├── .gitignore
├── README.md
├── agent.py
├── config.py
├── dataloader.py
├── dataset.py
├── docs
│   └── teaser.png
├── isp
│   ├── __init__.py
│   ├── denoise.py
│   ├── filters.py
│   ├── sharpen.py
│   └── unprocess_np.py
├── replay_memory.py
├── requirements.txt
├── train.py
├── util.py
├── value.py
└── yolov3
    ├── .dockerignore
    ├── .pre-commit-config.yaml
    ├── Arial.ttf
    ├── CITATION.cff
    ├── CONTRIBUTING.md
    ├── LICENSE
    ├── README.md
    ├── README.zh-CN.md
    ├── benchmarks.py
    ├── classify
    │   ├── predict.py
    │   ├── train.py
    │   ├── tutorial.ipynb
    │   └── val.py
    ├── data
    │   ├── Argoverse.yaml
    │   ├── GlobalWheat2020.yaml
    │   ├── ImageNet.yaml
    │   ├── SKU-110K.yaml
    │   ├── VisDrone.yaml
    │   ├── coco-2017-5000.yaml
    │   ├── coco-2017-small.yaml
    │   ├── coco-2017.yaml
    │   ├── coco-seg.yaml
    │   ├── coco.yaml
    │   ├── coco128-seg.yaml
    │   ├── coco128.yaml
    │   ├── hyps
    │   │   ├── hyp.Objects365.yaml
    │   │   ├── hyp.VOC.yaml
    │   │   ├── hyp.no-augmentation.yaml
    │   │   ├── hyp.scratch-high.yaml
    │   │   ├── hyp.scratch-low.yaml
    │   │   └── hyp.scratch-med.yaml
    │   ├── images
    │   │   ├── bus.jpg
    │   │   └── zidane.jpg
    │   ├── lod.yaml
    │   ├── lod_pynet.yaml
    │   ├── lod_rgb_dark.yaml
    │   ├── objects365.yaml
    │   ├── oprd.yaml
    │   ├── rod.yaml
    │   ├── rod_day.yaml
    │   ├── rod_night.yaml
    │   ├── rod_npy.yaml
    │   ├── rod_png.yaml
    │   ├── scripts
    │   │   ├── download_weights.sh
    │   │   ├── get_coco.sh
    │   │   ├── get_coco128.sh
    │   │   └── get_imagenet.sh
    │   ├── voc.yaml
    │   ├── xView.yaml
    │   └── yolov3-lod.yaml
    ├── detect.py
    ├── export.py
    ├── hubconf.py
    ├── models
    │   ├── __init__.py
    │   ├── common.py
    │   ├── experimental.py
    │   ├── hub
    │   │   ├── anchors.yaml
    │   │   ├── yolov5-bifpn.yaml
    │   │   ├── yolov5-fpn.yaml
    │   │   ├── yolov5-p2.yaml
    │   │   ├── yolov5-p34.yaml
    │   │   ├── yolov5-p6.yaml
    │   │   ├── yolov5-p7.yaml
    │   │   ├── yolov5-panet.yaml
    │   │   ├── yolov5l6.yaml
    │   │   ├── yolov5m6.yaml
    │   │   ├── yolov5n6.yaml
    │   │   ├── yolov5s-LeakyReLU.yaml
    │   │   ├── yolov5s-ghost.yaml
    │   │   ├── yolov5s-transformer.yaml
    │   │   ├── yolov5s6.yaml
    │   │   └── yolov5x6.yaml
    │   ├── segment
    │   │   ├── yolov5l-seg.yaml
    │   │   ├── yolov5m-seg.yaml
    │   │   ├── yolov5n-seg.yaml
    │   │   ├── yolov5s-seg.yaml
    │   │   └── yolov5x-seg.yaml
    │   ├── tf.py
    │   ├── yolo.py
    │   ├── yolov3-spp.yaml
    │   ├── yolov3-tiny.yaml
    │   ├── yolov3.yaml
    │   ├── yolov5l.yaml
    │   ├── yolov5m.yaml
    │   ├── yolov5n.yaml
    │   ├── yolov5s.yaml
    │   └── yolov5x.yaml
    ├── requirements.txt
    ├── run.sh
    ├── segment
    │   ├── predict.py
    │   ├── train.py
    │   ├── tutorial.ipynb
    │   └── val.py
    ├── setup.cfg
    ├── train.py
    ├── tutorial.ipynb
    ├── utils
    │   ├── __init__.py
    │   ├── activations.py
    │   ├── augmentations.py
    │   ├── autoanchor.py
    │   ├── autobatch.py
    │   ├── aws
    │   │   ├── __init__.py
    │   │   ├── mime.sh
    │   │   ├── resume.py
    │   │   └── userdata.sh
    │   ├── callbacks.py
    │   ├── dataloaders.py
    │   ├── docker
    │   │   ├── Dockerfile
    │   │   ├── Dockerfile-arm64
    │   │   └── Dockerfile-cpu
    │   ├── downloads.py
    │   ├── flask_rest_api
    │   │   ├── README.md
    │   │   ├── example_request.py
    │   │   └── restapi.py
    │   ├── general.py
    │   ├── google_app_engine
    │   │   ├── Dockerfile
    │   │   ├── additional_requirements.txt
    │   │   └── app.yaml
    │   ├── loggers
    │   │   ├── __init__.py
    │   │   ├── clearml
    │   │   │   ├── README.md
    │   │   │   ├── __init__.py
    │   │   │   ├── clearml_utils.py
    │   │   │   └── hpo.py
    │   │   ├── comet
    │   │   │   ├── README.md
    │   │   │   ├── __init__.py
    │   │   │   ├── comet_utils.py
    │   │   │   ├── hpo.py
    │   │   │   └── optimizer_config.json
    │   │   └── wandb
    │   │       ├── __init__.py
    │   │       └── wandb_utils.py
    │   ├── loss.py
    │   ├── metrics.py
    │   ├── plots.py
    │   ├── segment
    │   │   ├── __init__.py
    │   │   ├── augmentations.py
    │   │   ├── dataloaders.py
    │   │   ├── general.py
    │   │   ├── loss.py
    │   │   ├── metrics.py
    │   │   └── plots.py
    │   ├── torch_utils.py
    │   └── triton.py
    ├── val.py
    └── val_adaptiveisp.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__/

2 | **/__pycache__/

3 | .idea/

4 | .vscode

5 |

6 | # Distribution / packaging

7 | .Python

8 | env/

9 | build/

10 | develop-eggs/

11 | dist/

12 | downloads/

13 | eggs/

14 | .eggs/

15 | lib/

16 | lib64/

17 | parts/

18 | sdist/

19 | var/

20 | wheels/

21 | *.egg-info/

22 | /wandb/

23 | .installed.cfg

24 | *.egg

25 |

26 | .DS_Store

27 |

28 | # Jupyter Notebook

29 | .ipynb_checkpoints

30 |

31 | # pyenv

32 | .python-version

33 |

34 |

35 | experiments

36 | outputs

37 | output

38 | output_results

39 | tmp

40 | *.out

41 | results

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | from util import Dict

2 | from isp.filters import *

3 |

4 |

5 | cfg = Dict()

6 | cfg.val_freq = 1000

7 | cfg.save_model_freq = 1000

8 | cfg.print_freq = 100

9 | cfg.summary_freq = 100

10 | cfg.show_img_num = 2

11 |

12 | cfg.parameter_lr_mul = 1

13 | cfg.value_lr_mul = 1

14 | cfg.critic_lr_mul = 1

15 |

16 | ###########################################################################

17 | # Filter Parameters

18 | ###########################################################################

19 | cfg.filters = [

20 | ExposureFilter, GammaFilter, CCMFilter, SharpenFilter, DenoiseFilter,

21 | ToneFilter, ContrastFilter, SaturationPlusFilter, WNBFilter, ImprovedWhiteBalanceFilter

22 | ]

23 | cfg.filter_runtime_penalty = False

24 | cfg.filters_runtime = [1.7, 2.0, 1.9, 6.3, 10, 2.7, 2.1, 2.0, 1.9, 1.7]

25 | cfg.filter_runtime_penalty_lambda = 0.01

26 |

27 | # Gamma = 1/x ~ x

28 | cfg.curve_steps = 8

29 | cfg.gamma_range = 3

30 | cfg.exposure_range = 3.5

31 | cfg.wb_range = 1.1

32 | cfg.color_curve_range = (0.90, 1.10)

33 | cfg.lab_curve_range = (0.90, 1.10)

34 | cfg.tone_curve_range = (0.5, 2)

35 | cfg.usm_sharpen_range = (0.0, 2.0) # wikipedia recommended sigma 0.5-2.0; amount 0.5-1.5

36 | cfg.sharpen_range = (0.0, 10.0)

37 | cfg.ccm_range = (-2.0, 2.0)

38 | cfg.denoise_range = (0.0, 1.0)

39 |

40 | cfg.masking = False

41 | cfg.minimum_strength = 0.3

42 | cfg.maximum_sharpness = 1

43 | cfg.clamp = False

44 |

45 |

46 | ###########################################################################

47 | # RL Parameters

48 | ###########################################################################

49 | cfg.critic_logit_multiplier = 100

50 | cfg.discount_factor = 1.0 # 0.98

51 | # Each time the agent reuses a filter, a penalty is subtracted from the reward. Set to 0 to disable.

52 | cfg.filter_usage_penalty = 1.0

53 | # Use the temporal-difference error (which relies on the value network) or a single-step reward (greedy)?

54 | cfg.use_TD = True

55 | # Replay memory

56 | cfg.replay_memory_size = 128

57 | # Note, a trajectory will be killed either after achieving this value (by chance) or submission

58 | # Thus exploration will lead to kills as well.

59 | cfg.maximum_trajectory_length = 7

60 | cfg.over_length_keep_prob = 0.5

61 | cfg.all_reward = 1.0

62 | # Append input image with states?

63 | cfg.img_include_states = True

64 | # with prob. cfg.exploration, we randomly pick one action during training

65 | cfg.exploration = 0.05

66 | # Action entropy penalization

67 | cfg.exploration_penalty = 0.05

68 | cfg.early_stop_penalty = 1.0

69 | cfg.detect_loss_weight = 1.0

70 |

71 | ###########################################################################

72 | # Agent, Value Network Parameters

73 | ###########################################################################

74 | cfg.base_channels = 32

75 | cfg.dropout_keep_prob = 0.5

76 | cfg.shared_feature_extractor = True

77 | cfg.fc1_size = 128

78 | cfg.bnw = False

79 | # number of filters for the first convolutional layers for all networks

80 | cfg.feature_extractor_dims = 4096

81 | cfg.use_penalty = True

82 | cfg.z_type = 'uniform'

83 | cfg.z_dim_per_filter = 16

84 |

85 | cfg.num_state_dim = 3 + len(cfg.filters)

86 | cfg.z_dim = 3 + len(cfg.filters) * cfg.z_dim_per_filter

87 | cfg.test_steps = 5

88 |

--------------------------------------------------------------------------------
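
The two derived sizes at the end of `config.py` follow directly from the length of `cfg.filters`. The sketch below reproduces that arithmetic in isolation. The filter names are plain strings standing in for the classes imported from `isp.filters`, and the reading of the 19-channel default in `value.py` as "3 image channels + state vector + 3 image statistics" is an assumption drawn from how `Value.forward` concatenates its inputs, not something `config.py` states.

```python
# Stand-in for cfg.filters: only the number of entries matters here.
FILTER_NAMES = [
    "ExposureFilter", "GammaFilter", "CCMFilter", "SharpenFilter", "DenoiseFilter",
    "ToneFilter", "ContrastFilter", "SaturationPlusFilter", "WNBFilter",
    "ImprovedWhiteBalanceFilter",
]
Z_DIM_PER_FILTER = 16  # mirrors cfg.z_dim_per_filter

# Same formulas as the last lines of config.py.
num_state_dim = 3 + len(FILTER_NAMES)
z_dim = 3 + len(FILTER_NAMES) * Z_DIM_PER_FILTER

# Assumption: the value network sees RGB (3) + the state vector
# + 3 per-image statistics (luminance, contrast, saturation).
value_in_channels = 3 + num_state_dim + 3

print(num_state_dim, z_dim, value_in_channels)
```

Adding or removing a filter changes all three numbers, so any hard-coded channel count (such as the default `shape` in `value.py`) would need to be kept in sync.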

/docs/teaser.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenImagingLab/AdaptiveISP/a6775e64d9c3768fc964ffcb00692c5e042111f1/docs/teaser.png

--------------------------------------------------------------------------------

/isp/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenImagingLab/AdaptiveISP/a6775e64d9c3768fc964ffcb00692c5e042111f1/isp/__init__.py

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | about-time==4.2.1

2 | absl-py==2.1.0

3 | accelerate==0.33.0

4 | addict==2.4.0

5 | aiohttp==3.9.5

6 | aiosignal==1.3.1

7 | alive-progress==3.1.5

8 | antlr4-python3-runtime==4.9.3

9 | asttokens==2.4.1

10 | async-timeout==4.0.3

11 | attrs==23.2.0

12 | autograd==1.6.2

13 | cattrs==23.2.3

14 | cffi==1.16.0

15 | cma==3.2.2

16 | colorama==0.4.6

17 | colour-demosaicing==0.2.5

18 | colour-science==0.4.4

19 | comm==0.2.2

20 | contourpy==1.2.1

21 | cryptography==43.0.0

22 | cycler==0.12.1

23 | debugpy==1.8.1

24 | decorator==4.4.2

25 | Deprecated==1.2.14

26 | diffusers==0.30.0

27 | dill==0.3.8

28 | distro==1.9.0

29 | easydict==1.13

30 | einops==0.8.0

31 | exceptiongroup==1.2.1

32 | executing==2.0.1

33 | facexlib==0.3.0

34 | filterpy==1.4.5

35 | fonttools==4.51.0

36 | Forward_Warp==0.0.1

37 | forward_warp_cuda==0.0.0

38 | frozenlist==1.4.1

39 | fsspec==2024.3.1

40 | ftfy==6.2.0

41 | future==1.0.0

42 | grapheme==0.6.0

43 | grpcio==1.63.0

44 | huggingface-hub==0.24.5

45 | icecream==2.1.3

46 | imageio==2.34.1

47 | imageio-ffmpeg==0.5.1

48 | imgaug==0.4.0

49 | importlib_metadata==7.1.0

50 | iopath==0.1.10

51 | ipykernel==6.29.4

52 | ipython==8.24.0

53 | jedi==0.19.1

54 | joblib==1.4.2

55 | jupyter_client==8.6.1

56 | jupyter_core==5.7.2

57 | kiwisolver==1.4.5

58 | kornia==0.7.2

59 | kornia_rs==0.1.3

60 | lazy_loader==0.4

61 | lightning-utilities==0.11.5

62 | llvmlite==0.42.0

63 | lmdb==1.4.1

64 | loguru==0.7.2

65 | Markdown==3.6

66 | markdown-it-py==3.0.0

67 | matplotlib==3.8.4

68 | matplotlib-inline==0.1.7

69 | mdurl==0.1.2

70 | meshzoo==0.11.6

71 | mkl-service==2.4.0

72 | mmengine==0.10.4

73 | moviepy==1.0.3

74 | multidict==6.0.5

75 | nest-asyncio==1.6.0

76 | ninja==1.11.1.1

77 | numba==0.59.1

78 | omegaconf==2.3.0

79 | openai-clip==1.0.1

80 | opencv-python==4.9.0.80

81 | packaging==24.0

82 | pandas==2.2.2

83 | parso==0.8.4

84 | pexpect==4.9.0

85 | platformdirs==4.2.1

86 | portalocker==2.10.1

87 | proglog==0.1.10

88 | prompt-toolkit==3.0.43

89 | protobuf==5.26.1

90 | psutil==5.9.8

91 | ptyprocess==0.7.0

92 | pure-eval==0.2.2

93 | py-cpuinfo==9.0.0

94 | py-machineid==0.6.0

95 | pycocotools==2.0.7

96 | pycparser==2.22

97 | Pygments==2.18.0

98 | pyiqa==0.1.11

99 | pymoo==0.6.1.1

100 | pyparsing==3.1.2

101 | python-dateutil==2.9.0.post0

102 | python-package-info==0.0.9

103 | pytorch-lightning==2.3.3

104 | pytz==2024.1

105 | PyYAML==6.0.1

106 | pyzmq==26.0.3

107 | rawpy==0.21.0

108 | regex==2024.5.10

109 | requests-cache==1.2.1

110 | rich==13.7.1

111 | rich-argparse==1.5.2

112 | safetensors==0.4.3

113 | scikit-image==0.23.2

114 | scipy==1.13.0

115 | seaborn==0.13.2

116 | sentencepiece==0.2.0

117 | shapely==2.0.4

118 | six==1.16.0

119 | sk-video==1.1.10

120 | stack-data==0.6.3

121 | stonefish-license-manager==0.4.36

122 | tabulate==0.9.0

123 | tensorboard==2.16.2

124 | tensorboard-data-server==0.7.2

125 | termcolor==2.4.0

126 | thop==0.1.1.post2209072238

127 | tifffile==2024.5.10

128 | timm==0.9.16

129 | tokenizers==0.15.2

130 | tomli==2.0.1

131 | torch==2.0.1

132 | torch-tb-profiler==0.4.3

133 | torchaudio==2.0.2

134 | torchmetrics==1.4.0.post0

135 | torchvision==0.15.2

136 | tornado==6.4

137 | tqdm==4.66.4

138 | traitlets==5.14.3

139 | transformers==4.37.2

140 | triton==2.0.0

141 | tzdata==2024.1

142 | ultralytics==8.2.16

143 | url-normalize==1.4.3

144 | wcwidth==0.2.13

145 | Werkzeug==3.0.3

146 | wrapt==1.16.0

147 | x21==0.5.2

148 | yacs==0.1.8

149 | yapf==0.40.2

150 | yarl==1.9.4

151 | zipp==3.18.1

152 |

--------------------------------------------------------------------------------

/value.py:

--------------------------------------------------------------------------------

1 | import torch
2 | import torch.nn as nn
3 | import torch.nn.functional as F
4 |
5 |
6 | class FeatureExtractor(torch.nn.Module):
7 |     def __init__(self, shape=(17, 64, 64), mid_channels=32, output_dim=4096):
8 |         """shape: c,h,w"""
9 |         super(FeatureExtractor, self).__init__()
10 |         in_channels = shape[0]
11 |         self.output_dim = output_dim
12 |
13 |         min_feature_map_size = 4
14 |         assert output_dim % (min_feature_map_size ** 2) == 0, 'output dim=%d' % output_dim
15 |         size = int(shape[2])
16 |         # print('Agent CNN:')
17 |         # print(' ', shape)
18 |         size = size // 2
19 |         channels = mid_channels
20 |         layers = []
21 |         layers.append(nn.Conv2d(in_channels, channels, kernel_size=4, stride=2, padding=1))
22 |         layers.append(nn.BatchNorm2d(channels))
23 |         layers.append(nn.LeakyReLU(negative_slope=0.2))
24 |         while size > min_feature_map_size:
25 |             in_channels = channels
26 |             if size == min_feature_map_size * 2:
27 |                 channels = output_dim // (min_feature_map_size ** 2)
28 |             else:
29 |                 channels *= 2
30 |             assert size % 2 == 0
31 |             size = size // 2
32 |             # print(size, in_channels, channels)
33 |             layers.append(nn.Conv2d(in_channels, channels, kernel_size=4, stride=2, padding=1))
34 |             layers.append(nn.BatchNorm2d(channels))
35 |             layers.append(nn.LeakyReLU(negative_slope=0.2))
36 |         # layers.append(nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1))
37 |         # layers.append(nn.BatchNorm2d(channels))
38 |         # layers.append(nn.LeakyReLU(negative_slope=0.2))
39 |         self.layers = nn.Sequential(*layers)
40 |
41 |     def forward(self, x):
42 |         x = self.layers(x)
43 |         x = torch.reshape(x, [-1, self.output_dim])
44 |         return x
45 |
46 |
47 | # input: float in [0, 1]
48 | class Value(nn.Module):
49 |     def __init__(self, cfg, shape=(19, 64, 64)):
50 |         super(Value, self).__init__()
51 |         self.cfg = cfg
52 |         self.feature_extractor = FeatureExtractor(shape=shape, mid_channels=cfg.base_channels,
53 |                                                   output_dim=cfg.feature_extractor_dims)
54 |
55 |         self.fc1 = nn.Linear(cfg.feature_extractor_dims, cfg.fc1_size)
56 |         self.lrelu = nn.LeakyReLU(negative_slope=0.2)
57 |         self.fc2 = nn.Linear(cfg.fc1_size, 1)
58 |         self.tanh = nn.Tanh()
59 |
60 |         self.down_sample = nn.AdaptiveAvgPool2d((shape[1], shape[2]))
61 |
62 |     def forward(self, images, states=None):
63 |         images = self.down_sample(images)
64 |         lum = (images[:, 0, :, :] * 0.27 + images[:, 1, :, :] * 0.67 + images[:, 2, :, :] * 0.06 + 1e-5)[:, None, :, :]
65 |         # print(lum.shape)
66 |         # luminance and contrast
67 |         luminance = torch.mean(lum, dim=(1, 2, 3))
68 |         contrast = torch.var(lum, dim=(1, 2, 3))
69 |         # saturation
70 |         i_max, _ = torch.max(torch.clip(images, min=0.0, max=1.0), dim=1)
71 |         i_min, _ = torch.min(torch.clip(images, min=0.0, max=1.0), dim=1)
72 |         # print("i_max i_min shape:", i_max.shape, i_min.shape)
73 |         sat = (i_max - i_min) / (torch.minimum(i_max + i_min, 2.0 - i_max - i_min) + 1e-2)
74 |         # print("sat.shape", sat.shape)
75 |         saturation = torch.mean(sat, dim=[1, 2])
76 |         # print("luminance shape:", luminance.shape, contrast.shape, saturation.shape)
77 |         repetition = 1
78 |         state_feature = torch.cat(
79 |             [torch.tile(luminance[:, None], [1, repetition]),
80 |              torch.tile(contrast[:, None], [1, repetition]),
81 |              torch.tile(saturation[:, None], [1, repetition])], dim=1)
82 |         # print('States:', states.shape)
83 |         if states is None:
84 |             states = state_feature
85 |         else:
86 |             assert len(states.shape) == len(state_feature.shape)
87 |             states = torch.cat([states, state_feature], dim=1)
88 |         if states is not None:
89 |             states = states[:, :, None, None] + images[:, 0:1, :, :] * 0
90 |             # print(' States:', states.shape)
91 |             images = torch.cat([images, states], dim=1)
92 |         # print("images.shape", images.shape)
93 |         feature = self.feature_extractor(images)
94 |         # print(' CNN shape: ', feature.shape)
95 |         # print('Before final FCs', feature.shape)
96 |         out = self.fc2(self.lrelu(self.fc1(feature)))
97 |         # print(' ', out.shape)
98 |         # out = self.tanh(out)
99 |         return out
100 |
101 |
102 | if __name__ == "__main__":
103 |     from easydict import EasyDict
104 |     import numpy as np
105 |     cfg = EasyDict()
106 |     cfg['base_channels'] = 32
107 |     cfg['fc1_size'] = 128
108 |     cfg['feature_extractor_dims'] = 4096
109 |
110 |     torch.manual_seed(0)  # seed torch's RNG, which generates x and states below
111 |     x = torch.randn((1, 3, 512, 512))
112 |     states = torch.randn((1, 13))  # 13 = cfg.num_state_dim; 3 image + 13 state + 3 statistics = 19 input channels
113 |     # x = np.transpose(x, (0, 3, 1, 2))
114 |     # x = torch.from_numpy(x)
115 |     value = Value(cfg)
116 |     y = value(x, states)
117 |     print(y.shape, y)
118 |     print(value.state_dict())
119 |     torch.save(value.state_dict(), "value.pth")

--------------------------------------------------------------------------------
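
The layer-sizing loop in `FeatureExtractor.__init__` is easier to follow when separated from the PyTorch modules. The sketch below is a pure-Python restatement of that loop, not part of the repository: `conv_schedule` is a hypothetical helper that returns, for each stride-2 convolution, the input channels, output channels, and resulting spatial size.

```python
def conv_schedule(shape=(17, 64, 64), mid_channels=32, output_dim=4096, min_size=4):
    """Return one (in_channels, out_channels, output_size) tuple per stride-2 conv."""
    assert output_dim % (min_size ** 2) == 0, "output_dim must be divisible by min_size**2"
    in_channels = shape[0]
    size = shape[2] // 2          # the first conv halves the input width
    channels = mid_channels
    plan = [(in_channels, channels, size)]
    while size > min_size:
        in_channels = channels
        if size == min_size * 2:
            # Last conv: choose channels so that channels * min_size**2 == output_dim.
            channels = output_dim // (min_size ** 2)
        else:
            channels *= 2
        assert size % 2 == 0
        size //= 2
        plan.append((in_channels, channels, size))
    return plan


plan = conv_schedule(shape=(19, 64, 64))
for layer in plan:
    print(layer)

# The flattened size of the last feature map matches output_dim.
last_channels, last_size = plan[-1][1], plan[-1][2]
flattened = last_channels * last_size * last_size
```

For the 19-channel, 64x64 input used by `Value`, this gives four convolutions ending in a 256-channel 4x4 map, which is why the final `torch.reshape(x, [-1, self.output_dim])` is consistent with `output_dim=4096`.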

/yolov3/.dockerignore:

--------------------------------------------------------------------------------

1 | # Repo-specific DockerIgnore -------------------------------------------------------------------------------------------

2 | .git

3 | .cache

4 | .idea

5 | runs

6 | output

7 | coco

8 | storage.googleapis.com

9 |

10 | data/samples/*

11 | **/results*.csv

12 | *.jpg

13 |

14 | # Neural Network weights -----------------------------------------------------------------------------------------------

15 | **/*.pt

16 | **/*.pth

17 | **/*.onnx

18 | **/*.engine

19 | **/*.mlmodel

20 | **/*.torchscript

21 | **/*.torchscript.pt

22 | **/*.tflite

23 | **/*.h5

24 | **/*.pb

25 | *_saved_model/

26 | *_web_model/

27 | *_openvino_model/

28 |

29 | # Below Copied From .gitignore -----------------------------------------------------------------------------------------

30 | # Below Copied From .gitignore -----------------------------------------------------------------------------------------

31 |

32 |

33 | # GitHub Python GitIgnore ----------------------------------------------------------------------------------------------

34 | # Byte-compiled / optimized / DLL files

35 | __pycache__/

36 | *.py[cod]

37 | *$py.class

38 |

39 | # C extensions

40 | *.so

41 |

42 | # Distribution / packaging

43 | .Python

44 | env/

45 | build/

46 | develop-eggs/

47 | dist/

48 | downloads/

49 | eggs/

50 | .eggs/

51 | lib/

52 | lib64/

53 | parts/

54 | sdist/

55 | var/

56 | wheels/

57 | *.egg-info/

58 | wandb/

59 | .installed.cfg

60 | *.egg

61 |

62 | # PyInstaller

63 | # Usually these files are written by a python script from a template

64 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

65 | *.manifest

66 | *.spec

67 |

68 | # Installer logs

69 | pip-log.txt

70 | pip-delete-this-directory.txt

71 |

72 | # Unit test / coverage reports

73 | htmlcov/

74 | .tox/

75 | .coverage

76 | .coverage.*

77 | .cache

78 | nosetests.xml

79 | coverage.xml

80 | *.cover

81 | .hypothesis/

82 |

83 | # Translations

84 | *.mo

85 | *.pot

86 |

87 | # Django stuff:

88 | *.log

89 | local_settings.py

90 |

91 | # Flask stuff:

92 | instance/

93 | .webassets-cache

94 |

95 | # Scrapy stuff:

96 | .scrapy

97 |

98 | # Sphinx documentation

99 | docs/_build/

100 |

101 | # PyBuilder

102 | target/

103 |

104 | # Jupyter Notebook

105 | .ipynb_checkpoints

106 |

107 | # pyenv

108 | .python-version

109 |

110 | # celery beat schedule file

111 | celerybeat-schedule

112 |

113 | # SageMath parsed files

114 | *.sage.py

115 |

116 | # dotenv

117 | .env

118 |

119 | # virtualenv

120 | .venv*

121 | venv*/

122 | ENV*/

123 |

124 | # Spyder project settings

125 | .spyderproject

126 | .spyproject

127 |

128 | # Rope project settings

129 | .ropeproject

130 |

131 | # mkdocs documentation

132 | /site

133 |

134 | # mypy

135 | .mypy_cache/

136 |

137 |

138 | # https://github.com/github/gitignore/blob/master/Global/macOS.gitignore -----------------------------------------------

139 |

140 | # General

141 | .DS_Store

142 | .AppleDouble

143 | .LSOverride

144 |

145 | # Icon must end with two \r

146 | Icon

147 | Icon?

148 |

149 | # Thumbnails

150 | ._*

151 |

152 | # Files that might appear in the root of a volume

153 | .DocumentRevisions-V100

154 | .fseventsd

155 | .Spotlight-V100

156 | .TemporaryItems

157 | .Trashes

158 | .VolumeIcon.icns

159 | .com.apple.timemachine.donotpresent

160 |

161 | # Directories potentially created on remote AFP share

162 | .AppleDB

163 | .AppleDesktop

164 | Network Trash Folder

165 | Temporary Items

166 | .apdisk

167 |

168 |

169 | # https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

170 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and WebStorm

171 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

172 |

173 | # User-specific stuff:

174 | .idea/*

175 | .idea/**/workspace.xml

176 | .idea/**/tasks.xml

177 | .idea/dictionaries

178 | .html # Bokeh Plots

179 | .pg # TensorFlow Frozen Graphs

180 | .avi # videos

181 |

182 | # Sensitive or high-churn files:

183 | .idea/**/dataSources/

184 | .idea/**/dataSources.ids

185 | .idea/**/dataSources.local.xml

186 | .idea/**/sqlDataSources.xml

187 | .idea/**/dynamic.xml

188 | .idea/**/uiDesigner.xml

189 |

190 | # Gradle:

191 | .idea/**/gradle.xml

192 | .idea/**/libraries

193 |

194 | # CMake

195 | cmake-build-debug/

196 | cmake-build-release/

197 |

198 | # Mongo Explorer plugin:

199 | .idea/**/mongoSettings.xml

200 |

201 | ## File-based project format:

202 | *.iws

203 |

204 | ## Plugin-specific files:

205 |

206 | # IntelliJ

207 | out/

208 |

209 | # mpeltonen/sbt-idea plugin

210 | .idea_modules/

211 |

212 | # JIRA plugin

213 | atlassian-ide-plugin.xml

214 |

215 | # Cursive Clojure plugin

216 | .idea/replstate.xml

217 |

218 | # Crashlytics plugin (for Android Studio and IntelliJ)

219 | com_crashlytics_export_strings.xml

220 | crashlytics.properties

221 | crashlytics-build.properties

222 | fabric.properties

223 |

--------------------------------------------------------------------------------

/yolov3/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, AGPL-3.0 license
2 | # Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md
3 |
4 | exclude: 'docs/'
5 | # Define bot property if installed via https://github.com/marketplace/pre-commit-ci
6 | ci:
7 |   autofix_prs: true
8 |   autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
9 |   autoupdate_schedule: monthly
10 |   # submodules: true
11 |
12 | repos:
13 |   - repo: https://github.com/pre-commit/pre-commit-hooks
14 |     rev: v4.4.0
15 |     hooks:
16 |       - id: end-of-file-fixer
17 |       - id: trailing-whitespace
18 |       - id: check-case-conflict
19 |       # - id: check-yaml
20 |       - id: check-docstring-first
21 |       - id: double-quote-string-fixer
22 |       - id: detect-private-key
23 |
24 |   - repo: https://github.com/asottile/pyupgrade
25 |     rev: v3.10.1
26 |     hooks:
27 |       - id: pyupgrade
28 |         name: Upgrade code
29 |
30 |   - repo: https://github.com/PyCQA/isort
31 |     rev: 5.12.0
32 |     hooks:
33 |       - id: isort
34 |         name: Sort imports
35 |
36 |   - repo: https://github.com/google/yapf
37 |     rev: v0.40.0
38 |     hooks:
39 |       - id: yapf
40 |         name: YAPF formatting
41 |
42 |   - repo: https://github.com/executablebooks/mdformat
43 |     rev: 0.7.16
44 |     hooks:
45 |       - id: mdformat
46 |         name: MD formatting
47 |         additional_dependencies:
48 |           - mdformat-gfm
49 |           - mdformat-black
50 |         # exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"
51 |
52 |   - repo: https://github.com/PyCQA/flake8
53 |     rev: 6.1.0
54 |     hooks:
55 |       - id: flake8
56 |         name: PEP8
57 |
58 |   - repo: https://github.com/codespell-project/codespell
59 |     rev: v2.2.5
60 |     hooks:
61 |       - id: codespell
62 |         args:
63 |           - --ignore-words-list=crate,nd,strack,dota
64 |
65 |   # - repo: https://github.com/asottile/yesqa
66 |   #   rev: v1.4.0
67 |   #   hooks:
68 |   #     - id: yesqa
69 |
70 |   # - repo: https://github.com/asottile/dead
71 |   #   rev: v1.5.0
72 |   #   hooks:
73 |   #     - id: dead

74 |

--------------------------------------------------------------------------------

/yolov3/Arial.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenImagingLab/AdaptiveISP/a6775e64d9c3768fc964ffcb00692c5e042111f1/yolov3/Arial.ttf

--------------------------------------------------------------------------------

/yolov3/CITATION.cff:

--------------------------------------------------------------------------------

1 | cff-version: 1.2.0
2 | preferred-citation:
3 |   type: software
4 |   message: If you use YOLOv5, please cite it as below.
5 |   authors:
6 |   - family-names: Jocher
7 |     given-names: Glenn
8 |     orcid: "https://orcid.org/0000-0001-5950-6979"
9 |   title: "YOLOv5 by Ultralytics"
10 |   version: 7.0
11 |   doi: 10.5281/zenodo.3908559
12 |   date-released: 2020-5-29
13 |   license: AGPL-3.0
14 |   url: "https://github.com/ultralytics/yolov5"

15 |

--------------------------------------------------------------------------------

/yolov3/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | ## Contributing to YOLOv3 🚀

2 |

3 | We love your input! We want to make contributing to YOLOv5 as easy and transparent as possible, whether it's:

4 |

5 | - Reporting a bug

6 | - Discussing the current state of the code

7 | - Submitting a fix

8 | - Proposing a new feature

9 | - Becoming a maintainer

10 |

11 | YOLOv5 works so well due to our combined community effort, and for every small improvement you contribute you will be

12 | helping push the frontiers of what's possible in AI 😃!

13 |

14 | ## Submitting a Pull Request (PR) 🛠️

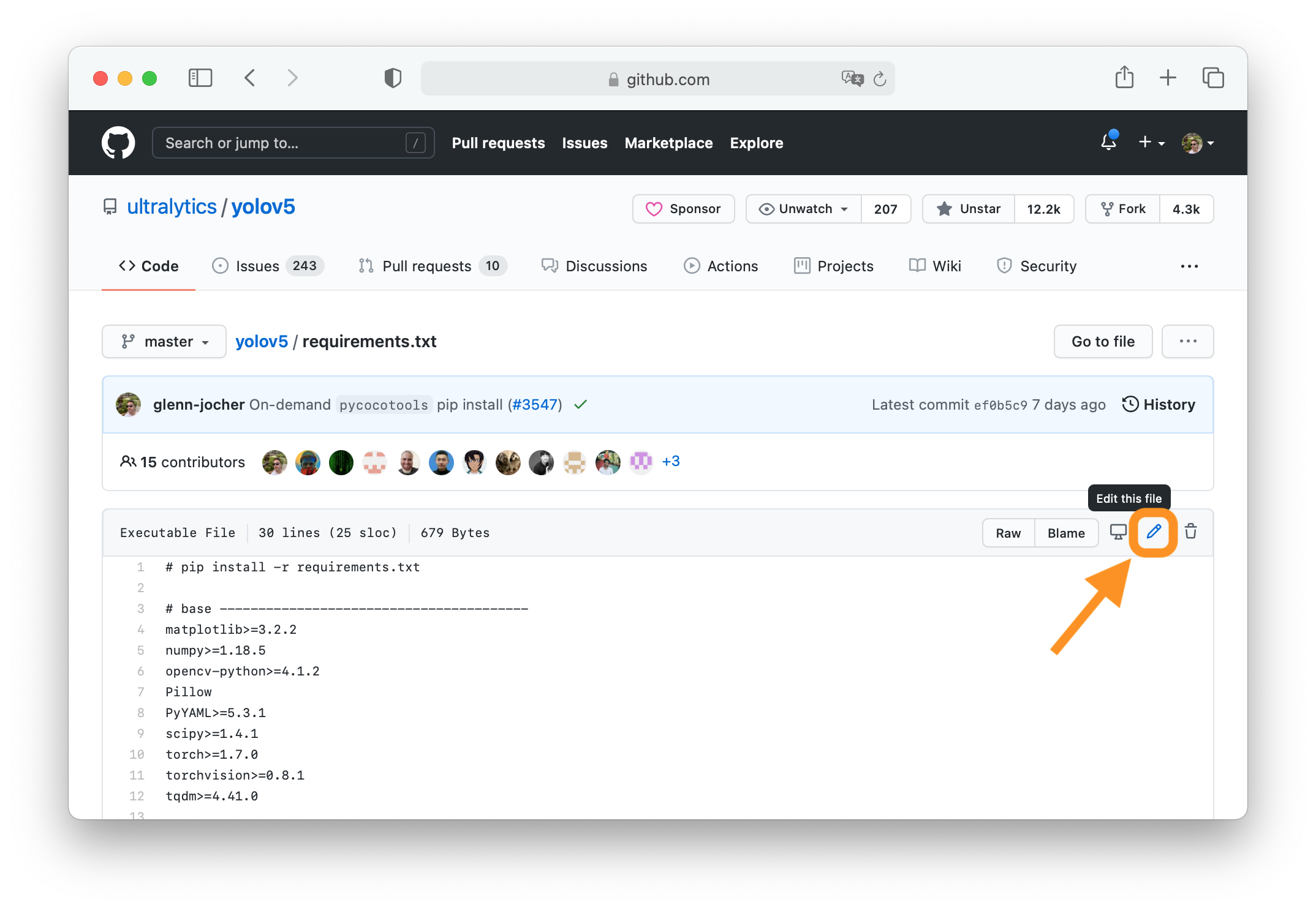

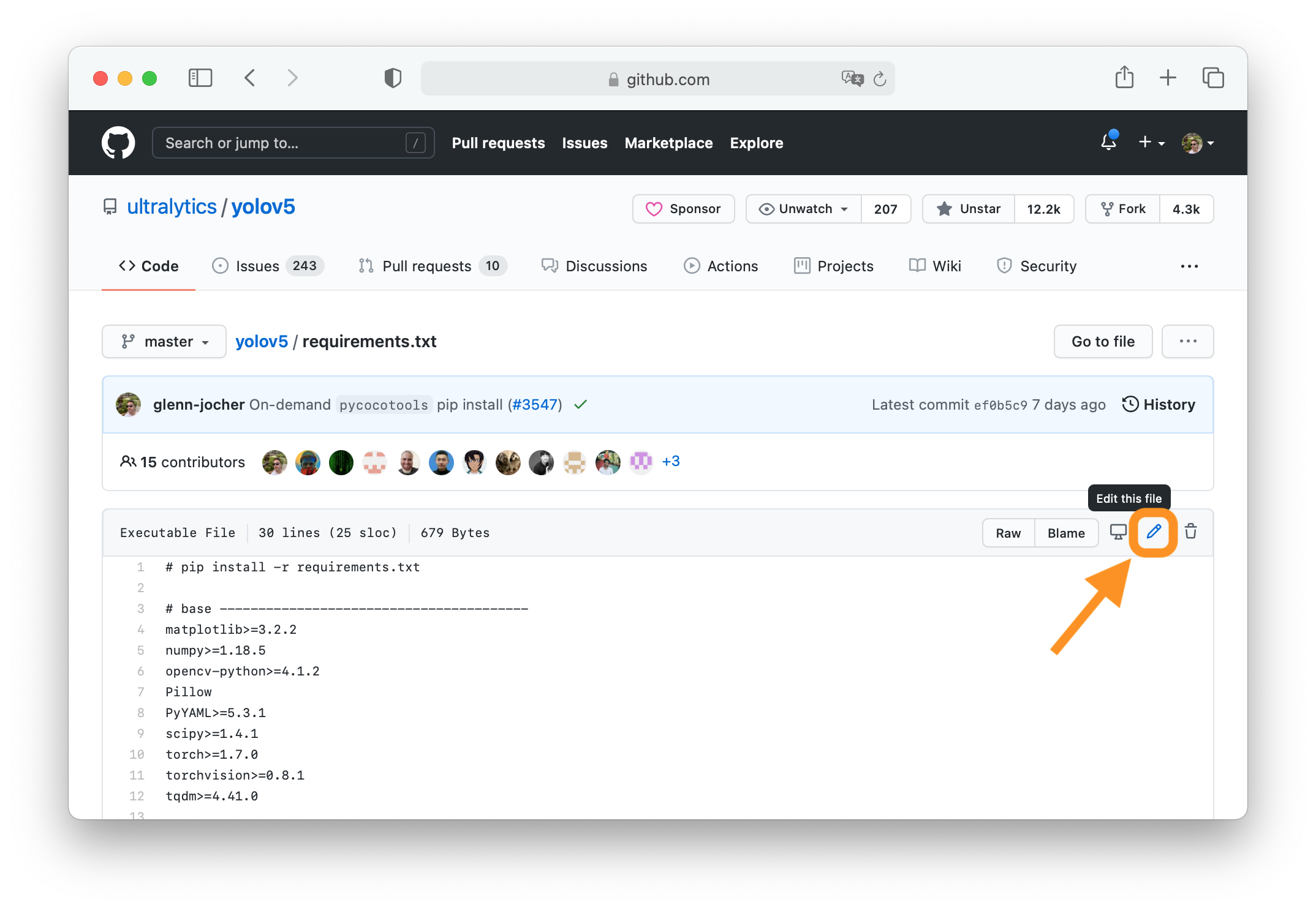

15 |

16 | Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

17 |

18 | ### 1. Select File to Update

19 |

20 | Select `requirements.txt` to update by clicking on it in GitHub.

21 |

22 |

23 |

24 | ### 2. Click 'Edit this file'

25 |

26 | The button is in the top-right corner.

27 |

28 |

29 |

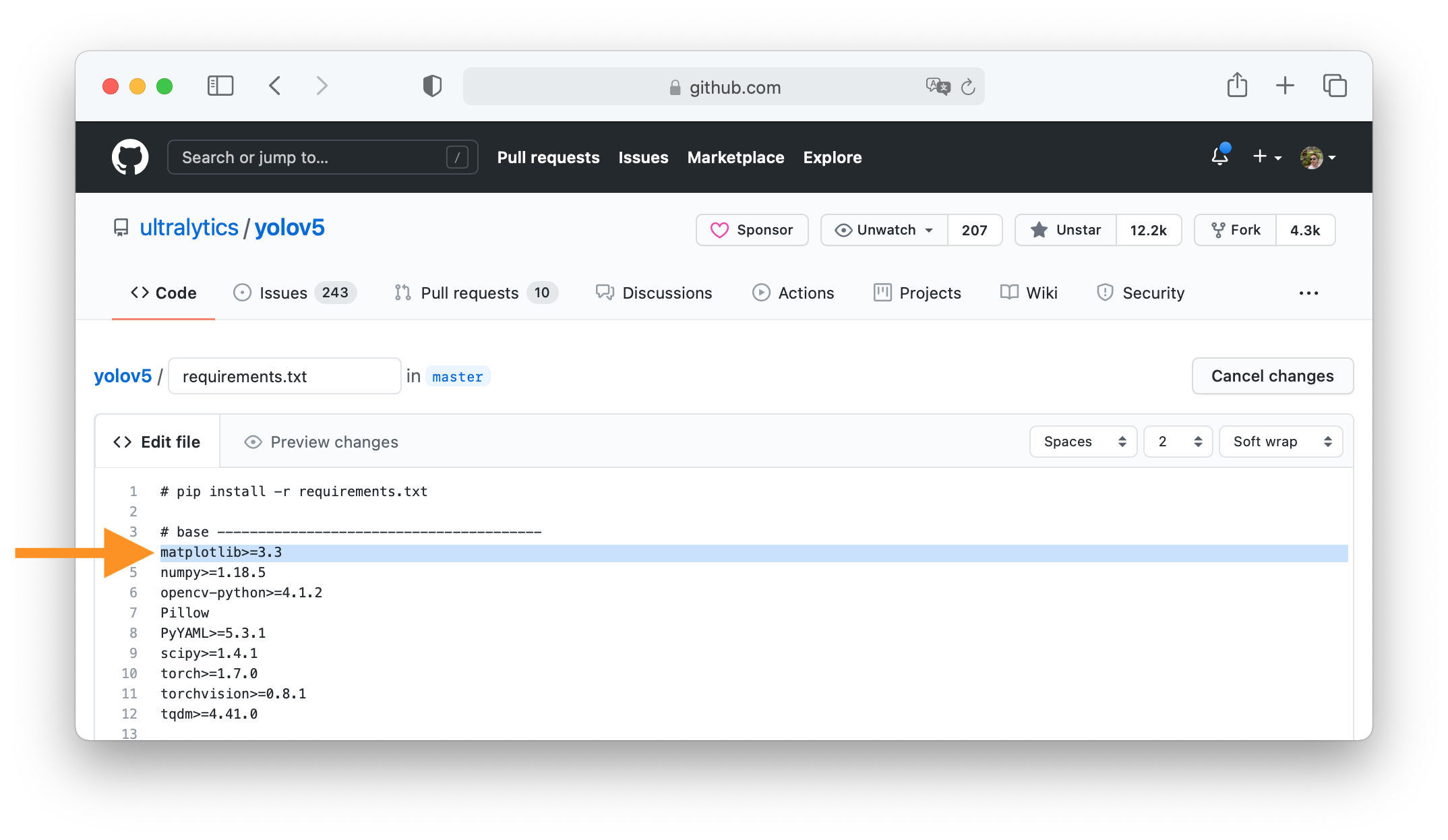

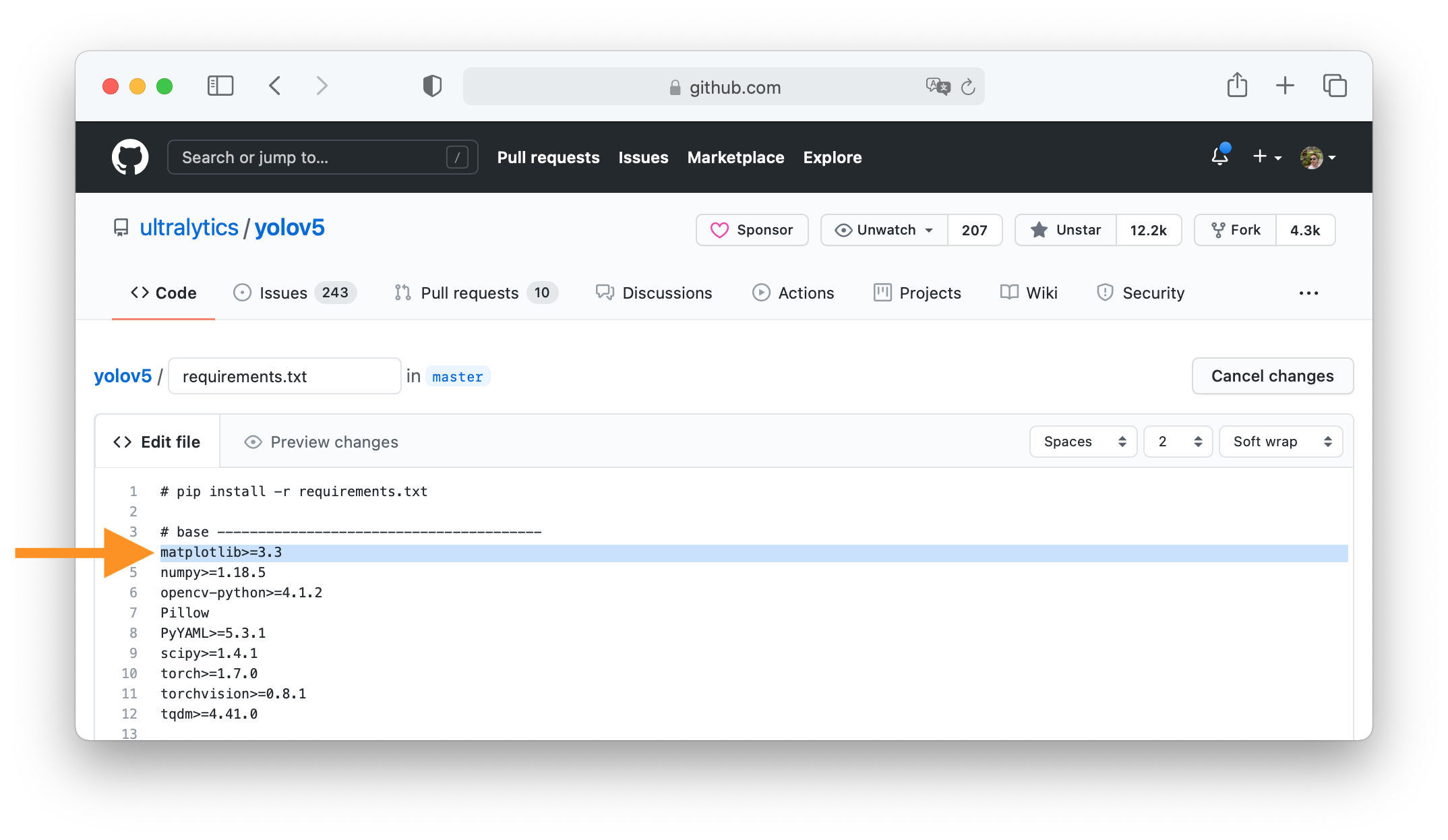

30 | ### 3. Make Changes

31 |

32 | Change the `matplotlib` version from `3.2.2` to `3.3`.

33 |

34 |

35 |

36 | ### 4. Preview Changes and Submit PR

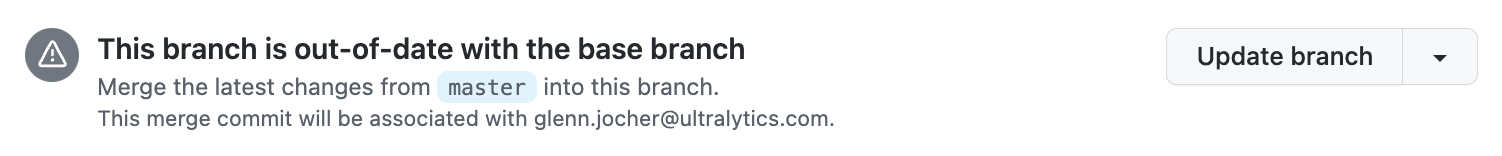

37 |

38 | Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**

39 | for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose

40 | changes** button. All done, your PR is now submitted to YOLOv5 for review and approval 😃!

41 |

42 |

43 |

44 | ### PR recommendations

45 |

46 | To allow your work to be integrated as seamlessly as possible, we advise you to:

47 |

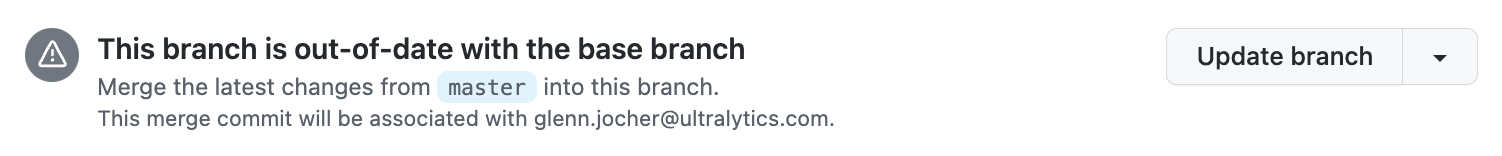

48 | - ✅ Verify your PR is **up-to-date** with `ultralytics/yolov5` `master` branch. If your PR is behind you can update

49 | your code by clicking the 'Update branch' button or by running `git pull` and `git merge master` locally.

50 |

51 |

52 |

53 | - ✅ Verify all YOLOv5 Continuous Integration (CI) **checks are passing**.

54 |

55 |

56 |

57 | - ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase

58 | but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

59 |

60 | ## Submitting a Bug Report 🐛

61 |

62 | If you spot a problem with YOLOv5 please submit a Bug Report!

63 |

64 | For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few

65 | short guidelines below to help users provide what we need to get started.

66 |

67 | When asking a question, people will be better able to provide help if you provide **code** that they can easily

68 | understand and use to **reproduce** the problem. This is referred to by community members as creating

69 | a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/). Your code that reproduces

70 | the problem should be:

71 |

72 | - ✅ **Minimal** – Use as little code as possible that still produces the same problem

73 | - ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

74 | - ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

75 |

76 | In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code

77 | should be:

78 |

79 | - ✅ **Current** – Verify that your code is up-to-date with the current

80 | GitHub [master](https://github.com/ultralytics/yolov5/tree/master), and if necessary `git pull` or `git clone` a new

81 | copy to ensure your problem has not already been resolved by previous commits.

82 | - ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

83 | repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

84 |

85 | If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛

86 | **Bug Report** [template](https://github.com/ultralytics/yolov5/issues/new/choose) and provide

87 | a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) to help us better

88 | understand and diagnose your problem.

89 |

90 | ## License

91 |

92 | By contributing, you agree that your contributions will be licensed under

93 | the [AGPL-3.0 license](https://choosealicense.com/licenses/agpl-3.0/)

94 |

--------------------------------------------------------------------------------

/yolov3/data/Argoverse.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Argoverse-HD dataset (ring-front-center camera) http://www.cs.cmu.edu/~mengtial/proj/streaming/ by Argo AI

3 | # Example usage: python train.py --data Argoverse.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── Argoverse ← downloads here (31.3 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/Argoverse # dataset root dir

12 | train: Argoverse-1.1/images/train/ # train images (relative to 'path') 39384 images

13 | val: Argoverse-1.1/images/val/ # val images (relative to 'path') 15062 images

14 | test: Argoverse-1.1/images/test/ # test images (optional) https://eval.ai/web/challenges/challenge-page/800/overview

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: bus

23 | 5: truck

24 | 6: traffic_light

25 | 7: stop_sign

26 |

27 |

28 | # Download script/URL (optional) ---------------------------------------------------------------------------------------

29 | download: |

30 | import json

31 |

32 | from tqdm import tqdm

33 | from utils.general import download, Path

34 |

35 |

36 | def argoverse2yolo(set):

37 | labels = {}

38 | a = json.load(open(set, "rb"))

39 | for annot in tqdm(a['annotations'], desc=f"Converting {set} to YOLOv5 format..."):

40 | img_id = annot['image_id']

41 | img_name = a['images'][img_id]['name']

42 | img_label_name = f'{img_name[:-3]}txt'

43 |

44 | cls = annot['category_id'] # instance class id

45 | x_center, y_center, width, height = annot['bbox']

46 | x_center = (x_center + width / 2) / 1920.0 # offset and scale

47 | y_center = (y_center + height / 2) / 1200.0 # offset and scale

48 | width /= 1920.0 # scale

49 | height /= 1200.0 # scale

50 |

51 | img_dir = set.parents[2] / 'Argoverse-1.1' / 'labels' / a['seq_dirs'][a['images'][annot['image_id']]['sid']]

52 | if not img_dir.exists():

53 | img_dir.mkdir(parents=True, exist_ok=True)

54 |

55 | k = str(img_dir / img_label_name)

56 | if k not in labels:

57 | labels[k] = []

58 | labels[k].append(f"{cls} {x_center} {y_center} {width} {height}\n")

59 |

60 | for k in labels:

61 | with open(k, "w") as f:

62 | f.writelines(labels[k])

63 |

64 |

65 | # Download

66 | dir = Path(yaml['path']) # dataset root dir

67 | urls = ['https://argoverse-hd.s3.us-east-2.amazonaws.com/Argoverse-HD-Full.zip']

68 | download(urls, dir=dir, delete=False)

69 |

70 | # Convert

71 | annotations_dir = 'Argoverse-HD/annotations/'

72 | (dir / 'Argoverse-1.1' / 'tracking').rename(dir / 'Argoverse-1.1' / 'images') # rename 'tracking' to 'images'

73 | for d in "train.json", "val.json":

74 | argoverse2yolo(dir / annotations_dir / d) # convert Argoverse annotations to YOLO labels

75 |

--------------------------------------------------------------------------------

/yolov3/data/GlobalWheat2020.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Global Wheat 2020 dataset http://www.global-wheat.com/ by University of Saskatchewan

3 | # Example usage: python train.py --data GlobalWheat2020.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── GlobalWheat2020 ← downloads here (7.0 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/GlobalWheat2020 # dataset root dir

12 | train: # train images (relative to 'path') 3422 images

13 | - images/arvalis_1

14 | - images/arvalis_2

15 | - images/arvalis_3

16 | - images/ethz_1

17 | - images/rres_1

18 | - images/inrae_1

19 | - images/usask_1

20 | val: # val images (relative to 'path') 748 images (WARNING: train set contains ethz_1)

21 | - images/ethz_1

22 | test: # test images (optional) 1276 images

23 | - images/utokyo_1

24 | - images/utokyo_2

25 | - images/nau_1

26 | - images/uq_1

27 |

28 | # Classes

29 | names:

30 | 0: wheat_head

31 |

32 |

33 | # Download script/URL (optional) ---------------------------------------------------------------------------------------

34 | download: |

35 | from utils.general import download, Path

36 |

37 |

38 | # Download

39 | dir = Path(yaml['path']) # dataset root dir

40 | urls = ['https://zenodo.org/record/4298502/files/global-wheat-codalab-official.zip',

41 | 'https://github.com/ultralytics/yolov5/releases/download/v1.0/GlobalWheat2020_labels.zip']

42 | download(urls, dir=dir)

43 |

44 | # Make Directories

45 | for p in 'annotations', 'images', 'labels':

46 | (dir / p).mkdir(parents=True, exist_ok=True)

47 |

48 | # Move

49 | for p in 'arvalis_1', 'arvalis_2', 'arvalis_3', 'ethz_1', 'rres_1', 'inrae_1', 'usask_1', \

50 | 'utokyo_1', 'utokyo_2', 'nau_1', 'uq_1':

51 | (dir / p).rename(dir / 'images' / p) # move to /images

52 | f = (dir / p).with_suffix('.json') # json file

53 | if f.exists():

54 | f.rename((dir / 'annotations' / p).with_suffix('.json')) # move to /annotations

55 |

--------------------------------------------------------------------------------

/yolov3/data/SKU-110K.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # SKU-110K retail items dataset https://github.com/eg4000/SKU110K_CVPR19 by Trax Retail

3 | # Example usage: python train.py --data SKU-110K.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── SKU-110K ← downloads here (13.6 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/SKU-110K # dataset root dir

12 | train: train.txt # train images (relative to 'path') 8219 images

13 | val: val.txt # val images (relative to 'path') 588 images

14 | test: test.txt # test images (optional) 2936 images

15 |

16 | # Classes

17 | names:

18 | 0: object

19 |

20 |

21 | # Download script/URL (optional) ---------------------------------------------------------------------------------------

22 | download: |

23 | import shutil

24 | from tqdm import tqdm

25 | from utils.general import np, pd, Path, download, xyxy2xywh

26 |

27 |

28 | # Download

29 | dir = Path(yaml['path']) # dataset root dir

30 | parent = Path(dir.parent) # download dir

31 | urls = ['http://trax-geometry.s3.amazonaws.com/cvpr_challenge/SKU110K_fixed.tar.gz']

32 | download(urls, dir=parent, delete=False)

33 |

34 | # Rename directories

35 | if dir.exists():

36 | shutil.rmtree(dir)

37 | (parent / 'SKU110K_fixed').rename(dir) # rename dir

38 | (dir / 'labels').mkdir(parents=True, exist_ok=True) # create labels dir

39 |

40 | # Convert labels

41 | names = 'image', 'x1', 'y1', 'x2', 'y2', 'class', 'image_width', 'image_height' # column names

42 | for d in 'annotations_train.csv', 'annotations_val.csv', 'annotations_test.csv':

43 | x = pd.read_csv(dir / 'annotations' / d, names=names).values # annotations

44 | images, unique_images = x[:, 0], np.unique(x[:, 0])

45 | with open((dir / d).with_suffix('.txt').__str__().replace('annotations_', ''), 'w') as f:

46 | f.writelines(f'./images/{s}\n' for s in unique_images)

47 | for im in tqdm(unique_images, desc=f'Converting {dir / d}'):

48 | cls = 0 # single-class dataset

49 | with open((dir / 'labels' / im).with_suffix('.txt'), 'a') as f:

50 | for r in x[images == im]:

51 | w, h = r[6], r[7] # image width, height

52 | xywh = xyxy2xywh(np.array([[r[1] / w, r[2] / h, r[3] / w, r[4] / h]]))[0] # instance

53 | f.write(f"{cls} {xywh[0]:.5f} {xywh[1]:.5f} {xywh[2]:.5f} {xywh[3]:.5f}\n") # write label

54 |

--------------------------------------------------------------------------------

/yolov3/data/VisDrone.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # VisDrone2019-DET dataset https://github.com/VisDrone/VisDrone-Dataset by Tianjin University

3 | # Example usage: python train.py --data VisDrone.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── VisDrone ← downloads here (2.3 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/VisDrone # dataset root dir

12 | train: VisDrone2019-DET-train/images # train images (relative to 'path') 6471 images

13 | val: VisDrone2019-DET-val/images # val images (relative to 'path') 548 images

14 | test: VisDrone2019-DET-test-dev/images # test images (optional) 1610 images

15 |

16 | # Classes

17 | names:

18 | 0: pedestrian

19 | 1: people

20 | 2: bicycle

21 | 3: car

22 | 4: van

23 | 5: truck

24 | 6: tricycle

25 | 7: awning-tricycle

26 | 8: bus

27 | 9: motor

28 |

29 |

30 | # Download script/URL (optional) ---------------------------------------------------------------------------------------

31 | download: |

32 | from utils.general import download, os, Path

33 |

34 | def visdrone2yolo(dir):

35 | from PIL import Image

36 | from tqdm import tqdm

37 |

38 | def convert_box(size, box):

39 | # Convert VisDrone box to YOLO xywh box

40 | dw = 1. / size[0]

41 | dh = 1. / size[1]

42 | return (box[0] + box[2] / 2) * dw, (box[1] + box[3] / 2) * dh, box[2] * dw, box[3] * dh

43 |

44 | (dir / 'labels').mkdir(parents=True, exist_ok=True) # make labels directory

45 | pbar = tqdm((dir / 'annotations').glob('*.txt'), desc=f'Converting {dir}')

46 | for f in pbar:

47 | img_size = Image.open((dir / 'images' / f.name).with_suffix('.jpg')).size

48 | lines = []

49 | with open(f, 'r') as file: # read annotation.txt

50 | for row in [x.split(',') for x in file.read().strip().splitlines()]:

51 | if row[4] == '0': # VisDrone 'ignored regions' class 0

52 | continue

53 | cls = int(row[5]) - 1

54 | box = convert_box(img_size, tuple(map(int, row[:4])))

55 | lines.append(f"{cls} {' '.join(f'{x:.6f}' for x in box)}\n")

56 | with open(str(f).replace(os.sep + 'annotations' + os.sep, os.sep + 'labels' + os.sep), 'w') as fl:

57 | fl.writelines(lines) # write label.txt

58 |

59 |

60 | # Download

61 | dir = Path(yaml['path']) # dataset root dir

62 | urls = ['https://github.com/ultralytics/yolov5/releases/download/v1.0/VisDrone2019-DET-train.zip',

63 | 'https://github.com/ultralytics/yolov5/releases/download/v1.0/VisDrone2019-DET-val.zip',

64 | 'https://github.com/ultralytics/yolov5/releases/download/v1.0/VisDrone2019-DET-test-dev.zip',

65 | 'https://github.com/ultralytics/yolov5/releases/download/v1.0/VisDrone2019-DET-test-challenge.zip']

66 | download(urls, dir=dir, curl=True, threads=4)

67 |

68 | # Convert

69 | for d in 'VisDrone2019-DET-train', 'VisDrone2019-DET-val', 'VisDrone2019-DET-test-dev':

70 | visdrone2yolo(dir / d) # convert VisDrone annotations to YOLO labels

71 |

--------------------------------------------------------------------------------

/yolov3/data/coco-2017-5000.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO 2017 dataset http://cocodataset.org by Microsoft

3 | # Example usage: python train.py --data coco-2017-5000.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco ← downloads here (20.1 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../../../datasets/coco2017 # dataset root dir

12 | train: train2017-5000.txt # train2017.txt # train images (relative to 'path') 118287 images

13 | val: val2017.txt # val images (relative to 'path') 5000 images

14 | test: val2017.txt # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | #download: |

102 | # from utils.general import download, Path

103 | #

104 | #

105 | # # Download labels

106 | # segments = False # segment or box labels

107 | # dir = Path(yaml['path']) # dataset root dir

108 | # url = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/'

109 | # urls = [url + ('coco2017labels-segments.zip' if segments else 'coco2017labels.zip')] # labels

110 | # download(urls, dir=dir.parent)

111 | #

112 | # # Download data

113 | # urls = ['http://images.cocodataset.org/zips/train2017.zip', # 19G, 118k images

114 | # 'http://images.cocodataset.org/zips/val2017.zip', # 1G, 5k images

115 | # 'http://images.cocodataset.org/zips/test2017.zip'] # 7G, 41k images (optional)

116 | # download(urls, dir=dir / 'images', threads=3)

117 |

--------------------------------------------------------------------------------

/yolov3/data/coco-2017-small.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO 2017 dataset http://cocodataset.org by Microsoft

3 | # Example usage: python train.py --data coco-2017-small.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco ← downloads here (20.1 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../../../datasets/coco2017 # dataset root dir

12 | train: train2017-1000.txt # train2017.txt # train images (relative to 'path') 118287 images

13 | val: val2017-small.txt # val images (relative to 'path') 5000 images

14 | test: test-dev2017.txt # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | #download: |

102 | # from utils.general import download, Path

103 | #

104 | #

105 | # # Download labels

106 | # segments = False # segment or box labels

107 | # dir = Path(yaml['path']) # dataset root dir

108 | # url = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/'

109 | # urls = [url + ('coco2017labels-segments.zip' if segments else 'coco2017labels.zip')] # labels

110 | # download(urls, dir=dir.parent)

111 | #

112 | # # Download data

113 | # urls = ['http://images.cocodataset.org/zips/train2017.zip', # 19G, 118k images

114 | # 'http://images.cocodataset.org/zips/val2017.zip', # 1G, 5k images

115 | # 'http://images.cocodataset.org/zips/test2017.zip'] # 7G, 41k images (optional)

116 | # download(urls, dir=dir / 'images', threads=3)

117 |

--------------------------------------------------------------------------------

/yolov3/data/coco-2017.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO 2017 dataset http://cocodataset.org by Microsoft

3 | # Example usage: python train.py --data coco-2017.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco ← downloads here (20.1 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../../../datasets/coco2017 # dataset root dir

12 | train: train2017-1000.txt # train2017.txt # train images (relative to 'path') 118287 images

13 | val: val2017.txt # val images (relative to 'path') 5000 images

14 | test: test-dev2017.txt # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | #download: |

102 | # from utils.general import download, Path

103 | #

104 | #

105 | # # Download labels

106 | # segments = False # segment or box labels

107 | # dir = Path(yaml['path']) # dataset root dir

108 | # url = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/'

109 | # urls = [url + ('coco2017labels-segments.zip' if segments else 'coco2017labels.zip')] # labels

110 | # download(urls, dir=dir.parent)

111 | #

112 | # # Download data

113 | # urls = ['http://images.cocodataset.org/zips/train2017.zip', # 19G, 118k images

114 | # 'http://images.cocodataset.org/zips/val2017.zip', # 1G, 5k images

115 | # 'http://images.cocodataset.org/zips/test2017.zip'] # 7G, 41k images (optional)

116 | # download(urls, dir=dir / 'images', threads=3)

117 |

--------------------------------------------------------------------------------

/yolov3/data/coco-seg.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO128-seg dataset https://www.kaggle.com/ultralytics/coco128 (first 128 images from COCO train2017) by Ultralytics

3 | # Example usage: python train.py --data coco-seg.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco128-seg ← downloads here (7 MB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../../../datasets/coco2017/coco2017labels-segments # dataset root dir

12 | train: train2017.txt # train images (relative to 'path')

13 | val: adaptiveisp_val2017.txt # val images (relative to 'path')

14 | test: # test images (optional)

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | #download: https://ultralytics.com/assets/coco128-seg.zip

102 |

--------------------------------------------------------------------------------

/yolov3/data/coco.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO 2017 dataset http://cocodataset.org by Microsoft

3 | # Example usage: python train.py --data coco.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco ← downloads here (20.1 GB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../../../datasets/coco2017 # dataset root dir

12 | train: train2017.txt # train images (relative to 'path') 118287 images

13 | val: val2017.txt # val images (relative to 'path') 5000 images

14 | test: test-dev2017.txt # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | # download: |

102 | # from utils.general import download, Path

103 |

104 |

105 | # # Download labels

106 | # segments = False # segment or box labels

107 | # dir = Path(yaml['path']) # dataset root dir

108 | # url = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/'

109 | # urls = [url + ('coco2017labels-segments.zip' if segments else 'coco2017labels.zip')] # labels

110 | # download(urls, dir=dir.parent)

111 |

112 | # # Download data

113 | # urls = ['http://images.cocodataset.org/zips/train2017.zip', # 19G, 118k images

114 | # 'http://images.cocodataset.org/zips/val2017.zip', # 1G, 5k images

115 | # 'http://images.cocodataset.org/zips/test2017.zip'] # 7G, 41k images (optional)

116 | # download(urls, dir=dir / 'images', threads=3)

117 |

--------------------------------------------------------------------------------

/yolov3/data/coco128-seg.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO128-seg dataset https://www.kaggle.com/ultralytics/coco128 (first 128 images from COCO train2017) by Ultralytics

3 | # Example usage: python train.py --data coco128-seg.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco128-seg ← downloads here (7 MB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/coco128-seg # dataset root dir

12 | train: images/train2017 # train images (relative to 'path') 128 images

13 | val: images/train2017 # val images (relative to 'path') 128 images

14 | test: # test images (optional)

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | download: https://ultralytics.com/assets/coco128-seg.zip

102 |

--------------------------------------------------------------------------------

/yolov3/data/coco128.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # COCO128 dataset https://www.kaggle.com/ultralytics/coco128 (first 128 images from COCO train2017) by Ultralytics

3 | # Example usage: python train.py --data coco128.yaml

4 | # parent

5 | # ├── yolov5

6 | # └── datasets

7 | # └── coco128 ← downloads here (7 MB)

8 |

9 |

10 | # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

11 | path: ../datasets/coco128 # dataset root dir

12 | train: images/train2017 # train images (relative to 'path') 128 images

13 | val: images/train2017 # val images (relative to 'path') 128 images

14 | test: # test images (optional)

15 |

16 | # Classes

17 | names:

18 | 0: person

19 | 1: bicycle

20 | 2: car

21 | 3: motorcycle

22 | 4: airplane

23 | 5: bus

24 | 6: train

25 | 7: truck

26 | 8: boat

27 | 9: traffic light

28 | 10: fire hydrant

29 | 11: stop sign

30 | 12: parking meter

31 | 13: bench

32 | 14: bird

33 | 15: cat

34 | 16: dog

35 | 17: horse

36 | 18: sheep

37 | 19: cow

38 | 20: elephant

39 | 21: bear

40 | 22: zebra

41 | 23: giraffe

42 | 24: backpack

43 | 25: umbrella

44 | 26: handbag

45 | 27: tie

46 | 28: suitcase

47 | 29: frisbee

48 | 30: skis

49 | 31: snowboard

50 | 32: sports ball

51 | 33: kite

52 | 34: baseball bat

53 | 35: baseball glove

54 | 36: skateboard

55 | 37: surfboard

56 | 38: tennis racket

57 | 39: bottle

58 | 40: wine glass

59 | 41: cup

60 | 42: fork

61 | 43: knife

62 | 44: spoon

63 | 45: bowl

64 | 46: banana

65 | 47: apple

66 | 48: sandwich

67 | 49: orange

68 | 50: broccoli

69 | 51: carrot

70 | 52: hot dog

71 | 53: pizza

72 | 54: donut

73 | 55: cake

74 | 56: chair

75 | 57: couch

76 | 58: potted plant

77 | 59: bed

78 | 60: dining table

79 | 61: toilet

80 | 62: tv

81 | 63: laptop

82 | 64: mouse

83 | 65: remote

84 | 66: keyboard

85 | 67: cell phone

86 | 68: microwave

87 | 69: oven

88 | 70: toaster

89 | 71: sink

90 | 72: refrigerator

91 | 73: book

92 | 74: clock

93 | 75: vase

94 | 76: scissors

95 | 77: teddy bear

96 | 78: hair drier

97 | 79: toothbrush

98 |

99 |

100 | # Download script/URL (optional)

101 | download: https://ultralytics.com/assets/coco128.zip

102 |

--------------------------------------------------------------------------------

/yolov3/data/hyps/hyp.Objects365.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Hyperparameters for Objects365 training

3 | # python train.py --weights yolov5m.pt --data Objects365.yaml --evolve

4 | # See Hyperparameter Evolution tutorial for details https://github.com/ultralytics/yolov5#tutorials

5 |

6 | lr0: 0.00258

7 | lrf: 0.17

8 | momentum: 0.779

9 | weight_decay: 0.00058

10 | warmup_epochs: 1.33

11 | warmup_momentum: 0.86

12 | warmup_bias_lr: 0.0711

13 | box: 0.0539

14 | cls: 0.299

15 | cls_pw: 0.825

16 | obj: 0.632

17 | obj_pw: 1.0

18 | iou_t: 0.2

19 | anchor_t: 3.44

20 | anchors: 3.2

21 | fl_gamma: 0.0

22 | hsv_h: 0.0188

23 | hsv_s: 0.704

24 | hsv_v: 0.36

25 | degrees: 0.0

26 | translate: 0.0902

27 | scale: 0.491

28 | shear: 0.0

29 | perspective: 0.0

30 | flipud: 0.0

31 | fliplr: 0.5

32 | mosaic: 1.0

33 | mixup: 0.0

34 | copy_paste: 0.0

35 |

--------------------------------------------------------------------------------

/yolov3/data/hyps/hyp.VOC.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Hyperparameters for VOC training

3 | # python train.py --batch 128 --weights yolov5m6.pt --data VOC.yaml --epochs 50 --img 512 --hyp hyp.scratch-med.yaml --evolve

4 | # See Hyperparameter Evolution tutorial for details https://github.com/ultralytics/yolov5#tutorials

5 |

6 | # YOLOv3 Hyperparameter Evolution Results

7 | # Best generation: 467

8 | # Last generation: 996

9 | # metrics/precision, metrics/recall, metrics/mAP_0.5, metrics/mAP_0.5:0.95, val/box_loss, val/obj_loss, val/cls_loss

10 | # 0.87729, 0.85125, 0.91286, 0.72664, 0.0076739, 0.0042529, 0.0013865

11 |

12 | lr0: 0.00334

13 | lrf: 0.15135

14 | momentum: 0.74832

15 | weight_decay: 0.00025

16 | warmup_epochs: 3.3835

17 | warmup_momentum: 0.59462

18 | warmup_bias_lr: 0.18657

19 | box: 0.02

20 | cls: 0.21638

21 | cls_pw: 0.5

22 | obj: 0.51728

23 | obj_pw: 0.67198

24 | iou_t: 0.2

25 | anchor_t: 3.3744

26 | fl_gamma: 0.0

27 | hsv_h: 0.01041

28 | hsv_s: 0.54703

29 | hsv_v: 0.27739

30 | degrees: 0.0

31 | translate: 0.04591

32 | scale: 0.75544

33 | shear: 0.0

34 | perspective: 0.0

35 | flipud: 0.0

36 | fliplr: 0.5

37 | mosaic: 0.85834

38 | mixup: 0.04266

39 | copy_paste: 0.0

40 | anchors: 3.412

41 |

--------------------------------------------------------------------------------

/yolov3/data/hyps/hyp.no-augmentation.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Hyperparameters when using the Albumentations framework

3 | # python train.py --hyp hyp.no-augmentation.yaml

4 | # See https://github.com/ultralytics/yolov5/pull/3882 for YOLOv3 + Albumentations Usage examples

5 |

6 | lr0: 0.01 # initial learning rate (SGD=1E-2, Adam=1E-3)

7 | lrf: 0.1 # final OneCycleLR learning rate (lr0 * lrf)

8 | momentum: 0.937 # SGD momentum/Adam beta1

9 | weight_decay: 0.0005 # optimizer weight decay 5e-4

10 | warmup_epochs: 3.0 # warmup epochs (fractions ok)

11 | warmup_momentum: 0.8 # warmup initial momentum

12 | warmup_bias_lr: 0.1 # warmup initial bias lr

13 | box: 0.05 # box loss gain

14 | cls: 0.3 # cls loss gain

15 | cls_pw: 1.0 # cls BCELoss positive_weight

16 | obj: 0.7 # obj loss gain (scale with pixels)

17 | obj_pw: 1.0 # obj BCELoss positive_weight

18 | iou_t: 0.20 # IoU training threshold

19 | anchor_t: 4.0 # anchor-multiple threshold

20 | # anchors: 3 # anchors per output layer (0 to ignore)

21 | # these parameters are all zero since we want to use the Albumentations framework

22 | fl_gamma: 0.0 # focal loss gamma (efficientDet default gamma=1.5)

23 | hsv_h: 0 # image HSV-Hue augmentation (fraction)

24 | hsv_s: 0 # image HSV-Saturation augmentation (fraction)

25 | hsv_v: 0 # image HSV-Value augmentation (fraction)

26 | degrees: 0.0 # image rotation (+/- deg)

27 | translate: 0 # image translation (+/- fraction)

28 | scale: 0 # image scale (+/- gain)

29 | shear: 0 # image shear (+/- deg)

30 | perspective: 0.0 # image perspective (+/- fraction), range 0-0.001

31 | flipud: 0.0 # image flip up-down (probability)

32 | fliplr: 0.0 # image flip left-right (probability)

33 | mosaic: 0.0 # image mosaic (probability)

34 | mixup: 0.0 # image mixup (probability)

35 | copy_paste: 0.0 # segment copy-paste (probability)

36 |
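
The file above zeroes every built-in augmentation so that an external framework can take over. A quick way to confirm that a hyp file really leaves nothing enabled is sketched below. The helper names (`parse_flat_hyp`, `enabled_augmentations`) and the choice of which keys count as augmentations are assumptions made for this example, and the tiny parser only handles flat `key: value  # comment` lines like the ones in these files.

```python
# Illustrative check, not part of the repository: list augmentation
# hyperparameters that are still non-zero in a flat hyp file.

# Assumed set of augmentation keys, taken from the keys in the file above.
AUGMENTATION_KEYS = (
    "hsv_h", "hsv_s", "hsv_v", "degrees", "translate", "scale", "shear",
    "perspective", "flipud", "fliplr", "mosaic", "mixup", "copy_paste",
)


def parse_flat_hyp(text):
    """Parse flat `key: value  # comment` lines into a dict of floats."""
    hyp = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line:
            continue  # skip blank and comment-only lines
        key, value = line.split(":", 1)
        hyp[key.strip()] = float(value)
    return hyp


def enabled_augmentations(hyp):
    """Return the augmentation keys whose value is non-zero."""
    return [k for k in AUGMENTATION_KEYS if hyp.get(k, 0.0) != 0.0]


# A few lines copied from hyp.no-augmentation.yaml.
SAMPLE = """
# anchors: 3 # anchors per output layer (0 to ignore)
hsv_h: 0 # image HSV-Hue augmentation (fraction)
scale: 0 # image scale (+/- gain)
fliplr: 0.0 # image flip left-right (probability)
mosaic: 0.0 # image mosaic (probability)
"""

if __name__ == "__main__":
    print(enabled_augmentations(parse_flat_hyp(SAMPLE)))
```

For real files a YAML library would be the more robust choice; the hand-rolled parser here only keeps the sketch free of third-party dependencies.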

--------------------------------------------------------------------------------

/yolov3/data/hyps/hyp.scratch-high.yaml:

--------------------------------------------------------------------------------

1 | # YOLOv3 🚀 by Ultralytics, AGPL-3.0 license

2 | # Hyperparameters for high-augmentation COCO training from scratch

3 | # python train.py --batch 32 --cfg yolov5m6.yaml --weights '' --data coco.yaml --img 1280 --epochs 300

4 | # See tutorials for hyperparameter evolution https://github.com/ultralytics/yolov5#tutorials

5 |

6 | lr0: 0.01 # initial learning rate (SGD=1E-2, Adam=1E-3)

7 | lrf: 0.1 # final OneCycleLR learning rate (lr0 * lrf)

8 | momentum: 0.937 # SGD momentum/Adam beta1

9 | weight_decay: 0.0005 # optimizer weight decay 5e-4

10 | warmup_epochs: 3.0 # warmup epochs (fractions ok)

11 | warmup_momentum: 0.8 # warmup initial momentum

12 | warmup_bias_lr: 0.1 # warmup initial bias lr

13 | box: 0.05 # box loss gain

14 | cls: 0.3 # cls loss gain

15 | cls_pw: 1.0 # cls BCELoss positive_weight

16 | obj: 0.7 # obj loss gain (scale with pixels)

17 | obj_pw: 1.0 # obj BCELoss positive_weight

18 | iou_t: 0.20 # IoU training threshold

19 | anchor_t: 4.0 # anchor-multiple threshold

20 | # anchors: 3 # anchors per output layer (0 to ignore)

21 | fl_gamma: 0.0 # focal loss gamma (efficientDet default gamma=1.5)

22 | hsv_h: 0.015 # image HSV-Hue augmentation (fraction)

23 | hsv_s: 0.7 # image HSV-Saturation augmentation (fraction)

24 | hsv_v: 0.4 # image HSV-Value augmentation (fraction)

25 | degrees: 0.0 # image rotation (+/- deg)

26 | translate: 0.1 # image translation (+/- fraction)

27 | scale: 0.9 # image scale (+/- gain)

28 | shear: 0.0 # image shear (+/- deg)