├── util

├── .aspell

├── gen_pdf

├── gen_notebooks

├── clean_all

├── push_pypi

└── spell_check

├── doc

├── flashNorm.pdf

├── matShrink.pdf

├── slimAttn.pdf

├── removeWeights.pdf

├── precomp1stLayer.pdf

├── fig

│ ├── flashNorm_fig1.pdf

│ ├── flashNorm_fig2.pdf

│ ├── flashNorm_fig3.pdf

│ ├── flashNorm_fig4.pdf

│ ├── flashNorm_fig5.pdf

│ ├── flashNorm_fig6.pdf

│ ├── flashNorm_fig7.pdf

│ ├── flashNorm_fig8.pdf

│ ├── flashNorm_figA.pdf

│ ├── flashNorm_figB.pdf

│ ├── matShrink_fig1.pdf

│ ├── matShrink_fig2.pdf

│ ├── matShrink_fig3.pdf

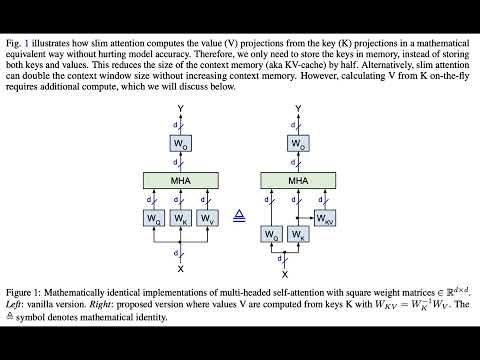

│ ├── slimAttn_fig1.pdf

│ ├── slimAttn_fig2.pdf

│ ├── slimAttn_fig3.pdf

│ ├── slimAttn_fig4.pdf

│ ├── slimAttn_fig5.pdf

│ ├── slimAttn_fig6.pdf

│ ├── slimAttn_fig7.pdf

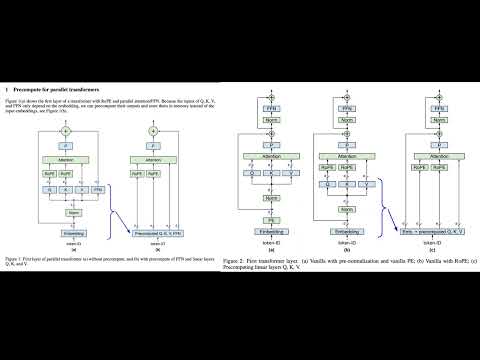

│ ├── precomp1stLayer_fig1.pdf

│ ├── precomp1stLayer_fig2.pdf

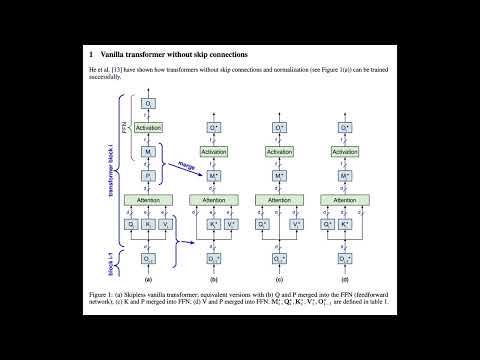

│ ├── removeWeights_fig1.pdf

│ ├── removeWeights_fig2.pdf

│ ├── removeWeights_fig3.pdf

│ ├── removeWeights_fig4.pdf

│ └── slimAttn_fig1.svg

├── slimAttn.md

├── CONTRIBUTING.md

├── README.md

└── flashNorm.md

├── tex

├── clean

├── run

├── neurips_2025_mods.sty

├── submit

├── README.md

├── arxiv.sty

├── precomp1stLayer.tex

├── neurips_2025.sty

├── removeWeights.tex

├── matShrink.tex

├── flashNorm.tex

└── matShrink_Sid.tex

├── requirements.txt

├── LICENSE

├── pyproject.toml

├── notebooks

├── README.md

├── update_packages.ipynb

├── flashNorm_example.ipynb

├── removeWeights_paper.ipynb

├── slimAttn_paper.ipynb

└── flashNorm_paper.ipynb

├── flashNorm_example.py

├── flashNorm_test.py

├── slimAttn_paper.py

├── README.md

└── transformer_tricks.py

/util/.aspell:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/util/.aspell

--------------------------------------------------------------------------------

/doc/flashNorm.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/flashNorm.pdf

--------------------------------------------------------------------------------

/doc/matShrink.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/matShrink.pdf

--------------------------------------------------------------------------------

/doc/slimAttn.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/slimAttn.pdf

--------------------------------------------------------------------------------

/doc/removeWeights.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/removeWeights.pdf

--------------------------------------------------------------------------------

/doc/precomp1stLayer.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/precomp1stLayer.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig1.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig1.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig2.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig3.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig3.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig4.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig4.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig5.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig5.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig6.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig6.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig7.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig7.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_fig8.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_fig8.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_figA.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_figA.pdf

--------------------------------------------------------------------------------

/doc/fig/flashNorm_figB.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/flashNorm_figB.pdf

--------------------------------------------------------------------------------

/doc/fig/matShrink_fig1.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/matShrink_fig1.pdf

--------------------------------------------------------------------------------

/doc/fig/matShrink_fig2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/matShrink_fig2.pdf

--------------------------------------------------------------------------------

/doc/fig/matShrink_fig3.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/matShrink_fig3.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig1.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig1.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig2.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig3.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig3.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig4.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig4.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig5.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig5.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig6.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig6.pdf

--------------------------------------------------------------------------------

/doc/fig/slimAttn_fig7.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/slimAttn_fig7.pdf

--------------------------------------------------------------------------------

/doc/fig/precomp1stLayer_fig1.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/precomp1stLayer_fig1.pdf

--------------------------------------------------------------------------------

/doc/fig/precomp1stLayer_fig2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/precomp1stLayer_fig2.pdf

--------------------------------------------------------------------------------

/doc/fig/removeWeights_fig1.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/removeWeights_fig1.pdf

--------------------------------------------------------------------------------

/doc/fig/removeWeights_fig2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/removeWeights_fig2.pdf

--------------------------------------------------------------------------------

/doc/fig/removeWeights_fig3.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/removeWeights_fig3.pdf

--------------------------------------------------------------------------------

/doc/fig/removeWeights_fig4.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/HEAD/doc/fig/removeWeights_fig4.pdf

--------------------------------------------------------------------------------

/tex/clean:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # script to clean up this directory

4 | # usage: ./clean

5 |

6 | \rm -f *.bbl *.aux *.blg *.log *.out

7 |

--------------------------------------------------------------------------------

/util/gen_pdf:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # generate PDF from tex for all files

4 | # usage: util/gen_pdf (run from the root dir of this repo)

5 |

6 | cd tex

7 | for fname in *.tex; do

8 | ./run "$fname"

9 | done

10 |

11 | ./clean

12 | cd -

13 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | transformer-tricks>=0.3.4

2 | jupytext>=1.16.4

3 | autopep8>=2.3.1

4 | twine>=6.1.0

5 | build>=1.2.2

6 |

7 | # pip list # see all versions

8 | #

9 | # Phi-3 needs flash-attn, but this requires CUDA

10 | # flash-attn==2.5.8

11 |

--------------------------------------------------------------------------------

/util/gen_notebooks:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # generate Jupyter notebooks from python

4 | # usage: util/gen_notebooks (run from the root dir of this repo)

5 |

6 | for fname in slimAttn_paper flashNorm_example; do

7 | jupytext "$fname".py -o notebooks/"$fname".ipynb

8 | done

9 |

--------------------------------------------------------------------------------

/util/clean_all:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # cleans up the entire repo by removing generated files

4 | # usage: util/clean_all (run from the root dir of this repo)

5 |

6 | # clean up ./util

7 | cd util

8 | rm -rf pypi

9 | cd -

10 |

11 | # clean up ./tex

12 | cd tex

13 | ./clean

14 | \rm -rf *_submit *_submit.tar.gz

15 | cd -

16 |

--------------------------------------------------------------------------------

/doc/slimAttn.md:

--------------------------------------------------------------------------------

1 | coming soon

2 |

3 | For now, see the [[notebook]](https://colab.research.google.com/github/OpenMachine-ai/transformer-tricks/blob/main/notebooks/slimAttn_paper.ipynb)

4 |

5 | Feature requests for frameworks to implement slim attention:

6 | - [llama.cpp and whisper.cpp](https://github.com/ggml-org/llama.cpp/issues/12359)

7 | - [vLLM](https://github.com/vllm-project/vllm/issues/14937)

8 | - [SGLang](https://github.com/sgl-project/sglang/issues/4496)

9 |

--------------------------------------------------------------------------------

/util/push_pypi:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Make sure to increment the version number in pyproject.toml and

4 | # requirements.txt before running this script! See below link for details:

5 | # https://packaging.python.org/en/latest/tutorials/packaging-projects/

6 | #

7 | # Setup: install build and twine via 'pip3 install build twine'

8 | # To upgrade: pip3 install --upgrade build twine pkginfo packaging

9 | # Usage: ./push_pypi

10 |

11 | # create folder 'pypi' and copy all relevant files

12 | rm -rf pypi

13 | mkdir pypi

14 | cp ../LICENSE ../README.md ../pyproject.toml ../transformer_tricks.py pypi

15 |

16 | # build and upload

17 | cd pypi

18 | python3 -m build

19 | python3 -m twine upload dist/*

20 |

21 | #rm -rf pypi

22 |

--------------------------------------------------------------------------------

/util/spell_check:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # spell check all tex and markdown files

4 | # usage: util/spell_check (run from the root dir of this repo)

5 |

6 | # notes:

7 | # - option -M is for markdown; -t is for tex

8 | # - file util/.aspell is our personal dictionary

9 | # - however, aspell seems to have a bug, the personal dictionary file

10 | # must be located in the same dir from which you call aspell, that's

11 | # why we first do 'cd util'

12 |

13 | cd util

14 |

15 | # all markdown files

16 | for file in ../*.md ../*/*.md; do

17 | aspell -d en_US -l en_US --personal=./.aspell -M -c "$file"

18 | done

19 |

20 | # all tex files

21 | for file in ../tex/*.tex; do

22 | aspell -d en_US -l en_US --personal=./.aspell -t -c "$file"

23 | done

24 |

25 | cd -

26 |

--------------------------------------------------------------------------------

/doc/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | We pay cash for your high-impact contributions, please contact us for details.

2 |

3 | Before submitting a PR, please do the following:

4 | - Make sure to minimize the number of files and lines of code, we strive for simple and readable code.

5 | - Format your code by typing `autopep8 *.py`. It's using the config in `pyproject.toml`

6 | - Generate notebooks from python by typing `util/gen_notebooks`

7 | - Whenever you change `transformer_tricks.py`, we will publish a new version of the package as follows:

8 | - First, update the version number in `pyproject.toml` and in `requirements.txt`

9 | - Then, push the package to PyPi by typing `./push_pypi.sh`

10 | - Links for python package: [pypi](https://pypi.org/project/transformer-tricks/), [stats](https://www.pepy.tech/projects/transformer-tricks)

11 |

--------------------------------------------------------------------------------

/doc/README.md:

--------------------------------------------------------------------------------

1 | Click on the links below for better PDF viewing:

2 | - [flashNorm.pdf](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/flashNorm.pdf)

3 | - [matShrink.pdf](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/matShrink.pdf)

4 | - [precomp1stLayer.pdf](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/precomp1stLayer.pdf)

5 | - [removeWeights.pdf](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/removeWeights.pdf)

6 | - [slimAttn.pdf](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/slimAttn.pdf)

7 |

--------------------------------------------------------------------------------

/tex/run:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # script to convert foo.tex to PDF

4 | # usage: ./run foo.tex or ./run foo

5 | # above generates foo.pdf and other files

6 |

7 | # remove filename extension from arg

8 | file="${1%.*}"

9 |

10 | ./clean

11 | pdflatex "$file"

12 | bibtex "$file"

13 | pdflatex "$file"

14 | pdflatex "$file"

15 | pdflatex "$file" # we sometimes need to run pdflatex 3 times

16 |

17 | # in case you want to diff your changes visually

18 | diff-pdf --view "$file".pdf ../doc/"$file".pdf

19 |

20 | mv "$file".pdf ../doc

21 |

22 | echo "--------------------------------------------------------------------------------"

23 | grep Warning "$file".log

24 |

25 | #./clean

26 |

27 | # note: to diff 2 PDF files visually, type the following:

28 | # diff-pdf --view file1.pdf file2.pdf

29 | # alternatively, use pdftotext to convert each PDF to text and then diff the text files:

30 | # pdftotext file1.pdf # this generates file1.txt

31 | # pdftotext file2.pdf # this generates file2.txt

32 | # diff file1.txt file2.txt

33 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2024 OpenMachine

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/tex/neurips_2025_mods.sty:

--------------------------------------------------------------------------------

1 | % My mods for neurips_2025.sty

2 |

3 | \renewcommand{\@noticestring}{} % remove the footnote 'Under review'

4 |

5 | \usepackage[utf8]{inputenc} % allow utf-8 input

6 | \usepackage[T1]{fontenc} % use 8-bit T1 fonts

7 | %% I removed this: \usepackage{hyperref} % hyperlinks

8 | \usepackage{url} % simple URL typesetting

9 | \usepackage{booktabs} % professional-quality tables

10 | \usepackage{amsfonts} % blackboard math symbols

11 | \usepackage{nicefrac} % compact symbols for 1/2, etc.

12 | \usepackage{microtype} % microtypography

13 | \usepackage{xcolor} % colors

14 |

15 | %% I added the following packages

16 | \usepackage[hidelinks,colorlinks=true,linkcolor=blue,citecolor=blue,urlcolor=blue]{hyperref}

17 | \usepackage{amsmath}

18 | \usepackage{amssymb}

19 | \usepackage{graphicx}

20 | \usepackage{makecell}

21 | \usepackage{multirow}

22 | \usepackage{tablefootnote}

23 | \usepackage{enumitem}

24 | \usepackage{pythonhighlight} % for python listings

25 | \usepackage[numbers]{natbib}

26 | \usepackage{caption}

27 | \captionsetup[figure]{skip=2pt} % reduce the space between figure and caption

28 | %\captionsetup[table]{skip=10pt}

29 |

--------------------------------------------------------------------------------

/tex/submit:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # script to submit foo.tex to arXiv

4 | # usage: ./submit foo.tex

5 | # above generates a directory foo_submit and a tar gz file foo_submit.tar.gz

6 | # only upload this tar file to arXiv and see the notes in README on how to submit

7 |

8 | # note: to double-check if everything works, run pdflatex foo two times

9 | # (or sometimes three times) as follows:

10 | # cd foo_submit

11 | # pdflatex foo && pdflatex foo

12 |

13 | # remove filename extension from arg

14 | file="${1%.*}"

15 |

16 | DIR="$file"_submit

17 |

18 | rm -Rf "$DIR" "$DIR".tar.gz

19 | mkdir "$DIR"

20 |

21 | ./run "$file"

22 |

23 | cp *.sty references.bib "$file".bbl "$file".tex "$DIR"

24 | cp ../doc/fig/"$file"_fig*.pdf "$DIR"

25 |

26 | # modify the figure-paths in the tex file: ../doc/fig/ -> ./

27 | sed -i "" "s,../doc/fig/,./,g" "$DIR"/"$file".tex

28 | # the two quotes (empty string) at the beginning of sed are for running on mac

29 |

30 | # TODO: it might also work without the sed command, but only if the tar.gz

31 | # archive is flat and has only one directory to the tex file (right now the

32 | # archive includes the directory 'foo_submit')

33 |

34 | tar -czvf "$DIR".tar.gz -C "$DIR" .

35 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | # config file for creating the PyPi package. See

2 | # https://packaging.python.org/en/latest/tutorials/packaging-projects/

3 |

4 | [build-system]

5 | requires = ["hatchling"]

6 | build-backend = "hatchling.build"

7 |

8 | [project]

9 | name = "transformer-tricks"

10 | # version numbering A.B.C: A is major version, B is minor version, and C is patch

11 | version = "0.3.4"

12 | authors = [

13 | {name="Open Machine", email="info@openmachine.ai"},

14 | ]

15 | description = "A collection of tricks to speed up LLMs, see our transformer-tricks papers on arXiv"

16 | readme = "README.md"

17 | requires-python = ">=3.11"

18 | classifiers = [

19 | "Programming Language :: Python :: 3",

20 | "License :: OSI Approved :: MIT License",

21 | "Operating System :: OS Independent",

22 | ]

23 | dependencies = [

24 | "transformers>=4.52.3",

25 | "accelerate>=1.7.0",

26 | "datasets>=3.6.0",

27 | ]

28 |

29 | [project.urls]

30 | "Homepage" = "https://github.com/OpenMachine-ai/transformer-tricks"

31 | "Bug Tracker" = "https://github.com/OpenMachine-ai/transformer-tricks/issues"

32 |

33 | [tool.autopep8]

34 | indent-size = 2

35 | in-place = true

36 | max-line-length = 120

37 | ignore = "E265, E401, E70, E20, E241, E11"

38 | # type 'autopep8 --list-fixes' to see a list of all rules

39 | # for debug, type 'autopep8 --verbose *.py'

40 |

--------------------------------------------------------------------------------

/notebooks/README.md:

--------------------------------------------------------------------------------

1 | Click on the icons below to run the notebooks in your browser. You can hit 'cancel' when it says 'Notebook does not have secret access' because we don't need an HF_TOKEN:

2 | - flashNorm_example.ipynb:

3 | - flashNorm_paper.ipynb:

4 | - removeWeights_paper.ipynb:

5 | - slimAttn_paper.ipynb:

6 | - update_packages.ipynb:

7 |

--------------------------------------------------------------------------------

/flashNorm_example.py:

--------------------------------------------------------------------------------

1 | # This example converts SmolLM-135M to FlashNorm and measures its perplexity

2 | # before and after the conversion.

3 | # Usage: python3 flashNorm_example.py

4 |

5 | # !wget -q https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/flashNorm_modeling_llama.py

6 | # %pip install --quiet transformer_tricks

7 | import transformer_tricks as tt

8 |

9 | tt.quiet_hf() # calm down HuggingFace

10 |

11 | # %%

12 | #-------------------------------------------------------------------------------

13 | # Example 1

14 | #-------------------------------------------------------------------------------

15 |

16 | # convert model and store the new model in ./SmolLM-135M_flashNorm_test

17 | tt.flashify_repo('HuggingFaceTB/SmolLM-135M')

18 |

19 | # run example inference of original and modified model

20 | tt.hello_world('HuggingFaceTB/SmolLM-135M')

21 | tt.hello_world('./SmolLM-135M_flashNorm_test')

22 |

23 | # measure perplexity of original and modified model

24 | tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)

25 | tt.perplexity('./SmolLM-135M_flashNorm_test', speedup=16)

26 |

27 | # %%

28 | #-------------------------------------------------------------------------------

29 | # Example 2

30 | #-------------------------------------------------------------------------------

31 |

32 | # convert model and store the new model in ./SmolLM-135M_flashNorm

33 | tt.flashify_repo('HuggingFaceTB/SmolLM-135M')

34 |

35 | # run example inference of original and modified model

36 | tt.hello_world('HuggingFaceTB/SmolLM-135M')

37 | tt.hello_world('./SmolLM-135M_flashNorm', arch='LlamaFlashNorm')

38 |

39 | # measure perplexity of original and modified model

40 | tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)

41 | tt.perplexity('./SmolLM-135M_flashNorm', speedup=16, arch='LlamaFlashNorm')

42 |

43 | # %% [markdown]

44 | # Whenever you change this file, make sure to regenerate the jupyter notebook by typing:

45 | # `util/gen_notebooks`

46 |

--------------------------------------------------------------------------------

/tex/README.md:

--------------------------------------------------------------------------------

1 | # Create your paper

2 |

3 | This folder contains the latex files for the Transformer Tricks papers. The flow is as follows:

4 | 1) Write first draft and drawings in Google docs.

5 | 2) Create file `foo.tex` and copy text from the Google doc.

6 | - Copy each drawing into a separate google drawing file and adjust the bounding box and "download" as PDF. This PDF is then used by latex.

7 | - For references, see the comments in file `references.bib`

8 | 3) Type `./run foo.tex` to create PDF.

9 | 4) Use spell checker as follows: `cd ..; util/spell_check`

10 | 5) Note: I converted some figures from PDF to SVG (so that I can use them in markdown) as follows `pdftocairo -svg foo.pdf foo.svg` TODO: maybe only use SVG drawings even for tex.

11 | 6) Submit to arXiv:

12 | - To submit `foo.tex`, type: `./submit foo.tex`

13 | - To double-check if everything works, run `pdflatex foo` two times (or sometimes three times) as follows:

14 | `cd foo_submit` and `pdflatex foo && pdflatex foo`

15 | - Then upload the generated `*.tar.gz` file to arXiv.

16 | - Notes for filling out the abstract field in the online form:

17 | - Make sure to remove citations or replace them by `arXiv:YYMM.NNNNN`

18 | - You can add hyperlinks to the abstract as follows: `See https://github.com/blabla for code`

19 | - You can force a new paragraph in the abstract by typing a carriage return followed by one white space in the new line (i.e. indent the new line after the carriage return)

20 | - Keep in mind: papers that are short (e.g. 6 pages or less) are automatically put on `hold` and need to be reviewed by a moderator, which can take several weeks.

21 |

22 | # Promote your paper

23 | - Post on social media: LinkedIn, twitter

24 | - Post on reddit and discord

25 | - Generate a podcast and YouTube video:

26 | - We use Notebook LM to generate audio podcasts. We then manually create videos with this audio, see [here](https://www.youtube.com/@OpenMachine)

27 | - Try generating videos and podcasts with the [arXiv paper reader](https://github.com/imelnyk/ArxivPapers), see videos [here](https://www.youtube.com/@ArxivPapers)

28 |

29 | # Submit to conference

30 | - We don't have any experience with this

31 | - It requires adding an introduction section and an extensive experiment section

32 |

--------------------------------------------------------------------------------

/doc/flashNorm.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Setup

4 | ```

5 | pip3 install transformer-tricks

6 | ```

7 |

8 | ## Example

9 | The example below converts SmolLM-135M to [FlashNorm](https://arxiv.org/pdf/2407.09577) and measures perplexity of the original and the modified model.

10 | ```python

11 | import transformer_tricks as tt

12 |

13 | # convert model and store the new model in ./SmolLM-135M_flashNorm_test

14 | tt.flashify_repo('HuggingFaceTB/SmolLM-135M')

15 |

16 | # run example inference of original and modified model

17 | tt.hello_world('HuggingFaceTB/SmolLM-135M')

18 | tt.hello_world('./SmolLM-135M_flashNorm_test')

19 |

20 | # measure perplexity of original and modified model

21 | tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)

22 | tt.perplexity('./SmolLM-135M_flashNorm_test', speedup=16)

23 | ```

24 | Results:

25 | ```

26 | Once upon a time there was a curious little girl

27 | Once upon a time there was a curious little girl

28 | perplexity = 16.083

29 | perplexity = 16.083

30 | ```

31 |

32 | You can run the example in your browser by clicking on this notebook: . Hit "cancel" when it says "Notebook does not have secret access", because we don't need an HF_TOKEN for SmolLM.

33 |

34 | TODO: [our HuggingFace repo](https://huggingface.co/open-machine/FlashNorm)

35 |

36 | ## Test FlashNorm

37 | ```shell

38 | # setup

39 | git clone https://github.com/OpenMachine-ai/transformer-tricks.git

40 | pip3 install --quiet -r requirements.txt

41 |

42 | # run tests

43 | python3 flashNorm_test.py

44 | ```

45 | Results:

46 | ```

47 | Once upon a time there was a curious little girl

48 | Once upon a time there was a curious little girl

49 | Once upon a time there was a little girl named

50 | Once upon a time there was a little girl named

51 | perplexity = 16.083

52 | perplexity = 16.083

53 | perplexity = 12.086

54 | perplexity = 12.086

55 | ```

56 | To run llama and other LLMs that need an agreement (not SmolLM), you first have to type the following, which will ask for your `hf_token`:

57 | ```

58 | huggingface-cli login

59 | ```

60 |

61 | ## Please give us a ⭐ if you like this repo, thanks!

62 |

--------------------------------------------------------------------------------

/notebooks/update_packages.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "provenance": []

7 | },

8 | "kernelspec": {

9 | "name": "python3",

10 | "display_name": "Python 3"

11 | },

12 | "language_info": {

13 | "name": "python"

14 | }

15 | },

16 | "cells": [

17 | {

18 | "cell_type": "code",

19 | "execution_count": 1,

20 | "metadata": {

21 | "id": "lOsSebCDGVTh"

22 | },

23 | "outputs": [],

24 | "source": [

25 | "# This colab helps you to fix python package issues:\n",

26 | "# - The huggingface (HF) packages are updated very often\n",

27 | "# - We want the transformer-tricks package to work with the (almost) latest\n",

28 | "# HF packages\n",

29 | "# - We only need 3 HF packages: transformers, accelerate, datasets\n",

30 | "# - These 3 HF packages will load many other HF packages (such as safetensors,\n",

31 | "# hub), numpy and torch\n",

32 | "\n",

33 | "# remove all pip packages, except for pip, dateutil, certifi, etc.\n",

34 | "!pip list --format=freeze | grep -v -E \"pip|dateutil|certifi|psutil|_distutils_hack|pkg_resources\" | xargs pip uninstall -y --quiet\n",

35 | "\n",

36 | "# above takes about 6 minutes !!!"

37 | ]

38 | },

39 | {

40 | "cell_type": "code",

41 | "source": [

42 | "# install latest versions of the 3 HF packages\n",

43 | "!pip install transformers --quiet\n",

44 | "!pip install accelerate --quiet\n",

45 | "!pip install datasets --quiet\n",

46 | "\n",

47 | "!pip list | grep -E \"transformers|accelerate|datasets\"\n",

48 | "!pip list | grep -E \"torch|numpy|safetensors|huggingface\"\n",

49 | "!python -V"

50 | ],

51 | "metadata": {

52 | "id": "kWz9vRphZL2A"

53 | },

54 | "execution_count": null,

55 | "outputs": []

56 | },

57 | {

58 | "cell_type": "code",

59 | "source": [

60 | "# download files transformer_tricks.py and flashNorm_test.py and test if it\n",

61 | "# works with the latest version of the HF packages\n",

62 | "!wget -q https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/transformer_tricks.py\n",

63 | "!wget -q https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/flashNorm_example.py\n",

64 | "!wget -q https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/flashNorm_modeling_llama.py\n",

65 | "\n",

66 | "!python flashNorm_example.py"

67 | ],

68 | "metadata": {

69 | "id": "FCh28_1fauTX"

70 | },

71 | "execution_count": null,

72 | "outputs": []

73 | }

74 | ]

75 | }

--------------------------------------------------------------------------------

/notebooks/flashNorm_example.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "id": "456ef5b0",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "# This example converts SmolLM-135M to FlashNorm and measures its perplexity\n",

11 | "# before and after the conversion.\n",

12 | "# Usage: python3 flashNorm_example.py\n",

13 | "\n",

14 | "!wget -q https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/flashNorm_modeling_llama.py\n",

15 | "%pip install --quiet transformer_tricks\n",

16 | "import transformer_tricks as tt\n",

17 | "\n",

18 | "tt.quiet_hf() # calm down HuggingFace"

19 | ]

20 | },

21 | {

22 | "cell_type": "code",

23 | "execution_count": null,

24 | "id": "a8cd3fd7",

25 | "metadata": {},

26 | "outputs": [],

27 | "source": [

28 | "#-------------------------------------------------------------------------------\n",

29 | "# Example 1\n",

30 | "#-------------------------------------------------------------------------------\n",

31 | "\n",

32 | "# convert model and store the new model in ./SmolLM-135M_flashNorm_test\n",

33 | "tt.flashify_repo('HuggingFaceTB/SmolLM-135M')\n",

34 | "\n",

35 | "# run example inference of original and modified model\n",

36 | "tt.hello_world('HuggingFaceTB/SmolLM-135M')\n",

37 | "tt.hello_world('./SmolLM-135M_flashNorm_test')\n",

38 | "\n",

39 | "# measure perplexity of original and modified model\n",

40 | "tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)\n",

41 | "tt.perplexity('./SmolLM-135M_flashNorm_test', speedup=16)"

42 | ]

43 | },

44 | {

45 | "cell_type": "code",

46 | "execution_count": null,

47 | "id": "31381dd7",

48 | "metadata": {},

49 | "outputs": [],

50 | "source": [

51 | "#-------------------------------------------------------------------------------\n",

52 | "# Example 2\n",

53 | "#-------------------------------------------------------------------------------\n",

54 | "\n",

55 | "# convert model and store the new model in ./SmolLM-135M_flashNorm\n",

56 | "tt.flashify_repo('HuggingFaceTB/SmolLM-135M')\n",

57 | "\n",

58 | "# run example inference of original and modified model\n",

59 | "tt.hello_world('HuggingFaceTB/SmolLM-135M')\n",

60 | "tt.hello_world('./SmolLM-135M_flashNorm', arch='LlamaFlashNorm')\n",

61 | "\n",

62 | "# measure perplexity of original and modified model\n",

63 | "tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)\n",

64 | "tt.perplexity('./SmolLM-135M_flashNorm', speedup=16, arch='LlamaFlashNorm')"

65 | ]

66 | },

67 | {

68 | "cell_type": "markdown",

69 | "id": "46c7d85f",

70 | "metadata": {},

71 | "source": [

72 | "Whenever you change this file, make sure to regenerate the jupyter notebook by typing:\n",

73 | " `util/gen_notebooks`"

74 | ]

75 | }

76 | ],

77 | "metadata": {

78 | "jupytext": {

79 | "cell_metadata_filter": "-all",

80 | "main_language": "python",

81 | "notebook_metadata_filter": "-all"

82 | }

83 | },

84 | "nbformat": 4,

85 | "nbformat_minor": 5

86 | }

87 |

--------------------------------------------------------------------------------

/flashNorm_test.py:

--------------------------------------------------------------------------------

1 | # flashify LLMs and run inference and perplexity to make sure that

2 | # the flashified models are equivalent to the original ones

3 | # Usage: python3 test_flashNorm.py

4 |

5 | import transformer_tricks as tt

6 |

7 | tt.quiet_hf() # calm down HuggingFace

8 |

9 | # convert models to flashNorm

10 | tt.flashify_repo('HuggingFaceTB/SmolLM-135M')

11 | tt.flashify_repo('HuggingFaceTB/SmolLM-360M')

12 | #tt.flashify_repo('HuggingFaceTB/SmolLM-1.7B', bars=True)

13 | #tt.flashify_repo('microsoft/Phi-3-mini-4k-instruct', bars=True)

14 |

15 | # run models

16 | tt.hello_world('HuggingFaceTB/SmolLM-135M')

17 | tt.hello_world( 'SmolLM-135M_flashNorm_test')

18 | tt.hello_world( 'SmolLM-135M_flashNorm', arch='LlamaFlashNorm')

19 | tt.hello_world('HuggingFaceTB/SmolLM-360M')

20 | tt.hello_world( 'SmolLM-360M_flashNorm_test')

21 | tt.hello_world( 'SmolLM-360M_flashNorm', arch='LlamaFlashNorm')

22 | #tt.hello_world('HuggingFaceTB/SmolLM-1.7B')

23 | #tt.hello_world( 'SmolLM-1.7B_flashNorm')

24 | #tt.hello_world('microsoft/Phi-3-mini-4k-instruct')

25 | #tt.hello_world( 'Phi-3-mini-4k-instruct_flashNorm')

26 |

27 | # measure perplexity

28 | tt.perplexity('HuggingFaceTB/SmolLM-135M', speedup=16)

29 | tt.perplexity( 'SmolLM-135M_flashNorm_test', speedup=16)

30 | tt.perplexity( 'SmolLM-135M_flashNorm', arch='LlamaFlashNorm', speedup=16)

31 | tt.perplexity('HuggingFaceTB/SmolLM-360M', speedup=16)

32 | tt.perplexity( 'SmolLM-360M_flashNorm_test', speedup=16)

33 | tt.perplexity( 'SmolLM-360M_flashNorm', arch='LlamaFlashNorm', speedup=16)

34 | #tt.perplexity('HuggingFaceTB/SmolLM-1.7B', speedup=64)

35 | #tt.perplexity( 'SmolLM-1.7B_flashNorm', speedup=64)

36 | #tt.perplexity('microsoft/Phi-3-mini-4k-instruct', speedup=64, bars=True)

37 | #tt.perplexity( 'Phi-3-mini-4k-instruct_flashNorm', speedup=64, bars=True)

38 |

39 | # TODO: add more LLMs

40 | #python3 gen.py stabilityai/stablelm-2-1_6b # doesn't use RMSNorm, but LayerNorm

41 | #python3 gen.py meta-llama/Meta-Llama-3.1-8B

42 | #python3 gen.py mistralai/Mistral-7B-v0.3

43 |

44 | # Notes for running larger models:

45 | # - To run llama and other semi-secret LLMs, you first have to type the following:

46 | # huggingface-cli login

47 | # above will ask you for the hf_token, which is the same you use e.g. in colab

48 | #

49 | # - On MacBook, open the 'Activity Monitor' and check your memory usage. If your

50 | # MacBook has only 8GB of DRAM, then you have only about 6GB available. Many LLMs

51 | # use float32, so a 1.5B model needs at least 6GB of DRAM.

52 | #

53 | # - Running gen.py is limited by DRAM bandwidth, not compute. Running ppl.py is

54 | # usually limited by compute (rather than by memory bandwidth), so only having

55 | # 8GB of DRAM is likely not an issue for running ppl.py on larger LLMs. That's

56 | # because ppl.py doesn't do the auto-regressive generation phase but only the

57 | # prompt phase (where all input tokens are batched).

58 | #

59 | # - The models get cached on your system at ~/.cache/huggingface, which can grow

60 | # very big, see du -h -d 3 ~/.cache/huggingface

61 |

--------------------------------------------------------------------------------

/notebooks/removeWeights_paper.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {

7 | "id": "P9KAn1NGtAX3"

8 | },

9 | "outputs": [],

10 | "source": [

11 | "import torch\n",

12 | "import numpy as np\n",

13 | "from huggingface_hub import hf_hub_download\n",

14 | "import gc # garbage collection needed for low RAM footprint\n",

15 | "\n",

16 | "# download Mistral-7B from https://huggingface.co/mistralai/Mistral-7B-v0.1\n",

17 | "hf_hub_download(repo_id='mistralai/Mistral-7B-v0.1', filename='pytorch_model-00001-of-00002.bin', local_dir='.')\n",

18 | "hf_hub_download(repo_id='mistralai/Mistral-7B-v0.1', filename='pytorch_model-00002-of-00002.bin', local_dir='.')\n",

19 | "\n",

20 | "# load model files, use mmap to keep RAM footprint low\n",

21 | "m1 = torch.load('pytorch_model-00001-of-00002.bin', weights_only=True, mmap=True)\n",

22 | "m2 = torch.load('pytorch_model-00002-of-00002.bin', weights_only=True, mmap=True)\n",

23 | "\n",

24 | "def get_weights(model, layer, name):\n",

25 | " \"\"\"returns weight matrix of specific layer and name (such as Q, K, V)\"\"\"\n",

26 | " layer_str = 'layers.' + str(layer)\n",

27 | " match name:\n",

28 | " case 'Q': suffix = layer_str + '.self_attn.q_proj.weight'\n",

29 | " case 'K': suffix = layer_str + '.self_attn.k_proj.weight'\n",

30 | " case 'V': suffix = layer_str + '.self_attn.v_proj.weight'\n",

31 | " case 'P': suffix = layer_str + '.self_attn.o_proj.weight'\n",

32 | " case 'O': suffix = layer_str + '.mlp.down_proj.weight'\n",

33 | " case 'E': suffix = 'embed_tokens.weight'\n",

34 | " W = model['model.' + suffix].to(torch.float64).numpy() # convert to float64\n",

35 | " return W if name == 'E' else W.T # transpose weights, except for 'E'\n",

36 | "\n",

37 | "for layer in range(0, 32):\n",

38 | " print('layer', layer)\n",

39 | "\n",

40 | " # get weights Q, K, V, P, O\n",

41 | " model = m1 if layer < 23 else m2 # use m1 for layers 0 to 22\n",

42 | " Q = get_weights(model, layer, 'Q')\n",

43 | " K = get_weights(model, layer, 'K')\n",

44 | " V = get_weights(model, layer, 'V')\n",

45 | " P = get_weights(model, layer, 'P')\n",

46 | " O = get_weights(model, layer - 1, 'E' if layer == 0 else 'O') # use embedding for 1st layer\n",

47 | "\n",

48 | " # check if weight elimination is numerically identical\n",

49 | " Q_inv = np.linalg.inv(Q) # errors out if matrix is not invertible\n",

50 | " K_star = Q_inv @ K\n",

51 | " V_star = Q_inv @ V\n",

52 | " O_star = O @ Q\n",

53 | " print(' is O* @ K* close to O @ K ? ', np.allclose(O_star @ K_star, O @ K))\n",

54 | " print(' is O* @ V* close to O @ V ? ', np.allclose(O_star @ V_star, O @ V))\n",

55 | "\n",

56 | " # also check if P is invertible\n",

57 | " P_inv = np.linalg.inv(P) # errors out if matrix is not invertible\n",

58 | "\n",

59 | "# garbage collection (to avoid colab's RAM limit)\n",

60 | "del m1, m2, model, Q, K, V, P, O, Q_inv, P_inv, K_star, V_star, O_star\n",

61 | "gc.collect()"

62 | ]

63 | }

64 | ],

65 | "metadata": {

66 | "colab": {

67 | "provenance": []

68 | },

69 | "kernelspec": {

70 | "display_name": "Python 3",

71 | "name": "python3"

72 | },

73 | "language_info": {

74 | "name": "python"

75 | }

76 | },

77 | "nbformat": 4,

78 | "nbformat_minor": 0

79 | }

--------------------------------------------------------------------------------

/slimAttn_paper.py:

--------------------------------------------------------------------------------

1 | # Proof of concept for paper "Slim Attention: cut your context memory in half"

2 | # Usage: python3 slimAttn_paper.py

3 |

4 | # %pip install --quiet transformer_tricks

5 | import transformer_tricks as tt

6 | import numpy as np

7 | import torch

8 | from transformers import AutoConfig

9 |

10 | #-------------------------------------------------------------------------------

11 | # defs

12 | #-------------------------------------------------------------------------------

13 | def softmax(x, axis=-1):

14 | """softmax along 'axis', default is the last axis"""

15 | e_x = np.exp(x - np.max(x, axis=axis, keepdims=True))

16 | return e_x / np.sum(e_x, axis=axis, keepdims=True)

17 |

18 | def msplit(M, h):

19 | """shortcut to split matrix M into h chunks"""

20 | return np.array_split(M, h, axis=-1)

21 |

22 | def ops(A, B):

23 | """number of OPs (operations) for matmul of A and B:

24 | - A and B must be 2D arrays, and their inner dimensions must agree!

25 | - A is an m × n matrix, and B is an n × p matrix, then the resulting product

26 | of A and B is an m × p matrix.

27 | - Each element (i,j) of the m x p result matrix is computed by the dotproduct

28 | of the i-th row of A and the j-th column of B.

29 | - Each dotproduct takes n multiplications and n - 1 additions, so total

30 | number of OPs is 2n - 1 per dotproduct.

31 | - There are m * p elements in the result matrix, so m * p dotproducts, so in

32 | total we need m * p * (2n - 1) OPs, which is approximately 2*m*p*n OPs

33 | - For simplicity, let's just use the simple approximation of OPs = 2*m*p*n"""

34 | m, n = A.shape

35 | p = B.shape[1]

36 | return 2 * m * n * p

37 |

38 | #-------------------------------------------------------------------------------

39 | # setup for model SmolLM2-1.7B

40 | #-------------------------------------------------------------------------------

41 | tt.quiet_hf() # calm down HuggingFace

42 |

43 | repo = 'HuggingFaceTB/SmolLM2-1.7B'

44 | param = tt.get_param(repo)

45 | config = AutoConfig.from_pretrained(repo)

46 |

47 | h = config.num_attention_heads

48 | d = config.hidden_size

49 | dk = config.head_dim

50 |

51 | # %%

52 | #-------------------------------------------------------------------------------

53 | # check if we can accurately compute V from K for each layer

54 | #-------------------------------------------------------------------------------

55 | for layer in range(config.num_hidden_layers):

56 | # convert to float64 for better accuracy of matrix inversion

57 | # note that all weights are transposed in tensorfile (per pytorch convention)

58 | Wk = param[tt.weight('K', layer)].to(torch.float64).numpy().T

59 | Wv = param[tt.weight('V', layer)].to(torch.float64).numpy().T

60 | Wkv = np.linalg.inv(Wk) @ Wv

61 | print(layer, ':', np.allclose(Wk @ Wkv, Wv)) # check if Wk @ Wkv close to Wv

62 |

63 | # %%

64 | #-------------------------------------------------------------------------------

65 | # compare options 1 and 2 for calculating equation (5) of paper

66 | #-------------------------------------------------------------------------------

67 |

68 | # get weights for Q, K, V and convert to float64

69 | # note that all weights are transposed in tensorfile (per pytorch convention)

70 | Wq = param[tt.weight('Q', 0)].to(torch.float64).numpy().T

71 | Wk = param[tt.weight('K', 0)].to(torch.float64).numpy().T

72 | Wv = param[tt.weight('V', 0)].to(torch.float64).numpy().T

73 | Wkv = np.linalg.inv(Wk) @ Wv # calculate Wkv (aka W_KV)

74 | # print('Is Wk @ Wkv close to Wv?', np.allclose(Wk @ Wkv, Wv))

75 |

76 | # generate random input X

77 | n = 100 # number of tokens

78 | X = np.random.rand(n, d).astype(np.float64) # range [0,1]

79 | Xn = np.expand_dims(X[n-1, :], axis=0) # n-th row of X; make it a 1 x d matrix

80 |

81 | Q = Xn @ Wq # only for the last row of X (for the generate-phase)

82 | K = X @ Wk

83 | V = X @ Wv

84 |

85 | # only consider the first head

86 | Q0, K0, V0 = msplit(Q, h)[0], msplit(K, h)[0], msplit(V, h)[0]

87 | Wkv0 = msplit(Wkv, h)[0]

88 |

89 | # baseline reference

90 | scores = softmax((Q0 @ K0.T) / np.sqrt(dk))

91 | head_ref = scores @ V0

92 |

93 | # head option1 and option2

94 | head_o1 = scores @ (K @ Wkv0) # option 1

95 | head_o2 = (scores @ K) @ Wkv0 # option 2

96 |

97 | # compare

98 | print('Is head_o1 close to head_ref?', np.allclose(head_o1, head_ref))

99 | print('Is head_o2 close to head_ref?', np.allclose(head_o2, head_ref))

100 |

101 | # computational complexity for both options

102 | o1_step1, o1_step2 = ops(K, Wkv0), ops(scores, (K @ Wkv0))

103 | o2_step1, o2_step2 = ops(scores, K), ops(scores @ K, Wkv0)

104 |

105 | print(f'Option 1 OPs: step 1 = {o1_step1:,}; step 2 = {o1_step2:,}; total = {(o1_step1 + o1_step2):,}')

106 | print(f'Option 2 OPs: step 1 = {o2_step1:,}; step 2 = {o2_step2:,}; total = {(o2_step1 + o2_step2):,}')

107 | print(f'speedup of option 2 over option 1: {((o1_step1 + o1_step2) / (o2_step1 + o2_step2)):.1f}')

108 |

109 | # %% [markdown]

110 | # Whenever you change this file, make sure to regenerate the jupyter notebook by typing:

111 | # `util/gen_notebooks`

112 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

7 |

8 | A collection of tricks to simplify and speed up transformer models:

9 | - Slim attention: [paper](https://arxiv.org/abs/2503.05840), [video](https://youtu.be/uVtk3B6YO4Y), [podcast](https://notebooklm.google.com/notebook/ac47a53c-866b-4271-ab79-bc48d1b41722/audio), [notebook](https://colab.research.google.com/github/OpenMachine-ai/transformer-tricks/blob/main/notebooks/slimAttn_paper.ipynb), [code-readme](doc/slimAttn.md), :hugs: [article](https://huggingface.co/blog/Kseniase/attentions), [reddit](https://www.reddit.com/r/LocalLLaMA/comments/1j9wkc2/slim_attention_cut_your_context_memory_in_half)

10 | - FlashNorm: [paper](https://arxiv.org/abs/2407.09577), [video](https://youtu.be/GEuJv34_XgU), [podcast](https://notebooklm.google.com/notebook/0877599c-720c-49b5-b451-8a41af592dd1/audio), [notebook](https://colab.research.google.com/github/OpenMachine-ai/transformer-tricks/blob/main/notebooks/flashNorm_paper.ipynb), [code-readme](doc/flashNorm.md)

11 | - Matrix-shrink \[work in progress\]: [paper](https://docs.google.com/viewer?url=https://raw.githubusercontent.com/OpenMachine-ai/transformer-tricks/refs/heads/main/doc/matShrink.pdf)

12 | - Precomputing the first layer: [paper](https://arxiv.org/abs/2402.13388), [video](https://youtu.be/pUeSwnCOoNI), [podcast](https://notebooklm.google.com/notebook/7794278e-de6a-40fc-ab1c-3240a40e55d5/audio)

13 | - KV-weights only for skipless transformers: [paper](https://arxiv.org/abs/2404.12362), [video](https://youtu.be/Tx_lMpphd2g), [podcast](https://notebooklm.google.com/notebook/0875eef7-094e-4c30-bc13-90a1a074c949/audio), [notebook](https://colab.research.google.com/github/OpenMachine-ai/transformer-tricks/blob/main/notebooks/removeWeights_paper.ipynb)

14 |

15 | These transformer tricks extend a recent trend in neural network design toward architectural parsimony, in which unnecessary components are removed to create more efficient models. Notable examples include [RMSNorm’s](https://arxiv.org/abs/1910.07467) simplification of LayerNorm by removing mean centering, [PaLM's](https://arxiv.org/abs/2204.02311) elimination of bias parameters, and [decoder-only transformer's](https://arxiv.org/abs/1801.10198) omission of the encoder stack. This trend began with the original [transformer model's](https://arxiv.org/abs/1706.03762) removal of recurrence and convolutions.

16 |

17 | For example, our [FlashNorm](https://arxiv.org/abs/2407.09577) removes the weights from RMSNorm and merges them with the next linear layer. And [slim attention](https://arxiv.org/abs/2503.05840) removes the entire V-cache from the context memory for MHA transformers.

18 |

19 | ---

20 |

21 | ## Explainer videos

22 |

23 | [](https://www.youtube.com/watch?v=uVtk3B6YO4Y "Slim attention")

24 | [](https://www.youtube.com/watch?v=GEuJv34_XgU "Flash normalization")

25 | [](https://www.youtube.com/watch?v=pUeSwnCOoNI "Precomputing the first layer")

26 | [](https://www.youtube.com/watch?v=Tx_lMpphd2g "Removing weights from skipless transformers")

27 |

28 | ---

29 |

30 | ## Installation

31 |

32 | Install the transformer tricks package:

33 | ```bash

34 | pip install transformer-tricks

35 | ```

36 |

37 | Alternatively, to run from latest repo:

38 | ```bash

39 | git clone https://github.com/OpenMachine-ai/transformer-tricks.git

40 | python3 -m venv .venv

41 | source .venv/bin/activate

42 | pip3 install --quiet -r requirements.txt

43 | ```

44 |

45 | ---

46 |

47 | ## Documentation

48 | Follow the links below for documentation of the python code in this directory:

49 | - [Slim attention](doc/slimAttn.md)

50 | - [Flash normalization](doc/flashNorm.md)

51 |

52 | ---

53 |

54 | ## Notebooks

55 | The papers are accompanied by the following Jupyter notebooks:

56 | - Slim attention:

57 | - Flash normalization:

58 | - Removing weights from skipless transformers:

59 |

60 | ---

61 | ## Newsletter

62 | Please subscribe to our [newsletter](https://transformertricks.substack.com) on substack to get the latest news about this project. We will never send you more than one email per month.

63 |

64 | [](https://transformertricks.substack.com)

65 |

66 | ---

67 |

68 | ## Contributing

69 | We pay cash for high-impact contributions. Please check out [CONTRIBUTING](doc/CONTRIBUTING.md) for how to get involved.

70 |

71 | ---

72 |

73 | ## Sponsors

74 | The Transformer Tricks project is currently sponsored by [OpenMachine](https://openmachine.ai). We'd love to hear from you if you'd like to join us in supporting this project.

75 |

76 | ---

77 |

78 | ### Please give us a ⭐ if you like this repo, and check out [TinyFive](https://github.com/OpenMachine-ai/tinyfive)

79 |

80 | ---

81 |

82 | [](https://www.star-history.com/#OpenMachine-ai/transformer-tricks&Date)

83 |

--------------------------------------------------------------------------------

/notebooks/slimAttn_paper.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "id": "9e3a70d2",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "# Proof of concept for paper \"Slim Attention: cut your context memory in half\"\n",

11 | "# Usage: python3 slimAttn_paper.py\n",

12 | "\n",

13 | "%pip install --quiet transformer_tricks\n",

14 | "import transformer_tricks as tt\n",

15 | "import numpy as np\n",

16 | "import torch\n",

17 | "from transformers import AutoConfig\n",

18 | "\n",

19 | "#-------------------------------------------------------------------------------\n",

20 | "# defs\n",

21 | "#-------------------------------------------------------------------------------\n",

22 | "def softmax(x, axis=-1):\n",

23 | " \"\"\"softmax along 'axis', default is the last axis\"\"\"\n",

24 | " e_x = np.exp(x - np.max(x, axis=axis, keepdims=True))\n",

25 | " return e_x / np.sum(e_x, axis=axis, keepdims=True)\n",

26 | "\n",

27 | "def msplit(M, h):\n",

28 | " \"\"\"shortcut to split matrix M into h chunks\"\"\"\n",

29 | " return np.array_split(M, h, axis=-1)\n",

30 | "\n",

31 | "def ops(A, B):\n",

32 | " \"\"\"number of OPs (operations) for matmul of A and B:\n",

33 | " - A and B must be 2D arrays, and their inner dimensions must agree!\n",

34 | " - A is an m × n matrix, and B is an n × p matrix, then the resulting product\n",

35 | " of A and B is an m × p matrix.\n",

36 | " - Each element (i,j) of the m x p result matrix is computed by the dotproduct\n",

37 | " of the i-th row of A and the j-th column of B.\n",

38 | " - Each dotproduct takes n multiplications and n - 1 additions, so total\n",

39 | " number of OPs is 2n - 1 per dotproduct.\n",

40 | " - There are m * p elements in the result matrix, so m * p dotproducts, so in\n",

41 | " total we need m * p * (2n - 1) OPs, which is approximately 2*m*p*n OPs\n",

42 | " - For simplicity, let's just use the simple approximation of OPs = 2*m*p*n\"\"\"\n",

43 | " m, n = A.shape\n",

44 | " p = B.shape[1]\n",

45 | " return 2 * m * n * p\n",

46 | "\n",

47 | "#-------------------------------------------------------------------------------\n",

48 | "# setup for model SmolLM2-1.7B\n",

49 | "#-------------------------------------------------------------------------------\n",

50 | "tt.quiet_hf() # calm down HuggingFace\n",

51 | "\n",

52 | "repo = 'HuggingFaceTB/SmolLM2-1.7B'\n",

53 | "param = tt.get_param(repo)\n",

54 | "config = AutoConfig.from_pretrained(repo)\n",

55 | "\n",

56 | "h = config.num_attention_heads\n",

57 | "d = config.hidden_size\n",

58 | "dk = config.head_dim"

59 | ]

60 | },

61 | {

62 | "cell_type": "code",

63 | "execution_count": null,

64 | "id": "6498fa85",

65 | "metadata": {},

66 | "outputs": [],

67 | "source": [

68 | "#-------------------------------------------------------------------------------\n",

69 | "# check if we can accurately compute V from K for each layer\n",

70 | "#-------------------------------------------------------------------------------\n",

71 | "for layer in range(config.num_hidden_layers):\n",

72 | " # convert to float64 for better accuracy of matrix inversion\n",

73 | " # note that all weights are transposed in tensorfile (per pytorch convention)\n",

74 | " Wk = param[tt.weight('K', layer)].to(torch.float64).numpy().T\n",

75 | " Wv = param[tt.weight('V', layer)].to(torch.float64).numpy().T\n",

76 | " Wkv = np.linalg.inv(Wk) @ Wv\n",

77 | " print(layer, ':', np.allclose(Wk @ Wkv, Wv)) # check if Wk @ Wkv close to Wv"

78 | ]

79 | },

80 | {

81 | "cell_type": "code",

82 | "execution_count": null,

83 | "id": "a0f6735d",

84 | "metadata": {},

85 | "outputs": [],

86 | "source": [

87 | "#-------------------------------------------------------------------------------\n",

88 | "# compare options 1 and 2 for calculating equation (5) of paper\n",

89 | "#-------------------------------------------------------------------------------\n",

90 | "\n",

91 | "# get weights for Q, K, V and convert to float64\n",

92 | "# note that all weights are transposed in tensorfile (per pytorch convention)\n",

93 | "Wq = param[tt.weight('Q', 0)].to(torch.float64).numpy().T\n",

94 | "Wk = param[tt.weight('K', 0)].to(torch.float64).numpy().T\n",

95 | "Wv = param[tt.weight('V', 0)].to(torch.float64).numpy().T\n",

96 | "Wkv = np.linalg.inv(Wk) @ Wv # calculate Wkv (aka W_KV)\n",

97 | "# print('Is Wk @ Wkv close to Wv?', np.allclose(Wk @ Wkv, Wv))\n",

98 | "\n",

99 | "# generate random input X\n",

100 | "n = 100 # number of tokens\n",

101 | "X = np.random.rand(n, d).astype(np.float64) # range [0,1]\n",

102 | "Xn = np.expand_dims(X[n-1, :], axis=0) # n-th row of X; make it a 1 x d matrix\n",

103 | "\n",

104 | "Q = Xn @ Wq # only for the last row of X (for the generate-phase)\n",

105 | "K = X @ Wk\n",

106 | "V = X @ Wv\n",

107 | "\n",

108 | "# only consider the first head\n",

109 | "Q0, K0, V0 = msplit(Q, h)[0], msplit(K, h)[0], msplit(V, h)[0]\n",

110 | "Wkv0 = msplit(Wkv, h)[0]\n",

111 | "\n",

112 | "# baseline reference\n",

113 | "scores = softmax((Q0 @ K0.T) / np.sqrt(dk))\n",

114 | "head_ref = scores @ V0\n",

115 | "\n",

116 | "# head option1 and option2\n",

117 | "head_o1 = scores @ (K @ Wkv0) # option 1\n",

118 | "head_o2 = (scores @ K) @ Wkv0 # option 2\n",

119 | "\n",

120 | "# compare\n",

121 | "print('Is head_o1 close to head_ref?', np.allclose(head_o1, head_ref))\n",

122 | "print('Is head_o2 close to head_ref?', np.allclose(head_o2, head_ref))\n",

123 | "\n",

124 | "# computational complexity for both options\n",

125 | "o1_step1, o1_step2 = ops(K, Wkv0), ops(scores, (K @ Wkv0))\n",

126 | "o2_step1, o2_step2 = ops(scores, K), ops(scores @ K, Wkv0)\n",

127 | "\n",

128 | "print(f'Option 1 OPs: step 1 = {o1_step1:,}; step 2 = {o1_step2:,}; total = {(o1_step1 + o1_step2):,}')\n",

129 | "print(f'Option 2 OPs: step 1 = {o2_step1:,}; step 2 = {o2_step2:,}; total = {(o2_step1 + o2_step2):,}')\n",

130 | "print(f'speedup of option 2 over option 1: {((o1_step1 + o1_step2) / (o2_step1 + o2_step2)):.1f}')"

131 | ]

132 | },

133 | {

134 | "cell_type": "markdown",

135 | "id": "fe352e46",

136 | "metadata": {},

137 | "source": [

138 | "Whenever you change this file, make sure to regenerate the jupyter notebook by typing:\n",

139 | " `util/gen_notebooks`"

140 | ]

141 | }

142 | ],

143 | "metadata": {

144 | "jupytext": {

145 | "cell_metadata_filter": "-all",

146 | "main_language": "python",

147 | "notebook_metadata_filter": "-all"

148 | }

149 | },

150 | "nbformat": 4,

151 | "nbformat_minor": 5

152 | }

153 |

--------------------------------------------------------------------------------

/notebooks/flashNorm_paper.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 1,

6 | "metadata": {

7 | "id": "P9KAn1NGtAX3"

8 | },

9 | "outputs": [],

10 | "source": [

11 | "# code for paper 'Transformer tricks: flash normalization'\n",

12 | "\n",

13 | "import numpy as np\n",

14 | "\n",

15 | "# reciprocal of RMS and activation functions\n",

16 | "def r_rms(x): return 1 / np.sqrt(np.mean(x**2))\n",

17 | "def r_ms(x): return 1 / np.mean(x**2)\n",

18 | "def relu(x): return np.maximum(0, x)\n",

19 | "def sigmoid(x): return 1 / (1 + np.exp(-x))\n",

20 | "def silu(x): return x * sigmoid(x) # often known as swish\n",

21 | "\n",

22 | "# merge normalization weights g into weight matrix W\n",

23 | "def flashify(g, W):\n",

24 | " Wnew = np.empty(W.shape)\n",

25 | " for i in range(g.shape[0]):\n",

26 | " Wnew[i, :] = g[i] * W[i, :]\n",

27 | " return Wnew\n",

28 | "\n",

29 | "# alternative flashify (same as above but fewer lines)\n",

30 | "#def flashify_alt(g, W):\n",

31 | "# G = np.repeat(g, W.shape[1]).reshape(W.shape)\n",

32 | "# return G * W # elementwise multiply"

33 | ]

34 | },

35 | {

36 | "cell_type": "code",

37 | "source": [

38 | "# variables\n",

39 | "n = 32\n",

40 | "f = 128\n",

41 | "a = np.random.rand(n) # row-vector\n",

42 | "g = np.random.rand(n) # row-vector\n",

43 | "W = np.random.rand(n, n)\n",

44 | "UP = np.random.rand(n, f)\n",

45 | "GATE = np.random.rand(n, f)\n",

46 | "DOWN = np.random.rand(f, n)\n",

47 | "\n",

48 | "# derived variables\n",

49 | "s = r_rms(a) # scaling factor\n",

50 | "Wstar = flashify(g, W)\n",

51 | "UPstar = flashify(g, UP)\n",

52 | "GATEstar = flashify(g, GATE)"

53 | ],

54 | "metadata": {

55 | "id": "yLty-2szRrDM"

56 | },

57 | "execution_count": 2,

58 | "outputs": []

59 | },

60 | {

61 | "cell_type": "code",

62 | "source": [

63 | "# code for section 1 of paper\n",

64 | "\n",

65 | "# figures 1(a), 1(b), and 1(c) of paper\n",

66 | "z_fig1a = (r_rms(a) * a * g) @ W\n",

67 | "z_fig1b = (r_rms(a) * a) @ Wstar\n",

68 | "z_fig1c = (a @ Wstar) * r_rms(a)\n",

69 | "\n",

70 | "# compare against z_fig1a\n",

71 | "print(np.allclose(z_fig1b, z_fig1a), ' (fig1b is close to fig1a if True)')\n",

72 | "print(np.allclose(z_fig1c, z_fig1a), ' (fig1c is close to fig1a if True)')"

73 | ],

74 | "metadata": {

75 | "colab": {

76 | "base_uri": "https://localhost:8080/"

77 | },

78 | "id": "4YSv5p16ScE2",

79 | "outputId": "e9e6ec90-04e7-4d80-98dc-374e8202f60e"

80 | },

81 | "execution_count": 3,

82 | "outputs": [

83 | {

84 | "output_type": "stream",

85 | "name": "stdout",

86 | "text": [

87 | "True (fig1b is close to fig1a if True)\n",

88 | "True (fig1c is close to fig1a if True)\n"

89 | ]

90 | }

91 | ]

92 | },

93 | {

94 | "cell_type": "code",

95 | "source": [

96 | "# code for section 2.1 of paper\n",

97 | "\n",

98 | "# reference and figures 2(a) and 2(b) of paper\n",

99 | "y_ref2 = relu((s * a * g) @ UP) @ DOWN\n",

100 | "y_fig2a = relu((a @ UPstar) * s) @ DOWN\n",

101 | "y_fig2b = (relu(a @ UPstar) @ DOWN) * s\n",

102 | "\n",

103 | "# compare against y_ref\n",

104 | "print(np.allclose(y_fig2a, y_ref2), ' (fig2a is close to reference if True)')\n",

105 | "print(np.allclose(y_fig2b, y_ref2), ' (fig2b is close to reference if True)')"

106 | ],

107 | "metadata": {

108 | "colab": {

109 | "base_uri": "https://localhost:8080/"

110 | },

111 | "id": "HlUzIzR-VXlg",

112 | "outputId": "6f90bd82-b003-4431-e071-a251b4906d8a"

113 | },

114 | "execution_count": 4,

115 | "outputs": [

116 | {

117 | "output_type": "stream",

118 | "name": "stdout",

119 | "text": [

120 | "True (fig2a is close to reference if True)\n",

121 | "True (fig2b is close to reference if True)\n"

122 | ]

123 | }

124 | ]

125 | },

126 | {

127 | "cell_type": "code",

128 | "source": [

129 | "# code for section 2.2 of paper\n",

130 | "\n",

131 | "# shortcuts\n",

132 | "a_norm = s * a * g\n",

133 | "a_gate, a_up = (a @ GATEstar), (a @ UPstar)\n",

134 | "\n",

135 | "# figure 3: reference and figures 3(a) and 3(b) of paper\n",

136 | "y_ref3 = ((a_norm @ GATE) * silu(a_norm @ UP)) @ DOWN\n",

137 | "y_fig3a = (a_gate * s * silu(a_up * s)) @ DOWN\n",

138 | "y_fig3b = ((a_gate * silu(a_up * s)) @ DOWN) * s\n",

139 | "\n",

140 | "# compare against y_ref3\n",

141 | "print(np.allclose(y_fig3a, y_ref3), ' (fig3a is close to reference if True)')\n",

142 | "print(np.allclose(y_fig3b, y_ref3), ' (fig3b is close to reference if True)')\n",

143 | "\n",

144 | "# figure 4: reference and figures 4(a) and 4(b) of paper\n",

145 | "y_ref4 = ((a_norm @ GATE) * relu(a_norm @ UP)) @ DOWN\n",

146 | "y_fig4a = (a_gate * s * relu(a_up * s)) @ DOWN\n",

147 | "y_fig4b = ((a_gate * relu(a_up)) @ DOWN) * r_ms(a)\n",

148 | "\n",

149 | "# compare against y_ref4\n",

150 | "print(np.allclose(y_fig4a, y_ref4), ' (fig4a is close to reference if True)')\n",

151 | "print(np.allclose(y_fig4b, y_ref4), ' (fig4b is close to reference if True)')"

152 | ],

153 | "metadata": {

154 | "colab": {

155 | "base_uri": "https://localhost:8080/"

156 | },

157 | "id": "3sVxcH8Q0MHt",

158 | "outputId": "5a7a7d7c-f935-4e80-fc34-9b59662e39d7"

159 | },

160 | "execution_count": 5,

161 | "outputs": [

162 | {

163 | "output_type": "stream",

164 | "name": "stdout",

165 | "text": [

166 | "True (fig3a is close to reference if True)\n",

167 | "True (fig3b is close to reference if True)\n",

168 | "True (fig4a is close to reference if True)\n",

169 | "True (fig4b is close to reference if True)\n"

170 | ]

171 | }

172 | ]

173 | },

174 | {

175 | "cell_type": "code",

176 | "source": [

177 | "# code for section 3 of paper\n",

178 | "\n",

179 | "# TODO"

180 | ],

181 | "metadata": {

182 | "id": "xW9Sz-Dy5H0e"

183 | },

184 | "execution_count": null,

185 | "outputs": []

186 | }

187 | ],

188 | "metadata": {

189 | "colab": {

190 | "provenance": []

191 | },

192 | "kernelspec": {

193 | "display_name": "Python 3",

194 | "name": "python3"

195 | },

196 | "language_info": {

197 | "name": "python"

198 | }

199 | },

200 | "nbformat": 4,

201 | "nbformat_minor": 0

202 | }

--------------------------------------------------------------------------------

/tex/arxiv.sty:

--------------------------------------------------------------------------------

1 | % This file is copied from

2 | % https://github.com/kourgeorge/arxiv-style/blob/master/arxiv.sty

3 | % my changes are marked by "OM-mods"

4 |

5 | \NeedsTeXFormat{LaTeX2e}

6 |

7 | \ProcessOptions\relax

8 |

9 | % fonts

10 | \renewcommand{\rmdefault}{ptm}

11 | \renewcommand{\sfdefault}{phv}

12 |

13 | % set page geometry

14 | \usepackage[verbose=true,letterpaper]{geometry}

15 | \AtBeginDocument{

16 | \newgeometry{

17 | textheight=9in,

18 | textwidth=6.5in,

19 | top=1in,

20 | % OM-mods: headheight=14pt,

21 | % OM-mods: headsep=25pt,

22 | footskip=30pt

23 | }

24 | }

25 |

26 | \widowpenalty=10000

27 | \clubpenalty=10000

28 | \flushbottom

29 | \sloppy

30 |

31 | % OM-mods: \newcommand{\headeright}{A Preprint}

32 | % OM-mods: \newcommand{\undertitle}{A Preprint}

33 | % OM-mods: \newcommand{\shorttitle}{\@title}

34 |

35 | \usepackage{fancyhdr}

36 | \usepackage{lastpage}

37 | \fancyhf{}

38 | \pagestyle{fancy}

39 | % OM-mods: \renewcommand{\headrulewidth}{0.4pt}

40 | % OM-mods: below line removes the header

41 | \renewcommand{\headrulewidth}{0pt}

42 | % OM-mods: \fancyheadoffset{0pt}

43 | % OM-mods: \rhead{\scshape \footnotesize \headeright}

44 | % OM-mods: \chead{\shorttitle}

45 | \cfoot{{\thepage} of \pageref*{LastPage}}

46 |

47 | % OM-mods: %Handling Keywords

48 | % OM-mods: \def\keywordname{{\bfseries \emph{Keywords}}}%

49 | % OM-mods: \def\keywords#1{\par\addvspace\medskipamount{\rightskip=0pt plus1cm

50 | % OM-mods: \def\and{\ifhmode\unskip\nobreak\fi\ $\cdot$

51 | % OM-mods: }\noindent\keywordname\enspace\ignorespaces#1\par}}

52 |

53 | % font sizes with reduced leading

54 | \renewcommand{\normalsize}{%

55 | \@setfontsize\normalsize\@xpt\@xipt

56 | \abovedisplayskip 7\p@ \@plus 2\p@ \@minus 5\p@

57 | \abovedisplayshortskip \z@ \@plus 3\p@

58 | \belowdisplayskip \abovedisplayskip

59 | \belowdisplayshortskip 4\p@ \@plus 3\p@ \@minus 3\p@

60 | }

61 | \normalsize

62 | \renewcommand{\small}{%

63 | \@setfontsize\small\@ixpt\@xpt

64 | \abovedisplayskip 6\p@ \@plus 1.5\p@ \@minus 4\p@

65 | \abovedisplayshortskip \z@ \@plus 2\p@

66 | \belowdisplayskip \abovedisplayskip

67 | \belowdisplayshortskip 3\p@ \@plus 2\p@ \@minus 2\p@

68 | }

69 | \renewcommand{\footnotesize}{\@setfontsize\footnotesize\@ixpt\@xpt}

70 | \renewcommand{\scriptsize}{\@setfontsize\scriptsize\@viipt\@viiipt}

71 | \renewcommand{\tiny}{\@setfontsize\tiny\@vipt\@viipt}

72 | \renewcommand{\large}{\@setfontsize\large\@xiipt{14}}

73 | \renewcommand{\Large}{\@setfontsize\Large\@xivpt{16}}

74 | \renewcommand{\LARGE}{\@setfontsize\LARGE\@xviipt{20}}

75 | \renewcommand{\huge}{\@setfontsize\huge\@xxpt{23}}