├── .github

└── workflows

│ └── email-notification.yml

├── LICENSE

├── README.md

├── citations.bib

├── examples

├── 01_Creating_Imbalanced_Benchmark_Datasets.ipynb

├── 02_Optimizing_AUROC_with_ResNet20_on_Imbalanced_CIFAR10.ipynb

├── 03_Optimizing_AUPRC_Loss_on_Imbalanced_dataset.ipynb

├── 04_Training_with_Pytorch_Learning_Rate_Scheduling.ipynb

├── 05_Optimizing_AUROC_Loss_with_DenseNet121_on_CheXpert.ipynb

├── 07_Optimizing_Multi_Label_AUROC_Loss_with_DenseNet121_on_CheXpert.ipynb

├── 08_Optimizing_AUROC_Loss_with_DenseNet121_on_Melanoma.ipynb

├── 09_Optimizing_CompositionalAUC_Loss_with_ResNet20_on_CIFAR10.ipynb

├── 10_Optimizing_NDCG_Loss_on_MovieLens20M.ipynb

├── 11_Optimizing_pAUC_Loss_on_Imbalanced_data_wrapper.ipynb

├── 11_Optimizing_pAUC_Loss_with_SOPA_on_Imbalanced_data.ipynb

├── 11_Optimizing_pAUC_Loss_with_SOPAs_on_Imbalanced_data.ipynb

├── 11_Optimizing_pAUC_Loss_with_SOTAs_on_Imbalanced_data.ipynb

├── 12_Optimizing_AUROC_Loss_on_Tabular_Data.ipynb

├── placeholder.md

└── scripts

│ ├── 01_Creating_Imbalanced_Benchmark_Datasets.py

│ ├── 02_optimizing_auroc_with_resnet20_on_imbalanced_cifar10.py

│ ├── 03_optimizing_auprc_loss_on_imbalanced_dataset.py

│ ├── 04_training_with_pytorch_learning_rate_scheduling.py

│ ├── 05_Optimizing_AUROC_loss_with_densenet121_on_CheXpert.py

│ ├── 05_optimizing_auroc_loss_with_densenet121_on_chexpert.py

│ ├── 06_Optimizing_AUROC_loss_with_DenseNet121_on_CIFAR100_in_Federated_Setting_CODASCA.py

│ ├── 07_optimizing_multi_label_auroc_loss_with_densenet121_on_chexpert.py

│ ├── 08_optimizing_auroc_loss_with_densenet121_on_melanoma.py

│ ├── 09_optimizing_compositionalauc_loss_with_resnet20_on_cifar10.py

│ ├── 10_optimizing_ndcg_loss_on_movielens20m.py

│ ├── 11_optimizing_pauc_loss_on_imbalanced_data_wrapper.py

│ ├── 11_optimizing_pauc_loss_with_sopa_on_imbalanced_data.py

│ ├── 11_optimizing_pauc_loss_with_sopas_on_imbalanced_data.py

│ └── 11_optimizing_pauc_loss_with_sotas_on_imbalanced_data.py

├── imgs

└── libauc_logo.png

├── libauc

├── __init__.py

├── datasets

│ ├── __init__.py

│ ├── breastcancer.py

│ ├── cat_vs_dog.py

│ ├── chexpert.py

│ ├── cifar.py

│ ├── dataset.py

│ ├── folder.py

│ ├── melanoma.py

│ ├── movielens.py

│ ├── musk2.py

│ ├── stl10.py

│ └── webdataset.py

├── losses

│ ├── __init__.py

│ ├── auc.py

│ ├── contrastive.py

│ ├── losses.py

│ ├── mil.py

│ ├── perf_at_top.py

│ ├── ranking.py

│ └── surrogate.py

├── metrics

│ ├── __init__.py

│ ├── metrics.py

│ └── metrics_k.py

├── models

│ ├── __init__.py

│ ├── densenet.py

│ ├── gnn.py

│ ├── mil_models.py

│ ├── neumf.py

│ ├── perceptron.py

│ ├── resnet.py

│ └── resnet_cifar.py

├── optimizers

│ ├── __init__.py

│ ├── adam.py

│ ├── adamw.py

│ ├── isogclr.py

│ ├── lars.py

│ ├── midam.py

│ ├── pdsca.py

│ ├── pesg.py

│ ├── sgd.py

│ ├── soap.py

│ ├── sogclr.py

│ ├── song.py

│ ├── sopa.py

│ ├── sopa_s.py

│ └── sota_s.py

├── sampler

│ ├── __init__.py

│ └── sampler.py

└── utils

│ ├── __init__.py

│ ├── paper_utils.py

│ └── utils.py

└── setup.py

/.github/workflows/email-notification.yml:

--------------------------------------------------------------------------------

1 | name: Email Notification

2 |

3 | on:

4 | issues:

5 | types: [opened]

6 |

7 | jobs:

8 | send-email:

9 | runs-on: ubuntu-latest

10 |

11 | steps:

12 | - name: Send Email Notification

13 | uses: dawidd6/action-send-mail@v2

14 | with:

15 | server_address: smtp.gmail.com

16 | server_port: 587

17 | username: ${{ secrets.EMAIL_USERNAME }}

18 | password: ${{ secrets.EMAIL_PASSWORD }}

19 | subject: 'New Issue Created'

20 | to: 'yangtia1@gmail.com'

21 | from: 'yangtia1@gmail.com'

22 | body: 'A new issue has been created in your GitHub repository.'

23 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 OptMAI

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | LibAUC: A Deep Learning Library for X-Risk Optimization

7 | ---

8 |

9 |

10 |  11 |

12 |

13 |

11 |

12 |

13 |  14 |

15 |

16 |

14 |

15 |

16 |  17 |

18 |

19 |

17 |

18 |

19 |  20 |

21 |

22 |

20 |

21 |

22 |  23 |

24 |

23 |

24 |

25 |

26 | | [**Documentation**](https://docs.libauc.org/)

27 | | [**Installation**](https://libauc.org/installation/)

28 | | [**Website**](https://libauc.org/)

29 | | [**Tutorial**](https://github.com/Optimization-AI/LibAUC/tree/main/examples)

30 | | [**Research**](https://libauc.org/publications/)

31 | | [**Github**](https://github.com/Optimization-AI/LibAUC/) |

32 |

33 |

34 | News

35 | ---

36 |

37 | - [8/14/2024]: **New Version is Available:** We are releasing LibAUC 1.4.0. We offer new optimizers/losses/models and have improved some existing optimizers. For more details, please check the latest [release note](https://github.com/Optimization-AI/LibAUC/releases/).

38 |

39 | - [04/07/2024]: **Bugs fixed:** We fixed a bug in datasets/folder.py by returning a return_index to support SogCLR/iSogCLR for contrastive learning. Fixed incorrect communication with all_gather in GCLoss_v1 and set gamma to original value when u is not 0. None of these were in our experimental code of the paper.

40 |

41 | - [02/11/2024]: **A Bug fixed:** We fixed a bug in the calculation of AUCM loss and MultiLabelAUCM loss (the margin parameter is missed in the original calculation which might cause the loss to be negative). However, it does not affect the learning as the updates are not affected by this. Both the source code and pip install are updated.

42 |

43 | - [06/10/2023]: **LibAUC 1.3.0 is now available!** In this update, we have made improvements and introduced new features. We also release a new documentation website at [https://docs.libauc.org/](https://docs.libauc.org/). Please see the [release notes](https://github.com/Optimization-AI/LibAUC/releases) for details.

44 |

45 |

46 | Why LibAUC?

47 | ---

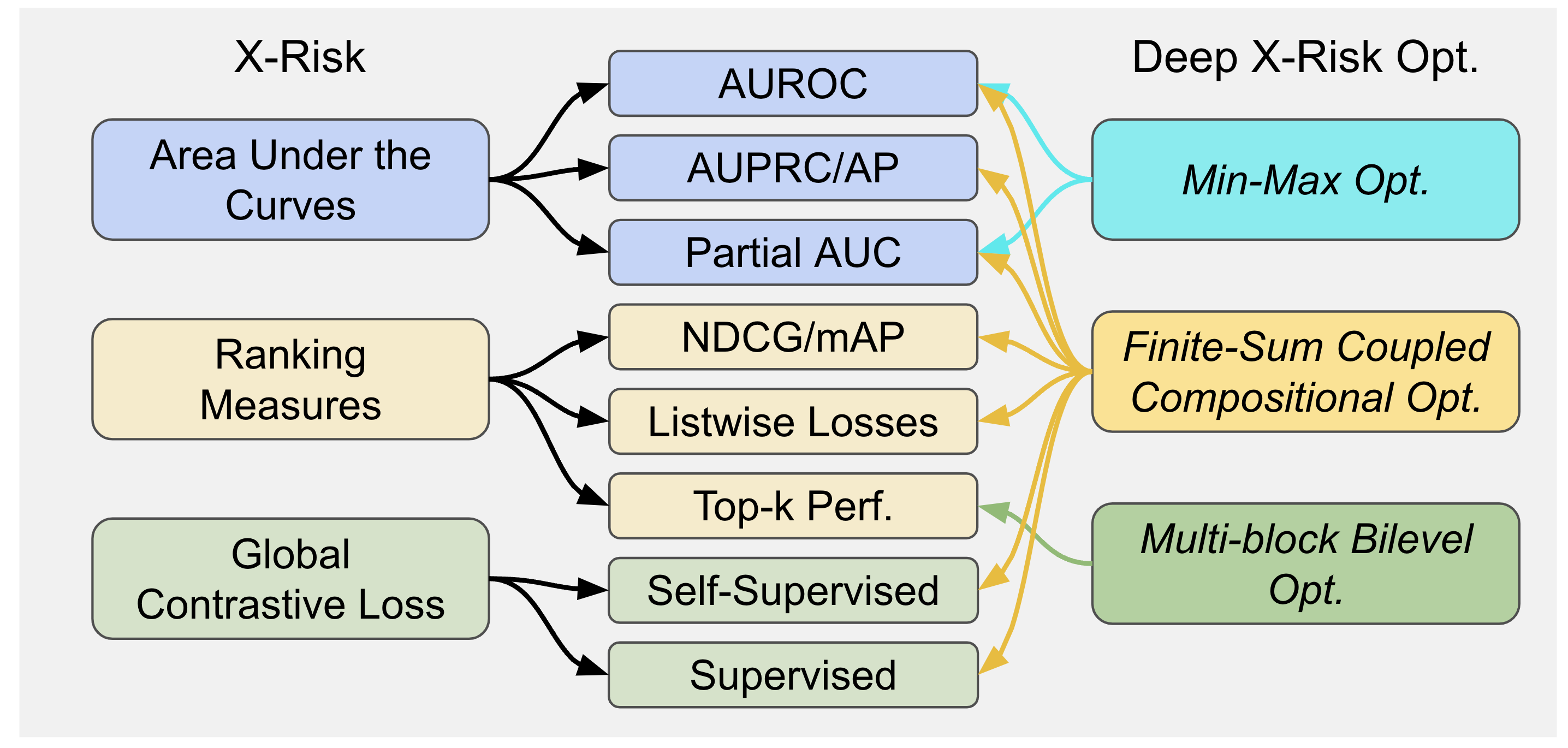

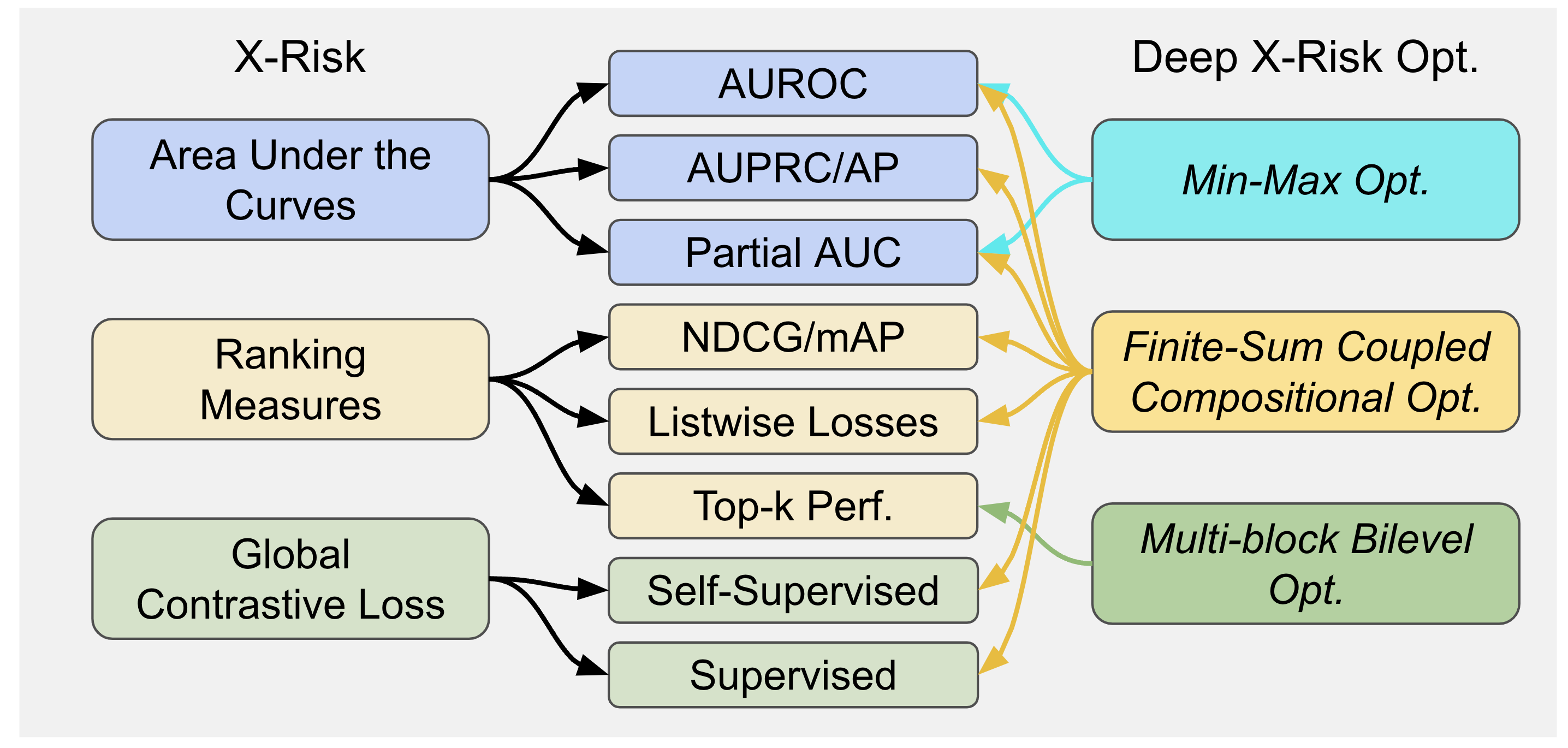

48 | LibAUC offers an easier way to directly optimize commonly-used performance measures and losses with user-friendly API. LibAUC has broad applications in AI for tackling many challenges, such as **Classification of Imbalanced Data (CID)**, **Learning to Rank (LTR)**, and **Contrastive Learning of Representation (CLR)**. LibAUC provides a unified framework to abstract the optimization of many compositional loss functions, including surrogate losses for AUROC, AUPRC/AP, and partial AUROC that are suitable for CID, surrogate losses for NDCG, top-K NDCG, and listwise losses that are used in LTR, and global contrastive losses for CLR. Here’s an overview:

49 |

50 |

51 |  52 |

52 |

53 |

54 |

55 | Installation

56 | --------------

57 | Installing from pip

58 | ```

59 | $ pip install -U libauc

60 | ```

61 |

62 | Installing from source

63 |

64 | ```

65 | $ git clone https://github.com/Optimization-AI/LibAUC.git

66 | $ cd LibAUC

67 | $ pip install .

68 | ```

69 |

70 |

71 |

72 |

73 |

74 | Usage

75 | ---

76 | #### Example training pipline for optimizing X-risk (e.g., AUROC)

77 | ```python

78 | >>> #import our loss and optimizer

79 | >>> from libauc.losses import AUCMLoss

80 | >>> from libauc.optimizers import PESG

81 | >>> #pretraining your model through supervised learning or self-supervised learning

82 | >>> #load a pretrained encoder and random initialize the last linear layer

83 | >>> #define loss & optimizer

84 | >>> Loss = AUCMLoss()

85 | >>> optimizer = PESG()

86 | ...

87 | >>> #training

88 | >>> model.train()

89 | >>> for data, targets in trainloader:

90 | >>> data, targets = data.cuda(), targets.cuda()

91 | logits = model(data)

92 | preds = torch.sigmoid(logits)

93 | loss = Loss(preds, targets)

94 | optimizer.zero_grad()

95 | loss.backward()

96 | optimizer.step()

97 | ...

98 | >>> #update internal parameters

99 | >>> optimizer.update_regularizer()

100 | ```

101 |

102 | Tutorials

103 | -------

104 | ### X-Risk Minimization

105 |

106 | - **Optimizing AUCMLoss**: [[example]](https://docs.libauc.org/examples/auroc.html)

107 | - **Optimizing APLoss**: [[example]](https://docs.libauc.org/examples/auprc.html)

108 | - **Optimizing CompositionalAUCLoss**: [[example]](https://docs.libauc.org/examples/compauc.html)

109 | - **Optimizing pAUCLoss**: [[example]](https://docs.libauc.org/examples/pauc.html)

110 | - **Optimizing MIDAMLoss**: [[example]](https://docs.libauc.org/examples/MIDAM-att-tabular.html)

111 | - **Optimizing NDCGLoss**: [[example]](https://docs.libauc.org/examples/ndcg.html)

112 | - **Optimizing GCLoss (Unimodal)**: [[example]](https://docs.libauc.org/examples/sogclr.html)

113 | - **Optimizing GCLoss (Bimodal)**: [[example]](https://docs.libauc.org/examples/sogclr_gamma.html)

114 |

115 |

116 | Other Applications

117 |

118 | - [Constructing benchmark imbalanced datasets for CIFAR10, CIFAR100, CATvsDOG, STL10](https://github.com/Optimization-AI/LibAUC/blob/main/examples/01_Creating_Imbalanced_Benchmark_Datasets.ipynb)

119 | - [Using LibAUC with PyTorch learning rate scheduler](https://github.com/Optimization-AI/LibAUC/blob/main/examples/04_Training_with_Pytorch_Learning_Rate_Scheduling.ipynb)

120 | - [Optimizing AUROC loss on Chest X-Ray dataset (CheXpert)](https://github.com/Optimization-AI/LibAUC/blob/main/examples/05_Optimizing_AUROC_Loss_with_DenseNet121_on_CheXpert.ipynb)

121 | - [Optimizing AUROC loss on Skin Cancer dataset (Melanoma)](https://github.com/Optimization-AI/LibAUC/blob/main/examples/08_Optimizing_AUROC_Loss_with_DenseNet121_on_Melanoma.ipynb)

122 | - [Optimizing multi-label AUROC loss on Chest X-Ray dataset (CheXpert)](https://github.com/Optimization-AI/LibAUC/blob/main/examples/07_Optimizing_Multi_Label_AUROC_Loss_with_DenseNet121_on_CheXpert.ipynb)

123 | - [Optimizing AUROC loss on Tabular dataset (Credit Fraud)](https://github.com/Optimization-AI/LibAUC/blob/main/examples/12_Optimizing_AUROC_Loss_on_Tabular_Data.ipynb)

124 | - [Optimizing AUROC loss for Federated Learning](https://github.com/Optimization-AI/LibAUC/blob/main/examples/scripts/06_Optimizing_AUROC_loss_with_DenseNet121_on_CIFAR100_in_Federated_Setting_CODASCA.py)

125 | - [Optimizing GCLoss (Bimodal with Cosine Gamma)](https://docs.libauc.org/examples/sogclr_gamma.html)

126 |

127 |

128 |

129 |

130 | Citation

131 | ---------

132 | If you find LibAUC useful in your work, please cite the following papers:

133 | ```

134 | @inproceedings{yuan2023libauc,

135 | title={LibAUC: A Deep Learning Library for X-Risk Optimization},

136 | author={Zhuoning Yuan and Dixian Zhu and Zi-Hao Qiu and Gang Li and Xuanhui Wang and Tianbao Yang},

137 | booktitle={29th SIGKDD Conference on Knowledge Discovery and Data Mining},

138 | year={2023}

139 | }

140 | ```

141 | ```

142 | @article{yang2022algorithmic,

143 | title={Algorithmic Foundations of Empirical X-Risk Minimization},

144 | author={Yang, Tianbao},

145 | journal={arXiv preprint arXiv:2206.00439},

146 | year={2022}

147 | }

148 | ```

149 |

150 | Contact

151 | ----------

152 | For any technical questions, please open a new issue in the Github. If you have any other questions, please contact us via libaucx@gmail.com or tianbao-yang@tamu.edu.

153 |

--------------------------------------------------------------------------------

/citations.bib:

--------------------------------------------------------------------------------

1 | @inproceedings{yuan2023libauc,

2 | title={LibAUC: A Deep Learning Library for X-Risk Optimization},

3 | author={Zhuoning Yuan and Dixian Zhu and Zi-Hao Qiu and Gang Li and Xuanhui Wang and Tianbao Yang},

4 | booktitle={29th SIGKDD Conference on Knowledge Discovery and Data Mining},

5 | year={2023}

6 | }

7 |

8 | @article{yang2022algorithmic,

9 | title={Algorithmic Foundation of Deep X-Risk Optimization},

10 | author={Yang, Tianbao},

11 | journal={arXiv preprint arXiv:2206.00439},

12 | year={2022}

13 | }

14 |

15 | @article{yang2022auc,

16 | title={AUC Maximization in the Era of Big Data and AI: A Survey},

17 | author={Yang, Tianbao and Ying, Yiming},

18 | journal={arXiv preprint arXiv:2203.15046},

19 | year={2022}

20 | }

21 |

22 | @article{yuan2022provable,

23 | title={Provable Stochastic Optimization for Global Contrastive Learning: Small Batch Does Not Harm Performance},

24 | author={Yuan, Zhuoning and Wu, Yuexin and Qiu, Zihao and Du, Xianzhi and Zhang, Lijun and Zhou, Denny and Yang, Tianbao},

25 | booktitle={International Conference on Machine Learning},

26 | year={2022},

27 | organization={PMLR}

28 | }

29 |

30 |

31 | @article{qiu2022large,

32 | title={Large-scale Stochastic Optimization of NDCG Surrogates for Deep Learning with Provable Convergence},

33 | author={Qiu, Zi-Hao and Hu, Quanqi and Zhong, Yongjian and Zhang, Lijun and Yang, Tianbao},

34 | booktitle={International Conference on Machine Learning},

35 | year={2022},

36 | organization={PMLR}

37 | }

38 |

39 | @article{zhu2022auc,

40 | title={When AUC meets DRO: Optimizing Partial AUC for Deep Learning with Non-Convex Convergence Guarantee},

41 | author={Zhu, Dixian and Li, Gang and Wang, Bokun and Wu, Xiaodong and Yang, Tianbao},

42 | booktitle={International Conference on Machine Learning},

43 | year={2022},

44 | organization={PMLR}

45 | }

46 |

47 |

48 | @inproceedings{yuan2021compositional,

49 | title={Compositional Training for End-to-End Deep AUC Maximization},

50 | author={Yuan, Zhuoning and Guo, Zhishuai and Chawla, Nitesh and Yang, Tianbao},

51 | booktitle={International Conference on Learning Representations},

52 | year={2022},

53 | organization={PMLR}

54 | }

55 |

56 |

57 | @inproceedings{yuan2021federated,

58 | title={Federated deep AUC maximization for hetergeneous data with a constant communication complexity},

59 | author={Yuan, Zhuoning and Guo, Zhishuai and Xu, Yi and Ying, Yiming and Yang, Tianbao},

60 | booktitle={International Conference on Machine Learning},

61 | pages={12219--12229},

62 | year={2021},

63 | organization={PMLR}

64 | }

65 |

66 | @inproceedings{yuan2021large,

67 | title={Large-scale robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification},

68 | author={Yuan, Zhuoning and Yan, Yan and Sonka, Milan and Yang, Tianbao},

69 | booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

70 | pages={3040--3049},

71 | year={2021}

72 | }

73 | @article{qi2021stochastic,

74 | title={Stochastic optimization of areas under precision-recall curves with provable convergence},

75 | author={Qi, Qi and Luo, Youzhi and Xu, Zhao and Ji, Shuiwang and Yang, Tianbao},

76 | journal={Advances in Neural Information Processing Systems},

77 | volume={34},

78 | pages={1752--1765},

79 | year={2021}

80 | }

81 |

--------------------------------------------------------------------------------

/examples/placeholder.md:

--------------------------------------------------------------------------------

1 | Code & tutorials will be available soon!

2 |

--------------------------------------------------------------------------------

/examples/scripts/01_Creating_Imbalanced_Benchmark_Datasets.py:

--------------------------------------------------------------------------------

1 | """

2 | Author: Zhuoning Yuan

3 | Contact: yzhuoning@gmail.com

4 | """

5 |

6 | from libauc.datasets import CIFAR10

7 | (train_data, train_label) = CIFAR10(root='./data', train=True)

8 | (test_data, test_label) = CIFAR10(root='./data', train=False)

9 |

10 | from libauc.datasets import CIFAR100

11 | (train_data, train_label) = CIFAR100(root='./data', train=True)

12 | (test_data, test_label) = CIFAR100(root='./data', train=False)

13 |

14 | from libauc.datasets import CAT_VS_DOG

15 | (train_data, train_label) = CAT_VS_DOG('./data/', train=True)

16 | (test_data, test_label) = CAT_VS_DOG('./data/', train=False)

17 |

18 | from libauc.datasets import STL10

19 | (train_data, train_label) = STL10(root='./data/', split='train') # return numpy array

20 | (test_data, test_label) = STL10(root='./data/', split='test') # return numpy array

21 |

22 | from libauc.utils import ImbalancedDataGenerator

23 |

24 | SEED = 123

25 | imratio = 0.1 # postive_samples/(total_samples)

26 |

27 | from libauc.datasets import CIFAR10

28 | (train_data, train_label) = CIFAR10(root='./data', train=True)

29 | (test_data, test_label) = CIFAR10(root='./data', train=False)

30 | g = ImbalancedDataGenerator(verbose=True, random_seed=0)

31 | (train_images, train_labels) = g.transform(train_data, train_label, imratio=imratio)

32 | (test_images, test_labels) = g.transform(test_data, test_label, imratio=0.5)

33 |

34 |

35 | import torch

36 | from torch.utils.data import Dataset, DataLoader

37 | import torchvision.transforms as transforms

38 | import numpy as np

39 | from PIL import Image

40 |

41 | class ImageDataset(Dataset):

42 | def __init__(self, images, targets, image_size=32, crop_size=30, mode='train'):

43 | self.images = images.astype(np.uint8)

44 | self.targets = targets

45 | self.mode = mode

46 | self.transform_train = transforms.Compose([

47 | transforms.ToTensor(),

48 | transforms.RandomCrop((crop_size, crop_size), padding=None),

49 | transforms.RandomHorizontalFlip(),

50 | transforms.Resize((image_size, image_size)),

51 | ])

52 | self.transform_test = transforms.Compose([

53 | transforms.ToTensor(),

54 | transforms.Resize((image_size, image_size)),

55 | ])

56 | def __len__(self):

57 | return len(self.images)

58 |

59 | def __getitem__(self, idx):

60 | image = self.images[idx]

61 | target = self.targets[idx]

62 | image = Image.fromarray(image.astype('uint8'))

63 | if self.mode == 'train':

64 | image = self.transform_train(image)

65 | else:

66 | image = self.transform_test(image)

67 | return image, target

68 |

69 |

70 | trainloader = DataLoader(ImageDataset(train_images, train_labels, mode='train'), batch_size=128, shuffle=True, num_workers=2, pin_memory=True)

71 | testloader = DataLoader(ImageDataset(test_images, test_labels, mode='test'), batch_size=128, shuffle=False, num_workers=2, pin_memory=True)

72 |

--------------------------------------------------------------------------------

/examples/scripts/02_optimizing_auroc_with_resnet20_on_imbalanced_cifar10.py:

--------------------------------------------------------------------------------

1 | """02_Optimizing_AUROC_with_ResNet20_on_Imbalanced_CIFAR10.ipynb

2 |

3 | **Author**: Zhuoning Yuan

4 |

5 | **Introduction**

6 | In this tutorial, you will learn how to quickly train a ResNet20 model by optimizing **AUROC** using our novel [AUCMLoss](https://arxiv.org/abs/2012.03173) and `PESG` optimizer on a binary image classification task on Cifar10. After completion of this tutorial, you should be able to use LibAUC to train your own models on your own datasets.

7 |

8 | **Useful Resources**:

9 | * Website: https://libauc.org

10 | * Github: https://github.com/Optimization-AI/LibAUC

11 |

12 | **Reference**:

13 | If you find this tutorial helpful in your work, please acknowledge our library and cite the following paper:

14 | @inproceedings{yuan2021large,

15 | title={Large-scale robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification},

16 | author={Yuan, Zhuoning and Yan, Yan and Sonka, Milan and Yang, Tianbao},

17 | booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

18 | pages={3040--3049},

19 | year={2021}

20 | }

21 | """

22 |

23 |

24 | from libauc.losses import AUCMLoss

25 | from libauc.optimizers import PESG

26 | from libauc.models import resnet20 as ResNet20

27 | from libauc.datasets import CIFAR10

28 | from libauc.utils import ImbalancedDataGenerator

29 | from libauc.sampler import DualSampler

30 | from libauc.metrics import auc_roc_score

31 |

32 | import torch

33 | from PIL import Image

34 | import numpy as np

35 | import torchvision.transforms as transforms

36 | from torch.utils.data import Dataset

37 | from sklearn.metrics import roc_auc_score

38 |

39 | def set_all_seeds(SEED):

40 | # REPRODUCIBILITY

41 | torch.manual_seed(SEED)

42 | np.random.seed(SEED)

43 | torch.backends.cudnn.deterministic = True

44 | torch.backends.cudnn.benchmark = False

45 |

46 |

47 | class ImageDataset(Dataset):

48 | def __init__(self, images, targets, image_size=32, crop_size=30, mode='train'):

49 | self.images = images.astype(np.uint8)

50 | self.targets = targets

51 | self.mode = mode

52 | self.transform_train = transforms.Compose([

53 | transforms.ToTensor(),

54 | transforms.RandomCrop((crop_size, crop_size), padding=None),

55 | transforms.RandomHorizontalFlip(),

56 | transforms.Resize((image_size, image_size)),

57 | ])

58 | self.transform_test = transforms.Compose([

59 | transforms.ToTensor(),

60 | transforms.Resize((image_size, image_size)),

61 | ])

62 | def __len__(self):

63 | return len(self.images)

64 |

65 | def __getitem__(self, idx):

66 | image = self.images[idx]

67 | target = self.targets[idx]

68 | image = Image.fromarray(image.astype('uint8'))

69 | if self.mode == 'train':

70 | image = self.transform_train(image)

71 | else:

72 | image = self.transform_test(image)

73 | return image, target

74 |

75 |

76 | # HyperParameters

77 | SEED = 123

78 | BATCH_SIZE = 128

79 | imratio = 0.1 # for demo

80 | total_epochs = 100

81 | decay_epochs = [50, 75]

82 |

83 | lr = 0.1

84 | margin = 1.0

85 | epoch_decay = 0.003 # refers gamma in the paper

86 | weight_decay = 0.0001

87 |

88 | # oversampling minority class, you can tune it in (0, 0.5]

89 | # e.g., sampling_rate=0.2 is that num of positive samples in mini-batch is sampling_rate*batch_size=13

90 | sampling_rate = 0.2

91 |

92 | # load data as numpy arrays

93 | train_data, train_targets = CIFAR10(root='./data', train=True)

94 | test_data, test_targets = CIFAR10(root='./data', train=False)

95 |

96 | # generate imbalanced data

97 | generator = ImbalancedDataGenerator(verbose=True, random_seed=0)

98 | (train_images, train_labels) = generator.transform(train_data, train_targets, imratio=imratio)

99 | (test_images, test_labels) = generator.transform(test_data, test_targets, imratio=0.5)

100 |

101 | # data augmentations

102 | trainSet = ImageDataset(train_images, train_labels)

103 | trainSet_eval = ImageDataset(train_images, train_labels, mode='test')

104 | testSet = ImageDataset(test_images, test_labels, mode='test')

105 |

106 | # dataloaders

107 | sampler = DualSampler(trainSet, BATCH_SIZE, sampling_rate=sampling_rate)

108 | trainloader = torch.utils.data.DataLoader(trainSet, batch_size=BATCH_SIZE, sampler=sampler, num_workers=2)

109 | trainloader_eval = torch.utils.data.DataLoader(trainSet_eval, batch_size=BATCH_SIZE, shuffle=False, num_workers=2)

110 | testloader = torch.utils.data.DataLoader(testSet, batch_size=BATCH_SIZE, shuffle=False, num_workers=2)

111 |

112 | """# **Creating models & AUC Optimizer**"""

113 | # You can include sigmoid/l2 activations on model's outputs before computing loss

114 | model = ResNet20(pretrained=False, last_activation=None, num_classes=1)

115 | model = model.cuda()

116 |

117 | # You can also pass Loss.a, Loss.b, Loss.alpha to optimizer (for old version users)

118 | loss_fn = AUCMLoss()

119 | optimizer = PESG(model,

120 | loss_fn=loss_fn,

121 | lr=lr,

122 | momentum=0.9,

123 | margin=margin,

124 | epoch_decay=epoch_decay,

125 | weight_decay=weight_decay)

126 |

127 |

128 | """# **Training**"""

129 | print ('Start Training')

130 | print ('-'*30)

131 |

132 | train_log = []

133 | test_log = []

134 | for epoch in range(total_epochs):

135 | if epoch in decay_epochs:

136 | optimizer.update_regularizer(decay_factor=10) # decrease learning rate by 10x & update regularizer

137 |

138 | train_loss = []

139 | model.train()

140 | for data, targets in trainloader:

141 | data, targets = data.cuda(), targets.cuda()

142 | y_pred = model(data)

143 | y_pred = torch.sigmoid(y_pred)

144 | loss = loss_fn(y_pred, targets)

145 | optimizer.zero_grad()

146 | loss.backward()

147 | optimizer.step()

148 | train_loss.append(loss.item())

149 |

150 | # evaluation on train & test sets

151 | model.eval()

152 | train_pred_list = []

153 | train_true_list = []

154 | for train_data, train_targets in trainloader_eval:

155 | train_data = train_data.cuda()

156 | train_pred = model(train_data)

157 | train_pred_list.append(train_pred.cpu().detach().numpy())

158 | train_true_list.append(train_targets.numpy())

159 | train_true = np.concatenate(train_true_list)

160 | train_pred = np.concatenate(train_pred_list)

161 | train_auc = auc_roc_score(train_true, train_pred)

162 | train_loss = np.mean(train_loss)

163 |

164 | test_pred_list = []

165 | test_true_list = []

166 | for test_data, test_targets in testloader:

167 | test_data = test_data.cuda()

168 | test_pred = model(test_data)

169 | test_pred_list.append(test_pred.cpu().detach().numpy())

170 | test_true_list.append(test_targets.numpy())

171 | test_true = np.concatenate(test_true_list)

172 | test_pred = np.concatenate(test_pred_list)

173 | val_auc = auc_roc_score(test_true, test_pred)

174 | model.train()

175 |

176 | # print results

177 | print("epoch: %s, train_loss: %.4f, train_auc: %.4f, test_auc: %.4f, lr: %.4f"%(epoch, train_loss, train_auc, val_auc, optimizer.lr ))

178 | train_log.append(train_auc)

179 | test_log.append(val_auc)

180 |

181 |

182 | """# **Visualization**

183 | Now, let's see the learning curve of optimizing AUROC on train and tes sets.

184 | """

185 | import matplotlib.pyplot as plt

186 | plt.rcParams["figure.figsize"] = (9,5)

187 | x=np.arange(len(train_log))

188 | plt.figure()

189 | plt.plot(x, train_log, LineStyle='-', label='Train Set', linewidth=3)

190 | plt.plot(x, test_log, LineStyle='-', label='Test Set', linewidth=3)

191 | plt.title('AUCMLoss (10% CIFAR10)',fontsize=25)

192 | plt.legend(fontsize=15)

193 | plt.ylabel('AUROC', fontsize=25)

194 | plt.xlabel('Epoch', fontsize=25)

--------------------------------------------------------------------------------

/examples/scripts/03_optimizing_auprc_loss_on_imbalanced_dataset.py:

--------------------------------------------------------------------------------

1 | """03_Optimizing_AUPRC_Loss_on_Imbalanced_dataset.ipynb

2 | # **Optimizing AUPRC Loss on imbalanced dataset**

3 |

4 | **Author**: Gang Li

5 | **Edited by**: Zhuoning Yuan

6 |

7 | In this tutorial, you will learn how to quickly train a Resnet18 model by optimizing **AUPRC** loss with **SOAP** optimizer [[ref]](https://arxiv.org/abs/2104.08736) on a binary image classification task with CIFAR-10 dataset. After completion of this tutorial, you should be able to use LibAUC to train your own models on your own datasets.

8 |

9 | **Useful Resources**:

10 | * Website: https://libauc.org

11 | * Github: https://github.com/Optimization-AI/LibAUC

12 |

13 | **Reference**:

14 | If you find this tutorial helpful, please acknowledge our library and cite the following paper:

15 |

16 | @article{qi2021stochastic,

17 | title={Stochastic Optimization of Areas Under Precision-Recall Curves with Provable Convergence},

18 | author={Qi, Qi and Luo, Youzhi and Xu, Zhao and Ji, Shuiwang and Yang, Tianbao},

19 | journal={Advances in Neural Information Processing Systems},

20 | volume={34},

21 | year={2021}

22 | }

23 | """

24 |

25 | !pip install libauc==1.2.0

26 |

27 | """# **Importing LibAUC**

28 |

29 | Import required packages to use

30 | """

31 |

32 | from libauc.losses import APLoss

33 | from libauc.optimizers import SOAP

34 | from libauc.models import resnet18 as ResNet18

35 | from libauc.datasets import CIFAR10

36 | from libauc.utils import ImbalancedDataGenerator

37 | from libauc.sampler import DualSampler

38 | from libauc.metrics import auc_prc_score

39 |

40 | import torchvision.transforms as transforms

41 | from torch.utils.data import Dataset

42 | import numpy as np

43 | import torch

44 | from PIL import Image

45 |

46 | """# **Configurations**

47 | **Reproducibility**

48 | The following function `set_all_seeds` limits the number of sources of randomness behaviors, such as model intialization, data shuffling, etcs. However, completely reproducible results are not guaranteed across PyTorch releases [[Ref]](https://pytorch.org/docs/stable/notes/randomness.html#:~:text=Completely%20reproducible%20results%20are%20not,even%20when%20using%20identical%20seeds.).

49 | """

50 |

51 | def set_all_seeds(SEED):

52 | # REPRODUCIBILITY

53 | np.random.seed(SEED)

54 | torch.manual_seed(SEED)

55 | torch.cuda.manual_seed(SEED)

56 | torch.backends.cudnn.deterministic = True

57 | torch.backends.cudnn.benchmark = False

58 |

59 | """# **Loading datasets**

60 | In this step, , we will use the [CIFAR10](https://www.cs.toronto.edu/~kriz/cifar.html) as benchmark dataset. Before importing data to `dataloader`, we construct imbalanced version for CIFAR10 by `ImbalanceDataGenerator`. Specifically, it first randomly splits the training data by class ID (e.g., 10 classes) into two even portions as the positive and negative classes, and then it randomly removes some samples from the positive class to make

61 | it imbalanced. We keep the testing set untouched. We refer `imratio` to the ratio of number of positive examples to number of all examples.

62 | """

63 |

64 | train_data, train_targets = CIFAR10(root='./data', train=True)

65 | test_data, test_targets = CIFAR10(root='./data', train=False)

66 |

67 | imratio = 0.02

68 | generator = ImbalancedDataGenerator(verbose=True, random_seed=2022)

69 | (train_images, train_labels) = generator.transform(train_data, train_targets, imratio=imratio)

70 | (test_images, test_labels) = generator.transform(test_data, test_targets, imratio=0.5)

71 |

72 | """Now that we defined the data input pipeline such as data augmentations. In this tutorials, we use `RandomCrop`, `RandomHorizontalFlip`."""

73 |

74 | class ImageDataset(Dataset):

75 | def __init__(self, images, targets, image_size=32, crop_size=30, mode='train'):

76 | self.images = images.astype(np.uint8)

77 | self.targets = targets

78 | self.mode = mode

79 | self.transform_train = transforms.Compose([

80 | transforms.ToTensor(),

81 | transforms.RandomCrop((crop_size, crop_size), padding=None),

82 | transforms.RandomHorizontalFlip(),

83 | transforms.Resize((image_size, image_size)),

84 | ])

85 | self.transform_test = transforms.Compose([

86 | transforms.ToTensor(),

87 | transforms.Resize((image_size, image_size)),

88 | ])

89 |

90 |

91 | # for loss function

92 | self.pos_indices = np.flatnonzero(targets==1)

93 | self.pos_index_map = {}

94 | for i, idx in enumerate(self.pos_indices):

95 | self.pos_index_map[idx] = i

96 |

97 | def __len__(self):

98 | return len(self.images)

99 |

100 | def __getitem__(self, idx):

101 | image = self.images[idx]

102 | target = self.targets[idx]

103 | image = Image.fromarray(image.astype('uint8'))

104 | if self.mode == 'train':

105 | idx = self.pos_index_map[idx] if idx in self.pos_indices else -1

106 | image = self.transform_train(image)

107 | else:

108 | image = self.transform_test(image)

109 | return idx, image, target

110 |

111 | """We define `dataset`, `DualSampler` and `dataloader` here. By default, we use `batch_size` 64 and we oversample the minority class with `pos:neg=1:1` by setting `sampling_rate=0.5`."""

112 |

113 | batch_size = 64

114 | sampling_rate = 0.5

115 |

116 | trainSet = ImageDataset(train_images, train_labels)

117 | trainSet_eval = ImageDataset(train_images, train_labels,mode='test')

118 | testSet = ImageDataset(test_images, test_labels, mode='test')

119 |

120 | sampler = DualSampler(trainSet, batch_size, sampling_rate=sampling_rate)

121 | trainloader = torch.utils.data.DataLoader(trainSet, batch_size=batch_size, sampler=sampler, num_workers=2)

122 | trainloader_eval = torch.utils.data.DataLoader(trainSet_eval, batch_size=batch_size, shuffle=False, num_workers=2)

123 | testloader = torch.utils.data.DataLoader(testSet, batch_size=batch_size, shuffle=False, num_workers=2)

124 |

125 |

126 | # Parameters

127 |

128 | lr = 1e-3

129 | margin = 0.6

130 | gamma = 0.1

131 | weight_decay = 0

132 | total_epoch = 60

133 | decay_epoch = [30]

134 | SEED = 2022

135 |

136 | """# **Model and Loss Setup**

137 | """

138 |

139 | set_all_seeds(SEED)

140 | model = ResNet18(pretrained=False, last_activation=None)

141 | model = model.cuda()

142 |

143 | Loss = APLoss(pos_len=sampler.pos_len, margin=margin, gamma=gamma)

144 | optimizer = SOAP(model.parameters(), lr=lr, mode='adam', weight_decay=weight_decay)

145 |

146 | """# **Training**"""

147 | print ('Start Training')

148 | print ('-'*30)

149 | test_best = 0

150 | train_list, test_list = [], []

151 | for epoch in range(total_epoch):

152 | if epoch in decay_epoch:

153 | optimizer.update_lr(decay_factor=10)

154 | model.train()

155 | for idx, (index, data, targets) in enumerate(trainloader):

156 | data, targets = data.cuda(), targets.cuda()

157 | y_pred = model(data)

158 | y_prob = torch.sigmoid(y_pred)

159 | loss = Loss(y_prob, targets, index)

160 | optimizer.zero_grad()

161 | loss.backward()

162 | optimizer.step()

163 |

164 | # evaluation

165 | model.eval()

166 | train_pred, train_true = [], []

167 | for i, data in enumerate(trainloader_eval):

168 | _, train_data, train_targets = data

169 | train_data = train_data.cuda()

170 | y_pred = model(train_data)

171 | y_prob = torch.sigmoid(y_pred)

172 | train_pred.append(y_prob.cpu().detach().numpy())

173 | train_true.append(train_targets.cpu().detach().numpy())

174 | train_true = np.concatenate(train_true)

175 | train_pred = np.concatenate(train_pred)

176 | train_ap = auc_prc_score(train_true, train_pred)

177 | train_list.append(train_ap)

178 |

179 | test_pred, test_true = [], []

180 | for j, data in enumerate(testloader):

181 | _, test_data, test_targets = data

182 | test_data = test_data.cuda()

183 | y_pred = model(test_data)

184 | y_prob = torch.sigmoid(y_pred)

185 | test_pred.append(y_prob.cpu().detach().numpy())

186 | test_true.append(test_targets.numpy())

187 | test_true = np.concatenate(test_true)

188 | test_pred = np.concatenate(test_pred)

189 | val_ap = auc_prc_score(test_true, test_pred)

190 | test_list.append(val_ap)

191 | if test_best < val_ap:

192 | test_best = val_ap

193 |

194 | model.train()

195 | print("epoch: %s, train_ap: %.4f, test_ap: %.4f, lr: %.4f, test_best: %.4f"%(epoch, train_ap, val_ap, optimizer.lr, test_best))

196 |

197 |

--------------------------------------------------------------------------------

/examples/scripts/04_training_with_pytorch_learning_rate_scheduling.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | """04_Training_with_Pytorch_Learning_Rate_Scheduling.ipynb

3 |

4 | **Author**: Zhuoning Yuan

5 | **Introduction**

6 |

7 | In this tutorial, you will learn how to quickly train models using LibAUC with [Pytorch Learning Rate Scheduler](https:/https://www.kaggle.com/code/isbhargav/guide-to-pytorch-learning-rate-scheduling/notebook/). After completion of this tutorial, you should be able to use LibAUC to train your own models on your own datasets.

8 |

9 | **Useful Resources**:

10 | * Website: https://libauc.org

11 | * Github: https://github.com/Optimization-AI/LibAUC

12 |

13 | **Reference**:

14 |

15 | If you find this tutorial helpful in your work, please acknowledge our library and cite the following paper:

16 |

17 | @inproceedings{yuan2021large,

18 | title={Large-scale robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification},

19 | author={Yuan, Zhuoning and Yan, Yan and Sonka, Milan and Yang, Tianbao},

20 | booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

21 | pages={3040--3049},

22 | year={2021}

23 | }

24 | """

25 |

26 | """# **Importing AUC Training Pipeline**"""

27 |

28 | from libauc.losses import AUCMLoss

29 | from libauc.optimizers import PESG

30 | from libauc.models import resnet20 as ResNet20

31 | from libauc.datasets import CIFAR10

32 | from libauc.utils import ImbalancedDataGenerator

33 | from libauc.metrics import auc_roc_score

34 |

35 | import torch

36 | from PIL import Image

37 | import numpy as np

38 | import torchvision.transforms as transforms

39 | from torch.utils.data import Dataset

40 |

41 | class ImageDataset(Dataset):

42 | def __init__(self, images, targets, image_size=32, crop_size=30, mode='train'):

43 | self.images = images.astype(np.uint8)

44 | self.targets = targets

45 | self.mode = mode

46 | self.transform_train = transforms.Compose([

47 | transforms.ToTensor(),

48 | transforms.RandomCrop((crop_size, crop_size), padding=None),

49 | transforms.RandomHorizontalFlip(),

50 | transforms.Resize((image_size, image_size)),

51 | ])

52 | self.transform_test = transforms.Compose([

53 | transforms.ToTensor(),

54 | transforms.Resize((image_size, image_size)),

55 | ])

56 | def __len__(self):

57 | return len(self.images)

58 |

59 | def __getitem__(self, idx):

60 | image = self.images[idx]

61 | target = self.targets[idx]

62 | image = Image.fromarray(image.astype('uint8'))

63 | if self.mode == 'train':

64 | image = self.transform_train(image)

65 | else:

66 | image = self.transform_test(image)

67 | return image, target

68 |

69 | # paramaters

70 | SEED = 123

71 | BATCH_SIZE = 128

72 | imratio = 0.1

73 | lr = 0.1

74 | epoch_decay = 2e-3 # 1/gamma

75 | weight_decay = 1e-4

76 | margin = 1.0

77 |

78 |

79 | # dataloader

80 | (train_data, train_label) = CIFAR10(root='./data', train=True)

81 | (test_data, test_label) = CIFAR10(root='./data', train=False)

82 |

83 | generator = ImbalancedDataGenerator(verbose=True, random_seed=0)

84 | (train_images, train_labels) = generator.transform(train_data, train_label, imratio=imratio)

85 | (test_images, test_labels) = generator.transform(test_data, test_label, imratio=0.5)

86 |

87 | trainloader = torch.utils.data.DataLoader(ImageDataset(train_images, train_labels), batch_size=BATCH_SIZE, shuffle=True, num_workers=1, pin_memory=True, drop_last=True)

88 | testloader = torch.utils.data.DataLoader(ImageDataset(test_images, test_labels, mode='test'), batch_size=BATCH_SIZE, shuffle=False, num_workers=1, pin_memory=True)

89 |

90 | # model

91 | model = ResNet20(pretrained=False, num_classes=1)

92 | model = model.cuda()

93 |

94 | # loss & optimizer

95 | loss_fn = AUCMLoss()

96 | optimizer = PESG(model,

97 | loss_fn=loss_fn,

98 | lr=lr,

99 | margin=margin,

100 | epoch_decay=epoch_decay,

101 | weight_decay=weight_decay)

102 |

103 | """# **Pytorch Learning Rate Scheduling**

104 | We will cover three scheduling functions in this section:

105 | * CosineAnnealingLR

106 | * ReduceLROnPlateau

107 | * MultiStepLR

108 |

109 | For more details, please refer to orginal PyTorch [doc](https://pytorch.org/docs/stable/optim.html).

110 |

111 | """

112 |

113 | def reset_model():

114 | # loss & optimizer

115 | loss_fn = AUCMLoss()

116 | optimizer = PESG(model,

117 | loss_fn=loss_fn,

118 | lr=lr,

119 | epoch_decay=epoch_decay,

120 | margin=margin,

121 | weight_decay=weight_decay)

122 | return loss_fn, optimizer

123 |

124 | """### CosineAnnealingLR"""

125 |

126 | total_epochs = 10

127 | loss_fn, optimizer = reset_model()

128 | scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, len(trainloader)*total_epochs)

129 |

130 | model.train()

131 | for epoch in range(total_epochs):

132 | for i, (data, targets) in enumerate(trainloader):

133 | data, targets = data.cuda(), targets.cuda()

134 | y_pred = model(data)

135 | y_pred = torch.sigmoid(y_pred)

136 | loss = loss_fn(y_pred, targets)

137 | optimizer.zero_grad()

138 | loss.backward()

139 | optimizer.step()

140 | scheduler.step()

141 | print("epoch: {}, loss: {:4f}, lr:{:4f}".format(epoch, loss.item(), optimizer.lr))

142 |

143 | """### ReduceLROnPlateau"""

144 |

145 | total_epochs = 20

146 | loss_fn, optimizer = reset_model()

147 | scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer,

148 | patience=3,

149 | verbose=True,

150 | factor=0.5,

151 | threshold=0.001,

152 | min_lr=0.00001)

153 |

154 | model.train()

155 | for epoch in range(total_epochs):

156 | for i, (data, targets) in enumerate(trainloader):

157 | data, targets = data.cuda(), targets.cuda()

158 | y_pred = model(data)

159 | y_pred = torch.sigmoid(y_pred)

160 | loss = loss_fn(y_pred, targets)

161 | optimizer.zero_grad()

162 | loss.backward()

163 | optimizer.step()

164 | scheduler.step(loss)

165 | print("epoch: {}, loss: {:4f}, lr:{:4f}".format(epoch, loss.item(), optimizer.lr))

166 |

167 | """### MultiStepLR"""

168 |

169 | total_epochs = 20

170 | loss_fn, optimizer = reset_model()

171 | scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[10,15], gamma=0.1)

172 |

173 | # reset model

174 | model.train()

175 | for epoch in range(total_epochs):

176 | for i, (data, targets) in enumerate(trainloader):

177 | data, targets = data.cuda(), targets.cuda()

178 | y_pred = model(data)

179 | y_pred = torch.sigmoid(y_pred)

180 | loss = loss_fn(y_pred, targets)

181 | optimizer.zero_grad()

182 | loss.backward()

183 | optimizer.step()

184 | scheduler.step()

185 | print("epoch: {}, loss: {:4f}, lr:{:4f}".format(epoch, loss.item(), optimizer.lr))

--------------------------------------------------------------------------------

/examples/scripts/05_Optimizing_AUROC_loss_with_densenet121_on_CheXpert.py:

--------------------------------------------------------------------------------

1 | """

2 | Author: Zhuoning Yuan

3 | Contact: yzhuoning@gmail.com

4 | """

5 |

6 | from libauc.losses import AUCMLoss, CrossEntropyLoss

7 | from libauc.optimizers import PESG, Adam

8 | from libauc.models import DenseNet121, DenseNet169

9 | from libauc.datasets import CheXpert

10 |

11 | import torch

12 | from PIL import Image

13 | import numpy as np

14 | import torchvision.transforms as transforms

15 | from torch.utils.data import Dataset

16 | from sklearn.metrics import roc_auc_score

17 |

18 |

19 | def set_all_seeds(SEED):

20 | # REPRODUCIBILITY

21 | torch.manual_seed(SEED)

22 | np.random.seed(SEED)

23 | torch.backends.cudnn.deterministic = True

24 | torch.backends.cudnn.benchmark = False

25 |

26 | """# **Pretraining**

27 | * Multi-label classification (5 tasks)

28 | * Adam + CrossEntropy Loss

29 | * This step is optional

30 | """

31 |

32 | # dataloader

33 | root = './CheXpert/CheXpert-v1.0-small/'

34 | # Index: -1 denotes multi-label mode including 5 diseases

35 | traindSet = CheXpert(csv_path=root+'train.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='train', class_index=-1)

36 | testSet = CheXpert(csv_path=root+'valid.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='valid', class_index=-1)

37 | trainloader = torch.utils.data.DataLoader(traindSet, batch_size=32, num_workers=2, shuffle=True)

38 | testloader = torch.utils.data.DataLoader(testSet, batch_size=32, num_workers=2, shuffle=False)

39 |

40 | # paramaters

41 | SEED = 123

42 | BATCH_SIZE = 32

43 | lr = 1e-4

44 | weight_decay = 1e-5

45 |

46 | # model

47 | set_all_seeds(SEED)

48 | model = DenseNet121(pretrained=True, last_activation=None, activations='relu', num_classes=5)

49 | model = model.cuda()

50 |

51 | # define loss & optimizer

52 | CELoss = CrossEntropyLoss()

53 | optimizer = Adam(model.parameters(), lr=lr, weight_decay=weight_decay)

54 |

55 | # training

56 | best_val_auc = 0

57 | for epoch in range(1):

58 | for idx, data in enumerate(trainloader):

59 | train_data, train_labels = data

60 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

61 | y_pred = model(train_data)

62 | loss = CELoss(y_pred, train_labels)

63 | optimizer.zero_grad()

64 | loss.backward()

65 | optimizer.step()

66 |

67 | # validation

68 | if idx % 400 == 0:

69 | model.eval()

70 | with torch.no_grad():

71 | test_pred = []

72 | test_true = []

73 | for jdx, data in enumerate(testloader):

74 | test_data, test_labels = data

75 | test_data = test_data.cuda()

76 | y_pred = model(test_data)

77 | test_pred.append(y_pred.cpu().detach().numpy())

78 | test_true.append(test_labels.numpy())

79 |

80 | test_true = np.concatenate(test_true)

81 | test_pred = np.concatenate(test_pred)

82 | val_auc_mean = roc_auc_score(test_true, test_pred)

83 | model.train()

84 |

85 | if best_val_auc < val_auc_mean:

86 | best_val_auc = val_auc_mean

87 | torch.save(model.state_dict(), 'ce_pretrained_model.pth')

88 |

89 | print ('Epoch=%s, BatchID=%s, Val_AUC=%.4f, Best_Val_AUC=%.4f'%(epoch, idx, val_auc_mean, best_val_auc ))

90 |

91 |

92 | """# **Optimizing AUCM Loss**

93 | * Binary Classification

94 | * PESG + AUCM Loss

95 | """

96 |

97 | # parameters

98 | class_id = 1 # 0:Cardiomegaly, 1:Edema, 2:Consolidation, 3:Atelectasis, 4:Pleural Effusion

99 | root = './CheXpert/CheXpert-v1.0-small/'

100 |

101 | # You can set use_upsampling=True and pass the class name by upsampling_cols=['Cardiomegaly'] to do upsampling. This may improve the performance

102 | traindSet = CheXpert(csv_path=root+'train.csv', image_root_path=root, use_upsampling=True, use_frontal=True, image_size=224, mode='train', class_index=class_id)

103 | testSet = CheXpert(csv_path=root+'valid.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='valid', class_index=class_id)

104 | trainloader = torch.utils.data.DataLoader(traindSet, batch_size=32, num_workers=2, shuffle=True)

105 | testloader = torch.utils.data.DataLoader(testSet, batch_size=32, num_workers=2, shuffle=False)

106 |

107 | # paramaters

108 | SEED = 123

109 | BATCH_SIZE = 32

110 | imratio = traindSet.imratio

111 | lr = 0.05 # using smaller learning rate is better

112 | gamma = 500

113 | weight_decay = 1e-5

114 | margin = 1.0

115 |

116 | # model

117 | set_all_seeds(SEED)

118 | model = DenseNet121(pretrained=False, last_activation='sigmoid', activations='relu', num_classes=1)

119 | model = model.cuda()

120 |

121 |

122 | # load pretrained model

123 | if True:

124 | PATH = 'ce_pretrained_model.pth'

125 | state_dict = torch.load(PATH)

126 | state_dict.pop('classifier.weight', None)

127 | state_dict.pop('classifier.bias', None)

128 | model.load_state_dict(state_dict, strict=False)

129 |

130 |

131 | # define loss & optimizer

132 | Loss = AUCMLoss(imratio=imratio)

133 | optimizer = PESG(model,

134 | a=Loss.a,

135 | b=Loss.b,

136 | alpha=Loss.alpha,

137 | imratio=imratio,

138 | lr=lr,

139 | gamma=gamma,

140 | margin=margin,

141 | weight_decay=weight_decay)

142 |

143 | best_val_auc = 0

144 | for epoch in range(2):

145 | if epoch > 0:

146 | optimizer.update_regularizer(decay_factor=10)

147 | for idx, data in enumerate(trainloader):

148 | train_data, train_labels = data

149 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

150 | y_pred = model(train_data)

151 | loss = Loss(y_pred, train_labels)

152 | optimizer.zero_grad()

153 | loss.backward()

154 | optimizer.step()

155 |

156 | # validation

157 | if idx % 400 == 0:

158 | model.eval()

159 | with torch.no_grad():

160 | test_pred = []

161 | test_true = []

162 | for jdx, data in enumerate(testloader):

163 | test_data, test_label = data

164 | test_data = test_data.cuda()

165 | y_pred = model(test_data)

166 | test_pred.append(y_pred.cpu().detach().numpy())

167 | test_true.append(test_label.numpy())

168 |

169 | test_true = np.concatenate(test_true)

170 | test_pred = np.concatenate(test_pred)

171 | val_auc = roc_auc_score(test_true, test_pred)

172 | model.train()

173 |

174 | if best_val_auc < val_auc:

175 | best_val_auc = val_auc

176 |

177 | print ('Epoch=%s, BatchID=%s, Val_AUC=%.4f, lr=%.4f'%(epoch, idx, val_auc, optimizer.lr))

178 |

179 | print ('Best Val_AUC is %.4f'%best_val_auc)

--------------------------------------------------------------------------------

/examples/scripts/05_optimizing_auroc_loss_with_densenet121_on_chexpert.py:

--------------------------------------------------------------------------------

1 | """05_Optimizing_AUROC_Loss_with_DenseNet121_on_CheXpert.ipynb

2 |

3 | **Author**: Zhuoning Yuan

4 |

5 | **Introduction**

6 | In this tutorial, you will learn how to quickly train a DenseNet121 model by optimizing **AUROC** using our novel **`AUCMLoss`** and **`PESG`** optimizer on Chest X-Ray dataset, e.g., [CheXpert](https://stanfordmlgroup.github.io/competitions/chexpert/). After completion of this tutorial, you should be able to use LibAUC to train your own models on your own datasets.

7 |

8 | **Useful Resources**:

9 | * Website: https://libauc.org

10 | * Github: https://github.com/Optimization-AI/LibAUC

11 |

12 | **Reference**:

13 | If you find this tutorial helpful in your work, please acknowledge our library and cite the following paper:

14 |

15 | @inproceedings{yuan2021large,

16 | title={Large-scale robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification},

17 | author={Yuan, Zhuoning and Yan, Yan and Sonka, Milan and Yang, Tianbao},

18 | booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

19 | pages={3040--3049},

20 | year={2021}

21 | }

22 | """

23 |

24 | """# **Downloading CheXpert**

25 | * To request dataset access, you need to apply from CheXpert website: https://stanfordmlgroup.github.io/competitions/chexpert/

26 | * In this tutorial, we use the smaller version of dataset with lower image resolution, i.e., *CheXpert-v1.0-small.zip*

27 |

28 | """

29 |

30 | """

31 | # **Importing LibAUC**"""

32 |

33 | from libauc.losses import AUCMLoss, CrossEntropyLoss

34 | from libauc.optimizers import PESG, Adam

35 | from libauc.models import densenet121 as DenseNet121

36 | from libauc.datasets import CheXpert

37 |

38 | import torch

39 | from PIL import Image

40 | import numpy as np

41 | import torchvision.transforms as transforms

42 | from torch.utils.data import Dataset

43 | from sklearn.metrics import roc_auc_score

44 |

45 | """# **Reproducibility**"""

46 |

47 | def set_all_seeds(SEED):

48 | # REPRODUCIBILITY

49 | torch.manual_seed(SEED)

50 | np.random.seed(SEED)

51 | torch.backends.cudnn.deterministic = True

52 | torch.backends.cudnn.benchmark = False

53 |

54 | """# **Pretraining**

55 | * Multi-label classification (5 tasks)

56 | * Adam + CrossEntropy Loss

57 | * This step is optional

58 |

59 |

60 |

61 | """

62 |

63 | # dataloader

64 | root = './CheXpert/CheXpert-v1.0-small/'

65 | # Index: -1 denotes multi-label mode including 5 diseases

66 | traindSet = CheXpert(csv_path=root+'train.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='train', class_index=-1)

67 | testSet = CheXpert(csv_path=root+'valid.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='valid', class_index=-1)

68 | trainloader = torch.utils.data.DataLoader(traindSet, batch_size=32, num_workers=2, shuffle=True)

69 | testloader = torch.utils.data.DataLoader(testSet, batch_size=32, num_workers=2, shuffle=False)

70 |

71 | # paramaters

72 | SEED = 123

73 | BATCH_SIZE = 32

74 | lr = 1e-4

75 | weight_decay = 1e-5

76 |

77 | # model

78 | set_all_seeds(SEED)

79 | model = DenseNet121(pretrained=True, last_activation=None, activations='relu', num_classes=5)

80 | model = model.cuda()

81 |

82 | # define loss & optimizer

83 | CELoss = CrossEntropyLoss()

84 | optimizer = Adam(model.parameters(), lr=lr, weight_decay=weight_decay)

85 |

86 | # training

87 | best_val_auc = 0

88 | for epoch in range(1):

89 | for idx, data in enumerate(trainloader):

90 | train_data, train_labels = data

91 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

92 | y_pred = model(train_data)

93 | loss = CELoss(y_pred, train_labels)

94 | optimizer.zero_grad()

95 | loss.backward()

96 | optimizer.step()

97 |

98 | # validation

99 | if idx % 400 == 0:

100 | model.eval()

101 | with torch.no_grad():

102 | test_pred = []

103 | test_true = []

104 | for jdx, data in enumerate(testloader):

105 | test_data, test_labels = data

106 | test_data = test_data.cuda()

107 | y_pred = model(test_data)

108 | test_pred.append(y_pred.cpu().detach().numpy())

109 | test_true.append(test_labels.numpy())

110 |

111 | test_true = np.concatenate(test_true)

112 | test_pred = np.concatenate(test_pred)

113 | val_auc_mean = roc_auc_score(test_true, test_pred)

114 | model.train()

115 |

116 | if best_val_auc < val_auc_mean:

117 | best_val_auc = val_auc_mean

118 | torch.save(model.state_dict(), 'ce_pretrained_model.pth')

119 |

120 | print ('Epoch=%s, BatchID=%s, Val_AUC=%.4f, Best_Val_AUC=%.4f'%(epoch, idx, val_auc_mean, best_val_auc ))

121 |

122 | """# **Optimizing AUCM Loss**

123 | * Binary Classification

124 | * PESG + AUCM Loss

125 | Note: you can also try other losses in this task, e.g., [CompositionalAUCLoss](https://github.com/Optimization-AI/LibAUC/blob/main/examples/09_Optimizing_CompositionalAUC_Loss_with_ResNet20_on_CIFAR10.ipynb).

126 | """

127 |

128 | # parameters

129 | class_id = 1 # 0:Cardiomegaly, 1:Edema, 2:Consolidation, 3:Atelectasis, 4:Pleural Effusion

130 | root = './CheXpert/CheXpert-v1.0-small/'

131 |

132 | # You can set use_upsampling=True and pass the class name by upsampling_cols=['Cardiomegaly'] to do upsampling. This may improve the performance

133 | traindSet = CheXpert(csv_path=root+'train.csv', image_root_path=root, use_upsampling=True, use_frontal=True, image_size=224, mode='train', class_index=class_id)

134 | testSet = CheXpert(csv_path=root+'valid.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='valid', class_index=class_id)

135 | trainloader = torch.utils.data.DataLoader(traindSet, batch_size=32, num_workers=2, shuffle=True)

136 | testloader = torch.utils.data.DataLoader(testSet, batch_size=32, num_workers=2, shuffle=False)

137 |

138 | # paramaters

139 | SEED = 123

140 | BATCH_SIZE = 32

141 | imratio = traindSet.imratio

142 | lr = 0.05 # using smaller learning rate is better

143 | epoch_decay = 2e-3

144 | weight_decay = 1e-5

145 | margin = 1.0

146 |

147 | # model

148 | set_all_seeds(SEED)

149 | model = DenseNet121(pretrained=False, last_activation=None, activations='relu', num_classes=1)

150 | model = model.cuda()

151 |

152 |

153 | # load pretrained model

154 | if True:

155 | PATH = 'ce_pretrained_model.pth'

156 | state_dict = torch.load(PATH)

157 | state_dict.pop('classifier.weight', None)

158 | state_dict.pop('classifier.bias', None)

159 | model.load_state_dict(state_dict, strict=False)

160 |

161 |

162 | # define loss & optimizer

163 | loss_fn = AUCMLoss()

164 | optimizer = PESG(model,

165 | loss_fn=loss_fn,

166 | lr=lr,

167 | margin=margin,

168 | epoch_decay=epoch_decay,

169 | weight_decay=weight_decay)

170 |

171 | best_val_auc = 0

172 | for epoch in range(2):

173 | if epoch > 0:

174 | optimizer.update_regularizer(decay_factor=10)

175 | for idx, data in enumerate(trainloader):

176 | train_data, train_labels = data

177 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

178 | y_pred = model(train_data)

179 | y_pred = torch.sigmoid(y_pred)

180 | loss = loss_fn(y_pred, train_labels)

181 | optimizer.zero_grad()

182 | loss.backward()

183 | optimizer.step()

184 |

185 | # validation

186 | if idx % 400 == 0:

187 | model.eval()

188 | with torch.no_grad():

189 | test_pred = []

190 | test_true = []

191 | for jdx, data in enumerate(testloader):

192 | test_data, test_label = data

193 | test_data = test_data.cuda()

194 | y_pred = model(test_data)

195 | test_pred.append(y_pred.cpu().detach().numpy())

196 | test_true.append(test_label.numpy())

197 |

198 | test_true = np.concatenate(test_true)

199 | test_pred = np.concatenate(test_pred)

200 | val_auc = roc_auc_score(test_true, test_pred)

201 | model.train()

202 |

203 | if best_val_auc < val_auc:

204 | best_val_auc = val_auc

205 |

206 | print ('Epoch=%s, BatchID=%s, Val_AUC=%.4f, lr=%.4f'%(epoch, idx, val_auc, optimizer.lr))

207 |

208 | print ('Best Val_AUC is %.4f'%best_val_auc)

--------------------------------------------------------------------------------

/examples/scripts/07_optimizing_multi_label_auroc_loss_with_densenet121_on_chexpert.py:

--------------------------------------------------------------------------------

1 | """

2 | # Optimizing Multi-label AUROC loss on Chest X-Ray Dataset (CheXpert)

3 |

4 | Author: Zhuoning Yuan

5 |

6 | Reference:

7 |

8 | If you find this tutorial helpful in your work, please acknowledge our library and cite the following paper:

9 |

10 | @inproceedings{yuan2021large,

11 | title={Large-scale robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification},

12 | author={Yuan, Zhuoning and Yan, Yan and Sonka, Milan and Yang, Tianbao},

13 | booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

14 | pages={3040--3049},

15 | year={2021}

16 | }

17 |

18 | @misc{libauc2022,

19 | title={LibAUC: A Deep Learning Library for X-Risk Optimization.},

20 | author={Zhuoning Yuan, Zi-Hao Qiu, Gang Li, Dixian Zhu, Zhishuai Guo, Quanqi Hu, Bokun Wang, Qi Qi, Yongjian Zhong, Tianbao Yang},

21 | year={2022}

22 | }

23 |

24 | """

25 |

26 |

27 | from libauc.losses import AUCM_MultiLabel, CrossEntropyLoss

28 | from libauc.optimizers import PESG, Adam

29 | from libauc.models import densenet121 as DenseNet121

30 | from libauc.datasets import CheXpert

31 | from libauc.metrics import auc_roc_score # for multi-task

32 |

33 | import torch

34 | from PIL import Image

35 | import numpy as np

36 | import torchvision.transforms as transforms

37 | from torch.utils.data import Dataset

38 | from sklearn.metrics import roc_auc_score

39 | import torch.nn.functional as F

40 |

41 |

42 | def set_all_seeds(SEED):

43 | # REPRODUCIBILITY

44 | torch.manual_seed(SEED)

45 | np.random.seed(SEED)

46 | torch.backends.cudnn.deterministic = True

47 | torch.backends.cudnn.benchmark = False

48 |

49 |

50 | # dataloader

51 | root = './CheXpert/CheXpert-v1.0-small/'

52 | # Index: -1 denotes multi-label mode including 5 diseases

53 | traindSet = CheXpert(csv_path=root+'train.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='train', class_index=-1, verbose=False)

54 | testSet = CheXpert(csv_path=root+'valid.csv', image_root_path=root, use_upsampling=False, use_frontal=True, image_size=224, mode='valid', class_index=-1, verbose=False)

55 | trainloader = torch.utils.data.DataLoader(traindSet, batch_size=32, num_workers=2, shuffle=True)

56 | testloader = torch.utils.data.DataLoader(testSet, batch_size=32, num_workers=2, shuffle=False)

57 |

58 | # check imbalance ratio for each task

59 | print (traindSet.imratio_list )

60 |

61 | # paramaters

62 | SEED = 123

63 | BATCH_SIZE = 32

64 | lr = 0.1

65 | gamma = 500

66 | weight_decay = 1e-5

67 | margin = 1.0

68 |

69 | # model

70 | set_all_seeds(SEED)

71 | model = DenseNet121(pretrained=True, last_activation=None, activations='relu', num_classes=5)

72 | model = model.cuda()

73 |

74 | # define loss & optimizer

75 | Loss = AUCM_MultiLabel(num_classes=5)

76 | optimizer = PESG(model,

77 | a=Loss.a,

78 | b=Loss.b,

79 | alpha=Loss.alpha,

80 | lr=lr,

81 | gamma=gamma,

82 | margin=margin,

83 | weight_decay=weight_decay, device='cuda')

84 |

85 | # training

86 | best_val_auc = 0

87 | for epoch in range(2):

88 |

89 | if epoch > 0:

90 | optimizer.update_regularizer(decay_factor=10)

91 |

92 | for idx, data in enumerate(trainloader):

93 | train_data, train_labels = data

94 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

95 | y_pred = model(train_data)

96 | y_pred = torch.sigmoid(y_pred)

97 | loss = Loss(y_pred, train_labels)

98 | optimizer.zero_grad()

99 | loss.backward()

100 | optimizer.step()

101 |

102 | # validation

103 | if idx % 400 == 0:

104 | model.eval()

105 | with torch.no_grad():

106 | test_pred = []

107 | test_true = []

108 | for jdx, data in enumerate(testloader):

109 | test_data, test_labels = data

110 | test_data = test_data.cuda()

111 | y_pred = model(test_data)

112 | y_pred = torch.sigmoid(y_pred)

113 | test_pred.append(y_pred.cpu().detach().numpy())

114 | test_true.append(test_labels.numpy())

115 |

116 | test_true = np.concatenate(test_true)

117 | test_pred = np.concatenate(test_pred)

118 | val_auc_mean = roc_auc_score(test_true, test_pred)

119 | model.train()

120 |

121 | if best_val_auc < val_auc_mean:

122 | best_val_auc = val_auc_mean

123 | torch.save(model.state_dict(), 'aucm_multi_label_pretrained_model.pth')

124 |

125 | print ('Epoch=%s, BatchID=%s, Val_AUC=%.4f, Best_Val_AUC=%.4f'%(epoch, idx, val_auc_mean, best_val_auc))

126 |

127 |

128 | # show auc roc scores for each task

129 | auc_roc_score(test_true, test_pred)

--------------------------------------------------------------------------------

/examples/scripts/08_optimizing_auroc_loss_with_densenet121_on_melanoma.py:

--------------------------------------------------------------------------------

1 | """

2 | Author: Zhuoning Yuan

3 | Contact: yzhuoning@gmail.com

4 | """

5 |

6 | """

7 | # **Importing LibAUC**"""

8 |

9 | from libauc.losses import AUCMLoss

10 | from libauc.optimizers import PESG

11 | from libauc.models import DenseNet121, DenseNet169

12 | from libauc.datasets import Melanoma

13 | from libauc.utils import auroc

14 |

15 | import torch

16 | from PIL import Image

17 | import numpy as np

18 | import torchvision.transforms as transforms

19 | from torch.utils.data import Dataset

20 |

21 | """# **Reproducibility**"""

22 |

23 | def set_all_seeds(SEED):

24 | # REPRODUCIBILITY

25 | torch.manual_seed(SEED)

26 | np.random.seed(SEED)

27 | torch.backends.cudnn.deterministic = True

28 | torch.backends.cudnn.benchmark = False

29 |

30 | """# **Data Augmentation**"""

31 |

32 | import albumentations as A

33 | from albumentations.pytorch.transforms import ToTensor

34 |

35 | def augmentations(image_size=256, is_test=True):

36 | # https://www.kaggle.com/vishnus/a-simple-pytorch-starter-code-single-fold-93

37 | imagenet_stats = {'mean':[0.485, 0.456, 0.406], 'std':[0.229, 0.224, 0.225]}

38 | train_tfms = A.Compose([

39 | A.Cutout(p=0.5),

40 | A.RandomRotate90(p=0.5),

41 | A.Flip(p=0.5),

42 | A.OneOf([

43 | A.RandomBrightnessContrast(brightness_limit=0.2,

44 | contrast_limit=0.2,

45 | ),

46 | A.HueSaturationValue(

47 | hue_shift_limit=20,

48 | sat_shift_limit=50,

49 | val_shift_limit=50)

50 | ], p=0.5),

51 | A.OneOf([

52 | A.IAAAdditiveGaussianNoise(),

53 | A.GaussNoise(),

54 | ], p=0.5),

55 | A.OneOf([

56 | A.MotionBlur(p=0.2),

57 | A.MedianBlur(blur_limit=3, p=0.1),

58 | A.Blur(blur_limit=3, p=0.1),

59 | ], p=0.5),

60 | A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.2, rotate_limit=45, p=0.5),

61 | A.OneOf([

62 | A.OpticalDistortion(p=0.3),

63 | A.GridDistortion(p=0.1),

64 | A.IAAPiecewiseAffine(p=0.3),

65 | ], p=0.5),

66 | ToTensor(normalize=imagenet_stats)

67 | ])

68 |

69 | test_tfms = A.Compose([ToTensor(normalize=imagenet_stats)])

70 | if is_test:

71 | return test_tfms

72 | else:

73 | return train_tfms

74 |

75 | """# **Optimizing AUCM Loss**

76 | * Installation of `albumentations` is required!

77 | """

78 |

79 | # dataset

80 | trainSet = Melanoma(root='./melanoma/', is_test=False, test_size=0.2, transforms=augmentations)

81 | testSet = Melanoma(root='./melanoma/', is_test=True, test_size=0.2, transforms=augmentations)

82 |

83 | # paramaters

84 | SEED = 123

85 | BATCH_SIZE = 64

86 | lr = 0.1

87 | gamma = 500

88 | imratio = trainSet.imratio

89 | weight_decay = 1e-5

90 | margin = 1.0

91 |

92 | # model

93 | set_all_seeds(SEED)

94 | model = DenseNet121(pretrained=True, last_activation=None, activations='relu', num_classes=1)

95 | model = model.cuda()

96 |

97 | trainloader = torch.utils.data.DataLoader(trainSet, batch_size=BATCH_SIZE, num_workers=2, shuffle=True)

98 | testloader = torch.utils.data.DataLoader(testSet, batch_size=BATCH_SIZE, num_workers=2, shuffle=False)

99 |

100 | # load your own pretrained model here

101 | # PATH = 'ce_pretrained_model.pth'

102 | # state_dict = torch.load(PATH)

103 | # state_dict.pop('classifier.weight', None)

104 | # state_dict.pop('classifier.bias', None)

105 | # model.load_state_dict(state_dict, strict=False)

106 |

107 | # define loss & optimizer

108 | Loss = AUCMLoss(imratio=imratio)

109 | optimizer = PESG(model,

110 | a=Loss.a,

111 | b=Loss.b,

112 | alpha=Loss.alpha,

113 | lr=lr,

114 | gamma=gamma,

115 | margin=margin,

116 | weight_decay=weight_decay)

117 |

118 | total_epochs = 16

119 | best_val_auc = 0

120 | for epoch in range(total_epochs):

121 |

122 | # reset stages

123 | if epoch== int(total_epochs*0.5) or epoch== int(total_epochs*0.75):

124 | optimizer.update_regularizer(decay_factor=10)

125 |

126 | # training

127 | for idx, data in enumerate(trainloader):

128 | train_data, train_labels = data

129 | train_data, train_labels = train_data.cuda(), train_labels.cuda()

130 | y_pred = model(train_data)

131 | y_pred = torch.sigmoid(y_pred)

132 | loss = Loss(y_pred, train_labels)

133 | optimizer.zero_grad()

134 | loss.backward()

135 | optimizer.step()

136 |

137 | # validation

138 | model.eval()

139 | with torch.no_grad():

140 | test_pred = []

141 | test_true = []

142 | for jdx, data in enumerate(testloader):

143 | test_data, test_label = data

144 | test_data = test_data.cuda()

145 | y_pred = model(test_data)

146 | y_pred = torch.sigmoid(y_pred)

147 | test_pred.append(y_pred.cpu().detach().numpy())

148 | test_true.append(test_label.numpy())

149 |

150 | test_true = np.concatenate(test_true)

151 | test_pred = np.concatenate(test_pred)

152 | val_auc = auroc(test_true, test_pred)

153 | model.train()

154 |

155 | if best_val_auc < val_auc:

156 | best_val_auc = val_auc

157 |

158 | print ('Epoch=%s, Loss=%.4f, Val_AUC=%.4f, lr=%.4f'%(epoch, loss, val_auc, optimizer.lr))

159 |

160 | print ('Best Val_AUC is %.4f'%best_val_auc)

--------------------------------------------------------------------------------

/examples/scripts/09_optimizing_compositionalauc_loss_with_resnet20_on_cifar10.py:

--------------------------------------------------------------------------------

1 | """09_Optimizing_CompositionalAUC_Loss_with_ResNet20_on_CIFAR10.ipynb

2 |

3 | **Author**: Zhuoning Yuan

4 |

5 | **Introduction**

6 | In this tutorial, we will learn how to quickly train a ResNet20 model by optimizing AUC score using our novel compositional training framework [[Ref]](https://openreview.net/forum?id=gPvB4pdu_Z) on an binary image classification task on Cifar10. After completion of this tutorial, you should be able to use LibAUC to train your own models on your own datasets.

7 |

8 | **Useful Resources**

9 | * Website: https://libauc.org

10 | * Github: https://github.com/Optimization-AI/LibAUC

11 |

12 |

13 | **References**

14 | If you find this tutorial helpful in your work, please acknowledge our library and cite the following papers:

15 |

16 | @inproceedings{yuan2022compositional,

17 | title={Compositional Training for End-to-End Deep AUC Maximization},

18 | author={Zhuoning Yuan and Zhishuai Guo and Nitesh Chawla and Tianbao Yang},

19 | booktitle={International Conference on Learning Representations},

20 | year={2022},

21 | url={https://openreview.net/forum?id=gPvB4pdu_Z}

22 | }

23 |

24 | """

25 |

26 | """# **Importing LibAUC**

27 | Import required packages to use

28 | """

29 |

30 | from libauc.losses import CompositionalAUCLoss

31 | from libauc.optimizers import PDSCA

32 | from libauc.models import resnet20 as ResNet20

33 | from libauc.datasets import CIFAR10, CIFAR100, STL10, CAT_VS_DOG

34 | from libauc.utils import ImbalancedDataGenerator

35 | from libauc.sampler import DualSampler

36 | from libauc.metrics import auc_roc_score

37 |

38 | import torch

39 | from PIL import Image

40 | import numpy as np

41 | import torchvision.transforms as transforms

42 | from torch.utils.data import Dataset

43 |

44 | """# **Reproducibility**