166 |

167 |

169 |

170 |

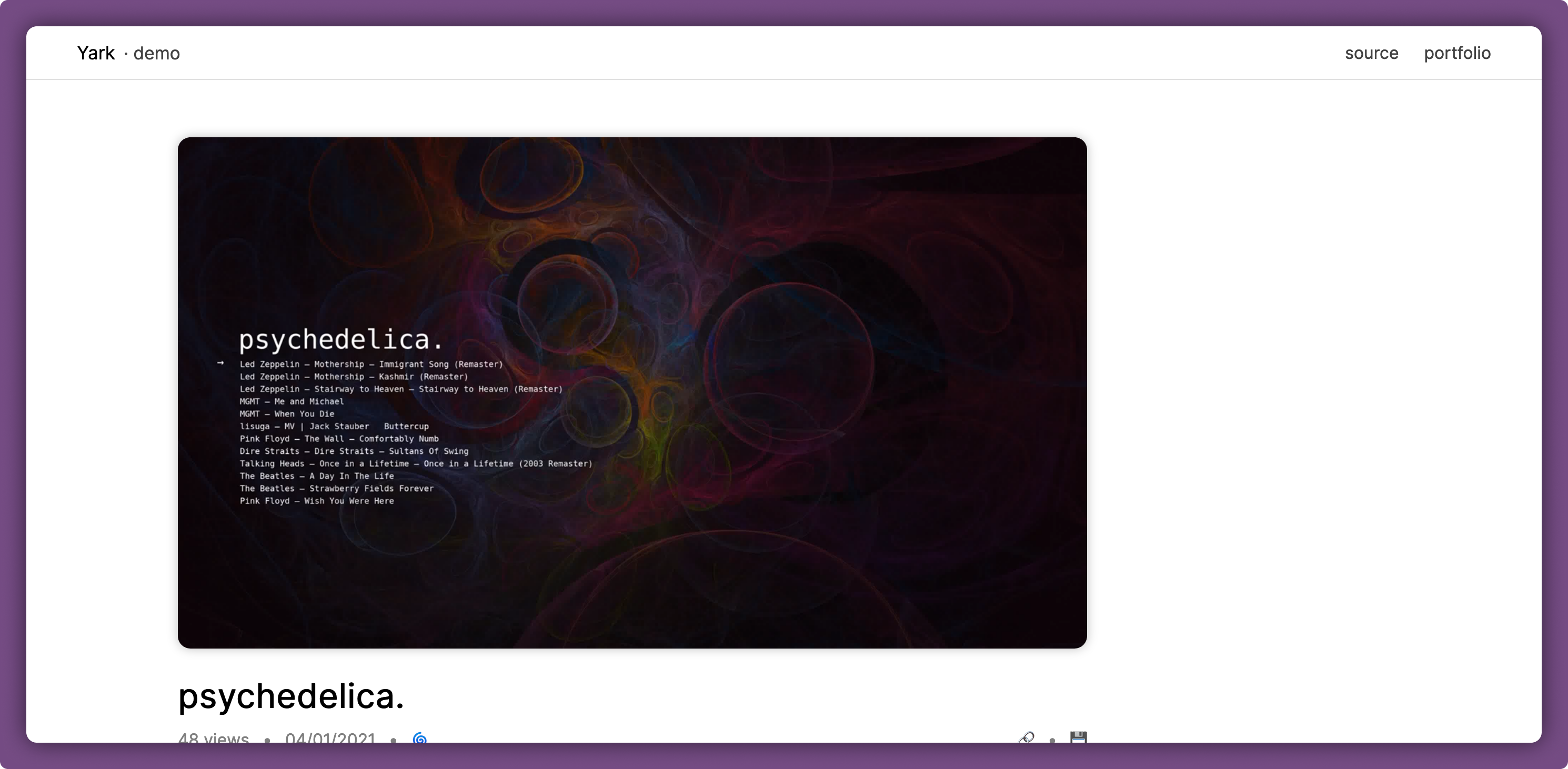

{{ video.title.current() }}

{% set views = video.views.current() %}
{{ views }} view{% if views != 1 %}s{% endif %}
•
{{ video.uploaded.strftime("%d/%m/%Y") }}
{% if video.updated() %}
•
🌀
{% endif %}

🔗
•
💾

{% set likes = video.likes.current() %}
{% if likes is not none %}
•
👍 {{ likes }}
{% endif %}

{% set description = video.description.current().split("\n") %}
{% set no_description = description|count == 1 and description[0] == "" %}
{% if not no_description %}
{% for line in description %}
{{ line }}
{% endfor %}
{% endif %}

Create Note

214 |

215 |

216 |

218 |

00:00

219 |

220 |

221 |

223 |

224 |

225 |

282 |

283 |

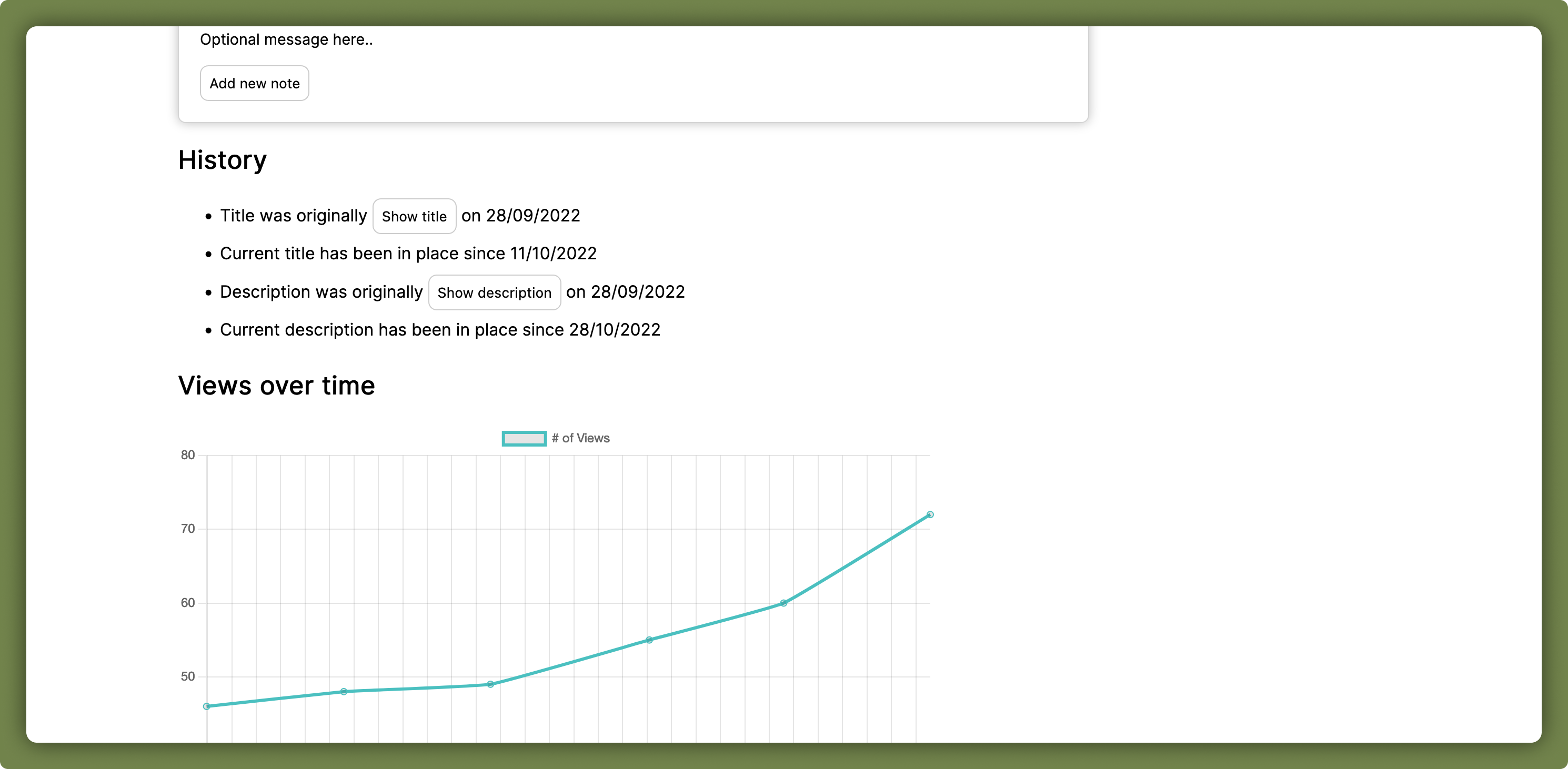

History

{% set mto_title = video.title.inner|count != 1 %}
{% set mto_description = video.description.inner|count != 1 %}
{% set mto_views = video.views.inner|count != 1 %}
{% set mto_likes = video.likes.inner|count != 1 %}

{% if not mto_title and not mto_description %}
- No title or description changes on record
{% else %}
{% if mto_title %}
{% for timestamp in video.title.inner %}
-
{% if loop.last %}
Current title has been in place since {{ timestamp.strftime("%d/%m/%Y") }}
{% else %}
{% if loop.first %}
Title was originally
{% else %}
Title changed to
{% endif %}
on {{ timestamp.strftime("%d/%m/%Y") }}
{{ video.title.inner[timestamp] }}
{% endif %}
{% endfor %}
{% else %}
- No title changes on record
{% endif %}

{% if mto_description %}
{% for timestamp in video.description.inner %}
-
{% if loop.last %}
Current description has been in place since {{ timestamp.strftime("%d/%m/%Y") }}
{% else %}
{% if loop.first %}
Description was originally
{% else %}
Description changed to
{% endif %}
on {{ timestamp.strftime("%d/%m/%Y") }}
{% for line in video.description.inner[timestamp].split("\n") %}
{{ line }}
{% endfor %}
{% endif %}
{% endfor %}
{% else %}
- No description changes on record
{% endif %}
{% endif %}

{% if not mto_views and not mto_likes %}
- No view or like changes on record
{% elif not mto_views %}
- No view changes on record
{% elif not mto_likes %}
- No like changes on record
{% endif %}

{% if mto_views or mto_likes %}
{% if mto_views %}
Views over time
{% endif %}
{% if mto_likes %}
Likes over time
{% endif %}
{% endif %}

{% if video.notes %}
Notes
{% for note in video.notes %}
{{ note.id }}
{% if note.body %}{{ note.body }}{% endif %}
{% endfor %}
{% endif %}
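The header markup above encodes a few display rules: the view count is pluralized unless it is exactly 1, the like count is shown whenever it is known (including 0) and hidden only when it is missing, and a description that splits into a single empty line is treated as absent. The following is a minimal Python sketch of those rules, assuming `views` is an int, `likes` is an int or `None`, and `uploaded` is a `datetime`. The function names are hypothetical helpers for illustration, not part of the project.

```python
from datetime import datetime
from typing import List, Optional


def views_label(views: int) -> str:
    # "1 view", but "0 views" and "2 views"
    return f"{views} view{'' if views == 1 else 's'}"


def likes_label(likes: Optional[int]) -> Optional[str]:
    # A count of 0 is still shown; only a missing count (None) hides it.
    # The template additionally prefixes the count with a thumbs-up icon.
    if likes is None:
        return None
    return str(likes)


def description_lines(description: str) -> List[str]:
    # "".split("\n") == [""], which the template treats as "no description"
    lines = description.split("\n")
    if len(lines) == 1 and lines[0] == "":
        return []
    return lines


def upload_date(uploaded: datetime) -> str:
    # Day/month/year, as in video.uploaded.strftime("%d/%m/%Y")
    return uploaded.strftime("%d/%m/%Y")
```

Checking `likes is None` rather than plain truthiness is what keeps a genuine count of 0 visible, which is the same distinction the template's `likes is not none` test draws.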

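The History section walks `video.title.inner` and `video.description.inner`, which the template indexes by timestamp, and labels the first, intermediate and last entries differently; the view and like messages follow the same "more than one entry" (`mto_`) test. The sketch below reproduces that branching in plain Python, assuming each `inner` is a dict mapping `datetime` keys to values in chronological insertion order. `history_lines`, `title_description_history` and `stats_message` are hypothetical helpers, and for readability they join the phrase, value and date into one sentence instead of laying them out in separate elements as the template does.

```python
from datetime import datetime
from typing import Dict, List, Optional


def history_lines(field: str, inner: Dict[datetime, str]) -> List[str]:
    """Describe the changes to one field ("Title" or "Description").

    Assumes `inner` maps each recorded timestamp to the value at that time,
    ordered oldest first (dicts keep insertion order).
    """
    # Same test as `video.title.inner|count != 1` in the template
    if len(inner) == 1:
        return [f"No {field.lower()} changes on record"]
    lines = []
    for index, (timestamp, value) in enumerate(inner.items()):
        date = timestamp.strftime("%d/%m/%Y")
        if index == len(inner) - 1:
            # loop.last: the current value, shown only with its start date
            lines.append(f"Current {field.lower()} has been in place since {date}")
        elif index == 0:
            # loop.first: the oldest recorded value
            lines.append(f'{field} was originally "{value}" on {date}')
        else:
            lines.append(f'{field} changed to "{value}" on {date}')
    return lines


def title_description_history(
    titles: Dict[datetime, str], descriptions: Dict[datetime, str]
) -> List[str]:
    # The template collapses the two "no changes" messages into one
    if len(titles) == 1 and len(descriptions) == 1:
        return ["No title or description changes on record"]
    return history_lines("Title", titles) + history_lines("Description", descriptions)


def stats_message(view_entries: int, like_entries: int) -> Optional[str]:
    # Mirrors the mto_views / mto_likes branches
    mto_views = view_entries != 1
    mto_likes = like_entries != 1
    if not mto_views and not mto_likes:
        return "No view or like changes on record"
    if not mto_views:
        return "No view changes on record"
    if not mto_likes:
        return "No like changes on record"
    return None  # both changed: the template shows the charts instead
```

For example, three recorded titles produce an "originally" line, a "changed to" line and a "Current title has been in place since" line, while a single recorded title produces only "No title changes on record".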