├── .readme
│   ├── figure3.png
│   ├── husky_test.gif
│   ├── husky_test_nav.gif
│   ├── motivation.png
│   ├── motivation_old.png
│   ├── vs_em.png
│   ├── vs_graph.png
│   └── vs_poster.png
├── CMakeLists.txt
├── LICENSE
├── README.md
├── include
│   ├── agribot_types.h
│   ├── agribot_vs.h
│   └── agribot_vs_nodehandler.h
├── launch
│   ├── camera_umd.launch
│   └── visualservoing.launch
├── msg
│   └── vs_msg.msg
├── package.xml
├── params
│   └── agribot_vs_run.yaml
└── src
    ├── agribot_vs.cpp
    ├── agribot_vs_node.cpp
    └── agribot_vs_nodehandler.cpp

/.readme/figure3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/figure3.png

--------------------------------------------------------------------------------

/.readme/husky_test.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/husky_test.gif

--------------------------------------------------------------------------------

/.readme/husky_test_nav.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/husky_test_nav.gif

--------------------------------------------------------------------------------

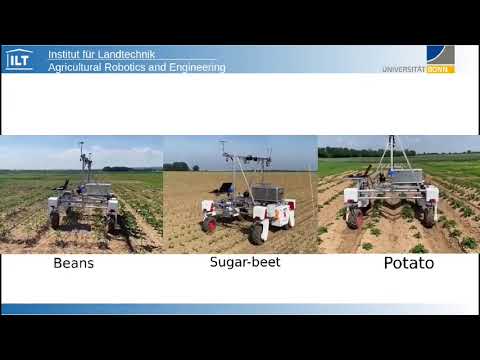

/.readme/motivation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/motivation.png

--------------------------------------------------------------------------------

/.readme/motivation_old.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/motivation_old.png

--------------------------------------------------------------------------------

/.readme/vs_em.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/vs_em.png

--------------------------------------------------------------------------------

/.readme/vs_graph.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/vs_graph.png

--------------------------------------------------------------------------------

/.readme/vs_poster.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/PRBonn/visual-crop-row-navigation/6ddfa6cc183c4d21eda64abe2003291ecffeb4f3/.readme/vs_poster.png

--------------------------------------------------------------------------------

/CMakeLists.txt:

--------------------------------------------------------------------------------

cmake_minimum_required(VERSION 2.8.3)
project(visual_crop_row_navigation)

# c++11
set(CMAKE_CXX_FLAGS "-std=c++11 ${CMAKE_CXX_FLAGS}")

find_package(catkin REQUIRED COMPONENTS
  roscpp
  genmsg
  cmake_modules
  message_generation
  dynamic_reconfigure
  std_msgs
  cv_bridge
  sensor_msgs
  image_transport
)

# PkgConfig must be found for pkg_check_modules() below to exist.
find_package( PkgConfig REQUIRED )
find_package( OpenCV REQUIRED )

add_message_files(FILES vs_msg.msg)

generate_messages(DEPENDENCIES std_msgs)

set (CMAKE_CXX_STANDARD 11)
if(NOT CMAKE_BUILD_TYPE)
  set(CMAKE_BUILD_TYPE Release)
endif()
# Append the warning flags rather than overwriting CMAKE_CXX_FLAGS,
# which would silently discard the -std=c++11 flag set above.
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -Wextra -fPIC")
set(CMAKE_CXX_FLAGS_DEBUG "-g")
set(CMAKE_CXX_FLAGS_RELEASE "-O3")

find_package (Eigen3 3.3 REQUIRED NO_MODULE)
pkg_check_modules( EIGEN3 REQUIRED eigen3 )

include_directories(
  include
  ${catkin_INCLUDE_DIRS}
  ${EIGEN3_INCLUDE_DIRS}
)

catkin_package(
  INCLUDE_DIRS
    include
  LIBRARIES
    ${PROJECT_NAME}_core
  CATKIN_DEPENDS
    sensor_msgs
    std_msgs
    message_runtime
)

## Declare a cpp library
add_library(${PROJECT_NAME}_core
  src/agribot_vs.cpp
)

## Declare cpp executables
add_executable(agribot_vs_node
  src/agribot_vs_node.cpp
  src/agribot_vs_nodehandler.cpp
)

target_link_libraries(agribot_vs_node
  ${PROJECT_NAME}_core
  ${catkin_LIBRARIES}
  ${OpenCV_LIBS}
  Eigen3::Eigen
)

# Make sure the generated message headers exist before compiling.
add_dependencies(agribot_vs_node ${${PROJECT_NAME}_EXPORTED_TARGETS})
add_dependencies(${PROJECT_NAME}_core ${${PROJECT_NAME}_EXPORTED_TARGETS})

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2019 Photogrammetry & Robotics Bonn

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

# Visual Crop Row Navigation

[Video](https://www.youtube.com/watch?v=z2Cb2FFZ2aU)

This is a visual-servoing-based robot navigation framework tailored to row-crop fields.
It uses the images from two on-board cameras and exploits the regular crop-row structure present in the fields for navigation, without performing explicit localization or mapping. It allows the robot to follow the crop rows accurately and handles the switch to the next row seamlessly within the same framework.

This implementation uses C++ and ROS and has been tested in different environments, both in simulation and in the real world, and on diverse robotic platforms.

This work has been developed at [IPB](http://www.ipb.uni-bonn.de/), University of Bonn.

Check out [video1](https://www.youtube.com/watch?v=uO6cgBqKBas) and [video2](https://youtu.be/KkCVQAhzS4g) of our robot following this approach to navigate a test row-crop field.
