├── scripts

├── train_fashion.sh

├── eval_fashion.sh

├── eval_market.sh

├── test_market.sh

├── test_fashion.sh

└── train_market.sh

├── requirements.txt

├── metrics

├── README.md

├── inception.py

└── metrics.py

├── data

├── data_loader.py

├── base_data_loader.py

├── custom_dataset_data_loader.py

├── __init__.py

├── generate_fashion_datasets.py

├── image_folder.py

├── base_dataset.py

├── market_dataset.py

└── fashion_dataset.py

├── Poster.md

├── models

├── models.py

├── __init__.py

├── PTM.py

├── base_model.py

├── DPTN_model.py

├── networks.py

├── external_function.py

├── base_function.py

└── ui_model.py

├── test.py

├── util

├── image_pool.py

├── html.py

├── pose_utils.py

├── visualizer.py

└── util.py

├── options

├── test_options.py

├── train_options.py

└── base_options.py

├── train.py

├── README.md

└── LICENSE.md

/scripts/train_fashion.sh:

--------------------------------------------------------------------------------

1 | python train.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --batchSize 32 --gpu_id=0

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | torch==1.7.1

2 | torchvision==0.8.2

3 | imageio

4 | natsort

5 | scipy

6 | scikit-image

7 | pandas

8 | dominate

9 | opencv-python

10 | visdom

11 |

--------------------------------------------------------------------------------

/scripts/eval_fashion.sh:

--------------------------------------------------------------------------------

1 | python -m metrics.metrics --gt_path=./dataset/fashion/test --distorated_path=./results/DPTN_fashion --fid_real_path=./dataset/fashion/train --name=./fashion

--------------------------------------------------------------------------------

/scripts/eval_market.sh:

--------------------------------------------------------------------------------

1 | python -m metrics.metrics --gt_path=./dataset/market/test --distorated_path=./results/DPTN_market --fid_real_path=./dataset/market/train --name=./market --market

--------------------------------------------------------------------------------

/scripts/test_market.sh:

--------------------------------------------------------------------------------

1 | python test.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --which_epoch latest --results_dir=./results/DPTN_market --batchSize 1 --gpu_id=0

--------------------------------------------------------------------------------

/scripts/test_fashion.sh:

--------------------------------------------------------------------------------

1 | python test.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --which_epoch latest --results_dir ./results/DPTN_fashion --batchSize 1 --gpu_id=0

--------------------------------------------------------------------------------

/metrics/README.md:

--------------------------------------------------------------------------------

1 | Please clone the official repository **[PerceptualSimilarity](https://github.com/richzhang/PerceptualSimilarity/tree/future)** of the LPIPS score, and put the folder PerceptualSimilarity here.

2 |

--------------------------------------------------------------------------------

/scripts/train_market.sh:

--------------------------------------------------------------------------------

1 | python train.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --dis_layer=3 --lambda_g=5 --lambda_rec 2 --t_s_ratio=0.8 --save_latest_freq=10400 --batchSize 32 --gpu_id=0

--------------------------------------------------------------------------------

/data/data_loader.py:

--------------------------------------------------------------------------------

1 |

2 | def CreateDataLoader(opt):

3 | from data.custom_dataset_data_loader import CustomDatasetDataLoader

4 | data_loader = CustomDatasetDataLoader()

5 | print(data_loader.name())

6 | data_loader.initialize(opt)

7 | return data_loader

8 |

--------------------------------------------------------------------------------

/data/base_data_loader.py:

--------------------------------------------------------------------------------

1 |

2 | class BaseDataLoader():

3 | def __init__(self):

4 | pass

5 |

6 | def initialize(self, opt):

7 | self.opt = opt

8 | pass

9 |

10 | def load_data():

11 | return None

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/Poster.md:

--------------------------------------------------------------------------------

1 |  2 | Our poster template can be download from [Google Drive](https://docs.google.com/presentation/d/1i02V0JZCw2mRZF99szitaOEkfVNeKR1q/edit?usp=sharing&ouid=111594135598063931892&rtpof=true&sd=true).

3 |

--------------------------------------------------------------------------------

/models/models.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import models

3 |

4 | def create_model(opt):

5 | '''

6 | if opt.model == 'pix2pixHD':

7 | from .pix2pixHD_model import Pix2PixHDModel, InferenceModel

8 | if opt.isTrain:

9 | model = Pix2PixHDModel()

10 | else:

11 | model = InferenceModel()

12 | elif opt.model == 'basic':

13 | from .basic_model import BasicModel

14 | model = BasicModel(opt)

15 | else:

16 | from .ui_model import UIModel

17 | model = UIModel()

18 | '''

19 | model = models.find_model_using_name(opt.model)(opt)

20 | if opt.verbose:

21 | print("model [%s] was created" % (model.name()))

22 | return model

23 |

--------------------------------------------------------------------------------

/test.py:

--------------------------------------------------------------------------------

1 | from data.data_loader import CreateDataLoader

2 | from options.test_options import TestOptions

3 | from models.models import create_model

4 | import numpy as np

5 | import torch

6 |

7 | if __name__=='__main__':

8 | # get testing options

9 | opt = TestOptions().parse()

10 | # creat a dataset

11 | data_loader = CreateDataLoader(opt)

12 | dataset = data_loader.load_data()

13 |

14 |

15 | print(len(dataset))

16 |

17 | dataset_size = len(dataset) * opt.batchSize

18 | print('testing images = %d' % dataset_size)

19 | # create a model

20 | model = create_model(opt)

21 |

22 | with torch.no_grad():

23 | for i, data in enumerate(dataset):

24 | model.set_input(data)

25 | model.test()

26 |

--------------------------------------------------------------------------------

/models/__init__.py:

--------------------------------------------------------------------------------

1 | """This package contains modules related to function, network architectures, and models"""

2 |

3 | import importlib

4 | from .base_model import BaseModel

5 |

6 |

7 | def find_model_using_name(model_name):

8 | """Import the module "model/[model_name]_model.py"."""

9 | model_file_name = "models." + model_name + "_model"

10 | modellib = importlib.import_module(model_file_name)

11 | model = None

12 | for name, cls in modellib.__dict__.items():

13 | if name.lower() == (model_name+'model').lower() and issubclass(cls, BaseModel):

14 | model = cls

15 |

16 | if model is None:

17 | print("In %s.py, there should be a subclass of BaseModel with class name that matches %s in lowercase." % (model_file_name, model_name))

18 | exit(0)

19 |

20 | return model

21 |

22 |

23 | def get_option_setter(model_name):

24 | """Return the static method of the model class."""

25 | model = find_model_using_name(model_name)

26 | return model.modify_options

27 |

--------------------------------------------------------------------------------

/util/image_pool.py:

--------------------------------------------------------------------------------

1 | import random

2 | import torch

3 | from torch.autograd import Variable

4 | class ImagePool():

5 | def __init__(self, pool_size):

6 | self.pool_size = pool_size

7 | if self.pool_size > 0:

8 | self.num_imgs = 0

9 | self.images = []

10 |

11 | def query(self, images):

12 | if self.pool_size == 0:

13 | return images

14 | return_images = []

15 | for image in images.data:

16 | image = torch.unsqueeze(image, 0)

17 | if self.num_imgs < self.pool_size:

18 | self.num_imgs = self.num_imgs + 1

19 | self.images.append(image)

20 | return_images.append(image)

21 | else:

22 | p = random.uniform(0, 1)

23 | if p > 0.5:

24 | random_id = random.randint(0, self.pool_size-1)

25 | tmp = self.images[random_id].clone()

26 | self.images[random_id] = image

27 | return_images.append(tmp)

28 | else:

29 | return_images.append(image)

30 | return_images = Variable(torch.cat(return_images, 0))

31 | return return_images

32 |

--------------------------------------------------------------------------------

/data/custom_dataset_data_loader.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data

2 | from data.base_data_loader import BaseDataLoader

3 | import data

4 |

5 | def CreateDataset(opt):

6 | '''

7 | dataset = None

8 | if opt.dataset_mode == 'fashion':

9 | from data.fashion_dataset import FashionDataset

10 | dataset = FashionDataset()

11 | else:

12 | from data.aligned_dataset import AlignedDataset

13 | dataset = AlignedDataset()

14 | '''

15 | dataset = data.find_dataset_using_name(opt.dataset_mode)()

16 | print("dataset [%s] was created" % (dataset.name()))

17 | dataset.initialize(opt)

18 | return dataset

19 |

20 | class CustomDatasetDataLoader(BaseDataLoader):

21 | def name(self):

22 | return 'CustomDatasetDataLoader'

23 |

24 | def initialize(self, opt):

25 | BaseDataLoader.initialize(self, opt)

26 | self.dataset = CreateDataset(opt)

27 | self.dataloader = torch.utils.data.DataLoader(

28 | self.dataset,

29 | batch_size=opt.batchSize,

30 | shuffle=(not opt.serial_batches) and opt.isTrain,

31 | num_workers=int(opt.nThreads))

32 |

33 | def load_data(self):

34 | return self.dataloader

35 |

36 | def __len__(self):

37 | return min(len(self.dataset), self.opt.max_dataset_size)

38 |

--------------------------------------------------------------------------------

/options/test_options.py:

--------------------------------------------------------------------------------

1 | from .base_options import BaseOptions

2 |

3 | class TestOptions(BaseOptions):

4 | def initialize(self):

5 | BaseOptions.initialize(self)

6 | self.parser.add_argument('--ntest', type=int, default=float("inf"), help='# of test examples.')

7 | self.parser.add_argument('--results_dir', type=str, default='./results/', help='saves results here.')

8 | self.parser.add_argument('--aspect_ratio', type=float, default=1.0, help='aspect ratio of result images')

9 | self.parser.add_argument('--phase', type=str, default='test', help='train, val, test, etc')

10 | self.parser.add_argument('--which_epoch', type=str, default='latest', help='which epoch to load? set to latest to use latest cached model')

11 | self.parser.add_argument('--how_many', type=int, default=50, help='how many test images to run')

12 | self.parser.add_argument('--cluster_path', type=str, default='features_clustered_010.npy', help='the path for clustered results of encoded features')

13 | self.parser.add_argument('--use_encoded_image', action='store_true', help='if specified, encode the real image to get the feature map')

14 | self.parser.add_argument("--export_onnx", type=str, help="export ONNX model to a given file")

15 | self.parser.add_argument("--engine", type=str, help="run serialized TRT engine")

16 | self.parser.add_argument("--onnx", type=str, help="run ONNX model via TRT")

17 | self.isTrain = False

18 |

--------------------------------------------------------------------------------

/data/__init__.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import torch.utils.data

3 | from data.base_dataset import BaseDataset

4 |

5 |

6 | def find_dataset_using_name(dataset_name):

7 | # Given the option --dataset [datasetname],

8 | # the file "datasets/datasetname_dataset.py"

9 | # will be imported.

10 | dataset_filename = "data." + dataset_name + "_dataset"

11 | datasetlib = importlib.import_module(dataset_filename)

12 |

13 | # In the file, the class called DatasetNameDataset() will

14 | # be instantiated. It has to be a subclass of BaseDataset,

15 | # and it is case-insensitive.

16 | dataset = None

17 | target_dataset_name = dataset_name.replace('_', '') + 'dataset'

18 | for name, cls in datasetlib.__dict__.items():

19 | if name.lower() == target_dataset_name.lower() \

20 | and issubclass(cls, BaseDataset):

21 | dataset = cls

22 |

23 | if dataset is None:

24 | raise ValueError("In %s.py, there should be a subclass of BaseDataset "

25 | "with class name that matches %s in lowercase." %

26 | (dataset_filename, target_dataset_name))

27 |

28 | return dataset

29 |

30 |

31 | def get_option_setter(dataset_name):

32 | dataset_class = find_dataset_using_name(dataset_name)

33 | return dataset_class.modify_commandline_options

34 |

35 | '''

36 | def create_dataloader(opt):

37 | dataset = find_dataset_using_name(opt.dataset_mode)

38 | instance = dataset()

39 | instance.initialize(opt)

40 | print("dataset [%s] of size %d was created" %

41 | (type(instance).__name__, len(instance)))

42 | dataloader = torch.utils.data.DataLoader(

43 | instance,

44 | batch_size=opt.batchSize,

45 | shuffle=not opt.serial_batches,

46 | num_workers=int(opt.nThreads),

47 | drop_last=opt.isTrain

48 | )

49 | return dataloader

50 | '''

--------------------------------------------------------------------------------

/data/generate_fashion_datasets.py:

--------------------------------------------------------------------------------

1 | import os

2 | import shutil

3 | from PIL import Image

4 |

5 | IMG_EXTENSIONS = [

6 | '.jpg', '.JPG', '.jpeg', '.JPEG',

7 | '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',

8 | ]

9 |

10 | def is_image_file(filename):

11 | return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

12 |

13 | def make_dataset(dir):

14 | images = []

15 | assert os.path.isdir(dir), '%s is not a valid directory' % dir

16 | new_root = './fashion'

17 | if not os.path.exists(new_root):

18 | os.mkdir(new_root)

19 |

20 | train_root = './fashion/train'

21 | if not os.path.exists(train_root):

22 | os.mkdir(train_root)

23 |

24 | test_root = './fashion/test'

25 | if not os.path.exists(test_root):

26 | os.mkdir(test_root)

27 |

28 | train_images = []

29 | train_f = open('./fashion/train.lst', 'r')

30 | for lines in train_f:

31 | lines = lines.strip()

32 | if lines.endswith('.jpg'):

33 | train_images.append(lines)

34 |

35 | test_images = []

36 | test_f = open('./fashion/test.lst', 'r')

37 | for lines in test_f:

38 | lines = lines.strip()

39 | if lines.endswith('.jpg'):

40 | test_images.append(lines)

41 |

42 | print(train_images, test_images)

43 |

44 |

45 | for root, _, fnames in sorted(os.walk(dir)):

46 | for fname in fnames:

47 | if is_image_file(fname):

48 | path = os.path.join(root, fname)

49 | path_names = path.split('/')

50 | # path_names[2] = path_names[2].replace('_', '')

51 | path_names[3] = path_names[3].replace('_', '')

52 | path_names[4] = path_names[4].split('_')[0] + "_" + "".join(path_names[4].split('_')[1:])

53 | path_names = "".join(path_names)

54 | # new_path = os.path.join(root, path_names)

55 | img = Image.open(path)

56 | imgcrop = img.crop((40, 0, 216, 256))

57 | if new_path in train_images:

58 | imgcrop.save(os.path.join(train_root, path_names))

59 | elif new_path in test_images:

60 | imgcrop.save(os.path.join(test_root, path_names))

61 |

62 | make_dataset('./fashion')

--------------------------------------------------------------------------------

/util/html.py:

--------------------------------------------------------------------------------

1 | import dominate

2 | from dominate.tags import *

3 | import os

4 |

5 |

6 | class HTML:

7 | def __init__(self, web_dir, title, refresh=0):

8 | self.title = title

9 | self.web_dir = web_dir

10 | self.img_dir = os.path.join(self.web_dir, 'images')

11 | if not os.path.exists(self.web_dir):

12 | os.makedirs(self.web_dir)

13 | if not os.path.exists(self.img_dir):

14 | os.makedirs(self.img_dir)

15 |

16 | self.doc = dominate.document(title=title)

17 | if refresh > 0:

18 | with self.doc.head:

19 | meta(http_equiv="refresh", content=str(refresh))

20 |

21 | def get_image_dir(self):

22 | return self.img_dir

23 |

24 | def add_header(self, str):

25 | with self.doc:

26 | h3(str)

27 |

28 | def add_table(self, border=1):

29 | self.t = table(border=border, style="table-layout: fixed;")

30 | self.doc.add(self.t)

31 |

32 | def add_images(self, ims, txts, links, width=512):

33 | self.add_table()

34 | with self.t:

35 | with tr():

36 | for im, txt, link in zip(ims, txts, links):

37 | with td(style="word-wrap: break-word;", halign="center", valign="top"):

38 | with p():

39 | with a(href=os.path.join('images', link)):

40 | img(style="width:%dpx" % (width), src=os.path.join('images', im))

41 | br()

42 | p(txt)

43 |

44 | def save(self):

45 | html_file = '%s/index.html' % self.web_dir

46 | f = open(html_file, 'wt')

47 | f.write(self.doc.render())

48 | f.close()

49 |

50 |

51 | if __name__ == '__main__':

52 | html = HTML('web/', 'test_html')

53 | html.add_header('hello world')

54 |

55 | ims = []

56 | txts = []

57 | links = []

58 | for n in range(4):

59 | ims.append('image_%d.jpg' % n)

60 | txts.append('text_%d' % n)

61 | links.append('image_%d.jpg' % n)

62 | html.add_images(ims, txts, links)

63 | html.save()

64 |

--------------------------------------------------------------------------------

/data/image_folder.py:

--------------------------------------------------------------------------------

1 | ###############################################################################

2 | # Code from

3 | # https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py

4 | # Modified the original code so that it also loads images from the current

5 | # directory as well as the subdirectories

6 | ###############################################################################

7 | import torch.utils.data as data

8 | from PIL import Image

9 | import os

10 |

11 | IMG_EXTENSIONS = [

12 | '.jpg', '.JPG', '.jpeg', '.JPEG',

13 | '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP', '.tiff'

14 | ]

15 |

16 |

17 | def is_image_file(filename):

18 | return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

19 |

20 |

21 | def make_dataset(dir):

22 | images = []

23 | assert os.path.isdir(dir), '%s is not a valid directory' % dir

24 |

25 | for root, _, fnames in sorted(os.walk(dir)):

26 | for fname in fnames:

27 | if is_image_file(fname):

28 | path = os.path.join(root, fname)

29 | images.append(path)

30 |

31 | return images

32 |

33 |

34 | def default_loader(path):

35 | return Image.open(path).convert('RGB')

36 |

37 |

38 | class ImageFolder(data.Dataset):

39 |

40 | def __init__(self, root, transform=None, return_paths=False,

41 | loader=default_loader):

42 | imgs = make_dataset(root)

43 | if len(imgs) == 0:

44 | raise(RuntimeError("Found 0 images in: " + root + "\n"

45 | "Supported image extensions are: " +

46 | ",".join(IMG_EXTENSIONS)))

47 |

48 | self.root = root

49 | self.imgs = imgs

50 | self.transform = transform

51 | self.return_paths = return_paths

52 | self.loader = loader

53 |

54 | def __getitem__(self, index):

55 | path = self.imgs[index]

56 | img = self.loader(path)

57 | if self.transform is not None:

58 | img = self.transform(img)

59 | if self.return_paths:

60 | return img, path

61 | else:

62 | return img

63 |

64 | def __len__(self):

65 | return len(self.imgs)

66 |

--------------------------------------------------------------------------------

/data/base_dataset.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data as data

2 | from PIL import Image

3 | import torchvision.transforms as transforms

4 | import numpy as np

5 | import random

6 |

7 |

8 | class BaseDataset(data.Dataset):

9 | def __init__(self):

10 | super(BaseDataset, self).__init__()

11 |

12 | def name(self):

13 | return 'BaseDataset'

14 |

15 | def initialize(self, opt):

16 | pass

17 |

18 | def get_params(opt, size):

19 | w, h = size

20 | new_h = h

21 | new_w = w

22 | if opt.resize_or_crop == 'resize_and_crop':

23 | new_h = new_w = opt.loadSize

24 | elif opt.resize_or_crop == 'scale_width_and_crop':

25 | new_w = opt.loadSize

26 | new_h = opt.loadSize * h // w

27 |

28 | x = random.randint(0, np.maximum(0, new_w - opt.fineSize))

29 | y = random.randint(0, np.maximum(0, new_h - opt.fineSize))

30 |

31 | flip = random.random() > 0.5

32 | return {'crop_pos': (x, y), 'flip': flip}

33 |

34 | def get_transform(opt, params, method=Image.BICUBIC, normalize=True):

35 | transform_list = []

36 | if 'resize' in opt.resize_or_crop:

37 | osize = [opt.loadSize, opt.loadSize]

38 | transform_list.append(transforms.Scale(osize, method))

39 | elif 'scale_width' in opt.resize_or_crop:

40 | transform_list.append(transforms.Lambda(lambda img: __scale_width(img, opt.loadSize, method)))

41 |

42 | if 'crop' in opt.resize_or_crop:

43 | transform_list.append(transforms.Lambda(lambda img: __crop(img, params['crop_pos'], opt.fineSize)))

44 |

45 | if opt.resize_or_crop == 'none':

46 | base = float(2 ** opt.n_downsample_global)

47 | if opt.netG == 'local':

48 | base *= (2 ** opt.n_local_enhancers)

49 | transform_list.append(transforms.Lambda(lambda img: __make_power_2(img, base, method)))

50 |

51 | if opt.isTrain and not opt.no_flip:

52 | transform_list.append(transforms.Lambda(lambda img: __flip(img, params['flip'])))

53 |

54 | transform_list += [transforms.ToTensor()]

55 |

56 | if normalize:

57 | transform_list += [transforms.Normalize((0.5, 0.5, 0.5),

58 | (0.5, 0.5, 0.5))]

59 | return transforms.Compose(transform_list)

60 |

61 |

62 | def normalize():

63 | return transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

64 |

65 |

66 | def __make_power_2(img, base, method=Image.BICUBIC):

67 | ow, oh = img.size

68 | h = int(round(oh / base) * base)

69 | w = int(round(ow / base) * base)

70 | if (h == oh) and (w == ow):

71 | return img

72 | return img.resize((w, h), method)

73 |

74 |

75 | def __scale_width(img, target_width, method=Image.BICUBIC):

76 | ow, oh = img.size

77 | if (ow == target_width):

78 | return img

79 | w = target_width

80 | h = int(target_width * oh / ow)

81 | return img.resize((w, h), method)

82 |

83 |

84 | def __crop(img, pos, size):

85 | ow, oh = img.size

86 | x1, y1 = pos

87 | tw = th = size

88 | if (ow > tw or oh > th):

89 | return img.crop((x1, y1, x1 + tw, y1 + th))

90 | return img

91 |

92 |

93 | def __flip(img, flip):

94 | if flip:

95 | return img.transpose(Image.FLIP_LEFT_RIGHT)

96 | return img

97 |

--------------------------------------------------------------------------------

/options/train_options.py:

--------------------------------------------------------------------------------

1 | from .base_options import BaseOptions

2 |

3 | class TrainOptions(BaseOptions):

4 | def initialize(self):

5 | BaseOptions.initialize(self)

6 | # for displays

7 | self.parser.add_argument('--display_freq', type=int, default=200, help='frequency of showing training results on screen')

8 | self.parser.add_argument('--print_freq', type=int, default=200, help='frequency of showing training results on console')

9 | self.parser.add_argument('--save_latest_freq', type=int, default=1000, help='frequency of saving the latest results')

10 | self.parser.add_argument('--save_epoch_freq', type=int, default=1, help='frequency of saving checkpoints at the end of epochs')

11 | self.parser.add_argument('--no_html', action='store_true', help='do not save intermediate training results to [opt.checkpoints_dir]/[opt.name]/web/')

12 | self.parser.add_argument('--debug', action='store_true', help='only do one epoch and displays at each iteration')

13 |

14 | # for training

15 | self.parser.add_argument('--continue_train', action='store_true', help='continue training: load the latest model')

16 | self.parser.add_argument('--load_pretrain', type=str, default='', help='load the pretrained model from the specified location')

17 | self.parser.add_argument('--which_epoch', type=str, default='latest', help='which epoch to load? set to latest to use latest cached model')

18 | self.parser.add_argument('--phase', type=str, default='train', help='train, val, test, etc')

19 | self.parser.add_argument('--niter', type=int, default=100, help='# of iter at starting learning rate')

20 | self.parser.add_argument('--niter_decay', type=int, default=100, help='# of iter to linearly decay learning rate to zero')

21 | self.parser.add_argument('--iter_start', type=int, default=0, help='# of iter to linearly decay learning rate to zero')

22 | self.parser.add_argument('--beta1', type=float, default=0.5, help='momentum term of adam')

23 | self.parser.add_argument('--lr', type=float, default=0.0002, help='initial learning rate for adam')

24 | self.parser.add_argument('--lr_policy', type=str, default='lambda', help='learning rate policy[lambda|step|plateau]')

25 | self.parser.add_argument('--gan_mode', type=str, default='lsgan', choices=['wgan-gp', 'hinge', 'lsgan'])

26 | # for discriminators

27 | self.parser.add_argument('--num_D', type=int, default=1, help='number of discriminators to use')

28 | self.parser.add_argument('--n_layers_D', type=int, default=3, help='only used if which_model_netD==n_layers')

29 | self.parser.add_argument('--ndf', type=int, default=64, help='# of discrim filters in first conv layer')

30 | self.parser.add_argument('--lambda_feat', type=float, default=10.0, help='weight for feature matching loss')

31 | self.parser.add_argument('--no_ganFeat_loss', action='store_true', help='if specified, do *not* use discriminator feature matching loss')

32 | self.parser.add_argument('--no_vgg_loss', action='store_true', help='if specified, do *not* use VGG feature matching loss')

33 | self.parser.add_argument('--pool_size', type=int, default=0, help='the size of image buffer that stores previously generated images')

34 |

35 | self.isTrain = True

36 |

--------------------------------------------------------------------------------

/data/market_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | from data.base_dataset import BaseDataset, get_params, get_transform, normalize

3 | from data.image_folder import make_dataset

4 | import torchvision.transforms.functional as F

5 | import torchvision.transforms as transforms

6 | from PIL import Image

7 | from util import pose_utils

8 | import pandas as pd

9 | import numpy as np

10 | import torch

11 |

12 | class MarketDataset(BaseDataset):

13 | @staticmethod

14 | def modify_commandline_options(parser, is_train):

15 | if is_train:

16 | parser.set_defaults(load_size=128)

17 | else:

18 | parser.set_defaults(load_size=128)

19 | parser.set_defaults(old_size=(128, 64))

20 | parser.set_defaults(structure_nc=18)

21 | parser.set_defaults(image_nc=3)

22 | return parser

23 |

24 | def initialize(self, opt):

25 | self.opt = opt

26 | self.root = opt.dataroot

27 | self.phase = opt.phase

28 |

29 | # prepare for image (image_dir), image_pair (name_pairs) and bone annotation (annotation_file)

30 | self.image_dir = os.path.join(self.root, self.phase)

31 | self.bone_file = os.path.join(self.root, 'market-annotation-%s.csv' % self.phase)

32 | pairLst = os.path.join(self.root, 'market-pairs-%s.csv' % self.phase)

33 | self.name_pairs = self.init_categories(pairLst)

34 | self.annotation_file = pd.read_csv(self.bone_file, sep=':')

35 | self.annotation_file = self.annotation_file.set_index('name')

36 |

37 | # load image size

38 | if isinstance(opt.loadSize, int):

39 | self.load_size = (128, 64)

40 | else:

41 | self.load_size = opt.loadSize

42 |

43 | # prepare for transformation

44 | transform_list=[]

45 | transform_list.append(transforms.ToTensor())

46 | transform_list.append(transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5)))

47 | self.trans = transforms.Compose(transform_list)

48 |

49 | def __getitem__(self, index):

50 | # prepare for source image Xs and target image Xt

51 | Xs_name, Xt_name = self.name_pairs[index]

52 | Xs_path = os.path.join(self.image_dir, Xs_name)

53 | Xt_path = os.path.join(self.image_dir, Xt_name)

54 |

55 | Xs = Image.open(Xs_path).convert('RGB')

56 | Xt = Image.open(Xt_path).convert('RGB')

57 |

58 | Xs = F.resize(Xs, self.load_size)

59 | Xt = F.resize(Xt, self.load_size)

60 |

61 | Ps = self.obtain_bone(Xs_name)

62 | Xs = self.trans(Xs)

63 | Pt = self.obtain_bone(Xt_name)

64 | Xt = self.trans(Xt)

65 |

66 | return {'Xs': Xs, 'Ps': Ps, 'Xt': Xt, 'Pt': Pt,

67 | 'Xs_path': Xs_name, 'Xt_path': Xt_name}

68 |

69 | def init_categories(self, pairLst):

70 | pairs_file_train = pd.read_csv(pairLst)

71 | size = len(pairs_file_train)

72 | pairs = []

73 | print('Loading data pairs ...')

74 | for i in range(size):

75 | pair = [pairs_file_train.iloc[i]['from'], pairs_file_train.iloc[i]['to']]

76 | pairs.append(pair)

77 |

78 | print('Loading data pairs finished ...')

79 | return pairs

80 |

81 | def getRandomAffineParam(self):

82 | if self.opt.angle is not False:

83 | angle = np.random.uniform(low=self.opt.angle[0], high=self.opt.angle[1])

84 | else:

85 | angle = 0

86 | if self.opt.scale is not False:

87 | scale = np.random.uniform(low=self.opt.scale[0], high=self.opt.scale[1])

88 | else:

89 | scale = 1

90 | if self.opt.shift is not False:

91 | shift_x = np.random.uniform(low=self.opt.shift[0], high=self.opt.shift[1])

92 | shift_y = np.random.uniform(low=self.opt.shift[0], high=self.opt.shift[1])

93 | else:

94 | shift_x = 0

95 | shift_y = 0

96 | return angle, (shift_x, shift_y), scale

97 |

98 | def obtain_bone(self, name):

99 | string = self.annotation_file.loc[name]

100 | array = pose_utils.load_pose_cords_from_strings(string['keypoints_y'], string['keypoints_x'])

101 | pose = pose_utils.cords_to_map(array, self.load_size, self.opt.old_size)

102 | pose = np.transpose(pose,(2, 0, 1))

103 | pose = torch.Tensor(pose)

104 | return pose

105 |

106 | def obtain_bone_affine(self, name, affine_matrix):

107 | string = self.annotation_file.loc[name]

108 | array = pose_utils.load_pose_cords_from_strings(string['keypoints_y'], string['keypoints_x'])

109 | pose = pose_utils.cords_to_map(array, self.load_size, self.opt.old_size, affine_matrix)

110 | pose = np.transpose(pose,(2, 0, 1))

111 | pose = torch.Tensor(pose)

112 | return pose

113 |

114 | def __len__(self):

115 | return len(self.name_pairs) // self.opt.batchSize * self.opt.batchSize

116 |

117 | def name(self):

118 | return 'MarketDataset'

--------------------------------------------------------------------------------

/data/fashion_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | from data.base_dataset import BaseDataset, get_params, get_transform, normalize

3 | from data.image_folder import make_dataset

4 | import torchvision.transforms.functional as F

5 | import torchvision.transforms as transforms

6 | from PIL import Image

7 | from util import pose_utils

8 | import pandas as pd

9 | import numpy as np

10 | import torch

11 |

12 | class FashionDataset(BaseDataset):

13 | @staticmethod

14 | def modify_commandline_options(parser, is_train):

15 | if is_train:

16 | parser.set_defaults(load_size=256)

17 | else:

18 | parser.set_defaults(load_size=256)

19 | parser.set_defaults(old_size=(256, 176))

20 | parser.set_defaults(structure_nc=18)

21 | parser.set_defaults(image_nc=3)

22 | return parser

23 |

24 | def initialize(self, opt):

25 | self.opt = opt

26 | self.root = opt.dataroot

27 | self.phase = opt.phase

28 |

29 | # prepare for image (image_dir), image_pair (name_pairs) and bone annotation (annotation_file)

30 | self.image_dir = os.path.join(self.root, self.phase)

31 | self.bone_file = os.path.join(self.root, 'fasion-resize-annotation-%s.csv' % self.phase)

32 | pairLst = os.path.join(self.root, 'fasion-resize-pairs-%s.csv' % self.phase)

33 | self.name_pairs = self.init_categories(pairLst)

34 | self.annotation_file = pd.read_csv(self.bone_file, sep=':')

35 | self.annotation_file = self.annotation_file.set_index('name')

36 |

37 | # load image size

38 | if isinstance(opt.loadSize, int):

39 | self.load_size = (opt.loadSize, opt.loadSize)

40 | else:

41 | self.load_size = opt.loadSize

42 |

43 | # prepare for transformation

44 | transform_list=[]

45 | transform_list.append(transforms.ToTensor())

46 | transform_list.append(transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5)))

47 | self.trans = transforms.Compose(transform_list)

48 |

49 | def __getitem__(self, index):

50 | # prepare for source image Xs and target image Xt

51 | Xs_name, Xt_name = self.name_pairs[index]

52 | Xs_path = os.path.join(self.image_dir, Xs_name)

53 | Xt_path = os.path.join(self.image_dir, Xt_name)

54 |

55 | Xs = Image.open(Xs_path).convert('RGB')

56 | Xt = Image.open(Xt_path).convert('RGB')

57 |

58 | Xs = F.resize(Xs, self.load_size)

59 | Xt = F.resize(Xt, self.load_size)

60 |

61 | Ps = self.obtain_bone(Xs_name)

62 | Xs = self.trans(Xs)

63 | Pt = self.obtain_bone(Xt_name)

64 | Xt = self.trans(Xt)

65 |

66 | return {'Xs': Xs, 'Ps': Ps, 'Xt': Xt, 'Pt': Pt,

67 | 'Xs_path': Xs_name, 'Xt_path': Xt_name}

68 |

69 | def init_categories(self, pairLst):

70 | pairs_file_train = pd.read_csv(pairLst)

71 | size = len(pairs_file_train)

72 | pairs = []

73 | print('Loading data pairs ...')

74 | for i in range(size):

75 | pair = [pairs_file_train.iloc[i]['from'], pairs_file_train.iloc[i]['to']]

76 | pairs.append(pair)

77 |

78 | print('Loading data pairs finished ...')

79 | return pairs

80 |

81 | def getRandomAffineParam(self):

82 | if self.opt.angle is not False:

83 | angle = np.random.uniform(low=self.opt.angle[0], high=self.opt.angle[1])

84 | else:

85 | angle = 0

86 | if self.opt.scale is not False:

87 | scale = np.random.uniform(low=self.opt.scale[0], high=self.opt.scale[1])

88 | else:

89 | scale = 1

90 | if self.opt.shift is not False:

91 | shift_x = np.random.uniform(low=self.opt.shift[0], high=self.opt.shift[1])

92 | shift_y = np.random.uniform(low=self.opt.shift[0], high=self.opt.shift[1])

93 | else:

94 | shift_x = 0

95 | shift_y = 0

96 | return angle, (shift_x, shift_y), scale

97 |

98 | def obtain_bone(self, name):

99 | string = self.annotation_file.loc[name]

100 | array = pose_utils.load_pose_cords_from_strings(string['keypoints_y'], string['keypoints_x'])

101 | pose = pose_utils.cords_to_map(array, self.load_size, self.opt.old_size)

102 | pose = np.transpose(pose,(2, 0, 1))

103 | pose = torch.Tensor(pose)

104 | return pose

105 |

106 | def obtain_bone_affine(self, name, affine_matrix):

107 | string = self.annotation_file.loc[name]

108 | array = pose_utils.load_pose_cords_from_strings(string['keypoints_y'], string['keypoints_x'])

109 | pose = pose_utils.cords_to_map(array, self.load_size, self.opt.old_size, affine_matrix)

110 | pose = np.transpose(pose,(2, 0, 1))

111 | pose = torch.Tensor(pose)

112 | return pose

113 |

114 | def __len__(self):

115 | return len(self.name_pairs) // self.opt.batchSize * self.opt.batchSize

116 |

117 | def name(self):

118 | return 'FashionDataset'

--------------------------------------------------------------------------------

/metrics/inception.py:

--------------------------------------------------------------------------------

1 | import torch.nn as nn

2 | import torch.nn.functional as F

3 | from torchvision import models

4 |

5 |

6 | class InceptionV3(nn.Module):

7 | """Pretrained InceptionV3 network returning feature maps"""

8 |

9 | # Index of default block of inception to return,

10 | # corresponds to output of final average pooling

11 | DEFAULT_BLOCK_INDEX = 3

12 |

13 | # Maps feature dimensionality to their output blocks indices

14 | BLOCK_INDEX_BY_DIM = {

15 | 64: 0, # First max pooling features

16 | 192: 1, # Second max pooling featurs

17 | 768: 2, # Pre-aux classifier features

18 | 2048: 3 # Final average pooling features

19 | }

20 |

21 | def __init__(self,

22 | output_blocks=[DEFAULT_BLOCK_INDEX],

23 | resize_input=True,

24 | normalize_input=True,

25 | requires_grad=False):

26 | """Build pretrained InceptionV3

27 | Parameters

28 | ----------

29 | output_blocks : list of int

30 | Indices of blocks to return features of. Possible values are:

31 | - 0: corresponds to output of first max pooling

32 | - 1: corresponds to output of second max pooling

33 | - 2: corresponds to output which is fed to aux classifier

34 | - 3: corresponds to output of final average pooling

35 | resize_input : bool

36 | If true, bilinearly resizes input to width and height 299 before

37 | feeding input to model. As the network without fully connected

38 | layers is fully convolutional, it should be able to handle inputs

39 | of arbitrary size, so resizing might not be strictly needed

40 | normalize_input : bool

41 | If true, normalizes the input to the statistics the pretrained

42 | Inception network expects

43 | requires_grad : bool

44 | If true, parameters of the model require gradient. Possibly useful

45 | for finetuning the network

46 | """

47 | super(InceptionV3, self).__init__()

48 |

49 | self.resize_input = resize_input

50 | self.normalize_input = normalize_input

51 | self.output_blocks = sorted(output_blocks)

52 | self.last_needed_block = max(output_blocks)

53 |

54 | assert self.last_needed_block <= 3, \

55 | 'Last possible output block index is 3'

56 |

57 | self.blocks = nn.ModuleList()

58 |

59 | inception = models.inception_v3(pretrained=True)

60 |

61 | # Block 0: input to maxpool1

62 | block0 = [

63 | inception.Conv2d_1a_3x3,

64 | inception.Conv2d_2a_3x3,

65 | inception.Conv2d_2b_3x3,

66 | nn.MaxPool2d(kernel_size=3, stride=2)

67 | ]

68 | self.blocks.append(nn.Sequential(*block0))

69 |

70 | # Block 1: maxpool1 to maxpool2

71 | if self.last_needed_block >= 1:

72 | block1 = [

73 | inception.Conv2d_3b_1x1,

74 | inception.Conv2d_4a_3x3,

75 | nn.MaxPool2d(kernel_size=3, stride=2)

76 | ]

77 | self.blocks.append(nn.Sequential(*block1))

78 |

79 | # Block 2: maxpool2 to aux classifier

80 | if self.last_needed_block >= 2:

81 | block2 = [

82 | inception.Mixed_5b,

83 | inception.Mixed_5c,

84 | inception.Mixed_5d,

85 | inception.Mixed_6a,

86 | inception.Mixed_6b,

87 | inception.Mixed_6c,

88 | inception.Mixed_6d,

89 | inception.Mixed_6e,

90 | ]

91 | self.blocks.append(nn.Sequential(*block2))

92 |

93 | # Block 3: aux classifier to final avgpool

94 | if self.last_needed_block >= 3:

95 | block3 = [

96 | inception.Mixed_7a,

97 | inception.Mixed_7b,

98 | inception.Mixed_7c,

99 | nn.AdaptiveAvgPool2d(output_size=(1, 1))

100 | ]

101 | self.blocks.append(nn.Sequential(*block3))

102 |

103 | for param in self.parameters():

104 | param.requires_grad = requires_grad

105 |

106 | def forward(self, inp):

107 | """Get Inception feature maps

108 | Parameters

109 | ----------

110 | inp : torch.autograd.Variable

111 | Input tensor of shape Bx3xHxW. Values are expected to be in

112 | range (0, 1)

113 | Returns

114 | -------

115 | List of torch.autograd.Variable, corresponding to the selected output

116 | block, sorted ascending by index

117 | """

118 | outp = []

119 | x = inp

120 |

121 | if self.resize_input:

122 | x = F.upsample(x, size=(299, 299), mode='bilinear')

123 |

124 | if self.normalize_input:

125 | x = x.clone()

126 | x[:, 0] = x[:, 0] * (0.229 / 0.5) + (0.485 - 0.5) / 0.5

127 | x[:, 1] = x[:, 1] * (0.224 / 0.5) + (0.456 - 0.5) / 0.5

128 | x[:, 2] = x[:, 2] * (0.225 / 0.5) + (0.406 - 0.5) / 0.5

129 |

130 | for idx, block in enumerate(self.blocks):

131 | x = block(x)

132 | if idx in self.output_blocks:

133 | outp.append(x)

134 |

135 | if idx == self.last_needed_block:

136 | break

137 |

138 | return outp

139 |

--------------------------------------------------------------------------------

/train.py:

--------------------------------------------------------------------------------

1 | import time

2 | import os

3 | import numpy as np

4 | import torch

5 | from torch.autograd import Variable

6 | from collections import OrderedDict

7 | from subprocess import call

8 | import fractions

9 | def lcm(a,b): return abs(a * b)/fractions.gcd(a,b) if a and b else 0

10 |

11 | from options.train_options import TrainOptions

12 | from data.data_loader import CreateDataLoader

13 | from models.models import create_model

14 | import util.util as util

15 | from util.visualizer import Visualizer

16 | from torch.utils.tensorboard import SummaryWriter

17 |

18 | opt = TrainOptions().parse()

19 | iter_path = os.path.join(opt.checkpoints_dir, opt.name, 'iter.txt')

20 | if opt.continue_train:

21 | try:

22 | start_epoch, epoch_iter = np.loadtxt(iter_path , delimiter=',', dtype=int)

23 | except:

24 | start_epoch, epoch_iter = 1, 0

25 | print('Resuming from epoch %d at iteration %d' % (start_epoch, epoch_iter))

26 | else:

27 | start_epoch, epoch_iter = 1, 0

28 |

29 | opt.iter_start = start_epoch

30 |

31 | opt.print_freq = lcm(opt.print_freq, opt.batchSize)

32 | if opt.debug:

33 | opt.display_freq = 1

34 | opt.print_freq = 1

35 | opt.niter = 1

36 | opt.niter_decay = 0

37 | opt.max_dataset_size = 10

38 |

39 | data_loader = CreateDataLoader(opt)

40 | dataset = data_loader.load_data()

41 | dataset_size = len(data_loader)

42 | print('#training images = %d' % dataset_size)

43 | writer = SummaryWriter(comment=opt.name)

44 |

45 | model = create_model(opt)

46 | visualizer = Visualizer(opt)

47 |

48 | total_steps = (start_epoch-1) * dataset_size + epoch_iter

49 |

50 | display_delta = total_steps % opt.display_freq

51 | print_delta = total_steps % opt.print_freq

52 | save_delta = total_steps % opt.save_latest_freq

53 |

54 | for epoch in range(start_epoch, opt.niter + opt.niter_decay + 1):

55 | epoch_start_time = time.time()

56 | if epoch != start_epoch:

57 | epoch_iter = epoch_iter % dataset_size

58 | for i, data in enumerate(dataset, start=epoch_iter):

59 | print("epoch: ", epoch, "iter: ", epoch_iter, "total_iteration: ", total_steps, end=" ")

60 | if total_steps % opt.print_freq == print_delta:

61 | iter_start_time = time.time()

62 | total_steps += opt.batchSize

63 | epoch_iter += opt.batchSize

64 |

65 | save_fake = total_steps % opt.display_freq == display_delta

66 |

67 | model.set_input(data)

68 | model.optimize_parameters()

69 |

70 | losses = model.get_current_errors()

71 | for k, v in losses.items():

72 | print(k, ": ", '%.2f' % v, end=" ")

73 | lr_G, lr_D = model.get_current_learning_rate()

74 | print("learning rate G: %.7f" % lr_G, end=" ")

75 | print("learning rate D: %.7f" % lr_D, end=" ")

76 | print('\n')

77 |

78 |

79 | writer.add_scalar('Loss/app_gen_s', losses['app_gen_s'], total_steps)

80 | writer.add_scalar('Loss/content_gen_s', losses['content_gen_s'], total_steps)

81 | writer.add_scalar('Loss/style_gen_s', losses['style_gen_s'], total_steps)

82 | writer.add_scalar('Loss/app_gen_t', losses['app_gen_t'], total_steps)

83 | writer.add_scalar('Loss/ad_gen_t', losses['ad_gen_t'], total_steps)

84 | writer.add_scalar('Loss/dis_img_gen_t', losses['dis_img_gen_t'], total_steps)

85 | writer.add_scalar('Loss/content_gen_t', losses['content_gen_t'], total_steps)

86 | writer.add_scalar('Loss/style_gen_t', losses['style_gen_t'], total_steps)

87 | writer.add_scalar('LR/G', lr_G, total_steps)

88 | writer.add_scalar('LR/D', lr_D, total_steps)

89 |

90 |

91 | ############## Display results and errors ##########

92 | if total_steps % opt.print_freq == print_delta:

93 | losses = model.get_current_errors()

94 | t = (time.time() - iter_start_time) / opt.batchSize

95 | visualizer.print_current_errors(epoch, epoch_iter, total_steps, losses, lr_G, lr_D, t)

96 | if opt.display_id > 0:

97 | visualizer.plot_current_errors(total_steps, losses)

98 |

99 | if total_steps % opt.display_freq == display_delta:

100 | visualizer.display_current_results(model.get_current_visuals(), epoch)

101 | if hasattr(model, 'distribution'):

102 | visualizer.plot_current_distribution(model.get_current_dis())

103 |

104 | if total_steps % opt.save_latest_freq == save_delta:

105 | print('saving the latest model (epoch %d, total_steps %d)' % (epoch, total_steps))

106 | model.save_networks('latest')

107 | if opt.dataset_mode == 'market':

108 | model.save_networks(total_steps)

109 | np.savetxt(iter_path, (epoch, epoch_iter), delimiter=',', fmt='%d')

110 |

111 | if epoch_iter >= dataset_size:

112 | break

113 |

114 | # end of epoch

115 | iter_end_time = time.time()

116 | print('End of epoch %d / %d \t Time Taken: %d sec' %

117 | (epoch, opt.niter + opt.niter_decay, time.time() - epoch_start_time))

118 |

119 | ### save model for this epoch

120 | if epoch % opt.save_epoch_freq == 0 or (epoch > opt.niter and epoch % (opt.save_epoch_freq//2) == 0):

121 | print('saving the model at the end of epoch %d, iters %d' % (epoch, total_steps))

122 | model.save_networks('latest')

123 | model.save_networks(epoch)

124 | np.savetxt(iter_path, (epoch+1, 0), delimiter=',', fmt='%d')

125 |

126 | ### linearly decay learning rate after certain iterations

127 | model.update_learning_rate()

128 |

--------------------------------------------------------------------------------

/util/pose_utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from scipy.ndimage.filters import gaussian_filter

3 | from skimage.draw import circle, line_aa, polygon

4 | import json

5 |

6 | import matplotlib

7 | matplotlib.use('Agg')

8 | import matplotlib.pyplot as plt

9 | import matplotlib.patches as mpatches

10 | from collections import defaultdict

11 | import skimage.measure, skimage.transform

12 | import sys

13 |

14 | LIMB_SEQ = [[1,2], [1,5], [2,3], [3,4], [5,6], [6,7], [1,8], [8,9],

15 | [9,10], [1,11], [11,12], [12,13], [1,0], [0,14], [14,16],

16 | [0,15], [15,17], [2,16], [5,17]]

17 |

18 | COLORS = [[255, 0, 0], [255, 85, 0], [255, 170, 0], [255, 255, 0], [170, 255, 0], [85, 255, 0], [0, 255, 0],

19 | [0, 255, 85], [0, 255, 170], [0, 255, 255], [0, 170, 255], [0, 85, 255], [0, 0, 255], [85, 0, 255],

20 | [170, 0, 255], [255, 0, 255], [255, 0, 170], [255, 0, 85]]

21 |

22 |

23 | LABELS = ['nose', 'neck', 'Rsho', 'Relb', 'Rwri', 'Lsho', 'Lelb', 'Lwri',

24 | 'Rhip', 'Rkne', 'Rank', 'Lhip', 'Lkne', 'Lank', 'Leye', 'Reye', 'Lear', 'Rear']

25 |

26 | MISSING_VALUE = -1

27 |

28 |

29 | def map_to_cord(pose_map, threshold=0.1):

30 | all_peaks = [[] for i in range(18)]

31 | pose_map = pose_map[..., :18]

32 |

33 | y, x, z = np.where(np.logical_and(pose_map == pose_map.max(axis = (0, 1)),

34 | pose_map > threshold))

35 | for x_i, y_i, z_i in zip(x, y, z):

36 | all_peaks[z_i].append([x_i, y_i])

37 |

38 | x_values = []

39 | y_values = []

40 |

41 | for i in range(18):

42 | if len(all_peaks[i]) != 0:

43 | x_values.append(all_peaks[i][0][0])

44 | y_values.append(all_peaks[i][0][1])

45 | else:

46 | x_values.append(MISSING_VALUE)

47 | y_values.append(MISSING_VALUE)

48 |

49 | return np.concatenate([np.expand_dims(y_values, -1), np.expand_dims(x_values, -1)], axis=1)

50 |

51 |

52 | def cords_to_map(cords, img_size, old_size=None, affine_matrix=None, sigma=6):

53 | old_size = img_size if old_size is None else old_size

54 | cords = cords.astype(float)

55 | result = np.zeros(img_size + cords.shape[0:1], dtype='float32')

56 | for i, point in enumerate(cords):

57 | if point[0] == MISSING_VALUE or point[1] == MISSING_VALUE:

58 | continue

59 | point[0] = point[0]/old_size[0] * img_size[0]

60 | point[1] = point[1]/old_size[1] * img_size[1]

61 | if affine_matrix is not None:

62 | point_ =np.dot(affine_matrix, np.matrix([point[1], point[0], 1]).reshape(3,1))

63 | point_0 = int(point_[1])

64 | point_1 = int(point_[0])

65 | else:

66 | point_0 = int(point[0])

67 | point_1 = int(point[1])

68 | xx, yy = np.meshgrid(np.arange(img_size[1]), np.arange(img_size[0]))

69 | result[..., i] = np.exp(-((yy - point_0) ** 2 + (xx - point_1) ** 2) / (2 * sigma ** 2))

70 | return result

71 |

72 |

73 | def draw_pose_from_cords(pose_joints, img_size, radius=2, draw_joints=True):

74 | colors = np.zeros(shape=img_size + (3, ), dtype=np.uint8)

75 | mask = np.zeros(shape=img_size, dtype=bool)

76 |

77 | if draw_joints:

78 | for f, t in LIMB_SEQ:

79 | from_missing = pose_joints[f][0] == MISSING_VALUE or pose_joints[f][1] == MISSING_VALUE

80 | to_missing = pose_joints[t][0] == MISSING_VALUE or pose_joints[t][1] == MISSING_VALUE

81 | if from_missing or to_missing:

82 | continue

83 | yy, xx, val = line_aa(pose_joints[f][0], pose_joints[f][1], pose_joints[t][0], pose_joints[t][1])

84 | colors[yy, xx] = np.expand_dims(val, 1) * 255

85 | mask[yy, xx] = True

86 |

87 | for i, joint in enumerate(pose_joints):

88 | if pose_joints[i][0] == MISSING_VALUE or pose_joints[i][1] == MISSING_VALUE:

89 | continue

90 | yy, xx = circle(joint[0], joint[1], radius=radius, shape=img_size)

91 | colors[yy, xx] = COLORS[i]

92 | mask[yy, xx] = True

93 |

94 | return colors, mask

95 |

96 |

97 | def draw_pose_from_map(pose_map, threshold=0.1, **kwargs):

98 | cords = map_to_cord(pose_map, threshold=threshold)

99 | return draw_pose_from_cords(cords, pose_map.shape[:2], **kwargs)

100 |

101 |

102 | def load_pose_cords_from_strings(y_str, x_str):

103 | y_cords = json.loads(y_str)

104 | x_cords = json.loads(x_str)

105 | return np.concatenate([np.expand_dims(y_cords, -1), np.expand_dims(x_cords, -1)], axis=1)

106 |

107 | def mean_inputation(X):

108 | X = X.copy()

109 | for i in range(X.shape[1]):

110 | for j in range(X.shape[2]):

111 | val = np.mean(X[:, i, j][X[:, i, j] != -1])

112 | X[:, i, j][X[:, i, j] == -1] = val

113 | return X

114 |

115 | def draw_legend():

116 | handles = [mpatches.Patch(color=np.array(color) / 255.0, label=name) for color, name in zip(COLORS, LABELS)]

117 | plt.legend(handles=handles, bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.)

118 |

119 | def produce_ma_mask(kp_array, img_size, point_radius=4):

120 | from skimage.morphology import dilation, erosion, square

121 | mask = np.zeros(shape=img_size, dtype=bool)

122 | limbs = [[2,3], [2,6], [3,4], [4,5], [6,7], [7,8], [2,9], [9,10],

123 | [10,11], [2,12], [12,13], [13,14], [2,1], [1,15], [15,17],

124 | [1,16], [16,18], [2,17], [2,18], [9,12], [12,6], [9,3], [17,18]]

125 | limbs = np.array(limbs) - 1

126 | for f, t in limbs:

127 | from_missing = kp_array[f][0] == MISSING_VALUE or kp_array[f][1] == MISSING_VALUE

128 | to_missing = kp_array[t][0] == MISSING_VALUE or kp_array[t][1] == MISSING_VALUE

129 | if from_missing or to_missing:

130 | continue

131 |

132 | norm_vec = kp_array[f] - kp_array[t]

133 | norm_vec = np.array([-norm_vec[1], norm_vec[0]])

134 | norm_vec = point_radius * norm_vec / np.linalg.norm(norm_vec)

135 |

136 |

137 | vetexes = np.array([

138 | kp_array[f] + norm_vec,

139 | kp_array[f] - norm_vec,

140 | kp_array[t] - norm_vec,

141 | kp_array[t] + norm_vec

142 | ])

143 | yy, xx = polygon(vetexes[:, 0], vetexes[:, 1], shape=img_size)

144 | mask[yy, xx] = True

145 |

146 | for i, joint in enumerate(kp_array):

147 | if kp_array[i][0] == MISSING_VALUE or kp_array[i][1] == MISSING_VALUE:

148 | continue

149 | yy, xx = circle(joint[0], joint[1], radius=point_radius, shape=img_size)

150 | mask[yy, xx] = True

151 |

152 | mask = dilation(mask, square(5))

153 | mask = erosion(mask, square(5))

154 | return mask

155 |

156 | if __name__ == "__main__":

157 | import pandas as pd

158 | from skimage.io import imread

159 | import pylab as plt

160 | import os

161 | i = 5

162 | df = pd.read_csv('data/market-annotation-train.csv', sep=':')

163 |

164 | for index, row in df.iterrows():

165 | pose_cords = load_pose_cords_from_strings(row['keypoints_y'], row['keypoints_x'])

166 |

167 | colors, mask = draw_pose_from_cords(pose_cords, (128, 64))

168 |

169 | mmm = produce_ma_mask(pose_cords, (128, 64)).astype(float)[..., np.newaxis].repeat(3, axis=-1)

170 | print(mmm.shape)

171 | img = imread('data/market-dataset/train/' + row['name'])

172 |

173 | mmm[mask] = colors[mask]

174 |

175 | print (mmm)

176 | plt.subplot(1, 1, 1)

177 | plt.imshow(mmm)

178 | plt.show()

179 |

--------------------------------------------------------------------------------

/options/base_options.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | from util import util

4 | import torch

5 | import data

6 | import models

7 |

8 | class BaseOptions():

9 | def __init__(self):

10 | self.parser = argparse.ArgumentParser()

11 | self.initialized = False

12 |

13 | def initialize(self):

14 | # experiment specifics

15 | self.parser.add_argument('--name', type=str, default='label2city', help='name of the experiment. It decides where to store samples and models')

16 | self.parser.add_argument('--gpu_ids', type=str, default='0', help='gpu ids: e.g. 0 0,1,2, 0,2. use -1 for CPU')

17 | self.parser.add_argument('--checkpoints_dir', type=str, default='./checkpoints', help='models are saved here')

18 | self.parser.add_argument('--model', type=str, default='pix2pixHD', help='which model to use')

19 | self.parser.add_argument('--norm', type=str, default='instance', help='instance normalization or batch normalization')

20 | self.parser.add_argument('--use_dropout', action='store_true', help='use dropout for the generator')

21 | self.parser.add_argument('--data_type', default=32, type=int, choices=[8, 16, 32], help="Supported data type i.e. 8, 16, 32 bit")

22 | self.parser.add_argument('--verbose', action='store_true', default=False, help='toggles verbose')

23 | self.parser.add_argument('--fp16', action='store_true', default=False, help='train with AMP')

24 | self.parser.add_argument('--local_rank', type=int, default=0, help='local rank for distributed training')

25 |

26 | # input/output sizes

27 | self.parser.add_argument('--image_nc', type=int, default=3)

28 | self.parser.add_argument('--pose_nc', type=int, default=18)

29 | self.parser.add_argument('--batchSize', type=int, default=1, help='input batch size')

30 | self.parser.add_argument('--old_size', type=int, default=(256, 176), help='Scale images to this size. The final image will be cropped to --crop_size.')

31 | self.parser.add_argument('--loadSize', type=int, default=256, help='scale images to this size')

32 | self.parser.add_argument('--fineSize', type=int, default=512, help='then crop to this size')

33 | self.parser.add_argument('--label_nc', type=int, default=35, help='# of input label channels')

34 | self.parser.add_argument('--input_nc', type=int, default=3, help='# of input image channels')

35 | self.parser.add_argument('--output_nc', type=int, default=3, help='# of output image channels')

36 |

37 | # for setting inputs

38 | self.parser.add_argument('--dataset_mode', type=str, default='fashion')

39 | self.parser.add_argument('--dataroot', type=str, default='/media/data2/zhangpz/DataSet/Fashion')

40 | self.parser.add_argument('--resize_or_crop', type=str, default='scale_width', help='scaling and cropping of images at load time [resize_and_crop|crop|scale_width|scale_width_and_crop]')

41 | self.parser.add_argument('--serial_batches', action='store_true', help='if true, takes images in order to make batches, otherwise takes them randomly')

42 | self.parser.add_argument('--no_flip', action='store_true', help='if specified, do not flip the images for data argumentation')

43 | self.parser.add_argument('--nThreads', default=2, type=int, help='# threads for loading data')

44 | self.parser.add_argument('--max_dataset_size', type=int, default=float("inf"), help='Maximum number of samples allowed per dataset. If the dataset directory contains more than max_dataset_size, only a subset is loaded.')

45 |

46 | # for displays

47 | self.parser.add_argument('--display_winsize', type=int, default=512, help='display window size')

48 | self.parser.add_argument('--tf_log', action='store_true', help='if specified, use tensorboard logging. Requires tensorflow installed')

49 | self.parser.add_argument('--display_id', type=int, default=0, help='display id of the web') # 1

50 | self.parser.add_argument('--display_port', type=int, default=8096, help='visidom port of the web display')

51 | self.parser.add_argument('--display_single_pane_ncols', type=int, default=0,

52 | help='if positive, display all images in a single visidom web panel')

53 | self.parser.add_argument('--display_env', type=str, default=self.parser.parse_known_args()[0].name.replace('_', ''),

54 | help='the environment of visidom display')

55 | # for instance-wise features

56 | self.parser.add_argument('--no_instance', action='store_true', help='if specified, do *not* add instance map as input')

57 | self.parser.add_argument('--instance_feat', action='store_true', help='if specified, add encoded instance features as input')

58 | self.parser.add_argument('--label_feat', action='store_true', help='if specified, add encoded label features as input')

59 | self.parser.add_argument('--feat_num', type=int, default=3, help='vector length for encoded features')

60 | self.parser.add_argument('--load_features', action='store_true', help='if specified, load precomputed feature maps')

61 | self.parser.add_argument('--n_downsample_E', type=int, default=4, help='# of downsampling layers in encoder')

62 | self.parser.add_argument('--nef', type=int, default=16, help='# of encoder filters in the first conv layer')

63 | self.parser.add_argument('--n_clusters', type=int, default=10, help='number of clusters for features')

64 |

65 | self.initialized = True

66 |

67 | def parse(self, save=True):

68 | if not self.initialized:

69 | self.initialize()

70 | opt, _ = self.parser.parse_known_args()

71 | # modify the options for different models

72 | model_option_set = models.get_option_setter(opt.model)

73 | self.parser = model_option_set(self.parser, self.isTrain)

74 |

75 | data_option_set = data.get_option_setter(opt.dataset_mode)

76 | self.parser = data_option_set(self.parser, self.isTrain)

77 |

78 | self.opt = self.parser.parse_args()

79 | self.opt.isTrain = self.isTrain # train or test

80 |

81 | if torch.cuda.is_available():

82 | self.opt.device = torch.device("cuda")

83 | torch.backends.cudnn.benchmark = True # cudnn auto-tuner

84 | else:

85 | self.opt.device = torch.device("cpu")

86 |

87 | str_ids = self.opt.gpu_ids.split(',')

88 | self.opt.gpu_ids = []

89 | for str_id in str_ids:

90 | id = int(str_id)

91 | if id >= 0:

92 | self.opt.gpu_ids.append(id)

93 |

94 | # set gpu ids

95 | if len(self.opt.gpu_ids) > 0:

96 | torch.cuda.set_device(self.opt.gpu_ids[0])

97 |

98 | args = vars(self.opt)

99 |

100 | print('------------ Options -------------')

101 | for k, v in sorted(args.items()):

102 | print('%s: %s' % (str(k), str(v)))

103 | print('-------------- End ----------------')

104 |

105 | # save to the disk

106 | expr_dir = os.path.join(self.opt.checkpoints_dir, self.opt.name)

107 | util.mkdirs(expr_dir)

108 | if save and not (self.isTrain and self.opt.continue_train):

109 | name = 'train' if self.isTrain else 'test'

110 | file_name = os.path.join(expr_dir, name+'_opt.txt')

111 | with open(file_name, 'wt') as opt_file:

112 | opt_file.write('------------ Options -------------\n')

113 | for k, v in sorted(args.items()):

114 | opt_file.write('%s: %s\n' % (str(k), str(v)))

115 | opt_file.write('-------------- End ----------------\n')

116 | return self.opt

117 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Dual-task Pose Transformer Network

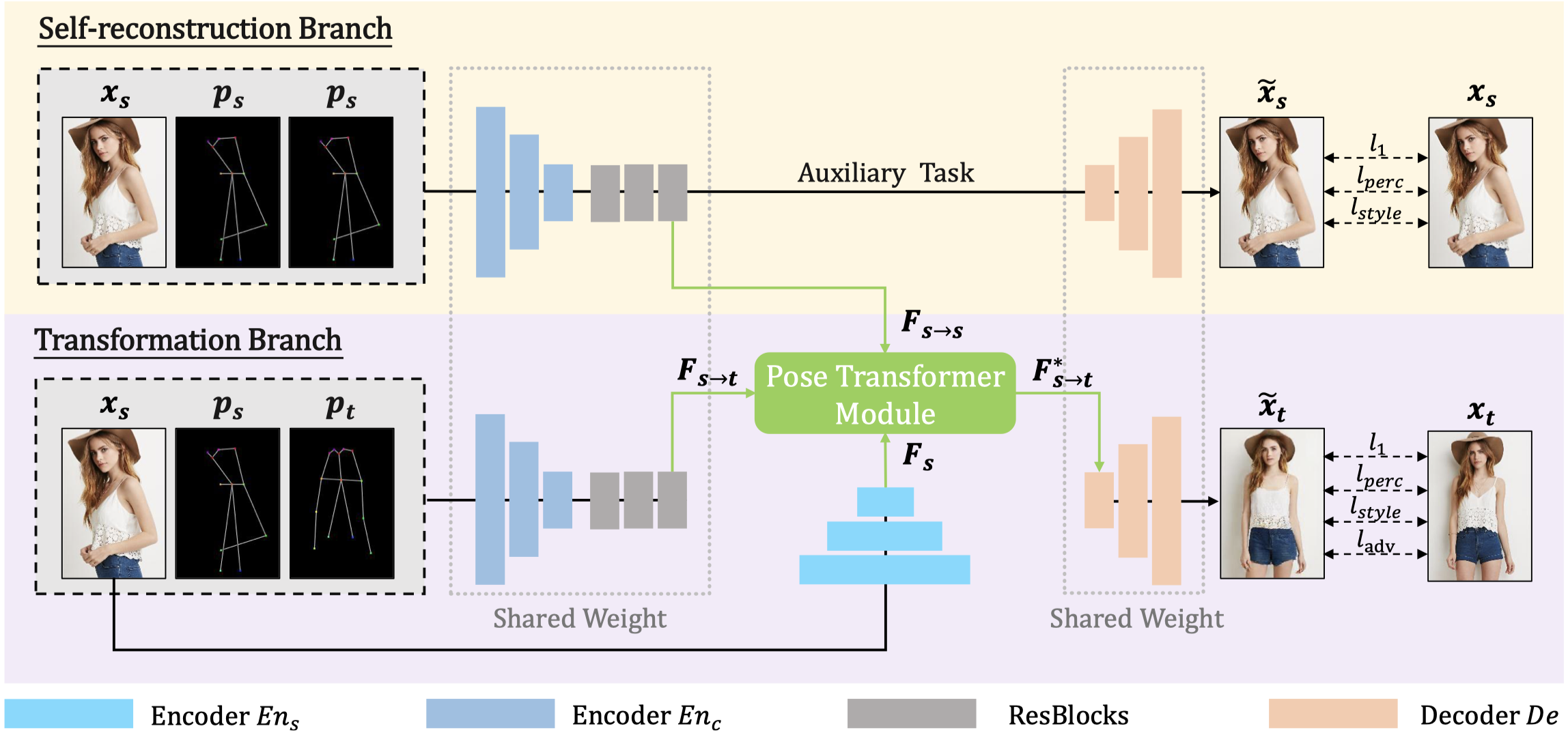

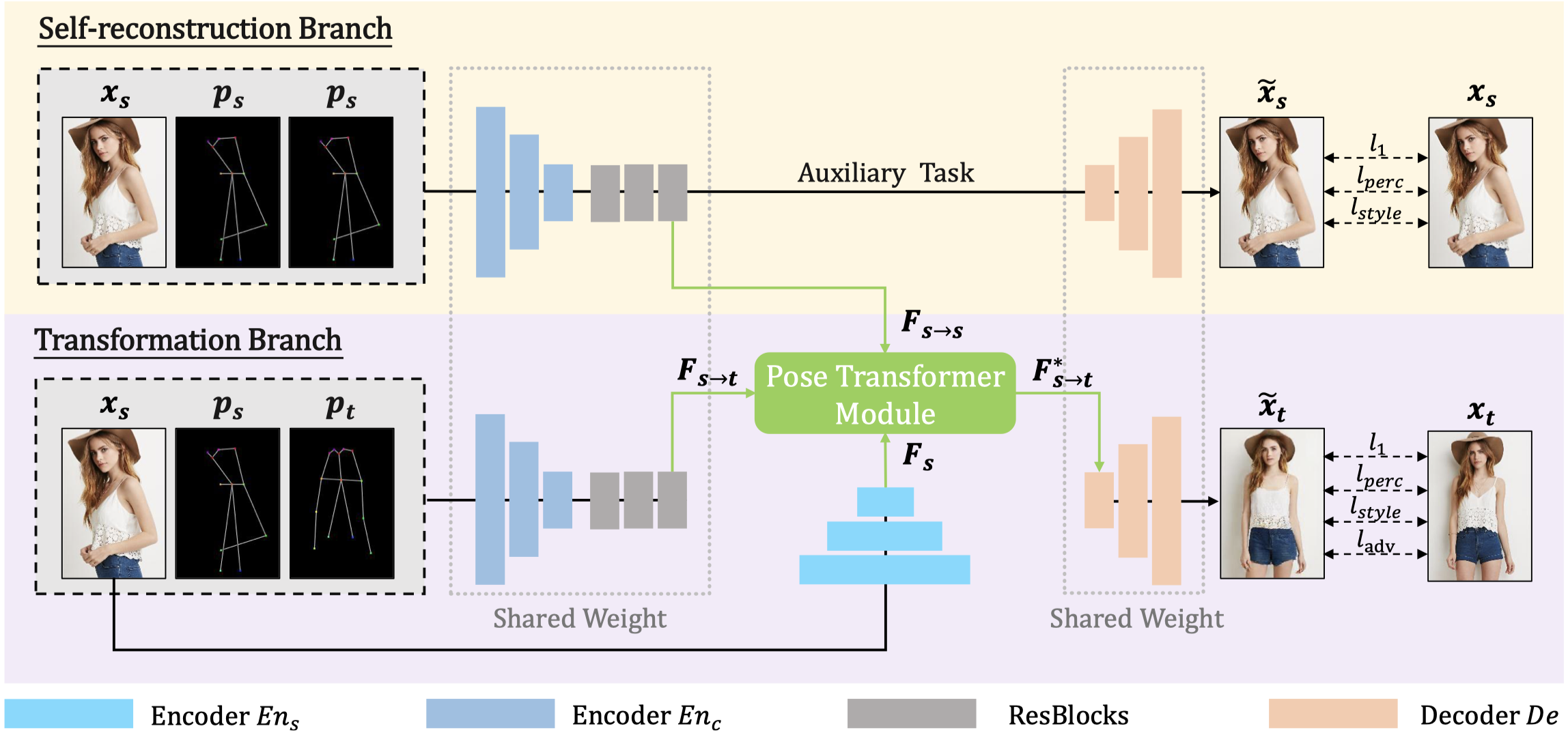

2 | The source code for our paper "[Exploring Dual-task Correlation for Pose Guided Person Image Generation](https://openaccess.thecvf.com/content/CVPR2022/papers/Zhang_Exploring_Dual-Task_Correlation_for_Pose_Guided_Person_Image_Generation_CVPR_2022_paper.pdf)“, Pengze Zhang, Lingxiao Yang, Jianhuang Lai, and Xiaohua Xie, CVPR 2022. Video: [[Chinese](https://www.koushare.com/video/videodetail/35887)] [[English](https://www.youtube.com/watch?v=p9o3lOlZBSE)]

3 |

2 | Our poster template can be download from [Google Drive](https://docs.google.com/presentation/d/1i02V0JZCw2mRZF99szitaOEkfVNeKR1q/edit?usp=sharing&ouid=111594135598063931892&rtpof=true&sd=true).

3 |

--------------------------------------------------------------------------------

/models/models.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import models

3 |

4 | def create_model(opt):

5 | '''

6 | if opt.model == 'pix2pixHD':

7 | from .pix2pixHD_model import Pix2PixHDModel, InferenceModel

8 | if opt.isTrain:

9 | model = Pix2PixHDModel()

10 | else:

11 | model = InferenceModel()

12 | elif opt.model == 'basic':

13 | from .basic_model import BasicModel

14 | model = BasicModel(opt)

15 | else:

16 | from .ui_model import UIModel

17 | model = UIModel()

18 | '''

19 | model = models.find_model_using_name(opt.model)(opt)

20 | if opt.verbose:

21 | print("model [%s] was created" % (model.name()))

22 | return model

23 |

--------------------------------------------------------------------------------

/test.py:

--------------------------------------------------------------------------------

1 | from data.data_loader import CreateDataLoader

2 | from options.test_options import TestOptions

3 | from models.models import create_model

4 | import numpy as np

5 | import torch

6 |

7 | if __name__=='__main__':

8 | # get testing options

9 | opt = TestOptions().parse()

10 | # creat a dataset

11 | data_loader = CreateDataLoader(opt)

12 | dataset = data_loader.load_data()

13 |

14 |

15 | print(len(dataset))

16 |

17 | dataset_size = len(dataset) * opt.batchSize

18 | print('testing images = %d' % dataset_size)

19 | # create a model

20 | model = create_model(opt)

21 |

22 | with torch.no_grad():

23 | for i, data in enumerate(dataset):

24 | model.set_input(data)

25 | model.test()

26 |

--------------------------------------------------------------------------------

/models/__init__.py:

--------------------------------------------------------------------------------

1 | """This package contains modules related to function, network architectures, and models"""

2 |

3 | import importlib

4 | from .base_model import BaseModel

5 |

6 |

7 | def find_model_using_name(model_name):

8 | """Import the module "model/[model_name]_model.py"."""

9 | model_file_name = "models." + model_name + "_model"

10 | modellib = importlib.import_module(model_file_name)

11 | model = None

12 | for name, cls in modellib.__dict__.items():

13 | if name.lower() == (model_name+'model').lower() and issubclass(cls, BaseModel):

14 | model = cls

15 |

16 | if model is None:

17 | print("In %s.py, there should be a subclass of BaseModel with class name that matches %s in lowercase." % (model_file_name, model_name))

18 | exit(0)

19 |

20 | return model

21 |

22 |

23 | def get_option_setter(model_name):

24 | """Return the static method of the model class."""

25 | model = find_model_using_name(model_name)

26 | return model.modify_options

27 |

--------------------------------------------------------------------------------

/util/image_pool.py:

--------------------------------------------------------------------------------

1 | import random

2 | import torch

3 | from torch.autograd import Variable

4 | class ImagePool():

5 | def __init__(self, pool_size):

6 | self.pool_size = pool_size

7 | if self.pool_size > 0:

8 | self.num_imgs = 0

9 | self.images = []

10 |

11 | def query(self, images):

12 | if self.pool_size == 0:

13 | return images

14 | return_images = []

15 | for image in images.data:

16 | image = torch.unsqueeze(image, 0)

17 | if self.num_imgs < self.pool_size:

18 | self.num_imgs = self.num_imgs + 1

19 | self.images.append(image)

20 | return_images.append(image)

21 | else:

22 | p = random.uniform(0, 1)

23 | if p > 0.5:

24 | random_id = random.randint(0, self.pool_size-1)

25 | tmp = self.images[random_id].clone()

26 | self.images[random_id] = image

27 | return_images.append(tmp)

28 | else:

29 | return_images.append(image)

30 | return_images = Variable(torch.cat(return_images, 0))

31 | return return_images

32 |

--------------------------------------------------------------------------------

/data/custom_dataset_data_loader.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data

2 | from data.base_data_loader import BaseDataLoader

3 | import data

4 |

5 | def CreateDataset(opt):

6 | '''

7 | dataset = None

8 | if opt.dataset_mode == 'fashion':

9 | from data.fashion_dataset import FashionDataset

10 | dataset = FashionDataset()

11 | else:

12 | from data.aligned_dataset import AlignedDataset

13 | dataset = AlignedDataset()

14 | '''

15 | dataset = data.find_dataset_using_name(opt.dataset_mode)()

16 | print("dataset [%s] was created" % (dataset.name()))

17 | dataset.initialize(opt)

18 | return dataset

19 |

20 | class CustomDatasetDataLoader(BaseDataLoader):

21 | def name(self):

22 | return 'CustomDatasetDataLoader'

23 |

24 | def initialize(self, opt):

25 | BaseDataLoader.initialize(self, opt)

26 | self.dataset = CreateDataset(opt)

27 | self.dataloader = torch.utils.data.DataLoader(

28 | self.dataset,

29 | batch_size=opt.batchSize,

30 | shuffle=(not opt.serial_batches) and opt.isTrain,

31 | num_workers=int(opt.nThreads))

32 |

33 | def load_data(self):

34 | return self.dataloader

35 |

36 | def __len__(self):

37 | return min(len(self.dataset), self.opt.max_dataset_size)

38 |

--------------------------------------------------------------------------------

/options/test_options.py:

--------------------------------------------------------------------------------

1 | from .base_options import BaseOptions

2 |

3 | class TestOptions(BaseOptions):

4 | def initialize(self):

5 | BaseOptions.initialize(self)

6 | self.parser.add_argument('--ntest', type=int, default=float("inf"), help='# of test examples.')

7 | self.parser.add_argument('--results_dir', type=str, default='./results/', help='saves results here.')

8 | self.parser.add_argument('--aspect_ratio', type=float, default=1.0, help='aspect ratio of result images')

9 | self.parser.add_argument('--phase', type=str, default='test', help='train, val, test, etc')

10 | self.parser.add_argument('--which_epoch', type=str, default='latest', help='which epoch to load? set to latest to use latest cached model')

11 | self.parser.add_argument('--how_many', type=int, default=50, help='how many test images to run')

12 | self.parser.add_argument('--cluster_path', type=str, default='features_clustered_010.npy', help='the path for clustered results of encoded features')

13 | self.parser.add_argument('--use_encoded_image', action='store_true', help='if specified, encode the real image to get the feature map')

14 | self.parser.add_argument("--export_onnx", type=str, help="export ONNX model to a given file")

15 | self.parser.add_argument("--engine", type=str, help="run serialized TRT engine")

16 | self.parser.add_argument("--onnx", type=str, help="run ONNX model via TRT")

17 | self.isTrain = False

18 |

--------------------------------------------------------------------------------

/data/__init__.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import torch.utils.data

3 | from data.base_dataset import BaseDataset

4 |

5 |

6 | def find_dataset_using_name(dataset_name):

7 | # Given the option --dataset [datasetname],

8 | # the file "datasets/datasetname_dataset.py"

9 | # will be imported.

10 | dataset_filename = "data." + dataset_name + "_dataset"

11 | datasetlib = importlib.import_module(dataset_filename)

12 |

13 | # In the file, the class called DatasetNameDataset() will

14 | # be instantiated. It has to be a subclass of BaseDataset,

15 | # and it is case-insensitive.

16 | dataset = None

17 | target_dataset_name = dataset_name.replace('_', '') + 'dataset'

18 | for name, cls in datasetlib.__dict__.items():

19 | if name.lower() == target_dataset_name.lower() \

20 | and issubclass(cls, BaseDataset):

21 | dataset = cls

22 |

23 | if dataset is None:

24 | raise ValueError("In %s.py, there should be a subclass of BaseDataset "

25 | "with class name that matches %s in lowercase." %

26 | (dataset_filename, target_dataset_name))

27 |

28 | return dataset

29 |

30 |

31 | def get_option_setter(dataset_name):

32 | dataset_class = find_dataset_using_name(dataset_name)

33 | return dataset_class.modify_commandline_options

34 |

35 | '''

36 | def create_dataloader(opt):

37 | dataset = find_dataset_using_name(opt.dataset_mode)

38 | instance = dataset()

39 | instance.initialize(opt)

40 | print("dataset [%s] of size %d was created" %

41 | (type(instance).__name__, len(instance)))

42 | dataloader = torch.utils.data.DataLoader(

43 | instance,

44 | batch_size=opt.batchSize,

45 | shuffle=not opt.serial_batches,

46 | num_workers=int(opt.nThreads),

47 | drop_last=opt.isTrain

48 | )

49 | return dataloader

50 | '''

--------------------------------------------------------------------------------

/data/generate_fashion_datasets.py:

--------------------------------------------------------------------------------

1 | import os

2 | import shutil