By default it passively scans the responses with Target "Scope in" . Make sure to add the targets into the scope. (Reason: To Avoid Noise)

12 | 13 |WHITELIST_CODES - You can add status_code's to this list for more accurate results.

14 | 15 |ex: avoiding https redirects by adding 301, if the path,url redirects to https.

16 | 17 |WHITELIST_PATTERN - Regex extracting pattern based on given patterns.

18 | 19 |ex: Mainly used to avoid performing regexes in gif,img,jpg,swf etc

27 | 28 |no_of_threads - Increase no of threads , default : 15

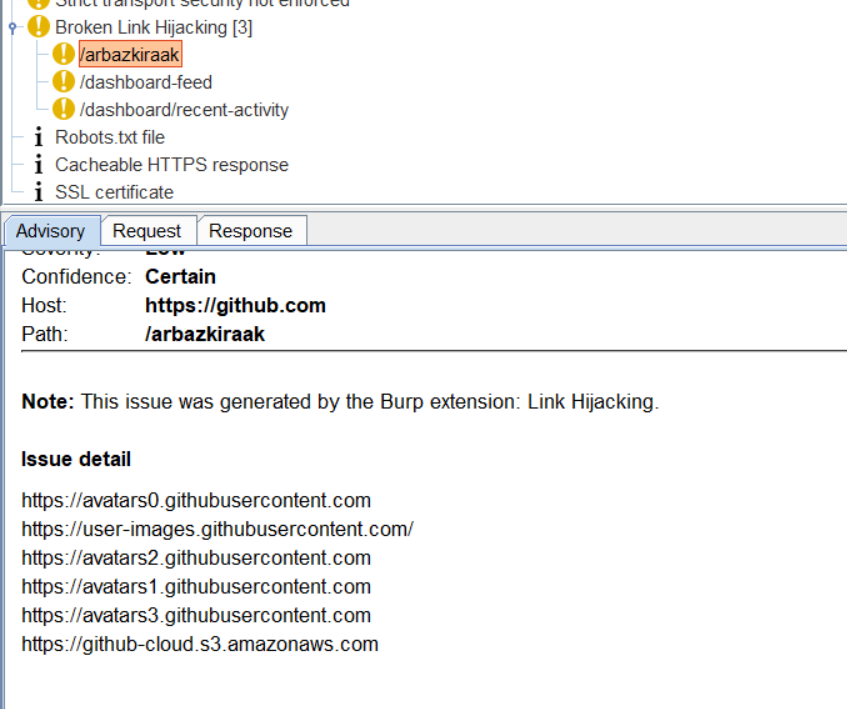

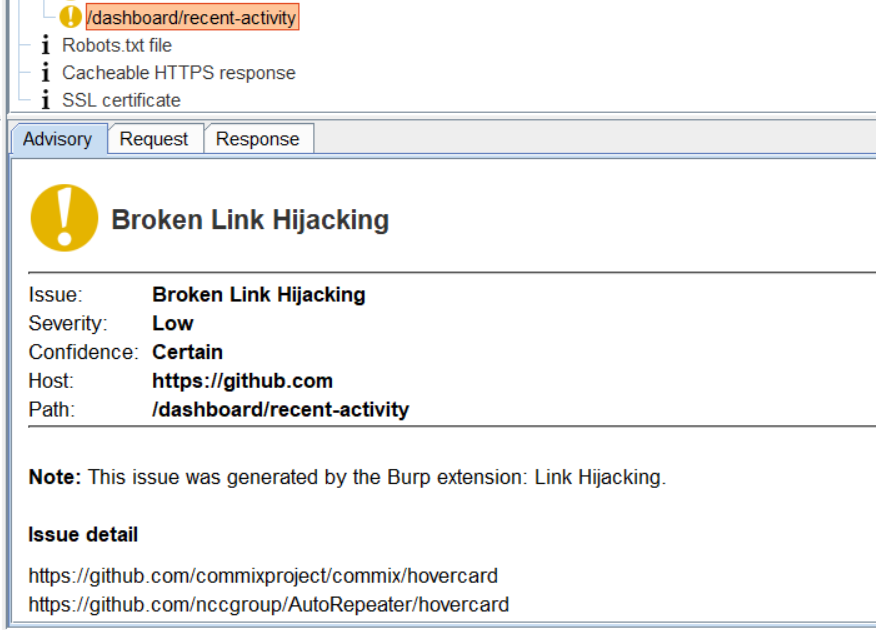

29 | -------------------------------------------------------------------------------- /.gitignore: -------------------------------------------------------------------------------- 1 | # Byte-compiled / optimized / DLL files 2 | __pycache__/ 3 | *.py[cod] 4 | *$py.class 5 | 6 | # C extensions 7 | *.so 8 | 9 | # Distribution / packaging 10 | .Python 11 | build/ 12 | develop-eggs/ 13 | dist/ 14 | downloads/ 15 | eggs/ 16 | .eggs/ 17 | lib/ 18 | lib64/ 19 | parts/ 20 | sdist/ 21 | var/ 22 | wheels/ 23 | *.egg-info/ 24 | .installed.cfg 25 | *.egg 26 | MANIFEST 27 | 28 | # PyInstaller 29 | # Usually these files are written by a python script from a template 30 | # before PyInstaller builds the exe, so as to inject date/other infos into it. 31 | *.manifest 32 | *.spec 33 | 34 | # Installer logs 35 | pip-log.txt 36 | pip-delete-this-directory.txt 37 | 38 | # Unit test / coverage reports 39 | htmlcov/ 40 | .tox/ 41 | .coverage 42 | .coverage.* 43 | .cache 44 | nosetests.xml 45 | coverage.xml 46 | *.cover 47 | .hypothesis/ 48 | .pytest_cache/ 49 | 50 | # Translations 51 | *.mo 52 | *.pot 53 | 54 | # Django stuff: 55 | *.log 56 | local_settings.py 57 | db.sqlite3 58 | 59 | # Flask stuff: 60 | instance/ 61 | .webassets-cache 62 | 63 | # Scrapy stuff: 64 | .scrapy 65 | 66 | # Sphinx documentation 67 | docs/_build/ 68 | 69 | # PyBuilder 70 | target/ 71 | 72 | # Jupyter Notebook 73 | .ipynb_checkpoints 74 | 75 | # pyenv 76 | .python-version 77 | 78 | # celery beat schedule file 79 | celerybeat-schedule 80 | 81 | # SageMath parsed files 82 | *.sage.py 83 | 84 | # Environments 85 | .env 86 | .venv 87 | env/ 88 | venv/ 89 | ENV/ 90 | env.bak/ 91 | venv.bak/ 92 | 93 | # Spyder project settings 94 | .spyderproject 95 | .spyproject 96 | 97 | # Rope project settings 98 | .ropeproject 99 | 100 | # mkdocs documentation 101 | /site 102 | 103 | # mypy 104 | .mypy_cache/ 105 | -------------------------------------------------------------------------------- /README.md: -------------------------------------------------------------------------------- 1 | About Broken Link Hijacking : https://edoverflow.com/2017/broken-link-hijacking/ by @EdOverflow 2 | 3 | # BLH Plugin 4 | 5 | Burp Extension to discover broken links using IScannerCheck & synchronized threads. 6 | 7 | # Features 8 | 9 | --- 10 | 11 | - Supports various HTML elements/attributes with regex based on following 12 | 13 | [`https://github.com/stevenvachon/broken-link-checker/blob/09682b3250e1b2d01e4995bac77ec77cb612db46/test/helpers/json-generators/scrapeHtml.js`](https://github.com/stevenvachon/broken-link-checker/blob/09682b3250e1b2d01e4995bac77ec77cb612db46/test/helpers/json-generators/scrapeHtml.js) 14 | 15 | - Concurrently checks multiple links using defined threads. 16 | - Customizing **`[STATUS_CODES|PATH-PATTERN|MIME-TYPE]`** 17 | 18 | [https://github.com/arbazkiraak/BurpBLH/blob/master/blhchecker.py#L20](https://github.com/arbazkiraak/BurpBLH/blob/master/blhchecker.py#L20) 19 | 20 | # Usage 21 | 22 | --- 23 | 24 | By default it passively scans the responses with Target "Scope in" . Make sure to add the targets into the scope. (Reason: To Avoid Noise) 25 | 26 | `WHITELIST_CODES` - You can add status_code's to this list for more accurate results. 27 | 28 | ex: avoiding https redirects by adding `301`, if the path,url redirects to https. 29 | 30 | `WHITELIST_PATTERN` - Regex extracting pattern based on given patterns. 31 | 32 | - ex: /admin.php 33 | - //google.com/test.jpg 34 | - ../../img.src 35 | 36 | `WHITELIST_MEMES` - Whitelisting MimeType to be processed for scanning patterns in responses if their Mime-Type matches. 37 | 38 | ex: Mainly used to avoid performing regexes in `gif,img,jpg,swf etc` 39 | 40 | `no_of_threads` - Increase no of threads , default : 15 41 | 42 | --- 43 | 44 | # Output 45 | 46 | - 2 Ways it outputs the broken links. 47 | 1. Broken Links which belongs to external origins. 48 | 2. Broken Links which belongs to same origins. 49 | - If there are no external origin broken links then look for same origin broken links & return **same origin broken links.** 50 | - if there are external origin broken links & same origin broken links then return only ***external origin broken links.*** 51 | 52 | OUTPUT1: External Origins 53 | 54 |  55 | 56 | OUTPUT2: Same Origins 57 | 58 |  59 | 60 | This plugin is based on [https://github.com/stevenvachon/broken-link-checker](https://github.com/stevenvachon/broken-link-checker) 61 | -------------------------------------------------------------------------------- /blhchecker.py: -------------------------------------------------------------------------------- 1 | from burp import IBurpExtender,IScannerCheck,IScanIssue,IHttpService 2 | from array import array 3 | import re,threading 4 | try: 5 | import Queue as queue 6 | except: 7 | import queue as queue 8 | from java.net import URL 9 | import urlparse 10 | from java.io import PrintWriter 11 | from java.lang import RuntimeException 12 | 13 | ## FOR URL BASED, Use below regex 14 | #REGEX = r'https?://(?:[-\w.]|(?:%[\da-fA-F]{2}))+' 15 | 16 | ## LINK BASED REGEX FOR HTML 17 | regex_str = r'(href|src|icon|data|url)=[\'"]?([^\'" >;]+)' 18 | 19 | 20 | WHITELIST_CODES = [200] 21 | WHITELIST_PATTERN = ["/","../","http","www"] 22 | WHITELIST_MEMES = ['HTML','Script','Other text','CSS','JSON'] 23 | 24 | class BurpExtender(IBurpExtender,IScannerCheck): 25 | extension_name = "Link Hijacking" 26 | q = queue.Queue() 27 | temp_urls = [] 28 | no_of_threads = 15 29 | def registerExtenderCallbacks(self,callbacks): 30 | try: 31 | self._callbacks = callbacks 32 | self._helpers = callbacks.getHelpers() 33 | self._callbacks.setExtensionName(self.extension_name) 34 | self._stdout = PrintWriter(self._callbacks.getStdout(),True) 35 | self._stderr = PrintWriter(self._callbacks.getStderr(),True) 36 | callbacks.registerScannerCheck(self) 37 | self._stdout.println("Extension Successfully Installed") 38 | return 39 | except Exception as e: 40 | self._stderr.println("Installation Problem ?") 41 | self._stderr.println(str(e)) 42 | return 43 | 44 | def split(self,strng, sep, pos): 45 | strng = strng.split(sep) 46 | return sep.join(strng[:pos]), sep.join(strng[pos:]) 47 | 48 | 49 | def _up_check(self,url): 50 | parse_object = urlparse.urlparse(url) 51 | hostname = parse_object.netloc 52 | if parse_object.scheme == 'https': 53 | port = 443 54 | SSL = True 55 | else: 56 | port = 80 57 | SSL = False 58 | try: 59 | req_bytes = self._helpers.buildHttpRequest(URL(str(url))) 60 | res_bytes = self._callbacks.makeHttpRequest(hostname,port,SSL,req_bytes) 61 | res = self._helpers.analyzeResponse(res_bytes) 62 | if res.getStatusCode() not in WHITELIST_CODES: 63 | return url 64 | except Exception as e: 65 | print(e) 66 | print('SKIPPING : ',url) 67 | return None 68 | 69 | def process_queue(self,res): 70 | while not self.q.empty(): 71 | url = self.q.get() 72 | furl = self._up_check(str(url)) 73 | if furl is not None: 74 | #print(furl) 75 | self.temp_urls.append(furl) 76 | 77 | def _blf(self,baseRequestResponse,regex,host): 78 | res = self._helpers.bytesToString(baseRequestResponse.getResponse()) 79 | re_r = regex.findall(res) 80 | if len(re_r) == 0: 81 | return 82 | re_r = list(set([tuple(j for j in re_r if j)[-1] for re_r in re_r])) 83 | re_r = list(set([i for i in re_r if i.startswith(tuple(WHITELIST_PATTERN))])) 84 | #re_r = [i.encode('utf-8').strip('') for i in re_r if isinstance(i,bytes)] 85 | #re_r = [i.replace('\\','/') for i in re_r] 86 | final = [] 87 | for i in re_r: 88 | if i.startswith('//') and ' ' not in i: 89 | i = 'https:'+str(i) 90 | final.append(i) 91 | elif i.startswith('/') and ' ' not in i: 92 | if '.' in i.split('/')[1]: 93 | i = 'https:/'+str(i) 94 | final.append(i) 95 | else: 96 | i = str(host)+str(i) 97 | final.append(i) 98 | elif (i.startswith('http') or i.startswith('www.')) and ' ' not in i: 99 | final.append(i) 100 | elif i.startswith('../') and ' ' not in i: 101 | i = str(host)+'/'+str(i) 102 | final.append(i) 103 | for url in final: 104 | self.q.put(str(url)) 105 | 106 | threads = [] 107 | for i in range(int(BurpExtender.no_of_threads)): 108 | t = threading.Thread(target=self.process_queue,args=(baseRequestResponse,)) 109 | threads.append(t) 110 | t.start() 111 | 112 | for j in threads: 113 | j.join() 114 | return True 115 | 116 | 117 | def doPassiveScan(self,baseRequestResponse): 118 | reqInfo = self._helpers.analyzeRequest(baseRequestResponse.getHttpService(),baseRequestResponse.getRequest()) 119 | if self._callbacks.isInScope(reqInfo.getUrl()) and str(self._helpers.analyzeResponse(baseRequestResponse.getResponse()).getInferredMimeType()) in WHITELIST_MEMES: 120 | _HTTP = baseRequestResponse.getHttpService() 121 | print('ON : ',str(reqInfo.getUrl())) 122 | _host = str(_HTTP.getProtocol())+"://"+str(_HTTP.getHost()) 123 | regex = re.compile(regex_str,re.VERBOSE) 124 | res = self._blf(baseRequestResponse,regex,_host) 125 | if res and len(self.temp_urls) > 0: 126 | final_urls = self.temp_urls[:] 127 | self.temp_urls[:] = [] 128 | return [CustomScanIssue(baseRequestResponse.getHttpService(),self._helpers.analyzeRequest(baseRequestResponse).getUrl(), 129 | [self._callbacks.applyMarkers(baseRequestResponse,None,None)],"Broken Link Hijacking",final_urls,"Low",_HTTP.getHost())] 130 | 131 | def consolidateDuplicateIssues(self,existingIssue,newIssue): 132 | if existingIssue.getIssueName() == newIssue.getIssueName(): 133 | return -1 134 | return 0 135 | 136 | def extensionUnloaded(self): 137 | self._stdout.println("Extension was unloaded") 138 | return 139 | 140 | class CustomScanIssue (IScanIssue): 141 | def __init__(self, httpService, url, httpMessages, name, detail, severity, hostbased): 142 | self._httpService = httpService 143 | self._url = url 144 | self._httpMessages = httpMessages 145 | self._name = name 146 | self._detail = detail 147 | self._severity = severity 148 | self._hostbased = hostbased 149 | 150 | 151 | def getUrl(self): 152 | return self._url 153 | 154 | def getIssueName(self): 155 | return self._name 156 | 157 | def getIssueType(self): 158 | return 0 159 | 160 | def getSeverity(self): 161 | return self._severity 162 | 163 | def getConfidence(self): 164 | return "Certain" 165 | 166 | def getIssueBackground(self): 167 | pass 168 | 169 | def getRemediationBackground(self): 170 | pass 171 | 172 | def getIssueDetail(self): 173 | host_b = [] 174 | for i in self._detail: 175 | if self._hostbased not in i: 176 | host_b.append(str(i)) 177 | if len(host_b) > 0: 178 | final = '