├── LICENSE

├── README.md

├── deep_learning_for_time_series_forecasting.ipynb

├── output

├── compare models.PNG

├── info

├── item daily sales.PNG

├── overall daily sales.PNG

├── overview.gif

└── store sales.PNG

├── test.csv

└── train.csv

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Pradnya Rajendra Patil

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [1]: https://github.com/Pradnya1208

4 | [2]: https://www.linkedin.com/in/pradnya-patil-b049161ba/

5 | [3]: https://public.tableau.com/app/profile/pradnya.patil3254#!/

6 | [4]: https://twitter.com/Pradnya1208

7 |

8 |

9 | [GitHub][1]

10 | [LinkedIn][2]

11 | [Tableau][3]

12 | [Twitter][4]

13 |

14 |

15 |

16 | # Time series forecasting using Deep Learning

17 |

18 |

19 |

20 |

21 | ## Overview:

22 | Deep learning methods offer a lot of promise for time series forecasting, such as the automatic learning of temporal dependence and the automatic handling of temporal structures like trends and seasonality.

23 |

24 | ## Dataset:

25 | [Predict Future Sales](https://www.kaggle.com/c/competitive-data-science-predict-future-sales)

26 | [Store Item Demand Forecasting Challenge](https://www.kaggle.com/c/competitive-data-science-predict-future-sales/data)

27 | #### Data Fields:

28 | - **ID** - an Id that represents a (Shop, Item) tuple within the test set

29 | - **shop_id** - unique identifier of a shop

30 | - **item_id** - unique identifier of a product

31 | - **item_category_id** - unique identifier of item category

32 | - **item_cnt_day** - number of products sold. You are predicting a monthly amount of this measure

33 | - **item_price** - current price of an item

34 | - **date** - date in format dd/mm/yyyy

35 | - **date_block_num** - a consecutive month number, used for convenience. January 2013 is 0, February 2013 is 1,..., October 2015 is 33

36 | - **item_name** - name of item

37 | - **shop_name** - name of shop

38 | - **item_category_name** - name of item category

39 | - **store** - Store ID

40 | - **sales** - Number of items sold at a particular store on a particular date.
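
The `date_block_num` field described above can be reproduced from a calendar date. The following is a minimal sketch, assuming the dd/mm/yyyy format listed for **date**; the helper name `date_block_num` and its `base_year` parameter are hypothetical and not part of the dataset or the notebook.

```python
from datetime import datetime

def date_block_num(date_str, base_year=2013):
    """Return a consecutive month index, with January of base_year mapped to 0."""
    d = datetime.strptime(date_str, "%d/%m/%Y")
    return (d.year - base_year) * 12 + (d.month - 1)

print(date_block_num("15/01/2013"))  # 0  (January 2013)
print(date_block_num("31/10/2015"))  # 33 (October 2015)
```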

41 |

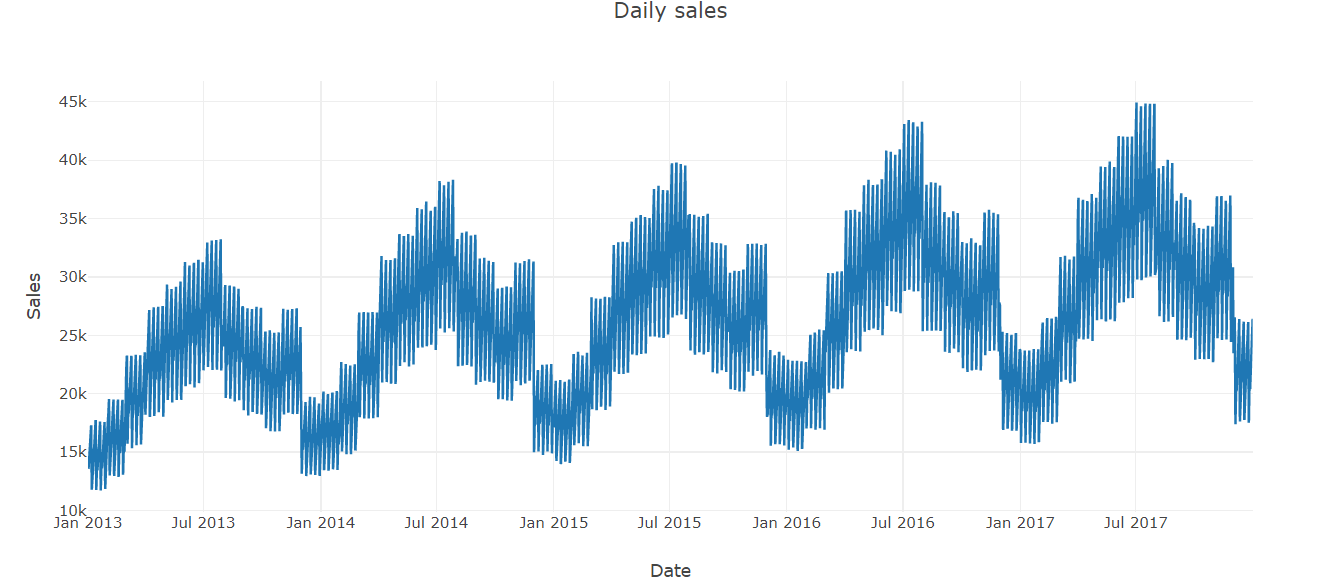

42 | #### Time period of dataset:

43 | ```

44 | Min date from train set: 2013-01-01

45 | Max date from train set: 2017-12-31

46 | ```

47 |

48 | ## Implementation:

49 |

50 | **Libraries:** `NumPy` `pandas` `tensorflow` `matplotlib` `sklearn` `seaborn`

51 | ## Data Exploration:

52 | Figures: overall daily sales, daily sales by store, and daily sales by item (see the `output` folder).

56 | ## Model training, evaluation, and prediction:

57 | ### Multilayer Perceptron:

58 | - The Multilayer Perceptron (MLP) model takes one input feature per time step, so the number of input features equals the window size.

59 | - An MLP does not treat its input as a sequence: it receives the window as a flat vector of features. This may be a limitation, since the model is not given the temporal ordering that the data has.
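
To illustrate how the window size determines the number of input features, here is a minimal sketch of turning a series into fixed-size windows with a one-step-ahead target. The function name `make_windows` and the sample values are made up for illustration; the notebook may build its windows differently.

```python
def make_windows(series, window):
    """Split a 1-D series into (inputs, target) pairs for one-step-ahead forecasting."""
    X, y = [], []
    for start in range(len(series) - window):
        X.append(series[start:start + window])   # `window` past values as features
        y.append(series[start + window])          # the next value is the target
    return X, y

sales = [10, 12, 13, 15, 18, 21]  # made-up daily sales
X, y = make_windows(sales, window=3)
print(X)  # [[10, 12, 13], [12, 13, 15], [13, 15, 18]]
print(y)  # [15, 18, 21]
```

Each row of `X` has exactly `window` entries, which is the input dimension an MLP would see.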

60 |

61 | ### CNN Model:

62 | - For the CNN model we use one convolutional hidden layer followed by a max pooling layer. The feature maps are then flattened and passed to a Dense layer, which outputs the prediction.

63 | - The convolutional layer should be able to identify patterns across neighbouring timesteps.
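
The convolution, pooling, and flattening steps can be sketched in plain Python. This is an illustration only: the kernels and the input window are made up, and a real convolutional layer would also learn its kernels and apply a bias and an activation function.

```python
def conv1d(window, kernel):
    """Slide the kernel over the window (valid padding, stride 1)."""
    k = len(kernel)
    return [sum(window[i + j] * kernel[j] for j in range(k))
            for i in range(len(window) - k + 1)]

def max_pool1d(values, pool_size=2):
    """Keep the maximum of each non-overlapping block of pool_size values."""
    return [max(values[i:i + pool_size])
            for i in range(0, len(values) - pool_size + 1, pool_size)]

window = [1, 2, 4, 7, 11, 16]        # made-up input window
kernels = [[-1, 1], [0.5, 0.5]]      # a difference filter and an averaging filter

feature_maps = [conv1d(window, k) for k in kernels]
pooled = [max_pool1d(fm) for fm in feature_maps]
flattened = [v for fm in pooled for v in fm]   # input to the final Dense layer

print(feature_maps)  # [[1, 2, 3, 4, 5], [1.5, 3.0, 5.5, 9.0, 13.5]]
print(flattened)     # [2, 4, 3.0, 9.0]
```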

64 |

65 | ### LSTM:

66 | - The LSTM model processes the input as a sequence, so it can learn sequential patterns (assuming they exist) better than the models above, especially patterns that span long sequences.
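
To show what "sees the input as a sequence" means, here is a minimal sketch of a single-unit LSTM forward pass with scalar weights. The weights are arbitrary placeholders rather than trained values, and `lstm_forward` is a hypothetical helper, not the notebook's implementation. Because the hidden and cell states are carried from one timestep to the next, feeding the same values in a different order gives a different output.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_forward(sequence, w=0.5, u=0.5, b=0.0):
    """Run a one-unit LSTM over the sequence and return the final hidden state.

    For brevity all four gates share the same placeholder weights (w, u, b);
    a trained LSTM has separate weights per gate.
    """
    h, c = 0.0, 0.0
    for x in sequence:
        z = w * x + u * h + b
        f = sigmoid(z)          # forget gate
        i = sigmoid(z)          # input gate
        o = sigmoid(z)          # output gate
        g = math.tanh(z)        # candidate cell value
        c = f * c + i * g       # update the cell state
        h = o * math.tanh(c)    # update the hidden state
    return h

forward = lstm_forward([0.1, 0.5, 0.9])
backward = lstm_forward([0.9, 0.5, 0.1])
print(forward != backward)  # True: the order of the inputs matters
```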

67 |

68 | ### CNN-LSTM:

69 | - CNN-LSTM is a hybrid model for univariate time series forecasting.

70 |

71 | - The benefit of this model is that it supports very long input sequences, which the CNN reads as blocks (subsequences) and the LSTM then pieces together.
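
The block-wise reading described above requires splitting each input window into equal subsequences before it reaches the CNN. A minimal sketch of that split follows; `to_subsequences` is a hypothetical helper and the window values are made up.

```python
def to_subsequences(window, n_subsequences):
    """Split one input window into equal-length blocks for the CNN to read."""
    if len(window) % n_subsequences != 0:
        raise ValueError("window length must be divisible by n_subsequences")
    steps = len(window) // n_subsequences
    return [window[i * steps:(i + 1) * steps] for i in range(n_subsequences)]

window = [1, 2, 3, 4, 5, 6, 7, 8]   # made-up window of 8 timesteps
blocks = to_subsequences(window, n_subsequences=2)
print(blocks)  # [[1, 2, 3, 4], [5, 6, 7, 8]]
```

The CNN would process each block separately, and the LSTM would then consume the resulting per-block features in order.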

72 |

73 | ### Comparing Models:

74 |


75 |

76 |

77 | | Model | Train RMSE | Validation RMSE |

78 | | -------- | ---------- | --------------- |

79 | | MLP | 18.36 | 18.50 |

80 | | CNN | 18.62 | 18.76 |

81 | | LSTM | 19.98 | 18.76 |

82 | | CNN-LSTM | 19.20 | 19.17 |
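
For reference, the RMSE metric reported in the table is the square root of the mean squared difference between actual and predicted values. A minimal sketch with made-up numbers (these are not the notebook's predictions):

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

actual = [3, 5, 7]       # made-up values
predicted = [2, 5, 9]    # made-up values
print(round(rmse(actual, predicted), 3))  # 1.291
```

Lower values indicate predictions that are closer to the observed sales.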

83 | ### Lessons Learned

84 | `Time Series Forecasting`

85 | `Deep Learning for Time Series Forecasting`

86 | `LSTM`

87 |

88 |

89 |

90 |

91 |

92 |

93 |

94 |

95 | ## References:

96 | [Deep Learning for Time Series Forecasting](https://machinelearningmastery.com/how-to-get-started-with-deep-learning-for-time-series-forecasting-7-day-mini-course/)

97 | ### Feedback

98 |

99 | If you have any feedback, please reach out at pradnyapatil671@gmail.com

100 |

101 |

102 | ### 🚀 About Me

103 | #### Hi, I'm Pradnya! 👋

104 | I am an AI enthusiast and a data science and ML practitioner.

105 |

106 |

107 |

108 |

109 |

110 |

111 |

112 |

113 |

114 |

115 |

116 |


127 |

--------------------------------------------------------------------------------

/output/compare models.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Pradnya1208/Time-series-forecasting-using-Deep-Learning/ee81f3c8041e7aa36d2066724b8d1aef5d8a9fa9/output/compare models.PNG

--------------------------------------------------------------------------------

/output/info:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/output/item daily sales.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Pradnya1208/Time-series-forecasting-using-Deep-Learning/ee81f3c8041e7aa36d2066724b8d1aef5d8a9fa9/output/item daily sales.PNG

--------------------------------------------------------------------------------

/output/overall daily sales.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Pradnya1208/Time-series-forecasting-using-Deep-Learning/ee81f3c8041e7aa36d2066724b8d1aef5d8a9fa9/output/overall daily sales.PNG

--------------------------------------------------------------------------------

/output/overview.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Pradnya1208/Time-series-forecasting-using-Deep-Learning/ee81f3c8041e7aa36d2066724b8d1aef5d8a9fa9/output/overview.gif

--------------------------------------------------------------------------------

/output/store sales.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Pradnya1208/Time-series-forecasting-using-Deep-Learning/ee81f3c8041e7aa36d2066724b8d1aef5d8a9fa9/output/store sales.PNG

--------------------------------------------------------------------------------