├── .gitignore

├── LICENSE

├── README.md

├── assets

└── demo.png

├── conf

├── config.yaml

└── diffusion.yml

├── data

└── demo

│ ├── jellycat

│ ├── 001.jpg

│ ├── 002.jpg

│ ├── 003.jpg

│ └── 004.jpg

│ ├── jordan

│ ├── 001.png

│ ├── 002.png

│ ├── 003.png

│ ├── 004.png

│ ├── 005.png

│ ├── 006.png

│ ├── 007.png

│ └── 008.png

│ ├── kew_gardens_ruined_arch

│ ├── 001.jpeg

│ ├── 002.jpeg

│ └── 003.jpeg

│ └── kotor_cathedral

│ ├── 001.jpeg

│ ├── 002.jpeg

│ ├── 003.jpeg

│ ├── 004.jpeg

│ ├── 005.jpeg

│ └── 006.jpeg

├── diffusionsfm

├── __init__.py

├── dataset

│ ├── __init__.py

│ ├── co3d_v2.py

│ └── custom.py

├── eval

│ ├── __init__.py

│ ├── eval_category.py

│ └── eval_jobs.py

├── inference

│ ├── __init__.py

│ ├── ddim.py

│ ├── load_model.py

│ └── predict.py

├── model

│ ├── base_model.py

│ ├── blocks.py

│ ├── diffuser.py

│ ├── diffuser_dpt.py

│ ├── dit.py

│ ├── feature_extractors.py

│ ├── memory_efficient_attention.py

│ └── scheduler.py

└── utils

│ ├── __init__.py

│ ├── configs.py

│ ├── distortion.py

│ ├── distributed.py

│ ├── geometry.py

│ ├── normalize.py

│ ├── rays.py

│ ├── slurm.py

│ └── visualization.py

├── docs

├── eval.md

└── train.md

├── gradio_app.py

├── requirements.txt

└── train.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__/

2 | .DS_Store

3 | wandb/

4 | slurm_logs/

5 | output/

6 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2025 Qitao Zhao

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # DiffusionSfM

2 |

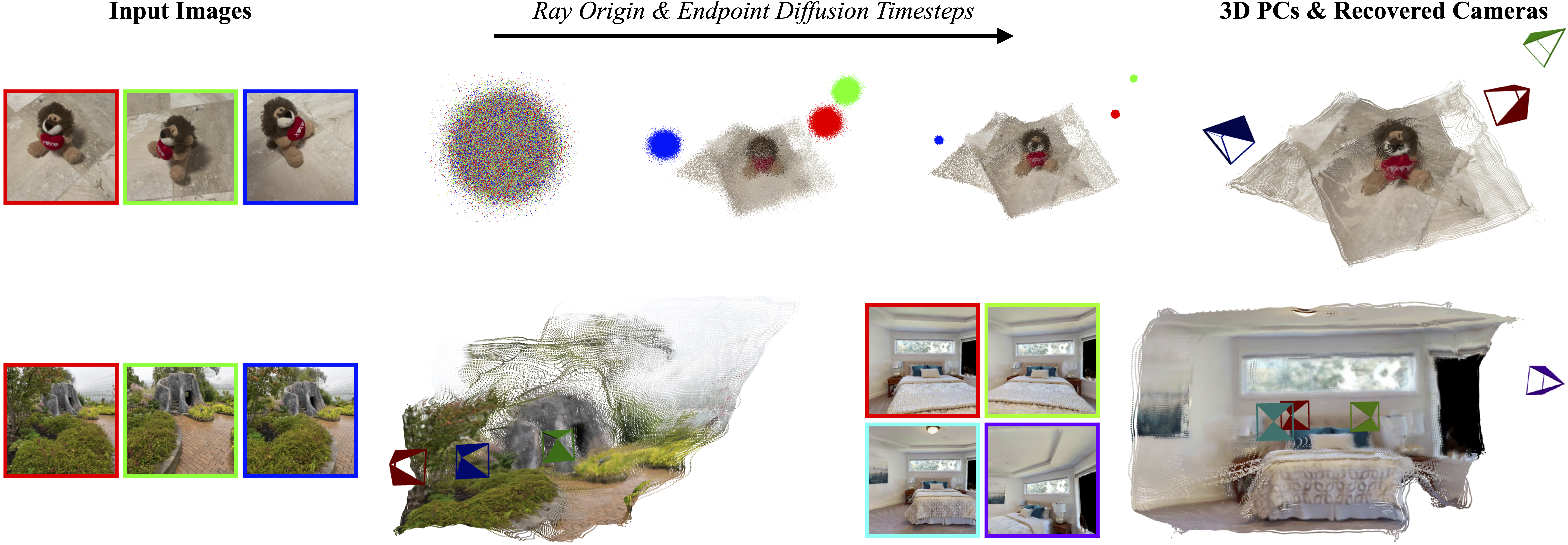

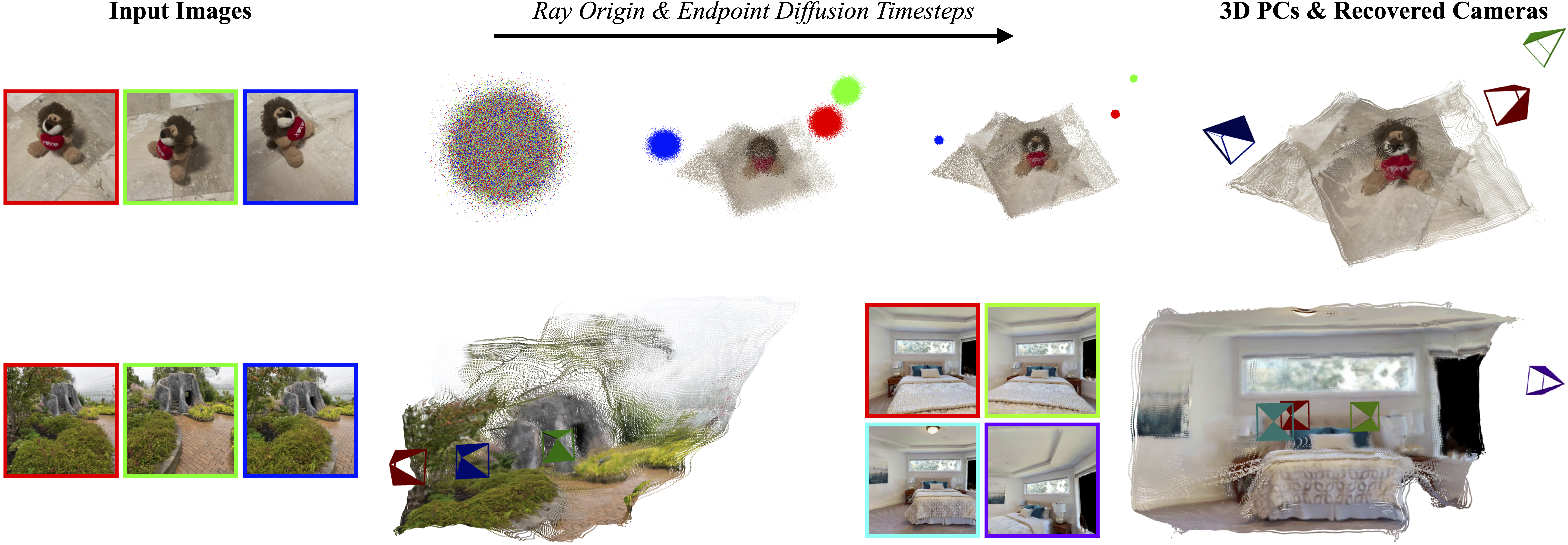

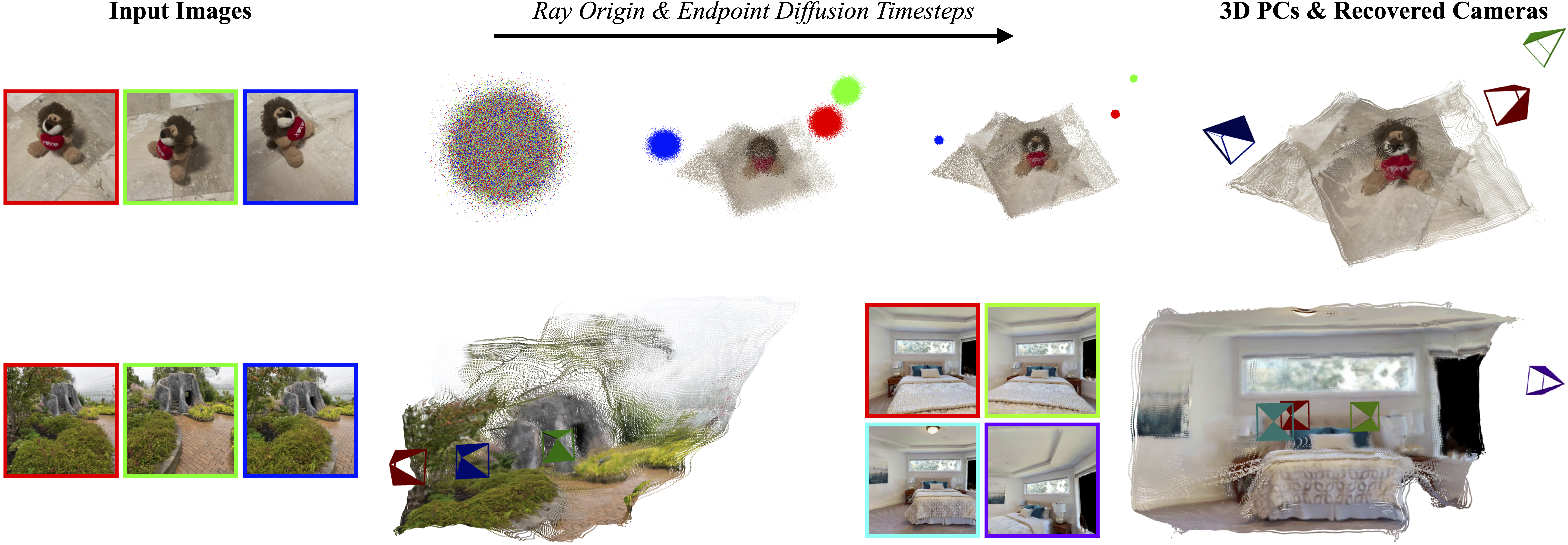

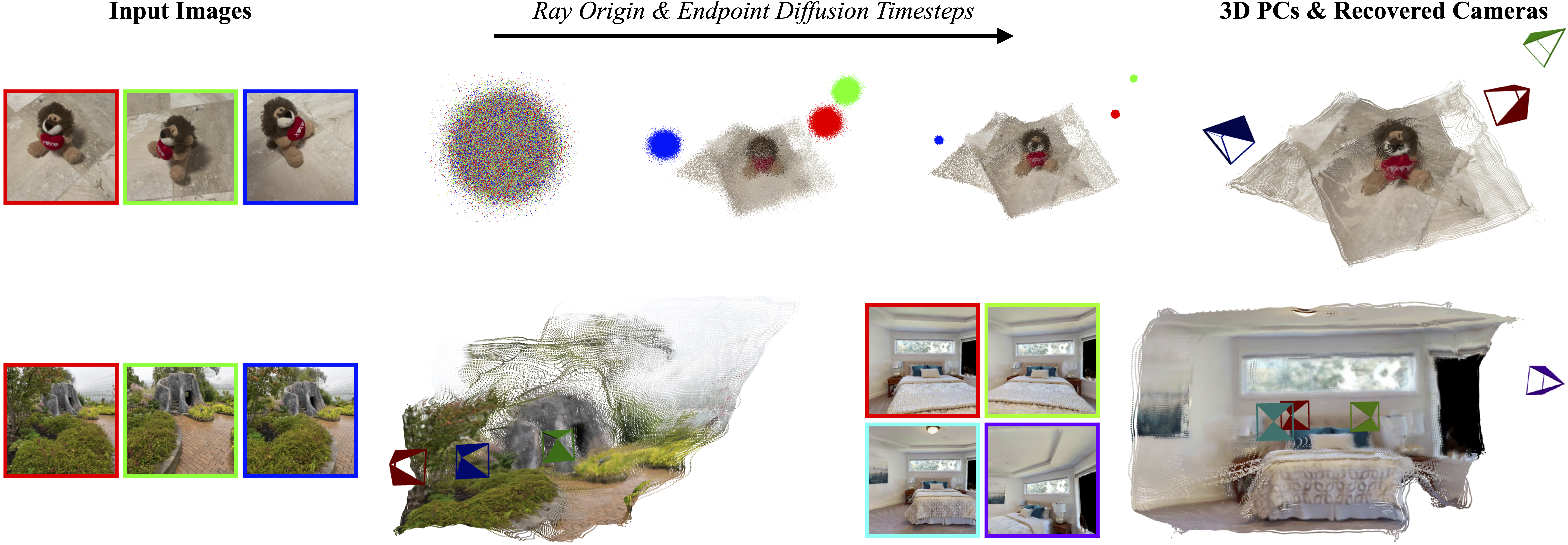

3 | This repository contains the official implementation for **DiffusionSfM: Predicting Structure and Motion**

4 | **via Ray Origin and Endpoint Diffusion**. The paper has been accepted to [CVPR 2025](https://cvpr.thecvf.com/Conferences/2025).

5 |

6 | [Project Page](https://qitaozhao.github.io/DiffusionSfM) | [arXiv](https://arxiv.org/abs/2505.05473) |  7 |

8 | ### News

9 |

10 | - 2025.05.04: Initial code release.

11 |

12 | ## Introduction

13 |

14 | **tl;dr** Given a set of multi-view images, **DiffusionSfM** represents scene geometry and cameras as pixel-wise ray origins and endpoints in a global frame. It learns a denoising diffusion model to infer these elements directly from multi-view inputs.

15 |

16 |

17 |

18 | ## Install

19 |

20 | 1. Clone DiffusionSfM:

21 |

22 | ```bash

23 | git clone https://github.com/QitaoZhao/DiffusionSfM.git

24 | cd DiffusionSfM

25 | ```

26 |

27 | 2. Create the environment and install packages:

28 |

29 | ```bash

30 | conda create -n diffusionsfm python=3.9

31 | conda activate diffusionsfm

32 |

33 | # enable nvcc

34 | conda install -c conda-forge cudatoolkit-dev

35 |

36 | ### torch

37 | # CUDA 11.7

38 | conda install pytorch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pytorch-cuda=11.7 -c pytorch -c nvidia

39 |

40 | pip install -r requirements.txt

41 |

42 | ### pytorch3D

43 | # CUDA 11.7

44 | conda install https://anaconda.org/pytorch3d/pytorch3d/0.7.7/download/linux-64/pytorch3d-0.7.7-py39_cu117_pyt201.tar.bz2

45 |

46 | # xformers

47 | conda install xformers -c xformers

48 | ```

49 |

50 | Tested on:

51 |

52 | - Springdale Linux 8.6 with torch 2.0.1 & CUDA 11.7 on A6000 GPUs.

53 |

54 | > **Note:** If you encounter the error

55 |

56 | > ImportError: .../libtorch_cpu.so: undefined symbol: iJIT_NotifyEvent

57 |

58 | > when importing PyTorch, refer to this [related issue](https://github.com/coleygroup/shepherd-score/issues/1) or try installing Intel MKL explicitly with:

59 |

60 | ```

61 | conda install mkl==2024.0

62 | ```

63 |

64 | ## Run Demo

65 |

66 | #### (1) Try the Online Demo

67 |

68 | Check out our interactive demo on Hugging Face:

69 |

70 | 👉 [DiffusionSfM Demo](https://huggingface.co/spaces/qitaoz/DiffusionSfM)

71 |

72 | #### (2) Run the Gradio Demo Locally

73 |

74 | Download the model weights manually from [Hugging Face](https://huggingface.co/qitaoz/DiffusionSfM):

75 |

76 | ```python

77 | from huggingface_hub import hf_hub_download

78 |

79 | filepath = hf_hub_download(repo_id="qitaoz/DiffusionSfM", filename="qitaoz/DiffusionSfM")

80 | ```

81 |

82 | or [Google Drive](https://drive.google.com/file/d/1NBdq7A1QMFGhIbpK1HT3ATv2S1jXWr2h/view?usp=drive_link):

83 |

84 | ```bash

85 | gdown https://drive.google.com/uc\?id\=1NBdq7A1QMFGhIbpK1HT3ATv2S1jXWr2h

86 | unzip models.zip

87 | ```

88 | Next run the demo like so:

89 |

90 | ```bash

91 | # first-time running may take a longer time

92 | python gradio_app.py

93 | ```

94 |

95 |

96 |

97 | You can run our model in two ways:

98 |

99 | 1. **Upload Images** — Upload your own multi-view images above.

100 | 2. **Use a Preprocessed Example** — Select one of the pre-collected examples below.

101 |

102 | ## Training

103 |

104 | Set up wandb:

105 |

106 | ```bash

107 | wandb login

108 | ```

109 |

110 | See [docs/train.md](https://github.com/QitaoZhao/DiffusionSfM/blob/main/docs/train.md) for more detailed instructions on training.

111 |

112 | ## Evaluation

113 |

114 | See [docs/eval.md](https://github.com/QitaoZhao/DiffusionSfM/blob/main/docs/eval.md) for instructions on how to run evaluation code.

115 |

116 | ## Acknowledgments

117 |

118 | This project builds upon [RayDiffusion](https://github.com/jasonyzhang/RayDiffusion). [Amy Lin](https://amyxlase.github.io/) and [Jason Y. Zhang](https://jasonyzhang.com/) developed the initial codebase during the early stages of this project.

119 |

120 | ## Cite DiffusionSfM

121 |

122 | If you find this code helpful, please cite:

123 |

124 | ```

125 | @inproceedings{zhao2025diffusionsfm,

126 | title={DiffusionSfM: Predicting Structure and Motion via Ray Origin and Endpoint Diffusion},

127 | author={Qitao Zhao and Amy Lin and Jeff Tan and Jason Y. Zhang and Deva Ramanan and Shubham Tulsiani},

128 | booktitle={CVPR},

129 | year={2025}

130 | }

131 | ```

--------------------------------------------------------------------------------

/assets/demo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/assets/demo.png

--------------------------------------------------------------------------------

/conf/config.yaml:

--------------------------------------------------------------------------------

1 | training:

2 | resume: False # If True, must set hydra.run.dir accordingly

3 | pretrain_path: ""

4 | interval_visualize: 1000

5 | interval_save_checkpoint: 5000

6 | interval_delete_checkpoint: 10000

7 | interval_evaluate: 5000

8 | delete_all_checkpoints_after_training: False

9 | lr: 1e-4

10 | mixed_precision: True

11 | matmul_precision: high

12 | max_iterations: 100000

13 | batch_size: 64

14 | num_workers: 8

15 | gpu_id: 0

16 | freeze_encoder: True

17 | seed: 0

18 | job_key: "" # Use this for submitit sweeps where timestamps might collide

19 | translation_scale: 1.0

20 | regression: False

21 | prob_unconditional: 0

22 | load_extra_cameras: False

23 | calculate_intrinsics: False

24 | distort: False

25 | normalize_first_camera: True

26 | diffuse_origins_and_endpoints: True

27 | diffuse_depths: False

28 | depth_resolution: 1

29 | dpt_head: False

30 | full_num_patches_x: 16

31 | full_num_patches_y: 16

32 | dpt_encoder_features: True

33 | nearest_neighbor: True

34 | no_bg_targets: True

35 | unit_normalize_scene: False

36 | sd_scale: 2

37 | bfloat: True

38 | first_cam_mediod: True

39 | gradient_clipping: False

40 | l1_loss: False

41 | grad_accumulation: False

42 | reinit: False

43 |

44 | model:

45 | pred_x0: True

46 | model_type: dit

47 | num_patches_x: 16

48 | num_patches_y: 16

49 | depth: 16

50 | num_images: 1

51 | random_num_images: True

52 | feature_extractor: dino

53 | append_ndc: True

54 | within_image: False

55 | use_homogeneous: True

56 | freeze_transformer: False

57 | cond_depth_mask: True

58 |

59 | noise_scheduler:

60 | type: linear

61 | max_timesteps: 100

62 | beta_start: 0.0120

63 | beta_end: 0.00085

64 | marigold_ddim: False

65 |

66 | dataset:

67 | name: co3d

68 | shape: all_train

69 | apply_augmentation: True

70 | use_global_intrinsics: True

71 | mask_holes: True

72 | image_size: 224

73 |

74 | debug:

75 | wandb: True

76 | project_name: diffusionsfm

77 | run_name:

78 | anomaly_detection: False

79 |

80 | hydra:

81 | run:

82 | dir: ./output/${now:%m%d_%H%M%S_%f}${training.job_key}

83 | output_subdir: hydra

84 |

--------------------------------------------------------------------------------

/conf/diffusion.yml:

--------------------------------------------------------------------------------

1 | name: diffusion

2 | channels:

3 | - conda-forge

4 | - iopath

5 | - nvidia

6 | - pkgs/main

7 | - pytorch

8 | - xformers

9 | dependencies:

10 | - _libgcc_mutex=0.1=conda_forge

11 | - _openmp_mutex=4.5=2_gnu

12 | - blas=1.0=mkl

13 | - brotli-python=1.0.9=py39h5a03fae_9

14 | - bzip2=1.0.8=h7f98852_4

15 | - ca-certificates=2023.7.22=hbcca054_0

16 | - certifi=2023.7.22=pyhd8ed1ab_0

17 | - charset-normalizer=3.2.0=pyhd8ed1ab_0

18 | - colorama=0.4.6=pyhd8ed1ab_0

19 | - cuda-cudart=11.7.99=0

20 | - cuda-cupti=11.7.101=0

21 | - cuda-libraries=11.7.1=0

22 | - cuda-nvrtc=11.7.99=0

23 | - cuda-nvtx=11.7.91=0

24 | - cuda-runtime=11.7.1=0

25 | - ffmpeg=4.3=hf484d3e_0

26 | - filelock=3.12.2=pyhd8ed1ab_0

27 | - freetype=2.12.1=hca18f0e_1

28 | - fvcore=0.1.5.post20221221=pyhd8ed1ab_0

29 | - gmp=6.2.1=h58526e2_0

30 | - gmpy2=2.1.2=py39h376b7d2_1

31 | - gnutls=3.6.13=h85f3911_1

32 | - idna=3.4=pyhd8ed1ab_0

33 | - intel-openmp=2022.1.0=h9e868ea_3769

34 | - iopath=0.1.9=py39

35 | - jinja2=3.1.2=pyhd8ed1ab_1

36 | - jpeg=9e=h0b41bf4_3

37 | - lame=3.100=h166bdaf_1003

38 | - lcms2=2.15=hfd0df8a_0

39 | - ld_impl_linux-64=2.40=h41732ed_0

40 | - lerc=4.0.0=h27087fc_0

41 | - libblas=3.9.0=16_linux64_mkl

42 | - libcblas=3.9.0=16_linux64_mkl

43 | - libcublas=11.10.3.66=0

44 | - libcufft=10.7.2.124=h4fbf590_0

45 | - libcufile=1.7.1.12=0

46 | - libcurand=10.3.3.129=0

47 | - libcusolver=11.4.0.1=0

48 | - libcusparse=11.7.4.91=0

49 | - libdeflate=1.17=h0b41bf4_0

50 | - libffi=3.3=h58526e2_2

51 | - libgcc-ng=13.1.0=he5830b7_0

52 | - libgomp=13.1.0=he5830b7_0

53 | - libiconv=1.17=h166bdaf_0

54 | - liblapack=3.9.0=16_linux64_mkl

55 | - libnpp=11.7.4.75=0

56 | - libnvjpeg=11.8.0.2=0

57 | - libpng=1.6.39=h753d276_0

58 | - libsqlite=3.42.0=h2797004_0

59 | - libstdcxx-ng=13.1.0=hfd8a6a1_0

60 | - libtiff=4.5.0=h6adf6a1_2

61 | - libwebp-base=1.3.1=hd590300_0

62 | - libxcb=1.13=h7f98852_1004

63 | - libzlib=1.2.13=hd590300_5

64 | - markupsafe=2.1.3=py39hd1e30aa_0

65 | - mkl=2022.1.0=hc2b9512_224

66 | - mpc=1.3.1=hfe3b2da_0

67 | - mpfr=4.2.0=hb012696_0

68 | - mpmath=1.3.0=pyhd8ed1ab_0

69 | - ncurses=6.4=hcb278e6_0

70 | - nettle=3.6=he412f7d_0

71 | - networkx=3.1=pyhd8ed1ab_0

72 | - numpy=1.25.2=py39h6183b62_0

73 | - openh264=2.1.1=h780b84a_0

74 | - openjpeg=2.5.0=hfec8fc6_2

75 | - openssl=1.1.1v=hd590300_0

76 | - pillow=9.4.0=py39h2320bf1_1

77 | - pip=23.2.1=pyhd8ed1ab_0

78 | - portalocker=2.7.0=py39hf3d152e_0

79 | - pthread-stubs=0.4=h36c2ea0_1001

80 | - pysocks=1.7.1=pyha2e5f31_6

81 | - python=3.9.0=hffdb5ce_5_cpython

82 | - python_abi=3.9=3_cp39

83 | - pytorch=2.0.1=py3.9_cuda11.7_cudnn8.5.0_0

84 | - pytorch-cuda=11.7=h778d358_5

85 | - pytorch-mutex=1.0=cuda

86 | - pyyaml=6.0=py39hb9d737c_5

87 | - readline=8.2=h8228510_1

88 | - requests=2.31.0=pyhd8ed1ab_0

89 | - setuptools=68.0.0=pyhd8ed1ab_0

90 | - sqlite=3.42.0=h2c6b66d_0

91 | - sympy=1.12=pypyh9d50eac_103

92 | - tabulate=0.9.0=pyhd8ed1ab_1

93 | - termcolor=2.3.0=pyhd8ed1ab_0

94 | - tk=8.6.12=h27826a3_0

95 | - torchaudio=2.0.2=py39_cu117

96 | - torchtriton=2.0.0=py39

97 | - torchvision=0.15.2=py39_cu117

98 | - tqdm=4.66.1=pyhd8ed1ab_0

99 | - typing_extensions=4.7.1=pyha770c72_0

100 | - tzdata=2023c=h71feb2d_0

101 | - urllib3=2.0.4=pyhd8ed1ab_0

102 | - wheel=0.41.1=pyhd8ed1ab_0

103 | - xformers=0.0.21=py39_cu11.8.0_pyt2.0.1

104 | - xorg-libxau=1.0.11=hd590300_0

105 | - xorg-libxdmcp=1.1.3=h7f98852_0

106 | - xz=5.2.6=h166bdaf_0

107 | - yacs=0.1.8=pyhd8ed1ab_0

108 | - yaml=0.2.5=h7f98852_2

109 | - zlib=1.2.13=hd590300_5

110 | - zstd=1.5.2=hfc55251_7

111 |

--------------------------------------------------------------------------------

/data/demo/jellycat/001.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jellycat/001.jpg

--------------------------------------------------------------------------------

/data/demo/jellycat/002.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jellycat/002.jpg

--------------------------------------------------------------------------------

/data/demo/jellycat/003.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jellycat/003.jpg

--------------------------------------------------------------------------------

/data/demo/jellycat/004.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jellycat/004.jpg

--------------------------------------------------------------------------------

/data/demo/jordan/001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/001.png

--------------------------------------------------------------------------------

/data/demo/jordan/002.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/002.png

--------------------------------------------------------------------------------

/data/demo/jordan/003.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/003.png

--------------------------------------------------------------------------------

/data/demo/jordan/004.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/004.png

--------------------------------------------------------------------------------

/data/demo/jordan/005.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/005.png

--------------------------------------------------------------------------------

/data/demo/jordan/006.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/006.png

--------------------------------------------------------------------------------

/data/demo/jordan/007.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/007.png

--------------------------------------------------------------------------------

/data/demo/jordan/008.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/jordan/008.png

--------------------------------------------------------------------------------

/data/demo/kew_gardens_ruined_arch/001.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kew_gardens_ruined_arch/001.jpeg

--------------------------------------------------------------------------------

/data/demo/kew_gardens_ruined_arch/002.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kew_gardens_ruined_arch/002.jpeg

--------------------------------------------------------------------------------

/data/demo/kew_gardens_ruined_arch/003.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kew_gardens_ruined_arch/003.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/001.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/001.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/002.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/002.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/003.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/003.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/004.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/004.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/005.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/005.jpeg

--------------------------------------------------------------------------------

/data/demo/kotor_cathedral/006.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/data/demo/kotor_cathedral/006.jpeg

--------------------------------------------------------------------------------

/diffusionsfm/__init__.py:

--------------------------------------------------------------------------------

1 | from .utils.rays import cameras_to_rays, rays_to_cameras, Rays

2 |

--------------------------------------------------------------------------------

/diffusionsfm/dataset/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/diffusionsfm/dataset/__init__.py

--------------------------------------------------------------------------------

/diffusionsfm/dataset/co3d_v2.py:

--------------------------------------------------------------------------------

1 | import gzip

2 | import json

3 | import os.path as osp

4 | import random

5 | import socket

6 | import time

7 | import torch

8 | import warnings

9 |

10 | import numpy as np

11 | from PIL import Image, ImageFile

12 | from tqdm import tqdm

13 | from pytorch3d.renderer import PerspectiveCameras

14 | from torch.utils.data import Dataset

15 | from torchvision import transforms

16 | import matplotlib.pyplot as plt

17 | from scipy import ndimage as nd

18 |

19 | from diffusionsfm.utils.distortion import distort_image

20 |

21 |

22 | HOSTNAME = socket.gethostname()

23 |

24 | CO3D_DIR = "../co3d_data" # update this

25 | CO3D_ANNOTATION_DIR = osp.join(CO3D_DIR, "co3d_annotations")

26 | CO3D_DIR = CO3D_DEPTH_DIR = osp.join(CO3D_DIR, "co3d")

27 | order_path = osp.join(

28 | CO3D_DIR, "co3d_v2_random_order_{sample_num}/{category}.json"

29 | )

30 |

31 |

32 | TRAINING_CATEGORIES = [

33 | "apple",

34 | "backpack",

35 | "banana",

36 | "baseballbat",

37 | "baseballglove",

38 | "bench",

39 | "bicycle",

40 | "bottle",

41 | "bowl",

42 | "broccoli",

43 | "cake",

44 | "car",

45 | "carrot",

46 | "cellphone",

47 | "chair",

48 | "cup",

49 | "donut",

50 | "hairdryer",

51 | "handbag",

52 | "hydrant",

53 | "keyboard",

54 | "laptop",

55 | "microwave",

56 | "motorcycle",

57 | "mouse",

58 | "orange",

59 | "parkingmeter",

60 | "pizza",

61 | "plant",

62 | "stopsign",

63 | "teddybear",

64 | "toaster",

65 | "toilet",

66 | "toybus",

67 | "toyplane",

68 | "toytrain",

69 | "toytruck",

70 | "tv",

71 | "umbrella",

72 | "vase",

73 | "wineglass",

74 | ]

75 |

76 | TEST_CATEGORIES = [

77 | "ball",

78 | "book",

79 | "couch",

80 | "frisbee",

81 | "hotdog",

82 | "kite",

83 | "remote",

84 | "sandwich",

85 | "skateboard",

86 | "suitcase",

87 | ]

88 |

89 | assert len(TRAINING_CATEGORIES) + len(TEST_CATEGORIES) == 51

90 |

91 | Image.MAX_IMAGE_PIXELS = None

92 | ImageFile.LOAD_TRUNCATED_IMAGES = True

93 |

94 |

95 | def fill_depths(data, invalid=None):

96 | data_list = []

97 | for i in range(data.shape[0]):

98 | data_item = data[i].numpy()

99 | # Invalid must be 1 where stuff is invalid, 0 where valid

100 | ind = nd.distance_transform_edt(

101 | invalid[i], return_distances=False, return_indices=True

102 | )

103 | data_list.append(torch.tensor(data_item[tuple(ind)]))

104 | return torch.stack(data_list, dim=0)

105 |

106 |

107 | def full_scene_scale(batch):

108 | cameras = PerspectiveCameras(R=batch["R"], T=batch["T"], device="cuda")

109 | cc = cameras.get_camera_center()

110 | centroid = torch.mean(cc, dim=0)

111 |

112 | diffs = cc - centroid

113 | norms = torch.linalg.norm(diffs, dim=1)

114 |

115 | furthest_index = torch.argmax(norms).item()

116 | scale = norms[furthest_index].item()

117 | return scale

118 |

119 |

120 | def square_bbox(bbox, padding=0.0, astype=None, tight=False):

121 | """

122 | Computes a square bounding box, with optional padding parameters.

123 | Args:

124 | bbox: Bounding box in xyxy format (4,).

125 | Returns:

126 | square_bbox in xyxy format (4,).

127 | """

128 | if astype is None:

129 | astype = type(bbox[0])

130 | bbox = np.array(bbox)

131 | center = (bbox[:2] + bbox[2:]) / 2

132 | extents = (bbox[2:] - bbox[:2]) / 2

133 |

134 | # No black bars if tight

135 | if tight:

136 | s = min(extents) * (1 + padding)

137 | else:

138 | s = max(extents) * (1 + padding)

139 |

140 | square_bbox = np.array(

141 | [center[0] - s, center[1] - s, center[0] + s, center[1] + s],

142 | dtype=astype,

143 | )

144 | return square_bbox

145 |

146 |

147 | def unnormalize_image(image, return_numpy=True, return_int=True):

148 | if isinstance(image, torch.Tensor):

149 | image = image.detach().cpu().numpy()

150 |

151 | if image.ndim == 3:

152 | if image.shape[0] == 3:

153 | image = image[None, ...]

154 | elif image.shape[2] == 3:

155 | image = image.transpose(2, 0, 1)[None, ...]

156 | else:

157 | raise ValueError(f"Unexpected image shape: {image.shape}")

158 | elif image.ndim == 4:

159 | if image.shape[1] == 3:

160 | pass

161 | elif image.shape[3] == 3:

162 | image = image.transpose(0, 3, 1, 2)

163 | else:

164 | raise ValueError(f"Unexpected batch image shape: {image.shape}")

165 | else:

166 | raise ValueError(f"Unsupported input shape: {image.shape}")

167 |

168 | mean = np.array([0.485, 0.456, 0.406])[None, :, None, None]

169 | std = np.array([0.229, 0.224, 0.225])[None, :, None, None]

170 | image = image * std + mean

171 |

172 | if return_int:

173 | image = np.clip(image * 255.0, 0, 255).astype(np.uint8)

174 | else:

175 | image = np.clip(image, 0.0, 1.0)

176 |

177 | if image.shape[0] == 1:

178 | image = image[0]

179 |

180 | if return_numpy:

181 | return image

182 | else:

183 | return torch.from_numpy(image)

184 |

185 |

186 | def unnormalize_image_for_vis(image):

187 | assert len(image.shape) == 5 and image.shape[2] == 3

188 | mean = torch.tensor([0.485, 0.456, 0.406]).view(1, 1, 3, 1, 1).to(image.device)

189 | std = torch.tensor([0.229, 0.224, 0.225]).view(1, 1, 3, 1, 1).to(image.device)

190 | image = image * std + mean

191 | image = (image - 0.5) / 0.5

192 | return image

193 |

194 |

195 | def _transform_intrinsic(image, bbox, principal_point, focal_length):

196 | # Rescale intrinsics to match bbox

197 | half_box = np.array([image.width, image.height]).astype(np.float32) / 2

198 | org_scale = min(half_box).astype(np.float32)

199 |

200 | # Pixel coordinates

201 | principal_point_px = half_box - (np.array(principal_point) * org_scale)

202 | focal_length_px = np.array(focal_length) * org_scale

203 | principal_point_px -= bbox[:2]

204 | new_bbox = (bbox[2:] - bbox[:2]) / 2

205 | new_scale = min(new_bbox)

206 |

207 | # NDC coordinates

208 | new_principal_ndc = (new_bbox - principal_point_px) / new_scale

209 | new_focal_ndc = focal_length_px / new_scale

210 |

211 | principal_point = torch.tensor(new_principal_ndc.astype(np.float32))

212 | focal_length = torch.tensor(new_focal_ndc.astype(np.float32))

213 |

214 | return principal_point, focal_length

215 |

216 |

217 | def construct_camera_from_batch(batch, device):

218 | if isinstance(device, int):

219 | device = f"cuda:{device}"

220 |

221 | return PerspectiveCameras(

222 | R=batch["R"].reshape(-1, 3, 3),

223 | T=batch["T"].reshape(-1, 3),

224 | focal_length=batch["focal_lengths"].reshape(-1, 2),

225 | principal_point=batch["principal_points"].reshape(-1, 2),

226 | image_size=batch["image_sizes"].reshape(-1, 2),

227 | device=device,

228 | )

229 |

230 |

231 | def save_batch_images(images, fname):

232 | cmap = plt.get_cmap("hsv")

233 | num_frames = len(images)

234 | num_rows = len(images)

235 | num_cols = 4

236 | figsize = (num_cols * 2, num_rows * 2)

237 | fig, axs = plt.subplots(num_rows, num_cols, figsize=figsize)

238 | axs = axs.flatten()

239 | for i in range(num_rows):

240 | for j in range(4):

241 | if i < num_frames:

242 | axs[i * 4 + j].imshow(unnormalize_image(images[i][j]))

243 | for s in ["bottom", "top", "left", "right"]:

244 | axs[i * 4 + j].spines[s].set_color(cmap(i / (num_frames)))

245 | axs[i * 4 + j].spines[s].set_linewidth(5)

246 | axs[i * 4 + j].set_xticks([])

247 | axs[i * 4 + j].set_yticks([])

248 | else:

249 | axs[i * 4 + j].axis("off")

250 | plt.tight_layout()

251 | plt.savefig(fname)

252 |

253 |

254 | def jitter_bbox(

255 | square_bbox,

256 | jitter_scale=(1.1, 1.2),

257 | jitter_trans=(-0.07, 0.07),

258 | direction_from_size=None,

259 | ):

260 |

261 | square_bbox = np.array(square_bbox.astype(float))

262 | s = np.random.uniform(jitter_scale[0], jitter_scale[1])

263 |

264 | # Jitter only one dimension if center cropping

265 | tx, ty = np.random.uniform(jitter_trans[0], jitter_trans[1], size=2)

266 | if direction_from_size is not None:

267 | if direction_from_size[0] > direction_from_size[1]:

268 | tx = 0

269 | else:

270 | ty = 0

271 |

272 | side_length = square_bbox[2] - square_bbox[0]

273 | center = (square_bbox[:2] + square_bbox[2:]) / 2 + np.array([tx, ty]) * side_length

274 | extent = side_length / 2 * s

275 | ul = center - extent

276 | lr = ul + 2 * extent

277 | return np.concatenate((ul, lr))

278 |

279 |

280 | class Co3dDataset(Dataset):

281 | def __init__(

282 | self,

283 | category=("all_train",),

284 | split="train",

285 | transform=None,

286 | num_images=2,

287 | img_size=224,

288 | mask_images=False,

289 | crop_images=True,

290 | co3d_dir=None,

291 | co3d_annotation_dir=None,

292 | precropped_images=False,

293 | apply_augmentation=True,

294 | normalize_cameras=True,

295 | no_images=False,

296 | sample_num=None,

297 | seed=0,

298 | load_extra_cameras=False,

299 | distort_image=False,

300 | load_depths=False,

301 | center_crop=False,

302 | depth_size=256,

303 | mask_holes=False,

304 | object_mask=True,

305 | ):

306 | """

307 | Args:

308 | num_images: Number of images in each batch.

309 | perspective_correction (str):

310 | "none": No perspective correction.

311 | "warp": Warp the image and label.

312 | "label_only": Correct the label only.

313 | """

314 | start_time = time.time()

315 |

316 | self.category = category

317 | self.split = split

318 | self.transform = transform

319 | self.num_images = num_images

320 | self.img_size = img_size

321 | self.mask_images = mask_images

322 | self.crop_images = crop_images

323 | self.precropped_images = precropped_images

324 | self.apply_augmentation = apply_augmentation

325 | self.normalize_cameras = normalize_cameras

326 | self.no_images = no_images

327 | self.sample_num = sample_num

328 | self.load_extra_cameras = load_extra_cameras

329 | self.distort = distort_image

330 | self.load_depths = load_depths

331 | self.center_crop = center_crop

332 | self.depth_size = depth_size

333 | self.mask_holes = mask_holes

334 | self.object_mask = object_mask

335 |

336 | if self.apply_augmentation:

337 | if self.center_crop:

338 | self.jitter_scale = (0.8, 1.1)

339 | self.jitter_trans = (0.0, 0.0)

340 | else:

341 | self.jitter_scale = (1.1, 1.2)

342 | self.jitter_trans = (-0.07, 0.07)

343 | else:

344 | # Note if trained with apply_augmentation, we should still use

345 | # apply_augmentation at test time.

346 | self.jitter_scale = (1, 1)

347 | self.jitter_trans = (0.0, 0.0)

348 |

349 | if self.distort:

350 | self.k1_max = 1.0

351 | self.k2_max = 1.0

352 |

353 | if co3d_dir is not None:

354 | self.co3d_dir = co3d_dir

355 | self.co3d_annotation_dir = co3d_annotation_dir

356 | else:

357 | self.co3d_dir = CO3D_DIR

358 | self.co3d_annotation_dir = CO3D_ANNOTATION_DIR

359 | self.co3d_depth_dir = CO3D_DEPTH_DIR

360 |

361 | if isinstance(self.category, str):

362 | self.category = [self.category]

363 |

364 | if "all_train" in self.category:

365 | self.category = TRAINING_CATEGORIES

366 | if "all_test" in self.category:

367 | self.category = TEST_CATEGORIES

368 | if "full" in self.category:

369 | self.category = TRAINING_CATEGORIES + TEST_CATEGORIES

370 | self.category = sorted(self.category)

371 | self.is_single_category = len(self.category) == 1

372 |

373 | # Fixing seed

374 | torch.manual_seed(seed)

375 | random.seed(seed)

376 | np.random.seed(seed)

377 |

378 | print(f"Co3d ({split}):")

379 |

380 | self.low_quality_translations = [

381 | "411_55952_107659",

382 | "427_59915_115716",

383 | "435_61970_121848",

384 | "112_13265_22828",

385 | "110_13069_25642",

386 | "165_18080_34378",

387 | "368_39891_78502",

388 | "391_47029_93665",

389 | "20_695_1450",

390 | "135_15556_31096",

391 | "417_57572_110680",

392 | ] # Initialized with sequences with poor depth masks

393 | self.rotations = {}

394 | self.category_map = {}

395 | for c in tqdm(self.category):

396 | annotation_file = osp.join(

397 | self.co3d_annotation_dir, f"{c}_{self.split}.jgz"

398 | )

399 | with gzip.open(annotation_file, "r") as fin:

400 | annotation = json.loads(fin.read())

401 |

402 | counter = 0

403 | for seq_name, seq_data in annotation.items():

404 | counter += 1

405 | if len(seq_data) < self.num_images:

406 | continue

407 |

408 | filtered_data = []

409 | self.category_map[seq_name] = c

410 | bad_seq = False

411 | for data in seq_data:

412 | # Make sure translations are not ridiculous and rotations are valid

413 | det = np.linalg.det(data["R"])

414 | if (np.abs(data["T"]) > 1e5).any() or det < 0.99 or det > 1.01:

415 | bad_seq = True

416 | self.low_quality_translations.append(seq_name)

417 | break

418 |

419 | # Ignore all unnecessary information.

420 | filtered_data.append(

421 | {

422 | "filepath": data["filepath"],

423 | "bbox": data["bbox"],

424 | "R": data["R"],

425 | "T": data["T"],

426 | "focal_length": data["focal_length"],

427 | "principal_point": data["principal_point"],

428 | },

429 | )

430 |

431 | if not bad_seq:

432 | self.rotations[seq_name] = filtered_data

433 |

434 | self.sequence_list = list(self.rotations.keys())

435 |

436 | IMAGENET_DEFAULT_MEAN = (0.485, 0.456, 0.406)

437 | IMAGENET_DEFAULT_STD = (0.229, 0.224, 0.225)

438 |

439 | if self.transform is None:

440 | self.transform = transforms.Compose(

441 | [

442 | transforms.ToTensor(),

443 | transforms.Resize(self.img_size, antialias=True),

444 | transforms.Normalize(mean=IMAGENET_DEFAULT_MEAN, std=IMAGENET_DEFAULT_STD),

445 | ]

446 | )

447 |

448 | self.transform_depth = transforms.Compose(

449 | [

450 | transforms.Resize(

451 | self.depth_size,

452 | antialias=False,

453 | interpolation=transforms.InterpolationMode.NEAREST_EXACT,

454 | ),

455 | ]

456 | )

457 |

458 | print(

459 | f"Low quality translation sequences, not used: {self.low_quality_translations}"

460 | )

461 | print(f"Data size: {len(self)}")

462 | print(f"Data loading took {(time.time()-start_time)} seconds.")

463 |

464 | def __len__(self):

465 | return len(self.sequence_list)

466 |

467 | def __getitem__(self, index):

468 | num_to_load = self.num_images if not self.load_extra_cameras else 8

469 |

470 | sequence_name = self.sequence_list[index % len(self.sequence_list)]

471 | metadata = self.rotations[sequence_name]

472 |

473 | if self.sample_num is not None:

474 | with open(

475 | order_path.format(sample_num=self.sample_num, category=self.category[0])

476 | ) as f:

477 | order = json.load(f)

478 | ids = order[sequence_name][:num_to_load]

479 | else:

480 | replace = len(metadata) < 8

481 | ids = np.random.choice(len(metadata), num_to_load, replace=replace)

482 |

483 | return self.get_data(index=index, ids=ids, num_valid_frames=num_to_load)

484 |

485 | def _get_scene_scale(self, sequence_name):

486 | n = len(self.rotations[sequence_name])

487 |

488 | R = torch.zeros(n, 3, 3)

489 | T = torch.zeros(n, 3)

490 |

491 | for i, ann in enumerate(self.rotations[sequence_name]):

492 | R[i, ...] = torch.tensor(self.rotations[sequence_name][i]["R"])

493 | T[i, ...] = torch.tensor(self.rotations[sequence_name][i]["T"])

494 |

495 | cameras = PerspectiveCameras(R=R, T=T)

496 | cc = cameras.get_camera_center()

497 | centeroid = torch.mean(cc, dim=0)

498 | diff = cc - centeroid

499 |

500 | norm = torch.norm(diff, dim=1)

501 | scale = torch.max(norm).item()

502 |

503 | return scale

504 |

505 | def _crop_image(self, image, bbox):

506 | image_crop = transforms.functional.crop(

507 | image,

508 | top=bbox[1],

509 | left=bbox[0],

510 | height=bbox[3] - bbox[1],

511 | width=bbox[2] - bbox[0],

512 | )

513 | return image_crop

514 |

515 | def _transform_intrinsic(self, image, bbox, principal_point, focal_length):

516 | half_box = np.array([image.width, image.height]).astype(np.float32) / 2

517 | org_scale = min(half_box).astype(np.float32)

518 |

519 | # Pixel coordinates

520 | principal_point_px = half_box - (np.array(principal_point) * org_scale)

521 | focal_length_px = np.array(focal_length) * org_scale

522 | principal_point_px -= bbox[:2]

523 | new_bbox = (bbox[2:] - bbox[:2]) / 2

524 | new_scale = min(new_bbox)

525 |

526 | # NDC coordinates

527 | new_principal_ndc = (new_bbox - principal_point_px) / new_scale

528 | new_focal_ndc = focal_length_px / new_scale

529 |

530 | return new_principal_ndc.astype(np.float32), new_focal_ndc.astype(np.float32)

531 |

532 | def get_data(

533 | self,

534 | index=None,

535 | sequence_name=None,

536 | ids=(0, 1),

537 | no_images=False,

538 | num_valid_frames=None,

539 | load_using_order=None,

540 | ):

541 | if load_using_order is not None:

542 | with open(

543 | order_path.format(sample_num=self.sample_num, category=self.category[0])

544 | ) as f:

545 | order = json.load(f)

546 | ids = order[sequence_name][:load_using_order]

547 |

548 | if sequence_name is None:

549 | index = index % len(self.sequence_list)

550 | sequence_name = self.sequence_list[index]

551 | metadata = self.rotations[sequence_name]

552 | category = self.category_map[sequence_name]

553 |

554 | # Read image & camera information from annotations

555 | annos = [metadata[i] for i in ids]

556 | images = []

557 | image_sizes = []

558 | PP = []

559 | FL = []

560 | crop_parameters = []

561 | filenames = []

562 | distortion_parameters = []

563 | depths = []

564 | depth_masks = []

565 | object_masks = []

566 | dino_images = []

567 | for anno in annos:

568 | filepath = anno["filepath"]

569 |

570 | if not no_images:

571 | image = Image.open(osp.join(self.co3d_dir, filepath)).convert("RGB")

572 | image_size = image.size

573 |

574 | # Optionally mask images with black background

575 | if self.mask_images:

576 | black_image = Image.new("RGB", image_size, (0, 0, 0))

577 | mask_name = osp.basename(filepath.replace(".jpg", ".png"))

578 |

579 | mask_path = osp.join(

580 | self.co3d_dir, category, sequence_name, "masks", mask_name

581 | )

582 | mask = Image.open(mask_path).convert("L")

583 |

584 | if mask.size != image_size:

585 | mask = mask.resize(image_size)

586 | mask = Image.fromarray(np.array(mask) > 125)

587 | image = Image.composite(image, black_image, mask)

588 |

589 | if self.object_mask:

590 | mask_name = osp.basename(filepath.replace(".jpg", ".png"))

591 | mask_path = osp.join(

592 | self.co3d_dir, category, sequence_name, "masks", mask_name

593 | )

594 | mask = Image.open(mask_path).convert("L")

595 |

596 | if mask.size != image_size:

597 | mask = mask.resize(image_size)

598 | mask = torch.from_numpy(np.array(mask) > 125)

599 |

600 | # Determine crop, Resnet wants square images

601 | bbox = np.array(anno["bbox"])

602 | good_bbox = ((bbox[2:] - bbox[:2]) > 30).all()

603 | bbox = (

604 | anno["bbox"]

605 | if not self.center_crop and good_bbox

606 | else [0, 0, image.width, image.height]

607 | )

608 |

609 | # Distort image and bbox if desired

610 | if self.distort:

611 | k1 = random.uniform(0, self.k1_max)

612 | k2 = random.uniform(0, self.k2_max)

613 |

614 | try:

615 | image, bbox = distort_image(

616 | image, np.array(bbox), k1, k2, modify_bbox=True

617 | )

618 |

619 | except:

620 | print("INFO:")

621 | print(sequence_name)

622 | print(index)

623 | print(ids)

624 | print(k1)

625 | print(k2)

626 |

627 | distortion_parameters.append(torch.FloatTensor([k1, k2]))

628 |

629 | bbox = square_bbox(np.array(bbox), tight=self.center_crop)

630 | if self.apply_augmentation:

631 | bbox = jitter_bbox(

632 | bbox,

633 | jitter_scale=self.jitter_scale,

634 | jitter_trans=self.jitter_trans,

635 | direction_from_size=image.size if self.center_crop else None,

636 | )

637 | bbox = np.around(bbox).astype(int)

638 |

639 | # Crop parameters

640 | crop_center = (bbox[:2] + bbox[2:]) / 2

641 | principal_point = torch.tensor(anno["principal_point"])

642 | focal_length = torch.tensor(anno["focal_length"])

643 |

644 | # convert crop center to correspond to a "square" image

645 | width, height = image.size

646 | length = max(width, height)

647 | s = length / min(width, height)

648 | crop_center = crop_center + (length - np.array([width, height])) / 2

649 |

650 | # convert to NDC

651 | cc = s - 2 * s * crop_center / length

652 | crop_width = 2 * s * (bbox[2] - bbox[0]) / length

653 | crop_params = torch.tensor([-cc[0], -cc[1], crop_width, s])

654 |

655 | # Crop and normalize image

656 | if not self.precropped_images:

657 | image = self._crop_image(image, bbox)

658 |

659 | try:

660 | image = self.transform(image)

661 | except:

662 | print("INFO:")

663 | print(sequence_name)

664 | print(index)

665 | print(ids)

666 | print(k1)

667 | print(k2)

668 |

669 | images.append(image[:, : self.img_size, : self.img_size])

670 | crop_parameters.append(crop_params)

671 |

672 | if self.load_depths:

673 | # Open depth map

674 | depth_name = osp.basename(

675 | filepath.replace(".jpg", ".jpg.geometric.png")

676 | )

677 | depth_path = osp.join(

678 | self.co3d_depth_dir,

679 | category,

680 | sequence_name,

681 | "depths",

682 | depth_name,

683 | )

684 | depth_pil = Image.open(depth_path)

685 |

686 | # 16 bit float type casting

687 | depth = torch.tensor(

688 | np.frombuffer(

689 | np.array(depth_pil, dtype=np.uint16), dtype=np.float16

690 | )

691 | .astype(np.float32)

692 | .reshape((depth_pil.size[1], depth_pil.size[0]))

693 | )

694 |

695 | # Crop and resize as with images

696 | if depth_pil.size != image_size:

697 | # bbox may have the wrong scale

698 | bbox = depth_pil.size[0] * bbox / image_size[0]

699 |

700 | if self.object_mask:

701 | assert mask.shape == depth.shape

702 |

703 | bbox = np.around(bbox).astype(int)

704 | depth = self._crop_image(depth, bbox)

705 |

706 | # Resize

707 | depth = self.transform_depth(depth.unsqueeze(0))[

708 | 0, : self.depth_size, : self.depth_size

709 | ]

710 | depths.append(depth)

711 |

712 | if self.object_mask:

713 | mask = self._crop_image(mask, bbox)

714 | mask = self.transform_depth(mask.unsqueeze(0))[

715 | 0, : self.depth_size, : self.depth_size

716 | ]

717 | object_masks.append(mask)

718 |

719 | PP.append(principal_point)

720 | FL.append(focal_length)

721 | image_sizes.append(torch.tensor([self.img_size, self.img_size]))

722 | filenames.append(filepath)

723 |

724 | if not no_images:

725 | if self.load_depths:

726 | depths = torch.stack(depths)

727 |

728 | depth_masks = torch.logical_or(depths <= 0, depths.isinf())

729 | depth_masks = (~depth_masks).long()

730 |

731 | if self.object_mask:

732 | object_masks = torch.stack(object_masks, dim=0)

733 |

734 | if self.mask_holes:

735 | depths = fill_depths(depths, depth_masks == 0)

736 |

737 | # Sometimes mask_holes misses stuff

738 | new_masks = torch.logical_or(depths <= 0, depths.isinf())

739 | new_masks = (~new_masks).long()

740 | depths[new_masks == 0] = -1

741 |

742 | assert torch.logical_or(depths > 0, depths == -1).all()

743 | assert not (depths.isinf()).any()

744 | assert not (depths.isnan()).any()

745 |

746 | if self.load_extra_cameras:

747 | # Remove the extra loaded image, for saving space

748 | images = images[: self.num_images]

749 |

750 | if self.distort:

751 | distortion_parameters = torch.stack(distortion_parameters)

752 |

753 | images = torch.stack(images)

754 | crop_parameters = torch.stack(crop_parameters)

755 | focal_lengths = torch.stack(FL)

756 | principal_points = torch.stack(PP)

757 | image_sizes = torch.stack(image_sizes)

758 | else:

759 | images = None

760 | crop_parameters = None

761 | distortion_parameters = None

762 | focal_lengths = []

763 | principal_points = []

764 | image_sizes = []

765 |

766 | # Assemble batch info to send back

767 | R = torch.stack([torch.tensor(anno["R"]) for anno in annos])

768 | T = torch.stack([torch.tensor(anno["T"]) for anno in annos])

769 |

770 | batch = {

771 | "model_id": sequence_name,

772 | "category": category,

773 | "n": len(metadata),

774 | "num_valid_frames": num_valid_frames,

775 | "ind": torch.tensor(ids),

776 | "image": images,

777 | "depth": depths,

778 | "depth_masks": depth_masks,

779 | "object_masks": object_masks,

780 | "R": R,

781 | "T": T,

782 | "focal_length": focal_lengths,

783 | "principal_point": principal_points,

784 | "image_size": image_sizes,

785 | "crop_parameters": crop_parameters,

786 | "distortion_parameters": torch.zeros(4),

787 | "filename": filenames,

788 | "category": category,

789 | "dataset": "co3d",

790 | }

791 |

792 | return batch

793 |

--------------------------------------------------------------------------------

/diffusionsfm/dataset/custom.py:

--------------------------------------------------------------------------------

1 |

2 | import torch

3 | import numpy as np

4 | import matplotlib.pyplot as plt

5 |

6 | from PIL import Image, ImageOps

7 | from torch.utils.data import Dataset

8 | from torchvision import transforms

9 |

10 | from diffusionsfm.dataset.co3d_v2 import square_bbox

11 |

12 |

13 | class CustomDataset(Dataset):

14 | def __init__(

15 | self,

16 | image_list,

17 | ):

18 | self.images = []

19 |

20 | for image_path in sorted(image_list):

21 | img = Image.open(image_path)

22 | img = ImageOps.exif_transpose(img).convert("RGB") # Apply EXIF rotation

23 | self.images.append(img)

24 |

25 | self.n = len(self.images)

26 | self.jitter_scale = [1, 1]

27 | self.jitter_trans = [0, 0]

28 | self.transform = transforms.Compose(

29 | [

30 | transforms.ToTensor(),

31 | transforms.Resize(224),

32 | transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),

33 | ]

34 | )

35 | self.transform_for_vis = transforms.Compose(

36 | [

37 | transforms.Resize(224),

38 | ]

39 | )

40 |

41 | def __len__(self):

42 | return 1

43 |

44 | def _crop_image(self, image, bbox, white_bg=False):

45 | if white_bg:

46 | # Only support PIL Images

47 | image_crop = Image.new(

48 | "RGB", (bbox[2] - bbox[0], bbox[3] - bbox[1]), (255, 255, 255)

49 | )

50 | image_crop.paste(image, (-bbox[0], -bbox[1]))

51 | else:

52 | image_crop = transforms.functional.crop(

53 | image,

54 | top=bbox[1],

55 | left=bbox[0],

56 | height=bbox[3] - bbox[1],

57 | width=bbox[2] - bbox[0],

58 | )

59 | return image_crop

60 |

61 | def __getitem__(self):

62 | return self.get_data()

63 |

64 | def get_data(self):

65 | cmap = plt.get_cmap("hsv")

66 | ids = [i for i in range(len(self.images))]

67 | images = [self.images[i] for i in ids]

68 | images_transformed = []

69 | images_for_vis = []

70 | crop_parameters = []

71 |

72 | for i, image in enumerate(images):

73 | bbox = np.array([0, 0, image.width, image.height])

74 | bbox = square_bbox(bbox, tight=True)

75 | bbox = np.around(bbox).astype(int)

76 | image = self._crop_image(image, bbox)

77 | images_transformed.append(self.transform(image))

78 | image_for_vis = self.transform_for_vis(image)

79 | color_float = cmap(i / len(images))

80 | color_rgb = tuple(int(255 * c) for c in color_float[:3])

81 | image_for_vis = ImageOps.expand(image_for_vis, border=3, fill=color_rgb)

82 | images_for_vis.append(image_for_vis)

83 |

84 | width, height = image.size

85 | length = max(width, height)

86 | s = length / min(width, height)

87 | crop_center = (bbox[:2] + bbox[2:]) / 2

88 | crop_center = crop_center + (length - np.array([width, height])) / 2

89 | # convert to NDC

90 | cc = s - 2 * s * crop_center / length

91 | crop_width = 2 * s * (bbox[2] - bbox[0]) / length

92 | crop_params = torch.tensor([-cc[0], -cc[1], crop_width, s])

93 |

94 | crop_parameters.append(crop_params)

95 | images = images_transformed

96 |

97 | batch = {}

98 | batch["image"] = torch.stack(images)

99 | batch["image_for_vis"] = images_for_vis

100 | batch["n"] = len(images)

101 | batch["ind"] = torch.tensor(ids),

102 | batch["crop_parameters"] = torch.stack(crop_parameters)

103 | batch["distortion_parameters"] = torch.zeros(4)

104 |

105 | return batch

106 |

--------------------------------------------------------------------------------

/diffusionsfm/eval/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/diffusionsfm/eval/__init__.py

--------------------------------------------------------------------------------

/diffusionsfm/eval/eval_category.py:

--------------------------------------------------------------------------------

1 | import os

2 | import json

3 | import torch

4 | import torchvision

5 | import numpy as np

6 | from tqdm.auto import tqdm

7 |

8 | from diffusionsfm.dataset.co3d_v2 import (

9 | Co3dDataset,

10 | full_scene_scale,

11 | )

12 | from pytorch3d.renderer import PerspectiveCameras

13 | from diffusionsfm.utils.visualization import filter_and_align_point_clouds

14 | from diffusionsfm.inference.load_model import load_model

15 | from diffusionsfm.inference.predict import predict_cameras

16 | from diffusionsfm.utils.geometry import (

17 | compute_angular_error_batch,

18 | get_error,

19 | n_to_np_rotations,

20 | )

21 | from diffusionsfm.utils.slurm import init_slurm_signals_if_slurm

22 | from diffusionsfm.utils.rays import cameras_to_rays

23 | from diffusionsfm.utils.rays import normalize_cameras_batch

24 |

25 |

26 | @torch.no_grad()

27 | def evaluate(

28 | cfg,

29 | model,

30 | dataset,

31 | num_images,

32 | device,

33 | use_pbar=True,

34 | calculate_intrinsics=True,

35 | additional_timesteps=(),

36 | num_evaluate=None,

37 | max_num_images=None,

38 | mode=None,

39 | metrics=True,

40 | load_depth=True,

41 | ):

42 | if cfg.training.get("dpt_head", False):

43 | H_in = W_in = 224

44 | H_out = W_out = cfg.training.full_num_patches_y

45 | else:

46 | H_in = H_out = cfg.model.num_patches_x

47 | W_in = W_out = cfg.model.num_patches_y

48 |

49 | results = {}

50 | instances = np.arange(0, len(dataset)) if num_evaluate is None else np.linspace(0, len(dataset) - 1, num_evaluate, endpoint=True, dtype=int)

51 | instances = tqdm(instances) if use_pbar else instances

52 |

53 | for counter, idx in enumerate(instances):

54 | batch = dataset[idx]

55 | instance = batch["model_id"]

56 | images = batch["image"].to(device)

57 | focal_length = batch["focal_length"].to(device)[:num_images]

58 | R = batch["R"].to(device)[:num_images]

59 | T = batch["T"].to(device)[:num_images]

60 | crop_parameters = batch["crop_parameters"].to(device)[:num_images]

61 |

62 | if load_depth:

63 | depths = batch["depth"].to(device)[:num_images]

64 | depth_masks = batch["depth_masks"].to(device)[:num_images]

65 | try:

66 | object_masks = batch["object_masks"].to(device)[:num_images]

67 | except KeyError:

68 | object_masks = depth_masks.clone()

69 |

70 | # Normalize cameras and scale depths for output resolution

71 | cameras_gt = PerspectiveCameras(

72 | R=R, T=T, focal_length=focal_length, device=device

73 | )

74 | cameras_gt, _, _ = normalize_cameras_batch(

75 | [cameras_gt],

76 | first_cam_mediod=cfg.training.first_cam_mediod,

77 | normalize_first_camera=cfg.training.normalize_first_camera,

78 | depths=depths.unsqueeze(0),

79 | crop_parameters=crop_parameters.unsqueeze(0),

80 | num_patches_x=H_in,

81 | num_patches_y=W_in,

82 | return_scales=True,

83 | )

84 | cameras_gt = cameras_gt[0]

85 |

86 | gt_rays = cameras_to_rays(

87 | cameras=cameras_gt,

88 | num_patches_x=H_in,

89 | num_patches_y=W_in,

90 | crop_parameters=crop_parameters,

91 | depths=depths,

92 | mode=mode,

93 | )

94 | gt_points = gt_rays.get_segments().view(num_images, -1, 3)

95 |

96 | resize = torchvision.transforms.Resize(

97 | 224,

98 | antialias=False,

99 | interpolation=torchvision.transforms.InterpolationMode.NEAREST_EXACT,

100 | )

101 | else:

102 | cameras_gt = PerspectiveCameras(

103 | R=R, T=T, focal_length=focal_length, device=device

104 | )

105 |

106 | pred_cameras, additional_cams = predict_cameras(

107 | model,

108 | images,

109 | device,

110 | crop_parameters=crop_parameters,

111 | num_patches_x=H_out,

112 | num_patches_y=W_out,

113 | max_num_images=max_num_images,

114 | additional_timesteps=additional_timesteps,

115 | calculate_intrinsics=calculate_intrinsics,

116 | mode=mode,

117 | return_rays=True,

118 | use_homogeneous=cfg.model.get("use_homogeneous", False),

119 | )

120 | cameras_to_evaluate = additional_cams + [pred_cameras]

121 |

122 | all_cams_batch = dataset.get_data(

123 | sequence_name=instance, ids=np.arange(0, batch["n"]), no_images=True

124 | )

125 | gt_scene_scale = full_scene_scale(all_cams_batch)

126 | R_gt = R

127 | T_gt = T

128 |

129 | errors = []

130 | for _, (camera, pred_rays) in enumerate(cameras_to_evaluate):

131 | R_pred = camera.R

132 | T_pred = camera.T

133 | f_pred = camera.focal_length

134 |

135 | R_pred_rel = n_to_np_rotations(num_images, R_pred).cpu().numpy()

136 | R_gt_rel = n_to_np_rotations(num_images, batch["R"]).cpu().numpy()

137 | R_error = compute_angular_error_batch(R_pred_rel, R_gt_rel)

138 |

139 | CC_error, _ = get_error(True, R_pred, T_pred, R_gt, T_gt, gt_scene_scale)

140 |

141 | if load_depth and metrics:

142 | # Evaluate outputs at the same resolution as DUSt3R

143 | pred_points = pred_rays.get_segments().view(num_images, H_out, H_out, 3)

144 | pred_points = pred_points.permute(0, 3, 1, 2)

145 | pred_points = resize(pred_points).permute(0, 2, 3, 1).view(num_images, H_in*W_in, 3)

146 |

147 | (

148 | _,

149 | _,

150 | _,

151 | _,

152 | metric_values,

153 | ) = filter_and_align_point_clouds(

154 | num_images,

155 | gt_points,

156 | pred_points,

157 | depth_masks,

158 | depth_masks,

159 | images,

160 | metrics=metrics,

161 | num_patches_x=H_in,

162 | )

163 |

164 | (

165 | _,

166 | _,

167 | _,

168 | _,

169 | object_metric_values,

170 | ) = filter_and_align_point_clouds(

171 | num_images,

172 | gt_points,

173 | pred_points,

174 | depth_masks * object_masks,

175 | depth_masks * object_masks,

176 | images,

177 | metrics=metrics,

178 | num_patches_x=H_in,

179 | )

180 |

181 | result = {

182 | "R_pred": R_pred.detach().cpu().numpy().tolist(),

183 | "T_pred": T_pred.detach().cpu().numpy().tolist(),

184 | "f_pred": f_pred.detach().cpu().numpy().tolist(),

185 | "R_gt": R_gt.detach().cpu().numpy().tolist(),

186 | "T_gt": T_gt.detach().cpu().numpy().tolist(),

187 | "f_gt": focal_length.detach().cpu().numpy().tolist(),

188 | "scene_scale": gt_scene_scale,

189 | "R_error": R_error.tolist(),

190 | "CC_error": CC_error,

191 | }

192 |

193 | if load_depth and metrics:

194 | result["CD"] = metric_values[1]

195 | result["CD_Object"] = object_metric_values[1]

196 | else:

197 | result["CD"] = 0

198 | result["CD_Object"] = 0

199 |

200 | errors.append(result)

201 |

202 | results[instance] = errors

203 |

204 | if counter == len(dataset) - 1:

205 | break

206 | return results

207 |

208 |

209 | def save_results(

210 | output_dir,

211 | checkpoint=800_000,

212 | category="hydrant",

213 | num_images=None,

214 | calculate_additional_timesteps=True,

215 | calculate_intrinsics=True,

216 | split="test",

217 | force=False,

218 | sample_num=1,

219 | max_num_images=None,

220 | dataset="co3d",

221 | ):

222 | init_slurm_signals_if_slurm()

223 | os.umask(000) # Default to 777 permissions

224 | eval_path = os.path.join(

225 | output_dir,

226 | f"eval_{dataset}",

227 | f"{category}_{num_images}_{sample_num}_ckpt{checkpoint}.json",

228 | )

229 |

230 | if os.path.exists(eval_path) and not force:

231 | print(f"File {eval_path} already exists. Skipping.")

232 | return

233 |

234 | if num_images is not None and num_images > 8:

235 | custom_keys = {"model.num_images": num_images}

236 | ignore_keys = ["pos_table"]

237 | else:

238 | custom_keys = None

239 | ignore_keys = []

240 |

241 | device = torch.device("cuda")

242 | model, cfg = load_model(

243 | output_dir,

244 | checkpoint=checkpoint,

245 | device=device,

246 | custom_keys=custom_keys,

247 | ignore_keys=ignore_keys,

248 | )

249 | if num_images is None:

250 | num_images = cfg.dataset.num_images

251 |

252 | if cfg.training.dpt_head:

253 | # Evaluate outputs at the same resolution as DUSt3R

254 | depth_size = 224

255 | else:

256 | depth_size = cfg.model.num_patches_x

257 |

258 | dataset = Co3dDataset(

259 | category=category,

260 | split=split,

261 | num_images=num_images,

262 | apply_augmentation=False,

263 | sample_num=None if split == "train" else sample_num,

264 | use_global_intrinsics=cfg.dataset.use_global_intrinsics,

265 | load_depths=True,

266 | center_crop=True,

267 | depth_size=depth_size,

268 | mask_holes=not cfg.training.regression,

269 | img_size=256 if cfg.model.unet_diffuser else 224,

270 | )

271 | print(f"Category {category} {len(dataset)}")

272 |

273 | if calculate_additional_timesteps:

274 | additional_timesteps = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]

275 | else:

276 | additional_timesteps = []

277 |

278 | results = evaluate(

279 | cfg=cfg,

280 | model=model,

281 | dataset=dataset,

282 | num_images=num_images,

283 | device=device,

284 | calculate_intrinsics=calculate_intrinsics,

285 | additional_timesteps=additional_timesteps,

286 | max_num_images=max_num_images,

287 | mode="segment",

288 | )

289 |

290 | os.makedirs(os.path.dirname(eval_path), exist_ok=True)

291 | with open(eval_path, "w") as f:

292 | json.dump(results, f)

--------------------------------------------------------------------------------

/diffusionsfm/eval/eval_jobs.py:

--------------------------------------------------------------------------------

1 | """

2 | python -m diffusionsfm.eval.eval_jobs --eval_path output/multi_diffusionsfm_dense --use_submitit

3 | """

4 |

5 | import os

6 | import json

7 | import submitit

8 | import argparse

9 | import itertools

10 | from glob import glob

11 |

12 | import numpy as np

13 | from tqdm.auto import tqdm

14 |

15 | from diffusionsfm.dataset.co3d_v2 import TEST_CATEGORIES, TRAINING_CATEGORIES

16 | from diffusionsfm.eval.eval_category import save_results

17 | from diffusionsfm.utils.slurm import submitit_job_watcher

18 |

19 |

20 | def evaluate_diffusionsfm(eval_path, use_submitit, mode):

21 | JOB_PARAMS = {

22 | "output_dir": [eval_path],

23 | "checkpoint": [800_000],

24 | "num_images": [2, 3, 4, 5, 6, 7, 8],

25 | "sample_num": [0, 1, 2, 3, 4],

26 | "category": TEST_CATEGORIES, # TRAINING_CATEGORIES + TEST_CATEGORIES,

27 | "calculate_additional_timesteps": [True],

28 | }

29 | if mode == "test":

30 | JOB_PARAMS["category"] = TEST_CATEGORIES

31 | elif mode == "train1":

32 | JOB_PARAMS["category"] = TRAINING_CATEGORIES[:len(TRAINING_CATEGORIES) // 2]

33 | elif mode == "train2":

34 | JOB_PARAMS["category"] = TRAINING_CATEGORIES[len(TRAINING_CATEGORIES) // 2:]

35 | keys, values = zip(*JOB_PARAMS.items())

36 | job_configs = [dict(zip(keys, p)) for p in itertools.product(*values)]

37 |

38 | if use_submitit:

39 | log_output = "./slurm_logs"

40 | executor = submitit.AutoExecutor(

41 | cluster=None, folder=log_output, slurm_max_num_timeout=10

42 | )

43 | # Use your own parameters

44 | executor.update_parameters(

45 | slurm_additional_parameters={

46 | "nodes": 1,

47 | "cpus-per-task": 5,

48 | "gpus": 1,

49 | "time": "6:00:00",

50 | "partition": "all",

51 | "exclude": "grogu-1-9, grogu-1-14,"

52 | }

53 | )

54 | jobs = []

55 | with executor.batch():

56 | # This context manager submits all jobs at once at the end.

57 | for params in job_configs:

58 | job = executor.submit(save_results, **params)

59 | job_param = f"{params['category']}_N{params['num_images']}_{params['sample_num']}"

60 | jobs.append((job_param, job))

61 | jobs = {f"{job_param}_{job.job_id}": job for job_param, job in jobs}

62 | submitit_job_watcher(jobs)

63 | else:

64 | for job_config in tqdm(job_configs):

65 | # This is much slower.

66 | save_results(**job_config)

67 |

68 |

69 | def process_predictions(eval_path, pred_index, checkpoint=800_000, threshold_R=15, threshold_CC=0.1):

70 | """

71 | pred_index should be 1 (corresponding to T=90)

72 | """

73 | def aggregate_per_category(categories, metric_key, num_images, sample_num, threshold=None):

74 | """

75 | Aggregates one metric over all data points in a prediction file and then across categories.

76 | - For R_error and CC_error: use mean to threshold-based accuracy

77 | - For CD and CD_Object: use median to reduce the effect of outliers

78 | """

79 | per_category_values = []

80 |

81 | for category in tqdm(categories, desc=f"Sample {sample_num}, N={num_images}, {metric_key}"):

82 | per_pred_values = []

83 |

84 | data_path = glob(

85 | os.path.join(eval_path, "eval", f"{category}_{num_images}_{sample_num}_ckpt{checkpoint}*.json")

86 | )[0]

87 |

88 | with open(data_path) as f:

89 | eval_data = json.load(f)

90 |

91 | for preds in eval_data.values():

92 | if metric_key in ["R_error", "CC_error"]:

93 | vals = np.array(preds[pred_index][metric_key])

94 | per_pred_values.append(np.mean(vals < threshold))

95 | else:

96 | per_pred_values.append(preds[pred_index][metric_key])

97 |

98 | # Aggregate over all predictions within this category

99 | per_category_values.append(

100 | np.mean(per_pred_values) if metric_key in ["R_error", "CC_error"]

101 | else np.median(per_pred_values) # CD or CD_Object — use median to filter outliers

102 | )

103 |

104 | if metric_key in ["R_error", "CC_error"]:

105 | return np.mean(per_category_values)

106 | else:

107 | return np.median(per_category_values)

108 |

109 | def aggregate_metric(categories, metric_key, num_images, threshold=None):

110 | """Aggregates one metric over 5 random samples per category and returns the final mean"""

111 | return np.mean([

112 | aggregate_per_category(categories, metric_key, num_images, sample_num, threshold=threshold)

113 | for sample_num in range(5)

114 | ])

115 |

116 | # Output containers

117 | all_seen_acc_R, all_seen_acc_CC = [], []

118 | all_seen_CD, all_seen_CD_Object = [], []

119 | all_unseen_acc_R, all_unseen_acc_CC = [], []

120 | all_unseen_CD, all_unseen_CD_Object = [], []

121 |

122 | for num_images in range(2, 9):

123 | # Seen categories

124 | all_seen_acc_R.append(

125 | aggregate_metric(TRAINING_CATEGORIES, "R_error", num_images, threshold=threshold_R)

126 | )

127 | all_seen_acc_CC.append(

128 | aggregate_metric(TRAINING_CATEGORIES, "CC_error", num_images, threshold=threshold_CC)

129 | )

130 | all_seen_CD.append(

131 | aggregate_metric(TRAINING_CATEGORIES, "CD", num_images)

132 | )

133 | all_seen_CD_Object.append(

134 | aggregate_metric(TRAINING_CATEGORIES, "CD_Object", num_images)

135 | )

136 |

137 | # Unseen categories

138 | all_unseen_acc_R.append(

139 | aggregate_metric(TEST_CATEGORIES, "R_error", num_images, threshold=threshold_R)

140 | )

141 | all_unseen_acc_CC.append(

142 | aggregate_metric(TEST_CATEGORIES, "CC_error", num_images, threshold=threshold_CC)

143 | )

144 | all_unseen_CD.append(

145 | aggregate_metric(TEST_CATEGORIES, "CD", num_images)

146 | )

147 | all_unseen_CD_Object.append(

148 | aggregate_metric(TEST_CATEGORIES, "CD_Object", num_images)

149 | )

150 |

151 | # Print the results in formatted rows

152 | print("N= ", " ".join(f"{i: 5}" for i in range(2, 9)))

153 | print("Seen R ", " ".join([f"{x:0.3f}" for x in all_seen_acc_R]))

154 | print("Seen CC ", " ".join([f"{x:0.3f}" for x in all_seen_acc_CC]))

155 | print("Seen CD ", " ".join([f"{x:0.3f}" for x in all_seen_CD]))

156 | print("Seen CD_Obj ", " ".join([f"{x:0.3f}" for x in all_seen_CD_Object]))

157 | print("Unseen R ", " ".join([f"{x:0.3f}" for x in all_unseen_acc_R]))

158 | print("Unseen CC ", " ".join([f"{x:0.3f}" for x in all_unseen_acc_CC]))

159 | print("Unseen CD ", " ".join([f"{x:0.3f}" for x in all_unseen_CD]))

160 | print("Unseen CD_Obj", " ".join([f"{x:0.3f}" for x in all_unseen_CD_Object]))

161 |

162 |

163 | if __name__ == "__main__":

164 | parser = argparse.ArgumentParser()

165 | parser.add_argument("--eval_path", type=str, default=None)

166 | parser.add_argument("--use_submitit", action="store_true")

167 | parser.add_argument("--mode", type=str, default="test")

168 | args = parser.parse_args()

169 |

170 | eval_path = "output/multi_diffusionsfm_dense" if args.eval_path is None else args.eval_path

171 | use_submitit = args.use_submitit

172 | mode = args.mode

173 |

174 | evaluate_diffusionsfm(eval_path, use_submitit, mode)

175 | process_predictions(eval_path, 1)

--------------------------------------------------------------------------------

/diffusionsfm/inference/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/QitaoZhao/DiffusionSfM/4bb08800721bdcf46b0c823586a2fab4702ff282/diffusionsfm/inference/__init__.py

--------------------------------------------------------------------------------

/diffusionsfm/inference/ddim.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import random

3 | import numpy as np

4 | from tqdm.auto import tqdm

5 |

6 | from diffusionsfm.utils.rays import compute_ndc_coordinates

7 |

8 |

9 | def inference_ddim(

10 | model,

11 | images,

12 | device,

13 | crop_parameters=None,

14 | eta=0,

15 | num_inference_steps=100,

16 | pbar=True,

17 | stop_iteration=None,

18 | num_patches_x=16,

19 | num_patches_y=16,

20 | visualize=False,

21 | max_num_images=8,

22 | seed=0,

23 | ):

24 | """

25 | Implements DDIM-style inference.

26 |

27 | To get multiple samples, batch the images multiple times.

28 |

29 | Args:

30 | model: Ray Diffuser.

31 | images (torch.Tensor): (B, N, C, H, W).

32 | patch_rays_gt (torch.Tensor): If provided, the patch rays which are ground

33 | truth (B, N, P, 6).

34 | eta (float, optional): Stochasticity coefficient. 0 is completely deterministic,

35 | 1 is equivalent to DDPM. (Default: 0)

36 | num_inference_steps (int, optional): Number of inference steps. (Default: 100)

37 | pbar (bool, optional): Whether to show progress bar. (Default: True)

38 | """

39 | timesteps = model.noise_scheduler.compute_inference_timesteps(num_inference_steps)

40 | batch_size = images.shape[0]

41 | num_images = images.shape[1]

42 |

43 | if isinstance(eta, list):

44 | eta_0, eta_1 = float(eta[0]), float(eta[1])

45 | else:

46 | eta_0, eta_1 = 0, 0

47 |

48 | # Fixing seed

49 | if seed is not None:

50 | torch.manual_seed(seed)

51 | random.seed(seed)

52 | np.random.seed(seed)

53 |

54 | with torch.no_grad():

55 | x_tau = torch.randn(

56 | batch_size,

57 | num_images,

58 | model.ray_out if hasattr(model, "ray_out") else model.ray_dim,

59 | num_patches_x,

60 | num_patches_y,

61 | device=device,

62 | )

63 |

64 | if visualize:

65 | x_taus = [x_tau]

66 | all_pred = []

67 | noise_samples = []

68 |

69 | image_features = model.feature_extractor(images, autoresize=True)

70 |

71 | if model.append_ndc:

72 | ndc_coordinates = compute_ndc_coordinates(

73 | crop_parameters=crop_parameters,

74 | no_crop_param_device="cpu",

75 | num_patches_x=model.width,

76 | num_patches_y=model.width,

77 | distortion_coeffs=None,

78 | )[..., :2].to(device)

79 | ndc_coordinates = ndc_coordinates.permute(0, 1, 4, 2, 3)

80 | else:

81 | ndc_coordinates = None

82 |

83 | if stop_iteration is None:

84 | loop = range(len(timesteps))

85 | else:

86 | loop = range(len(timesteps) - stop_iteration + 1)

87 | loop = tqdm(loop) if pbar else loop

88 |

89 | for t in loop: