", "version": VERSION},

28 | )

29 |

30 |

31 | def check_first_connect(_: LifecycleMetaEvent) -> bool:

32 | return True

33 |

34 |

35 | start_metaevent = on_metaevent(rule=check_first_connect, temp=True)

36 | FIRST_BOOT_MESSAGE = (

37 | "首次启动,目前没有订阅,请添加!\n另外,请检查配置文件的内容(详见部署教程)!"

38 | )

39 | BOOT_SUCCESS_MESSAGE = "ELF_RSS 订阅器启动成功!"

40 |

41 |

42 | # 启动时发送启动成功信息

43 | @start_metaevent.handle()

44 | async def start(bot: Bot) -> None:

45 | # 启动后检查 data 目录,不存在就创建

46 | if not DATA_PATH.is_dir():

47 | DATA_PATH.mkdir()

48 |

49 | boot_message = (

50 | f"Version: v{VERSION}\nAuthor:Quan666\nhttps://github.com/Quan666/ELF_RSS"

51 | )

52 |

53 | rss_list = Rss.read_rss() # 读取list

54 | if not rss_list:

55 | await send_message_to_admin(f"{FIRST_BOOT_MESSAGE}\n{boot_message}", bot)

56 | logger.info(FIRST_BOOT_MESSAGE)

57 | if plugin_config.enable_boot_message:

58 | await send_message_to_admin(f"{BOOT_SUCCESS_MESSAGE}\n{boot_message}", bot)

59 | logger.info(BOOT_SUCCESS_MESSAGE)

60 | # 创建检查更新任务

61 | await asyncio.gather(*[tr.add_job(rss) for rss in rss_list if not rss.stop])

62 |

--------------------------------------------------------------------------------

/docs/更新日志.md:

--------------------------------------------------------------------------------

1 | # 更新日志

2 |

3 | ## 2.0

4 |

5 | 请查看 commit 记录

6 |

7 | ### 2020年12月21日

8 |

9 | #### 全新 2.0 正式版

10 |

11 | > 新特性:

12 | >

13 | > 1. 更易使用的命令

14 | > 2. 更简洁的代码,方便移植到你自己的机器人

15 | > 3. 使用全新的 Nonebot2 框架

16 |

17 | ### 2022年1月25日

18 |

19 | #### `nonebot2 2.0.0b1` 适配

20 |

21 | 适配 `nonebot2 2.0.0b1`,其余未修改。

22 |

23 | ### 2022年1月25日

24 |

25 | #### 频道适配

26 |

27 | 适配 QQ 频道,需使用 `gocqhttp v1.0.0-beta8-fix2` 及以上版本。

28 |

29 | ## 1.0

30 |

31 | ### 2020年6月8日

32 |

33 | [v1.3.1](https://github.com/Quan666/ELF_RSS/commit/4d6f9e45849e14c15849eaa871f4e79364b42256) 修复bug

34 |

35 | ### 2020年6月17日

36 |

37 | [v1.3.2](https://github.com/Quan666/ELF_RSS/commit/3b47c06ef0d90319c3de0fbeb728fb035fb67f82) 修复拉取失败 bug

38 |

39 | ### 2020年6月18日

40 |

41 | [v1.3.3](https://github.com/Quan666/ELF_RSS/commit/50935b3b8fae783027e007237ba4cf3388779f8f) 修复修改订阅地址时,订阅地址带有“=”号导致的bug

42 |

43 | ### 2020年8月8日

44 |

45 | [v1.3.4](https://github.com/Quan666/ELF_RSS/commit/c115e76499cdf308f129a13cfeb9d07fa4bae270) 修复一些 bug,新增图片压缩,默认压缩大于 3MB 的图片

46 |

47 | ### 2020年8月19日

48 |

49 | [v1.3.5](https://github.com/Quan666/ELF_RSS/commit/dbac5337f66c786ed97c286a503840871e6ffc7f)

50 |

51 | * 适配Linux

52 | * 修复图片压缩导致的图片丢失,以及优化压缩方式

53 | * 修复一些其他 bug

54 | * 新增配置项 `ZIP_SIZE` 图片压缩大小 kb * 1024 = MB

55 | * 删除配置项 `IsAir` `Linux_Path`

56 |

57 | [v1.3.7](https://github.com/Quan666/ELF_RSS/commit/a125119f3ea2c2d5c967e863b067fda145fcacc9)

58 |

59 | * 新增 [只转发包含图片的推特](https://github.com/Quan666/ELF_RSS/issues/5)

60 | * 新增 百度翻译,需自己申请相应api

61 | * 修复一些其他 bug

62 | * 新增配置项 `blockquote = True` #是否显示转发的内容,默认打开

63 | * 新增配置项 `showlottery = True` #是否显示互动抽奖信息,默认打开

64 | * 新增配置项 `UseBaidu = False` `BaiduID = ''` `BaiduKEY = ''`

65 |

66 | * 添加了retry来防止获取外网rss时超时

67 |

68 | [v1.3.8](https://github.com/Quan666/ELF_RSS/commit/b47e3da5a6cf2a7c7abd1ed96a05ad1d9c8d3cba)

69 |

70 | * 弃用 第三方属性,但添加订阅(add)等命令还保留该属性,预计 2 个版本后删除

71 | * 弃用 配置项 ROOTUSER, 只使用 SUPERUSERS

72 | * 新增 分群管理,即在群聊使用命令时,优先作用于群聊

73 |

74 | 如果群组添加的订阅名或者订阅地址已经存在于后台,会只添加进订阅群,不修改其他参数

75 |

76 | * 修复一些其他 bug

77 | * 新增配置项

78 |

79 | ```text

80 | #群组订阅的默认参数

81 | add_uptime = 10 #默认订阅更新时间

82 | add_proxy = False #默认是否启用代理

83 |

84 | ···

85 |

86 | Blockword = ["互动抽奖","微博抽奖平台"] #屏蔽词填写 支持正则,看里面格式就明白怎么添加了吧(

87 | ```

88 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/command/add_dy.py:

--------------------------------------------------------------------------------

1 | import re

2 |

3 | from nonebot import on_command

4 | from nonebot.adapters.onebot.v11 import (

5 | GroupMessageEvent,

6 | Message,

7 | MessageEvent,

8 | PrivateMessageEvent,

9 | )

10 | from nonebot.adapters.onebot.v11.permission import GROUP_ADMIN, GROUP_OWNER

11 | from nonebot.matcher import Matcher

12 | from nonebot.params import ArgPlainText, CommandArg

13 | from nonebot.permission import SUPERUSER

14 | from nonebot.rule import to_me

15 |

16 | from .. import my_trigger as tr

17 | from ..rss_class import Rss

18 |

19 | RSS_ADD = on_command(

20 | "add",

21 | aliases={"添加订阅", "sub"},

22 | rule=to_me(),

23 | priority=5,

24 | permission=GROUP_ADMIN | GROUP_OWNER | SUPERUSER,

25 | )

26 |

27 |

28 | @RSS_ADD.handle()

29 | async def handle_first_receive(matcher: Matcher, args: Message = CommandArg()) -> None:

30 | plain_text = args.extract_plain_text().strip()

31 | if plain_text and re.match(r"^\S+\s\S+$", plain_text):

32 | matcher.set_arg("RSS_ADD", args)

33 |

34 |

35 | prompt = """\

36 | 请输入

37 | 名称 订阅地址

38 | 空格分割

39 | 私聊默认订阅到当前账号,群聊默认订阅到当前群组

40 | 更多信息可通过 change 命令修改\

41 | """

42 |

43 |

44 | @RSS_ADD.got("RSS_ADD", prompt=prompt)

45 | async def handle_rss_add(

46 | event: MessageEvent, name_and_url: str = ArgPlainText("RSS_ADD")

47 | ) -> None:

48 | try:

49 | name, url = name_and_url.strip().split(" ")

50 | except ValueError:

51 | await RSS_ADD.reject(prompt)

52 | return

53 | if not name or not url:

54 | await RSS_ADD.reject(prompt)

55 | return

56 |

57 | if _ := Rss.get_one_by_name(name):

58 | await RSS_ADD.finish(f"已存在订阅名为 {name} 的订阅")

59 | return

60 |

61 | await add_feed(name, url, event)

62 |

63 |

64 | async def add_feed(

65 | name: str,

66 | url: str,

67 | event: MessageEvent,

68 | ) -> None:

69 | rss = Rss()

70 | rss.name = name

71 | rss.url = url

72 | user = str(event.user_id) if isinstance(event, PrivateMessageEvent) else None

73 | group = str(event.group_id) if isinstance(event, GroupMessageEvent) else None

74 | guild_channel = None

75 | rss.add_user_or_group_or_channel(user, group, guild_channel)

76 | await RSS_ADD.send(f"👏 已成功添加订阅 {name} !")

77 | await tr.add_job(rss)

78 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/command/show_dy.py:

--------------------------------------------------------------------------------

1 | from typing import List, Optional

2 |

3 | from nonebot import on_command

4 | from nonebot.adapters.onebot.v11 import GroupMessageEvent, Message, MessageEvent

5 | from nonebot.adapters.onebot.v11.permission import GROUP_ADMIN, GROUP_OWNER

6 | from nonebot.params import CommandArg

7 | from nonebot.permission import SUPERUSER

8 | from nonebot.rule import to_me

9 |

10 | from ..rss_class import Rss

11 |

12 | RSS_SHOW = on_command(

13 | "show",

14 | aliases={"查看订阅"},

15 | rule=to_me(),

16 | priority=5,

17 | permission=GROUP_ADMIN | GROUP_OWNER | SUPERUSER,

18 | )

19 |

20 |

21 | def handle_rss_list(rss_list: List[Rss]) -> str:

22 | rss_info_list = [

23 | f"(已停止){i.name}:{i.url}" if i.stop else f"{i.name}:{i.url}"

24 | for i in rss_list

25 | ]

26 | return "\n\n".join(rss_info_list)

27 |

28 |

29 | async def show_rss_by_name(

30 | rss_name: str, group_id: Optional[int], guild_channel_id: Optional[str]

31 | ) -> str:

32 | rss = Rss.get_one_by_name(rss_name)

33 | if (

34 | rss is None

35 | or (group_id and str(group_id) not in rss.group_id)

36 | or (guild_channel_id and guild_channel_id not in rss.guild_channel_id)

37 | ):

38 | return f"❌ 订阅 {rss_name} 不存在或未订阅!"

39 | else:

40 | # 隐私考虑,不展示除当前群组或频道外的群组、频道和QQ

41 | return str(rss.hide_some_infos(group_id, guild_channel_id))

42 |

43 |

44 | # 不带订阅名称默认展示当前群组或账号的订阅,带订阅名称就显示该订阅的

45 | @RSS_SHOW.handle()

46 | async def handle_rss_show(event: MessageEvent, args: Message = CommandArg()) -> None:

47 | rss_name = args.extract_plain_text().strip()

48 |

49 | user_id = event.get_user_id()

50 | group_id = event.group_id if isinstance(event, GroupMessageEvent) else None

51 | guild_channel_id = None

52 |

53 | if rss_name:

54 | rss_msg = await show_rss_by_name(rss_name, group_id, guild_channel_id)

55 | await RSS_SHOW.finish(rss_msg)

56 |

57 | if group_id:

58 | rss_list = Rss.get_by_group(group_id=group_id)

59 | elif guild_channel_id:

60 | rss_list = Rss.get_by_guild_channel(guild_channel_id=guild_channel_id)

61 | else:

62 | rss_list = Rss.get_by_user(user=user_id)

63 |

64 | if rss_list:

65 | msg_str = handle_rss_list(rss_list)

66 | await RSS_SHOW.finish(msg_str)

67 | else:

68 | await RSS_SHOW.finish("❌ 当前没有任何订阅!")

69 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/config.py:

--------------------------------------------------------------------------------

1 | from pathlib import Path

2 | from typing import List, Optional

3 |

4 | from nonebot import get_driver

5 | from nonebot.config import Config

6 | from nonebot.log import logger

7 | from pydantic import AnyHttpUrl

8 |

9 | DATA_PATH = Path.cwd() / "data"

10 | JSON_PATH = DATA_PATH / "rss.json"

11 |

12 |

13 | class ELFConfig(Config):

14 | class Config:

15 | extra = "allow"

16 |

17 | # 代理地址

18 | rss_proxy: Optional[str] = None

19 | rsshub: AnyHttpUrl = "https://rsshub.app" # type: ignore

20 | # 备用 rsshub 地址

21 | rsshub_backup: List[AnyHttpUrl] = []

22 | db_cache_expire: int = 30

23 | limit: int = 200

24 | max_length: int = 1024 # 正文长度限制,防止消息太长刷屏,以及消息过长发送失败的情况

25 | enable_boot_message: bool = True # 是否启用启动时的提示消息推送

26 | debug: bool = (

27 | False # 是否开启 debug 模式,开启后会打印更多的日志信息,同时检查更新时不会使用缓存,便于调试

28 | )

29 |

30 | zip_size: int = 2 * 1024

31 | gif_zip_size: int = 6 * 1024

32 | img_format: Optional[str] = None

33 | img_down_path: Optional[str] = None

34 |

35 | blockquote: bool = True

36 | black_word: Optional[List[str]] = None

37 |

38 | # 百度翻译的 appid 和 key

39 | baidu_id: Optional[str] = None

40 | baidu_key: Optional[str] = None

41 | deepl_translator_api_key: Optional[str] = None

42 | # 配合 deepl_translator 使用的语言检测接口,前往 https://detectlanguage.com/documentation 注册获取 api_key

43 | single_detection_api_key: Optional[str] = None

44 |

45 | qb_username: Optional[str] = None # qbittorrent 用户名

46 | qb_password: Optional[str] = None # qbittorrent 密码

47 | qb_web_url: Optional[str] = None # qbittorrent 的 web 地址

48 | qb_down_path: Optional[str] = (

49 | None # qb 的文件下载地址,这个地址必须是 go-cqhttp 能访问到的

50 | )

51 | down_status_msg_group: Optional[List[int]] = None # 下载进度消息提示群组

52 | down_status_msg_date: int = 10 # 下载进度检查及提示间隔时间,单位秒

53 |

54 | pikpak_username: Optional[str] = None # pikpak 用户名

55 | pikpak_password: Optional[str] = None # pikpak 密码

56 | # pikpak 离线保存的目录, 默认是根目录,示例: ELF_RSS/Downloads ,目录不存在会自动创建, 不能/结尾

57 | pikpak_download_path: str = ""

58 |

59 | telegram_admin_ids: List[int] = (

60 | []

61 | ) # Telegram 管理员 ID 列表,用于接收离线通知和管理机器人

62 | telegram_bot_token: Optional[str] = None # Telegram 机器人的 token

63 |

64 |

65 | config = ELFConfig(**get_driver().config.dict())

66 | logger.debug(f"RSS Config loaded: {config!r}")

67 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/routes/weibo.py:

--------------------------------------------------------------------------------

1 | from typing import Any, Dict

2 |

3 | from nonebot.log import logger

4 | from pyquery import PyQuery as Pq

5 |

6 | from ...config import config

7 | from ...rss_class import Rss

8 | from .. import ParsingBase, handle_html_tag

9 | from ..handle_images import handle_img_combo, handle_img_combo_with_content

10 | from ..utils import get_summary

11 |

12 |

13 | # 处理正文 处理网页 tag

14 | @ParsingBase.append_handler(parsing_type="summary", rex="/weibo/")

15 | async def handle_summary(item: Dict[str, Any], tmp: str) -> str:

16 | summary_html = Pq(get_summary(item))

17 |

18 | # 判断是否保留转发内容

19 | if not config.blockquote:

20 | summary_html.remove("blockquote")

21 |

22 | tmp += handle_html_tag(html=summary_html)

23 |

24 | return tmp

25 |

26 |

27 | # 处理图片

28 | @ParsingBase.append_handler(parsing_type="picture", rex="/weibo/")

29 | async def handle_picture(rss: Rss, item: Dict[str, Any], tmp: str) -> str:

30 | # 判断是否开启了只推送标题

31 | if rss.only_title:

32 | return ""

33 |

34 | res = ""

35 | try:

36 | res += await handle_img(

37 | item=item,

38 | img_proxy=rss.img_proxy,

39 | img_num=rss.max_image_number,

40 | )

41 | except Exception as e:

42 | logger.warning(f"{rss.name} 没有正文内容!{e}")

43 |

44 | # 判断是否开启了只推送图片

45 | return f"{res}\n" if rss.only_pic else f"{tmp + res}\n"

46 |

47 |

48 | # 处理图片、视频

49 | async def handle_img(item: Dict[str, Any], img_proxy: bool, img_num: int) -> str:

50 | if item.get("image_content"):

51 | return await handle_img_combo_with_content(

52 | item.get("gif_url", ""), item["image_content"]

53 | )

54 | html = Pq(get_summary(item))

55 | # 移除多余图标

56 | html.remove("span.url-icon")

57 | img_str = ""

58 | # 处理图片

59 | doc_img = list(html("img").items())

60 | # 只发送限定数量的图片,防止刷屏

61 | if 0 < img_num < len(doc_img):

62 | img_str += f"\n因启用图片数量限制,目前只有 {img_num} 张图片:"

63 | doc_img = doc_img[:img_num]

64 | for img in doc_img:

65 | url = img.attr("src")

66 | img_str += await handle_img_combo(url, img_proxy)

67 |

68 | # 处理视频

69 | if doc_video := html("video"):

70 | img_str += "\n视频封面:"

71 | for video in doc_video.items():

72 | url = video.attr("poster")

73 | img_str += await handle_img_combo(url, img_proxy)

74 |

75 | return img_str

76 |

--------------------------------------------------------------------------------

/.env.dev:

--------------------------------------------------------------------------------

1 | # 配置 NoneBot 监听的 IP/主机名

2 | HOST=0.0.0.0

3 | # 配置 NoneBot 监听的端口

4 | PORT=8080

5 | # 开启 debug 模式 **请勿在生产环境开启**

6 | DEBUG=false

7 | # 配置 NoneBot 超级用户:管理员qq,支持多管理员,逗号分隔 注意,启动消息只发送给第一个管理员

8 | SUPERUSERS=["123123123"]

9 | # 配置机器人的昵称

10 | NICKNAME=["elf","ELF"]

11 | # 配置命令起始字符

12 | COMMAND_START=["","/"]

13 | # 配置命令分割字符

14 | COMMAND_SEP=["."]

15 |

16 | # 代理地址

17 | #RSS_PROXY="127.0.0.1:7890"

18 | # rsshub订阅地址

19 | RSSHUB="https://rsshub.app"

20 | # 备用 rsshub 地址 示例: ["https://rsshub.app","https://rsshub.app"] 务必使用双引号!!!

21 | RSSHUB_BACKUP=[]

22 | # 去重数据库的记录清理限定天数

23 | DB_CACHE_EXPIRE=30

24 | # 缓存rss条数

25 | LIMIT=200

26 | # 正文长度限制,防止消息太长刷屏,以及消息过长发送失败的情况

27 | MAX_LENGTH=1024

28 | # 是否启用启动时的提示消息推送

29 | ENABLE_BOOT_MESSAGE=true

30 |

31 | # 图片压缩

32 | # 非 GIF 图片压缩后的最大长宽值,单位 px

33 | ZIP_SIZE=2048

34 | # GIF 图片压缩临界值,单位 KB

35 | GIF_ZIP_SIZE=6144

36 | # 保存图片的文件名,可使用 {subs}:订阅名 {name}:文件名 {ext}:文件后缀(可省略)

37 | IMG_FORMAT="{subs}/{name}{ext}"

38 | # 图片的下载路径,默认为./data/image 可以为相对路径(./test)或绝对路径(/home)

39 | IMG_DOWN_PATH=""

40 |

41 | # 是否显示转发的内容(主要是微博),默认打开,如果关闭还有转发的信息的话,可以自行添加进屏蔽词(但是这整条消息就会没)

42 | BLOCKQUOTE=true

43 | # 屏蔽词填写 支持正则,如 ["互动抽奖","微博抽奖平台"] 务必使用双引号!!!

44 | BLACK_WORD=[]

45 |

46 | # 使用百度翻译API 可选,填的话两个都要填,不填默认使用谷歌翻译(需墙外?)

47 | # 百度翻译接口appid和secretKey,前往http://api.fanyi.baidu.com/获取

48 | # 一般来说申请标准版免费就够了,想要好一点可以认证上高级版,有月限额,rss用也足够了

49 | BAIDU_ID=""

50 | BAIDU_KEY=""

51 | # DEEPL 翻译API 可选,不填默认使用谷歌翻译(需墙外?)

52 | DEEPL_TRANSLATOR_API_KEY=""

53 | # 配合 deepl_translator 使用的语言检测接口,前往 https://detectlanguage.com/documentation 注册获取 api_key

54 | SINGLE_DETECTION_API_KEY=""

55 |

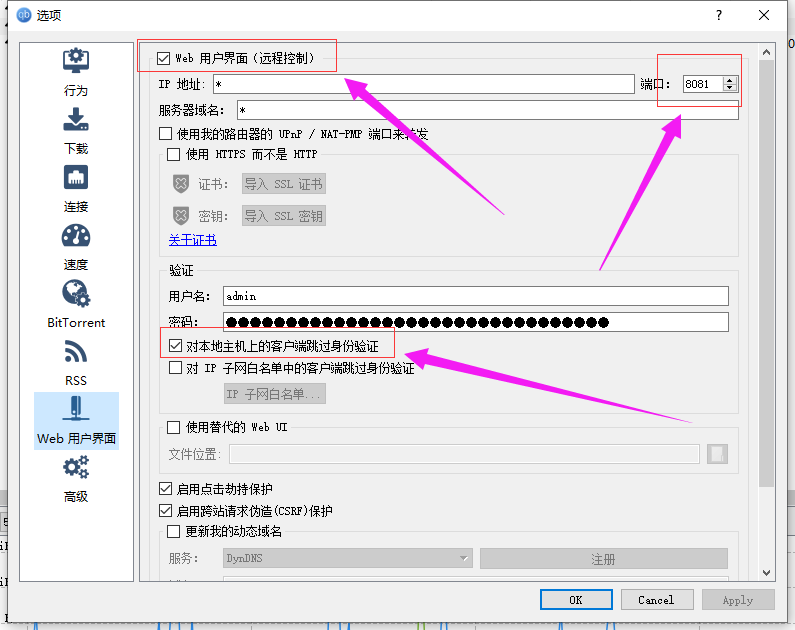

56 | # qbittorrent 相关设置(文件下载位置等更多设置请在qbittorrent软件中设置)

57 | # qbittorrent 用户名

58 | QB_USERNAME=""

59 | # qbittorrent 密码

60 | QB_PASSWORD=""

61 | # qbittorrent 客户端默认是关闭状态,请打开并设置端口号为 8081,同时勾选 “对本地主机上的客户端跳过身份验证”

62 | #QB_WEB_URL="http://127.0.0.1:8081"

63 | # qb的文件下载地址,这个地址必须是 go-cqhttp能访问到的

64 | QB_DOWN_PATH=""

65 | # 下载进度消息提示群组 示例 [12345678] 注意:最好是将该群设置为免打扰

66 | DOWN_STATUS_MSG_GROUP=[]

67 | # 下载进度检查及提示间隔时间,单位秒,不建议小于 10

68 | DOWN_STATUS_MSG_DATE=10

69 |

70 | # pikpak 相关设置

71 | # pikpak 用户名

72 | PIKPAK_USERNAME=""

73 | # pikpak 密码

74 | PIKPAK_PASSWORD=""

75 | # pikpak 离线保存的目录, 默认是根目录,示例: ELF_RSS/Downloads ,目录不存在会自动创建, 不能/结尾

76 | PIKPAK_DOWNLOAD_PATH=""

77 |

78 | # Telegram 相关设置

79 | # Telegram 管理员 ID 列表,用于接收离线通知和管理机器人

80 | TELEGRAM_ADMIN_IDS=[]

81 | # Telegram 机器人的 token

82 | TELEGRAM_BOT_TOKEN=""

83 |

84 | # MYELF博客地址 https://myelf.club

85 | # 出现问题请在 GitHub 上提 issues

86 | # 项目地址 https://github.com/Quan666/ELF_RSS

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ELF_RSS

2 |

3 | [](https://www.codacy.com/gh/Quan666/ELF_RSS/dashboard?utm_source=github.com&utm_medium=referral&utm_content=Quan666/ELF_RSS&utm_campaign=Badge_Grade)

4 | [](https://jq.qq.com/?_wv=1027&k=sST08Nkd)

5 |

6 | > 1. 容易使用的命令

7 | > 2. 更规范的代码,方便移植到你自己的机器人

8 | > 3. 使用全新的 [Nonebot2](https://v2.nonebot.dev/guide/) 框架

9 |

10 | 这是一个以 Python 编写的 QQ 机器人插件,用于订阅 RSS 并实时以 QQ消息推送。

11 |

12 | 算是第一次用 Python 写出来的比较完整、实用的项目。代码比较难看,正在重构中

13 |

14 | ---

15 |

16 | 当然也有很多插件能够做到订阅 RSS ,但不同的是,大多数都需要在服务器上修改相应配置才能添加订阅,而该插件只需要发送QQ消息给机器人就能动态添加订阅。

17 |

18 | 对于订阅,支持QQ、QQ群、QQ频道的单个、多个订阅。

19 |

20 | 每个订阅的个性化设置丰富,能够应付多种场景。

21 |

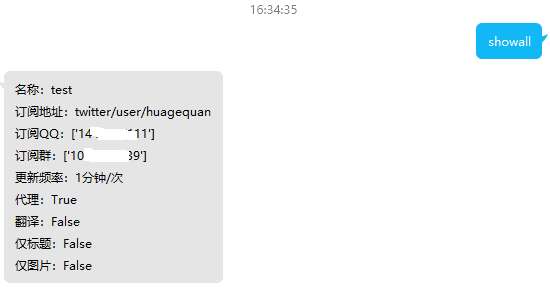

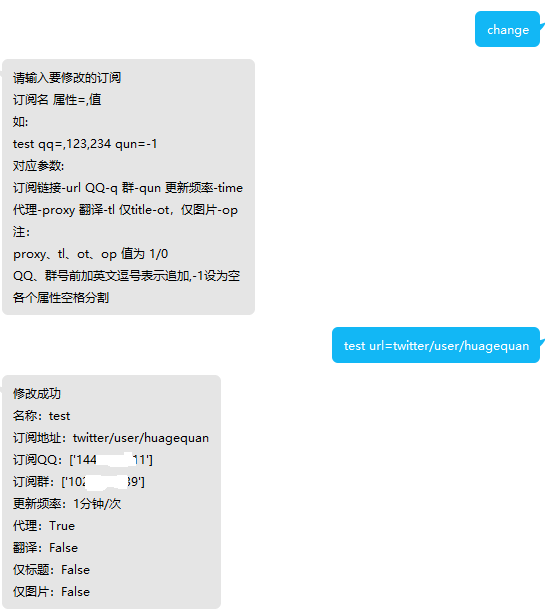

22 | ## 功能介绍

23 |

24 | * 发送命令添加、删除、查询、修改 RSS 订阅

25 | * 交互式添加 RSSHub 订阅

26 | * 订阅内容翻译(使用谷歌机翻,可设置为百度翻译)

27 | * 个性化订阅设置(更新频率、翻译、仅标题、仅图片等)

28 | * 多平台支持

29 | * 图片压缩后发送

30 | * 种子下载并上传到群文件

31 | * 离线下载到 PikPak 网盘(方便追番)

32 | * 消息支持根据链接、标题、图片去重

33 | * 可设置只发送限定数量的图片,防止刷屏

34 | * 可设置从正文中要移除的指定内容,支持正则

35 |

36 | ## 文档目录

37 |

38 | > 注意:推荐 Python 3.8.3+ 版本 Windows版安装包下载地址:[https://www.python.org/ftp/python/3.8.3/python-3.8.3-amd64.exe](https://www.python.org/ftp/python/3.8.3/python-3.8.3-amd64.exe)

39 | >

40 | > * [部署教程](docs/部署教程.md)

41 | > * [使用教程](docs/2.0%20使用教程.md)

42 | > * [使用教程 旧版](docs/1.0%20使用教程.md)

43 | > * [常见问题](docs/常见问题.md)

44 | > * [更新日志](docs/更新日志.md)

45 |

46 | ## 效果预览

47 |

48 |

49 |

50 |

51 |

52 |

53 |

54 |

55 |

56 | ## TODO

57 |

58 | * [x] 1. 订阅信息保护,不在群组中输出订阅QQ、群组

59 | * [x] 2. 更为强大的检查更新时间设置

60 | * [x] 3. RSS 源中 torrent 自动下载并上传至订阅群(适合番剧订阅)

61 | * [x] 4. 暂停检查订阅更新

62 | * [x] 5. 正则匹配订阅名

63 | * [x] 6. 性能优化,尽可能替换为异步操作

64 |

65 | ## 感谢以下项目或服务

66 |

67 | 不分先后

68 |

69 | * [RSSHub](https://github.com/DIYgod/RSSHub)

70 | * [Nonebot](https://github.com/nonebot/nonebot2)

71 | * [酷Q(R. I. P)](https://cqp.cc/)

72 | * [coolq-http-api](https://github.com/richardchien/coolq-http-api)

73 | * [go-cqhttp](https://github.com/Mrs4s/go-cqhttp)

74 |

75 | ## Star History

76 |

77 | [](https://starchart.cc/Quan666/ELF_RSS)

78 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/my_trigger.py:

--------------------------------------------------------------------------------

1 | import asyncio

2 | import re

3 |

4 | from apscheduler.executors.pool import ProcessPoolExecutor, ThreadPoolExecutor

5 | from apscheduler.triggers.cron import CronTrigger

6 | from apscheduler.triggers.interval import IntervalTrigger

7 | from async_timeout import timeout

8 | from nonebot.log import logger

9 | from nonebot_plugin_apscheduler import scheduler

10 |

11 | from . import rss_parsing

12 | from .rss_class import Rss

13 |

14 |

15 | # 检测某个rss更新

16 | async def check_update(rss: Rss) -> None:

17 | logger.info(f"{rss.name} 检查更新")

18 | try:

19 | wait_for = 5 * 60 if re.search(r"[_*/,-]", rss.time) else int(rss.time) * 60

20 | async with timeout(wait_for):

21 | await rss_parsing.start(rss)

22 | except asyncio.TimeoutError:

23 | logger.error(f"{rss.name} 检查更新超时,结束此次任务!")

24 |

25 |

26 | def delete_job(rss: Rss) -> None:

27 | if scheduler.get_job(rss.name):

28 | scheduler.remove_job(rss.name)

29 |

30 |

31 | # 加入订阅任务队列并立即执行一次

32 | async def add_job(rss: Rss) -> None:

33 | delete_job(rss)

34 | # 加入前判断是否存在子频道或群组或用户,三者不能同时为空

35 | if any([rss.user_id, rss.group_id, rss.guild_channel_id]):

36 | rss_trigger(rss)

37 | await check_update(rss)

38 |

39 |

40 | def rss_trigger(rss: Rss) -> None:

41 | if re.search(r"[_*/,-]", rss.time):

42 | my_trigger_cron(rss)

43 | return

44 | # 制作一个“time分钟/次”触发器

45 | trigger = IntervalTrigger(minutes=int(rss.time), jitter=10)

46 | # 添加任务

47 | scheduler.add_job(

48 | func=check_update, # 要添加任务的函数,不要带参数

49 | trigger=trigger, # 触发器

50 | args=(rss,), # 函数的参数列表,注意:只有一个值时,不能省略末尾的逗号

51 | id=rss.name,

52 | misfire_grace_time=30, # 允许的误差时间,建议不要省略

53 | max_instances=1, # 最大并发

54 | default=ThreadPoolExecutor(64), # 最大线程

55 | processpool=ProcessPoolExecutor(8), # 最大进程

56 | coalesce=True, # 积攒的任务是否只跑一次,是否合并所有错过的Job

57 | )

58 | logger.info(f"定时任务 {rss.name} 添加成功")

59 |

60 |

61 | # cron 表达式

62 | # 参考 https://www.runoob.com/linux/linux-comm-crontab.html

63 |

64 |

65 | def my_trigger_cron(rss: Rss) -> None:

66 | # 解析参数

67 | tmp_list = rss.time.split("_")

68 | times_list = ["*/5", "*", "*", "*", "*"]

69 | for index, value in enumerate(tmp_list):

70 | if value:

71 | times_list[index] = value

72 | try:

73 | # 制作一个触发器

74 | trigger = CronTrigger(

75 | minute=times_list[0],

76 | hour=times_list[1],

77 | day=times_list[2],

78 | month=times_list[3],

79 | day_of_week=times_list[4],

80 | )

81 | except Exception:

82 | logger.exception(f"创建定时器错误!cron:{times_list}")

83 | return

84 |

85 | # 添加任务

86 | scheduler.add_job(

87 | func=check_update, # 要添加任务的函数,不要带参数

88 | trigger=trigger, # 触发器

89 | args=(rss,), # 函数的参数列表,注意:只有一个值时,不能省略末尾的逗号

90 | id=rss.name,

91 | misfire_grace_time=30, # 允许的误差时间,建议不要省略

92 | max_instances=1, # 最大并发

93 | default=ThreadPoolExecutor(64), # 最大线程

94 | processpool=ProcessPoolExecutor(8), # 最大进程

95 | coalesce=True, # 积攒的任务是否只跑一次,是否合并所有错过的Job

96 | )

97 | logger.info(f"定时任务 {rss.name} 添加成功")

98 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/command/show_all.py:

--------------------------------------------------------------------------------

1 | import asyncio

2 | import re

3 | from typing import List, Optional

4 |

5 | from nonebot import on_command

6 | from nonebot.adapters.onebot.v11 import (

7 | ActionFailed,

8 | GroupMessageEvent,

9 | Message,

10 | MessageEvent,

11 | )

12 | from nonebot.adapters.onebot.v11.permission import GROUP_ADMIN, GROUP_OWNER

13 | from nonebot.params import CommandArg

14 | from nonebot.permission import SUPERUSER

15 | from nonebot.rule import to_me

16 |

17 | from ..rss_class import Rss

18 | from .show_dy import handle_rss_list

19 |

20 | RSS_SHOW_ALL = on_command(

21 | "show_all",

22 | aliases={"showall", "select_all", "selectall", "所有订阅"},

23 | rule=to_me(),

24 | priority=5,

25 | permission=GROUP_ADMIN | GROUP_OWNER | SUPERUSER,

26 | )

27 |

28 |

29 | def filter_results_by_keyword(

30 | rss_list: List[Rss],

31 | search_keyword: str,

32 | group_id: Optional[int],

33 | guild_channel_id: Optional[str],

34 | ) -> List[Rss]:

35 | return [

36 | i

37 | for i in rss_list

38 | if (

39 | re.search(search_keyword, i.name, flags=re.I)

40 | or re.search(search_keyword, i.url, flags=re.I)

41 | or (

42 | search_keyword.isdigit()

43 | and not group_id

44 | and not guild_channel_id

45 | and (

46 | (i.user_id and search_keyword in i.user_id)

47 | or (i.group_id and search_keyword in i.group_id)

48 | or (i.guild_channel_id and search_keyword in i.guild_channel_id)

49 | )

50 | )

51 | )

52 | ]

53 |

54 |

55 | def get_rss_list(group_id: Optional[int], guild_channel_id: Optional[str]) -> List[Rss]:

56 | if group_id:

57 | return Rss.get_by_group(group_id=group_id)

58 | elif guild_channel_id:

59 | return Rss.get_by_guild_channel(guild_channel_id=guild_channel_id)

60 | else:

61 | return Rss.read_rss()

62 |

63 |

64 | @RSS_SHOW_ALL.handle()

65 | async def handle_rss_show_all(

66 | event: MessageEvent, args: Message = CommandArg()

67 | ) -> None:

68 | search_keyword = args.extract_plain_text().strip()

69 |

70 | group_id = event.group_id if isinstance(event, GroupMessageEvent) else None

71 | guild_channel_id = None

72 |

73 | if not (rss_list := get_rss_list(group_id, guild_channel_id)):

74 | await RSS_SHOW_ALL.finish("❌ 当前没有任何订阅!")

75 | return

76 |

77 | result = (

78 | filter_results_by_keyword(rss_list, search_keyword, group_id, guild_channel_id)

79 | if search_keyword

80 | else rss_list

81 | )

82 |

83 | if result:

84 | await RSS_SHOW_ALL.send(f"当前共有 {len(result)} 条订阅")

85 | result.sort(key=lambda x: x.get_url())

86 | await asyncio.sleep(0.5)

87 | page_size = 30

88 | while result:

89 | current_page = result[:page_size]

90 | msg_str = handle_rss_list(current_page)

91 | try:

92 | await RSS_SHOW_ALL.send(msg_str)

93 | except ActionFailed:

94 | page_size -= 5

95 | continue

96 | result = result[page_size:]

97 | else:

98 | await RSS_SHOW_ALL.finish("❌ 当前没有任何订阅!")

99 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 |

2 | # Created by https://www.toptal.com/developers/gitignore/api/python

3 | # Edit at https://www.toptal.com/developers/gitignore?templates=python

4 |

5 | ### Python ###

6 | # Byte-compiled / optimized / DLL files

7 | __pycache__/

8 | *.py[cod]

9 | *$py.class

10 |

11 | # C extensions

12 | *.so

13 |

14 | # Distribution / packaging

15 | .Python

16 | build/

17 | develop-eggs/

18 | dist/

19 | downloads/

20 | eggs/

21 | .eggs/

22 | lib/

23 | lib64/

24 | parts/

25 | sdist/

26 | var/

27 | wheels/

28 | pip-wheel-metadata/

29 | share/python-wheels/

30 | *.egg-info/

31 | .installed.cfg

32 | *.egg

33 | MANIFEST

34 |

35 | # PyInstaller

36 | # Usually these files are written by a python script from a template

37 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

38 | *.manifest

39 | *.spec

40 |

41 | # Installer logs

42 | pip-log.txt

43 | pip-delete-this-directory.txt

44 |

45 | # Unit test / coverage reports

46 | htmlcov/

47 | .tox/

48 | .nox/

49 | .coverage

50 | .coverage.*

51 | .cache

52 | nosetests.xml

53 | coverage.xml

54 | *.cover

55 | *.py,cover

56 | .hypothesis/

57 | .pytest_cache/

58 | pytestdebug.log

59 |

60 | # Translations

61 | *.mo

62 | *.pot

63 |

64 | # Django stuff:

65 | *.log

66 | local_settings.py

67 | db.sqlite3

68 | db.sqlite3-journal

69 |

70 | # Flask stuff:

71 | instance/

72 | .webassets-cache

73 |

74 | # Scrapy stuff:

75 | .scrapy

76 |

77 | # Sphinx documentation

78 | docs/_build/

79 | doc/_build/

80 |

81 | # PyBuilder

82 | target/

83 |

84 | # Jupyter Notebook

85 | .ipynb_checkpoints

86 |

87 | # IPython

88 | profile_default/

89 | ipython_config.py

90 |

91 | # pyenv

92 | .python-version

93 |

94 | # pipenv

95 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

96 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

97 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

98 | # install all needed dependencies.

99 | #Pipfile.lock

100 |

101 | # poetry

102 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

103 | # This is especially recommended for binary packages to ensure reproducibility, and is more

104 | # commonly ignored for libraries.

105 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

106 | poetry.lock

107 |

108 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

109 | __pypackages__/

110 |

111 | # Celery stuff

112 | celerybeat-schedule

113 | celerybeat.pid

114 |

115 | # SageMath parsed files

116 | *.sage.py

117 |

118 | # Environments

119 | .env

120 | .venv

121 | env/

122 | venv/

123 | ENV/

124 | env.bak/

125 | venv.bak/

126 |

127 | # Spyder project settings

128 | .spyderproject

129 | .spyproject

130 |

131 | # Rope project settings

132 | .ropeproject

133 |

134 | # mkdocs documentation

135 | /site

136 |

137 | # mypy

138 | .mypy_cache/

139 | .dmypy.json

140 | dmypy.json

141 |

142 | # Pyre type checker

143 | .pyre/

144 |

145 | # pytype static type analyzer

146 | .pytype/

147 | .idea/

148 | .env.prod

149 | data/

150 |

151 | # vscode

152 | .vscode

153 | # End of https://www.toptal.com/developers/gitignore/api/python

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/command/del_dy.py:

--------------------------------------------------------------------------------

1 | from typing import List, Optional, Tuple

2 |

3 | from nonebot import on_command

4 | from nonebot.adapters.onebot.v11 import GroupMessageEvent, Message, MessageEvent

5 | from nonebot.adapters.onebot.v11.permission import GROUP_ADMIN, GROUP_OWNER

6 | from nonebot.matcher import Matcher

7 | from nonebot.params import ArgPlainText, CommandArg

8 | from nonebot.permission import SUPERUSER

9 | from nonebot.rule import to_me

10 |

11 | from .. import my_trigger as tr

12 | from ..rss_class import Rss

13 |

14 | RSS_DELETE = on_command(

15 | "deldy",

16 | aliases={"drop", "unsub", "删除订阅"},

17 | rule=to_me(),

18 | priority=5,

19 | permission=GROUP_ADMIN | GROUP_OWNER | SUPERUSER,

20 | )

21 |

22 |

23 | @RSS_DELETE.handle()

24 | async def handle_first_receive(matcher: Matcher, args: Message = CommandArg()) -> None:

25 | if args.extract_plain_text():

26 | matcher.set_arg("RSS_DELETE", args)

27 |

28 |

29 | async def process_rss_deletion(

30 | rss_name_list: List[str], group_id: Optional[int], guild_channel_id: Optional[str]

31 | ) -> Tuple[List[str], List[str]]:

32 | delete_successes = []

33 | delete_failures = []

34 |

35 | for rss_name in rss_name_list:

36 | rss = Rss.get_one_by_name(name=rss_name)

37 | if rss is None:

38 | delete_failures.append(rss_name)

39 | elif guild_channel_id:

40 | if rss.delete_guild_channel(guild_channel=guild_channel_id):

41 | if not any([rss.group_id, rss.user_id, rss.guild_channel_id]):

42 | rss.delete_rss()

43 | tr.delete_job(rss)

44 | else:

45 | await tr.add_job(rss)

46 | delete_successes.append(rss_name)

47 | else:

48 | delete_failures.append(rss_name)

49 | elif group_id:

50 | if rss.delete_group(group=str(group_id)):

51 | if not any([rss.group_id, rss.user_id, rss.guild_channel_id]):

52 | rss.delete_rss()

53 | tr.delete_job(rss)

54 | else:

55 | await tr.add_job(rss)

56 | delete_successes.append(rss_name)

57 | else:

58 | delete_failures.append(rss_name)

59 | else:

60 | rss.delete_rss()

61 | tr.delete_job(rss)

62 | delete_successes.append(rss_name)

63 |

64 | return delete_successes, delete_failures

65 |

66 |

67 | @RSS_DELETE.got("RSS_DELETE", prompt="输入要删除的订阅名")

68 | async def handle_rss_delete(

69 | event: MessageEvent, rss_name: str = ArgPlainText("RSS_DELETE")

70 | ) -> None:

71 | group_id = event.group_id if isinstance(event, GroupMessageEvent) else None

72 | guild_channel_id = None

73 |

74 | rss_name_list = rss_name.strip().split(" ")

75 |

76 | delete_successes, delete_failures = await process_rss_deletion(

77 | rss_name_list, group_id, guild_channel_id

78 | )

79 |

80 | result = []

81 | if delete_successes:

82 | if guild_channel_id:

83 | result.append(

84 | f'👏 当前子频道成功取消订阅: {"、".join(delete_successes)} !'

85 | )

86 | elif group_id:

87 | result.append(f'👏 当前群组成功取消订阅: {"、".join(delete_successes)} !')

88 | else:

89 | result.append(f'👏 成功删除订阅: {"、".join(delete_successes)} !')

90 | if delete_failures:

91 | if guild_channel_id:

92 | result.append(f'❌ 当前子频道没有订阅: {"、".join(delete_successes)} !')

93 | elif group_id:

94 | result.append(f'❌ 当前群组没有订阅: {"、".join(delete_successes)} !')

95 | else:

96 | result.append(f'❌ 未找到订阅: {"、".join(delete_successes)} !')

97 |

98 | await RSS_DELETE.finish("\n".join(result))

99 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/pikpak_offline.py:

--------------------------------------------------------------------------------

1 | from typing import Any, Dict, List, Optional

2 |

3 | from nonebot.log import logger

4 | from pikpakapi import PikPakApi

5 | from pikpakapi.PikpakException import PikpakException

6 |

7 | from .config import config

8 |

9 | pikpak_client: Optional[PikPakApi] = None

10 | if config.pikpak_username and config.pikpak_password:

11 | pikpak_client = PikPakApi(

12 | username=config.pikpak_username,

13 | password=config.pikpak_password,

14 | )

15 |

16 |

17 | async def refresh_access_token() -> None:

18 | """

19 | Login or Refresh access_token PikPak

20 |

21 | """

22 | try:

23 | await pikpak_client.refresh_access_token()

24 | except PikpakException as e:

25 | logger.warning(f"refresh_access_token {e}")

26 | await pikpak_client.login()

27 |

28 |

29 | async def login() -> None:

30 | if not pikpak_client.access_token:

31 | await pikpak_client.login()

32 |

33 |

34 | async def path_to_id(

35 | path: Optional[str] = None, create: bool = False

36 | ) -> List[Dict[str, Any]]:

37 | """

38 | path: str like "/1/2/3"

39 | create: bool create path if not exist

40 | 将形如 /path/a/b 的路径转换为 文件夹的id

41 | """

42 | if not path:

43 | return []

44 | paths = [p.strip() for p in path.split("/") if len(p) > 0]

45 | path_ids = []

46 | count = 0

47 | next_page_token = None

48 | parent_id = None

49 | while count < len(paths):

50 | data = await pikpak_client.file_list(

51 | parent_id=parent_id, next_page_token=next_page_token

52 | )

53 | if _id := next(

54 | (

55 | f.get("id")

56 | for f in data.get("files", [])

57 | if f.get("kind", "") == "drive#folder" and f.get("name") == paths[count]

58 | ),

59 | "",

60 | ):

61 | path_ids.append(

62 | {

63 | "id": _id,

64 | "name": paths[count],

65 | }

66 | )

67 | count += 1

68 | parent_id = _id

69 | elif data.get("next_page_token"):

70 | next_page_token = data.get("next_page_token")

71 | elif create:

72 | data = await pikpak_client.create_folder(

73 | name=paths[count], parent_id=parent_id

74 | )

75 | _id = data.get("file").get("id")

76 | path_ids.append(

77 | {

78 | "id": _id,

79 | "name": paths[count],

80 | }

81 | )

82 | count += 1

83 | parent_id = _id

84 | else:

85 | break

86 | return path_ids

87 |

88 |

89 | async def pikpak_offline_download(

90 | url: str,

91 | path: Optional[str] = None,

92 | parent_id: Optional[str] = None,

93 | name: Optional[str] = None,

94 | ) -> Dict[str, Any]:

95 | """

96 | Offline download

97 | 当有path时, 表示下载到指定的文件夹, 否则下载到根目录

98 | 如果存在 parent_id, 以 parent_id 为准

99 | """

100 | await login()

101 | try:

102 | if not parent_id:

103 | path_ids = await path_to_id(path, create=True)

104 | if path_ids and len(path_ids) > 0:

105 | parent_id = path_ids[-1].get("id")

106 | return await pikpak_client.offline_download(url, parent_id=parent_id, name=name) # type: ignore

107 | except PikpakException as e:

108 | logger.warning(e)

109 | await refresh_access_token()

110 | return await pikpak_offline_download(

111 | url=url, path=path, parent_id=parent_id, name=name

112 | )

113 | except Exception as e:

114 | msg = f"PikPak Offline Download Error: {e}"

115 | logger.error(msg)

116 | raise Exception(msg) from e

117 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/download_torrent.py:

--------------------------------------------------------------------------------

1 | import re

2 | from typing import Any, Dict, List, Optional

3 |

4 | import aiohttp

5 | from nonebot.adapters.onebot.v11 import Bot

6 | from nonebot.log import logger

7 |

8 | from ..config import config

9 | from ..parsing.utils import get_summary

10 | from ..pikpak_offline import pikpak_offline_download

11 | from ..qbittorrent_download import start_down

12 | from ..rss_class import Rss

13 | from ..utils import convert_size, get_bot, get_torrent_b16_hash, send_msg

14 |

15 |

16 | async def down_torrent(

17 | rss: Rss, item: Dict[str, Any], proxy: Optional[str]

18 | ) -> List[str]:

19 | """

20 | 创建下载种子任务

21 | """

22 | bot: Bot = await get_bot() # type: ignore

23 | if bot is None:

24 | raise ValueError("There are not bots to get.")

25 | hash_list = []

26 | for tmp in item["links"]:

27 | if (

28 | tmp["type"] == "application/x-bittorrent"

29 | or tmp["href"].find(".torrent") > 0

30 | ):

31 | hash_list.append(

32 | await start_down(

33 | bot=bot,

34 | url=tmp["href"],

35 | group_ids=rss.group_id,

36 | name=rss.name,

37 | proxy=proxy,

38 | )

39 | )

40 | return hash_list

41 |

42 |

43 | async def fetch_magnet_link(rss: Rss, url: str, proxy: Optional[str]) -> Optional[str]:

44 | try:

45 | async with aiohttp.ClientSession(

46 | timeout=aiohttp.ClientTimeout(total=100)

47 | ) as session:

48 | resp = await session.get(url, proxy=proxy)

49 | content = await resp.read()

50 | return f"magnet:?xt=urn:btih:{get_torrent_b16_hash(content)}"

51 | except Exception as e:

52 | msg = f"{rss.name} 下载种子失败: {e}"

53 | logger.error(msg)

54 | await send_msg(msg=msg, user_ids=rss.user_id, group_ids=rss.group_id)

55 | return None

56 |

57 |

58 | async def pikpak_offline(

59 | rss: Rss, item: Dict[str, Any], proxy: Optional[str]

60 | ) -> List[Dict[str, Any]]:

61 | """

62 | 创建pikpak 离线下载任务

63 | 下载到 config.pikpak_download_path/rss.name or find rss.pikpak_path_rex

64 | """

65 | download_infos = []

66 | for tmp in item["links"]:

67 | if (

68 | tmp["type"] == "application/x-bittorrent"

69 | or tmp["href"].find(".torrent") > 0

70 | ):

71 | url = tmp["href"]

72 | if not re.search(r"magnet:\?xt=urn:btih:", url):

73 | if not (url := await fetch_magnet_link(rss, url, proxy)):

74 | continue

75 | try:

76 | path = f"{config.pikpak_download_path}/{rss.name}"

77 | summary = get_summary(item)

78 | if rss.pikpak_path_key and (

79 | result := re.findall(rss.pikpak_path_key, summary)

80 | ):

81 | path = (

82 | config.pikpak_download_path

83 | + "/"

84 | + re.sub(r'[?*:"<>\\/|]', "_", result[0])

85 | )

86 | logger.info(f"Offline download {url} to {path}")

87 | info = await pikpak_offline_download(url=url, path=path)

88 | download_infos.append(

89 | {

90 | "name": info["task"]["name"],

91 | "file_size": convert_size(int(info["task"]["file_size"])),

92 | "path": path,

93 | }

94 | )

95 | except Exception as e:

96 | msg = f"{rss.name} PikPak 离线下载失败: {e}"

97 | logger.error(msg)

98 | await send_msg(msg=msg, user_ids=rss.user_id, group_ids=rss.group_id)

99 | return download_infos

100 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/handle_translation.py:

--------------------------------------------------------------------------------

1 | import hashlib

2 | import random

3 | import re

4 | from typing import Dict, Optional

5 |

6 | import aiohttp

7 | import emoji

8 | from deep_translator import DeeplTranslator, GoogleTranslator, single_detection

9 | from nonebot.log import logger

10 |

11 | from ..config import config

12 |

13 |

14 | async def baidu_translator(content: str, appid: str, secret_key: str) -> str:

15 | url = "https://api.fanyi.baidu.com/api/trans/vip/translate"

16 | salt = str(random.randint(32768, 65536))

17 | sign = hashlib.md5((appid + content + salt + secret_key).encode()).hexdigest()

18 | params = {

19 | "q": content,

20 | "from": "auto",

21 | "to": "zh",

22 | "appid": appid,

23 | "salt": salt,

24 | "sign": sign,

25 | }

26 | async with aiohttp.ClientSession() as session:

27 | resp = await session.get(url, params=params, timeout=aiohttp.ClientTimeout(10))

28 | data = await resp.json()

29 | try:

30 | content = "".join(i["dst"] + "\n" for i in data["trans_result"])

31 | return "\n百度翻译:\n" + content[:-1]

32 | except Exception as e:

33 | error_msg = f"百度翻译失败:{data['error_msg']}"

34 | logger.warning(error_msg)

35 | raise Exception(error_msg) from e

36 |

37 |

38 | async def google_translation(text: str, proxies: Optional[Dict[str, str]]) -> str:

39 | # text 是处理过emoji的

40 | try:

41 | translator = GoogleTranslator(source="auto", target="zh-CN", proxies=proxies)

42 | return "\n谷歌翻译:\n" + str(translator.translate(re.escape(text)))

43 | except Exception as e:

44 | error_msg = "\nGoogle翻译失败:" + str(e) + "\n"

45 | logger.warning(error_msg)

46 | raise Exception(error_msg) from e

47 |

48 |

49 | async def deepl_translator(text: str, proxies: Optional[Dict[str, str]]) -> str:

50 | try:

51 | lang = None

52 | if config.single_detection_api_key:

53 | lang = single_detection(text, api_key=config.single_detection_api_key)

54 | translator = DeeplTranslator(

55 | api_key=config.deepl_translator_api_key,

56 | source=lang,

57 | target="zh",

58 | use_free_api=True,

59 | proxies=proxies,

60 | )

61 | return "\nDeepl翻译:\n" + str(translator.translate(re.escape(text)))

62 | except Exception as e:

63 | error_msg = "\nDeeplTranslator翻译失败:" + str(e) + "\n"

64 | logger.warning(error_msg)

65 | raise Exception(error_msg) from e

66 |

67 |

68 | # 翻译

69 | async def handle_translation(content: str) -> str:

70 | proxies = (

71 | {

72 | "https": config.rss_proxy,

73 | "http": config.rss_proxy,

74 | }

75 | if config.rss_proxy

76 | else None

77 | )

78 |

79 | text = emoji.demojize(content)

80 | text = re.sub(r":[A-Za-z_]*:", " ", text)

81 | try:

82 | # 优先级 DeeplTranslator > 百度翻译 > GoogleTranslator

83 | # 异常时使用 GoogleTranslator 重试

84 | google_translator_flag = False

85 | try:

86 | if config.deepl_translator_api_key:

87 | text = await deepl_translator(text=text, proxies=proxies)

88 | elif config.baidu_id and config.baidu_key:

89 | text = await baidu_translator(

90 | content, config.baidu_id, config.baidu_key

91 | )

92 | else:

93 | google_translator_flag = True

94 | except Exception:

95 | google_translator_flag = True

96 | if google_translator_flag:

97 | text = await google_translation(text=text, proxies=proxies)

98 | except Exception as e:

99 | logger.error(e)

100 | text = str(e)

101 |

102 | text = text.replace("\\", "")

103 | return text

104 |

--------------------------------------------------------------------------------

/docs/迁移到 go-cqhttp.md:

--------------------------------------------------------------------------------

1 | # 迁移到 go-cqhttp

2 |

3 | > **前提:已经按照部署流程部署好 ELF_RSS**

4 |

5 | 准备工作

6 |

7 | - 下载 [go-cqhttp](https://github.com/Mrs4s/go-cqhttp/releases)

8 |

9 | ## 开始

10 |

11 | 1. 解压并运行 `go-cqhttp`,选择使用 `反向 WS` 方式连接,之后会生成配置文件,关闭 `go-cqhttp`

12 | 2. 修改 `config.yml` 文件,参考配置如下: 其中 `uin` 处填写 QQ 号

13 |

14 | ```yaml

15 | # go-cqhttp 默认配置文件

16 |

17 | account: # 账号相关

18 | uin: 1122334455 # QQ账号

19 | password: '' # 密码为空时使用扫码登录

20 | encrypt: false # 是否开启密码加密

21 | status: 0 # 在线状态 请参考 https://github.com/Mrs4s/go-cqhttp/blob/dev/docs/config.md#在线状态

22 | relogin: # 重连设置

23 | delay: 3 # 首次重连延迟, 单位秒

24 | interval: 3 # 重连间隔

25 | max-times: 0 # 最大重连次数, 0为无限制

26 |

27 | # 是否使用服务器下发的新地址进行重连

28 | # 注意, 此设置可能导致在海外服务器上连接情况更差

29 | use-sso-address: true

30 |

31 | heartbeat:

32 | # 心跳频率, 单位秒

33 | # -1 为关闭心跳

34 | interval: 5

35 |

36 | message:

37 | # 上报数据类型

38 | # 可选: string,array

39 | post-format: string

40 | # 是否忽略无效的CQ码, 如果为假将原样发送

41 | ignore-invalid-cqcode: false

42 | # 是否强制分片发送消息

43 | # 分片发送将会带来更快的速度

44 | # 但是兼容性会有些问题

45 | force-fragment: false

46 | # 是否将url分片发送

47 | fix-url: false

48 | # 下载图片等请求网络代理

49 | proxy-rewrite: ''

50 | # 是否上报自身消息

51 | report-self-message: false

52 | # 移除服务端的Reply附带的At

53 | remove-reply-at: false

54 | # 为Reply附加更多信息

55 | extra-reply-data: false

56 |

57 | output:

58 | # 日志等级 trace,debug,info,warn,error

59 | log-level: warn

60 | # 是否启用 DEBUG

61 | debug: false # 开启调试模式

62 |

63 | # 默认中间件锚点

64 | default-middlewares: &default

65 | # 访问密钥, 强烈推荐在公网的服务器设置

66 | access-token: ''

67 | # 事件过滤器文件目录

68 | filter: ''

69 | # API限速设置

70 | # 该设置为全局生效

71 | # 原 cqhttp 虽然启用了 rate_limit 后缀, 但是基本没插件适配

72 | # 目前该限速设置为令牌桶算法, 请参考:

73 | # https://baike.baidu.com/item/%E4%BB%A4%E7%89%8C%E6%A1%B6%E7%AE%97%E6%B3%95/6597000?fr=aladdin

74 | rate-limit:

75 | enabled: false # 是否启用限速

76 | frequency: 1 # 令牌回复频率, 单位秒

77 | bucket: 1 # 令牌桶大小

78 |

79 | database: # 数据库相关设置

80 | leveldb:

81 | # 是否启用内置leveldb数据库

82 | # 启用将会增加10-20MB的内存占用和一定的磁盘空间

83 | # 关闭将无法使用 撤回 回复 get_msg 等上下文相关功能

84 | enable: true

85 |

86 | # 连接服务列表

87 | servers:

88 | # HTTP 通信设置

89 | - http:

90 | # 服务端监听地址

91 | host: 127.0.0.1

92 | # 服务端监听端口

93 | port: 5700

94 | # 反向HTTP超时时间, 单位秒

95 | # 最小值为5,小于5将会忽略本项设置

96 | timeout: 5

97 | middlewares:

98 | <<: *default # 引用默认中间件

99 | # 反向HTTP POST地址列表

100 | post:

101 | #- url: '' # 地址

102 | # secret: '' # 密钥

103 | #- url: 127.0.0.1:5701 # 地址

104 | # secret: '' # 密钥

105 | # 正向WS设置

106 | - ws:

107 | # 正向WS服务器监听地址

108 | host: 127.0.0.1

109 | # 正向WS服务器监听端口

110 | port: 6700

111 | middlewares:

112 | <<: *default # 引用默认中间件

113 | # 反向WS设置

114 | - ws-reverse:

115 | # 反向WS Universal 地址

116 | # 注意 设置了此项地址后下面两项将会被忽略

117 | universal: ws://127.0.0.1:8080/onebot/v11/ws/

118 | # 反向WS API 地址

119 | api: ws://your_websocket_api.server

120 | # 反向WS Event 地址

121 | event: ws://your_websocket_event.server

122 | # 重连间隔 单位毫秒

123 | reconnect-interval: 3000

124 | middlewares:

125 | <<: *default # 引用默认中间件

126 | # pprof 性能分析服务器, 一般情况下不需要启用.

127 | # 如果遇到性能问题请上传报告给开发者处理

128 | # 注意: pprof服务不支持中间件、不支持鉴权. 请不要开放到公网

129 | - pprof:

130 | # 是否禁用pprof性能分析服务器

131 | disabled: true

132 | # pprof服务器监听地址

133 | host: 127.0.0.1

134 | # pprof服务器监听端口

135 | port: 7700

136 |

137 | # 可添加更多

138 | # 添加方式,同一连接方式可添加多个,具体配置说明请查看 go-cqhttp 文档

139 | #- http: # http 通信

140 | #- ws: # 正向 Websocket

141 | #- ws-reverse: # 反向 Websocket

142 | #- pprof: #性能分析服务器

143 | ```

144 |

145 | 3. 再次运行 go-cqhttp

146 | 4. 到此基本就迁移完毕

147 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/command/rsshub_add.py:

--------------------------------------------------------------------------------

1 | from typing import Any, Dict

2 |

3 | import aiohttp

4 | from nonebot import on_command

5 | from nonebot.adapters.onebot.v11 import Message, MessageEvent

6 | from nonebot.adapters.onebot.v11.permission import GROUP_ADMIN, GROUP_OWNER

7 | from nonebot.matcher import Matcher

8 | from nonebot.params import ArgPlainText, CommandArg

9 | from nonebot.permission import SUPERUSER

10 | from nonebot.rule import to_me

11 | from nonebot.typing import T_State

12 | from yarl import URL

13 |

14 | from ..config import config

15 | from ..rss_class import Rss

16 | from .add_dy import add_feed

17 |

18 | rsshub_routes: Dict[str, Any] = {}

19 |

20 |

21 | RSSHUB_ADD = on_command(

22 | "rsshub_add",

23 | rule=to_me(),

24 | priority=5,

25 | permission=GROUP_ADMIN | GROUP_OWNER | SUPERUSER,

26 | )

27 |

28 |

29 | @RSSHUB_ADD.handle()

30 | async def handle_first_receive(matcher: Matcher, args: Message = CommandArg()) -> None:

31 | if args.extract_plain_text().strip():

32 | matcher.set_arg("route", args)

33 |

34 |

35 | @RSSHUB_ADD.got("name", prompt="请输入要订阅的订阅名")

36 | async def handle_feed_name(name: str = ArgPlainText("name")) -> None:

37 | if not name.strip():

38 | await RSSHUB_ADD.reject("订阅名不能为空,请重新输入")

39 | return

40 |

41 | if _ := Rss.get_one_by_name(name=name):

42 | await RSSHUB_ADD.reject(f"已存在名为 {name} 的订阅,请重新输入")

43 |

44 |

45 | @RSSHUB_ADD.got("route", prompt="请输入要订阅的 RSSHub 路由名")

46 | async def handle_rsshub_routes(

47 | state: T_State, route: str = ArgPlainText("route")

48 | ) -> None:

49 | if not route.strip():

50 | await RSSHUB_ADD.reject("路由名不能为空,请重新输入")

51 | return

52 |

53 | rsshub_url = URL(str(config.rsshub))

54 | # 对本机部署的 RSSHub 不使用代理

55 | local_host = [

56 | "localhost",

57 | "127.0.0.1",

58 | ]

59 | if config.rss_proxy and rsshub_url.host not in local_host:

60 | proxy = f"http://{config.rss_proxy}"

61 | else:

62 | proxy = None

63 |

64 | global rsshub_routes

65 | if not rsshub_routes:

66 | async with aiohttp.ClientSession() as session:

67 | resp = await session.get(rsshub_url.with_path("api/routes"), proxy=proxy)

68 | if resp.status != 200:

69 | await RSSHUB_ADD.finish(

70 | "获取路由数据失败,请检查 RSSHub 的地址配置及网络连接"

71 | )

72 | rsshub_routes = await resp.json()

73 |

74 | if route not in rsshub_routes["data"]:

75 | await RSSHUB_ADD.reject(f"未找到名为 {route} 的 RSSHub 路由,请重新输入")

76 | else:

77 | route_list = state["route_list"] = rsshub_routes["data"][route]["routes"]

78 | if len(route_list) > 1:

79 | await RSSHUB_ADD.send(

80 | "请输入序号来选择要订阅的 RSSHub 路由:\n"

81 | + "\n".join(

82 | f"{index + 1}. {_route}" for index, _route in enumerate(route_list)

83 | )

84 | )

85 | else:

86 | state["route_index"] = Message("0")

87 |

88 |

89 | @RSSHUB_ADD.got("route_index")

90 | async def handle_route_index(

91 | state: T_State, route_index: str = ArgPlainText("route_index")

92 | ) -> None:

93 | route = state["route_list"][int(route_index) - 1]

94 | if args := [i for i in route.split("/") if i.startswith(":")]:

95 | await RSSHUB_ADD.send(

96 | '请依次输入要订阅的 RSSHub 路由参数,并用 "/" 分隔:\n'

97 | + "/".join(

98 | f"{i.rstrip('?')}(可选)" if i.endswith("?") else f"{i}" for i in args

99 | )

100 | + "\n要置空请输入#或直接留空"

101 | )

102 | else:

103 | state["route_args"] = Message()

104 |

105 |

106 | @RSSHUB_ADD.got("route_args")

107 | async def handle_route_args(

108 | event: MessageEvent,

109 | state: T_State,

110 | name: str = ArgPlainText("name"),

111 | route_index: str = ArgPlainText("route_index"),

112 | route_args: str = ArgPlainText("route_args"),

113 | ) -> None:

114 | route = state["route_list"][int(route_index) - 1]

115 | feed_url = "/".join([i for i in route.split("/") if not i.startswith(":")])

116 | for i in route_args.split("/"):

117 | if len(i.strip("#")) > 0:

118 | feed_url += f"/{i}"

119 |

120 | await add_feed(name, feed_url.lstrip("/"), event)

121 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/routes/danbooru.py:

--------------------------------------------------------------------------------

1 | import sqlite3

2 | from typing import Any, Dict

3 |

4 | import aiohttp

5 | from nonebot.log import logger

6 | from pyquery import PyQuery as Pq

7 | from tenacity import RetryError, retry, stop_after_attempt, stop_after_delay

8 |

9 | from ...config import DATA_PATH

10 | from ...rss_class import Rss

11 | from .. import ParsingBase, cache_db_manage, duplicate_exists, write_item

12 | from ..handle_images import (

13 | get_preview_gif_from_video,

14 | handle_img_combo,

15 | handle_img_combo_with_content,

16 | )

17 | from ..utils import get_proxy

18 |

19 |

20 | # 处理图片

21 | @ParsingBase.append_handler(parsing_type="picture", rex="danbooru")

22 | async def handle_picture(rss: Rss, item: Dict[str, Any], tmp: str) -> str:

23 | # 判断是否开启了只推送标题

24 | if rss.only_title:

25 | return ""

26 |

27 | try:

28 | res = await handle_img(

29 | item=item,

30 | img_proxy=rss.img_proxy,

31 | )

32 | except RetryError:

33 | res = "预览图获取失败"

34 | logger.warning(f"[{item['link']}]的预览图获取失败")

35 |

36 | # 判断是否开启了只推送图片

37 | return f"{res}\n" if rss.only_pic else f"{tmp + res}\n"

38 |

39 |

40 | # 处理图片、视频

41 | @retry(stop=(stop_after_attempt(5) | stop_after_delay(30)))

42 | async def handle_img(item: Dict[str, Any], img_proxy: bool) -> str:

43 | if item.get("image_content"):

44 | return await handle_img_combo_with_content(

45 | item.get("gif_url", ""), item["image_content"]

46 | )

47 | img_str = ""

48 |

49 | # 处理图片

50 | async with aiohttp.ClientSession() as session:

51 | resp = await session.get(item["link"], proxy=get_proxy(img_proxy))

52 | d = Pq(await resp.text())

53 | if img := d("img#image"):

54 | url = img.attr("src")

55 | else:

56 | img_str += "视频预览:"

57 | url = d("video#image").attr("src")

58 | try:

59 | url = await get_preview_gif_from_video(url)

60 | except RetryError:

61 | logger.warning("视频预览获取失败,将发送原视频封面")

62 | url = d("meta[property='og:image']").attr("content")

63 | img_str += await handle_img_combo(url, img_proxy)

64 |

65 | return img_str

66 |

67 |

68 | # 如果启用了去重模式,对推送列表进行过滤

69 | @ParsingBase.append_before_handler(rex="danbooru", priority=12)

70 | async def handle_check_update(rss: Rss, state: Dict[str, Any]) -> Dict[str, Any]:

71 | change_data = state["change_data"]

72 | conn = state["conn"]

73 | db = state["tinydb"]

74 |

75 | # 检查是否启用去重 使用 duplicate_filter_mode 字段

76 | if not rss.duplicate_filter_mode:

77 | return {"change_data": change_data}

78 |

79 | if not conn:

80 | conn = sqlite3.connect(str(DATA_PATH / "cache.db"))

81 | conn.set_trace_callback(logger.debug)

82 |

83 | cache_db_manage(conn)

84 |

85 | delete = []

86 | for index, item in enumerate(change_data):

87 | try:

88 | summary = await get_summary(item, rss.img_proxy)

89 | except RetryError:

90 | logger.warning(f"[{item['link']}]的预览图获取失败")

91 | continue

92 | is_duplicate, image_hash = await duplicate_exists(

93 | rss=rss,

94 | conn=conn,

95 | item=item,

96 | summary=summary,

97 | )

98 | if is_duplicate:

99 | write_item(db, item)

100 | delete.append(index)

101 | else:

102 | change_data[index]["image_hash"] = str(image_hash)

103 |

104 | change_data = [

105 | item for index, item in enumerate(change_data) if index not in delete

106 | ]

107 |

108 | return {

109 | "change_data": change_data,

110 | "conn": conn,

111 | }

112 |

113 |

114 | # 获取正文

115 | @retry(stop=(stop_after_attempt(5) | stop_after_delay(30)))

116 | async def get_summary(item: Dict[str, Any], img_proxy: bool) -> str:

117 | summary = (

118 | item["content"][0].get("value") if item.get("content") else item["summary"]

119 | )

120 | # 如果图片非视频封面,替换为更清晰的预览图;否则移除,以此跳过图片去重检查

121 | summary_doc = Pq(summary)

122 | async with aiohttp.ClientSession() as session:

123 | resp = await session.get(item["link"], proxy=get_proxy(img_proxy))

124 | d = Pq(await resp.text())

125 | if img := d("img#image"):

126 | summary_doc("img").attr("src", img.attr("src"))

127 | else:

128 | summary_doc.remove("img")

129 | return str(summary_doc)

130 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/routes/pixiv.py:

--------------------------------------------------------------------------------

1 | import re

2 | import sqlite3

3 | from typing import Any, Dict

4 |

5 | import aiohttp

6 | from nonebot.log import logger

7 | from pyquery import PyQuery as Pq

8 | from tenacity import RetryError, TryAgain, retry, stop_after_attempt, stop_after_delay

9 |

10 | from ...config import DATA_PATH

11 | from ...rss_class import Rss

12 | from .. import ParsingBase, cache_db_manage, duplicate_exists, write_item

13 | from ..handle_images import (

14 | get_preview_gif_from_video,

15 | handle_img_combo,

16 | handle_img_combo_with_content,

17 | )

18 | from ..utils import get_summary

19 |

20 |

21 | # 如果启用了去重模式,对推送列表进行过滤

22 | @ParsingBase.append_before_handler(rex="/pixiv/", priority=12)

23 | async def handle_check_update(rss: Rss, state: Dict[str, Any]) -> Dict[str, Any]:

24 | change_data = state["change_data"]

25 | conn = state["conn"]

26 | db = state["tinydb"]

27 |

28 | # 检查是否启用去重 使用 duplicate_filter_mode 字段

29 | if not rss.duplicate_filter_mode:

30 | return {"change_data": change_data}

31 |

32 | if not conn:

33 | conn = sqlite3.connect(str(DATA_PATH / "cache.db"))

34 | conn.set_trace_callback(logger.debug)

35 |

36 | cache_db_manage(conn)

37 |

38 | delete = []

39 | for index, item in enumerate(change_data):

40 | summary = get_summary(item)

41 | try:

42 | summary_doc = Pq(summary)

43 | # 如果图片为动图,通过移除来跳过图片去重检查

44 | if re.search("类型:ugoira", str(summary_doc)):

45 | summary_doc.remove("img")

46 | summary = str(summary_doc)

47 | except Exception as e:

48 | logger.warning(e)

49 | is_duplicate, image_hash = await duplicate_exists(

50 | rss=rss,

51 | conn=conn,

52 | item=item,

53 | summary=summary,

54 | )

55 | if is_duplicate:

56 | write_item(db, item)

57 | delete.append(index)

58 | else:

59 | change_data[index]["image_hash"] = str(image_hash)

60 |

61 | change_data = [

62 | item for index, item in enumerate(change_data) if index not in delete

63 | ]

64 |

65 | return {

66 | "change_data": change_data,

67 | "conn": conn,

68 | }

69 |

70 |

71 | # 处理图片

72 | @ParsingBase.append_handler(parsing_type="picture", rex="/pixiv/")

73 | async def handle_picture(rss: Rss, item: Dict[str, Any], tmp: str) -> str:

74 | # 判断是否开启了只推送标题

75 | if rss.only_title:

76 | return ""

77 |

78 | res = ""

79 | try:

80 | res += await handle_img(

81 | item=item, img_proxy=rss.img_proxy, img_num=rss.max_image_number, rss=rss

82 | )

83 | except Exception as e:

84 | logger.warning(f"{rss.name} 没有正文内容!{e}")

85 |

86 | # 判断是否开启了只推送图片

87 | return f"{res}\n" if rss.only_pic else f"{tmp + res}\n"

88 |

89 |

90 | # 处理图片、视频

91 | @retry(stop=(stop_after_attempt(5) | stop_after_delay(30)))

92 | async def handle_img(

93 | item: Dict[str, Any], img_proxy: bool, img_num: int, rss: Rss

94 | ) -> str:

95 | if item.get("image_content"):

96 | return await handle_img_combo_with_content(

97 | item.get("gif_url", ""), item["image_content"]

98 | )

99 | html = Pq(get_summary(item))

100 | link = item["link"]

101 | img_str = ""

102 | # 处理动图

103 | if re.search("类型:ugoira", str(html)):

104 | ugoira_id = re.search(r"\d+", link).group() # type: ignore

105 | try:

106 | url = await get_ugoira_video(ugoira_id)

107 | url = await get_preview_gif_from_video(url)

108 | img_str += await handle_img_combo(url, img_proxy)

109 | except RetryError:

110 | logger.warning(f"动图[{link}]的预览图获取失败,将发送原动图封面")

111 | url = html("img").attr("src")

112 | img_str += await handle_img_combo(url, img_proxy)

113 | else:

114 | # 处理图片

115 | doc_img = list(html("img").items())

116 | # 只发送限定数量的图片,防止刷屏

117 | if 0 < img_num < len(doc_img):

118 | img_str += f"\n因启用图片数量限制,目前只有 {img_num} 张图片:"

119 | doc_img = doc_img[:img_num]

120 | for img in doc_img:

121 | url = img.attr("src")

122 | img_str += await handle_img_combo(url, img_proxy, rss)

123 |

124 | return img_str

125 |

126 |

127 | # 获取动图为视频

128 | @retry(stop=(stop_after_attempt(5) | stop_after_delay(30)))

129 | async def get_ugoira_video(ugoira_id: str) -> Any:

130 | async with aiohttp.ClientSession() as session:

131 | data = {"id": ugoira_id, "type": "ugoira"}

132 | resp = await session.post("https://ugoira.huggy.moe/api/illusts", data=data)

133 | url = (await resp.json()).get("data")[0].get("url")

134 | if not url:

135 | raise TryAgain

136 | return url

137 |

138 |

139 | # 处理来源

140 | @ParsingBase.append_handler(parsing_type="source", rex="/pixiv/")

141 | async def handle_source(item: Dict[str, Any]) -> str:

142 | source = item["link"]

143 | # 缩短 pixiv 链接

144 | str_link = re.sub("https://www.pixiv.net/artworks/", "https://pixiv.net/i/", source)

145 | return f"链接:{str_link}\n"

146 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/cache_manage.py:

--------------------------------------------------------------------------------

1 | from io import BytesIO

2 | from sqlite3 import Connection

3 | from typing import Any, Dict, Optional, Tuple

4 |

5 | import imagehash

6 | from nonebot.log import logger

7 | from PIL import Image, UnidentifiedImageError

8 | from pyquery import PyQuery as Pq

9 | from tinydb import Query, TinyDB

10 | from tinydb.operations import delete

11 |

12 | from ..config import config

13 | from ..rss_class import Rss

14 | from .check_update import get_item_date

15 | from .handle_images import download_image

16 |

17 |

18 | # 精简 xxx.json (缓存) 中的字段

19 | def cache_filter(data: Dict[str, Any]) -> Dict[str, Any]:

20 | keys = [

21 | "guid",

22 | "link",

23 | "published",

24 | "updated",

25 | "title",

26 | "hash",

27 | ]

28 | if data.get("to_send"):

29 | keys += [

30 | "content",

31 | "summary",

32 | "to_send",

33 | ]

34 | return {k: v for k in keys if (v := data.get(k))}

35 |

36 |

37 | # 对去重数据库进行管理

38 | def cache_db_manage(conn: Connection) -> None:

39 | cursor = conn.cursor()

40 | # 用来去重的 sqlite3 数据表如果不存在就创建一个

41 | cursor.execute(

42 | """

43 | CREATE TABLE IF NOT EXISTS main (

44 | "id" INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT,

45 | "link" TEXT,

46 | "title" TEXT,

47 | "image_hash" TEXT,

48 | "datetime" TEXT DEFAULT (DATETIME('Now', 'LocalTime'))

49 | );

50 | """

51 | )

52 | cursor.close()

53 | conn.commit()

54 | cursor = conn.cursor()

55 | # 移除超过 config.db_cache_expire 天没重复过的记录

56 | cursor.execute(

57 | "DELETE FROM main WHERE datetime <= DATETIME('Now', 'LocalTime', ?);",

58 | (f"-{config.db_cache_expire} Day",),

59 | )

60 | cursor.close()

61 | conn.commit()

62 |

63 |

64 | # 对缓存 json 进行管理

65 | def cache_json_manage(db: TinyDB, new_data_length: int) -> None:

66 | # 只保留最多 config.limit + new_data_length 条的记录

67 | limit = config.limit + new_data_length

68 | retains = db.all()

69 | retains.sort(key=get_item_date)

70 | retains = retains[-limit:]

71 | db.truncate()

72 | db.insert_multiple(retains)

73 |

74 |

75 | async def get_image_hash(rss: Rss, summary: str, item: Dict[str, Any]) -> Optional[str]:

76 | try:

77 | summary_doc = Pq(summary)

78 | except Exception as e:

79 | logger.warning(e)

80 | # 没有正文内容直接跳过

81 | return None

82 |

83 | img_doc = summary_doc("img")

84 | # 只处理仅有一张图片的情况

85 | if len(img_doc) != 1:

86 | return None

87 |

88 | url = img_doc.attr("src")

89 | # 通过图像的指纹来判断是否实际是同一张图片

90 | content = await download_image(url, rss.img_proxy)

91 |

92 | if not content:

93 | return None

94 |

95 | try:

96 | im = Image.open(BytesIO(content))

97 | except UnidentifiedImageError:

98 | return None

99 |

100 | item["image_content"] = content

101 | # GIF 图片的 image_hash 实际上是第一帧的值,为了避免误伤直接跳过

102 | if im.format == "GIF":

103 | item["gif_url"] = url

104 | return None

105 |

106 | return str(imagehash.dhash(im))

107 |

108 |

109 | # 去重判断

110 | async def duplicate_exists(

111 | rss: Rss, conn: Connection, item: Dict[str, Any], summary: str

112 | ) -> Tuple[bool, Optional[str]]:

113 | flag = False

114 | link = item["link"].replace("'", "''")

115 | title = item["title"].replace("'", "''")

116 | image_hash = None

117 | cursor = conn.cursor()

118 | sql = "SELECT * FROM main WHERE 1=1"

119 | args = []

120 |

121 | for mode in rss.duplicate_filter_mode:

122 | if mode == "image":

123 | image_hash = await get_image_hash(rss, summary, item)

124 | if image_hash:

125 | sql += " AND image_hash=?"

126 | args.append(image_hash)

127 | elif mode == "link":

128 | sql += " AND link=?"

129 | args.append(link)

130 | elif mode == "title":

131 | sql += " AND title=?"

132 | args.append(title)

133 |

134 | if "or" in rss.duplicate_filter_mode:

135 | sql = sql.replace("AND", "OR").replace("OR", "AND", 1)

136 |

137 | cursor.execute(f"{sql};", args)

138 | result = cursor.fetchone()

139 |

140 | if result is not None:

141 | result_id = result[0]

142 | cursor.execute(

143 | "UPDATE main SET datetime = DATETIME('Now','LocalTime') WHERE id = ?;",

144 | (result_id,),

145 | )

146 | cursor.close()

147 | conn.commit()

148 | flag = True

149 |

150 | return flag, image_hash

151 |

152 |

153 | # 消息发送后存入去重数据库

154 | def insert_into_cache_db(

155 | conn: Connection, item: Dict[str, Any], image_hash: str

156 | ) -> None:

157 | cursor = conn.cursor()

158 | link = item["link"].replace("'", "''")

159 | title = item["title"].replace("'", "''")

160 | cursor.execute(

161 | "INSERT INTO main (link, title, image_hash) VALUES (?, ?, ?);",

162 | (link, title, image_hash),

163 | )

164 | cursor.close()

165 | conn.commit()

166 |

167 |

168 | # 写入缓存 json

169 | def write_item(db: TinyDB, new_item: Dict[str, Any]) -> None:

170 | if not new_item.get("to_send"):

171 | db.update(delete("to_send"), Query().hash == str(new_item.get("hash"))) # type: ignore

172 | db.upsert(cache_filter(new_item), Query().hash == str(new_item.get("hash")))

173 |

--------------------------------------------------------------------------------

/src/plugins/ELF_RSS2/parsing/handle_html_tag.py:

--------------------------------------------------------------------------------

1 | import re

2 | from html import unescape as html_unescape

3 |

4 | import bbcode

5 | from pyquery import PyQuery as Pq

6 | from yarl import URL

7 |

8 | from ..config import config

9 |

10 |

11 | # 处理 bbcode

12 | def handle_bbcode(html: Pq) -> str:

13 | rss_str = html_unescape(str(html))

14 |

15 | # issue 36 处理 bbcode

16 | rss_str = re.sub(

17 | r"(\[url=[^]]+])?\[img[^]]*].+\[/img](\[/url])?", "", rss_str, flags=re.I

18 | )

19 |

20 | # 处理一些 bbcode 标签

21 | bbcode_tags = [

22 | "align",

23 | "b",

24 | "backcolor",

25 | "color",

26 | "font",

27 | "size",

28 | "table",

29 | "tbody",

30 | "td",

31 | "tr",

32 | "u",

33 | "url",

34 | ]

35 |

36 | for i in bbcode_tags:

37 | rss_str = re.sub(rf"\[{i}=[^]]+]", "", rss_str, flags=re.I)

38 | rss_str = re.sub(rf"\[/?{i}]", "", rss_str, flags=re.I)

39 |

40 | # 去掉结尾被截断的信息

41 | rss_str = re.sub(

42 | r"(\[[^]]+|\[img][^\[\]]+) \.\.\n?", "", rss_str, flags=re.I

43 | )

44 |

45 | # 检查正文是否为 bbcode ,没有成对的标签也当作不是,从而不进行处理

46 | bbcode_search = re.search(r"\[/(\w+)]", rss_str)

47 | if bbcode_search and re.search(f"\\[{bbcode_search[1]}", rss_str):

48 | parser = bbcode.Parser()

49 | parser.escape_html = False

50 | rss_str = parser.format(rss_str)

51 |

52 | return rss_str

53 |

54 |

55 | def handle_lists(html: Pq, rss_str: str) -> str:

56 | # 有序/无序列表 标签处理

57 | for ul in html("ul").items():

58 | for li in ul("li").items():

59 | li_str_search = re.search("(.+)", repr(str(li)))

60 | rss_str = rss_str.replace(

61 | str(li), f"\n- {li_str_search[1]}" # type: ignore

62 | ).replace("\\n", "\n")

63 | for ol in html("ol").items():

64 | for index, li in enumerate(ol("li").items()):

65 | li_str_search = re.search("(.+)", repr(str(li)))

66 | rss_str = rss_str.replace(

67 | str(li), f"\n{index + 1}. {li_str_search[1]}" # type: ignore

68 | ).replace("\\n", "\n")

69 | rss_str = re.sub("", "\n", rss_str)

70 | # 处理没有被 ul / ol 标签包围的 li 标签

71 | rss_str = rss_str.replace("", "- ").replace("", "")

72 | return rss_str

73 |

74 |

75 | # 标签处理

76 | def handle_links(html: Pq, rss_str: str) -> str:

77 | for a in html("a").items():

78 | a_str = re.search(

79 | r"]+>.*?", html_unescape(str(a)), flags=re.DOTALL

80 | ).group() # type: ignore

81 | if a.text() and str(a.text()) != a.attr("href"):

82 | # 去除微博超话

83 | if re.search(

84 | r"https://m\.weibo\.cn/p/index\?extparam=\S+&containerid=\w+",

85 | a.attr("href"),

86 | ):

87 | rss_str = rss_str.replace(a_str, "")

88 | # 去除微博话题对应链接 及 微博用户主页链接,只保留文本

89 | elif (

90 | a.attr("href").startswith("https://m.weibo.cn/search?containerid=")

91 | and re.search("#.+#", a.text())

92 | ) or (

93 | a.attr("href").startswith("https://weibo.com/")

94 | and a.text().startswith("@")

95 | ):

96 | rss_str = rss_str.replace(a_str, a.text())

97 | else:

98 | if a.attr("href").startswith("https://weibo.cn/sinaurl?u="):

99 | a.attr("href", URL(a.attr("href")).query["u"])

100 | rss_str = rss_str.replace(a_str, f" {a.text()}: {a.attr('href')}\n")

101 | else:

102 | rss_str = rss_str.replace(a_str, f" {a.attr('href')}\n")

103 | return rss_str

104 |

105 |

106 | # HTML标签等处理

107 | def handle_html_tag(html: Pq) -> str:

108 | rss_str = html_unescape(str(html))

109 |

110 | rss_str = handle_lists(html, rss_str)

111 | rss_str = handle_links(html, rss_str)

112 |

113 | # 处理一些 HTML 标签

114 | html_tags = [

115 | "b",

116 | "blockquote",

117 | "code",

118 | "dd",

119 | "del",

120 | "div",

121 | "dl",