├── .gitignore
├── LICENSE
├── README.md
├── REFERENCES.md
├── SETUP.md
├── app
│   ├── app.py
│   ├── assets
│   │   └── default.min.css
│   └── model.py
├── assets
│   ├── CCCar_org_seq_inp_vert.gif
│   ├── CCperson_org_seq_inp_vert.gif
│   ├── Docker-config.png
│   └── Evaluation-Flow.png
├── data
│   └── .gitkeep
├── detect
│   ├── docker
│   │   ├── Dockerfile
│   │   ├── README.md
│   │   ├── build_detector.sh
│   │   ├── run_detector.sh
│   │   └── set_X11.sh
│   └── scripts
│       ├── ObjectDetection
│       │   ├── __init__.py
│       │   ├── detect.py
│       │   ├── imutils.py
│       │   └── inpaintRemote.py
│       ├── demo.py
│       ├── run_detection.py
│       ├── test_detect.sh
│       ├── test_inpaint_remote.sh
│       └── test_sshparamiko.py
├── inpaint
│   ├── docker
│   │   ├── Dockerfile
│   │   ├── build_inpaint.sh
│   │   ├── run_inpainting.sh
│   │   └── set_X11.sh
│   ├── pretrained_models
│   │   └── .gitkeep
│   └── scripts
│       ├── test_inpaint_ex_container.sh
│       └── test_inpaint_in_container.sh
├── setup
│   ├── _download_googledrive.sh
│   ├── download_inpaint_models.sh
│   ├── setup_network.sh
│   ├── setup_venv.sh
│   └── ssh
│       └── sshd_config
└── tools
    ├── convert_frames2video.py
    ├── convert_video2frames.py
    └── play_video.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # force explicit directory keeping

2 | data/**

3 | !data/.gitkeep

4 |

5 | # vscode

6 | **.vscode

7 |

8 | # general files

9 | .DS_Store

10 | app/static/**

11 | app/upload/**

12 | app/_cache/**

13 | *.ipynb

14 | *.pyc

15 |

16 | # detectron2 files

17 |

18 | # inpainting files

19 | inpaint/pretrained_models/*.pth

20 | inpaint/pretrained_models/*.tar

21 | inpaint/pretrained_models/DAVIS_model/**

22 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 RexBarker

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # VideoObjectRemoval

2 | ### *Video inpainting with automatic object detection*

3 | ---

4 |

5 | ### Quick Links ###

6 |

7 | - [Setup](./SETUP.md)

8 |

9 | - [Background](./DESCRIPTION.md)

10 |

11 | - [References](./REFERENCES.md)

12 |

13 | ### Overview

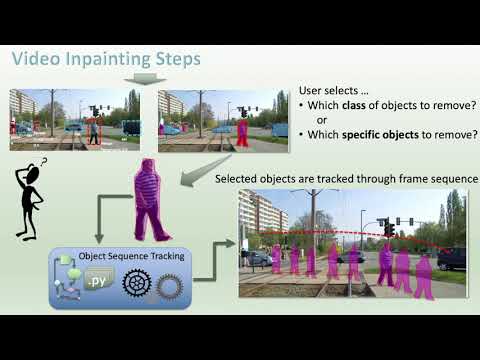

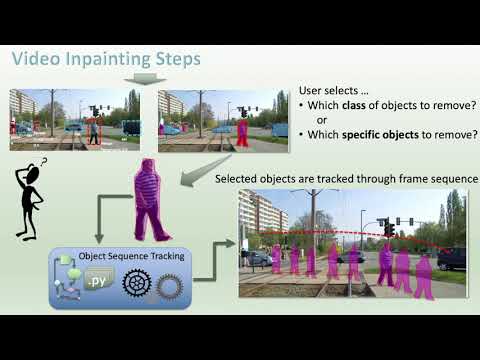

14 | In this project, a prototype video editing system based on “inpainting” is demonstrated. Inpainting is an image editing method that replaces masked regions of an image with a suitable background. The resulting video is thus free of the selected objects and more readily adaptable for further simulation. The system uses a three-step approach: (1) detection, (2) mask grouping, and (3) inpainting. The detection step identifies objects within the video based on a given object class definition and produces pixel-level masks. Next, the object masks are grouped and tracked through the frame sequence to determine persistence and allow correction of classified results. Finally, the grouped masks are used to target specific object instances in the video for inpainting removal.
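The mask-grouping step can be illustrated with a small sketch. This is not the project's implementation (that lives in `ObjectDetection/imutils.py`, which is not shown here). It assumes axis-aligned boxes given as `(x0, y0, x1, y1)` and links boxes in consecutive frames greedily by intersection-over-union (IoU). The names `bbox_iou` and `group_boxes` and the 0.3 threshold are hypothetical.

```python
def bbox_iou(a, b):
    """Intersection-over-union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def group_boxes(frames, min_iou=0.3):
    """Greedily link per-frame boxes into tracks.

    frames: list (one entry per frame) of lists of boxes.
    Returns a list of tracks; each track is a list of (frame_index, box).
    """
    tracks = []
    for frame_idx, boxes in enumerate(frames):
        unmatched = list(boxes)
        for track in tracks:
            last_idx, last_box = track[-1]
            if last_idx != frame_idx - 1 or not unmatched:
                continue  # only extend tracks seen in the previous frame
            best = max(unmatched, key=lambda b: bbox_iou(last_box, b))
            if bbox_iou(last_box, best) >= min_iou:
                track.append((frame_idx, best))
                unmatched.remove(best)
        # any box that matched no existing track starts a new one
        tracks.extend([(frame_idx, box)] for box in unmatched)
    return tracks


# Two objects in frames 0 and 1; only the first is still present in frame 2.
frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(1, 0, 11, 10), (52, 50, 62, 60)],
    [(2, 0, 12, 10)],
]
tracks = group_boxes(frames)
print(len(tracks), [len(t) for t in tracks])
```

The length of each track gives a simple persistence measure, which is the kind of information a minimum-sequence-length filter could act on.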

15 |

16 | The end result of this project is a video editing platform in the context of locomotive route simulation. The final video output demonstrates the system’s ability to automatically remove moving pedestrians from a video sequence; such pedestrians appear in most street tram simulations. This work also addresses the limitations of the system, in particular the inability to remove quasi-stationary objects. The overall outcome of the project is a video editing system with automation capabilities rivaling commercial inpainting software.

17 |

18 | ### Project Video

19 |

20 |

23 |

24 |

25 | ### Project Results

26 |

27 | | Result | Description |

28 | | ------ |:------------ |

29 | |  | **Single object removal**<br>- A single vehicle is removed using a conforming mask<br>- elliptical dilation mask of 21 pixels used<br><br>Source: YouTube video 15:16-15:17 |

30 |

31 | ---

32 |

33 | | Result | Description |

34 | | ------ |:------------ |

35 | |  | **Multiple object removal**<br>- pedestrians are removed using bounding-box shaped masks<br>- elliptical dilation mask of 21 pixels used<br><br>Source: YouTube video 15:26-15:27 |
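Both results above grow the object masks with an elliptical dilation before inpainting. The sketch below shows the idea in plain Python on a tiny binary mask. It is an illustration under stated assumptions, not the project's code: the helper names `ellipse_offsets` and `dilate` are hypothetical, and a real pipeline would more likely use an image library's morphology routines on full-size frames.

```python
def ellipse_offsets(rx, ry):
    """Offsets (dx, dy) that fall inside an ellipse with half-widths rx and ry."""
    return [(dx, dy)
            for dy in range(-ry, ry + 1)
            for dx in range(-rx, rx + 1)
            if (dx / max(rx, 1)) ** 2 + (dy / max(ry, 1)) ** 2 <= 1.0]


def dilate(mask, rx, ry):
    """Binary dilation of a 2D 0/1 mask (list of rows) by an elliptical kernel."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    offsets = ellipse_offsets(rx, ry)
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            # stamp the kernel around every foreground pixel
            for dx, dy in offsets:
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:
                    out[yy][xx] = 1
    return out


mask = [[0] * 7 for _ in range(5)]
mask[2][3] = 1                      # a single foreground pixel
grown = dilate(mask, rx=2, ry=1)    # kernel is wider than it is tall
for row in grown:
    print("".join("#" if v else "." for v in row))
```

Dilating the mask gives the inpainting step a margin around each object, so that edge pixels the detector missed are also replaced.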

36 |

37 | ### Project Setup

38 |

39 | 1. Install NVIDIA Docker, if not already installed (see [Setup](./SETUP.md))

40 |

41 | 2. Follow the instructions from the YouTube video for the specific project setup:

42 |

43 |

46 |

47 |

48 |

--------------------------------------------------------------------------------

/REFERENCES.md:

--------------------------------------------------------------------------------

1 | # References

2 |

3 | The following resources were utilized in this project:

4 |

5 | - [1] nbei (R. Xu), "Deep-Flow-Guided-Video-Inpainting", GitHub repository: https://github.com/nbei/Deep-Flow-Guided-Video-Inpainting

6 |

7 | - [2] nbei (R. Xu), "Deep-Flow-Guided-Video-Inpainting", model training weights: https://drive.google.com/drive/folders/1a2FrHIQGExJTHXxSIibZOGMukNrypr_g

8 |

9 | - [3] Facebookresearch, Y. Wu, A. Kirillov, F. Massa, W-Y Lo, R. Girshick, "Detectron2", GitHub repository: https://github.com/facebookresearch/detectron2

10 |

11 | - [4] Facebookresearch, (various authors), "Detectron2 Model Zoo and Baselines", GitHub repository: https://github.com/facebookresearch/detectron2/blob/master/MODEL_ZOO.md

12 |

--------------------------------------------------------------------------------

/SETUP.md:

--------------------------------------------------------------------------------

1 | # Setup

2 | ---

3 |

4 | ### Docker Setup

5 | (based on the [NVIDIA Docker installation guide](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#docker))

6 |

7 | This section describes the NVIDIA Docker installation for Ubuntu, specifically Ubuntu 18.04 LTS and 20.04 LTS. At the time of this report, the NVIDIA Docker platform is only supported on Linux.

8 |

9 | **Remove existing older Docker Installations:**

10 |

11 | If there is an existing Docker installation, it should be upgraded as necessary. This project was built with Docker v19.03.

12 | `$ sudo apt-get remove docker docker-engine docker.io containerd runc`

13 |

14 | **Install latest Docker engine:**

15 |

16 | The following script can be used to install Docker and all required repositories:

17 | ```

18 | $ curl -fsSL https://get.docker.com -o get-docker.sh

19 | $ sudo sh get-docker.sh

20 | $ sudo systemctl start docker && sudo systemctl enable docker

21 | ```

22 |

23 | Add your user name to the `docker` group:

24 | `$ sudo usermod -aG docker your-user`

25 |

26 | **Install NVIDIA Docker:**

27 |

28 | These installation instructions are based on the NVIDIA Docker documentation [6]. First, ensure that the appropriate NVIDIA driver is installed on the host system. This can be tested with the following command, which should list the current GPU state along with the current driver version:

29 | `$ nvidia-smi`

30 |

31 | If there is an existing earlier version of the NVIDIA Docker system (<=1.0), this must be first uninstalled:

32 | `$ docker volume ls -q -f driver=nvidia-docker | xargs -r -I{} -n1 docker ps -q -a -f volume={} | xargs -r docker rm -f`

33 |

34 | `$ sudo apt-get purge nvidia-docker`

35 |

36 | Set up the package repository and GPG key for the distribution:

37 | ```

38 | $ distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

39 | $ curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

40 | $ curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

41 | ```
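The first command above builds the repository name by concatenating the `ID` and `VERSION_ID` fields of `/etc/os-release`. The following sketch mimics that step in Python so the resulting string can be checked before the repository list is fetched. It is a simplified stand-in for shell sourcing (it handles plain `KEY=value` lines with optional quotes only), and the function name `distribution_from_os_release` is hypothetical.

```python
def distribution_from_os_release(text):
    """Mimic `. /etc/os-release; echo $ID$VERSION_ID` for simple files."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields.get("ID", "") + fields.get("VERSION_ID", "")


# Sample content in the os-release format (values are illustrative)
sample = '''NAME="Ubuntu"
VERSION="18.04.5 LTS (Bionic Beaver)"
ID=ubuntu
ID_LIKE=debian
VERSION_ID="18.04"
'''
print(distribution_from_os_release(sample))  # ubuntu18.04
```

On a real host, the same function could be applied to the contents of `/etc/os-release`.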

42 |

43 | **Install the nvidia-docker2 package:**

44 | ```

45 | $ sudo apt-get update

46 | $ sudo apt-get install -y nvidia-docker2

47 | $ sudo systemctl restart docker

48 | ```

49 |

50 | Test the NVIDIA Docker installation by executing nvidia-smi from within a container:

51 | `$ sudo docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi`

52 |

53 | ---

54 | # Project Setup

55 |

56 | Follow the instructions from the YouTube video for the specific project setup:

57 |

58 |

61 |

62 |

--------------------------------------------------------------------------------

/app/app.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import base64

4 | from glob import glob

5 | from shutil import rmtree

6 | from io import BytesIO

7 | import time

8 | from datetime import datetime

9 |

10 | #import flask

11 | #from flask import send_file, make_response

12 | #from flask import send_from_directory

13 | from flask_caching import Cache

14 | import dash

15 | import dash_player

16 | import dash_bootstrap_components as dbc

17 | from dash.dependencies import Input, Output, State

18 | import dash_core_components as dcc

19 | import dash_html_components as html

20 | import plotly.graph_objects as go

21 | from PIL import Image

22 |

23 | from model import detect_scores_bboxes_classes, \

24 | detr, createNullVideo

25 | from model import CLASSES, DEVICE

26 | from model import inpaint, testContainerWrite, performInpainting

27 |

28 | libpath = "/home/appuser/scripts/" # to keep the dev repo in place, w/o linking

29 | sys.path.insert(1,libpath)

30 | import ObjectDetection.imutils as imu

31 |

32 |

33 | basedir = os.getcwd()

34 | uploaddir = os.path.join(basedir,"upload")

35 | print(f"ObjectDetect loaded, using DEVICE={DEVICE}")

36 | print(f"Basedirectory: {basedir}")

37 |

38 | # clean up old uploads

39 | if os.path.exists(uploaddir):

40 | rmtree(uploaddir)

41 |

42 | os.mkdir(uploaddir)

43 |

44 | # cleanup old runs

45 | if os.path.exists(os.path.join(basedir,"static")):

46 | for f in [*glob(os.path.join(basedir,'static/sequence_*.mp4')),

47 | *glob(os.path.join(basedir,'static/inpaint_*.mp4'))]:

48 | os.remove(f)

49 | else:

50 | os.mkdir(os.path.join(basedir,"static"))

51 |

52 | # remove and create dummy video for place holder

53 | tempfile = os.path.join(basedir,'static/result.mp4')

54 | if not os.path.exists(tempfile):

55 | createNullVideo(tempfile)

56 |

57 |

58 | # ----------

59 | # Helper functions

60 | def getImageFileNames(dirPath):

61 |     # Return the sorted frame image paths in dirPath (PNG preferred over JPG),

62 |     # or None when the directory is missing or contains no images.

63 |     if not os.path.isdir(dirPath):

64 |         return None

65 |

66 |     fnames = []

67 |     for filetype in ('*.png', '*.jpg'):

68 |         fnames = sorted(glob(os.path.join(dirPath,filetype)))

69 |         if fnames:

70 |             break

71 |

72 |     return fnames or None

73 |

74 |

75 | # ----------

76 | # Dash component wrappers

77 | def Row(children=None, **kwargs):

78 | return html.Div(children, className="row", **kwargs)

79 |

80 |

81 | def Column(children=None, width=1, **kwargs):

82 | nb_map = {

83 | 1: 'one', 2: 'two', 3: 'three', 4: 'four', 5: 'five', 6: 'six',

84 | 7: 'seven', 8: 'eight', 9: 'nine', 10: 'ten', 11: 'eleven', 12: 'twelve'}

85 |

86 | return html.Div(children, className=f"{nb_map[width]} columns", **kwargs)

87 |

88 | # ----------

89 | # plotly.py helper functions

90 |

91 | # upload files

92 | def save_file(name, content):

93 | """Decode and store a file uploaded with Plotly Dash."""

94 | filepath = os.path.join(uploaddir, name)

95 | data = content.encode("utf8").split(b";base64,")[1]

96 | with open(filepath, "wb") as fp:

97 | fp.write(base64.decodebytes(data))

98 |

99 | return filepath
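`save_file` relies on the upload component delivering the file contents as a base64 data-URL string of the form `data:<mime>;base64,<payload>`. The sketch below shows that decoding step in isolation, using only the standard library. The helper name `decode_upload` and the sample bytes are hypothetical, and the `contents` string is built by hand here as a stand-in for what an upload would deliver.

```python
import base64


def decode_upload(contents):
    """Split a base64 data-URL string into (mime_type, raw_bytes)."""
    header, _, payload = contents.partition(";base64,")
    if not header.startswith("data:") or not payload:
        raise ValueError("not a base64 data URL")
    return header[len("data:"):], base64.b64decode(payload)


# Hand-built stand-in for the string an upload component would provide
raw = b"\x00\x01fake video bytes"
contents = "data:video/mp4;base64," + base64.b64encode(raw).decode("ascii")

mime, data = decode_upload(contents)
print(mime, data == raw)
```

Returning the MIME type alongside the bytes would let a caller validate the upload by content type rather than by file extension alone.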

100 |

101 | def vfile_to_frames(fname):

102 | assert os.path.exists(fname), "Could not determine path to video file"

103 | dirPath = os.path.join(uploaddir, os.path.splitext(os.path.basename(fname))[0])

104 | nfiles = imu.videofileToFramesDirectory(videofile=fname,dirPath=dirPath,

105 | padlength=5, imgtype='png', cleanDirectory=True)

106 | return dirPath

107 |

108 | # PIL images

109 | def pil_to_b64(im, enc="png"):

110 | io_buf = BytesIO()

111 | im.save(io_buf, format=enc)

112 | encoded = base64.b64encode(io_buf.getvalue()).decode("utf-8")

113 | return f"data:image/{enc};base64," + encoded

114 |

115 |

116 | def pil_to_fig(im, showlegend=False, title=None):

117 | img_width, img_height = im.size

118 | fig = go.Figure()

119 | # This trace is added to help the autoresize logic work.

120 | fig.add_trace(go.Scatter(

121 | x=[img_width * 0.05, img_width * 0.95],

122 | y=[img_height * 0.95, img_height * 0.05],

123 | showlegend=False, mode="markers", marker_opacity=0,

124 | hoverinfo="none", legendgroup='Image'))

125 |

126 | fig.add_layout_image(dict(

127 | source=pil_to_b64(im), sizing="stretch", opacity=1, layer="below",

128 | x=0, y=0, xref="x", yref="y", sizex=img_width, sizey=img_height,))

129 |

130 | # Adapt axes to the right width and height, lock aspect ratio

131 | fig.update_xaxes(

132 | showgrid=False, visible=False, constrain="domain", range=[0, img_width])

133 |

134 | fig.update_yaxes(

135 | showgrid=False, visible=False,

136 | scaleanchor="x", scaleratio=1,

137 | range=[img_height, 0])

138 |

139 | fig.update_layout(title=title, showlegend=showlegend)

140 |

141 | return fig

142 |

143 | # graph

144 | def add_bbox(fig, x0, y0, x1, y1,

145 | showlegend=True, name=None, color=None,

146 | opacity=0.5, group=None, text=None):

147 | fig.add_trace(go.Scatter(

148 | x=[x0, x1, x1, x0, x0],

149 | y=[y0, y0, y1, y1, y0],

150 | mode="lines",

151 | fill="toself",

152 | opacity=opacity,

153 | marker_color=color,

154 | hoveron="fills",

155 | name=name,

156 | hoverlabel_namelength=0,

157 | text=text,

158 | legendgroup=group,

159 | showlegend=showlegend,

160 | ))

161 |

162 | def get_index_by_bbox(objGroupingDict,class_name,bbox_in):

163 | # gets the order of the object within the groupingSequence

164 | # by bounding box overlap

165 | maxIoU = 0.0

166 | maxIdx = 0

167 | for instIdx, objInst in enumerate(objGroupingDict[class_name]):

168 | # only search for object found in seq_i= 0

169 | # there must exist objects at seq_i=0 since we predicted them on first frame

170 | bbx,_,seq_i = objInst[0]

171 |

172 | if seq_i != 0: continue

173 | IoU = imu.bboxIoU(bbx, bbox_in)

174 | if IoU > maxIoU:

175 | maxIoU = IoU

176 | maxIdx = instIdx

177 |

178 | return maxIdx

179 |

180 |

181 | def get_selected_objects(figure,objGroupingDict):

182 | # recovers the selected items from the figure

183 | # infer the object location by the bbox IoU (more stable than order)

184 | # returns a dict of selected indexed items

185 |

186 | obsObjects = {}

187 | for d in figure['data']:

188 | objInst = d.get('legendgroup')

189 | wasVisible = d.get('visible') != 'legendonly'

190 | if objInst is not None and ":" in objInst and wasVisible:

191 |

192 | classname = objInst.split(":")[0]

193 | x = d['x']

194 | y = d['y']

195 | bbox = [x[0], y[0], x[2], y[2]]

196 | inst = get_index_by_bbox(objGroupingDict,classname,bbox)

197 | if obsObjects.get(classname,None) is None:

198 | obsObjects[classname] = [int(inst)]

199 | else:

200 | obsObjects[classname].append(int(inst))

201 |

202 | return obsObjects

203 |

204 |

205 | # colors for visualization

206 | COLORS = ['#fe938c','#86e7b8','#f9ebe0','#208aae','#fe4a49',

207 | '#291711', '#5f4b66', '#b98b82', '#87f5fb', '#63326e'] * 50

208 |

209 | # Start Dash

210 | app = dash.Dash(__name__, external_stylesheets=[dbc.themes.BOOTSTRAP])

211 | server = app.server # Expose the server variable for deployments

212 | cache = Cache()

213 | CACHE_CONFIG = {

214 | 'CACHE_TYPE': 'filesystem',

215 | 'CACHE_DIR': os.path.join(basedir,'_cache')

216 | }

217 | cache.init_app(server,config=CACHE_CONFIG)

218 |

219 | # ----------

220 | # layout

221 | app.layout = html.Div(className='container', children=[

222 | Row(html.H1("Video Object Removal App")),

223 |

224 | # Input

225 | Row(html.P("Input Directory Path:")),

226 | Row([

227 | Column(width=6, children=[

228 | dcc.Upload(

229 | id="upload-file",

230 | children=html.Div(

231 | ["Drag and drop or click to select a file to upload."]

232 | ),

233 | style={

234 | "width": "100%",

235 | "height": "60px",

236 | "lineHeight": "60px",

237 | "borderWidth": "1px",

238 | "borderStyle": "dashed",

239 | "borderRadius": "5px",

240 | "textAlign": "center",

241 | "margin": "10px",

242 | },

243 | multiple=False,

244 | )

245 | ]),

246 | ]),

247 |

248 | Row([

249 | Column(width=6, children=[

250 | html.H3('Upload file',id='input-dirpath-display', style={'width': '100%'})

251 | ]),

252 | Column(width=2,children=[

253 | html.Button("Run Single", id='button-single', n_clicks=0)

254 | ]),

255 | ]),

256 |

257 | html.Hr(),

258 |

259 | # frame number selection

260 | Row([

261 | Column(width=2, children=[ html.P('Frame range:')]),

262 | Column(width=10, children=[

263 | dcc.Input(id='input-framenmin',type='number',value=0),

264 | dcc.RangeSlider(

265 | id='slider-framenums', min=0, max=100, step=1, value=[0,100],

266 | marks={0: '0', 100: '100'}, allowCross=False),

267 | dcc.Input(id='input-framenmax',type='number',value=100)

268 | ], style={"display": "grid", "grid-template-columns": "10% 70% 10%"}),

269 | ],style={'width':"100%"}),

270 |

271 | html.Hr(),

272 |

273 | Row([

274 | Column(width=5, children=[

275 | html.P('Confidence Threshold:'),

276 | dcc.Slider(

277 | id='slider-confidence', min=0, max=1, step=0.05, value=0.7,

278 | marks={0: '0', 1: '1'})

279 | ]),

280 | Column(width=7, children=[

281 | html.P('Object selection:'),

282 | Row([

283 | Column(width=3, children=dcc.Checklist(

284 | id='cb-person',

285 | options=[

286 | {'label': ' person', 'value': 'person'},

287 | {'label': ' handbag', 'value': 'handbag'},

288 | {'label': ' backpack', 'value': 'backpack'},

289 | {'label': ' suitcase', 'value': 'suitcase'},

290 | ],

291 | value = ['person', 'handbag','backpack','suitcase'])

292 | ),

293 | Column(width=3, children=dcc.Checklist(

294 | id='cb-vehicle',

295 | options=[

296 | {'label': ' car', 'value': 'car'},

297 | {'label': ' truck', 'value': 'truck'},

298 | {'label': ' motorcycle', 'value': 'motorcycle'},

299 | {'label': ' bus', 'value': 'bus'},

300 | ],

301 | value = ['car', 'truck', 'motorcycle'])

302 | ),

303 | Column(width=3, children=dcc.Checklist(

304 | id='cb-environment',

305 | options=[

306 | {'label': ' traffic light', 'value': 'traffic light'},

307 | {'label': ' stop sign', 'value': 'stop sign'},

308 | {'label': ' bench', 'value': 'bench'},

309 | ],

310 | value = [])

311 | )

312 | ])

313 | ]),

314 | ]),

315 |

316 | # prediction output graph

317 | Row(dcc.Graph(id='model-output', style={"height": "70vh"})),

318 |

319 | # processing options

320 | html.Hr(),

321 | Row([

322 | Column(width=7, children=[

323 | html.P('Processing options:'),

324 | Row([

325 | Column(width=3, children=dcc.Checklist(

326 | id='cb-options',

327 | options=[

328 | {'label': ' accept all', 'value': 'acceptall'},

329 | {'label': ' fill sequence', 'value': 'fillSequence'},

330 | {'label': ' use BBmask', 'value': 'useBBmasks'},

331 | ],

332 | value=['fillSequence'])

333 | ),

334 | Column(width=3, children= [

335 | html.P('dilation half-width (no dilation=0):'),

336 | dcc.Input(

337 | id='input-dilationhwidth',

338 | type='number',

339 | value=0)

340 | ]),

341 | Column(width=3, children=[

342 | html.P('minimum seq. length'),

343 | dcc.Input(

344 | id='input-minseqlength',

345 | type='number',

346 | value=0)

347 | ])

348 | ])

349 | ]),

350 | ]),

351 |

352 | # Sequence Video

353 | html.Hr(),

354 | Row([

355 | Column(width=2,children=[

356 | html.Button("Run Sequence", id='button-sequence', n_clicks=0),

357 | dcc.Loading(id='loading-sequence-bobble',

358 | type='circle',

359 | children=html.Div(id='loading-sequence'))

360 | ]),

361 | Column(width=2,children=[]), # place holder

362 | Row([

363 | Column(width=4, children=[

364 | html.P("Start Frame:"),

365 | html.Label("0",id='sequence-startframe')

366 | ]),

367 | Column(width=4, children=[]),

368 | Column(width=4, children=[

369 | html.P("End Frame:"),

370 | html.Label("0",id='sequence-endframe')

371 | ])

372 | ]),

373 | ]),

374 | html.P("Sequence Output"),

375 | html.Div([ dash_player.DashPlayer(

376 | id='sequence-output',

377 | url='static/result.mp4',

378 | controls=True,

379 | style={"height": "70vh"}) ]),

380 |

381 | # Inpainting Video

382 | html.Hr(),

383 | Row([

384 | Column(width=2,children=[

385 | html.Button("Run Inpaint", id='button-inpaint', n_clicks=0),

386 | dcc.Loading(id='loading-inpaint-bobble',

387 | type='circle',

388 | children=html.Div(id='loading-inpaint'))

389 | ]),

390 | Column(width=2,children=[]), # place holder

391 | Row([

392 | Column(width=4, children=[

393 | html.P("Start Frame:"),

394 | html.Label("0",id='inpaint-startframe')

395 | ]),

396 | Column(width=4, children=[]),

397 | Column(width=4, children=[

398 | html.P("End Frame:"),

399 | html.Label("0",id='inpaint-endframe')

400 | ])

401 | ]),

402 | ]),

403 | html.P("Inpainting Output"),

404 | html.Div([ dash_player.DashPlayer(

405 | id='inpaint-output',

406 | url='static/result.mp4',

407 | controls=True,

408 | style={"height": "70vh"}) ]),

409 |

410 | # hidden signal value

411 | html.Div(id='signal-sequence', style={'display': 'none'}),

412 | html.Div(id='signal-inpaint', style={'display': 'none'}),

413 | html.Div(id='input-dirpath', style={'display': 'none'}),

414 |

415 | ])

416 |

417 |

418 | # ----------

419 | # callbacks

420 |

421 | # upload file

422 | @app.callback(

423 | Output("input-dirpath", "children"),

424 | [Input("upload-file", "filename"), Input("upload-file", "contents")],

425 | )

426 | def update_output(uploaded_filename, uploaded_file_content):

427 | # Save uploaded files and regenerate the file list.

428 |

429 | if uploaded_filename is not None and uploaded_file_content is not None and \

430 | uploaded_filename.split(".")[-1] in ('mp4', 'mov', 'avi') :

431 | dirPath = vfile_to_frames(save_file(uploaded_filename, uploaded_file_content))

432 | return dirPath

433 | else:

434 | return "(none)"

435 |

436 | # update_framenum_minmax()

437 | # purpose: to update min/max boxes of the slider

438 | @app.callback(

439 | [Output('input-framenmin','value'),

440 | Output('input-framenmax','value'),

441 | Output('sequence-startframe','children'),

442 | Output('sequence-endframe','children'),

443 | Output('inpaint-startframe','children'),

444 | Output('inpaint-endframe','children')

445 | ],

446 | [Input('slider-framenums','value')]

447 | )

448 | def update_framenum_minmax(framenumrange):

449 | return framenumrange[0], framenumrange[1], \

450 | str(framenumrange[0]), str(framenumrange[1]), \

451 | str(framenumrange[0]), str(framenumrange[1])

452 |

453 |

454 | @app.callback(

455 | [Output('slider-framenums','max'),

456 | Output('slider-framenums','marks'),

457 | Output('slider-framenums','value'),

458 | Output('input-dirpath-display','children')],

459 | [Input('button-single','n_clicks'),

460 | Input('button-sequence','n_clicks'),

461 | Input('button-inpaint', 'n_clicks'),

462 | Input('input-dirpath', 'children')],

463 | [State('upload-file', 'filename'),

464 | State('slider-framenums','max'),

465 | State('slider-framenums','marks'),

466 | State('slider-framenums','value') ]

467 | )

468 | def update_dirpath(nc_single, nc_sequence, nc_inpaint, s_dirpath,

469 | vfilename, s_fnmax, s_fnmarks, s_fnvalue):

470 | if s_dirpath is None or s_dirpath == "(none)":

471 | #s_dirpath = "/home/appuser/data/Colomar/frames" #temporary fix

472 | return 100, {'0':'0', '100':'100'}, [0,100], '(none)'

473 |

474 | dirpath = s_dirpath

475 | fnames = getImageFileNames(s_dirpath)

476 | if fnames:

477 | fnmax = len(fnames)-1

478 | if fnmax != s_fnmax:

479 | fnmarks = {0: '0', fnmax: f"{fnmax}"}

480 | fnvalue = [0, fnmax]

481 | else:

482 | fnmarks = s_fnmarks

483 | fnvalue = s_fnvalue

484 | else:

485 | fnmax = s_fnmax

486 | fnmarks = s_fnmarks

487 | fnvalue = s_fnvalue

488 |

489 | return fnmax, fnmarks, fnvalue, vfilename

490 |

491 | # ***************

492 | # * run_single

493 | # ***************

494 | # create single prediction at first frame

495 | @app.callback(

496 | [Output('model-output', 'figure')],

497 | [Input('button-single', 'n_clicks')],

498 | [State('input-dirpath', 'children'),

499 | State('slider-framenums','value'),

500 | State('slider-confidence', 'value'),

501 | State('cb-person','value'),

502 | State('cb-vehicle','value'),

503 | State('cb-environment','value')

504 | ],

505 | )

506 | def run_single(n_clicks, dirpath, framerange, confidence,

507 | cb_person, cb_vehicle, cb_environment):

508 |     validDir = dirpath is not None and os.path.isdir(dirpath)

509 |     fnames = getImageFileNames(dirpath) if validDir else None

510 |     if fnames:

511 |         imgfile = fnames[framerange[0]]

512 |         im = Image.open(imgfile)

513 |     else:

514 |         # no frames available: return an empty placeholder figure

515 |         im = Image.new('RGB',(640,480))

516 |         fig = pil_to_fig(im, showlegend=True, title='No Image')

517 |         return fig,

518 |

519 | theseObjects = [ *cb_person, *cb_vehicle, *cb_environment]

520 | wasConfidence = detr.cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST

521 |

522 | if sorted(theseObjects) != sorted(detr.selObjNames) or \

523 | abs(confidence - wasConfidence) > 0.00001:

524 | detr.__init__(selectObjectNames=theseObjects, score_threshold=confidence)

525 |

526 | tstart = time.time()

527 | scores, boxes, selClasses = detect_scores_bboxes_classes(imgfile, detr)

528 | tend = time.time()

529 |

530 | fig = pil_to_fig(im, showlegend=True, title=f'DETR Predictions ({tend-tstart:.2f}s)')

531 |

532 | seenClassIndex = {}

533 | insertOrder = []

534 | for i,class_id in enumerate(selClasses):

535 | classname = CLASSES[class_id]

536 |

537 | if seenClassIndex.get(classname,None) is not None:

538 | seenClassIndex[classname] += 1

539 | else:

540 | seenClassIndex[classname] = 0

541 |

542 | insertOrder.append([classname + ":" + str(seenClassIndex[classname]),i])

543 |

544 | insertOrder = sorted(insertOrder, \

545 | key=lambda x: (x[0].split(":")[0], int(x[0].split(":")[1])))

546 |

547 | for label,i in insertOrder:

548 |

549 | confidence = scores[i]

550 | x0, y0, x1, y1 = boxes[i]

551 | class_id = selClasses[i]

552 |

553 | # only display legend when it's not in the existing classes

554 | text = f"class={label}

61 |

62 |

--------------------------------------------------------------------------------

/app/app.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import base64

4 | from glob import glob

5 | from shutil import rmtree

6 | from io import BytesIO

7 | import time

8 | from datetime import datetime

9 |

10 | #import flask

11 | #from flask import send_file, make_response

12 | #from flask import send_from_directory

13 | from flask_caching import Cache

14 | import dash

15 | import dash_player

16 | import dash_bootstrap_components as dbc

17 | from dash.dependencies import Input, Output, State

18 | import dash_core_components as dcc

19 | import dash_html_components as html

20 | import plotly.graph_objects as go

21 | from PIL import Image

22 |

23 | from model import detect_scores_bboxes_classes, \

24 | detr, createNullVideo

25 | from model import CLASSES, DEVICE

26 | from model import inpaint, testContainerWrite, performInpainting

27 |

28 | libpath = "/home/appuser/scripts/" # to keep the dev repo in place, w/o linking

29 | sys.path.insert(1,libpath)

30 | import ObjectDetection.imutils as imu

31 |

32 |

33 | basedir = os.getcwd()

34 | uploaddir = os.path.join(basedir,"upload")

35 | print(f"ObjectDetect loaded, using DEVICE={DEVICE}")

36 | print(f"Basedirectory: {basedir}")

37 |

38 | # cleaup old uploads

39 | if os.path.exists(uploaddir):

40 | rmtree(uploaddir)

41 |

42 | os.mkdir(uploaddir)

43 |

44 | # cleanup old runs

45 | if os.path.exists(os.path.join(basedir,"static")):

46 | for f in [*glob(os.path.join(basedir,'static/sequence_*.mp4')),

47 | *glob(os.path.join(basedir,'static/inpaint_*.mp4'))]:

48 | os.remove(f)

49 | else:

50 | os.mkdir(os.path.join(basedir,"static"))

51 |

52 | # remove and create dummy video for place holder

53 | tempfile = os.path.join(basedir,'static/result.mp4')

54 | if not os.path.exists(tempfile):

55 | createNullVideo(tempfile)

56 |

57 |

58 | # ----------

59 | # Helper functions

60 | def getImageFileNames(dirPath):

61 | if not os.path.isdir(dirPath):

62 | return go.Figure().update_layout(title='Incorrect Directory Path!')

63 |

64 | for filetype in ('*.png', '*.jpg'):

65 | fnames = sorted(glob(os.path.join(dirPath,filetype)))

66 | if len(fnames) > 0: break

67 |

68 | if not fnames:

69 | # no image files found

70 | return None

71 | else:

72 | return fnames

73 |

74 |

75 | # ----------

76 | # Dash component wrappers

77 | def Row(children=None, **kwargs):

78 | return html.Div(children, className="row", **kwargs)

79 |

80 |

81 | def Column(children=None, width=1, **kwargs):

82 | nb_map = {

83 | 1: 'one', 2: 'two', 3: 'three', 4: 'four', 5: 'five', 6: 'six',

84 | 7: 'seven', 8: 'eight', 9: 'nine', 10: 'ten', 11: 'eleven', 12: 'twelve'}

85 |

86 | return html.Div(children, className=f"{nb_map[width]} columns", **kwargs)

87 |

88 | # ----------

89 | # plotly.py helper functions

90 |

91 | # upload files

92 | def save_file(name, content):

93 | """Decode and store a file uploaded with Plotly Dash."""

94 | filepath = os.path.join(uploaddir, os.path.basename(name))  # drop any directory part of the client-supplied name

95 | data = content.encode("utf8").split(b";base64,")[1]

96 | with open(filepath, "wb") as fp:

97 | fp.write(base64.decodebytes(data))

98 |

99 | return filepath

100 |
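The upload handling in `save_file` can be sketched in isolation as follows. This is a minimal sketch, assuming the upload arrives as a `data:<mime>;base64,<payload>` string, which is how Dash's `dcc.Upload` delivers `contents`. The helper name `decode_upload` and its `dest_dir` parameter are hypothetical and not part of the repository.

```python
import base64
import os


def decode_upload(name, content, dest_dir):
    """Decode a base64 data URL and write it under dest_dir (hypothetical helper)."""
    # Keep only the final path component so a crafted name such as
    # "../../x.mp4" cannot escape dest_dir.
    safe_name = os.path.basename(name)
    if not safe_name:
        raise ValueError("empty file name")

    # The content is expected to look like "data:<mime>;base64,<payload>".
    _header, sep, payload = content.partition(";base64,")
    if not sep:
        raise ValueError("content is not a base64 data URL")

    filepath = os.path.join(dest_dir, safe_name)
    with open(filepath, "wb") as fp:
        fp.write(base64.b64decode(payload))
    return filepath
```

Using `partition` instead of `split(...)[1]` turns a malformed upload into an explicit `ValueError` rather than an `IndexError`.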

101 | def vfile_to_frames(fname):

102 | assert os.path.exists(fname), "Could not determine path to video file"

103 | dirPath = os.path.join(uploaddir, os.path.splitext(os.path.basename(fname))[0])  # handles file names containing extra dots

104 | nfiles = imu.videofileToFramesDirectory(videofile=fname,dirPath=dirPath,

105 | padlength=5, imgtype='png', cleanDirectory=True)

106 | return dirPath

107 |

108 | # PIL images

109 | def pil_to_b64(im, enc="png"):

110 | io_buf = BytesIO()

111 | im.save(io_buf, format=enc)

112 | encoded = base64.b64encode(io_buf.getvalue()).decode("utf-8")

113 | return f"data:image/{enc};base64," + encoded

114 |

115 |

116 | def pil_to_fig(im, showlegend=False, title=None):

117 | img_width, img_height = im.size

118 | fig = go.Figure()

119 | # This trace is added to help the autoresize logic work.

120 | fig.add_trace(go.Scatter(

121 | x=[img_width * 0.05, img_width * 0.95],

122 | y=[img_height * 0.95, img_height * 0.05],

123 | showlegend=False, mode="markers", marker_opacity=0,

124 | hoverinfo="none", legendgroup='Image'))

125 |

126 | fig.add_layout_image(dict(

127 | source=pil_to_b64(im), sizing="stretch", opacity=1, layer="below",

128 | x=0, y=0, xref="x", yref="y", sizex=img_width, sizey=img_height,))

129 |

130 | # Adapt axes to the right width and height, lock aspect ratio

131 | fig.update_xaxes(

132 | showgrid=False, visible=False, constrain="domain", range=[0, img_width])

133 |

134 | fig.update_yaxes(

135 | showgrid=False, visible=False,

136 | scaleanchor="x", scaleratio=1,

137 | range=[img_height, 0])

138 |

139 | fig.update_layout(title=title, showlegend=showlegend)

140 |

141 | return fig

142 |

143 | # graph

144 | def add_bbox(fig, x0, y0, x1, y1,

145 | showlegend=True, name=None, color=None,

146 | opacity=0.5, group=None, text=None):

147 | fig.add_trace(go.Scatter(

148 | x=[x0, x1, x1, x0, x0],

149 | y=[y0, y0, y1, y1, y0],

150 | mode="lines",

151 | fill="toself",

152 | opacity=opacity,

153 | marker_color=color,

154 | hoveron="fills",

155 | name=name,

156 | hoverlabel_namelength=0,

157 | text=text,

158 | legendgroup=group,

159 | showlegend=showlegend,

160 | ))

161 |

162 | def get_index_by_bbox(objGroupingDict,class_name,bbox_in):

163 | # gets the order of the object within the groupingSequence

164 | # by bounding box overlap

165 | maxIoU = 0.0

166 | maxIdx = 0

167 | for instIdx, objInst in enumerate(objGroupingDict[class_name]):

168 | # only search for object found in seq_i= 0

169 | # there must exist objects at seq_i=0 since we predicted them on first frame

170 | bbx,_,seq_i = objInst[0]

171 |

172 | if seq_i != 0: continue

173 | IoU = imu.bboxIoU(bbx, bbox_in)

174 | if IoU > maxIoU:

175 | maxIoU = IoU

176 | maxIdx = instIdx

177 |

178 | return maxIdx

179 |
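The matching in `get_index_by_bbox` relies on `imu.bboxIoU`, whose implementation is not shown in this file. The following is a minimal sketch of how such an intersection-over-union match could work, assuming boxes are given as `[x0, y0, x1, y1]`. The names `bbox_iou` and `best_match` are hypothetical, and unlike the function above, `best_match` returns `None` rather than index 0 when nothing overlaps.

```python
def bbox_iou(box_a, box_b):
    """Intersection over union of two [x0, y0, x1, y1] boxes."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b

    # Width and height of the overlap rectangle, clamped at zero
    # when the boxes do not intersect.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h

    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def best_match(candidates, query):
    """Index of the candidate box with the highest IoU, or None if none overlap."""
    best_idx, best_iou = None, 0.0
    for idx, box in enumerate(candidates):
        iou = bbox_iou(box, query)
        if iou > best_iou:
            best_idx, best_iou = idx, iou
    return best_idx
```

Returning `None` for the no-overlap case lets a caller distinguish "no match" from "matched the first instance", which the `maxIdx = 0` default cannot express.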

180 |

181 | def get_selected_objects(figure,objGroupingDict):

182 | # recovers the selected items from the figure

183 | # infer the object location by the bbox IoU (more stable than order)

184 | # returns a dict of selected indexed items

185 |

186 | obsObjects = {}

187 | for d in figure['data']:

188 | objInst = d.get('legendgroup')

189 | wasVisible = d.get('visible') != 'legendonly'

190 | if objInst is not None and ":" in objInst and wasVisible:

191 |

192 | classname = objInst.split(":")[0]

193 | x = d['x']

194 | y = d['y']

195 | bbox = [x[0], y[0], x[2], y[2]]

196 | inst = get_index_by_bbox(objGroupingDict,classname,bbox)

197 | if obsObjects.get(classname,None) is None:

198 | obsObjects[classname] = [int(inst)]

199 | else:

200 | obsObjects[classname].append(int(inst))

201 |

202 | return obsObjects

203 |

204 |

205 | # colors for visualization

206 | COLORS = ['#fe938c','#86e7b8','#f9ebe0','#208aae','#fe4a49',

207 | '#291711', '#5f4b66', '#b98b82', '#87f5fb', '#63326e'] * 50

208 |

209 | # Start Dash

210 | app = dash.Dash(__name__, external_stylesheets=[dbc.themes.BOOTSTRAP])

211 | server = app.server # Expose the server variable for deployments

212 | cache = Cache()

213 | CACHE_CONFIG = {

214 | 'CACHE_TYPE': 'filesystem',

215 | 'CACHE_DIR': os.path.join(basedir,'_cache')

216 | }

217 | cache.init_app(server,config=CACHE_CONFIG)

218 |

219 | # ----------

220 | # layout

221 | app.layout = html.Div(className='container', children=[

222 | Row(html.H1("Video Object Removal App")),

223 |

224 | # Input

225 | Row(html.P("Input Directory Path:")),

226 | Row([

227 | Column(width=6, children=[

228 | dcc.Upload(

229 | id="upload-file",

230 | children=html.Div(

231 | ["Drag and drop or click to select a file to upload."]

232 | ),

233 | style={

234 | "width": "100%",

235 | "height": "60px",

236 | "lineHeight": "60px",

237 | "borderWidth": "1px",

238 | "borderStyle": "dashed",

239 | "borderRadius": "5px",

240 | "textAlign": "center",

241 | "margin": "10px",

242 | },

243 | multiple=False,

244 | )

245 | ]),

246 | ]),

247 |

248 | Row([

249 | Column(width=6, children=[

250 | html.H3('Upload file',id='input-dirpath-display', style={'width': '100%'})

251 | ]),

252 | Column(width=2,children=[

253 | html.Button("Run Single", id='button-single', n_clicks=0)

254 | ]),

255 | ]),

256 |

257 | html.Hr(),

258 |

259 | # frame number selection

260 | Row([

261 | Column(width=2, children=[ html.P('Frame range:')]),

262 | Column(width=10, children=[

263 | dcc.Input(id='input-framenmin',type='number',value=0),

264 | dcc.RangeSlider(

265 | id='slider-framenums', min=0, max=100, step=1, value=[0,100],

266 | marks={0: '0', 100: '100'}, allowCross=False),

267 | dcc.Input(id='input-framenmax',type='number',value=100)

268 | ], style={"display": "grid", "grid-template-columns": "10% 70% 10%"}),

269 | ],style={'width':"100%"}),

270 |

271 | html.Hr(),

272 |

273 | Row([

274 | Column(width=5, children=[

275 | html.P('Confidence Threshold:'),

276 | dcc.Slider(

277 | id='slider-confidence', min=0, max=1, step=0.05, value=0.7,

278 | marks={0: '0', 1: '1'})

279 | ]),

280 | Column(width=7, children=[

281 | html.P('Object selection:'),

282 | Row([

283 | Column(width=3, children=dcc.Checklist(

284 | id='cb-person',

285 | options=[

286 | {'label': ' person', 'value': 'person'},

287 | {'label': ' handbag', 'value': 'handbag'},

288 | {'label': ' backpack', 'value': 'backpack'},

289 | {'label': ' suitcase', 'value': 'suitcase'},

290 | ],

291 | value = ['person', 'handbag','backpack','suitcase'])

292 | ),

293 | Column(width=3, children=dcc.Checklist(

294 | id='cb-vehicle',

295 | options=[

296 | {'label': ' car', 'value': 'car'},

297 | {'label': ' truck', 'value': 'truck'},

298 | {'label': ' motorcycle', 'value': 'motorcycle'},

299 | {'label': ' bus', 'value': 'bus'},

300 | ],

301 | value = ['car', 'truck', 'motorcycle'])

302 | ),

303 | Column(width=3, children=dcc.Checklist(

304 | id='cb-environment',

305 | options=[

306 | {'label': ' traffic light', 'value': 'traffic light'},

307 | {'label': ' stop sign', 'value': 'stop sign'},

308 | {'label': ' bench', 'value': 'bench'},

309 | ],

310 | value = [])

311 | )

312 | ])

313 | ]),

314 | ]),

315 |

316 | # prediction output graph

317 | Row(dcc.Graph(id='model-output', style={"height": "70vh"})),

318 |

319 | # processing options

320 | html.Hr(),

321 | Row([

322 | Column(width=7, children=[

323 | html.P('Processing options:'),

324 | Row([

325 | Column(width=3, children=dcc.Checklist(

326 | id='cb-options',

327 | options=[

328 | {'label': ' accept all', 'value': 'acceptall'},

329 | {'label': ' fill sequence', 'value': 'fillSequence'},

330 | {'label': ' use BBmask', 'value': 'useBBmasks'},

331 | ],

332 | value=['fillSequence'])

333 | ),

334 | Column(width=3, children= [

335 | html.P('dilation half-width (no dilation=0):'),

336 | dcc.Input(

337 | id='input-dilationhwidth',

338 | type='number',

339 | value=0)

340 | ]),

341 | Column(width=3, children=[

342 | html.P('minimum seq. length'),

343 | dcc.Input(

344 | id='input-minseqlength',

345 | type='number',

346 | value=0)

347 | ])

348 | ])

349 | ]),

350 | ]),

351 |

352 | # Sequence Video

353 | html.Hr(),

354 | Row([

355 | Column(width=2,children=[

356 | html.Button("Run Sequence", id='button-sequence', n_clicks=0),

357 | dcc.Loading(id='loading-sequence-bobble',

358 | type='circle',

359 | children=html.Div(id='loading-sequence'))

360 | ]),

361 | Column(width=2,children=[]), # place holder

362 | Row([

363 | Column(width=4, children=[

364 | html.P("Start Frame:"),

365 | html.Label("0",id='sequence-startframe')

366 | ]),

367 | Column(width=4, children=[]),

368 | Column(width=4, children=[

369 | html.P("End Frame:"),

370 | html.Label("0",id='sequence-endframe')

371 | ])

372 | ]),

373 | ]),

374 | html.P("Sequence Output"),

375 | html.Div([ dash_player.DashPlayer(

376 | id='sequence-output',

377 | url='static/result.mp4',

378 | controls=True,

379 | style={"height": "70vh"}) ]),

380 |

381 | # Inpainting Video

382 | html.Hr(),

383 | Row([

384 | Column(width=2,children=[

385 | html.Button("Run Inpaint", id='button-inpaint', n_clicks=0),

386 | dcc.Loading(id='loading-inpaint-bobble',

387 | type='circle',

388 | children=html.Div(id='loading-inpaint'))

389 | ]),

390 | Column(width=2,children=[]), # place holder

391 | Row([

392 | Column(width=4, children=[

393 | html.P("Start Frame:"),

394 | html.Label("0",id='inpaint-startframe')

395 | ]),

396 | Column(width=4, children=[]),

397 | Column(width=4, children=[

398 | html.P("End Frame:"),

399 | html.Label("0",id='inpaint-endframe')

400 | ])

401 | ]),

402 | ]),

403 | html.P("Inpainting Output"),

404 | html.Div([ dash_player.DashPlayer(

405 | id='inpaint-output',

406 | url='static/result.mp4',

407 | controls=True,

408 | style={"height": "70vh"}) ]),

409 |

410 | # hidden signal value

411 | html.Div(id='signal-sequence', style={'display': 'none'}),

412 | html.Div(id='signal-inpaint', style={'display': 'none'}),

413 | html.Div(id='input-dirpath', style={'display': 'none'}),

414 |

415 | ])

416 |

417 |

418 | # ----------

419 | # callbacks

420 |

421 | # upload file

422 | @app.callback(

423 | Output("input-dirpath", "children"),

424 | [Input("upload-file", "filename"), Input("upload-file", "contents")],

425 | )

426 | def update_output(uploaded_filename, uploaded_file_content):

427 | # Save uploaded files and regenerate the file list.

428 |

429 | if uploaded_filename is not None and uploaded_file_content is not None and \

430 | uploaded_filename.split(".")[-1] in ('mp4', 'mov', 'avi') :

431 | dirPath = vfile_to_frames(save_file(uploaded_filename, uploaded_file_content))

432 | return dirPath

433 | else:

434 | return "(none)"

435 |

436 | # update_framenum_minmax()

437 | # purpose: to update min/max boxes of the slider

438 | @app.callback(

439 | [Output('input-framenmin','value'),

440 | Output('input-framenmax','value'),

441 | Output('sequence-startframe','children'),

442 | Output('sequence-endframe','children'),

443 | Output('inpaint-startframe','children'),

444 | Output('inpaint-endframe','children')

445 | ],

446 | [Input('slider-framenums','value')]

447 | )

448 | def update_framenum_minmax(framenumrange):

449 | return framenumrange[0], framenumrange[1], \

450 | str(framenumrange[0]), str(framenumrange[1]), \

451 | str(framenumrange[0]), str(framenumrange[1])

452 |

453 |

454 | @app.callback(

455 | [Output('slider-framenums','max'),

456 | Output('slider-framenums','marks'),

457 | Output('slider-framenums','value'),

458 | Output('input-dirpath-display','children')],

459 | [Input('button-single','n_clicks'),

460 | Input('button-sequence','n_clicks'),

461 | Input('button-inpaint', 'n_clicks'),

462 | Input('input-dirpath', 'children')],

463 | [State('upload-file', 'filename'),

464 | State('slider-framenums','max'),

465 | State('slider-framenums','marks'),

466 | State('slider-framenums','value') ]

467 | )

468 | def update_dirpath(nc_single, nc_sequence, nc_inpaint, s_dirpath,

469 | vfilename, s_fnmax, s_fnmarks, s_fnvalue):

470 | if s_dirpath is None or s_dirpath == "(none)":

471 | #s_dirpath = "/home/appuser/data/Colomar/frames" #temporary fix

472 | return 100, {'0':'0', '100':'100'}, [0,100], '(none)'

473 |

474 | dirpath = s_dirpath

475 | fnames = getImageFileNames(s_dirpath)

476 | if fnames:

477 | fnmax = len(fnames)-1

478 | if fnmax != s_fnmax:

479 | fnmarks = {0: '0', fnmax: f"{fnmax}"}

480 | fnvalue = [0, fnmax]

481 | else:

482 | fnmarks = s_fnmarks

483 | fnvalue = s_fnvalue

484 | else:

485 | fnmax = s_fnmax

486 | fnmarks = s_fnmarks

487 | fnvalue = s_fnvalue

488 |

489 | return fnmax, fnmarks, fnvalue, vfilename

490 |

491 | # ***************

492 | # * run_single

493 | # ***************

494 | # create single prediction at first frame

495 | @app.callback(

496 | [Output('model-output', 'figure')],

497 | [Input('button-single', 'n_clicks')],

498 | [State('input-dirpath', 'children'),

499 | State('slider-framenums','value'),

500 | State('slider-confidence', 'value'),

501 | State('cb-person','value'),

502 | State('cb-vehicle','value'),

503 | State('cb-environment','value')

504 | ],

505 | )

506 | def run_single(n_clicks, dirpath, framerange, confidence,

507 | cb_person, cb_vehicle, cb_environment):

508 |

509 | if dirpath is not None and os.path.isdir(dirpath):

510 | fnames = getImageFileNames(dirpath)

511 | imgfile = fnames[framerange[0]]

512 | im = Image.open(imgfile)

513 | else:

514 | # no valid frame directory: fall back to a blank placeholder image

515 | im = Image.new('RGB',(640,480))

516 | fig = pil_to_fig(im, showlegend=True, title='No Image')

517 | return fig,

518 |

519 | theseObjects = [ *cb_person, *cb_vehicle, *cb_environment]

520 | wasConfidence = detr.cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST

521 |

522 | if sorted(theseObjects) != sorted(detr.selObjNames) or \

523 | abs(confidence - wasConfidence) > 0.00001:

524 | detr.__init__(selectObjectNames=theseObjects, score_threshold=confidence)

525 |

526 | tstart = time.time()

527 | scores, boxes, selClasses = detect_scores_bboxes_classes(imgfile, detr)

528 | tend = time.time()

529 |

530 | fig = pil_to_fig(im, showlegend=True, title=f'DETR Predictions ({tend-tstart:.2f}s)')

531 |

532 | seenClassIndex = {}

533 | insertOrder = []

534 | for i,class_id in enumerate(selClasses):

535 | classname = CLASSES[class_id]

536 |

537 | if seenClassIndex.get(classname,None) is not None:

538 | seenClassIndex[classname] += 1

539 | else:

540 | seenClassIndex[classname] = 0

541 |

542 | insertOrder.append([classname + ":" + str(seenClassIndex[classname]),i])

543 |

544 | insertOrder = sorted(insertOrder, \

545 | key=lambda x: (x[0].split(":")[0], int(x[0].split(":")[1])))

546 |

547 | for label,i in insertOrder:

548 |

549 | confidence = scores[i]

550 | x0, y0, x1, y1 = boxes[i]

551 | class_id = selClasses[i]

552 |

553 | # hover text: instance label and detection confidence

554 | text = f"class={label}<br>confidence={confidence:.3f}"

555 |

556 | add_bbox(

557 | fig, x0, y0, x1, y1,

558 | opacity=0.7, group=label, name=label, color=COLORS[class_id],

559 | showlegend=True, text=text,

560 | )

561 |

562 | return fig,

563 |

564 |

565 | # ***************

566 | # run_sequence

567 | # ***************

568 | # Produce sequence prediction with grouping

569 | @app.callback(

570 | [Output('loading-sequence', 'children'),

571 | Output('signal-sequence','children')],

572 | [Input('button-sequence', 'n_clicks')],

573 | [State('input-dirpath', 'children'),

574 | State('slider-framenums','value'),

575 | State('slider-confidence', 'value'),

576 | State('model-output','figure'),

577 | State('cb-person','value'),

578 | State('cb-vehicle','value'),

579 | State('cb-environment','value'),

580 | State('cb-options','value'),

581 | State('input-dilationhwidth','value'),

582 | State('input-minseqlength','value')]

583 | )

584 | def run_sequence(n_clicks, dirpath, framerange, confidence,figure,

585 | cb_person, cb_vehicle, cb_environment,

586 | cb_options, dilationhwidth, minsequencelength):

587 |

588 | if dirpath is not None and os.path.isdir(dirpath):

589 | fnames = getImageFileNames(dirpath)

590 | else:

591 | return "", "Null:None"

592 |

593 | selectObjects = [ *cb_person, *cb_vehicle, *cb_environment]

594 | acceptall = 'acceptall' in cb_options

595 | fillSequence = 'fillSequence' in cb_options

596 | useBBmasks = 'useBBmasks' in cb_options

597 |

598 | fmin, fmax = framerange

599 | fnames = fnames[fmin:fmax]

600 |

601 | # was this a repeat?

602 | if len(detr.imglist) != 0:

603 | if fnames == detr.selectFiles:

604 | return "", "Null:None"

605 |

606 | detr.__init__(score_threshold=confidence,selectObjectNames=selectObjects)

607 |

608 | vfile = compute_sequence(fnames,framerange,confidence,

609 | figure, selectObjects,

610 | acceptall, fillSequence, useBBmasks,

611 | dilationhwidth, minsequencelength)

612 |

613 | return "", f'sequencevid:{vfile}'

614 |

615 |

616 | @cache.memoize()

617 | def compute_sequence(fnames,framerange,confidence,

618 | figure,selectObjectNames,

619 | acceptall, fillSequence, useBBmasks,

620 | dilationhwidth, minsequencelength):

621 | detr.selectFiles = fnames

622 |

623 | staticdir = os.path.join(os.getcwd(),"static")

624 | detr.load_images(filelist=fnames)

625 | detr.predict_sequence(useBBmasks=useBBmasks,selObjectNames=selectObjectNames)

626 | detr.groupObjBBMaskSequence()

627 |

628 | obsObjectsDict = get_selected_objects(figure=figure,

629 | objGroupingDict=detr.objBBMaskSeqGrpDict) \

630 | if not acceptall else None

631 |

632 | # filtered by object class, instance, and length

633 | detr.filter_ObjBBMaskSeq(allowObjNameInstances= obsObjectsDict,

634 | minCount=minsequencelength)

635 |

636 | # fill sequence

637 | if fillSequence:

638 | detr.fill_ObjBBMaskSequence(specificObjectNameInstances=obsObjectsDict)

639 |

640 | # use dilation

641 | if dilationhwidth > 0:

642 | detr.combine_MaskSequence()

643 | detr.dilateErode_MaskSequence(kernelShape='el',

644 | maskHalfWidth=dilationhwidth)

645 |

646 | vfile = 'sequence_' + datetime.now().strftime("%Y%m%d_%H%M%S") + ".mp4"

647 | if not os.environ.get("VSCODE_DEBUG"):

648 | detr.create_animationObject(framerange=framerange,

649 | useMasks=True,

650 | toHTML=False,

651 | figsize=(20,15),

652 | interval=30,

653 | MPEGfile=os.path.join(staticdir,vfile),

654 | useFFMPEGdirect=True)

655 | return vfile

656 |

657 |

658 | @app.callback(Output('sequence-output','url'),

659 | [Input('signal-sequence','children')],

660 | [State('sequence-output','url')])

661 | def serve_sequence_video(signal,currurl):

662 | if currurl is None:

663 | return 'static/result.mp4'

664 | else:

665 | sigtype,vfile = signal.split(":")

666 | if vfile is not None and \

667 | isinstance(vfile,str) and \

668 | sigtype == 'sequencevid' and \

669 | os.path.exists(f"./static/{vfile}"):

670 |

671 | # remove old file

672 | if currurl != 'static/result.mp4' and os.path.exists(currurl):  # keep the shared placeholder video

673 | os.remove(currurl)

674 |

675 | # serve new file

676 | return f"static/{vfile}"

677 | else:

678 | return currurl

679 |

680 |
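The hidden `signal-*` divs carry strings of the form `<type>:<filename>`, which the serve callbacks unpack with `signal.split(":")`. A more defensive parse could look like the sketch below. The helper name `parse_signal` is hypothetical, and this illustrates one option rather than the app's actual code.

```python
def parse_signal(signal, expected_type):
    """Return the file name from "<type>:<name>", or None if it does not match."""
    # The div's children may be None before any callback has written to it.
    if not isinstance(signal, str):
        return None

    # partition splits on the first colon only, so a file name that
    # itself contains a colon does not break the unpacking.
    sigtype, sep, vfile = signal.partition(":")
    if not sep or sigtype != expected_type or not vfile:
        return None
    return vfile
```

A callback could then test `parse_signal(signal, 'sequencevid')` for `None` before checking that the file exists under `static/`.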

681 | # ***************

682 | # run_inpaint

683 | # ***************

684 | # Produce inpainting results

685 |

686 | @app.callback(

687 | [Output('loading-inpaint', 'children'),

688 | Output('signal-inpaint','children')],

689 | [Input('button-inpaint', 'n_clicks')]

690 | )

691 | def run_inpaint(n_clicks):

692 | if not n_clicks:

693 | return "", "Null:None"

694 |

695 | assert testContainerWrite(inpaintObj=inpaint,

696 | workDir="../data",

697 | hardFail=False) , "Errors connecting with write access in containers"

698 |

699 | staticdir = os.path.join(os.getcwd(),"static")

700 | vfile = 'inpaint_' + datetime.now().strftime("%Y%m%d_%H%M%S") + ".mp4"

701 | performInpainting(detrObj=detr,

702 | inpaintObj=inpaint,

703 | workDir = "../data",

704 | outputVideo=os.path.join(staticdir,vfile))

705 |

706 | return "", f"inpaintvid:{vfile}"

707 |

708 |

709 | @app.callback(Output('inpaint-output','url'),

710 | [Input('signal-inpaint','children')],

711 | [State('inpaint-output','url')])

712 | def serve_inpaint_video(signal,currurl):

713 | if currurl is None:

714 | return 'static/result.mp4'

715 | else:

716 | sigtype,vfile = signal.split(":")

717 | if vfile is not None and \

718 | isinstance(vfile,str) and \

719 | sigtype == 'inpaintvid' and \

720 | os.path.exists(f"./static/{vfile}"):

721 |

722 | # remove old file

723 | if currurl != 'static/result.mp4' and os.path.exists(currurl):  # keep the shared placeholder video

724 | os.remove(currurl)

725 |

726 | # serve new file

727 | return f"static/{vfile}"

728 | else:

729 | return currurl

730 |

731 |

732 | # ---------------------------------------------------------------------

733 | if __name__ == '__main__':

734 |

735 | app.run_server(debug=True,host='0.0.0.0',processes=1,threaded=True)

736 |

--------------------------------------------------------------------------------

/app/assets/default.min.css:

--------------------------------------------------------------------------------

1 | /* Table of contents

2 | ––––––––––––––––––––––––––––––––––––––––––––––––––

3 | - Plotly.js

4 | - Grid

5 | - Base Styles

6 | - Typography

7 | - Links

8 | - Buttons

9 | - Forms

10 | - Lists

11 | - Code

12 | - Tables

13 | - Spacing

14 | - Utilities

15 | - Clearing

16 | - Media Queries

17 | */

18 |

19 | /* Plotly.js

20 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

21 | /* plotly.js's modebar's z-index is 1001 by default

22 | * https://github.com/plotly/plotly.js/blob/7e4d8ab164258f6bd48be56589dacd9bdd7fded2/src/css/_modebar.scss#L5

23 | * In case a dropdown is above the graph, the dropdown's options

24 | * will be rendered below the modebar

25 | * Increase the select option's z-index

26 | */

27 |

28 | /* This was actually not quite right -

29 | dropdowns were overlapping each other (edited October 26)

30 |

31 | .Select {

32 | z-index: 1002;

33 | }*/

34 |

35 |

36 | /* Grid

37 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

38 | .container {

39 | position: relative;

40 | width: 100%;

41 | max-width: 960px;

42 | margin: 0 auto;

43 | padding: 0 20px;

44 | box-sizing: border-box; }

45 | .column,

46 | .columns {

47 | width: 100%;

48 | float: left;

49 | box-sizing: border-box; }

50 |

51 | /* For devices larger than 400px */

52 | @media (min-width: 400px) {

53 | .container {

54 | width: 85%;

55 | padding: 0; }

56 | }

57 |

58 | /* For devices larger than 550px */

59 | @media (min-width: 550px) {

60 | .container {

61 | width: 80%; }

62 | .column,

63 | .columns {

64 | margin-left: 4%; }

65 | .column:first-child,

66 | .columns:first-child {

67 | margin-left: 0; }

68 |

69 | .one.column,

70 | .one.columns { width: 4.66666666667%; }

71 | .two.columns { width: 13.3333333333%; }

72 | .three.columns { width: 22%; }

73 | .four.columns { width: 30.6666666667%; }

74 | .five.columns { width: 39.3333333333%; }

75 | .six.columns { width: 48%; }

76 | .seven.columns { width: 56.6666666667%; }

77 | .eight.columns { width: 65.3333333333%; }

78 | .nine.columns { width: 74.0%; }

79 | .ten.columns { width: 82.6666666667%; }

80 | .eleven.columns { width: 91.3333333333%; }

81 | .twelve.columns { width: 100%; margin-left: 0; }

82 |

83 | .one-third.column { width: 30.6666666667%; }

84 | .two-thirds.column { width: 65.3333333333%; }

85 |

86 | .one-half.column { width: 48%; }

87 |

88 | /* Offsets */

89 | .offset-by-one.column,

90 | .offset-by-one.columns { margin-left: 8.66666666667%; }

91 | .offset-by-two.column,

92 | .offset-by-two.columns { margin-left: 17.3333333333%; }

93 | .offset-by-three.column,

94 | .offset-by-three.columns { margin-left: 26%; }

95 | .offset-by-four.column,

96 | .offset-by-four.columns { margin-left: 34.6666666667%; }

97 | .offset-by-five.column,

98 | .offset-by-five.columns { margin-left: 43.3333333333%; }

99 | .offset-by-six.column,

100 | .offset-by-six.columns { margin-left: 52%; }

101 | .offset-by-seven.column,

102 | .offset-by-seven.columns { margin-left: 60.6666666667%; }

103 | .offset-by-eight.column,

104 | .offset-by-eight.columns { margin-left: 69.3333333333%; }

105 | .offset-by-nine.column,

106 | .offset-by-nine.columns { margin-left: 78.0%; }

107 | .offset-by-ten.column,

108 | .offset-by-ten.columns { margin-left: 86.6666666667%; }

109 | .offset-by-eleven.column,

110 | .offset-by-eleven.columns { margin-left: 95.3333333333%; }

111 |

112 | .offset-by-one-third.column,

113 | .offset-by-one-third.columns { margin-left: 34.6666666667%; }

114 | .offset-by-two-thirds.column,

115 | .offset-by-two-thirds.columns { margin-left: 69.3333333333%; }

116 |

117 | .offset-by-one-half.column,

118 | .offset-by-one-half.columns { margin-left: 52%; }

119 |

120 | }

121 |

122 |

123 | /* Base Styles

124 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

125 | /* NOTE

126 | html is set to 62.5% so that all the REM measurements throughout Skeleton

127 | are based on 10px sizing. So basically 1.5rem = 15px :) */

128 | html {

129 | font-size: 62.5%; }

130 | body {

131 | font-size: 1.5em; /* currently ems cause chrome bug misinterpreting rems on body element */

132 | line-height: 1.6;

133 | font-weight: 400;

134 | font-family: "Open Sans", "HelveticaNeue", "Helvetica Neue", Helvetica, Arial, sans-serif;

135 | color: rgb(50, 50, 50); }

136 |

137 |

138 | /* Typography

139 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

140 | h1, h2, h3, h4, h5, h6 {

141 | margin-top: 0;

142 | margin-bottom: 0;

143 | font-weight: 300; }

144 | h1 { font-size: 4.5rem; line-height: 1.2; letter-spacing: -.1rem; margin-bottom: 2rem; }

145 | h2 { font-size: 3.6rem; line-height: 1.25; letter-spacing: -.1rem; margin-bottom: 1.8rem; margin-top: 1.8rem;}

146 | h3 { font-size: 3.0rem; line-height: 1.3; letter-spacing: -.1rem; margin-bottom: 1.5rem; margin-top: 1.5rem;}

147 | h4 { font-size: 2.6rem; line-height: 1.35; letter-spacing: -.08rem; margin-bottom: 1.2rem; margin-top: 1.2rem;}

148 | h5 { font-size: 2.2rem; line-height: 1.5; letter-spacing: -.05rem; margin-bottom: 0.6rem; margin-top: 0.6rem;}

149 | h6 { font-size: 2.0rem; line-height: 1.6; letter-spacing: 0; margin-bottom: 0.75rem; margin-top: 0.75rem;}

150 |

151 | p {

152 | margin-top: 0; }

153 |

154 |

155 | /* Blockquotes

156 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

157 | blockquote {

158 | border-left: 4px lightgrey solid;

159 | padding-left: 1rem;

160 | margin-top: 2rem;

161 | margin-bottom: 2rem;

162 | margin-left: 0rem;

163 | }

164 |

165 |

166 | /* Links

167 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

168 | a {

169 | color: #1EAEDB;

170 | text-decoration: underline;

171 | cursor: pointer;}

172 | a:hover {

173 | color: #0FA0CE; }

174 |

175 |

176 | /* Buttons

177 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

178 | .button,

179 | button,

180 | input[type="submit"],

181 | input[type="reset"],

182 | input[type="button"] {

183 | display: inline-block;

184 | height: 38px;

185 | padding: 0 30px;

186 | color: #555;

187 | text-align: center;

188 | font-size: 11px;

189 | font-weight: 600;

190 | line-height: 38px;

191 | letter-spacing: .1rem;

192 | text-transform: uppercase;

193 | text-decoration: none;

194 | white-space: nowrap;

195 | background-color: transparent;

196 | border-radius: 4px;

197 | border: 1px solid #bbb;

198 | cursor: pointer;

199 | box-sizing: border-box; }

200 | .button:hover,

201 | button:hover,

202 | input[type="submit"]:hover,

203 | input[type="reset"]:hover,

204 | input[type="button"]:hover,

205 | .button:focus,

206 | button:focus,

207 | input[type="submit"]:focus,

208 | input[type="reset"]:focus,

209 | input[type="button"]:focus {

210 | color: #333;

211 | border-color: #888;

212 | outline: 0; }

213 | .button.button-primary,

214 | button.button-primary,

215 | input[type="submit"].button-primary,

216 | input[type="reset"].button-primary,

217 | input[type="button"].button-primary {

218 | color: #FFF;

219 | background-color: #33C3F0;

220 | border-color: #33C3F0; }

221 | .button.button-primary:hover,

222 | button.button-primary:hover,

223 | input[type="submit"].button-primary:hover,

224 | input[type="reset"].button-primary:hover,

225 | input[type="button"].button-primary:hover,

226 | .button.button-primary:focus,

227 | button.button-primary:focus,

228 | input[type="submit"].button-primary:focus,

229 | input[type="reset"].button-primary:focus,

230 | input[type="button"].button-primary:focus {

231 | color: #FFF;

232 | background-color: #1EAEDB;

233 | border-color: #1EAEDB; }

234 |

235 |

236 | /* Forms

237 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

238 | input[type="email"],

239 | input[type="number"],

240 | input[type="search"],

241 | input[type="text"],

242 | input[type="tel"],

243 | input[type="url"],

244 | input[type="password"],

245 | textarea,

246 | select {

247 | height: 38px;

248 | padding: 6px 10px; /* The 6px vertically centers text on FF, ignored by Webkit */

249 | background-color: #fff;

250 | border: 1px solid #D1D1D1;

251 | border-radius: 4px;

252 | box-shadow: none;

253 | box-sizing: border-box;

254 | font-family: inherit;

255 | font-size: inherit; /*https://stackoverflow.com/questions/6080413/why-doesnt-input-inherit-the-font-from-body*/}

256 | /* Removes awkward default styles on some inputs for iOS */

257 | input[type="email"],

258 | input[type="number"],

259 | input[type="search"],

260 | input[type="text"],

261 | input[type="tel"],

262 | input[type="url"],

263 | input[type="password"],

264 | textarea {

265 | -webkit-appearance: none;

266 | -moz-appearance: none;

267 | appearance: none; }

268 | textarea {

269 | min-height: 65px;

270 | padding-top: 6px;

271 | padding-bottom: 6px; }

272 | input[type="email"]:focus,

273 | input[type="number"]:focus,

274 | input[type="search"]:focus,

275 | input[type="text"]:focus,

276 | input[type="tel"]:focus,

277 | input[type="url"]:focus,

278 | input[type="password"]:focus,

279 | textarea:focus,

280 | select:focus {

281 | border: 1px solid #33C3F0;

282 | outline: 0; }

283 | label,

284 | legend {

285 | display: block;

286 | margin-bottom: 0px; }

287 | fieldset {

288 | padding: 0;

289 | border-width: 0; }

290 | input[type="checkbox"],

291 | input[type="radio"] {

292 | display: inline; }

293 | label > .label-body {

294 | display: inline-block;

295 | margin-left: .5rem;

296 | font-weight: normal; }

297 |

298 |

299 | /* Lists

300 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

301 | ul {

302 | list-style: circle inside; }

303 | ol {

304 | list-style: decimal inside; }

305 | ol, ul {

306 | padding-left: 0;

307 | margin-top: 0; }

308 | ul ul,

309 | ul ol,

310 | ol ol,

311 | ol ul {

312 | margin: 1.5rem 0 1.5rem 3rem;

313 | font-size: 90%; }

314 | li {

315 | margin-bottom: 1rem; }

316 |

317 |

318 | /* Tables

319 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

320 | table {

321 | border-collapse: collapse;

322 | }

323 | th:not(.CalendarDay),

324 | td:not(.CalendarDay) {

325 | padding: 12px 15px;

326 | text-align: left;

327 | border-bottom: 1px solid #E1E1E1; }

328 | th:first-child:not(.CalendarDay),

329 | td:first-child:not(.CalendarDay) {

330 | padding-left: 0; }

331 | th:last-child:not(.CalendarDay),

332 | td:last-child:not(.CalendarDay) {

333 | padding-right: 0; }

334 |

335 |

336 | /* Spacing

337 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

338 | button,

339 | .button {

340 | margin-bottom: 0rem; }

341 | input,

342 | textarea,

343 | select,

344 | fieldset {

345 | margin-bottom: 0rem; }

346 | pre,

347 | dl,

348 | figure,

349 | table,

350 | form {

351 | margin-bottom: 0rem; }

352 | p,

353 | ul,

354 | ol {

355 | margin-bottom: 0.75rem; }

356 |

357 | /* Utilities

358 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

359 | .u-full-width {

360 | width: 100%;

361 | box-sizing: border-box; }

362 | .u-max-full-width {

363 | max-width: 100%;

364 | box-sizing: border-box; }

365 | .u-pull-right {

366 | float: right; }

367 | .u-pull-left {

368 | float: left; }

369 |

370 |

371 | /* Misc

372 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

373 | hr {

374 | margin-top: 3rem;

375 | margin-bottom: 3.5rem;

376 | border-width: 0;

377 | border-top: 1px solid #E1E1E1; }

378 |

379 |

380 | /* Clearing

381 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

382 |

383 | /* Self Clearing Goodness */

384 | .container:after,

385 | .row:after,

386 | .u-cf {

387 | content: "";

388 | display: table;

389 | clear: both; }

390 |

391 |

392 | /* Media Queries

393 | –––––––––––––––––––––––––––––––––––––––––––––––––– */

394 | /*

395 | Note: The best way to structure the use of media queries is to create the queries

396 | near the relevant code. For example, if you wanted to change the styles for buttons

397 | on small devices, paste the mobile query code up in the buttons section and style it

398 | there.

399 | */

400 |

401 |

402 | /* Larger than mobile */

403 | @media (min-width: 400px) {}

404 |

405 | /* Larger than phablet (also point when grid becomes active) */

406 | @media (min-width: 550px) {}

407 |

408 | /* Larger than tablet */

409 | @media (min-width: 750px) {}

410 |

411 | /* Larger than desktop */

412 | @media (min-width: 1000px) {}

413 |

414 | /* Larger than Desktop HD */

415 | @media (min-width: 1200px) {}

--------------------------------------------------------------------------------

/app/model.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import tempfile

4 | import cv2

5 | from threading import Thread

6 | from time import sleep

7 | from glob import glob

8 |

9 | libpath = "/home/appuser/scripts/" # add the scripts directory to the import path so the dev repo stays in place, without linking

10 | sys.path.insert(1,libpath)

11 | import ObjectDetection.imutils as imu

12 | from ObjectDetection.detect import DetectSingle, TrackSequence, GroupSequence

13 | from ObjectDetection.inpaintRemote import InpaintRemote

14 |

15 | # ------------

16 | # helper functions

17 |

18 | class ThreadWithReturnValue(Thread):  # a Thread whose join() returns the target's result

19 |     def __init__(self, group=None, target=None, name=None,

20 |                  args=(), kwargs=None, Verbose=None):  # Verbose is unused

21 |         Thread.__init__(self, group, target, name, args, kwargs)

22 |         self._return = None

23 |     def run(self):

24 |         if self._target is not None:

25 |             self._return = self._target(*self._args, **self._kwargs)

26 |     def join(self, *args):

27 |         Thread.join(self, *args)

28 |         return self._return

29 |

30 |

31 | # for output bounding box post-processing

32 | #def box_cxcywh_to_xyxy(x):

33 | # x_c, y_c, w, h = x.unbind(1)

34 | # b = [(x_c - 0.5 * w), (y_c - 0.5 * h),

35 | # (x_c + 0.5 * w), (y_c + 0.5 * h)]

36 | # return torch.stack(b, dim=1)

37 |

38 | #def rescale_bboxes(out_bbox, size):

39 | # img_w, img_h = size

40 | # b = box_cxcywh_to_xyxy(out_bbox)

41 | # b = b * torch.tensor([img_w, img_h, img_w, img_h], dtype=torch.float32)

42 | # return b

43 |

44 |

45 | def detect_scores_bboxes_classes(im, model):

46 |     model.predict(im)  # run detection; results are stored on the model object

47 |     return model.scores, model.bboxes, model.selClassList

48 |

49 |

50 | #def filter_boxes(scores, boxes, confidence=0.7, apply_nms=True, iou=0.5):

51 | # keep = scores.max(-1).values > confidence

52 | # scores, boxes = scores[keep], boxes[keep]

53 | #

54 | # if apply_nms:

55 | # top_scores, labels = scores.max(-1)

56 | # keep = batched_nms(boxes, top_scores, labels, iou)

57 | # scores, boxes = scores[keep], boxes[keep]

58 | #

59 | # return scores, boxes

60 |