├── IMG
│   ├── EOTL-2D-CAR-312.png
│   ├── EOTL-2D-CYC-066.png
│   ├── EOTL-2D-PED-022.png
│   ├── EOTL-3D-CAR-276.png
│   ├── EOTL-3D-CYC-125.png
│   └── EOTL-3D-PED-091.png
├── README.md
├── autoware_tracker
│   ├── CMakeLists.txt
│   ├── LICENSE
│   ├── README.md
│   ├── config
│   │   └── params.yaml
│   ├── launch
│   │   ├── run.launch
│   │   └── rviz.rviz
│   ├── msg
│   │   ├── Centroids.msg
│   │   ├── CloudCluster.msg
│   │   ├── CloudClusterArray.msg
│   │   ├── DetectedObject.msg
│   │   └── DetectedObjectArray.msg
│   ├── package.xml
│   └── src
│       ├── detected_objects_visualizer
│       │   ├── visualize_detected_objects.cpp
│       │   ├── visualize_detected_objects.h
│       │   ├── visualize_detected_objects_main.cpp
│       │   ├── visualize_rects.cpp
│       │   ├── visualize_rects.h
│       │   └── visualize_rects_main.cpp
│       ├── libkitti
│       │   ├── kitti.cpp
│       │   └── kitti.h
│       ├── lidar_euclidean_cluster_detect
│       │   ├── cluster.cpp
│       │   ├── cluster.h
│       │   ├── gencolors.cpp
│       │   ├── lidar_euclidean_cluster_detect.cpp
│       │   └── lidar_euclidean_cluster_detect.cpp.old
│       └── lidar_imm_ukf_pda_track
│           ├── imm_ukf_pda.cpp
│           ├── imm_ukf_pda.cpp.old
│           ├── imm_ukf_pda.h
│           ├── imm_ukf_pda_main.cpp
│           ├── ukf.cpp
│           └── ukf.h
├── efficient_det_ros
│   ├── LICENSE
│   ├── __pycache__
│   │   └── backbone.cpython-37.pyc
│   ├── backbone.py
│   ├── benchmark
│   │   └── coco_eval_result
│   ├── coco_eval.py
│   ├── efficient_det_node.py
│   ├── efficientdet
│   │   ├── __pycache__
│   │   │   ├── model.cpython-37.pyc
│   │   │   └── utils.cpython-37.pyc
│   │   ├── config.py
│   │   ├── dataset.py
│   │   ├── loss.py
│   │   ├── model.py
│   │   └── utils.py
│   ├── efficientdet_test.py
│   ├── efficientdet_test_videos.py
│   ├── efficientnet
│   │   ├── __init__.py
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-37.pyc
│   │   │   ├── model.cpython-37.pyc
│   │   │   ├── utils.cpython-37.pyc
│   │   │   └── utils_extra.cpython-37.pyc
│   │   ├── model.py
│   │   ├── utils.py
│   │   └── utils_extra.py
│   ├── projects
│   │   ├── coco.yml
│   │   ├── kitti.yml
│   │   └── shape.yml
│   ├── readme.md
│   ├── train.py
│   ├── tutorial
│   │   └── train_shape.ipynb
│   ├── utils
│   │   ├── __pycache__
│   │   │   └── utils.cpython-37.pyc
│   │   ├── sync_batchnorm
│   │   │   ├── __init__.py
│   │   │   ├── __pycache__
│   │   │   │   ├── __init__.cpython-37.pyc
│   │   │   │   ├── batchnorm.cpython-37.pyc
│   │   │   │   ├── comm.cpython-37.pyc
│   │   │   │   └── replicate.cpython-37.pyc
│   │   │   ├── batchnorm.py
│   │   │   ├── batchnorm_reimpl.py
│   │   │   ├── comm.py
│   │   │   ├── replicate.py
│   │   │   └── unittest.py
│   │   └── utils.py
│   └── weights
│       └── efficientdet-d2.pth
├── kitti_camera_ros
│   ├── .gitignore
│   ├── .travis.yml
│   ├── CMakeLists.txt
│   ├── LICENSE
│   ├── README.md
│   ├── launch
│   │   └── kitti_camera_ros.launch
│   ├── package.xml
│   └── src
│       └── kitti_camera_ros.cpp
├── kitti_velodyne_ros
│   ├── .gitignore
│   ├── .travis.yml
│   ├── CMakeLists.txt
│   ├── LICENSE
│   ├── README.md
│   ├── launch
│   │   ├── kitti_velodyne_ros.launch
│   │   ├── kitti_velodyne_ros.rviz
│   │   ├── kitti_velodyne_ros_loam.launch
│   │   └── kitti_velodyne_ros_loam.rviz
│   ├── package.xml
│   └── src
│       └── kitti_velodyne_ros.cpp
├── launch
│   ├── efficient_online_learning.launch
│   └── efficient_online_learning.rviz
├── online_forests_ros
│   ├── CMakeLists.txt
│   ├── LICENSE
│   ├── README.md
│   ├── config
│   │   └── orf.conf
│   ├── doc
│   │   └── 2009-OnlineRandomForests.pdf
│   ├── include
│   │   └── online_forests
│   │       ├── classifier.h
│   │       ├── data.h
│   │       ├── hyperparameters.h
│   │       ├── onlinenode.h
│   │       ├── onlinerf.h
│   │       ├── onlinetree.h
│   │       ├── randomtest.h
│   │       └── utilities.h
│   ├── launch
│   │   └── online_forests_ros.launch
│   ├── model
│   │   ├── dna-test.libsvm
│   │   └── dna-train.libsvm
│   ├── package.xml
│   └── src
│       ├── online_forests
│       │   ├── Online-Forest.cpp
│       │   ├── classifier.cpp
│       │   ├── data.cpp
│       │   ├── hyperparameters.cpp
│       │   ├── onlinenode.cpp
│       │   ├── onlinerf.cpp
│       │   ├── onlinetree.cpp
│       │   ├── randomtest.cpp
│       │   └── utilities.cpp
│       └── online_forests_ros.cpp
├── online_svm_ros
│   ├── CMakeLists.txt
│   ├── LICENSE
│   ├── README.md
│   ├── config
│   │   └── svm.yaml
│   ├── launch
│   │   └── online_svm_ros.launch
│   ├── package.xml
│   └── src
│       └── online_svm_ros.cpp
└── point_cloud_features
    ├── CMakeLists.txt
    ├── LICENSE
    ├── README.md
    ├── include
    │   └── point_cloud_features
    │       └── point_cloud_features.h
    ├── launch
    │   └── point_cloud_feature_extractor.launch
    ├── package.xml
    └── src
        ├── point_cloud_feature_extractor.cpp
        └── point_cloud_features
            └── point_cloud_features.cpp

/IMG/EOTL-2D-CAR-312.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-2D-CAR-312.png

--------------------------------------------------------------------------------

/IMG/EOTL-2D-CYC-066.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-2D-CYC-066.png

--------------------------------------------------------------------------------

/IMG/EOTL-2D-PED-022.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-2D-PED-022.png

--------------------------------------------------------------------------------

/IMG/EOTL-3D-CAR-276.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-3D-CAR-276.png

--------------------------------------------------------------------------------

/IMG/EOTL-3D-CYC-125.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-3D-CYC-125.png

--------------------------------------------------------------------------------

/IMG/EOTL-3D-PED-091.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/IMG/EOTL-3D-PED-091.png

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

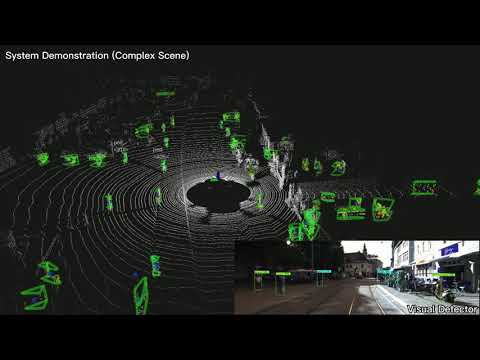

1 | # Efficient Online Transfer Learning for 3D Object Classification in Autonomous Driving #

2 |

3 | *We are actively updating this repository (especially removing hard-coded values and adding comments) to make it easier to use. If you have any questions, please open an issue. Thanks!*

4 |

5 | This is a ROS-based efficient online learning framework for object classification in 3D LiDAR scans, taking advantage of robust multi-target tracking to avoid the need for data annotation by a human expert.

6 | The system has only been tested on Ubuntu 18.04 with ROS Melodic (compilation fails on Ubuntu 20.04 with ROS Noetic).

7 |

8 | Please watch the videos below for more details.

9 |

10 | [Watch the video on YouTube](https://www.youtube.com/watch?v=wl5ehOFV5Ac)

11 |

12 | ## NEWS

13 | [2023-01-11] Our results on the KITTI 3D object detection benchmark rank 276th, **91st**, and 125th for car, pedestrian, and cyclist, respectively.

14 |

15 | 3D Object Detection

16 |

17 | *CAR*

18 |

19 |

20 | *PEDESTRIAN*

21 |

22 |

23 | *CYCLIST*

24 |

25 |

26 |

27 | [2023-01-11] Our evaluation results in KITTI 2D OBJECT DETECTION achieved rankings of **22** on pedestrian and **66** on cyclist!

28 |

29 | 2D Object Detection

30 |

31 | *CAR*

32 |

33 |

34 | *PEDESTRIAN*

35 |

36 |

37 | *CYCLIST*

38 |

39 |

40 |

41 | ## Install & Build

42 | Please read the readme file of each sub-package first and install the corresponding dependencies.

43 |

44 | ## Run

45 | ### 1. Prepare dataset

46 | * (Optional) Download the [raw data](http://www.cvlibs.net/datasets/kitti/raw_data.php) from KITTI.

47 |

48 | * (Optional) Download the [sample data](https://github.com/epan-utbm/efficient_online_learning/releases/download/sample_data/2011_09_26_drive_0005_sync.tar) for testing.

49 |

50 | * (Optional) Prepare a customized dataset according to the format of the sample data.

51 |

52 | ### 2. Manually set the path parameters

53 | * `launch/efficient_online_learning`

54 | * `autoware_tracker/config/params.yaml`

55 |

56 | ### 3. Run the project

57 | ```sh

58 | cd catkin_ws

59 | source devel/setup.bash

60 | roslaunch src/efficient_online_learning/launch/efficient_online_learning.launch

61 | ```

62 |

63 | ## Citation

64 |

65 | If you use this code, please cite the following:

66 |

67 | ```

68 | @article{yangr23sensors,

69 | author = {Rui Yang and Zhi Yan and Tao Yang and Yaonan Wang and Yassine Ruichek},

70 | title = {Efficient Online Transfer Learning for Road Participants Detection in Autonomous Driving},

71 | journal = {IEEE Sensors Journal},

72 | volume = {23},

73 | number = {19},

74 | pages = {23522--23535},

75 | year = {2023}

76 | }

77 |

78 | @inproceedings{yangr21itsc,

79 | title={Efficient online transfer learning for 3D object classification in autonomous driving},

80 | author={Rui Yang and Zhi Yan and Tao Yang and Yassine Ruichek},

81 | booktitle = {Proceedings of the 2021 IEEE International Conference on Intelligent Transportation Systems (ITSC)},

82 | pages = {2950--2957},

83 | address = {Indianapolis, USA},

84 | month = {September},

85 | year = {2021}

86 | }

87 | ```

88 |

--------------------------------------------------------------------------------

/autoware_tracker/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | cmake_minimum_required(VERSION 2.8.3)

2 | project(autoware_tracker)

3 |

4 | set(CMAKE_BUILD_TYPE "Release")

5 | set(CMAKE_CXX_FLAGS "-std=c++11")

6 | set(CMAKE_CXX_FLAGS_RELEASE "-O3 -Wall -g -pthread")

7 |

8 | find_package(catkin REQUIRED COMPONENTS

9 | tf

10 | pcl_ros

11 | roscpp

12 | std_msgs

13 | sensor_msgs

14 | geometry_msgs

15 | visualization_msgs

16 | cv_bridge

17 | image_transport

18 | jsk_recognition_msgs

19 | jsk_rviz_plugins

20 | message_generation

21 | )

22 |

23 | # Messages

24 | add_message_files(

25 | DIRECTORY msg

26 | FILES

27 | Centroids.msg

28 | CloudCluster.msg

29 | CloudClusterArray.msg

30 | DetectedObject.msg

31 | DetectedObjectArray.msg

32 | )

33 | generate_messages(

34 | DEPENDENCIES

35 | geometry_msgs

36 | jsk_recognition_msgs

37 | sensor_msgs

38 | std_msgs

39 | )

40 |

41 | catkin_package(

42 | #INCLUDE_DIRS include

43 | CATKIN_DEPENDS

44 | tf

45 | pcl_ros

46 | roscpp

47 | std_msgs

48 | sensor_msgs

49 | geometry_msgs

50 | visualization_msgs

51 | cv_bridge

52 | image_transport

53 | jsk_recognition_msgs

54 | jsk_rviz_plugins

55 | message_runtime

56 | message_generation

57 | )

58 |

59 |

60 | find_package(OpenMP)

61 | find_package(OpenCV REQUIRED)

62 | find_package(Eigen3 QUIET)

63 |

64 | include_directories(

65 | #include

66 | ${catkin_INCLUDE_DIRS}

67 | ${OpenCV_INCLUDE_DIRS}

68 | )

69 |

70 | add_executable(visualize_detected_objects

71 | src/detected_objects_visualizer/visualize_detected_objects_main.cpp

72 | src/detected_objects_visualizer/visualize_detected_objects.cpp

73 | )

74 | add_dependencies(visualize_detected_objects ${catkin_EXPORTED_TARGETS} ${PROJECT_NAME}_generate_messages_cpp)

75 | target_link_libraries(visualize_detected_objects ${OpenCV_LIBRARIES} ${EIGEN3_LIBRARIES} ${catkin_LIBRARIES})

76 |

77 | add_executable(lidar_euclidean_cluster_detect

78 | src/lidar_euclidean_cluster_detect/lidar_euclidean_cluster_detect.cpp

79 | src/lidar_euclidean_cluster_detect/cluster.cpp

80 | src/libkitti/kitti.cpp)

81 | add_dependencies(lidar_euclidean_cluster_detect ${catkin_EXPORTED_TARGETS} ${PROJECT_NAME}_generate_messages_cpp)

82 | target_link_libraries(lidar_euclidean_cluster_detect ${OpenCV_LIBRARIES} ${catkin_LIBRARIES})

83 |

84 | add_executable(imm_ukf_pda

85 | src/lidar_imm_ukf_pda_track/imm_ukf_pda_main.cpp

86 | src/lidar_imm_ukf_pda_track/imm_ukf_pda.h

87 | src/lidar_imm_ukf_pda_track/imm_ukf_pda.cpp

88 | src/lidar_imm_ukf_pda_track/ukf.cpp

89 | )

90 | add_dependencies(imm_ukf_pda ${catkin_EXPORTED_TARGETS} ${PROJECT_NAME}_generate_messages_cpp)

91 | target_link_libraries(imm_ukf_pda ${catkin_LIBRARIES})

92 |

93 | install(DIRECTORY include/${PROJECT_NAME}/

94 | DESTINATION ${CATKIN_PACKAGE_INCLUDE_DESTINATION}

95 | FILES_MATCHING PATTERN "*.h"

96 | PATTERN ".svn" EXCLUDE)

97 |

--------------------------------------------------------------------------------

/autoware_tracker/README.md:

--------------------------------------------------------------------------------

1 | # autoware_tracker

2 |

3 | This package is forked from [https://github.com/TixiaoShan/autoware_tracker](https://github.com/TixiaoShan/autoware_tracker); the original README follows the dividing line.

4 |

5 | [2020-10-xx]: Added "automatic annotation" for point clouds. Please install the dependency first: `$ sudo apt install ros-melodic-vision-msgs`.

6 |

7 | [2020-09-18]: Added "intensity" to the points, which is essential for our online learning system, as the intensity can help us distinguish objects.

8 |

9 | ---

10 |

11 | # Readme

12 |

13 | Barebone package for point cloud object tracking used in Autoware. The package is only tested in Ubuntu 16.04 and ROS Kinetic. No deep learning is used.

14 |

15 | # Install JSK

16 | ```

17 | sudo apt-get install ros-kinetic-jsk-recognition-msgs

18 | sudo apt-get install ros-kinetic-jsk-rviz-plugins

19 | ```

20 |

21 | # Compile

22 | ```

23 | cd ~/catkin_ws/src

24 | git clone https://github.com/TixiaoShan/autoware_tracker.git

25 | cd ~/catkin_ws

26 | catkin_make -j1

27 | ```

28 | `-j1` is only needed for message generation during the first build.

29 |

30 | # Sample data

31 |

32 | If you don't have any bag files handy, you can download a sample bag with:

33 | ```

34 | wget https://autoware-ai.s3.us-east-2.amazonaws.com/sample_moriyama_150324.tar.gz

35 | ```

36 |

37 | # Demo

38 |

39 | Run the autoware tracker:

40 | ```

41 | roslaunch autoware_tracker run.launch

42 | ```

43 |

44 | Play the sample ros bag:

45 | ```

46 | rosbag play sample_moriyama_150324.bag

47 | ```

48 |

--------------------------------------------------------------------------------

/autoware_tracker/config/params.yaml:

--------------------------------------------------------------------------------

1 | autoware_tracker:

2 |

3 | cluster:

4 | label_source: "/image_detections"

5 | extrinsic_calibration: "/home/epan/Rui/datasets/2011_09_26_drive_0005_sync/calib_2011_09_26.txt"

6 | iou_threshold: 0.5

7 |

8 | points_node: "/points_raw"

9 | output_frame: "velodyne"

10 |

11 | remove_ground: true

12 |

13 | downsample_cloud: true

14 | leaf_size: 0.1

15 |

16 | use_multiple_thres: true

17 |

18 | cluster_size_min: 20

19 | cluster_size_max: 100000

20 | clip_min_height: -2.0

21 | clip_max_height: 0.0

22 | cluster_merge_threshold: 1.5

23 | clustering_distance: 0.75

24 | remove_points_min: 2.0

25 | remove_points_max: 100.0

26 |

27 | keep_lanes: false

28 | keep_lane_left_distance: 5.0

29 | keep_lane_right_distance: 5.0

30 |

31 | use_diffnormals: false

32 | pose_estimation: true

33 |

34 | tracker:

35 | gating_thres: 9.22

36 | gate_probability: 0.99

37 | detection_probability: 0.9

38 | life_time_thres: 8

39 | static_velocity_thres: 0.5

40 | static_num_history_thres: 3

41 | prevent_explosion_thres: 1000

42 | merge_distance_threshold: 0.5

43 | use_sukf: false

44 |

45 | tracking_frame: "/world"

46 | # namespace: /detection/object_tracker/

47 | track_probability: 0.7

48 |
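
The tracker values `gating_thres: 9.22` and `gate_probability: 0.99` above appear to be related. For a chi-square distribution with 2 degrees of freedom, the quantile has the closed form `-2 * ln(1 - p)`, which gives roughly 9.21 for `p = 0.99`. The sketch below only illustrates that relationship. The 2-degree-of-freedom assumption and the function names `chi2_gate_2dof` and `inside_gate` are hypothetical and are not taken from the package, which may use these parameters differently.

```python
import math


def chi2_gate_2dof(gate_probability: float) -> float:
    """Chi-square quantile for 2 degrees of freedom.

    Solves 1 - exp(-x / 2) = p for x. The 2-DOF choice is an assumption
    (e.g. a 2D position measurement), not something the source states.
    """
    if not 0.0 <= gate_probability < 1.0:
        raise ValueError("gate_probability must be in [0, 1)")
    return -2.0 * math.log(1.0 - gate_probability)


def inside_gate(mahalanobis_sq: float, gating_thres: float = 9.22) -> bool:
    """Accept a measurement whose squared Mahalanobis distance is within the gate."""
    return mahalanobis_sq <= gating_thres


print(round(chi2_gate_2dof(0.99), 2))  # 9.21
```

If this reading is right, changing `gate_probability` without adjusting `gating_thres` would leave the two values inconsistent, so it may be worth checking how the tracker node consumes them before tuning either.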

--------------------------------------------------------------------------------

/autoware_tracker/launch/run.launch:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/autoware_tracker/launch/rviz.rviz:

--------------------------------------------------------------------------------

1 | Panels:

2 | - Class: rviz/Displays

3 | Help Height: 0

4 | Name: Displays

5 | Property Tree Widget:

6 | Expanded:

7 | - /Global Options1

8 | - /MarkerArray1

9 | - /MarkerArray1/Namespaces1

10 | Splitter Ratio: 0.505813956

11 | Tree Height: 1124

12 | - Class: rviz/Selection

13 | Name: Selection

14 | - Class: rviz/Tool Properties

15 | Expanded:

16 | - /2D Pose Estimate1

17 | - /2D Nav Goal1

18 | - /Publish Point1

19 | Name: Tool Properties

20 | Splitter Ratio: 0.588679016

21 | - Class: rviz/Views

22 | Expanded:

23 | - /Current View1

24 | Name: Views

25 | Splitter Ratio: 0.5

26 | - Class: rviz/Time

27 | Experimental: false

28 | Name: Time

29 | SyncMode: 0

30 | SyncSource: Point cluster

31 | Toolbars:

32 | toolButtonStyle: 2

33 | Visualization Manager:

34 | Class: ""

35 | Displays:

36 | - Class: rviz/Axes

37 | Enabled: true

38 | Length: 1

39 | Name: Axes

40 | Radius: 0.300000012

41 | Reference Frame:

42 | Value: true

43 | - Class: rviz/Group

44 | Displays:

45 | - Alpha: 1

46 | Autocompute Intensity Bounds: true

47 | Autocompute Value Bounds:

48 | Max Value: 10

49 | Min Value: -10

50 | Value: true

51 | Axis: Z

52 | Channel Name: intensity

53 | Class: rviz/PointCloud2

54 | Color: 255; 255; 255

55 | Color Transformer: Intensity

56 | Decay Time: 0

57 | Enabled: true

58 | Invert Rainbow: false

59 | Max Color: 255; 255; 255

60 | Max Intensity: 142

61 | Min Color: 0; 0; 0

62 | Min Intensity: 0

63 | Name: Point cloud

64 | Position Transformer: XYZ

65 | Queue Size: 10

66 | Selectable: true

67 | Size (Pixels): 1

68 | Size (m): 0.00999999978

69 | Style: Points

70 | Topic: /points_raw

71 | Unreliable: false

72 | Use Fixed Frame: true

73 | Use rainbow: true

74 | Value: true

75 | - Alpha: 1

76 | Autocompute Intensity Bounds: true

77 | Autocompute Value Bounds:

78 | Max Value: 10

79 | Min Value: -10

80 | Value: true

81 | Axis: Z

82 | Channel Name: intensity

83 | Class: rviz/PointCloud2

84 | Color: 255; 255; 255

85 | Color Transformer: RGB8

86 | Decay Time: 0

87 | Enabled: true

88 | Invert Rainbow: false

89 | Max Color: 255; 255; 255

90 | Max Intensity: 4096

91 | Min Color: 0; 0; 0

92 | Min Intensity: 0

93 | Name: Point cluster

94 | Position Transformer: XYZ

95 | Queue Size: 10

96 | Selectable: true

97 | Size (Pixels): 5

98 | Size (m): 0.00999999978

99 | Style: Points

100 | Topic: /autoware_tracker/cluster/points_cluster

101 | Unreliable: false

102 | Use Fixed Frame: true

103 | Use rainbow: true

104 | Value: true

105 | Enabled: true

106 | Name: Lidar cluster

107 | - Class: rviz/MarkerArray

108 | Enabled: true

109 | Marker Topic: /autoware_tracker/visualizer/objects

110 | Name: MarkerArray

111 | Namespaces:

112 | arrow_markers: true

113 | box_markers: false

114 | centroid_markers: true

115 | hull_markers: true

116 | label_markers: true

117 | Queue Size: 100

118 | Value: true

119 | Enabled: true

120 | Global Options:

121 | Background Color: 48; 48; 48

122 | Default Light: true

123 | Fixed Frame: velodyne

124 | Frame Rate: 30

125 | Name: root

126 | Tools:

127 | - Class: rviz/Interact

128 | Hide Inactive Objects: true

129 | - Class: rviz/MoveCamera

130 | - Class: rviz/Select

131 | - Class: rviz/FocusCamera

132 | - Class: rviz/Measure

133 | - Class: rviz/SetInitialPose

134 | Topic: /initialpose

135 | - Class: rviz/SetGoal

136 | Topic: /move_base_simple/goal

137 | - Class: rviz/PublishPoint

138 | Single click: true

139 | Topic: /clicked_point

140 | Value: true

141 | Views:

142 | Current:

143 | Class: rviz/Orbit

144 | Distance: 48.6941605

145 | Enable Stereo Rendering:

146 | Stereo Eye Separation: 0.0599999987

147 | Stereo Focal Distance: 1

148 | Swap Stereo Eyes: false

149 | Value: false

150 | Focal Point:

151 | X: 0

152 | Y: 0

153 | Z: 0

154 | Focal Shape Fixed Size: true

155 | Focal Shape Size: 0.0500000007

156 | Invert Z Axis: false

157 | Name: Current View

158 | Near Clip Distance: 0.00999999978

159 | Pitch: 0.869796932

160 | Target Frame: base_link

161 | Value: Orbit (rviz)

162 | Yaw: 2.58810186

163 | Saved: ~

164 | Window Geometry:

165 | Displays:

166 | collapsed: false

167 | Height: 1274

168 | Hide Left Dock: false

169 | Hide Right Dock: true

170 | QMainWindow State: 000000ff00000000fd000000040000000000000206000004abfc020000000efb0000001200530065006c0065006300740069006f006e00000001e10000009b0000006300fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afc0000002e000004ab000000dd00fffffffa000000020100000003fb0000000a0049006d0061006700650000000000ffffffff0000000000000000fb0000000c00430061006d0065007200610000000000ffffffff0000000000000000fb000000100044006900730070006c0061007900730100000000000001360000017900fffffffb0000000a0049006d006100670065010000028e000000d20000000000000000fb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb000000120049006d006100670065005f0072006100770000000000ffffffff0000000000000000fb0000000c00430061006d006500720061000000024e000001710000000000000000fb000000120049006d00610067006500200052006100770100000421000000160000000000000000fb0000000a0049006d00610067006501000002f4000000cb0000000000000000fb0000000a0049006d006100670065010000056c0000026c0000000000000000000000010000016300000313fc0200000003fb0000000a00560069006500770073000000002e00000313000000b700fffffffb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000001200530065006c0065006300740069006f006e010000025a000000b20000000000000000000000020000073f000000a8fc0100000001fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000006400000005cfc0100000002fb0000000800540069006d00650000000000000006400000038300fffffffb0000000800540069006d00650100000000000004500000000000000000000006ae000004ab00000004000000040000000800000008fc000000010
0000002000000010000000a0054006f006f006c00730100000000ffffffff0000000000000000

171 | Selection:

172 | collapsed: false

173 | Time:

174 | collapsed: false

175 | Tool Properties:

176 | collapsed: false

177 | Views:

178 | collapsed: true

179 | Width: 2235

180 | X: 692

181 | Y: 316

182 |

--------------------------------------------------------------------------------

/autoware_tracker/msg/Centroids.msg:

--------------------------------------------------------------------------------

1 | std_msgs/Header header

2 | geometry_msgs/Point[] points

3 |

--------------------------------------------------------------------------------

/autoware_tracker/msg/CloudCluster.msg:

--------------------------------------------------------------------------------

1 | std_msgs/Header header

2 |

3 | uint32 id

4 | string label

5 | float64 score

6 |

7 | sensor_msgs/PointCloud2 cloud

8 |

9 | geometry_msgs/PointStamped min_point

10 | geometry_msgs/PointStamped max_point

11 | geometry_msgs/PointStamped avg_point

12 | geometry_msgs/PointStamped centroid_point

13 |

14 | float64 estimated_angle

15 |

16 | geometry_msgs/Vector3 dimensions

17 | geometry_msgs/Vector3 eigen_values

18 | geometry_msgs/Vector3[] eigen_vectors

19 |

20 | #Array of 33 floats containing the FPFH descriptor

21 | std_msgs/Float32MultiArray fpfh_descriptor

22 |

23 | jsk_recognition_msgs/BoundingBox bounding_box

24 | geometry_msgs/PolygonStamped convex_hull

25 |

26 | # Indicator information

27 | # INDICATOR_LEFT 0

28 | # INDICATOR_RIGHT 1

29 | # INDICATOR_BOTH 2

30 | # INDICATOR_NONE 3

31 | uint32 indicator_state
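
To make the geometric fields of `CloudCluster.msg` more concrete, here is a minimal sketch of how `min_point`, `max_point`, `avg_point`, and `dimensions` could be derived from a cluster's points. It is an assumption about the meaning of these fields based on their names alone; the helper `cluster_summary` is hypothetical and the package's actual computation (including how `centroid_point` differs from `avg_point`) is not shown in this section.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def cluster_summary(points: List[Point]) -> Dict[str, Point]:
    """Axis-aligned summary of a cluster: per-axis min, max, mean, and extent."""
    if not points:
        raise ValueError("cluster has no points")
    xs, ys, zs = zip(*points)
    min_pt = (min(xs), min(ys), min(zs))
    max_pt = (max(xs), max(ys), max(zs))
    n = len(points)
    avg_pt = (sum(xs) / n, sum(ys) / n, sum(zs) / n)
    # Extent along each axis; assumed to correspond to the `dimensions` field.
    dims = tuple(hi - lo for lo, hi in zip(min_pt, max_pt))
    return {
        "min_point": min_pt,
        "max_point": max_pt,
        "avg_point": avg_pt,
        "dimensions": dims,
    }


summary = cluster_summary([(0.0, 0.0, 0.0), (2.0, 4.0, 6.0)])
print(summary["avg_point"])  # (1.0, 2.0, 3.0)
```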

--------------------------------------------------------------------------------

/autoware_tracker/msg/CloudClusterArray.msg:

--------------------------------------------------------------------------------

1 | std_msgs/Header header

2 | CloudCluster[] clusters

--------------------------------------------------------------------------------

/autoware_tracker/msg/DetectedObject.msg:

--------------------------------------------------------------------------------

1 | std_msgs/Header header

2 |

3 | uint32 id

4 | string label

5 | float32 score #Score as defined by the detection, Optional

6 | std_msgs/ColorRGBA color # Define this object specific color

7 | bool valid # Defines if this object is valid, or invalid as defined by the filtering

8 |

9 | ################ 3D BB

10 | string space_frame #3D Space coordinate frame of the object, required if pose and dimensions are defined

11 | geometry_msgs/Pose pose

12 | geometry_msgs/Vector3 dimensions

13 | geometry_msgs/Vector3 variance

14 | geometry_msgs/Twist velocity

15 | geometry_msgs/Twist acceleration

16 |

17 | sensor_msgs/PointCloud2 pointcloud

18 |

19 | geometry_msgs/PolygonStamped convex_hull

20 | # autoware_msgs/LaneArray candidate_trajectories

21 |

22 | bool pose_reliable

23 | bool velocity_reliable

24 | bool acceleration_reliable

25 |

26 | ############### 2D Rect

27 | string image_frame # Image coordinate Frame, Required if x,y,w,h defined

28 | int32 x # X coord in image space(pixel) of the initial point of the Rect

29 | int32 y # Y coord in image space(pixel) of the initial point of the Rect

30 | int32 width # Width of the Rect in pixels

31 | int32 height # Height of the Rect in pixels

32 | float32 angle # Angle [0 to 2*PI), allow rotated rects

33 |

34 | sensor_msgs/Image roi_image

35 |

36 | ############### Indicator information

37 | uint8 indicator_state # INDICATOR_LEFT = 0, INDICATOR_RIGHT = 1, INDICATOR_BOTH = 2, INDICATOR_NONE = 3

38 |

39 | ############### Behavior State of the Detected Object

40 | uint8 behavior_state # FORWARD_STATE = 0, STOPPING_STATE = 1, BRANCH_LEFT_STATE = 2, BRANCH_RIGHT_STATE = 3, YIELDING_STATE = 4, ACCELERATING_STATE = 5, SLOWDOWN_STATE = 6

41 |

42 | #

43 | string[] user_defined_info

44 |

45 | # yang21itsc

46 | bool last_sample
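
The `indicator_state` and `behavior_state` fields are plain `uint8` values whose meanings are documented only in comments. A subscriber written in Python might wrap them in enums so the numeric codes are not scattered through the code. The sketch below copies the values from the comments in `DetectedObject.msg`; the class names and the `describe` helper are hypothetical and not part of the package.

```python
from enum import IntEnum


class IndicatorState(IntEnum):
    # Values as listed in the indicator_state comment of DetectedObject.msg.
    LEFT = 0
    RIGHT = 1
    BOTH = 2
    NONE = 3


class BehaviorState(IntEnum):
    # Values as listed in the behavior_state comment of DetectedObject.msg.
    FORWARD = 0
    STOPPING = 1
    BRANCH_LEFT = 2
    BRANCH_RIGHT = 3
    YIELDING = 4
    ACCELERATING = 5
    SLOWDOWN = 6


def describe(indicator_state: int, behavior_state: int) -> str:
    """Turn the raw uint8 codes into a readable label; raises ValueError on unknown codes."""
    return f"{IndicatorState(indicator_state).name}/{BehaviorState(behavior_state).name}"


print(describe(3, 0))  # NONE/FORWARD
```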

--------------------------------------------------------------------------------

/autoware_tracker/msg/DetectedObjectArray.msg:

--------------------------------------------------------------------------------

1 | std_msgs/Header header

2 | DetectedObject[] objects
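
Each `DetectedObject` carries a 2D rectangle (`x`, `y`, `width`, `height`), and `params.yaml` sets `iou_threshold: 0.5` for the cluster node. A plausible use, assumed here rather than confirmed by the source, is to match image detections against projected clusters by intersection over union. The `Rect` class and `iou` function below are hypothetical illustrations of that computation for axis-aligned rectangles; the `angle` field is ignored.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    # Mirrors the 2D Rect fields of DetectedObject.msg (pixels).
    x: int
    y: int
    width: int
    height: int


def iou(a: Rect, b: Rect) -> float:
    """Intersection over union of two axis-aligned rectangles."""
    overlap_w = max(0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    overlap_h = max(0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    inter = overlap_w * overlap_h
    union = a.width * a.height + b.width * b.height - inter
    return inter / union if union > 0 else 0.0


a = Rect(0, 0, 10, 10)
b = Rect(5, 0, 10, 10)
print(iou(a, b) >= 0.5)  # False: overlap is 50 px^2 of a 150 px^2 union
```

With a threshold of 0.5, two equally sized boxes must overlap by at least two thirds of their area to count as a match, which is a fairly strict criterion.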

--------------------------------------------------------------------------------

/autoware_tracker/package.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 | autoware_tracker

4 | 1.0.0

5 | The autoware tracker package

6 | Tixiao Shan

7 | Apache 2

8 |

9 | catkin

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 | jsk_recognition_msgs

20 | jsk_recognition_msgs

21 |

22 |

23 | pcl_ros

24 | roscpp

25 | geometry_msgs

26 | std_msgs

27 | sensor_msgs

28 | tf

29 | visualization_msgs

30 | cv_bridge

31 | image_transport

32 |

33 |

34 | pcl_ros

35 | roscpp

36 | geometry_msgs

37 | std_msgs

38 | sensor_msgs

39 | tf

40 | visualization_msgs

41 | cv_bridge

42 | image_transport

43 |

44 |

45 |

46 |

47 | jsk_rviz_plugins

48 |

49 |

50 |

51 | jsk_rviz_plugins

52 |

53 |

54 | message_generation

55 | message_generation

56 | message_runtime

57 | message_runtime

58 |

59 |

60 |

61 |

62 |

63 |

64 |

65 |

66 |

67 |

68 |

--------------------------------------------------------------------------------

/autoware_tracker/src/detected_objects_visualizer/visualize_detected_objects.h:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | *

16 | ********************

17 | * v1.0: amc-nu (abrahammonrroy@yahoo.com)

18 | */

19 |

20 | #ifndef _VISUALIZEDETECTEDOBJECTS_H

21 | #define _VISUALIZEDETECTEDOBJECTS_H

22 |

23 | #include

24 | #include

25 | #include

26 | #include

27 | #include

28 |

29 | #include

30 | #include

31 |

32 | #include

33 |

34 | #include

35 | #include

36 |

37 | #include "autoware_tracker/DetectedObject.h"

38 | #include "autoware_tracker/DetectedObjectArray.h"

39 |

40 | #define __APP_NAME__ "visualize_detected_objects"

41 |

42 | class VisualizeDetectedObjects

43 | {

44 | private:

45 | const double arrow_height_;

46 | const double label_height_;

47 | const double object_max_linear_size_ = 50.;

48 | double object_speed_threshold_;

49 | double arrow_speed_threshold_;

50 | double marker_display_duration_;

51 |

52 | int marker_id_;

53 |

54 | std_msgs::ColorRGBA label_color_, box_color_, hull_color_, arrow_color_, centroid_color_, model_color_;

55 |

56 | std::string input_topic_, ros_namespace_;

57 |

58 | ros::NodeHandle node_handle_;

59 | ros::Subscriber subscriber_detected_objects_;

60 |

61 | ros::Publisher publisher_markers_;

62 |

63 | visualization_msgs::MarkerArray ObjectsToLabels(const autoware_tracker::DetectedObjectArray &in_objects);

64 |

65 | visualization_msgs::MarkerArray ObjectsToArrows(const autoware_tracker::DetectedObjectArray &in_objects);

66 |

67 | visualization_msgs::MarkerArray ObjectsToBoxes(const autoware_tracker::DetectedObjectArray &in_objects);

68 |

69 | visualization_msgs::MarkerArray ObjectsToModels(const autoware_tracker::DetectedObjectArray &in_objects);

70 |

71 | visualization_msgs::MarkerArray ObjectsToHulls(const autoware_tracker::DetectedObjectArray &in_objects);

72 |

73 | visualization_msgs::MarkerArray ObjectsToCentroids(const autoware_tracker::DetectedObjectArray &in_objects);

74 |

75 | std::string ColorToString(const std_msgs::ColorRGBA &in_color);

76 |

77 | void DetectedObjectsCallback(const autoware_tracker::DetectedObjectArray &in_objects);

78 |

79 | bool IsObjectValid(const autoware_tracker::DetectedObject &in_object);

80 |

81 | float CheckColor(double value);

82 |

83 | float CheckAlpha(double value);

84 |

85 | std_msgs::ColorRGBA ParseColor(const std::vector<double> &in_color);

86 |

87 | public:

88 | VisualizeDetectedObjects();

89 | };

90 |

91 | #endif // _VISUALIZEDETECTEDOBJECTS_H

92 |

--------------------------------------------------------------------------------

/autoware_tracker/src/detected_objects_visualizer/visualize_detected_objects_main.cpp:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | *

16 | ********************

17 | * v1.0: amc-nu (abrahammonrroy@yahoo.com)

18 | */

19 |

20 | #include "visualize_detected_objects.h"

21 |

22 | int main(int argc, char** argv)

23 | {

24 | ros::init(argc, argv, "visualize_detected_objects");

25 | ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Warn);

26 |

27 | VisualizeDetectedObjects app;

28 | ros::spin();

29 |

30 | return 0;

31 | }

32 |

--------------------------------------------------------------------------------

/autoware_tracker/src/detected_objects_visualizer/visualize_rects.cpp:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | *

16 | ********************

17 | * v1.0: amc-nu (abrahammonrroy@yahoo.com)

18 | */

19 |

20 | #include "visualize_rects.h"

21 |

22 | VisualizeRects::VisualizeRects()

23 | {

24 | ros::NodeHandle private_nh_("~");

25 |

26 | ros::NodeHandle nh;

27 |

28 | std::string image_src_topic;

29 | std::string object_src_topic;

30 | std::string image_out_topic;

31 |

32 | private_nh_.param<std::string>("image_src", image_src_topic, "/image_raw");

33 | private_nh_.param<std::string>("object_src", object_src_topic, "/detection/image_detector/objects");

34 | private_nh_.param<std::string>("image_out", image_out_topic, "/image_rects");

35 |

36 | //get namespace from topic

37 | std::string ros_namespace = image_src_topic;

38 | std::size_t found_pos = ros_namespace.rfind("/");//find last / from topic name to extract namespace

39 | std::cout << ros_namespace << std::endl;

40 | if (found_pos!=std::string::npos)

41 | ros_namespace.erase(found_pos, ros_namespace.length()-found_pos);

42 | std::cout << ros_namespace << std::endl;

43 | image_out_topic = ros_namespace + image_out_topic;

44 |

45 | image_filter_subscriber_ = new message_filters::Subscriber<sensor_msgs::Image>(private_nh_,

46 | image_src_topic,

47 | 1);

48 | ROS_INFO("[%s] image_src: %s", __APP_NAME__, image_src_topic.c_str());

49 | detection_filter_subscriber_ = new message_filters::Subscriber<autoware_tracker::DetectedObjectArray>(private_nh_,

50 | object_src_topic,

51 | 1);

52 | ROS_INFO("[%s] object_src: %s", __APP_NAME__, object_src_topic.c_str());

53 |

54 | detections_synchronizer_ =

55 | new message_filters::Synchronizer<SyncPolicyT>(SyncPolicyT(10),

56 | *image_filter_subscriber_,

57 | *detection_filter_subscriber_);

58 | detections_synchronizer_->registerCallback(

59 | boost::bind(&VisualizeRects::SyncedDetectionsCallback, this, _1, _2));

60 |

61 |

62 | publisher_image_ = node_handle_.advertise<sensor_msgs::Image>(

63 | image_out_topic, 1);

64 | ROS_INFO("[%s] image_out: %s", __APP_NAME__, image_out_topic.c_str());

65 |

66 | }

67 |

68 | void

69 | VisualizeRects::SyncedDetectionsCallback(

70 | const sensor_msgs::Image::ConstPtr &in_image_msg,

71 | const autoware_tracker::DetectedObjectArray::ConstPtr &in_objects)

72 | {

73 | try

74 | {

75 | image_ = cv_bridge::toCvShare(in_image_msg, "bgr8")->image;

76 | cv::Mat drawn_image;

77 | drawn_image = ObjectsToRects(image_, in_objects);

78 | sensor_msgs::ImagePtr drawn_msg = cv_bridge::CvImage(in_image_msg->header, "bgr8", drawn_image).toImageMsg();

79 | publisher_image_.publish(drawn_msg);

80 | }

81 | catch (cv_bridge::Exception& e)

82 | {

83 | ROS_ERROR("[%s] Could not convert from '%s' to 'bgr8'.", __APP_NAME__, in_image_msg->encoding.c_str());

84 | }

85 | }

86 |

87 | cv::Mat

88 | VisualizeRects::ObjectsToRects(cv::Mat in_image, const autoware_tracker::DetectedObjectArray::ConstPtr &in_objects)

89 | {

90 | cv::Mat final_image = in_image.clone();

91 | for (auto const &object: in_objects->objects)

92 | {

93 | if (IsObjectValid(object))

94 | {

95 | cv::Rect rect;

96 | rect.x = object.x;

97 | rect.y = object.y;

98 | rect.width = object.width;

99 | rect.height = object.height;

100 |

101 | if (rect.x+rect.width >= in_image.cols)

102 | rect.width = in_image.cols -rect.x - 1;

103 |

104 | if (rect.y+rect.height >= in_image.rows)

105 | rect.height = in_image.rows -rect.y - 1;

106 |

107 | //draw rectangle

108 | cv::rectangle(final_image,

109 | rect,

110 | cv::Scalar(244,134,66),

111 | 4,

112 | CV_AA);

113 |

114 | //draw label

115 | std::string label = "";

116 | if (!object.label.empty() && object.label != "unknown")

117 | {

118 | label = object.label;

119 | }

120 | int font_face = cv::FONT_HERSHEY_DUPLEX;

121 | double font_scale = 1.5;

122 | int thickness = 1;

123 |

124 | int baseline=0;

125 | cv::Size text_size = cv::getTextSize(label,

126 | font_face,

127 | font_scale,

128 | thickness,

129 | &baseline);

130 | baseline += thickness;

131 |

132 | cv::Point text_origin(object.x - text_size.height,object.y);

133 |

134 | cv::rectangle(final_image,

135 | text_origin + cv::Point(0, baseline),

136 | text_origin + cv::Point(text_size.width, -text_size.height),

137 | cv::Scalar(0,0,0),

138 | CV_FILLED,

139 | CV_AA,

140 | 0);

141 |

142 | cv::putText(final_image,

143 | label,

144 | text_origin,

145 | font_face,

146 | font_scale,

147 | cv::Scalar::all(255),

148 | thickness,

149 | CV_AA,

150 | false);

151 |

152 | }

153 | }

154 | return final_image;

155 | }//ObjectsToRects

156 |

157 | bool VisualizeRects::IsObjectValid(const autoware_tracker::DetectedObject &in_object)

158 | {

159 | if (!in_object.valid ||

160 | in_object.width < 0 ||

161 | in_object.height < 0 ||

162 | in_object.x < 0 ||

163 | in_object.y < 0

164 | )

165 | {

166 | return false;

167 | }

168 | return true;

169 | }//end IsObjectValid
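
The constructor and `ObjectsToRects` above do two small pieces of arithmetic that are easy to misread: deriving the output namespace from the image topic, and shrinking a box that runs past the image edge. The following is a minimal Python sketch of that logic only, not part of the node; the function names `topic_namespace` and `clamp_rect` are hypothetical and chosen for illustration.

```python
def topic_namespace(topic: str) -> str:
    """Return everything before the last '/' (mirrors rfind("/") + erase)."""
    pos = topic.rfind("/")
    # If no '/' is found the C++ code leaves the string unchanged.
    return topic if pos == -1 else topic[:pos]


def clamp_rect(x, y, width, height, cols, rows):
    """Shrink width/height so the box stays inside a cols x rows image."""
    if x + width >= cols:
        width = cols - x - 1
    if y + height >= rows:
        height = rows - y - 1
    return x, y, width, height


# Output topic is the input topic's namespace plus the configured suffix.
out_topic = topic_namespace("/camera/image_raw") + "/image_rects"
# A 100x100 box near the bottom-right corner of a 640x480 image gets trimmed.
box = clamp_rect(600, 400, 100, 100, cols=640, rows=480)
```

Note that a top-level topic such as `/image_raw` yields an empty namespace, so the output topic stays `/image_rects`.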

--------------------------------------------------------------------------------

/autoware_tracker/src/detected_objects_visualizer/visualize_rects.h:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | *

16 | ********************

17 | * v1.0: amc-nu (abrahammonrroy@yahoo.com)

18 | */

19 |

20 | #ifndef _VISUALIZERECTS_H

21 | #define _VISUALIZERECTS_H

22 |

23 | #include

24 | #include

25 | #include

26 | #include

27 | #include

28 |

29 | #include

30 |

31 | #include

32 | #include

33 | #include

34 | #include

35 | #include

36 |

37 | #include

38 | #include

39 | #include

40 |

41 | #include "autoware_tracker/DetectedObject.h"

42 | #include "autoware_tracker/DetectedObjectArray.h"

43 |

44 | #define __APP_NAME__ "visualize_rects"

45 |

46 | class VisualizeRects

47 | {

48 | private:

49 | std::string input_topic_;

50 |

51 | ros::NodeHandle node_handle_;

52 | ros::Subscriber subscriber_detected_objects_;

53 | image_transport::Subscriber subscriber_image_;

54 |

55 | message_filters::Subscriber<autoware_tracker::DetectedObjectArray> *detection_filter_subscriber_;

56 | message_filters::Subscriber<sensor_msgs::Image> *image_filter_subscriber_;

57 |

58 | ros::Publisher publisher_image_;

59 |

60 | cv::Mat image_;

61 | std_msgs::Header image_header_;

62 |

63 | typedef

64 | message_filters::sync_policies::ApproximateTime<sensor_msgs::Image,

65 | autoware_tracker::DetectedObjectArray> SyncPolicyT;

66 |

67 | message_filters::Synchronizer<SyncPolicyT>

68 | *detections_synchronizer_;

69 |

70 | void

71 | SyncedDetectionsCallback(

72 | const sensor_msgs::Image::ConstPtr &in_image_msg,

73 | const autoware_tracker::DetectedObjectArray::ConstPtr &in_range_detections);

74 |

75 | bool IsObjectValid(const autoware_tracker::DetectedObject &in_object);

76 |

77 | cv::Mat ObjectsToRects(cv::Mat in_image, const autoware_tracker::DetectedObjectArray::ConstPtr &in_objects);

78 |

79 | public:

80 | VisualizeRects();

81 | };

82 |

83 | #endif // _VISUALIZERECTS_H

84 |

--------------------------------------------------------------------------------

/autoware_tracker/src/detected_objects_visualizer/visualize_rects_main.cpp:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | *

16 | ********************

17 | * v1.0: amc-nu (abrahammonrroy@yahoo.com)

18 | */

19 |

20 | #include "visualize_rects.h"

21 |

22 | int main(int argc, char** argv)

23 | {

24 | ros::init(argc, argv, "visualize_rects");

25 | VisualizeRects app;

26 | ros::spin();

27 |

28 | return 0;

29 | }

30 |

--------------------------------------------------------------------------------

/autoware_tracker/src/lidar_euclidean_cluster_detect/cluster.h:

--------------------------------------------------------------------------------

1 | /*

2 | * Cluster.h

3 | *

4 | * Created on: Oct 19, 2016

5 | * Author: Ne0

6 | */

7 | #ifndef CLUSTER_H_

8 | #define CLUSTER_H_

9 |

10 | #include

11 | #include

12 |

13 | #include

14 | #include

15 | #include

16 | #include

17 | #include

18 |

19 | #include

20 | #include

21 |

22 | #include

23 | #include

24 | #include

25 |

26 | #include

27 | #include

28 | #include

29 |

30 | #include

31 |

32 | #include

33 | #include

34 |

35 | #include

36 | #include

37 | #include

38 |

39 | #include

40 | #include

41 |

42 | #include

43 | #include

44 |

45 | #include

46 |

47 | #include

48 | #include

49 | #include

50 |

51 | #include "autoware_tracker/CloudCluster.h"

52 |

53 | #include "opencv2/core/core.hpp"

54 | #include "opencv2/imgproc/imgproc.hpp"

55 |

56 | #include

57 | #include

58 | #include

59 |

60 | class Cluster

61 | {

62 | pcl::PointCloud::Ptr pointcloud_;

63 | pcl::PointXYZI min_point_;

64 | pcl::PointXYZI max_point_;

65 | pcl::PointXYZI average_point_;

66 | pcl::PointXYZI centroid_;

67 | double orientation_angle_;

68 | float length_, width_, height_;

69 |

70 | jsk_recognition_msgs::BoundingBox bounding_box_;

71 | geometry_msgs::PolygonStamped polygon_;

72 |

73 | std::string label_;

74 | int id_;

75 | int r_, g_, b_;

76 |

77 | Eigen::Matrix3f eigen_vectors_;

78 | Eigen::Vector3f eigen_values_;

79 |

80 | bool valid_cluster_;

81 |

82 | public:

83 | /* \brief Constructor. Creates a Cluster object using the specified points in a PointCloud

84 | * \param[in] in_origin_cloud_ptr Origin PointCloud

85 | * \param[in] in_cluster_indices Indices of the Origin Pointcloud to create the Cluster

86 | * \param[in] in_id ID of the cluster

87 | * \param[in] in_r Amount of Red [0-255]

88 | * \param[in] in_g Amount of Green [0-255]

89 | * \param[in] in_b Amount of Blue [0-255]

90 | * \param[in] in_label Label to identify this cluster (optional)

91 | * \param[in] in_estimate_pose Flag to enable Pose Estimation of the Bounding Box

92 | * */

93 | void SetCloud(const pcl::PointCloud::Ptr in_origin_cloud_ptr,

94 | const std::vector& in_cluster_indices, std_msgs::Header in_ros_header, int in_id, int in_r,

95 | int in_g, int in_b, std::string in_label, bool in_estimate_pose);

96 |

97 | /* \brief Returns the autoware_tracker::CloudCluster message associated to this Cluster */

98 | void ToROSMessage(std_msgs::Header in_ros_header, autoware_tracker::CloudCluster& out_cluster_message);

99 |

100 | Cluster();

101 | virtual ~Cluster();

102 |

103 | /* \brief Returns the pointer to the PointCloud containing the points in this Cluster */

104 | pcl::PointCloud::Ptr GetCloud();

105 | /* \brief Returns the minimum point in the cluster */

106 | pcl::PointXYZI GetMinPoint();

107 | /* \brief Returns the maximum point in the cluster*/

108 | pcl::PointXYZI GetMaxPoint();

109 | /* \brief Returns the average point in the cluster*/

110 | pcl::PointXYZI GetAveragePoint();

111 | /* \brief Returns the centroid point in the cluster */

112 | pcl::PointXYZI GetCentroid();

113 | /* \brief Returns the calculated BoundingBox of the object */

114 | jsk_recognition_msgs::BoundingBox GetBoundingBox();

115 | /* \brief Returns the calculated PolygonArray of the object */

116 | geometry_msgs::PolygonStamped GetPolygon();

117 | /* \brief Returns the angle in radians of the BoundingBox. 0 if pose estimation was not enabled. */

118 | double GetOrientationAngle();

119 | /* \brief Returns the Length of the Cluster */

120 | float GetLenght();

121 | /* \brief Returns the Width of the Cluster */

122 | float GetWidth();

123 | /* \brief Returns the Height of the Cluster */

124 | float GetHeight();

125 | /* \brief Returns the Id of the Cluster */

126 | int GetId();

127 | /* \brief Returns the Label of the Cluster */

128 | std::string GetLabel();

129 | /* \brief Returns the Eigen Vectors of the cluster */

130 | Eigen::Matrix3f GetEigenVectors();

131 | /* \brief Returns the Eigen Values of the Cluster */

132 | Eigen::Vector3f GetEigenValues();

133 |

134 | /* \brief Returns if the Cluster is marked as valid or not*/

135 | bool IsValid();

136 | /* \brief Sets whether the Cluster is valid or not*/

137 | void SetValidity(bool in_valid);

138 |

139 | /* \brief Returns a pointer to a PointCloud object containing the merged points between current Cluster and the

140 | * specified PointCloud

141 | * \param[in] in_cloud_ptr Origin PointCloud

142 | * */

143 | pcl::PointCloud::Ptr JoinCloud(const pcl::PointCloud::Ptr in_cloud_ptr);

144 |

145 | /* \brief Calculates and returns a pointer to the FPFH Descriptor of this cluster

146 | *

147 | */

148 | std::vector GetFpfhDescriptor(const unsigned int& in_ompnum_threads, const double& in_normal_search_radius,

149 | const double& in_fpfh_search_radius);

150 | };

151 |

152 | typedef boost::shared_ptr<Cluster> ClusterPtr;

153 |

154 | #endif /* CLUSTER_H_ */

155 |

--------------------------------------------------------------------------------

/autoware_tracker/src/lidar_euclidean_cluster_detect/gencolors.cpp:

--------------------------------------------------------------------------------

1 | #ifndef GENCOLORS_CPP_

2 | #define GENCOLORS_CPP_

3 |

4 | /*M///////////////////////////////////////////////////////////////////////////////////////

5 | //

6 | // IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING.

7 | //

8 | // By downloading, copying, installing or using the software you agree to this license.

9 | // If you do not agree to this license, do not download, install,

10 | // copy or use the software.

11 | //

12 | //

13 | // License Agreement

14 | // For Open Source Computer Vision Library

15 | //

16 | // Copyright (C) 2000-2008, Intel Corporation, all rights reserved.

17 | // Copyright (C) 2009, Willow Garage Inc., all rights reserved.

18 | // Third party copyrights are property of their respective owners.

19 | //

20 | // Redistribution and use in source and binary forms, with or without modification,

21 | // are permitted provided that the following conditions are met:

22 | //

23 | // * Redistribution's of source code must retain the above copyright notice,

24 | // this list of conditions and the following disclaimer.

25 | //

26 | // * Redistribution's in binary form must reproduce the above copyright notice,

27 | // this list of conditions and the following disclaimer in the documentation

28 | // and/or other materials provided with the distribution.

29 | //

30 | // * The name of the copyright holders may not be used to endorse or promote products

31 | // derived from this software without specific prior written permission.

32 | //

33 | // This software is provided by the copyright holders and contributors "as is" and

34 | // any express or implied warranties, including, but not limited to, the implied

35 | // warranties of merchantability and fitness for a particular purpose are disclaimed.

36 | // In no event shall the Intel Corporation or contributors be liable for any direct,

37 | // indirect, incidental, special, exemplary, or consequential damages

38 | // (including, but not limited to, procurement of substitute goods or services;

39 | // loss of use, data, or profits; or business interruption) however caused

40 | // and on any theory of liability, whether in contract, strict liability,

41 | // or tort (including negligence or otherwise) arising in any way out of

42 | // the use of this software, even if advised of the possibility of such damage.

43 | //

44 | //M*/

45 | #include "opencv2/core/core.hpp"

46 | //#include "precomp.hpp"

47 | #include

48 |

49 | #include

50 |

51 | using namespace cv;

52 |

53 | static void downsamplePoints(const Mat& src, Mat& dst, size_t count)

54 | {

55 | CV_Assert(count >= 2);

56 | CV_Assert(src.cols == 1 || src.rows == 1);

57 | CV_Assert(src.total() >= count);

58 | CV_Assert(src.type() == CV_8UC3);

59 |

60 | dst.create(1, (int)count, CV_8UC3);

61 | // TODO: optimize by exploiting symmetry in the distance matrix

62 | Mat dists((int)src.total(), (int)src.total(), CV_32FC1, Scalar(0));

63 | if (dists.empty())

64 | std::cerr << "Such a big matrix can't be created." << std::endl;

65 |

66 | for (int i = 0; i < dists.rows; i++)

67 | {

68 | for (int j = i; j < dists.cols; j++)

69 | {

70 | float dist = (float)norm(src.at<Point3_<uchar> >(i) - src.at<Point3_<uchar> >(j));

71 | dists.at<float>(j, i) = dists.at<float>(i, j) = dist;

72 | }

73 | }

74 |

75 | double maxVal;

76 | Point maxLoc;

77 | minMaxLoc(dists, 0, &maxVal, 0, &maxLoc);

78 |

79 | dst.at<Point3_<uchar> >(0) = src.at<Point3_<uchar> >(maxLoc.x);

80 | dst.at<Point3_<uchar> >(1) = src.at<Point3_<uchar> >(maxLoc.y);

81 |

82 | Mat activedDists(0, dists.cols, dists.type());

83 | Mat candidatePointsMask(1, dists.cols, CV_8UC1, Scalar(255));

84 | activedDists.push_back(dists.row(maxLoc.y));

85 | candidatePointsMask.at<uchar>(0, maxLoc.y) = 0;

86 |

87 | for (size_t i = 2; i < count; i++)

88 | {

89 | activedDists.push_back(dists.row(maxLoc.x));

90 | candidatePointsMask.at<uchar>(0, maxLoc.x) = 0;

91 |

92 | Mat minDists;

93 | reduce(activedDists, minDists, 0, CV_REDUCE_MIN);

94 | minMaxLoc(minDists, 0, &maxVal, 0, &maxLoc, candidatePointsMask);

95 | dst.at<Point3_<uchar> >((int)i) = src.at<Point3_<uchar> >(maxLoc.x);

96 | }

97 | }

98 |

99 | void generateColors(std::vector<Scalar>& colors, size_t count, size_t factor = 10)

100 | {

101 | if (count < 1)

102 | return;

103 |

104 | colors.resize(count);

105 |

106 | if (count == 1)

107 | {

108 | colors[0] = Scalar(0, 0, 255); // red

109 | return;

110 | }

111 | if (count == 2)

112 | {

113 | colors[0] = Scalar(0, 0, 255); // red

114 | colors[1] = Scalar(0, 255, 0); // green

115 | return;

116 | }

117 |

118 | // Generate a set of colors in RGB space. The size of the set is several times (=factor) larger than

119 | // the needed count of colors.

120 | Mat bgr(1, (int)(count * factor), CV_8UC3);

121 | randu(bgr, 0, 256);

122 |

123 | // Convert the colors set to Lab space.

124 | // Distances between colors in this space correspond to human perception.

125 | Mat lab;

126 | cvtColor(bgr, lab, cv::COLOR_BGR2Lab);

127 |

128 | // Subsample colors from the generated set so as

129 | // to maximize the minimum distance between them.

130 | // Douglas-Peucker algorithm is used for this.

131 | Mat lab_subset;

132 | downsamplePoints(lab, lab_subset, count);

133 |

134 | // Convert subsampled colors back to RGB

135 | Mat bgr_subset;

136 | cvtColor(lab_subset, bgr_subset, cv::COLOR_Lab2BGR);

137 |

138 | CV_Assert(bgr_subset.total() == count);

139 | for (size_t i = 0; i < count; i++)

140 | {

141 | Point3_<uchar> c = bgr_subset.at<Point3_<uchar> >((int)i);

142 | colors[i] = Scalar(c.x, c.y, c.z);

143 | }

144 | }

145 |

146 | #endif // GENCOLORS_CPP

147 |
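
The selection loop in `downsamplePoints` reads as a greedy max-min (farthest-point) selection: it seeds the subset with the two points that are farthest apart, then repeatedly adds the candidate whose distance to its nearest already-chosen point is largest. The sketch below restates that idea in plain Python under that reading; `farthest_point_subset` is a hypothetical name, it uses Euclidean distance on tuples rather than OpenCV matrices, and the order of the two seed points may differ from the C++ code.

```python
import math


def farthest_point_subset(points, count):
    """Greedy max-min selection of `count` points from `points`."""
    if count < 2 or count > len(points):
        raise ValueError("count must be between 2 and len(points)")
    n = len(points)
    # Seed with the pair of points that are farthest apart.
    first, second = max(
        ((i, j) for i in range(n) for j in range(i + 1, n)),
        key=lambda ij: math.dist(points[ij[0]], points[ij[1]]),
    )
    chosen = [first, second]
    while len(chosen) < count:
        candidates = [i for i in range(n) if i not in chosen]
        # Pick the candidate that is farthest from its nearest chosen point.
        best = max(
            candidates,
            key=lambda i: min(math.dist(points[i], points[c]) for c in chosen),
        )
        chosen.append(best)
    return [points[i] for i in chosen]


# Five colors on a line in a 3-channel space; ask for three well-separated ones.
palette = [(0, 0, 0), (10, 0, 0), (1, 0, 0), (5, 0, 0), (9, 0, 0)]
subset = farthest_point_subset(palette, 3)
```

Doing the selection in Lab space, as `generateColors` does, means "far apart" approximates "looks different" rather than "differs numerically in BGR".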

--------------------------------------------------------------------------------

/autoware_tracker/src/lidar_imm_ukf_pda_track/imm_ukf_pda.h:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | */

16 |

17 | #ifndef OBJECT_TRACKING_IMM_UKF_JPDAF_H

18 | #define OBJECT_TRACKING_IMM_UKF_JPDAF_H

19 |

20 |

21 | #include

22 | #include

23 | #include

24 |

25 |

26 | #include

27 | #include

28 |

29 | #include

30 | #include

31 | #include

32 | #include

33 |

34 | #include

35 |

36 | // #include

37 |

38 | #include "autoware_tracker/DetectedObject.h"

39 | #include "autoware_tracker/DetectedObjectArray.h"

40 |

41 | #include "ukf.h"

42 |

43 | class ImmUkfPda

44 | {

45 | private:

46 | int target_id_;

47 | bool init_;

48 | double timestamp_;

49 |

50 | std::vector targets_;

51 |

52 | // probabilistic data association params

53 | double gating_thres_;

54 | double gate_probability_;

55 | double detection_probability_;

56 |

57 | // object association param

58 | int life_time_thres_;

59 |

60 | // static classification param

61 | double static_velocity_thres_;

62 | int static_num_history_thres_;

63 |

64 | // switch sukf and ImmUkfPda

65 | bool use_sukf_;

66 |

67 | // whether to benchmark the tracking result

68 | bool is_benchmark_;

69 | int frame_count_;

70 | std::string kitti_data_dir_;

71 |

72 | // for benchmark

73 | std::string result_file_path_;

74 |

75 | // prevent explode param for ukf

76 | double prevent_explosion_thres_;

77 |

78 | // for vectormap assisted tracking

79 | bool use_vectormap_;

80 | bool has_subscribed_vectormap_;

81 | double lane_direction_chi_thres_;

82 | double nearest_lane_distance_thres_;

83 | std::string vectormap_frame_;

84 | // vector_map::VectorMap vmap_;

85 | // std::vector lanes_;

86 |

87 | double merge_distance_threshold_;

88 | const double CENTROID_DISTANCE = 0.2;//distance to consider centroids the same

89 |

90 | std::string input_topic_;

91 | std::string output_topic_;

92 |

93 | std::string tracking_frame_;

94 |

95 | tf::TransformListener tf_listener_;

96 | tf::StampedTransform local2global_;

97 | tf::StampedTransform tracking_frame2lane_frame_;

98 | tf::StampedTransform lane_frame2tracking_frame_;

99 |

100 | ros::NodeHandle node_handle_;

101 | ros::NodeHandle private_nh_;

102 | ros::Subscriber sub_detected_array_;

103 | ros::Publisher pub_object_array_;

104 | //yang21itsc

105 | ros::Publisher pub_example_array_;

106 | ros::Publisher vis_examples_;

107 | //yang21itsc

108 |

109 | std_msgs::Header input_header_;

110 |

111 | // yang21itsc

112 | std::vector learning_buffer;

113 | double track_probability_;

114 | // yang21itsc

115 |

116 | void callback(const autoware_tracker::DetectedObjectArray& input);

117 |

118 | void transformPoseToGlobal(const autoware_tracker::DetectedObjectArray& input,

119 | autoware_tracker::DetectedObjectArray& transformed_input);

120 | void transformPoseToLocal(autoware_tracker::DetectedObjectArray& detected_objects_output);

121 |

122 | geometry_msgs::Pose getTransformedPose(const geometry_msgs::Pose& in_pose,

123 | const tf::StampedTransform& tf_stamp);

124 |

125 | bool updateNecessaryTransform();

126 |

127 | void measurementValidation(const autoware_tracker::DetectedObjectArray& input, UKF& target, const bool second_init,

128 | const Eigen::VectorXd& max_det_z, const Eigen::MatrixXd& max_det_s,

129 | std::vector& object_vec, std::vector& matching_vec);

130 | autoware_tracker::DetectedObject getNearestObject(UKF& target,

131 | const std::vector& object_vec);

132 | void updateBehaviorState(const UKF& target, const bool use_sukf, autoware_tracker::DetectedObject& object);

133 |

134 | void initTracker(const autoware_tracker::DetectedObjectArray& input, double timestamp);

135 | void secondInit(UKF& target, const std::vector& object_vec, double dt);

136 |

137 | void updateTrackingNum(const std::vector& object_vec, UKF& target);

138 |

139 | bool probabilisticDataAssociation(const autoware_tracker::DetectedObjectArray& input, const double dt,

140 | std::vector& matching_vec,

141 | std::vector& object_vec, UKF& target);

142 | void makeNewTargets(const double timestamp, const autoware_tracker::DetectedObjectArray& input,

143 | const std::vector& matching_vec);

144 |

145 | void staticClassification();

146 |

147 | void makeOutput(const autoware_tracker::DetectedObjectArray& input,

148 | const std::vector& matching_vec,

149 | autoware_tracker::DetectedObjectArray& detected_objects_output);

150 |

151 | void removeUnnecessaryTarget();

152 |

153 | void dumpResultText(autoware_tracker::DetectedObjectArray& detected_objects);

154 |

155 | void tracker(const autoware_tracker::DetectedObjectArray& transformed_input,

156 | autoware_tracker::DetectedObjectArray& detected_objects_output);

157 |

158 | bool updateDirection(const double smallest_nis, const autoware_tracker::DetectedObject& in_object,

159 | autoware_tracker::DetectedObject& out_object, UKF& target);

160 |

161 | bool storeObjectWithNearestLaneDirection(const autoware_tracker::DetectedObject& in_object,

162 | autoware_tracker::DetectedObject& out_object);

163 |

164 | void checkVectormapSubscription();

165 |

166 | autoware_tracker::DetectedObjectArray

167 | removeRedundantObjects(const autoware_tracker::DetectedObjectArray& in_detected_objects,

168 | const std::vector in_tracker_indices);

169 |

170 | autoware_tracker::DetectedObjectArray

171 | forwardNonMatchedObject(const autoware_tracker::DetectedObjectArray& tmp_objects,

172 | const autoware_tracker::DetectedObjectArray& input,

173 | const std::vector& matching_vec);

174 |

175 | bool

176 | arePointsClose(const geometry_msgs::Point& in_point_a,

177 | const geometry_msgs::Point& in_point_b,

178 | float in_radius);

179 |

180 | bool

181 | arePointsEqual(const geometry_msgs::Point& in_point_a,

182 | const geometry_msgs::Point& in_point_b);

183 |

184 | bool

185 | isPointInPool(const std::vector& in_pool,

186 | const geometry_msgs::Point& in_point);

187 |

188 | void updateTargetWithAssociatedObject(const std::vector& object_vec,

189 | UKF& target);

190 |

191 | public:

192 | ImmUkfPda();

193 | void run();

194 | };

195 |

196 | #endif /* OBJECT_TRACKING_IMM_UKF_JPDAF_H */

197 |

--------------------------------------------------------------------------------

/autoware_tracker/src/lidar_imm_ukf_pda_track/imm_ukf_pda_main.cpp:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright 2018-2019 Autoware Foundation. All rights reserved.

3 | *

4 | * Licensed under the Apache License, Version 2.0 (the "License");

5 | * you may not use this file except in compliance with the License.

6 | * You may obtain a copy of the License at

7 | *

8 | * http://www.apache.org/licenses/LICENSE-2.0

9 | *

10 | * Unless required by applicable law or agreed to in writing, software

11 | * distributed under the License is distributed on an "AS IS" BASIS,

12 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | * See the License for the specific language governing permissions and

14 | * limitations under the License.

15 | */

16 |

17 | #include "imm_ukf_pda.h"

18 |

19 | int main(int argc, char** argv)

20 | {

21 | ros::init(argc, argv, "imm_ukf_pda_tracker");

22 | ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Warn);

23 |

24 | ImmUkfPda app;

25 | app.run();

26 | ros::spin();

27 | return 0;

28 | }

29 |

--------------------------------------------------------------------------------

/efficient_det_ros/__pycache__/backbone.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/RuiYang-1010/efficient_online_learning/2dca08c20a0a8f88b957c3e276f3ed4bf6f78c31/efficient_det_ros/__pycache__/backbone.cpython-37.pyc

--------------------------------------------------------------------------------

/efficient_det_ros/backbone.py:

--------------------------------------------------------------------------------

1 | # Author: Zylo117

2 |

3 | import torch

4 | from torch import nn

5 |

6 | from efficientdet.model import BiFPN, Regressor, Classifier, EfficientNet

7 | from efficientdet.utils import Anchors

8 |

9 |

10 | class EfficientDetBackbone(nn.Module):

11 |     def __init__(self, num_classes=80, compound_coef=0, load_weights=False, **kwargs):
12 |         super(EfficientDetBackbone, self).__init__()
13 |         self.compound_coef = compound_coef
14 |
15 |         self.backbone_compound_coef = [0, 1, 2, 3, 4, 5, 6, 6, 7]
16 |         self.fpn_num_filters = [64, 88, 112, 160, 224, 288, 384, 384, 384]
17 |         self.fpn_cell_repeats = [3, 4, 5, 6, 7, 7, 8, 8, 8]
18 |         self.input_sizes = [512, 640, 768, 896, 1024, 1280, 1280, 1536, 1536]
19 |         self.box_class_repeats = [3, 3, 3, 4, 4, 4, 5, 5, 5]
20 |         self.pyramid_levels = [5, 5, 5, 5, 5, 5, 5, 5, 6]
21 |         self.anchor_scale = [4., 4., 4., 4., 4., 4., 4., 5., 4.]
22 |         self.aspect_ratios = kwargs.get('ratios', [(1.0, 1.0), (1.4, 0.7), (0.7, 1.4)])
23 |         self.num_scales = len(kwargs.get('scales', [2 ** 0, 2 ** (1.0 / 3.0), 2 ** (2.0 / 3.0)]))
24 |         conv_channel_coef = {
25 |             # the channels of P3/P4/P5.
26 |             0: [40, 112, 320],
27 |             1: [40, 112, 320],
28 |             2: [48, 120, 352],
29 |             3: [48, 136, 384],
30 |             4: [56, 160, 448],
31 |             5: [64, 176, 512],
32 |             6: [72, 200, 576],
33 |             7: [72, 200, 576],
34 |             8: [80, 224, 640],
35 |         }
36 |
37 |         num_anchors = len(self.aspect_ratios) * self.num_scales
38 |
39 |         self.bifpn = nn.Sequential(
40 |             *[BiFPN(self.fpn_num_filters[self.compound_coef],
41 |                     conv_channel_coef[compound_coef],
42 |                     True if _ == 0 else False,
43 |                     attention=True if compound_coef < 6 else False,
44 |                     use_p8=compound_coef > 7)
45 |               for _ in range(self.fpn_cell_repeats[compound_coef])])
46 |
47 |         self.num_classes = num_classes
48 |         self.regressor = Regressor(in_channels=self.fpn_num_filters[self.compound_coef], num_anchors=num_anchors,
49 |                                    num_layers=self.box_class_repeats[self.compound_coef],
50 |                                    pyramid_levels=self.pyramid_levels[self.compound_coef])
51 |         self.classifier = Classifier(in_channels=self.fpn_num_filters[self.compound_coef], num_anchors=num_anchors,
52 |                                      num_classes=num_classes,
53 |                                      num_layers=self.box_class_repeats[self.compound_coef],
54 |                                      pyramid_levels=self.pyramid_levels[self.compound_coef])
55 |
56 |         self.anchors = Anchors(anchor_scale=self.anchor_scale[compound_coef],
57 |                                pyramid_levels=(torch.arange(self.pyramid_levels[self.compound_coef]) + 3).tolist(),
58 |                                **kwargs)
59 |
60 |         self.backbone_net = EfficientNet(self.backbone_compound_coef[compound_coef], load_weights)

61 |

62 |     def freeze_bn(self):
63 |         for m in self.modules():
64 |             if isinstance(m, nn.BatchNorm2d):
65 |                 m.eval()
66 |
67 |     def forward(self, inputs):
68 |         max_size = inputs.shape[-1]
69 |
70 |         _, p3, p4, p5 = self.backbone_net(inputs)
71 |
72 |         features = (p3, p4, p5)
73 |         features = self.bifpn(features)
74 |
75 |         regression = self.regressor(features)
76 |         classification = self.classifier(features)
77 |         anchors = self.anchors(inputs, inputs.dtype)
78 |
79 |         return features, regression, classification, anchors

80 |

81 |     def init_backbone(self, path):
82 |         state_dict = torch.load(path)
83 |         try:
84 |             ret = self.load_state_dict(state_dict, strict=False)
85 |             print(ret)
86 |         except RuntimeError as e:
87 |             print('Ignoring "' + str(e) + '"')

88 |
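
The constructor above picks one entry from each per-variant list using `compound_coef`, then derives the anchor count and the list of pyramid levels. The following is a minimal, torch-free sketch of that lookup, written only for illustration: `describe_variant` and the upper-case constant names are hypothetical and do not exist in `backbone.py`; the numeric tables and defaults are copied from the listing above.

```python
# Illustrative sketch only: describe_variant and these constant names are
# hypothetical helpers, not part of backbone.py. The values mirror the lists
# defined in EfficientDetBackbone.__init__.
FPN_NUM_FILTERS = [64, 88, 112, 160, 224, 288, 384, 384, 384]
FPN_CELL_REPEATS = [3, 4, 5, 6, 7, 7, 8, 8, 8]
INPUT_SIZES = [512, 640, 768, 896, 1024, 1280, 1280, 1536, 1536]
BOX_CLASS_REPEATS = [3, 3, 3, 4, 4, 4, 5, 5, 5]
PYRAMID_LEVELS = [5, 5, 5, 5, 5, 5, 5, 5, 6]

DEFAULT_RATIOS = [(1.0, 1.0), (1.4, 0.7), (0.7, 1.4)]
DEFAULT_SCALES = [2 ** 0, 2 ** (1.0 / 3.0), 2 ** (2.0 / 3.0)]


def describe_variant(compound_coef, ratios=DEFAULT_RATIOS, scales=DEFAULT_SCALES):
    """Return the settings the constructor would select for one variant."""
    if not 0 <= compound_coef < len(INPUT_SIZES):
        raise ValueError('compound_coef must be between 0 and 8')
    n_levels = PYRAMID_LEVELS[compound_coef]
    return {
        'input_size': INPUT_SIZES[compound_coef],
        'fpn_num_filters': FPN_NUM_FILTERS[compound_coef],
        'fpn_cell_repeats': FPN_CELL_REPEATS[compound_coef],
        'box_class_repeats': BOX_CLASS_REPEATS[compound_coef],
        # One anchor per (aspect ratio, scale) pair at every feature-map cell.
        'num_anchors': len(ratios) * len(scales),
        # Same result as (torch.arange(n_levels) + 3).tolist() in the listing.
        'pyramid_levels': [level + 3 for level in range(n_levels)],
        # BiFPN flags as passed in the constructor.
        'attention': compound_coef < 6,
        'use_p8': compound_coef > 7,
    }


if __name__ == '__main__':
    for coef in (0, 8):
        print(coef, describe_variant(coef))
```

With the default ratios and scales, every variant gets 3 x 3 = 9 anchors per location. Variants 0 through 7 use levels P3 to P7, and only variant 8 adds P8.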

--------------------------------------------------------------------------------

/efficient_det_ros/coco_eval.py:

--------------------------------------------------------------------------------

1 | # Author: Zylo117

2 |

3 | """

4 | COCO-Style Evaluations

5 |

6 | put images here datasets/your_project_name/val_set_name/*.jpg

7 | put annotations here datasets/your_project_name/annotations/instances_{val_set_name}.json

8 | put weights here /path/to/your/weights/*.pth

9 | change compound_coef

10 |

11 | """

12 |

13 | import json

14 | import os

15 |

16 | import argparse

17 | import torch

18 | import yaml

19 | from tqdm import tqdm

20 | from pycocotools.coco import COCO

21 | from pycocotools.cocoeval import COCOeval

22 |

23 | from backbone import EfficientDetBackbone

24 | from efficientdet.utils import BBoxTransform, ClipBoxes

25 | from utils.utils import preprocess, invert_affine, postprocess, boolean_string

26 |

27 | ap = argparse.ArgumentParser()

28 | ap.add_argument('-p', '--project', type=str, default='coco', help='project file that contains parameters')

29 | ap.add_argument('-c', '--compound_coef', type=int, default=0, help='coefficients of efficientdet')

30 | ap.add_argument('-w', '--weights', type=str, default=None, help='/path/to/weights')

31 | ap.add_argument('--nms_threshold', type=float, default=0.5, help='nms threshold, don\'t change it if not for testing purposes')

32 | ap.add_argument('--cuda', type=boolean_string, default=True)

33 | ap.add_argument('--device', type=int, default=0)

34 | ap.add_argument('--float16', type=boolean_string, default=False)

35 | ap.add_argument('--override', type=boolean_string, default=True, help='override previous bbox results file if exists')

36 | args = ap.parse_args()

37 |

38 | compound_coef = args.compound_coef

39 | nms_threshold = args.nms_threshold

40 | use_cuda = args.cuda

41 | gpu = args.device

42 | use_float16 = args.float16

43 | override_prev_results = args.override

44 | project_name = args.project

45 | weights_path = f'weights/efficientdet-d{compound_coef}.pth' if args.weights is None else args.weights

46 |

47 | print(f'running coco-style evaluation on project {project_name}, weights {weights_path}...')

48 |

49 | params = yaml.safe_load(open(f'projects/{project_name}.yml'))

50 | obj_list = params['obj_list']

51 |

52 | input_sizes = [512, 640, 768, 896, 1024, 1280, 1280, 1536, 1536]

53 |

54 |

55 | def evaluate_coco(img_path, set_name, image_ids, coco, model, threshold=0.05):
56 |     results = []
57 |
58 |     regressBoxes = BBoxTransform()
59 |     clipBoxes = ClipBoxes()
60 |
61 |     for image_id in tqdm(image_ids):
62 |         image_info = coco.loadImgs(image_id)[0]
63 |         image_path = img_path + image_info['file_name']
64 |
65 |         ori_imgs, framed_imgs, framed_metas = preprocess(image_path, max_size=input_sizes[compound_coef], mean=params['mean'], std=params['std'])
66 |         x = torch.from_numpy(framed_imgs[0])
67 |
68 |         if use_cuda:
69 |             x = x.cuda(gpu)
70 |             if use_float16:
71 |                 x = x.half()
72 |             else:
73 |                 x = x.float()
74 |         else:
75 |             x = x.float()
76 |
77 |         x = x.unsqueeze(0).permute(0, 3, 1, 2)
78 |         features, regression, classification, anchors = model(x)
79 |
80 |         preds = postprocess(x,
81 |                             anchors, regression, classification,
82 |                             regressBoxes, clipBoxes,
83 |                             threshold, nms_threshold)
84 |
85 |         if not preds:
86 |             continue
87 |
88 |         preds = invert_affine(framed_metas, preds)[0]
89 |
90 |         scores = preds['scores']
91 |         class_ids = preds['class_ids']
92 |         rois = preds['rois']
93 |
94 |         if rois.shape[0] > 0:
95 |             # x1,y1,x2,y2 -> x1,y1,w,h
96 |             rois[:, 2] -= rois[:, 0]
97 |             rois[:, 3] -= rois[:, 1]
98 |
99 |             bbox_score = scores
100 |
101 |             for roi_id in range(rois.shape[0]):

101 | for roi_id in range(rois.shape[0]):