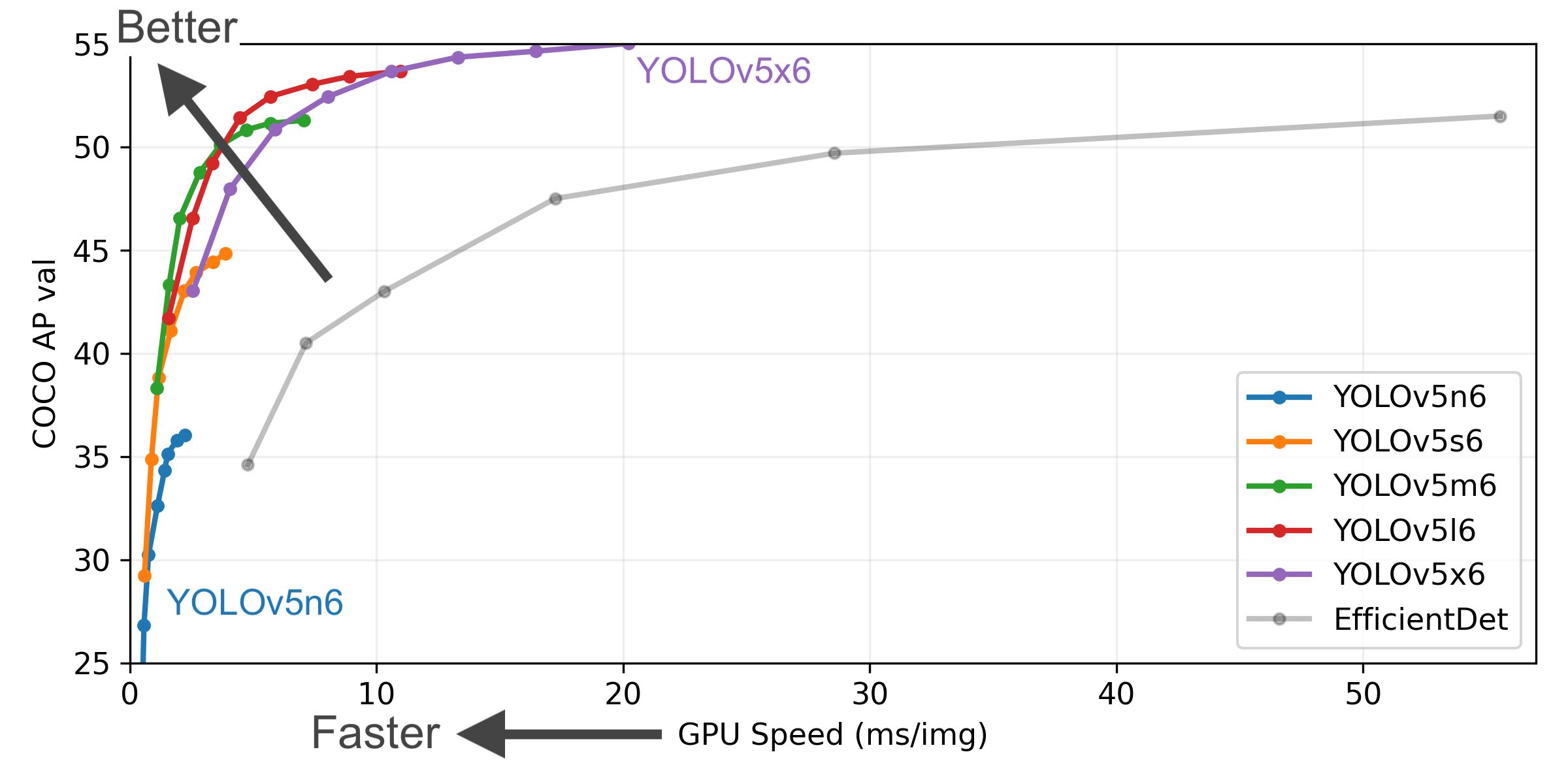

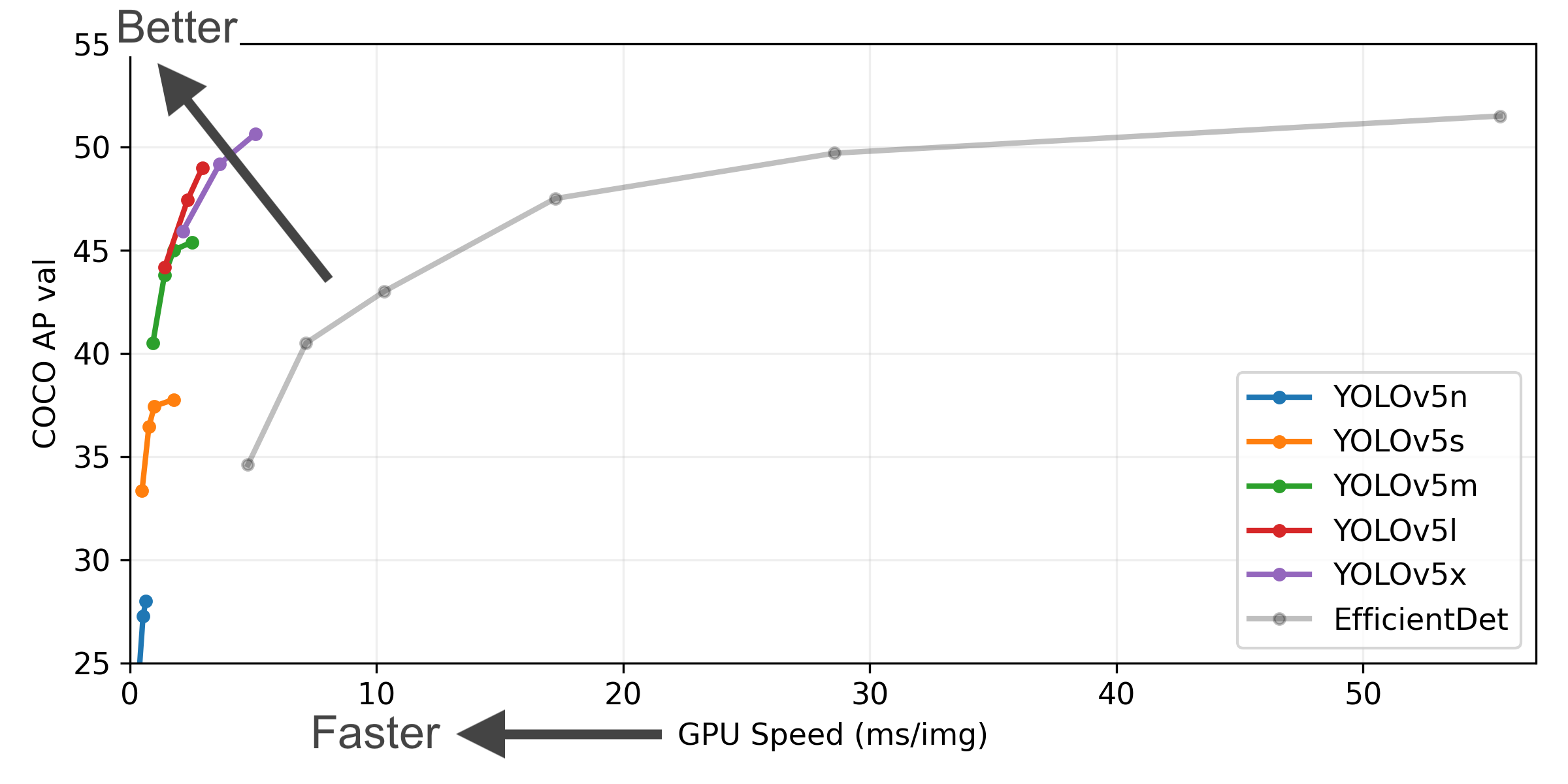

This project improves YOLOv5 for small object detection. YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset, and represents Ultralytics open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.
