├── .github
│   └── workflows
│       └── lint.yaml
├── .gitignore
├── .pre-commit-config.yaml
├── Dockerfile
├── LICENSE
├── Pipfile
├── Pipfile.lock
├── README.md
├── action.yaml
├── github_actions_avatar.png
├── github_status_embed
│   ├── __init__.py
│   ├── __main__.py
│   ├── log.py
│   ├── types.py
│   └── webhook.py
├── img
│   ├── embed_comparison.png
│   └── type_comparison.png
├── requirements.txt
└── tox.ini

/.github/workflows/lint.yaml:

--------------------------------------------------------------------------------

1 | name: Lint
2 |
3 | on:
4 |   push:
5 |     branches:
6 |       - main
7 |   pull_request:
8 |
9 |
10 | jobs:
11 |   lint:
12 |     name: Run pre-commit & flake8
13 |     runs-on: ubuntu-latest
14 |     env:
15 |       # Configure pip to cache dependencies and do a user install
16 |       PIP_NO_CACHE_DIR: false
17 |       PIP_USER: 1
18 |
19 |       # Hide the graphical elements from pipenv's output
20 |       PIPENV_HIDE_EMOJIS: 1
21 |       PIPENV_NOSPIN: 1
22 |
23 |       # Make sure pipenv does not try to reuse an environment it's running in
24 |       PIPENV_IGNORE_VIRTUALENVS: 1
25 |
26 |       # Specify explicit paths for python dependencies and the pre-commit
27 |       # environment so we know which directories to cache
28 |       PYTHONUSERBASE: ${{ github.workspace }}/.cache/py-user-base
29 |       PRE_COMMIT_HOME: ${{ github.workspace }}/.cache/pre-commit-cache
30 |
31 |     steps:
32 |       - name: Add custom PYTHONUSERBASE to PATH
33 |         run: echo '${{ env.PYTHONUSERBASE }}/bin/' >> $GITHUB_PATH
34 |
35 |       - name: Checkout repository
36 |         uses: actions/checkout@v2
37 |
38 |       - name: Setup python
39 |         id: python
40 |         uses: actions/setup-python@v2
41 |         with:
42 |           python-version: '3.9'
43 |
44 |       # This step caches our Python dependencies. To make sure we
45 |       # only restore a cache when the dependencies, the python version,
46 |       # the runner operating system, and the dependency location haven't
47 |       # changed, we create a cache key that is a composite of those states.
48 |       #
49 |       # Only when the context is exactly the same, we will restore the cache.
50 |       - name: Python Dependency Caching
51 |         uses: actions/cache@v2
52 |         id: python_cache
53 |         with:
54 |           path: ${{ env.PYTHONUSERBASE }}
55 |           key: "python-0-${{ runner.os }}-${{ env.PYTHONUSERBASE }}-\
56 |             ${{ steps.python.outputs.python-version }}-\
57 |             ${{ hashFiles('./Pipfile', './Pipfile.lock') }}"
58 |
59 |       # Install our dependencies if we did not restore a dependency cache
60 |       - name: Install dependencies using pipenv
61 |         if: steps.python_cache.outputs.cache-hit != 'true'
62 |         run: |
63 |           pip install pipenv
64 |           pipenv install --dev --deploy --system
65 |
66 |       # This step caches our pre-commit environment. To make sure we
67 |       # do create a new environment when our pre-commit setup changes,
68 |       # we create a cache key based on relevant factors.
69 |       - name: Pre-commit Environment Caching
70 |         uses: actions/cache@v2
71 |         with:
72 |           path: ${{ env.PRE_COMMIT_HOME }}
73 |           key: "precommit-0-${{ runner.os }}-${{ env.PRE_COMMIT_HOME }}-\
74 |             ${{ steps.python.outputs.python-version }}-\
75 |             ${{ hashFiles('./.pre-commit-config.yaml') }}"
76 |
77 |       # We will not run `flake8` here, as flake8 runs in a separate
78 |       # step below. As pre-commit does not support user installs, we set
79 |       # PIP_USER=0 to not do a user install.
80 |       - name: Run pre-commit hooks
81 |         id: pre-commit
82 |         run: export PIP_USER=0; SKIP=flake8 pre-commit run --all-files
83 |
84 |       # Run flake8 and have it format the linting errors in the format of
85 |       # the GitHub Workflow command to register error annotations. This
86 |       # means that our flake8 output is automatically added as an error
87 |       # annotation to both the run result and in the "Files" tab of a
88 |       # pull request.
89 |       #
90 |       # Format used:
91 |       # ::error file={filename},line={line},col={col}::{message}
92 |       #
93 |       # We run this step if every other step succeeded or if only
94 |       # the pre-commit step failed. If one of the pre-commit tests
95 |       # fails, we're still interested in linting results as well.
96 |       - name: Run flake8
97 |         if: success() || steps.pre-commit.outcome == 'failure'
98 |         run: "flake8 \
99 |           --format='::error file=%(path)s,line=%(row)d,col=%(col)d::[flake8] %(code)s: %(text)s'"
100 |

--------------------------------------------------------------------------------
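The `--format` option in the "Run flake8" step turns every flake8 violation into a GitHub workflow command of the form `::error file={filename},line={line},col={col}::{message}`, which GitHub renders as an error annotation. The Python sketch below builds the same string for a single violation. The `Violation` class and the helper names are hypothetical and not part of this repository. The percent-encoding of `%`, CR, and LF (plus `:` and `,` in property values) follows the escaping used by GitHub's `@actions/core` toolkit; the flake8 format string itself does not apply it.

```python
from dataclasses import dataclass


@dataclass
class Violation:
    """Hypothetical record for one flake8 violation."""

    path: str
    row: int
    col: int
    code: str
    text: str


def escape_data(value: str) -> str:
    """Escape a workflow command message (`%` must be replaced first)."""
    return value.replace("%", "%25").replace("\r", "%0D").replace("\n", "%0A")


def escape_property(value: str) -> str:
    """Escape a workflow command property value such as a file path."""
    return escape_data(value).replace(":", "%3A").replace(",", "%2C")


def to_annotation(v: Violation) -> str:
    """Render a violation in the same shape as the workflow's --format string."""
    message = escape_data(f"[flake8] {v.code}: {v.text}")
    return (
        f"::error file={escape_property(v.path)},line={v.row},col={v.col}"
        f"::{message}"
    )


if __name__ == "__main__":
    example = Violation("github_status_embed/webhook.py", 12, 5, "E501", "line too long")
    print(to_annotation(example))
    # prints ::error file=github_status_embed/webhook.py,line=12,col=5::[flake8] E501: line too long
```

Printing such a line to stdout from any step is what makes GitHub attach the annotation; flake8 is simply used as the producer here.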

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | *.egg-info/

24 | .installed.cfg

25 | *.egg

26 |

27 | # PyInstaller

28 | # Usually these files are written by a python script from a template

29 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

30 | *.manifest

31 | *.spec

32 |

33 | # Installer logs

34 | pip-log.txt

35 | pip-delete-this-directory.txt

36 |

37 | # Unit test / coverage reports

38 | htmlcov/

39 | .tox/

40 | .coverage

41 | .coverage.*

42 | .cache

43 | nosetests.xml

44 | coverage.xml

45 | *.cover

46 | .hypothesis/

47 |

48 | # Translations

49 | *.mo

50 | *.pot

51 |

52 | # Django stuff:

53 | *.log

54 | local_settings.py

55 |

56 | # Flask stuff:

57 | instance/

58 | .webassets-cache

59 |

60 | # Scrapy stuff:

61 | .scrapy

62 |

63 | # Sphinx documentation

64 | docs/_build/

65 |

66 | # PyBuilder

67 | target/

68 |

69 | # Jupyter Notebook

70 | .ipynb_checkpoints

71 |

72 | # pyenv

73 | .python-version

74 |

75 | # celery beat schedule file

76 | celerybeat-schedule

77 |

78 | # SageMath parsed files

79 | *.sage.py

80 |

81 | # dotenv

82 | .env

83 |

84 | # virtualenv

85 | .venv

86 | venv/

87 | ENV/

88 |

89 | # Spyder project settings

90 | .spyderproject

91 | .spyproject

92 |

93 | # Rope project settings

94 | .ropeproject

95 |

96 | # mkdocs documentation

97 | /site

98 |

99 | # mypy

100 | .mypy_cache/

101 |

102 | # PyCharm

103 | .idea/

104 |

105 | # VSCode

106 | .vscode/

107 |

108 | # Vagrant

109 | .vagrant

110 |

111 | # Logfiles

112 | log.*

113 | *.log.*

114 | !log.py

115 |

116 | # Custom user configuration

117 | config.yml

118 |

119 | # xmlrunner unittest XML reports

120 | TEST-**.xml

121 |

122 | # Mac OS .DS_Store, which is a file that stores custom attributes of its containing folder

123 | .DS_Store

124 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | repos:
2 |   - repo: https://github.com/pre-commit/pre-commit-hooks
3 |     rev: v3.2.0
4 |     hooks:
5 |       - id: check-merge-conflict
6 |       - id: check-yaml
7 |       - id: end-of-file-fixer
8 |       - id: mixed-line-ending
9 |         args: [--fix=lf]
10 |       - id: trailing-whitespace
11 |         args: [--markdown-linebreak-ext=md]
12 |   - repo: https://github.com/pre-commit/pygrep-hooks
13 |     rev: v1.5.1
14 |     hooks:
15 |       - id: python-check-blanket-noqa
16 |   - repo: local
17 |     hooks:
18 |       - id: flake8
19 |         name: Flake8
20 |         description: This hook runs flake8 within our project's pipenv environment.
21 |         entry: pipenv run flake8
22 |         language: python
23 |         types: [python]
24 |         require_serial: true
25 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM python:3.9-slim

2 |

3 | COPY requirements.txt .

4 | RUN pip install --target=/app -r requirements.txt

5 |

6 | WORKDIR /app

7 | COPY action.yaml github_status_embed/

8 | COPY ./github_status_embed github_status_embed/

9 |

10 | ENV PYTHONPATH /app

11 | ENTRYPOINT ["python", "-m", "github_status_embed"]

12 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 Sebastiaan Zeeff

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Pipfile:

--------------------------------------------------------------------------------

1 | [[source]]

2 | url = "https://pypi.org/simple"

3 | verify_ssl = true

4 | name = "pypi"

5 |

6 | [packages]

7 | requests = "==2.25.0"

8 | PyYAML = "==5.3.1"

9 |

10 | [dev-packages]

11 | flake8 = "~=3.8.4"

12 | flake8-annotations = "~=2.4.1"

13 | flake8-bugbear = "~=20.11.1"

14 | flake8-docstrings = "~=1.5.0"

15 | flake8-import-order = "~=0.18.1"

16 | flake8-string-format = "~=0.3.0"

17 | flake8-tidy-imports = "~=4.1.0"

18 | flake8-todo = "~=0.7"

19 | pep8-naming = "~=0.11.1"

20 | pre-commit = "~=2.9.2"

21 |

22 | [requires]

23 | python_version = "3.9"

24 |

--------------------------------------------------------------------------------

/Pipfile.lock:

--------------------------------------------------------------------------------

1 | {

2 | "_meta": {

3 | "hash": {

4 | "sha256": "80c125f22c0aa5e29eed0b545013174edac9043c561e5368f8d19ea0edbbfc93"

5 | },

6 | "pipfile-spec": 6,

7 | "requires": {

8 | "python_version": "3.9"

9 | },

10 | "sources": [

11 | {

12 | "name": "pypi",

13 | "url": "https://pypi.org/simple",

14 | "verify_ssl": true

15 | }

16 | ]

17 | },

18 | "default": {

19 | "certifi": {

20 | "hashes": [

21 | "sha256:1f422849db327d534e3d0c5f02a263458c3955ec0aae4ff09b95f195c59f4edd",

22 | "sha256:f05def092c44fbf25834a51509ef6e631dc19765ab8a57b4e7ab85531f0a9cf4"

23 | ],

24 | "version": "==2020.11.8"

25 | },

26 | "chardet": {

27 | "hashes": [

28 | "sha256:84ab92ed1c4d4f16916e05906b6b75a6c0fb5db821cc65e70cbd64a3e2a5eaae",

29 | "sha256:fc323ffcaeaed0e0a02bf4d117757b98aed530d9ed4531e3e15460124c106691"

30 | ],

31 | "version": "==3.0.4"

32 | },

33 | "idna": {

34 | "hashes": [

35 | "sha256:b307872f855b18632ce0c21c5e45be78c0ea7ae4c15c828c20788b26921eb3f6",

36 | "sha256:b97d804b1e9b523befed77c48dacec60e6dcb0b5391d57af6a65a312a90648c0"

37 | ],

38 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

39 | "version": "==2.10"

40 | },

41 | "pyyaml": {

42 | "hashes": [

43 | "sha256:06a0d7ba600ce0b2d2fe2e78453a470b5a6e000a985dd4a4e54e436cc36b0e97",

44 | "sha256:240097ff019d7c70a4922b6869d8a86407758333f02203e0fc6ff79c5dcede76",

45 | "sha256:4f4b913ca1a7319b33cfb1369e91e50354d6f07a135f3b901aca02aa95940bd2",

46 | "sha256:6034f55dab5fea9e53f436aa68fa3ace2634918e8b5994d82f3621c04ff5ed2e",

47 | "sha256:69f00dca373f240f842b2931fb2c7e14ddbacd1397d57157a9b005a6a9942648",

48 | "sha256:73f099454b799e05e5ab51423c7bcf361c58d3206fa7b0d555426b1f4d9a3eaf",

49 | "sha256:74809a57b329d6cc0fdccee6318f44b9b8649961fa73144a98735b0aaf029f1f",

50 | "sha256:7739fc0fa8205b3ee8808aea45e968bc90082c10aef6ea95e855e10abf4a37b2",

51 | "sha256:95f71d2af0ff4227885f7a6605c37fd53d3a106fcab511b8860ecca9fcf400ee",

52 | "sha256:ad9c67312c84def58f3c04504727ca879cb0013b2517c85a9a253f0cb6380c0a",

53 | "sha256:b8eac752c5e14d3eca0e6dd9199cd627518cb5ec06add0de9d32baeee6fe645d",

54 | "sha256:cc8955cfbfc7a115fa81d85284ee61147059a753344bc51098f3ccd69b0d7e0c",

55 | "sha256:d13155f591e6fcc1ec3b30685d50bf0711574e2c0dfffd7644babf8b5102ca1a"

56 | ],

57 | "index": "pypi",

58 | "version": "==5.3.1"

59 | },

60 | "requests": {

61 | "hashes": [

62 | "sha256:7f1a0b932f4a60a1a65caa4263921bb7d9ee911957e0ae4a23a6dd08185ad5f8",

63 | "sha256:e786fa28d8c9154e6a4de5d46a1d921b8749f8b74e28bde23768e5e16eece998"

64 | ],

65 | "index": "pypi",

66 | "version": "==2.25.0"

67 | },

68 | "urllib3": {

69 | "hashes": [

70 | "sha256:19188f96923873c92ccb987120ec4acaa12f0461fa9ce5d3d0772bc965a39e08",

71 | "sha256:d8ff90d979214d7b4f8ce956e80f4028fc6860e4431f731ea4a8c08f23f99473"

72 | ],

73 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3, 3.4' and python_version < '4'",

74 | "version": "==1.26.2"

75 | }

76 | },

77 | "develop": {

78 | "appdirs": {

79 | "hashes": [

80 | "sha256:7d5d0167b2b1ba821647616af46a749d1c653740dd0d2415100fe26e27afdf41",

81 | "sha256:a841dacd6b99318a741b166adb07e19ee71a274450e68237b4650ca1055ab128"

82 | ],

83 | "version": "==1.4.4"

84 | },

85 | "attrs": {

86 | "hashes": [

87 | "sha256:31b2eced602aa8423c2aea9c76a724617ed67cf9513173fd3a4f03e3a929c7e6",

88 | "sha256:832aa3cde19744e49938b91fea06d69ecb9e649c93ba974535d08ad92164f700"

89 | ],

90 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

91 | "version": "==20.3.0"

92 | },

93 | "cfgv": {

94 | "hashes": [

95 | "sha256:32e43d604bbe7896fe7c248a9c2276447dbef840feb28fe20494f62af110211d",

96 | "sha256:cf22deb93d4bcf92f345a5c3cd39d3d41d6340adc60c78bbbd6588c384fda6a1"

97 | ],

98 | "markers": "python_full_version >= '3.6.1'",

99 | "version": "==3.2.0"

100 | },

101 | "distlib": {

102 | "hashes": [

103 | "sha256:8c09de2c67b3e7deef7184574fc060ab8a793e7adbb183d942c389c8b13c52fb",

104 | "sha256:edf6116872c863e1aa9d5bb7cb5e05a022c519a4594dc703843343a9ddd9bff1"

105 | ],

106 | "version": "==0.3.1"

107 | },

108 | "filelock": {

109 | "hashes": [

110 | "sha256:18d82244ee114f543149c66a6e0c14e9c4f8a1044b5cdaadd0f82159d6a6ff59",

111 | "sha256:929b7d63ec5b7d6b71b0fa5ac14e030b3f70b75747cef1b10da9b879fef15836"

112 | ],

113 | "version": "==3.0.12"

114 | },

115 | "flake8": {

116 | "hashes": [

117 | "sha256:749dbbd6bfd0cf1318af27bf97a14e28e5ff548ef8e5b1566ccfb25a11e7c839",

118 | "sha256:aadae8761ec651813c24be05c6f7b4680857ef6afaae4651a4eccaef97ce6c3b"

119 | ],

120 | "index": "pypi",

121 | "version": "==3.8.4"

122 | },

123 | "flake8-annotations": {

124 | "hashes": [

125 | "sha256:0bcebb0792f1f96d617ded674dca7bf64181870bfe5dace353a1483551f8e5f1",

126 | "sha256:bebd11a850f6987a943ce8cdff4159767e0f5f89b3c88aca64680c2175ee02df"

127 | ],

128 | "index": "pypi",

129 | "version": "==2.4.1"

130 | },

131 | "flake8-bugbear": {

132 | "hashes": [

133 | "sha256:528020129fea2dea33a466b9d64ab650aa3e5f9ffc788b70ea4bc6cf18283538",

134 | "sha256:f35b8135ece7a014bc0aee5b5d485334ac30a6da48494998cc1fabf7ec70d703"

135 | ],

136 | "index": "pypi",

137 | "version": "==20.11.1"

138 | },

139 | "flake8-docstrings": {

140 | "hashes": [

141 | "sha256:3d5a31c7ec6b7367ea6506a87ec293b94a0a46c0bce2bb4975b7f1d09b6f3717",

142 | "sha256:a256ba91bc52307bef1de59e2a009c3cf61c3d0952dbe035d6ff7208940c2edc"

143 | ],

144 | "index": "pypi",

145 | "version": "==1.5.0"

146 | },

147 | "flake8-import-order": {

148 | "hashes": [

149 | "sha256:90a80e46886259b9c396b578d75c749801a41ee969a235e163cfe1be7afd2543",

150 | "sha256:a28dc39545ea4606c1ac3c24e9d05c849c6e5444a50fb7e9cdd430fc94de6e92"

151 | ],

152 | "index": "pypi",

153 | "version": "==0.18.1"

154 | },

155 | "flake8-polyfill": {

156 | "hashes": [

157 | "sha256:12be6a34ee3ab795b19ca73505e7b55826d5f6ad7230d31b18e106400169b9e9",

158 | "sha256:e44b087597f6da52ec6393a709e7108b2905317d0c0b744cdca6208e670d8eda"

159 | ],

160 | "version": "==1.0.2"

161 | },

162 | "flake8-string-format": {

163 | "hashes": [

164 | "sha256:65f3da786a1461ef77fca3780b314edb2853c377f2e35069723348c8917deaa2",

165 | "sha256:812ff431f10576a74c89be4e85b8e075a705be39bc40c4b4278b5b13e2afa9af"

166 | ],

167 | "index": "pypi",

168 | "version": "==0.3.0"

169 | },

170 | "flake8-tidy-imports": {

171 | "hashes": [

172 | "sha256:62059ca07d8a4926b561d392cbab7f09ee042350214a25cf12823384a45d27dd",

173 | "sha256:c30b40337a2e6802ba3bb611c26611154a27e94c53fc45639e3e282169574fd3"

174 | ],

175 | "index": "pypi",

176 | "version": "==4.1.0"

177 | },

178 | "flake8-todo": {

179 | "hashes": [

180 | "sha256:6e4c5491ff838c06fe5a771b0e95ee15fc005ca57196011011280fc834a85915"

181 | ],

182 | "index": "pypi",

183 | "version": "==0.7"

184 | },

185 | "identify": {

186 | "hashes": [

187 | "sha256:943cd299ac7f5715fcb3f684e2fc1594c1e0f22a90d15398e5888143bd4144b5",

188 | "sha256:cc86e6a9a390879dcc2976cef169dd9cc48843ed70b7380f321d1b118163c60e"

189 | ],

190 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

191 | "version": "==1.5.10"

192 | },

193 | "mccabe": {

194 | "hashes": [

195 | "sha256:ab8a6258860da4b6677da4bd2fe5dc2c659cff31b3ee4f7f5d64e79735b80d42",

196 | "sha256:dd8d182285a0fe56bace7f45b5e7d1a6ebcbf524e8f3bd87eb0f125271b8831f"

197 | ],

198 | "version": "==0.6.1"

199 | },

200 | "nodeenv": {

201 | "hashes": [

202 | "sha256:5304d424c529c997bc888453aeaa6362d242b6b4631e90f3d4bf1b290f1c84a9",

203 | "sha256:ab45090ae383b716c4ef89e690c41ff8c2b257b85b309f01f3654df3d084bd7c"

204 | ],

205 | "version": "==1.5.0"

206 | },

207 | "pep8-naming": {

208 | "hashes": [

209 | "sha256:a1dd47dd243adfe8a83616e27cf03164960b507530f155db94e10b36a6cd6724",

210 | "sha256:f43bfe3eea7e0d73e8b5d07d6407ab47f2476ccaeff6937c84275cd30b016738"

211 | ],

212 | "index": "pypi",

213 | "version": "==0.11.1"

214 | },

215 | "pre-commit": {

216 | "hashes": [

217 | "sha256:949b13efb7467ae27e2c8f9e83434dacf2682595124d8902554a4e18351e5781",

218 | "sha256:e31c04bc23741194a7c0b983fe512801e151a0638c6001c49f2bd034f8a664a1"

219 | ],

220 | "index": "pypi",

221 | "version": "==2.9.2"

222 | },

223 | "pycodestyle": {

224 | "hashes": [

225 | "sha256:2295e7b2f6b5bd100585ebcb1f616591b652db8a741695b3d8f5d28bdc934367",

226 | "sha256:c58a7d2815e0e8d7972bf1803331fb0152f867bd89adf8a01dfd55085434192e"

227 | ],

228 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

229 | "version": "==2.6.0"

230 | },

231 | "pydocstyle": {

232 | "hashes": [

233 | "sha256:19b86fa8617ed916776a11cd8bc0197e5b9856d5433b777f51a3defe13075325",

234 | "sha256:aca749e190a01726a4fb472dd4ef23b5c9da7b9205c0a7857c06533de13fd678"

235 | ],

236 | "markers": "python_version >= '3.5'",

237 | "version": "==5.1.1"

238 | },

239 | "pyflakes": {

240 | "hashes": [

241 | "sha256:0d94e0e05a19e57a99444b6ddcf9a6eb2e5c68d3ca1e98e90707af8152c90a92",

242 | "sha256:35b2d75ee967ea93b55750aa9edbbf72813e06a66ba54438df2cfac9e3c27fc8"

243 | ],

244 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

245 | "version": "==2.2.0"

246 | },

247 | "pyyaml": {

248 | "hashes": [

249 | "sha256:06a0d7ba600ce0b2d2fe2e78453a470b5a6e000a985dd4a4e54e436cc36b0e97",

250 | "sha256:240097ff019d7c70a4922b6869d8a86407758333f02203e0fc6ff79c5dcede76",

251 | "sha256:4f4b913ca1a7319b33cfb1369e91e50354d6f07a135f3b901aca02aa95940bd2",

252 | "sha256:6034f55dab5fea9e53f436aa68fa3ace2634918e8b5994d82f3621c04ff5ed2e",

253 | "sha256:69f00dca373f240f842b2931fb2c7e14ddbacd1397d57157a9b005a6a9942648",

254 | "sha256:73f099454b799e05e5ab51423c7bcf361c58d3206fa7b0d555426b1f4d9a3eaf",

255 | "sha256:74809a57b329d6cc0fdccee6318f44b9b8649961fa73144a98735b0aaf029f1f",

256 | "sha256:7739fc0fa8205b3ee8808aea45e968bc90082c10aef6ea95e855e10abf4a37b2",

257 | "sha256:95f71d2af0ff4227885f7a6605c37fd53d3a106fcab511b8860ecca9fcf400ee",

258 | "sha256:ad9c67312c84def58f3c04504727ca879cb0013b2517c85a9a253f0cb6380c0a",

259 | "sha256:b8eac752c5e14d3eca0e6dd9199cd627518cb5ec06add0de9d32baeee6fe645d",

260 | "sha256:cc8955cfbfc7a115fa81d85284ee61147059a753344bc51098f3ccd69b0d7e0c",

261 | "sha256:d13155f591e6fcc1ec3b30685d50bf0711574e2c0dfffd7644babf8b5102ca1a"

262 | ],

263 | "index": "pypi",

264 | "version": "==5.3.1"

265 | },

266 | "six": {

267 | "hashes": [

268 | "sha256:30639c035cdb23534cd4aa2dd52c3bf48f06e5f4a941509c8bafd8ce11080259",

269 | "sha256:8b74bedcbbbaca38ff6d7491d76f2b06b3592611af620f8426e82dddb04a5ced"

270 | ],

271 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

272 | "version": "==1.15.0"

273 | },

274 | "snowballstemmer": {

275 | "hashes": [

276 | "sha256:209f257d7533fdb3cb73bdbd24f436239ca3b2fa67d56f6ff88e86be08cc5ef0",

277 | "sha256:df3bac3df4c2c01363f3dd2cfa78cce2840a79b9f1c2d2de9ce8d31683992f52"

278 | ],

279 | "version": "==2.0.0"

280 | },

281 | "toml": {

282 | "hashes": [

283 | "sha256:806143ae5bfb6a3c6e736a764057db0e6a0e05e338b5630894a5f779cabb4f9b",

284 | "sha256:b3bda1d108d5dd99f4a20d24d9c348e91c4db7ab1b749200bded2f839ccbe68f"

285 | ],

286 | "markers": "python_version >= '2.6' and python_version not in '3.0, 3.1, 3.2, 3.3'",

287 | "version": "==0.10.2"

288 | },

289 | "virtualenv": {

290 | "hashes": [

291 | "sha256:07cff122e9d343140366055f31be4dcd61fd598c69d11cd33a9d9c8df4546dd7",

292 | "sha256:e0aac7525e880a429764cefd3aaaff54afb5d9f25c82627563603f5d7de5a6e5"

293 | ],

294 | "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",

295 | "version": "==20.2.1"

296 | }

297 | }

298 | }

299 |

--------------------------------------------------------------------------------
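The lint workflow's dependency cache key ends in `hashFiles('./Pipfile', './Pipfile.lock')`, so editing either of the two files above yields a new key and forces a fresh `pipenv install`. GitHub documents `hashFiles` as computing a SHA-256 hash for each matched file and then a final SHA-256 hash over those, returning an empty string when nothing matches. The Python sketch below approximates that for explicit paths only; it is not guaranteed to produce the same digest GitHub does, and all function names are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Iterable


def combine_digests(contents: Iterable[bytes]) -> str:
    """Hash each file's bytes with SHA-256, then hash the concatenated digests."""
    combined = hashlib.sha256()
    matched = False
    for data in contents:
        combined.update(hashlib.sha256(data).digest())
        matched = True
    # Like hashFiles(), return an empty string when no file matched.
    return combined.hexdigest() if matched else ""


def approx_hash_files(*paths: str) -> str:
    """Approximate hashFiles() for explicit paths, skipping missing files."""
    return combine_digests(Path(p).read_bytes() for p in paths if Path(p).is_file())


def python_cache_key(
    runner_os: str, user_base: str, python_version: str, *dep_files: str
) -> str:
    """Compose a key shaped like the lint workflow's `python-0-...` key."""
    return (
        f"python-0-{runner_os}-{user_base}-{python_version}-"
        f"{approx_hash_files(*dep_files)}"
    )
```

For example, `python_cache_key("Linux", "/tmp/py-user-base", "3.9.1", "Pipfile", "Pipfile.lock")` changes whenever the contents of either file change, while the OS, user base, and Python version segments keep caches from different environments apart.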

/README.md:

--------------------------------------------------------------------------------

1 | # GitHub Actions Status Embed for Discord

2 | _Send enhanced and informational GitHub Actions status embeds to Discord webhooks._

3 |

4 | ## Why?

5 |

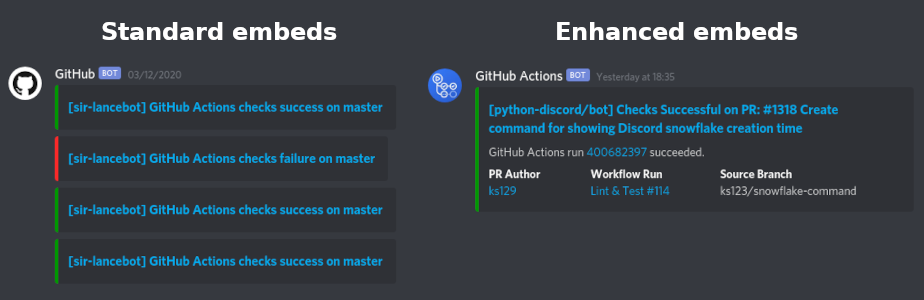

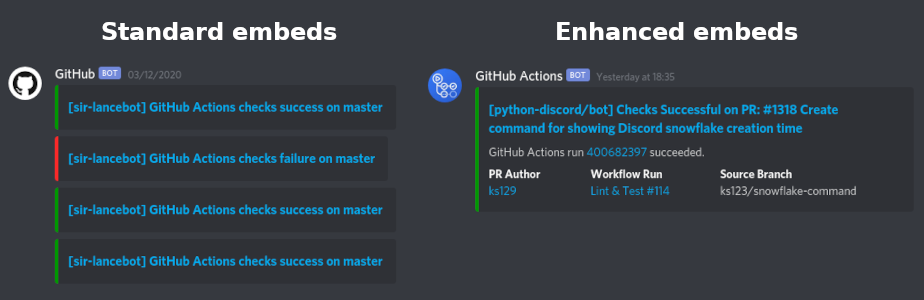

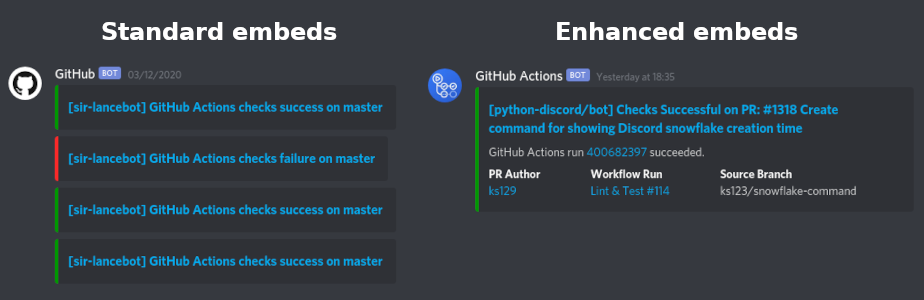

6 | The default status embeds GitHub delivers for workflow runs are very basic: they contain the name of the repository, the result of the workflow run (but not the name of the workflow!), and the branch that served as the context for the workflow run. If the workflow was triggered by a pull request from a fork, the embed does not even differentiate between branches on the fork and the base repository: the "master" branch in the example below actually refers to the "master" branch of a fork!

7 |

8 | Another problem occurs when multiple workflows are chained: GitHub will send an embed to your webhook for each individual run result. While this is sometimes what you want, if you chain multiple workflows, the number of embeds you receive may flood your log channel or trigger Discord rate limiting. Unfortunately, there's no fine-tuning either: with the standard GitHub webhook events, it's all or nothing.

9 |

10 | ## Solution

11 |

12 | As a solution to this problem, I decided to write a new action that sends an enhanced embed containing a lot more information about the workflow run. The design was inspired by both the status embed sent by Azure as well as the embeds GitHub sends for issue/pull request updates. Here's an example:

13 |

14 |

15 |
16 | Comparison between a standard and an enhanced embed as provided by this action.
17 |

18 |

19 | As you can see, the standard embeds on the left don't contain a lot of information, while the embed on the right shows the information you'd typically want for a check run on a pull request. While it would be possible to include even more information, there's also obviously a trade-off between the amount of information and the vertical space required to display the embed in Discord.

20 |

21 | Having a custom action also lets you decide when embeds are delivered: for example, you can send embeds only for failing jobs, or only at the end of a sequence of chained workflows.

22 |

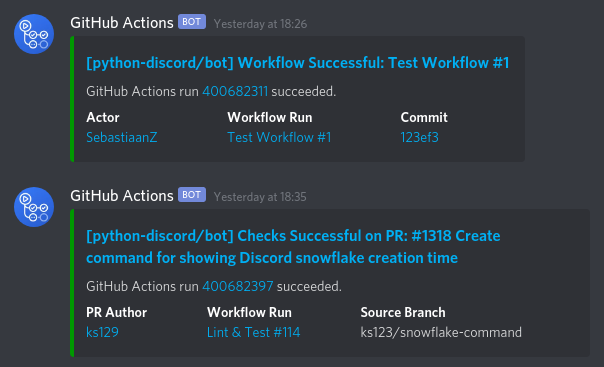

23 | ## General Workflow Runs & PRs

24 |

25 | When a workflow is triggered for a Pull Request, it's natural to include a bit of information about the Pull Request in the embed to give context to the result. However, when a workflow is triggered for another event, there's no Pull Request involved, which also means we can't include information about that non-existent PR in the embed. That's why the Action automatically tailors the embed towards a PR if PR information is provided, and towards a general workflow run if not.

26 |

27 | Spot the difference:

28 |

29 |

30 |

31 |

32 |
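A minimal Python sketch of how an embed could be tailored depending on whether PR information is present. It assumes Discord's Execute Webhook endpoint (`https://discord.com/api/webhooks/{webhook_id}/{webhook_token}`), which accepts a JSON body with an `embeds` list, and GitHub's run URL format `https://github.com/{owner}/{repo}/actions/runs/{run_id}`. The titles, colors, and function names are hypothetical and are not the layout produced by `github_status_embed/webhook.py`. The sketch only builds the payload and sends nothing, similar in spirit to `dry_run`.

```python
from typing import Any, Dict, Optional

# Hypothetical embed colors per status (Discord expects an integer RGB value).
STATUS_COLORS = {"success": 0x28A745, "failure": 0xCB2431, "cancelled": 0x6A737D}


def webhook_url(webhook_id: str, webhook_token: str) -> str:
    """Return the URL of Discord's Execute Webhook endpoint."""
    return f"https://discord.com/api/webhooks/{webhook_id}/{webhook_token}"


def build_payload(
    status: str,
    workflow_name: str,
    repository: str,
    run_id: int,
    pr_number: Optional[int] = None,
    pr_title: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a webhook JSON body whose embed is tailored to PR or non-PR runs."""
    run_url = f"https://github.com/{repository}/actions/runs/{run_id}"
    if pr_number is not None:
        # PR context available: mention the pull request in the title.
        title = f"[{repository}] Checks {status} on PR #{pr_number}: {pr_title}"
    else:
        # General workflow run: there is no PR to describe.
        title = f"[{repository}] {workflow_name} {status}"
    # An unsupported status raises KeyError here.
    embed = {"title": title, "url": run_url, "color": STATUS_COLORS[status]}
    return {"embeds": [embed]}


# Usage: POST this dict as JSON to webhook_url(webhook_id, webhook_token).
payload = build_payload("failure", "Lint & Test", "owner/repo", 42, pr_number=7, pr_title="Fix bug")
```

Keeping payload construction separate from the HTTP request makes the tailoring logic easy to test without a live webhook.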

33 | ## Usage

34 |

35 | To use the action, add it as a step in your workflow and provide the appropriate arguments.

36 |

37 | ### Example workflow file

38 |

39 | ```yaml
40 | on:
41 |   push:
42 |     branches:
43 |       - main
44 |   pull_request:
45 |
46 | jobs:
47 |   send_embed:
48 |     runs-on: ubuntu-latest
49 |     name: Send an embed to Discord
50 |
51 |     steps:
52 |       - name: Run the GitHub Actions Status Embed Action
53 |         uses: SebastiaanZ/github-status-embed-for-discord@main
54 |         with:
55 |           # Discord webhook
56 |           webhook_id: '1234567890'  # Has to be provided as a string
57 |           webhook_token: ${{ secrets.webhook_token }}
58 |
59 |           # Optional arguments for PR-related events
60 |           # Note: There's no harm in including these lines for non-PR
61 |           # events, as non-existing paths in objects will evaluate to
62 |           # `null` silently and the github status embed action will
63 |           # treat them as absent.
64 |           pr_author_login: ${{ github.event.pull_request.user.login }}
65 |           pr_number: ${{ github.event.pull_request.number }}
66 |           pr_title: ${{ github.event.pull_request.title }}
67 |           pr_source: ${{ github.event.pull_request.head.label }}
68 | ```
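The comment in the example relies on GitHub expressions evaluating a missing property path, such as `github.event.pull_request.user.login` on a `push` event, to `null` instead of failing. The hypothetical `get_path` helper below mimics that behavior in Python on a plain dictionary standing in for the event payload; it is an illustration, not how GitHub actually evaluates expressions.

```python
from typing import Any, Mapping, Optional


def get_path(obj: Any, dotted: str) -> Optional[Any]:
    """Follow a dotted path, returning None as soon as a key is missing."""
    current = obj
    for key in dotted.split("."):
        if not isinstance(current, Mapping) or key not in current:
            return None
        current = current[key]
    return current


# Simplified stand-ins for a push event and a pull_request event payload.
push_event = {"ref": "refs/heads/main"}
pr_event = {"pull_request": {"number": 7, "user": {"login": "octocat"}}}

print(get_path(push_event, "pull_request.user.login"))  # prints None
print(get_path(pr_event, "pull_request.user.login"))  # prints octocat
```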

69 |

70 | ### Command specification

71 |

72 | **Note:** The default values assume that the workflow you want to report the status of is also the workflow that is running this action. If this is not possible (e.g., because you don't have access to secrets in a `pull_request`-triggered workflow), you could use a `workflow_run` triggered workflow that reports the status of the workflow that triggered it. See the recipes section below for an example.

73 |

74 | | Argument | Description | Default |

75 | | --- | --- | :---: |

76 | | status | Status for the embed; one of ["success", "failure", "cancelled"] | (required) |

77 | | webhook_id | ID of the Discord webhook (use a string) | (required) |

78 | | webhook_token | Token of the Discord webhook | (required) |

79 | | workflow_name | Name of the workflow | github.workflow |

80 | | run_id | Run ID of the workflow | github.run_id |

81 | | run_number | Run number of the workflow | github.run_number |

82 | | actor | Actor who requested the workflow | github.actor |

83 | | repository | Repository; has to be in form `owner/repo` | github.repository |

84 | | ref | Branch or tag ref that triggered the workflow run | github.ref |

85 | | sha | Full commit SHA that triggered the workflow run | github.sha |

86 | | pr_author_login | **Login** of the Pull Request author | (optional)¹ |

87 | | pr_number | Pull Request number | (optional)¹ |

88 | | pr_title | Title of the Pull Request | (optional)¹ |

89 | | pr_source | Source branch for the Pull Request | (optional)¹ |

90 | | debug | Set to "true" to turn on debug logging | false |
91 | | dry_run | Set to "true" to skip sending the webhook request | false |

92 | | pull_request_payload | PR payload in JSON format² **(deprecated)** | (deprecated)³ |

93 |

94 | 1) The Action will determine whether to send an embed tailored towards a Pull Request Check Run or towards a general workflow run based on the presence of non-null values for the four pull request arguments. This means that you either have to provide **all** of them or **none** of them.

95 |

96 | Do note that you can typically keep the arguments in the argument list even if your workflow is triggered for non-PR events, as GitHub's object notation (`name.name.name`) will silently return `null` if a name is unset. In the workflow example above, a `push` event would send an embed tailored to a general workflow run, as all the PR-related arguments would be `null`.

97 |

98 | 2) The pull request payload may be nested within an array, `[{...}]`. If the array contains multiple PR payloads, only the first one will be picked.

99 |

100 | 3) A JSON payload takes precedence over the individual PR arguments: if a JSON payload is present, it will be used and the individual PR arguments will be ignored, unless parsing the JSON fails.
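The three notes above amount to a small resolution procedure: a parseable JSON payload (unwrapped from an array if needed) takes precedence; otherwise the four `pr_*` arguments are used only when all of them are set. The Python sketch below encodes that reading. `PullRequestInfo` and `resolve_pr_info` are hypothetical names rather than this action's actual code, and the payload keys mirror the `jq` paths used in the `workflow_run` recipe. Two behaviors are assumptions not stated in this README: empty strings count as absent, and a partial set of arguments raises `ValueError`.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class PullRequestInfo:
    """Hypothetical container for the four PR fields used in the embed."""

    author_login: str
    number: int
    title: str
    source: str


def _from_payload(raw: str) -> Optional[PullRequestInfo]:
    """Parse a PR payload, returning None if it cannot be used."""
    try:
        payload = json.loads(raw)
        if isinstance(payload, list):
            # Note 2: `[{...}]` is accepted and only the first PR is used.
            payload = payload[0]
        return PullRequestInfo(
            author_login=payload["user"]["login"],
            number=int(payload["number"]),
            title=payload["title"],
            source=payload["head"]["label"],
        )
    except (ValueError, LookupError, TypeError):
        # Note 3: fall back to the individual arguments if parsing fails.
        return None


def resolve_pr_info(
    pr_author_login: Optional[str] = None,
    pr_number: Optional[str] = None,
    pr_title: Optional[str] = None,
    pr_source: Optional[str] = None,
    pull_request_payload: Optional[str] = None,
) -> Optional[PullRequestInfo]:
    """Return PR details, or None for a general workflow run embed."""
    # Note 3: a parseable JSON payload takes precedence.
    if pull_request_payload:
        info = _from_payload(pull_request_payload)
        if info is not None:
            return info

    # Note 1: all four arguments or none. Assumption: "" counts as absent.
    values = [v or None for v in (pr_author_login, pr_number, pr_title, pr_source)]
    if all(v is None for v in values):
        return None
    if any(v is None for v in values):
        # Assumption: this sketch rejects a partial set outright.
        raise ValueError("provide all four pr_* arguments or none of them")
    author, number, title, source = values
    return PullRequestInfo(author, int(number), title, source)
```

For a `push` event, all four arguments arrive unset, so `resolve_pr_info` returns `None` and a general workflow run embed would be built.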

101 |

102 | ## Recipes

103 |

104 | ### Reporting the status of a `pull_request`-triggered workflow

105 |

106 | One complication with `pull_request`-triggered workflows is that your secrets won't be available if the workflow is triggered for a pull request made from a fork. As you'd typically provide the webhook token as a secret, this makes using this action in such a workflow slightly more complicated.

107 |

108 | However, GitHub has provided an additional workflow trigger specifically for this situation: [`workflow_run`](https://docs.github.com/en/free-pro-team@latest/actions/reference/events-that-trigger-workflows#workflow_run). You can use this event to start a workflow whenever another workflow is being run or has just finished. As workflows triggered by `workflow_run` always run in the base repository, they have full access to your secrets.

109 |

110 | To give your `workflow_run`-triggered workflow access to all the information we need to build a Pull Request status embed, you'll need to pass some details along from the original workflow. One way to do that is to upload them as an artifact by adding these two steps to the end of your `pull_request`-triggered workflow:

111 |

112 | ```yaml
113 | name: Lint & Test
114 |
115 | on:
116 |   pull_request:
117 |
118 |
119 | jobs:
120 |   lint-test:
121 |     runs-on: ubuntu-latest
122 |
123 |     steps:
124 |       # Your regular steps here
125 |
126 |       # -------------------------------------------------------------------------------
127 |
128 |       # Prepare the Pull Request Payload artifact. If this fails,
129 |       # we fail silently using the `continue-on-error` option. It's
130 |       # nice if this succeeds, but if it fails for any reason, it
131 |       # does not mean that our lint-test checks failed.
132 |       - name: Prepare Pull Request Payload artifact
133 |         id: prepare-artifact
134 |         if: always() && github.event_name == 'pull_request'
135 |         continue-on-error: true
136 |         run: cat $GITHUB_EVENT_PATH | jq '.pull_request' > pull_request_payload.json
137 |
138 |       # This only makes sense if the previous step succeeded. To
139 |       # get the original outcome of the previous step before the
140 |       # `continue-on-error` conclusion is applied, we use the
141 |       # `.outcome` value. This step also fails silently.
142 |       - name: Upload a Build Artifact
143 |         if: always() && steps.prepare-artifact.outcome == 'success'
144 |         continue-on-error: true
145 |         uses: actions/upload-artifact@v2
146 |         with:
147 |           name: pull-request-payload
148 |           path: pull_request_payload.json
149 | ```
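The "Prepare Pull Request Payload artifact" step pipes the event file into `jq '.pull_request'`. GitHub Actions sets `GITHUB_EVENT_PATH` to the path of a JSON file holding the full webhook payload of the triggering event. If `jq` is unavailable, a Python equivalent could look like the sketch below; the function names are hypothetical. Like `jq`, it writes JSON `null` when the event has no `pull_request` key.

```python
import json
import os
from pathlib import Path


def extract_pr_payload(event_json: str) -> str:
    """Return the event's `pull_request` object as JSON text (`null` if absent)."""
    event = json.loads(event_json)
    return json.dumps(event.get("pull_request"), indent=2)


def write_pr_payload(event_path: str, out_path: str = "pull_request_payload.json") -> None:
    """Read the event file and write the extracted PR payload to `out_path`."""
    payload = extract_pr_payload(Path(event_path).read_text(encoding="utf-8"))
    Path(out_path).write_text(payload, encoding="utf-8")


if __name__ == "__main__":
    # GITHUB_EVENT_PATH is only set inside a GitHub Actions run.
    event_path = os.environ.get("GITHUB_EVENT_PATH")
    if event_path:
        write_pr_payload(event_path)
```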

150 |

151 | Then, add a new workflow that is triggered whenever the workflow above is run:

152 |

153 | ```yaml

154 | name: Status Embed

155 |

156 | on:

157 | workflow_run:

158 | workflows:

159 | - Lint & Test

160 | types:

161 | - completed

162 |

163 | jobs:

164 | status_embed:

165 | name: Send Status Embed to Discord

166 | runs-on: ubuntu-latest

167 |

168 | steps:

169 | # Process the artifact uploaded in the `pull_request`-triggered workflow:

170 | - name: Get Pull Request Information

171 | id: pr_info

172 | if: github.event.workflow_run.event == 'pull_request'

173 | run: |

174 | curl -s -H "Authorization: token $GITHUB_TOKEN" ${{ github.event.workflow_run.artifacts_url }} > artifacts.json

175 | DOWNLOAD_URL=$(cat artifacts.json | jq -r '.artifacts[] | select(.name == "pull-request-payload") | .archive_download_url')

176 | [ -z "$DOWNLOAD_URL" ] && exit 1

177 | wget --quiet --header="Authorization: token $GITHUB_TOKEN" -O pull_request_payload.zip $DOWNLOAD_URL || exit 2

178 | unzip -p pull_request_payload.zip > pull_request_payload.json

179 | [ -s pull_request_payload.json ] || exit 3

180 | echo "::set-output name=pr_author_login::$(jq -r '.user.login // empty' pull_request_payload.json)"

181 | echo "::set-output name=pr_number::$(jq -r '.number // empty' pull_request_payload.json)"

182 | echo "::set-output name=pr_title::$(jq -r '.title // empty' pull_request_payload.json)"

183 | echo "::set-output name=pr_source::$(jq -r '.head.label // empty' pull_request_payload.json)"

184 | env:

185 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

186 |

187 | # Send an informational status embed to Discord instead of the

188 | # standard embeds that Discord sends. This embed will contain

189 | # more information and we can fine tune when we actually want

190 | # to send an embed.

191 | - name: GitHub Actions Status Embed for Discord

192 | uses: SebastiaanZ/github-status-embed-for-discord@v0.2.1

193 | with:

194 | # Webhook ID and token

195 | webhook_id: '1234567'

196 | webhook_token: ${{ secrets.webhook_token }}

197 |

198 | # We need to provide the information of the workflow that

199 | # triggered this workflow instead of this workflow.

200 | workflow_name: ${{ github.event.workflow_run.name }}

201 | run_id: ${{ github.event.workflow_run.id }}

202 | run_number: ${{ github.event.workflow_run.run_number }}

203 | status: ${{ github.event.workflow_run.conclusion }}

204 | sha: ${{ github.event.workflow_run.head_sha }}

205 |

206 | # Now we can use the information extracted in the previous step:

207 | pr_author_login: ${{ steps.pr_info.outputs.pr_author_login }}

208 | pr_number: ${{ steps.pr_info.outputs.pr_number }}

209 | pr_title: ${{ steps.pr_info.outputs.pr_title }}

210 | pr_source: ${{ steps.pr_info.outputs.pr_source }}

211 | ```
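The `Get Pull Request Information` step reads four fields from the artifact with `jq -r '<path> // empty'`, so a missing value becomes an empty string instead of the literal `null`. The same mapping is sketched in Python below for illustration; the helper names `pr_outputs` and `_dig` are hypothetical, while the field paths are the ones `PullRequest.from_payload` in `types.py` also reads.

```python
import json
import typing


def _dig(data: typing.Any, *keys: str) -> typing.Any:
    """Follow nested keys, returning None as soon as one is missing (like jq on null)."""
    for key in keys:
        if not isinstance(data, dict):
            return None
        data = data.get(key)
    return data


def pr_outputs(payload_json: str) -> typing.Dict[str, str]:
    """Map a pull_request payload onto the action's four `pr_*` inputs."""
    payload = json.loads(payload_json)
    paths = {
        "pr_author_login": ("user", "login"),
        "pr_number": ("number",),
        "pr_title": ("title",),
        "pr_source": ("head", "label"),
    }
    outputs = {}
    for name, path in paths.items():
        value = _dig(payload, *path)
        # jq's `//` operator treats both null and false as "no value".
        outputs[name] = "" if value is None or value is False else str(value)
    return outputs
```

Empty strings matter here: `PullRequest` is an optional dataclass, so if all four inputs arrive empty the action falls back to a regular, non-PR embed.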

212 |

--------------------------------------------------------------------------------

/action.yaml:

--------------------------------------------------------------------------------

1 | name: GitHub Actions Status Embed for Discord

2 | author: Sebastiaan Zeeff

3 | description: Send an enhanced GitHub Actions status embed to a Discord webhook

4 |

5 | inputs:

6 | workflow_name:

7 | description: 'name of the workflow'

8 | required: false

9 | default: ${{ github.workflow }}

10 |

11 | run_id:

12 | description: 'run id of this workflow run'

13 | required: false

14 | default: ${{ github.run_id }}

15 |

16 | run_number:

17 | description: 'number of this workflow run'

18 | required: false

19 | default: ${{ github.run_number }}

20 |

21 | status:

22 | description: 'result status communicated by this embed'

23 | required: true

24 |

25 | repository:

26 | description: 'GitHub repository name, including owner'

27 | required: false

28 | default: ${{ github.repository }}

29 |

30 | actor:

31 | description: 'actor that initiated the workflow run'

32 | required: false

33 | default: ${{ github.actor }}

34 |

35 | ref:

36 | description: 'The branch or tag that triggered the workflow'

37 | required: false

38 | default: ${{ github.ref }}

39 |

40 | sha:

41 | description: 'sha of the commit that triggered the workflow'

42 | required: false

43 | default: ${{ github.sha }}

44 |

45 | webhook_id:

46 | description: 'ID of the Discord webhook that is targeted'

47 | required: true

48 |

49 | webhook_token:

50 | description: 'token for the Discord webhook that is targeted'

51 | required: true

52 |

53 | pr_author_login:

54 | description: 'login of the PR author'

55 | required: false

56 |

57 | pr_number:

58 | description: 'number of the Pull Request'

59 | required: false

60 |

61 | pr_title:

62 | description: 'title of the Pull Request'

63 | required: false

64 |

65 | pr_source:

66 | description: 'source of the Pull Request'

67 | required: false

68 |

69 | pull_request_payload:

70 | description: 'Pull Request in JSON payload form'

71 | required: false

72 |

73 | debug:

74 | description: 'Output debug logging as annotations'

75 | required: false

76 | default: 'false'

77 |

78 | dry_run:

79 | description: 'Do not send a request to the webhook endpoint'

80 | required: false

81 | default: 'false'

82 |

83 | runs:

84 | using: 'docker'

85 | image: 'Dockerfile'

86 | args:

87 | # Arguments should be listed in the same order as above.

88 | - ${{ inputs.workflow_name }}

89 | - ${{ inputs.run_id }}

90 | - ${{ inputs.run_number }}

91 | - ${{ inputs.status }}

92 | - ${{ inputs.repository }}

93 | - ${{ inputs.actor }}

94 | - ${{ inputs.ref }}

95 | - ${{ inputs.sha }}

96 | - ${{ inputs.webhook_id }}

97 | - ${{ inputs.webhook_token }}

98 | - ${{ inputs.pr_author_login }}

99 | - ${{ inputs.pr_number }}

100 | - ${{ inputs.pr_title }}

101 | - ${{ inputs.pr_source }}

102 | - ${{ inputs.pull_request_payload }}

103 | - ${{ inputs.debug }}

104 | - ${{ inputs.dry_run }}

105 |

106 | branding:

107 | icon: 'check-circle'

108 | color: 'green'

109 |
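`__main__.py` registers one positional CLI argument per entry in `inputs`, in file order, so the `args` list under `runs` has to follow exactly the same order. A small consistency check could catch drift between the two; the sketch below assumes the YAML has already been parsed into a dict (for example with `yaml.safe_load`, as `__main__.py` does), and `misordered_args` is a hypothetical helper, not part of this repository.

```python
import re
import typing

# Matches entries such as "${{ inputs.run_id }}" and captures the input name.
_ARG_PATTERN = re.compile(r"^\$\{\{\s*inputs\.(\w+)\s*\}\}$")


def misordered_args(spec: typing.Dict[str, typing.Any]) -> typing.List[str]:
    """Return a description of every place where `runs.args` deviates from `inputs`."""
    input_names = list(spec["inputs"])
    arg_names = []
    for arg in spec["runs"]["args"]:
        match = _ARG_PATTERN.match(arg.strip())
        arg_names.append(match.group(1) if match else arg)

    problems = []
    for position, (expected, actual) in enumerate(zip(input_names, arg_names)):
        if expected != actual:
            problems.append(f"position {position}: expected {expected!r}, got {actual!r}")
    if len(input_names) != len(arg_names):
        problems.append(f"{len(input_names)} inputs but {len(arg_names)} args")
    return problems
```

An empty list means the two sections agree; anything else would shift every following value into the wrong CLI argument.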

--------------------------------------------------------------------------------

/github_actions_avatar.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/SebastiaanZ/github-status-embed-for-discord/67f67a60934c0254efd1ed741b5ce04250ebd508/github_actions_avatar.png

--------------------------------------------------------------------------------

/github_status_embed/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Github Status Embed for Discord.

3 |

4 | This application sends an enhanced GitHub Actions Status Embed to a

5 | Discord webhook. The default embeds sent by GitHub don't contain a lot

6 | of information and are sent for every workflow run. This application,

7 | part of a Docker-based action, allows you to send more meaningful embeds

8 | when you want to send them.

9 | """

10 |

--------------------------------------------------------------------------------

/github_status_embed/__main__.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import importlib.resources

3 | import logging

4 | import pathlib

5 | import sys

6 |

7 | import yaml

8 |

9 | from github_status_embed.webhook import send_webhook

10 | from .log import setup_logging

11 | from .types import MissingActionFile, PullRequest, Webhook, Workflow

12 |

13 |

14 | log = logging.getLogger(__name__)

15 |

16 | parser = argparse.ArgumentParser(

17 | description="Send an enhanced GitHub Actions Status Embed to a Discord webhook.",

18 | epilog="Note: Make sure to keep your webhook token private!",

19 | )

20 |

21 | # Auto-generate arguments from `action.yaml` to ensure they are in

22 | # synchronization with each other. This also takes the description

23 | # of each input argument and adds it as a `help` hint.

24 | #

25 | # The `action.yaml` file may be located in two different places,

26 | # depending on whether the application is run in distributed form

27 | # or directly from the git repository.

28 | try:

29 | # Distributed package location using `importlib`

30 | action_specs = importlib.resources.read_text('github_status_embed', 'action.yaml')

31 | except FileNotFoundError:

32 | # The GitHub Actions marketplace requires it to be located in the

33 | # root of the repository, so if we're running a non-distributed

34 | # version, we haven't packaged the file inside of the package

35 | # directly yet and we have to read it from the repo root.

36 | action_file = pathlib.Path(__file__).parent.parent / "action.yaml"

37 | try:

38 | action_specs = action_file.read_text(encoding="utf-8")

39 | except FileNotFoundError:

40 | raise MissingActionFile("the `action.yaml` can't be found!") from None

41 |

42 | # Now that we've loaded the specifications of our action, extract the inputs

43 | # and their description to register CLI arguments.

44 | action_specs = yaml.safe_load(action_specs)

45 | for argument, configuration in action_specs["inputs"].items():

46 | parser.add_argument(argument, help=configuration["description"])

47 |

48 |

49 | if __name__ == "__main__":

50 | arguments = vars(parser.parse_args())

51 | debug = arguments.pop('debug') not in ('', '0', 'false')

52 | dry_run = arguments.pop('dry_run') not in ('', '0', 'false')

53 |

54 | # Set up logging and make sure to mask the webhook_token in log records

55 | level = logging.DEBUG if debug else logging.WARNING

56 | setup_logging(level, masked_values=[arguments['webhook_token']])

57 |

58 | # If debug logging is requested, enable it for our application only

59 | if debug:

60 | output = "\n".join(f" {k}={v!r}" for k, v in arguments.items())

61 | log.debug("Received the following arguments from the CLI:\n%s", output)

62 |

63 | # Extract Action arguments by creating dataclasses

64 | workflow = Workflow.from_arguments(arguments)

65 | webhook = Webhook.from_arguments(arguments)

66 | if arguments.get("pull_request_payload", False):

67 | log.warning("The use of `pull_request_payload` is deprecated and will be removed in v1.0.0")

68 | pull_request = PullRequest.from_payload(arguments)

69 | else:

70 | pull_request = PullRequest.from_arguments(arguments)

71 |

72 | # Send webhook

73 | success = send_webhook(workflow, webhook, pull_request, dry_run)

74 |

75 | if not success:

76 | log.debug("Exiting with status code 1")

77 | sys.exit(1)

78 |
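The entry point builds its parser directly from the `inputs` mapping of `action.yaml` and then interprets `debug` and `dry_run` as booleans by plain string comparison. A stand-alone sketch of both ideas follows; `build_parser`, `is_enabled`, and `FALSY` are illustrative names, and the `inputs` dict stands in for what `yaml.safe_load` returns.

```python
import argparse
import typing

# Strings treated as "off" for the boolean inputs, as in `__main__.py`.
FALSY = ("", "0", "false")


def build_parser(inputs: typing.Dict[str, typing.Dict[str, str]]) -> argparse.ArgumentParser:
    """Register one positional argument per action input, in declaration order."""
    parser = argparse.ArgumentParser(description="Sketch of the action's CLI.")
    for name, config in inputs.items():
        parser.add_argument(name, help=config["description"])
    return parser


def is_enabled(value: str) -> bool:
    """Mirror the `not in ('', '0', 'false')` check used for `debug` and `dry_run`."""
    return value not in FALSY
```

Because the comparison is case-sensitive, a value such as `'False'` counts as enabled; empty strings, which GitHub passes for unset inputs, are accepted as positional values by `argparse`.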

--------------------------------------------------------------------------------

/github_status_embed/log.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import sys

3 | import typing

4 |

5 |

6 | class MaskingFormatter(logging.Formatter):

7 | """A logging formatter that masks given values."""

8 |

9 | def __init__(self, *args, masked_values: typing.Sequence[str], **kwargs) -> None:

10 | super().__init__(*args, **kwargs)

11 | self._masked_values = masked_values

12 |

13 | def format(self, record: logging.LogRecord) -> str:

14 | """Emit a logging record after masking certain values."""

15 | message = super().format(record)

16 | for masked_value in self._masked_values:

17 | message = message.replace(masked_value, "")

18 |

19 | return message

20 |

21 |

22 | def setup_logging(level: int, masked_values: typing.Sequence[str]) -> None:

23 | """Set up the logging utilities and make sure to mask sensitive data."""

24 | root = logging.getLogger()

25 | root.setLevel(level)

26 |

27 | logging.addLevelName(logging.DEBUG, 'debug')

28 | logging.addLevelName(logging.INFO, 'debug') # GitHub Actions does not have an "info" message

29 | logging.addLevelName(logging.WARNING, 'warning')

30 | logging.addLevelName(logging.ERROR, 'error')

31 |

32 | handler = logging.StreamHandler(sys.stdout)

33 | formatter = MaskingFormatter(

34 | '::%(levelname)s::%(message)s',

35 | datefmt="%Y-%m-%d %H:%M:%S",

36 | masked_values=masked_values,

37 | )

38 | handler.setFormatter(formatter)

39 | root.addHandler(handler)

40 |
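Masking operates on the fully formatted string, so a token is removed whether it appears in the format string or in one of its arguments. The stand-alone sketch below reproduces `MaskingFormatter` on an isolated logger so it can be tried without touching the root logger; `make_logger` and the logger name `masking-demo` are made up for the example.

```python
import io
import logging
import typing


class MaskingFormatter(logging.Formatter):
    """Same approach as log.py: remove secret values from every formatted record."""

    def __init__(self, *args, masked_values: typing.Sequence[str], **kwargs) -> None:
        super().__init__(*args, **kwargs)
        self._masked_values = masked_values

    def format(self, record: logging.LogRecord) -> str:
        # Format first, so secrets passed as %-style arguments are caught as well.
        message = super().format(record)
        for masked_value in self._masked_values:
            message = message.replace(masked_value, "")
        return message


def make_logger(stream: typing.TextIO, secret: str) -> logging.Logger:
    """Build an isolated logger that writes GitHub workflow commands to `stream`."""
    # As in log.py, rename the level so `%(levelname)s` renders as GitHub's "warning".
    logging.addLevelName(logging.WARNING, "warning")
    logger = logging.getLogger("masking-demo")
    logger.handlers.clear()   # avoid duplicate handlers if called twice
    logger.propagate = False  # keep the demo away from the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(MaskingFormatter("::%(levelname)s::%(message)s", masked_values=[secret]))
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()
demo_log = make_logger(buffer, secret="s3cr3t-token")
demo_log.warning("Posting to https://discord.com/api/webhooks/123/%s", "s3cr3t-token")
print(buffer.getvalue(), end="")  # ::warning::Posting to https://discord.com/api/webhooks/123/
```

The secret is deleted rather than replaced by a placeholder, so a masked line can look truncated.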

--------------------------------------------------------------------------------

/github_status_embed/types.py:

--------------------------------------------------------------------------------

1 | from __future__ import annotations

2 |

3 | import collections.abc

4 | import dataclasses

5 | import enum

6 | import json

7 | import logging

8 | import typing

9 |

10 | log = logging.getLogger(__name__)

11 |

12 | MIN_EMBED_FIELD_LENGTH = 20

13 |

14 |

15 | class MissingActionFile(FileNotFoundError):

16 | """Raised when the Action file can't be located."""

17 |

18 |

19 | class InvalidArgument(TypeError):

20 | """Raised when an argument is of the wrong type."""

21 |

22 |

23 | class MissingArgument(TypeError):

24 | """Raised when a required argument was missing from the inputs."""

25 |

26 | def __init__(self, arg_name: str) -> None:

27 | msg = "\n\n".join((

28 | f"missing non-null value for argument `{arg_name}`",

29 | "Hint: incorrect context paths like `github.non_existent` return `null` silently.",

30 | ))

31 | super().__init__(msg)

32 |

33 |

34 | class TypedDataclass:

35 | """Convert the dataclass arguments to the annotated types."""

36 |

37 | optional = False

38 |

39 | def __init__(self, *args, **kwargs):

40 | raise NotImplementedError

41 |

42 | @classmethod

43 | def __init_subclass__(cls, optional: bool = False, **kwargs) -> None:

44 | """Keep track of whether or not this class is optional."""

45 | super().__init_subclass__(**kwargs)

46 | cls.optional = optional

47 |

48 | @classmethod

49 | def from_arguments(cls, arguments: typing.Dict[str, str]) -> typing.Optional[TypedDataclass]:

50 | """Convert the attributes to their annotated types."""

51 | typed_attributes = typing.get_type_hints(cls)

52 |

53 | # If we have an optional dataclass and none of the values were provided,

54 | # return `None`. The reason is that optional classes should either be

55 | # fully initialized, with all values, or not at all. If we observe at

56 | # least one value, we assume that the intention was to provide them

57 | # all.

58 | if cls.optional and all(arguments.get(attr, "") == "" for attr in typed_attributes):

59 | return None

60 |

61 | # Extract and convert the keyword arguments needed for this data type.

62 | kwargs = {}

63 | for attribute, _type in typed_attributes.items():

64 | value = arguments.pop(attribute, None)

65 |

66 | # At this point, we should not have any missing arguments any more.

67 | if value is None:

68 | raise MissingArgument(attribute)

69 |

70 | try:

71 | if issubclass(_type, enum.Enum):

72 | value = _type[value.upper()]

73 | else:

74 | value = _type(value)

75 | if isinstance(value, collections.abc.Sized) and len(value) == 0:

76 | raise ValueError

77 | except (ValueError, KeyError):

78 | raise InvalidArgument(f"invalid value for `{attribute}`: {value}") from None

79 | else:

80 | kwargs[attribute] = value

81 |

82 | return cls(**kwargs)

83 |

84 |

85 | class WorkflowStatus(enum.Enum):

86 | """An Enum subclass that represents the workflow status."""

87 |

88 | SUCCESS = {"verb": "succeeded", "adjective": "Successful", "color": 38912}

89 | FAILURE = {"verb": "failed", "adjective": "Failed", "color": 16525609}

90 | CANCELLED = {"verb": "was cancelled", "adjective": "Cancelled", "color": 6702148}

91 |

92 | @property

93 | def verb(self) -> str:

94 | """Return the verb associated with the status."""

95 | return self.value["verb"]

96 |

97 | @property

98 | def color(self) -> int:

99 | """Return the color associated with the status."""

100 | return self.value["color"]

101 |

102 | @property

103 | def adjective(self) -> str:

104 | """Return the adjective associated with the status."""

105 | return self.value["adjective"]

106 |

107 |

108 | @dataclasses.dataclass(frozen=True)

109 | class Workflow(TypedDataclass):

110 | """A dataclass to hold information about the executed workflow."""

111 |

112 | workflow_name: str

113 | run_id: int

114 | run_number: int

115 | status: WorkflowStatus

116 | repository: str

117 | actor: str

118 | ref: str

119 | sha: str

120 |

121 | @property

122 | def name(self) -> str:

123 | """A convenience getter for the Workflow name."""

124 | return self.workflow_name

125 |

126 | @property

127 | def id(self) -> int:

128 | """A convenience getter for the Workflow id."""

129 | return self.run_id

130 |

131 | @property

132 | def number(self) -> int:

133 | """A convenience getter for the Workflow number."""

134 | return self.run_number

135 |

136 | @property

137 | def url(self) -> str:

138 | """Get the url to the Workflow run result."""

139 | return f"https://github.com/{self.repository}/actions/runs/{self.run_id}"

140 |

141 | @property

142 | def actor_url(self) -> str:

143 | """Get the url to the actor's GitHub profile."""

144 | return f"https://github.com/{self.actor}"

145 |

146 | @property

147 | def short_sha(self) -> str:

148 | """Return the short commit sha."""

149 | return self.sha[:7]

150 |

151 | @property

152 | def commit_url(self) -> str:

153 | """Return the short commit sha."""

154 | return f"https://github.com/{self.repository}/commit/{self.sha}"

155 |

156 | @property

157 | def repository_owner(self) -> str:

158 | """Extract and return the repository owner from the repository field."""

159 | owner, _, _name = self.repository.partition("/")

160 | return owner

161 |

162 | @property

163 | def repository_name(self) -> str:

164 | """Extract and return the repository name from the repository field."""

165 | _owner, _, name = self.repository.partition("/")

166 | return name

167 |

168 |

169 | @dataclasses.dataclass(frozen=True)

170 | class Webhook(TypedDataclass):

171 | """A simple dataclass to hold information about the target webhook."""

172 |

173 | webhook_id: int

174 | webhook_token: str

175 |

176 | @property

177 | def id(self) -> int:

178 | """Return the snowflake ID of the webhook."""

179 | return self.webhook_id

180 |

181 | @property

182 | def token(self) -> str:

183 | """Return the token of the webhook."""

184 | return self.webhook_token

185 |

186 | @property

187 | def url(self) -> str:

188 | """Return the endpoint to execute this webhook."""

189 | return f"https://canary.discord.com/api/webhooks/{self.id}/{self.token}"

190 |

191 |

192 | @dataclasses.dataclass(frozen=True)

193 | class PullRequest(TypedDataclass, optional=True):

194 | """

195 | Dataclass to hold the PR-related arguments.

196 |

197 | The attribute names are equal to argument names in the GitHub Actions

198 | specification to allow for helpful error messages. To provide a convenient

199 | public API, property getters were used with less redundant information in

200 | the naming scheme.

201 | """

202 |

203 | pr_author_login: str

204 | pr_number: int

205 | pr_title: str

206 | pr_source: str

207 |

208 | @classmethod

209 | def from_payload(cls, arguments: typing.Dict[str, str]) -> typing.Optional[PullRequest]:

210 | """Create a Pull Request instance from Pull Request Payload JSON."""

211 | # Safe load the JSON Payload provided as a command line argument.

212 | raw_payload = arguments.pop('pull_request_payload').replace("\\", "\\\\")

213 | log.debug(f"Attempting to parse PR Payload JSON: {raw_payload!r}.")

214 | try:

215 | payload = json.loads(raw_payload)

216 | except json.JSONDecodeError:

217 | log.debug("Failed to parse JSON, dropping down to empty payload")

218 | payload = {}

219 | else:

220 | log.debug("Successfully parsed payload")

221 |

222 | # If the payload contains multiple PRs in a list, use the first one.

223 | if isinstance(payload, list):

224 | log.debug("The payload contained a list, extracting first PR.")

225 | payload = payload[0] if payload else {}

226 |

227 | if not payload:

228 | log.warning("PR payload could not be parsed, attempting regular pr arguments.")

229 | return cls.from_arguments(arguments)

230 |

231 | # Get the target arguments from the payload, yielding similar results

232 | # when keys are missing as to when their corresponding arguments are

233 | # missing.

234 | arguments["pr_author_login"] = payload.get('user', {}).get('login', '')

235 | arguments["pr_number"] = payload.get('number', '')

236 | arguments["pr_title"] = payload.get('title', '')

237 | arguments["pr_source"] = payload.get('head', {}).get('label', '')

238 |

239 | return cls.from_arguments(arguments)

240 |

241 | @property

242 | def author(self) -> str:

243 | """Return the `pr_author_login` field."""

244 | return self.pr_author_login

245 |

246 | @property

247 | def author_url(self) -> str:

248 | """Return a URL for the author's profile."""

249 | return f"https://github.com/{self.pr_author_login}"

250 |

251 | @property

252 | def number(self) -> int:

253 | """Return the `pr_number`."""

254 | return self.pr_number

255 |

256 | @property

257 | def title(self) -> str:

258 | """Return the title of the PR."""

259 | return self.pr_title

260 |

261 | def shortened_source(self, length: int, owner: typing.Optional[str] = None) -> str:

262 | """Return a shortened representation of the source branch."""

263 | pr_source = self.pr_source

264 |

265 | # This removes the owner prefix in the source field if it matches

266 | # the current repository. This means that it will only be displayed

267 | # when the PR is made from a branch on a fork.

268 | if owner:

269 | pr_source = pr_source.removeprefix(f"{owner}:")

270 |

271 | # Truncate the `pr_source` if it's longer than the specified length

272 | length = length if length >= MIN_EMBED_FIELD_LENGTH else MIN_EMBED_FIELD_LENGTH

273 | if len(pr_source) > length:

274 | stop = length - 3

275 | pr_source = f"{pr_source[:stop]}..."

276 |

277 | return pr_source

278 |

279 |

280 | class AllowedMentions(typing.TypedDict, total=False):

281 | """A TypedDict to represent the AllowedMentions in a webhook payload."""

282 |

283 | parse: typing.List[str]

284 | users: typing.List[str]

285 | roles: typing.List[str]

286 | replied_user: bool

287 |

288 |

289 | class EmbedField(typing.TypedDict, total=False):

290 | """A TypedDict to represent an embed field in a webhook payload."""

291 |

292 | name: str

293 | value: str

294 | inline: bool

295 |

296 |

297 | class EmbedFooter(typing.TypedDict, total=False):

298 | """A TypedDict to represent an embed footer in a webhook payload."""

299 |

300 | text: str

301 | icon_url: str

302 | proxy_icon_url: str

303 |

304 |

305 | class EmbedThumbnail(typing.TypedDict, total=False):

306 | """A TypedDict to represent an embed thumbnail in a webhook payload."""

307 |

308 | url: str

309 | proxy_url: str

310 | height: int

311 | width: int

312 |

313 |

314 | class EmbedProvider(typing.TypedDict, total=False):

315 | """A TypedDict to represent an embed provider in a webhook payload."""

316 |

317 | name: str

318 | url: str

319 |

320 |

321 | class EmbedAuthor(typing.TypedDict, total=False):

322 | """A TypedDict to represent an embed author in a webhook payload."""

323 |

324 | name: str

325 | url: str

326 | icon_url: str

327 | proxy_icon_url: str

328 |

329 |

330 | class EmbedVideo(typing.TypedDict, total=False):

331 | """A TypedDict to represent an embed video in a webhook payload."""

332 |

333 | url: str

334 | height: int

335 | width: int

336 |

337 |

338 | class EmbedImage(typing.TypedDict, total=False):

339 | """A TypedDict to represent an embed image in a webhook payload."""

340 |

341 | url: str

342 | proxy_url: str

343 | height: int

344 | width: int

345 |

346 |

347 | class Embed(typing.TypedDict, total=False):

348 | """A TypedDict to represent an embed in a webhook payload."""

349 |

350 | title: str

351 | type: str

352 | description: str

353 | url: str

354 | timestamp: str

355 | color: int

356 | footer: EmbedFooter

357 | image: EmbedImage

358 | thumbnail: EmbedThumbnail

359 | video: EmbedVideo

360 | provider: EmbedProvider

361 | author: EmbedAuthor

362 | fields: typing.List[EmbedField]

363 |

364 |

365 | class WebhookPayload(typing.TypedDict, total=False):

366 | """A TypedDict to represent the webhook payload itself."""

367 |

368 | content: str

369 | username: str

370 | avatar_url: str

371 | tts: bool

372 | file: bytes

373 | embeds: typing.List[Embed]

374 | payload_json: str

375 | allowed_mentions: AllowedMentions

376 |
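`TypedDataclass.from_arguments` is what turns the raw CLI strings into typed values: enums are looked up by upper-cased member name, other types are called as constructors, and empty sized values are rejected. Below is a condensed, self-contained sketch of that conversion; `Status`, `Run`, and `coerce` are hypothetical stand-ins for `WorkflowStatus`, `Workflow`, and the classmethod, and a plain `TypeError` replaces the module's `InvalidArgument`/`MissingArgument`.

```python
import collections.abc
import dataclasses
import enum
import typing


class Status(enum.Enum):
    """Hypothetical stand-in for WorkflowStatus."""

    SUCCESS = "succeeded"
    FAILURE = "failed"


@dataclasses.dataclass(frozen=True)
class Run:
    """Hypothetical stand-in for the Workflow dataclass."""

    run_id: int
    status: Status
    actor: str


def coerce(cls: type, arguments: typing.Dict[str, str], optional: bool = False) -> typing.Any:
    """Build `cls` from raw string arguments, converting each to its annotated type."""
    hints = typing.get_type_hints(cls)

    # Optional groups are all-or-nothing: if every value is empty, treat the group as absent.
    if optional and all(arguments.get(name, "") == "" for name in hints):
        return None

    kwargs = {}
    for name, type_ in hints.items():
        raw = arguments.get(name)  # unlike the original, this does not pop
        if raw is None:
            raise TypeError(f"missing non-null value for argument `{name}`")
        try:
            if issubclass(type_, enum.Enum):
                value = type_[raw.upper()]  # member lookup by name: "success" -> SUCCESS
            else:
                value = type_(raw)
            if isinstance(value, collections.abc.Sized) and len(value) == 0:
                raise ValueError  # reject empty strings for required fields
        except (ValueError, KeyError):
            raise TypeError(f"invalid value for `{name}`: {raw}") from None
        kwargs[name] = value
    return cls(**kwargs)
```

Looking enum members up by name means the `conclusion` strings GitHub reports (`success`, `failure`, `cancelled`) map directly onto the members, while any other conclusion is rejected as invalid.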

--------------------------------------------------------------------------------

/github_status_embed/webhook.py:

--------------------------------------------------------------------------------

1 | import json

2 | import logging

3 | import typing

4 |

5 | import requests

6 |

7 | from github_status_embed import types

8 |

9 |

10 | log = logging.getLogger(__name__)

11 |

12 | EMBED_DESCRIPTION = "GitHub Actions run [{run_id}]({run_url}) {status_verb}."

13 | PULL_REQUEST_URL = "https://github.com/{repository}/pull/{number}"

14 | WEBHOOK_USERNAME = "GitHub Actions"

15 | WEBHOOK_AVATAR_URL = (

16 | "https://raw.githubusercontent.com/"

17 | "SebastiaanZ/github-status-embed-for-discord/main/"

18 | "github_actions_avatar.png"

19 | )

20 | FIELD_CHARACTER_BUDGET = 60

21 |

22 |

23 | def get_payload_pull_request(

24 | workflow: types.Workflow, pull_request: types.PullRequest

25 | ) -> types.WebhookPayload:

26 | """Create a WebhookPayload with information about a Pull Request."""

27 | # Calculate the character budget for the Source Branch field

28 | author = pull_request.pr_author_login

29 | workflow_number = f"{workflow.name} #{workflow.number}"

30 | characters_left = FIELD_CHARACTER_BUDGET - len(author) - len(workflow_number)

31 |

32 | fields = [

33 | types.EmbedField(

34 | name="PR Author",

35 | value=f"[{author}]({pull_request.author_url})",

36 | inline=True,

37 | ),

38 | types.EmbedField(

39 | name="Workflow Run",

40 | value=f"[{workflow_number}]({workflow.url})",

41 | inline=True,

42 | ),

43 | types.EmbedField(

44 | name="Source Branch",

45 | value=pull_request.shortened_source(characters_left, owner=workflow.repository_owner),

46 | inline=True,

47 | ),

48 | ]

49 |

50 | embed = types.Embed(

51 | title=(

52 | f"[{workflow.repository}] Checks {workflow.status.adjective} on PR: "

53 | f"#{pull_request.number} {pull_request.title}"

54 | ),

55 | description=EMBED_DESCRIPTION.format(

56 | run_id=workflow.id, run_url=workflow.url, status_verb=workflow.status.verb,

57 | ),

58 | url=PULL_REQUEST_URL.format(

59 | repository=workflow.repository, number=pull_request.number

60 | ),

61 | color=workflow.status.color,

62 | fields=fields,

63 | )

64 |

65 | webhook_payload = types.WebhookPayload(

66 | username=WEBHOOK_USERNAME,

67 | avatar_url=WEBHOOK_AVATAR_URL,

68 | embeds=[embed]

69 | )

70 | return webhook_payload

71 |

72 |

73 | def get_payload(workflow: types.Workflow) -> types.WebhookPayload:

74 | """Create a WebhookPayload with information about a generic Workflow run."""

75 | embed_fields = [

76 | types.EmbedField(

77 | name="Actor",

78 | value=f"[{workflow.actor}]({workflow.actor_url})",

79 | inline=True,

80 | ),

81 | types.EmbedField(

82 | name="Workflow Run",

83 | value=f"[{workflow.name} #{workflow.number}]({workflow.url})",

84 | inline=True,

85 | ),

86 | types.EmbedField(

87 | name="Commit",

88 | value=f"[{workflow.short_sha}]({workflow.commit_url})",

89 | inline=True,

90 | ),

91 | ]

92 |

93 | embed = types.Embed(

94 | title=f"[{workflow.repository}] Workflow {workflow.status.adjective}",

95 | description=EMBED_DESCRIPTION.format(

96 | run_id=workflow.id, run_url=workflow.url, status_verb=workflow.status.verb,

97 | ),

98 | url=workflow.url,

99 | color=workflow.status.color,

100 | fields=embed_fields,

101 | )

102 |

103 | webhook_payload = types.WebhookPayload(

104 | username=WEBHOOK_USERNAME,

105 | avatar_url=WEBHOOK_AVATAR_URL,

106 | embeds=[embed]

107 | )

108 |

109 | return webhook_payload

110 |

111 |

112 | def send_webhook(

113 | workflow: types.Workflow,

114 | webhook: types.Webhook,

115 | pull_request: typing.Optional[types.PullRequest],

116 | dry_run: bool = False,

117 | ) -> bool:

118 | """Send an embed to specified webhook."""

119 | if pull_request is None:

120 | log.debug("Creating payload for non-Pull Request event")

121 | payload = get_payload(workflow)

122 | else:

123 | log.debug("Creating payload for Pull Request Check")

124 | payload = get_payload_pull_request(workflow, pull_request)

125 |

126 | log.debug("Generated payload:\n%s", json.dumps(payload, indent=4))

127 |

128 | if dry_run:

129 | return True

130 |

131 | response = requests.post(webhook.url, json=payload)

132 |

133 | log.debug(f"Response: [{response.status_code}] {response.reason}")

134 | if response.ok:

135 | print(f"[status: {response.status_code}] Successfully delivered webhook payload!")

136 | else:

137 | # Output an error message using the GitHub Actions error command format

138 | print(

139 | "::error::Discord webhook delivery failed! "

140 | f"(status: {response.status_code}; reason: {response.reason})"

141 | )

142 |

143 | return response.ok

144 |
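The PR embed shares a 60-character budget (`FIELD_CHARACTER_BUDGET`) across its three inline fields: whatever the author login and the `"<workflow> #<number>"` label do not use is left for the source branch, but never less than `MIN_EMBED_FIELD_LENGTH` (20). The function below reproduces that arithmetic from `get_payload_pull_request` and `PullRequest.shortened_source` in one place for illustration; `source_field` is a hypothetical name.

```python
MIN_EMBED_FIELD_LENGTH = 20  # value from types.py
FIELD_CHARACTER_BUDGET = 60  # value from webhook.py


def source_field(author: str, workflow_name: str, run_number: int, pr_source: str, owner: str) -> str:
    """Compute the Source Branch field value the way the PR embed does."""
    workflow_number = f"{workflow_name} #{run_number}"
    length = FIELD_CHARACTER_BUDGET - len(author) - len(workflow_number)

    # Drop the "owner:" prefix for same-repository branches (str.removeprefix needs 3.9+).
    source = pr_source.removeprefix(f"{owner}:")

    # Clamp to the minimum, then truncate with an ellipsis if the branch is too long.
    length = max(length, MIN_EMBED_FIELD_LENGTH)
    if len(source) > length:
        source = f"{source[:length - 3]}..."
    return source
```

For example, with the author `octocat` (7 characters) and `Lint & Test #128` (16 characters), 37 characters remain for the branch. Because of the clamp, long author logins or workflow names can still push the three fields past 60 characters in total.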

--------------------------------------------------------------------------------

/img/embed_comparison.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/SebastiaanZ/github-status-embed-for-discord/67f67a60934c0254efd1ed741b5ce04250ebd508/img/embed_comparison.png

--------------------------------------------------------------------------------

/img/type_comparison.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/SebastiaanZ/github-status-embed-for-discord/67f67a60934c0254efd1ed741b5ce04250ebd508/img/type_comparison.png

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | requests==2.25.0

2 | PyYAML==5.3.1

3 |

--------------------------------------------------------------------------------

/tox.ini:

--------------------------------------------------------------------------------

1 | [flake8]

2 | max-line-length=100

3 | docstring-convention=all

4 | import-order-style=pycharm

5 | application_import_names=github_status_embed

6 | exclude=.cache,.venv,.git

7 | ignore=

8 | B311,W503,E226,S311,T000,

9 | # Missing Docstrings

10 | D100,D104,D105,D107,

11 | # Docstring Whitespace

12 | D203,D212,D214,D215,

13 | # Docstring Quotes

14 | D301,D302,

15 | # Docstring Content

16 | D400,D401,D402,D404,D405,D406,D407,D408,D409,D410,D411,D412,D413,D414,D416,D417,

17 | # Type Annotations

18 | ANN002,ANN003,ANN101,ANN102,ANN204,ANN206

19 |

--------------------------------------------------------------------------------

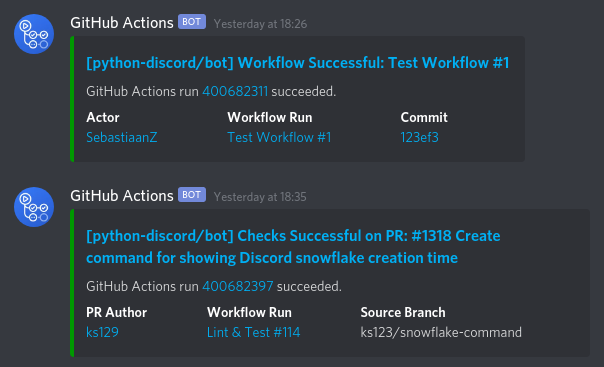

16 | Comparison between a standard and an enhanced embed as provided by this action.

17 |

16 | Comparison between a standard and an enhanced embed as provided by this action.

17 |  16 | Comparison between a standard and an enhanced embed as provided by this action.

17 |

16 | Comparison between a standard and an enhanced embed as provided by this action.

17 |  31 |

31 |