├── .idea
│   ├── .gitignore
│   ├── encodings.xml
│   ├── libraries
│   │   └── R_User_Library.xml
│   ├── misc.xml
│   ├── modules.xml
│   ├── vcs.xml
│   └── 基于Paddlepaddle的黑白影片色彩重建.iml
├── Prior
│   ├── Clear.py
│   └── imageCrop.py
├── README.md
├── conversion.py
├── gray2color.py
├── main.py
├── model
│   └── gray2color.inference.model

│ ├── batch_norm_0.b_0

│ ├── batch_norm_0.w_0

│ ├── batch_norm_0.w_1

│ ├── batch_norm_0.w_2

│ ├── batch_norm_1.b_0

│ ├── batch_norm_1.w_0

│ ├── batch_norm_1.w_1

│ ├── batch_norm_1.w_2

│ ├── batch_norm_10.b_0

│ ├── batch_norm_10.w_0

│ ├── batch_norm_10.w_1

│ ├── batch_norm_10.w_2

│ ├── batch_norm_11.b_0

│ ├── batch_norm_11.w_0

│ ├── batch_norm_11.w_1

│ ├── batch_norm_11.w_2

│ ├── batch_norm_12.b_0

│ ├── batch_norm_12.w_0

│ ├── batch_norm_12.w_1

│ ├── batch_norm_12.w_2

│ ├── batch_norm_13.b_0

│ ├── batch_norm_13.w_0

│ ├── batch_norm_13.w_1

│ ├── batch_norm_13.w_2

│ ├── batch_norm_14.b_0

│ ├── batch_norm_14.w_0

│ ├── batch_norm_14.w_1

│ ├── batch_norm_14.w_2

│ ├── batch_norm_2.b_0

│ ├── batch_norm_2.w_0

│ ├── batch_norm_2.w_1

│ ├── batch_norm_2.w_2

│ ├── batch_norm_3.b_0

│ ├── batch_norm_3.w_0

│ ├── batch_norm_3.w_1

│ ├── batch_norm_3.w_2

│ ├── batch_norm_4.b_0

│ ├── batch_norm_4.w_0

│ ├── batch_norm_4.w_1

│ ├── batch_norm_4.w_2

│ ├── batch_norm_5.b_0

│ ├── batch_norm_5.w_0

│ ├── batch_norm_5.w_1

│ ├── batch_norm_5.w_2

│ ├── batch_norm_6.b_0

│ ├── batch_norm_6.w_0

│ ├── batch_norm_6.w_1

│ ├── batch_norm_6.w_2

│ ├── batch_norm_7.b_0

│ ├── batch_norm_7.w_0

│ ├── batch_norm_7.w_1

│ ├── batch_norm_7.w_2

│ ├── batch_norm_8.b_0

│ ├── batch_norm_8.w_0

│ ├── batch_norm_8.w_1

│ ├── batch_norm_8.w_2

│ ├── batch_norm_9.b_0

│ ├── batch_norm_9.w_0

│ ├── batch_norm_9.w_1

│ ├── batch_norm_9.w_2

│ ├── conv2d_0.b_0

│ ├── conv2d_0.w_0

│ ├── conv2d_1.b_0

│ ├── conv2d_1.w_0

│ ├── conv2d_10.b_0

│ ├── conv2d_10.w_0

│ ├── conv2d_11.b_0

│ ├── conv2d_11.w_0

│ ├── conv2d_12.b_0

│ ├── conv2d_12.w_0

│ ├── conv2d_13.b_0

│ ├── conv2d_13.w_0

│ ├── conv2d_14.b_0

│ ├── conv2d_14.w_0

│ ├── conv2d_2.b_0

│ ├── conv2d_2.w_0

│ ├── conv2d_3.b_0

│ ├── conv2d_3.w_0

│ ├── conv2d_4.b_0

│ ├── conv2d_4.w_0

│ ├── conv2d_5.b_0

│ ├── conv2d_5.w_0

│ ├── conv2d_6.b_0

│ ├── conv2d_6.w_0

│ ├── conv2d_7.b_0

│ ├── conv2d_7.w_0

│ ├── conv2d_8.b_0

│ ├── conv2d_8.w_0

│ ├── conv2d_9.b_0

│ ├── conv2d_9.w_0

│ ├── conv2d_transpose_0.w_0

│ └── conv2d_transpose_1.w_0

├── resouse
│   └── logo.png
└── train
    ├── ResNet.py
    ├── Utils.py
    └── fileReader.py

/.idea/.gitignore:

--------------------------------------------------------------------------------

1 | # Default ignored files

2 | /workspace.xml

--------------------------------------------------------------------------------

/.idea/encodings.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.idea/libraries/R_User_Library.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.idea/基于Paddlepaddle的黑白影片色彩重建.iml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/Prior/Clear.py:

--------------------------------------------------------------------------------

import imghdr  # NOTE: imghdr is deprecated since Python 3.11 and removed in 3.13
import os
import threading

import numpy as np
from PIL import Image


# Delete non-JPEG and single-channel (grayscale) images from the dataset, using multiple threads.
def cutArray(l, num):
    """Split list l into num consecutive chunks of nearly equal size."""
    n = len(l)
    # Integer boundaries always yield exactly num chunks, with no float rounding drift.
    return [l[i * n // num:(i + 1) * n // num] for i in range(num)]


def deleteErrorImage(path, image_dir):
    """Delete every file listed in image_dir (names relative to path) that is not a multi-channel JPEG."""
    count = 0
    for file in image_dir:
        try:
            image = os.path.join(path, file)
            # Compare strings with != ; `is not` tests object identity, not equality.
            if imghdr.what(image) != 'jpeg':
                os.remove(image)
                count = count + 1
                continue  # the file is gone, so do not try to open it

            with Image.open(image) as im:
                img = np.array(im)
            # A 2-D array means the image has a single channel.
            if len(img.shape) == 2:
                os.remove(image)
                count = count + 1
        except Exception as e:
            print(e)
    print('done!')
    print('Number of files deleted: ' + str(count))


class thread(threading.Thread):
    def __init__(self, threadID, path, files):
        threading.Thread.__init__(self)
        self.threadID = threadID
        self.path = path
        self.files = files

    def run(self):
        deleteErrorImage(self.path, self.files)


if __name__ == '__main__':
    path = './work/train/'
    files = cutArray(os.listdir(path), 8)
    threadList = [threading.Thread(target=deleteErrorImage, args=(path, chunk), daemon=True)
                  for chunk in files]
    # Start every thread before joining any of them, so the chunks run concurrently.
    for t in threadList:
        t.start()
    for t in threadList:
        t.join()

--------------------------------------------------------------------------------

/Prior/imageCrop.py:

--------------------------------------------------------------------------------

import os
import threading

from PIL import Image
from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

# Resize images and then center-crop them to 256*256, using multiple threads.
w = 256
h = 256


def cutArray(l, num):
    """Split list l into num consecutive chunks of nearly equal size."""
    n = len(l)
    return [l[i * n // num:(i + 1) * n // num] for i in range(num)]


def convertjpg(jpgfile, outdir, width=w, height=h):
    """Scale the image so its shorter side equals width, center-crop it to
    width x height, and save it in outdir under the same file name."""
    img = Image.open(jpgfile)
    # Use names that do not shadow the module-level h.
    (img_w, img_h) = img.size
    rate = min(img_w, img_h) / width
    try:
        img = img.resize((int(img_w // rate), int(img_h // rate)), Image.BILINEAR)
        (img_w, img_h) = img.size
        lstart = (img_w - width) // 2
        hstart = (img_h - height) // 2
        img = img.crop((lstart, hstart, lstart + width, hstart + height))
        img.save(os.path.join(outdir, os.path.basename(jpgfile)))
    except Exception as e:
        print(e)


class thread(threading.Thread):
    def __init__(self, threadID, inpath, outpath, files):
        threading.Thread.__init__(self)
        self.threadID = threadID
        self.inpath = inpath
        self.outpath = outpath
        self.files = files

    def run(self):
        count = 0
        try:
            for file in self.files:
                convertjpg(os.path.join(self.inpath, file), self.outpath)
                count = count + 1
        except Exception as e:
            print(e)
        print('Number of images processed: ' + str(count))


if __name__ == '__main__':
    inpath = './work/train/'
    outpath = './work/train/'
    files = cutArray(os.listdir(inpath), 8)
    threads = [thread(i + 1, inpath, outpath, chunk) for i, chunk in enumerate(files)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

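**Colorize black-and-white films by learning, from the scene semantics of grayscale images, a mapping between color and structural/texture features.**

| The scripts require the ffmpeg command-line tool |
| -------------------------- |

## Project file structure

+ cache  cache directory for video conversion
    * audio  audio track of the source video
    * input_img  frames extracted from the source video (black-and-white images)
    * output_img  processed images generated from the frames in input_img (color images)
+ data  dataset directory
    * test  test set
    * train  training set
+ model  directory for model files
+ movie  directory for video files (*.mp4)
+ prior  preprocessing directory
    * Clear.py  removes corrupted files and non-JPEG images from the training and test sets
    * getExperience.py  preprocesses the ImageNet dataset to obtain the empirical distribution over the Q space
    * getWeight.py  combines the empirical distribution with a Gaussian function to obtain its weights
    * imageCrop.py  resizes images and then crops them to 256*256 pixels
    * Experience.npy  NumPy array file of the empirical distribution produced by getExperience.py
    * Q.npy  NumPy array file for the manually defined Q space
    * Weight.npy  NumPy array file of the empirical-distribution weights produced by getWeight.py
+ train  directory for training scripts
    * fileReader.py  reader that loads files from a given folder and preprocesses them
    * train.npy  training script
+ conversion.py  reads input.mp4 from the movie directory, splits it into frames stored in cache/input_img/, and stores the audio in cache/audio/
+ gray2color.py  runs an input image through the model, converts the result to an RGB image, and saves it

## Converting images and videos
First enter the paddle environment:
```bash
cd <project path>
source activate
```
Convert an image:
```bash
python main.py -img_in <path of the image to convert> -save <output path>
```
Convert a video:
```bash
python main.py -movie_in <path of the video to convert> -save <output path>
```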

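# PaddleColorization - Black-and-White Photo Colorization

> Isn't colorizing a black-and-white photo a bit like magic?

> This project walks you step by step through colorizing black-and-white images, and even black-and-white films.

---

## Start the colorization journey!

### First, a look at the results



> Feel free to fork the project and learn from it. If you have any questions, leave a comment and let's discuss.

> A little self-promotion: my interests include transfer learning and generative adversarial networks. Feel free to get in touch and follow. [Follow me on AI Studio](https://aistudio.baidu.com/aistudio/personalcenter/thirdview/56447)

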

65 | # 1 项目简介

66 |

67 | 本项目基于paddlepaddle,结合残差网络(ResNet),通过监督学习的方式,训练模型将黑白图片转换为彩色图片

68 |

69 | ---

70 |

71 | ### 1.1 残差网络(ResNet)

72 |

73 | ### 1.1.1 背景介绍

74 |

75 | ResNet(Residual Network) [15] 是2015年ImageNet图像分类、图像物体定位和图像物体检测比赛的冠军。针对随着网络训练加深导致准确度下降的问题,ResNet提出了残差学习方法来减轻训练深层网络的困难。在已有设计思路(BN, 小卷积核,全卷积网络)的基础上,引入了残差模块。每个残差模块包含两条路径,其中一条路径是输入特征的直连通路,另一条路径对该特征做两到三次卷积操作得到该特征的残差,最后再将两条路径上的特征相加。

76 |

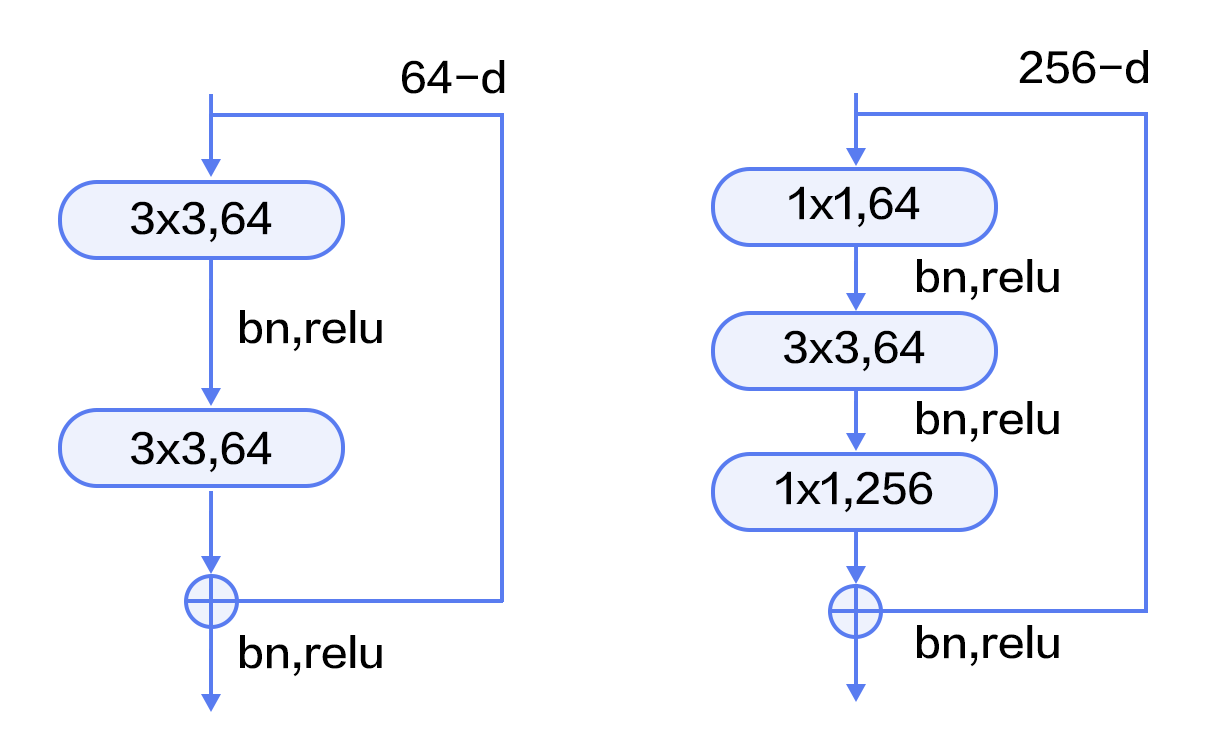

77 | 残差模块如图9所示,左边是基本模块连接方式,由两个输出通道数相同的3x3卷积组成。右边是瓶颈模块(Bottleneck)连接方式,之所以称为瓶颈,是因为上面的1x1卷积用来降维(图示例即256->64),下面的1x1卷积用来升维(图示例即64->256),这样中间3x3卷积的输入和输出通道数都较小(图示例即64->64)。
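
To make the bottleneck argument concrete, the following sketch counts convolution weights for the two block types, using the channel numbers from the figure (256 and 64). It is an illustration only: the helper names are hypothetical, and biases and BatchNorm parameters are ignored.

```python
def conv_params(in_ch, out_ch, k):
    """Number of weights in a k x k convolution (biases and BatchNorm ignored)."""
    return in_ch * out_ch * k * k


def basic_block_params(ch):
    """Basic block: two 3x3 convolutions with the same number of channels."""
    return 2 * conv_params(ch, ch, 3)


def bottleneck_params(ch, mid):
    """Bottleneck: 1x1 reduce (ch -> mid), 3x3 (mid -> mid), 1x1 restore (mid -> ch)."""
    return (conv_params(ch, mid, 1)
            + conv_params(mid, mid, 3)
            + conv_params(mid, ch, 1))


basic = basic_block_params(256)            # 2 * 256 * 256 * 9
bottleneck = bottleneck_params(256, 64)    # 256*64 + 64*64*9 + 64*256
print(basic, bottleneck, round(basic / bottleneck, 1))
```

On 256-channel features, the bottleneck needs roughly one seventeenth of the weights of a basic block, which is why it is preferred in very deep networks. This project uses the basic block.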

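


### 1.2 Design approach and the main problem addressed

> * Design approach: by training on a large number of samples, the network learns an empirical distribution (for example, the sky is always blue and grass is always green) and uses it to infer a plausible color for each part of a black-and-white image.

> * Main problem addressed: many objects do not have a fixed color, that is, object color is multimodal (for example, an apple can be red, green, or yellow). With mean squared error as the loss function, objects with multimodal colors drift toward an "average" color (usually pale yellow), so the colorized image has low saturation.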
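
The averaging effect can be checked numerically. The sketch below assumes three hypothetical (a, b) chroma targets for the same gray input (the numbers are made up for illustration) and compares the mean squared error of predicting the mean against predicting any single mode.

```python
import math


def mse(pred, targets):
    """Mean squared error between one predicted (a, b) pair and several target pairs."""
    return sum((pred[0] - a) ** 2 + (pred[1] - b) ** 2 for a, b in targets) / len(targets)


def chroma(ab):
    """Distance from the gray axis; a rough proxy for saturation."""
    return math.hypot(ab[0], ab[1])


# Hypothetical chroma values for one gray apple seen as red, green and yellow.
modes = [(60.0, 40.0), (-50.0, 50.0), (0.0, 70.0)]
mean = (sum(a for a, _ in modes) / len(modes),
        sum(b for _, b in modes) / len(modes))

print('MSE at mean:', mse(mean, modes), 'chroma:', chroma(mean))
for m in modes:
    print('MSE at mode', m, ':', mse(m, modes), 'chroma:', chroma(m))
```

The mean has the lowest error of all candidates, yet it lies closer to the gray axis than any of the modes. This is the desaturation described above.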


---

### 1.3 Main features of this project

* Sets the Adam optimizer's beta1 parameter to 0.8; see the [original paper](https://arxiv.org/abs/1412.6980) for details
* Sets the momentum parameter of BatchNorm to 0.5
* Uses the basic block connection
* Redesigns the loss function on top of mean squared error to suppress the multimodality problem

---

The loss function is:

$Out = \frac{1}{n}\sum{(input-label)^{2}} + \frac{26.7}{n\sum{(input - \overline{input})^{2}}}$
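
A minimal pure-Python sketch of this formula follows. It assumes that `input` in the formula is the network's predicted value per element (called `pred` here) and that the mean is taken over all elements. The `eps` term is not part of the formula; it is added here only to avoid division by zero when the prediction is constant. This is not the project's training code.

```python
def colorization_loss(pred, label, weight=26.7, eps=1e-8):
    """MSE plus a penalty that grows as the prediction's spread shrinks.

    pred, label: flat sequences of equal length.
    eps: hypothetical safeguard, not in the original formula.
    """
    n = len(pred)
    mse = sum((p - t) ** 2 for p, t in zip(pred, label)) / n
    mean_pred = sum(pred) / n
    spread = sum((p - mean_pred) ** 2 for p in pred)
    return mse + weight / (n * spread + eps)


label = [10.0, -10.0, 30.0, -30.0]
flat = [1.0, -1.0, 3.0, -3.0]      # washed-out prediction
vivid = [9.0, -9.0, 27.0, -27.0]   # prediction with more spread
print(colorization_loss(flat, label), colorization_loss(vivid, label))
```

The second term is inversely proportional to the sum of squared deviations of the prediction, so predictions that collapse toward a single average value are penalized in addition to the MSE term.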

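
---

### 1.4 Dataset (ImageNet)

> The ImageNet project is a large visual database designed for research on visual object recognition software. More than 14 million image URLs have been hand-annotated by ImageNet to indicate which objects are pictured; for at least one million of the images, bounding boxes are also provided. ImageNet contains more than 20,000 categories; [2] a typical category, such as "balloon" or "strawberry", contains several hundred images. The database of annotations of third-party image URLs is freely available directly from ImageNet, although the actual images do not belong to ImageNet. Since 2010, the ImageNet project has held an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), in which programs compete to correctly classify and detect objects and scenes. The challenge uses a "trimmed" list of 1,000 non-overlapping classes. The breakthrough on the ImageNet challenge in 2012 is widely regarded as the start of the deep learning revolution of the 2010s. (Source: Baidu Baike)

> About ImageNet 2012:
>
> * Training images (Task 1 & 2). 138GB. (about 1.2 million high-resolution images in 1,000 categories)
> * Validation images (all tasks). 6.3GB.
> * Training bounding box annotations (Task 1 & 2 only). 20MB.
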

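### 1.5 Lab color space

> The Lab mode is based on an international standard for measuring color established in 1931 by the Commission Internationale de l'Éclairage (CIE). It was revised in 1976 and then named as a color mode. The Lab color model makes up for shortcomings of the RGB and CMYK color modes. It is a device-independent color model and is also based on physiological characteristics. [1] The Lab color model has three components: lightness (L) and two color channels, a and b. The a channel runs from dark green (low values) through gray (middle values) to bright pink (high values); the b channel runs from bright blue (low values) through gray (middle values) to yellow (high values). Colors mixed in this way therefore appear bright. (Source: Baidu Baike)
>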
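
The following sketch converts a single 8-bit sRGB pixel to Lab with the standard CIE formulas (D65 white point), to show why Lab suits colorization: a gray pixel has a = b = 0, so the L channel carries all of the black-and-white information and the network only has to predict a and b. The function name is hypothetical; the project itself uses `skimage.color.rgb2lab` in `gray2color.py`.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE Lab (D65 reference white)."""

    def to_linear(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ, normalized by the D65 white point.
    x = (0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl) / 0.95047
    y = (0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl) / 1.00000
    z = (0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl) / 1.08883

    def f(t):
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x), f(y), f(z)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


print(srgb_to_lab(128, 128, 128))  # gray: a and b are (numerically) zero
print(srgb_to_lab(255, 0, 0))      # red: large positive a and b
```

Pure red comes out at roughly L = 53, a = 80, b = 67, while every gray level differs only in L.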

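



# 2. Project summary

This document has described the project step by step.
As for the training results: by suppressing averaging and increasing dispersion, the project raises the saturation of the colorized output, but the final results still differ noticeably from reality. The model works well only on some scenes and remains weak on man-made scenes (such as supermarket interiors).
The next step is to refine the loss function further, so that the colorization is convincing to human intuition rather than merely as close as possible to the real scene.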

--------------------------------------------------------------------------------

/conversion.py:

--------------------------------------------------------------------------------

import os
import shutil
import subprocess

import cv2
from cv2 import VideoWriter, VideoWriter_fourcc

# This script requires the ffmpeg command-line tool.


def video2photo(videoName='./movie/input.mp4'):
    '''Split a video into frames and extract its audio track.

    Frames are written to ./cache/input_img/ as 1.jpg, 2.jpg, ...;
    the audio is written to ./cache/audio/audio.wav.
    INPUTS
        videoName  path of the video to read (default ./movie/input.mp4)
    OUTPUTS
        None
    '''
    video = cv2.VideoCapture(videoName)
    n = 1
    # Clear the frame cache and the audio cache.
    for cache_dir in ('./cache/input_img', './cache/audio'):
        shutil.rmtree(cache_dir, ignore_errors=True)
        os.makedirs(cache_dir)
    # Extract the audio with ffmpeg. An argument list avoids shell quoting problems.
    command = ['ffmpeg', '-i', videoName, '-ab', '160k', '-ac', '2', '-ar', '44100',
               '-vn', './cache/audio/audio.wav']
    subprocess.call(command, stdout=subprocess.DEVNULL, stderr=subprocess.STDOUT)

    rval, frame = video.read() if video.isOpened() else (False, None)
    while rval:
        # Write the current frame before reading the next one, so the first frame
        # is kept and no empty frame is written at the end of the video.
        cv2.imwrite('./cache/input_img/' + str(n) + '.jpg', frame)
        n = n + 1
        rval, frame = video.read()
    video.release()
    print('[*] Video split into frames successfully...')


def photo2video(videoName='./cache/output.mp4', fps=25, imgs='./cache/output_img'):
    '''Assemble frames into a video and mux it with the cached audio.

    The final result is written to ./movie/output.mkv.
    INPUTS
        videoName  path of the intermediate (silent) video to write
        fps        frame rate of the video
        imgs       directory containing the frames 1.jpg, 2.jpg, ...
    OUTPUTS
        None
    '''
    # Get the resolution from the first frame.
    img = cv2.imread(imgs + '/1.jpg')
    height, width = img.shape[:2]
    size = (width, height)

    fourcc = VideoWriter_fourcc(*'mp4v')
    videoWriter = VideoWriter(videoName, fourcc, fps, size)
    photos = os.listdir(imgs)
    # Frames are numbered from 1 (see video2photo), not from 0.
    for photo in range(1, len(photos) + 1):
        frame = cv2.imread(imgs + '/' + str(photo) + '.jpg')
        videoWriter.write(frame)
    videoWriter.release()
    # Mux the newly generated video with the extracted audio.
    if os.path.exists('./movie/output.mkv'):
        os.remove('./movie/output.mkv')
    # Read the video that was just written (videoName), not a hard-coded path.
    command = ['ffmpeg', '-i', videoName, '-i', './cache/audio/audio.wav',
               '-c', 'copy', './movie/output.mkv']
    subprocess.call(command, stdout=subprocess.DEVNULL, stderr=subprocess.STDOUT)
    # os.remove('./cache/output.mp4')
    print('[*] Video generated successfully: ./movie/output.mkv')


# test
if __name__ == '__main__':
    photo2video(imgs='./cache/output_img')

--------------------------------------------------------------------------------

/gray2color.py:

--------------------------------------------------------------------------------

import os

import matplotlib.pyplot as plt
import numpy as np
import paddle
from paddle import fluid
from skimage import io, color, transform

import lab2rgb  # local module; not included in this listing
import Prior.imageCrop as crop

use_cuda = True
place = fluid.CUDAPlace(0) if use_cuda else fluid.CPUPlace()
exe = fluid.Executor(place)
scope = fluid.core.Scope()
fluid.scope_guard(scope)
[inference_program, feed_target_names, fetch_targets] = fluid.io.load_inference_model(
    dirname=r'model/gray2color.inference.model', executor=exe)


def loadImage(image):
    '''Read an image, convert it to Lab, and return the L channel as a 1x1x256x256 float32 array.'''
    img = io.imread(image)
    lab = np.array(color.rgb2lab(img)).transpose()
    l = lab[:1, :, :]
    return l.reshape(1, 1, 256, 256).astype('float32')


def nd_to_2d(i, axis=0):
    '''Convert an N-dimensional array to a 2-dimensional array.
    INPUT
        i     N-dimensional array
        axis  the dimension to keep
    OUTPUT
        o     the resulting 2-dimensional array
    '''
    n = i.ndim
    shapeArray = np.array(i.shape)
    diff = np.setdiff1d(np.arange(0, n), np.array(axis))
    p = np.prod(shapeArray[diff])
    ax = np.concatenate((diff, np.array(axis).flatten()), axis=0)
    o = i.transpose((ax))
    o = o.reshape(p, shapeArray[axis])
    return o


def run(input, output):
    '''Colorize an image and save the result.
    INPUT
        input   path of the input image
        output  path of the output image
    OUTPUT
        None
    '''
    inference_transpiler_program = inference_program.clone()
    # t = fluid.transpiler.InferenceTranspiler()
    # t.transpile(inference_transpiler_program, place)
    # Crop the input to 256*256 in place. The original call passed outdir=None,
    # which made the save fail; writing back to the input's own directory is an
    # assumption about the intended behavior and overwrites the input file.
    crop.convertjpg(jpgfile=input, outdir=os.path.dirname(input))
    l = loadImage(input)
    result = exe.run(inference_program, feed={feed_target_names[0]: (l)}, fetch_list=fetch_targets)

    ab = result[0][0]
    l = l[0][0]
    img = lab2rgb.lab2rgb(l, ab)
    # img = transform.rotate(img,270)
    # img = np.fliplr(img)
    img = img.astype('float32')
    plt.grid(False)
    plt.axis('off')
    plt.imshow(img)
    # plt.show()
    plt.savefig(str(output), bbox_inches='tight')
    plt.close()  # release the figure so memory does not grow across many frames


if __name__ == '__main__':
    # run('/home/redflashing/PycharmProjects/基于Paddlepaddle的黑白影片色彩重建/data/train/n01514859_142.JPEG','/home/redflashing/下载/1.JPEG')
    path = './dataset/test/'
    files = os.listdir(path)
    for file in files:
        try:
            run(path + file, 'output_img/' + file)
            print(file + ' done!')
        except Exception as e:
            print(e)

--------------------------------------------------------------------------------

/main.py:

--------------------------------------------------------------------------------

import argparse
import os

import conversion
import gray2color


def parse_args():
    parser = argparse.ArgumentParser(description='Color reconstruction of black-and-white films based on PaddlePaddle')
    parser.add_argument('-img_in', dest='img_in', help='path of the black-and-white image to convert', type=str)
    parser.add_argument('-save', dest='save', help='path where the result is saved', type=str, default='./movie/')
    parser.add_argument('-movie_in', dest='movie_in', help='path of the black-and-white film to convert', type=str)
    args = parser.parse_args()
    return args


if __name__ == '__main__':
    args = parse_args()
    if args.img_in is not None and args.movie_in is None:
        # Single image: colorize it and save the result to args.save.
        gray2color.run(input=args.img_in, output=args.save)
        print('[*] Image converted successfully, saved to ' + args.save)
    elif args.img_in is None and args.movie_in is not None:
        # Video: split into frames, colorize each frame, then reassemble.
        print('[*] Converting...')
        conversion.video2photo(videoName=args.movie_in)
        cacheIN = 'cache/input_img/'
        cacheOUT = 'cache/output_img/'
        imgs = os.listdir(cacheIN)
        for img in imgs:
            gray2color.run(input=cacheIN + img, output=cacheOUT + img)
        conversion.photo2video(videoName=args.save, fps=25)
        print('[*] Video converted successfully, saved to ' + args.save)
    else:
        # Exactly one of -img_in and -movie_in must be given.
        print('[*] Invalid input')

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_0.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_0.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_0.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_0.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_0.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_0.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_0.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_0.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_1.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_1.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_1.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_1.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_1.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_1.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_1.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_1.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_10.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_10.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_10.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_10.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_10.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_10.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_10.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_10.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_11.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_11.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_11.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_11.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_11.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_11.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_11.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_11.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_12.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_12.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_12.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_12.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_12.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_12.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_12.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_12.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_13.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_13.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_13.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_13.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_13.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_13.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_13.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_13.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_14.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_14.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_14.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_14.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_14.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_14.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_14.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_14.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_2.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_2.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_2.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_2.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_2.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_2.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_2.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_2.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_3.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_3.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_3.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_3.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_3.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_3.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_3.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_3.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_4.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_4.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_4.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_4.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_4.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_4.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_4.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_4.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_5.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_5.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_5.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_5.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_5.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_5.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_5.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_5.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_6.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_6.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_6.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_6.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_6.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_6.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_6.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_6.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_7.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_7.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_7.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_7.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_7.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_7.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_7.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_7.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_8.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_8.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_8.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_8.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_8.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_8.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_8.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_8.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_9.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_9.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_9.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_9.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_9.w_1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_9.w_1

--------------------------------------------------------------------------------

/model/gray2color.inference.model/batch_norm_9.w_2:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/batch_norm_9.w_2

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_0.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_0.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_0.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_0.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_1.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_1.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_1.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_1.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_10.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_10.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_10.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_10.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_11.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_11.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_11.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_11.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_12.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_12.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_12.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_12.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_13.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_13.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_13.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_13.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_14.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_14.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_14.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_14.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_2.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_2.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_2.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_2.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_3.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_3.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_3.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_3.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_4.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_4.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_4.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_4.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_5.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_5.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_5.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_5.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_6.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_6.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_6.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_6.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_7.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_7.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_7.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_7.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_8.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_8.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_8.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_8.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_9.b_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_9.b_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_9.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_9.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_transpose_0.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_transpose_0.w_0

--------------------------------------------------------------------------------

/model/gray2color.inference.model/conv2d_transpose_1.w_0:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/model/gray2color.inference.model/conv2d_transpose_1.w_0

--------------------------------------------------------------------------------

/resouse/logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Siyou-Li/PaddleColorization/ba3e06e41e09d37d73dbf2cf766b60bcb0e1a46f/resouse/logo.png

--------------------------------------------------------------------------------

/train/ResNet.py:

--------------------------------------------------------------------------------

import paddle
import paddle.fluid as fluid
import numpy as np
import sys
import os
sys.path.append('./train')
# transform is imported explicitly; it was previously only available via the star import below
from skimage import io, color, transform
from train.fileReader import *
import matplotlib.pyplot as plt
import lab2rgb

Params_dirname = "work/model/gray2color.inference.model"
BATCH_SIZE = 30
EPOCH_NUM = 300


'''Custom loss function'''
def createLoss(predict, truth):
    '''Mean squared error'''
    loss1 = fluid.layers.square_error_cost(predict, truth)
    #loss2 = fluid.layers.square_error_cost(predict,fluid.layers.fill_constant(shape=[BATCH_SIZE,2,256,256],value=fluid.layers.mean(predict),dtype='float32'))
    cost = fluid.layers.mean(loss1)  #+ 16.7 / fluid.layers.mean(loss2)
    return cost


def conv_bn_layer(input,
                  ch_out,
                  filter_size,
                  stride,
                  padding,
                  act='relu',
                  bias_attr=True):
    tmp = fluid.layers.conv2d(
        input=input,
        filter_size=filter_size,
        num_filters=ch_out,
        stride=stride,
        padding=padding,
        act=None,
        bias_attr=bias_attr)
    return fluid.layers.batch_norm(input=tmp, act=act, momentum=0.5)


def shortcut(input, ch_in, ch_out, stride):
    # Project with a 1x1 convolution only when the channel count changes
    if ch_in != ch_out:
        return conv_bn_layer(input, ch_out, 1, stride, 0, None)
    else:
        return input


def basicblock(input, ch_in, ch_out, stride):
    tmp = conv_bn_layer(input, ch_out, 3, stride, 1)
    tmp = conv_bn_layer(tmp, ch_out, 3, 1, 1, act=None, bias_attr=True)
    short = shortcut(input, ch_in, ch_out, stride)
    return fluid.layers.elementwise_add(x=tmp, y=short, act='relu')


def layer_warp(block_func, input, ch_in, ch_out, count, stride):
    tmp = block_func(input, ch_in, ch_out, stride)
    for i in range(1, count):
        tmp = block_func(tmp, ch_out, ch_out, 1)
    return tmp


# Transposed convolution (deconvolution) layer
def deconv(x, num_filters, filter_size=5, stride=2, dilation=1, padding=2, output_size=None, act=None):
    return fluid.layers.conv2d_transpose(
        input=x,
        num_filters=num_filters,
        # number of filters
        output_size=output_size,
        # output image size
        filter_size=filter_size,
        # filter size
        stride=stride,
        # stride
        dilation=dilation,
        # dilation rate
        padding=padding,
        use_cudnn=True,
        # whether to use the cuDNN kernel
        act=act
        # activation function
    )


def bn(x, name=None, act=None, momentum=0.5):
    # name must be a string: it is used as the prefix of the moving statistics' names
    return fluid.layers.batch_norm(
        x,
        bias_attr=None,
        # object specifying the bias attributes
        moving_mean_name=name + '3',
        # name of moving_mean
        moving_variance_name=name + '4',
        # name of moving_variance
        name=name,
        act=act,
        momentum=momentum,
    )


def resnetImagenet(input):
    # The size comments give the spatial resolution after each stage (input is 256x256)
    #128
    x = layer_warp(basicblock, input, 64, 128, 1, 2)
    #64
    x = layer_warp(basicblock, x, 128, 256, 1, 2)
    #32
    x = layer_warp(basicblock, x, 256, 512, 1, 2)
    #16
    x = layer_warp(basicblock, x, 512, 1024, 1, 2)
    #8
    x = layer_warp(basicblock, x, 1024, 2048, 1, 2)
    #16
    x = deconv(x, num_filters=1024, filter_size=4, stride=2, padding=1)
    x = bn(x, name='bn_1', act='relu', momentum=0.5)
    #32
    x = deconv(x, num_filters=512, filter_size=4, stride=2, padding=1)
    x = bn(x, name='bn_2', act='relu', momentum=0.5)
    #64
    x = deconv(x, num_filters=256, filter_size=4, stride=2, padding=1)
    x = bn(x, name='bn_3', act='relu', momentum=0.5)
    #128
    x = deconv(x, num_filters=128, filter_size=4, stride=2, padding=1)
    x = bn(x, name='bn_4', act='relu', momentum=0.5)
    #256
    x = deconv(x, num_filters=64, filter_size=4, stride=2, padding=1)
    x = bn(x, name='bn_5', act='relu', momentum=0.5)

    # Final layer outputs the two predicted channels (a and b)
    x = deconv(x, num_filters=2, filter_size=3, stride=1, padding=1)
    return x


def ResNettrain():
    gray = fluid.layers.data(name='gray', shape=[1, 256, 256], dtype='float32')
    truth = fluid.layers.data(name='truth', shape=[2, 256, 256], dtype='float32')
    predict = resnetImagenet(gray)
    cost = createLoss(predict=predict, truth=truth)
    return predict, cost


'''Optimizer function'''


def optimizer_program():
    return fluid.optimizer.Adam(learning_rate=2e-5, beta1=0.8)


train_reader = paddle.batch(paddle.reader.shuffle(
    reader=train(), buf_size=7500 * 3
), batch_size=BATCH_SIZE)
test_reader = paddle.batch(reader=test(), batch_size=10)

use_cuda = True
if not use_cuda:
    os.environ['CPU_NUM'] = str(6)
feed_order = ['gray', 'weight']
place = fluid.CUDAPlace(0) if use_cuda else fluid.CPUPlace()

main_program = fluid.default_main_program()
star_program = fluid.default_startup_program()

'''Build the training network'''
predict, cost = ResNettrain()

'''Optimizer'''
optimizer = optimizer_program()
optimizer.minimize(cost)

exe = fluid.Executor(place)

plt.ion()
def train_loop():
    # These re-declare the data layers already created in ResNettrain()
    gray = fluid.layers.data(name='gray', shape=[1, 256, 256], dtype='float32')
    truth = fluid.layers.data(name='truth', shape=[2, 256, 256], dtype='float32')
    feeder = fluid.DataFeeder(
        feed_list=['gray', 'truth'], place=place)
    exe.run(star_program)

    # Incremental training: resume from previously saved persistables
    fluid.io.load_persistables(exe, 'work/model/incremental/', main_program)

    for pass_id in range(EPOCH_NUM):
        step = 0
        for data in train_reader():
            loss = exe.run(main_program, feed=feeder.feed(data), fetch_list=[cost])
            step += 1
            if step % 1000 == 0:
                try:
                    # Preview the first 10 predictions of the current batch
                    generated_img = exe.run(main_program, feed=feeder.feed(data), fetch_list=[predict])
                    plt.figure(figsize=(15, 6))
                    plt.grid(False)
                    for i in range(10):
                        ab = generated_img[0][i]
                        l = data[i][0][0]
                        a = ab[0]
                        b = ab[1]
                        l = l[:, :, np.newaxis]
                        a = a[:, :, np.newaxis].astype('float64')
                        b = b[:, :, np.newaxis].astype('float64')
                        lab = np.concatenate((l, a, b), axis=2)
                        img = color.lab2rgb((lab))
                        # Undo the axis swap introduced by transpose() in fileReader.load
                        img = transform.rotate(img, 270)
                        img = np.fliplr(img)
                        plt.grid(False)
                        plt.subplot(2, 5, i + 1)
                        plt.imshow(img)
                        plt.axis('off')
                        plt.xticks([])
                        plt.yticks([])
                    msg = 'Epoch ID={0} Batch ID={1} Loss={2}'.format(pass_id, step, loss[0][0])
                    plt.suptitle(msg, fontsize=20)
                    plt.draw()
                    plt.savefig('{}/{:04d}_{:04d}.png'.format('work/output_img', pass_id, step), bbox_inches='tight')
                    plt.pause(0.01)
                except IOError as e:
                    # Print the actual error instance rather than the exception class
                    print(e)

        fluid.io.save_persistables(exe, 'work/model/incremental/', main_program)
        fluid.io.save_inference_model(Params_dirname, ["gray"], [predict], exe)
    plt.ioff()
train_loop()

--------------------------------------------------------------------------------
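
The size comments in `resnetImagenet` in `/train/ResNet.py` (128, 64, ..., 8, 16, ..., 256) can be checked with the usual output-size formulas for convolution and transposed convolution. The sketch below is plain Python and does not call Paddle; the helper names `conv_out`, `deconv_out`, and `trace_sizes` are hypothetical, and it assumes dilation 1 and no extra output padding, as in the file's calls.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a standard convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride, padding):
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_sizes(size=256):
    """Return the spatial size after each stage of the encoder/decoder stack."""
    sizes = [size]
    # Five basic blocks: the first 3x3 conv of each uses stride 2, padding 1.
    for _ in range(5):
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append(size)
    # Five transposed convs with filter_size=4, stride=2, padding=1.
    for _ in range(5):
        size = deconv_out(size, kernel=4, stride=2, padding=1)
        sizes.append(size)
    # Final transposed conv (filter_size=3, stride=1, padding=1) keeps the size.
    sizes.append(deconv_out(size, kernel=3, stride=1, padding=1))
    return sizes


print(trace_sizes())
# [256, 128, 64, 32, 16, 8, 16, 32, 64, 128, 256, 256]
```

The 1x1 stride-2 shortcut gives the same size as the 3x3 stride-2 path (`conv_out(256, 1, 2, 0)` is also 128), which is why the elementwise add in `basicblock` lines up.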

/train/Utils.py:

--------------------------------------------------------------------------------

import numpy as np
import matplotlib
import math
# matplotlib.use('agg')
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec

# % matplotlib inline
# % config InlineBackend.figure_format = 'svg'

img_dim = 48


def plot(gen_data):
    pad_dim = 1
    paded = pad_dim + img_dim
    gen_data = gen_data.reshape(gen_data.shape[0], img_dim, img_dim)
    # math.ceil() rounds up to the nearest integer, so n * n >= number of images
    n = int(math.ceil(math.sqrt(gen_data.shape[0])))
    # np.pad() extends the array; 'constant' fills the padding with a constant value (0 by default)
    # np.transpose() permutes the array axes
    gen_data = (np.pad(
        gen_data, [[0, n * n - gen_data.shape[0]], [pad_dim, 0], [pad_dim, 0]],
        'constant').reshape((n, n, paded, paded)).transpose((0, 2, 1, 3))
        .reshape((n * paded, n * paded)))
    fig = plt.figure(figsize=(8, 8))
    # plt.axis('off') hides the axis lines and labels
    plt.axis('off')
    plt.imshow(gen_data, cmap='Greys_r', vmin=-1, vmax=1)
    return fig

--------------------------------------------------------------------------------
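
The pad, reshape, transpose, reshape chain in `plot` in `/train/Utils.py` is easier to follow on a tiny batch. The sketch below reproduces only the tiling step with NumPy and leaves out the Matplotlib part; the function name `tile_images` and the constant-valued test images are assumptions made for illustration.

```python
import math

import numpy as np


def tile_images(images, pad=1):
    """Arrange (N, H, W) images into one square grid with n = ceil(sqrt(N)) tiles per side."""
    count, height, width = images.shape
    n = int(math.ceil(math.sqrt(count)))
    # Append blank images to fill the n x n grid and add a border on the top and left.
    padded = np.pad(images, [[0, n * n - count], [pad, 0], [pad, 0]], 'constant')
    ph, pw = height + pad, width + pad
    # (n*n, ph, pw) -> (row, col, y, x) -> (row, y, col, x) -> single 2-D canvas.
    grid = padded.reshape(n, n, ph, pw).transpose(0, 2, 1, 3)
    return grid.reshape(n * ph, n * pw)


# Five 48x48 images, image k filled with the value k + 1.
batch = np.stack([np.full((48, 48), k + 1, dtype='float32') for k in range(5)])
canvas = tile_images(batch)
print(canvas.shape)  # (147, 147)
```

With five images the grid is 3 x 3, each tile is 49 pixels wide including its border, and image `k` lands at grid row `k // 3`, column `k % 3`.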

/train/fileReader.py:

--------------------------------------------------------------------------------

import os
import cv2
import numpy as np
import paddle.dataset as dataset
from skimage import io,color,transform
import sklearn.neighbors as neighbors

'''Prepare the data and define Reader()'''

PATH = 'work/train/'
TEST = 'work/train/'  # the test reader currently reads the same directory as training


class DataGenerater:
    def __init__(self):
        datalist = os.listdir(PATH)
        self.testlist = os.listdir(TEST)
        self.datalist = datalist

    def load(self, image):
        '''Read an image, convert it to Lab, and split out the L and ab channels'''
        img = io.imread(image)
        # transpose() reverses the axes: (H, W, 3) -> (3, W, H)
        lab = np.array(color.rgb2lab(img)).transpose()
        l = lab[:1, :, :]
        l = l.astype('float32')
        ab = lab[1:, :, :]
        ab = ab.astype('float32')
        return l, ab

    def create_train_reader(self):
        '''Define the reader for the training set'''

        def reader():
            for img in self.datalist:
                # print(img)
                try:
                    l, ab = self.load(PATH + img)
                    # print(ab)
                    yield l.astype('float32'), ab.astype('float32')
                except Exception as e:
                    # Skip files that cannot be read or converted
                    print(e)

        return reader

    def create_test_reader(self, ):
        '''Define the reader for the test set'''

        def reader():
            for img in self.testlist:
                l, ab = self.load(TEST + img)
                yield l.astype('float32'), ab.astype('float32')

        return reader


def train(batch_sizes=32):
    reader = DataGenerater().create_train_reader()
    return reader


def test():
    reader = DataGenerater().create_test_reader()
    return reader

--------------------------------------------------------------------------------
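
`DataGenerater.load` in `/train/fileReader.py` calls `.transpose()` with no arguments, which reverses all axes, so the L and ab arrays come out as `(1, W, H)` and `(2, W, H)` rather than `(1, H, W)` and `(2, H, W)`. The sketch below shows that layout with NumPy only. It is an illustration under assumptions: a random array stands in for the output of `color.rgb2lab`, the helper names `split_lab` and `merge_lab` are hypothetical, and the merge uses a second transpose instead of the `transform.rotate` and `np.fliplr` calls found in the training preview.

```python
import numpy as np

# Stand-in for color.rgb2lab(img): an (H, W, 3) array with H=4, W=6.
rng = np.random.default_rng(0)
lab_hwc = rng.uniform(-50, 50, size=(4, 6, 3))


def split_lab(lab_hwc):
    """Split an (H, W, 3) Lab array into L and ab, using the same slicing as load()."""
    lab = lab_hwc.transpose()          # (H, W, 3) -> (3, W, H)
    l = lab[:1].astype('float32')      # (1, W, H)
    ab = lab[1:].astype('float32')     # (2, W, H)
    return l, ab


def merge_lab(l, ab):
    """Rebuild the (H, W, 3) array from the channel-first L and ab parts."""
    lab = np.concatenate((l, ab), axis=0)  # (3, W, H)
    return lab.transpose()                 # (3, W, H) -> (H, W, 3)


l, ab = split_lab(lab_hwc)
restored = merge_lab(l, ab)
print(l.shape, ab.shape, restored.shape)  # (1, 6, 4) (2, 6, 4) (4, 6, 3)
```

For the square 256x256 training images the swap does not change the array shapes, only the orientation of the image content.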