├── pytorch_ssim

├── LICENSE.txt

├── setup.cfg

├── einstein.png

├── max_ssim.gif

├── .gitignore

├── setup.py

├── max_ssim.py

├── README.md

└── pytorch_ssim

│ └── __init__.py

├── PerceptualSimilarity

├── data

│ ├── __init__.py

│ ├── dataset

│ │ ├── __init__.py

│ │ ├── base_dataset.py

│ │ ├── jnd_dataset.py

│ │ └── twoafc_dataset.py

│ ├── base_data_loader.py

│ ├── data_loader.py

│ ├── custom_dataset_data_loader.py

│ └── image_folder.py

├── util

│ ├── __init__.py

│ ├── util.py

│ ├── html.py

│ └── visualizer.py

├── .gitignore

├── imgs

│ ├── ex_p0.png

│ ├── ex_p1.png

│ ├── ex_ref.png

│ ├── fig1.png

│ ├── ex_dir0

│ │ ├── 0.png

│ │ └── 1.png

│ ├── ex_dir1

│ │ ├── 0.png

│ │ └── 1.png

│ └── ex_dir_pair

│ │ ├── ex_p0.png

│ │ ├── ex_p1.png

│ │ └── ex_ref.png

├── scripts

│ ├── eval_valsets.sh

│ ├── train_test_metric.sh

│ ├── train_test_metric_tune.sh

│ ├── train_test_metric_scratch.sh

│ ├── download_dataset_valonly.sh

│ └── download_dataset.sh

├── lpips

│ ├── weights

│ │ ├── v0.0

│ │ │ ├── alex.pth

│ │ │ ├── vgg.pth

│ │ │ └── squeeze.pth

│ │ └── v0.1

│ │ │ ├── alex.pth

│ │ │ ├── vgg.pth

│ │ │ └── squeeze.pth

│ ├── __init__.py

│ ├── pretrained_networks.py

│ ├── lpips.py

│ └── trainer.py

├── requirements.txt

├── setup.py

├── lpips_2imgs.py

├── lpips_2dirs.py

├── LICENSE

├── test_network.py

├── lpips_loss.py

├── Dockerfile

├── lpips_1dir_allpairs.py

├── test_dataset_model.py

├── train.py

└── README.md

├── pytorch_msssim

├── LICENSE.txt

├── try1.py

├── setup.cfg

├── einstein.png

├── .gitignore

├── setup.py

├── max_ssim.py

├── README.md

└── pytorch_msssim

│ └── __init__.py

├── Examples

└── BRViT_sample1.jfif

├── LICENSE

├── README.md

├── evaluate.py

├── store_results.py

├── train.py

├── Bokeh_Data

└── test.csv

└── model.py

/pytorch_ssim/LICENSE.txt:

--------------------------------------------------------------------------------

1 | MIT

2 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/util/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pytorch_msssim/LICENSE.txt:

--------------------------------------------------------------------------------

1 | MIT

2 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/dataset/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pytorch_msssim/try1.py:

--------------------------------------------------------------------------------

1 | import pytorch_msssim

2 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 |

3 | checkpoints/*

4 |

--------------------------------------------------------------------------------

/pytorch_msssim/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | description-file = README.md

3 |

--------------------------------------------------------------------------------

/pytorch_ssim/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | description-file = README.md

3 |

--------------------------------------------------------------------------------

/pytorch_ssim/einstein.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/pytorch_ssim/einstein.png

--------------------------------------------------------------------------------

/pytorch_ssim/max_ssim.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/pytorch_ssim/max_ssim.gif

--------------------------------------------------------------------------------

/Examples/BRViT_sample1.jfif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/Examples/BRViT_sample1.jfif

--------------------------------------------------------------------------------

/pytorch_msssim/einstein.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/pytorch_msssim/einstein.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_p0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_p0.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_p1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_p1.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_ref.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_ref.png

--------------------------------------------------------------------------------

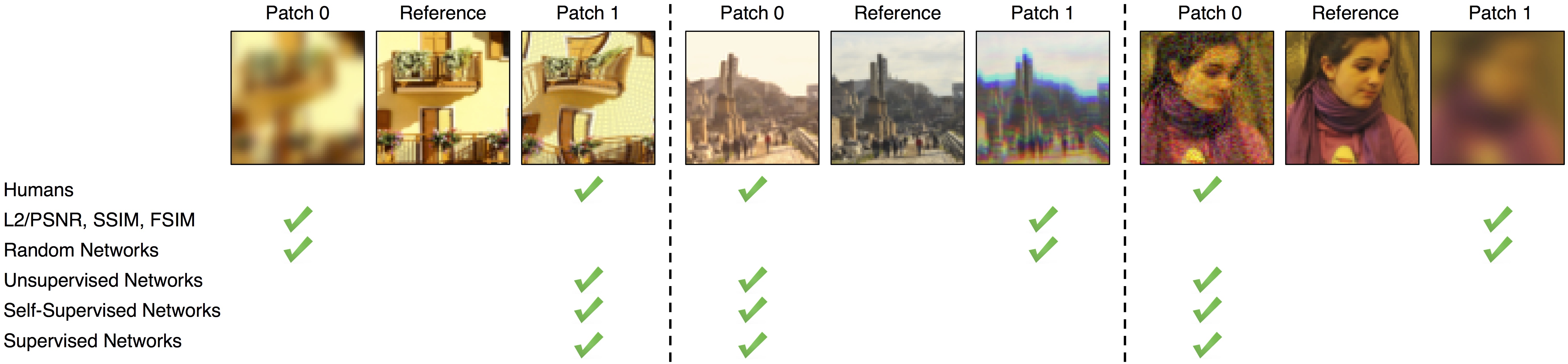

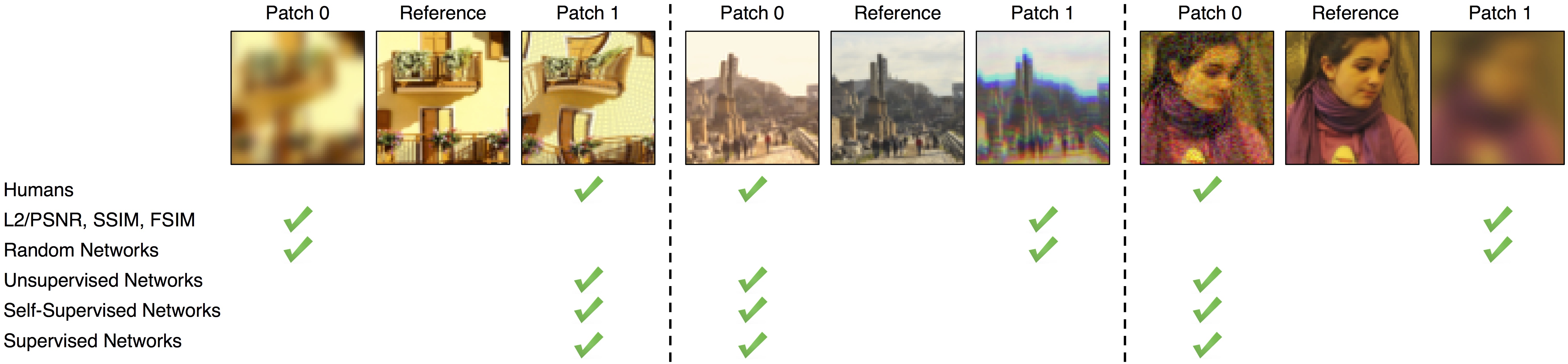

/PerceptualSimilarity/imgs/fig1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/fig1.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/eval_valsets.sh:

--------------------------------------------------------------------------------

1 |

2 | python ./test_dataset_model.py --dataset_mode 2afc --model lpips --net alex --use_gpu --batch_size 50

3 |

4 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir0/0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir0/0.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir0/1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir0/1.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir1/0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir1/0.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir1/1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir1/1.png

--------------------------------------------------------------------------------

/pytorch_msssim/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *.gif

3 | *.png

4 | *.jpg

5 | test*

6 | !einstein.png

7 | !max_ssim.gif

8 | MANIFEST

9 | dist/*

10 | .sync-config.cson

11 |

--------------------------------------------------------------------------------

/pytorch_ssim/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *.gif

3 | *.png

4 | *.jpg

5 | test*

6 | !einstein.png

7 | !max_ssim.gif

8 | MANIFEST

9 | dist/*

10 | .sync-config.cson

11 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir_pair/ex_p0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir_pair/ex_p0.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir_pair/ex_p1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir_pair/ex_p1.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/imgs/ex_dir_pair/ex_ref.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/imgs/ex_dir_pair/ex_ref.png

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.0/alex.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.0/alex.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.0/vgg.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.0/vgg.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.1/alex.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.1/alex.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.1/vgg.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.1/vgg.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.0/squeeze.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.0/squeeze.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips/weights/v0.1/squeeze.pth:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Soester10/Bokeh-Rendering-with-Vision-Transformers/HEAD/PerceptualSimilarity/lpips/weights/v0.1/squeeze.pth

--------------------------------------------------------------------------------

/PerceptualSimilarity/requirements.txt:

--------------------------------------------------------------------------------

1 | torch>=0.4.0

2 | torchvision>=0.2.1

3 | numpy>=1.14.3

4 | scipy>=1.0.1

5 | scikit-image>=0.13.0

6 | opencv>=2.4.11

7 | matplotlib>=1.5.1

8 | tqdm>=4.28.1

9 | jupyter

10 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/base_data_loader.py:

--------------------------------------------------------------------------------

1 |

2 | class BaseDataLoader():

3 | def __init__(self):

4 | pass

5 |

6 | def initialize(self):

7 | pass

8 |

9 | def load_data():

10 | return None

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/train_test_metric.sh:

--------------------------------------------------------------------------------

1 |

2 | TRIAL=${1}

3 | NET=${2}

4 | mkdir checkpoints

5 | mkdir checkpoints/${NET}_${TRIAL}

6 | python ./train.py --use_gpu --net ${NET} --name ${NET}_${TRIAL}

7 | python ./test_dataset_model.py --use_gpu --net ${NET} --model_path ./checkpoints/${NET}_${TRIAL}/latest_net_.pth

8 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/dataset/base_dataset.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data as data

2 |

3 | class BaseDataset(data.Dataset):

4 | def __init__(self):

5 | super(BaseDataset, self).__init__()

6 |

7 | def name(self):

8 | return 'BaseDataset'

9 |

10 | def initialize(self):

11 | pass

12 |

13 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/train_test_metric_tune.sh:

--------------------------------------------------------------------------------

1 |

2 | TRIAL=${1}

3 | NET=${2}

4 | mkdir checkpoints

5 | mkdir checkpoints/${NET}_${TRIAL}_tune

6 | python ./train.py --train_trunk --use_gpu --net ${NET} --name ${NET}_${TRIAL}_tune

7 | python ./test_dataset_model.py --train_trunk --use_gpu --net ${NET} --model_path ./checkpoints/${NET}_${TRIAL}_tune/latest_net_.pth

8 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/train_test_metric_scratch.sh:

--------------------------------------------------------------------------------

1 |

2 | TRIAL=${1}

3 | NET=${2}

4 | mkdir checkpoints

5 | mkdir checkpoints/${NET}_${TRIAL}_scratch

6 | python ./train.py --from_scratch --train_trunk --use_gpu --net ${NET} --name ${NET}_${TRIAL}_scratch

7 | python ./test_dataset_model.py --from_scratch --train_trunk --use_gpu --net ${NET} --model_path ./checkpoints/${NET}_${TRIAL}_scratch/latest_net_.pth

8 |

9 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/data_loader.py:

--------------------------------------------------------------------------------

1 | def CreateDataLoader(datafolder,dataroot='./dataset',dataset_mode='2afc',load_size=64,batch_size=1,serial_batches=True,nThreads=4):

2 | from data.custom_dataset_data_loader import CustomDatasetDataLoader

3 | data_loader = CustomDatasetDataLoader()

4 | # print(data_loader.name())

5 | data_loader.initialize(datafolder,dataroot=dataroot+'/'+dataset_mode,dataset_mode=dataset_mode,load_size=load_size,batch_size=batch_size,serial_batches=serial_batches, nThreads=nThreads)

6 | return data_loader

7 |

--------------------------------------------------------------------------------

/pytorch_msssim/setup.py:

--------------------------------------------------------------------------------

1 | from distutils.core import setup

2 |

3 | setup(

4 | name = 'pytorch_msssim',

5 | packages = ['pytorch_msssim'], # this must be the same as the name above

6 | version = '0.1',

7 | description = 'Differentiable multi-scale structural similarity (MS-SSIM) index',

8 | author = 'Jorge Pessoa',

9 | author_email = 'jpessoa.on@gmail.com',

10 | url = 'https://github.com/jorge-pessoa/pytorch-msssim', # use the URL to the github repo

11 | keywords = ['pytorch', 'image-processing', 'deep-learning', 'ms-ssim'], # arbitrary keywords

12 | classifiers = [],

13 | )

14 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/download_dataset_valonly.sh:

--------------------------------------------------------------------------------

1 |

2 | mkdir dataset

3 |

4 | # JND Dataset

5 | wget https://people.eecs.berkeley.edu/~rich.zhang/projects/2018_perceptual/dataset/jnd.tar.gz -O ./dataset/jnd.tar.gz

6 |

7 | mkdir dataset/jnd

8 | tar -xzf ./dataset/jnd.tar.gz -C ./dataset

9 | rm ./dataset/jnd.tar.gz

10 |

11 | # 2AFC Val set

12 | mkdir dataset/2afc/

13 | wget https://people.eecs.berkeley.edu/~rich.zhang/projects/2018_perceptual/dataset/twoafc_val.tar.gz -O ./dataset/twoafc_val.tar.gz

14 |

15 | mkdir dataset/2afc/val

16 | tar -xzf ./dataset/twoafc_val.tar.gz -C ./dataset/2afc

17 | rm ./dataset/twoafc_val.tar.gz

18 |

--------------------------------------------------------------------------------

/pytorch_ssim/setup.py:

--------------------------------------------------------------------------------

1 | from distutils.core import setup

2 | setup(

3 | name = 'pytorch_ssim',

4 | packages = ['pytorch_ssim'], # this must be the same as the name above

5 | version = '0.1',

6 | description = 'Differentiable structural similarity (SSIM) index',

7 | author = 'Po-Hsun (Evan) Su',

8 | author_email = 'evan.pohsun.su@gmail.com',

9 | url = 'https://github.com/Po-Hsun-Su/pytorch-ssim', # use the URL to the github repo

10 | download_url = 'https://github.com/Po-Hsun-Su/pytorch-ssim/archive/0.1.tar.gz', # I'll explain this in a second

11 | keywords = ['pytorch', 'image-processing', 'deep-learning'], # arbitrary keywords

12 | classifiers = [],

13 | )

14 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/setup.py:

--------------------------------------------------------------------------------

1 |

2 | import setuptools

3 | with open("README.md", "r") as fh:

4 | long_description = fh.read()

5 | setuptools.setup(

6 | name='lpips',

7 | version='0.1.2',

8 | author="Richard Zhang",

9 | author_email="rizhang@adobe.com",

10 | description="LPIPS Similarity metric",

11 | long_description=long_description,

12 | long_description_content_type="text/markdown",

13 | url="https://github.com/richzhang/PerceptualSimilarity",

14 | packages=['lpips'],

15 | package_data={'lpips': ['weights/v0.0/*.pth','weights/v0.1/*.pth']},

16 | include_package_data=True,

17 | classifiers=[

18 | "Programming Language :: Python :: 3",

19 | "License :: OSI Approved :: BSD License",

20 | "Operating System :: OS Independent",

21 | ],

22 | )

23 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/scripts/download_dataset.sh:

--------------------------------------------------------------------------------

1 |

2 | mkdir dataset

3 |

4 | # JND Dataset

5 | wget https://people.eecs.berkeley.edu/~rich.zhang/projects/2018_perceptual/dataset/jnd.tar.gz -O ./dataset/jnd.tar.gz

6 |

7 | mkdir dataset/jnd

8 | tar -xzf ./dataset/jnd.tar.gz -C ./dataset

9 | rm ./dataset/jnd.tar.gz

10 |

11 | # 2AFC Val set

12 | mkdir dataset/2afc/

13 | wget https://people.eecs.berkeley.edu/~rich.zhang/projects/2018_perceptual/dataset/twoafc_val.tar.gz -O ./dataset/twoafc_val.tar.gz

14 |

15 | mkdir dataset/2afc/val

16 | tar -xzf ./dataset/twoafc_val.tar.gz -C ./dataset/2afc

17 | rm ./dataset/twoafc_val.tar.gz

18 |

19 | # 2AFC Train set

20 | mkdir dataset/2afc/

21 | wget https://people.eecs.berkeley.edu/~rich.zhang/projects/2018_perceptual/dataset/twoafc_train.tar.gz -O ./dataset/twoafc_train.tar.gz

22 |

23 | mkdir dataset/2afc/train

24 | tar -xzf ./dataset/twoafc_train.tar.gz -C ./dataset/2afc

25 | rm ./dataset/twoafc_train.tar.gz

26 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips_2imgs.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import lpips

3 |

4 | parser = argparse.ArgumentParser(formatter_class=argparse.ArgumentDefaultsHelpFormatter)

5 | parser.add_argument('-p0','--path0', type=str, default='./imgs/ex_ref.png')

6 | parser.add_argument('-p1','--path1', type=str, default='./imgs/ex_p0.png')

7 | parser.add_argument('-v','--version', type=str, default='0.1')

8 | parser.add_argument('--use_gpu', action='store_true', help='turn on flag to use GPU')

9 |

10 | opt = parser.parse_args()

11 |

12 | ## Initializing the model

13 | loss_fn = lpips.LPIPS(net='alex',version=opt.version)

14 |

15 | if(opt.use_gpu):

16 | loss_fn.cuda()

17 |

18 | # Load images

19 | img0 = lpips.im2tensor(lpips.load_image(opt.path0)) # RGB image from [-1,1]

20 | img1 = lpips.im2tensor(lpips.load_image(opt.path1))

21 |

22 | if(opt.use_gpu):

23 | img0 = img0.cuda()

24 | img1 = img1.cuda()

25 |

26 | # Compute distance

27 | dist01 = loss_fn.forward(img0,img1)

28 | print('Distance: %.3f'%dist01)

29 |

--------------------------------------------------------------------------------

/pytorch_ssim/max_ssim.py:

--------------------------------------------------------------------------------

1 | import pytorch_ssim

2 | import torch

3 | from torch.autograd import Variable

4 | from torch import optim

5 | import cv2

6 | import numpy as np

7 |

8 | npImg1 = cv2.imread("einstein.png")

9 |

10 | img1 = torch.from_numpy(np.rollaxis(npImg1, 2)).float().unsqueeze(0)/255.0

11 | img2 = torch.rand(img1.size())

12 |

13 | if torch.cuda.is_available():

14 | img1 = img1.cuda()

15 | img2 = img2.cuda()

16 |

17 |

18 | img1 = Variable( img1, requires_grad=False)

19 | img2 = Variable( img2, requires_grad = True)

20 |

21 |

22 | # Functional: pytorch_ssim.ssim(img1, img2, window_size = 11, size_average = True)

23 | ssim_value = pytorch_ssim.ssim(img1, img2).data[0]

24 | print("Initial ssim:", ssim_value)

25 |

26 | # Module: pytorch_ssim.SSIM(window_size = 11, size_average = True)

27 | ssim_loss = pytorch_ssim.SSIM()

28 |

29 | optimizer = optim.Adam([img2], lr=0.01)

30 |

31 | while ssim_value < 0.95:

32 | optimizer.zero_grad()

33 | ssim_out = -ssim_loss(img1, img2)

34 | ssim_value = - ssim_out.data[0]

35 | print(ssim_value)

36 | ssim_out.backward()

37 | optimizer.step()

38 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Hariharan N

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips_2dirs.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | import lpips

4 |

5 | parser = argparse.ArgumentParser(formatter_class=argparse.ArgumentDefaultsHelpFormatter)

6 | parser.add_argument('-d0','--dir0', type=str, default='./imgs/ex_dir0')

7 | parser.add_argument('-d1','--dir1', type=str, default='./imgs/ex_dir1')

8 | parser.add_argument('-o','--out', type=str, default='./imgs/example_dists.txt')

9 | parser.add_argument('-v','--version', type=str, default='0.1')

10 | parser.add_argument('--use_gpu', action='store_true', help='turn on flag to use GPU')

11 |

12 | opt = parser.parse_args()

13 |

14 | ## Initializing the model

15 | loss_fn = lpips.LPIPS(net='alex',version=opt.version)

16 | if(opt.use_gpu):

17 | loss_fn.cuda()

18 |

19 | # crawl directories

20 | f = open(opt.out,'w')

21 | files = os.listdir(opt.dir0)

22 |

23 | for file in files:

24 | if(os.path.exists(os.path.join(opt.dir1,file))):

25 | # Load images

26 | img0 = lpips.im2tensor(lpips.load_image(os.path.join(opt.dir0,file))) # RGB image from [-1,1]

27 | img1 = lpips.im2tensor(lpips.load_image(os.path.join(opt.dir1,file)))

28 |

29 | if(opt.use_gpu):

30 | img0 = img0.cuda()

31 | img1 = img1.cuda()

32 |

33 | # Compute distance

34 | dist01 = loss_fn.forward(img0,img1)

35 | print('%s: %.3f'%(file,dist01))

36 | f.writelines('%s: %.6f\n'%(file,dist01))

37 |

38 | f.close()

39 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2018, Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, Oliver Wang

2 | All rights reserved.

3 |

4 | Redistribution and use in source and binary forms, with or without

5 | modification, are permitted provided that the following conditions are met:

6 |

7 | * Redistributions of source code must retain the above copyright notice, this

8 | list of conditions and the following disclaimer.

9 |

10 | * Redistributions in binary form must reproduce the above copyright notice,

11 | this list of conditions and the following disclaimer in the documentation

12 | and/or other materials provided with the distribution.

13 |

14 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

15 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

16 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

17 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

18 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

19 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

20 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

21 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

22 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

23 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

24 |

25 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Bokeh-Rendering-with-Vision-Transformers

2 | Establishing new state-of-the-art results for Bokeh Rendering on the EBB! Dataset. The preprint of our work can be found [here](https://www.techrxiv.org/articles/preprint/Bokeh_Effect_Rendering_with_Vision_Transformers/17714849).

3 |

4 | ### Sample:

5 |

6 |  7 |

8 |

9 | ### References

10 |

11 | Model adapted from https://github.com/isl-org/DPT

12 |

13 | SSIM loss can be found at https://github.com/Po-Hsun-Su/pytorch-ssim

14 |

15 | MSSSIM loss can be found at https://github.com/jorge-pessoa/pytorch-msssim

16 |

17 | LPIPS can be found at https://github.com/richzhang/PerceptualSimilarity

18 |

19 |

20 | ### BRViT Weights

21 |

22 | Our latest model weights can be downloaded from [here](https://drive.google.com/file/d/1V4oX1fARjaIujXQ7Vf4UDwxJhm9ubVG-/view?usp=sharing).

23 |

24 |

25 | ### BRViT Metrics

26 |

27 | Common Metrics with the latest weights for model comparison:

28 |

29 | 1. PSNR: 24.76

30 | 2. SSIM: 0.8904

31 | 3. LPIPS: 0.1924

32 |

33 |

34 | ### Dataset

35 |

36 | Training: [https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/Training.zip](https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/Training.zip)

37 |

38 | Validation: [https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/ValidationBokehFree.zip](https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/ValidationBokehFree.zip)

39 |

40 | Testing: [https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/TestBokehFree.zip](https://data.vision.ee.ethz.ch/timofter/AIM19Bokeh/TestBokehFree.zip)

41 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/util/util.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 |

3 | import numpy as np

4 | from PIL import Image

5 | import numpy as np

6 | import os

7 | import matplotlib.pyplot as plt

8 | import torch

9 |

10 | def load_image(path):

11 | if(path[-3:] == 'dng'):

12 | import rawpy

13 | with rawpy.imread(path) as raw:

14 | img = raw.postprocess()

15 | elif(path[-3:]=='bmp' or path[-3:]=='jpg' or path[-3:]=='png'):

16 | import cv2

17 | return cv2.imread(path)[:,:,::-1]

18 | else:

19 | img = (255*plt.imread(path)[:,:,:3]).astype('uint8')

20 |

21 | return img

22 |

23 | def save_image(image_numpy, image_path, ):

24 | image_pil = Image.fromarray(image_numpy)

25 | image_pil.save(image_path)

26 |

27 | def mkdirs(paths):

28 | if isinstance(paths, list) and not isinstance(paths, str):

29 | for path in paths:

30 | mkdir(path)

31 | else:

32 | mkdir(paths)

33 |

34 | def mkdir(path):

35 | if not os.path.exists(path):

36 | os.makedirs(path)

37 |

38 |

39 | def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

40 | # def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=1.):

41 | image_numpy = image_tensor[0].cpu().float().numpy()

42 | image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

43 | return image_numpy.astype(imtype)

44 |

45 | def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

46 | # def im2tensor(image, imtype=np.uint8, cent=1., factor=1.):

47 | return torch.Tensor((image / factor - cent)

48 | [:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

49 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/test_network.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import lpips

3 | from IPython import embed

4 |

5 | use_gpu = False # Whether to use GPU

6 | spatial = True # Return a spatial map of perceptual distance.

7 |

8 | # Linearly calibrated models (LPIPS)

9 | loss_fn = lpips.LPIPS(net='alex', spatial=spatial) # Can also set net = 'squeeze' or 'vgg'

10 | # loss_fn = lpips.LPIPS(net='alex', spatial=spatial, lpips=False) # Can also set net = 'squeeze' or 'vgg'

11 |

12 | if(use_gpu):

13 | loss_fn.cuda()

14 |

15 | ## Example usage with dummy tensors

16 | dummy_im0 = torch.zeros(1,3,64,64) # image should be RGB, normalized to [-1,1]

17 | dummy_im1 = torch.zeros(1,3,64,64)

18 | if(use_gpu):

19 | dummy_im0 = dummy_im0.cuda()

20 | dummy_im1 = dummy_im1.cuda()

21 | dist = loss_fn.forward(dummy_im0,dummy_im1)

22 |

23 | ## Example usage with images

24 | ex_ref = lpips.im2tensor(lpips.load_image('./imgs/ex_ref.png'))

25 | ex_p0 = lpips.im2tensor(lpips.load_image('./imgs/ex_p0.png'))

26 | ex_p1 = lpips.im2tensor(lpips.load_image('./imgs/ex_p1.png'))

27 | if(use_gpu):

28 | ex_ref = ex_ref.cuda()

29 | ex_p0 = ex_p0.cuda()

30 | ex_p1 = ex_p1.cuda()

31 |

32 | ex_d0 = loss_fn.forward(ex_ref,ex_p0)

33 | ex_d1 = loss_fn.forward(ex_ref,ex_p1)

34 |

35 | if not spatial:

36 | print('Distances: (%.3f, %.3f)'%(ex_d0, ex_d1))

37 | else:

38 | print('Distances: (%.3f, %.3f)'%(ex_d0.mean(), ex_d1.mean())) # The mean distance is approximately the same as the non-spatial distance

39 |

40 | # Visualize a spatially-varying distance map between ex_p0 and ex_ref

41 | import pylab

42 | pylab.imshow(ex_d0[0,0,...].data.cpu().numpy())

43 | pylab.show()

44 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/custom_dataset_data_loader.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data

2 | from data.base_data_loader import BaseDataLoader

3 | import os

4 |

5 | def CreateDataset(dataroots,dataset_mode='2afc',load_size=64,):

6 | dataset = None

7 | if dataset_mode=='2afc': # human judgements

8 | from data.dataset.twoafc_dataset import TwoAFCDataset

9 | dataset = TwoAFCDataset()

10 | elif dataset_mode=='jnd': # human judgements

11 | from data.dataset.jnd_dataset import JNDDataset

12 | dataset = JNDDataset()

13 | else:

14 | raise ValueError("Dataset Mode [%s] not recognized."%self.dataset_mode)

15 |

16 | dataset.initialize(dataroots,load_size=load_size)

17 | return dataset

18 |

19 | class CustomDatasetDataLoader(BaseDataLoader):

20 | def name(self):

21 | return 'CustomDatasetDataLoader'

22 |

23 | def initialize(self, datafolders, dataroot='./dataset',dataset_mode='2afc',load_size=64,batch_size=1,serial_batches=True, nThreads=1):

24 | BaseDataLoader.initialize(self)

25 | if(not isinstance(datafolders,list)):

26 | datafolders = [datafolders,]

27 | data_root_folders = [os.path.join(dataroot,datafolder) for datafolder in datafolders]

28 | self.dataset = CreateDataset(data_root_folders,dataset_mode=dataset_mode,load_size=load_size)

29 | self.dataloader = torch.utils.data.DataLoader(

30 | self.dataset,

31 | batch_size=batch_size,

32 | shuffle=not serial_batches,

33 | num_workers=int(nThreads))

34 |

35 | def load_data(self):

36 | return self.dataloader

37 |

38 | def __len__(self):

39 | return len(self.dataset)

40 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips_loss.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | from torch.autograd import Variable

4 | import matplotlib.pyplot as plt

5 | import argparse

6 | import lpips

7 |

8 | parser = argparse.ArgumentParser(formatter_class=argparse.ArgumentDefaultsHelpFormatter)

9 | parser.add_argument('--ref_path', type=str, default='./imgs/ex_ref.png')

10 | parser.add_argument('--pred_path', type=str, default='./imgs/ex_p1.png')

11 | parser.add_argument('--use_gpu', action='store_true', help='turn on flag to use GPU')

12 |

13 | opt = parser.parse_args()

14 |

15 | loss_fn = lpips.LPIPS(net='vgg')

16 | if(opt.use_gpu):

17 | loss_fn.cuda()

18 |

19 | ref = lpips.im2tensor(lpips.load_image(opt.ref_path))

20 | pred = Variable(lpips.im2tensor(lpips.load_image(opt.pred_path)), requires_grad=True)

21 | if(opt.use_gpu):

22 | with torch.no_grad():

23 | ref = ref.cuda()

24 | pred = pred.cuda()

25 |

26 | optimizer = torch.optim.Adam([pred,], lr=1e-3, betas=(0.9, 0.999))

27 |

28 | plt.ion()

29 | fig = plt.figure(1)

30 | ax = fig.add_subplot(131)

31 | ax.imshow(lpips.tensor2im(ref))

32 | ax.set_title('target')

33 | ax = fig.add_subplot(133)

34 | ax.imshow(lpips.tensor2im(pred.data))

35 | ax.set_title('initialization')

36 |

37 | for i in range(1000):

38 | dist = loss_fn.forward(pred, ref)

39 | optimizer.zero_grad()

40 | dist.backward()

41 | optimizer.step()

42 | pred.data = torch.clamp(pred.data, -1, 1)

43 |

44 | if i % 10 == 0:

45 | print('iter %d, dist %.3g' % (i, dist.view(-1).data.cpu().numpy()[0]))

46 | pred.data = torch.clamp(pred.data, -1, 1)

47 | pred_img = lpips.tensor2im(pred.data)

48 |

49 | ax = fig.add_subplot(132)

50 | ax.imshow(pred_img)

51 | ax.set_title('iter %d, dist %.3f' % (i, dist.view(-1).data.cpu().numpy()[0]))

52 | plt.pause(5e-2)

53 | # plt.imsave('imgs_saved/%04d.jpg'%i,pred_img)

54 |

55 |

56 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM nvidia/cuda:9.0-base-ubuntu16.04

2 |

3 | LABEL maintainer="Seyoung Park "

4 |

5 | # This Dockerfile is forked from Tensorflow Dockerfile

6 |

7 | # Pick up some PyTorch gpu dependencies

8 | RUN apt-get update && apt-get install -y --no-install-recommends \

9 | build-essential \

10 | cuda-command-line-tools-9-0 \

11 | cuda-cublas-9-0 \

12 | cuda-cufft-9-0 \

13 | cuda-curand-9-0 \

14 | cuda-cusolver-9-0 \

15 | cuda-cusparse-9-0 \

16 | curl \

17 | libcudnn7=7.1.4.18-1+cuda9.0 \

18 | libfreetype6-dev \

19 | libhdf5-serial-dev \

20 | libpng12-dev \

21 | libzmq3-dev \

22 | pkg-config \

23 | python \

24 | python-dev \

25 | rsync \

26 | software-properties-common \

27 | unzip \

28 | && \

29 | apt-get clean && \

30 | rm -rf /var/lib/apt/lists/*

31 |

32 |

33 | # Install miniconda

34 | RUN apt-get update && apt-get install -y --no-install-recommends \

35 | wget && \

36 | MINICONDA="Miniconda3-latest-Linux-x86_64.sh" && \

37 | wget --quiet https://repo.continuum.io/miniconda/$MINICONDA && \

38 | bash $MINICONDA -b -p /miniconda && \

39 | rm -f $MINICONDA

40 | ENV PATH /miniconda/bin:$PATH

41 |

42 | # Install PyTorch

43 | RUN conda update -n base conda && \

44 | conda install pytorch torchvision cuda90 -c pytorch

45 |

46 | # Install PerceptualSimilarity dependencies

47 | RUN conda install numpy scipy jupyter matplotlib && \

48 | conda install -c conda-forge scikit-image && \

49 | apt-get install -y python-qt4 && \

50 | pip install opencv-python

51 |

52 | # For CUDA profiling, TensorFlow requires CUPTI. Maybe PyTorch needs this too.

53 | ENV LD_LIBRARY_PATH /usr/local/cuda/extras/CUPTI/lib64:$LD_LIBRARY_PATH

54 |

55 | # IPython

56 | EXPOSE 8888

57 |

58 | WORKDIR "/notebooks"

59 |

60 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/lpips_1dir_allpairs.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | import lpips

4 | import numpy as np

5 |

6 | parser = argparse.ArgumentParser(formatter_class=argparse.ArgumentDefaultsHelpFormatter)

7 | parser.add_argument('-d','--dir', type=str, default='./imgs/ex_dir_pair')

8 | parser.add_argument('-o','--out', type=str, default='./imgs/example_dists.txt')

9 | parser.add_argument('-v','--version', type=str, default='0.1')

10 | parser.add_argument('--all-pairs', action='store_true', help='turn on to test all N(N-1)/2 pairs, leave off to just do consecutive pairs (N-1)')

11 | parser.add_argument('-N', type=int, default=None)

12 | parser.add_argument('--use_gpu', action='store_true', help='turn on flag to use GPU')

13 |

14 | opt = parser.parse_args()

15 |

16 | ## Initializing the model

17 | loss_fn = lpips.LPIPS(net='alex',version=opt.version)

18 | if(opt.use_gpu):

19 | loss_fn.cuda()

20 |

21 | # crawl directories

22 | f = open(opt.out,'w')

23 | files = os.listdir(opt.dir)

24 | if(opt.N is not None):

25 | files = files[:opt.N]

26 | F = len(files)

27 |

28 | dists = []

29 | for (ff,file) in enumerate(files[:-1]):

30 | img0 = lpips.im2tensor(lpips.load_image(os.path.join(opt.dir,file))) # RGB image from [-1,1]

31 | if(opt.use_gpu):

32 | img0 = img0.cuda()

33 |

34 | if(opt.all_pairs):

35 | files1 = files[ff+1:]

36 | else:

37 | files1 = [files[ff+1],]

38 |

39 | for file1 in files1:

40 | img1 = lpips.im2tensor(lpips.load_image(os.path.join(opt.dir,file1)))

41 |

42 | if(opt.use_gpu):

43 | img1 = img1.cuda()

44 |

45 | # Compute distance

46 | dist01 = loss_fn.forward(img0,img1)

47 | print('(%s,%s): %.3f'%(file,file1,dist01))

48 | f.writelines('(%s,%s): %.6f\n'%(file,file1,dist01))

49 |

50 | dists.append(dist01.item())

51 |

52 | avg_dist = np.mean(np.array(dists))

53 | stderr_dist = np.std(np.array(dists))/np.sqrt(len(dists))

54 |

55 | print('Avg: %.5f +/- %.5f'%(avg_dist,stderr_dist))

56 | f.writelines('Avg: %.6f +/- %.6f'%(avg_dist,stderr_dist))

57 |

58 | f.close()

59 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/dataset/jnd_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | import torchvision.transforms as transforms

3 | from data.dataset.base_dataset import BaseDataset

4 | from data.image_folder import make_dataset

5 | from PIL import Image

6 | import numpy as np

7 | import torch

8 | from IPython import embed

9 |

10 | class JNDDataset(BaseDataset):

11 | def initialize(self, dataroot, load_size=64):

12 | self.root = dataroot

13 | self.load_size = load_size

14 |

15 | self.dir_p0 = os.path.join(self.root, 'p0')

16 | self.p0_paths = make_dataset(self.dir_p0)

17 | self.p0_paths = sorted(self.p0_paths)

18 |

19 | self.dir_p1 = os.path.join(self.root, 'p1')

20 | self.p1_paths = make_dataset(self.dir_p1)

21 | self.p1_paths = sorted(self.p1_paths)

22 |

23 | transform_list = []

24 | transform_list.append(transforms.Scale(load_size))

25 | transform_list += [transforms.ToTensor(),

26 | transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5))]

27 |

28 | self.transform = transforms.Compose(transform_list)

29 |

30 | # judgement directory

31 | self.dir_S = os.path.join(self.root, 'same')

32 | self.same_paths = make_dataset(self.dir_S,mode='np')

33 | self.same_paths = sorted(self.same_paths)

34 |

35 | def __getitem__(self, index):

36 | p0_path = self.p0_paths[index]

37 | p0_img_ = Image.open(p0_path).convert('RGB')

38 | p0_img = self.transform(p0_img_)

39 |

40 | p1_path = self.p1_paths[index]

41 | p1_img_ = Image.open(p1_path).convert('RGB')

42 | p1_img = self.transform(p1_img_)

43 |

44 | same_path = self.same_paths[index]

45 | same_img = np.load(same_path).reshape((1,1,1,)) # [0,1]

46 |

47 | same_img = torch.FloatTensor(same_img)

48 |

49 | return {'p0': p0_img, 'p1': p1_img, 'same': same_img,

50 | 'p0_path': p0_path, 'p1_path': p1_path, 'same_path': same_path}

51 |

52 | def __len__(self):

53 | return len(self.p0_paths)

54 |

--------------------------------------------------------------------------------

/pytorch_ssim/README.md:

--------------------------------------------------------------------------------

1 | # pytorch-ssim

2 |

3 | ### Differentiable structural similarity (SSIM) index.

4 |

5 |

6 | ## Installation

7 | 1. Clone this repo.

8 | 2. Copy "pytorch_ssim" folder in your project.

9 |

10 | ## Example

11 | ### basic usage

12 | ```python

13 | import pytorch_ssim

14 | import torch

15 | from torch.autograd import Variable

16 |

17 | img1 = Variable(torch.rand(1, 1, 256, 256))

18 | img2 = Variable(torch.rand(1, 1, 256, 256))

19 |

20 | if torch.cuda.is_available():

21 | img1 = img1.cuda()

22 | img2 = img2.cuda()

23 |

24 | print(pytorch_ssim.ssim(img1, img2))

25 |

26 | ssim_loss = pytorch_ssim.SSIM(window_size = 11)

27 |

28 | print(ssim_loss(img1, img2))

29 |

30 | ```

31 | ### maximize ssim

32 | ```python

33 | import pytorch_ssim

34 | import torch

35 | from torch.autograd import Variable

36 | from torch import optim

37 | import cv2

38 | import numpy as np

39 |

40 | npImg1 = cv2.imread("einstein.png")

41 |

42 | img1 = torch.from_numpy(np.rollaxis(npImg1, 2)).float().unsqueeze(0)/255.0

43 | img2 = torch.rand(img1.size())

44 |

45 | if torch.cuda.is_available():

46 | img1 = img1.cuda()

47 | img2 = img2.cuda()

48 |

49 |

50 | img1 = Variable( img1, requires_grad=False)

51 | img2 = Variable( img2, requires_grad = True)

52 |

53 |

54 | # Functional: pytorch_ssim.ssim(img1, img2, window_size = 11, size_average = True)

55 | ssim_value = pytorch_ssim.ssim(img1, img2).data[0]

56 | print("Initial ssim:", ssim_value)

57 |

58 | # Module: pytorch_ssim.SSIM(window_size = 11, size_average = True)

59 | ssim_loss = pytorch_ssim.SSIM()

60 |

61 | optimizer = optim.Adam([img2], lr=0.01)

62 |

63 | while ssim_value < 0.95:

64 | optimizer.zero_grad()

65 | ssim_out = -ssim_loss(img1, img2)

66 | ssim_value = - ssim_out.data[0]

67 | print(ssim_value)

68 | ssim_out.backward()

69 | optimizer.step()

70 |

71 | ```

72 |

73 | ## Reference

74 | https://ece.uwaterloo.ca/~z70wang/research/ssim/

75 |

--------------------------------------------------------------------------------

/pytorch_msssim/max_ssim.py:

--------------------------------------------------------------------------------

1 | from pytorch_msssim import msssim, ssim

2 | import torch

3 | from torch import optim

4 |

5 | from PIL import Image

6 | from torchvision.transforms.functional import to_tensor

7 | import numpy as np

8 |

9 | # display = True requires matplotlib

10 | display = True

11 | metric = 'MSSSIM' # MSSSIM or SSIM

12 | device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

13 |

14 | def post_process(img):

15 | img = img.detach().cpu().numpy()

16 | img = np.transpose(np.squeeze(img, axis=0), (1, 2, 0))

17 | img = np.squeeze(img) # works if grayscale

18 | return img

19 |

20 | # Preprocessing

21 | img1 = to_tensor(Image.open('einstein.png')).unsqueeze(0).type(torch.FloatTensor)

22 |

23 | img2 = torch.rand(img1.size())

24 | img2 = torch.nn.functional.sigmoid(img2) # use sigmoid to clamp between [0, 1]

25 |

26 | img1 = img1.to(device)

27 | img2 = img2.to(device)

28 |

29 | img1.requires_grad = False

30 | img2.requires_grad = True

31 |

32 | loss_func = msssim if metric == 'MSSSIM' else ssim

33 |

34 | value = loss_func(img1, img2)

35 | print("Initial %s: %.5f" % (metric, value.item()))

36 |

37 | optimizer = optim.Adam([img2], lr=0.01)

38 |

39 | # MSSSIM yields higher values for worse results, because noise is removed in scales with lower resolutions

40 | threshold = 0.999 if metric == 'MSSSIM' else 0.9

41 |

42 | while value < threshold:

43 | optimizer.zero_grad()

44 | msssim_out = -loss_func(img1, img2)

45 | value = -msssim_out.item()

46 | print('Current MS-SSIM = %.5f' % value)

47 | msssim_out.backward()

48 | optimizer.step()

49 |

50 | if display:

51 | # Post processing

52 | img1np = post_process(img1)

53 | img2 = torch.nn.functional.sigmoid(img2)

54 | img2np = post_process(img2)

55 | import matplotlib.pyplot as plt

56 | cmap = 'gray' if len(img1np.shape) == 2 else None

57 | plt.subplot(1, 2, 1)

58 | plt.imshow(img1np, cmap=cmap)

59 | plt.title('Original')

60 | plt.subplot(1, 2, 2)

61 | plt.imshow(img2np, cmap=cmap)

62 | plt.title('Generated, {:s}: {:.3f}'.format(metric, value))

63 | plt.show()

64 |

--------------------------------------------------------------------------------

/evaluate.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.nn.functional as F

4 |

5 | import timm

6 | import types

7 | import math

8 | import numpy as np

9 | from PIL import Image

10 | import PIL.Image as pil

11 | from torchvision import transforms, datasets

12 | from torch.utils.data import Dataset, DataLoader

13 | import torch.utils.data as data

14 | import PerceptualSimilarity.lpips.lpips as lpips

15 | import glob

16 | import gc

17 | import pytorch_msssim.pytorch_msssim as pytorch_msssim

18 | from torch.cuda.amp import autocast

19 | from model import BRViT

20 | import matplotlib.pyplot as plt

21 | from pytorch_ssim.pytorch_ssim import ssim

22 | from torchvision.utils import save_image

23 | from PerceptualSimilarity.util import util

24 |

25 |

26 | import pandas as pd

27 | from tqdm import tqdm

28 | import sys

29 | import cv2

30 |

31 | from skimage.metrics import peak_signal_noise_ratio as compare_psnr

32 | from skimage.metrics import structural_similarity as compare_ssim

33 |

34 | batch_size = 1

35 | feed_width = 1536

36 | feed_height = 1024

37 |

38 |

39 |

40 | def evaluate():

41 | tot_lpips_loss = 0.0

42 | total_psnr = 0.0

43 | total_ssim = 0.0

44 |

45 | device = torch.device("cuda")

46 | loss_fn = lpips.LPIPS(net='alex').to(device)

47 |

48 | for i in tqdm(range(294)):

49 | csv_file = "Bokeh_Data/test.csv"

50 | root_dir = "."

51 | dataa = pd.read_csv(csv_file)

52 | idx = i

53 |

54 | img0 = util.im2tensor(util.load_image(root_dir + dataa.iloc[idx, 0][1:])) # RGB image from [-1,1]

55 | img1 = util.im2tensor(util.load_image(f"Results/{4400+i}.png"))

56 | img0 = img0.to(device)

57 | img1 = img1.to(device)

58 |

59 | lpips_loss = loss_fn.forward(img0, img1)

60 | tot_lpips_loss += lpips_loss.item()

61 |

62 |

63 | total_psnr += compare_psnr(I0,I1)

64 | total_ssim += compare_ssim(I0, I1, multichannel=True)

65 |

66 |

67 | print("TOTAL LPIPS:",":", tot_lpips_loss / 294)

68 | print("TOTAL PSNR",":", total_psnr / 294)

69 | print("TOTAL SSIM",":", total_ssim / 294)

70 |

71 |

72 | if __name__ == "__main__":

73 | evaluate()

74 |

75 |

76 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/util/html.py:

--------------------------------------------------------------------------------

1 | import dominate

2 | from dominate.tags import *

3 | import os

4 |

5 |

6 | class HTML:

7 | def __init__(self, web_dir, title, image_subdir='', reflesh=0):

8 | self.title = title

9 | self.web_dir = web_dir

10 | # self.img_dir = os.path.join(self.web_dir, )

11 | self.img_subdir = image_subdir

12 | self.img_dir = os.path.join(self.web_dir, image_subdir)

13 | if not os.path.exists(self.web_dir):

14 | os.makedirs(self.web_dir)

15 | if not os.path.exists(self.img_dir):

16 | os.makedirs(self.img_dir)

17 | # print(self.img_dir)

18 |

19 | self.doc = dominate.document(title=title)

20 | if reflesh > 0:

21 | with self.doc.head:

22 | meta(http_equiv="reflesh", content=str(reflesh))

23 |

24 | def get_image_dir(self):

25 | return self.img_dir

26 |

27 | def add_header(self, str):

28 | with self.doc:

29 | h3(str)

30 |

31 | def add_table(self, border=1):

32 | self.t = table(border=border, style="table-layout: fixed;")

33 | self.doc.add(self.t)

34 |

35 | def add_images(self, ims, txts, links, width=400):

36 | self.add_table()

37 | with self.t:

38 | with tr():

39 | for im, txt, link in zip(ims, txts, links):

40 | with td(style="word-wrap: break-word;", halign="center", valign="top"):

41 | with p():

42 | with a(href=os.path.join(link)):

43 | img(style="width:%dpx" % width, src=os.path.join(im))

44 | br()

45 | p(txt)

46 |

47 | def save(self,file='index'):

48 | html_file = '%s/%s.html' % (self.web_dir,file)

49 | f = open(html_file, 'wt')

50 | f.write(self.doc.render())

51 | f.close()

52 |

53 |

54 | if __name__ == '__main__':

55 | html = HTML('web/', 'test_html')

56 | html.add_header('hello world')

57 |

58 | ims = []

59 | txts = []

60 | links = []

61 | for n in range(4):

62 | ims.append('image_%d.png' % n)

63 | txts.append('text_%d' % n)

64 | links.append('image_%d.png' % n)

65 | html.add_images(ims, txts, links)

66 | html.save()

67 |

--------------------------------------------------------------------------------

/store_results.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.nn.functional as F

4 |

5 | import timm

6 | import types

7 | import math

8 | import numpy as np

9 | from PIL import Image

10 | import PIL.Image as pil

11 | from torchvision import transforms, datasets

12 | from torch.utils.data import Dataset, DataLoader

13 | import torch.utils.data as data

14 | import PerceptualSimilarity.lpips.lpips as lpips

15 | import glob

16 | import gc

17 | import pytorch_msssim.pytorch_msssim as pytorch_msssim

18 | from torch.cuda.amp import autocast

19 | from model import BRViT

20 | import matplotlib.pyplot as plt

21 | from pytorch_ssim.pytorch_ssim import ssim

22 | from torchvision.utils import save_image

23 |

24 | import pandas as pd

25 | from tqdm import tqdm

26 | import sys

27 | import cv2

28 | import time

29 | import scipy.misc

30 |

31 | from skimage.metrics import peak_signal_noise_ratio as compare_psnr

32 | from skimage.metrics import structural_similarity as compare_ssim

33 |

34 |

35 | feed_width = 1536

36 | feed_height = 1024

37 |

38 |

39 | #to store results

40 | def store_results():

41 | device = torch.device("cuda")

42 |

43 | model = BRViT().to(device)

44 |

45 | PATH = "weights/BRViT_53_0.pt"

46 | model.load_state_dict(torch.load(PATH), strict=True)

47 |

48 | with torch.no_grad():

49 | for i in tqdm(range(4400,4694)):

50 | csv_file = "Bokeh_Data/test.csv"

51 | data = pd.read_csv(csv_file)

52 | root_dir = "."

53 | idx = i - 4400

54 | bok = pil.open(root_dir + data.iloc[idx, 0][1:]).convert('RGB')

55 | input_image = pil.open(root_dir + data.iloc[idx, 1][1:]).convert('RGB')

56 | original_width, original_height = input_image.size

57 |

58 | org_image = input_image

59 | org_image = transforms.ToTensor()(org_image).unsqueeze(0)

60 |

61 | input_image = input_image.resize((feed_width, feed_height), pil.LANCZOS)

62 | input_image = transforms.ToTensor()(input_image).unsqueeze(0)

63 |

64 | # prediction

65 | org_image = org_image.to(device)

66 | input_image = input_image.to(device)

67 |

68 | bok_pred = model(input_image)

69 |

70 | bok_pred = F.interpolate(bok_pred,(original_height,original_width),mode = 'bilinear')

71 |

72 | save_image(bok_pred,'Results/'+ str(i) +'.png')

73 |

74 |

75 |

76 | if __name__ == "__main__":

77 | store_results()

78 |

79 |

80 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/image_folder.py:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Code from

3 | # https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py

4 | # Modified the original code so that it also loads images from the current

5 | # directory as well as the subdirectories

6 | ################################################################################

7 |

8 | import torch.utils.data as data

9 |

10 | from PIL import Image

11 | import os

12 | import os.path

13 |

14 | IMG_EXTENSIONS = [

15 | '.jpg', '.JPG', '.jpeg', '.JPEG',

16 | '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',

17 | ]

18 |

19 | NP_EXTENSIONS = ['.npy',]

20 |

21 | def is_image_file(filename, mode='img'):

22 | if(mode=='img'):

23 | return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

24 | elif(mode=='np'):

25 | return any(filename.endswith(extension) for extension in NP_EXTENSIONS)

26 |

27 | def make_dataset(dirs, mode='img'):

28 | if(not isinstance(dirs,list)):

29 | dirs = [dirs,]

30 |

31 | images = []

32 | for dir in dirs:

33 | assert os.path.isdir(dir), '%s is not a valid directory' % dir

34 | for root, _, fnames in sorted(os.walk(dir)):

35 | for fname in fnames:

36 | if is_image_file(fname, mode=mode):

37 | path = os.path.join(root, fname)

38 | images.append(path)

39 |

40 | # print("Found %i images in %s"%(len(images),root))

41 | return images

42 |

43 | def default_loader(path):

44 | return Image.open(path).convert('RGB')

45 |

46 | class ImageFolder(data.Dataset):

47 | def __init__(self, root, transform=None, return_paths=False,

48 | loader=default_loader):

49 | imgs = make_dataset(root)

50 | if len(imgs) == 0:

51 | raise(RuntimeError("Found 0 images in: " + root + "\n"

52 | "Supported image extensions are: " + ",".join(IMG_EXTENSIONS)))

53 |

54 | self.root = root

55 | self.imgs = imgs

56 | self.transform = transform

57 | self.return_paths = return_paths

58 | self.loader = loader

59 |

60 | def __getitem__(self, index):

61 | path = self.imgs[index]

62 | img = self.loader(path)

63 | if self.transform is not None:

64 | img = self.transform(img)

65 | if self.return_paths:

66 | return img, path

67 | else:

68 | return img

69 |

70 | def __len__(self):

71 | return len(self.imgs)

72 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/data/dataset/twoafc_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | import torchvision.transforms as transforms

3 | from data.dataset.base_dataset import BaseDataset

4 | from data.image_folder import make_dataset

5 | from PIL import Image

6 | import numpy as np

7 | import torch

8 | # from IPython import embed

9 |

10 | class TwoAFCDataset(BaseDataset):

11 | def initialize(self, dataroots, load_size=64):

12 | if(not isinstance(dataroots,list)):

13 | dataroots = [dataroots,]

14 | self.roots = dataroots

15 | self.load_size = load_size

16 |

17 | # image directory

18 | self.dir_ref = [os.path.join(root, 'ref') for root in self.roots]

19 | self.ref_paths = make_dataset(self.dir_ref)

20 | self.ref_paths = sorted(self.ref_paths)

21 |

22 | self.dir_p0 = [os.path.join(root, 'p0') for root in self.roots]

23 | self.p0_paths = make_dataset(self.dir_p0)

24 | self.p0_paths = sorted(self.p0_paths)

25 |

26 | self.dir_p1 = [os.path.join(root, 'p1') for root in self.roots]

27 | self.p1_paths = make_dataset(self.dir_p1)

28 | self.p1_paths = sorted(self.p1_paths)

29 |

30 | transform_list = []

31 | transform_list.append(transforms.Scale(load_size))

32 | transform_list += [transforms.ToTensor(),

33 | transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5))]

34 |

35 | self.transform = transforms.Compose(transform_list)

36 |

37 | # judgement directory

38 | self.dir_J = [os.path.join(root, 'judge') for root in self.roots]

39 | self.judge_paths = make_dataset(self.dir_J,mode='np')

40 | self.judge_paths = sorted(self.judge_paths)

41 |

42 | def __getitem__(self, index):

43 | p0_path = self.p0_paths[index]

44 | p0_img_ = Image.open(p0_path).convert('RGB')

45 | p0_img = self.transform(p0_img_)

46 |

47 | p1_path = self.p1_paths[index]

48 | p1_img_ = Image.open(p1_path).convert('RGB')

49 | p1_img = self.transform(p1_img_)

50 |

51 | ref_path = self.ref_paths[index]

52 | ref_img_ = Image.open(ref_path).convert('RGB')

53 | ref_img = self.transform(ref_img_)

54 |

55 | judge_path = self.judge_paths[index]

56 | # judge_img = (np.load(judge_path)*2.-1.).reshape((1,1,1,)) # [-1,1]

57 | judge_img = np.load(judge_path).reshape((1,1,1,)) # [0,1]

58 |

59 | judge_img = torch.FloatTensor(judge_img)

60 |

61 | return {'p0': p0_img, 'p1': p1_img, 'ref': ref_img, 'judge': judge_img,

62 | 'p0_path': p0_path, 'p1_path': p1_path, 'ref_path': ref_path, 'judge_path': judge_path}

63 |

64 | def __len__(self):

65 | return len(self.p0_paths)

66 |

--------------------------------------------------------------------------------

/pytorch_msssim/README.md:

--------------------------------------------------------------------------------

1 | # pytorch-msssim

2 |

3 | ### Differentiable Multi-Scale Structural Similarity (SSIM) index

4 |

5 | This small utiliy provides a differentiable MS-SSIM implementation for PyTorch based on Po Hsun Su's implementation of SSIM @ https://github.com/Po-Hsun-Su/pytorch-ssim.

6 | At the moment only the product method for MS-SSIM is supported.

7 |

8 | ## Installation

9 |

10 | Master branch now only supports PyTorch 0.4 or higher. All development occurs in the dev branch (`git checkout dev` after cloning the repository to get the latest development version).

11 |

12 | To install the current version of pytorch_mssim:

13 |

14 | 1. Clone this repo.

15 | 2. Go to the repo directory.

16 | 3. Run `python setup.py install`

17 |

18 | or

19 |

20 | 1. Clone this repo.

21 | 2. Copy "pytorch_msssim" folder in your project.

22 |

23 | To install a version of of pytorch_mssim that runs in PyTorch 0.3.1 or lower use the tag checkpoint-0.3. To do so, run the following commands after cloning the repository:

24 |

25 | ```

26 | git fetch --all --tags

27 | git checkout tags/checkpoint-0.3

28 | ```

29 |

30 | ## Example

31 |

32 | ### Basic usage

33 | ```python

34 | import pytorch_msssim

35 | import torch

36 |

37 | device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

38 | m = pytorch_msssim.MSSSIM()

39 |

40 | img1 = torch.rand(1, 1, 256, 256)

41 | img2 = torch.rand(1, 1, 256, 256)

42 |

43 | print(pytorch_msssim.msssim(img1, img2))

44 | print(m(img1, img2))

45 |

46 |

47 | ```

48 |

49 | ### Training

50 |

51 | For a detailed example on how to use msssim for optimization, take a look at the file max_ssim.py.

52 |

53 |

54 | ### Stability and normalization

55 |

56 | MS-SSIM is a particularly unstable metric when used for some architectures and may result in NaN values early on during the training. The msssim method provides a normalize attribute to help in these cases. There are three possible values. We recommend using the value normalized="relu" when training.

57 |

58 | - None : no normalization method is used and should be used for evaluation

59 | - "relu" : the `ssim`and `mc` values of each level during the calculation are rectified using a relu ensuring that negative values are zeroed

60 | - "simple" : the `ssim`result of each iteration is averaged with 1 for an expected lower bound of 0.5 - should ONLY be used for the initial iterations of your training or when averaging below 0.6 normalized score

61 |

62 | Currently and due to backward compability, a value of True will equal the "simple" normalization.

63 |

64 | ## Reference

65 | https://ece.uwaterloo.ca/~z70wang/research/ssim/

66 |

67 | https://github.com/Po-Hsun-Su/pytorch-ssim

68 |

69 | Thanks to z70wang for proposing MS-SSIM and providing the initial implementation, and Po-Hsun-Su for the initial differentiable SSIM implementation for Pytorch.

70 |

--------------------------------------------------------------------------------

/pytorch_ssim/pytorch_ssim/__init__.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn.functional as F

3 | from torch.autograd import Variable

4 | import numpy as np

5 | from math import exp

6 |

7 | def gaussian(window_size, sigma):

8 | gauss = torch.Tensor([exp(-(x - window_size//2)**2/float(2*sigma**2)) for x in range(window_size)])

9 | return gauss/gauss.sum()

10 |

11 | def create_window(window_size, channel):

12 | _1D_window = gaussian(window_size, 1.5).unsqueeze(1)

13 | _2D_window = _1D_window.mm(_1D_window.t()).float().unsqueeze(0).unsqueeze(0)

14 | window = Variable(_2D_window.expand(channel, 1, window_size, window_size).contiguous())

15 | return window

16 |

17 | def _ssim(img1, img2, window, window_size, channel, size_average = True):

18 | mu1 = F.conv2d(img1, window, padding = window_size//2, groups = channel)

19 | mu2 = F.conv2d(img2, window, padding = window_size//2, groups = channel)

20 |

21 | mu1_sq = mu1.pow(2)

22 | mu2_sq = mu2.pow(2)

23 | mu1_mu2 = mu1*mu2

24 |

25 | sigma1_sq = F.conv2d(img1*img1, window, padding = window_size//2, groups = channel) - mu1_sq

26 | sigma2_sq = F.conv2d(img2*img2, window, padding = window_size//2, groups = channel) - mu2_sq

27 | sigma12 = F.conv2d(img1*img2, window, padding = window_size//2, groups = channel) - mu1_mu2

28 |

29 | C1 = 0.01**2

30 | C2 = 0.03**2

31 |

32 | ssim_map = ((2*mu1_mu2 + C1)*(2*sigma12 + C2))/((mu1_sq + mu2_sq + C1)*(sigma1_sq + sigma2_sq + C2))

33 |

34 | if size_average:

35 | return ssim_map.mean()

36 | else:

37 | return ssim_map.mean(1).mean(1).mean(1)

38 |

39 | class SSIM(torch.nn.Module):

40 | def __init__(self, window_size = 11, size_average = True):

41 | super(SSIM, self).__init__()

42 | self.window_size = window_size

43 | self.size_average = size_average

44 | self.channel = 1

45 | self.window = create_window(window_size, self.channel)

46 |

47 | def forward(self, img1, img2):

48 | (_, channel, _, _) = img1.size()

49 |

50 | if channel == self.channel and self.window.data.type() == img1.data.type():

51 | window = self.window

52 | else:

53 | window = create_window(self.window_size, channel)

54 |

55 | if img1.is_cuda:

56 | window = window.cuda(img1.get_device())

57 | window = window.type_as(img1)

58 |

59 | self.window = window

60 | self.channel = channel

61 |

62 |

63 | return _ssim(img1, img2, window, self.window_size, channel, self.size_average)

64 |

65 | def ssim(img1, img2, window_size = 11, size_average = True):

66 | (_, channel, _, _) = img1.size()

67 | window = create_window(window_size, channel)

68 |

69 | if img1.is_cuda:

70 | window = window.cuda(img1.get_device())

71 | window = window.type_as(img1)

72 |

73 | return _ssim(img1, img2, window, window_size, channel, size_average)

74 |

--------------------------------------------------------------------------------

/PerceptualSimilarity/test_dataset_model.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import lpips

3 | from data import data_loader as dl

4 | import argparse

5 | from IPython import embed

6 |

7 | parser = argparse.ArgumentParser()

8 | parser.add_argument('--dataset_mode', type=str, default='2afc', help='[2afc,jnd]')

9 | parser.add_argument('--datasets', type=str, nargs='+', default=['val/traditional','val/cnn','val/superres','val/deblur','val/color','val/frameinterp'], help='datasets to test - for jnd mode: [val/traditional],[val/cnn]; for 2afc mode: [train/traditional],[train/cnn],[train/mix],[val/traditional],[val/cnn],[val/color],[val/deblur],[val/frameinterp],[val/superres]')

10 | parser.add_argument('--model', type=str, default='lpips', help='distance model type [lpips] for linearly calibrated net, [baseline] for off-the-shelf network, [l2] for euclidean distance, [ssim] for Structured Similarity Image Metric')

11 | parser.add_argument('--net', type=str, default='alex', help='[squeeze], [alex], or [vgg] for network architectures')

12 | parser.add_argument('--colorspace', type=str, default='Lab', help='[Lab] or [RGB] for colorspace to use for l2, ssim model types')

13 | parser.add_argument('--batch_size', type=int, default=50, help='batch size to test image patches in')

14 | parser.add_argument('--use_gpu', action='store_true', help='turn on flag to use GPU')

15 | parser.add_argument('--gpu_ids', type=int, nargs='+', default=[0], help='gpus to use')

16 | parser.add_argument('--nThreads', type=int, default=4, help='number of threads to use in data loader')

17 |

18 | parser.add_argument('--model_path', type=str, default=None, help='location of model, will default to ./weights/v[version]/[net_name].pth')

19 |

20 | parser.add_argument('--from_scratch', action='store_true', help='model was initialized from scratch')

21 | parser.add_argument('--train_trunk', action='store_true', help='model trunk was trained/tuned')

22 | parser.add_argument('--version', type=str, default='0.1', help='v0.1 is latest, v0.0 was original release')

23 |

24 | opt = parser.parse_args()

25 | if(opt.model in ['l2','ssim']):

26 | opt.batch_size = 1

27 |

28 | # initialize model

29 | trainer = lpips.Trainer()

30 | # trainer.initialize(model=opt.model,net=opt.net,colorspace=opt.colorspace,model_path=opt.model_path,use_gpu=opt.use_gpu)

31 | trainer.initialize(model=opt.model, net=opt.net, colorspace=opt.colorspace,

32 | model_path=opt.model_path, use_gpu=opt.use_gpu, pnet_rand=opt.from_scratch, pnet_tune=opt.train_trunk,

33 | version=opt.version, gpu_ids=opt.gpu_ids)

34 |

35 | if(opt.model in ['net-lin','net']):

36 | print('Testing model [%s]-[%s]'%(opt.model,opt.net))

37 | elif(opt.model in ['l2','ssim']):

38 | print('Testing model [%s]-[%s]'%(opt.model,opt.colorspace))

39 |

40 | # initialize data loader

41 | for dataset in opt.datasets:

42 | data_loader = dl.CreateDataLoader(dataset,dataset_mode=opt.dataset_mode, batch_size=opt.batch_size, nThreads=opt.nThreads)

43 |

44 | # evaluate model on data

45 | if(opt.dataset_mode=='2afc'):

46 | (score, results_verbose) = lpips.score_2afc_dataset(data_loader, trainer.forward, name=dataset)

47 | elif(opt.dataset_mode=='jnd'):

48 | (score, results_verbose) = lpips.score_jnd_dataset(data_loader, trainer.forward, name=dataset)

49 |

50 | # print results

51 | print(' Dataset [%s]: %.2f'%(dataset,100.*score))

52 |

53 |

--------------------------------------------------------------------------------

/pytorch_msssim/pytorch_msssim/__init__.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn.functional as F

3 | from math import exp

4 | import numpy as np

5 |

6 |

7 | def gaussian(window_size, sigma):

8 | gauss = torch.Tensor([exp(-(x - window_size//2)**2/float(2*sigma**2)) for x in range(window_size)])

9 | return gauss/gauss.sum()

10 |

11 |

12 | def create_window(window_size, channel=1):

13 | _1D_window = gaussian(window_size, 1.5).unsqueeze(1)

14 | _2D_window = _1D_window.mm(_1D_window.t()).float().unsqueeze(0).unsqueeze(0)

15 | window = _2D_window.expand(channel, 1, window_size, window_size).contiguous()

16 | return window

17 |

18 |

19 | def ssim(img1, img2, window_size=11, window=None, size_average=True, full=False, val_range=None):

20 | # Value range can be different from 255. Other common ranges are 1 (sigmoid) and 2 (tanh).

21 | if val_range is None:

22 | if torch.max(img1) > 128:

23 | max_val = 255

24 | else:

25 | max_val = 1

26 |

27 | if torch.min(img1) < -0.5:

28 | min_val = -1

29 | else:

30 | min_val = 0

31 | L = max_val - min_val

32 | else:

33 | L = val_range

34 |

35 | padd = 0