├── .env.example
├── CODE_OF_CONDUCT.md
├── CONTRIBUTING.md
├── LICENCE
├── README.md
└── main.py

/.env.example:

--------------------------------------------------------------------------------

1 | DEEPSEEK=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

2 |

--------------------------------------------------------------------------------
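The `.env.example` file declares a single variable, `DEEPSEEK`, which presumably holds the DeepSeek API key. How `main.py` actually reads it is not shown here. The following is a hypothetical, stdlib-only sketch of one way to load it: `load_env_file` and `get_deepseek_key` are illustrative names, not part of this repository. A library such as python-dotenv is a common alternative.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=VALUE lines from a .env-style file (hypothetical helper).

    Blank lines and '#' comments are skipped; matching surrounding quotes
    around a value are stripped. A missing file yields an empty dict.
    """
    values: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        return values
    for raw in env_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def get_deepseek_key(path: str = ".env") -> str:
    """Return the DEEPSEEK key, preferring the process environment over the file."""
    key = os.environ.get("DEEPSEEK") or load_env_file(path).get("DEEPSEEK")
    if not key:
        raise RuntimeError("DEEPSEEK is not set; copy .env.example to .env and fill it in")
    return key
```

Checking the real environment first lets a deployment override the file without editing it.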

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

# Code of Conduct for DeepSeek-API-Unofficial

## Our Pledge

In the interest of fostering an open and welcoming environment, we as contributors and maintainers pledge to make participation in our project and our community a harassment-free experience for everyone, regardless of age, body size, disability, ethnicity, gender identity and expression, level of experience, nationality, personal appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment include:

- Using welcoming and inclusive language
- Being respectful of differing viewpoints and experiences
- Gracefully accepting constructive criticism
- Focusing on what is best for the community
- Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

- The use of sexualized language or imagery and unwelcome sexual attention or advances
- Trolling, insulting/derogatory comments, and personal or political attacks
- Public or private harassment
- Publishing others' private information, such as a physical or electronic address, without explicit permission
- Other conduct which could reasonably be considered inappropriate in a professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable behavior and are expected to take appropriate and fair corrective action in response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct, or to ban temporarily or permanently any contributor for other behaviors that they deem inappropriate, threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces when an individual is representing the project or its community. Examples of representing a project or community include using an official project e-mail address, posting via an official social media account, or acting as an appointed representative at an online or offline event.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported by contacting the project team at [your email]. All complaints will be reviewed and investigated and will result in a response that is deemed necessary and appropriate to the circumstances. The project team is obligated to maintain confidentiality with regard to the reporter of an incident.

## Attribution

This Code of Conduct is adapted from the Contributor Covenant, version 1.4, available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html.

Date: May 4, 2024

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

# 🌟 Contributing to DeepSeek-API-Unofficial 🌟


| **Model** | **#Total Params** | **#Activated Params** | **Context Length** | **Download** |
| :------------: | :------------: | :------------: | :------------: | :------------: |
| DeepSeek-V2-Lite | 16B | 2.4B | 32k | [🤗 HuggingFace](https://huggingface.co/deepseek-ai/DeepSeek-V2-Lite) |
| DeepSeek-V2-Lite-Chat (SFT) | 16B | 2.4B | 32k | [🤗 HuggingFace](https://huggingface.co/deepseek-ai/DeepSeek-V2-Lite-Chat) |
| DeepSeek-V2 | 236B | 21B | 128k | [🤗 HuggingFace](https://huggingface.co/deepseek-ai/DeepSeek-V2) |
| DeepSeek-V2-Chat (RL) | 236B | 21B | 128k | [🤗 HuggingFace](https://huggingface.co/deepseek-ai/DeepSeek-V2-Chat) |

Due to the constraints of HuggingFace, the open-source code currently runs slower on GPUs than our internal codebase. To run our model efficiently, we offer a dedicated vLLM solution that optimizes inference performance.

## 4. Evaluation Results

### Base Model

#### Standard Benchmark (Models larger than 67B)

| **Benchmark** | **Domain** | **LLaMA3 70B** | **Mixtral 8x22B** | **DeepSeek-V1 (Dense-67B)** | **DeepSeek-V2 (MoE-236B)** |
|:-----------:|:--------:|:------------:|:---------------:|:-------------------------:|:------------------------:|
| **MMLU** | English | 78.9 | 77.6 | 71.3 | 78.5 |
| **BBH** | English | 81.0 | 78.9 | 68.7 | 78.9 |
| **C-Eval** | Chinese | 67.5 | 58.6 | 66.1 | 81.7 |
| **CMMLU** | Chinese | 69.3 | 60.0 | 70.8 | 84.0 |
| **HumanEval** | Code | 48.2 | 53.1 | 45.1 | 48.8 |
| **MBPP** | Code | 68.6 | 64.2 | 57.4 | 66.6 |
| **GSM8K** | Math | 83.0 | 80.3 | 63.4 | 79.2 |
| **Math** | Math | 42.2 | 42.5 | 18.7 | 43.6 |

#### Standard Benchmark (Models smaller than 16B)

| **Benchmark** | **Domain** | **DeepSeek 7B (Dense)** | **DeepSeekMoE 16B** | **DeepSeek-V2-Lite (MoE-16B)** |
|:-------------:|:----------:|:--------------:|:-----------------:|:--------------------------:|
| **Architecture** | - | MHA+Dense | MHA+MoE | MLA+MoE |
| **MMLU** | English | 48.2 | 45.0 | 58.3 |
| **BBH** | English | 39.5 | 38.9 | 44.1 |
| **C-Eval** | Chinese | 45.0 | 40.6 | 60.3 |
| **CMMLU** | Chinese | 47.2 | 42.5 | 64.3 |
| **HumanEval** | Code | 26.2 | 26.8 | 29.9 |
| **MBPP** | Code | 39.0 | 39.2 | 43.2 |
| **GSM8K** | Math | 17.4 | 18.8 | 41.1 |
| **Math** | Math | 3.3 | 4.3 | 17.1 |

For more evaluation details, such as few-shot settings and prompts, please check our paper.

#### Context Window

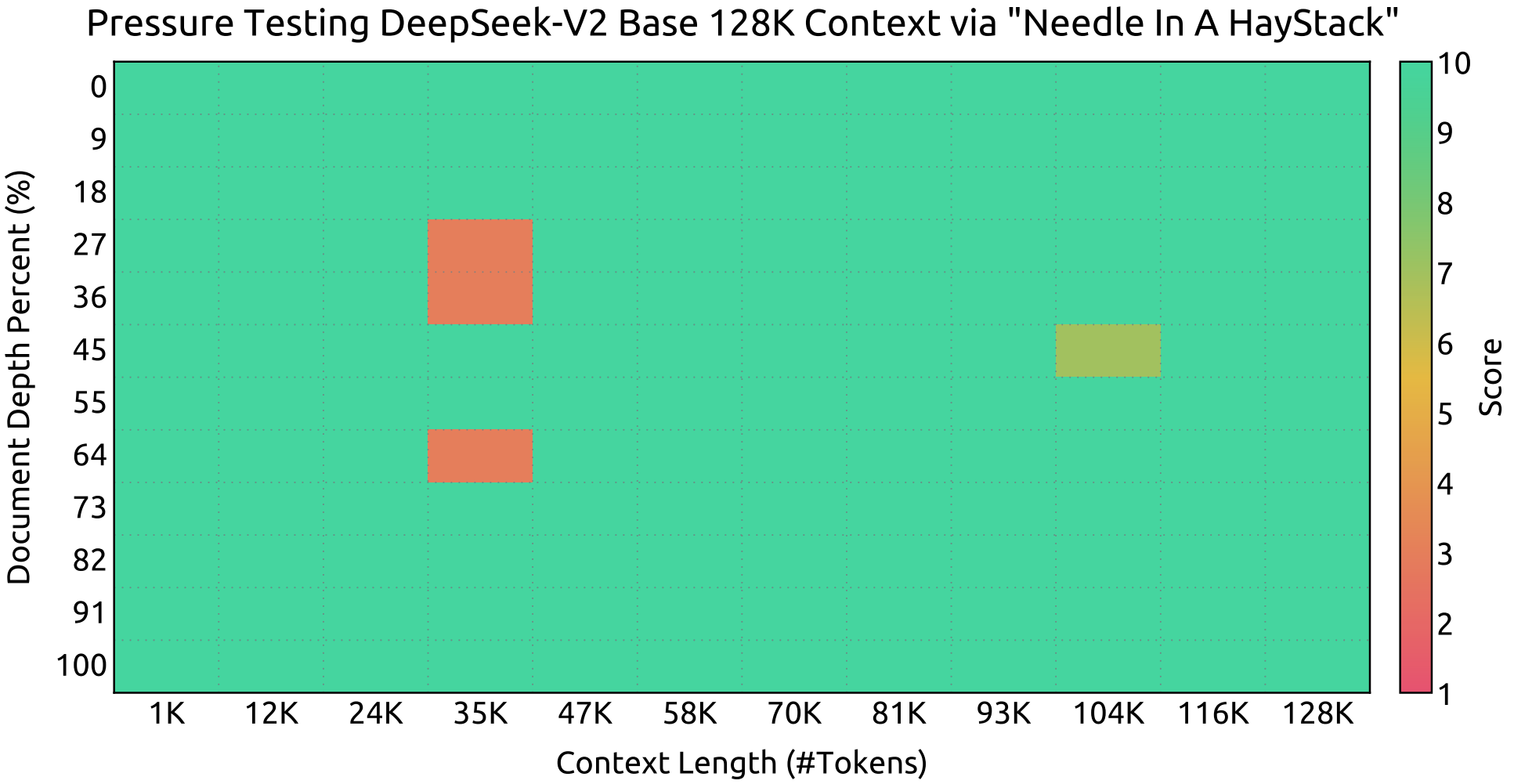

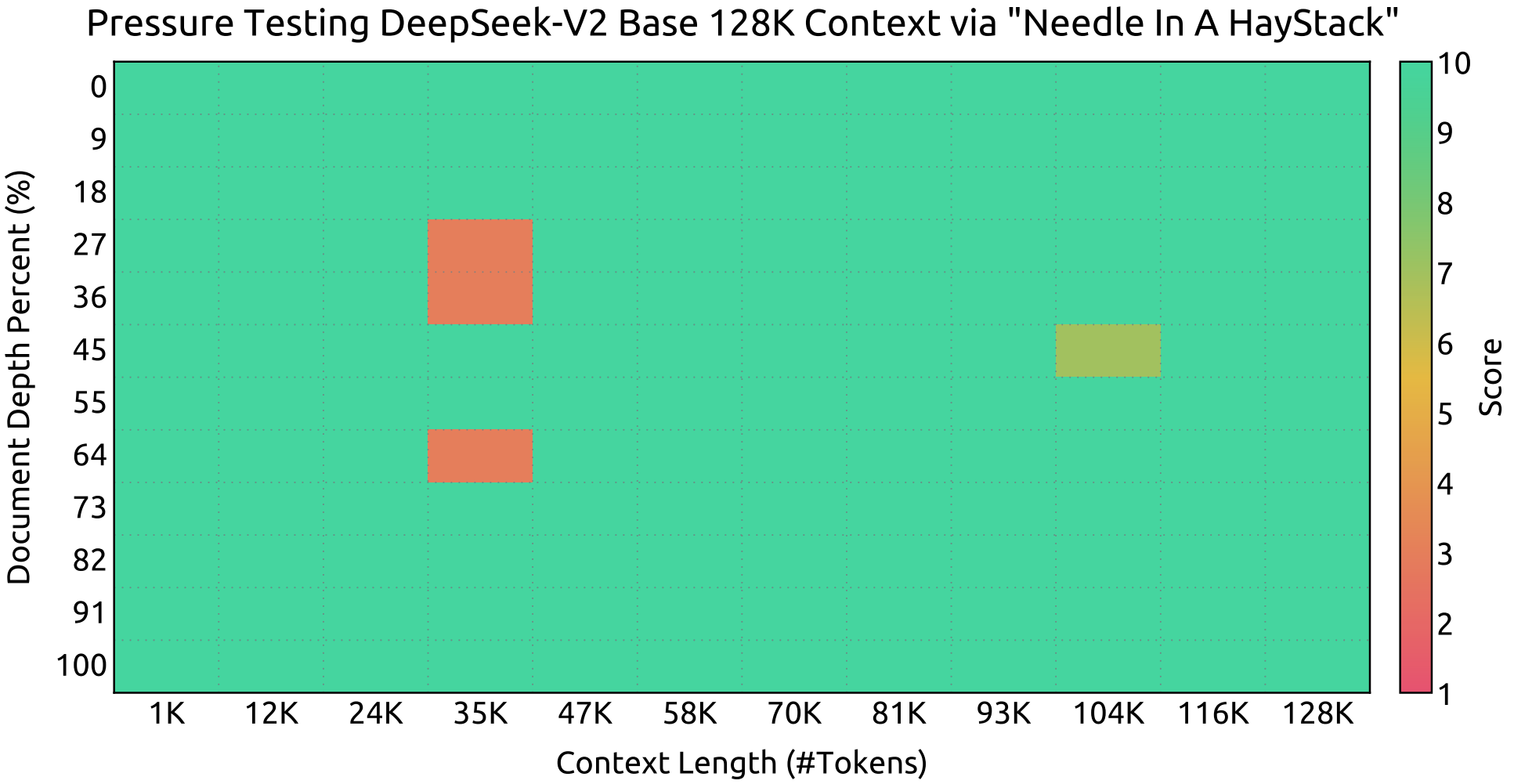

Evaluation results on the Needle In A Haystack (NIAH) tests. DeepSeek-V2 performs well across all context window lengths up to **128K**.
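A NIAH test hides a short fact (the needle) at a chosen relative depth inside a long filler context (the haystack) and asks the model to retrieve it; sweeping context length and depth produces a grid like the figures above. The sketch below covers only prompt construction and scoring, under simplifying assumptions: length is counted in whitespace-separated words rather than model tokens, scoring is a plain substring match, and the filler, needle, and question strings are hypothetical placeholders, not the prompts used in DeepSeek's evaluation.

```python
def build_niah_prompt(filler: str, needle: str, question: str,
                      context_words: int, depth: float) -> str:
    """Build a needle-in-a-haystack prompt.

    context_words: haystack size in words (a rough stand-in for tokens).
    depth: relative needle position, 0.0 = start of context, 1.0 = end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    filler_words = filler.split()
    if not filler_words:
        raise ValueError("filler must contain at least one word")
    # Repeat the filler until the haystack reaches the requested length.
    haystack = [filler_words[i % len(filler_words)] for i in range(context_words)]
    # Insert the needle's words at the requested relative depth.
    insert_at = round(depth * len(haystack))
    words = haystack[:insert_at] + needle.split() + haystack[insert_at:]
    return " ".join(words) + "\n\n" + question


def needle_found(answer: str, expected: str) -> bool:
    """Score a model answer by case-insensitive substring match."""
    return expected.lower() in answer.lower()
```

A full run would sweep `context_words` up to the context limit and `depth` from 0 to 1, send each prompt to the model, and record `needle_found` per grid cell; a real harness would count length with the model's own tokenizer.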

### Chat Model

#### Standard Benchmark (Models larger than 67B)

| Benchmark | Domain | Qwen1.5 72B Chat | Mixtral 8x22B | LLaMA3 70B Instruct | DeepSeek-V1 Chat (SFT) | DeepSeek-V2 Chat (SFT) | DeepSeek-V2 Chat (RL) |
|:-----------:|:----------------:|:------------------:|:---------------:|:---------------------:|:-------------:|:-----------------------:|:----------------------:|
| **MMLU** | English | 76.2 | 77.8 | 80.3 | 71.1 | 78.4 | 77.8 |
| **BBH** | English | 65.9 | 78.4 | 80.1 | 71.7 | 81.3 | 79.7 |
| **C-Eval** | Chinese | 82.2 | 60.0 | 67.9 | 65.2 | 80.9 | 78.0 |
| **CMMLU** | Chinese | 82.9 | 61.0 | 70.7 | 67.8 | 82.4 | 81.6 |
| **HumanEval** | Code | 68.9 | 75.0 | 76.2 | 73.8 | 76.8 | 81.1 |
| **MBPP** | Code | 52.2 | 64.4 | 69.8 | 61.4 | 70.4 | 72.0 |
| **LiveCodeBench (0901-0401)** | Code | 18.8 | 25.0 | 30.5 | 18.3 | 28.7 | 32.5 |
| **GSM8K** | Math | 81.9 | 87.9 | 93.2 | 84.1 | 90.8 | 92.2 |
| **Math** | Math | 40.6 | 49.8 | 48.5 | 32.6 | 52.7 | 53.9 |

#### Standard Benchmark (Models smaller than 16B)

| Benchmark | Domain | DeepSeek 7B Chat (SFT) | DeepSeekMoE 16B Chat (SFT) | DeepSeek-V2-Lite 16B Chat (SFT) |
|:-----------:|:----------------:|:------------------:|:---------------:|:---------------------:|
| **MMLU** | English | 49.7 | 47.2 | 55.7 |
| **BBH** | English | 43.1 | 42.2 | 48.1 |
| **C-Eval** | Chinese | 44.7 | 40.0 | 60.1 |
| **CMMLU** | Chinese | 51.2 | 49.3 | 62.5 |
| **HumanEval** | Code | 45.1 | 45.7 | 57.3 |
| **MBPP** | Code | 39.0 | 46.2 | 45.8 |
| **GSM8K** | Math | 62.6 | 62.2 | 72.0 |
| **Math** | Math | 14.7 | 15.2 | 27.9 |

#### English Open-Ended Generation Evaluation

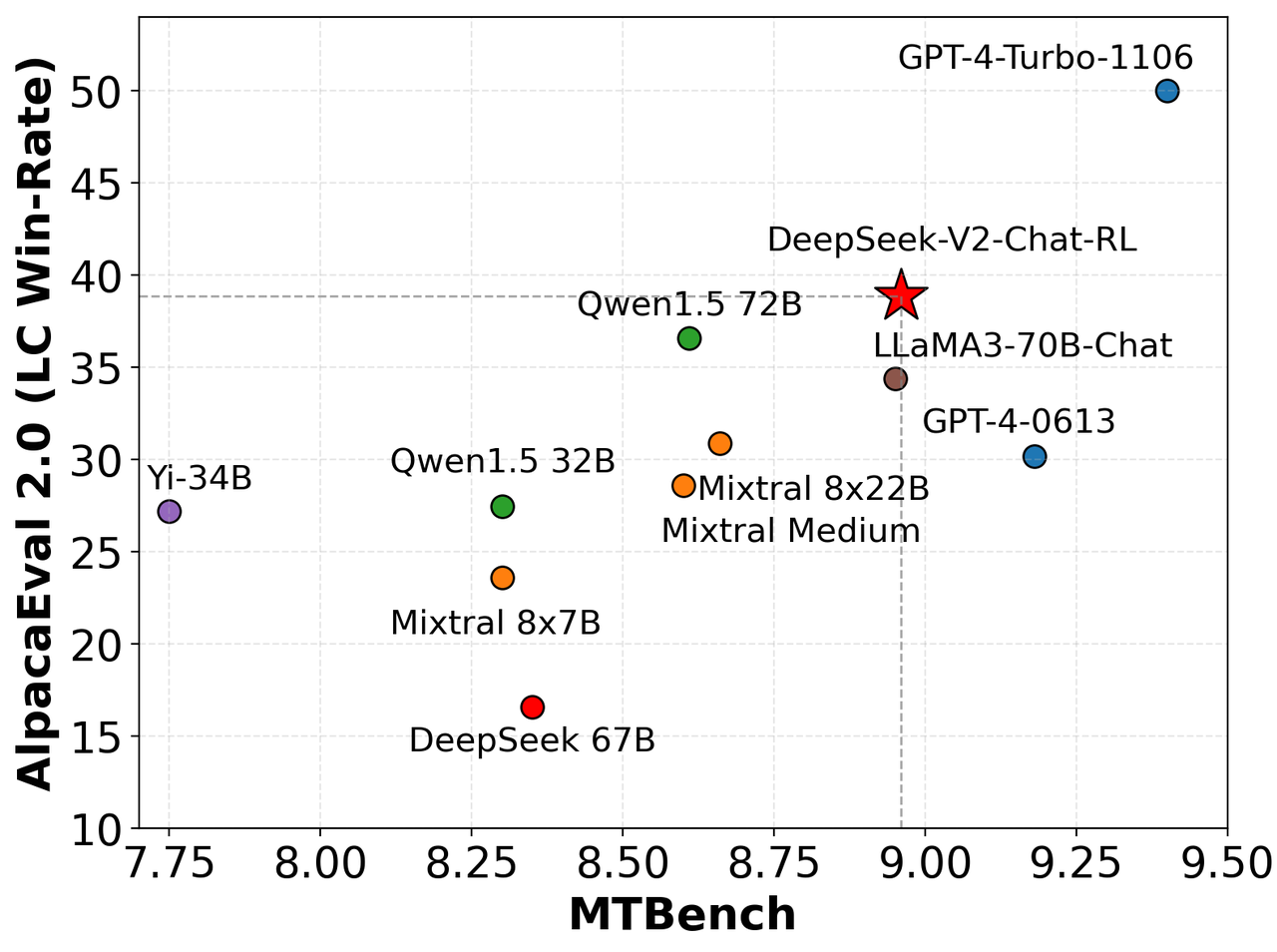

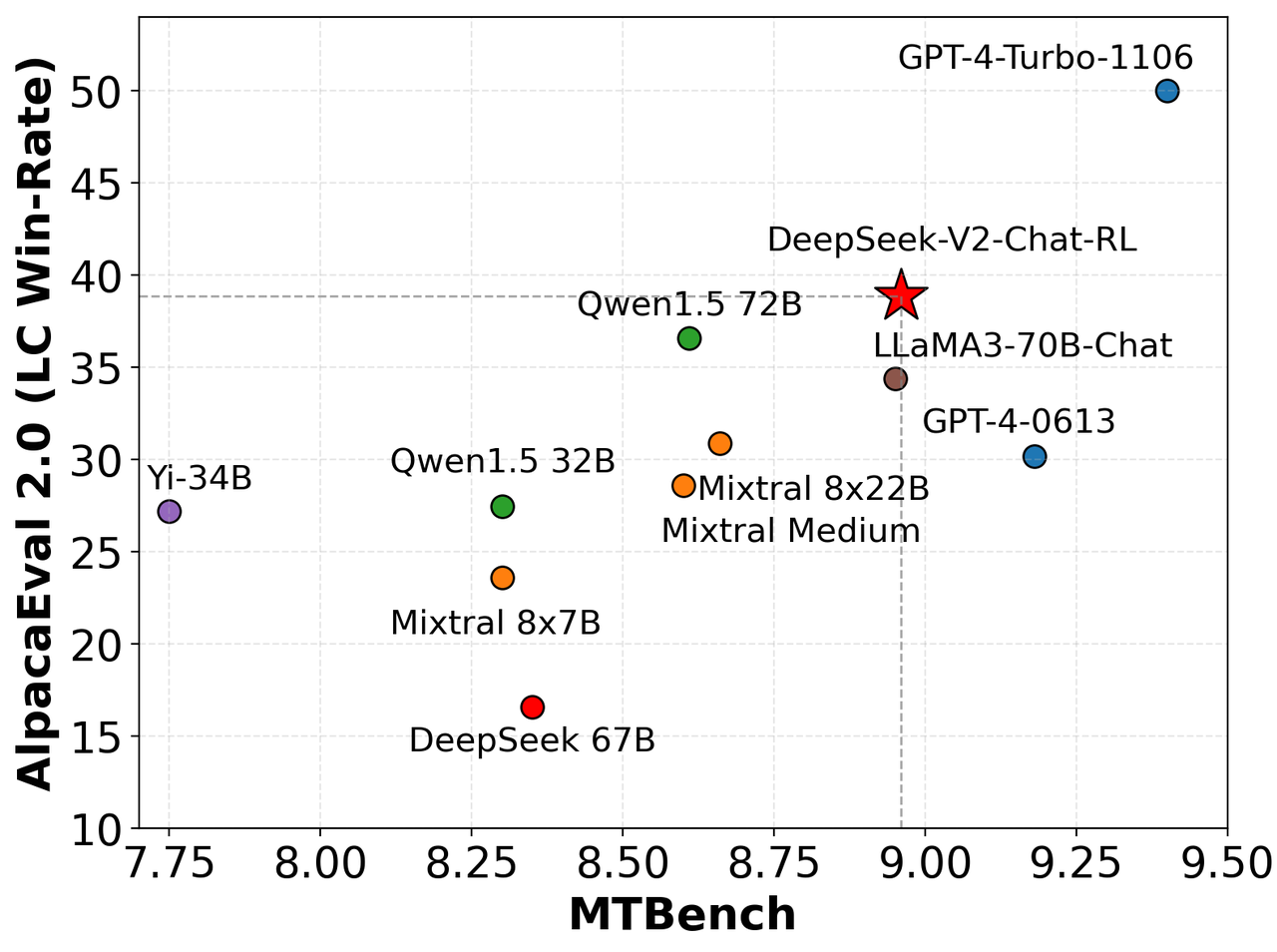

We evaluate our model on AlpacaEval 2.0 and MT-Bench; the results show that DeepSeek-V2 Chat (RL) performs competitively on English conversation generation.

#### Chinese Open-Ended Generation Evaluation

**AlignBench** (https://arxiv.org/abs/2311.18743)

| **Model** | **Open/Closed Source** | **Overall** | **Chinese Reasoning** | **Chinese Language** |
| :---: | :---: | :---: | :---: | :---: |
| gpt-4-1106-preview | Closed | 8.01 | 7.73 | 8.29 |
| DeepSeek-V2 Chat (RL) | Open | 7.91 | 7.45 | 8.36 |
| erniebot-4.0-202404 (ERNIE Bot) | Closed | 7.89 | 7.61 | 8.17 |
| DeepSeek-V2 Chat (SFT) | Open | 7.74 | 7.30 | 8.17 |
| gpt-4-0613 | Closed | 7.53 | 7.47 | 7.59 |
| erniebot-4.0-202312 (ERNIE Bot) | Closed | 7.36 | 6.84 | 7.88 |
| moonshot-v1-32k-202404 (Moonshot AI) | Closed | 7.22 | 6.42 | 8.02 |
| Qwen1.5-72B-Chat (Tongyi Qianwen) | Open | 7.19 | 6.45 | 7.93 |
| DeepSeek-67B-Chat | Open | 6.43 | 5.75 | 7.11 |
| Yi-34B-Chat (01.AI) | Open | 6.12 | 4.86 | 7.38 |
| gpt-3.5-turbo-0613 | Closed | 6.08 | 5.35 | 6.71 |
| DeepSeek-V2-Lite 16B Chat | Open | 6.01 | 4.71 | 7.32 |

#### Coding Benchmarks

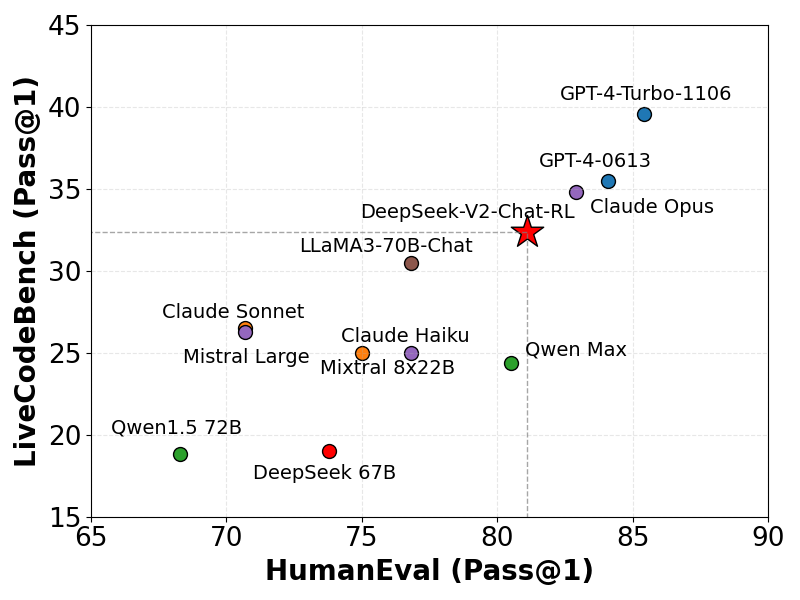

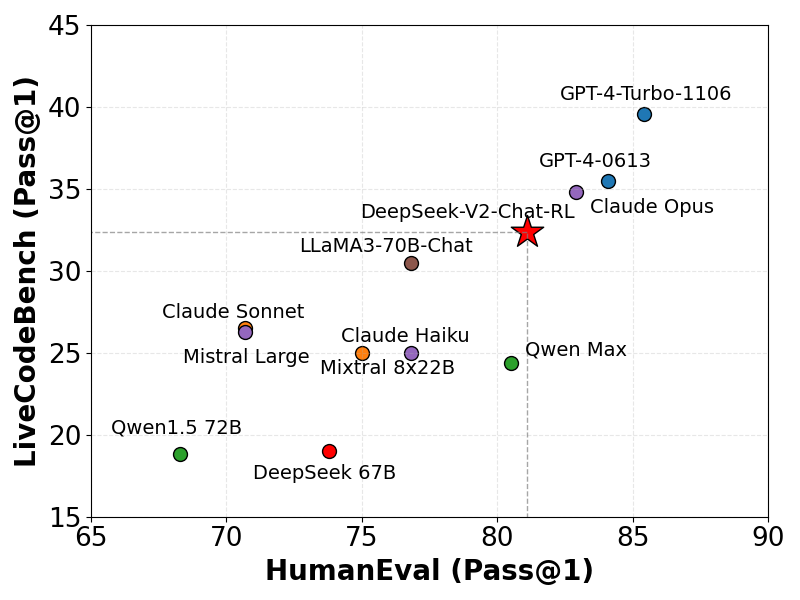

We evaluate our model on LiveCodeBench (0901-0401), a benchmark designed for live coding challenges. As the figure shows, DeepSeek-V2 achieves a Pass@1 score that surpasses several other strong models; in the chat-model table, DeepSeek-V2 Chat (RL) scores 32.5, ahead of LLaMA3 70B Instruct (30.5), Mixtral 8x22B (25.0), and Qwen1.5 72B Chat (18.8). This indicates the model's effectiveness on live coding tasks.
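Pass@1 is the fraction of problems solved by a single sampled solution. When n samples are drawn per problem and c of them pass all tests, a widely used way to compute pass@k is the unbiased estimator from the HumanEval/Codex work, 1 - C(n-c, k) / C(n, k), averaged over problems. The sketch below implements that estimator; whether the LiveCodeBench numbers above used one sample or several per problem is not stated here, so treat it as an illustration of the metric rather than DeepSeek's evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of the samples contains a correct one.
        return 1.0
    # 1 - P(all k chosen samples are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With one sample per problem (n = k = 1), `pass_at_k` returns 1.0 or 0.0, so the mean reduces to the fraction of problems solved.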

## 5. Model Architecture

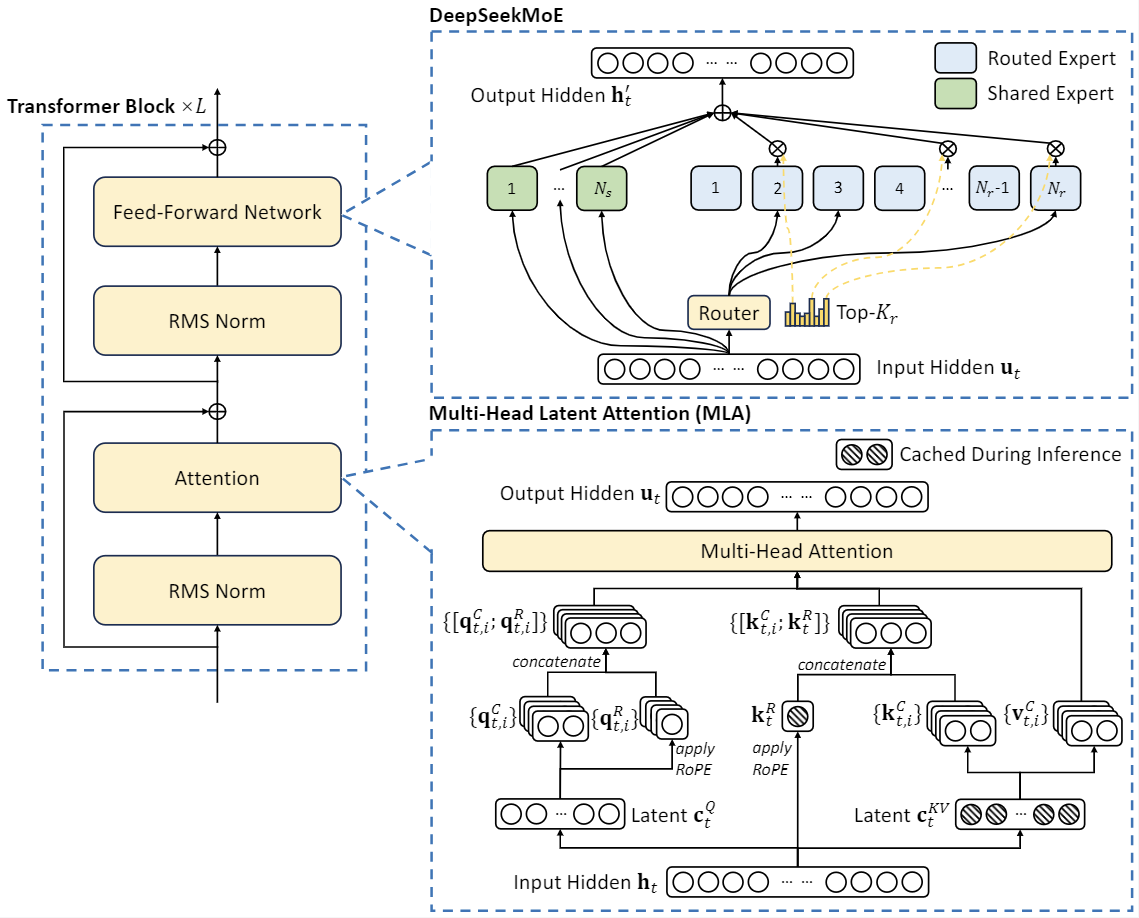

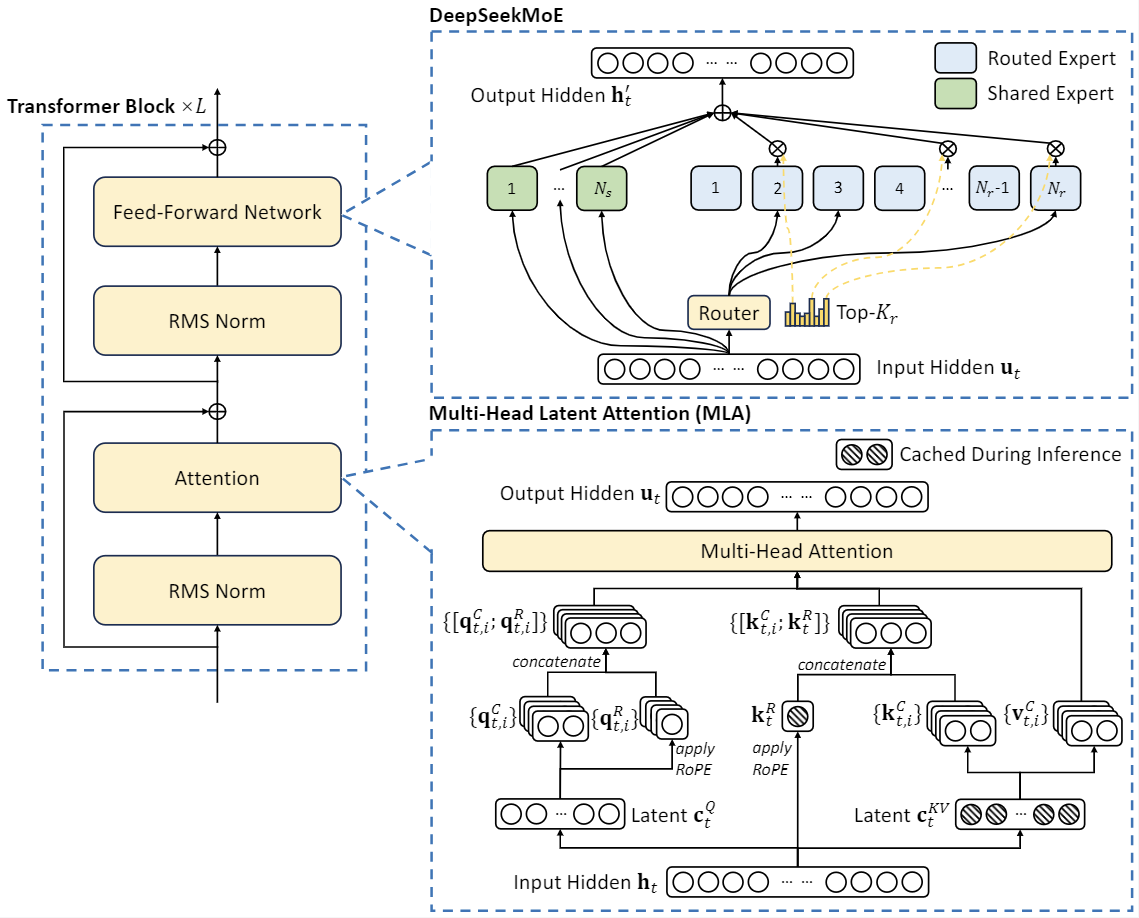

DeepSeek-V2 adopts innovative architectures to enable economical training and efficient inference:

- For attention, we design MLA (Multi-head Latent Attention), which uses low-rank joint compression of keys and values to eliminate the inference-time key-value cache bottleneck, thus supporting efficient inference.
- For Feed-Forward Networks (FFNs), we adopt the DeepSeekMoE architecture, a high-performance MoE architecture that enables training stronger models at lower cost.
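The benefit of MLA's low-rank joint compression is easiest to see by counting what must be cached per token and per layer. Standard multi-head attention caches a key and a value for every head, 2 · n_h · d_h elements, whereas MLA caches one compressed latent of width d_c plus, in DeepSeek-V2, a small decoupled RoPE key of width d_h^R. The sketch below does that arithmetic against a hypothetical full-MHA model with the same head count. The dimensions are assumptions for illustration (they follow the configuration reported for DeepSeek-V2: n_h = 128, d_h = 128, d_c = 512, d_h^R = 64, 60 layers); check the official model config before relying on them.

```python
def mha_kv_elements_per_token(n_heads: int, head_dim: int, n_layers: int) -> int:
    """Standard MHA: one key and one value vector per head, in every layer."""
    return 2 * n_heads * head_dim * n_layers


def mla_kv_elements_per_token(latent_dim: int, rope_dim: int, n_layers: int) -> int:
    """MLA: one shared compressed KV latent plus a decoupled RoPE key, per layer."""
    return (latent_dim + rope_dim) * n_layers


def kv_cache_bytes(elements_per_token: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    """Cache size for one sequence; bytes_per_elem=2 assumes FP16/BF16 storage."""
    return elements_per_token * seq_len * bytes_per_elem


# Illustrative dimensions (assumed, see the lead-in).
N_HEADS, HEAD_DIM, N_LAYERS = 128, 128, 60
LATENT_DIM, ROPE_DIM = 512, 64

mha = mha_kv_elements_per_token(N_HEADS, HEAD_DIM, N_LAYERS)
mla = mla_kv_elements_per_token(LATENT_DIM, ROPE_DIM, N_LAYERS)
print(f"MHA: {mha} elements/token, MLA: {mla} elements/token, ratio {mla / mha:.4f}")
seq = 128 * 1024
print(f"128K-token cache: MHA {kv_cache_bytes(mha, seq) / 2**30:.1f} GiB, "
      f"MLA {kv_cache_bytes(mla, seq) / 2**30:.1f} GiB")
```

With these assumed values the per-token MLA cache is under 2% of the full-MHA cache; real savings depend on the baseline model, precision, and any cache quantization.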

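DeepSeekMoE pairs many fine-grained routed experts with a few shared experts that every token always uses; a gate scores the routed experts and only the top-k are evaluated, which is why only part of the total parameters is activated per token (for example, 21B of 236B for DeepSeek-V2 in the model table). The following is a minimal single-token sketch of that forward pass in plain Python. Everything concrete in it is an assumption for illustration: experts are arbitrary callables on lists of floats, the gate is a softmax over dot-product affinities with per-expert centroid vectors, and selected gate weights are not renormalized. It omits load balancing and is not DeepSeek's implementation.

```python
import math
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(u: Vector, shared: Sequence[Expert], routed: Sequence[Expert],
                centroids: Sequence[Vector], top_k: int) -> tuple[Vector, list[int]]:
    """One MoE FFN step for a single token u (hypothetical sketch).

    Output = u + sum(shared experts) + sum(gate_i * expert_i) over the top-k
    routed experts, where gate_i is the softmax affinity of u to centroid i.
    """
    affinities = softmax([sum(a * b for a, b in zip(u, c)) for c in centroids])
    chosen = sorted(range(len(routed)), key=lambda i: affinities[i], reverse=True)[:top_k]
    out = list(u)  # residual connection
    for expert in shared:  # shared experts process every token
        out = [o + y for o, y in zip(out, expert(u))]
    for i in chosen:  # only the top-k routed experts are evaluated
        out = [o + affinities[i] * y for o, y in zip(out, routed[i](u))]
    return out, chosen
```

With `top_k=1`, exactly one routed expert runs per token no matter how many exist; that is the mechanism behind the gap between total and activated parameters.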
## 6. Chat Website

You can chat with DeepSeek-V2 on DeepSeek's official website: [chat.deepseek.com](https://chat.deepseek.com/sign_in)

### 🤝 Contributing

Your contributions are welcome! Please refer to our [CONTRIBUTING.md](CONTRIBUTING.md) for contribution guidelines.

### 📜 License

This project is licensed under the [MIT License](LICENCE). The full license text is available in the [LICENCE](LICENCE) file.

### 📬 Get in Touch

For inquiries or assistance, please open an issue or reach out through our social channels:
