6 | Hang Zhang, Kristin Dana 7 |

8 | @article{zhang2017multistyle,

9 | title={Multi-style Generative Network for Real-time Transfer},

10 | author={Zhang, Hang and Dana, Kristin},

11 | journal={arXiv preprint arXiv:1703.06953},

12 | year={2017}

13 | }

14 |

15 |


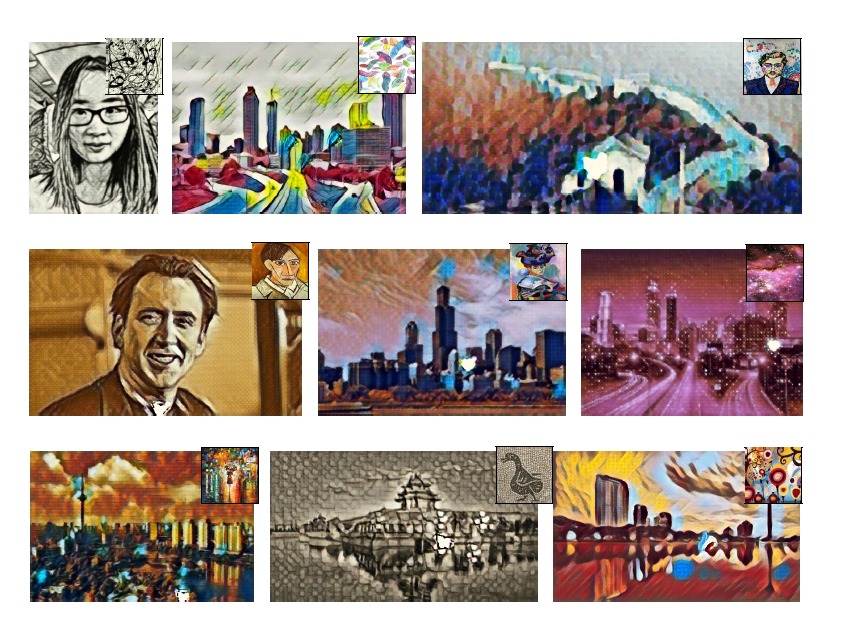

55 | [[More Example Results](Examples.md)]

56 |

57 | ### Train Your Own Model

58 | Please follow [this tutorial to train a new model](Training.md).

59 |

60 | ### Release Timeline

61 | - [x] 03/20/2017 We have released the [demo video](https://www.youtube.com/watch?v=oy6pWNWBt4Y).

62 | - [x] 03/24/2017 We have released the [arXiv paper](https://arxiv.org/pdf/1703.06953.pdf) and test code with pre-trained models.

63 | - [x] 04/09/2017 We have released the training code.

64 | - [x] 04/24/2017 Please check out our PyTorch [implementation](https://github.com/zhanghang1989/PyTorch-Style-Transfer).

65 |

66 | ### Acknowledgement

67 | The code benefits from outstanding prior work and its implementations, including:

68 | - [Texture Networks: Feed-forward Synthesis of Textures and Stylized Images](https://arxiv.org/pdf/1603.03417.pdf) by Ulyanov *et al.*, ICML 2016 ([code](https://github.com/DmitryUlyanov/texture_nets))

69 | - [Perceptual Losses for Real-Time Style Transfer and Super-Resolution](https://arxiv.org/pdf/1603.08155.pdf) by Johnson *et al.*, ECCV 2016 ([code](https://github.com/jcjohnson/fast-neural-style))

70 | - [Image Style Transfer Using Convolutional Neural Networks](http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Gatys_Image_Style_Transfer_CVPR_2016_paper.pdf) by Gatys *et al.*, CVPR 2016, and its Torch implementation ([code](https://github.com/jcjohnson/neural-style)) by Johnson

71 |

--------------------------------------------------------------------------------

/modules/Inspiration.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | local Inspiration, parent = torch.class('nn.Inspiration', 'nn.Module')

17 |

18 | local function isint(x)

19 | return type(x) == 'number' and x == math.floor(x)

20 | end

21 |

22 | function Inspiration:__init(C)

23 | parent.__init(self)

24 | assert(self and C, 'should specify C')

25 | assert(isint(C), 'C should be an integer')

26 |

27 | self.C = C

28 | self.MM_WG = nn.MM()

29 | self.MM_PX = nn.MM(true, false)

30 | self.target = torch.Tensor(C,C)

31 | self.Weight = torch.Tensor(C,C)

32 | self.P = torch.Tensor(C,C)

33 |

34 | self.gradWeight = torch.Tensor(C, C)

35 | self.gradWG = {torch.Tensor(C, C), torch.Tensor(C, C)}

36 | self.gradPX = {torch.Tensor(), torch.Tensor()}

37 | self.gradInput = torch.Tensor()

38 | self:reset()

39 | end

40 |

41 | function Inspiration:reset(stdv)

42 | if stdv then

43 | stdv = stdv * math.sqrt(2)

44 | else

45 | stdv = 1./math.sqrt(self.C)

46 | end

47 | self.Weight:uniform(-stdv, stdv)

48 | self.target:uniform(-stdv, stdv)

49 | return self

50 | end

51 |

52 | function Inspiration:setTarget(nT)

53 | assert(self and nT, 'should specify a target')

54 | self.target = nT

55 | end

56 |

57 | function Inspiration:updateOutput(input)

58 | assert(self)

59 | -- P = W*G, Y = P^T * X (MM_PX transposes its first argument)

60 | --self.output:resizeAs(input)

61 | if input:dim() == 3 then

62 | self.P = self.MM_WG:forward({self.Weight, self.target})

63 | self.output = self.MM_PX:forward({self.P, input:view(self.C,-1)}):viewAs(input)

64 | elseif input:dim() == 4 then

65 | local B = input:size(1)

66 | self.P = self.MM_WG:forward({self.Weight, self.target})

67 | self.output = self.MM_PX:forward({self.P:add_dummy():expand(B,self.C,self.C), input:view(B,self.C,-1)}):viewAs(input)

68 | else

69 | error('Unsupported dimension for Inspiration layer')

70 | end

71 | return self.output

72 | end

73 |

74 | function Inspiration:updateGradInput(input, gradOutput)

75 | assert(self and self.gradInput)

76 |

77 | --self.gradInput:resizeAs(input):fill(0)

78 | if input:dim() == 3 then

79 | self.gradPX = self.MM_PX:backward({self.P, input:view(self.C,-1)}, gradOutput:view(self.C,-1))

80 | elseif input:dim() == 4 then

81 | local B = input:size(1)

82 | self.gradPX = self.MM_PX:backward({self.P:add_dummy():expand(B,self.C,self.C), input:view(B,self.C,-1)}, gradOutput:view(B,self.C,-1))

83 | else

84 | error('Unsupported dimension for Inspiration layer')

85 | end

86 |

87 | self.gradInput = self.gradPX[2]:viewAs(input)

88 | return self.gradInput

89 | end

90 |

91 | function Inspiration:accGradParameters(input, gradOutput, scale)

92 | assert(self)

93 | scale = scale or 1

94 |

95 | if input:dim() == 3 then

96 | self.gradWG = self.MM_WG:backward({self.Weight, self.target}, self.gradPX[1])

97 | self.gradWeight = scale * self.gradWG[1]

98 | elseif input:dim() == 4 then

99 | self.gradWG = self.MM_WG:backward({self.Weight, self.target}, self.gradPX[1]:sum(1):squeeze())

100 | self.gradWeight = scale * self.gradWG[1]

101 | else

102 | error('Unsupported dimension for Inspiration layer')

103 | end

104 | end

105 |

106 | function Inspiration:__tostring__()

107 | return torch.type(self) ..

108 | string.format(

109 | '(%dxHxW -> %dxHxW)',

110 | self.C, self.C

111 | )

112 | end

113 |
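Reading the forward and backward passes of `nn.Inspiration` together, the computation can be summarized as below. This is a sketch derived from the code above, assuming `nn.MM(true, false)` multiplies the transpose of its first argument by its second. The symbols $X_b$ (the $b$-th input reshaped to $C \times HW$), $Y_b$ (the matching output), $G$ (`self.target`), $W$ (`self.Weight`), and $s$ (`scale`) are notation introduced here, not names from the source.

```latex
\begin{aligned}
P &= W G, & Y_b &= P^{\top} X_b, \\
\frac{\partial L}{\partial X_b} &= P \,\frac{\partial L}{\partial Y_b}, &
\frac{\partial L}{\partial P} &= \sum_b X_b \left(\frac{\partial L}{\partial Y_b}\right)^{\top}, \\
\frac{\partial L}{\partial W} &= s \,\frac{\partial L}{\partial P}\, G^{\top}.
\end{aligned}
```

The sum over $b$ corresponds to `self.gradPX[1]:sum(1)` in the batched branch of `accGradParameters`; in the 3-D branch there is a single term.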

--------------------------------------------------------------------------------

/experiments/utils/utils.lua:

--------------------------------------------------------------------------------

1 | require 'torch'

2 | require 'nn'

3 | local cjson = require 'cjson'

4 |

5 |

6 | local M = {}

7 |

8 |

9 | -- Parse a string of comma-separated numbers

10 | -- For example convert "1.0,3.14" to {1.0, 3.14}

11 | function M.parse_num_list(s)

12 | local nums = {}

13 | for _, ss in ipairs(s:split(',')) do

14 | table.insert(nums, tonumber(ss))

15 | end

16 | return nums

17 | end

18 |

19 |

20 | -- Parse a layer string and associated weights string.

21 | -- The layers string is a string of comma-separated layer strings, and the

22 | -- weight string contains comma-separated numbers. If the weights string

23 | -- contains only a single number it is duplicated to be the same length as the

24 | -- layers.

25 | function M.parse_layers(layers_string, weights_string)

26 | local layers = layers_string:split(',')

27 | local weights = M.parse_num_list(weights_string)

28 | if #weights == 1 and #layers > 1 then

29 | -- Duplicate the same weight for all layers

30 | local w = weights[1]

31 | weights = {}

32 | for i = 1, #layers do

33 | table.insert(weights, w)

34 | end

35 | elseif #weights ~= #layers then

36 | local msg = 'size mismatch between layers "%s" and weights "%s"'

37 | error(string.format(msg, layers_string, weights_string))

38 | end

39 | return layers, weights

40 | end

41 |

42 |

43 | function M.setup_gpu(gpu, backend, use_cudnn)

44 | local dtype = 'torch.FloatTensor'

45 | if gpu >= 0 then

46 | if backend == 'cuda' then

47 | require 'cutorch'

48 | require 'cunn'

49 | cutorch.setDevice(gpu + 1)

50 | dtype = 'torch.CudaTensor'

51 | if use_cudnn then

52 | require 'cudnn'

53 | cudnn.benchmark = true

54 | end

55 | elseif backend == 'opencl' then

56 | require 'cltorch'

57 | require 'clnn'

58 | cltorch.setDevice(gpu + 1)

59 | dtype = torch.Tensor():cl():type()

60 | use_cudnn = false

61 | end

62 | else

63 | use_cudnn = false

64 | end

65 | return dtype, use_cudnn

66 | end

67 |

68 |

69 | function M.clear_gradients(m)

70 | if torch.isTypeOf(m, nn.Container) then

71 | m:applyToModules(M.clear_gradients)

72 | end

73 | if m.weight and m.gradWeight then

74 | m.gradWeight = m.gradWeight.new()

75 | end

76 | if m.bias and m.gradBias then

77 | m.gradBias = m.gradBias.new()

78 | end

79 | end

80 |

81 |

82 | function M.restore_gradients(m)

83 | if torch.isTypeOf(m, nn.Container) then

84 | m:applyToModules(M.restore_gradients)

85 | end

86 | if m.weight and m.gradWeight then

87 | m.gradWeight = m.gradWeight.new(#m.weight):zero()

88 | end

89 | if m.bias and m.gradBias then

90 | m.gradBias = m.gradBias.new(#m.bias):zero()

91 | end

92 | end

93 |

94 |

95 | function M.read_json(path)

96 | local file = assert(io.open(path, 'r'))

97 | local text = file:read('*a') -- read the whole file, not only its first line

98 | file:close()

99 | local info = cjson.decode(text)

100 | return info

101 | end

102 |

103 |

104 | function M.write_json(path, j)

105 | cjson.encode_sparse_array(true, 2, 10)

106 | local text = cjson.encode(j)

107 | local file = io.open(path, 'w')

108 | file:write(text)

109 | file:close()

110 | end

111 |

112 | local IMAGE_EXTS = {'jpg', 'jpeg', 'png', 'ppm', 'pgm'}

113 | function M.is_image_file(filename)

114 | -- Hidden files are not images

115 | if string.sub(filename, 1, 1) == '.' then

116 | return false

117 | end

118 | -- Check against a list of known image extensions

119 | local ext = string.lower(paths.extname(filename) or "")

120 | for _, image_ext in ipairs(IMAGE_EXTS) do

121 | if ext == image_ext then

122 | return true

123 | end

124 | end

125 | return false

126 | end

127 |

128 |

129 | function M.median_filter(img, r)

130 | local u = img:unfold(2, r, 1):contiguous()

131 | u = u:unfold(3, r, 1):contiguous()

132 | local HH, WW = u:size(2), u:size(3)

133 | local dtype = u:type()

134 | -- Median is not defined for CudaTensors, cast to float and back

135 | local med = u:view(3, HH, WW, r * r):float():median():type(dtype)

136 | return med[{{}, {}, {}, 1}]

137 | end

138 |

139 |

140 | return M

141 |

142 |
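The weight-broadcasting rule in `parse_layers` can be tried without Torch. The sketch below is a plain-Lua restatement under one assumption: `split` is a hypothetical helper standing in for the `string:split` method that the Torch environment provides, and the layer indices and weight in the usage lines are made-up values.

```lua
-- Hypothetical stand-in for Torch's string:split (not part of plain Lua).
local function split(s, sep)
  local parts = {}
  for piece in string.gmatch(s, '[^' .. sep .. ']+') do
    parts[#parts + 1] = piece
  end
  return parts
end

-- Same rule as M.parse_layers: a single weight is repeated for every layer,
-- otherwise the two lists must have the same length.
local function parse_layers(layers_string, weights_string)
  local layers = split(layers_string, ',')
  local weights = {}
  for _, w in ipairs(split(weights_string, ',')) do
    weights[#weights + 1] = tonumber(w)
  end
  if #weights == 1 and #layers > 1 then
    local w = weights[1]
    weights = {}
    for i = 1, #layers do
      weights[i] = w
    end
  elseif #weights ~= #layers then
    local msg = 'size mismatch between layers "%s" and weights "%s"'
    error(string.format(msg, layers_string, weights_string))
  end
  return layers, weights
end

-- Usage: four layers share one weight.
local layers, weights = parse_layers('4,9,16,23', '5.0')
print(#layers, #weights)

-- A genuine length mismatch raises an error.
local ok = pcall(parse_layers, '4,9', '1,2,3')
print(ok)
```

Note that the layers stay strings while the weights become numbers, which matches how `main.lua` later passes layer strings to `layer_utils`.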

--------------------------------------------------------------------------------

/modules/layer_utils.lua:

--------------------------------------------------------------------------------

1 | require 'nn'

2 |

3 | --[[

4 | Utility functions for getting and inserting layers into models composed of

5 | hierarchies of nn Modules and nn Containers. In such a model, we can address

6 | each module with a unique "layer string", which is a series of integers

7 | separated by dashes. This is easiest to understand with an example: consider

8 | the following network; we have labeled each module with its layer string:

9 |

10 | nn.Sequential {

11 | (1) nn.SpatialConvolution

12 | (2) nn.Sequential {

13 | (2-1) nn.SpatialConvolution

14 | (2-2) nn.SpatialConvolution

15 | }

16 | (3) nn.Sequential {

17 | (3-1) nn.SpatialConvolution

18 | (3-2) nn.Sequential {

19 | (3-2-1) nn.SpatialConvolution

20 | (3-2-2) nn.SpatialConvolution

21 | (3-2-3) nn.SpatialConvolution

22 | }

23 | (3-3) nn.SpatialConvolution

24 | }

25 | (4) nn.View

26 | (5) nn.Linear

27 | }

28 |

29 | Any layers that have the instance variable _ignore set to true are ignored

30 | when computing layer strings for layers. This way, we can insert new layers into

31 | a network without changing the layer strings of existing layers.

32 | --]]

33 | local M = {}

34 |

35 |

36 | --[[

37 | Convert a layer string to an array of integers.

38 |

39 | For example layer_string_to_nums("1-23-4") = {1, 23, 4}.

40 | --]]

41 | function M.layer_string_to_nums(layer_string)

42 | local nums = {}

43 | for _, s in ipairs(layer_string:split('-')) do

44 | table.insert(nums, tonumber(s))

45 | end

46 | return nums

47 | end

48 |

49 |

50 | --[[

51 | Comparison function for layer strings that is compatible with table.sort.

52 | In this comparison scheme, 2-3 comes AFTER 2-3-X for all X.

53 |

54 | Input:

55 | - s1, s2: Two layer strings.

56 |

57 | Output:

58 | - true if s1 should come before s2 in sorted order; false otherwise.

59 | --]]

60 | function M.compare_layer_strings(s1, s2)

61 | local left = M.layer_string_to_nums(s1)

62 | local right = M.layer_string_to_nums(s2)

63 | local out = nil

64 | for i = 1, math.min(#left, #right) do

65 | if left[i] < right[i] then

66 | out = true

67 | elseif left[i] > right[i] then

68 | out = false

69 | end

70 | if out ~= nil then break end

71 | end

72 |

73 | if out == nil then

74 | out = (#left > #right)

75 | end

76 | return out

77 | end

78 |

79 |

80 | --[[

81 | Get a layer from the network net using a layer string.

82 | --]]

83 | function M.get_layer(net, layer_string)

84 | local nums = M.layer_string_to_nums(layer_string)

85 | local layer = net

86 | for i, num in ipairs(nums) do

87 | local count = 0

88 | for j = 1, #layer do

89 | if not layer:get(j)._ignore then

90 | count = count + 1

91 | end

92 | if count == num then

93 | layer = layer:get(j)

94 | break

95 | end

96 | end

97 | end

98 | return layer

99 | end

100 |

101 |

102 | -- Insert a new layer immediately after the layer specified by a layer string.

103 | -- Any layer inserted this way is flagged with _ignore = true, so it does not shift existing layer strings.

104 | function M.insert_after(net, layer_string, new_layer)

105 | new_layer._ignore = true

106 | local nums = M.layer_string_to_nums(layer_string)

107 | local container = net

108 | for i = 1, #nums do

109 | local count = 0

110 | for j = 1, #container do

111 | if not container:get(j)._ignore then

112 | count = count + 1

113 | end

114 | if count == nums[i] then

115 | if i < #nums then

116 | container = container:get(j)

117 | break

118 | elseif i == #nums then

119 | container:insert(new_layer, j + 1)

120 | return

121 | end

122 | end

123 | end

124 | end

125 | end

126 |

127 |

128 | -- Remove the layers of the network that occur after the last layer flagged with _ignore

129 | function M.trim_network(net)

130 | local function contains_ignore(layer)

131 | if torch.isTypeOf(layer, nn.Container) then

132 | local found = false

133 | for i = 1, layer:size() do

134 | found = found or contains_ignore(layer:get(i))

135 | end

136 | return found

137 | else

138 | return layer._ignore == true

139 | end

140 | end

141 | local last_layer = 0

142 | for i = 1, #net do

143 | if contains_ignore(net:get(i)) then

144 | last_layer = i

145 | end

146 | end

147 | local num_to_remove = #net - last_layer

148 | for i = 1, num_to_remove do

149 | net:remove()

150 | end

151 | return net

152 | end

153 |

154 |

155 | return M

156 |

157 |
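The ordering described for `compare_layer_strings` (a nested layer such as 3-2-3 sorts before its container 3-2) can be checked in plain Lua. The sketch below re-implements the comparison with `string.gmatch` instead of Torch's `string:split`; the helper name `to_nums` and the sample layer strings are illustrative only.

```lua
-- Convert "3-2-1" to {3, 2, 1} using plain-Lua pattern matching.
local function to_nums(layer_string)
  local nums = {}
  for piece in string.gmatch(layer_string, '[^-]+') do
    nums[#nums + 1] = tonumber(piece)
  end
  return nums
end

-- Compare component by component; if one string is a prefix of the other,
-- the longer (more deeply nested) string comes first.
local function compare_layer_strings(s1, s2)
  local left, right = to_nums(s1), to_nums(s2)
  for i = 1, math.min(#left, #right) do
    if left[i] ~= right[i] then
      return left[i] < right[i]
    end
  end
  return #left > #right
end

local names = {'3-2', '1', '3-2-3', '2-1', '3-2-1', '3-3'}
table.sort(names, compare_layer_strings)
print(table.concat(names, ' '))  -- 1 2-1 3-2-1 3-2-3 3-2 3-3
```

Sorting children before their container means that a pass over the sorted list touches the innermost layers of a container before the container itself.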

--------------------------------------------------------------------------------

/modules/PerceptualCriterion.lua:

--------------------------------------------------------------------------------

1 | local layer_utils = require 'texture.layer_utils'

2 |

3 | local crit, parent = torch.class('nn.PerceptualCriterion', 'nn.Criterion')

4 |

5 | --[[

6 | Input: args is a table with the following keys:

7 | - cnn: A network giving the base CNN.

8 | - content_layers: An array of layer strings

9 | - content_weights: A list of the same length as content_layers

10 | - style_layers: An array of layer strings

11 | - style_weights: A list of the same length as style_layers

13 | - deepdream_layers: Array of layer strings

14 | - deepdream_weights: List of the same length as deepdream_layers

15 | --]]

16 | function crit:__init(args)

17 | args.content_layers = args.content_layers or {}

18 | args.style_layers = args.style_layers or {}

19 | args.deepdream_layers = args.deepdream_layers or {}

20 |

21 | self.net = args.cnn

22 | self.net:evaluate()

23 | self.content_loss_layers = {}

24 | self.style_loss_layers = {}

25 | self.deepdream_loss_layers = {}

26 |

27 | -- Set up content loss layers

28 | for i, layer_string in ipairs(args.content_layers) do

29 | local weight = args.content_weights[i]

30 | local content_loss_layer = nn.ContentLoss(weight)

31 | layer_utils.insert_after(self.net, layer_string, content_loss_layer)

32 | table.insert(self.content_loss_layers, content_loss_layer)

33 | end

34 |

35 | -- Set up style loss layers

36 | for i, layer_string in ipairs(args.style_layers) do

37 | local weight = args.style_weights[i]

38 | local style_loss_layer = nn.StyleLoss(weight)

39 | layer_utils.insert_after(self.net, layer_string, style_loss_layer)

40 | table.insert(self.style_loss_layers, style_loss_layer)

41 | end

42 |

43 | layer_utils.trim_network(self.net)

44 | self.grad_net_output = torch.Tensor()

45 |

46 | end

47 |

48 |

49 | --[[

50 | target: Tensor of shape (1, 3, H, W) giving pixels for style target image

51 | --]]

52 | function crit:setStyleTarget(target)

53 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

54 | content_loss_layer:setMode('none')

55 | end

56 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

57 | style_loss_layer:setMode('capture')

58 | end

59 | self.net:forward(target)

60 | end

61 |

62 |

63 | --[[

64 | target: Tensor of shape (N, 3, H, W) giving pixels for content target images

65 | --]]

66 | function crit:setContentTarget(target)

67 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

68 | style_loss_layer:setMode('none')

69 | end

70 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

71 | content_loss_layer:setMode('capture')

72 | end

73 | self.net:forward(target)

74 | end

75 |

76 |

77 | function crit:setStyleWeight(weight)

78 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

79 | style_loss_layer.strength = weight

80 | end

81 | end

82 |

83 |

84 | function crit:setContentWeight(weight)

85 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

86 | content_loss_layer.strength = weight

87 | end

88 | end

89 |

90 |

91 | --[[

92 | Inputs:

93 | - input: Tensor of shape (N, 3, H, W) giving pixels for generated images

94 | - target: Table with the following keys:

95 | - content_target: Tensor of shape (N, 3, H, W)

96 | - style_target: Tensor of shape (1, 3, H, W)

97 | --]]

98 | function crit:updateOutput(input, target)

99 | if target.content_target then

100 | self:setContentTarget(target.content_target)

101 | end

102 | if target.style_target then

103 | self:setStyleTarget(target.style_target)

104 | end

105 |

106 | -- Make sure to set all content and style loss layers to loss mode before

107 | -- running the image forward.

108 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

109 | content_loss_layer:setMode('loss')

110 | end

111 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

112 | style_loss_layer:setMode('loss')

113 | end

114 |

115 | local output = self.net:forward(input)

116 |

117 | -- Set up a tensor of zeros to pass as gradient to net in backward pass

118 | self.grad_net_output:resizeAs(output):zero()

119 |

120 | -- Go through and add up losses

121 | self.total_content_loss = 0

122 | self.content_losses = {}

123 | self.total_style_loss = 0

124 | self.style_losses = {}

125 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

126 | self.total_content_loss = self.total_content_loss + content_loss_layer.loss

127 | table.insert(self.content_losses, content_loss_layer.loss)

128 | end

129 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

130 | self.total_style_loss = self.total_style_loss + style_loss_layer.loss

131 | table.insert(self.style_losses, style_loss_layer.loss)

132 | end

133 |

134 | self.output = self.total_style_loss + self.total_content_loss

135 | return self.output

136 | end

137 |

138 |

139 | function crit:updateGradInput(input, target)

140 | self.gradInput = self.net:updateGradInput(input, self.grad_net_output)

141 | return self.gradInput

142 | end

143 |

144 |

--------------------------------------------------------------------------------

/experiments/models/hang.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | require 'texture'

17 |

18 | local pad = nn.SpatialReflectionPadding

19 | local normalization = nn.InstanceNormalization

20 | local layer_utils = require 'texture.layer_utils'

21 |

22 | local M = {}

23 |

24 | function M.createModel(opt)

25 | -- Upvalue shared by the block builders below; tracks the current number of channels

26 | local iChannels

27 |

28 | -- The shortcut layer is either identity or 1x1 convolution

29 | local function shortcut(nInputPlane, nOutputPlane, stride)

30 | if nInputPlane ~= nOutputPlane then

31 | return nn.Sequential()

32 | :add(nn.SpatialConvolution(nInputPlane, nOutputPlane, 1, 1, stride, stride))

33 | else

34 | return nn.Identity()

35 | end

36 | end

37 |

38 | local function full_shortcut(nInputPlane, nOutputPlane, stride)

39 | if nInputPlane ~= nOutputPlane or stride ~= 1 then

40 | return nn.Sequential()

41 | --:add(pad(1,0,1,0))

42 | :add(nn.SpatialUpSamplingNearest(stride))

43 | :add(nn.SpatialConvolution(nInputPlane, nOutputPlane, 1, 1, 1, 1))

44 | --:add(nn.SpatialFullConvolution(nInputPlane, nOutputPlane, 1, 1, stride, stride, 1, 1, 1, 1))

45 | else

46 | return nn.Identity()

47 | end

48 | end

49 |

50 | local function basic_block(n, stride)

51 | stride = stride or 1

52 | local nInputPlane = iChannels

53 | iChannels = n

54 | -- Convolutions

55 | local conv_block = nn.Sequential()

56 |

57 | conv_block:add(normalization(nInputPlane))

58 | conv_block:add(nn.ReLU(true))

59 | conv_block:add(pad(1, 1, 1, 1))

60 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 3, 3, stride, stride, 0, 0))

61 |

62 | conv_block:add(normalization(n))

63 | conv_block:add(nn.ReLU(true))

64 | conv_block:add(pad(1, 1, 1, 1))

65 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

66 |

67 | local concat = nn.ConcatTable():add(conv_block):add(shortcut(nInputPlane, n, stride))

68 |

69 | -- Sum

70 | local res_block = nn.Sequential()

71 | res_block:add(concat)

72 | res_block:add(nn.CAddTable())

73 | return res_block

74 | end

75 |

76 | local function bottleneck(n, stride)

77 | stride = stride or 1

78 | local nInputPlane = iChannels

79 | iChannels = 4 * n

80 | -- Convolutions

81 | local conv_block = nn.Sequential()

82 |

83 | conv_block:add(normalization(nInputPlane))

84 | conv_block:add(nn.ReLU(true))

85 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 1, 1, 1, 1, 0, 0))

86 |

87 | conv_block:add(normalization(n))

88 | conv_block:add(nn.ReLU(true))

89 | conv_block:add(pad(1, 1, 1, 1))

90 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, stride, stride, 0, 0))

91 |

92 | conv_block:add(normalization(n))

93 | conv_block:add(nn.ReLU(true))

94 | conv_block:add(nn.SpatialConvolution(n, n*4, 1, 1, 1, 1, 0, 0))

95 |

96 | local concat = nn.ConcatTable():add(conv_block):add(shortcut(nInputPlane, n*4, stride))

97 |

98 | -- Sum

99 | local res_block = nn.Sequential()

100 | res_block:add(concat)

101 | res_block:add(nn.CAddTable())

102 | return res_block

103 | end

104 |

105 | local function full_bottleneck(n, stride)

106 | stride = stride or 1

107 | local nInputPlane = iChannels

108 | iChannels = 4 * n

109 | -- Convolutions

110 | local conv_block = nn.Sequential()

111 |

112 | conv_block:add(normalization(nInputPlane))

113 | conv_block:add(nn.ReLU(true))

114 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 1, 1, 1, 1, 0, 0))

115 |

116 | conv_block:add(normalization(n))

117 | conv_block:add(nn.ReLU(true))

118 |

119 | if stride~=1 then

120 | conv_block:add(nn.SpatialUpSamplingNearest(stride))

121 | conv_block:add(pad(1, 1, 1, 1))

122 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

123 | else

124 | conv_block:add(pad(1, 1, 1, 1))

125 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

126 | end

127 | conv_block:add(normalization(n))

128 | conv_block:add(nn.ReLU(true))

129 | conv_block:add(nn.SpatialConvolution(n, n*4, 1, 1, 1, 1, 0, 0))

130 |

131 | local concat = nn.ConcatTable()

132 | :add(conv_block)

133 | :add(full_shortcut(nInputPlane, n*4, stride))

134 |

135 | -- Sum

136 | local res_block = nn.Sequential()

137 | res_block:add(concat)

138 | res_block:add(nn.CAddTable())

139 | return res_block

140 | end

141 |

142 | local function layer(block, features, count, stride)

143 | local s = nn.Sequential()

144 | for i=1,count do

145 | s:add(block(features, i==1 and stride or 1))

146 | end

147 | return s

148 | end

149 |

150 | local model = nn.Sequential()

151 | model.cNetsNum = {}

152 |

153 | -- 256x256

154 | model:add(normalization(3))

155 | model:add(pad(3, 3, 3, 3))

156 | model:add(nn.SpatialConvolution(3, 64, 7, 7, 1, 1, 0, 0))

157 | model:add(normalization(64))

158 | model:add(nn.ReLU(true))

159 |

160 | iChannels = 64

161 | local block = bottleneck -- basic_block

162 |

163 | model:add(layer(block, 32, 1, 2))

164 | model:add(layer(block, 64, 1, 2))

165 |

166 | -- 64x64x256 for a 256x256 input (two stride-2 bottlenecks, 4*64 channels)

167 | model:add(nn.Inspiration(iChannels))

168 | table.insert(model.cNetsNum,#model)

169 | model:add(normalization(iChannels))

170 | model:add(nn.ReLU(true))

171 |

172 | for i = 1,opt.model_nres do

173 | model:add(layer(block, 64, 1, 1))

174 | end

175 |

176 | block = full_bottleneck

177 | model:add(layer(block, 32, 1, 2))

178 | model:add(layer(block, 16, 1, 2))

179 |

180 | model:add(normalization(64))

181 | model:add(nn.ReLU(true))

182 |

183 | model:add(pad(3, 3, 3, 3))

184 | model:add(nn.SpatialConvolution(64, 3, 7, 7, 1, 1, 0, 0))

185 |

186 | model:add(nn.TotalVariation(opt.tv_strength))

187 |

188 | function model:setTarget(feat, dtype)

189 | model.modules[model.cNetsNum[1]]:setTarget(feat[3]:type(dtype))

190 | end

191 | return model

192 | end

193 |

194 | function M.createCNets(opt)

195 | -- The descriptive network in the paper

196 | local cnet = torch.load(opt.loss_network)

197 | cnet:evaluate()

198 | cnet.style_layers = {}

199 |

200 | -- Set up calibrate layers

201 | for i, layer_string in ipairs(opt.style_layers) do

202 | local calibrator = nn.Calibrate()

203 | layer_utils.insert_after(cnet, layer_string, calibrator)

204 | table.insert(cnet.style_layers, calibrator)

205 | end

206 | layer_utils.trim_network(cnet)

207 |

208 | function cnet:calibrate(input)

209 | cnet:forward(input)

210 | local feat = {}

211 | for i, calibrator in ipairs(cnet.style_layers) do

212 | table.insert(feat, calibrator:getGram())

213 | end

214 | return feat

215 | end

216 |

217 | return cnet

218 | end

219 |

220 | return M

221 |
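To see how `iChannels` and the strides in `createModel` determine the tensor shapes, the plain-Lua sketch below traces channels and spatial size through the layer sequence. It does not build any Torch modules. The function name `trace_shapes`, the 256x256 input, and the choice of 6 residual blocks are assumptions for illustration, and the halving/doubling arithmetic assumes an input size divisible by 4.

```lua
-- Trace {name, channels, size} through the generator defined in createModel.
local function trace_shapes(size, nres)
  local c, h = 64, size  -- 7x7 stem: stride 1 with reflection padding keeps the size
  local shapes = {}
  local function record(name)
    shapes[#shapes + 1] = {name = name, channels = c, size = h}
  end
  -- bottleneck(n, stride): 4*n output channels, size divided by stride
  local function down(n, stride)
    c, h = 4 * n, math.floor(h / stride)
  end
  -- full_bottleneck(n, stride): 4*n output channels, nearest upsampling by stride
  local function up(n, stride)
    c, h = 4 * n, h * stride
  end

  record('stem')
  down(32, 2); record('bottleneck(32, 2)')
  down(64, 2); record('bottleneck(64, 2)')
  record('Inspiration')  -- CxC transform; shape unchanged
  for _ = 1, nres do
    down(64, 1)
  end
  record('residual blocks')
  up(32, 2); record('full_bottleneck(32, 2)')
  up(16, 2); record('full_bottleneck(16, 2)')
  c = 3; record('output conv')
  return shapes
end

local shapes = trace_shapes(256, 6)
for _, s in ipairs(shapes) do
  print(string.format('%-24s %3d channels, %3d x %3d', s.name, s.channels, s.size, s.size))
end
```

Under these assumptions the Inspiration layer operates on 256 channels, which is why `nn.Inspiration(iChannels)` allocates 256x256 `Weight` and `target` matrices, and the decoder returns to 64 channels before the final 7x7 convolution.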

--------------------------------------------------------------------------------

/experiments/main.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | require 'texture'

17 | require 'image'

18 | require 'optim'

19 |

20 | require 'utils.DataLoader'

21 |

22 | local utils = require 'utils.utils'

23 | local preprocess = require 'utils.preprocess'

24 | local opts = require 'opts'

25 | local imgLoader = require 'utils.getImages'

26 |

27 | function main()

28 | local opt = opts.parse(arg)

29 | -- Parse layer strings and weights

30 | opt.content_layers, opt.content_weights =

31 | utils.parse_layers(opt.content_layers, opt.content_weights)

32 | opt.style_layers, opt.style_weights =

33 | utils.parse_layers(opt.style_layers, opt.style_weights)

34 |

35 | -- Figure out preprocessing

36 | if not preprocess[opt.preprocessing] then

37 | local msg = 'invalid -preprocessing "%s"; must be "vgg" or "resnet"'

38 | error(string.format(msg, opt.preprocessing))

39 | end

40 | preprocess = preprocess[opt.preprocessing]

41 |

42 | -- Figure out the backend

43 | local dtype, use_cudnn = utils.setup_gpu(opt.gpu, opt.backend, opt.use_cudnn == 1)

44 |

45 | -- Style images

46 | local styleLoader = imgLoader(opt.style_image_folder)

47 | local featpath = opt.style_image_folder .. '/feat.t7'

48 | if not paths.filep(featpath) then

49 | local extractor = require "extractGram"

50 | extractor.exec(opt)

51 | end

52 | local feat = torch.load(featpath)

53 | feat = nn.utils.recursiveType(feat, dtype)

54 |

55 | -- Build the model

56 | local model = nil

57 |

58 | -- Checkpoint

59 | if opt.resume ~= '' then

60 | print('Loading checkpoint from ' .. opt.resume)

61 | model = torch.load(opt.resume).model:type(dtype)

62 | else

63 | print('Initializing model from scratch')

64 | local models = require('models.' .. opt.model)

65 | model = models.createModel(opt):type(dtype)

66 | end

67 |

68 | if use_cudnn then cudnn.convert(model, cudnn) end

69 | model:training()

70 | print(model)

71 |

72 | -- Set up the perceptual loss function

73 | local percep_crit

74 | local loss_net = torch.load(opt.loss_network)

75 | local crit_args = {

76 | cnn = loss_net,

77 | style_layers = opt.style_layers,

78 | style_weights = opt.style_weights,

79 | content_layers = opt.content_layers,

80 | content_weights = opt.content_weights,

81 | }

82 | percep_crit = nn.PerceptualCriterion(crit_args):type(dtype)

83 |

84 | local loader = DataLoader(opt)

85 | local params, grad_params = model:getParameters()

86 |

87 | local function f(x)

88 | assert(x == params)

89 | grad_params:zero()

90 |

91 | local x, y = loader:getBatch('train')

92 | x, y = x:type(dtype), y:type(dtype)

93 |

94 | -- Run model forward

95 | local out = model:forward(x)

96 | local target = {content_target=y}

97 | local loss = percep_crit:forward(out, target)

98 | local grad_out = percep_crit:backward(out, target)

99 |

100 | -- Run model backward

101 | model:backward(x, grad_out)

102 |

103 | return loss, grad_params

104 | end

105 |

106 | local optim_state = {learningRate=opt.learning_rate}

107 | local train_loss_history = {}

108 | local val_loss_history = {}

109 | local val_loss_history_ts = {}

110 | local style_loss_history = nil

111 |

112 | style_loss_history = {}

113 | for i, k in ipairs(opt.style_layers) do

114 | style_loss_history[string.format('style-%d', k)] = {}

115 | end

116 | for i, k in ipairs(opt.content_layers) do

117 | style_loss_history[string.format('content-%d', k)] = {}

118 | end

119 |

120 | local style_weight = opt.style_weight

121 | for t = 1, opt.num_iterations do

122 | -- set Target Here

123 | if (t-1)%opt.style_iter == 0 then

124 | --print('Setting Style Target')

125 | local idx = (t-1)/opt.style_iter % #feat + 1

126 |

127 | local style_image = styleLoader:get(idx)

128 | style_image = image.scale(style_image, opt.style_image_size)

129 | style_image = preprocess.preprocess(style_image:add_dummy())

130 | percep_crit:setStyleTarget(style_image:type(dtype))

131 |

132 | local style_image_feat = feat[idx]

133 | model:setTarget(style_image_feat, dtype)

134 | end

135 | local epoch = t / loader.num_minibatches['train']

136 |

137 | local _, loss = optim.adam(f, params, optim_state)

138 |

139 | table.insert(train_loss_history, loss[1])

140 |

141 | for i, k in ipairs(opt.style_layers) do

142 | table.insert(style_loss_history[string.format('style-%d', k)],

143 | percep_crit.style_losses[i])

144 | end

145 | for i, k in ipairs(opt.content_layers) do

146 | table.insert(style_loss_history[string.format('content-%d', k)],

147 | percep_crit.content_losses[i])

148 | end

149 |

150 | print(string.format('Epoch %f, Iteration %d / %d, loss = %f',

151 | epoch, t, opt.num_iterations, loss[1]), optim_state.learningRate)

152 |

153 | if t % opt.checkpoint_every == 0 then

154 | -- Check loss on the validation set

155 | loader:reset('val')

156 | model:evaluate()

157 | local val_loss = 0

158 | print 'Running on validation set ... '

159 | local val_batches = opt.num_val_batches

160 | for j = 1, val_batches do

161 | local x, y = loader:getBatch('val')

162 | x, y = x:type(dtype), y:type(dtype)

163 | local out = model:forward(x)

164 | --y = shave_y(x, y, out)

165 |

166 | local percep_loss = 0

167 | percep_loss = percep_crit:forward(out, {content_target=y})

168 | val_loss = val_loss + percep_loss

169 | end

170 | val_loss = val_loss / val_batches

171 | print(string.format('val loss = %f', val_loss))

172 | table.insert(val_loss_history, val_loss)

173 | table.insert(val_loss_history_ts, t)

174 | model:training()

175 |

176 | -- Save a checkpoint

177 | local checkpoint = {

178 | opt=opt,

179 | train_loss_history=train_loss_history,

180 | val_loss_history=val_loss_history,

181 | val_loss_history_ts=val_loss_history_ts,

182 | style_loss_history=style_loss_history,

183 | }

184 | local filename = string.format('%s.json', opt.checkpoint_name)

185 | paths.mkdir(paths.dirname(filename))

186 | utils.write_json(filename, checkpoint)

187 |

188 | -- Save a torch checkpoint; convert the model to float first

189 | model:clearState()

190 | if use_cudnn then

191 | cudnn.convert(model, nn)

192 | end

193 | model:float()

194 | checkpoint.model = model

195 | filename = string.format('%s.t7', opt.checkpoint_name)

196 | torch.save(filename, checkpoint)

197 |

198 | -- Convert the model back

199 | model:type(dtype)

200 | if use_cudnn then

201 | cudnn.convert(model, cudnn)

202 | end

203 | params, grad_params = model:getParameters()

204 |

205 | collectgarbage()

206 | collectgarbage()

207 | end

208 |

209 | if opt.lr_decay_every > 0 and t % opt.lr_decay_every == 0 then

210 | local new_lr = opt.lr_decay_factor * optim_state.learningRate

211 | optim_state = {learningRate = new_lr}

212 | end

213 | end

214 | end

215 |

216 |

217 | main()

218 |

219 |
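The training loop above switches the style target every `opt.style_iter` iterations and cycles through the precomputed features in `feat`. A minimal plain-Lua sketch of that index arithmetic follows; `style_index` is a hypothetical helper, not part of the repository, and the values `2` and `3` are made up for illustration.

```lua
-- Hypothetical helper mirroring the index arithmetic in the training loop.
-- t is the 1-based iteration, style_iter the number of iterations per style,
-- num_styles the number of style images (#feat in the script).
local function style_index(t, style_iter, num_styles)
  -- math.floor keeps the result an integer for every t, not only when
  -- (t - 1) is an exact multiple of style_iter.
  return math.floor((t - 1) / style_iter) % num_styles + 1
end

-- Two iterations per style, three styles: the index walks 1..3 and wraps.
local schedule = {}
for t = 1, 7 do
  schedule[t] = style_index(t, 2, 3)
end
print(table.concat(schedule, ' '))
```

The training script only evaluates the expression when `(t-1) % opt.style_iter == 0`, so the division there is already exact; the `math.floor` here just makes the helper safe to call on any iteration.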

--------------------------------------------------------------------------------

/cmake/select_compute_arch.cmake:

--------------------------------------------------------------------------------

1 | # Synopsis:

2 | # CUDA_SELECT_NVCC_ARCH_FLAGS(out_variable [target_CUDA_architectures])

3 | # -- Selects GPU arch flags for nvcc based on target_CUDA_architectures

4 | # target_CUDA_architectures : Auto | Common | All | LIST(ARCH_AND_PTX ...)

5 | # - "Auto" detects local machine GPU compute arch at runtime.

6 | # - "Common" and "All" cover common and entire subsets of architectures

7 | # ARCH_AND_PTX : NAME | NUM.NUM | NUM.NUM(NUM.NUM) | NUM.NUM+PTX

8 | # NAME: Fermi Kepler Maxwell Kepler+Tegra Kepler+Tesla Maxwell+Tegra Pascal

9 | # NUM: Any number. Only those pairs are currently accepted by NVCC though:

10 | # 2.0 2.1 3.0 3.2 3.5 3.7 5.0 5.2 5.3 6.0 6.2

11 | # Returns LIST of flags to be added to CUDA_NVCC_FLAGS in ${out_variable}

12 | # Additionally, sets ${out_variable}_readable to the resulting numeric list

13 | # Example:

14 | # CUDA_SELECT_NVCC_ARCH_FLAGS(ARCH_FLAGS 3.0 3.5+PTX 5.2(5.0) Maxwell)

15 | # LIST(APPEND CUDA_NVCC_FLAGS ${ARCH_FLAGS})

16 | #

17 | # More info on CUDA architectures: https://en.wikipedia.org/wiki/CUDA

18 | #

19 |

20 | # This list will be used for CUDA_ARCH_NAME = All option

21 | set(CUDA_KNOWN_GPU_ARCHITECTURES "Fermi" "Kepler" "Maxwell")

22 |

23 | # This list will be used for CUDA_ARCH_NAME = Common option (enabled by default)

24 | set(CUDA_COMMON_GPU_ARCHITECTURES "3.0" "3.5" "5.0")

25 |

26 | if (CUDA_VERSION VERSION_GREATER "6.5")

27 | list(APPEND CUDA_KNOWN_GPU_ARCHITECTURES "Kepler+Tegra" "Kepler+Tesla" "Maxwell+Tegra")

28 | list(APPEND CUDA_COMMON_GPU_ARCHITECTURES "5.2")

29 | endif ()

30 |

31 | if (CUDA_VERSION VERSION_GREATER "7.5")

32 | list(APPEND CUDA_KNOWN_GPU_ARCHITECTURES "Pascal")

33 | list(APPEND CUDA_COMMON_GPU_ARCHITECTURES "6.0" "6.1" "6.1+PTX")

34 | else()

35 | list(APPEND CUDA_COMMON_GPU_ARCHITECTURES "5.2+PTX")

36 | endif ()

37 |

38 |

39 |

40 | ################################################################################################

41 | # A function for automatic detection of GPUs installed (if autodetection is enabled)

42 | # Usage:

43 | # CUDA_DETECT_INSTALLED_GPUS(OUT_VARIABLE)

44 | #

45 | function(CUDA_DETECT_INSTALLED_GPUS OUT_VARIABLE)

46 | if(NOT CUDA_GPU_DETECT_OUTPUT)

47 | set(cufile ${PROJECT_BINARY_DIR}/detect_cuda_archs.cu)

48 |

49 | file(WRITE ${cufile} ""

50 | "#include

--------------------------------------------------------------------------------

/modules/Inspiration.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | local Inspiration, parent = torch.class('nn.Inspiration', 'nn.Module')

17 |

18 | local function isint(x)

19 | return type(x) == 'number' and x == math.floor(x)

20 | end

21 |

22 | function Inspiration:__init(C)

23 | parent.__init(self)

24 | assert(self and C, 'should specify C')

25 | assert(isint(C), 'C should be an integer')

26 |

27 | self.C = C

28 | self.MM_WG = nn.MM()

29 | self.MM_PX = nn.MM(true, false)

30 | self.target = torch.Tensor(C,C)

31 | self.Weight = torch.Tensor(C,C)

32 | self.P = torch.Tensor(C,C)

33 |

34 | self.gradWeight = torch.Tensor(C, C)

35 | self.gradWG = {torch.Tensor(C, C), torch.Tensor(C, C)}

36 | self.gradPX = {torch.Tensor(), torch.Tensor()}

37 | self.gradInput = torch.Tensor()

38 | self:reset()

39 | end

40 |

41 | function Inspiration:reset(stdv)

42 | if stdv then

43 | stdv = stdv * math.sqrt(2)

44 | else

45 | stdv = 1./math.sqrt(self.C)

46 | end

47 | self.Weight:uniform(-stdv, stdv)

48 | self.target:uniform(-stdv, stdv)

49 | return self

50 | end

51 |

52 | function Inspiration:setTarget(nT)

53 | assert(self and nT, 'should specify a target')

54 | self.target = nT

55 | end

56 |

57 | function Inspiration:updateOutput(input)

58 | assert(self)

59 | -- P=WG Y=XP

60 | --self.output:resizeAs(input)

61 | if input:dim() == 3 then

62 | self.P = self.MM_WG:forward({self.Weight, self.target})

63 | self.output = self.MM_PX:forward({self.P, input:view(self.C,-1)}):viewAs(input)

64 | elseif input:dim() == 4 then

65 | local B = input:size(1)

66 | self.P = self.MM_WG:forward({self.Weight, self.target})

67 | self.output = self.MM_PX:forward({self.P:add_dummy():expand(B,self.C,self.C), input:view(B,self.C,-1)}):viewAs(input)

68 | else

69 | error('Unsupported dimension for Inspiration layer')

70 | end

71 | return self.output

72 | end

73 |

74 | function Inspiration:updateGradInput(input, gradOutput)

75 | assert(self and self.gradInput)

76 |

77 | --self.gradInput:resizeAs(input):fill(0)

78 | if input:dim() == 3 then

79 | self.gradPX = self.MM_PX:backward({self.P, input:view(self.C,-1)}, gradOutput:view(self.C,-1))

80 | elseif input:dim() == 4 then

81 | local B = input:size(1)

82 | self.gradPX = self.MM_PX:backward({self.P:add_dummy():expand(B,self.C,self.C), input:view(B,self.C,-1)}, gradOutput:view(B,self.C,-1))

83 | else

84 | error('Unsupported dimension for Inspiration layer')

85 | end

86 |

87 | self.gradInput = self.gradPX[2]:viewAs(input)

88 | return self.gradInput

89 | end

90 |

91 | function Inspiration:accGradParameters(input, gradOutput, scale)

92 | assert(self)

93 | scale = scale or 1

94 |

95 | if input:dim() == 3 then

96 | self.gradWG = self.MM_WG:backward({self.Weight, self.target}, self.gradPX[1])

97 | self.gradWeight = scale * self.gradWG[1]

98 | elseif input:dim() == 4 then

99 | self.gradWG = self.MM_WG:backward({self.Weight, self.target}, self.gradPX[1]:sum(1):squeeze())

100 | self.gradWeight = scale * self.gradWG[1]

101 | else

102 | error('Unsupported dimension for Inspiration layer')

103 | end

104 | end

105 |

106 | function Inspiration:__tostring__()

107 | return torch.type(self) ..

108 | string.format(

109 | '(%dxHxW, -> %dxHxW)',

110 | self.C, self.C

111 | )

112 | end

113 |
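The forward and backward passes of the Inspiration layer above can be summarized in matrix form. This is a reading of the code rather than a statement from the authors: it assumes `nn.MM(true, false)` transposes its first argument, that the input is reshaped to $X \in \mathbb{R}^{C \times HW}$, and that $G$ denotes `self.target` and $W$ denotes `self.Weight`.

```latex
% Forward pass (updateOutput)
P = W G, \qquad Y = P^{\top} X, \qquad X, Y \in \mathbb{R}^{C \times HW}

% Backward pass (updateGradInput, accGradParameters)
\frac{\partial L}{\partial X} = P \, \frac{\partial L}{\partial Y}, \qquad
\frac{\partial L}{\partial P} = X \left( \frac{\partial L}{\partial Y} \right)^{\top}, \qquad
\frac{\partial L}{\partial W} = \frac{\partial L}{\partial P} \, G^{\top}
```

For a batched input, the same $P$ is shared by every sample, so $\partial L / \partial P$ is summed over the batch dimension before it is propagated to $W$, which matches the `sum(1)` call in `accGradParameters`.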

--------------------------------------------------------------------------------

/experiments/utils/utils.lua:

--------------------------------------------------------------------------------

1 | require 'torch'

2 | require 'nn'

3 | local cjson = require 'cjson'

4 |

5 |

6 | local M = {}

7 |

8 |

9 | -- Parse a string of comma-separated numbers

10 | -- For example convert "1.0,3.14" to {1.0, 3.14}

11 | function M.parse_num_list(s)

12 | local nums = {}

13 | for _, ss in ipairs(s:split(',')) do

14 | table.insert(nums, tonumber(ss))

15 | end

16 | return nums

17 | end

18 |

19 |

20 | -- Parse a layer string and associated weights string.

21 | -- The layers string is a string of comma-separated layer strings, and the

22 | -- weight string contains comma-separated numbers. If the weights string

23 | -- contains only a single number it is duplicated to be the same length as the

24 | -- layers.

25 | function M.parse_layers(layers_string, weights_string)

26 | local layers = layers_string:split(',')

27 | local weights = M.parse_num_list(weights_string)

28 | if #weights == 1 and #layers > 1 then

29 | -- Duplicate the same weight for all layers

30 | local w = weights[1]

31 | weights = {}

32 | for i = 1, #layers do

33 | table.insert(weights, w)

34 | end

35 | elseif #weights ~= #layers then

36 | local msg = 'size mismatch between layers "%s" and weights "%s"'

37 | error(string.format(msg, layers_string, weights_string))

38 | end

39 | return layers, weights

40 | end

41 |

42 |

43 | function M.setup_gpu(gpu, backend, use_cudnn)

44 | local dtype = 'torch.FloatTensor'

45 | if gpu >= 0 then

46 | if backend == 'cuda' then

47 | require 'cutorch'

48 | require 'cunn'

49 | cutorch.setDevice(gpu + 1)

50 | dtype = 'torch.CudaTensor'

51 | if use_cudnn then

52 | require 'cudnn'

53 | cudnn.benchmark = true

54 | end

55 | elseif backend == 'opencl' then

56 | require 'cltorch'

57 | require 'clnn'

58 | cltorch.setDevice(gpu + 1)

59 | dtype = torch.Tensor():cl():type()

60 | use_cudnn = false

61 | end

62 | else

63 | use_cudnn = false

64 | end

65 | return dtype, use_cudnn

66 | end

67 |

68 |

69 | function M.clear_gradients(m)

70 | if torch.isTypeOf(m, nn.Container) then

71 | m:applyToModules(M.clear_gradients)

72 | end

73 | if m.weight and m.gradWeight then

74 | m.gradWeight = m.gradWeight.new()

75 | end

76 | if m.bias and m.gradBias then

77 | m.gradBias = m.gradBias.new()

78 | end

79 | end

80 |

81 |

82 | function M.restore_gradients(m)

83 | if torch.isTypeOf(m, nn.Container) then

84 | m:applyToModules(M.restore_gradients)

85 | end

86 | if m.weight and m.gradWeight then

87 | m.gradWeight = m.gradWeight.new(#m.weight):zero()

88 | end

89 | if m.bias and m.gradBias then

90 | m.gradBias = m.gradBias.new(#m.bias):zero()

91 | end

92 | end

93 |

94 |

95 | function M.read_json(path)

96 | local file = io.open(path, 'r')

97 | local text = file:read('*a') -- read the whole file, not just the first line

98 | file:close()

99 | local info = cjson.decode(text)

100 | return info

101 | end

102 |

103 |

104 | function M.write_json(path, j)

105 | cjson.encode_sparse_array(true, 2, 10)

106 | local text = cjson.encode(j)

107 | local file = io.open(path, 'w')

108 | file:write(text)

109 | file:close()

110 | end

111 |

112 | local IMAGE_EXTS = {'jpg', 'jpeg', 'png', 'ppm', 'pgm'}

113 | function M.is_image_file(filename)

114 | -- Hidden files are not images

115 | if string.sub(filename, 1, 1) == '.' then

116 | return false

117 | end

118 | -- Check against a list of known image extensions

119 | local ext = string.lower(paths.extname(filename) or "")

120 | for _, image_ext in ipairs(IMAGE_EXTS) do

121 | if ext == image_ext then

122 | return true

123 | end

124 | end

125 | return false

126 | end

127 |

128 |

129 | function M.median_filter(img, r)

130 | local u = img:unfold(2, r, 1):contiguous()

131 | u = u:unfold(3, r, 1):contiguous()

132 | local HH, WW = u:size(2), u:size(3)

133 | local dtype = u:type()

134 | -- Median is not defined for CudaTensors, cast to float and back

135 | local med = u:view(3, HH, WW, r * r):float():median():type(dtype)

136 | return med[{{}, {}, {}, 1}]

137 | end

138 |

139 |

140 | return M

141 |

142 |
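The weight-broadcasting rule described for `parse_layers` above (a single weight is repeated for every layer, any other length mismatch is an error) can be tried outside Torch. The following is a minimal plain-Lua sketch; `split` is a hypothetical stand-in for the string `split` method the original code relies on, and the layer and weight strings are made-up inputs.

```lua
-- Plain-Lua stand-in for the split method used by the original code
-- (hypothetical helper; sep is assumed to be a single ordinary character).
local function split(s, sep)
  local parts = {}
  for piece in string.gmatch(s, '([^' .. sep .. ']+)') do
    table.insert(parts, piece)
  end
  return parts
end

-- Same rule as parse_layers: one weight is broadcast to all layers,
-- otherwise the two lists must have equal length.
local function parse_layers(layers_string, weights_string)
  local layers = split(layers_string, ',')
  local weights = {}
  for _, w in ipairs(split(weights_string, ',')) do
    table.insert(weights, tonumber(w))
  end
  if #weights == 1 and #layers > 1 then
    local w = weights[1]
    weights = {}
    for i = 1, #layers do
      weights[i] = w
    end
  elseif #weights ~= #layers then
    local msg = 'size mismatch between layers "%s" and weights "%s"'
    error(string.format(msg, layers_string, weights_string))
  end
  return layers, weights
end

-- One weight for four layers: the weight is repeated four times.
local layers, weights = parse_layers('4,9,16,23', '5.0')
print(#layers, #weights, weights[4])
```

Calling the sketch with three layers and two weights raises the size-mismatch error instead of silently padding.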

--------------------------------------------------------------------------------

/modules/layer_utils.lua:

--------------------------------------------------------------------------------

1 | require 'nn'

2 |

3 | --[[

4 | Utility functions for getting and inserting layers into models composed of

5 | hierarchies of nn Modules and nn Containers. In such a model, we can uniquely

6 | address each module with a unique "layer string", which is a series of integers

7 | separated by dashes. This is easiest to understand with an example: consider

8 | the following network; we have labeled each module with its layer string:

9 |

10 | nn.Sequential {

11 | (1) nn.SpatialConvolution

12 | (2) nn.Sequential {

13 | (2-1) nn.SpatialConvolution

14 | (2-2) nn.SpatialConvolution

15 | }

16 | (3) nn.Sequential {

17 | (3-1) nn.SpatialConvolution

18 | (3-2) nn.Sequential {

19 | (3-2-1) nn.SpatialConvolution

20 | (3-2-2) nn.SpatialConvolution

21 | (3-2-3) nn.SpatialConvolution

22 | }

23 | (3-3) nn.SpatialConvolution

24 | }

25 | (4) nn.View

26 | (5) nn.Linear

27 | }

28 |

29 | Any layers that have the instance variable _ignore set to true are ignored

30 | when computing layer strings for layers. This way, we can insert new layers into

31 | a network without changing the layer strings of existing layers.

32 | --]]

33 | local M = {}

34 |

35 |

36 | --[[

37 | Convert a layer string to an array of integers.

38 |

39 | For example layer_string_to_nums("1-23-4") = {1, 23, 4}.

40 | --]]

41 | function M.layer_string_to_nums(layer_string)

42 | local nums = {}

43 | for _, s in ipairs(layer_string:split('-')) do

44 | table.insert(nums, tonumber(s))

45 | end

46 | return nums

47 | end

48 |

49 |

50 | --[[

51 | Comparison function for layer strings that is compatible with table.sort.

52 | In this comparison scheme, 2-3 comes AFTER 2-3-X for all X.

53 |

54 | Input:

55 | - s1, s2: Two layer strings.

56 |

57 | Output:

58 | - true if s1 should come before s2 in sorted order; false otherwise.

59 | --]]

60 | function M.compare_layer_strings(s1, s2)

61 | local left = M.layer_string_to_nums(s1)

62 | local right = M.layer_string_to_nums(s2)

63 | local out = nil

64 | for i = 1, math.min(#left, #right) do

65 | if left[i] < right[i] then

66 | out = true

67 | elseif left[i] > right[i] then

68 | out = false

69 | end

70 | if out ~= nil then break end

71 | end

72 |

73 | if out == nil then

74 | out = (#left > #right)

75 | end

76 | return out

77 | end

78 |

79 |

80 | --[[

81 | Get a layer from the network net using a layer string.

82 | --]]

83 | function M.get_layer(net, layer_string)

84 | local nums = M.layer_string_to_nums(layer_string)

85 | local layer = net

86 | for i, num in ipairs(nums) do

87 | local count = 0

88 | for j = 1, #layer do

89 | if not layer:get(j)._ignore then

90 | count = count + 1

91 | end

92 | if count == num then

93 | layer = layer:get(j)

94 | break

95 | end

96 | end

97 | end

98 | return layer

99 | end

100 |

101 |

102 | -- Insert a new layer immediately after the layer specified by a layer string.

103 | -- Any layers inserted this way are flagged with a special variable

104 | function M.insert_after(net, layer_string, new_layer)

105 | new_layer._ignore = true

106 | local nums = M.layer_string_to_nums(layer_string)

107 | local container = net

108 | for i = 1, #nums do

109 | local count = 0

110 | for j = 1, #container do

111 | if not container:get(j)._ignore then

112 | count = count + 1

113 | end

114 | if count == nums[i] then

115 | if i < #nums then

116 | container = container:get(j)

117 | break

118 | elseif i == #nums then

119 | container:insert(new_layer, j + 1)

120 | return

121 | end

122 | end

123 | end

124 | end

125 | end

126 |

127 |

128 | -- Remove the layers of the network that occur after the last _ignore

129 | function M.trim_network(net)

130 | local function contains_ignore(layer)

131 | if torch.isTypeOf(layer, nn.Container) then

132 | local found = false

133 | for i = 1, layer:size() do

134 | found = found or contains_ignore(layer:get(i))

135 | end

136 | return found

137 | else

138 | return layer._ignore == true

139 | end

140 | end

141 | local last_layer = 0

142 | for i = 1, #net do

143 | if contains_ignore(net:get(i)) then

144 | last_layer = i

145 | end

146 | end

147 | local num_to_remove = #net - last_layer

148 | for i = 1, num_to_remove do

149 | net:remove()

150 | end

151 | return net

152 | end

153 |

154 |

155 | return M

156 |

157 |
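The ordering produced by `compare_layer_strings` above (a nested string such as 2-3-X sorts before its parent 2-3) is easiest to see on a small list. The sketch below re-implements the comparison in plain Lua, without Torch's string helpers; `to_nums` and the sample layer strings are illustrative assumptions.

```lua
-- Convert "3-2-1" to {3, 2, 1} using only the standard string library.
local function to_nums(layer_string)
  local nums = {}
  for s in string.gmatch(layer_string, '[^%-]+') do
    table.insert(nums, tonumber(s))
  end
  return nums
end

-- Same ordering as compare_layer_strings: compare element by element;
-- on a shared prefix the longer (more deeply nested) string comes first.
local function compare_layer_strings(s1, s2)
  local left, right = to_nums(s1), to_nums(s2)
  for i = 1, math.min(#left, #right) do
    if left[i] ~= right[i] then
      return left[i] < right[i]
    end
  end
  return #left > #right
end

local names = {'3-2', '1', '3-2-3', '2-1', '3-2-1'}
table.sort(names, compare_layer_strings)
print(table.concat(names, ' '))
```

Sorting children ahead of their parent container is one way to keep insertions into nested modules from disturbing positions that still have to be visited; whether that is the authors' motivation is not stated in the source.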

--------------------------------------------------------------------------------

/modules/PerceptualCriterion.lua:

--------------------------------------------------------------------------------

1 | local layer_utils = require 'texture.layer_utils'

2 |

3 | local crit, parent = torch.class('nn.PerceptualCriterion', 'nn.Criterion')

4 |

5 | --[[

6 | Input: args is a table with the following keys:

7 | - cnn: A network giving the base CNN.

8 | - content_layers: An array of layer strings

9 | - content_weights: A list of the same length as content_layers

10 | - style_layers: An array of layer strings

11 | - style_weights: A list of the same length as style_layers

12 | "mean" or "gram"

13 | - deepdream_layers: Array of layer strings

14 | - deepdream_weights: List of the same length as deepdream_layers

15 | --]]

16 | function crit:__init(args)

17 | args.content_layers = args.content_layers or {}

18 | args.style_layers = args.style_layers or {}

19 | args.deepdream_layers = args.deepdream_layers or {}

20 |

21 | self.net = args.cnn

22 | self.net:evaluate()

23 | self.content_loss_layers = {}

24 | self.style_loss_layers = {}

25 | self.deepdream_loss_layers = {}

26 |

27 | -- Set up content loss layers

28 | for i, layer_string in ipairs(args.content_layers) do

29 | local weight = args.content_weights[i]

30 | local content_loss_layer = nn.ContentLoss(weight)

31 | layer_utils.insert_after(self.net, layer_string, content_loss_layer)

32 | table.insert(self.content_loss_layers, content_loss_layer)

33 | end

34 |

35 | -- Set up style loss layers

36 | for i, layer_string in ipairs(args.style_layers) do

37 | local weight = args.style_weights[i]

38 | local style_loss_layer = nn.StyleLoss(weight)

39 | layer_utils.insert_after(self.net, layer_string, style_loss_layer)

40 | table.insert(self.style_loss_layers, style_loss_layer)

41 | end

42 |

43 | layer_utils.trim_network(self.net)

44 | self.grad_net_output = torch.Tensor()

45 |

46 | end

47 |

48 |

49 | --[[

50 | target: Tensor of shape (1, 3, H, W) giving pixels for style target image

51 | --]]

52 | function crit:setStyleTarget(target)

53 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

54 | content_loss_layer:setMode('none')

55 | end

56 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

57 | style_loss_layer:setMode('capture')

58 | end

59 | self.net:forward(target)

60 | end

61 |

62 |

63 | --[[

64 | target: Tensor of shape (N, 3, H, W) giving pixels for content target images

65 | --]]

66 | function crit:setContentTarget(target)

67 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

68 | style_loss_layer:setMode('none')

69 | end

70 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

71 | content_loss_layer:setMode('capture')

72 | end

73 | self.net:forward(target)

74 | end

75 |

76 |

77 | function crit:setStyleWeight(weight)

78 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

79 | style_loss_layer.strength = weight

80 | end

81 | end

82 |

83 |

84 | function crit:setContentWeight(weight)

85 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

86 | content_loss_layer.strength = weight

87 | end

88 | end

89 |

90 |

91 | --[[

92 | Inputs:

93 | - input: Tensor of shape (N, 3, H, W) giving pixels for generated images

94 | - target: Table with the following keys:

95 | - content_target: Tensor of shape (N, 3, H, W)

96 | - style_target: Tensor of shape (1, 3, H, W)

97 | --]]

98 | function crit:updateOutput(input, target)

99 | if target.content_target then

100 | self:setContentTarget(target.content_target)

101 | end

102 | if target.style_target then

103 | self:setStyleTarget(target.style_target)

104 | end

105 |

106 | -- Make sure to set all content and style loss layers to loss mode before

107 | -- running the image forward.

108 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

109 | content_loss_layer:setMode('loss')

110 | end

111 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

112 | style_loss_layer:setMode('loss')

113 | end

114 |

115 | local output = self.net:forward(input)

116 |

117 | -- Set up a tensor of zeros to pass as gradient to net in backward pass

118 | self.grad_net_output:resizeAs(output):zero()

119 |

120 | -- Go through and add up losses

121 | self.total_content_loss = 0

122 | self.content_losses = {}

123 | self.total_style_loss = 0

124 | self.style_losses = {}

125 | for i, content_loss_layer in ipairs(self.content_loss_layers) do

126 | self.total_content_loss = self.total_content_loss + content_loss_layer.loss

127 | table.insert(self.content_losses, content_loss_layer.loss)

128 | end

129 | for i, style_loss_layer in ipairs(self.style_loss_layers) do

130 | self.total_style_loss = self.total_style_loss + style_loss_layer.loss

131 | table.insert(self.style_losses, style_loss_layer.loss)

132 | end

133 |

134 | self.output = self.total_style_loss + self.total_content_loss

135 | return self.output

136 | end

137 |

138 |

139 | function crit:updateGradInput(input, target)

140 | self.gradInput = self.net:updateGradInput(input, self.grad_net_output)

141 | return self.gradInput

142 | end

143 |

144 |

--------------------------------------------------------------------------------

/experiments/models/hang.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | require 'texture'

17 |

18 | local pad = nn.SpatialReflectionPadding

19 | local normalization = nn.InstanceNormalization

20 | local layer_utils = require 'texture.layer_utils'

21 |

22 | local M = {}

23 |

24 | function M.createModel(opt)

25 | -- Local upvalue, shared by the block builders, tracking the input channels

26 | local iChannels

27 |

28 | -- The shortcut layer is either identity or 1x1 convolution

29 | local function shortcut(nInputPlane, nOutputPlane, stride)

30 | if nInputPlane ~= nOutputPlane then

31 | return nn.Sequential()

32 | :add(nn.SpatialConvolution(nInputPlane, nOutputPlane, 1, 1, stride, stride))

33 | else

34 | return nn.Identity()

35 | end

36 | end

37 |

38 | local function full_shortcut(nInputPlane, nOutputPlane, stride)

39 | if nInputPlane ~= nOutputPlane or stride ~= 1 then

40 | return nn.Sequential()

41 | --:add(pad(1,0,1,0))

42 | :add(nn.SpatialUpSamplingNearest(stride))

43 | :add(nn.SpatialConvolution(nInputPlane, nOutputPlane, 1, 1, 1, 1))

44 | --:add(nn.SpatialFullConvolution(nInputPlane, nOutputPlane, 1, 1, stride, stride, 1, 1, 1, 1))

45 | else

46 | return nn.Identity()

47 | end

48 | end

49 |

50 | local function basic_block(n, stride)

51 | stride = stride or 1

52 | local nInputPlane = iChannels

53 | iChannels = n

54 | -- Convolutions

55 | local conv_block = nn.Sequential()

56 |

57 | conv_block:add(normalization(nInputPlane))

58 | conv_block:add(nn.ReLU(true))

59 | conv_block:add(pad(1, 1, 1, 1))

60 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 3, 3, stride, stride, 0, 0))

61 |

62 | conv_block:add(normalization(n))

63 | conv_block:add(nn.ReLU(true))

64 | conv_block:add(pad(1, 1, 1, 1))

65 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

66 |

67 | local concat = nn.ConcatTable():add(conv_block):add(shortcut(nInputPlane, n, stride))

68 |

69 | -- Sum

70 | local res_block = nn.Sequential()

71 | res_block:add(concat)

72 | res_block:add(nn.CAddTable())

73 | return res_block

74 | end

75 |

76 | local function bottleneck(n, stride)

77 | stride = stride or 1

78 | local nInputPlane = iChannels

79 | iChannels = 4 * n

80 | -- Convolutions

81 | local conv_block = nn.Sequential()

82 |

83 | conv_block:add(normalization(nInputPlane))

84 | conv_block:add(nn.ReLU(true))

85 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 1, 1, 1, 1, 0, 0))

86 |

87 | conv_block:add(normalization(n))

88 | conv_block:add(nn.ReLU(true))

89 | conv_block:add(pad(1, 1, 1, 1))

90 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, stride, stride, 0, 0))

91 |

92 | conv_block:add(normalization(n))

93 | conv_block:add(nn.ReLU(true))

94 | conv_block:add(nn.SpatialConvolution(n, n*4, 1, 1, 1, 1, 0, 0))

95 |

96 | local concat = nn.ConcatTable():add(conv_block):add(shortcut(nInputPlane, n*4, stride))

97 |

98 | -- Sum

99 | local res_block = nn.Sequential()

100 | res_block:add(concat)

101 | res_block:add(nn.CAddTable())

102 | return res_block

103 | end

104 |

105 | local function full_bottleneck(n, stride)

106 | stride = stride or 1

107 | local nInputPlane = iChannels

108 | iChannels = 4 * n

109 | -- Convolutions

110 | local conv_block = nn.Sequential()

111 |

112 | conv_block:add(normalization(nInputPlane))

113 | conv_block:add(nn.ReLU(true))

114 | conv_block:add(nn.SpatialConvolution(nInputPlane, n, 1, 1, 1, 1, 0, 0))

115 |

116 | conv_block:add(normalization(n))

117 | conv_block:add(nn.ReLU(true))

118 |

119 | if stride~=1 then

120 | conv_block:add(nn.SpatialUpSamplingNearest(stride))

121 | conv_block:add(pad(1, 1, 1, 1))

122 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

123 | else

124 | conv_block:add(pad(1, 1, 1, 1))

125 | conv_block:add(nn.SpatialConvolution(n, n, 3, 3, 1, 1, 0, 0))

126 | end

127 | conv_block:add(normalization(n))

128 | conv_block:add(nn.ReLU(true))

129 | conv_block:add(nn.SpatialConvolution(n, n*4, 1, 1, 1, 1, 0, 0))

130 |

131 | local concat = nn.ConcatTable()

132 | :add(conv_block)

133 | :add(full_shortcut(nInputPlane, n*4, stride))

134 |

135 | -- Sum

136 | local res_block = nn.Sequential()

137 | res_block:add(concat)

138 | res_block:add(nn.CAddTable())

139 | return res_block

140 | end

141 |

142 | local function layer(block, features, count, stride)

143 | local s = nn.Sequential()

144 | for i=1,count do

145 | s:add(block(features, i==1 and stride or 1))

146 | end

147 | return s

148 | end

149 |

150 | local model = nn.Sequential()

151 | model.cNetsNum = {}

152 |

153 | -- 256x256

154 | model:add(normalization(3))

155 | model:add(pad(3, 3, 3, 3))

156 | model:add(nn.SpatialConvolution(3, 64, 7, 7, 1, 1, 0, 0))

157 | model:add(normalization(64))

158 | model:add(nn.ReLU(true))

159 |

160 | iChannels = 64

161 | local block = bottleneck -- basic_block

162 |

163 | model:add(layer(block, 32, 1, 2))

164 | model:add(layer(block, 64, 1, 2))

165 |

166 | -- 64x64x256 for a 256x256 input (two stride-2 bottlenecks, 4*64 channels)

167 | model:add(nn.Inspiration(iChannels))

168 | table.insert(model.cNetsNum,#model)

169 | model:add(normalization(iChannels))

170 | model:add(nn.ReLU(true))

171 |

172 | for i = 1,opt.model_nres do

173 | model:add(layer(block, 64, 1, 1))

174 | end

175 |

176 | block = full_bottleneck

177 | model:add(layer(block, 32, 1, 2))

178 | model:add(layer(block, 16, 1, 2))

179 |

180 | model:add(normalization(64))

181 | model:add(nn.ReLU(true))

182 |

183 | model:add(pad(3, 3, 3, 3))

184 | model:add(nn.SpatialConvolution(64, 3, 7, 7, 1, 1, 0, 0))

185 |

186 | model:add(nn.TotalVariation(opt.tv_strength))

187 |

188 | function model:setTarget(feat, dtype)

189 | model.modules[model.cNetsNum[1]]:setTarget(feat[3]:type(dtype))

190 | end

191 | return model

192 | end

193 |

194 | function M.createCNets(opt)

195 | -- The descriptive network in the paper

196 | local cnet = torch.load(opt.loss_network)

197 | cnet:evaluate()

198 | cnet.style_layers = {}

199 |

200 | -- Set up calibrate layers

201 | for i, layer_string in ipairs(opt.style_layers) do

202 | local calibrator = nn.Calibrate()

203 | layer_utils.insert_after(cnet, layer_string, calibrator)

204 | table.insert(cnet.style_layers, calibrator)

205 | end

206 | layer_utils.trim_network(cnet)

207 |

208 | function cnet:calibrate(input)

209 | cnet:forward(input)

210 | local feat = {}

211 | for i, calibrator in ipairs(cnet.style_layers) do

212 | table.insert(feat, calibrator:getGram())

213 | end

214 | return feat

215 | end

216 |

217 | return cnet

218 | end

219 |

220 | return M

221 |
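The `cnet:calibrate` function above collects one Gram matrix per style layer through `calibrator:getGram()`. The implementation of `nn.Calibrate` is not shown in this file, so the following is only a minimal sketch of what a Gram matrix is, written in plain Lua with no Torch dependency. The helper name `gram`, the table-of-tables feature layout, and the normalization by the number of spatial positions are all assumptions made for illustration; the repository's own layer may normalize differently.

```lua
-- Hypothetical helper, not part of the repository.
-- features[c][n]: c indexes channels, n indexes flattened spatial positions.
local function gram(features)
  local C = #features      -- number of channels
  local N = #features[1]   -- number of spatial positions (H * W)
  local G = {}
  for i = 1, C do
    G[i] = {}
    for j = 1, C do
      -- Inner product between the responses of channel i and channel j
      local sum = 0
      for n = 1, N do
        sum = sum + features[i][n] * features[j][n]
      end
      -- Assumption: normalize by the number of positions
      G[i][j] = sum / N
    end
  end
  return G
end

-- Toy feature map with 2 channels and 4 spatial positions
local feats = { {1, 2, 3, 4}, {0, 1, 0, 1} }
local G = gram(feats)
print(G[1][1], G[1][2], G[2][2])
```

The result is a symmetric C x C matrix that discards spatial layout and keeps only channel co-occurrence statistics, which is why it can serve as a style descriptor independent of image content.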

--------------------------------------------------------------------------------

/experiments/main.lua:

--------------------------------------------------------------------------------

1 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | -- Created by: Hang Zhang

3 | -- ECE Department, Rutgers University

4 | -- Email: zhang.hang@rutgers.edu

5 | -- Copyright (c) 2017

6 | --

7 | -- Free to reuse and distribute this software for research or

8 | -- non-profit purpose, subject to the following conditions:

9 | -- 1. The code must retain the above copyright notice, this list of

10 | -- conditions.

11 | -- 2. Original authors' names are not deleted.

12 | -- 3. The authors' names are not used to endorse or promote products

13 | -- derived from this software

14 | --+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

15 |

16 | require 'texture'

17 | require 'image'

18 | require 'optim'

19 |

20 | require 'utils.DataLoader'

21 |

22 | local utils = require 'utils.utils'

23 | local preprocess = require 'utils.preprocess'

24 | local opts = require 'opts'

25 | local imgLoader = require 'utils.getImages'

26 |

27 | function main()

28 | local opt = opts.parse(arg)

29 | -- Parse layer strings and weights

30 | opt.content_layers, opt.content_weights =

31 | utils.parse_layers(opt.content_layers, opt.content_weights)

32 | opt.style_layers, opt.style_weights =

33 | utils.parse_layers(opt.style_layers, opt.style_weights)

34 |

35 | -- Figure out preprocessing

36 | if not preprocess[opt.preprocessing] then

37 | local msg = 'invalid -preprocessing "%s"; must be "vgg" or "resnet"'

38 | error(string.format(msg, opt.preprocessing))

39 | end

40 | preprocess = preprocess[opt.preprocessing]

41 |

42 | -- Figure out the backend

43 | local dtype, use_cudnn = utils.setup_gpu(opt.gpu, opt.backend, opt.use_cudnn == 1)

44 |

45 | -- Style images

46 | local styleLoader = imgLoader(opt.style_image_folder)

47 | local featpath = opt.style_image_folder .. '/feat.t7'

48 | if not paths.filep(featpath) then

49 | local extractor = require "extractGram"

50 | extractor.exec(opt)

51 | end

52 | local feat = torch.load(featpath)

53 | feat = nn.utils.recursiveType(feat, dtype) -- follow the selected backend instead of hard-coding CUDA

54 |

55 | -- Build the model

56 | local model = nil

57 |

58 | -- Checkpoint

59 | if opt.resume ~= '' then

60 | print('Loading checkpoint from ' .. opt.resume)

61 | model = torch.load(opt.resume).model:type(dtype)

62 | else

63 | print('Initializing model from scratch')

64 | local models = require('models.' .. opt.model)

65 | model = models.createModel(opt):type(dtype)

66 | end

67 |

68 | if use_cudnn then cudnn.convert(model, cudnn) end

69 | model:training()

70 | print(model)

71 |

72 | -- Set up the perceptual loss function

73 | local percep_crit

74 | local loss_net = torch.load(opt.loss_network)

75 | local crit_args = {

76 | cnn = loss_net,

77 | style_layers = opt.style_layers,

78 | style_weights = opt.style_weights,

79 | content_layers = opt.content_layers,

80 | content_weights = opt.content_weights,

81 | }

82 | percep_crit = nn.PerceptualCriterion(crit_args):type(dtype)

83 |

84 | local loader = DataLoader(opt)

85 | local params, grad_params = model:getParameters()

86 |

87 | local function f(x)

88 | assert(x == params)

89 | grad_params:zero()

90 |

91 | local x, y = loader:getBatch('train')

92 | x, y = x:type(dtype), y:type(dtype)

93 |

94 | -- Run model forward

95 | local out = model:forward(x)

96 | local target = {content_target=y}

97 | local loss = percep_crit:forward(out, target)

98 | local grad_out = percep_crit:backward(out, target)

99 |

100 | -- Run model backward

101 | model:backward(x, grad_out)

102 |

103 | return loss, grad_params

104 | end

105 |

106 | local optim_state = {learningRate=opt.learning_rate}

107 | local train_loss_history = {}

108 | local val_loss_history = {}

109 | local val_loss_history_ts = {}

110 | local style_loss_history = nil

111 |

112 | style_loss_history = {}

113 | for i, k in ipairs(opt.style_layers) do

114 | style_loss_history[string.format('style-%d', k)] = {}

115 | end

116 | for i, k in ipairs(opt.content_layers) do

117 | style_loss_history[string.format('content-%d', k)] = {}

118 | end

119 |