├── flink-tabel-sql 1

├── flink-tabel-sql.iml

├── src

│ └── main

│ │ ├── resources

│ │ ├── list.txt

│ │ └── log4j.properties

│ │ └── java

│ │ └── robinwang

│ │ ├── SQLJob.java

│ │ └── TabelJob.java

├── Flink SQL实战 - 1.md

└── pom.xml

├── flink-tabel-sql 2&3

├── flink-tabel-sql.iml

├── src

│ └── main

│ │ ├── resources

│ │ ├── list.txt

│ │ └── log4j.properties

│ │ └── java

│ │ └── robinwang

│ │ ├── custom

│ │ ├── Tuple1Schema.java

│ │ ├── KafkaTabelSource.java

│ │ └── MyRetractStreamTableSink.java

│ │ ├── KafkaSource2.java

│ │ ├── CustomSinkJob.java

│ │ └── KafkaSource.java

├── Socket_server.py

├── kafka_tabelCount.py

├── Flink SQL实战 - 2.md

├── Flink SQL实战 - 3.md

└── pom.xml

├── flink-tabel-sql 4&5

├── flink-tabel-sql.iml

├── src

│ └── main

│ │ ├── resources

│ │ ├── list.txt

│ │ └── log4j.properties

│ │ └── java

│ │ └── robinwang

│ │ ├── udfs

│ │ ├── IsStatus.java

│ │ ├── KyeWordCount.java

│ │ └── MaxStatus.java

│ │ ├── custom

│ │ ├── POJOSchema.java

│ │ ├── KafkaTabelSource.java

│ │ └── MyRetractStreamTableSink.java

│ │ ├── entity

│ │ └── Response.java

│ │ ├── UdafJob.java

│ │ ├── UdtfJob.java

│ │ └── UdsfJob.java

├── kafka_JSON.py

├── kafka_keywordsJSON.py

├── Flink SQL 实战 (5):使用自定义函数实现关键字过滤统计.md

├── flink-table-sql.iml

├── pom.xml

└── Flink SQL 实战 (4):UDF-用户自定义函数.md

├── flink-tabel-sql 6

├── flink-json-1.9.1.jar

├── flink-sql-connector-kafka_2.11-1.9.1.jar

├── kafka_result.py

├── sql-client-defaults.yaml

└── Flink SQL 实战 (6):SQL Client.md

└── README.md

/flink-tabel-sql 1/flink-tabel-sql.iml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/flink-tabel-sql.iml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/flink-tabel-sql.iml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/flink-tabel-sql 6/flink-json-1.9.1.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/StarPlatinumStudio/Flink-SQL-Practice/HEAD/flink-tabel-sql 6/flink-json-1.9.1.jar

--------------------------------------------------------------------------------

/flink-tabel-sql 6/flink-sql-connector-kafka_2.11-1.9.1.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/StarPlatinumStudio/Flink-SQL-Practice/HEAD/flink-tabel-sql 6/flink-sql-connector-kafka_2.11-1.9.1.jar

--------------------------------------------------------------------------------

/flink-tabel-sql 1/src/main/resources/list.txt:

--------------------------------------------------------------------------------

1 | Apple

2 | Xiaomi

3 | Huawei

4 | Oppo

5 | Vivo

6 | OnePlus

7 | Apple

8 | Xiaomi

9 | Huawei

10 | Oppo

11 | Vivo

12 | OnePlus

13 | Apple

14 | Xiaomi

15 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/resources/list.txt:

--------------------------------------------------------------------------------

1 | Apple

2 | Xiaomi

3 | Huawei

4 | Oppo

5 | Vivo

6 | OnePlus

7 | Apple

8 | Xiaomi

9 | Huawei

10 | Oppo

11 | Vivo

12 | OnePlus

13 | Apple

14 | Xiaomi

15 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/resources/list.txt:

--------------------------------------------------------------------------------

1 | Apple

2 | Xiaomi

3 | Huawei

4 | Oppo

5 | Vivo

6 | OnePlus

7 | Apple

8 | Xiaomi

9 | Huawei

10 | Oppo

11 | Vivo

12 | OnePlus

13 | Apple

14 | Xiaomi

15 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/udfs/IsStatus.java:

--------------------------------------------------------------------------------

1 | package robinwang.udfs;

2 |

3 | import org.apache.flink.table.functions.ScalarFunction;

4 |

5 | public class IsStatus extends ScalarFunction {

6 | private int status = 0;

7 | public IsStatus(int status){

8 | this.status = status;

9 | }

10 |

11 | public boolean eval(int status){

12 | if (this.status == status){

13 | return true;

14 | } else {

15 | return false;

16 | }

17 | }

18 | }

19 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/custom/Tuple1Schema.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 | import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

3 | import org.apache.flink.api.java.tuple.Tuple1;

4 |

5 | import java.io.IOException;

6 |

7 | public final class Tuple1Schema extends AbstractDeserializationSchema> {

8 | @Override

9 | public Tuple1 deserialize(byte[] bytes) throws IOException {

10 | return new Tuple1<>(new String(bytes,"utf-8"));

11 | }

12 | }

13 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/udfs/KyeWordCount.java:

--------------------------------------------------------------------------------

1 | package robinwang.udfs;

2 |

3 | import org.apache.flink.api.java.tuple.Tuple2;

4 | import org.apache.flink.table.functions.TableFunction;

5 |

6 | public class KyeWordCount extends TableFunction> {

7 | private String[] keys;

8 | public KyeWordCount(String[] keys){

9 | this.keys=keys;

10 | }

11 | public void eval(String in){

12 | for (String key:keys){

13 | if (in.contains(key)){

14 | collect(new Tuple2(key,1));

15 | }

16 | }

17 | }

18 | }

19 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/Socket_server.py:

--------------------------------------------------------------------------------

1 | import socket

2 | import time

3 |

4 | # 创建socket对象

5 | s = socket.socket()

6 | # 将socket绑定到本机IP和端口

7 | s.bind(('192.168.1.130', 9000))

8 | # 服务端开始监听来自客户端的连接

9 | s.listen()

10 | while True:

11 | c, addr = s.accept()

12 | count = 0

13 |

14 | while True:

15 | c.send('{"project":"mobile","protocol":"Dindex/","companycode":"05780","model":"Dprotocol","response":"SucceedHeSNNNllo","response_time":0.03257,"status":0}\n'.encode('utf-8'))

16 | time.sleep(0.005)

17 | count += 1

18 | if count > 100000:

19 | # 关闭连接

20 | c.close()

21 | break

22 | time.sleep(1)

23 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/udfs/MaxStatus.java:

--------------------------------------------------------------------------------

1 | package robinwang.udfs;

2 |

3 | import org.apache.flink.table.functions.AggregateFunction;

4 |

5 | public class MaxStatus extends AggregateFunction {

6 | @Override

7 | public Integer getValue(StatusACC statusACC) {

8 | return statusACC.maxStatus;

9 | }

10 |

11 | @Override

12 | public StatusACC createAccumulator() {

13 | return new StatusACC();

14 | }

15 | public void accumulate(StatusACC statusACC,int status){

16 | if (status>statusACC.maxStatus){

17 | statusACC.maxStatus=status;

18 | }

19 | }

20 | public static class StatusACC{

21 | public int maxStatus=0;

22 | }

23 | }

24 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/custom/POJOSchema.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 |

3 | import com.alibaba.fastjson.JSON;

4 | import robinwang.entity.Response;

5 | import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

6 |

7 | import java.io.IOException;

8 |

9 | /**

10 | * JSON:

11 | * {

12 | * "response": "",

13 | * "status": 0,

14 | * "protocol": ""

15 | * "timestamp":0

16 | * }

17 | */

18 | public final class POJOSchema extends AbstractDeserializationSchema {

19 | @Override

20 | public Response deserialize(byte[] bytes) throws IOException {

21 | //byte[]转JavaBean

22 | try {

23 | return JSON.parseObject(bytes,Response.class);

24 | }

25 | catch (Exception ex){

26 | ex.printStackTrace();

27 | }

28 | return null;

29 | }

30 | }

31 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/kafka_tabelCount.py:

--------------------------------------------------------------------------------

1 | # https://pypi.org/project/kafka-python/

2 | import pickle

3 | import time

4 | import json

5 | from kafka import KafkaProducer

6 |

7 | producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'],

8 | key_serializer=lambda k: pickle.dumps(k),

9 | value_serializer=lambda v: pickle.dumps(v))

10 | start_time = time.time()

11 | for i in range(0, 10000):

12 | print('------{}---------'.format(i))

13 | producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'),compression_type='gzip')

14 | producer.send('test', [{"response":"testSucceed","status":0},{"response":"testSucceed","status":0},{"response":"testSucceed","status":0},{"response":"testSucceed","status":0},{"response":"testSucceed","status":0}])

15 | # future = producer.send('test', key='num', value=i, partition=0)

16 | # 将缓冲区的全部消息push到broker当中

17 | producer.flush()

18 | producer.close()

19 |

20 | end_time = time.time()

21 | time_counts = end_time - start_time

22 | print(time_counts)

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Apache Flink® SQL Practice

2 |

3 | **This repository provides a practice for Flink's Tabel API & SQL API.**

4 |

5 | 请配合我的专栏:[Flink SQL原理和实战]( https://blog.csdn.net/qq_35815527/category_9634641.html ) 使用

6 |

7 | ### Apache Flink

8 |

9 | Apache Flink(以下简称Flink)是第三代流处理引擎,支持精确的流处理,能同时满足各种规模下对高吞吐和低延迟的需求等优势。

10 |

11 | ## 为什么要用 SQL

12 |

13 | 以下是本人基于[Apache Flink® SQL Training]( https://github.com/ververica/sql-training )翻译的Flink SQL介绍:

14 |

15 | SQL 是 Flink的强大抽象处理功能,位于 Flink 分层抽象的顶层。

16 |

17 | #### DataStream API非常棒

18 |

19 | 非常有表现力的流处理API转换、聚合和连接事件Java和Scala控制如何处理事件的时间戳、水印、窗口、计时器、触发器、允许延迟……维护和更新应用程序状态键控状态、操作符状态、状态后端、检查点

20 |

21 | #### 但并不是每个人都适合

22 |

23 | - 编写分布式程序并不总是那么容易理解新概念:时间,状态等

24 |

25 | - 需要知识和技能–连续应用程序有特殊要求–编程经验(Java / Scala)

26 | - 用户希望专注于他们的业务逻辑

27 |

28 | #### 而SQL API 就做的很好

29 |

30 | - 关系api是声明性的,用户说什么是需要的,

31 |

32 | - 系统决定如何计算it查询,可以有效地优化,让Flink处理状态和时间,

33 |

34 | - 每个人都知道和使用SQL

35 |

36 | #### 结论

37 |

38 | Flink SQL 简单、声明性和简洁的关系API表达能力强,

39 |

40 | 足以支持大量的用例,

41 |

42 | 用于批处理和流数据的统一语法和语义

43 |

44 | ------

45 |

46 | *Apache Flink, Flink®, Apache®, the squirrel logo, and the Apache feather logo are either registered trademarks or trademarks of The Apache Software Foundation.*

47 |

48 |

--------------------------------------------------------------------------------

/flink-tabel-sql 6/kafka_result.py:

--------------------------------------------------------------------------------

1 | # https://pypi.org/project/kafka-python/

2 | import pickle

3 | import time

4 | import json

5 | from kafka import KafkaProducer

6 |

7 | producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'],

8 | key_serializer=lambda k: pickle.dumps(k),

9 | value_serializer=lambda v: pickle.dumps(v))

10 | start_time = time.time()

11 | for i in range(0, 10000):

12 | print('------{}---------'.format(i))

13 | producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'),compression_type='gzip')

14 | producer.send('log',{"response":"res","status":0,"protocol":"protocol","timestamp":0})

15 | producer.send('log',{"response":"res","status":1,"protocol":"protocol","timestamp":0})

16 | producer.send('log',{"response":"resKEY","status":2,"protocol":"protocol","timestamp":0})

17 | producer.send('log',{"response":"res","status":3,"protocol":"protocol","timestamp":0})

18 | producer.send('log',{"response":"res","status":4,"protocol":"protocol","timestamp":0})

19 | producer.send('log',{"response":"res","status":5,"protocol":"protocol","timestamp":0})

20 | producer.flush()

21 | producer.close()

22 | #

23 | end_time = time.time()

24 | time_counts = end_time - start_time

25 | print(time_counts)

26 |

--------------------------------------------------------------------------------

/flink-tabel-sql 1/src/main/resources/log4j.properties:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Licensed to the Apache Software Foundation (ASF) under one

3 | # or more contributor license agreements. See the NOTICE file

4 | # distributed with this work for additional information

5 | # regarding copyright ownership. The ASF licenses this file

6 | # to you under the Apache License, Version 2.0 (the

7 | # "License"); you may not use this file except in compliance

8 | # with the License. You may obtain a copy of the License at

9 | #

10 | # http://www.apache.org/licenses/LICENSE-2.0

11 | #

12 | # Unless required by applicable law or agreed to in writing, software

13 | # distributed under the License is distributed on an "AS IS" BASIS,

14 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

15 | # See the License for the specific language governing permissions and

16 | # limitations under the License.

17 | ################################################################################

18 |

19 | log4j.rootLogger=INFO, console

20 |

21 | log4j.appender.console=org.apache.log4j.ConsoleAppender

22 | log4j.appender.console.layout=org.apache.log4j.PatternLayout

23 | log4j.appender.console.layout.ConversionPattern=%d{HH:mm:ss,SSS} %-5p %-60c %x - %m%n

24 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/resources/log4j.properties:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Licensed to the Apache Software Foundation (ASF) under one

3 | # or more contributor license agreements. See the NOTICE file

4 | # distributed with this work for additional information

5 | # regarding copyright ownership. The ASF licenses this file

6 | # to you under the Apache License, Version 2.0 (the

7 | # "License"); you may not use this file except in compliance

8 | # with the License. You may obtain a copy of the License at

9 | #

10 | # http://www.apache.org/licenses/LICENSE-2.0

11 | #

12 | # Unless required by applicable law or agreed to in writing, software

13 | # distributed under the License is distributed on an "AS IS" BASIS,

14 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

15 | # See the License for the specific language governing permissions and

16 | # limitations under the License.

17 | ################################################################################

18 |

19 | log4j.rootLogger=INFO, console

20 |

21 | log4j.appender.console=org.apache.log4j.ConsoleAppender

22 | log4j.appender.console.layout=org.apache.log4j.PatternLayout

23 | log4j.appender.console.layout.ConversionPattern=%d{HH:mm:ss,SSS} %-5p %-60c %x - %m%n

24 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/resources/log4j.properties:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Licensed to the Apache Software Foundation (ASF) under one

3 | # or more contributor license agreements. See the NOTICE file

4 | # distributed with this work for additional information

5 | # regarding copyright ownership. The ASF licenses this file

6 | # to you under the Apache License, Version 2.0 (the

7 | # "License"); you may not use this file except in compliance

8 | # with the License. You may obtain a copy of the License at

9 | #

10 | # http://www.apache.org/licenses/LICENSE-2.0

11 | #

12 | # Unless required by applicable law or agreed to in writing, software

13 | # distributed under the License is distributed on an "AS IS" BASIS,

14 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

15 | # See the License for the specific language governing permissions and

16 | # limitations under the License.

17 | ################################################################################

18 |

19 | log4j.rootLogger=INFO, console

20 |

21 | log4j.appender.console=org.apache.log4j.ConsoleAppender

22 | log4j.appender.console.layout=org.apache.log4j.PatternLayout

23 | log4j.appender.console.layout.ConversionPattern=%d{HH:mm:ss,SSS} %-5p %-60c %x - %m%n

24 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/kafka_JSON.py:

--------------------------------------------------------------------------------

1 | # https://pypi.org/project/kafka-python/

2 | import pickle

3 | import time

4 | import json

5 | from kafka import KafkaProducer

6 |

7 | producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'],

8 | key_serializer=lambda k: pickle.dumps(k),

9 | value_serializer=lambda v: pickle.dumps(v))

10 | start_time = time.time()

11 | for i in range(0, 10000):

12 | print('------{}---------'.format(i))

13 | producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'),compression_type='gzip')

14 | producer.send('test',{"response":"res","status":0,"protocol":"protocol","timestamp":0})

15 | producer.send('test',{"response":"res","status":1,"protocol":"protocol","timestamp":0})

16 | producer.send('test',{"response":"res","status":2,"protocol":"protocol","timestamp":0})

17 | producer.send('test',{"response":"res","status":3,"protocol":"protocol","timestamp":0})

18 | producer.send('test',{"response":"res","status":4,"protocol":"protocol","timestamp":0})

19 | producer.send('test',{"response":"res","status":5,"protocol":"protocol","timestamp":0})

20 | # future = producer.send('test', key='num', value=i, partition=0)

21 | # 将缓冲区的全部消息push到broker当中

22 | producer.flush()

23 | producer.close()

24 |

25 | end_time = time.time()

26 | time_counts = end_time - start_time

27 | print(time_counts)

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/entity/Response.java:

--------------------------------------------------------------------------------

1 | package robinwang.entity;

2 |

3 | /**

4 | * JavaBean类

5 | * JSON:

6 | * {

7 | * "response": "",

8 | * "status": 0,

9 | * "protocol": ""

10 | * "timestamp":0

11 | * }

12 | */

13 | public class Response {

14 | private String response;

15 | private int status;

16 | private String protocol;

17 | private long timestamp;

18 |

19 | public Response(String response, int status, String protocol, long timestamp) {

20 | this.response = response;

21 | this.status = status;

22 | this.protocol = protocol;

23 | this.timestamp = timestamp;

24 | }

25 | public Response(){}

26 |

27 | public String getResponse() {

28 | return response;

29 | }

30 |

31 | public void setResponse(String response) {

32 | this.response = response;

33 | }

34 |

35 | public int getStatus() {

36 | return status;

37 | }

38 |

39 | public void setStatus(int status) {

40 | this.status = status;

41 | }

42 |

43 | public String getProtocol() {

44 | return protocol;

45 | }

46 |

47 | public void setProtocol(String protocol) {

48 | this.protocol = protocol;

49 | }

50 |

51 | public long getTimestamp() {

52 | return timestamp;

53 | }

54 |

55 | public void setTimestamp(long timestamp) {

56 | this.timestamp = timestamp;

57 | }

58 | }

59 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/UdafJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 |

3 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

4 | import org.apache.flink.table.api.EnvironmentSettings;

5 | import org.apache.flink.table.api.Table;

6 | import org.apache.flink.table.api.java.StreamTableEnvironment;

7 | import org.apache.flink.types.Row;

8 | import robinwang.custom.KafkaTabelSource;

9 | import robinwang.udfs.MaxStatus;

10 |

11 | /**

12 | *聚合最大的status

13 | */

14 | public class UdafJob {

15 | public static void main(String[] args) throws Exception {

16 | StreamExecutionEnvironment streamEnv = StreamExecutionEnvironment.getExecutionEnvironment();

17 | EnvironmentSettings streamSettings = EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

18 | StreamTableEnvironment streamTabelEnv = StreamTableEnvironment.create(streamEnv, streamSettings);

19 | KafkaTabelSource kafkaTabelSource = new KafkaTabelSource();

20 | streamTabelEnv.registerTableSource("kafkaDataStream", kafkaTabelSource);//使用自定义TableSource

21 | streamTabelEnv.registerFunction("maxStatus",new MaxStatus());

22 | Table wordWithCount = streamTabelEnv.sqlQuery("SELECT maxStatus(status) AS maxStatus FROM kafkaDataStream");

23 | streamTabelEnv.toRetractStream(wordWithCount, Row.class).print();

24 | streamTabelEnv.execute("BLINK STREAMING QUERY");

25 | }

26 | }

27 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/kafka_keywordsJSON.py:

--------------------------------------------------------------------------------

1 | # https://pypi.org/project/kafka-python/

2 | import pickle

3 | import time

4 | import json

5 | from kafka import KafkaProducer

6 |

7 | producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'],

8 | key_serializer=lambda k: pickle.dumps(k),

9 | value_serializer=lambda v: pickle.dumps(v))

10 | start_time = time.time()

11 | for i in range(0, 10000):

12 | print('------{}---------'.format(i))

13 | producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'),compression_type='gzip')

14 | producer.send('test',{"response":"resKeyWordWARNINGillegal","status":0,"protocol":"protocol","timestamp":0})

15 | producer.send('test',{"response":"resKeyWordWARNINGillegal","status":1,"protocol":"protocol","timestamp":0})

16 | producer.send('test',{"response":"resresKeyWordWARNING","status":2,"protocol":"protocol","timestamp":0})

17 | producer.send('test',{"response":"resKeyWord","status":3,"protocol":"protocol","timestamp":0})

18 | producer.send('test',{"response":"res","status":4,"protocol":"protocol","timestamp":0})

19 | producer.send('test',{"response":"res","status":5,"protocol":"protocol","timestamp":0})

20 | # future = producer.send('test', key='num', value=i, partition=0)

21 | # 将缓冲区的全部消息push到broker当中

22 | producer.flush()

23 | producer.close()

24 |

25 | end_time = time.time()

26 | time_counts = end_time - start_time

27 | print(time_counts)

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/custom/KafkaTabelSource.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 |

3 | import org.apache.flink.api.common.serialization.SimpleStringSchema;

4 | import org.apache.flink.streaming.api.datastream.DataStream;

5 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

6 | import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

7 | import org.apache.flink.table.api.DataTypes;

8 | import org.apache.flink.table.api.TableSchema;

9 | import org.apache.flink.table.sources.StreamTableSource;

10 | import org.apache.flink.table.types.DataType;

11 |

12 | import java.util.Properties;

13 |

14 | public class KafkaTabelSource implements StreamTableSource {

15 | @Override

16 | public DataType getProducedDataType() {

17 | return DataTypes.STRING();

18 | }

19 | @Override

20 | public TableSchema getTableSchema() {

21 | return TableSchema.builder().fields(new String[]{"word"},new DataType[]{DataTypes.STRING()}).build();

22 | }

23 | @Override

24 | public DataStream getDataStream(StreamExecutionEnvironment env) {

25 | Properties kafkaProperties=new Properties();

26 | kafkaProperties.setProperty("bootstrap.servers", "0.0.0.0:9092");

27 | kafkaProperties.setProperty("group.id", "test");

28 | DataStream kafkaStream=env.addSource(new FlinkKafkaConsumer011<>("test",new SimpleStringSchema(),kafkaProperties));

29 | return kafkaStream;

30 | }

31 | }

32 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/UdtfJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 |

3 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

4 | import org.apache.flink.table.api.EnvironmentSettings;

5 | import org.apache.flink.table.api.Table;

6 | import org.apache.flink.table.api.java.StreamTableEnvironment;

7 | import org.apache.flink.types.Row;

8 | import robinwang.custom.KafkaTabelSource;

9 | import robinwang.udfs.KyeWordCount;

10 | /**

11 | * 关键字过滤统计

12 | */

13 | public class UdtfJob {

14 | public static void main(String[] args) throws Exception {

15 | StreamExecutionEnvironment streamEnv = StreamExecutionEnvironment.getExecutionEnvironment();

16 | EnvironmentSettings streamSettings = EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

17 | StreamTableEnvironment streamTabelEnv = StreamTableEnvironment.create(streamEnv, streamSettings);

18 | KafkaTabelSource kafkaTabelSource = new KafkaTabelSource();

19 | streamTabelEnv.registerTableSource("kafkaDataStream", kafkaTabelSource);//使用自定义TableSource

20 | streamTabelEnv.registerFunction("CountKEY", new KyeWordCount(new String[]{"KeyWord","WARNING","illegal"}));

21 | Table wordWithCount = streamTabelEnv.sqlQuery("SELECT key,COUNT(countv) AS countsum FROM kafkaDataStream LEFT JOIN LATERAL TABLE(CountKEY(response)) as T(key, countv) ON TRUE GROUP BY key");

22 | streamTabelEnv.toRetractStream(wordWithCount, Row.class).print();

23 | streamTabelEnv.execute("BLINK STREAMING QUERY");

24 | }

25 | }

26 |

--------------------------------------------------------------------------------

/flink-tabel-sql 1/src/main/java/robinwang/SQLJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import org.apache.flink.api.common.typeinfo.TypeInformation;

3 | import org.apache.flink.api.common.typeinfo.Types;

4 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

5 | import org.apache.flink.table.api.EnvironmentSettings;

6 | import org.apache.flink.table.api.Table;

7 | import org.apache.flink.table.api.java.StreamTableEnvironment;

8 | import org.apache.flink.table.sources.CsvTableSource;

9 | import org.apache.flink.table.sources.TableSource;

10 | import org.apache.flink.types.Row;

11 |

12 | public class SQLJob {

13 | public static void main(String[] args) throws Exception {

14 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

15 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

16 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

17 | String path= SQLJob.class.getClassLoader().getResource("list.txt").getPath();

18 | String[] fieldNames={"word"};

19 | TypeInformation[] fieldTypes={Types.STRING};

20 | TableSource fileSource=new CsvTableSource(path,fieldNames,fieldTypes);

21 | blinkStreamTabelEnv.registerTableSource("FlieSourceTable",fileSource);

22 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT count(word) AS _count,word FROM FlieSourceTable GROUP BY word");

23 | blinkStreamTabelEnv.toRetractStream(wordWithCount, Row.class).print();

24 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

25 | }

26 | }

27 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/KafkaSource2.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import robinwang.custom.KafkaTabelSource;

3 | import robinwang.custom.MyRetractStreamTableSink;

4 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

5 | import org.apache.flink.table.api.DataTypes;

6 | import org.apache.flink.table.api.EnvironmentSettings;

7 | import org.apache.flink.table.api.Table;

8 | import org.apache.flink.table.api.java.StreamTableEnvironment;

9 | import org.apache.flink.table.sinks.RetractStreamTableSink;

10 | import org.apache.flink.table.types.DataType;

11 | import org.apache.flink.types.Row;

12 | public class KafkaSource2 {

13 | public static void main(String[] args) throws Exception {

14 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

15 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

16 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

17 | blinkStreamTabelEnv.registerTableSource("kafkaDataStream",new KafkaTabelSource());//使用自定义TableSource

18 | RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new DataType[]{DataTypes.BIGINT(), DataTypes.STRING()});

19 | blinkStreamTabelEnv.registerTableSink("sinkTable",retractStreamTableSink);

20 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT count(word) AS _count,word FROM kafkaDataStream GROUP BY word ");

21 | wordWithCount.insertInto("sinkTable");

22 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

23 | }

24 | }

25 |

--------------------------------------------------------------------------------

/flink-tabel-sql 1/src/main/java/robinwang/TabelJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import org.apache.flink.api.common.typeinfo.Types;

3 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

4 | import org.apache.flink.table.api.EnvironmentSettings;

5 | import org.apache.flink.table.api.Table;

6 | import org.apache.flink.table.api.java.StreamTableEnvironment;

7 | import org.apache.flink.table.descriptors.FileSystem;

8 | import org.apache.flink.table.descriptors.OldCsv;

9 | import org.apache.flink.table.descriptors.Schema;

10 | import org.apache.flink.types.Row;

11 |

12 | public class TabelJob {

13 | public static void main(String[] args) throws Exception {

14 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

15 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

16 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

17 | String path=TabelJob.class.getClassLoader().getResource("list.txt").getPath();

18 | blinkStreamTabelEnv

19 | .connect(new FileSystem().path(path))

20 | .withFormat(new OldCsv().field("word", Types.STRING).lineDelimiter("\n"))

21 | .withSchema(new Schema().field("word",Types.STRING))

22 | .inAppendMode()

23 | .registerTableSource("FlieSourceTable");

24 |

25 | Table wordWithCount = blinkStreamTabelEnv.scan("FlieSourceTable")

26 | .groupBy("word")

27 | .select("word,count(word) as _count");

28 | blinkStreamTabelEnv.toRetractStream(wordWithCount, Row.class).print();

29 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

30 | }

31 | }

32 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/custom/KafkaTabelSource.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 |

3 | import robinwang.entity.Response;

4 | import org.apache.flink.api.common.typeinfo.TypeInformation;

5 | import org.apache.flink.streaming.api.datastream.DataStream;

6 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

7 | import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

8 | import org.apache.flink.table.api.DataTypes;

9 | import org.apache.flink.table.api.TableSchema;

10 | import org.apache.flink.table.sources.StreamTableSource;

11 | import org.apache.flink.table.factories.TableSourceFactory;

12 | import org.apache.flink.table.types.DataType;

13 |

14 | import java.util.Properties;

15 | /**

16 | * {

17 | * "response": "",

18 | * "status": 0,

19 | * "protocol": ""

20 | * "timestamp":0

21 | * }

22 | */

23 | public class KafkaTabelSource implements StreamTableSource {

24 | @Override

25 | public TypeInformation getReturnType() {

26 | // 对于非泛型类型,传递Class

27 | return TypeInformation.of(Response.class);

28 | }

29 |

30 | @Override

31 | public TableSchema getTableSchema() {

32 | return TableSchema.builder().fields(new String[]{"response","status","protocol","timestamp"},new DataType[]{DataTypes.STRING(),DataTypes.INT(),DataTypes.STRING(),DataTypes.BIGINT()}).build();

33 | }

34 |

35 | @Override

36 | public DataStream getDataStream(StreamExecutionEnvironment env) {

37 | Properties kafkaProperties=new Properties();

38 | kafkaProperties.setProperty("bootstrap.servers", "0.0.0.0:9092");

39 | kafkaProperties.setProperty("group.id", "test");

40 | DataStream kafkaStream=env.addSource(new FlinkKafkaConsumer011<>("test",new POJOSchema(),kafkaProperties));

41 | return kafkaStream;

42 | }

43 | }

44 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/UdsfJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import robinwang.custom.KafkaTabelSource;

3 | import robinwang.custom.MyRetractStreamTableSink;

4 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

5 | import org.apache.flink.table.api.EnvironmentSettings;

6 | import org.apache.flink.table.api.Table;

7 | import org.apache.flink.table.api.java.StreamTableEnvironment;

8 | import org.apache.flink.table.sinks.RetractStreamTableSink;

9 | import org.apache.flink.types.Row;

10 | import robinwang.udfs.IsStatus;

11 |

12 | /**

13 | * 查看kafkaDataStream中status=5的数据

14 | */

15 | public class UdsfJob {

16 | public static void main(String[] args) throws Exception {

17 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

18 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

19 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

20 | KafkaTabelSource kafkaTabelSource=new KafkaTabelSource();

21 | blinkStreamTabelEnv.registerTableSource("kafkaDataStream",kafkaTabelSource);//使用自定义TableSource

22 | // RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(kafkaTabelSource.getTableSchema().getFieldNames(),kafkaTabelSource.getTableSchema().getFieldDataTypes());

23 | // blinkStreamTabelEnv.registerTableSink("sinkTable",retractStreamTableSink);

24 | blinkStreamTabelEnv.registerFunction("IsStatusFive",new IsStatus(5));

25 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT * FROM kafkaDataStream WHERE IsStatusFive(status)");

26 | blinkStreamTabelEnv.toAppendStream(wordWithCount,Row.class).print();

27 | // wordWithCount.insertInto("sinkTable");

28 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

29 | }

30 | }

31 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/CustomSinkJob.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import robinwang.custom.MyRetractStreamTableSink;

3 | import org.apache.flink.api.common.typeinfo.TypeInformation;

4 | import org.apache.flink.api.common.typeinfo.Types;

5 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

6 | import org.apache.flink.table.api.EnvironmentSettings;

7 | import org.apache.flink.table.api.Table;

8 | import org.apache.flink.table.api.java.StreamTableEnvironment;

9 | import org.apache.flink.table.sinks.RetractStreamTableSink;

10 | import org.apache.flink.table.sources.CsvTableSource;

11 | import org.apache.flink.table.sources.TableSource;

12 | import org.apache.flink.types.Row;

13 |

14 | public class CustomSinkJob {

15 | public static void main(String[] args) throws Exception {

16 | //初始化Flink执行环境

17 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

18 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

19 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

20 | //获取Resource路径

21 | String path= CustomSinkJob.class.getClassLoader().getResource("list.txt").getPath();

22 |

23 | //注册数据源

24 | TableSource fileSource=new CsvTableSource(path,new String[]{"word"},new TypeInformation[]{Types.STRING});

25 | blinkStreamTabelEnv.registerTableSource("flieSourceTable",fileSource);

26 |

27 | //注册数据汇(Sink)

28 | RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new TypeInformation[]{Types.LONG,Types.STRING});

29 | //或者

30 | //RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new DataType[]{DataTypes.BIGINT(),DataTypes.STRING()});

31 | blinkStreamTabelEnv.registerTableSink("sinkTable",retractStreamTableSink);

32 |

33 | //执行SQL

34 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT count(word) AS _count,word FROM flieSourceTable GROUP BY word ");

35 |

36 | //将SQL结果插入到Sink Table

37 | wordWithCount.insertInto("sinkTable");

38 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

39 | }

40 | }

41 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/custom/MyRetractStreamTableSink.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 |

3 | import org.apache.flink.api.common.typeinfo.TypeInformation;

4 | import org.apache.flink.api.java.tuple.Tuple2;

5 | import org.apache.flink.api.java.typeutils.RowTypeInfo;

6 | import org.apache.flink.streaming.api.datastream.DataStream;

7 | import org.apache.flink.streaming.api.datastream.DataStreamSink;

8 | import org.apache.flink.streaming.api.functions.sink.SinkFunction;

9 | import org.apache.flink.table.api.TableSchema;

10 | import org.apache.flink.table.sinks.RetractStreamTableSink;

11 | import org.apache.flink.table.sinks.TableSink;

12 | import org.apache.flink.table.types.DataType;

13 | import org.apache.flink.types.Row;

14 |

15 | public class MyRetractStreamTableSink implements RetractStreamTableSink {

16 | private TableSchema tableSchema;

17 | //构造函数,储存TableSchema

18 | public MyRetractStreamTableSink(String[] fieldNames,TypeInformation[] typeInformations){

19 | this.tableSchema=new TableSchema(fieldNames,typeInformations);

20 | }

21 | //重载

22 | public MyRetractStreamTableSink(String[] fieldNames,DataType[] dataTypes){

23 | this.tableSchema=TableSchema.builder().fields(fieldNames,dataTypes).build();

24 | }

25 | //Table sink must implement a table schema.

26 | @Override

27 | public TableSchema getTableSchema() {

28 | return tableSchema;

29 | }

30 | @Override

31 | public DataStreamSink consumeDataStream(DataStream> dataStream) {

32 | return dataStream.addSink(new SinkFunction>() {

33 | @Override

34 | public void invoke(Tuple2 value, Context context) throws Exception {

35 | //自定义Sink

36 | // f0==true :插入新数据

37 | // f0==false:删除旧数据

38 | if(value.f0){

39 | //可以写入MySQL、Kafka或者发HttpPost...根据具体情况开发

40 | System.out.println(value.f1);

41 | }

42 | }

43 | });

44 | }

45 |

46 | //接口定义的方法

47 | @Override

48 | public TypeInformation getRecordType() {

49 | return new RowTypeInfo(tableSchema.getFieldTypes(),tableSchema.getFieldNames());

50 | }

51 | //接口定义的方法

52 | @Override

53 | public TableSink> configure(String[] strings, TypeInformation[] typeInformations) {

54 | return null;

55 | }

56 | //接口定义的方法

57 | @Override

58 | public void emitDataStream(DataStream> dataStream) {

59 | }

60 |

61 | }

62 |

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/src/main/java/robinwang/custom/MyRetractStreamTableSink.java:

--------------------------------------------------------------------------------

1 | package robinwang.custom;

2 |

3 | import org.apache.flink.api.common.typeinfo.TypeInformation;

4 | import org.apache.flink.api.java.tuple.Tuple2;

5 | import org.apache.flink.api.java.typeutils.RowTypeInfo;

6 | import org.apache.flink.streaming.api.datastream.DataStream;

7 | import org.apache.flink.streaming.api.datastream.DataStreamSink;

8 | import org.apache.flink.streaming.api.functions.sink.SinkFunction;

9 | import org.apache.flink.table.api.TableSchema;

10 | import org.apache.flink.table.sinks.RetractStreamTableSink;

11 | import org.apache.flink.table.sinks.TableSink;

12 | import org.apache.flink.table.types.DataType;

13 | import org.apache.flink.types.Row;

14 |

15 | public class MyRetractStreamTableSink implements RetractStreamTableSink {

16 | private TableSchema tableSchema;

17 | //构造函数,储存TableSchema

18 | public MyRetractStreamTableSink(String[] fieldNames,TypeInformation[] typeInformations){

19 | this.tableSchema=new TableSchema(fieldNames,typeInformations);

20 | }

21 | //重载

22 | public MyRetractStreamTableSink(String[] fieldNames,DataType[] dataTypes){

23 | this.tableSchema=TableSchema.builder().fields(fieldNames,dataTypes).build();

24 | }

25 | //Table sink must implement a table schema.

26 | @Override

27 | public TableSchema getTableSchema() {

28 | return tableSchema;

29 | }

30 | @Override

31 | public DataStreamSink consumeDataStream(DataStream> dataStream) {

32 | return dataStream.addSink(new SinkFunction>() {

33 | @Override

34 | public void invoke(Tuple2 value, Context context) throws Exception {

35 | //自定义Sink

36 | // f0==true :插入新数据

37 | // f0==false:删除旧数据

38 | if(value.f0){

39 | //可以写入MySQL、Kafka或者发HttpPost...根据具体情况开发

40 | System.out.println(value.f1);

41 | }

42 | }

43 | });

44 | }

45 |

46 | //接口定义的方法

47 | @Override

48 | public TypeInformation getRecordType() {

49 | return new RowTypeInfo(tableSchema.getFieldTypes(),tableSchema.getFieldNames());

50 | }

51 | //接口定义的方法

52 | @Override

53 | public TableSink> configure(String[] strings, TypeInformation[] typeInformations) {

54 | return null;

55 | }

56 | //接口定义的方法

57 | @Override

58 | public void emitDataStream(DataStream> dataStream) {

59 | }

60 |

61 | }

62 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/src/main/java/robinwang/KafkaSource.java:

--------------------------------------------------------------------------------

1 | package robinwang;

2 | import robinwang.custom.MyRetractStreamTableSink;

3 | import robinwang.custom.Tuple1Schema;

4 | import org.apache.flink.api.java.tuple.Tuple1;

5 | import org.apache.flink.streaming.api.datastream.DataStream;

6 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

7 | import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

8 | import org.apache.flink.table.api.DataTypes;

9 | import org.apache.flink.table.api.EnvironmentSettings;

10 | import org.apache.flink.table.api.Table;

11 | import org.apache.flink.table.api.java.StreamTableEnvironment;

12 | import org.apache.flink.table.sinks.RetractStreamTableSink;

13 | import org.apache.flink.table.types.DataType;

14 | import org.apache.flink.types.Row;

15 |

16 | import java.util.Properties;

17 |

18 | public class KafkaSource {

19 | public static void main(String[] args) throws Exception {

20 | //初始化Flink执行环境

21 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

22 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

23 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

24 |

25 | Properties kafkaProperties=new Properties();

26 | kafkaProperties.setProperty("bootstrap.servers", "0.0.0.0:9092");

27 | kafkaProperties.setProperty("group.id", "test");

28 | DataStream> kafkaStream=blinkStreamEnv.addSource(new FlinkKafkaConsumer011<>("test",new Tuple1Schema(),kafkaProperties));

29 | // DataStream> kafkaStream=blinkStreamEnv.addSource(new FlinkKafkaConsumer011<>("test",new AbstractDeserializationSchema>(){

30 | // @Override

31 | // public Tuple1 deserialize(byte[] bytes) throws IOException {

32 | // return new Tuple1<>(new String(bytes,"utf-8"));

33 | // }

34 | // },kafkaProperties));

35 |

36 | //如果多列应为:fromDataStream(kafkaStream,"f0,f1,f2");

37 | Table source=blinkStreamTabelEnv.fromDataStream(kafkaStream,"word");

38 | blinkStreamTabelEnv.registerTable("kafkaDataStream",source);

39 |

40 | //注册数据汇(Sink)

41 | RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new DataType[]{DataTypes.BIGINT(), DataTypes.STRING()});

42 | blinkStreamTabelEnv.registerTableSink("sinkTable",retractStreamTableSink);

43 |

44 | //执行SQL

45 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT count(word) AS _count,word FROM kafkaDataStream GROUP BY word ");

46 |

47 | //将SQL结果插入到Sink Table

48 | wordWithCount.insertInto("sinkTable");

49 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

50 | }

51 | }

52 |

--------------------------------------------------------------------------------

/flink-tabel-sql 6/sql-client-defaults.yaml:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Licensed to the Apache Software Foundation (ASF) under one

3 | # or more contributor license agreements. See the NOTICE file

4 | # distributed with this work for additional information

5 | # regarding copyright ownership. The ASF licenses this file

6 | # to you under the Apache License, Version 2.0 (the

7 | # "License"); you may not use this file except in compliance

8 | # with the License. You may obtain a copy of the License at

9 | #

10 | # http://www.apache.org/licenses/LICENSE-2.0

11 | #

12 | # Unless required by applicable law or agreed to in writing, software

13 | # distributed under the License is distributed on an "AS IS" BASIS,

14 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

15 | # See the License for the specific language governing permissions and

16 | # limitations under the License.

17 | ################################################################################

18 |

19 |

20 | # This file defines the default environment for Flink's SQL Client.

21 | # Defaults might be overwritten by a session specific environment.

22 |

23 |

24 | # See the Table API & SQL documentation for details about supported properties.

25 |

26 |

27 | #==============================================================================

28 | # Tables

29 | #==============================================================================

30 |

31 | # Define tables here such as sources, sinks, views, or temporal tables.

32 |

33 | tables:

34 | - name: Logs

35 | type: source

36 | update-mode: append

37 | schema:

38 | - name: response

39 | type: STRING

40 | - name: status

41 | type: INT

42 | - name: protocol

43 | type: STRING

44 | - name: timestamp

45 | type: BIGINT

46 | connector:

47 | property-version: 1

48 | type: kafka

49 | version: universal

50 | topic: log

51 | startup-mode: earliest-offset

52 | properties:

53 | - key: zookeeper.connect

54 | value: 0.0.0.0:2181

55 | - key: bootstrap.servers

56 | value: 0.0.0.0:9092

57 | - key: group.id

58 | value: test

59 | format:

60 | property-version: 1

61 | type: json

62 | schema: "ROW(response STRING,status INT,protocol STRING,timestamp BIGINT)"

63 | # A typical table source definition looks like:

64 | # - name: ...

65 | # type: source-table

66 | # connector: ...

67 | # format: ...

68 | # schema: ...

69 |

70 | # A typical view definition looks like:

71 | # - name: ...

72 | # type: view

73 | # query: "SELECT ..."

74 |

75 | # A typical temporal table definition looks like:

76 | # - name: ...

77 | # type: temporal-table

78 | # history-table: ...

79 | # time-attribute: ...

80 | # primary-key: ...

81 |

82 |

83 | #==============================================================================

84 | # User-defined functions

85 | #==============================================================================

86 |

87 | # Define scalar, aggregate, or table functions here.

88 |

89 | functions: [] # empty list

90 | # A typical function definition looks like:

91 | # - name: ...

92 | # from: class

93 | # class: ...

94 | # constructor: ...

95 |

96 |

97 | #==============================================================================

98 | # Catalogs

99 | #==============================================================================

100 |

101 | # Define catalogs here.

102 |

103 | catalogs: [] # empty list

104 | # A typical catalog definition looks like:

105 | # - name: myhive

106 | # type: hive

107 | # hive-conf-dir: /opt/hive_conf/

108 | # default-database: ...

109 |

110 |

111 | #==============================================================================

112 | # Execution properties

113 | #==============================================================================

114 |

115 | # Properties that change the fundamental execution behavior of a table program.

116 |

117 | execution:

118 | # select the implementation responsible for planning table programs

119 | # possible values are 'old' (used by default) or 'blink'

120 | planner: old

121 | # 'batch' or 'streaming' execution

122 | type: streaming

123 | # allow 'event-time' or only 'processing-time' in sources

124 | time-characteristic: event-time

125 | # interval in ms for emitting periodic watermarks

126 | periodic-watermarks-interval: 200

127 | # 'changelog' or 'table' presentation of results

128 | result-mode: table

129 | # maximum number of maintained rows in 'table' presentation of results

130 | max-table-result-rows: 1000000

131 | # parallelism of the program

132 | parallelism: 1

133 | # maximum parallelism

134 | max-parallelism: 128

135 | # minimum idle state retention in ms

136 | min-idle-state-retention: 0

137 | # maximum idle state retention in ms

138 | max-idle-state-retention: 0

139 | # current catalog ('default_catalog' by default)

140 | current-catalog: default_catalog

141 | # current database of the current catalog (default database of the catalog by default)

142 | current-database: default_database

143 | # controls how table programs are restarted in case of a failures

144 | restart-strategy:

145 | # strategy type

146 | # possible values are "fixed-delay", "failure-rate", "none", or "fallback" (default)

147 | type: fallback

148 |

149 | #==============================================================================

150 | # Configuration options

151 | #==============================================================================

152 |

153 | # Configuration options for adjusting and tuning table programs.

154 |

155 | # A full list of options and their default values can be found

156 | # on the dedicated "Configuration" web page.

157 |

158 | # A configuration can look like:

159 | # configuration:

160 | # table.exec.spill-compression.enabled: true

161 | # table.exec.spill-compression.block-size: 128kb

162 | # table.optimizer.join-reorder-enabled: true

163 |

164 | #==============================================================================

165 | # Deployment properties

166 | #==============================================================================

167 |

168 | # Properties that describe the cluster to which table programs are submitted to.

169 |

170 | deployment:

171 | # general cluster communication timeout in ms

172 | response-timeout: 5000

173 | # (optional) address from cluster to gateway

174 | gateway-address: ""

175 | # (optional) port from cluster to gateway

176 | gateway-port: 0

177 |

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/Flink SQL实战 - 2.md:

--------------------------------------------------------------------------------

1 | ## 实战篇-2:Tabel API & SQL 自定义 Sinks函数

2 |

3 | ### 引子:匪夷所思的Bool数据

4 |

5 | 在上一篇实战博客,我们使用Flink SQL API编写了一个基本的WordWithCount计算任务

6 |

7 | 我截取了一段控制台输出:

8 |

9 | ```

10 | 2> (true,1,Huawei)

11 | 5> (false,1,Vivo)

12 | 5> (true,2,Vivo)

13 | 2> (false,1,Huawei)

14 | 2> (true,2,Huawei)

15 | 3> (true,1,Xiaomi)

16 | 3> (false,1,Xiaomi)

17 | 3> (true,2,Xiaomi)

18 | ```

19 |

20 | 不难发现我们定义的表数据本应该是只有LONG和STRING两个字段,但是控制台直接输出Tabel的结果却多出一个BOOL类型的数据。而且同样计数值的数据会出现true和false各一次。

21 |

22 | 在官方文档关于[retractstreamtablesink]( https://ci.apache.org/projects/flink/flink-docs-release-1.9/dev/table/sourceSinks.html#retractstreamtablesink )的介绍中, 该表数据将被转换为一个累加和收回消息流,这些消息被编码为Java的 ```Tuple2``` 类型。第一个字段是一个布尔标志,用于指示消息类型(true表示插入,false表示删除)。第二个字段才是sink的数据类型。

23 |

24 | 所以在我们的WordWithCount计算中,执行的SQL语句对表的操作不是单纯insert插入,而是每执行一次sink都会在sink中执行 **删除旧数据** 和 **插入新数据** 两次操作。

25 |

26 | ----

27 |

28 | 用于Flink Tabel环境的自定义 Sources & Sinks函数和DataStream API思路是差不多的,如果有编写DataStream APISources & Sinks函数的经验,编写用于Flink Tabel环境的自定义函数是较容易理解和上手的。

29 |

30 | ### 定义TableSink

31 |

32 | 现在我们要给之前的WordWithCount计算任务添加一个自定义Sink

33 |

34 |

35 |

36 |

37 |

38 |

39 |

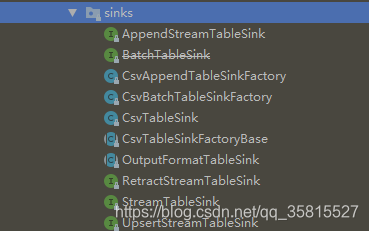

40 | flink.table.sinks提供了有三种继承 ```StreamTableSink``` 类的接口:

41 |

42 | - AppendStreamTableSink: 仅发出对表插入的更改

43 | - RetractStreamTableSink:发出对表具有插入,更新和删除的更改 ,消息被编码为 ```Tuple2```

44 | - UpsertStreamTableSink: 发出对表具有插入,更新和删除的更改 ,消息被编码为 ```Tuple2 ```,表必须要有类似主键的唯一键值( 使用setKeyFields(方法),不然会报错

45 |

46 | 因为在我们的WordWithCount计算中,执行的SQL语句对表的操作不是单纯insert插入,所以我们需要编写实现RetractStreamTableSink的用户自定义函数:

47 |

48 | ```

49 | public class MyRetractStreamTableSink implements RetractStreamTableSink {

50 | private TableSchema tableSchema;

51 | //构造函数,储存TableSchema

52 | public MyRetractStreamTableSink(String[] fieldNames,TypeInformation[] typeInformations){

53 | this.tableSchema=new TableSchema(fieldNames,typeInformations);

54 | }

55 | //重载

56 | public MyRetractStreamTableSink(String[] fieldNames,DataType[] dataTypes){

57 | this.tableSchema=TableSchema.builder().fields(fieldNames,dataTypes).build();

58 | }

59 | //Table sink must implement a table schema.

60 | @Override

61 | public TableSchema getTableSchema() {

62 | return tableSchema;

63 | }

64 | @Override

65 | public DataStreamSink consumeDataStream(DataStream> dataStream) {

66 | return dataStream.addSink(new SinkFunction>() {

67 | @Override

68 | public void invoke(Tuple2 value, Context context) throws Exception {

69 | //自定义Sink

70 | // f0==true :插入新数据

71 | // f0==false:删除旧数据

72 | if(value.f0){

73 | //可以写入MySQL、Kafka或者发HttpPost...根据具体情况开发

74 | System.out.println(value.f1);

75 | }

76 | }

77 | });

78 | }

79 |

80 | //接口定义的方法

81 | @Override

82 | public TypeInformation getRecordType() {

83 | return new RowTypeInfo(tableSchema.getFieldTypes(),tableSchema.getFieldNames());

84 | }

85 | //接口定义的方法

86 | @Override

87 | public TableSink> configure(String[] strings, TypeInformation[] typeInformations) {

88 | return null;

89 | }

90 | //接口定义的方法

91 | @Override

92 | public void emitDataStream(DataStream> dataStream) {

93 | }

94 |

95 | }

96 | ```

97 |

98 | 吐槽一下,目前使用1.9.0版本API,在注册source Tabel都用 ```TypeInformation[]``` 表示数据类型。

99 |

100 | 而在编写Sink时使用```TypeInformation[]```的方法都被@Deprecated,提供了Builder方法代替构造,使用```DataType[]``` 为 ```TableSchema.builder().fields``` 的参数表示数据类型,统一使用 ```TypeInformation[]``` 表示数据类型比较潇洒,当然使用 ```TableSchema.builder()``` 方法有对空值的检查,更加***可靠***。

101 |

102 | 所以写了重载函数:我全都要

103 |

104 | 使用自定义Sink,直接用new定义Tabel的结构简化了代码:

105 |

106 | ```

107 | import kmops.models.MyRetractStreamTableSink;

108 | import org.apache.flink.api.common.typeinfo.TypeInformation;

109 | import org.apache.flink.api.common.typeinfo.Types;

110 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

111 | import org.apache.flink.table.api.EnvironmentSettings;

112 | import org.apache.flink.table.api.Table;

113 | import org.apache.flink.table.api.java.StreamTableEnvironment;

114 | import org.apache.flink.table.sinks.RetractStreamTableSink;

115 | import org.apache.flink.table.sources.CsvTableSource;

116 | import org.apache.flink.table.sources.TableSource;

117 | import org.apache.flink.types.Row;

118 |

119 | public class CustomSinkJob {

120 | public static void main(String[] args) throws Exception {

121 | //初始化Flink执行环境

122 | StreamExecutionEnvironment blinkStreamEnv=StreamExecutionEnvironment.getExecutionEnvironment();

123 | EnvironmentSettings blinkStreamSettings= EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

124 | StreamTableEnvironment blinkStreamTabelEnv= StreamTableEnvironment.create(blinkStreamEnv,blinkStreamSettings);

125 | //获取Resource路径

126 | String path= CustomSinkJob.class.getClassLoader().getResource("list.txt").getPath();

127 |

128 | //注册数据源

129 | TableSource fileSource=new CsvTableSource(path,new String[]{"word"},new TypeInformation[]{Types.STRING});

130 | blinkStreamTabelEnv.registerTableSource("flieSourceTable",fileSource);

131 |

132 | //注册数据汇(Sink)

133 | RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new TypeInformation[]{Types.LONG,Types.STRING});

134 | //或者

135 | //RetractStreamTableSink retractStreamTableSink=new MyRetractStreamTableSink(new String[]{"_count","word"},new DataType[]{DataTypes.BIGINT(),DataTypes.STRING()});

136 | blinkStreamTabelEnv.registerTableSink("sinkTable",retractStreamTableSink);

137 |

138 | //执行SQL

139 | Table wordWithCount = blinkStreamTabelEnv.sqlQuery("SELECT count(word) AS _count,word FROM flieSourceTable GROUP BY word ");

140 |

141 | //将SQL结果插入到Sink Table

142 | wordWithCount.insertInto("sinkTable");

143 | blinkStreamTabelEnv.execute("BLINK STREAMING QUERY");

144 | }

145 | }

146 |

147 | ```

148 |

149 | 输出结果:

150 |

151 | ```

152 | 1,OnePlus

153 | 1,Oppo

154 | 2,Oppo

155 | 2,OnePlus

156 | ```

157 |

158 | ### GitHub

159 |

160 | 源码已上传至GitHub

161 |

162 | https://github.com/StarPlatinumStudio/Flink-SQL-Practice

163 |

164 | 下篇博客干货极多

165 |

166 | ### To Be Continue=>

--------------------------------------------------------------------------------

/flink-tabel-sql 4&5/Flink SQL 实战 (5):使用自定义函数实现关键字过滤统计.md:

--------------------------------------------------------------------------------

1 | ## Flink SQL 实战 (5):使用自定义函数实现关键字过滤统计

2 |

3 | 在上一篇实战博客中使用POJO Schema解析来自 Kafka 的 JSON 数据源并且使用自定义函数处理。

4 |

5 | 现在我们使用更强大自定义函数处理数据

6 |

7 | ## 使用自定义函数实现关键字过滤统计

8 |

9 | ### 自定义表函数(UDTF)

10 |

11 | 与自定义的标量函数相似,自定义表函数将零,一个或多个标量值作为输入参数。 但是,与标量函数相比,它可以返回任意数量的行作为输出,而不是单个值。

12 |

13 | 为了定义表函数,必须扩展基类TableFunction并实现**评估方法**。 表函数的行为由其评估方法确定。 必须将评估方法声明为公开并命名为eval。 通过实现多个名为eval的方法,可以重载TableFunction。 评估方法的参数类型确定表函数的所有有效参数。 返回表的类型由TableFunction的通用类型确定。 评估方法使用 collect(T)方法发出输出行。

14 |

15 | 定义一个过滤字符串 记下关键字 的自定义表函数

16 |

17 | KyeWordCount.java:

18 |

19 | ```

20 | import org.apache.flink.api.java.tuple.Tuple2;

21 | import org.apache.flink.table.functions.TableFunction;

22 |

23 | public class KyeWordCount extends TableFunction> {

24 | private String[] keys;

25 | public KyeWordCount(String[] keys){

26 | this.keys=keys;

27 | }

28 | public void eval(String in){

29 | for (String key:keys){

30 | if (in.contains(key)){

31 | collect(new Tuple2(key,1));

32 | }

33 | }

34 | }

35 | }

36 | ```

37 |

38 | 实现关键字过滤统计:

39 |

40 | ```

41 | public class UdtfJob {

42 | public static void main(String[] args) throws Exception {

43 | StreamExecutionEnvironment streamEnv = StreamExecutionEnvironment.getExecutionEnvironment();

44 | EnvironmentSettings streamSettings = EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

45 | StreamTableEnvironment streamTabelEnv = StreamTableEnvironment.create(streamEnv, streamSettings);

46 | KafkaTabelSource kafkaTabelSource = new KafkaTabelSource();

47 | streamTabelEnv.registerTableSource("kafkaDataStream", kafkaTabelSource);//使用自定义TableSource

48 | //注册自定义函数定义三个关键字:"KeyWord","WARNING","illegal"

49 | streamTabelEnv.registerFunction("CountKEY", new KyeWordCount(new String[]{"KeyWord","WARNING","illegal"}));

50 | //编写SQL

51 | Table wordWithCount = streamTabelEnv.sqlQuery("SELECT key,COUNT(countv) AS countsum FROM kafkaDataStream LEFT JOIN LATERAL TABLE(CountKEY(response)) as T(key, countv) ON TRUE GROUP BY key");

52 | //直接输出Retract流

53 | streamTabelEnv.toRetractStream(wordWithCount, Row.class).print();

54 | streamTabelEnv.execute("BLINK STREAMING QUERY");

55 | }

56 | }

57 | ```

58 |

59 | 测试用Python脚本如下

60 |

61 | ```

62 | # https://pypi.org/project/kafka-python/

63 | import pickle

64 | import time

65 | import json

66 | from kafka import KafkaProducer

67 |

68 | producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'],

69 | key_serializer=lambda k: pickle.dumps(k),

70 | value_serializer=lambda v: pickle.dumps(v))

71 | start_time = time.time()

72 | for i in range(0, 10000):

73 | print('------{}---------'.format(i))

74 | producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'),compression_type='gzip')

75 | producer.send('test',{"response":"resKeyWordWARNINGillegal","status":0,"protocol":"protocol","timestamp":0})

76 | producer.send('test',{"response":"resKeyWordWARNINGillegal","status":1,"protocol":"protocol","timestamp":0})

77 | producer.send('test',{"response":"resresKeyWordWARNING","status":2,"protocol":"protocol","timestamp":0})

78 | producer.send('test',{"response":"resKeyWord","status":3,"protocol":"protocol","timestamp":0})

79 | producer.send('test',{"response":"res","status":4,"protocol":"protocol","timestamp":0})

80 | producer.send('test',{"response":"res","status":5,"protocol":"protocol","timestamp":0})

81 | # future = producer.send('test', key='num', value=i, partition=0)

82 | # 将缓冲区的全部消息push到broker当中

83 | producer.flush()

84 | producer.close()

85 |

86 | end_time = time.time()

87 | time_counts = end_time - start_time

88 | print(time_counts)

89 | ```

90 |

91 | 控制台输出:

92 |

93 | ```

94 | ...

95 | 6> (false,KeyWord,157)

96 | 3> (false,WARNING,119)

97 | 3> (true,WARNING,120)

98 | 6> (true,KeyWord,158)

99 | 7> (true,illegal,80)

100 | 6> (false,KeyWord,158)

101 | 6> (true,KeyWord,159)

102 | 6> (false,KeyWord,159)

103 | 6> (true,KeyWord,160)

104 | ...

105 | ```

106 |

107 | ### 自定义聚合函数

108 |

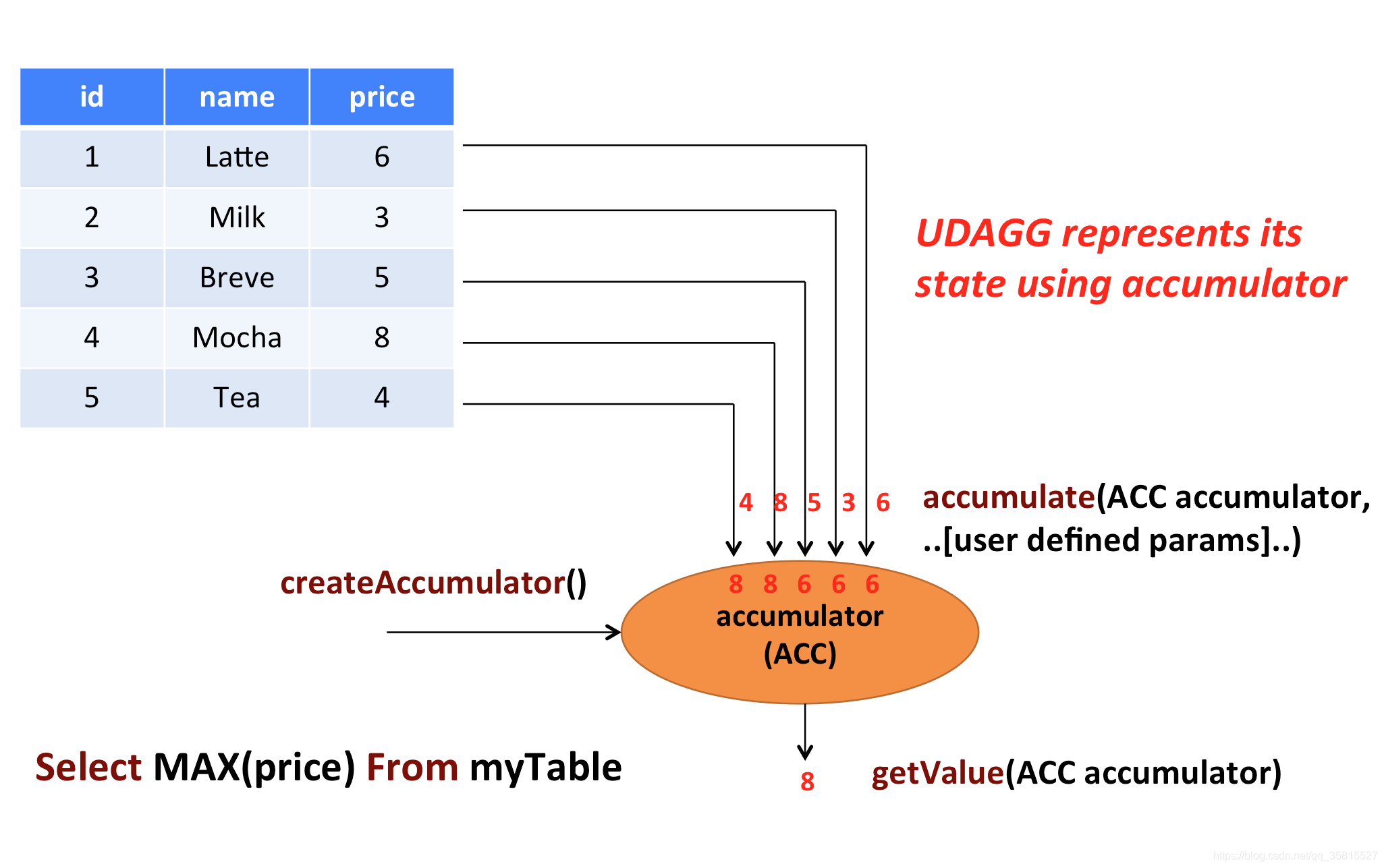

109 | 自定义聚合函数(UDAGGs)将一个表聚合为一个标量值。

110 |

111 |

112 |

113 | 聚合函数适合用于累计的工作,上面的图显示了聚合的一个示例。假设您有一个包含饮料数据的表。该表由三列组成:id、name和price,共计5行。想象一下,你需要找到所有饮料的最高价格。执行max()聚合。您需要检查5行中的每一行,结果将是单个数值。

114 |

115 | 用户定义的聚合函数是通过扩展AggregateFunction类来实现的。AggregateFunction的工作原理如下。首先,它需要一个累加器,这个累加器是保存聚合中间结果的数据结构。通过调用AggregateFunction的createAccumulator()方法来创建一个空的累加器。随后,对每个输入行调用该函数的accumulator()方法来更新累加器。处理完所有行之后,将调用函数的getValue()方法来计算并返回最终结果。

116 |

117 | **每个AggregateFunction必须使用以下方法: **

118 |

119 | - `createAccumulator()`创建一个空的累加器

120 | - `accumulate()`更新累加器

121 | - `getValue()`计算并返回最终结果

122 |

123 | 除了上述方法之外,还有一些可选方法。虽然其中一些方法允许系统更有效地执行查询,但是对于某些用例是必需的。例如,如果应该在会话组窗口的上下文中应用聚合函数,那么merge()方法是必需的(当观察到连接它们的行时,需要连接两个会话窗口的累加器。

124 |

125 | **AggregateFunction可选方法**

126 |

127 | - `retract()` 定义restract:减少Accumulator ,对于在有界窗口上的聚合是必需的。

128 | - `merge()` merge多个Accumulator , 对于许多批处理聚合和会话窗口聚合都是必需的。

129 | - `resetAccumulator()` 重置Accumulator ,对于许多批处理聚合都是必需的。

130 |

131 | ##### 使用聚合函数聚合最大的status值

132 |

133 | 编写自定义聚合函数,用于聚合出最大的status

134 |

135 | ```

136 | public class MaxStatus extends AggregateFunction {

137 | @Override

138 | public Integer getValue(StatusACC statusACC) {

139 | return statusACC.maxStatus;

140 | }

141 |

142 | @Override

143 | public StatusACC createAccumulator() {

144 | return new StatusACC();

145 | }

146 | public void accumulate(StatusACC statusACC,int status){

147 | if (status>statusACC.maxStatus){

148 | statusACC.maxStatus=status;

149 | }

150 | }

151 | public static class StatusACC{

152 | public int maxStatus=0;

153 | }

154 | }

155 | ```

156 |

157 | mian函数修改注册和SQL就可以使用

158 |

159 | ```

160 | /**

161 | *聚合最大的status

162 | */

163 | streamTabelEnv.registerFunction("maxStatus",new MaxStatus());

164 | Table wordWithCount = streamTabelEnv.sqlQuery("SELECT maxStatus(status) AS maxStatus FROM kafkaDataStream");

165 | ```

166 |

167 | 使用之前的python脚本测试

168 |

169 | 控制台输出(全部):

170 |

171 | ```

172 | 5> (false,1)

173 | 8> (true,3)

174 | 3> (false,0)

175 | 4> (true,1)

176 | 6> (true,2)

177 | 2> (true,0)

178 | 2> (true,4)

179 | 1> (false,3)

180 | 7> (false,2)

181 | 3> (false,4)

182 | 4> (true,5)

183 | ```

184 |

185 | 除非输入更大的Status,否则控制台不会继续输出新结果

186 |

187 | ### 表聚合函数

188 |

189 | 用户定义的表聚合函数(UDTAGGs)将一个表(具有一个或多个属性的一个或多个行)聚合到具有多行和多列的结果表。

190 |

191 | 和聚合函数几乎一致,有需求的朋友可以参考官方文档

192 |

193 | [Table Aggregation Functions]( https://ci.apache.org/projects/flink/flink-docs-release-1.9/dev/table/udfs.html#table-aggregation-functions )

194 |

195 | ## GitHub

196 |

197 | 项目源码、python kafka moke小程序已上传至GitHub

198 |

199 | https://github.com/StarPlatinumStudio/Flink-SQL-Practice

200 |

201 | 我的专栏:[Flink SQL原理和实战]( https://blog.csdn.net/qq_35815527/category_9634641.html )

202 |

203 | ### To Be Continue=>

--------------------------------------------------------------------------------

/flink-tabel-sql 2&3/Flink SQL实战 - 3.md:

--------------------------------------------------------------------------------

1 | ## 实战篇-3:Tabel API & SQL 注册Tabel Source

2 |

3 | 在上一篇实战博客,我们给WordWithCount计算任务自定义了Sink函数

4 |

5 | 现在我们开始研究自定义Source:

6 |

7 | ### 前 方 干 货 极 多 ###

8 |

9 |

10 |

11 | ## 注册Tabel Source

12 |

13 | 我们以Kafka Source举例,讲2种注册Tabel Source的方法和一些技巧:

14 |

15 | ### 将DataStream转换为表

16 |

17 | 想要将DataStream转换为表,我们需要一个DataStream

18 |

19 | 以Kafka为外部数据源,需要在pom文件中添加依赖

20 |

21 | ```

22 |

23 | org.apache.flink

24 | flink-connector-kafka-0.11_2.11

25 | ${flink.version}

26 |

27 |

28 | org.apache.flink

29 | flink-connector-kafka_2.11

30 | ${flink.version}

31 |

32 | ```

33 |

34 | 添加Kafka DataStream:

35 |

36 | ```

37 | DataStream> kafkaStream=blinkStreamEnv.addSource(new FlinkKafkaConsumer011<>("test",new AbstractDeserializationSchema>(){

38 | @Override

39 | public Tuple1 deserialize(byte[] bytes) throws IOException {

40 | return new Tuple1<>(new String(bytes,"utf-8"));

41 | }

42 | },kafkaProperties));

43 | ```

44 |

45 | 注册表:

46 |

47 | ```

48 | //如果多列应为:fromDataStream(kafkaStream,"f0,f1,f2");

49 | Table source=blinkStreamTabelEnv.fromDataStream(kafkaStream,"word");

50 | blinkStreamTabelEnv.registerTable("kafkaDataStream",source);

51 | ```

52 |

53 | 虽然没有指定是Tabel Source,但是可以在后续流程使用注册好的 kafkaDataStream 表

54 |

55 | ### 数据类型到表架构的映射

56 |

57 | Flink的DataStream和DataSet API支持非常多种类型。元组,POJO,Scala案例类和Flink的Row类型等复合类型允许嵌套的数据结构具有多个字段,这些字段可在表表达式中访问。

58 |

59 | 上述符合数据类型可以通过自定义Schema来使用

60 |

61 | ### 自定义Schema

62 |

63 | 我喜欢将自定义函数封装成类,简洁可复用

64 |

65 | ```

66 | import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

67 | import org.apache.flink.types.Row;

68 | import java.io.IOException;

69 |

70 | public final class RowSchema extends AbstractDeserializationSchema {

71 | @Override

72 | public Row deserialize(byte[] bytes) throws IOException {

73 | //定义长度为1行的Row

74 | Row row=new Row(1);

75 | //设置字段,如果多行可以解析JSON循环

76 | row.setField(0,new String(bytes,"utf-8"));

77 | return row;

78 | }

79 | }

80 | ```

81 |

82 | 在main中使用:

83 |

84 | ```

85 | DataStream kafkaStream=blinkStreamEnv.addSource(new FlinkKafkaConsumer011<>("test",new RowSchema(),kafkaProperties));

86 | ```

87 |

88 | 到这里已经注册好可用的Datastream Source Tabel了

89 |

90 | 但是还可以进一步自定义:

91 |

92 | ### 自定义TableSource

93 |

94 | StreamTableSource接口继承自TableSource接口,可以在getDataStream方法中编写DataStream

95 |

96 | ```

97 | import org.apache.flink.api.common.serialization.SimpleStringSchema;

98 | import org.apache.flink.streaming.api.datastream.DataStream;

99 | import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

100 | import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

101 | import org.apache.flink.table.api.DataTypes;

102 | import org.apache.flink.table.api.TableSchema;

103 | import org.apache.flink.table.sources.StreamTableSource;

104 | import org.apache.flink.table.types.DataType;

105 |

106 | import java.util.Properties;

107 |

108 | public class KafkaTabelSource implements StreamTableSource {

109 | @Override

110 | public DataType getProducedDataType() {

111 | return DataTypes.STRING();

112 | }

113 | @Override

114 | public TableSchema getTableSchema() {

115 | return TableSchema.builder().fields(new String[]{"word"},new DataType[]{DataTypes.STRING()}).build();

116 | }

117 | @Override