├── LICENSE

├── README.md

├── aliyun-yd.rules

├── bad-crawler.rules

├── basic-crawler.rules

├── good-bot.rules

└── security-scan-bot.rules

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2018 Sukka

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Cloudflare Block Bad Bot Ruleset

2 |

11 | > Block bad, possibly even malicious, web crawlers (automated bots) and other malicious traffic using Cloudflare Firewall Rules

13 |

14 | ## Introduction

15 |

16 | `Cloudflare Block Bad Bot Ruleset` stops bad bots, spam referrers, adware, malware and other kinds of bad internet traffic from ever reaching your websites. It is inspired by [nginx-badbot-blocker](https://github.com/mariusv/nginx-badbot-blocker) and works with Cloudflare Firewall Rules.

19 |

20 | ## Precautions

21 |

22 | `Cloudflare Block Bad Bot Ruleset` is mainly based on the User-Agent header, which is well known to be easy to spoof, so this project cannot replace a proper Web Application Firewall.
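To make the limitation concrete, here is a minimal Python sketch that approximates three clauses from `basic-crawler.rules` with plain substring tests. The function name `matches_basic_crawler` and the sample User-Agent strings are illustrative assumptions, not part of the project; Cloudflare evaluates the real expressions at its edge, not in Python.

```python
def matches_basic_crawler(user_agent: str) -> bool:
    """Approximate three clauses of basic-crawler.rules (hypothetical helper).

    `contains` is treated as a case-sensitive substring test and
    `lower(...)` as lowercasing the header before the test.
    """
    ua = user_agent
    return (
        "python" in ua.lower()               # (lower(http.user_agent) contains "python")
        or ("lient" in ua and "ttp" in ua)   # (... contains "lient" and ... contains "ttp")
        or "libwww" in ua                    # (http.user_agent contains "libwww")
    )


# The same client, first with its default header and then with a spoofed one.
honest_ua = "python-requests/2.31.0"
spoofed_ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

print(matches_basic_crawler(honest_ua))   # True: the rule would fire
print(matches_basic_crawler(spoofed_ua))  # False: the rule is bypassed
```

A client that changes a single header slips past every User-Agent rule, which is why the ruleset complements a WAF rather than replacing one.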

25 |

26 | ## Ruleset

27 |

28 | Rule Name | File Name | Action | What For

29 | ---- | ---- | ---- | ----

30 | Good Bot | [good-bot.rules](./good-bot.rules) | Allow | Match known good bots.

31 | Aliyun Yundun | [aliyun-yd.rules](./aliyun-yd.rules) | Block | Match Aliyun Yundun based on known IP CIDR ranges.

32 | Basic Crawler | [basic-crawler.rules](./basic-crawler.rules) | Block/Challenge | Match some basic HTTP request libraries.

33 | Bad Crawler | [bad-crawler.rules](./bad-crawler.rules) | Block/Challenge | Match most known bad bots, including SEO and marketing crawlers; the basic ruleset is not included.

34 | Security Scanner | [security-scan-bot.rules](./security-scan-bot.rules) | Block/Challenge | Match most known security scanners.
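The table pairs each rule file with an action. The Python sketch below shows one way to reason about how they combine for a given User-Agent. It rests on two loud assumptions: rules are checked in the table's order and the first match decides (actual precedence depends on how the rules are configured in Cloudflare), and each rule is reduced to a couple of substrings taken from its file. `RULES` and `decide` are hypothetical names; `cf.client.bot` and the IP-based Aliyun rule are left out because they cannot be emulated from a User-Agent string.

```python
from typing import Callable, List, Tuple

# (rule name, action, predicate over the User-Agent string)
Rule = Tuple[str, str, Callable[[str], bool]]

# Heavily abbreviated stand-ins for the rule files; assumption of this sketch.
RULES: List[Rule] = [
    ("Good Bot", "allow",
     lambda ua: "facebookexternalhit" in ua or "Slackbot" in ua),
    ("Basic Crawler", "block/challenge",
     lambda ua: "python" in ua.lower() or "libwww" in ua),
    ("Bad Crawler", "block/challenge",
     lambda ua: "MJ12bot" in ua or "Semrush" in ua),
    ("Security Scanner", "block/challenge",
     lambda ua: "masscan" in ua or "zmap" in ua.lower()),
]


def decide(user_agent: str) -> Tuple[str, str]:
    """Return (rule name, action) for the first matching rule."""
    for name, action, predicate in RULES:
        if predicate(user_agent):
            return name, action
    return "(no rule)", "pass"


print(decide("Slackbot-LinkExpanding 1.0 (+https://api.slack.com/robots)"))
print(decide("Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)"))
print(decide("masscan/1.3"))
```

Putting the Allow rule first means a known good bot is never caught by a broader Block/Challenge pattern further down the list.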

35 |

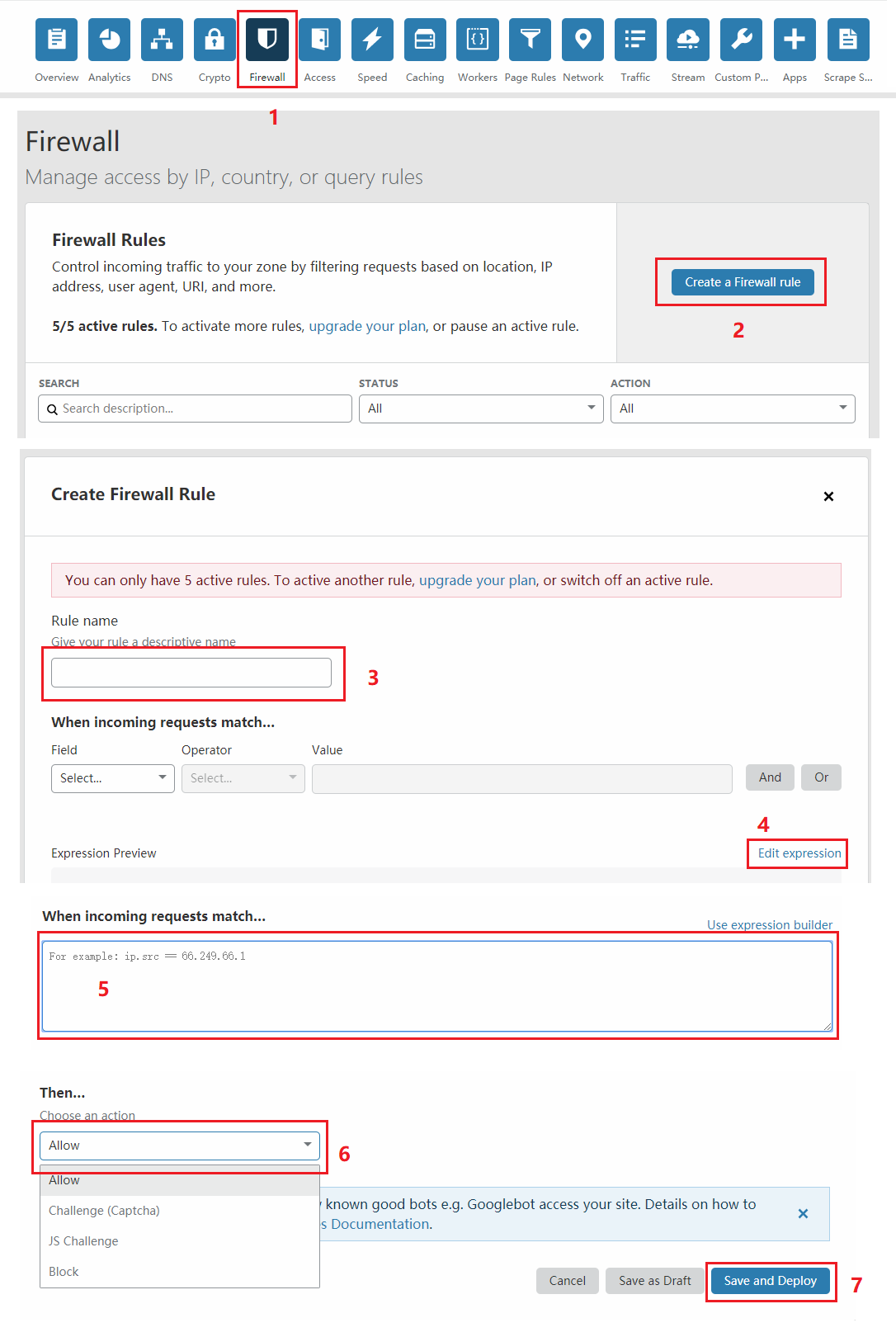

36 | ## Usage

37 |

38 |

39 |

40 | ## More Information

41 |

42 | - [Announcing Firewall Rules | Cloudflare Blog](https://blog.cloudflare.com/announcing-firewall-rules/)

43 | - [Cloudflare Firewall Rules | Cloudflare Documentation](https://developers.cloudflare.com/firewall/)

44 | - [nginx-badbot-blocker | GitHub](https://github.com/mariusv/nginx-badbot-blocker)

45 | - [nginx-ultimate-bad-bot-blocker | GitHub](https://github.com/mitchellkrogza/nginx-ultimate-bad-bot-blocker)

46 |

47 | ## Todo List

48 |

49 | - [ ] Bad referrer list

50 | - [ ] Known bad IP List

51 |

52 | ## Maintainer

53 |

54 | **Cloudflare Block Bad Bot Ruleset** © [Sukka](https://github.com/SukkaW), Released under the [MIT](./LICENSE) License.

55 |

56 | > [Personal Website](https://skk.moe) · [Blog](https://blog.skk.moe) · GitHub [@SukkaW](https://github.com/SukkaW) · Telegram Channel [@SukkaChannel](https://t.me/SukkaChannel) · Twitter [@isukkaw](https://twitter.com/isukkaw) · Keybase [@sukka](https://keybase.io/sukka)

57 |

--------------------------------------------------------------------------------

/aliyun-yd.rules:

--------------------------------------------------------------------------------

1 | (ip.src in {140.205.201.0/24 140.205.225.0/24 106.11.222.0/23 106.11.224.0/24 106.11.228.0/22})

2 |

--------------------------------------------------------------------------------
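The `ip.src in {...}` expression above tests whether the client address falls inside any of the listed CIDR ranges. A rough local equivalent, using Python's standard `ipaddress` module, can help check a suspicious address from your logs before deploying the rule. The names `ALIYUN_YD_NETWORKS` and `is_aliyun_yundun` are hypothetical; the ranges are copied from `aliyun-yd.rules`.

```python
from ipaddress import ip_address, ip_network

# CIDR ranges copied verbatim from aliyun-yd.rules.
ALIYUN_YD_NETWORKS = [
    ip_network(cidr)
    for cidr in (
        "140.205.201.0/24",
        "140.205.225.0/24",
        "106.11.222.0/23",
        "106.11.224.0/24",
        "106.11.228.0/22",
    )
]


def is_aliyun_yundun(ip: str) -> bool:
    """Return True if `ip` falls inside any of the listed ranges."""
    addr = ip_address(ip)
    return any(addr in network for network in ALIYUN_YD_NETWORKS)


print(is_aliyun_yundun("106.11.223.5"))  # True: inside 106.11.222.0/23
print(is_aliyun_yundun("8.8.8.8"))       # False: outside every range
```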

/bad-crawler.rules:

--------------------------------------------------------------------------------

1 | (http.user_agent contains "80legs") or

2 | (http.user_agent contains "Abonti") or

3 | (http.user_agent contains "admantx") or

4 | (http.user_agent contains "aipbot") or

5 | (http.user_agent contains "AllSubmitter") or

6 | (http.user_agent contains "Backlink") or (http.user_agent contains "backlink") or

7 | (http.user_agent contains "Badass") or

8 | (http.user_agent contains "Bigfoot") or

9 | (lower(http.user_agent) contains "blexbot") or

10 | (http.user_agent contains "Buddy") or

11 | (http.user_agent contains "CherryPicker") or

12 | (http.user_agent contains "cloudsystemnetwork") or

13 | (http.user_agent contains "cognitiveseo") or

14 | (http.user_agent contains "Collector") or

15 | (http.user_agent contains "cosmos") or

16 | (http.user_agent contains "CrazyWebCrawler") or

17 | (http.user_agent contains "Crescent") or

18 | (http.user_agent contains "Devil") or

19 | ((lower(http.user_agent) contains "domain") and ((http.user_agent contains "spider") or (http.user_agent contains "stat") or (http.user_agent contains "Appender") or (http.user_agent contains "Crawler"))) or

20 | (http.user_agent contains "DittoSpyder") or

21 | (http.user_agent contains "Konqueror") or

22 | (http.user_agent contains "Easou") or (http.user_agent contains "Yisou") or (http.user_agent contains "Etao") or

23 | (http.user_agent contains "mail" and ((http.user_agent contains "olf") or (http.user_agent contains "spider"))) or

24 | (http.user_agent contains "exabot.com") or

25 | (http.user_agent contains "getintent") or

26 | (http.user_agent contains "Grabber") or

27 | (http.user_agent contains "GrabNet") or

28 | (http.user_agent contains "HEADMasterSEO") or

29 | (http.user_agent contains "heritrix") or

30 | (http.user_agent contains "htmlparser") or

31 | (http.user_agent contains "hubspot") or

32 | (http.user_agent contains "Jyxobot") or

33 | (lower(http.user_agent) contains "kraken") or

34 | (http.user_agent contains "larbin") or

35 | (http.user_agent contains "ltx71") or

36 | (http.user_agent contains "leiki") or

37 | (http.user_agent contains "LinkScan") or

38 | (http.user_agent contains "Magnet") or

39 | (http.user_agent contains "Mag-Net") or

40 | (http.user_agent contains "Mechanize") or

41 | (http.user_agent contains "MegaIndex") or

42 | (http.user_agent contains "Metasearch") or

43 | (http.user_agent contains "MJ12bot") or

44 | (http.user_agent contains "moz.com") or

45 | (http.user_agent contains "Navroad") or

46 | (http.user_agent contains "Netcraft") or

47 | (http.user_agent contains "niki-bot") or

48 | (http.user_agent contains "NimbleCrawler") or

49 | (http.user_agent contains "Nimbostratus") or

50 | (http.user_agent contains "Ninja") or

51 | (http.user_agent contains "Openfind") or

52 | (http.user_agent contains "Page" and http.user_agent contains "Analyzer") or

53 | (http.user_agent contains "Pixray") or

54 | (http.user_agent contains "probethenet") or

55 | (http.user_agent contains "proximic") or

56 | (http.user_agent contains "psbot") or

57 | (http.user_agent contains "RankActive") or (http.user_agent contains "RankingBot") or (http.user_agent contains "RankurBot") or

58 | (http.user_agent contains "Reaper") or

59 | (http.user_agent contains "SalesIntelligent") or

60 | (http.user_agent contains "Semrush") or

61 | (http.user_agent contains "SEOkicks") or

62 | (http.user_agent contains "spbot") or

63 | (http.user_agent contains "SEOstats") or

64 | (http.user_agent contains "Snapbot") or

65 | (http.user_agent contains "Stripper") or

66 | (http.user_agent contains "Siteimprove") or

67 | (http.user_agent contains "sitesell") or

68 | (http.user_agent contains "Siphon") or

69 | (http.user_agent contains "Sucker") or

70 | (http.user_agent contains "TenFourFox") or

71 | (http.user_agent contains "TurnitinBot") or

72 | (http.user_agent contains "trendiction") or

73 | (http.user_agent contains "twingly") or

74 | (http.user_agent contains "VidibleScraper") or

75 | (http.user_agent contains "WebLeacher") or

76 | (http.user_agent contains "WebmasterWorldForum") or

77 | (http.user_agent contains "webmeup") or

78 | (http.user_agent contains "Webster") or

79 | (http.user_agent contains "Widow") or

80 | (http.user_agent contains "Xaldon") or

81 | (http.user_agent contains "Xenu") or

82 | (http.user_agent contains "xtractor") or

83 | (http.user_agent contains "Zermelo")

84 |

--------------------------------------------------------------------------------

/basic-crawler.rules:

--------------------------------------------------------------------------------

1 | (lower(http.user_agent) contains "fuck") or

2 | (http.user_agent contains "lient" and http.user_agent contains "ttp") or

3 | (lower(http.user_agent) contains "java") or

4 | (http.user_agent contains "Joomla") or

5 | (http.user_agent contains "libweb") or (http.user_agent contains "libwww") or

6 | (http.user_agent contains "PHPCrawl") or

7 | (http.user_agent contains "PyCurl") or

8 | (lower(http.user_agent) contains "python") or

9 | (http.user_agent contains "wrk") or

10 | (http.user_agent contains "hey/")

--------------------------------------------------------------------------------

/good-bot.rules:

--------------------------------------------------------------------------------

1 | (cf.client.bot) or

2 | (http.user_agent contains "duckduckgo") or

3 | (http.user_agent contains "facebookexternalhit") or

4 | (http.user_agent contains "Feedfetcher-Google") or

5 | (http.user_agent contains "LinkedInBot") or

6 | (http.user_agent contains "Mediapartners-Google") or

7 | (http.user_agent contains "msnbot") or

8 | (http.user_agent contains "Slackbot") or

9 | (lower(http.user_agent) contains "twitterbot") or

10 | (http.user_agent contains "ia_archive") or

11 | (http.user_agent contains "yahoo")

12 |

--------------------------------------------------------------------------------

/security-scan-bot.rules:

--------------------------------------------------------------------------------

1 | (http.user_agent contains "Acunetix") or

2 | (lower(http.user_agent) contains "apache") or

3 | (http.user_agent contains "BackDoorBot") or

4 | (http.user_agent contains "cobion") or

5 | (http.user_agent contains "masscan") or (http.user_agent contains "FHscan") or (http.user_agent contains "scanbot") or (http.user_agent contains "Gscan") or (http.user_agent contains "Researchscan") or (http.user_agent contains "WPScan") or (http.user_agent contains "ScanAlert") or (http.user_agent contains "Wprecon") or

6 | (lower(http.user_agent) contains "virusdie") or

7 | (http.user_agent contains "VoidEYE") or

8 | (http.user_agent contains "WebShag") or

9 | (http.user_agent contains "Zeus") or

10 | (http.user_agent contains "zgrab") or

11 | (lower(http.user_agent) contains "zmap") or (lower(http.user_agent) contains "nmap") or (lower(http.user_agent) contains "fimap") or

12 | (http.user_agent contains "ZmEu") or

13 | (http.user_agent contains "ZumBot") or

14 | (http.user_agent contains "Zyborg")

15 |

--------------------------------------------------------------------------------
