├── setup.cfg

├── DeepImageSearch

├── __init__.py

├── config.py

└── DeepImageSearch.py

├── images

├── deep image search logo New.png

├── deep image search logo - white.png

└── Deep-Image-Search-Demo-Screenshot-NEW.png

├── requirements.txt

├── Demo

└── DeepImageSearchDemo.py

├── LICENSE.txt

├── setup.py

├── Documents

└── Document.md

├── README.md

└── CHANGELOG.md

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | description-file = README.rst

3 |

--------------------------------------------------------------------------------

/DeepImageSearch/__init__.py:

--------------------------------------------------------------------------------

1 | from DeepImageSearch.DeepImageSearch import Load_Data,Search_Setup

2 |

--------------------------------------------------------------------------------

/images/deep image search logo New.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TechyNilesh/DeepImageSearch/HEAD/images/deep image search logo New.png

--------------------------------------------------------------------------------

/images/deep image search logo - white.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TechyNilesh/DeepImageSearch/HEAD/images/deep image search logo - white.png

--------------------------------------------------------------------------------

/images/Deep-Image-Search-Demo-Screenshot-NEW.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TechyNilesh/DeepImageSearch/HEAD/images/Deep-Image-Search-Demo-Screenshot-NEW.png

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | faiss_cpu==1.7.3

2 | matplotlib==3.5.2

3 | numpy==1.24.2

4 | pandas==1.4.3

5 | Pillow==9.5.0

6 | timm==0.6.13

7 | torch==2.0.0

8 | torchvision==0.15.1

9 | tqdm==4.65.0

--------------------------------------------------------------------------------

/Demo/DeepImageSearchDemo.py:

--------------------------------------------------------------------------------

1 | ### Sample Dataset Link:

2 | ### https://www.kaggle.com/datasets/iamsouravbanerjee/animal-image-dataset-90-different-animals

3 |

4 | from DeepImageSearch import Load_Data, Search_Setup

5 |

6 | # Load images from a folder

7 | image_list = Load_Data().from_folder(['folder_path'])

8 |

9 | # Set up the search engine

10 | st = Search_Setup(image_list=image_list, model_name='vgg19', pretrained=True, image_count=100)

11 |

12 | # Index the images

13 | st.run_index()

14 |

15 | # Get metadata

16 | metadata = st.get_image_metadata_file()

17 |

18 | # Add New images to the index

19 | st.add_images_to_index(['image_path_1', 'image_path_2'])

20 |

21 | # Get similar images

22 | st.get_similar_images(image_path='image_path', number_of_images=10)

23 |

24 | # Plot similar images

25 | st.plot_similar_images(image_path='image_path', number_of_images=9)

26 |

27 | # Update metadata

28 | metadata = st.get_image_metadata_file()

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

1 | MIT License

2 | Copyright (c) 2021 Nilesh Verma

3 | Permission is hereby granted, free of charge, to any person obtaining a copy

4 | of this software and associated documentation files (the "Software"), to deal

5 | in the Software without restriction, including without limitation the rights

6 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

7 | copies of the Software, and to permit persons to whom the Software is

8 | furnished to do so, subject to the following conditions:

9 | The above copyright notice and this permission notice shall be included in all

10 | copies or substantial portions of the Software.

11 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

12 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

13 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

14 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

15 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

16 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

17 | SOFTWARE.

--------------------------------------------------------------------------------

/DeepImageSearch/config.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | def image_data_with_features_pkl(model_name: str, metadata_dir: str = 'metadata-files') -> str:

4 | """

5 | Get the path to the image data features pickle file.

6 |

7 | Parameters:

8 | -----------

9 | model_name : str

10 | Name of the model

11 | metadata_dir : str, optional (default='metadata-files')

12 | Base directory for metadata files

13 |

14 | Returns:

15 | --------

16 | str

17 | Path to the pickle file

18 | """

19 | image_data_with_features_pkl = os.path.join(metadata_dir, model_name, 'image_data_features.pkl')

20 | return image_data_with_features_pkl

21 |

22 | def image_features_vectors_idx(model_name: str, metadata_dir: str = 'metadata-files') -> str:

23 | """

24 | Get the path to the image features vectors index file.

25 |

26 | Parameters:

27 | -----------

28 | model_name : str

29 | Name of the model

30 | metadata_dir : str, optional (default='metadata-files')

31 | Base directory for metadata files

32 |

33 | Returns:

34 | --------

35 | str

36 | Path to the FAISS index file

37 | """

38 | image_features_vectors_idx = os.path.join(metadata_dir, model_name, 'image_features_vectors.idx')

39 | return image_features_vectors_idx

40 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | from setuptools import setup

2 | import pathlib

3 |

4 |

5 | # The directory containing this file

6 | HERE = pathlib.Path(__file__).parent

7 |

8 | # The text of the README file

9 | README = (HERE / "README.md").read_text()

10 |

11 | setup(

12 | long_description_content_type="text/markdown",

13 | name = 'DeepImageSearch',

14 | packages = ['DeepImageSearch'],

15 | version = '2.5',

16 | license='MIT',

17 | description = 'DeepImageSearch is a Python library for fast and accurate image search. It offers seamless integration with Python, GPU support, and advanced capabilities for identifying complex image patterns using the Vision Transformer models.',

18 | long_description=README,

19 | author = 'Nilesh Verma',

20 | author_email = 'me@nileshverma.com',

21 | url = 'https://github.com/TechyNilesh/DeepImageSearch',

22 | download_url = 'https://github.com/TechyNilesh/DeepImageSearch/archive/refs/tags/v_25.tar.gz',

23 | keywords = ['Deep Image Search Engine', 'AI Image search', 'Image Search Python'],

24 | install_requires=[

25 | 'faiss_cpu>=1.7.3,<1.8.0',

26 | 'torch>=2.0.0,<2.1.0',

27 | 'torchvision>=0.15.1,<0.16.0',

28 | 'matplotlib>=3.5.2,<3.6.0',

29 | 'pandas>=1.4.3,<1.5.0',

30 | 'numpy>=1.24.2,<1.25.0',

31 | 'tqdm>=4.65.0,<5.0.0',

32 | 'Pillow>=9.5.0,<10.0.0',

33 | 'timm>=0.6.13,<0.7.0'

34 | ],

35 | classifiers=[

36 | 'Development Status :: 5 - Production/Stable',

37 | 'Intended Audience :: Developers',

38 | 'Topic :: Software Development :: Build Tools',

39 | 'License :: OSI Approved :: MIT License',

40 | 'Programming Language :: Python :: 3',

41 | 'Programming Language :: Python :: 3.7',

42 | 'Programming Language :: Python :: 3.8',

43 | 'Programming Language :: Python :: 3.9',

44 | 'Programming Language :: Python :: 3.10'

45 | ],

46 | )

47 |

--------------------------------------------------------------------------------

/Documents/Document.md:

--------------------------------------------------------------------------------

1 | # Deep Image Search - AI Based Image Search Engine

2 |

3 | **DeepImageSearch** is a Python library for fast and accurate image search. It offers seamless integration with Python, GPU support, and advanced capabilities for identifying complex image patterns using the Vision Transformer models.

4 |

5 | ## Features

6 |

7 | - **500+ Pre-trained Models:** With DeepImageSearch, you can import more than 500 pre-trained image and transformer models based on the `timm` library.

8 | - **Listing Models:** To list all the available models, you can use the following code snippet:

9 |

10 | ```python

11 | import timm

12 | timm.list_models(pretrained=True)

13 | ```

14 |

15 | - **Facebook FAISS Integration:** DeepImageSearch also integrates with Facebook's FAISS library, which allows for efficient similarity search and clustering of dense vectors. This enhances the performance of the search and provides better results.

16 |

17 | ## Installation

18 |

19 | ```bash

20 | pip install DeepImageSearch --upgrade

21 | ```

22 |

23 | ## Usage

24 |

25 | ```python

26 | from DeepImageSearch import Load_Data, Search_Setup

27 | ```

28 |

29 | ### Load Data

30 |

31 | Load data from single/multiple folders or a CSV file.

32 |

33 | ```python

34 | dl = Load_Data()

35 |

36 | image_list = dl.from_folder(["folder1", "folder2"])

37 |

38 | # or

39 |

40 | image_list = dl.from_csv("image_data.csv", "image_paths")

41 | ```

42 |

43 | ### Initialize Search Setup

44 |

45 | Initialize the search setup with the list of images, model name, and other configurations.

46 |

47 | ```python

48 | st = Search_Setup(image_list, model_name="vgg19", pretrained=True, image_count=None)

49 | ```

50 |

51 | ### Index Images

52 |

53 | Index images for searching.

54 |

55 | ```python

56 | st.run_index()

57 | ```

58 |

59 | ### Add New Images to Index

60 |

61 | Add new images to the existing index.

62 |

63 | ```python

64 | new_image_paths = ["new_image1.jpg", "new_image2.jpg"]

65 |

66 | st.add_images_to_index(new_image_paths)

67 | ```

68 |

69 | ### Plot Similar Images

70 |

71 | Display similar images in a grid.

72 |

73 | ```python

74 | st.plot_similar_images("query_image.jpg", number_of_images=6)

75 | ```

76 |

77 | ### Get Similar Images

78 |

79 | Get a list of similar images.

80 |

81 | ```python

82 | similar_images = search_setup.get_similar_images("query_image.jpg", number_of_images=10)

83 | ```

84 |

85 | ### Get Image Metadata File

86 |

87 | Get the metadata file containing image paths and features.

88 |

89 | ```python

90 | metadata = st.get_image_metadata_file()

91 | ```

92 |

93 | ## Classes and Methods

94 |

95 | ### Load_Data

96 |

97 | A class for loading data from single/multiple folders or a CSV file.

98 |

99 | - `from_folder(folder_list: list) -> list`: Loads image paths from a list of folder paths. The method iterates through all files in each folder and appends the path of the image files with extensions like .png, .jpg, .jpeg, .gif, and .bmp. The method returns a list of image paths.

100 | - `from_csv(csv_file_path: str, images_column_name: str) -> list`: Load images from a CSV file with the specified column name and return a list of image paths.

101 |

102 | ### Search_Setup

103 |

104 | A class to setup the search functionality.

105 |

106 | - `Search_Setup(image_list: list, model_name='vgg19', pretrained=True, image_count: int = None)`: Initialize the search setup with the given image list, model name, and pretrained flag. Optionally, limit the number of images to use.

107 | - `image_list`: A list of images paths.

108 | - `model_name`: Name of the pre-trained model to be used for feature extraction. Default is 'vgg19'.

109 | - `pretrained`: Boolean value indicating whether to use the pre-trained weights for the model. Default is True.

110 | - `image_count`: Number of images to be considered for feature extraction. If not specified, all images will be used.

111 | - `run_index() -> info`: Extracts features from the dataset and indexes them. If the metadata and features are already present, the method prompts the user to confirm whether to re-extract the features or not. The method also loads the metadata and feature vectors from the saved pickle file.

112 | - `add_images_to_index(new_image_paths: list) -> info`: Adds new images to the existing index. The method loads the existing metadata and index and appends the feature vectors of the new images. The method saves the updated index to disk.

113 | - `plot_similar_images(image_path: str, number_of_images: int = 6) -> plot`: Display similar images in a grid for the given image path and number of images.

114 | - `get_similar_images(image_path: str, number_of_images: int = 10) -> dict`: Get a dictionary of similar images for the given image path and number of images.

115 | - `get_image_metadata_file() -> pd.DataFrame`: Get the metadata file containing image paths and features as a pandas DataFrame.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Deep Image Search - AI-Based Image Search Engine

2 |

3 |

4 | **DeepImageSearch** is a powerful Python library that combines **state-of-the-art computer vision models** for feature extraction with **highly optimized algorithms for indexing and searching**. This enables fast and accurate similarity search and clustering of dense vectors, allowing users to build **scalable image search systems** capable of handling large-scale datasets. The library offers seamless integration with Python and provides **GPU support** for accelerated processing, delivering a comprehensive solution for researchers and developers working on image-based search and retrieval applications. By incorporating the **Vision Transformer (ViT) model**, DeepImageSearch further enhances its capabilities in identifying and understanding complex image patterns, making it an essential tool for advanced image search and analysis tasks.

5 |

6 |

7 |    [](https://pepy.tech/project/deepimagesearch)

8 |

9 | ## Developed By

10 |

11 | ### [Nilesh Verma](https://nileshverma.com "Nilesh Verma")

12 |

13 | ## Features

14 | - You can now load more than 500+ pre-trained state-of-the-art computer vision models available on [timm](https://timm.fast.ai/).

15 | - Faster Search using [FAISS (Facebook AI Similarity Search)](https://github.com/facebookresearch/faiss).

16 | - Highly Accurate Output Results.

17 | - GPU & CPU based indexing and Searching Support.

18 | - Best for implementing on Python-based web applications or APIs.

19 | - Applications include image-based e-commerce recommendations, social media, and other image-based platforms that want to implement image recommendations and search.

20 |

21 | ## Installation

22 |

23 | This library is compatible with both *windows* and *Linux system* you can just use **PIP command** to install this library on your system:

24 |

25 | ```shell

26 | pip install DeepImageSearch --upgrade

27 | ```

28 | If you're using a GPU, first uninstall the **faiss_cpu** version and then try installing the **faiss_gpu** version. The library installs the CPU version by default because not all systems support GPUs.

29 |

30 | ## How To Use?

31 |

32 | We have provided the **Demo** folder under the *GitHub repository*, you can find the example in both **.py** and **.ipynb** file. Following are the ideal flow of the code:

33 |

34 | ```python

35 | from DeepImageSearch import Load_Data, Search_Setup

36 |

37 | # Load images from a folder

38 | image_list = Load_Data().from_folder(['folder_path'])

39 |

40 | # Set up the search engine, You can load 'vit_base_patch16_224_in21k', 'resnet50' etc more then 500+ models

41 | st = Search_Setup(image_list=image_list, model_name='vgg19', pretrained=True, image_count=100)

42 |

43 | # Index the images

44 | st.run_index()

45 |

46 | # Get metadata

47 | metadata = st.get_image_metadata_file()

48 |

49 | # Add new images to the index

50 | st.add_images_to_index(['image_path_1', 'image_path_2'])

51 |

52 | # Get similar images

53 | st.get_similar_images(image_path='image_path', number_of_images=10)

54 |

55 | # Plot similar images

56 | st.plot_similar_images(image_path='image_path', number_of_images=9)

57 |

58 | # Update metadata

59 | metadata = st.get_image_metadata_file()

60 | ```

61 |

62 | This code demonstrates how to load images, set up the search engine, index the images, add new images to the index, and retrieve similar images.

63 |

64 | **Note:** Some models may not work properly due to resizing and normalization issues. By default, I have chosen a size of 224x244. Please try to select models that support this size or resized inputs. I have already tested many models, but testing over 500 is beyond my scope.

65 |

66 | ## Documentation

67 |

68 | This project aims to provide a powerful image search engine using deep learning techniques. To get started, please follow the link: [Read Full Documents](https://github.com/TechyNilesh/DeepImageSearch/blob/main/Documents/Document.md)

69 |

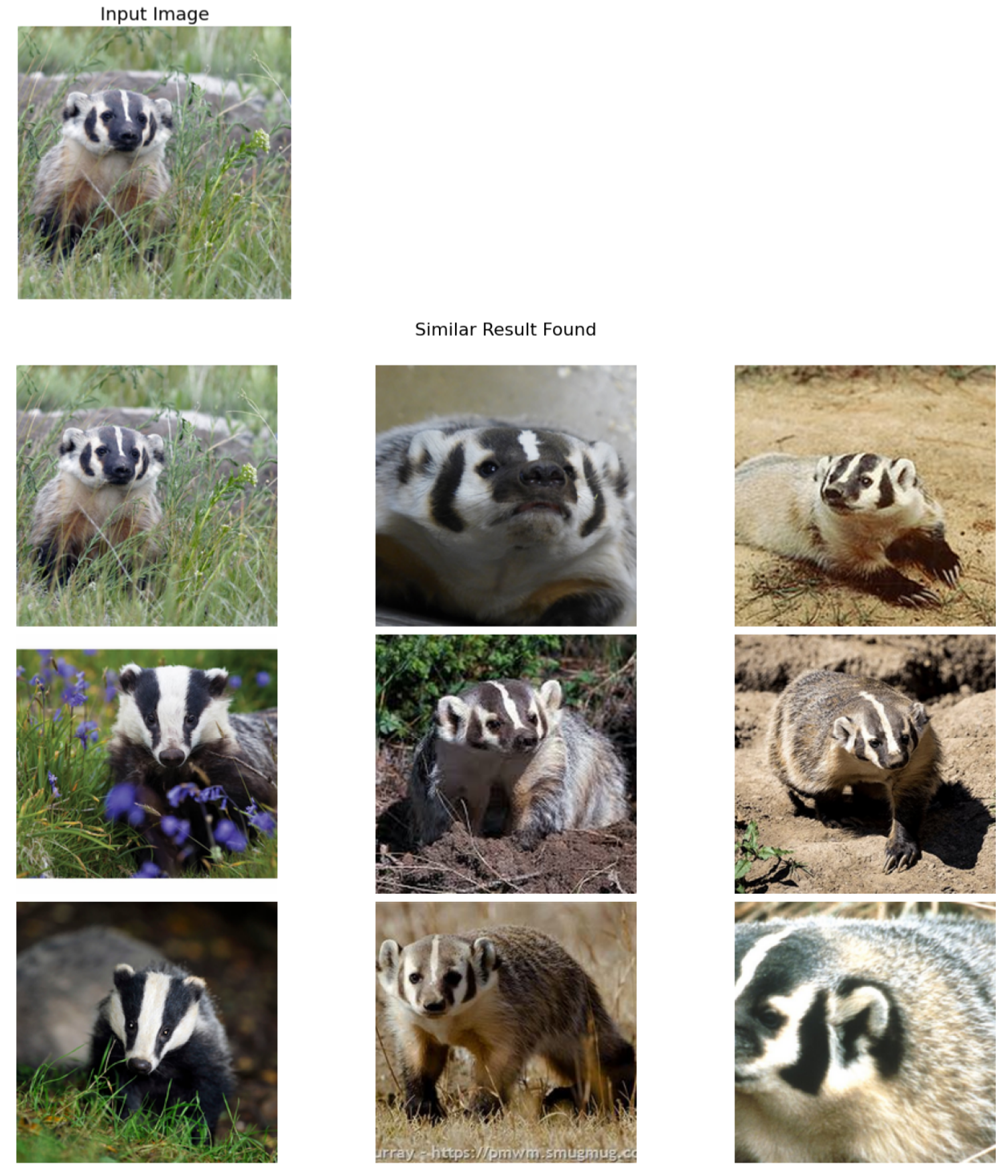

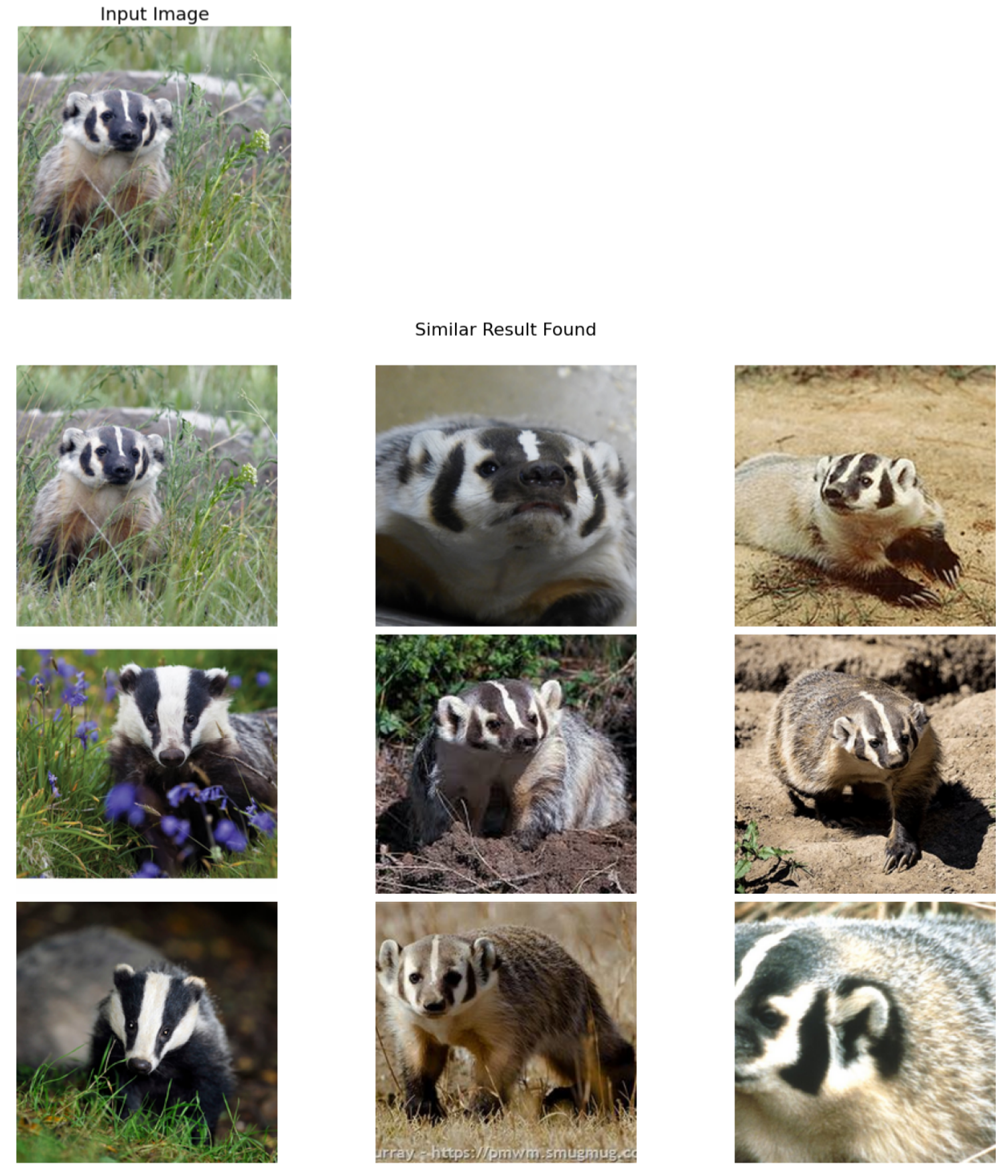

70 | ## Screenshot

71 |

72 |

73 |

74 | ## Citaion

75 |

76 | If you use DeepImageSerach in your Research/Product, please cite the following GitHub Repository:

77 |

78 | ```latex

79 | @misc{TechyNilesh/DeepImageSearch,

80 | author = {VERMA, NILESH},

81 | title = {Deep Image Search - AI-Based Image Search Engine},

82 | year = {2021},

83 | publisher = {GitHub},

84 | journal = {GitHub repository},

85 | howpublished = {\url{https://github.com/TechyNilesh/DeepImageSearch}},

86 | }

87 | ```

88 |

89 | ### Please do STAR the repository, if it helped you in anyway.

90 |

91 | **More cool features will be added in future. Feel free to give suggestions, report bugs and contribute.**

92 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | # Changelog

2 |

3 | All notable changes to DeepImageSearch will be documented in this file.

4 |

5 | ## [Unreleased] - Code Review Fixes

6 |

7 | ### Critical Bug Fixes

8 |

9 | #### Fixed directory existence check bug

10 | - **Issue**: Line 82 used `if f'metadata-files/{self.model_name}' not in os.listdir()` which would fail

11 | - **Fix**: Now uses `os.path.exists()` for proper directory checking

12 | - **Impact**: Prevents crashes when checking for metadata directories

13 |

14 | #### Removed blocking input() call

15 | - **Issue**: Line 157 used `input()` which blocked in automated/production environments

16 | - **Fix**: Replaced with `force_reindex` parameter (default: False)

17 | - **Impact**: Library now works in APIs, scripts, and automated pipelines

18 | - **Breaking Change**: `run_index()` no longer prompts user interactively

19 |

20 | #### Fixed silent error handling

21 | - **Issue**: Lines 122-125 used bare `except:` clause that caught all errors silently

22 | - **Fix**: Now uses specific exceptions (FileNotFoundError, IOError) with proper logging

23 | - **Impact**: Errors are now visible and debuggable

24 |

25 | ### New Features

26 |

27 | #### Configurable image size

28 | - **Added**: `image_size` parameter (default: 224) to `Search_Setup.__init__()`

29 | - **Benefit**: Support for models requiring different input sizes (384x384, 512x512, etc.)

30 |

31 | #### Multiple FAISS index types

32 | - **Added**: `index_type` parameter with options: 'flat', 'ivf', 'hnsw'

33 | - `flat`: Exact search (default, backward compatible)

34 | - `ivf`: Faster approximate search for large datasets (100k+ images)

35 | - `hnsw`: Graph-based approximate search

36 | - **Benefit**: Better performance and scalability for large image collections

37 |

38 | #### GPU support

39 | - **Added**: `use_gpu` parameter (default: False) to `Search_Setup.__init__()`

40 | - **Benefit**: Faster feature extraction when GPU is available

41 |

42 | #### Configurable metadata directory

43 | - **Added**: `metadata_dir` parameter (default: 'metadata-files')

44 | - **Benefit**: Allows custom storage locations for index files

45 |

46 | ### Performance Improvements

47 |

48 | #### Batch processing for indexing

49 | - **Change**: Features now added to FAISS index in batches (default: 1000)

50 | - **Benefit**: Reduced memory usage for large datasets

51 |

52 | #### Efficient bulk image addition

53 | - **Change**: `add_images_to_index()` now processes in batches (default: 100)

54 | - **Benefit**: Significantly faster when adding many images at once

55 |

56 | #### IVF index training

57 | - **Change**: When using IVF index, automatically trains with optimal cluster count

58 | - **Benefit**: Better search accuracy vs speed tradeoff

59 |

60 | #### torch.no_grad() for inference

61 | - **Change**: Added `with torch.no_grad()` during feature extraction

62 | - **Benefit**: Reduced memory usage during inference

63 |

64 | ### Code Quality Improvements

65 |

66 | #### Comprehensive input validation

67 | - **Added**: Validation for all user inputs

68 | - `image_list` cannot be empty or None

69 | - `image_count` must be positive integer

70 | - `image_size` must be positive integer

71 | - `index_type` must be valid option

72 | - File paths validated before processing

73 | - **Benefit**: Clear error messages instead of cryptic failures

74 |

75 | #### Professional logging system

76 | - **Change**: Replaced print statements with Python logging module

77 | - **Format**: `%(asctime)s - %(name)s - %(levelname)s - %(message)s`

78 | - **Levels**: INFO for normal operations, WARNING for issues, ERROR for failures

79 | - **Benefit**: Proper log management and no ANSI color code issues

80 |

81 | #### Comprehensive type hints

82 | - **Added**: Type hints for all function parameters and return values

83 | - **Types used**: List, Dict, Optional, Union from typing module

84 | - **Benefit**: Better IDE support and code maintainability

85 |

86 | #### Image file validation

87 | - **Change**: Image files validated with `Image.open().verify()` before adding

88 | - **Benefit**: Prevents processing of corrupted or non-image files

89 |

90 | #### Path validation and security

91 | - **Change**: All file paths validated before use

92 | - Check file existence with `os.path.exists()`

93 | - Verify files are regular files with `os.path.isfile()`

94 | - Validate image extensions

95 | - **Benefit**: Better security and clearer error messages

96 |

97 | #### Better exception handling

98 | - **Change**: Specific exceptions with descriptive messages

99 | - **Example**: `FileNotFoundError`, `RuntimeError`, `ValueError`, `TypeError`

100 | - **Benefit**: Easier debugging and error handling

101 |

102 | ### Documentation Improvements

103 |

104 | #### Enhanced docstrings

105 | - **Change**: All methods now have detailed docstrings with:

106 | - Parameter descriptions

107 | - Return value descriptions

108 | - Examples where appropriate

109 | - **Benefit**: Better API documentation

110 |

111 | #### Type-annotated config functions

112 | - **Change**: config.py functions now have type hints and docstrings

113 | - **Benefit**: Clearer interface for configuration paths

114 |

115 | ### Backward Compatibility

116 |

117 | #### Maintained API compatibility

118 | - **Note**: All existing code should work without changes

119 | - **Exception**: `run_index()` no longer prompts interactively (use `force_reindex=True` instead)

120 |

121 | #### Default values preserved

122 | - All new parameters have sensible defaults matching old behavior

123 | - `image_size=224` (same as hardcoded before)

124 | - `index_type='flat'` (same as before)

125 | - `use_gpu=False` (CPU-only as before)

126 | - `metadata_dir='metadata-files'` (same as before)

127 |

128 | ### Bug Fixes List

129 |

130 | 1. ✅ Directory check using `os.path.exists()` instead of `os.listdir()`

131 | 2. ✅ Removed blocking `input()` call

132 | 3. ✅ Fixed bare `except:` clauses with specific exceptions

133 | 4. ✅ Fixed hardcoded image size (224x224)

134 | 5. ✅ Added batch processing for memory efficiency

135 | 6. ✅ Fixed inefficient one-by-one image addition

136 | 7. ✅ Fixed deprecated `DataFrame.append()` usage

137 | 8. ✅ Added input validation throughout

138 | 9. ✅ Replaced print with logging module

139 | 10. ✅ Added comprehensive type hints

140 | 11. ✅ Added file path validation

141 | 12. ✅ Made metadata directory configurable

142 | 13. ✅ Added FAISS index type options

143 | 14. ✅ Added proper context managers for file operations

144 | 15. ✅ Added GPU support with automatic detection

145 | 16. ✅ Added support for more image formats (tiff, webp)

146 |

147 | ### Migration Guide

148 |

149 | #### For users upgrading from previous versions:

150 |

151 | **No changes required** - All existing code continues to work!

152 |

153 | **Optional improvements** you can make:

154 |

155 | ```python

156 | # Old code (still works):

157 | st = Search_Setup(image_list=image_list, model_name='vgg19')

158 | st.run_index()

159 |

160 | # New recommended code with improvements:

161 | st = Search_Setup(

162 | image_list=image_list,

163 | model_name='vgg19',

164 | image_size=224, # Now configurable

165 | use_gpu=True, # Use GPU if available

166 | index_type='ivf', # Faster for large datasets

167 | metadata_dir='./my_index' # Custom location

168 | )

169 | st.run_index(force_reindex=False) # No interactive prompt

170 |

171 | # For large datasets (100k+ images):

172 | st = Search_Setup(

173 | image_list=image_list,

174 | model_name='vit_base_patch16_224',

175 | index_type='hnsw', # Much faster approximate search

176 | use_gpu=True

177 | )

178 |

179 | # Adding images is now much faster:

180 | st.add_images_to_index(new_images, batch_size=100)

181 | ```

182 |

183 | ### Technical Details

184 |

185 | #### FAISS Index Selection Guide

186 |

187 | - **IndexFlatL2 (flat)**:

188 | - Exact nearest neighbor search

189 | - Best for: < 10k images

190 | - Search time: O(n)

191 |

192 | - **IndexIVFFlat (ivf)**:

193 | - Approximate search with inverted file index

194 | - Best for: 10k - 1M images

195 | - Search time: O(log n) with training

196 | - Trains with sqrt(n) clusters

197 |

198 | - **IndexHNSWFlat (hnsw)**:

199 | - Graph-based approximate search

200 | - Best for: 100k+ images

201 | - Search time: O(log n)

202 | - No training required

203 |

204 | ### Known Issues

205 |

206 | None currently identified.

207 |

208 | ### Next Steps / Future Improvements

209 |

210 | Potential future enhancements (not yet implemented):

211 | - HDF5 support as alternative to pickle for features

212 | - Progress checkpointing for long operations

213 | - Multi-GPU support

214 | - Asynchronous feature extraction

215 | - More flexible image preprocessing pipelines

216 |

217 | ---

218 |

219 | ## Version History

220 |

221 | ### [2.5] - Previous Release

222 | - Initial support for 500+ models via timm

223 | - FAISS integration for similarity search

224 | - Basic indexing and search functionality

225 |

--------------------------------------------------------------------------------

/DeepImageSearch/DeepImageSearch.py:

--------------------------------------------------------------------------------

1 | import DeepImageSearch.config as config

2 | import os

3 | import pandas as pd

4 | import matplotlib.pyplot as plt

5 | from PIL import Image

6 | from tqdm import tqdm

7 | import numpy as np

8 | from torchvision import transforms

9 | import torch

10 | from torch.autograd import Variable

11 | import timm

12 | from PIL import ImageOps

13 | import math

14 | import faiss

15 | import logging

16 | from typing import List, Dict, Optional, Union

17 | from pathlib import Path

18 |

19 | # Configure logging

20 | logging.basicConfig(

21 | level=logging.INFO,

22 | format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'

23 | )

24 | logger = logging.getLogger(__name__)

25 |

26 | class Load_Data:

27 | """A class for loading data from single/multiple folders or a CSV file"""

28 |

29 | def __init__(self):

30 | """

31 | Initializes an instance of LoadData class

32 | """

33 | pass

34 |

35 | def from_folder(self, folder_list: List[str]) -> List[str]:

36 | """

37 | Adds images from the specified folders to the image_list.

38 |

39 | Parameters:

40 | -----------

41 | folder_list : list

42 | A list of paths to the folders containing images to be added to the image_list.

43 |

44 | Returns:

45 | --------

46 | list

47 | List of valid image paths found in the folders.

48 | """

49 | if not folder_list:

50 | raise ValueError("folder_list cannot be empty")

51 |

52 | if not isinstance(folder_list, list):

53 | raise TypeError("folder_list must be a list")

54 |

55 | self.folder_list = folder_list

56 | image_path = []

57 | valid_extensions = ('.png', '.jpg', '.jpeg', '.gif', '.bmp', '.tiff', '.webp')

58 |

59 | for folder in self.folder_list:

60 | if not os.path.exists(folder):

61 | logger.warning(f"Folder does not exist: {folder}")

62 | continue

63 |

64 | if not os.path.isdir(folder):

65 | logger.warning(f"Path is not a directory: {folder}")

66 | continue

67 |

68 | for root, dirs, files in os.walk(folder):

69 | for file in files:

70 | if file.lower().endswith(valid_extensions):

71 | full_path = os.path.join(root, file)

72 | # Validate that the file can be opened as an image

73 | try:

74 | with Image.open(full_path) as img:

75 | img.verify()

76 | image_path.append(full_path)

77 | except Exception as e:

78 | logger.warning(f"Skipping invalid image file {full_path}: {e}")

79 | continue

80 |

81 | if not image_path:

82 | logger.warning("No valid images found in the specified folders")

83 | else:

84 | logger.info(f"Found {len(image_path)} valid images")

85 |

86 | return image_path

87 |

88 | def from_csv(self, csv_file_path: str, images_column_name: str) -> List[str]:

89 | """

90 | Adds images from the specified column of a CSV file to the image_list.

91 |

92 | Parameters:

93 | -----------

94 | csv_file_path : str

95 | The path to the CSV file.

96 | images_column_name : str

97 | The name of the column containing the paths to the images to be added to the image_list.

98 |

99 | Returns:

100 | --------

101 | list

102 | List of image paths from the CSV file.

103 | """

104 | if not os.path.exists(csv_file_path):

105 | raise FileNotFoundError(f"CSV file not found: {csv_file_path}")

106 |

107 | self.csv_file_path = csv_file_path

108 | self.images_column_name = images_column_name

109 |

110 | try:

111 | df = pd.read_csv(self.csv_file_path)

112 | except Exception as e:

113 | raise ValueError(f"Error reading CSV file: {e}")

114 |

115 | if images_column_name not in df.columns:

116 | raise ValueError(f"Column '{images_column_name}' not found in CSV. Available columns: {df.columns.tolist()}")

117 |

118 | image_paths = df[self.images_column_name].dropna().tolist()

119 |

120 | # Validate image paths

121 | valid_paths = []

122 | for path in image_paths:

123 | if os.path.exists(path) and os.path.isfile(path):

124 | valid_paths.append(path)

125 | else:

126 | logger.warning(f"Image path does not exist: {path}")

127 |

128 | logger.info(f"Loaded {len(valid_paths)} valid image paths from CSV")

129 | return valid_paths

130 |

131 | class Search_Setup:

132 | """ A class for setting up and running image similarity search."""

133 |

134 | def __init__(

135 | self,

136 | image_list: List[str],

137 | model_name: str = 'vgg19',

138 | pretrained: bool = True,

139 | image_count: Optional[int] = None,

140 | image_size: int = 224,

141 | metadata_dir: str = 'metadata-files',

142 | use_gpu: bool = False,

143 | index_type: str = 'flat'

144 | ):

145 | """

146 | Parameters:

147 | -----------

148 | image_list : list

149 | A list of images to be indexed and searched.

150 | model_name : str, optional (default='vgg19')

151 | The name of the pre-trained model to use for feature extraction.

152 | pretrained : bool, optional (default=True)

153 | Whether to use the pre-trained weights for the chosen model.

154 | image_count : int, optional (default=None)

155 | The number of images to be indexed and searched. If None, all images in the image_list will be used.

156 | image_size : int, optional (default=224)

157 | The size to which images will be resized for feature extraction.

158 | metadata_dir : str, optional (default='metadata-files')

159 | Directory to store metadata and index files.

160 | use_gpu : bool, optional (default=False)

161 | Whether to use GPU for feature extraction.

162 | index_type : str, optional (default='flat')

163 | Type of FAISS index to use. Options: 'flat', 'ivf', 'hnsw'

164 | """

165 | # Validate inputs

166 | if not image_list:

167 | raise ValueError("image_list cannot be empty")

168 |

169 | if not isinstance(image_list, list):

170 | raise TypeError("image_list must be a list")

171 |

172 | if image_count is not None and (not isinstance(image_count, int) or image_count <= 0):

173 | raise ValueError("image_count must be a positive integer or None")

174 |

175 | if not isinstance(image_size, int) or image_size <= 0:

176 | raise ValueError("image_size must be a positive integer")

177 |

178 | if index_type not in ['flat', 'ivf', 'hnsw']:

179 | raise ValueError("index_type must be one of: 'flat', 'ivf', 'hnsw'")

180 |

181 | self.model_name = model_name

182 | self.pretrained = pretrained

183 | self.image_data = pd.DataFrame()

184 | self.d = None

185 | self.image_size = image_size

186 | self.metadata_dir = metadata_dir

187 | self.use_gpu = use_gpu and torch.cuda.is_available()

188 | self.index_type = index_type

189 |

190 | if image_count is None:

191 | self.image_list = image_list

192 | else:

193 | self.image_list = image_list[:image_count]

194 |

195 | logger.info(f"Initialized with {len(self.image_list)} images")

196 |

197 | # Create metadata directory

198 | model_metadata_dir = os.path.join(self.metadata_dir, self.model_name)

199 | if not os.path.exists(model_metadata_dir):

200 | try:

201 | os.makedirs(model_metadata_dir)

202 | logger.info(f"Created metadata directory: {model_metadata_dir}")

203 | except Exception as e:

204 | raise RuntimeError(f"Error creating metadata directory {model_metadata_dir}: {e}")

205 |

206 | # Load the pre-trained model and remove the last layer

207 | logger.info(f"Loading model: {model_name}")

208 | try:

209 | base_model = timm.create_model(self.model_name, pretrained=self.pretrained)

210 | self.model = torch.nn.Sequential(*list(base_model.children())[:-1])

211 | self.model.eval()

212 |

213 | if self.use_gpu:

214 | self.model = self.model.cuda()

215 | logger.info(f"Model loaded successfully on GPU: {model_name}")

216 | else:

217 | logger.info(f"Model loaded successfully on CPU: {model_name}")

218 | except Exception as e:

219 | raise RuntimeError(f"Error loading model {model_name}: {e}")

220 |

221 | def _extract(self, img: Image.Image) -> np.ndarray:

222 | """

223 | Extract features from a single image.

224 |

225 | Parameters:

226 | -----------

227 | img : PIL.Image

228 | Input image

229 |

230 | Returns:

231 | --------

232 | np.ndarray

233 | Normalized feature vector

234 | """

235 | # Resize and convert the image

236 | img = img.resize((self.image_size, self.image_size))

237 | img = img.convert('RGB')

238 |

239 | # Preprocess the image

240 | preprocess = transforms.Compose([

241 | transforms.ToTensor(),

242 | transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

243 | ])

244 | x = preprocess(img)

245 | x = Variable(torch.unsqueeze(x, dim=0).float(), requires_grad=False)

246 |

247 | if self.use_gpu:

248 | x = x.cuda()

249 |

250 | # Extract features

251 | with torch.no_grad():

252 | feature = self.model(x)

253 |

254 | if self.use_gpu:

255 | feature = feature.cpu()

256 |

257 | feature = feature.data.numpy().flatten()

258 | return feature / np.linalg.norm(feature)

259 |

260 | def _get_feature(self, image_data: List[str]) -> List[Optional[np.ndarray]]:

261 | """

262 | Extract features from a list of images.

263 |

264 | Parameters:

265 | -----------

266 | image_data : list

267 | List of image paths

268 |

269 | Returns:

270 | --------

271 | list

272 | List of feature vectors (or None for failed extractions)

273 | """

274 | self.image_data = image_data

275 | features = []

276 | failed_images = []

277 |

278 | for img_path in tqdm(self.image_data, desc="Extracting features"):

279 | try:

280 | with Image.open(img_path) as img:

281 | feature = self._extract(img=img)

282 | features.append(feature)

283 | except FileNotFoundError:

284 | logger.error(f"File not found: {img_path}")

285 | features.append(None)

286 | failed_images.append((img_path, "File not found"))

287 | except IOError as e:

288 | logger.error(f"Error opening image {img_path}: {e}")

289 | features.append(None)

290 | failed_images.append((img_path, f"IO Error: {e}"))

291 | except Exception as e:

292 | logger.error(f"Unexpected error processing {img_path}: {e}")

293 | features.append(None)

294 | failed_images.append((img_path, f"Error: {e}"))

295 |

296 | if failed_images:

297 | logger.warning(f"Failed to process {len(failed_images)} images")

298 |

299 | return features

300 |

301 | def _start_feature_extraction(self) -> pd.DataFrame:

302 | """

303 | Extract features from all images and save to disk.

304 |

305 | Returns:

306 | --------

307 | pd.DataFrame

308 | DataFrame containing image paths and features

309 | """

310 | image_data = pd.DataFrame()

311 | image_data['images_paths'] = self.image_list

312 | f_data = self._get_feature(self.image_list)

313 | image_data['features'] = f_data

314 |

315 | # Remove rows with None features

316 | original_count = len(image_data)

317 | image_data = image_data.dropna().reset_index(drop=True)

318 | removed_count = original_count - len(image_data)

319 |

320 | if removed_count > 0:

321 | logger.warning(f"Removed {removed_count} images due to feature extraction failures")

322 |

323 | if len(image_data) == 0:

324 | raise RuntimeError("No valid features extracted from any images")

325 |

326 | # Save metadata

327 | pkl_path = config.image_data_with_features_pkl(self.model_name, self.metadata_dir)

328 | image_data.to_pickle(pkl_path)

329 | logger.info(f"Image metadata saved: {pkl_path}")

330 |

331 | return image_data

332 |

333 | def _create_faiss_index(self, dimension: int) -> faiss.Index:

334 | """

335 | Create a FAISS index based on the specified index_type.

336 |

337 | Parameters:

338 | -----------

339 | dimension : int

340 | Dimension of feature vectors

341 |

342 | Returns:

343 | --------

344 | faiss.Index

345 | FAISS index object

346 | """

347 | if self.index_type == 'flat':

348 | index = faiss.IndexFlatL2(dimension)

349 | logger.info("Using IndexFlatL2 (exact search)")

350 | elif self.index_type == 'ivf':

351 | # For IVF, use sqrt(n) clusters as a rule of thumb

352 | n_list = min(100, int(np.sqrt(len(self.image_list))))

353 | quantizer = faiss.IndexFlatL2(dimension)

354 | index = faiss.IndexIVFFlat(quantizer, dimension, n_list)

355 | logger.info(f"Using IndexIVFFlat with {n_list} clusters (approximate search)")

356 | elif self.index_type == 'hnsw':

357 | index = faiss.IndexHNSWFlat(dimension, 32)

358 | logger.info("Using IndexHNSWFlat (approximate search)")

359 | else:

360 | raise ValueError(f"Unknown index type: {self.index_type}")

361 |

362 | return index

363 |

364 | def _start_indexing(self, image_data: pd.DataFrame, batch_size: int = 1000):

365 | """

366 | Create and save FAISS index from extracted features.

367 |

368 | Parameters:

369 | -----------

370 | image_data : pd.DataFrame

371 | DataFrame containing image features

372 | batch_size : int, optional (default=1000)

373 | Number of features to process at once to avoid memory issues

374 | """

375 | self.image_data = image_data

376 | d = len(image_data['features'][0])

377 | self.d = d

378 |

379 | index = self._create_faiss_index(d)

380 |

381 | # For IVF index, need to train first

382 | if self.index_type == 'ivf':

383 | logger.info("Training IVF index...")

384 | features_matrix = np.vstack(image_data['features'].values).astype(np.float32)

385 | index.train(features_matrix)

386 |

387 | # Add features in batches to avoid memory issues

388 | total_features = len(image_data)

389 | for start_idx in tqdm(range(0, total_features, batch_size), desc="Indexing features"):

390 | end_idx = min(start_idx + batch_size, total_features)

391 | batch_features = image_data['features'].iloc[start_idx:end_idx]

392 | features_matrix = np.vstack(batch_features.values).astype(np.float32)

393 | index.add(features_matrix)

394 |

395 | # Save index

396 | idx_path = config.image_features_vectors_idx(self.model_name, self.metadata_dir)

397 | faiss.write_index(index, idx_path)

398 | logger.info(f"Index saved: {idx_path}")

399 |

400 | def run_index(self, force_reindex: bool = False):

401 | """

402 | Indexes the images in the image_list and creates an index file for fast similarity search.

403 |

404 | Parameters:

405 | -----------

406 | force_reindex : bool, optional (default=False)

407 | If True, force re-extraction of features even if metadata exists.

408 | If False, will skip if metadata already exists.

409 | """

410 | model_metadata_dir = os.path.join(self.metadata_dir, self.model_name)

411 |

412 | # Check if metadata already exists

413 | metadata_exists = (

414 | os.path.exists(config.image_data_with_features_pkl(self.model_name, self.metadata_dir)) and

415 | os.path.exists(config.image_features_vectors_idx(self.model_name, self.metadata_dir))

416 | )

417 |

418 | if metadata_exists and not force_reindex:

419 | logger.info("Metadata and index files already exist. Use force_reindex=True to re-extract.")

420 | logger.info(f"Loading existing metadata from: {model_metadata_dir}")

421 | else:

422 | if force_reindex:

423 | logger.info("Force reindex enabled. Re-extracting features...")

424 | data = self._start_feature_extraction()

425 | self._start_indexing(data)

426 |

427 | # Load metadata

428 | self.image_data = pd.read_pickle(config.image_data_with_features_pkl(self.model_name, self.metadata_dir))

429 | self.f = len(self.image_data['features'][0])

430 | logger.info(f"Loaded {len(self.image_data)} indexed images")

431 |

432 | def add_images_to_index(self, new_image_paths: List[str], batch_size: int = 100):

433 | """

434 | Adds new images to the existing index.

435 |

436 | Parameters:

437 | -----------

438 | new_image_paths : list

439 | A list of paths to the new images to be added to the index.

440 | batch_size : int, optional (default=100)

441 | Number of images to process in each batch for efficiency.

442 | """

443 | if not new_image_paths:

444 | logger.warning("No new images provided")

445 | return

446 |

447 | # Validate new image paths

448 | valid_paths = []

449 | for path in new_image_paths:

450 | if os.path.exists(path) and os.path.isfile(path):

451 | valid_paths.append(path)

452 | else:

453 | logger.warning(f"Skipping invalid path: {path}")

454 |

455 | if not valid_paths:

456 | logger.warning("No valid images to add")

457 | return

458 |

459 | logger.info(f"Adding {len(valid_paths)} new images to index")

460 |

461 | # Load existing metadata and index

462 | self.image_data = pd.read_pickle(config.image_data_with_features_pkl(self.model_name, self.metadata_dir))

463 | index = faiss.read_index(config.image_features_vectors_idx(self.model_name, self.metadata_dir))

464 |

465 | # Process new images in batches

466 | new_metadata_list = []

467 | new_features_list = []

468 |

469 | for i, new_image_path in enumerate(tqdm(valid_paths, desc="Processing new images")):

470 | try:

471 | with Image.open(new_image_path) as img:

472 | feature = self._extract(img)

473 | new_metadata_list.append({"images_paths": new_image_path, "features": feature})

474 | new_features_list.append(feature)

475 | except Exception as e:

476 | logger.error(f"Error processing {new_image_path}: {e}")

477 | continue

478 |

479 | # Add to index in batches

480 | if len(new_features_list) >= batch_size or i == len(valid_paths) - 1:

481 | if new_features_list:

482 | features_array = np.array(new_features_list, dtype=np.float32)

483 | index.add(features_array)

484 | new_features_list = []

485 |

486 | # Update metadata

487 | if new_metadata_list:

488 | new_metadata_df = pd.DataFrame(new_metadata_list)

489 | self.image_data = pd.concat([self.image_data, new_metadata_df], axis=0, ignore_index=True)

490 |

491 | # Save the updated metadata and index

492 | self.image_data.to_pickle(config.image_data_with_features_pkl(self.model_name, self.metadata_dir))

493 | faiss.write_index(index, config.image_features_vectors_idx(self.model_name, self.metadata_dir))

494 |

495 | logger.info(f"Successfully added {len(new_metadata_list)} new images to the index")

496 | else:

497 | logger.warning("No new images were successfully processed")

498 |

499 | def _search_by_vector(self, v: np.ndarray, n: int) -> Dict[int, str]:

500 | """

501 | Search for similar images using a feature vector.

502 |

503 | Parameters:

504 | -----------

505 | v : np.ndarray

506 | Feature vector to search for

507 | n : int

508 | Number of similar images to return

509 |

510 | Returns:

511 | --------

512 | dict

513 | Dictionary mapping indices to image paths

514 | """

515 | self.v = v

516 | self.n = n

517 |

518 | index = faiss.read_index(config.image_features_vectors_idx(self.model_name, self.metadata_dir))

519 |

520 | # For IVF index, set number of probes for search

521 | if self.index_type == 'ivf':

522 | index.nprobe = 10

523 |

524 | D, I = index.search(np.array([self.v], dtype=np.float32), self.n)

525 | return dict(zip(I[0], self.image_data.iloc[I[0]]['images_paths'].to_list()))

526 |

527 | def _get_query_vector(self, image_path: str) -> np.ndarray:

528 | """

529 | Extract feature vector from a query image.

530 |

531 | Parameters:

532 | -----------

533 | image_path : str

534 | Path to the query image

535 |

536 | Returns:

537 | --------

538 | np.ndarray

539 | Feature vector

540 | """

541 | if not os.path.exists(image_path):

542 | raise FileNotFoundError(f"Query image not found: {image_path}")

543 |

544 | self.image_path = image_path

545 |

546 | try:

547 | with Image.open(self.image_path) as img:

548 | query_vector = self._extract(img)

549 | except Exception as e:

550 | raise RuntimeError(f"Error extracting features from query image: {e}")

551 |

552 | return query_vector

553 |

554 | def plot_similar_images(self, image_path: str, number_of_images: int = 6):

555 | """

556 | Plots a given image and its most similar images according to the indexed image features.

557 |

558 | Parameters:

559 | -----------

560 | image_path : str

561 | The path to the query image to be plotted.

562 | number_of_images : int, optional (default=6)

563 | The number of most similar images to the query image to be plotted.

564 | """

565 | if not os.path.exists(image_path):

566 | raise FileNotFoundError(f"Query image not found: {image_path}")

567 |

568 | if number_of_images <= 0:

569 | raise ValueError("number_of_images must be positive")

570 |

571 | input_img = Image.open(image_path)

572 | input_img_resized = ImageOps.fit(input_img, (224, 224), Image.LANCZOS)

573 | plt.figure(figsize=(5, 5))

574 | plt.axis('off')

575 | plt.title('Input Image', fontsize=18)

576 | plt.imshow(input_img_resized)

577 | plt.show()

578 |

579 | query_vector = self._get_query_vector(image_path)

580 | img_list = list(self._search_by_vector(query_vector, number_of_images).values())

581 |

582 | grid_size = math.ceil(math.sqrt(number_of_images))

583 | axes = []

584 | fig = plt.figure(figsize=(20, 15))

585 | for a in range(number_of_images):

586 | if a >= len(img_list):

587 | break

588 | axes.append(fig.add_subplot(grid_size, grid_size, a + 1))

589 | plt.axis('off')

590 | try:

591 | img = Image.open(img_list[a])

592 | img_resized = ImageOps.fit(img, (224, 224), Image.LANCZOS)

593 | plt.imshow(img_resized)

594 | except Exception as e:

595 | logger.error(f"Error displaying image {img_list[a]}: {e}")

596 |

597 | fig.tight_layout()

598 | fig.subplots_adjust(top=0.93)

599 | fig.suptitle('Similar Result Found', fontsize=22)

600 | plt.show(fig)

601 |

602 | def get_similar_images(self, image_path: str, number_of_images: int = 10) -> Dict[int, str]:

603 | """

604 | Returns the most similar images to a given query image according to the indexed image features.

605 |

606 | Parameters:

607 | -----------

608 | image_path : str

609 | The path to the query image.

610 | number_of_images : int, optional (default=10)

611 | The number of most similar images to the query image to be returned.

612 |

613 | Returns:

614 | --------

615 | dict

616 | Dictionary mapping indices to similar image paths

617 | """

618 | if number_of_images <= 0:

619 | raise ValueError("number_of_images must be positive")

620 |

621 | self.image_path = image_path

622 | self.number_of_images = number_of_images

623 | query_vector = self._get_query_vector(self.image_path)

624 | img_dict = self._search_by_vector(query_vector, self.number_of_images)

625 | return img_dict

626 |

627 | def get_image_metadata_file(self) -> pd.DataFrame:

628 | """

629 | Returns the metadata file containing information about the indexed images.

630 |

631 | Returns:

632 | --------

633 | DataFrame

634 | The Pandas DataFrame of the metadata file.

635 | """

636 | self.image_data = pd.read_pickle(config.image_data_with_features_pkl(self.model_name, self.metadata_dir))

637 | return self.image_data

638 |

--------------------------------------------------------------------------------