├── src
│   └── animatediff
│       ├── utils
│       │   ├── __init__.py
│       │   ├── wild_card.py
│       │   ├── mask_rembg.py
│       │   ├── mask_animseg.py
│       │   ├── civitai2config.py
│       │   ├── device.py
│       │   ├── pipeline.py
│       │   ├── huggingface.py
│       │   ├── convert_lora_safetensor_to_diffusers.py
│       │   ├── tagger.py
│       │   └── composite.py
│       ├── models
│       │   ├── __init__.py
│       │   ├── clip.py
│       │   └── resnet.py
│       ├── repo
│       │   └── .gitignore
│       ├── rife
│       │   ├── __init__.py
│       │   ├── ncnn.py
│       │   ├── rife.py
│       │   └── ffmpeg.py
│       ├── __main__.py
│       ├── ip_adapter
│       │   ├── __init__.py
│       │   └── resampler.py
│       ├── softmax_splatting
│       │   ├── correlation
│       │   │   └── README.md
│       │   └── README.md
│       ├── pipelines
│       │   ├── __init__.py
│       │   ├── context.py
│       │   ├── ti.py
│       │   └── lora.py
│       ├── dwpose
│       │   ├── wholebody.py
│       │   ├── __init__.py
│       │   └── onnxdet.py
│       ├── __init__.py
│       ├── schedulers.py
│       └── settings.py
├── setup.py
├── config
│   ├── prompts
│   │   ├── ignore_tokens.txt
│   │   ├── to_8fps_Frames.bat
│   │   ├── concat_2horizontal.bat
│   │   ├── copy_png.bat
│   │   ├── 01-ToonYou.json
│   │   ├── 04-MajicMix.json
│   │   ├── 08-GhibliBackground.json
│   │   ├── 03-RcnzCartoon.json
│   │   ├── 06-Tusun.json
│   │   ├── 05-RealisticVision.json
│   │   ├── 07-FilmVelvia.json
│   │   ├── 02-Lyriel.json
│   │   ├── prompt_travel_multi_controlnet.json
│   │   └── img2img_sample.json
│   ├── inference
│   │   ├── motion_sdxl.json
│   │   ├── default.json
│   │   ├── motion_v2.json
│   │   ├── sd15-unet3d.json
│   │   └── sd15-unet.json
│   └── GroundingDINO
│       ├── GroundingDINO_SwinB_cfg.py
│       └── GroundingDINO_SwinT_OGC.py
├── MANIFEST.in
├── scripts
│   ├── download
│   │   ├── 11-ToonYou.sh
│   │   ├── 12-Lyriel.sh
│   │   ├── 14-MajicMix.sh
│   │   ├── 13-RcnzCartoon.sh
│   │   ├── 15-RealisticVision.sh
│   │   ├── 16-Tusun.sh
│   │   ├── 17-FilmVelvia.sh
│   │   ├── 18-GhibliBackground.sh
│   │   ├── 03-BaseSD.py
│   │   ├── 01-Motion-Modules.sh
│   │   ├── sd-models.aria2
│   │   └── 02-All-SD-Models.sh
│   └── test_persistent.py
├── data
│   └── models
│       ├── WD14tagger
│       │   └── model.onnx
│       └── README.md
├── .editorconfig
├── pyproject.toml
├── .pre-commit-config.yaml
├── setup.cfg
├── .vscode
│   └── settings.json
├── test.py
├── app.py
├── requirements.txt
├── README.md
├── .gitignore
└── example.md

/src/animatediff/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/animatediff/models/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/animatediff/repo/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

3 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | import setuptools

2 |

3 | setuptools.setup()

4 |

--------------------------------------------------------------------------------

/config/prompts/ignore_tokens.txt:

--------------------------------------------------------------------------------

1 | motion_blur

2 | blurry

3 | realistic

4 | depth_of_field

5 |

--------------------------------------------------------------------------------

/config/prompts/to_8fps_Frames.bat:

--------------------------------------------------------------------------------

1 | ffmpeg -i %1 -start_number 0 -vf "scale=512:768,fps=8" %%04d.png

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | # setuptools_scm will grab all tracked files, minus these exclusions

2 | prune .vscode

3 |

--------------------------------------------------------------------------------

/src/animatediff/rife/__init__.py:

--------------------------------------------------------------------------------

1 | from .rife import app

2 |

3 | __all__ = [

4 | "app",

5 | ]

6 |

--------------------------------------------------------------------------------

/src/animatediff/__main__.py:

--------------------------------------------------------------------------------

1 | from animatediff.cli import cli

2 |

3 | if __name__ == "__main__":

4 | cli()

5 |

--------------------------------------------------------------------------------

/config/prompts/concat_2horizontal.bat:

--------------------------------------------------------------------------------

1 | ffmpeg -i %1 -i %2 -filter_complex "[0:v][1:v]hstack=inputs=2[v]" -map "[v]" -crf 15 2horizontal.mp4

--------------------------------------------------------------------------------

/scripts/download/11-ToonYou.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/78775 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 |

--------------------------------------------------------------------------------

/scripts/download/12-Lyriel.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/72396 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 |

--------------------------------------------------------------------------------

/scripts/download/14-MajicMix.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/79068 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 |

--------------------------------------------------------------------------------

/data/models/WD14tagger/model.onnx:

--------------------------------------------------------------------------------

1 | ../../../../../.cache/huggingface/hub/models--SmilingWolf--wd-v1-4-moat-tagger-v2/blobs/b8cef913be4c9e8d93f9f903e74271416502ce0b4b04df0ff1e2f00df488aa03

--------------------------------------------------------------------------------

/scripts/download/13-RcnzCartoon.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/71009 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 |

--------------------------------------------------------------------------------

/scripts/download/15-RealisticVision.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/29460 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 |

--------------------------------------------------------------------------------

/data/models/README.md:

--------------------------------------------------------------------------------

1 | ## Folder that contains the weights

2 |

3 | Place the base model weights in a new 'huggingface' folder and the motion module weights in a new 'motion-module' folder.

4 |

5 |

--------------------------------------------------------------------------------
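
The folder layout described in this README can be prepared with a few lines of Python. This is a minimal sketch, not part of the repository: the helper name `ensure_weight_dirs` is hypothetical, and only the two folder names come from the README itself.

```python
from pathlib import Path


def ensure_weight_dirs(models_root):
    """Create the two weight folders named in the README, if they are missing.

    `models_root` is an assumption: pass whichever directory holds this README.
    """
    root = Path(models_root)
    created = []
    for name in ("huggingface", "motion-module"):
        folder = root / name
        # parents=True also creates models_root itself when it does not exist yet
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created
```

Calling `ensure_weight_dirs("data/models")` from the repository root would leave existing folders untouched, since `exist_ok=True` makes the call idempotent.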

/config/prompts/copy_png.bat:

--------------------------------------------------------------------------------

1 |

2 | setlocal enableDelayedExpansion

3 | FOR /l %%N in (1,1,%~n1) do (

4 | set "n=00000%%N"

5 | set "TEST=!n:~-5!"

6 | echo !TEST!

7 | copy /y %1 !TEST!.png

8 | )

9 |

10 | ren %1 00000.png

11 |

12 |

--------------------------------------------------------------------------------

/scripts/download/16-Tusun.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/97261 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 | wget https://civitai.com/api/download/models/50705 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

4 |

--------------------------------------------------------------------------------

/scripts/download/17-FilmVelvia.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/90115 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 | wget https://civitai.com/api/download/models/92475 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

4 |

--------------------------------------------------------------------------------

/scripts/download/18-GhibliBackground.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | wget https://civitai.com/api/download/models/102828 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

3 | wget https://civitai.com/api/download/models/57618 -P models/DreamBooth_LoRA/ --content-disposition --no-check-certificate

4 |

--------------------------------------------------------------------------------

/src/animatediff/ip_adapter/__init__.py:

--------------------------------------------------------------------------------

1 | from .ip_adapter import (IPAdapter, IPAdapterFull, IPAdapterPlus,

2 | IPAdapterPlusXL, IPAdapterXL)

3 |

4 | __all__ = [

5 | "IPAdapter",

6 | "IPAdapterPlus",

7 | "IPAdapterPlusXL",

8 | "IPAdapterXL",

9 | "IPAdapterFull",

10 | ]

11 |

--------------------------------------------------------------------------------

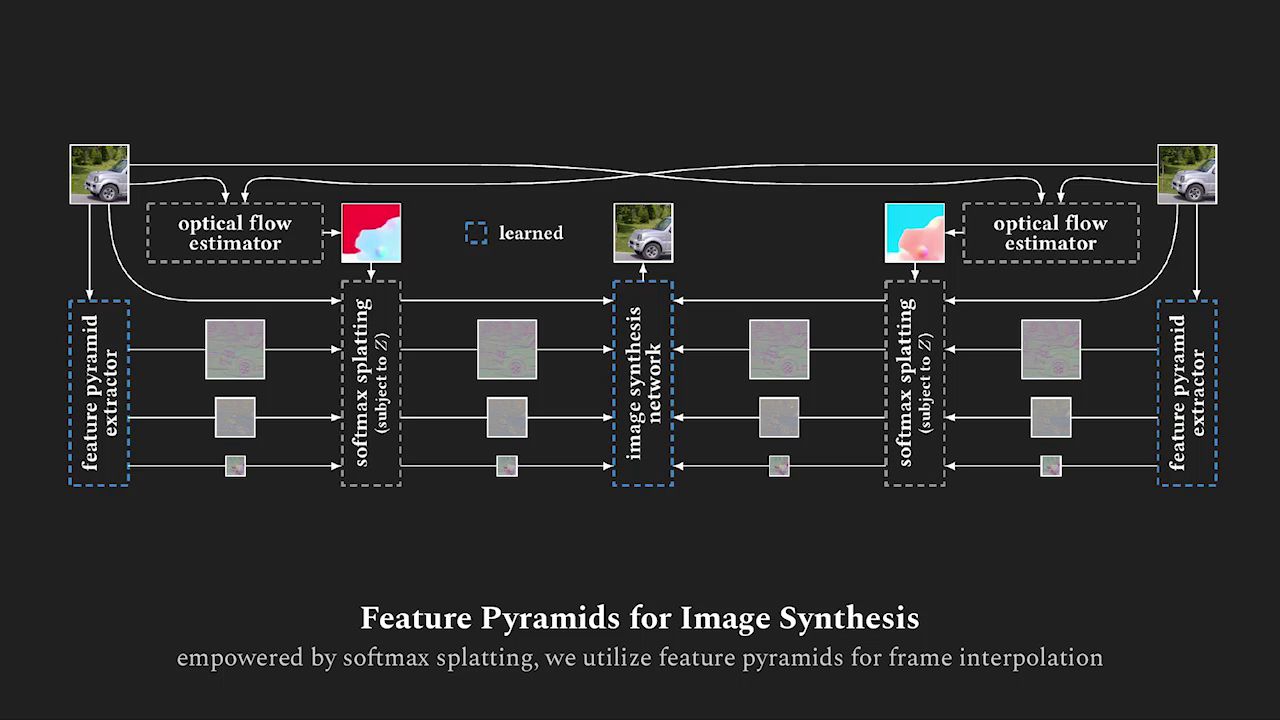

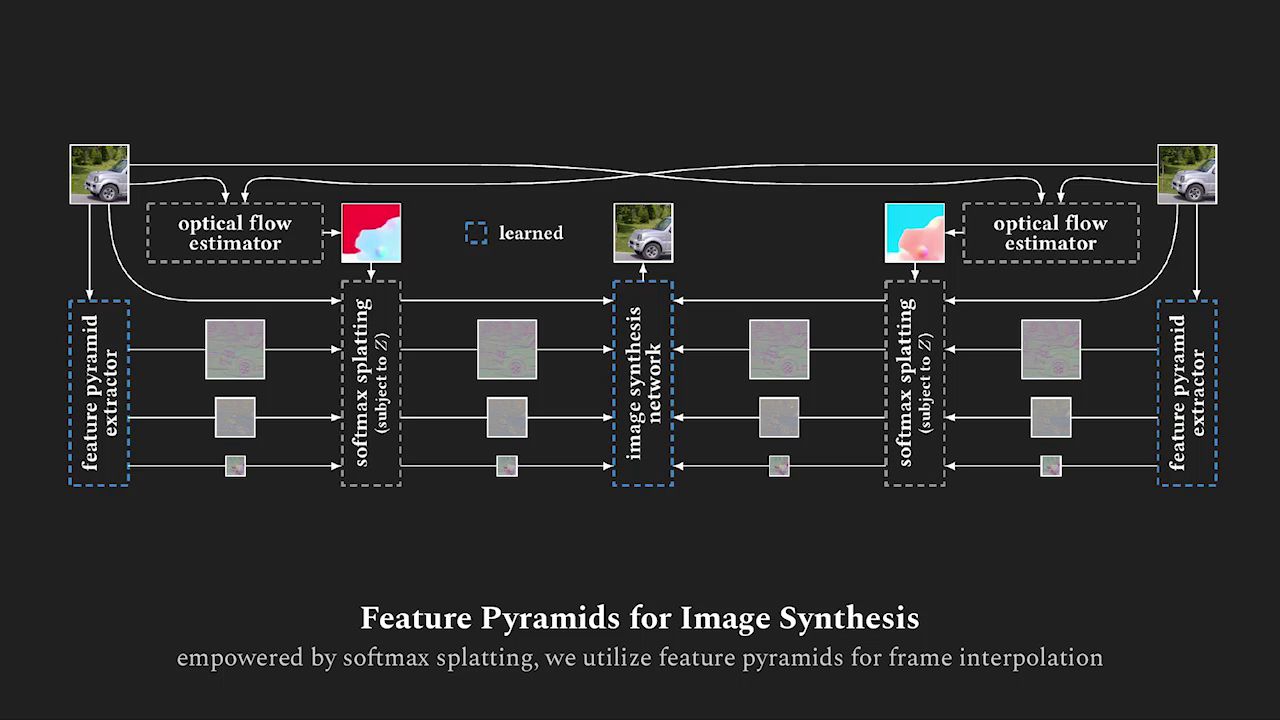

/src/animatediff/softmax_splatting/correlation/README.md:

--------------------------------------------------------------------------------

1 | This is an adaptation of the FlowNet2 implementation in order to compute cost volumes. Should you be making use of this work, please make sure to adhere to the licensing terms of the original authors. Should you make use of or modify this particular implementation, please acknowledge it appropriately.

--------------------------------------------------------------------------------

/src/animatediff/pipelines/__init__.py:

--------------------------------------------------------------------------------

1 | from .animation import AnimationPipeline, AnimationPipelineOutput

2 | from .context import get_context_scheduler, get_total_steps, ordered_halving, uniform

3 | from .ti import get_text_embeddings, load_text_embeddings

4 |

5 | __all__ = [

6 | "AnimationPipeline",

7 | "AnimationPipelineOutput",

8 | "get_context_scheduler",

9 | "get_total_steps",

10 | "ordered_halving",

11 | "uniform",

12 | "get_text_embeddings",

13 | "load_text_embeddings",

14 | ]

15 |

--------------------------------------------------------------------------------

/scripts/download/03-BaseSD.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | from diffusers.pipelines import StableDiffusionPipeline

3 |

4 | from animatediff import get_dir

5 |

6 | out_dir = get_dir("data/models/huggingface/stable-diffusion-v1-5")

7 |

8 | pipeline = StableDiffusionPipeline.from_pretrained(

9 | "runwayml/stable-diffusion-v1-5",

10 | use_safetensors=True,

11 | safety_checker=None, requires_safety_checker=False,  # pass directly; a literal `kwargs=` keyword is not unpacked

12 | )

13 | pipeline.save_pretrained(

14 | save_directory=str(out_dir),

15 | safe_serialization=True,

16 | )

17 |

--------------------------------------------------------------------------------

/scripts/download/01-Motion-Modules.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | echo "Attempting download of Motion Module models from Google Drive."

4 | echo "If this fails, please download them manually from the links in the error messages/README."

5 |

6 | gdown 1RqkQuGPaCO5sGZ6V6KZ-jUWmsRu48Kdq -O models/motion-module/ || true

7 | gdown 1ql0g_Ys4UCz2RnokYlBjyOYPbttbIpbu -O models/motion-module/ || true

8 |

9 | echo "Motion module download script complete."

10 | echo "If you see errors above, please download the models manually from the links in the error messages/README."

11 | exit 0

12 |

--------------------------------------------------------------------------------

/.editorconfig:

--------------------------------------------------------------------------------

1 | # http://editorconfig.org

2 |

3 | root = true

4 |

5 | [*]

6 | indent_style = space

7 | indent_size = 4

8 | trim_trailing_whitespace = true

9 | insert_final_newline = true

10 | charset = utf-8

11 | end_of_line = lf

12 |

13 | [*.bat]

14 | indent_style = tab

15 | end_of_line = crlf

16 |

17 | [*.{json,jsonc}]

18 | indent_style = space

19 | indent_size = 2

20 |

21 | [.vscode/*.{json,jsonc}]

22 | indent_style = space

23 | indent_size = 4

24 |

25 | [*.{yml,yaml,toml}]

26 | indent_style = space

27 | indent_size = 2

28 |

29 | [*.md]

30 | trim_trailing_whitespace = false

31 |

32 | [Makefile]

33 | indent_style = tab

34 | indent_size = 8

35 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | build-backend = "setuptools.build_meta"

3 | requires = ["setuptools>=46.4.0", "wheel", "setuptools_scm[toml]>=6.2"]

4 |

5 | [tool.setuptools_scm]

6 | write_to = "src/animatediff/_version.py"

7 |

8 | [tool.black]

9 | line-length = 110

10 | target-version = ['py310']

11 | ignore = ['F841', 'F401', 'E501']

12 | preview = true

13 |

14 | [tool.ruff]

15 | line-length = 110

16 | target-version = 'py310'

17 | ignore = ['F841', 'F401', 'E501']

18 |

19 | [tool.ruff.isort]

20 | combine-as-imports = true

21 | force-wrap-aliases = true

22 | known-local-folder = ["src"]

23 | known-first-party = ["animatediff"]

24 |

25 | [tool.pyright]

26 | include = ['src/**']

27 | exclude = ['/usr/lib/**']

28 |

--------------------------------------------------------------------------------

/config/inference/motion_sdxl.json:

--------------------------------------------------------------------------------

1 | {

2 | "unet_additional_kwargs": {

3 | "unet_use_temporal_attention": false,

4 | "use_motion_module": true,

5 | "motion_module_resolutions": [1, 2, 4, 8],

6 | "motion_module_mid_block": false,

7 | "motion_module_type": "Vanilla",

8 | "motion_module_kwargs": {

9 | "num_attention_heads": 8,

10 | "num_transformer_block": 1,

11 | "attention_block_types": ["Temporal_Self", "Temporal_Self"],

12 | "temporal_position_encoding": true,

13 | "temporal_position_encoding_max_len": 32,

14 | "temporal_attention_dim_div": 1

15 | }

16 | },

17 | "noise_scheduler_kwargs": {

18 | "num_train_timesteps": 1000,

19 | "beta_start": 0.00085,

20 | "beta_end": 0.020,

21 | "beta_schedule": "scaled_linear"

22 | }

23 | }

24 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # See https://pre-commit.com for more information

2 | ci:

3 | autofix_prs: true

4 | autoupdate_branch: "main"

5 | autoupdate_commit_msg: "[pre-commit.ci] pre-commit autoupdate"

6 | autoupdate_schedule: weekly

7 |

8 | repos:

9 | - repo: https://github.com/astral-sh/ruff-pre-commit

10 | rev: "v0.0.281"

11 | hooks:

12 | - id: ruff

13 | args: ["--fix", "--exit-non-zero-on-fix"]

14 |

15 | - repo: https://github.com/psf/black

16 | rev: 23.7.0

17 | hooks:

18 | - id: black

19 | args: ["--line-length=110"]

20 |

21 | - repo: https://github.com/pre-commit/pre-commit-hooks

22 | rev: v4.4.0

23 | hooks:

24 | - id: trailing-whitespace

25 | args: [--markdown-linebreak-ext=md]

26 | - id: end-of-file-fixer

27 | - id: check-yaml

28 | - id: check-added-large-files

29 |

--------------------------------------------------------------------------------

/config/inference/default.json:

--------------------------------------------------------------------------------

1 | {

2 | "unet_additional_kwargs": {

3 | "unet_use_cross_frame_attention": false,

4 | "unet_use_temporal_attention": false,

5 | "use_motion_module": true,

6 | "motion_module_resolutions": [1, 2, 4, 8],

7 | "motion_module_mid_block": false,

8 | "motion_module_decoder_only": false,

9 | "motion_module_type": "Vanilla",

10 | "motion_module_kwargs": {

11 | "num_attention_heads": 8,

12 | "num_transformer_block": 1,

13 | "attention_block_types": ["Temporal_Self", "Temporal_Self"],

14 | "temporal_position_encoding": true,

15 | "temporal_position_encoding_max_len": 24,

16 | "temporal_attention_dim_div": 1

17 | }

18 | },

19 | "noise_scheduler_kwargs": {

20 | "num_train_timesteps": 1000,

21 | "beta_start": 0.00085,

22 | "beta_end": 0.012,

23 | "beta_schedule": "linear",

24 | "steps_offset": 1,

25 | "clip_sample": false

26 | }

27 | }

28 |

--------------------------------------------------------------------------------
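
The `noise_scheduler_kwargs` in these inference configs select a `beta_schedule` of either `"linear"` or `"scaled_linear"`. The sketch below shows, under assumptions, what those two names commonly mean in diffusion scheduler implementations: `linear` spaces the betas evenly between `beta_start` and `beta_end`, while `scaled_linear` spaces their square roots evenly and then squares them. The helper names `linspace` and `make_betas` are hypothetical, and this is not the project's own loading code.

```python
def linspace(start, stop, n):
    """Return n evenly spaced floats from start to stop inclusive (n >= 2)."""
    step = (stop - start) / (n - 1)
    return [start + i * step for i in range(n)]


def make_betas(beta_start, beta_end, num_train_timesteps, beta_schedule):
    """Build a per-timestep beta list for the two schedule names used in the configs."""
    if beta_schedule == "linear":
        return linspace(beta_start, beta_end, num_train_timesteps)
    if beta_schedule == "scaled_linear":
        # Evenly spaced in sqrt(beta), then squared back
        roots = linspace(beta_start ** 0.5, beta_end ** 0.5, num_train_timesteps)
        return [r * r for r in roots]
    raise ValueError(f"unsupported beta_schedule: {beta_schedule!r}")


# Values taken from config/inference/default.json
betas = make_betas(0.00085, 0.012, 1000, "linear")
```

Both schedules share the same endpoints; with `scaled_linear` the intermediate betas are smaller than with `linear`, so noise is added more gently in the early timesteps.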

/config/inference/motion_v2.json:

--------------------------------------------------------------------------------

1 | {

2 | "unet_additional_kwargs": {

3 | "use_inflated_groupnorm": true,

4 | "unet_use_cross_frame_attention": false,

5 | "unet_use_temporal_attention": false,

6 | "use_motion_module": true,

7 | "motion_module_resolutions": [1, 2, 4, 8],

8 | "motion_module_mid_block": true,

9 | "motion_module_decoder_only": false,

10 | "motion_module_type": "Vanilla",

11 | "motion_module_kwargs": {

12 | "num_attention_heads": 8,

13 | "num_transformer_block": 1,

14 | "attention_block_types": ["Temporal_Self", "Temporal_Self"],

15 | "temporal_position_encoding": true,

16 | "temporal_position_encoding_max_len": 32,

17 | "temporal_attention_dim_div": 1

18 | }

19 | },

20 | "noise_scheduler_kwargs": {

21 | "num_train_timesteps": 1000,

22 | "beta_start": 0.00085,

23 | "beta_end": 0.012,

24 | "beta_schedule": "linear",

25 | "steps_offset": 1,

26 | "clip_sample": false

27 | }

28 | }

29 |

--------------------------------------------------------------------------------

/scripts/test_persistent.py:

--------------------------------------------------------------------------------

1 | from rich import print

2 |

3 | from animatediff import get_dir

4 | from animatediff.cli import generate, logger

5 |

6 | config_dir = get_dir("config")

7 |

8 | config_path = config_dir.joinpath("prompts/test.json")

9 | width = 512

10 | height = 512

11 | length = 32

12 | context = 16

13 | stride = 4

14 |

15 | logger.warning("Running first-round generation test, this should load the full model.\n\n")

16 | out_dir = generate(

17 | config_path=config_path,

18 | width=width,

19 | height=height,

20 | length=length,

21 | context=context,

22 | stride=stride,

23 | )

24 | logger.warning(f"Generated animation to {out_dir}")

25 |

26 | logger.warning("\n\nRunning second-round generation test, this should reuse the already loaded model.\n\n")

27 | out_dir = generate(

28 | config_path=config_path,

29 | width=width,

30 | height=height,

31 | length=length,

32 | context=context,

33 | stride=stride,

34 | )

35 | logger.warning(f"Generated animation to {out_dir}")

36 |

37 | logger.error("If the second round didn't talk about reloading the model, it worked! yay!")

38 |

--------------------------------------------------------------------------------

/scripts/download/sd-models.aria2:

--------------------------------------------------------------------------------

1 | https://civitai.com/api/download/models/78775

2 | out=models/sd/toonyou_beta3.safetensors

3 | https://civitai.com/api/download/models/72396

4 | out=models/sd/lyriel_v16.safetensors

5 | https://civitai.com/api/download/models/71009

6 | out=models/sd/rcnzCartoon3d_v10.safetensors

7 | https://civitai.com/api/download/models/79068

8 | out=models/sd/majicmixRealistic_v5Preview.safetensors

9 | https://civitai.com/api/download/models/29460

10 | out=models/sd/realisticVisionV40_v20Novae.safetensors

11 | https://civitai.com/api/download/models/97261

12 | out=models/sd/TUSUN.safetensors

13 | https://civitai.com/api/download/models/50705

14 | out=models/sd/leosamsMoonfilm_reality20.safetensors

15 | https://civitai.com/api/download/models/90115

16 | out=models/sd/FilmVelvia2.safetensors

17 | https://civitai.com/api/download/models/92475

18 | out=models/sd/leosamsMoonfilm_filmGrain10.safetensors

19 | https://civitai.com/api/download/models/102828

20 | out=models/sd/Pyramid\ lora_Ghibli_n3.safetensors

21 | https://civitai.com/api/download/models/57618

22 | out=models/sd/CounterfeitV30_v30.safetensors

23 |

--------------------------------------------------------------------------------

/config/GroundingDINO/GroundingDINO_SwinB_cfg.py:

--------------------------------------------------------------------------------

1 | batch_size = 1

2 | modelname = "groundingdino"

3 | backbone = "swin_B_384_22k"

4 | position_embedding = "sine"

5 | pe_temperatureH = 20

6 | pe_temperatureW = 20

7 | return_interm_indices = [1, 2, 3]

8 | backbone_freeze_keywords = None

9 | enc_layers = 6

10 | dec_layers = 6

11 | pre_norm = False

12 | dim_feedforward = 2048

13 | hidden_dim = 256

14 | dropout = 0.0

15 | nheads = 8

16 | num_queries = 900

17 | query_dim = 4

18 | num_patterns = 0

19 | num_feature_levels = 4

20 | enc_n_points = 4

21 | dec_n_points = 4

22 | two_stage_type = "standard"

23 | two_stage_bbox_embed_share = False

24 | two_stage_class_embed_share = False

25 | transformer_activation = "relu"

26 | dec_pred_bbox_embed_share = True

27 | dn_box_noise_scale = 1.0

28 | dn_label_noise_ratio = 0.5

29 | dn_label_coef = 1.0

30 | dn_bbox_coef = 1.0

31 | embed_init_tgt = True

32 | dn_labelbook_size = 2000

33 | max_text_len = 256

34 | text_encoder_type = "bert-base-uncased"

35 | use_text_enhancer = True

36 | use_fusion_layer = True

37 | use_checkpoint = True

38 | use_transformer_ckpt = True

39 | use_text_cross_attention = True

40 | text_dropout = 0.0

41 | fusion_dropout = 0.0

42 | fusion_droppath = 0.1

43 | sub_sentence_present = True

44 |

--------------------------------------------------------------------------------

/config/GroundingDINO/GroundingDINO_SwinT_OGC.py:

--------------------------------------------------------------------------------

1 | batch_size = 1

2 | modelname = "groundingdino"

3 | backbone = "swin_T_224_1k"

4 | position_embedding = "sine"

5 | pe_temperatureH = 20

6 | pe_temperatureW = 20

7 | return_interm_indices = [1, 2, 3]

8 | backbone_freeze_keywords = None

9 | enc_layers = 6

10 | dec_layers = 6

11 | pre_norm = False

12 | dim_feedforward = 2048

13 | hidden_dim = 256

14 | dropout = 0.0

15 | nheads = 8

16 | num_queries = 900

17 | query_dim = 4

18 | num_patterns = 0

19 | num_feature_levels = 4

20 | enc_n_points = 4

21 | dec_n_points = 4

22 | two_stage_type = "standard"

23 | two_stage_bbox_embed_share = False

24 | two_stage_class_embed_share = False

25 | transformer_activation = "relu"

26 | dec_pred_bbox_embed_share = True

27 | dn_box_noise_scale = 1.0

28 | dn_label_noise_ratio = 0.5

29 | dn_label_coef = 1.0

30 | dn_bbox_coef = 1.0

31 | embed_init_tgt = True

32 | dn_labelbook_size = 2000

33 | max_text_len = 256

34 | text_encoder_type = "bert-base-uncased"

35 | use_text_enhancer = True

36 | use_fusion_layer = True

37 | use_checkpoint = True

38 | use_transformer_ckpt = True

39 | use_text_cross_attention = True

40 | text_dropout = 0.0

41 | fusion_dropout = 0.0

42 | fusion_droppath = 0.1

43 | sub_sentence_present = True

44 |

--------------------------------------------------------------------------------

/config/prompts/01-ToonYou.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "ToonYou",

3 | "base": "",

4 | "path": "models/sd/toonyou_beta3.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "compile": false,

7 | "seed": [

8 | 10788741199826055000, 6520604954829637000, 6519455744612556000,

9 | 16372571278361864000

10 | ],

11 | "scheduler": "k_dpmpp",

12 | "steps": 30,

13 | "guidance_scale": 8.5,

14 | "clip_skip": 2,

15 | "prompt": [

16 | "1girl, solo, best quality, masterpiece, looking at viewer, purple hair, orange hair, gradient hair, blurry background, upper body, dress, flower print, spaghetti strap, bare shoulders",

17 | "1girl, solo, masterpiece, best quality, cherry blossoms, hanami, pink flower, white flower, spring season, wisteria, petals, flower, plum blossoms, outdoors, falling petals, white hair, black eyes,",

18 | "1girl, solo, best quality, masterpiece, looking at viewer, purple hair, orange hair, gradient hair, blurry background, upper body, dress, flower print, spaghetti strap, bare shoulders",

19 | "1girl, solo, best quality, masterpiece, cloudy sky, dandelion, contrapposto, alternate hairstyle"

20 | ],

21 | "n_prompt": [

22 | "worst quality, low quality, cropped, lowres, text, jpeg artifacts, multiple view"

23 | ]

24 | }

25 |

--------------------------------------------------------------------------------

/src/animatediff/utils/wild_card.py:

--------------------------------------------------------------------------------

1 | import glob

2 | import os

3 | import random

4 | import re

5 |

6 | wild_card_regex = r'(\A|\W)__([\w-]+)__(\W|\Z)'

7 |

8 |

9 | def create_wild_card_map(wild_card_dir):

10 | result = {}

11 | if os.path.isdir(wild_card_dir):

12 | txt_list = glob.glob(os.path.join(wild_card_dir, "**/*.txt"), recursive=True)

13 | for txt in txt_list:

14 | basename_without_ext = os.path.splitext(os.path.basename(txt))[0]

15 | with open(txt, encoding='utf-8') as f:

16 | try:

17 | result[basename_without_ext] = [s.rstrip() for s in f.readlines()]

18 | except Exception as e:

19 | print(e)

20 | print("cannot read", txt)

21 | return result

22 |

23 | def replace_wild_card_token(match_obj, wild_card_map):

24 | m1 = match_obj.group(1)

25 | m3 = match_obj.group(3)

26 |

27 | dict_name = match_obj.group(2)

28 |

29 | if dict_name in wild_card_map:

30 | token_list = wild_card_map[dict_name]

31 | token = random.choice(token_list)

32 | return m1+token+m3

33 | else:

34 | return match_obj.group(0)

35 |

36 | def replace_wild_card(prompt, wild_card_dir):

37 | wild_card_map = create_wild_card_map(wild_card_dir)

38 | prompt = re.sub(wild_card_regex, lambda x: replace_wild_card_token(x, wild_card_map ), prompt)

39 | return prompt

40 |

--------------------------------------------------------------------------------
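
The following sketch illustrates how the `__name__` wildcard syntax handled by `wild_card.py` behaves. It is a compact, self-contained re-statement for illustration only: the regex is copied from the file above, while `load_wild_cards`, `expand`, `demo`, and the `color.txt` sample file are hypothetical names introduced here.

```python
import random
import re
import tempfile
from pathlib import Path

# Same pattern as wild_card_regex in wild_card.py
WILD_CARD_REGEX = r'(\A|\W)__([\w-]+)__(\W|\Z)'


def load_wild_cards(wild_card_dir):
    """Map each .txt file's stem to its list of non-empty lines."""
    cards = {}
    for txt in Path(wild_card_dir).rglob("*.txt"):
        lines = [s.strip() for s in txt.read_text(encoding="utf-8").splitlines()]
        cards[txt.stem] = [s for s in lines if s]
    return cards


def expand(prompt, cards, rng=random):
    """Replace every known __name__ token with a random line from name.txt."""
    def pick(match):
        options = cards.get(match.group(2))
        if not options:
            # Unknown wildcard: leave the token exactly as written
            return match.group(0)
        return match.group(1) + rng.choice(options) + match.group(3)
    return re.sub(WILD_CARD_REGEX, pick, prompt)


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "color.txt").write_text("red\nblue\n", encoding="utf-8")
        cards = load_wild_cards(tmp)
    return expand("1girl, __color__ hair, __unknown__ dress", cards, random.Random(0))
```

`demo()` returns the prompt with `__color__` replaced by either `red` or `blue`, while `__unknown__` is left untouched because no `unknown.txt` exists in the wildcard directory.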

/config/prompts/04-MajicMix.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "MajicMix",

3 | "base": "",

4 | "path": "models/sd/majicmixRealistic_v5Preview.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "seed": [

7 | 1572448948722921000, 1099474677988590700, 6488833139725636000,

8 | 18339859844376519000

9 | ],

10 | "scheduler": "k_dpmpp",

11 | "steps": 25,

12 | "guidance_scale": 7.5,

13 | "prompt": [

14 | "1girl, offshoulder, light smile, shiny skin best quality, masterpiece, photorealistic",

15 | "best quality, masterpiece, photorealistic, 1boy, 50 years old beard, dramatic lighting",

16 | "best quality, masterpiece, photorealistic, 1girl, light smile, shirt with collars, waist up, dramatic lighting, from below",

17 | "male, man, beard, bodybuilder, skinhead,cold face, tough guy, cowboyshot, tattoo, french windows, luxury hotel masterpiece, best quality, photorealistic"

18 | ],

19 | "n_prompt": [

20 | "ng_deepnegative_v1_75t, badhandv4, worst quality, low quality, normal quality, lowres, bad anatomy, bad hands, watermark, moles",

21 | "nsfw, ng_deepnegative_v1_75t,badhandv4, worst quality, low quality, normal quality, lowres,watermark, monochrome",

22 | "nsfw, ng_deepnegative_v1_75t,badhandv4, worst quality, low quality, normal quality, lowres,watermark, monochrome",

23 | "nude, nsfw, ng_deepnegative_v1_75t, badhandv4, worst quality, low quality, normal quality, lowres, bad anatomy, bad hands, monochrome, grayscale watermark, moles, people"

24 | ]

25 | }

26 |

--------------------------------------------------------------------------------

/config/prompts/08-GhibliBackground.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "GhibliBackground",

3 | "base": "models/sd/CounterfeitV30_25.safetensors",

4 | "path": "models/sd/lora_Ghibli_n3.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "seed": [

7 | 8775748474469046000, 5893874876080607000, 11911465742147697000,

8 | 12437784838692000000

9 | ],

10 | "scheduler": "k_dpmpp",

11 | "steps": 25,

12 | "guidance_scale": 7.5,

13 | "lora_alpha": 1,

14 | "prompt": [

15 | "best quality,single build,architecture, blue_sky, building,cloudy_sky, day, fantasy, fence, field, house, build,architecture,landscape, moss, outdoors, overgrown, path, river, road, rock, scenery, sky, sword, tower, tree, waterfall",

16 | "black_border, building, city, day, fantasy, ice, landscape, letterboxed, mountain, ocean, outdoors, planet, scenery, ship, snow, snowing, water, watercraft, waterfall, winter",

17 | ",mysterious sea area, fantasy,build,concept",

18 | "Tomb Raider,Scenography,Old building"

19 | ],

20 | "n_prompt": [

21 | "easynegative,bad_construction,bad_structure,bad_wail,bad_windows,blurry,cloned_window,cropped,deformed,disfigured,error,extra_windows,extra_chimney,extra_door,extra_structure,extra_frame,fewer_digits,fused_structure,gross_proportions,jpeg_artifacts,long_roof,low_quality,structure_limbs,missing_windows,missing_doors,missing_roofs,mutated_structure,mutation,normal_quality,out_of_frame,owres,poorly_drawn_structure,poorly_drawn_house,signature,text,too_many_windows,ugly,username,uta,watermark,worst_quality"

22 | ]

23 | }

24 |

--------------------------------------------------------------------------------

/scripts/download/02-All-SD-Models.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | set -euo pipefail

3 |

4 | repo_dir=$(git rev-parse --show-toplevel 2>/dev/null || true)

5 | if [[ ! -d "${repo_dir}" ]]; then

6 | echo "Could not find the repo root. Checking for ./data/models/sd"

7 | repo_dir="."

8 | fi

9 |

10 | models_dir=$(realpath "${repo_dir}/data/models/sd")

11 | if [[ ! -d "${models_dir}" ]]; then

12 | echo "Could not find repo root or models directory."

13 | echo "Either create ./data/models/sd or run this script from a checked-out git repo."

14 | exit 1

15 | fi

16 |

17 | model_urls=(

18 | https://civitai.com/api/download/models/78775 # ToonYou

19 | https://civitai.com/api/download/models/72396 # Lyriel

20 | https://civitai.com/api/download/models/71009 # RcnzCartoon

21 | https://civitai.com/api/download/models/79068 # MajicMix

22 | https://civitai.com/api/download/models/29460 # RealisticVision

23 | https://civitai.com/api/download/models/97261 # Tusun (1/2)

24 | https://civitai.com/api/download/models/50705 # Tusun (2/2)

25 | https://civitai.com/api/download/models/90115 # FilmVelvia (1/2)

26 | https://civitai.com/api/download/models/92475 # FilmVelvia (2/2)

27 | https://civitai.com/api/download/models/102828 # GhibliBackground (1/2)

28 | https://civitai.com/api/download/models/57618 # GhibliBackground (2/2)

29 | )

30 |

31 | echo "Downloading model files to ${models_dir}..."

32 |

33 | # Create the models directory if it doesn't exist

34 | mkdir -p "${models_dir}"

35 |

36 | # Download the models

37 | for url in "${model_urls[@]}"; do

38 | curl -JLO --output-dir "${models_dir}" "${url}" || true

39 | done

40 |

--------------------------------------------------------------------------------

/src/animatediff/dwpose/wholebody.py:

--------------------------------------------------------------------------------

1 | # https://github.com/IDEA-Research/DWPose

2 | import cv2

3 | import numpy as np

4 | import onnxruntime as ort

5 |

6 | from .onnxdet import inference_detector

7 | from .onnxpose import inference_pose

8 |

9 |

10 | class Wholebody:

11 | def __init__(self, device='cuda:0'):

12 | providers = ['CPUExecutionProvider'

13 | ] if device == 'cpu' else ['CUDAExecutionProvider']

14 | onnx_det = 'data/models/DWPose/yolox_l.onnx'

15 | onnx_pose = 'data/models/DWPose/dw-ll_ucoco_384.onnx'

16 |

17 | self.session_det = ort.InferenceSession(path_or_bytes=onnx_det, providers=providers)

18 | self.session_pose = ort.InferenceSession(path_or_bytes=onnx_pose, providers=providers)

19 |

20 | def __call__(self, oriImg):

21 | det_result = inference_detector(self.session_det, oriImg)

22 | keypoints, scores = inference_pose(self.session_pose, det_result, oriImg)

23 |

24 | keypoints_info = np.concatenate(

25 | (keypoints, scores[..., None]), axis=-1)

26 | # compute neck joint

27 | neck = np.mean(keypoints_info[:, [5, 6]], axis=1)

28 | # neck score when visualizing pred

29 | neck[:, 2:4] = np.logical_and(

30 | keypoints_info[:, 5, 2:4] > 0.3,

31 | keypoints_info[:, 6, 2:4] > 0.3).astype(int)

32 | new_keypoints_info = np.insert(

33 | keypoints_info, 17, neck, axis=1)

34 | mmpose_idx = [

35 | 17, 6, 8, 10, 7, 9, 12, 14, 16, 13, 15, 2, 1, 4, 3

36 | ]

37 | openpose_idx = [

38 | 1, 2, 3, 4, 6, 7, 8, 9, 10, 12, 13, 14, 15, 16, 17

39 | ]

40 | new_keypoints_info[:, openpose_idx] = \

41 | new_keypoints_info[:, mmpose_idx]

42 | keypoints_info = new_keypoints_info

43 |

44 | keypoints, scores = keypoints_info[

45 | ..., :2], keypoints_info[..., 2]

46 |

47 | return keypoints, scores

48 |

49 |

50 |
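
Note on the keypoint remapping in `Wholebody.__call__` above: the following is a minimal standalone sketch, not the module itself, of the neck-joint insertion and the MMPose-to-OpenPose index swap, run on dummy data. The function name `coco_to_openpose` and the `threshold` parameter are hypothetical names introduced only for this illustration; the index lists are copied from the listing.

```python
import numpy as np


def coco_to_openpose(keypoints, scores, threshold=0.3):
    """Illustrative helper (hypothetical name).

    keypoints: (N, K, 2) array, scores: (N, K) array, with K >= 17.
    Returns arrays of shape (N, K + 1, 2) and (N, K + 1).
    """
    # Stack x, y and score into one (N, K, 3) array.
    info = np.concatenate((keypoints, scores[..., None]), axis=-1)
    # The neck is the midpoint of the two shoulder joints (indices 5 and 6).
    neck = np.mean(info[:, [5, 6]], axis=1)
    # The neck score is 1 only when both shoulders pass the threshold.
    neck[:, 2] = np.logical_and(
        info[:, 5, 2] > threshold, info[:, 6, 2] > threshold
    ).astype(float)
    # Insert the neck as joint 17, then permute into OpenPose order.
    info = np.insert(info, 17, neck, axis=1)
    mmpose_idx = [17, 6, 8, 10, 7, 9, 12, 14, 16, 13, 15, 2, 1, 4, 3]
    openpose_idx = [1, 2, 3, 4, 6, 7, 8, 9, 10, 12, 13, 14, 15, 16, 17]
    # The right-hand side is a copy (fancy indexing), so the swap is safe.
    info[:, openpose_idx] = info[:, mmpose_idx]
    return info[..., :2], info[..., 2]


# Dummy input: one person, 17 joints, joint k located at (k, k).
kp = np.stack([np.arange(17.0), np.arange(17.0)], axis=-1)[None]
sc = np.full((1, 17), 0.9)
out_kp, out_sc = coco_to_openpose(kp, sc)
```

After the swap, OpenPose slot 1 holds the synthesized neck, and slots not named in `openpose_idx` (0, 5 and 11 among the first 18) keep their original values.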

--------------------------------------------------------------------------------

/src/animatediff/__init__.py:

--------------------------------------------------------------------------------

1 | try:

2 | from ._version import (

3 | version as __version__,

4 | version_tuple,

5 | )

6 | except ImportError:

7 | __version__ = "unknown (no version information available)"

8 | version_tuple = (0, 0, "unknown", "noinfo")

9 |

10 | from functools import lru_cache

11 | from os import getenv

12 | from pathlib import Path

13 | from warnings import filterwarnings

14 |

15 | from rich.console import Console

16 | from tqdm import TqdmExperimentalWarning

17 |

18 | PACKAGE = __package__.replace("_", "-")

19 | PACKAGE_ROOT = Path(__file__).parent.parent

20 |

21 | HF_HOME = Path(getenv("HF_HOME", Path.home() / ".cache" / "huggingface"))

22 | HF_HUB_CACHE = Path(getenv("HUGGINGFACE_HUB_CACHE", HF_HOME.joinpath("hub")))

23 |

24 | HF_LIB_NAME = "animatediff-cli"

25 | HF_LIB_VER = __version__

26 | HF_MODULE_REPO = "neggles/animatediff-modules"

27 |

28 | console = Console(highlight=True)

29 | err_console = Console(stderr=True)

30 |

31 | # shhh torch, don't worry about it it's fine

32 | filterwarnings("ignore", category=UserWarning, message="TypedStorage is deprecated")

33 | # you too tqdm

34 | filterwarnings("ignore", category=TqdmExperimentalWarning)

35 |

36 |

37 | @lru_cache(maxsize=4)

38 | def get_dir(dirname: str = "data") -> Path:

39 | if PACKAGE_ROOT.name == "src":

40 | # we're installed in editable mode from within the repo

41 | dirpath = PACKAGE_ROOT.parent.joinpath(dirname)

42 | else:

43 | # we're installed normally, so we just use the current working directory

44 | dirpath = Path.cwd().joinpath(dirname)

45 | dirpath.mkdir(parents=True, exist_ok=True)

46 | return dirpath.absolute()

47 |

48 |

49 | __all__ = [

50 | "__version__",

51 | "version_tuple",

52 | "PACKAGE",

53 | "PACKAGE_ROOT",

54 | "HF_HOME",

55 | "HF_HUB_CACHE",

56 | "console",

57 | "err_console",

58 | "get_dir",

59 | "models",

60 | "pipelines",

61 | "rife",

62 | "utils",

63 | "cli",

64 | "generate",

65 | "schedulers",

66 | "settings",

67 | ]

68 |

--------------------------------------------------------------------------------

/config/inference/sd15-unet3d.json:

--------------------------------------------------------------------------------

1 | {

2 | "sample_size": 64,

3 | "in_channels": 4,

4 | "out_channels": 4,

5 | "center_input_sample": false,

6 | "flip_sin_to_cos": true,

7 | "freq_shift": 0,

8 | "down_block_types": [

9 | "CrossAttnDownBlock3D",

10 | "CrossAttnDownBlock3D",

11 | "CrossAttnDownBlock3D",

12 | "DownBlock3D"

13 | ],

14 | "mid_block_type": "UNetMidBlock3DCrossAttn",

15 | "up_block_types": [

16 | "UpBlock3D",

17 | "CrossAttnUpBlock3D",

18 | "CrossAttnUpBlock3D",

19 | "CrossAttnUpBlock3D"

20 | ],

21 | "only_cross_attention": false,

22 | "block_out_channels": [320, 640, 1280, 1280],

23 | "layers_per_block": 2,

24 | "downsample_padding": 1,

25 | "mid_block_scale_factor": 1,

26 | "act_fn": "silu",

27 | "norm_num_groups": 32,

28 | "norm_eps": 1e-5,

29 | "cross_attention_dim": 768,

30 | "attention_head_dim": 8,

31 | "dual_cross_attention": false,

32 | "use_linear_projection": false,

33 | "class_embed_type": null,

34 | "num_class_embeds": null,

35 | "upcast_attention": false,

36 | "resnet_time_scale_shift": "default",

37 | "use_motion_module": true,

38 | "motion_module_resolutions": [1, 2, 4, 8],

39 | "motion_module_mid_block": false,

40 | "motion_module_decoder_only": false,

41 | "motion_module_type": "Vanilla",

42 | "motion_module_kwargs": {

43 | "num_attention_heads": 8,

44 | "num_transformer_block": 1,

45 | "attention_block_types": ["Temporal_Self", "Temporal_Self"],

46 | "temporal_position_encoding": true,

47 | "temporal_position_encoding_max_len": 24,

48 | "temporal_attention_dim_div": 1

49 | },

50 | "unet_use_cross_frame_attention": false,

51 | "unet_use_temporal_attention": false,

52 | "_use_default_values": [

53 | "use_linear_projection",

54 | "mid_block_type",

55 | "upcast_attention",

56 | "dual_cross_attention",

57 | "num_class_embeds",

58 | "only_cross_attention",

59 | "class_embed_type",

60 | "resnet_time_scale_shift"

61 | ],

62 | "_class_name": "UNet3DConditionModel",

63 | "_diffusers_version": "0.6.0"

64 | }

65 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | name = animatediff

3 | author = Andi Powers-Holmes

4 | email = aholmes@omnom.net

5 | maintainer = Andi Powers-Holmes

6 | maintainer_email = aholmes@omnom.net

7 | license_files = LICENSE.md

8 |

9 | [options]

10 | python_requires = >=3.10

11 | packages = find:

12 | package_dir =

13 | =src

14 | py_modules =

15 | animatediff

16 | include_package_data = True

17 | install_requires =

18 | accelerate >= 0.20.3

19 | colorama >= 0.4.3, < 0.5.0

20 | cmake >= 3.25.0

21 | diffusers == 0.23.0

22 | einops >= 0.6.1

23 | gdown >= 4.6.6

24 | ninja >= 1.11.0

25 | numpy >= 1.22.4

26 | omegaconf >= 2.3.0

27 | pillow >= 9.4.0, < 10.0.0

28 | pydantic >= 1.10.0, < 2.0.0

29 | rich >= 13.0.0, < 14.0.0

30 | safetensors >= 0.3.1

31 | sentencepiece >= 0.1.99

32 | shellingham >= 1.5.0, < 2.0.0

33 | torch >= 2.1.0, < 2.2.0

34 | torchaudio

35 | torchvision

36 | transformers >= 4.30.2, < 4.35.0

37 | typer >= 0.9.0, < 1.0.0

38 | controlnet_aux

39 | matplotlib

40 | ffmpeg-python >= 0.2.0

41 | mediapipe

42 | xformers >= 0.0.22.post7

43 | opencv-python

44 |

45 | [options.packages.find]

46 | where = src

47 |

48 | [options.package_data]

49 | * = *.txt, *.md

50 |

51 | [options.extras_require]

52 | dev =

53 | black >= 22.3.0

54 | ruff >= 0.0.234

55 | setuptools-scm >= 7.0.0

56 | pre-commit >= 3.3.0

57 | ipython

58 | rife =

59 | ffmpeg-python >= 0.2.0

60 | stylize =

61 | ffmpeg-python >= 0.2.0

62 | onnxruntime-gpu

63 | pandas

64 | opencv-python

65 | dwpose =

66 | onnxruntime-gpu

67 | stylize_mask =

68 | ffmpeg-python >= 0.2.0

69 | pandas

70 | segment-anything-hq == 0.3

71 | groundingdino-py == 0.4.0

72 | gitpython

73 | rembg[gpu]

74 | onnxruntime-gpu

75 |

76 | [options.entry_points]

77 | console_scripts =

78 | animatediff = animatediff.cli:cli

79 |

80 | [flake8]

81 | max-line-length = 110

82 | ignore =

83 | # these are annoying during development but should be enabled later

84 | F401 # module imported but unused

85 | F841 # local variable is assigned to but never used

86 | # black automatically fixes this

87 | E501 # line too long

88 | # black breaks these two rules:

89 | E203 # whitespace before :

90 | W503 # line break before binary operator

91 | extend-exclude =

92 | .venv

93 |

--------------------------------------------------------------------------------

/.vscode/settings.json:

--------------------------------------------------------------------------------

1 | {

2 | "editor.insertSpaces": true,

3 | "editor.tabSize": 4,

4 | "files.trimTrailingWhitespace": true,

5 | "editor.rulers": [100, 120],

6 |

7 | "files.associations": {

8 | "*.yaml": "yaml"

9 | },

10 |

11 | "files.exclude": {

12 | "**/.git": true,

13 | "**/.svn": true,

14 | "**/.hg": true,

15 | "**/CVS": true,

16 | "**/.DS_Store": true,

17 | "**/Thumbs.db": true,

18 | "**/__pycache__": true

19 | },

20 |

21 | "[python]": {

22 | "editor.wordBasedSuggestions": false,

23 | "editor.formatOnSave": true,

24 | "editor.defaultFormatter": "ms-python.black-formatter",

25 | "editor.codeActionsOnSave": {

26 | "source.organizeImports": true

27 | }

28 | },

29 | "python.analysis.include": ["./src", "./scripts", "./tests"],

30 |

31 | "python.linting.enabled": false,

32 | "python.linting.pylintEnabled": false,

33 | "python.linting.flake8Enabled": true,

34 | "python.linting.flake8Args": ["--config=${workspaceFolder}/setup.cfg"],

35 |

36 | "[json]": {

37 | "editor.tabSize": 2,

38 | "editor.detectIndentation": false,

39 | "editor.formatOnSave": true,

40 | "editor.formatOnSaveMode": "file"

41 | },

42 |

43 | "[toml]": {

44 | "editor.tabSize": 2,

45 | "editor.detectIndentation": false,

46 | "editor.formatOnSave": true,

47 | "editor.formatOnSaveMode": "file",

48 | "editor.defaultFormatter": "tamasfe.even-better-toml",

49 | "editor.rulers": [80, 100]

50 | },

51 | "evenBetterToml.formatter.columnWidth": 88,

52 |

53 | "[yaml]": {

54 | "editor.detectIndentation": false,

55 | "editor.tabSize": 2,

56 | "editor.formatOnSave": true,

57 | "editor.formatOnSaveMode": "file"

58 | },

59 | "yaml.format.bracketSpacing": true,

60 | "yaml.format.proseWrap": "preserve",

61 | "yaml.format.singleQuote": false,

62 | "yaml.format.printWidth": 110,

63 |

64 | "[markdown]": {

65 | "files.trimTrailingWhitespace": false

66 | },

67 |

68 | "css.lint.validProperties": ["dock", "content-align", "content-justify"],

69 | "[css]": {

70 | "editor.formatOnSave": true

71 | },

72 |

73 | "remote.autoForwardPorts": false,

74 | "remote.autoForwardPortsSource": "process"

75 | }

76 |

--------------------------------------------------------------------------------

/test.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import json

3 | import os

4 | import asyncio

5 |

6 | async def stylize(video):

7 | command = f"animatediff stylize create-config {video}"

8 | process = await asyncio.create_subprocess_shell(

9 | command,

10 | stdout=asyncio.subprocess.PIPE,

11 | stderr=asyncio.subprocess.PIPE

12 | )

13 | stdout, stderr = await process.communicate()

14 | if process.returncode == 0:

15 | return stdout.decode()

16 | else:

17 | raise RuntimeError(f"animatediff stylize create-config failed: {stderr.decode()}")

18 |

19 | async def start_video_edit(prompt_file):

20 | command = f"animatediff stylize generate {prompt_file}"

21 | process = await asyncio.create_subprocess_shell(

22 | command,

23 | stdout=asyncio.subprocess.PIPE,

24 | stderr=asyncio.subprocess.PIPE

25 | )

26 | stdout, stderr = await process.communicate()

27 | if process.returncode == 0:

28 | return stdout.decode()

29 | else:

30 | raise RuntimeError(f"animatediff stylize generate failed: {stderr.decode()}")

31 |

32 | def edit_video(video, pos_prompt):

33 | x = asyncio.run(stylize(video))

34 | x = x.split("stylize.py")

35 | config = x[18].split("config =")[-1].strip()

36 | d = x[19].split("stylize_dir = ")[-1].strip()

37 |

38 | with open(config, 'r+') as f:

39 | data = json.load(f)

40 | data['head_prompt'] = pos_prompt

41 | data["path"] = "models/huggingface/xxmix9realistic_v40.safetensors"

42 |

43 | os.remove(config)

44 | with open(config, 'w') as f:

45 | json.dump(data, f, indent=4)

46 |

47 | out = asyncio.run(start_video_edit(d))

48 | out = out.split("Stylized results are output to ")[-1]

49 | out = out.split("stylize.py")[0].strip()

50 |

51 | cwd = os.getcwd()

52 | video_dir = cwd + "/" + out

53 |

54 | video_extensions = {'.mp4', '.avi', '.mkv', '.mov', '.flv', '.wmv'}

55 | video_path = None

56 |

57 | for dirpath, dirnames, filenames in os.walk(video_dir):

58 | for filename in filenames:

59 | if os.path.splitext(filename)[1].lower() in video_extensions:

60 | video_path = os.path.join(dirpath, filename)

61 | break

62 | if video_path:

63 | break

64 |

65 | return video_path

66 |

67 | video_path = input("Enter the path to your video: ")

68 | pos_prompt = input("Enter what you want to do with the video: ")

69 | print("The video is stored at", edit_video(video_path, pos_prompt))
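
Note on the output lookup in `edit_video` above: the nested `os.walk` loop returns the first file whose extension is in `video_extensions`. A possible `pathlib` variant is sketched below. It is an illustration, not part of the repository; `find_first_video` and `VIDEO_EXTENSIONS` are hypothetical names, and unlike `os.walk` it sorts the candidates so the result is deterministic.

```python
from pathlib import Path
from typing import Optional

# Same extension set as in the listing above.
VIDEO_EXTENSIONS = {".mp4", ".avi", ".mkv", ".mov", ".flv", ".wmv"}


def find_first_video(root) -> Optional[Path]:
    """Return the first video file under `root` (sorted order), or None."""
    for path in sorted(Path(root).rglob("*")):
        # Compare the lower-cased suffix so ".MP4" also matches.
        if path.is_file() and path.suffix.lower() in VIDEO_EXTENSIONS:
            return path
    return None
```

Returning `None` when nothing matches mirrors the behaviour of the original loop, so callers still need to handle that case.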

--------------------------------------------------------------------------------

/src/animatediff/utils/mask_rembg.py:

--------------------------------------------------------------------------------

1 | import glob

2 | import logging

3 | import os

4 | from pathlib import Path

5 |

6 | import cv2

7 | import numpy as np

8 | import torch

9 | from PIL import Image

10 | from rembg import new_session, remove

11 | from tqdm.rich import tqdm

12 |

13 | logger = logging.getLogger(__name__)

14 |

15 | def rembg_create_fg(frame_dir, output_dir, output_mask_dir, masked_area_list,

16 | bg_color=(0,255,0),

17 | mask_padding=0,

18 | ):

19 |

20 | frame_list = sorted(glob.glob( os.path.join(frame_dir, "[0-9]*.png"), recursive=False))

21 |

22 | if mask_padding != 0:

23 | kernel = np.ones((abs(mask_padding),abs(mask_padding)),np.uint8)

24 | kernel2 = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))

25 |

26 | session = new_session(providers=['CUDAExecutionProvider', 'CPUExecutionProvider'])

27 |

28 | for i, frame in tqdm(enumerate(frame_list),total=len(frame_list), desc="creating mask"):

29 | frame = Path(frame)

30 | file_name = frame.name

31 |

32 | cur_frame_no = int(frame.stem)

33 |

34 | img = Image.open(frame)

35 | img_array = np.asarray(img)

36 |

37 | mask_array = remove(img_array, only_mask=True, session=session)

38 |

39 | #mask_array = mask_array[None,...]

40 |

41 | if mask_padding < 0:

42 | mask_array = cv2.erode(mask_array.astype(np.uint8),kernel,iterations = 1)

43 | elif mask_padding > 0:

44 | mask_array = cv2.dilate(mask_array.astype(np.uint8),kernel,iterations = 1)

45 |

46 | mask_array = cv2.morphologyEx(mask_array, cv2.MORPH_OPEN, kernel2)

47 | mask_array = cv2.GaussianBlur(mask_array, (7, 7), sigmaX=3, sigmaY=3, borderType=cv2.BORDER_DEFAULT)

48 |

49 | if masked_area_list[cur_frame_no] is not None:

50 | masked_area_list[cur_frame_no] = np.where(masked_area_list[cur_frame_no] > mask_array[None,...], masked_area_list[cur_frame_no], mask_array[None,...])

51 | else:

52 | masked_area_list[cur_frame_no] = mask_array[None,...]

53 |

54 | if output_mask_dir:

55 | Image.fromarray(mask_array).save( output_mask_dir / file_name )

56 |

57 | img_array = np.asarray(img).copy()

58 | if bg_color is not None:

59 | img_array[mask_array == 0] = bg_color

60 |

61 | img = Image.fromarray(img_array)

62 |

63 | img.save( output_dir / file_name )

64 |

65 | return masked_area_list

66 |

67 |

68 |

69 |
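
Note on the mask merge in `rembg_create_fg` above: `np.where(a > b, a, b)` is an element-wise maximum, so the per-frame update could also be written with `np.maximum`. The sketch below shows this on small dummy arrays. `merge_mask` is a hypothetical helper name used only for illustration; the repository code keeps the logic inline.

```python
import numpy as np


def merge_mask(existing, new_mask):
    """Combine a stored (1, H, W) mask with a new (H, W) mask.

    Keeps the larger value per pixel, matching the
    np.where(existing > new, existing, new) pattern in the listing.
    """
    new_mask = new_mask[None, ...]  # add the leading axis used in the list
    if existing is None:
        return new_mask
    return np.maximum(existing, new_mask)


existing = np.array([[[0, 200], [50, 0]]], dtype=np.uint8)
new = np.array([[100, 100], [10, 255]], dtype=np.uint8)
merged = merge_mask(existing, new)
```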

--------------------------------------------------------------------------------

/config/prompts/03-RcnzCartoon.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "RcnzCartoon",

3 | "base": "",

4 | "path": "models/sd/rcnzCartoon3d_v10.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "seed": [

7 | 16931037867122268000, 2094308009433392000, 4292543217695451000,

8 | 15572665120852310000

9 | ],

10 | "scheduler": "k_dpmpp",

11 | "steps": 25,

12 | "guidance_scale": 7.5,

13 | "prompt": [

14 | "Jane Eyre with headphones, natural skin texture,4mm,k textures, soft cinematic light, adobe lightroom, photolab, hdr, intricate, elegant, highly detailed, sharp focus, cinematic look, soothing tones, insane details, intricate details, hyperdetailed, low contrast, soft cinematic light, dim colors, exposure blend, hdr, faded",

15 | "close up Portrait photo of muscular bearded guy in a worn mech suit, light bokeh, intricate, steel metal [rust], elegant, sharp focus, photo by greg rutkowski, soft lighting, vibrant colors, masterpiece, streets, detailed face",

16 | "absurdres, photorealistic, masterpiece, a 30 year old man with gold framed, aviator reading glasses and a black hooded jacket and a beard, professional photo, a character portrait, altermodern, detailed eyes, detailed lips, detailed face, grey eyes",

17 | "a golden labrador, warm vibrant colours, natural lighting, dappled lighting, diffused lighting, absurdres, highres,k, uhd, hdr, rtx, unreal, octane render, RAW photo, photorealistic, global illumination, subsurface scattering"

18 | ],

19 | "n_prompt": [

20 | "deformed, distorted, disfigured, poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, mutated hands and fingers, disconnected limbs, mutation, mutated, ugly, disgusting, blurry, amputation",

21 | "nude, cross eyed, tongue, open mouth, inside, 3d, cartoon, anime, sketches, worst quality, low quality, normal quality, lowres, normal quality, monochrome, grayscale, skin spots, acnes, skin blemishes, bad anatomy, red eyes, muscular",

22 | "easynegative, cartoon, anime, sketches, necklace, earrings worst quality, low quality, normal quality, bad anatomy, bad hands, shiny skin, error, missing fingers, extra digit, fewer digits, jpeg artifacts, signature, watermark, username, blurry, chubby, anorectic, bad eyes, old, wrinkled skin, red skin, photograph By bad artist -neg, big eyes, muscular face,",

23 | "beard, EasyNegative, lowres, chromatic aberration, depth of field, motion blur, blurry, bokeh, bad quality, worst quality, multiple arms, badhand"

24 | ]

25 | }

26 |

--------------------------------------------------------------------------------

/config/prompts/06-Tusun.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "Tusun",

3 | "base": "models/sd/moonfilm_reality20.safetensors",

4 | "path": "models/sd/TUSUN.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "seed": [

7 | 10154078483724687000, 2664393535095473700, 4231566096207623000,

8 | 1713349740448094500

9 | ],

10 | "scheduler": "k_dpmpp",

11 | "steps": 25,

12 | "guidance_scale": 7.5,

13 | "lora_alpha": 0.6,

14 | "prompt": [

15 | "tusuncub with its mouth open, blurry, open mouth, fangs, photo background, looking at viewer, tongue, full body, solo, cute and lovely, Beautiful and realistic eye details, perfect anatomy, Nonsense, pure background, Centered-Shot, realistic photo, photograph, 4k, hyper detailed, DSLR, 24 Megapixels, 8mm Lens, Full Frame, film grain, Global Illumination, studio Lighting, Award Winning Photography, diffuse reflection, ray tracing",

16 | "cute tusun with a blurry background, black background, simple background, signature, face, solo, cute and lovely, Beautiful and realistic eye details, perfect anatomy, Nonsense, pure background, Centered-Shot, realistic photo, photograph, 4k, hyper detailed, DSLR, 24 Megapixels, 8mm Lens, Full Frame, film grain, Global Illumination, studio Lighting, Award Winning Photography, diffuse reflection, ray tracing",

17 | "cut tusuncub walking in the snow, blurry, looking at viewer, depth of field, blurry background, full body, solo, cute and lovely, Beautiful and realistic eye details, perfect anatomy, Nonsense, pure background, Centered-Shot, realistic photo, photograph, 4k, hyper detailed, DSLR, 24 Megapixels, 8mm Lens, Full Frame, film grain, Global Illumination, studio Lighting, Award Winning Photography, diffuse reflection, ray tracing",

18 | "character design, cyberpunk tusun kitten wearing astronaut suit, sci-fic, realistic eye color and details, fluffy, big head, science fiction, communist ideology, Cyborg, fantasy, intense angle, soft lighting, photograph, 4k, hyper detailed, portrait wallpaper, realistic, photo-realistic, DSLR, 24 Megapixels, Full Frame, vibrant details, octane render, finely detail, best quality, incredibly absurdres, robotic parts, rim light, vibrant details, luxurious cyberpunk, hyperrealistic, cable electric wires, microchip, full body"

19 | ],

20 | "n_prompt": [

21 | "worst quality, low quality, deformed, distorted, disfigured, bad eyes, bad anatomy, disconnected limbs, wrong body proportions, low quality, worst quality, text, watermark, signatre, logo, illustration, painting, cartoons, ugly, easy_negative"

22 | ]

23 | }

24 |

--------------------------------------------------------------------------------

/config/prompts/05-RealisticVision.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "RealisticVision",

3 | "base": "",

4 | "path": "models/sd/realisticVisionV20_v20.safetensors",

5 | "motion_module": "models/motion-module/mm_sd_v15.ckpt",

6 | "seed": [

7 | 5658137986800322000, 12099779162349365000, 10499524853910854000,

8 | 16768009035333712000

9 | ],

10 | "scheduler": "k_dpmpp",

11 | "steps": 25,

12 | "guidance_scale": 7.5,

13 | "prompt": [

14 | "b&w photo of 42 y.o man in black clothes, bald, face, half body, body, high detailed skin, skin pores, coastline, overcast weather, wind, waves, 8k uhd, dslr, soft lighting, high quality, film grain, Fujifilm XT3",

15 | "close up photo of a rabbit, forest, haze, halation, bloom, dramatic atmosphere, centred, rule of thirds, 200mm 1.4f macro shot",

16 | "photo of coastline, rocks, storm weather, wind, waves, lightning, 8k uhd, dslr, soft lighting, high quality, film grain, Fujifilm XT3",

17 | "night, b&w photo of old house, post apocalypse, forest, storm weather, wind, rocks, 8k uhd, dslr, soft lighting, high quality, film grain"

18 | ],

19 | "n_prompt": [

20 | "semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime, text, close up, cropped, out of frame, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck",

21 | "semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime, text, close up, cropped, out of frame, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck",

22 | "blur, haze, deformed iris, deformed pupils, semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime, mutated hands and fingers, deformed, distorted, disfigured, poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, disconnected limbs, mutation, mutated, ugly, disgusting, amputation",

23 | "blur, haze, deformed iris, deformed pupils, semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime, art, mutated hands and fingers, deformed, distorted, disfigured, poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, disconnected limbs, mutation, mutated, ugly, disgusting, amputation"

24 | ]

25 | }

26 |

--------------------------------------------------------------------------------

/config/inference/sd15-unet.json:

--------------------------------------------------------------------------------

1 | {

2 | "sample_size": 64,

3 | "in_channels": 4,

4 | "out_channels": 4,

5 | "center_input_sample": false,

6 | "flip_sin_to_cos": true,

7 | "freq_shift": 0,

8 | "down_block_types": [

9 | "CrossAttnDownBlock2D",

10 | "CrossAttnDownBlock2D",

11 | "CrossAttnDownBlock2D",

12 | "DownBlock2D"

13 | ],

14 | "mid_block_type": "UNetMidBlock2DCrossAttn",

15 | "up_block_types": [

16 | "UpBlock2D",

17 | "CrossAttnUpBlock2D",

18 | "CrossAttnUpBlock2D",

19 | "CrossAttnUpBlock2D"

20 | ],

21 | "only_cross_attention": false,

22 | "block_out_channels": [320, 640, 1280, 1280],

23 | "layers_per_block": 2,

24 | "downsample_padding": 1,

25 | "mid_block_scale_factor": 1,

26 | "act_fn": "silu",

27 | "norm_num_groups": 32,

28 | "norm_eps": 1e-5,

29 | "cross_attention_dim": 768,

30 | "transformer_layers_per_block": 1,

31 | "encoder_hid_dim": null,

32 | "encoder_hid_dim_type": null,

33 | "attention_head_dim": 8,

34 | "num_attention_heads": null,

35 | "dual_cross_attention": false,

36 | "use_linear_projection": false,

37 | "class_embed_type": null,

38 | "addition_embed_type": null,

39 | "addition_time_embed_dim": null,

40 | "num_class_embeds": null,

41 | "upcast_attention": false,

42 | "resnet_time_scale_shift": "default",

43 | "resnet_skip_time_act": false,

44 | "resnet_out_scale_factor": 1.0,

45 | "time_embedding_type": "positional",

46 | "time_embedding_dim": null,

47 | "time_embedding_act_fn": null,

48 | "timestep_post_act": null,

49 | "time_cond_proj_dim": null,

50 | "conv_in_kernel": 3,

51 | "conv_out_kernel": 3,

52 | "projection_class_embeddings_input_dim": null,

53 | "class_embeddings_concat": false,

54 | "mid_block_only_cross_attention": null,

55 | "cross_attention_norm": null,

56 | "addition_embed_type_num_heads": 64,

57 | "_use_default_values": [

58 | "transformer_layers_per_block",

59 | "use_linear_projection",

60 | "num_class_embeds",

61 | "addition_embed_type",

62 | "cross_attention_norm",

63 | "conv_out_kernel",

64 | "encoder_hid_dim_type",

65 | "projection_class_embeddings_input_dim",

66 | "num_attention_heads",

67 | "only_cross_attention",

68 | "class_embed_type",

69 | "resnet_time_scale_shift",

70 | "addition_embed_type_num_heads",

71 | "timestep_post_act",

72 | "mid_block_type",

73 | "mid_block_only_cross_attention",

74 | "time_embedding_type",

75 | "addition_time_embed_dim",

76 | "time_embedding_dim",

77 | "encoder_hid_dim",

78 | "resnet_skip_time_act",

79 | "conv_in_kernel",

80 | "upcast_attention",

81 | "dual_cross_attention",

82 | "resnet_out_scale_factor",

83 | "time_cond_proj_dim",

84 | "class_embeddings_concat",

85 | "time_embedding_act_fn"

86 | ],

87 | "_class_name": "UNet2DConditionModel",

88 | "_diffusers_version": "0.6.0"

89 | }

90 |

--------------------------------------------------------------------------------

/app.py:

--------------------------------------------------------------------------------

1 | import json

2 | import os

3 | import asyncio

4 | import gradio as gr

5 |

6 | async def stylize(video):

7 | command = f"animatediff stylize create-config {video}"

8 | process = await asyncio.create_subprocess_shell(

9 | command,

10 | stdout=asyncio.subprocess.PIPE,

11 | stderr=asyncio.subprocess.PIPE

12 | )

13 | stdout, stderr = await process.communicate()

14 | if process.returncode == 0:

15 | return stdout.decode()

16 | else:

17 | raise RuntimeError(f"animatediff stylize create-config failed: {stderr.decode()}")

18 |

19 | async def start_video_edit(prompt_file):

20 | command = f"animatediff stylize generate {prompt_file}"

21 | process = await asyncio.create_subprocess_shell(

22 | command,

23 | stdout=asyncio.subprocess.PIPE,

24 | stderr=asyncio.subprocess.PIPE

25 | )

26 | stdout, stderr = await process.communicate()

27 | if process.returncode == 0:

28 | return stdout.decode()

29 | else:

30 | raise RuntimeError(f"animatediff stylize generate failed: {stderr.decode()}")

31 |

32 | def edit_video(video, pos_prompt):

33 | x = asyncio.run(stylize(video))

34 | x = x.split("stylize.py")

35 | config = x[18].split("config =")[-1].strip()

36 | d = x[19].split("stylize_dir = ")[-1].strip()

37 |

38 | with open(config, 'r+') as f:

39 | data = json.load(f)

40 | data['head_prompt'] = pos_prompt

41 | data["path"] = "models/huggingface/xxmix9realistic_v40.safetensors"

42 |

43 | os.remove(config)

44 | with open(config, 'w') as f:

45 | json.dump(data, f, indent=4)

46 |

47 | out = asyncio.run(start_video_edit(d))

48 | out = out.split("Stylized results are output to ")[-1]

49 | out = out.split("stylize.py")[0].strip()

50 |

51 | cwd = os.getcwd()

52 | video_dir = cwd + "/" + out

53 |

54 | video_extensions = {'.mp4', '.avi', '.mkv', '.mov', '.flv', '.wmv'}

55 | video_path = None

56 |

57 | for dirpath, dirnames, filenames in os.walk(video_dir):

58 | for filename in filenames:

59 | if os.path.splitext(filename)[1].lower() in video_extensions:

60 | video_path = os.path.join(dirpath, filename)

61 | break

62 | if video_path:

63 | break

64 |

65 | return video_path

66 |

67 | print("ready")

68 |

69 | with gr.Blocks() as interface:

70 | gr.Markdown("## Video Processor with Text Prompts")

71 | with gr.Row():

72 | with gr.Column():

73 | positive_prompt = gr.Textbox(label="Positive Prompt")

74 | video_input = gr.Video(label="Input Video")

75 | with gr.Column():

76 | video_output = gr.Video(label="Processed Video")

77 |

78 | process_button = gr.Button("Process Video")

79 | process_button.click(fn=edit_video,

80 | inputs=[video_input, positive_prompt],

81 | outputs=video_output

82 | )

83 |

84 | interface.launch()

85 |

--------------------------------------------------------------------------------

/src/animatediff/dwpose/__init__.py:

--------------------------------------------------------------------------------

# https://github.com/IDEA-Research/DWPose
# Openpose
# Original from CMU https://github.com/CMU-Perceptual-Computing-Lab/openpose
# 2nd Edited by https://github.com/Hzzone/pytorch-openpose
# 3rd Edited by ControlNet
# 4th Edited by ControlNet (added face and correct hands)

import os

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
import cv2
import numpy as np
import torch
from controlnet_aux.util import HWC3, resize_image
from PIL import Image

from . import util
from .wholebody import Wholebody


def draw_pose(pose, H, W):
    # Render body, hand, and face keypoints onto a black H x W canvas.
    bodies = pose['bodies']
    faces = pose['faces']
    hands = pose['hands']
    candidate = bodies['candidate']
    subset = bodies['subset']
    canvas = np.zeros(shape=(H, W, 3), dtype=np.uint8)

    canvas = util.draw_bodypose(canvas, candidate, subset)

    canvas = util.draw_handpose(canvas, hands)

    canvas = util.draw_facepose(canvas, faces)

    return canvas


class DWposeDetector:
    def __init__(self):
        pass

    def to(self, device):
        # The pose estimator is created here, so to() must be called before __call__.
        self.pose_estimation = Wholebody(device)
        return self

    def __call__(self, input_image, detect_resolution=512, image_resolution=512, output_type="pil", **kwargs):
        # Convert the RGB input to a BGR uint8 array.
        input_image = cv2.cvtColor(np.array(input_image, dtype=np.uint8), cv2.COLOR_RGB2BGR)

        input_image = HWC3(input_image)
        input_image = resize_image(input_image, detect_resolution)
        H, W, C = input_image.shape
        with torch.no_grad():
            candidate, subset = self.pose_estimation(input_image)
            nums, keys, locs = candidate.shape
            # Normalize keypoint coordinates by the detection image size.
            candidate[..., 0] /= float(W)
            candidate[..., 1] /= float(H)
            # The first 18 keypoints per person are the body joints.
            body = candidate[:, :18].copy()
            body = body.reshape(nums*18, locs)
            # Replace each confident body score with its flat index into `body`;
            # mark the rest as -1.
            score = subset[:, :18]
            for i in range(len(score)):
                for j in range(len(score[i])):
                    if score[i][j] > 0.3:
                        score[i][j] = int(18*i+j)
                    else:
                        score[i][j] = -1

            # Mask keypoints whose confidence is below 0.3.
            un_visible = subset < 0.3
            candidate[un_visible] = -1

            # Foot keypoints are sliced out but not drawn.
            foot = candidate[:, 18:24]

            faces = candidate[:, 24:92]

            # Stack the two hand keypoint blocks along the first axis.
            hands = candidate[:, 92:113]
            hands = np.vstack([hands, candidate[:, 113:]])

            bodies = dict(candidate=body, subset=score)
            pose = dict(bodies=bodies, hands=hands, faces=faces)

            detected_map = draw_pose(pose, H, W)
            detected_map = HWC3(detected_map)

            # Resize the pose map to match the input resized to image_resolution.
            img = resize_image(input_image, image_resolution)
            H, W, C = img.shape

            detected_map = cv2.resize(detected_map, (W, H), interpolation=cv2.INTER_LINEAR)

            if output_type == "pil":
                detected_map = Image.fromarray(detected_map)

            return detected_map
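The slicing in `DWposeDetector.__call__` can be tried in isolation with synthetic data. The following is a minimal sketch, not part of the repository: `split_keypoints` is a hypothetical helper, and the layout of 134 keypoints per person (18 body, 6 foot, 68 face, 21 per hand) is an assumption inferred from the slice bounds above. It works on a copy of the input instead of mutating it in place.

```python
import numpy as np

# Assumed layout: 134 keypoints per person (18 body, 6 foot, 68 face, 21 + 21 hand).
N_BODY, N_FOOT, N_FACE, N_HAND = 18, 6, 68, 21


def split_keypoints(candidate, subset, width, height, threshold=0.3):
    """Hypothetical helper mirroring the slicing in DWposeDetector.__call__."""
    candidate = np.array(candidate, dtype=np.float64)  # np.array copies the input
    subset = np.asarray(subset, dtype=np.float64)
    nums, _, locs = candidate.shape

    # Normalize pixel coordinates by the image size.
    candidate[..., 0] /= float(width)
    candidate[..., 1] /= float(height)

    # Body keypoints are flattened across people and copied before masking.
    body = candidate[:, :N_BODY].reshape(nums * N_BODY, locs).copy()

    # Confident body joints get their flat index into `body`; the rest get -1.
    flat_index = np.arange(nums * N_BODY, dtype=np.float64).reshape(nums, N_BODY)
    score = np.where(subset[:, :N_BODY] > threshold, flat_index, -1.0)

    # Low-confidence keypoints are set to -1 for the face and hand slices.
    candidate[subset < threshold] = -1

    face_start = N_BODY + N_FOOT
    hand_start = face_start + N_FACE
    faces = candidate[:, face_start:hand_start]
    hands = np.vstack([
        candidate[:, hand_start:hand_start + N_HAND],
        candidate[:, hand_start + N_HAND:],
    ])
    return dict(bodies=dict(candidate=body, subset=score), hands=hands, faces=faces)


# Usage with random data for two people on a 512 x 512 image.
rng = np.random.default_rng(0)
demo_candidate = rng.uniform(0, 512, size=(2, 134, 2))
demo_subset = np.ones((2, 134))
demo_pose = split_keypoints(demo_candidate, demo_subset, width=512, height=512)
print(demo_pose["bodies"]["candidate"].shape, demo_pose["hands"].shape, demo_pose["faces"].shape)
```

Stacking the two hand blocks with `np.vstack` doubles the first axis, so the hand array has two rows per detected person.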

--------------------------------------------------------------------------------

/config/prompts/07-FilmVelvia.json:

--------------------------------------------------------------------------------

{
  "name": "FilmVelvia",
  "base": "models/sd/majicmixRealistic_v4.safetensors",
  "path": "models/sd/FilmVelvia2.safetensors",
  "motion_module": "models/motion-module/mm_sd_v15.ckpt",
  "seed": [
    358675358833372800, 3519455280971924000, 11684545350557985000,
    8696855302100400000
  ],
  "scheduler": "k_dpmpp",
  "steps": 25,
  "guidance_scale": 7.5,
  "lora_alpha": 0.6,
  "prompt": [
    "a woman standing on the side of a road at night,girl, long hair, motor vehicle, car, looking at viewer, ground vehicle, night, hands in pockets, blurry background, coat, black hair, parted lips, bokeh, jacket, brown hair, outdoors, red lips, upper body, artist name",
    ", dark shot,0mm, portrait quality of a arab man worker,boy, wasteland that stands out vividly against the background of the desert, barren landscape, closeup, moles skin, soft light, sharp, exposure blend, medium shot, bokeh, hdr, high contrast, cinematic, teal and orange5, muted colors, dim colors, soothing tones, low saturation, hyperdetailed, noir",
    "fashion photography portrait of 1girl, offshoulder, fluffy short hair, soft light, rim light, beautiful shadow, low key, photorealistic, raw photo, natural skin texture, realistic eye and face details, hyperrealism, ultra high res, 4K, Best quality, masterpiece, necklace, cleavage, in the dark",
    "In this lighthearted portrait, a woman is dressed as a fierce warrior, armed with an arsenal of paintbrushes and palette knives. Her war paint is composed of thick, vibrant strokes of color, and her armor is made of paint tubes and paint-splattered canvases. She stands victoriously atop a mountain of conquered blank canvases, with a beautiful, colorful landscape behind her, symbolizing the power of art and creativity. bust Portrait, close-up, Bright and transparent scene lighting, "
  ],
  "n_prompt": [
    "cartoon, anime, sketches,worst quality, low quality, deformed, distorted, disfigured, bad eyes, wrong lips, weird mouth, bad teeth, mutated hands and fingers, bad anatomy, wrong anatomy, amputation, extra limb, missing limb, floating limbs, disconnected limbs, mutation, ugly, disgusting, bad_pictures, negative_hand-neg",
    "cartoon, anime, sketches,worst quality, low quality, deformed, distorted, disfigured, bad eyes, wrong lips, weird mouth, bad teeth, mutated hands and fingers, bad anatomy, wrong anatomy, amputation, extra limb, missing limb, floating limbs, disconnected limbs, mutation, ugly, disgusting, bad_pictures, negative_hand-neg",
    "wrong white balance, dark, cartoon, anime, sketches,worst quality, low quality, deformed, distorted, disfigured, bad eyes, wrong lips, weird mouth, bad teeth, mutated hands and fingers, bad anatomy, wrong anatomy, amputation, extra limb, missing limb, floating limbs, disconnected limbs, mutation, ugly, disgusting, bad_pictures, negative_hand-neg",
    "wrong white balance, dark, cartoon, anime, sketches,worst quality, low quality, deformed, distorted, disfigured, bad eyes, wrong lips, weird mouth, bad teeth, mutated hands and fingers, bad anatomy, wrong anatomy, amputation, extra limb, missing limb, floating limbs, disconnected limbs, mutation, ugly, disgusting, bad_pictures, negative_hand-neg"
  ]
}
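The FilmVelvia config lists four seeds, four prompts, and four negative prompts. The sketch below assumes these lists are meant to line up by position; that pairing is an assumption, since the code that consumes the config is not shown here. `check_prompt_config` is a hypothetical helper, not a function from this repository, and the sample values are shortened placeholders.

```python
import json

# Keys assumed to hold parallel lists (one entry per generated clip).
LIST_KEYS = ("seed", "prompt", "n_prompt")


def check_prompt_config(text):
    """Hypothetical validator: parse a prompt config and compare list lengths."""
    cfg = json.loads(text)
    lengths = {key: len(cfg[key]) for key in LIST_KEYS}
    if len(set(lengths.values())) != 1:
        raise ValueError(f"list lengths differ: {lengths}")
    return cfg


sample = """
{
  "name": "FilmVelvia",
  "seed": [358675358833372800, 3519455280971924000],
  "prompt": ["first prompt", "second prompt"],
  "n_prompt": ["first negative", "second negative"]
}
"""

cfg = check_prompt_config(sample)
# Pair each seed with the prompt and negative prompt at the same position.
for seed, prompt, n_prompt in zip(cfg["seed"], cfg["prompt"], cfg["n_prompt"]):
    print(seed, "|", prompt, "|", n_prompt)
```

Python's `json` module parses the large seed values as arbitrary-precision integers, so they are read back exactly as written in the file.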

--------------------------------------------------------------------------------

/config/prompts/02-Lyriel.json:

--------------------------------------------------------------------------------

{
  "name": "Lyriel",
  "base": "",
  "path": "models/sd/lyriel_v16.safetensors",
  "motion_module": "models/motion-module/mm_sd_v15.ckpt",
  "seed": [
    10917152860782582000, 6399018107401806000, 15875751942533906000,
    6653196880059937000
  ],
  "scheduler": "k_dpmpp",
  "steps": 25,
  "guidance_scale": 7.5,
  "prompt": [
    "dark shot, epic realistic, portrait of halo, sunglasses, blue eyes, tartan scarf, white hair by atey ghailan, by greg rutkowski, by greg tocchini, by james gilleard, by joe fenton, by kaethe butcher, gradient yellow, black, brown and magenta color scheme, grunge aesthetic!!! graffiti tag wall background, art by greg rutkowski and artgerm, soft cinematic light, adobe lightroom, photolab, hdr, intricate, highly detailed, depth of field, faded, neutral colors, hdr, muted colors, hyperdetailed, artstation, cinematic, warm lights, dramatic light, intricate details, complex background, rutkowski, teal and orange",
    "A forbidden castle high up in the mountains, pixel art, intricate details2, hdr, intricate details, hyperdetailed5, natural skin texture, hyperrealism, soft light, sharp, game art, key visual, surreal",
    "dark theme, medieval portrait of a man sharp features, grim, cold stare, dark colors, Volumetric lighting, baroque oil painting by Greg Rutkowski, Artgerm, WLOP, Alphonse Mucha dynamic lighting hyperdetailed intricately detailed, hdr, muted colors, complex background, hyperrealism, hyperdetailed, amandine van ray",
    "As I have gone alone in there and with my treasures bold, I can keep my secret where and hint of riches new and old. Begin it where warm waters halt and take it in a canyon down, not far but too far to walk, put in below the home of brown."
  ],
  "n_prompt": [