├── .gitignore

├── 0. Old

├── 1. Reinforcement Learning - Pickup problem.ipynb

├── 2. Reinforcement Learning - Multi-armed bandits.ipynb

└── scripts

│ ├── algorithms.py

│ ├── grid_world.py

│ ├── maze.py

│ ├── multi_armed_bandit.py

│ ├── open_ai_gym.py

│ └── pickup_problem.py

├── 0. Solving Gym environments

├── Agents development - Breakout.ipynb

├── breakout_with_rl.py

├── cartpole_with_deepqlearning.py

└── pendulum_with_actorcritic.py

├── 1. Tic Tac Toe

├── 1. Solving Tic Tac Toe with Policy gradients.ipynb

└── images

│ ├── game_random_rl_agents.gif

│ ├── game_random_rl_agents2.gif

│ ├── game_random_rl_agents3.gif

│ ├── game_random_rules_agents.gif

│ ├── game_random_rules_agents2.gif

│ ├── game_rules_rl_agents.gif

│ └── game_two_random_agents.gif

├── 2. Data Center Cooling

├── 0. Explaining the Data Center Cooling environment.ipynb

├── 1. Reinforcement Learning - Q Learning.ipynb

├── 2. Reinforcement Learning - Deep-Q-Learning.ipynb

├── README.md

└── app.py

├── 3. Robotics

├── Minitaur pybullet environment.ipynb

└── minitaur.py

├── 4. Chrome Dino

├── 20180102 - Chrome Dino development.ipynb

├── 20180203 - Genetic algorithms experiments.ipynb

├── README.md

├── dino.py

├── experiments.py

└── images

│ ├── capture1.png

│ ├── dino_hardcoded_agent.gif

│ ├── dino_ml_agent1.gif

│ └── dino_ml_agent1_bad.gif

├── 5. Delivery Optimization

├── Optimizing delivery with Reinforcement Learning.ipynb

├── README.md

├── Routing optimization with Deep Reinforcement Learning.ipynb

├── delivery.py

├── env1.png

├── env2.png

├── env3.png

├── training.png

├── training_100_stops.gif

├── training_100_stops_traffic.gif

├── training_10_stops.gif

├── training_500_stops.gif

├── training_500_stops_traffic.gif

└── training_50_stops.gif

├── 6. Solving a Rubik's Cube

├── Solving a Rubik's cube with RL.ipynb

└── rubik.py

├── 7. Multi-Agents Simulations

├── 20191018 - Sugarscape playground.ipynb

├── 20191112 - Chicken game.ipynb

├── 20200318 - Hyperion dev.ipynb

├── README.md

├── pygame_test.py

├── test.gif

└── test2.gif

├── 8. Unity ML agents tests

├── README.md

└── rolling_a_ball

│ ├── 20200202 - Rolling a Ball.ipynb

│ └── rollingaball1.png

├── 9. Discrete optimization with RL

├── README.md

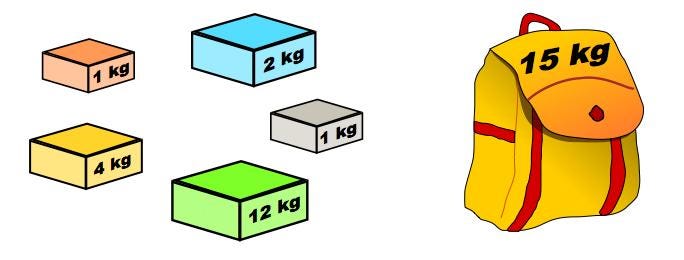

├── Reinforcement Learning for knapsack problem.ipynb

├── knapsack_problem

│ └── knapsack

│ │ ├── Solver.java

│ │ ├── _coursera

│ │ ├── data

│ │ ├── ks_10000_0

│ │ ├── ks_1000_0

│ │ ├── ks_100_0

│ │ ├── ks_100_1

│ │ ├── ks_100_2

│ │ ├── ks_106_0

│ │ ├── ks_19_0

│ │ ├── ks_200_0

│ │ ├── ks_200_1

│ │ ├── ks_300_0

│ │ ├── ks_30_0

│ │ ├── ks_400_0

│ │ ├── ks_40_0

│ │ ├── ks_45_0

│ │ ├── ks_4_0

│ │ ├── ks_500_0

│ │ ├── ks_50_0

│ │ ├── ks_50_1

│ │ ├── ks_60_0

│ │ ├── ks_82_0

│ │ ├── ks_lecture_dp_1

│ │ └── ks_lecture_dp_2

│ │ ├── handout.pdf

│ │ ├── solver.py

│ │ ├── solverJava.py

│ │ └── submit.py

└── lessons

│ ├── README.md

│ ├── discrete_optimization.md

│ ├── dynamic_programming.md

│ └── knapsack_problem.md

├── README.md

└── rl

├── __init__.py

├── agents

├── __init__.py

├── actor_critic_agent.py

├── base_agent.py

├── dqn2d_agent.py

├── dqn_agent.py

├── q_agent.py

└── sarsa_agent.py

├── envs

├── __init__.py

├── data_center_cooling.py

└── tictactoe.py

├── memory.py

└── utils.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 |

49 | # Translations

50 | *.mo

51 | *.pot

52 |

53 | # Django stuff:

54 | *.log

55 | local_settings.py

56 |

57 | # Flask stuff:

58 | instance/

59 | .webassets-cache

60 |

61 | # Scrapy stuff:

62 | .scrapy

63 |

64 | # Sphinx documentation

65 | docs/_build/

66 |

67 | # PyBuilder

68 | target/

69 |

70 | # Jupyter Notebook

71 | .ipynb_checkpoints

72 |

73 | # pyenv

74 | .python-version

75 |

76 | # celery beat schedule file

77 | celerybeat-schedule

78 |

79 | # SageMath parsed files

80 | *.sage.py

81 |

82 | # dotenv

83 | .env

84 |

85 | # Spyder project settings

86 | .spyderproject

87 | .spyproject

88 |

89 | # Rope project settings

90 | .ropeproject

91 |

92 | # mkdocs documentation

93 | /site

94 |

95 | # mypy

96 | .mypy_cache/

97 |

--------------------------------------------------------------------------------

/0. Old/scripts/algorithms.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 | First RL script done using Keras and policy gradients

8 |

9 | - Inspired by @steinbrecher script on https://gym.openai.com/evaluations/eval_usjJ7onVTTwrn43wrbBiAv

10 | - Still inspired by Karpathy's work too

11 |

12 | Started on the 30/12/2016

13 |

14 |

15 |

16 | theo.alves.da.costa@gmail.com

17 | https://github.com/theolvs

18 | ------------------------------------------------------------------------

19 | """

20 |

21 |

22 | import numpy as np

23 | # import gym

24 | import os

25 | from keras.models import load_model, Sequential

26 | from keras.layers import Dense, Activation, Dropout

27 | from keras.optimizers import SGD, RMSprop

28 |

29 |

30 |

31 |

32 |

33 |

34 |

35 |

36 |

37 | class Brain():

38 | def __init__(self,env,env_name = "default",H = 500,learning_rate = 0.01,dropout = 0.0,hidden_layers = 1,reload = False,input_dim = 0,output_dim = 0):

39 |

40 | self.env_name = env_name

41 | self.base_path = "C:/Data Science/15. Reinforcement Learning/0. Models/"

42 | file = [x for x in os.listdir(self.base_path) if self.env_name in x]

43 |

44 | self.H = H

45 | self.gamma = 0.5

46 | self.batch_size = 10

47 | self.learning_rate = learning_rate

48 | self.dropout = dropout

49 | self.hidden_layers = hidden_layers

50 |

51 | if input_dim == 0:

52 | try:

53 | self.observation_space = env.observation_space.n

54 | self.observation_to_vectorize = True

55 | except Exception as e:

56 | self.observation_space = env.observation_space.shape[0]

57 | self.observation_to_vectorize = False

58 | else:

59 | self.observation_space = input_dim

60 | self.observation_to_vectorize = False

61 |

62 | if output_dim == 0:

63 | self.action_space = env.action_space.n

64 | else:

65 | self.action_space = output_dim

66 |

67 |

68 | if len(file) == 0 or reload:

69 | print('>> Building a fully connected neural network')

70 | self.episode_number = 0

71 | self.model = self.build_fcc_model_with_regularization(H,input_dim = self.observation_space,output_dim = self.action_space,dropout = self.dropout,hidden_layers = self.hidden_layers)

72 | else:

73 | print('>> Loading the previously trained model')

74 | self.episode_number = int(file[0][file[0].find("(")+1:file[0].find(")")])

75 | self.model = load_model(self.base_path + file[0])

76 |

77 |

78 |

79 | self.inputs,self.actions,self.probas,self.rewards,self.step_rewards = [],[],[],[],[]

80 | self.episode_rewards,self.episode_running_rewards = [],[]

81 | self.reward_sum = 0

82 | self.running_reward = 0

83 |

84 |

85 | def rebuild_model(self):

86 | self.model = self.build_fcc_model_with_regularization(self.H,input_dim = self.observation_space,output_dim = self.action_space,dropout = self.dropout,hidden_layers = self.hidden_layers)

87 |

88 |

89 |

90 | def build_fcc_model(self,H = 500,input_dim = 4,output_dim = 2):

91 | model = Sequential()

92 | model.add(Dense(H, input_dim=input_dim))

93 | model.add(Activation('relu'))

94 | model.add(Dense(H))

95 | model.add(Activation('relu'))

96 |

97 | sgd = SGD(lr=self.learning_rate, decay=1e-6, momentum=0.9, nesterov=True)

98 |

99 | if output_dim <= 2:

100 | model.add(Dense(1))

101 | model.add(Activation('sigmoid'))

102 | model.compile(loss='mse',

103 | optimizer=sgd,

104 | metrics=['accuracy'])

105 | else:

106 | model.add(Dense(output_dim))

107 | model.add(Activation('softmax'))

108 | model.compile(loss='categorical_crossentropy',

109 | optimizer=sgd,

110 | metrics=['accuracy'])

111 |

112 | return model

113 |

114 |

115 |

116 | def build_fcc_model_with_regularization(self,H = 500,input_dim = 4,output_dim = 2,dropout = 0.0,hidden_layers = 1):

117 | model = Sequential()

118 | model.add(Dense(H, input_dim=input_dim,init='uniform'))

119 | model.add(Activation('relu'))

120 | model.add(Dropout(dropout))

121 |

122 | for i in range(hidden_layers):

123 | model.add(Dense(H,init='uniform'))

124 | model.add(Activation('relu'))

125 | model.add(Dropout(dropout))

126 |

127 | sgd = SGD(lr=self.learning_rate, decay=1e-6, momentum=0.9, nesterov=True)

128 |

129 | if output_dim <= 2:

130 | model.add(Dense(1))

131 | model.add(Activation('sigmoid'))

132 | model.compile(loss='mse',

133 | optimizer=sgd,

134 | metrics=['accuracy'])

135 | else:

136 | model.add(Dense(output_dim))

137 | model.add(Activation('softmax'))

138 | model.compile(loss='categorical_crossentropy',

139 | optimizer=sgd,

140 | metrics=['accuracy'])

141 |

142 | return model

143 |

144 |

145 |

146 | def to_input(self,observation):

147 | if self.observation_to_vectorize:

148 | observation = self.vectorize_observation(observation,self.observation_space)

149 | return np.reshape(observation,(1,self.observation_space))

150 |

151 |

152 | def predict(self,observation,possible_moves = []):

153 |

154 | x = self.to_input(observation)

155 |

156 | # getting the probability of action

157 | probas = self.model.predict(x)[0]

158 |

159 |

160 | if len(possible_moves) > 0:

161 | probas += 1e-9

162 | probas *= possible_moves

163 | probas /= np.sum(probas)

164 |

165 | # sampling the correct action

166 | action= self.sample_action(probas)

167 |

168 | return x,action,probas

169 |

170 |

171 | def sample_action(self,probabilities):

172 | if len(probabilities)<=2:

173 | action = 1 if np.random.uniform() < probabilities[0] else 0

174 | else:

175 | action = np.random.choice(len(probabilities),p = np.array(probabilities))

176 |

177 | return action

178 |

179 | def vectorize_action(self,action):

180 | if self.action_space <= 2:

181 | return action

182 | else:

183 | onehot_vector = np.zeros(self.action_space)

184 | onehot_vector[action] = 1

185 | return onehot_vector

186 |

187 | def vectorize_observation(self,value,size):

188 | onehot_vector = np.zeros(size)

189 | onehot_vector[value] = 1

190 | return onehot_vector

191 |

192 |

193 |

194 | def record(self,input = None,action = None,proba = None,reward = None):

195 | if type(input) != type(None):

196 | self.inputs.append(input)

197 |

198 | if type(action) != type(None):

199 | self.actions.append(action)

200 |

201 | if type(proba) != type(None):

202 | self.probas.append(proba)

203 |

204 | if type(reward) != type(None):

205 | self.rewards.append(reward)

206 | self.reward_sum += reward

207 |

208 |

209 |

210 |

211 | def discounting_rewards(self,r,normalization = True):

212 | discounted_r = np.zeros_like(r)

213 | running_add = 0

214 | for t in reversed(range(0, r.size)):

215 | running_add = running_add * self.gamma + r[t]

216 | discounted_r[t] = running_add

217 |

218 | if normalization:

219 | discounted_r = np.subtract(discounted_r,np.mean(discounted_r),casting = "unsafe")

220 | discounted_r = np.divide(discounted_r,np.std(discounted_r),casting = "unsafe")

221 |

222 | return discounted_r

223 |

224 |

225 | def discount_rewards(self,normalization = True):

226 | rewards = np.vstack(self.rewards)

227 | return self.discounting_rewards(rewards,normalization)

228 |

229 |

230 | def record_episode(self):

231 | # self.step_rewards.extend(self.discount_rewards(normalization = True))

232 |

233 | # self.rewards = np.array([self.rewards[-1]]*len(self.rewards))

234 | # self.reward_sum = self.rewards[-1]*100

235 |

236 | self.reward_sum = np.sum(self.rewards)

237 | self.rewards = self.discount_rewards(normalization = False)

238 | self.step_rewards.extend(self.rewards)

239 |

240 |

241 | self.episode_rewards.append(self.reward_sum)

242 | self.running_reward = np.mean(self.episode_rewards)

243 | self.episode_number += 1

244 |

245 | def reset_episode(self):

246 | self.rewards = []

247 | self.reward_sum = 0

248 |

249 | def update_on_batch(self,show = False):

250 | if show: print('... Training on batch of size %s'%self.batch_size)

251 | self.actions = np.vstack(self.actions)

252 | self.probas = np.vstack(self.probas)

253 | self.step_rewards = np.vstack(self.step_rewards)

254 | self.inputs = np.vstack(self.inputs)

255 |

256 | self.targets = self.step_rewards * (self.actions - self.probas) + self.probas

257 | # print(self.targets)

258 |

259 | #ajouter la protection de la max rewards

260 |

261 | self.model.train_on_batch(self.inputs,self.targets)

262 |

263 | self.inputs,self.actions,self.probas,self.step_rewards = [],[],[],[]

264 |

265 | def save_model(self):

266 | file = [x for x in os.listdir(self.base_path) if self.env_name in x]

267 | self.model.save(self.base_path+"%s(%s).h5"%(self.env_name,self.episode_number))

268 | if len(file)>0:

269 | os.remove(self.base_path+file[0])

270 | # self.model.save(self.base_path+"%s.h5"%(self.env_name))

271 |

272 |

273 | def build_cnn_model(self,input_dim,output_dim):

274 | model = Sequential()

275 |

276 | model.add(Convolution2D(32, 3, 3, border_mode='same',input_shape=input_dim))

277 | model.add(Activation('relu'))

278 | model.add(Convolution2D(32, 3, 3))

279 | model.add(Activation('relu'))

280 | model.add(MaxPooling2D(pool_size=(2, 2)))

281 | model.add(Dropout(0.25))

282 |

283 | model.add(Convolution2D(64, 3, 3, border_mode='same'))

284 | model.add(Activation('relu'))

285 | model.add(Convolution2D(64, 3, 3))

286 | model.add(Activation('relu'))

287 | model.add(MaxPooling2D(pool_size=(2, 2)))

288 | model.add(Dropout(0.25))

289 |

290 | model.add(Flatten())

291 | model.add(Dense(512))

292 | model.add(Activation('relu'))

293 | model.add(Dropout(0.5))

294 | model.add(Dense(output_dim))

295 | model.add(Activation('softmax'))

296 |

297 | # Let's train the model using RMSprop

298 | model.compile(loss='categorical_crossentropy',optimizer='rmsprop',metrics=['accuracy'])

299 |

300 | return model

301 |

--------------------------------------------------------------------------------

/0. Old/scripts/grid_world.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 | Grid World

8 |

9 | Started on the 08/08/2017

10 |

11 |

12 | References :

13 | - https://www.youtube.com/watch?v=A5eihauRQvo&t=5s

14 | - https://github.com/llSourcell/q_learning_demo

15 | - http://firsttimeprogrammer.blogspot.fr/2016/09/getting-ai-smarter-with-q-learning.html

16 |

17 |

18 | theo.alves.da.costa@gmail.com

19 | https://github.com/theolvs

20 | ------------------------------------------------------------------------

21 | """

22 |

23 |

24 | import os

25 | import matplotlib.pyplot as plt

26 | import pandas as pd

27 | import numpy as np

28 | import sys

29 | import random

30 | import time

31 |

32 |

33 |

34 |

35 |

36 | #===========================================================================================================

37 | # CELLS DEFINITION

38 | #===========================================================================================================

39 |

40 |

41 | class Cell(object):

42 | def __init__(self,reward = 0,is_terminal = False,is_occupied = False,is_wall = False,is_start = False):

43 | self.reward = reward

44 | self.is_terminal = is_terminal

45 | self.is_occupied = is_occupied

46 | self.is_wall = is_wall

47 | self.is_start = is_start

48 |

49 | def __repr__(self):

50 | if self.is_occupied:

51 | return "x"

52 | else:

53 | return " "

54 |

55 |

56 | def __str__(self):

57 | return self.__str__()

58 |

59 |

60 |

61 |

62 | class Start(Cell):

63 | def __init__(self):

64 | super().__init__(is_occupied = True,is_start = True)

65 |

66 |

67 |

68 |

69 | class End(Cell):

70 | def __init__(self,reward = 10):

71 | super().__init__(reward = reward,is_terminal = True)

72 |

73 | def __repr__(self):

74 | return "O"

75 |

76 |

77 |

78 | class Hole(Cell):

79 | def __init__(self,reward = -10):

80 | super().__init__(reward = reward,is_terminal = True)

81 |

82 | def __repr__(self):

83 | return "X"

84 |

85 |

86 |

87 | class Wall(Cell):

88 | def __init__(self):

89 | super().__init__(is_wall = True)

90 |

91 | def __repr__(self):

92 | return "#"

93 |

94 |

95 |

96 |

97 | #===========================================================================================================

98 | # GRIDS DEFINITION

99 | #===========================================================================================================

100 |

101 |

102 |

103 |

104 | class Grid(object):

105 | def __init__(self,cells):

106 | self.grid = cells

107 |

108 |

109 | def __repr__(self):

110 | pass

111 |

112 |

113 | def __str__(self):

114 | pass

115 |

116 |

--------------------------------------------------------------------------------

/0. Old/scripts/multi_armed_bandit.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 | Multi Armed Bandit Problem

8 |

9 | Started on the 14/04/2017

10 |

11 |

12 | theo.alves.da.costa@gmail.com

13 | https://github.com/theolvs

14 | ------------------------------------------------------------------------

15 | """

16 |

17 |

18 | import os

19 | import matplotlib.pyplot as plt

20 | import pandas as pd

21 | import numpy as np

22 | import sys

23 |

24 |

25 | # Deep Learning (Keras, Tensorflow)

26 | import tensorflow as tf

27 | from keras.models import Sequential

28 | from keras.optimizers import SGD,RMSprop, Adam

29 | from keras.layers import Dense, Dropout, Activation, Flatten

30 | from keras.layers import MaxPooling2D,ZeroPadding2D,Conv2D

31 | from keras.utils.np_utils import to_categorical

32 |

33 |

34 |

35 |

36 | #===========================================================================================================

37 | # BANDIT DEFINITION

38 | #===========================================================================================================

39 |

40 |

41 |

42 | class Bandit(object):

43 | def __init__(self,p = None):

44 | '''Simple bandit initialization'''

45 | self.p = p if p is not None else np.random.random()

46 |

47 | def pull(self):

48 | '''Simulate a pull from the bandit

49 |

50 | '''

51 | if np.random.random() < self.p:

52 | return 1

53 | else:

54 | return -1

55 |

56 |

57 |

58 | def create_list_bandits(n = 4,p = None):

59 | if p is None: p = [None]*n

60 | bandits = [Bandit(p = p[i]) for i in range(n)]

61 | return bandits

62 |

63 |

64 |

65 |

66 |

67 | #===========================================================================================================

68 | # NEURAL NETWORK

69 | #===========================================================================================================

70 |

71 |

72 |

73 | def build_fcc_model(H = 100,lr = 0.1,dim = 4):

74 | model = Sequential()

75 | model.add(Dense(H, input_dim=dim))

76 | model.add(Activation('relu'))

77 | model.add(Dense(H))

78 | model.add(Activation('relu'))

79 |

80 | sgd = SGD(lr=lr, decay=1e-6, momentum=0.9, nesterov=True)

81 |

82 |

83 | model.add(Dense(dim))

84 | model.add(Activation('softmax'))

85 | model.compile(loss='categorical_crossentropy',

86 | optimizer=sgd,

87 | metrics=['accuracy'])

88 |

89 | return model

90 |

91 |

92 | model = build_fcc_model()

93 |

94 |

95 |

96 |

97 |

98 | #===========================================================================================================

99 | # SAMPLING ACTION

100 | #===========================================================================================================

101 |

102 |

103 | def sample_action(probas,epsilon = 0.2):

104 | probas = probas[0]

105 | if np.random.rand() < epsilon:

106 | choice = np.random.randint(0,len(probas))

107 | else:

108 | choice = np.random.choice(range(len(probas)),p = probas)

109 | return choice

110 |

111 |

112 |

113 |

114 |

115 |

116 |

117 |

118 |

119 | #===========================================================================================================

120 | # EPISODE

121 | #===========================================================================================================

122 |

123 |

124 |

125 |

126 | def run_episode(bandits,model,probas = None,train = True,epsilon = 0.2):

127 |

128 | if probas is None:

129 | probas = np.ones((1,len(bandits)))/len(bandits)

130 |

131 | # sampling action

132 | bandit_to_pull = sample_action(probas,epsilon = epsilon)

133 | action = to_categorical(bandit_to_pull,num_classes=probas.shape[1])

134 |

135 | # reward

136 | reward = bandits[bandit_to_pull].pull()

137 |

138 | # feed vectors

139 | X = action

140 | y = (action - probas)*reward

141 |

142 | if train:

143 | model.train_on_batch(X,y)

144 |

145 | # update probabilities

146 | probas = model.predict(X)

147 |

148 | return reward,probas

149 |

150 |

151 |

152 |

153 |

154 |

155 |

156 | #===========================================================================================================

157 | # GAME

158 | #===========================================================================================================

159 |

160 |

161 | def run_game(n_episodes = 100,lr = 0.1,n_bandits = 4,p = None,epsilon = 0.2):

162 |

163 | # DEFINE THE BANDITS

164 | bandits = create_list_bandits(n = n_bandits,p = p)

165 | probabilities_to_win = [x.p for x in bandits]

166 | best_bandit = np.argmax(probabilities_to_win)

167 | print(">> Probabilities to win : {} -> Best bandit : {}".format(probabilities_to_win,best_bandit))

168 |

169 | # INITIALIZE THE NEURAL NETWORK

170 | model = build_fcc_model(lr = lr,dim = n_bandits)

171 |

172 | # INITIALIZE BUFFERS

173 | rewards = []

174 | avg_rewards = []

175 | all_probas = np.array([])

176 |

177 | # EPISODES LOOP

178 | for i in range(n_episodes):

179 | print("\r[{}/{}] episodes completed".format(i+1,n_episodes),end = "")

180 |

181 | # Random choice at the first episode

182 | if i == 0:

183 | reward,probas = run_episode(bandits = bandits,model = model,epsilon = epsilon)

184 |

185 | # Updated probabilities at the following episodes

186 | else:

187 | reward,probas = run_episode(bandits = bandits,model = model,probas = probas)

188 |

189 |

190 | # Store the rewards and the probas

191 | rewards.append(reward)

192 | avg_rewards.append(np.mean(rewards))

193 | all_probas = np.append(all_probas,probas)

194 |

195 | print("")

196 |

197 |

198 | # GET THE BEST PREDICTED BANDIT

199 | predicted_bandit = np.argmax(probas)

200 | print(">> Predicted bandit : {} - {}".format(predicted_bandit,"CORRECT !!!" if predicted_bandit == best_bandit else "INCORRECT"))

201 |

202 |

203 | # PLOT THE EVOLUTION OF PROBABILITIES OVER TRAINING

204 | all_probas = all_probas.reshape((n_episodes,n_bandits)).transpose()

205 | plt.figure(figsize = (12,5))

206 | plt.title("Probabilities on Bandit choice - {} episodes - learning rate {}".format(n_episodes,lr))

207 | for i,p in enumerate(list(all_probas)):

208 | plt.plot(p,label = "Bandit {}".format(i),lw = 1)

209 |

210 | plt.plot(avg_rewards,linestyle="-", dashes=(5, 4),color = "black",lw = 0.5,label = "average running reward")

211 | plt.legend()

212 | plt.ylim([-0.2,1])

213 |

214 | plt.show()

215 |

216 |

217 |

218 |

219 |

220 |

--------------------------------------------------------------------------------

/0. Old/scripts/open_ai_gym.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 | First RL script done using Keras and policy gradients

8 |

9 | - Inspired by @steinbrecher script on https://gym.openai.com/evaluations/eval_usjJ7onVTTwrn43wrbBiAv

10 | - Still inspired by Karpathy's work too

11 |

12 | Started on the 30/12/2016

13 |

14 |

15 | https://github.com/rybskej/atari-py

16 | https://sourceforge.net/projects/vcxsrv/

17 |

18 |

19 | Environment which works with script:

20 | - CartPole-v0

21 | - MountainCar-v0

22 | - Taxi-v1

23 |

24 |

25 | theo.alves.da.costa@gmail.com

26 | https://github.com/theolvs

27 | ------------------------------------------------------------------------

28 | """

29 |

30 |

31 | import numpy as np

32 | import gym

33 | import os

34 | from keras.models import load_model, Sequential

35 | from keras.layers import Dense, Activation

36 | from keras.optimizers import SGD, RMSprop

37 |

38 |

39 |

40 | #-------------------------------------------------------------------------------

41 |

42 |

43 |

44 |

45 |

46 | # def main(n_episodes = 20):

47 | # for i_episode in range(n_episodes):

48 | # observation = env.reset()

49 | # print(observation)

50 | # break

51 | # for t in range(1000):

52 | # if render: env.render

53 | # print(observation)

54 | # action = env.action_space.sample()

55 | # observation, reward, done, info = env.step(action)

56 | # if done:

57 | # print("Episode finished after {} timesteps".format(t+1))

58 | # break

59 |

60 |

61 |

62 |

63 |

64 |

65 | #-------------------------------------------------------------------------------

66 |

67 |

68 |

69 |

70 |

71 |

72 |

73 |

74 | class Brain():

75 | def __init__(self,env,env_name = "default",H = 500,reload = False):

76 |

77 | self.env_name = env_name

78 | self.base_path = "C:/Users/talvesdacosta/Documents/Perso/Data Science/15. Reinforcement Learning/3. Open AI Gym/models/"

79 | file = [x for x in os.listdir(self.base_path) if self.env_name in x]

80 |

81 | self.H = H

82 | self.gamma = 0.975

83 | self.batch_size = 10

84 |

85 | try:

86 | self.observation_space = env.observation_space.n

87 | self.observation_to_vectorize = True

88 | except Exception as e:

89 | self.observation_space = env.observation_space.shape[0]

90 | self.observation_to_vectorize = False

91 |

92 | self.action_space = env.action_space.n

93 |

94 |

95 | if len(file) == 0 or reload:

96 | print('>> Building a fully connected neural network')

97 | self.episode_number = 0

98 | self.model = self.build_fcc_model(H,input_dim = self.observation_space,output_dim = self.action_space)

99 | else:

100 | print('>> Loading the previously trained model')

101 | self.episode_number = int(file[0][file[0].find("(")+1:file[0].find(")")])

102 | self.model = load_model(self.base_path + file[0])

103 |

104 |

105 |

106 | self.inputs,self.actions,self.probas,self.rewards,self.step_rewards = [],[],[],[],[]

107 | self.episode_rewards,self.episode_running_rewards = [],[]

108 | self.reward_sum = 0

109 | self.running_reward = 0

110 |

111 |

112 |

113 |

114 | def build_fcc_model(self,H = 500,input_dim = 4,output_dim = 2):

115 | model = Sequential()

116 | model.add(Dense(H, input_dim=input_dim))

117 | model.add(Activation('relu'))

118 | model.add(Dense(H))

119 | model.add(Activation('relu'))

120 |

121 | sgd = SGD(lr=0.05, decay=1e-6, momentum=0.9, nesterov=True)

122 |

123 | if output_dim <= 2:

124 | model.add(Dense(1))

125 | model.add(Activation('sigmoid'))

126 | model.compile(loss='mse',

127 | optimizer=sgd,

128 | metrics=['accuracy'])

129 | else:

130 | model.add(Dense(output_dim))

131 | model.add(Activation('softmax'))

132 | model.compile(loss='categorical_crossentropy',

133 | optimizer=sgd,

134 | metrics=['accuracy'])

135 |

136 | return model

137 |

138 |

139 |

140 | def to_input(self,observation):

141 | if self.observation_to_vectorize:

142 | observation = self.vectorize_observation(observation,self.observation_space)

143 | return np.reshape(observation,(1,self.observation_space))

144 |

145 |

146 | def predict(self,observation):

147 |

148 | x = self.to_input(observation)

149 |

150 | # getting the probability of action

151 | probas = self.model.predict(x)[0]

152 |

153 | # sampling the correct action

154 | action= self.sample_action(probas)

155 |

156 | return x,action,probas

157 |

158 |

159 | def sample_action(self,probabilities):

160 | if len(probabilities)<=2:

161 | action = 1 if np.random.uniform() < probabilities[0] else 0

162 | else:

163 | action = np.random.choice(len(probabilities),p = np.array(probabilities))

164 |

165 | return action

166 |

167 | def vectorize_action(self,action):

168 | if self.action_space <= 2:

169 | return action

170 | else:

171 | onehot_vector = np.zeros(self.action_space)

172 | onehot_vector[action] = 1

173 | return onehot_vector

174 |

175 | def vectorize_observation(self,value,size):

176 | onehot_vector = np.zeros(size)

177 | onehot_vector[value] = 1

178 | return onehot_vector

179 |

180 |

181 |

182 | def record(self,input = None,action = None,proba = None,reward = None):

183 | if type(input) != type(None):

184 | self.inputs.append(input)

185 |

186 | if type(action) != type(None):

187 | self.actions.append(action)

188 |

189 | if type(proba) != type(None):

190 | self.probas.append(proba)

191 |

192 | if type(reward) != type(None):

193 | self.rewards.append(reward)

194 | self.reward_sum += reward

195 |

196 |

197 |

198 |

199 | def discounting_rewards(self,r,normalization = True):

200 | discounted_r = np.zeros_like(r)

201 | running_add = 0

202 | for t in reversed(range(0, r.size)):

203 | running_add = running_add * self.gamma + r[t]

204 | discounted_r[t] = running_add

205 |

206 | if normalization:

207 | discounted_r = np.subtract(discounted_r,np.mean(discounted_r),casting = "unsafe")

208 | discounted_r = np.divide(discounted_r,np.std(discounted_r),casting = "unsafe")

209 |

210 | return discounted_r

211 |

212 |

213 | def discount_rewards(self,normalization = True):

214 | rewards = np.vstack(self.rewards)

215 | return self.discounting_rewards(rewards,normalization)

216 |

217 |

218 | def record_episode(self):

219 | self.step_rewards.extend(self.discount_rewards(normalization = True))

220 | self.episode_rewards.append(self.reward_sum)

221 | self.running_reward = np.mean(self.episode_rewards)

222 | self.episode_number += 1

223 |

224 | def reset_episode(self):

225 | self.rewards = []

226 | self.reward_sum = 0

227 |

228 | def update_on_batch(self):

229 | print('... Training on batch of size %s'%self.batch_size)

230 | self.actions = np.vstack(self.actions)

231 | self.probas = np.vstack(self.probas)

232 | self.step_rewards = np.vstack(self.step_rewards)

233 | self.inputs = np.vstack(self.inputs)

234 |

235 | self.targets = self.step_rewards * (self.actions - self.probas) + self.probas

236 |

237 | #ajouter la protection de la max rewards

238 |

239 | self.model.train_on_batch(self.inputs,self.targets)

240 |

241 | self.inputs,self.actions,self.probas,self.step_rewards = [],[],[],[]

242 |

243 | def save_model(self):

244 | file = [x for x in os.listdir(self.base_path) if self.env_name in x]

245 | self.model.save(self.base_path+"%s(%s).h5"%(self.env_name,self.episode_number))

246 | if len(file)>0:

247 | os.remove(self.base_path+file[0])

248 | # self.model.save(self.base_path+"%s.h5"%(self.env_name))

249 |

250 |

251 |

252 |

253 |

254 |

255 |

256 |

257 |

258 |

259 |

260 | def main(env_name = 'CartPole-v0',n_episodes = 20,render = False,reload = False,n_by_episode = 1000):

261 | env = gym.make(env_name)

262 | brain = Brain(env,env_name = env_name,reload = reload)

263 | # env.monitor.start(brain.base_path+'monitor/%s'%env_name)

264 |

265 |

266 | for i_episode in range(1,n_episodes+1):

267 | observation = env.reset()

268 | for t in range(n_by_episode):

269 | if render: env.render()

270 |

271 | x,action,proba = brain.predict(observation)

272 |

273 | observation, reward, done, info = env.step(action)

274 | action = brain.vectorize_action(action)

275 | brain.record(input = x,action = action,proba = proba,reward = reward)

276 |

277 | if done or t == n_by_episode - 1:

278 | brain.record_episode()

279 | print("Episode {} : total reward was {:0.03f} and running mean {:0.03f}".format(brain.episode_number, brain.reward_sum, brain.running_reward))

280 |

281 |

282 | if i_episode % brain.batch_size == 0:

283 | brain.update_on_batch()

284 |

285 | if i_episode % 100 == 0:

286 | brain.save_model()

287 |

288 |

289 | brain.reset_episode()

290 |

291 | break

292 |

293 | # env.monitor.close()

--------------------------------------------------------------------------------

/0. Solving Gym environments/breakout_with_rl.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 |

8 | Started on the 25/08/2017

9 |

10 | theo.alves.da.costa@gmail.com

11 | https://github.com/theolvs

12 | ------------------------------------------------------------------------

13 | """

14 |

15 |

16 |

17 |

18 |

19 | # Usual libraries

20 | import os

21 | import matplotlib.pyplot as plt

22 | import pandas as pd

23 | import numpy as np

24 | import sys

25 | import random

26 | import time

27 | from tqdm import tqdm

28 | import random

29 | import gym

30 | import numpy as np

31 |

32 |

33 | # Keras (Deep Learning)

34 | from keras.models import Sequential

35 | from keras.layers import Dense

36 | from keras.optimizers import Adam

37 |

38 |

39 | # Custom RL library

40 | import sys

41 | sys.path.insert(0,'..')

42 |

43 | from rl import utils

44 | from rl.agents.dqn2d_agent import DQN2DAgent

45 |

46 |

47 |

48 |

49 |

50 |

51 |

52 | #----------------------------------------------------------------

53 | # CONSTANTS

54 |

55 |

56 | N_EPISODES = 1000

57 | MAX_STEPS = 10000

58 | RENDER = True

59 | RENDER_EVERY = 50

60 | BATCH_SIZE = 256

61 | MAX_MEMORY = MAX_STEPS

62 |

63 |

64 |

65 | #----------------------------------------------------------------

66 | # MAIN LOOP

67 |

68 |

69 | if __name__ == "__main__":

70 |

71 | # Define the gym environment

72 | env = gym.make('Pong-v0')

73 |

74 | # Get the environement action and observation space

75 | state_size = env.observation_space.shape

76 | action_size = env.action_space.n

77 |

78 | # Create the RL Agent

79 | agent = DQN2DAgent(state_size,action_size,max_memory = MAX_MEMORY)

80 |

81 | # Initialize a list to store the rewards

82 | rewards = []

83 |

84 |

85 |

86 | #---------------------------------------------

87 | # ITERATION OVER EPISODES

88 | for i_episode in range(N_EPISODES):

89 |

90 |

91 |

92 | # Reset the environment

93 | s = env.reset()

94 |

95 |

96 | #-----------------------------------------

97 | # EPISODE RUN

98 | for i_step in range(MAX_STEPS):

99 |

100 | # Render the environement

101 | if RENDER : env.render() #and (i_step % RENDER_EVERY == 0)

102 |

103 | # Store s before

104 | if i_step == 0:

105 | s_before = s

106 |

107 |

108 | # The agent chose the action considering the given current state

109 | a = agent.act(s_before,s)

110 |

111 |

112 | # Take the action, get the reward from environment and go to the next state

113 | s_next,r,done,info = env.step(a)

114 |

115 | # print(r)

116 |

117 | # Tweaking the reward to make it negative when we lose

118 | # r = r if not done else -10

119 |

120 | # Remember the important variables

121 | agent.remember(

122 | np.expand_dims(s,axis=0),

123 | a,

124 | r,

125 | np.expand_dims(s_next,axis=0),

126 | np.expand_dims(s_before,axis=0),

127 | done)

128 |

129 | # Go to the next state

130 | s_before = s

131 | s = s_next

132 |

133 | # If the episode is terminated

134 | if done:

135 | print("Episode {}/{} finished after {} timesteps - epsilon : {:.2}".format(i_episode+1,N_EPISODES,i_step,agent.epsilon))

136 | break

137 |

138 |

139 | #-----------------------------------------

140 |

141 | # Store the rewards

142 | rewards.append(i_step)

143 |

144 |

145 | # Training

146 | agent.train(batch_size = BATCH_SIZE)

147 |

148 |

149 |

150 |

151 |

152 | # Plot the average running rewards

153 | utils.plot_average_running_rewards(rewards)

154 |

--------------------------------------------------------------------------------

/0. Solving Gym environments/cartpole_with_deepqlearning.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 |

8 | Started on the 25/08/2017

9 |

10 | theo.alves.da.costa@gmail.com

11 | https://github.com/theolvs

12 | ------------------------------------------------------------------------

13 | """

14 |

15 |

16 |

17 |

18 |

19 | # Usual libraries

20 | import os

21 | import matplotlib.pyplot as plt

22 | import pandas as pd

23 | import numpy as np

24 | import sys

25 | import random

26 | import time

27 | from tqdm import tqdm

28 | import random

29 | import gym

30 | import numpy as np

31 |

32 |

33 | # Keras (Deep Learning)

34 | from keras.models import Sequential

35 | from keras.layers import Dense

36 | from keras.optimizers import Adam

37 |

38 |

39 | # Custom RL library

40 | import sys

41 | sys.path.insert(0,'..')

42 |

43 | from rl import utils

44 | from rl.agents.dqn_agent import DQNAgent

45 |

46 |

47 |

48 |

49 |

50 |

51 |

52 | #----------------------------------------------------------------

53 | # CONSTANTS

54 |

55 |

56 | N_EPISODES = 1000

57 | MAX_STEPS = 1000

58 | RENDER = True

59 | RENDER_EVERY = 50

60 |

61 |

62 |

63 | #----------------------------------------------------------------

64 | # MAIN LOOP

65 |

66 |

67 | if __name__ == "__main__":

68 |

69 | # Define the gym environment

70 | env = gym.make('CartPole-v1')

71 |

72 | # Get the environement action and observation space

73 | state_size = env.observation_space.shape[0]

74 | action_size = env.action_space.n

75 |

76 | # Create the RL Agent

77 | agent = DQNAgent(state_size,action_size)

78 |

79 | # Initialize a list to store the rewards

80 | rewards = []

81 |

82 |

83 |

84 |

85 |

86 | #---------------------------------------------

87 | # ITERATION OVER EPISODES

88 | for i_episode in range(N_EPISODES):

89 |

90 |

91 |

92 | # Reset the environment

93 | s = env.reset()

94 |

95 |

96 | #-----------------------------------------

97 | # EPISODE RUN

98 | for i_step in range(MAX_STEPS):

99 |

100 | # Render the environement

101 | if RENDER : env.render() #and (i_step % RENDER_EVERY == 0)

102 |

103 | # The agent chose the action considering the given current state

104 | a = agent.act(s)

105 |

106 | # Take the action, get the reward from environment and go to the next state

107 | s_next,r,done,info = env.step(a)

108 |

109 | # Tweaking the reward to make it negative when we lose

110 | r = r if not done else -10

111 |

112 | # Remember the important variables

113 | agent.remember(s,a,r,s_next,done)

114 |

115 | # Go to the next state

116 | s = s_next

117 |

118 | # If the episode is terminated

119 | if done:

120 | print("Episode {}/{} finished after {} timesteps - epsilon : {:.2}".format(i_episode+1,N_EPISODES,i_step,agent.epsilon))

121 | break

122 |

123 |

124 | #-----------------------------------------

125 |

126 | # Store the rewards

127 | rewards.append(i_step)

128 |

129 |

130 | # Training

131 | agent.train()

132 |

133 |

134 |

135 |

136 |

137 | # Plot the average running rewards

138 | utils.plot_average_running_rewards(rewards)

139 |

--------------------------------------------------------------------------------

/0. Solving Gym environments/pendulum_with_actorcritic.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 |

8 | Started on the 13/11/2017

9 |

10 | theo.alves.da.costa@gmail.com

11 | https://github.com/theolvs

12 | ------------------------------------------------------------------------

13 | """

14 |

15 |

16 |

17 |

18 |

19 | # Usual libraries

20 | import os

21 | import matplotlib.pyplot as plt

22 | import pandas as pd

23 | import numpy as np

24 | import sys

25 | import random

26 | import time

27 | from tqdm import tqdm

28 | import random

29 | import gym

30 | import numpy as np

31 |

32 |

33 | # Keras (Deep Learning)

34 | from keras.models import Sequential

35 | from keras.layers import Dense

36 | from keras.optimizers import Adam

37 | import tensorflow as tf

38 | import keras.backend as K

39 |

40 | # Custom RL library

41 | import sys

42 | sys.path.insert(0,'..')

43 |

44 | from rl import utils

45 | from rl.agents.actor_critic_agent import ActorCriticAgent

46 |

47 |

48 |

49 |

50 |

51 |

52 |

53 | #----------------------------------------------------------------

54 | # CONSTANTS

55 |

56 |

57 | N_EPISODES = 10000

58 | MAX_STEPS = 500

59 | RENDER = True

60 | RENDER_EVERY = 50

61 |

62 |

63 |

64 | #----------------------------------------------------------------

65 | # MAIN LOOP

66 |

67 |

68 | if __name__ == "__main__":

69 |

70 | # Define the gym environment

71 | sess = tf.Session()

72 | K.set_session(sess)

73 | env = gym.make('Pendulum-v0')

74 |

75 | # Define the agent

76 | agent = ActorCriticAgent(env, sess)

77 |

78 | # Initialize a list to store the rewards

79 | rewards = []

80 |

81 |

82 |

83 |

84 |

85 | #---------------------------------------------

86 | # ITERATION OVER EPISODES

87 | for i_episode in range(N_EPISODES):

88 |

89 |

90 |

91 | # Reset the environment

92 | s = env.reset()

93 |

94 | reward = 0

95 |

96 |

97 | #-----------------------------------------

98 | # EPISODE RUN

99 | for i_step in range(MAX_STEPS):

100 |

101 | # Render the environement

102 | if RENDER : env.render() #and (i_step % RENDER_EVERY == 0)

103 |

104 | # The agent chose the action considering the given current state

105 | s = s.reshape((1, env.observation_space.shape[0]))

106 | a = agent.act(s)

107 | a = a.reshape((1, env.action_space.shape[0]))

108 |

109 | # Take the action, get the reward from environment and go to the next state

110 | s_next,r,done,_ = env.step(a)

111 | s_next = s_next.reshape((1, env.observation_space.shape[0]))

112 | reward += r

113 |

114 | # Tweaking the reward to make it negative when we lose

115 |

116 | # Remember the important variables

117 | agent.remember(s,a,r,s_next,done)

118 |

119 | # Go to the next state

120 | s = s_next

121 |

122 | # If the episode is terminated

123 | if done:

124 | print("Episode {}/{} finished after {} timesteps - epsilon : {:.2} - reward : {}".format(i_episode+1,N_EPISODES,i_step,agent.epsilon,reward))

125 | break

126 |

127 |

128 | #-----------------------------------------

129 |

130 | # Store the rewards

131 | rewards.append(i_step)

132 |

133 |

134 | # Training

135 | agent.train()

136 |

137 |

138 |

139 |

140 |

141 | # Plot the average running rewards

142 | utils.plot_average_running_rewards(rewards)

143 |

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_random_rl_agents.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_random_rl_agents.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_random_rl_agents2.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_random_rl_agents2.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_random_rl_agents3.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_random_rl_agents3.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_random_rules_agents.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_random_rules_agents.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_random_rules_agents2.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_random_rules_agents2.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_rules_rl_agents.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_rules_rl_agents.gif

--------------------------------------------------------------------------------

/1. Tic Tac Toe/images/game_two_random_agents.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TheoLvs/reinforcement-learning/c54f732d25c198b4daa3deccb4684bc847131cf2/1. Tic Tac Toe/images/game_two_random_agents.gif

--------------------------------------------------------------------------------

/2. Data Center Cooling/README.md:

--------------------------------------------------------------------------------

1 | # Data Center Cooling

2 |

3 |

4 | Inspired by [DeepMind's realization](https://deepmind.com/blog/deepmind-ai-reduces-google-data-centre-cooling-bill-40/)

5 |

6 | This repository hold the development of a business question that can be solved with Reinforcement Learning : cooling data centers

7 | - The environment modelled with the fashion of OpenAI Gym's environments

8 | - Solving the problem with different RL algorithms (Q-Learning, Deep-Q-Learning, Policy Gradients)

9 | - A interactive Dash app to test the environment and the agents

10 |

11 |

12 | ***

13 | ## Data Center Cooling environment

14 |

15 | To try out the app, launch with ``python app.py`` and go to port ``localhost:8050``

16 |

17 |

18 |

19 |

20 |

21 |

--------------------------------------------------------------------------------

/2. Data Center Cooling/app.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | TWITTER APP

7 |

8 | Started on the 22/06/2017

9 |

10 |

11 | https://plot.ly/dash/live-updates

12 | https://plot.ly/dash/getting-started

13 | https://plot.ly/dash/getting-started-part-2

14 | https://plot.ly/dash/gallery/new-york-oil-and-gas/

15 |

16 | theo.alves.da.costa@gmail.com

17 | https://github.com/theolvs

18 | ------------------------------------------------------------------------

19 | """

20 |

21 | # USUAL

22 | import os

23 | import numpy as np

24 | from tqdm import tqdm

25 | from copy import deepcopy

26 |

27 | # DASH IMPORT

28 | import dash

29 | import dash_core_components as dcc

30 | import dash_html_components as html

31 | from dash.dependencies import Input, Output, Event, State

32 | import plotly.graph_objs as go

33 |

34 | import sys

35 | sys.path.append("C:/git/reinforcement-learning/")

36 |

37 |

38 |

39 | #--------------------------------------------------------------------------------

40 | from rl.envs.data_center_cooling import DataCenterCooling

41 | from rl.agents.q_agent import QAgent

42 | from rl.agents.dqn_agent import DQNAgent

43 | from rl.agents.sarsa_agent import SarsaAgent

44 | from rl import utils

45 |

46 |

47 |

48 |

49 | def run_episode(env,agent,max_step = 100,verbose = 1):

50 |

51 | s = env.reset()

52 |

53 | episode_reward = 0

54 |

55 | i = 0

56 | while i < max_step:

57 |

58 | # Choose an action

59 | a = agent.act(s)

60 |

61 | # Take the action, and get the reward from environment

62 | s_next,r,done = env.step(a)

63 |

64 | if verbose: print(s_next,r,done)

65 |

66 | # Update our knowledge in the Q-table

67 | agent.train(s,a,r,s_next)

68 |

69 | # Update the caches

70 | episode_reward += r

71 | s = s_next

72 |

73 | # If the episode is terminated

74 | i += 1

75 | if done:

76 | break

77 |

78 | return env,agent,episode_reward

79 |

80 |

81 |

82 |

83 | def run_n_episodes(env,type_agent = "Q Agent",n_episodes = 2000,lr = 0.8,gamma = 0.95):

84 |

85 | environment = deepcopy(env)

86 |

87 | # Initialize the agent

88 | states_size = len(env.observation_space)

89 | actions_size = len(env.action_space)

90 |

91 | if type_agent == "Q Agent":

92 | print("... Using Q Agent")

93 | agent = QAgent(states_size,actions_size,lr = lr,gamma = gamma)

94 | elif type_agent == "SARSA Agent":

95 | print("... Using SARSA Agent")

96 | agent = SarsaAgent(states_size,actions_size,lr = lr,gamma = gamma)

97 |

98 | # Store the rewards

99 | rewards = []

100 |

101 | # Experience replay

102 | for i in tqdm(range(n_episodes)):

103 |

104 | # Run the episode

105 | environment,agent,episode_reward = run_episode(environment,agent,verbose = 0)

106 | rewards.append(episode_reward)

107 |

108 | return environment,agent,rewards

109 |

110 |

111 | class Clicks(object):

112 | def __init__(self):

113 | self.count = 0

114 |

115 | reset_clicks = Clicks()

116 | train_clicks = Clicks()

117 | env = DataCenterCooling()

118 | np.random.seed()

119 |

120 | #---------------------------------------------------------------------------------

121 | # CREATE THE APP

122 | app = dash.Dash("Data Cooling Center")

123 |

124 |

125 | # # Making the app available offline

126 | offline = False

127 | app.css.config.serve_locally = offline

128 | app.scripts.config.serve_locally = offline

129 |

130 |

131 | style = {

132 | 'font-weight': 'bolder',

133 | 'font-family': 'Product Sans',

134 | }

135 |

136 | container_style = {

137 | "margin":"20px",

138 | }

139 |

140 |

141 |

142 | AGENTS = [{"label":x,"value":x} for x in ["Q Agent","SARSA Agent","Deep-Q-Network Agent","Policy Gradient Agent"]]

143 |

144 | #---------------------------------------------------------------------------------

145 | # LAYOUT

146 | app.layout = html.Div(children=[

147 |

148 |

149 |

150 |

151 |

152 | # HEADER FIRST CONTAINER

153 | html.Div([

154 | html.H2("Data Center Cooling",style = {'color': "rgba(117, 117, 117, 0.95)",**style}),

155 |

156 | html.Div([

157 | html.H4("Environment",style = {'color': "rgba(117, 117, 117, 0.95)",**style}),

158 | html.P("Cooling levels",id = "cooling"),

159 | dcc.Slider(min=10,max=100,step=10,value=10,id = "levels-cooling"),

160 | html.P("Cost factor",id = "cost-factor"),

161 | dcc.Slider(min=0.0,max=5,step=0.1,value=1,id = "levels-cost-factor"),

162 | html.P("Risk factor",id = "risk-factor"),

163 | dcc.Slider(min=0.0,max=5,step=0.1,value=1,id = "levels-risk-factor"),

164 | html.Br(),

165 | html.Button("Reset",id = "reset-env",style = style,n_clicks = 0),

166 | ],style = {"height":"50%"}),

167 |

168 |

169 | html.Div([

170 | html.H4("Agent",style = {'color': "rgba(117, 117, 117, 0.95)",**style}),

171 | dcc.Dropdown(id = "input-agent",options = AGENTS,value = "Q Agent",multi = False),

172 | html.P("N episodes",id = "input-episodes"),

173 | dcc.Slider(min=500,max=10000,step=500,value=5000,id = "n-episodes"),

174 | html.P("Learning rate",id = "input-lr"),

175 | dcc.Slider(min=0.001,max=1.0,step=0.005,value=0.1,id = "lr"),

176 | html.Br(),

177 | html.Button("Train",id = "training",style = style,n_clicks = 0),

178 | ],style = {"height":"50%"}),

179 |

180 |

181 |

182 | ],style={**style,**container_style,'width': '20%',"height":"800px", 'float' : 'left', 'display': 'inline'}, className="container"),

183 |

184 |

185 |

186 |

187 | # ANALYTICS CONTAINER

188 | html.Div([

189 |

190 | dcc.Graph(id='render',animate = False,figure = env.render(with_plotly = True),style = {"height":"100%"}),

191 |

192 |

193 | ],style={**style,**container_style,'width': '55%',"height":"800px", 'float' : 'right', 'display': 'inline'}, className="container"),

194 |

195 |

196 | ])

197 |

198 |

199 |

200 |

201 | #---------------------------------------------------------------------------------

202 | # CALLBACKS

203 |

204 |

205 |

206 | # Callback to stop the streaming

207 | @app.callback(

208 | Output("render","figure"),

209 | [Input('reset-env','n_clicks'),Input('training','n_clicks'),Input('levels-cost-factor','value'),Input('levels-risk-factor','value')],

210 | state = [State('levels-cooling','value'),State('lr','value'),State('n-episodes','value'),State('input-agent','value')]

211 |

212 | )

213 | def render(click_reset,click_training,cost_factor,risk_factor,levels_cooling,lr,n_episodes,type_agent):

214 |

215 |

216 | print("Reset ",click_reset," - ",reset_clicks.count)

217 | print("Train ",click_training," - ",train_clicks.count)

218 |

219 |

220 | if click_reset > reset_clicks.count:

221 | reset_clicks.count = click_reset

222 | env.__init__(levels_cooling = levels_cooling,risk_factor = risk_factor,cost_factor = cost_factor,keep_cooling = True)

223 |

224 | elif click_training > train_clicks.count:

225 | train_clicks.count = click_training

226 | env_temp,agent,rewards = run_n_episodes(env,n_episodes = n_episodes,lr = lr,type_agent = type_agent)

227 | utils.plot_average_running_rewards(rewards,"C:/Users/talvesdacosta/Desktop/results.png")

228 | # os.system("start "+"C:/Users/talvesdacosta/Desktop/results.png")

229 | env.cooling = env_temp.cooling

230 | else:

231 | env.risk_factor = risk_factor

232 | env.cost_factor = cost_factor

233 |

234 |

235 |

236 | return env.render(with_plotly = True)

237 |

238 |

239 |

240 |

241 | @app.callback(

242 | Output("cooling","children"),

243 | [Input('levels-cooling','value')])

244 | def update_cooling(value):

245 | env.levels_cooling = value

246 | env.define_cooling(value)

247 | return "Cooling levels : {}".format(value)

248 |

249 |

250 |

251 | @app.callback(

252 | Output("risk-factor","children"),

253 | [Input('levels-risk-factor','value')])

254 | def update_risk(value):

255 | return "Risk factor : {}".format(value)

256 |

257 |

258 |

259 | @app.callback(

260 | Output("cost-factor","children"),

261 | [Input('levels-cost-factor','value')])

262 | def update_cost(value):

263 | return "Cost factor : {}".format(value)

264 |

265 | @app.callback(

266 | Output("input-episodes","children"),

267 | [Input('n-episodes','value')])

268 | def update_episodes(value):

269 | return "N episodes : {}".format(value)

270 |

271 | @app.callback(

272 | Output("input-lr","children"),

273 | [Input('lr','value')])

274 | def update_lr(value):

275 | return "Learning rate : {}".format(value)

276 |

277 |

278 |

279 |

280 |

281 |

282 | #---------------------------------------------------------------------------------

283 | # ADD EXTERNAL CSS

284 |

285 | external_css = ["https://fonts.googleapis.com/css?family=Product+Sans:400,400i,700,700i",

286 | "https://cdn.rawgit.com/plotly/dash-app-stylesheets/2cc54b8c03f4126569a3440aae611bbef1d7a5dd/stylesheet.css"]

287 |

288 | for css in external_css:

289 | app.css.append_css({"external_url": css})

290 |

291 |

292 |

293 |

294 |

295 |

296 |

297 | #---------------------------------------------------------------------------------

298 | # RUN SERVER

299 | if __name__ == '__main__':

300 | app.run_server(debug=True)

301 | np.random.seed()

--------------------------------------------------------------------------------

/3. Robotics/minitaur.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | REINFORCEMENT LEARNING

7 |

8 | Started on the 25/08/2017

9 |

10 | theo.alves.da.costa@gmail.com

11 | https://github.com/theolvs

12 | ------------------------------------------------------------------------

13 | """

14 |

15 |

16 |

17 |

18 |

19 | # Usual libraries

20 | import os

21 | import matplotlib.pyplot as plt

22 | import pandas as pd

23 | import numpy as np

24 | import sys

25 | import random

26 | import time

27 | from tqdm import tqdm

28 | import random

29 | import gym

30 | import numpy as np

31 |

32 |

33 | # Keras (Deep Learning)

34 | from keras.models import Sequential

35 | from keras.layers import Dense

36 | from keras.optimizers import Adam

37 |

38 |

39 | # Custom RL library

40 | import sys

41 | sys.path.insert(0,'..')

42 |

43 | from rl import utils

44 | from rl.agents.dqn_agent import DQNAgent

45 |

46 | import pybullet_envs.bullet.minitaur_gym_env as e

47 |

48 |

49 |

50 |

51 |

52 | #----------------------------------------------------------------

53 | # CONSTANTS

54 |

55 |

56 | N_EPISODES = 1000

57 | MAX_STEPS = 2000

58 | RENDER = True

59 | RENDER_EVERY = 50

60 |

61 |

62 |

63 | #----------------------------------------------------------------

64 | # MAIN LOOP

65 |

66 |

67 | if __name__ == "__main__":

68 |

69 | # Define the gym environment

70 | env = e.MinitaurBulletEnv(render=True)

71 |

72 | # Get the environement action and observation space

73 | state_size = env.observation_space.shape[0]

74 | action_size = env.action_space.shape[0]

75 |

76 | # Create the RL Agent

77 | agent = DQNAgent(state_size,action_size,low = -1,high = 1,action_type="continuous")

78 |

79 | # Initialize a list to store the rewards

80 | rewards = []

81 |

82 |

83 |

84 |

85 |

86 | #---------------------------------------------

87 | # ITERATION OVER EPISODES

88 | for i_episode in range(N_EPISODES):

89 |

90 |

91 |

92 | # Reset the environment

93 | s = env.reset()

94 | reward = 0

95 |

96 |

97 | #-----------------------------------------

98 | # EPISODE RUN

99 | for i_step in range(MAX_STEPS):

100 |

101 | # Render the environement

102 | if RENDER : env.render() #and (i_step % RENDER_EVERY == 0)

103 |

104 | # The agent chose the action considering the given current state

105 | a = agent.act(s)

106 |

107 | # Take the action, get the reward from environment and go to the next state

108 | s_next,r,done,info = env.step(a)

109 | reward += r

110 |

111 | # Remember the important variables

112 | agent.remember(s,a,r,s_next,done)

113 |

114 | # Go to the next state

115 | s = s_next

116 |

117 | # If the episode is terminated

118 | if done:

119 | print("Episode {}/{} finished after {} timesteps - epsilon : {:.2} - reward : {:.2}".format(i_episode+1,N_EPISODES,i_step,agent.epsilon,reward))

120 | break

121 |

122 |

123 | #-----------------------------------------

124 |

125 | # Store the rewards

126 | rewards.append(i_step)

127 |

128 |

129 | # Training

130 | agent.train(batch_size = 128)

131 |

132 |

133 |

134 |

135 |

136 | # Plot the average running rewards

137 | utils.plot_average_running_rewards(rewards)

138 |

--------------------------------------------------------------------------------

/4. Chrome Dino/README.md:

--------------------------------------------------------------------------------

1 | # Chrome Dino Project

2 | ## Playing and solving the Chrome Dinosaur Game with Evolution Strategies and PyTorch

3 |

4 |

5 |

6 | ##### Summary

7 | - Capturing image from the game - **OK**

8 | - Allowing control programmatically - **OK**

9 | - Trying a simple implementation of rules-based agent with classic CV algorithms - **OK**

10 | - Capturing scores for fitness and reward - **OK**

11 | - Creating the environment for RL - **OK**

12 | - Developing a RL agent that learns via evolution strategies - **OK**

13 | - Different experiments on both agent and method of learning

14 |

15 |

16 | ##### Ideas

17 | - Taking as input of the neural network

18 | - The boundaries of the obstacles in a 1D vector

19 | - The raw image

20 | - The processed image

21 | - Initialize the agent with hard coded policy

22 | - Combine the RL agent and the rules-based Agent

23 | - Try other evolution strategies

24 | - Crossover on the fitness

25 | - Simple ES

26 | - CMA-ES

27 |

28 |

29 | ##### Experiments :

30 | 1. **Genetic algorithm** : Generation of 20 dinos, 5 survive, and make 10 offsprings. 10 random dinos are created to complete the 20 population. Did not work at all after 100 generations, still an average score of 50 which is stopping at the first obstacle. This was tested without mutations. The Neural Network is very shallow MLP with one 100-unit hidden layer.

31 | 2. **Genetic algorithm** : Generation of 40 dinos, 10 survive, make 45 offsprings, but only 40 are selected at random to recreate the 40-population. Added mutations with gaussian noise at this step. Tried as well with a shallow MLP but also with a simple logistic regression in PyTorch

32 | 3. **Genetic algorithm** : Generation of 50 dinos, 12 survive, make 66 offsprings, but only 38 are selected at random to recreate the population. The input is now modelled by a vector with the position on the x axis of the next 2 obstacles. Thus I went back to a shallow MLP with the following structure ``(2 input features,50 hidden layers,1 output)`` giving me the probability to jump. When ensuring a high mutation factor for the gaussian noise to have more exploration. The dinosaurs reach a max score of 600 in about 70 generations of 50 dinos (6 hours on my laptop). But they fail when reaching the birds that were not included in the training.

33 | 4. **Evolution Strategy** : I went back to a simple evolution strategy to focus the training on the dino with the good behavior. The selection will be the top 10 or 20% at each generation. Then the next generation is created based on the fittest on which is adding gaussian noise as the mutations. With this strategy the dinosaur reach a max score of 600 in about 20 generations of 50 dinos. This works better than the last solution, but it is always falling to local optimas with dino jumping all the time to maximize their score.

34 | 5. **Evolution Strategy** : to correct the bad behavior of jumping all the time, I added a discount factor if moves are done when there is no obstacles. By counting the number of obstacles passed and the number of moves. The new reward is then modelled in the fashion of the Bellman equation, by incrementing a discounted reward to the previous reward. With this correction, after one generation the "always-jumping" behavior has disappeared, and with a few generations the dinos reach a good enough policy. In 10 generations of 10 dinos only (only 10 minutes on my laptop) we reach easily the max score of 600 previously reached, with a good enough average policy. But new issues arise : birds that come after 600 points which require to duck, speed increasing over time, long obstacles which would require to jump before. Here is a screen capture of the game at this state :

35 |

36 |

37 |

38 |

39 | ##### Misc

40 | - Finding parameter on when to jump

41 | - Logreg/NN on the first and second position of obstacles

42 | - ML + Heuristics model

43 | - Bayesian priors

--------------------------------------------------------------------------------

/4. Chrome Dino/experiments.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 |

4 |

5 | """--------------------------------------------------------------------

6 | GENETIC ALGORITHMS EXPERIMENTS

7 | Started on the 2018/01/03

8 | theo.alves.da.costa@gmail.com

9 | https://github.com/theolvs

10 | ------------------------------------------------------------------------

11 | """

12 |

13 | from scipy import stats

14 | import seaborn as sns

15 | import os

16 | import matplotlib.pyplot as plt

17 | import pandas as pd

18 | import numpy as np

19 | import sys

20 | import time

21 | from tqdm import tqdm

22 | import itertools

23 |

24 |

25 |

26 |

27 | #=============================================================================================================================

28 | # DISTRIBUTIONS

29 | #=============================================================================================================================

30 |

31 |

32 |

33 |

34 |

35 | class Dist(object):

36 | def __init__(self,mu = None,std = None,label = None):

37 | self.mu = np.random.rand()*20 - 10 if mu is None else mu

38 | self.std = np.random.rand()*10 if std is None else std

39 | self.label = "" if not label else " - "+label

40 | self.func = lambda x : stats.norm.cdf(x,loc = self.mu,scale = self.std)

41 |

42 | def __repr__(self,markdown = False):

43 | return "Norm {1}mu={2}{0}, {0}std={3}{0}{4}".format("$" if markdown else "","$\\" if markdown else "",

44 | round(self.mu,2),round(self.std,2),self.label)

45 |

46 | def plot(self,fill = True):

47 | x = np.linspace(-20, 20, 100)

48 | y = stats.norm.pdf(x,loc = self.mu,scale = self.std)

49 | plt.plot(x,y,label = self.__repr__(markdown = True))

50 | if fill:

51 | plt.fill_between(x, 0, y, alpha=0.4)

52 |

53 |

54 | def __add__(self,other):

55 | mu = np.mean([self.mu,other.mu])

56 | std = np.mean([self.std,other.std])

57 | return Dist(mu,std)

58 |

59 | def mutate(self,alpha = 1):

60 | self.mu = self.mu + 1/(1+np.log(1+alpha)) * np.random.randn()

61 | self.std = max(self.std + 1/(1+np.log(1+alpha)) * np.random.randn(),0.5)

62 |

63 | def fitness(self,x):

64 | return 1 - stats.kstest(x,self.func).statistic

65 |

66 |

67 |

68 |

69 |

70 |

71 |