├── .gitignore
├── LICENSE
├── README.md
├── elephant-hbase
│   ├── README.md
│   ├── pom.xml
│   └── src
│       └── main
│           ├── java
│           │   └── HelloHbase.java
│           └── resources
│               ├── core-site.xml
│               ├── hbase-site.xml
│               ├── hbase.keytab
│               ├── hdfs-site.xml
│               └── krb5.conf
├── elephant-hdfs
│   ├── README.md
│   ├── conf
│   │   ├── core-site.xml
│   │   ├── hdfs-site.xml
│   │   ├── hdfs.keytab
│   │   └── krb5.conf
│   ├── pom.xml
│   └── src
│       └── main
│           └── java
│               ├── HDFSUtils.java
│               └── KBHDFSTest.java
├── elephant-hive
│   ├── README.md
│   ├── pom.xml
│   └── src
│       └── main
│           └── java
│               ├── UDAF
│               │   ├── Maximum.java
│               │   └── Mean.java
│               └── UDF
│                   └── Strip.java
├── elephant-mr
│   ├── README.md
│   ├── input
│   │   └── sample.txt
│   ├── pom.xml
│   └── src
│       └── main
│           └── java
│               ├── MaxTemperature.java
│               ├── MaxTemperatureMapper.java
│               └── MaxTemperatureReducer.java
└── pom.xml

/.gitignore:

--------------------------------------------------------------------------------

# Compiled class file
*.class

# Log file
*.log

# BlueJ files
*.ctxt

# Mobile Tools for Java (J2ME)
.mtj.tmp/

# Package Files #
*.jar
*.war
*.nar
*.ear
*.zip
*.tar.gz
*.rar

# virtual machine crash logs, see http://www.java.com/en/download/help/error_hotspot.xml
hs_err_pid*

# IDEA
*.idea/
*.iml
target/

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2018

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

API usage examples for big-data ecosystem components.

--------------------------------------------------------------------------------

/elephant-hbase/README.md:

--------------------------------------------------------------------------------

## HelloHbase

Creates, modifies and then deletes a simple HBase table on a Kerberos-secured cluster that uses HDFS HA.

--------------------------------------------------------------------------------

/elephant-hbase/pom.xml:

--------------------------------------------------------------------------------

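<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>elephant</artifactId>
        <groupId>com.hadoopbook</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>elephant-hbase</artifactId>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>7</source>
                    <target>7</target>
                </configuration>
            </plugin>
        </plugins>
    </build>

    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.2.0-cdh5.13.1</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.6.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.6.0</version>
        </dependency>
    </dependencies>
</project>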

--------------------------------------------------------------------------------

/elephant-hbase/src/main/java/HelloHbase.java:

--------------------------------------------------------------------------------

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.io.compress.Compression;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.security.UserGroupInformation;

import java.io.IOException;
import java.net.URISyntaxException;

public class HelloHbase {

    /*
     * Initialize Kerberos authentication from krb5.conf and hbase.keytab on the classpath.
     * A failed login is propagated instead of being printed and ignored, so the program
     * never continues unauthenticated.
     */
    public static void initKerberosEnv(Configuration conf) throws IOException {
        System.setProperty("java.security.krb5.conf", HelloHbase.class.getClassLoader().getResource("krb5.conf").getPath());
        System.setProperty("javax.security.auth.useSubjectCredsOnly", "false");
        // Verbose Kerberos tracing; turn it off outside of debugging
        System.setProperty("sun.security.krb5.debug", "true");
        UserGroupInformation.setConfiguration(conf);
        UserGroupInformation.loginUserFromKeytab("hbase/kt1@DEV.DXY.CN", HelloHbase.class.getClassLoader().getResource("hbase.keytab").getPath());
        System.out.println(UserGroupInformation.getCurrentUser());
    }

    /**
     * Create the table; if a table with the same name already exists, drop it first.
     * @param admin
     * @param table
     * @throws IOException
     */
    public static void createOrOverwrite(Admin admin, HTableDescriptor table) throws IOException {
        if (admin.tableExists(table.getTableName())) {
            // A table must be disabled before it can be deleted
            admin.disableTable(table.getTableName());
            admin.deleteTable(table.getTableName());
        }
        admin.createTable(table);
    }

    /**
     * Create mytable with one uncompressed column family, mycf.
     * @param config
     * @throws IOException
     */
    public static void createSchemaTables(Configuration config) throws IOException {
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            HTableDescriptor table = new HTableDescriptor(TableName.valueOf("mytable"));
            table.addFamily(new HColumnDescriptor("mycf").setCompressionType(Compression.Algorithm.NONE));
            System.out.println("Creating table.");
            // Create the table, replacing any existing one
            createOrOverwrite(admin, table);
            System.out.println("Done.");
        }
    }

    /**
     * Modify mytable: add column family newcf and update column family mycf.
     * @param config
     * @throws IOException
     */
    public static void modifySchema(Configuration config) throws IOException {
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            TableName tableName = TableName.valueOf("mytable");

            if (!admin.tableExists(tableName)) {
                // Throw instead of calling System.exit(), so the connection and admin are closed
                throw new IOException("Table does not exist: " + tableName);
            }

            // Add column family newcf to mytable
            HColumnDescriptor newColumn = new HColumnDescriptor("newcf");
            newColumn.setCompactionCompressionType(Compression.Algorithm.GZ);
            newColumn.setMaxVersions(HConstants.ALL_VERSIONS);
            admin.addColumn(tableName, newColumn);

            // Fetch the current table descriptor
            HTableDescriptor table = admin.getTableDescriptor(tableName);

            // Update column family mycf, starting from its existing descriptor so that
            // settings not changed here keep their current values
            HColumnDescriptor mycf = table.getFamily(Bytes.toBytes("mycf"));
            mycf.setCompactionCompressionType(Compression.Algorithm.GZ);
            mycf.setMaxVersions(HConstants.ALL_VERSIONS);
            table.modifyFamily(mycf);
            admin.modifyTable(tableName, table);
        }
    }

    /**
     * Delete mytable. Deleting mycf first is redundant, because deleteTable removes
     * every column family; it is kept to show Admin.deleteColumn.
     * @param config
     * @throws IOException
     */
    public static void deleteSchema(Configuration config) throws IOException {
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            TableName tableName = TableName.valueOf("mytable");

            // Disable mytable
            admin.disableTable(tableName);

            // Delete column family mycf
            admin.deleteColumn(tableName, Bytes.toBytes("mycf"));

            // Delete mytable
            admin.deleteTable(tableName);
        }
    }

    public static void main(String[] args) throws URISyntaxException, IOException {
        // Load the client configuration files from the classpath
        Configuration config = HBaseConfiguration.create();
        config.addResource(new Path(ClassLoader.getSystemResource("hdfs-site.xml").toURI()));
        config.addResource(new Path(ClassLoader.getSystemResource("core-site.xml").toURI()));
        config.addResource(new Path(ClassLoader.getSystemResource("hbase-site.xml").toURI()));

        // Kerberos authentication
        initKerberosEnv(config);

        // Create the table
        createSchemaTables(config);

        // Modify the table
        modifySchema(config);

        // Delete the table
        deleteSchema(config);
    }
}

--------------------------------------------------------------------------------
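`initKerberosEnv` hands `getResource(...).getPath()` to `java.security.krb5.conf` and to `loginUserFromKeytab`. That yields a usable file path only while the resources sit unpacked on disk (for example when run from an IDE). Once they are packaged in a jar, the URL path looks like `file:/.../app.jar!/krb5.conf`, and a directory containing spaces comes back URL-encoded. A minimal JDK-only sketch, assuming the resources are still loaded from the classpath, copies a resource to a temporary file first. `ClasspathFiles` and its methods are hypothetical names, not part of Hadoop or HBase.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical helper (not part of Hadoop/HBase): turns classpath resources such as
// krb5.conf or hbase.keytab into real files whose paths Kerberos/JAAS can open.
final class ClasspathFiles {
    private ClasspathFiles() {
    }

    // Copies the stream into a fresh temp file (deleted on JVM exit) and returns its absolute path.
    static String copyToTempFile(InputStream in, String prefix, String suffix) throws IOException {
        Path tmp = Files.createTempFile(prefix, suffix);
        tmp.toFile().deleteOnExit();
        try (InputStream src = in) {
            Files.copy(src, tmp, StandardCopyOption.REPLACE_EXISTING);
        }
        return tmp.toAbsolutePath().toString();
    }

    // Looks the resource up on the classpath and materializes it; fails loudly if it is missing.
    static String resourceAsFile(String name) throws IOException {
        InputStream in = ClasspathFiles.class.getClassLoader().getResourceAsStream(name);
        if (in == null) {
            throw new IOException("Resource not found on classpath: " + name);
        }
        int dot = name.lastIndexOf('.');
        String suffix = dot >= 0 ? name.substring(dot) : ".tmp";
        return copyToTempFile(in, "res-", suffix);
    }
}
```

Inside `initKerberosEnv` the two lookups would then become `System.setProperty("java.security.krb5.conf", ClasspathFiles.resourceAsFile("krb5.conf"))` and `UserGroupInformation.loginUserFromKeytab("hbase/kt1@DEV.DXY.CN", ClasspathFiles.resourceAsFile("hbase.keytab"))`. On POSIX systems `Files.createTempFile` creates the file readable only by its owner by default, which matters for a keytab copy.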

/elephant-hbase/src/main/resources/core-site.xml:

--------------------------------------------------------------------------------

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://nameservice1</value>
  </property>
  <property>
    <name>fs.trash.interval</name>
    <value>1</value>
  </property>
  <property>
    <name>io.compression.codecs</name>
    <value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.DeflateCodec,org.apache.hadoop.io.compress.SnappyCodec,org.apache.hadoop.io.compress.Lz4Codec</value>
  </property>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
  </property>
  <property>
    <name>hadoop.security.authorization</name>
    <value>true</value>
  </property>
  <property>
    <name>hadoop.rpc.protection</name>
    <value>authentication</value>
  </property>
  <property>
    <name>hadoop.ssl.require.client.cert</name>
    <value>false</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.keystores.factory.class</name>
    <value>org.apache.hadoop.security.ssl.FileBasedKeyStoresFactory</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.server.conf</name>
    <value>ssl-server.xml</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.client.conf</name>
    <value>ssl-client.xml</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.security.auth_to_local</name>
    <value>RULE:[1:$1@$0](^.*@DEV\.DXY\.CN$)s/^(.*)@DEV\.DXY\.CN$/$1/g
RULE:[2:$1@$0](^.*@DEV\.DXY\.CN$)s/^(.*)@DEV\.DXY\.CN$/$1/g
DEFAULT</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mapred.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mapred.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.flume.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.flume.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.httpfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.httpfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.yarn.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.yarn.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping</name>
    <value>org.apache.hadoop.security.LdapGroupsMapping</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.url</name>
    <value>ldap://kt1</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.bind.user</name>
    <value>cn=admin,dc=dev,dc=dxy,dc=cn</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.base</name>
    <value>dc=dev,dc=dxy,dc=cn</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.filter.user</name>
    <value>(&amp;(objectClass=posixAccount)(cn={0}))</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.filter.group</name>
    <value>(objectClass=groupOfNames)</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.attr.member</name>
    <value>member</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.attr.group.name</name>
    <value>cn</value>
  </property>
  <property>
    <name>hadoop.security.instrumentation.requires.admin</name>
    <value>false</value>
  </property>
</configuration>

--------------------------------------------------------------------------------
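The `hadoop.security.auth_to_local` rules in this file decide which local user name a Kerberos principal maps to. Both `RULE` lines rewrite the principal to `<first component>@<realm>`, keep it only if the realm is `DEV.DXY.CN`, and then strip the realm, so `hbase/kt1@DEV.DXY.CN` becomes `hbase`. The sketch below re-implements just these two rules with `java.util.regex` to make that concrete. It is a simplified illustration under that assumption, not Hadoop's parser, which lives in `org.apache.hadoop.security.authentication.util.KerberosName` and supports the full rule grammar; the principal `alice@DEV.DXY.CN` is a hypothetical example.

```java
import java.util.regex.Pattern;

// Simplified, hypothetical re-implementation of the two RULE lines in core-site.xml;
// not Hadoop's KerberosName, which handles the complete auth_to_local grammar.
final class AuthToLocalSketch {
    // Filter part of both rules: (^.*@DEV\.DXY\.CN$)
    private static final Pattern FILTER = Pattern.compile("^.*@DEV\\.DXY\\.CN$");
    // Substitution part of both rules: s/^(.*)@DEV\.DXY\.CN$/$1/g
    private static final Pattern STRIP_REALM = Pattern.compile("^(.*)@DEV\\.DXY\\.CN$");

    private AuthToLocalSketch() {
    }

    static String shortName(String principal) {
        int at = principal.lastIndexOf('@');
        if (at <= 0) {
            throw new IllegalArgumentException("Expected name[/host]@REALM: " + principal);
        }
        String realm = principal.substring(at + 1);
        String[] components = principal.substring(0, at).split("/", -1);
        if (components.length > 2) {
            throw new IllegalArgumentException("Too many components: " + principal);
        }
        // RULE:[1:$1@$0] covers one-component principals, RULE:[2:$1@$0] two-component ones;
        // both format the principal as "<first component>@<realm>".
        String formatted = components[0] + "@" + realm;
        if (FILTER.matcher(formatted).matches()) {
            return STRIP_REALM.matcher(formatted).replaceAll("$1");
        }
        // DEFAULT only strips the default realm, which krb5.conf also sets to DEV.DXY.CN,
        // so a principal from any other realm has no mapping.
        throw new IllegalArgumentException("No auth_to_local rule applies to " + principal);
    }

    public static void main(String[] args) {
        System.out.println(shortName("hbase/kt1@DEV.DXY.CN")); // hbase
        System.out.println(shortName("alice@DEV.DXY.CN"));     // alice
    }
}
```

In real client code the same mapping is what `UserGroupInformation.getCurrentUser().getShortUserName()` reports after the keytab login.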

/elephant-hbase/src/main/resources/hbase-site.xml:

--------------------------------------------------------------------------------

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://nameservice1/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.replication</name>
    <value>true</value>
  </property>
  <property>
    <name>replication.source.ratio</name>
    <value>1.0</value>
  </property>
  <property>
    <name>replication.source.nb.capacity</name>
    <value>1000</value>
  </property>
  <property>
    <name>hbase.client.write.buffer</name>
    <value>2097152</value>
  </property>
  <property>
    <name>hbase.client.pause</name>
    <value>100</value>
  </property>
  <property>
    <name>hbase.client.retries.number</name>
    <value>35</value>
  </property>
  <property>
    <name>hbase.client.scanner.caching</name>
    <value>100</value>
  </property>
  <property>
    <name>hbase.client.keyvalue.maxsize</name>
    <value>10485760</value>
  </property>
  <property>
    <name>hbase.ipc.client.allowsInterrupt</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.client.primaryCallTimeout.get</name>
    <value>10</value>
  </property>
  <property>
    <name>hbase.client.primaryCallTimeout.multiget</name>
    <value>10</value>
  </property>
  <property>
    <name>hbase.splitlog.manager.timeout</name>
    <value>120000</value>
  </property>
  <property>
    <name>hbase.fs.tmp.dir</name>
    <value>/user/${user.name}/hbase-staging</value>
  </property>
  <property>
    <name>hbase.regionserver.port</name>
    <value>60020</value>
  </property>
  <property>
    <name>hbase.regionserver.ipc.address</name>
    <value>0.0.0.0</value>
  </property>
  <property>
    <name>hbase.regionserver.info.port</name>
    <value>60030</value>
  </property>
  <property>
    <name>hbase.client.scanner.timeout.period</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.regionserver.handler.count</name>
    <value>30</value>
  </property>
  <property>
    <name>hbase.regionserver.metahandler.count</name>
    <value>10</value>
  </property>
  <property>
    <name>hbase.regionserver.msginterval</name>
    <value>3000</value>
  </property>
  <property>
    <name>hbase.regionserver.optionallogflushinterval</name>
    <value>1000</value>
  </property>
  <property>
    <name>hbase.regionserver.regionSplitLimit</name>
    <value>2147483647</value>
  </property>
  <property>
    <name>hbase.regionserver.logroll.period</name>
    <value>3600000</value>
  </property>
  <property>
    <name>hbase.regionserver.nbreservationblocks</name>
    <value>4</value>
  </property>
  <property>
    <name>hbase.regionserver.global.memstore.size</name>
    <value>0.4</value>
  </property>
  <property>
    <name>hbase.regionserver.global.memstore.size.lower.limit</name>
    <value>0.95</value>
  </property>
  <property>
    <name>hbase.regionserver.codecs</name>
    <value></value>
  </property>
  <property>
    <name>hbase.regionserver.maxlogs</name>
    <value>32</value>
  </property>
  <property>
    <name>hbase.regionserver.hlog.blocksize</name>
    <value>134217728</value>
  </property>
  <property>
    <name>hbase.regionserver.thread.compaction.small</name>
    <value>1</value>
  </property>
  <property>
    <name>hbase.ipc.server.read.threadpool.size</name>
    <value>10</value>
  </property>
  <property>
    <name>hbase.wal.regiongrouping.numgroups</name>
    <value>1</value>
  </property>
  <property>
    <name>hbase.wal.provider</name>
    <value>multiwal</value>
  </property>
  <property>
    <name>hbase.wal.storage.policy</name>
    <value>NONE</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.flush.size</name>
    <value>134217728</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.mslab.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.mslab.chunksize</name>
    <value>2097152</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.mslab.max.allocation</name>
    <value>262144</value>
  </property>
  <property>
    <name>hbase.hregion.preclose.flush.size</name>
    <value>5242880</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.block.multiplier</name>
    <value>2</value>
  </property>
  <property>
    <name>hbase.hregion.max.filesize</name>
    <value>10737418240</value>
  </property>
  <property>
    <name>hbase.hregion.majorcompaction.jitter</name>
    <value>0.5</value>
  </property>
  <property>
    <name>hbase.region.replica.replication.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.hstore.compactionThreshold</name>
    <value>3</value>
  </property>
  <property>
    <name>hbase.hstore.blockingStoreFiles</name>
    <value>10</value>
  </property>
  <property>
    <name>hbase.hstore.blockingWaitTime</name>
    <value>90000</value>
  </property>
  <property>
    <name>hbase.hregion.majorcompaction</name>
    <value>604800000</value>
  </property>
  <property>
    <name>hfile.block.cache.size</name>
    <value>0.4</value>
  </property>
  <property>
    <name>hbase.bucketcache.combinedcache.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.bucketcache.size</name>
    <value>1024</value>
  </property>
  <property>
    <name>hbase.hash.type</name>
    <value>murmur</value>
  </property>
  <property>
    <name>hbase.server.thread.wakefrequency</name>
    <value>10000</value>
  </property>
  <property>
    <name>hbase.coprocessor.abortonerror</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.coprocessor.region.classes</name>
    <value>org.apache.hadoop.hbase.security.access.SecureBulkLoadEndpoint</value>
  </property>
  <property>
    <name>hbase.superuser</name>
    <value></value>
  </property>
  <property>
    <name>hbase.rpc.timeout</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.snapshot.master.timeoutMillis</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.snapshot.region.timeout</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.snapshot.master.timeout.millis</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.security.authentication</name>
    <value>simple</value>
  </property>
  <property>
    <name>hbase.security.authorization</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.row.level.authorization</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.rpc.protection</name>
    <value>authentication</value>
  </property>
  <property>
    <name>zookeeper.session.timeout</name>
    <value>60000</value>
  </property>
  <property>
    <name>zookeeper.znode.parent</name>
    <value>/hbase</value>
  </property>
  <property>
    <name>zookeeper.znode.rootserver</name>
    <value>root-region-server</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>kt3,kt2,kt1</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.client.keytab.file</name>
    <value>hbase.keytab</value>
  </property>
  <property>
    <name>hbase.regionserver.kerberos.principal</name>
    <value>hbase/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>hbase.regionserver.keytab.file</name>
    <value>hbase.keytab</value>
  </property>
  <property>
    <name>hbase.client.kerberos.principal</name>
    <value>hbase/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>hbase.client.keytab.file</name>
    <value>hbase.keytab</value>
  </property>
  <property>
    <name>hbase.bulkload.staging.dir</name>
    <value>/tmp/hbase-staging</value>
  </property>
</configuration>

--------------------------------------------------------------------------------
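Several sizes in this `hbase-site.xml` are raw byte counts. A small worked example converts them and derives one consequence: HBase flushes a region's memstore once it reaches `hbase.hregion.memstore.flush.size`, and blocks further writes to that region when the memstore reaches `flush.size × hbase.hregion.memstore.block.multiplier`. `hbase.hregion.max.filesize` is, roughly, the region size at which a split is triggered, and `hbase.client.write.buffer` is the client-side buffer for batched writes. The values are copied from the file above; `MemstoreMath` is only an illustration, not HBase code.

```java
// Illustrative arithmetic on values copied from hbase-site.xml; not HBase code.
final class MemstoreMath {
    static final long MIB = 1024L * 1024L;
    static final long GIB = 1024L * MIB;

    static final long FLUSH_SIZE = 134217728L;     // hbase.hregion.memstore.flush.size
    static final int BLOCK_MULTIPLIER = 2;          // hbase.hregion.memstore.block.multiplier
    static final long MAX_FILESIZE = 10737418240L; // hbase.hregion.max.filesize
    static final long WRITE_BUFFER = 2097152L;     // hbase.client.write.buffer

    private MemstoreMath() {
    }

    // Memstore size at which writes to a single region are blocked until a flush completes.
    static long blockingSize(long flushSize, int multiplier) {
        return flushSize * multiplier;
    }

    public static void main(String[] args) {
        System.out.println("flush at        " + FLUSH_SIZE / MIB + " MiB");
        System.out.println("block writes at " + blockingSize(FLUSH_SIZE, BLOCK_MULTIPLIER) / MIB + " MiB");
        System.out.println("max region size " + MAX_FILESIZE / GIB + " GiB");
        System.out.println("client buffer   " + WRITE_BUFFER / MIB + " MiB");
    }
}
```

So each region flushes at 128 MiB and stalls writers at 256 MiB; raising only the multiplier trades more memstore memory for fewer write stalls.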

/elephant-hbase/src/main/resources/hbase.keytab:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Tianny/Elephant/7dd7454f4070c726d676120ad0a709f1d70b412a/elephant-hbase/src/main/resources/hbase.keytab

--------------------------------------------------------------------------------

/elephant-hbase/src/main/resources/hdfs-site.xml:

--------------------------------------------------------------------------------

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>nameservice1</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.nameservice1</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled.nameservice1</name>
    <value>true</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>kt1:2181,kt2:2181,kt3:2181</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.nameservice1</name>
    <value>namenode109,namenode177</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode109</name>
    <value>kt1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.servicerpc-address.nameservice1.namenode109</name>
    <value>kt1:8022</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.nameservice1.namenode109</name>
    <value>kt1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.https-address.nameservice1.namenode109</name>
    <value>kt1:50470</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode177</name>
    <value>kt2:8020</value>
  </property>
  <property>
    <name>dfs.namenode.servicerpc-address.nameservice1.namenode177</name>
    <value>kt2:8022</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.nameservice1.namenode177</name>
    <value>kt2:50070</value>
  </property>
  <property>
    <name>dfs.namenode.https-address.nameservice1.namenode177</name>
    <value>kt2:50470</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
  <property>
    <name>dfs.client.use.datanode.hostname</name>
    <value>false</value>
  </property>
  <property>
    <name>fs.permissions.umask-mode</name>
    <value>022</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.algorithm</name>
    <value>3des</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.cipher.suites</name>
    <value>AES/CTR/NoPadding</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.cipher.key.bitlength</name>
    <value>256</value>
  </property>
  <property>
    <name>dfs.namenode.acls.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.use.legacy.blockreader</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.client.read.shortcircuit</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.domain.socket.path</name>
    <value>/var/run/hdfs-sockets/dn</value>
  </property>
  <property>
    <name>dfs.client.read.shortcircuit.skip.checksum</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.client.domain.socket.data.traffic</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.datanode.hdfs-blocks-metadata.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.block.access.token.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.namenode.kerberos.principal</name>
    <value>hdfs/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>dfs.namenode.kerberos.internal.spnego.principal</name>
    <value>HTTP/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>dfs.datanode.kerberos.principal</name>
    <value>hdfs/_HOST@DEV.DXY.CN</value>
  </property>
</configuration>

--------------------------------------------------------------------------------
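With HDFS HA, `fs.defaultFS` in `core-site.xml` points at the logical name `hdfs://nameservice1` rather than at a host. The client resolves it through this `hdfs-site.xml`: `dfs.ha.namenodes.nameservice1` lists the NameNode IDs, each ID has its own `dfs.namenode.rpc-address.nameservice1.<id>`, and `ConfiguredFailoverProxyProvider` fails over between those addresses. The sketch below reproduces only the lookup step over a plain `Map`, with values copied from this file. `HaAddressSketch` is a hypothetical illustration, not Hadoop's implementation, and it performs no failover.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustration of how the HA keys in hdfs-site.xml fit together; not Hadoop code.
final class HaAddressSketch {
    private HaAddressSketch() {
    }

    // Returns the RPC address of every NameNode configured for the given nameservice.
    static List<String> rpcAddresses(Map<String, String> conf, String nameservice) {
        String ids = conf.get("dfs.ha.namenodes." + nameservice);
        if (ids == null) {
            throw new IllegalArgumentException("No NameNode IDs configured for " + nameservice);
        }
        List<String> addresses = new ArrayList<>();
        for (String id : ids.split(",")) {
            String key = "dfs.namenode.rpc-address." + nameservice + "." + id.trim();
            String address = conf.get(key);
            if (address == null) {
                throw new IllegalArgumentException("Missing " + key);
            }
            addresses.add(address);
        }
        return addresses;
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        conf.put("dfs.ha.namenodes.nameservice1", "namenode109,namenode177");
        conf.put("dfs.namenode.rpc-address.nameservice1.namenode109", "kt1:8020");
        conf.put("dfs.namenode.rpc-address.nameservice1.namenode177", "kt2:8020");
        System.out.println(rpcAddresses(conf, "nameservice1")); // [kt1:8020, kt2:8020]
    }
}
```

A missing `rpc-address` entry for a listed ID is the kind of mistake this check surfaces early; the real client reports it when the `FileSystem` is first created.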

/elephant-hbase/src/main/resources/krb5.conf:

--------------------------------------------------------------------------------

[libdefaults]
  default_realm = DEV.DXY.CN
  dns_lookup_kdc = false
  dns_lookup_realm = false
  ticket_lifetime = 86400
  renew_lifetime = 604800
  forwardable = true
  default_tgs_enctypes = aes256-cts-hmac-sha1-96
  default_tkt_enctypes = aes256-cts-hmac-sha1-96
  permitted_enctypes = aes256-cts-hmac-sha1-96
  udp_preference_limit = 1
  kdc_timeout = 3000
[realms]
  DEV.DXY.CN = {
    kdc = kt1
    admin_server = kt1
  }
[domain_realm]

--------------------------------------------------------------------------------

/elephant-hdfs/README.md:

--------------------------------------------------------------------------------

## Elephant-hdfs

HDFS API examples for a Kerberos-secured cluster.

--------------------------------------------------------------------------------

/elephant-hdfs/conf/core-site.xml:

--------------------------------------------------------------------------------

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://nameservice1</value>
  </property>
  <property>
    <name>fs.trash.interval</name>
    <value>1</value>
  </property>
  <property>
    <name>io.compression.codecs</name>
    <value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.DeflateCodec,org.apache.hadoop.io.compress.SnappyCodec,org.apache.hadoop.io.compress.Lz4Codec</value>
  </property>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
  </property>
  <property>
    <name>hadoop.security.authorization</name>
    <value>true</value>
  </property>
  <property>
    <name>hadoop.rpc.protection</name>
    <value>authentication</value>
  </property>
  <property>
    <name>hadoop.ssl.require.client.cert</name>
    <value>false</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.keystores.factory.class</name>
    <value>org.apache.hadoop.security.ssl.FileBasedKeyStoresFactory</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.server.conf</name>
    <value>ssl-server.xml</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.ssl.client.conf</name>
    <value>ssl-client.xml</value>
    <final>true</final>
  </property>
  <property>
    <name>hadoop.security.auth_to_local</name>
    <value>RULE:[1:$1@$0](^.*@DEV\.DXY\.CN$)s/^(.*)@DEV\.DXY\.CN$/$1/g
RULE:[2:$1@$0](^.*@DEV\.DXY\.CN$)s/^(.*)@DEV\.DXY\.CN$/$1/g
DEFAULT</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mapred.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mapred.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.flume.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.flume.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.httpfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.httpfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.yarn.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.yarn.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping</name>
    <value>org.apache.hadoop.security.LdapGroupsMapping</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.url</name>
    <value>ldap://kt1</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.bind.user</name>
    <value>cn=admin,dc=dev,dc=dxy,dc=cn</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.base</name>
    <value>dc=dev,dc=dxy,dc=cn</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.filter.user</name>
    <value>(&amp;(objectClass=posixAccount)(cn={0}))</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.filter.group</name>
    <value>(objectClass=groupOfNames)</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.attr.member</name>
    <value>member</value>
  </property>
  <property>
    <name>hadoop.security.group.mapping.ldap.search.attr.group.name</name>
    <value>cn</value>
  </property>
  <property>
    <name>hadoop.security.instrumentation.requires.admin</name>
    <value>false</value>
  </property>
</configuration>

--------------------------------------------------------------------------------

/elephant-hdfs/conf/hdfs-site.xml:

--------------------------------------------------------------------------------

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>nameservice1</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.nameservice1</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled.nameservice1</name>
    <value>true</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>kt1:2181,kt2:2181,kt3:2181</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.nameservice1</name>
    <value>namenode109,namenode177</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode109</name>
    <value>kt1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.servicerpc-address.nameservice1.namenode109</name>
    <value>kt1:8022</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.nameservice1.namenode109</name>
    <value>kt1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.https-address.nameservice1.namenode109</name>
    <value>kt1:50470</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode177</name>
    <value>kt2:8020</value>
  </property>
  <property>
    <name>dfs.namenode.servicerpc-address.nameservice1.namenode177</name>
    <value>kt2:8022</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.nameservice1.namenode177</name>
    <value>kt2:50070</value>
  </property>
  <property>
    <name>dfs.namenode.https-address.nameservice1.namenode177</name>
    <value>kt2:50470</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
  <property>
    <name>dfs.client.use.datanode.hostname</name>
    <value>false</value>
  </property>
  <property>
    <name>fs.permissions.umask-mode</name>
    <value>022</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.algorithm</name>
    <value>3des</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.cipher.suites</name>
    <value>AES/CTR/NoPadding</value>
  </property>
  <property>
    <name>dfs.encrypt.data.transfer.cipher.key.bitlength</name>
    <value>256</value>
  </property>
  <property>
    <name>dfs.namenode.acls.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.use.legacy.blockreader</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.client.read.shortcircuit</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.domain.socket.path</name>
    <value>/var/run/hdfs-sockets/dn</value>
  </property>
  <property>
    <name>dfs.client.read.shortcircuit.skip.checksum</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.client.domain.socket.data.traffic</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.datanode.hdfs-blocks-metadata.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.block.access.token.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.namenode.kerberos.principal</name>
    <value>hdfs/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>dfs.namenode.kerberos.internal.spnego.principal</name>
    <value>HTTP/_HOST@DEV.DXY.CN</value>
  </property>
  <property>
    <name>dfs.datanode.kerberos.principal</name>
    <value>hdfs/_HOST@DEV.DXY.CN</value>
  </property>
</configuration>

--------------------------------------------------------------------------------
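Three values in this `hdfs-site.xml` determine how files land on the cluster: `dfs.blocksize` (134217728 bytes, i.e. 128 MiB), `dfs.replication` (3) and `fs.permissions.umask-mode` (022, octal). As a worked example with a hypothetical 1 GiB file: 1024 MiB / 128 MiB gives 8 blocks, each stored 3 times, so 24 block replicas and about 3 GiB of raw disk. HDFS starts new files at mode 0666 and new directories at 0777 before applying the umask, so 022 yields 0644 and 0755. `HdfsSettingsMath` below only reproduces this arithmetic with the JDK; Hadoop's own permission handling lives in classes such as `FsPermission`.

```java
// Illustrative arithmetic on values copied from hdfs-site.xml; not Hadoop code.
final class HdfsSettingsMath {
    static final long BLOCK_SIZE = 134217728L; // dfs.blocksize (128 MiB)
    static final int REPLICATION = 3;          // dfs.replication
    static final int UMASK = 022;              // fs.permissions.umask-mode; also an octal literal in Java

    private HdfsSettingsMath() {
    }

    // Number of blocks a file of the given length occupies (ceiling division).
    static long blockCount(long fileBytes, long blockSize) {
        return (fileBytes + blockSize - 1) / blockSize;
    }

    // Raw bytes stored across the cluster, ignoring checksum and metadata overhead.
    static long rawBytes(long fileBytes, int replication) {
        return fileBytes * replication;
    }

    // Effective mode of a new file: default 0666 with the umask bits cleared.
    static String filePermission(int umask) {
        return Integer.toOctalString(0666 & ~umask);
    }

    // Effective mode of a new directory: default 0777 with the umask bits cleared.
    static String dirPermission(int umask) {
        return Integer.toOctalString(0777 & ~umask);
    }

    public static void main(String[] args) {
        long oneGiB = 1024L * 1024L * 1024L;
        System.out.println(blockCount(oneGiB, BLOCK_SIZE) + " blocks");         // 8 blocks
        System.out.println(rawBytes(oneGiB, REPLICATION) + " raw bytes");       // 3221225472 raw bytes
        System.out.println(filePermission(UMASK) + " / " + dirPermission(UMASK)); // 644 / 755
    }
}
```

The last block of a file only takes as much space as the data in it, so one byte over 1 GiB adds a ninth block but almost no extra storage.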

/elephant-hdfs/conf/hdfs.keytab:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Tianny/Elephant/7dd7454f4070c726d676120ad0a709f1d70b412a/elephant-hdfs/conf/hdfs.keytab

--------------------------------------------------------------------------------

/elephant-hdfs/conf/krb5.conf:

--------------------------------------------------------------------------------

[libdefaults]
  default_realm = DEV.DXY.CN
  dns_lookup_kdc = false
  dns_lookup_realm = false
  ticket_lifetime = 86400
  renew_lifetime = 604800
  forwardable = true
  default_tgs_enctypes = aes256-cts-hmac-sha1-96
  default_tkt_enctypes = aes256-cts-hmac-sha1-96
  permitted_enctypes = aes256-cts-hmac-sha1-96
  udp_preference_limit = 1
  kdc_timeout = 3000
[realms]
  DEV.DXY.CN = {
    kdc = kt1
    admin_server = kt1
  }
[domain_realm]

--------------------------------------------------------------------------------

/elephant-hdfs/pom.xml:

--------------------------------------------------------------------------------

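<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>elephant</artifactId>
        <groupId>com.hadoopbook</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>
    <artifactId>elephant-hdfs</artifactId>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <hadoop.version>2.6.0</hadoop.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>test</scope>
        </dependency>
    </dependencies>

    <!-- The compiler plugin belongs under build/plugins; declared as a dependency it has no effect on compilation -->
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
            </plugin>
        </plugins>
    </build>
</project>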

--------------------------------------------------------------------------------

/elephant-hdfs/src/main/java/HDFSUtils.java:

--------------------------------------------------------------------------------

1 | import org.apache.hadoop.conf.Configuration;

2 | import org.apache.hadoop.fs.FSDataOutputStream;

3 | import org.apache.hadoop.fs.FileStatus;

4 | import org.apache.hadoop.fs.FileSystem;

5 | import org.apache.hadoop.fs.Path;

6 | import org.apache.hadoop.io.IOUtils;

7 |

8 | import java.io.File;

9 | import java.io.IOException;

10 | import java.io.InputStream;

11 |

12 | /**

13 | * HDFS 文件系统操作工具类

14 | */

15 |

16 | public class HDFSUtils {

17 | /*

18 | * 初始化 HDFS Configuration

19 | */

20 | public static Configuration initConfiguration(String confPath) {

21 | Configuration configuration = new Configuration();

22 | // System.out.println(confPath + File.separator + "core-site.xml");

23 | configuration.addResource(new Path(confPath + File.separator + "core-site.xml"));

24 | configuration.addResource(new Path(confPath + File.separator + "hdfs-site.xml"));

25 | return configuration;

26 | }

27 |

28 | /*

29 | * 向 HDFS 指定目录创建一个文件

30 | * @param fs HDFS 文件系统

31 | * @param dst 目标文件路径

32 | * @param contents 文件内容

33 | */

34 | public static void createFile(FileSystem fs, String dst, String contents) {

35 | try {

36 | Path path = new Path(dst);

37 | FSDataOutputStream fsDataOutputStream = fs.create(path);

38 | fsDataOutputStream.write(contents.getBytes());

39 | fsDataOutputStream.close();

40 | } catch (IOException e) {

41 | e.printStackTrace();

42 | }

43 | }

44 |

45 | /*

46 | * 上传文件至 HDFS

47 | * @param fs HDFS 文件系统

48 | * @param src 源文件路径

49 | * @param dst 目标文件路径

50 | * */

51 | public static void uploadFile(FileSystem fs, String src, String dst) {

52 | try {

53 | Path srcPath = new Path(src); // 原路径

54 | Path dstPath = new Path(dst); // 目标路径

55 | fs.copyFromLocalFile(false, srcPath, dstPath);

56 | // 打印文件路径

57 | System.out.println("----list files-----");

58 | FileStatus[] fileStatuses = fs.listStatus(dstPath);

59 | for (FileStatus file : fileStatuses) {

60 | System.out.println(file.getPath());

61 | }

62 | } catch (IOException e) {

63 | e.printStackTrace();

64 | }

65 | }

66 |

67 | /*

68 | * 文件重命名

69 | * @param fs

70 | * @param oldName

71 | * @param newName

72 | */

73 |

74 | public static void rename(FileSystem fs, String oldName, String newName) {

75 | try {

76 | Path oldPath = new Path(oldName);

77 | Path newPath = new Path(newName);

78 | boolean isOk = fs.rename(oldPath, newPath);

79 | if (isOk) {

80 | System.out.print("rename ok");

81 | } else {

82 | System.out.print("rename failure");

83 | }

84 | } catch (IOException e) {

85 | e.printStackTrace();

86 | }

87 | }

88 |

89 | /*

90 | * 删除文件

91 | */

92 |

93 | public static void delete(FileSystem fs, String filePath) {

94 | try {

95 | Path path = new Path(filePath);

96 | boolean isOk = fs.deleteOnExit(path);

97 | if (isOk) {

98 | System.out.println("delete ok");

99 | } else {

100 | System.out.print("delete failure");

101 | }

102 | } catch (IOException e) {

103 | e.printStackTrace();

104 | }

105 | }

106 |

107 | /*

108 | Create an HDFS directory (and any missing parent directories)

109 | */

110 | public static void mkdir(FileSystem fs, String path) {

111 | try {

112 | Path srcPath = new Path(path);

113 | if (fs.exists(srcPath)) {

114 | System.out.println("directory already exists");

115 | return;

116 | }

117 |

118 | boolean isOk = fs.mkdirs(srcPath);

119 | if (isOk) {

120 | System.out.println("create dir ok");

121 | } else {

122 | System.out.println("create dir failure");

123 | }

124 | } catch (IOException e) {

125 | e.printStackTrace();

126 | }

127 | }

128 |

129 | /*

130 | Read an HDFS file and copy its contents to standard output

131 | */

132 | public static void readFile(FileSystem fs, String filePath) {

133 | Path srcPath = new Path(filePath);

134 | // try-with-resources closes the HDFS input stream when done

135 | try (InputStream in = fs.open(srcPath)) {

136 | IOUtils.copyBytes(in, System.out, 4096, false); // copy to stdout; false leaves System.out open

137 | } catch (IOException e) {

138 | e.printStackTrace();

139 | }

140 | }

141 |

142 | }

143 |
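The HDFS helpers above share one pattern: open a stream, use it, close it, and encode text explicitly. Running them needs a cluster, so the following is a minimal JDK-only sketch of the same discipline, assuming local temp files stand in for HDFS paths; the class and method names are hypothetical. It also shows why a deferred delete differs from an immediate one: `File.deleteOnExit()` only schedules removal at JVM exit, much as Hadoop's `FileSystem.deleteOnExit(Path)` only deletes when the `FileSystem` is closed.

```java
import java.io.ByteArrayOutputStream;
import java.io.File;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// JDK-only stand-in for HDFSUtils (hypothetical): local temp files play the role of HDFS paths.
public class StreamDisciplineSketch {

    // Mirrors createFile: explicit UTF-8, stream closed by try-with-resources.
    static void createFile(Path dst, String contents) {
        try (OutputStream out = Files.newOutputStream(dst)) {
            out.write(contents.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Mirrors readFile: read everything, decode as UTF-8, close automatically.
    static String readFile(Path src) {
        try (InputStream in = Files.newInputStream(src)) {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buf.write(chunk, 0, n);
            }
            return new String(buf.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Write, read back, then delete immediately; returns what was read.
    static String roundTrip(String contents) {
        try {
            Path tmp = Files.createTempFile("hdfs-sketch", ".txt");
            try {
                createFile(tmp, contents);
                return readFile(tmp);
            } finally {
                Files.delete(tmp); // immediate deletion
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // deleteOnExit only schedules removal (at JVM exit), so the file still exists afterwards.
    static boolean existsAfterDeleteOnExit() {
        try {
            File f = Files.createTempFile("hdfs-sketch", ".txt").toFile();
            f.deleteOnExit();
            return f.exists();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("Gr\u00fc\u00dfe, HDFS"));
        System.out.println("exists after deleteOnExit: " + existsAfterDeleteOnExit());
    }
}
```

The non-ASCII string survives the round trip only because both sides agree on UTF-8; relying on `getBytes()` with no charset ties the result to each JVM's default encoding.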

--------------------------------------------------------------------------------

/elephant-hdfs/src/main/java/KBHDFSTest.java:

--------------------------------------------------------------------------------

1 | import org.apache.hadoop.fs.FileSystem;

2 | import org.apache.hadoop.conf.Configuration;

3 | import org.apache.hadoop.security.UserGroupInformation;

4 |

5 | import java.io.File;

6 | import java.io.IOException;

7 |

8 | /*

9 | * Access HDFS in a Kerberos-secured environment

10 | */

11 |

12 | public class KBHDFSTest {

13 | private static String confPath = System.getProperty("user.dir") + File.separator + "elephant-hdfs" + File.separator + "conf";

14 |

15 | /*

16 | * Initialize the Kerberos environment: point the JVM at krb5.conf and log in from the keytab

17 | */

18 | public static void initKerberosEnv(Configuration conf) {

19 | System.setProperty("java.security.krb5.conf", confPath + File.separator + "krb5.conf");

20 | System.setProperty("javax.security.auth.useSubjectCredsOnly", "false");

21 | System.setProperty("sun.security.krb5.debug", "true");

22 | try {

23 | UserGroupInformation.setConfiguration(conf);

24 | UserGroupInformation.loginUserFromKeytab("hdfs/kt1@DEV.DXY.CN", confPath + File.separator + "hdfs.keytab");

25 | System.out.println(UserGroupInformation.getCurrentUser());

26 | } catch (IOException e) {

27 | e.printStackTrace();

28 | }

29 | }

30 |

31 | public static void main(String[] args) {

32 | // Initialize the HDFS Configuration from the conf directory

33 | Configuration configuration = HDFSUtils.initConfiguration(confPath);

34 | initKerberosEnv(configuration);

35 | // try-with-resources closes the FileSystem when the demo finishes

36 | try (FileSystem fileSystem = FileSystem.get(configuration)) {

37 | HDFSUtils.mkdir(fileSystem, "/test");

38 | HDFSUtils.uploadFile(fileSystem, confPath + File.separator + "krb5.conf", "/test");

39 | HDFSUtils.rename(fileSystem, "/test/krb5.conf", "/test/krb51.conf");

40 | HDFSUtils.readFile(fileSystem, "/test/krb51.conf");

41 | HDFSUtils.delete(fileSystem, "/test/krb51.conf");

42 | } catch (IOException e) {

43 | e.printStackTrace();

44 | }

45 | }

46 | }

47 |

--------------------------------------------------------------------------------

/elephant-hive/README.md:

--------------------------------------------------------------------------------

1 | ## Elephant-hive

2 |

3 | Hive UDF and UDAF examples.

4 |

5 | [See my blog for a detailed explanation.](https://tianny.cc/2019/03/15/Hive-UDF/)

--------------------------------------------------------------------------------

/elephant-hive/pom.xml:

--------------------------------------------------------------------------------

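1 | <?xml version="1.0" encoding="UTF-8"?>

2 | <project xmlns="http://maven.apache.org/POM/4.0.0"

3 | xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

4 | xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

5 | <parent>

6 | <artifactId>elephant</artifactId>

7 | <groupId>com.hadoopbook</groupId>

8 | <version>1.0-SNAPSHOT</version>

9 | </parent>

10 | <modelVersion>4.0.0</modelVersion>

11 |

12 | <artifactId>elephant-hive</artifactId>

13 | <dependencies>

14 | <dependency>

15 | <groupId>org.apache.hive</groupId>

16 | <artifactId>hive-exec</artifactId>

17 | <version>0.13.1</version>

18 | </dependency>

19 | <dependency>

20 | <groupId>org.apache.hadoop</groupId>

21 | <artifactId>hadoop-common</artifactId>

22 | <version>2.6.0</version>

23 | </dependency>

24 | </dependencies>

25 | </project>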

--------------------------------------------------------------------------------

/elephant-hive/src/main/java/UDAF/Maximum.java:

--------------------------------------------------------------------------------

1 | package UDAF;

2 |

3 | import org.apache.hadoop.hive.ql.exec.UDAF;

4 | import org.apache.hadoop.hive.ql.exec.UDAFEvaluator;

5 | import org.apache.hadoop.io.IntWritable;

6 |

7 | /**

8 | * A UDAF for calculating the maximum of a collection of integers

9 | */

10 | public class Maximum extends UDAF {

11 | public static class MaximumIntUDAFEvaluator implements UDAFEvaluator {

12 | private IntWritable result;

13 |

14 | public void init() {

15 | result = null;

16 | }

17 |

18 | public boolean iterate(IntWritable value) {

19 | if (value == null) {

20 | return true;

21 | }

22 | if (result == null) {

23 | result = new IntWritable(value.get());

24 | } else {

25 | result.set(Math.max(result.get(), value.get()));

26 | }

27 | return true;

28 | }

29 |

30 | public IntWritable terminatePartial() {

31 | return result;

32 | }

33 |

34 | public boolean merge(IntWritable other) {

35 | return iterate(other);

36 | }

37 |

38 | public IntWritable terminate() {

39 | return result;

40 | }

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

/elephant-hive/src/main/java/UDAF/Mean.java:

--------------------------------------------------------------------------------

1 | package UDAF;

2 |

3 | import org.apache.hadoop.hive.ql.exec.UDAF;

4 | import org.apache.hadoop.hive.ql.exec.UDAFEvaluator;

5 | import org.apache.hadoop.hive.serde2.io.DoubleWritable;

6 |

7 | /**

8 | * A UDAF for calculating the mean of a collection of doubles

9 | */

10 | public class Mean extends UDAF {

11 | public static class MeanDoubleUDAFEvaluator implements UDAFEvaluator {

12 | public static class PartialResult {

13 | double sum;

14 | long count;

15 | }

16 |

17 | private PartialResult partial;

18 |

19 | public void init() {

20 | partial = null;

21 | }

22 |

23 | public boolean iterate(DoubleWritable value) {

24 | if (value == null) {

25 | return true;

26 | }

27 | if (partial == null) {

28 | partial = new PartialResult();

29 | }

30 | partial.sum += value.get();

31 | partial.count++;

32 | return true;

33 | }

34 |

35 | public PartialResult terminatePartial() {

36 | return partial;

37 | }

38 |

39 | public boolean merge(PartialResult other) {

40 | if (other == null) {

41 | return true;

42 | }

43 | if (partial == null) {

44 | partial = new PartialResult();

45 | }

46 | partial.sum += other.sum;

47 | partial.count += other.count;

48 | return true;

49 | }

50 |

51 | public DoubleWritable terminate() {

52 | if (partial == null) {

53 | return null;

54 | }

55 | return new DoubleWritable(partial.sum / partial.count);

56 | }

57 | }

58 | }

59 |
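Hive drives an old-style `UDAFEvaluator` through `init()`, `iterate()`, `terminatePartial()`, `merge()` and `terminate()`. The sketch below replays that sequence for `Mean` in plain Java; it is not Hive's execution engine, and it assumes `Double` in place of `DoubleWritable` and two hypothetical input splits. It shows why the partial result carries a (sum, count) pair rather than a partial mean.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java replay of Mean.MeanDoubleUDAFEvaluator's call sequence (not Hive itself).
// Double stands in for DoubleWritable; each List<Double> is a hypothetical input split.
public class MeanLifecycleSketch {

    // Same shape as Mean.PartialResult: running sum plus count.
    static final class Partial {
        double sum;
        long count;
    }

    // Map side: init(), iterate() per row, then terminatePartial().
    static Partial partialFor(List<Double> split) {
        Partial partial = null;                  // init()
        for (Double value : split) {             // iterate(value)
            if (value == null) {
                continue;                        // NULL rows are ignored
            }
            if (partial == null) {
                partial = new Partial();
            }
            partial.sum += value;
            partial.count++;
        }
        return partial;                          // terminatePartial(); null for an all-NULL split
    }

    // Reduce side: init(), merge() per partial, then terminate().
    static Double mean(List<Partial> partials) {
        Partial total = null;                    // init()
        for (Partial other : partials) {         // merge(other)
            if (other == null) {
                continue;
            }
            if (total == null) {
                total = new Partial();
            }
            total.sum += other.sum;
            total.count += other.count;
        }
        return total == null ? null : total.sum / total.count; // terminate()
    }

    public static void main(String[] args) {
        Partial a = partialFor(Arrays.asList(1.0, 2.0, 3.0, null)); // sum 6.0, count 3
        Partial b = partialFor(Arrays.asList(10.0));                // sum 10.0, count 1
        System.out.println(mean(Arrays.asList(a, b)));              // 4.0, i.e. 16.0 / 4
    }
}
```

Averaging the two split means instead would give (2.0 + 10.0) / 2 = 6.0, which is wrong. `Maximum` can simply reuse `iterate()` as `merge()` because the maximum of partial maxima is the overall maximum.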

--------------------------------------------------------------------------------

/elephant-hive/src/main/java/UDF/Strip.java:

--------------------------------------------------------------------------------

1 | package UDF;

2 |

3 | import org.apache.commons.lang.StringUtils;

4 | import org.apache.hadoop.hive.ql.exec.UDF;

5 | import org.apache.hadoop.io.Text;

6 |

7 | /**

8 | * A UDF must be a subclass of org.apache.hadoop.hive.ql.exec.UDF.

9 | * A UDF must implement at least one evaluate() method.

10 | */

11 |

12 | public class Strip extends UDF {

13 | private Text result = new Text();

14 |

15 | /**

16 | * Strip leading and trailing whitespace from the input.

17 | * @param str the input string

18 | * @return the stripped string, or null if str is null

19 | */

20 | public Text evaluate(Text str) {

21 | if (str == null) {

22 | return null;

23 | }

24 | result.set(StringUtils.strip(str.toString()));

25 | return result;

26 | }

27 |

28 | /**

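29 | * Strip any of a set of supplied characters from both ends of the string.

30 | * @param str the input string

31 | * @param stripChars the characters to remove; null means whitespace

32 | * @return the stripped string, or null if str is null

33 | */

34 | public Text evaluate(Text str, String stripChars) {

35 | if (str == null) {

36 | return null;

37 | }

38 | result.set(StringUtils.strip(str.toString(), stripChars));

39 | return result;

40 | }

41 | }

42 |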
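Both `evaluate` overloads delegate to commons-lang `StringUtils.strip`. As a rough illustration of the behavior they rely on, here is a JDK-only sketch written for this example rather than copied from commons-lang: with a `null` character set, whitespace (per `Character.isWhitespace`) is removed from both ends; otherwise any character found in the set is removed, and characters in the middle are kept.

```java
// JDK-only sketch of the semantics of commons-lang StringUtils.strip(str, stripChars).
// Hypothetical helper class, not part of the project.
public class StripSketch {

    // Should this character be removed from either end?
    private static boolean isStripped(char c, String stripChars) {
        return stripChars == null ? Character.isWhitespace(c) : stripChars.indexOf(c) >= 0;
    }

    static String strip(String str, String stripChars) {
        if (str == null || str.isEmpty()) {
            return str;
        }
        int start = 0;
        int end = str.length();
        while (start < end && isStripped(str.charAt(start), stripChars)) {
            start++; // trim from the left
        }
        while (end > start && isStripped(str.charAt(end - 1), stripChars)) {
            end--;   // trim from the right
        }
        return str.substring(start, end);
    }

    public static void main(String[] args) {
        System.out.println("[" + strip("  banana \t", null) + "]"); // [banana]
        System.out.println("[" + strip("xxbananaxy", "xy") + "]"); // [banana]
        System.out.println("[" + strip("banana", "ab") + "]");     // [nan]
    }
}
```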

--------------------------------------------------------------------------------

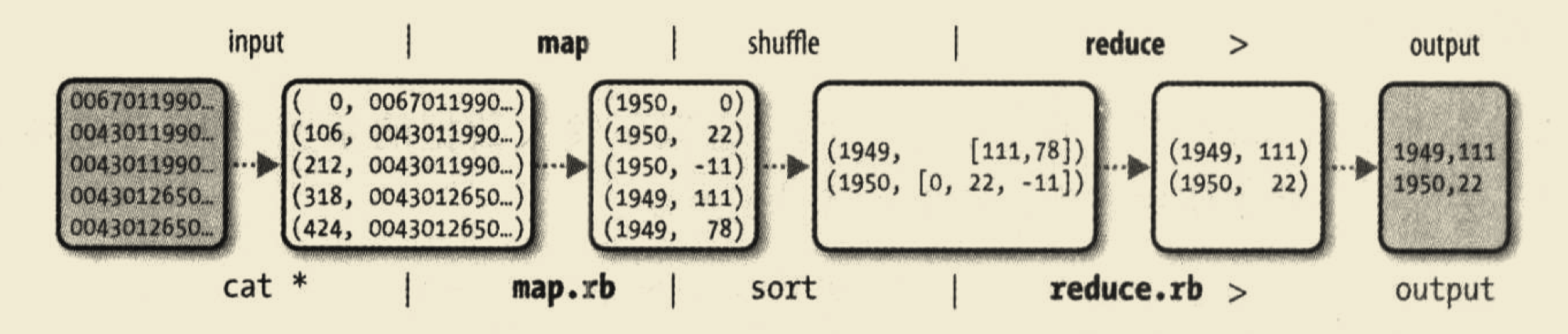

/elephant-mr/README.md:

--------------------------------------------------------------------------------

1 | ## Elephant-mr

2 |

3 | 用于输出每一年的最高气温

4 |

5 | 整个 MapReduce 的流程如下图:

6 |

7 |

--------------------------------------------------------------------------------

/elephant-mr/input/sample.txt:

--------------------------------------------------------------------------------

1 | 0067011990999991950051507004+68750+023550FM-12+038299999V0203301N00671220001CN9999999N9+00001+99999999999

2 | 0043011990999991950051512004+68750+023550FM-12+038299999V0203201N00671220001CN9999999N9+00221+99999999999

3 | 0043011990999991950051518004+68750+023550FM-12+038299999V0203201N00261220001CN9999999N9-00111+99999999999

4 | 0043012650999991949032412004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+01111+99999999999

5 | 0043012650999991949032418004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+00781+99999999999

6 |
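The job's mapper and reducer are not shown in this excerpt, so the sketch below assumes the fixed-width offsets used by the classic NCDC max-temperature example: year at characters 15-18, a signed temperature in tenths of a degree Celsius at 87-91, and a quality flag at 92, with 9999 marking a missing reading. It collapses the map step (parse and filter) and the reduce step (maximum per year) into one loop over the five records above; the class name is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-process sketch of the max-temperature job (hypothetical class, not the project's mapper/reducer).
// ASSUMED NCDC offsets: year = chars 15-18, signed temperature = chars 87-91, quality = char 92.
public class MaxTemperatureSketch {
    private static final int MISSING = 9999;

    // The five records from elephant-mr/input/sample.txt.
    static final List<String> SAMPLE = Arrays.asList(
        "0067011990999991950051507004+68750+023550FM-12+038299999V0203301N00671220001CN9999999N9+00001+99999999999",
        "0043011990999991950051512004+68750+023550FM-12+038299999V0203201N00671220001CN9999999N9+00221+99999999999",
        "0043011990999991950051518004+68750+023550FM-12+038299999V0203201N00261220001CN9999999N9-00111+99999999999",
        "0043012650999991949032412004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+01111+99999999999",
        "0043012650999991949032418004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+00781+99999999999");

    // Map step (parse + filter) and reduce step (keep the max per year) in one pass.
    static Map<String, Integer> maxByYear(List<String> lines) {
        Map<String, Integer> max = new TreeMap<>(); // sorted by year for stable output
        for (String line : lines) {
            String year = line.substring(15, 19);
            // skip a leading '+' sign; a leading '-' is kept and parsed
            int temp = line.charAt(87) == '+'
                    ? Integer.parseInt(line.substring(88, 92))
                    : Integer.parseInt(line.substring(87, 92));
            String quality = line.substring(92, 93);
            if (temp != MISSING && quality.matches("[01459]")) {
                max.merge(year, temp, Integer::max);
            }
        }
        return max;
    }

    public static void main(String[] args) {
        System.out.println(maxByYear(SAMPLE)); // {1949=111, 1950=22}
    }
}
```

In the real job the mapper would emit `(year, temperature)` pairs and the reducer would take the maximum of each year's values; 111 and 22 correspond to 11.1 °C and 2.2 °C.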

--------------------------------------------------------------------------------

/elephant-mr/pom.xml:

--------------------------------------------------------------------------------

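1 | <?xml version="1.0" encoding="UTF-8"?>

2 | <project xmlns="http://maven.apache.org/POM/4.0.0"

3 | xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

4 | xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

5 | <parent>

6 | <artifactId>elephant</artifactId>

7 | <groupId>com.hadoopbook</groupId>

8 | <version>1.0-SNAPSHOT</version>

9 | </parent>

10 | <modelVersion>4.0.0</modelVersion>

11 | <artifactId>elephant-mr</artifactId>

12 | <properties>

13 | <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

14 | <maven.compiler.source>1.8</maven.compiler.source>

15 | <maven.compiler.target>1.8</maven.compiler.target>

16 | <hadoop.version>2.6.0</hadoop.version>

17 | </properties>

18 |

19 | <dependencies>

20 | <dependency>

21 | <groupId>org.apache.hadoop</groupId>

22 | <artifactId>hadoop-common</artifactId>

23 | <version>${hadoop.version}</version>

24 | </dependency>

25 | <dependency>

26 | <groupId>org.apache.hadoop</groupId>

27 | <artifactId>hadoop-client</artifactId>

28 | <version>${hadoop.version}</version>

29 | </dependency>

30 | <dependency>

31 | <groupId>junit</groupId>

32 | <artifactId>junit</artifactId>

33 | <version>4.11</version>

34 | </dependency>

35 | </dependencies>

36 | <build>

37 | <plugins>

38 | <plugin>

39 | <groupId>org.apache.maven.plugins</groupId>

40 | <artifactId>maven-compiler-plugin</artifactId>

41 | <version>3.7.0</version>

42 | </plugin>

43 | </plugins>

44 | </build>

45 | </project>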

--------------------------------------------------------------------------------

/elephant-mr/src/main/java/MaxTemperature.java:

--------------------------------------------------------------------------------

1 | import org.apache.hadoop.conf.Configuration;

2 | import org.apache.hadoop.fs.Path;

3 | import org.apache.hadoop.io.IntWritable;

4 | import org.apache.hadoop.io.Text;

5 | import org.apache.hadoop.mapreduce.Job;

6 | import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

7 | import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

8 |

9 | public class MaxTemperature {

10 |

11 | public static void main(String[] args) throws Exception{

12 | if (args.length != 2) {

13 | System.err.println("Usage: MaxTemperature