├── CaseAnalyse.md

├── DatasetUrl.md

├── Images

├── 0004.png

├── 0005.png

├── 0006.png

├── 0007.png

├── 0008.png

├── 0009.png

├── 001.png

├── 0010.png

├── 0011.png

├── 0012.png

├── 0013.png

├── 0014.png

├── 0015.png

├── 0016.png

├── 0017.png

├── 0018.png

├── 0019.png

├── 002.png

├── 0020.png

├── 0021.png

├── 0022.png

├── 0023.png

├── 0024.png

├── 0025.png

├── 0026.png

└── 003.png

├── Knowledge.md

├── Lecture_00

└── README.md

├── Lecture_01

├── LinearRegression.md

├── LinearRegression.py

└── README.md

├── Lecture_02

├── LogisticRegression.py

├── README.md

├── data

│ ├── 01.png

│ ├── 02.png

│ └── LogiReg_data.txt

├── e2.py

└── e3.py

├── Lecture_03

├── README.md

├── data

│ ├── 04.png

│ └── README.md

├── ex1.py

└── ex2.py

├── Lecture_04

├── README.md

├── allElectronicsData.dot

├── data

│ └── 01.PNG

└── ex1.py

├── Lecture_05

├── README.md

├── data

│ └── README.md

├── ex1.py

├── ex2.py

└── ex3.py

├── Lecture_06

├── README.md

├── data

│ ├── Figure_1.png

│ ├── README.md

│ ├── p18.png

│ └── simhei.ttf

├── ex1.py

├── ex2.py

├── ex3.py

└── word_cloud.py

├── Lecture_07

├── README.md

├── ex1.py

└── maping.py

├── Lecture_08

├── DBSCAN.md

├── Kmeans.md

├── README.md

├── data

│ └── README.md

└── ex1.py

├── Lecture_09

├── README.md

├── data

│ └── README.md

├── ex1.py

└── ex2.py

├── Lecture_10

├── README.md

└── 初探神经网络.pdf

├── Lecture_11

├── README.md

├── data

│ └── README.md

└── ex1.py

├── Lecture_12

├── README.md

├── data

│ └── README.md

├── ex1.py

├── ex2.py

├── ex3.py

├── ex4.py

└── ex5.py

├── Lecture_13

├── README.md

├── data

│ └── README.md

└── ex1.py

├── Lecture_14

├── README.md

└── data

│ └── README.md

├── Lecture_15

├── README.md

└── data

│ └── README.md

├── Others

├── Anaconda.md

├── EnvironmentSetting.md

├── InstallCUDA.md

├── InstallPytorch.md

├── InstallTensorflow.md

└── Xshell2Service.md

├── README.md

├── RecommendBook.md

└── tools

├── README.md

├── accFscore.py

├── pieChart.py

├── plot001.py

├── plot002.py

├── plot003.py

├── plot004.py

└── visiualImage.py

/CaseAnalyse.md:

--------------------------------------------------------------------------------

1 | # 实战案例分析集合

2 | ## 1.回归

3 | - [1001 波士顿房价预测](./Lecture_01/README.md)

4 |

5 | - [1002 基于Tensorflow的波士顿房价预测](./Lecture_12/README.md)

6 | - [1003 Tensorflow实现两层全连接神经网络拟合正弦函数](./Lecture_12/README.md)

7 |

8 | ## 2.分类

9 | - [2001 是否患癌预测](./Lecture_02/README.md)

10 |

11 | - [2002 是否录取预测](./Lecture_02/README.md)

12 | - [2003 信用卡通过预测](./Lecture_03/README.md)

13 | - [2004 基于决策树的iris预测](./Lecture_04/README.md)

14 | - [2005 是否患有糖尿病预测](./Lecture_05/README.md)

15 | - [2006 泰坦尼克号人员生还预测](./Lecture_05/README.md)

16 | - [2007 基于贝叶斯算法和编辑距离的单词拼写纠正](./Lecture_06/README.md)

17 | - [2008 基于贝叶斯算法和TF-IDF的中文垃圾邮件分类](./Lecture_06/README.md)

18 | - [2009 基于贝叶斯算法和TF-IDF的中文新闻分类](./Lecture_06/README.md)

19 | - [2010 基于SVM的人脸识别](./Lecture_07/README.md)

20 | - [2011 基于决策树和词向量表示的中文垃圾邮件分类](./Lecture_09/README.md)

21 | - [2012 三层神经网络手写体识别](./Lecture_11/README.md)

22 | - [2013 基于Softmax分类器的MNIST手写体识别](./Lecture_12/README.md)

23 | - [2014 基于多层全连接神经网络的MNIST手写体识别](./Lecture_12/README.md)

24 |

25 | ## 3.聚类

26 | - [3001 基于Kmeans的手写体聚类分析](Lecture_08/README.md)

27 | ### [<主页>](./README.md)

28 |

--------------------------------------------------------------------------------

/DatasetUrl.md:

--------------------------------------------------------------------------------

1 | # 数据集下载地址集合

2 | ### 如链接失效,请联系 wangchengo@126.com

3 |

4 | ### 1.分类

5 | - [1001-印度人糖尿病预测(pima-indians-diabetes)](https://pan.baidu.com/s/1Z2JtgJBafytuMRzPDU8Ncw) 提取码:hfb3

6 |

7 | - [1002-泰坦尼克号获救预测](https://pan.baidu.com/s/1Nbd29zac79SHV43oMVDV9A) 提取码: wvmf

8 |

9 | - [1003-单词拼写纠正](https://pan.baidu.com/s/1EPz-Z7WKVPAULGmZ8K6UWQ ) 提取码:zw1s

10 |

11 | - [1004-中文垃圾邮件分类](https://pan.baidu.com/s/10hGDFL9t58o0Moq6BcbotA) 提取码:dyxr

12 |

13 | - [1005-搜狗新闻分类(搜狗实验室)](https://pan.baidu.com/s/1CVLWjTmKht8bQHeep7NSJw) 提取码:44t6

14 |

15 | - [1006-手写体识别5000by10](https://pan.baidu.com/s/1zgOpwZSJMNJ4JP5cbZxMKA) 提取码:wt5f

16 |

17 | - [1007-英文新闻分类数据集AG_news](https://pan.baidu.com/s/19sXx0xnol8c9L0wse_OAMw) 提取码:xvqr

18 |

19 | 训练集120K=4*20K,测试集7.6K,类别数4

20 |

21 | - [1008-DBPedia ontology](https://pan.baidu.com/s/18Uy8uJCAr0uoM0v3uu0yWw) 提取码:nn97

22 |

23 | 来自 DBpedia 2014 的 14 个不重叠的分类的 40,000 个训练样本和 5,000 个测试样本,即训练集560K,测试集70K

24 |

25 | - [1009-Yelp review Full](https://pan.baidu.com/s/1OoJ387QsY7aGgdEPPMKjBw) 提取码:0k94

26 |

27 | 5分类,每个评级分别包含 130,000 个训练样本和 10,000 个 测试样本,即训练集650K,测试集50K

28 |

29 | - [1010-Yelp reviews Polarity](https://pan.baidu.com/s/1oT6du2rLQDCWhPxXtbIyjw) 提取码:do3p

30 |

31 | 2分类,不同极性分别包含 280,000 个训练样本和 19,000 个测试样,即训练集560K,测试集38K

32 |

33 |

34 |

35 | ### 2.回归

36 |

37 | ### 3.中英文语料库

38 | - [3001-常用中文停用词表](https://pan.baidu.com/s/1ovGC1RrIOioMNALjsXu9Ow) 提取码: 9jff

39 |

40 | - [3002-常用词向量](https://github.com/Embedding/Chinese-Word-Vectors)

41 | ### [<主页>](./README.md)

42 |

43 |

--------------------------------------------------------------------------------

/Images/0004.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0004.png

--------------------------------------------------------------------------------

/Images/0005.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0005.png

--------------------------------------------------------------------------------

/Images/0006.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0006.png

--------------------------------------------------------------------------------

/Images/0007.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0007.png

--------------------------------------------------------------------------------

/Images/0008.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0008.png

--------------------------------------------------------------------------------

/Images/0009.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0009.png

--------------------------------------------------------------------------------

/Images/001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/001.png

--------------------------------------------------------------------------------

/Images/0010.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0010.png

--------------------------------------------------------------------------------

/Images/0011.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0011.png

--------------------------------------------------------------------------------

/Images/0012.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0012.png

--------------------------------------------------------------------------------

/Images/0013.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0013.png

--------------------------------------------------------------------------------

/Images/0014.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0014.png

--------------------------------------------------------------------------------

/Images/0015.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0015.png

--------------------------------------------------------------------------------

/Images/0016.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0016.png

--------------------------------------------------------------------------------

/Images/0017.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0017.png

--------------------------------------------------------------------------------

/Images/0018.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0018.png

--------------------------------------------------------------------------------

/Images/0019.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0019.png

--------------------------------------------------------------------------------

/Images/002.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/002.png

--------------------------------------------------------------------------------

/Images/0020.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0020.png

--------------------------------------------------------------------------------

/Images/0021.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0021.png

--------------------------------------------------------------------------------

/Images/0022.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0022.png

--------------------------------------------------------------------------------

/Images/0023.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0023.png

--------------------------------------------------------------------------------

/Images/0024.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0024.png

--------------------------------------------------------------------------------

/Images/0025.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0025.png

--------------------------------------------------------------------------------

/Images/0026.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/0026.png

--------------------------------------------------------------------------------

/Images/003.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Images/003.png

--------------------------------------------------------------------------------

/Knowledge.md:

--------------------------------------------------------------------------------

1 | ## 章节知识点预览目录

2 | - 第零讲 [预备](Lecture_00/README.md)

3 | - 0.1 最小二乘法与正态分布

4 | - 第一讲 [线性回归](Lecture_01/README.md)

5 | - 1.1 最小二乘法

6 | - 1.2 似然函数

7 | - 1.3 梯度下降算法与学习率

8 | - 1.4 特征缩放

9 | - 第二讲 [逻辑回归](Lecture_02/README.md)

10 | - 2.1 Matplotlib作图库介绍

11 | - 2.2 sklearn库介绍

12 | - 第三讲 [案例分析](Lecture_03/README.md)

13 | - 3.1 过拟合、欠拟合与恰拟合

14 | - 3.2 准确率与混淆矩阵

15 | - 3.3 超参数与k-flod交叉验证

16 | - 3.4 超参数的并行搜索

17 | - 3.5 样本重采样

18 | - 3.6 Pandas库介绍

19 | - 第四讲 [决策树算法](Lecture_04/README.md)

20 | - 4.1 决策树的构造及剪枝

21 | - 4.2 决策树的可视化

22 | - 第五讲 [集成算法](Lecture_05/README.md)

23 | - 5.1 Bagging: 随机森林

24 | - 5.2 Boosting: Xgboost,AdaBoost

25 | - 5.3 Stacking

26 | - 5.4 数据缺失值的处理

27 | - 5.5 特征筛选及特征转换

28 | - 第六讲 [贝叶斯算法](Lecture_06/README.md)

29 | - 6.1 贝叶斯算法和平滑处理

30 | - 6.2 中文分词

31 | - 6.3 词集模型与词袋模型

32 | - 6.4 TF-IDF

33 | - 6.5 相似度衡量(欧氏距离与余弦距离)

34 | - 第七讲 [支持向量机](Lecture_07/README.md)

35 | - 7.1 支持向量机与核函数

36 | - 7.2 PCA的使用

37 | - 7.3 RGB图片的介绍

38 | - 7.4 pipeline的使用

39 | - 第八讲 [聚类算法](Lecture_08/README.md)

40 | - 8.1 聚类与无监督算法

41 | - 8.2 聚类与分类的区别

42 | - 8.3 基于距离的聚类算法(Kmeans)

43 | - 8.4 基于密度的聚类算法(DBSCAN)

44 | - 8.5 聚类算法的评估标准(准确率与召回率)

45 | - 第九讲 [语言模型于词向量](Lecture_09/README.md)

46 | - 9.1 词向量模型简介

47 | - 9.2 Gensim库的使用

48 | - 9.3 第三方词向量使用

49 | - 第十讲 [初探神经网络](Lecture_10/README.md)

50 | - 10.1 什么是神经网络? 怎么理解

51 | - 10.2 神经网络的前向传播过程

52 | - 第十一讲 [反向传播算法](Lecture_11/README.md)

53 | - 11.1 神经网络的求解

54 | - 11.2 反向传播算法

55 | - 11.3 如何用Pickle保存变量

56 | - 第十二讲 [Tensorflow的使用](Lecture_12/README.md)

57 | - 12.1 Tensorflow框架简介与安装

58 | - 12.2 Tensorflow的运行模式

59 | - 12.3 Softmax分类器与交叉熵(Cross entropy)

60 | - 12.4 `tf.add_to_collection`与`tf.nn.in_top_k`

61 | - 第十三讲 [卷积神经网络](./Lecture_13/README.md)

62 | - 2.1 卷积的思想与特点

63 | - 2.2 卷积的过程

64 | - 2.3 `Tensorflow`中卷积的使用方法

65 | - 2.4 `Tensorflow`中的padding操作

66 | ### [<主页>](./README.md)

67 |

--------------------------------------------------------------------------------

/Lecture_00/README.md:

--------------------------------------------------------------------------------

1 | 1. **工欲善其事必先利其器**

2 | 1. **系统选择**

3 | 对于操作系统,如果之前有学过各种Linux发行版操作系统的,可以继续使用;如果没接触过的那就先使用windows,等到后面再教如何使用。

4 | 2. **笔记整理**

5 | 记住,**一定要做笔记**、**一定要做笔记**、**一定要做笔记**,重要的话说三遍,并且尽量是电子笔记,不做笔记你会后悔的!

6 | 推荐大家尽量使用支持[Markdown](https://baike.baidu.com/item/markdown/3245829?fr=aladdin)和[LaTex](https://baike.baidu.com/item/LaTeX/1212106?fr=aladdin)的博客平台,例如[CSDN](https://blog.csdn.net/)、[作业部落](https://www.zybuluo.com/mdeditor)等;

7 | - [Markdown 语法手册](https://www.zybuluo.com/EncyKe/note/120103)

8 | - [LaTeX公式指导手册](https://www.zybuluo.com/codeep/note/163962#2%E6%B7%BB%E5%8A%A0%E6%B3%A8%E9%87%8A%E6%96%87%E5%AD%97-text)

9 | 3. **代码托管**

10 | 对于代码的维护也是一个重要的工作,需要我们尽量学习掌握如何用第三方工具来有效的管理。因为即使你不用,比你厉害的人也在用,你要用到别人的代码就要先接触这个平台。对于常用的代码托管平台国外有有[Github](https://github.com/)、[Gitlab](https://about.gitlab.com/)等,国内有[码云](https://gitee.com/)等,推荐使用github

11 | - [Github入门](https://blog.csdn.net/The_lastest/article/details/70001156)

12 | - [Git教程](https://www.liaoxuefeng.com/wiki/0013739516305929606dd18361248578c67b8067c8c017b000/)

13 |

14 | 对于上面提到的这三点,大家之前有没接触到都无所谓;先去大致了解一下他们分别都是干什么的,脑子有个印象就好,不用一头钻进去学。说白了这些都只是工具,完全可以在我们需要用到那个部分那个点的时候再去看。包括Python语言也一样,我不建议大家一门心思的去学Python,这样真的没多大用,在实践中学习就好。

15 | 2. **对于大家的学习方法**

16 | 你们先按照我的说明来看视频,然后我再总的来讲解一下一些常见的问题,以及带着大家做一些动手的实例来理解算法的原理。对于接下来的第一讲,线性回归,大家可以先去看一下这篇文章[最小二乘法与正态分布](https://blog.csdn.net/The_lastest/article/details/82413772)

17 |

18 | 3. **本节视频1,2**

19 | 练习:

20 |

21 | - [这100道练习,带你玩转Numpy](https://www.kesci.com/home/project/59f29f67c5f3f5119527a2cc)

22 | 大家只需要完成中100个练习,就不用再去刻意学numpy了,遵循遇到一个掌握一个即可!对于这100个练习,也只需有个印象就行,不要去刻意花时间去记。

23 | ### [<主页>](../README.md) [<下一讲>](../Lecture_01/README.md)

--------------------------------------------------------------------------------

/Lecture_01/LinearRegression.md:

--------------------------------------------------------------------------------

1 | #### 1.为什么说线性回归中误差是服从均值为0的方差为$\color{red}{\sigma^2}$的正态(高斯)分布,不是0均值行不行?

2 |

3 | 正态分布:

4 | $$

5 | f(x)=\frac{1}{\sqrt{2\pi}\sigma}\exp{\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)}

6 | $$

7 | 对于为什么服从正态分布,参见[最小二乘法与正态分布](https://blog.csdn.net/The_lastest/article/details/82413772);对于“不是0均值行不行”,答案是行。因为在线性回归中即使均值不为0,我们也可以通过最终通过调节偏置来使得均值为0。

8 |

9 | #### 2.什么是最小二乘法?

10 |

11 | 预测值与真实值的算术平均值

12 |

13 | #### 3.为什么要用最小二乘法而不是最小四乘法,六乘法?

14 |

15 | 因为最小二乘法的优化结果,同高斯分布下的极大似然估计结果一样;即最小二乘法是根据基于高斯分布下的极大似然估计推导出来的,而最小四乘法等不能保证这一点。

16 |

17 | #### 4.怎么理解似然函数(likelihood function)

18 |

19 | 统计学中,似然函数是一种关于统计模型参数的函数。给定输出$X$时,关于参数$\theta$的似然函数$L(\theta|x)$(在数值上)等于给定参数$\theta$后变量$x$的概率:$L(\theta|x)=P(X=x|\theta)$。

20 |

21 | 统计学的观点始终是认为样本的出现是基于一个分布的。那么我们去假设这个分布为$f$,里面有参数$\theta$。对于不同的$\theta$,样本的分布不一样(例如,质地不同的硬币,即使在大样本下也不可能得出正面朝上的概率相同)。$P(X=x|θ)$表示的就是在给定参数$\theta$的情况下,$x$出现的可能性多大。$L(θ|x)$表示的是在给定样本$x$的时候,哪个参数$\theta$使得$x$出现的可能性多大。所以其实这个等式要表示的核心意思都是在给一个$\theta$和一个样本$x$的时候,整个事件发生的可能性多大。

22 |

23 | 一句话,对于似然函数就是已知观测结果,但对于不同的分布(不同的参数$\theta$),将使得出现这一结果的概率不同;

24 |

25 | 举例:

26 |

27 | 小明从兜里掏出一枚硬币(质地不均)向上抛了10次,其中正面朝上7次,正面朝下3次;但并不知道在大样本下随机一次正面朝上的概率$\theta$。问:出现这一结果的概率?

28 | $$

29 | P=C_{10}^{7}\theta^{7}(1-\theta)^{3}=120\cdot\theta^{7}(1-\theta)^{3}

30 | $$

31 |

32 | ```

33 | import matplotlib.pyplot as plt

34 | import numpy as np

35 | x = np.linspace(0,1,500)

36 | y=120*np.power(x,7)*np.power((1-x),3)

37 | plt.scatter(x,y,color='r',linestyle='-',linewidth=0.1)

38 | plt.xlabel(r'$\theta$',fontsize=20)

39 | plt.ylabel('p',fontsize=20)

40 | plt.show()

41 | ```

42 |

43 |

44 |

45 |

46 |

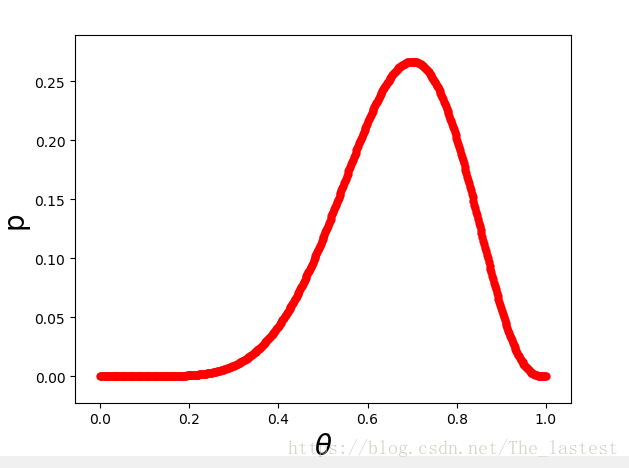

47 | 如图,我们可以发现当且仅当$\theta=0.7$ 时,似然函数取得最大值,即此时情况下事件“正面朝上7次,正面朝下3次”发生的可能性最大,而$\theta=0.7$也就是最大似然估计的结果。

48 |

49 | ------

50 |

51 | **线性回归推导:**

52 |

53 | 记样本为$(x^{(i)},y^{(i)})$,对样本的观测(预测)值记为$\hat{y}^{(i)}=\theta^Tx^{(i)}+\epsilon^{(i)}$,则有:

54 | $$

55 | y^{(i)}=\theta^Tx^{(i)}+\epsilon^{(i)}\tag{01}

56 | $$

57 | 其中$\epsilon^{(i)}$表示第$i$个预测值与真实值之间的误差,同时由于误差$\epsilon^{(i)}$服从均值为0的高斯分布,于是有:

58 | $$

59 | p(\epsilon^{(i)})=\frac{1}{\sqrt{2\pi}\sigma}\exp{\left(-\frac{(\epsilon^{(i)})^2}{2\sigma^2}\right)}\tag{02}

60 | $$

61 | 其中,$p(\epsilon^{(i)})$是概率密度函数

62 |

63 | 于是将$(1)$带入$(2)$有:

64 | $$

65 | p(\epsilon^{(i)})=\frac{1}{\sqrt{2\pi}\sigma}\exp{\left(-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right)}\tag{03}

66 | $$

67 | 此时请注意看等式$(3)$的右边部分,显然是随机变量$y^{(i)}$,服从以$\theta^Tx^{(i)}$为均值的正态分布(想想正态分布的表达式),又由于该密度函数与参数$\theta,x$有关(即随机变量$(y^{i})$是$x^{(i)},\theta$下的条件分布),于是有:

68 | $$

69 | p(y^{(i)}|x^{(i)};\theta)=\frac{1}{\sqrt{2\pi}\sigma}\exp{\left(-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right)}\tag{04}

70 | $$

71 | 到目前为止,也就是说此时真实值$y^{(i)}$服从均值为$\theta^Tx^{(i)}$,方差为$\sigma^2$的正态分布。同时,由于$\theta^Tx^{(i)}$是依赖于参数$\theta$的变量,那么什么样的一组参数$\theta$能够使得已知的观测值最容易发生呢?此时就要用到极大似然估计来进行参数估计(似然函数的作用就是找到一组参数能够使得随机变量(此处就是$y^{(i)}$)出现的可能性最大):

72 | $$

73 | L(\theta)=\prod_{i=1}^m p(y^{(i)}|x^{(i)};\theta)=\prod_{i=1}^m\frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right)\tag{05}

74 | $$

75 | 为了便于求解,在等式$(05)$的两边同时取自然对数:

76 | $$

77 | \begin{aligned}

78 | \log L(\theta)&=\log\left\{ \prod_{i=1}^m\frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right)\right\}\\[3ex]

79 | &=\sum_{i=1}^m\log\left\{\frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right)\right\}\\[3ex]

80 | &=\sum_{i=1}^m\left\{\log\frac{1}{\sqrt{2\pi}\sigma}-\frac{(y^{(i)}-\theta^Tx^{(i)})^2}{2\sigma^2}\right\}\\[3ex]

81 | &=m\cdot\log\frac{1}{\sqrt{2\pi}\sigma}-\frac{1}{\sigma^2}\frac{1}{2}\sum_{i=1}^m\left(y^{(i)}-\theta^Tx^{(i)}\right)^2

82 | \end{aligned}

83 | $$

84 | 由于$\max L(\theta)\iff\max\log L(\theta)$,所以:

85 | $$

86 | \max\log L(\theta)\iff\min \frac{1}{\sigma^2}\frac{1}{2}\sum_{i=1}^m\left(y^{(i)}-\theta^Tx^{(i)}\right)^2\iff\min\frac{1}{2}\sum_{i=1}^m\left(y^{(i)}-\theta^Tx^{(i)}\right)^2

87 | $$

88 | 于是得目标函数:

89 | $$

90 | \begin{aligned}

91 | J(\theta)&=\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-\theta^Tx^{(i)}\right)^2\\[3ex]

92 | &=\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-Wx^{(i)}\right)^2

93 | \end{aligned}

94 | $$

95 | 矢量化:

96 | $$

97 | J = 0.5 * (1 / m) * np.sum((y - np.dot(X, w) - b) ** 2)

98 | $$

99 | **求解梯度**

100 |

101 | 符号说明:

102 | $y^{(i)}$表示第$i$个样本的真实值;

103 | $\hat{y}^{(i)}$表示第$i$个样本的预测值;

104 | $W$表示权重(列)向量,$W_j$表示其中一个分量;

105 | $X$表示数据集,形状为$m\times n$,$m$为样本个数,$n$为特征维度;

106 | $x^{(i)}$为一个(列)向量,表示第$i$个样本,$x^{(i)}_j$为第$j$维特征

107 | $$

108 | \begin{aligned}

109 | J(W,b)&=\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-\hat{y}^{(i)}\right)^2=\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-(W^Tx^{(i)}+b)\right)^2\\[4ex]

110 | \frac{\partial J}{\partial W_j}&=\frac{\partial }{\partial W_j}\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-(W_1x^{(i)}_1+W_2x^{(i)}_2\cdots W_nx^{(i)}_n+b)\right)^2\\[3ex]

111 | &=\frac{1}{m}\sum_{i=1}^m\left(y^{(i)}-(W_1x^{(i)}_1+W_2x^{(i)}_2\cdots W_nx^{(i)}_n+b)\right)\cdot(-x_j^{(i)})\\[3ex]

112 | &=\frac{1}{m}\sum_{i=1}^m\left(y^{(i)}-(W^Tx^{(i)}+b)\right)\cdot(-x_j^{(i)})\\[4ex]

113 | \frac{\partial J}{\partial b}&=\frac{\partial }{\partial W_j}\frac{1}{2m}\sum_{i=1}^m\left(y^{(i)}-(W^Tx^{(i)}+b)\right)^2\\[3ex]

114 | &=-\frac{1}{m}\sum_{i=1}^m\left(y^{(i)}-(W^Tx^{(i)}+b)\right)\\[3ex]

115 | \frac{\partial J}{\partial W}&=-\frac{1}{m} np.dot(x.T,(y-\hat{y}))\\[3ex]

116 | \frac{\partial J}{\partial b}&=-\frac{1}{m} np.sum(y-\hat{y})\\[3ex]

117 | \end{aligned}

118 | $$

119 |

120 | ------

121 |

122 | #### 5.怎么理解梯度(Gradient and learning rate),为什么沿着梯度的方向就能保证函数的变化率最大?

123 |

124 | 首先需要明白梯度是一个向量;其次是函数在任意一点,只有沿着梯度的方向才能保证函数值的变化率最大。

125 |

126 | 我们知道函数$f(x)$在某点($x_0$)的导数值决定了其在该点的变化率,也就是说$|f'(x_0)|$越大,则函数$f(x)$在$x=x_0$处的变化速度越快。同时对于高维空间(以三维空间为例)来说,函数$f(x,y)$在某点$(x_0,y_0)$的方向导数值$|\frac{\partial f}{\partial\vec{l}}|$ 的大小还取决于沿着哪个方向求导,也就是说沿着不同的方向,函数$f(x,y)$在$(x_0,y_0)$处的变化率不同。又由于:

127 | $$

128 | \begin{align*}

129 | \frac{\partial f}{\partial\vec{l}}&=\{\frac{\partial f}{\partial x},\frac{\partial f}{\partial y}\} \cdot\{cos\alpha,cos\beta\}\\

130 | &=gradf\cdot\vec{l^0}\\

131 | &=|gradf|\cdot|\vec{l^0}|\cdot cos\theta\\

132 | &=|gradf|\cdot1\cdot cos\theta\\

133 | &=|gradf|\cdot cos\theta

134 | \end{align*}

135 | $$

136 | 因此,当$\theta=0$是,即$\vec{l}$与向量(梯度)$\{\frac{\partial f}{\partial x},\frac{\partial f}{\partial y}\}$同向时方向导数取到最大值:

137 | $$\color{red}{\frac{\partial f}{\partial\vec{l}}=|gradf|=\sqrt{(\frac{\partial f}{\partial x})^2+(\frac{\partial f}{\partial y})^2}}$$

138 |

139 | 故,沿着梯度的方向才能保证函数值的变化率最大。

140 | 参见:[方向导数(Directional derivatives)](https://blog.csdn.net/The_lastest/article/details/77898799)、[梯度(Gradient vectors)](https://blog.csdn.net/The_lastest/article/details/77899206)

141 |

142 | 函数$f(\cdot)$的(方向)导数反映的是函数$f(\cdot)$在点$P$处的变化率的大小,即$|f'(\cdot)|_P|$越大,函数$f(\cdot)$在该点的变化率越大。为了更快的优化目标函数,我们需要找到满足$|f'(\cdot)|_P|$最大时的情况,由梯度计算公式可知,当且仅当方向导数的方向与梯度的方向一致时,$|f'(\cdot)|_P|$能取得最大值。——2019年10月5日更新

143 |

144 | #### 6.怎么理解梯度下降算法与学习率(Gradient Descent)?

145 |

146 | $$w=w-\alpha\frac{\partial J}{\partial w}$$

147 | 梯度下降算法可以看成是空间中的某个点$w$,每次沿着梯度的反方向走一小步,然后更新$w$,然后再走一小步,如此往复直到$J(w)$收敛。而学习率$\alpha$决定的就是在确定方向后每次走多大的“步子”。

148 |

149 | #### 7.学习率过大或者过小将会对目标函数产生什么样的影响?

150 |

151 | $\alpha$过大可能会导致目标函数震荡不能收敛,太小则可能需要大量的迭代才能收敛,耗费时间。

152 |

153 | #### 8.运用梯度下降算法的前提是什么?

154 |

155 | 目标函数为凸函数(形如$y=x^2$)

156 |

157 | #### 9.梯度下降算法是否一定能找到最优解?

158 |

159 | 对于凸函数而言一定等。对于非凸函数来说,能找到局部最优。

160 |

161 |

--------------------------------------------------------------------------------

/Lecture_01/LinearRegression.py:

--------------------------------------------------------------------------------

1 | from sklearn.datasets import load_boston

2 | import numpy as np

3 | import matplotlib.pyplot as plt

4 |

5 |

6 | def feature_scalling(X):

7 | mean = X.mean(axis=0)

8 | std = X.std(axis=0)

9 | return (X - mean) / std

10 |

11 |

12 | def load_data(shuffled=False):

13 | data = load_boston()

14 | # print(data.DESCR)# 数据集描述

15 | X = data.data

16 | y = data.target

17 | X = feature_scalling(X)

18 | y = np.reshape(y, (len(y), 1))

19 | if shuffled:

20 | shuffle_index = np.random.permutation(y.shape[0])

21 | X = X[shuffle_index]

22 | y = y[shuffle_index] # 打乱数据

23 | return X, y

24 |

25 |

26 | def costJ(X, y, w, b):

27 | m, n = X.shape

28 | J = 0.5 * (1 / m) * np.sum((y - np.dot(X, w) - b) ** 2)

29 | return J

30 |

31 |

32 | X, y = load_data()

33 | m, n = X.shape # 506,13

34 | w = np.random.randn(13, 1)

35 | b = 0.1

36 | alpha = 0.01

37 | cost_history = []

38 | for i in range(5000):

39 | y_hat = np.dot(X, w) + b

40 | grad_w = -(1 / m) * np.dot(X.T, (y - y_hat))

41 | grad_b = -(1 / m) * np.sum(y - y_hat)

42 | w = w - alpha * grad_w

43 | b = b - alpha * grad_b

44 | if i % 100 == 0:

45 | cost_history.append(costJ(X, y, w, b))

46 |

47 | # plt.plot(np.arange(len(cost_history)),cost_history)

48 | # plt.show()

49 | # print(cost_history)

50 |

51 | y_pre = np.dot(X, w) + b

52 | numerator = np.sum((y - y_pre) ** 2)

53 | denominator= np.sum((y - y.mean()) ** 2)

54 | print(1 - (numerator / denominator))

55 |

--------------------------------------------------------------------------------

/Lecture_01/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频6,7

2 | ### 2. 思考问题:

3 | 1. 为什么说线性回归中误差是服从均值为0的方差为sigma^2的正态(高斯)分布,不是0均值行不行?

4 |

5 | 2. 什么是最小二乘法?

6 | 3. 为什么要用最小二乘法而不是最小四乘法,六乘法?

7 | 4. 怎么理解似然函数(likelihood function)

8 | 5. 怎么理解梯度与学习率(Gradient and learning rate)?

9 | 6. 怎么理解梯度下降算法(Gradient Descent)?

10 | 7. 运用梯度下降算法的前提是什么?

11 | 8. 梯度下降算法是否一定能找到最优解?

12 | 9. 学习率过大或者过小将会对目标函数产生什么样的影响?

13 | 10. 什么是feature scalling

14 |

15 | 参考 [线性回归 地址一](https://blog.csdn.net/The_lastest/article/details/82556307) [地址二](./LinearRegression.md)

16 |

17 | ### 3. 算法示例:

18 | - 示例1[波士顿房价预测](LinearRegression.py)

19 | 涉及知识点:

20 | 1. 数据归一化(feature scalling)

21 | 2. 数据打乱(shuffle)

22 | 3. 实现梯度下降

23 | ### [<主页>](../README.md) [<下一讲>](../Lecture_02/README.md)

--------------------------------------------------------------------------------

/Lecture_02/LogisticRegression.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from sklearn.datasets import load_breast_cancer

3 |

4 |

5 | def feature_scalling(X):

6 | mean = X.mean(axis=0)

7 | std = X.std(axis=0)

8 | return (X - mean) / std

9 |

10 |

11 | def load_data(shuffled=False):

12 | data_cancer = load_breast_cancer()

13 | x = data_cancer.data

14 | y = data_cancer.target

15 | x = feature_scalling(x)

16 | y = np.reshape(y, (len(y), 1))

17 | if shuffled:

18 | shuffled_index = np.random.permutation(y.shape[0])

19 | x = x[shuffled_index]

20 | y = y[shuffled_index]

21 | return x, y

22 |

23 |

24 | def sigmoid(z):

25 | gz = 1 / (1 + np.exp(-z))

26 | return gz

27 |

28 |

29 | def gradDescent(X, y, W, b, alpha, maxIt):

30 | cost_history = []

31 | maxIteration = maxIt

32 | m, n = X.shape

33 | for i in range(maxIteration):

34 | z = np.dot(X, W) + b

35 | error = sigmoid(z) - y

36 | W = W - (1 / m) * alpha * np.dot(X.T, error)

37 | b = b - (1.0 / m) * alpha * np.sum(error)

38 | cost_history.append(cost_function(X, y, W, b))

39 | return W, b, cost_history

40 |

41 |

42 | def accuracy(X, y, W, b):

43 | m, n = np.shape(X)

44 | z = np.dot(X, W) + b

45 | y_hat = sigmoid(z)

46 | predictioin = np.ones((m, 1), dtype=float)

47 | for i in range(m):

48 | if y_hat[i, 0] < 0.5:

49 | predictioin[i] = 0.0

50 | return 1 - np.sum(np.abs(y - predictioin)) / m

51 |

52 |

53 | def cost_function(X, y, W, b):

54 | m, n = X.shape

55 | z = np.dot(X, W) + b

56 | y_hat = sigmoid(z)

57 | J = (-1 / m) * np.sum(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

58 | return J

59 |

60 | if __name__ == '__main__':

61 | X, y = load_data()

62 | m, n = X.shape

63 | alpha = 0.1

64 | W = np.random.randn(n, 1)

65 | b = 0.1

66 | maxIt = 200

67 | W, b, cost_history = gradDescent(X, y, W, b, alpha, maxIt)

68 | print("******************")

69 | print("W is : ")

70 | print(W)

71 | print("accuracy is : " + str(accuracy(X, y, W, b)))

72 | print("******************")

73 |

--------------------------------------------------------------------------------

/Lecture_02/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频3,4,8,9

2 | ### 2. 知识点

3 | - 利用 `Matplotlib`画图

4 | - [Matplotlib画图系列(一)简易线形图及散点图](https://blog.csdn.net/The_lastest/article/details/79828638)

5 | - [Matplotlib画图系列(二)误差曲线(errorbar) ](https://blog.csdn.net/The_lastest/article/details/79829046)

6 | - 逻辑回归代价函数的由来

7 | - [Logistic回归代价函数的数学推导及实现](https://blog.csdn.net/The_lastest/article/details/78761577)

8 | - 利用sklearn库来实现逻辑回归

9 | - 见示例3

10 | ### 3. 算法示例:

11 | - 示例1[breast_cancer分类](LogisticRegression.py)

12 | - 示例2[是否录取分类](e2.py)

13 | - 可视化

14 |

15 | - 损失图

16 |

17 | - 示例3[用sklearn库实现示例2](e3.py)

18 | ### [<主页>](../README.md) [<下一讲>](../Lecture_03/README.md)

--------------------------------------------------------------------------------

/Lecture_02/data/01.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_02/data/01.png

--------------------------------------------------------------------------------

/Lecture_02/data/02.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_02/data/02.png

--------------------------------------------------------------------------------

/Lecture_02/data/LogiReg_data.txt:

--------------------------------------------------------------------------------

1 | 34.62365962451697,78.0246928153624,0

2 | 30.28671076822607,43.89499752400101,0

3 | 35.84740876993872,72.90219802708364,0

4 | 60.18259938620976,86.30855209546826,1

5 | 79.0327360507101,75.3443764369103,1

6 | 45.08327747668339,56.3163717815305,0

7 | 61.10666453684766,96.51142588489624,1

8 | 75.02474556738889,46.55401354116538,1

9 | 76.09878670226257,87.42056971926803,1

10 | 84.43281996120035,43.53339331072109,1

11 | 95.86155507093572,38.22527805795094,0

12 | 75.01365838958247,30.60326323428011,0

13 | 82.30705337399482,76.48196330235604,1

14 | 69.36458875970939,97.71869196188608,1

15 | 39.53833914367223,76.03681085115882,0

16 | 53.9710521485623,89.20735013750205,1

17 | 69.07014406283025,52.74046973016765,1

18 | 67.94685547711617,46.67857410673128,0

19 | 70.66150955499435,92.92713789364831,1

20 | 76.97878372747498,47.57596364975532,1

21 | 67.37202754570876,42.83843832029179,0

22 | 89.67677575072079,65.79936592745237,1

23 | 50.534788289883,48.85581152764205,0

24 | 34.21206097786789,44.20952859866288,0

25 | 77.9240914545704,68.9723599933059,1

26 | 62.27101367004632,69.95445795447587,1

27 | 80.1901807509566,44.82162893218353,1

28 | 93.114388797442,38.80067033713209,0

29 | 61.83020602312595,50.25610789244621,0

30 | 38.78580379679423,64.99568095539578,0

31 | 61.379289447425,72.80788731317097,1

32 | 85.40451939411645,57.05198397627122,1

33 | 52.10797973193984,63.12762376881715,0

34 | 52.04540476831827,69.43286012045222,1

35 | 40.23689373545111,71.16774802184875,0

36 | 54.63510555424817,52.21388588061123,0

37 | 33.91550010906887,98.86943574220611,0

38 | 64.17698887494485,80.90806058670817,1

39 | 74.78925295941542,41.57341522824434,0

40 | 34.1836400264419,75.2377203360134,0

41 | 83.90239366249155,56.30804621605327,1

42 | 51.54772026906181,46.85629026349976,0

43 | 94.44336776917852,65.56892160559052,1

44 | 82.36875375713919,40.61825515970618,0

45 | 51.04775177128865,45.82270145776001,0

46 | 62.22267576120188,52.06099194836679,0

47 | 77.19303492601364,70.45820000180959,1

48 | 97.77159928000232,86.7278223300282,1

49 | 62.07306379667647,96.76882412413983,1

50 | 91.56497449807442,88.69629254546599,1

51 | 79.94481794066932,74.16311935043758,1

52 | 99.2725269292572,60.99903099844988,1

53 | 90.54671411399852,43.39060180650027,1

54 | 34.52451385320009,60.39634245837173,0

55 | 50.2864961189907,49.80453881323059,0

56 | 49.58667721632031,59.80895099453265,0

57 | 97.64563396007767,68.86157272420604,1

58 | 32.57720016809309,95.59854761387875,0

59 | 74.24869136721598,69.82457122657193,1

60 | 71.79646205863379,78.45356224515052,1

61 | 75.3956114656803,85.75993667331619,1

62 | 35.28611281526193,47.02051394723416,0

63 | 56.25381749711624,39.26147251058019,0

64 | 30.05882244669796,49.59297386723685,0

65 | 44.66826172480893,66.45008614558913,0

66 | 66.56089447242954,41.09209807936973,0

67 | 40.45755098375164,97.53518548909936,1

68 | 49.07256321908844,51.88321182073966,0

69 | 80.27957401466998,92.11606081344084,1

70 | 66.74671856944039,60.99139402740988,1

71 | 32.72283304060323,43.30717306430063,0

72 | 64.0393204150601,78.03168802018232,1

73 | 72.34649422579923,96.22759296761404,1

74 | 60.45788573918959,73.09499809758037,1

75 | 58.84095621726802,75.85844831279042,1

76 | 99.82785779692128,72.36925193383885,1

77 | 47.26426910848174,88.47586499559782,1

78 | 50.45815980285988,75.80985952982456,1

79 | 60.45555629271532,42.50840943572217,0

80 | 82.22666157785568,42.71987853716458,0

81 | 88.9138964166533,69.80378889835472,1

82 | 94.83450672430196,45.69430680250754,1

83 | 67.31925746917527,66.58935317747915,1

84 | 57.23870631569862,59.51428198012956,1

85 | 80.36675600171273,90.96014789746954,1

86 | 68.46852178591112,85.59430710452014,1

87 | 42.0754545384731,78.84478600148043,0

88 | 75.47770200533905,90.42453899753964,1

89 | 78.63542434898018,96.64742716885644,1

90 | 52.34800398794107,60.76950525602592,0

91 | 94.09433112516793,77.15910509073893,1

92 | 90.44855097096364,87.50879176484702,1

93 | 55.48216114069585,35.57070347228866,0

94 | 74.49269241843041,84.84513684930135,1

95 | 89.84580670720979,45.35828361091658,1

96 | 83.48916274498238,48.38028579728175,1

97 | 42.2617008099817,87.10385094025457,1

98 | 99.31500880510394,68.77540947206617,1

99 | 55.34001756003703,64.9319380069486,1

100 | 74.77589300092767,89.52981289513276,1

101 |

--------------------------------------------------------------------------------

/Lecture_02/e2.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import matplotlib.pyplot as plt

3 | import pandas as pd

4 | import numpy as np

5 | from LogisticRegression import gradDescent,cost_function,accuracy,feature_scalling

6 |

7 |

8 | def load_data():

9 | data = pd.read_csv('./data/LogiReg_data.txt', names=['exam1', 'exam2', 'label']).as_matrix()

10 | X = data[:, :-1] # 取前两列

11 | y = data[:, -1:] # 取最后一列

12 | shuffle_index = np.random.permutation(X.shape[0])

13 | X = X[shuffle_index]

14 | y = y[shuffle_index]

15 | return X, y

16 |

17 |

18 | def visualize_data(X, y):

19 | positive = np.where(y == 1)[0]

20 | negative = np.where(y == 0)[0]

21 | plt.scatter(X[positive,0],X[positive,1],s=30,c='b',marker='o',label='Admitted')

22 | plt.scatter(X[negative,0],X[negative,1],s=30,c='r',marker='o',label='Not Admitted')

23 | plt.legend()

24 | plt.show()

25 |

26 | def visualize_cost(ite,cost):

27 | plt.plot(np.linspace(0,ite,ite),cost,linewidth=1)

28 | plt.title('cost history',color='r')

29 | plt.xlabel('iterations')

30 | plt.ylabel('cost J')

31 | plt.show()

32 |

33 |

34 | if __name__ == '__main__':

35 | # Step 1. Load data

36 | X, y = load_data()

37 | # Step 2. Visualize data

38 | visualize_data(X, y)

39 | #

40 | m, n = X.shape

41 | X = feature_scalling(X)

42 | alpha = 0.1

43 | W = np.random.randn(n, 1)

44 | b = 0.1

45 | maxIt = 10000

46 | W, b, cost_history = gradDescent(X, y, W, b, alpha, maxIt)

47 | print("******************")

48 | print(cost_history[:20])

49 | visualize_cost(maxIt,cost_history)

50 | print("accuracys is : " + str(accuracy(X, y, W, b)))

51 | print("W:",W)

52 | print("b: ",b)

53 | print("******************")

54 |

--------------------------------------------------------------------------------

/Lecture_02/e3.py:

--------------------------------------------------------------------------------

1 | import matplotlib.pyplot as plt

2 | import pandas as pd

3 | import numpy as np

4 | from LogisticRegression import feature_scalling

5 | from sklearn.linear_model import LogisticRegression

6 |

7 | def load_data():

8 | data = pd.read_csv('./data/LogiReg_data.txt', names=['exam1', 'exam2', 'label']).as_matrix()

9 | X = data[:, :-1] # 取前两列

10 | y = data[:, -1:] # 取最后一列

11 | shuffle_index = np.random.permutation(X.shape[0])

12 | X = X[shuffle_index]

13 | y = y[shuffle_index]

14 | return X, y

15 |

16 |

17 | def visualize_cost(ite,cost):

18 | plt.plot(np.linspace(0,ite,ite),cost,linewidth=1)

19 | plt.title('cost history',color='r')

20 | plt.xlabel('iterations')

21 | plt.ylabel('cost J')

22 | plt.show()

23 |

24 |

25 | if __name__ == '__main__':

26 | X, y = load_data()

27 | X = feature_scalling(X)

28 | lr = LogisticRegression()

29 | lr.fit(X,y)

30 | print("******************")

31 | print("accuracys is :" ,lr.score(X,y))

32 | print("W:{},b:{}".format(lr.coef_,lr.intercept_))

33 | print("******************")

--------------------------------------------------------------------------------

/Lecture_03/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频10

2 | 本节视频中讲到的内容比较多也比较杂,但却**非常重要**。有一点不好的就是视频中的代码稍微有点乱,我会用一些其它方式来实现视频中代码(自己能看下去也行)。大家只要掌握好我列出的知识点即可。

3 | ### 2. 知识点

4 | - 2.1 过拟合(over fitting)和欠拟合(under fitting)具体是指什么?常用的解决方法是什么?

5 | - [斯坦福机器学习-第三周(分类,逻辑回归,过度拟合及解决方法)](https://blog.csdn.net/The_lastest/article/details/73349592)

6 | - [机器学习中正则化项L1和L2的直观理解](https://blog.csdn.net/jinping_shi/article/details/52433975)

7 | - 2.2 什么就超参数(hyper parameter)? 如何对模型进行评估?

8 | - 混淆矩阵(confusion matrix)

9 |

10 | - 准确性(accuracy)

11 | - 精确率(precision)

12 | - 召回率(recall)

13 | - F1-score(**精确率和召回率的调和平均**)

14 | - 2.3 如何对模型进行筛选?

15 | - K折交叉验证(K-fold cross validation)

16 | - 并行参数搜索

17 | - 2.2 如何解决样本分布不均?

18 | - 下采样(down sampling)示例1:以样本数少的类别为标准,去掉样本数多的类别中多余的样本;

19 | - 过采样(over sampling)示例2:以样本数多的类别为标准,对样本数少的类别再生成若干个样本,使两个类别中的样本一致;

20 | ### 3. 示例

21 | - 3.1 示例1 [下采样](ex1.py)

22 | - 3.2 示例2 [过采样](ex2.py)

23 | ### [<主页>](../README.md) [<下一讲>](../Lecture_04/README.md)

--------------------------------------------------------------------------------

/Lecture_03/data/04.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_03/data/04.png

--------------------------------------------------------------------------------

/Lecture_03/data/README.md:

--------------------------------------------------------------------------------

1 | 下载好后放入data目录下即可

2 |

3 | ### 数据集下载地址:

4 | 链接: https://pan.baidu.com/s/1OlZ-nkS4sbjSgoaetqqOGg 提取码: ggr8

--------------------------------------------------------------------------------

/Lecture_03/ex1.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import matplotlib.pyplot as plt

3 | import numpy as np

4 | from sklearn.preprocessing import StandardScaler

5 | from sklearn.cross_validation import train_test_split

6 | from sklearn.grid_search import GridSearchCV

7 | from sklearn.linear_model import LogisticRegression

8 | from sklearn.metrics import classification_report

9 |

10 |

11 | def load_and_analyse_data():

12 | data = pd.read_csv('./data/creditcard.csv')

13 |

14 | # ----------------------查看样本分布情况----------------------------------

15 | # count_classes = pd.value_counts(data['Class'],sort=True).sort_index()

16 | # print(count_classes)# negative 0 :284315 positive 1 :492

17 | # count_classes.plot(kind='bar')

18 | # plt.title('Fraud class histogram')

19 | # plt.xlabel('Class')

20 | # plt.ylabel('Frequency')

21 | # plt.show()

22 | # --------------------------------------------------------------------------

23 |

24 | # ----------------------预处理---------------------------------------------

25 |

26 | # ----------------------标准化Amount列---------

27 | data['normAmout'] = StandardScaler().fit_transform(data['Amount'].values.reshape(-1, 1))

28 | data = data.drop(['Time', 'Amount'], axis=1)

29 | # ----------------------------------------------

30 |

31 | X = data.ix[:, data.columns != 'Class']

32 | y = data.ix[:, data.columns == 'Class']

33 | positive_number = len(y[y.Class == 1]) # 492

34 | negative_number = len(y[y.Class == 0]) # 284315

35 | positive_indices = np.array(y[y.Class == 1].index)

36 | negative_indices = np.array(y[y.Class == 0].index)

37 |

38 | # ----------------------采样-------------------

39 | random_negative_indices = np.random.choice(negative_indices, positive_number, replace=False)

40 | random_negative_indices = np.array(random_negative_indices)

41 | under_sample_indices = np.concatenate([positive_indices, random_negative_indices])

42 | under_sample_data = data.iloc[under_sample_indices, :]

43 | X_sample = under_sample_data.ix[:, under_sample_data.columns != 'Class']

44 | y_sample = under_sample_data.ix[:, under_sample_data.columns == 'Class']

45 | return np.array(X), np.array(y).reshape(len(y)), np.array(X_sample), np.array(y_sample).reshape(len(y_sample))

46 |

47 |

48 | if __name__ == '__main__':

49 | X, y, X_sample, y_sample = load_and_analyse_data()

50 | _, X_test, _, y_test = train_test_split(X, y, test_size=0.3, random_state=30)

51 | X_train, X_dev, y_train, y_dev = train_test_split(X_sample, y_sample, test_size=0.3,

52 | random_state=1)

53 |

54 | print("X_train:{} X_dev:{} X_test:{}".format(len(y_train),len(y_dev),len(y_test)))

55 | model = LogisticRegression()

56 | parameters = {'C': [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3, 10]}

57 | gs = GridSearchCV(model, parameters, verbose=5, cv=5)

58 | gs.fit(X_train, y_train)

59 | print('最佳模型:', gs.best_params_, gs.best_score_)

60 | print('在采样数据上的性能表现:')

61 | print(gs.score(X_dev, y_dev))

62 | y_dev_pre = gs.predict(X_dev)

63 | print(classification_report(y_dev, y_dev_pre))

64 | print('在原始数据上的性能表现:')

65 | print(gs.score(X_test, y_test))

66 | y_pre = gs.predict(X_test)

67 | print(classification_report(y_test, y_pre))

68 |

--------------------------------------------------------------------------------

/Lecture_03/ex2.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import matplotlib.pyplot as plt

3 | import numpy as np

4 | from sklearn.preprocessing import StandardScaler

5 | from sklearn.cross_validation import train_test_split

6 | from sklearn.grid_search import GridSearchCV

7 | from sklearn.linear_model import LogisticRegression

8 | from sklearn.metrics import classification_report

9 | from imblearn.over_sampling import SMOTE

10 |

11 | def load_and_analyse_data():

12 | data = pd.read_csv('./data/creditcard.csv')

13 | # ----------------------预处理---------------------------------------------

14 |

15 | # ----------------------标准化Amount列---------

16 | data['normAmout'] = StandardScaler().fit_transform(data['Amount'].values.reshape(-1, 1))

17 | data = data.drop(['Time', 'Amount'], axis=1)

18 | # ----------------------------------------------

19 |

20 | X = data.ix[:, data.columns != 'Class']

21 | y = data.ix[:, data.columns == 'Class']

22 | X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.3,random_state=0)

23 | # ----------------------采样-------------------

24 | sample_solver = SMOTE(random_state=0)

25 | X_sample ,y_sample = sample_solver.fit_sample(X_train,y_train)

26 | return np.array(X_test),np.array(y_test).reshape(len(y_test)),np.array(X_sample),np.array(y_sample).reshape(len(y_sample))

27 |

28 | if __name__ == '__main__':

29 | X_test, y_test, X_sample, y_sample = load_and_analyse_data()

30 | X_train,X_dev,y_train,y_dev = train_test_split(X_sample,y_sample,test_size=0.3,random_state=1)

31 |

32 | print("X_train:{} X_dev:{} X_test:{}".format(len(y_train), len(y_dev), len(y_test)))

33 | model = LogisticRegression()

34 | parameters = {'C':[0.001,0.003,0.01,0.03,0.1,0.3,1,3,10]}

35 | gs = GridSearchCV(model,parameters,verbose=5,cv=5)

36 | gs.fit(X_train,y_train)

37 | print('最佳模型:',gs.best_params_,gs.best_score_)

38 | print('在采样数据上的性能表现:')

39 | print(gs.score(X_dev,y_dev))

40 | y_dev_pre = gs.predict(X_dev)

41 | print(classification_report(y_dev,y_dev_pre))

42 | print('在原始数据上的性能表现:')

43 | print(gs.score(X_test,y_test))

44 | y_pre = gs.predict(X_test)

45 | print(classification_report(y_test,y_pre))

46 |

--------------------------------------------------------------------------------

/Lecture_04/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频

2 | - 本节视频11,12

3 | ### 2. 知识点

4 | - 2.1 什么是决策树(decision tree)?什么是信息熵?

5 | - [决策树——(一)决策树的思想](https://blog.csdn.net/The_lastest/article/details/78906751)

6 | - 2.2 决策树的构造与剪枝?

7 | - [决策树——(二)决策树的生成与剪枝ID3,C4.5](https://blog.csdn.net/The_lastest/article/details/78915862)

8 | - [决策树——(三)决策树的生成与剪枝CART](https://blog.csdn.net/The_lastest/article/details/78975439)

9 | - 2.3 决策树的可视化

10 | - [Graphviz](https://graphviz.gitlab.io/_pages/Download/Download_windows.html)

11 | - [机器学习笔记——决策数实现及使用Graphviz查看](https://blog.csdn.net/akadiao/article/details/77800909)

12 |

13 |

14 | ### 3. 示例

15 | - 示例1[ iris分类](ex1.py)

16 | ### 4. 任务

17 | - 4.1 熟悉查看[scikit-learn API](http://scikit-learn.org/stable/modules/classes.html)

18 | ### [<主页>](../README.md) [<下一讲>](../Lecture_05/README.md)

--------------------------------------------------------------------------------

/Lecture_04/allElectronicsData.dot:

--------------------------------------------------------------------------------

1 | digraph Tree {

2 | node [shape=box] ;

3 | 0 [label="petal width (cm) <= -0.526\ngini = 0.665\nsamples = 105\nvalue = [36, 32, 37]\nclass = C"] ;

4 | 1 [label="gini = 0.0\nsamples = 36\nvalue = [36, 0, 0]\nclass = A"] ;

5 | 0 -> 1 [labeldistance=2.5, labelangle=45, headlabel="True"] ;

6 | 2 [label="petal width (cm) <= 0.593\ngini = 0.497\nsamples = 69\nvalue = [0, 32, 37]\nclass = C"] ;

7 | 0 -> 2 [labeldistance=2.5, labelangle=-45, headlabel="False"] ;

8 | 3 [label="petal length (cm) <= 0.706\ngini = 0.161\nsamples = 34\nvalue = [0, 31, 3]\nclass = B"] ;

9 | 2 -> 3 ;

10 | 4 [label="gini = 0.0\nsamples = 30\nvalue = [0, 30, 0]\nclass = B"] ;

11 | 3 -> 4 ;

12 | 5 [label="gini = 0.375\nsamples = 4\nvalue = [0, 1, 3]\nclass = C"] ;

13 | 3 -> 5 ;

14 | 6 [label="petal length (cm) <= 0.621\ngini = 0.056\nsamples = 35\nvalue = [0, 1, 34]\nclass = C"] ;

15 | 2 -> 6 ;

16 | 7 [label="gini = 0.375\nsamples = 4\nvalue = [0, 1, 3]\nclass = C"] ;

17 | 6 -> 7 ;

18 | 8 [label="gini = 0.0\nsamples = 31\nvalue = [0, 0, 31]\nclass = C"] ;

19 | 6 -> 8 ;

20 | }

--------------------------------------------------------------------------------

/Lecture_04/data/01.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_04/data/01.PNG

--------------------------------------------------------------------------------

/Lecture_04/ex1.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from sklearn.tree import DecisionTreeClassifier

3 | from sklearn.metrics import classification_report

4 |

5 |

6 | def load_data():

7 | from sklearn.datasets import load_iris

8 | from sklearn.preprocessing import StandardScaler

9 | from sklearn.model_selection import train_test_split

10 | data = load_iris()

11 | X = data.data

12 | y = data.target

13 | ss = StandardScaler()

14 | X = ss.fit_transform(X)

15 | x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)

16 | return x_train, y_train, x_test, y_test, data.feature_names

17 |

18 |

19 | def train():

20 | x_train, y_train, x_test, y_test, _ = load_data()

21 | model = DecisionTreeClassifier()

22 | model.fit(x_train, y_train)

23 | y_pre = model.predict(x_test)

24 | print(model.score(x_test, y_test))

25 | print(classification_report(y_test, y_pre))

26 |

27 |

28 | def grid_search():

29 | from sklearn.model_selection import GridSearchCV

30 | x_train, y_train, x_test, y_test, _ = load_data()

31 | model = DecisionTreeClassifier()

32 | parameters = {'max_depth': np.arange(1, 50, 2)}

33 | gs = GridSearchCV(model, parameters, verbose=5, cv=5)

34 | gs.fit(x_train, y_train)

35 | print('最佳模型:', gs.best_params_, gs.best_score_)

36 | y_pre = gs.predict(x_test)

37 | print(classification_report(y_test, y_pre))

38 |

39 |

40 | def tree_visilize():

41 | from sklearn import tree

42 | x_train, y_train, x_test, y_test, feature_names = load_data()

43 | print('类标:', np.unique(y_train))

44 | print('特征名称:', feature_names)

45 | model = DecisionTreeClassifier(max_depth=3)

46 | model.fit(x_train, y_train)

47 | print(model.score(x_test, y_test))

48 | with open("allElectronicsData.dot", "w") as f:

49 | tree.export_graphviz(model, feature_names=feature_names, class_names=['A', 'B', 'C'], out_file=f)

50 |

51 |

52 | if __name__ == '__main__':

53 | train()

54 | # grid_search()

55 | # tree_visilize()

56 |

--------------------------------------------------------------------------------

/Lecture_05/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频

2 | - 本节视频13,14,24

3 | ### 2. 知识点

4 | - 2.1 算法原理理解

5 | - Bagging:并行构造n个模型,每个模型彼此独立;如,RandomForest

6 | - Boosting:串行构造模型,下一个模型的提升依赖于训练好的上以个模型;如,AdaBoost,Xgboost

7 | - Stacking:第一阶段得出各自结果,第二阶段再用前一阶段结果训练

8 | - 2.2 数据预处理

9 | - 分析筛选数据特征

10 | - 缺失值补充(均值,最值)

11 | - 特征转换

12 | [用pandas处理缺失值补全及DictVectorizer特征转换](https://blog.csdn.net/The_lastest/article/details/79103386)

13 | [利用随机森林对特征重要性进行评估](https://blog.csdn.net/The_lastest/article/details/81151986)

14 | - 2.3 Xgboost

15 | - 安装

16 | - 方法一:在线安装

17 | ```python

18 | pip install -i https://pypi.tuna.tsinghua.edu.cn/simple/ xgboost

19 | ```

20 | - 方法二:本地安装

21 | 首先去[戳此处](https://www.lfd.uci.edu/~gohlke/pythonlibs/#xgboost)搜索并下载xboost对应安装包

22 | (cp27表示python2.7,win32表示32位,amd64表示64位)

23 | ```python

24 | pip install xgboost-0.80-cp36-cp36m-win_amd64.whl

25 | ```

26 | - 大致原理

27 | ### 3. 示例

28 | - 3.1 本示例先对特征进行人工分析,然后选择其中7个进行训练

29 | - [示例1](ex1.py)

30 | - 3.2 本示例先对特征进行评估,然后选择其中3个进行训练

31 | - [示例2](ex2.py)

32 | - 3.3 本示例是以stacking的思想进行训练

33 | - [示例3](ex3.py)

34 | ### 4. 任务

35 | - 4.1 根据所给[数据集1001](../DatasetUrl.md),预测某人是否患有糖尿病;

36 | - 4.2 根据所给[数据集1002](../DatasetUrl.md),预测泰坦尼克号人员生还情况;

37 |

38 | 要求:

39 | - 要求模型预测的准确率尽可能高;

40 | - 分模块书写代码(比如数据预处理,不同模型的训练要抽象成函数,具体可参见前面例子);

41 | ### [<主页>](../README.md) [<下一讲>](../Lecture_06/README.md)

--------------------------------------------------------------------------------

/Lecture_05/data/README.md:

--------------------------------------------------------------------------------

1 | 下载好后放入data目录下即可

2 |

3 | ### 数据集下载地址:

4 |

5 | [数据集1002](../../DatasetUrl.md)

--------------------------------------------------------------------------------

/Lecture_05/ex1.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | from sklearn.model_selection import GridSearchCV

3 | import numpy as np

4 |

5 |

6 | def load_data_and_preprocessing():

7 | train = pd.read_csv('./data/titanic_train.csv')

8 | test = pd.read_csv('./data/test.csv')

9 | # print(train['Name'])

10 | # print(titannic_train.describe())

11 | # print(train.info())

12 | train_y = train['Survived']

13 | selected_features = ['Pclass', 'Sex', 'Age', 'SibSp', 'Parch', 'Fare', 'Embarked']

14 | train_x = train[selected_features]

15 | train_x['Age'].fillna(train_x['Age'].mean(), inplace=True) # 以均值填充

16 | # print(train_x['Embarked'].value_counts())

17 | train_x['Embarked'].fillna('S', inplace=True)

18 | # print(train_x.info())

19 |

20 | test_x = test[selected_features]

21 | test_x['Age'].fillna(test_x['Age'].mean(), inplace=True)

22 | test_x['Fare'].fillna(test_x['Fare'].mean(), inplace=True)

23 | # print(test_x.info())

24 |

25 | train_x.loc[train_x['Embarked'] == 'S', 'Embarked'] = 0

26 | train_x.loc[train_x['Embarked'] == 'C', 'Embarked'] = 1

27 | train_x.loc[train_x['Embarked'] == 'Q', 'Embarked'] = 2

28 | train_x.loc[train_x['Sex'] == 'male', 'Sex'] = 0

29 | train_x.loc[train_x['Sex'] == 'female', 'Sex'] = 1

30 | x_train = train_x.as_matrix()

31 | y_train = train_y.as_matrix()

32 |

33 | test_x.loc[test_x['Embarked'] == 'S', 'Embarked'] = 0

34 | test_x.loc[test_x['Embarked'] == 'C', 'Embarked'] = 1

35 | test_x.loc[test_x['Embarked'] == 'Q', 'Embarked'] = 2

36 | test_x.loc[test_x['Sex'] == 'male', 'Sex'] = 0

37 | test_x.loc[test_x['Sex'] == 'female', 'Sex'] = 1

38 | x_test = test_x

39 | return x_train, y_train, x_test

40 |

41 |

42 | def logistic_regression():

43 | from sklearn.linear_model import LogisticRegression

44 | x_train, y_train, x_test = load_data_and_preprocessing()

45 | model = LogisticRegression()

46 | paras = {'C': np.linspace(0.1, 10, 50)}

47 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

48 | gs.fit(x_train, y_train)

49 | print('best score:', gs.best_score_)

50 | print('best parameters:', gs.best_params_)

51 |

52 |

53 | def decision_tree():

54 | from sklearn.tree import DecisionTreeClassifier

55 | x_train, y_train, x_test = load_data_and_preprocessing()

56 | model = DecisionTreeClassifier()

57 | paras = {'criterion': ['gini', 'entropy'], 'max_depth': np.arange(5, 50, 5)}

58 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

59 | gs.fit(x_train, y_train)

60 | print('best score:', gs.best_score_)

61 | print('best parameters:', gs.best_params_)

62 |

63 |

64 | def random_forest():

65 | from sklearn.ensemble import RandomForestClassifier

66 | x_train, y_train, x_test = load_data_and_preprocessing()

67 | model = RandomForestClassifier()

68 | paras = {'n_estimators': np.arange(10, 100, 10), 'criterion': ['gini', 'entropy'], 'max_depth': np.arange(5, 50, 5)}

69 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

70 | gs.fit(x_train, y_train)

71 | print('best score:', gs.best_score_)

72 | print('best parameters:', gs.best_params_)

73 |

74 |

75 | def gradient_boosting():

76 | from sklearn.ensemble import GradientBoostingClassifier

77 | x_train, y_train, x_test = load_data_and_preprocessing()

78 | model = GradientBoostingClassifier()

79 | paras = {'learning_rate': np.arange(0.1, 1, 0.1), 'n_estimators': range(80, 120, 10), 'max_depth': range(5, 10, 1)}

80 | gs = GridSearchCV(model, paras, cv=5, verbose=3,n_jobs=2)

81 | gs.fit(x_train, y_train)

82 | print('best score:', gs.best_score_)

83 | print('best parameters:', gs.best_params_)

84 |

85 |

86 | if __name__ == '__main__':

87 | # logistic_regression() # 0.7979

88 | # decision_tree()#0.813

89 | # random_forest() # 0.836 {'criterion': 'entropy', 'max_depth': 10, 'n_estimators': 60}

90 | gradient_boosting()#0.830 {'learning_rate': 0.1, 'max_depth': 5, 'n_estimators': 90}

91 |

--------------------------------------------------------------------------------

/Lecture_05/ex2.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | from sklearn.model_selection import GridSearchCV

4 |

5 | def feature_selection():

6 | from sklearn.feature_selection import SelectKBest, f_classif

7 | import matplotlib.pyplot as plt

8 | train = pd.read_csv('./data/titanic_train.csv')

9 | selected_features = ['Pclass', 'Sex', 'Age', 'SibSp', 'Parch', 'Fare', 'Embarked']

10 | train_x = train[selected_features]

11 | train_y = train['Survived']

12 | train_x['Age'].fillna(train_x['Age'].mean(), inplace=True) # 以均值填充

13 | train_x['Embarked'].fillna('S', inplace=True)

14 | train_x.loc[train_x['Embarked'] == 'S', 'Embarked'] = 0

15 | train_x.loc[train_x['Embarked'] == 'C', 'Embarked'] = 1

16 | train_x.loc[train_x['Embarked'] == 'Q', 'Embarked'] = 2

17 | train_x.loc[train_x['Sex'] == 'male', 'Sex'] = 0

18 | train_x.loc[train_x['Sex'] == 'female', 'Sex'] = 1

19 |

20 | selector = SelectKBest(f_classif, k=5)

21 | selector.fit(train_x, train_y)

22 | scores = selector.scores_

23 | plt.bar(range(len(selected_features)), scores)

24 | plt.xticks(range(len(selected_features)), selected_features, rotation='vertical')

25 | plt.show()

26 |

27 | x_train = train_x[['Pclass', 'Sex', 'Fare']]

28 | y_train = train_y.as_matrix()

29 | return x_train, y_train

30 | def logistic_regression():

31 | from sklearn.linear_model import LogisticRegression

32 | x_train, y_train= feature_selection()

33 | model = LogisticRegression()

34 | paras = {'C': np.linspace(0.1, 10, 50)}

35 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

36 | gs.fit(x_train, y_train)

37 | print('best score:', gs.best_score_)

38 | print('best parameters:', gs.best_params_)

39 |

40 |

41 | def decision_tree():

42 | from sklearn.tree import DecisionTreeClassifier

43 | x_train, y_train = feature_selection()

44 | model = DecisionTreeClassifier()

45 | paras = {'criterion': ['gini', 'entropy'], 'max_depth': np.arange(5, 50, 5)}

46 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

47 | gs.fit(x_train, y_train)

48 | print('best score:', gs.best_score_)

49 | print('best parameters:', gs.best_params_)

50 |

51 |

52 | def random_forest():

53 | from sklearn.ensemble import RandomForestClassifier

54 | x_train, y_train = feature_selection()

55 | model = RandomForestClassifier()

56 | paras = {'n_estimators': np.arange(10, 100, 10), 'criterion': ['gini', 'entropy'], 'max_depth': np.arange(5, 50, 5)}

57 | gs = GridSearchCV(model, paras, cv=5, verbose=3)

58 | gs.fit(x_train, y_train)

59 | print('best score:', gs.best_score_)

60 | print('best parameters:', gs.best_params_)

61 |

62 | if __name__ == '__main__':

63 | # feature_selection()

64 | # logistic_regression()#0.783

65 | # decision_tree()#0.814

66 | random_forest()# 0.814

--------------------------------------------------------------------------------

/Lecture_05/ex3.py:

--------------------------------------------------------------------------------

1 | from ex1 import load_data_and_preprocessing

2 | from sklearn.model_selection import KFold

3 | from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier

4 |

5 |

6 | def stacking():

7 | s = 0

8 | x_train, y_train, x_test = load_data_and_preprocessing()

9 | kf = KFold(n_splits=5)

10 | rfc = RandomForestClassifier(criterion='entropy', max_depth=10, n_estimators=60)

11 | gbc = GradientBoostingClassifier(learning_rate=0.1, max_depth=5, n_estimators=90)

12 | for train_index, test_index in kf.split(x_train):

13 | train_x, test_x = x_train[train_index], x_train[test_index]

14 | train_y, test_y = y_train[train_index], y_train[test_index]

15 | rfc.fit(train_x, train_y)

16 | rfc_pre = rfc.predict_proba(test_x)[:,1]

17 | gbc.fit(train_x, train_y)

18 | gbc_pre = gbc.predict_proba(test_x)[:,1]

19 | y_pre = ((rfc_pre+gbc_pre)/2 >= 0.5)*1

20 | acc = sum((test_y == y_pre)*1)/len(y_pre)

21 | s += acc

22 | print(acc)

23 | print('Accuracy: ',s/5)# 0.823

24 |

25 |

26 | if __name__ == '__main__':

27 | stacking()

28 |

--------------------------------------------------------------------------------

/Lecture_06/README.md:

--------------------------------------------------------------------------------

1 | ### 1. 本节视频

2 | - 本节视频15,16

3 | ### 2. 知识点

4 | - 2.1 贝叶斯算法和贝叶斯估计

5 | - [朴素贝叶斯算法与贝叶斯估计](https://blog.csdn.net/The_lastest/article/details/78807198)

6 | - 平滑处理(拉普拉斯平滑)

7 | - 2.2 特征提取

8 | - 分词处理

9 | - [利用jieba进行中文分词并进行词频统计](https://blog.csdn.net/The_lastest/article/details/81027387)

10 | - 词集模型

11 | - 词袋模型

12 | - TF-IDF

13 | - [Scikit-learn CountVectorizer与TfidfVectorizer](https://blog.csdn.net/The_lastest/article/details/79093407)

14 | - 2.3 相似度计算

15 | - 欧氏距离

16 | - cos距离

17 |

18 | ### 3. 示例

19 | - [3.1 基于贝叶斯算法的中文垃圾邮件分类](ex1.py)

20 | - [3.2 词云图](word_cloud.py)

21 |

22 | ### 4. 任务

23 | - [4.1 在3.1的基础上,完成选取所有词中前5000个出现频率最高的词为字典构造TF-IDF特征矩阵,然后训练模型]()

24 | - [4.2 基于贝叶斯算法和编辑距离的单词拼写纠正](ex2.py)

25 | - [4.3 基于贝叶斯算法的中文新闻分类](ex3.py)

26 | ### [<主页>](../README.md) [<下一讲>](../Lecture_07/README.md)

--------------------------------------------------------------------------------

/Lecture_06/data/Figure_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_06/data/Figure_1.png

--------------------------------------------------------------------------------

/Lecture_06/data/README.md:

--------------------------------------------------------------------------------

1 | 下载好后放入data目录下即可

2 |

3 | ### 数据集下载地址:

4 |

5 | 数据集编号: 1003,1004,1005

6 |

7 | 停用词:3001

8 |

9 | [数据集下载地址集合](../../DatasetUrl.md)

10 |

11 |

--------------------------------------------------------------------------------

/Lecture_06/data/p18.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_06/data/p18.png

--------------------------------------------------------------------------------

/Lecture_06/data/simhei.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TolicWang/MachineLearningWithMe/11d3bc63acc4a40947856e9cdabd47ab6c40793d/Lecture_06/data/simhei.ttf

--------------------------------------------------------------------------------

/Lecture_06/ex1.py:

--------------------------------------------------------------------------------

1 | import re

2 | import jieba

3 | import numpy as np

4 | from sklearn.feature_extraction.text import TfidfVectorizer

5 | from sklearn.model_selection import train_test_split

6 | import sys

7 | from sklearn.naive_bayes import MultinomialNB

8 |

9 |

10 | def clean_str(string, sep=" "):

11 | """

12 | 该函数的作用是去掉一个字符串中的所有非中文字符

13 | :param string: 输入必须是字符串类型

14 | :param sep: 表示去掉的部分用什么填充,默认为一个空格

15 | :return: 返回处理后的字符串

16 |

17 | example:

18 | s = "祝你2018000国庆快乐!"

19 | print(clean_str(s))# 祝你 国庆快乐

20 | print(clean_str(s,sep=""))# 祝你国庆快乐

21 | """

22 | string = re.sub(r"[^\u4e00-\u9fff]", sep, string)

23 | string = re.sub(r"\s{2,}", sep, string) # 若有空格,则最多只保留2个宽度

24 | return string.strip()

25 |

26 |

27 | def cut_line(line):

28 | """

29 | 该函数的作用是 先清洗字符串,然后分词

30 | :param line: 输入必须是字符串类型

31 | :return: 分词后的结果

32 |

33 | example:

34 | s ='我今天很高兴'

35 | print(cut_line(s))# 我 今天 很 高兴

36 | """

37 | line = clean_str(line)

38 | seg_list = jieba.cut(line)

39 | cut_words = " ".join(seg_list)

40 | return cut_words

41 |

42 |

43 | def load_data_and_labels(positive_data_file, negative_data_file):

44 | """

45 | 该函数的作用是按行载入数据,然后分词。同时给每个样本构造构造标签

46 | :param positive_data_file: txt文本格式,其中每一行为一个样本

47 | :param negative_data_file: txt文本格式,其中每一行为一个样本

48 | :return: 分词后的结果和标签

49 | example:

50 | positive_data_file:

51 | 今天我很高兴,你吃饭了吗?

52 | 这个怎么这么不正式啊?还上进青年

53 | 我觉得这个不错!

54 | return:

55 | x_text: ['今天 我 很 高兴 你 吃饭 了 吗', '这个 怎么 这么 不 正式 啊 还 上 进 青年', '我 觉得 这个 不错']

56 | y: [1,1,1]

57 | """

58 | print("================Processing in function: %s() !=================" % sys._getframe().f_code.co_name)

59 | positive = []

60 | negative = []

61 | for line in open(positive_data_file, encoding='utf-8'):

62 | positive.append(cut_line(line))

63 | for line in open(negative_data_file, encoding='utf-8'):

64 | negative.append(cut_line(line))

65 | x_text = positive + negative

66 |

67 | positive_label = [1 for _ in positive] # 构造标签

68 | negative_label = [0 for _ in negative]

69 |

70 | y = np.concatenate([positive_label, negative_label], axis=0)

71 |

72 | return x_text, y

73 |

74 |

75 | def get_tf_idf(features,top_k=None):

76 | print()

77 | """

78 | 该函数的作用是得到tfidf特征矩阵

79 | :param features:

80 | :param top_k: 取出现频率最高的前top_k个词为特征向量,默认取全部(即字典长度)

81 | :return:

82 |

83 | example:

84 | X_test = ['没有 你 的 地方 都是 他乡', '没有 你 的 旅行 都是 流浪 较之']

85 | IFIDF词频矩阵:

86 | [[0.57615236 0.57615236 0. 0.40993715 0. 0.40993715]

87 | [0. 0. 0.57615236 0.40993715 0.57615236 0.40993715]]

88 | """

89 | print("================Processing in function: %s() !=================" % sys._getframe().f_code.co_name)

90 | stopwors_dir = './data/stopwords/chinaStopwords.txt'

91 | stopwords = open(stopwors_dir, encoding='utf-8').read().replace('\n', ' ').split()

92 | tfidf = TfidfVectorizer(token_pattern=r"(?u)\b\w\w+\b", stop_words=stopwords)

93 | weight = tfidf.fit_transform(features).toarray()

94 | word = tfidf.get_feature_names()

95 | print('字典长度为:', len(word))

96 | return weight

97 |

98 |

99 | def get_train_test(positive_file, negative_file):

100 | """

101 | 该函数的作用是打乱并划分数据集

102 | :param positive_file:

103 | :param negative_file:

104 | :return:

105 | """

106 | print("================Processing in function: %s() !=================" % sys._getframe().f_code.co_name)

107 | x_text, y = load_data_and_labels(positive_file, negative_file)

108 | x = get_tf_idf(x_text)

109 | X_train, X_test, y_train, y_test = train_test_split(x, y, shuffle=True, test_size=0.3)

110 | return X_train, X_test, y_train, y_test

111 |

112 |

113 | def train(positive_file, negative_file):

114 | print("================Processing in function: %s() !=================" % sys._getframe().f_code.co_name)

115 | X_train, X_test, y_train, y_test = get_train_test(positive_file, negative_file)

116 | model = MultinomialNB()

117 | model.fit(X_train, y_train)

118 | print(model.score(X_test, y_test))

119 |

120 |

121 | if __name__ == "__main__":

122 | positive_file = './data/email/ham_5000.utf8'

123 | negative_file = './data/email/spam_5000.utf8'

124 | train(positive_file, negative_file)

125 |

--------------------------------------------------------------------------------

/Lecture_06/ex2.py:

--------------------------------------------------------------------------------

1 | import re

2 | from collections import Counter

3 | import pickle

4 | import sys, os

5 |

6 |

7 | def load_all_words(data_dir):

8 | """

9 | 该函数的作用是返回数据集中所有的单词

10 | :param data_dir:

11 | :return:

12 |

13 | example:

14 | (#15 in our series by

15 | all_words =['in','our','series','by']

16 | """

17 | text = open(data_dir).read().replace('\n', '').lower()

18 | all_words = re.findall('[a-z]+', text)

19 | return all_words

20 |

21 |

22 | def get_edit_one_distance(word='at'):

23 | """

24 | 该函数的作用是得到一个单词,编辑距离为1情况下的所有可能单词(不一定是正确单词)

25 | :param word:

26 | :return:

27 | example:

28 |

29 | word = 'at'

30 | edit_one={'att', 'aa', 'am', 'ati', 't', 'abt', 'mt', 'aot', 'atu', 'ay', 'aft', 'ac', 'dat', 'ato', 'ft', 'lat',.......}

31 | """

32 | n = len(word)

33 | alphabet = 'abcdefghijklmnopqrstuvwxyz'

34 | edit_one = set([word[0:i] + word[i + 1:] for i in range(n)] + # deletion

35 | [word[0:i] + word[i + 1] + word[i] + word[i + 2:] for i in range(n - 1)] + # transposition

36 | [word[0:i] + c + word[i + 1:] for i in range(n) for c in alphabet] + # alteration

37 | [word[0:i] + c + word[i:] for i in range(n + 1) for c in alphabet]) # insertion

38 | return edit_one

39 |

40 |

41 | def save_model(model_dir='./', para=None):

42 | """

43 | 该函数的作用是保存传进来的参数para

44 | :param model_dir: 保存路径

45 | :param para:

46 | :return:

47 | """

48 | p = {'model': para}

49 | temp = open(model_dir, 'wb')

50 | pickle.dump(p, temp)

51 |

52 |

53 | def load_model(model_dir='./'):

54 | """

55 | 该函数的作用是载入训练好的模型,如果不存在则训练

56 | :param model_dir:

57 | :return:

58 | """

59 | if os.path.exists(model_dir):

60 | p = open(model_dir, 'rb')

61 | data = pickle.load(p)

62 | model = data['model']

63 | else:

64 | model = train()

65 | save_model(model_dir, model)

66 | return model

67 |

68 |

69 | def train():

70 | """

71 | 该函数的作用是训练模型,并且保存

72 | :return:

73 | """

74 | data_dir = './data/spellcheck/big.txt'

75 | all_words = load_all_words(data_dir=data_dir)

76 | c = Counter()

77 | for word in all_words: # 统计词频

78 | c[word] += 1

79 | return c

80 |

81 |

82 | def predict(word):

83 | """

84 | 该函数的作用是,当用户输入单词不在预料库中是,然后根据预料库预测某个可能词

85 | :param word: 输入的单词

86 | :return:

87 |

88 | example:

89 | word = 'tha'

90 | the

91 | """

92 | model_dir = './data/spellcheck/model.dic'

93 | model = load_model(model_dir)

94 | all_words = [w for w in model]

95 | if word in all_words:

96 | correct_word = word

97 | else:

98 | all_candidates = get_edit_one_distance(word)

99 | correct_candidates = []

100 | unique_words = set(all_words)

101 | max_fre = 0

102 | correct_word = ""

103 | for word in all_candidates:

104 | if word in unique_words:

105 | correct_candidates.append(word)

106 | for word in correct_candidates:

107 | freq = model.get(word)

108 | if freq > max_fre:

109 | max_fre = freq

110 | correct_word = word

111 | print("所有的候选词:", correct_candidates)