├── .gitignore
├── Cargo.lock
├── Cargo.toml
├── README.md
├── config
│   └── entangledb.yaml
├── husky
│   └── cloud
│       ├── build.sh
│       ├── entangledb1
│       │   ├── data
│       │   │   └── .gitkeep
│       │   └── entangledb.yaml
│       ├── entangledb2
│       │   ├── data
│       │   │   └── .gitkeep
│       │   └── entangledb.yaml
│       ├── entangledb3
│       │   ├── data
│       │   │   └── .gitkeep
│       │   └── entangledb.yaml
│       ├── entangledb4
│       │   ├── data
│       │   │   └── .gitkeep
│       │   └── entangledb.yaml
│       └── entangledb5
│           ├── data
│           │   └── .gitkeep
│           └── entangledb.yaml
├── learning_resources.md
└── src
    ├── bin
    │   ├── entangledb.rs
    │   └── entanglesql.rs
    ├── client.rs
    ├── error.rs
    ├── lib.rs
    ├── raft
    │   ├── log.rs
    │   ├── message.rs
    │   ├── mod.rs
    │   ├── node
    │   │   ├── candidate.rs
    │   │   ├── follower.rs
    │   │   ├── leader.rs
    │   │   └── mod.rs
    │   ├── server.rs
    │   └── state.rs
    ├── server.rs
    ├── sql
    │   ├── engine
    │   │   ├── kv.rs
    │   │   ├── mod.rs
    │   │   └── raft.rs
    │   ├── execution
    │   │   ├── aggregation.rs
    │   │   ├── join.rs
    │   │   ├── mod.rs
    │   │   ├── mutation.rs
    │   │   ├── query.rs
    │   │   ├── schema.rs
    │   │   └── source.rs
    │   ├── mod.rs
    │   ├── parser
    │   │   ├── ast.rs
    │   │   ├── lexer.rs
    │   │   └── mod.rs
    │   ├── plan
    │   │   ├── mod.rs
    │   │   ├── optimizer.rs
    │   │   └── planner.rs
    │   ├── schema.rs
    │   └── types
    │       ├── expression.rs
    │       └── mod.rs
    └── storage
        ├── bincode.rs
        ├── debug.rs
        ├── engine
        │   ├── bitcask.rs
        │   ├── memory.rs
        │   └── mod.rs
        ├── golden
        │   ├── bitcask
        │   │   ├── compact-after
        │   │   ├── compact-before
        │   │   └── log
        │   └── mvcc
        │       ├── anomaly_dirty_read
        │       ├── anomaly_dirty_write
        │       ├── anomaly_fuzzy_read
        │       ├── anomaly_lost_update
        │       ├── anomaly_phantom_read
        │       ├── anomaly_read_skew
        │       ├── anomaly_write_skew
        │       ├── begin
        │       ├── begin_as_of
        │       ├── begin_read_only
        │       ├── delete
        │       ├── delete_conflict
        │       ├── get
        │       ├── get_isolation
        │       ├── resume
        │       ├── rollback
        │       ├── scan
        │       ├── scan_isolation
        │       ├── scan_key_version_encoding
        │       ├── scan_prefix
        │       ├── set
        │       ├── set_conflict
        │       └── unversioned
        ├── keycode.rs
        ├── mod.rs
        └── mvcc.rs

/.gitignore:

--------------------------------------------------------------------------------

1 | /husky/*/entangledb*/data

2 | /data

3 | /target

4 | .vscode/

5 | **/*.rs.bk

6 | .aider*

7 |

--------------------------------------------------------------------------------

/Cargo.toml:

--------------------------------------------------------------------------------

1 | [package]

2 | name = "entangledb"

3 | description = "A distributed SQL database"

4 | version = "0.1.0"

5 | edition = "2021"

6 | default-run = "entangledb"

7 |

8 | [lib]

9 | doctest = false

10 |

11 | [dependencies]

12 | bincode = "~1.3.3"

13 | clap = { version = "~4.4.2", features = ["cargo"] }

14 | config = "~0.13.3"

15 | derivative = "~2.2.0"

16 | fs4 = "~0.6.6"

17 | futures = "~0.3.15"

18 | futures-util = "~0.3.15"

19 | hex = "~0.4.3"

20 | lazy_static = "~1.4.0"

21 | log = "~0.4.14"

22 | names = "~0.14.0"

23 | rand = "~0.8.3"

24 | regex = "1.5.4"

25 | rustyline = "~12.0.0"

26 | rustyline-derive = "0.9.0"

27 | serde = "~1.0.126"

28 | serde_bytes = "~0.11.12"

29 | serde_derive = "~1.0.126"

30 | simplelog = "~0.12.1"

31 | tokio = { version = "~1.32.0", features = [

32 | "macros",

33 | "rt",

34 | "rt-multi-thread",

35 | "net",

36 | "io-util",

37 | "time",

38 | "sync",

39 | ] }

40 | tokio-serde = { version = "~0.8", features = ["bincode"] }

41 | tokio-stream = { version = "~0.1.6", features = ["net"] }

42 | tokio-util = { version = "~0.7.8", features = ["codec"] }

43 | uuid = { version = "~1.4.1", features = ["v4"] }

44 |

45 | [dev-dependencies]

46 | goldenfile = "~1.5.2"

47 | paste = "~1.0.14"

48 | pretty_assertions = "~1.4.0"

49 | serial_test = "~2.0.0"

50 | tempdir = "~0.3.7"

51 | tempfile = "~3.8.0"

52 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Table of Contents

2 | - [Overview](#overview)

3 | - [Usage](#usage)

4 | - [TODO](#todo)

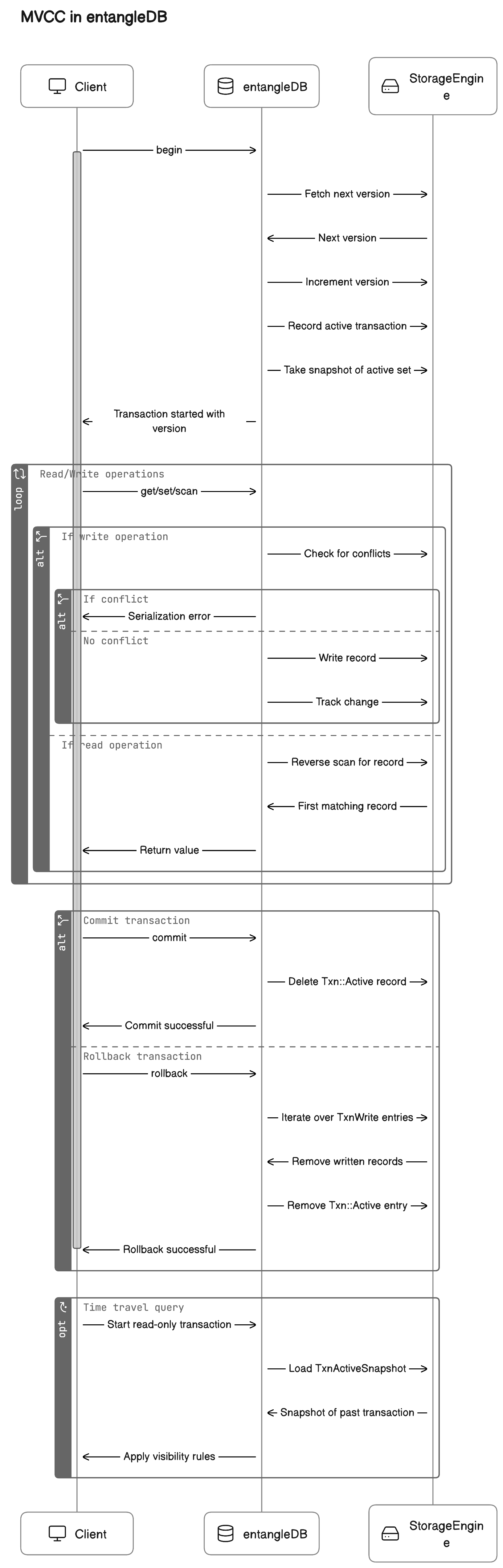

5 | - [MVCC in entangleDB](#mvcc-in-entangledb)

6 | - [SQL Query Execution in entangleDB](#sql-query-execution-in-entangledb)

7 | - [entangleDB Raft Consensus Engine](#entangledb-raft-consensus-engine)

8 | - [What I am trying to build](#what-i-am-trying-to-build)

9 | - [Distributed Consensus Engine](#1-distributed-consensus-engine)

10 | - [Transaction Engine](#2-transaction-engine)

11 | - [Storage Engine](#3-storage-engine)

12 | - [Query Engine](#4-query-engine)

13 | - [SQL Interface and PostgreSQL Compatibility](#5-sql-interface-and-postgresql-compatibility)

14 | - [Proposed Architecture](#proposed-architecture)

15 | - [SQL Engine](#sql-engine)

16 | - [Raft Engine](#raft-engine)

17 | - [Storage Engine](#storage-engine)

18 | - [entangleDB Peers](#entangledb-peers)

19 | - [Example SQL Queries that you will be able to execute in entangleDB](#example-sql-queries-that-you-will-be-able-to-execute-in-entangledb)

20 | - [Learning Resources I've been using for building the database](#learning-resources-ive-been-using-for-building-the-database)

21 |

22 | ## Overview

23 |

24 | I'm building entangleDB to learn how databases work from the inside out. My aim is to understand databases deeply, from the big picture down to the small details, and to build a strong foundation in the subject.

25 |

26 | The name "entangleDB" is special because it's in honor of a friend who loves databases just as much as I do.

27 |

28 | The plan is to write the database in Rust. My main goal is to create something that's not only useful for me to learn from but also helpful for others who want to dive deep into how databases work. I'm hoping to make it PostgreSQL-compatible.

29 |

30 | ## Usage

31 | Prerequisite: the Rust compiler. Follow the official guide to install the [Rust compiler](https://www.rust-lang.org/tools/install).

32 |

33 | A five-node entangleDB cluster can be started on `localhost` ports `3201` to `3205`:

34 |

35 | ```

36 | (cd husky/cloud && ./build.sh)

37 | ```

38 |

39 | The `entanglesql` client connects to the node on `localhost` port `3205` by default:

40 |

41 | ```

42 | cargo run --release --bin entanglesql

43 |

44 | Connected to EntangleDB node "5". Enter !help for instructions.

45 | entangledb> SELECT * FROM dishes;

46 | poha

47 | breads

48 | korma

49 | ```

50 |

51 | ## TODO

52 | 1. Make the isolation level configurable; currently, it is set to repeatable read (snapshot).

53 | 2. Implement partitions, both hash and range types.

54 | 3. Utilize generics throughout in Rust, thereby eliminating the need for std::fmt::Display + Send + Sync.

55 | 4. Consider the use of runtime assertions instead of employing Error::Internal ubiquitously.

56 | 5. Revisit the implementation of time-travel queries.

57 |

58 | ## MVCC in entangleDB

59 |

60 |

61 |

62 | ## SQL Query Execution in entangleDB

63 |

64 |

65 | ## entangleDB Raft Consensus Engine

66 |

67 |

68 | ## What I am trying to build

69 |

70 | ### 1. Distributed Consensus Engine

71 |

72 | The design for entangleDB centers around a custom-built consensus engine, intended for high availability in distributed settings. This engine will be crucial in maintaining consistent and reliable state management across various nodes.

73 |

74 | A key focus will be on linearizable state machine replication, an essential feature for ensuring data consistency across all nodes, especially for applications that require strong consistency.

75 |

76 | ### 2. Transaction Engine

77 |

78 | The proposed transaction engine for entangleDB is committed to adhering to ACID properties, ensuring reliability and integrity in every transaction.

79 |

80 | The plan includes the implementation of Snapshot Isolation and Serializable Isolation, with the aim of optimizing transaction handling for enhanced concurrency and data integrity.
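
To make the snapshot isolation idea concrete, here is a minimal in-memory sketch of versioned reads. It is not the code in `src/storage/mvcc.rs`; the `VersionedStore` type, its methods, and the `active` set are hypothetical names chosen for illustration. The assumption is that a transaction reading at some version sees the newest write at or below that version, skipping writes from transactions that were still uncommitted when it began.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Hypothetical version type for this sketch.
type Version = u64;

/// A toy MVCC store: every write is kept under (key, version).
/// A value of `None` marks a deletion (tombstone).
#[derive(Default)]
struct VersionedStore {
    data: BTreeMap<(Vec<u8>, Version), Option<Vec<u8>>>,
}

impl VersionedStore {
    /// Records a write (or a deletion, if `value` is `None`) at `version`.
    fn write(&mut self, key: &[u8], version: Version, value: Option<Vec<u8>>) {
        self.data.insert((key.to_vec(), version), value);
    }

    /// Reads `key` as seen by a transaction running at `version`.
    /// Versions listed in `active` belong to concurrent, uncommitted
    /// transactions and are invisible to the reader.
    fn read(&self, key: &[u8], version: Version, active: &BTreeSet<Version>) -> Option<Vec<u8>> {
        self.data
            .range((key.to_vec(), 0)..=(key.to_vec(), version))
            .rev() // newest candidate version first
            .find(|((_, v), _)| !active.contains(v))
            .and_then(|(_, value)| value.clone())
    }
}
```

Because old versions are never overwritten, a reader at version 2 keeps seeing the value written at version 1 even after a later transaction writes version 3.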

81 |

82 | ### 3. Storage Engine

83 |

84 | The planned storage engine for entangleDB will explore a variety of storage formats to find and utilize the most efficient methods for data storage and retrieval.

85 |

86 | The storage layer is being designed for flexibility, to support a range of backend technologies and meet diverse storage requirements.

87 |

88 | ### 4. Query Engine

89 |

90 | The development of the query engine will focus on rapid and effective query processing, utilizing advanced optimization algorithms.

91 |

92 | A distinctive feature of entangleDB will be its ability to handle time-travel queries, allowing users to access and analyze data from different historical states.

93 |

94 | ### 5. SQL Interface and PostgreSQL Compatibility

95 |

96 | The SQL interface for entangleDB is intended to support a wide array of SQL functionalities, including complex queries, joins, aggregates, and window functions.

97 |

98 | Compatibility with PostgreSQL’s wire protocol is a goal, to facilitate smooth integration with existing PostgreSQL setups and offer a solid alternative for database system upgrades or migrations.

99 |

100 | ## Proposed Architecture

101 |

102 |

103 | ## SQL Engine

104 |

105 | The SQL Engine is responsible for the intake and processing of SQL queries. It consists of:

106 |

107 | - **SQL Session**: The processing pipeline within a session includes:

108 | - `Parser`: Interprets SQL queries and converts them into a machine-understandable format.

109 | - `Planner`: Devises an execution plan based on the parsed input.

110 | - `Executor`: Carries out the plan, accessing and modifying the database.

111 |

112 | Adjacent to the session is the:

113 |

114 | - **SQL Storage Raft Backend**: This component integrates with the Raft consensus protocol to ensure distributed transactions are consistent and resilient.
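
The parser, planner, and executor stages described above can be sketched end to end for a deliberately tiny grammar (`SELECT <integer> + <integer> ...;`). This is an illustrative toy, not the code in `src/sql`; the `Expr` and `Plan` types and the `parse`, `plan`, and `execute` functions are hypothetical names.

```rust
/// Hypothetical AST for the toy grammar: integer constants joined by `+`.
#[derive(Debug, PartialEq)]
enum Expr {
    Constant(i64),
    Add(Box<Expr>, Box<Expr>),
}

/// Hypothetical plan with a single node type that projects expressions.
#[derive(Debug, PartialEq)]
enum Plan {
    Project(Vec<Expr>),
}

/// Parser: turns SQL text into an AST, or returns an error message.
fn parse(sql: &str) -> Result<Expr, String> {
    let body = sql.trim().trim_end_matches(';').trim();
    let rest = body.strip_prefix("SELECT ").ok_or("expected SELECT")?;
    let mut expr: Option<Expr> = None;
    for term in rest.split('+') {
        let constant = Expr::Constant(term.trim().parse::<i64>().map_err(|e| e.to_string())?);
        expr = Some(match expr {
            None => constant,
            // Left-associative: (a + b) + c
            Some(lhs) => Expr::Add(Box::new(lhs), Box::new(constant)),
        });
    }
    expr.ok_or_else(|| "expected expression".to_string())
}

/// Planner: wraps the parsed expression in a projection node.
fn plan(expr: Expr) -> Plan {
    Plan::Project(vec![expr])
}

/// Executor: evaluates the plan and returns one row of values.
fn execute(plan: &Plan) -> Vec<i64> {
    fn eval(expr: &Expr) -> i64 {
        match expr {
            Expr::Constant(c) => *c,
            Expr::Add(lhs, rhs) => eval(lhs) + eval(rhs),
        }
    }
    match plan {
        Plan::Project(exprs) => exprs.iter().map(eval).collect(),
    }
}
```

Keeping the three stages as separate functions with plain data passed between them mirrors the session pipeline described above, and leaves room for an optimizer step between `plan` and `execute`.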

115 |

116 | ## Raft Engine

117 |

118 | The Raft Engine is crucial for maintaining a consistent state across the distributed system:

119 |

120 | - **Raft Node**: This consensus node confirms that all database transactions are in sync across the network.

121 | - **Raft Log**: A record of all transactions agreed upon by the Raft consensus algorithm, which is crucial for data integrity and fault tolerance.
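
As a rough illustration of how a leader could decide which log entries are replicated widely enough to commit, the sketch below computes the highest index stored on a majority of nodes. The `NodeId` and `Index` aliases and the `quorum_index` function are hypothetical names for this example, not the types in `src/raft`. Full Raft additionally requires that the entry at that index belong to the leader's current term, which this sketch leaves out.

```rust
use std::collections::HashMap;

/// Hypothetical aliases for this sketch.
type NodeId = u8;
type Index = u64;

/// Returns the highest log index that is stored on a majority of nodes,
/// given each node's last known log index (the leader included).
fn quorum_index(last_index: &HashMap<NodeId, Index>) -> Index {
    let mut indexes: Vec<Index> = last_index.values().copied().collect();
    if indexes.is_empty() {
        return 0;
    }
    // Sort descending: the value at position (quorum - 1) is present on
    // at least `quorum` nodes.
    indexes.sort_unstable_by(|a, b| b.cmp(a));
    let quorum = indexes.len() / 2 + 1;
    indexes[quorum - 1]
}
```

With five nodes at indexes 7, 5, 5, 3, and 1, three nodes hold index 5 or higher, so 5 is the highest index a majority has stored.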

122 |

123 | ## Storage Engine

124 |

125 | The Storage Engine is where the actual data is stored and managed:

126 |

127 | - **State Machine Driver**: Consists of:

128 | - `State Machine Interface`: An intermediary that conveys state changes from the Raft log to the storage layer.

129 | - `Key Value Backend`: The primary storage layer, consisting of:

130 | - `Bitcask Engine`: A simple, fast on-disk storage system for key-value data.

131 | - `MVCC Storage`: Handles multiple versions of data for read-write concurrency control.
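
The Bitcask idea can be sketched in a few lines: values are appended to a log, and an in-memory index (the "keydir") points each live key at its latest value. This is a simplified toy, not the code in `src/storage/engine/bitcask.rs`: the `ToyBitcask` type and its methods are hypothetical, a `Vec<u8>` stands in for the log file, and keys and tombstones are not written to the log. How the real engine applies `compact_threshold` is not shown in this dump; the assumption here is that it is compared against the fraction of garbage in the log.

```rust
use std::collections::BTreeMap;

/// A toy Bitcask-style store (hypothetical, for illustration only).
#[derive(Default)]
struct ToyBitcask {
    /// Stands in for the append-only log file on disk.
    log: Vec<u8>,
    /// Maps each live key to (value offset, value length) in the log.
    keydir: BTreeMap<Vec<u8>, (usize, usize)>,
}

impl ToyBitcask {
    /// Appends the value to the log and points the key at it.
    fn set(&mut self, key: &[u8], value: &[u8]) {
        let offset = self.log.len();
        self.log.extend_from_slice(value);
        self.keydir.insert(key.to_vec(), (offset, value.len()));
    }

    /// Looks the key up in the keydir and reads its value from the log.
    fn get(&self, key: &[u8]) -> Option<&[u8]> {
        self.keydir.get(key).map(|&(offset, len)| &self.log[offset..offset + len])
    }

    /// Drops the key from the keydir; its old bytes become garbage.
    fn delete(&mut self, key: &[u8]) {
        self.keydir.remove(key);
    }

    /// Fraction of log bytes that no live key refers to any more.
    fn garbage_ratio(&self) -> f64 {
        if self.log.is_empty() {
            return 0.0;
        }
        let live: usize = self.keydir.values().map(|&(_, len)| len).sum();
        (self.log.len() - live) as f64 / self.log.len() as f64
    }
}
```

Overwriting a key leaves its previous value behind in the log, so the garbage ratio grows until a compaction rewrites only the live entries.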

132 |

133 | ## entangleDB Peers

134 |

135 | - Interaction between multiple database instances, or "peers".

136 |

137 | ## Example SQL Queries that you will be able to execute in entangleDB

138 |

139 | ```sql

140 | -- Transaction example with a table creation, data insertion, and selection

141 | BEGIN;

142 |

143 | CREATE TABLE employees (id INT PRIMARY KEY, name VARCHAR, department VARCHAR);

144 | INSERT INTO employees VALUES (1, 'Alice', 'Engineering'), (2, 'Bob', 'HR');

145 | SELECT * FROM employees;

146 |

147 | COMMIT;

148 |

149 | -- Aggregation query with JOIN

150 | SELECT department, AVG(salary) FROM employees JOIN salaries ON employees.id = salaries.emp_id GROUP BY department;

151 |

152 | -- Time-travel query

153 | SELECT * FROM employees AS OF SYSTEM TIME '-5m';

154 | ```

155 |

156 | ## Learning Resources I've been using for building the database

157 |

158 | For a comprehensive list of the resources that have guided what I'm building in this distributed database, check out the [Learning Resources](https://github.com/TypicalDefender/entangleDB/blob/main/learning_resources.md) page.

159 |

160 |

161 |

162 |

163 |

--------------------------------------------------------------------------------

/config/entangledb.yaml:

--------------------------------------------------------------------------------

1 | # The node ID, peer ID/address map (empty for single node), and log level.

2 | id: 1

3 | peers: {}

4 | log_level: INFO

5 |

6 | # Network addresses to bind the SQL and Raft servers to.

7 | listen_sql: 0.0.0.0:3205

8 | listen_raft: 0.0.0.0:3305

9 |

10 |

11 | data_dir: data

12 | compact_threshold: 0.2

13 | sync: true

14 |

15 | storage_raft: bitcask

16 |

17 | storage_sql: bitcask

18 |

--------------------------------------------------------------------------------

/husky/cloud/build.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | set -euo pipefail

4 |

5 | cargo build --release --bin entangledb

6 |

7 | for ID in 1 2 3 4 5; do

8 | (cargo run -q --release -- -c entangledb$ID/entangledb.yaml 2>&1 | sed -e "s/\\(.*\\)/entangledb$ID \\1/g") &

9 | done

10 |

11 | trap 'kill $(jobs -p)' EXIT

12 | wait < <(jobs -p)

--------------------------------------------------------------------------------

/husky/cloud/entangledb1/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb1/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb1/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 1

2 | data_dir: entangledb1/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3201

5 | listen_raft: 0.0.0.0:3301

6 | peers:

7 | '2': 127.0.0.1:3302

8 | '3': 127.0.0.1:3303

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb2/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb2/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb2/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 2

2 | data_dir: entangledb2/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3202

5 | listen_raft: 0.0.0.0:3302

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '3': 127.0.0.1:3303

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb3/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb3/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb3/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 3

2 | data_dir: entangledb3/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3203

5 | listen_raft: 0.0.0.0:3303

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb4/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb4/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb4/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 4

2 | data_dir: entangledb4/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3204

5 | listen_raft: 0.0.0.0:3304

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '3': 127.0.0.1:3303

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb5/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb5/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb5/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 5

2 | data_dir: entangledb5/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3205

5 | listen_raft: 0.0.0.0:3305

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '3': 127.0.0.1:3303

10 | '4': 127.0.0.1:3304

--------------------------------------------------------------------------------

/learning_resources.md:

--------------------------------------------------------------------------------

1 | # Learning Resources I've been using for building the database

2 |

3 | ### Introductory Materials

4 |

5 | **1. Lectures by Andy Pavlo**

6 | - **CMU 15-445 Intro to Database Systems**: [YouTube Playlist](https://www.youtube.com/playlist?list=PLSE8ODhjZXjbohkNBWQs_otTrBTrjyohi) (A Pavlo 2019)

7 | - **CMU 15-721 Advanced Database Systems**: [YouTube Playlist](https://www.youtube.com/playlist?list=PLSE8ODhjZXjasmrEd2_Yi1deeE360zv5O) (A Pavlo 2020)

8 |

9 | **2. Books by Martin Kleppmann and Alex Petrov**

10 | - **Designing Data-Intensive Applications**: [Link to Book](https://dataintensive.net/) (M Kleppmann 2017)

11 | - **Database Internals**: [Link to Book](https://www.databass.dev) (A Petrov 2019)

12 |

13 | ### Raft Algorithm

14 |

15 | **1. Original Paper and Talks**

16 | - **In Search of an Understandable Consensus Algorithm**: [Raft Paper](https://raft.github.io/raft.pdf) (D Ongaro, J Ousterhout 2014)

17 | - **Designing for Understandability: The Raft Consensus Algorithm**: [YouTube Video](https://www.youtube.com/watch?v=vYp4LYbnnW8) (J Ousterhout 2016)

18 |

19 | **2. Student Guide**

20 | - **Students' Guide to Raft**: [Blog Post](https://thesquareplanet.com/blog/students-guide-to-raft/) (J Gjengset 2016)

21 |

22 | ### Parsing Techniques

23 |

24 | **1. Books by Thorsten Ball**

25 | - **Writing An Interpreter In Go**: [Link to Book](https://interpreterbook.com) (T Ball 2016)

26 | - **Writing A Compiler In Go**: [Link to Book](https://compilerbook.com) (T Ball 2018)

27 |

28 | **2. Blog Post**

29 | - **Parsing Expressions by Precedence Climbing**: [Blog Post](https://eli.thegreenplace.net/2012/08/02/parsing-expressions-by-precedence-climbing) (E Bendersky 2012)

30 |

31 | ### Transactions and Consistency

32 |

33 | **1. Overviews and Classic Papers**

34 | - **Consistency Models**: [Jepsen Article](https://jepsen.io/consistency) (Jepsen 2016)

35 | - **A Critique of ANSI SQL Isolation Levels**: [Research Paper](https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/tr-95-51.pdf) (H Berenson et al 1995)

36 | - **Generalized Isolation Level Definitions**: [Research Paper](http://pmg.csail.mit.edu/papers/icde00.pdf) (A Adya, B Liskov, P O'Neil 2000)

37 |

38 | **2. Blog Posts on MVCC Implementation**

39 | - **Implementing Your Own Transactions with MVCC**: [Blog Post](https://levelup.gitconnected.com/implementing-your-own-transactions-with-mvcc-bba11cab8e70) (E Chance 2015)

40 | - **How Postgres Makes Transactions Atomic**: [Blog Post](https://brandur.org/postgres-atomicity) (B Leach 2017)

41 |

--------------------------------------------------------------------------------

/src/bin/entangledb.rs:

--------------------------------------------------------------------------------

1 | /*

2 | * entangledb is the entangledb server. It takes configuration via a configuration file, command-line

3 | * parameters, and environment variables, then starts up an entangledb TCP server that communicates with

4 | * SQL clients (port 3205) and Raft peers (port 3305).

5 | */

6 |

7 | #![warn(clippy::all)]

8 |

9 | use serde_derive::Deserialize;

10 | use std::collections::HashMap;

11 | use entangledb::error::{Error, Result};

12 | use entangledb::raft;

13 | use entangledb::sql;

14 | use entangledb::storage;

15 | use entangledb::Server;

16 |

17 | #[tokio::main]

18 | async fn main() -> Result<()> {

19 | let args = clap::command!()

20 | .arg(

21 | clap::Arg::new("config")

22 | .short('c')

23 | .long("config")

24 | .help("Configuration file path")

25 | .default_value("config/entangledb.yaml"),

26 | )

27 | .get_matches();

28 | let cfg = Config::new(args.get_one::<String>("config").unwrap().as_ref())?;

29 |

30 | let loglevel = cfg.log_level.parse::<simplelog::LevelFilter>()?;

31 | let mut logconfig = simplelog::ConfigBuilder::new();

32 | if loglevel != simplelog::LevelFilter::Debug {

33 | logconfig.add_filter_allow_str("entangledb");

34 | }

35 | simplelog::SimpleLogger::init(loglevel, logconfig.build())?;

36 |

37 | let path = std::path::Path::new(&cfg.data_dir);

38 | let raft_log = match cfg.storage_raft.as_str() {

39 | "bitcask" | "" => raft::Log::new(

40 | Box::new(storage::engine::BitCask::new_compact(

41 | path.join("log"),

42 | cfg.compact_threshold,

43 | )?),

44 | cfg.sync,

45 | )?,

46 | "memory" => raft::Log::new(Box::new(storage::engine::Memory::new()), false)?,

47 | name => return Err(Error::Config(format!("Unknown Raft storage engine {}", name))),

48 | };

49 | let raft_state: Box<dyn raft::State> = match cfg.storage_sql.as_str() {

50 | "bitcask" | "" => {

51 | let engine =

52 | storage::engine::BitCask::new_compact(path.join("state"), cfg.compact_threshold)?;

53 | Box::new(sql::engine::Raft::new_state(engine)?)

54 | }

55 | "memory" => {

56 | let engine = storage::engine::Memory::new();

57 | Box::new(sql::engine::Raft::new_state(engine)?)

58 | }

59 | name => return Err(Error::Config(format!("Unknown SQL storage engine {}", name))),

60 | };

61 |

62 | Server::new(cfg.id, cfg.peers, raft_log, raft_state)

63 | .await?

64 | .listen(&cfg.listen_sql, &cfg.listen_raft)

65 | .await?

66 | .serve()

67 | .await

68 | }

69 |

70 | #[derive(Debug, Deserialize)]

71 | struct Config {

72 | id: raft::NodeID,

73 | peers: HashMap<raft::NodeID, String>,

74 | listen_sql: String,

75 | listen_raft: String,

76 | log_level: String,

77 | data_dir: String,

78 | compact_threshold: f64,

79 | sync: bool,

80 | storage_raft: String,

81 | storage_sql: String,

82 | }

83 |

84 | impl Config {

85 | fn new(file: &str) -> Result<Self> {

86 | Ok(config::Config::builder()

87 | .set_default("id", "entangledb")?

88 | .set_default("listen_sql", "0.0.0.0:3205")?

89 | .set_default("listen_raft", "0.0.0.0:3305")?

90 | .set_default("log_level", "info")?

91 | .set_default("data_dir", "data")?

92 | .set_default("compact_threshold", 0.2)?

93 | .set_default("sync", true)?

94 | .set_default("storage_raft", "bitcask")?

95 | .set_default("storage_sql", "bitcask")?

96 | .add_source(config::File::with_name(file))

97 | .add_source(config::Environment::with_prefix("entangledb"))

98 | .build()?

99 | .try_deserialize()?)

100 | }

101 | }

102 |

--------------------------------------------------------------------------------

/src/bin/entanglesql.rs:

--------------------------------------------------------------------------------

1 | #![warn(clippy::all)]

2 |

3 | use rustyline::history::DefaultHistory;

4 | use rustyline::validate::{ValidationContext, ValidationResult, Validator};

5 | use rustyline::{error::ReadlineError, Editor, Modifiers};

6 | use rustyline_derive::{Completer, Helper, Highlighter, Hinter};

7 | use entangledb::error::{Error, Result};

8 | use entangledb::sql::execution::ResultSet;

9 | use entangledb::sql::parser::{Lexer, Token};

10 | use entangledb::Client;

11 |

12 | #[tokio::main]

13 | async fn main() -> Result<()> {

14 | let opts = clap::command!()

15 | .name("entanglesql")

16 | .about("An EntangleDB client.")

17 | .args([

18 | clap::Arg::new("command"),

19 | clap::Arg::new("host")

20 | .short('H')

21 | .long("host")

22 | .help("Host to connect to")

23 | .default_value("127.0.0.1"),

24 | clap::Arg::new("port")

25 | .short('p')

26 | .long("port")

27 | .help("Port number to connect to")

28 | .value_parser(clap::value_parser!(u16))

29 | .default_value("3205"),

30 | ])

31 | .get_matches();

32 |

33 | let mut entanglesql =

34 | EntangleSQL::new(opts.get_one::<String>("host").unwrap(), *opts.get_one("port").unwrap())

35 | .await?;

36 |

37 | if let Some(command) = opts.get_one::<String>("command") {

38 | entanglesql.execute(command).await

39 | } else {

40 | entanglesql.run().await

41 | }

42 | }

43 |

44 | /// The EntangleSQL REPL

45 | struct EntangleSQL {

46 | client: Client,

47 | editor: Editor<EntangleInputValidator, DefaultHistory>,

48 | history_path: Option<std::path::PathBuf>,

49 | show_headers: bool,

50 | }

51 |

52 | impl EntangleSQL {

53 | async fn new(host: &str, port: u16) -> Result<Self> {

54 | Ok(Self {

55 | client: Client::new((host, port)).await?,

56 | editor: Editor::new()?,

57 | history_path: std::env::var_os("HOME")

58 | .map(|home| std::path::Path::new(&home).join(".entanglesql.history")),

59 | show_headers: false,

60 | })

61 | }

62 |

63 | /// Executes a line of input

64 | async fn execute(&mut self, input: &str) -> Result<()> {

65 | if input.starts_with('!') {

66 | self.execute_command(input).await

67 | } else if !input.is_empty() {

68 | self.execute_query(input).await

69 | } else {

70 | Ok(())

71 | }

72 | }

73 |

74 | /// Handles a REPL command (prefixed by !, e.g. !help)

75 | async fn execute_command(&mut self, input: &str) -> Result<()> {

76 | let mut input = input.split_ascii_whitespace();

77 | let command = input.next().ok_or_else(|| Error::Parse("Expected command.".to_string()))?;

78 |

79 | let getargs = |n| {

80 | let args: Vec<&str> = input.collect();

81 | if args.len() != n {

82 | Err(Error::Parse(format!("{}: expected {} args, got {}", command, n, args.len())))

83 | } else {

84 | Ok(args)

85 | }

86 | };

87 |

88 | match command {

89 | "!headers" => match getargs(1)?[0] {

90 | "on" => {

91 | self.show_headers = true;

92 | println!("Headers enabled");

93 | }

94 | "off" => {

95 | self.show_headers = false;

96 | println!("Headers disabled");

97 | }

98 | v => return Err(Error::Parse(format!("Invalid value {}, expected on or off", v))),

99 | },

100 | "!help" => println!(

101 | r#"

102 | Enter a SQL statement terminated by a semicolon (;) to execute it and display the result.

103 | The following commands are also available:

104 |

105 | !headers Enable or disable column headers

106 | !help This help message

107 | !status Display server status

108 | !table [table] Display table schema, if it exists

109 | !tables List tables

110 | "#

111 | ),

112 | "!status" => {

113 | let status = self.client.status().await?;

114 | let mut node_logs = status

115 | .raft

116 | .node_last_index

117 | .iter()

118 | .map(|(id, index)| format!("{}:{}", id, index))

119 | .collect::<Vec<String>>();

120 | node_logs.sort();

121 | println!(

122 | r#"

123 | Server: {server} (leader {leader} in term {term} with {nodes} nodes)

124 | Raft log: {committed} committed, {applied} applied, {raft_size} MB ({raft_storage} storage)

125 | Node logs: {logs}

126 | MVCC: {active_txns} active txns, {versions} versions

127 | Storage: {keys} keys, {logical_size} MB logical, {nodes}x {disk_size} MB disk, {garbage_percent}% garbage ({sql_storage} engine)

128 | "#,

129 | server = status.raft.server,

130 | leader = status.raft.leader,

131 | term = status.raft.term,

132 | nodes = status.raft.node_last_index.len(),

133 | committed = status.raft.commit_index,

134 | applied = status.raft.apply_index,

135 | raft_storage = status.raft.storage,

136 | raft_size =

137 | format_args!("{:.3}", status.raft.storage_size as f64 / 1000.0 / 1000.0),

138 | logs = node_logs.join(" "),

139 | versions = status.mvcc.versions,

140 | active_txns = status.mvcc.active_txns,

141 | keys = status.mvcc.storage.keys,

142 | logical_size =

143 | format_args!("{:.3}", status.mvcc.storage.size as f64 / 1000.0 / 1000.0),

144 | garbage_percent = format_args!(

145 | "{:.0}",

146 | if status.mvcc.storage.total_disk_size > 0 {

147 | status.mvcc.storage.garbage_disk_size as f64

148 | / status.mvcc.storage.total_disk_size as f64

149 | * 100.0

150 | } else {

151 | 0.0

152 | }

153 | ),

154 | disk_size = format_args!(

155 | "{:.3}",

156 | status.mvcc.storage.total_disk_size as f64 / 1000.0 / 1000.0

157 | ),

158 | sql_storage = status.mvcc.storage.name,

159 | )

160 | }

161 | "!table" => {

162 | let args = getargs(1)?;

163 | println!("{}", self.client.get_table(args[0]).await?);

164 | }

165 | "!tables" => {

166 | getargs(0)?;

167 | for table in self.client.list_tables().await? {

168 | println!("{}", table)

169 | }

170 | }

171 | c => return Err(Error::Parse(format!("Unknown command {}", c))),

172 | }

173 | Ok(())

174 | }

175 |

176 | /// Runs a query and displays the results

177 | async fn execute_query(&mut self, query: &str) -> Result<()> {

178 | match self.client.execute(query).await? {

179 | ResultSet::Begin { version, read_only } => match read_only {

180 | false => println!("Began transaction at new version {}", version),

181 | true => println!("Began read-only transaction at version {}", version),

182 | },

183 | ResultSet::Commit { version: id } => println!("Committed transaction {}", id),

184 | ResultSet::Rollback { version: id } => println!("Rolled back transaction {}", id),

185 | ResultSet::Create { count } => println!("Created {} rows", count),

186 | ResultSet::Delete { count } => println!("Deleted {} rows", count),

187 | ResultSet::Update { count } => println!("Updated {} rows", count),

188 | ResultSet::CreateTable { name } => println!("Created table {}", name),

189 | ResultSet::DropTable { name } => println!("Dropped table {}", name),

190 | ResultSet::Explain(plan) => println!("{}", plan),

191 | ResultSet::Query { columns, mut rows } => {

192 | if self.show_headers {

193 | println!(

194 | "{}",

195 | columns

196 | .iter()

197 | .map(|c| c.name.as_deref().unwrap_or("?"))

198 | .collect::<Vec<_>>()

199 | .join("|")

200 | );

201 | }

202 | while let Some(row) = rows.next().transpose()? {

203 | println!(

204 | "{}",

205 | row.into_iter().map(|v| format!("{}", v)).collect::<Vec<_>>().join("|")

206 | );

207 | }

208 | }

209 | }

210 | Ok(())

211 | }

212 |

213 | /// Prompts the user for input

214 | fn prompt(&mut self) -> Result<Option<String>> {

215 | let prompt = match self.client.txn() {

216 | Some((version, false)) => format!("entangledb:{}> ", version),

217 | Some((version, true)) => format!("entangledb@{}> ", version),

218 | None => "entangledb> ".into(),

219 | };

220 | match self.editor.readline(&prompt) {

221 | Ok(input) => {

222 | self.editor.add_history_entry(&input)?;

223 | Ok(Some(input.trim().to_string()))

224 | }

225 | Err(ReadlineError::Eof) | Err(ReadlineError::Interrupted) => Ok(None),

226 | Err(err) => Err(err.into()),

227 | }

228 | }

229 |

230 | /// Runs the EntangleSQL REPL

231 | async fn run(&mut self) -> Result<()> {

232 | if let Some(path) = &self.history_path {

233 | match self.editor.load_history(path) {

234 | Ok(_) => {}

235 | Err(ReadlineError::Io(ref err)) if err.kind() == std::io::ErrorKind::NotFound => {}

236 | Err(err) => return Err(err.into()),

237 | };

238 | }

239 | // self.editor.set_helper(Some(InputValidator));

240 | self.editor.set_helper(Some(EntangleInputValidator));

241 | // Make sure multiline pastes are interpreted as normal inputs.

242 | self.editor.bind_sequence(

243 | rustyline::KeyEvent(rustyline::KeyCode::BracketedPasteStart, Modifiers::NONE),

244 | rustyline::Cmd::Noop,

245 | );

246 |

247 | let status = self.client.status().await?;

248 | println!(

249 | "Connected to EntangleDB node \"{}\". Enter !help for instructions.",

250 | status.raft.server

251 | );

252 |

253 | while let Some(input) = self.prompt()? {

254 | match self.execute(&input).await {

255 | Ok(()) => {}

256 | error @ Err(Error::Internal(_)) => return error,

257 | Err(error) => println!("Error: {}", error),

258 | }

259 | }

260 |

261 | if let Some(path) = &self.history_path {

262 | self.editor.save_history(path)?;

263 | }

264 | Ok(())

265 | }

266 | }

267 |

268 | /// A Rustyline helper for multiline editing. It parses input lines and determines if they make up a complete command or not.

269 | #[derive(Completer, Helper, Highlighter, Hinter)]

270 | struct EntangleInputValidator;

271 |

272 | impl Validator for EntangleInputValidator {

273 | fn validate(&self, ctx: &mut ValidationContext) -> rustyline::Result {

274 | let input = ctx.input();

275 |

276 | // Empty lines and ! commands are fine.

277 | if input.is_empty() || input.starts_with('!') || input == ";" {

278 | return Ok(ValidationResult::Valid(None));

279 | }

280 |

281 | // For SQL statements, just look for any semicolon or lexer error and if found accept the

282 | // input and rely on the server to do further validation and error handling. Otherwise,

283 | // wait for more input.

284 | for result in Lexer::new(ctx.input()) {

285 | match result {

286 | Ok(Token::Semicolon) => return Ok(ValidationResult::Valid(None)),

287 | Err(_) => return Ok(ValidationResult::Valid(None)),

288 | _ => {}

289 | }

290 | }

291 | Ok(ValidationResult::Incomplete)

292 | }

293 |

294 | fn validate_while_typing(&self) -> bool {

295 | false

296 | }

297 | }

298 |

--------------------------------------------------------------------------------

/src/client.rs:

--------------------------------------------------------------------------------

1 | use crate::error::{Error, Result};

2 | use crate::server::{Request, Response};

3 | use crate::sql::engine::Status;

4 | use crate::sql::execution::ResultSet;

5 | use crate::sql::schema::Table;

6 |

7 | use futures::future::FutureExt as _;

8 | use futures::sink::SinkExt as _;

9 | use futures::stream::TryStreamExt as _;

10 | use rand::Rng as _;

11 | use std::cell::Cell;

12 | use std::future::Future;

13 | use std::ops::{Deref, Drop};

14 | use std::sync::Arc;

15 | use tokio::net::{TcpStream, ToSocketAddrs};

16 | use tokio::sync::{Mutex, MutexGuard};

17 | use tokio_util::codec::{Framed, LengthDelimitedCodec};

18 |

19 | type Connection = tokio_serde::Framed<

20 | Framed,

21 | Result,

22 | Request,

23 | tokio_serde::formats::Bincode, Request>,

24 | >;

25 |

26 | /// Number of serialization retries in with_txn()

27 | const WITH_TXN_RETRIES: u8 = 8;

28 |

29 | /// A entangledb client

30 | #[derive(Clone)]

31 | pub struct Client {

32 | conn: Arc>,

33 | txn: Cell>,

34 | }

35 |

36 | impl Client {

37 | /// Creates a new client

38 | pub async fn new(addr: A) -> Result {

39 | Ok(Self {

40 | conn: Arc::new(Mutex::new(tokio_serde::Framed::new(

41 | Framed::new(TcpStream::connect(addr).await?, LengthDelimitedCodec::new()),

42 | tokio_serde::formats::Bincode::default(),

43 | ))),

44 | txn: Cell::new(None),

45 | })

46 | }

47 |

48 | /// Call a server method

49 | async fn call(&self, request: Request) -> Result {

50 | let mut conn = self.conn.lock().await;

51 | self.call_locked(&mut conn, request).await

52 | }

53 |

54 | /// Call a server method while holding the mutex lock

55 | async fn call_locked(

56 | &self,

57 | conn: &mut MutexGuard<'_, Connection>,

58 | request: Request,

59 | ) -> Result {

60 | conn.send(request).await?;

61 | match conn.try_next().await? {

62 | Some(result) => result,

63 | None => Err(Error::Internal("Server disconnected".into())),

64 | }

65 | }

66 |

67 | /// Executes a query

68 | pub async fn execute(&self, query: &str) -> Result {

69 | let mut conn = self.conn.lock().await;

70 | let mut resultset =

71 | match self.call_locked(&mut conn, Request::Execute(query.into())).await? {

72 | Response::Execute(rs) => rs,

73 | resp => return Err(Error::Internal(format!("Unexpected response {:?}", resp))),

74 | };

75 | if let ResultSet::Query { columns, .. } = resultset {

76 | // FIXME We buffer rows for now to avoid lifetime hassles

77 | let mut rows = Vec::new();

78 | while let Some(result) = conn.try_next().await? {

79 | match result? {

80 | Response::Row(Some(row)) => rows.push(row),

81 | Response::Row(None) => break,

82 | response => {

83 | return Err(Error::Internal(format!("Unexpected response {:?}", response)))

84 | }

85 | }

86 | }

87 | resultset = ResultSet::Query { columns, rows: Box::new(rows.into_iter().map(Ok)) }

88 | };

89 | match &resultset {

90 | ResultSet::Begin { version, read_only } => self.txn.set(Some((*version, *read_only))),

91 | ResultSet::Commit { .. } => self.txn.set(None),

92 | ResultSet::Rollback { .. } => self.txn.set(None),

93 | _ => {}

94 | }

95 | Ok(resultset)

96 | }

97 |

98 | /// Fetches the table schema as SQL

99 | pub async fn get_table(&self, table: &str) -> Result

102 |

103 | ## SQL Engine

104 |

105 | The SQL Engine is responsible for the intake and processing of SQL queries. It consists of:

106 |

107 | - **SQL Session**: The processing pipeline within a session includes:

108 | - `Parser`: Interprets SQL queries and converts them into a machine-understandable format.

109 | - `Planner`: Devises an execution plan based on the parsed input.

110 | - `Executor`: Carries out the plan, accessing and modifying the database.

111 |

112 | Adjacent to the session is the:

113 |

114 | - **SQL Storage Raft Backend**: This component integrates with the Raft consensus protocol to ensure distributed transactions are consistent and resilient.
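
The parser, planner, and executor stages described above can be sketched roughly as follows. This is a minimal illustration, not entangleDB's actual code: the `Statement`, `Plan`, `Row`, and `Tables` types and the `parse`, `plan`, `execute`, and `run` functions are hypothetical names, and only `SELECT * FROM <table>` is handled.

```rust
use std::collections::HashMap;

// Hypothetical, heavily simplified types for illustration only.
// They are not entangleDB's actual AST, plan nodes, or executor.
#[derive(Debug, PartialEq)]
enum Statement {
    Select { table: String },
}

#[derive(Debug, PartialEq)]
enum Plan {
    Scan { table: String },
}

type Row = Vec<String>;
type Tables = HashMap<String, Vec<Row>>;

/// Parser: turns `SELECT * FROM <table>;` into a statement.
fn parse(sql: &str) -> Result<Statement, String> {
    let trimmed = sql.trim().trim_end_matches(';');
    let tokens: Vec<&str> = trimmed.split_whitespace().collect();
    match tokens.as_slice() {
        [select, star, from, table]
            if select.eq_ignore_ascii_case("select")
                && *star == "*"
                && from.eq_ignore_ascii_case("from") =>
        {
            Ok(Statement::Select { table: (*table).to_string() })
        }
        _ => Err(format!("unsupported statement: {}", sql)),
    }
}

/// Planner: maps the statement to an execution plan node.
fn plan(statement: Statement) -> Plan {
    match statement {
        Statement::Select { table } => Plan::Scan { table },
    }
}

/// Executor: runs the plan against the stored tables.
fn execute(plan: Plan, tables: &Tables) -> Result<Vec<Row>, String> {
    match plan {
        Plan::Scan { table } => tables
            .get(&table)
            .cloned()
            .ok_or_else(|| format!("table {} does not exist", table)),
    }
}

/// Runs the full pipeline for one statement.
fn run(sql: &str, tables: &Tables) -> Result<Vec<Row>, String> {
    execute(plan(parse(sql)?), tables)
}
```

Keeping the three stages as separate functions means each one can be tested on its own, and an optimizer pass could later be slotted in between `plan` and `execute`.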

115 |

116 | ## Raft Engine

117 |

118 | The Raft Engine is crucial for maintaining a consistent state across the distributed system:

119 |

120 | - **Raft Node**: This consensus node confirms that all database transactions are in sync across the network.

121 | - **Raft Log**: A record of all transactions agreed upon by the Raft consensus algorithm, which is crucial for data integrity and fault tolerance.
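
To make the idea of "agreed upon" concrete, here is a small sketch of how a Raft leader can derive the highest log index stored on a majority of nodes. The function name `quorum_index` is an assumption for illustration and is not taken from entangleDB's source. Real Raft additionally requires the entry at that index to belong to the leader's current term before it is committed, which this sketch leaves out.

```rust
/// Returns the highest log index stored on a majority of nodes, given the
/// last log index of every node (including the leader itself).
/// Hypothetical helper for illustration; not entangleDB's actual API.
fn quorum_index(mut last_indexes: Vec<u64>) -> Option<u64> {
    if last_indexes.is_empty() {
        return None;
    }
    // Sort descending, so position k holds the (k+1)-th highest index.
    last_indexes.sort_unstable_by(|a, b| b.cmp(a));
    // A majority of n nodes is n / 2 + 1 nodes.
    let quorum = last_indexes.len() / 2 + 1;
    // The quorum-th highest index is present on at least `quorum` nodes.
    Some(last_indexes[quorum - 1])
}
```

With five nodes whose last indexes are 7, 5, 5, 3, and 2, three nodes hold index 5 or higher, so 5 is the highest index a majority has stored.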

122 |

123 | ## Storage Engine

124 |

125 | The Storage Engine is where the actual data is stored and managed:

126 |

127 | - **State Machine Driver**: Comprises:

128 | - `State Machine Interface`: An intermediary that conveys state changes from the Raft log to the storage layer.

129 | - `Key Value Backend`: The primary storage layer, consisting of:

130 | - `Bitcask Engine`: A simple, fast on-disk storage system for key-value data.

131 | - `MVCC Storage`: Handles multiple versions of data for read-write concurrency control.
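
The version handling behind MVCC can be illustrated with a short sketch. It assumes a simplified in-memory layout where each write is stored under a `(key, version)` pair and a reader sees the newest version at or below its own that was not written by a transaction still in progress. The `Mvcc` type and its methods are hypothetical and do not reflect entangleDB's actual key encoding or API.

```rust
use std::collections::{BTreeMap, HashSet};

/// Hypothetical in-memory MVCC store; `None` values are deletion tombstones.
#[derive(Default)]
struct Mvcc {
    versions: BTreeMap<(String, u64), Option<String>>,
}

impl Mvcc {
    /// Records a write (or a delete, if `value` is `None`) at `version`.
    fn write(&mut self, key: &str, version: u64, value: Option<&str>) {
        self.versions.insert((key.to_string(), version), value.map(String::from));
    }

    /// Reads `key` as seen by a transaction at `version`, skipping versions
    /// written by the other transactions listed in `active`.
    fn read(&self, key: &str, version: u64, active: &HashSet<u64>) -> Option<String> {
        self.versions
            .range((key.to_string(), 0)..=(key.to_string(), version))
            .rev() // newest visible candidate first
            .find(|((_, v), _)| !active.contains(v))
            .and_then(|(_, value)| value.clone())
    }
}
```

Because old versions are kept rather than overwritten, readers never block writers: a reader at version 2 keeps seeing the value written at version 1 even after a later transaction writes version 3.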

132 |

133 | ## entangleDB Peers

134 |

135 | - Multiple database instances, or "peers", interact with one another to form a cluster.

136 |

137 | ## Example SQL Queries that you will be able to execute in entangleDB

138 |

139 | ```sql

140 | -- Transaction example with a table creation, data insertion, and selection

141 | BEGIN;

142 |

143 | CREATE TABLE employees (id INT PRIMARY KEY, name VARCHAR, department VARCHAR);

144 | INSERT INTO employees VALUES (1, 'Alice', 'Engineering'), (2, 'Bob', 'HR');

145 | SELECT * FROM employees;

146 |

147 | COMMIT;

148 |

149 | -- Aggregation query with JOIN

150 | SELECT department, AVG(salary) FROM employees JOIN salaries ON employees.id = salaries.emp_id GROUP BY department;

151 |

152 | -- Time-travel query

153 | SELECT * FROM employees AS OF SYSTEM TIME '-5m';

154 | ```

155 |

156 | ## Learning Resources I've been using for building the database

157 |

158 | For a comprehensive list of resources that have informed what to build in a distributed database, check out the [Learning Resources](https://github.com/TypicalDefender/entangleDB/blob/main/learning_resources.md) page.

159 |

160 |

161 |

162 |

163 |

--------------------------------------------------------------------------------

/config/entangledb.yaml:

--------------------------------------------------------------------------------

1 | # The node ID, peer ID/address map (empty for single node), and log level.

2 | id: 1

3 | peers: {}

4 | log_level: INFO

5 |

6 | # Network addresses to bind the SQL and Raft servers to.

7 | listen_sql: 0.0.0.0:3205

8 | listen_raft: 0.0.0.0:3305

9 |

10 |

11 | data_dir: data

12 | compact_threshold: 0.2

13 | sync: true

14 |

15 | storage_raft: bitcask

16 |

17 | storage_sql: bitcask

18 |

--------------------------------------------------------------------------------

/husky/cloud/build.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | set -euo pipefail

4 |

5 | cargo build --release --bin entangledb

6 |

7 | for ID in 1 2 3 4 5; do

8 | (cargo run -q --release -- -c entangledb$ID/entangledb.yaml 2>&1 | sed -e "s/\\(.*\\)/entangledb$ID \\1/g") &

9 | done

10 |

11 | trap 'kill $(jobs -p)' EXIT

12 | wait < <(jobs -p)

--------------------------------------------------------------------------------

/husky/cloud/entangledb1/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb1/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb1/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 1

2 | data_dir: entangledb1/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3201

5 | listen_raft: 0.0.0.0:3301

6 | peers:

7 | '2': 127.0.0.1:3302

8 | '3': 127.0.0.1:3303

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb2/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb2/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb2/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 2

2 | data_dir: entangledb2/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3202

5 | listen_raft: 0.0.0.0:3302

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '3': 127.0.0.1:3303

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb3/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb3/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb3/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 3

2 | data_dir: entangledb3/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3203

5 | listen_raft: 0.0.0.0:3303

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '4': 127.0.0.1:3304

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb4/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb4/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb4/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 4

2 | data_dir: entangledb4/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3204

5 | listen_raft: 0.0.0.0:3304

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '3': 127.0.0.1:3303

10 | '5': 127.0.0.1:3305

--------------------------------------------------------------------------------

/husky/cloud/entangledb5/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/TypicalDefender/entangleDB/beaf75098d2c936bf841c34ccb9241a144058380/husky/cloud/entangledb5/data/.gitkeep

--------------------------------------------------------------------------------

/husky/cloud/entangledb5/entangledb.yaml:

--------------------------------------------------------------------------------

1 | id: 5

2 | data_dir: entangledb5/data

3 | sync: false

4 | listen_sql: 0.0.0.0:3205

5 | listen_raft: 0.0.0.0:3305

6 | peers:

7 | '1': 127.0.0.1:3301

8 | '2': 127.0.0.1:3302

9 | '3': 127.0.0.1:3303

10 | '4': 127.0.0.1:3304

--------------------------------------------------------------------------------

/learning_resources.md:

--------------------------------------------------------------------------------

1 | # Learning Resources I've been using for building the database

2 |

3 | ### Introductory Materials

4 |

5 | **1. Lectures by Andy Pavlo**

6 | - **CMU 15-445 Intro to Database Systems**: [YouTube Playlist](https://www.youtube.com/playlist?list=PLSE8ODhjZXjbohkNBWQs_otTrBTrjyohi) (A Pavlo 2019)

7 | - **CMU 15-721 Advanced Database Systems**: [YouTube Playlist](https://www.youtube.com/playlist?list=PLSE8ODhjZXjasmrEd2_Yi1deeE360zv5O) (A Pavlo 2020)

8 |

9 | **2. Books by Martin Kleppmann and Alex Petrov**

10 | - **Designing Data-Intensive Applications**: [Link to Book](https://dataintensive.net/) (M Kleppmann 2017)

11 | - **Database Internals**: [Link to Book](https://www.databass.dev) (A Petrov 2019)

12 |

13 | ### Raft Algorithm

14 |

15 | **1. Original Paper and Talks**

16 | - **In Search of an Understandable Consensus Algorithm**: [Raft Paper](https://raft.github.io/raft.pdf) (D Ongaro, J Ousterhout 2014)

17 | - **Designing for Understandability: The Raft Consensus Algorithm**: [YouTube Video](https://www.youtube.com/watch?v=vYp4LYbnnW8) (J Ousterhout 2016)

18 |

19 | **2. Student Guide**

20 | - **Students' Guide to Raft**: [Blog Post](https://thesquareplanet.com/blog/students-guide-to-raft/) (J Gjengset 2016)

21 |

22 | ### Parsing Techniques

23 |

24 | **1. Books by Thorsten Ball**

25 | - **Writing An Interpreter In Go**: [Link to Book](https://interpreterbook.com) (T Ball 2016)

26 | - **Writing A Compiler In Go**: [Link to Book](https://compilerbook.com) (T Ball 2018)

27 |

28 | **2. Blog Post**

29 | - **Parsing Expressions by Precedence Climbing**: [Blog Post](https://eli.thegreenplace.net/2012/08/02/parsing-expressions-by-precedence-climbing) (E Bendersky 2012)

30 |

31 | ### Transactions and Consistency

32 |

33 | **1. Overviews and Classic Papers**

34 | - **Consistency Models**: [Jepsen Article](https://jepsen.io/consistency) (Jepsen 2016)

35 | - **A Critique of ANSI SQL Isolation Levels**: [Research Paper](https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/tr-95-51.pdf) (H Berenson et al 1995)

36 | - **Generalized Isolation Level Definitions**: [Research Paper](http://pmg.csail.mit.edu/papers/icde00.pdf) (A Adya, B Liskov, P O'Neil 2000)

37 |

38 | **2. Blog Posts on MVCC Implementation**

39 | - **Implementing Your Own Transactions with MVCC**: [Blog Post](https://levelup.gitconnected.com/implementing-your-own-transactions-with-mvcc-bba11cab8e70) (E Chance 2015)

40 | - **How Postgres Makes Transactions Atomic**: [Blog Post](https://brandur.org/postgres-atomicity) (B Leach 2017)

41 |

--------------------------------------------------------------------------------

/src/bin/entangledb.rs:

--------------------------------------------------------------------------------

1 | /*

2 | * entangledb is the entangledb server. It takes configuration via a configuration file, command-line

3 | * parameters, and environment variables, then starts up an entangledb TCP server that communicates with

4 | * SQL clients (port 3205) and Raft peers (port 3305).

5 | */

6 |

7 | #![warn(clippy::all)]

8 |

9 | use serde_derive::Deserialize;

10 | use std::collections::HashMap;

11 | use entangledb::error::{Error, Result};

12 | use entangledb::raft;

13 | use entangledb::sql;

14 | use entangledb::storage;

15 | use entangledb::Server;

16 |

17 | #[tokio::main]

18 | async fn main() -> Result<()> {

19 | let args = clap::command!()

20 | .arg(

21 | clap::Arg::new("config")

22 | .short('c')

23 | .long("config")

24 | .help("Configuration file path")

25 | .default_value("config/entangledb.yaml"),

26 | )

27 | .get_matches();

28 | let cfg = Config::new(args.get_one::<String>("config").unwrap().as_ref())?;

29 |

30 | let loglevel = cfg.log_level.parse::<simplelog::LevelFilter>()?;

31 | let mut logconfig = simplelog::ConfigBuilder::new();

32 | if loglevel != simplelog::LevelFilter::Debug {

33 | logconfig.add_filter_allow_str("entangledb");

34 | }

35 | simplelog::SimpleLogger::init(loglevel, logconfig.build())?;

36 |

37 | let path = std::path::Path::new(&cfg.data_dir);

38 | let raft_log = match cfg.storage_raft.as_str() {

39 | "bitcask" | "" => raft::Log::new(

40 | Box::new(storage::engine::BitCask::new_compact(

41 | path.join("log"),

42 | cfg.compact_threshold,

43 | )?),

44 | cfg.sync,

45 | )?,

46 | "memory" => raft::Log::new(Box::new(storage::engine::Memory::new()), false)?,

47 | name => return Err(Error::Config(format!("Unknown Raft storage engine {}", name))),

48 | };

49 | let raft_state: Box<dyn raft::State> = match cfg.storage_sql.as_str() {

50 | "bitcask" | "" => {

51 | let engine =

52 | storage::engine::BitCask::new_compact(path.join("state"), cfg.compact_threshold)?;

53 | Box::new(sql::engine::Raft::new_state(engine)?)

54 | }

55 | "memory" => {

56 | let engine = storage::engine::Memory::new();

57 | Box::new(sql::engine::Raft::new_state(engine)?)

58 | }

59 | name => return Err(Error::Config(format!("Unknown SQL storage engine {}", name))),

60 | };

61 |

62 | Server::new(cfg.id, cfg.peers, raft_log, raft_state)

63 | .await?

64 | .listen(&cfg.listen_sql, &cfg.listen_raft)

65 | .await?

66 | .serve()

67 | .await

68 | }

69 |

70 | #[derive(Debug, Deserialize)]

71 | struct Config {

72 | id: raft::NodeID,

73 | peers: HashMap<raft::NodeID, String>,

74 | listen_sql: String,

75 | listen_raft: String,

76 | log_level: String,

77 | data_dir: String,

78 | compact_threshold: f64,

79 | sync: bool,

80 | storage_raft: String,

81 | storage_sql: String,

82 | }

83 |

84 | impl Config {

85 | fn new(file: &str) -> Result<Self> {

86 | Ok(config::Config::builder()

87 | .set_default("id", "entangledb")?

88 | .set_default("listen_sql", "0.0.0.0:3205")?

89 | .set_default("listen_raft", "0.0.0.0:3305")?

90 | .set_default("log_level", "info")?

91 | .set_default("data_dir", "data")?

92 | .set_default("compact_threshold", 0.2)?

93 | .set_default("sync", true)?

94 | .set_default("storage_raft", "bitcask")?

95 | .set_default("storage_sql", "bitcask")?

96 | .add_source(config::File::with_name(file))

97 | .add_source(config::Environment::with_prefix("entangledb"))

98 | .build()?

99 | .try_deserialize()?)

100 | }

101 | }

102 |

--------------------------------------------------------------------------------

/src/bin/entanglesql.rs:

--------------------------------------------------------------------------------

1 | #![warn(clippy::all)]

2 |

3 | use rustyline::history::DefaultHistory;

4 | use rustyline::validate::{ValidationContext, ValidationResult, Validator};

5 | use rustyline::{error::ReadlineError, Editor, Modifiers};

6 | use rustyline_derive::{Completer, Helper, Highlighter, Hinter};

7 | use entangledb::error::{Error, Result};

8 | use entangledb::sql::execution::ResultSet;

9 | use entangledb::sql::parser::{Lexer, Token};

10 | use entangledb::Client;

11 |

12 | #[tokio::main]

13 | async fn main() -> Result<()> {

14 | let opts = clap::command!()

15 | .name("entanglesql")

16 | .about("An EntangleDB client.")

17 | .args([

18 | clap::Arg::new("command"),

19 | clap::Arg::new("host")

20 | .short('H')

21 | .long("host")

22 | .help("Host to connect to")

23 | .default_value("127.0.0.1"),

24 | clap::Arg::new("port")

25 | .short('p')

26 | .long("port")

27 | .help("Port number to connect to")

28 | .value_parser(clap::value_parser!(u16))

29 | .default_value("3205"),

30 | ])

31 | .get_matches();

32 |

33 | let mut entanglesql =

34 | EntangleSQL::new(opts.get_one::<String>("host").unwrap(), *opts.get_one("port").unwrap())

35 | .await?;

36 |

37 | if let Some(command) = opts.get_one::<String>("command") {

38 | entanglesql.execute(command).await

39 | } else {

40 | entanglesql.run().await

41 | }

42 | }

43 |

44 | /// The EntangleSQL REPL

45 | struct EntangleSQL {

46 | client: Client,

47 | editor: Editor<EntangleInputValidator, DefaultHistory>,

48 | history_path: Option<std::path::PathBuf>,

49 | show_headers: bool,

50 | }

51 |

52 | impl EntangleSQL {

53 | async fn new(host: &str, port: u16) -> Result<Self> {

54 | Ok(Self {

55 | client: Client::new((host, port)).await?,

56 | editor: Editor::new()?,

57 | history_path: std::env::var_os("HOME")

58 | .map(|home| std::path::Path::new(&home).join(".entanglesql.history")),

59 | show_headers: false,

60 | })

61 | }

62 |

63 | /// Executes a line of input

64 | async fn execute(&mut self, input: &str) -> Result<()> {

65 | if input.starts_with('!') {

66 | self.execute_command(input).await

67 | } else if !input.is_empty() {

68 | self.execute_query(input).await

69 | } else {

70 | Ok(())

71 | }

72 | }

73 |

74 | /// Handles a REPL command (prefixed by !, e.g. !help)

75 | async fn execute_command(&mut self, input: &str) -> Result<()> {

76 | let mut input = input.split_ascii_whitespace();

77 | let command = input.next().ok_or_else(|| Error::Parse("Expected command.".to_string()))?;

78 |

79 | let getargs = |n| {

80 | let args: Vec<&str> = input.collect();

81 | if args.len() != n {

82 | Err(Error::Parse(format!("{}: expected {} args, got {}", command, n, args.len())))

83 | } else {

84 | Ok(args)

85 | }

86 | };

87 |

88 | match command {

89 | "!headers" => match getargs(1)?[0] {

90 | "on" => {

91 | self.show_headers = true;

92 | println!("Headers enabled");

93 | }

94 | "off" => {

95 | self.show_headers = false;

96 | println!("Headers disabled");

97 | }

98 | v => return Err(Error::Parse(format!("Invalid value {}, expected on or off", v))),

99 | },

100 | "!help" => println!(

101 | r#"

102 | Enter a SQL statement terminated by a semicolon (;) to execute it and display the result.

103 | The following commands are also available:

104 |

105 | !headers <on|off> Enable or disable column headers

106 | !help This help message

107 | !status Display server status

108 | !table [table] Display table schema, if it exists

109 | !tables List tables

110 | "#

111 | ),

112 | "!status" => {

113 | let status = self.client.status().await?;

114 | let mut node_logs = status

115 | .raft

116 | .node_last_index

117 | .iter()

118 | .map(|(id, index)| format!("{}:{}", id, index))

119 | .collect::<Vec<_>>();

120 | node_logs.sort();

121 | println!(

122 | r#"

123 | Server: {server} (leader {leader} in term {term} with {nodes} nodes)

124 | Raft log: {committed} committed, {applied} applied, {raft_size} MB ({raft_storage} storage)

125 | Node logs: {logs}

126 | MVCC: {active_txns} active txns, {versions} versions

127 | Storage: {keys} keys, {logical_size} MB logical, {nodes}x {disk_size} MB disk, {garbage_percent}% garbage ({sql_storage} engine)

128 | "#,

129 | server = status.raft.server,

130 | leader = status.raft.leader,

131 | term = status.raft.term,

132 | nodes = status.raft.node_last_index.len(),

133 | committed = status.raft.commit_index,

134 | applied = status.raft.apply_index,

135 | raft_storage = status.raft.storage,

136 | raft_size =

137 | format_args!("{:.3}", status.raft.storage_size as f64 / 1000.0 / 1000.0),

138 | logs = node_logs.join(" "),

139 | versions = status.mvcc.versions,

140 | active_txns = status.mvcc.active_txns,

141 | keys = status.mvcc.storage.keys,

142 | logical_size =

143 | format_args!("{:.3}", status.mvcc.storage.size as f64 / 1000.0 / 1000.0),

144 | garbage_percent = format_args!(

145 | "{:.0}",

146 | if status.mvcc.storage.total_disk_size > 0 {

147 | status.mvcc.storage.garbage_disk_size as f64

148 | / status.mvcc.storage.total_disk_size as f64

149 | * 100.0

150 | } else {

151 | 0.0

152 | }

153 | ),

154 | disk_size = format_args!(

155 | "{:.3}",

156 | status.mvcc.storage.total_disk_size as f64 / 1000.0 / 1000.0

157 | ),

158 | sql_storage = status.mvcc.storage.name,

159 | )

160 | }

161 | "!table" => {

162 | let args = getargs(1)?;

163 | println!("{}", self.client.get_table(args[0]).await?);

164 | }

165 | "!tables" => {

166 | getargs(0)?;

167 | for table in self.client.list_tables().await? {

168 | println!("{}", table)

169 | }

170 | }

171 | c => return Err(Error::Parse(format!("Unknown command {}", c))),

172 | }

173 | Ok(())

174 | }

175 |

176 | /// Runs a query and displays the results

177 | async fn execute_query(&mut self, query: &str) -> Result<()> {

178 | match self.client.execute(query).await? {

179 | ResultSet::Begin { version, read_only } => match read_only {

180 | false => println!("Began transaction at new version {}", version),

181 | true => println!("Began read-only transaction at version {}", version),

182 | },

183 | ResultSet::Commit { version: id } => println!("Committed transaction {}", id),

184 | ResultSet::Rollback { version: id } => println!("Rolled back transaction {}", id),

185 | ResultSet::Create { count } => println!("Created {} rows", count),

186 | ResultSet::Delete { count } => println!("Deleted {} rows", count),

187 | ResultSet::Update { count } => println!("Updated {} rows", count),

188 | ResultSet::CreateTable { name } => println!("Created table {}", name),

189 | ResultSet::DropTable { name } => println!("Dropped table {}", name),

190 | ResultSet::Explain(plan) => println!("{}", plan),

191 | ResultSet::Query { columns, mut rows } => {

192 | if self.show_headers {

193 | println!(

194 | "{}",

195 | columns

196 | .iter()

197 | .map(|c| c.name.as_deref().unwrap_or("?"))

198 | .collect::<Vec<_>>()

199 | .join("|")

200 | );

201 | }

202 | while let Some(row) = rows.next().transpose()? {

203 | println!(

204 | "{}",

205 | row.into_iter().map(|v| format!("{}", v)).collect::<Vec<_>>().join("|")

206 | );

207 | }

208 | }

209 | }

210 | Ok(())

211 | }

212 |

213 | /// Prompts the user for input

214 | fn prompt(&mut self) -> Result<Option<String>> {

215 | let prompt = match self.client.txn() {

216 | Some((version, false)) => format!("entangledb:{}> ", version),

217 | Some((version, true)) => format!("entangledb@{}> ", version),

218 | None => "entangledb> ".into(),

219 | };

220 | match self.editor.readline(&prompt) {

221 | Ok(input) => {

222 | self.editor.add_history_entry(&input)?;

223 | Ok(Some(input.trim().to_string()))

224 | }

225 | Err(ReadlineError::Eof) | Err(ReadlineError::Interrupted) => Ok(None),

226 | Err(err) => Err(err.into()),

227 | }

228 | }

229 |

230 | /// Runs the EntangleSQL REPL

231 | async fn run(&mut self) -> Result<()> {

232 | if let Some(path) = &self.history_path {

233 | match self.editor.load_history(path) {

234 | Ok(_) => {}

235 | Err(ReadlineError::Io(ref err)) if err.kind() == std::io::ErrorKind::NotFound => {}

236 | Err(err) => return Err(err.into()),

237 | };

238 | }

239 | // self.editor.set_helper(Some(InputValidator));

240 | self.editor.set_helper(Some(EntangleInputValidator));

241 | // Make sure multiline pastes are interpreted as normal inputs.

242 | self.editor.bind_sequence(

243 | rustyline::KeyEvent(rustyline::KeyCode::BracketedPasteStart, Modifiers::NONE),

244 | rustyline::Cmd::Noop,

245 | );

246 |

247 | let status = self.client.status().await?;

248 | println!(

249 | "Connected to EntangleDB node \"{}\". Enter !help for instructions.",

250 | status.raft.server

251 | );

252 |

253 | while let Some(input) = self.prompt()? {

254 | match self.execute(&input).await {

255 | Ok(()) => {}

256 | error @ Err(Error::Internal(_)) => return error,

257 | Err(error) => println!("Error: {}", error),

258 | }

259 | }

260 |

261 | if let Some(path) = &self.history_path {

262 | self.editor.save_history(path)?;

263 | }

264 | Ok(())

265 | }

266 | }

267 |

268 | /// A Rustyline helper for multiline editing. It parses input lines and determines if they make up a complete command or not.

269 | #[derive(Completer, Helper, Highlighter, Hinter)]

270 | struct EntangleInputValidator;

271 |

272 | impl Validator for EntangleInputValidator {

273 | fn validate(&self, ctx: &mut ValidationContext) -> rustyline::Result<ValidationResult> {

274 | let input = ctx.input();

275 |

276 | // Empty lines and ! commands are fine.

277 | if input.is_empty() || input.starts_with('!') || input == ";" {

278 | return Ok(ValidationResult::Valid(None));

279 | }

280 |

281 | // For SQL statements, just look for any semicolon or lexer error and if found accept the

282 | // input and rely on the server to do further validation and error handling. Otherwise,

283 | // wait for more input.

284 | for result in Lexer::new(ctx.input()) {

285 | match result {

286 | Ok(Token::Semicolon) => return Ok(ValidationResult::Valid(None)),

287 | Err(_) => return Ok(ValidationResult::Valid(None)),

288 | _ => {}

289 | }

290 | }

291 | Ok(ValidationResult::Incomplete)

292 | }

293 |

294 | fn validate_while_typing(&self) -> bool {

295 | false

296 | }

297 | }

298 |

--------------------------------------------------------------------------------

/src/client.rs:

--------------------------------------------------------------------------------

1 | use crate::error::{Error, Result};

2 | use crate::server::{Request, Response};

3 | use crate::sql::engine::Status;

4 | use crate::sql::execution::ResultSet;

5 | use crate::sql::schema::Table;

6 |

7 | use futures::future::FutureExt as _;

8 | use futures::sink::SinkExt as _;

9 | use futures::stream::TryStreamExt as _;

10 | use rand::Rng as _;

11 | use std::cell::Cell;

12 | use std::future::Future;

13 | use std::ops::{Deref, Drop};

14 | use std::sync::Arc;

15 | use tokio::net::{TcpStream, ToSocketAddrs};

16 | use tokio::sync::{Mutex, MutexGuard};

17 | use tokio_util::codec::{Framed, LengthDelimitedCodec};

18 |

19 | type Connection = tokio_serde::Framed<

20 | Framed<TcpStream, LengthDelimitedCodec>,

21 | Result<Response>,

22 | Request,

23 | tokio_serde::formats::Bincode<Result<Response>, Request>,

24 | >;

25 |

26 | /// Number of serialization retries in with_txn()

27 | const WITH_TXN_RETRIES: u8 = 8;

28 |

29 | /// An entangledb client

30 | #[derive(Clone)]

31 | pub struct Client {

32 | conn: Arc<Mutex<Connection>>,

33 | txn: Cell<Option<(u64, bool)>>,

34 | }

35 |

36 | impl Client {

37 | /// Creates a new client

38 | pub async fn new<A: ToSocketAddrs>(addr: A) -> Result<Self> {

39 | Ok(Self {

40 | conn: Arc::new(Mutex::new(tokio_serde::Framed::new(

41 | Framed::new(TcpStream::connect(addr).await?, LengthDelimitedCodec::new()),

42 | tokio_serde::formats::Bincode::default(),

43 | ))),

44 | txn: Cell::new(None),

45 | })

46 | }

47 |

48 | /// Call a server method

49 | async fn call(&self, request: Request) -> Result<Response> {

50 | let mut conn = self.conn.lock().await;

51 | self.call_locked(&mut conn, request).await

52 | }

53 |

54 | /// Call a server method while holding the mutex lock

55 | async fn call_locked(

56 | &self,

57 | conn: &mut MutexGuard<'_, Connection>,

58 | request: Request,

59 | ) -> Result<Response> {

60 | conn.send(request).await?;

61 | match conn.try_next().await? {

62 | Some(result) => result,

63 | None => Err(Error::Internal("Server disconnected".into())),

64 | }

65 | }

66 |

67 | /// Executes a query

68 | pub async fn execute(&self, query: &str) -> Result<ResultSet> {

69 | let mut conn = self.conn.lock().await;

70 | let mut resultset =

71 | match self.call_locked(&mut conn, Request::Execute(query.into())).await? {

72 | Response::Execute(rs) => rs,

73 | resp => return Err(Error::Internal(format!("Unexpected response {:?}", resp))),

74 | };

75 | if let ResultSet::Query { columns, .. } = resultset {

76 | // FIXME We buffer rows for now to avoid lifetime hassles

77 | let mut rows = Vec::new();

78 | while let Some(result) = conn.try_next().await? {

79 | match result? {

80 | Response::Row(Some(row)) => rows.push(row),

81 | Response::Row(None) => break,

82 | response => {

83 | return Err(Error::Internal(format!("Unexpected response {:?}", response)))

84 | }

85 | }

86 | }

87 | resultset = ResultSet::Query { columns, rows: Box::new(rows.into_iter().map(Ok)) }

88 | };

89 | match &resultset {

90 | ResultSet::Begin { version, read_only } => self.txn.set(Some((*version, *read_only))),

91 | ResultSet::Commit { .. } => self.txn.set(None),

92 | ResultSet::Rollback { .. } => self.txn.set(None),

93 | _ => {}

94 | }

95 | Ok(resultset)

96 | }

97 |

98 | /// Fetches the table schema as SQL

99 | pub async fn get_table(&self, table: &str) -> Result<Table> {

100 | match self.call(Request::GetTable(table.into())).await? {

101 | Response::GetTable(t) => Ok(t),

102 | resp => Err(Error::Value(format!("Unexpected response: {:?}", resp))),

103 | }

104 | }

105 |

106 | /// Lists database tables

107 | pub async fn list_tables(&self) -> Result<Vec<String>> {

108 | match self.call(Request::ListTables).await? {

109 | Response::ListTables(t) => Ok(t),

110 | resp => Err(Error::Value(format!("Unexpected response: {:?}", resp))),

111 | }

112 | }

113 |

114 | /// Checks server status

115 | pub async fn status(&self) -> Result<Status> {

116 | match self.call(Request::Status).await? {

117 | Response::Status(s) => Ok(s),

118 | resp => Err(Error::Value(format!("Unexpected response: {:?}", resp))),

119 | }

120 | }

121 |

122 | /// Returns the version and read-only state of the txn

123 | pub fn txn(&self) -> Option<(u64, bool)> {

124 | self.txn.get()

125 | }

126 |

127 | /// Runs a query in a transaction, automatically retrying serialization failures with

128 | /// exponential backoff.

129 | pub async fn with_txn<W, F, R>(&self, mut with: W) -> Result<R>

130 | where

131 | W: FnMut(Client) -> F,

132 | F: Future<Output = Result<R>>,