├── .gitignore

├── .gitignore~

├── .idea

└── vcs.xml

├── CMakeLists.txt

├── Makefile

├── Makefile.config

├── Makefile.config.example

├── README.md

├── README_en.md

├── a.out

├── build

├── caffe.cloc

├── cmake

├── ConfigGen.cmake

├── Cuda.cmake

├── Dependencies.cmake

├── External

│ ├── gflags.cmake

│ └── glog.cmake

├── Misc.cmake

├── Modules

│ ├── FindAtlas.cmake

│ ├── FindGFlags.cmake

│ ├── FindGlog.cmake

│ ├── FindLAPACK.cmake

│ ├── FindLMDB.cmake

│ ├── FindLevelDB.cmake

│ ├── FindMKL.cmake

│ ├── FindMatlabMex.cmake

│ ├── FindNumPy.cmake

│ ├── FindOpenBLAS.cmake

│ ├── FindSnappy.cmake

│ └── FindvecLib.cmake

├── ProtoBuf.cmake

├── Summary.cmake

├── Targets.cmake

├── Templates

│ ├── CaffeConfig.cmake.in

│ ├── CaffeConfigVersion.cmake.in

│ └── caffe_config.h.in

├── Utils.cmake

└── lint.cmake

├── examples

├── 00-classification.ipynb

├── 01-learning-lenet.ipynb

├── 02-fine-tuning.ipynb

├── CMakeLists.txt

├── brewing-logreg.ipynb

├── cifar10

│ ├── cifar10_full.prototxt

│ ├── cifar10_full_sigmoid_solver.prototxt

│ ├── cifar10_full_sigmoid_solver_bn.prototxt

│ ├── cifar10_full_sigmoid_train_test.prototxt

│ ├── cifar10_full_sigmoid_train_test_bn.prototxt

│ ├── cifar10_full_solver.prototxt

│ ├── cifar10_full_solver_lr1.prototxt

│ ├── cifar10_full_solver_lr2.prototxt

│ ├── cifar10_full_train_test.prototxt

│ ├── cifar10_quick.prototxt

│ ├── cifar10_quick_solver.prototxt

│ ├── cifar10_quick_solver_lr1.prototxt

│ ├── cifar10_quick_train_test.prototxt

│ ├── convert_cifar_data.cpp

│ ├── create_cifar10.sh

│ ├── readme.md

│ ├── train_full.sh

│ ├── train_full_sigmoid.sh

│ ├── train_full_sigmoid_bn.sh

│ └── train_quick.sh

├── cpp_classification

│ ├── classification.cpp

│ └── readme.md

├── detection.ipynb

├── feature_extraction

│ ├── imagenet_val.prototxt

│ └── readme.md

├── finetune_flickr_style

│ ├── assemble_data.py

│ ├── flickr_style.csv.gz

│ ├── readme.md

│ └── style_names.txt

├── finetune_pascal_detection

│ ├── pascal_finetune_solver.prototxt

│ └── pascal_finetune_trainval_test.prototxt

├── hdf5_classification

│ ├── nonlinear_auto_test.prototxt

│ ├── nonlinear_auto_train.prototxt

│ ├── nonlinear_train_val.prototxt

│ └── train_val.prototxt

├── imagenet

│ ├── create_imagenet.sh

│ ├── make_imagenet_mean.sh

│ ├── readme.md

│ ├── resume_training.sh

│ └── train_caffenet.sh

├── images

│ ├── cat gray.jpg

│ ├── cat.jpg

│ ├── cat_gray.jpg

│ └── fish-bike.jpg

├── mnist

│ ├── convert_mnist_data.cpp

│ ├── create_mnist.sh

│ ├── lenet.prototxt

│ ├── lenet_adadelta_solver.prototxt

│ ├── lenet_auto_solver.prototxt

│ ├── lenet_consolidated_solver.prototxt

│ ├── lenet_multistep_solver.prototxt

│ ├── lenet_solver.prototxt

│ ├── lenet_solver_adam.prototxt

│ ├── lenet_solver_rmsprop.prototxt

│ ├── lenet_train_test.prototxt

│ ├── mnist_autoencoder.prototxt

│ ├── mnist_autoencoder_solver.prototxt

│ ├── mnist_autoencoder_solver_adadelta.prototxt

│ ├── mnist_autoencoder_solver_adagrad.prototxt

│ ├── mnist_autoencoder_solver_nesterov.prototxt

│ ├── readme.md

│ ├── train_lenet.sh

│ ├── train_lenet_adam.sh

│ ├── train_lenet_consolidated.sh

│ ├── train_lenet_docker.sh

│ ├── train_lenet_rmsprop.sh

│ ├── train_mnist_autoencoder.sh

│ ├── train_mnist_autoencoder_adadelta.sh

│ ├── train_mnist_autoencoder_adagrad.sh

│ └── train_mnist_autoencoder_nesterov.sh

├── net_surgery.ipynb

├── net_surgery

│ ├── bvlc_caffenet_full_conv.prototxt

│ └── conv.prototxt

├── pascal-multilabel-with-datalayer.ipynb

├── pycaffe

│ ├── caffenet.py

│ ├── layers

│ │ ├── pascal_multilabel_datalayers.py

│ │ └── pyloss.py

│ ├── linreg.prototxt

│ └── tools.py

├── siamese

│ ├── convert_mnist_siamese_data.cpp

│ ├── create_mnist_siamese.sh

│ ├── mnist_siamese.ipynb

│ ├── mnist_siamese.prototxt

│ ├── mnist_siamese_solver.prototxt

│ ├── mnist_siamese_train_test.prototxt

│ ├── readme.md

│ └── train_mnist_siamese.sh

├── unreal_example

│ ├── CMakeLists.txt

│ ├── readme.md

│ └── src

│ │ ├── main.cpp

│ │ └── main.cpp~

└── web_demo

│ ├── app.py

│ ├── exifutil.py

│ ├── readme.md

│ ├── requirements.txt

│ └── templates

│ └── index.html

├── generatepb.sh

├── include

└── caffe

│ ├── blob.hpp

│ ├── caffe.hpp

│ ├── common.hpp

│ ├── data_transformer.hpp

│ ├── filler.hpp

│ ├── internal_thread.hpp

│ ├── layer.hpp

│ ├── layer_factory.hpp

│ ├── layers

│ ├── absval_layer.hpp

│ ├── accuracy_layer.hpp

│ ├── argmax_layer.hpp

│ ├── base_conv_layer.hpp

│ ├── base_data_layer.hpp

│ ├── batch_norm_layer.hpp

│ ├── batch_reindex_layer.hpp

│ ├── bias_layer.hpp

│ ├── bnll_layer.hpp

│ ├── box_annotator_ohem_layer.hpp

│ ├── concat_layer.hpp

│ ├── contrastive_loss_layer.hpp

│ ├── conv_layer.hpp

│ ├── crop_layer.hpp

│ ├── cudnn_conv_layer.hpp

│ ├── cudnn_lcn_layer.hpp

│ ├── cudnn_lrn_layer.hpp

│ ├── cudnn_pooling_layer.hpp

│ ├── cudnn_relu_layer.hpp

│ ├── cudnn_sigmoid_layer.hpp

│ ├── cudnn_softmax_layer.hpp

│ ├── cudnn_tanh_layer.hpp

│ ├── data_layer.hpp

│ ├── deconv_layer.hpp

│ ├── dense_image_data_layer.hpp.bk

│ ├── dropout_layer.hpp

│ ├── dummy_data_layer.hpp

│ ├── eltwise_layer.hpp

│ ├── elu_layer.hpp

│ ├── embed_layer.hpp

│ ├── euclidean_loss_layer.hpp

│ ├── exp_layer.hpp

│ ├── filter_layer.hpp

│ ├── flatten_layer.hpp

│ ├── hdf5_data_layer.hpp

│ ├── hdf5_output_layer.hpp

│ ├── hinge_loss_layer.hpp

│ ├── im2col_layer.hpp

│ ├── image_data_layer.hpp

│ ├── infogain_loss_layer.hpp

│ ├── inner_product_blob_layer.hpp

│ ├── inner_product_layer.hpp

│ ├── input_layer.hpp

│ ├── log_layer.hpp

│ ├── loss_layer.hpp

│ ├── lrn_layer.hpp

│ ├── lstm_layer.hpp

│ ├── memory_data_layer.hpp

│ ├── multinomial_logistic_loss_layer.hpp

│ ├── mvn_layer.hpp

│ ├── neuron_layer.hpp

│ ├── parameter_layer.hpp

│ ├── pooling_layer.hpp

│ ├── power_layer.hpp

│ ├── prelu_layer.hpp

│ ├── proposal_layer.bk

│ ├── psroi_pooling_layer.hpp

│ ├── python_layer.hpp

│ ├── recurrent_layer.hpp

│ ├── reduction_layer.hpp

│ ├── relu_layer.hpp

│ ├── reshape_layer.hpp

│ ├── rnn_layer.hpp

│ ├── roi_pooling_layer.hpp

│ ├── scale_layer.hpp

│ ├── sigmoid_cross_entropy_loss_layer.hpp

│ ├── sigmoid_layer.hpp

│ ├── silence_layer.hpp

│ ├── slice_layer.hpp

│ ├── smooth_l1_loss_layer.hpp

│ ├── smooth_l1_loss_ohem_layer.hpp

│ ├── softmax_layer.hpp

│ ├── softmax_loss_layer.hpp

│ ├── softmax_loss_ohem_layer.hpp

│ ├── split_layer.hpp

│ ├── spp_layer.hpp

│ ├── tanh_layer.hpp

│ ├── threshold_layer.hpp

│ ├── tile_layer.hpp

│ └── window_data_layer.hpp

│ ├── net.hpp

│ ├── parallel.hpp

│ ├── proto

│ └── caffe.pb.h

│ ├── sgd_solvers.hpp

│ ├── solver.hpp

│ ├── solver_factory.hpp

│ ├── syncedmem.hpp

│ ├── test

│ ├── test_caffe_main.hpp

│ └── test_gradient_check_util.hpp

│ └── util

│ ├── benchmark.hpp

│ ├── blocking_queue.hpp

│ ├── cudnn.hpp

│ ├── db.hpp

│ ├── db_leveldb.hpp

│ ├── db_lmdb.hpp

│ ├── device_alternate.hpp

│ ├── format.hpp

│ ├── gpu_util.cuh

│ ├── hdf5.hpp

│ ├── im2col.hpp

│ ├── insert_splits.hpp

│ ├── io.hpp

│ ├── math_functions.hpp

│ ├── mkl_alternate.hpp

│ ├── nccl.hpp

│ ├── rng.hpp

│ ├── signal_handler.h

│ └── upgrade_proto.hpp

├── install_depencies.sh

├── matlab

├── +caffe

│ ├── +test

│ │ ├── test_io.m

│ │ ├── test_net.m

│ │ └── test_solver.m

│ ├── Blob.m

│ ├── Layer.m

│ ├── Net.m

│ ├── Solver.m

│ ├── get_net.m

│ ├── get_solver.m

│ ├── imagenet

│ │ └── ilsvrc_2012_mean.mat

│ ├── io.m

│ ├── private

│ │ ├── CHECK.m

│ │ ├── CHECK_FILE_EXIST.m

│ │ ├── caffe_.cpp

│ │ └── is_valid_handle.m

│ ├── reset_all.m

│ ├── run_tests.m

│ ├── set_device.m

│ ├── set_mode_cpu.m

│ ├── set_mode_gpu.m

│ └── version.m

├── CMakeLists.txt

├── demo

│ └── classification_demo.m

└── hdf5creation

│ ├── .gitignore

│ ├── demo.m

│ └── store2hdf5.m

├── python

├── .gitignore

├── .gitignore~

├── CMakeLists.txt

├── caffe

│ ├── __init__.py

│ ├── __init__.pyc

│ ├── _caffe.cpp

│ ├── classifier.py

│ ├── classifier.pyc

│ ├── coord_map.py

│ ├── detector.py

│ ├── detector.pyc

│ ├── draw.py

│ ├── imagenet

│ │ └── ilsvrc_2012_mean.npy

│ ├── io.py

│ ├── io.pyc

│ ├── net_spec.py

│ ├── net_spec.pyc

│ ├── pycaffe.py

│ ├── pycaffe.pyc

│ └── test

│ │ ├── test_coord_map.py

│ │ ├── test_io.py

│ │ ├── test_layer_type_list.py

│ │ ├── test_net.py

│ │ ├── test_net_spec.py

│ │ ├── test_python_layer.py

│ │ ├── test_python_layer_with_param_str.py

│ │ └── test_solver.py

├── classify.py

├── detect.py

├── draw_net.py

├── net_pics

│ └── lenet.png

├── requirements.txt

└── train.py

├── scripts

├── build_docs.sh

├── copy_notebook.py

├── cpp_lint.py

├── deploy_docs.sh

├── download_model_binary.py

├── download_model_from_gist.sh

├── gather_examples.sh

├── split_caffe_proto.py

├── travis

│ ├── build.sh

│ ├── configure-cmake.sh

│ ├── configure-make.sh

│ ├── configure.sh

│ ├── defaults.sh

│ ├── install-deps.sh

│ ├── install-python-deps.sh

│ ├── setup-venv.sh

│ └── test.sh

└── upload_model_to_gist.sh

├── src

├── caffe

│ ├── CMakeLists.txt

│ ├── blob.cpp

│ ├── common.cpp

│ ├── data_transformer.cpp

│ ├── internal_thread.cpp

│ ├── layer.cpp

│ ├── layer_factory.cpp

│ ├── layers

│ │ ├── absval_layer.cpp

│ │ ├── absval_layer.cu

│ │ ├── accuracy_layer.cpp

│ │ ├── argmax_layer.cpp

│ │ ├── base_conv_layer.cpp

│ │ ├── base_data_layer.cpp

│ │ ├── base_data_layer.cu

│ │ ├── batch_norm_layer.cpp

│ │ ├── batch_norm_layer.cu

│ │ ├── batch_reindex_layer.cpp

│ │ ├── batch_reindex_layer.cu

│ │ ├── bias_layer.cpp

│ │ ├── bias_layer.cu

│ │ ├── bnll_layer.cpp

│ │ ├── bnll_layer.cu

│ │ ├── box_annotator_ohem_layer.cpp

│ │ ├── box_annotator_ohem_layer.cu

│ │ ├── concat_layer.cpp

│ │ ├── concat_layer.cu

│ │ ├── contrastive_loss_layer.cpp

│ │ ├── contrastive_loss_layer.cu

│ │ ├── conv_layer.cpp

│ │ ├── conv_layer.cu

│ │ ├── crop_layer.cpp

│ │ ├── crop_layer.cu

│ │ ├── cudnn_conv_layer.cpp

│ │ ├── cudnn_conv_layer.cu

│ │ ├── cudnn_lcn_layer.cpp

│ │ ├── cudnn_lcn_layer.cu

│ │ ├── cudnn_lrn_layer.cpp

│ │ ├── cudnn_lrn_layer.cu

│ │ ├── cudnn_pooling_layer.cpp

│ │ ├── cudnn_pooling_layer.cu

│ │ ├── cudnn_relu_layer.cpp

│ │ ├── cudnn_relu_layer.cu

│ │ ├── cudnn_sigmoid_layer.cpp

│ │ ├── cudnn_sigmoid_layer.cu

│ │ ├── cudnn_softmax_layer.cpp

│ │ ├── cudnn_softmax_layer.cu

│ │ ├── cudnn_tanh_layer.cpp

│ │ ├── cudnn_tanh_layer.cu

│ │ ├── data_layer.cpp

│ │ ├── deconv_layer.cpp

│ │ ├── deconv_layer.cu

│ │ ├── dense_image_data_layer.cpp.bk

│ │ ├── dropout_layer.cpp

│ │ ├── dropout_layer.cu

│ │ ├── dummy_data_layer.cpp

│ │ ├── eltwise_layer.cpp

│ │ ├── eltwise_layer.cu

│ │ ├── elu_layer.cpp

│ │ ├── elu_layer.cu

│ │ ├── embed_layer.cpp

│ │ ├── embed_layer.cu

│ │ ├── euclidean_loss_layer.cpp

│ │ ├── euclidean_loss_layer.cu

│ │ ├── exp_layer.cpp

│ │ ├── exp_layer.cu

│ │ ├── filter_layer.cpp

│ │ ├── filter_layer.cu

│ │ ├── flatten_layer.cpp

│ │ ├── hdf5_data_layer.cpp

│ │ ├── hdf5_data_layer.cu

│ │ ├── hdf5_output_layer.cpp

│ │ ├── hdf5_output_layer.cu

│ │ ├── hinge_loss_layer.cpp

│ │ ├── im2col_layer.cpp

│ │ ├── im2col_layer.cu

│ │ ├── image_data_layer.cpp

│ │ ├── infogain_loss_layer.cpp

│ │ ├── inner_product_blob_layer.cpp

│ │ ├── inner_product_blob_layer.cu

│ │ ├── inner_product_layer.cpp

│ │ ├── inner_product_layer.cu

│ │ ├── input_layer.cpp

│ │ ├── log_layer.cpp

│ │ ├── log_layer.cu

│ │ ├── loss_layer.cpp

│ │ ├── lrn_layer.cpp

│ │ ├── lrn_layer.cu

│ │ ├── lstm_layer.cpp

│ │ ├── lstm_unit_layer.cpp

│ │ ├── lstm_unit_layer.cu

│ │ ├── memory_data_layer.cpp

│ │ ├── multinomial_logistic_loss_layer.cpp

│ │ ├── mvn_layer.cpp

│ │ ├── mvn_layer.cu

│ │ ├── neuron_layer.cpp

│ │ ├── parameter_layer.cpp

│ │ ├── pooling_layer.cpp

│ │ ├── pooling_layer.cu

│ │ ├── power_layer.cpp

│ │ ├── power_layer.cu

│ │ ├── prelu_layer.cpp

│ │ ├── prelu_layer.cu

│ │ ├── proposal_layer.cpp.bk

│ │ ├── proposal_layer.cu.bk

│ │ ├── psroi_pooling_layer.cpp

│ │ ├── psroi_pooling_layer.cu

│ │ ├── recurrent_layer.cpp

│ │ ├── recurrent_layer.cu

│ │ ├── reduction_layer.cpp

│ │ ├── reduction_layer.cu

│ │ ├── relu_layer.cpp

│ │ ├── relu_layer.cu

│ │ ├── reshape_layer.cpp

│ │ ├── rnn_layer.cpp

│ │ ├── roi_pooling_layer.cpp

│ │ ├── roi_pooling_layer.cu

│ │ ├── scale_layer.cpp

│ │ ├── scale_layer.cu

│ │ ├── sigmoid_cross_entropy_loss_layer.cpp

│ │ ├── sigmoid_cross_entropy_loss_layer.cu

│ │ ├── sigmoid_layer.cpp

│ │ ├── sigmoid_layer.cu

│ │ ├── silence_layer.cpp

│ │ ├── silence_layer.cu

│ │ ├── slice_layer.cpp

│ │ ├── slice_layer.cu

│ │ ├── smooth_L1_loss_ohem_layer.cpp

│ │ ├── smooth_L1_loss_ohem_layer.cu

│ │ ├── smooth_l1_loss_layer.cpp

│ │ ├── smooth_l1_loss_layer.cu

│ │ ├── softmax_layer.cpp

│ │ ├── softmax_layer.cu

│ │ ├── softmax_loss_layer.cpp

│ │ ├── softmax_loss_layer.cu

│ │ ├── softmax_loss_ohem_layer.cpp

│ │ ├── softmax_loss_ohem_layer.cu

│ │ ├── split_layer.cpp

│ │ ├── split_layer.cu

│ │ ├── spp_layer.cpp

│ │ ├── tanh_layer.cpp

│ │ ├── tanh_layer.cu

│ │ ├── threshold_layer.cpp

│ │ ├── threshold_layer.cu

│ │ ├── tile_layer.cpp

│ │ ├── tile_layer.cu

│ │ └── window_data_layer.cpp

│ ├── net.cpp

│ ├── parallel.cpp

│ ├── proto

│ │ ├── caffe.pb.cc

│ │ ├── caffe.pb.h

│ │ └── caffe.proto

│ ├── solver.cpp

│ ├── solvers

│ │ ├── adadelta_solver.cpp

│ │ ├── adadelta_solver.cu

│ │ ├── adagrad_solver.cpp

│ │ ├── adagrad_solver.cu

│ │ ├── adam_solver.cpp

│ │ ├── adam_solver.cu

│ │ ├── nesterov_solver.cpp

│ │ ├── nesterov_solver.cu

│ │ ├── rmsprop_solver.cpp

│ │ ├── rmsprop_solver.cu

│ │ ├── sgd_solver.cpp

│ │ └── sgd_solver.cu

│ ├── syncedmem.cpp

│ ├── test

│ │ ├── CMakeLists.txt

│ │ ├── test_accuracy_layer.cpp

│ │ ├── test_argmax_layer.cpp

│ │ ├── test_batch_norm_layer.cpp

│ │ ├── test_batch_reindex_layer.cpp

│ │ ├── test_benchmark.cpp

│ │ ├── test_bias_layer.cpp

│ │ ├── test_blob.cpp

│ │ ├── test_caffe_main.cpp

│ │ ├── test_common.cpp

│ │ ├── test_concat_layer.cpp

│ │ ├── test_contrastive_loss_layer.cpp

│ │ ├── test_convolution_layer.cpp

│ │ ├── test_crop_layer.cpp

│ │ ├── test_data

│ │ │ ├── generate_sample_data.py

│ │ │ ├── sample_data.h5

│ │ │ ├── sample_data_2_gzip.h5

│ │ │ ├── sample_data_list.txt

│ │ │ ├── solver_data.h5

│ │ │ └── solver_data_list.txt

│ │ ├── test_data_layer.cpp

│ │ ├── test_data_transformer.cpp

│ │ ├── test_db.cpp

│ │ ├── test_deconvolution_layer.cpp

│ │ ├── test_dummy_data_layer.cpp

│ │ ├── test_eltwise_layer.cpp

│ │ ├── test_embed_layer.cpp

│ │ ├── test_euclidean_loss_layer.cpp

│ │ ├── test_filler.cpp

│ │ ├── test_filter_layer.cpp

│ │ ├── test_flatten_layer.cpp

│ │ ├── test_gradient_based_solver.cpp

│ │ ├── test_hdf5_output_layer.cpp

│ │ ├── test_hdf5data_layer.cpp

│ │ ├── test_hinge_loss_layer.cpp

│ │ ├── test_im2col_kernel.cu

│ │ ├── test_im2col_layer.cpp

│ │ ├── test_image_data_layer.cpp

│ │ ├── test_infogain_loss_layer.cpp

│ │ ├── test_inner_product_layer.cpp

│ │ ├── test_internal_thread.cpp

│ │ ├── test_io.cpp

│ │ ├── test_layer_factory.cpp

│ │ ├── test_lrn_layer.cpp

│ │ ├── test_lstm_layer.cpp

│ │ ├── test_math_functions.cpp

│ │ ├── test_maxpool_dropout_layers.cpp

│ │ ├── test_memory_data_layer.cpp

│ │ ├── test_multinomial_logistic_loss_layer.cpp

│ │ ├── test_mvn_layer.cpp

│ │ ├── test_net.cpp

│ │ ├── test_neuron_layer.cpp

│ │ ├── test_platform.cpp

│ │ ├── test_pooling_layer.cpp

│ │ ├── test_power_layer.cpp

│ │ ├── test_protobuf.cpp

│ │ ├── test_random_number_generator.cpp

│ │ ├── test_reduction_layer.cpp

│ │ ├── test_reshape_layer.cpp

│ │ ├── test_rnn_layer.cpp

│ │ ├── test_scale_layer.cpp

│ │ ├── test_sigmoid_cross_entropy_loss_layer.cpp

│ │ ├── test_slice_layer.cpp

│ │ ├── test_softmax_layer.cpp

│ │ ├── test_softmax_with_loss_layer.cpp

│ │ ├── test_solver.cpp

│ │ ├── test_solver_factory.cpp

│ │ ├── test_split_layer.cpp

│ │ ├── test_spp_layer.cpp

│ │ ├── test_stochastic_pooling.cpp

│ │ ├── test_syncedmem.cpp

│ │ ├── test_tanh_layer.cpp

│ │ ├── test_threshold_layer.cpp

│ │ ├── test_tile_layer.cpp

│ │ ├── test_upgrade_proto.cpp

│ │ └── test_util_blas.cpp

│ └── util

│ │ ├── benchmark.cpp

│ │ ├── blocking_queue.cpp

│ │ ├── cudnn.cpp

│ │ ├── db.cpp

│ │ ├── db_leveldb.cpp

│ │ ├── db_lmdb.cpp

│ │ ├── hdf5.cpp

│ │ ├── im2col.cpp

│ │ ├── im2col.cu

│ │ ├── insert_splits.cpp

│ │ ├── io.cpp

│ │ ├── math_functions.cpp

│ │ ├── math_functions.cu

│ │ ├── signal_handler.cpp

│ │ └── upgrade_proto.cpp

└── gtest

│ ├── CMakeLists.txt

│ ├── gtest-all.cpp

│ ├── gtest.h

│ └── gtest_main.cc

└── tools

├── CMakeLists.txt

├── caffe.cpp

├── caffe_pb2.py

├── compute_image_mean.cpp

├── convert_imageset.cpp

├── device_query.cpp

├── extra

├── extract_seconds.py

├── launch_resize_and_crop_images.sh

├── parse_log.py

├── parse_log.sh

├── plot_log.gnuplot.example

├── plot_training_log.py.example

├── resize_and_crop_images.py

└── summarize.py

├── extract_features.cpp

├── finetune_net.cpp

├── net_speed_benchmark.cpp

├── test_net.cpp

├── train_net.cpp

├── upgrade_net_proto_binary.cpp

├── upgrade_net_proto_text.cpp

└── upgrade_solver_proto_text.cpp

/.gitignore:

--------------------------------------------------------------------------------

1 | ./build

2 | cmake-build-debug/

3 | .idea/

4 | .build_release/

5 |

--------------------------------------------------------------------------------

/.gitignore~:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/.gitignore~

--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/README_en.md:

--------------------------------------------------------------------------------

1 | # Unreal Caffe

2 |

3 |

4 |

5 |

6 |

7 | This is a self-contained Caffe version. I gathered many useful layers here, so you can use it directly for tasks such as *Faster-RCNN*, *RFCN*, and *FCN*. Briefly, I made the following adjustments to the official Caffe:

8 |

9 | - cuDNN 7 support;

10 | - `roi_pooling_layer`, needed for Fast-RCNN-series tasks;

11 | - `smooth_l1_loss_ohem_layer`, needed for RFCN tasks;

12 | - `deformalable_conv_layer`, an advanced convolution layer (still under test).

13 |

14 |

15 |

16 | ### Installation

17 |

18 | With **unreal_caffe**, you do not need to put up with the build errors of the official version. Just run:

19 |

20 | ```shell

21 | git clone https://github.com/UnrealVision/unreal_caffe.git

22 | cd unreal_caffe

23 | make -j32

24 | ```

25 |

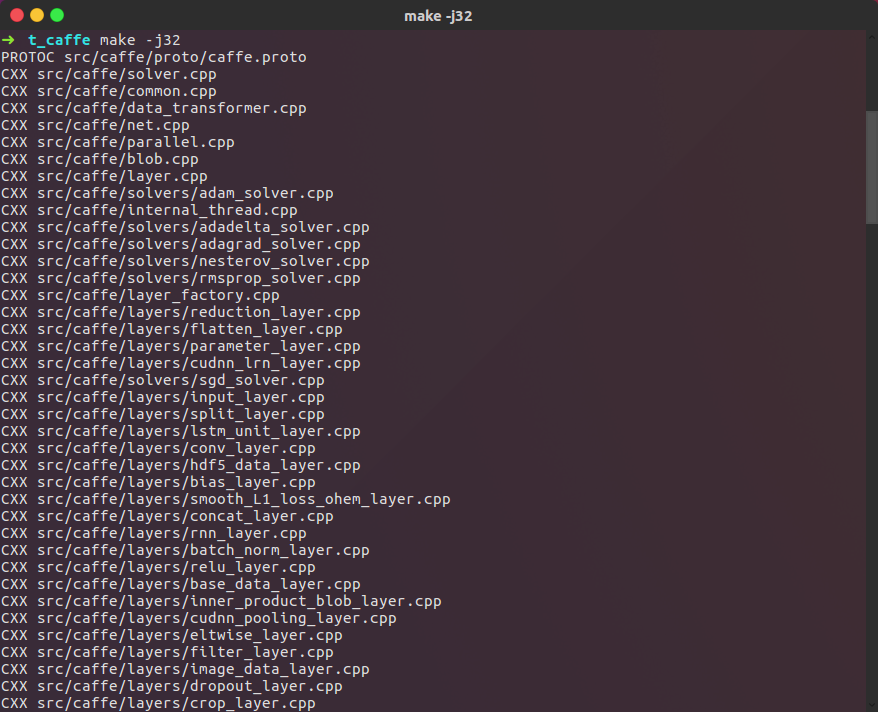

26 | You should got this:

27 |

28 |

29 |

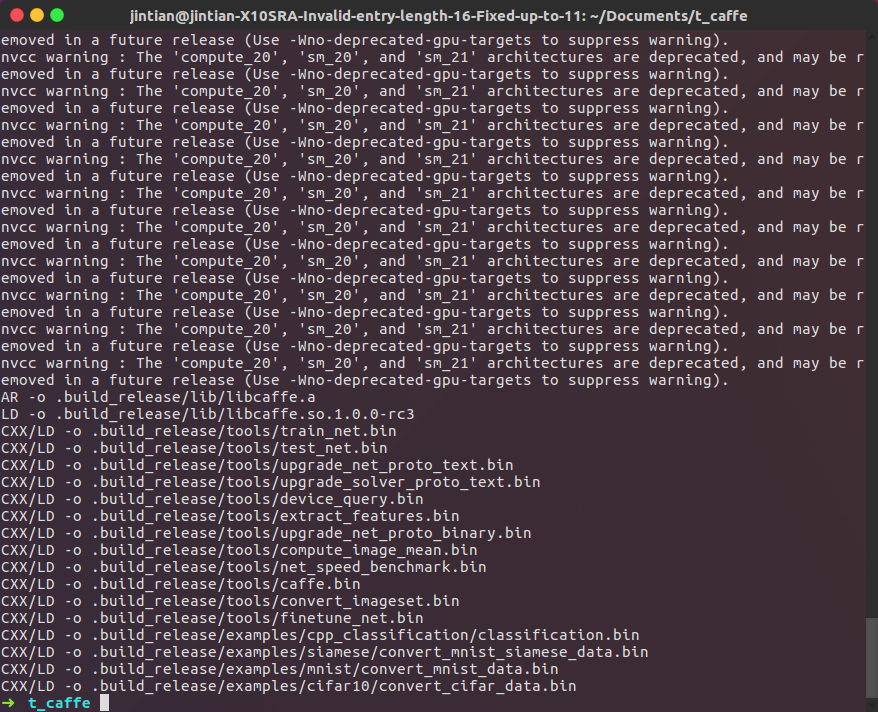

30 | And after build complete, you will got this:

31 |

32 |

33 |

34 | Then run:

35 |

36 | ```

37 | make pycaffe

38 | ```

39 |

40 | One last thing:

41 |

42 | Add `/path/to/unreal_caffe/python` to your `PYTHONPATH` in your `~/.bashrc` or `~/.zshrc` file so that you can use Caffe from Python. **unreal_caffe uses Python 2.7 by default. You can enable Python 3 support instead, but not both at the same time!**

43 |

44 |

45 |

46 | ### Tasks

47 |

48 | I will later post some demos showing how to use this Caffe version for various tasks.

49 |

50 |

51 |

52 | # Copyright

53 |

54 | This code was originally written by BVLC and Yangqing Jia. I only made some modifications on top of it. I hope others will help me maintain this version and add more features to unreal_caffe.

--------------------------------------------------------------------------------
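The README's last installation step (putting `/path/to/unreal_caffe/python` on the Python search path) can also be done per script. The following is a minimal sketch, not part of the repository: the helper names `add_pycaffe_to_path` and `pycaffe_available` are hypothetical, and the path is the README's placeholder. It only checks whether `import caffe` succeeds after the bindings have been built.

```python
import os
import sys


def add_pycaffe_to_path(caffe_root):
    """Prepend <caffe_root>/python to sys.path and return that directory."""
    python_dir = os.path.join(os.path.abspath(caffe_root), "python")
    if python_dir not in sys.path:
        sys.path.insert(0, python_dir)
    return python_dir


def pycaffe_available():
    """Return True if `import caffe` succeeds in this interpreter."""
    try:
        import caffe  # noqa: F401
        return True
    except ImportError:
        return False


if __name__ == "__main__":
    # "/path/to/unreal_caffe" is the placeholder used in the README.
    print(add_pycaffe_to_path("/path/to/unreal_caffe"))
    print("caffe importable: %s" % pycaffe_available())
```

Setting the path in the shell profile, as the README describes, has the same effect for every script; the per-script variant is handy when several Caffe builds live side by side.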

/a.out:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/a.out

--------------------------------------------------------------------------------

/build:

--------------------------------------------------------------------------------

1 | .build_release

--------------------------------------------------------------------------------

/caffe.cloc:

--------------------------------------------------------------------------------

1 | Bourne Shell

2 | filter remove_matches ^\s*#

3 | filter remove_inline #.*$

4 | extension sh

5 | script_exe sh

6 | C

7 | filter remove_matches ^\s*//

8 | filter call_regexp_common C

9 | filter remove_inline //.*$

10 | extension c

11 | extension ec

12 | extension pgc

13 | C++

14 | filter remove_matches ^\s*//

15 | filter remove_inline //.*$

16 | filter call_regexp_common C

17 | extension C

18 | extension cc

19 | extension cpp

20 | extension cxx

21 | extension pcc

22 | C/C++ Header

23 | filter remove_matches ^\s*//

24 | filter call_regexp_common C

25 | filter remove_inline //.*$

26 | extension H

27 | extension h

28 | extension hh

29 | extension hpp

30 | CUDA

31 | filter remove_matches ^\s*//

32 | filter remove_inline //.*$

33 | filter call_regexp_common C

34 | extension cu

35 | Python

36 | filter remove_matches ^\s*#

37 | filter docstring_to_C

38 | filter call_regexp_common C

39 | filter remove_inline #.*$

40 | extension py

41 | make

42 | filter remove_matches ^\s*#

43 | filter remove_inline #.*$

44 | extension Gnumakefile

45 | extension Makefile

46 | extension am

47 | extension gnumakefile

48 | extension makefile

49 | filename Gnumakefile

50 | filename Makefile

51 | filename gnumakefile

52 | filename makefile

53 | script_exe make

54 |

--------------------------------------------------------------------------------
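To illustrate what the two filters in the `Bourne Shell` entry of `caffe.cloc` do, here is a rough Python sketch. It assumes `remove_matches` drops every line matching the regex and `remove_inline` strips the matching tail of a line; the function name `count_shell_code_lines` is hypothetical and this is not how cloc itself is implemented.

```python
import re


def count_shell_code_lines(text):
    """Count lines that still contain code after both filters are applied."""
    count = 0
    for line in text.splitlines():
        # filter remove_matches ^\s*#  -> drop whole-line comments
        if re.search(r"^\s*#", line):
            continue
        # filter remove_inline #.*$   -> strip trailing comments
        line = re.sub(r"#.*$", "", line)
        # blank lines are not counted as code
        if line.strip():
            count += 1
    return count


if __name__ == "__main__":
    script = "#!/bin/sh\n# comment\necho hi  # greet\n\nexit 0\n"
    print(count_shell_code_lines(script))
```

Note that under these rules a shebang line is treated as a comment, because it also matches `^\s*#`.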

/cmake/Modules/FindGFlags.cmake:

--------------------------------------------------------------------------------

1 | # - Try to find GFLAGS

2 | #

3 | # The following variables are optionally searched for defaults

4 | # GFLAGS_ROOT_DIR: Base directory where all GFLAGS components are found

5 | #

6 | # The following are set after configuration is done:

7 | # GFLAGS_FOUND

8 | # GFLAGS_INCLUDE_DIRS

9 | # GFLAGS_LIBRARIES

10 | # GFLAGS_LIBRARY_DIRS

11 |

12 | include(FindPackageHandleStandardArgs)

13 |

14 | set(GFLAGS_ROOT_DIR "" CACHE PATH "Folder contains Gflags")

15 |

16 | # We are testing only a couple of files in the include directories

17 | if(WIN32)

18 | find_path(GFLAGS_INCLUDE_DIR gflags/gflags.h

19 | PATHS ${GFLAGS_ROOT_DIR}/src/windows)

20 | else()

21 | find_path(GFLAGS_INCLUDE_DIR gflags/gflags.h

22 | PATHS ${GFLAGS_ROOT_DIR})

23 | endif()

24 |

25 | if(MSVC)

26 | find_library(GFLAGS_LIBRARY_RELEASE

27 | NAMES libgflags

28 | PATHS ${GFLAGS_ROOT_DIR}

29 | PATH_SUFFIXES Release)

30 |

31 | find_library(GFLAGS_LIBRARY_DEBUG

32 | NAMES libgflags-debug

33 | PATHS ${GFLAGS_ROOT_DIR}

34 | PATH_SUFFIXES Debug)

35 |

36 | set(GFLAGS_LIBRARY optimized ${GFLAGS_LIBRARY_RELEASE} debug ${GFLAGS_LIBRARY_DEBUG})

37 | else()

38 | find_library(GFLAGS_LIBRARY gflags)

39 | endif()

40 |

41 | find_package_handle_standard_args(GFlags DEFAULT_MSG GFLAGS_INCLUDE_DIR GFLAGS_LIBRARY)

42 |

43 |

44 | if(GFLAGS_FOUND)

45 | set(GFLAGS_INCLUDE_DIRS ${GFLAGS_INCLUDE_DIR})

46 | set(GFLAGS_LIBRARIES ${GFLAGS_LIBRARY})

47 | message(STATUS "Found gflags (include: ${GFLAGS_INCLUDE_DIR}, library: ${GFLAGS_LIBRARY})")

48 | mark_as_advanced(GFLAGS_LIBRARY_DEBUG GFLAGS_LIBRARY_RELEASE

49 | GFLAGS_LIBRARY GFLAGS_INCLUDE_DIR GFLAGS_ROOT_DIR)

50 | endif()

51 |

--------------------------------------------------------------------------------

/cmake/Modules/FindGlog.cmake:

--------------------------------------------------------------------------------

1 | # - Try to find Glog

2 | #

3 | # The following variables are optionally searched for defaults

4 | # GLOG_ROOT_DIR: Base directory where all GLOG components are found

5 | #

6 | # The following are set after configuration is done:

7 | # GLOG_FOUND

8 | # GLOG_INCLUDE_DIRS

9 | # GLOG_LIBRARIES

10 | # GLOG_LIBRARY_DIRS

11 |

12 | include(FindPackageHandleStandardArgs)

13 |

14 | set(GLOG_ROOT_DIR "" CACHE PATH "Folder contains Google glog")

15 |

16 | if(WIN32)

17 | find_path(GLOG_INCLUDE_DIR glog/logging.h

18 | PATHS ${GLOG_ROOT_DIR}/src/windows)

19 | else()

20 | find_path(GLOG_INCLUDE_DIR glog/logging.h

21 | PATHS ${GLOG_ROOT_DIR})

22 | endif()

23 |

24 | if(MSVC)

25 | find_library(GLOG_LIBRARY_RELEASE libglog_static

26 | PATHS ${GLOG_ROOT_DIR}

27 | PATH_SUFFIXES Release)

28 |

29 | find_library(GLOG_LIBRARY_DEBUG libglog_static

30 | PATHS ${GLOG_ROOT_DIR}

31 | PATH_SUFFIXES Debug)

32 |

33 | set(GLOG_LIBRARY optimized ${GLOG_LIBRARY_RELEASE} debug ${GLOG_LIBRARY_DEBUG})

34 | else()

35 | find_library(GLOG_LIBRARY glog

36 | PATHS ${GLOG_ROOT_DIR}

37 | PATH_SUFFIXES lib lib64)

38 | endif()

39 |

40 | find_package_handle_standard_args(Glog DEFAULT_MSG GLOG_INCLUDE_DIR GLOG_LIBRARY)

41 |

42 | if(GLOG_FOUND)

43 | set(GLOG_INCLUDE_DIRS ${GLOG_INCLUDE_DIR})

44 | set(GLOG_LIBRARIES ${GLOG_LIBRARY})

45 | message(STATUS "Found glog (include: ${GLOG_INCLUDE_DIR}, library: ${GLOG_LIBRARY})")

46 | mark_as_advanced(GLOG_ROOT_DIR GLOG_LIBRARY_RELEASE GLOG_LIBRARY_DEBUG

47 | GLOG_LIBRARY GLOG_INCLUDE_DIR)

48 | endif()

49 |

--------------------------------------------------------------------------------

/cmake/Modules/FindLMDB.cmake:

--------------------------------------------------------------------------------

1 | # Try to find the LMDB libraries and headers

2 | # LMDB_FOUND - system has LMDB lib

3 | # LMDB_INCLUDE_DIR - the LMDB include directory

4 | # LMDB_LIBRARIES - Libraries needed to use LMDB

5 |

6 | # FindCWD based on FindGMP by:

7 | # Copyright (c) 2006, Laurent Montel,

8 | #

9 | # Redistribution and use is allowed according to the terms of the BSD license.

10 |

11 | # Adapted from FindCWD by:

12 | # Copyright 2013 Conrad Steenberg

13 | # Aug 31, 2013

14 |

15 | find_path(LMDB_INCLUDE_DIR NAMES lmdb.h PATHS "$ENV{LMDB_DIR}/include")

16 | find_library(LMDB_LIBRARIES NAMES lmdb PATHS "$ENV{LMDB_DIR}/lib" )

17 |

18 | include(FindPackageHandleStandardArgs)

19 | find_package_handle_standard_args(LMDB DEFAULT_MSG LMDB_INCLUDE_DIR LMDB_LIBRARIES)

20 |

21 | if(LMDB_FOUND)

22 | message(STATUS "Found lmdb (include: ${LMDB_INCLUDE_DIR}, library: ${LMDB_LIBRARIES})")

23 | mark_as_advanced(LMDB_INCLUDE_DIR LMDB_LIBRARIES)

24 |

25 | caffe_parse_header(${LMDB_INCLUDE_DIR}/lmdb.h

26 | LMDB_VERSION_LINES MDB_VERSION_MAJOR MDB_VERSION_MINOR MDB_VERSION_PATCH)

27 | set(LMDB_VERSION "${MDB_VERSION_MAJOR}.${MDB_VERSION_MINOR}.${MDB_VERSION_PATCH}")

28 | endif()

29 |

--------------------------------------------------------------------------------
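`FindLMDB.cmake` builds `LMDB_VERSION` from three macros read out of `lmdb.h` via `caffe_parse_header`, whose implementation is not shown in this listing. The sketch below shows the general idea in Python, under the assumption that the macros appear as plain `#define NAME value` lines; the function name and the sample header values are hypothetical.

```python
import re


def parse_header_defines(header_text, names):
    """Return {name: value} for each `#define name value` found in the text."""
    values = {}
    for name in names:
        pattern = r"^\s*#\s*define\s+%s\s+(\S+)" % re.escape(name)
        match = re.search(pattern, header_text, re.MULTILINE)
        if match:
            values[name] = match.group(1)
    return values


if __name__ == "__main__":
    # Hypothetical header excerpt; real values depend on the installed LMDB.
    sample = (
        "#define MDB_VERSION_MAJOR 0\n"
        "#define MDB_VERSION_MINOR 9\n"
        "#define MDB_VERSION_PATCH 18\n"
    )
    names = ["MDB_VERSION_MAJOR", "MDB_VERSION_MINOR", "MDB_VERSION_PATCH"]
    found = parse_header_defines(sample, names)
    print(".".join(found[n] for n in names))
```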

/cmake/Modules/FindSnappy.cmake:

--------------------------------------------------------------------------------

1 | # Find the Snappy libraries

2 | #

3 | # The following variables are optionally searched for defaults

4 | # SNAPPY_ROOT_DIR: Base directory where all Snappy components are found

5 | #

6 | # The following are set after configuration is done:

7 | # SNAPPY_FOUND

8 | # Snappy_INCLUDE_DIR

9 | # Snappy_LIBRARIES

10 |

11 | find_path(Snappy_INCLUDE_DIR NAMES snappy.h

12 | PATHS ${SNAPPY_ROOT_DIR} ${SNAPPY_ROOT_DIR}/include)

13 |

14 | find_library(Snappy_LIBRARIES NAMES snappy

15 | PATHS ${SNAPPY_ROOT_DIR} ${SNAPPY_ROOT_DIR}/lib)

16 |

17 | include(FindPackageHandleStandardArgs)

18 | find_package_handle_standard_args(Snappy DEFAULT_MSG Snappy_INCLUDE_DIR Snappy_LIBRARIES)

19 |

20 | if(SNAPPY_FOUND)

21 | message(STATUS "Found Snappy (include: ${Snappy_INCLUDE_DIR}, library: ${Snappy_LIBRARIES})")

22 | mark_as_advanced(Snappy_INCLUDE_DIR Snappy_LIBRARIES)

23 |

24 | caffe_parse_header(${Snappy_INCLUDE_DIR}/snappy-stubs-public.h

25 | SNAPPY_VERION_LINES SNAPPY_MAJOR SNAPPY_MINOR SNAPPY_PATCHLEVEL)

26 | set(Snappy_VERSION "${SNAPPY_MAJOR}.${SNAPPY_MINOR}.${SNAPPY_PATCHLEVEL}")

27 | endif()

28 |

29 |

--------------------------------------------------------------------------------

/cmake/Modules/FindvecLib.cmake:

--------------------------------------------------------------------------------

1 | # Find the vecLib libraries as part of Accelerate.framework or as a standalone framework

2 | #

3 | # The following are set after configuration is done:

4 | # VECLIB_FOUND

5 | # vecLib_INCLUDE_DIR

6 | # vecLib_LINKER_LIBS

7 |

8 |

9 | if(NOT APPLE)

10 | return()

11 | endif()

12 |

13 | set(__veclib_include_suffix "Frameworks/vecLib.framework/Versions/Current/Headers")

14 |

15 | find_path(vecLib_INCLUDE_DIR vecLib.h

16 | DOC "vecLib include directory"

17 | PATHS /System/Library/Frameworks/Accelerate.framework/Versions/Current/${__veclib_include_suffix}

18 | /System/Library/${__veclib_include_suffix}

19 | /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX10.9.sdk/System/Library/Frameworks/Accelerate.framework/Versions/Current/Frameworks/vecLib.framework/Headers/

20 | NO_DEFAULT_PATH)

21 |

22 | include(FindPackageHandleStandardArgs)

23 | find_package_handle_standard_args(vecLib DEFAULT_MSG vecLib_INCLUDE_DIR)

24 |

25 | if(VECLIB_FOUND)

26 | if(vecLib_INCLUDE_DIR MATCHES "^/System/Library/Frameworks/vecLib.framework.*")

27 | set(vecLib_LINKER_LIBS -lcblas "-framework vecLib")

28 | message(STATUS "Found standalone vecLib.framework")

29 | else()

30 | set(vecLib_LINKER_LIBS -lcblas "-framework Accelerate")

31 | message(STATUS "Found vecLib as part of Accelerate.framework")

32 | endif()

33 |

34 | mark_as_advanced(vecLib_INCLUDE_DIR)

35 | endif()

36 |

--------------------------------------------------------------------------------

/cmake/Templates/CaffeConfigVersion.cmake.in:

--------------------------------------------------------------------------------

1 | set(PACKAGE_VERSION "@Caffe_VERSION@")

2 |

3 | # Check whether the requested PACKAGE_FIND_VERSION is compatible

4 | if("${PACKAGE_VERSION}" VERSION_LESS "${PACKAGE_FIND_VERSION}")

5 | set(PACKAGE_VERSION_COMPATIBLE FALSE)

6 | else()

7 | set(PACKAGE_VERSION_COMPATIBLE TRUE)

8 | if ("${PACKAGE_VERSION}" VERSION_EQUAL "${PACKAGE_FIND_VERSION}")

9 | set(PACKAGE_VERSION_EXACT TRUE)

10 | endif()

11 | endif()

12 |

--------------------------------------------------------------------------------
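The compatibility rule in `CaffeConfigVersion.cmake.in` can be mirrored in a few lines of Python. This is a sketch that assumes both versions are well-formed dot-separated integers and that omitted components count as zero, which is how CMake documents its `VERSION_LESS` and `VERSION_EQUAL` comparisons; the function name `check_version` is hypothetical.

```python
def _components(version):
    """Split '1.2.3' into [1, 2, 3]."""
    return [int(part) for part in version.split(".")]


def check_version(package_version, find_version):
    """Return (compatible, exact) following the template's logic."""
    a = _components(package_version)
    b = _components(find_version)
    # Pad the shorter list with zeros so '1.0' compares equal to '1.0.0'.
    width = max(len(a), len(b))
    a += [0] * (width - len(a))
    b += [0] * (width - len(b))
    compatible = not (a < b)          # installed version is not older
    exact = compatible and a == b     # only checked in the compatible branch
    return compatible, exact


if __name__ == "__main__":
    print(check_version("1.0.0", "1.0"))
    print(check_version("1.0.0", "1.1"))
    print(check_version("1.2.0", "1.1.5"))
```

In other words, any installed version at least as new as the requested one is accepted; there is no upper bound or major-version check.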

/cmake/Templates/caffe_config.h.in:

--------------------------------------------------------------------------------

1 | /* Sources directory */

2 | #define SOURCE_FOLDER "${PROJECT_SOURCE_DIR}"

3 |

4 | /* Binaries directory */

5 | #define BINARY_FOLDER "${PROJECT_BINARY_DIR}"

6 |

7 | /* NVIDIA CUDA */

8 | #cmakedefine HAVE_CUDA

9 |

10 | /* NVIDIA cuDNN */

11 | #cmakedefine HAVE_CUDNN

12 | #cmakedefine USE_CUDNN

13 |

14 | /* CPU-only build (no CUDA) */

15 | #cmakedefine CPU_ONLY

16 |

17 | /* Test device */

18 | #define CUDA_TEST_DEVICE ${CUDA_TEST_DEVICE}

19 |

20 | /* Temporary (TODO: remove) */

21 | #if 1

22 | #define CMAKE_SOURCE_DIR SOURCE_FOLDER "/src/"

23 | #define EXAMPLES_SOURCE_DIR BINARY_FOLDER "/examples/"

24 | #define CMAKE_EXT ".gen.cmake"

25 | #else

26 | #define CMAKE_SOURCE_DIR "src/"

27 | #define EXAMPLES_SOURCE_DIR "examples/"

28 | #define CMAKE_EXT ""

29 | #endif

30 |

31 | /* Matlab */

32 | #cmakedefine HAVE_MATLAB

33 |

34 | /* IO libraries */

35 | #cmakedefine USE_OPENCV

36 | #cmakedefine USE_LEVELDB

37 | #cmakedefine USE_LMDB

38 | #cmakedefine ALLOW_LMDB_NOLOCK

39 |

--------------------------------------------------------------------------------
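The `#cmakedefine` lines in `caffe_config.h.in` are expanded by CMake's `configure_file`: a set variable yields `#define NAME`, an unset one yields `/* #undef NAME */`. The Python sketch below imitates only that one rule for value-less `#cmakedefine` lines. The function name is hypothetical, and CMake's own truthiness rules (for example `OFF` or `NOTFOUND`) are simplified to a boolean dictionary.

```python
import re


def expand_cmakedefine(template, variables):
    """Expand bare `#cmakedefine NAME` lines; leave other lines untouched."""
    out = []
    for line in template.splitlines():
        match = re.match(r"#cmakedefine\s+(\w+)\s*$", line)
        if match:
            name = match.group(1)
            if variables.get(name):
                out.append("#define %s" % name)
            else:
                out.append("/* #undef %s */" % name)
        else:
            out.append(line)
    return "\n".join(out)


if __name__ == "__main__":
    template = "/* IO libraries */\n#cmakedefine USE_LMDB\n#cmakedefine CPU_ONLY"
    print(expand_cmakedefine(template, {"USE_LMDB": True}))
```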

/cmake/lint.cmake:

--------------------------------------------------------------------------------

1 |

2 | set(CMAKE_SOURCE_DIR ..)

3 | set(LINT_COMMAND ${CMAKE_SOURCE_DIR}/scripts/cpp_lint.py)

4 | set(SRC_FILE_EXTENSIONS h hpp hu c cpp cu cc)

5 | set(EXCLUDE_FILE_EXTENSTIONS pb.h pb.cc)

6 | set(LINT_DIRS include src/caffe examples tools python matlab)

7 |

8 | cmake_policy(SET CMP0009 NEW) # suppress cmake warning

9 |

10 | # find all files of interest

11 | foreach(ext ${SRC_FILE_EXTENSIONS})

12 | foreach(dir ${LINT_DIRS})

13 | file(GLOB_RECURSE FOUND_FILES ${CMAKE_SOURCE_DIR}/${dir}/*.${ext})

14 | set(LINT_SOURCES ${LINT_SOURCES} ${FOUND_FILES})

15 | endforeach()

16 | endforeach()

17 |

18 | # find all files that should be excluded

19 | foreach(ext ${EXCLUDE_FILE_EXTENSTIONS})

20 | file(GLOB_RECURSE FOUND_FILES ${CMAKE_SOURCE_DIR}/*.${ext})

21 | set(EXCLUDED_FILES ${EXCLUDED_FILES} ${FOUND_FILES})

22 | endforeach()

23 |

24 | # exclude generated pb files

25 | list(REMOVE_ITEM LINT_SOURCES ${EXCLUDED_FILES})

26 |

27 | execute_process(

28 | COMMAND ${LINT_COMMAND} ${LINT_SOURCES}

29 | ERROR_VARIABLE LINT_OUTPUT

30 | ERROR_STRIP_TRAILING_WHITESPACE

31 | )

32 |

33 | string(REPLACE "\n" ";" LINT_OUTPUT ${LINT_OUTPUT})

34 |

35 | list(GET LINT_OUTPUT -1 LINT_RESULT)

36 | list(REMOVE_AT LINT_OUTPUT -1)

37 | string(REPLACE " " ";" LINT_RESULT ${LINT_RESULT})

38 | list(GET LINT_RESULT -1 NUM_ERRORS)

39 | if(NUM_ERRORS GREATER 0)

40 | foreach(msg ${LINT_OUTPUT})

41 | string(FIND ${msg} "Done" result)

42 | if(result LESS 0)

43 | message(STATUS ${msg})

44 | endif()

45 | endforeach()

46 | message(FATAL_ERROR "Lint found ${NUM_ERRORS} errors!")

47 | else()

48 | message(STATUS "Lint did not find any errors!")

49 | endif()

50 |

51 |
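
The tail of lint.cmake recovers the error count by splitting the captured stderr into lines, taking the last line, and reading its last space-separated token. A minimal Python sketch of that parsing logic follows. The function name `parse_lint_summary` and the sample text are hypothetical; the sample only assumes that the linter ends its output with a summary line whose final token is the error count.

```python
def parse_lint_summary(stderr_text):
    """Return (messages, num_errors) from linter stderr, mirroring lint.cmake."""
    # Strip trailing whitespace, then split into lines (string(REPLACE "\n" ";" ...)).
    lines = stderr_text.rstrip().split("\n")
    # The last line is the summary (list(GET LINT_OUTPUT -1 LINT_RESULT)).
    summary = lines[-1]
    # Its last space-separated token is the error count.
    num_errors = int(summary.split(" ")[-1])
    # Keep the remaining lines, skipping any that contain "Done".
    messages = [m for m in lines[:-1] if "Done" not in m]
    return messages, num_errors


# Hypothetical linter output, for illustration only.
sample = (
    "src/foo.cpp:10:  Missing space after ,  [whitespace/comma] [3]\n"
    "Done processing src/foo.cpp\n"
    "Total errors found: 1\n"
)
messages, num_errors = parse_lint_summary(sample)
print(num_errors, messages)
```

As in the CMake script, a non-zero count is what would trigger the fatal error; the filtered messages are what would be echoed first.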

--------------------------------------------------------------------------------

/examples/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | file(GLOB_RECURSE examples_srcs "${PROJECT_SOURCE_DIR}/examples/*.cpp")

2 |

3 | foreach(source_file ${examples_srcs})

4 | # get file name

5 | get_filename_component(name ${source_file} NAME_WE)

6 |

7 | # get folder name

8 | get_filename_component(path ${source_file} PATH)

9 | get_filename_component(folder ${path} NAME_WE)

10 |

11 | add_executable(${name} ${source_file})

12 | target_link_libraries(${name} ${Caffe_LINK})

13 | caffe_default_properties(${name})

14 |

15 | # set back RUNTIME_OUTPUT_DIRECTORY

16 | set_target_properties(${name} PROPERTIES

17 | RUNTIME_OUTPUT_DIRECTORY "${PROJECT_BINARY_DIR}/examples/${folder}")

18 |

19 | caffe_set_solution_folder(${name} examples)

20 |

21 | # install

22 | install(TARGETS ${name} DESTINATION bin)

23 |

24 | if(UNIX OR APPLE)

25 | # Funny command to make tutorials work

26 | # TODO: remove in future as soon as naming is standardized everywhere

27 | set(__outname ${PROJECT_BINARY_DIR}/examples/${folder}/${name}${Caffe_POSTFIX})

28 | add_custom_command(TARGET ${name} POST_BUILD

29 | COMMAND ln -sf "${__outname}" "${__outname}.bin")

30 | endif()

31 | endforeach()

32 |
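
The loop above derives each target name from the source file, the output folder from its parent directory, and (on UNIX) adds a `.bin` symlink next to the binary. A rough Python sketch of that path derivation is given below. `example_target` is a hypothetical helper, and the `build` binary directory and empty postfix are assumptions chosen for illustration; the real values come from `PROJECT_BINARY_DIR` and `Caffe_POSTFIX`.

```python
from pathlib import PurePosixPath


def example_target(source_file, binary_dir="build", postfix=""):
    """Return (target name, binary path, symlink path) for one example source."""
    src = PurePosixPath(source_file)
    # NAME_WE: file name without directory or extension.
    name = src.name.split(".")[0]
    # NAME_WE applied to the containing directory gives the folder name.
    folder = src.parent.name.split(".")[0]
    out = f"{binary_dir}/examples/{folder}/{name}{postfix}"
    # The POST_BUILD step links "<out>.bin" to "<out>".
    return name, out, out + ".bin"


print(example_target("examples/cifar10/convert_cifar_data.cpp"))
```

Under those assumptions the symlink path matches the `convert_cifar_data.bin` name that create_cifar10.sh invokes.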

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_full_sigmoid_solver.prototxt:

--------------------------------------------------------------------------------

1 | # reduce learning rate after 120 epochs (60000 iters) by a factor of 10

2 | # then another factor of 10 after 10 more epochs (5000 iters)

3 |

4 | # The train/test net protocol buffer definition

5 | net: "examples/cifar10/cifar10_full_sigmoid_train_test.prototxt"

6 | # test_iter specifies how many forward passes the test should carry out.

7 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

8 | # covering the full 10,000 testing images.

9 | test_iter: 10

10 | # Carry out testing every 1000 training iterations.

11 | test_interval: 1000

12 | # The base learning rate, momentum and the weight decay of the network.

13 | base_lr: 0.001

14 | momentum: 0.9

15 | #weight_decay: 0.004

16 | # The learning rate policy

17 | lr_policy: "step"

18 | gamma: 1

19 | stepsize: 5000

20 | # Display every 100 iterations

21 | display: 100

22 | # The maximum number of iterations

23 | max_iter: 60000

24 | # snapshot intermediate results

25 | snapshot: 10000

26 | snapshot_prefix: "examples/cifar10_full_sigmoid"

27 | # solver mode: CPU or GPU

28 | solver_mode: GPU

29 |
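
With `lr_policy: "step"`, Caffe computes the rate as base_lr * gamma ^ floor(iter / stepsize). The short Python sketch below evaluates that formula for the values in this solver; `step_lr` is an illustrative name, not a Caffe function. Because gamma is 1 here, the rate stays at base_lr for the whole run, so an actual reduction would need a gamma below 1 (the 0.1 used for comparison is hypothetical).

```python
def step_lr(base_lr, gamma, stepsize, iteration):
    """Learning rate under the "step" policy: base_lr * gamma ** floor(iter / stepsize)."""
    return base_lr * gamma ** (iteration // stepsize)


# Values from the solver above: base_lr 0.001, gamma 1, stepsize 5000.
rates = [step_lr(0.001, 1, 5000, it) for it in (0, 5000, 59999)]

# For comparison only: a hypothetical gamma of 0.1 after the first step.
decayed = step_lr(0.001, 0.1, 5000, 5000)

print(rates, decayed)
```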

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_full_sigmoid_solver_bn.prototxt:

--------------------------------------------------------------------------------

1 | # reduce learning rate after 120 epochs (60000 iters) by a factor of 10

2 | # then another factor of 10 after 10 more epochs (5000 iters)

3 |

4 | # The train/test net protocol buffer definition

5 | net: "examples/cifar10/cifar10_full_sigmoid_train_test_bn.prototxt"

6 | # test_iter specifies how many forward passes the test should carry out.

7 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

8 | # covering the full 10,000 testing images.

9 | test_iter: 10

10 | # Carry out testing every 1000 training iterations.

11 | test_interval: 1000

12 | # The base learning rate, momentum and the weight decay of the network.

13 | base_lr: 0.001

14 | momentum: 0.9

15 | #weight_decay: 0.004

16 | # The learning rate policy

17 | lr_policy: "step"

18 | gamma: 1

19 | stepsize: 5000

20 | # Display every 100 iterations

21 | display: 100

22 | # The maximum number of iterations

23 | max_iter: 60000

24 | # snapshot intermediate results

25 | snapshot: 10000

26 | snapshot_prefix: "examples/cifar10_full_sigmoid_bn"

27 | # solver mode: CPU or GPU

28 | solver_mode: GPU

29 |

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_full_solver.prototxt:

--------------------------------------------------------------------------------

1 | # reduce learning rate after 120 epochs (60000 iters) by a factor of 10

2 | # then another factor of 10 after 10 more epochs (5000 iters)

3 |

4 | # The train/test net protocol buffer definition

5 | net: "examples/cifar10/cifar10_full_train_test.prototxt"

6 | # test_iter specifies how many forward passes the test should carry out.

7 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

8 | # covering the full 10,000 testing images.

9 | test_iter: 100

10 | # Carry out testing every 1000 training iterations.

11 | test_interval: 1000

12 | # The base learning rate, momentum and the weight decay of the network.

13 | base_lr: 0.001

14 | momentum: 0.9

15 | weight_decay: 0.004

16 | # The learning rate policy

17 | lr_policy: "fixed"

18 | # Display every 200 iterations

19 | display: 200

20 | # The maximum number of iterations

21 | max_iter: 60000

22 | # snapshot intermediate results

23 | snapshot: 10000

24 | snapshot_format: HDF5

25 | snapshot_prefix: "examples/cifar10/cifar10_full"

26 | # solver mode: CPU or GPU

27 | solver_mode: GPU

28 |
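
The test_iter comment is simple arithmetic: the number of images evaluated per test pass is the test batch size times test_iter. The sketch below assumes the test batch size of 100 stated in the comment (the value itself is set in the net definition, not in the solver); `images_per_test_pass` is an illustrative name.

```python
def images_per_test_pass(test_batch_size, test_iter):
    """Number of test images seen in one evaluation pass."""
    return test_batch_size * test_iter


TEST_BATCH_SIZE = 100  # assumption taken from the solver comment

full = images_per_test_pass(TEST_BATCH_SIZE, 100)    # test_iter: 100
sigmoid = images_per_test_pass(TEST_BATCH_SIZE, 10)  # test_iter: 10, as in the sigmoid solvers
print(full, sigmoid)
```

Under that assumption, test_iter: 100 covers the full test set, whereas the test_iter: 10 used by the sigmoid solvers would cover only a tenth of it.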

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_full_solver_lr1.prototxt:

--------------------------------------------------------------------------------

1 | # reduce learning rate after 120 epochs (60000 iters) by a factor of 10

2 | # then another factor of 10 after 10 more epochs (5000 iters)

3 |

4 | # The train/test net protocol buffer definition

5 | net: "examples/cifar10/cifar10_full_train_test.prototxt"

6 | # test_iter specifies how many forward passes the test should carry out.

7 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

8 | # covering the full 10,000 testing images.

9 | test_iter: 100

10 | # Carry out testing every 1000 training iterations.

11 | test_interval: 1000

12 | # The base learning rate, momentum and the weight decay of the network.

13 | base_lr: 0.0001

14 | momentum: 0.9

15 | weight_decay: 0.004

16 | # The learning rate policy

17 | lr_policy: "fixed"

18 | # Display every 200 iterations

19 | display: 200

20 | # The maximum number of iterations

21 | max_iter: 65000

22 | # snapshot intermediate results

23 | snapshot: 5000

24 | snapshot_format: HDF5

25 | snapshot_prefix: "examples/cifar10/cifar10_full"

26 | # solver mode: CPU or GPU

27 | solver_mode: GPU

28 |

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_full_solver_lr2.prototxt:

--------------------------------------------------------------------------------

1 | # reduce learning rate after 120 epochs (60000 iters) by a factor of 10

2 | # then another factor of 10 after 10 more epochs (5000 iters)

3 |

4 | # The train/test net protocol buffer definition

5 | net: "examples/cifar10/cifar10_full_train_test.prototxt"

6 | # test_iter specifies how many forward passes the test should carry out.

7 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

8 | # covering the full 10,000 testing images.

9 | test_iter: 100

10 | # Carry out testing every 1000 training iterations.

11 | test_interval: 1000

12 | # The base learning rate, momentum and the weight decay of the network.

13 | base_lr: 0.00001

14 | momentum: 0.9

15 | weight_decay: 0.004

16 | # The learning rate policy

17 | lr_policy: "fixed"

18 | # Display every 200 iterations

19 | display: 200

20 | # The maximum number of iterations

21 | max_iter: 70000

22 | # snapshot intermediate results

23 | snapshot: 5000

24 | snapshot_format: HDF5

25 | snapshot_prefix: "examples/cifar10/cifar10_full"

26 | # solver mode: CPU or GPU

27 | solver_mode: GPU

28 |

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_quick_solver.prototxt:

--------------------------------------------------------------------------------

1 | # reduce the learning rate after 8 epochs (4000 iters) by a factor of 10

2 |

3 | # The train/test net protocol buffer definition

4 | net: "examples/cifar10/cifar10_quick_train_test.prototxt"

5 | # test_iter specifies how many forward passes the test should carry out.

6 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

7 | # covering the full 10,000 testing images.

8 | test_iter: 100

9 | # Carry out testing every 500 training iterations.

10 | test_interval: 500

11 | # The base learning rate, momentum and the weight decay of the network.

12 | base_lr: 0.001

13 | momentum: 0.9

14 | weight_decay: 0.004

15 | # The learning rate policy

16 | lr_policy: "fixed"

17 | # Display every 100 iterations

18 | display: 100

19 | # The maximum number of iterations

20 | max_iter: 4000

21 | # snapshot intermediate results

22 | snapshot: 4000

23 | snapshot_format: HDF5

24 | snapshot_prefix: "examples/cifar10/cifar10_quick"

25 | # solver mode: CPU or GPU

26 | solver_mode: GPU

27 |

--------------------------------------------------------------------------------

/examples/cifar10/cifar10_quick_solver_lr1.prototxt:

--------------------------------------------------------------------------------

1 | # reduce the learning rate after 8 epochs (4000 iters) by a factor of 10

2 |

3 | # The train/test net protocol buffer definition

4 | net: "examples/cifar10/cifar10_quick_train_test.prototxt"

5 | # test_iter specifies how many forward passes the test should carry out.

6 | # In the case of CIFAR10, we have test batch size 100 and 100 test iterations,

7 | # covering the full 10,000 testing images.

8 | test_iter: 100

9 | # Carry out testing every 500 training iterations.

10 | test_interval: 500

11 | # The base learning rate, momentum and the weight decay of the network.

12 | base_lr: 0.0001

13 | momentum: 0.9

14 | weight_decay: 0.004

15 | # The learning rate policy

16 | lr_policy: "fixed"

17 | # Display every 100 iterations

18 | display: 100

19 | # The maximum number of iterations

20 | max_iter: 5000

21 | # snapshot intermediate results

22 | snapshot: 5000

23 | snapshot_format: HDF5

24 | snapshot_prefix: "examples/cifar10/cifar10_quick"

25 | # solver mode: CPU or GPU

26 | solver_mode: GPU

27 |

--------------------------------------------------------------------------------

/examples/cifar10/create_cifar10.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | # This script converts the CIFAR-10 data into the database format set by $DBTYPE (lmdb by default).

3 | set -e

4 |

5 | EXAMPLE=examples/cifar10

6 | DATA=data/cifar10

7 | DBTYPE=lmdb

8 |

9 | echo "Creating $DBTYPE..."

10 |

11 | rm -rf $EXAMPLE/cifar10_train_$DBTYPE $EXAMPLE/cifar10_test_$DBTYPE

12 |

13 | ./build/examples/cifar10/convert_cifar_data.bin $DATA $EXAMPLE $DBTYPE

14 |

15 | echo "Computing image mean..."

16 |

17 | ./build/tools/compute_image_mean -backend=$DBTYPE \

18 | $EXAMPLE/cifar10_train_$DBTYPE $EXAMPLE/mean.binaryproto

19 |

20 | echo "Done."

21 |

--------------------------------------------------------------------------------

/examples/cifar10/train_full.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | TOOLS=./build/tools

5 |

6 | $TOOLS/caffe train \

7 | --solver=examples/cifar10/cifar10_full_solver.prototxt $@

8 |

9 | # reduce learning rate by factor of 10

10 | $TOOLS/caffe train \

11 | --solver=examples/cifar10/cifar10_full_solver_lr1.prototxt \

12 | --snapshot=examples/cifar10/cifar10_full_iter_60000.solverstate.h5 $@

13 |

14 | # reduce learning rate by factor of 10

15 | $TOOLS/caffe train \

16 | --solver=examples/cifar10/cifar10_full_solver_lr2.prototxt \

17 | --snapshot=examples/cifar10/cifar10_full_iter_65000.solverstate.h5 $@

18 |
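
Each later stage resumes from the solver state written when the previous stage reached its max_iter. The file names in the script follow the pattern `<snapshot_prefix>_iter_<N>.solverstate.h5`, where the `.h5` suffix goes with `snapshot_format: HDF5`. The sketch below rebuilds the two `--snapshot` paths from the solver settings; `solverstate_path` is a hypothetical helper, not a Caffe function.

```python
def solverstate_path(snapshot_prefix, iteration):
    """Build the HDF5 solver-state file name used in train_full.sh."""
    return f"{snapshot_prefix}_iter_{iteration}.solverstate.h5"


PREFIX = "examples/cifar10/cifar10_full"  # snapshot_prefix shared by the three solvers
stage_max_iter = [60000, 65000, 70000]    # max_iter of the full, lr1, and lr2 solvers

# Stage k+1 resumes from the state saved at the end of stage k.
resume_from = [solverstate_path(PREFIX, n) for n in stage_max_iter[:-1]]
for path in resume_from:
    print(path)
```

This chaining works because 60000 and 65000 are multiples of the snapshot intervals (10000 and 5000) configured in the first two solvers.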

--------------------------------------------------------------------------------

/examples/cifar10/train_full_sigmoid.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | TOOLS=./build/tools

5 |

6 | $TOOLS/caffe train \

7 | --solver=examples/cifar10/cifar10_full_sigmoid_solver.prototxt $@

8 |

9 |

--------------------------------------------------------------------------------

/examples/cifar10/train_full_sigmoid_bn.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | TOOLS=./build/tools

5 |

6 | $TOOLS/caffe train \

7 | --solver=examples/cifar10/cifar10_full_sigmoid_solver_bn.prototxt $@

8 |

9 |

--------------------------------------------------------------------------------

/examples/cifar10/train_quick.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | TOOLS=./build/tools

5 |

6 | $TOOLS/caffe train \

7 | --solver=examples/cifar10/cifar10_quick_solver.prototxt $@

8 |

9 | # reduce learning rate by factor of 10 after 8 epochs

10 | $TOOLS/caffe train \

11 | --solver=examples/cifar10/cifar10_quick_solver_lr1.prototxt \

12 | --snapshot=examples/cifar10/cifar10_quick_iter_4000.solverstate.h5 $@

13 |

--------------------------------------------------------------------------------

/examples/finetune_flickr_style/flickr_style.csv.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/examples/finetune_flickr_style/flickr_style.csv.gz

--------------------------------------------------------------------------------

/examples/finetune_flickr_style/style_names.txt:

--------------------------------------------------------------------------------

1 | Detailed

2 | Pastel

3 | Melancholy

4 | Noir

5 | HDR

6 | Vintage

7 | Long Exposure

8 | Horror

9 | Sunny

10 | Bright

11 | Hazy

12 | Bokeh

13 | Serene

14 | Texture

15 | Ethereal

16 | Macro

17 | Depth of Field

18 | Geometric Composition

19 | Minimal

20 | Romantic

21 |

--------------------------------------------------------------------------------

/examples/finetune_pascal_detection/pascal_finetune_solver.prototxt:

--------------------------------------------------------------------------------

1 | net: "examples/finetune_pascal_detection/pascal_finetune_trainval_test.prototxt"

2 | test_iter: 100

3 | test_interval: 1000

4 | base_lr: 0.001

5 | lr_policy: "step"

6 | gamma: 0.1

7 | stepsize: 20000

8 | display: 20

9 | max_iter: 100000

10 | momentum: 0.9

11 | weight_decay: 0.0005

12 | snapshot: 10000

13 | snapshot_prefix: "examples/finetune_pascal_detection/pascal_det_finetune"

14 |

--------------------------------------------------------------------------------

/examples/hdf5_classification/nonlinear_auto_test.prototxt:

--------------------------------------------------------------------------------

1 | layer {

2 | name: "data"

3 | type: "HDF5Data"

4 | top: "data"

5 | top: "label"

6 | hdf5_data_param {

7 | source: "examples/hdf5_classification/data/test.txt"

8 | batch_size: 10

9 | }

10 | }

11 | layer {

12 | name: "ip1"

13 | type: "InnerProduct"

14 | bottom: "data"

15 | top: "ip1"

16 | inner_product_param {

17 | num_output: 40

18 | weight_filler {

19 | type: "xavier"

20 | }

21 | }

22 | }

23 | layer {

24 | name: "relu1"

25 | type: "ReLU"

26 | bottom: "ip1"

27 | top: "ip1"

28 | }

29 | layer {

30 | name: "ip2"

31 | type: "InnerProduct"

32 | bottom: "ip1"

33 | top: "ip2"

34 | inner_product_param {

35 | num_output: 2

36 | weight_filler {

37 | type: "xavier"

38 | }

39 | }

40 | }

41 | layer {

42 | name: "accuracy"

43 | type: "Accuracy"

44 | bottom: "ip2"

45 | bottom: "label"

46 | top: "accuracy"

47 | }

48 | layer {

49 | name: "loss"

50 | type: "SoftmaxWithLoss"

51 | bottom: "ip2"

52 | bottom: "label"

53 | top: "loss"

54 | }

55 |

--------------------------------------------------------------------------------

/examples/hdf5_classification/nonlinear_auto_train.prototxt:

--------------------------------------------------------------------------------

1 | layer {

2 | name: "data"

3 | type: "HDF5Data"

4 | top: "data"

5 | top: "label"

6 | hdf5_data_param {

7 | source: "examples/hdf5_classification/data/train.txt"

8 | batch_size: 10

9 | }

10 | }

11 | layer {

12 | name: "ip1"

13 | type: "InnerProduct"

14 | bottom: "data"

15 | top: "ip1"

16 | inner_product_param {

17 | num_output: 40

18 | weight_filler {

19 | type: "xavier"

20 | }

21 | }

22 | }

23 | layer {

24 | name: "relu1"

25 | type: "ReLU"

26 | bottom: "ip1"

27 | top: "ip1"

28 | }

29 | layer {

30 | name: "ip2"

31 | type: "InnerProduct"

32 | bottom: "ip1"

33 | top: "ip2"

34 | inner_product_param {

35 | num_output: 2

36 | weight_filler {

37 | type: "xavier"

38 | }

39 | }

40 | }

41 | layer {

42 | name: "accuracy"

43 | type: "Accuracy"

44 | bottom: "ip2"

45 | bottom: "label"

46 | top: "accuracy"

47 | }

48 | layer {

49 | name: "loss"

50 | type: "SoftmaxWithLoss"

51 | bottom: "ip2"

52 | bottom: "label"

53 | top: "loss"

54 | }

55 |

--------------------------------------------------------------------------------

/examples/hdf5_classification/nonlinear_train_val.prototxt:

--------------------------------------------------------------------------------

1 | name: "LogisticRegressionNet"

2 | layer {

3 | name: "data"

4 | type: "HDF5Data"

5 | top: "data"

6 | top: "label"

7 | include {

8 | phase: TRAIN

9 | }

10 | hdf5_data_param {

11 | source: "examples/hdf5_classification/data/train.txt"

12 | batch_size: 10

13 | }

14 | }

15 | layer {

16 | name: "data"

17 | type: "HDF5Data"

18 | top: "data"

19 | top: "label"

20 | include {

21 | phase: TEST

22 | }

23 | hdf5_data_param {

24 | source: "examples/hdf5_classification/data/test.txt"

25 | batch_size: 10

26 | }

27 | }

28 | layer {

29 | name: "fc1"

30 | type: "InnerProduct"

31 | bottom: "data"

32 | top: "fc1"

33 | param {

34 | lr_mult: 1

35 | decay_mult: 1

36 | }

37 | param {

38 | lr_mult: 2

39 | decay_mult: 0

40 | }

41 | inner_product_param {

42 | num_output: 40

43 | weight_filler {

44 | type: "xavier"

45 | }

46 | bias_filler {

47 | type: "constant"

48 | value: 0

49 | }

50 | }

51 | }

52 | layer {

53 | name: "relu1"

54 | type: "ReLU"

55 | bottom: "fc1"

56 | top: "fc1"

57 | }

58 | layer {

59 | name: "fc2"

60 | type: "InnerProduct"

61 | bottom: "fc1"

62 | top: "fc2"

63 | param {

64 | lr_mult: 1

65 | decay_mult: 1

66 | }

67 | param {

68 | lr_mult: 2

69 | decay_mult: 0

70 | }

71 | inner_product_param {

72 | num_output: 2

73 | weight_filler {

74 | type: "xavier"

75 | }

76 | bias_filler {

77 | type: "constant"

78 | value: 0

79 | }

80 | }

81 | }

82 | layer {

83 | name: "loss"

84 | type: "SoftmaxWithLoss"

85 | bottom: "fc2"

86 | bottom: "label"

87 | top: "loss"

88 | }

89 | layer {

90 | name: "accuracy"

91 | type: "Accuracy"

92 | bottom: "fc2"

93 | bottom: "label"

94 | top: "accuracy"

95 | include {

96 | phase: TEST

97 | }

98 | }

99 |

--------------------------------------------------------------------------------

/examples/hdf5_classification/train_val.prototxt:

--------------------------------------------------------------------------------

1 | name: "LogisticRegressionNet"

2 | layer {

3 | name: "data"

4 | type: "HDF5Data"

5 | top: "data"

6 | top: "label"

7 | include {

8 | phase: TRAIN

9 | }

10 | hdf5_data_param {

11 | source: "examples/hdf5_classification/data/train.txt"

12 | batch_size: 10

13 | }

14 | }

15 | layer {

16 | name: "data"

17 | type: "HDF5Data"

18 | top: "data"

19 | top: "label"

20 | include {

21 | phase: TEST

22 | }

23 | hdf5_data_param {

24 | source: "examples/hdf5_classification/data/test.txt"

25 | batch_size: 10

26 | }

27 | }

28 | layer {

29 | name: "fc1"

30 | type: "InnerProduct"

31 | bottom: "data"

32 | top: "fc1"

33 | param {

34 | lr_mult: 1

35 | decay_mult: 1

36 | }

37 | param {

38 | lr_mult: 2

39 | decay_mult: 0

40 | }

41 | inner_product_param {

42 | num_output: 2

43 | weight_filler {

44 | type: "xavier"

45 | }

46 | bias_filler {

47 | type: "constant"

48 | value: 0

49 | }

50 | }

51 | }

52 | layer {

53 | name: "loss"

54 | type: "SoftmaxWithLoss"

55 | bottom: "fc1"

56 | bottom: "label"

57 | top: "loss"

58 | }

59 | layer {

60 | name: "accuracy"

61 | type: "Accuracy"

62 | bottom: "fc1"

63 | bottom: "label"

64 | top: "accuracy"

65 | include {

66 | phase: TEST

67 | }

68 | }

69 |

--------------------------------------------------------------------------------

/examples/imagenet/create_imagenet.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | # Create the imagenet lmdb inputs

3 | # N.B. set the path to the imagenet train + val data dirs

4 | set -e

5 |

6 | EXAMPLE=examples/imagenet

7 | DATA=data/ilsvrc12

8 | TOOLS=build/tools

9 |

10 | TRAIN_DATA_ROOT=/path/to/imagenet/train/

11 | VAL_DATA_ROOT=/path/to/imagenet/val/

12 |

13 | # Set RESIZE=true to resize the images to 256x256. Leave as false if images have

14 | # already been resized using another tool.

15 | RESIZE=false

16 | if $RESIZE; then

17 | RESIZE_HEIGHT=256

18 | RESIZE_WIDTH=256

19 | else

20 | RESIZE_HEIGHT=0

21 | RESIZE_WIDTH=0

22 | fi

23 |

24 | if [ ! -d "$TRAIN_DATA_ROOT" ]; then

25 | echo "Error: TRAIN_DATA_ROOT is not a path to a directory: $TRAIN_DATA_ROOT"

26 | echo "Set the TRAIN_DATA_ROOT variable in create_imagenet.sh to the path" \

27 | "where the ImageNet training data is stored."

28 | exit 1

29 | fi

30 |

31 | if [ ! -d "$VAL_DATA_ROOT" ]; then

32 | echo "Error: VAL_DATA_ROOT is not a path to a directory: $VAL_DATA_ROOT"

33 | echo "Set the VAL_DATA_ROOT variable in create_imagenet.sh to the path" \

34 | "where the ImageNet validation data is stored."

35 | exit 1

36 | fi

37 |

38 | echo "Creating train lmdb..."

39 |

40 | GLOG_logtostderr=1 $TOOLS/convert_imageset \

41 | --resize_height=$RESIZE_HEIGHT \

42 | --resize_width=$RESIZE_WIDTH \

43 | --shuffle \

44 | $TRAIN_DATA_ROOT \

45 | $DATA/train.txt \

46 | $EXAMPLE/ilsvrc12_train_lmdb

47 |

48 | echo "Creating val lmdb..."

49 |

50 | GLOG_logtostderr=1 $TOOLS/convert_imageset \

51 | --resize_height=$RESIZE_HEIGHT \

52 | --resize_width=$RESIZE_WIDTH \

53 | --shuffle \

54 | $VAL_DATA_ROOT \

55 | $DATA/val.txt \

56 | $EXAMPLE/ilsvrc12_val_lmdb

57 |

58 | echo "Done."

59 |

--------------------------------------------------------------------------------

/examples/imagenet/make_imagenet_mean.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | # Compute the mean image from the imagenet training lmdb

3 | # N.B. this is available in data/ilsvrc12

4 |

5 | EXAMPLE=examples/imagenet

6 | DATA=data/ilsvrc12

7 | TOOLS=build/tools

8 |

9 | $TOOLS/compute_image_mean $EXAMPLE/ilsvrc12_train_lmdb \

10 | $DATA/imagenet_mean.binaryproto

11 |

12 | echo "Done."

13 |

--------------------------------------------------------------------------------

/examples/imagenet/resume_training.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | ./build/tools/caffe train \

5 | --solver=models/bvlc_reference_caffenet/solver.prototxt \

6 | --snapshot=models/bvlc_reference_caffenet/caffenet_train_10000.solverstate.h5 \

7 | $@

8 |

--------------------------------------------------------------------------------

/examples/imagenet/train_caffenet.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | set -e

3 |

4 | ./build/tools/caffe train \

5 | --solver=models/bvlc_reference_caffenet/solver.prototxt $@

6 |

--------------------------------------------------------------------------------

/examples/images/cat gray.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/examples/images/cat gray.jpg

--------------------------------------------------------------------------------

/examples/images/cat.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/examples/images/cat.jpg

--------------------------------------------------------------------------------

/examples/images/cat_gray.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/examples/images/cat_gray.jpg

--------------------------------------------------------------------------------

/examples/images/fish-bike.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/UnrealVision/unreal_caffe/45243b6a2100739cd9110ffbeded55c5723894b3/examples/images/fish-bike.jpg

--------------------------------------------------------------------------------

/examples/mnist/create_mnist.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env sh

2 | # This script converts the mnist data into lmdb/leveldb format,

3 | # depending on the value assigned to $BACKEND.

4 | set -e

5 |

6 | EXAMPLE=examples/mnist

7 | DATA=data/mnist

8 | BUILD=build/examples/mnist

9 |

10 | BACKEND="lmdb"

11 |

12 | echo "Creating ${BACKEND}..."

13 |

14 | rm -rf $EXAMPLE/mnist_train_${BACKEND}

15 | rm -rf $EXAMPLE/mnist_test_${BACKEND}

16 |

17 | $BUILD/convert_mnist_data.bin $DATA/train-images-idx3-ubyte \

18 | $DATA/train-labels-idx1-ubyte $EXAMPLE/mnist_train_${BACKEND} --backend=${BACKEND}

19 | $BUILD/convert_mnist_data.bin $DATA/t10k-images-idx3-ubyte \

20 | $DATA/t10k-labels-idx1-ubyte $EXAMPLE/mnist_test_${BACKEND} --backend=${BACKEND}

21 |

22 | echo "Done."

23 |

--------------------------------------------------------------------------------

/examples/mnist/lenet_adadelta_solver.prototxt:

--------------------------------------------------------------------------------

1 | # The train/test net protocol buffer definition

2 | net: "examples/mnist/lenet_train_test.prototxt"

3 | # test_iter specifies how many forward passes the test should carry out.

4 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

5 | # covering the full 10,000 testing images.

6 | test_iter: 100

7 | # Carry out testing every 500 training iterations.

8 | test_interval: 500

9 | # The base learning rate, momentum and the weight decay of the network.

10 | base_lr: 1.0

11 | lr_policy: "fixed"

12 | momentum: 0.95

13 | weight_decay: 0.0005

14 | # Display every 100 iterations

15 | display: 100

16 | # The maximum number of iterations

17 | max_iter: 10000

18 | # snapshot intermediate results

19 | snapshot: 5000

20 | snapshot_prefix: "examples/mnist/lenet_adadelta"

21 | # solver mode: CPU or GPU

22 | solver_mode: GPU

23 | type: "AdaDelta"

24 | delta: 1e-6

25 |

--------------------------------------------------------------------------------

/examples/mnist/lenet_auto_solver.prototxt:

--------------------------------------------------------------------------------

1 | # The train/test net protocol buffer definition

2 | train_net: "mnist/lenet_auto_train.prototxt"

3 | test_net: "mnist/lenet_auto_test.prototxt"

4 | # test_iter specifies how many forward passes the test should carry out.

5 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

6 | # covering the full 10,000 testing images.

7 | test_iter: 100

8 | # Carry out testing every 500 training iterations.

9 | test_interval: 500

10 | # The base learning rate, momentum and the weight decay of the network.

11 | base_lr: 0.01

12 | momentum: 0.9

13 | weight_decay: 0.0005

14 | # The learning rate policy

15 | lr_policy: "inv"

16 | gamma: 0.0001

17 | power: 0.75

18 | # Display every 100 iterations

19 | display: 100

20 | # The maximum number of iterations

21 | max_iter: 10000

22 | # snapshot intermediate results

23 | snapshot: 5000

24 | snapshot_prefix: "mnist/lenet"

25 |

--------------------------------------------------------------------------------

/examples/mnist/lenet_multistep_solver.prototxt:

--------------------------------------------------------------------------------

1 | # The train/test net protocol buffer definition

2 | net: "examples/mnist/lenet_train_test.prototxt"

3 | # test_iter specifies how many forward passes the test should carry out.

4 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

5 | # covering the full 10,000 testing images.

6 | test_iter: 100

7 | # Carry out testing every 500 training iterations.

8 | test_interval: 500

9 | # The base learning rate, momentum and the weight decay of the network.

10 | base_lr: 0.01

11 | momentum: 0.9

12 | weight_decay: 0.0005

13 | # The learning rate policy

14 | lr_policy: "multistep"

15 | gamma: 0.9

16 | stepvalue: 5000

17 | stepvalue: 7000

18 | stepvalue: 8000

19 | stepvalue: 9000

20 | stepvalue: 9500

21 | # Display every 100 iterations

22 | display: 100

23 | # The maximum number of iterations

24 | max_iter: 10000

25 | # snapshot intermediate results

26 | snapshot: 5000

27 | snapshot_prefix: "examples/mnist/lenet_multistep"

28 | # solver mode: CPU or GPU

29 | solver_mode: GPU

30 |
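
Under `lr_policy: "multistep"`, the rate is multiplied by gamma each time the iteration count reaches one of the listed stepvalue entries. A minimal Python sketch of that schedule, using the values from this solver; `multistep_lr` is an illustrative name and the code is a restatement of the rule, not Caffe's implementation.

```python
def multistep_lr(base_lr, gamma, stepvalues, iteration):
    """Learning rate after applying gamma once per stepvalue already reached."""
    steps_passed = sum(1 for s in stepvalues if iteration >= s)
    return base_lr * gamma ** steps_passed


STEPVALUES = [5000, 7000, 8000, 9000, 9500]  # stepvalue entries from the solver

for it in (0, 4999, 5000, 7000, 9999):
    print(it, multistep_lr(0.01, 0.9, STEPVALUES, it))
```

With gamma at 0.9 the decay is gentle: after all five steps the rate is base_lr times 0.9 to the fifth power, a bit under 60% of its starting value.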

--------------------------------------------------------------------------------

/examples/mnist/lenet_solver.prototxt:

--------------------------------------------------------------------------------

1 | # The train/test net protocol buffer definition

2 | net: "examples/mnist/lenet_train_test.prototxt"

3 | # test_iter specifies how many forward passes the test should carry out.

4 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

5 | # covering the full 10,000 testing images.

6 | test_iter: 100

7 | # Carry out testing every 500 training iterations.

8 | test_interval: 500

9 | # The base learning rate, momentum and the weight decay of the network.

10 | base_lr: 0.01

11 | momentum: 0.9

12 | weight_decay: 0.0005

13 | # The learning rate policy

14 | lr_policy: "inv"

15 | gamma: 0.0001

16 | power: 0.75

17 | # Display every 100 iterations

18 | display: 100

19 | # The maximum number of iterations

20 | max_iter: 10000

21 | # snapshot intermediate results

22 | snapshot: 5000

23 | snapshot_prefix: "examples/mnist/lenet"

24 | # solver mode: CPU or GPU

25 | solver_mode: GPU

26 |
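
For the "inv" policy used above, the learning rate decays smoothly as `base_lr * (1 + gamma * iter) ^ (-power)`. The following Python sketch evaluates that formula with this file's values as defaults (the helper name `inv_lr` is an assumption made for illustration):

```python
def inv_lr(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """Learning rate under the 'inv' schedule (illustrative helper)."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)

if __name__ == "__main__":
    # Print the rate at a few points between the start and max_iter.
    for it in (0, 2500, 5000, 10000):
        print(it, inv_lr(it))
```

At `max_iter: 10000` the factor is `2 ^ (-0.75)`, so the rate ends at roughly 59% of `base_lr`.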

--------------------------------------------------------------------------------

/examples/mnist/lenet_solver_adam.prototxt:

--------------------------------------------------------------------------------

1 | # This solver follows "ADAM: A METHOD FOR STOCHASTIC OPTIMIZATION"

2 | # The train/test net protocol buffer definition

3 | net: "examples/mnist/lenet_train_test.prototxt"

4 | # test_iter specifies how many forward passes the test should carry out.

5 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

6 | # covering the full 10,000 testing images.

7 | test_iter: 100

8 | # Carry out testing every 500 training iterations.

9 | test_interval: 500

10 | # All parameters are from the cited paper above

11 | base_lr: 0.001

12 | momentum: 0.9

13 | momentum2: 0.999

14 | # Since Adam adapts the effective step size per parameter, we keep the base

15 | # learning rate at a fixed value

16 | lr_policy: "fixed"

17 | # Display every 100 iterations

18 | display: 100

19 | # The maximum number of iterations

20 | max_iter: 10000

21 | # snapshot intermediate results

22 | snapshot: 5000

23 | snapshot_prefix: "examples/mnist/lenet"

24 | type: "Adam"

25 | # solver mode: CPU or GPU

26 | solver_mode: GPU

27 |
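
To show how `base_lr`, `momentum` and `momentum2` enter the update, here is a sketch of one scalar Adam step as described in the cited paper, with `momentum` playing the role of beta1 and `momentum2` the role of beta2. This is an illustration under those assumptions, not Caffe's implementation; the function name `adam_step` and the `eps` default are hypothetical choices.

```python
import math

def adam_step(theta, grad, m, v, t,
              lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter (illustrative sketch).

    t is the 1-based step count. Returns the updated (theta, m, v).
    """
    m = beta1 * m + (1.0 - beta1) * grad           # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad    # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                 # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

if __name__ == "__main__":
    theta, m, v = 0.0, 0.0, 0.0
    theta, m, v = adam_step(theta, grad=1.0, m=m, v=v, t=1)
    print(theta, m, v)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by about `lr` regardless of the gradient's scale.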

--------------------------------------------------------------------------------

/examples/mnist/lenet_solver_rmsprop.prototxt:

--------------------------------------------------------------------------------

1 | # The train/test net protocol buffer definition

2 | net: "examples/mnist/lenet_train_test.prototxt"

3 | # test_iter specifies how many forward passes the test should carry out.

4 | # In the case of MNIST, we have test batch size 100 and 100 test iterations,

5 | # covering the full 10,000 testing images.

6 | test_iter: 100

7 | # Carry out testing every 500 training iterations.

8 | test_interval: 500

9 | # The base learning rate, momentum and the weight decay of the network.

10 | base_lr: 0.01

11 | momentum: 0.0

12 | weight_decay: 0.0005

13 | # The learning rate policy

14 | lr_policy: "inv"

15 | gamma: 0.0001

16 | power: 0.75

17 | # Display every 100 iterations

18 | display: 100

19 | # The maximum number of iterations

20 | max_iter: 10000

21 | # snapshot intermediate results

22 | snapshot: 5000

23 | snapshot_prefix: "examples/mnist/lenet_rmsprop"

24 | # solver mode: CPU or GPU

25 | solver_mode: GPU

26 | type: "RMSProp"

27 | rms_decay: 0.98

28 |

--------------------------------------------------------------------------------

/examples/mnist/mnist_autoencoder_solver.prototxt:

--------------------------------------------------------------------------------

1 | net: "examples/mnist/mnist_autoencoder.prototxt"

2 | test_state: { stage: 'test-on-train' }

3 | test_iter: 500

4 | test_state: { stage: 'test-on-test' }

5 | test_iter: 100

6 | test_interval: 500

7 | test_compute_loss: true

8 | base_lr: 0.01

9 | lr_policy: "step"

10 | gamma: 0.1

11 | stepsize: 10000

12 | display: 100

13 | max_iter: 65000

14 | weight_decay: 0.0005

15 | snapshot: 10000

16 | snapshot_prefix: "examples/mnist/mnist_autoencoder"

17 | momentum: 0.9

18 | # solver mode: CPU or GPU

19 | solver_mode: GPU

20 |
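
The "step" policy in this solver multiplies the learning rate by `gamma` every `stepsize` iterations, that is `base_lr * gamma ^ floor(iter / stepsize)`. A small Python sketch with this file's values as defaults (the helper name `step_lr` is hypothetical):

```python
def step_lr(iteration, base_lr=0.01, gamma=0.1, stepsize=10000):
    """Learning rate under the 'step' schedule (illustrative helper)."""
    return base_lr * gamma ** (iteration // stepsize)

if __name__ == "__main__":
    # max_iter is 65000, so the rate is reduced six times during training.
    for it in (0, 9999, 10000, 30000, 64999):
        print(it, step_lr(it))
```

Because `max_iter` is 65000 and `gamma` is 0.1, the final iterations run at a rate six orders of magnitude below `base_lr`.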

--------------------------------------------------------------------------------

/examples/mnist/mnist_autoencoder_solver_adadelta.prototxt:

--------------------------------------------------------------------------------

1 | net: "examples/mnist/mnist_autoencoder.prototxt"

2 | test_state: { stage: 'test-on-train' }

3 | test_iter: 500

4 | test_state: { stage: 'test-on-test' }

5 | test_iter: 100

6 | test_interval: 500

7 | test_compute_loss: true

8 | base_lr: 1.0

9 | lr_policy: "fixed"

10 | momentum: 0.95

11 | delta: 1e-8

12 | display: 100

13 | max_iter: 65000

14 | weight_decay: 0.0005