├── models

├── __init__.py

├── model_store.py

├── resnet.py

├── resnet_dilation.py

└── fpn_global_local_fmreg_ensemble.py

├── utils

├── __init__.py

├── metrics.py

├── lr_scheduler.py

├── loss.py

└── lovasz_losses.py

├── dataset

├── __init__.py

└── deep_globe.py

├── docs

└── images

│ ├── glnet.png

│ ├── examples.jpg

│ ├── gl_branch.png

│ └── deep_globe_acc_mem_ext.jpg

├── requirements.txt

├── train_deep_globe_global.sh

├── train_deep_globe_global2local.sh

├── train_deep_globe_local2global.sh

├── eval_deep_globe.sh

├── LICENSE

├── .gitignore

├── test.txt

├── option.py

├── crossvali.txt

├── README.md

├── train.txt

├── train_deep_globe.py

└── helper.py

/models/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/dataset/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/docs/images/glnet.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/VITA-Group/GLNet/HEAD/docs/images/glnet.png

--------------------------------------------------------------------------------

/docs/images/examples.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/VITA-Group/GLNet/HEAD/docs/images/examples.jpg

--------------------------------------------------------------------------------

/docs/images/gl_branch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/VITA-Group/GLNet/HEAD/docs/images/gl_branch.png

--------------------------------------------------------------------------------

/docs/images/deep_globe_acc_mem_ext.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/VITA-Group/GLNet/HEAD/docs/images/deep_globe_acc_mem_ext.jpg

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy

2 | torch==0.4.1

3 | torchvision==0.3.0

4 | tqdm

5 | tensorboardX

6 | Pillow

7 | opencv-python==3.4.4

8 |

--------------------------------------------------------------------------------

/train_deep_globe_global.sh:

--------------------------------------------------------------------------------

1 | export CUDA_VISIBLE_DEVICES=0

2 | python train_deep_globe.py \

3 | --n_class 7 \

4 | --data_path "/ssd1/chenwy/deep_globe/data/" \

5 | --model_path "/home/chenwy/deep_globe/saved_models/" \

6 | --log_path "/home/chenwy/deep_globe/runs/" \

7 | --task_name "fpn_deepglobe_global" \

8 | --mode 1 \

9 | --batch_size 6 \

10 | --sub_batch_size 6 \

11 | --size_g 508 \

12 | --size_p 508 \

--------------------------------------------------------------------------------

/train_deep_globe_global2local.sh:

--------------------------------------------------------------------------------

1 | export CUDA_VISIBLE_DEVICES=0

2 | python train_deep_globe.py \

3 | --n_class 7 \

4 | --data_path "/ssd1/chenwy/deep_globe/data/" \

5 | --model_path "/home/chenwy/deep_globe/saved_models/" \

6 | --log_path "/home/chenwy/deep_globe/runs/" \

7 | --task_name "fpn_deepglobe_global2local" \

8 | --mode 2 \

9 | --batch_size 6 \

10 | --sub_batch_size 6 \

11 | --size_g 508 \

12 | --size_p 508 \

13 | --path_g "fpn_deepglobe_global.pth" \

--------------------------------------------------------------------------------

/train_deep_globe_local2global.sh:

--------------------------------------------------------------------------------

1 | export CUDA_VISIBLE_DEVICES=0

2 | python train_deep_globe.py \

3 | --n_class 7 \

4 | --data_path "/ssd1/chenwy/deep_globe/data/" \

5 | --model_path "/home/chenwy/deep_globe/saved_models/" \

6 | --log_path "/home/chenwy/deep_globe/runs/" \

7 | --task_name "fpn_deepglobe_local2global" \

8 | --mode 3 \

9 | --batch_size 6 \

10 | --sub_batch_size 6 \

11 | --size_g 508 \

12 | --size_p 508 \

13 | --path_g "fpn_deepglobe_global.pth" \

14 | --path_g2l "fpn_deepglobe_global2local.pth" \

--------------------------------------------------------------------------------

/eval_deep_globe.sh:

--------------------------------------------------------------------------------

1 | export CUDA_VISIBLE_DEVICES=0

2 | python train_deep_globe.py \

3 | --n_class 7 \

4 | --data_path "/ssd1/chenwy/deep_globe/data/" \

5 | --model_path "/home/chenwy/deep_globe/saved_models/" \

6 | --log_path "/home/chenwy/deep_globe/runs/" \

7 | --task_name "eval" \

8 | --mode 3 \

9 | --batch_size 6 \

10 | --sub_batch_size 6 \

11 | --size_g 508 \

12 | --size_p 508 \

13 | --path_g "fpn_deepglobe_global.pth" \

14 | --path_g2l "fpn_deepglobe_global2local.pth" \

15 | --path_l2g "fpn_deepglobe_local2global.pth" \

16 | --evaluation

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Wuyang

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # vim swp files

2 | *.swp

3 | # caffe/pytorch model files

4 | *.pth

5 |

6 | # Mkdocs

7 | # /docs/

8 | /mkdocs/docs/temp

9 |

10 | .DS_Store

11 | .idea

12 | .vscode

13 | .pytest_cache

14 | /experiments

15 |

16 | # resource temp folder

17 | tests/resources/temp/*

18 | !tests/resources/temp/.gitkeep

19 |

20 | # Byte-compiled / optimized / DLL files

21 | __pycache__/

22 | *.py[cod]

23 | *$py.class

24 |

25 | # C extensions

26 | *.so

27 |

28 | # Distribution / packaging

29 | .Python

30 | build/

31 | develop-eggs/

32 | dist/

33 | downloads/

34 | eggs/

35 | .eggs/

36 | lib/

37 | lib64/

38 | parts/

39 | sdist/

40 | var/

41 | wheels/

42 | *.egg-info/

43 | .installed.cfg

44 | *.egg

45 | MANIFEST

46 |

47 | # PyInstaller

48 | # Usually these files are written by a python script from a template

49 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

50 | *.manifest

51 | *.spec

52 |

53 | # Installer logs

54 | pip-log.txt

55 | pip-delete-this-directory.txt

56 |

57 | # Unit test / coverage reports

58 | htmlcov/

59 | .tox/

60 | .coverage

61 | .coverage.*

62 | .cache

63 | nosetests.xml

64 | coverage.xml

65 | *.cover

66 | .hypothesis/

67 | .pytest_cache/

68 |

69 | # Translations

70 | *.mo

71 | *.pot

72 |

73 | # Django stuff:

74 | *.log

75 | .static_storage/

76 | .media/

77 | local_settings.py

78 | local_settings.py

79 | db.sqlite3

80 |

81 | # Flask stuff:

82 | instance/

83 | .webassets-cache

84 |

85 | # Scrapy stuff:

86 | .scrapy

87 |

88 | # Sphinx documentation

89 | docs/_build/

90 |

91 | # PyBuilder

92 | target/

93 |

94 | # Jupyter Notebook

95 | .ipynb_checkpoints

96 |

97 | # pyenv

98 | .python-version

99 |

100 | # celery beat schedule file

101 | celerybeat-schedule

102 |

103 | # SageMath parsed files

104 | *.sage.py

105 |

106 | # Environments

107 | .env

108 | .venv

109 | env/

110 | venv/

111 | ENV/

112 | env.bak/

113 | venv.bak/

114 |

115 | # Spyder project settings

116 | .spyderproject

117 | .spyproject

118 |

119 | # Rope project settings

120 | .ropeproject

121 |

122 | # mkdocs documentation

123 | /site

124 |

125 | # mypy

126 | .mypy_cache/

127 |

--------------------------------------------------------------------------------

/utils/metrics.py:

--------------------------------------------------------------------------------

1 | # Adapted from score written by wkentaro

2 | # https://github.com/wkentaro/pytorch-fcn/blob/master/torchfcn/utils.py

3 |

4 | import numpy as np

5 |

6 | class ConfusionMatrix(object):

7 |

8 | def __init__(self, n_classes):

9 | self.n_classes = n_classes

10 | # axis = 0: target

11 | # axis = 1: prediction

12 | self.confusion_matrix = np.zeros((n_classes, n_classes))

13 | # self.iou = []

14 | # self.iou_threshold = []

15 |

16 | def _fast_hist(self, label_true, label_pred, n_class):

17 | mask = (label_true >= 0) & (label_true < n_class)

18 | hist = np.bincount(n_class * label_true[mask].astype(int) + label_pred[mask], minlength=n_class**2).reshape(n_class, n_class)

19 | return hist

20 |

21 | def update(self, label_trues, label_preds):

22 | for lt, lp in zip(label_trues, label_preds):

23 | tmp = self._fast_hist(lt.flatten(), lp.flatten(), self.n_classes)

24 |

25 | # iu = np.diag(tmp) / (tmp.sum(axis=1) + tmp.sum(axis=0) - np.diag(tmp))

26 | # self.iou.append(iu[1])

27 | # if iu[1] >= 0.65: self.iou_threshold.append(iu[1])

28 | # else: self.iou_threshold.append(0)

29 |

30 | self.confusion_matrix += tmp

31 |

32 | def get_scores(self):

33 | """Returns accuracy score evaluation result.

34 | - overall accuracy

35 | - mean accuracy

36 | - mean IU

37 | - fwavacc

38 | """

39 | hist = self.confusion_matrix

40 | # accuracy is recall/sensitivity for each class, predicted TP / all real positives

41 | # axis in sum: perform summation along

42 | acc = np.nan_to_num(np.diag(hist) / hist.sum(axis=1))

43 | acc_mean = np.mean(np.nan_to_num(acc))

44 |

45 | intersect = np.diag(hist)

46 | union = hist.sum(axis=1) + hist.sum(axis=0) - np.diag(hist)

47 | iou = intersect / union

48 | mean_iou = np.mean(np.nan_to_num(iou))

49 |

50 | freq = hist.sum(axis=1) / hist.sum() # freq of each target

51 | # fwavacc = (freq[freq > 0] * iou[freq > 0]).sum()

52 | freq_iou = (freq * iou).sum()

53 |

54 | return {'accuracy': acc,

55 | 'accuracy_mean': acc_mean,

56 | 'freqw_iou': freq_iou,

57 | 'iou': iou,

58 | 'iou_mean': mean_iou,

59 | # 'IoU_threshold': np.mean(np.nan_to_num(self.iou_threshold)),

60 | }

61 |

62 | def reset(self):

63 | self.confusion_matrix = np.zeros((self.n_classes, self.n_classes))

64 | # self.iou = []

65 | # self.iou_threshold = []

--------------------------------------------------------------------------------

/test.txt:

--------------------------------------------------------------------------------

1 | 10452_sat.jpg

2 | 114473_sat.jpg

3 | 120245_sat.jpg

4 | 127660_sat.jpg

5 | 137499_sat.jpg

6 | 143364_sat.jpg

7 | 143794_sat.jpg

8 | 147545_sat.jpg

9 | 148260_sat.jpg

10 | 148381_sat.jpg

11 | 161109_sat.jpg

12 | 170535_sat.jpg

13 | 181447_sat.jpg

14 | 185522_sat.jpg

15 | 186739_sat.jpg

16 | 195769_sat.jpg

17 | 209787_sat.jpg

18 | 211316_sat.jpg

19 | 219555_sat.jpg

20 | 225393_sat.jpg

21 | 225945_sat.jpg

22 | 226788_sat.jpg

23 | 242583_sat.jpg

24 | 245846_sat.jpg

25 | 255876_sat.jpg

26 | 271245_sat.jpg

27 | 271941_sat.jpg

28 | 273002_sat.jpg

29 | 277049_sat.jpg

30 | 277900_sat.jpg

31 | 28689_sat.jpg

32 | 28935_sat.jpg

33 | 294978_sat.jpg

34 | 307626_sat.jpg

35 | 309818_sat.jpg

36 | 321711_sat.jpg

37 | 326173_sat.jpg

38 | 326238_sat.jpg

39 | 330838_sat.jpg

40 | 332354_sat.jpg

41 | 338111_sat.jpg

42 | 340798_sat.jpg

43 | 343215_sat.jpg

44 | 349442_sat.jpg

45 | 351228_sat.jpg

46 | 387018_sat.jpg

47 | 393043_sat.jpg

48 | 396979_sat.jpg

49 | 397137_sat.jpg

50 | 402209_sat.jpg

51 | 407467_sat.jpg

52 | 412210_sat.jpg

53 | 420078_sat.jpg

54 | 427037_sat.jpg

55 | 428841_sat.jpg

56 | 432089_sat.jpg

57 | 437963_sat.jpg

58 | 449319_sat.jpg

59 | 454655_sat.jpg

60 | 457070_sat.jpg

61 | 457265_sat.jpg

62 | 471187_sat.jpg

63 | 498049_sat.jpg

64 | 501284_sat.jpg

65 | 503968_sat.jpg

66 | 504704_sat.jpg

67 | 505217_sat.jpg

68 | 508676_sat.jpg

69 | 509290_sat.jpg

70 | 513585_sat.jpg

71 | 513968_sat.jpg

72 | 525105_sat.jpg

73 | 533948_sat.jpg

74 | 533952_sat.jpg

75 | 543806_sat.jpg

76 | 547080_sat.jpg

77 | 556452_sat.jpg

78 | 557439_sat.jpg

79 | 560353_sat.jpg

80 | 572237_sat.jpg

81 | 574789_sat.jpg

82 | 576417_sat.jpg

83 | 584663_sat.jpg

84 | 589940_sat.jpg

85 | 591815_sat.jpg

86 | 599743_sat.jpg

87 | 603617_sat.jpg

88 | 606_sat.jpg

89 | 615420_sat.jpg

90 | 620018_sat.jpg

91 | 624916_sat.jpg

92 | 627583_sat.jpg

93 | 635841_sat.jpg

94 | 639004_sat.jpg

95 | 649042_sat.jpg

96 | 652183_sat.jpg

97 | 659953_sat.jpg

98 | 660933_sat.jpg

99 | 661864_sat.jpg

100 | 671164_sat.jpg

101 | 68078_sat.jpg

102 | 684377_sat.jpg

103 | 691384_sat.jpg

104 | 708588_sat.jpg

105 | 71125_sat.jpg

106 | 713813_sat.jpg

107 | 732669_sat.jpg

108 | 751939_sat.jpg

109 | 755453_sat.jpg

110 | 757745_sat.jpg

111 | 771393_sat.jpg

112 | 772452_sat.jpg

113 | 777185_sat.jpg

114 | 7791_sat.jpg

115 | 78298_sat.jpg

116 | 78430_sat.jpg

117 | 7892_sat.jpg

118 | 79049_sat.jpg

119 | 799523_sat.jpg

120 | 810749_sat.jpg

121 | 819442_sat.jpg

122 | 828684_sat.jpg

123 | 829962_sat.jpg

124 | 835147_sat.jpg

125 | 842556_sat.jpg

126 | 850510_sat.jpg

127 | 857201_sat.jpg

128 | 858771_sat.jpg

129 | 875327_sat.jpg

130 | 882451_sat.jpg

131 | 898741_sat.jpg

132 | 925382_sat.jpg

133 | 937922_sat.jpg

134 | 950926_sat.jpg

135 | 956410_sat.jpg

136 | 956928_sat.jpg

137 | 965276_sat.jpg

138 | 982744_sat.jpg

139 | 987381_sat.jpg

140 | 992507_sat.jpg

141 | 994520_sat.jpg

142 | 998002_sat.jpg

143 |

--------------------------------------------------------------------------------

/utils/lr_scheduler.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Hang Zhang

3 | ## ECE Department, Rutgers University

4 | ## Email: zhang.hang@rutgers.edu

5 | ## Copyright (c) 2017

6 | ##

7 | ## This source code is licensed under the MIT-style license found in the

8 | ## LICENSE file in the root directory of this source tree

9 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

10 |

11 | import math

12 |

13 | class LR_Scheduler(object):

14 | """Learning Rate Scheduler

15 |

16 | Step mode: ``lr = baselr * 0.1 ^ {floor(epoch-1 / lr_step)}``

17 |

18 | Cosine mode: ``lr = baselr * 0.5 * (1 + cos(iter/maxiter))``

19 |

20 | Poly mode: ``lr = baselr * (1 - iter/maxiter) ^ 0.9``

21 |

22 | Args:

23 | args: :attr:`args.lr_scheduler` lr scheduler mode (`cos`, `poly`),

24 | :attr:`args.lr` base learning rate, :attr:`args.epochs` number of epochs,

25 | :attr:`args.lr_step`

26 |

27 | iters_per_epoch: number of iterations per epoch

28 | """

29 | def __init__(self, mode, base_lr, num_epochs, iters_per_epoch=0,

30 | lr_step=0, warmup_epochs=0):

31 | self.mode = mode

32 | print('Using {} LR Scheduler!'.format(self.mode))

33 | self.lr = base_lr

34 | if mode == 'step':

35 | assert lr_step

36 | self.lr_step = lr_step

37 | self.iters_per_epoch = iters_per_epoch

38 | self.N = num_epochs * iters_per_epoch

39 | self.epoch = -1

40 | self.warmup_iters = warmup_epochs * iters_per_epoch

41 |

42 | def __call__(self, optimizer, i, epoch, best_pred):

43 | T = epoch * self.iters_per_epoch + i

44 | if self.mode == 'cos':

45 | lr = 0.5 * self.lr * (1 + math.cos(1.0 * T / self.N * math.pi))

46 | elif self.mode == 'poly':

47 | lr = self.lr * pow((1 - 1.0 * T / self.N), 0.9)

48 | elif self.mode == 'step':

49 | lr = self.lr * (0.1 ** (epoch // self.lr_step))

50 | else:

51 | raise NotImplemented

52 | # warm up lr schedule

53 | if self.warmup_iters > 0 and T < self.warmup_iters:

54 | lr = lr * 1.0 * T / self.warmup_iters

55 | if epoch > self.epoch:

56 | print('\n=>Epoches %i, learning rate = %.7f, \

57 | previous best = %.4f' % (epoch, lr, best_pred))

58 | self.epoch = epoch

59 | assert lr >= 0

60 | self._adjust_learning_rate(optimizer, lr)

61 |

62 | def _adjust_learning_rate(self, optimizer, lr):

63 | if len(optimizer.param_groups) == 1:

64 | optimizer.param_groups[0]['lr'] = lr

65 | else:

66 | # enlarge the lr at the head

67 | for i in range(len(optimizer.param_groups)):

68 | if optimizer.param_groups[i]['lr'] > 0: optimizer.param_groups[i]['lr'] = lr

69 | # optimizer.param_groups[0]['lr'] = lr

70 | # for i in range(1, len(optimizer.param_groups)):

71 | # optimizer.param_groups[i]['lr'] = lr * 10

72 |

--------------------------------------------------------------------------------

/option.py:

--------------------------------------------------------------------------------

1 | ###########################################################################

2 | # Created by: CASIA IVA

3 | # Email: jliu@nlpr.ia.ac.cn

4 | # Copyright (c) 2018

5 | ###########################################################################

6 |

7 | import os

8 | import argparse

9 | import torch

10 |

11 | # path_g = os.path.join(model_path, "cityscapes_global.800_4.5.2019.lr5e5.pth")

12 | # # path_g = os.path.join(model_path, "fpn_global.804_nonorm_3.17.2019.lr2e5" + ".pth")

13 | # path_g2l = os.path.join(model_path, "fpn_global2local.508_deep.cat.1x_fmreg_ensemble.p3.0.15l2_3.19.2019.lr2e5.pth")

14 | # path_l2g = os.path.join(model_path, "fpn_local2global.508_deep.cat.1x_fmreg_ensemble.p3_3.19.2019.lr2e5.pth")

15 | class Options():

16 | def __init__(self):

17 | parser = argparse.ArgumentParser(description='PyTorch Segmentation')

18 | # model and dataset

19 | parser.add_argument('--n_class', type=int, default=7, help='segmentation classes')

20 | parser.add_argument('--data_path', type=str, help='path to dataset where images store')

21 | parser.add_argument('--model_path', type=str, help='path to store trained model files, no need to include task specific name')

22 | parser.add_argument('--log_path', type=str, help='path to store tensorboard log files, no need to include task specific name')

23 | parser.add_argument('--task_name', type=str, help='task name for naming saved model files and log files')

24 | parser.add_argument('--mode', type=int, default=1, choices=[1, 2, 3], help='mode for training procedure. 1: train global branch only. 2: train local branch with fixed global branch. 3: train global branch with fixed local branch')

25 | parser.add_argument('--evaluation', action='store_true', default=False, help='evaluation only')

26 | parser.add_argument('--batch_size', type=int, default=6, help='batch size for origin global image (without downsampling)')

27 | parser.add_argument('--sub_batch_size', type=int, default=6, help='batch size for using local image patches')

28 | parser.add_argument('--size_g', type=int, default=508, help='size (in pixel) for downsampled global image')

29 | parser.add_argument('--size_p', type=int, default=508, help='size (in pixel) for cropped local image')

30 | parser.add_argument('--path_g', type=str, default="", help='name for global model path')

31 | parser.add_argument('--path_g2l', type=str, default="", help='name for local from global model path')

32 | parser.add_argument('--path_l2g', type=str, default="", help='name for global from local model path')

33 | parser.add_argument('--lamb_fmreg', type=float, default=0.15, help='loss weight feature map regularization')

34 |

35 | # the parser

36 | self.parser = parser

37 |

38 | def parse(self):

39 | args = self.parser.parse_args()

40 | # default settings for epochs and lr

41 | if args.mode == 1 or args.mode == 3:

42 | args.num_epochs = 120

43 | args.lr = 5e-5

44 | else:

45 | args.num_epochs = 50

46 | args.lr = 2e-5

47 | return args

48 |

--------------------------------------------------------------------------------

/utils/loss.py:

--------------------------------------------------------------------------------

1 | import torch.nn as nn

2 | import torch.nn.functional as F

3 | import torch

4 |

5 |

6 | class CrossEntropyLoss2d(nn.Module):

7 | def __init__(self, weight=None, size_average=True, ignore_index=-100):

8 | super(CrossEntropyLoss2d, self).__init__()

9 | self.nll_loss = nn.NLLLoss(weight, size_average, ignore_index)

10 |

11 | def forward(self, inputs, targets):

12 | return self.nll_loss(F.log_softmax(inputs, dim=1), targets)

13 |

14 |

15 | def one_hot(index, classes):

16 | # index is not flattened (pypass ignore) ############

17 | # size = index.size()[:1] + (classes,) + index.size()[1:]

18 | # view = index.size()[:1] + (1,) + index.size()[1:]

19 | #####################################################

20 | # index is flatten (during ignore) ##################

21 | size = index.size()[:1] + (classes,)

22 | view = index.size()[:1] + (1,)

23 | #####################################################

24 |

25 | # mask = torch.Tensor(size).fill_(0).to(device)

26 | mask = torch.Tensor(size).fill_(0).cuda()

27 | index = index.view(view)

28 | ones = 1.

29 |

30 | return mask.scatter_(1, index, ones)

31 |

32 |

33 | class FocalLoss(nn.Module):

34 |

35 | def __init__(self, gamma=0, eps=1e-7, size_average=True, one_hot=True, ignore=None):

36 | super(FocalLoss, self).__init__()

37 | self.gamma = gamma

38 | self.eps = eps

39 | self.size_average = size_average

40 | self.one_hot = one_hot

41 | self.ignore = ignore

42 |

43 | def forward(self, input, target):

44 | '''

45 | only support ignore at 0

46 | '''

47 | B, C, H, W = input.size()

48 | input = input.permute(0, 2, 3, 1).contiguous().view(-1, C) # B * H * W, C = P, C

49 | target = target.view(-1)

50 | if self.ignore is not None:

51 | valid = (target != self.ignore)

52 | input = input[valid]

53 | target = target[valid]

54 |

55 | if self.one_hot: target = one_hot(target, input.size(1))

56 | probs = F.softmax(input, dim=1)

57 | probs = (probs * target).sum(1)

58 | probs = probs.clamp(self.eps, 1. - self.eps)

59 |

60 | log_p = probs.log()

61 | # print('probs size= {}'.format(probs.size()))

62 | # print(probs)

63 |

64 | batch_loss = -(torch.pow((1 - probs), self.gamma)) * log_p

65 | # print('-----bacth_loss------')

66 | # print(batch_loss)

67 |

68 | if self.size_average:

69 | loss = batch_loss.mean()

70 | else:

71 | loss = batch_loss.sum()

72 | return loss

73 |

74 |

75 | class SoftCrossEntropyLoss2d(nn.Module):

76 | def __init__(self):

77 | super(SoftCrossEntropyLoss2d, self).__init__()

78 |

79 | def forward(self, inputs, targets):

80 | loss = 0

81 | inputs = -F.log_softmax(inputs, dim=1)

82 | for index in range(inputs.size()[0]):

83 | loss += F.conv2d(inputs[range(index, index+1)], targets[range(index, index+1)])/(targets.size()[2] *

84 | targets.size()[3])

85 | return loss

86 |

--------------------------------------------------------------------------------

/models/model_store.py:

--------------------------------------------------------------------------------

1 | """Model store which provides pretrained models."""

2 | from __future__ import print_function

3 | __all__ = ['get_model_file', 'purge']

4 | import os

5 | import zipfile

6 |

7 | from .utils import download, check_sha1

8 |

9 | _model_sha1 = {name: checksum for checksum, name in [

10 | ('ebb6acbbd1d1c90b7f446ae59d30bf70c74febc1', 'resnet50'),

11 | ('2a57e44de9c853fa015b172309a1ee7e2d0e4e2a', 'resnet101'),

12 | ('0d43d698c66aceaa2bc0309f55efdd7ff4b143af', 'resnet152'),

13 | ('2e22611a7f3992ebdee6726af169991bc26d7363', 'deepten_minc'),

14 | ('662e979de25a389f11c65e9f1df7e06c2c356381', 'fcn_resnet50_ade'),

15 | ('eeed8e582f0fdccdba8579e7490570adc6d85c7c', 'fcn_resnet50_pcontext'),

16 | ('54f70c772505064e30efd1ddd3a14e1759faa363', 'psp_resnet50_ade'),

17 | ('075195c5237b778c718fd73ceddfa1376c18dfd0', 'deeplab_resnet50_ade'),

18 | ('5ee47ee28b480cc781a195d13b5806d5bbc616bf', 'encnet_resnet101_coco'),

19 | ('4de91d5922d4d3264f678b663f874da72e82db00', 'encnet_resnet50_pcontext'),

20 | ('9f27ea13d514d7010e59988341bcbd4140fcc33d', 'encnet_resnet101_pcontext'),

21 | ('07ac287cd77e53ea583f37454e17d30ce1509a4a', 'encnet_resnet50_ade'),

22 | ('3f54fa3b67bac7619cd9b3673f5c8227cf8f4718', 'encnet_resnet101_ade'),

23 | ]}

24 |

25 | encoding_repo_url = 'https://hangzh.s3.amazonaws.com/'

26 | _url_format = '{repo_url}encoding/models/{file_name}.zip'

27 |

28 | def short_hash(name):

29 | if name not in _model_sha1:

30 | raise ValueError('Pretrained model for {name} is not available.'.format(name=name))

31 | return _model_sha1[name][:8]

32 |

33 | def get_model_file(name, root=os.path.join('~', '.encoding', 'models')):

34 | r"""Return location for the pretrained on local file system.

35 |

36 | This function will download from online model zoo when model cannot be found or has mismatch.

37 | The root directory will be created if it doesn't exist.

38 |

39 | Parameters

40 | ----------

41 | name : str

42 | Name of the model.

43 | root : str, default '~/.encoding/models'

44 | Location for keeping the model parameters.

45 |

46 | Returns

47 | -------

48 | file_path

49 | Path to the requested pretrained model file.

50 | """

51 | file_name = '{name}-{short_hash}'.format(name=name, short_hash=short_hash(name))

52 | root = os.path.expanduser(root)

53 | file_path = os.path.join(root, file_name+'.pth')

54 | sha1_hash = _model_sha1[name]

55 | if os.path.exists(file_path):

56 | if check_sha1(file_path, sha1_hash):

57 | return file_path

58 | else:

59 | print('Mismatch in the content of model file {} detected.' +

60 | ' Downloading again.'.format(file_path))

61 | else:

62 | print('Model file {} is not found. Downloading.'.format(file_path))

63 |

64 | if not os.path.exists(root):

65 | os.makedirs(root)

66 |

67 | zip_file_path = os.path.join(root, file_name+'.zip')

68 | repo_url = os.environ.get('ENCODING_REPO', encoding_repo_url)

69 | if repo_url[-1] != '/':

70 | repo_url = repo_url + '/'

71 | download(_url_format.format(repo_url=repo_url, file_name=file_name),

72 | path=zip_file_path,

73 | overwrite=True)

74 | with zipfile.ZipFile(zip_file_path) as zf:

75 | zf.extractall(root)

76 | os.remove(zip_file_path)

77 |

78 | if check_sha1(file_path, sha1_hash):

79 | return file_path

80 | else:

81 | raise ValueError('Downloaded file has different hash. Please try again.')

82 |

83 | def purge(root=os.path.join('~', '.encoding', 'models')):

84 | r"""Purge all pretrained model files in local file store.

85 |

86 | Parameters

87 | ----------

88 | root : str, default '~/.encoding/models'

89 | Location for keeping the model parameters.

90 | """

91 | root = os.path.expanduser(root)

92 | files = os.listdir(root)

93 | for f in files:

94 | if f.endswith(".pth"):

95 | os.remove(os.path.join(root, f))

96 |

97 | def pretrained_model_list():

98 | return list(_model_sha1.keys())

99 |

--------------------------------------------------------------------------------

/crossvali.txt:

--------------------------------------------------------------------------------

1 | 102122_sat.jpg

2 | 114577_sat.jpg

3 | 115444_sat.jpg

4 | 119012_sat.jpg

5 | 123172_sat.jpg

6 | 124529_sat.jpg

7 | 125510_sat.jpg

8 | 126796_sat.jpg

9 | 127976_sat.jpg

10 | 129297_sat.jpg

11 | 129298_sat.jpg

12 | 133209_sat.jpg

13 | 136252_sat.jpg

14 | 139581_sat.jpg

15 | 143353_sat.jpg

16 | 147716_sat.jpg

17 | 154626_sat.jpg

18 | 155165_sat.jpg

19 | 162310_sat.jpg

20 | 16453_sat.jpg

21 | 166293_sat.jpg

22 | 166805_sat.jpg

23 | 168514_sat.jpg

24 | 176225_sat.jpg

25 | 180902_sat.jpg

26 | 192918_sat.jpg

27 | 194156_sat.jpg

28 | 19627_sat.jpg

29 | 200561_sat.jpg

30 | 200589_sat.jpg

31 | 210436_sat.jpg

32 | 211739_sat.jpg

33 | 219670_sat.jpg

34 | 229383_sat.jpg

35 | 233615_sat.jpg

36 | 234269_sat.jpg

37 | 246378_sat.jpg

38 | 247179_sat.jpg

39 | 255889_sat.jpg

40 | 262885_sat.jpg

41 | 264436_sat.jpg

42 | 268881_sat.jpg

43 | 273274_sat.jpg

44 | 2774_sat.jpg

45 | 280861_sat.jpg

46 | 283326_sat.jpg

47 | 286339_sat.jpg

48 | 300745_sat.jpg

49 | 312676_sat.jpg

50 | 315848_sat.jpg

51 | 323581_sat.jpg

52 | 324170_sat.jpg

53 | 329017_sat.jpg

54 | 331421_sat.jpg

55 | 334677_sat.jpg

56 | 334811_sat.jpg

57 | 338661_sat.jpg

58 | 34567_sat.jpg

59 | 350033_sat.jpg

60 | 350328_sat.jpg

61 | 351271_sat.jpg

62 | 354033_sat.jpg

63 | 358314_sat.jpg

64 | 358464_sat.jpg

65 | 362191_sat.jpg

66 | 373103_sat.jpg

67 | 375563_sat.jpg

68 | 394500_sat.jpg

69 | 406425_sat.jpg

70 | 416794_sat.jpg

71 | 418261_sat.jpg

72 | 419820_sat.jpg

73 | 424590_sat.jpg

74 | 427774_sat.jpg

75 | 428597_sat.jpg

76 | 430587_sat.jpg

77 | 434210_sat.jpg

78 | 43814_sat.jpg

79 | 438721_sat.jpg

80 | 44070_sat.jpg

81 | 442338_sat.jpg

82 | 443271_sat.jpg

83 | 455374_sat.jpg

84 | 461001_sat.jpg

85 | 461755_sat.jpg

86 | 462612_sat.jpg

87 | 467855_sat.jpg

88 | 471930_sat.jpg

89 | 472774_sat.jpg

90 | 479682_sat.jpg

91 | 491491_sat.jpg

92 | 495406_sat.jpg

93 | 499325_sat.jpg

94 | 499600_sat.jpg

95 | 501804_sat.jpg

96 | 512669_sat.jpg

97 | 514385_sat.jpg

98 | 514414_sat.jpg

99 | 51911_sat.jpg

100 | 536496_sat.jpg

101 | 537221_sat.jpg

102 | 538243_sat.jpg

103 | 538922_sat.jpg

104 | 544078_sat.jpg

105 | 544537_sat.jpg

106 | 550312_sat.jpg

107 | 552001_sat.jpg

108 | 557175_sat.jpg

109 | 559477_sat.jpg

110 | 563092_sat.jpg

111 | 565914_sat.jpg

112 | 570992_sat.jpg

113 | 571520_sat.jpg

114 | 577164_sat.jpg

115 | 584712_sat.jpg

116 | 584865_sat.jpg

117 | 586222_sat.jpg

118 | 586806_sat.jpg

119 | 600230_sat.jpg

120 | 605707_sat.jpg

121 | 614561_sat.jpg

122 | 619800_sat.jpg

123 | 62078_sat.jpg

124 | 621459_sat.jpg

125 | 626323_sat.jpg

126 | 628479_sat.jpg

127 | 638168_sat.jpg

128 | 638937_sat.jpg

129 | 641771_sat.jpg

130 | 646596_sat.jpg

131 | 650253_sat.jpg

132 | 651537_sat.jpg

133 | 652733_sat.jpg

134 | 654770_sat.jpg

135 | 660069_sat.jpg

136 | 669156_sat.jpg

137 | 673927_sat.jpg

138 | 679507_sat.jpg

139 | 686781_sat.jpg

140 | 688544_sat.jpg

141 | 692982_sat.jpg

142 | 702918_sat.jpg

143 | 703413_sat.jpg

144 | 705728_sat.jpg

145 | 706996_sat.jpg

146 | 707319_sat.jpg

147 | 708527_sat.jpg

148 | 725646_sat.jpg

149 | 726265_sat.jpg

150 | 728521_sat.jpg

151 | 730889_sat.jpg

152 | 733758_sat.jpg

153 | 741105_sat.jpg

154 | 748225_sat.jpg

155 | 749375_sat.jpg

156 | 762470_sat.jpg

157 | 762937_sat.jpg

158 | 767012_sat.jpg

159 | 772130_sat.jpg

160 | 775304_sat.jpg

161 | 77669_sat.jpg

162 | 784518_sat.jpg

163 | 794214_sat.jpg

164 | 81039_sat.jpg

165 | 818254_sat.jpg

166 | 820347_sat.jpg

167 | 831146_sat.jpg

168 | 834900_sat.jpg

169 | 838873_sat.jpg

170 | 839012_sat.jpg

171 | 839641_sat.jpg

172 | 841286_sat.jpg

173 | 841404_sat.jpg

174 | 861353_sat.jpg

175 | 864488_sat.jpg

176 | 867349_sat.jpg

177 | 867983_sat.jpg

178 | 875409_sat.jpg

179 | 876248_sat.jpg

180 | 891153_sat.jpg

181 | 893651_sat.jpg

182 | 897901_sat.jpg

183 | 900985_sat.jpg

184 | 904606_sat.jpg

185 | 908837_sat.jpg

186 | 912087_sat.jpg

187 | 912620_sat.jpg

188 | 918105_sat.jpg

189 | 919602_sat.jpg

190 | 925425_sat.jpg

191 | 930491_sat.jpg

192 | 934795_sat.jpg

193 | 935193_sat.jpg

194 | 935318_sat.jpg

195 | 941237_sat.jpg

196 | 942986_sat.jpg

197 | 949559_sat.jpg

198 | 958243_sat.jpg

199 | 958443_sat.jpg

200 | 961919_sat.jpg

201 | 96841_sat.jpg

202 | 970925_sat.jpg

203 | 97337_sat.jpg

204 | 978039_sat.jpg

205 | 981253_sat.jpg

206 | 986342_sat.jpg

207 | 997521_sat.jpg

208 |

--------------------------------------------------------------------------------

/models/resnet.py:

--------------------------------------------------------------------------------

1 | import torch.nn as nn

2 | import torch.utils.model_zoo as model_zoo

3 |

4 |

5 | __all__ = ['ResNet', 'resnet50', 'resnet101']

6 |

7 |

8 | model_urls = {

9 | 'resnet18': 'https://download.pytorch.org/models/resnet18-5c106cde.pth',

10 | 'resnet34': 'https://download.pytorch.org/models/resnet34-333f7ec4.pth',

11 | 'resnet50': 'https://download.pytorch.org/models/resnet50-19c8e357.pth',

12 | 'resnet101': 'https://download.pytorch.org/models/resnet101-5d3b4d8f.pth',

13 | 'resnet152': 'https://download.pytorch.org/models/resnet152-b121ed2d.pth',

14 | }

15 |

16 |

17 | class Bottleneck(nn.Module):

18 | expansion = 4

19 |

20 | def __init__(self, inplanes, planes, stride=1, downsample=None):

21 | super(Bottleneck, self).__init__()

22 | self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

23 | self.bn1 = nn.BatchNorm2d(planes)

24 | self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,

25 | padding=1, bias=False)

26 | self.bn2 = nn.BatchNorm2d(planes)

27 | self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1, bias=False)

28 | self.bn3 = nn.BatchNorm2d(planes * self.expansion)

29 | self.relu = nn.ReLU(inplace=True)

30 | self.downsample = downsample

31 | self.stride = stride

32 |

33 | def forward(self, x):

34 | residual = x

35 |

36 | out = self.conv1(x)

37 | out = self.bn1(out)

38 | out = self.relu(out)

39 |

40 | out = self.conv2(out)

41 | out = self.bn2(out)

42 | out = self.relu(out)

43 |

44 | out = self.conv3(out)

45 | out = self.bn3(out)

46 |

47 | if self.downsample is not None:

48 | residual = self.downsample(x)

49 |

50 | out += residual

51 | out = self.relu(out)

52 |

53 | return out

54 |

55 |

56 | class ResNet(nn.Module):

57 |

58 | def __init__(self, block, layers):

59 | self.inplanes = 64

60 | super(ResNet, self).__init__()

61 | self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,

62 | bias=False)

63 | self.bn1 = nn.BatchNorm2d(64)

64 | self.relu = nn.ReLU(inplace=True)

65 | self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

66 | self.layer1 = self._make_layer(block, 64, layers[0])

67 | self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

68 | self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

69 | self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

70 |

71 | for m in self.modules():

72 | if isinstance(m, nn.Conv2d):

73 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

74 | elif isinstance(m, nn.BatchNorm2d):

75 | nn.init.constant_(m.weight, 1)

76 | nn.init.constant_(m.bias, 0)

77 |

78 | def _make_layer(self, block, planes, blocks, stride=1):

79 | downsample = None

80 | if stride != 1 or self.inplanes != planes * block.expansion:

81 | downsample = nn.Sequential(

82 | nn.Conv2d(self.inplanes, planes * block.expansion,

83 | kernel_size=1, stride=stride, bias=False),

84 | nn.BatchNorm2d(planes * block.expansion),

85 | )

86 |

87 | layers = []

88 | layers.append(block(self.inplanes, planes, stride, downsample))

89 | self.inplanes = planes * block.expansion

90 | for i in range(1, blocks):

91 | layers.append(block(self.inplanes, planes))

92 |

93 | return nn.Sequential(*layers)

94 |

95 | def forward(self, x):

96 | x = self.conv1(x)

97 | x = self.bn1(x)

98 | x = self.relu(x)

99 | x = self.maxpool(x)

100 |

101 | c2 = self.layer1(x)

102 | c3 = self.layer2(c2)

103 | c4 = self.layer3(c3)

104 | c5 = self.layer4(c4)

105 |

106 | return c2, c3, c4, c5

107 |

108 |

109 | def resnet50(pretrained=False, **kwargs):

110 | """Constructs a ResNet-50 model.

111 | Args:

112 | pretrained (bool): If True, returns a model pre-trained on ImageNet

113 | """

114 | model = ResNet(Bottleneck, [3, 4, 6, 3])

115 | if pretrained:

116 | model.load_state_dict(model_zoo.load_url(model_urls['resnet50']), strict=False)

117 | return model

118 |

119 |

120 | def resnet101(pretrained=False, **kwargs):

121 | """Constructs a ResNet-101 model.

122 | Args:

123 | pretrained (bool): If True, returns a model pre-trained on ImageNet

124 | """

125 | model = ResNet(Bottleneck, [3, 4, 23, 3], **kwargs)

126 | if pretrained:

127 | model.load_state_dict(model_zoo.load_url(model_urls['resnet101']), strict=False)

128 | return model

129 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

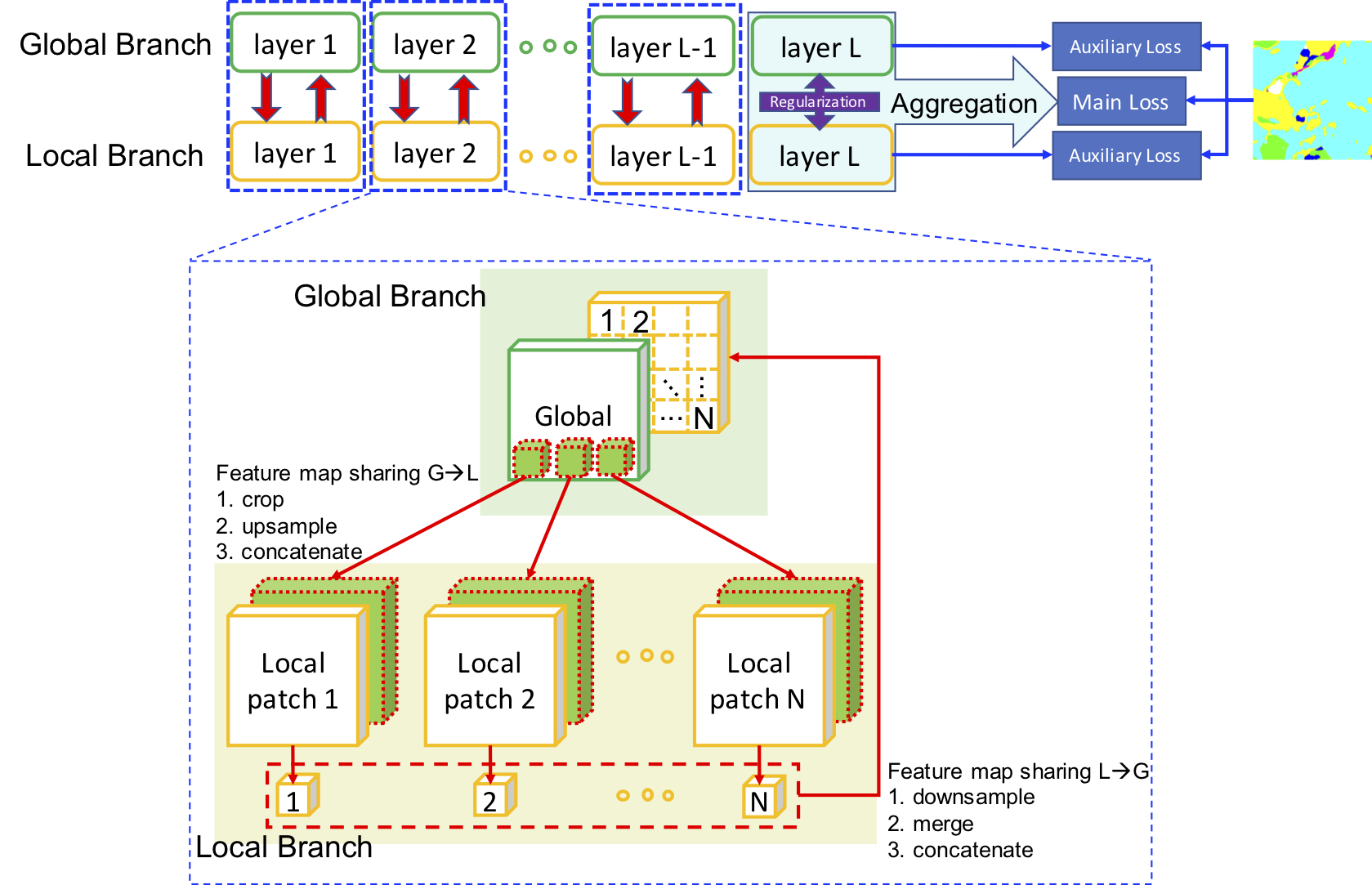

1 | # GLNet for Memory-Efficient Segmentation of Ultra-High Resolution Images

2 |

3 | [](https://lgtm.com/projects/g/chenwydj/ultra_high_resolution_segmentation/context:python) [](https://opensource.org/licenses/MIT)

4 |

5 | Collaborative Global-Local Networks for Memory-Efficient Segmentation of Ultra-High Resolution Images

6 |

7 | Wuyang Chen*, Ziyu Jiang*, Zhangyang Wang, Kexin Cui, and Xiaoning Qian

8 |

9 | In CVPR 2019 (Oral). [[Youtube](https://www.youtube.com/watch?v=am1GiItQI88)]

10 |

11 | ## Overview

12 |

13 | Segmentation of ultra-high resolution images is increasingly demanded in a wide range of applications (e.g. urban planning), yet poses significant challenges for algorithm efficiency, in particular considering the (GPU) memory limits.

14 |

15 | We propose collaborative **Global-Local Networks (GLNet)** to effectively preserve both global and local information in a highly memory-efficient manner.

16 |

17 | * **Memory-efficient**: **training w. only one 1080Ti** and **inference w. less than 2GB GPU memory**, for ultra-high resolution images of up to 30M pixels.

18 |

19 | * **High-quality**: GLNet outperforms existing segmentation models on ultra-high resolution images.

20 |

21 |

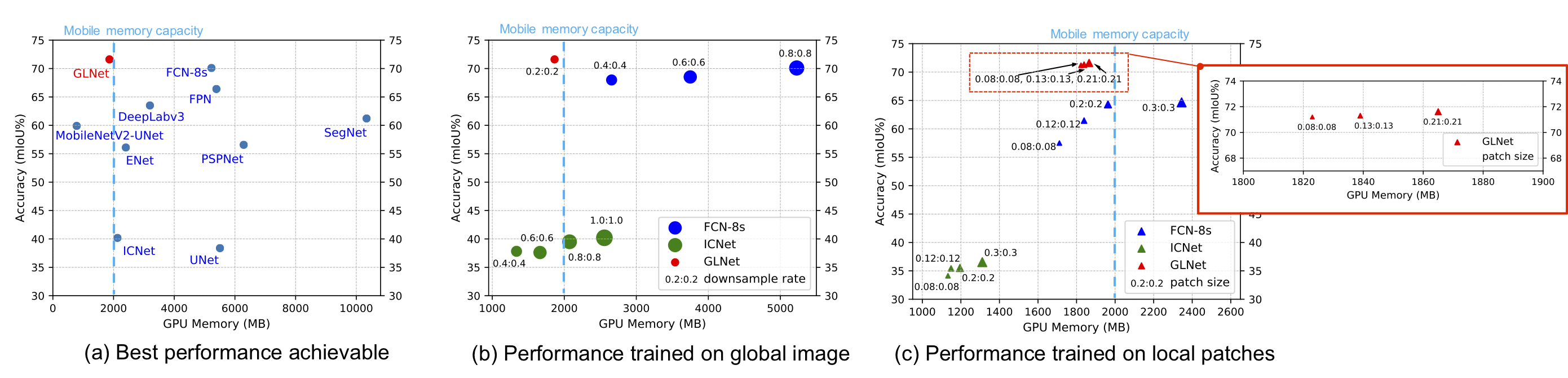

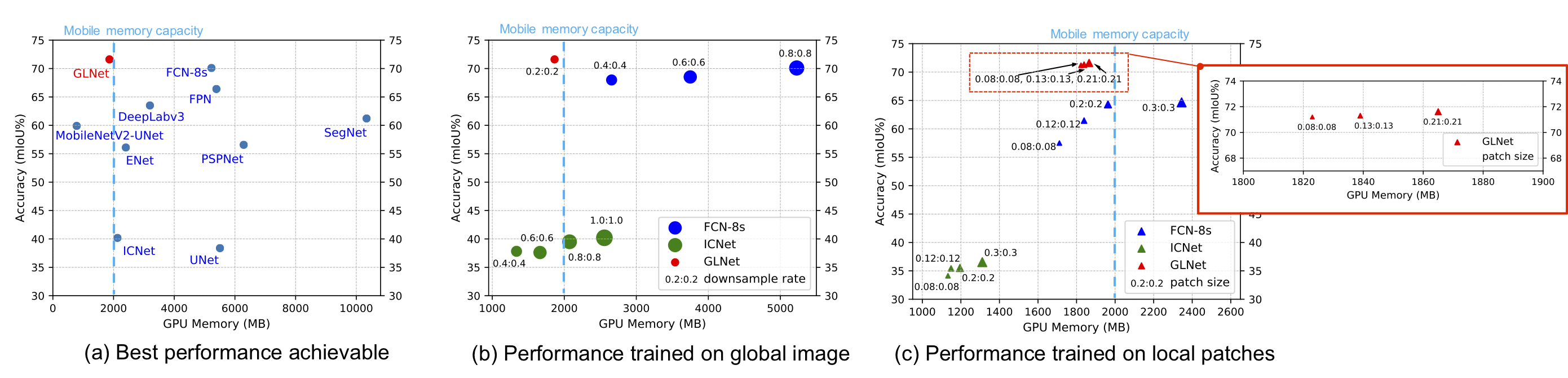

22 |  23 | Inference memory v.s. mIoU on the DeepGlobe dataset.

24 |

25 | GLNet (red dots) integrates both global and local information in a compact way, contributing to a well-balanced trade-off between accuracy and memory usage.

26 |

23 | Inference memory v.s. mIoU on the DeepGlobe dataset.

24 |

25 | GLNet (red dots) integrates both global and local information in a compact way, contributing to a well-balanced trade-off between accuracy and memory usage.

26 |

27 |

28 |

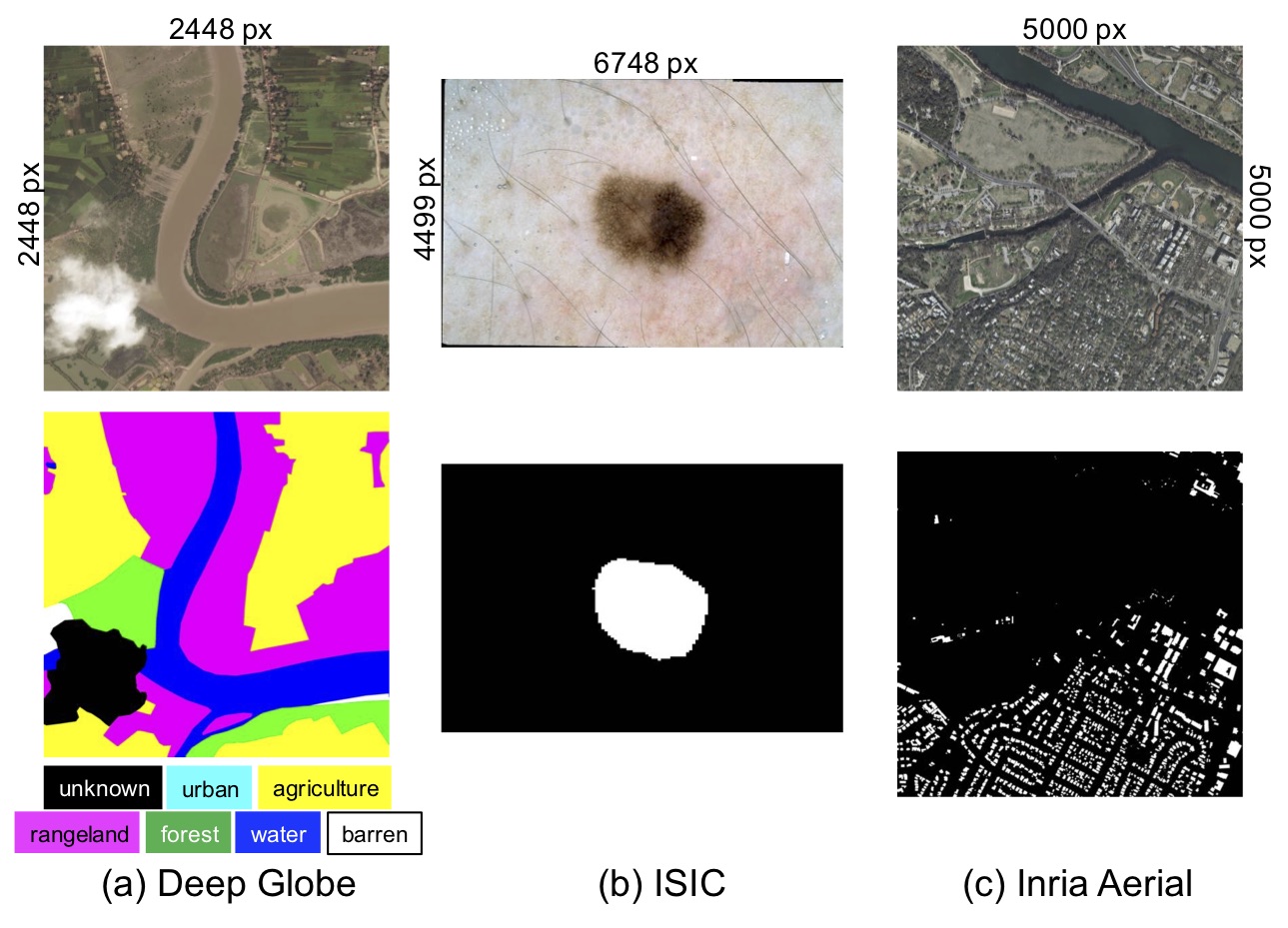

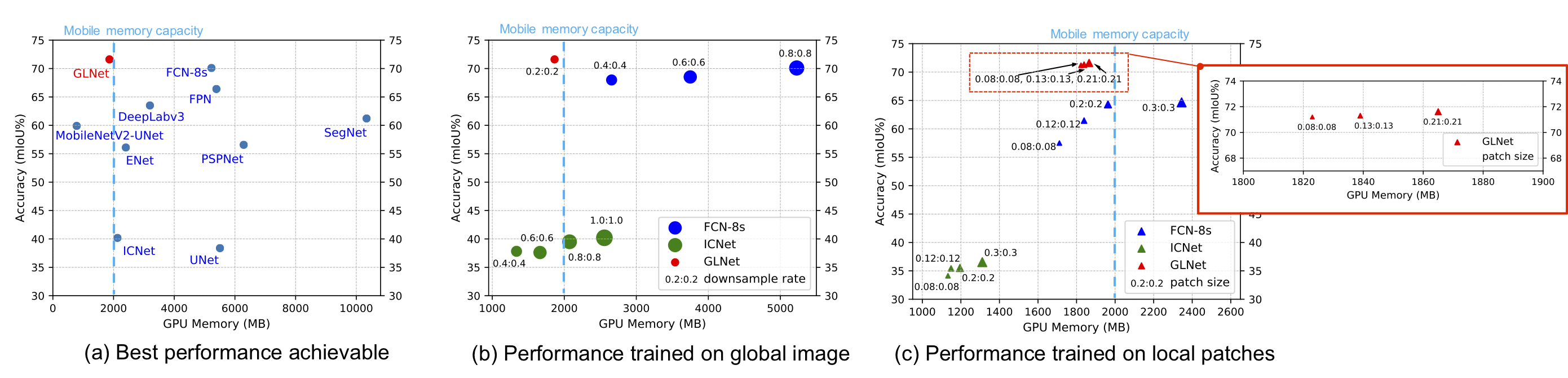

29 |  30 | Ultra-high resolution Datasets: DeepGlobe, ISIC, Inria Aerial

31 |

30 | Ultra-high resolution Datasets: DeepGlobe, ISIC, Inria Aerial

31 |

32 |

33 | ## Methods

34 |

35 |

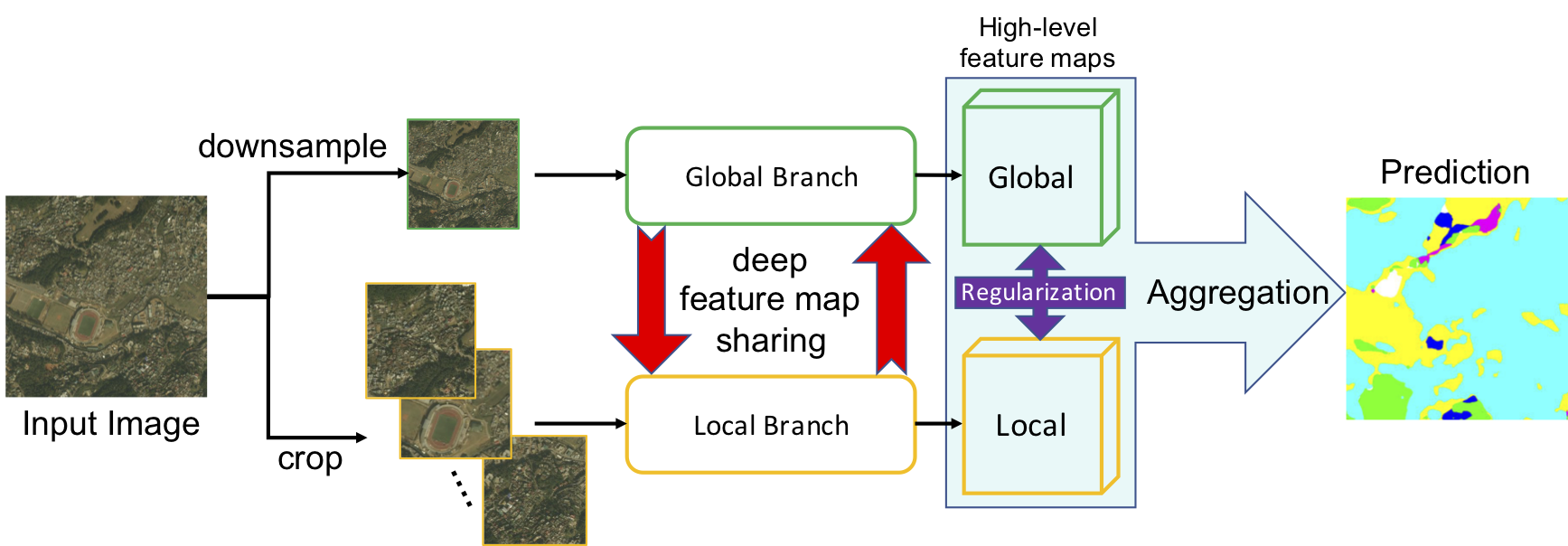

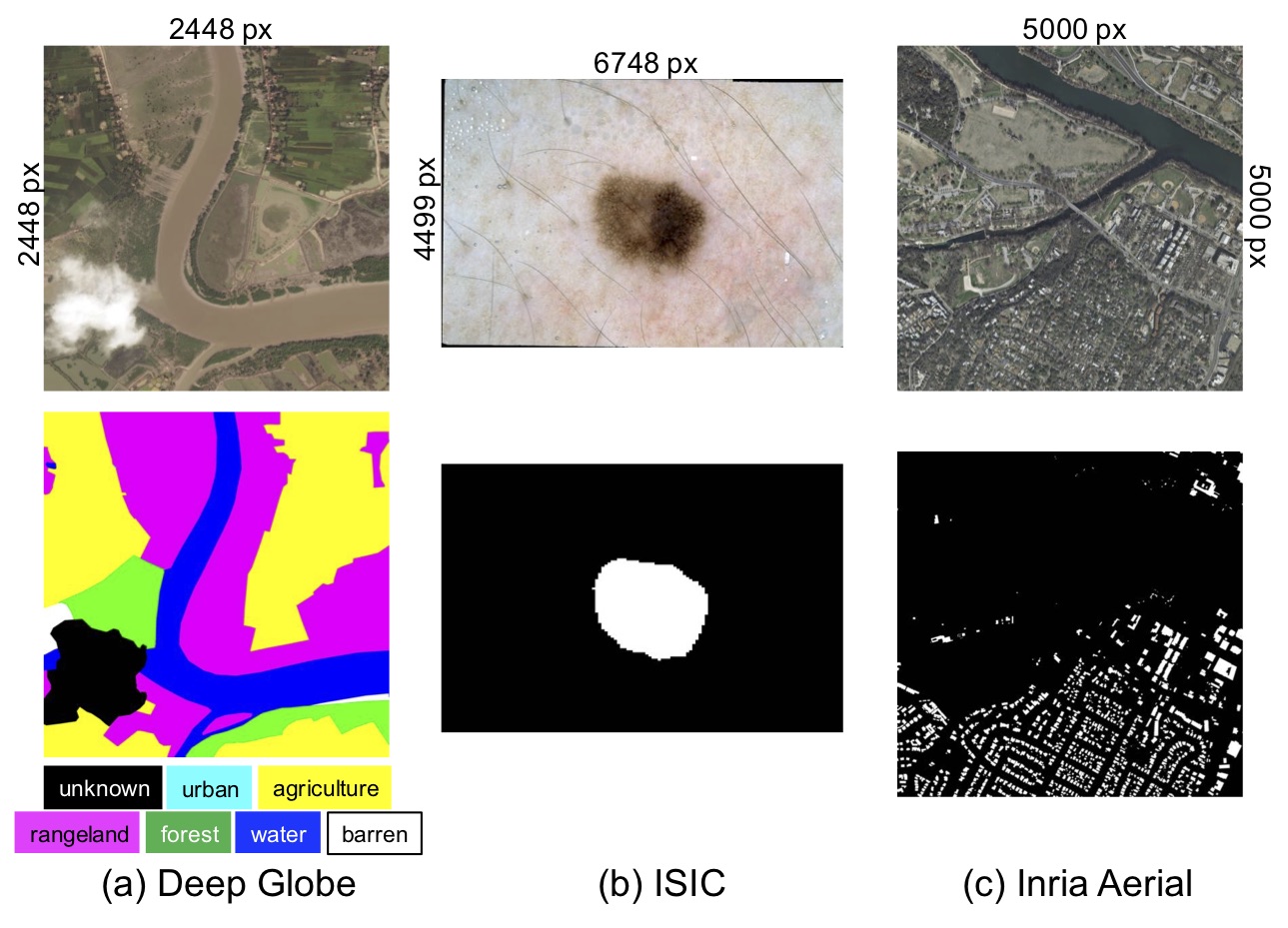

36 |  37 | GLNet: the global and local branch takes downsampled and cropped images, respectively. Deep feature map sharing and feature map regularization enforce our global-local collaboration. The final segmentation is generated by aggregating high-level feature maps from two branches.

38 |

37 | GLNet: the global and local branch takes downsampled and cropped images, respectively. Deep feature map sharing and feature map regularization enforce our global-local collaboration. The final segmentation is generated by aggregating high-level feature maps from two branches.

38 |

39 |

40 |

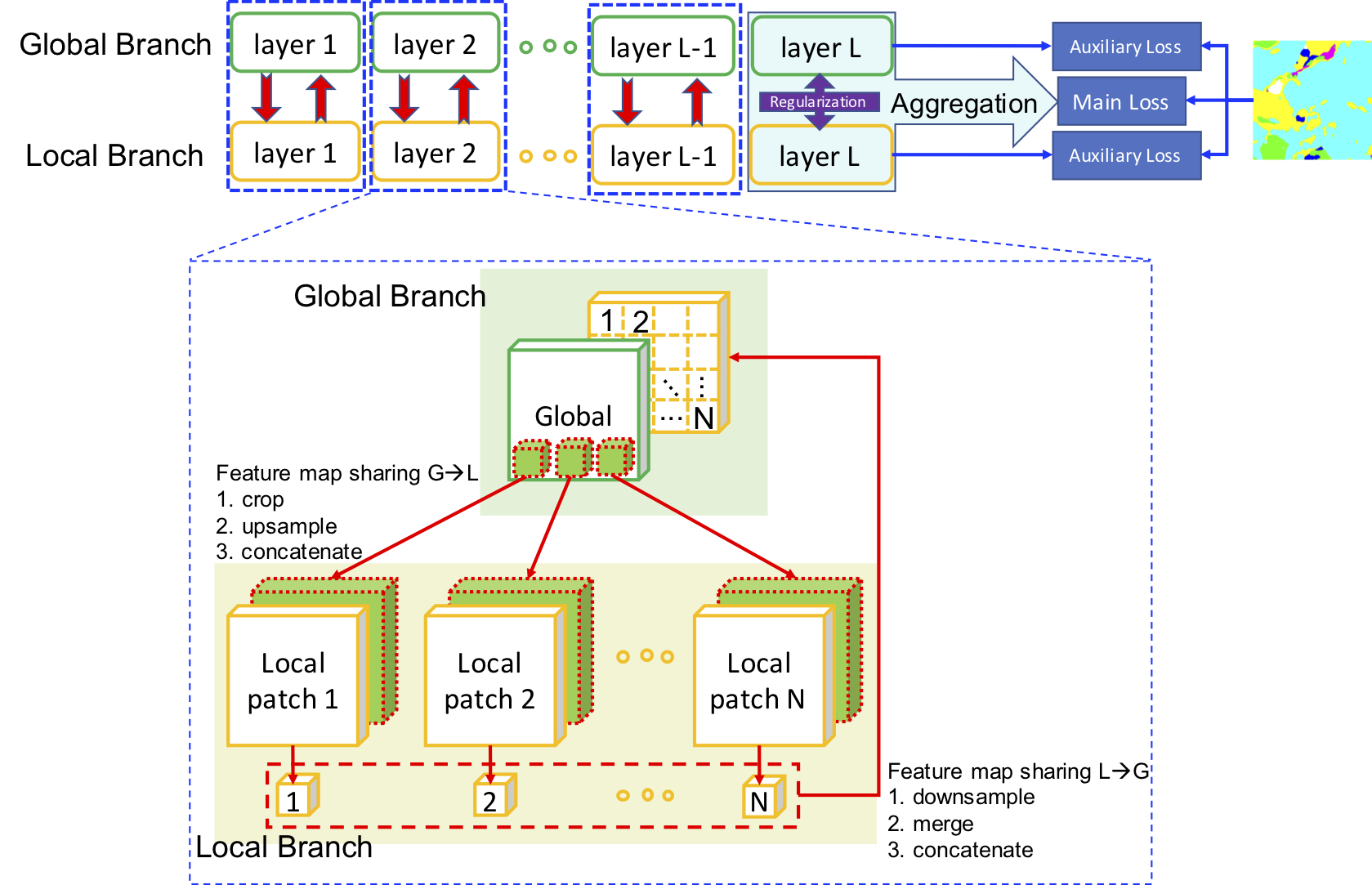

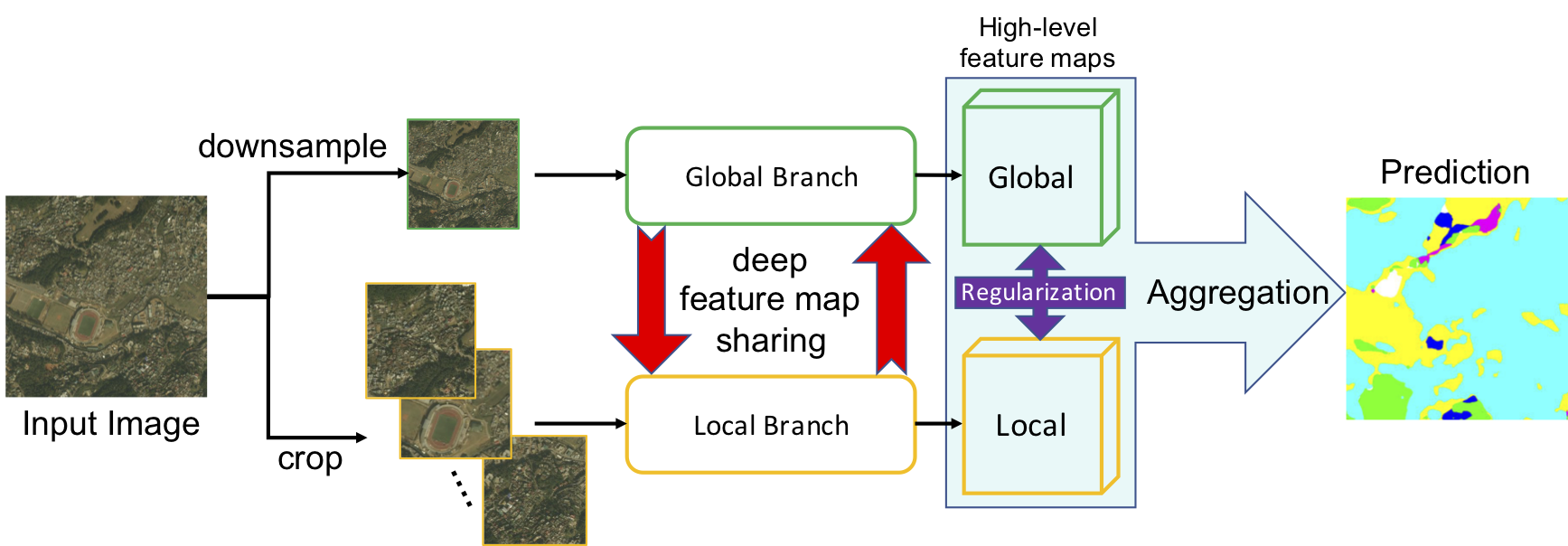

41 |  42 | Deep feature map sharing: at each layer, feature maps with global context and ones with local fine structures are bidirectionally brought together, contributing to a complete patch-based deep global-local collaboration.

43 |

42 | Deep feature map sharing: at each layer, feature maps with global context and ones with local fine structures are bidirectionally brought together, contributing to a complete patch-based deep global-local collaboration.

43 |

44 |

45 | ## Training

46 | Current this code base works for Python version >= 3.5.

47 |

48 | Please install the dependencies: `pip install -r requirements.txt`

49 |

50 | First, you could register and download the Deep Globe "Land Cover Classification" dataset here:

51 | https://competitions.codalab.org/competitions/18468

52 |

53 | Then please sequentially finish the following steps:

54 | 1. `./train_deep_globe_global.sh`

55 | 2. `./train_deep_globe_global2local.sh`

56 | 3. `./train_deep_globe_local2global.sh`

57 |

58 | The above jobs complete the following tasks:

59 | * create folder "saved_models" and "runs" to store the model checkpoints and logging files (you could configure the bash scrips to use your own paths).

60 | * step 1 and 2 prepare the trained models for step 2 and 3, respectively. You could use your own names to save the model checkpoints, but this requires to update values of the flag `path_g` and `path_g2l`.

61 |

62 | ## Evaluation

63 | 1. Please download the pre-trained models for the Deep Globe dataset and put them into folder "saved_models":

64 | * [fpn_deepglobe_global.pth](https://drive.google.com/file/d/1xUJoNEzj5LeclH9tHXZ2VsEI9LpC77kQ/view?usp=sharing)

65 | * [fpn_deepglobe_global2local.pth](https://drive.google.com/file/d/1_lCzi2KIygcrRcvBJ31G3cBwAMibn_AS/view?usp=sharing)

66 | * [fpn_deepglobe_local2global.pth](https://drive.google.com/file/d/198EcAO7VN8Ujn4N4FBg3sRgb8R_UKhYv/view?usp=sharing)

67 | 2. Download (see above "Training" section) and prepare the Deep Globe dataset according to the train.txt and crossvali.txt: put the image and label files into folder "train" and folder "crossvali"

68 | 3. Run script `./eval_deep_globe.sh`

69 |

70 | ## Citation

71 | If you use this code for your research, please cite our paper.

72 | ```

73 | @inproceedings{chen2019GLNET,

74 | title={Collaborative Global-Local Networks for Memory-Efficient Segmentation of Ultra-High Resolution Images},

75 | author={Chen, Wuyang and Jiang, Ziyu and Wang, Zhangyang and Cui, Kexin and Qian, Xiaoning},

76 | booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

77 | year={2019}

78 | }

79 | ```

80 |

81 | ## Acknowledgement

82 | We thank Prof. Andrew Jiang and Junru Wu for helping experiments.

83 |

84 |

86 |

--------------------------------------------------------------------------------

/dataset/deep_globe.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch.utils.data as data

3 | import numpy as np

4 | from PIL import Image, ImageFile

5 | import random

6 | from torchvision.transforms import ToTensor

7 | from torchvision import transforms

8 | import cv2

9 |

10 | ImageFile.LOAD_TRUNCATED_IMAGES = True

11 |

12 |

13 | def is_image_file(filename):

14 | return any(filename.endswith(extension) for extension in [".png", ".jpg", ".jpeg"])

15 |

16 |

17 | def find_label_map_name(img_filenames, labelExtension=".png"):

18 | img_filenames = img_filenames.replace('_sat.jpg', '_mask')

19 | return img_filenames + labelExtension

20 |

21 |

22 | def RGB_mapping_to_class(label):

23 | l, w = label.shape[0], label.shape[1]

24 | classmap = np.zeros(shape=(l, w))

25 | indices = np.where(np.all(label == (0, 255, 255), axis=-1))

26 | classmap[indices[0].tolist(), indices[1].tolist()] = 1

27 | indices = np.where(np.all(label == (255, 255, 0), axis=-1))

28 | classmap[indices[0].tolist(), indices[1].tolist()] = 2

29 | indices = np.where(np.all(label == (255, 0, 255), axis=-1))

30 | classmap[indices[0].tolist(), indices[1].tolist()] = 3

31 | indices = np.where(np.all(label == (0, 255, 0), axis=-1))

32 | classmap[indices[0].tolist(), indices[1].tolist()] = 4

33 | indices = np.where(np.all(label == (0, 0, 255), axis=-1))

34 | classmap[indices[0].tolist(), indices[1].tolist()] = 5

35 | indices = np.where(np.all(label == (255, 255, 255), axis=-1))

36 | classmap[indices[0].tolist(), indices[1].tolist()] = 6

37 | indices = np.where(np.all(label == (0, 0, 0), axis=-1))

38 | classmap[indices[0].tolist(), indices[1].tolist()] = 0

39 | # plt.imshow(colmap)

40 | # plt.show()

41 | return classmap

42 |

43 |

44 | def classToRGB(label):

45 | l, w = label.shape[0], label.shape[1]

46 | colmap = np.zeros(shape=(l, w, 3)).astype(np.float32)

47 | indices = np.where(label == 1)

48 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [0, 255, 255]

49 | indices = np.where(label == 2)

50 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [255, 255, 0]

51 | indices = np.where(label == 3)

52 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [255, 0, 255]

53 | indices = np.where(label == 4)

54 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [0, 255, 0]

55 | indices = np.where(label == 5)

56 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [0, 0, 255]

57 | indices = np.where(label == 6)

58 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [255, 255, 255]

59 | indices = np.where(label == 0)

60 | colmap[indices[0].tolist(), indices[1].tolist(), :] = [0, 0, 0]

61 | transform = ToTensor();

62 | # plt.imshow(colmap)

63 | # plt.show()

64 | return transform(colmap)

65 |

66 |

67 | def class_to_target(inputs, numClass):

68 | batchSize, l, w = inputs.shape[0], inputs.shape[1], inputs.shape[2]

69 | target = np.zeros(shape=(batchSize, l, w, numClass), dtype=np.float32)

70 | for index in range(7):

71 | indices = np.where(inputs == index)

72 | temp = np.zeros(shape=7, dtype=np.float32)

73 | temp[index] = 1

74 | target[indices[0].tolist(), indices[1].tolist(), indices[2].tolist(), :] = temp

75 | return target.transpose(0, 3, 1, 2)

76 |

77 |

78 | def label_bluring(inputs):

79 | batchSize, numClass, height, width = inputs.shape

80 | outputs = np.ones((batchSize, numClass, height, width), dtype=np.float)

81 | for batchCnt in range(batchSize):

82 | for index in range(numClass):

83 | outputs[batchCnt, index, ...] = cv2.GaussianBlur(inputs[batchCnt, index, ...].astype(np.float), (7, 7), 0)

84 | return outputs

85 |

86 |

87 | class DeepGlobe(data.Dataset):

88 | """input and label image dataset"""

89 |

90 | def __init__(self, root, ids, label=False, transform=False):

91 | super(DeepGlobe, self).__init__()

92 | """

93 | Args:

94 |

95 | fileDir(string): directory with all the input images.

96 | transform(callable, optional): Optional transform to be applied on a sample

97 | """

98 | self.root = root

99 | self.label = label

100 | self.transform = transform

101 | self.ids = ids

102 | self.classdict = {1: "urban", 2: "agriculture", 3: "rangeland", 4: "forest", 5: "water", 6: "barren", 0: "unknown"}

103 |

104 | self.color_jitter = transforms.ColorJitter(brightness=0.3, contrast=0.3, saturation=0.3, hue=0.04)

105 | self.resizer = transforms.Resize((2448, 2448))

106 |

107 | def __getitem__(self, index):

108 | sample = {}

109 | sample['id'] = self.ids[index][:-8]

110 | image = Image.open(os.path.join(self.root, "Sat/" + self.ids[index])) # w, h

111 | sample['image'] = image

112 | # sample['image'] = transforms.functional.adjust_contrast(image, 1.4)

113 | if self.label:

114 | # label = scipy.io.loadmat(join(self.root, 'Notification/' + self.ids[index].replace('_sat.jpg', '_mask.mat')))["label"]

115 | # label = Image.fromarray(label)

116 | label = Image.open(os.path.join(self.root, 'Label/' + self.ids[index].replace('_sat.jpg', '_mask.png')))

117 | sample['label'] = label

118 | if self.transform and self.label:

119 | image, label = self._transform(image, label)

120 | sample['image'] = image

121 | sample['label'] = label

122 | # return {'image': image.astype(np.float32), 'label': label.astype(np.int64)}

123 | return sample

124 |

125 | def _transform(self, image, label):

126 | # if np.random.random() > 0.5:

127 | # image = self.color_jitter(image)

128 |

129 | # if np.random.random() > 0.5:

130 | # image = transforms.functional.vflip(image)

131 | # label = transforms.functional.vflip(label)

132 |

133 | if np.random.random() > 0.5:

134 | image = transforms.functional.hflip(image)

135 | label = transforms.functional.hflip(label)

136 |

137 | if np.random.random() > 0.5:

138 | degree = random.choice([90, 180, 270])

139 | image = transforms.functional.rotate(image, degree)

140 | label = transforms.functional.rotate(label, degree)

141 |

142 | # if np.random.random() > 0.5:

143 | # degree = 60 * np.random.random() - 30

144 | # image = transforms.functional.rotate(image, degree)

145 | # label = transforms.functional.rotate(label, degree)

146 |

147 | # if np.random.random() > 0.5:

148 | # ratio = np.random.random()

149 | # h = int(2448 * (ratio + 2) / 3.)

150 | # w = int(2448 * (ratio + 2) / 3.)

151 | # i = int(np.floor(np.random.random() * (2448 - h)))

152 | # j = int(np.floor(np.random.random() * (2448 - w)))

153 | # image = self.resizer(transforms.functional.crop(image, i, j, h, w))

154 | # label = self.resizer(transforms.functional.crop(label, i, j, h, w))

155 |

156 | return image, label

157 |

158 |

159 | def __len__(self):

160 | return len(self.ids)

--------------------------------------------------------------------------------

/utils/lovasz_losses.py:

--------------------------------------------------------------------------------

1 | # https://github.com/bermanmaxim/LovaszSoftmax/blob/master/pytorch/lovasz_losses.py

2 | """

3 | Lovasz-Softmax and Jaccard hinge loss in PyTorch

4 | Maxim Berman 2018 ESAT-PSI KU Leuven (MIT License)

5 | """

6 |

7 | from __future__ import print_function, division

8 |

9 | import torch

10 | from torch.autograd import Variable

11 | import torch.nn.functional as F

12 | import numpy as np

13 | try:

14 | from itertools import ifilterfalse

15 | except ImportError: # py3k

16 | from itertools import filterfalse

17 |

18 |

19 | def lovasz_grad(gt_sorted):

20 | """

21 | Computes gradient of the Lovasz extension w.r.t sorted errors

22 | See Alg. 1 in paper

23 | """

24 | p = len(gt_sorted)

25 | gts = gt_sorted.sum()

26 | intersection = gts - gt_sorted.float().cumsum(0)

27 | union = gts + (1 - gt_sorted).float().cumsum(0)

28 | jaccard = 1. - intersection / union

29 | if p > 1: # cover 1-pixel case

30 | jaccard[1:p] = jaccard[1:p] - jaccard[0:-1]

31 | return jaccard

32 |

33 |

34 | def iou_binary(preds, labels, EMPTY=1., ignore=None, per_image=True):

35 | """

36 | IoU for foreground class

37 | binary: 1 foreground, 0 background

38 | """

39 | if not per_image:

40 | preds, labels = (preds,), (labels,)

41 | ious = []

42 | for pred, label in zip(preds, labels):

43 | intersection = ((label == 1) & (pred == 1)).sum()

44 | union = ((label == 1) | ((pred == 1) & (label != ignore))).sum()

45 | if not union:

46 | iou = EMPTY

47 | else:

48 | iou = float(intersection) / union

49 | ious.append(iou)

50 | iou = mean(ious) # mean accross images if per_image

51 | return 100 * iou

52 |

53 |

54 | def iou(preds, labels, C, EMPTY=1., ignore=None, per_image=False):

55 | """

56 | Array of IoU for each (non ignored) class

57 | """

58 | if not per_image:

59 | preds, labels = (preds,), (labels,)

60 | ious = []

61 | for pred, label in zip(preds, labels):

62 | iou = []

63 | for i in range(C):

64 | if i != ignore: # The ignored label is sometimes among predicted classes (ENet - CityScapes)

65 | intersection = ((label == i) & (pred == i)).sum()

66 | union = ((label == i) | ((pred == i) & (label != ignore))).sum()

67 | if not union:

68 | iou.append(EMPTY)

69 | else:

70 | iou.append(float(intersection) / union)

71 | ious.append(iou)

72 | ious = map(mean, zip(*ious)) # mean accross images if per_image

73 | return 100 * np.array(ious)

74 |

75 |

76 | # --------------------------- BINARY LOSSES ---------------------------

77 |

78 |

79 | def lovasz_hinge(logits, labels, per_image=True, ignore=None):

80 | """

81 | Binary Lovasz hinge loss

82 | logits: [B, H, W] Variable, logits at each pixel (between -\infty and +\infty)

83 | labels: [B, H, W] Tensor, binary ground truth masks (0 or 1)

84 | per_image: compute the loss per image instead of per batch

85 | ignore: void class id

86 | """

87 | if per_image:

88 | loss = mean(lovasz_hinge_flat(*flatten_binary_scores(log.unsqueeze(0), lab.unsqueeze(0), ignore))

89 | for log, lab in zip(logits, labels))

90 | else:

91 | loss = lovasz_hinge_flat(*flatten_binary_scores(logits, labels, ignore))

92 | return loss

93 |

94 |

95 | def lovasz_hinge_flat(logits, labels):

96 | """

97 | Binary Lovasz hinge loss

98 | logits: [P] Variable, logits at each prediction (between -\infty and +\infty)

99 | labels: [P] Tensor, binary ground truth labels (0 or 1)

100 | ignore: label to ignore

101 | """

102 | if len(labels) == 0:

103 | # only void pixels, the gradients should be 0

104 | return logits.sum() * 0.

105 | signs = 2. * labels.float() - 1.

106 | errors = (1. - logits * Variable(signs))

107 | errors_sorted, perm = torch.sort(errors, dim=0, descending=True)

108 | perm = perm.data

109 | gt_sorted = labels[perm]

110 | grad = lovasz_grad(gt_sorted)

111 | loss = torch.dot(F.relu(errors_sorted), Variable(grad))

112 | return loss

113 |

114 |

115 | def flatten_binary_scores(scores, labels, ignore=None):

116 | """

117 | Flattens predictions in the batch (binary case)

118 | Remove labels equal to 'ignore'

119 | """

120 | scores = scores.view(-1)

121 | labels = labels.view(-1)

122 | if ignore is None:

123 | return scores, labels

124 | valid = (labels != ignore)

125 | vscores = scores[valid]

126 | vlabels = labels[valid]

127 | return vscores, vlabels

128 |

129 |

130 | class StableBCELoss(torch.nn.modules.Module):

131 | def __init__(self):

132 | super(StableBCELoss, self).__init__()

133 | def forward(self, input, target):

134 | neg_abs = - input.abs()

135 | loss = input.clamp(min=0) - input * target + (1 + neg_abs.exp()).log()

136 | return loss.mean()

137 |

138 |

139 | def binary_xloss(logits, labels, ignore=None):

140 | """

141 | Binary Cross entropy loss

142 | logits: [B, H, W] Variable, logits at each pixel (between -\infty and +\infty)

143 | labels: [B, H, W] Tensor, binary ground truth masks (0 or 1)

144 | ignore: void class id

145 | """

146 | logits, labels = flatten_binary_scores(logits, labels, ignore)

147 | loss = StableBCELoss()(logits, Variable(labels.float()))

148 | return loss

149 |

150 |

151 | # --------------------------- MULTICLASS LOSSES ---------------------------

152 |

153 |

154 | def lovasz_softmax(probas, labels, only_present=False, per_image=False, ignore=None):

155 | """

156 | Multi-class Lovasz-Softmax loss

157 | probas: [B, C, H, W] Variable, class probabilities at each prediction (between 0 and 1)

158 | labels: [B, H, W] Tensor, ground truth labels (between 0 and C - 1)

159 | only_present: average only on classes present in ground truth

160 | per_image: compute the loss per image instead of per batch

161 | ignore: void class labels

162 | """

163 | if per_image:

164 | loss = mean(lovasz_softmax_flat(*flatten_probas(prob.unsqueeze(0), lab.unsqueeze(0), ignore), only_present=only_present)

165 | for prob, lab in zip(probas, labels))

166 | else:

167 | loss = lovasz_softmax_flat(*flatten_probas(probas, labels, ignore), only_present=only_present)

168 | return loss

169 |

170 |

171 | def lovasz_softmax_flat(probas, labels, only_present=False):

172 | """

173 | Multi-class Lovasz-Softmax loss

174 | probas: [P, C] Variable, class probabilities at each prediction (between 0 and 1)

175 | labels: [P] Tensor, ground truth labels (between 0 and C - 1)

176 | only_present: average only on classes present in ground truth

177 | """

178 | C = probas.size(1)

179 | losses = []

180 | for c in range(C):

181 | fg = (labels == c).float() # foreground for class c

182 | if only_present and fg.sum() == 0:

183 | continue

184 | errors = (Variable(fg) - probas[:, c]).abs()

185 | errors_sorted, perm = torch.sort(errors, 0, descending=True)

186 | perm = perm.data

187 | fg_sorted = fg[perm]

188 | losses.append(torch.dot(errors_sorted, Variable(lovasz_grad(fg_sorted))))

189 | return mean(losses)

190 |

191 |

192 | def flatten_probas(probas, labels, ignore=None):

193 | """

194 | Flattens predictions in the batch

195 | """

196 | B, C, H, W = probas.size()

197 | probas = probas.permute(0, 2, 3, 1).contiguous().view(-1, C) # B * H * W, C = P, C

198 | labels = labels.view(-1)

199 | if ignore is None:

200 | return probas, labels

201 | valid = (labels != ignore)

202 | vprobas = probas[valid.nonzero().squeeze()]

203 | vlabels = labels[valid]

204 | # vlabels = labels[valid] - 1

205 | return vprobas, vlabels

206 |

207 | def xloss(logits, labels, ignore=None):

208 | """

209 | Cross entropy loss

210 | """

211 | return F.cross_entropy(logits, Variable(labels), ignore_index=255)

212 |

213 |

214 | # --------------------------- HELPER FUNCTIONS ---------------------------

215 |

216 | def mean(l, ignore_nan=False, empty=0):

217 | """

218 | nanmean compatible with generators.

219 | """

220 | l = iter(l)

221 | if ignore_nan:

222 | l = ifilterfalse(np.isnan, l)

223 | try:

224 | n = 1

225 | acc = next(l)

226 | except StopIteration:

227 | if empty == 'raise':

228 | raise ValueError('Empty mean')

229 | return empty

230 | for n, v in enumerate(l, 2):

231 | acc += v

232 | if n == 1:

233 | return acc

234 | return acc / n

235 |

--------------------------------------------------------------------------------

/train.txt:

--------------------------------------------------------------------------------

1 | 100694_sat.jpg

2 | 10233_sat.jpg

3 | 103665_sat.jpg

4 | 103730_sat.jpg

5 | 104113_sat.jpg

6 | 10901_sat.jpg

7 | 111335_sat.jpg

8 | 114433_sat.jpg

9 | 119079_sat.jpg

10 | 119_sat.jpg

11 | 120625_sat.jpg

12 | 122104_sat.jpg

13 | 122178_sat.jpg

14 | 125795_sat.jpg

15 | 131720_sat.jpg

16 | 133254_sat.jpg

17 | 13415_sat.jpg

18 | 134465_sat.jpg

19 | 137806_sat.jpg

20 | 139482_sat.jpg

21 | 140299_sat.jpg

22 | 141685_sat.jpg

23 | 142766_sat.jpg

24 | 149624_sat.jpg

25 | 152569_sat.jpg

26 | 154124_sat.jpg

27 | 15573_sat.jpg

28 | 156574_sat.jpg

29 | 156951_sat.jpg

30 | 157839_sat.jpg

31 | 158163_sat.jpg

32 | 159177_sat.jpg

33 | 159280_sat.jpg

34 | 159322_sat.jpg

35 | 160037_sat.jpg

36 | 161838_sat.jpg

37 | 164029_sat.jpg

38 | 172307_sat.jpg

39 | 172854_sat.jpg

40 | 174980_sat.jpg

41 | 176112_sat.jpg

42 | 176506_sat.jpg

43 | 182027_sat.jpg

44 | 182422_sat.jpg

45 | 185562_sat.jpg

46 | 192576_sat.jpg

47 | 192602_sat.jpg

48 | 20187_sat.jpg

49 | 202277_sat.jpg

50 | 204494_sat.jpg

51 | 204562_sat.jpg

52 | 207663_sat.jpg

53 | 207743_sat.jpg

54 | 208495_sat.jpg

55 | 208695_sat.jpg

56 | 21023_sat.jpg

57 | 210473_sat.jpg

58 | 210669_sat.jpg

59 | 215525_sat.jpg

60 | 217085_sat.jpg

61 | 21717_sat.jpg

62 | 218329_sat.jpg

63 | 221278_sat.jpg

64 | 232373_sat.jpg

65 | 2334_sat.jpg

66 | 235869_sat.jpg

67 | 238322_sat.jpg

68 | 239955_sat.jpg

69 | 244423_sat.jpg

70 | 24813_sat.jpg

71 | 252743_sat.jpg

72 | 253691_sat.jpg

73 | 254565_sat.jpg

74 | 255711_sat.jpg

75 | 256189_sat.jpg

76 | 257695_sat.jpg

77 | 26261_sat.jpg

78 | 263576_sat.jpg

79 | 266_sat.jpg

80 | 267065_sat.jpg

81 | 267163_sat.jpg

82 | 269601_sat.jpg

83 | 271609_sat.jpg

84 | 27460_sat.jpg

85 | 276761_sat.jpg

86 | 276912_sat.jpg

87 | 277644_sat.jpg

88 | 277994_sat.jpg

89 | 280703_sat.jpg

90 | 282120_sat.jpg

91 | 28559_sat.jpg

92 | 291214_sat.jpg

93 | 291781_sat.jpg

94 | 293776_sat.jpg

95 | 29419_sat.jpg

96 | 294697_sat.jpg

97 | 296279_sat.jpg

98 | 296368_sat.jpg

99 | 298396_sat.jpg

100 | 298817_sat.jpg

101 | 299287_sat.jpg

102 | 300626_sat.jpg

103 | 300967_sat.jpg

104 | 303327_sat.jpg

105 | 306486_sat.jpg

106 | 308959_sat.jpg

107 | 310419_sat.jpg

108 | 311386_sat.jpg

109 | 315352_sat.jpg

110 | 316446_sat.jpg

111 | 318338_sat.jpg

112 | 321724_sat.jpg

113 | 322400_sat.jpg

114 | 325354_sat.jpg

115 | 331533_sat.jpg

116 | 331994_sat.jpg

117 | 33262_sat.jpg

118 | 333661_sat.jpg

119 | 335737_sat.jpg

120 | 33573_sat.jpg

121 | 337272_sat.jpg

122 | 338798_sat.jpg

123 | 340898_sat.jpg

124 | 343016_sat.jpg

125 | 34330_sat.jpg

126 | 343425_sat.jpg

127 | 34359_sat.jpg

128 | 345134_sat.jpg

129 | 345494_sat.jpg

130 | 347676_sat.jpg

131 | 347725_sat.jpg

132 | 3484_sat.jpg

133 | 351727_sat.jpg

134 | 352808_sat.jpg

135 | 358591_sat.jpg

136 | 361129_sat.jpg

137 | 36183_sat.jpg

138 | 362274_sat.jpg

139 | 365555_sat.jpg

140 | 373186_sat.jpg

141 | 37586_sat.jpg

142 | 376441_sat.jpg

143 | 37755_sat.jpg

144 | 382428_sat.jpg

145 | 383392_sat.jpg

146 | 383637_sat.jpg

147 | 384477_sat.jpg

148 | 387554_sat.jpg

149 | 388811_sat.jpg

150 | 392711_sat.jpg

151 | 397351_sat.jpg

152 | 397864_sat.jpg

153 | 400179_sat.jpg

154 | 40168_sat.jpg

155 | 402002_sat.jpg

156 | 40350_sat.jpg

157 | 403978_sat.jpg

158 | 405378_sat.jpg

159 | 405744_sat.jpg

160 | 411741_sat.jpg

161 | 413779_sat.jpg

162 | 416381_sat.jpg

163 | 416463_sat.jpg

164 | 417313_sat.jpg

165 | 41944_sat.jpg

166 | 420066_sat.jpg

167 | 423117_sat.jpg

168 | 428327_sat.jpg

169 | 434243_sat.jpg

170 | 435277_sat.jpg

171 | 439854_sat.jpg

172 | 442329_sat.jpg

173 | 444902_sat.jpg

174 | 45357_sat.jpg

175 | 45676_sat.jpg

176 | 457982_sat.jpg

177 | 458687_sat.jpg

178 | 458776_sat.jpg

179 | 463855_sat.jpg

180 | 467076_sat.jpg

181 | 468103_sat.jpg

182 | 470446_sat.jpg

183 | 470798_sat.jpg

184 | 476582_sat.jpg

185 | 476991_sat.jpg

186 | 482365_sat.jpg

187 | 483506_sat.jpg

188 | 485061_sat.jpg

189 | 491356_sat.jpg

190 | 491696_sat.jpg

191 | 492365_sat.jpg

192 | 495876_sat.jpg

193 | 496948_sat.jpg

194 | 499161_sat.jpg

195 | 499266_sat.jpg

196 | 499418_sat.jpg

197 | 499511_sat.jpg

198 | 501053_sat.jpg

199 | 507241_sat.jpg

200 | 508571_sat.jpg

201 | 511850_sat.jpg

202 | 515521_sat.jpg

203 | 516056_sat.jpg

204 | 516317_sat.jpg

205 | 518833_sat.jpg

206 | 520614_sat.jpg

207 | 524056_sat.jpg

208 | 524518_sat.jpg

209 | 528163_sat.jpg

210 | 530040_sat.jpg

211 | 534154_sat.jpg

212 | 53987_sat.jpg

213 | 541060_sat.jpg

214 | 541353_sat.jpg

215 | 544464_sat.jpg

216 | 547201_sat.jpg

217 | 547785_sat.jpg

218 | 548423_sat.jpg

219 | 548686_sat.jpg

220 | 549870_sat.jpg

221 | 549959_sat.jpg

222 | 552206_sat.jpg

223 | 552396_sat.jpg

224 | 55374_sat.jpg

225 | 556572_sat.jpg

226 | 557309_sat.jpg

227 | 561117_sat.jpg

228 | 568270_sat.jpg

229 | 56924_sat.jpg

230 | 570332_sat.jpg

231 | 575902_sat.jpg

232 | 584941_sat.jpg

233 | 585043_sat.jpg

234 | 586670_sat.jpg

235 | 587968_sat.jpg

236 | 588542_sat.jpg

237 | 58864_sat.jpg

238 | 58910_sat.jpg

239 | 596837_sat.jpg

240 | 599842_sat.jpg

241 | 599975_sat.jpg

242 | 601966_sat.jpg

243 | 602453_sat.jpg

244 | 604647_sat.jpg

245 | 604833_sat.jpg

246 | 605037_sat.jpg

247 | 605764_sat.jpg

248 | 606014_sat.jpg

249 | 606370_sat.jpg

250 | 607622_sat.jpg

251 | 608673_sat.jpg

252 | 609234_sat.jpg

253 | 611015_sat.jpg

254 | 612214_sat.jpg

255 | 61245_sat.jpg

256 | 613687_sat.jpg

257 | 616234_sat.jpg

258 | 616860_sat.jpg

259 | 617844_sat.jpg

260 | 618372_sat.jpg

261 | 621206_sat.jpg

262 | 621633_sat.jpg

263 | 622733_sat.jpg

264 | 623857_sat.jpg

265 | 625296_sat.jpg

266 | 626208_sat.jpg

267 | 627806_sat.jpg

268 | 629198_sat.jpg

269 | 632489_sat.jpg

270 | 634421_sat.jpg

271 | 634717_sat.jpg

272 | 635157_sat.jpg

273 | 636849_sat.jpg

274 | 638158_sat.jpg

275 | 639149_sat.jpg

276 | 639314_sat.jpg

277 | 6399_sat.jpg

278 | 642909_sat.jpg

279 | 644103_sat.jpg

280 | 644150_sat.jpg

281 | 645001_sat.jpg

282 | 649260_sat.jpg

283 | 650751_sat.jpg

284 | 651312_sat.jpg

285 | 65170_sat.jpg

286 | 651774_sat.jpg

287 | 652883_sat.jpg

288 | 655313_sat.jpg

289 | 66344_sat.jpg

290 | 664140_sat.jpg

291 | 664396_sat.jpg

292 | 665914_sat.jpg

293 | 668465_sat.jpg

294 | 669010_sat.jpg

295 | 669779_sat.jpg

296 | 672041_sat.jpg

297 | 672823_sat.jpg

298 | 675424_sat.jpg

299 | 675849_sat.jpg

300 | 676758_sat.jpg

301 | 678520_sat.jpg

302 | 679036_sat.jpg

303 | 682046_sat.jpg

304 | 682688_sat.jpg

305 | 682949_sat.jpg

306 | 692004_sat.jpg

307 | 695475_sat.jpg

308 | 696257_sat.jpg

309 | 69628_sat.jpg

310 | 698065_sat.jpg

311 | 698628_sat.jpg

312 | 699650_sat.jpg

313 | 711893_sat.jpg

314 | 714414_sat.jpg

315 | 715633_sat.jpg

316 | 715846_sat.jpg

317 | 71619_sat.jpg

318 | 717225_sat.jpg

319 | 723067_sat.jpg

320 | 723719_sat.jpg

321 | 727832_sat.jpg

322 | 72807_sat.jpg

323 | 730821_sat.jpg

324 | 736869_sat.jpg

325 | 736933_sat.jpg

326 | 739122_sat.jpg

327 | 739760_sat.jpg

328 | 740937_sat.jpg

329 | 747824_sat.jpg

330 | 749523_sat.jpg

331 | 753408_sat.jpg

332 | 759668_sat.jpg

333 | 759855_sat.jpg

334 | 761189_sat.jpg

335 | 762359_sat.jpg

336 | 763075_sat.jpg

337 | 763892_sat.jpg

338 | 765792_sat.jpg

339 | 76759_sat.jpg

340 | 768475_sat.jpg

341 | 772144_sat.jpg

342 | 772567_sat.jpg

343 | 77388_sat.jpg

344 | 774779_sat.jpg

345 | 778804_sat.jpg

346 | 782103_sat.jpg

347 | 784140_sat.jpg

348 | 786226_sat.jpg

349 | 7906_sat.jpg

350 | 798411_sat.jpg

351 | 801361_sat.jpg

352 | 802645_sat.jpg

353 | 80318_sat.jpg

354 | 803958_sat.jpg

355 | 805150_sat.jpg

356 | 806805_sat.jpg

357 | 807146_sat.jpg

358 | 80808_sat.jpg

359 | 808980_sat.jpg

360 | 81011_sat.jpg

361 | 810368_sat.jpg

362 | 811075_sat.jpg

363 | 820543_sat.jpg

364 | 825592_sat.jpg

365 | 825816_sat.jpg

366 | 827126_sat.jpg

367 | 830444_sat.jpg

368 | 834433_sat.jpg

369 | 838669_sat.jpg

370 | 841621_sat.jpg

371 | 845069_sat.jpg

372 | 847604_sat.jpg

373 | 848649_sat.jpg

374 | 848728_sat.jpg

375 | 848780_sat.jpg

376 | 849797_sat.jpg

377 | 853702_sat.jpg

378 | 855_sat.jpg

379 | 860326_sat.jpg

380 | 866782_sat.jpg

381 | 867017_sat.jpg

382 | 868003_sat.jpg

383 | 86805_sat.jpg

384 | 870705_sat.jpg

385 | 873132_sat.jpg

386 | 875328_sat.jpg

387 | 877160_sat.jpg

388 | 878990_sat.jpg

389 | 880610_sat.jpg

390 | 88571_sat.jpg

391 | 888263_sat.jpg

392 | 888343_sat.jpg

393 | 889145_sat.jpg

394 | 889920_sat.jpg

395 | 890145_sat.jpg

396 | 893261_sat.jpg

397 | 893904_sat.jpg

398 | 895509_sat.jpg

399 | 899693_sat.jpg

400 | 901715_sat.jpg

401 | 902350_sat.jpg

402 | 903649_sat.jpg

403 | 906113_sat.jpg

404 | 910525_sat.jpg

405 | 911457_sat.jpg

406 | 914008_sat.jpg

407 | 916141_sat.jpg

408 | 916336_sat.jpg

409 | 916518_sat.jpg

410 | 917081_sat.jpg

411 | 918446_sat.jpg

412 | 919051_sat.jpg

413 | 923223_sat.jpg

414 | 923618_sat.jpg

415 | 924236_sat.jpg

416 | 926392_sat.jpg

417 | 927126_sat.jpg

418 | 927644_sat.jpg

419 | 930028_sat.jpg

420 | 939614_sat.jpg

421 | 940229_sat.jpg

422 | 942307_sat.jpg

423 | 942594_sat.jpg

424 | 943463_sat.jpg

425 | 943943_sat.jpg

426 | 946386_sat.jpg

427 | 946408_sat.jpg

428 | 946475_sat.jpg

429 | 947994_sat.jpg

430 | 949235_sat.jpg

431 | 951120_sat.jpg

432 | 952430_sat.jpg

433 | 954552_sat.jpg

434 | 95613_sat.jpg

435 | 95683_sat.jpg

436 | 95863_sat.jpg

437 | 961407_sat.jpg

438 | 965977_sat.jpg

439 | 967818_sat.jpg

440 | 96870_sat.jpg

441 | 969934_sat.jpg

442 | 971880_sat.jpg

443 | 98150_sat.jpg

444 | 981852_sat.jpg

445 | 983603_sat.jpg

446 | 987079_sat.jpg

447 | 987427_sat.jpg

448 | 988517_sat.jpg

449 | 989499_sat.jpg

450 | 990573_sat.jpg

451 | 990617_sat.jpg

452 | 990619_sat.jpg

453 | 991758_sat.jpg

454 | 995492_sat.jpg

455 |

--------------------------------------------------------------------------------

/train_deep_globe.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # coding: utf-8

3 |

4 | from __future__ import absolute_import, division, print_function

5 |

6 | import os

7 | import numpy as np

8 | import torch

9 | import torch.nn as nn

10 | from torchvision import transforms

11 | from tqdm import tqdm

12 | from dataset.deep_globe import DeepGlobe, classToRGB, is_image_file