├── .gitignore
├── LICENSE
├── README.md
├── __init__.py
├── examples
│   ├── batch_process.py
│   ├── convert_siglib.py
│   ├── libc-scraper
│   │   ├── .gitignore
│   │   ├── README.md
│   │   ├── merge_ubuntu.py
│   │   ├── process-deb.sh
│   │   ├── run.sh
│   │   └── ubuntu-libc-scraper.py
│   ├── merge_multiple_versions.py
│   └── sig_match.py
├── icon.ico
├── images
│   └── explorer.png
├── plugin.json
├── pyproject.toml
├── requirements.txt
├── setup.cfg
├── sigkit
│   ├── FlatbufSignatureLibrary
│   │   ├── CallRef.py
│   │   ├── Function.py
│   │   ├── Pattern.py
│   │   ├── SignatureLibrary.py
│   │   ├── TrieNode.py
│   │   └── __init__.py
│   ├── __init__.py
│   ├── compute_sig.py
│   ├── sig_serialize_fb.py
│   ├── sig_serialize_json.py
│   ├── sigexplorer.py
│   ├── signaturelibrary.py
│   └── trie_ops.py
└── signaturelibrary.fbs

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__

2 | *.bndb

3 | *.a

4 | *.so

5 | *.so.6

6 | testcases/

7 | *.pyc

8 | .idea

9 |

10 | *.pkl

11 | *.fb

12 | *.sig

13 | *.zlib

14 |

15 | sigs/

16 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2019-2020 Vector 35 Inc

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

4 |

5 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

6 |

7 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Signature Kit Plugin (v1.2.2)

2 | Author: **Vector 35 Inc**

3 |

4 | _Python tools for working with Signature Libraries_

5 |

6 | ## Description:

7 |

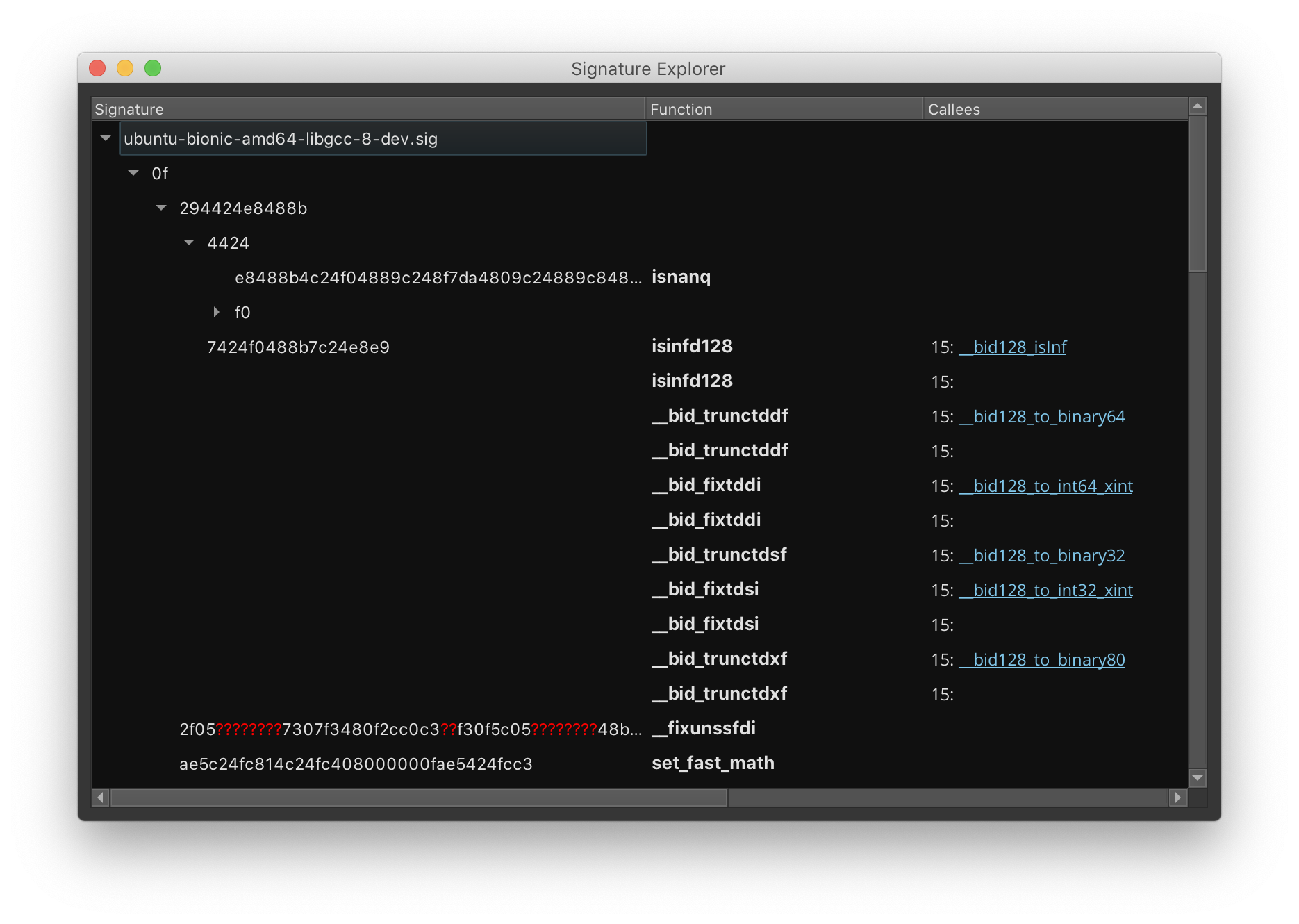

8 | This plugin provides Python tools for generating, manipulating, viewing, loading, and saving signature libraries (.sig) for the Signature System. This plugin also provides UI integration for easy access from the Binary Ninja UI to common functions in the `Plugins\Signature Library` menu.

9 |

10 |

11 |

12 |

13 | Also included are [example scripts](https://github.com/Vector35/sigkit/tree/master/examples) which demonstrate batch processing and automatic creation of signature libraries for Ubuntu libc.

14 | You can also run the Signature Explorer GUI as a standalone app.

15 |

16 |

17 | ## Installation Instructions

18 |

19 | ### Windows

20 |

21 |

22 |

23 | ### Linux

24 |

25 |

26 |

27 | ### Darwin

28 |

29 |

30 |

31 | ## Minimum Version

32 |

33 | This plugin requires the following minimum version of Binary Ninja:

34 |

35 | * 1997

36 |

37 |

38 |

39 | ## Required Dependencies

40 |

41 | The following dependencies are required for this plugin:

42 |

43 | * pip - flatbuffers

44 |

45 |

46 | ## License

47 |

48 | This plugin is released under an MIT license.

49 | ## Metadata Version

50 |

51 | 2

52 |

--------------------------------------------------------------------------------

/__init__.py:

--------------------------------------------------------------------------------

1 | # coding=utf-8

2 |

3 | # Copyright (c) 2019-2020 Vector 35 Inc

4 | #

5 | # Permission is hereby granted, free of charge, to any person obtaining a copy

6 | # of this software and associated documentation files (the "Software"), to

7 | # deal in the Software without restriction, including without limitation the

8 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

9 | # sell copies of the Software, and to permit persons to whom the Software is

10 | # furnished to do so, subject to the following conditions:

11 | #

12 | # The above copyright notice and this permission notice shall be included in

13 | # all copies or substantial portions of the Software.

14 | #

15 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

20 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

21 | # IN THE SOFTWARE.

22 |

23 | from .sigkit.sig_serialize_fb import SignatureLibraryReader, SignatureLibraryWriter

24 | from .sigkit.compute_sig import process_function as generate_function_signature

25 |

26 | def load_signature_library(filename):

27 |     """

28 |     Load a signature library from a .sig file.

29 |     :param filename: input filename

30 |     :return: instance of `TrieNode`, the root of the signature trie.

31 |     """

32 |     with open(filename, 'rb') as f:

33 |         buf = f.read()

34 |     return SignatureLibraryReader().deserialize(buf)

35 |

36 | def save_signature_library(sig_lib, filename):

37 |     """

38 |     Save the given signature library to a file.

39 |     :param sig_lib: instance of `TrieNode`, the root of the signature trie.

40 |     :param filename: destination filename

41 |     """

42 |     buf = SignatureLibraryWriter().serialize(sig_lib)

43 |     with open(filename, 'wb') as f:

44 |         f.write(buf)

45 |

--------------------------------------------------------------------------------

/examples/batch_process.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | # Copyright (c) 2015-2020 Vector 35 Inc

4 | #

5 | # Permission is hereby granted, free of charge, to any person obtaining a copy

6 | # of this software and associated documentation files (the "Software"), to

7 | # deal in the Software without restriction, including without limitation the

8 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

9 | # sell copies of the Software, and to permit persons to whom the Software is

10 | # furnished to do so, subject to the following conditions:

11 | #

12 | # The above copyright notice and this permission notice shall be included in

13 | # all copies or substantial portions of the Software.

14 | #

15 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

20 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

21 | # IN THE SOFTWARE.

22 |

23 | """

24 | This script processes many object files using headless mode and generates

25 | function signatures for functions in them in a highly parallelized fashion.

26 | The result is a dictionary of {FunctionNode: FunctionInfo} that is then pickled

27 | and saved to disk. These pickles can be processed with a merging script, e.g.

28 | merge_multiple_versions.py or libc-scraper's merge_ubuntu.py.

29 | """

30 |

31 | import time

32 |

33 | from binaryninja import *

34 |

35 | import sigkit

36 |

37 | def process_bv(bv):

38 | global results

39 | print(bv.file.filename, ': processing')

40 | guess_relocs = len(bv.relocation_ranges) == 0

41 |

42 | for func in bv.functions:

43 | try:

44 | if bv.get_symbol_at(func.start) is None: continue

45 | node, info = sigkit.generate_function_signature(func, guess_relocs)

46 | results.put((node, info))

47 | print("Processed", func.name)

48 | except:

49 | import traceback

50 | traceback.print_exc()

51 | print(bv.file.filename, ': done')

52 |

53 | def on_analysis_complete(self):

54 | global wg

55 | process_bv(self.view)

56 | with wg.get_lock():

57 | wg.value -= 1

58 | self.view.file.close()

59 |

60 | def process_binary(input_binary):

61 | global wg

62 | print(input_binary, ': loading')

63 | if input_binary.endswith('.dll'):

64 | bv = binaryninja.BinaryViewType["PE"].open(input_binary)

65 | cxt = PluginCommandContext(bv)

66 | PluginCommand.get_valid_list(cxt)['PDB\\Load (BETA)'].execute(cxt)

67 | elif input_binary.endswith('.o'):

68 | bv = binaryninja.BinaryViewType["ELF"].open(input_binary)

69 | else:

70 | raise ValueError('unsupported input file', input_binary)

71 | if not bv:

72 | print('Failed to load', input_binary)

73 | return

74 | AnalysisCompletionEvent(bv, on_analysis_complete)

75 | bv.update_analysis()

76 | with wg.get_lock():

77 | wg.value += 1

78 |

79 | def async_process(input_queue):

80 | for input_binary in input_queue:

81 | process_binary(input_binary)

82 | yield

83 |

84 | def init_child(wg_, results_):

85 | global wg, results

86 | wg, results = wg_, results_

87 |

88 | if __name__ == '__main__':

89 | import sys

90 | from pathlib import Path

91 | if len(sys.argv) < 3:

92 | print('Usage: %s ' % (sys.argv[0]))

93 | print('The pickle designates the filename of a pickle file that the computed function metadata will be saved to.')

94 | sys.exit(1)

95 |

96 | import multiprocessing as mp

97 | wg = mp.Value('i', 0)

98 | results = mp.Queue()

99 |

100 | func_info = {}

101 |

102 | with mp.Pool(mp.cpu_count(), initializer=init_child, initargs=(wg, results)) as pool:

103 | pool.map(process_binary, map(str, Path('.').glob(sys.argv[1])))

104 |

105 | while True:

106 | time.sleep(0.1)

107 | with wg.get_lock():

108 | if wg.value == 0: break

109 |

110 | while not results.empty():

111 | node, info = results.get()

112 | func_info[node] = info

113 |

114 | import pickle

115 | with open(sys.argv[2], 'wb') as f:

116 | pickle.dump(func_info, f)

117 |

--------------------------------------------------------------------------------

/examples/convert_siglib.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2015-2020 Vector 35 Inc

2 | #

3 | # Permission is hereby granted, free of charge, to any person obtaining a copy

4 | # of this software and associated documentation files (the "Software"), to

5 | # deal in the Software without restriction, including without limitation the

6 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

7 | # sell copies of the Software, and to permit persons to whom the Software is

8 | # furnished to do so, subject to the following conditions:

9 | #

10 | # The above copyright notice and this permission notice shall be included in

11 | # all copies or substantial portions of the Software.

12 | #

13 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

18 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

19 | # IN THE SOFTWARE.

20 |

21 | """

22 | This utility shows how to load and save signature libraries using the sigkit API.

23 | Although many file formats are supported, Binary Ninja will only support signatures

24 | in the .sig (flatbuffer) format. The other formats are provided for debugging

25 | purposes.

26 | """

27 |

28 | import json, pickle

29 | import zlib

30 |

31 | from sigkit import *

32 |

33 | if __name__ == '__main__':

34 |     import sys

35 |

36 |     if len(sys.argv) < 2:

37 |         print('Usage: convert_siglib.py ')

38 |         sys.exit(1)

39 |

40 |     # Load a signature library.

41 |     filename = sys.argv[1]

42 |     basename, ext = filename[:filename.index('.')], filename[filename.index('.'):]

43 |     if ext == '.sig':

44 |         with open(filename, 'rb') as f:

45 |             sig_trie = sig_serialize_fb.load(f)

46 |     elif ext == '.json':

47 |         with open(filename, 'r') as f:

48 |             sig_trie = sig_serialize_json.load(f)

49 |     elif ext == '.json.zlib':

50 |         with open(filename, 'rb') as f:

51 |             sig_trie = sig_serialize_json.deserialize(json.loads(zlib.decompress(f.read()).decode('utf-8')))

52 |     elif ext == '.pkl':

53 |         with open(filename, 'rb') as f:

54 |             sig_trie = pickle.load(f)

55 |     else:

56 |         print('Unsupported file extension ' + ext)

57 |         sys.exit(1)

58 |

59 |     # Save the signature library to a binary format and write it to a file.

60 |     buf = sig_serialize_fb.dumps(sig_trie)

61 |     with open(basename + '.sig', 'wb') as f:

62 |         f.write(buf)

63 |

64 |     # This is a pretty stringent assertion, but I want to be sure this implementation is correct.

65 |     # having the exact same round-trip depends on having a consistent iteration order through the trie as well

66 |     # as the ordering of the functions per node. That's enforced by iterating the trie (DFS) in a sorted fashion.

67 |     assert buf == sig_serialize_fb.SignatureLibraryWriter().serialize(sig_serialize_fb.SignatureLibraryReader().deserialize(buf))

68 |

--------------------------------------------------------------------------------

/examples/libc-scraper/.gitignore:

--------------------------------------------------------------------------------

1 | ubuntu

2 | requests-cache.sqlite

3 |

--------------------------------------------------------------------------------

/examples/libc-scraper/README.md:

--------------------------------------------------------------------------------

1 | # libc-scraper

2 |

3 | This directory includes scripts that demonstrate how sigkit can be scaled up performantly.

4 |

5 | The goal of libc-scraper is to scrape *.debs for Ubuntu libcs, process them using headless mode, and generate space-efficient signature libraries.

6 |

7 | batch_process.py demonstrates how to generate signatures using headless mode.

8 |

9 | Of special interest is merge_ubuntu.py, which shows how you can create small signature libraries that combine multiple versions of the same library.

10 | Using clever tricks, it is possible to aggressively deduplicate across multiple versions while maintaining accuracy.

11 |
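As a rough illustration of why combining versions saves space, the toy sketch below stores each distinct (name, pattern) pair once and records which versions contain it. It is a simplified stand-in written under stated assumptions, not the trie-based algorithm in `sigkit.trie_ops`: the function `merge_versions`, the version labels, and the byte patterns are all hypothetical.

```python
from collections import defaultdict


def merge_versions(versions):
    """Merge {function_name: pattern_bytes} dicts from several library versions.

    Hypothetical helper: identical (name, pattern) pairs are stored once,
    together with the set of versions they were seen in.
    """
    merged = defaultdict(set)
    for version, funcs in versions.items():
        for name, pattern in funcs.items():
            merged[(name, pattern)].add(version)
    return dict(merged)


# Made-up data: memcpy is byte-identical in both versions, strlen differs.
versions = {
    "2.26": {"memcpy": b"\x55\x48\x89\xe5", "strlen": b"\x31\xc0"},
    "2.27": {"memcpy": b"\x55\x48\x89\xe5", "strlen": b"\x31\xc9"},
}

merged = merge_versions(versions)
total = sum(len(funcs) for funcs in versions.values())
print(total, "entries before merging,", len(merged), "after")
```

Here the unchanged `memcpy` collapses into a single entry, while the two `strlen` variants are both kept so that either version can still be matched.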

--------------------------------------------------------------------------------

/examples/libc-scraper/merge_ubuntu.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | # Copyright (c) 2015-2020 Vector 35 Inc

4 | #

5 | # Permission is hereby granted, free of charge, to any person obtaining a copy

6 | # of this software and associated documentation files (the "Software"), to

7 | # deal in the Software without restriction, including without limitation the

8 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

9 | # sell copies of the Software, and to permit persons to whom the Software is

10 | # furnished to do so, subject to the following conditions:

11 | #

12 | # The above copyright notice and this permission notice shall be included in

13 | # all copies or substantial portions of the Software.

14 | #

15 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

20 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

21 | # IN THE SOFTWARE.

22 |

23 | """

24 | This script generates libc signature libraries after precomputing function

25 | signatures using batch_process.py, using all cpus available on the machine.

26 | """

27 |

28 | import os, sys

29 | import gc

30 | import pickle

31 | from pathlib import Path

32 | import tqdm

33 | import asyncio

34 | import concurrent.futures

35 | import math

36 |

37 | import sigkit.signaturelibrary

38 | import sigkit.trie_ops

39 | import sigkit.sig_serialize_fb

40 |

41 | cpu_factor = int(math.ceil(math.sqrt(os.cpu_count())))

42 |

43 | # delete weird, useless funcs and truncate names

44 | def cleanup_info(func_info, maxlen=40):

45 | import re

46 | to_delete = set()

47 | for f in func_info:

48 | if re.match(r'\.L\d+', f.name):

49 | to_delete.add(f)

50 | continue

51 | f.name = f.name[:maxlen]

52 | for f in to_delete:

53 | del func_info[f]

54 |

55 | # load all pickles into a single signature library

56 | def load_pkls(pkls):

57 | # rarely-used libgcc stuff

58 | pkl_blacklist = {'libcilkrts.pkl', 'libubsan.pkl', 'libitm.pkl', 'libgcov.pkl', 'libmpx.pkl', 'libmpxwrappers.pkl', 'libquadmath.pkl', 'libgomp.pkl'}

59 | trie, func_info = sigkit.signaturelibrary.new_trie(), {}

60 | for pkl in pkls:

61 | if os.path.basename(pkl) in pkl_blacklist: continue

62 | with open(pkl, 'rb') as f:

63 | pkl_funcs = pickle.load(f)

64 | cleanup_info(pkl_funcs)

65 | sigkit.trie_ops.trie_insert_funcs(trie, pkl_funcs)

66 | func_info.update(pkl_funcs)

67 | sigkit.trie_ops.finalize_trie(trie, func_info)

68 | return trie, func_info

69 |

70 | def combine_sig_libs(sig_lib1, sig_lib2):

71 | sigkit.trie_ops.combine_signature_libraries(*sig_lib1, *sig_lib2)

72 | return sig_lib1

73 |

74 | def finalize_sig_lib(sig_lib):

75 | sigkit.trie_ops.finalize_trie(*sig_lib)

76 | return sig_lib

77 |

78 | def do_package(package):

79 | loop = asyncio.get_event_loop()

80 | pool = concurrent.futures.ProcessPoolExecutor(cpu_factor)

81 |

82 | async def inner():

83 | print('Processing', package)

84 | result_filename = os.path.join('sigs', package.replace('/', '-') + '.sig')

85 | if os.path.exists(result_filename):

86 | print(result_filename + ' exists')

87 | return

88 |

89 | pkl_groups = []

90 | for pkg_version in os.listdir(package):

91 | pkg_version = os.path.join(package, pkg_version)

92 | pkls = Path(pkg_version).glob('**/*.pkl')

93 | pkls = list(map(str, pkls))

94 | if not pkls: continue

95 | # print(' ' + pkg_version, len(pkls))

96 | pkl_groups.append(pkls)

97 | if not pkl_groups:

98 | print(package, 'has no versions available')

99 | return

100 |

101 | with tqdm.tqdm(total=len(pkl_groups), desc='generating tries') as pbar:

102 | async def async_load(to_load):

103 | result = await loop.run_in_executor(pool, load_pkls, to_load)

104 | pbar.update(1)

105 | pbar.refresh()

106 | return result

107 | lib_versions = await asyncio.gather(*map(async_load, pkl_groups))

108 |

109 | # linear merge

110 | # dst_trie, dst_funcs = sigkit.signaturelibrary.new_trie(), {}

111 | # for trie, funcs in tqdm.tqdm(lib_versions):

112 | # sigkit.trie_ops.combine_signature_libraries(dst_trie, dst_funcs, trie, funcs)

113 |

114 | # big brain parallel async binary merge

115 | with tqdm.tqdm(total=len(lib_versions)-1, desc='merging') as pbar:

116 | async def merge(sig_libs):

117 | assert len(sig_libs)

118 | if len(sig_libs) == 1:

119 | return sig_libs[0]

120 | else:

121 | half = len(sig_libs) // 2

122 | sig_lib1, sig_lib2 = await asyncio.gather(merge(sig_libs[:half]), merge(sig_libs[half:]))

123 | sig_libs[:] = [None] * len(sig_libs) # free memory

124 | merged_lib = await loop.run_in_executor(pool, combine_sig_libs, sig_lib1, sig_lib2)

125 | pbar.update(1)

126 | pbar.refresh()

127 | gc.collect()

128 | return merged_lib

129 | sig_lib = await merge(lib_versions)

130 |

131 | dst_trie, dst_funcs = await loop.run_in_executor(pool, finalize_sig_lib, sig_lib)

132 | if not dst_funcs:

133 | print(package, 'has no functions')

134 | return

135 |

136 | buf = sigkit.sig_serialize_fb.SignatureLibraryWriter().serialize(dst_trie)

137 | with open(result_filename, 'wb') as f:

138 | f.write(buf)

139 | print(' saved to', result_filename, ' | size:', len(buf))

140 |

141 | loop.run_until_complete(inner())

142 |

143 | def main():

144 | if not os.path.exists('sigs'):

145 | os.mkdir('sigs')

146 | elif not os.path.isdir('sigs'):

147 | print('Please delete "sigs" before starting')

148 | sys.exit(1)

149 |

150 | tasks = []

151 | distr = 'ubuntu'

152 | # for version in os.listdir(distr):

153 | for version in ['bionic']:

154 | version = os.path.join(distr, version)

155 | for arch in os.listdir(version):

156 | arch = os.path.join(version, arch)

157 | for package in os.listdir(arch):

158 | package = os.path.join(arch, package)

159 | tasks.append(package)

160 |

161 | # we are going to do some hierarchical multiprocessing because there is a very high pickle message-passing overhead

162 | # so a lot of cpu time gets burned pickling in the main process simply passing work to worker processes

163 | import subprocess

164 | import multiprocessing.pool

165 | pool = multiprocessing.pool.ThreadPool(cpu_factor)

166 | def do_package_in_worker(package):

167 | subprocess.call(['python3', __file__, '-c', package])

168 | for _ in pool.imap_unordered(do_package_in_worker, tasks):

169 | pass

170 |

171 | if __name__ == '__main__':

172 | if len(sys.argv) <= 1:

173 | main()

174 | elif len(sys.argv) >= 3 and sys.argv[1] == '-c':

175 | # child

176 | do_package(sys.argv[2])

177 |

--------------------------------------------------------------------------------

/examples/libc-scraper/process-deb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | debfile=$1

3 | pushd `dirname $debfile`

4 | debfilename=`basename $debfile`

5 | echo Now processing $debfilename

6 | debfile_extract=${debfilename%.*};

7 | if [ -d $debfile_extract ]; then

8 | echo $debfile_extract already exists, exiting

9 | exit

10 | fi

11 | dpkg-deb -x $debfilename $debfile_extract

12 | pushd $debfile_extract

13 | for libfile in `find . -iname '*.a'`; do

14 | f=`basename $libfile`

15 | if [[ $f = libasan.a || $f = libtsan.a ]]; then

16 | echo Skipping $libfile

17 | continue

18 | fi

19 | pushd `dirname $libfile`

20 | echo ..Now processing $f

21 | g=${f%.*};

22 | if [ ! -d $g ]; then

23 | mkdir -p $g

24 | pushd $g

25 | ar vx ../$f >> ../"$g"_log.txt

26 | python3 ~/sigkit/batch_process.py "*.o" ../"$g".pkl ../"$g"_checkpoint.pkl >> ../"$g"_log.txt 2>&1

27 | rm -f *.o # free disk space

28 | popd

29 | else

30 | echo Skipping existing $g

31 | fi

32 | popd

33 | done

34 | g=objs

35 | python3 ~/sigkit/batch_process.py "**/*.o" "$g".pkl "$g"_checkpoint.pkl >> "$g"_log.txt 2>&1

36 | find . -iname "*.so" -delete

37 | find . -iname "*.x" -delete

38 | find . -iname "*.h" -delete

39 | popd

40 | popd

--------------------------------------------------------------------------------

/examples/libc-scraper/run.sh:

--------------------------------------------------------------------------------

1 | find . -iname '*.deb' | (while read line; do

2 | arch=`echo $line | awk -F/ '{print $3}'`

3 | if [[ $arch = amd64 || $arch = arm64 || $arch = armel || $arch = armhf || $arch = i386 || $arch = lpia || $arch = powerpc ]]; then

4 | echo "$line"

5 | fi

6 | done) | parallel -j 3 "process-deb.sh {}"

7 |

--------------------------------------------------------------------------------

/examples/libc-scraper/ubuntu-libc-scraper.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | # Copyright (c) 2015-2020 Vector 35 Inc

4 | #

5 | # Permission is hereby granted, free of charge, to any person obtaining a copy

6 | # of this software and associated documentation files (the "Software"), to

7 | # deal in the Software without restriction, including without limitation the

8 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

9 | # sell copies of the Software, and to permit persons to whom the Software is

10 | # furnished to do so, subject to the following conditions:

11 | #

12 | # The above copyright notice and this permission notice shall be included in

13 | # all copies or substantial portions of the Software.

14 | #

15 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

20 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

21 | # IN THE SOFTWARE.

22 |

23 | """

24 | This script downloads .debs for the libc-dev packages off Ubuntu launchpad

25 | leveraging high-performance asynchronous i/o.

26 | """

27 |

28 | import sys, os

29 | from bs4 import BeautifulSoup

30 | import urllib.parse

31 |

32 | import aiohttp

33 | import asyncio

34 |

35 | packages = ['libc6-dev', 'libgcc-8-dev', 'libgcc-7-dev', 'libgcc-6-dev', 'libgcc-5-dev']

36 |

37 | session, sem = None, None

38 | async def must(f):

39 | global session, sem

40 | await sem.put(None)

41 | retries = 0

42 | while True:

43 | try:

44 | r = await f(session)

45 | if r.status == 200: break

46 | print(r.status)

47 | except: pass

48 | retries += 1

49 | if retries > 10:

50 | print('Maximum retry count exceeded')

51 | sys.exit(1)

52 | await asyncio.sleep(1.0)

53 | await sem.get()

54 | return r

55 |

56 | async def get_html(url):

57 | async with (await must(lambda session: session.get(url))) as resp:

58 | sys.stderr.write('GET ' + url + '\n')

59 | return BeautifulSoup(await resp.text(), features="html.parser")

60 |

61 | async def get_series():

62 | series = set()

63 | soup = await get_html('https://launchpad.net/ubuntu/+series')

64 | for strong in soup.find_all('strong'):

65 | for a in strong.find_all('a'):

66 | series.add(a['href'])

67 | return series

68 |

69 | async def get_archs(series):

70 | soup = await get_html('https://launchpad.net' + series + '/+builds')

71 | for select in soup.find_all('select', {'id': 'arch_tag'}):

72 | for option in select.find_all('option'):

73 | if option['value'] == 'all': continue

74 | yield series + '/' + option['value']

75 |

76 | async def get_versions(arch, package):

77 | soup = await get_html('https://launchpad.net' + arch + '/' + package)

78 | for tr in soup.find_all('tr'):

79 | if len(tr.find_all('td')) != 10: continue

80 | yield tr.find_all('td')[9].find_all('a')[0]['href']

81 |

82 | async def get_deb_link(version):

83 | soup = await get_html('https://launchpad.net' + version)

84 | for a in soup.find_all('a', {'class': 'sprite'}):

85 | if a['href'].endswith('.deb'):

86 | return a['href']

87 |

88 | async def download_deb(version, deb_url):

89 | filename = urllib.parse.urlparse(deb_url).path

90 | filename = filename[filename.rindex('/') + 1:]

91 | version = os.curdir + version

92 | filename = os.path.join(version, filename)

93 | if os.path.exists(filename):

94 | print('Skipping existing file', filename)

95 | return

96 | os.makedirs(version, exist_ok=True)

97 | async with (await must(lambda session: session.get(deb_url))) as resp:

98 | data = await resp.read()

99 | if not data:

100 | print('FAILED DOWNLOAD', filename, 'from', deb_url)

101 | return

102 | with open(filename, 'wb') as f:

103 | f.write(data)

104 | print('Downloaded', filename)

105 |

106 | async def process_version(version):

107 | deb_link = await get_deb_link(version)

108 | if deb_link:

109 | await download_deb(version, deb_link)

110 | else:

111 | print('No .deb for', version)

112 |

113 | async def process_arch(arch):

114 | await asyncio.gather(*[asyncio.create_task(process_version(version)) for package in packages async for version in get_versions(arch, package)])

115 |

116 | async def process_series(series):

117 | await asyncio.gather(*[asyncio.create_task(process_arch(arch)) async for arch in get_archs(series)])

118 |

119 | async def main():

120 | global session

121 | async with aiohttp.ClientSession() as session:

122 | await asyncio.gather(*[asyncio.create_task(process_series(series)) for series in await get_series()])

123 |

124 | if __name__ == '__main__':

125 | MAX_CONCURRENT = 16

126 | loop = asyncio.get_event_loop()

127 | sem = asyncio.Queue(maxsize=MAX_CONCURRENT)  # the loop argument was removed in Python 3.10

128 | loop.run_until_complete(main())

129 | loop.close()

130 |

--------------------------------------------------------------------------------

/examples/merge_multiple_versions.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | # Copyright (c) 2015-2020 Vector 35 Inc

4 | #

5 | # Permission is hereby granted, free of charge, to any person obtaining a copy

6 | # of this software and associated documentation files (the "Software"), to

7 | # deal in the Software without restriction, including without limitation the

8 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

9 | # sell copies of the Software, and to permit persons to whom the Software is

10 | # furnished to do so, subject to the following conditions:

11 | #

12 | # The above copyright notice and this permission notice shall be included in

13 | # all copies or substantial portions of the Software.

14 | #

15 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

20 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

21 | # IN THE SOFTWARE.

22 |

23 | """

24 | This script shows how you can merge the signature libraries generated for

25 | different versions of the same library. We would want to do this because

26 | there's a lot of overlap between the two and duplicated functions.

27 | We want to avoid creating huge signatures that are bloated with these

28 | duplicated functions, so we will deduplicate them using the trie_ops package.

29 |

30 | This script loads pickled dicts of {FunctionNode: FunctionInfo} generated

31 | by batch_process.py.

32 | """

33 |

34 | import pickle, json

35 | import gc

36 | from pathlib import Path

37 |

38 | import sigkit.signaturelibrary, sigkit.trie_ops, sigkit.sig_serialize_json, sigkit.sigexplorer

39 |

40 |

41 | def func_count(trie):

42 | return len(set(trie.all_functions()))

43 |

44 | # Clean up the functions list, exclude some garbage functions, etc.

45 | def preprocess_funcs_list(func_info):

46 | import re

47 | to_delete = set()

48 | for f in func_info:

49 | if re.match(r'\.L\d+', f.name):

50 | to_delete.add(f)

51 | continue

52 | f.name = f.name[:40] # trim long names

53 | for f in to_delete:

54 | del func_info[f]

55 |

56 | def load_pkls(path, glob):

57 | pkls = list(map(str, Path(path).glob(glob)))

58 | trie, func_info = sigkit.signaturelibrary.new_trie(), {}

59 | for pkl in pkls:

60 | with open(pkl, 'rb') as f:

61 | pkl_funcs = pickle.load(f)

62 | preprocess_funcs_list(pkl_funcs)

63 | sigkit.trie_ops.trie_insert_funcs(trie, pkl_funcs)

64 | func_info.update(pkl_funcs)

65 | sigkit.trie_ops.finalize_trie(trie, func_info)

66 | return trie, func_info

67 |

68 | gc.disable() # I AM SPEED - Lightning McQueen

69 | dst_trie, dst_info = load_pkls('.', 'libc_version1/*.pkl')

70 | src_trie, src_info = load_pkls('.', 'libc_version2/*.pkl')

71 | gc.enable() # i am no longer speed.

72 |

73 | size1, size2 = func_count(dst_trie), func_count(src_trie)

74 | print("Pre-merge sizes: %d + %d = %d funcs" % (size1, size2, size1+size2))

75 |

76 | sigkit.trie_ops.combine_signature_libraries(dst_trie, dst_info, src_trie, src_info)

77 | print("Post-merge size: %d funcs" % (func_count(dst_trie),))

78 |

79 | sigkit.trie_ops.finalize_trie(dst_trie, dst_info)

80 | print("Finalized size: %d funcs" % (func_count(dst_trie),))

81 |

82 | print(json.dumps(sigkit.sig_serialize_json.serialize(dst_trie)))

83 | sigkit.explore_signature_library(dst_trie)

84 |
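The script above turns the garbage collector off while the pickles are loaded. One way to make sure it is switched back on afterwards, even if loading raises, is a small context manager. This is a minimal sketch and not part of sigkit; `gc_paused` is a hypothetical helper name, and the list comprehension only stands in for the real loading work.

```python
import gc
from contextlib import contextmanager


@contextmanager
def gc_paused():
    """Disable the cyclic garbage collector inside the block, then restore it."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        yield
    finally:
        # Only re-enable if the collector was running when we started.
        if was_enabled:
            gc.enable()


with gc_paused():
    inside = gc.isenabled()  # False while the block runs
    records = [{"id": i} for i in range(1000)]  # stand-in for load_pkls(...)
```

Restoring the previous state, rather than calling `gc.enable()` unconditionally, keeps the helper safe to nest.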

--------------------------------------------------------------------------------

/examples/sig_match.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2015-2020 Vector 35 Inc

2 | #

3 | # Permission is hereby granted, free of charge, to any person obtaining a copy

4 | # of this software and associated documentation files (the "Software"), to

5 | # deal in the Software without restriction, including without limitation the

6 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

7 | # sell copies of the Software, and to permit persons to whom the Software is

8 | # furnished to do so, subject to the following conditions:

9 | #

10 | # The above copyright notice and this permission notice shall be included in

11 | # all copies or substantial portions of the Software.

12 | #

13 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

18 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

19 | # IN THE SOFTWARE.

20 |

21 | """

22 | This file contains a signature matcher implementation in Python. This

23 | implementation is only an illustrative example and should be used for testing

24 | purposes only. It is extremely slow compared to the native implementation

25 | found in Binary Ninja. Furthermore, the algorithm shown here is outdated

26 | compared to the native implementation, so matcher results will be of inferior

27 | quality.

28 | """

29 |

30 | from __future__ import print_function

31 |

32 | from binaryninja import *

33 |

34 | import sigkit.compute_sig

35 |

36 | class SignatureMatcher(object):

37 | def __init__(self, sig_trie, bv):

38 | self.sig_trie = sig_trie

39 | self.bv = bv

40 |

41 | self._matches = {}

42 | self._matches_inv = {}

43 | self.results = {}

44 |

45 | self._cur_match_debug = ""

46 |

47 | def resolve_thunk(self, func, level=0):

48 | if sigkit.compute_sig.get_func_len(func) >= 8:

49 | return func

50 |

51 | first_insn = func.mlil[0]

52 | if first_insn.operation == MediumLevelILOperation.MLIL_TAILCALL:

53 | thunk_dest = self.bv.get_function_at(first_insn.dest.value.value)

54 | elif first_insn.operation == MediumLevelILOperation.MLIL_JUMP and first_insn.dest.operation == MediumLevelILOperation.MLIL_LOAD and first_insn.dest.src.operation == MediumLevelILOperation.MLIL_CONST_PTR:

55 | data_var = self.bv.get_data_var_at(first_insn.dest.src.value.value)

56 | if not data_var or not data_var.data_refs_from: return None

57 | thunk_dest = self.bv.get_function_at(data_var.data_refs_from[0])

58 | else:

59 | return func

60 |

61 | if thunk_dest is None:

62 | return None

63 |

64 | if level >= 100:

65 | # something is wrong here. there's a weird infinite loop of thunks.

66 | sys.stderr.write('Warning: reached recursion limit while trying to resolve thunk %s!\n' % (func.name,))

67 | return None

68 |

69 | print('* following thunk %s -> %s' % (func.name, thunk_dest.name))

70 | return self.resolve_thunk(thunk_dest, level + 1)

71 |

72 | def on_match(self, func, func_node, level=0):

73 | if func in self._matches:

74 | if self._matches[func] != func_node:

75 | sys.stderr.write('Warning: CONFLICT on %s: %s vs %s' % (func.name, self._matches[func], func_node) + '\n')

76 | if func in self.results:

77 | del self.results[func]

78 | return

79 |

80 | self.results[func] = func_node

81 |

82 | if func_node in self._matches_inv:

83 | if self._matches_inv[func_node] != func:

84 | sys.stderr.write('Warning: INVERSE CONFLICT (%s) on %s: %s vs %s' % (self._cur_match_debug, func_node, self._matches_inv[func_node].name, func.name) + '\n')

85 | return

86 |

87 | print((' ' * level) + func.name, '=>', func_node.name, 'from', func_node.source_binary, '(' + self._cur_match_debug + ')')

88 | self._matches[func] = func_node

89 | self._matches_inv[func_node] = func

90 |

91 | def compute_func_callees(self, func):

92 | """

93 | Return a dict mapping each call-site offset (relative to the function start) to the function it calls.

94 | """

95 | callees = {}

96 | for ref in func.call_sites:

97 | callee_addrs = self.bv.get_callees(ref.address, ref.function, ref.arch)

98 | if len(callee_addrs) != 1: continue

99 | callees[ref.address - func.start] = self.bv.get_function_at(callee_addrs[0])

100 | return callees

101 |

102 | def does_func_match(self, func, func_node, visited, level=0):

103 | print((' '*level) + 'compare', 'None' if not func else func.name, 'vs', '*' if not func_node else func_node.name, 'from ' + func_node.source_binary if func_node else '')

104 | # no information about this function. assume wildcard.

105 | if func_node is None:

106 | return 999

107 |

108 | # we expect a function to be here but there isn't one. no match.

109 | if func is None:

110 | return 0

111 |

112 | # fix for msvc thunks -.-

113 | thunk_dest = self.resolve_thunk(func)

114 | if not thunk_dest:

115 | sys.stderr.write('Warning: encountered a weird thunk %s, giving up\n' % (func.name,))

116 | return 0

117 | func = thunk_dest

118 |

119 | # this is essentially a dfs on the callgraph. if we encounter a backedge,

120 | # treat it optimistically, implying that the callers match if the callees match.

121 | # however, we track our previous assumptions, meaning that if we previously

122 | # optimistically assumed b == a, then later on if we compare b and c, we say

123 | # that b != c since we already assumed b == a (and c != a)

124 | if func in visited:

125 | print("we've already visited this one before")

126 | return 999 if visited[func] == func_node else 0

127 | visited[func] = func_node

128 |

129 | # if we've already figured out what this function is, don't waste our time doing it again.

130 | if func in self._matches:

131 | return 999 if self._matches[func] == func_node else 0

132 |

133 | func_len = sigkit.compute_sig.get_func_len(func)

134 | func_data = self.bv.read(func.start, func_len)

135 | if not func_node.is_bridge:

136 | trie_matches = self.sig_trie.find(func_data)

137 | if func_node not in trie_matches:

138 | print((' ' * level) + 'trie mismatch!')

139 | return 0

140 | else:

141 | print((' ' * level) + 'this is a bridge node.')

142 |

143 | disambiguation_data = func_data[func_node.pattern_offset:func_node.pattern_offset + len(func_node.pattern)]

144 | if not func_node.pattern.matches(disambiguation_data):

145 | print((' ' * level) + 'disambiguation mismatch!')

146 | return 1

147 |

148 | callees = self.compute_func_callees(func)

149 | for call_site in callees:

150 | if call_site not in func_node.callees:

151 | print((' ' * level) + 'call sites mismatch!')

152 | return 2

153 | for call_site, callee in func_node.callees.items():

154 | if callee is not None and call_site not in callees:

155 | print((' ' * level) + 'call sites mismatch!')

156 | return 2

157 |

158 | for call_site in callees:

159 | if self.does_func_match(callees[call_site], func_node.callees[call_site], visited, level + 1) != 999:

160 | print((' '*level) + 'callee ' + func_node.callees[call_site].name + ' mismatch!')

161 | return 3

162 |

163 | self._cur_match_debug = 'full match'

164 | self.on_match(func, func_node, level)

165 | return 999

166 |

167 |

168 | def process_func(self, func):

169 | """

170 | Try to sig the given function.

171 | Return the list of signatures the function matched against

172 | """

173 | func_len = sigkit.compute_sig.get_func_len(func)

174 | func_data = self.bv.read(func.start, func_len)

175 | trie_matches = self.sig_trie.find(func_data)

176 | best_score, results = 0, []

177 | for candidate_func in trie_matches:

178 | score = self.does_func_match(func, candidate_func, {})

179 | if score > best_score:

180 | results = [candidate_func]

181 | best_score = score

182 | elif score == best_score:

183 | results.append(candidate_func)

184 | if len(results) == 0:

185 | print(func.name, '=>', 'no match', end=", ")

186 | for x in self.sig_trie.all_values():

187 | if x.name == func.name:

188 | print('but there was a signature from', x.source_binary)

189 | break

190 | else:

191 | print('but this is OK.')

192 | assert best_score == 0

193 | return results

194 | elif len(results) > 1:

195 | print(func.name, '=>', 'deferred at level', best_score, results)

196 | return results

197 |

198 | match = results[0]

199 | if best_score == 1:

200 | self._cur_match_debug = 'bytes match (but disambiguation mismatch?)'

201 | self.on_match(func, match)

202 | return results

203 | elif best_score == 2:

204 | self._cur_match_debug = 'bytes + disambiguation match (but callee count mismatch)'

205 | self.on_match(func, match)

206 | return results

207 | elif best_score == 3:

208 | self._cur_match_debug = 'bytes + disambiguation match (but callees mismatch)'

209 | self.on_match(func, match)

210 | return results

211 | else:

212 | self._cur_match_debug = 'full match'

213 | self.on_match(func, match)

214 | return results

215 |

216 | def run(self):

217 | queue = self.bv.functions

218 | while True: # silly fixedpoint worklist algorithm

219 | deferred = []

220 | print('Start of pass, %d functions remaining' % (len(queue),))

221 |

222 | for func in queue:

223 | if func in self._matches:

224 | continue

225 | if sigkit.compute_sig.get_func_len(func) < 8:

226 | continue

227 | matches = self.process_func(func)

228 | if len(matches) > 1:

229 | deferred.append(func)

230 |

231 | print('Pass complete, %d functions deferred' % (len(deferred),))

232 | if len(queue) == len(deferred):

233 | print('No changes. Quit.')

234 | break

235 | queue = deferred

236 |
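The `run` method above is a fixed-point worklist: it makes repeated passes over the functions that are still ambiguous and stops once a pass makes no progress. The same control flow can be sketched without Binary Ninja. Everything here is illustrative: `run_worklist`, `try_resolve` and the toy dependency table are hypothetical names, not part of sigkit.

```python
def run_worklist(items, try_resolve):
    """Repeat passes over `items` until a pass resolves nothing new.

    `try_resolve(item)` is a caller-supplied callback that returns True once
    the item is settled and False when it should be retried on a later pass.
    Returns the items still unresolved when the fixed point is reached.
    """
    queue = list(items)
    while True:
        deferred = []
        for item in queue:
            if not try_resolve(item):
                deferred.append(item)
        if len(deferred) == len(queue):  # no progress this pass: stop
            return deferred
        queue = deferred


# Toy example: a name resolves only after all of its dependencies have.
deps = {"a": [], "b": ["a"], "c": ["b"], "d": ["e"], "e": ["d"]}
resolved = set()


def try_resolve(name):
    if all(dep in resolved for dep in deps[name]):
        resolved.add(name)
        return True
    return False


stuck = run_worklist(deps, try_resolve)
```

In the toy data, `d` and `e` depend on each other, so they are what remains when the loop terminates.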

--------------------------------------------------------------------------------

/icon.ico:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Vector35/sigkit/a7420964415a875a1e6181ecdc603cfc29e34058/icon.ico

--------------------------------------------------------------------------------

/images/explorer.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Vector35/sigkit/a7420964415a875a1e6181ecdc603cfc29e34058/images/explorer.png

--------------------------------------------------------------------------------

/plugin.json:

--------------------------------------------------------------------------------

1 | {

2 | "pluginmetadataversion": 2,

3 | "name": "Signature Kit Plugin",

4 | "type": [

5 | "helper",

6 | "ui",

7 | "core"

8 | ],

9 | "api": [

10 | "python2",

11 | "python3"

12 | ],

13 | "description": "Python tools for working with Signature Libraries",

14 | "license": {

15 | "name": "MIT",

16 | "text": "Copyright (c) 2019-2020 Vector 35 Inc\n\nPermission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:\n\nThe above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.\n\nTHE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE."

17 | },

18 | "platforms": [

19 | "Windows",

20 | "Linux",

21 | "Darwin"

22 | ],

23 | "installinstructions": {

24 | "Windows": "",

25 | "Linux": "",

26 | "Darwin": ""

27 | },

28 | "dependencies": {

29 | "pip": [

30 | "flatbuffers"

31 | ]

32 | },

33 | "version": "1.2.2",

34 | "author": "Vector 35 Inc",

35 | "minimumbinaryninjaversion": 1997

36 | }

37 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = ["setuptools"]

3 | build-backend = "setuptools.build_meta"

4 |

5 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | flatbuffers

2 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | name = sigkit

3 | version = 1.2.1

4 | license = "MIT"

5 | long_description = file: README.md

6 |

7 | [options]

8 | install_requires = flatbuffers

9 | packages=find:

10 |

11 |

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/CallRef.py:

--------------------------------------------------------------------------------

1 | # automatically generated by the FlatBuffers compiler, do not modify

2 |

3 | # namespace: FlatbufSignatureLibrary

4 |

5 | import flatbuffers

6 |

7 | class CallRef(object):

8 | __slots__ = ['_tab']

9 |

10 | # CallRef

11 | def Init(self, buf, pos):

12 | self._tab = flatbuffers.table.Table(buf, pos)

13 |

14 | # CallRef

15 | def Offset(self): return self._tab.Get(flatbuffers.number_types.Int32Flags, self._tab.Pos + flatbuffers.number_types.UOffsetTFlags.py_type(0))

16 | # CallRef

17 | def DstId(self): return self._tab.Get(flatbuffers.number_types.Int32Flags, self._tab.Pos + flatbuffers.number_types.UOffsetTFlags.py_type(4))

18 |

19 | def CreateCallRef(builder, offset, dstId):

20 | builder.Prep(4, 8)

21 | builder.PrependInt32(dstId)

22 | builder.PrependInt32(offset)

23 | return builder.Offset()

24 |
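The accessors above read two 32-bit integers at byte offsets 0 and 4, so a `CallRef` occupies 8 bytes; FlatBuffers stores scalars little-endian. The layout can be sketched with the standard `struct` module. This illustrates the byte layout only and does not replace the generated code; `pack_callref` and `unpack_callref` are hypothetical helper names.

```python
import struct

# Little-endian: int32 offset at byte 0, int32 dst_id at byte 4.
CALLREF = struct.Struct("<ii")


def pack_callref(offset, dst_id):
    """Encode one CallRef-shaped record into 8 bytes."""
    return CALLREF.pack(offset, dst_id)


def unpack_callref(buf, pos=0):
    """Decode (offset, dst_id) from `buf` starting at byte `pos`."""
    return CALLREF.unpack_from(buf, pos)


raw = pack_callref(0x10, 7)
fields = unpack_callref(raw)
```

`CreateCallRef` prepends `dstId` before `offset` because the builder writes its buffer back to front; the resulting layout still has `offset` first.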

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/Function.py:

--------------------------------------------------------------------------------

1 | # automatically generated by the FlatBuffers compiler, do not modify

2 |

3 | # namespace: FlatbufSignatureLibrary

4 |

5 | import flatbuffers

6 |

7 | class Function(object):

8 | __slots__ = ['_tab']

9 |

10 | @classmethod

11 | def GetRootAsFunction(cls, buf, offset):

12 | n = flatbuffers.encode.Get(flatbuffers.packer.uoffset, buf, offset)

13 | x = Function()

14 | x.Init(buf, n + offset)

15 | return x

16 |

17 | # Function

18 | def Init(self, buf, pos):

19 | self._tab = flatbuffers.table.Table(buf, pos)

20 |

21 | # Function

22 | def Name(self):

23 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

24 | if o != 0:

25 | return self._tab.String(o + self._tab.Pos)

26 | return None

27 |

28 | # Function

29 | def SourceBinary(self):

30 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

31 | if o != 0:

32 | return self._tab.String(o + self._tab.Pos)

33 | return None

34 |

35 | # Function

36 | def Callees(self, j):

37 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(8))

38 | if o != 0:

39 | x = self._tab.Vector(o)

40 | x += flatbuffers.number_types.UOffsetTFlags.py_type(j) * 8

41 | from .CallRef import CallRef

42 | obj = CallRef()

43 | obj.Init(self._tab.Bytes, x)

44 | return obj

45 | return None

46 |

47 | # Function

48 | def CalleesLength(self):

49 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(8))

50 | if o != 0:

51 | return self._tab.VectorLen(o)

52 | return 0

53 |

54 | # Function

55 | def Pattern(self):

56 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(10))

57 | if o != 0:

58 | x = self._tab.Indirect(o + self._tab.Pos)

59 | from .Pattern import Pattern

60 | obj = Pattern()

61 | obj.Init(self._tab.Bytes, x)

62 | return obj

63 | return None

64 |

65 | # Function

66 | def PatternOffset(self):

67 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(12))

68 | if o != 0:

69 | return self._tab.Get(flatbuffers.number_types.Uint32Flags, o + self._tab.Pos)

70 | return 0

71 |

72 | # Function

73 | def IsBridge(self):

74 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(14))

75 | if o != 0:

76 | return bool(self._tab.Get(flatbuffers.number_types.BoolFlags, o + self._tab.Pos))

77 | return False

78 |

79 | def FunctionStart(builder): builder.StartObject(6)

80 | def FunctionAddName(builder, name): builder.PrependUOffsetTRelativeSlot(0, flatbuffers.number_types.UOffsetTFlags.py_type(name), 0)

81 | def FunctionAddSourceBinary(builder, sourceBinary): builder.PrependUOffsetTRelativeSlot(1, flatbuffers.number_types.UOffsetTFlags.py_type(sourceBinary), 0)

82 | def FunctionAddCallees(builder, callees): builder.PrependUOffsetTRelativeSlot(2, flatbuffers.number_types.UOffsetTFlags.py_type(callees), 0)

83 | def FunctionStartCalleesVector(builder, numElems): return builder.StartVector(8, numElems, 4)

84 | def FunctionAddPattern(builder, pattern): builder.PrependUOffsetTRelativeSlot(3, flatbuffers.number_types.UOffsetTFlags.py_type(pattern), 0)

85 | def FunctionAddPatternOffset(builder, patternOffset): builder.PrependUint32Slot(4, patternOffset, 0)

86 | def FunctionAddIsBridge(builder, isBridge): builder.PrependBoolSlot(5, isBridge, 0)

87 | def FunctionEnd(builder): return builder.EndObject()

88 |

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/Pattern.py:

--------------------------------------------------------------------------------

1 | # automatically generated by the FlatBuffers compiler, do not modify

2 |

3 | # namespace: FlatbufSignatureLibrary

4 |

5 | import flatbuffers

6 |

7 | class Pattern(object):

8 | __slots__ = ['_tab']

9 |

10 | @classmethod

11 | def GetRootAsPattern(cls, buf, offset):

12 | n = flatbuffers.encode.Get(flatbuffers.packer.uoffset, buf, offset)

13 | x = Pattern()

14 | x.Init(buf, n + offset)

15 | return x

16 |

17 | # Pattern

18 | def Init(self, buf, pos):

19 | self._tab = flatbuffers.table.Table(buf, pos)

20 |

21 | # Pattern

22 | def Data(self, j):

23 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

24 | if o != 0:

25 | a = self._tab.Vector(o)

26 | return self._tab.Get(flatbuffers.number_types.Uint8Flags, a + flatbuffers.number_types.UOffsetTFlags.py_type(j * 1))

27 | return 0

28 |

29 | # Pattern

30 | def DataAsNumpy(self):

31 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

32 | if o != 0:

33 | return self._tab.GetVectorAsNumpy(flatbuffers.number_types.Uint8Flags, o)

34 | return 0

35 |

36 | # Pattern

37 | def DataLength(self):

38 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

39 | if o != 0:

40 | return self._tab.VectorLen(o)

41 | return 0

42 |

43 | # Pattern

44 | def Mask(self, j):

45 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

46 | if o != 0:

47 | a = self._tab.Vector(o)

48 | return self._tab.Get(flatbuffers.number_types.Uint8Flags, a + flatbuffers.number_types.UOffsetTFlags.py_type(j * 1))

49 | return 0

50 |

51 | # Pattern

52 | def MaskAsNumpy(self):

53 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

54 | if o != 0:

55 | return self._tab.GetVectorAsNumpy(flatbuffers.number_types.Uint8Flags, o)

56 | return 0

57 |

58 | # Pattern

59 | def MaskLength(self):

60 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

61 | if o != 0:

62 | return self._tab.VectorLen(o)

63 | return 0

64 |

65 | def PatternStart(builder): builder.StartObject(2)

66 | def PatternAddData(builder, data): builder.PrependUOffsetTRelativeSlot(0, flatbuffers.number_types.UOffsetTFlags.py_type(data), 0)

67 | def PatternStartDataVector(builder, numElems): return builder.StartVector(1, numElems, 1)

68 | def PatternAddMask(builder, mask): builder.PrependUOffsetTRelativeSlot(1, flatbuffers.number_types.UOffsetTFlags.py_type(mask), 0)

69 | def PatternStartMaskVector(builder, numElems): return builder.StartVector(1, numElems, 1)

70 | def PatternEnd(builder): return builder.EndObject()

71 |
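A `Pattern` stores two parallel byte vectors, `Data` and `Mask`. A plausible reading, stated here as an assumption rather than taken from the code above, is that a zero mask byte marks a wildcard position and a nonzero mask byte means the data byte must match exactly. Under that assumption, matching can be sketched as follows; `pattern_matches` is a hypothetical helper, not the library's `Pattern.matches`.

```python
def pattern_matches(data, mask, buf):
    """Return True if `buf` starts with `data`, ignoring wildcard positions.

    Assumption: mask[i] == 0 means position i is a wildcard; any other
    value means buf[i] must equal data[i].
    """
    if len(data) != len(mask):
        raise ValueError("data and mask must have the same length")
    if len(buf) < len(data):
        return False
    return all(m == 0 or d == b for d, m, b in zip(data, mask, buf))


data = bytes([0xE8, 0x00, 0x00, 0x00, 0x00, 0xC3])
mask = bytes([1, 0, 0, 0, 0, 1])  # the four operand bytes are wildcards

hit = pattern_matches(data, mask, bytes([0xE8, 0x12, 0x34, 0x56, 0x78, 0xC3]))
miss = pattern_matches(data, mask, bytes([0xE9, 0x12, 0x34, 0x56, 0x78, 0xC3]))
```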

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/SignatureLibrary.py:

--------------------------------------------------------------------------------

1 | # automatically generated by the FlatBuffers compiler, do not modify

2 |

3 | # namespace: FlatbufSignatureLibrary

4 |

5 | import flatbuffers

6 |

7 | class SignatureLibrary(object):

8 | __slots__ = ['_tab']

9 |

10 | @classmethod

11 | def GetRootAsSignatureLibrary(cls, buf, offset):

12 | n = flatbuffers.encode.Get(flatbuffers.packer.uoffset, buf, offset)

13 | x = SignatureLibrary()

14 | x.Init(buf, n + offset)

15 | return x

16 |

17 | # SignatureLibrary

18 | def Init(self, buf, pos):

19 | self._tab = flatbuffers.table.Table(buf, pos)

20 |

21 | # SignatureLibrary

22 | def Functions(self, j):

23 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

24 | if o != 0:

25 | x = self._tab.Vector(o)

26 | x += flatbuffers.number_types.UOffsetTFlags.py_type(j) * 4

27 | x = self._tab.Indirect(x)

28 | from .Function import Function

29 | obj = Function()

30 | obj.Init(self._tab.Bytes, x)

31 | return obj

32 | return None

33 |

34 | # SignatureLibrary

35 | def FunctionsLength(self):

36 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

37 | if o != 0:

38 | return self._tab.VectorLen(o)

39 | return 0

40 |

41 | # SignatureLibrary

42 | def Root(self):

43 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

44 | if o != 0:

45 | x = self._tab.Indirect(o + self._tab.Pos)

46 | from .TrieNode import TrieNode

47 | obj = TrieNode()

48 | obj.Init(self._tab.Bytes, x)

49 | return obj

50 | return None

51 |

52 | def SignatureLibraryStart(builder): builder.StartObject(2)

53 | def SignatureLibraryAddFunctions(builder, functions): builder.PrependUOffsetTRelativeSlot(0, flatbuffers.number_types.UOffsetTFlags.py_type(functions), 0)

54 | def SignatureLibraryStartFunctionsVector(builder, numElems): return builder.StartVector(4, numElems, 4)

55 | def SignatureLibraryAddRoot(builder, root): builder.PrependUOffsetTRelativeSlot(1, flatbuffers.number_types.UOffsetTFlags.py_type(root), 0)

56 | def SignatureLibraryEnd(builder): return builder.EndObject()

57 |

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/TrieNode.py:

--------------------------------------------------------------------------------

1 | # automatically generated by the FlatBuffers compiler, do not modify

2 |

3 | # namespace: FlatbufSignatureLibrary

4 |

5 | import flatbuffers

6 |

7 | class TrieNode(object):

8 | __slots__ = ['_tab']

9 |

10 | @classmethod

11 | def GetRootAsTrieNode(cls, buf, offset):

12 | n = flatbuffers.encode.Get(flatbuffers.packer.uoffset, buf, offset)

13 | x = TrieNode()

14 | x.Init(buf, n + offset)

15 | return x

16 |

17 | # TrieNode

18 | def Init(self, buf, pos):

19 | self._tab = flatbuffers.table.Table(buf, pos)

20 |

21 | # TrieNode

22 | def PatternPrefix(self):

23 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))

24 | if o != 0:

25 | return self._tab.Get(flatbuffers.number_types.Uint8Flags, o + self._tab.Pos)

26 | return 0

27 |

28 | # TrieNode

29 | def Pattern(self):

30 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(6))

31 | if o != 0:

32 | x = self._tab.Indirect(o + self._tab.Pos)

33 | from .Pattern import Pattern

34 | obj = Pattern()

35 | obj.Init(self._tab.Bytes, x)

36 | return obj

37 | return None

38 |

39 | # TrieNode

40 | def Children(self, j):

41 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(8))

42 | if o != 0:

43 | x = self._tab.Vector(o)

44 | x += flatbuffers.number_types.UOffsetTFlags.py_type(j) * 4

45 | x = self._tab.Indirect(x)

46 | from .TrieNode import TrieNode

47 | obj = TrieNode()

48 | obj.Init(self._tab.Bytes, x)

49 | return obj

50 | return None

51 |

52 | # TrieNode

53 | def ChildrenLength(self):

54 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(8))

55 | if o != 0:

56 | return self._tab.VectorLen(o)

57 | return 0

58 |

59 | # TrieNode

60 | def WildcardChild(self):

61 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(10))

62 | if o != 0:

63 | x = self._tab.Indirect(o + self._tab.Pos)

64 | from .TrieNode import TrieNode

65 | obj = TrieNode()

66 | obj.Init(self._tab.Bytes, x)

67 | return obj

68 | return None

69 |

70 | # TrieNode

71 | def Functions(self, j):

72 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(12))

73 | if o != 0:

74 | a = self._tab.Vector(o)

75 | return self._tab.Get(flatbuffers.number_types.Uint32Flags, a + flatbuffers.number_types.UOffsetTFlags.py_type(j * 4))

76 | return 0

77 |

78 | # TrieNode

79 | def FunctionsAsNumpy(self):

80 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(12))

81 | if o != 0:

82 | return self._tab.GetVectorAsNumpy(flatbuffers.number_types.Uint32Flags, o)

83 | return 0

84 |

85 | # TrieNode

86 | def FunctionsLength(self):

87 | o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(12))

88 | if o != 0:

89 | return self._tab.VectorLen(o)

90 | return 0

91 |

92 | def TrieNodeStart(builder): builder.StartObject(5)

93 | def TrieNodeAddPatternPrefix(builder, patternPrefix): builder.PrependUint8Slot(0, patternPrefix, 0)

94 | def TrieNodeAddPattern(builder, pattern): builder.PrependUOffsetTRelativeSlot(1, flatbuffers.number_types.UOffsetTFlags.py_type(pattern), 0)

95 | def TrieNodeAddChildren(builder, children): builder.PrependUOffsetTRelativeSlot(2, flatbuffers.number_types.UOffsetTFlags.py_type(children), 0)

96 | def TrieNodeStartChildrenVector(builder, numElems): return builder.StartVector(4, numElems, 4)

97 | def TrieNodeAddWildcardChild(builder, wildcardChild): builder.PrependUOffsetTRelativeSlot(3, flatbuffers.number_types.UOffsetTFlags.py_type(wildcardChild), 0)

98 | def TrieNodeAddFunctions(builder, functions): builder.PrependUOffsetTRelativeSlot(4, flatbuffers.number_types.UOffsetTFlags.py_type(functions), 0)

99 | def TrieNodeStartFunctionsVector(builder, numElems): return builder.StartVector(4, numElems, 4)

100 | def TrieNodeEnd(builder): return builder.EndObject()

101 |

--------------------------------------------------------------------------------

/sigkit/FlatbufSignatureLibrary/__init__.py:

--------------------------------------------------------------------------------

1 | import flatbuffers

2 |

3 | if hasattr(flatbuffers, "__version__"):

4 | saved_EndVector = flatbuffers.Builder.EndVector

5 | flatbuffers.Builder.EndVector = lambda self, *args: saved_EndVector(self)
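The shim above wraps `EndVector` so that any extra positional arguments are accepted and dropped. This is presumably because newer `flatbuffers` releases take no element count there while older call sites still pass one. The pattern can be shown with a stand-in class; `Builder` below is a hypothetical stub, not the real `flatbuffers.Builder`.

```python
class Builder:
    """Stand-in for a builder whose EndVector takes no extra arguments."""

    def EndVector(self):
        return "ended"


# Keep a reference to the original method, then replace it with a wrapper
# that ignores any positional arguments a legacy caller might pass.
_saved_end_vector = Builder.EndVector
Builder.EndVector = lambda self, *args: _saved_end_vector(self)

builder = Builder()
new_style = builder.EndVector()   # current call shape
old_style = builder.EndVector(3)  # legacy call shape with an element count
```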

--------------------------------------------------------------------------------

/sigkit/__init__.py:

--------------------------------------------------------------------------------

1 | from binaryninja import *

2 |

3 | # exports

4 | from . import trie_ops

5 | from . import sig_serialize_fb

6 | from . import sig_serialize_json

7 |

8 | from .signaturelibrary import TrieNode, FunctionNode, Pattern, MaskedByte, new_trie

9 | from .compute_sig import process_function as generate_function_signature

10 |

11 | if core_ui_enabled():

12 | from .sigexplorer import explore_signature_library

13 | import binaryninjaui

14 |

15 | def signature_explorer(prompt=True):

16 | """

17 | Open the signature explorer UI.

18 | :param prompt: if True, prompt the user to open a file immediately.

19 | :return: `App`, a Qt window

20 | """

21 | if "qt_major_version" in binaryninjaui.__dict__ and binaryninjaui.qt_major_version == 6:

22 | from PySide6.QtWidgets import QApplication

23 | else:

24 | from PySide2.QtWidgets import QApplication

25 | app = QApplication.instance()

26 | global widget # avoid lifetime issues from it falling out of scope

27 | widget = sigexplorer.App()

28 | if prompt:

29 | widget.open_file()

30 | widget.show()

31 | if app: # VERY IMPORTANT to avoiding lifetime issues???

32 | app.exec_()

33 | return widget

34 |

35 |

36 | # UI plugin code

37 | def _generate_signature_library(bv):

38 | guess_relocs = len(bv.relocation_ranges) == 0

39 | if guess_relocs:

40 | log.log_debug('Relocation information unavailable; choosing pattern masks heuristically')

41 | else:

42 | log.log_debug('Generating pattern masks based on relocation ranges')

43 |

44 | func_count = sum(map(lambda func: int(bool(bv.get_symbol_at(func.start))), bv.functions))

45 | log.log_info('Generating signatures for %d functions' % (func_count,))

46 | # Warning for usability purposes. Someone will be confused why it's skipping auto-named functions

47 | if func_count / float(len(bv.functions)) < 0.5:

48 | num_skipped = len(bv.functions) - func_count

49 | log.log_warn("%d functions that don't have a name or symbol will be skipped" % (num_skipped,))

50 |

51 | funcs = {}

52 | for func in bv.functions:

53 | if bv.get_symbol_at(func.start) is None: continue

54 | func_node, info = generate_function_signature(func, guess_relocs)

55 | if func_node and info:

56 | funcs[func_node] = info

57 | log.log_debug('Processed ' + func.name)

58 |

59 |

60 | log.log_debug('Constructing signature trie')

61 | trie = signaturelibrary.new_trie()

62 | trie_ops.trie_insert_funcs(trie, funcs)

63 | log.log_debug('Finalizing trie')

64 | trie_ops.finalize_trie(trie, funcs)

65 |

66 |

67 | if 'SIGNATURE_FILE_NAME' in bv.session_data:

68 | output_filename = bv.session_data['SIGNATURE_FILE_NAME']

69 | else:

70 | output_filename = get_save_filename_input("Filename:", "*.sig", bv.file.filename + '.sig')

71 | if not output_filename:

72 | log.log_debug('Save cancelled')

73 | return

74 | if isinstance(output_filename, bytes):

75 | output_filename = output_filename.decode('utf-8')

76 | buf = sig_serialize_fb.SignatureLibraryWriter().serialize(trie)

77 | with open(output_filename, 'wb') as f:

78 | f.write(buf)

79 | log.log_info('Saved to ' + output_filename)

80 |

81 | PluginCommand.register(

82 | "Signature Library\\Generate Signature Library",

83 | "Create a Signature Library that the Signature Matcher can use to locate functions.",

84 | _generate_signature_library

85 | )

86 |

87 | PluginCommand.register(

88 | "Signature Library\\Explore Signature Library",

89 | "View a Signature Library's contents in a graphical interface.",

90 | lambda bv: signature_explorer()

91 | )

92 |

--------------------------------------------------------------------------------
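
The function-selection step in `_generate_signature_library` above can be illustrated without Binary Ninja. This is a minimal sketch, not the plugin's code: `select_named_functions`, `function_starts`, and `symbols` are hypothetical stand-ins for the loop over `bv.functions` and the `bv.get_symbol_at` lookup, and the 0.5 threshold mirrors the usability warning above. Unlike the original, the sketch guards against an empty function list to avoid dividing by zero.

```python
def select_named_functions(function_starts, symbols):
    """Return (named, skipped_count, should_warn).

    function_starts: iterable of function start addresses
                     (hypothetical stand-in for bv.functions).
    symbols: dict mapping address -> symbol name
             (hypothetical stand-in for bv.get_symbol_at).
    """
    starts = list(function_starts)
    # Only functions that have a symbol are kept; the rest are skipped.
    named = [addr for addr in starts if symbols.get(addr) is not None]
    skipped = len(starts) - len(named)
    # Warn when fewer than half of the functions are named.
    should_warn = bool(starts) and len(named) / float(len(starts)) < 0.5
    return named, skipped, should_warn


if __name__ == "__main__":
    starts = [0x1000, 0x1040, 0x1080, 0x10C0, 0x1100]
    symbols = {0x1000: "main", 0x1080: "helper"}
    named, skipped, warn = select_named_functions(starts, symbols)
    print([hex(a) for a in named], skipped, warn)
```

Returning the skipped count and the warning flag together keeps the logging decision separate from the selection itself, which makes the behaviour easy to check in isolation.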

/sigkit/compute_sig.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2015-2020 Vector 35 Inc

2 | #

3 | # Permission is hereby granted, free of charge, to any person obtaining a copy

4 | # of this software and associated documentation files (the "Software"), to

5 | # deal in the Software without restriction, including without limitation the

6 | # rights to use, copy, modify, merge, publish, distribute, sublicense, and/or

7 | # sell copies of the Software, and to permit persons to whom the Software is

8 | # furnished to do so, subject to the following conditions:

9 | #

10 | # The above copyright notice and this permission notice shall be included in

11 | # all copies or substantial portions of the Software.

12 | #

13 | # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

18 | # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

19 | # IN THE SOFTWARE.

20 |

21 | """

22 | This package contains code to compute function signatures using Binary

23 | Ninja's Python API. The most useful function is `process_function`, which

24 | generates a function signature for the specified function.

25 | """

26 |

27 | from binaryninja import *

28 |

29 | from . import signaturelibrary

30 | from . import trie_ops

31 |

32 | def is_llil_relocatable(llil):

33 | """

34 | Guesses whether an LLIL instruction is likely to contain operands that have been or would be relocated by a linker.

35 | :param llil: the LLIL instruction

36 | :return: True if the LLIL instruction, or any of its operands, contains LLIL_CONST_PTR or LLIL_EXTERN_PTR.

37 | """

38 | if not isinstance(llil, LowLevelILInstruction):

39 | return False

40 | if llil.operation in [LowLevelILOperation.LLIL_CONST_PTR, LowLevelILOperation.LLIL_EXTERN_PTR]:

41 | return True

42 | for operand in llil.operands:

43 | if is_llil_relocatable(operand):

44 | return True

45 | return False

46 |

47 | def guess_relocations_mask(func, sig_length):

48 | """

49 | Compute the relocations mask on a best-effort basis using a heuristic based on the LLIL.

50 | :param func: Binary Ninja API function

51 | :param sig_length: how long the mask should be

52 | :return: an array of booleans, signifying whether the byte at each index is significant or not for matching, or None if the LLIL for the function is unavailable

53 | """

54 |

55 | mask = [False] * sig_length

56 | i = 0

57 | while i < sig_length:

58 | bb = func.get_basic_block_at(func.start + i)

59 | if not bb: # not in a basicblock; wildcard

60 | mask[i] = False

61 | i += 1

62 | continue

63 |

64 | bb._buildStartCache()

65 | if not bb._instLengths:

66 | i += 1

67 | continue

68 | for insn_len in bb._instLengths:

69 | # This throws an exception for large functions where you need to manually force analysis

70 | try:

71 | llil = func.get_low_level_il_at(func.start + i, bb.arch)

72 | except exceptions.ILException:

73 | log_warn(f"Skipping function at {hex(func.start)}. You need to force the analysis of this function.")

74 | return None

75 |

76 | insn_mask = not is_llil_relocatable(llil)

77 | # if not insn_mask:

78 | # func.set_auto_instr_highlight(func.start + i, HighlightStandardColor.BlueHighlightColor)

79 | mask[i:min(i + insn_len, sig_length)] = [insn_mask] * min(insn_len, sig_length - i)

80 | i += insn_len

81 | if i >= sig_length: break

82 | return mask

83 |

84 | def find_relocation(func, start, end):

85 | """

86 | Resolves the relocation spanning `start` to `end`. If `start` == `end`, the range is expanded to the whole instruction containing `start`.

87 | :param func: function start and end are contained in

88 | :param start: start address

89 | :param end: end address

90 | :return: the corrected start address and length of the relocation, or (None, None) if `start` is not inside a basic block

91 | """

92 |

93 | if end != start: # relocation isn't stupid

94 | return start, end - start

95 | # relocation is stupid (start==end), so just expand to the whole instruction

96 | bb = func.get_basic_block_at(start)

97 | if not bb: # not in a basicblock, don't care.

98 | return None, None

99 | bb._buildStartCache()

100 | for i, insn_start in enumerate(bb._instStarts):

101 | insn_end = insn_start + bb._instLengths[i]

102 | if (insn_start < end and start < insn_end) or (start == end and insn_start <= start < insn_end):

103 | return insn_start, bb._instLengths[i]

104 |

105 | def relocations_mask(func, sig_length):

106 | """

107 | Compute the relocations mask based on the relocation metadata contained within the binary.

108 | :param func: BinaryNinja api function

109 | :param sig_length: how long the mask should be

110 | :return: an array of booleans, signifying whether the byte at each index is significant or not for matching

111 | """

112 |

113 | mask = [True] * sig_length

114 | for start, end in func.view.relocation_ranges:

115 | if start > func.start + sig_length or end < func.start: continue

116 | reloc_start, reloc_len = find_relocation(func, start, end)

117 | if reloc_start is None: continue # not in a basicblock, don't care.

118 | reloc_start -= func.start

119 | if reloc_start < 0:

120 | reloc_len = reloc_len + reloc_start

121 | reloc_start = 0

122 | if reloc_len <= 0: continue

123 | mask[reloc_start:reloc_start + reloc_len] = [False] * reloc_len

124 |

125 | in_block = [False] * sig_length

126 | for bb in func.basic_blocks:

127 | bb_start_offset = bb.start - func.start

128 | bb_end_offset = bb_start_offset + get_bb_len(bb)

129 | if bb_start_offset > sig_length or bb.start < func.start: continue

130 | in_block[bb_start_offset:min(bb_end_offset, sig_length)] = [True] * min(get_bb_len(bb), sig_length - bb_start_offset)

131 |

132 | mask = [a and b for a,b in zip(mask, in_block)]

133 | return mask

134 |

135 | def get_bb_len(bb):

136 | """

137 | Calculate the length of the basicblock, taking into account weird cases like the block ending with an illegal instruction