├── .gitignore

├── AI.jpg

├── _layouts

├── home.html

├── category.html

├── tag.html

├── collection.html

├── archive-taxonomy.html

├── posts.html

├── splash.html

├── tags.html

├── default.html

├── categories.html

├── archive.html

├── search.html

├── compress.html

└── single.html

├── Gemfile

├── _includes

├── footer

│ └── custom.html

├── analytics-providers

│ ├── custom.html

│ ├── google-gtag.html

│ ├── google-universal.html

│ └── google.html

├── comments-providers

│ ├── custom.html

│ ├── google-plus.html

│ ├── facebook.html

│ ├── scripts.html

│ ├── disqus.html

│ ├── discourse.html

│ ├── staticman.html

│ └── staticman_v2.html

├── posts-tag.html

├── posts-category.html

├── head

│ └── custom.html

├── page__hero_video.html

├── page__taxonomy.html

├── author-profile-custom-links.html

├── browser-upgrade.html

├── toc

├── figure

├── search

│ ├── lunr-search-scripts.html

│ ├── search_form.html

│ ├── google-search-scripts.html

│ └── algolia-search-scripts.html

├── analytics.html

├── mathjax.html

├── video

├── documents-collection.html

├── disqus.html

├── read-time.html

├── sidebar.html

├── post_pagination.html

├── scripts.html

├── footer.html

├── comment.html

├── tag-list.html

├── group-by-array

├── head.html

├── category-list.html

├── archive-single.html

├── gallery

├── masthead.html

├── breadcrumbs.html

├── feature_row

├── nav_list

├── social-share.html

├── paginator.html

├── page__hero.html

├── toc.html

├── seo.html

├── author-profile.html

└── comments.html

├── machine-learning

├── images

│ ├── svm_gm.png

│ ├── err_ana.png

│ ├── svm_bound.png

│ ├── err_ana_cn.png

│ ├── svm_outlier.png

│ ├── ablative_ana.png

│ ├── cs229_boost_1.png

│ ├── cs229_lec1_bgd.png

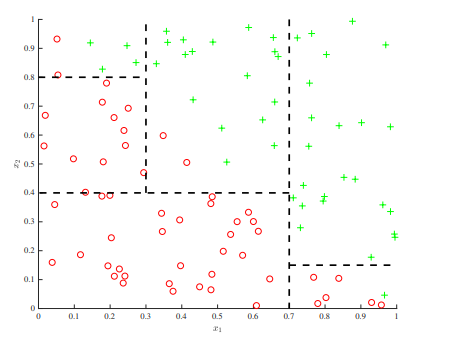

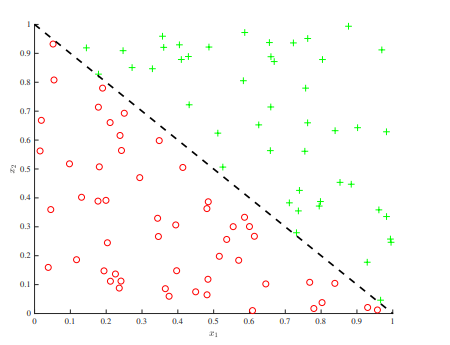

│ ├── cs229_trees_1.png

│ ├── cs229_trees_10.png

│ ├── cs229_trees_11.png

│ ├── cs229_trees_12.png

│ ├── cs229_trees_13.png

│ ├── cs229_trees_14.png

│ ├── cs229_trees_15.png

│ ├── cs229_trees_16.png

│ ├── cs229_trees_17.png

│ ├── cs229_trees_18.png

│ ├── cs229_trees_19.png

│ ├── cs229_trees_2.png

│ ├── cs229_trees_20.png

│ ├── cs229_trees_3.png

│ ├── cs229_trees_4.png

│ ├── cs229_trees_5.png

│ ├── cs229_trees_6.png

│ ├── cs229_trees_7.png

│ ├── cs229_trees_8.png

│ ├── cs229_trees_9.png

│ ├── svm_coordinate.png

│ ├── svm_intuition.png

│ ├── svm_two_coord.png

│ ├── ablative_ana_cn.png

│ ├── cs229_usv_keams.png

│ ├── cs229_gen_gda_learn.png

│ ├── cs229_gen_mul_gau.png

│ ├── cs229_lec1_intuit.png

│ ├── cs229_lec1_logistic.png

│ ├── cs229_lec1_newton.png

│ ├── cs229_usv_em_jensen.png

│ ├── cs229_deeplearning_nn.png

│ ├── cs229_em_missingdata.png

│ ├── cs229_deeplearning_bp_1.png

│ ├── cs229_deeplearning_bp_2.png

│ ├── cs229_deeplearning_cnn_1.png

│ ├── cs229_deeplearning_cnn_2.png

│ ├── cs229_deeplearning_link.png

│ ├── cs229_learningtheory_vc1.png

│ ├── cs229_learningtheory_vc2.png

│ ├── cs229_learningtheory_vc3.png

│ └── cs229_deeplearning_neuron.png

├── english-version

│ ├── dl_propagation.md

│ ├── usv_factor_analysis.md

│ ├── rl.md

│ ├── sv_bias_variance_tradeoff.md

│ ├── sv_online_learning_perceptron.md

│ ├── sv_regularization_model_selection.md

│ └── usv_kmeans.md

└── chinese-version

│ ├── sv_bias_variance_tradeoff_ch.md

│ ├── sv_regularization_model_selection_ch.md

│ ├── sv_tree_ch.md

│ └── sv_boost_ch.md

├── LICENSE

├── package.json

├── Rakefile

├── staticman.yml

├── README.md

├── Gemfile.lock

└── _config.yml

/.gitignore:

--------------------------------------------------------------------------------

1 | _site/*

2 | test

3 |

--------------------------------------------------------------------------------

/AI.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/AI.jpg

--------------------------------------------------------------------------------

/_layouts/home.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

--------------------------------------------------------------------------------

/Gemfile:

--------------------------------------------------------------------------------

1 | source "https://rubygems.org"

2 | gem 'github-pages', group: :jekyll_plugins

--------------------------------------------------------------------------------

/_includes/footer/custom.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

--------------------------------------------------------------------------------

/_includes/analytics-providers/custom.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

--------------------------------------------------------------------------------

/_includes/comments-providers/custom.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

--------------------------------------------------------------------------------

/machine-learning/images/svm_gm.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_gm.png

--------------------------------------------------------------------------------

/machine-learning/images/err_ana.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/err_ana.png

--------------------------------------------------------------------------------

/machine-learning/images/svm_bound.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_bound.png

--------------------------------------------------------------------------------

/_includes/posts-tag.html:

--------------------------------------------------------------------------------

1 | {%- for post in site.tags[include.taxonomy] -%}

2 | {% include archive-single.html %}

3 | {%- endfor -%}

4 |

--------------------------------------------------------------------------------

/machine-learning/images/err_ana_cn.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/err_ana_cn.png

--------------------------------------------------------------------------------

/machine-learning/images/svm_outlier.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_outlier.png

--------------------------------------------------------------------------------

/machine-learning/images/ablative_ana.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/ablative_ana.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_boost_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_boost_1.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_lec1_bgd.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_lec1_bgd.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_1.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_10.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_10.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_11.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_11.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_12.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_13.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_13.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_14.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_14.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_15.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_15.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_16.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_16.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_17.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_17.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_18.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_18.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_19.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_19.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_2.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_20.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_20.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_3.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_4.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_5.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_6.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_6.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_7.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_7.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_8.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_8.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_trees_9.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_trees_9.png

--------------------------------------------------------------------------------

/machine-learning/images/svm_coordinate.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_coordinate.png

--------------------------------------------------------------------------------

/machine-learning/images/svm_intuition.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_intuition.png

--------------------------------------------------------------------------------

/machine-learning/images/svm_two_coord.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/svm_two_coord.png

--------------------------------------------------------------------------------

/_includes/posts-category.html:

--------------------------------------------------------------------------------

1 | {%- for post in site.categories[include.taxonomy] -%}

2 | {% include archive-single.html %}

3 | {%- endfor -%}

4 |

--------------------------------------------------------------------------------

/machine-learning/images/ablative_ana_cn.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/ablative_ana_cn.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_usv_keams.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_usv_keams.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_gen_gda_learn.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_gen_gda_learn.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_gen_mul_gau.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_gen_mul_gau.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_lec1_intuit.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_lec1_intuit.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_lec1_logistic.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_lec1_logistic.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_lec1_newton.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_lec1_newton.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_usv_em_jensen.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_usv_em_jensen.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_nn.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_nn.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_em_missingdata.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_em_missingdata.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_bp_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_bp_1.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_bp_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_bp_2.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_cnn_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_cnn_1.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_cnn_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_cnn_2.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_link.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_link.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_learningtheory_vc1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_learningtheory_vc1.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_learningtheory_vc2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_learningtheory_vc2.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_learningtheory_vc3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_learningtheory_vc3.png

--------------------------------------------------------------------------------

/machine-learning/images/cs229_deeplearning_neuron.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Wei2624/AI_Learning_Hub/HEAD/machine-learning/images/cs229_deeplearning_neuron.png

--------------------------------------------------------------------------------

/_includes/head/custom.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/_includes/page__hero_video.html:

--------------------------------------------------------------------------------

1 | {% capture video_id %}{{ page.header.video.id }}{% endcapture %}

2 | {% capture video_provider %}{{ page.header.video.provider }}{% endcapture %}

3 |

4 | {% include video id=video_id provider=video_provider %}

5 |

--------------------------------------------------------------------------------

/_layouts/category.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

7 |

8 | {% include posts-category.html taxonomy=page.taxonomy type=page.entries_layout %}

9 |

10 |

--------------------------------------------------------------------------------

/_includes/page__taxonomy.html:

--------------------------------------------------------------------------------

1 | {% if site.tag_archive.type and page.tags[0] %}

2 | {% include tag-list.html %}

3 | {% endif %}

4 |

5 | {% if site.category_archive.type and page.categories[0] %}

6 | {% include category-list.html %}

7 | {% endif %}

--------------------------------------------------------------------------------

/_includes/author-profile-custom-links.html:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/_layouts/tag.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

7 |

8 | {% include posts-tag.html taxonomy=page.taxonomy type=page.entries_layout %}

9 |

10 |

--------------------------------------------------------------------------------

/_includes/comments-providers/google-plus.html:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/_includes/browser-upgrade.html:

--------------------------------------------------------------------------------

1 |

4 |

--------------------------------------------------------------------------------

/_layouts/collection.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

7 |

8 | {% include documents-collection.html collection=page.collection sort_by=page.sort_by sort_order=page.sort_order type=page.entries_layout %}

9 |

10 |

--------------------------------------------------------------------------------

/_includes/toc:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/_layouts/archive-taxonomy.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: default

3 | author_profile: false

4 | ---

5 |

6 |

7 | {% include sidebar.html %}

8 |

9 |

10 |

{{ page.title }}

11 | {% for post in page.posts %}

12 | {% include archive-single.html %}

13 | {% endfor %}

14 |

15 |

9 | {% if include.caption %}

10 | {{ include.caption | markdownify | remove: "

9 | {% if include.caption %}

10 | {{ include.caption | markdownify | remove: "" | remove: "

" }}

11 | {% endif %}

12 |

13 |

--------------------------------------------------------------------------------

/_includes/search/lunr-search-scripts.html:

--------------------------------------------------------------------------------

1 | {% assign lang = site.locale | slice: 0,2 | default: "en" %}

2 | {% case lang %}

3 | {% when "gr" %}

4 | {% assign lang = "gr" %}

5 | {% else %}

6 | {% assign lang = "en" %}

7 | {% endcase %}

8 |

9 |

10 |

--------------------------------------------------------------------------------

/_includes/analytics-providers/google-gtag.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

10 |

--------------------------------------------------------------------------------

/_includes/analytics.html:

--------------------------------------------------------------------------------

1 | {% if jekyll.environment == 'production' and site.analytics.provider and page.analytics != false %}

2 |

3 | {% case site.analytics.provider %}

4 | {% when "google" %}

5 | {% include /analytics-providers/google.html %}

6 | {% when "google-universal" %}

7 | {% include /analytics-providers/google-universal.html %}

8 | {% when "google-gtag" %}

9 | {% include /analytics-providers/google-gtag.html %}

10 | {% when "custom" %}

11 | {% include /analytics-providers/custom.html %}

12 | {% endcase %}

13 |

14 | {% endif %}

--------------------------------------------------------------------------------

/_includes/mathjax.html:

--------------------------------------------------------------------------------

1 | {% if page.mathjax %}

2 |

10 |

16 |

22 | {% endif %}

23 |

--------------------------------------------------------------------------------

/_includes/video:

--------------------------------------------------------------------------------

1 | {% capture video_id %}{{ include.id }}{% endcapture %}

2 | {% capture video_provider %}{{ include.provider }}{% endcapture %}

3 |

4 |

5 |

6 | {% if video_provider == "vimeo" %}

7 |

8 | {% elsif video_provider == "youtube" %}

9 |

10 | {% endif %}

11 |

12 |

--------------------------------------------------------------------------------

/_includes/analytics-providers/google-universal.html:

--------------------------------------------------------------------------------

1 |

11 |

--------------------------------------------------------------------------------

/_includes/documents-collection.html:

--------------------------------------------------------------------------------

1 | {% assign entries = site[include.collection] %}

2 |

3 | {% if include.sort_by == 'title' %}

4 | {% if include.sort_order == 'reverse' %}

5 | {% assign entries = entries | sort: 'title' | reverse %}

6 | {% else %}

7 | {% assign entries = entries | sort: 'title' %}

8 | {% endif %}

9 | {% elsif include.sort_by == 'date' %}

10 | {% if include.sort_order == 'reverse' %}

11 | {% assign entries = entries | sort: 'date' | reverse %}

12 | {% else %}

13 | {% assign entries = entries | sort: 'date' %}

14 | {% endif %}

15 | {% endif %}

16 |

17 | {%- for post in entries -%}

18 | {% include archive-single.html %}

19 | {%- endfor -%}

20 |

--------------------------------------------------------------------------------

/_includes/analytics-providers/google.html:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/_includes/disqus.html:

--------------------------------------------------------------------------------

1 | {% if site.disqus %}

2 |

17 | {% endif %}

18 |

--------------------------------------------------------------------------------

/_includes/comments-providers/scripts.html:

--------------------------------------------------------------------------------

1 | {% if site.comments.provider and page.comments %}

2 | {% case site.comments.provider %}

3 | {% when "disqus" %}

4 | {% include /comments-providers/disqus.html %}

5 | {% when "discourse" %}

6 | {% include /comments-providers/discourse.html %}

7 | {% when "facebook" %}

8 | {% include /comments-providers/facebook.html %}

9 | {% when "google-plus" %}

10 | {% include /comments-providers/google-plus.html %}

11 | {% when "staticman" %}

12 | {% include /comments-providers/staticman.html %}

13 | {% when "staticman_v2" %}

14 | {% include /comments-providers/staticman_v2.html %}

15 | {% when "custom" %}

16 | {% include /comments-providers/custom.html %}

17 | {% endcase %}

18 | {% endif %}

--------------------------------------------------------------------------------

/_includes/read-time.html:

--------------------------------------------------------------------------------

1 | {% assign words_per_minute = site.words_per_minute | default: 200 %}

2 |

3 | {% if post.read_time %}

4 | {% assign words = post.content | strip_html | number_of_words %}

5 | {% elsif page.read_time %}

6 | {% assign words = page.content | strip_html | number_of_words %}

7 | {% endif %}

8 |

9 | {% if words < words_per_minute %}

10 | {{ site.data.ui-text[site.locale].less_than | default: "less than" }} 1 {{ site.data.ui-text[site.locale].minute_read | default: "minute read" }}

11 | {% elsif words == words_per_minute %}

12 | 1 {{ site.data.ui-text[site.locale].minute_read | default: "minute read" }}

13 | {% else %}

14 | {{ words | divided_by:words_per_minute }} {{ site.data.ui-text[site.locale].minute_read | default: "minute read" }}

15 | {% endif %}

--------------------------------------------------------------------------------

/_includes/comments-providers/disqus.html:

--------------------------------------------------------------------------------

1 | {% if site.comments.disqus.shortname %}

2 |

14 |

15 | {% endif %}

16 |

--------------------------------------------------------------------------------

/_includes/comments-providers/discourse.html:

--------------------------------------------------------------------------------

1 | {% if site.comments.discourse.server %}

2 | {% capture canonical %}{% if site.permalink contains '.html' %}{{ page.url | absolute_url }}{% else %}{{ page.url | absolute_url | remove:'index.html' | strip_slash }}{% endif %}{% endcapture %}

3 |

12 |

13 | {% endif %}

14 |

--------------------------------------------------------------------------------

/_includes/sidebar.html:

--------------------------------------------------------------------------------

1 | {% if page.author_profile or layout.author_profile or page.sidebar %}

2 |

23 | {% endif %}

24 |

--------------------------------------------------------------------------------

/_includes/post_pagination.html:

--------------------------------------------------------------------------------

1 | {% if page.previous or page.next %}

2 |

14 | {% endif %}

--------------------------------------------------------------------------------

/_includes/search/search_form.html:

--------------------------------------------------------------------------------

1 |

2 | {%- assign search_provider = site.search_provider | default: "lunr" -%}

3 | {%- case search_provider -%}

4 | {%- when "lunr" -%}

5 |

6 |

7 | {%- when "google" -%}

8 |

11 |

12 |

13 |

14 | {%- when "algolia" -%}

15 |

16 |

17 | {%- endcase -%}

18 |

12 |

13 | {% if page.title %}{% endif %}

14 | {% if page.excerpt %}{% endif %}

15 | {% if page.date %}{% endif %}

16 | {% if page.last_modified_at %}{% endif %}

17 |

18 |

21 |

22 |

17 | {{ site.data.ui-text[site.locale].tags_label | default: "Tags:" }}

18 |

19 | {% for hash in tag_hashes %}

20 | {% assign keyValue = hash | split: '#' %}

21 | {% capture tag_word %}{{ keyValue[1] | strip_newlines }}{% endcapture %}

22 | {{ tag_word }}{% unless forloop.last %}, {% endunless %}

23 | {% endfor %}

24 |

25 |

26 | {% endif %}

--------------------------------------------------------------------------------

/_includes/group-by-array:

--------------------------------------------------------------------------------

1 |

7 |

8 |

9 | {% assign __empty_array = '' | split: ',' %}

10 | {% assign group_names = __empty_array %}

11 | {% assign group_items = __empty_array %}

12 |

13 |

14 | {% assign __names = include.collection | map: include.field %}

15 |

16 |

17 | {% assign __names = __names | join: ',' | join: ',' | split: ',' %}

18 |

19 |

20 | {% assign __names = __names | sort %}

21 | {% for name in __names %}

22 |

23 |

24 | {% unless name == previous %}

25 |

26 |

27 | {% assign group_names = group_names | push: name %}

28 | {% endunless %}

29 |

30 | {% assign previous = name %}

31 | {% endfor %}

32 |

33 |

34 |

35 | {% for name in group_names %}

36 |

37 |

38 | {% assign __item = __empty_array %}

39 | {% for __element in include.collection %}

40 | {% if __element[include.field] contains name %}

41 | {% assign __item = __item | push: __element %}

42 | {% endif %}

43 | {% endfor %}

44 |

45 |

46 | {% assign group_items = group_items | push: __item %}

47 | {% endfor %}

--------------------------------------------------------------------------------

/_includes/head.html:

--------------------------------------------------------------------------------

1 |

2 |

3 | {% include seo.html %}

4 |

5 |

6 |

7 |

8 |

9 |

10 |

13 |

14 |

15 |

16 |

17 |

31 |

32 | {% if site.head_scripts %}

33 | {% for script in site.head_scripts %}

34 | {% if script contains "://" %}

35 | {% capture script_path %}{{ script }}{% endcapture %}

36 | {% else %}

37 | {% capture script_path %}{{ script | relative_url }}{% endcapture %}

38 | {% endif %}

39 |

40 | {% endfor %}

41 | {% endif %}

42 |

--------------------------------------------------------------------------------

/_layouts/tags.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

7 | {% assign tags_max = 0 %}

8 | {% for tag in site.tags %}

9 | {% if tag[1].size > tags_max %}

10 | {% assign tags_max = tag[1].size %}

11 | {% endif %}

12 | {% endfor %}

13 |

14 |

15 | {% for i in (1..tags_max) reversed %}

16 | {% for tag in site.tags %}

17 | {% if tag[1].size == i %}

18 | -

19 |

20 | {{ tag[0] }} {{ i }}

21 |

22 |

23 | {% endif %}

24 | {% endfor %}

25 | {% endfor %}

26 |

27 |

28 | {% for i in (1..tags_max) reversed %}

29 | {% for tag in site.tags %}

30 | {% if tag[1].size == i %}

31 |

40 | {% endif %}

41 | {% endfor %}

42 | {% endfor %}

43 |

--------------------------------------------------------------------------------

/_includes/category-list.html:

--------------------------------------------------------------------------------

1 | {% case site.category_archive.type %}

2 | {% when "liquid" %}

3 | {% assign path_type = "#" %}

4 | {% when "jekyll-archives" %}

5 | {% assign path_type = nil %}

6 | {% endcase %}

7 |

8 | {% if site.category_archive.path %}

9 | {% comment %}

10 |

11 |

12 | {% endcomment %}

13 | {% capture page_categories %}{% for category in page.categories %}{{ category | downcase }}#{{ category }}{% unless forloop.last %},{% endunless %}{% endfor %}{% endcapture %}

14 | {% assign category_hashes = page_categories | split: ',' | sort %}

15 |

16 |

17 | {{ site.data.ui-text[site.locale].categories_label | default: "Categories:" }}

18 |

19 | {% for hash in category_hashes %}

20 | {% assign keyValue = hash | split: '#' %}

21 | {% capture category_word %}{{ keyValue[1] | strip_newlines }}{% endcapture %}

22 | {{ category_word }}{% unless forloop.last %}, {% endunless %}

23 | {% endfor %}

24 |

25 |

26 | {% endif %}

--------------------------------------------------------------------------------

/_layouts/default.html:

--------------------------------------------------------------------------------

1 | ---

2 | ---

3 |

4 |

5 |

11 |

12 |

13 | {% include head.html %}

14 | {% include head/custom.html %}

15 | {% include analytics.html %}

16 |

17 |

18 |

19 |

20 |

21 | {% include browser-upgrade.html %}

22 | {% include masthead.html %}

23 |

24 |

25 | {{ content }}

26 |

27 |

28 | {% if site.search == true %}

29 |

30 | {% include search/search_form.html %}

31 |

32 | {% endif %}

33 |

34 |

40 |

41 | {% include scripts.html %}

42 |

43 |

44 |

45 |

--------------------------------------------------------------------------------

/_layouts/categories.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: archive

3 | ---

4 |

5 | {{ content }}

6 |

7 | {% assign categories_max = 0 %}

8 | {% for category in site.categories %}

9 | {% if category[1].size > categories_max %}

10 | {% assign categories_max = category[1].size %}

11 | {% endif %}

12 | {% endfor %}

13 |

14 |

15 | {% for i in (1..categories_max) reversed %}

16 | {% for category in site.categories %}

17 | {% if category[1].size == i %}

18 | -

19 |

20 | {{ category[0] }} {{ i }}

21 |

22 |

23 | {% endif %}

24 | {% endfor %}

25 | {% endfor %}

26 |

27 |

28 | {% for i in (1..categories_max) reversed %}

29 | {% for category in site.categories %}

30 | {% if category[1].size == i %}

31 |

40 | {% endif %}

41 | {% endfor %}

42 | {% endfor %}

43 |

--------------------------------------------------------------------------------

/_layouts/archive.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: default

3 | ---

4 |

5 | {% if page.header.overlay_color or page.header.overlay_image or page.header.image %}

6 | {% include page__hero.html %}

7 | {% elsif page.header.video.id and page.header.video.provider %}

8 | {% include page__hero_video.html %}

9 | {% endif %}

10 |

11 | {% if page.url != "/" and site.breadcrumbs %}

12 | {% unless paginator %}

13 | {% include breadcrumbs.html %}

14 | {% endunless %}

15 | {% endif %}

16 |

17 |

18 | {% include sidebar.html %}

19 |

20 |

21 | {% unless page.header.overlay_color or page.header.overlay_image %}

22 |

{{ page.title }}

23 | {% endunless %}

24 |

25 | {% if page.toc %}

26 |

32 | {% endif %}

33 | {{ content }}

34 | {% if page.link %}{% endif %}

35 |

36 |

37 |

" | remove: "

" %}

9 | {% else %}

10 | {% assign title = post.title %}

11 | {% endif %}

12 |

13 |

14 |

15 | {% if include.type == "grid" and teaser %}

16 |

17 |

24 |

27 | {% if post.link %}

28 | {{ title }} Permalink

29 | {% else %}

30 | {{ title }}

31 | {% endif %}

32 |

33 | {% if post.read_time %}

34 | {% include read-time.html %}

35 | {% endif %}

36 | {% if post.excerpt %}{{ post.excerpt | markdownify | strip_html | truncate: 160 }}

{% endif %}

37 |

38 |

37 |

38 | {% else %}

39 |

37 |

38 | {% else %}

39 |  46 | {% endif %}

47 | {% endfor %}

48 | {% if include.caption %}

49 | {{ include.caption | markdownify | remove: "

46 | {% endif %}

47 | {% endfor %}

48 | {% if include.caption %}

49 | {{ include.caption | markdownify | remove: "" | remove: "

" }}

50 | {% endif %}

51 |

--------------------------------------------------------------------------------

/_layouts/search.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: default

3 | ---

4 |

5 | {% if page.header.overlay_color or page.header.overlay_image or page.header.image %}

6 | {% include page__hero.html %}

7 | {% endif %}

8 |

9 | {% if page.url != "/" and site.breadcrumbs %}

10 | {% unless paginator %}

11 | {% include breadcrumbs.html %}

12 | {% endunless %}

13 | {% endif %}

14 |

15 |

16 | {% include sidebar.html %}

17 |

18 |

19 | {% unless page.header.overlay_color or page.header.overlay_image %}

20 |

{{ page.title }}

21 | {% endunless %}

22 |

23 | {{ content }}

24 |

25 | {%- assign search_provider = site.search_provider | default: "lunr" -%}

26 | {%- case search_provider -%}

27 | {%- when "lunr" -%}

28 |

29 |

30 | {%- when "google" -%}

31 |

34 |

35 |

36 |

37 | {%- when "algolia" -%}

38 |

39 |

40 | {%- endcase -%}

41 |

42 |

8 |

9 | {% for f in feature_row %}

10 |

11 | {% if f.url contains "://" %}

12 | {% capture f_url %}{{ f.url }}{% endcapture %}

13 | {% else %}

14 | {% capture f_url %}{{ f.url | relative_url }}{% endcapture %}

15 | {% endif %}

16 |

17 |

18 |

19 | {% if f.image_path %}

20 |

21 |

28 | {% if f.image_caption %}

29 |

{{ f.image_caption | markdownify | remove: "" | remove: "

" }}

30 | {% endif %}

31 |

32 | {% endif %}

33 |

34 |

35 | {% if f.title %}

36 |

{{ f.title }}

37 | {% endif %}

38 |

39 | {% if f.excerpt %}

40 |

41 | {{ f.excerpt | markdownify }}

42 |

43 | {% endif %}

44 |

45 | {% if f.url %}

46 |

{{ f.btn_label | default: site.data.ui-text[site.locale].more_label | default: "Learn More" }}

47 | {% endif %}

48 |

49 |

50 |

51 | {% endfor %}

52 |

53 |

{{ site.data.ui-text[site.locale].share_on_label | default: "Share on" }}

4 | {% endif %}

5 |

6 |

7 |

8 | Facebook

9 |

10 | Google+

11 |

12 | QR Code

13 |

14 |

--------------------------------------------------------------------------------

/_includes/search/algolia-search-scripts.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

55 |

--------------------------------------------------------------------------------

/_includes/comments-providers/staticman.html:

--------------------------------------------------------------------------------

1 | {% if site.repository and site.staticman.branch %}

2 |

42 | {% endif %}

--------------------------------------------------------------------------------

/_includes/comments-providers/staticman_v2.html:

--------------------------------------------------------------------------------

1 | {% if site.repository and site.staticman.branch %}

2 |

42 | {% endif %}

--------------------------------------------------------------------------------

/Rakefile:

--------------------------------------------------------------------------------

1 | require "bundler/gem_tasks"

2 | require "jekyll"

3 | require "listen"

4 |

5 | def listen_ignore_paths(base, options)

6 | [

7 | /_config\.ya?ml/,

8 | /_site/,

9 | /\.jekyll-metadata/

10 | ]

11 | end

12 |

13 | def listen_handler(base, options)

14 | site = Jekyll::Site.new(options)

15 | Jekyll::Command.process_site(site)

16 | proc do |modified, added, removed|

17 | t = Time.now

18 | c = modified + added + removed

19 | n = c.length

20 | relative_paths = c.map{ |p| Pathname.new(p).relative_path_from(base).to_s }

21 | print Jekyll.logger.message("Regenerating:", "#{relative_paths.join(", ")} changed... ")

22 | begin

23 | Jekyll::Command.process_site(site)

24 | puts "regenerated in #{Time.now - t} seconds."

25 | rescue => e

26 | puts "error:"

27 | Jekyll.logger.warn "Error:", e.message

28 | Jekyll.logger.warn "Error:", "Run jekyll build --trace for more information."

29 | end

30 | end

31 | end

32 |

33 | task :preview do

34 | base = Pathname.new('.').expand_path

35 | options = {

36 | "source" => base.join('test').to_s,

37 | "destination" => base.join('test/_site').to_s,

38 | "force_polling" => false,

39 | "serving" => true,

40 | "theme" => "minimal-mistakes-jekyll"

41 | }

42 |

43 | options = Jekyll.configuration(options)

44 |

45 | ENV["LISTEN_GEM_DEBUGGING"] = "1"

46 | listener = Listen.to(

47 | base.join("_data"),

48 | base.join("_includes"),

49 | base.join("_layouts"),

50 | base.join("_sass"),

51 | base.join("assets"),

52 | options["source"],

53 | :ignore => listen_ignore_paths(base, options),

54 | :force_polling => options['force_polling'],

55 | &(listen_handler(base, options))

56 | )

57 |

58 | begin

59 | listener.start

60 | Jekyll.logger.info "Auto-regeneration:", "enabled for '#{options["source"]}'"

61 |

62 | unless options['serving']

63 | trap("INT") do

64 | listener.stop

65 | puts " Halting auto-regeneration."

66 | exit 0

67 | end

68 |

69 | loop { sleep 1000 }

70 | end

71 | rescue ThreadError

72 | # You pressed Ctrl-C, oh my!

73 | end

74 |

75 | Jekyll::Commands::Serve.process(options)

76 | end

77 |

--------------------------------------------------------------------------------

/machine-learning/english-version/dl_propagation.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: single

3 | mathjax: true

4 | toc: true

5 | toc_sticky: true

6 | category: Machine Learning

7 | tags: [notes]

8 | qr: machine_learning_notes.png

9 | title: Backpropagation

10 | share: true

11 | permalink: /MachineLearning/dl_propagtion/

12 | sidebar:

13 | nav: "MachineLearning"

14 | ---

15 |

16 | # 1 Forward Propagation

17 |

18 | This is more like a summary section.

19 |

20 | We set $a^{[0]} = x$ for our input to the network and $\ell = 1,2,\dots,N$ where N is the number of layers of network. Then, we have

21 |

22 | $$z^{[\ell]} = W^{[\ell]}a^{[\ell-1]} + b^{[\ell]}$$

23 |

24 | $$a^{[\ell]} = g^{[\ell]}(z^{[\ell]})$$

25 |

26 | where $g^{[\ell]}$ is the same for all the layers except for last layer. For the last layer, we can do:

27 |

28 | 1 regression then $g(x) = x$

29 |

30 | 2 binary then $g(x) = sigmoid(x)$

31 |

32 | 3 multi-class then $g(x) = softmax(x)$

33 |

34 | Finally, we can have the output of the network $a^{[N]}$ and compute its loss.

35 |

36 | For regression, we have:

37 |

38 | $$\mathcal{L}(\hat{y},y) = \frac{1}{2}(\hat{y} - y)^2$$

39 |

40 | For binary classification, we have:

41 |

42 | $$\mathcal{L}(\hat{y},y) = -\bigg(y\log\hat{y} + (1-y)\log (1-\hat{y})\bigg)$$

43 |

44 | For multi-classification, we have:

45 |

46 | $$\mathcal{L}(\hat{y},y) = -\sum\limits_{j=1}^k\mathbb{1}\{y=j\}\log\hat{y}_j$$

47 |

48 |

49 | Note that for multi-class, if we have $\hat{y}$ as a k-dimensional vector, we can calculate its cross-entropy for its loss:

50 |

51 | $$\mathcal{L}(\hat{y},y) = -\sum\limits_{j=1}^ky_j\log\hat{y}_j$$

52 |

53 | # 2 Backpropagation

54 |

55 | We define that:

56 |

57 | $$\delta^{[\ell]} = \triangledown_{z^{[\ell]}}\mathcal{L}(\hat{y},y)$$

58 |

59 | So we have three steps for computing the gradient for any layer:

60 |

61 | 1 For output layer N, we have:

62 |

63 | $$\delta^{[N]} = \triangledown_{z^{[N]}}\mathcal{L}(\hat{y},y)$$

64 |

65 | For softmax function, since it is not performed element-wise, so you can directly caculate it as a whole. For sigmoid, it is applied element-wise, so we need to:

66 |

67 | $$\triangledown_{z^{[N]}}\mathcal{L}(\hat{y},y) = \triangledown_{\hat{y}}\mathcal{L}(\hat{y},y)\circ (g^{[N]})^{\prime}(z^{[N]})$$

68 |

69 | Note this is element-wise operation.

70 |

71 | 2 For $\ell = N-1,N-2,\dots,1$, we have:

72 |

73 | $$\delta^{[\ell]} = (W^{[\ell+1]T}\delta^{[\ell+1]})\circ g^{\prime}(z^{[\ell]})$$

74 |

75 | 3 For each layer, we have:

76 |

77 | $$\triangle_{W^{[\ell]}}J(W,b) = \delta^{[\ell]}a^{[\ell]T}$$

78 |

79 | $$\triangle_{b^{[\ell]}}J(W,b) = \delta^{[\ell]}$$

80 |

81 | This can be directly used in coding, which acts like a formula.

82 |

--------------------------------------------------------------------------------

/machine-learning/chinese-version/sv_bias_variance_tradeoff_ch.md:

--------------------------------------------------------------------------------

1 | ---

2 | published: true

3 | layout: single

4 | mathjax: true

5 | toc: true

6 | toc_sticky: true

7 | category: Machine Learning

8 | tags: [notes,chinese]

9 | excerpt: "This post is a translation for one of Wei's posts in his machine learning notes."

10 | title: Bias Varicne Tradeoff Chinese Version

11 | share: true

12 | author_profile: true

13 | permalink: /MachineLearning/sv_bias_variance_tradeoff_ch/

14 | ---

15 |

16 | This Article is a Chinese translation of a study note by Wei. Click [here](https://wei2624.github.io/MachineLearning/sv_bias_varience_tradeoff/) to see the original English version in Wei's homepage. I will continue to update Chinese translation to sync with Wei's notes.

17 |

18 | 请注意: 本文是我翻译的一份学习资料,英文原版请点击[Wei的学习笔记](https://wei2624.github.io/MachineLearning/sv_bias_varience_tradeoff/)。我将不断和原作者的英文笔记同步内容,定期更新和维护。

19 |

20 | 在这一节中,我们重点讨论偏差和误差之间是如何相互关联的。我们总想拥有0偏差和0方差,然而在实际中这是不可能的。因此,它们之间总会有权衡,一者多,另一者少。

21 |

22 | # 1 偏差-方差间权衡 (Bias Variance Tradeoff)

23 |

24 | 我们将基于一些样本训练好的模型定义为$\overset{\wedge}{f}$,并且$y$ 为事实标签。因此,**均方差(mean squared error(MSE))**可以定义为:

25 |

26 | $$\mathbb{E}_{(x,y)\sim \text{test set}} \lvert \overset{\wedge}{f}(x) - y \rvert^2$$

27 |

28 | 对于很高的均方差,我们有以下3种解释:

29 |

30 | **过渡拟合(overfitting)**: 模型只在训练样本中表现良好,但是并不能很好地推广适用到测试数据上。

31 |

32 | **欠拟合(underfitting)**: 模型训练还不够,或者没有足够的训练数据,以至于模型不能很好的表示训练数据的情况。

33 |

34 | **两者都不**: 数据的**噪音(noise)**太大。

35 |

36 | 我们将这些情况归纳为**偏差-方差权衡(Bias-Variance Tradeoff)**。

37 |

38 | 假设所有数据都来自于以下定义的相似的分布:$y_i = f(x_i) + \epsilon_i$ 其中噪音 $\mathbb{E}[\epsilon] = 0$ and $Var(\epsilon) = \sigma^2$。

39 |

40 | 尽管我们的目标是计算f,但我们只能通过从以上分布所产生的样本中训练得到一个估值。因此,$\overset{\wedge}{f}(x_i)$ 是随机的,因为它取决于随机的$\epsilon_i$,并且它也是$y = f(x_i) + \epsilon_i$的预测值。因此,得出$\mathbb{E}(\overset{\wedge}{f}(x)-y)$是很合理的。

41 |

42 | 我们也可以计算MSE的期望:

43 |

44 | $$\begin{align}

45 | \mathbb{E}[(y-\overset{\wedge}{f}(x))^2] &= \mathbb{E}[y^2 + (\overset{\wedge}{f})^2 - 2y\overset{\wedge}{f}]\\

46 | &= \mathbb{E}{y^2} + E[(\overset{\wedge}{f})^2] - \mathbb{E}[2y\overset{\wedge}{f}] \\

47 | &= Var(y) + Var(\overset{\wedge}{f}) + (f^2 - 2f\mathbb{E}[\overset{\wedge}{f}] + (\mathbb{E}[\overset{\wedge}{f}])^2\\

48 | &= Var(y) + Var(\overset{\wedge}{f}) + (f - \mathbb{E}[\overset{\wedge}{f}])^2\\

49 | &=\sigma^2 + \text{Bias}(f)^2+ Var(\overset{\wedge}{f})

50 | \end{align}$$

51 |

52 | 第一项是我们无法处理的噪声。高偏差意味着模型的学习效率很低,并且欠拟合。一个高度的方差代表着模型不能很好的概括更多普通的情况,同时代表过渡拟合。

53 |

54 | # 2 误差分析 (Error Aanalysis)

55 |

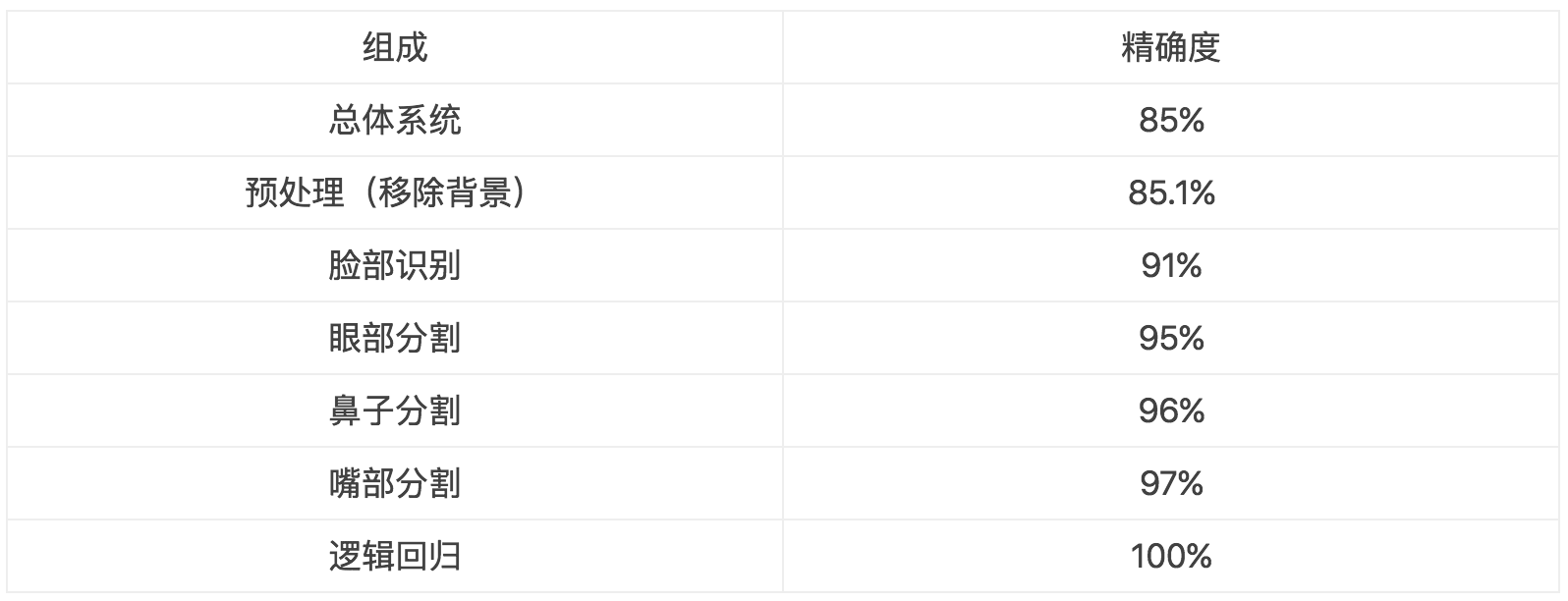

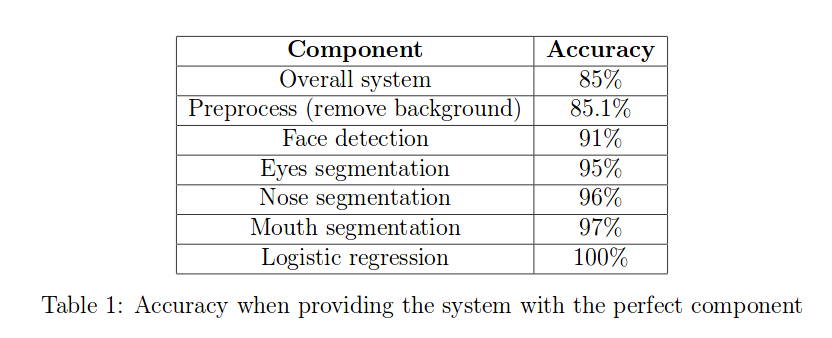

56 | 为了分析一个模型,我们应该首先将模型模块化。然后我们将每个模块的事实标签代入到每一模块中,观察每一个变化会如何影响整体模型的精确度。我们试图观察事实标签中的哪个模块对模型系统的影响最大。以下是一个例子

57 |

58 |

59 |

60 | 表1:这个表给出了模块化对应的准确度

61 |

62 | # 3 去除分析 (Ablative Analysis)

63 |

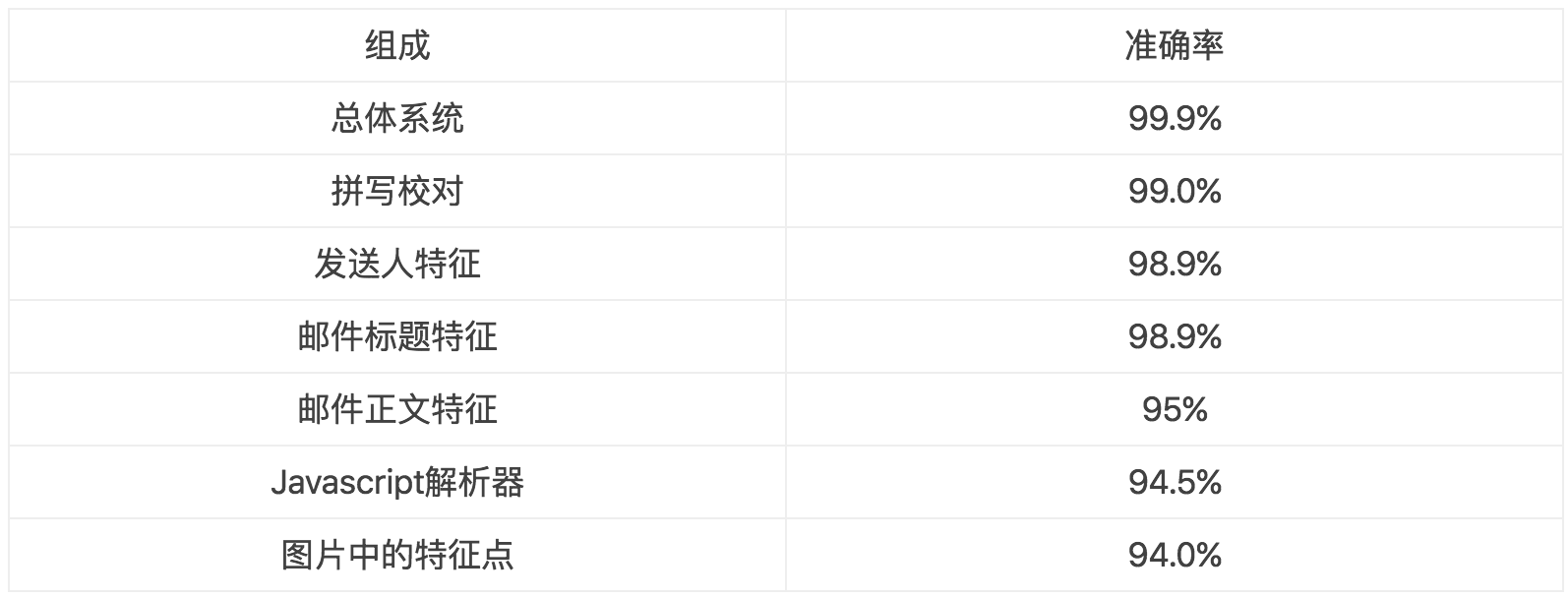

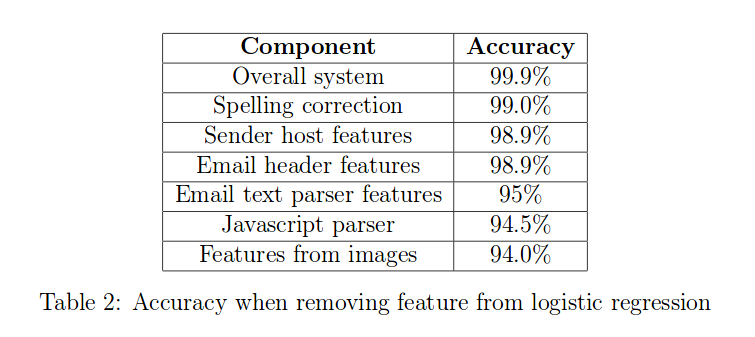

64 | 误差分析试图识别模型当前表现与完美表现之前的区别,而去除分析试图识别基准线与当前模型之前的区别。去除分析非常重要,很多研究论文因为丢失了这部分而被拒绝。这个分析可以告诉我们模型的哪个部分是最具影响力的。

65 | 例如,假设我们有更多附加的特征可以让模型表现更好。我们想观察通过每一次减少一个附加的特征,模型的表现会减少多少。下面是一个例子

66 |

67 |

68 |

69 | 表2:从逻辑回归移除特征的精确度

70 |

71 |

--------------------------------------------------------------------------------

/machine-learning/english-version/usv_factor_analysis.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: single

3 | mathjax: true

4 | toc: true

5 | toc_sticky: true

6 | category: Machine Learning

7 | tags: [notes]

8 | qr: machine_learning_notes.png

9 | title: Factor Analysis

10 | share: true

11 | permalink: /MachineLearning/usv_factor_analysis/

12 | sidebar:

13 | nav: "MachineLearning"

14 | ---

15 |

16 | # Introduction

17 |

18 | Recall that when we have the data $x^i\in \mathbb{R}^n$ for mixture of Gaussian, we usually assume that the number of samples m is larger than the sample dimension n. Then, EM algorithm can be applied to fit the data. However, EM algorithm will fail if data dimension is larger than the number of sample.

19 |

20 | For example, if $n \gg m$, in such case, it might be difficult to model the data with even a single Gaussian. This is because m data points can only span a subspace of feature space$\mathbb{R}^n$. If we model such a dataset using maximum likelihood estimator, we should have:

21 |

22 | $$\begin{align}

23 | \mu &= \frac{1}{m}\sum\limits_{i=1}^m x^i \\

24 | \Sigma &= \frac{1}{m} \sum\limits_{i=1}^m (x^i-\mu)((x^i-\mu)^T)

25 | \end{align}$$

26 |

27 | Each $(x^i-\mu)((x^i-\mu)^T)$ produces a matrix with rank 1. The rank of the sum of all the matrices is the sum of the rank of each matrix. Thus, the final $\Sigma$ has the most rank m. If $n \gg m$, $\Sigma$ is a singular matrix, and its inverse does not exist. Furthermore, $1/\lvert \Sigma \rvert^{1/2} = 1/0$, which is invalid. This cannot be used to define the density of Gaussian distribution.

28 |

29 | Thus, we will talk about how to find the best fit of model given the few amount of data.

30 |

31 |

32 | # Restrictions of $\Sigma$

33 |

34 | If we do not have sufficient data to fit a model, we might want to place some restrictions on $\Sigma$ so that it can be a valid covariance matrix.

35 |

36 | The first restriction is to force the covariance matrix to be diagonal. In this setting, we should have our covariance matrix as:

37 |

38 | $$\Sigma_{jj} = \frac{1}{m} \sum\limits_{i=1}^m (x_j^i - \mu_j)^2$$

39 |

40 | Off-diagonals are just zero.

41 |

42 | The second type of restriction is to further force the covariance matrix to the diagonal matrix where all the diagonals are equal. In general, we have $\Sigma = \sigma^2 I$ where $\sigma^2$ is the control parameter.

43 |

44 | It can also be found using maximum likelihood as:

45 |

46 | $$\sigma^2 = \frac{1}{mn} \sum\limits_{j=1}^n\sum\limits_{i=1}^m (x_j^i - \mu_j)^2$$

47 |

48 | If we have a 2D Gaussian and plot it, we should see a contours that are circles.

49 |

50 | To see why this helps, if we model a full, unconstrained covariance matrix, it was necessary (not sufficient) that $m\geq n$ in order to make $\Sigma$ non-singular. On the other hand, either of the two restriction above will produce a non-singular matrix $\Sigma$ when $m\geq 2$.

51 |

52 | However, both restrictions have the same issue. That is, we cannot model the correlation and dependence between any pair of features in the covariance matrix because they are forced to be zero. So We cannot capture any correlation between any pair of features, which is bad.

53 |

54 | # Marginals and Conditions of Gaussian

55 |

56 | Before talking about factor analysis, we want to talk about how to find conditional and marginal distributions of multivariate Gaussian variables.

57 |

58 | Suppose we have a vector-valued random variable:

59 |

60 | $$x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$$

61 |

62 | where $x_1\in \mathbb{R}^r, x_2\in$

63 |

--------------------------------------------------------------------------------

/_includes/paginator.html:

--------------------------------------------------------------------------------

1 | {% if paginator.total_pages > 1 %}

2 |

69 | {% endif %}

70 |

--------------------------------------------------------------------------------

/machine-learning/english-version/rl.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: single

3 | mathjax: true

4 | toc: true

5 | toc_sticky: true

6 | category: Machine Learning

7 | tags: [notes]

8 | qr: machine_learning_notes.png

9 | title: Reinforcement Learning

10 | share: true

11 | permalink: /MachineLearning/rl/

12 | sidebar:

13 | nav: "MachineLearning"

14 | ---

15 |

16 |

17 | # 1 Introduction

18 |

19 | In supervised learning scheme, we have labels for each data sample, which sorts of represents the "right answer" for each sample. In reinforcement learning (RL), we have no such labels at all because it might not be appropriate to define the "right answer" in some scenario. For example, it is hard to label the correct movement for game Go to achieve a higher score given a current state. Unlike unsupervised learning either where no metric evalution is given for a new prediction, RL has the reward function to evluate the proposed action. The goal is to maximize the reward.

20 |

21 |

22 | # 2 Markov Decision Processes (MDP)

23 |

24 | MDP has been the foundation of RL. MDP typically contains a typle $(S, A, \{P_{sa}\}, \gamma, R$ where:

25 |

26 | - S is the set of states.

27 |

28 | - A is the set of actions that an agent can take.

29 |

30 | - $P_{sa}$ is the transition probability vector of taking action $a\in A$ at the state $s \in S$.

31 |

32 | - $\gamma$ is the discount factor

33 |

34 | - $R: S\times A \rightarrow \mathbb{R}$ is the reward function.

35 |

36 | A typical MDP starts from an intial state $s_0$ and $a_o \in A$. Then we trainsit to $s_1 \sim P_{s_0 a_0}$. This process will continue as:

37 |

38 | $$s_0 \rightarrow{a_0} s_1 \rightarrow{a_1} s_2 \rightarrow{a_2} \dots$$

39 |

40 | The **reward** for such a sequence can be defined as:

41 |

42 | $$R(s_0,a_0) + \gamma R(s_1,a_1) + \gamma^2 R(s_2, a_2) + \dots$$

43 |

44 | Or without the loss of generality:

45 |

46 | $$R(s_0) + \gamma R(s_1) + \gamma^2 R(s_2) + \dots$$

47 |

48 | Our goal is to maxmize:

49 |

50 | $$\mathbb{E}[R(s_0) + \gamma R(s_1) + \gamma^2 R(s_2) + \dots]$$

51 |

52 | One key note here is to notice the discount factor which is compounded over time. This simply means that to maximize the total reward, we want to get the largest reward as soon as possible and postpone negative rewards as long as possible.

53 |

54 | To make an action at a given state, we also have the **policy** $\pi: S\rightarrow A$ mapping from the states to the actions, namely $a=\pi(s)$. In addition, we also define the **value function** with the fixed policy as:

55 |

56 | $$V^{\pi}(s) = R(s) + \gamma \sum\limits_{s^{\prime}\in S} P_{s\pi(s)}(s^{\prime})V^{\pi}(s^{\prime})$$

57 |

58 | which is also called **Bellman equation**. The first term is the immediate reward of a state s. The second term is the expected sum of discount rewards for starting in state $s^{\prime}$. Basically, it is $\mathbb{E}_{s^{\prime}\sim P_{s\pi(s)}}[V^{\pi}(s^{\prime})]$. Bellman equations enable us to solve a finite-state MDP problem ($\| S \| < \infty$). We can write dowm $\| S \|$ equations with one for each state with $\| S \|$ variables.

59 |

60 | The **optimal value function** is defined as:

61 |

62 | $$V^{\ast}(s) = \max_{\pi}V^{\pi}(s)$$

63 |

64 | We can also write it in Bellman's form:

65 |

66 | $$V^{\ast}(s) = R(s) + \max_{a\in A} \gamma \sum\limits_{s^{\prime}\in S} P_{sa}(s^{\prime})V^{\ast}(s^{\prime})$$

67 |

68 | Similarily, we can have:

69 |

70 | $$\pi^{\ast}(s) = \arg\max_{a\in A} \sum\limits_{s^{\prime}\in S} P_{sa}(s^{\prime})V^{\ast}(s^{\prime})$$

71 |

72 | Then, we can conlcude that:

73 |

74 | $$V^{\ast}(s) = V^{\pi^{\ast}}(s) \geq V^{\pi}(s)$$

75 |

76 | One thing to notice is that $\pi^{\ast}$ is optimal for all the states regardless of what current state it is.

77 |

78 |

79 |

80 |

--------------------------------------------------------------------------------

/staticman.yml:

--------------------------------------------------------------------------------

1 | comments:

2 | # (*) REQUIRED

3 | #

4 | # Names of the fields the form is allowed to submit. If a field that is

5 | # not here is part of the request, an error will be thrown.

6 | allowedFields: ["name", "email", "url", "message"]

7 |

8 | # (*) REQUIRED WHEN USING NOTIFICATIONS

9 | #

10 | # When allowedOrigins is defined, only requests sent from one of the domains

11 | # listed will be accepted. The origin is sent as part as the `options` object

12 | # (e.g.

36 | {% if page.header.overlay_color or page.header.overlay_image %}

37 |

38 |

39 | {% if paginator and site.paginate_show_page_num %}

40 | {{ site.title }}{% unless paginator.page == 1 %} {{ site.data.ui-text[site.locale].page | default: "Page" }} {{ paginator.page }}{% endunless %}

41 | {% else %}

42 | {{ page.title | default: site.title | markdownify | remove: "

" | remove: "

" }}

43 | {% endif %}

44 |

45 | {% if page.header.show_overlay_excerpt != false and page.excerpt %}

46 |

{{ page.excerpt | markdownify | remove: "

" | remove: "

" }}

47 | {% endif %}

48 | {% if page.read_time %}

49 |

{% include read-time.html %}

50 | {% endif %}

51 | {% if page.header.cta_url %}

52 |

{{ page.header.cta_label | default: site.data.ui-text[site.locale].more_label | default: "Learn More" }}

53 | {% endif %}

54 | {% if page.header.actions %}

55 |

56 | {% for action in page.header.actions %}

57 | {% if action.url contains "://" %}

58 | {% assign url = action.url %}

59 | {% else %}

60 | {% assign url = action.url | relative_url %}

61 | {% endif %}

62 | {{ action.label | default: site.data.ui-text[site.locale].more_label | default: "Learn More" }}

63 | {% endfor %}

64 | {% endif %}

65 |

68 | {% endif %}

69 | {% if page.header.caption %}

70 | {{ page.header.caption | markdownify | remove: "

68 | {% endif %}

69 | {% if page.header.caption %}

70 | {{ page.header.caption | markdownify | remove: "" | remove: "

" }}

71 | {% endif %}

72 |

73 |

--------------------------------------------------------------------------------

/machine-learning/english-version/sv_bias_variance_tradeoff.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: single

3 | mathjax: true

4 | toc: true

5 | toc_sticky: true

6 | category: Machine Learning

7 | tags: [notes]

8 | qr: machine_learning_notes.png

9 | title: Bias-Varaince and Error Analysis

10 | share: true

11 | permalink: /MachineLearning/sv_bias_variance_tradeoff/

12 | sidebar:

13 | nav: "MachineLearning"

14 | ---

15 |

16 | A Chinese version of this section is available. It can be found [here](https://dark417.github.io/MachineLearning/sv_bias_variance_tradeoff_ch/). The Chinese version will be synced periodically with English version. If the page is not working, you can check out a back-up link [here](https://wei2624.github.io/MachineLearning/sv_bias_variance_tradeoff_ch/).

17 |

18 | ---

19 |

20 | In this section, we focus on how bias and varaince are correlated. We always want to have zero bias and zero variance. However, this is practically impossible. So there is tradeoff in between.

21 |

22 | # 1 The Bias-Varaince Tradeoff

23 |

24 | Let's denote $\overset{\wedge}{f}$ be the model that is trained on some dataset and $y$ be the ground truth. Then, the mean squared error(MSE) is defined:

25 |

26 | $$\mathbb{E}_{(x,y)\sim \text{test set}} \lvert \overset{\wedge}{f}(x) - y \rvert^2$$

27 |

28 | We have three explanation for a high MSE:

29 |

30 | **Overfitting:** The model does not generalize well and probably only works well in training dataset.

31 |

32 | **Underfitting:** The model does not train enough or have enough data for training so does not learn a good representation.

33 |

34 | **Neither:** The noise of data is too high.

35 |

36 | We formulate these into **Bias-Varaince Tradeoff**.

37 |

38 | Assume that samples are sampled from similar distribution which can be defined as:

39 |

40 | $y_i = f(x_i) + \epsilon_i$ where the noise $\mathbb{E}[\epsilon] = 0$ and $Var(\epsilon) = \sigma^2$.

41 |

42 | Whereas our goal is to compute f, we can only obtain an estimate by looking at training samples generated from above distribution. Thus, $\overset{\wedge}{f}(x_i)$ is random since it depends on $\epsilon_i$ which is random and it is also the prediction of $y = f(x_i) + \epsilon_i$. Thus, it makes sense to get $\mathbb{E}(\overset{\wedge}{f}(x)-y)$.

43 |

44 | We can now calculate the expected MSE:

45 |

46 | $$\begin{align}

47 | \mathbb{E}[(y-\overset{\wedge}{f}(x))^2] &= \mathbb{E}[y^2 + (\overset{\wedge}{f})^2 - 2y\overset{\wedge}{f}]\\

48 | &= \mathbb{E}{y^2} + E[(\overset{\wedge}{f})^2] - \mathbb{E}[2y\overset{\wedge}{f}] \\

49 | &= Var(y) + Var(\overset{\wedge}{f}) + (f^2 - 2f\mathbb{E}[\overset{\wedge}{f}] + (\mathbb{E}[\overset{\wedge}{f}])^2\\

50 | &= Var(y) + Var(\overset{\wedge}{f}) + (f - \mathbb{E}[\overset{\wedge}{f}])^2\\

51 | &=\sigma^2 + \text{Bias}(f)^2+ Var(\overset{\wedge}{f})

52 | \end{align}$$

53 |

54 | The fisrt term is data noise which we cannot do anything. A high bias term means the model does not learn efficiently and is underfitting. A high variance means that the model does not generalize well and is overfitting.

55 |

56 | # 2 Error Analysis

57 |

58 | To analyze a model, we should first build a pipeline of the interests. Then, we start from plugging ground truth for each component and see how much accuracy that change makes on the model. We always try to see which componenet in ground truth is affect the most when adding to the system. An example can be seen below.

59 |

60 |

61 |

62 | # 3 Ablative Analysis

63 |

64 | Whereas error analysis tries to recognize the difference between current performance and perfect performance, Ablative Analysis tries to recognize that between baseline and current model. Ablation analysis is quite important, many research papers are rejected because of the missing of this part. This analysis can tell us which part of the model affects the most.

65 |

66 |

67 | For example, assume that we have more add-on features that makes the model perform better. We want to see how much performance it will be reduced by eliminating one add-on feature at a time. An example can be shown below.

68 |

69 |

--------------------------------------------------------------------------------

/machine-learning/chinese-version/sv_regularization_model_selection_ch.md:

--------------------------------------------------------------------------------

1 | ---

2 | published: true

3 | layout: single

4 | mathjax: true

5 | toc: true

6 | toc_sticky: true

7 | category: Machine Learning

8 | tags: [notes,chinese]

9 | excerpt: "This post is a translation for one of Wei's posts in his machine learning notes."

10 | title: Regularization and Model Selection Chinese Version

11 | share: true

12 | author_profile: true

13 | permalink: /MachineLearning/sv_regularization_model_selection_ch/

14 | ---

15 |

16 | This Article is a Chinese translation of a study note by Wei. Click [here](https://wei2624.github.io/MachineLearning/sv_regularization_model_selection/) to see the original English version in Wei's homepage. I will continue to update Chinese translation to sync with Wei's notes.

17 |

18 | 请注意: 本文是我翻译的一份学习资料,英文原版请点击[Wei的学习笔记](https://wei2624.github.io/MachineLearning/sv_regularization_model_selection/)。我将不断和原作者的英文笔记同步内容,定期更新和维护。

19 |

20 | 正则化与模型选择

21 | 在选择模型时,如果我们在一个模型中有k个参数,那么问题就是这k个参数应该是什么值?哪些值可以给出最佳偏差-方差权衡呢。其中,我们从有限集合的模型 $\mathcal{M} = \{M_1,M_2,\dots,M_d\}$ 中来选取最佳模型。在集合中,我们有不同的模型,或者不同的参数。

22 |

23 | # 1 交叉验证(Cross Validation)

24 |

25 | 想象一下,给定数据集S与一系列的模型,我们很容易想到通过以下方式来选择模型:

26 |

27 | 1 从S集合训练每个模型$M_i$ ,并得到相应的假设$h_i$

28 |

29 | 2 选取最小训练误差的模型

30 |

31 | 这个想法不能达到目的因为当我们选择的多项数阶数越高时,模型会更好的拟合训练数据集。然而,这个模型将会在新的数据集中有很高的统一化误差,也就是高方差。

32 |

33 | 在这个情况中,**保留交叉验证(hold-out cross validation)**将会做得更好:

34 |

35 | 1 以70%和30%的比例将S随机分成训练数据集$S_{tr}$和验证数据集$S_{cv}$

36 |

37 | 2 在$S_{tr}$在中训练每一个 $M_i$ 以学习假设 $h_i$

38 |

39 | 3 选择拥有最小**经验误差(empirical error)**的模型 $S_{cv}$,我们将它标记为

40 | $\hat{\varepsilon}\_{S_{cv}}(h_i)$

41 |

42 | 通过以上几步,我们试图通过测试模型在验证集上的表现以估计真实统一化误差。在第3步中,在选择最优模型后,我们可以用整个数据集来重复训练模型来得到最佳假设模型。然而,即使我们可以这样做,我们仍然选择的是基于70%数据集来训练模型。当数据少的时候这是很糟糕的。

43 |

44 | 因此,我们引出**K折交叉验证(K-fold cross validation)**:

45 |

46 | 1 随机将S分成k个分离的子集,每个子集有m/k个样本,记为$S_1,S_2,\dots,S_k$

47 |

48 | 2 对于每个模型$M_i$,我们排除一个子集并标记为j,然后我们用其余的样本训练模型以得到$H_{ij}$。我们在$S_j$上测试模型,并且得到 $\varepsilon_{S_j}(h_{ij})$。我们这样遍历每一个j。最后,我们获取统一化误差除以j的平均。

49 |

50 | 3 我们选择有最小平均统一误差的模型

51 |

52 | 通常我们取k为10。虽然这样计算上很复杂,但是它会给我们很好的结果。如果数据很少,我们也可能设k=m。在这种情况下,我们每一次除去一个样本,这种方法叫**除一交叉验证(leave-one-out cross validation)**。

53 |

54 | # 2 特征选择(Feature Selection)

55 |

56 | 如果我们有n个特征,m个样本,其中$n \gg m$ (VC 维度is O(n)),我们可能会过度拟合。在这种情况下,你想选择最重要的特征来训练。在暴力算法中,我们会有用$2^n$ 个特征组合,我们会有$2^n$ 个可能的模型,这处理起来会很费力。因此我们可以选择用**向前搜索算法(forward search algorithm)**:

57 |

58 | 1 我们初始化为$\mathcal{F} = \emptyset$

59 |

60 | 2 重复:(a)for $i =1,\dots,n$ 如果$i\notin\mathcal{F}$, 让$\mathcal{F}_i = \mathcal{F}\cup\{i\}$ 并且使用交叉验证算法来估计$\mathcal{F}_i$. (b)设置$\mathcal{F}$作为(a)中的最佳特征子集

61 |

62 | 3 从以上选择最佳特征子集。

63 |

64 | 你可以通过设置目标特征数量来终止循环。相反地,在特征选择中我们也可以使用**向后搜索算法(backward search)**,这于去除算法类似。然而,因为这两种算法的时间复杂度都是$O(n^2)$ ,它们训练起来都会比较慢。

65 |

66 | 然而,我们也可以使用**过滤特征选择(filter feature selection)**。它的概念是对于标签y,我们会根据每一个特征提供了多少信息来给它打分,然后挑选出最佳者。

67 | 一个容易想到的方法是根据每个$x_i$和标签y的相关性打分。实际中,我们将分数设为**相互信息(mutual information)**:

68 |

69 | $$MI(x_i,y) = \sum\limits_{x_i\in\{0,1\}}\sum\limits_{y\in\{0,1\}} p(x_i,y)\log\frac{p(x_i,y)}{p(x_i)p(y)}$$

70 |

71 | 其中我们假设每个特征和标签都是二元值,并且求和覆盖整个变量域。每一个可能性都会从训练数据集中计算。为了进一步理解,我们知道:

72 |

73 | $$MI(x_i,y) = KL(p(x_i,y)\lvert\lvert p(x_i)p(y))$$

74 |

75 | 其中KL是**相对熵(Kullback-Leibler divergence)**。它计算了竖线两边变量分布的差异。如果$x_i$和 $y$ 是独立的,那么 KL 是0。这代表着特征和标签直接没有任何关系。然而如果MI很高,那么这个特征和标签有强相关性。

76 |

77 | # 3 贝叶斯统计与正则化(Bayesian Statistics and regularization)

78 |

79 | 在前面一章我们讨论了**最大似然法(maximum likelihood (ML) algorithm)**是如何训练模型参数的:

80 |

81 | $$\theta_{ML} = \arg\max\prod_{i=1}^m p(y^{(i)}\lvert x^{(i)},\theta)$$

82 |

83 | 在这种情况下,我们视$\theta$ 为未知参数,它已经存在但是未知。我们的任务是找到未知参数并计算它的值。

84 | 同时$\theta$也是随机的,因此我们设置一个先验值,称它为**先验分布(prior distribution)**。基于先验分布,我们可以用S数据集来计算后验分布:

85 |

86 | $$p(\theta\lvert S) = \frac{p(S\lvert\theta)p(\theta)}{p(S)} = \frac{\prod_{i=1}^m p(y^{(i)}\lvert x^{(i)},\theta)(p(\theta)}{\int_{\theta}\prod_{i=1}^m p(y^{(i)}\lvert x^{(i)},\theta)(p(\theta)d\theta}$$

87 |

88 | 使用后验分布来预测推断,我们有:

89 |

90 | $$p(y\lvert x,S) = \int_{\theta}p(y\lvert x,\theta)p(\theta\lvert S)d\theta$$

91 |