├── code

├── finetune

│ ├── core

│ │ ├── __init__.py

│ │ ├── datasets

│ │ │ ├── __init__.py

│ │ │ ├── Makefile

│ │ │ ├── utils.py

│ │ │ ├── megatron_dataset.py

│ │ │ ├── blended_megatron_dataset_config.py

│ │ │ ├── blended_dataset.py

│ │ │ ├── blended_megatron_dataset_builder.py

│ │ │ ├── gpt_dataset.py

│ │ │ ├── indexed_dataset.py

│ │ │ └── helpers.cpp

│ │ └── parse_mixture.py

│ ├── scripts

│ │ ├── count_tokens.sh

│ │ ├── preprocess_data.sh

│ │ └── run_finetune.sh

│ ├── tools

│ │ ├── count_mmap_token.py

│ │ └── codecmanipulator.py

│ ├── config

│ │ └── ds_config_zero2.json

│ └── requirements.txt

├── prompt_egs

│ ├── genre.txt

│ └── lyrics.txt

├── inference

│ ├── mm_tokenizer_v0.2_hf

│ │ └── tokenizer.model

│ ├── codecmanipulator.py

│ ├── mmtokenizer.py

│ └── infer.py

├── requirements.txt

├── evals

│ └── pitch_range

│ │ └── main.py

└── top_200_tags.json

├── example.mp3

├── fig

├── model.pdf

└── tokenpair.pdf

├── tokenpair.png

└── README.md

/code/finetune/core/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/code/finetune/core/datasets/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/example.mp3:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/WtxwNs/BACH/HEAD/example.mp3

--------------------------------------------------------------------------------

/fig/model.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/WtxwNs/BACH/HEAD/fig/model.pdf

--------------------------------------------------------------------------------

/tokenpair.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/WtxwNs/BACH/HEAD/tokenpair.png

--------------------------------------------------------------------------------

/fig/tokenpair.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/WtxwNs/BACH/HEAD/fig/tokenpair.pdf

--------------------------------------------------------------------------------

/code/prompt_egs/genre.txt:

--------------------------------------------------------------------------------

1 | inspiring female uplifting pop airy vocal electronic bright vocal vocal

--------------------------------------------------------------------------------

/code/inference/mm_tokenizer_v0.2_hf/tokenizer.model:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/WtxwNs/BACH/HEAD/code/inference/mm_tokenizer_v0.2_hf/tokenizer.model

--------------------------------------------------------------------------------

/code/requirements.txt:

--------------------------------------------------------------------------------

1 | torch

2 | omegaconf

3 | torchaudio

4 | einops

5 | numpy

6 | transformers

7 | sentencepiece

8 | tqdm

9 | tensorboard

10 | descript-audiotools>=0.7.2

11 | descript-audio-codec

12 | scipy

13 | accelerate>=0.26.0

14 |

--------------------------------------------------------------------------------

/code/finetune/core/datasets/Makefile:

--------------------------------------------------------------------------------

1 | CXXFLAGS += -O3 -Wall -shared -std=c++11 -fPIC -fdiagnostics-color

2 | CPPFLAGS += $(shell python3 -m pybind11 --includes)

3 | LIBNAME = helpers

4 | LIBEXT = $(shell python3-config --extension-suffix)

5 |

6 | default: $(LIBNAME)$(LIBEXT)

7 |

8 | %$(LIBEXT): %.cpp

9 | $(CXX) $(CXXFLAGS) $(CPPFLAGS) $< -o $@

10 |

--------------------------------------------------------------------------------

/code/finetune/scripts/count_tokens.sh:

--------------------------------------------------------------------------------

1 | echo "Please input parenet directory, will count all .bin files..."

2 | echo "Example: bash ./count_tokens.sh /workspace/dataset/music"

3 |

4 | PARENT_DIR=${1:-/workspace/dataset/music}

5 | LOG_DIR=./count_token_logs/

6 | mkdir -p $LOG_DIR

7 |

8 | # find all .bin files

9 | BINS=$(find $PARENT_DIR -name "*.bin" -type f)

10 |

11 | for bin in $BINS; do

12 | echo Checking mmap file: $bin

13 |

14 | mmap_path=$bin

15 |

16 | # mmap size in human readable format (e.g. 1.2G)

17 | mmap_size=$(du -h $mmap_path | awk '{print $1}')

18 | echo "Counting largest mmap file: $mmap_path, size: $mmap_size"

19 |

20 | # remove PARENT_DIR, replace / with _

21 | subdir=$(echo $mmap_path | sed "s|$PARENT_DIR/||g" | sed 's/\//_/g')

22 |

23 | cmd="nohup python tools/count_mmap_token.py --mmap_path $mmap_path > $LOG_DIR/count.$subdir.log 2>&1 &"

24 | echo $cmd

25 |

26 | eval $cmd

27 |

28 |

29 | echo "Finished!"

30 | done

31 |

32 |

33 |

--------------------------------------------------------------------------------

/code/finetune/tools/count_mmap_token.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | sys.path.append(os.path.abspath(os.path.join(os.path.dirname(__file__),

4 | os.path.pardir)))

5 | from core.datasets.indexed_dataset import MMapIndexedDataset

6 | import argparse

7 | from tqdm import tqdm

8 |

9 |

10 | def get_args():

11 | parser = argparse.ArgumentParser()

12 | parser.add_argument("--mmap_path", type=str, required=True, help="Path to the .bin mmap file")

13 | return parser.parse_args()

14 |

15 |

16 | args = get_args()

17 |

18 | slice_path = args.mmap_path

19 | if slice_path.endswith(".bin"):

20 | slice_path = slice_path[:-4]

21 |

22 | dataset = MMapIndexedDataset(slice_path)

23 |

24 |

25 | def count_ids(dataset):

26 | count = 0

27 | for doc_ids in tqdm(dataset):

28 | count += doc_ids.shape[0]

29 | return count

30 |

31 | print("Counting tokens in ", args.mmap_path)

32 | total_cnt = count_ids(dataset)

33 | print("Total number of tokens: ", total_cnt)

34 |

--------------------------------------------------------------------------------

/code/prompt_egs/lyrics.txt:

--------------------------------------------------------------------------------

1 | [verse]

2 | Staring at the sunset, colors paint the sky

3 | Thoughts of you keep swirling, can't deny

4 | I know I let you down, I made mistakes

5 | But I'm here to mend the heart I didn't break

6 |

7 | [chorus]

8 | Every road you take, I'll be one step behind

9 | Every dream you chase, I'm reaching for the light

10 | You can't fight this feeling now

11 | I won't back down

12 | You know you can't deny it now

13 | I won't back down

14 |

15 | [verse]

16 | They might say I'm foolish, chasing after you

17 | But they don't feel this love the way we do

18 | My heart beats only for you, can't you see?

19 | I won't let you slip away from me

20 |

21 | [chorus]

22 | Every road you take, I'll be one step behind

23 | Every dream you chase, I'm reaching for the light

24 | You can't fight this feeling now

25 | I won't back down

26 | You know you can't deny it now

27 | I won't back down

28 |

29 | [bridge]

30 | No, I won't back down, won't turn around

31 | Until you're back where you belong

32 | I'll cross the oceans wide, stand by your side

33 | Together we are strong

34 |

35 | [outro]

36 | Every road you take, I'll be one step behind

37 | Every dream you chase, love's the tie that binds

38 | You can't fight this feeling now

39 | I won't back down

--------------------------------------------------------------------------------

/code/finetune/config/ds_config_zero2.json:

--------------------------------------------------------------------------------

1 | {

2 | "fp16": {

3 | "enabled": "auto",

4 | "loss_scale": 0,

5 | "loss_scale_window": 1000,

6 | "initial_scale_power": 64,

7 | "hysteresis": 2,

8 | "min_loss_scale": 1

9 | },

10 | "bf16": {

11 | "enabled": "auto"

12 | },

13 | "optimizer": {

14 | "type": "AdamW",

15 | "params": {

16 | "lr": "auto",

17 | "betas": "auto",

18 | "eps": "auto",

19 | "weight_decay": "auto"

20 | }

21 | },

22 |

23 | "scheduler": {

24 | "type": "WarmupCosineLR",

25 | "params": {

26 | "total_num_steps": "auto",

27 | "warmup_min_ratio": 0.03,

28 | "warmup_num_steps": "auto",

29 | "cos_min_ratio": 0.1

30 | }

31 | },

32 |

33 | "zero_optimization": {

34 | "stage": 2,

35 | "offload_optimizer": {

36 | "device": "none",

37 | "pin_memory": true

38 | },

39 | "offload_param": {

40 | "device": "none",

41 | "pin_memory": true

42 | },

43 | "overlap_comm": false,

44 | "contiguous_gradients": true,

45 | "sub_group_size": 1e9,

46 | "reduce_bucket_size": "auto"

47 | },

48 |

49 | "gradient_accumulation_steps": "auto",

50 | "gradient_clipping": 1.0,

51 | "steps_per_print": 100,

52 | "train_batch_size": "auto",

53 | "train_micro_batch_size_per_gpu": "auto",

54 | "wall_clock_breakdown": false

55 | }

56 |

--------------------------------------------------------------------------------

/code/finetune/requirements.txt:

--------------------------------------------------------------------------------

1 | accelerate==1.6.0

2 | annotated-types==0.7.0

3 | blobfile==3.0.0

4 | certifi==2025.4.26

5 | charset-normalizer==3.4.2

6 | click==8.2.0

7 | deepspeed==0.16.7

8 | docker-pycreds==0.4.0

9 | einops==0.8.1

10 | filelock==3.13.1

11 | fsspec==2024.6.1

12 | gitdb==4.0.12

13 | GitPython==3.1.44

14 | hjson==3.1.0

15 | huggingface-hub==0.31.2

16 | idna==3.10

17 | Jinja2==3.1.4

18 | lxml==5.4.0

19 | MarkupSafe==2.1.5

20 | mpmath==1.3.0

21 | msgpack==1.1.0

22 | networkx==3.3

23 | ninja==1.11.1.4

24 | nltk==3.9.1

25 | numpy==2.1.2

26 | nvidia-cublas-cu12==12.1.3.1

27 | nvidia-cuda-cupti-cu12==12.1.105

28 | nvidia-cuda-nvrtc-cu12==12.1.105

29 | nvidia-cuda-runtime-cu12==12.1.105

30 | nvidia-cudnn-cu12==9.1.0.70

31 | nvidia-cufft-cu12==11.0.2.54

32 | nvidia-curand-cu12==10.3.2.106

33 | nvidia-cusolver-cu12==11.4.5.107

34 | nvidia-cusparse-cu12==12.1.0.106

35 | nvidia-ml-py==12.575.51

36 | nvidia-nccl-cu12==2.20.5

37 | nvidia-nvjitlink-cu12==12.1.105

38 | nvidia-nvtx-cu12==12.1.105

39 | packaging==25.0

40 | peft==0.15.2

41 | pillow==11.0.0

42 | platformdirs==4.3.8

43 | protobuf==6.30.2

44 | psutil==7.0.0

45 | py-cpuinfo==9.0.0

46 | pybind11==2.13.6

47 | pycryptodomex==3.22.0

48 | pydantic==2.11.4

49 | pydantic_core==2.33.2

50 | PyYAML==6.0.2

51 | regex==2024.11.6

52 | requests==2.32.3

53 | safetensors==0.5.3

54 | scipy==1.15.3

55 | sentencepiece==0.2.0

56 | sentry-sdk==2.28.0

57 | setproctitle==1.3.6

58 | six==1.17.0

59 | smmap==5.0.2

60 | sympy==1.13.3

61 | tiktoken==0.9.0

62 | tokenizers==0.21.1

63 | torch==2.4.0

64 | torchaudio==2.4.0

65 | torchvision==0.19.0

66 | tqdm==4.67.1

67 | transformers==4.50.0

68 | triton==3.0.0

69 | typing-inspection==0.4.0

70 | typing_extensions==4.12.2

71 | urllib3==2.4.0

72 | wandb==0.19.11

73 |

--------------------------------------------------------------------------------

/code/finetune/core/datasets/utils.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

2 |

3 | import logging

4 | from enum import Enum

5 | from typing import List

6 |

7 | import numpy

8 | import torch

9 |

10 | logger = logging.getLogger(__name__)

11 |

12 |

13 | class Split(Enum):

14 | train = 0

15 | valid = 1

16 | test = 2

17 |

18 |

19 | def compile_helpers():

20 | """Compile C++ helper functions at runtime. Make sure this is invoked on a single process.

21 | """

22 | import os

23 | import subprocess

24 |

25 | command = ["make", "-C", os.path.abspath(os.path.dirname(__file__))]

26 | if subprocess.run(command).returncode != 0:

27 | import sys

28 |

29 | log_single_rank(logger, logging.ERROR, "Failed to compile the C++ dataset helper functions")

30 | sys.exit(1)

31 |

32 |

33 | def log_single_rank(logger: logging.Logger, *args, rank=0, **kwargs):

34 | """If torch distributed is initialized, log only on rank

35 |

36 | Args:

37 | logger (logging.Logger): The logger to write the logs

38 |

39 | rank (int, optional): The rank to write on. Defaults to 0.

40 | """

41 | if torch.distributed.is_initialized():

42 | if torch.distributed.get_rank() == rank:

43 | logger.log(*args, **kwargs)

44 | else:

45 | logger.log(*args, **kwargs)

46 |

47 |

48 | def normalize(weights: List[float]) -> List[float]:

49 | """Do non-exponentiated normalization

50 |

51 | Args:

52 | weights (List[float]): The weights

53 |

54 | Returns:

55 | List[float]: The normalized weights

56 | """

57 | w = numpy.array(weights, dtype=numpy.float64)

58 | w_sum = numpy.sum(w)

59 | w = (w / w_sum).tolist()

60 | return w

61 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 | Watch how BACH turns raw tokens into structured music—step by step.

5 |

6 |

7 | # BACH: Bar-level AI Composing Helper

8 |

9 |

10 |

11 |  12 |

13 |

14 |

12 |

13 |

14 |  15 |

16 |

15 |

16 |  17 |

17 |  18 |

18 |

19 |

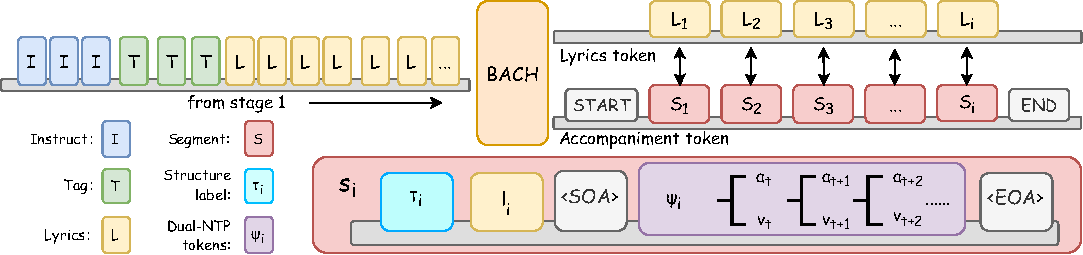

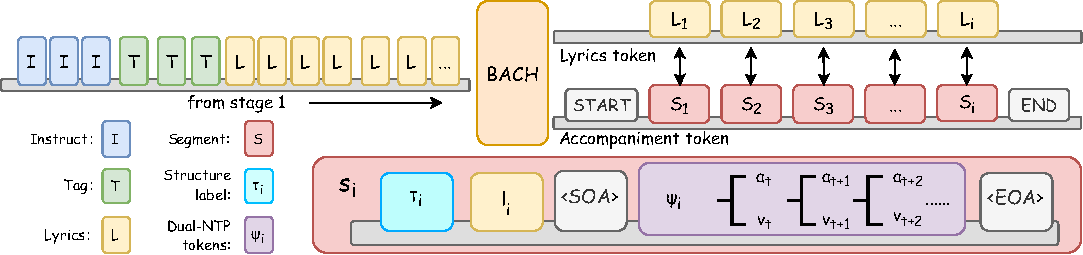

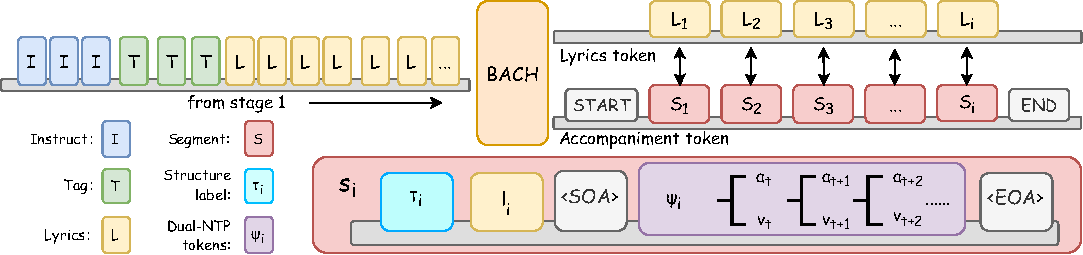

20 | > *"Via Score to Performance: Efficient Human-Controllable Long Song Generation with Bar-Level Symbolic Notation"*

21 | > ICASSP 2026 Submission – **Pending Review**

22 |

23 | ---

24 |

25 | ## 🎼 One-sentence Summary

26 | BACH is the first **human-editable**, **bar-level** symbolic song generator:

27 | LLM writes lyrics → Transformer emits ABC score → off-the-shelf renderers give **minutes-long, Suno-level** music.

28 | **1 B params**, **minute-level** inference, **SOTA open-source**.

29 |

30 | ---

31 |

32 | ## 📦 What is inside this repo (preview release)

33 | | Path | Description |

34 | |------|-------------|

35 | | `README.md` | This file |

36 | | `code/` | inference code |

37 | | `example.mp3` | an example song |

38 | | `fig/` | Architecture figure |

39 |

40 | ---

41 |

42 | ## 🏗️ Model Architecture (one glance)

43 |

44 | User prompt

45 | Qwen3 — lyrics & style tags

46 | BACH-1B Decoder-Only Transformer

47 | ABC score (Dual-NTP + Chain-of-Score)

48 | ABC → MIDI → FluidSynth + VOCALOID

49 | Stereo mix

50 |

51 |

52 | | Component | Key idea |

53 | |-----------|----------|

54 | | **Dual-NTP** | Predict `{vocal_patch, accomp_patch}` jointly every step |

55 | | **Chain-of-Score** | Section tags `[START:Chorus] ... [END:Chorus]` for long coherence |

56 | | **Bar-stream patch** | 16-char non-overlapping patches per bar |

57 |

58 | ---

59 |

60 | ## 🧪 Quick start (CPU friendly)

61 | ```bash

62 | # 1. Clone

63 | git clone https://github.com/your-github/BACH.git

64 | cd BACH

65 |

66 | # 2. Install

67 | pip install -r requirements.txt # transformers>=4.41 mido abcpy fluidsynth

68 |

69 | # 3. Generate ABC

70 | python bach/generate.py \

71 | --prompt "A rainy-day lo-fi hip-hop song about missing the last train" \

72 | --out_abc demo/rainy_lofi.abc

73 |

74 | # 4. Render audio

75 | ```

76 |

77 | ## 🎧 Listen now

78 | example.mp3 is ready for you, it's a whole song. You can compare it with Suno🙂

79 |

80 | ## Full release upon related paper acceptance

81 | - Complete training set (ABC + lyrics + structure labels)

82 | - BACH-1B weights (Transformers format)

83 | - Training scripts (multiphase + multitask + ICL)

84 | - Complete Code

85 |

86 | ## 📎 Citation

87 | Paper is released on Arxiv,

88 | ```bibtex

89 | @misc{wang2025scoreperformanceefficienthumancontrollable,

90 | title={Via Score to Performance: Efficient Human-Controllable Long Song Generation with Bar-Level Symbolic Notation},

91 | author={Tongxi Wang and Yang Yu and Qing Wang and Junlang Qian},

92 | year={2025},

93 | eprint={2508.01394},

94 | archivePrefix={arXiv},

95 | primaryClass={cs.SD},

96 | url={https://arxiv.org/abs/2508.01394},

97 | }

98 |

--------------------------------------------------------------------------------

/code/evals/pitch_range/main.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import librosa

3 | import time

4 | import argparse

5 | import torch

6 | from extract_pitch_values_from_audio.src import RMVPE

7 | import os

8 | from pathlib import Path

9 | from tqdm import tqdm

10 |

11 | def process_audio(rmvpe, audio_path, output_path, device, hop_length, threshold):

12 | """Process an audio file in 10-second chunks and save the results."""

13 | # Load the audio file

14 | audio, sr = librosa.load(str(audio_path), sr=None)

15 | chunk_size = 10 * sr

16 | # pad to make the audio length to be multiple of hop_length

17 | audio = np.pad(audio, (0, chunk_size - len(audio) % chunk_size), mode='constant')

18 |

19 | # Calculate chunk size in samples (10 seconds * sample rate)

20 | total_chunks = int(np.round(len(audio) / chunk_size))

21 |

22 | # Initialize arrays to store results

23 | all_f0 = []

24 | total_infer_time = 0

25 |

26 | # Process each chunk

27 | for i in tqdm(range(total_chunks)):

28 | start_idx = i * chunk_size

29 | end_idx = min((i + 1) * chunk_size, len(audio))

30 | chunk = audio[start_idx:end_idx]

31 |

32 | # Process the chunk

33 | t = time.time()

34 | f0_chunk = rmvpe.infer_from_audio(chunk, sr, device=device, thred=threshold, use_viterbi=True)

35 | chunk_infer_time = time.time() - t

36 | total_infer_time += chunk_infer_time

37 |

38 | # Append results

39 | all_f0.extend(f0_chunk)

40 |

41 | # Create output directory if it doesn't exist

42 | output_path.parent.mkdir(parents=True, exist_ok=True)

43 |

44 | # remove all 0 in the f0

45 | all_f0 = np.array(all_f0)

46 | all_f0 = all_f0[all_f0 != 0]

47 |

48 | # convert all_f0 to a list

49 | all_f0 = all_f0.tolist()

50 |

51 | # Save the results

52 | with open(output_path, 'w') as f:

53 | for f0 in all_f0:

54 | f.write(f'{f0:.2f}\n')

55 |

56 | return total_infer_time, len(audio) / sr # Return total inference time and audio duration

57 |

58 | def main():

59 | input_dir = Path("/root/yue_pitch_evals/yue_vs_others_sep")

60 | output_dir = Path("/root/yue_pitch_evals/yue_vs_others_sep_pitch")

61 | device = "cuda"

62 |

63 | print(f'Using device: {device}')

64 | print('Loading model...')

65 | rmvpe = RMVPE("model.pt", hop_length=160)

66 |

67 | # Find all WAV files in input directory and subdirectories

68 | wav_files = list(input_dir.rglob('*.Vocals.mp3'))

69 | print(f'Found {len(wav_files)} WAV files to process')

70 |

71 | total_time = 0

72 | total_audio_duration = 0

73 |

74 | # Process each WAV file

75 | for wav_path in tqdm(wav_files, desc="Processing files"):

76 | # Calculate relative path to maintain directory structure

77 | rel_path = wav_path.relative_to(input_dir)

78 | # Create output path with .txt extension

79 | output_path = output_dir / str(rel_path).replace('.Vocals.mp3', '.txt')

80 |

81 | try:

82 | infer_time, audio_duration = process_audio(

83 | rmvpe, wav_path, output_path, device,

84 | 160, 0.03

85 | )

86 | total_time += infer_time

87 | total_audio_duration += audio_duration

88 |

89 | tqdm.write(f'Processed {wav_path.name}')

90 | tqdm.write(f'Time: {infer_time:.2f}s, RTF: {infer_time/audio_duration:.2f}')

91 |

92 | except Exception as e:

93 | tqdm.write(f'Error processing {wav_path}: {str(e)}')

94 | continue

95 |

96 | print('\nProcessing complete!')

97 | print(f'Total processing time: {total_time:.2f}s')

98 | print(f'Average RTF: {total_time/total_audio_duration:.2f}')

99 |

100 | if __name__ == '__main__':

101 | main()

--------------------------------------------------------------------------------

/code/finetune/scripts/preprocess_data.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | DATA_SETTING=$1

4 | MODE_TYPE=$2

5 | TOKENIZER_MODEL=$3

6 | AUDIO_PROMPT_MODES=($4)

7 | if [ -z "$4" ]; then

8 | AUDIO_PROMPT_MODES=('dual' 'inst' 'vocal' 'mixture')

9 | fi

10 |

11 | if [ -z "$DATA_SETTING" ] || [ -z "$MODE_TYPE" ]; then

12 | echo "Usage: $0 "

13 | echo " : e.g., dummy"

14 | echo " : cot or icl_cot"

15 | exit 1

16 | fi

17 |

18 | # Common settings based on DATA_SETTING

19 | if [ "$DATA_SETTING" == "dummy" ]; then

20 | DATA_ROOT=example

21 | NAME_PREFIX=dummy.msa.xcodec_16k

22 | CODEC_TYPE=xcodec

23 | INSTRUCTION="Generate music from the given lyrics segment by segment."

24 | ORDER=textfirst

25 | DROPOUT=0.0

26 | KEEP_SEQUENTIAL_SAMPLES=true

27 | QUANTIZER_BEGIN_IDX=0

28 | NUM_QUANTIZERS=1

29 | else

30 | echo "Invalid setting: $DATA_SETTING"

31 | exit 1

32 | fi

33 |

34 | JSONL_NAME=jsonl/$NAME_PREFIX.jsonl

35 |

36 | # Mode-specific settings and execution

37 | if [ "$MODE_TYPE" == "cot" ]; then

38 | echo "Running in 'cot' mode..."

39 | NAME_SUFFIX=stage_1_token_level_interleave_cot_xcodec

40 | MMAP_NAME=mmap/${NAME_PREFIX}_${NAME_SUFFIX}_$ORDER

41 |

42 | rm -f $DATA_ROOT/jsonl/${NAME_PREFIX}_*.jsonl # Use -f to avoid error if files don't exist

43 | mkdir -p $DATA_ROOT/$MMAP_NAME

44 |

45 | args="python core/preprocess_data_conditional_xcodec_segment.py \

46 | --input $DATA_ROOT/$JSONL_NAME \

47 | --output-prefix $DATA_ROOT/$MMAP_NAME \

48 | --tokenizer-model $TOKENIZER_MODEL \

49 | --tokenizer-type MMSentencePieceTokenizer \

50 | --codec-type $CODEC_TYPE \

51 | --workers 8 \

52 | --partitions 1 \

53 | --instruction \"$INSTRUCTION\" \

54 | --instruction-dropout-rate $DROPOUT \

55 | --order $ORDER \

56 | --append-eod \

57 | --quantizer-begin $QUANTIZER_BEGIN_IDX \

58 | --n-quantizer $NUM_QUANTIZERS \

59 | --use-token-level-interleave \

60 | --keep-sequential-samples \

61 | --cot

62 | "

63 |

64 | echo "$args"

65 | sleep 5

66 | eval $args

67 |

68 | rm -f $DATA_ROOT/jsonl/${NAME_PREFIX}_*.jsonl # Use -f

69 | rm -f $DATA_ROOT/${MMAP_NAME}_*_text_document.bin # Use -f

70 | rm -f $DATA_ROOT/${MMAP_NAME}_*_text_document.idx # Use -f

71 |

72 | elif [ "$MODE_TYPE" == "icl_cot" ]; then

73 | echo "Running in 'icl_cot' mode..."

74 | NAME_SUFFIX=stage_1_token_level_interleave_long_prompt_msa

75 | MMAP_NAME=mmap/${NAME_PREFIX}_${NAME_SUFFIX}_$ORDER # Define MMAP_NAME base for this mode

76 | PROMPT_LEN=30

77 |

78 | rm -f $DATA_ROOT/jsonl/${NAME_PREFIX}_*.jsonl # Use -f

79 | mkdir -p $DATA_ROOT/$MMAP_NAME # Ensure base MMAP dir exists

80 |

81 |

82 | for mode in "${AUDIO_PROMPT_MODES[@]}"; do

83 | echo "Processing mode: $mode"

84 | MODE_MMAP_NAME=${MMAP_NAME}_${mode} # Mode specific path

85 | mkdir -p $DATA_ROOT/$MODE_MMAP_NAME # Ensure mode-specific dir exists

86 |

87 | args="python core/preprocess_data_conditional_xcodec_segment.py \

88 | --input $DATA_ROOT/$JSONL_NAME \

89 | --output-prefix $DATA_ROOT/$MODE_MMAP_NAME \

90 | --tokenizer-model $TOKENIZER_MODEL \

91 | --tokenizer-type MMSentencePieceTokenizer \

92 | --codec-type $CODEC_TYPE \

93 | --workers 8 \

94 | --partitions 1 \

95 | --instruction \"$INSTRUCTION\" \

96 | --instruction-dropout-rate $DROPOUT \

97 | --order $ORDER \

98 | --append-eod \

99 | --quantizer-begin $QUANTIZER_BEGIN_IDX \

100 | --n-quantizer $NUM_QUANTIZERS \

101 | --cot \

102 | --use-token-level-interleave \

103 | --use-audio-icl \

104 | --audio-prompt-mode $mode \

105 | --audio-prompt-len $PROMPT_LEN \

106 | --keep-sequential-samples

107 | "

108 |

109 | echo "$args"

110 | sleep 5

111 | eval $args

112 |

113 | # Clean up mode-specific files

114 | rm -f $DATA_ROOT/jsonl/${NAME_PREFIX}_*.jsonl # Use -f

115 | rm -f $DATA_ROOT/${MODE_MMAP_NAME}_*_text_document.bin # Use -f

116 | rm -f $DATA_ROOT/${MODE_MMAP_NAME}_*_text_document.idx # Use -f

117 | done

118 |

119 | else

120 | echo "Invalid mode_type: $MODE_TYPE. Use 'cot' or 'icl_cot'."

121 | exit 1

122 | fi

123 |

124 | echo "Preprocessing finished for setting '$DATA_SETTING' and mode_type '$MODE_TYPE'."

--------------------------------------------------------------------------------

/code/finetune/core/datasets/megatron_dataset.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

2 |

3 | import hashlib

4 | import json

5 | from abc import ABC, abstractmethod, abstractstaticmethod

6 | from collections import OrderedDict

7 | from typing import Dict, List

8 |

9 | import numpy

10 | import torch

11 |

12 | from core.datasets.blended_megatron_dataset_config import BlendedMegatronDatasetConfig

13 | from core.datasets.indexed_dataset import MMapIndexedDataset

14 | from core.datasets.utils import Split

15 |

16 |

17 | class MegatronDataset(ABC, torch.utils.data.Dataset):

18 | """The wrapper class from which dataset classes should inherit e.g. GPTDataset

19 |

20 | Args:

21 | indexed_dataset (MMapIndexedDataset): The MMapIndexedDataset around which to build the

22 | MegatronDataset

23 |

24 | indexed_indices (numpy.ndarray): The set of the documents indices to expose

25 |

26 | num_samples (int): The number of samples to draw from the indexed dataset

27 |

28 | index_split (Split): The indexed_indices Split

29 |

30 | config (BlendedMegatronDatasetConfig): The container for all config sourced parameters

31 | """

32 |

33 | def __init__(

34 | self,

35 | indexed_dataset: MMapIndexedDataset,

36 | indexed_indices: numpy.ndarray,

37 | num_samples: int,

38 | index_split: Split,

39 | config: BlendedMegatronDatasetConfig,

40 | ) -> None:

41 | assert indexed_indices.size > 0

42 | assert num_samples > 0

43 | assert self.is_multimodal() == indexed_dataset.multimodal

44 | assert self.is_split_by_sequence() != self.is_split_by_document()

45 |

46 | self.indexed_dataset = indexed_dataset

47 | self.indexed_indices = indexed_indices

48 | self.num_samples = num_samples

49 | self.index_split = index_split

50 | self.config = config

51 |

52 | self.unique_identifiers = OrderedDict()

53 | self.unique_identifiers["class"] = type(self).__name__

54 | self.unique_identifiers["path_prefix"] = self.indexed_dataset.path_prefix

55 | self.unique_identifiers["num_samples"] = self.num_samples

56 | self.unique_identifiers["index_split"] = self.index_split.name

57 | for attr in self._key_config_attributes():

58 | self.unique_identifiers[attr] = getattr(self.config, attr)

59 | self.unique_identifiers["add_bos"] = getattr(self.config, "add_bos", False)

60 |

61 | self.unique_description = json.dumps(self.unique_identifiers, indent=4)

62 | self.unique_description_hash = hashlib.md5(

63 | self.unique_description.encode("utf-8")

64 | ).hexdigest()

65 |

66 | self._finalize()

67 |

68 | @abstractmethod

69 | def _finalize(self) -> None:

70 | """Build the dataset and assert any subclass-specific conditions

71 | """

72 | pass

73 |

74 | @abstractmethod

75 | def __len__(self) -> int:

76 | """Return the length of the dataset

77 |

78 | Returns:

79 | int: See abstract implementation

80 | """

81 | pass

82 |

83 | @abstractmethod

84 | def __getitem__(self, idx: int) -> Dict[str, numpy.ndarray]:

85 | """Return from the dataset

86 |

87 | Args:

88 | idx (int): The index into the dataset

89 |

90 | Returns:

91 | Dict[str, numpy.ndarray]: See abstract implementation

92 | """

93 | pass

94 |

95 | @abstractstaticmethod

96 | def is_multimodal() -> bool:

97 | """Return True if the inheritor class and its internal MMapIndexedDataset are multimodal

98 |

99 | Returns:

100 | bool: See abstract implementation

101 | """

102 | pass

103 |

104 | @abstractstaticmethod

105 | def is_split_by_sequence() -> bool:

106 | """Return whether the dataset is split by sequence

107 |

108 | For example, the GPT train/valid/test split is document agnostic

109 |

110 | Returns:

111 | bool: See abstract implementation

112 | """

113 | pass

114 |

115 | @classmethod

116 | def is_split_by_document(cls) -> bool:

117 | """Return whether the dataset is split by document

118 |

119 | For example, the BERT train/valid/test split is document aware

120 |

121 | Returns:

122 | bool: The negation of cls.is_split_by_sequence

123 | """

124 | return not cls.is_split_by_sequence()

125 |

126 | @staticmethod

127 | def _key_config_attributes() -> List[str]:

128 | """Return all config attributes which contribute to uniquely identifying the dataset.

129 |

130 | These attributes will be used to build a uniquely identifying string and MD5 hash which

131 | will be used to cache/load the dataset from run to run.

132 |

133 | Returns:

134 | List[str]: The key config attributes

135 | """

136 | return ["split", "random_seed", "sequence_length"]

--------------------------------------------------------------------------------

/code/finetune/core/parse_mixture.py:

--------------------------------------------------------------------------------

1 |

2 | """

3 | # you can run the following command to make DB2TOKCNT readable

4 | autopep8 --in-place --aggressive --aggressive finetune/scripts/parse_mixture.py

5 |

6 | This script is used to parse the mixture of the pretraining data

7 | input: path to the yaml file

8 | output: a megatron style data mixture string

9 | """

10 |

11 | import os

12 | import sys

13 | import argparse

14 | import yaml

15 | import re

16 |

17 |

18 | EXAMPLE_LOG_STRING = """Zarr-based strategies will not be registered because of missing packages

19 | Counting tokens in ./mmap/example.bin

20 |

21 | 0%| | 0/597667 [00:00= 1:

117 | mixture_str += repeat_str(

118 | f"1 {mmap_path_without_ext} ", int(repeat_times))

119 | else:

120 | # weight is less than 1

121 | mixture_str += f"{repeat_times} {mmap_path_without_ext} "

122 | tokcnt = DB2TOKCNT[mmap_path]

123 | if isinstance(tokcnt, str):

124 | assert tokcnt.endswith("B"), f"invalid tokcnt: {tokcnt}"

125 | tokcnt = float(tokcnt.replace("B", "")) * 10**9

126 | total_tokcnt += tokcnt * repeat_times

127 | else:

128 | assert isinstance(tokcnt, int), f"invalid tokcnt: {tokcnt}"

129 | total_tokcnt += tokcnt * repeat_times

130 |

131 | # total iter count

132 | total_iter = total_tokcnt / (cfg["GLOBAL_BATCH_SIZE"] * cfg["SEQ_LEN"])

133 |

134 | # into string x.xxxB

135 | total_tokcnt /= 1e9

136 | total_tokcnt = f"{total_tokcnt:.3f}B"

137 |

138 | return mixture_str, total_tokcnt, total_iter

139 |

140 |

141 | def parse_mixture_from_cfg(cfg):

142 | keys = list(cfg.keys())

143 | # find keys ends with _ROUND

144 | rounds = [k for k in keys if k.endswith("_ROUND")]

145 |

146 | def repeat_str(s, n):

147 | return "".join([s for _ in range(n)])

148 |

149 | total_tokcnt = 0

150 | mixture_str = ""

151 | for r in rounds:

152 | repeat_times = float(r.replace("_ROUND", ""))

153 | mmap_paths = sorted(set(cfg[r]))

154 | for mmap_path in mmap_paths:

155 | mmap_path_without_ext = os.path.splitext(mmap_path)[0]

156 | tokcnt = DB2TOKCNT[mmap_path]

157 | if isinstance(tokcnt, str):

158 | assert tokcnt.endswith("B"), f"invalid tokcnt: {tokcnt}"

159 | tokcnt = float(tokcnt.replace("B", "")) * 10**9

160 | total_tokcnt += tokcnt * repeat_times

161 | else:

162 | assert isinstance(tokcnt, int), f"invalid tokcnt: {tokcnt}"

163 | total_tokcnt += tokcnt * repeat_times

164 |

165 | mixture_str += f"{int(tokcnt * repeat_times)} {mmap_path_without_ext} "

166 |

167 | # total iter count

168 | total_iter = total_tokcnt / (cfg["GLOBAL_BATCH_SIZE"] * cfg["SEQ_LEN"])

169 |

170 | # into string x.xxxB

171 | total_tokcnt /= 1e9

172 | total_tokcnt = f"{total_tokcnt:.3f}B"

173 |

174 | return mixture_str, total_tokcnt, total_iter

175 |

176 |

177 | if __name__ == "__main__":

178 |

179 | args = parse_args()

180 |

181 | cfg = load_yaml(args.cfg)

182 | print(f"[INFO] Loaded cfg from {args.cfg}")

183 |

184 | TOKEN_COUNT_LOG_DIR = cfg["TOKEN_COUNT_LOG_DIR"]

185 | print(f"[INFO] TOKEN_COUNT_LOG_DIR: {TOKEN_COUNT_LOG_DIR}")

186 |

187 | get_tokcnts_from_logs(TOKEN_COUNT_LOG_DIR,

188 | by_billions=args.by_billions)

189 | print(f"[INFO] DB2TOKCNT reloaded from the logs in {TOKEN_COUNT_LOG_DIR}\n")

190 |

191 | mixture_str, total_tokcnt, total_iter = parse_mixture_from_cfg(cfg)

192 | print(f"[CRITICAL] DATA_PATH **(copy to the training script)**:\n{mixture_str}\n")

193 | print(f"[CRITICAL] TRAIN_ITERS **(copy to the training script)**:\n{total_iter}\n")

194 | print(f"[INFO] Total token count: {total_tokcnt}")

195 |

--------------------------------------------------------------------------------

/code/finetune/core/datasets/blended_megatron_dataset_config.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2023, NVIDIA CORPORATION. All rights reserved.

2 |

3 | import functools

4 | import logging

5 | import re

6 | from dataclasses import dataclass, field

7 | from typing import Callable, List, Optional, Tuple

8 |

9 | import torch

10 |

11 | from core.datasets.utils import Split, log_single_rank, normalize

12 | # from parallel_state import get_virtual_pipeline_model_parallel_rank

13 |

14 | logger = logging.getLogger(__name__)

15 |

16 |

17 | @dataclass

18 | class BlendedMegatronDatasetConfig:

19 | """Configuration object for megatron-core blended and megatron datasets

20 |

21 | Attributes:

22 | is_built_on_rank (Callable): A callable which returns True if the dataset should be built

23 | on the current rank. It should be Megatron Core parallelism aware i.e. global rank, group

24 | rank, and virtual rank may inform its return value.

25 |

26 | random_seed (int): The seed for all RNG during dataset creation.

27 |

28 | sequence_length (int): The sequence length.

29 |

30 | blend (Optional[List[str]]): The blend string, consisting of either a single dataset or a

31 | flattened sequential sequence of weight-dataset pairs. For exampe, ["dataset-path1"] and

32 | ["50", "dataset-path1", "50", "dataset-path2"] are both valid. Not to be used with

33 | 'blend_per_split'. Defaults to None.

34 |

35 | blend_per_split (blend_per_split: Optional[List[Optional[List[str]]]]): A set of blend

36 | strings, as defined above, one for each split distribution. Not to be used with 'blend'.

37 | Defauls to None.

38 |

39 | split (Optional[str]): The split string, a comma separated weighting for the dataset splits

40 | when drawing samples from a single distribution. Not to be used with 'blend_per_split'.

41 | Defaults to None.

42 |

43 | split_vector: (Optional[List[float]]): The split string, parsed and normalized post-

44 | initialization. Not to be passed to the constructor.

45 |

46 | path_to_cache (str): Where all re-useable dataset indices are to be cached.

47 | """

48 |

49 | is_built_on_rank: Callable

50 |

51 | random_seed: int

52 |

53 | sequence_length: int

54 |

55 | blend: Optional[List[str]] = None

56 |

57 | blend_per_split: Optional[List[Optional[List[str]]]] = None

58 |

59 | split: Optional[str] = None

60 |

61 | split_vector: Optional[List[float]] = field(init=False, default=None)

62 |

63 | path_to_cache: str = None

64 |

65 | def __post_init__(self):

66 | """Python dataclass method that is used to modify attributes after initialization. See

67 | https://docs.python.org/3/library/dataclasses.html#post-init-processing for more details.

68 | """

69 | if torch.distributed.is_initialized():

70 | gb_rank = torch.distributed.get_rank()

71 | # vp_rank = get_virtual_pipeline_model_parallel_rank()

72 | vp_rank = 0

73 | if gb_rank == 0 and (vp_rank == 0 or vp_rank is None):

74 | assert (

75 | self.is_built_on_rank()

76 | ), "is_built_on_rank must return True when global rank = 0 and vp rank = 0"

77 |

78 | if self.blend_per_split is not None and any(self.blend_per_split):

79 | assert self.blend is None, "blend and blend_per_split are incompatible"

80 | assert len(self.blend_per_split) == len(

81 | Split

82 | ), f"blend_per_split must contain {len(Split)} blends"

83 | if self.split is not None:

84 | self.split = None

85 | log_single_rank(logger, logging.WARNING, f"Let split = {self.split}")

86 | else:

87 | assert self.blend is not None, "one of either blend or blend_per_split must be provided"

88 | assert self.split is not None, "both blend and split must be provided"

89 | self.split_vector = _parse_and_normalize_split(self.split)

90 | self.split_matrix = convert_split_vector_to_split_matrix(self.split_vector)

91 | log_single_rank(logger, logging.INFO, f"Let split_vector = {self.split_vector}")

92 |

93 |

94 | @dataclass

95 | class GPTDatasetConfig(BlendedMegatronDatasetConfig):

96 | """Configuration object for megatron-core blended and megatron GPT datasets

97 |

98 | Attributes:

99 | return_document_ids (bool): Whether to return the document ids when querying the dataset.

100 | """

101 |

102 | return_document_ids: bool = False

103 |

104 | add_bos: bool = False

105 |

106 | enable_shuffle: bool = False

107 |

108 |

109 | def _parse_and_normalize_split(split: str) -> List[float]:

110 | """Parse the dataset split ratios from a string

111 |

112 | Args:

113 | split (str): The train valid test split string e.g. "99,1,0"

114 |

115 | Returns:

116 | List[float]: The trian valid test split ratios e.g. [99.0, 1.0, 0.0]

117 | """

118 | split = list(map(float, re.findall(r"[.0-9]+", split)))

119 | split = split + [0.0 for _ in range(len(Split) - len(split))]

120 |

121 | assert len(split) == len(Split)

122 | assert all(map(lambda _: _ >= 0.0, split))

123 |

124 | split = normalize(split)

125 |

126 | return split

127 |

128 |

129 | def convert_split_vector_to_split_matrix(

130 | vector_a: List[float], vector_b: Optional[List[float]] = None

131 | ) -> List[Optional[Tuple[float, float]]]:

132 | """Build the split matrix from one or optionally two contributing split vectors.

133 |

134 | Ex. a standard conversion:

135 |

136 | [0.99, 0.01, 0.0] -> [(0, 0.99), (0.99, 1.0), None]

137 |

138 | Ex. a conversion for Retro when Retro pretraining uses a [0.99, 0.01, 0.0] split and Retro

139 | preprocessing used a [0.98, 0.02, 0.0] split:

140 |

141 | [0.99, 0.01, 0.0], [0.98, 0.02, 0.0] -> [(0, 0.98), (0.99, 1.0), None]

142 |

143 | Args:

144 | vector_a (List[float]): The primary split vector

145 |

146 | vector_b (Optional[List[float]]): An optional secondary split vector which constrains the

147 | primary split vector. Defaults to None.

148 |

149 | Returns:

150 | List[Tuple[float, float]]: The split matrix consisting of book-ends of each split in order

151 | """

152 | if vector_b is None:

153 | vector_b = vector_a

154 |

155 | # [.900, .090, .010] -> [0.00, .900, .990, 100]

156 | expansion_a = functools.reduce(lambda a, b: a + [a[len(a) - 1] + b], [[0], *vector_a])

157 | expansion_b = functools.reduce(lambda a, b: a + [a[len(a) - 1] + b], [[0], *vector_b])

158 |

159 | # [0.00, .900, .990, 100.0] -> [(0.00, .900), (.900, .990), (.990, 100)]

160 | bookends_a = list(zip(expansion_a[:-1], expansion_a[1:]))

161 | bookends_b = list(zip(expansion_b[:-1], expansion_b[1:]))

162 |

163 | # gather per-split overlap or None

164 | matrix = []

165 | for bookend_a, bookend_b in zip(bookends_a, bookends_b):

166 | if min(bookend_a[1], bookend_b[1]) <= max(bookend_a[0], bookend_b[0]):

167 | overlap = None

168 | else:

169 | overlap = (max(bookend_a[0], bookend_b[0]), min(bookend_a[1], bookend_b[1]))

170 | matrix.append(overlap)

171 |

172 | return matrix

173 |

--------------------------------------------------------------------------------

/code/finetune/scripts/run_finetune.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # ==============================

4 | # YuE Fine-tuning Script

5 | # ==============================

6 |

7 | # Help information

8 | print_help() {

9 | echo "========================================================"

10 | echo "YuE Fine-tuning Script Help"

11 | echo "========================================================"

12 | echo "Before running this script, please update the following variables:"

13 | echo ""

14 | echo "1. Data paths:"

15 | echo " DATA_PATH - Replace with actual weights and data paths"

16 | echo " DATA_CACHE_PATH - Replace with actual cache directory"

17 | echo ""

18 | echo "2. Model configuration:"

19 | echo " TOKENIZER_MODEL_PATH - Replace with actual tokenizer path"

20 | echo " MODEL_CACHE_DIR - Replace with actual cache directory"

21 | echo " OUTPUT_DIR - Replace with actual output directory"

22 | echo ""

23 | echo "3. If using WandB:"

24 | echo " WANDB_API_KEY - Replace with your actual API key"

25 | echo ""

26 | echo "Example usage:"

27 | echo " DATA_PATH=\"data1-weight /path/to/data1 data2-weight /path/to/data2\""

28 | echo " DATA_CACHE_PATH=\"/path/to/cache\""

29 | echo " TOKENIZER_MODEL_PATH=\"/path/to/tokenizer\""

30 | echo " MODEL_CACHE_DIR=\"/path/to/model/cache\""

31 | echo " OUTPUT_DIR=\"/path/to/output\""

32 | echo " WANDB_API_KEY=\"your-actual-wandb-key\""

33 | echo "========================================================"

34 | exit 1

35 | }

36 |

37 | # Check if help is requested

38 | if [[ "$1" == "--help" || "$1" == "-h" ]]; then

39 | print_help

40 | fi

41 |

42 | # Check for placeholder values

43 | check_placeholders() {

44 | local has_placeholders=false

45 |

46 | if [[ "$DATA_PATH" == *""* ]]; then

52 | echo "Error: Please set actual data cache path in DATA_CACHE_PATH variable."

53 | has_placeholders=true

54 | fi

55 |

56 | if [[ "$TOKENIZER_MODEL_PATH" == *""* ]]; then

57 | echo "Error: Please set actual tokenizer model path in TOKENIZER_MODEL_PATH variable."

58 | has_placeholders=true

59 | fi

60 |

61 | if [[ "$MODEL_CACHE_DIR" == *""* ]]; then

62 | echo "Error: Please set actual model cache directory in MODEL_CACHE_DIR variable."

63 | has_placeholders=true

64 | fi

65 |

66 | if [[ "$OUTPUT_DIR" == *""* ]]; then

67 | echo "Error: Please set actual output directory in OUTPUT_DIR variable."

68 | has_placeholders=true

69 | fi

70 |

71 | if [[ "$USE_WANDB" == "true" && "$WANDB_API_KEY" == *""* ]]; then

72 | echo "Error: Please set actual WandB API key in WANDB_API_KEY variable or disable WandB."

73 | has_placeholders=true

74 | fi

75 |

76 | if [[ "$has_placeholders" == "true" ]]; then

77 | echo ""

78 | echo "Please update the script with your actual paths and values."

79 | echo "Run './scripts/run_finetune.sh --help' for more information."

80 | exit 1

81 | fi

82 | }

83 |

84 | # Exit on error

85 | set -e

86 |

87 | # Check if we're in the finetune directory

88 | CURRENT_DIR=$(basename "$PWD")

89 | if [ "$CURRENT_DIR" != "finetune" ]; then

90 | echo "Error: This script must be run from the finetune/ directory"

91 | echo "Current directory: $PWD"

92 | echo "Please change to the finetune directory and try again"

93 | exit 1

94 | fi

95 |

96 | # ==============================

97 | # Configuration Parameters

98 | # ==============================

99 |

100 | # Hardware configuration

101 | NUM_GPUS=8

102 | MASTER_PORT=9999

103 | # Uncomment and modify if you need specific GPUs

104 | # export CUDA_VISIBLE_DEVICES=4,5,6,7

105 |

106 | # Training hyperparameters

107 | PER_DEVICE_TRAIN_BATCH_SIZE=1

108 | PER_DEVICE_EVAL_BATCH_SIZE=1

109 | GLOBAL_BATCH_SIZE=$((NUM_GPUS*PER_DEVICE_TRAIN_BATCH_SIZE))

110 | USE_BF16=true

111 | SEQ_LENGTH=8192

112 | TRAIN_ITERS=150

113 | NUM_TRAIN_EPOCHS=10

114 |

115 | # Data paths (replace with your actual paths)

116 | DATA_PATH=""

117 | DATA_CACHE_PATH=""

118 |

119 | # Set comma-separated list of proportions for training, validation, and test split

120 | DATA_SPLIT="900,50,50"

121 |

122 | # Model configuration

123 | TOKENIZER_MODEL_PATH=""

124 | MODEL_NAME="m-a-p/YuE-s1-7B-anneal-en-cot"

125 | MODEL_CACHE_DIR=""

126 | OUTPUT_DIR=""

127 | DEEPSPEED_CONFIG=config/ds_config_zero2.json

128 |

129 | # LoRA configuration

130 | LORA_R=64

131 | LORA_ALPHA=32

132 | LORA_DROPOUT=0.1

133 | LORA_TARGET_MODULES="q_proj k_proj v_proj o_proj"

134 | # Logging configuration

135 | LOGGING_STEPS=5

136 | SAVE_STEPS=5

137 | USE_WANDB=true

138 | WANDB_API_KEY=""

139 | RUN_NAME="YuE-ft-lora"

140 |

141 | # ==============================

142 | # Environment Setup

143 | # ==============================

144 |

145 | # Check for placeholder values

146 | check_placeholders

147 |

148 | # Export environment variables

149 | export WANDB_API_KEY=$WANDB_API_KEY

150 | export PYTHONPATH=$PWD:$PYTHONPATH

151 |

152 | # Print configuration

153 | echo "==============================================="

154 | echo "YuE Fine-tuning Configuration:"

155 | echo "==============================================="

156 | echo "Number of GPUs: $NUM_GPUS"

157 | echo "Global batch size: $GLOBAL_BATCH_SIZE"

158 | echo "Model: $MODEL_NAME"

159 | echo "Output directory: $OUTPUT_DIR"

160 | echo "Training epochs: $NUM_TRAIN_EPOCHS"

161 | echo "==============================================="

162 |

163 | # ==============================

164 | # Build and Execute Command

165 | # ==============================

166 |

167 | # Base command

168 | CMD="torchrun --nproc_per_node=$NUM_GPUS --master_port=$MASTER_PORT scripts/train_lora.py \

169 | --seq-length $SEQ_LENGTH \

170 | --data-path $DATA_PATH \

171 | --data-cache-path $DATA_CACHE_PATH \

172 | --split $DATA_SPLIT \

173 | --tokenizer-model $TOKENIZER_MODEL_PATH \

174 | --global-batch-size $GLOBAL_BATCH_SIZE \

175 | --per-device-train-batch-size $PER_DEVICE_TRAIN_BATCH_SIZE \

176 | --per-device-eval-batch-size $PER_DEVICE_EVAL_BATCH_SIZE \

177 | --train-iters $TRAIN_ITERS \

178 | --num-train-epochs $NUM_TRAIN_EPOCHS \

179 | --logging-steps $LOGGING_STEPS \

180 | --save-steps $SAVE_STEPS \

181 | --deepspeed $DEEPSPEED_CONFIG"

182 |

183 | # Add conditional arguments

184 | if [ "$USE_WANDB" = true ]; then

185 | CMD="$CMD --report-to wandb --run-name \"$RUN_NAME\""

186 | elif [ "$USE_WANDB" = false ]; then

187 | CMD="$CMD --report-to none"

188 | fi

189 |

190 | CMD="$CMD \

191 | --model-name-or-path \"$MODEL_NAME\" \

192 | --cache-dir $MODEL_CACHE_DIR \

193 | --output-dir $OUTPUT_DIR \

194 | --lora-r $LORA_R \

195 | --lora-alpha $LORA_ALPHA \

196 | --lora-dropout $LORA_DROPOUT \

197 | --lora-target-modules $LORA_TARGET_MODULES"

198 |

199 | if [ "$USE_BF16" = true ]; then

200 | CMD="$CMD --bf16"

201 | fi

202 |

203 | # Execute the command

204 | echo "Running command: $CMD"

205 | echo "==============================================="

206 | eval $CMD

207 |

208 | # Check exit status

209 | if [ $? -eq 0 ]; then

210 | echo "==============================================="

211 | echo "Fine-tuning completed successfully!"

212 | echo "Output saved to: $OUTPUT_DIR"

213 | echo "==============================================="

214 | else

215 | echo "==============================================="

216 | echo "Error: Fine-tuning failed with exit code $?"

217 | echo "==============================================="

218 | exit 1

219 | fi

--------------------------------------------------------------------------------

/code/finetune/core/datasets/blended_dataset.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

2 |

3 | import hashlib

4 | import json

5 | import logging

6 | import os

7 | import time

8 | from collections import OrderedDict

9 | from typing import Dict, List, Tuple, Union

10 |

11 | import numpy

12 | import torch

13 |

14 | from core.datasets.blended_megatron_dataset_config import BlendedMegatronDatasetConfig

15 | from core.datasets.megatron_dataset import MegatronDataset

16 | from core.datasets.utils import log_single_rank, normalize

17 |

18 | logger = logging.getLogger(__name__)

19 |

20 | _VERBOSE = False

21 |

22 |

23 | class BlendedDataset(torch.utils.data.Dataset):

24 | """Conjugating class for a set of MegatronDataset instances

25 |

26 | Args:

27 | datasets (List[MegatronDataset]): The MegatronDataset instances to blend

28 |

29 | weights (List[float]): The weights which determines the dataset blend ratios

30 |

31 | size (int): The number of samples to draw from the blend

32 |

33 | config (BlendedMegatronDatasetConfig): The config object which informs dataset creation

34 |

35 | Raises:

36 | RuntimeError: When the dataset has fewer or more samples than 'size' post-initialization

37 | """

38 |

39 | def __init__(

40 | self,

41 | datasets: List[MegatronDataset],

42 | weights: List[float],

43 | size: int,

44 | config: BlendedMegatronDatasetConfig,

45 | ) -> None:

46 | assert len(datasets) < 32767

47 | assert len(datasets) == len(weights)

48 | assert numpy.isclose(sum(weights), 1.0)

49 | assert all(map(lambda _: type(_) == type(datasets[0]), datasets))

50 |

51 | # Alert user to unnecessary blending

52 | if len(datasets) == 1:

53 | log_single_rank(

54 | logger, logging.WARNING, f"Building a BlendedDataset for a single MegatronDataset"

55 | )

56 |

57 | # Redundant normalization for bitwise identical comparison with Megatron-LM

58 | weights = normalize(weights)

59 |

60 | self.datasets = datasets

61 | self.weights = weights

62 | self.size = size

63 | self.config = config

64 |

65 | unique_identifiers = OrderedDict()

66 | unique_identifiers["class"] = type(self).__name__

67 | unique_identifiers["datasets"] = [dataset.unique_identifiers for dataset in self.datasets]

68 | unique_identifiers["weights"] = self.weights

69 | unique_identifiers["size"] = self.size

70 |

71 | self.unique_description = json.dumps(unique_identifiers, indent=4)

72 | self.unique_description_hash = hashlib.md5(

73 | self.unique_description.encode("utf-8")

74 | ).hexdigest()

75 |

76 | self.dataset_index, self.dataset_sample_index = self._build_indices()

77 |

78 | # Check size

79 | _ = self[self.size - 1]

80 | try:

81 | _ = self[self.size]

82 | raise RuntimeError(f"{type(self).__name__} size is improperly bounded")

83 | except IndexError:

84 | log_single_rank(logger, logging.INFO, f"> {type(self).__name__} length: {len(self)}")

85 |

86 | def __len__(self) -> int:

87 | return self.size

88 |

89 | def __getitem__(self, idx: int) -> Dict[str, Union[int, numpy.ndarray]]:

90 | dataset_id = self.dataset_index[idx]

91 | dataset_sample_id = self.dataset_sample_index[idx]

92 | return {

93 | "dataset_id": dataset_id,

94 | **self.datasets[dataset_id][dataset_sample_id],

95 | }

96 |

97 | def _build_indices(self) -> Tuple[numpy.ndarray, numpy.ndarray]:

98 | """Build and optionally cache the dataset index and the dataset sample index

99 |

100 | The dataset index is a 1-D mapping which determines the dataset to query. The dataset

101 | sample index is a 1-D mapping which determines the sample to request from the queried

102 | dataset.

103 |

104 | Returns:

105 | Tuple[numpy.ndarray, numpy.ndarray]: The dataset index and the dataset sample index

106 | """

107 | path_to_cache = self.config.path_to_cache

108 |

109 | if path_to_cache:

110 | get_path_to = lambda suffix: os.path.join(

111 | path_to_cache, f"{self.unique_description_hash}-{type(self).__name__}-{suffix}"

112 | )

113 | path_to_description = get_path_to("description.txt")

114 | path_to_dataset_index = get_path_to("dataset_index.npy")

115 | path_to_dataset_sample_index = get_path_to("dataset_sample_index.npy")

116 | cache_hit = all(

117 | map(

118 | os.path.isfile,

119 | [path_to_description, path_to_dataset_index, path_to_dataset_sample_index],

120 | )

121 | )

122 | else:

123 | cache_hit = False

124 |

125 | if not path_to_cache or (not cache_hit and torch.distributed.get_rank() == 0):

126 | log_single_rank(

127 | logger, logging.INFO, f"Build and save the {type(self).__name__} indices",

128 | )

129 |

130 | # Build the dataset and dataset sample indexes

131 | log_single_rank(

132 | logger, logging.INFO, f"\tBuild and save the dataset and dataset sample indexes"

133 | )

134 | t_beg = time.time()

135 | from core.datasets import helpers

136 |

137 | dataset_index = numpy.zeros(self.size, dtype=numpy.int16)

138 | dataset_sample_index = numpy.zeros(self.size, dtype=numpy.int64)

139 | helpers.build_blending_indices(

140 | dataset_index,

141 | dataset_sample_index,

142 | self.weights,

143 | len(self.datasets),

144 | self.size,

145 | _VERBOSE,

146 | )

147 |

148 | if path_to_cache:

149 | os.makedirs(path_to_cache, exist_ok=True)

150 | # Write the description

151 | with open(path_to_description, "wt") as writer:

152 | writer.write(self.unique_description)

153 | # Save the indexes

154 | numpy.save(path_to_dataset_index, dataset_index, allow_pickle=True)

155 | numpy.save(path_to_dataset_sample_index, dataset_sample_index, allow_pickle=True)

156 | else:

157 | log_single_rank(

158 | logger,

159 | logging.WARNING,

160 | "Unable to save the indexes because path_to_cache is None",

161 | )

162 |

163 | t_end = time.time()

164 | log_single_rank(logger, logging.DEBUG, f"\t> time elapsed: {t_end - t_beg:4f} seconds")

165 |

166 | return dataset_index, dataset_sample_index

167 |

168 | log_single_rank(logger, logging.INFO, f"Load the {type(self).__name__} indices")

169 |

170 | log_single_rank(

171 | logger, logging.INFO, f"\tLoad the dataset index from {path_to_dataset_index}"

172 | )

173 | t_beg = time.time()

174 | dataset_index = numpy.load(path_to_dataset_index, allow_pickle=True, mmap_mode='r')

175 | t_end = time.time()

176 | log_single_rank(logger, logging.DEBUG, f"\t> time elapsed: {t_end - t_beg:4f} seconds")

177 |

178 | log_single_rank(

179 | logger,

180 | logging.INFO,

181 | f"\tLoad the dataset sample index from {path_to_dataset_sample_index}",

182 | )

183 | t_beg = time.time()

184 | dataset_sample_index = numpy.load(

185 | path_to_dataset_sample_index, allow_pickle=True, mmap_mode='r'

186 | )

187 | t_end = time.time()

188 | log_single_rank(logger, logging.DEBUG, f"\t> time elapsed: {t_end - t_beg:4f} seconds")

189 |

190 | return dataset_index, dataset_sample_index

191 |

--------------------------------------------------------------------------------

/code/finetune/tools/codecmanipulator.py:

--------------------------------------------------------------------------------

1 | import json

2 | import numpy as np

3 | import einops

4 |

5 |

6 | class CodecManipulator(object):

7 | r"""

8 | **mm tokenizer v0.1**

9 | see codeclm/hf/mm_tokenizer_v0.1_hf/id2vocab.json

10 |

11 | text tokens:

12 | llama tokenizer 0~31999

13 |

14 | special tokens: "32000": "", "32001": "", "32002": "", "32003": "", "32004": "", "32005": "", "32006": "", "32007": "", "32008": "", "32009": "", "32010": "", "32011": "", "32012": "", "32013": "", "32014": "", "32015": "", "32016": "", "32017": "", "32018": "", "32019": "", "32020": "", "32021": ""

15 |

16 | mm tokens:

17 | dac_16k: 4 codebook, 1024 vocab, 32022 - 36117

18 | dac_44k: 9 codebook, 1024 vocab, 36118 - 45333

19 | xcodec: 12 codebook, 1024 vocab, 45334 - 57621

20 | semantic mert: 1024, 57622 - 58645

21 | semantic hubert: 512, 58646 - 59157

22 | visual: 64000, not included in v0.1

23 | semanticodec 100tps 16384: semantic=16384, 59158 - 75541, acoustic=8192, 75542 - 83733

24 | """

25 | def __init__(self, codec_type, quantizer_begin=None, n_quantizer=None, teacher_forcing=False, data_feature="codec"):

26 | self.codec_type = codec_type

27 | self.mm_v0_2_cfg = {

28 | "dac16k": {"codebook_size": 1024, "num_codebooks": 4, "global_offset": 32022, "sep": [""], "fps": 50},

29 | "dac44k": {"codebook_size": 1024, "num_codebooks": 9, "global_offset": 36118, "sep": [""]},

30 | "xcodec": {"codebook_size": 1024, "num_codebooks": 12, "global_offset": 45334, "sep": [""], "fps": 50},

31 | "mert": {"codebook_size": 1024, "global_offset": 57622, "sep": [""]},

32 | "hubert": {"codebook_size": 512, "global_offset": 58646, "sep": [""]},

33 | "semantic/s": {"codebook_size": 16384, "num_codebooks": 1, "global_offset": 59158, "sep": ["", ""]},

34 | "semantic/a": {"codebook_size": 8192, "num_codebooks": 1, "global_offset": 75542, "sep": ["", ""]},

35 | "semanticodec": {"codebook_size": [16384, 8192], "num_codebooks": 2, "global_offset": 59158, "sep": [""], "fps": 50},

36 | "special_tokens": {

37 | '': 32000, '': 32001, '': 32002, '': 32003, '': 32004, '': 32005, '': 32006, '': 32007, '': 32008, '': 32009, '': 32010, '': 32011, '': 32012, '': 32013, '': 32014, '': 32015, '': 32016, '': 32017, '': 32018, '': 32019, '': 32020, '': 32021

38 | },

39 | "metadata": {

40 | "len": 83734,

41 | "text_range": [0, 31999],

42 | "special_range": [32000, 32021],

43 | "mm_range": [32022, 83733]

44 | },

45 | "codec_range": {

46 | "dac16k": [32022, 36117],

47 | "dac44k": [36118, 45333],

48 | "xcodec": [45334, 57621],

49 | # "hifi16k": [53526, 57621],

50 | "mert": [57622, 58645],

51 | "hubert": [58646, 59157],

52 | "semantic/s": [59158, 75541],

53 | "semantic/a": [75542, 83733],

54 | "semanticodec": [59158, 83733]

55 | }

56 | }

57 | self.sep = self.mm_v0_2_cfg[self.codec_type]["sep"]

58 | self.sep_ids = [self.mm_v0_2_cfg["special_tokens"][s] for s in self.sep]

59 | self.codebook_size = self.mm_v0_2_cfg[self.codec_type]["codebook_size"]

60 | self.num_codebooks = self.mm_v0_2_cfg[self.codec_type]["num_codebooks"]

61 | self.global_offset = self.mm_v0_2_cfg[self.codec_type]["global_offset"]

62 | self.fps = self.mm_v0_2_cfg[self.codec_type]["fps"] if "fps" in self.mm_v0_2_cfg[self.codec_type] else None

63 |

64 | self.quantizer_begin = quantizer_begin if quantizer_begin is not None else 0

65 | self.n_quantizer = n_quantizer if n_quantizer is not None else self.num_codebooks

66 | self.teacher_forcing = teacher_forcing

67 | self.data_feature = data_feature

68 |

69 |

70 | def offset_tok_ids(self, x, global_offset=0, codebook_size=2048, num_codebooks=4):

71 | """

72 | x: (K, T)

73 | """

74 | if isinstance(codebook_size, int):

75 | assert x.max() < codebook_size, f"max(x)={x.max()}, codebook_size={codebook_size}"

76 | elif isinstance(codebook_size, list):

77 | for i, cs in enumerate(codebook_size):

78 | assert x[i].max() < cs, f"max(x)={x[i].max()}, codebook_size={cs}, layer_id={i}"

79 | else:

80 | raise ValueError(f"codebook_size={codebook_size}")

81 | assert x.min() >= 0, f"min(x)={x.min()}"

82 | assert x.shape[0] == num_codebooks or x.shape[0] == self.n_quantizer, \

83 | f"x.shape[0]={x.shape[0]}, num_codebooks={num_codebooks}, n_quantizer={self.n_quantizer}"

84 |

85 | _x = x.copy()

86 | _x = _x.astype(np.uint32)

87 | cum_offset = 0

88 | quantizer_begin = self.quantizer_begin

89 | quantizer_end = quantizer_begin+self.n_quantizer

90 | for k in range(self.quantizer_begin, quantizer_end): # k: quantizer_begin to quantizer_end - 1

91 | if isinstance(codebook_size, int):

92 | _x[k] += global_offset + k * codebook_size

93 | elif isinstance(codebook_size, list):

94 | _x[k] += global_offset + cum_offset

95 | cum_offset += codebook_size[k]

96 | else:

97 | raise ValueError(f"codebook_size={codebook_size}")

98 | return _x[quantizer_begin:quantizer_end]

99 |

100 | def unoffset_tok_ids(self, x, global_offset=0, codebook_size=2048, num_codebooks=4):

101 | """

102 | x: (K, T)

103 | """

104 | if isinstance(codebook_size, int):

105 | assert x.max() < global_offset + codebook_size * num_codebooks, f"max(x)={x.max()}, codebook_size={codebook_size}"

106 | elif isinstance(codebook_size, list):

107 | assert x.max() < global_offset + sum(codebook_size), f"max(x)={x.max()}, codebook_size={codebook_size}"

108 | assert x.min() >= global_offset, f"min(x)={x.min()}, global_offset={global_offset}"

109 | assert x.shape[0] == num_codebooks or x.shape[0] == self.n_quantizer, \

110 | f"x.shape[0]={x.shape[0]}, num_codebooks={num_codebooks}, n_quantizer={self.n_quantizer}"

111 |

112 | _x = x.copy()

113 | _x = _x.astype(np.uint32)

114 | cum_offset = 0

115 | quantizer_begin = self.quantizer_begin

116 | quantizer_end = quantizer_begin+self.n_quantizer

117 | for k in range(quantizer_begin, quantizer_end):

118 | if isinstance(codebook_size, int):

119 | _x[k-quantizer_begin] -= global_offset + k * codebook_size

120 | elif isinstance(codebook_size, list):

121 | _x[k-quantizer_begin] -= global_offset + cum_offset

122 | cum_offset += codebook_size[k]

123 | else:

124 | raise ValueError(f"codebook_size={codebook_size}")

125 | return _x

126 |

127 | def flatten(self, x):

128 | if len(x.shape) > 2:

129 | x = x.squeeze()

130 | assert x.shape[0] == self.num_codebooks or x.shape[0] == self.n_quantizer, \

131 | f"x.shape[0]={x.shape[0]}, num_codebooks={self.num_codebooks}, n_quantizer={self.n_quantizer}"

132 | return einops.rearrange(x, 'K T -> (T K)')

133 |

134 | def unflatten(self, x, n_quantizer=None):

135 | if x.ndim > 1 and x.shape[0] == 1:

136 | x = x.squeeze(0)

137 | assert len(x.shape) == 1

138 | assert x.shape[0] % self.num_codebooks == 0 or x.shape[0] % self.n_quantizer == 0, \

139 | f"x.shape[0]={x.shape[0]}, num_codebooks={self.num_codebooks}, n_quantizer={self.n_quantizer}"

140 | if n_quantizer!=self.num_codebooks:

141 | return einops.rearrange(x, '(T K) -> K T', K=n_quantizer)

142 | return einops.rearrange(x, '(T K) -> K T', K=self.num_codebooks)

143 |

144 | # def check_codec_type_from_path(self, path):

145 | # if self.codec_type == "hifi16k":

146 | # assert "academicodec_hifi_16k_320d_large_uni" in path

147 |

148 | def get_codec_type_from_range(self, ids):

149 | ids_range = [ids.min(), ids.max()]

150 | codec_range = self.mm_v0_2_cfg["codec_range"]

151 | for codec_type, r in codec_range.items():

152 | if ids_range[0] >= r[0] and ids_range[1] <= r[1]:

153 | return codec_type

154 | raise ValueError(f"ids_range={ids_range}, codec_range={codec_range}")

155 |

156 | def npy2ids(self, npy):

157 | if isinstance(npy, str):

158 | data = np.load(npy)

159 | elif isinstance(npy, np.ndarray):

160 | data = npy

161 | else:

162 | raise ValueError(f"not supported type: {type(npy)}")

163 |

164 | assert len(data.shape)==2, f'data shape: {data.shape} is not (n_codebook, seq_len)'

165 | data = self.offset_tok_ids(

166 | data,

167 | global_offset=self.global_offset,

168 | codebook_size=self.codebook_size,

169 | num_codebooks=self.num_codebooks,

170 | )

171 | data = self.flatten(data)

172 | codec_range = self.get_codec_type_from_range(data)

173 | assert codec_range == self.codec_type, f"get_codec_type_from_range(data)={codec_range}, self.codec_type={self.codec_type}"

174 | data = data.tolist()

175 | return data

176 |

177 | def ids2npy(self, token_ids):

178 | # make sure token_ids starts with codebook 0

179 | if isinstance(self.codebook_size, int):

180 | codebook_0_range = (self.global_offset + self.quantizer_begin*self.codebook_size, self.global_offset + (self.quantizer_begin+1)*self.codebook_size)

181 | elif isinstance(self.codebook_size, list):

182 | codebook_0_range = (self.global_offset, self.global_offset + self.codebook_size[0])

183 | assert token_ids[0] >= codebook_0_range[0] \

184 | and token_ids[0] < codebook_0_range[1], f"token_ids[0]={token_ids[self.quantizer_begin]}, codebook_0_range={codebook_0_range}"

185 | data = np.array(token_ids)

186 | data = self.unflatten(data, n_quantizer=self.n_quantizer)

187 | data = self.unoffset_tok_ids(

188 | data,

189 | global_offset=self.global_offset,

190 | codebook_size=self.codebook_size,

191 | num_codebooks=self.num_codebooks,

192 | )

193 | return data

194 |

195 | def npy_to_json_str(self, npy_path):

196 | data = self.npy2ids(npy_path)

197 | return json.dumps({"text": data, "src": npy_path, "codec": self.codec_type})

198 |

199 | def sep(self):

200 | return ''.join(self.sep)

201 |

202 | def sep_ids(self):

203 | return self.sep_ids

204 |

--------------------------------------------------------------------------------

/code/inference/codecmanipulator.py:

--------------------------------------------------------------------------------

1 | import json

2 | import numpy as np

3 | import einops

4 |

5 |

6 | class CodecManipulator(object):

7 | r"""

8 | **mm tokenizer v0.1**

9 | see codeclm/hf/mm_tokenizer_v0.1_hf/id2vocab.json

10 |

11 | text tokens:

12 | llama tokenizer 0~31999

13 |

14 | special tokens: "32000": "", "32001": "", "32002": "", "32003": "", "32004": "", "32005": "", "32006": "", "32007": "", "32008": "", "32009": "", "32010": "", "32011": "", "32012": "", "32013": "", "32014": "", "32015": "", "32016": "", "32017": "", "32018": "", "32019": "", "32020": "", "32021": ""

15 |

16 | mm tokens:

17 | dac_16k: 4 codebook, 1024 vocab, 32022 - 36117

18 | dac_44k: 9 codebook, 1024 vocab, 36118 - 45333

19 | xcodec: 12 codebook, 1024 vocab, 45334 - 57621

20 | semantic mert: 1024, 57622 - 58645

21 | semantic hubert: 512, 58646 - 59157

22 | visual: 64000, not included in v0.1

23 | semanticodec 100tps 16384: semantic=16384, 59158 - 75541, acoustic=8192, 75542 - 83733

24 | """

25 | def __init__(self, codec_type, quantizer_begin=None, n_quantizer=None, teacher_forcing=False, data_feature="codec"):

26 | self.codec_type = codec_type

27 | self.mm_v0_2_cfg = {

28 | "dac16k": {"codebook_size": 1024, "num_codebooks": 4, "global_offset": 32022, "sep": [""], "fps": 50},

29 | "dac44k": {"codebook_size": 1024, "num_codebooks": 9, "global_offset": 36118, "sep": [""]},

30 | "xcodec": {"codebook_size": 1024, "num_codebooks": 12, "global_offset": 45334, "sep": [""], "fps": 50},

31 | "mert": {"codebook_size": 1024, "global_offset": 57622, "sep": [""]},

32 | "hubert": {"codebook_size": 512, "global_offset": 58646, "sep": [""]},

33 | "semantic/s": {"codebook_size": 16384, "num_codebooks": 1, "global_offset": 59158, "sep": ["", ""]},

34 | "semantic/a": {"codebook_size": 8192, "num_codebooks": 1, "global_offset": 75542, "sep": ["", ""]},

35 | "semanticodec": {"codebook_size": [16384, 8192], "num_codebooks": 2, "global_offset": 59158, "sep": [""], "fps": 50},

36 | "special_tokens": {

37 | '': 32000, '': 32001, '': 32002, '': 32003, '': 32004, '': 32005, '': 32006, '': 32007, '': 32008, '': 32009, '': 32010, '': 32011, '': 32012, '': 32013, '': 32014, '': 32015, '': 32016, '': 32017, '': 32018, '': 32019, '': 32020, '': 32021

38 | },

39 | "metadata": {

40 | "len": 83734,

41 | "text_range": [0, 31999],

42 | "special_range": [32000, 32021],

43 | "mm_range": [32022, 83733]

44 | },

45 | "codec_range": {

46 | "dac16k": [32022, 36117],

47 | "dac44k": [36118, 45333],

48 | "xcodec": [45334, 57621],

49 | # "hifi16k": [53526, 57621],

50 | "mert": [57622, 58645],

51 | "hubert": [58646, 59157],

52 | "semantic/s": [59158, 75541],

53 | "semantic/a": [75542, 83733],

54 | "semanticodec": [59158, 83733]

55 | }

56 | }

57 | self.sep = self.mm_v0_2_cfg[self.codec_type]["sep"]

58 | self.sep_ids = [self.mm_v0_2_cfg["special_tokens"][s] for s in self.sep]

59 | self.codebook_size = self.mm_v0_2_cfg[self.codec_type]["codebook_size"]

60 | self.num_codebooks = self.mm_v0_2_cfg[self.codec_type]["num_codebooks"]

61 | self.global_offset = self.mm_v0_2_cfg[self.codec_type]["global_offset"]

62 | self.fps = self.mm_v0_2_cfg[self.codec_type]["fps"] if "fps" in self.mm_v0_2_cfg[self.codec_type] else None

63 |

64 | self.quantizer_begin = quantizer_begin if quantizer_begin is not None else 0

65 | self.n_quantizer = n_quantizer if n_quantizer is not None else self.num_codebooks

66 | self.teacher_forcing = teacher_forcing

67 | self.data_feature = data_feature

68 |

69 |

70 | def offset_tok_ids(self, x, global_offset=0, codebook_size=2048, num_codebooks=4):

71 | """

72 | x: (K, T)

73 | """

74 | if isinstance(codebook_size, int):

75 | assert x.max() < codebook_size, f"max(x)={x.max()}, codebook_size={codebook_size}"

76 | elif isinstance(codebook_size, list):

77 | for i, cs in enumerate(codebook_size):

78 | assert x[i].max() < cs, f"max(x)={x[i].max()}, codebook_size={cs}, layer_id={i}"

79 | else:

80 | raise ValueError(f"codebook_size={codebook_size}")

81 | assert x.min() >= 0, f"min(x)={x.min()}"

82 | assert x.shape[0] == num_codebooks or x.shape[0] == self.n_quantizer, \

83 | f"x.shape[0]={x.shape[0]}, num_codebooks={num_codebooks}, n_quantizer={self.n_quantizer}"

84 |

85 | _x = x.copy()

86 | _x = _x.astype(np.uint32)

87 | cum_offset = 0

88 | quantizer_begin = self.quantizer_begin

89 | quantizer_end = quantizer_begin+self.n_quantizer

90 | for k in range(self.quantizer_begin, quantizer_end): # k: quantizer_begin to quantizer_end - 1

91 | if isinstance(codebook_size, int):

92 | _x[k] += global_offset + k * codebook_size

93 | elif isinstance(codebook_size, list):

94 | _x[k] += global_offset + cum_offset

95 | cum_offset += codebook_size[k]

96 | else:

97 | raise ValueError(f"codebook_size={codebook_size}")

98 | return _x[quantizer_begin:quantizer_end]

99 |

100 | def unoffset_tok_ids(self, x, global_offset=0, codebook_size=2048, num_codebooks=4):

101 | """

102 | x: (K, T)

103 | """

104 | if isinstance(codebook_size, int):

105 | assert x.max() < global_offset + codebook_size * num_codebooks, f"max(x)={x.max()}, codebook_size={codebook_size}"

106 | elif isinstance(codebook_size, list):

107 | assert x.max() < global_offset + sum(codebook_size), f"max(x)={x.max()}, codebook_size={codebook_size}"

108 | assert x.min() >= global_offset, f"min(x)={x.min()}, global_offset={global_offset}"

109 | assert x.shape[0] == num_codebooks or x.shape[0] == self.n_quantizer, \