├── .gitignore
├── LICENSE
├── Multi-processing and Multi-threading in Python
│   ├── Multi-processing
│   │   ├── Multi-processing in Python.md
│   │   ├── distributed_processing_server.py
│   │   ├── distributed_processing_worker.py
│   │   └── multiprocessing_async.py
│   └── Multi-threading
│       ├── Locks and Sync Blocking
│       │   ├── sync_blocking.py
│       │   └── wait_blocking.py
│       ├── Multi-threading in Python.md
│       ├── Race Condition Demo by Raymond Hettinger
│       │   ├── comm_via_atomic_message_queue.py
│       │   ├── comm_via_locks.py
│       │   ├── note
│       │   └── race_condition.py
│       └── multithreading_async.py
├── Multi-threading in Java
│   ├── AtomicIntegerDemo.java
│   ├── Bank User Example from 15-619 Cloud Computing
│   │   ├── BankUserMultiThreaded.java
│   │   ├── F17 15-619 Cloud Computing- (writeup: Thread-safe programming in Java) - TheProject.Zone.pdf
│   │   └── S17 15-619 Cloud Computing- (writeup: Introduction to multithreaded programming in Java) - TheProject.Zone.pdf
│   ├── BlockingQueueDemo.java
│   ├── Locks and Sync Blocking
│   │   └── WaitBlockingDemo.java
│   └── Multi-threading in Java.md
├── README.md
├── distributed_locking.py
├── thread_notify.png
├── thread_status.png
└── thread_wait.png

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 |

3 | # Java compilation files

4 | *.class

5 |

6 | # Python compilation files

7 | __pycache__/

8 | *.pyc

9 |

10 | # Project files

11 | .vscode/

12 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Ziang Lu

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-processing/Multi-processing in Python.md:

--------------------------------------------------------------------------------

1 | # Multi-processing in Python

2 |

3 | ## Basic Implementations

4 |

5 | * Using the `os.fork()` function (Unix only)

6 |

7 | ```python

8 | import os

9 |

10 | print(f'Process ({os.getpid()}) start...')

11 | pid = os.fork()  # The OS makes a copy of the current (parent) process, called the child process

12 | # os.fork() is called once but returns twice: it returns the child's PID in the parent, and 0 in the child

13 | if pid != 0:

14 |     print(f'I ({os.getpid()}) just created a child process ({pid}).')

15 | else:

16 |     print(f'I am child process ({os.getpid()}) and my parent is {os.getppid()}.')

17 |

18 | # Output:

19 | # Process (15918) start...

20 | # I (15918) just created a child process (15919).

21 | # I am child process (15919) and my parent is 15918.

22 | ```
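The example above never waits for its child. Below is a minimal sketch of the parent reaping the child with `os.waitpid()` and decoding its exit status. Like `os.fork()` itself, it is Unix only. The helper name `fork_and_wait` and the exit code 3 are illustrative.

```python
import os


def fork_and_wait(exit_code: int) -> int:
    """Fork a child that exits with exit_code; return the exit status the parent sees."""
    pid = os.fork()  # Unix only
    if pid == 0:
        # Child: exit at once; os._exit() skips cleanup handlers and stdio flushing
        os._exit(exit_code)
    # Parent: block until this particular child terminates, then decode its status
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)


if __name__ == '__main__':
    print(f'Child exited with status {fork_and_wait(3)}')
```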

23 |

24 | * Using the `multiprocessing` module

25 |

26 | * Create subprocesses using `Process`

27 |

28 | ```python

29 | import os

30 | from multiprocessing import Process

31 |

32 | def run_process(name: str) -> None:

33 |     """

34 |     Dummy task to be run within a process.

35 |     :param name: str

36 |     :return: None

37 |     """

38 |     print(f"Running child process '{name}' ({os.getpid()})")

39 |

40 | if __name__ == '__main__':  # Required with the "spawn" start method (e.g., Windows, macOS)

41 |     print(f'Parent process {os.getpid()}')

42 |     p = Process(target=run_process, args=('test',))

43 |     print('Child process starting...')

44 |     p.start()

45 |     p.join()

46 |     print('Child process ends.')

47 |     print('Parent process ends.')

48 |

49 | # Output:

50 | # Parent process 16623

51 | # Child process starting...

52 | # Running child process 'test' (16624)

53 | # Child process ends.

54 | # Parent process ends.

55 | ```

56 |

57 | * Create a pool of subprocesses using `Pool`, then run tasks in it with the `Pool.apply()`, `Pool.map()`, `Pool.imap()` or `Pool.imap_unordered()` method

58 |

59 | ```python

60 | import os

61 | import random

62 | import time

63 | from multiprocessing import Pool

64 |

65 |

66 | def long_time_task(name: str) -> float:

67 |     """

68 |     Dummy long task to be run within a process.

69 |     :param name: str

70 |     :return: float

71 |     """

72 |     print(f"Running task '{name}' ({os.getpid()})...")

73 |     start = time.time()

74 |     time.sleep(random.random() * 3)

75 |     end = time.time()

76 |     time_elapsed = end - start

77 |     print(f"Task '{name}' runs {time_elapsed:.2f} seconds.")

78 |     return time_elapsed

79 |

80 | if __name__ == '__main__':

81 |     print(f'Parent process {os.getpid()}')

82 |     with Pool(4) as pool:  # Start a pool of 4 worker processes

83 |         # Run a single task in the pool

84 |         # pool.apply(func=long_time_task, args=('Some Task',))  # Will block here

85 |

86 |         # Run the same task concurrently in the pool, only with different arguments

87 |         start = time.time()

88 |         # results = pool.map(

89 |         #     func=long_time_task, iterable=map(lambda x: f'Task-{x}', range(5))

90 |         # )  # Will block here

91 |         total_running_time = 0

92 |         # for result in pool.imap(

93 |         #     func=long_time_task, iterable=map(lambda x: f'Task-{x}', range(5))

94 |         # ):  # Lazy version of pool.map()

95 |         #     total_running_time += result

96 |         for result in pool.imap_unordered(

97 |             func=long_time_task, iterable=map(lambda x: f'Task-{x}', range(5))

98 |         ):  # Lazy, unordered version of pool.map()

99 |             total_running_time += result

100 |         pool.close()  # No new tasks can be submitted after close()

101 |         pool.join()

102 |         print(f'Theoretical total running time: {total_running_time:.2f} seconds.')

103 |         end = time.time()

104 |         print(f'Actual running time: {end - start:.2f} seconds.')

105 |         print('All subprocesses done.')

106 |

107 | # Output:

108 | # Parent process 9727

109 | # Running task 'Task-0' (9728)...

110 | # Running task 'Task-1' (9729)...

111 | # Running task 'Task-2' (9730)...

112 | # Running task 'Task-3' (9731)...

113 | # Task 'Task-0' runs 0.23 seconds.

114 | # Running task 'Task-4' (9728)...

115 | # Task 'Task-3' runs 0.44 seconds.

116 | # Task 'Task-4' runs 1.39 seconds.

117 | # Task 'Task-1' runs 1.90 seconds.

118 | # Task 'Task-2' runs 1.89 seconds.

119 | # Theoretical total running time: 5.85 seconds.

120 | # Actual running time: 1.95 seconds.

121 | # All subprocesses done.

122 | ```

123 |

124 | ***

125 |

126 | **Multi-processing + Async (Subprocess [Coroutine])**

127 |

128 | -> Wrap coroutines inside subprocesses

129 |

130 | *Create a process pool (subprocesses) and put asynchronous tasks (coroutines) into it; see `multiprocessing_async.py`*

131 |
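One way to combine real coroutines with a process pool is `asyncio`'s `loop.run_in_executor()` together with `concurrent.futures.ProcessPoolExecutor`. This is a swapped-in technique; it is not necessarily what `multiprocessing_async.py` does. The sketch below uses the hypothetical names `cpu_task` and `main`.

```python
import asyncio
import concurrent.futures as cf
from typing import List


def cpu_task(n: int) -> int:
    """CPU-bound work (sum of squares below n); runs inside a worker process."""
    return sum(i * i for i in range(n))


async def main(sizes: List[int]) -> List[int]:
    loop = asyncio.get_running_loop()
    with cf.ProcessPoolExecutor(max_workers=4) as pool:
        # Each call returns an awaitable future backed by a worker process
        futures = [loop.run_in_executor(pool, cpu_task, n) for n in sizes]
        # The event loop stays free while the processes compute
        return await asyncio.gather(*futures)


if __name__ == '__main__':
    print(asyncio.run(main([10, 100, 1000])))
```

The coroutine only awaits; the heavy computation happens in other processes, so each process has its own GIL.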

132 | ***

133 |

134 | * Using the `subprocess` module to run external programs (rather than our own Python functions) as subprocesses

135 |

136 | ```python

137 | import subprocess

138 |

139 | print('$ nslookup www.python.org')

140 | r = subprocess.call(['nslookup', 'www.python.org'])

141 | # Equivalent to typing `nslookup www.python.org` on the command line

142 | print('Exit code:', r)

143 | print()

144 |

145 | # Output:

146 | # $ nslookup www.python.org

147 | # Server: 137.99.203.20

148 | # Address: 137.99.203.20#53

149 | #

150 | # Non-authoritative answer:

151 | # www.python.org canonical name = python.map.fastly.net.

152 | # Name: python.map.fastly.net

153 | # Address: 151.101.208.223

154 | #

155 | # Exit code: 0

156 |

157 | # External programs may also need input on stdin

158 | print('$ nslookup')

159 | # Equivalent to typing `nslookup` on the command line

160 | p = subprocess.Popen(

161 | ['nslookup'],

162 | stdin=subprocess.PIPE,

163 | stdout=subprocess.PIPE,

164 | stderr=subprocess.PIPE

165 | )

166 | output, _ = p.communicate(b'set q=mx\npython.org\nexit\n')

167 | # Equivalent to then typing the following at the nslookup prompt:

168 | # set q=mx

169 | # python.org

170 | # exit

171 | print(output.decode('utf-8'))

172 | print('Exit code:', p.returncode)

173 | print()

174 |

175 | # Output:

176 | # $ nslookup

177 | # Server: 137.99.203.20

178 | # Address: 137.99.203.20#53

179 | #

180 | # Non-authoritative answer:

181 | # python.org mail exchanger = 50 mail.python.org

182 | #

183 | # Authoritative answers can be found from:

184 | #

185 | #

186 | # Exit code: 0

187 | ```
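`subprocess.run()` (Python 3.5+, with `capture_output=`/`text=` since 3.7) is the recommended high-level interface for both cases above. The sketch below stays portable by running the current interpreter (`sys.executable`) instead of `nslookup`. `run_command` is a hypothetical helper.

```python
import subprocess
import sys
from typing import List, Optional


def run_command(args: List[str], stdin_text: Optional[str] = None) -> subprocess.CompletedProcess:
    """Run an external program, optionally feed it stdin_text, and capture its output."""
    return subprocess.run(
        args,
        input=stdin_text,     # Sent to the child's stdin, like Popen.communicate()
        capture_output=True,  # Same as stdout=subprocess.PIPE, stderr=subprocess.PIPE
        text=True,            # Decode stdout/stderr to str instead of bytes
        check=False,          # Don't raise on a non-zero exit code; inspect returncode
    )


# Illustrative child program: upper-cases whatever it reads from stdin
result = run_command(
    [sys.executable, '-c', 'import sys; print(sys.stdin.read().upper())'],
    stdin_text='hello',
)
print(result.stdout, end='')
print('Exit code:', result.returncode)
```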

188 |

189 |

190 |

191 | ## Communication between Processes

192 |

193 | * Through a pipe: `Pipe()` returns a pair of `multiprocessing.connection.Connection` objects, one for each end of the pipe

194 |

195 | ```python

196 | from multiprocessing import Pipe, Process

197 | from multiprocessing.connection import Connection

198 |

199 |

200 | def write_process(conn: Connection) -> None:

201 |     """

202 |     Process to write data to the pipe through the given connection.

203 |     :param conn: Connection

204 |     :return: None

205 |     """

206 |     print('Child process writing to the pipe...')

207 |     conn.send([42, None, 'Hello'])

208 |     conn.close()

209 |

210 | if __name__ == '__main__':

211 |     parent_conn, child_conn = Pipe()

212 |

213 |     p = Process(target=write_process, args=(child_conn,))

214 |     p.start()

215 |

216 |     print(f'Parent process reading from pipe... {parent_conn.recv()}')

217 |

218 |     p.join()

219 |

220 | # Output:

221 | # Child process writing to the pipe...

222 | # Parent process reading from pipe... [42, None, 'Hello']

223 | ```
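`Pipe()` is duplex by default, so both ends can send and receive. The sketch below shows a small request/response exchange. `echo_upper`, `ask_child` and the `None` stop message are illustrative choices.

```python
from multiprocessing import Pipe, Process
from multiprocessing.connection import Connection
from typing import List


def echo_upper(conn: Connection) -> None:
    """Child: reply to each message with its upper-cased form until None arrives."""
    while True:
        msg = conn.recv()
        if msg is None:  # Stop message
            break
        conn.send(msg.upper())
    conn.close()


def ask_child(words: List[str]) -> List[str]:
    parent_conn, child_conn = Pipe()  # duplex=True by default
    p = Process(target=echo_upper, args=(child_conn,))
    p.start()
    replies = []
    for word in words:
        parent_conn.send(word)              # Request...
        replies.append(parent_conn.recv())  # ...then block for the response
    parent_conn.send(None)
    p.join()
    return replies


if __name__ == '__main__':
    print(ask_child(['hello', 'pipe']))
```

Each word costs one round trip here, which is fine for a demo but is exactly the pattern to avoid for many small messages.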

224 |

225 | * Through `multiprocessing.Queue`

226 |

227 | ```python

228 | import random

229 | import time

230 | from multiprocessing import Process, Queue

231 | from typing import List

232 |

233 |

234 | def producer_process(q: Queue, urls: List[str]) -> None:

235 |     """

236 |     Producer process to write data to the given message queue.

237 |     :param q: Queue

238 |     :param urls: list[str]

239 |     :return: None

240 |     """

241 |     print('Producer process starts writing...')

242 |     for url in urls:

243 |         q.put(url)

244 |         print(f'[Producer Process] Put {url} to the queue')

245 |         time.sleep(random.random())

246 |

247 |

248 | def consumer_process(q: Queue) -> None:

249 |     """

250 |     Consumer process to get data from the given message queue.

251 |     :param q: Queue

252 |     :return: None

253 |     """

254 |     print('Consumer process starts reading...')

255 |     while True:

256 |         url = q.get(block=True)

257 |         print(f'[Consumer Process] Get {url} from the queue')

258 |

259 | if __name__ == '__main__':

260 |     q = Queue()

261 |     producer1 = Process(target=producer_process, args=(q, ['url1', 'url2', 'url3']))

262 |     producer2 = Process(target=producer_process, args=(q, ['url4', 'url5']))

263 |     consumer = Process(target=consumer_process, args=(q,))

264 |

265 |     producer1.start()

266 |     producer2.start()

267 |     consumer.start()

268 |

269 |     producer1.join()

270 |     producer2.join()

271 |

272 |     # Since there is an infinite loop in consumer_process, we need to manually force

273 |     # it to stop.

274 |     time.sleep(3)  # Give the consumer time to drain the queue (a heuristic, not a guarantee)

275 |     consumer.terminate()

276 |

277 | # Output:

278 | # Producer process starts writing...

279 | # [Producer Process] Put url1 to the queue

280 | # Producer process starts writing...

281 | # [Producer Process] Put url4 to the queue

282 | # Consumer process starts reading...

283 | # [Consumer Process] Get url1 from the queue

284 | # [Consumer Process] Get url4 from the queue

285 | # [Producer Process] Put url5 to the queue

286 | # [Consumer Process] Get url5 from the queue

287 | # [Producer Process] Put url2 to the queue

288 | # [Consumer Process] Get url2 from the queue

289 | # [Producer Process] Put url3 to the queue

290 | # [Consumer Process] Get url3 from the queue

291 | ```
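A common alternative to `time.sleep()` plus `terminate()` is to stop the consumer with a sentinel value. The sketch below assumes a single consumer and uses `None` as the sentinel. `producer`, `consumer` and `run` are hypothetical names.

```python
from multiprocessing import Process, Queue
from typing import List

SENTINEL = None  # Hypothetical "no more work" marker


def producer(q: Queue, urls: List[str]) -> None:
    for url in urls:
        q.put(url)


def consumer(q: Queue, out: Queue) -> None:
    """Consume until the sentinel arrives, then report how many items were seen."""
    count = 0
    while True:
        url = q.get()
        if url is SENTINEL:
            break
        count += 1
    out.put(count)


def run(url_lists: List[List[str]]) -> int:
    q, out = Queue(), Queue()
    producers = [Process(target=producer, args=(q, urls)) for urls in url_lists]
    cons = Process(target=consumer, args=(q, out))
    for p in producers:
        p.start()
    cons.start()
    for p in producers:
        p.join()  # A child exits only after its queued items are flushed
    q.put(SENTINEL)  # Every producer is done: tell the consumer to stop
    count = out.get()  # Read before join() so the consumer can flush `out`
    cons.join()  # The consumer exits on its own; no terminate() needed
    return count


if __name__ == '__main__':
    print(run([['url1', 'url2', 'url3'], ['url4', 'url5']]))
```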

292 | * Don't make too many round trips between processes

293 |

294 |   Instead, do a significant amount of work in each trip

295 |

296 | * Don't send or receive large amounts of data at one time (objects sent through a `Queue` or `Pipe` are pickled and copied)

297 |

298 |
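One concrete way to follow the first tip is the `chunksize` argument of `Pool.map()`. It ships inputs to the workers in batches instead of one task per message. In the sketch below, `square`, `squares` and the chunk size of 250 are arbitrary illustrative choices.

```python
from multiprocessing import Pool
from typing import List


def square(x: int) -> int:
    return x * x


def squares(n: int, chunksize: int) -> List[int]:
    with Pool(4) as pool:
        # Each worker receives `chunksize` inputs per message instead of one,
        # so far fewer pickle/send/receive round trips are needed
        return pool.map(square, range(n), chunksize=chunksize)


if __name__ == '__main__':
    result = squares(1000, chunksize=250)
    print(result[:5], len(result))
```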

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-processing/distributed_processing_server.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Distributed processing: distribute multiple processes to multiple machines.

6 |

7 | Task server module.

8 | """

9 |

10 | __author__ = 'Ziang Lu'

11 |

12 | import random

13 | from multiprocessing import Queue

14 | from multiprocessing.managers import BaseManager

15 |

16 | # Create a queue for sending tasks and a queue for receiving results

17 | task_queue = Queue(maxsize=5)

18 | result_queue = Queue(maxsize=5)

19 |

20 |

21 | class ServerQueueManager(BaseManager):

22 |     pass

23 |

24 |

25 | # Register two callables on ServerQueueManager that return the two queues (lambdas cannot be pickled, so this relies on the "fork" start method)

26 | ServerQueueManager.register('get_task_queue', callable=lambda: task_queue)

27 | ServerQueueManager.register('get_result_queue', callable=lambda: result_queue)

28 |

29 |

30 | ##### SERVER-SIDE #####

31 |

32 | # Create the manager, bound to port 5000 with authkey b'abc'

33 | server_manager = ServerQueueManager(address=('', 5000), authkey=b'abc')

34 | # Start the manager (this launches a separate server process)

35 | server_manager.start()

36 | print('Server manager started.')

37 |

38 | # Obtain task_queue and result_queue through ServerQueueManager

39 | task_q = server_manager.get_task_queue()  # Actually a proxy object

40 | result_q = server_manager.get_result_queue()  # Actually a proxy object

41 |

42 | # Put tasks into task_q (put() blocks while the queue already holds 5 items)

43 | for _ in range(10):

44 |     n = random.randint(0, 10000)

45 |     print(f'Put task {n}...')

46 |     task_q.put(n)

47 |

48 | # Output:

49 | # Server manager started.

50 | # Put task 8739...

51 | # Put task 5790...

52 | # Put task 474...

53 | # Put task 8122...

54 | # Put task 4101...

55 | # Put task 8863...

56 | # Put task 3944...

57 | # Put task 7217...

58 | # Put task 6542...

59 | # Put task 2097...

60 |

61 |

62 | # Read the task results from result_q

63 | print('Getting results...')

64 | for _ in range(10):

65 |     # Blocks here for up to 10 seconds waiting for a result (raises queue.Empty on timeout)

66 |     r = result_q.get(timeout=10)

67 |     print(f'Result: {r}')

68 |

69 | # Shut down the manager

70 | server_manager.shutdown()

71 | print('Server manager exited.')

72 |

73 | # Output:

74 | # Getting results...

75 | # Result: 8739 * 8739 = 76370121

76 | # Result: 5790 * 5790 = 33524100

77 | # Result: 474 * 474 = 224676

78 | # Result: 8122 * 8122 = 65966884

79 | # Result: 4101 * 4101 = 16818201

80 | # Result: 8863 * 8863 = 78552769

81 | # Result: 3944 * 3944 = 15555136

82 | # Result: 7217 * 7217 = 52085089

83 | # Result: 6542 * 6542 = 42797764

84 | # Result: 2097 * 2097 = 4397409

85 | # Server manager exited.

86 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-processing/distributed_processing_worker.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Distributed processing: distribute multiple processes to multiple machines.

6 |

7 | Task consumer module.

8 | """

9 |

10 | __author__ = 'Ziang Lu'

11 |

12 | import queue

13 | import time

14 | from multiprocessing.managers import BaseManager

15 |

16 |

17 | class WorkerQueueManager(BaseManager):

18 |     pass

19 |

20 |

21 | # WorkerQueueManager only fetches the queues over the network, so it registers them by name only

22 | WorkerQueueManager.register('get_task_queue')

23 | WorkerQueueManager.register('get_result_queue')

24 |

25 | ##### WORKER-SIDE #####

26 |

27 | server_addr = '127.0.0.1' # localhost

28 | # Create the manager; the port and authkey must match those in distributed_processing_server.py

29 | worker_manager = WorkerQueueManager(

30 |     address=(server_addr, 5000), authkey=b'abc'

31 | )

32 | # Connect to the server

33 | print(f'Connecting to server {server_addr}...')

34 | worker_manager.connect()

35 | print('Worker started.')

36 |

37 | # Obtain task_queue and result_queue through WorkerQueueManager

38 | task_q = worker_manager.get_task_queue()  # Actually a proxy object

39 | result_q = worker_manager.get_result_queue()  # Actually a proxy object

40 |

41 | # Take a task from task_q, execute it, and write the result to result_q

42 | for _ in range(10):

43 |     try:

44 |         n = task_q.get(timeout=1)

45 |         print(f'Calculating {n} * {n}...')

46 |         result = f'{n} * {n} = {n * n}'

47 |         time.sleep(1)

48 |         result_q.put(result)

49 |     except queue.Empty:  # The timeout exception lives in the queue module, not on multiprocessing.Queue

50 |         print('Task queue is empty.')

51 | print('Worker exits.')

52 |

53 |

54 | # Output:

55 | # Connecting to server 127.0.0.1...

56 | # Worker started.

57 | # Calculating 8739 * 8739...

58 | # Calculating 5790 * 5790...

59 | # Calculating 474 * 474...

60 | # Calculating 8122 * 8122...

61 | # Calculating 4101 * 4101...

62 | # Calculating 8863 * 8863...

63 | # Calculating 3944 * 3944...

64 | # Calculating 7217 * 7217...

65 | # Calculating 6542 * 6542...

66 | # Calculating 2097 * 2097...

67 | # Worker exits.

68 |

--------------------------------------------------------------------------------
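To try the server/worker pattern above on one machine, it can be condensed into a single script. This sketch makes several assumptions. It swaps in `queue.Queue` and a module-level `get_task_queue()` function in place of the lambdas, so the registry can be pickled even with the "spawn" start method. It binds to port 0 (an OS-chosen free port) instead of 5000. `QueueManager` and `roundtrip` are hypothetical names.

```python
import queue
from multiprocessing.managers import BaseManager
from typing import List

_task_queue = queue.Queue()  # The copy that matters lives in the manager's server process


def get_task_queue() -> queue.Queue:
    # A module-level function, unlike a lambda, can be pickled
    return _task_queue


class QueueManager(BaseManager):
    pass


QueueManager.register('get_task_queue', callable=get_task_queue)


def roundtrip(values: List[int]) -> List[int]:
    # Port 0 lets the OS pick a free port; start() records the real address
    server = QueueManager(address=('127.0.0.1', 0), authkey=b'abc')
    server.start()
    try:
        # A second manager object plays the worker role and connects over the network
        worker = QueueManager(address=server.address, authkey=b'abc')
        worker.connect()
        q = worker.get_task_queue()  # Proxy to the queue in the server process
        for v in values:
            q.put(v)
        return [q.get(timeout=1) for _ in values]
    finally:
        server.shutdown()


if __name__ == '__main__':
    print(roundtrip([8739, 5790, 474]))
```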

/Multi-processing and Multi-threading in Python/Multi-processing/multiprocessing_async.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | We can submit tasks to a process pool asynchronously.

6 |

7 | This can be implemented in two ways:

8 | - one with "concurrent.futures" module

9 | - one with "multiprocessing" module

10 | """

11 |

12 | __author__ = 'Ziang Lu'

13 |

14 | import concurrent.futures as cf

15 | import os

16 | import random

17 | import time

18 | from multiprocessing import Pool

19 |

20 |

21 | def long_time_task(name: str) -> float:

22 |     """

23 |     Dummy long task to be run within a process.

24 |     :param name: str

25 |     :return: float

26 |     """

27 |     print(f"Running task '{name}' ({os.getpid()})...")

28 |     start = time.time()

29 |     time.sleep(random.random() * 3)

30 |     end = time.time()

31 |     time_elapsed = end - start

32 |     print(f"Task '{name}' runs {time_elapsed:.2f} seconds.")

33 |     return time_elapsed

34 |

35 |

36 | def demo1():

37 |     # With "concurrent.futures" module

38 |     print(f'Parent process {os.getpid()}')

39 |     with cf.ProcessPoolExecutor(max_workers=4) as pool:  # Start a pool of 4 worker processes

40 |         start = time.time()

41 |         # Run several tasks in the pool; from the main process's point of view this is async

42 |         futures = [pool.submit(long_time_task, f'Task-{i}') for i in range(5)]  # Will NOT block here

43 |

44 |         # Since submit() is asynchronous (non-blocking), the tasks in the

45 |         # process pool are still running at this point, but the main process

46 |         # has already moved on to here.

47 |         total_running_time = 0

48 |         for future in cf.as_completed(futures):

49 |             total_running_time += future.result()

50 |

51 |         print(f'Theoretical total running time: {total_running_time:.2f} '

52 |               f'seconds.')

53 |         end = time.time()

54 |         print(f'Actual running time: {end - start:.2f} seconds.')

55 |         print('All subprocesses done.')

56 |

57 | # Output:

58 | # Parent process 41585

59 | # Running task 'Task-0' (41586)...

60 | # Running task 'Task-1' (41587)...

61 | # Running task 'Task-2' (41588)...

62 | # Running task 'Task-3' (41589)...

63 | # Task 'Task-3' runs 0.29 seconds.

64 | # Running task 'Task-4' (41589)...

65 | # Task 'Task-1' runs 0.43 seconds.

66 | # Task 'Task-2' runs 0.46 seconds.

67 | # Task 'Task-4' runs 0.79 seconds.

68 | # Task 'Task-0' runs 1.25 seconds.

69 | # Theoretical total running time: 3.21 seconds.

70 | # Actual running time: 1.26 seconds.

71 | # All subprocesses done.

72 |

73 |

74 | def demo2():

75 |     # With "multiprocessing" module

76 |     print(f'Parent process {os.getpid()}')

77 |     with Pool(4) as pool:  # Start a pool of 4 worker processes

78 |         start = time.time()

79 |         # Run several tasks in the pool; from the main process's point of view this is async

80 |         results = [

81 |             pool.apply_async(func=long_time_task, args=(f'Task-{i}',))

82 |             for i in range(5)

83 |         ]  # Will NOT block here

84 |         pool.close()  # No new tasks can be submitted after close()

85 |         # Since apply_async() is asynchronous (non-blocking), the tasks in the

86 |         # process pool are still running at this point, but the main process

87 |         # has already moved on to here.

88 |         total_running_time = 0

89 |         for result in results:  # AsyncResult

90 |             total_running_time += result.get(timeout=10)  # Blocks until this result arrives

91 |

92 |         print(f'Theoretical total running time: {total_running_time:.2f} '

93 |               f'seconds.')

94 |         end = time.time()

95 |         print(f'Actual running time: {end - start:.2f} seconds.')

96 |         print('All subprocesses done.')

97 |

98 | # Output:

99 | # Parent process 10161

100 | # Running task 'Task-0' (10162)...

101 | # Running task 'Task-1' (10163)...

102 | # Running task 'Task-2' (10164)...

103 | # Running task 'Task-3' (10165)...

104 | # Task 'Task-2' runs 1.08 seconds.

105 | # Running task 'Task-4' (10164)...

106 | # Task 'Task-3' runs 1.43 seconds.

107 | # Task 'Task-0' runs 2.69 seconds.

108 | # Task 'Task-1' runs 2.72 seconds.

109 | # Task 'Task-4' runs 2.07 seconds.

110 | # Theoretical total running time: 10.00 seconds.

111 | # Actual running time: 3.18 seconds.

112 | # All subprocesses done.

113 |

114 | if __name__ == '__main__':

115 |     demo1()

116 |     demo2()

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/Locks and Sync Blocking/sync_blocking.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Test module to implement synchronous blocking to address race condition among

6 | threads, using threading.Lock and

7 | threading.Semaphore/threading.BoundedSemaphore.

8 | """

9 |

10 | __author__ = 'Ziang Lu'

11 |

12 | import time

13 | from threading import BoundedSemaphore, Lock, Semaphore, Thread, current_thread

14 |

15 | balance = 0

16 |

17 | # Lock

18 | lock = Lock()

19 |

20 |

21 | def thread_func(n: int) -> None:

22 |     """

23 |     Dummy function to be run within a thread.

24 |     :param n: int

25 |     :return: None

26 |     """

27 |     for _ in range(10000000):

28 |         # Note that Lock objects can be used in the traditional way, i.e., via

29 |         # acquire() and release() methods, but they can also simply be used as a

30 |         # context manager, as syntactic sugar

31 |         with lock:

32 |             change_balance(n)

33 |

34 |

35 | def change_balance(n: int) -> None:

36 |     """

37 |     Changes the balance.

38 |     :param n: int

39 |     :return: None

40 |     """

41 |     global balance

42 |     balance += n

43 |     balance -= n

44 |     # Deposit first, then withdraw: the net effect should be no change

45 |

46 |

47 | th1 = Thread(target=thread_func, args=(5,))

48 | th2 = Thread(target=thread_func, args=(8,))

49 | th1.start()

50 | th2.start()

51 | th1.join()

52 | th2.join()

53 | print(balance)

54 |

55 |

56 | # Output:

57 | # 0

58 |

59 |

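60 | # Semaphore

61 | # Manages an internal counter: each acquire() decrements it by 1, and each release() increments it by 1

62 | # The counter can never go below 0; when it is 0, acquire() blocks the calling thread until another thread calls

63 | # release()

64 |

65 | # Note: threads that hold the semaphore at the same time can still have race conditions among themselves

66 |

67 | # BoundedSemaphore

68 | # Like Semaphore, but the counter may not exceed its initial value (release() raises ValueError if it would)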

69 |

70 | # A bounded semaphore with initial value 2

71 | bounded_sema = BoundedSemaphore(value=2)

72 |

73 |

74 | def func() -> None:

75 |     """

76 |     Dummy function.

77 |     :return: None

78 |     """

79 |     th_name = current_thread().name

80 |     # Request the semaphore; on success the counter is decremented by 1

81 |     print(f'{th_name} acquiring semaphore...')

82 |     # Note that BoundedSemaphore objects can be used in the traditional way,

83 |     # i.e., via acquire() and release() methods, but they can also simply be

84 |     # used as a context manager, as syntactic sugar

85 |     with bounded_sema:  # The counter is incremented by 1 when the semaphore is released

86 |         print(f'{th_name} gets semaphore')

87 |         time.sleep(4)

88 |

89 |

90 | threads = [Thread(target=func) for _ in range(4)]

91 | for th in threads:

92 |     th.start()

93 | for th in threads:

94 |     th.join()

95 |

96 |

97 | # Output:

98 | # Thread-3 acquiring semaphore...

99 | # Thread-3 gets semaphore

100 | # Thread-4 acquiring semaphore...

101 | # Thread-4 gets semaphore

102 | # Thread-5 acquiring semaphore...

103 | # Thread-6 acquiring semaphore... # Will block here for 4 seconds, waiting for the semaphore

104 | # Thread-5 gets semaphore

105 | # Thread-6 gets semaphore

106 |

--------------------------------------------------------------------------------
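The semaphore's effect in `sync_blocking.py` can also be checked directly instead of by reading the printed order. The sketch below records the peak number of threads inside the guarded section, and shows the `ValueError` a `BoundedSemaphore` raises on one release too many. `active`, `peak` and `worker` are hypothetical names. A plain `Lock` protects the counters themselves.

```python
import time
from threading import BoundedSemaphore, Lock, Thread

sema = BoundedSemaphore(value=2)  # At most 2 threads inside at once
counter_lock = Lock()             # Protects active/peak, which every thread updates
active = 0
peak = 0


def worker() -> None:
    global active, peak
    with sema:
        with counter_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.1)  # Simulate work while holding the semaphore
        with counter_lock:
            active -= 1


threads = [Thread(target=worker) for _ in range(6)]
for th in threads:
    th.start()
for th in threads:
    th.join()
print(f'Peak concurrency: {peak}')  # Never more than 2

try:
    sema.release()  # One release more than acquired
except ValueError:
    print('BoundedSemaphore refused to exceed its initial value')
```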

/Multi-processing and Multi-threading in Python/Multi-threading/Locks and Sync Blocking/wait_blocking.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Test module to implement wait blocking to address race condition, using

6 | threading.Condition and threading.Event.

7 | """

8 |

9 | __author__ = 'Ziang Lu'

10 |

11 | import time

12 | from threading import Condition, Event, Thread, current_thread

13 |

14 | # Condition

15 |

16 | product = None  # The shared product

17 | condition = Condition()

18 |

19 |

20 | def producer() -> None:

21 |     global product

22 |     while True:

23 |         with condition:  # Acquires the lock, and always releases it at the end of the block

24 |             if not product:

25 |                 # Produce a product

26 |                 print('Producing something...')

27 |                 product = 'anything'

28 |                 condition.notify()

29 |

30 |             condition.wait()  # Releases the lock while waiting; re-acquires it once notified

31 |         time.sleep(2)  # Sleep without holding the lock

32 |

33 |

34 | def consumer() -> None:

35 |     global product

36 |     while True:

37 |         with condition:

38 |             if product:

39 |                 # Consume the product

40 |                 print('Consuming something...')

41 |                 product = None

42 |                 condition.notify()

43 |

44 |             condition.wait()

45 |         time.sleep(2)

46 |

47 |

48 | prod_thread = Thread(target=producer)

49 | cons_thread = Thread(target=consumer)

50 | prod_thread.start()

51 | cons_thread.start()

52 |

53 |

54 | # Output:

55 | # Producing something...

56 | # Consuming something...

57 | # Producing something...

58 | # Consuming something...

59 | # ...

60 |

--------------------------------------------------------------------------------
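The docstring of `wait_blocking.py` also mentions `threading.Event`, which the listing does not demonstrate. In the minimal sketch below, one thread blocks in `wait()` until another calls `set()`. The names `ready`, `received` and `waiter` are illustrative.

```python
import time
from threading import Event, Thread

ready = Event()  # Internal flag starts out False
received = []


def waiter() -> None:
    # Blocks until the flag becomes True; returns False if 5 seconds pass first
    if ready.wait(timeout=5):
        received.append('got the signal')


th = Thread(target=waiter)
th.start()
time.sleep(0.5)  # Simulate preparing something
ready.set()      # Sets the flag to True and wakes up every waiting thread
th.join()
print(received)
```

Unlike a Condition, an Event needs no explicit lock. Once set, it stays set until `clear()` is called.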

/Multi-processing and Multi-threading in Python/Multi-threading/Multi-threading in Python.md:

--------------------------------------------------------------------------------

1 | # Multi-threading in Python

2 |

3 | ## Basic Implementations

4 |

5 | * Using the `threading` module

6 |

7 | * Normal implementation

8 |

9 | ```python

10 | import time

11 | from threading import Thread, current_thread

12 |

13 |

14 | def loop() -> None:

15 |     """

16 |     Loop to be run within a thread.

17 |     :return: None

18 |     """

19 |     th_name = current_thread().name

20 |     print(f'Running thread {th_name}...')

21 |     n = 1

22 |     while n < 5:

23 |         print(f'thread {th_name} >>> {n}')

24 |         time.sleep(1)

25 |         n += 1

26 |     print(f'Thread {th_name} ends.')

27 |

28 |

29 | th_name = current_thread().name

30 | print(f'Running thread {th_name}...')

31 | th = Thread(target=loop, name='LoopThread')

32 | th.start()

33 | th.join()

34 | print(f'Thread {th_name} ends.')

35 | print()

36 |

37 | # Output:

38 | # Running thread MainThread...

39 | # Running thread LoopThread...

40 | # thread LoopThread >>> 1

41 | # thread LoopThread >>> 2

42 | # thread LoopThread >>> 3

43 | # thread LoopThread >>> 4

44 | # Thread LoopThread ends.

45 | # Thread MainThread ends.

46 |

47 |

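48 | # Note:

49 | # Python threads are real OS threads, but the interpreter has a GIL (Global Interpreter Lock)

50 | # Before any Python thread can run, it must acquire the GIL; the interpreter then releases the GIL automatically after every 100 bytecode instructions so that other threads get a chance to run

51 | # (Python 2.x; since Python 3.2 the GIL is released after a time interval instead, see sys.getswitchinterval())

52 | # So in Python, multiple threads can only take turns running Python bytecode

53 | # (even 100 threads on a 100-core CPU use only 1 core at a time for Python code => multi-threading cannot give parallelism here)

54 | # But since each process has its own GIL, multi-processing can achieve real parallelism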

55 | ```

56 |

57 | * Extending the `threading.Thread` class and overriding its `run()` method

58 |

59 | ```python

60 | from threading import Thread

61 |

62 | import requests

63 | from bs4 import BeautifulSoup

64 |

65 |

66 | class MyThread(Thread):

67 |     """

68 |     My inherited thread class.

69 |     """

70 |     __slots__ = ['_page_num']

71 |

72 |     _BASE_URL = 'https://movie.douban.com/top250?start={}&filter='

73 |

74 |     def __init__(self, page_num: int):

75 |         """

76 |         Constructor with parameter.

77 |         :param page_num: int

78 |         """

79 |         super().__init__()

80 |         self._page_num = page_num

81 |

82 |     def run(self):

83 |         # Note that run() is called automatically (in the new thread) when

84 |         # start() is called

85 |

86 |         url = self._BASE_URL.format(self._page_num * 25)

87 |         r = requests.get(url)

88 |         soup = BeautifulSoup(r.text, 'lxml')

89 |         title_tags = soup.find('ol', class_='grid_view').find_all('li')

90 |         for title_tag in title_tags:

91 |             title = title_tag.find('span', class_='title').text

92 |             print(title)

93 |

94 |

95 | for page_num in range(10):

96 |     th = MyThread(page_num)

97 |     th.start()

98 | ```
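The scraping example above needs network access plus `requests`, `bs4` and `lxml`. The sketch below uses the same subclassing pattern with no dependencies, and also stores a result that the caller reads after `join()`. `SumThread` is a hypothetical name.

```python
from threading import Thread


class SumThread(Thread):
    """Thread subclass that sums range(lo, hi) and keeps the result."""

    def __init__(self, lo: int, hi: int):
        super().__init__()  # Thread.__init__() must run before start()
        self._numbers = range(lo, hi)
        self.result = None

    def run(self) -> None:
        # Called in the new thread when start() is invoked
        self.result = sum(self._numbers)


threads = [SumThread(i * 100, (i + 1) * 100) for i in range(10)]
for th in threads:
    th.start()
for th in threads:
    th.join()  # Wait so that every result is ready before reading it
print(sum(th.result for th in threads))
```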

99 |

100 | * **Multi-threading + Async (Thread [Coroutine])**

101 |

102 | -> Wrap coroutines inside threads

103 |

104 | *Create a thread pool and put asynchronous tasks (coroutines) into it; see `multithreading_async.py`*

105 |
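One way to combine coroutines with threads is `asyncio.to_thread()`, which requires Python 3.9+. It runs a blocking function in the event loop's default thread pool. `multithreading_async.py` may take a different approach. `blocking_io` and `main` are hypothetical names, and exact timings vary by machine.

```python
import asyncio
import time


def blocking_io(seconds: float) -> float:
    """A blocking call (e.g., a synchronous I/O library) that would stall the event loop."""
    time.sleep(seconds)
    return seconds


async def main() -> float:
    start = time.perf_counter()
    # Each call runs in a worker thread; the coroutine awaits all of them concurrently
    await asyncio.gather(*(asyncio.to_thread(blocking_io, 0.2) for _ in range(5)))
    return time.perf_counter() - start


if __name__ == '__main__':
    print(f'{asyncio.run(main()):.2f} seconds')  # Roughly 0.2, not 5 * 0.2
```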

106 |

107 |

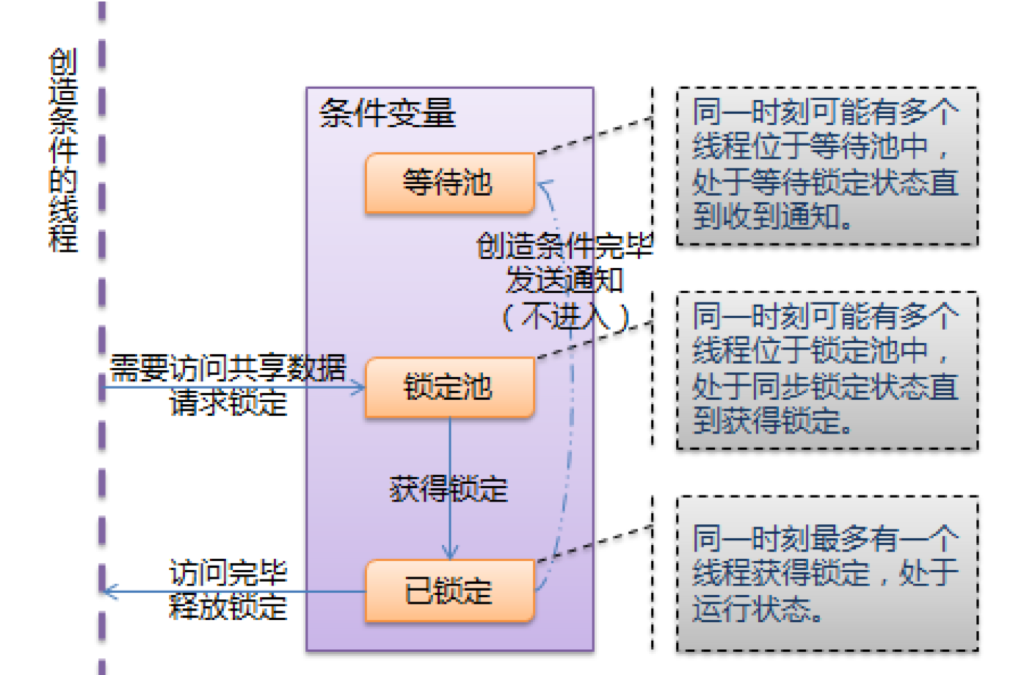

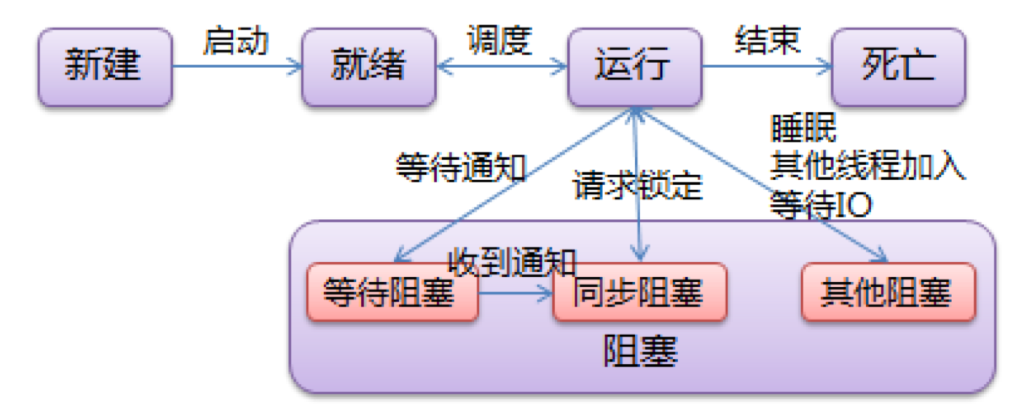

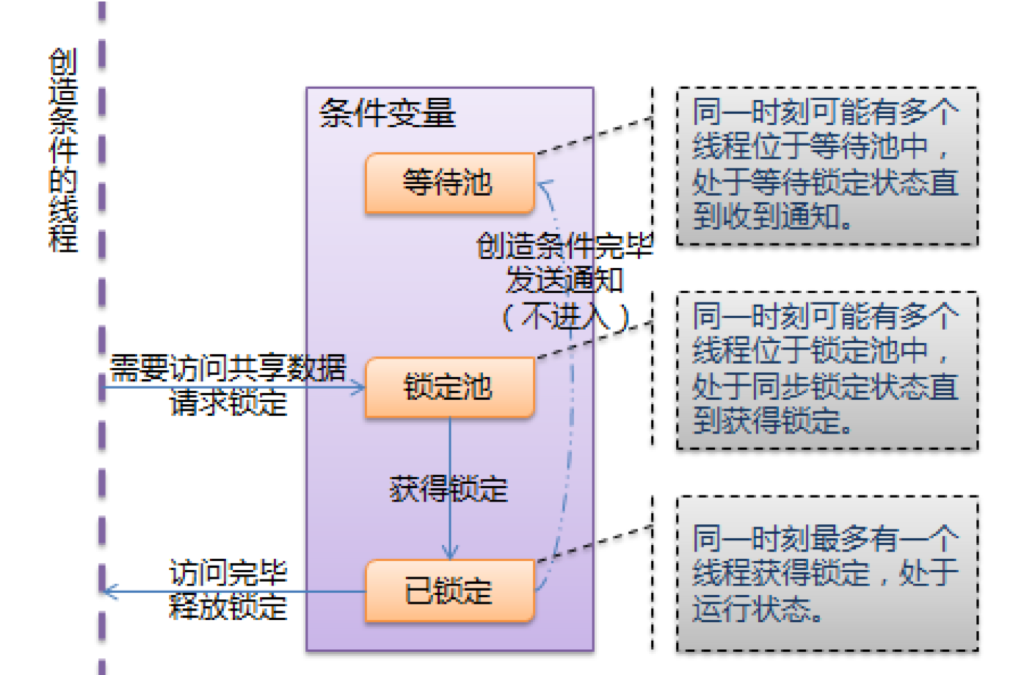

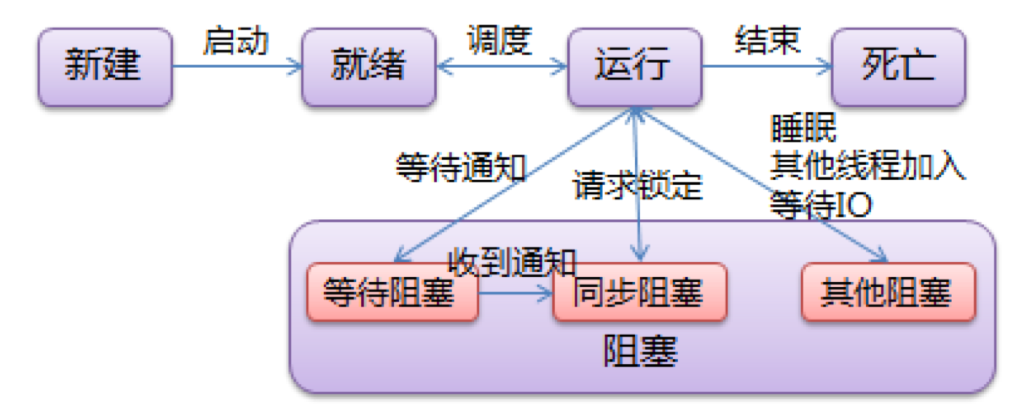

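108 | ## wait and notify with Python threads

109 |

110 | ### Condition (condition variable)

111 |

112 | A Condition is always associated with a lock. To share one lock among several Conditions, pass a Lock/RLock instance to the constructor; otherwise the Condition creates its own RLock

113 |

114 | **In other words, in addition to the lock pool that comes with the lock, a Condition has its own wait pool**

115 |

116 | **Constructor:**

117 |

118 | `Condition(lock=None)`

119 |

120 | **Instance methods:**

121 |

122 | - `acquire(*args)` / `release()`

123 |

124 |   Call the corresponding methods of the associated lock

125 |

126 | - `wait(timeout=None)` *(the calling thread must hold the lock, otherwise a `RuntimeError` is raised)*

127 |

128 |   Releases the lock and puts the current thread into the Condition's wait pool until it is notified or the timeout expires; the lock is re-acquired before `wait()` returns

129 |

130 | - `notify(n=1)` *(the calling thread must hold the lock, otherwise a `RuntimeError` is raised)*

131 |

132 |   Wakes up at most `n` threads (one by default) from the Condition's wait pool; a woken thread then tries to re-acquire the lock (enters the lock pool), but the current thread does not release the lock, so the woken thread continues only after the current thread releases it

133 |

134 | - `notify_all()` *(the calling thread must hold the lock, otherwise a `RuntimeError` is raised)*

135 |

136 |   Wakes up all threads in the Condition's wait pool; each of them then tries to re-acquire the lock (enters the lock pool), but the current thread does not release the lock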

137 |
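A minimal sketch of the methods above: several threads `wait()` on a Condition until a flag is set, and a single `notify_all()` wakes them all. It uses `wait_for()` (Python 3.2+). That method re-checks the predicate in a loop, so it also copes with spurious wakeups and with threads that arrive after the notification. The names are illustrative.

```python
from threading import Condition, Thread

cond = Condition()  # Creates its own RLock internally
ready = False
woken = []


def waiter(i: int) -> None:
    with cond:                        # acquire() ... release()
        cond.wait_for(lambda: ready)  # Releases the lock while waiting
        woken.append(i)               # Runs with the lock re-acquired


threads = [Thread(target=waiter, args=(i,)) for i in range(3)]
for th in threads:
    th.start()

with cond:             # notify_all() requires holding the lock
    ready = True
    cond.notify_all()  # Wake every thread in the wait pool

for th in threads:
    th.join()
print(sorted(woken))
```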

138 |

139 |

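140 | #### The notorious Python GIL (Global Interpreter Lock)

141 |

142 | **In Python, a thread must acquire the GIL before it can run.**

143 |

144 | *(Think of the GIL as a "pass": there is only one GIL per Python process, and a thread without the pass is not allowed to run on the CPU.)*

145 |

146 | => **Because of the GIL, a Python process can only execute one thread at any given moment (the one holding the GIL)**; and every time the GIL is released, threads compete for the lock and a thread switch happens, which costs resources. This is why **Python multi-threading is not very efficient, even on a multi-core CPU**

147 |

148 |

149 |

150 | Under Python multi-threading, each thread runs as follows:

151 |

152 | 1. Acquire the process's GIL

153 |

154 | 2. Run code until it sleeps or the Python virtual machine suspends it

155 |

156 | 3. Release the process's GIL, which happens:

157 |

158 |    - When the thread hits an I/O operation

159 |

160 |      => **For I/O-bound programs, while thread A is waiting, the interpreter can switch to thread B, so the waiting time is not wasted and overall efficiency improves**

161 |

162 |      => **Python multi-threading works well for I/O-bound code**

163 |

164 |    - In Python 2.x, when the tick count reaches 100

165 |

166 |      => For CPU-bound programs, the tick count reaches 100 very quickly, so the GIL is released constantly and threads keep competing for the lock and switching, which wastes a lot of resources

167 |

168 |    - In Python 3.x (since 3.2), when a timer reaches a threshold instead (the "switch interval", 5 ms by default; see `sys.getswitchinterval()`)

169 |

170 |      => This is somewhat friendlier to CPU-bound programs, but does not solve the underlying problem

171 |

172 |    => **Python multi-threading does not work well for CPU-bound code**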

173 |
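The I/O-bound case can be seen in a small timing sketch. Here `time.sleep()` stands in for an I/O wait; like real blocking I/O, it releases the GIL, so five 0.2-second waits overlap. The function names and numbers are illustrative, and exact timings vary by machine.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(seconds: float) -> float:
    time.sleep(seconds)  # Releases the GIL while waiting, like blocking I/O
    return seconds


def run_threaded(n: int, seconds: float) -> float:
    """Return the wall-clock time for n concurrent waits of `seconds` each."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n) as pool:
        list(pool.map(fake_io, [seconds] * n))
    return time.perf_counter() - start


elapsed = run_threaded(5, 0.2)
print(f'{elapsed:.2f} seconds')  # Close to 0.2 rather than 5 * 0.2 = 1.0
```

A CPU-bound `fake_io` (e.g., a pure-Python loop) would not overlap this way, for the reasons listed above.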

174 |

175 |

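176 | **Conclusion: on a multi-core machine, the most common way to speed up CPU-bound work through concurrency is multi-processing, which can improve performance effectively** (each process has its own independent GIL, so processes do not interfere with each other and can truly run in parallel.)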

177 |

178 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/Race Condition Demo by Raymond Hettinger/comm_via_atomic_message_queue.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Simple demo of solving race conditions with an (atomic) message queue.

6 | Note that the race conditions in this demo are also amplified by the fuzzing

7 | technique.

8 |

9 | To do away with race conditions, we need to:

10 | 1. Ensure an explicit ordering of the operations (on the shared resources)

11 | All operations (on the shared resources) must be executed in the same order

12 | they are received.

13 | => To ensure this, we simply put the operations (on one shared resource) in a

14 | single thread.

15 | 2. Restrict access to the shared resource

16 | Only one operation can access the shared resource at the same time. During

17 | the period of access, no other operations can read or change its value.

18 |

19 | Specifically for solution with (atomic) message queue,

20 | 1. Each shared resource shall be accessed in exactly its own thread.

21 | 2. All communications with that thread shall be done using an atomic message

22 | queue.

23 | """

24 |

25 | import random

26 | import time

27 | from queue import Queue

28 | from threading import Thread

29 |

30 | ##### Fuzzing technique #####

31 |

32 | FUZZ = False

33 |

34 |

35 | def fuzz() -> None:

36 | """

37 | Fuzzes the program for a random amount of time, if instructed.

38 | :return: None

39 | """

40 | if FUZZ:

41 | time.sleep(random.random())

42 |

43 |

44 | ##### Daemon thread for print() access & Atomic message queue for print() #####

45 |

46 | # Note that the built-in print() function is a "global" resource, and thus could

47 | # lead to race condition

48 | # Therefore, as instructed above, accessing print() function should be handled

49 | # in exactly its own thread, which should be a daemon thread. (=> 1)

50 |

51 | # All communications with the print()-access daemon thread shall be done using

52 | # an atomic message queue. (=> 2)

53 | print_queue = Queue() # Atomic message queue used for the print()-access daemon thread

54 |

55 |

56 | def print_manager() -> None:

57 | """

58 | Daemon thread function that uses an atomic message queue to communicate with

59 | the external world, and has exclusive right to access print() function.

60 | :return: None

61 | """

62 | while True:

63 | fuzz()

64 | stuff_to_print = print_queue.get()

65 | for line in stuff_to_print:

66 | fuzz()

67 | print(line)

68 | fuzz()

69 | # Mark the task as done

70 | print_queue.task_done()

71 |

72 |

73 | # Create and start the print()-access daemon thread

74 | print_daemon_thread = Thread(

75 | target=print_manager, name='print()-access Daemon Thread'

76 | )

77 | print_daemon_thread.daemon = True

78 | print_daemon_thread.start()

79 |

80 |

81 | ##### Daemon thread for "counter" & Atomic message queue for "counter" #####

82 |

83 | counter = 0

84 | # As instructed above, accessing the "counter" global variable should be handled

85 | # in exactly its own thread, which should be a daemon thread. (=> 1)

86 |

87 | # All communications with the "counter"-access daemon thread shall be done using

88 | # an atomic message queue. (=> 2)

89 | # Atomic message queue used for the "counter"-access daemon thread

90 | counter_queue = Queue()

91 |

92 |

93 | def counter_manager() -> None:

94 | """

95 | Daemon thread function that uses an atomic message queue to communicate with

96 | the external world, and has exclusive right to access the "counter" global

97 | variable.

98 | :return: None

99 | """

100 | while True:

101 | global counter

102 | fuzz()

103 | old_val = counter

104 | fuzz()

105 | increment = counter_queue.get()

106 | fuzz()

107 | counter = old_val + increment

108 | fuzz()

109 | # Send a message to the print()-access atomic message queue in order to

110 | # print the "counter" value

111 | print_queue.put([f'Counter value: {counter}', '----------'])

112 | fuzz()

113 | # Mark the task as done

114 | counter_queue.task_done()

115 |

116 |

117 | # Create and start the "counter"-access daemon thread

118 | counter_daemon_thread = Thread(

119 | target=counter_manager, name='Counter-access Daemon Thread'

120 | )

121 | counter_daemon_thread.daemon = True

122 | counter_daemon_thread.start()

123 |

124 |

125 | ##### Actual workers #####

126 |

127 |

128 | def worker() -> None:

129 | """

130 | Thread function that increments "counter" by 1.

131 | This is done by sending a message to the "counter"-access atomic message

132 | queue.

133 | :return: None

134 | """

135 | fuzz()

136 | counter_queue.put(1)

137 |

138 |

139 | print_queue.put(['Starting up'])

140 |

141 | # Create and start 10 worker threads

142 | worker_threads = []

143 | for _ in range(10):

144 | worker_thread = Thread(target=worker)

145 | worker_threads.append(worker_thread)

146 | worker_thread.start()

147 | fuzz()

148 | # Join the 10 worker threads

149 | for worker_thread in worker_threads:

150 | worker_thread.join()

151 | fuzz()

152 | # By now, it is guaranteed that 10 messages have been sent to the

153 | # "counter"-access atomic message queue, but the tasks haven't necessarily been

154 | # done yet.

155 |

156 | # Note that since the "counter"-access thread is a daemon thread and never ends,

157 | # we cannot join it

158 | # Instead, we can join the atomic message queue itself, which waits until all

159 | # the tasks have been marked as done.

160 | counter_queue.join()

161 |

162 | print_queue.put(['Finishing up'])

163 | print_queue.join() # Same reason as above

164 |

165 | # Output:

166 | # Starting up

167 | # Counter value: 1

168 | # ----------

169 | # Counter value: 2

170 | # ----------

171 | # Counter value: 3

172 | # ----------

173 | # Counter value: 4

174 | # ----------

175 | # Counter value: 5

176 | # ----------

177 | # Counter value: 6

178 | # ----------

179 | # Counter value: 7

180 | # ----------

181 | # Counter value: 8

182 | # ----------

183 | # Counter value: 9

184 | # ----------

185 | # Counter value: 10

186 | # ----------

187 | # Finishing up

188 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/Race Condition Demo by Raymond Hettinger/comm_via_locks.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Simple demo of solving race conditions with locks.

6 | Note that the race conditions in this demo can also be amplified by the fuzzing

7 | technique (set FUZZ = True).

8 |

9 | In order to do away with race conditions, we need to:

10 | 1. Ensure an explicit ordering of the operations (on the shared resources)

11 | All operations (on the shared resources) must be executed in the same order

12 | they are received.

13 | 2. Restrict access to the shared resource

14 | Only one operation can access the shared resource at the same time. During

15 | the period of access, no other operations can read or change its value.

16 |

17 | Specifically for solution with locks,

18 | 2. All accesses to the shared resource shall be done using its own lock.

19 | """

20 |

21 | import random

22 | import time

23 | from threading import Condition, Thread

24 |

25 | ##### Fuzzing technique #####

26 |

27 | FUZZ = False

28 |

29 |

30 | def fuzz() -> None:

31 | """

32 | Fuzzes the program for a random amount of time, if instructed.

33 | :return: None

34 | """

35 | if FUZZ:

36 | time.sleep(random.random())

37 |

38 |

39 | ##### Locks for print()-access & "counter"-access #####

40 |

41 | # All accesses to the shared resource shall be done using its own lock. (=> 2)

42 | print_lock = Condition() # Lock for print()-access

43 |

44 | counter = 0

45 |

46 | counter_lock = Condition() # Lock for "counter"-access

47 |

48 |

49 | def worker() -> None:

50 | """

51 | Thread function that increments "counter" by 1.

52 | :return: None

53 | """

54 | global counter

55 | # Lock on the "counter"-access lock

56 | with counter_lock:

57 | fuzz()

58 | old_val = counter

59 | fuzz()

60 | counter = old_val + 1

61 | # Lock on the print()-access lock

62 | with print_lock:

63 | fuzz()

64 | print(f'The counter value is {counter}')

65 | fuzz()

66 | print('----------')

67 |

68 |

69 | # Lock on the print()-access lock

70 | with print_lock:

71 | print('Starting up')

72 |

73 | # Create and start 10 worker threads

74 | worker_threads = []

75 | for _ in range(10):

76 | worker_thread = Thread(target=worker)

77 | worker_threads.append(worker_thread)

78 | worker_thread.start()

79 | fuzz()

80 | # Join the 10 worker threads

81 | for worker_thread in worker_threads:

82 | worker_thread.join()

83 | fuzz()

84 |

85 | # Lock on the print()-access lock

86 | with print_lock:

87 | print('Finishing up')

88 |

89 | # Output:

90 | # Starting up

91 | # The counter value is 1

92 | # ----------

93 | # The counter value is 2

94 | # ----------

95 | # The counter value is 3

96 | # ----------

97 | # The counter value is 4

98 | # ----------

99 | # The counter value is 5

100 | # ----------

101 | # The counter value is 6

102 | # ----------

103 | # The counter value is 7

104 | # ----------

105 | # The counter value is 8

106 | # ----------

107 | # The counter value is 9

108 | # ----------

109 | # The counter value is 10

110 | # ----------

111 | # Finishing up

112 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/Race Condition Demo by Raymond Hettinger/note:

--------------------------------------------------------------------------------

1 | This example is summarized from a talk given by Raymond Hettinger at PyCon 2016:

2 | https://www.youtube.com/watch?v=Bv25Dwe84g0&index=4&list=PLZdWBibC1pBRc5317FXPYMkxVPmnHGz7f

3 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/Race Condition Demo by Raymond Hettinger/race_condition.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | Simple demo of race condition, amplified by fuzzing technique.

6 | """

7 |

8 | import random

9 | import time

10 | from threading import Thread

11 |

12 | # Fuzzing is a technique for amplifying race conditions to make them more

13 | # visible.

14 | # Basically, define a fuzz() function, which simply sleeps a random amount of

15 | # time if instructed, and then call the fuzz() function before each operation

16 |

17 | # Fuzzing setup

18 |

19 | FUZZ = True

20 |

21 |

22 | def fuzz() -> None:

23 | """

24 | Fuzzes the program for a random amount of time, if instructed.

25 | :return: None

26 | """

27 | if FUZZ:

28 | time.sleep(random.random())

29 |

30 |

31 | # Shared global variable

32 | counter = 0

33 |

34 |

35 | def worker() -> None:

36 | """

37 | :return: None

38 | """

39 | global counter

40 | fuzz()

41 | old_val = counter # Read from the global variable

42 | fuzz()

43 | counter = old_val + 1 # Write to the global variable

44 | # Whenever there is read from/write to global variables, there could be race

45 | # condition.

46 | # To amplify this race condition to make it more visible, we utilize fuzzing

47 | # technique as mentioned above.

48 | fuzz()

49 | # Note that the built-in print() function is also a "global" resource, and

50 | # thus could lead to race condition as well

51 | print(f'The counter is {counter}')

52 | fuzz()

53 | print('----------')

54 |

55 |

56 | print('Starting up')

57 | for _ in range(10):

58 | Thread(target=worker).start()

59 | fuzz()

60 | print('Finishing up')

61 |

--------------------------------------------------------------------------------

/Multi-processing and Multi-threading in Python/Multi-threading/multithreading_async.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 |

4 | """

5 | We can submit asynchronous tasks to a thread pool.

6 | """

7 |

8 | __author__ = 'Ziang Lu'

9 |

10 | import concurrent.futures as cf

11 |

12 | import requests

13 |

14 | sites = [

15 | 'http://europe.wsj.com/',

16 | 'http://some-made-up-domain.com/',

17 | 'http://www.bbc.co.uk/',

18 | 'http://www.cnn.com/',

19 | 'http://www.foxnews.com/',

20 | ]

21 |

22 |

23 | def site_size(url: str) -> int:

24 | """

25 | Returns the page size in bytes of the given URL.

26 | :param url: str

27 | :return: int

28 | """

29 | response = requests.get(url)

30 | return len(response.content)

31 |

32 |

33 | # Create a thread pool with 10 threads

34 | with cf.ThreadPoolExecutor(max_workers=10) as pool:

35 | # Submit tasks for execution

36 | future_to_url = {pool.submit(site_size, url): url for url in sites} # Will NOT block here

37 |

38 | # Since submit() method is asynchronous (non-blocking), by now the tasks in

39 | # the thread pool are still executing, but in this main thread, we have

40 | # successfully proceeded to here.

41 | # Wait until all the submitted tasks have been completed

42 | for future in cf.as_completed(future_to_url):

43 | url = future_to_url[future]

44 | try:

45 | # Get the execution result

46 | page_size = future.result()

47 | except Exception as e:

48 | print(f'{url} generated an exception: {e}')

49 | else:

50 | print(f'{url} page is {page_size} bytes.')

51 |

52 | # Output:

53 | # http://some-made-up-domain.com/ generated an exception: HTTPConnectionPool(host='some-made-up-domain.com', port=80): Max retries exceeded with url: / (Caused by NewConnectionError(': Failed to establish a new connection: [Errno 8] nodename nor servname provided, or not known'))

54 | # http://www.foxnews.com/ page is 216594 bytes.

55 | # http://www.cnn.com/ page is 1725827 bytes.

56 | # http://europe.wsj.com/ page is 979035 bytes.

57 | # http://www.bbc.co.uk/ page is 289252 bytes.

58 |

--------------------------------------------------------------------------------

/Multi-threading in Java/AtomicIntegerDemo.java:

--------------------------------------------------------------------------------

1 | import java.util.concurrent.atomic.AtomicInteger;

2 |

3 | /**

4 | * Self-defined class that implements Runnable interface.

5 | */

6 | class Processing implements Runnable {

7 | /**

8 | * Number of processed tasks.

9 | */

10 | // private int processedCount = 0;

11 | private AtomicInteger processedCount = new AtomicInteger();

12 |

13 | /**

14 | * Accessor of processedCount.

15 | * @return processedCount

16 | */

17 | int getProcessedCount() {

18 | // return processedCount;

19 | return processedCount.get();

20 | }

21 |

22 | @Override

23 | public void run() {

24 | for (int i = 1; i < 5; ++i) {

25 | processSomeTask(i);

26 | System.out.println(Thread.currentThread().getName() + " finished processing task-" + i);

27 |

28 | // Wrong implementation:

29 | // ++processedCount;

30 | // Normal integer increment is NOT atomic, resulting in race condition and thus wrong final result.

31 |

32 | // Correct implementation: Use AtomicInteger

33 | processedCount.incrementAndGet();

34 | // AtomicInteger increment is atomic.

35 | }

36 | }

37 |

38 | /**

39 | * Private helper method to process some task.

40 | * @param i task-i

41 | */

42 | private void processSomeTask(int i) {

43 | try {

44 | Thread.sleep(i * 1000);

45 | } catch (InterruptedException e) {

46 | e.printStackTrace();

47 | }

48 | }

49 | }

50 |

51 | /**

52 | * Simple demo for using AtomicInteger for atomic operations.

53 | *

54 | * @author Ziang Lu

55 | */

56 | public class AtomicIntegerDemo {

57 |

58 | /**

59 | * Main driver.

60 | * @param args arguments from command line

61 | */

62 | public static void main(String[] args) {

63 | Processing sharedRunnable = new Processing();

64 | Thread th1 = new Thread(sharedRunnable, "Thread-1"), th2 = new Thread(sharedRunnable, "Thread-2");

65 | th1.start();

66 | th2.start();

67 | try {

68 | th1.join();

69 | th2.join();

70 | } catch (InterruptedException e) {

71 | e.printStackTrace();

72 | }

73 | System.out.println("Total processed count: " + sharedRunnable.getProcessedCount());

74 |

75 | /*

76 | * Output:

77 | * Thread-1 finished processing task-1

78 | * Thread-2 finished processing task-1

79 | * Thread-2 finished processing task-2

80 | * Thread-1 finished processing task-2

81 | * Thread-1 finished processing task-3

82 | * Thread-2 finished processing task-3

83 | * Thread-1 finished processing task-4

84 | * Thread-2 finished processing task-4

85 | * Total processed count: 8

86 | */

87 | }

88 |

89 | }

90 |

--------------------------------------------------------------------------------

/Multi-threading in Java/Bank User Example from 15-619 Cloud Computing/BankUserMultiThreaded.java:

--------------------------------------------------------------------------------

1 | import java.util.PriorityQueue;

2 |

3 | public class BankUserMultiThreaded {

4 |

5 | /**

6 | * Balance of the bank user.

7 | */

8 | private static int balance;

9 | /**

10 | * Min-heap for the deposit/withdraw operation timestamps.

11 | *

12 | * In order to ensure an explicit ordering of the deposit/withdraw

13 | * operations, i.e., all the operations must be executed in the order they

14 | * are received:

15 | * we create a min-heap of the timestamps of the deposit/withdraw operation.

16 | */

17 | private static final PriorityQueue<Long> operations = new PriorityQueue<>();

18 |

19 | /**

20 | * Adds the given amount to the balance.

21 | * No need to do authentication in deposit() method.

22 | * @param amt amount to deposit

23 | */

24 | public static void deposit(final int amt) {

25 | // Get the timestamp of the operation, and push it to the min-heap

26 | Long timestamp = getTime();

27 | synchronized (operations) { operations.offer(timestamp); } // PriorityQueue is not thread-safe

28 |

29 | // Create a new thread for the deposit operation

30 | Thread th = new Thread(new Runnable() {

31 | @Override

32 | public void run() {

33 | // Try to acquire operations' monitor, and wait until it is this operation's turn

34 | acquireLock(timestamp);

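35 | // When acquireLock() returns, this operation is at the head of the min-heap, so only this thread may proceed (the monitor itself is released on return)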

36 |

37 | // No need to do authentication

38 | balance += amt;

39 | System.out.println(String.format("Deposit %d to funds. Now at %d", amt, balance));

40 |

41 | // Operation done: release the lock so that the next operation can proceed

42 | releaseLock();

43 | }

44 | }, "Thread-Deposit");

45 | th.start();

46 | }

47 |

48 | /**

49 | * Private helper method to get the current timestamp.

50 | * @return current timestamp

51 | */

52 | private static Long getTime() {

53 | return System.nanoTime();

54 | }

55 |

56 | /**

57 | * Private helper method for the given operation timestamp's thread to

58 | * acquire operations's monitor.

59 | * @param timestamp given operation timestamp

60 | */

61 | private static void acquireLock(Long timestamp) {

62 | synchronized (operations) { // Try to acquire operations' monitor

63 | // Once operations' monitor has been acquired:

64 |

65 | // Check whether the operation to perform is the current operation

66 | while (timestamp != operations.peek()) {

67 | try {

68 | operations.wait(); // The current thread enters operations' wait set and releases operations' monitor

69 | } catch (InterruptedException ex) {

70 | System.out.println(Thread.currentThread().getName() + " interrupted");

71 | }

72 | }

73 | // At this point, the current operation is the next one to execute, and the current thread holds operations' monitor again

74 | }

75 | }

76 |

77 | /**

78 | * Private helper method for the current operation timestamp's thread to

79 | * release operation's monitor.

80 | */

81 | private static void releaseLock() {

82 | synchronized (operations) {

83 | // Remove the timestamp of the operation just performed (while holding the monitor, since PriorityQueue is not thread-safe)

84 | operations.poll();

85 | operations.notifyAll(); // Wake up all threads in operations' wait set to compete for operations' monitor

86 | // Because of the check on line 66, the thread of the next operation acquires operations' monitor and exits acquireLock(), while

87 | // the threads of the other operations re-enter operations' wait set

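88 | // Note that notify() cannot be used here: if the awakened thread is not the next operation's thread, by line 66 it re-enters operations' wait set,

89 | // so every thread ends up waiting in operations' wait set with none left to wake another, i.e., a deadlock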

90 | }

91 | }

92 |

93 | /**

94 | * Private helper method to wait 50 milliseconds.

95 | */

96 | private static void wait_50ms() {

97 | try {

98 | Thread.sleep(50);

99 | } catch (InterruptedException e) {

100 | return;

101 | }

102 | }

103 |

104 | /**

105 | * Removes the given amount from the balance.

106 | * @param amt amount to withdraw

107 | */

108 | public static void withdraw(final int amt) {

109 | // Get the timestamp of the operation, and push it to the min-heap

110 | Long timestamp = getTime();

111 | operations.offer(timestamp);

112 |

113 | // Create a new thread responsible for the withdraw operation

114 | Thread th = new Thread(new Runnable() {

115 | @Override

116 | public void run() {

117 | // Try to acquire operations' monitor, and wait until it is this operation's turn

118 | acquireLock(timestamp);

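119 | // When acquireLock() returns, this operation is at the head of the min-heap, so only this thread may proceed (the monitor itself is released on return)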

120 |

121 | int holdings = balance;

122 | if (!authenticate()) { // authenticate() method always takes 500 milliseconds to run.

123 | releaseLock(); return; // Release the lock before aborting, otherwise later operations would wait forever

124 | }

125 | if (holdings < amt) {

126 | System.out.println(String.format(

127 | "Overdraft Error: insufficient funds for this withdrawal. Balance = %d. Amt = %d", holdings, amt

128 | ));

129 | // Operation done: release the lock so that the next operation can proceed

130 | releaseLock();

131 | return;

132 | }

133 | balance = holdings - amt;

134 | System.out.println(String.format("Withdraw %d from funds. Now at %d", amt, balance));

135 |

136 | // Operation done: release the lock so that the next operation can proceed

137 | releaseLock();

138 | }

139 | }, "Thread-Withdraw");

140 | th.start();

141 | }

142 |

143 | /**

144 | * Private helper method to authenticate.

145 | * Due to poor optimization, this method always takes 500 milliseconds to

146 | * run.

147 | * @return whether the authentication succeeds

148 | */

149 | public static Boolean authenticate() {

150 | try {

151 | Thread.sleep(500);

152 | } catch (InterruptedException e) {

153 | System.out.println("ERROR: ABORT OPERATION, Authentication failed");

154 | return false;

155 | }

156 | return true;

157 | }

158 |

159 | public static void test_case0() {

160 | balance = 0;

161 | deposit(100);

162 | wait_50ms(); //

163 | deposit(200);

164 | wait_50ms(); //

165 | deposit(700);

166 | wait_50ms(); // To make sure the deposits are in the sequentially correct order

167 | if (balance == 1000) {

168 | System.out.println("Test passed!");

169 | } else {

170 | System.out.println("Test failed!");

171 | }

172 | }

173 |

174 | public static void test_case1() {

175 | balance = 0;

176 | deposit(100);

177 | deposit(200);

178 | deposit(700);

179 | }

180 |

181 | public static void test_case2() {

182 | balance = 0;

183 | deposit(1000);

184 | withdraw(1000);

185 | withdraw(1000);

186 | withdraw(1000);

187 | withdraw(1000);

188 | withdraw(1000);

189 | }

190 |

191 | public static void test_case3() {

192 | balance = 0;

193 | withdraw(1000);

194 | deposit(500);

195 | deposit(500);

196 | withdraw(500);

197 | withdraw(500);

198 | withdraw(1000);

199 | }

200 |

201 | public static void test_case4() {

202 | balance = 0;

203 | deposit(2000);

204 | withdraw(500);

205 | withdraw(1000);

206 | withdraw(1500);

207 | deposit(4000);

208 | withdraw(2000);

209 | withdraw(2500);

210 | withdraw(3000);

211 | deposit(5000);

212 | withdraw(3500);

213 | withdraw(4000);

214 | }

215 |

216 | public static void main(String[] args) {

217 | // Uncomment test cases as you go

218 | // test_case0();

219 | // test_case1();

220 | // test_case2();

221 | // test_case3();

222 | test_case4();

223 | }

224 |

225 | }

226 |

--------------------------------------------------------------------------------

/Multi-threading in Java/Bank User Example from 15-619 Cloud Computing/F17 15-619 Cloud Computing- (writeup: Thread-safe programming in Java) - TheProject.Zone.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Ziang-Lu/Multiprocessing-and-Multithreading/8d61a4479517fe8f1711f069c388d5828753092b/Multi-threading in Java/Bank User Example from 15-619 Cloud Computing/F17 15-619 Cloud Computing- (writeup: Thread-safe programming in Java) - TheProject.Zone.pdf

--------------------------------------------------------------------------------

/Multi-threading in Java/Bank User Example from 15-619 Cloud Computing/S17 15-619 Cloud Computing- (writeup: Introduction to multithreaded programming in Java) - TheProject.Zone.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Ziang-Lu/Multiprocessing-and-Multithreading/8d61a4479517fe8f1711f069c388d5828753092b/Multi-threading in Java/Bank User Example from 15-619 Cloud Computing/S17 15-619 Cloud Computing- (writeup: Introduction to multithreaded programming in Java) - TheProject.Zone.pdf

--------------------------------------------------------------------------------

/Multi-threading in Java/BlockingQueueDemo.java:

--------------------------------------------------------------------------------

1 | import java.util.Random;

2 | import java.util.concurrent.ArrayBlockingQueue;

3 | import java.util.concurrent.BlockingQueue;

4 |

5 | /**

6 | * Producer class.

7 | * Writes data to an atomic message queue

8 | */

9 | class Producer implements Runnable {

10 | /**

11 | * Random number generator to use.

12 | */

13 | private final Random random;

14 | /**

15 | * Atomic message queue used by this producer.

16 | */

17 | private final BlockingQueue<String> queue;

18 |

19 | /**

20 | * Constructor with parameter.

21 | * @param queue atomic message queue to use

22 | */

23 | Producer(BlockingQueue<String> queue) {

24 | random = new Random();

25 | this.queue = queue;

26 | }

27 |

28 | @Override

29 | public void run() {

30 | try {

31 | // Produce messages

32 | for (int i = 0; i < 5; ++i) {

33 | // Simulate the producing process by sleeping for 1~2 seconds

34 | Thread.sleep((random.nextInt(2) + 1) * 1000);

35 | String msg = String.format("Message-%d", i);

36 | queue.put(msg);

37 | System.out.println("Producer put " + msg + " into the queue");

38 | }

39 | // Put a special value indicating that the message queue should shut down

40 | queue.put("exit");

41 | } catch (InterruptedException e) {

42 | e.printStackTrace();

43 | }

44 | }

45 | }

46 |

47 | /**

48 | * Consumer class.

49 | * Reads data from an atomic message queue

50 | */

51 | class Consumer implements Runnable {

52 | /**

53 | * Random number generator to use.

54 | */

55 | private final Random random;

56 | /**

57 | * Atomic queue used by this consumer.

58 | */

59 | private final BlockingQueue<String> queue;

60 |

61 | /**

62 | * Constructor with parameter.

63 | * @param queue atomic message queue to use

64 | */

65 | Consumer(BlockingQueue<String> queue) {

66 | random = new Random();

67 | this.queue = queue;

68 | }

69 |

70 | @Override

71 | public void run() {

72 | try {

73 | String msg = queue.take();

74 | // Check for the special value indicating that the message queue should shut down

75 | while (!msg.equals("exit")) {

76 | System.out.println("Consumer got " + msg + " from the queue");

77 | // Simulate the consuming process by sleeping for 1~4 seconds

78 | Thread.sleep((random.nextInt(4) + 1) * 1000);

79 | msg = queue.take();

80 | }

81 | } catch (InterruptedException e) {

82 | e.printStackTrace();

83 | }

84 | }

85 | }

86 |

87 | /**

88 | * Simple demo for using BlockingQueue as an atomic message queue.

89 | *

90 | * @author Ziang Lu

91 | */

92 | public class BlockingQueueDemo {

93 |

94 | /**

95 | * Main driver.

96 | * @param args arguments from command line

97 | */

98 | public static void main(String[] args) {

99 | BlockingQueue<String> queue = new ArrayBlockingQueue<>(3);

100 | new Thread(new Producer(queue)).start();

101 | new Thread(new Consumer(queue)).start();

102 |

103 | /*

104 | * Output:

105 | * Producer put Message-0 into the queue

106 | * Consumer got Message-0 from the queue

107 | * Producer put Message-1 into the queue

108 | * Consumer got Message-1 from the queue

109 | * Producer put Message-2 into the queue

110 | * Consumer got Message-2 from the queue

111 | * Producer put Message-3 into the queue

112 | * Producer put Message-4 into the queue

113 | * Consumer got Message-3 from the queue

114 | * Consumer got Message-4 from the queue

115 | */

116 | }

117 |

118 | }

119 |

--------------------------------------------------------------------------------

/Multi-threading in Java/Locks and Sync Blocking/WaitBlockingDemo.java:

--------------------------------------------------------------------------------

1 | /**

2 | * Self-defined queue to synchronize on.

3 | */

4 | class MyQueue {

5 | /**

6 | * Underlying number in the queue.

7 | */

8 | private int n;

9 | /**

10 | * Whether the number is set by put() method.

11 | */

12 | boolean numIsSet = false;

13 |

14 | /**

15 | * Accessor of n.

16 | * @return n

17 | */

18 | int get() {

19 | System.out.println("Got " + n + " from queue");

20 | return n;

21 | }

22 |

23 | /**

24 | * Mutator of n

25 | * @param n n

26 | */

27 | void put(int n) {

28 | System.out.println("Put " + n + " into the queue");

29 | this.n = n;

30 | }

31 | }

32 |

33 | /**

34 | * Self-defined Producer class that implements Runnable interface.

35 | * Writes data to a synchronized queue

36 | */

37 | class Producer implements Runnable {

38 | /**

39 | * Queue to write data to.

40 | */

41 | private final MyQueue queue;

42 |

43 | /**

44 | * Constructor with parameter.

45 | * @param queue queue to write data to

46 | */

47 | Producer(MyQueue queue) {

48 | this.queue = queue;

49 | }

50 |

51 | @Override

52 | public void run() {

53 | int i = 1;

54 | while (i <= 5) {

55 | synchronized (queue) {

56 | while (queue.numIsSet) {

57 | try {

58 | System.out.println("Producer waiting...");

59 | queue.wait();

60 | System.out.println("Producer got queue's monitor");

61 | } catch (InterruptedException e) {

62 | System.out.println(Thread.currentThread().getName() + " interrupted");

63 | }

64 | }

65 | queue.put(i);

66 | queue.numIsSet = true;

67 | queue.notify();

68 | ++i;

69 | }

70 | }

71 | }

72 | }

73 |

74 | /**

75 | * Self-defined Consumer class that implements Runnable interface.

76 | * Reads data from a synchronized queue

77 | */

78 | class Consumer implements Runnable {

79 | /**

80 | * Queue to read data from.

81 | */

82 | private final MyQueue queue;

83 |

84 | /**

85 | * Constructor with parameter.

86 | * @param queue queue to read data from

87 | */

88 | Consumer(MyQueue queue) {

89 | this.queue = queue;

90 | }

91 |

92 | @Override

93 | public void run() {

94 | while (true) {

95 | synchronized (queue) {

96 | while (!queue.numIsSet) {

97 | try {

98 | System.out.println("Consumer waiting...");

99 | queue.wait();

100 | System.out.println("Consumer got queue's monitor");

101 | } catch (InterruptedException e) {

102 | System.out.println(Thread.currentThread().getName() + " interrupted");

103 | }

104 | }

105 | queue.get();

106 | queue.numIsSet = false;

107 | queue.notify();

108 | }

109 | }

110 | }

111 | }

112 |

113 | public class WaitBlockingDemo {

114 |

115 | /**

116 | * Main driver.

117 | * @param args arguments from command line

118 | */

119 | public static void main(String[] args) {

120 | MyQueue queue = new MyQueue();

121 | Thread producer = new Thread(new Producer(queue), "Thread-Producer");

122 | Thread consumer = new Thread(new Consumer(queue), "Thread-Consumer");

123 | producer.start();

124 | consumer.start();

125 | try {

126 | producer.join();

127 | consumer.join();

128 | } catch (InterruptedException e) {

129 | System.out.println(Thread.currentThread().getName() + " interrupted");

130 | }

131 |

132 | /*

133 | * Output:

134 | * Put 1 into the queue

135 | * Producer waiting...

136 | * Got 1 from queue

137 | * Consumer waiting...

138 | * Producer got queue's monitor

139 | * Put 2 into the queue

140 | * Producer waiting...

141 | * Consumer got queue's monitor

142 | * Got 2 from queue

143 | * Consumer waiting...

144 | * Producer got queue's monitor

145 | * Put 3 into the queue

146 | * Producer waiting...

147 | * Consumer got queue's monitor

148 | * Got 3 from queue

149 | * Consumer waiting...

150 | * Producer got queue's monitor

151 | * Put 4 into the queue

152 | * Producer waiting...

153 | * Consumer got queue's monitor

154 | * Got 4 from queue

155 | * Consumer waiting...

156 | * Producer got queue's monitor

157 | * Put 5 into the queue

158 | * Consumer got queue's monitor

159 | * Got 5 from queue

160 | * Consumer waiting... (the consumer loops forever, so the program does not exit by itself)

161 | */

162 | }

163 |

164 | }

165 |

--------------------------------------------------------------------------------

/Multi-threading in Java/Multi-threading in Java.md:

--------------------------------------------------------------------------------

1 | # Multi-threading in Java

2 |

3 | ## Basic Implementations

4 |

5 | * Implementing the `Runnable` interface, which means implementing its `run()` method

6 |

7 | ```java

8 | /**

9 | * Self-defined class that implements Runnable interface.

10 | */

11 | class DisplayMessage implements Runnable {

12 | /**

13 | * Message to display.

14 | */

15 | private final String msg;

16 |

17 | /**

18 | * Constructor with parameter.

19 | * @param msg message to display

20 | */

21 | DisplayMessage(String msg) {

22 | this.msg = msg;

23 | }

24 |

25 | @Override

26 | public void run() {

27 | try {

28 | for (int i = 0; i < 3; ++i) {

29 | System.out.println(msg);

30 | Thread.sleep((long) (500 + Math.random() * 500));

31 | }

32 | } catch (InterruptedException ex) {

33 | System.out.println(Thread.currentThread().getName() + " interrupted");

34 | }

35 | }

36 | }

37 |

38 | /**

39 | * Simple demo of multi-threading by implementing the Runnable interface.

40 | *

41 | * @author Ziang Lu

42 | */

43 | public class SimpleDemo {

44 |

45 | /**

46 | * Main driver.

47 | * @param args arguments from command line

48 | */

49 | public static void main(String[] args) {

50 | Thread helloThread = new Thread(new DisplayMessage("hello"), "Thread-Hello");

51 | System.out.println("Starting hello thread...");

52 | helloThread.start();

53 |

54 | Thread byeThread = new Thread(new DisplayMessage("bye"), "Thread-Bye");

55 | System.out.println("Starting bye thread...");

56 | byeThread.start();

57 |

58 | try {

59 | helloThread.join();

60 | byeThread.join();

61 | } catch (InterruptedException ex) {

62 | System.out.println(Thread.currentThread().getName() + " interrupted");

63 | }

64 | System.out.println();

65 |

66 | /*

67 | * Sample output (the interleaving of the lines varies between runs because of the random sleeps):

68 | * Starting hello thread...

69 | * Starting bye thread...

70 | * hello

71 | * bye

72 | * hello

73 | * bye

74 | * hello

75 | * bye

76 | */

77 | }

78 |

79 | }

80 | ```
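Because `Runnable` declares a single abstract method, `run()`, it is a functional interface, so on Java 8 or later a lambda can take the place of a named class such as `DisplayMessage`. The sketch below assumes Java 8+; the class `LambdaRunnableDemo`, the helper `runAll`, and the shared log list are hypothetical names introduced only for illustration, and the random sleeps of the example above are dropped to keep it short.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/**
 * Hypothetical demo: Runnable has a single abstract method, run(), so a
 * lambda can be passed to the Thread constructor instead of a named class.
 */
public class LambdaRunnableDemo {

    /**
     * Starts one thread per message; each thread appends its message 3 times
     * to a shared log. Returns the log after all threads have finished.
     * @param msgs messages to record, one thread per message
     * @return every recorded message, in the order the threads appended them
     */
    public static List<String> runAll(String... msgs) {
        // Thread-safe list, because several threads append to it concurrently
        List<String> log = new CopyOnWriteArrayList<>();
        Thread[] threads = new Thread[msgs.length];
        for (int i = 0; i < msgs.length; ++i) {
            String msg = msgs[i]; // effectively final, so the lambda may capture it
            // The lambda body plays the role of DisplayMessage.run()
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 3; ++j) {
                    log.add(msg);
                }
            }, "Thread-" + msg);
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join(); // wait for each worker before returning the log
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt(); // restore the interrupt flag
                break;
            }
        }
        return log;
    }

    public static void main(String[] args) {
        // The interleaving of "hello" and "bye" can differ between runs
        System.out.println(runAll("hello", "bye"));
    }
}
```

Only the order of the entries is nondeterministic; each message always appears exactly three times once every thread has been joined.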

81 |

82 | * Extending the `Thread` class and overriding its `run()` method

83 |

84 | ```java

85 | /**

86 | * Self-defined class that extends Thread class.

87 | */

88 | class GuessANumber extends Thread {

89 | /**

90 | * Number to guess.

91 | */

92 | private final int num;

93 |

94 | /**

95 | * Constructor with parameter.

96 | * @param num number to guess

97 | */

98 | GuessANumber(int num) {

99 | this.num = num;

100 | }

101 |

102 | @Override

103 | public void run() {

104 | String threadName = Thread.currentThread().getName();

105 | int counter = 0;

106 | int guess = 0;

107 | do {

108 | guess = (int) (Math.random() * 10 + 1);

109 | System.out.println(threadName + " guesses " + guess);

110 | ++counter;

111 | } while (guess != num);

112 | System.out.println("Correct! " + threadName + " uses " + counter + " guesses.");

113 | }

114 | }

115 |

116 | /**

117 | * Simple demo of multi-threading by extending the Thread class.

118 | *

119 | * @author Ziang Lu

120 | */

121 | public class SimpleDemo {

122 |

123 | /**

124 | * Main driver.

125 | * @param args arguments from command line

126 | */

127 | public static void main(String[] args) {

128 | Thread guessThread = new GuessANumber(7);

129 | guessThread.setName("Thread-Guess");

130 | System.out.println("Starting guess thread...");

131 | guessThread.start();

132 | try {

133 | guessThread.join();

134 | } catch (InterruptedException ex) {