├── 2022
│   ├── readme.md
│   ├── week10handout.pdf
│   ├── week12handout.pdf
│   ├── week13handout.pdf
│   ├── week14handout.pdf
│   ├── week15handout.pdf
│   ├── week1handout.pdf
│   ├── week3handout.pdf
│   ├── week4handout.pdf
│   ├── week5handout.pdf
│   ├── week6handout.pdf
│   ├── week7handout.pdf
│   └── week8handout.pdf
├── 2024
│   ├── Week 1
│   │   └── week1handout.pdf
│   ├── Week 10
│   │   ├── basic LaTeX document.zip
│   │   ├── week10assignment.md
│   │   └── week10handout.pdf
│   ├── Week 11
│   │   ├── week11assignment.md
│   │   └── week11handout.pdf
│   ├── Week 12
│   │   ├── big_project.zip
│   │   ├── week12assignment.md
│   │   └── week12handout.pdf
│   ├── Week 13
│   │   ├── big_project_1.zip
│   │   ├── big_project_2.zip
│   │   ├── corpus1.TXT
│   │   ├── corpus2.TXT
│   │   ├── corpus3.TXT
│   │   ├── week13assignment.md
│   │   └── week13upload.pdf
│   ├── Week 14
│   │   ├── readme-example.md
│   │   ├── week14assignment.md
│   │   └── week14handout.pdf
│   ├── Week 15
│   │   └── week15handout.pdf
│   ├── Week 2
│   │   ├── .Rhistory
│   │   ├── code_APR15.r
│   │   ├── week2assignment.md
│   │   └── week2handout.pdf
│   ├── Week 3
│   │   ├── code_APR22.r
│   │   ├── week3assignment.md
│   │   └── week3handout.pdf
│   ├── Week 4
│   │   ├── code_APR29.r
│   │   ├── week4assignment.md
│   │   └── week4handout.pdf
│   ├── Week 5
│   │   ├── code_MAY06.r
│   │   ├── week5assignment.md
│   │   └── week5handout.pdf
│   ├── Week 6
│   │   ├── code_MAY13.r
│   │   ├── week6assignment.md
│   │   └── week6handout.pdf
│   ├── Week 8
│   │   ├── code_MAY27.r
│   │   ├── week8.pdf
│   │   ├── week8assignment.md
│   │   └── week8handout.pdf
│   ├── Week 9
│   │   ├── quarto-demo.zip
│   │   ├── week9assignment.md
│   │   └── week9handout.pdf
│   └── readme.md
├── 2025
│   ├── Week 1
│   │   ├── assignment01.md
│   │   └── week01handout.pdf
│   ├── Week 2
│   │   ├── assignment02.md
│   │   ├── week02.R
│   │   └── week02handout.pdf
│   ├── Week 3
│   │   ├── assignment03.R
│   │   ├── assignment03.md
│   │   ├── week03.R
│   │   └── week03handout.pdf
│   ├── Week 4
│   │   ├── assignment04.R
│   │   ├── assignment04.md
│   │   ├── week04.R
│   │   └── week04handout.pdf
│   ├── Week 5
│   │   ├── assignment05.R
│   │   ├── assignment05.md
│   │   ├── week05.R
│   │   └── week05handout.pdf
│   ├── Week 6
│   │   ├── assignment06.R
│   │   └── week06handout.pdf
│   ├── Week 7
│   │   ├── assignment07.R
│   │   ├── week07.R
│   │   └── week07handout.pdf
│   ├── Week 8
│   │   ├── assignment08.md
│   │   ├── week08.R
│   │   └── week08handout.pdf
│   ├── Week 9
│   │   ├── assignment09.md
│   │   ├── quarto_demo.zip
│   │   └── week09handout.pdf
│   └── readme.md
├── R_tutorial
│   ├── .gitignore
│   ├── R_tutorial.Rproj
│   ├── readme.md
│   ├── rsconnect
│   │   └── documents
│   │       └── tutorial.Rmd
│   │           └── shinyapps.io
│   │               └── anna-pryslopska
│   │                   └── TidyversePractice.dcf
│   ├── tutorial.Rmd
│   └── tutorial.html
└── readme.md

/2022/readme.md:

--------------------------------------------------------------------------------

1 | # Course description

2 |

3 | This seminar provides a gentle, hands-on introduction to the essential tools for quantitative research for students of the humanities. During the seminar, students will familiarize themselves with a wide array of software that is rarely taught but is invaluable in developing an efficient, transparent, reusable, and scalable research workflow. From text files, through data visualization, to creating beautiful reports: this course will empower students to improve their skills and help them establish good practices.

4 |

5 | The seminar is targeted at students with little to no experience with programming, who wish to improve their workflow, learn the basics of data handling and document typesetting, prepare for a big project (such as a BA or MA thesis), and learn about scientific project management.

6 |

7 | Materials: laptop with internet access

8 |

9 | ## Syllabus

10 |

11 | | Week | Topic | Description | Slides |

12 | | --:| -- | -- | -- |

13 | | 1 | Introduction, course overview and software installation | Course overview and expectations, classroom management and assignments/grading etc. | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week1handout.pdf) (some pages missing for privacy) |

14 | | 2 | | *no class* | |

15 | | 3 | Looking at data | Data types, encoding, R and RStudio, installing and loading packages, importing data | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week3handout.pdf) |

16 | | 4 | Reading data, data inspection and manipulation | Basic operators, importing, sorting, filtering, subsetting, removing missing data, data manipulation | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week4handout.pdf) |

17 | | 5 | Data manipulation and error handling | Data manipulation (merging, summary statistics, grouping, if ... else ..., etc.), naming variables, pipelines, documentation, tidy code, error handling and getting help | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week5handout.pdf) |

18 | | 6 | Data visualization | Visualizing in R (`ggplot2`, `esquisse`), choice of visualization, plot types, best practices, exporting plots and data | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week6handout.pdf) |

19 | | 7 | Creating reports with RMarkdown and `knitr` | Pandoc, RMarkdown, basic syntax and elements, export, document, and chunk options, documentation | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week7handout.pdf) |

20 | | 8 | Typesetting documents with LaTeX | What is LaTeX, basic document and file structure, advantages and disadvantages, from R to LaTeX | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week8handout.pdf) |

21 | | 9 | | *no class* | |

22 | | 10 | Typesetting documents with LaTeX | Editing text (commands, whitespace, environments, font properties, figures, and tables), glosses, IPA symbols, semantic formulae, syntactic trees | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week10handout.pdf) |

23 | | 11 | | *no class* | |

24 | | 12 | Typesetting documents with LaTeX and bibliography management | Large projects, citations, references, bibliography styles, bib file structure | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week12handout.pdf) |

25 | | 13 | Literature and reference management, common command line commands | Reference managers, looking up literature, text editors, command line commands (grep, diff, ping, cd, etc.) | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week13handout.pdf) (some pages missing for privacy) |

26 | | 14 | Version control and Git | Git, GitHub, version control | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week14handout.pdf) |

27 | | 15 | Version control and Git, course wrap-up | Git, GitHub, SSH, reverting to older versions | [Slides](https://github.com/a-nap/Digital-Research-Toolkit/blob/main/2022/week15handout.pdf) |

28 |

--------------------------------------------------------------------------------

/2022/week10handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week10handout.pdf

--------------------------------------------------------------------------------

/2022/week12handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week12handout.pdf

--------------------------------------------------------------------------------

/2022/week13handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week13handout.pdf

--------------------------------------------------------------------------------

/2022/week14handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week14handout.pdf

--------------------------------------------------------------------------------

/2022/week15handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week15handout.pdf

--------------------------------------------------------------------------------

/2022/week1handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week1handout.pdf

--------------------------------------------------------------------------------

/2022/week3handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week3handout.pdf

--------------------------------------------------------------------------------

/2022/week4handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week4handout.pdf

--------------------------------------------------------------------------------

/2022/week5handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week5handout.pdf

--------------------------------------------------------------------------------

/2022/week6handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week6handout.pdf

--------------------------------------------------------------------------------

/2022/week7handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week7handout.pdf

--------------------------------------------------------------------------------

/2022/week8handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2022/week8handout.pdf

--------------------------------------------------------------------------------

/2024/Week 1/week1handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 1/week1handout.pdf

--------------------------------------------------------------------------------

/2024/Week 10/basic LaTeX document.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 10/basic LaTeX document.zip

--------------------------------------------------------------------------------

/2024/Week 10/week10assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 10

2 |

3 | Create a basic XeLaTeX document for the Noisy channel experiment (as on page 29 in the handout and the provided LaTeX files). Upload the resulting files to ILIAS. Use the scientific document structure. You DON'T need to include:

4 |

5 | - references,

6 | - abstract,

7 | - tables,

8 | - pictures,

9 | - lists,

10 | - numbered examples.

11 |

12 | You DO need to:

13 |

14 | - describe the experiment in detail,

15 | - include a description of the phenomenon,

16 | - describe the sentences.

17 |

--------------------------------------------------------------------------------

/2024/Week 10/week10handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 10/week10handout.pdf

--------------------------------------------------------------------------------

/2024/Week 11/week11assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 11

2 |

3 | Re-create the Moses illusion report in LaTeX (don't worry about proper citations). Upload all the files needed for compiling your report (obligatorily the TEX file, but also plots) and the PDF report file.

4 |

5 | - Include at least one table and one figure of the data (with captions).

6 | - Reference and hyperlink the table and figure in the text.

7 | - Create one list (via itemize, enumerate, or exe).

8 | - Make one gloss (e.g. translate a question from the experiment to your native language).

9 | - Make one syntactic tree.

10 | - Make one semantic formula.

11 | - Preserve the scientific article structure (Background, methods, results, etc.).

12 | - Include a table of contents.

13 |

--------------------------------------------------------------------------------

/2024/Week 11/week11handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 11/week11handout.pdf

--------------------------------------------------------------------------------

/2024/Week 12/big_project.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 12/big_project.zip

--------------------------------------------------------------------------------

/2024/Week 12/week12assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 12

2 |

3 | Continue writing the Moses illusion report: make a style file and add a reference section. Upload your TEX, PDF, BIB, image, and style files.

4 |

5 | 1. Put all packages you're loading in a separate style file and load it.

6 | 2. Add 10 different but relevant references to your report, among them

7 | - at least one journal `@article`

8 | - at least one book `@book`

9 | - at least one part of a book `@incollection`

10 | - at least one thesis `@thesis`

11 | 3. Reference all the citations in the text, so that there is at least one of each of these:

12 | - as a parenthetical reference

13 | - as a textual reference

14 | - reference only the author

15 | - reference only the publication year

16 | - reference only the title

17 | - reference it without a citation but include in bibliography

18 | 4. Sort the entries by name, year, and title

19 | 5. Use the `authoryear` or `apa` reference style

--------------------------------------------------------------------------------

/2024/Week 12/week12handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 12/week12handout.pdf

--------------------------------------------------------------------------------

/2024/Week 13/big_project_1.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 13/big_project_1.zip

--------------------------------------------------------------------------------

/2024/Week 13/big_project_2.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 13/big_project_2.zip

--------------------------------------------------------------------------------

/2024/Week 13/week13assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 13

2 |

3 | Upload the solution as a single file of your choosing.

4 |

5 | - Find the changes I made in the big project (files: `big_project_1.zip` vs `big_project_2.zip`). I didn't compile it, so the PDF will look the same.

6 | - How many times does the word "Tagblatt" appear in the files `corpus1.TXT`, `corpus2.TXT`, and `corpus3.TXT`? Count only the lines.

7 | - Count all the lines and instances where the whole article "die" appears in these 3 files. Capitalization does not matter: "Die" counts, "Dieser" does not.

8 | - What are the differences between `corpus2.TXT` and `corpus3.TXT`?

9 |
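The course approaches these counting tasks with command-line tools such as `grep` and `diff`. Purely as an illustration of the distinction between counting lines and counting instances, here is a minimal base-R sketch. The vectors `corpus_a` and `corpus_b` are made-up stand-ins for the corpus files, not their real content.

```r
# Hypothetical stand-in lines; the real corpus files are not reproduced here.
corpus_a <- c(
  "Die Katze liest das Tagblatt.",
  "Dieser Hund mag die Zeitung, die Katze nicht.",
  "Das Tagblatt zitiert das Tagblatt."
)
corpus_b <- c(
  "Die Katze liest das Tagblatt.",
  "Das Tagblatt zitiert das Tagblatt."
)

# Number of LINES that contain "Tagblatt" (a line with two hits counts once)
tagblatt_lines <- sum(grepl("Tagblatt", corpus_a, fixed = TRUE))

# Whole word "die", ignoring case: \\b marks a word boundary,
# so "Die" matches but "Dieser" does not
pattern <- "\\bdie\\b"
die_lines <- sum(grepl(pattern, corpus_a, ignore.case = TRUE, perl = TRUE))

# Number of INSTANCES: collect every match on every line, then count them
hits <- gregexpr(pattern, corpus_a, ignore.case = TRUE, perl = TRUE)
die_instances <- sum(lengths(regmatches(corpus_a, hits)))

# Lines present in one vector but not in the other
only_in_a <- setdiff(corpus_a, corpus_b)

print(c(tagblatt_lines = tagblatt_lines,
        die_lines = die_lines,
        die_instances = die_instances))
print(only_in_a)
```

With real files, `readLines()` would supply the character vectors. Note that `setdiff()` ignores line order and duplicates, so it is only a rough substitute for a proper diff.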

--------------------------------------------------------------------------------

/2024/Week 13/week13upload.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 13/week13upload.pdf

--------------------------------------------------------------------------------

/2024/Week 14/readme-example.md:

--------------------------------------------------------------------------------

1 | # Big project

2 |

3 | What is this? This project is authored by Anna Prysłopska.

4 |

5 | This is my Big Project. This can be my BA thesis, a novel, the next big app that Facebook will buy (I don't subscribe to their rebranding), or anything else.

6 |

7 | ## Table of Contents

8 | - [Installation](#installation)

9 | - [Usage](#usage)

10 | - [Configuration](#configuration)

11 | - [Examples](#examples)

12 | - [Contributing](#contributing)

13 | - [Contact](#contact)

14 |

15 | ## Installation

16 |

17 | You need LaTeX for this.

18 |

19 | ## Usage

20 |

21 | Read it.

22 |

23 | ## Configuration

24 |

25 | Some details.

26 |

27 | ## Examples

28 |

29 | Just read it. If you cannot read, this documentation will not help you.

30 |

31 | ## Contributing

32 |

33 | Pull requests are welcome or not.

34 |

35 | ## Contact

36 |

37 | It's Anna Prysłopska, Wikipedia, and ChatGPT.

--------------------------------------------------------------------------------

/2024/Week 14/week14assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 14

2 |

3 | Upload a plain text file about how far you got with #2.

4 |

5 | 1. Make a new git repository for the project we worked on this semester (Moses illusion, noisy channel, and the related files). Add a readme file, then add the R files, the Quarto files, and the LaTeX files AS INDIVIDUAL COMMITS. Include only the necessary files (omit redundant files). You should have at least 4 commits. Write meaningful commit messages.

6 | 2. Attempt this exercise and report on how far you got with it: [Bandit](https://overthewire.org/wargames/bandit/bandit0.html)

7 |

--------------------------------------------------------------------------------

/2024/Week 14/week14handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 14/week14handout.pdf

--------------------------------------------------------------------------------

/2024/Week 15/week15handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 15/week15handout.pdf

--------------------------------------------------------------------------------

/2024/Week 2/.Rhistory:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 2/.Rhistory

--------------------------------------------------------------------------------

/2024/Week 2/code_APR15.r:

--------------------------------------------------------------------------------

1 | # Lecture 15 APR 2024 -----------------------------------------------------

2 | # Installing and loading packages

3 | install.packages("dplyr")

4 | library(dplyr)

5 |

6 | # Working directory

7 | setwd() # FIXME remember to add your path!

8 | getwd()

9 |

10 | # First function call

11 | print("Hello world!")

12 |

13 | # Assignment

14 | ten <- 10.2 # works

15 | "rose" -> Rose # works

16 | name = "Anna" # works

17 | true <<- FALSE # works

18 | 13/12 ->> n # doesn't work

19 | 13/12 ->> nrs # works

20 |

21 | # Homework

22 | # 1. Change the layout and color theme of RStudio.

23 | # 2. Make and upload a screenshot of your RStudio installation.

24 | # 3. Install and load the packages: tidyverse, knitr, MASS, and psych

25 | # 4. Write a code that prints a long text (~30 words) and save it to a variable.

26 | # 5. Upload your code to ILIAS.

27 |

28 | # Session information

29 | sessionInfo()

30 |

--------------------------------------------------------------------------------

/2024/Week 2/week2assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 2

2 |

3 | Upload 2 files to complete this assignment.

4 |

5 | ## Part 1/file 1 (image)

6 |

7 | - Change the layout and color theme of RStudio.

8 | - Make and upload a screenshot of your RStudio installation.

9 |

10 | ## Part 2/file 2 (r script)

11 |

12 | Upload an R script that does all of the following:

13 |

14 | - Install and load the packages: tidyverse, knitr, MASS, and psych

15 | - Prints a long text (~30 words) and saves it to a variable.

16 |
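One possible pattern for the script part, sketched under assumptions: the loop installs a package only if it is missing and then loads it. The base packages `stats` and `utils` are used here as stand-ins so the sketch runs anywhere. The text and the variable name `long_text` are placeholders.

```r
# Stand-in package names; replace with the packages named in the assignment.
needed <- c("stats", "utils")

for (pkg in needed) {
  # Install only when the package is not yet available
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg)
  }
  # character.only = TRUE lets library() take the name from a variable
  library(pkg, character.only = TRUE)
}

# Save a text to a variable, then print it
long_text <- "This is a placeholder sentence that would be replaced by a longer text."
print(long_text)

# Rough word count: split on whitespace and count the pieces
word_count <- length(strsplit(long_text, "\\s+")[[1]])
print(word_count)
```

Checking before installing avoids re-downloading packages each time the script runs.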

--------------------------------------------------------------------------------

/2024/Week 2/week2handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 2/week2handout.pdf

--------------------------------------------------------------------------------

/2024/Week 3/code_APR22.r:

--------------------------------------------------------------------------------

1 | # Lecture 22 APR 2024 -----------------------------------------------------

2 | library(tidyverse)

3 | library(psych)

4 |

5 | # Data types

6 | log <- TRUE

7 | int <- 1L

8 | dbl <- 1.0

9 | cpx <- 1+0i

10 | chr <- "one"

11 | nan <- NaN

12 | inf <- Inf

13 | ninf <- -Inf

14 | mis <- NA

15 | ntype <- NULL

16 |

17 | # Data type exercises

18 | # = is for assignment; == is for equality

19 | log == int

20 | log == 10

21 | int == dbl

22 | dbl == cpx

23 | cpx == chr

24 | chr == nan

25 | nan == inf

26 | inf == ninf

27 | ninf == mis

28 | mis == mis

29 | mis == ntype

30 | ninf == ntype

31 |

32 |

33 | 5L + 2

34 | 3.7 * 3L

35 | 99999.0e-1 - 3.3e+3

36 | 10 / as.complex(2)

37 | as.character(5) / 5

38 |

39 | # Removing from the environment

40 | x <- "bad"

41 | rm(x)

42 |

43 | # Moses illusion data

44 | moses <- read_csv("moses.csv")

45 | moses

46 | print(moses)

47 | print(moses, n=Inf)

48 | View(moses)

49 | head(moses)

50 | head(as.data.frame(moses))

51 | tail(as.data.frame(moses), n = 20)

52 | spec(moses)

53 | summary(moses)

54 | describe(moses)

55 | colnames(moses)

--------------------------------------------------------------------------------

/2024/Week 3/week3assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 3

2 |

3 | Please complete the following tasks. Submit the assignment as a single R script. Use comments and sections to give your file structure.

4 |

5 | ## According to R, what is the type of the following

6 |

7 | "Anna"

8 | -10

9 | FALSE

10 | 3.14

11 | as.logical(1)

12 |

13 | ## According to R, is the following true

14 |

15 | 7+0i == 7

16 | 9 == 9.0

17 | "zero" == 0L

18 | "cat" == "cat"

19 | TRUE == 1

20 |

21 | ## What is the output of the following operations and why?

22 |

23 | 10 < 1

24 | 5 != 4

25 | 5 - FALSE

26 | 1.0 == 1

27 | 4 *9.1

28 | "a" + 1

29 | 0/0

30 | b* 2

31 | (1-2)/0

32 | 10 <- 20

33 | NA == NA

34 | -Inf == NA

35 |
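As a hedged illustration of how R reports types and compares values of different types, the sketch below uses values that deliberately differ from the ones in the questions above. The variable names are arbitrary.

```r
# typeof() reports R's internal storage type
t_chr <- typeof("text")   # "character"
t_int <- typeof(2L)       # "integer": the L suffix requests an integer
t_dbl <- typeof(2)        # "double": plain numbers are doubles
t_lgl <- typeof(TRUE)     # "logical"
t_cpx <- typeof(2 + 0i)   # "complex"

# == coerces both sides to the more general type before comparing
int_vs_dbl <- 2L == 2.0   # TRUE: the integer is promoted to double
chr_vs_num <- "2" == 2    # TRUE: the number is converted to the string "2"

# identical() does not coerce, so the storage type matters
same_object <- identical(2L, 2)   # FALSE

# Comparisons with NA are unknown, so the result is NA; test with is.na()
na_compare <- NA == 1     # NA
na_check <- is.na(NA)     # TRUE

print(c(t_chr, t_int, t_dbl, t_lgl, t_cpx))
print(c(int_vs_dbl, chr_vs_num, same_object, na_compare, na_check))
```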

36 | ## Read and inspect the `noisy.csv` data

37 |

38 | What are the meaningful columns? What should be kept and what can be discarded?

39 |

40 | (anonymized data tbd)

41 |

--------------------------------------------------------------------------------

/2024/Week 3/week3handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 3/week3handout.pdf

--------------------------------------------------------------------------------

/2024/Week 4/code_APR29.r:

--------------------------------------------------------------------------------

1 | # Lecture 29 APR 2024 -----------------------------------------------------

2 | library(tidyverse)

3 | library(psych)

4 |

5 | # Moses illusion data

6 | moses <- read_csv("moses.csv")

7 |

8 | ## Clean up data -----------------------------------------------------------

9 | # Task 1: Rename and drop columns

10 | moses.ren <-

11 | rename(moses,

12 | ID = MD5.hash.of.participant.s.IP.address,

13 | ANSWER = Value)

14 |

15 | moses.sel <-

16 | select(moses.ren, c(ITEM, CONDITION, ANSWER, ID,

17 | Label, Parameter))

18 |

19 | # Task 2: Remove missing values

20 | moses.na <- na.omit(moses.sel)

21 |

22 | # Task 3: Remove unnecessary rows

23 | moses.fil <-

24 | filter(moses.na,

25 | Parameter == "Final",

26 | Label != "instructions",

27 | CONDITION %in% 1:2)

28 |

29 | # Task 4: Sort the values

30 | moses.arr <- arrange(moses.fil, ITEM, CONDITION,

31 | desc(ANSWER))

32 |

33 | # Task 5: Re-code item number

34 | moses.it <- mutate(moses.arr, ITEM = as.numeric(ITEM))

35 | head(moses.it, n=20)

36 |

37 | # Task 6: Look at possible answers

38 | uk <- unique(select(filter(moses.it, ITEM == 2), ANSWER))

39 |

40 | ## Noisy channel data ------------------------------------------------------

41 | noisy <- read_csv("noisy.csv")

42 |

43 | noisy |>

44 | rename(ID = MD5.hash.of.participant.s.IP.address) |>

45 | select(ID,

46 | Label,

47 | PennElementType,

48 | Parameter,

49 | Value,

50 | ITEM,

51 | CONDITION,

52 | Reading.time,

53 | Sentence..or.sentence.MD5.) |>

54 | view()

55 |

56 | # Session information

57 | sessionInfo()

58 |

--------------------------------------------------------------------------------

/2024/Week 4/week4assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 4

2 |

3 | Please complete the following tasks. Submit the assignment as a single R script. Use comments and sections to give your file structure.

4 |

5 | ## What do the following evaluate to and why?

6 |

7 | !TRUE

8 | FALSE + 0

9 | 5 & TRUE

10 | 0 & TRUE

11 | 1 - FALSE

12 | FALSE + 1

13 | 1 | FALSE

14 | FALSE | NA

15 |
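A short sketch of the rules behind expressions like these, using different values from the ones listed: in arithmetic, logical values act as 0 and 1; in logical operations, numbers are treated as TRUE unless they are zero; `NA` propagates only when the result really depends on it.

```r
# Arithmetic treats TRUE as 1 and FALSE as 0
two <- TRUE + TRUE                    # 2
n_true <- sum(c(TRUE, FALSE, TRUE))   # 2

# Logical operators treat any non-zero number as TRUE
nonzero_and <- 3 & TRUE               # TRUE

# NA means "unknown": the result is NA only if the unknown value matters
and_na_true <- TRUE & NA              # NA: depends on the unknown value
and_na_false <- FALSE & NA            # FALSE: FALSE & anything is FALSE
or_na_true <- TRUE | NA               # TRUE: TRUE | anything is TRUE

print(c(two = two, n_true = n_true))
print(c(nonzero_and, and_na_true, and_na_false, or_na_true))
```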

16 | ## Have the moses.csv data saved in your environment as "moses". Why do the following fail?

17 |

18 | Summary(moses)

19 | read_csv(moses.csv)

20 | tail(moses, n==10)

21 | describe(Moses)

22 | filter(moses, CONDITION == 102)

23 | arragne(moses, ID)

24 | mutate(moses, ITEMS = as.character("ITEM"))

25 |

26 | ## Clean up the Moses illusion data like we did in the tasks in class and save it to a new data frame. Use pipes instead of saving each step to a new data frame.

27 |

28 | - select relevant columns

29 | - rename mislabeled columns

30 | - remove missing data

31 | - remove unnecessary rows

32 | - change the item column to numeric values

33 | - arrange by item, condition, and answer

34 |
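The steps above can be chained with the native pipe `|>` (available from R 4.1) so that no intermediate data frames are needed. The sketch below is a minimal illustration on a made-up tibble `raw`. Its columns only mimic the names used in the lecture script, and the values are invented.

```r
library(dplyr)

# Hypothetical toy data standing in for moses.csv
raw <- tibble(
  ITEM = c("2", "1", "1", NA),
  CONDITION = c(1, 2, 1, 1),
  Value = c("Noah", "Moses", "two", "x"),
  Parameter = c("Final", "Final", "Final", "Final"),
  extra = 1:4
)

clean <- raw |>
  rename(ANSWER = Value) |>                      # rename the mislabeled column
  select(ITEM, CONDITION, ANSWER, Parameter) |>  # keep the relevant columns
  na.omit() |>                                   # drop rows with missing values
  filter(Parameter == "Final") |>                # drop unnecessary rows
  mutate(ITEM = as.numeric(ITEM)) |>             # item numbers as numeric values
  arrange(ITEM, CONDITION, desc(ANSWER))         # sort last

print(clean)
```

Converting `ITEM` to numeric before `arrange()` is a design choice in this sketch: sorting a character column would place "10" before "2".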

35 | ## From the Moses illusion data, make two new variables (printing and dont.know, respectively) with all answers which are supposed to mean "printing (press)" and "don't know".

36 |

37 | ## Preprocess noisy channel data.

38 |

39 | Make two data frames: for reading times and for acceptability judgments.

40 |

41 | ### Acceptability ratings

42 |

43 | - rename the ID column and column with the rating

44 | - only data from the experiment (see `Label`) and where `PennElementType` IS "Scale"

45 | - make sure the column with the rating data is numeric

46 | - select the relevant columns: participant ID, item, condition, rating

47 | - remove missing values

48 |

49 | ### Reading times

50 |

51 | - rename the ID column and column with the full sentence

52 | - only data from the experiment (see `Label`)

53 | - only data where `PennElementType` IS NOT "Scale" or "TextInput"

54 | - only data from where Reading.time is not "NULL" (as a string)

55 | - make a new column with reading times as numeric values

56 | - keep only those rows with realistic reading times (between 80 and 2000 ms)

57 | - select relevant columns: participant ID, item, condition, sentence, reading time, and Parameter

58 | - remove missing values

59 |
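A minimal sketch of the reading-time steps, assuming a made-up tibble `rt_raw`. The element type `"Key"` is an invented placeholder, not a value confirmed for the real data. Filtering out the string `"NULL"` before converting avoids the coercion warning that `as.numeric("NULL")` would otherwise produce.

```r
library(dplyr)

# Hypothetical toy data; only the column names follow the assignment
rt_raw <- tibble(
  Reading.time = c("NULL", "350", "45", "1200", "5000"),
  PennElementType = c("Scale", "Key", "Key", "Key", "Key")
)

rt <- rt_raw |>
  # Drop the "NULL" strings and the unwanted element types first
  filter(Reading.time != "NULL",
         !PennElementType %in% c("Scale", "TextInput")) |>
  # Now every remaining value converts cleanly to a number
  mutate(RT = as.numeric(Reading.time)) |>
  # Keep only realistic reading times (in ms)
  filter(RT > 80, RT < 2000)

print(rt)
```

Whether the limits 80 and 2000 themselves should be kept (`>=` and `<=`) is a decision the assignment leaves open; this sketch excludes them.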

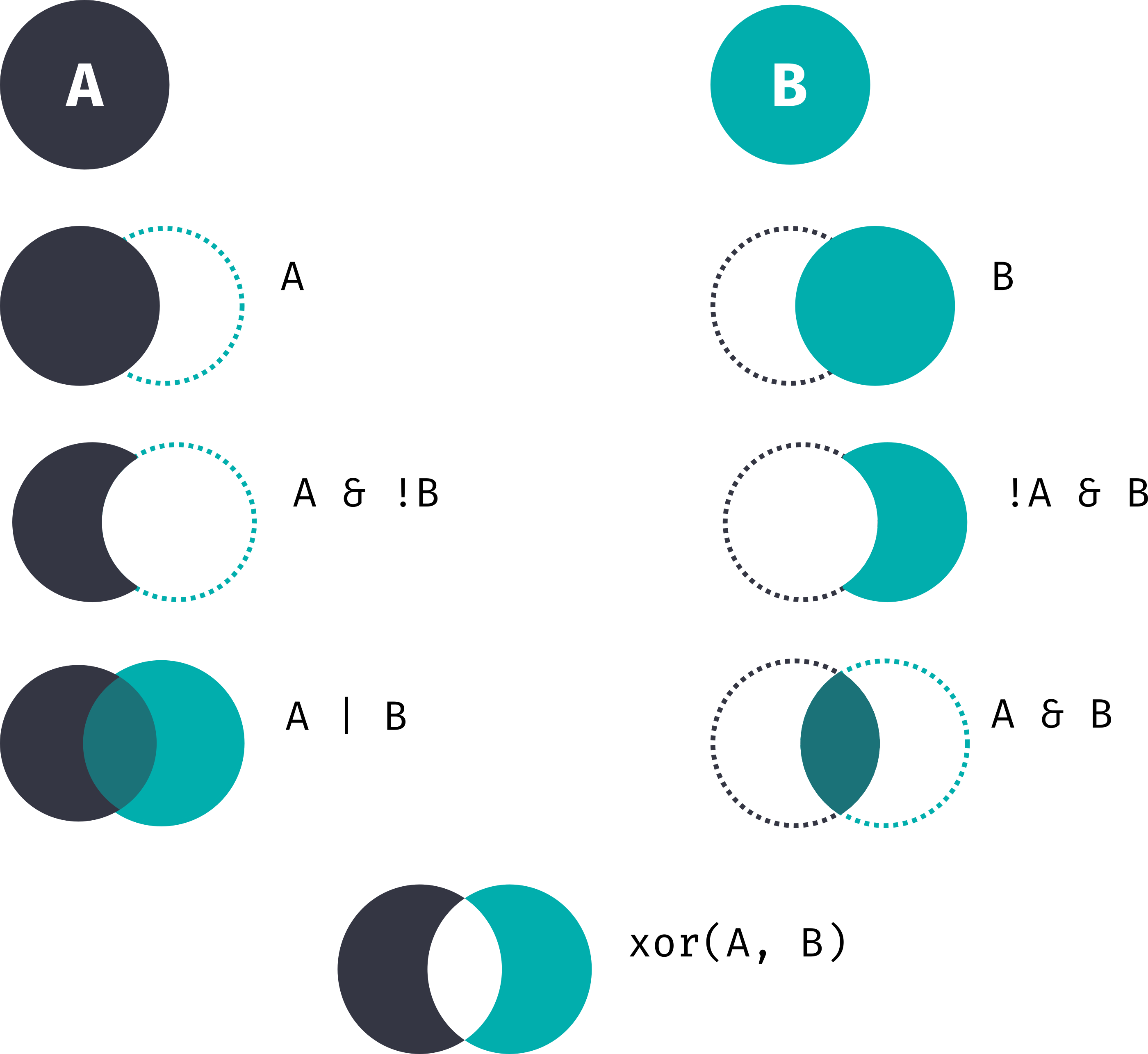

60 | ## Solve the logic exercise from the slides.

61 |

--------------------------------------------------------------------------------

/2024/Week 4/week4handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 4/week4handout.pdf

--------------------------------------------------------------------------------

/2024/Week 5/code_MAY06.r:

--------------------------------------------------------------------------------

1 | # Lecture 6 May 2024 ------------------------------------------------------

2 | library(tidyverse)

3 | library(psych)

4 |

5 | ## Homework ---------------------------------------------------------------

6 |

7 | moses <- read_csv("moses.csv")

8 |

9 | moses <-

10 | moses |>

11 | rename(ID = MD5.hash.of.participant.s.IP.address,

12 | ANSWER = Value) |>

13 | select(ITEM, CONDITION, ANSWER, ID, Label, Parameter) |>

14 | na.omit() |>

15 | filter(Parameter == "Final",

16 | Label != "instructions",

17 | CONDITION%in%1:2) |>

18 | arrange(ITEM, CONDITION, desc(ANSWER)) |>

19 | mutate(ITEM = as.numeric(ITEM))

20 |

21 |

22 |

23 | moses |> select(ANSWER) |> arrange(ANSWER) |> unique() # the column was renamed to ANSWER above

24 | dont.know <- c("Don't Know", "Don't know", "don't knoe", "don't know",

25 | "don`t know", "dont know", "don´t know", "i don't know",

26 | "Not sure", "no idea", "forgotten", "I do not know",

27 | "I don't know")

28 |

29 | moses |> filter(ITEM == 108) |> select(ANSWER) |> unique() # the column was renamed to ANSWER above

30 | printing <- c("Print", "printer", "Printing", "Printing books", "printing press",

31 | "press", "Press", "letter press", "letterpress", "Letterpressing",

32 | "inventing printing", "inventing the book press/his bibles",

33 | "finding a way to print books", "for inventing the pressing machine",

34 | "Drucka", "Book print", "book printing", "bookpress", "Buchdruck",

35 | "the book printer")

36 |

37 |

38 |

39 | noisy <- read_csv("noisy.csv")

40 | noisy.rt <-

41 | noisy |>

42 | rename(ID = "MD5.hash.of.participant.s.IP.address",

43 | SENTENCE = "Sentence..or.sentence.MD5.") |>

44 | mutate(RT = as.numeric(Reading.time)) |>

45 | filter(Label == "experiment",

46 | PennElementType != "Scale",

47 | PennElementType != "TextInput",

48 | Reading.time != "NULL",

49 | RT > 80 & RT < 2000) |>

50 | select(ID, ITEM, CONDITION, SENTENCE, RT, Parameter) |>

51 | na.omit()

52 |

53 | noisy.aj <-

54 | noisy |>

55 | filter(Label == "experiment",

56 | PennElementType == "Scale") |>

57 | mutate(RATING = as.numeric(Value),

58 | ID = MD5.hash.of.participant.s.IP.address) |> # unquoted: copy the column, not the literal string

59 | select(ID, ITEM, CONDITION, RATING) |>

60 | na.omit()

61 |

62 |

63 | ## Naming -----------------------------------------------------------------

64 |

65 | ueOd2FNRGAP0dRopq4OqU <- 1:10

66 | ueOd2FNRGAPOdRopq4OqU <- c("Passport", "ID", "Driver's license")

67 | ueOb2FNRGAPOdRopq4OqU <- FALSE

68 | ueOd2FNRGAPOdRopq4OqU <- 5L

69 |

70 | He_just.kept_talking.in.one.long_incredibly.unbroken.sentence.moving_from.topic_to.topic <- 1

71 |

72 | ## Joining, cleaning, grouping, summarizing -------------------------------

73 | moses <- read_csv("moses_clean.csv") # Look, I overwrote the previous 'moses' variable!

74 | questions <- read_csv("questions.csv")

75 |

76 | # Task 1

77 | data_with_answers <-

78 | moses |>

79 | inner_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

80 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST)

81 |

82 | moses |>

83 | full_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

84 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST)

85 |

86 | moses |>

87 | merge(questions, by=c("ITEM", "CONDITION", "LIST")) |>

88 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST)

89 |

90 | # Task 2

91 | moses |>

92 | inner_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

93 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST) |>

94 | mutate(ACCURATE = ANSWER == CORRECT_ANSWER)

95 |

96 | # Task 3

97 | moses |>

98 | inner_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

99 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST) |>

100 | mutate(ACCURATE = ifelse(CORRECT_ANSWER == ANSWER,

101 | yes = "correct",

102 | no = ifelse(ANSWER == "dont_know",

103 | yes = "dont_know",

104 | no = "incorrect")))

105 |

106 | # Task 4

107 | moses |>

108 | inner_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

109 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST) |>

110 | mutate(ACCURATE = ifelse(CORRECT_ANSWER == ANSWER,

111 | yes = "correct",

112 | no = ifelse(ANSWER == "dont_know",

113 | yes = "dont_know",

114 | no = "incorrect")),

115 | CONDITION = case_when(CONDITION == '1' ~ 'illusion',

116 | CONDITION == '2' ~ 'no illusion',

117 | CONDITION == '100' ~ 'good filler',

118 | CONDITION == '101' ~ 'bad filler'))

119 |

120 | # Task 5

121 | moses |>

122 | full_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

123 | select(ITEM, CONDITION, ID, ANSWER, CORRECT_ANSWER, LIST) |>

124 | mutate(ACCURATE = ifelse(CORRECT_ANSWER == ANSWER,

125 | yes = "correct",

126 | no = ifelse(ANSWER == "dont_know",

127 | yes = "dont_know",

128 | no = "incorrect")),

129 | CONDITION = case_when(CONDITION == '1' ~ 'illusion',

130 | CONDITION == '2' ~ 'no illusion',

131 | CONDITION == '100' ~ 'good filler',

132 | CONDITION == '101' ~ 'bad filler')) |>

133 | group_by(ITEM, ACCURATE) |>

134 | summarise(Count = n())

--------------------------------------------------------------------------------

/2024/Week 5/week5assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 5

2 |

3 | ## Read and preprocess the new Moses illusion data (`moses_clean.csv`)

4 |

5 | 1. Calculate the percentage of "correct", "incorrect", and "don't know" answers in the two critical conditions.

6 | 2. Of all the questions in all conditions, which question was the easiest and which was the hardest?

7 | 3. Of the Moses illusion questions, which question fooled most people?

8 | 4. Which participant was the best in answering questions? Who was the worst?
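As a cross-check for question 1, the percentages can also be tabulated in base R. This is only a sketch on an invented toy data frame; the column names `CONDITION` and `ACCURATE` and the answer labels are assumptions about how the data is coded after preprocessing, not something `moses_clean.csv` is guaranteed to contain.

```r
# Invented toy data (assumption): one row per answer, already coded for accuracy
toy <- data.frame(
  CONDITION = c(rep("illusion", 4), rep("no illusion", 4)),
  ACCURATE  = c("correct", "incorrect", "incorrect", "dont_know",
                "correct", "correct", "correct", "incorrect")
)

# Count the answers in each condition
counts <- with(toy, table(CONDITION, ACCURATE))

# margin = 1 divides each count by its row total, so every condition sums to 100
percentages <- prop.table(counts, margin = 1) * 100
percentages
```

Using `margin = 1` gives percentages within each condition. Leaving it out would give percentages of the whole data set, which answers a different question.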

9 |

10 | ## Read and inspect the updated new noisy channel data (`noisy_rt.csv` and `noisy_aj.csv`).

11 |

12 | 1. **Acceptability judgment data:** Calculate the mean rating in each condition. How was the data spread out? Did the participants rate the sentences differently?

13 | 2. **Reading times:** Calculate the average time participants spent reading each sentence fragment in each condition. Did the participants read the sentences differently in each condition?

14 | 3. Make one data frame out of both data frames. Keep all the information but remove redundancy.
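For question 3, one possible approach is a full join on the columns the two data frames share. The sketch below uses two invented toy data frames; the key columns `ID`, `ITEM`, and `CONDITION` and the value columns `RATING`, `IA`, and `RT` are assumed here for illustration and should be checked against the real files.

```r
library(dplyr)

# Invented toy data (assumption): one rating per participant and item
aj_toy <- data.frame(
  ID        = c("p1", "p1", "p2"),
  ITEM      = c(1, 2, 1),
  CONDITION = c("a", "b", "a"),
  RATING    = c(5, 3, 6)
)

# Invented toy data (assumption): one reading time per interest area (IA)
rt_toy <- data.frame(
  ID        = c("p1", "p1", "p1", "p2"),
  ITEM      = c(1, 1, 2, 1),
  CONDITION = c("a", "a", "b", "a"),
  IA        = c(1, 2, 1, 1),
  RT        = c(300, 350, 410, 290)
)

# Naming the keys explicitly keeps the shared columns from being duplicated
# and documents which columns identify a trial
noisy_toy <- full_join(aj_toy, rt_toy, by = c("ID", "ITEM", "CONDITION"))
noisy_toy
```

Because each rating belongs to several reading times, the rating is repeated on every matching reading-time row. That repetition is expected in a long-format table and is not the kind of redundancy the task asks you to remove.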

15 |

--------------------------------------------------------------------------------

/2024/Week 5/week5handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 5/week5handout.pdf

--------------------------------------------------------------------------------

/2024/Week 6/code_MAY13.r:

--------------------------------------------------------------------------------

1 | library(tidyverse)

2 |

3 | # Homework ----------------------------------------------------------------

4 | # Task 1.1: Calculate the percentage of "correct", "incorrect",

5 | # and "don't know" answers in the two critical conditions.

6 |

7 | moses <- read_csv("moses_clean.csv")

8 | questions <- read_csv("questions.csv")

9 |

10 | moses.preprocessed <-

11 | moses |>

12 | inner_join(questions, by=c("ITEM", "CONDITION", "LIST")) |>

13 | select(ID, ITEM, CONDITION, QUESTION, ANSWER, CORRECT_ANSWER) |>

14 | mutate(ACCURATE = ifelse(CORRECT_ANSWER == ANSWER,

15 | yes = "correct",

16 | no = ifelse(ANSWER == "dont_know",

17 | yes = "dont_know",

18 | no = "incorrect")),

19 | CONDITION = case_when(CONDITION == '1' ~ 'illusion',

20 | CONDITION == '2' ~ 'no illusion',

21 | CONDITION == '100' ~ 'good filler',

22 | CONDITION == '101' ~ 'bad filler'))

23 |

24 | moses.preprocessed |>

25 | filter(CONDITION %in% c('illusion', 'no illusion')) |>

26 | group_by(CONDITION, ACCURATE) |>

27 | summarise(count = n()) |>

28 | mutate(percentage = count / sum(count) * 100)

29 |

30 | # Task 1.2: Of all the questions in all conditions, which

31 | # question was the easiest and which was the hardest?

32 |

33 | minmax <-

34 | moses.preprocessed |>

35 | group_by(ITEM, QUESTION, ACCURATE) |>

36 | summarise(count = n()) |>

37 | mutate(CORRECT_ANSWERS = count / sum(count) * 100) |>

38 | arrange(CORRECT_ANSWERS) |>

39 | filter(ACCURATE == "correct")

40 |

41 | head(minmax, 2)

42 | tail(minmax, 2)

43 |

44 | minmax |>

45 | filter(CORRECT_ANSWERS == min(minmax$CORRECT_ANSWERS) |

46 | CORRECT_ANSWERS == max(minmax$CORRECT_ANSWERS))

47 |

48 | # Task 1.3: Of the Moses illusion questions, which question fooled most people?

49 |

50 | moses.preprocessed |>

51 | group_by(ITEM, CONDITION, QUESTION, ACCURATE) |>

52 | summarise(count = n()) |>

53 | mutate(CORRECT_ANSWERS = count / sum(count) * 100) |>

54 | filter(CONDITION == 'illusion',

55 | ACCURATE == "incorrect") |>

56 | arrange(CORRECT_ANSWERS) |>

57 | print(n=Inf)

58 |

59 | # Task 1.4: Which participant was the best in answering questions?

60 | # Who was the worst?

61 |

62 | moses.preprocessed |>

63 | group_by(ID, ACCURATE) |>

64 | summarise(count = n()) |>

65 | mutate(CORRECT_ANSWERS = count / sum(count) * 100) |>

66 | filter(ACCURATE == "correct") |>

67 | arrange(CORRECT_ANSWERS) |>

68 | print(n=Inf)

69 |

70 | # Task 2.1

71 | noisy_aj <- read.csv("noisy_aj.csv")

72 | noisy_aj |>

73 | group_by(CONDITION) |>

74 | summarise(MEAN_RATING = mean(RATING),

75 | SD = sd(RATING))

76 |

77 | # Task 2.2

78 | noisy_rt <- read.csv("noisy_rt.csv")

79 | noisy_rt |>

80 | group_by(IA, CONDITION) |>

81 | summarise(MEAN_RT = mean(RT),

82 | SD = sd(RT))

83 |

84 | # Task 2.3

85 |

86 | noisy <- noisy_aj |>

87 | full_join(noisy_rt)

88 | # full_join(noisy_rt, by=c("ID", "ITEM", "CONDITION")) |> head()

89 |

90 | # Lecture 13 May 2024 ------------------------------------------------------

91 |

92 | # Noisy data preparation

93 | noisy <- read_csv("noisy.csv")

94 | noisy.rt <-

95 | noisy |>

96 | rename(ID = "MD5.hash.of.participant.s.IP.address",

97 | SENTENCE = "Sentence..or.sentence.MD5.") |>

98 | mutate(RT = as.numeric(Reading.time)) |>

99 | filter(Label == "experiment",

100 | PennElementType != "Scale",

101 | PennElementType != "TextInput",

102 | Reading.time != "NULL",

103 | RT > 80 & RT < 2000) |>

104 | select(ID, ITEM, CONDITION, SENTENCE, RT, Parameter) |>

105 | na.omit()

106 |

107 | # Plotting

108 | # Data summary with 1 row per observation

109 | noisy.summary <-

110 | noisy.rt |>

111 | group_by(ITEM, CONDITION, Parameter) |>

112 | summarise(RT = mean(RT)) |>

113 | group_by(CONDITION, Parameter) |>

114 | summarise(MeanRT = mean(RT),

115 | SD = sd(RT)) |>

116 | rename(IA = Parameter)

117 |

118 | # Plot object

119 | noisy.summary |>

120 | ggplot() +

121 | aes(x=as.numeric(IA), y=MeanRT, colour=CONDITION) +

122 | geom_line() +

123 | geom_point() +

124 | facet_wrap(.~CONDITION) +

125 | stat_sum() +

126 | # geom_errorbar(aes(ymin=MeanRT-2*SD, ymax=MeanRT+2*SD)) +

127 | coord_polar() +

128 | theme_classic() +

129 | labs(x = "Interest area",

130 | y = "Mean reading time in ms",

131 | title = "Noisy channel data",

132 | subtitle = "Reading times only",

133 | caption = "Additional caption",

134 | colour="Condition",

135 | size = "Count")

136 |

137 | # Esquisse

138 | library(esquisse)

139 | set_i18n("de") # Set language to German

140 | esquisser()

141 |

--------------------------------------------------------------------------------

/2024/Week 6/week6assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 6

2 |

3 | **Make 10 plots overall.**

4 |

5 | 9 plots should visualize the data from the two in-class experiments. These plots should follow the WCOG guidelines and show different aspects of the data (e.g. only one condition, only one interest area). Do not make 3 plots that show the same thing, e.g. three times the mean acceptability rating between conditions.

6 |

7 | - 3 plots for the Moses illusion data (line, point, and bar),

8 | - 3 plots for the noisy channel reading time data (line, point, and bar), and

9 | - 3 plots for the noisy channel acceptability rating data (line, point, and bar).

10 |

11 | You can use hybrid plots as well.

12 |

13 | The last plot can be based on any dataset you want and be in any shape you want. It has to be ugly, unreadable, and violate as many WCOG guidelines as it can.

14 |

15 | If you use a dataset outside of the two experiments in class, please upload it with your script file.
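As a starting point, a bar plot of a per-condition summary might be sketched as follows. The data frame is invented for illustration (the condition labels and mean ratings are not course data), and the styling choices are only one option, not a statement of what the guidelines require.

```r
library(ggplot2)

# Invented toy summary (assumption): mean rating per condition
ratings <- data.frame(
  CONDITION   = c("plausible", "implausible"),
  MEAN_RATING = c(5.8, 2.4)
)

# geom_col() draws bars whose heights are the values already in the data,
# which suits a table that has been summarised beforehand
rating_plot <-
  ggplot(ratings) +
  aes(x = CONDITION, y = MEAN_RATING, fill = CONDITION) +
  geom_col() +
  theme_minimal() +
  labs(x = "Condition",
       y = "Mean acceptability rating",
       fill = "Condition")

rating_plot
```

Swapping `geom_col()` for `geom_point()` or `geom_line()` (the latter with `group = 1` inside `aes()`) would turn the same summary into a point or line plot.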

16 |

--------------------------------------------------------------------------------

/2024/Week 6/week6handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 6/week6handout.pdf

--------------------------------------------------------------------------------

/2024/Week 8/code_MAY27.r:

--------------------------------------------------------------------------------

1 | # Load necessary packages

2 | library(ggplot2)

3 | library(patchwork)

4 | library(cowplot)

5 |

6 |

7 | # Last homework: minimal and maximal values only --------------------------

8 | minmax <-

9 | moses.preprocessed |>

10 | group_by(ITEM, QUESTION, ACCURATE) |>

11 | summarise(count = n()) |>

12 | mutate(CORRECT_ANSWERS = count / sum(count) * 100) |>

13 | arrange(CORRECT_ANSWERS) |>

14 | filter(ACCURATE == "correct")

15 |

16 | minmax |>

17 | filter(CORRECT_ANSWERS == min(minmax$CORRECT_ANSWERS) |

18 | CORRECT_ANSWERS == max(minmax$CORRECT_ANSWERS))

19 |

20 | # Generate plots from the R base 'iris' dataframe -------------------------

21 | # Find out more about the iris dataframe by typing: ?iris

22 | plot1 <-

23 | ggplot(iris) +

24 | aes(x = Sepal.Length,

25 | fill = Species) +

26 | geom_density(alpha = 0.5) +

27 | theme_minimal()

28 |

29 | plot2 <-

30 | ggplot(iris) +

31 | aes(x = Sepal.Length,

32 | y = Sepal.Width,

33 | color = Species) +

34 | geom_point() +

35 | theme_minimal()

36 |

37 | plot3 <-

38 | ggplot(iris) +

39 | aes(x = Species, y = Petal.Width, fill = Species) +

40 | geom_boxplot() +

41 | theme_minimal()

42 |

43 | plot4 <-

44 | ggplot(iris) +

45 | aes(x = Petal.Length,

46 | y = Petal.Width,

47 | colour = Species,

48 | group = Species) +

49 | geom_step() +

50 | theme_minimal()

51 |

52 |

53 | # Patchwork ---------------------------------------------------------------

54 | # Join plots and arrange them in two rows

55 | plots <- (plot1 | plot2 | plot3) / plot4 + plot_layout(nrow = 2)

56 | # Keep all legends together and add annotations

57 | plots + plot_layout(guides = 'collect') + plot_annotation(tag_levels = 'A')

58 |

59 | # Export plots

60 | ggsave("patchwork_plots.png", width=1000, units = "px", dpi=100)

61 | ggsave("patchwork_plots.pdf", dpi=100)

62 |

63 | # Remove last plot

64 | dev.off()

65 |

66 | # Cowplot -----------------------------------------------------------------

67 | # Join plots, remove all legends, add annotations

68 | plots <-

69 | plot_grid(plot1 + theme(legend.position="none"),

70 | plot2 + theme(legend.position="none"),

71 | plot3 + theme(legend.position="none"),

72 | plot4 + theme(legend.position="none"),

73 | labels = c('A', 'B', 'C', 'D'),

74 | label_size = 12)

75 | # Choose one legend to keep

76 | legend <-

77 | get_legend(plot1 +

78 | guides(color = guide_legend(nrow = 1)) +

79 | theme(legend.box.margin = margin(12, 12, 12, 12)))

80 | # Put the plot and legend together

81 | plot_grid(plots, legend, rel_widths = c(3, .4))

82 |

83 | # Export plots

84 | ggsave("cowplot_plots.png", height=8, dpi=100)

85 | ggsave("cowplot_plots.pdf", dpi=100)

86 |

--------------------------------------------------------------------------------

/2024/Week 8/week8.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 8/week8.pdf

--------------------------------------------------------------------------------

/2024/Week 8/week8assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 8

2 |

3 | Upload 1 file.

4 |

5 | - Upload one PNG or PDF file with the plots from last week's homework in one picture (**not one picture per plot**). Use the packages `patchwork` or `cowplot`.

6 | - Vote for the ugliest plot

7 | - Install Quarto: https://quarto.org/docs/get-started/

8 | - Watch the introductory video: https://youtu.be/_f3latmOhew?si=xxovQvYkUosC_4uB

9 |

--------------------------------------------------------------------------------

/2024/Week 8/week8handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 8/week8handout.pdf

--------------------------------------------------------------------------------

/2024/Week 9/quarto-demo.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 9/quarto-demo.zip

--------------------------------------------------------------------------------

/2024/Week 9/week9assignment.md:

--------------------------------------------------------------------------------

1 | # Assignment Week 9

2 |

3 | Submit two files in which you report on the Moses illusion experiment: a Quarto file (`.qmd`) and an HTML file (`.html`). The Quarto file should have all the code, text, and markup. The HTML file should look like **a beautiful report with no unnecessary code or output.** Treat it like a report or presentation, but be very brief; this is not a term paper.

4 |

5 | - Keep all the code you need for analyzing and visualizing the data.

6 | - Make at least one table.

7 | - Make at least one list.

8 | - Include at least one plot of the data.

9 | - Reference the table, list, and plot in the report text by hyperlinking/cross-referencing.

10 | - Include the session info in full.

11 |

12 | Your Quarto file should include ALL code needed to generate the data. That means you need to include everything from start to finish, so also the code for **loading the packages and data**. Then all the code for preprocessing and generating tables, plots, etc.

13 |

--------------------------------------------------------------------------------

/2024/Week 9/week9handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2024/Week 9/week9handout.pdf

--------------------------------------------------------------------------------

/2024/readme.md:

--------------------------------------------------------------------------------

1 | # Digital Research Toolkit for Linguists

2 |

3 | Author: `anna.pryslopska[ AT ]ling.uni-stuttgart.de`

4 |

5 | These are the original materials from the course "Digital Research Toolkit for Linguists", taught by me in the Summer Semester 2024 at the University of Stuttgart.

6 |

7 | If you want to replicate this course, you can do so with proper attribution. To replicate the data, follow these links for [Experiment 1](https://farm.pcibex.net/r/CuZHnp/) (full Moses illusion experiment) and [Experiment 2](https://farm.pcibex.net/r/zAxKiw/) (demo of self-paced reading with acceptability judgment).

8 |

9 | ## Schedule and syllabus

10 |

11 | This is a rough overview of the topics discussed every week. These are subject to change, depending on how the class goes.

12 |

13 | | Week | Topic | Description | Assignments | Materials |

14 | | ---- | ----- | ----------- | ----------- | --------- |

15 | | 1 | Introduction & overview | Course overview and expectations, classroom management and assignments/grading etc. Data collection. | Complete [Experiment 1](https://farm.pcibex.net/p/glQRwV/) and [Experiment 2](https://farm.pcibex.net/p/ceZUkj/) and recruit one more person. [Install R](https://www.r-project.org/) and [RStudio](https://posit.co/download/rstudio-desktop/), install [Texmaker](https://www.xm1math.net/texmaker/) or make an [Overleaf](https://www.overleaf.com/) account. | [Slides](https://github.com/a-nap/DRTfL2024/blob/1e3ac235f6957eaaebf8a19f1889d0b6a6f79fb7/Week%201/week1handout.pdf) |

16 | | 2 | Data, R and RStudio | Intro recap, directories, R and RStudio, installing and loading packages, working with scripts | Read chapters 2, 6 and 7 of [R for Data Science](https://r4ds.hadley.nz/), complete [assignment 1](https://github.com/a-nap/DRTfL2024/blob/main/Week%202/week2assignment.md) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%202/week2handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%202/code_APR15.r) |

17 | | 3 | Reading data, data inspection and manipulation | Looking at your data, data types, importing, making sense of the data, intro to sorting, filtering, subsetting, removing missing data, data manipulation | Read chapters 3, 4 and 5 of [R for Data Science](https://r4ds.hadley.nz/), complete [assignment 2](https://github.com/a-nap/DRTfL2024/blob/main/Week%203/week3assignment.md). | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%203/week3handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%203/code_APR22.r), data |

18 | | 4 | Data manipulation | Basic operators, data manipulation (filtering, sorting, subsetting, arranging), pipelines, tidy code, practice. | Complete [assignment 3](https://github.com/a-nap/DRTfL2024/blob/main/Week%204/week4assignment.md) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%204/week4handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%204/code_APR29.r), data |

19 | | 5 | Data manipulation and error handling | Summary statistics, grouping, merging, if ... else, naming variables, tidy code, error handling and getting help. | [Assignment 4](https://github.com/a-nap/DRTfL2024/blob/main/Week%205/week5assignment.md), read the slides from the QCBS R Workshop Series [*Workshop 3: Introduction to data visualisation with `ggplot2`*](https://r.qcbs.ca/workshop03/pres-en/workshop03-pres-en.html) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%205/week5handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%205/code_MAY06.r) |

20 | | 6 | Data visualization | Communicating with graphics, choice of visualization, plot types, best practices, visualizing in R (`ggplot2`, `esquisse`), exporting plots and data | Complete [assignment 5](https://github.com/a-nap/DRTfL2024/blob/main/Week%206/week6assignment.md). If you haven't yet, install the package `esquisse` | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%206/week6handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%206/code_MAY13.r) |

21 | | 7 | No class | Holiday | | |

22 | | 8 | Data visualization | Data visualization recap, best practices, lying with plots, practical exercises, exporting/saving plots and data. | Complete [assignment 6](https://github.com/a-nap/DRTfL2024/blob/main/Week%208/week8assignment.md). Install [Quarto](https://quarto.org/docs/get-started/). Watch [the introductory video](https://www.youtube.com/watch?v=_f3latmOhew) | Slides [large](https://github.com/a-nap/DRTfL2024/blob/main/Week%208/week8.pdf) and [compressed](https://github.com/a-nap/DRTfL2024/blob/main/Week%208/week8handout.pdf), [code](https://github.com/a-nap/DRTfL2024/blob/main/Week%208/code_MAY27.r) |

23 | | 9 | Creating reports with Quarto and knitr | Pandoc, markdown, Quarto, basic syntax and elements, export, document, and chunk options, documentation | Complete [assignment 7](https://github.com/a-nap/DRTfL2024/blob/main/Week%209/week9assignment.md). | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%209/week9handout.pdf), [compressed Quarto files](https://github.com/a-nap/DRTfL2024/blob/main/Week%209/quarto-demo.zip) |

24 | | 10 | Typesetting documents with LaTeX | What is LaTeX, basic document and file structure, advantages and disadvantages, from R to LaTeX | Complete [assignment 8](https://github.com/a-nap/DRTfL2024/blob/main/Week%2010/week10assignment.md), read chapter 2 of [*The Not So Short Introduction to LaTeX*](https://tobi.oetiker.ch/lshort/lshort.pdf). | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2010/week10handout.pdf), [basic LaTeX file (zip)](https://github.com/a-nap/DRTfL2024/blob/main/Week%2010/basic%20LaTeX%20document.zip) |

25 | | 11 | Typesetting documents with LaTeX | Editing text (commands, whitespace, environments, font properties, figures, and tables), glosses, IPA symbols, semantic formulae, syntactic trees | Complete [assignment 9](https://github.com/a-nap/DRTfL2024/blob/main/Week%2011/week11assignment.md), read [*Bibliography management with biblatex*](https://www.overleaf.com/learn/latex/Bibliography_management_with_biblatex) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2011/week11handout.pdf) |

26 | | 12 | Typesetting documents with LaTeX and bibliography management | Large projects, citations, references, bibliography styles, bib file structure | Complete [assignment 10](https://github.com/a-nap/DRTfL2024/blob/main/Week%2012/week12assignment.md) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2012/week12handout.pdf), [big project files](https://github.com/a-nap/DRTfL2024/blob/main/Week%2012/big_project.zip) |

27 | | 13 | Literature and reference management, common command line commands | Reference managers, looking up literature, command line commands (grep, diff, ping, cd, etc.) | Complete [assignment 11](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/week13assignment.md) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/week13upload.pdf), [corpus1.txt](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/corpus1.TXT), [corpus2.txt](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/corpus2.TXT), [corpus3.txt](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/corpus3.TXT), [big project 1](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/big_project_1.zip), [big project 2](https://github.com/a-nap/DRTfL2024/blob/main/Week%2013/big_project_2.zip) |

28 | | 14 | Text editors, version control and Git | Text editors, Git, GitHub, version control | Complete [assignment 12](https://github.com/a-nap/DRTfL2024/blob/main/Week%2014/week14assignment.md) | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2014/week14handout.pdf), [example readme file](https://github.com/a-nap/DRTfL2024/blob/main/Week%2014/readme-example.md) |

29 | | 15 | Version control and Git | Git, GitHub, SSH, reverting to older versions | In class assignment | [Slides](https://github.com/a-nap/DRTfL2024/blob/main/Week%2015/week15handout.pdf), [SSH for GitHub video](https://vimeo.com/989393245) |

30 |

31 | ## Recommended reading

32 |

33 | ### Git

34 |

35 | - GitHub Git guide: [`https://github.com/git-guides/`](https://github.com/git-guides/)

36 | - Another git guide: [`http://rogerdudler.github.io/git-guide/`](http://rogerdudler.github.io/git-guide/)

37 | - Git tutorial: [`http://git-scm.com/docs/gittutorial`](http://git-scm.com/docs/gittutorial)

38 | - Another git tutorial: [`https://www.w3schools.com/git/`](https://www.w3schools.com/git/)

39 | - Git cheat sheets: [`https://training.github.com/`](https://training.github.com/)

40 | - Where to ask questions: [Stackoverflow](https://stackoverflow.com)

41 |

42 | ### LaTeX

43 |

44 | - Overleaf (n.d.) *Bibliography management with biblatex*. Accessed: 2024-06-24. URL: [`https://www.overleaf.com/learn/latex/Bibliography_management_with_biblatex`](https://www.overleaf.com/learn/latex/Bibliography_management_with_biblatex)

45 | - Dickinson, Markus and Josh Herring (2008). *LaTeX for Linguists*. Accessed: 2024-06-07. URL:

46 | [`https://cl.indiana.edu/~md7/08/latex/slides.pdf`](https://cl.indiana.edu/~md7/08/latex/slides.pdf).

47 | - LaTeX/Linguistics - Wikibooks (2024). Accessed: 2024-06-07. URL: [`https://en.wikibooks.org/wiki/LaTeX/Linguistics`](https://en.wikibooks.org/wiki/LaTeX/Linguistics).

48 | - Oetiker, Tobias et al. (2023). *The Not So Short Introduction to LaTeX*. Accessed: 2024-06-07. URL:

49 | [`https://tobi.oetiker.ch/lshort/lshort.pdf`](https://tobi.oetiker.ch/lshort/lshort.pdf).

50 |

51 | ### Quarto

52 |

53 | - Introductory video: [`https://www.youtube.com/watch?v=_f3latmOhew`](https://www.youtube.com/watch?v=_f3latmOhew)

54 | - Documentation: [`https://quarto.org/docs/get-started/`](https://quarto.org/docs/get-started/)

55 |

56 | ### R

57 |

58 | - QCBS R Workshop Series [`https://r.qcbs.ca/`](https://r.qcbs.ca/)

59 | - Wickham, Hadley, Mine Çetinkaya-Rundel, and Garrett Grolemund (2023). *R for data science: import, tidy, transform, visualize, and model data*. 2nd ed. O’Reilly Media, Inc. URL: [`https://r4ds.hadley.nz/`](https://r4ds.hadley.nz/).

60 |

61 | ### Experiments

62 |

63 | - Free-response: Erickson, Thomas D and Mark E Mattson (1981). “From words to meaning: A semantic illusion”. In: *Journal of Verbal Learning and Verbal Behavior* 20.5, pp. 540–551. DOI: [`10.1016/s0022-5371(81)90165-1`](https://www.sciencedirect.com/science/article/abs/pii/S0022537181901651).

64 | - Self-paced reading with acceptability judgments: Gibson, Edward, Leon Bergen, and Steven T Piantadosi (2013). “Rational integration of noisy evidence and prior semantic expectations in sentence interpretation”. In: *Proceedings of the National Academy of Sciences* 110.20, pp. 8051–8056. DOI: [`10.1073/pnas.1216438110`](https://www.pnas.org/doi/full/10.1073/pnas.1216438110).

65 |

--------------------------------------------------------------------------------

/2025/Week 1/assignment01.md:

--------------------------------------------------------------------------------

1 | # Assignment 01

2 |

3 | Give one answer to each of the three tasks.

4 |

5 | ## Task 1

6 |

7 | 1. Take part in the class experiment: https://farm.pcibex.net/p/glQRwV/

8 | 2. Which question did you like most?

9 |

10 | ## Task 2

11 |

12 | 1. Install R: https://cran.r-project.org/

13 | 2. Install RStudio: https://www.rstudio.com/

14 | 3. Did you successfully install both?

15 |

16 | ## Task 3

17 |

18 | Check if your data is sold: https://netzpolitik.org/2024/databroker-files-jetzt-testen-wurde-mein-handy-standort-verkauft/

19 |

20 | Was your data sold?

--------------------------------------------------------------------------------

/2025/Week 1/week01handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2025/Week 1/week01handout.pdf

--------------------------------------------------------------------------------

/2025/Week 2/assignment02.md:

--------------------------------------------------------------------------------

1 | # Assignment 02

2 |

3 | Upload 2 files to complete this assignment.

4 |

5 | ## Part 1/file 1 (image)

6 |

7 | - Change the layout and color theme of RStudio.

8 | - Make and upload a screenshot of your RStudio installation.

9 |

10 | ## Part 2/file 2 (r script)

11 |

12 | Upload an R script that does all of the following:

13 |

14 | - Install and load the packages: tidyverse, knitr, patchwork, and psych

15 | - Print a long text (30-50 words) and save it to a variable called "long_text"
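To check that a text falls inside the 30-50 word range, the words can be counted in base R. The sketch below is one way to do it; the sample sentence and the helper names `words` and `word_count` are made up for illustration.

```r
# print() returns its argument invisibly, so the text is shown and stored in one step
long_text <- print("The Moses illusion is a semantic illusion in which readers fail to notice that a question contains a wrong name, for example when they are asked how many animals of each kind Moses took on the ark.")

# Split on runs of whitespace; punctuation stays attached to the neighbouring word
words <- strsplit(long_text, "\\s+")[[1]]
word_count <- length(words)

# TRUE if the text is within the required range
word_count >= 30 & word_count <= 50
```

Splitting on whitespace treats hyphenated words and words with attached punctuation as single words, which is usually close enough for a length check like this one.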

16 |

--------------------------------------------------------------------------------

/2025/Week 2/week02.R:

--------------------------------------------------------------------------------

1 | # Week 02 -----------------------------------------------------------------

2 | # April 15th 2025

3 |

4 | # Working directory

5 | setwd("path here") # For me, this is "~/Linguistics toolkit course/2025/Code"

6 | getwd() # Show the working directory

7 |

8 | # Packages

9 | install.packages(c("NAME", "ANOTHER NAME")) # Install packages called NAME and ANOTHER NAME

10 | library(NAME) # Load one package at a time

11 | sessionInfo() # Current R session information

12 | detach("package:NAME", unload = TRUE) # Unload the package called NAME

--------------------------------------------------------------------------------

/2025/Week 2/week02handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2025/Week 2/week02handout.pdf

--------------------------------------------------------------------------------

/2025/Week 3/assignment03.R:

--------------------------------------------------------------------------------

1 | # Homework assignment week 3

2 | # AUTOGRADER INFORMATION/CAUTION

3 | # Type your answers in the comments next to the word "ANSWER".

4 | # Do not make new lines, delete code, or change the code. This might make the autograder fail.

5 |

6 | # 1. According to R, what is the type of the following variables:

7 | "R" # ANSWER

8 | -10 # ANSWER

9 | FALSE # ANSWER

10 | 3.14 # ANSWER

11 | as.logical(1) # ANSWER

12 | as.numeric(TRUE) # ANSWER

13 |

14 | # 2. According to R, are the following two variables equivalent (yes/no):

15 | 7+0i == 7 # ANSWER

16 | 9 == 9.0 # ANSWER

17 | "zero" == 0L # ANSWER

18 | "cat" == "cat" # ANSWER

19 | TRUE == 1 # ANSWER

20 |

21 | # 3. What is the output of the following operations? If there is an error, what caused it?

22 | -10 > 1 # ANSWER

23 | 5 != 4 # ANSWER

24 | 5 - FALSE # ANSWER

25 | 17.0 == 7 # ANSWER

26 | 4 = 9.1 # ANSWER

27 | 0/0 # ANSWER

28 | "toolkit" + 1 # ANSWER

29 | toolkit = 2 # ANSWER

30 | toolkit * 2 # ANSWER

31 | (1-2)/0 # ANSWER

32 | 10 -> 20 # ANSWER

33 | NA == NA # ANSWER

34 | NA == Inf # ANSWER

35 |

36 | # 4. Create a long text (30-50 words) and save it to a variable called "long_text".

37 |

--------------------------------------------------------------------------------

/2025/Week 3/assignment03.md:

--------------------------------------------------------------------------------

1 | # Assignment 03

2 |

3 | Upload 1 file to complete this assignment: assignment03.R

4 |

5 | ## AUTOGRADER INFORMATION/CAUTION

6 |

7 | Type your answers in the comments next to the word "ANSWER" for tasks 1-3.

--------------------------------------------------------------------------------

/2025/Week 3/week03.R:

--------------------------------------------------------------------------------

1 | # Week 03 -----------------------------------------------------------------

2 | # April 22nd 2025

3 |

4 | library(tidyverse)

5 | library(psych)

6 |

7 | # Data types

8 | typeof(1L)

9 | is.numeric(1)

10 | as.character(1)

11 |

12 | # Printing and assigning

13 | print("Hello World")

14 | jabberwocky <- print("Twas brillig, and the slithy toves did gyre and gimble in the wabe: all mimsy were the borogoves, and the mome raths outgrabe.")

15 | rm(jabberwocky) # Removes the variable "jabberwocky"

16 |

17 | # This operator can be used anywhere

18 | ten <- 10.2

19 | "rose" -> Rose

20 | mean(number <- 10)

21 |

22 | # This operator can be used only at the top level

23 | name = "Anna"

24 | mean(number = 10) # This will not work and will cause an error

25 |

26 | # This operator assigns the value in the enclosing (usually global) environment (used mainly in functions)

27 | true <<- FALSE

28 | 13/12 ->> n

29 | mean(number <<- 10)

30 |
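
The difference between `<-` and `<<-` only shows up inside a function. The sketch below is an illustration under assumed names (`counter`, `add_local`, and `add_global` are hypothetical and do not appear in the course script).

```r
counter <- 0

# `<-` inside a function creates a local copy; the outer `counter` is untouched
add_local <- function() {
  counter <- counter + 1
  counter
}

# `<<-` modifies `counter` in the enclosing environment (here: the global one)
add_global <- function() {
  counter <<- counter + 1
  counter
}

local_result <- add_local()   # 1
after_local <- counter        # still 0

global_result <- add_global() # 1
after_global <- counter       # now 1
```

Because `<<-` changes variables outside the function, it is usually safer to return a value and assign it with `<-` at the top level.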

31 | # Type coercion

32 | TRUE + 1

33 | 5L + 2

34 | 3.7 * 3L

35 | 99999.0e-1 - 3.3e+3

36 | 10 / as.complex(2)

37 | as.character(5) / 5 # This will not work and will cause an error

38 | paste(5+0i, "five")

39 |
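
One way to see what the coercion examples above do is to wrap them in `typeof()`. This is a small sketch; the variable names are made up for illustration. R converts to the more general type, roughly in the order logical, integer, double, complex, character.

```r
# typeof() reveals which type R coerced each result to
type_lgl_int <- typeof(TRUE + 1L)            # "integer"
type_int_dbl <- typeof(5L + 2)               # "double"
type_dbl_cpl <- typeof(10 / as.complex(2))   # "complex"
type_paste   <- typeof(paste(5+0i, "five"))  # "character"

# Arithmetic on text fails; convert back to a number first
fixed <- as.numeric(as.character(5)) / 5     # 1
```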

40 | # Loading data

41 | getwd() # Check your working directory!

42 | moses <- read_csv("moses_raw_data.csv")

43 |

44 | # Inspecting data

45 | View(moses)

46 | moses

47 | print(moses, n=Inf)

48 | head(moses)

49 | tail(moses, n=20)

50 | spec(moses)

51 | summary(moses)

52 | describe(moses)

53 | colnames(moses)

54 |

55 | # This function calculates the probability of getting exactly 6 successes

56 | # out of 9 tries in a binomial experiment, where each try has a 50% (0.5)

57 | # chance of success. In other words: What are the chances of a fair coin landing

58 | # on "heads" 6 times out of 9 throws. Returns a probability between 0 and 1.

59 | # You can translate probability to percent by multiplying the result by 100

60 | # (so around 16.4%).

61 | dbinom(x=6, size=9, prob=0.5)

62 |
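
The value returned by `dbinom()` can be checked by hand with the binomial formula, choose(n, k) * p^k * (1 - p)^(n - k). The sketch below uses the same numbers as the call above; the variable names `n`, `k`, `p`, `manual`, and `builtin` are only for illustration.

```r
n <- 9    # number of throws
k <- 6    # number of "heads"
p <- 0.5  # chance of "heads" on one throw

# Binomial formula written out by hand
manual <- choose(n, k) * p^k * (1 - p)^(n - k)

# The built-in function gives the same number
builtin <- dbinom(x = k, size = n, prob = p)

same <- isTRUE(all.equal(manual, builtin))  # TRUE
percent <- round(builtin * 100, 1)          # 16.4
```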

63 | min(moses$EventTime)

64 | max(moses$EventTime)

65 | quantile(moses$EventTime)

66 | colnames(moses)

67 | mean(moses$EventTime)

68 | median(moses$EventTime)

69 | min(moses$EventTime)

70 | max(moses$EventTime)

71 | range(moses$EventTime)

72 | sd(moses$EventTime)

73 | skew(moses$EventTime)

74 | kurtosis(moses$EventTime) # requires the package "moments", which we won't use

75 | mean_se(moses$EventTime)

76 |

77 | # Data cleanup

78 | select(WHERE, WHAT) # Select columns

79 | na.omit(WHERE) # Remove missing values

80 | filter(WHERE, TRUE CONDITION) # Select rows, based on a condition

81 | arrange(WHERE, HOW) # Reorder data by rows

82 | rename(WHERE, NEW = OLD) # Rename columns

83 | mutate(WHERE, NEW = FUNCTION(OLD)) # Create new values

84 |
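
The templates above can be tried out on a small made-up data frame. This is only a sketch: `toy` and its columns are hypothetical and are not the real Moses illusion data, and the steps are written one at a time so each result can be inspected.

```r
library(dplyr)

# Hypothetical toy data (not the real Moses data)
toy <- data.frame(
  ID = 1:4,
  CONDITION = c(2, 1, 3, 1),
  ANSWER = c("Noah", NA, "Moses", "Noah"),
  EXTRA = c("a", "b", "c", "d")
)

step1 <- select(toy, ID, CONDITION, ANSWER)            # Keep three columns
step2 <- na.omit(step1)                                # Drop the row with a missing answer
step3 <- filter(step2, CONDITION != 3)                 # Keep rows where the condition is TRUE
step4 <- arrange(step3, CONDITION)                     # Sort rows by condition
step5 <- rename(step4, condition = CONDITION)          # NEW = OLD
step6 <- mutate(step5, answer_lower = tolower(ANSWER)) # New column from an old one
```

Each function takes the data frame as its first argument (the WHERE in the templates) and returns a new data frame, so nothing is changed until you assign the result.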

85 | # Optional plot: Normal distribution with standard deviation lines.

86 | # Feel free to ignore. This code gives you a small preview of data visualization

87 | # that we'll be doing later in the course.

88 |

89 | # Define custom colors I use for the course

90 | dusk <- "#343643"

91 | pine <- "#476938"

92 | meadow <- "#86B047"

93 | sunshine <- "#DABA2E"

94 |

95 | # Set the mean and standard deviation for the normal distribution

96 | mean_value <- 0

97 | sd_value <- 1

98 |

99 | # Create a sequence of values from -4 to 4 (for plotting the bell curve)

100 | x_values <- seq(-4, 4, length.out = 100)

101 |

102 | # Generate the normal distribution values for those x-values

103 | y_values <- dnorm(x_values, mean = mean_value, sd = sd_value)

104 |

105 | # Create a data frame to use with ggplot

106 | data <- data.frame(x = x_values, y = y_values)

107 |

108 | # Plot the normal distribution curve

109 | ggplot(data, aes(x = x, y = y)) +

110 | geom_line(linewidth=2) + # Line for the bell curve

111 | annotate("text", x = mean_value + sd_value, y = 0.4, label = "68%", color = pine, size = 5) +

112 | annotate("text", x = mean_value + 2*sd_value, y = 0.4, label = "95%", color = meadow, size = 5) +

113 | annotate("text", x = mean_value + 3*sd_value, y = 0.4, label = "99.7%", color = sunshine, size = 5) +

114 | annotate("text", x = mean_value - sd_value, y = 0.4, label = "±1 SD", color = pine, size = 5) +

115 | annotate("text", x = mean_value - 2*sd_value, y = 0.4, label = "±2 SD", color = meadow, size = 5) +

116 | annotate("text", x = mean_value - 3*sd_value, y = 0.4, label = "±3 SD", color = sunshine, size = 5) +

117 | geom_histogram(stat="identity", fill="white", color=dusk) + # Bars showing the values; comment out this line to hide them

118 | geom_vline(xintercept = mean_value, color = dusk, linetype = "dashed") + # Mean line

119 | geom_vline(xintercept = mean_value + sd_value, color = pine, linetype = "dotted", linewidth=1) + # +1 SD line

120 | geom_vline(xintercept = mean_value - sd_value, color = pine, linetype = "dotted", linewidth=1) + # -1 SD line

121 | geom_vline(xintercept = mean_value + 2*sd_value, color = meadow, linetype = "dashed", linewidth=1) + # +2 SD line

122 | geom_vline(xintercept = mean_value - 2*sd_value, color = meadow, linetype = "dashed", linewidth=1) + # -2 SD line

123 | geom_vline(xintercept = mean_value + 3*sd_value, color = sunshine, linetype = "solid", linewidth=1) + # +3 SD line

124 | geom_vline(xintercept = mean_value - 3*sd_value, color = sunshine, linetype = "solid", linewidth=1) + # -3 SD line

125 | labs(title = "Normal distribution with standard deviation lines", x = "Some variable X",

126 | y = "Density (how much data lies here)",

127 | subtitle="AKA Bell curve with ±1, ±2, ±3 SDs") +

128 | theme_bw() +

129 | theme(panel.grid = element_blank()) # Removes grid lines, because I think they're distracting

130 |

131 |
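
The 68%, 95%, and 99.7% labels in the plot above can be reproduced with `pnorm()`, which gives the share of a normal distribution lying below a value. This is a short sketch; the helper `within_sd` is a hypothetical name, not part of the course script.

```r
# Share of a standard normal distribution within k standard deviations of the mean
within_sd <- function(k) pnorm(k) - pnorm(-k)

shares <- round(within_sd(1:3) * 100, 1)  # 68.3 95.4 99.7
```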

--------------------------------------------------------------------------------

/2025/Week 3/week03handout.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/a-nap/Digital-Research-Toolkit/a77600100bb012f00ea4329d576d3e40501eea01/2025/Week 3/week03handout.pdf

--------------------------------------------------------------------------------

/2025/Week 4/assignment04.R:

--------------------------------------------------------------------------------

1 | ###########################################################################

2 | # Assignment Week 4

3 | ###########################################################################

4 |

5 | # Please complete the following 4 tasks. Submit the assignment as a single R script.

6 | # Use comments and sections to give your file structure. I should be able to run

7 | # your script without errors.

8 |

9 | # Task 1 ------------------------------------------------------------------

10 | # Clean up the Moses illusion data like we did in the tasks in class and save it

11 | # to a new data frame.

12 | # - select relevant columns

13 | # - rename mislabeled columns

14 | # - remove missing data

15 | # - remove unnecessary rows

16 | # - arrange by condition and answer

17 |

18 |

19 |

20 |

21 |

22 |

23 | # Task 2 ------------------------------------------------------------------

24 | # Have the Moses data saved in your environment as "moses".

25 | # Why do these functions not work as intended? Fix the code and explain what was

26 | # wrong.

27 | ### IMPORTANT #############################################################

28 | # Type your answers in the comments next to the word "ANSWER".

29 |

30 | read_csv(moses.csv) # ANSWER

31 | tail(moses, n==10) # ANSWER

32 | Summary(moses) # ANSWER

33 | describe(Moses) # ANSWER

34 | filter(moses, CONDITION == 102) # ANSWER