├── pywick

├── models

│ ├── localization

│ │ └── __init__.py

│ ├── segmentation

│ │ ├── testnets

│ │ │ ├── hrnetv2

│ │ │ │ ├── __init__.py

│ │ │ │ └── bn_helper.py

│ │ │ ├── drnet

│ │ │ │ ├── __init__.py

│ │ │ │ └── utils.py

│ │ │ ├── tkcnet

│ │ │ │ └── __init__.py

│ │ │ ├── gscnn

│ │ │ │ ├── utils

│ │ │ │ │ ├── __init__.py

│ │ │ │ │ └── AttrDict.py

│ │ │ │ ├── my_functionals

│ │ │ │ │ └── __init__.py

│ │ │ │ ├── mynn.py

│ │ │ │ ├── __init__.py

│ │ │ │ └── config.py

│ │ │ ├── axial_deeplab

│ │ │ │ └── __init__.py

│ │ │ ├── lg_kernel_exfuse

│ │ │ │ └── __init__.py

│ │ │ ├── mixnet

│ │ │ │ ├── __init__.py

│ │ │ │ ├── layers.py

│ │ │ │ └── mdconv.py

│ │ │ ├── exfuse

│ │ │ │ ├── __init__.py

│ │ │ │ └── unet_layer.py

│ │ │ ├── flatten.py

│ │ │ ├── __init__.py

│ │ │ └── msc.py

│ │ ├── da_basenets

│ │ │ ├── __init__.py

│ │ │ ├── segbase.py

│ │ │ ├── model_store.py

│ │ │ └── jpu.py

│ │ ├── emanet

│ │ │ ├── __init__.py

│ │ │ └── settings.py

│ │ ├── galdnet

│ │ │ └── __init__.py

│ │ ├── refinenet

│ │ │ └── __init__.py

│ │ ├── mnas_linknets

│ │ │ └── __init__.py

│ │ ├── gcnnets

│ │ │ └── __init__.py

│ │ ├── config.py

│ │ ├── LICENSE-BSD3-Clause.txt

│ │ ├── __init__.py

│ │ ├── fcn32s.py

│ │ ├── drn_seg.py

│ │ ├── fcn16s.py

│ │ ├── seg_net.py

│ │ └── lexpsp.py

│ ├── classification

│ │ ├── dpn

│ │ │ └── __init__.py

│ │ ├── testnets

│ │ │ ├── __init__.py

│ │ │ └── se_module.py

│ │ ├── resnext_features

│ │ │ └── __init__.py

│ │ ├── pretrained_notes.txt

│ │ └── __init__.py

│ ├── LICENSE-MIT.txt

│ ├── __init__.py

│ ├── LICENSE-BSD 2-Clause.txt

│ ├── LICENSE-BSD.txt

│ ├── LICENSE_LIP6.txt

│ └── model_locations.py

├── modules

│ ├── __init__.py

│ └── stn.py

├── dictmodels

│ ├── __init__.py

│ ├── model_spec.py

│ └── dict_config.py

├── meters

│ ├── meter.py

│ ├── averagemeter.py

│ ├── __init__.py

│ ├── msemeter.py

│ ├── timemeter.py

│ ├── movingaveragevaluemeter.py

│ ├── mapmeter.py

│ ├── averagevaluemeter.py

│ └── classerrormeter.py

├── README_loss_functions.md

├── functions

│ ├── __init__.py

│ ├── mish.py

│ ├── LICENSE-MIT.txt

│ ├── swish.py

│ └── activations_autofn.py

├── datasets

│ ├── tnt

│ │ ├── __init__.py

│ │ ├── table.py

│ │ ├── dataset.py

│ │ ├── concatdataset.py

│ │ ├── transform.py

│ │ ├── resampledataset.py

│ │ ├── transformdataset.py

│ │ ├── listdataset.py

│ │ └── shuffledataset.py

│ ├── UsefulDataset.py

│ ├── __init__.py

│ ├── ClonedFolderDataset.py

│ ├── PredictFolderDataset.py

│ └── TensorDataset.py

├── transforms

│ ├── README.md

│ ├── __init__.py

│ └── utils.py

├── gridsearch

│ ├── __init__.py

│ ├── grid_test.py

│ └── pipeline.py

├── __init__.py

├── optimizers

│ ├── rangerlars.py

│ ├── lookaheadsgd.py

│ ├── __init__.py

│ ├── addsign.py

│ ├── powersign.py

│ └── lookahead.py

├── callbacks

│ ├── __init__.py

│ ├── LambdaCallback.py

│ ├── EarlyStopping.py

│ ├── History.py

│ ├── TQDM.py

│ ├── LRScheduler.py

│ ├── Callback.py

│ ├── CSVLogger.py

│ └── CallbackContainer.py

├── configs

│ ├── eval_classifier.yaml

│ └── train_classifier.json

├── LICENSE-MIT.txt

├── custom_regularizers.py

└── cust_random.py

├── setup.cfg

├── examples

├── imgs

│ ├── orig1.png

│ ├── orig2.png

│ ├── orig3.png

│ ├── tform1.png

│ ├── tform2.png

│ └── tform3.png

├── 17flowers_split.py

├── mnist_example.py

└── mnist_loader_example.py

├── docs

└── source

│ ├── help.rst

│ ├── api

│ ├── losses.rst

│ ├── samplers.rst

│ ├── initializers.rst

│ ├── regularizers.rst

│ ├── constraints.rst

│ ├── conditions.rst

│ ├── pywick.gridsearch.rst

│ ├── pywick.models.localization.rst

│ ├── pywick.models.rst

│ ├── pywick.functions.rst

│ ├── pywick.transforms.rst

│ ├── pywick.models.torchvision.rst

│ ├── pywick.datasets.rst

│ ├── pywick.meters.rst

│ ├── pywick.datasets.tnt.rst

│ └── pywick.rst.old

│ ├── requirements.txt

│ ├── segmentation_guide.md

│ ├── description.rst

│ ├── classification_guide.md

│ └── index.rst

├── .deepsource.toml

├── requirements.txt

├── tests

├── run_test.sh

└── integration

│ ├── fit_simple

│ ├── single_input_no_target.py

│ ├── simple_multi_input_no_target.py

│ ├── simple_multi_input_single_target.py

│ ├── single_input_single_target.py

│ ├── single_input_multi_target.py

│ └── simple_multi_input_multi_target.py

│ └── fit_loader_simple

│ ├── single_input_single_target.py

│ └── single_input_multi_target.py

├── .gitignore

├── entrypoint.sh

├── readthedocs.yml

├── LICENSE.txt

├── setup.py

└── Dockerfile

/pywick/models/localization/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | description-file = README.md

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/hrnetv2/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pywick/models/classification/dpn/__init__.py:

--------------------------------------------------------------------------------

1 | from .dualpath import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/da_basenets/__init__.py:

--------------------------------------------------------------------------------

1 | from . import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/emanet/__init__.py:

--------------------------------------------------------------------------------

1 | from .emanet import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/galdnet/__init__.py:

--------------------------------------------------------------------------------

1 | from .GALDNet import *

2 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/refinenet/__init__.py:

--------------------------------------------------------------------------------

1 | from .refinenet import *

--------------------------------------------------------------------------------

/pywick/modules/__init__.py:

--------------------------------------------------------------------------------

1 | from .module_trainer import ModuleTrainer

2 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/mnas_linknets/__init__.py:

--------------------------------------------------------------------------------

1 | from .linknet import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/drnet/__init__.py:

--------------------------------------------------------------------------------

1 | from .drnet import DRNet

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/tkcnet/__init__.py:

--------------------------------------------------------------------------------

1 | from .tkcnet import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/gscnn/utils/__init__.py:

--------------------------------------------------------------------------------

1 | from .AttrDict import *

2 |

--------------------------------------------------------------------------------

/pywick/dictmodels/__init__.py:

--------------------------------------------------------------------------------

1 | from .dict_config import *

2 | from .model_spec import *

3 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/axial_deeplab/__init__.py:

--------------------------------------------------------------------------------

1 | from .axial_deeplab import *

--------------------------------------------------------------------------------

/examples/imgs/orig1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/orig1.png

--------------------------------------------------------------------------------

/examples/imgs/orig2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/orig2.png

--------------------------------------------------------------------------------

/examples/imgs/orig3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/orig3.png

--------------------------------------------------------------------------------

/examples/imgs/tform1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/tform1.png

--------------------------------------------------------------------------------

/examples/imgs/tform2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/tform2.png

--------------------------------------------------------------------------------

/examples/imgs/tform3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/achaiah/pywick/HEAD/examples/imgs/tform3.png

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/lg_kernel_exfuse/__init__.py:

--------------------------------------------------------------------------------

1 | from .large_kernel_exfuse import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/mixnet/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Source: https://github.com/zsef123/MixNet-PyTorch

3 | """

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/exfuse/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Source: https://github.com/rplab-snu/nucleus_segmentation

3 | """

--------------------------------------------------------------------------------

/docs/source/help.rst:

--------------------------------------------------------------------------------

1 | Help

2 | ======

3 | Please visit our `github page`_.

4 |

5 | .. _github page: https://github.com/achaiah/pywick

--------------------------------------------------------------------------------

/pywick/models/classification/testnets/__init__.py:

--------------------------------------------------------------------------------

1 | from .se_densenet_full import se_densenet121, se_densenet161, se_densenet169, se_densenet201

--------------------------------------------------------------------------------

/.deepsource.toml:

--------------------------------------------------------------------------------

1 | version = 1

2 |

3 | [[analyzers]]

4 | name = "python"

5 | enabled = true

6 |

7 | [analyzers.meta]

8 | runtime_version = "3.x.x"

--------------------------------------------------------------------------------

/docs/source/api/losses.rst:

--------------------------------------------------------------------------------

1 | Losses

2 | ========

3 |

4 | .. automodule:: pywick.losses

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

--------------------------------------------------------------------------------

/docs/source/api/samplers.rst:

--------------------------------------------------------------------------------

1 | Samplers

2 | ==========

3 |

4 | .. automodule:: pywick.samplers

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

--------------------------------------------------------------------------------

/docs/source/api/initializers.rst:

--------------------------------------------------------------------------------

1 | Initializers

2 | ============

3 |

4 | .. automodule:: pywick.initializers

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

--------------------------------------------------------------------------------

/docs/source/api/regularizers.rst:

--------------------------------------------------------------------------------

1 | Regularizers

2 | ============

3 |

4 | .. automodule:: pywick.regularizers

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

--------------------------------------------------------------------------------

/docs/source/api/constraints.rst:

--------------------------------------------------------------------------------

1 | Constraints

2 | ============

3 |

4 | .. automodule:: pywick.constraints

5 | :members: Constraint, MaxNorm, NonNeg, UnitNorm

6 | :undoc-members:

--------------------------------------------------------------------------------

/pywick/models/segmentation/gcnnets/__init__.py:

--------------------------------------------------------------------------------

1 | from .gcn import *

2 | from .gcn_nasnet import *

3 | from .gcn_densenet import *

4 | from .gcn_psp import *

5 | from .gcn_resnext import *

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/flatten.py:

--------------------------------------------------------------------------------

1 | from torch.nn import Module

2 |

3 |

4 | class Flatten(Module):

5 | @staticmethod

6 | def forward(x):

7 | return x.view(x.size(0), -1)

8 |

--------------------------------------------------------------------------------

/docs/source/api/conditions.rst:

--------------------------------------------------------------------------------

1 | Conditions

2 | ============

3 |

4 | .. automodule:: pywick.conditions

5 | :members: Condition, SegmentationInputAsserts, SegmentationOutputAsserts

6 | :undoc-members:

--------------------------------------------------------------------------------

/pywick/models/classification/resnext_features/__init__.py:

--------------------------------------------------------------------------------

1 | from .resnext101_32x4d_features import resnext101_32x4d_features

2 | from .resnext101_64x4d_features import resnext101_64x4d_features

3 | from .resnext50_32x4d_features import resnext50_32x4d_features

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/gscnn/my_functionals/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Copyright (C) 2019 NVIDIA Corporation. All rights reserved.

3 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

4 | """

5 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | albumentations

2 | dill

3 | #hickle

4 | h5py

5 | # inplace_abn

6 | numpy

7 | opencv-python-headless

8 | pandas

9 | pillow

10 | prodict

11 | pycm

12 | pyyaml

13 | scipy

14 | requests

15 | scikit-image

16 | six

17 | tabulate

18 | tini

19 | tqdm

20 | yacs

--------------------------------------------------------------------------------

/pywick/meters/meter.py:

--------------------------------------------------------------------------------

1 |

2 | class Meter:

3 | """

4 | Abstract meter class from which all other meters inherit

5 | """

6 | def reset(self):

7 | pass

8 |

9 | def add(self):

10 | pass

11 |

12 | def value(self):

13 | pass

14 |

--------------------------------------------------------------------------------

/docs/source/requirements.txt:

--------------------------------------------------------------------------------

1 | albumentations

2 | dill

3 | #hickle

4 | h5py

5 | # inplace_abn

6 | numpy

7 | opencv-python-headless

8 | pandas

9 | pillow

10 | prodict

11 | pycm

12 | pyyaml

13 | scipy

14 | requests

15 | scikit-image

16 | six

17 | tabulate

18 | tini

19 | tqdm

20 | yacs

--------------------------------------------------------------------------------

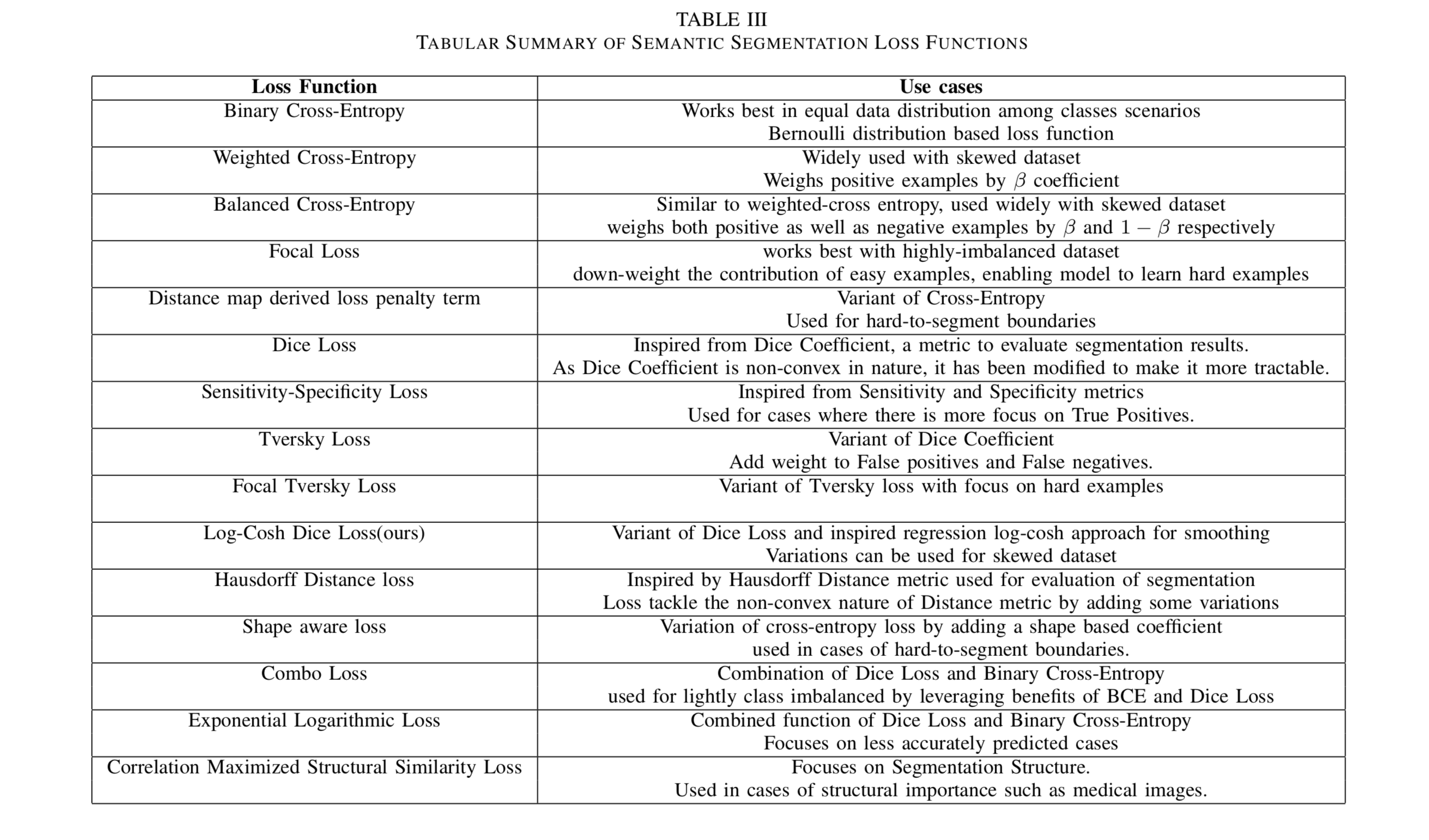

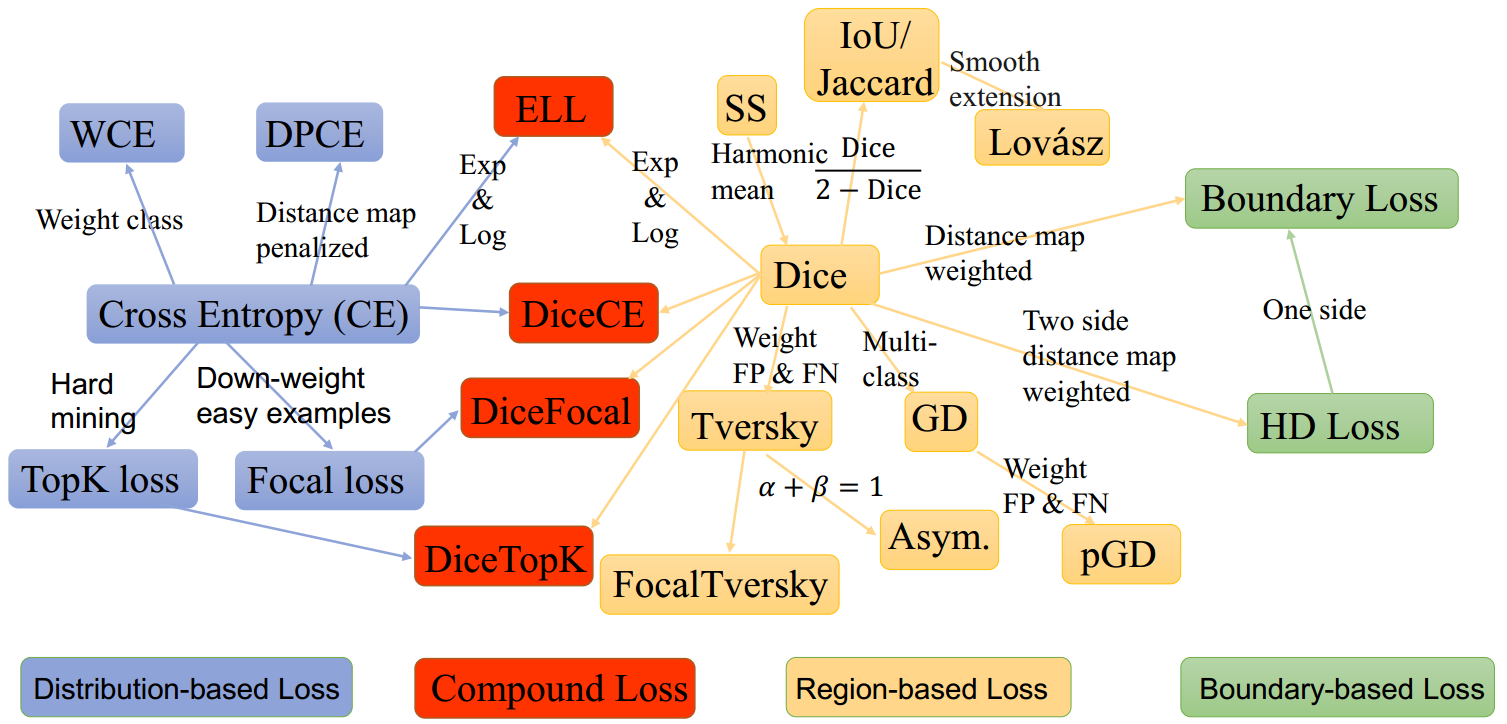

/pywick/README_loss_functions.md:

--------------------------------------------------------------------------------

1 | ## Summarized Loss functions and their use-cases

2 |

3 |

4 |

5 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/hrnetv2/bn_helper.py:

--------------------------------------------------------------------------------

1 |

2 | import torch

3 | import functools

4 |

5 | if torch.__version__.startswith('0'):

6 | from inplace_abn import InPlaceABNSync

7 | BatchNorm2d = functools.partial(InPlaceABNSync, activation='none')

8 | BatchNorm2d_class = InPlaceABNSync

9 | relu_inplace = False

10 | else:

11 | BatchNorm2d_class = BatchNorm2d = torch.nn.SyncBatchNorm

12 | relu_inplace = True

--------------------------------------------------------------------------------

/pywick/functions/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Here you can find a collection of functions that are used in neural networks. One of the most important aspects of a neural

3 | network is a good activation function. Pytorch already has a solid `collection `_

4 | of activation functions but here are a few more experimental ones to play around with.

5 | """

6 |

7 | from . import *

8 |

--------------------------------------------------------------------------------

/docs/source/api/pywick.gridsearch.rst:

--------------------------------------------------------------------------------

1 | Gridsearch

2 | =========================

3 |

4 | .. automodule:: pywick.gridsearch

5 | :members:

6 | :undoc-members:

7 |

8 | Gridsearch

9 | -------------

10 |

11 | .. automodule:: pywick.gridsearch.gridsearch

12 | :members:

13 | :undoc-members:

14 |

15 | Pipeline

16 | ---------------------------------

17 |

18 | .. automodule:: pywick.gridsearch.pipeline

19 | :members: Pipeline

20 | :undoc-members:

21 |

--------------------------------------------------------------------------------

/pywick/meters/averagemeter.py:

--------------------------------------------------------------------------------

1 | class AverageMeter:

2 | """Computes and stores the average and current value"""

3 | def __init__(self):

4 | self.reset()

5 |

6 | def reset(self):

7 | self.val = 0

8 | self.avg = 0

9 | self.sum = 0

10 | self.count = 0

11 |

12 | def update(self, val, n=1):

13 | self.val = val

14 | self.sum += val * n

15 | self.count += n

16 | self.avg = self.sum / self.count

--------------------------------------------------------------------------------

/pywick/datasets/tnt/__init__.py:

--------------------------------------------------------------------------------

1 | from .batchdataset import BatchDataset

2 | from .concatdataset import ConcatDataset

3 | from .dataset import Dataset

4 | from .listdataset import ListDataset

5 | from .multipartitiondataset import MultiPartitionDataset

6 | from .resampledataset import ResampleDataset

7 | from .shuffledataset import ShuffleDataset

8 | from .splitdataset import SplitDataset

9 | from .table import *

10 | from .transform import *

11 | from .transformdataset import TransformDataset

12 |

--------------------------------------------------------------------------------

/pywick/transforms/README.md:

--------------------------------------------------------------------------------

1 | ### Transforms

2 | Various transform functions have been collected here over the years to make augmentations easier to use. However, most transforms involving images

3 | (rather than numpy arrays or tensors) will be deprecated in favor of the [albumentations](https://github.com/albu/albumentations) package

4 | that you can install separately.

5 |

6 | ### Removed Transforms

7 | * CV2_transforms have been removed in favor of the [albumentations](https://github.com/albu/albumentations) package

--------------------------------------------------------------------------------

/tests/run_test.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | set -e

3 |

4 | PYCMD=${PYCMD:="python"}

5 | if [ "$1" == "coverage" ];

6 | then

7 | coverage erase

8 | PYCMD="coverage run --parallel-mode --source torch "

9 | echo "coverage flag found. Setting python command to: \"$PYCMD\""

10 | fi

11 |

12 | pushd "$(dirname "$0")"

13 |

14 | $PYCMD test_meters.py

15 | $PYCMD unit/transforms/test_affine_transforms.py

16 | $PYCMD unit/transforms/test_image_transforms.py

17 | $PYCMD unit/transforms/test_tensor_transforms.py

18 |

--------------------------------------------------------------------------------

/pywick/gridsearch/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | When trying to find the right hyperparameters for your neural network, sometimes you just have to do a lot of trial and error.

3 | Currently, our Gridsearch implementation is pretty basic, but it allows you to supply ranges of input values for various

4 | metaparameters and then executes training runs in either random or sequential fashion.\n

5 | Warning: this class is a bit underdeveloped. Tread with care.

6 | """

7 |

8 | from .gridsearch import GridSearch

9 | from .pipeline import Pipeline

--------------------------------------------------------------------------------

/docs/source/api/pywick.models.localization.rst:

--------------------------------------------------------------------------------

1 | Localization

2 | ==================================

3 |

4 | FPN

5 | -------------------------------------

6 |

7 | .. automodule:: pywick.models.localization.fpn

8 | :members: FPN, FPN101

9 | :undoc-members:

10 | :show-inheritance:

11 |

12 | Retina\_FPN

13 | ---------------------------------------------

14 |

15 | .. automodule:: pywick.models.localization.retina_fpn

16 | :members: RetinaFPN, RetinaFPN101

17 | :undoc-members:

18 | :show-inheritance:

19 |

--------------------------------------------------------------------------------

/pywick/transforms/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Along with custom transforms provided by Pywick, we fully support integration of Albumentations `_ which contains a great number of useful transform functions. See train_classifier.py for an example of how to incorporate albumentations into training.

3 | """

4 |

5 | from .affine_transforms import *

6 | from .distortion_transforms import *

7 | from .image_transforms import *

8 | from .tensor_transforms import *

9 | from .utils import *

10 |

--------------------------------------------------------------------------------

/pywick/__init__.py:

--------------------------------------------------------------------------------

1 | __version__ = '0.6.5'

2 | __author__ = 'Achaiah'

3 | __description__ = 'High-level batteries-included neural network training library for Pytorch'

4 |

5 | from pywick import (

6 | callbacks,

7 | conditions,

8 | constraints,

9 | datasets,

10 | dictmodels,

11 | functions,

12 | gridsearch,

13 | losses,

14 | meters,

15 | metrics,

16 | misc,

17 | models,

18 | modules,

19 | optimizers,

20 | regularizers,

21 | samplers,

22 | transforms,

23 | utils

24 | )

25 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .git/

2 | sandbox/

3 |

4 | *.DS_Store

5 | *__pycache__*

6 | __pycache__

7 | *.pyc

8 | .ipynb_checkpoints/

9 | *.ipynb_checkpoints/

10 | *.bkbn

11 | .spyderworkspace

12 | .spyderproject

13 |

14 | # setup.py working directory

15 | build

16 | # sphinx build directory

17 | doc/_build

18 | docs/build

19 | docs/Makefile

20 | docs/make.bat

21 | docs/source/_build

22 | # setup.py dist directory

23 | dist

24 | # Egg metadata

25 | *.egg-info

26 | .eggs

27 |

28 | .idea

29 | /pywick.egg-info/

30 |

31 | pywick/configs/train_classifier_local.yaml

32 |

--------------------------------------------------------------------------------

/pywick/datasets/tnt/table.py:

--------------------------------------------------------------------------------

1 | import torch

2 |

3 |

4 | def canmergetensor(tbl):

5 | if not isinstance(tbl, list):

6 | return False

7 |

8 | if torch.is_tensor(tbl[0]):

9 | sz = tbl[0].numel()

10 | for v in tbl:

11 | if v.numel() != sz:

12 | return False

13 | return True

14 | return False

15 |

16 |

17 | def mergetensor(tbl):

18 | sz = [len(tbl)] + list(tbl[0].size())

19 | res = tbl[0].new(torch.Size(sz))

20 | for i,v in enumerate(tbl):

21 | res[i].copy_(v)

22 | return res

23 |

--------------------------------------------------------------------------------

/pywick/optimizers/rangerlars.py:

--------------------------------------------------------------------------------

1 | from .lookahead import *

2 | from .ralamb import *

3 |

4 | # RAdam + LARS + LookAHead

5 |

6 | class RangerLars(Lookahead):

7 |

8 | def __init__(self, params, alpha=0.5, k=6, *args, **kwargs):

9 | """

10 | Combination of RAdam + LARS + LookAhead

11 |

12 | :param params:

13 | :param alpha:

14 | :param k:

15 | :param args:

16 | :param kwargs:

17 | :return:

18 | """

19 | ralamb = Ralamb(params, *args, **kwargs)

20 | super().__init__(ralamb, alpha, k)

21 |

--------------------------------------------------------------------------------

/docs/source/api/pywick.models.rst:

--------------------------------------------------------------------------------

1 | Models

2 | =====================

3 |

4 | .. automodule:: pywick.models

5 | :members:

6 | :undoc-members:

7 |

8 | .. toctree::

9 |

10 | pywick.models.torchvision

11 | pywick.models.rwightman

12 | pywick.models.classification

13 | pywick.models.localization

14 | pywick.models.segmentation

15 |

16 |

17 | utility functions

18 | ---------------------------------

19 |

20 | .. automodule:: pywick.models.model_utils

21 | :members: load_checkpoint, get_model, get_fc_names, get_supported_models

22 | :show-inheritance:

23 |

--------------------------------------------------------------------------------

/docs/source/segmentation_guide.md:

--------------------------------------------------------------------------------

1 | ## Segmentation

2 |

3 | In a short while we will publish a walk-through that will go into detail

4 | on how to do segmentation with Pywick. In the meantime, if you feel

5 | adventurous feel free to look at our [README](https://pywick.readthedocs.io/en/latest/README.html).

6 |

7 | You can also take a look at our [Classification guide](https://pywick.readthedocs.io/en/latest/classification_guide.html) to get a good idea of how to get started on your own. The segmentation training process is very similar but involves more complicated directory structure for data.

8 |

--------------------------------------------------------------------------------

/pywick/dictmodels/model_spec.py:

--------------------------------------------------------------------------------

1 | from typing import Dict

2 |

3 | from prodict import Prodict

4 |

5 |

6 | class ModelSpec(Prodict):

7 | """

8 | Model specification to instantiate. Most models will have pre-configured and pre-trained variants but this gives you more fine-grained control

9 | """

10 |

11 | model_name : int # Size of the batch to use when training (per GPU)

12 | model_params : Dict # where to find the training data

13 |

14 | def init(self):

15 | # nothing initialized yet but will be expanded in the future

16 | pass

17 |

--------------------------------------------------------------------------------

/pywick/models/classification/pretrained_notes.txt:

--------------------------------------------------------------------------------

1 | last_linear

2 | ----------

3 | NOTE: Some pretrained models contain '.fc' as the name of the last layer. Simply rename it to 'last_linear' before loading the weights.

4 |

5 | inceptionresnetv2

6 | inceptionv4

7 | nasnetalarge

8 | nasnetamobile

9 | pnasnet

10 | polynet

11 | resnet_swish

12 | resnext101_x

13 | SENet / se_resnet50, se_resnet101, se_resnet152 etc

14 | WideResNet

15 |

16 |

17 | Conv2D (1x1 kernel)

18 | -----------

19 | DPN (dpn68, dpn68b, dpn92, dpn98, dpn107, dpn131)

20 |

21 |

22 | Multiple FC

23 | -----------

24 | inception (torchvision) (fc)

25 | pyramid_resnet (fc2, fc3, fc4)

26 | resnet (torchvision) (fc)

27 | se_resnet (relies on resnet) (fc)

--------------------------------------------------------------------------------

/docs/source/api/pywick.functions.rst:

--------------------------------------------------------------------------------

1 | Functions

2 | ========================

3 |

4 | .. automodule:: pywick.functions

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

9 | CyclicLR

10 | --------------------------------

11 |

12 | .. automodule:: pywick.functions.cyclicLR

13 | :members:

14 | :undoc-members:

15 | :show-inheritance:

16 |

17 | Mish

18 | --------------------------------

19 |

20 | .. automodule:: pywick.functions.mish

21 | :members:

22 | :undoc-members:

23 | :show-inheritance:

24 |

25 | Swish + Aria

26 | -----------------------------

27 |

28 | .. automodule:: pywick.functions.swish

29 | :members:

30 | :undoc-members:

31 | :show-inheritance:

32 |

--------------------------------------------------------------------------------

/pywick/meters/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Meters are used to accumulate values over time or batch and generally provide some statistical measure of your process.

3 | """

4 |

5 | from pywick.meters.apmeter import APMeter

6 | from pywick.meters.aucmeter import AUCMeter

7 | from pywick.meters.averagemeter import AverageMeter

8 | from pywick.meters.averagevaluemeter import AverageValueMeter

9 | from pywick.meters.classerrormeter import ClassErrorMeter

10 | from pywick.meters.confusionmeter import ConfusionMeter

11 | from pywick.meters.mapmeter import mAPMeter

12 | from pywick.meters.movingaveragevaluemeter import MovingAverageValueMeter

13 | from pywick.meters.msemeter import MSEMeter

14 | from pywick.meters.timemeter import TimeMeter

15 |

--------------------------------------------------------------------------------

/pywick/modules/stn.py:

--------------------------------------------------------------------------------

1 |

2 | import torch.nn as nn

3 |

4 | from ..functions import F_affine2d, F_affine3d

5 |

6 |

7 | class STN2d(nn.Module):

8 |

9 | def __init__(self, local_net):

10 | super(STN2d, self).__init__()

11 | self.local_net = local_net

12 |

13 | def forward(self, x):

14 | params = self.local_net(x)

15 | x_transformed = F_affine2d(x[0], params.view(2,3))

16 | return x_transformed

17 |

18 |

19 | class STN3d(nn.Module):

20 |

21 | def __init__(self, local_net):

22 | self.local_net = local_net

23 |

24 | def forward(self, x):

25 | params = self.local_net(x)

26 | x_transformed = F_affine3d(x, params.view(3,4))

27 | return x_transformed

28 |

29 |

--------------------------------------------------------------------------------

/pywick/optimizers/lookaheadsgd.py:

--------------------------------------------------------------------------------

1 | from pywick.optimizers.lookahead import *

2 | from torch.optim import SGD

3 |

4 |

5 | class LookaheadSGD(Lookahead):

6 |

7 | def __init__(self, params, lr, alpha=0.5, k=6, momentum=0.9, dampening=0, weight_decay=0.0001, nesterov=False):

8 | """

9 | Combination of SGD + LookAhead

10 |

11 | :param params:

12 | :param lr:

13 | :param alpha:

14 | :param k:

15 | :param momentum:

16 | :param dampening:

17 | :param weight_decay:

18 | :param nesterov:

19 | """

20 | sgd = SGD(params, lr=lr, momentum=momentum, dampening=dampening, weight_decay=weight_decay, nesterov=nesterov)

21 | super().__init__(sgd, alpha, k)

22 |

--------------------------------------------------------------------------------

/docs/source/description.rst:

--------------------------------------------------------------------------------

1 | Welcome to Pywick!

2 | ========================

3 |

4 | About

5 | ^^^^^

6 | Pywick is a high-level Pytorch training framework that aims to get you up and running quickly with state of the art neural networks.

7 | Does the world need another Pytorch framework? Probably not. But we started this project when no good frameworks were available and

8 | it just kept growing. So here we are.

9 |

10 | Pywick tries to stay on the bleeding edge of research into neural networks. If you just wish to run a vanilla CNN, this is probably

11 | going to be overkill. However, if you want to get lost in the world of neural networks, fine-tuning and hyperparameter optimization

12 | for months on end then this is probably the right place for you :)

--------------------------------------------------------------------------------

/entrypoint.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # run demo if "demo" env variable is set

4 | if [ -n "$demo" ]; then

5 | # prepare directories

6 | mkdir -p /data /jobs && cd /data && \

7 | # get the dataset

8 | wget https://www.robots.ox.ac.uk/~vgg/data/flowers/17/17flowers.tgz && \

9 | tar xzf 17flowers.tgz && rm 17flowers.tgz && \

10 | # refactor images into correct structure

11 | python /home/pywick/examples/17flowers_split.py && \

12 | rm -rf jpg && \

13 | # train on the dataset

14 | cd /home/pywick/pywick && python train_classifier.py configs/train_classifier.yaml

15 | echo "keeping container alive ..."

16 | tail -f /dev/null

17 |

18 | # otherwise keep the container alive

19 | else

20 | echo "running a blank container..."

21 | tail -f /dev/null

22 | fi

--------------------------------------------------------------------------------

/readthedocs.yml:

--------------------------------------------------------------------------------

1 | # .readthedocs.yml

2 | # Read the Docs configuration file

3 | # See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

4 |

5 | # Required

6 | version: 2

7 |

8 | # Build documentation in the docs/ directory with Sphinx

9 | sphinx:

10 | configuration: docs/source/conf.py

11 |

12 | # Build documentation with MkDocs

13 | #mkdocs:

14 | # configuration: mkdocs.yml

15 |

16 | # Optionally build your docs in additional formats such as PDF and ePub

17 | formats: all

18 |

19 | # Configuration for the documentation build process

20 | build:

21 | image: latest

22 |

23 | # Optionally set the version of Python and requirements required to build your docs

24 | python:

25 | version: 3.6

26 | install:

27 | - requirements: docs/source/requirements.txt

--------------------------------------------------------------------------------

/docs/source/api/pywick.transforms.rst:

--------------------------------------------------------------------------------

1 | Transforms

2 | =========================

3 |

4 | Affine

5 | -------------------------------------------

6 |

7 | .. automodule:: pywick.transforms.affine_transforms

8 | :members:

9 | :undoc-members:

10 |

11 |

12 | Distortion

13 | -----------------------------------------------

14 |

15 | .. automodule:: pywick.transforms.distortion_transforms

16 | :members:

17 | :undoc-members:

18 |

19 |

20 | Image

21 | ------------------------------------------

22 |

23 | .. automodule:: pywick.transforms.image_transforms

24 | :members:

25 | :undoc-members:

26 |

27 |

28 | Tensor

29 | -------------------------------------------

30 |

31 | .. automodule:: pywick.transforms.tensor_transforms

32 | :members:

33 | :undoc-members:

34 |

35 |

--------------------------------------------------------------------------------

/pywick/meters/msemeter.py:

--------------------------------------------------------------------------------

1 | import math

2 | from . import meter

3 | import torch

4 |

5 |

6 | class MSEMeter(meter.Meter):

7 | def __init__(self, root=False):

8 | super(MSEMeter, self).__init__()

9 | self.reset()

10 | self.root = root

11 |

12 | def reset(self):

13 | self.n = 0

14 | self.sesum = 0.0

15 |

16 | def add(self, output, target):

17 | if not torch.is_tensor(output) and not torch.is_tensor(target):

18 | output = torch.from_numpy(output)

19 | target = torch.from_numpy(target)

20 | self.n += output.numel()

21 | self.sesum += torch.sum((output - target) ** 2)

22 |

23 | def value(self):

24 | mse = self.sesum / max(1, self.n)

25 | return math.sqrt(mse) if self.root else mse

26 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/mixnet/layers.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 |

4 |

5 | class Swish(nn.Module):

6 | @staticmethod

7 | def forward(x):

8 | return x * torch.sigmoid(x)

9 |

10 |

11 | class Flatten(nn.Module):

12 | @staticmethod

13 | def forward(x):

14 | return x.view(x.shape[0], -1)

15 |

16 |

17 | class SEModule(nn.Module):

18 | def __init__(self, ch, squeeze_ch):

19 | super().__init__()

20 | self.se = nn.Sequential(

21 | nn.AdaptiveAvgPool2d(1),

22 | nn.Conv2d(ch, squeeze_ch, 1, 1, 0, bias=True),

23 | Swish(),

24 | nn.Conv2d(squeeze_ch, ch, 1, 1, 0, bias=True),

25 | )

26 |

27 | def forward(self, x):

28 | return x * torch.sigmoid(self.se(x))

29 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/config.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | # here (https://github.com/pytorch/vision/tree/master/torchvision/models) to find the download link of pretrained models

4 |

5 | root = '/models/pytorch'

6 | res50_path = os.path.join(root, 'resnet50-19c8e357.pth')

7 | res101_path = os.path.join(root, 'resnet101-5d3b4d8f.pth')

8 | res152_path = os.path.join(root, 'resnet152-b121ed2d.pth')

9 | inception_v3_path = os.path.join(root, 'inception_v3_google-1a9a5a14.pth')

10 | vgg19_bn_path = os.path.join(root, 'vgg19_bn-c79401a0.pth')

11 | vgg16_path = os.path.join(root, 'vgg16-397923af.pth')

12 | dense201_path = os.path.join(root, 'densenet201-4c113574.pth')

13 |

14 | '''

15 | vgg16 trained using caffe

16 | visit this (https://github.com/jcjohnson/pytorch-vgg) to download the converted vgg16

17 | '''

18 | vgg16_caffe_path = os.path.join(root, 'vgg16-caffe.pth')

19 |

--------------------------------------------------------------------------------

/pywick/callbacks/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Callbacks are the primary mechanism by which one can embed event hooks into the training process. Many useful callbacks are provided

3 | out of the box but in all likelihood you will want to implement your own to execute actions based on training events. To do so,

4 | simply extend the pywick.callbacks.Callback class and overwrite functions that you are interested in acting upon.

5 |

6 | """

7 | from .Callback import *

8 | from .CallbackContainer import *

9 | from .CSVLogger import *

10 | from .CyclicLRScheduler import *

11 | from .EarlyStopping import *

12 | from .ExperimentLogger import *

13 | from .History import *

14 | from .LambdaCallback import *

15 | from .LRScheduler import *

16 | from .ModelCheckpoint import *

17 | from .OneCycleLRScheduler import *

18 | from .ReduceLROnPlateau import *

19 | from .SimpleModelCheckpoint import *

20 | from .TQDM import *

21 |

--------------------------------------------------------------------------------

/pywick/meters/timemeter.py:

--------------------------------------------------------------------------------

1 | import time

2 | from . import meter

3 |

4 | class TimeMeter(meter.Meter):

5 | """

6 | This meter is designed to measure the time between events and can be

7 | used to measure, for instance, the average processing time per batch of data.

8 | It is different from most other meters in terms of the methods it provides:

9 |

10 | Mmethods:

11 |

12 | * `reset()` resets the timer, setting the timer and unit counter to zero.

13 | * `value()` returns the time passed since the last `reset()`; divided by the counter value when `unit=true`.

14 | """

15 | def __init__(self, unit):

16 | super(TimeMeter, self).__init__()

17 | self.unit = unit

18 | self.reset()

19 |

20 | def reset(self):

21 | self.n = 0

22 | self.time = time.time()

23 |

24 | def value(self):

25 | return time.time() - self.time

26 |

--------------------------------------------------------------------------------

/pywick/models/classification/testnets/se_module.py:

--------------------------------------------------------------------------------

1 | # Source: https://github.com/zhouyuangan/SE_DenseNet/blob/master/se_module.py (License: MIT)

2 |

3 | from torch import nn

4 |

5 |

6 | class SELayer(nn.Module):

7 | def __init__(self, channel, reduction=16):

8 | if channel <= reduction:

9 | raise AssertionError("Make sure your input channel bigger than reduction which equals to {}".format(reduction))

10 | super(SELayer, self).__init__()

11 | self.avg_pool = nn.AdaptiveAvgPool2d(1)

12 | self.fc = nn.Sequential(

13 | nn.Linear(channel, channel // reduction),

14 | nn.ReLU(inplace=True),

15 | nn.Linear(channel // reduction, channel),

16 | nn.Sigmoid()

17 | )

18 |

19 | def forward(self, x):

20 | b, c, _, _ = x.size()

21 | y = self.avg_pool(x).view(b, c)

22 | y = self.fc(y).view(b, c, 1, 1)

23 | return x * y

--------------------------------------------------------------------------------

/pywick/configs/eval_classifier.yaml:

--------------------------------------------------------------------------------

1 | # This specification extends / overrides default.yaml where necessary

2 | __include__: default.yaml

3 |

4 | eval:

5 | batch_size: 1 # size of batch to run through eval

6 | dataroots: '/data/eval' # directory containing evaluation data

7 | eval_chkpt: '/data/models/best.pth' # saved checkpoint to use for evaluation

8 | gpu_id: 0

9 | has_grnd_truth: True # whether ground truth is provided (as directory names under which images reside)

10 | # input_size: 224 # should be saved with the model but could be overridden here

11 | jobroot: '/jobs/eval_output' # where to output predictions

12 | topK: 5 # number of results to return

13 | use_gpu: False # toggle gpu use for inference

14 | workers: 1 # keep at 1 otherwise statistics may not be accurate

--------------------------------------------------------------------------------

/examples/17flowers_split.py:

--------------------------------------------------------------------------------

1 | import shutil

2 | import os

3 |

4 | directory = "jpg"

5 | target_train = "17flowers"

6 |

7 | if not os.path.isdir(target_train):

8 | os.makedirs(target_train)

9 |

10 | classes = [

11 | "daffodil",

12 | "snowdrop",

13 | "lilyvalley",

14 | "bluebell",

15 | "crocus",

16 | "iris",

17 | "tigerlily",

18 | "tulip",

19 | "fritillary",

20 | "sunflower",

21 | "daisy",

22 | "coltsfoot",

23 | "dandelion",

24 | "cowslip",

25 | "buttercup",

26 | "windflower",

27 | "pansy",

28 | ]

29 |

30 | j = 0

31 | for i in range(1, 1361):

32 | label_dir = os.path.join(target_train, classes[j])

33 |

34 | if not os.path.isdir(label_dir):

35 | os.makedirs(label_dir)

36 |

37 | filename = "image_" + str(i).zfill(4) + ".jpg"

38 | shutil.copy(

39 | os.path.join(directory, filename), os.path.join(label_dir, filename)

40 | )

41 |

42 | if i % 80 == 0:

43 | j += 1

--------------------------------------------------------------------------------

/docs/source/api/pywick.models.torchvision.rst:

--------------------------------------------------------------------------------

1 | Torchvision Models

2 | ====================================

3 |

4 | All standard `torchvision models `_

5 | are supported out of the box.

6 |

7 | * AlexNet

8 | * Densenet (121, 161, 169, 201)

9 | * GoogLeNet

10 | * Inception V3

11 | * Mobilenet V2

12 | * ResNet (18, 34, 50, 101, 152)

13 | * ShuffleNet V2

14 | * SqueezeNet (1.0, 1.1)

15 | * VGG (11, 13, 16, 19)

16 |

17 | Keep in mind that if you use torvision loading methods (e.g. ``torchvision.models.alexnet(...)``) you

18 | will get a vanilla pretrained model based on Imagenet with 1000 classes. However, more typically,

19 | you'll want to use a pretrained model with your own dataset (and your own number of classes). In that

20 | case you should instead use Pywick's ``models.model_utils.get_model(...)`` utility function

21 | which will do all the dirty work for you and give you a pretrained model but with your custom

22 | number of classes!

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/gscnn/mynn.py:

--------------------------------------------------------------------------------

1 | """

2 | Copyright (C) 2019 NVIDIA Corporation. All rights reserved.

3 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

4 | """

5 |

6 | import torch.nn as nn

7 | from .config import cfg

8 |

9 |

10 | def Norm2d(in_channels):

11 | """

12 | Custom Norm Function to allow flexible switching

13 | """

14 | layer = cfg.MODEL.BNFUNC

15 | normalizationLayer = layer(in_channels)

16 | return normalizationLayer

17 |

18 |

19 | def initialize_weights(*models):

20 | for model in models:

21 | for module in model.modules():

22 | if isinstance(module(nn.Conv2d, nn.Linear)):

23 | nn.init.kaiming_normal(module.weight)

24 | if module.bias is not None:

25 | module.bias.data.zero_()

26 | elif isinstance(module, nn.BatchNorm2d):

27 | module.weight.data.fill_(1)

28 | module.bias.data.zero_()

29 |

--------------------------------------------------------------------------------

/pywick/functions/mish.py:

--------------------------------------------------------------------------------

1 | # Source: https://github.com/rwightman/gen-efficientnet-pytorch/blob/master/geffnet/activations/activations.py (Apache 2.0)

2 | # Note. Cuda-compiled source can be found here: https://github.com/thomasbrandon/mish-cuda (MIT)

3 |

4 | import torch.nn as nn

5 | import torch.nn.functional as F

6 |

7 | def mish(x, inplace: bool = False):

8 | """Mish: A Self Regularized Non-Monotonic Neural Activation Function - https://arxiv.org/abs/1908.08681

9 | """

10 | return x.mul(F.softplus(x).tanh())

11 |

12 | class Mish(nn.Module):

13 | """

14 | Mish - "Mish: A Self Regularized Non-Monotonic Neural Activation Function"

15 | https://arxiv.org/abs/1908.08681v1

16 | implemented for PyTorch / FastAI by lessw2020

17 | github: https://github.com/lessw2020/mish

18 | """

19 | def __init__(self, inplace: bool = False):

20 | super(Mish, self).__init__()

21 | self.inplace = inplace

22 |

23 | def forward(self, x):

24 | return mish(x, self.inplace)

25 |

--------------------------------------------------------------------------------

/pywick/models/LICENSE-MIT.txt:

--------------------------------------------------------------------------------

1 | The MIT License

2 |

3 | Copyright 2019

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

6 |

7 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

8 |

9 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/__init__.py:

--------------------------------------------------------------------------------

1 | from .autofocusNN import *

2 | from .axial_deeplab import *

3 | from .dabnet import *

4 | from .deeplabv3 import DeepLabV3 as TEST_DLV3

5 | from .deeplabv2 import DeepLabV2 as TEST_DLV2

6 | from .deeplabv3_xception import DeepLabv3_plus as TEST_DLV3_Xception

7 | from .deeplabv3_xception import create_DLX_V3_pretrained as TEST_DLX_V3

8 | from .deeplabv3_resnet import create_DLR_V3_pretrained as TEST_DLR_V3

9 | from .difnet import DifNet101, DifNet152

10 | from .drnet import DRNet

11 | from .encnet import EncNet as TEST_EncNet, encnet_resnet50 as TEST_EncNet_Res50, encnet_resnet101 as TEST_EncNet_Res101, encnet_resnet152 as TEST_EncNet_Res152

12 | from .exfuse import UnetExFuse

13 | from .gscnn import GSCNN

14 | from .lg_kernel_exfuse import GCNFuse

15 | from .psanet import *

16 | from .psp_saeed import PSPNet as TEST_PSPNet2

17 | from .tkcnet import TKCNet_Resnet101

18 | from .tiramisu_test import FCDenseNet57 as TEST_Tiramisu57

19 | from .Unet_nested import UNet_Nested_dilated as TEST_Unet_nested_dilated

20 | from .unet_plus_plus import NestNet as Unet_Plus_Plus

--------------------------------------------------------------------------------

/pywick/transforms/utils.py:

--------------------------------------------------------------------------------

1 | import cv2

2 |

3 |

4 | def read_cv2_as_rgba(path):

5 | """

6 | Reads files from the provided path and returns them as a dictionary of: {'image': rgba, 'mask': rgba[:, :, 3]}

7 | :param path: Absolute file path

8 |

9 | :return: {'image': rgba, 'mask': rgba[:, :, 3]}

10 | """

11 | image = cv2.imread(path, -1)

12 | # By default OpenCV uses BGR color space for color images, so we need to convert the image to RGB color space.

13 | rgba = cv2.cvtColor(image, cv2.COLOR_BGRA2RGBA)

14 | return {'image': rgba, 'mask': rgba[:, :, 3]}

15 |

16 |

17 | def read_cv2_as_rgb(path):

18 | """

19 | Reads files from the provided path and returns them as a dictionary of: {'image': rgb} in RGB format

20 | :param path: Absolute file path

21 |

22 | :return: CV2 / numpy array in RGB format

23 | """

24 | image = cv2.imread(path, -1)

25 | # By default OpenCV uses BGR color space for color images, so we need to convert the image to RGB color space.

26 | return {'image': cv2.cvtColor(image, cv2.COLOR_BGR2RGB)}

27 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/emanet/settings.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import numpy as np

3 | from torch import Tensor

4 |

5 |

6 | # Data settings

7 | DATA_ROOT = '/path/to/VOC'

8 | MEAN = Tensor(np.array([0.485, 0.456, 0.406]))

9 | STD = Tensor(np.array([0.229, 0.224, 0.225]))

10 | SCALES = (0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0)

11 | CROP_SIZE = 513

12 | IGNORE_LABEL = 255

13 |

14 | # Model definition

15 | N_CLASSES = 21

16 | N_LAYERS = 101

17 | STRIDE = 8

18 | BN_MOM = 3e-4

19 | EM_MOM = 0.9

20 | STAGE_NUM = 3

21 |

22 | # Training settings

23 | BATCH_SIZE = 16

24 | ITER_MAX = 30000

25 | ITER_SAVE = 2000

26 |

27 | LR_DECAY = 10

28 | LR = 9e-3

29 | LR_MOM = 0.9

30 | POLY_POWER = 0.9

31 | WEIGHT_DECAY = 1e-4

32 |

33 | DEVICE = 0

34 | DEVICES = list(range(0, 4))

35 |

36 | LOG_DIR = './logdir'

37 | MODEL_DIR = './models'

38 | NUM_WORKERS = 16

39 |

40 | logger = logging.getLogger('train')

41 | logger.setLevel(logging.INFO)

42 | ch = logging.StreamHandler()

43 | ch.setLevel(logging.INFO)

44 | formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')

45 | ch.setFormatter(formatter)

46 | logger.addHandler(ch)

47 |

--------------------------------------------------------------------------------

/pywick/datasets/tnt/dataset.py:

--------------------------------------------------------------------------------

1 | from torch.utils.data import DataLoader

2 |

3 |

4 | class Dataset:

5 | def __init__(self):

6 | pass

7 |

8 | def __len__(self):

9 | pass

10 |

11 | def __getitem__(self, idx):

12 | if idx >= len(self):

13 | raise IndexError("CustomRange index out of range")

14 | pass

15 |

16 | def batch(self, *args, **kwargs):

17 | from .batchdataset import BatchDataset

18 | return BatchDataset(self, *args, **kwargs)

19 |

20 | def transform(self, *args, **kwargs):

21 | from .transformdataset import TransformDataset

22 | return TransformDataset(self, *args, **kwargs)

23 |

24 | def shuffle(self, *args, **kwargs):

25 | from .shuffledataset import ShuffleDataset

26 | return ShuffleDataset(self, *args, **kwargs)

27 |

28 | def parallel(self, *args, **kwargs):

29 | return DataLoader(self, *args, **kwargs)

30 |

31 | def partition(self, *args, **kwargs):

32 | from .multipartitiondataset import MultiPartitionDataset

33 | return MultiPartitionDataset(self, *args, **kwargs)

34 |

35 |

--------------------------------------------------------------------------------

/pywick/models/segmentation/testnets/gscnn/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Copyright (C) 2019 NVIDIA Corporation. All rights reserved.

3 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

4 | """

5 |

6 | import importlib

7 | import torch

8 | import logging

9 |

10 | from .gscnn import GSCNN

11 |

12 | def get_net(args, criterion):

13 | net = get_model(network=args.arch, num_classes=args.dataset_cls.num_classes,

14 | criterion=criterion, trunk=args.trunk)

15 | num_params = sum([param.nelement() for param in net.parameters()])

16 | logging.info('Model params = {:2.1f}M'.format(num_params / 1000000))

17 |

18 | net = net.cuda()

19 | net = torch.nn.DataParallel(net)

20 | return net

21 |

22 |

23 | def get_model(network, num_classes, criterion, trunk):

24 |

25 | module = network[:network.rfind('.')]

26 | model = network[network.rfind('.')+1:]

27 | mod = importlib.import_module(module)

28 | net_func = getattr(mod, model)

29 | net = net_func(num_classes=num_classes, trunk=trunk, criterion=criterion)

30 | return net

31 |

32 |

33 |

--------------------------------------------------------------------------------

/pywick/LICENSE-MIT.txt:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Elad Hoffer

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/pywick/meters/movingaveragevaluemeter.py:

--------------------------------------------------------------------------------

1 | import math

2 | from . import meter

3 | import torch

4 |

5 |

6 | class MovingAverageValueMeter(meter.Meter):

7 | """

8 | Keeps track of mean and standard deviation of some value for a given window.

9 | """

10 | def __init__(self, windowsize):

11 | super(MovingAverageValueMeter, self).__init__()

12 | self.windowsize = windowsize

13 | self.valuequeue = torch.Tensor(windowsize)

14 | self.reset()

15 |

16 | def reset(self):

17 | self.sum = 0.0

18 | self.n = 0

19 | self.var = 0.0

20 | self.valuequeue.fill_(0)

21 |

22 | def add(self, value):

23 | queueid = (self.n % self.windowsize)

24 | oldvalue = self.valuequeue[queueid]

25 | self.sum += value - oldvalue

26 | self.var += value * value - oldvalue * oldvalue

27 | self.valuequeue[queueid] = value

28 | self.n += 1

29 |

30 | def value(self):

31 | n = min(self.n, self.windowsize)

32 | mean = self.sum / max(1, n)

33 | std = math.sqrt(max((self.var - n * mean * mean) / max(1, n-1), 0))

34 | return mean, std

35 |

36 |

--------------------------------------------------------------------------------

/pywick/functions/LICENSE-MIT.txt:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Elad Hoffer

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/pywick/datasets/UsefulDataset.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data.dataset as ds

2 |

3 | class UsefulDataset(ds.Dataset):

4 | '''

5 | A ``torch.utils.data.Dataset`` class with additional useful functions.

6 | '''

7 |

8 | def __init__(self):

9 | self.num_inputs = 1 # these are hardcoded for the fit module to work

10 | self.num_targets = 1 # these are hardcoded for the fit module to work

11 |

12 | def getdata(self):

13 | """

14 | Data that the Dataset class operates on. Typically iterable/list of tuple(label,target).

15 | Note: This is different than simply calling myDataset.data because some datasets are comprised of multiple other datasets!

16 | The dataset returned should be the `combined` dataset!

17 |

18 | :return: iterable - Representation of the entire dataset (combined if necessary from multiple other datasets)

19 | """

20 | raise NotImplementedError

21 |

22 | def getmeta_data(self):

23 | """

24 | Additional data to return that might be useful to consumer. Typically a dict.

25 |

26 | :return: dict(any)

27 | """

28 | raise NotImplementedError

--------------------------------------------------------------------------------

/pywick/datasets/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Datasets are the primary mechanism by which Pytorch assembles training and testing data

3 | to be used while training neural networks. While `pytorch` already provides a number of

4 | handy `datasets `_ and

5 | `torchvision` further extends them to common

6 | `academic sets `_,

7 | the implementations below provide some very powerful options for loading all kinds of data.

8 | We had to extend the default Pytorch implementation as by default it does not keep track

9 | of some useful metadata. That said, you can use our datasets in the normal fashion you're used to

10 | with Pytorch.

11 | """

12 |

13 | from .BaseDataset import BaseDataset

14 | from .ClonedFolderDataset import ClonedFolderDataset

15 | from .CSVDataset import CSVDataset

16 | from .FolderDataset import FolderDataset

17 | from .MultiFolderDataset import MultiFolderDataset

18 | from .PredictFolderDataset import PredictFolderDataset

19 | from .TensorDataset import TensorDataset

20 | from .UsefulDataset import UsefulDataset

21 | from . import data_utils

22 | from .tnt import *

23 |

--------------------------------------------------------------------------------

/pywick/meters/mapmeter.py:

--------------------------------------------------------------------------------

1 | from . import meter, APMeter

2 |

3 | class mAPMeter(meter.Meter):

4 | """

5 | The mAPMeter measures the mean average precision over all classes.

6 |

7 | The mAPMeter is designed to operate on `NxK` Tensors `output` and

8 | `target`, and optionally a `Nx1` Tensor weight where (1) the `output`

9 | contains model output scores for `N` examples and `K` classes that ought to

10 | be higher when the model is more convinced that the example should be

11 | positively labeled, and smaller when the model believes the example should

12 | be negatively labeled (for instance, the output of a sigmoid function); (2)

13 | the `target` contains only values 0 (for negative examples) and 1

14 | (for positive examples); and (3) the `weight` ( > 0) represents weight for

15 | each sample.

16 | """

17 | def __init__(self):

18 | super(mAPMeter, self).__init__()

19 | self.apmeter = APMeter()

20 |

21 | def reset(self):

22 | self.apmeter.reset()

23 |

24 | def add(self, output, target, weight=None):

25 | self.apmeter.add(output, target, weight)

26 |

27 | def value(self):

28 | return self.apmeter.value().mean()

29 |

--------------------------------------------------------------------------------

/pywick/optimizers/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Optimizers govern the path that your neural network takes as it tries to minimize error.

3 | Picking the right optimizer and initializing it with the right parameters will either make your network learn successfully

4 | or will cause it not to learn at all! Pytorch already implements the most widely used flavors such as SGD, Adam, RMSProp etc.

5 | Here we strive to include optimizers that Pytorch has missed (and any cutting edge ones that have not yet been added).

6 | """

7 |

8 | from .a2grad import A2GradInc, A2GradExp, A2GradUni

9 | from .adabelief import AdaBelief

10 | from .adahessian import Adahessian

11 | from .adamp import AdamP

12 | from .adamw import AdamW

13 | from .addsign import AddSign

14 | from .apollo import Apollo

15 | from .eve import Eve

16 | from .lars import Lars

17 | from .lookahead import Lookahead

18 | from .lookaheadsgd import LookaheadSGD

19 | from .madgrad import MADGRAD

20 | from .nadam import Nadam

21 | from .powersign import PowerSign

22 | from .qhadam import QHAdam

23 | from .radam import RAdam

24 | from .ralamb import Ralamb

25 | from .rangerlars import RangerLars

26 | from .sgdw import SGDW

27 | from .swa import SWA

28 | from torch.optim import *

29 |

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

1 | COPYRIGHT

2 |

3 | Copyright (c) 2019, Achaiah.

4 | All rights reserved.

5 |

6 | LICENSE

7 |

8 | The MIT License (MIT)

9 |

10 | Permission is hereby granted, free of charge, to any person obtaining a copy

11 | of this software and associated documentation files (the "Software"), to deal

12 | in the Software without restriction, including without limitation the rights

13 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

14 | copies of the Software, and to permit persons to whom the Software is

15 | furnished to do so, subject to the following conditions:

16 |

17 | The above copyright notice and this permission notice shall be included in all

18 | copies or substantial portions of the Software.

19 |

20 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

21 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

22 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

23 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

24 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

25 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

26 | SOFTWARE.

27 |

--------------------------------------------------------------------------------

/pywick/datasets/tnt/concatdataset.py:

--------------------------------------------------------------------------------

1 | from .dataset import Dataset

2 | import numpy as np

3 |

4 |

5 | class ConcatDataset(Dataset):

6 | """

7 | Dataset to concatenate multiple datasets.

8 |

9 | Purpose: useful to assemble different existing datasets, possibly

10 | large-scale datasets as the concatenation operation is done in an

11 | on-the-fly manner.

12 |

13 | Args:

14 | datasets (iterable): List of datasets to be concatenated

15 | """

16 |

17 | def __init__(self, datasets):

18 | super(ConcatDataset, self).__init__()

19 |

20 | self.datasets = list(datasets)

21 | if len(datasets) <= 0:

22 | raise AssertionError('datasets should not be an empty iterable')

23 | self.cum_sizes = np.cumsum([len(x) for x in self.datasets])

24 |

25 | def __len__(self):

26 | return self.cum_sizes[-1]

27 |

28 | def __getitem__(self, idx):

29 | super(ConcatDataset, self).__getitem__(idx)

30 | dataset_index = self.cum_sizes.searchsorted(idx, 'right')

31 |

32 | if dataset_index == 0:

33 | dataset_idx = idx

34 | else:

35 | dataset_idx = idx - self.cum_sizes[dataset_index - 1]

36 |

37 | return self.datasets[dataset_index][dataset_idx]

38 |

--------------------------------------------------------------------------------

/pywick/gridsearch/grid_test.py:

--------------------------------------------------------------------------------

1 | import json

2 | from .gridsearch import GridSearch

3 |

4 | my_args = {

5 | 'shape': '+plus+',

6 | 'animal':['cat', 'mouse', 'dog'],

7 | 'number':[4, 5, 6],

8 | 'device':['CUP', 'MUG', 'TPOT'],

9 | 'flower' : '=Rose='

10 | }

11 |

12 | def tryme(args_dict):

13 | print(json.dumps(args_dict, indent=4))

14 |

15 | def tryme_vars(animal='', number=0, device='', shape='', flower=''):

16 | print(animal + " : " + str(number) + " : " + device + " : " + shape + " : " + flower)

17 |

18 | def main():

19 | grids = GridSearch(tryme, grid_params=my_args, search_behavior='exhaustive', args_as_dict=True)

20 |

21 | print('-------- INITIAL SETTINGS ---------')

22 | tryme(my_args)

23 | print('-------------- END ----------------')

24 | print('-------------- --- ----------------')

25 | print()

26 | print('+++++++++++ Dict Result ++++++++++')

27 | grids.run()

28 | print('+++++++++++++ End Dict Result +++++++++++\n\n')

29 |

30 | grids = GridSearch(tryme_vars, grid_params=my_args, search_behavior='sampled_0.5', args_as_dict=False)

31 | print('========== Vars Result ==========')

32 | grids.run()

33 | print('========== End Vars Result ==========')

34 | # exit()

35 |

36 | if __name__ == '__main__':

37 | main()

38 |

--------------------------------------------------------------------------------

/pywick/models/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Neural network models is what deep learning is all about! While you can download some standard models from

3 | `torchvision `_, we strive to create a library of models