├── utils

├── bremm.png

├── saveload.py

├── basic.py

├── data.py

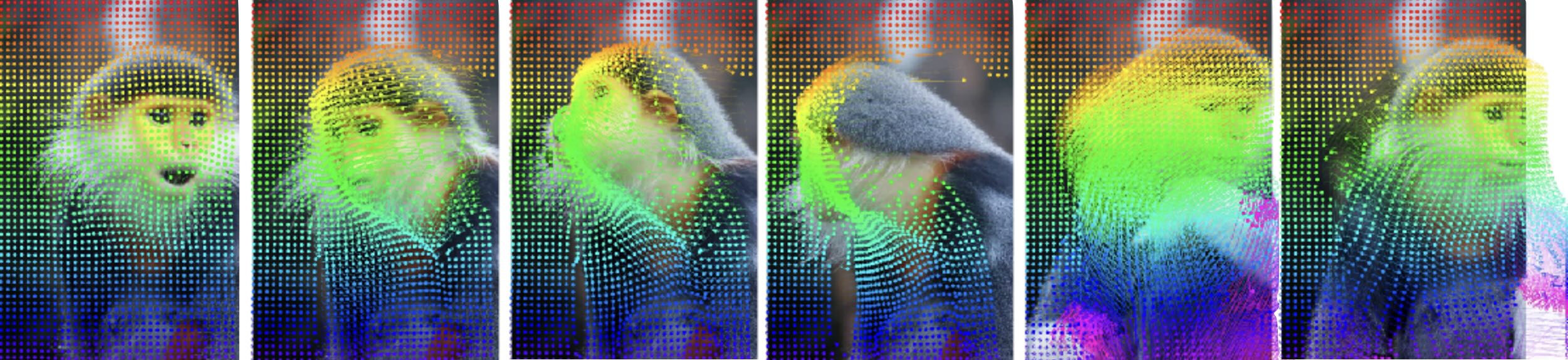

├── samp.py

├── loss.py

├── misc.py

└── py.py

├── .gitignore

├── demo_video

└── download_video.sh

├── download_reference_model.sh

├── requirements.txt

├── LICENSE

├── datasets

├── davisdataset.py

├── rgbstackingdataset.py

├── kineticsdataset.py

├── robotapdataset.py

├── egopointsdataset.py

├── crohddataset.py

├── badjadataset.py

├── horsedataset.py

├── drivetrackdataset.py

├── kubric_movif_dataset.py

├── exportdataset.py

├── pointdataset.py

└── dynrep_dataset.py

├── README.md

├── demo.py

├── test_dense_on_sparse.py

└── train_stage1.py

/utils/bremm.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/aharley/alltracker/HEAD/utils/bremm.png

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

*~
*#*
*pyc
#.*
.DS_Store
*.out
*.gif
stock_videos/
logs*
*.mp4
temp_*

--------------------------------------------------------------------------------

/demo_video/download_video.sh:

--------------------------------------------------------------------------------

#!/bin/bash
FILE="monkey.mp4"
echo "downloading ${FILE} from dropbox"
# the URL is quoted so the shell does not treat '&' as a background operator
wget --max-redirect=20 -O "${FILE}" "https://www.dropbox.com/scl/fi/fm2m3ylhzmqae05bzwm8q/monkey.mp4?rlkey=ibf81gaqpxkh334rccu7zrioe&st=mli9bqb6&dl=1"

--------------------------------------------------------------------------------

/download_reference_model.sh:

--------------------------------------------------------------------------------

#!/bin/bash
FILE="alltracker_reference.tar.gz"
echo "downloading ${FILE} from dropbox"
# the URL is quoted so the shell does not treat '&' as a background operator
wget --max-redirect=20 -O "${FILE}" "https://www.dropbox.com/scl/fi/ng66ceortfy07bgie3r54/alltracker_reference.tar.gz?rlkey=o781im2v0sl7035hy8fcuv1d5&st=u5mcttcx&dl=1"

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

torch
torchaudio
torchvision
pytorch_lightning==2.4.0
opencv-python==4.10.0.84
einops==0.8.0
moviepy==1.0.3
h5py==3.12.1
matplotlib==3.9.2
scikit-learn==1.5.2
scikit-image==0.24.0
tensorboardX==2.6.2.2
prettytable==3.12.0

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2025 Adam W. Harley

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/datasets/davisdataset.py:

--------------------------------------------------------------------------------

from numpy import random
import torch
import numpy as np
import pickle
from datasets.pointdataset import PointDataset
import utils.data
import cv2

class DavisDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/tapvid_davis',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(DavisDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        print('loading TAPVID-DAVIS dataset...')

        self.dname = 'davis'
        self.only_first = only_first

        input_path = '%s/tapvid_davis.pkl' % data_root
        with open(input_path, 'rb') as f:
            data = pickle.load(f)
            if isinstance(data, dict):
                data = list(data.values())
        self.data = data
        print('found %d videos in %s' % (len(self.data), data_root))

    def __getitem__(self, index):
        dat = self.data[index]
        rgbs = dat['video'] # list of H,W,C uint8 images
        trajs = dat['points'] # N,S,2 array
        visibs = 1-dat['occluded'] # N,S array
        # note the annotations are only valid when not occluded

        trajs = trajs.transpose(1,0,2) # S,N,2
        visibs = visibs.transpose(1,0) # S,N
        valids = visibs.copy()

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        # in this data, 1.0,1.0 should lie at the bottom-right corner pixel
        H, W = rgbs[0].shape[:2]
        trajs[:,:,0] *= W-1
        trajs[:,:,1] *= H-1

        rgbs = torch.from_numpy(np.stack(rgbs,0)).permute(0,3,1,2).contiguous().float() # S,C,H,W
        trajs = torch.from_numpy(trajs).float() # S,N,2
        valids = torch.from_numpy(valids).float() # S,N
        visibs = torch.from_numpy(visibs).float() # S,N

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.data)

--------------------------------------------------------------------------------
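
The coordinate comment in the loader above ("1.0,1.0 should lie at the bottom-right corner pixel") can be illustrated in isolation. The sketch below is a standalone NumPy example, not code from the repository; the helper name `normalized_to_pixels` is hypothetical and it assumes trajectories are stored as normalized (x, y) pairs in [0, 1].

```python
import numpy as np

def normalized_to_pixels(trajs, height, width):
    """Map (x, y) coordinates in [0, 1] to pixel indices.

    Hypothetical helper: scaling by (size - 1) sends 1.0 to the last
    pixel index rather than one past the image edge.
    """
    out = np.asarray(trajs, dtype=np.float32).copy()
    out[..., 0] *= width - 1   # x runs along the image width
    out[..., 1] *= height - 1  # y runs along the image height
    return out

# One frame (S=1) with two points (N=2): the two opposite image corners.
corners = np.array([[[0.0, 0.0], [1.0, 1.0]]])
pixels = normalized_to_pixels(corners, height=384, width=512)
print(pixels[0, 1])  # coordinates of the bottom-right corner pixel
```

Scaling by `W` and `H` instead would place the point (1.0, 1.0) one pixel outside the image, which is why the `-1` matters under this convention.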

/datasets/rgbstackingdataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
import pickle
from datasets.pointdataset import PointDataset
import utils.data
import cv2

class RGBStackingDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/tapvid_rgbstacking',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(RGBStackingDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        print('loading TAPVID-RGB-Stacking dataset...')

        self.dname = 'rgbstacking'
        self.only_first = only_first

        input_path = '%s/tapvid_rgb_stacking.pkl' % data_root
        with open(input_path, 'rb') as f:
            data = pickle.load(f)
            if isinstance(data, dict):
                data = list(data.values())
        self.data = data
        print('found %d videos in %s' % (len(self.data), data_root))

    def __getitem__(self, index):
        dat = self.data[index]
        rgbs = dat['video'] # list of H,W,C uint8 images
        trajs = dat['points'] # N,S,2 array
        visibs = 1-dat['occluded'] # N,S array
        # note the annotations are only valid when visible
        valids = visibs.copy()

        trajs = trajs.transpose(1,0,2) # S,N,2
        visibs = visibs.transpose(1,0) # S,N
        valids = valids.transpose(1,0) # S,N

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        # 1.0,1.0 should lie at the bottom-right corner pixel
        H, W = rgbs[0].shape[:2]
        trajs[:,:,0] *= W-1
        trajs[:,:,1] *= H-1

        rgbs = torch.from_numpy(np.stack(rgbs,0)).permute(0,3,1,2).contiguous().float() # S,C,H,W
        trajs = torch.from_numpy(trajs).float() # S,N,2
        visibs = torch.from_numpy(visibs).float() # S,N
        valids = torch.from_numpy(valids).float() # S,N

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.data)

--------------------------------------------------------------------------------

/datasets/kineticsdataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
import pickle
from datasets.pointdataset import PointDataset
import utils.data
import cv2
from pathlib import Path
import io
from PIL import Image

def decode(frame):
    byteio = io.BytesIO(frame)
    img = Image.open(byteio)
    return np.array(img)

class KineticsDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/tapvid_kinetics',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(KineticsDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        print('loading TAPVID-Kinetics dataset...')

        self.dname = 'kinetics'
        self.only_first = only_first

        self.data = []
        for vid_pkl in sorted(list(Path(data_root).glob('*.pkl')))[:]:
            vid_pkl = vid_pkl.name
            print(vid_pkl)
            input_path = "%s/%s" % (data_root, vid_pkl)
            with open(input_path, "rb") as f:
                data = pickle.load(f)
            self.data += data
        print("found %d videos in %s" % (len(self.data), data_root))

    def __getitem__(self, index):
        dat = self.data[index]
        rgbs = dat['video'] # list of H,W,C uint8 images
        if isinstance(rgbs[0], bytes): # decode if needed
            rgbs = [decode(frame) for frame in rgbs]
        trajs = dat['points'] # N,S,2 array
        visibs = 1-dat['occluded'] # N,S array
        # note the annotations are only valid when visible

        trajs = trajs.transpose(1,0,2) # S,N,2
        visibs = visibs.transpose(1,0) # S,N
        valids = visibs.copy()

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        H, W = rgbs[0].shape[:2]
        trajs[:,:,0] *= W-1
        trajs[:,:,1] *= H-1

        rgbs = torch.from_numpy(np.stack(rgbs,0)).permute(0,3,1,2).contiguous().float() # S,C,H,W
        trajs = torch.from_numpy(trajs).float() # S,N,2
        visibs = torch.from_numpy(visibs).float() # S,N
        valids = torch.from_numpy(valids).float() # S,N

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.data)

--------------------------------------------------------------------------------

/datasets/robotapdataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
import pickle
from datasets.pointdataset import PointDataset
import utils.data
import cv2

class RobotapDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/robotap',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(RobotapDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        self.dname = 'robo'
        self.only_first = only_first

        # self.train_pkls = ['robotap_split0.pkl', 'robotap_split1.pkl', 'robotap_split2.pkl']
        self.val_pkls = ['robotap_split3.pkl', 'robotap_split4.pkl']

        print("loading robotap dataset...")
        # self.vid_pkls = self.train_pkls if is_training else self.val_pkls
        self.data = []
        for vid_pkl in self.val_pkls:
            print(vid_pkl)
            input_path = "%s/%s" % (data_root, vid_pkl)
            with open(input_path, "rb") as f:
                data = pickle.load(f)
            keys = list(data.keys())
            self.data += [data[key] for key in keys]
        print("found %d videos in %s" % (len(self.data), data_root))

    def __len__(self):
        return len(self.data)

    def getitem_helper(self, index):
        dat = self.data[index]
        rgbs = dat["video"] # list of H,W,C uint8 images
        trajs = dat["points"] # N,S,2 array
        visibs = 1 - dat["occluded"] # N,S array

        # note the annotations are only valid when not occluded
        trajs = trajs.transpose(1,0,2) # S,N,2
        visibs = visibs.transpose(1,0) # S,N
        valids = visibs.copy()

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        # 1.0,1.0 should lie at the bottom-right corner pixel
        H, W = rgbs[0].shape[:2]
        trajs[:,:,0] *= W-1
        trajs[:,:,1] *= H-1

        rgbs = torch.from_numpy(np.stack(rgbs,0)).permute(0,3,1,2).contiguous().float() # S,C,H,W
        trajs = torch.from_numpy(trajs).float() # S,N,2
        visibs = torch.from_numpy(visibs).float() # S,N
        valids = torch.from_numpy(valids).float() # S,N

        if self.seq_len is not None:
            rgbs = rgbs[:self.seq_len]
            trajs = trajs[:self.seq_len]
            valids = valids[:self.seq_len]
            visibs = visibs[:self.seq_len]

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

--------------------------------------------------------------------------------

/utils/saveload.py:

--------------------------------------------------------------------------------

import pathlib
import os
import torch

def save(ckpt_dir, module, optimizer, scheduler, global_step, keep_latest=2, model_name='model'):
    pathlib.Path(ckpt_dir).mkdir(exist_ok=True, parents=True)
    prev_ckpts = list(pathlib.Path(ckpt_dir).glob('%s-*pth' % model_name))
    prev_ckpts.sort(key=lambda p: p.stat().st_mtime,reverse=True)
    if len(prev_ckpts) > keep_latest-1:
        for f in prev_ckpts[keep_latest-1:]:
            f.unlink()
    save_path = '%s/%s-%09d.pth' % (ckpt_dir, model_name, global_step)
    save_dict = {
        "model": module.state_dict(),
        "optimizer": optimizer.state_dict(),
        "global_step": global_step,
    }
    if scheduler is not None:
        save_dict['scheduler'] = scheduler.state_dict()
    print(f"saving {save_path}")
    torch.save(save_dict, save_path)
    return False

def load(fabric, ckpt_path, model, optimizer=None, scheduler=None, model_ema=None, step=0, model_name='model', ignore_load=None, strict=True, verbose=True, weights_only=False):
    if verbose:
        print('reading ckpt from %s' % ckpt_path)
    if not os.path.exists(ckpt_path):
        print('...there is no full checkpoint in %s' % ckpt_path)
        print('-- note this function no longer appends "saved_checkpoints/" before the ckpt_path --')
        assert(False)
    else:
        if os.path.isfile(ckpt_path):
            path = ckpt_path
            print('...found checkpoint %s' % (path))
        else:
            prev_ckpts = list(pathlib.Path(ckpt_path).glob('%s-*pth' % model_name))
            prev_ckpts.sort(key=lambda p: p.stat().st_mtime,reverse=True)
            if len(prev_ckpts):
                path = prev_ckpts[0]
                # e.g., './checkpoints/2Ai4_5e-4_base18_1539/model-000050000.pth'
                # OR ./whatever.pth
                step = int(str(path).split('-')[-1].split('.')[0])
                if verbose:
                    print('...found checkpoint %s; (parsed step %d from path)' % (path, step))
            else:
                print('...there is no full checkpoint here!')
                return 0
        if fabric is not None:
            checkpoint = fabric.load(path)
        else:
            checkpoint = torch.load(path, weights_only=weights_only)
        if optimizer is not None:
            optimizer.load_state_dict(checkpoint['optimizer'])
        if scheduler is not None:
            scheduler.load_state_dict(checkpoint['scheduler'])
        assert ignore_load is None # not ready yet
        if 'model' in checkpoint:
            state_dict = checkpoint['model']
        else:
            state_dict = checkpoint
        model.load_state_dict(state_dict, strict=strict)
    return step

--------------------------------------------------------------------------------
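
The checkpoint rotation and step parsing in `saveload.py` can be tried without PyTorch. The following is a minimal sketch using only the standard library; the function names `prune_checkpoints` and `parse_step` are hypothetical and do not exist in the repository, and the sketch only mirrors the file-handling logic, not the actual saving or loading of weights.

```python
import pathlib

def prune_checkpoints(ckpt_dir, keep_latest=2, model_name='model'):
    """Delete older checkpoints so that at most keep_latest-1 remain.

    Hypothetical helper: this leaves room for the checkpoint that is
    about to be written, so keep_latest files exist after the next save.
    """
    ckpts = sorted(
        pathlib.Path(ckpt_dir).glob('%s-*pth' % model_name),
        key=lambda p: p.stat().st_mtime,
        reverse=True,  # newest first
    )
    for old in ckpts[max(keep_latest - 1, 0):]:
        old.unlink()

def parse_step(path):
    """Recover the global step from a name such as model-000050000.pth."""
    return int(str(path).split('-')[-1].split('.')[0])

print(parse_step('./checkpoints/run/model-000050000.pth'))
```

Because the ordering relies on file modification times rather than the step encoded in the filename, copying or touching checkpoint files can change which one counts as the latest.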

/datasets/egopointsdataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
from datasets.pointdataset import PointDataset
import utils.data
import cv2
from pathlib import Path

class EgoPointsDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/ego_points',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(EgoPointsDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        print('loading egopoints dataset...')

        self.dname = 'egopoints'
        self.only_first = only_first

        self.data = []
        for subfolder in Path(data_root).iterdir():
            if subfolder.is_dir():
                annot_fn = subfolder / 'annot.npz'
                if not annot_fn.exists():
                    continue
                data = np.load(annot_fn)
                trajs_2d, valids, visibs, vis_valids = data['trajs_2d'], data['valids'], data['visibs'], data['vis_valids']

                self.data.append({
                    'rgb_paths': sorted(subfolder.glob('rgbs/*.jpg')),
                    'trajs_2d': trajs_2d,
                    'valids': valids,
                    'visibs': visibs,
                    'vis_valids': vis_valids,
                })

        print('found %d videos in %s' % (len(self.data), data_root))

    def __getitem__(self, index):
        dat = self.data[index]
        rgb_paths = dat['rgb_paths']
        trajs = dat['trajs_2d'] # S,N,2
        valids = dat['valids'] # S,N
        visibs = valids.copy() # we don't use this

        rgbs = [cv2.imread(str(rgb_path))[..., ::-1] for rgb_path in rgb_paths]

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        # resize
        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        rgb0_raw = cv2.imread(str(rgb_paths[0]))[..., ::-1]
        trajs = trajs / (np.array([rgb0_raw.shape[1], rgb0_raw.shape[0]]) - 1)
        trajs = np.maximum(np.minimum(trajs, 1.), 0.)
        # 1.0,1.0 should map to the bottom-right corner pixel
        H, W = rgbs[0].shape[:2]
        trajs[:,:,0] *= W-1
        trajs[:,:,1] *= H-1

        rgbs = torch.from_numpy(np.stack(rgbs,0)).permute(0,3,1,2).contiguous().float() # S,C,H,W
        trajs = torch.from_numpy(trajs).float() # S,N,2
        valids = torch.from_numpy(valids).float() # S,N
        visibs = torch.from_numpy(visibs).float()

        if self.seq_len is not None:
            rgbs = rgbs[:self.seq_len]
            trajs = trajs[:self.seq_len]
            valids = valids[:self.seq_len]
            visibs = visibs[:self.seq_len]

        # require at least one valid timestep (after cutting)
        val_ok = torch.sum(valids, axis=0) > 0
        trajs = trajs[:,val_ok]
        valids = valids[:,val_ok]
        visibs = visibs[:,val_ok]

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            valids=valids,
            visibs=visibs,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.data)

--------------------------------------------------------------------------------

/datasets/crohddataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
import os
from PIL import Image
import cv2
from datasets.pointdataset import PointDataset
import utils.data

class CrohdDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/crohd',
            crop_size=(384, 512),
            seq_len=None,
            only_first=False,
    ):
        super(CrohdDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        self.dname = 'crohd'
        self.seq_len = seq_len
        self.only_first = only_first

        dataset_dir = "%s/HT21/train" % self.data_root
        label_location = "%s/HT21Labels/train" % self.data_root
        subfolders = ["HT21-01", "HT21-02", "HT21-03", "HT21-04"]

        print("loading data from {0}".format(dataset_dir))
        self.dataset_dir = dataset_dir
        self.subfolders = subfolders
        print("found %d samples" % len(self.subfolders))

    def getitem_helper(self, index):
        subfolder = self.subfolders[index]

        label_path = os.path.join(self.dataset_dir, subfolder, "gt/gt.txt")
        labels = np.loadtxt(label_path, delimiter=",")

        n_frames = int(labels[-1, 0])
        n_heads = int(labels[:, 1].max())

        bboxes = np.zeros((n_frames, n_heads, 4))
        visibs = np.zeros((n_frames, n_heads))

        for i in range(labels.shape[0]):
            (
                frame_id,
                head_id,
                bb_left,
                bb_top,
                bb_width,
                bb_height,
                conf,
                cid,
                vis,
            ) = labels[i]
            frame_id = int(frame_id) - 1 # convert 1-indexing to 0-indexing
            head_id = int(head_id) - 1 # convert 1-indexing to 0-indexing

            visibs[frame_id, head_id] = vis
            box_cur = np.array(
                [bb_left, bb_top, bb_left + bb_width, bb_top + bb_height]
            ) # convert xywh to x1, y1, x2, y2
            bboxes[frame_id, head_id] = box_cur

        prescale = 0.75 # to save memory

        # take the center of each head box as a coordinate
        trajs = np.stack([bboxes[:, :, [0, 2]].mean(2), bboxes[:, :, [1, 3]].mean(2)], axis=2) # S,N,2
        trajs = trajs * prescale
        valids = visibs.copy()

        S, N = valids.shape

        rgbs = []
        for ii in range(S):
            rgb_path = os.path.join(self.dataset_dir, subfolder, "img1", str(ii + 1).zfill(6) + ".jpg")
            rgb = Image.open(rgb_path) # 1920x1080
            rgb = rgb.resize((int(rgb.size[0] * prescale), int(rgb.size[1] * prescale)), Image.BILINEAR) # save memory by downsampling here
            rgbs.append(rgb)
        rgbs = np.stack(rgbs) # S,H,W,3

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        H, W = rgbs[0].shape[:2]
        S, N = trajs.shape[:2]
        sx = W / self.crop_size[1]
        sy = H / self.crop_size[0]
        trajs[:,:,0] /= sx
        trajs[:,:,1] /= sy
        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        rgbs = np.stack(rgbs)
        H,W = rgbs[0].shape[:2]

        rgbs = torch.from_numpy(rgbs).permute(0, 3, 1, 2).float()
        trajs = torch.from_numpy(trajs).float()
        visibs = torch.from_numpy(visibs).float()
        valids = torch.from_numpy(valids).float()

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.subfolders)

--------------------------------------------------------------------------------
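
The CroHD loader above turns per-row head boxes into point trajectories by taking box centers. The sketch below shows that idea on a tiny hand-made label array. It is a simplified illustration, not the loader itself: the helper name `head_centers` is hypothetical, only the first six columns (frame id, head id, left, top, width, height) are used, and every annotated entry is marked valid instead of reading the visibility column.

```python
import numpy as np

def head_centers(labels):
    """Convert rows of (frame_id, head_id, left, top, width, height)
    with 1-indexed ids into center trajectories of shape S,N,2."""
    n_frames = int(labels[:, 0].max())
    n_heads = int(labels[:, 1].max())
    centers = np.zeros((n_frames, n_heads, 2))
    valids = np.zeros((n_frames, n_heads))
    for row in labels:
        s = int(row[0]) - 1  # convert 1-indexing to 0-indexing
        n = int(row[1]) - 1
        # center of an xywh box is (left + width/2, top + height/2)
        centers[s, n] = [row[2] + row[4] / 2.0, row[3] + row[5] / 2.0]
        valids[s, n] = 1.0
    return centers, valids

# Two frames of a single head moving 2 pixels to the right.
toy_labels = np.array([
    [1, 1, 10.0, 20.0, 4.0, 6.0],
    [2, 1, 12.0, 20.0, 4.0, 6.0],
])
centers, valids = head_centers(toy_labels)
print(centers[:, 0])
```

The result matches averaging the x1/x2 and y1/y2 corners, which is how the loader computes the centers after converting boxes to corner format.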

/datasets/badjadataset.py:

--------------------------------------------------------------------------------

import torch
import numpy as np
from datasets.pointdataset import PointDataset
import utils.data
import cv2
import glob
import imageio
import pandas as pd

# this is badja with annotations hand-cleaned by adam in 2022

class BadjaDataset(PointDataset):
    def __init__(
            self,
            data_root='../datasets/badja',
            crop_size=(384,512),
            seq_len=None,
            only_first=False,
    ):
        super(BadjaDataset, self).__init__(
            data_root=data_root,
            crop_size=crop_size,
            seq_len=seq_len,
        )

        self.dname = 'badja'
        self.seq_len = seq_len
        self.only_first = only_first

        npzs = glob.glob('%s/complete_aa/*.npz' % self.data_root)
        npzs = sorted(npzs)
        df = pd.read_csv('%s/picks_and_coords.txt' % self.data_root, sep=' ', header=None)
        track_names = df[0].tolist()
        pick_frames = np.array(df[1])

        self.animal_names = []
        self.animal_trajs = []
        self.animal_visibs = []
        self.animal_valids = []
        self.animal_picks = []

        for ind in range(len(npzs)):
            o = np.load(npzs[ind])

            animal_name = o['animal_name']
            trajs = o['trajs_g']
            valids = o['valids_g']

            S, N, D = trajs.shape

            assert(D==2)

            N = trajs.shape[1]

            # hand-picked frame where it's fair to start tracking this kp
            pick_g = np.zeros((N), dtype=np.int32)

            for n in range(N):
                short_name = '%s_%02d' % (animal_name, n)
                txt_id = track_names.index(short_name)
                pick_id = pick_frames[txt_id]
                pick_g[n] = pick_id

                # discard annotations before the pick
                valids[:pick_id,n] = 0
                valids[pick_id,n] = 2

            self.animal_names.append(animal_name)
            self.animal_trajs.append(trajs)
            self.animal_valids.append(valids)

    def __getitem__(self, index):
        animal_name = self.animal_names[index]
        trajs = self.animal_trajs[index].copy()
        valids = self.animal_valids[index]
        valids = (valids==2) * 1.0
        visibs = valids.copy()

        S,N,D = trajs.shape

        filenames = glob.glob('%s/videos/%s/*.png' % (self.data_root, animal_name)) + glob.glob('%s/videos/%s/*.jpg' % (self.data_root, animal_name))
        filenames = sorted(filenames)
        S = len(filenames)
        filenames_short = [fn.split('/')[-1] for fn in filenames]

        rgbs = []
        for s in range(S):
            filename_actual = filenames[s]
            rgb = imageio.imread(filename_actual)
            rgbs.append(rgb)

        rgbs, trajs, visibs, valids = utils.data.standardize_test_data(
            rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

        S = len(rgbs)
        H, W, C = rgbs[0].shape
        N = trajs.shape[1]
        rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]
        sx = W / self.crop_size[1]
        sy = H / self.crop_size[0]
        trajs[:,:,0] /= sx
        trajs[:,:,1] /= sy
        rgbs = np.stack(rgbs, 0)
        H, W, C = rgbs[0].shape

        rgbs = torch.from_numpy(rgbs).reshape(S, H, W, 3).permute(0,3,1,2).float()
        trajs = torch.from_numpy(trajs).reshape(S, N, 2).float()
        visibs = torch.from_numpy(visibs).reshape(S, N).float()
        valids = torch.from_numpy(valids).reshape(S, N).float()

        sample = utils.data.VideoData(
            video=rgbs,
            trajs=trajs,
            visibs=visibs,
            valids=valids,
            dname=self.dname,
        )
        return sample, True

    def __len__(self):
        return len(self.animal_names)

--------------------------------------------------------------------------------

/utils/basic.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import os

4 | EPS = 1e-6

5 |

6 | def sub2ind(height, width, y, x):

7 | return y*width + x

8 |

9 | def ind2sub(height, width, ind):

10 | y = ind // width

11 | x = ind % width

12 | return y, x

13 |

14 | def get_lr_str(lr):

15 | lrn = "%.1e" % lr # e.g., 5.0e-04

16 | lrn = lrn[0] + lrn[3:5] + lrn[-1] # e.g., 5e-4

17 | return lrn

18 |

19 | def strnum(x):

20 | s = '%g' % x

21 | if '.' in s:

22 | if x < 1.0:

23 | s = s[s.index('.'):]

24 | s = s[:min(len(s),4)]

25 | return s

26 |

27 | def assert_same_shape(t1, t2):

28 | for (x, y) in zip(list(t1.shape), list(t2.shape)):

29 | assert(x==y)

30 |

31 | def mkdir(path):

32 | if not os.path.exists(path):

33 | os.makedirs(path)

34 |

35 | def print_stats(name, tensor):

36 | shape = tensor.shape

37 | tensor = tensor.detach().cpu().numpy()

38 | print('%s (%s) min = %.2f, mean = %.2f, max = %.2f' % (name, tensor.dtype, np.min(tensor), np.mean(tensor), np.max(tensor)), shape)

39 |

40 | def normalize_single(d):

41 | # d is a whatever shape torch tensor

42 | dmin = torch.min(d)

43 | dmax = torch.max(d)

44 | d = (d-dmin)/(EPS+(dmax-dmin))

45 | return d

46 |

47 | def normalize(d):

48 | # d is B x whatever. normalize within each element of the batch

49 | out = torch.zeros(d.size(), dtype=d.dtype, device=d.device)

50 | B = list(d.size())[0]

51 | for b in list(range(B)):

52 | out[b] = normalize_single(d[b])

53 | return out

54 |

55 | def meshgrid2d(B, Y, X, stack=False, norm=False, device='cuda', on_chans=False):

56 | # returns a meshgrid sized B x Y x X

57 |

58 | grid_y = torch.linspace(0.0, Y-1, Y, device=torch.device(device))

59 | grid_y = torch.reshape(grid_y, [1, Y, 1])

60 | grid_y = grid_y.repeat(B, 1, X)

61 |

62 | grid_x = torch.linspace(0.0, X-1, X, device=torch.device(device))

63 | grid_x = torch.reshape(grid_x, [1, 1, X])

64 | grid_x = grid_x.repeat(B, Y, 1)

65 |

66 | if norm:

67 | grid_y, grid_x = normalize_grid2d(

68 | grid_y, grid_x, Y, X)

69 |

70 | if stack:

71 | # note we stack in xy order

72 | # (see https://pytorch.org/docs/stable/nn.functional.html#torch.nn.functional.grid_sample)

73 | if on_chans:

74 | grid = torch.stack([grid_x, grid_y], dim=1)

75 | else:

76 | grid = torch.stack([grid_x, grid_y], dim=-1)

77 | return grid

78 | else:

79 | return grid_y, grid_x

80 |

81 | def gridcloud2d(B, Y, X, norm=False, device='cuda'):

82 | # we want to sample for each location in the grid

83 | grid_y, grid_x = meshgrid2d(B, Y, X, norm=norm, device=device)

84 | x = torch.reshape(grid_x, [B, -1])

85 | y = torch.reshape(grid_y, [B, -1])

86 | # these are B x N

87 | xy = torch.stack([x, y], dim=2)

88 | # this is B x N x 2

89 | return xy

90 |

91 | def reduce_masked_mean(x, mask, dim=None, keepdim=False, broadcast=False):

92 | # x and mask are the same shape, or at least broadcastably so < actually it's safer if you disallow broadcasting

93 | # returns shape-1

94 | # axis can be a list of axes

95 | if not broadcast:

96 | for (a,b) in zip(x.size(), mask.size()):

97 | if not a==b:

98 | print('some shape mismatch:', x.shape, mask.shape)

99 | assert(a==b) # some shape mismatch!

100 | # assert(x.size() == mask.size())

101 | prod = x*mask

102 | if dim is None:

103 | numer = torch.sum(prod)

104 | denom = EPS+torch.sum(mask)

105 | else:

106 | numer = torch.sum(prod, dim=dim, keepdim=keepdim)

107 | denom = EPS+torch.sum(mask, dim=dim, keepdim=keepdim)

108 | mean = numer/denom

109 | return mean

110 |

111 | def reduce_masked_median(x, mask, keep_batch=False):

112 | # x and mask are the same shape

113 | assert(x.size() == mask.size())

114 | device = x.device

115 |

116 | B = list(x.shape)[0]

117 | x = x.detach().cpu().numpy()

118 | mask = mask.detach().cpu().numpy()

119 |

120 | if keep_batch:

121 | x = np.reshape(x, [B, -1])

122 | mask = np.reshape(mask, [B, -1])

123 | meds = np.zeros([B], np.float32)

124 | for b in list(range(B)):

125 | xb = x[b]

126 | mb = mask[b]

127 | if np.sum(mb) > 0:

128 | xb = xb[mb > 0]

129 | meds[b] = np.median(xb)

130 | else:

131 | meds[b] = np.nan

132 | meds = torch.from_numpy(meds).to(device)

133 | return meds.float()

134 | else:

135 | x = np.reshape(x, [-1])

136 | mask = np.reshape(mask, [-1])

137 | if np.sum(mask) > 0:

138 | x = x[mask > 0]

139 | med = np.median(x)

140 | else:

141 | med = np.nan

142 | med = np.array([med], np.float32)

143 | med = torch.from_numpy(med).to(device)

144 | return med.float()

145 |
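The masked-mean reduction in `reduce_masked_mean` above can be sketched in NumPy as follows. This is only an illustration: the helper name `masked_mean` is hypothetical, the `EPS` value is an assumption (its definition is not shown in this listing), and the real function works on torch tensors.

```python
import numpy as np

EPS = 1e-6  # assumed value; the repo defines EPS outside this listing


def masked_mean(x, mask, axis=None, keepdims=False):
    """Average x over the entries where mask is nonzero (hypothetical helper)."""
    x = np.asarray(x, dtype=np.float64)
    mask = np.asarray(mask, dtype=np.float64)
    if x.shape != mask.shape:
        raise ValueError(f"shape mismatch: {x.shape} vs {mask.shape}")
    numer = np.sum(x * mask, axis=axis, keepdims=keepdims)
    # EPS keeps the division finite when the mask is empty
    denom = EPS + np.sum(mask, axis=axis, keepdims=keepdims)
    return numer / denom


x = np.array([[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]])
mask = np.array([[1, 0, 1, 0], [0, 0, 0, 0]])
per_row = masked_mean(x, mask, axis=1)  # the second row has an empty mask
```

With an empty mask the result is zero rather than NaN, which is convenient for losses but can hide the fact that nothing was averaged.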

--------------------------------------------------------------------------------

/datasets/horsedataset.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import os

4 | from PIL import Image

5 | import cv2

6 | from datasets.pointdataset import PointDataset

7 | import pickle

8 | import utils.data

9 |

10 | class HorseDataset(PointDataset):

11 | def __init__(

12 | self,

13 | data_root='../datasets/horse10',

14 | crop_size=(384, 512),

15 | seq_len=None,

16 | only_first=False,

17 | ):

18 | super(HorseDataset, self).__init__(

19 | data_root=data_root,

20 | crop_size=crop_size,

21 | seq_len=seq_len,

22 | )

23 | print("loading horse dataset...")

24 |

25 | self.seq_len = seq_len

26 | self.only_first = only_first

27 | self.dname = 'hor'

28 |

29 | self.dataset_location = data_root

30 | self.anno_path = os.path.join(self.dataset_location, "seq_annotation.pkl")

31 | with open(self.anno_path, "rb") as f:

32 | self.annotation = pickle.load(f)

33 |

34 | self.video_names = []

35 |

36 | for video_name in list(self.annotation.keys()):

37 | video = self.annotation[video_name]

38 |

39 | rgbs = []

40 | trajs = []

41 | visibs = []

42 | for sample in video:

43 | img_path = sample["img_path"]

44 | img_path = self.dataset_location + '/' + img_path

45 | rgb = Image.open(img_path)

46 | rgbs.append(rgb)

47 | trajs.append(np.squeeze(sample["keypoints"], 0))

48 | visibs.append(np.squeeze(sample["keypoints_visible"], 0))

49 |

50 | rgbs = np.stack(rgbs, axis=0)

51 | trajs = np.stack(trajs, axis=0)

52 | visibs = np.stack(visibs, axis=0)

53 | valids = visibs.copy()

54 |

55 | S, H, W, C = rgbs.shape

56 | _, N, D = trajs.shape

57 |

58 | for si in range(S):

59 | # avoid 2px edge, since these are not really visible (according to adam)

60 | oob_inds = np.logical_or(

61 | np.logical_or(trajs[si, :, 0] < 2, trajs[si, :, 0] >= W-2),

62 | np.logical_or(trajs[si, :, 1] < 2, trajs[si, :, 1] >= H-2),

63 | )

64 | visibs[si, oob_inds] = 0

65 |

66 | rgbs, trajs, visibs, valids = utils.data.standardize_test_data(

67 | rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

68 |

69 | N = trajs.shape[1]

70 | if N > 0:

71 | self.video_names.append(video_name)

72 |

73 | print(f"found {len(self.video_names)} unique videos in {self.dataset_location}")

74 |

75 | def getitem_helper(self, index):

76 | video_name = self.video_names[index]

77 | video = self.annotation[video_name]

78 |

79 | rgbs = []

80 | trajs = []

81 | visibs = []

82 | for sample in video:

83 | img_path = sample["img_path"]

84 | img_path = self.dataset_location + '/' + img_path

85 | rgb = Image.open(img_path)

86 | rgbs.append(rgb)

87 | trajs.append(np.squeeze(sample["keypoints"], 0))

88 | visibs.append(np.squeeze(sample["keypoints_visible"], 0))

89 |

90 | rgbs = np.stack(rgbs, axis=0)

91 | trajs = np.stack(trajs, axis=0)

92 | visibs = np.stack(visibs, axis=0)

93 | valids = visibs.copy()

94 |

95 | S, H, W, C = rgbs.shape

96 | _, N, D = trajs.shape

97 |

98 | for si in range(S):

99 | # avoid 2px edge, since these are not really visible (according to adam)

100 | oob_inds = np.logical_or(

101 | np.logical_or(trajs[si, :, 0] < 2, trajs[si, :, 0] >= W-2),

102 | np.logical_or(trajs[si, :, 1] < 2, trajs[si, :, 1] >= H-2),

103 | )

104 | visibs[si, oob_inds] = 0

105 |

106 | rgbs, trajs, visibs, valids = utils.data.standardize_test_data(

107 | rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

108 |

109 | H, W = rgbs[0].shape[:2]

110 | trajs[:,:,0] /= W-1

111 | trajs[:,:,1] /= H-1

112 | rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]

113 | rgbs = np.stack(rgbs)

114 | H,W = rgbs[0].shape[:2]

115 | trajs[:,:,0] *= W-1

116 | trajs[:,:,1] *= H-1

117 |

118 | rgbs = torch.from_numpy(rgbs).permute(0, 3, 1, 2).float()

119 | trajs = torch.from_numpy(trajs)

120 | visibs = torch.from_numpy(visibs)

121 | valids = torch.from_numpy(valids)

122 |

123 | sample = utils.data.VideoData(

124 | video=rgbs,

125 | trajs=trajs,

126 | visibs=visibs,

127 | valids=valids,

128 | dname=self.dname,

129 | )

130 | return sample, True

131 |

132 | def __len__(self):

133 | return len(self.video_names)

134 |
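The resize step in `getitem_helper` above divides the track coordinates by `W-1` and `H-1`, resizes the frames, then multiplies by the new `W-1` and `H-1`. A minimal NumPy sketch of that rescaling follows; the function name `rescale_trajs` and the example sizes are assumptions for illustration.

```python
import numpy as np


def rescale_trajs(trajs, old_hw, new_hw):
    """Map pixel coords from an old (H, W) image to a new (H, W) image.

    Uses the (size - 1) convention, so corner pixel centers map to corner pixel centers.
    """
    old_h, old_w = old_hw
    new_h, new_w = new_hw
    out = np.asarray(trajs, dtype=np.float32).copy()
    out[..., 0] *= (new_w - 1) / (old_w - 1)  # x scales with width
    out[..., 1] *= (new_h - 1) / (old_h - 1)  # y scales with height
    return out


# one frame, two points: the top-left and bottom-right corners of a 480x640 image
trajs = np.array([[[0.0, 0.0], [639.0, 479.0]]])
resized = rescale_trajs(trajs, old_hw=(480, 640), new_hw=(384, 512))
```

The bottom-right corner lands on approximately `(511, 383)`, the bottom-right pixel center of the resized frame.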

--------------------------------------------------------------------------------

/utils/data.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import dataclasses

3 | import torch.nn.functional as F

4 | from dataclasses import dataclass

5 | from typing import Any, Optional, Dict

6 | import utils.misc

7 | import numpy as np

8 |

9 | def replace_invalid_xys_with_nearest(xys, valids):

10 | # replace invalid xys with nearby ones

11 | invalid_idx = np.where(valids==0)[0]

12 | valid_idx = np.where(valids==1)[0]

13 | for idx in invalid_idx:

14 | nearest = valid_idx[np.argmin(np.abs(valid_idx - idx))]

15 | xys[idx] = xys[nearest]

16 | return xys

17 |

18 | def standardize_test_data(rgbs, trajs, visibs, valids, S_cap=600, only_first=False, seq_len=None):

19 | trajs = trajs.astype(np.float32) # S,N,2

20 | visibs = visibs.astype(np.float32) # S,N

21 | valids = valids.astype(np.float32) # S,N

22 |

23 | # only take tracks that make sense

24 | visval_ok = np.sum(valids*visibs, axis=0) > 1

25 | trajs = trajs[:,visval_ok]

26 | visibs = visibs[:,visval_ok]

27 | valids = valids[:,visval_ok]

28 |

29 | # fill in missing data (for visualization)

30 | N = trajs.shape[1]

31 | for ni in range(N):

32 | trajs[:,ni] = replace_invalid_xys_with_nearest(trajs[:,ni], valids[:,ni])

33 |

34 | # use S_cap or seq_len (legacy)

35 | if seq_len is not None:

36 | S = min(len(rgbs), seq_len)

37 | else:

38 | S = len(rgbs)

39 | S = min(S, S_cap)

40 |

41 | if only_first:

42 | # we'll find the best frame to start on

43 | best_count = 0

44 | best_ind = 0

45 |

46 | for si in range(0,len(rgbs)-64):

47 | # try this slice

48 | visibs_ = visibs[si:min(si+S,len(rgbs)+1)] # S,N

49 | valids_ = valids[si:min(si+S,len(rgbs)+1)] # S,N

50 | visval_ok0 = (visibs_[0]*valids_[0]) > 0 # N

51 | visval_okA = np.sum(visibs_*valids_, axis=0) > 1 # N

52 | all_ok = visval_ok0 & visval_okA

53 | # print('- slicing %d to %d; sum(ok) %d' % (si, min(si+S,len(rgbs)+1), np.sum(all_ok)))

54 | if np.sum(all_ok) > best_count:

55 | best_count = np.sum(all_ok)

56 | best_ind = si

57 | si = best_ind

58 | rgbs = rgbs[si:si+S]

59 | trajs = trajs[si:si+S]

60 | visibs = visibs[si:si+S]

61 | valids = valids[si:si+S]

62 | vis_ok0 = visibs[0] > 0 # N

63 | trajs = trajs[:,vis_ok0]

64 | visibs = visibs[:,vis_ok0]

65 | valids = valids[:,vis_ok0]

66 | # print('- best_count', best_count, 'best_ind', best_ind)

67 |

68 | if seq_len is not None:

69 | rgbs = rgbs[:seq_len]

70 | trajs = trajs[:seq_len]

71 | valids = valids[:seq_len]

72 | visibs = visibs[:seq_len]

73 | # req two timesteps valid (after seqlen trim)

74 | visval_ok = np.sum(visibs*valids, axis=0) > 1

75 | trajs = trajs[:,visval_ok]

76 | valids = valids[:,visval_ok]

77 | visibs = visibs[:,visval_ok]

78 |

79 | return rgbs, trajs, visibs, valids

80 |

81 |

82 | @dataclass(eq=False)

83 | class VideoData:

84 | """

85 | Dataclass for storing video tracks data.

86 | """

87 |

88 | video: torch.Tensor # B,S,C,H,W

89 | trajs: torch.Tensor # B,S,N,2

90 | visibs: torch.Tensor # B,S,N

91 | valids: Optional[torch.Tensor] = None # B,S,N

92 | dname: Optional[str] = None

93 |

94 |

95 | def collate_fn(batch):

96 | """

97 | Collate function for video tracks data.

98 | """

99 | video = torch.stack([b.video for b in batch], dim=0)

100 | trajs = torch.stack([b.trajs for b in batch], dim=0)

101 | visibs = torch.stack([b.visibs for b in batch], dim=0)

102 | dname = [b.dname for b in batch]

103 |

104 | return VideoData(

105 | video=video,

106 | trajs=trajs,

107 | visibs=visibs,

108 | dname=dname,

109 | )

110 |

111 |

112 | def collate_fn_train(batch):

113 | """

114 | Collate function for video tracks data during training.

115 | """

116 | gotit = [gotit for _, gotit in batch]

117 | video = torch.stack([b.video for b, _ in batch], dim=0)

118 | trajs = torch.stack([b.trajs for b, _ in batch], dim=0)

119 | visibs = torch.stack([b.visibs for b, _ in batch], dim=0)

120 | valids = torch.stack([b.valids for b, _ in batch], dim=0)

121 | dname = [b.dname for b, _ in batch]

122 |

123 | return (

124 | VideoData(

125 | video=video,

126 | trajs=trajs,

127 | visibs=visibs,

128 | valids=valids,

129 | dname=dname,

130 | ),

131 | gotit,

132 | )

133 |

134 |

135 | def try_to_cuda(t: Any) -> Any:

136 | """

137 | Try to move the input variable `t` to a cuda device.

138 |

139 | Args:

140 | t: Input.

141 |

142 | Returns:

143 | t_cuda: `t` moved to a cuda device, if supported.

144 | """

145 | try:

146 | t = t.float().cuda()

147 | except AttributeError:

148 | pass

149 | return t

150 |

151 |

152 | def dataclass_to_cuda_(obj):

153 | """

154 | Move all contents of a dataclass to cuda inplace if supported.

155 |

156 | Args:

157 | obj: Input dataclass.

158 |

159 | Returns:

160 | obj: `obj` moved to a cuda device, if supported.

161 | """

162 | for f in dataclasses.fields(obj):

163 | setattr(obj, f.name, try_to_cuda(getattr(obj, f.name)))

164 | return obj

165 |
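To make the gap-filling in `replace_invalid_xys_with_nearest` concrete, here is the same logic run on a toy track. The input values are made up for illustration. The helper needs at least one valid timestep, which `standardize_test_data` ensures by filtering tracks before calling it.

```python
import numpy as np


def replace_invalid_xys_with_nearest(xys, valids):
    # same logic as the listing above: copy each invalid timestep
    # from the closest valid timestep (ties go to the earlier one)
    invalid_idx = np.where(valids == 0)[0]
    valid_idx = np.where(valids == 1)[0]
    for idx in invalid_idx:
        nearest = valid_idx[np.argmin(np.abs(valid_idx - idx))]
        xys[idx] = xys[nearest]
    return xys


# a 4-step track where timesteps 0 and 2 are invalid
xys = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]], dtype=np.float32)
valids = np.array([0, 1, 0, 1], dtype=np.float32)
filled = replace_invalid_xys_with_nearest(xys.copy(), valids)
```

Timestep 2 is equally close to timesteps 1 and 3; `np.argmin` returns the first minimum, so it is filled from timestep 1.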

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # AllTracker: Efficient Dense Point Tracking at High Resolution

2 |

3 | **[[Paper](https://arxiv.org/abs/2506.07310)] [[Project Page](https://alltracker.github.io/)] [[Gradio Demo](https://huggingface.co/spaces/aharley/alltracker)]**

4 |

5 |

6 |

7 | **AllTracker is a point tracking model which is faster and more accurate than other similar models, while also producing dense output at high resolution.**

8 |

9 | AllTracker estimates long-range point tracks by way of estimating the flow field between a query frame and every other frame of a video. Unlike existing point tracking methods, our approach delivers high-resolution and dense (all-pixel) correspondence fields, which can be visualized as flow maps. Unlike existing optical flow methods, our approach corresponds one frame to hundreds of subsequent frames, rather than just the next frame.

10 |

11 | We are actively adding to this repo, but please ping or open an issue if you notice something missing or broken. The demo (at least) should work for everyone!

12 |

13 |

14 | ## Env setup

15 |

16 | Install miniconda:

17 | ```

18 | mkdir -p ~/miniconda3

19 | wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

20 | bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

21 | rm ~/miniconda3/miniconda.sh

22 | source ~/miniconda3/bin/activate

23 | conda init

24 | ```

25 |

26 | Set up a fresh conda environment for AllTracker:

27 |

28 | ```

29 | conda create -n alltracker python=3.12.8

30 | conda activate alltracker

31 | pip install -r requirements.txt

32 | ```

33 |

34 | ## Running the demo

35 |

36 | Download the sample video:

37 | ```

38 | cd demo_video

39 | sh download_video.sh

40 | cd ..

41 | ```

42 |

43 | Run the demo:

44 | ```

45 | python demo.py --mp4_path ./demo_video/monkey.mp4

46 | ```

47 | The demo script will automatically download the model weights from [huggingface](https://huggingface.co/aharley/alltracker/tree/main) if needed.

48 |

49 | For a fancier visualization, giving a side-by-side view of the input and output, try this:

50 | ```

51 | python demo.py --mp4_path ./demo_video/monkey.mp4 --query_frame 32 --conf_thr 0.01 --bkg_opacity 0.0 --rate 2 --hstack --query_frame 16

52 | ```

53 |

54 |

55 |

56 |

57 | ## Training

58 |

59 | AllTracker is trained in two stages: Stage 1 is Kubric alone; Stage 2 is a mix of datasets. This 2-stage regime enables fair comparisons with models that train only on Kubric.

60 |

61 | ### Data prep

62 |

63 | Start by downloading Kubric.

64 |

65 | - 24-frame data: [kubric_au.tar.gz](https://huggingface.co/datasets/aharley/alltracker_data/resolve/main/kubric_au.tar.gz?download=true)

66 |

67 | - 64-frame data: [part1](https://huggingface.co/datasets/aharley/alltracker_data/resolve/main/ce64_kub_aa?download=true), [part2](https://huggingface.co/datasets/aharley/alltracker_data/resolve/main/ce64_kub_ab?download=true), [part3](https://huggingface.co/datasets/aharley/alltracker_data/resolve/main/ce64_kub_ac?download=true)

68 |

69 | Merge the parts by concatenating:

70 | ```

71 | cat ce64_kub_aa ce64_kub_ab ce64_kub_ac > ce64_kub.tar.gz

72 | ```
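If `cat` is not available (for example on Windows), the same merge can be sketched in Python. The helper name `concat_parts` is hypothetical; the part names are the ones listed above.

```python
import shutil
from pathlib import Path


def concat_parts(part_paths, out_path):
    """Concatenate files byte-for-byte, in the given order."""
    with open(out_path, "wb") as out_f:
        for part in part_paths:
            with open(part, "rb") as in_f:
                shutil.copyfileobj(in_f, out_f)  # streams in chunks, so large parts are fine
    return Path(out_path)


if __name__ == "__main__":
    parts = ["ce64_kub_aa", "ce64_kub_ab", "ce64_kub_ac"]
    # only merge when all parts have been downloaded into the current directory
    if all(Path(p).exists() for p in parts):
        concat_parts(parts, "ce64_kub.tar.gz")
```

The order of the parts matters: they must be concatenated in the `aa`, `ab`, `ac` sequence to produce a valid archive.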

73 |

74 | The 24-frame Kubric data is a torch export of the official `kubric-public/tfds/movi_f/512x512` data.

75 |

76 | With Kubric, you can skip the other datasets and start training Stage 1.

77 |

78 | Download the rest of the point tracking datasets from [here](https://huggingface.co/datasets/aharley/alltracker_data/tree/main). There you will find 24-frame datasets, `ce24*.tar.gz`, and 64-frame datasets, `ce64*.tar.gz`. Some of the datasets are large, and they are split into parts, so you need to create the full files by concatenating.

79 |

80 | On disk, the point tracking datasets should look like this:

81 | ```

82 | data/

83 | ├── ce24/

84 | │ ├── drivingpt/

85 | │ ├── fltpt/

86 | │ ├── monkapt/

87 | │ ├── springpt/

88 | ├── ce64/

89 | │ ├── drivingpt/

90 | │ ├── kublong/

91 | │ ├── monkapt/

92 | │ ├── podlong/

93 | │ ├── springpt/

94 | ├── dynamicreplica/

95 | ├── kubric_au/

96 | ```

97 |

98 | Download the optical flow datasets from the official websites: [FlyingChairs, FlyingThings3D, Monkaa, Driving](https://lmb.informatik.uni-freiburg.de/resources/datasets), [AutoFlow](https://autoflow-google.github.io/), [SPRING](https://spring-benchmark.org/), [VIPER](https://playing-for-benchmarks.org/download/), [HD1K](http://hci-benchmark.iwr.uni-heidelberg.de/), [KITTI](https://www.cvlibs.net/datasets/kitti/eval_scene_flow.php?benchmark=flow), [TARTANAIR](https://theairlab.org/tartanair-dataset/).

99 |

100 |

101 | ### Stage 1

102 |

103 | Stage 1 is to train the model for 200k steps on Kubric.

104 |

105 | ```

106 | export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7; python train_stage1.py --mixed_precision --lr 5e-4 --max_steps 200000 --data_dir /data --exp "stage1abc"

107 | ```

108 |

109 | This should produce a tensorboard log in `./logs_train/`, and checkpoints in `./checkpoints/`, in folder names similar to "64Ai4i3_5e-4m_stage1abc_1318". (The 4-digit string at the end is a timecode indicating when the run began, to help make the filepaths unique.)

110 |

111 | ### Stage 2

112 |

113 | Stage 2 is to train the model for 400k steps on a mix of point tracking datasets and optical flow datasets. This stage initializes from the output of Stage 1.

114 |

115 | ```

116 | export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7; python train_stage2.py --mixed_precision --init_dir '64Ai4i3_5e-4m_stage1abc_1318' --lr 1e-5 --max_steps 400000 --exp 'stage2abc'

117 | ```

118 |

119 | ## Evaluation

120 |

121 | Test the model on point tracking datasets with a command like:

122 |

123 | ```

124 | python test_dense_on_sparse.py --dname 'dav'

125 | ```

126 |

127 | At the end, you should see:

128 | ```

129 | da: 76.3,

130 | aj: 63.3,

131 | oa: 90.0,

132 | ```

133 | which represent `d_avg` (accuracy), Average Jaccard, and Occlusion Accuracy. We find that small numerical issues (even across GPUs) may cause fluctuations of about ±0.1 on these metrics.
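As a rough illustration of what `d_avg` measures, the sketch below follows the usual TAP-Vid-style definition: the average, over pixel thresholds 1, 2, 4, 8 and 16, of the fraction of visible points whose position error is below the threshold. This is an assumption about the metric, not a copy of the repo's evaluation code, which may differ in details such as the resolution at which errors are measured.

```python
import numpy as np


def d_avg(pred, gt, visible, thresholds=(1, 2, 4, 8, 16)):
    """Mean over thresholds of the fraction of visible points within that pixel distance."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    visible = np.asarray(visible, dtype=bool)
    dist = np.linalg.norm(pred - gt, axis=-1)  # per-point Euclidean error in pixels
    fracs = [np.mean(dist[visible] < t) for t in thresholds]
    return 100.0 * float(np.mean(fracs))


gt = np.zeros((2, 3, 2))   # S=2 frames, N=3 points, xy
pred = gt.copy()
pred[0, 0, 0] = 3.0        # 3 px error: passes only the 4, 8 and 16 px thresholds
pred[1, 2, 1] = 50.0       # large error, but the point is occluded below, so it is ignored
visible = np.ones((2, 3), dtype=bool)
visible[1, 2] = False
score = d_avg(pred, gt, visible)
```

Here five points are visible; four are exact and one is off by 3 px, so the per-threshold fractions are 0.8, 0.8, 1, 1, 1 and the score is 92.0.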

134 |

135 | Test it at higher resolution with the `image_size` arg, like: `--image_size 448 768`, which should produce `da: 78.8, aj: 65.9, oa: 90.2` or `--image_size 768 1024`, which should produce `da: 80.6, aj: 67.2, oa: 89.7`.

136 |

137 | Note that AJ and OA are not reliable metrics in all datasets, because not all datasets follow the same rules about visibility annotation.

138 |

139 | Dataloaders for all test datasets are in the repo, and can be run in sequence with a command like:

140 | ```

141 | python test_dense_on_sparse.py --dname 'bad,cro,dav,dri,ego,hor,kin,rgb,rob'

142 | ```

143 | but if you have multiple GPUs, we recommend running the tests in parallel.

144 |

145 |

146 | ## Citation

147 |

148 | If you use this code for your research, please cite:

149 |

150 | ```

151 | Adam W. Harley, Yang You, Xinglong Sun, Yang Zheng, Nikhil Raghuraman, Yunqi Gu, Sheldon Liang, Wen-Hsuan Chu, Achal Dave, Pavel Tokmakov, Suya You, Rares Ambrus, Katerina Fragkiadaki, Leonidas J. Guibas. AllTracker: Efficient Dense Point Tracking at High Resolution. ICCV 2025.

152 | ```

153 |

154 | Bibtex:

155 | ```

156 | @inproceedings{harley2025alltracker,

157 | author = {Adam W. Harley and Yang You and Xinglong Sun and Yang Zheng and Nikhil Raghuraman and Yunqi Gu and Sheldon Liang and Wen-Hsuan Chu and Achal Dave and Pavel Tokmakov and Suya You and Rares Ambrus and Katerina Fragkiadaki and Leonidas J. Guibas},

158 | title = {All{T}racker: {E}fficient Dense Point Tracking at High Resolution},

159 | booktitle = {ICCV},

160 | year = {2025}

161 | }

162 | ```

163 |

--------------------------------------------------------------------------------

/utils/samp.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import utils.basic

3 | import torch.nn.functional as F

4 |

5 | def bilinear_sampler(input, coords, align_corners=True, padding_mode="border"):

6 | r"""Sample a tensor using bilinear interpolation

7 |

8 | `bilinear_sampler(input, coords)` samples a tensor :attr:`input` at

9 | coordinates :attr:`coords` using bilinear interpolation. It is the same

10 | as `torch.nn.functional.grid_sample()` but with a different coordinate

11 | convention.

12 |

13 | The input tensor is assumed to be of shape :math:`(B, C, H, W)`, where

14 | :math:`B` is the batch size, :math:`C` is the number of channels,

15 | :math:`H` is the height of the image, and :math:`W` is the width of the

16 | image. The tensor :attr:`coords` of shape :math:`(B, H_o, W_o, 2)` is

17 | interpreted as an array of 2D point coordinates :math:`(x_i,y_i)`.

18 |

19 | Alternatively, the input tensor can be of size :math:`(B, C, T, H, W)`,

20 | in which case sample points are triplets :math:`(t_i,x_i,y_i)`. Note

21 | that in this case the order of the components is slightly different

22 | from `grid_sample()`, which would expect :math:`(x_i,y_i,t_i)`.

23 |

24 | If `align_corners` is `True`, the coordinate :math:`x` is assumed to be

25 | in the range :math:`[0,W-1]`, with 0 corresponding to the center of the

26 | left-most image pixel and :math:`W-1` to the center of the right-most

27 | pixel.

28 |

29 | If `align_corners` is `False`, the coordinate :math:`x` is assumed to

30 | be in the range :math:`[0,W]`, with 0 corresponding to the left edge of

31 | the left-most pixel and :math:`W` to the right edge of the right-most

32 | pixel.

33 |

34 | Similar conventions apply to the :math:`y` for the range

35 | :math:`[0,H-1]` and :math:`[0,H]` and to :math:`t` for the range

36 | :math:`[0,T-1]` and :math:`[0,T]`.

37 |

38 | Args:

39 | input (Tensor): batch of input images.

40 | coords (Tensor): batch of coordinates.

41 | align_corners (bool, optional): Coordinate convention. Defaults to `True`.

42 | padding_mode (str, optional): Padding mode. Defaults to `"border"`.

43 |

44 | Returns:

45 | Tensor: sampled points.

46 | """

47 |

48 | sizes = input.shape[2:]

49 |

50 | assert len(sizes) in [2, 3]

51 |

52 | if len(sizes) == 3:

53 | # t x y -> x y t to match dimensions T H W in grid_sample

54 | coords = coords[..., [1, 2, 0]]

55 |

56 | if align_corners:

57 | coords = coords * torch.tensor(

58 | [2 / max(size - 1, 1) for size in reversed(sizes)], device=coords.device

59 | )

60 | else:

61 | coords = coords * torch.tensor(

62 | [2 / size for size in reversed(sizes)], device=coords.device

63 | )

64 |

65 | coords -= 1

66 |

67 | return F.grid_sample(

68 | input, coords, align_corners=align_corners, padding_mode=padding_mode

69 | )

70 |

71 |

72 | def sample_features4d(input, coords):

73 | r"""Sample spatial features

74 |

75 | `sample_features4d(input, coords)` samples the spatial features

76 | :attr:`input` represented by a 4D tensor :math:`(B, C, H, W)`.

77 |

78 | The field is sampled at coordinates :attr:`coords` using bilinear

79 | interpolation. :attr:`coords` is assumed to be of shape :math:`(B, R,

80 | 2)`, where each sample has the format :math:`(x_i, y_i)`. This uses the

81 | same convention as :func:`bilinear_sampler` with `align_corners=True`.

82 |

83 | The output tensor has one feature per point, and has shape :math:`(B,

84 | R, C)`.

85 |

86 | Args:

87 | input (Tensor): spatial features.

88 | coords (Tensor): points.

89 |

90 | Returns:

91 | Tensor: sampled features.

92 | """

93 |

94 | B, _, _, _ = input.shape

95 |

96 | # B R 2 -> B R 1 2

97 | coords = coords.unsqueeze(2)

98 |

99 | # B C R 1

100 | feats = bilinear_sampler(input, coords)

101 |

102 | return feats.permute(0, 2, 1, 3).view(

103 | B, -1, feats.shape[1] * feats.shape[3]

104 | ) # B C R 1 -> B R C

105 |

106 |

107 | def sample_features5d(input, coords):

108 | r"""Sample spatio-temporal features

109 |

110 | `sample_features5d(input, coords)` works in the same way as

111 | :func:`sample_features4d` but for spatio-temporal features and points:

112 | :attr:`input` is a 5D tensor :math:`(B, T, C, H, W)`, :attr:`coords` is

113 | a :math:`(B, R1, R2, 3)` tensor of spatio-temporal point :math:`(t_i,

114 | x_i, y_i)`. The output tensor has shape :math:`(B, R1, R2, C)`.

115 |

116 | Args:

117 | input (Tensor): spatio-temporal features.

118 | coords (Tensor): spatio-temporal points.

119 |

120 | Returns:

121 | Tensor: sampled features.

122 | """

123 |

124 | B, T, _, _, _ = input.shape

125 |

126 | # B T C H W -> B C T H W

127 | input = input.permute(0, 2, 1, 3, 4)

128 |

129 | # B R1 R2 3 -> B R1 R2 1 3

130 | coords = coords.unsqueeze(3)

131 |

132 | # B C R1 R2 1

133 | feats = bilinear_sampler(input, coords)

134 |

135 | return feats.permute(0, 2, 3, 1, 4).view(

136 | B, feats.shape[2], feats.shape[3], feats.shape[1]

137 | ) # B C R1 R2 1 -> B R1 R2 C

138 |

139 |

140 | def bilinear_sample2d(im, x, y, return_inbounds=False):

141 | # x and y are each B, N

142 | # output is B, C, N

143 | B, C, H, W = list(im.shape)

144 | N = list(x.shape)[1]

145 |

146 | x = x.float()

147 | y = y.float()

148 | H_f = torch.tensor(H, dtype=torch.float32)

149 | W_f = torch.tensor(W, dtype=torch.float32)

150 |

151 | # inbound_mask = (x>-0.5).float()*(y>-0.5).float()*(x …

[lines 152-206 of utils/samp.py are missing from this listing]

207 | x_valid = (x > -0.5).byte() & (x < float(W_f - 0.5)).byte()

208 | y_valid = (y > -0.5).byte() & (y < float(H_f - 0.5)).byte()

209 | inbounds = (x_valid & y_valid).float()

210 | inbounds = inbounds.reshape(B, N) # something seems wrong here for B>1; i'm getting an error here (or downstream if i put -1)

211 | return output, inbounds

212 |

213 | return output # B, C, N

214 |
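The coordinate convention described in the `bilinear_sampler` docstring comes down to a linear map from pixel coordinates to the `[-1, 1]` range that `grid_sample` expects. A minimal scalar sketch of that map follows; `normalize_coord` is a hypothetical name, and the real function applies the same scaling to whole tensors.

```python
def normalize_coord(x, size, align_corners=True):
    """Map a pixel coordinate to the [-1, 1] range expected by grid_sample."""
    if align_corners:
        # 0 -> -1 and size-1 -> +1 (pixel centers sit at the extremes)
        return x * 2.0 / max(size - 1, 1) - 1.0
    # 0 -> -1 and size -> +1 (pixel edges sit at the extremes)
    return x * 2.0 / size - 1.0


W = 512
left = normalize_coord(0, W)                       # center of the left-most pixel
right = normalize_coord(W - 1, W)                  # center of the right-most pixel
edge = normalize_coord(W, W, align_corners=False)  # right edge of the right-most pixel
```

The `max(size - 1, 1)` guard mirrors the listing above and avoids a division by zero when a dimension has size 1.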

--------------------------------------------------------------------------------

/datasets/drivetrackdataset.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import os

4 | import glob

5 | import cv2

6 | from datasets.pointdataset import PointDataset

7 | import pickle

8 | import utils.data

9 | from pathlib import Path

10 |

11 | class DrivetrackDataset(PointDataset):

12 | def __init__(

13 | self,

14 | data_root='../datasets/drivetrack',

15 | crop_size=(384, 512),

16 | seq_len=None,

17 | traj_per_sample=512,

18 | only_first=False,

19 | ):

20 | super(DrivetrackDataset, self).__init__(

21 | data_root=data_root,

22 | crop_size=crop_size,

23 | seq_len=seq_len,

24 | traj_per_sample=traj_per_sample,

25 | )

26 | print("loading drivetrack dataset...")

27 |

28 | self.dname = 'drivetrack'

29 | self.only_first = only_first

30 | S = seq_len

31 |

32 | self.dataset_location = Path(data_root)

33 | self.S = S

34 | video_fns = sorted(list(self.dataset_location.glob('*.npz')))

35 |

36 | self.video_fns = []

37 | for video_fn in video_fns[:100]: # drivetrack is huge and self-similar, so we trim to 100

38 | ds = np.load(video_fn, allow_pickle=True)

39 | rgbs, trajs, visibs = ds['video'], ds['tracks'], ds['visibles']

40 | # rgbs is T,1280,1920,3

41 | # trajs is N,T,2

42 | # visibs is N,T

43 |

44 | trajs = np.transpose(trajs, (1,0,2)).astype(np.float32) # S,N,2

45 | visibs = np.transpose(visibs, (1,0)).astype(np.float32) # S,N

46 | valids = visibs.copy()

47 | # print('N0', trajs.shape[1])

48 |

49 | # discard tracks with any inf/nan

50 | idx = np.nonzero(np.isfinite(trajs.sum(0).sum(1)))[0] # N

51 | trajs = trajs[:,idx]

52 | visibs = visibs[:,idx]

53 | valids = valids[:,idx]

54 | # print('N1', trajs.shape[1])

55 |

56 | if trajs.shape[1] < self.traj_per_sample:

57 | continue

58 |

59 | # shuffle and trim

60 | inds = np.random.permutation(trajs.shape[1])

61 | inds = inds[:10000]

62 | trajs = trajs[:,inds]

63 | visibs = visibs[:,inds]

64 | valids = valids[:,inds]

65 | # print('N2', trajs.shape[1])

66 |

67 | S,H,W,C = rgbs.shape

68 |

69 | # set OOB to invisible

70 | visibs[trajs[:, :, 0] > W-1] = False

71 | visibs[trajs[:, :, 0] < 0] = False

72 | visibs[trajs[:, :, 1] > H-1] = False

73 | visibs[trajs[:, :, 1] < 0] = False

74 |

75 | rgbs, trajs, visibs, valids = utils.data.standardize_test_data(

76 | rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

77 | # print('N3', trajs.shape[1])

78 |

79 | if trajs.shape[1] < self.traj_per_sample:

80 | continue

81 |

82 | trajs = torch.from_numpy(trajs)

83 | visibs = torch.from_numpy(visibs)

84 | valids = torch.from_numpy(valids)

85 | # discard tracks that go far OOB

86 | crop_tensor = torch.tensor(self.crop_size).flip(0)[None, None] / 2.0

87 | close_pts_inds = torch.all(

88 | torch.linalg.vector_norm(trajs[..., :2] - crop_tensor, dim=-1) < max(H,W)*2,

89 | dim=0,

90 | )

91 | trajs = trajs[:, close_pts_inds]

92 | visibs = visibs[:, close_pts_inds]

93 | valids = valids[:, close_pts_inds]

94 | # print('N4', trajs.shape[1])

95 |

96 | if trajs.shape[1] < self.traj_per_sample:

97 | continue

98 |

99 | visible_inds = (valids[0]*visibs[0]).nonzero(as_tuple=False)[:, 0]

100 | trajs = trajs[:, visible_inds].float()

101 | visibs = visibs[:, visible_inds].float()

102 | valids = valids[:, visible_inds].float()

103 | # print('N5', trajs.shape[1])

104 |

105 | if trajs.shape[1] >= self.traj_per_sample:

106 | self.video_fns.append(video_fn)

107 |

108 | print(f"found {len(self.video_fns)} unique videos in {self.dataset_location}")

109 |

110 | def getitem_helper(self, index):

111 | video_fn = self.video_fns[index]

112 | ds = np.load(video_fn, allow_pickle=True)

113 | rgbs, trajs, visibs = ds['video'], ds['tracks'], ds['visibles']

114 | # rgbs is T,1280,1920,3

115 | # trajs is N,T,2

116 | # visibs is N,T

117 |

118 | trajs = np.transpose(trajs, (1,0,2)).astype(np.float32) # S,N,2

119 | visibs = np.transpose(visibs, (1,0)).astype(np.float32) # S,N

120 | valids = visibs.copy()

121 |

122 | # discard inf/nan

123 | idx = np.nonzero(np.isfinite(trajs.sum(0).sum(1)))[0] # N

124 | trajs = trajs[:,idx]

125 | visibs = visibs[:,idx]

126 | valids = valids[:,idx]

127 |

128 | # shuffle and trim

129 | inds = np.random.permutation(trajs.shape[1])

130 | inds = inds[:10000]

131 | trajs = trajs[:,inds]

132 | visibs = visibs[:,inds]

133 | valids = valids[:,inds]

134 | N = trajs.shape[1]

135 | # print('N2', trajs.shape[1])

136 |

137 | S,H,W,C = rgbs.shape

138 | # set OOB to invisible

139 | visibs[trajs[:, :, 0] > W-1] = False

140 | visibs[trajs[:, :, 0] < 0] = False

141 | visibs[trajs[:, :, 1] > H-1] = False

142 | visibs[trajs[:, :, 1] < 0] = False

143 |

144 | rgbs, trajs, visibs, valids = utils.data.standardize_test_data(

145 | rgbs, trajs, visibs, valids, only_first=self.only_first, seq_len=self.seq_len)

146 |

147 | H, W = rgbs[0].shape[:2]

148 | trajs[:,:,0] /= W-1

149 | trajs[:,:,1] /= H-1

150 | rgbs = [cv2.resize(rgb, (self.crop_size[1], self.crop_size[0]), interpolation=cv2.INTER_LINEAR) for rgb in rgbs]

151 | rgbs = np.stack(rgbs)

152 | H,W = rgbs[0].shape[:2]

153 | trajs[:,:,0] *= W-1

154 | trajs[:,:,1] *= H-1

155 |

156 | trajs = torch.from_numpy(trajs)

157 | visibs = torch.from_numpy(visibs)

158 | valids = torch.from_numpy(valids)

159 |

160 | # discard tracks that go far OOB

161 | crop_tensor = torch.tensor(self.crop_size).flip(0)[None, None] / 2.0

162 | close_pts_inds = torch.all(

163 | torch.linalg.vector_norm(trajs[..., :2] - crop_tensor, dim=-1) < max(H,W)*2,

164 | dim=0,

165 | )

166 | trajs = trajs[:, close_pts_inds]

167 | visibs = visibs[:, close_pts_inds]

168 | valids = valids[:, close_pts_inds]

169 | # print('N3', trajs.shape[1])

170 |

171 | visible_pts_inds = (valids[0]*visibs[0]).nonzero(as_tuple=False)[:, 0]

172 | point_inds = torch.randperm(len(visible_pts_inds))[: self.traj_per_sample]

173 | if len(point_inds) < self.traj_per_sample:

174 | return None, False

175 | visible_inds_sampled = visible_pts_inds[point_inds]

176 | trajs = trajs[:, visible_inds_sampled].float()

177 | visibs = visibs[:, visible_inds_sampled].float()

178 | valids = valids[:, visible_inds_sampled].float()

179 | # print('N4', trajs.shape[1])

180 |

181 | trajs = trajs[:, :self.traj_per_sample]

182 | visibs = visibs[:, :self.traj_per_sample]

183 | valids = valids[:, :self.traj_per_sample]

184 | # print('N5', trajs.shape[1])

185 |

186 | rgbs = torch.from_numpy(rgbs).permute(0, 3, 1, 2).float()

187 |

188 | sample = utils.data.VideoData(

189 | video=rgbs,

190 | trajs=trajs,

191 | visibs=visibs,

192 | valids=valids,

193 | dname=self.dname,

194 | )

195 | return sample, True

196 |

197 | def __len__(self):

198 | return len(self.video_fns)

199 |
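The "discard inf/nan" step above relies on the fact that summing a track over time and over xy propagates any non-finite value to the per-track total. A small NumPy sketch with made-up values:

```python
import numpy as np

S, N = 3, 4
trajs = np.zeros((S, N, 2), dtype=np.float32)
trajs[1, 1, 0] = np.nan  # track 1 has a NaN at one timestep
trajs[2, 3, 1] = np.inf  # track 3 has an inf at one timestep

# Sum over time (axis 0), then over xy (axis 1 of the result): shape (N,)
per_track_total = trajs.sum(0).sum(1)
keep = np.nonzero(np.isfinite(per_track_total))[0]  # indices of fully finite tracks
trajs_clean = trajs[:, keep]                        # S, N_kept, 2
```

Only tracks 0 and 2 survive. Note that a sum can also overflow to inf for very large finite coordinates, so this check is slightly stricter than testing each value individually.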

--------------------------------------------------------------------------------

/utils/loss.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn.functional as F

3 | import torch.nn as nn

4 | from typing import List

5 | import utils.basic

6 |

7 |

8 | def sequence_loss(

9 | flow_preds,

10 | flow_gt,

11 | valids,

12 | vis=None,

13 | gamma=0.8,

14 | use_huber_loss=False,

15 | loss_only_for_visible=False,

16 | ):

17 | """Loss function defined over sequence of flow predictions"""

18 | total_flow_loss = 0.0

19 | for j in range(len(flow_gt)):

20 | B, S, N, D = flow_gt[j].shape

21 | B, S2, N = valids[j].shape

22 | assert S == S2

23 | n_predictions = len(flow_preds[j])

24 | flow_loss = 0.0

25 | for i in range(n_predictions):

26 | i_weight = gamma ** (n_predictions - i - 1)

27 | flow_pred = flow_preds[j][i]

28 | if use_huber_loss:

29 | i_loss = huber_loss(flow_pred, flow_gt[j], delta=6.0)

30 | else:

31 | i_loss = (flow_pred - flow_gt[j]).abs() # B, S, N, 2

32 | i_loss = torch.mean(i_loss, dim=3) # B, S, N

33 | valid_ = valids[j].clone()

34 | if loss_only_for_visible:

35 | valid_ = valid_ * vis[j]

36 | flow_loss += i_weight * utils.basic.reduce_masked_mean(i_loss, valid_)

37 | flow_loss = flow_loss / n_predictions

38 | total_flow_loss += flow_loss

39 | return total_flow_loss / len(flow_gt)

40 |
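The weighting used in `sequence_loss` gives the last refinement step a weight of 1 and discounts earlier steps by powers of `gamma`. The sketch below only reproduces that arithmetic; the helper name `iteration_weights` is an assumption for illustration and does not exist in the repository.

```python
def iteration_weights(n_predictions, gamma=0.8):
    """Per-iteration weights gamma ** (n_predictions - i - 1), as in sequence_loss."""
    return [gamma ** (n_predictions - i - 1) for i in range(n_predictions)]


weights = iteration_weights(4)  # earliest prediction first, final prediction last

# sequence_loss divides the weighted sum by n_predictions, not by sum(weights),
# so the effective weights do not sum to 1.
effective = [w / len(weights) for w in weights]
```

With `gamma=0.8` and four predictions, the weights are approximately 0.512, 0.64, 0.8, and 1.0, so later iterations dominate the loss.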

41 | def sequence_loss_dense(

42 | flow_preds,

43 | flow_gt,

44 | valids,

45 | vis=None,

46 | gamma=0.8,

47 | use_huber_loss=False,

48 | loss_only_for_visible=False,

49 | ):

50 | """Dense variant of sequence_loss: loss over a sequence of dense flow predictions (flow_gt[j] is B,S,D,H,W)."""

51 | total_flow_loss = 0.0

52 | for j in range(len(flow_gt)):

53 | # print('flow_gt[j]', flow_gt[j].shape)

54 | B, S, D, H, W = flow_gt[j].shape

55 | B, S2, _, H, W = valids[j].shape

56 | assert S == S2

57 | n_predictions = len(flow_preds[j])

58 | flow_loss = 0.0

59 | # import ipdb; ipdb.set_trace()

60 | for i in range(n_predictions):

61 | # print('flow_e[j][i]', flow_preds[j][i].shape)

62 | i_weight = gamma ** (n_predictions - i - 1)

63 | flow_pred = flow_preds[j][i] # B,S,2,H,W

64 | if use_huber_loss:

65 | i_loss = huber_loss(flow_pred, flow_gt[j], delta=6.0) # B,S,2,H,W

66 | else:

67 | i_loss = (flow_pred - flow_gt[j]).abs() # B,S,2,H,W

68 | i_loss_ = torch.mean(i_loss, dim=2) # B,S,H,W

69 | valid_ = valids[j].reshape(B,S,H,W)

70 | # print(' (%d,%d) i_loss_' % (i,j), i_loss_.shape)

71 | # print(' (%d,%d) valid_' % (i,j), valid_.shape)

72 | if loss_only_for_visible:

73 | valid_ = valid_ * vis[j].reshape(B,-1,H,W) # usually B,S,H,W, but maybe B,1,H,W

74 | flow_loss += i_weight * utils.basic.reduce_masked_mean(i_loss_, valid_, broadcast=True)

75 | # import ipdb; ipdb.set_trace()

76 | flow_loss = flow_loss / n_predictions

77 | total_flow_loss += flow_loss

78 | return total_flow_loss / len(flow_gt)

79 |

80 |

81 | def huber_loss(x, y, delta=1.0):

82 | """Calculate element-wise Huber loss between x and y"""

83 | diff = x - y

84 | abs_diff = diff.abs()

85 | flag = (abs_diff <= delta).float()

86 | return flag * 0.5 * diff**2 + (1 - flag) * delta * (abs_diff - 0.5 * delta)

87 |
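A few worked values make the two branches of `huber_loss` concrete. The sketch below copies the function body from above and evaluates it at `delta=6.0`, the value used by the sequence losses; the input values are arbitrary and chosen only for illustration.

```python
import torch


def huber_loss(x, y, delta=1.0):
    """Element-wise Huber loss: quadratic for |x - y| <= delta, linear beyond it."""
    diff = x - y
    abs_diff = diff.abs()
    flag = (abs_diff <= delta).float()
    return flag * 0.5 * diff**2 + (1 - flag) * delta * (abs_diff - 0.5 * delta)


pred = torch.tensor([3.0, 6.0, 10.0, -10.0])
gt = torch.zeros(4)
values = huber_loss(pred, gt, delta=6.0)
# |diff| = 3  -> 0.5 * 3**2      = 4.5   (quadratic branch)
# |diff| = 6  -> 0.5 * 6**2      = 18.0  (boundary, quadratic branch)
# |diff| = 10 -> 6 * (10 - 3)    = 42.0  (linear branch, same for -10)
```

The two branches agree at `|diff| = delta`, so the loss is continuous there, and errors beyond 6 pixels grow linearly instead of quadratically.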

88 |

89 | def sequence_BCE_loss(vis_preds, vis_gts, valids=None, use_logits=False):

90 | total_bce_loss = 0.0

91 | # all_vis_preds = [torch.stack(vp) for vp in vis_preds]

92 | # all_vis_preds = torch.stack(all_vis_preds)

93 | # utils.basic.print_stats('all_vis_preds', all_vis_preds)

94 | for j in range(len(vis_preds)):

95 | n_predictions = len(vis_preds[j])

96 | bce_loss = 0.0

97 | for i in range(n_predictions):

98 | # utils.basic.print_stats('vis_preds[%d][%d]' % (j,i), vis_preds[j][i])

99 | # utils.basic.print_stats('vis_gts[%d]' % (j), vis_gts[j])

100 | if use_logits:

101 | loss = F.binary_cross_entropy_with_logits(vis_preds[j][i], vis_gts[j], reduction='none')

102 | else:

103 | loss = F.binary_cross_entropy(vis_preds[j][i], vis_gts[j], reduction='none')

104 | if valids is None:

105 | bce_loss += loss.mean()

106 | else:

107 | bce_loss += (loss * valids[j]).mean()

108 | bce_loss = bce_loss / n_predictions

109 | total_bce_loss += bce_loss

110 | return total_bce_loss / len(vis_preds)

111 |
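When `valids` is passed, `sequence_BCE_loss` multiplies the per-element loss by the mask and then takes a plain `.mean()`, so masked-out elements contribute zero but still count in the denominator. The toy comparison below contrasts that with a mean taken over valid elements only. The tensors are made-up values, and the second normalization is shown only as a point of comparison, not as what the repository does.

```python
import torch
import torch.nn.functional as F

probs = torch.tensor([0.9, 0.2, 0.6, 0.5])   # predicted visibility probabilities
target = torch.tensor([1.0, 0.0, 1.0, 0.0])  # ground-truth visibility
valid = torch.tensor([1.0, 1.0, 0.0, 0.0])   # only the first two entries are valid

loss = F.binary_cross_entropy(probs, target, reduction='none')

# Normalization used in sequence_BCE_loss: divide by the total element count.
mean_over_all = (loss * valid).mean()

# Alternative for comparison: divide by the number of valid elements.
mean_over_valid = (loss * valid).sum() / valid.sum()
```

With half of the entries masked out, `mean_over_all` is exactly half of `mean_over_valid`, so the scale of this loss term depends on the fraction of valid points in the batch.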

112 |

113 | # def sequence_BCE_loss_dense(vis_preds, vis_gts):

114 | # total_bce_loss = 0.0

115 | # for j in range(len(vis_preds)):

116 | # n_predictions = len(vis_preds[j])

117 | # bce_loss = 0.0

118 | # for i in range(n_predictions):

119 | # vis_e = vis_preds[j][i]

120 | # vis_g = vis_gts[j]

121 | # print('vis_e', vis_e.shape, 'vis_g', vis_g.shape)

122 | # vis_loss = F.binary_cross_entropy(vis_e, vis_g)

123 | # bce_loss += vis_loss

124 | # bce_loss = bce_loss / n_predictions

125 | # total_bce_loss += bce_loss

126 | # return total_bce_loss / len(vis_preds)

127 |

128 |

129 | def sequence_prob_loss(

130 | tracks: torch.Tensor,

131 | confidence: torch.Tensor,

132 | target_points: torch.Tensor,

133 | visibility: torch.Tensor,

134 | expected_dist_thresh: float = 12.0,

135 | use_logits=False,

136 | ):

137 | """Loss for classifying whether a point is within a pixel threshold of its target."""

138 | # Points with an error larger than 12 pixels are likely to be useless; marking

139 | # them as occluded will actually improve Jaccard metrics and give

140 | # qualitatively better results.

141 | total_logprob_loss = 0.0

142 | for j in range(len(tracks)):

143 | n_predictions = len(tracks[j])

144 | logprob_loss = 0.0

145 | for i in range(n_predictions):

146 | err = torch.sum((tracks[j][i].detach() - target_points[j]) ** 2, dim=-1)

147 | valid = (err <= expected_dist_thresh**2).float()

148 | if use_logits:

149 | loss = F.binary_cross_entropy_with_logits(confidence[j][i], valid, reduction="none")

150 | else:

151 | loss = F.binary_cross_entropy(confidence[j][i], valid, reduction="none")

152 | loss *= visibility[j]

153 | loss = torch.mean(loss, dim=[1, 2])

154 | logprob_loss += loss

155 | logprob_loss = logprob_loss / n_predictions

156 | total_logprob_loss += logprob_loss