├── .github

└── FUNDING.yml

├── README.md

├── TutorialProject

├── NumPyCNN.py

├── README.md

└── example.py

├── cnn.py

├── dataset_inputs.npy

├── dataset_outputs.npy

├── example.py

└── requirements.txt

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | # These are supported funding model platforms

2 |

3 | github: # Replace with up to 4 GitHub Sponsors-enabled usernames e.g., [user1, user2]

4 | # paypal: http://paypal.me/ahmedfgad # Replace with a single Patreon username

5 | open_collective: pygad

6 | ko_fi: # Replace with a single Ko-fi username

7 | tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel

8 | community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry

9 | liberapay: # Replace with a single Liberapay username

10 | issuehunt: # Replace with a single IssueHunt username

11 | otechie: # Replace with a single Otechie username

12 | custom: ['https://donate.stripe.com/eVa5kO866elKgM0144', 'http://paypal.me/ahmedfgad'] # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']

13 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # NumPyCNN: Implementing Convolutional Neural Networks From Scratch

2 | NumPyCNN is a Python implementation for convolutional neural networks (CNNs) from scratch using NumPy.

3 |

4 | **IMPORTANT** *If you are looking for the code of the tutorial titled [Building Convolutional Neural Network using NumPy from Scratch](https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad/), it was moved to the [TutorialProject](https://github.com/ahmedfgad/NumPyCNN/tree/master/TutorialProject) directory on 20 May 2020.*

5 |

6 | The project has a single module named `cnn.py` which implements all classes and functions needed to build the CNN.

7 |

8 | Note that the project implements only the **forward pass** of CNNs and that **no learning algorithm is used**. The learning rate is only used to make some changes to the weights after each epoch, which is better than leaving the weights unchanged.

9 |

10 | The project can be used for classification problems where only 1 class per sample is allowed.
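
As a minimal sketch of what "one class per sample" means in practice, assume the last layer produces one score per class for every sample. The array below is made up for illustration and does not come from the project; the predicted label is simply the index of the largest score in each row.

```python
import numpy

# Hypothetical per-class scores for 3 samples and 4 classes (made-up numbers).
probabilities = numpy.array([[0.10, 0.70, 0.15, 0.05],
                             [0.25, 0.25, 0.40, 0.10],
                             [0.90, 0.05, 0.03, 0.02]])

# Exactly one class label per sample: the index of the largest score in each row.
predicted_classes = numpy.argmax(probabilities, axis=1)
print(predicted_classes)
```

Problems where a sample can carry several labels at once do not fit this scheme, because `argmax` keeps only a single index per row.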

11 |

12 | The project will be extended to **train CNN using the genetic algorithm** with the help of a library named [PyGAD](https://pypi.org/project/pygad). Check the library's documentation at [Read The Docs](https://pygad.readthedocs.io/): https://pygad.readthedocs.io

13 |

14 | # Donation

15 |

16 | - [Credit/Debit Card](https://donate.stripe.com/eVa5kO866elKgM0144): https://donate.stripe.com/eVa5kO866elKgM0144

17 | - [Open Collective](https://opencollective.com/pygad): [opencollective.com/pygad](https://opencollective.com/pygad)

18 | - PayPal: Use either this link: [paypal.me/ahmedfgad](https://paypal.me/ahmedfgad) or the e-mail address ahmed.f.gad@gmail.com

19 | - Interac e-Transfer: Use e-mail address ahmed.f.gad@gmail.com

20 |

21 | # Installation

22 |

23 | To install [PyGAD](https://pypi.org/project/pygad), use pip to download and install the library from [PyPI](https://pypi.org/project/pygad) (Python Package Index). The library's PyPI page is https://pypi.org/project/pygad.

24 |

25 | Install PyGAD with the following command:

26 |

27 | ```python

28 | pip install pygad

29 | ```

30 |

31 | To get started with PyGAD, please read the documentation at [Read The Docs](https://pygad.readthedocs.io/) https://pygad.readthedocs.io.

32 |

33 | # PyGAD Source Code

34 |

35 | The source code of the PyGAD modules is found in the following GitHub projects:

36 |

37 | - [pygad](https://github.com/ahmedfgad/GeneticAlgorithmPython): https://github.com/ahmedfgad/GeneticAlgorithmPython

38 | - [pygad.nn](https://github.com/ahmedfgad/NumPyANN): https://github.com/ahmedfgad/NumPyANN

39 | - [pygad.gann](https://github.com/ahmedfgad/NeuralGenetic): https://github.com/ahmedfgad/NeuralGenetic

40 | - [pygad.cnn](https://github.com/ahmedfgad/NumPyCNN): https://github.com/ahmedfgad/NumPyCNN

41 | - [pygad.gacnn](https://github.com/ahmedfgad/CNNGenetic): https://github.com/ahmedfgad/CNNGenetic

42 | - [pygad.kerasga](https://github.com/ahmedfgad/KerasGA): https://github.com/ahmedfgad/KerasGA

43 | - [pygad.torchga](https://github.com/ahmedfgad/TorchGA): https://github.com/ahmedfgad/TorchGA

44 |

45 | The documentation of PyGAD is available at [Read The Docs](https://pygad.readthedocs.io/) https://pygad.readthedocs.io.

46 |

47 | # PyGAD Documentation

48 |

49 | The documentation of the PyGAD library is available at [Read The Docs](https://pygad.readthedocs.io) at this link: https://pygad.readthedocs.io. It discusses the modules supported by PyGAD and all their classes, methods, attributes, and functions. For each module, a number of examples are given.

50 |

51 | If there is an issue using PyGAD, feel free to open an issue in this [GitHub repository](https://github.com/ahmedfgad/GeneticAlgorithmPython) https://github.com/ahmedfgad/GeneticAlgorithmPython or send an e-mail to ahmed.f.gad@gmail.com.

52 |

53 | If you built a project that uses PyGAD, then please drop an e-mail to ahmed.f.gad@gmail.com with the following information so that your project is included in the documentation.

54 |

55 | - Project title

56 | - Brief description

57 | - Preferably, a link that directs the readers to your project

58 |

59 | Please check the **Contact Us** section for more contact details.

60 |

61 | # Life Cycle of PyGAD

62 |

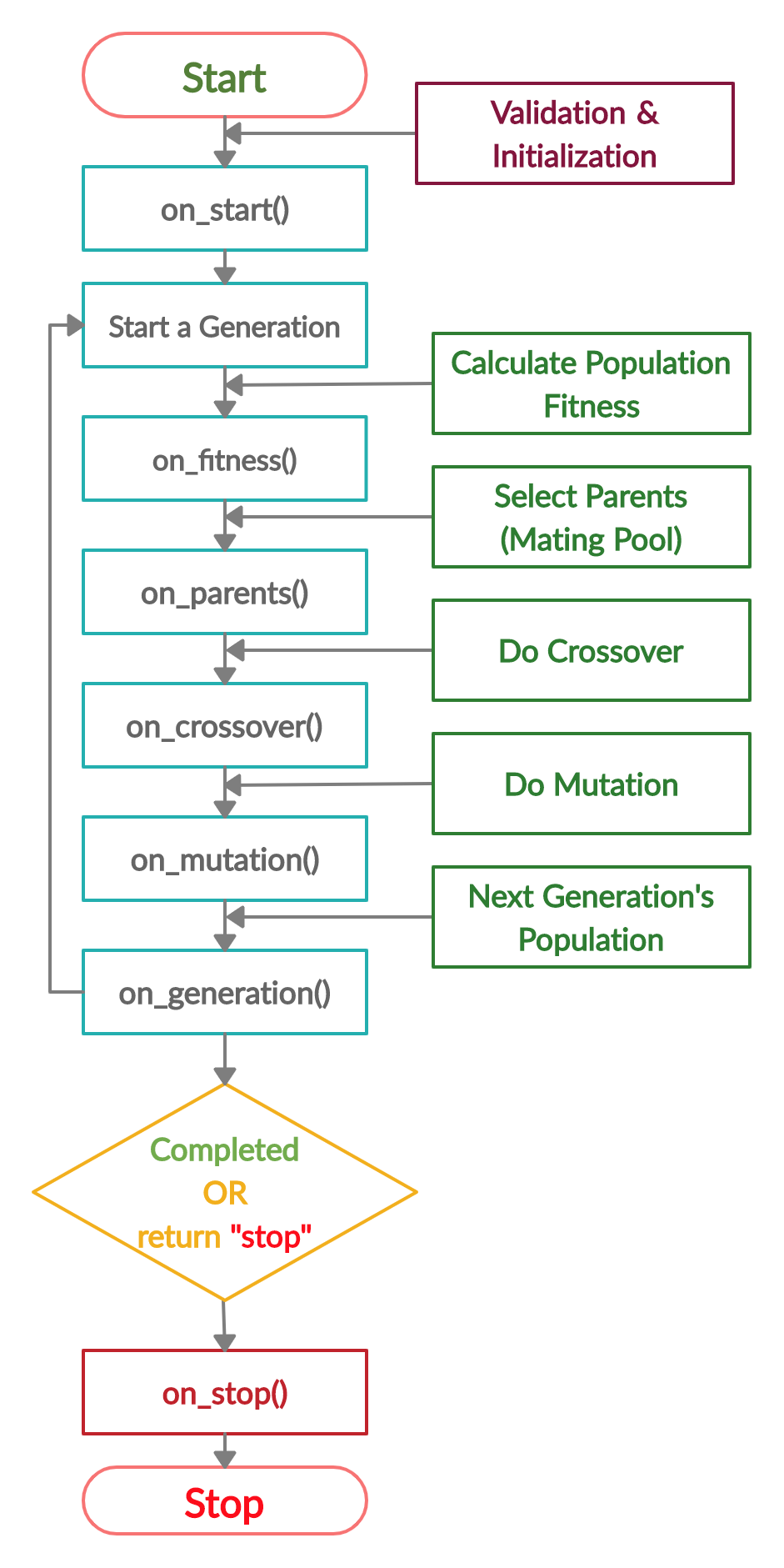

63 | The next figure lists the different stages in the lifecycle of an instance of the `pygad.GA` class. Note that PyGAD stops when either all generations are completed or when the function passed to the `on_generation` parameter returns the string `stop`.

64 |

65 |

66 |

67 | The next code implements all the callback functions to trace the execution of the genetic algorithm. Each callback function prints its name.

68 |

69 | ```python

70 | import pygad

71 | import numpy

72 |

73 | function_inputs = [4,-2,3.5,5,-11,-4.7]

74 | desired_output = 44

75 |

76 | def fitness_func(ga_instance, solution, solution_idx):

77 |     output = numpy.sum(solution*function_inputs)

78 |     fitness = 1.0 / (numpy.abs(output - desired_output) + 0.000001)

79 |     return fitness

80 |

81 | fitness_function = fitness_func

82 |

83 | def on_start(ga_instance):

84 |     print("on_start()")

85 |

86 | def on_fitness(ga_instance, population_fitness):

87 |     print("on_fitness()")

88 |

89 | def on_parents(ga_instance, selected_parents):

90 |     print("on_parents()")

91 |

92 | def on_crossover(ga_instance, offspring_crossover):

93 |     print("on_crossover()")

94 |

95 | def on_mutation(ga_instance, offspring_mutation):

96 |     print("on_mutation()")

97 |

98 | def on_generation(ga_instance):

99 |     print("on_generation()")

100 |

101 | def on_stop(ga_instance, last_population_fitness):

102 |     print("on_stop()")

103 |

104 | ga_instance = pygad.GA(num_generations=3,

105 |                        num_parents_mating=5,

106 |                        fitness_func=fitness_function,

107 |                        sol_per_pop=10,

108 |                        num_genes=len(function_inputs),

109 |                        on_start=on_start,

110 |                        on_fitness=on_fitness,

111 |                        on_parents=on_parents,

112 |                        on_crossover=on_crossover,

113 |                        on_mutation=on_mutation,

114 |                        on_generation=on_generation,

115 |                        on_stop=on_stop)

116 |

117 | ga_instance.run()

118 | ```

119 |

120 | With `num_generations` set to 3, the code above prints the following output.

121 |

122 | ```

123 | on_start()

124 |

125 | on_fitness()

126 | on_parents()

127 | on_crossover()

128 | on_mutation()

129 | on_generation()

130 |

131 | on_fitness()

132 | on_parents()

133 | on_crossover()

134 | on_mutation()

135 | on_generation()

136 |

137 | on_fitness()

138 | on_parents()

139 | on_crossover()

140 | on_mutation()

141 | on_generation()

142 |

143 | on_stop()

144 | ```
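
To make the fitness formula in `fitness_func` above more concrete, here is a small sketch that evaluates it by hand for two candidate solutions. The helper name `fitness_of` and both candidates are hypothetical and chosen only for illustration; the formula, `function_inputs`, and `desired_output` are taken from the code above.

```python
import numpy

function_inputs = numpy.array([4, -2, 3.5, 5, -11, -4.7])
desired_output = 44

def fitness_of(solution):
    # Same formula as fitness_func, without the PyGAD-specific arguments.
    output = numpy.sum(numpy.asarray(solution) * function_inputs)
    return 1.0 / (numpy.abs(output - desired_output) + 0.000001)

# A hypothetical candidate whose weighted sum (-5.2) is far from 44 ...
far = fitness_of([1, 1, 1, 1, 1, 1])
# ... and one that reaches 44 exactly (11 * 4 = 44, all other genes are zero).
exact = fitness_of([11, 0, 0, 0, 0, 0])
print(far, exact)
```

The closer the weighted sum gets to `desired_output`, the larger the fitness; the small constant in the denominator only prevents a division by zero when the match is exact.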

145 |

146 | # Example

147 |

148 | Check the [PyGAD's documentation](https://pygad.readthedocs.io/en/latest/cnn.html) for information about the implementation of this example.

149 |

150 | ```python

151 | import numpy

152 | import pygad.cnn

153 |

154 | train_inputs = numpy.load("dataset_inputs.npy")

155 | train_outputs = numpy.load("dataset_outputs.npy")

156 |

157 | sample_shape = train_inputs.shape[1:]

158 | num_classes = 4

159 |

160 | input_layer = pygad.cnn.Input2D(input_shape=sample_shape)

161 | conv_layer1 = pygad.cnn.Conv2D(num_filters=2,

162 | kernel_size=3,

163 | previous_layer=input_layer,

164 | activation_function=None)

165 | relu_layer1 = pygad.cnn.Sigmoid(previous_layer=conv_layer1)

166 | average_pooling_layer = pygad.cnn.AveragePooling2D(pool_size=2,

167 | previous_layer=relu_layer1,

168 | stride=2)

169 |

170 | conv_layer2 = pygad.cnn.Conv2D(num_filters=3,

171 | kernel_size=3,

172 | previous_layer=average_pooling_layer,

173 | activation_function=None)

174 | relu_layer2 = pygad.cnn.ReLU(previous_layer=conv_layer2)

175 | max_pooling_layer = pygad.cnn.MaxPooling2D(pool_size=2,

176 | previous_layer=relu_layer2,

177 | stride=2)

178 |

179 | conv_layer3 = pygad.cnn.Conv2D(num_filters=1,

180 | kernel_size=3,

181 | previous_layer=max_pooling_layer,

182 | activation_function=None)

183 | relu_layer3 = pygad.cnn.ReLU(previous_layer=conv_layer3)

184 | pooling_layer = pygad.cnn.AveragePooling2D(pool_size=2,

185 | previous_layer=relu_layer3,

186 | stride=2)

187 |

188 | flatten_layer = pygad.cnn.Flatten(previous_layer=pooling_layer)

189 | dense_layer1 = pygad.cnn.Dense(num_neurons=100,

190 | previous_layer=flatten_layer,

191 | activation_function="relu")

192 | dense_layer2 = pygad.cnn.Dense(num_neurons=num_classes,

193 | previous_layer=dense_layer1,

194 | activation_function="softmax")

195 |

196 | model = pygad.cnn.Model(last_layer=dense_layer2,

197 | epochs=1,

198 | learning_rate=0.01)

199 |

200 | model.summary()

201 |

202 | model.train(train_inputs=train_inputs,

203 | train_outputs=train_outputs)

204 |

205 | predictions = model.predict(data_inputs=train_inputs)

206 | print(predictions)

207 |

208 | num_wrong = numpy.where(predictions != train_outputs)[0]

209 | num_correct = train_outputs.size - num_wrong.size

210 | accuracy = 100 * (num_correct/train_outputs.size)

211 | print("Number of correct classifications : {num_correct}.".format(num_correct=num_correct))

212 | print("Number of wrong classifications : {num_wrong}.".format(num_wrong=num_wrong.size))

213 | print("Classification accuracy : {accuracy}.".format(accuracy=accuracy))

214 | ```
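
The accuracy computation at the end of the example can be checked on a tiny case. The sketch below repeats the same steps on made-up labels (both arrays are hypothetical and unrelated to `dataset_outputs.npy`).

```python
import numpy

# Hypothetical ground-truth labels and predictions, only for illustration.
train_outputs = numpy.array([0, 1, 1, 3, 2])
predictions = numpy.array([0, 1, 2, 3, 2])

# Indices of the samples whose predicted label differs from the true label.
wrong_indices = numpy.where(predictions != train_outputs)[0]
num_correct = train_outputs.size - wrong_indices.size
accuracy = 100 * (num_correct / train_outputs.size)
print(wrong_indices, num_correct, accuracy)
```

Note that the variable called `num_wrong` in the example holds the indices of the misclassified samples, which is why its `.size` is used to get the count.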

215 |

216 | # For More Information

217 |

218 | There are different resources that can be used to get started with building CNNs and their Python implementation.

219 |

220 | ## Tutorial: Implementing Genetic Algorithm in Python

221 |

222 | To start with coding the genetic algorithm, you can check the tutorial titled [**Genetic Algorithm Implementation in Python**](https://www.linkedin.com/pulse/genetic-algorithm-implementation-python-ahmed-gad) available at these links:

223 |

224 | - [LinkedIn](https://www.linkedin.com/pulse/genetic-algorithm-implementation-python-ahmed-gad)

225 | - [Towards Data Science](https://towardsdatascience.com/genetic-algorithm-implementation-in-python-5ab67bb124a6)

226 | - [KDnuggets](https://www.kdnuggets.com/2018/07/genetic-algorithm-implementation-python.html)

227 |

228 | [This tutorial](https://www.linkedin.com/pulse/genetic-algorithm-implementation-python-ahmed-gad) was prepared based on a previous version of the project, but it is still a good resource to start with coding the genetic algorithm.

229 |

230 |

231 |

232 | ## Tutorial: Introduction to Genetic Algorithm

233 |

234 | Get started with the genetic algorithm by reading the tutorial titled [**Introduction to Optimization with Genetic Algorithm**](https://www.linkedin.com/pulse/introduction-optimization-genetic-algorithm-ahmed-gad) which is available at these links:

235 |

236 | * [LinkedIn](https://www.linkedin.com/pulse/introduction-optimization-genetic-algorithm-ahmed-gad)

237 | * [Towards Data Science](https://towardsdatascience.com/introduction-to-optimization-with-genetic-algorithm-2f5001d9964b)

238 | * [KDnuggets](https://www.kdnuggets.com/2018/03/introduction-optimization-with-genetic-algorithm.html)

239 |

240 |

241 |

242 | ## Tutorial: Build Neural Networks in Python

243 |

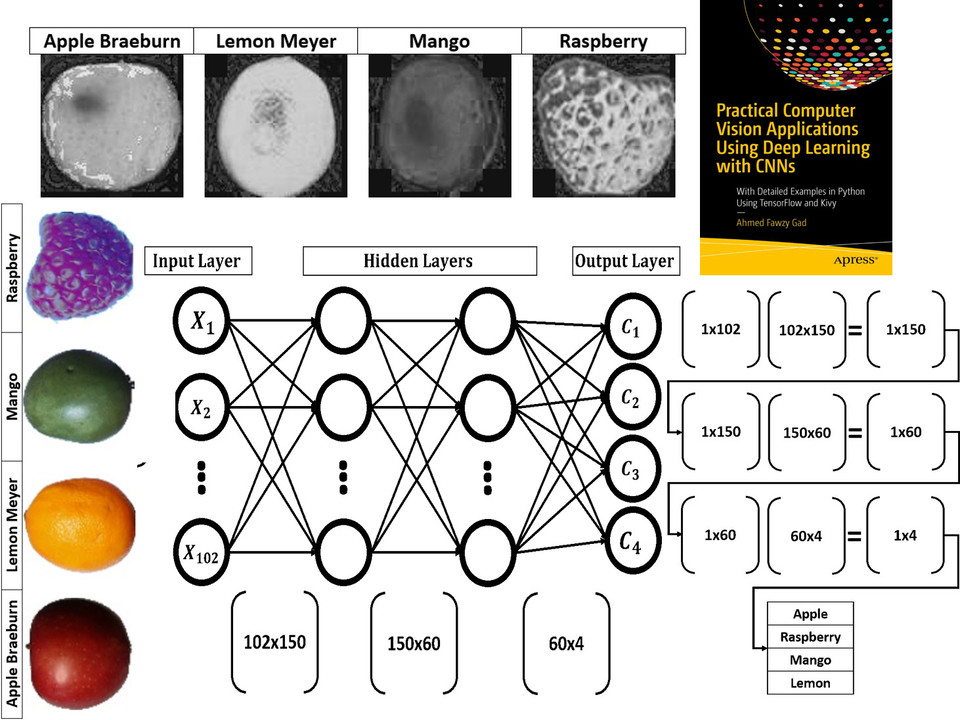

244 | Read about building neural networks in Python through the tutorial titled [**Artificial Neural Network Implementation using NumPy and Classification of the Fruits360 Image Dataset**](https://www.linkedin.com/pulse/artificial-neural-network-implementation-using-numpy-fruits360-gad) available at these links:

245 |

246 | * [LinkedIn](https://www.linkedin.com/pulse/artificial-neural-network-implementation-using-numpy-fruits360-gad)

247 | * [Towards Data Science](https://towardsdatascience.com/artificial-neural-network-implementation-using-numpy-and-classification-of-the-fruits360-image-3c56affa4491)

248 | * [KDnuggets](https://www.kdnuggets.com/2019/02/artificial-neural-network-implementation-using-numpy-and-image-classification.html)

249 |

250 |

251 |

252 | ## Tutorial: Optimize Neural Networks with Genetic Algorithm

253 |

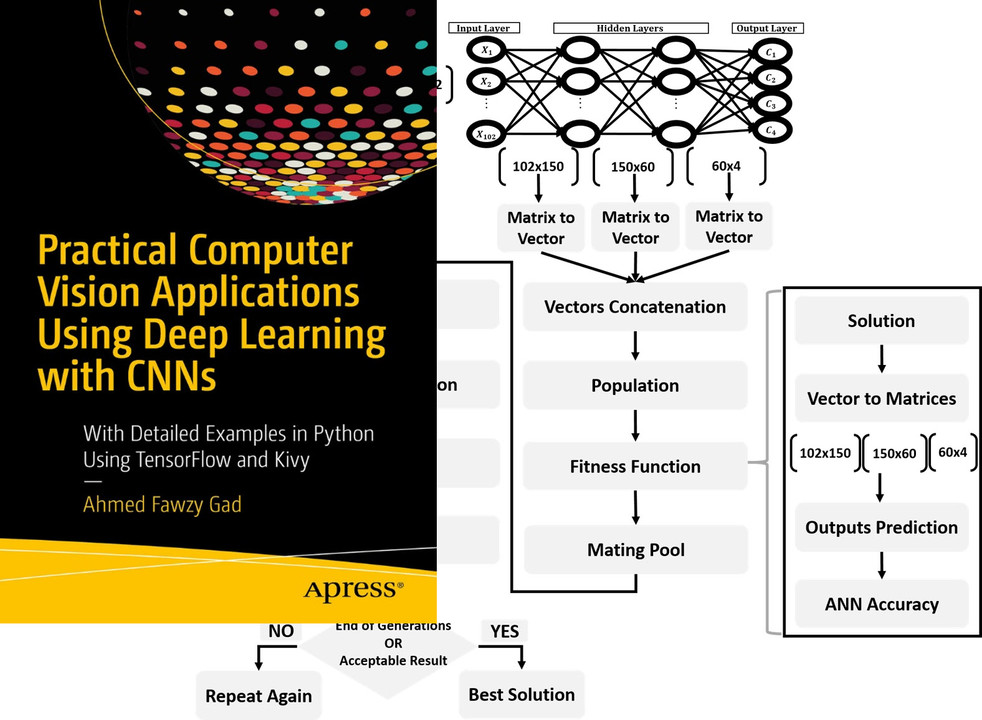

254 | Read about training neural networks using the genetic algorithm through the tutorial titled [**Artificial Neural Networks Optimization using Genetic Algorithm with Python**](https://www.linkedin.com/pulse/artificial-neural-networks-optimization-using-genetic-ahmed-gad) available at these links:

255 |

256 | - [LinkedIn](https://www.linkedin.com/pulse/artificial-neural-networks-optimization-using-genetic-ahmed-gad)

257 | - [Towards Data Science](https://towardsdatascience.com/artificial-neural-networks-optimization-using-genetic-algorithm-with-python-1fe8ed17733e)

258 | - [KDnuggets](https://www.kdnuggets.com/2019/03/artificial-neural-networks-optimization-genetic-algorithm-python.html)

259 |

260 |

261 |

262 | ## Tutorial: Building CNN in Python

263 |

264 | To start with coding CNNs, you can check the tutorial titled [**Building Convolutional Neural Network using NumPy from Scratch**](https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad) available at these links:

265 |

266 | - [LinkedIn](https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad)

267 | - [Towards Data Science](https://towardsdatascience.com/building-convolutional-neural-network-using-numpy-from-scratch-b30aac50e50a)

268 | - [KDnuggets](https://www.kdnuggets.com/2018/04/building-convolutional-neural-network-numpy-scratch.html)

269 | - [Chinese Translation](http://m.aliyun.com/yunqi/articles/585741)

270 |

271 | [This tutorial](https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad) was prepared based on a previous version of the project, but it is still a good resource to start with coding CNNs.

272 |

273 |

274 |

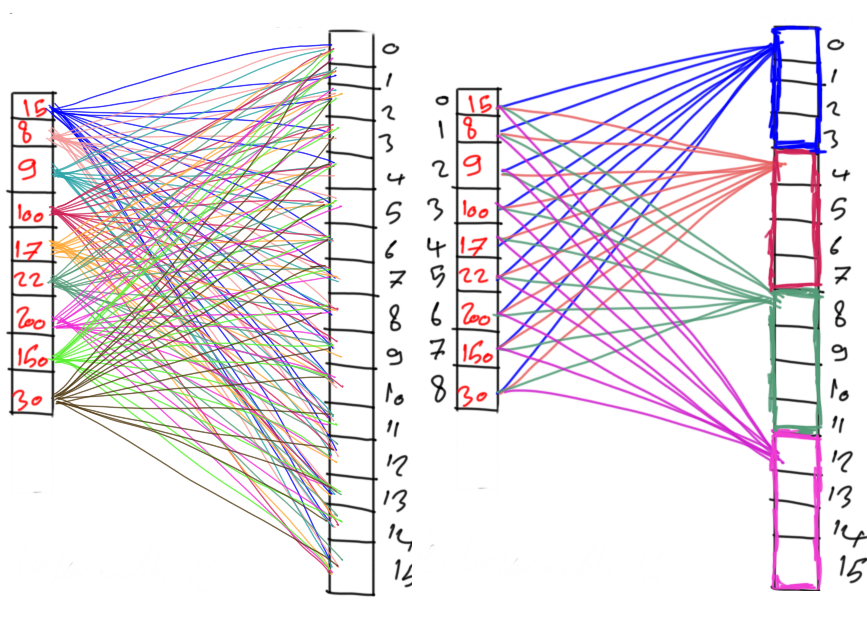

275 | ## Tutorial: Derivation of CNN from FCNN

276 |

277 | Get started with CNNs by reading the tutorial titled [**Derivation of Convolutional Neural Network from Fully Connected Network Step-By-Step**](https://www.linkedin.com/pulse/derivation-convolutional-neural-network-from-fully-connected-gad) which is available at these links:

278 |

279 | * [LinkedIn](https://www.linkedin.com/pulse/derivation-convolutional-neural-network-from-fully-connected-gad)

280 | * [Towards Data Science](https://towardsdatascience.com/derivation-of-convolutional-neural-network-from-fully-connected-network-step-by-step-b42ebafa5275)

281 | * [KDnuggets](https://www.kdnuggets.com/2018/04/derivation-convolutional-neural-network-fully-connected-step-by-step.html)

282 |

283 |

284 |

285 | ## Book: Practical Computer Vision Applications Using Deep Learning with CNNs

286 |

287 | You can also check my book cited as [**Ahmed Fawzy Gad 'Practical Computer Vision Applications Using Deep Learning with CNNs'. Dec. 2018, Apress, 978-1-4842-4167-7**](https://www.amazon.com/Practical-Computer-Vision-Applications-Learning/dp/1484241665) which discusses neural networks, convolutional neural networks, deep learning, genetic algorithm, and more.

288 |

289 | Find the book at these links:

290 |

291 | - [Amazon](https://www.amazon.com/Practical-Computer-Vision-Applications-Learning/dp/1484241665)

292 | - [Springer](https://link.springer.com/book/10.1007/978-1-4842-4167-7)

293 | - [Apress](https://www.apress.com/gp/book/9781484241660)

294 | - [O'Reilly](https://www.oreilly.com/library/view/practical-computer-vision/9781484241677)

295 | - [Google Books](https://books.google.com.eg/books?id=xLd9DwAAQBAJ)

296 |

297 |

298 |

299 | # Citing PyGAD - Bibtex Formatted Citation

300 |

301 | If you used PyGAD, please consider adding a citation to the following paper about PyGAD:

302 |

303 | ```

304 | @misc{gad2021pygad,

305 | title={PyGAD: An Intuitive Genetic Algorithm Python Library},

306 | author={Ahmed Fawzy Gad},

307 | year={2021},

308 | eprint={2106.06158},

309 | archivePrefix={arXiv},

310 | primaryClass={cs.NE}

311 | }

312 | ```

313 |

314 | # Contact Us

315 |

316 | * E-mail: ahmed.f.gad@gmail.com

317 | * [LinkedIn](https://www.linkedin.com/in/ahmedfgad)

318 | * [Amazon Author Page](https://amazon.com/author/ahmedgad)

319 | * [Heartbeat](https://heartbeat.fritz.ai/@ahmedfgad)

320 | * [Paperspace](https://blog.paperspace.com/author/ahmed)

321 | * [KDnuggets](https://kdnuggets.com/author/ahmed-gad)

322 | * [TowardsDataScience](https://towardsdatascience.com/@ahmedfgad)

323 | * [GitHub](https://github.com/ahmedfgad)

324 |

--------------------------------------------------------------------------------

/TutorialProject/NumPyCNN.py:

--------------------------------------------------------------------------------

1 | import numpy

2 | import sys

3 |

4 | """

5 | Convolutional neural network implementation using NumPy.

6 | An article describing this project is titled "Building Convolutional Neural Network using NumPy from Scratch". It is available in these links: https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad/

7 | https://www.kdnuggets.com/2018/04/building-convolutional-neural-network-numpy-scratch.html

8 | It is also translated into Chinese: http://m.aliyun.com/yunqi/articles/585741

9 |

10 | The project is tested using Python 3.5.2 installed inside Anaconda 4.2.0 (64-bit)

11 | NumPy version used is 1.14.0

12 |

13 | For more info., contact me:

14 | Ahmed Fawzy Gad

15 | KDnuggets: https://www.kdnuggets.com/author/ahmed-gad

16 | LinkedIn: https://www.linkedin.com/in/ahmedfgad

17 | Facebook: https://www.facebook.com/ahmed.f.gadd

18 | ahmed.f.gad@gmail.com

19 | ahmed.fawzy@ci.menofia.edu.eg

20 | """

21 |

22 | def conv_(img, conv_filter):

23 |     filter_size = conv_filter.shape[1]

24 |     result = numpy.zeros((img.shape))

25 |     #Looping through the image to apply the convolution operation.

26 |     for r in numpy.uint16(numpy.arange(filter_size/2.0,

27 |                           img.shape[0]-filter_size/2.0+1)):

28 |         for c in numpy.uint16(numpy.arange(filter_size/2.0,

29 |                                            img.shape[1]-filter_size/2.0+1)):

30 |             """

31 |             Getting the current region to get multiplied with the filter.

32 |             How to loop through the image and get the region based on

33 |             the image and filter sizes is the most tricky part of convolution.

34 |             """

35 |             curr_region = img[r-numpy.uint16(numpy.floor(filter_size/2.0)):r+numpy.uint16(numpy.ceil(filter_size/2.0)),

36 |                               c-numpy.uint16(numpy.floor(filter_size/2.0)):c+numpy.uint16(numpy.ceil(filter_size/2.0))]

37 |             #Element-wise multiplication between the current region and the filter.

38 |             curr_result = curr_region * conv_filter

39 |             conv_sum = numpy.sum(curr_result) #Summing the result of multiplication.

40 |             result[r, c] = conv_sum #Saving the summation in the convolution layer feature map.

41 |

42 |     #Clipping the outliers of the result matrix.

43 |     final_result = result[numpy.uint16(filter_size/2.0):result.shape[0]-numpy.uint16(filter_size/2.0),

44 |                           numpy.uint16(filter_size/2.0):result.shape[1]-numpy.uint16(filter_size/2.0)]

45 |     return final_result

46 | def conv(img, conv_filter):

47 |

48 |     if len(img.shape) != len(conv_filter.shape) - 1: # Check whether the filter bank has exactly one more dimension than the image.

49 |         print("Error: Number of dimensions in conv filter and image do not match.")

50 |         sys.exit()

51 |     if len(img.shape) > 2 or len(conv_filter.shape) > 3: # Check if number of image channels matches the filter depth.

52 |         if img.shape[-1] != conv_filter.shape[-1]:

53 |             print("Error: Number of channels in both image and filter must match.")

54 |             sys.exit()

55 |     if conv_filter.shape[1] != conv_filter.shape[2]: # Check if filter dimensions are equal.

56 |         print('Error: Filter must be a square matrix. I.e. number of rows and columns must match.')

57 |         sys.exit()

58 |     if conv_filter.shape[1]%2==0: # Check if filter dimensions are odd.

59 |         print('Error: Filter must have an odd size. I.e. number of rows and columns must be odd.')

60 |         sys.exit()

61 |

62 |     # An empty feature map to hold the output of convolving the filter(s) with the image.

63 |     feature_maps = numpy.zeros((img.shape[0]-conv_filter.shape[1]+1,

64 |                                 img.shape[1]-conv_filter.shape[1]+1,

65 |                                 conv_filter.shape[0]))

66 |

67 |     # Convolving the image by the filter(s).

68 |     for filter_num in range(conv_filter.shape[0]):

69 |         print("Filter ", filter_num + 1)

70 |         curr_filter = conv_filter[filter_num, :] # getting a filter from the bank.

71 |         """

72 |         Checking if there are multiple channels for the single filter.

73 |         If so, then each channel will convolve the image.

74 |         The result of all convolutions are summed to return a single feature map.

75 |         """

76 |         if len(curr_filter.shape) > 2:

77 |             conv_map = conv_(img[:, :, 0], curr_filter[:, :, 0]) # Array holding the sum of all feature maps.

78 |             for ch_num in range(1, curr_filter.shape[-1]): # Convolving each channel with the image and summing the results.

79 |                 conv_map = conv_map + conv_(img[:, :, ch_num],

80 |                                   curr_filter[:, :, ch_num])

81 |         else: # There is just a single channel in the filter.

82 |             conv_map = conv_(img, curr_filter)

83 |         feature_maps[:, :, filter_num] = conv_map # Holding feature map with the current filter.

84 |     return feature_maps # Returning all feature maps.

85 |

86 |

87 | def pooling(feature_map, size=2, stride=2):

88 |     #Preparing the output of the pooling operation: one cell per window position visited by the loops below.

89 |     pool_out = numpy.zeros(((feature_map.shape[0]-size)//stride+1,

90 |                             (feature_map.shape[1]-size)//stride+1,

91 |                             feature_map.shape[-1]))

92 |     for map_num in range(feature_map.shape[-1]):

93 |         r2 = 0

94 |         for r in numpy.arange(0,feature_map.shape[0]-size+1, stride):

95 |             c2 = 0

96 |             for c in numpy.arange(0, feature_map.shape[1]-size+1, stride):

97 |                 pool_out[r2, c2, map_num] = numpy.max([feature_map[r:r+size, c:c+size, map_num]])

98 |                 c2 = c2 + 1

99 |             r2 = r2 +1

100 |     return pool_out

101 |

102 | def relu(feature_map):

103 |     #Preparing the output of the ReLU activation function.

104 |     relu_out = numpy.zeros(feature_map.shape)

105 |     for map_num in range(feature_map.shape[-1]):

106 |         for r in numpy.arange(0,feature_map.shape[0]):

107 |             for c in numpy.arange(0, feature_map.shape[1]):

108 |                 relu_out[r, c, map_num] = numpy.max([feature_map[r, c, map_num], 0])

109 |     return relu_out

110 |

--------------------------------------------------------------------------------

/TutorialProject/README.md:

--------------------------------------------------------------------------------

1 | # NumPyCNN

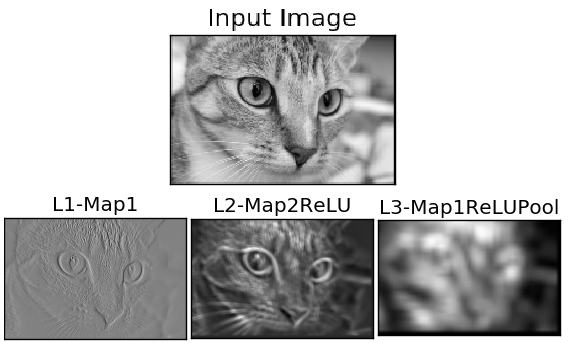

2 | Convolutional neural network implementation using NumPy. Just three layers are created which are convolution (conv for short), ReLU, and max pooling. The major steps involved are as follows:

3 | 1. Reading the input image.

4 | 2. Preparing filters.

5 | 3. Conv layer: Convolving each filter with the input image.

6 | 4. ReLU layer: Applying ReLU activation function on the feature maps (output of conv layer).

7 | 5. Max Pooling layer: Applying the pooling operation on the output of ReLU layer.

8 | 6. Stacking conv, ReLU, and max pooling layers
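
The ReLU and max pooling steps listed above can be sketched on a tiny example. This is a simplified illustration on a made-up 4x4 single-channel feature map, not the project's own functions; the output-size expression is the usual one for non-padded pooling and is an assumption here.

```python
import numpy

# A made-up 4x4 single-channel feature map.
feature_map = numpy.array([[ 1., -2.,  3., -4.],
                           [-5.,  6., -7.,  8.],
                           [ 9., -1.,  2., -3.],
                           [-4.,  5., -6.,  7.]])

# ReLU: negative values become zero, positive values are kept.
relu_out = numpy.maximum(feature_map, 0)

# 2x2 max pooling with stride 2: keep the largest value in each window.
size, stride = 2, 2
rows = (relu_out.shape[0] - size) // stride + 1
cols = (relu_out.shape[1] - size) // stride + 1
pool_out = numpy.zeros((rows, cols))
for r in range(rows):
    for c in range(cols):
        window = relu_out[r*stride:r*stride+size, c*stride:c*stride+size]
        pool_out[r, c] = window.max()
print(pool_out)
```

Each pooled value summarizes one 2x2 window, so the 4x4 map shrinks to 2x2 while the strongest responses survive.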

9 |

10 | **The project is tested using Python 3.5.2 installed inside Anaconda 4.2.0 (64-bit)

11 | NumPy version used is 1.14.0**

12 |

13 | The file named **example.py** is an example of using the project.

14 | The code starts by reading an input image. The image can be either a single-channel (gray) image or a multi-channel (e.g. RGB) image.

15 |

16 | ```python

17 | # Reading the image

18 | #img = skimage.io.imread("test.jpg")

19 | #img = skimage.data.checkerboard()

20 | img = skimage.data.chelsea()

21 | #img = skimage.data.camera()

22 | ```

23 |

24 | In this example, a gray input image is used, so the image is converted to gray first.

25 | ```python

26 | # Converting the image into gray.

27 | img = skimage.color.rgb2gray(img)

28 | ```
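
For intuition, a grayscale conversion is a weighted sum of the three color channels. The sketch below assumes the luminance weights given in the scikit-image documentation for `rgb2gray` (0.2125, 0.7154, 0.0721) and uses a made-up two-pixel image; it is an illustration, not a replacement for the library call.

```python
import numpy

# A made-up 1x2 RGB image with 8-bit values: one pure red pixel and one white pixel.
rgb = numpy.array([[[255, 0, 0], [255, 255, 255]]], dtype=numpy.uint8)

# Scale to [0, 1], then take a weighted sum over the channel axis.
weights = numpy.array([0.2125, 0.7154, 0.0721])  # assumed rgb2gray weights
gray = (rgb / 255.0) @ weights
print(gray.shape, gray)
```

The result has no channel axis any more, which is why the filters of the first conv layer need only a width and a height.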

29 |

30 | The filters of the first conv layer are prepared according to the input image dimensions. The filter is created by specifying the following:

31 | 1) Number of filters.

32 | 2) Size of first dimension.

33 | 3) Size of second dimension.

34 | 4) Size of third dimension and so on.

35 |

36 | Because the image is gray, the filter has just a width and a height and no depth. That is why it is created by specifying just three numbers (number of filters, width, and height). Here is an example of creating two 3x3 filters.

37 | ```python

38 | # First conv layer

39 | #l1_filter = numpy.random.rand(2,7,7)*20 # Preparing the filters randomly.

40 | l1_filter = numpy.zeros((2,3,3))

41 | l1_filter[0, :, :] = numpy.array([[[-1, 0, 1],

42 | [-1, 0, 1],

43 | [-1, 0, 1]]])

44 | l1_filter[1, :, :] = numpy.array([[[1, 1, 1],

45 | [0, 0, 0],

46 | [-1, -1, -1]]])

47 | ```
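
The two filters above respond to vertical and horizontal edges respectively. The following sketch applies the same values to a made-up 5x5 image with a single vertical edge; the helper `slide` is hypothetical and written only for this illustration (a sum of element-wise products at every position where the filter fits).

```python
import numpy

# Made-up 5x5 image: dark left part, bright right part (a vertical edge).
img = numpy.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

vertical = numpy.array([[-1, 0, 1],
                        [-1, 0, 1],
                        [-1, 0, 1]], dtype=float)
horizontal = numpy.array([[ 1,  1,  1],
                          [ 0,  0,  0],
                          [-1, -1, -1]], dtype=float)

def slide(image, kernel):
    # Sum of element-wise products at every position where the kernel fits.
    k = kernel.shape[0]
    out = numpy.zeros((image.shape[0] - k + 1, image.shape[1] - k + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            out[r, c] = numpy.sum(image[r:r+k, c:c+k] * kernel)
    return out

vertical_map = slide(img, vertical)
horizontal_map = slide(img, horizontal)
print(vertical_map)
print(horizontal_map)
```

The first filter produces large values where the window covers the edge and zero in the flat region, while the second filter stays at zero because the image has no horizontal edges.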

48 |

49 | The code can still work with RGB images. The only difference is using filters whose depth matches the number of image channels. If the image is RGB and not converted to gray, then the filter will be created by specifying 4 numbers (number of filters, width, height, and number of channels). Here is an example of creating two 7x7x3 filters.

50 | ```python

51 | # First conv layer

52 | l1_filter = numpy.random.rand(2, 7, 7, 3) # Preparing the filters randomly.

53 | ```
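
With a multi-channel image, each filter channel is applied to the matching image channel and the per-channel results are summed into one feature map. The sketch below checks this for a single window position, using made-up random data and a smaller 3x3x3 filter for brevity (the shapes and the seed are assumptions for illustration).

```python
import numpy

rng = numpy.random.default_rng(0)
img = rng.random((6, 6, 3))    # made-up RGB-like image
filt = rng.random((3, 3, 3))   # one 3x3 filter with depth 3

# The filter depth has to match the number of image channels.
assert img.shape[-1] == filt.shape[-1], "channel counts must match"

# Response at the top-left position, computed channel by channel ...
per_channel = sum(numpy.sum(img[0:3, 0:3, ch] * filt[:, :, ch]) for ch in range(3))
# ... equals a single sum over the whole 3x3x3 region.
whole = numpy.sum(img[0:3, 0:3, :] * filt)
print(numpy.isclose(per_channel, whole))
```

This is why one filter yields one feature map regardless of how many channels the input has.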

54 |

55 | Next, the filters are applied to the image through the stack of layers used in the ConvNet.

56 | ```python

57 | print("\n**Working with conv layer 1**")

58 | l1_feature_map = numpycnn.conv(img, l1_filter)

59 | print("\n**ReLU**")

60 | l1_feature_map_relu = numpycnn.relu(l1_feature_map)

61 | print("\n**Pooling**")

62 | l1_feature_map_relu_pool = numpycnn.pooling(l1_feature_map_relu, 2, 2)

63 | print("**End of conv layer 1**\n")

64 | ```
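
It can help to track how the conv layer changes the array shape. Since no padding is used, a filter of size k shrinks each spatial dimension by k - 1, and the number of filters becomes the depth of the output. The helper below is a hypothetical sketch of that arithmetic; the 300x451 input size is an assumption about the gray version of the `chelsea` image.

```python
def conv_output_shape(img_shape, filter_bank_shape):
    # filter_bank_shape is (num_filters, size, size[, channels]).
    num_filters, size = filter_bank_shape[0], filter_bank_shape[1]
    return (img_shape[0] - size + 1, img_shape[1] - size + 1, num_filters)

# Assumed input: a 300x451 gray image convolved with two 3x3 filters.
l1_shape = conv_output_shape((300, 451), (2, 3, 3))
print(l1_shape)
```

The same helper applies to the later layers once the pooled shape is known, for example with a filter bank shape of `(3, 5, 5, 2)` for the second conv layer.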

65 |

66 | Here are the outputs of such conv-relu-pool layers.

67 |

68 |

69 | ```python

70 | # Second conv layer

71 | l2_filter = numpy.random.rand(3, 5, 5, l1_feature_map_relu_pool.shape[-1])

72 | print("\n**Working with conv layer 2**")

73 | l2_feature_map = numpycnn.conv(l1_feature_map_relu_pool, l2_filter)

74 | print("\n**ReLU**")

75 | l2_feature_map_relu = numpycnn.relu(l2_feature_map)

76 | print("\n**Pooling**")

77 | l2_feature_map_relu_pool = numpycnn.pooling(l2_feature_map_relu, 2, 2)

78 | print("**End of conv layer 2**\n")

79 | ```

80 | The outputs of such conv-relu-pool layers are shown below.

81 |

82 |

83 | ```python

84 | # Third conv layer

85 | l3_filter = numpy.random.rand(1, 7, 7, l2_feature_map_relu_pool.shape[-1])

86 | print("\n**Working with conv layer 3**")

87 | l3_feature_map = numpycnn.conv(l2_feature_map_relu_pool, l3_filter)

88 | print("\n**ReLU**")

89 | l3_feature_map_relu = numpycnn.relu(l3_feature_map)

90 | print("\n**Pooling**")

91 | l3_feature_map_relu_pool = numpycnn.pooling(l3_feature_map_relu, 2, 2)

92 | print("**End of conv layer 3**\n")

93 | ```

94 | The following graph shows the outputs of the above conv-relu-pool layers.

95 |

96 |

97 | An article describing this project is titled "Building Convolutional Neural Network using NumPy from Scratch". It is available in these links:

98 | https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad/

99 | https://www.kdnuggets.com/2018/04/building-convolutional-neural-network-numpy-scratch.html

100 | It is also translated into Chinese: http://m.aliyun.com/yunqi/articles/585741

101 |

102 | For more info.:

103 | KDnuggets: https://www.kdnuggets.com/author/ahmed-gad

104 | LinkedIn: https://www.linkedin.com/in/ahmedfgad

105 | Facebook: https://www.facebook.com/ahmed.f.gadd

106 | ahmed.f.gad@gmail.com

107 | ahmed.fawzy@ci.menofia.edu.eg

108 |

--------------------------------------------------------------------------------

/TutorialProject/example.py:

--------------------------------------------------------------------------------

1 | import skimage.data

2 | import numpy

3 | import matplotlib.pyplot

4 | import NumPyCNN as numpycnn

5 |

6 | """

7 | Convolutional neural network implementation using NumPy

8 | An article describing this project is titled "Building Convolutional Neural Network using NumPy from Scratch". It is available in these links: https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad/

9 | https://www.kdnuggets.com/2018/04/building-convolutional-neural-network-numpy-scratch.html

10 | It is also translated into Chinese: http://m.aliyun.com/yunqi/articles/585741

11 |

12 | The project is tested using Python 3.5.2 installed inside Anaconda 4.2.0 (64-bit)

13 | NumPy version used is 1.14.0

14 |

15 | For more info., contact me:

16 | Ahmed Fawzy Gad

17 | KDnuggets: https://www.kdnuggets.com/author/ahmed-gad

18 | LinkedIn: https://www.linkedin.com/in/ahmedfgad

19 | Facebook: https://www.facebook.com/ahmed.f.gadd

20 | ahmed.f.gad@gmail.com

21 | ahmed.fawzy@ci.menofia.edu.eg

22 | """

23 |

24 | # Reading the image

25 | #img = skimage.io.imread("test.jpg")

26 | #img = skimage.data.checkerboard()

27 | img = skimage.data.chelsea()

28 | #img = skimage.data.camera()

29 |

30 | # Converting the image into gray.

31 | img = skimage.color.rgb2gray(img)

32 |

33 | # First conv layer

34 | #l1_filter = numpy.random.rand(2,7,7)*20 # Preparing the filters randomly.

35 | l1_filter = numpy.zeros((2,3,3))

36 | l1_filter[0, :, :] = numpy.array([[[-1, 0, 1],

37 | [-1, 0, 1],

38 | [-1, 0, 1]]])

39 | l1_filter[1, :, :] = numpy.array([[[1, 1, 1],

40 | [0, 0, 0],

41 | [-1, -1, -1]]])

42 |

43 | print("\n**Working with conv layer 1**")

44 | l1_feature_map = numpycnn.conv(img, l1_filter)

45 | print("\n**ReLU**")

46 | l1_feature_map_relu = numpycnn.relu(l1_feature_map)

47 | print("\n**Pooling**")

48 | l1_feature_map_relu_pool = numpycnn.pooling(l1_feature_map_relu, 2, 2)

49 | print("**End of conv layer 1**\n")

50 |

51 | # Second conv layer

52 | l2_filter = numpy.random.rand(3, 5, 5, l1_feature_map_relu_pool.shape[-1])

53 | print("\n**Working with conv layer 2**")

54 | l2_feature_map = numpycnn.conv(l1_feature_map_relu_pool, l2_filter)

55 | print("\n**ReLU**")

56 | l2_feature_map_relu = numpycnn.relu(l2_feature_map)

57 | print("\n**Pooling**")

58 | l2_feature_map_relu_pool = numpycnn.pooling(l2_feature_map_relu, 2, 2)

59 | print("**End of conv layer 2**\n")

60 |

61 | # Third conv layer

62 | l3_filter = numpy.random.rand(1, 7, 7, l2_feature_map_relu_pool.shape[-1])

63 | print("\n**Working with conv layer 3**")

64 | l3_feature_map = numpycnn.conv(l2_feature_map_relu_pool, l3_filter)

65 | print("\n**ReLU**")

66 | l3_feature_map_relu = numpycnn.relu(l3_feature_map)

67 | print("\n**Pooling**")

68 | l3_feature_map_relu_pool = numpycnn.pooling(l3_feature_map_relu, 2, 2)

69 | print("**End of conv layer 3**\n")

70 |

71 | # Graphing results

72 | fig0, ax0 = matplotlib.pyplot.subplots(nrows=1, ncols=1)

73 | ax0.imshow(img).set_cmap("gray")

74 | ax0.set_title("Input Image")

75 | ax0.get_xaxis().set_ticks([])

76 | ax0.get_yaxis().set_ticks([])

77 | matplotlib.pyplot.savefig("in_img.png", bbox_inches="tight")

78 | matplotlib.pyplot.close(fig0)

79 |

80 | # Layer 1

81 | fig1, ax1 = matplotlib.pyplot.subplots(nrows=3, ncols=2)

82 | ax1[0, 0].imshow(l1_feature_map[:, :, 0]).set_cmap("gray")

83 | ax1[0, 0].get_xaxis().set_ticks([])

84 | ax1[0, 0].get_yaxis().set_ticks([])

85 | ax1[0, 0].set_title("L1-Map1")

86 |

87 | ax1[0, 1].imshow(l1_feature_map[:, :, 1]).set_cmap("gray")

88 | ax1[0, 1].get_xaxis().set_ticks([])

89 | ax1[0, 1].get_yaxis().set_ticks([])

90 | ax1[0, 1].set_title("L1-Map2")

91 |

92 | ax1[1, 0].imshow(l1_feature_map_relu[:, :, 0]).set_cmap("gray")

93 | ax1[1, 0].get_xaxis().set_ticks([])

94 | ax1[1, 0].get_yaxis().set_ticks([])

95 | ax1[1, 0].set_title("L1-Map1ReLU")

96 |

97 | ax1[1, 1].imshow(l1_feature_map_relu[:, :, 1]).set_cmap("gray")

98 | ax1[1, 1].get_xaxis().set_ticks([])

99 | ax1[1, 1].get_yaxis().set_ticks([])

100 | ax1[1, 1].set_title("L1-Map2ReLU")

101 |

102 | ax1[2, 0].imshow(l1_feature_map_relu_pool[:, :, 0]).set_cmap("gray")

103 | ax1[2, 0].get_xaxis().set_ticks([])

104 | ax1[2, 0].get_yaxis().set_ticks([])

105 | ax1[2, 0].set_title("L1-Map1ReLUPool")

106 |

107 | ax1[2, 1].imshow(l1_feature_map_relu_pool[:, :, 1]).set_cmap("gray")

108 | ax1[2, 1].get_xaxis().set_ticks([])

109 | ax1[2, 1].get_yaxis().set_ticks([])

110 | ax1[2, 1].set_title("L1-Map2ReLUPool")

111 |

112 | matplotlib.pyplot.savefig("L1.png", bbox_inches="tight")

113 | matplotlib.pyplot.close(fig1)

114 |

115 | # Layer 2

116 | fig2, ax2 = matplotlib.pyplot.subplots(nrows=3, ncols=3)

117 | ax2[0, 0].imshow(l2_feature_map[:, :, 0]).set_cmap("gray")

118 | ax2[0, 0].get_xaxis().set_ticks([])

119 | ax2[0, 0].get_yaxis().set_ticks([])

120 | ax2[0, 0].set_title("L2-Map1")

121 |

122 | ax2[0, 1].imshow(l2_feature_map[:, :, 1]).set_cmap("gray")

123 | ax2[0, 1].get_xaxis().set_ticks([])

124 | ax2[0, 1].get_yaxis().set_ticks([])

125 | ax2[0, 1].set_title("L2-Map2")

126 |

127 | ax2[0, 2].imshow(l2_feature_map[:, :, 2]).set_cmap("gray")

128 | ax2[0, 2].get_xaxis().set_ticks([])

129 | ax2[0, 2].get_yaxis().set_ticks([])

130 | ax2[0, 2].set_title("L2-Map3")

131 |

132 | ax2[1, 0].imshow(l2_feature_map_relu[:, :, 0]).set_cmap("gray")

133 | ax2[1, 0].get_xaxis().set_ticks([])

134 | ax2[1, 0].get_yaxis().set_ticks([])

135 | ax2[1, 0].set_title("L2-Map1ReLU")

136 |

137 | ax2[1, 1].imshow(l2_feature_map_relu[:, :, 1]).set_cmap("gray")

138 | ax2[1, 1].get_xaxis().set_ticks([])

139 | ax2[1, 1].get_yaxis().set_ticks([])

140 | ax2[1, 1].set_title("L2-Map2ReLU")

141 |

142 | ax2[1, 2].imshow(l2_feature_map_relu[:, :, 2]).set_cmap("gray")

143 | ax2[1, 2].get_xaxis().set_ticks([])

144 | ax2[1, 2].get_yaxis().set_ticks([])

145 | ax2[1, 2].set_title("L2-Map3ReLU")

146 |

147 | ax2[2, 0].imshow(l2_feature_map_relu_pool[:, :, 0]).set_cmap("gray")

148 | ax2[2, 0].get_xaxis().set_ticks([])

149 | ax2[2, 0].get_yaxis().set_ticks([])

150 | ax2[2, 0].set_title("L2-Map1ReLUPool")

151 |

152 | ax2[2, 1].imshow(l2_feature_map_relu_pool[:, :, 1]).set_cmap("gray")

153 | ax2[2, 1].get_xaxis().set_ticks([])

154 | ax2[2, 1].get_yaxis().set_ticks([])

155 | ax2[2, 1].set_title("L2-Map2ReLUPool")

156 |

157 | ax2[2, 2].imshow(l2_feature_map_relu_pool[:, :, 2]).set_cmap("gray")

158 | ax2[2, 2].get_xaxis().set_ticks([])

159 | ax2[2, 2].get_yaxis().set_ticks([])

160 | ax2[2, 2].set_title("L2-Map3ReLUPool")

161 |

162 | matplotlib.pyplot.savefig("L2.png", bbox_inches="tight")

163 | matplotlib.pyplot.close(fig2)

164 |

165 | # Layer 3

166 | fig3, ax3 = matplotlib.pyplot.subplots(nrows=1, ncols=3)

167 | ax3[0].imshow(l3_feature_map[:, :, 0]).set_cmap("gray")

168 | ax3[0].get_xaxis().set_ticks([])

169 | ax3[0].get_yaxis().set_ticks([])

170 | ax3[0].set_title("L3-Map1")

171 |

172 | ax3[1].imshow(l3_feature_map_relu[:, :, 0]).set_cmap("gray")

173 | ax3[1].get_xaxis().set_ticks([])

174 | ax3[1].get_yaxis().set_ticks([])

175 | ax3[1].set_title("L3-Map1ReLU")

176 |

177 | ax3[2].imshow(l3_feature_map_relu_pool[:, :, 0]).set_cmap("gray")

178 | ax3[2].get_xaxis().set_ticks([])

179 | ax3[2].get_yaxis().set_ticks([])

180 | ax3[2].set_title("L3-Map1ReLUPool")

181 |

182 | matplotlib.pyplot.savefig("L3.png", bbox_inches="tight")

183 | matplotlib.pyplot.close(fig3)

184 |

--------------------------------------------------------------------------------
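The two first-layer filters in the script above are a vertical-edge and a horizontal-edge detector. As a minimal sketch of what they compute, the snippet below applies them to a toy image with a naive "valid" sliding-window sum of products. The helper `conv_valid` and the toy image are assumptions made for illustration; they are not part of the repository, and the real `numpycnn.conv` may differ in details.

```python
import numpy

def conv_valid(img, filt):
    """Naive 'valid' sliding-window sum of products for one 2D filter."""
    k = filt.shape[0]
    out = numpy.zeros((img.shape[0] - k + 1, img.shape[1] - k + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Element-wise product of the current region and the filter, then sum.
            out[r, c] = numpy.sum(img[r:r + k, c:c + k] * filt)
    return out

# Same filter values as l1_filter[0] and l1_filter[1] in the script.
vertical = numpy.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]])
horizontal = numpy.array([[1, 1, 1], [0, 0, 0], [-1, -1, -1]])

# Hypothetical toy image: dark left half, bright right half (one vertical edge).
img = numpy.zeros((5, 6))
img[:, 3:] = 1.0

v_map = conv_valid(img, vertical)    # responds where columns change
h_map = conv_valid(img, horizontal)  # stays zero: all rows are identical
print(v_map)
print(h_map)
```

The vertical filter responds only in the windows that straddle the edge, while the horizontal filter sees no change between rows and returns zeros everywhere.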

/cnn.py:

--------------------------------------------------------------------------------

1 | import numpy

2 | import functools

3 |

4 | """

5 | Convolutional neural network implementation using NumPy

6 | A tutorial that helps to get started (Building Convolutional Neural Network using NumPy from Scratch) available in these links:

7 | https://www.linkedin.com/pulse/building-convolutional-neural-network-using-numpy-from-ahmed-gad

8 | https://towardsdatascience.com/building-convolutional-neural-network-using-numpy-from-scratch-b30aac50e50a

9 | https://www.kdnuggets.com/2018/04/building-convolutional-neural-network-numpy-scratch.html

10 | It is also translated into Chinese: http://m.aliyun.com/yunqi/articles/585741

11 | """

12 |

13 | # Activation functions supported by the cnn.py module.

14 | supported_activation_functions = ("sigmoid", "relu", "softmax")

15 |

16 | def sigmoid(sop):

17 |

18 | """

19 | Applies the sigmoid function.

20 |

21 | sop: The input to which the sigmoid function is applied.

22 |

23 | Returns the result of the sigmoid function.

24 | """

25 |

26 | if type(sop) in [list, tuple]:

27 | sop = numpy.array(sop)

28 |

29 | return 1.0 / (1 + numpy.exp(-1 * sop))

30 |

31 | def relu(sop):

32 |

33 | """

34 | Applies the rectified linear unit (ReLU) function.

35 |

36 | sop: The input to which the relu function is applied.

37 |

38 | Returns the result of the ReLU function.

39 | """

40 |

41 | if not (type(sop) in [list, tuple, numpy.ndarray]):

42 | if sop < 0:

43 | return 0

44 | else:

45 | return sop

46 | elif type(sop) in [list, tuple]:

47 | sop = numpy.array(sop)

48 |

49 | result = sop.copy() # Copy so the caller's array is not modified in place.

50 | result[sop < 0] = 0

51 |

52 | return result

53 |

54 | def softmax(layer_outputs):

55 |

56 | """

57 | Applies the softmax function.

58 |

59 | layer_outputs: The input to which the softmax function is applied.

60 |

61 | Returns the result of the softmax function.

62 | """

63 | return layer_outputs / (numpy.sum(layer_outputs) + 0.000001)
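As written, `softmax()` divides each output by the sum of the outputs without exponentiating them first. The sketch below contrasts that behavior with the textbook softmax. The function names `normalize_by_sum` and `softmax_exp` are hypothetical and introduced only for this comparison; this is not a proposed replacement for the module's function.

```python
import numpy

def normalize_by_sum(x):
    # Mirrors the expression used by softmax() in cnn.py.
    return x / (numpy.sum(x) + 0.000001)

def softmax_exp(x):
    # Textbook softmax; subtracting the max keeps exp() from overflowing.
    x = numpy.asarray(x, dtype=float)
    e = numpy.exp(x - numpy.max(x))
    return e / numpy.sum(e)

scores = numpy.array([1.0, 2.0, 3.0])
a = normalize_by_sum(scores)  # proportional to the raw scores
b = softmax_exp(scores)       # emphasizes the largest score
print(a, b)
```

Both results sum to roughly 1 for positive inputs, but only the exponentiated version is guaranteed to stay non-negative when some scores are negative.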

64 |

65 | def layers_weights(model, initial=True):

66 |

67 | """

68 | Creates a list holding the weights of all layers in the CNN.

69 |

70 | model: A reference to the instance from the cnn.Model class.

71 | initial: When True, the function returns the initial weights of the layers. When False, the trained weights of the layers are returned. The initial weights are only needed before network training starts. The trained weights are needed to predict the network outputs.

72 |

73 | Returns a list (network_weights) holding the weights of the layers in the CNN.

74 | """

75 |

76 | network_weights = []

77 |

78 | layer = model.last_layer

79 | while "previous_layer" in layer.__init__.__code__.co_varnames:

80 | if type(layer) in [Conv2D, Dense]:

81 | # If the 'initial' parameter is True, append the initial weights. Otherwise, append the trained weights.

82 | if initial == True:

83 | network_weights.append(layer.initial_weights)

84 | elif initial == False:

85 | network_weights.append(layer.trained_weights)

86 | else:

87 | raise ValueError("Unexpected value to the 'initial' parameter: {initial}.".format(initial=initial))

88 |

89 | # Go to the previous layer.

90 | layer = layer.previous_layer

91 |

92 | # If the first layer in the network is not an input layer (i.e. an instance of the Input2D class), raise an error.

93 | if not (type(layer) is Input2D):

94 | raise TypeError("The first layer in the network architecture must be an input layer.")

95 |

96 | # Currently, the weights of the layers are in the reverse order. In other words, the weights of the first layer are at the last index of the 'network_weights' list while the weights of the last layer are at the first index.

97 | # Reversing the 'network_weights' list to order the layers' weights according to their location in the network architecture (i.e. the weights of the first layer appear at index 0 of the list).

98 | network_weights.reverse()

99 | return numpy.array(network_weights)

100 |

101 | def layers_weights_as_matrix(model, vector_weights):

102 |

103 | """

104 | Converts the network weights from vectors to matrices.

105 |

106 | model: A reference to the instance from the cnn.Model class.

107 | vector_weights: The network weights as vectors where the weights of each layer form a single vector.

108 |

109 | Returns a list (network_weights) holding the weights of the CNN layers as matrices.

110 | """

111 |

112 | network_weights = []

113 |

114 | start = 0

115 | layer = model.last_layer

116 | vector_weights = vector_weights[::-1]

117 | while "previous_layer" in layer.__init__.__code__.co_varnames:

118 | if type(layer) in [Conv2D, Dense]:

119 | layer_weights_shape = layer.initial_weights.shape

120 | layer_weights_size = layer.initial_weights.size

121 |

122 | weights_vector=vector_weights[start:start + layer_weights_size]

123 | # matrix = pygad.nn.DenseLayer.to_array(vector=weights_vector, shape=layer_weights_shape)

124 | matrix = numpy.reshape(weights_vector, newshape=(layer_weights_shape))

125 | network_weights.append(matrix)

126 |

127 | start = start + layer_weights_size

128 |

129 | # Go to the previous layer.

130 | layer = layer.previous_layer

131 |

132 | # If the first layer in the network is not an input layer (i.e. an instance of the Input2D class), raise an error.

133 | if not (type(layer) is Input2D):

134 | raise TypeError("The first layer in the network architecture must be an input layer.")

135 |

136 | # Currently, the weights of the layers are in the reverse order. In other words, the weights of the first layer are at the last index of the 'network_weights' list while the weights of the last layer are at the first index.

137 | # Reversing the 'network_weights' list to order the layers' weights according to their location in the network architecture (i.e. the weights of the first layer appear at index 0 of the list).

138 | network_weights.reverse()

139 | return numpy.array(network_weights)

140 |

141 | def layers_weights_as_vector(model, initial=True):

142 |

143 | """

144 | Creates a list holding the weights of each layer (Conv and Dense) in the CNN as a vector.

145 |

146 | model: A reference to the instance from the cnn.Model class.

147 | initial: When True, the function returns the initial weights of the CNN. When False, the trained weights of the CNN layers are returned. The initial weights are only needed before network training starts. The trained weights are needed to predict the network outputs.

148 |

149 | Returns a list (network_weights) holding the weights of the CNN layers as a vector.

150 | """

151 |

152 | network_weights = []

153 |

154 | layer = model.last_layer

155 | while "previous_layer" in layer.__init__.__code__.co_varnames:

156 | if type(layer) in [Conv2D, Dense]:

157 | # If the 'initial' parameter is True, append the initial weights. Otherwise, append the trained weights.

158 | if initial == True:

159 | vector = numpy.reshape(layer.initial_weights, newshape=(layer.initial_weights.size))

160 | # vector = pygad.nn.DenseLayer.to_vector(matrix=layer.initial_weights)

161 | network_weights.extend(vector)

162 | elif initial == False:

163 | vector = numpy.reshape(layer.trained_weights, newshape=(layer.trained_weights.size))

164 | # vector = pygad.nn.DenseLayer.to_vector(array=layer.trained_weights)

165 | network_weights.extend(vector)

166 | else:

167 | raise ValueError("Unexpected value to the 'initial' parameter: {initial}.".format(initial=initial))

168 |

169 | # Go to the previous layer.

170 | layer = layer.previous_layer

171 |

172 | # If the first layer in the network is not an input layer (i.e. an instance of the Input2D class), raise an error.

173 | if not (type(layer) is Input2D):

174 | raise TypeError("The first layer in the network architecture must be an input layer.")

175 |

176 | # Currently, the weights of the layers are in the reverse order. In other words, the weights of the first layer are at the last index of the 'network_weights' list while the weights of the last layer are at the first index.

177 | # Reversing the 'network_weights' list to order the layers' weights according to their location in the network architecture (i.e. the weights of the first layer appear at index 0 of the list).

178 | network_weights.reverse()

179 | return numpy.array(network_weights)
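The vector and matrix helpers above are meant to be inverses of each other. A minimal sketch of that round trip, using plain arrays in place of a `cnn.Model`, is shown below. The layer shapes are hypothetical and the loops only imitate the traversal order of the two functions (last layer first, then a full reversal).

```python
import numpy

# Hypothetical weight shapes for a first (conv) and a last (dense) layer.
shapes = [(2, 3, 3, 1), (4, 2)]
weights = [numpy.arange(numpy.prod(s), dtype=float).reshape(s) for s in shapes]

# Flatten: visit layers from last to first, then reverse the whole flat list.
flat = []
for w in reversed(weights):
    flat.extend(w.reshape(w.size))
flat.reverse()
flat = numpy.array(flat)

# Restore: reverse the vector again and slice one layer at a time,
# again starting from the last layer.
rev = flat[::-1]
restored = []
start = 0
for s in reversed(shapes):
    size = int(numpy.prod(s))
    restored.append(rev[start:start + size].reshape(s))
    start += size
restored.reverse()

print(all(numpy.array_equal(a, b) for a, b in zip(weights, restored)))
```

Note that reversing the flat list also reverses the element order inside each layer, so the vector is only meaningful when decoded with the matching reversal.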

180 |

181 | def update_layers_trained_weights(model, final_weights):

182 |

183 | """

184 | After the network weights are trained, the 'trained_weights' attribute of each layer is updated by the weights calculated after passing all the epochs (such weights are passed in the 'final_weights' parameter).

185 | By just passing a reference to the last layer in the network (i.e. output layer) in addition to the final weights, this function updates the 'trained_weights' attribute of all layers.

186 |

187 | model: A reference to the instance from the cnn.Model class.

188 | final_weights: An array of layers weights as matrices after passing through all the epochs.

189 | """

190 |

191 | layer = model.last_layer

192 | layer_idx = len(final_weights) - 1

193 | while "previous_layer" in layer.__init__.__code__.co_varnames:

194 | if type(layer) in [Conv2D, Dense]:

195 | layer.trained_weights = final_weights[layer_idx]

196 |

197 | layer_idx = layer_idx - 1

198 |

199 | # Go to the previous layer.

200 | layer = layer.previous_layer

201 |

202 | class Input2D:

203 |

204 | """

205 | Implementing the input layer of a CNN.

206 | The CNN architecture must start with an input layer.

207 | """

208 |

209 | def __init__(self, input_shape):

210 |

211 | """

212 | input_shape: Shape of the input sample to the CNN.

213 | """

214 |

215 | # If the input sample has less than 2 dimensions, then an exception is raised.

216 | if len(input_shape) < 2:

217 | raise ValueError("The Input2D class creates an input layer for data inputs with at least 2 dimensions but ({num_dim}) dimensions found.".format(num_dim=len(input_shape)))

218 | # If the input sample has exactly 2 dimensions, the third dimension is set to 1.

219 | elif len(input_shape) == 2:

220 | input_shape = (input_shape[0], input_shape[1], 1)

221 |

222 | for dim_idx, dim in enumerate(input_shape):

223 | if dim <= 0:

224 | raise ValueError("The dimension size of the inputs cannot be <= 0. Please pass a valid value to the 'input_shape' parameter.")

225 |

226 | self.input_shape = input_shape # Shape of the input sample.

227 | self.layer_output_size = input_shape # Shape of the output from the current layer. For an input layer, it is the same as the shape of the input sample.

228 |

229 | class Conv2D:

230 |

231 | """

232 | Implementing the convolution layer.

233 | """

234 |

235 | def __init__(self, num_filters, kernel_size, previous_layer, activation_function=None):

236 |

237 | """

238 | num_filters: Number of filters in the convolution layer.

239 | kernel_size: Kernel size of the filter.

240 | previous_layer: A reference to the previous layer.

241 | activation_function=None: The name of the activation function to be used in the conv layer. If None, then no activation function is applied besides the convolution operation. The activation function can be applied by a separate layer.

242 | """

243 |

244 | if num_filters <= 0:

245 | raise ValueError("Number of filters cannot be <= 0. Please pass a valid value to the 'num_filters' parameter.")

246 | # Number of filters in the conv layer.

247 | self.num_filters = num_filters

248 |

249 | if kernel_size <= 0:

250 | raise ValueError("The kernel size cannot be <= 0. Please pass a valid value to the 'kernel_size' parameter.")

251 | # Kernel size of each filter.

252 | self.kernel_size = kernel_size

253 |

254 | # Validating the activation function

255 | if (activation_function is None):

256 | self.activation = None

257 | elif (activation_function == "relu"):

258 | self.activation = relu

259 | elif (activation_function == "sigmoid"):

260 | self.activation = sigmoid

261 | elif (activation_function == "softmax"):

262 | raise ValueError("The softmax activation function cannot be used in a conv layer.")

263 | else:

264 | raise ValueError("The specified activation function '{activation_function}' is not among the supported activation functions {supported_activation_functions}. Please use one of the supported functions.".format(activation_function=activation_function, supported_activation_functions=supported_activation_functions))

265 |

266 | # The activation function used in the current layer.

267 | self.activation_function = activation_function

268 |

269 | if previous_layer is None:

270 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

271 | # A reference to the layer that precedes the current layer in the network architecture.

272 | self.previous_layer = previous_layer

273 |

274 | # Shape of the filter bank: (num_filters, kernel_size, kernel_size, number of input channels).

275 | self.filter_bank_size = (self.num_filters,

276 | self.kernel_size,

277 | self.kernel_size,

278 | self.previous_layer.layer_output_size[-1])

279 |

280 | # Initializing the filters of the conv layer.

281 | self.initial_weights = numpy.random.uniform(low=-0.1,

282 | high=0.1,

283 | size=self.filter_bank_size)

284 |

285 | # The trained filters of the conv layer. Only assigned a value after the network is trained (i.e. the train_network() function completes).

286 | # Just initialized to be equal to the initial filters

287 | self.trained_weights = self.initial_weights.copy()

288 |

289 | # Size of the input to the layer.

290 | self.layer_input_size = self.previous_layer.layer_output_size

291 |

292 | # Size of the output from the layer.

293 | # Later, it must consider strides and paddings.

294 | self.layer_output_size = (self.previous_layer.layer_output_size[0] - self.kernel_size + 1,

295 | self.previous_layer.layer_output_size[1] - self.kernel_size + 1,

296 | num_filters)

297 |

298 | # The layer_output attribute holds the latest output from the layer.

299 | self.layer_output = None

300 |

301 | def conv_(self, input2D, conv_filter):

302 |

303 | """

304 | Convolves the input (input2D) by a single filter (conv_filter).

305 |

306 | input2D: The input to be convolved by a single filter.

307 | conv_filter: The filter convolving the input.

308 |

309 | Returns the result of convolution.

310 | """

311 |

312 | result = numpy.zeros(shape=(input2D.shape[0], input2D.shape[1], conv_filter.shape[0]))

313 | # Looping through the image to apply the convolution operation.

314 | for r in numpy.uint16(numpy.arange(self.filter_bank_size[1]/2.0,

315 | input2D.shape[0]-self.filter_bank_size[1]/2.0+1)):

316 | for c in numpy.uint16(numpy.arange(self.filter_bank_size[1]/2.0,

317 | input2D.shape[1]-self.filter_bank_size[1]/2.0+1)):

318 | """

319 | Getting the current region to get multiplied with the filter.

320 | How to loop through the image and get the region based on

321 | the image and filter sizes is the trickiest part of convolution.

322 | """

323 | if len(input2D.shape) == 2:

324 | curr_region = input2D[r-numpy.uint16(numpy.floor(self.filter_bank_size[1]/2.0)):r+numpy.uint16(numpy.ceil(self.filter_bank_size[1]/2.0)),

325 | c-numpy.uint16(numpy.floor(self.filter_bank_size[1]/2.0)):c+numpy.uint16(numpy.ceil(self.filter_bank_size[1]/2.0))]

326 | else:

327 | curr_region = input2D[r-numpy.uint16(numpy.floor(self.filter_bank_size[1]/2.0)):r+numpy.uint16(numpy.ceil(self.filter_bank_size[1]/2.0)),

328 | c-numpy.uint16(numpy.floor(self.filter_bank_size[1]/2.0)):c+numpy.uint16(numpy.ceil(self.filter_bank_size[1]/2.0)), :]

329 | # Element-wise multiplication between the current region and each filter.

330 |

331 | for filter_idx in range(conv_filter.shape[0]):

332 | curr_result = curr_region * conv_filter[filter_idx]

333 | conv_sum = numpy.sum(curr_result) # Summing the result of multiplication.

334 |

335 | if self.activation is None:

336 | result[r, c, filter_idx] = conv_sum # Saving the SOP in the convolution layer feature map.

337 | else:

338 | result[r, c, filter_idx] = self.activation(conv_sum) # Saving the activation function result in the convolution layer feature map.

339 |

340 | # Clipping the outliers of the result matrix.

341 | final_result = result[numpy.uint16(self.filter_bank_size[1]/2.0):result.shape[0]-numpy.uint16(self.filter_bank_size[1]/2.0),

342 | numpy.uint16(self.filter_bank_size[1]/2.0):result.shape[1]-numpy.uint16(self.filter_bank_size[1]/2.0), :]

343 | return final_result

344 |

345 | def conv(self, input2D):

346 |

347 | """

348 | Convolves the input (input2D) by a filter bank.

349 |

350 | input2D: The input to be convolved by the filter bank.

351 |

352 | The conv() method saves the result of convolving the input by the filter bank in the layer_output attribute.

353 | """

354 |

355 | if len(input2D.shape) != len(self.initial_weights.shape) - 1: # Check if there is a match in the number of dimensions between the image and the filters.

356 | raise ValueError("Number of dimensions in the conv filter and the input do not match.")

357 | if len(input2D.shape) > 2 or len(self.initial_weights.shape) > 3: # Check if number of image channels matches the filter depth.

358 | if input2D.shape[-1] != self.initial_weights.shape[-1]:

359 | raise ValueError("Number of channels in both the input and the filter must match.")

360 | if self.initial_weights.shape[1] != self.initial_weights.shape[2]: # Check if filter dimensions are equal.

361 | raise ValueError('A filter must be a square matrix. I.e. number of rows and columns must match.')

362 | if self.initial_weights.shape[1]%2==0: # Check if filter dimensions are odd.

363 | raise ValueError('A filter must have an odd size. I.e. number of rows and columns must be odd.')

364 |

365 | self.layer_output = self.conv_(input2D, self.trained_weights)

366 |

367 | class AveragePooling2D:

368 |

369 | """

370 | Implementing the average pooling layer.

371 | """

372 |

373 | def __init__(self, pool_size, previous_layer, stride=2):

374 |

375 | """

376 | pool_size: Pool size.

377 | previous_layer: Reference to the previous layer in the CNN architecture.

378 | stride=2: Stride

379 | """

380 |

381 | if not (type(pool_size) is int):

382 | raise ValueError("The expected type of the pool_size is int but {pool_size_type} found.".format(pool_size_type=type(pool_size)))

383 |

384 | if pool_size <= 0:

385 | raise ValueError("The passed value to the pool_size parameter cannot be <= 0.")

386 | self.pool_size = pool_size

387 |

388 | if stride <= 0:

389 | raise ValueError("The passed value to the stride parameter cannot be <= 0.")

390 | self.stride = stride

391 |

392 | if previous_layer is None:

393 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

394 | # A reference to the layer that precedes the current layer in the network architecture.

395 | self.previous_layer = previous_layer

396 |

397 | # Size of the input to the layer.

398 | self.layer_input_size = self.previous_layer.layer_output_size

399 |

400 | # Size of the output from the layer.

401 | self.layer_output_size = (numpy.uint16((self.previous_layer.layer_output_size[0] - self.pool_size + 1)/stride + 1),

402 | numpy.uint16((self.previous_layer.layer_output_size[1] - self.pool_size + 1)/stride + 1),

403 | self.previous_layer.layer_output_size[-1])

404 |

405 | # The layer_output attribute holds the latest output from the layer.

406 | self.layer_output = None

407 |

408 | def average_pooling(self, input2D):

409 |

410 | """

411 | Applies the average pooling operation.

412 |

413 | input2D: The input to which the average pooling operation is applied.

414 |

415 | The average_pooling() method saves its result in the layer_output attribute.

416 | """

417 |

418 | # Preparing the output of the pooling operation.

419 | pool_out = numpy.zeros((numpy.uint16((input2D.shape[0]-self.pool_size+1)/self.stride+1),

420 | numpy.uint16((input2D.shape[1]-self.pool_size+1)/self.stride+1),

421 | input2D.shape[-1]))

422 | for map_num in range(input2D.shape[-1]):

423 | r2 = 0

424 | for r in numpy.arange(0,input2D.shape[0]-self.pool_size+1, self.stride):

425 | c2 = 0

426 | for c in numpy.arange(0, input2D.shape[1]-self.pool_size+1, self.stride):

427 | pool_out[r2, c2, map_num] = numpy.mean([input2D[r:r+self.pool_size, c:c+self.pool_size, map_num]])

428 | c2 = c2 + 1

429 | r2 = r2 +1

430 |

431 | self.layer_output = pool_out

432 |

433 | class MaxPooling2D:

434 |

435 | """

436 | Similar to the AveragePooling2D class except that it implements max pooling.

437 | """

438 |

439 | def __init__(self, pool_size, previous_layer, stride=2):

440 |

441 | """

442 | pool_size: Pool size.

443 | previous_layer: Reference to the previous layer in the CNN architecture.

444 | stride=2: Stride

445 | """

446 |

447 | if not (type(pool_size) is int):

448 | raise ValueError("The expected type of the pool_size is int but {pool_size_type} found.".format(pool_size_type=type(pool_size)))

449 |

450 | if pool_size <= 0:

451 | raise ValueError("The passed value to the pool_size parameter cannot be <= 0.")

452 | self.pool_size = pool_size

453 |

454 | if stride <= 0:

455 | raise ValueError("The passed value to the stride parameter cannot be <= 0.")

456 | self.stride = stride

457 |

458 | if previous_layer is None:

459 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

460 | # A reference to the layer that precedes the current layer in the network architecture.

461 | self.previous_layer = previous_layer

462 |

463 | # Size of the input to the layer.

464 | self.layer_input_size = self.previous_layer.layer_output_size

465 |

466 | # Size of the output from the layer.

467 | self.layer_output_size = (numpy.uint16((self.previous_layer.layer_output_size[0] - self.pool_size + 1)/stride + 1),

468 | numpy.uint16((self.previous_layer.layer_output_size[1] - self.pool_size + 1)/stride + 1),

469 | self.previous_layer.layer_output_size[-1])

470 |

471 | # The layer_output attribute holds the latest output from the layer.

472 | self.layer_output = None

473 |

474 | def max_pooling(self, input2D):

475 |

476 | """

477 | Applies the max pooling operation.

478 |

479 | input2D: The input to which the max pooling operation is applied.

480 |

481 | The max_pooling() method saves its result in the layer_output attribute.

482 | """

483 |

484 | # Preparing the output of the pooling operation.

485 | pool_out = numpy.zeros((numpy.uint16((input2D.shape[0]-self.pool_size+1)/self.stride+1),

486 | numpy.uint16((input2D.shape[1]-self.pool_size+1)/self.stride+1),

487 | input2D.shape[-1]))

488 | for map_num in range(input2D.shape[-1]):

489 | r2 = 0

490 | for r in numpy.arange(0,input2D.shape[0]-self.pool_size+1, self.stride):

491 | c2 = 0

492 | for c in numpy.arange(0, input2D.shape[1]-self.pool_size+1, self.stride):

493 | pool_out[r2, c2, map_num] = numpy.max([input2D[r:r+self.pool_size, c:c+self.pool_size, map_num]])

494 | c2 = c2 + 1

495 | r2 = r2 +1

496 |

497 | self.layer_output = pool_out
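Both pooling methods size `pool_out` with one expression and fill it with a separate loop. The sketch below compares the two counts for a couple of input heights; it assumes our reading of the code above is right, and the helper names `allocated_rows` and `filled_rows` are hypothetical.

```python
import numpy

def allocated_rows(h, pool_size, stride):
    # Same expression used to size pool_out in the pooling methods.
    return int(numpy.uint16((h - pool_size + 1) / stride + 1))

def filled_rows(h, pool_size, stride):
    # Number of window positions the row loop actually visits.
    return len(numpy.arange(0, h - pool_size + 1, stride))

for h in (4, 5):
    print(h, allocated_rows(h, 2, 2), filled_rows(h, 2, 2))
```

For some input sizes the allocated output has one more row (or column) than the loop fills, in which case the extra entries keep their initial zeros.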

498 |

499 | class ReLU:

500 |

501 | """

502 | Implementing the ReLU layer.

503 | """

504 |

505 | def __init__(self, previous_layer):

506 |

507 | """

508 | previous_layer: Reference to the previous layer.

509 | """

510 |

511 | if previous_layer is None:

512 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

513 |

514 | # A reference to the layer that precedes the current layer in the network architecture.

515 | self.previous_layer = previous_layer

516 |

517 | # Size of the input to the layer.

518 | self.layer_input_size = self.previous_layer.layer_output_size

519 |

520 | # Size of the output from the layer.

521 | self.layer_output_size = self.previous_layer.layer_output_size

522 |

523 | # The layer_output attribute holds the latest output from the layer.

524 | self.layer_output = None

525 |

526 | def relu_layer(self, layer_input):

527 |

528 | """

529 | Applies the ReLU function over all elements in input to the ReLU layer.

530 |

531 | layer_input: The input to which the ReLU function is applied.

532 |

533 | The relu_layer() method saves its result in the layer_output attribute.

534 | """

535 |

536 | self.layer_output_size = layer_input.size

537 | self.layer_output = relu(layer_input)

538 |

539 | class Sigmoid:

540 |

541 | """

542 | Implementing the sigmoid layer.

543 | """

544 |

545 | def __init__(self, previous_layer):

546 |

547 | """

548 | previous_layer: Reference to the previous layer.

549 | """

550 |

551 | if previous_layer is None:

552 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

553 | # A reference to the layer that precedes the current layer in the network architecture.

554 | self.previous_layer = previous_layer

555 |

556 | # Size of the input to the layer.

557 | self.layer_input_size = self.previous_layer.layer_output_size

558 |

559 | # Size of the output from the layer.

560 | self.layer_output_size = self.previous_layer.layer_output_size

561 |

562 | # The layer_output attribute holds the latest output from the layer.

563 | self.layer_output = None

564 |

565 | def sigmoid_layer(self, layer_input):

566 |

567 | """

568 | Applies the sigmoid function over all elements in input to the sigmoid layer.

569 |

570 | layer_input: The input to which the sigmoid function is applied.

571 |

572 | The sigmoid_layer() method saves its result in the layer_output attribute.

573 | """

574 |

575 | self.layer_output_size = layer_input.size

576 | self.layer_output = sigmoid(layer_input)

577 |

578 | class Flatten:

579 |

580 | """

581 | Implementing the flatten layer.

582 | """

583 |

584 | def __init__(self, previous_layer):

585 |

586 | """

587 | previous_layer: Reference to the previous layer.

588 | """

589 |

590 | if previous_layer is None:

591 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

592 | # A reference to the layer that precedes the current layer in the network architecture.

593 | self.previous_layer = previous_layer

594 |

595 | # Size of the input to the layer.

596 | self.layer_input_size = self.previous_layer.layer_output_size

597 |

598 | # Size of the output from the layer.

599 | self.layer_output_size = functools.reduce(lambda x, y: x*y, self.previous_layer.layer_output_size)

600 |

601 | # The layer_output attribute holds the latest output from the layer.

602 | self.layer_output = None

603 |

604 | def flatten(self, input2D):

605 |

606 | """

607 | Reshapes the input into a 1D vector.

608 |

609 | input2D: The input to the Flatten layer that will be converted into a 1D vector.

610 |

611 | The flatten() method saves its result in the layer_output attribute.

612 | """

613 |

614 | self.layer_output_size = input2D.size

615 | self.layer_output = numpy.ravel(input2D)
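The Flatten layer computes its output size as the product of the previous layer's output dimensions and then unrolls the input with `numpy.ravel`. The sketch below shows both steps on a toy array; the shape `(2, 3, 4)` is an assumed example, not a value taken from the project.

```python
import functools
import numpy

# Assumed output shape of the previous layer (example values only).
input_shape = (2, 3, 4)

# Same reduction the constructor uses: multiply all dimensions together.
flat_size = functools.reduce(lambda x, y: x * y, input_shape)

# Build a toy input and flatten it into a 1D vector.
feature_maps = numpy.arange(flat_size).reshape(input_shape)
flat_vector = numpy.ravel(feature_maps)
```

The length of `flat_vector` matches `flat_size`, which is why a following dense layer can size its weight matrix from `layer_output_size` alone.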

616 |

617 | class Dense:

618 |

619 | """

620 | Implementing the dense (fully connected) layer of a CNN.

621 | """

622 |

623 | def __init__(self, num_neurons, previous_layer, activation_function="relu"):

624 |

625 | """

626 | num_neurons: Number of neurons in the dense layer.

627 | previous_layer: Reference to the previous layer.

628 | activation_function: Name of the activation function to be used in the current layer.

629 | """

630 |

631 | if num_neurons <= 0:

632 | raise ValueError("Number of neurons cannot be <= 0. Please pass a valid value to the 'num_neurons' parameter.")

633 |

634 | # Number of neurons in the dense layer.

635 | self.num_neurons = num_neurons

636 |

637 | # Validating the activation function

638 | if (activation_function == "relu"):

639 | self.activation = relu

640 | elif (activation_function == "sigmoid"):

641 | self.activation = sigmoid

642 | elif (activation_function == "softmax"):

643 | self.activation = softmax

644 | else:

645 | raise ValueError("The specified activation function '{activation_function}' is not among the supported activation functions {supported_activation_functions}. Please use one of the supported functions.".format(activation_function=activation_function, supported_activation_functions=supported_activation_functions))

646 |

647 | self.activation_function = activation_function

648 |

649 | if previous_layer is None:

650 | raise TypeError("The previous layer cannot be of Type 'None'. Please pass a valid layer to the 'previous_layer' parameter.")

651 | # A reference to the layer that precedes the current layer in the network architecture.

652 | self.previous_layer = previous_layer

653 |

654 | if type(self.previous_layer.layer_output_size) in [list, tuple, numpy.ndarray] and len(self.previous_layer.layer_output_size) > 1:

655 | raise ValueError("The input to the dense layer must be of type int but {sh} found.".format(sh=type(self.previous_layer.layer_output_size)))

656 | # Initializing the weights of the layer.

657 | self.initial_weights = numpy.random.uniform(low=-0.1,

658 | high=0.1,

659 | size=(self.previous_layer.layer_output_size, self.num_neurons))

660 |

661 | # The trained weights of the layer, which are updated as the network is trained.

662 | # They start as a copy of the initial weights.

663 | self.trained_weights = self.initial_weights.copy()

664 |

665 | # Size of the input to the layer.

666 | self.layer_input_size = self.previous_layer.layer_output_size

667 |

668 | # Size of the output from the layer.

669 | self.layer_output_size = num_neurons

670 |

671 | # The layer_output attribute holds the latest output from the layer.

672 | self.layer_output = None

673 |

674 | def dense_layer(self, layer_input):

675 |

676 | """

677 | Calculates the output of the dense layer.

678 |

679 | layer_input: The input to the dense layer

680 |

681 | The dense_layer() method saves its result in the layer_output attribute.

682 | """

683 |

684 | if self.trained_weights is None:

685 | raise TypeError("The weights of the dense layer cannot be of Type 'None'.")

686 |

687 | sop = numpy.matmul(layer_input, self.trained_weights)

688 |

689 | self.layer_output = self.activation(sop)
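The dense layer's forward pass is a matrix product between a 1D input and a weight matrix of shape `(inputs, neurons)`, followed by the activation. The sketch below reproduces that arithmetic outside the class. The sizes, the seeded generator, and the inline ReLU are assumptions chosen for illustration.

```python
import numpy

rng = numpy.random.default_rng(0)  # seeded only to make the sketch repeatable

num_inputs, num_neurons = 6, 3  # assumed example sizes

# Weights drawn from the same range the constructor uses.
weights = rng.uniform(low=-0.1, high=0.1, size=(num_inputs, num_neurons))

# A flattened input vector, as a Flatten layer would produce.
layer_input = rng.uniform(size=num_inputs)

# Sum of products: one value per neuron.
sop = numpy.matmul(layer_input, weights)

# ReLU written inline as a stand-in for the module's activation function.
output = numpy.maximum(sop, 0)
```

Because the input is 1D with `num_inputs` elements, `sop` has one entry per neuron, which matches the `layer_output_size = num_neurons` set in the constructor.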

690 |

691 | class Model:

692 |

693 | """

694 | Creating a CNN model.

695 | """

696 |

697 | def __init__(self, last_layer, epochs=10, learning_rate=0.01):

698 |

699 | """

700 | last_layer: A reference to the last layer in the CNN architecture.

701 | epochs=10: Number of epochs.

702 | learning_rate=0.01: Learning rate.

703 | """

704 |

705 | self.last_layer = last_layer

706 | self.epochs = epochs

707 | self.learning_rate = learning_rate

708 |

709 | # The network_layers attribute is a list holding references to all CNN layers.

710 | self.network_layers = self.get_layers()

711 |

712 | def get_layers(self):

713 |

714 | """

715 | Prepares a list of all layers in the CNN model.

716 | Returns the list.

717 | """

718 |

719 | network_layers = []

720 |

721 | # The last layer in the network architecture.

722 | layer = self.last_layer

723 |

724 | while "previous_layer" in layer.__init__.__code__.co_varnames:

725 | network_layers.insert(0, layer)

726 | layer = layer.previous_layer

727 |

728 | return network_layers
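`get_layers()` walks backwards from the last layer and stops at the first layer whose constructor has no `previous_layer` name, which is how it detects the input layer. The sketch below replays that traversal with two hypothetical stub classes (`InputStub`, `LayerStub`) and a hypothetical free function `collect_layers`; none of these names exist in the project.

```python
class InputStub:
    """Stand-in for an input layer: its __init__ has no previous_layer."""
    def __init__(self, input_shape):
        self.layer_output_size = input_shape

class LayerStub:
    """Stand-in for any layer that links back to its predecessor."""
    def __init__(self, name, previous_layer):
        self.name = name
        self.previous_layer = previous_layer

def collect_layers(last_layer):
    layers = []
    layer = last_layer
    # co_varnames lists the argument and local names of __init__.
    while "previous_layer" in layer.__init__.__code__.co_varnames:
        layers.insert(0, layer)  # prepend so the result is in forward order
        layer = layer.previous_layer
    return layers

input_layer = InputStub((8, 8, 1))
conv = LayerStub("conv", input_layer)
flat = LayerStub("flatten", conv)
dense = LayerStub("dense", flat)

ordered_names = [layer.name for layer in collect_layers(dense)]
```

The input layer itself is not included in the result. Note that this check relies on every layer class defining its own Python `__init__`; a class that inherits `object.__init__` has no `__code__` attribute to inspect.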

729 |

730 | def train(self, train_inputs, train_outputs):

731 |

732 | """

733 | Trains the CNN model.

734 | Note that no learning algorithm is used for training the CNN. The learning rate is only used to make some changes to the weights, which is better than leaving them unchanged.

735 |

736 | train_inputs: Training data inputs.

737 | train_outputs: Training data outputs.

738 | """

739 |

740 | if (train_inputs.ndim != 4):

741 | raise ValueError("The training data input has {num_dims} dimensions but it must have 4 dimensions. The first dimension is the number of training samples, the second & third dimensions represent the width and height of the sample, and the fourth dimension represents the number of channels in the sample.".format(num_dims=train_inputs.ndim))

742 |

743 | if (train_inputs.shape[0] != len(train_outputs)):

744 | raise ValueError("Mismatch between the number of input samples and number of labels: {num_samples_inputs} != {num_samples_outputs}.".format(num_samples_inputs=train_inputs.shape[0], num_samples_outputs=len(train_outputs)))

745 |

746 | network_predictions = []

747 | network_error = 0

748 |

749 | for epoch in range(self.epochs):

750 | print("Epoch {epoch}".format(epoch=epoch))

751 | for sample_idx in range(train_inputs.shape[0]):

752 | # print("Sample {sample_idx}".format(sample_idx=sample_idx))

753 | self.feed_sample(train_inputs[sample_idx, :])

754 |

755 | try:

756 | predicted_label = numpy.where(numpy.max(self.last_layer.layer_output) == self.last_layer.layer_output)[0][0]

757 | except IndexError:

758 | print(self.last_layer.layer_output)

759 | raise IndexError("Index out of range")

760 | network_predictions.append(predicted_label)

761 |

762 | network_error = network_error + abs(predicted_label - train_outputs[sample_idx])

763 |

764 | self.update_weights(network_error)
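Inside the training loop, the predicted label is the index of the largest value in the last layer's output, and the error is the absolute difference between that index and the true label. The sketch below isolates those two steps; the output vector and the true label are made-up example values.

```python
import numpy

# Assumed output of the last layer for one sample (example values only).
last_layer_output = numpy.array([0.1, 0.7, 0.2])

# Same expression as in train(): first index where the maximum occurs.
predicted_label = numpy.where(numpy.max(last_layer_output) == last_layer_output)[0][0]

# Assumed ground-truth class index for this sample.
true_label = 2

# Per-sample contribution to the accumulated network error.
sample_error = abs(predicted_label - true_label)
```

For finite outputs this index is the same one `numpy.argmax` returns. One way the `IndexError` guarded against in `train()` can arise is an output containing NaN: the maximum is then NaN, the equality test is false everywhere, and `numpy.where` returns an empty index array.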

765 |

766 | def feed_sample(self, sample):

767 |

768 | """

769 | Feeds a sample through the CNN layers.

770 |

771 | sample: The sample to be fed to the CNN layers.

772 |

773 | Returns results of the last layer in the CNN.

774 | """

775 |

776 | last_layer_outputs = sample

777 | for layer in self.network_layers:

778 | if type(layer) is Conv2D:

779 | # import time

780 | # time1 = time.time()

781 | layer.conv(input2D=last_layer_outputs)

782 | # time2 = time.time()

783 | # print(time2 - time1)

784 | elif type(layer) is Dense:

785 | layer.dense_layer(layer_input=last_layer_outputs)

786 | elif type(layer) is MaxPooling2D:

787 | layer.max_pooling(input2D=last_layer_outputs)

788 | elif type(layer) is AveragePooling2D:

789 | layer.average_pooling(input2D=last_layer_outputs)

790 | elif type(layer) is ReLU: