├── .gitignore

├── Data

└── .gitignore

├── LICENSE

├── Literature

├── base_paper.pdf

├── copy_network.pdf

├── decoder_part.odt

├── doubts

└── graves2016.pdf

├── README.md

├── TensorFlow_implementation

├── .gitignore

├── Data_Preprocessor.ipynb

├── Summary_Generator

│ ├── Model.py

│ ├── Tensorflow_Graph

│ │ ├── __init__.py

│ │ ├── order_planner_with_copynet.py

│ │ ├── order_planner_without_copynet.py

│ │ └── utils.py

│ ├── Text_Preprocessing_Helpers

│ │ ├── __init__.py

│ │ ├── pickling_tools.py

│ │ └── utils.py

│ └── __init__.py

├── fast_data_preprocessor_part1.py

├── fast_data_preprocessor_part2.py

├── inferencer.py

├── pre_processing_op.ipynb

├── seq2seq

│ ├── __init__.py

│ ├── configurable.py

│ ├── contrib

│ │ ├── __init__.py

│ │ ├── experiment.py

│ │ ├── rnn_cell.py

│ │ └── seq2seq

│ │ │ ├── __init__.py

│ │ │ ├── decoder.py

│ │ │ └── helper.py

│ ├── data

│ │ ├── __init__.py

│ │ ├── input_pipeline.py

│ │ ├── parallel_data_provider.py

│ │ ├── postproc.py

│ │ ├── sequence_example_decoder.py

│ │ ├── split_tokens_decoder.py

│ │ └── vocab.py

│ ├── decoders

│ │ ├── __init__.py

│ │ ├── attention.py

│ │ ├── attention_decoder.py

│ │ ├── basic_decoder.py

│ │ ├── beam_search_decoder.py

│ │ └── rnn_decoder.py

│ ├── encoders

│ │ ├── __init__.py

│ │ ├── conv_encoder.py

│ │ ├── encoder.py

│ │ ├── image_encoder.py

│ │ ├── pooling_encoder.py

│ │ └── rnn_encoder.py

│ ├── global_vars.py

│ ├── graph_module.py

│ ├── graph_utils.py

│ ├── inference

│ │ ├── __init__.py

│ │ ├── beam_search.py

│ │ └── inference.py

│ ├── losses.py

│ ├── metrics

│ │ ├── __init__.py

│ │ ├── bleu.py

│ │ ├── metric_specs.py

│ │ └── rouge.py

│ ├── models

│ │ ├── __init__.py

│ │ ├── attention_seq2seq.py

│ │ ├── basic_seq2seq.py

│ │ ├── bridges.py

│ │ ├── image2seq.py

│ │ ├── model_base.py

│ │ └── seq2seq_model.py

│ ├── tasks

│ │ ├── __init__.py

│ │ ├── decode_text.py

│ │ ├── dump_attention.py

│ │ ├── dump_beams.py

│ │ └── inference_task.py

│ ├── test

│ │ ├── __init__.py

│ │ ├── attention_test.py

│ │ ├── beam_search_test.py

│ │ ├── bridges_test.py

│ │ ├── conv_encoder_test.py

│ │ ├── data_test.py

│ │ ├── decoder_test.py

│ │ ├── example_config_test.py

│ │ ├── hooks_test.py

│ │ ├── input_pipeline_test.py

│ │ ├── losses_test.py

│ │ ├── metrics_test.py

│ │ ├── models_test.py

│ │ ├── pipeline_test.py

│ │ ├── pooling_encoder_test.py

│ │ ├── rnn_cell_test.py

│ │ ├── rnn_encoder_test.py

│ │ ├── train_utils_test.py

│ │ ├── utils.py

│ │ └── vocab_test.py

│ └── training

│ │ ├── __init__.py

│ │ ├── hooks.py

│ │ └── utils.py

├── trainer_with_copy_net.py

└── trainer_without_copy_net.py

├── Visualizations

├── first_run_of_both.png

└── projector_pic.png

└── architecture_diagram.jpeg

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 |

49 | # Translations

50 | *.mo

51 | *.pot

52 |

53 | # Django stuff:

54 | *.log

55 | local_settings.py

56 |

57 | # Flask stuff:

58 | instance/

59 | .webassets-cache

60 |

61 | # Scrapy stuff:

62 | .scrapy

63 |

64 | # Sphinx documentation

65 | docs/_build/

66 |

67 | # PyBuilder

68 | target/

69 |

70 | # Jupyter Notebook

71 | .ipynb_checkpoints

72 |

73 | # pyenv

74 | .python-version

75 |

76 | # celery beat schedule file

77 | celerybeat-schedule

78 |

79 | # SageMath parsed files

80 | *.sage.py

81 |

82 | # dotenv

83 | .env

84 |

85 | # virtualenv

86 | .venv

87 | venv/

88 | ENV/

89 |

90 | # Spyder project settings

91 | .spyderproject

92 | .spyproject

93 |

94 | # Rope project settings

95 | .ropeproject

96 |

97 | # mkdocs documentation

98 | /site

99 |

100 | # mypy

101 | .mypy_cache/

102 |

103 | # ignore pycharm setup

104 | .idea/

105 |

--------------------------------------------------------------------------------

/Data/.gitignore:

--------------------------------------------------------------------------------

1 | # ignore the full version of the wikipedia-biography-dataset

2 | wikipedia-biography-dataset/

3 |

4 | # ignore link as well

5 | wikipedia-biography-dataset

6 |

7 | # ignore the three full dataset files

8 | *.nb

9 | *.sent

10 | *.box

11 |

12 | # ignore the pickle files

13 | *.pickle

14 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Animesh Karnewar

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Literature/base_paper.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/Literature/base_paper.pdf

--------------------------------------------------------------------------------

/Literature/copy_network.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/Literature/copy_network.pdf

--------------------------------------------------------------------------------

/Literature/decoder_part.odt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/Literature/decoder_part.odt

--------------------------------------------------------------------------------

/Literature/doubts:

--------------------------------------------------------------------------------

1 | 1.) In link based attention, how is the link matrix implemented?

2 |

3 | 2.) Equation 8, what is the first alpha(t - 1)

4 |

5 | 3.) Equation 8, how is the product of Link matrix with the alpha(t - 1) dimensionally correct?

6 |

7 | 4.) alpha_t_link is used for computing zt and also for computing alpha_hybrid

8 |

9 | 5.) how is the vocabulary calculated programmatically?

10 |

11 |

12 |

--------------------------------------------------------------------------------

/Literature/graves2016.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/Literature/graves2016.pdf

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Natural-Language-Summary-Generation-From-Structured-Data

2 | Implementation (Personal) of the paper titled

3 | `"Order-Planning Neural Text Generation From Structured Data"`. The dataset

4 | for this project can be found at ->

5 | [WikiBio](https://github.com/DavidGrangier/wikipedia-biography-dataset)

6 |

7 | Requirements for training:

8 | * `python 3+`

9 | * `tensorflow-gpu` (preferable; CPU will take forever)

10 | * `Host Memory 12GB+` (this will be addressed soon)

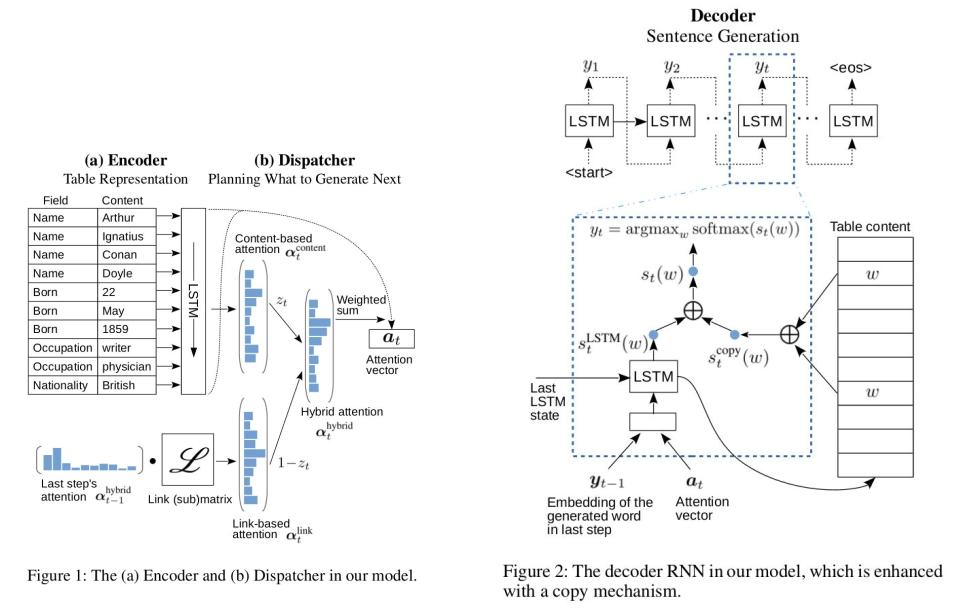

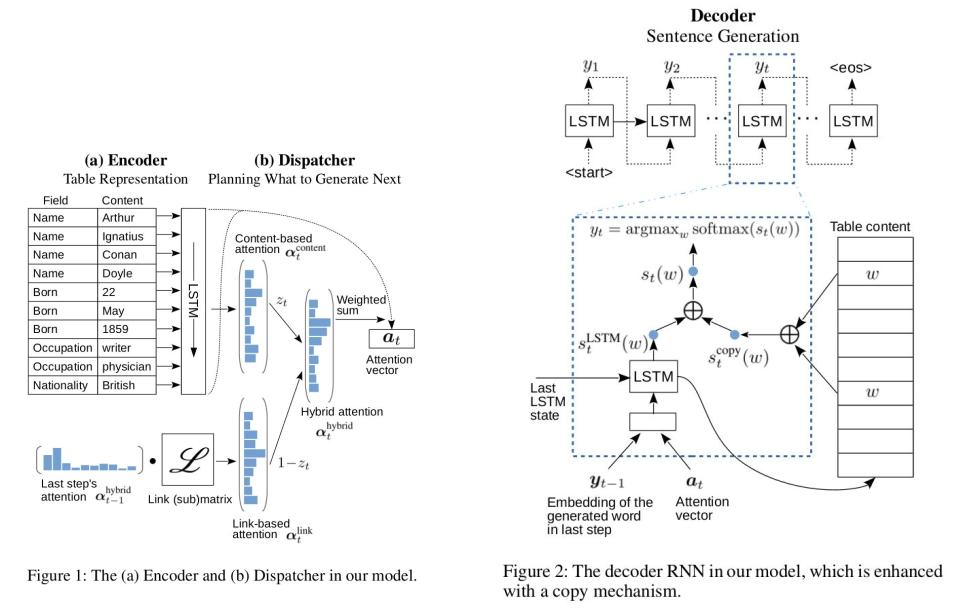

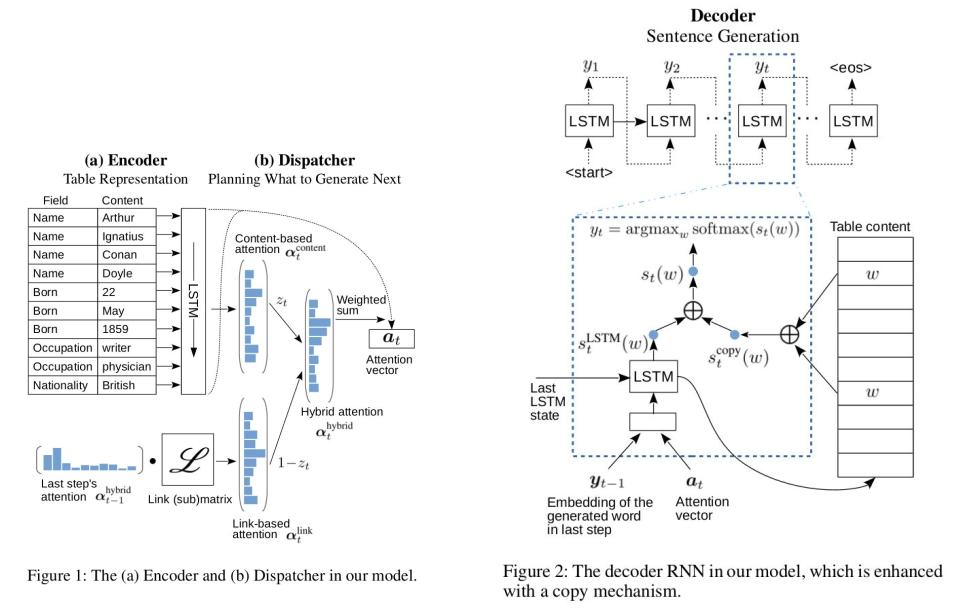

11 |

12 | ## Architecture

13 |

14 |  15 |

15 |

16 |

17 | ## Running the Code

18 | Process of using this code is slightly involved presently.

19 | This will be addressed in further development (perhaps with collaboration).

20 |

21 | #### 1. Preprocessing:

22 | Please refer to the `/TensorFlow_implementation/Data_Preprocessor.ipynb`

23 | for info about what steps are performed in preprocessing the data. Using

24 | the notebook on the full data for preprocessing will be very slow,

25 | so please use the following procedure for it.

26 |

27 | Step 1:

28 | (your_venv)$ python fast_data_preprocessor_part1.py

29 |

30 | Note that all the tweakable parameters are declared at the

31 | beginning of the script (Change them as per your requirement).

32 | This will generate a `temp.pickle` file in the same directory. Do not delete

33 | it even after full preprocessing. This is like a backup of the

34 | preprocessing pipeline; i.e. if you decide to change something later,

35 | you would'nt have to run the entire preprocessing again.

36 |

37 | Step 2:

38 | (your_venv)$ python fast_data_preprocessor_part12.py

39 |

40 | This will create the following file: `/Data/plug_and_play.pickle`. Again,

41 | tweakable parameters are at the beginning of the script.

42 | **Please Note that this process requires RAM 12GB+.

43 | If you have < 12GB Host memory, please use a subset of

44 | the dataset instead of the entire dataset

45 | (change `data_limit` in the script).**

46 |

47 | #### 2. Training:

48 |

49 | Once preprocessing is done, simply run one of the two training Scripts.

50 |

51 | (your_venv)$ python trainer_with_copy_net.py

52 | OR

53 | (your_venv)$ python trainer_without_copy_net.py

54 |

55 | Again all the hyperparameters are present at the beginning of the script.

56 | Example `trainer_without_copy_net.py`:

57 |

58 | ''' Name of the model: '''

59 | # This can be changed to create new models in the directory

60 | model_name = "Model_1(without_copy_net)"

61 |

62 | '''

63 | ========================================================

64 | || All Tweakable hyper-parameters

65 | ========================================================

66 | '''

67 | # constants for this script

68 | no_of_epochs = 500

69 | train_percentage = 100

70 | batch_size = 8

71 | checkpoint_factor = 100

72 | learning_rate = 3e-4 # for learning rate

73 | # but I have noticed that this learning rate works quite well.

74 | momentum = 0.9

75 |

76 | # Memory usage fraction:

77 | gpu_memory_usage_fraction = 1

78 |

79 | # Embeddings size:

80 | field_embedding_size = 100

81 | content_label_embedding_size = 400 # This is a much bigger

82 | # vocabulary compared to the field_name's vocabulary

83 |

84 | # LSTM hidden state sizes

85 | lstm_cell_state_size = hidden_state_size = 500 # they are

86 | # same (for now)

87 | '''

88 | ========================================================

89 | '''

90 |

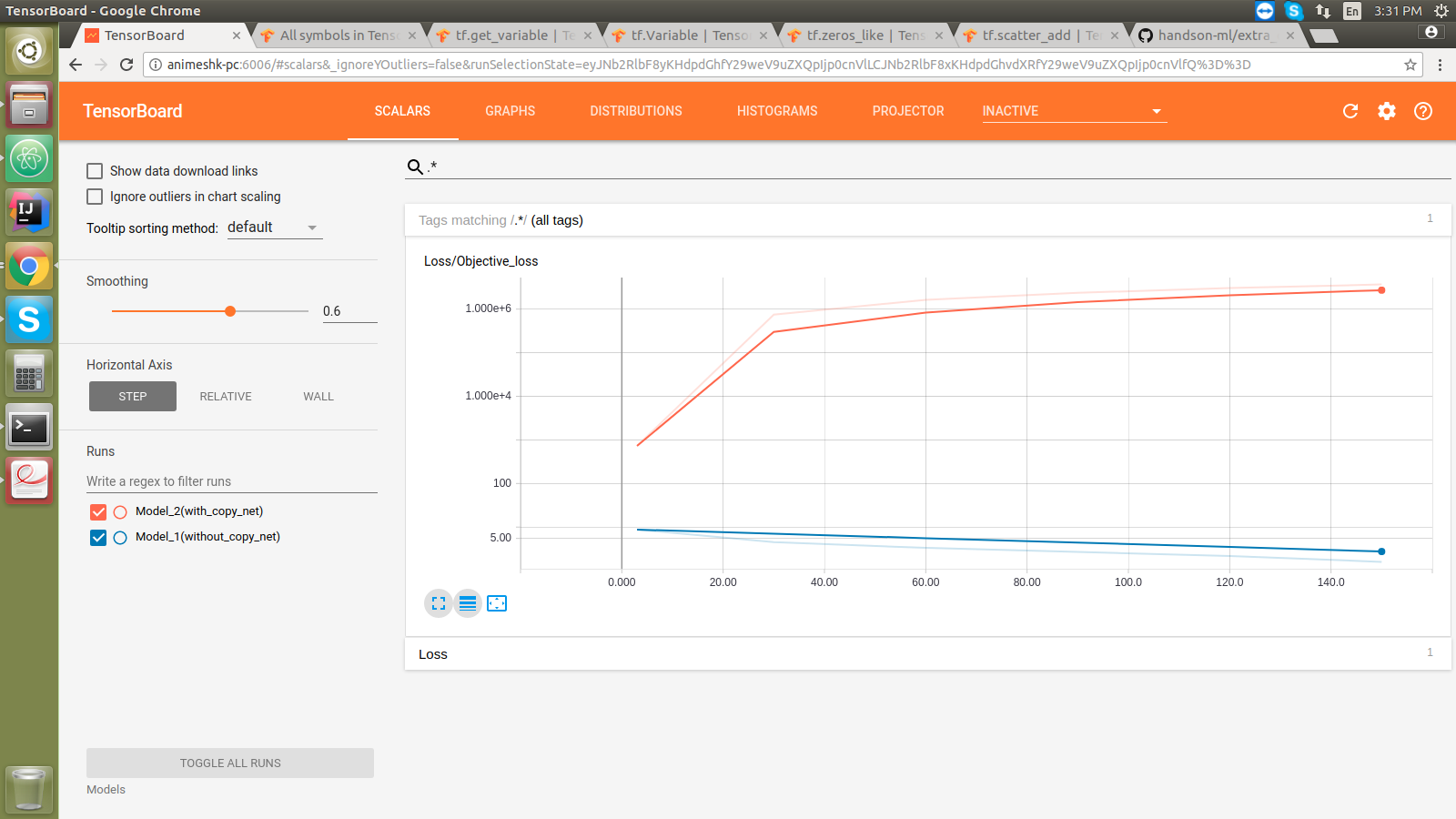

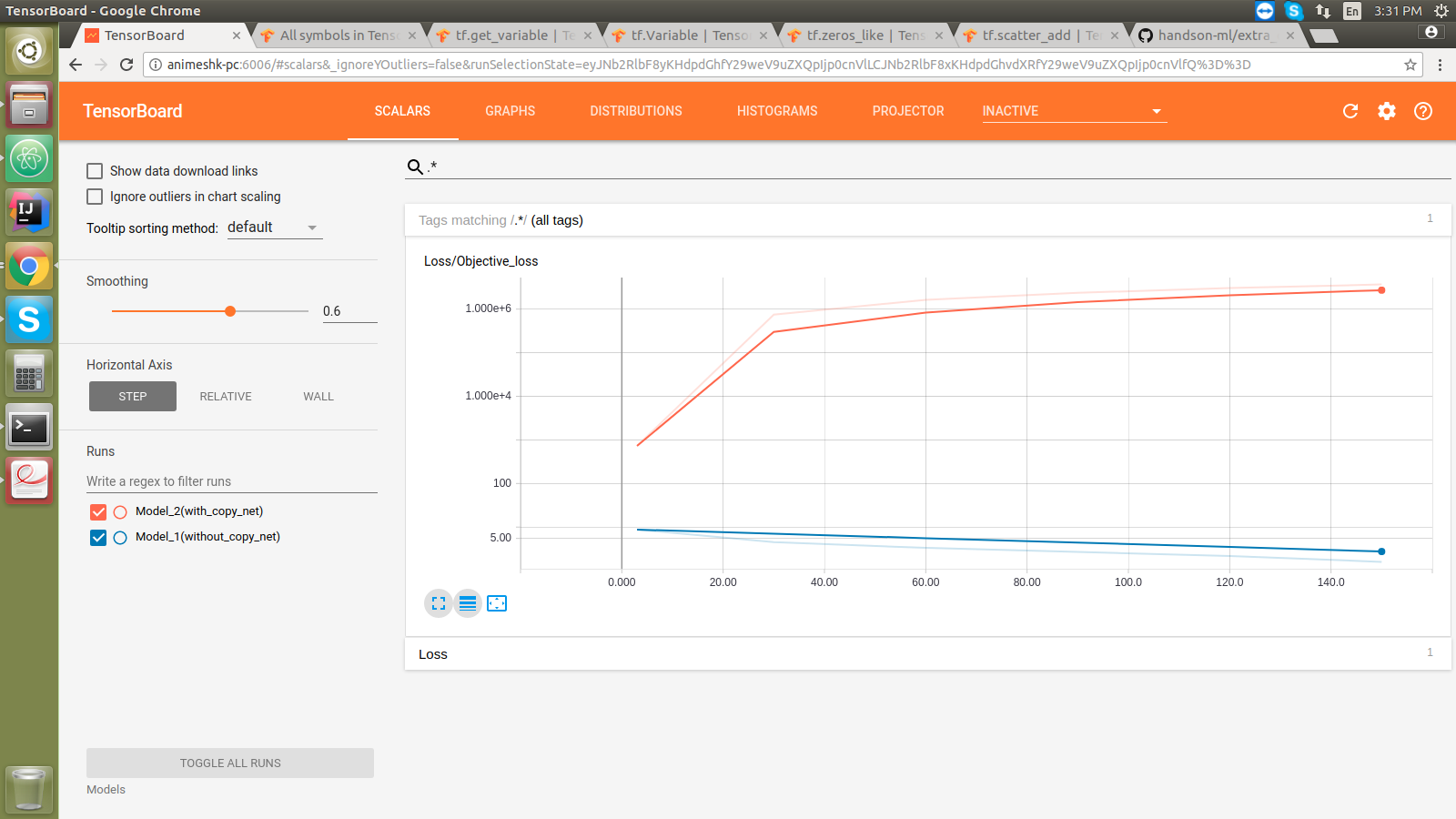

91 | ## Test Runs:

92 | Once training is started, log-dirs are created for Tensorboard.

93 | Start your `tensorboard` server pointing to the log-dir.

94 |

95 | #### Loss monitor:

96 |

97 |

98 |  100 |

100 |

101 |

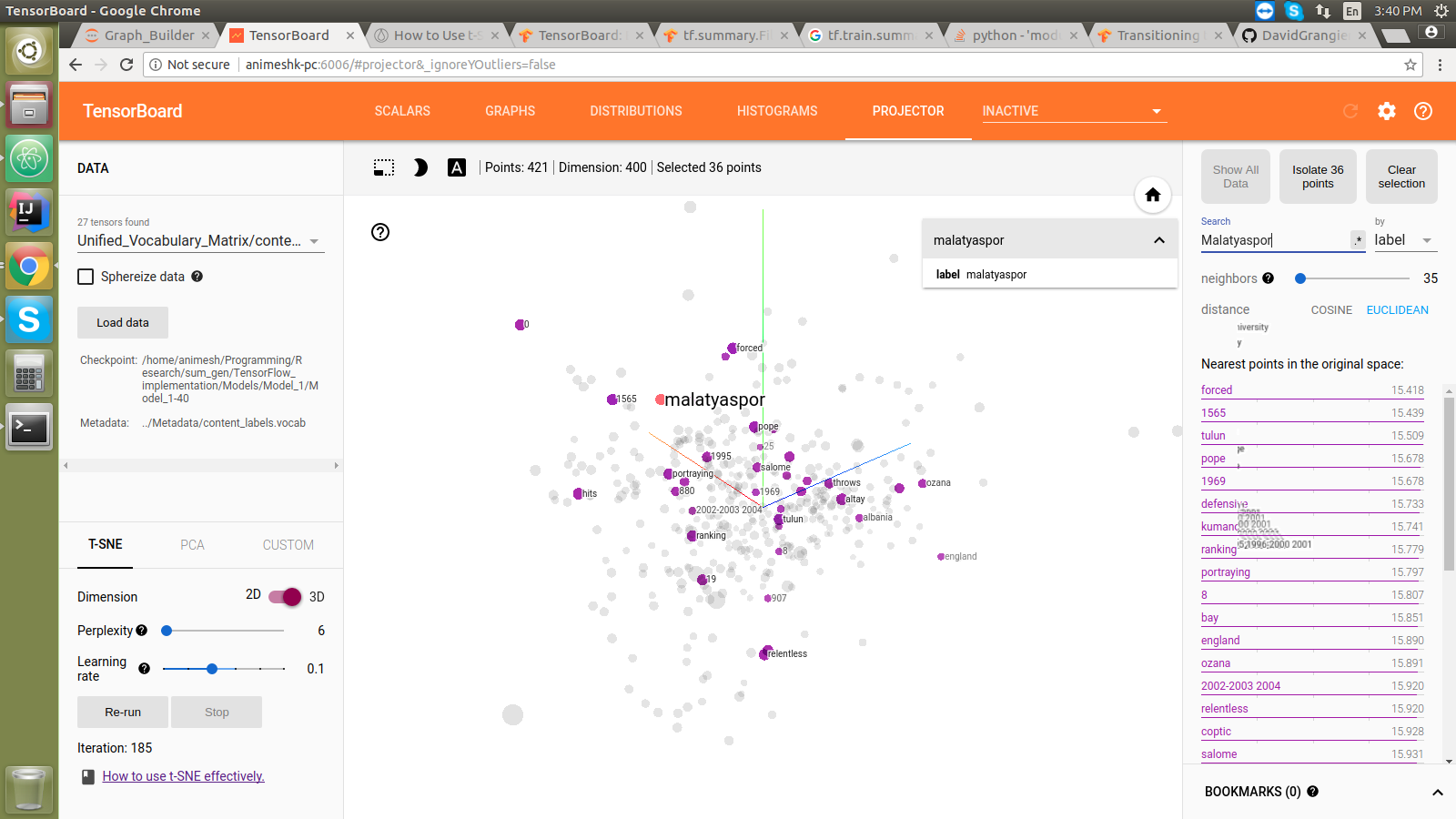

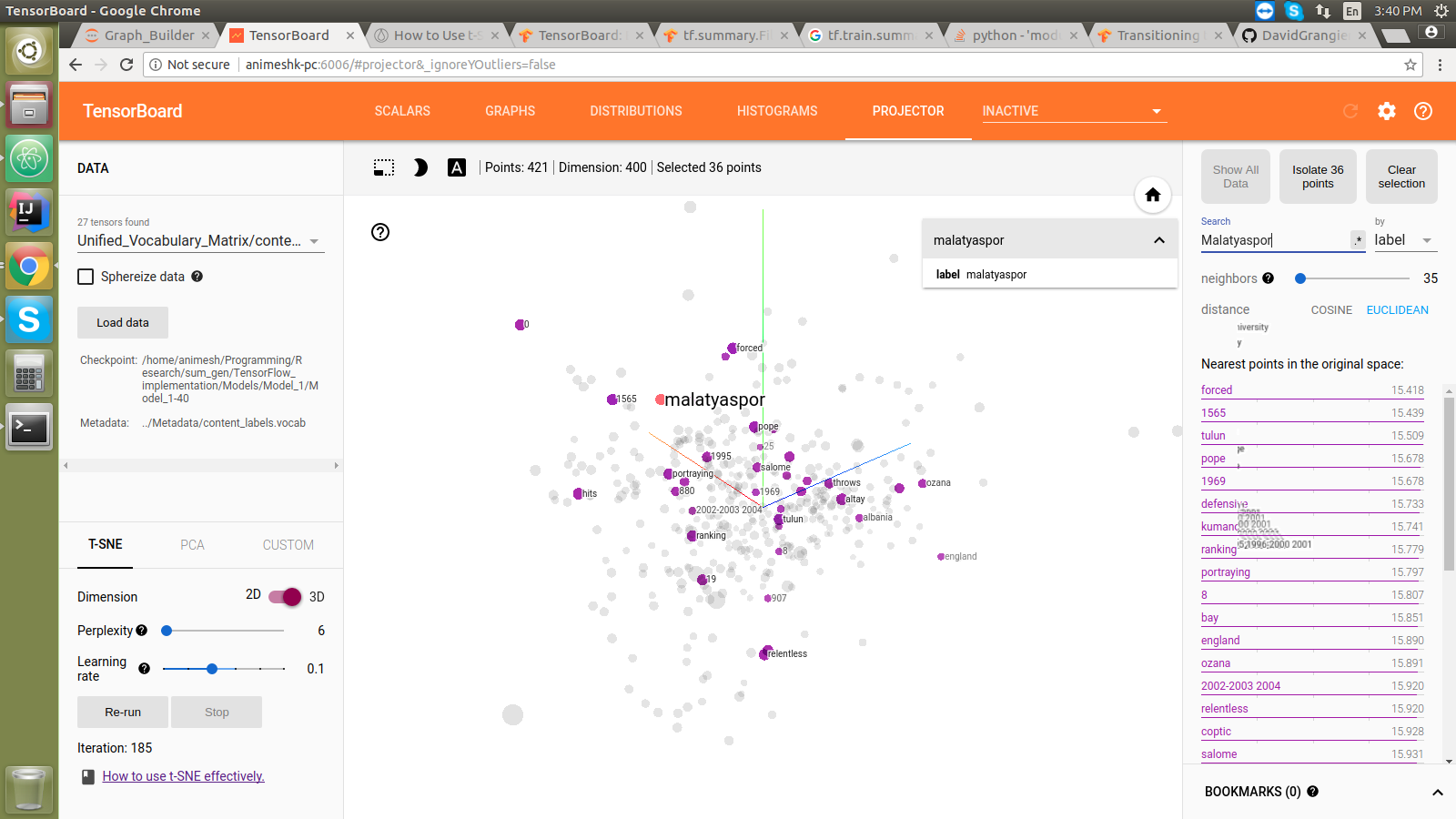

102 | #### Embedding projector:

103 |

104 |

105 |  107 |

107 |

108 |

109 | * **Trained models coming soon ...**

110 |

111 | ## Thanks

112 | Please feel free to open PRs (contribute)/ issues / comments (feedback) here.

113 |

114 |

115 | Best regards,

116 | @akanimax :)

--------------------------------------------------------------------------------

/TensorFlow_implementation/.gitignore:

--------------------------------------------------------------------------------

1 | # ignore the pickle files

2 | *.pickle

3 |

4 | # ignore the Models directory

5 | Models/

6 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/Summary_Generator/Tensorflow_Graph/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/TensorFlow_implementation/Summary_Generator/Tensorflow_Graph/__init__.py

--------------------------------------------------------------------------------

/TensorFlow_implementation/Summary_Generator/Tensorflow_Graph/utils.py:

--------------------------------------------------------------------------------

1 | '''

2 | Library of helper tools for training and creating the Tensorflow graph of the

3 | system

4 | '''

5 |

6 | import numpy as np

7 |

8 | # Obtain the sequence lengths for the given input field_encodings / content_encodings (To feed to the RNN encoder)

9 | def get_lengths(sequences):

10 | '''

11 | Function to obtain the lengths of the given encodings. This allows for variable length sequences in the

12 | RNN encoder.

13 | @param

14 | sequences = [2d] list of integer encoded sequences, padded to the max_length of the batch

15 |

16 | @return

17 | lengths = [1d] list containing the lengths of the sequences

18 | '''

19 | return list(map(lambda x: len(x), sequences))

20 |

21 |

22 | def pad_sequences(seqs, pad_value = 0):

23 | '''

24 | funtion for padding the list of sequences and return a tensor that has all the sequences padded

25 | with leading 0s (for the bucketing phase)

26 | @param

27 | seqs => the list of integer sequences

28 | pad_value => the integer used as the padding value (defaults to zero)

29 | @return => padded tensor for this batch

30 | '''

31 |

32 | # find the maximum length among the given sequences

33 | max_length = max(map(lambda x: len(x), seqs))

34 |

35 | # create a list denoting the values with which the sequences need to be padded:

36 | padded_seqs = [] # initialize to empty list

37 | for seq in seqs:

38 | seq_len = len(seq) # obtain the length of current sequences

39 | diff = max_length - seq_len # calculate the padding amount for this seq

40 | padded_seqs.append(seq + [pad_value for _ in range(diff)])

41 |

42 |

43 | # return the padded seqs tensor

44 | return np.array(padded_seqs)

45 |

46 |

47 |

48 | # function to perform synchronous random shuffling of the training data

49 | def synch_random_shuffle_non_np(X, Y):

50 | '''

51 | ** This function takes in the parameters that are non numpy compliant dtypes such as list, tuple, etc.

52 | Although this function works on numpy arrays as well, this is not as performant enough

53 | @param

54 | X, Y => The data to be shuffled

55 | @return => The shuffled data

56 | '''

57 | combined = list(zip(X, Y))

58 |

59 | # shuffle the combined list in place

60 | np.random.shuffle(combined)

61 |

62 | # extract the data back from the combined list

63 | X, Y = list(zip(*combined))

64 |

65 | # return the shuffled data:

66 | return X, Y

67 |

68 |

69 |

70 | # function to split the data into train - dev sets:

71 | def split_train_dev(X, Y, train_percentage):

72 | '''

73 | function to split the given data into two small datasets (train - dev)

74 | @param

75 | X, Y => the data to be split

76 | (** Make sure the train dimension is the first one)

77 | train_percentage => the percentage which should be in the training set.

78 | (**this should be in 100% not decimal)

79 | @return => train_X, train_Y, test_X, test_Y

80 | '''

81 | m_examples = len(X)

82 | assert train_percentage <= 100, "Train percentage cannot be greater than 100! NOOB!"

83 | partition_point = int((m_examples * (float(train_percentage) / 100)) + 0.5) # 0.5 is added for rounding

84 |

85 | # construct the train_X, train_Y, test_X, test_Y sets:

86 | train_X = X[: partition_point]; train_Y = Y[: partition_point]

87 | test_X = X[partition_point: ]; test_Y = Y[partition_point: ]

88 |

89 | assert len(train_X) + len(test_X) == m_examples, "Something wrong in X splitting"

90 | assert len(train_Y) + len(test_Y) == m_examples, "Something wrong in Y splitting"

91 |

92 | # return the constructed sets

93 | return train_X, train_Y, test_X, test_Y

94 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/Summary_Generator/Text_Preprocessing_Helpers/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/TensorFlow_implementation/Summary_Generator/Text_Preprocessing_Helpers/__init__.py

--------------------------------------------------------------------------------

/TensorFlow_implementation/Summary_Generator/Text_Preprocessing_Helpers/pickling_tools.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 |

3 | import _pickle as pickle # pickle module in python

4 | import os # for path related operations

5 |

6 | '''

7 | Simple function to perform pickling of the given object. This fucntion may fail if the size of the object exceeds

8 | the max size of the pickling protocol used. Although this is highly rare, One might then have to resort to some other

9 | strategy to pickle the data.

10 | The second function available is to unpickle a file located at the specified path

11 | '''

12 |

13 | # coded by botman

14 |

15 | # function to pickle an object

16 | def pickleIt(obj, save_path):

17 | '''

18 | function to pickle the given object.

19 | @param

20 | obj => the python object to be pickled

21 | save_path => the path where the pickled file is to be saved

22 | @return => nothing (the pickle file gets saved at the given location)

23 | '''

24 | if(not os.path.isfile(save_path)):

25 | with open(save_path, 'wb') as dumping:

26 | pickle.dump(obj, dumping)

27 |

28 | print("The file has been pickled at:", save_path)

29 |

30 | else:

31 | print("The pickle file already exists: ", save_path)

32 |

33 |

34 | # function to unpickle the given file and load the obj back into the python environment

35 | def unPickleIt(pickle_path): # might throw the file not found exception

36 | '''

37 | function to unpickle the object from the given path

38 | @param

39 | pickle_path => the path where the pickle file is located

40 | @return => the object extracted from the saved path

41 | '''

42 |

43 | with open(pickle_path, 'rb') as dumped_pickle:

44 | obj = pickle.load(dumped_pickle)

45 |

46 | return obj # return the unpickled object

47 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/Summary_Generator/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/akanimax/natural-language-summary-generation-from-structured-data/b8cc906286f97e8523acc8306945c34a4f8ef17c/TensorFlow_implementation/Summary_Generator/__init__.py

--------------------------------------------------------------------------------

/TensorFlow_implementation/fast_data_preprocessor_part1.py:

--------------------------------------------------------------------------------

1 | '''

2 | script for preprocessing the data from the files

3 | This script is optimized for producing the processed data faster

4 | '''

5 | from __future__ import print_function

6 | import numpy as np

7 | import os

8 | from Summary_Generator.Text_Preprocessing_Helpers.pickling_tools import *

9 |

10 | # set the data_path

11 | data_path = "../Data"

12 |

13 | data_files_paths = {

14 | "table_content": os.path.join(data_path, "train.box"),

15 | "nb_sentences" : os.path.join(data_path, "train.nb"),

16 | "train_sentences": os.path.join(data_path, "train.sent")

17 | }

18 |

19 | # generate the lists for all the samples in the dataset by reading the file once

20 |

21 |

22 | #=======================================================================================================================

23 | # Read the file for field_names and content_names

24 | #=======================================================================================================================

25 |

26 |

27 | print("Reading from the train.box file ...")

28 | with open(data_files_paths["table_content"]) as t_file:

29 | # read all the lines from the file:

30 | table_contents = t_file.readlines()

31 |

32 | # split all the lines at tab to generate the list of field_value pairs

33 | table_contents = map(lambda x: x.strip().split('\t'), table_contents)

34 |

35 |

36 | print("splitting the samples into field_names and content_words ...")

37 | # convert this list of string pairs into list of lists of tuples

38 | table_contents = map(lambda y: map(lambda x: tuple(x.split(":")), y), table_contents)

39 |

40 | # write a loop to separate out the field_names and the content_words

41 | count = 0; field_names = []; content_words = [] # initialize these to empty lists

42 | for sample in table_contents:

43 | # unzip the list:

44 | fields, contents = zip(*sample)

45 |

46 | # modify the fields to discard the _1, _2 labels

47 | fields = map(lambda x: x.split("_")[0], fields)

48 |

49 | # append the lists to appropriate lists

50 | field_names.append(list(fields)); content_words.append(list(contents))

51 |

52 | # increment the counter

53 | count += 1

54 |

55 | # give a feed_back for 1,00,000 samples:

56 | if(count % 100000 == 0):

57 | print("seperated", count, "samples")

58 |

59 | print("\nfield_names:\n", field_names[: 3], "\n\ncontent_words:\n", content_words[: 3])

60 |

61 |

62 |

63 | #==================================================================================================================

64 | # Read the file for the labels now

65 | #==================================================================================================================

66 | print("\n\nReading from the train.nb and the train.sent files ...")

67 | (labels, label_lengths) = (open(data_files_paths["train_sentences"]), open(data_files_paths["nb_sentences"]))

68 | label_words = labels.readlines(); lab_lengths = label_lengths.readlines()

69 | # close the files:

70 | labels.close(); label_lengths.close()

71 |

72 | print(label_words[: 3])

73 |

74 | # now perfrom the map_reduce operation to receive the a data structure similar to the field_names and content_words

75 | print("grouping lines in train.sent according to the train.nb ... ")

76 | count = 0; label_sentences = [] # initialize to empty list

77 |

78 | for length in lab_lengths:

79 | temp = []; cnt = 0;

80 | while(cnt < int(length)):

81 | sent = label_words.pop(0)

82 | # print("sent", sent)

83 | temp += sent.strip().split(' ')

84 | cnt += 1

85 | # print("temp ", temp)

86 |

87 | # append the temp to the label_sentences

88 | label_sentences.append(temp)

89 |

90 | # increment the counter

91 | count += 1

92 |

93 | # print a feedback for 1000 samples:

94 | if(count % 1000 == 0):

95 | print("grouped", count, "label_sentences")

96 |

97 |

98 | print(label_sentences[-3:])

99 |

100 |

101 | print("pickling the stuff generated till now ... ")

102 | # finally pickle the objects into a temporary pickle file:

103 | # temp_pickle object definition:

104 | temp_pickle = {

105 | "fields": field_names,

106 | "content": content_words,

107 | "label": label_sentences

108 | }

109 |

110 | pickleIt(temp_pickle, "temp.pickle")

111 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/fast_data_preprocessor_part2.py:

--------------------------------------------------------------------------------

1 | '''

2 | This script picks up from where we left in the first part.

3 | '''

4 |

5 | from __future__ import print_function

6 | from Summary_Generator.Text_Preprocessing_Helpers.pickling_tools import *

7 | from Summary_Generator.Text_Preprocessing_Helpers.utils import *

8 |

9 |

10 | # obtain the data from the pickle file generated as an entailment of the preprocessing part 1.

11 | temp_pickle_file_path = "temp.pickle"

12 |

13 | # set the limit on the samples to be trained on:

14 | limit = 600000 # no limit for now

15 |

16 | # unpickle the object from this file

17 | print("unpickling the data ...")

18 | temp_obj = unPickleIt(temp_pickle_file_path)

19 |

20 | # extract the three lists from this temp_obj

21 | field_names = temp_obj['fields'][:limit]

22 | content_words = temp_obj['content'][:limit]

23 | label_words = temp_obj['label'][:limit]

24 |

25 | # print first three elements from this list to verify the sanity:

26 | print("\nField_names:", field_names[: 3]); print("\nContent_words:", content_words[: 3]), print("\nLabel_words:", label_words[: 3])

27 |

28 | # tokenize the field_names:

29 | print("\n\nTokenizing the field_names ...")

30 | field_sequences, field_dict, rev_field_dict, field_vocab_size = prepare_tokenizer(field_names)

31 |

32 | print("Encoded field_sequences:", field_sequences[: 3])

33 |

34 |

35 | #Last part is to tokenize the content and the label sequences together:

36 | # note the length of the content_words:

37 | content_split_point = len(content_words)

38 |

39 | # attach them together

40 | # transform the label_words to add and tokens to all the sentences

41 | for i in range(len(label_words)):

42 | label_words[i] = [''] + label_words[i] + ['']

43 |

44 | unified_content_label_list = content_words + label_words

45 |

46 | # tokenize the unified_content_and_label_words:

47 | print("\n\nTokenizing the content and the label names ...")

48 | unified_sequences, content_label_dict, rev_content_label_dict, content_label_vocab_size = prepare_tokenizer(unified_content_label_list, max_word_length = 20000)

49 |

50 | print("Encoded content_label_sequences:", unified_sequences[: 3])

51 |

52 | # obtain the content and label sequences by separating it from the unified_sequences

53 | content_sequences = unified_sequences[: content_split_point]; label_sequences = unified_sequences[content_split_point: ]

54 |

55 | # Finally, pickle all of it together:

56 | pickle_obj = {

57 | # ''' Input structured data: '''

58 |

59 | # field_encodings and related data:

60 | 'field_encodings': field_sequences,

61 | 'field_dict': field_dict,

62 | 'field_rev_dict': rev_field_dict,

63 | 'field_vocab_size': field_vocab_size,

64 |

65 | # content encodings and related data:

66 | 'content_encodings': content_sequences,

67 |

68 | # ''' Label summary sentences: '''

69 |

70 | # label encodings and related data:

71 | 'label_encodings': label_sequences,

72 |

73 | # V union C related data:

74 | 'content_union_label_dict': content_label_dict,

75 | 'rev_content_union_label_dict': rev_content_label_dict,

76 | 'content_label_vocab_size': content_label_vocab_size

77 | }

78 |

79 | # call the pickling function to perform the pickling:

80 | print("\nPickling the processed data ...")

81 | pickleIt(pickle_obj, "../Data/plug_and_play.pickle")

82 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/inferencer.py:

--------------------------------------------------------------------------------

1 | '''

2 | Script for checking if the Inference computations run properly for the trained graph.

3 | '''

4 |

5 | from Summary_Generator.Tensorflow_Graph import order_planner_without_copynet

6 | from Summary_Generator.Text_Preprocessing_Helpers.pickling_tools import *

7 | from Summary_Generator.Tensorflow_Graph.utils import *

8 | from Summary_Generator.Model import *

9 | import numpy as np

10 | import tensorflow as tf

11 |

12 |

13 | # random_seed value for consistent debuggable behaviour

14 | seed_value = 3

15 |

16 | np.random.seed(seed_value) # set this seed for a device independant consistent behaviour

17 |

18 | ''' Set the constants for the script '''

19 | # various paths of the files

20 | data_path = "../Data" # the data path

21 |

22 | data_files_paths = {

23 | "table_content": os.path.join(data_path, "train.box"),

24 | "nb_sentences" : os.path.join(data_path, "train.nb"),

25 | "train_sentences": os.path.join(data_path, "train.sent")

26 | }

27 |

28 | base_model_path = "Models"

29 | plug_and_play_data_file = os.path.join(data_path, "plug_and_play.pickle")

30 |

31 |

32 | # Set the train_percentage mark here.

33 | train_percentage = 90

34 |

35 |

36 |

37 | ''' Extract and setup the data '''

38 | # Obtain the data:

39 | data = unPickleIt(plug_and_play_data_file)

40 |

41 | field_encodings = data['field_encodings']

42 | field_dict = data['field_dict']

43 |

44 | content_encodings = data['content_encodings']

45 |

46 | label_encodings = data['label_encodings']

47 | content_label_dict = data['content_union_label_dict']

48 | rev_content_label_dict = data['rev_content_union_label_dict']

49 |

50 | # vocabulary sizes

51 | field_vocab_size = data['field_vocab_size']

52 | content_label_vocab_size = data['content_label_vocab_size']

53 |

54 |

55 | X, Y = synch_random_shuffle_non_np(zip(field_encodings, content_encodings), label_encodings)

56 |

57 | train_X, train_Y, dev_X, dev_Y = split_train_dev(X, Y, train_percentage)

58 | train_X_field, train_X_content = zip(*train_X)

59 | train_X_field = list(train_X_field); train_X_content = list(train_X_content)

60 |

61 | # Free up the resources by deleting non required stuff

62 | del X, Y, field_encodings, content_encodings, train_X

63 |

64 | # print train_X_field, train_X_content, train_Y, dev_X, dev_Y

65 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """

15 | seq2seq library base module

16 | """

17 |

18 | from __future__ import absolute_import

19 | from __future__ import division

20 | from __future__ import print_function

21 |

22 | from seq2seq.graph_module import GraphModule

23 |

24 | from seq2seq import contrib

25 | from seq2seq import data

26 | from seq2seq import decoders

27 | from seq2seq import encoders

28 | from seq2seq import global_vars

29 | from seq2seq import graph_utils

30 | from seq2seq import inference

31 | from seq2seq import losses

32 | from seq2seq import metrics

33 | from seq2seq import models

34 | from seq2seq import test

35 | from seq2seq import training

36 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/configurable.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """

15 | Abstract base class for objects that are configurable using

16 | a parameters dictionary.

17 | """

18 |

19 | from __future__ import absolute_import

20 | from __future__ import division

21 | from __future__ import print_function

22 |

23 | import abc

24 | import copy

25 | from pydoc import locate

26 |

27 | import six

28 | import yaml

29 |

30 | import tensorflow as tf

31 |

32 |

33 | class abstractstaticmethod(staticmethod): #pylint: disable=C0111,C0103

34 | """Decorates a method as abstract and static"""

35 | __slots__ = ()

36 |

37 | def __init__(self, function):

38 | super(abstractstaticmethod, self).__init__(function)

39 | function.__isabstractmethod__ = True

40 |

41 | __isabstractmethod__ = True

42 |

43 |

44 | def _create_from_dict(dict_, default_module, *args, **kwargs):

45 | """Creates a configurable class from a dictionary. The dictionary must have

46 | "class" and "params" properties. The class can be either fully qualified, or

47 | it is looked up in the modules passed via `default_module`.

48 | """

49 | class_ = locate(dict_["class"]) or getattr(default_module, dict_["class"])

50 | params = {}

51 | if "params" in dict_:

52 | params = dict_["params"]

53 | instance = class_(params, *args, **kwargs)

54 | return instance

55 |

56 |

57 | def _maybe_load_yaml(item):

58 | """Parses `item` only if it is a string. If `item` is a dictionary

59 | it is returned as-is.

60 | """

61 | if isinstance(item, six.string_types):

62 | return yaml.load(item)

63 | elif isinstance(item, dict):

64 | return item

65 | else:

66 | raise ValueError("Got {}, expected YAML string or dict", type(item))

67 |

68 |

69 | def _deep_merge_dict(dict_x, dict_y, path=None):

70 | """Recursively merges dict_y into dict_x.

71 | """

72 | if path is None: path = []

73 | for key in dict_y:

74 | if key in dict_x:

75 | if isinstance(dict_x[key], dict) and isinstance(dict_y[key], dict):

76 | _deep_merge_dict(dict_x[key], dict_y[key], path + [str(key)])

77 | elif dict_x[key] == dict_y[key]:

78 | pass # same leaf value

79 | else:

80 | dict_x[key] = dict_y[key]

81 | else:

82 | dict_x[key] = dict_y[key]

83 | return dict_x

84 |

85 |

86 | def _parse_params(params, default_params):

87 | """Parses parameter values to the types defined by the default parameters.

88 | Default parameters are used for missing values.

89 | """

90 | # Cast parameters to correct types

91 | if params is None:

92 | params = {}

93 | result = copy.deepcopy(default_params)

94 | for key, value in params.items():

95 | # If param is unknown, drop it to stay compatible with past versions

96 | if key not in default_params:

97 | raise ValueError("%s is not a valid model parameter" % key)

98 | # Param is a dictionary

99 | if isinstance(value, dict):

100 | default_dict = default_params[key]

101 | if not isinstance(default_dict, dict):

102 | raise ValueError("%s should not be a dictionary", key)

103 | if default_dict:

104 | value = _parse_params(value, default_dict)

105 | else:

106 | # If the default is an empty dict we do not typecheck it

107 | # and assume it's done downstream

108 | pass

109 | if value is None:

110 | continue

111 | if default_params[key] is None:

112 | result[key] = value

113 | else:

114 | result[key] = type(default_params[key])(value)

115 | return result

116 |

117 |

118 | @six.add_metaclass(abc.ABCMeta)

119 | class Configurable(object):

120 | """Interface for all classes that are configurable

121 | via a parameters dictionary.

122 |

123 | Args:

124 | params: A dictionary of parameters.

125 | mode: A value in tf.contrib.learn.ModeKeys

126 | """

127 |

128 | def __init__(self, params, mode):

129 | self._params = _parse_params(params, self.default_params())

130 | self._mode = mode

131 | self._print_params()

132 |

133 | def _print_params(self):

134 | """Logs parameter values"""

135 | classname = self.__class__.__name__

136 | tf.logging.info("Creating %s in mode=%s", classname, self._mode)

137 | tf.logging.info("\n%s", yaml.dump({classname: self._params}))

138 |

139 | @property

140 | def mode(self):

141 | """Returns a value in tf.contrib.learn.ModeKeys.

142 | """

143 | return self._mode

144 |

145 | @property

146 | def params(self):

147 | """Returns a dictionary of parsed parameters.

148 | """

149 | return self._params

150 |

151 | @abstractstaticmethod

152 | def default_params():

153 | """Returns a dictionary of default parameters. The default parameters

154 | are used to define the expected type of passed parameters. Missing

155 | parameter values are replaced with the defaults returned by this method.

156 | """

157 | raise NotImplementedError

158 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/contrib/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/contrib/experiment.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 |

15 | """A patched tf.learn Experiment class to handle GPU memory

16 | sharing issues.

17 | """

18 |

19 | import tensorflow as tf

20 |

21 | class Experiment(tf.contrib.learn.Experiment):

22 | """A patched tf.learn Experiment class to handle GPU memory

23 | sharing issues."""

24 |

25 | def __init__(self, train_steps_per_iteration=None, *args, **kwargs):

26 | super(Experiment, self).__init__(*args, **kwargs)

27 | self._train_steps_per_iteration = train_steps_per_iteration

28 |

29 | def _has_training_stopped(self, eval_result):

30 | """Determines whether the training has stopped."""

31 | if not eval_result:

32 | return False

33 |

34 | global_step = eval_result.get(tf.GraphKeys.GLOBAL_STEP)

35 | return global_step and self._train_steps and (

36 | global_step >= self._train_steps)

37 |

38 | def continuous_train_and_eval(self,

39 | continuous_eval_predicate_fn=None):

40 | """Interleaves training and evaluation.

41 |

42 | The frequency of evaluation is controlled by the `train_steps_per_iteration`

43 | (via constructor). The model will be first trained for

44 | `train_steps_per_iteration`, and then be evaluated in turns.

45 |

46 | This differs from `train_and_evaluate` as follows:

47 | 1. The procedure will have train and evaluation in turns. The model

48 | will be trained for a number of steps (usuallly smaller than `train_steps`

49 | if provided) and then be evaluated. `train_and_evaluate` will train the

50 | model for `train_steps` (no small training iteraions).

51 |

52 | 2. Due to the different approach this schedule takes, it leads to two

53 | differences in resource control. First, the resources (e.g., memory) used

54 | by training will be released before evaluation (`train_and_evaluate` takes

55 | double resources). Second, more checkpoints will be saved as a checkpoint

56 | is generated at the end of each small trainning iteration.

57 |

58 | Args:

59 | continuous_eval_predicate_fn: A predicate function determining whether to

60 | continue after each iteration. `predicate_fn` takes the evaluation

61 | results as its arguments. At the beginning of evaluation, the passed

62 | eval results will be None so it's expected that the predicate function

63 | handles that gracefully. When `predicate_fn` is not specified, this will

64 | run in an infinite loop or exit when global_step reaches `train_steps`.

65 |

66 | Returns:

67 | A tuple of the result of the `evaluate` call to the `Estimator` and the

68 | export results using the specified `ExportStrategy`.

69 |

70 | Raises:

71 | ValueError: if `continuous_eval_predicate_fn` is neither None nor

72 | callable.

73 | """

74 |

75 | if (continuous_eval_predicate_fn is not None and

76 | not callable(continuous_eval_predicate_fn)):

77 | raise ValueError(

78 | "`continuous_eval_predicate_fn` must be a callable, or None.")

79 |

80 | eval_result = None

81 |

82 | # Set the default value for train_steps_per_iteration, which will be

83 | # overriden by other settings.

84 | train_steps_per_iteration = 1000

85 | if self._train_steps_per_iteration is not None:

86 | train_steps_per_iteration = self._train_steps_per_iteration

87 | elif self._train_steps is not None:

88 | # train_steps_per_iteration = int(self._train_steps / 10)

89 | train_steps_per_iteration = min(

90 | self._min_eval_frequency, self._train_steps)

91 |

92 | while (not continuous_eval_predicate_fn or

93 | continuous_eval_predicate_fn(eval_result)):

94 |

95 | if self._has_training_stopped(eval_result):

96 | # Exits once max steps of training is satisfied.

97 | tf.logging.info("Stop training model as max steps reached")

98 | break

99 |

100 | tf.logging.info("Training model for %s steps", train_steps_per_iteration)

101 | self._estimator.fit(

102 | input_fn=self._train_input_fn,

103 | steps=train_steps_per_iteration,

104 | monitors=self._train_monitors)

105 |

106 | tf.logging.info("Evaluating model now.")

107 | eval_result = self._estimator.evaluate(

108 | input_fn=self._eval_input_fn,

109 | steps=self._eval_steps,

110 | metrics=self._eval_metrics,

111 | name="one_pass",

112 | hooks=self._eval_hooks)

113 |

114 | return eval_result, self._maybe_export(eval_result)

115 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/contrib/rnn_cell.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """Collection of RNN Cells

15 | """

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 | from __future__ import unicode_literals

21 |

22 | import sys

23 | import inspect

24 |

25 | import tensorflow as tf

26 | from tensorflow.python.ops import array_ops # pylint: disable=E0611

27 | from tensorflow.python.util import nest # pylint: disable=E0611

28 | from tensorflow.contrib.rnn import MultiRNNCell # pylint: disable=E0611

29 |

30 | # Import all cell classes from Tensorflow

31 | TF_CELL_CLASSES = [

32 | x for x in tf.contrib.rnn.__dict__.values()

33 | if inspect.isclass(x) and issubclass(x, tf.contrib.rnn.RNNCell)

34 | ]

35 | for cell_class in TF_CELL_CLASSES:

36 | setattr(sys.modules[__name__], cell_class.__name__, cell_class)

37 |

38 |

39 | class ExtendedMultiRNNCell(MultiRNNCell):

40 | """Extends the Tensorflow MultiRNNCell with residual connections"""

41 |

42 | def __init__(self,

43 | cells,

44 | residual_connections=False,

45 | residual_combiner="add",

46 | residual_dense=False):

47 | """Create a RNN cell composed sequentially of a number of RNNCells.

48 |

49 | Args:

50 | cells: list of RNNCells that will be composed in this order.

51 | state_is_tuple: If True, accepted and returned states are n-tuples, where

52 | `n = len(cells)`. If False, the states are all

53 | concatenated along the column axis. This latter behavior will soon be

54 | deprecated.

55 | residual_connections: If true, add residual connections between all cells.

56 | This requires all cells to have the same output_size. Also, iff the

57 | input size is not equal to the cell output size, a linear transform

58 | is added before the first layer.

59 | residual_combiner: One of "add" or "concat". To create inputs for layer

60 | t+1 either "add" the inputs from the prev layer or concat them.

61 | residual_dense: Densely connect each layer to all other layers

62 |

63 | Raises:

64 | ValueError: if cells is empty (not allowed), or at least one of the cells

65 | returns a state tuple but the flag `state_is_tuple` is `False`.

66 | """

67 | super(ExtendedMultiRNNCell, self).__init__(cells, state_is_tuple=True)

68 | assert residual_combiner in ["add", "concat", "mean"]

69 |

70 | self._residual_connections = residual_connections

71 | self._residual_combiner = residual_combiner

72 | self._residual_dense = residual_dense

73 |

74 | def __call__(self, inputs, state, scope=None):

75 | """Run this multi-layer cell on inputs, starting from state."""

76 | if not self._residual_connections:

77 | return super(ExtendedMultiRNNCell, self).__call__(

78 | inputs, state, (scope or "extended_multi_rnn_cell"))

79 |

80 | with tf.variable_scope(scope or "extended_multi_rnn_cell"):

81 | # Adding Residual connections are only possible when input and output

82 | # sizes are equal. Optionally transform the initial inputs to

83 | # `cell[0].output_size`

84 | if self._cells[0].output_size != inputs.get_shape().as_list()[1] and \

85 | (self._residual_combiner in ["add", "mean"]):

86 | inputs = tf.contrib.layers.fully_connected(

87 | inputs=inputs,

88 | num_outputs=self._cells[0].output_size,

89 | activation_fn=None,

90 | scope="input_transform")

91 |

92 | # Iterate through all layers (code from MultiRNNCell)

93 | cur_inp = inputs

94 | prev_inputs = [cur_inp]

95 | new_states = []

96 | for i, cell in enumerate(self._cells):

97 | with tf.variable_scope("cell_%d" % i):

98 | if not nest.is_sequence(state):

99 | raise ValueError(

100 | "Expected state to be a tuple of length %d, but received: %s" %

101 | (len(self.state_size), state))

102 | cur_state = state[i]

103 | next_input, new_state = cell(cur_inp, cur_state)

104 |

105 | # Either combine all previous inputs or only the current input

106 | input_to_combine = prev_inputs[-1:]

107 | if self._residual_dense:

108 | input_to_combine = prev_inputs

109 |

110 | # Add Residual connection

111 | if self._residual_combiner == "add":

112 | next_input = next_input + sum(input_to_combine)

113 | if self._residual_combiner == "mean":

114 | combined_mean = tf.reduce_mean(tf.stack(input_to_combine), 0)

115 | next_input = next_input + combined_mean

116 | elif self._residual_combiner == "concat":

117 | next_input = tf.concat([next_input] + input_to_combine, 1)

118 | cur_inp = next_input

119 | prev_inputs.append(cur_inp)

120 |

121 | new_states.append(new_state)

122 | new_states = (tuple(new_states)

123 | if self._state_is_tuple else array_ops.concat(new_states, 1))

124 | return cur_inp, new_states

125 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/contrib/seq2seq/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """Collection of input-related utlities.

15 | """

16 |

17 | from seq2seq.data import input_pipeline

18 | from seq2seq.data import parallel_data_provider

19 | from seq2seq.data import postproc

20 | from seq2seq.data import split_tokens_decoder

21 | from seq2seq.data import vocab

22 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/parallel_data_provider.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """A Data Provder that reads parallel (aligned) data.

15 | """

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 | from __future__ import unicode_literals

21 |

22 | import numpy as np

23 |

24 | import tensorflow as tf

25 | from tensorflow.contrib.slim.python.slim.data import data_provider

26 | from tensorflow.contrib.slim.python.slim.data import parallel_reader

27 |

28 | from seq2seq.data import split_tokens_decoder

29 |

30 |

31 | def make_parallel_data_provider(data_sources_source,

32 | data_sources_target,

33 | reader=tf.TextLineReader,

34 | num_samples=None,

35 | source_delimiter=" ",

36 | target_delimiter=" ",

37 | **kwargs):

38 | """Creates a DataProvider that reads parallel text data.

39 |

40 | Args:

41 | data_sources_source: A list of data sources for the source text files.

42 | data_sources_target: A list of data sources for the target text files.

43 | Can be None for inference mode.

44 | num_samples: Optional, number of records in the dataset

45 | delimiter: Split tokens in the data on this delimiter. Defaults to space.

46 | kwargs: Additional arguments (shuffle, num_epochs, etc) that are passed

47 | to the data provider

48 |

49 | Returns:

50 | A DataProvider instance

51 | """

52 |

53 | decoder_source = split_tokens_decoder.SplitTokensDecoder(

54 | tokens_feature_name="source_tokens",

55 | length_feature_name="source_len",

56 | append_token="SEQUENCE_END",

57 | delimiter=source_delimiter)

58 |

59 | dataset_source = tf.contrib.slim.dataset.Dataset(

60 | data_sources=data_sources_source,

61 | reader=reader,

62 | decoder=decoder_source,

63 | num_samples=num_samples,

64 | items_to_descriptions={})

65 |

66 | dataset_target = None

67 | if data_sources_target is not None:

68 | decoder_target = split_tokens_decoder.SplitTokensDecoder(

69 | tokens_feature_name="target_tokens",

70 | length_feature_name="target_len",

71 | prepend_token="SEQUENCE_START",

72 | append_token="SEQUENCE_END",

73 | delimiter=target_delimiter)

74 |

75 | dataset_target = tf.contrib.slim.dataset.Dataset(

76 | data_sources=data_sources_target,

77 | reader=reader,

78 | decoder=decoder_target,

79 | num_samples=num_samples,

80 | items_to_descriptions={})

81 |

82 | return ParallelDataProvider(

83 | dataset1=dataset_source, dataset2=dataset_target, **kwargs)

84 |

85 |

86 | class ParallelDataProvider(data_provider.DataProvider):

87 | """Creates a ParallelDataProvider. This data provider reads two datasets

88 | in parallel, keeping them aligned.

89 |

90 | Args:

91 | dataset1: The first dataset. An instance of the Dataset class.

92 | dataset2: The second dataset. An instance of the Dataset class.

93 | Can be None. If None, only `dataset1` is read.

94 | num_readers: The number of parallel readers to use.

95 | shuffle: Whether to shuffle the data sources and common queue when

96 | reading.

97 | num_epochs: The number of times each data source is read. If left as None,

98 | the data will be cycled through indefinitely.

99 | common_queue_capacity: The capacity of the common queue.

100 | common_queue_min: The minimum number of elements in the common queue after

101 | a dequeue.

102 | seed: The seed to use if shuffling.

103 | """

104 |

105 | def __init__(self,

106 | dataset1,

107 | dataset2,

108 | shuffle=True,

109 | num_epochs=None,

110 | common_queue_capacity=4096,

111 | common_queue_min=1024,

112 | seed=None):

113 |

114 | if seed is None:

115 | seed = np.random.randint(10e8)

116 |

117 | _, data_source = parallel_reader.parallel_read(

118 | dataset1.data_sources,

119 | reader_class=dataset1.reader,

120 | num_epochs=num_epochs,

121 | num_readers=1,

122 | shuffle=False,

123 | capacity=common_queue_capacity,

124 | min_after_dequeue=common_queue_min,

125 | seed=seed)

126 |

127 | data_target = ""

128 | if dataset2 is not None:

129 | _, data_target = parallel_reader.parallel_read(

130 | dataset2.data_sources,

131 | reader_class=dataset2.reader,

132 | num_epochs=num_epochs,

133 | num_readers=1,

134 | shuffle=False,

135 | capacity=common_queue_capacity,

136 | min_after_dequeue=common_queue_min,

137 | seed=seed)

138 |

139 | # Optionally shuffle the data

140 | if shuffle:

141 | shuffle_queue = tf.RandomShuffleQueue(

142 | capacity=common_queue_capacity,

143 | min_after_dequeue=common_queue_min,

144 | dtypes=[tf.string, tf.string],

145 | seed=seed)

146 | enqueue_ops = []

147 | enqueue_ops.append(shuffle_queue.enqueue([data_source, data_target]))

148 | tf.train.add_queue_runner(

149 | tf.train.QueueRunner(shuffle_queue, enqueue_ops))

150 | data_source, data_target = shuffle_queue.dequeue()

151 |

152 | # Decode source items

153 | items = dataset1.decoder.list_items()

154 | tensors = dataset1.decoder.decode(data_source, items)

155 |

156 | if dataset2 is not None:

157 | # Decode target items

158 | items2 = dataset2.decoder.list_items()

159 | tensors2 = dataset2.decoder.decode(data_target, items2)

160 |

161 | # Merge items and results

162 | items = items + items2

163 | tensors = tensors + tensors2

164 |

165 | super(ParallelDataProvider, self).__init__(

166 | items_to_tensors=dict(zip(items, tensors)),

167 | num_samples=dataset1.num_samples)

168 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/postproc.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # Copyright 2017 Google Inc.

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | """

17 | A collection of commonly used post-processing functions.

18 | """

19 |

20 | from __future__ import absolute_import

21 | from __future__ import division

22 | from __future__ import print_function

23 | from __future__ import unicode_literals

24 |

25 | def strip_bpe(text):

26 | """Deodes text that was processed using BPE from

27 | https://github.com/rsennrich/subword-nmt"""

28 | return text.replace("@@ ", "").strip()

29 |

30 | def decode_sentencepiece(text):

31 | """Decodes text that uses https://github.com/google/sentencepiece encoding.

32 | Assumes that pieces are separated by a space"""

33 | return "".join(text.split(" ")).replace("▁", " ").strip()

34 |

35 | def slice_text(text,

36 | eos_token="SEQUENCE_END",

37 | sos_token="SEQUENCE_START"):

38 | """Slices text from SEQUENCE_START to SEQUENCE_END, not including

39 | these special tokens.

40 | """

41 | eos_index = text.find(eos_token)

42 | text = text[:eos_index] if eos_index > -1 else text

43 | sos_index = text.find(sos_token)

44 | text = text[sos_index+len(sos_token):] if sos_index > -1 else text

45 | return text.strip()

46 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/sequence_example_decoder.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """A decoder for tf.SequenceExample"""

15 |

16 | import tensorflow as tf

17 | from tensorflow.contrib.slim.python.slim.data import data_decoder

18 |

19 |

20 | class TFSEquenceExampleDecoder(data_decoder.DataDecoder):

21 | """A decoder for TensorFlow Examples.

22 | Decoding Example proto buffers is comprised of two stages: (1) Example parsing

23 | and (2) tensor manipulation.

24 | In the first stage, the tf.parse_example function is called with a list of

25 | FixedLenFeatures and SparseLenFeatures. These instances tell TF how to parse

26 | the example. The output of this stage is a set of tensors.

27 | In the second stage, the resulting tensors are manipulated to provide the

28 | requested 'item' tensors.

29 | To perform this decoding operation, an ExampleDecoder is given a list of

30 | ItemHandlers. Each ItemHandler indicates the set of features for stage 1 and

31 | contains the instructions for post_processing its tensors for stage 2.

32 | """

33 |

34 | def __init__(self, context_keys_to_features, sequence_keys_to_features,

35 | items_to_handlers):

36 | """Constructs the decoder.

37 | Args:

38 | keys_to_features: a dictionary from TF-Example keys to either

39 | tf.VarLenFeature or tf.FixedLenFeature instances. See tensorflow's

40 | parsing_ops.py.

41 | items_to_handlers: a dictionary from items (strings) to ItemHandler

42 | instances. Note that the ItemHandler's are provided the keys that they

43 | use to return the final item Tensors.

44 | """

45 | self._context_keys_to_features = context_keys_to_features

46 | self._sequence_keys_to_features = sequence_keys_to_features

47 | self._items_to_handlers = items_to_handlers

48 |

49 | def list_items(self):

50 | """See base class."""

51 | return list(self._items_to_handlers.keys())

52 |

53 | def decode(self, serialized_example, items=None):

54 | """Decodes the given serialized TF-example.

55 | Args:

56 | serialized_example: a serialized TF-example tensor.

57 | items: the list of items to decode. These must be a subset of the item

58 | keys in self._items_to_handlers. If `items` is left as None, then all

59 | of the items in self._items_to_handlers are decoded.

60 | Returns:

61 | the decoded items, a list of tensor.

62 | """

63 | context, sequence = tf.parse_single_sequence_example(

64 | serialized_example, self._context_keys_to_features,

65 | self._sequence_keys_to_features)

66 |

67 | # Merge context and sequence features

68 | example = {}

69 | example.update(context)

70 | example.update(sequence)

71 |

72 | all_features = {}

73 | all_features.update(self._context_keys_to_features)

74 | all_features.update(self._sequence_keys_to_features)

75 |

76 | # Reshape non-sparse elements just once:

77 | for k, value in all_features.items():

78 | if isinstance(value, tf.FixedLenFeature):

79 | example[k] = tf.reshape(example[k], value.shape)

80 |

81 | if not items:

82 | items = self._items_to_handlers.keys()

83 |

84 | outputs = []

85 | for item in items:

86 | handler = self._items_to_handlers[item]

87 | keys_to_tensors = {key: example[key] for key in handler.keys}

88 | outputs.append(handler.tensors_to_item(keys_to_tensors))

89 | return outputs

90 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/split_tokens_decoder.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """A decoder that splits a string into tokens and returns the

15 | individual tokens and the length.

16 | """

17 |

18 | from __future__ import absolute_import

19 | from __future__ import division

20 | from __future__ import print_function

21 | from __future__ import unicode_literals

22 |

23 | import tensorflow as tf

24 | from tensorflow.contrib.slim.python.slim.data import data_decoder

25 |

26 |

27 | class SplitTokensDecoder(data_decoder.DataDecoder):

28 | """A DataProvider that splits a string tensor into individual tokens and

29 | returns the tokens and the length.

30 | Optionally prepends or appends special tokens.

31 |

32 | Args:

33 | delimiter: Delimiter to split on. Must be a single character.

34 | tokens_feature_name: A descriptive feature name for the token values

35 | length_feature_name: A descriptive feature name for the length value

36 | """

37 |

38 | def __init__(self,

39 | delimiter=" ",

40 | tokens_feature_name="tokens",

41 | length_feature_name="length",

42 | prepend_token=None,

43 | append_token=None):

44 | self.delimiter = delimiter

45 | self.tokens_feature_name = tokens_feature_name

46 | self.length_feature_name = length_feature_name

47 | self.prepend_token = prepend_token

48 | self.append_token = append_token

49 |

50 | def decode(self, data, items):

51 | decoded_items = {}

52 |

53 | # Split tokens

54 | tokens = tf.string_split([data], delimiter=self.delimiter).values

55 |

56 | # Optionally prepend a special token

57 | if self.prepend_token is not None:

58 | tokens = tf.concat([[self.prepend_token], tokens], 0)

59 |

60 | # Optionally append a special token

61 | if self.append_token is not None:

62 | tokens = tf.concat([tokens, [self.append_token]], 0)

63 |

64 | decoded_items[self.length_feature_name] = tf.size(tokens)

65 | decoded_items[self.tokens_feature_name] = tokens

66 | return [decoded_items[_] for _ in items]

67 |

68 | def list_items(self):

69 | return [self.tokens_feature_name, self.length_feature_name]

70 |

--------------------------------------------------------------------------------

/TensorFlow_implementation/seq2seq/data/vocab.py:

--------------------------------------------------------------------------------

1 | # Copyright 2017 Google Inc.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | """Vocabulary related functions.

15 | """

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | import collections

22 | import tensorflow as tf

23 | from tensorflow import gfile

24 |

25 | SpecialVocab = collections.namedtuple("SpecialVocab",

26 | ["UNK", "SEQUENCE_START", "SEQUENCE_END"])

27 |

28 |

29 | class VocabInfo(

30 | collections.namedtuple("VocbabInfo",

31 | ["path", "vocab_size", "special_vocab"])):

32 | """Convenience structure for vocabulary information.

33 | """

34 |

35 | @property

36 | def total_size(self):

37 | """Returns size the the base vocabulary plus the size of extra vocabulary"""

38 | return self.vocab_size + len(self.special_vocab)

39 |

40 |

41 | def get_vocab_info(vocab_path):

42 | """Creates a `VocabInfo` instance that contains the vocabulary size and

43 | the special vocabulary for the given file.

44 |

45 | Args:

46 | vocab_path: Path to a vocabulary file with one word per line.

47 |

48 | Returns:

49 | A VocabInfo tuple.

50 | """

51 | with gfile.GFile(vocab_path) as file:

52 | vocab_size = sum(1 for _ in file)

53 | special_vocab = get_special_vocab(vocab_size)

54 | return VocabInfo(vocab_path, vocab_size, special_vocab)

55 |

56 |

57 | def get_special_vocab(vocabulary_size):

58 | """Returns the `SpecialVocab` instance for a given vocabulary size.

59 | """

60 | return SpecialVocab(*range(vocabulary_size, vocabulary_size + 3))

61 |

62 |

63 | def create_vocabulary_lookup_table(filename, default_value=None):

64 | """Creates a lookup table for a vocabulary file.

65 |

66 | Args:

67 | filename: Path to a vocabulary file containg one word per line.

68 | Each word is mapped to its line number.

69 | default_value: UNK tokens will be mapped to this id.

70 | If None, UNK tokens will be mapped to [vocab_size]

71 |

72 | Returns:

73 | A tuple (vocab_to_id_table, id_to_vocab_table,

74 | word_to_count_table, vocab_size). The vocab size does not include

75 | the UNK token.

76 | """

77 | if not gfile.Exists(filename):

78 | raise ValueError("File does not exist: {}".format(filename))

79 |

80 | # Load vocabulary into memory

81 | with gfile.GFile(filename) as file:

82 | vocab = list(line.strip("\n") for line in file)

83 | vocab_size = len(vocab)

84 |

85 | has_counts = len(vocab[0].split("\t")) == 2

86 | if has_counts:

87 | vocab, counts = zip(*[_.split("\t") for _ in vocab])

88 | counts = [float(_) for _ in counts]

89 | vocab = list(vocab)

90 | else:

91 | counts = [-1. for _ in vocab]

92 |