├── .gitignore
├── go.mod
├── fs
    ├── os_mmap_windows_amd64.go
    ├── os_mmap_windows_386.go
    ├── os_mmap_test.go
    ├── mem_test.go
    ├── os_test.go
    ├── os_plan9.go
    ├── os_unix.go
    ├── os_mmap_unix.go
    ├── os_mmap_windows.go
    ├── os.go
    ├── os_windows.go
    ├── sub.go
    ├── fs.go
    ├── os_mmap.go
    ├── mem.go
    └── fs_test.go
├── doc.go
├── db_rpc_test.go
├── db_mmap_test.go
├── internal
    ├── hash
    │   ├── seed_test.go
    │   ├── seed.go
    │   ├── murmurhash32.go
    │   └── murmurhash32_test.go
    ├── errors
    │   ├── errors.go
    │   └── errors_test.go
    └── assert
    │   ├── assert.go
    │   └── assert_test.go
├── metrics.go
├── logger.go
├── lock.go
├── .github
    └── workflows
    │   ├── golangci-lint.yaml
    │   └── test.yaml
├── errors.go
├── gobfile.go
├── example_test.go
├── header.go
├── iterator_test.go
├── datalog_test.go
├── options.go
├── CHANGELOG.md
├── backup_test.go
├── iterator.go
├── backup.go
├── file.go
├── file_test.go
├── README.md
├── bucket.go
├── recovery.go
├── segment.go
├── compaction.go
├── datalog.go
├── recovery_test.go
├── docs
    └── design.md
├── index.go
├── compaction_test.go
├── db.go
├── LICENSE
└── db_test.go

/.gitignore:

--------------------------------------------------------------------------------

1 | /fs/test

2 |

--------------------------------------------------------------------------------

/go.mod:

--------------------------------------------------------------------------------

1 | module github.com/akrylysov/pogreb

2 |

3 | go 1.18

4 |

--------------------------------------------------------------------------------

/fs/os_mmap_windows_amd64.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | const maxMmapSize = 1 << 48

4 |

--------------------------------------------------------------------------------

/doc.go:

--------------------------------------------------------------------------------

1 | /*

2 | Package pogreb implements an embedded key-value store for read-heavy workloads.

3 | */

4 | package pogreb

5 |

--------------------------------------------------------------------------------

/fs/os_mmap_windows_386.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "math"

5 | )

6 |

7 | const maxMmapSize = math.MaxInt32

8 |

--------------------------------------------------------------------------------

/fs/os_mmap_test.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "testing"

5 | )

6 |

7 | func TestOSMMapFS(t *testing.T) {

8 | testFS(t, Sub(OSMMap, t.TempDir()))

9 | }

10 |

--------------------------------------------------------------------------------

/db_rpc_test.go:

--------------------------------------------------------------------------------

1 | //go:build plan9

2 | // +build plan9

3 |

4 | package pogreb

5 |

6 | import (

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | var testFileSystems = []fs.FileSystem{fs.Mem, fs.OS}

11 |

--------------------------------------------------------------------------------

/db_mmap_test.go:

--------------------------------------------------------------------------------

1 | //go:build !plan9

2 | // +build !plan9

3 |

4 | package pogreb

5 |

6 | import (

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | var testFileSystems = []fs.FileSystem{fs.Mem, fs.OSMMap, fs.OS}

11 |

--------------------------------------------------------------------------------

/internal/hash/seed_test.go:

--------------------------------------------------------------------------------

1 | package hash

2 |

3 | import (

4 | "testing"

5 |

6 | "github.com/akrylysov/pogreb/internal/assert"

7 | )

8 |

9 | func TestRandSeed(t *testing.T) {

10 | _, err := RandSeed()

11 | assert.Nil(t, err)

12 | }

13 |

--------------------------------------------------------------------------------

/metrics.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import "expvar"

4 |

5 | // Metrics holds the DB metrics.

6 | type Metrics struct {

7 | Puts expvar.Int

8 | Dels expvar.Int

9 | Gets expvar.Int

10 | HashCollisions expvar.Int

11 | }

12 |

--------------------------------------------------------------------------------

/logger.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "log"

5 | "os"

6 | )

7 |

8 | var logger = log.New(os.Stderr, "pogreb: ", 0)

9 |

10 | // SetLogger sets the global logger.

11 | func SetLogger(l *log.Logger) {

12 | if l != nil {

13 | logger = l

14 | }

15 | }

16 |

--------------------------------------------------------------------------------

/lock.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "os"

5 |

6 | "github.com/akrylysov/pogreb/fs"

7 | )

8 |

9 | const (

10 | lockName = "lock"

11 | )

12 |

13 | func createLockFile(opts *Options) (fs.LockFile, bool, error) {

14 | return opts.FileSystem.CreateLockFile(lockName, os.FileMode(0644))

15 | }

16 |

--------------------------------------------------------------------------------

/fs/mem_test.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "testing"

5 | )

6 |

7 | func TestMemFS(t *testing.T) {

8 | testFS(t, Mem)

9 | }

10 |

11 | func TestMemLockFile(t *testing.T) {

12 | testLockFile(t, Mem)

13 | }

14 |

15 | func TestMemLockAcquireExisting(t *testing.T) {

16 | testLockFileAcquireExisting(t, Mem)

17 | }

18 |

--------------------------------------------------------------------------------

/internal/hash/seed.go:

--------------------------------------------------------------------------------

1 | package hash

2 |

3 | import (

4 | "crypto/rand"

5 | "encoding/binary"

6 | )

7 |

8 | // RandSeed generates a random hash seed.

9 | func RandSeed() (uint32, error) {

10 | b := make([]byte, 4)

11 | if _, err := rand.Read(b); err != nil {

12 | return 0, err

13 | }

14 | return binary.LittleEndian.Uint32(b), nil

15 | }

16 |

--------------------------------------------------------------------------------

/fs/os_test.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "testing"

5 | )

6 |

7 | func TestOSFS(t *testing.T) {

8 | testFS(t, Sub(OS, t.TempDir()))

9 | }

10 |

11 | func TestOSLockFile(t *testing.T) {

12 | testLockFile(t, Sub(OS, t.TempDir()))

13 | }

14 |

15 | func TestOSLockAcquireExisting(t *testing.T) {

16 | testLockFileAcquireExisting(t, Sub(OS, t.TempDir()))

17 | }

18 |

--------------------------------------------------------------------------------

/.github/workflows/golangci-lint.yaml:

--------------------------------------------------------------------------------

1 | name: golangci-lint

2 | on:

3 |   push:

4 |     tags:

5 |       - v*

6 |     branches:

7 |       - master

8 |       - main

9 |   pull_request:

10 | jobs:

11 |   golangci:

12 |     name: lint

13 |     runs-on: ubuntu-latest

14 |     steps:

15 |       - uses: actions/checkout@v4

16 |       - name: golangci-lint

17 |         uses: golangci/golangci-lint-action@v6

18 |

--------------------------------------------------------------------------------

/errors.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "github.com/akrylysov/pogreb/internal/errors"

5 | )

6 |

7 | var (

8 | errKeyTooLarge = errors.New("key is too large")

9 | errValueTooLarge = errors.New("value is too large")

10 | errFull = errors.New("database is full")

11 | errCorrupted = errors.New("database is corrupted")

12 | errLocked = errors.New("database is locked")

13 | errBusy = errors.New("database is busy")

14 | )

15 |

--------------------------------------------------------------------------------

/fs/os_plan9.go:

--------------------------------------------------------------------------------

1 | //go:build plan9

2 | // +build plan9

3 |

4 | package fs

5 |

6 | import (

7 | "os"

8 | "syscall"

9 | )

10 |

11 | func createLockFile(name string, perm os.FileMode) (LockFile, bool, error) {

12 | acquiredExisting := false

13 | if _, err := os.Stat(name); err == nil {

14 | acquiredExisting = true

15 | }

16 | f, err := os.OpenFile(name, os.O_RDWR|os.O_CREATE, syscall.DMEXCL|perm)

17 | if err != nil {

18 | return nil, false, err

19 | }

20 | return &osLockFile{f, name}, acquiredExisting, nil

21 | }

22 |

23 | // DefaultFileSystem returns the default FileSystem for this platform.

24 | func DefaultFileSystem() FileSystem {

25 | return OS

26 | }

27 |

--------------------------------------------------------------------------------

/gobfile.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "encoding/gob"

5 |

6 | "github.com/akrylysov/pogreb/fs"

7 | )

8 |

9 | func readGobFile(fsys fs.FileSystem, name string, v interface{}) error {

10 | f, err := openFile(fsys, name, openFileFlags{readOnly: true})

11 | if err != nil {

12 | return err

13 | }

14 | defer f.Close()

15 | dec := gob.NewDecoder(f)

16 | return dec.Decode(v)

17 | }

18 |

19 | func writeGobFile(fsys fs.FileSystem, name string, v interface{}) error {

20 | f, err := openFile(fsys, name, openFileFlags{truncate: true})

21 | if err != nil {

22 | return err

23 | }

24 | defer f.Close()

25 | enc := gob.NewEncoder(f)

26 | return enc.Encode(v)

27 | }

28 |

--------------------------------------------------------------------------------

/fs/os_unix.go:

--------------------------------------------------------------------------------

1 | //go:build !(plan9 || windows)

2 | // +build !plan9,!windows

3 |

4 | package fs

5 |

6 | import (

7 | "os"

8 | "syscall"

9 | )

10 |

11 | func createLockFile(name string, perm os.FileMode) (LockFile, bool, error) {

12 | acquiredExisting := false

13 | if _, err := os.Stat(name); err == nil {

14 | acquiredExisting = true

15 | }

16 | f, err := os.OpenFile(name, os.O_RDWR|os.O_CREATE, perm)

17 | if err != nil {

18 | return nil, false, err

19 | }

20 | if err := syscall.Flock(int(f.Fd()), syscall.LOCK_EX|syscall.LOCK_NB); err != nil {

21 | if err == syscall.EWOULDBLOCK {

22 | err = os.ErrExist

23 | }

24 | return nil, false, err

25 | }

26 | return &osLockFile{f, name}, acquiredExisting, nil

27 | }

28 |

--------------------------------------------------------------------------------

/.github/workflows/test.yaml:

--------------------------------------------------------------------------------

1 | name: Test

2 | on: [push, pull_request]

3 | jobs:

4 |   test:

5 |     strategy:

6 |       matrix:

7 |         go-version: [1.18.x, 1.x]

8 |         os: [ubuntu-latest, macos-latest, windows-latest]

9 |     runs-on: ${{ matrix.os }}

10 |     steps:

11 |     - name: Install Go

12 |       uses: actions/setup-go@v5

13 |       with:

14 |         go-version: ${{ matrix.go-version }}

15 |     - name: Checkout code

16 |       uses: actions/checkout@v4

17 |     - name: Build GOARCH=386

18 |       if: ${{ matrix.os != 'macos-latest' }}

19 |       env:

20 |         GOARCH: "386"

21 |       run: go build

22 |     - name: Test

23 |       run: go test ./... -race -coverprofile=coverage.txt -covermode=atomic

24 |     - name: Upload coverage to Codecov

25 |       if: ${{ matrix.os == 'ubuntu-latest' }}

26 |       uses: codecov/codecov-action@v5

27 |

--------------------------------------------------------------------------------

/example_test.go:

--------------------------------------------------------------------------------

1 | package pogreb_test

2 |

3 | import (

4 | "log"

5 |

6 | "github.com/akrylysov/pogreb"

7 | )

8 |

9 | func Example() {

10 | db, err := pogreb.Open("pogreb.test", nil)

11 | if err != nil {

12 | log.Fatal(err)

13 | return

14 | }

15 | defer db.Close()

16 |

17 | // Insert a new key-value pair.

18 | if err := db.Put([]byte("testKey"), []byte("testValue")); err != nil {

19 | log.Fatal(err)

20 | }

21 |

22 | // Retrieve the inserted value.

23 | val, err := db.Get([]byte("testKey"))

24 | if err != nil {

25 | log.Fatal(err)

26 | }

27 | log.Printf("%s", val)

28 |

29 | // Iterate over items.

30 | it := db.Items()

31 | for {

32 | key, val, err := it.Next()

33 | if err == pogreb.ErrIterationDone {

34 | break

35 | }

36 | if err != nil {

37 | log.Fatal(err)

38 | }

39 | log.Printf("%s %s", key, val)

40 | }

41 | }

42 |

--------------------------------------------------------------------------------

/fs/os_mmap_unix.go:

--------------------------------------------------------------------------------

1 | //go:build !(plan9 || windows)

2 | // +build !plan9,!windows

3 |

4 | package fs

5 |

6 | import (

7 | "os"

8 | "syscall"

9 | "unsafe"

10 | )

11 |

12 | func mmap(f *os.File, fileSize int64, mappingSize int64) ([]byte, error) {

13 | p, err := syscall.Mmap(int(f.Fd()), 0, int(mappingSize), syscall.PROT_READ, syscall.MAP_SHARED)

14 | return p, err

15 | }

16 |

17 | func munmap(data []byte) error {

18 | return syscall.Munmap(data)

19 | }

20 |

21 | func madviceRandom(data []byte) error {

22 | _, _, errno := syscall.Syscall(syscall.SYS_MADVISE, uintptr(unsafe.Pointer(&data[0])), uintptr(len(data)), uintptr(syscall.MADV_RANDOM))

23 | if errno != 0 {

24 | return errno

25 | }

26 | return nil

27 | }

28 |

29 | func (f *osMMapFile) Truncate(size int64) error {

30 | if err := f.File.Truncate(size); err != nil {

31 | return err

32 | }

33 | f.size = size

34 | return f.mremap()

35 | }

36 |

--------------------------------------------------------------------------------

/header.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "bytes"

5 | "encoding/binary"

6 | )

7 |

8 | const (

9 | formatVersion = 2 // File format version.

10 | headerSize = 512

11 | )

12 |

13 | var (

14 | signature = [8]byte{'p', 'o', 'g', 'r', 'e', 'b', '\x0e', '\xfd'}

15 | )

16 |

17 | type header struct {

18 | signature [8]byte

19 | formatVersion uint32

20 | }

21 |

22 | func newHeader() *header {

23 | return &header{

24 | signature: signature,

25 | formatVersion: formatVersion,

26 | }

27 | }

28 |

29 | func (h header) MarshalBinary() ([]byte, error) {

30 | buf := make([]byte, headerSize)

31 | copy(buf[:8], h.signature[:])

32 | binary.LittleEndian.PutUint32(buf[8:12], h.formatVersion)

33 | return buf, nil

34 | }

35 |

36 | func (h *header) UnmarshalBinary(data []byte) error {

37 | if !bytes.Equal(data[:8], signature[:]) {

38 | return errCorrupted

39 | }

40 | copy(h.signature[:], data[:8])

41 | h.formatVersion = binary.LittleEndian.Uint32(data[8:12])

42 | return nil

43 | }

44 |

--------------------------------------------------------------------------------
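The header layout in header.go (an 8-byte signature followed by a little-endian uint32 format version, padded to 512 bytes) can be exercised in isolation. The following is a minimal sketch, not the package's code: `encodeHeader` and `decodeHeader` are hypothetical free functions standing in for the `MarshalBinary`/`UnmarshalBinary` methods, and the length check in `decodeHeader` is an addition of this sketch.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"errors"
	"fmt"
)

const headerSize = 512

var signature = [8]byte{'p', 'o', 'g', 'r', 'e', 'b', '\x0e', '\xfd'}

var errCorrupted = errors.New("database is corrupted")

// encodeHeader (hypothetical helper) writes the signature into bytes 0..7
// and the format version into bytes 8..11; the rest of the block stays zero.
func encodeHeader(version uint32) []byte {
	buf := make([]byte, headerSize)
	copy(buf[:8], signature[:])
	binary.LittleEndian.PutUint32(buf[8:12], version)
	return buf
}

// decodeHeader (hypothetical helper) validates the signature and returns the
// format version. The length guard is an assumption of this sketch; it avoids
// a slice-bounds panic on inputs shorter than 12 bytes.
func decodeHeader(data []byte) (uint32, error) {
	if len(data) < 12 || !bytes.Equal(data[:8], signature[:]) {
		return 0, errCorrupted
	}
	return binary.LittleEndian.Uint32(data[8:12]), nil
}

func main() {
	buf := encodeHeader(2)
	version, err := decodeHeader(buf)
	fmt.Println(len(buf), version, err)

	_, err = decodeHeader([]byte("short"))
	fmt.Println(err)
}
```

Reserving a fixed 512-byte block leaves room for future header fields without moving the data that follows it.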

/internal/errors/errors.go:

--------------------------------------------------------------------------------

1 | package errors

2 |

3 | import (

4 | "errors"

5 | "fmt"

6 | )

7 |

8 | type wrappedError struct {

9 | cause error

10 | msg string

11 | }

12 |

13 | func (we wrappedError) Error() string {

14 | return we.msg + ": " + we.cause.Error()

15 | }

16 |

17 | func (we wrappedError) Unwrap() error {

18 | return we.cause

19 | }

20 |

21 | // New returns an error that formats as the given text.

22 | func New(text string) error {

23 | return errors.New(text)

24 | }

25 |

26 | // Wrap returns an error annotating err with an additional message.

27 | // Compatible with Go 1.13 error chains.

28 | func Wrap(cause error, message string) error {

29 | return wrappedError{

30 | cause: cause,

31 | msg: message,

32 | }

33 | }

34 |

35 | // Wrapf returns an error annotating err with an additional formatted message.

36 | // Compatible with Go 1.13 error chains.

37 | func Wrapf(cause error, format string, a ...interface{}) error {

38 | return wrappedError{

39 | cause: cause,

40 | msg: fmt.Sprintf(format, a...),

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

/internal/hash/murmurhash32.go:

--------------------------------------------------------------------------------

1 | package hash

2 |

3 | import (

4 | "math/bits"

5 | )

6 |

7 | const (

8 | c1 uint32 = 0xcc9e2d51

9 | c2 uint32 = 0x1b873593

10 | )

11 |

12 | // Sum32WithSeed is a port of MurmurHash3_x86_32 function.

13 | func Sum32WithSeed(data []byte, seed uint32) uint32 {

14 | h1 := seed

15 | dlen := len(data)

16 |

17 | for len(data) >= 4 {

18 | k1 := uint32(data[0]) | uint32(data[1])<<8 | uint32(data[2])<<16 | uint32(data[3])<<24

19 | data = data[4:]

20 |

21 | k1 *= c1

22 | k1 = bits.RotateLeft32(k1, 15)

23 | k1 *= c2

24 |

25 | h1 ^= k1

26 | h1 = bits.RotateLeft32(h1, 13)

27 | h1 = h1*5 + 0xe6546b64

28 | }

29 |

30 | var k1 uint32

31 | switch len(data) {

32 | case 3:

33 | k1 ^= uint32(data[2]) << 16

34 | fallthrough

35 | case 2:

36 | k1 ^= uint32(data[1]) << 8

37 | fallthrough

38 | case 1:

39 | k1 ^= uint32(data[0])

40 | k1 *= c1

41 | k1 = bits.RotateLeft32(k1, 15)

42 | k1 *= c2

43 | h1 ^= k1

44 | }

45 |

46 | h1 ^= uint32(dlen)

47 |

48 | h1 ^= h1 >> 16

49 | h1 *= 0x85ebca6b

50 | h1 ^= h1 >> 13

51 | h1 *= 0xc2b2ae35

52 | h1 ^= h1 >> 16

53 |

54 | return h1

55 | }

56 |

--------------------------------------------------------------------------------

/internal/hash/murmurhash32_test.go:

--------------------------------------------------------------------------------

1 | package hash

2 |

3 | import (

4 | "fmt"

5 | "testing"

6 |

7 | "github.com/akrylysov/pogreb/internal/assert"

8 | )

9 |

10 | func TestSum32WithSeed(t *testing.T) {

11 | testCases := []struct {

12 | in []byte

13 | seed uint32

14 | out uint32

15 | }{

16 | {

17 | in: nil,

18 | out: 0,

19 | },

20 | {

21 | in: nil,

22 | seed: 1,

23 | out: 1364076727,

24 | },

25 | {

26 | in: []byte{1},

27 | out: 3831157163,

28 | },

29 | {

30 | in: []byte{1, 2},

31 | out: 1690789502,

32 | },

33 | {

34 | in: []byte{1, 2, 3},

35 | out: 2161234436,

36 | },

37 | {

38 | in: []byte{1, 2, 3, 4},

39 | out: 1043635621,

40 | },

41 | {

42 | in: []byte{1, 2, 3, 4, 5},

43 | out: 2727459272,

44 | },

45 | }

46 | for i, tc := range testCases {

47 | t.Run(fmt.Sprintf("%d", i), func(t *testing.T) {

48 | assert.Equal(t, tc.out, Sum32WithSeed(tc.in, tc.seed))

49 | })

50 | }

51 | }

52 |

53 | func BenchmarkSum32WithSeed(b *testing.B) {

54 | data := []byte("pogreb_Sum32WithSeed_bench")

55 | b.SetBytes(int64(len(data)))

56 | for n := 0; n < b.N; n++ {

57 | Sum32WithSeed(data, 0)

58 | }

59 | }

60 |

--------------------------------------------------------------------------------

/internal/errors/errors_test.go:

--------------------------------------------------------------------------------

1 | package errors

2 |

3 | import (

4 | "errors"

5 | "testing"

6 |

7 | "github.com/akrylysov/pogreb/internal/assert"

8 | )

9 |

10 | func TestWrap(t *testing.T) {

11 | err1 := New("err1")

12 | w11 := Wrap(err1, "wrapped 11")

13 | w12 := Wrapf(w11, "wrapped %d%s", 1, "2")

14 |

15 | assert.Equal(t, err1, w11.(wrappedError).Unwrap())

16 | assert.Equal(t, w11, w12.(wrappedError).Unwrap())

17 |

18 | assert.Equal(t, "wrapped 11: err1", w11.Error())

19 | assert.Equal(t, "wrapped 12: wrapped 11: err1", w12.Error())

20 | }

21 |

22 | func TestIs(t *testing.T) {

23 | err1 := New("err1")

24 | w11 := Wrap(err1, "wrapped 11")

25 | w12 := Wrap(w11, "wrapped 12")

26 |

27 | err2 := New("err2")

28 | w21 := Wrap(err2, "wrapped 21")

29 |

30 | assert.Equal(t, true, errors.Is(err1, err1))

31 | assert.Equal(t, true, errors.Is(w11, err1))

32 | assert.Equal(t, true, errors.Is(w12, err1))

33 | assert.Equal(t, true, errors.Is(w12, w11))

34 |

35 | assert.Equal(t, false, errors.Is(err1, err2))

36 | assert.Equal(t, false, errors.Is(w11, err2))

37 | assert.Equal(t, false, errors.Is(w12, err2))

38 | assert.Equal(t, false, errors.Is(w21, err1))

39 | assert.Equal(t, false, errors.Is(w21, w11))

40 | }

41 |

--------------------------------------------------------------------------------

/fs/os_mmap_windows.go:

--------------------------------------------------------------------------------

1 | //go:build windows

2 | // +build windows

3 |

4 | package fs

5 |

6 | import (

7 | "os"

8 | "syscall"

9 | "unsafe"

10 | )

11 |

12 | func mmap(f *os.File, fileSize int64, mappingSize int64) ([]byte, error) {

13 | size := fileSize

14 | low, high := uint32(size), uint32(size>>32)

15 | fmap, err := syscall.CreateFileMapping(syscall.Handle(f.Fd()), nil, syscall.PAGE_READONLY, high, low, nil)

16 | if err != nil {

17 | return nil, err

18 | }

19 | defer syscall.CloseHandle(fmap)

20 | ptr, err := syscall.MapViewOfFile(fmap, syscall.FILE_MAP_READ, 0, 0, uintptr(size))

21 | if err != nil {

22 | return nil, err

23 | }

24 | data := (*[maxMmapSize]byte)(unsafe.Pointer(ptr))[:size]

25 | return data, nil

26 | }

27 |

28 | func munmap(data []byte) error {

29 | return syscall.UnmapViewOfFile(uintptr(unsafe.Pointer(&data[0])))

30 | }

31 |

32 | func madviceRandom(data []byte) error {

33 | return nil

34 | }

35 |

36 | func (f *osMMapFile) Truncate(size int64) error {

37 | // Truncating a memory-mapped file fails on Windows. Unmap it first.

38 | if err := f.munmap(); err != nil {

39 | return err

40 | }

41 | if err := f.File.Truncate(size); err != nil {

42 | return err

43 | }

44 | f.size = size

45 | return f.mremap()

46 | }

47 |

--------------------------------------------------------------------------------

/iterator_test.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "bytes"

5 | "testing"

6 |

7 | "github.com/akrylysov/pogreb/internal/assert"

8 | )

9 |

10 | func TestIteratorEmpty(t *testing.T) {

11 | db, err := createTestDB(nil)

12 | assert.Nil(t, err)

13 | it := db.Items()

14 | for i := 0; i < 8; i++ {

15 | _, _, err := it.Next()

16 | if err != ErrIterationDone {

17 | t.Fatalf("expected %v; got %v", ErrIterationDone, err)

18 | }

19 | }

20 | assert.Nil(t, db.Close())

21 | }

22 |

23 | func TestIterator(t *testing.T) {

24 | db, err := createTestDB(nil)

25 | assert.Nil(t, err)

26 |

27 | items := map[byte]bool{}

28 | var i byte

29 | for i = 0; i < 255; i++ {

30 | items[i] = false

31 | err := db.Put([]byte{i}, []byte{i})

32 | assert.Nil(t, err)

33 | }

34 |

35 | it := db.Items()

36 | for {

37 | key, value, err := it.Next()

38 | if err == ErrIterationDone {

39 | break

40 | }

41 | assert.Nil(t, err)

42 | if _, ok := items[key[0]]; !ok {

43 | t.Fatalf("unknown key %v", key[0])

44 | }

45 | if !bytes.Equal(key, value) {

46 | t.Fatalf("expected %v; got %v", key, value)

47 | }

48 | items[key[0]] = true

49 | }

50 |

51 | for k, v := range items {

52 | if !v {

53 | t.Fatalf("expected to iterate over key %v", k)

54 | }

55 | }

56 |

57 | for i := 0; i < 8; i++ {

58 | _, _, err := it.Next()

59 | if err != ErrIterationDone {

60 | t.Fatalf("expected %v; got %v", ErrIterationDone, err)

61 | }

62 | }

63 |

64 | assert.Nil(t, db.Close())

65 | }

66 |

--------------------------------------------------------------------------------

/datalog_test.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "testing"

5 |

6 | "github.com/akrylysov/pogreb/internal/assert"

7 | )

8 |

9 | func (dl *datalog) segmentMetas() []segmentMeta {

10 | var metas []segmentMeta

11 | for _, seg := range dl.segmentsBySequenceID() {

12 | metas = append(metas, *seg.meta)

13 | }

14 | return metas

15 | }

16 |

17 | func TestDatalog(t *testing.T) {

18 | db, err := createTestDB(nil)

19 | assert.Nil(t, err)

20 |

21 | _, _, err = db.datalog.put([]byte{'1'}, []byte{'1'})

22 | assert.Nil(t, err)

23 | assert.Equal(t, &segmentMeta{PutRecords: 1}, db.datalog.segments[0].meta)

24 | assert.Nil(t, db.datalog.segments[1])

25 |

26 | sm := db.datalog.segmentsBySequenceID()

27 | assert.Equal(t, []*segment{db.datalog.segments[0]}, sm)

28 |

29 | // Writing to a full file swaps it.

30 | db.datalog.segments[0].meta.Full = true

31 | _, _, err = db.datalog.put([]byte{'1'}, []byte{'1'})

32 | assert.Nil(t, err)

33 | assert.Equal(t, &segmentMeta{PutRecords: 1, Full: true}, db.datalog.segments[0].meta)

34 | assert.Equal(t, &segmentMeta{PutRecords: 1}, db.datalog.segments[1].meta)

35 |

36 | sm = db.datalog.segmentsBySequenceID()

37 | assert.Equal(t, []*segment{db.datalog.segments[0], db.datalog.segments[1]}, sm)

38 |

39 | _, _, err = db.datalog.put([]byte{'1'}, []byte{'1'})

40 | assert.Nil(t, err)

41 | assert.Equal(t, &segmentMeta{PutRecords: 1, Full: true}, db.datalog.segments[0].meta)

42 | assert.Equal(t, &segmentMeta{PutRecords: 2}, db.datalog.segments[1].meta)

43 |

44 | assert.Nil(t, db.Close())

45 | }

46 |

--------------------------------------------------------------------------------

/fs/os.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "os"

5 | )

6 |

7 | type osFS struct{}

8 |

9 | // OS is a file system backed by the os package.

10 | var OS FileSystem = &osFS{}

11 |

12 | func (fs *osFS) OpenFile(name string, flag int, perm os.FileMode) (File, error) {

13 | f, err := os.OpenFile(name, flag, perm)

14 | if err != nil {

15 | return nil, err

16 | }

17 | return &osFile{File: f}, nil

18 | }

19 |

20 | func (fs *osFS) CreateLockFile(name string, perm os.FileMode) (LockFile, bool, error) {

21 | return createLockFile(name, perm)

22 | }

23 |

24 | func (fs *osFS) Stat(name string) (os.FileInfo, error) {

25 | return os.Stat(name)

26 | }

27 |

28 | func (fs *osFS) Remove(name string) error {

29 | return os.Remove(name)

30 | }

31 |

32 | func (fs *osFS) Rename(oldpath, newpath string) error {

33 | return os.Rename(oldpath, newpath)

34 | }

35 |

36 | func (fs *osFS) ReadDir(name string) ([]os.DirEntry, error) {

37 | return os.ReadDir(name)

38 | }

39 |

40 | func (fs *osFS) MkdirAll(path string, perm os.FileMode) error {

41 | return os.MkdirAll(path, perm)

42 | }

43 |

44 | type osFile struct {

45 | *os.File

46 | }

47 |

48 | func (f *osFile) Slice(start int64, end int64) ([]byte, error) {

49 | buf := make([]byte, end-start)

50 | _, err := f.ReadAt(buf, start)

51 | if err != nil {

52 | return nil, err

53 | }

54 | return buf, nil

55 | }

56 |

57 | type osLockFile struct {

58 | *os.File

59 | path string

60 | }

61 |

62 | func (f *osLockFile) Unlock() error {

63 | if err := os.Remove(f.path); err != nil {

64 | return err

65 | }

66 | return f.Close()

67 | }

68 |

--------------------------------------------------------------------------------

/fs/os_windows.go:

--------------------------------------------------------------------------------

1 | //go:build windows

2 | // +build windows

3 |

4 | package fs

5 |

6 | import (

7 | "os"

8 | "syscall"

9 | "unsafe"

10 | )

11 |

12 | var (

13 | modkernel32 = syscall.NewLazyDLL("kernel32.dll")

14 | procLockFileEx = modkernel32.NewProc("LockFileEx")

15 | )

16 |

17 | const (

18 | errorLockViolation = 0x21

19 | )

20 |

21 | func lockfile(f *os.File) error {

22 | var ol syscall.Overlapped

23 |

24 | r1, _, err := syscall.Syscall6(

25 | procLockFileEx.Addr(),

26 | 6,

27 | uintptr(f.Fd()), // handle

28 | uintptr(0x0003), // flags: LOCKFILE_EXCLUSIVE_LOCK | LOCKFILE_FAIL_IMMEDIATELY

29 | uintptr(0), // reserved

30 | uintptr(1), // locklow

31 | uintptr(0), // lockhigh

32 | uintptr(unsafe.Pointer(&ol)),

33 | )

34 | if r1 == 0 && (err == syscall.ERROR_FILE_EXISTS || err == errorLockViolation) {

35 | return os.ErrExist

36 | }

37 | return nil

38 | }

39 |

40 | func createLockFile(name string, perm os.FileMode) (LockFile, bool, error) {

41 | acquiredExisting := false

42 | if _, err := os.Stat(name); err == nil {

43 | acquiredExisting = true

44 | }

45 | fd, err := syscall.CreateFile(&(syscall.StringToUTF16(name)[0]),

46 | syscall.GENERIC_READ|syscall.GENERIC_WRITE,

47 | syscall.FILE_SHARE_READ|syscall.FILE_SHARE_WRITE|syscall.FILE_SHARE_DELETE,

48 | nil,

49 | syscall.CREATE_ALWAYS,

50 | syscall.FILE_ATTRIBUTE_NORMAL,

51 | 0)

52 | if err != nil {

53 | return nil, false, os.ErrExist

54 | }

55 | f := os.NewFile(uintptr(fd), name)

56 | if err := lockfile(f); err != nil {

57 | f.Close()

58 | return nil, false, err

59 | }

60 | return &osLockFile{f, name}, acquiredExisting, nil

61 | }

62 |

--------------------------------------------------------------------------------

/fs/sub.go:

--------------------------------------------------------------------------------

1 | package fs

2 |

3 | import (

4 | "os"

5 | "path/filepath"

6 | )

7 |

8 | // Sub returns a new file system rooted at dir.

9 | func Sub(fsys FileSystem, dir string) FileSystem {

10 | return &subFS{

11 | fsys: fsys,

12 | root: dir,

13 | }

14 | }

15 |

16 | type subFS struct {

17 | fsys FileSystem

18 | root string

19 | }

20 |

21 | func (fs *subFS) OpenFile(name string, flag int, perm os.FileMode) (File, error) {

22 | subName := filepath.Join(fs.root, name)

23 | return fs.fsys.OpenFile(subName, flag, perm)

24 | }

25 |

26 | func (fs *subFS) Stat(name string) (os.FileInfo, error) {

27 | subName := filepath.Join(fs.root, name)

28 | return fs.fsys.Stat(subName)

29 | }

30 |

31 | func (fs *subFS) Remove(name string) error {

32 | subName := filepath.Join(fs.root, name)

33 | return fs.fsys.Remove(subName)

34 | }

35 |

36 | func (fs *subFS) Rename(oldpath, newpath string) error {

37 | subOldpath := filepath.Join(fs.root, oldpath)

38 | subNewpath := filepath.Join(fs.root, newpath)

39 | return fs.fsys.Rename(subOldpath, subNewpath)

40 | }

41 |

42 | func (fs *subFS) ReadDir(name string) ([]os.DirEntry, error) {

43 | subName := filepath.Join(fs.root, name)

44 | return fs.fsys.ReadDir(subName)

45 | }

46 |

47 | func (fs *subFS) CreateLockFile(name string, perm os.FileMode) (LockFile, bool, error) {

48 | subName := filepath.Join(fs.root, name)

49 | return fs.fsys.CreateLockFile(subName, perm)

50 | }

51 |

52 | func (fs *subFS) MkdirAll(path string, perm os.FileMode) error {

53 | subPath := filepath.Join(fs.root, path)

54 | return fs.fsys.MkdirAll(subPath, perm)

55 | }

56 |

57 | var _ FileSystem = &subFS{}

58 |

--------------------------------------------------------------------------------

/options.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "math"

5 | "time"

6 |

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | // Options holds the optional DB parameters.

11 | type Options struct {

12 | // BackgroundSyncInterval sets the amount of time between background Sync() calls.

13 | //

14 | // Setting the value to 0 disables the automatic background synchronization.

15 | // Setting the value to -1 makes the DB call Sync() after every write operation.

16 | // Default: 0

17 | BackgroundSyncInterval time.Duration

18 |

19 | // BackgroundCompactionInterval sets the amount of time between background Compact() calls.

20 | //

21 | // Setting the value to 0 disables the automatic background compaction.

22 | // Default: 0

23 | BackgroundCompactionInterval time.Duration

24 |

25 | // FileSystem sets the file system implementation.

26 | //

27 | // Default: fs.OSMMap.

28 | FileSystem fs.FileSystem

29 | rootFS fs.FileSystem

30 |

31 | maxSegmentSize uint32

32 | compactionMinSegmentSize uint32

33 | compactionMinFragmentation float32

34 | }

35 |

36 | func (src *Options) copyWithDefaults(path string) *Options {

37 | opts := Options{}

38 | if src != nil {

39 | opts = *src

40 | }

41 | if opts.FileSystem == nil {

42 | opts.FileSystem = fs.DefaultFileSystem()

43 | }

44 | opts.rootFS = opts.FileSystem

45 | opts.FileSystem = fs.Sub(opts.FileSystem, path)

46 | if opts.maxSegmentSize == 0 {

47 | opts.maxSegmentSize = math.MaxUint32

48 | }

49 | if opts.compactionMinSegmentSize == 0 {

50 | opts.compactionMinSegmentSize = 32 << 20

51 | }

52 | if opts.compactionMinFragmentation == 0 {

53 | opts.compactionMinFragmentation = 0.5

54 | }

55 | return &opts

56 | }

57 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | # Changelog

2 |

3 | ## [0.10.2] - 2023-12-10

4 | ### Fixed

5 | - Fix an edge case causing recovery to fail.

6 |

7 | ## [0.10.1] - 2021-05-01

8 | ### Changed

9 | - Improve error reporting.

10 | ### Fixed

11 | - Fix compilation for 32-bit OS.

12 |

13 | ## [0.10.0] - 2021-02-09

14 | ### Added

15 | - Memory-mapped file access can now be disabled by setting `Options.FileSystem` to `fs.OS`.

16 | ### Changed

17 | - The default file system implementation is changed to `fs.OSMMap`.

18 |

19 | ## [0.9.2] - 2021-01-01

20 | ### Changed

21 | - Write-ahead log doesn't rely on wall-clock time anymore. It prevents potential race conditions during compaction and recovery.

22 | ### Fixed

23 | - Fix recovery writing extra delete records.

24 |

25 | ## [0.9.1] - 2020-04-03

26 | ### Changed

27 | - Improve Go 1.14 compatibility (remove "unsafe" usage).

28 |

29 | ## [0.9.0] - 2020-03-08

30 | ### Changed

31 | - Replace the unstructured data file for storing key-value pairs with a write-ahead log.

32 | ### Added

33 | - In the event of a crash or a power loss the database is automatically recovered.

34 | - Optional background compaction allows reclaiming disk space occupied by overwritten or deleted keys.

35 | ### Fixed

36 | - Fix disk space overhead when storing small keys and values.

37 |

38 | ## [0.8.3] - 2019-11-03

39 | ### Fixed

40 | - Fix slice bounds out of range error mapping files on Windows.

41 |

42 | ## [0.8.2] - 2019-09-04

43 | ### Fixed

44 | - Fix a race condition that could lead to data corruption.

45 |

46 | ## [0.8.1] - 2019-06-30

47 | ### Fixed

48 | - Fix panic when accessing closed database.

49 | - Return an error when opening an invalid database.

50 |

51 | ## [0.8] - 2019-03-30

52 | ### Changed

53 | - ~2x write performance improvement on non-Windows.

54 |

55 | ## [0.7] - 2019-03-23

56 | ### Added

57 | - Windows support (@mattn).

58 | ### Changed

59 | - Improve freelist performance.

60 |

--------------------------------------------------------------------------------

/backup_test.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "testing"

5 |

6 | "github.com/akrylysov/pogreb/internal/assert"

7 | )

8 |

9 | const testDBBackupName = testDBName + ".backup"

10 |

11 | func TestBackup(t *testing.T) {

12 | opts := &Options{

13 | maxSegmentSize: 1024,

14 | compactionMinSegmentSize: 520,

15 | compactionMinFragmentation: 0.02,

16 | }

17 |

18 | run := func(name string, f func(t *testing.T, db *DB)) bool {

19 | return t.Run(name, func(t *testing.T) {

20 | db, err := createTestDB(opts)

21 | assert.Nil(t, err)

22 | f(t, db)

23 | assert.Nil(t, db.Close())

24 | _ = cleanDir(testDBBackupName)

25 | })

26 | }

27 |

28 | run("empty", func(t *testing.T, db *DB) {

29 | assert.Nil(t, db.Backup(testDBBackupName))

30 | db2, err := Open(testDBBackupName, opts)

31 | assert.Nil(t, err)

32 | assert.Nil(t, db2.Close())

33 | })

34 |

35 | run("single segment", func(t *testing.T, db *DB) {

36 | assert.Nil(t, db.Put([]byte{0}, []byte{0}))

37 | assert.Equal(t, 1, countSegments(t, db))

38 | assert.Nil(t, db.Backup(testDBBackupName))

39 | db2, err := Open(testDBBackupName, opts)

40 | assert.Nil(t, err)

41 | v, err := db2.Get([]byte{0})

42 | assert.Nil(t, err)

43 | assert.Equal(t, []byte{0}, v)

44 | assert.Nil(t, db2.Close())

45 | })

46 |

47 | run("multiple segments", func(t *testing.T, db *DB) {

48 | for i := byte(0); i < 100; i++ {

49 | assert.Nil(t, db.Put([]byte{i}, []byte{i}))

50 | }

51 | assert.Equal(t, 3, countSegments(t, db))

52 | assert.Nil(t, db.Backup(testDBBackupName))

53 | db2, err := Open(testDBBackupName, opts)

54 | assert.Nil(t, err)

55 | assert.Equal(t, 3, countSegments(t, db2))

56 | for i := byte(0); i < 100; i++ {

57 | v, err := db2.Get([]byte{i})

58 | assert.Nil(t, err)

59 | assert.Equal(t, []byte{i}, v)

60 | }

61 | assert.Nil(t, db2.Close())

62 | })

63 | }

64 |

--------------------------------------------------------------------------------

/fs/fs.go:

--------------------------------------------------------------------------------

1 | /*

2 | Package fs provides a file system interface.

3 | */

4 | package fs

5 |

6 | import (

7 | "errors"

8 | "io"

9 | "os"

10 | )

11 |

12 | var (

13 | errAppendModeNotSupported = errors.New("append mode is not supported")

14 | )

15 |

16 | // File is the interface compatible with os.File.

17 | // Only ReadAt, Slice and Stat are safe for concurrent use; all other methods are not.

18 | type File interface {

19 | io.Closer

20 | io.Reader

21 | io.ReaderAt

22 | io.Seeker

23 | io.Writer

24 | io.WriterAt

25 |

26 | // Stat returns os.FileInfo describing the file.

27 | Stat() (os.FileInfo, error)

28 |

29 | // Sync commits the current contents of the file.

30 | Sync() error

31 |

32 | // Truncate changes the size of the file.

33 | Truncate(size int64) error

34 |

35 | // Slice reads and returns the contents of file from offset start to offset end.

36 | Slice(start int64, end int64) ([]byte, error)

37 | }

38 |

39 | // LockFile represents a lock file.

40 | type LockFile interface {

41 | // Unlock releases the lock and removes the lock file.

42 | Unlock() error

43 | }

44 |

45 | // FileSystem represents a file system.

46 | type FileSystem interface {

47 | // OpenFile opens the file with specified flag.

48 | OpenFile(name string, flag int, perm os.FileMode) (File, error)

49 |

50 | // Stat returns os.FileInfo describing the file.

51 | Stat(name string) (os.FileInfo, error)

52 |

53 | // Remove removes the file.

54 | Remove(name string) error

55 |

56 | // Rename renames oldpath to newpath.

57 | Rename(oldpath, newpath string) error

58 |

59 | // ReadDir reads the directory and returns a list of directory entries.

60 | ReadDir(name string) ([]os.DirEntry, error)

61 |

62 | // CreateLockFile creates a lock file.

63 | CreateLockFile(name string, perm os.FileMode) (LockFile, bool, error)

64 |

65 | // MkdirAll creates a directory named path.

66 | MkdirAll(path string, perm os.FileMode) error

67 | }

68 |

--------------------------------------------------------------------------------

/iterator.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "errors"

5 | "sync"

6 | )

7 |

8 | // ErrIterationDone is returned by ItemIterator.Next calls when there are no more items to return.

9 | var ErrIterationDone = errors.New("no more items in iterator")

10 |

11 | type item struct {

12 | key []byte

13 | value []byte

14 | }

15 |

16 | // ItemIterator is an iterator over DB key-value pairs. It iterates the items in an unspecified order.

17 | type ItemIterator struct {

18 | db *DB

19 | nextBucketIdx uint32

20 | queue []item

21 | mu sync.Mutex

22 | }

23 |

24 | // fetchItems adds items to the iterator queue from a bucket located at nextBucketIdx.

25 | func (it *ItemIterator) fetchItems(nextBucketIdx uint32) error {

26 | bit := it.db.index.newBucketIterator(nextBucketIdx)

27 | for {

28 | b, err := bit.next()

29 | if err == ErrIterationDone {

30 | return nil

31 | }

32 | if err != nil {

33 | return err

34 | }

35 | for i := 0; i < slotsPerBucket; i++ {

36 | sl := b.slots[i]

37 | if sl.offset == 0 {

38 | // No more items in the bucket.

39 | break

40 | }

41 | key, value, err := it.db.datalog.readKeyValue(sl)

42 | if err != nil {

43 | return err

44 | }

45 | key = cloneBytes(key)

46 | value = cloneBytes(value)

47 | it.queue = append(it.queue, item{key: key, value: value})

48 | }

49 | }

50 | }

51 |

52 | // Next returns the next key-value pair if available, otherwise it returns ErrIterationDone error.

53 | func (it *ItemIterator) Next() ([]byte, []byte, error) {

54 | it.mu.Lock()

55 | defer it.mu.Unlock()

56 |

57 | it.db.mu.RLock()

58 | defer it.db.mu.RUnlock()

59 |

60 | // The iterator queue is empty and we have more buckets to check.

61 | for len(it.queue) == 0 && it.nextBucketIdx < it.db.index.numBuckets {

62 | if err := it.fetchItems(it.nextBucketIdx); err != nil {

63 | return nil, nil, err

64 | }

65 | it.nextBucketIdx++

66 | }

67 |

68 | if len(it.queue) > 0 {

69 | item := it.queue[0]

70 | it.queue = it.queue[1:]

71 | return item.key, item.value, nil

72 | }

73 |

74 | return nil, nil, ErrIterationDone

75 | }

76 |

--------------------------------------------------------------------------------

/backup.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "io"

5 | "os"

6 |

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | func touchFile(fsys fs.FileSystem, path string) error {

11 | f, err := fsys.OpenFile(path, os.O_CREATE|os.O_TRUNC, os.FileMode(0640))

12 | if err != nil {

13 | return err

14 | }

15 | return f.Close()

16 | }

17 |

18 | // Backup creates a database backup at the specified path.

19 | func (db *DB) Backup(path string) error {

20 | // Make sure the compaction is not running during backup.

21 | db.maintenanceMu.Lock()

22 | defer db.maintenanceMu.Unlock()

23 |

24 | if err := db.opts.rootFS.MkdirAll(path, 0755); err != nil {

25 | return err

26 | }

27 |

28 | db.mu.RLock()

29 | var segments []*segment

30 | activeSegmentSizes := make(map[uint16]int64)

31 | for _, seg := range db.datalog.segmentsBySequenceID() {

32 | segments = append(segments, seg)

33 | if !seg.meta.Full {

34 | // Save the size of each active segment to copy only the data persisted up to the point

35 | // when the backup started.

36 | activeSegmentSizes[seg.id] = seg.size

37 | }

38 | }

39 | db.mu.RUnlock()

40 |

41 | srcFS := db.opts.FileSystem

42 | dstFS := fs.Sub(db.opts.rootFS, path)

43 |

44 | for _, seg := range segments {

45 | name := segmentName(seg.id, seg.sequenceID)

46 | mode := os.FileMode(0640)

47 | srcFile, err := srcFS.OpenFile(name, os.O_RDONLY, mode)

48 | if err != nil {

49 | return err

50 | }

51 |

52 | dstFile, err := dstFS.OpenFile(name, os.O_CREATE|os.O_RDWR|os.O_TRUNC, mode)

53 | if err != nil {

54 | return err

55 | }

56 |

57 | if srcSize, ok := activeSegmentSizes[seg.id]; ok {

58 | if _, err := io.CopyN(dstFile, srcFile, srcSize); err != nil {

59 | return err

60 | }

61 | } else {

62 | if _, err := io.Copy(dstFile, srcFile); err != nil {

63 | return err

64 | }

65 | }

66 |

67 | if err := srcFile.Close(); err != nil {

68 | return err

69 | }

70 | if err := dstFile.Close(); err != nil {

71 | return err

72 | }

73 | }

74 |

75 | if err := touchFile(dstFS, lockName); err != nil {

76 | return err

77 | }

78 |

79 | return nil

80 | }

81 |

--------------------------------------------------------------------------------

/internal/assert/assert.go:

--------------------------------------------------------------------------------

1 | package assert

2 |

3 | import (

4 | "reflect"

5 | "testing"

6 | "time"

7 | )

8 |

9 | // Equal fails the test when expected is not equal to actual.

10 | func Equal(t testing.TB, expected interface{}, actual interface{}) {

11 | if !reflect.DeepEqual(expected, actual) {

12 | t.Helper()

13 | t.Fatalf("expected %+v; got %+v", expected, actual)

14 | }

15 | }

16 |

17 | // https://github.com/golang/go/blob/go1.15/src/reflect/value.go#L1071

18 | var nillableKinds = map[reflect.Kind]bool{

19 | reflect.Chan: true,

20 | reflect.Func: true,

21 | reflect.Map: true,

22 | reflect.Ptr: true,

23 | reflect.UnsafePointer: true,

24 | reflect.Interface: true,

25 | reflect.Slice: true,

26 | }

27 |

28 | // Nil fails the test when obj is not nil.

29 | func Nil(t testing.TB, obj interface{}) {

30 | if obj == nil {

31 | return

32 | }

33 | val := reflect.ValueOf(obj)

34 | if !nillableKinds[val.Kind()] || !val.IsNil() {

35 | t.Helper()

36 | t.Fatalf("expected nil; got %+v", obj)

37 | }

38 | }

39 |

40 | // NotNil fails the test when obj is nil.

41 | func NotNil(t testing.TB, obj interface{}) {

42 | val := reflect.ValueOf(obj)

43 | if obj == nil || (nillableKinds[val.Kind()] && val.IsNil()) {

44 | t.Helper()

45 | t.Fatalf("expected not nil; got %+v", obj)

46 | }

47 | }

48 |

49 | const pollingInterval = time.Millisecond * 10 // How often CompleteWithin polls the cond function.

50 |

51 | // CompleteWithin fails the test when cond doesn't succeed within waitDur.

52 | func CompleteWithin(t testing.TB, waitDur time.Duration, cond func() bool) {

53 | start := time.Now()

54 | for time.Since(start) < waitDur {

55 | if cond() {

56 | return

57 | }

58 | time.Sleep(pollingInterval)

59 | }

60 | t.Helper()

61 | t.Fatalf("expected to complete within %v", waitDur)

62 | }

63 |

64 | // Panic fails the test when the test doesn't panic with the expected message.

65 | func Panic(t testing.TB, expectedMessage string, f func()) {

66 | t.Helper()

67 | var message interface{}

68 | func() {

69 | defer func() {

70 | message = recover()

71 | }()

72 | f()

73 | }()

74 | Equal(t, expectedMessage, message)

75 | }

76 |

--------------------------------------------------------------------------------

/file.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "io"

5 | "os"

6 |

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | // file is a database file.

11 | // When stored in a file system, the file starts with a header.

12 | type file struct {

13 | fs.File

14 | size int64

15 | }

16 |

17 | type openFileFlags struct {

18 | truncate bool

19 | readOnly bool

20 | }

21 |

22 | func openFile(fsyst fs.FileSystem, name string, flags openFileFlags) (*file, error) {

23 | var flag int

24 | if flags.readOnly {

25 | flag = os.O_RDONLY

26 | } else {

27 | flag = os.O_CREATE | os.O_RDWR

28 | if flags.truncate {

29 | flag |= os.O_TRUNC

30 | }

31 | }

32 | fi, err := fsyst.OpenFile(name, flag, os.FileMode(0640))

33 | f := &file{}

34 | if err != nil {

35 | return f, err

36 | }

37 | clean := fi.Close

38 | defer func() {

39 | if clean != nil {

40 | _ = clean()

41 | }

42 | }()

43 | f.File = fi

44 | stat, err := fi.Stat()

45 | if err != nil {

46 | return f, err

47 | }

48 | f.size = stat.Size()

49 | if f.size == 0 {

50 | // It's a new file - write header.

51 | if err := f.writeHeader(); err != nil {

52 | return nil, err

53 | }

54 | } else {

55 | if err := f.readHeader(); err != nil {

56 | return nil, err

57 | }

58 | }

59 | if _, err := f.Seek(int64(headerSize), io.SeekStart); err != nil {

60 | return nil, err

61 | }

62 | clean = nil

63 | return f, nil

64 | }

65 |

66 | func (f *file) writeHeader() error {

67 | h := newHeader()

68 | data, err := h.MarshalBinary()

69 | if err != nil {

70 | return err

71 | }

72 | if _, err = f.append(data); err != nil {

73 | return err

74 | }

75 | return nil

76 | }

77 |

78 | func (f *file) readHeader() error {

79 | h := &header{}

80 | buf := make([]byte, headerSize)

81 | if _, err := io.ReadFull(f, buf); err != nil {

82 | return err

83 | }

84 | return h.UnmarshalBinary(buf)

85 | }

86 |

87 | func (f *file) empty() bool {

88 | return f.size == int64(headerSize)

89 | }

90 |

91 | func (f *file) extend(size uint32) (int64, error) {

92 | off := f.size

93 | if err := f.Truncate(off + int64(size)); err != nil {

94 | return 0, err

95 | }

96 | f.size += int64(size)

97 | return off, nil

98 | }

99 |

100 | func (f *file) append(data []byte) (int64, error) {

101 | off := f.size

102 | if _, err := f.WriteAt(data, off); err != nil {

103 | return 0, err

104 | }

105 | f.size += int64(len(data))

106 | return off, nil

107 | }

108 |

--------------------------------------------------------------------------------

/file_test.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "errors"

5 | "os"

6 | "time"

7 |

8 | "github.com/akrylysov/pogreb/fs"

9 | )

10 |

11 | type errfs struct{}

12 |

13 | func (fs *errfs) OpenFile(name string, flag int, perm os.FileMode) (fs.File, error) {

14 | return &errfile{}, nil

15 | }

16 |

17 | func (fs *errfs) CreateLockFile(name string, perm os.FileMode) (fs.LockFile, bool, error) {

18 | return &errfile{}, false, nil

19 | }

20 |

21 | func (fs *errfs) Stat(name string) (os.FileInfo, error) {

22 | return nil, errfileError

23 | }

24 |

25 | func (fs *errfs) Remove(name string) error {

26 | return errfileError

27 | }

28 |

29 | func (fs *errfs) Rename(oldpath, newpath string) error {

30 | return errfileError

31 | }

32 |

33 | func (fs *errfs) ReadDir(name string) ([]os.DirEntry, error) {

34 | return nil, errfileError

35 | }

36 |

37 | func (fs *errfs) MkdirAll(path string, perm os.FileMode) error {

38 | return errfileError

39 | }

40 |

41 | type errfile struct{}

42 |

43 | var errfileError = errors.New("errfile error")

44 |

45 | func (m *errfile) Close() error {

46 | return errfileError

47 | }

48 |

49 | func (m *errfile) Unlock() error {

50 | return errfileError

51 | }

52 |

53 | func (m *errfile) ReadAt(p []byte, off int64) (int, error) {

54 | return 0, errfileError

55 | }

56 |

57 | func (m *errfile) Read(p []byte) (int, error) {

58 | return 0, errfileError

59 | }

60 |

61 | func (m *errfile) WriteAt(p []byte, off int64) (int, error) {

62 | return 0, errfileError

63 | }

64 |

65 | func (m *errfile) Write(p []byte) (int, error) {

66 | return 0, errfileError

67 | }

68 |

69 | func (m *errfile) Seek(offset int64, whence int) (int64, error) {

70 | return 0, errfileError

71 | }

72 |

73 | func (m *errfile) Stat() (os.FileInfo, error) {

74 | return nil, errfileError

75 | }

76 |

77 | func (m *errfile) Sync() error {

78 | return errfileError

79 | }

80 |

81 | func (m *errfile) Truncate(size int64) error {

82 | return errfileError

83 | }

84 |

85 | func (m *errfile) Name() string {

86 | return "errfile"

87 | }

88 |

89 | func (m *errfile) Size() int64 {

90 | return 0

91 | }

92 |

93 | func (m *errfile) Mode() os.FileMode {

94 | return os.FileMode(0)

95 | }

96 |

97 | func (m *errfile) ModTime() time.Time {

98 | return time.Now()

99 | }

100 |

101 | func (m *errfile) IsDir() bool {

102 | return false

103 | }

104 |

105 | func (m *errfile) Sys() interface{} {

106 | return errfileError

107 | }

108 |

109 | func (m *errfile) Slice(start int64, end int64) ([]byte, error) {

110 | return nil, errfileError

111 | }

112 |

113 | func (m *errfile) Mmap(fileSize int64, mappingSize int64) error {

114 | return errfileError

115 | }

116 |

117 | func (m *errfile) Munmap() error {

118 | return errfileError

119 | }

120 |

121 | // Compile time interface assertion.

122 | var _ fs.File = &errfile{}

123 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Pogreb

4 | [pkg.go.dev](https://pkg.go.dev/github.com/akrylysov/pogreb)

5 | [GitHub Actions](https://github.com/akrylysov/pogreb/actions)

6 | [Go Report Card](https://goreportcard.com/report/github.com/akrylysov/pogreb)

7 | [Codecov](https://codecov.io/gh/akrylysov/pogreb)

8 |

9 | Pogreb is an embedded key-value store for read-heavy workloads written in Go.

10 |

11 | ## Key characteristics

12 |

13 | - 100% Go.

14 | - Optimized for fast random lookups and infrequent bulk inserts.

15 | - Can store larger-than-memory data sets.

16 | - Low memory usage.

17 | - All DB methods are safe for concurrent use by multiple goroutines.

18 |

19 | ## Installation

20 |

21 | ```sh

22 | $ go get -u github.com/akrylysov/pogreb

23 | ```

24 |

25 | ## Usage

26 |

27 | ### Opening a database

28 |

29 | To open or create a new database, use the `pogreb.Open()` function:

30 |

31 | ```go

32 | package main

33 |

34 | import (

35 | "log"

36 |

37 | "github.com/akrylysov/pogreb"

38 | )

39 |

40 | func main() {

41 | db, err := pogreb.Open("pogreb.test", nil)

42 | if err != nil {

43 | log.Fatal(err)

44 | return

45 | }

46 | defer db.Close()

47 | }

48 | ```

49 |

50 | ### Writing to a database

51 |

52 | Use the `DB.Put()` function to insert a new key-value pair:

53 |

54 | ```go

55 | err := db.Put([]byte("testKey"), []byte("testValue"))

56 | if err != nil {

57 | log.Fatal(err)

58 | }

59 | ```

60 |

61 | ### Reading from a database

62 |

63 | To retrieve the inserted value, use the `DB.Get()` function:

64 |

65 | ```go

66 | val, err := db.Get([]byte("testKey"))

67 | if err != nil {

68 | log.Fatal(err)

69 | }

70 | log.Printf("%s", val)

71 | ```

72 |

73 | ### Deleting from a database

74 |

75 | Use the `DB.Delete()` function to delete a key-value pair:

76 |

77 | ```go

78 | err := db.Delete([]byte("testKey"))

79 | if err != nil {

80 | log.Fatal(err)

81 | }

82 | ```

83 |

84 | ### Iterating over items

85 |

86 | To iterate over items, use `ItemIterator` returned by `DB.Items()`:

87 |

88 | ```go

89 | it := db.Items()

90 | for {

91 | key, val, err := it.Next()

92 | if err == pogreb.ErrIterationDone {

93 | break

94 | }

95 | if err != nil {

96 | log.Fatal(err)

97 | }

98 | log.Printf("%s %s", key, val)

99 | }

100 | ```

101 |

102 | ## Performance

103 |

104 | The benchmarking code can be found in the [pogreb-bench](https://github.com/akrylysov/pogreb-bench) repository.

105 |

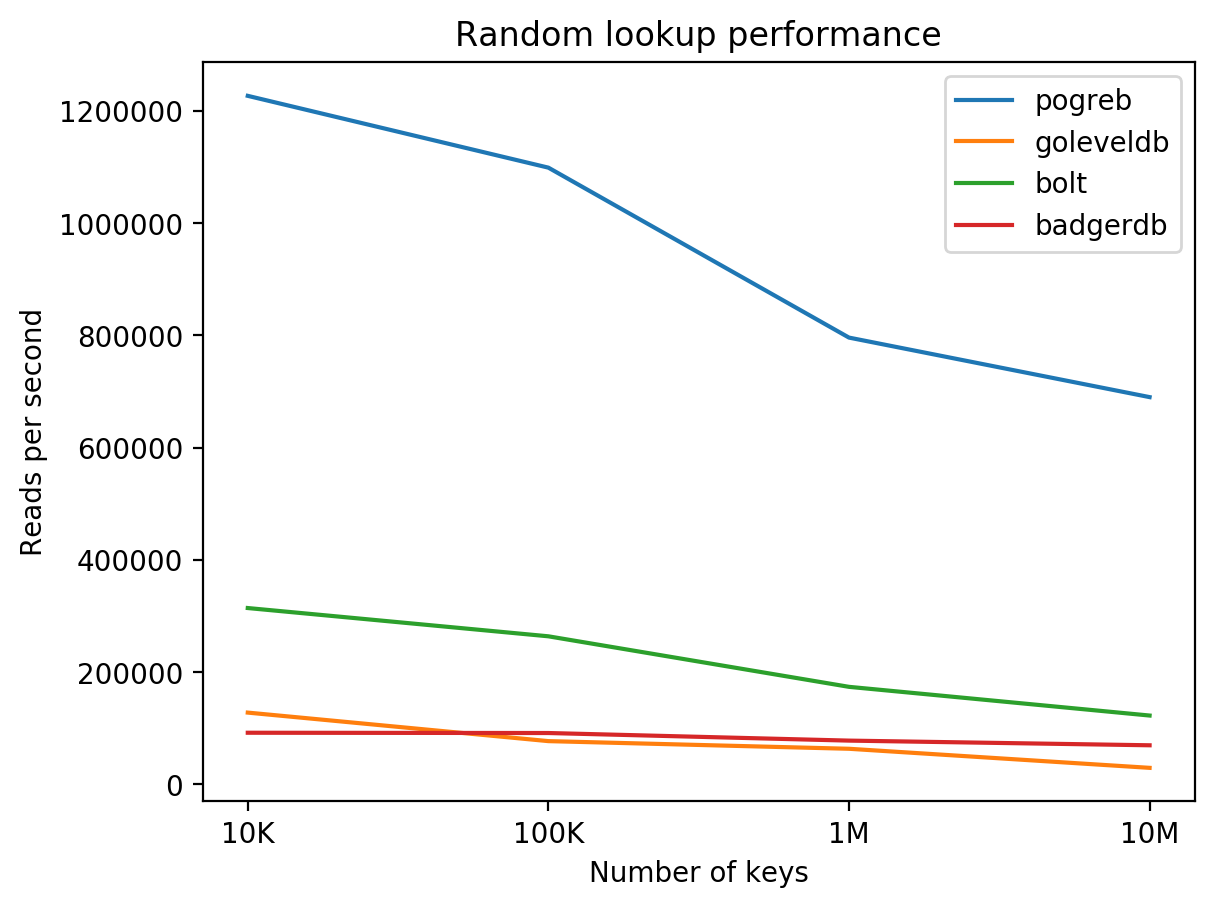

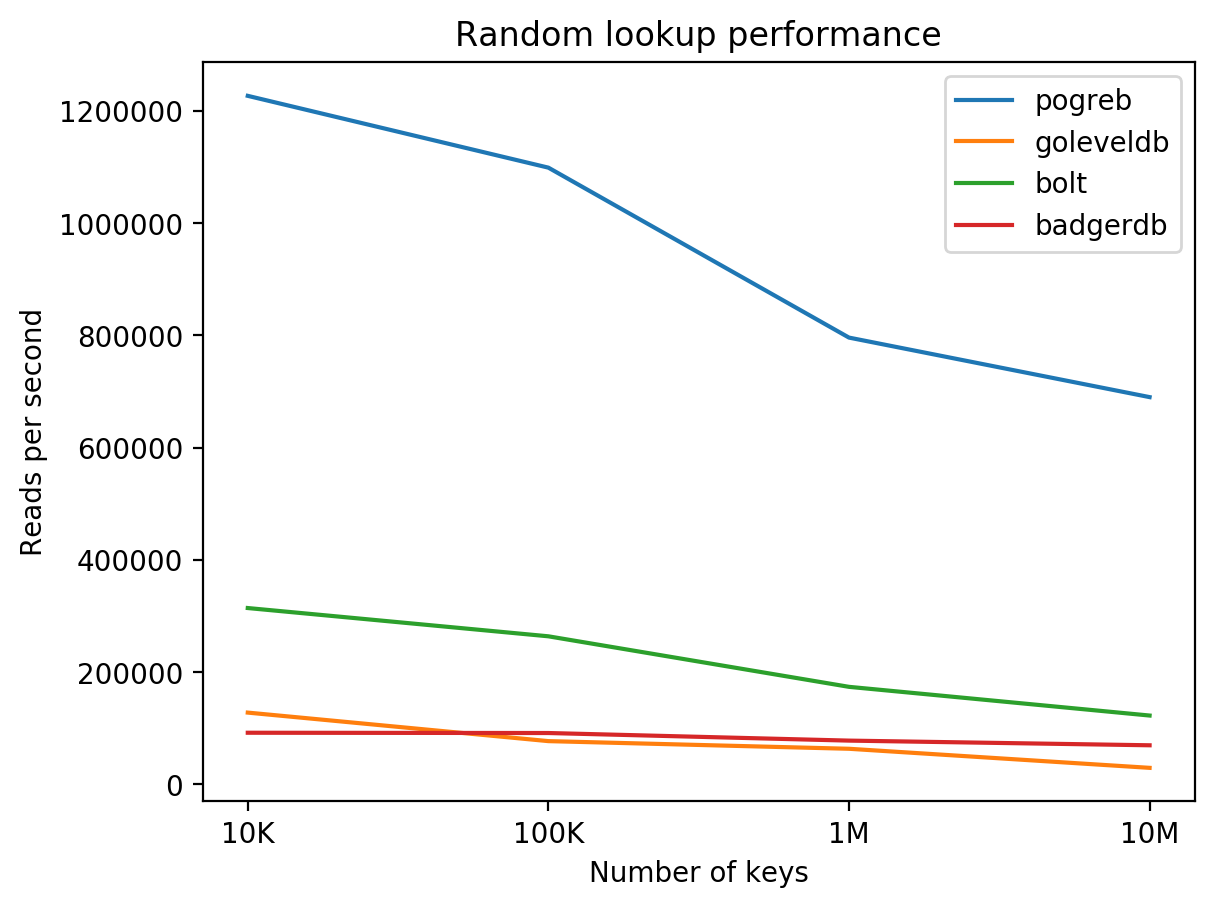

106 | Results of read performance benchmark of pogreb, goleveldb, bolt and badgerdb

107 | on DigitalOcean 8 CPUs / 16 GB RAM / 160 GB SSD + Ubuntu 16.04.3 (higher is better):

108 |

109 |

110 |

111 | ## Internals

112 |

113 | [Design document](/docs/design.md).

114 |

115 | ## Limitations

116 |

117 | The design choices made to optimize for point lookups bring limitations for other potential use-cases. For example, using a hash table for indexing makes range scans impossible. Additionally, having a single hash table shared across all WAL segments makes the recovery process require rebuilding the entire index, which may be impractical for large databases.
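
Because the index is a hash table, a prefix or range query can only be emulated by scanning every item and filtering. The sketch below illustrates that cost; it is not part of pogreb. The names `itemIterator`, `scanPrefix` and `sliceIterator` are hypothetical, the interface merely mirrors the `Next()` signature of `ItemIterator`, and `errDone` stands in for `pogreb.ErrIterationDone` so the example runs without the library:

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
)

// errDone stands in for pogreb.ErrIterationDone in this self-contained sketch.
var errDone = errors.New("no more items in iterator")

// itemIterator is a hypothetical interface matching the Next method of ItemIterator.
type itemIterator interface {
	Next() (key, value []byte, err error)
}

// scanPrefix walks every item and collects the values whose key starts with prefix.
// The cost is linear in the total number of items, no matter how few keys match.
func scanPrefix(it itemIterator, prefix []byte) ([][]byte, error) {
	var out [][]byte
	for {
		key, val, err := it.Next()
		if errors.Is(err, errDone) {
			return out, nil
		}
		if err != nil {
			return nil, err
		}
		if bytes.HasPrefix(key, prefix) {
			out = append(out, val)
		}
	}
}

// sliceIterator is an in-memory stand-in for a database iterator (assumption for the demo).
type sliceIterator struct {
	items [][2]string
}

func (s *sliceIterator) Next() ([]byte, []byte, error) {
	if len(s.items) == 0 {
		return nil, nil, errDone
	}
	kv := s.items[0]
	s.items = s.items[1:]
	return []byte(kv[0]), []byte(kv[1]), nil
}

func main() {
	it := &sliceIterator{items: [][2]string{{"user:1", "a"}, {"order:7", "b"}, {"user:2", "c"}}}
	vals, err := scanPrefix(it, []byte("user:"))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d matches\n", len(vals))
}
```

With a real database, the iterator returned by `db.Items()` could be passed in place of `sliceIterator`, provided the sentinel check is changed to `pogreb.ErrIterationDone`. Since items come back in an unspecified order, the results would need to be sorted by the caller if ordering matters.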

--------------------------------------------------------------------------------

/bucket.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "encoding/binary"

5 | )

6 |

7 | const (

8 | bucketSize = 512

9 | slotsPerBucket = 31 // Maximum number of 16-byte slots that fit in a 512-byte bucket alongside the 8-byte overflow offset (31*16 + 8 = 504).

10 | )

11 |

12 | // slot corresponds to a single item in the hash table.

13 | type slot struct {

14 | hash uint32

15 | segmentID uint16

16 | keySize uint16

17 | valueSize uint32

18 | offset uint32 // Offset of the record in a segment.

19 | }

20 |

21 | func (sl slot) kvSize() uint32 {

22 | return uint32(sl.keySize) + sl.valueSize

23 | }

24 |

25 | // bucket is an array of slots.

26 | type bucket struct {

27 | slots [slotsPerBucket]slot

28 | next int64 // Offset of overflow bucket.

29 | }

30 |

31 | // bucketHandle is a bucket, plus its offset and the file it's written to.

32 | type bucketHandle struct {

33 | bucket

34 | file *file

35 | offset int64

36 | }

37 |

38 | func (b bucket) MarshalBinary() ([]byte, error) {

39 | buf := make([]byte, bucketSize)

40 | data := buf

41 | for i := 0; i < slotsPerBucket; i++ {

42 | sl := b.slots[i]

43 | binary.LittleEndian.PutUint32(buf[:4], sl.hash)

44 | binary.LittleEndian.PutUint16(buf[4:6], sl.segmentID)

45 | binary.LittleEndian.PutUint16(buf[6:8], sl.keySize)

46 | binary.LittleEndian.PutUint32(buf[8:12], sl.valueSize)

47 | binary.LittleEndian.PutUint32(buf[12:16], sl.offset)

48 | buf = buf[16:]

49 | }

50 | binary.LittleEndian.PutUint64(buf[:8], uint64(b.next))

51 | return data, nil

52 | }

53 |

54 | func (b *bucket) UnmarshalBinary(data []byte) error {

55 | for i := 0; i < slotsPerBucket; i++ {

56 | _ = data[16] // bounds check hint to compiler; see golang.org/issue/14808

57 | b.slots[i].hash = binary.LittleEndian.Uint32(data[:4])

58 | b.slots[i].segmentID = binary.LittleEndian.Uint16(data[4:6])

59 | b.slots[i].keySize = binary.LittleEndian.Uint16(data[6:8])

60 | b.slots[i].valueSize = binary.LittleEndian.Uint32(data[8:12])

61 | b.slots[i].offset = binary.LittleEndian.Uint32(data[12:16])

62 | data = data[16:]

63 | }

64 | b.next = int64(binary.LittleEndian.Uint64(data[:8]))

65 | return nil

66 | }

67 |

68 | func (b *bucket) del(slotIdx int) {

69 | i := slotIdx

70 | // Shift slots.

71 | for ; i < slotsPerBucket-1; i++ {

72 | b.slots[i] = b.slots[i+1]

73 | }

74 | b.slots[i] = slot{}

75 | }

76 |

77 | func (b *bucketHandle) read() error {

78 | buf, err := b.file.Slice(b.offset, b.offset+int64(bucketSize))

79 | if err != nil {

80 | return err

81 | }

82 | return b.UnmarshalBinary(buf)

83 | }

84 |

85 | func (b *bucketHandle) write() error {

86 | buf, err := b.MarshalBinary()

87 | if err != nil {

88 | return err

89 | }

90 | _, err = b.file.WriteAt(buf, b.offset)

91 | return err

92 | }

93 |

94 | // slotWriter inserts and writes slots into a bucket.

95 | type slotWriter struct {

96 | bucket *bucketHandle

97 | slotIdx int

98 | prevBuckets []*bucketHandle

99 | }

100 |

101 | func (sw *slotWriter) insert(sl slot, idx *index) error {

102 | if sw.slotIdx == slotsPerBucket {

103 | // Bucket is full, create a new overflow bucket.

104 | nextBucket, err := idx.createOverflowBucket()

105 | if err != nil {

106 | return err

107 | }

108 | sw.bucket.next = nextBucket.offset

109 | sw.prevBuckets = append(sw.prevBuckets, sw.bucket)

110 | sw.bucket = nextBucket

111 | sw.slotIdx = 0

112 | }

113 | sw.bucket.slots[sw.slotIdx] = sl

114 | sw.slotIdx++

115 | return nil

116 | }

117 |

118 | func (sw *slotWriter) write() error {

119 | // Write previous buckets first.

120 | for i := len(sw.prevBuckets) - 1; i >= 0; i-- {

121 | if err := sw.prevBuckets[i].write(); err != nil {

122 | return err

123 | }

124 | }

125 | return sw.bucket.write()

126 | }

127 |

--------------------------------------------------------------------------------

/fs/os_mmap.go:

--------------------------------------------------------------------------------

1 | //go:build !plan9

2 |

3 | package fs

4 |

5 | import (

6 | "io"

7 | "os"

8 | )

9 |

10 | const (

11 | initialMmapSize = 1024 << 20 // 1 GiB

12 | )

13 |

14 | type osMMapFS struct {

15 | osFS

16 | }

17 |

18 | // OSMMap is a file system backed by the os package and memory-mapped files.

19 | var OSMMap FileSystem = &osMMapFS{}

20 |

21 | func (fs *osMMapFS) OpenFile(name string, flag int, perm os.FileMode) (File, error) {

22 | if flag&os.O_APPEND != 0 {

23 | // osMMapFS doesn't support opening files in append-only mode.

24 | // The database doesn't currently use O_APPEND.

25 | return nil, errAppendModeNotSupported

26 | }

27 | f, err := os.OpenFile(name, flag, perm)

28 | if err != nil {

29 | return nil, err

30 | }

31 |

32 | stat, err := f.Stat()

33 | if err != nil {

34 | return nil, err

35 | }

36 |

37 | mf := &osMMapFile{

38 | File: f,

39 | size: stat.Size(),

40 | }

41 | if err := mf.mremap(); err != nil {

42 | return nil, err

43 | }

44 | return mf, nil

45 | }

46 |

47 | type osMMapFile struct {

48 | *os.File

49 | data []byte

50 | offset int64

51 | size int64

52 | mmapSize int64

53 | }

54 |

55 | func (f *osMMapFile) WriteAt(p []byte, off int64) (int, error) {

56 | n, err := f.File.WriteAt(p, off)

57 | if err != nil {

58 | return 0, err

59 | }

60 | writeOff := off + int64(n)

61 | if writeOff > f.size {

62 | f.size = writeOff

63 | }

64 | return n, f.mremap()

65 | }

66 |

67 | func (f *osMMapFile) Write(p []byte) (int, error) {

68 | n, err := f.File.Write(p)

69 | if err != nil {

70 | return 0, err

71 | }

72 | f.offset += int64(n)

73 | if f.offset > f.size {

74 | f.size = f.offset

75 | }

76 | return n, f.mremap()

77 | }

78 |

79 | func (f *osMMapFile) Seek(offset int64, whence int) (int64, error) {

80 | off, err := f.File.Seek(offset, whence)

81 | f.offset = off

82 | return off, err

83 | }

84 |

85 | func (f *osMMapFile) Read(p []byte) (int, error) {

86 | n, err := f.File.Read(p)

87 | f.offset += int64(n)

88 | return n, err

89 | }

90 |

91 | func (f *osMMapFile) Slice(start int64, end int64) ([]byte, error) {

92 | if end > f.size {

93 | return nil, io.EOF

94 | }

95 | if f.data == nil {

96 | return nil, os.ErrClosed

97 | }

98 | return f.data[start:end], nil

99 | }

100 |

101 | func (f *osMMapFile) munmap() error {

102 | if f.data == nil {

103 | return nil

104 | }

105 | if err := munmap(f.data); err != nil {

106 | return err

107 | }

108 | f.data = nil

109 | f.mmapSize = 0

110 | return nil

111 | }

112 |

113 | func (f *osMMapFile) mmap(fileSize int64, mappingSize int64) error {

114 | if f.data != nil {

115 | if err := munmap(f.data); err != nil {

116 | return err

117 | }

118 | }

119 |

120 | data, err := mmap(f.File, fileSize, mappingSize)

121 | if err != nil {

122 | return err

123 | }

124 |

125 | _ = madviceRandom(data)

126 |

127 | f.data = data

128 | return nil

129 | }

130 |

131 | func (f *osMMapFile) mremap() error {

132 | mmapSize := f.mmapSize

133 |

134 | if mmapSize >= f.size {

135 | return nil

136 | }

137 |

138 | if mmapSize == 0 {

139 | mmapSize = initialMmapSize

140 | if mmapSize < f.size {

141 | mmapSize = f.size

142 | }

143 | } else {

144 | if err := f.munmap(); err != nil {

145 | return err

146 | }

147 | mmapSize *= 2

148 | }

149 |

150 | if err := f.mmap(f.size, mmapSize); err != nil {

151 | return err

152 | }

153 |

154 | // On Windows mmap may memory-map less than the requested size.

155 | f.mmapSize = int64(len(f.data))

156 |

157 | return nil

158 | }

159 |

160 | func (f *osMMapFile) Close() error {

161 | if err := f.munmap(); err != nil {

162 | return err

163 | }

164 | return f.File.Close()

165 | }

166 |

167 | // DefaultFileSystem returns the default FileSystem for this platform.

168 | func DefaultFileSystem() FileSystem {

169 | return OSMMap

170 | }

171 |

--------------------------------------------------------------------------------

/recovery.go:

--------------------------------------------------------------------------------

1 | package pogreb

2 |

3 | import (

4 | "io"

5 | "path/filepath"

6 |

7 | "github.com/akrylysov/pogreb/fs"

8 | )

9 |

10 | const (

11 | recoveryBackupExt = ".bac"

12 | )

13 |

14 | func backupNonsegmentFiles(fsys fs.FileSystem) error {

15 | logger.Println("moving non-segment files...")

16 |

17 | files, err := fsys.ReadDir(".")

18 | if err != nil {

19 | return err

20 | }

21 |

22 | for _, file := range files {

23 | name := file.Name()

24 | ext := filepath.Ext(name)

25 | if ext == segmentExt || name == lockName {

26 | continue

27 | }

28 | dst := name + recoveryBackupExt

29 | if err := fsys.Rename(name, dst); err != nil {

30 | return err

31 | }

32 | logger.Printf("moved %s to %s", name, dst)

33 | }

34 |

35 | return nil

36 | }

37 |

38 | func removeRecoveryBackupFiles(fsys fs.FileSystem) error {

39 | logger.Println("removing recovery backup files...")

40 |

41 | files, err := fsys.ReadDir(".")

42 | if err != nil {

43 | return err

44 | }

45 |

46 | for _, file := range files {

47 | name := file.Name()

48 | ext := filepath.Ext(name)

49 | if ext != recoveryBackupExt {

50 | continue

51 | }

52 | if err := fsys.Remove(name); err != nil {

53 | return err

54 | }

55 | logger.Printf("removed %s", name)

56 | }

57 |

58 | return nil

59 | }

60 |

61 | // recoveryIterator iterates over records of all datalog segments in insertion order.

62 | // Corrupted segments are truncated to the last valid record.

63 | type recoveryIterator struct {

64 | segments []*segment

65 | segit *segmentIterator

66 | }

67 |

68 | func newRecoveryIterator(segments []*segment) *recoveryIterator {

69 | return &recoveryIterator{

70 | segments: segments,

71 | }

72 | }

73 |

74 | func (it *recoveryIterator) next() (record, error) {

75 | for {

76 | if it.segit == nil {

77 | if len(it.segments) == 0 {

78 | return record{}, ErrIterationDone

79 | }

80 | var err error

81 | it.segit, err = newSegmentIterator(it.segments[0])

82 | if err != nil {

83 | return record{}, err

84 | }

85 | it.segments = it.segments[1:]

86 | }

87 | rec, err := it.segit.next()

88 | if err == io.EOF || err == io.ErrUnexpectedEOF || err == errCorrupted {

89 | // Truncate file to the last valid offset.

90 | if err := it.segit.f.Truncate(int64(it.segit.offset)); err != nil {

91 | return record{}, err

92 | }

93 | fi, fierr := it.segit.f.Stat()

94 | if fierr != nil {

95 | return record{}, fierr

96 | }

97 | logger.Printf("truncated segment %s to offset %d", fi.Name(), it.segit.offset)

98 | err = ErrIterationDone

99 | }

100 | if err == ErrIterationDone {

101 | it.segit = nil

102 | continue

103 | }

104 | if err != nil {

105 | return record{}, err

106 | }

107 | return rec, nil

108 | }

109 | }

110 |

111 | func (db *DB) recover() error {

112 | logger.Println("started recovery")

113 | logger.Println("rebuilding index...")

114 |

115 | segments := db.datalog.segmentsBySequenceID()

116 | it := newRecoveryIterator(segments)

117 | for {

118 | rec, err := it.next()

119 | if err == ErrIterationDone {

120 | break

121 | }

122 | if err != nil {

123 | return err

124 | }

125 |

126 | h := db.hash(rec.key)

127 | meta := db.datalog.segments[rec.segmentID].meta

128 | if rec.rtype == recordTypePut {

129 | sl := slot{

130 | hash: h,

131 | segmentID: rec.segmentID,

132 | keySize: uint16(len(rec.key)),

133 | valueSize: uint32(len(rec.value)),

134 | offset: rec.offset,

135 | }

136 | if err := db.put(sl, rec.key); err != nil {

137 | return err

138 | }

139 | meta.PutRecords++

140 | } else {