├── .VERSION
├── .github
│   └── workflows
│       ├── ci.yml
│       └── publish.yml
├── .gitignore
├── LICENSE
├── README.md
├── codenav
│   ├── __init__.py
│   ├── agents
│   │   ├── __init__.py
│   │   ├── agent.py
│   │   ├── cohere
│   │   │   ├── __init__.py
│   │   │   └── agent.py
│   │   ├── gpt4
│   │   │   ├── __init__.py
│   │   │   └── agent.py
│   │   ├── interaction_formatters.py
│   │   └── llm_chat_agent.py
│   ├── codenav_run.py
│   ├── constants.py
│   ├── default_eval_spec.py
│   ├── environments
│   │   ├── __init__.py
│   │   ├── abstractions.py
│   │   ├── code_env.py
│   │   ├── code_summary_env.py
│   │   ├── done_env.py
│   │   └── retrieval_env.py
│   ├── interaction
│   │   ├── __init__.py
│   │   ├── episode.py
│   │   └── messages.py
│   ├── prompts
│   │   ├── __init__.py
│   │   ├── codenav
│   │   │   └── repo_description.txt
│   │   ├── default
│   │   │   ├── action__code.txt
│   │   │   ├── action__done.txt
│   │   │   ├── action__es_search.txt
│   │   │   ├── action__guidelines.txt
│   │   │   ├── action__preamble.txt
│   │   │   ├── overview.txt
│   │   │   ├── repo_description.txt
│   │   │   ├── response__code.txt
│   │   │   ├── response__done.txt
│   │   │   ├── response__es_search.txt
│   │   │   ├── response__preamble.txt
│   │   │   └── workflow.txt
│   │   ├── query_prompt.py
│   │   └── restart_prompt.py
│   ├── retrieval
│   │   ├── __init__.py
│   │   ├── code_blocks.py
│   │   ├── code_summarizer.py
│   │   └── elasticsearch
│   │       ├── README.md
│   │       ├── __init__.py
│   │       ├── create_index.py
│   │       ├── debug_add_item_to_index.py
│   │       ├── elasticsearch.yml
│   │       ├── elasticsearch_constants.py
│   │       ├── elasticsearch_retriever.py
│   │       ├── index_codebase.py
│   │       └── install_elasticsearch.py
│   └── utils
│       ├── __init__.py
│       ├── config_params.py
│       ├── eval_types.py
│       ├── evaluator.py
│       ├── hashing_utils.py
│       ├── linting_and_type_checking_utils.py
│       ├── llm_utils.py
│       ├── logging_utils.py
│       ├── omegaconf_utils.py
│       ├── parsing_utils.py
│       ├── prompt_utils.py
│       └── string_utils.py
├── codenav_examples
│   ├── create_code_env.py
│   ├── create_episode.py
│   ├── create_index.py
│   ├── create_prompt.py
│   └── parallel_evaluation.py
├── playground
│   └── .gitignore
├── pyproject.toml
├── requirements.txt
├── scripts
│   └── release.py
└── setup.py

/.VERSION:

--------------------------------------------------------------------------------

1 | 0.0.1

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI
2 |
3 | concurrency:
4 |   group: ${{ github.workflow }}-${{ github.ref }}
5 |   cancel-in-progress: true
6 |
7 | on:
8 |   pull_request:
9 |     branches:
10 |       - main
11 |   push:
12 |     branches:
13 |       - main
14 |
15 | env:
16 |   # Change this to invalidate existing cache.
17 |   CACHE_PREFIX: v0
18 |   PYTHON_PATH: ./
19 |
20 | jobs:
21 |   checks:
22 |     name: python ${{ matrix.python }} - ${{ matrix.task.name }}
23 |     runs-on: [ubuntu-latest]
24 |     timeout-minutes: 30
25 |     strategy:
26 |       fail-fast: false
27 |       matrix:
28 |         python: [3.9]
29 |         task:
30 |           - name: Style
31 |             run: |
32 |               black --check .
33 |
34 |           # TODO: Add testing back in after fixing the tests for use with GH actions.
35 |           # - name: Test
36 |           #   run: |
37 |           #     pytest -v --color=yes tests/
38 |
39 |     steps:
40 |       - uses: actions/checkout@v3
41 |
42 |       - name: Setup Python
43 |         uses: actions/setup-python@v4
44 |         with:
45 |           python-version: ${{ matrix.python }}
46 |
47 |       - name: Install prerequisites
48 |         run: |
49 |           pip install --upgrade pip setuptools wheel virtualenv
50 |
51 |       - name: Set build variables
52 |         shell: bash
53 |         run: |
54 |           # Get the exact Python version to use in the cache key.
55 |           echo "PYTHON_VERSION=$(python --version)" >> $GITHUB_ENV
56 |           echo "RUNNER_ARCH=$(uname -m)" >> $GITHUB_ENV
57 |           # Use week number in cache key so we can refresh the cache weekly.
58 |           echo "WEEK_NUMBER=$(date +%V)" >> $GITHUB_ENV
59 |
60 |       - uses: actions/cache@v3
61 |         id: virtualenv-cache
62 |         with:
63 |           path: .venv
64 |           key: ${{ env.CACHE_PREFIX }}-${{ env.WEEK_NUMBER }}-${{ runner.os }}-${{ env.RUNNER_ARCH }}-${{ env.PYTHON_VERSION }}-${{ hashFiles('requirements.txt') }}
65 |           restore-keys: |
66 |             ${{ env.CACHE_PREFIX }}-${{ env.WEEK_NUMBER }}-${{ runner.os }}-${{ env.RUNNER_ARCH }}-${{ env.PYTHON_VERSION }}-
67 |
68 |       - name: Setup virtual environment (no cache hit)
69 |         if: steps.virtualenv-cache.outputs.cache-hit != 'true'
70 |         run: |
71 |           test -d .venv || virtualenv -p $(which python) --copies --reset-app-data .venv
72 |           . .venv/bin/activate
73 |           pip install -e .[dev]
74 |
75 |       - name: Setup virtual environment (cache hit)
76 |         if: steps.virtualenv-cache.outputs.cache-hit == 'true'
77 |         run: |
78 |           . .venv/bin/activate
79 |           pip install --no-deps -e .[dev]
80 |
81 |       - name: Show environment info
82 |         run: |
83 |           . .venv/bin/activate
84 |           which python
85 |           python --version
86 |           pip freeze
87 |
88 |       - name: ${{ matrix.task.name }}
89 |         run: |
90 |           . .venv/bin/activate
91 |           ${{ matrix.task.run }}
92 |
93 |       - name: Clean up
94 |         if: always()
95 |         run: |
96 |           . .venv/bin/activate
97 |           pip uninstall -y codenav
98 |
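The cache key in this workflow is built from a manual prefix, the ISO week number, the runner OS and architecture, the exact Python version string, and a hash of `requirements.txt`; the restore key is the same string without the hash. The Python sketch below mirrors that composition for illustration only. `week_number`, `ci_restore_key`, and `ci_cache_key` are hypothetical helpers, and plain SHA-256 over the file bytes stands in for GitHub's `hashFiles`, which uses its own scheme, so the digests will not match real cache keys.

```python
import datetime
import hashlib


def week_number(day: datetime.date) -> str:
    """ISO-8601 week number, zero-padded to two digits like `date +%V`."""
    return f"{day.isocalendar()[1]:02d}"


def ci_restore_key(
    prefix: str, day: datetime.date, runner_os: str, runner_arch: str, python_version: str
) -> str:
    """Hypothetical mirror of the `restore-keys` entry: every key part except the file hash."""
    return "-".join([prefix, week_number(day), runner_os, runner_arch, python_version]) + "-"


def ci_cache_key(
    prefix: str,
    day: datetime.date,
    runner_os: str,
    runner_arch: str,
    python_version: str,
    requirements: bytes,
) -> str:
    """Hypothetical mirror of the `key` entry.

    SHA-256 of the raw bytes stands in for `hashFiles('requirements.txt')`,
    so the digest will not match the one GitHub Actions computes.
    """
    digest = hashlib.sha256(requirements).hexdigest()
    return ci_restore_key(prefix, day, runner_os, runner_arch, python_version) + digest
```

Because the restore key drops only the hash, a run whose `requirements.txt` changed can still restore the same week's `.venv` through the prefix match; `cache-hit` is then not `'true'`, so the "no cache hit" step skips creating the existing `.venv` and reinstalls on top of it. Bumping `CACHE_PREFIX` or crossing into a new ISO week forces a cold start.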

--------------------------------------------------------------------------------

/.github/workflows/publish.yml:

--------------------------------------------------------------------------------

1 | # This workflow will upload the codenav package using Twine (after manually triggering it)
2 | # For more information see: https://help.github.com/en/actions/language-and-framework-guides/using-python-with-github-actions#publishing-to-package-registries
3 |
4 | name: Publish PYPI Packages
5 |
6 | on:
7 |   workflow_dispatch:
8 |
9 | jobs:
10 |   deploy:
11 |
12 |     runs-on: ubuntu-latest
13 |
14 |     steps:
15 |       - uses: actions/checkout@v2
16 |       - name: Set up Python
17 |         uses: actions/setup-python@v2
18 |         with:
19 |           python-version: '3.9'
20 |       - name: Install dependencies
21 |         run: |
22 |           python -m pip install --upgrade pip
23 |           pip install setuptools twine
24 |       - name: Build and publish
25 |         env:
26 |           TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}
27 |         run: |
28 |           python scripts/release.py
29 |           twine upload -u __token__ dist/*

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 |

29 | # PyInstaller

30 | # Usually these files are written by a python script from a template

31 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

32 | *.manifest

33 | *.spec

34 |

35 | # Installer logs

36 | pip-log.txt

37 | pip-delete-this-directory.txt

38 |

39 | # Unit test / coverage reports

40 | htmlcov/

41 | .tox/

42 | .nox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | *.py,cover

50 | .hypothesis/

51 | .pytest_cache/

52 | cover/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | .pybuilder/

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | # For a library or package, you might want to ignore these files since the code is

87 | # intended to run in multiple environments; otherwise, check them in:

88 | # .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # poetry

98 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

99 | # This is especially recommended for binary packages to ensure reproducibility, and is more

100 | # commonly ignored for libraries.

101 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

102 | #poetry.lock

103 |

104 | # pdm

105 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

106 | #pdm.lock

107 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

108 | # in version control.

109 | # https://pdm.fming.dev/#use-with-ide

110 | .pdm.toml

111 |

112 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

113 | __pypackages__/

114 |

115 | # Celery stuff

116 | celerybeat-schedule

117 | celerybeat.pid

118 |

119 | # SageMath parsed files

120 | *.sage.py

121 |

122 | # Environments

123 | .env

124 | .venv

125 | env/

126 | venv/

127 | ENV/

128 | env.bak/

129 | venv.bak/

130 |

131 | # Spyder project settings

132 | .spyderproject

133 | .spyproject

134 |

135 | # Rope project settings

136 | .ropeproject

137 |

138 | # mkdocs documentation

139 | /site

140 |

141 | # mypy

142 | .mypy_cache/

143 | .dmypy.json

144 | dmypy.json

145 |

146 | # Pyre type checker

147 | .pyre/

148 |

149 | # pytype static type analyzer

150 | .pytype/

151 |

152 | # Cython debug symbols

153 | cython_debug/

154 |

155 | # PyCharm

156 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

157 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

158 | # and can be added to the global gitignore or merged into this file. For a more nuclear

159 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

160 | #.idea/

161 |

162 | rag_cache

163 | __tmp*

164 | wandb

165 | tmp

166 | external_src

167 | codenav/external_src

168 | file_lock

169 | tmp.py

170 | *.jpg

171 | *.png

172 | rsync-to-*

173 | results

174 | *_out

175 | .vscode

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | Copyright 2024 Allen Institute for AI

179 |

180 | Licensed under the Apache License, Version 2.0 (the "License");

181 | you may not use this file except in compliance with the License.

182 | You may obtain a copy of the License at

183 |

184 | http://www.apache.org/licenses/LICENSE-2.0

185 |

186 | Unless required by applicable law or agreed to in writing, software

187 | distributed under the License is distributed on an "AS IS" BASIS,

188 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

189 | See the License for the specific language governing permissions and

190 | limitations under the License.

191 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

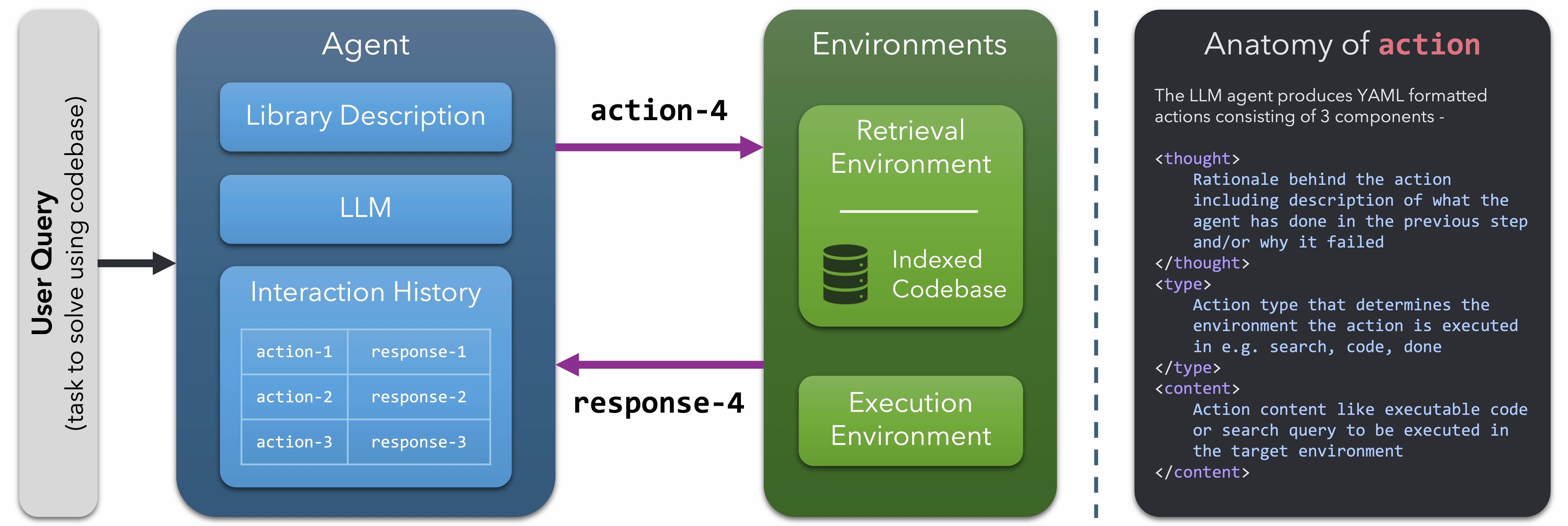

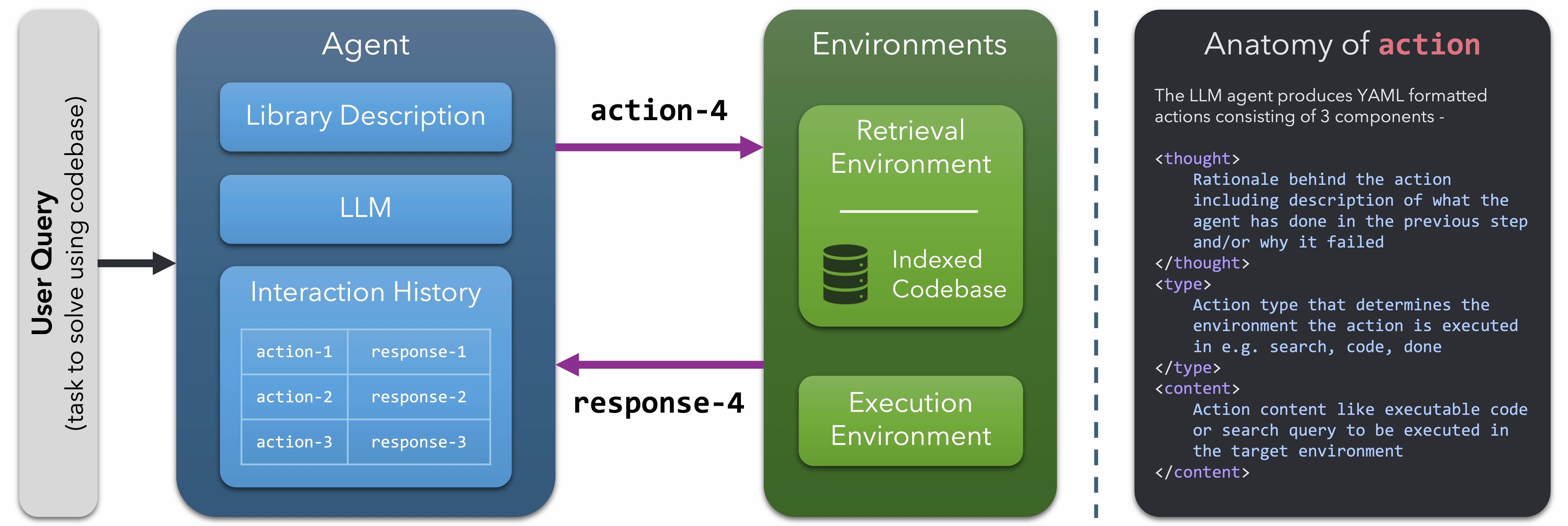

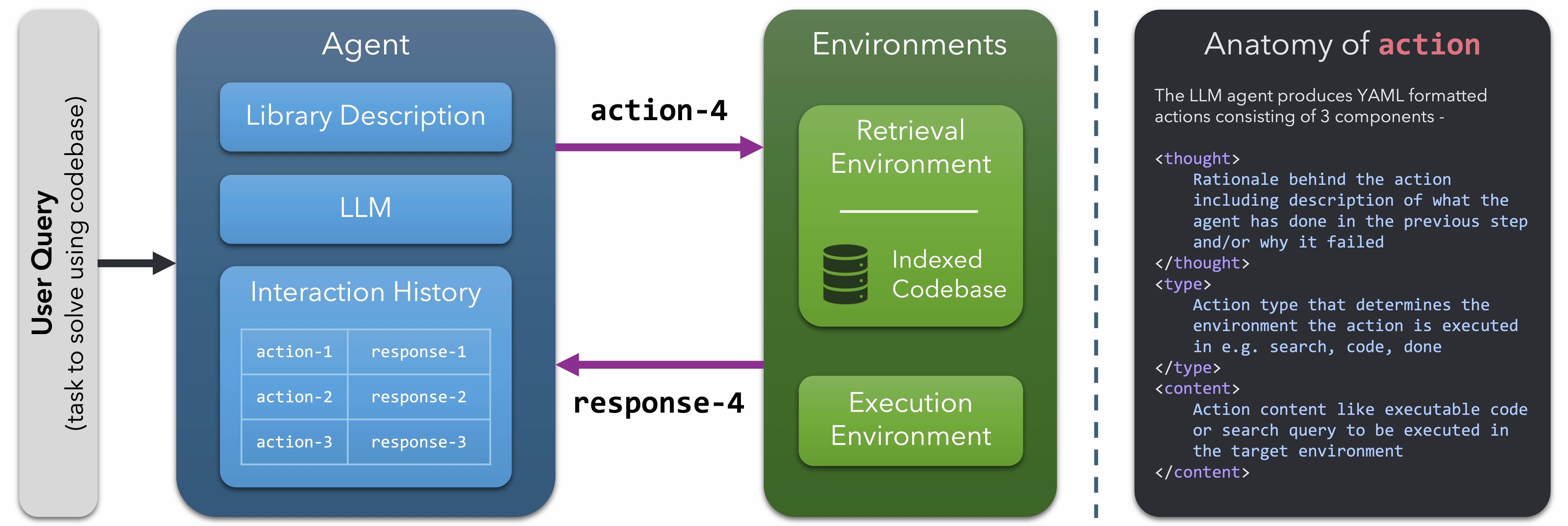

1 | # [CodeNav: Beyond tool-use to using real-world codebases with LLM agents 🚀](https://codenav.allenai.org/)

2 |

3 |

4 |

5 |

46 | Click to see DoneEnv.py contents

47 |

48 | class DoneEnv(CodeNavEnv):
49 |     """
50 |     DoneEnv is an environment class that handles the 'done' action in the CodeNav framework.
51 |
52 |     Methods:
53 |         check_action_validity(action: CodeNavAction) -> Tuple[bool, str]:
54 |             Checks if the given action is valid for the 'done' action.
55 |
56 |         step(action: CodeNavAction) -> None:
57 |             Executes the 'done' action.
58 |     """
59 |     def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
60 |         """
61 |         Checks if the given action is valid for the 'done' action.
62 |
63 |         Args:
64 |             action (CodeNavAction): The action to be validated.
65 |
66 |         Returns:
67 |             Tuple[bool, str]: A tuple containing a boolean indicating validity and an error message if invalid.
68 |         """
69 |         assert action.content is not None
70 |
71 |         if action.content.strip().lower() in ["true", "false"]:
72 |             return True, ""
73 |         else:
74 |             return (
75 |                 False,
76 |                 "When executing the done action, the content must be either 'True' or 'False'",
77 |             )
78 |
79 |     def step(self, action: CodeNavAction) -> None:
80 |         """
81 |         Executes the 'done' action.
82 |
83 |         Args:
84 |             action (CodeNavAction): The action to be executed.
85 |         """
86 |         return None

87 |

88 |
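The `check_action_validity` rule above accepts only `True` or `False` as the content of a `done` action, ignoring case and surrounding whitespace. A standalone sketch of the same check, using a hypothetical function name and no CodeNav imports, makes the accepted inputs explicit:

```python
from typing import Tuple


def check_done_content(content: str) -> Tuple[bool, str]:
    """Hypothetical mirror of DoneEnv.check_action_validity on the raw content string."""
    # Normalise whitespace and case before comparing, exactly as DoneEnv does.
    if content.strip().lower() in ("true", "false"):
        return True, ""
    return (
        False,
        "When executing the done action, the content must be either 'True' or 'False'",
    )
```

So `" TRUE\n"` and `"false"` pass, while anything else, such as `"yes"`, is rejected with the same error message DoneEnv returns.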

89 | Note: the `codenav` command-line tool is simply an alias for running the [codenav_run.py](codenav%2Fcodenav_run.py) script, so
90 | you can replace `codenav ...` with `python -m codenav.codenav_run ...`
91 | or `python /path/to/codenav/codenav_run.py ...` and obtain the same results.

92 |

93 | Here's a more detailed description of the arguments you can pass to `codenav query` or `python -m codenav.codenav_run query`:

94 | | Argument | Type | Description |

95 | | --- | --- | --- |

96 | | `--code_dir` | str | The path to the codebase you want CodeNav to use. By default, all files in this directory will get indexed with relative file paths. For instance, if you set `--code_dir /Users/tanmay/codebase` and it contains a `computer_vision/tool.py` file, then this file will be indexed with the relative path `computer_vision/tool.py` |
97 | | `--force_subdir` | str | If you only want to index a subdirectory within `code_dir`, set this to the name of that subdirectory |

98 | | `--module` | str | If you have a module installed e.g. via `pip install transformers` and you want CodeNav to use this module, you can simply set `--module transformers` instead of providing `--code_dir` |

99 | | `--repo_description_path` | str | If you have a README file or a file with a description of the codebase you are using, you can provide the path to this file here. You may use this file to point out to CodeNav the high-level purpose and structure of the codebase (e.g. highlight important directories, files, classes or functions) |

100 | | `--force_reindex` | bool | Set this flag if you want to force CodeNav to reindex the codebase. Otherwise, CodeNav will reuse an existing index if it exists or create one if it doesn't |

101 | | `--playground_dir` | str | The path specified here will work as the current directory for CodeNav's execution environment |

102 | | `--query` | str | The query you want CodeNav to solve using the codebase |

103 | | `--query_file` | str | If your query is long, you may want to save it to a txt file and provide the path to the text file here |

104 | | `--max_steps` | int | The maximum number of interactions allowed between the CodeNav agent and its environments |
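
The flags in the table map directly onto an argument list, so a query can also be launched from Python with `subprocess`. The helper below is a hypothetical convenience sketch, not part of CodeNav. It uses the `python -m codenav.codenav_run query` form from the note above, treats `--module` and `--code_dir` as mutually exclusive (the table presents `--module` as an alternative to `--code_dir`, but that is our reading), and assumes `--force_reindex` is a switch that takes no value.

```python
import sys
from typing import List, Optional


def build_query_command(
    query: str,
    code_dir: Optional[str] = None,
    module: Optional[str] = None,
    playground_dir: Optional[str] = None,
    max_steps: Optional[int] = None,
    force_reindex: bool = False,
) -> List[str]:
    """Hypothetical helper: argv for `python -m codenav.codenav_run query ...`."""
    # Assumption: exactly one source of code, either a directory or an installed module.
    if (code_dir is None) == (module is None):
        raise ValueError("pass exactly one of code_dir or module")
    cmd = [sys.executable, "-m", "codenav.codenav_run", "query", "--query", query]
    if code_dir is not None:
        cmd += ["--code_dir", code_dir]
    else:
        cmd += ["--module", str(module)]
    if playground_dir is not None:
        cmd += ["--playground_dir", playground_dir]
    if max_steps is not None:
        cmd += ["--max_steps", str(max_steps)]
    if force_reindex:
        # Assumed to be a boolean switch that takes no value.
        cmd.append("--force_reindex")
    return cmd
```

For example, `subprocess.run(build_query_command("Find the DoneEnv and instantiate it", code_dir="/path/to/codebase", max_steps=20), check=True)` would run a single query, provided the Elasticsearch server has already been started with `init`.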

105 |

106 |

107 | ### CodeNav as a library

108 |

109 | If you'd like to use CodeNav programmatically, you can do so by importing the `codenav` module and using the various

110 | functions/classes we provide. To get a sense of how this is done, we provide a number of example scripts

111 | under the [codenav_examples](codenav_examples) directory:

112 | - [create_index.py](codenav_examples%2Fcreate_index.py): Creates an Elasticsearch index for this codebase and then uses the `RetrievalEnv` environment to search for a code snippet.

113 | - [create_episode.py](codenav_examples%2Fcreate_episode.py): Creates an `OpenAICodeNavAgent` agent and then uses it to generate a solution for the query `"Find the DoneEnv and instantiate it"` **on this codebase** (i.e. executes a CodeNav agent on the CodeNav codebase). Be sure to run the `create_index.py` script above to generate the index before running this script.

114 | - [create_code_env.py](codenav_examples%2Fcreate_code_env.py): Creates a `PythonCodeEnv` object and then executes a given code string in this environment.
115 | - [create_prompt.py](codenav_examples%2Fcreate_prompt.py): Creates a custom prompt and instantiates a CodeNav agent with that prompt.

116 | - [parallel_evaluation.py](codenav_examples%2Fparallel_evaluation.py): Demonstrates how to run multiple CodeNav agents in parallel. This is useful for evaluating on a dataset of queries using multiple processes. The EvalSpec abstraction also helps you organize the code a little better!

117 |

118 | **Note** - You will still need to launch the Elasticsearch server before running any of the above. To do so, run

119 | ```

120 | python -m codenav.codenav_run init

121 | ```
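
Before running the examples, it can help to confirm that the server started by `init` is actually reachable. The sketch below is a hypothetical helper built only on the standard library. It assumes Elasticsearch listens on its default HTTP port, 9200, on localhost, which this README does not state (it only mentions Kibana on port 5601), so adjust the URL if your setup differs.

```python
import urllib.error
import urllib.request


def elasticsearch_is_up(url: str = "http://localhost:9200", timeout: float = 2.0) -> bool:
    """Hypothetical check: True if an HTTP GET to `url` returns a 2xx status in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, or an HTTP error status (e.g. 401).
        return False
```

If your Elasticsearch instance requires TLS or authentication, this plain-HTTP probe will report `False` even when the server is running.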

122 |

123 | ## Elasticsearch & Indexing Gotchas 🤔

124 |

125 | When running CodeNav, you must start an Elasticsearch server on your machine (e.g. by running `codenav init`),
126 | and once you run a query on a given codebase, CodeNav will index that codebase exactly once.
127 | This means there are three things you should keep in mind:
128 | 1. You must manually shut down the Elasticsearch server once you are done with it. You can do this by running `codenav stop`.
129 | 2. If you modify or update the codebase you are asking CodeNav to use, the Elasticsearch index will not update automatically, so CodeNav will be writing code based on stale information. In this case, add the `--force_reindex` flag when running `codenav query` to force CodeNav to reindex the codebase.
130 | 3. If CodeNav seems unable to find a file through search, check that the file was indexed correctly. You can inspect all indexed files using Elasticsearch's Kibana interface at `http://localhost:5601/`. To view all the indices created by CodeNav, go to `http://localhost:5601/app/management/data/index_management`. Click on the index you want to inspect, then click on "Discover Index" on the top-right side of the page. This will show you all the code blocks stored in this index. You can now use the UI to run queries against this index and check whether the file you are looking for is present and has the correct file path.
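
Point 2 above leaves it to you to notice when the codebase has changed. One way to automate that decision, sketched here as an assumption rather than anything CodeNav does itself, is to fingerprint the files under `--code_dir` and add `--force_reindex` only when the fingerprint differs from the one recorded at the last indexing run. `codebase_fingerprint` is a hypothetical helper; because it hashes relative paths together with file contents, edits, renames, additions, and deletions all change the result.

```python
import hashlib
from pathlib import Path
from typing import Optional, Tuple


def codebase_fingerprint(code_dir: str, suffixes: Optional[Tuple[str, ...]] = None) -> str:
    """Hypothetical helper: SHA-256 over relative paths and contents of files under code_dir.

    With suffixes=None every file is included, matching the README's note that all
    files in --code_dir are indexed; pass e.g. (".py",) to narrow it.
    """
    root = Path(code_dir)
    # Sort so the fingerprint does not depend on filesystem iteration order.
    files = sorted(
        p
        for p in root.rglob("*")
        if p.is_file() and (suffixes is None or p.suffix in suffixes)
    )
    digest = hashlib.sha256()
    for path in files:
        rel = path.relative_to(root).as_posix().encode("utf-8")
        data = path.read_bytes()
        # Length-prefix path and content so different files cannot collide by concatenation.
        digest.update(b"%d:%s:%d:" % (len(rel), rel, len(data)))
        digest.update(data)
    return digest.hexdigest()
```

Store the value after a run that indexed the codebase (for example in a small text file) and pass `--force_reindex` when a freshly computed value differs. Files CodeNav would not index, such as anything under `.git`, also affect the fingerprint, so narrowing with `suffixes` can avoid needless reindexing.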

131 |

132 | ## Warning ⚠️

133 |

134 | CodeNav is a research project and may make errors. As CodeNav can potentially execute ANY code

135 | it wants, it is not suitable for security-sensitive applications. We strongly recommend

136 | that you run CodeNav in a sandboxed environment where data loss or security breaches are not a concern.

137 |

138 | ## Authors ✍️

139 | - [Tanmay Gupta](https://tanmaygupta.info/)

140 | - [Luca Weihs](https://lucaweihs.github.io/)

141 | - [Aniruddha Kembhavi](https://anikem.github.io/)

142 |

143 | ## License 📄

144 | This project is licensed under the Apache 2.0 License.

145 |

146 | ## Citation

147 | ```bibtex

148 | @misc{gupta2024codenavtooluseusingrealworld,

149 | title={CodeNav: Beyond tool-use to using real-world codebases with LLM agents},

150 | author={Tanmay Gupta and Luca Weihs and Aniruddha Kembhavi},

151 | year={2024},

152 | eprint={2406.12276},

153 | archivePrefix={arXiv},

154 | primaryClass={cs.AI},

155 | url={https://arxiv.org/abs/2406.12276},

156 | }

157 | ```

158 |

159 | CodeNav builds on the research direction we started exploring with VisProg (CVPR 2023 Best Paper). For more context, please visit [https://github.com/allenai/visprog/blob/main/README.md](https://github.com/allenai/visprog/blob/main/README.md).

160 |

--------------------------------------------------------------------------------

/codenav/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/allenai/codenav/2f200d9de2ee15cc82e232b8414765fe5f2fb617/codenav/__init__.py

--------------------------------------------------------------------------------

/codenav/agents/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/allenai/codenav/2f200d9de2ee15cc82e232b8414765fe5f2fb617/codenav/agents/__init__.py

--------------------------------------------------------------------------------

/codenav/agents/agent.py:

--------------------------------------------------------------------------------

1 | import abc

2 | from typing import Optional, Sequence, List, Dict, Any

3 |

4 | import codenav.interaction.messages as msg

5 | from codenav.agents.interaction_formatters import InteractionFormatter

6 |

7 |

8 | class CodeNavAgent(abc.ABC):

9 | max_tokens: int

10 | interaction_formatter: InteractionFormatter

11 | model: str

12 |

13 | def __init__(self, allowed_action_types: Sequence[msg.ACTION_TYPES]):

14 | self.allowed_action_types = allowed_action_types

15 | # self.reset()

16 |

17 | def reset(self):

18 | """Reset episode state. Must be called before starting a new episode."""

19 | self.episode_state = self.init_episode_state()

20 |

21 | @abc.abstractmethod

22 | def init_episode_state(self) -> msg.EpisodeState:

23 | """Build system prompt and initialize the episode state with it"""

24 | raise NotImplementedError

25 |

26 | def update_state(self, interaction: msg.Interaction):

27 | self.episode_state.update(interaction)

28 |

29 | @property

30 | def system_prompt_str(self) -> str:

31 | return self.episode_state.system_prompt.content

32 |

33 | @property

34 | def user_query_prompt_str(self) -> str:

35 | queries = [

36 | i.response

37 | for i in self.episode_state.interactions

38 | if isinstance(i.response, msg.UserQueryToAgent)

39 | ]

40 | assert len(queries) == 1

41 | return queries[0].message

42 |

43 | @abc.abstractmethod

44 | def get_action(self) -> msg.CodeNavAction:

45 | raise NotImplementedError

46 |

47 | @abc.abstractmethod

48 | def summarize_episode_state_for_restart(

49 | self, episode_state: msg.EpisodeState, max_tokens: Optional[int]

50 | ) -> str:

51 | raise NotImplementedError

52 |

53 | @property

54 | @abc.abstractmethod

55 | def all_queries_and_responses(self) -> List[Dict[str, Any]]:

56 | raise NotImplementedError

57 |
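Because `CodeNavAgent.__init__` leaves `self.reset()` commented out, `episode_state` does not exist until `reset()` runs. Calling `update_state` (or reading `system_prompt_str`) before then raises `AttributeError`. The sketch below reproduces that contract with hypothetical, dependency-free stand-ins (`BaseAgent`, `ToyAgent`, a simplified `EpisodeState`). These are not the real `codenav` classes.

```python
import abc
from dataclasses import dataclass, field
from typing import List


@dataclass
class EpisodeState:
    # Hypothetical stand-in for codenav.interaction.messages.EpisodeState
    system_prompt: str
    interactions: List[str] = field(default_factory=list)

    def update(self, interaction: str) -> None:
        self.interactions.append(interaction)


class BaseAgent(abc.ABC):
    def __init__(self) -> None:
        # Mirrors CodeNavAgent: no episode state is created in the constructor.
        pass

    def reset(self) -> None:
        """Must be called before starting a new episode."""
        self.episode_state = self.init_episode_state()

    @abc.abstractmethod
    def init_episode_state(self) -> EpisodeState:
        raise NotImplementedError

    def update_state(self, interaction: str) -> None:
        self.episode_state.update(interaction)


class ToyAgent(BaseAgent):
    def init_episode_state(self) -> EpisodeState:
        return EpisodeState(system_prompt="You are a helpful agent.")


agent = ToyAgent()
try:
    agent.update_state("query")  # fails: reset() has not been called yet
except AttributeError as e:
    print("AttributeError:", e)

agent.reset()
agent.update_state("query")
print(agent.episode_state.interactions)  # ['query']
```

Callers (such as an episode runner) are therefore responsible for calling `reset()` once per episode before any other state access.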

--------------------------------------------------------------------------------

/codenav/agents/cohere/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/allenai/codenav/2f200d9de2ee15cc82e232b8414765fe5f2fb617/codenav/agents/cohere/__init__.py

--------------------------------------------------------------------------------

/codenav/agents/cohere/agent.py:

--------------------------------------------------------------------------------

1 | import copy

2 | import time

3 | from typing import List, Literal, Optional, Sequence

4 |

5 | from cohere import ChatMessage, InternalServerError, TooManyRequestsError

6 |

7 | import codenav.interaction.messages as msg

8 | from codenav.agents.interaction_formatters import InteractionFormatter

9 | from codenav.agents.llm_chat_agent import LLMChatCodeNavAgent, LlmChatMessage

10 | from codenav.constants import COHERE_CLIENT

11 |

12 |

13 | class CohereCodeNavAgent(LLMChatCodeNavAgent):

14 | def __init__(

15 | self,

16 | prompt: str,

17 | model: Literal["command-r", "command-r-plus"] = "command-r",

18 | max_tokens: int = 50000,

19 | allowed_action_types: Sequence[msg.ACTION_TYPES] = (

20 | "code",

21 | "done",

22 | "search",

23 | "reset",

24 | ),

25 | prompt_set: str = "default",

26 | interaction_formatter: Optional[InteractionFormatter] = None,

27 | ):

28 | super().__init__(

29 | model=model,

30 | prompt=prompt,

31 | max_tokens=max_tokens,

32 | allowed_action_types=allowed_action_types,

33 | interaction_formatter=interaction_formatter,

34 | )

35 |

36 | @property

37 | def client(self):

38 | return COHERE_CLIENT

39 |

40 | def query_llm(

41 | self,

42 | messages: List[LlmChatMessage],

43 | model: Optional[str] = None,

44 | max_tokens: Optional[int] = None,

45 | ) -> str:

46 | if model is None:

47 | model = self.model

48 |

49 | if max_tokens is None:

50 | max_tokens = self.max_tokens

51 |

52 | output = None

53 | nretries = 50

54 | for retry in range(nretries):

55 | try:

56 | output = self.client.chat(

57 | message=messages[-1]["message"],

58 | model=model,

59 | chat_history=messages[:-1],

60 | prompt_truncation="OFF",

61 | temperature=0.0,

62 | max_input_tokens=max_tokens,

63 | max_tokens=3000,

64 | )

65 | break

66 | except (TooManyRequestsError, InternalServerError):

67 | pass

68 |

69 | if retry >= nretries - 1:

70 | raise RuntimeError(f"Hit max retries ({nretries})")

71 |

72 | time.sleep(5)

73 |

74 | self._all_queries_and_responses.append(

75 | {

76 | "input": copy.deepcopy(messages),

77 | "output": output.text,

78 | "input_tokens": output.meta.billed_units.input_tokens,

79 | "output_tokens": output.meta.billed_units.output_tokens,

80 | }

81 | )

82 | return output.text

83 |

84 | def create_message_from_text(self, text: str, role: str) -> ChatMessage:

85 | role = {

86 | "assistant": "CHATBOT",

87 | "user": "USER",

88 | "system": "SYSTEM",

89 | }[role]

90 |

91 | return dict(role=role, message=text)

92 |
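`CohereCodeNavAgent.query_llm` makes up to `nretries = 50` attempts, sleeping a fixed 5 seconds after each `TooManyRequestsError` or `InternalServerError` and raising `RuntimeError` once the attempts are exhausted. The sketch below factors that loop into a generic helper. `call_with_retries` is a hypothetical name, and the injectable `sleep` parameter is an assumption added only so the behavior can be exercised without waiting.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    retriable: Tuple[Type[BaseException], ...],
    nretries: int = 50,
    delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying with a fixed delay on the given exception types."""
    for retry in range(nretries):
        try:
            return fn()  # success: no sleep after the final attempt
        except retriable:
            if retry >= nretries - 1:
                raise RuntimeError(f"Hit max retries ({nretries})")
            sleep(delay_s)
    raise ValueError("nretries must be positive")


class Transient(Exception):
    pass


attempts = {"n": 0}


def flaky() -> str:
    # Fails twice, then succeeds.
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise Transient("try again")
    return "ok"


result = call_with_retries(flaky, (Transient,), nretries=5, sleep=lambda s: None)
print(result)  # ok
```

A fixed delay keeps the logic simple; exponential backoff is a common alternative when rate limits persist.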

--------------------------------------------------------------------------------

/codenav/agents/gpt4/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/allenai/codenav/2f200d9de2ee15cc82e232b8414765fe5f2fb617/codenav/agents/gpt4/__init__.py

--------------------------------------------------------------------------------

/codenav/agents/gpt4/agent.py:

--------------------------------------------------------------------------------

1 | from typing import Optional, Sequence

2 |

3 | import codenav.interaction.messages as msg

4 | from codenav.agents.interaction_formatters import InteractionFormatter

5 | from codenav.agents.llm_chat_agent import LLMChatCodeNavAgent, LlmChatMessage

6 | from codenav.constants import DEFAULT_OPENAI_MODEL, OPENAI_CLIENT

7 | from codenav.utils.llm_utils import create_openai_message

8 |

9 |

10 | class OpenAICodeNavAgent(LLMChatCodeNavAgent):

11 | def __init__(

12 | self,

13 | prompt: str,

14 | model: str = DEFAULT_OPENAI_MODEL,

15 | max_tokens: int = 50000,

16 | allowed_action_types: Sequence[msg.ACTION_TYPES] = (

17 | "code",

18 | "done",

19 | "search",

20 | "reset",

21 | ),

22 | interaction_formatter: Optional[InteractionFormatter] = None,

23 | ):

24 | super().__init__(

25 | model=model,

26 | prompt=prompt,

27 | max_tokens=max_tokens,

28 | allowed_action_types=allowed_action_types,

29 | interaction_formatter=interaction_formatter,

30 | )

31 |

32 | @property

33 | def client(self):

34 | return OPENAI_CLIENT

35 |

36 | def create_message_from_text(self, text: str, role: str) -> LlmChatMessage:

37 | return create_openai_message(text=text, role=role)

38 |

--------------------------------------------------------------------------------

/codenav/agents/interaction_formatters.py:

--------------------------------------------------------------------------------

1 | import abc

2 | from types import MappingProxyType

3 | from typing import Mapping, Literal

4 |

5 | from codenav.interaction.messages import (

6 | CodeNavAction,

7 | RESPONSE_TYPES,

8 | Interaction,

9 | MultiRetrievalResult,

10 | RetrievalResult,

11 | )

12 | from codenav.retrieval.elasticsearch.create_index import es_doc_to_string

13 |

14 | DEFAULT_ACTION_FORMAT_KWARGS = MappingProxyType(

15 | dict(include_header=True),

16 | )

17 |

18 | DEFAULT_RESPONSE_FORMAT_KWARGS = MappingProxyType(

19 | dict(

20 | include_code=False,

21 | display_updated_vars=True,

22 | include_query=True,

23 | include_header=True,

24 | )

25 | )

26 |

27 |

28 | class InteractionFormatter(abc.ABC):

29 | def format_action(self, action: CodeNavAction):

30 | raise NotImplementedError

31 |

32 | def format_response(self, response: RESPONSE_TYPES):

33 | raise NotImplementedError

34 |

35 |

36 | class DefaultInteractionFormatter(InteractionFormatter):

37 | def __init__(

38 | self,

39 | action_format_kwargs: Mapping[str, bool] = DEFAULT_ACTION_FORMAT_KWARGS,

40 | response_format_kwargs: Mapping[str, bool] = DEFAULT_RESPONSE_FORMAT_KWARGS,

41 | ):

42 | self.action_format_kwargs = action_format_kwargs

43 | self.response_format_kwargs = response_format_kwargs

44 |

45 | def format_action(self, action: CodeNavAction):

46 | return Interaction.format_action(

47 | action,

48 | **self.action_format_kwargs,

49 | )

50 |

51 | def format_response(self, response: RESPONSE_TYPES):

52 | return Interaction.format_response(

53 | response,

54 | **self.response_format_kwargs,

55 | )

56 |

57 |

58 | class CustomRetrievalInteractionFormatter(DefaultInteractionFormatter):

59 | def __init__(

60 | self, use_summary: Literal["ifshorter", "always", "never", "prototype"]

61 | ):

62 | super().__init__()

63 | self.use_summary = use_summary

64 |

65 | def format_retrieval_result(self, rr: RetrievalResult, include_query=True):

66 | res_str = ""

67 | if include_query:

68 | res_str += f"QUERY:\n{rr.query}\n\n"

69 |

70 | res_str += "CODE BLOCKS:\n"

71 |

72 | if len(rr.es_docs) == 0:

73 | if rr.failure_reason is not None:

74 | res_str += f"Failed to retrieve code blocks: {rr.failure_reason}\n"

75 | else:

76 | res_str += "No code blocks found.\n"

77 |

78 | return res_str

79 |

80 | for doc in rr.es_docs[: rr.max_expanded]:

81 | if self.use_summary == "prototype":

82 | doc = {**doc}

83 | doc["text"] = doc["prototype"] or doc["text"]

84 |

85 | use_summary = "never"

86 | else:

87 | use_summary = self.use_summary

88 |

89 | res_str += f"---\n{es_doc_to_string(doc, use_summary=use_summary)}\n"

90 |

91 | res_str += "---\n"

92 |

93 | unexpanded_docs = rr.es_docs[rr.max_expanded :]

94 | if len(unexpanded_docs) == 0:

95 | res_str += "(All code blocks matching the query were returned.)\n"

96 | else:

97 | res_str += (

98 | f"({len(unexpanded_docs)} additional code blocks not shown."

99 | f" Search again with the same query to see additional results.)\n\n"

100 | )

101 |

102 | if rr.max_prototype > 0:

103 | prototypes_docs = [

104 | doc

105 | for doc in unexpanded_docs

106 | if doc["type"] in {"CLASS", "FUNCTION"}

107 | ]

108 | num_prototype_docs_shown = min(len(prototypes_docs), rr.max_prototype)

109 | res_str += (

110 | f"Prototypes for the next {num_prototype_docs_shown} out of"

111 | f" {len(prototypes_docs)} classes/functions found in unexpanded results"

112 | f" (search again with the same query to see details):\n"

113 | )

114 | for doc in prototypes_docs[:num_prototype_docs_shown]:

115 | res_str += f"{es_doc_to_string(doc, prototype=True)}\n"

116 |

117 | return res_str

118 |

119 | def format_response(self, response: RESPONSE_TYPES):

120 | if not isinstance(response, MultiRetrievalResult):

121 | return super(CustomRetrievalInteractionFormatter, self).format_response(

122 | response

123 | )

124 | else:

125 | res_str = ""

126 | for res in response.retrieval_results:

127 | res_str += self.format_retrieval_result(res, include_query=True)

128 | res_str += "\n"

129 |

130 | return res_str

131 |
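`CustomRetrievalInteractionFormatter.format_retrieval_result` shows the first `max_expanded` documents in full. Among the remaining documents, it then lists prototypes for at most `max_prototype` of those whose `type` is `CLASS` or `FUNCTION`. The selection step can be sketched as a pure function over plain dicts. `split_retrieval_docs` and the `name` key are hypothetical; only the `type` field and the `CLASS`/`FUNCTION` values come from the source.

```python
from typing import Any, Dict, List, Tuple

Doc = Dict[str, Any]


def split_retrieval_docs(
    docs: List[Doc], max_expanded: int, max_prototype: int
) -> Tuple[List[Doc], List[Doc], int]:
    """Return (expanded docs, prototype docs shown, total prototype candidates)."""
    expanded = docs[:max_expanded]
    unexpanded = docs[max_expanded:]
    # Only classes and functions have a prototype (signature) worth listing.
    candidates = [d for d in unexpanded if d["type"] in {"CLASS", "FUNCTION"}]
    shown = candidates[: max(0, max_prototype)]
    return expanded, shown, len(candidates)


docs = [
    {"type": "FUNCTION", "name": "a"},
    {"type": "CLASS", "name": "b"},
    {"type": "IMPORT", "name": "c"},
    {"type": "FUNCTION", "name": "d"},
    {"type": "FUNCTION", "name": "e"},
]
expanded, shown, total = split_retrieval_docs(docs, max_expanded=2, max_prototype=1)
print(len(expanded), [d["name"] for d in shown], total)  # 2 ['d'] 2
```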

--------------------------------------------------------------------------------

/codenav/agents/llm_chat_agent.py:

--------------------------------------------------------------------------------

1 | import copy

2 | import traceback

3 | from typing import Any, Dict, List, Optional, Sequence, Union, cast

4 |

5 | from openai.types.chat import (

6 | ChatCompletionAssistantMessageParam,

7 | ChatCompletionSystemMessageParam,

8 | ChatCompletionUserMessageParam,

9 | )

10 |

11 | import codenav.interaction.messages as msg

12 | from codenav.agents.agent import CodeNavAgent

13 | from codenav.agents.interaction_formatters import (

14 | DefaultInteractionFormatter,

15 | InteractionFormatter,

16 | )

17 | from codenav.constants import (

18 | TOGETHER_CLIENT,

19 | )

20 | from codenav.prompts.restart_prompt import RESTART_PROMPT

21 | from codenav.utils.llm_utils import MaxTokensExceededError, query_gpt

22 |

23 | LlmChatMessage = Union[

24 | ChatCompletionSystemMessageParam,

25 | ChatCompletionUserMessageParam,

26 | ChatCompletionAssistantMessageParam,

27 | ]

28 |

29 |

30 | class LLMChatCodeNavAgent(CodeNavAgent):

31 | def __init__(

32 | self,

33 | model: str,

34 | prompt: str,

35 | max_tokens: int = 50000,

36 | allowed_action_types: Sequence[msg.ACTION_TYPES] = (

37 | "code",

38 | "done",

39 | "search",

40 | "reset",

41 | ),

42 | interaction_formatter: Optional[InteractionFormatter] = None,

43 | ):

44 | super().__init__(allowed_action_types=allowed_action_types)

45 | self.model = model

46 | self.prompt = prompt

47 | self.max_tokens = max_tokens

48 |

49 | if interaction_formatter is None:

50 | self.interaction_formatter = DefaultInteractionFormatter()

51 | else:

52 | self.interaction_formatter = interaction_formatter

53 |

54 | self._all_queries_and_responses: List[Dict] = []

55 |

56 | @property

57 | def client(self):

58 | return TOGETHER_CLIENT

59 |

60 | def query_llm(

61 | self,

62 | messages: List[LlmChatMessage],

63 | model: Optional[str] = None,

64 | max_tokens: Optional[int] = None,

65 | ) -> str:

66 | if model is None:

67 | model = self.model

68 |

69 | if max_tokens is None:

70 | max_tokens = self.max_tokens

71 |

72 | response_dict = query_gpt(

73 | messages=messages,

74 | model=model,

75 | max_tokens=max_tokens,

76 | client=self.client,

77 | return_input_output_tokens=True,

78 | )

79 | self._all_queries_and_responses.append(

80 | {"input": copy.deepcopy(messages), **response_dict}

81 | )

82 | return response_dict["output"]

83 |

84 | @property

85 | def all_queries_and_responses(self) -> List[Dict[str, Any]]:

86 | return copy.deepcopy(self._all_queries_and_responses)

87 |

88 | def init_episode_state(self) -> msg.EpisodeState:

89 | return msg.EpisodeState(system_prompt=msg.SystemPrompt(content=self.prompt))

90 |

91 | def create_message_from_text(self, text: str, role: str) -> LlmChatMessage:

92 | return cast(LlmChatMessage, {"role": role, "content": text})

93 |

94 | def build_chat_context(

95 | self,

96 | episode_state: msg.EpisodeState,

97 | ) -> List[LlmChatMessage]:

98 | chat_messages: List[LlmChatMessage] = [

99 | self.create_message_from_text(

100 | text=episode_state.system_prompt.content, role="system"

101 | ),

102 | ]

103 | for interaction in episode_state.interactions:

104 | if interaction.hidden:

105 | continue

106 |

107 | if interaction.action is not None:

108 | chat_messages.append(

109 | self.create_message_from_text(

110 | text=self.interaction_formatter.format_action(

111 | interaction.action

112 | ),

113 | role="assistant",

114 | )

115 | )

116 |

117 | if interaction.response is not None:

118 | chat_messages.append(

119 | self.create_message_from_text(

120 | text=self.interaction_formatter.format_response(

121 | interaction.response,

122 | ),

123 | role="user",

124 | )

125 | )

126 |

127 | return chat_messages

128 |

129 | def summarize_episode_state_for_restart(

130 | self, episode_state: msg.EpisodeState, max_tokens: Optional[int]

131 | ) -> str:

132 | if max_tokens is None:

133 | max_tokens = self.max_tokens

134 |

135 | chat_messages = self.build_chat_context(episode_state)

136 |

137 | current_env_var_names = {

138 | var_name

139 | for i in episode_state.interactions

140 | if isinstance(i.response, msg.ExecutionResult)

141 | for var_name in (i.response.updated_vars or {}).keys()

142 | }

143 |

144 | chat_messages.append(

145 | self.create_message_from_text(

146 | text=RESTART_PROMPT.format(

147 | current_env_var_names=", ".join(sorted(list(current_env_var_names)))

148 | ),

149 | role="user",

150 | )

151 | )

152 |

153 | return self.query_llm(messages=chat_messages, max_tokens=max_tokens)

154 |

155 | def get_action(self) -> msg.CodeNavAction:

156 | chat_messages = self.build_chat_context(self.episode_state)

157 | try:

158 | output = self.query_llm(messages=chat_messages)

159 | return msg.CodeNavAction.from_text(output)

160 | except MaxTokensExceededError:

161 | if "reset" in self.allowed_action_types:

162 | summary = self.summarize_episode_state_for_restart(

163 | episode_state=self.episode_state, max_tokens=2 * self.max_tokens

164 | )

165 | for interaction in self.episode_state.interactions:

166 | if not isinstance(interaction.response, msg.UserQueryToAgent):

167 | if not interaction.hidden:

168 | interaction.hidden_at_index = len(

169 | self.episode_state.interactions

170 | )

171 | interaction.hidden = True

172 | action = msg.CodeNavAction.from_text(summary)

173 | action.type = "reset"

174 | return action

175 |

176 | return msg.CodeNavAction(

177 | thought=f"Max tokens exceeded:\n{traceback.format_exc()}",

178 | type="done",

179 | content="False",

180 | )

181 |
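`build_chat_context` always starts with the system prompt. Each non-hidden interaction then contributes its formatted action as an `assistant` message and its formatted response as a `user` message. When `get_action` hits `MaxTokensExceededError` with `"reset"` allowed, every interaction except the user query is marked hidden, so it drops out of later contexts. The sketch below uses a hypothetical `Interaction` dataclass whose action and response are already-formatted strings. This stands in for the real message types and `InteractionFormatter`.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Interaction:
    # Hypothetical simplification: action/response are pre-formatted strings.
    action: Optional[str]
    response: Optional[str]
    hidden: bool = False


def build_chat_context(
    system_prompt: str, interactions: List[Interaction]
) -> List[Dict[str, str]]:
    messages = [{"role": "system", "content": system_prompt}]
    for it in interactions:
        if it.hidden:
            continue  # hidden interactions (e.g. after a reset) are skipped
        if it.action is not None:
            messages.append({"role": "assistant", "content": it.action})
        if it.response is not None:
            messages.append({"role": "user", "content": it.response})
    return messages


history = [
    Interaction(action=None, response="Plot a histogram of x."),  # user query
    Interaction(action="search: histogram", response="CODE BLOCKS: ...", hidden=True),
    Interaction(action="code: plt.hist(x)", response="Executed."),
]
context = build_chat_context("SYS", history)
print([m["role"] for m in context])  # ['system', 'user', 'assistant', 'user']
```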

--------------------------------------------------------------------------------

/codenav/codenav_run.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import os

3 | import re

4 | import subprocess

5 | import time

6 | from argparse import ArgumentParser

7 | from typing import Any, Dict, Optional, Sequence

8 |

9 | import attrs

10 | from elasticsearch import Elasticsearch

11 |

12 | from codenav.default_eval_spec import run_codenav_on_query

13 | from codenav.interaction.episode import Episode

14 | from codenav.retrieval.elasticsearch.index_codebase import (

15 | DEFAULT_ES_HOST,

16 | DEFAULT_ES_PORT,

17 | DEFAULT_KIBANA_PORT,

18 | build_index,

19 | )

20 | from codenav.retrieval.elasticsearch.install_elasticsearch import (

21 | ES_PATH,

22 | KIBANA_PATH,

23 | install_elasticsearch,

24 | is_es_installed,

25 | )

26 |

27 |

28 | def is_port_in_use(port: int) -> bool:

29 | import socket

30 |

31 | with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:

32 | return s.connect_ex(("localhost", port)) == 0

33 |

34 |

35 | def is_es_running():

36 | es = Elasticsearch(DEFAULT_ES_HOST)

37 | return es.ping()

38 |

39 |

40 | def run_init():

41 | es = Elasticsearch(DEFAULT_ES_HOST)

42 | if es.ping():

43 | print(

44 | "Initialization complete, Elasticsearch is already running at http://localhost:9200."

45 | )

46 | return

47 |

48 | if not is_es_installed():

49 | print("Elasticsearch installation not found, downloading...")

50 | install_elasticsearch()

51 |

52 | if not is_es_installed():

53 | raise ValueError("Elasticsearch installation failed")

54 |

55 | if is_port_in_use(DEFAULT_ES_PORT) or is_port_in_use(DEFAULT_KIBANA_PORT):

56 | raise ValueError(

57 | f"Port {DEFAULT_ES_PORT} or {DEFAULT_KIBANA_PORT} is already in use;"

58 | f" both ports must be free to start Elasticsearch and Kibana."

59 | )

60 |

61 | cmd = os.path.join(ES_PATH, "bin", "elasticsearch")

62 | print(f"Starting Elasticsearch server with command: {cmd}")

63 | es_process = subprocess.Popen(

64 | [cmd], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL

65 | )

66 |

67 | cmd = os.path.join(KIBANA_PATH, "bin", "kibana")

68 | print(f"Starting Kibana server with command: {cmd}")

69 | kibana_process = subprocess.Popen(

70 | [cmd], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL

71 | )

72 |

73 | es_started = False

74 | kibana_started = False

75 | try:

76 | for _ in range(10):

77 | es_started = es.ping()

78 | kibana_started = is_port_in_use(DEFAULT_KIBANA_PORT)

79 |

80 | if not es_started:

81 | print("Elasticsearch server not started yet...")

82 |

83 | if not kibana_started:

84 | print("Kibana server not started yet...")

85 |

86 | if es_started and kibana_started:

87 | break

88 |

89 | print("Waiting 10 seconds...")

90 | time.sleep(10)

91 |

92 | if not (es_started and kibana_started):

93 | raise RuntimeError("Elasticsearch and/or Kibana failed to start")

94 |

95 | finally:

96 | if not (es_started and kibana_started):

97 | es_process.kill()

98 | kibana_process.kill()

99 |

100 | # noinspection PyUnreachableCode

101 | print(

102 | f"Initialization complete."

103 | f" Elasticsearch server started successfully (PID {es_process.pid}) and can be accessed on port {DEFAULT_ES_PORT}."

104 | f" You can also access the Kibana dashboard (PID {kibana_process.pid}) on port {DEFAULT_KIBANA_PORT}."

105 | f" You will need to manually stop these processes when you are done with them."

106 | )

107 |

108 |

109 | def main():

110 | parser = ArgumentParser()

111 | parser.add_argument(

112 | "command",

113 | help="command to be executed",

114 | choices=["init", "stop", "query"],

115 | )

116 | parser.add_argument(

117 | "--code_dir",

118 | type=str,

119 | default=None,

120 | help="Path to the codebase to use. Only one of `code_dir` or `module` should be provided.",

121 | )

122 | parser.add_argument(

123 | "--module",

124 | type=str,

125 | default=None,

126 | help="Module to use for the codebase. Only one of `code_dir` or `module` should be provided.",

127 | )

128 |

129 | parser.add_argument(

130 | "--playground_dir",

131 | type=str,

132 | default=None,

133 | help="The working directory for the agent.",

134 | )

135 | parser.add_argument(

136 | "--max_steps",

137 | type=int,

138 | default=20,

139 | help="Maximum number of rounds of interaction.",

140 | )

141 | parser.add_argument(

142 | "-q",

143 | "--q",

144 | "--query",

145 | type=str,

146 | help="A description of the problem you want the agent to solve (using `code_dir` or `module`).",

147 | )

148 | parser.add_argument(

149 | "-f",

150 | "--query_file",

151 | type=str,

152 | default=None,

153 | help="A path to a file containing your query (useful for long/detailed queries that are hard to enter on the commandline).",

154 | )

155 | parser.add_argument(

156 | "--force_reindex",

157 | action="store_true",

158 | help="Will delete the existing index (if any) and refresh it.",

159 | )

160 |

161 | parser.add_argument(

162 | "--force_subdir",

163 | type=str,

164 | default=None,

165 | help="Index only a subdirectory of the code_dir",

166 | )

167 |

168 | parser.add_argument(

169 | "--repo_description_path",

170 | type=str,

171 | default=None,

172 | help="Path to a file containing a description of the codebase.",

173 | )

174 |

175 | args = parser.parse_args()

176 |

177 | if args.command == "init":

178 | run_init()

179 | elif args.command == "stop":

180 | # Find all processes that start with ES_PATH and KIBANA_PATH and kill them

181 | for path in [ES_PATH, KIBANA_PATH]:

182 | cmd = f"ps aux | grep {path} | grep -v grep | awk '{{print $2}}' | xargs kill "

183 | subprocess.run(cmd, shell=True)

184 | elif args.command == "query":

185 | if not is_es_running():

186 | raise ValueError(

187 | "Elasticsearch not running, please run `codenav init` first."

188 | )

189 |

190 | assert (args.q is None) != (

191 | args.query_file is None

192 | ), "Exactly one of `q` or `query_file` should be provided"

193 |

194 | if args.query_file is not None:

195 | print(args.query_file)

196 | with open(args.query_file, "r") as f:

197 | args.q = f.read()

198 |

199 | if args.q is None:

200 | raise ValueError("No query provided")

201 |

202 | if (args.code_dir is None) == (args.module is None):

203 | raise ValueError("Exactly one of `code_dir` or `module` should be provided")

204 |

205 | if args.code_dir is None and args.module is None:

206 | raise ValueError("No code_dir or module provided")

207 |

208 | if args.playground_dir is None:

209 | raise ValueError("No playground_dir provided")

210 |

211 | if args.code_dir is None:

212 | path_to_module = os.path.abspath(

213 | os.path.dirname(importlib.import_module(args.module).__file__)

214 | )

215 | args.code_dir = os.path.dirname(path_to_module)

216 | code_name = os.path.basename(path_to_module)

217 | force_subdir = code_name

218 | sys_path = os.path.dirname(path_to_module)

219 | else:

220 | force_subdir = args.force_subdir

221 | args.code_dir = os.path.abspath(args.code_dir)

222 | sys_path = args.code_dir

223 | code_name = os.path.basename(args.code_dir)

224 |

225 | args.playground_dir = os.path.abspath(args.playground_dir)

226 |

227 | if args.force_reindex or not Elasticsearch(DEFAULT_ES_HOST).indices.exists(

228 | index=code_name

229 | ):

230 | print(f"Index {code_name} not found, creating index...")

231 | build_index(

232 | code_dir=args.code_dir,

233 | index_uid=code_name,

234 | delete_index=args.force_reindex,

235 | force_subdir=force_subdir,

236 | )

237 |

238 | run_codenav_on_query(

239 | exp_name=re.sub("[^A-Za-z0-9 ]", "", args.q).replace(" ", "_")[:30],

240 | out_dir=args.playground_dir,

241 | query=args.q,

242 | code_dir=args.code_dir if args.module is None else args.module,

243 | sys_paths=[sys_path],

244 | index_name=code_name,

245 | working_dir=args.playground_dir,

246 | max_steps=args.max_steps,

247 | repo_description_path=args.repo_description_path,

248 | )

249 |

250 | else:

251 | raise ValueError(f"Unrecognized command: {args.command}")

252 |

253 |

254 | if __name__ == "__main__":

255 | main()

256 |
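Two details of the `query` command are easy to get subtly wrong. First, checking that exactly one of `--code_dir` and `--module` was given needs each `is None` test parenthesized. Python parses `a is None == b is None` as the chain `(a is None) and (None == b) and (b is None)`, which is true only when both are missing. Second, the experiment name is derived by keeping ASCII letters, digits and spaces. The character-class range must use an ASCII hyphen (`0-9`): an en dash would turn it into the literal characters `0`, `–`, `9`. The helper names below (`exactly_one_provided`, `make_exp_name`) are hypothetical.

```python
import re
from typing import Optional


def exactly_one_provided(a: Optional[str], b: Optional[str]) -> bool:
    # Parenthesize each test; the unparenthesized form is a chained comparison.
    return (a is None) != (b is None)


def make_exp_name(query: str, max_len: int = 30) -> str:
    # Keep ASCII letters, digits and spaces, then use underscores and truncate.
    return re.sub("[^A-Za-z0-9 ]", "", query).replace(" ", "_")[:max_len]


a, b = "/path/to/repo", "mymodule"
print(a is None == b is None)  # False: the chained form misses "both given"
print(exactly_one_provided(a, b))  # False
print(make_exp_name("Plot 3 histograms, please!"))  # Plot_3_histograms_please
```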

--------------------------------------------------------------------------------

/codenav/constants.py:

--------------------------------------------------------------------------------

1 | import os

2 | import warnings

3 | from pathlib import Path

4 | from typing import Optional

5 |

6 | ABS_PATH_OF_CODENAV_DIR = os.path.abspath(os.path.dirname(Path(__file__)))

7 | PROMPTS_DIR = os.path.join(ABS_PATH_OF_CODENAV_DIR, "prompts")

8 |

9 | DEFAULT_OPENAI_MODEL = "gpt-4o-2024-05-13"

10 | DEFAULT_RETRIEVAL_PER_QUERY = 3

11 |

12 |

13 | def get_env_var(name: str) -> Optional[str]:

14 | if name in os.environ:

15 | return os.environ[name]

16 | else:

17 | return None

18 |

19 |

20 | OPENAI_API_KEY = get_env_var("OPENAI_API_KEY")

21 | OPENAI_ORG = get_env_var("OPENAI_ORG")

22 | OPENAI_CLIENT = None

23 | try:

24 | from openai import OpenAI

25 |

26 | if OPENAI_API_KEY is not None and OPENAI_ORG is not None:

27 | OPENAI_CLIENT = OpenAI(

28 | api_key=OPENAI_API_KEY,

29 | organization=OPENAI_ORG,

30 | )

31 | else:

32 | warnings.warn(

33 | "OPENAI_API_KEY and OPENAI_ORG not set. OpenAI API will not work."

34 | )

35 | except ImportError:

36 | warnings.warn("openai package not found. OpenAI API will not work.")

37 |

38 |

39 | TOGETHER_API_KEY = get_env_var("TOGETHER_API_KEY")

40 | TOGETHER_CLIENT = None

41 | try:

42 | from together import Together

43 |

44 | if TOGETHER_API_KEY is not None:

45 | TOGETHER_CLIENT = Together(api_key=TOGETHER_API_KEY)

46 | else:

47 | warnings.warn("TOGETHER_API_KEY not set. Together API will not work.")

48 | except ImportError:

49 | warnings.warn("together package not found. Together API will not work.")

50 |

51 |

52 | COHERE_API_KEY = get_env_var("COHERE_API_KEY")

53 | COHERE_CLIENT = None

54 | try:

55 | import cohere

56 |

57 | if COHERE_API_KEY is not None:

58 | COHERE_CLIENT = cohere.Client(api_key=COHERE_API_KEY)

59 | else:

60 | warnings.warn("COHERE_API_KEY not set. Cohere API will not work.")

61 | except ImportError:

62 | warnings.warn("cohere package not found. Cohere API will not work.")

63 |
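`constants.py` repeats one pattern per provider: read an API key from the environment (`get_env_var` behaves like `os.environ.get`), build a client only if the key is present, and otherwise emit a warning and leave the client as `None`. The real module also wraps each import in `try/except ImportError`. The sketch below captures the key-or-warn part with a hypothetical `make_optional_client` helper and a dummy factory in place of the OpenAI, Together or Cohere constructors.

```python
import os
import warnings
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def make_optional_client(
    env_var: str, factory: Callable[[str], T], label: str
) -> Optional[T]:
    """Build a client from an API key in the environment, or warn and return None."""
    api_key = os.environ.get(env_var)  # same result as get_env_var(env_var)
    if api_key is None:
        warnings.warn(f"{env_var} not set. {label} API will not work.")
        return None
    return factory(api_key)


os.environ["DEMO_API_KEY"] = "secret"
client = make_optional_client("DEMO_API_KEY", lambda key: {"api_key": key}, "Demo")
print(client)  # {'api_key': 'secret'}
```

Leaving the client as `None` lets the package import cleanly when only one provider is configured. Failures surface later, when an agent that needs the missing client is used.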

--------------------------------------------------------------------------------

/codenav/default_eval_spec.py:

--------------------------------------------------------------------------------

1 | import os

2 | from typing import Any, List, Optional

3 |

4 | from codenav.agents.gpt4.agent import OpenAICodeNavAgent

5 | from codenav.constants import ABS_PATH_OF_CODENAV_DIR, DEFAULT_OPENAI_MODEL

6 | from codenav.environments.code_env import PythonCodeEnv

7 | from codenav.environments.done_env import DoneEnv

8 | from codenav.environments.retrieval_env import EsCodeRetriever, RetrievalEnv

9 | from codenav.interaction.episode import Episode

10 | from codenav.retrieval.elasticsearch.elasticsearch_constants import RESERVED_CHARACTERS

11 | from codenav.retrieval.elasticsearch.index_codebase import DEFAULT_ES_HOST

12 | from codenav.utils.eval_types import EvalInput, EvalSpec, Str2AnyDict

13 | from codenav.utils.evaluator import CodenavEvaluator

14 | from codenav.utils.prompt_utils import PROMPTS_DIR, PromptBuilder

15 |

16 |

17 | class DefaultEvalSpec(EvalSpec):

18 | def __init__(

19 | self,

20 | episode_kwargs: Str2AnyDict,

21 | interaction_kwargs: Str2AnyDict,

22 | logging_kwargs: Str2AnyDict,

23 | ):

24 | super().__init__(episode_kwargs, interaction_kwargs, logging_kwargs)

25 |

26 | @staticmethod

27 | def build_episode(

28 | eval_input: EvalInput,

29 | episode_kwargs: Optional[Str2AnyDict] = None,

30 | ) -> Episode:

31 | assert episode_kwargs is not None

32 |

33 | prompt_builder = PromptBuilder(

34 | prompt_dirs=episode_kwargs["prompt_dirs"],

35 | repo_description=episode_kwargs["repo_description"],

36 | )

37 | prompt = prompt_builder.build(

38 | dict(

39 | AVAILABLE_ACTIONS=episode_kwargs["allowed_actions"],

40 | RESERVED_CHARACTERS=RESERVED_CHARACTERS,

41 | RETRIEVALS_PER_KEYWORD=episode_kwargs["retrievals_per_keyword"],

42 | )

43 | )

44 |

45 | return Episode(

46 | agent=OpenAICodeNavAgent(

47 | prompt=prompt,

48 | model=episode_kwargs["llm"],

49 | allowed_action_types=episode_kwargs["allowed_actions"],

50 | ),

51 | action_type_to_env=dict(

52 | code=PythonCodeEnv(

53 | code_dir=episode_kwargs["code_dir"],

54 | sys_paths=episode_kwargs["sys_paths"],

55 | working_dir=episode_kwargs["working_dir"],

56 | ),

57 | search=RetrievalEnv(

58 | code_retriever=EsCodeRetriever(

59 | index_name=episode_kwargs["index_name"],

60 | host=episode_kwargs["host"],

61 | ),

62 | expansions_per_query=episode_kwargs["retrievals_per_keyword"],

63 | prototypes_per_query=episode_kwargs["prototypes_per_keyword"],

64 | summarize_code=False,

65 | ),

66 | done=DoneEnv(),

67 | ),

68 | user_query_str=eval_input.query,

69 | )

70 |

71 | @staticmethod

72 | def run_interaction(

73 | episode: Episode,

74 | interaction_kwargs: Optional[Str2AnyDict] = None,

75 | ) -> Str2AnyDict:

76 | assert interaction_kwargs is not None

77 | episode.step_until_max_steps_or_success(

78 | max_steps=interaction_kwargs["max_steps"],

79 | verbose=interaction_kwargs["verbose"],

80 | )

81 | ipynb_str = episode.to_notebook(cur_dir=episode.code_env.working_dir)

82 | return dict(ipynb_str=ipynb_str)

83 |

84 | @staticmethod

85 | def log_output(

86 | interaction_output: Str2AnyDict,

87 | eval_input: EvalInput,

88 | logging_kwargs: Optional[Str2AnyDict] = None,

89 | ) -> Any:

90 | assert logging_kwargs is not None

91 |

92 | outfile = os.path.join(logging_kwargs["out_dir"], f"{eval_input.uid}.ipynb")

93 | with open(outfile, "w") as f:

94 | f.write(interaction_output["ipynb_str"])

95 |

96 | return outfile

97 |

98 |

99 | def run_codenav_on_query(

100 | exp_name: str,

101 | out_dir: str,

102 | query: str,

103 | code_dir: str,

104 | index_name: str,

105 | working_dir: str = os.path.join(

106 | os.path.dirname(ABS_PATH_OF_CODENAV_DIR), "playground"

107 | ),

108 | sys_paths: Optional[List[str]] = None,

109 | repo_description_path: Optional[str] = None,

110 | es_host: str = DEFAULT_ES_HOST,

111 | max_steps: int = 20,

112 | ):

113 | prompt_dirs = [PROMPTS_DIR]

114 | repo_description = "default/repo_description.txt"

115 | if repo_description_path is not None:

116 | prompt_dir, repo_description = os.path.split(repo_description_path)

117 | conflict_path = os.path.join(PROMPTS_DIR, repo_description)

118 | if os.path.exists(conflict_path):

119 | raise ValueError(

120 | f"Prompt conflict detected: {repo_description} already exists in {PROMPTS_DIR}. "

121 | f"Please rename the {repo_description_path} file to resolve this conflict."

122 | )

123 | # add the custom description's directory after the default prompts directory

124 | prompt_dirs.append(prompt_dir)

125 |

126 | episode_kwargs = dict(

127 | allowed_actions=["done", "code", "search"],

128 | repo_description=repo_description,

129 | retrievals_per_keyword=3,

130 | prototypes_per_keyword=7,

131 | llm=DEFAULT_OPENAI_MODEL,

132 | code_dir=code_dir,

133 | sys_paths=[] if sys_paths is None else sys_paths,

134 | working_dir=working_dir,

135 | index_name=index_name,

136 | host=es_host,

137 | prompt_dirs=prompt_dirs,

138 | )

139 | interaction_kwargs = dict(max_steps=max_steps, verbose=True)

140 | logging_kwargs = dict(out_dir=out_dir)

141 |

142 | # Run CodeNav on the query

143 | outfile = CodenavEvaluator.evaluate_input(

144 | eval_input=EvalInput(uid=exp_name, query=query),

145 | eval_spec=DefaultEvalSpec(

146 | episode_kwargs=episode_kwargs,

147 | interaction_kwargs=interaction_kwargs,

148 | logging_kwargs=logging_kwargs,

149 | ),

150 | )

151 |

152 | print("Output saved to ", outfile)

153 |
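`run_codenav_on_query` handles a custom `repo_description_path` by splitting it into a directory and a file name. It rejects the file if a prompt with the same name already exists in `PROMPTS_DIR`, and otherwise appends the directory to `prompt_dirs`. The sketch below isolates that logic as a hypothetical `resolve_prompt_dirs` function. How `PromptBuilder` then searches those directories is not shown in the source and is not modeled here.

```python
import os
import tempfile
from typing import List, Optional, Tuple


def resolve_prompt_dirs(
    prompts_dir: str, repo_description_path: Optional[str]
) -> Tuple[List[str], str]:
    """Simplified sketch of the prompt-directory logic in run_codenav_on_query."""
    prompt_dirs = [prompts_dir]
    repo_description = "default/repo_description.txt"
    if repo_description_path is not None:
        prompt_dir, repo_description = os.path.split(repo_description_path)
        # The same relative name in the built-in prompts dir would be ambiguous.
        if os.path.exists(os.path.join(prompts_dir, repo_description)):
            raise ValueError(
                f"Prompt conflict detected: {repo_description} already exists in {prompts_dir}."
            )
        prompt_dirs.append(prompt_dir)  # appended after the built-in prompts dir
    return prompt_dirs, repo_description


with tempfile.TemporaryDirectory() as builtin, tempfile.TemporaryDirectory() as user:
    dirs, desc = resolve_prompt_dirs(builtin, os.path.join(user, "my_repo.txt"))
    print(desc, dirs == [builtin, user])  # my_repo.txt True
```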

--------------------------------------------------------------------------------

/codenav/environments/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/allenai/codenav/2f200d9de2ee15cc82e232b8414765fe5f2fb617/codenav/environments/__init__.py

--------------------------------------------------------------------------------

/codenav/environments/abstractions.py:

--------------------------------------------------------------------------------

1 | import abc
2 | from typing import Tuple, Optional, Union
3 |
4 | from codenav.interaction.messages import (
5 |     CodeNavAction,
6 |     InvalidAction,
7 |     MultiRetrievalResult,
8 |     ExecutionResult,
9 |     UserMessageToAgent,
10 | )
11 |
12 |
13 | class CodeNavEnv(abc.ABC):
14 |     @abc.abstractmethod
15 |     def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
16 |         raise NotImplementedError
17 |
18 |     @abc.abstractmethod
19 |     def step(
20 |         self, action: CodeNavAction
21 |     ) -> Optional[
22 |         Union[InvalidAction, MultiRetrievalResult, ExecutionResult, UserMessageToAgent]
23 |     ]:
24 |         raise NotImplementedError
25 |
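A minimal, self-contained sketch of the contract `CodeNavEnv` imposes on its subclasses. To run without the `codenav` package, it assumes a hypothetical one-field dataclass in place of `CodeNavAction` and simplifies the `step` return type to `Optional[str]`; `EchoEnv` and `PartialEnv` are illustrative names, not part of the repository. Because both methods are declared with `@abc.abstractmethod`, a subclass that leaves either one unimplemented cannot be instantiated.

```python
import abc
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CodeNavAction:
    # Hypothetical stand-in for codenav.interaction.messages.CodeNavAction
    content: Optional[str] = None


class CodeNavEnv(abc.ABC):
    # Same shape as the abstract base above, with a simplified step() return type
    @abc.abstractmethod
    def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
        raise NotImplementedError

    @abc.abstractmethod
    def step(self, action: CodeNavAction) -> Optional[str]:
        raise NotImplementedError


class EchoEnv(CodeNavEnv):
    # Hypothetical env: accepts any non-empty content and echoes it back
    def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
        if not action.content:
            return False, "Empty action content."
        return True, ""

    def step(self, action: CodeNavAction) -> Optional[str]:
        return f"echo: {action.content}"


class PartialEnv(CodeNavEnv):
    # Implements only one of the two abstract methods
    def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
        return True, ""


env = EchoEnv()
print(env.check_action_validity(CodeNavAction("hi")))  # (True, '')
print(env.step(CodeNavAction("hi")))  # echo: hi

try:
    PartialEnv()  # step() is still abstract, so abc raises TypeError here
except TypeError as e:
    print("cannot instantiate:", type(e).__name__)
```

Enforcing the interface at instantiation time, rather than relying only on the `raise NotImplementedError` bodies, means a missing method surfaces when the environment is constructed instead of on the first action sent to it.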

--------------------------------------------------------------------------------

/codenav/environments/code_summary_env.py:

--------------------------------------------------------------------------------

1 | from typing import Optional, Tuple
2 |
3 | from codenav.environments.abstractions import CodeNavEnv
4 | from codenav.interaction.messages import CodeNavAction, UserMessageToAgent
5 |
6 |
7 | class CodeSummaryEnv(CodeNavEnv):
8 |     def __init__(self) -> None:
9 |         self.summary: Optional[str] = None
10 |
11 |     def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
12 |         if action.content is None or action.content.strip() == "":
13 |             return False, "No summary found in the action content."
14 |
15 |         return True, ""
16 |
17 |     def step(self, action: CodeNavAction) -> UserMessageToAgent:
18 |         assert action.content is not None
19 |         self.summary = action.content.strip()
20 |         return UserMessageToAgent(message="Summary received and stored.")
21 |

--------------------------------------------------------------------------------

/codenav/environments/done_env.py:

--------------------------------------------------------------------------------

1 | from typing import Tuple
2 |
3 | from codenav.environments.abstractions import CodeNavEnv
4 | from codenav.interaction.messages import CodeNavAction
5 |
6 |
7 | class DoneEnv(CodeNavEnv):
8 |     def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
9 |         assert action.content is not None
10 |
11 |         if action.content.strip().lower() in ["true", "false"]:
12 |             return True, ""
13 |         else:
14 |             return (
15 |                 False,
16 |                 "When executing the done action, the content must be either 'True' or 'False'",
17 |             )
18 |
19 |     def step(self, action: CodeNavAction) -> None:
20 |         return None
21 |
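`CodeSummaryEnv` and `DoneEnv` both follow a validate-then-step pattern: `check_action_validity` returns an `(ok, error_message)` pair, and `step` is only meaningful for an action that passed validation. The sketch below reproduces `DoneEnv`'s rule (the content must be `True` or `False`, case-insensitive, surrounding whitespace ignored) inside a hypothetical `dispatch` helper. The helper, the stand-in `CodeNavAction` dataclass, and the `InvalidAction:` string format are assumptions for illustration, not how the CodeNav episode loop is written; unlike `DoneEnv`, the sketch also reports missing content as invalid instead of failing an `assert`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CodeNavAction:
    # Hypothetical stand-in for codenav.interaction.messages.CodeNavAction
    content: Optional[str] = None


def done_action_validity(action: CodeNavAction) -> Tuple[bool, str]:
    # Mirrors DoneEnv.check_action_validity, except that None content is
    # reported as invalid rather than tripping an assert
    if action.content is not None and action.content.strip().lower() in ["true", "false"]:
        return True, ""
    return (
        False,
        "When executing the done action, the content must be either 'True' or 'False'",
    )


def dispatch(action: CodeNavAction) -> str:
    # Hypothetical driver: validate first, and only "step" if the action is valid
    ok, error = done_action_validity(action)
    if not ok:
        return f"InvalidAction: {error}"
    assert action.content is not None  # guaranteed by the validity check
    # DoneEnv.step returns None; here we just report the parsed flag
    return f"done={action.content.strip().lower() == 'true'}"


for content in ["True", " false ", "yes", None]:
    print(repr(content), "->", dispatch(CodeNavAction(content)))
```

Keeping validation separate from `step` lets the caller turn a malformed action into an error message for the agent without the environment ever mutating its state.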

--------------------------------------------------------------------------------

/codenav/environments/retrieval_env.py:

--------------------------------------------------------------------------------

1 | import os
2 | import traceback
3 | from typing import Dict, List, Sequence, Tuple, Union
4 |
5 | import codenav.interaction.messages as msg
6 | from codenav.environments.abstractions import CodeNavEnv
7 | from codenav.interaction.messages import CodeNavAction
8 | from codenav.retrieval.code_blocks import CodeBlockType
9 | from codenav.retrieval.elasticsearch.create_index import EsDocument, es_doc_to_hash
10 | from codenav.retrieval.elasticsearch.elasticsearch_retriever import (
11 |     EsCodeRetriever,
12 |     parallel_add_summary_to_es_docs,
13 | )
14 |
15 |
16 | def reorder_es_docs(es_docs: List[EsDocument]) -> List[EsDocument]:
17 |     scores = []
18 |     for es_doc in es_docs:
19 |         t = es_doc["type"]
20 |         proto = es_doc.get("prototype") or ""
21 |
22 |         if t in [
23 |             CodeBlockType.FUNCTION.name,
24 |             CodeBlockType.CLASS.name,
25 |             CodeBlockType.DOCUMENTATION.name,
26 |         ]:
27 |             score = 0.0
28 |         elif t in [CodeBlockType.IMPORT.name, CodeBlockType.ASSIGNMENT.name]:
29 |             score = 1.0
30 |         else:
31 |             score = 2.0
32 |
33 |         if (
34 |             os.path.basename(es_doc["file_path"]).lower().startswith("test_")
35 |             or os.path.basename(es_doc["file_path"]).lower().endswith("_test.py")
36 |             or "test_" in proto.lower() or "Test" in proto
37 |         ):
38 |             score += 2.0
39 |
40 |         scores.append(score)
41 |
42 |     return [
43 |         es_doc for _, es_doc in sorted(list(zip(scores, es_docs)), key=lambda x: x[0])
44 |     ]
45 |

46 |
47 | class RetrievalEnv(CodeNavEnv):
48 |     def __init__(
49 |         self,
50 |         code_retriever: EsCodeRetriever,
51 |         expansions_per_query: int,
52 |         prototypes_per_query: int,
53 |         max_per_query: int = 100,
54 |         summarize_code: bool = True,
55 |         overwrite_existing_summary: bool = False,
56 |     ):
57 |         self.code_retriever = code_retriever
58 |         self.expansions_per_query = expansions_per_query
59 |         self.prototypes_per_query = prototypes_per_query
60 |         self.max_per_query = max_per_query
61 |         self.summarize_code = summarize_code
62 |         self.overwrite_existing_summary = overwrite_existing_summary
63 |
64 |         self.retrieved_es_docs: Dict[str, EsDocument] = {}
65 |
66 |     def reset(self):
67 |         self.retrieved_es_docs = dict()
68 |
69 |     def check_action_validity(self, action: CodeNavAction) -> Tuple[bool, str]:
70 |         return True, ""
71 |
72 |     def _get_retrieval_result(self, query: str):
73 |         query = query.strip()
74 |
75 |         try:
76 |             es_docs = self.code_retriever.search(
77 |                 query=query, default_n=self.max_per_query
78 |             )
79 |         except Exception:
80 |             error_msg = traceback.format_exc()
81 |             print(error_msg)
82 |             if "Failed to parse query" in error_msg:
83 |                 error_msg = (
84 |                     f"Failed to parse search query: {query}\n"
85 |                     "Please check the syntax and try again (be careful to escape any reserved characters)."
86 |                 )
87 |
88 |             return msg.RetrievalResult(
89 |                 query=query,
90 |                 es_docs=[],
91 |                 failure_reason=error_msg,
92 |             )
93 |
94 |         filtered_es_docs = [
95 |             es_doc
96 |             for es_doc in es_docs
97 |             if es_doc_to_hash(es_doc) not in self.retrieved_es_docs
98 |         ]
99 |