├── .gitignore

├── LICENSE

├── README.md

├── images

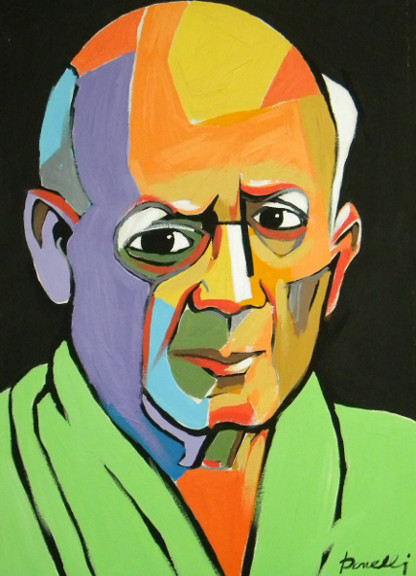

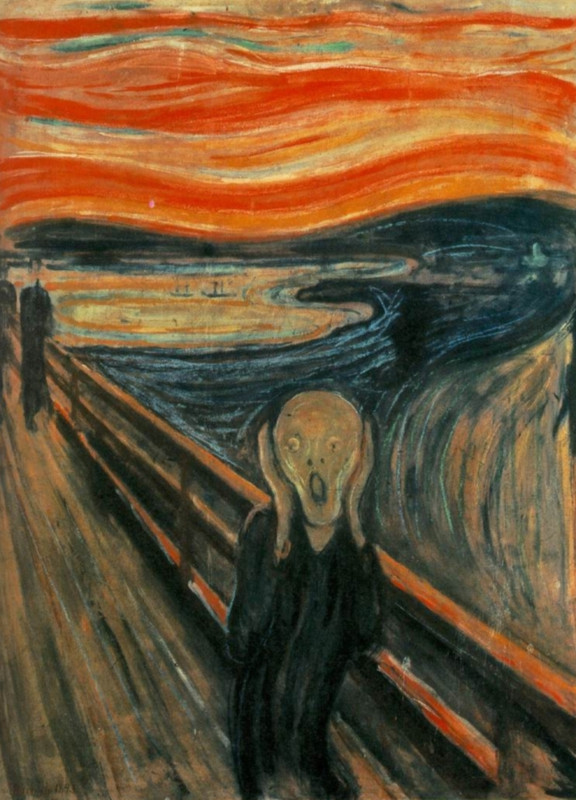

├── donelli.jpg

├── groening.jpg

├── lundstroem.jpg

├── margrethe.jpg

├── margrethe_donelli.jpg

├── margrethe_groening.jpg

├── margrethe_lundstroem.jpg

├── margrethe_picasso.jpg

├── margrethe_skrik.jpg

├── picasso.jpg

├── skrik.jpg

├── starry_night.jpg

├── tuebingen-starry_night.jpg

└── tuebingen.jpg

├── matconvnet.py

├── neural_artistic_style.py

└── style_network.py

/.gitignore:

--------------------------------------------------------------------------------

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]

# C extensions
*.so

# Distribution / packaging
.Python
env/
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
*.egg-info/
.installed.cfg
*.egg

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*,cover

# Translations
*.mo
*.pot

# Django stuff:
*.log

# Sphinx documentation
docs/_build/

# PyBuilder
target/

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

The MIT License (MIT)

Copyright (c) 2015 Anders Boesen Lindbo Larsen

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Neural Artistic Style in Python

2 |

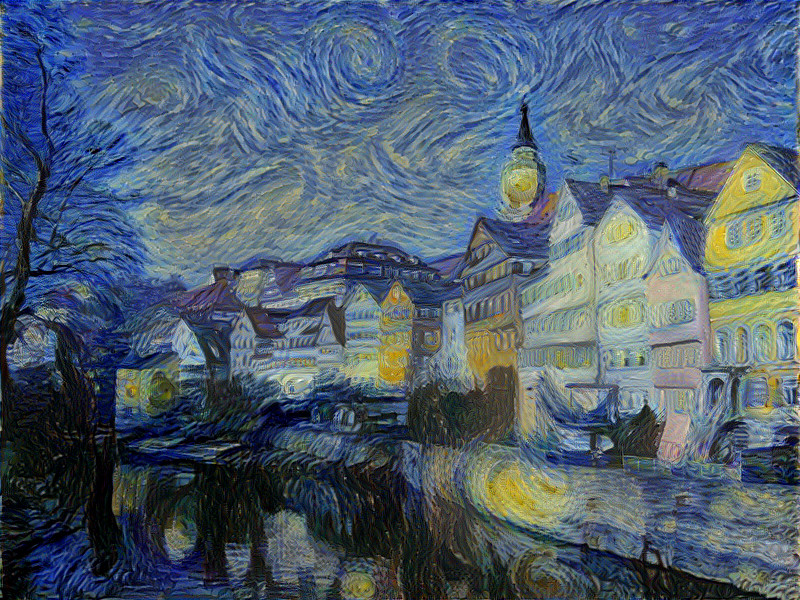

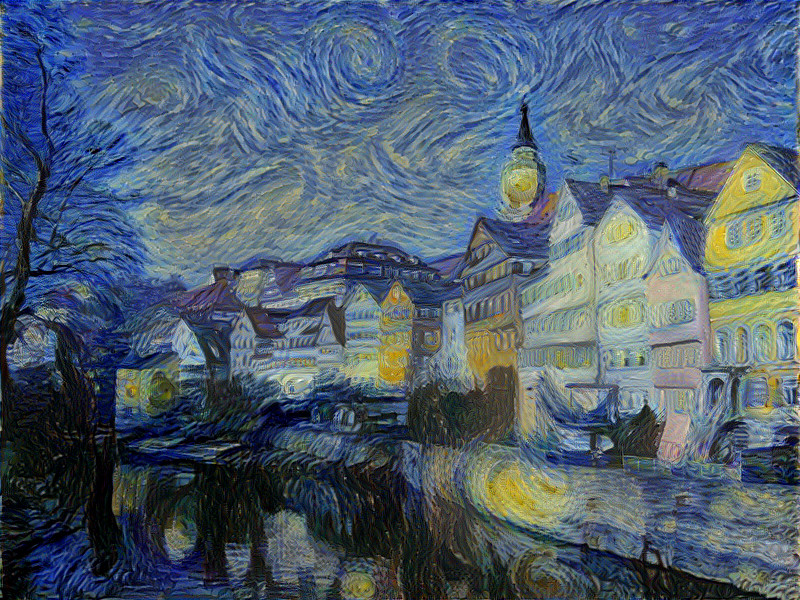

3 | Implementation of [A Neural Algorithm of Artistic Style](http://arxiv.org/abs/1508.06576). A method to transfer the style of one image to the subject of another image.

4 |

5 |

6 | ### Requirements

7 | - [DeepPy](http://github.com/andersbll/deeppy), Deep learning in Python.

8 | - [CUDArray](http://github.com/andersbll/cudarray) with [cuDNN](https://developer.nvidia.com/cudnn), CUDA-accelerated NumPy.

9 | - [Pretrained VGG 19 model](http://www.vlfeat.org/matconvnet/pretrained), choose *imagenet-vgg-verydeep-19*.

10 |

11 |

12 | ### Examples

13 | Execute

14 |

15 | python neural_artistic_style.py --subject images/tuebingen.jpg --style images/starry_night.jpg

16 |

17 | The two inputs are

18 |

19 |

20 | Subject:

21 |  22 | Style:

23 |

22 | Style:

23 |  24 |

24 |

25 |

26 | The output becomes:

27 |

28 |  29 |

29 |

30 |

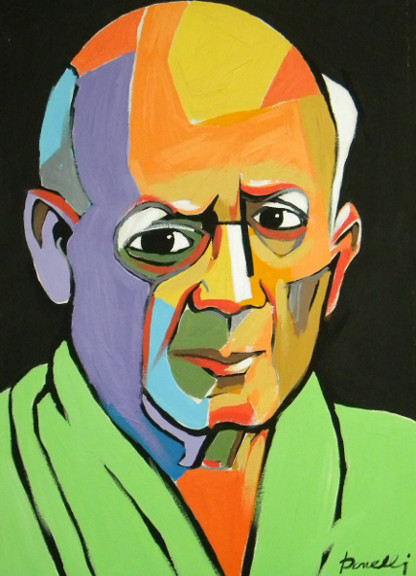

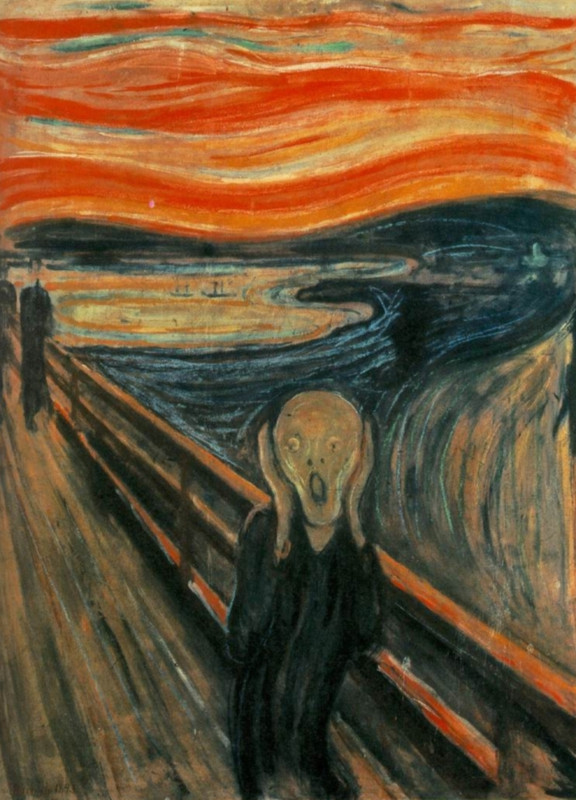

31 | We can also choose a (younger version) of HM the Queen of Denmark as subject and paint her using different styles. Click the images to see the full size.

32 |

33 | **Subject**

34 |

35 |  36 |

36 |

37 |

38 | **Styles**

39 |

40 |  41 |

41 |  42 |

42 |  43 |

43 |  44 |

44 |  45 |

45 |

46 |

47 | **Outputs**

48 |

49 |  50 |

50 |  51 |

51 |  52 |

52 |  53 |

53 |  54 |

54 |

55 |

56 |

57 | ### Help

58 | List command line options with

59 |

60 | python neural_artistic_style.py --help

61 |

--------------------------------------------------------------------------------
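For reference, the objective from the cited paper (Gatys et al.) can be summarized as below in the paper's notation, where the paper's "content" corresponds to what this repository calls the subject. Here F^l holds the generated image's features at layer l (N_l channels by M_l pixels), P^l the subject's features, A^l the style image's Gram matrix, w_l per-layer style weights, and alpha, beta the overall subject and style weights. This summarizes the paper rather than this code, which changes the normalization as listed in the `StyleNetwork` docstring; the `--subject-ratio` option plays a role roughly comparable to alpha/beta.

```latex
\begin{aligned}
G^l_{ij} &= \sum_k F^l_{ik} F^l_{jk} \\
\mathcal{L}_{\text{content}} &= \frac{1}{2} \sum_{i,j} \left(F^l_{ij} - P^l_{ij}\right)^2 \\
E_l &= \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \left(G^l_{ij} - A^l_{ij}\right)^2,
\qquad \mathcal{L}_{\text{style}} = \sum_l w_l E_l \\
\mathcal{L}_{\text{total}} &= \alpha \, \mathcal{L}_{\text{content}} + \beta \, \mathcal{L}_{\text{style}}
\end{aligned}
```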

/images/donelli.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/donelli.jpg

--------------------------------------------------------------------------------

/images/groening.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/groening.jpg

--------------------------------------------------------------------------------

/images/lundstroem.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/lundstroem.jpg

--------------------------------------------------------------------------------

/images/margrethe.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe.jpg

--------------------------------------------------------------------------------

/images/margrethe_donelli.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe_donelli.jpg

--------------------------------------------------------------------------------

/images/margrethe_groening.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe_groening.jpg

--------------------------------------------------------------------------------

/images/margrethe_lundstroem.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe_lundstroem.jpg

--------------------------------------------------------------------------------

/images/margrethe_picasso.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe_picasso.jpg

--------------------------------------------------------------------------------

/images/margrethe_skrik.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/margrethe_skrik.jpg

--------------------------------------------------------------------------------

/images/picasso.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/picasso.jpg

--------------------------------------------------------------------------------

/images/skrik.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/skrik.jpg

--------------------------------------------------------------------------------

/images/starry_night.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/starry_night.jpg

--------------------------------------------------------------------------------

/images/tuebingen-starry_night.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/tuebingen-starry_night.jpg

--------------------------------------------------------------------------------

/images/tuebingen.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/andersbll/neural_artistic_style/94b21acca47db89a06b095c48f0c3c69d424144b/images/tuebingen.jpg

--------------------------------------------------------------------------------

/matconvnet.py:

--------------------------------------------------------------------------------

import numpy as np
import scipy.io
import deeppy as dp


def conv_layer(weights, bias, border_mode):
    """Build a DeepPy convolution layer from pretrained bc01 parameters."""
    return dp.Convolution(
        n_filters=weights.shape[0],
        filter_shape=weights.shape[2:],
        border_mode=border_mode,
        weights=weights,
        bias=bias,
    )


def pool_layer(pool_method, border_mode):
    """Build a subsampling layer using 'max' or 'avg' pooling."""
    return dp.Pool(
        win_shape=(3, 3),
        strides=(2, 2),
        method=pool_method,
        border_mode=border_mode,
    )


def vgg_net(path, pool_method='max', border_mode='same'):
    """Load a VGG network stored in MatConvNet format.

    Returns the network as a list of DeepPy layers together with the mean
    pixel value that must be subtracted from input images.
    """
    matconvnet = scipy.io.loadmat(path)
    img_mean = matconvnet['meta'][0][0][2][0][0][2]
    vgg_layers = matconvnet['layers'][0]
    layers = []
    for layer in vgg_layers:
        layer = layer[0][0]
        layer_type = layer[1][0]
        if layer_type == 'conv':
            params = layer[2][0]
            weights = params[0]
            bias = params[1]
            # MatConvNet stores filters as (height, width, in, out); convert
            # to (out, in, height, width) and reshape the bias to (1, out, 1, 1)
            weights = np.transpose(weights, (3, 2, 0, 1)).astype(dp.float_)
            bias = np.reshape(bias, (1, bias.size, 1, 1)).astype(dp.float_)
            layers.append(conv_layer(weights, bias, border_mode))
        elif layer_type == 'pool':
            layers.append(pool_layer(pool_method, border_mode))
        elif layer_type == 'relu':
            layers.append(dp.ReLU())
        elif layer_type == 'softmax':
            # The classifier output is not needed for style transfer
            pass
        else:
            raise ValueError('invalid layer type: %s' % layer_type)
    return layers, img_mean

--------------------------------------------------------------------------------
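The parameter conversion in `vgg_net()` can be checked on synthetic data. The sketch below uses only NumPy (no `.mat` file and no DeepPy); `matconvnet_to_bc01` is a hypothetical helper that repeats the two conversions. It assumes MatConvNet's (height, width, in, out) filter layout, which the transpose `(3, 2, 0, 1)` turns into the (out, in, height, width) layout that `conv_layer()` reads `n_filters` and `filter_shape` from.

```python
import numpy as np


def matconvnet_to_bc01(weights, bias):
    """Hypothetical helper repeating the conversions in vgg_net().

    weights: (height, width, in_channels, out_channels), MatConvNet layout.
    bias: any shape holding out_channels elements.
    Returns (out, in, height, width) filters and a (1, out, 1, 1) bias.
    """
    filters = np.transpose(weights, (3, 2, 0, 1))
    bias = np.reshape(bias, (1, bias.size, 1, 1))
    return filters, bias


rng = np.random.RandomState(0)
# Synthetic parameters shaped like VGG's first layer: 3x3 filters, RGB in, 64 out
weights = rng.randn(3, 3, 3, 64).astype(np.float32)
bias = rng.randn(1, 64).astype(np.float32)

filters, bias_bc01 = matconvnet_to_bc01(weights, bias)
print(filters.shape)    # (64, 3, 3, 3)
print(bias_bc01.shape)  # (1, 64, 1, 1)

# Filter k, input channel c, row y, column x refers to the same entry
k, c, y, x = 5, 2, 1, 0
assert filters[k, c, y, x] == weights[y, x, c, k]

# The (1, out, 1, 1) bias broadcasts over a (1, out, height, width) feature map
feature_map = np.zeros((1, 64, 8, 8), dtype=np.float32)
biased = feature_map + bias_bc01
assert np.allclose(biased[0, :, 4, 4], bias.ravel())
```

Keeping the bias as `(1, out, 1, 1)` means a plain `+=` adds it to every pixel of every output channel, which is how `Convolution.fprop()` in `style_network.py` applies it.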

/neural_artistic_style.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python

import os
import argparse
import numpy as np
import scipy.misc
import deeppy as dp

from matconvnet import vgg_net
from style_network import StyleNetwork


def weight_tuple(s):
    """Parse a 'conv_idx,weight' command line argument."""
    try:
        conv_idx, weight = s.split(',')
        # The index is used for array indexing and must be an integer
        return int(conv_idx), float(weight)
    except ValueError:
        raise argparse.ArgumentTypeError('weights must be "int,float"')


def float_range(x):
    x = float(x)
    if x < 0.0 or x > 1.0:
        raise argparse.ArgumentTypeError("%r not in range [0, 1]" % x)
    return x


def weight_array(weights):
    """Scatter (conv_idx, weight) pairs into an array normalized to sum 1."""
    array = np.zeros(19)
    for idx, weight in weights:
        array[idx] = weight
    norm = np.sum(array)
    if norm > 0:
        array /= norm
    return array


# scipy.misc.imread() and imsave() need Pillow and were removed in SciPy 1.2;
# newer SciPy versions require porting these two helpers.
def imread(path):
    return scipy.misc.imread(path).astype(dp.float_)


def imsave(path, img):
    img = np.clip(img, 0, 255).astype(np.uint8)
    scipy.misc.imsave(path, img)


def to_bc01(img):
    """Convert a (height, width, channels) image to a batch of one in bc01."""
    return np.transpose(img, (2, 0, 1))[np.newaxis, ...]


def to_rgb(img):
    """Inverse of to_bc01()."""
    return np.transpose(img[0], (1, 2, 0))


def run():
    parser = argparse.ArgumentParser(
        description='Neural artistic style. Generates an image by combining '
                    'the subject from one image and the style from another.',
        formatter_class=argparse.ArgumentDefaultsHelpFormatter
    )
    parser.add_argument('--subject', required=True, type=str,
                        help='Subject image.')
    parser.add_argument('--style', required=True, type=str,
                        help='Style image.')
    parser.add_argument('--output', default='out.png', type=str,
                        help='Output image.')
    parser.add_argument('--init', default=None, type=str,
                        help='Initial image. Defaults to the subject image.')
    parser.add_argument('--init-noise', default=0.0, type=float_range,
                        help='Weight between [0, 1] to adjust the noise level '
                             'in the initial image.')
    parser.add_argument('--random-seed', default=None, type=int,
                        help='Random seed.')
    parser.add_argument('--animation', default='animation', type=str,
                        help='Output animation directory.')
    parser.add_argument('--iterations', default=500, type=int,
                        help='Number of iterations to run.')
    parser.add_argument('--learn-rate', default=2.0, type=float,
                        help='Learning rate.')
    parser.add_argument('--smoothness', type=float, default=5e-8,
                        help='Weight of smoothing scheme.')
    parser.add_argument('--subject-weights', nargs='*', type=weight_tuple,
                        default=[(9, 1)],
                        help='List of subject weights (conv_idx,weight).')
    parser.add_argument('--style-weights', nargs='*', type=weight_tuple,
                        default=[(0, 1), (2, 1), (4, 1), (8, 1), (12, 1)],
                        help='List of style weights (conv_idx,weight).')
    parser.add_argument('--subject-ratio', type=float, default=2e-2,
                        help='Weight of subject relative to style.')
    parser.add_argument('--pool-method', default='avg', type=str,
                        choices=['max', 'avg'], help='Subsampling scheme.')
    parser.add_argument('--network', default='imagenet-vgg-verydeep-19.mat',
                        type=str, help='Network in MatConvNet format.')
    args = parser.parse_args()

    if args.random_seed is not None:
        np.random.seed(args.random_seed)

    layers, pixel_mean = vgg_net(args.network, pool_method=args.pool_method)

    # Inputs, mean-subtracted as the VGG network expects
    style_img = imread(args.style) - pixel_mean
    subject_img = imread(args.subject) - pixel_mean
    if args.init is None:
        init_img = subject_img
    else:
        init_img = imread(args.init) - pixel_mean
    # Blend the initial image with Gaussian noise
    noise = np.random.normal(size=init_img.shape, scale=np.std(init_img)*1e-1)
    init_img = init_img * (1 - args.init_noise) + noise * args.init_noise

    # Setup network
    subject_weights = weight_array(args.subject_weights) * args.subject_ratio
    style_weights = weight_array(args.style_weights)
    net = StyleNetwork(layers, to_bc01(init_img), to_bc01(subject_img),
                       to_bc01(style_img), subject_weights, style_weights,
                       args.smoothness)

    # Repaint image
    def net_img():
        return to_rgb(net.image) + pixel_mean

    if not os.path.exists(args.animation):
        os.mkdir(args.animation)

    params = net.params
    learn_rule = dp.Adam(learn_rate=args.learn_rate)
    learn_rule_states = [learn_rule.init_state(p) for p in params]
    for i in range(args.iterations):
        # Save the current image as an animation frame
        imsave(os.path.join(args.animation, '%.4d.png' % i), net_img())
        cost = np.mean(net.update())
        for param, state in zip(params, learn_rule_states):
            learn_rule.step(param, state)
        print('Iteration: %i, cost: %.4f' % (i, cost))
    imsave(args.output, net_img())


if __name__ == "__main__":
    run()

--------------------------------------------------------------------------------
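The argument helpers above can be exercised without DeepPy or a GPU. The standalone sketch below re-implements `weight_tuple`, `weight_array`, `to_bc01` and `to_rgb` in plain NumPy (the `n_layers` parameter is a hypothetical addition; the script hard-codes 19) to show how the default `--style-weights` are normalized, how `--subject-ratio` scales the subject weights, and that the two layout conversions are inverses.

```python
import numpy as np


def weight_tuple(s):
    """Parse 'conv_idx,weight' into (int, float), as the CLI helper does."""
    conv_idx, weight = s.split(',')
    return int(conv_idx), float(weight)


def weight_array(weights, n_layers=19):
    """Scatter (conv_idx, weight) pairs into an array that sums to 1."""
    array = np.zeros(n_layers)
    for idx, weight in weights:
        array[idx] = weight
    norm = np.sum(array)
    if norm > 0:
        array /= norm
    return array


def to_bc01(img):
    # (height, width, channels) -> (1, channels, height, width)
    return np.transpose(img, (2, 0, 1))[np.newaxis, ...]


def to_rgb(img):
    # (1, channels, height, width) -> (height, width, channels)
    return np.transpose(img[0], (1, 2, 0))


# Default style weights: five layers with equal weight, 0.2 each after normalizing
style = weight_array([weight_tuple(s) for s in ['0,1', '2,1', '4,1', '8,1', '12,1']])
print(style[[0, 2, 4, 8, 12]])  # [0.2 0.2 0.2 0.2 0.2]

# Subject weights are normalized first, then scaled by --subject-ratio (2e-2)
subject = weight_array([(9, 1.0)]) * 2e-2
print(subject[9])  # 0.02

# Round trip between image layout and network layout
img = np.random.RandomState(0).rand(4, 5, 3)
batch = to_bc01(img)
print(batch.shape)  # (1, 3, 4, 5)
assert np.array_equal(to_rgb(batch), img)
```

Because both weight arrays are normalized independently, only the relative weights within each list matter; the balance between subject and style is set by `--subject-ratio` alone.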

/style_network.py:

--------------------------------------------------------------------------------

import numpy as np
import cudarray as ca
import deeppy as dp
from deeppy.base import Model
from deeppy.parameter import Parameter


class Convolution(dp.Convolution):
    """ Convolution layer wrapper

    This layer does not propagate gradients to filters. Also, it reduces
    memory consumption as it does not store fprop() input for bprop().
    """
    def __init__(self, layer):
        self.layer = layer

    def fprop(self, x):
        y = self.conv_op.fprop(x, self.weights.array)
        y += self.bias.array
        return y

    def bprop(self, y_grad):
        # Backprop to input image only
        _, x_grad = self.layer.conv_op.bprop(
            imgs=None, filters=self.weights.array, convout_d=y_grad,
            to_imgs=True, to_filters=False
        )
        return x_grad

    # Wrap layer methods
    def __getattr__(self, attr):
        # __getattr__ only runs when normal lookup fails, so delegate to the
        # wrapped layer. Guard 'layer' itself to avoid infinite recursion
        # before __init__() has set it.
        if attr == 'layer':
            raise AttributeError(attr)
        return getattr(self.layer, attr)


def gram_matrix(img_bc01):
    """Gram matrix of the feature maps; assumes a batch size of 1."""
    n_channels = img_bc01.shape[1]
    feats = ca.reshape(img_bc01, (n_channels, -1))
    gram = ca.dot(feats, feats.T)
    return gram


class StyleNetwork(Model):
    """ Artistic style network

    Implementation of [1].

    Differences:
    - The gradients for both subject and style are normalized. The original
      method uses pre-normalized convolutional features.
    - The Gram matrices are scaled wrt. # of pixels. The original method is
      sensitive to different image sizes between subject and style.
    - Additional smoothing term for visually better results.

    References:
    [1]: A Neural Algorithm of Artistic Style; Leon A. Gatys, Alexander S.
         Ecker, Matthias Bethge; arXiv:1508.06576; 08/2015
    """

    def __init__(self, layers, init_img, subject_img, style_img,
                 subject_weights, style_weights, smoothness=0.0):

        # Map weights (in convolution indices) to layer indices. Each weight
        # is attached to the activation layer that follows its convolution.
        self.subject_weights = np.zeros(len(layers))
        self.style_weights = np.zeros(len(layers))
        layers_len = 0
        conv_idx = 0
        for l, layer in enumerate(layers):
            if isinstance(layer, dp.Activation):
                self.subject_weights[l] = subject_weights[conv_idx]
                self.style_weights[l] = style_weights[conv_idx]
                if subject_weights[conv_idx] > 0 or \
                   style_weights[conv_idx] > 0:
                    layers_len = l+1
                conv_idx += 1

        # Discard layers after the last one with a nonzero weight
        layers = layers[:layers_len]

        # Wrap convolution layers for better performance
        self.layers = [Convolution(l) if isinstance(l, dp.Convolution) else l
                       for l in layers]

        # Setup network
        x_shape = init_img.shape
        self.x = Parameter(init_img)
        self.x.setup(x_shape)
        for layer in self.layers:
            layer.setup(x_shape)
            x_shape = layer.y_shape(x_shape)

        # Precompute subject features and style Gram matrices
        self.subject_feats = [None]*len(self.layers)
        self.style_grams = [None]*len(self.layers)
        next_subject = ca.array(subject_img)
        next_style = ca.array(style_img)
        for l, layer in enumerate(self.layers):
            next_subject = layer.fprop(next_subject)
            next_style = layer.fprop(next_style)
            if self.subject_weights[l] > 0:
                self.subject_feats[l] = next_subject
            if self.style_weights[l] > 0:
                gram = gram_matrix(next_style)
                # Scale gram matrix to compensate for different image sizes
                n_pixels_subject = np.prod(next_subject.shape[2:])
                n_pixels_style = np.prod(next_style.shape[2:])
                scale = (n_pixels_subject / float(n_pixels_style))
                self.style_grams[l] = gram * scale

        # Smoothing term: a normalized 3x3 Laplacian kernel applied to each
        # color channel separately
        self.tv_weight = smoothness
        kernel = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=dp.float_)
        kernel /= np.sum(np.abs(kernel))
        self.tv_kernel = ca.array(kernel[np.newaxis, np.newaxis, ...])
        self.tv_conv = ca.nnet.ConvBC01((1, 1), (1, 1))

    @property
    def image(self):
        # Current image as a NumPy array in bc01 layout
        return np.array(self.x.array)

    @property
    def params(self):
        # Only the image is optimized; the network weights stay fixed
        return [self.x]

    def update(self):
        # Forward propagation, keeping the features of weighted layers
        next_x = self.x.array
        x_feats = [None]*len(self.layers)
        for l, layer in enumerate(self.layers):
            next_x = layer.fprop(next_x)
            if self.subject_weights[l] > 0 or self.style_weights[l] > 0:
                x_feats[l] = next_x

        # Backward propagation, adding each layer's loss gradient on the way
        grad = ca.zeros_like(next_x)
        loss = ca.zeros(1)
        for l, layer in reversed(list(enumerate(self.layers))):
            if self.subject_weights[l] > 0:
                diff = x_feats[l] - self.subject_feats[l]
                norm = ca.sum(ca.fabs(diff)) + 1e-8
                weight = float(self.subject_weights[l]) / norm
                grad += diff * weight
                loss += 0.5*weight*ca.sum(diff**2)
            if self.style_weights[l] > 0:
                diff = gram_matrix(x_feats[l]) - self.style_grams[l]
                n_channels = diff.shape[0]
                x_feat = ca.reshape(x_feats[l], (n_channels, -1))
                style_grad = ca.reshape(ca.dot(diff, x_feat), x_feats[l].shape)
                # Epsilon avoids division by zero, as for the subject term
                norm = ca.sum(ca.fabs(style_grad)) + 1e-8
                weight = float(self.style_weights[l]) / norm
                style_grad *= weight
                grad += style_grad
                loss += 0.25*weight*ca.sum(diff**2)
            grad = layer.bprop(grad)

        if self.tv_weight > 0:
            # Subtracting the Laplacian makes descent steps pull each pixel
            # towards the mean of its neighbors
            x = ca.reshape(self.x.array, (3, 1) + grad.shape[2:])
            tv = self.tv_conv.fprop(x, self.tv_kernel)
            tv *= self.tv_weight
            grad -= ca.reshape(tv, grad.shape)

        ca.copyto(self.x.grad_array, grad)
        return loss

--------------------------------------------------------------------------------
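The style gradient in `StyleNetwork.update()` relies on the identity that, for G = F F^T and a fixed symmetric target A, the gradient of 1/4 * sum((G - A)^2) with respect to F is (G - A) F. The standalone NumPy sketch below checks this against central finite differences and then applies the per-layer L1 normalization used in `update()`. It assumes features already flattened to (channels, pixels), as `gram_matrix()` does for a batch of one; `style_loss_and_grad` is a hypothetical helper, not part of the repository.

```python
import numpy as np


def gram_matrix(feats):
    """Gram matrix G = F F^T of a (channels, pixels) feature matrix."""
    return np.dot(feats, feats.T)


def style_loss_and_grad(feats, target_gram):
    """Hypothetical helper: unweighted style term and its gradient.

    loss = 1/4 * sum((G - A)**2) and grad = (G - A) F, where G = F F^T.
    The gradient formula assumes A is symmetric, which holds for a Gram matrix.
    """
    diff = gram_matrix(feats) - target_gram
    loss = 0.25 * np.sum(diff ** 2)
    grad = np.dot(diff, feats)
    return loss, grad


rng = np.random.RandomState(0)
n_channels, n_pixels = 4, 6
style_feats = rng.randn(n_channels, n_pixels)
target = gram_matrix(style_feats)
feats = rng.randn(n_channels, n_pixels)

loss, grad = style_loss_and_grad(feats, target)

# Central finite differences, one feature entry at a time
eps = 1e-6
numeric = np.zeros_like(feats)
for idx in np.ndindex(feats.shape):
    plus = feats.copy()
    plus[idx] += eps
    minus = feats.copy()
    minus[idx] -= eps
    loss_plus, _ = style_loss_and_grad(plus, target)
    loss_minus, _ = style_loss_and_grad(minus, target)
    numeric[idx] = (loss_plus - loss_minus) / (2 * eps)

print('max abs error:', np.max(np.abs(numeric - grad)))

# update() rescales each layer's gradient to unit L1 norm before weighting it
normalized = grad / (np.sum(np.abs(grad)) + 1e-8)
print('L1 norm after normalization:', np.sum(np.abs(normalized)))
```

The L1 normalization makes each layer's contribution depend only on its weight, not on the raw magnitude of its features, which is the first of the differences listed in the `StyleNetwork` docstring.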
