├── cassandra.md

├── choosing_a_database.md

├── database-foundation.md

├── database-research.pdf

├── drawio

├── foundation_of_data_systems

└── storage_and_retrieval

├── images

├── ![]().png

├── 2020-10-09-08-07-27.png

├── 2020-10-09-08-22-44.png

├── 2020-10-09-08-23-40.png

├── 2020-10-09-08-25-53.png

├── 2020-10-09-08-26-23.png

├── 2020-10-09-09-45-09.png

├── 2020-10-09-09-51-37.png

├── 2020-10-15-10-14-29.png

├── 2020-10-15-10-33-23.png

├── 2020-10-15-10-41-34.png

├── 2020-10-15-11-02-23.png

├── cassandra

│ ├── 149f7c7b.png

│ ├── 2a482058.png

│ ├── 2e4598fe.png

│ ├── 3580057f.png

│ ├── 5abf55f1.png

│ └── e8f5bac5.png

├── choosing_database

│ ├── ab021a97.png

│ └── cf5618a7.png

├── foundation_of_data_systems.png

├── indexing.png

├── intensive

│ ├── 2020-09-30-11-24-08.png

│ ├── 2020-09-30-12-43-17.png

│ ├── 2020-09-30-12-45-56.png

│ ├── 2020-09-30-12-50-56.png

│ ├── 2020-09-30-13-39-23.png

│ ├── 2020-09-30-13-39-31.png

│ ├── 2020-09-30-13-58-58.png

│ ├── 2020-09-30-14-09-32.png

│ ├── 2020-09-30-14-12-27.png

│ ├── 2020-09-30-15-29-21.png

│ ├── 2020-09-30-15-33-44.png

│ ├── 2020-09-30-15-35-46.png

│ ├── 2020-09-30-15-53-48.png

│ ├── 2020-09-30-15-59-51.png

│ ├── 2020-09-30-21-37-42.png

│ ├── 2020-09-30-21-41-30.png

│ ├── 2020-10-01-09-54-22.png

│ ├── 2020-10-01-09-54-24.png

│ └── 2020-10-01-10-32-50.png

├── mongodb-replicaset

│ ├── 0dde5a21.png

│ ├── 10d422c0.png

│ ├── 2b6a83ef.png

│ ├── 3265315e.png

│ ├── 32cf40dc.png

│ ├── 378a5b1f.png

│ ├── 42075f95.png

│ ├── 53f7d13b.png

│ ├── 5de8cac9.png

│ ├── 648e69b1.png

│ ├── 676c1811.png

│ ├── 93653734.png

│ ├── 937a3729.png

│ ├── 99c0ddfc.png

│ ├── 9a3d1e67.png

│ ├── b2aa36d1.png

│ ├── cda7fe4a.png

│ ├── d1b63713.png

│ ├── de1f1c67.png

│ └── e7b2e770.png

├── pool.png

└── readme.md

│ ├── 2020-10-18-17-14-25.png

│ ├── 2020-10-18-17-14-32.png

│ ├── 2020-10-18-17-14-44.png

│ ├── 2020-10-18-17-22-30.png

│ ├── 2020-10-18-18-07-48.png

│ ├── 2020-10-18-18-11-26.png

│ ├── 2020-10-18-20-52-24.png

│ ├── 2020-10-18-20-53-08.png

│ ├── 2020-10-18-21-13-33.png

│ ├── 2020-10-18-21-13-59.png

│ ├── 2020-10-18-21-14-27.png

│ ├── 2020-10-18-21-14-37.png

│ ├── 2020-10-18-21-15-56.png

│ ├── 2020-10-18-21-17-15.png

│ ├── 2020-10-18-21-19-04.png

│ ├── 2020-10-19-07-27-09.png

│ ├── 2020-10-19-07-27-12.png

│ ├── 2020-10-19-08-11-48.png

│ ├── 2020-10-19-09-31-29.png

│ ├── 2020-10-19-09-46-37.png

│ ├── 2020-10-19-09-47-50.png

│ ├── 2020-10-19-09-51-23.png

│ ├── 2020-10-19-11-12-43.png

│ ├── 2020-10-19-15-25-19.png

│ ├── 2020-10-19-15-25-26.png

│ ├── 2020-10-19-16-56-48.png

│ ├── 2020-10-19-17-08-49.png

│ └── 2020-10-19-17-21-23.png

├── mongodb.md

├── nosql.md

├── postgresql.md

├── readme.md

├── relational_vs_nosql.md

├── scaling.md

└── storage_engine.md

/cassandra.md:

--------------------------------------------------------------------------------

1 | # Cassandra

2 | ## Why use Cassandra ?

3 | - Is a good fit for several nodes, a great fit if your application is expected to require `dozen of nodes`.

4 | - Lots of writes, statistics, analysis. Consider your application from the perspective of the ratio of reads to write. `Excellent throughput on writes`.

5 | - Has out of the box support for `geographical distribution` of data. Easily to replicate data across multiple data centers.

6 |

7 | ## Cassandra key features

8 | - **Linear scalability**: You can add more and more nodes to the cluster and it will still have the top performance

9 | - **Fault tolerance**: You don't have to worry about a master node going down and the whole things stop to work

10 | - **Commodity hardware**: You do not have to buy specialized servers in order to run cassandra you can use commodity hardware

11 | - **Highly-performant**: For realtime application, different with hadoop when they run at nights, at batches. Those traditional databases are not designed for realtime

12 |

13 | ## Database distribution

14 | To distribute the trows across the nodes, a partitioner is used. The partitioner uses an algorithm to determin which node a given row of data will go to

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 | ### Virtual nodes

25 | Instead of a node being responsible for just one token range, it is instead responsible for many small token ranges(by default, 256 of them)

26 |

27 | - Virtual nodes were created to make it easier to add new nodes to a cluster while keeping the cluster balanced

28 | - When a new ndoe is added, it receives many small token range slices from the existing nodes, to maintain a balanced cluster

29 |

30 |

31 |

32 | ## Usecases

33 | - Sensor data

34 | - Recommendation engine, gaming, fraud detection, location based services

35 |

36 | ## Data modeling [1]

37 | ### Query-first design

38 | In cassandra you don't start with the data model, you start with the query model

39 |

40 | ## Cassandra configuration

41 | ### Snitch

42 | Snitch is how the nodes in a cluster know aout the topology of the cluster

43 |

44 |

45 | ## Read/ Write data [2]

46 |

47 |

48 | ### Gossip

49 | Every one second, each node communicates with up to theree other nodes, exchanging information about ifself and all the other nodes that it has information about

50 |

51 | - Gossip is the **internal** communication method for nodes in a cluster to talk to each other

52 | - For **external communication** such as an application to a database, CQL or Thrift are used

53 |

54 |

55 |

56 | ## Reference

57 | [1] Cassandra: The Definitive Guide (https://learning.oreilly.com/library/view/cassandra-the-definitive/9781098115159/)

58 |

59 | [2] https://www.udemy.com/course/apache-cassandra/

60 | https://www.javatpoint.com/use-cases-of-cassandra

--------------------------------------------------------------------------------

/choosing_a_database.md:

--------------------------------------------------------------------------------

1 | # Choosing database

2 |

3 | [7 Database Paradigms - YouTube](https://www.youtube.com/watch?v=W2Z7fbCLSTw)

4 |

5 | ## Columned based

6 | - `Decentralized and can scale horizontally`, a popular usecases for scaling a large amount of time-series data like records from an IOT device, weather sensor, on in the case of netflix, a history of different shows watch you watched

7 | - It's often used in situation where you have `frequent write` but `infrequent update and reads`

8 | - When you need to retrieve columns of data in one disk block to reduce disk I/O.

9 | - It's not going to be your primary app database. For that you need something more general purpose

10 |

11 | ## Document oriented database

12 | Tradeoff: Schemaless relation-ish queries without joins

13 |

14 |

15 |

16 | Reads from a frontend application are much faster however writing or updating data tend to be more complex, document databases are far more general purpose than the other options

17 |

18 | For developers, they are easier to use, best for mosts apps, games, iot and many other usecases. If you are not sure how your data is structured at this point, a document database is properly

19 |

20 |

21 | ## Graph database

22 |

23 |

24 | ## Chosing your database

25 | Two reasons to consider a NoSQL database: programmer productivity and data access performance.

26 | - To improve programmer productivity by using a database that better matches an application’s

27 | needs.

28 | - To improve data access performance via some combination of handling larger data volumes,

29 | reducing latency, and improving throughput.

30 |

31 | It's essential to test your expectation about programmer productivity and performance before commiting to using a NoSQL technology

32 |

33 | ### Sticking with the default

34 | There are many cases you’re better off sticking with the default option of a relational database:

35 | - You can easily find people with the experience of using them.

36 | - They are mature, so you are less likely to run into the rough edges of new technology

37 | - Picking a new technology will always introduce a risk of problems should things run into difficulties

38 |

39 |

40 | ---

41 | ### SQL

42 | traditional SQL databases are missing two important capabilities — linear write scalability (i.e. automatic sharding across multiple nodes) and automatic/zero-data loss failover.

43 |

44 | This means data volumes ingested cannot exceed the max write throughput of a single node.

45 |

46 | Additionally, some temporary data loss should be expected on failover

47 |

48 | . Zero downtime upgrades are also very difficult to achieve in the SQL database world.

49 |

50 | ### NoSQL

51 | NoSQL DBs are usually distributed in nature where data gets partitioned or sharded across multiple nodes.

52 | They mandate denormalization which means inserted data also needs to be copied multiple times to serve the specific queries you have in mind.

--------------------------------------------------------------------------------

/database-foundation.md:

--------------------------------------------------------------------------------

1 | ## 1. Foundation of data systems [1]

2 |

3 |

4 | ### 1.1. Reliability

5 | - `Tolerate` hardware and software & software faults

6 | - The system should continue to work correctly even in the face of adversity.

7 |

8 | #### 1.1.1. Hardware faults

9 | Anyone who has worked with large datacenters can tell you that these things happen all the time when you have a lot of machines:

10 | - Hard disk crash

11 | - RAM becomes faulty

12 | - The power grid has a blackout

13 | - Someone unplugs the wrong network cable.

14 |

15 | We can achieve `reliability` by using software fault-tolerance techniques in preference or in addition to hardware

16 | redundancy.

17 |

18 | For example: A single-server system

19 | requires planned `downtime` if you need to reboot the machine. Whereas a system that can tolerate machine failure can be patched one node at a time, without downtime of the entire system.

20 |

21 | #### 1.1.2. Software errors

22 | - Hardware faults as being random and independent from each other. It is unlikely that a large number of hardware components will fail at the same time.

23 | - A software bug that causes every instance of an application server to crash when

24 | given a particular bad input

25 | - A service that the system depends on that slows down, becomes unresponsive, or

26 | starts returning corrupted response

27 | - Cascading failures, where a small fault in one component triggers a fault in

28 | another component, which in turn triggers further faults

29 |

30 | Solutions:

31 | - Carefully thinking about assumptions and interactions in the

32 | system

33 | - Thorough testing

34 | - Process isolation

35 | - Allowing processes to crash and restart

36 | - Measuring, monitoring, and analyzing system behavior in production

37 |

38 | #### 1.1.3. Human errors

39 | Configuration errors by operators were the leading cause of outages, whereas hard‐

40 | ware faults (servers or network) played a role in only 10–25% of outages

41 |

42 | How we make our system reliables:

43 | - Test thoroughly at all levels, from unit tests to whole-system integration tests and

44 | manual tests

45 | - Make it fast to roll back configuration changes, roll

46 | out new code gradually

47 | - Set up detailed and clear monitoring, such as performance metrics and error

48 | rates

49 |

50 | #### 1.1.4. How important is reliability?

51 | - Bugs in business applications cause `lost productivity`

52 | - Outages of ecommerce sites can have huge costs in terms of `lost revenue

53 | and damage to reputation`.

54 |

55 |

56 | ### 1.2. Scalability

57 | - Measuring load & performance

58 | - Latency percentiles, throughput

59 | - As the system grows, there should be reasonable ways of dealing with that growth. Scalability is the term we use to describe a system’s ability to cope with increased

60 | load.

61 |

62 | #### 1.2.1. Describing Load

63 | - Post tweet: A user can publish a new message to their followers (4.6k requests/sec on aver‐

64 | age, over 12k requests/sec at peak)

65 |

66 | - Home timeline: A user can view tweets posted by the people they follow (300k requests/sec)

67 |

68 |

69 |

70 |

71 |

72 | #### 1.2.2. Describing performance

73 | If the 95th percentile response time

74 | is 1.5 seconds, that means 95 out of 100 requests take less than 1.5 seconds

75 |

76 | ### 1.3. Maintainability

77 | - Operability

78 | - Simplicty & evolvability

79 |

80 | #### 1.3.1. Operability

81 | Make it easy for operations teams to keep the system running smoothly.

82 |

83 | #### 1.3.2. Simplicity

84 | Make it easy for new engineers to understand the system, by removing as much

85 | complexity as possible from the system. (Note this is not the same as simplicity

86 | of the user interface.)

87 |

88 | #### 1.3.3. Evolvability

89 | Make it easy for engineers to make changes to the system in the future, adapting

90 | it for unanticipated use cases as requirements change. Also known as extensibility, modifiability, or plasticity.

91 |

92 |

93 |

94 | ## 2. Data model and query languages

95 | ### 2.1. The Birth of NoSQL

96 | - A need for greater scalability than relational databases can easily achive, including very large datasets or very high write throughput

97 | - Specialized query operations that are not well supported by the relational model

98 | - Frustration with the restrictiveness of relational schemas, and a desire for a more

99 | dynamic and expressive data model

100 |

101 |

102 |

103 |

104 | ### 2.2. Document data model and JSON

105 | The JSON representation has better `locality` than the multi-table schema. If you want to fetch a profile in the relational example, you need to either

106 | perform multiple queries or perform a messy multi-way join between the users table and its subordinate tables. In the JSON representation, all the relevant information is in one place, and one query is sufficient.

107 |

108 | - If the data in your application has a document-like structure (i.e., a tree of one-to-many relationships, where typically the entire tree is loaded at once), then it’s probably a good idea to use a document model.

109 |

110 | #### 2.2.1. Advantage

111 | - Flexibility in the document model: Do not enforce any schema on the data in documents

112 | - Data locality for queries: If your application often

113 | needs to access the entire document (for example, to render it on a web page), there is

114 | a performance advantage to this storage locality

115 | But it is generally recommended that you keep

116 | documents fairly small and avoid writes that increase the size of a document

117 |

118 |

119 | #### 2.2.2. Limitation

120 | - you cannot refer directly to a nested item within a document, but instead you need to say something like “the second item in the list of positions for user 251”

121 | - Poor support for join.

122 | - Many-to-many relationships may never be needed in an analytics application that uses a document database to record which events occurred at which time

123 |

124 | ### 2.3. Schema changes

125 |

126 |

127 | Schema changes have a bad reputation of being slow and requiring downtime.

128 |

129 | MySQL is a notable exception—it

130 | copies the entire table on `ALTER TABLE` , which can mean minutes or even hours of

131 | downtime when altering a large table although various tools exist to work around

132 | this limitation

133 |

134 | #### MapReduce Querying

135 | MapReduce is a programming model for processing large amounts of data in bulk

136 | across many machines, popularized by Google

137 |

138 |

139 |

140 |

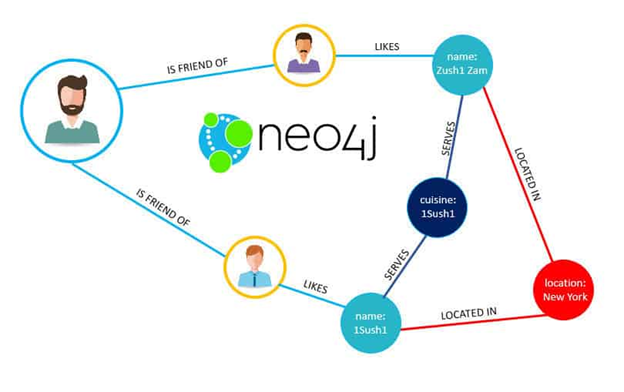

141 | ### 2.4. Graph-Like Data Models

142 | - If your application has mostly one-to-many relationships (tree-structured data) or no relationships between records, the document

143 | model is appropriate.

144 |

145 | - If many-to-many relationships are very common in your data? The relational model can handle simple cases of many-to-many relationships, but as the con‐

146 | nections within your data become more complex, it becomes more natural to start

147 | modeling your data as a graph

148 |

149 | A graph consists of two kinds of object: Vertices and edges. Many kinds of data can be modeled as a graph. Typical examples include:

150 |

151 | - Social graphs: Vertices are people, and edges indicate which people know each other.

152 | - The web graph: Vertices are web pages, and edges indicate HTML links to other pages.

153 | Well-known algorithms can operate on these graphs:

154 | - Car navigation system: search for the shortest path between two points in a road network

155 | - PageRank can be used on the web graph to determine the popularity of a web page

156 | and thus its ranking in search results.

157 |

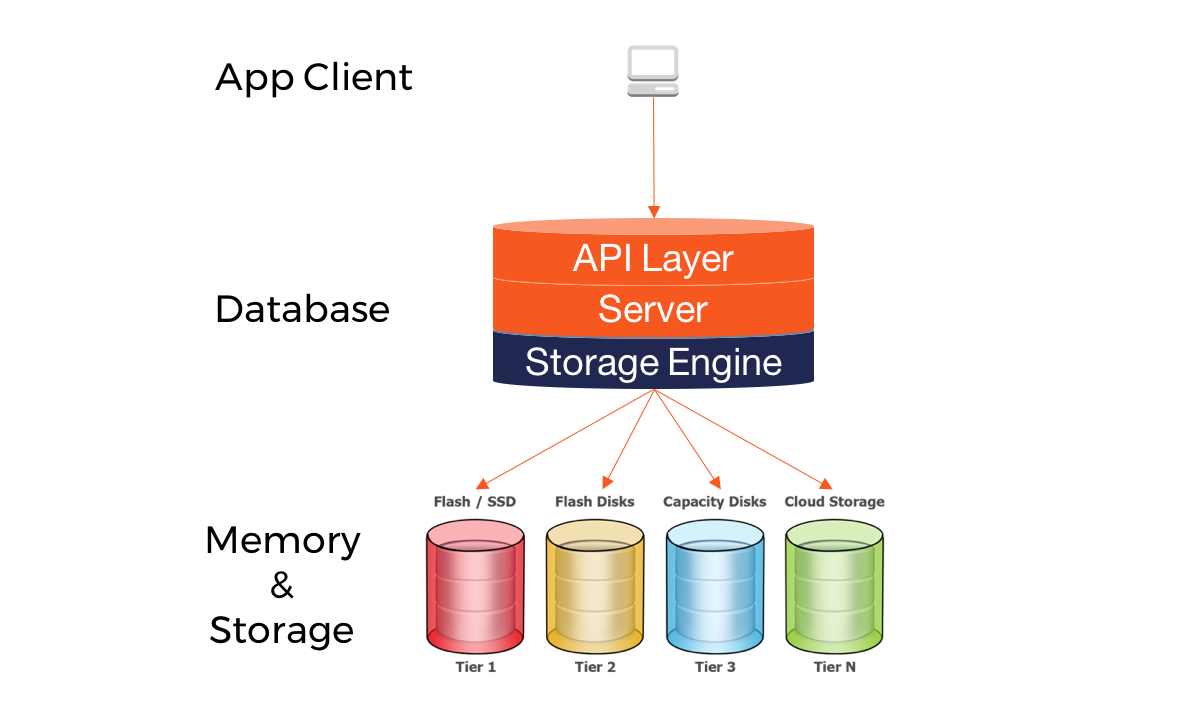

158 | ## 3. Storage and Retrieval

159 | - Why should you, as an application developer, care how the database handles storage and retrieval internally. You do need to select a storage engine that is appropriate for your application, from the many that are available.

160 | - In order to tune a storage engine to perform well on your kind of workload, you need to have a rough idea of what the storage engine is doing under the hood.

161 | - There is a big difference between storage engines that are optimized for transactional workloads and those that are optimized for analytics.

162 |

163 | Two families of storage engines:

164 | - Log-structured storage engines

165 | - Page oriented storage engines such as B-Trees.

166 |

167 | ### 3.1. Hash index

168 | - Is usually implemented as a hash map.

169 | - Let’s say our data storage consists only of appending to a file, Whenever you append a

170 | new key-value pair to the file, you also update the hash map to reflect the offset of the

171 | data you just wrote

172 | - When you want to look up a value, use the hash map to find the offset in the data file, seek to that location, and read the value.

173 |

174 | How we avoid eventually running out of disk space?

175 | - break the log into segments of a certain size by closing a segment file when it reaches a certain size

176 | - making subsequent writes to a new segment file

177 | - We can then perform compaction on these segments. Compaction means throwing away duplicate

178 | keys in the log, and keeping only the most recent update for each key.

179 |

180 | - merge several segments together at the same time as performing the compaction

181 |

182 | Hash index implementation, append-only log: The order of key-value pairs in the file does not matter

183 | - File format: It’s faster and simpler to use a binary format

184 | - Deleting records: If you want to delete a key and its associated value, you have to append a special deletion record to the data file (sometimes called a tombstone)

185 | - Crash recovery: If the database is restarted, the in-memory hash maps are lost.

186 | storing a snapshot of each segment’s hash map on disk, which can be loaded into memory more quickly.

187 | - Partially written records: The database may crash at any time, including halfway through appending a record to the log. Bitcask files include checksums, allowing such corrupted parts

188 | of the log to be detected and ignored.

189 | - Concurrency control: As writes are appended to the log in a strictly sequential order, a common implementation choice is to have only one writer thread

190 |

191 | #### append-only design turns out to be good for several reasons

192 | - Appending and segment merging are sequential write operations, which are gererally much faster than random writes

193 | - Concurrency and crash recovery are much simpler if segment files are append only or immutable.

194 | #### Limitation

195 | - The hash table must fit in memory, so if you have a very large number of keys, you’re out of luck.

196 |

197 | ### SSTables(Sorted String Table) and LSMTrees

198 | - The sequence of key-value pairs is sorted by key.

199 | Advantages over log segments with hash indexes:

200 | - Merging segments is simple and efficient even if the files are bigger than the

201 | available memory

202 |

203 | - In order to find a particular key in the file, you no longer need to keep an index

204 | of all the keys in memory. You still need an in-memory index to tell you the offsets for some of the keys, but it can be sparse: one key for every few kilobytes of segment file is sufficient

205 |

206 |

207 |

208 | #### Constructing and maintaining SSTables

209 | - How do you get your data to be sorted by key in the first place? Our

210 | incoming writes can occur in any order.? We can insert keys in order and read them back in sorted order with Red-black trees, AVL trees

211 | Working flow:

212 | - When a write comes in -> add it to an in-memory balanced tree data structure(red-black) tree. This is sometimes called a memtable.

213 | - When the memtable gets bigger than some threshold -> write it out to disk as an SSTable file

214 | - The new SSTable file becomes the most recent segment of the database.

215 | - In order to serve a read request, first try to find the key in the memtable, then in the most recent on-disk segment, then in the next-older segment,

216 | - From time to time, run a merging and compaction process in the background to combine segment files and to discard overwritten or deleted values.

217 |

218 | #### Making an LSM-tree out of SSTables

219 |

220 | ### B-Trees

221 | B-Trees keep key-value pairs sorted by key, which allows efficient value lookups and range queries.

222 |

223 | Log structured indexes break the database down into variable-size segments typically several megabytes or more in size, and always write a segment sequentially

224 |

225 | B-trees break the databaes down into fixed-size blocks or pages, traditionally 4KB in size(sometimes bigger) and read or write one page at a

226 | time. This design corresponds more closely to the underlying hardware, as disks are

227 | also arranged in fixed-size blocks.

228 |

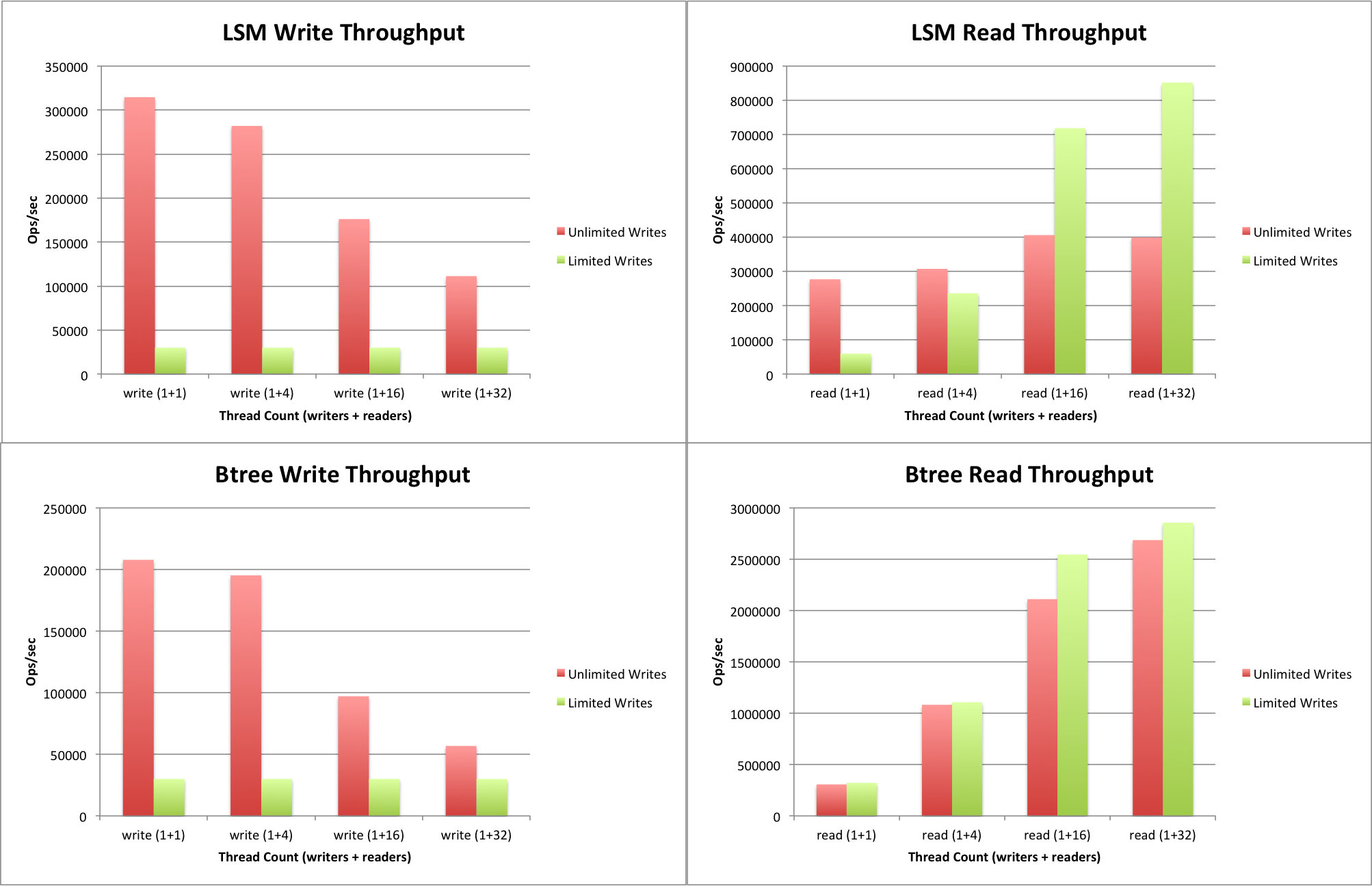

229 | ### Decding factor between B-tree and LSM trees

230 | https://rkenmi.com/posts/b-trees-vs-lsm-trees#:~:text=Modern%20databases%20are%20typically%20represented,in%20fixed%20size%20page%20segments.

231 | - B-Trees typically modify entries in-place

232 | - LSM trees on the other hand append entries and discard stale entries from time to time

233 | - B-Trees only have to worry about 1 unique key

234 | - LSM trees will potentially have duplicate keys

235 |

236 | If `reads` are a concern, then it may be worthwhile to look into B-Trees instead of LSM Trees. In a nutshell, if we do a simple database query with LSM Trees, we'll first look at the memtable for the key. If it doesn't exist there, then we look at the most recent SSTable, and if not there, then we look at the 2nd most recent SSTable, and onwards. This means that if the key to be queried doesn't exist at all, then LSM Trees can be quite slow.

237 |

238 | Otherwise if writes are a concern, LSM Trees are more attractive due to sequential writes. In general, sequential write is a lot faster than random writes, especially on magnetic hard disks. Do note though, that the maximum write throughput can be more unpredictable than B-Trees due to the periodic compaction and merging going on in the background.

239 |

240 | ### Full-text search and fuzzy indexes

241 | - full-text search engines commonly allow a search for one word to be expanded to include synonyms of the word

242 | - ignore grammatical variations of words

243 | - search for occurences of words near each other in the same document

244 | - Cope with typos in documents or queries

245 |

246 | ### Transaction Processing or Analytics? OLTP, OLAP

247 |

248 | There was a trend for companies to stop using their OLTP systems for analytics

249 | purposes, and to run the analytics on a separate database instead. This separate data‐

250 | base was called a data warehouse.

251 |

252 | ### Data warehousing

253 | A data warehouse, by contrast, is a separate database that analysts can query to their

254 | hearts’ content, without affecting OLTP operations

255 | Data warehous contains a read-only copy of the data in all the various OLTP systems in the company

256 |

257 |

258 | Advatange of using data warehouse: rather than querying OLTP systems directly for analytics, is that the data warehouse can be optimized for analytic access patterns.

259 |

260 | ### Column-Oriented Storage

261 | - Instead of loading all of those rows from disk to memory, parse them, filter out those that don't meet the required conditions. That can take a long time

262 | - The idea behind column-oriented storage is don't store all the values from one row together, but store all the values from each column together instead. If each column is stored in a separate file, a query only needs to read and parse those columns

263 | that are used in that query, which can save a lot of work

264 |

265 | ### Encoding

266 | - XML, JSON, binary format

267 | - Apache thrift, Message pack, gRPC

268 | - Avaro

269 |

270 | ## Distributed data

271 | There are various reasons why you might want to distribute a database across multi‐

272 | ple machines

273 | - Scalability

274 | If your data volume, read load, or write load grows bigger than a single machine

275 | can handle, you can potentially spread the load across multiple machines.

276 |

277 | - Fault tolerance/high availability

278 | If your application needs to continue working even if one machine (or several

279 | machines, or the network, or an entire datacenter) goes down, you can use multi‐

280 | ple machines to give you redundancy. When one fails, another one can take over.

281 |

282 | - Latency

283 | If you have users around the world, you might want to have servers at various

284 | locations worldwide so that each user can be served from a datacenter that is geo‐

285 | graphically close to them

286 |

287 | ### Replication

288 | Keeping a copy of the same data on multiple machines that are connected via a network. Reasons:

289 | - To keep data geographically close to your users(reduce latency)

290 | - To allow the system to continue working even if some of its parts have failed(increase availability)

291 | - Scale out the number of machines that can serve read queries(increase read throughput)

292 | #### Leader and followers

293 | - One of the replicas is designated the leader

294 | - The other replicas are known as followers

295 | - When a client wants to read from the database, it can query either the leader or any of the followers. However, writes are only accepted on the leader

296 |

297 |

298 | ### Setting Up New Followers

299 | - Take a consistent snapshot of the leader’s database at some point in time—if pos‐

300 | sible, without taking a lock on the entire database.

301 | - Copy the snapshot to the new follower node.

302 | - The follower connects to the leader and requests all the data changes that have

303 | happened since the snapshot was taken.This requires that the snapshot is associ‐

304 | ated with an exact position in the leader’s replication log.MySQL calls it the binlog coordinates.

305 | - When the follower has processed the backlog of data changes since the snapshot,

306 | we say it has caught up. It can now continue to process data changes from the

307 | leader as they happen.

308 | ### Problems with Replication Lag:

309 | The delay between a write happening on the leader and being reflected on a follower—the replication lag

310 | - Leader-based replication requires all writes to go through a single node,

311 | - Read-only queries can go to any replica

312 |

313 | **read-after-write consistency**:

314 | - When reading something that the user may have modified, read it from the leader; otherwise, read it from a follower.

315 | - For example, user profile information on a social network is nor‐

316 | mally only editable by the owner of the profile,

317 | **Monotonic Reads**: Monotonic reads is that user shouldn't see things moving backward in time

318 | - Make sure that each user always makes their reads from the same replica. For example: the replica can be choosen based on a hash of the UserID, rather than randomly. If that replica failed, the user's queries will need to be rerouted to

319 | another replica.

320 |

321 | **Consistent Prefix Reads**:

322 | Anomaly: If some partitions are replicated slower than others, an observer may see the

323 | answer before they see the question.

324 | This guarantee says that if a sequence of writes happens in a certain order,

325 | then anyone reading those writes will see them appear in the same order.

326 |

327 | One solution is to make sure that any writes that are causally related to each other are

328 | written to the same partition

329 |

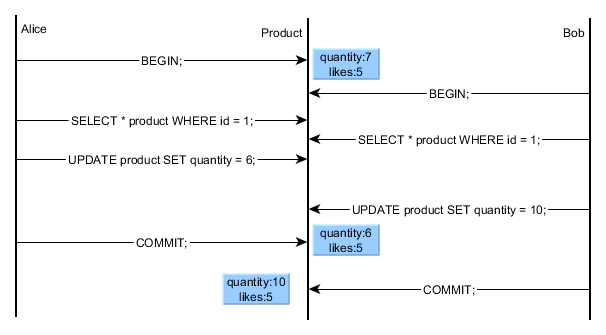

330 | ### Solutions for Replication Lag

331 | When working with an eventually consistent system, it is worth thinking about how

332 | the application behaves if the replication lag increases to several minutes or even

333 | hours.

334 |

335 | However, if the result is a bad expe‐

336 | rience for users, it’s important to design the system to provide a stronger guarantee,

337 | such as read-after-write

338 |

339 | ### Multi-leader replication

340 | - Allow more than one node to accept writes

341 | Usecases:

342 | - Multi-datacenter operation: You can have a leader in each datacenter

343 |

344 |

345 |

346 | Compare how the single-leader and multi-leader configurations fare in a multi-

347 | datacenter deployment:

348 | - Performance: every write can be processed in the local datacenter

349 | and is replicated asynchronously to the other datacenters. Thus, the inter-

350 | datacenter network delay is hidden from users, which means the perceived per‐

351 | formance may be better.

352 | - each datacenter can continue operating independently of the others,

353 | and replication catches up when the failed datacenter comes back online.

354 |

355 | Downside:

356 | - the same data may be concurrently modified in two different datacenters

357 | - those write conflicts must be resolved

358 |

359 | #### Synchronous Versus Asynchronous Replication

360 | - Synchronous: The leader waits until follower has confirmed that it receive the write before reporting success to user

361 | - Asynchronous: the leader send the message, doesn't wait for a response from the follower

362 | It's impractical for all followers to be synchronous: Any one node outage would cause the whole system to grind to a halt.

363 | **semi-synchronous**: one of the followers is synchronous, and the others are asynchronous. If the synchronous follower becomes unavailable or slow, one of the asynchronous followers is made synchronous.

364 |

365 | ## The trouble with distributed system

366 | ### Unreliable Networks

367 | network problems can be surprisingly common, Public cloud services such as EC2 are notorious for having frequent transient net‐work glitches. nobody is immune from network problems

368 | - Your request may have been lost(perhaps someone unplugged a network cable) (perhaps someone unplugged a network cable).

369 | - Your request may be waiting in a queue and will be delivered later

370 | - The remote node may have failed

371 | - The remote node may have temporarily stopped responding (perhaps it is expe‐

372 | riencing a long garbage collection pause), but it

373 | will start responding again later.

374 | - The remote node may have processed your request, but the response has been

375 | lost on the network

376 | - The remote node may have processed your request, but the response has been

377 | delayed and will be delivered later

378 | Solution: Timeout- after some time you give up waiting

379 | and assume that the response is not going to arrive

380 |

381 |

382 | ## References

383 | [1] Martin Kleppmann: [Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems](https://www.amazon.com/Designing-Data-Intensive-Applications-Reliable-Maintainable/dp/1449373321)

384 |

--------------------------------------------------------------------------------

/database-research.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/database-research.pdf

--------------------------------------------------------------------------------

/drawio/foundation_of_data_systems:

--------------------------------------------------------------------------------

1 | 7Vxtb5w4EP41K919SMQ75GOaps1JiVo1lXr96AUDvgCmxuzL/fqzwbCA2Zd0Ydk9baoGGNtgZuaZGXuGzPSHePWZgDR8wR6MZprirWb6x5mmqY6jsQOnrEuKad6VhIAgT3TaEF7Rv1AQFUHNkQezVkeKcURR2ia6OEmgS1s0QAhetrv5OGo/NQUBlAivLohk6g/k0bCkOqayoT9BFITVk1VFtMSg6iwIWQg8vGyQ9MeZ/kAwpuVZvHqAEWdexZdy3KctrfXECEzoIQOitR+Cv95AsviSpsv75886+nVjirnRdfXC0GPvLy4xoSEOcAKixw31A8F54kF+V4Vdbfo8Y5wyosqI/0BK10KYIKeYkUIaR6K1fCZ/0NZXEaQM58SFO+ZfqQQgAaQ7+mk1w5mmQhxDStZsHIERoGjRngcQKhPU/TZcZSeCse9gsjUFkxkryfpvPv7WrC5/itsVFx9Xrau1uJpAOPqUwnGuwtkpHGNK4Yj7LkCUiyfNNCti0/3gY/bCTbFZv3JcNdxkBePvWQfVTFebRnYW8OMnLkA2f5ywLtjnU+c+ClB+yNYZhXH1IDbv8lnlSElb2rqwDBGFrykoWL9kLrEtdx9F0QOOMCnG6h6Aju8yekYJfoONFst14Nyvn7eAhMLVbpnLMhIDdEN4IOGC1ep6uXFomiVoYcOZVbThxXrpbudYdyKGfsWo0GIhqLu2nHSzw/8Sq2JQRwT1LH5fKpoEtm8wQmCOIkTXw+q9CR3P6NN7R5vrljWQ3rfZqfWovaqdUu11VeLiCdS+w3vf5P96bU7xMyxQjnXt/UAx7GmRoktI4SuGMZDi+77m9noIz5pb5kBIMdRzg4oxSVg2oOIbh4ZXkwa/xt0UbD6D6Pdg8Rwb/hZD7wkB60aHlBumbFcg0LFv3aX9+/qzk3IGgxrByuZedWdbP3NSaEs+6gUwpWH/R/FTjgv7/dTcMQ1TGcZP1dtuZ+OnVDlofgLEW7JXYlQf5BG9wHjgRu0sRQzrQEYbozG6J+bCPhWMhoRgkl0gp7Wz47NsN57yGCSXzeRz47I2te80dPs93rO4+goJYq8PyfAuVbUO9KnqsTsvx4ltkn2rMwh5DpfPJPFy7d+r+NfeHS93+1cGYdR4WbMnVh5L01vqc6uoFwP7SVfJ1TQbPvE7jiABlMceNIQZP76hxGM6WqYTishvYF+5f+OOJyoa9PJnqJBwy5ZbM4mgntSHOpJUqpxNqG7SNSUlS4sQZn+6SOPpolLNlaJtKd6FtyaYxCCS00k3rO0JZCE7eCh7YweXAH6sU0jl89tzYmR5olNM/dv9C/s9hy6TTFYp7/pSZv/KZs1hl/DfeRKkUR5kJS65ehKcBPwOkC4xKUQD5mwqu19uTva8bgfXDFe0Dd4dIN0Ga2YIEJur/rFx9b0w8zfaNnOBGaj9qCgrCZHnwUTyGWPaBK1jFEytJ7C+6zEKqj2aVbg7K6vQWKPO86DQvjwrQAYZ6zkvUZJRkLiFJ8HCcoSXgL0HkLnAQwW6fICinIjbgVm988HeroYmsy4pOyviMEpQEEC+mOzpzYBL2v0tEHNlT+ZZ2ubCFbu/jV2zjdwqJb8Xuc5o2VHlbJD7QzgNFyc+CnIiKkWuajf4Vkyv3pmnDCP1S69F0Y/dEtmSY3c6a2S9I4Gxc+zyzvoLBFlOCo/zv1lXGdr+dVXv3mRXHMMBwpgCEHCFaGOPi139bLRs9if4xQgbXJW27a9VnXQDUrd7fOSAWDhNQaLR3Y9Xe3Te6dH52nsMz1h5M+EZA29YQzNJLY/RlyPtq/Ycz6DIS7KvLAThsVex7hlSf09SWCix2JmaxYYcOz8DChOXzzqFzPYlFEVw4A3Jk+T8u8w2tZ6I8bTMlqvSv4eMkUGY5sNm+6HKFNru4/CdZetgJHU27anV2ZykUHbAcMLQDwwnzHGCd7tT+2ycuEDWkKs1vjAzdLkVsta2iuPJwnRTv3SMHFpHZw6SU5Yw4thbAqU9GDm2mLMyt4cWc3b6j5OcNuSyn1cUpxFyB//24yRLDMc4O7xOnf7X7lrp/xvlVtkUBExUAGCYh9oAawgb8F7oqopttbGo7MbuvgEjgdeUwPu4wIvL/XJLVQ6oNjgxfOXtyKmyEy/gjefTENc7CLJ1MZaw35iHWOKjVgpBXGTBeU7xDcJ0VmXEq89bFZInSZm+y2KMaRjtS/lfUxwHhYqGfdtOrvVvwZiy9tYqPbz6yv59bPUtTF9fTvyxqbMJXHI1TgKUwDIhXGgslxXhiXFP0tv3Z8Y9tGiVu8CYKUjxEBdmGSiEV2ebs8atGgOvyl3GVd2FZZ9q2z2GWR3tEwxTrs47lWUW7W7Ja95CgvkffPeCvSx7G6V1+udOOBRqH5em3Q1BEsAaDO/Q/70mWkLEhXAGeF5tLXwIaE5gtt8WXAEsh1bdT076ENxb+fEbzoldbv7AURnubv5MlP74Hw==

--------------------------------------------------------------------------------

/drawio/storage_and_retrieval:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/images/![]().png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/![]().png

--------------------------------------------------------------------------------

/images/2020-10-09-08-07-27.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-08-07-27.png

--------------------------------------------------------------------------------

/images/2020-10-09-08-22-44.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-08-22-44.png

--------------------------------------------------------------------------------

/images/2020-10-09-08-23-40.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-08-23-40.png

--------------------------------------------------------------------------------

/images/2020-10-09-08-25-53.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-08-25-53.png

--------------------------------------------------------------------------------

/images/2020-10-09-08-26-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-08-26-23.png

--------------------------------------------------------------------------------

/images/2020-10-09-09-45-09.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-09-45-09.png

--------------------------------------------------------------------------------

/images/2020-10-09-09-51-37.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-09-09-51-37.png

--------------------------------------------------------------------------------

/images/2020-10-15-10-14-29.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-15-10-14-29.png

--------------------------------------------------------------------------------

/images/2020-10-15-10-33-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-15-10-33-23.png

--------------------------------------------------------------------------------

/images/2020-10-15-10-41-34.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-15-10-41-34.png

--------------------------------------------------------------------------------

/images/2020-10-15-11-02-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/2020-10-15-11-02-23.png

--------------------------------------------------------------------------------

/images/cassandra/149f7c7b.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/149f7c7b.png

--------------------------------------------------------------------------------

/images/cassandra/2a482058.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/2a482058.png

--------------------------------------------------------------------------------

/images/cassandra/2e4598fe.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/2e4598fe.png

--------------------------------------------------------------------------------

/images/cassandra/3580057f.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/3580057f.png

--------------------------------------------------------------------------------

/images/cassandra/5abf55f1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/5abf55f1.png

--------------------------------------------------------------------------------

/images/cassandra/e8f5bac5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/cassandra/e8f5bac5.png

--------------------------------------------------------------------------------

/images/choosing_database/ab021a97.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/choosing_database/ab021a97.png

--------------------------------------------------------------------------------

/images/choosing_database/cf5618a7.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/choosing_database/cf5618a7.png

--------------------------------------------------------------------------------

/images/foundation_of_data_systems.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/foundation_of_data_systems.png

--------------------------------------------------------------------------------

/images/indexing.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/indexing.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-11-24-08.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-11-24-08.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-12-43-17.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-12-43-17.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-12-45-56.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-12-45-56.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-12-50-56.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-12-50-56.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-13-39-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-13-39-23.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-13-39-31.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-13-39-31.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-13-58-58.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-13-58-58.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-14-09-32.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-14-09-32.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-14-12-27.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-14-12-27.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-15-29-21.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-15-29-21.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-15-33-44.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-15-33-44.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-15-35-46.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-15-35-46.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-15-53-48.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-15-53-48.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-15-59-51.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-15-59-51.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-21-37-42.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-21-37-42.png

--------------------------------------------------------------------------------

/images/intensive/2020-09-30-21-41-30.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-09-30-21-41-30.png

--------------------------------------------------------------------------------

/images/intensive/2020-10-01-09-54-22.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-10-01-09-54-22.png

--------------------------------------------------------------------------------

/images/intensive/2020-10-01-09-54-24.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-10-01-09-54-24.png

--------------------------------------------------------------------------------

/images/intensive/2020-10-01-10-32-50.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/intensive/2020-10-01-10-32-50.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/0dde5a21.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/0dde5a21.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/10d422c0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/10d422c0.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/2b6a83ef.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/2b6a83ef.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/3265315e.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/3265315e.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/32cf40dc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/32cf40dc.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/378a5b1f.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/378a5b1f.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/42075f95.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/42075f95.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/53f7d13b.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/53f7d13b.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/5de8cac9.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/5de8cac9.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/648e69b1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/648e69b1.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/676c1811.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/676c1811.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/93653734.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/93653734.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/937a3729.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/937a3729.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/99c0ddfc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/99c0ddfc.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/9a3d1e67.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/9a3d1e67.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/b2aa36d1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/b2aa36d1.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/cda7fe4a.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/cda7fe4a.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/d1b63713.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/d1b63713.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/de1f1c67.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/de1f1c67.png

--------------------------------------------------------------------------------

/images/mongodb-replicaset/e7b2e770.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/mongodb-replicaset/e7b2e770.png

--------------------------------------------------------------------------------

/images/pool.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/pool.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-17-14-25.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-17-14-25.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-17-14-32.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-17-14-32.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-17-14-44.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-17-14-44.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-17-22-30.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-17-22-30.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-18-07-48.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-18-07-48.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-18-11-26.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-18-11-26.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-20-52-24.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-20-52-24.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-20-53-08.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-20-53-08.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-13-33.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-13-33.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-13-59.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-13-59.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-14-27.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-14-27.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-14-37.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-14-37.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-15-56.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-15-56.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-17-15.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-17-15.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-18-21-19-04.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-18-21-19-04.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-07-27-09.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-07-27-09.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-07-27-12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-07-27-12.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-08-11-48.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-08-11-48.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-09-31-29.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-09-31-29.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-09-46-37.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-09-46-37.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-09-47-50.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-09-47-50.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-09-51-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-09-51-23.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-11-12-43.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-11-12-43.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-15-25-19.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-15-25-19.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-15-25-26.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-15-25-26.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-16-56-48.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-16-56-48.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-17-08-49.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-17-08-49.png

--------------------------------------------------------------------------------

/images/readme.md/2020-10-19-17-21-23.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/anhthii/database-notes/4e7fad12afab907545e4cb8ee85b9bac3e19b636/images/readme.md/2020-10-19-17-21-23.png

--------------------------------------------------------------------------------

/mongodb.md:

--------------------------------------------------------------------------------

1 | # MongoDB

2 | ## Why use MongoDB ?

3 | - Combine the best features of key-value stores and relational databases

4 | - Is designed to `rapidly develop`, prototype web-application and internet infrastructures. We can start writing code immediately and move fast.

5 | - Flexible data model: Don't need to do a lot of `planning around schemas`

6 | - The data model and persistence strategies are built for high `read-and-write throughput`

7 | - Easy to set up a high availibity with `automatic failover`

8 | - `Built-in sharding` features help scaling out huge data collection, we don't have to do application-side sharding

9 |

10 | ### weakingness

11 |

12 |

13 |

14 | ## Key features

15 | ### 1. Document data model

16 |

17 |

18 |

19 |

20 | ### 2. Schemaless model advantages

21 | Your application code and not the database, enforces the data’s structure.

22 |

23 | This can speed up initial application development when the schema is changing frequently.

24 |

25 | ### 3. Indexes

26 |

27 | - Indexes in Mongo DB are implemented as a B-tree data structure

28 | - By permitting `multiple secondary indexes` Mongo DB allows users to optimize for a wide variety of queries

29 | - With Mongo DB , you can create up to 64 indexes per collection

30 |

31 | ### 4. Replication

32 |

33 | - Mongo DB provides database replication via a topology known as a replica set.

34 | - Replica sets distribute data across two or more machines for redundancy and automate

35 | failover in the event of server and network outages

36 | - Replica sets consist of many Mongo DB servers

37 | - A replica set’s primary node can accept both reads and writes, but the secondary nodes are read-only

38 |

39 | ### 5. Speed and durability

40 | - `Write speed` is understood as the volume of inserts, updates, and deletes that a database can process in a given time frame

41 | - `Durability` refers to level of assurance that these write operaitons have been made permanent

42 |

43 | In Mongodb, users control the speed and durablity trade-off by choosing` write semantics` and deciding whether to enable journaling. MongoDB safely

44 |

45 | - You can configure MongoDB to `fire-and-forget`, sending off a write without for an acknowledgement. Ideal of low-value data (like clickstreams and logs)

46 |

47 | ### 6. Scaling

48 |

49 | - Easy to scale out by plug in more servers

50 | - MongoDB provide range-based partitioning mechanism known as `sharding` which atutomatically manages the distribution of data across node

51 | - No application code has to handle the logic of sharding

52 |

53 | ### 7. Aggregation [2]

54 | MongoDB have an aggregation framework modeled on the concept of data processing pipelines that allows you to do expressive analytical query on the database easily, which is a feature that traditional `SQL` don't support.

55 |

56 |

57 |

58 | ###

59 |

60 |

61 | ## Suitable usecases

62 | ### Event logging

63 | - Document databases can store all these different types of events and can act as a central data store for event storage.

64 | - Events can be sharded by the name of the application

65 | where the event originated or by the type of event such as `order_processed` or `customer_logged`

66 |

67 | ### Web Analytics or Real-Time Analytics

68 | New metrics can be easily added without schema changes.

69 |

70 | ### E-Commerce Applications

71 | E-commerce applications often need to have flexible schema for products and orders, as well as the

72 | ability to evolve their data models without expensive database refactoring or data migration

73 |

74 | ## When not to use

75 | - **Complex Transactions Spanning Different Operations**: If you need to have atomic cross-document operations, then document databases may not be for you

76 | - **Queries against Varying Aggregate Structure**: Since the data is saved as an aggregate, if the design of the aggregate is constantly changing, you need to normalize the data. In this scenario, document databases may not work.

77 |

78 |

79 | ## Handle schema changes in MongoDB

80 | - Write an upgrade script.

81 | - Incrementally update your documents as they are used.

82 |

83 | https://mongodb.github.io/mongo-csharp-driver/2.10/reference/bson/mapping/schema_changes/

84 | https://derickrethans.nl/managing-schema-changes.html

85 |

86 | ## Strength and weakness

87 | ### Strength

88 | - Be able to handle huge amounts of data(and huge amounts of request) by replication and horizontal scaling.

89 | - Flexible data model, no need to conform a schema

90 | - Easy to use

91 |

92 | ### Weakness

93 | - Encourage denormalization of schemas, thus lead to `duplicate` data

94 | - Mongo is focused on large datasets, works best in large cluster, which can require some effort to design and manage, setting up a Mongo cluster requires a little more forethought

95 | - Database management is complex

96 | - If indexing is implemented poorly or composite index in an incorrect order, MongoDB can be one of the `slowest database`

97 | - not good in applications where analytics are performed or where joins are required because there is no joins in MongoDB.

98 |

99 |

100 | ## Mongodb index

101 | http://learnmongodbthehardway.com/schema/indexes/

102 | - Indexes are key to achieving high performance in MongoDB

103 | - They allow the database to search through less documents to satisfy a query.

104 | - Without an index MongoDB has to scan through all of the documents in a collection to fulfill the query.

105 | - An index increases the time it takes to insert a document

106 | - Indexes trade off faster queries against storage space.

107 |

108 | - MongoDB automatically uses all free memory on the machine as its cache. System resource monitors show that MongoDB uses a lot of memory, but its usage is dynamic. If another process suddenly needs half the server’s RAM, MongoDB will yield cached memory to the other process.[1]

109 |

110 | ## Database modeling

111 | ```

112 | Inp progess ....

113 | ```

114 |

115 | ## MongoBB replicaset

116 | - Group of mongod processes that maintain the same dataset

117 | - Redundancy and high availability

118 | - Increased read capacity

119 |

120 |

121 |

122 |

123 |

124 |

125 |

126 |

127 |

128 |

129 |

130 |

131 |

132 |

133 |

134 |

135 |

136 |

137 |

138 |

139 | - Manager in application driver

140 |

141 |

142 |

143 | Replica sets allow us high availability, but some point of time, we want

144 |

145 |

146 |

147 |

148 |

149 |

150 |

151 |

152 |

153 |

154 |

155 |

156 |

157 |

158 | ## References

159 | [1]https://stackoverflow.com/a/22010542

160 |

161 | [2] https://docs.mongodb.com/manual/core/map-reduce/

162 |

163 |

--------------------------------------------------------------------------------

/nosql.md:

--------------------------------------------------------------------------------

1 | # Why NoSQL?

2 | - Flexible, schemaless data modeling.

3 | - Help dealing with data in **Aggregates**

4 | - Support large volumes of data by running on clusters. Relational databases are not designed to run effciently on clusters

5 |

6 | ## Aggregate data model [1]

7 | - Dealing in aggregates make it much easier for these databases to handle operating on a cluster, sinces the aggregate makes a natural unit for replication and sharding.

8 |

9 | - Aggregates are often easier for application programmers to work with, since they often manipulate data through aggregate structures.

10 |

11 | ### Relations and Aggregates

12 | #### Relation

13 |

14 |

15 | #### Aggregate

16 | ```js

17 | // in customers

18 | {