├── .editorconfig

├── .github

└── workflows

│ ├── close-inactive-issues.yaml

│ ├── main.yaml

│ └── secret-scanning.yaml

├── .gitignore

├── CHANGELOG.md

├── LICENSE

├── Makefile

├── README.md

├── config

├── arg_plate_example.yaml

└── latin_plate_example.yaml

├── docs

├── architecture.md

├── contributing.md

├── index.md

├── installation.md

├── reference.md

└── usage.md

├── fast_plate_ocr

├── __init__.py

├── cli

│ ├── __init__.py

│ ├── cli.py

│ ├── onnx_converter.py

│ ├── train.py

│ ├── utils.py

│ ├── valid.py

│ ├── visualize_augmentation.py

│ └── visualize_predictions.py

├── common

│ ├── __init__.py

│ └── utils.py

├── inference

│ ├── __init__.py

│ ├── config.py

│ ├── hub.py

│ ├── onnx_inference.py

│ ├── process.py

│ └── utils.py

├── py.typed

└── train

│ ├── __init__.py

│ ├── data

│ ├── __init__.py

│ ├── augmentation.py

│ └── dataset.py

│ ├── model

│ ├── __init__.py

│ ├── config.py

│ ├── custom.py

│ ├── layer_blocks.py

│ └── models.py

│ └── utilities

│ ├── __init__.py

│ ├── backend_utils.py

│ └── utils.py

├── mkdocs.yml

├── poetry.lock

├── poetry.toml

├── pyproject.toml

└── test

├── __init__.py

├── assets

├── __init__.py

├── test_plate_1.png

└── test_plate_2.png

├── conftest.py

└── fast_lp_ocr

├── __init__.py

├── inference

├── __init__.py

├── test_hub.py

├── test_onnx_inference.py

└── test_process.py

└── train

├── __init__.py

├── test_config.py

├── test_custom.py

├── test_models.py

└── test_utils.py

/.editorconfig:

--------------------------------------------------------------------------------

1 | # EditorConfig: https://EditorConfig.org

2 | root = true

3 |

4 | [*]

5 | charset = utf-8

6 | end_of_line = lf

7 | indent_style = space

8 | trim_trailing_whitespace = true

9 | insert_final_newline = true

10 |

11 | [*.py]

12 | indent_size = 4

13 | max_line_length = 100

14 |

--------------------------------------------------------------------------------

/.github/workflows/close-inactive-issues.yaml:

--------------------------------------------------------------------------------

1 | name: Close inactive issues

2 |

3 | on:

4 | schedule:

5 | - cron: "30 1 * * *" # Runs daily at 1:30 AM UTC

6 |

7 | jobs:

8 | close-issues:

9 | runs-on: ubuntu-latest

10 | permissions:

11 | issues: write

12 | pull-requests: write

13 | steps:

14 | - uses: actions/stale@v5

15 | with:

16 | days-before-issue-stale: 90 # The number of days old an issue can be before marking it stale

17 | days-before-issue-close: 14 # The number of days to wait to close an issue after it being marked stale

18 | stale-issue-label: "stale"

19 | stale-issue-message: "This issue is stale because it has been open for 90 days with no activity."

20 | close-issue-message: "This issue was closed because it has been inactive for 14 days since being marked as stale."

21 | days-before-pr-stale: -1 # Disables stale behavior for PRs

22 | days-before-pr-close: -1 # Disables closing behavior for PRs

23 | repo-token: ${{ secrets.GITHUB_TOKEN }}

24 |

--------------------------------------------------------------------------------

/.github/workflows/main.yaml:

--------------------------------------------------------------------------------

1 | name: Test and Deploy

2 | on:

3 | push:

4 | branches:

5 | - master

6 | tags:

7 | - 'v*'

8 | pull_request:

9 | branches:

10 | - master

11 | jobs:

12 | test:

13 | name: Test

14 | strategy:

15 | fail-fast: false

16 | matrix:

17 | python-version: [ '3.10', '3.11', '3.12' ]

18 | os: [ ubuntu-latest ]

19 | runs-on: ${{ matrix.os }}

20 | steps:

21 | - uses: actions/checkout@v3

22 |

23 | - name: Install poetry

24 | run: pipx install poetry

25 |

26 | - uses: actions/setup-python@v4

27 | with:

28 | python-version: ${{ matrix.python-version }}

29 | cache: 'poetry'

30 |

31 | - name: Install dependencies

32 | run: poetry install --all-extras

33 |

34 | - name: Check format

35 | run: make check_format

36 |

37 | - name: Run linters

38 | run: make lint

39 |

40 | - name: Run tests

41 | run: make test

42 |

43 | publish-to-pypi:

44 | name: Build and Publish to PyPI

45 | needs:

46 | - test

47 | if: "startsWith(github.ref, 'refs/tags/v')"

48 | runs-on: ubuntu-latest

49 | environment:

50 | name: pypi

51 | url: https://pypi.org/p/fast-plate-ocr

52 | permissions:

53 | id-token: write

54 | steps:

55 | - uses: actions/checkout@v3

56 |

57 | - name: Install poetry

58 | run: pipx install poetry

59 |

60 | - name: Setup Python

61 | uses: actions/setup-python@v3

62 | with:

63 | python-version: '3.10'

64 |

65 | - name: Build a binary wheel

66 | run: poetry build

67 |

68 | - name: Publish distribution 📦 to PyPI

69 | uses: pypa/gh-action-pypi-publish@release/v1

70 |

71 | github-release:

72 | name: Create GitHub release

73 | needs:

74 | - publish-to-pypi

75 | runs-on: ubuntu-latest

76 |

77 | permissions:

78 | contents: write

79 |

80 | steps:

81 | - uses: actions/checkout@v3

82 |

83 | - name: Check package version matches tag

84 | id: check-version

85 | uses: samuelcolvin/check-python-version@v4.1

86 | with:

87 | version_file_path: 'pyproject.toml'

88 |

89 | - name: Create GitHub Release

90 | env:

91 | GITHUB_TOKEN: ${{ github.token }}

92 | tag: ${{ github.ref_name }}

93 | run: |

94 | gh release create "$tag" \

95 | --repo="$GITHUB_REPOSITORY" \

96 | --title="${GITHUB_REPOSITORY#*/} ${tag#v}" \

97 | --generate-notes

98 |

99 | update_docs:

100 | name: Update documentation

101 | needs:

102 | - github-release

103 | runs-on: ubuntu-latest

104 |

105 | steps:

106 | - uses: actions/checkout@v3

107 | with:

108 | fetch-depth: 0

109 |

110 | - name: Install poetry

111 | run: pipx install poetry

112 |

113 | - uses: actions/setup-python@v4

114 | with:

115 | python-version: '3.10'

116 | cache: 'poetry'

117 |

118 | - name: Configure Git user

119 | run: |

120 | git config --local user.email "github-actions[bot]@users.noreply.github.com"

121 | git config --local user.name "github-actions[bot]"

122 |

123 | - name: Retrieve version

124 | id: check-version

125 | uses: samuelcolvin/check-python-version@v4.1

126 | with:

127 | version_file_path: 'pyproject.toml'

128 | skip_env_check: true

129 |

130 | - name: Deploy the docs

131 | run: |

132 | poetry run mike deploy \

133 | --update-aliases \

134 | --push \

135 | --branch docs-site \

136 | ${{ steps.check-version.outputs.VERSION_MAJOR_MINOR }} latest

137 |

--------------------------------------------------------------------------------

/.github/workflows/secret-scanning.yaml:

--------------------------------------------------------------------------------

1 | on:

2 | push:

3 | branches:

4 | - master

5 | pull_request:

6 | branches:

7 | - master

8 |

9 | name: Secret Leaks

10 | jobs:

11 | trufflehog:

12 | runs-on: ubuntu-latest

13 | steps:

14 | - name: Checkout code

15 | uses: actions/checkout@v4

16 | with:

17 | fetch-depth: 0

18 | - name: Secret Scanning

19 | uses: trufflesecurity/trufflehog@main

20 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 |

29 | # PyInstaller

30 | # Usually these files are written by a python script from a template

31 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

32 | *.manifest

33 | *.spec

34 |

35 | # Installer logs

36 | pip-log.txt

37 | pip-delete-this-directory.txt

38 |

39 | # Unit test / coverage reports

40 | htmlcov/

41 | .tox/

42 | .nox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | *.py,cover

50 | .hypothesis/

51 | .pytest_cache/

52 | cover/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | .pybuilder/

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | # For a library or package, you might want to ignore these files since the code is

87 | # intended to run in multiple environments; otherwise, check them in:

88 | # .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # poetry

98 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

99 | # This is especially recommended for binary packages to ensure reproducibility, and is more

100 | # commonly ignored for libraries.

101 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

102 | #poetry.lock

103 |

104 | # pdm

105 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

106 | #pdm.lock

107 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

108 | # in version control.

109 | # https://pdm.fming.dev/#use-with-ide

110 | .pdm.toml

111 |

112 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

113 | __pypackages__/

114 |

115 | # Celery stuff

116 | celerybeat-schedule

117 | celerybeat.pid

118 |

119 | # SageMath parsed files

120 | *.sage.py

121 |

122 | # Environments

123 | .env

124 | .venv

125 | env/

126 | venv/

127 | ENV/

128 | env.bak/

129 | venv.bak/

130 |

131 | # Spyder project settings

132 | .spyderproject

133 | .spyproject

134 |

135 | # Rope project settings

136 | .ropeproject

137 |

138 | # mkdocs documentation

139 | /site

140 |

141 | # mypy

142 | .mypy_cache/

143 | .dmypy.json

144 | dmypy.json

145 |

146 | # Pyre type checker

147 | .pyre/

148 |

149 | # pytype static type analyzer

150 | .pytype/

151 |

152 | # Cython debug symbols

153 | cython_debug/

154 |

155 | # pyenv

156 | .python-version

157 |

158 | # CUDA DNN

159 | cudnn64_7.dll

160 |

161 | # Train folder

162 | train_val_set/

163 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | # Changelog

2 |

3 | All notable changes to this project will be documented in this file.

4 |

5 | The format is based on [Keep a Changelog](https://keepachangelog.com/en/1.1.0/),

6 | and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0.html).

7 |

8 | ## [0.3.0] - 2024-12-08

9 |

10 | ### Added

11 |

12 | - New Global model using MobileViTV2 trained with data from +65 countries, with 85k+ plates 🚀 .

13 |

14 | [0.2.0]: https://github.com/ankandrew/fast-plate-ocr/compare/v0.2.0...v0.3.0

15 |

16 | ## [0.2.0] - 2024-10-14

17 |

18 | ### Added

19 |

20 | - New European model using MobileViTV2 - trained on +40 countries 🚀 .

21 | - Added more logging to train script.

22 |

23 | [0.2.0]: https://github.com/ankandrew/fast-plate-ocr/compare/v0.1.6...v0.2.0

24 |

25 | ## [0.1.6] - 2024-05-09

26 |

27 | ### Added

28 |

29 | - Add new Argentinian model trained with more (synthetic) data.

30 | - Add option to visualize only predictions which have low char prob.

31 | - Add onnxsim for simplifying ONNX model when exporting.

32 |

33 | [0.1.6]: https://github.com/ankandrew/fast-plate-ocr/compare/v0.1.5...v0.1.6

34 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2024 ankandrew

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | # Directories

2 | SRC_PATHS := fast_plate_ocr/ test/

3 |

4 | # Tasks

5 | .PHONY: help

6 | help:

7 | @echo "Available targets:"

8 | @echo " help : Show this help message"

9 | @echo " format : Format code using Ruff format"

10 | @echo " check_format : Check code formatting with Ruff format"

11 | @echo " ruff : Run Ruff linter"

12 | @echo " pylint : Run Pylint linter"

13 | @echo " mypy : Run MyPy static type checker"

14 | @echo " lint : Run linters (Ruff, Pylint and Mypy)"

15 | @echo " test : Run tests using pytest"

16 | @echo " checks : Check format, lint, and test"

17 | @echo " clean : Clean up caches and build artifacts"

18 |

19 | .PHONY: format

20 | format:

21 | @echo "==> Sorting imports..."

22 | @# Currently, the Ruff formatter does not sort imports, see https://docs.astral.sh/ruff/formatter/#sorting-imports

23 | @poetry run ruff check --select I --fix $(SRC_PATHS)

24 | @echo "=====> Formatting code..."

25 | @poetry run ruff format $(SRC_PATHS)

26 |

27 | .PHONY: check_format

28 | check_format:

29 | @echo "=====> Checking format..."

30 | @poetry run ruff format --check --diff $(SRC_PATHS)

31 | @echo "=====> Checking imports are sorted..."

32 | @poetry run ruff check --select I --exit-non-zero-on-fix $(SRC_PATHS)

33 |

34 | .PHONY: ruff

35 | ruff:

36 | @echo "=====> Running Ruff..."

37 | @poetry run ruff check $(SRC_PATHS)

38 |

39 | .PHONY: pylint

40 | pylint:

41 | @echo "=====> Running Pylint..."

42 | @poetry run pylint $(SRC_PATHS)

43 |

44 | .PHONY: mypy

45 | mypy:

46 | @echo "=====> Running Mypy..."

47 | @poetry run mypy $(SRC_PATHS)

48 |

49 | .PHONY: lint

50 | lint: ruff pylint mypy

51 |

52 | .PHONY: test

53 | test:

54 | @echo "=====> Running tests..."

55 | @poetry run pytest test/

56 |

57 | .PHONY: clean

58 | clean:

59 | @echo "=====> Cleaning caches..."

60 | @poetry run ruff clean

61 | @rm -rf .cache .pytest_cache .mypy_cache build dist *.egg-info

62 |

63 | checks: format lint test

64 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Fast & Lightweight License Plate OCR

2 |

3 | [](https://github.com/ankandrew/fast-plate-ocr/actions)

4 | [](https://keras.io/keras_3/)

5 | [](https://pypi.python.org/pypi/fast-plate-ocr)

6 | [](https://pypi.python.org/pypi/fast-plate-ocr)

7 | [](https://github.com/astral-sh/ruff)

8 | [](https://github.com/pylint-dev/pylint)

9 | [](http://mypy-lang.org/)

10 | [](https://onnx.ai/)

11 | [](https://huggingface.co/spaces/ankandrew/fast-alpr)

12 | [](https://ankandrew.github.io/fast-plate-ocr/)

13 | [](https://pypi.python.org/pypi/fast-plate-ocr)

14 |

15 |

16 |

17 | ---

18 |

19 | ### Introduction

20 |

21 | **Lightweight** and **fast** OCR models for license plate text recognition. You can train models from scratch or use

22 | the trained models for inference.

23 |

24 | The idea is to use this after a plate object detector, since the OCR expects the cropped plates.

25 |

26 | ### Features

27 |

28 | - **Keras 3 Backend Support**: Compatible with **[TensorFlow](https://www.tensorflow.org/)**, **[JAX](https://github.com/google/jax)**, and **[PyTorch](https://pytorch.org/)** backends 🧠

29 | - **Augmentation Variety**: Diverse **augmentations** via **[Albumentations](https://albumentations.ai/)** library 🖼️

30 | - **Efficient Execution**: **Lightweight** models that are cheap to run 💰

31 | - **ONNX Runtime Inference**: **Fast** and **optimized** inference with **[ONNX runtime](https://onnxruntime.ai/)** ⚡

32 | - **User-Friendly CLI**: Simplified **CLI** for **training** and **validating** OCR models 🛠️

33 | - **Model HUB**: Access to a collection of **pre-trained models** ready for inference 🌟

34 |

35 | ### Available Models

36 |

37 | | Model Name | Time b=1

(ms)[1] | Throughput

(plates/second)[1] | Accuracy[2] | Dataset |

38 | |:----------------------------------------:|:--------------------------------:|:----------------------------------------------:|:----------------------:|:--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

39 | | `argentinian-plates-cnn-model` | 2.1 | 476 | 94.05% | Non-synthetic, plates up to 2020. Dataset [arg_plate_dataset.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset.zip). |

40 | | `argentinian-plates-cnn-synth-model` | 2.1 | 476 | 94.19% | Plates up to 2020 + synthetic plates. Dataset [arg_plate_dataset_plus_synth.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset_plus_synth.zip). |

41 | | `european-plates-mobile-vit-v2-model` | 2.9 | 344 | 92.5%[3] | European plates (from +40 countries, trained on 40k+ plates). |

42 | | 🆕🔥 `global-plates-mobile-vit-v2-model` | 2.9 | 344 | 93.3%[4] | Worldwide plates (from +65 countries, trained on 85k+ plates). |

43 |

44 | > [!TIP]

45 | > Try `fast-plate-ocr` pre-trained models in [Hugging Spaces](https://huggingface.co/spaces/ankandrew/fast-alpr).

46 |

47 |

48 | Notes

49 |

50 | _[1] Inference on Mac M1 chip using CPUExecutionProvider. Utilizing CoreMLExecutionProvider accelerates speed by 5x in the CNN models._

51 |

52 | _[2] Accuracy is what we refer to as plate_acc. See [metrics section](#model-metrics)._

53 |

54 | _[3] For detailed accuracy for each country see [results](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/european_mobile_vit_v2_ocr_results.json) and the corresponding [val split](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/european_mobile_vit_v2_ocr_val.zip) used._

55 |

56 | _[4] For detailed accuracy for each country see [results](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/global_mobile_vit_v2_ocr_results.json)._

57 |

58 |

59 |

60 |

61 | Reproduce results

62 |

63 | * Calculate Inference Time:

64 |

65 | ```shell

66 | pip install fast_plate_ocr

67 | ```

68 |

69 | ```python

70 | from fast_plate_ocr import ONNXPlateRecognizer

71 |

72 | m = ONNXPlateRecognizer("argentinian-plates-cnn-model")

73 | m.benchmark()

74 | ```

75 | * Calculate Model accuracy:

76 |

77 | ```shell

78 | pip install fast-plate-ocr[train]

79 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr_config.yaml

80 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr.keras

81 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_benchmark.zip

82 | unzip arg_plate_benchmark.zip

83 | fast_plate_ocr valid \

84 | -m arg_cnn_ocr.keras \

85 | --config-file arg_cnn_ocr_config.yaml \

86 | --annotations benchmark/annotations.csv

87 | ```

88 |

89 |

90 |

91 | ### Inference

92 |

93 | For inference, install:

94 |

95 | ```shell

96 | pip install fast_plate_ocr

97 | ```

98 |

99 | #### Usage

100 |

101 | To predict from disk image:

102 |

103 | ```python

104 | from fast_plate_ocr import ONNXPlateRecognizer

105 |

106 | m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

107 | print(m.run('test_plate.png'))

108 | ```

109 |

110 |

111 | run demo

112 |

113 |

114 |

115 |

116 |

117 | To run model benchmark:

118 |

119 | ```python

120 | from fast_plate_ocr import ONNXPlateRecognizer

121 |

122 | m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

123 | m.benchmark()

124 | ```

125 |

126 |

127 | benchmark demo

128 |

129 |

130 |

131 |

132 |

133 | Make sure to check out the [docs](https://ankandrew.github.io/fast-plate-ocr) for more information.

134 |

135 | ### CLI

136 |

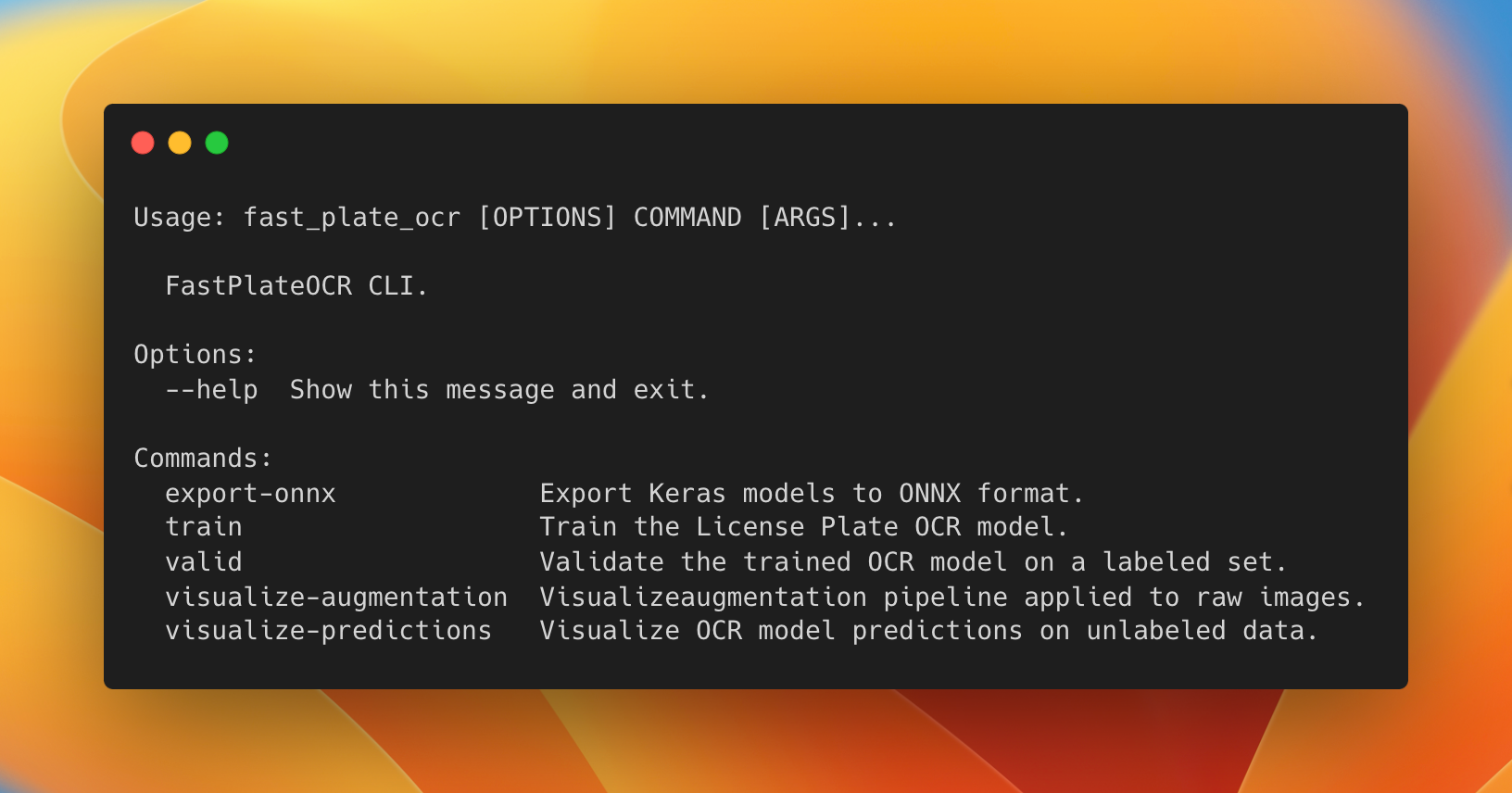

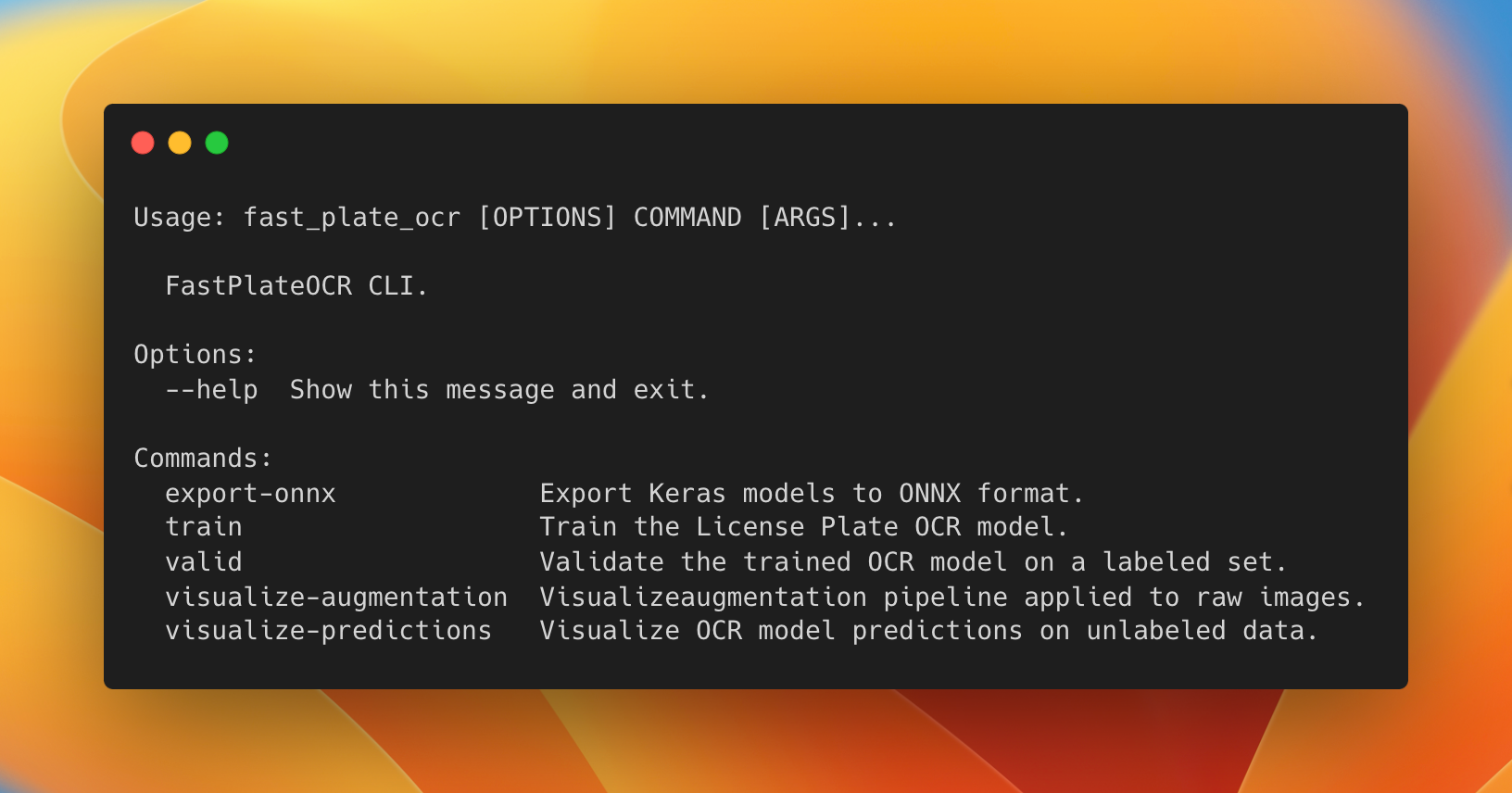

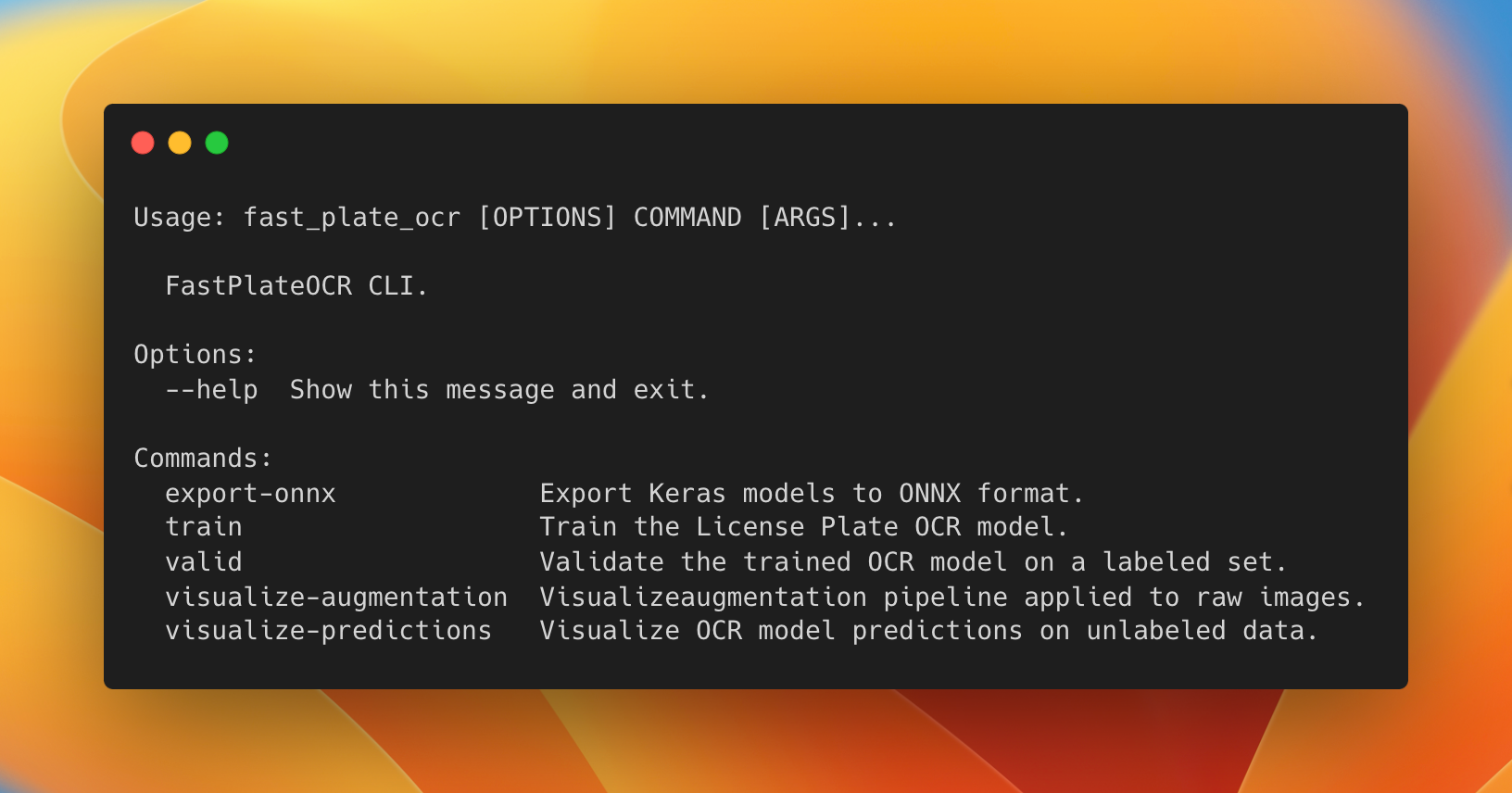

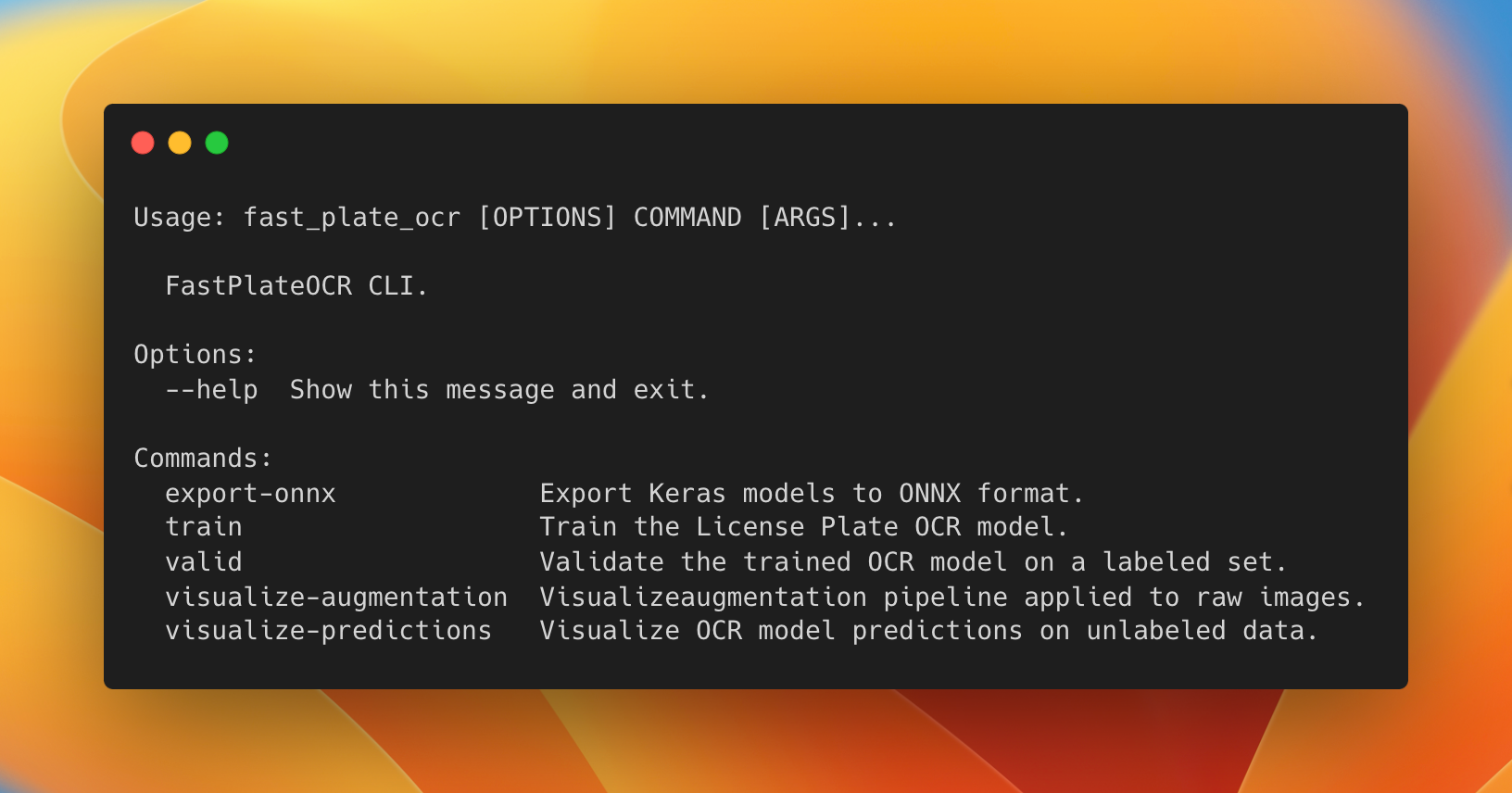

137 |  138 |

139 | To train or use the CLI tool, you'll need to install:

140 |

141 | ```shell

142 | pip install fast_plate_ocr[train]

143 | ```

144 |

145 | > [!IMPORTANT]

146 | > Make sure you have installed a supported backend for Keras.

147 |

148 | #### Train Model

149 |

150 | To train the model you will need:

151 |

152 | 1. A configuration used for the OCR model. Depending on your use case, you might have more plate slots or different set

153 | of characters. Take a look at the config for Argentinian license plate as an example:

154 | ```yaml

155 | # Config example for Argentinian License Plates

156 | # The old license plates contain 6 slots/characters (i.e. JUH697)

157 | # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD)

158 |

159 | # Max number of plate slots supported. This represents the number of model classification heads.

160 | max_plate_slots: 7

161 | # All the possible character set for the model output.

162 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

163 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

164 | pad_char: '_'

165 | # Image height which is fed to the model.

166 | img_height: 70

167 | # Image width which is fed to the model.

168 | img_width: 140

169 | ```

170 | 2. A labeled dataset,

171 | see [arg_plate_dataset.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset.zip)

172 | for the expected data format.

173 | 3. Run train script:

174 | ```shell

175 | # You can set the backend to either TensorFlow, JAX or PyTorch

176 | # (just make sure it is installed)

177 | KERAS_BACKEND=tensorflow fast_plate_ocr train \

178 | --annotations path_to_the_train.csv \

179 | --val-annotations path_to_the_val.csv \

180 | --config-file config.yaml \

181 | --batch-size 128 \

182 | --epochs 750 \

183 | --dense \

184 | --early-stopping-patience 100 \

185 | --reduce-lr-patience 50

186 | ```

187 |

188 | You will probably want to change the augmentation pipeline to apply to your dataset.

189 |

190 | In order to do this define an Albumentations pipeline:

191 |

192 | ```python

193 | import albumentations as A

194 |

195 | transform_pipeline = A.Compose(

196 | [

197 | # ...

198 | A.RandomBrightnessContrast(brightness_limit=0.1, contrast_limit=0.1, p=1),

199 | A.MotionBlur(blur_limit=(3, 5), p=0.1),

200 | A.CoarseDropout(max_holes=10, max_height=4, max_width=4, p=0.3),

201 | # ... and any other augmentation ...

202 | ]

203 | )

204 |

205 | # Export to a file (this resultant YAML can be used by the train script)

206 | A.save(transform_pipeline, "./transform_pipeline.yaml", data_format="yaml")

207 | ```

208 |

209 | And then you can train using the custom transformation pipeline with the `--augmentation-path` option.

210 |

211 | #### Visualize Augmentation

212 |

213 | It's useful to visualize the augmentation pipeline before training the model. This helps us to identify

214 | if we should apply more heavy augmentation or less, as it can hurt the model.

215 |

216 | You might want to see the augmented image next to the original, to see how much it changed:

217 |

218 | ```shell

219 | fast_plate_ocr visualize-augmentation \

220 | --img-dir benchmark/imgs \

221 | --columns 2 \

222 | --show-original \

223 | --augmentation-path '/transform_pipeline.yaml'

224 | ```

225 |

226 | You will see something like:

227 |

228 |

229 |

230 | #### Validate Model

231 |

232 | After finishing training you can validate the model on a labeled test dataset.

233 |

234 | Example:

235 |

236 | ```shell

237 | fast_plate_ocr valid \

238 | --model arg_cnn_ocr.keras \

239 | --config-file arg_plate_example.yaml \

240 | --annotations benchmark/annotations.csv

241 | ```

242 |

243 | #### Visualize Predictions

244 |

245 | Once you finish training your model, you can view the model predictions on raw data with:

246 |

247 | ```shell

248 | fast_plate_ocr visualize-predictions \

249 | --model arg_cnn_ocr.keras \

250 | --img-dir benchmark/imgs \

251 | --config-file arg_cnn_ocr_config.yaml

252 | ```

253 |

254 | You will see something like:

255 |

256 |

257 |

258 | #### Export as ONNX

259 |

260 | Exporting the Keras model to ONNX format might be beneficial to speed-up inference time.

261 |

262 | ```shell

263 | fast_plate_ocr export-onnx \

264 | --model arg_cnn_ocr.keras \

265 | --output-path arg_cnn_ocr.onnx \

266 | --opset 18 \

267 | --config-file arg_cnn_ocr_config.yaml

268 | ```

269 |

270 | ### Keras Backend

271 |

272 | To train the model, you can install the ML Framework you like the most. **Keras 3** has

273 | support for **TensorFlow**, **JAX** and **PyTorch** backends.

274 |

275 | To change the Keras backend you can either:

276 |

277 | 1. Export `KERAS_BACKEND` environment variable, i.e. to use JAX for training:

278 | ```shell

279 | KERAS_BACKEND=jax fast_plate_ocr train --config-file ...

280 | ```

281 | 2. Edit your local config file at `~/.keras/keras.json`.

282 |

283 | _Note: You will probably need to install your desired framework for training._

284 |

285 | ### Model Architecture

286 |

287 | The current model architecture is quite simple but effective.

288 | See [cnn_ocr_model](https://github.com/ankandrew/cnn-ocr-lp/blob/e59b738bad86d269c82101dfe7a3bef49b3a77c7/fast_plate_ocr/train/model/models.py#L23-L23)

289 | for implementation details.

290 |

291 | The model output consists of several heads. Each head represents the prediction of a character of the

292 | plate. If the plate consists of 7 characters at most (`max_plate_slots=7`), then the model would have 7 heads.

293 |

294 | Example of Argentinian plates:

295 |

296 |

297 |

298 | Each head will output a probability distribution over the `vocabulary` specified during training. So the output

299 | prediction for a single plate will be of shape `(max_plate_slots, vocabulary_size)`.

300 |

301 | ### Model Metrics

302 |

303 | During training, you will see the following metrics

304 |

305 | * **plate_acc**: Compute the number of **license plates** that were **fully classified**. For a single plate, if the

306 | ground truth is `ABC123` and the prediction is also `ABC123`, it would score 1. However, if the prediction was

307 | `ABD123`, it would score 0, as **not all characters** were correctly classified.

308 |

309 | * **cat_acc**: Calculate the accuracy of **individual characters** within the license plates that were

310 | **correctly classified**. For example, if the correct label is `ABC123` and the prediction is `ABC133`, it would yield

311 | a precision of 83.3% (5 out of 6 characters correctly classified), rather than 0% as in plate_acc, because it's not

312 | completely classified correctly.

313 |

314 | * **top_3_k**: Calculate how frequently the true character is included in the **top-3 predictions**

315 | (the three predictions with the highest probability).

316 |

317 | ### Contributing

318 |

319 | Contributions to the repo are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models,

320 | your contributions are warmly welcomed.

321 |

322 | To start contributing or to begin development, you can follow these steps:

323 |

324 | 1. Clone repo

325 | ```shell

326 | git clone https://github.com/ankandrew/fast-plate-ocr.git

327 | ```

328 | 2. Install all dependencies using [Poetry](https://python-poetry.org/docs/#installation):

329 | ```shell

330 | poetry install --all-extras

331 | ```

332 | 3. To ensure your changes pass linting and tests before submitting a PR:

333 | ```shell

334 | make checks

335 | ```

336 |

337 | If you want to train a model and share it, we'll add it to the HUB 🚀

338 |

339 | If you look to contribute to the repo, some cool things are in the backlog:

340 |

341 | - [ ] Implement [STN](https://arxiv.org/abs/1506.02025) using Keras 3 (With `keras.ops`)

342 | - [ ] Implement [SVTRv2](https://arxiv.org/abs/2411.15858).

343 | - [ ] Implement CTC loss function, so we can choose that or CE loss.

344 | - [ ] Extra head for country recognition, making it configurable.

345 |

--------------------------------------------------------------------------------

/config/arg_plate_example.yaml:

--------------------------------------------------------------------------------

1 | # Config example for Argentinian License Plates

2 | # The old license plates contain 6 slots/characters (i.e. JUH697)

3 | # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD)

4 |

5 | # Max number of plate slots supported. This represents the number of model classification heads.

6 | max_plate_slots: 7

7 | # All the possible character set for the model output.

8 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

9 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

10 | pad_char: '_'

11 | # Image height which is fed to the model.

12 | img_height: 70

13 | # Image width which is fed to the model.

14 | img_width: 140

15 |

--------------------------------------------------------------------------------

/config/latin_plate_example.yaml:

--------------------------------------------------------------------------------

1 | # Config example for Latin plates from 70 countries

2 |

3 | # Max number of plate slots supported. This represents the number of model classification heads.

4 | max_plate_slots: 9

5 | # All the possible character set for the model output.

6 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

7 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

8 | pad_char: '_'

9 | # Image height which is fed to the model.

10 | img_height: 70

11 | # Image width which is fed to the model.

12 | img_width: 140

13 |

--------------------------------------------------------------------------------

/docs/architecture.md:

--------------------------------------------------------------------------------

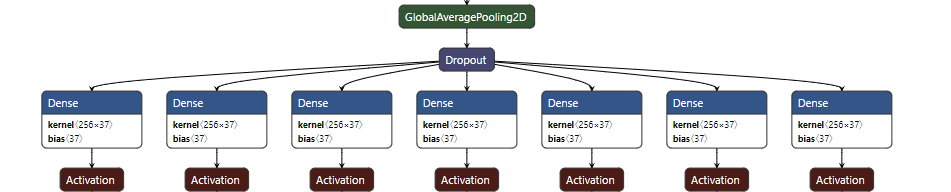

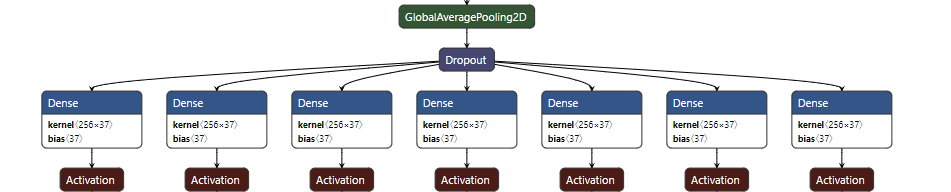

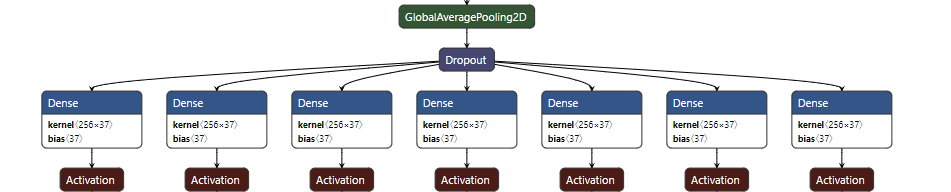

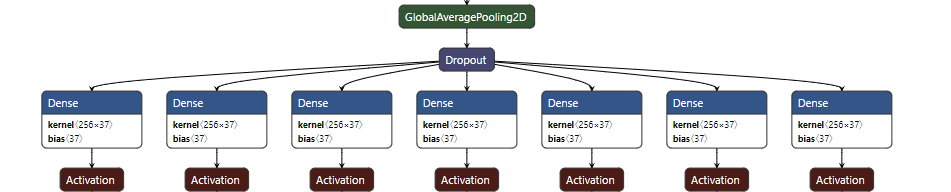

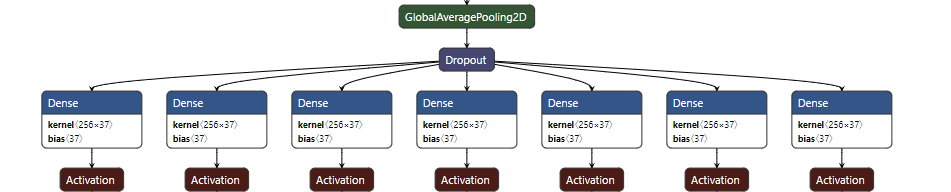

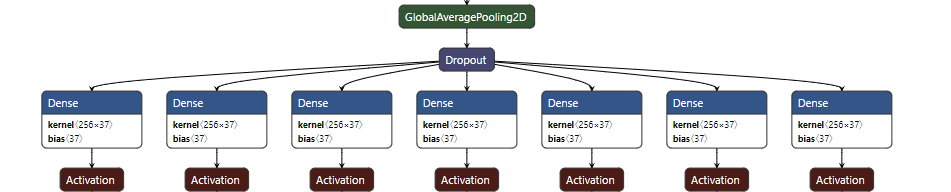

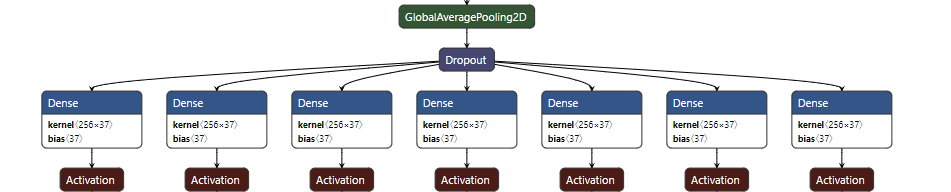

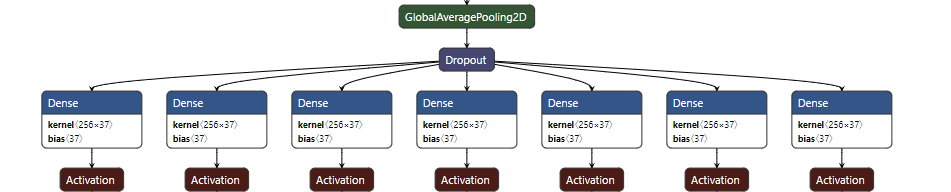

1 | ### ConvNet (CNN) model

2 |

3 | The current model architecture is quite simple but effective. It just consists of a few CNN layers with several output

4 | heads.

5 | See [cnn_ocr_model](https://github.com/ankandrew/cnn-ocr-lp/blob/e59b738bad86d269c82101dfe7a3bef49b3a77c7/fast_plate_ocr/train/model/models.py#L23-L23)

6 | for implementation details.

7 |

8 | The model output consists of several heads. Each head represents the prediction of a character of the

9 | plate. If the plate consists of 7 characters at most (`max_plate_slots=7`), then the model would have 7 heads.

10 |

11 | Example of Argentinian plates:

12 |

13 |

14 |

15 | Each head will output a probability distribution over the `vocabulary` specified during training. So the output

16 | prediction for a single plate will be of shape `(max_plate_slots, vocabulary_size)`.

17 |

18 | ### Model Metrics

19 |

20 | During training, you will see the following metrics

21 |

22 | * **plate_acc**: Compute the number of **license plates** that were **fully classified**. For a single plate, if the

23 | ground truth is `ABC123` and the prediction is also `ABC123`, it would score 1. However, if the prediction was

24 | `ABD123`, it would score 0, as **not all characters** were correctly classified.

25 |

26 | * **cat_acc**: Calculate the accuracy of **individual characters** within the license plates that were

27 | **correctly classified**. For example, if the correct label is `ABC123` and the prediction is `ABC133`, it would yield

28 | a precision of 83.3% (5 out of 6 characters correctly classified), rather than 0% as in plate_acc, because it's not

29 | completely classified correctly.

30 |

31 | * **top_3_k**: Calculate how frequently the true character is included in the **top-3 predictions**

32 | (the three predictions with the highest probability).

33 |

--------------------------------------------------------------------------------

/docs/contributing.md:

--------------------------------------------------------------------------------

1 | Contributions are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models,

2 | your contributions are warmly welcomed.

3 |

4 | To start contributing or to begin development, you can follow these steps:

5 |

6 | 1. Clone repo

7 | ```shell

8 | git clone https://github.com/ankandrew/fast-plate-ocr.git

9 | ```

10 | 2. Install all dependencies using [Poetry](https://python-poetry.org/docs/#installation):

11 | ```shell

12 | poetry install --all-extras

13 | ```

14 | 3. To ensure your changes pass linting and tests before submitting a PR:

15 | ```shell

16 | make checks

17 | ```

18 |

19 | ???+ tip

20 | If you want to train a model and share it, we'll add it to the HUB 🚀

21 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | # Fast & Lightweight License Plate OCR

2 |

3 |

4 |

5 | **FastPlateOCR** is a **lightweight** and **fast** OCR framework for **license plate text recognition**. You can train

6 | models from scratch or use the trained models for inference.

7 |

8 | The idea is to use this after a plate object detector, since the OCR expects the cropped plates.

9 |

10 | ### Features

11 |

12 | - **Keras 3 Backend Support**: Compatible with **TensorFlow**, **JAX**, and **PyTorch** backends 🧠

13 | - **Augmentation Variety**: Diverse augmentations via **Albumentations** library 🖼️

14 | - **Efficient Execution**: **Lightweight** models that are cheap to run 💰

15 | - **ONNX Runtime Inference**: **Fast** and **optimized** inference with ONNX runtime ⚡

16 | - **User-Friendly CLI**: Simplified **CLI** for **training** and **validating** OCR models 🛠️

17 | - **Model HUB**: Access to a collection of pre-trained models ready for inference 🌟

18 |

19 | ### Model Zoo

20 |

21 | We currently have the following available models:

22 |

23 | | Model Name | Time b=1

138 |

139 | To train or use the CLI tool, you'll need to install:

140 |

141 | ```shell

142 | pip install fast_plate_ocr[train]

143 | ```

144 |

145 | > [!IMPORTANT]

146 | > Make sure you have installed a supported backend for Keras.

147 |

148 | #### Train Model

149 |

150 | To train the model you will need:

151 |

152 | 1. A configuration used for the OCR model. Depending on your use case, you might have more plate slots or different set

153 | of characters. Take a look at the config for Argentinian license plate as an example:

154 | ```yaml

155 | # Config example for Argentinian License Plates

156 | # The old license plates contain 6 slots/characters (i.e. JUH697)

157 | # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD)

158 |

159 | # Max number of plate slots supported. This represents the number of model classification heads.

160 | max_plate_slots: 7

161 | # All the possible character set for the model output.

162 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

163 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

164 | pad_char: '_'

165 | # Image height which is fed to the model.

166 | img_height: 70

167 | # Image width which is fed to the model.

168 | img_width: 140

169 | ```

170 | 2. A labeled dataset,

171 | see [arg_plate_dataset.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset.zip)

172 | for the expected data format.

173 | 3. Run train script:

174 | ```shell

175 | # You can set the backend to either TensorFlow, JAX or PyTorch

176 | # (just make sure it is installed)

177 | KERAS_BACKEND=tensorflow fast_plate_ocr train \

178 | --annotations path_to_the_train.csv \

179 | --val-annotations path_to_the_val.csv \

180 | --config-file config.yaml \

181 | --batch-size 128 \

182 | --epochs 750 \

183 | --dense \

184 | --early-stopping-patience 100 \

185 | --reduce-lr-patience 50

186 | ```

187 |

188 | You will probably want to change the augmentation pipeline to apply to your dataset.

189 |

190 | In order to do this define an Albumentations pipeline:

191 |

192 | ```python

193 | import albumentations as A

194 |

195 | transform_pipeline = A.Compose(

196 | [

197 | # ...

198 | A.RandomBrightnessContrast(brightness_limit=0.1, contrast_limit=0.1, p=1),

199 | A.MotionBlur(blur_limit=(3, 5), p=0.1),

200 | A.CoarseDropout(max_holes=10, max_height=4, max_width=4, p=0.3),

201 | # ... and any other augmentation ...

202 | ]

203 | )

204 |

205 | # Export to a file (this resultant YAML can be used by the train script)

206 | A.save(transform_pipeline, "./transform_pipeline.yaml", data_format="yaml")

207 | ```

208 |

209 | And then you can train using the custom transformation pipeline with the `--augmentation-path` option.

210 |

211 | #### Visualize Augmentation

212 |

213 | It's useful to visualize the augmentation pipeline before training the model. This helps us to identify

214 | if we should apply more heavy augmentation or less, as it can hurt the model.

215 |

216 | You might want to see the augmented image next to the original, to see how much it changed:

217 |

218 | ```shell

219 | fast_plate_ocr visualize-augmentation \

220 | --img-dir benchmark/imgs \

221 | --columns 2 \

222 | --show-original \

223 | --augmentation-path '/transform_pipeline.yaml'

224 | ```

225 |

226 | You will see something like:

227 |

228 |

229 |

230 | #### Validate Model

231 |

232 | After finishing training you can validate the model on a labeled test dataset.

233 |

234 | Example:

235 |

236 | ```shell

237 | fast_plate_ocr valid \

238 | --model arg_cnn_ocr.keras \

239 | --config-file arg_plate_example.yaml \

240 | --annotations benchmark/annotations.csv

241 | ```

242 |

243 | #### Visualize Predictions

244 |

245 | Once you finish training your model, you can view the model predictions on raw data with:

246 |

247 | ```shell

248 | fast_plate_ocr visualize-predictions \

249 | --model arg_cnn_ocr.keras \

250 | --img-dir benchmark/imgs \

251 | --config-file arg_cnn_ocr_config.yaml

252 | ```

253 |

254 | You will see something like:

255 |

256 |

257 |

258 | #### Export as ONNX

259 |

260 | Exporting the Keras model to ONNX format might be beneficial to speed-up inference time.

261 |

262 | ```shell

263 | fast_plate_ocr export-onnx \

264 | --model arg_cnn_ocr.keras \

265 | --output-path arg_cnn_ocr.onnx \

266 | --opset 18 \

267 | --config-file arg_cnn_ocr_config.yaml

268 | ```

269 |

270 | ### Keras Backend

271 |

272 | To train the model, you can install the ML Framework you like the most. **Keras 3** has

273 | support for **TensorFlow**, **JAX** and **PyTorch** backends.

274 |

275 | To change the Keras backend you can either:

276 |

277 | 1. Export `KERAS_BACKEND` environment variable, i.e. to use JAX for training:

278 | ```shell

279 | KERAS_BACKEND=jax fast_plate_ocr train --config-file ...

280 | ```

281 | 2. Edit your local config file at `~/.keras/keras.json`.

282 |

283 | _Note: You will probably need to install your desired framework for training._

284 |

285 | ### Model Architecture

286 |

287 | The current model architecture is quite simple but effective.

288 | See [cnn_ocr_model](https://github.com/ankandrew/cnn-ocr-lp/blob/e59b738bad86d269c82101dfe7a3bef49b3a77c7/fast_plate_ocr/train/model/models.py#L23-L23)

289 | for implementation details.

290 |

291 | The model output consists of several heads. Each head represents the prediction of a character of the

292 | plate. If the plate consists of 7 characters at most (`max_plate_slots=7`), then the model would have 7 heads.

293 |

294 | Example of Argentinian plates:

295 |

296 |

297 |

298 | Each head will output a probability distribution over the `vocabulary` specified during training. So the output

299 | prediction for a single plate will be of shape `(max_plate_slots, vocabulary_size)`.

300 |

301 | ### Model Metrics

302 |

303 | During training, you will see the following metrics

304 |

305 | * **plate_acc**: Compute the number of **license plates** that were **fully classified**. For a single plate, if the

306 | ground truth is `ABC123` and the prediction is also `ABC123`, it would score 1. However, if the prediction was

307 | `ABD123`, it would score 0, as **not all characters** were correctly classified.

308 |

309 | * **cat_acc**: Calculate the accuracy of **individual characters** within the license plates that were

310 | **correctly classified**. For example, if the correct label is `ABC123` and the prediction is `ABC133`, it would yield

311 | a precision of 83.3% (5 out of 6 characters correctly classified), rather than 0% as in plate_acc, because it's not

312 | completely classified correctly.

313 |

314 | * **top_3_k**: Calculate how frequently the true character is included in the **top-3 predictions**

315 | (the three predictions with the highest probability).

316 |

317 | ### Contributing

318 |

319 | Contributions to the repo are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models,

320 | your contributions are warmly welcomed.

321 |

322 | To start contributing or to begin development, you can follow these steps:

323 |

324 | 1. Clone repo

325 | ```shell

326 | git clone https://github.com/ankandrew/fast-plate-ocr.git

327 | ```

328 | 2. Install all dependencies using [Poetry](https://python-poetry.org/docs/#installation):

329 | ```shell

330 | poetry install --all-extras

331 | ```

332 | 3. To ensure your changes pass linting and tests before submitting a PR:

333 | ```shell

334 | make checks

335 | ```

336 |

337 | If you want to train a model and share it, we'll add it to the HUB 🚀

338 |

339 | If you look to contribute to the repo, some cool things are in the backlog:

340 |

341 | - [ ] Implement [STN](https://arxiv.org/abs/1506.02025) using Keras 3 (With `keras.ops`)

342 | - [ ] Implement [SVTRv2](https://arxiv.org/abs/2411.15858).

343 | - [ ] Implement CTC loss function, so we can choose that or CE loss.

344 | - [ ] Extra head for country recognition, making it configurable.

345 |

--------------------------------------------------------------------------------

/config/arg_plate_example.yaml:

--------------------------------------------------------------------------------

1 | # Config example for Argentinian License Plates

2 | # The old license plates contain 6 slots/characters (i.e. JUH697)

3 | # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD)

4 |

5 | # Max number of plate slots supported. This represents the number of model classification heads.

6 | max_plate_slots: 7

7 | # All the possible character set for the model output.

8 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

9 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

10 | pad_char: '_'

11 | # Image height which is fed to the model.

12 | img_height: 70

13 | # Image width which is fed to the model.

14 | img_width: 140

15 |

--------------------------------------------------------------------------------

/config/latin_plate_example.yaml:

--------------------------------------------------------------------------------

1 | # Config example for Latin plates from 70 countries

2 |

3 | # Max number of plate slots supported. This represents the number of model classification heads.

4 | max_plate_slots: 9

5 | # All the possible character set for the model output.

6 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

7 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

8 | pad_char: '_'

9 | # Image height which is fed to the model.

10 | img_height: 70

11 | # Image width which is fed to the model.

12 | img_width: 140

13 |

--------------------------------------------------------------------------------

/docs/architecture.md:

--------------------------------------------------------------------------------

1 | ### ConvNet (CNN) model

2 |

3 | The current model architecture is quite simple but effective. It just consists of a few CNN layers with several output

4 | heads.

5 | See [cnn_ocr_model](https://github.com/ankandrew/cnn-ocr-lp/blob/e59b738bad86d269c82101dfe7a3bef49b3a77c7/fast_plate_ocr/train/model/models.py#L23-L23)

6 | for implementation details.

7 |

8 | The model output consists of several heads. Each head represents the prediction of a character of the

9 | plate. If the plate consists of 7 characters at most (`max_plate_slots=7`), then the model would have 7 heads.

10 |

11 | Example of Argentinian plates:

12 |

13 |

14 |

15 | Each head will output a probability distribution over the `vocabulary` specified during training. So the output

16 | prediction for a single plate will be of shape `(max_plate_slots, vocabulary_size)`.

17 |

18 | ### Model Metrics

19 |

20 | During training, you will see the following metrics

21 |

22 | * **plate_acc**: Compute the number of **license plates** that were **fully classified**. For a single plate, if the

23 | ground truth is `ABC123` and the prediction is also `ABC123`, it would score 1. However, if the prediction was

24 | `ABD123`, it would score 0, as **not all characters** were correctly classified.

25 |

26 | * **cat_acc**: Calculate the accuracy of **individual characters** within the license plates that were

27 | **correctly classified**. For example, if the correct label is `ABC123` and the prediction is `ABC133`, it would yield

28 | a precision of 83.3% (5 out of 6 characters correctly classified), rather than 0% as in plate_acc, because it's not

29 | completely classified correctly.

30 |

31 | * **top_3_k**: Calculate how frequently the true character is included in the **top-3 predictions**

32 | (the three predictions with the highest probability).

33 |

--------------------------------------------------------------------------------

/docs/contributing.md:

--------------------------------------------------------------------------------

1 | Contributions are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models,

2 | your contributions are warmly welcomed.

3 |

4 | To start contributing or to begin development, you can follow these steps:

5 |

6 | 1. Clone repo

7 | ```shell

8 | git clone https://github.com/ankandrew/fast-plate-ocr.git

9 | ```

10 | 2. Install all dependencies using [Poetry](https://python-poetry.org/docs/#installation):

11 | ```shell

12 | poetry install --all-extras

13 | ```

14 | 3. To ensure your changes pass linting and tests before submitting a PR:

15 | ```shell

16 | make checks

17 | ```

18 |

19 | ???+ tip

20 | If you want to train a model and share it, we'll add it to the HUB 🚀

21 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | # Fast & Lightweight License Plate OCR

2 |

3 |

4 |

5 | **FastPlateOCR** is a **lightweight** and **fast** OCR framework for **license plate text recognition**. You can train

6 | models from scratch or use the trained models for inference.

7 |

8 | The idea is to use this after a plate object detector, since the OCR expects the cropped plates.

9 |

10 | ### Features

11 |

12 | - **Keras 3 Backend Support**: Compatible with **TensorFlow**, **JAX**, and **PyTorch** backends 🧠

13 | - **Augmentation Variety**: Diverse augmentations via **Albumentations** library 🖼️

14 | - **Efficient Execution**: **Lightweight** models that are cheap to run 💰

15 | - **ONNX Runtime Inference**: **Fast** and **optimized** inference with ONNX runtime ⚡

16 | - **User-Friendly CLI**: Simplified **CLI** for **training** and **validating** OCR models 🛠️

17 | - **Model HUB**: Access to a collection of pre-trained models ready for inference 🌟

18 |

19 | ### Model Zoo

20 |

21 | We currently have the following available models:

22 |

23 | | Model Name | Time b=1

(ms)[1] | Throughput

(plates/second)[1] | Accuracy[2] | Dataset |

24 | |:----------------------------------------:|:--------------------------------:|:----------------------------------------------:|:----------------------:|:--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

25 | | `argentinian-plates-cnn-model` | 2.1 | 476 | 94.05% | Non-synthetic, plates up to 2020. Dataset [arg_plate_dataset.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset.zip). |

26 | | `argentinian-plates-cnn-synth-model` | 2.1 | 476 | 94.19% | Plates up to 2020 + synthetic plates. Dataset [arg_plate_dataset_plus_synth.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset_plus_synth.zip). |

27 | | `european-plates-mobile-vit-v2-model` | 2.9 | 344 | 92.5%[3] | European plates (from +40 countries, trained on 40k+ plates). |

28 | | 🆕🔥 `global-plates-mobile-vit-v2-model` | 2.9 | 344 | 93.3%[4] | Worldwide plates (from +65 countries, trained on 85k+ plates). |

29 |

30 | _[1] Inference on Mac M1 chip using CPUExecutionProvider. Utilizing CoreMLExecutionProvider accelerates speed

31 | by 5x in the CNN models._

32 |

33 | _[2] Accuracy is what we refer as plate_acc. See [metrics section](#model-metrics)._

34 |

35 | _[3] For detailed accuracy for each country see [results](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/european_mobile_vit_v2_ocr_results.json) and

36 | the corresponding [val split](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/european_mobile_vit_v2_ocr_val.zip) used._

37 |

38 | _[4] For detailed accuracy for each country see [results](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/global_mobile_vit_v2_ocr_results.json)._

39 |

40 |

41 | Reproduce results.

42 |

43 | Calculate Inference Time:

44 |

45 | ```shell

46 | pip install fast_plate_ocr

47 | ```

48 |

49 | ```python

50 | from fast_plate_ocr import ONNXPlateRecognizer

51 |

52 | m = ONNXPlateRecognizer("argentinian-plates-cnn-model")

53 | m.benchmark()

54 | ```

55 |

56 | Calculate Model accuracy:

57 |

58 | ```shell

59 | pip install fast-plate-ocr[train]

60 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr_config.yaml

61 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr.keras

62 | curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_benchmark.zip

63 | unzip arg_plate_benchmark.zip

64 | fast_plate_ocr valid \

65 | -m arg_cnn_ocr.keras \

66 | --config-file arg_cnn_ocr_config.yaml \

67 | --annotations benchmark/annotations.csv

68 | ```

69 |

70 |

71 |

--------------------------------------------------------------------------------

/docs/installation.md:

--------------------------------------------------------------------------------

1 | ### Inference

2 |

3 | For **inference**, install:

4 |

5 | ```shell

6 | pip install fast_plate_ocr

7 | ```

8 |

9 | ### Train

10 |

11 | To **train** or use the **CLI tool**, you'll need to install:

12 |

13 | ```shell

14 | pip install fast_plate_ocr[train]

15 | ```

16 |

17 | ???+ info

18 | You will probably need to **install** your desired framework for training. FastPlateOCR doesn't

19 | enforce you to use any specific framework. See [Keras backend](usage.md#keras-backend) section.

20 |

--------------------------------------------------------------------------------

/docs/reference.md:

--------------------------------------------------------------------------------

1 | ::: fast_plate_ocr.inference.onnx_inference

2 |

--------------------------------------------------------------------------------

/docs/usage.md:

--------------------------------------------------------------------------------

1 | ### API

2 |

3 | To predict from disk image:

4 |

5 | ```python

6 | from fast_plate_ocr import ONNXPlateRecognizer

7 |

8 | m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

9 | print(m.run('test_plate.png'))

10 | ```

11 |

12 |

13 | Demo

14 |

15 |

16 |

17 |

20 |

21 | To run model benchmark:

22 |

23 | ```python

24 | from fast_plate_ocr import ONNXPlateRecognizer

25 |

26 | m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

27 | m.benchmark()

28 | ```

29 |

30 |

31 | Demo

32 |

33 |

34 |

35 |

38 |

39 | For a full list of options see [Reference](reference.md).

40 |

41 | ### CLI

42 |

43 |  44 |

45 | To train or use the CLI tool, you'll need to install:

46 |

47 | ```shell

48 | pip install fast_plate_ocr[train]

49 | ```

50 |

51 | #### Train Model

52 |

53 | To train the model you will need:

54 |

55 | 1. A configuration used for the OCR model. Depending on your use case, you might have more plate slots or different set

56 | of characters. Take a look at the config for Argentinian license plate as an example:

57 | ```yaml

58 | # Config example for Argentinian License Plates

59 | # The old license plates contain 6 slots/characters (i.e. JUH697)

60 | # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD)

61 |

62 | # Max number of plate slots supported. This represents the number of model classification heads.

63 | max_plate_slots: 7

64 | # All the possible character set for the model output.

65 | alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_'

66 | # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet.

67 | pad_char: '_'

68 | # Image height which is fed to the model.

69 | img_height: 70

70 | # Image width which is fed to the model.

71 | img_width: 140

72 | ```

73 | 2. A labeled dataset,

74 | see [arg_plate_dataset.zip](https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_dataset.zip)

75 | for the expected data format.

76 | 3. Run train script:

77 | ```shell

78 | # You can set the backend to either TensorFlow, JAX or PyTorch

79 | # (just make sure it is installed)

80 | KERAS_BACKEND=tensorflow fast_plate_ocr train \

81 | --annotations path_to_the_train.csv \

82 | --val-annotations path_to_the_val.csv \

83 | --config-file config.yaml \

84 | --batch-size 128 \

85 | --epochs 750 \

86 | --dense \

87 | --early-stopping-patience 100 \

88 | --reduce-lr-patience 50

89 | ```

90 |

91 | You will probably want to change the augmentation pipeline to apply to your dataset.

92 |

93 | In order to do this define an Albumentations pipeline:

94 |

95 | ```python

96 | import albumentations as A

97 |

98 | transform_pipeline = A.Compose(

99 | [

100 | # ...

101 | A.RandomBrightnessContrast(brightness_limit=0.1, contrast_limit=0.1, p=1),

102 | A.MotionBlur(blur_limit=(3, 5), p=0.1),

103 | A.CoarseDropout(max_holes=10, max_height=4, max_width=4, p=0.3),

104 | # ... and any other augmentation ...

105 | ]

106 | )

107 |

108 | # Export to a file (this resultant YAML can be used by the train script)

109 | A.save(transform_pipeline, "./transform_pipeline.yaml", data_format="yaml")

110 | ```

111 |

112 | And then you can train using the custom transformation pipeline with the `--augmentation-path` option.

113 |

114 | #### Visualize Augmentation

115 |

116 | It's useful to visualize the augmentation pipeline before training the model. This helps us to identify

117 | if we should apply more heavy augmentation or less, as it can hurt the model.

118 |

119 | You might want to see the augmented image next to the original, to see how much it changed:

120 |

121 | ```shell

122 | fast_plate_ocr visualize-augmentation \

123 | --img-dir benchmark/imgs \

124 | --columns 2 \

125 | --show-original \

126 | --augmentation-path '/transform_pipeline.yaml'

127 | ```

128 |

129 | You will see something like:

130 |

131 |

132 |

133 | #### Validate Model

134 |

135 | After finishing training you can validate the model on a labeled test dataset.

136 |

137 | Example:

138 |

139 | ```shell

140 | fast_plate_ocr valid \

141 | --model arg_cnn_ocr.keras \

142 | --config-file arg_plate_example.yaml \

143 | --annotations benchmark/annotations.csv

144 | ```

145 |

146 | #### Visualize Predictions

147 |

148 | Once you finish training your model, you can view the model predictions on raw data with:

149 |

150 | ```shell

151 | fast_plate_ocr visualize-predictions \

152 | --model arg_cnn_ocr.keras \

153 | --img-dir benchmark/imgs \

154 | --config-file arg_cnn_ocr_config.yaml

155 | ```

156 |

157 | You will see something like:

158 |

159 |

160 |

161 | #### Export as ONNX

162 |

163 | Exporting the Keras model to ONNX format might be beneficial to speed-up inference time.

164 |

165 | ```shell

166 | fast_plate_ocr export-onnx \

167 | --model arg_cnn_ocr.keras \

168 | --output-path arg_cnn_ocr.onnx \

169 | --opset 18 \

170 | --config-file arg_cnn_ocr_config.yaml

171 | ```

172 |

173 | ### Keras Backend

174 |

175 | To train the model, you can install the ML Framework you like the most. **Keras 3** has

176 | support for **TensorFlow**, **JAX** and **PyTorch** backends.

177 |

178 | To change the Keras backend you can either:

179 |

180 | 1. Export `KERAS_BACKEND` environment variable, i.e. to use JAX for training:

181 | ```shell

182 | KERAS_BACKEND=jax fast_plate_ocr train --config-file ...

183 | ```

184 | 2. Edit your local config file at `~/.keras/keras.json`.

185 |

186 | ???+ tip

187 | **Usually training with JAX and TensorFlow is faster.**

188 |

189 | _Note: You will probably need to install your desired framework for training._

190 |

--------------------------------------------------------------------------------

/fast_plate_ocr/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Fast Plate OCR package.

3 | """

4 |

5 | from fast_plate_ocr.inference.onnx_inference import ONNXPlateRecognizer

6 |

7 | __all__ = ["ONNXPlateRecognizer"]

8 |

--------------------------------------------------------------------------------

/fast_plate_ocr/cli/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ankandrew/fast-plate-ocr/49954297c2226ad6c488f6fbfb93169b579b815d/fast_plate_ocr/cli/__init__.py

--------------------------------------------------------------------------------

/fast_plate_ocr/cli/cli.py:

--------------------------------------------------------------------------------

1 | """

2 | Main CLI used when training a FastPlateOCR model.

3 | """

4 |

5 | try:

6 | import click

7 |

8 | from fast_plate_ocr.cli.onnx_converter import export_onnx

9 | from fast_plate_ocr.cli.train import train

10 | from fast_plate_ocr.cli.valid import valid

11 | from fast_plate_ocr.cli.visualize_augmentation import visualize_augmentation

12 | from fast_plate_ocr.cli.visualize_predictions import visualize_predictions

13 | except ImportError as e:

14 | raise ImportError("Make sure to 'pip install fast-plate-ocr[train]' to run this!") from e

15 |

16 |

17 | @click.group(context_settings={"max_content_width": 120})

18 | def main_cli():

19 | """FastPlateOCR CLI."""

20 |

21 |

22 | main_cli.add_command(visualize_predictions)

23 | main_cli.add_command(visualize_augmentation)

24 | main_cli.add_command(valid)

25 | main_cli.add_command(train)

26 | main_cli.add_command(export_onnx)

27 |

--------------------------------------------------------------------------------

/fast_plate_ocr/cli/onnx_converter.py:

--------------------------------------------------------------------------------

1 | """

2 | Script for converting Keras models to ONNX format.

3 | """

4 |

5 | import logging

6 | import pathlib

7 | import shutil

8 | from tempfile import NamedTemporaryFile

9 |

10 | import click

11 | import numpy as np

12 | import onnx

13 | import onnxruntime as rt

14 | import onnxsim

15 | import tensorflow as tf

16 | import tf2onnx

17 | from tf2onnx import constants as tf2onnx_constants

18 |

19 | from fast_plate_ocr.common.utils import log_time_taken

20 | from fast_plate_ocr.train.model.config import load_config_from_yaml

21 | from fast_plate_ocr.train.utilities.utils import load_keras_model

22 |

23 | logging.basicConfig(

24 | level=logging.INFO, format="%(asctime)s - %(message)s", datefmt="%Y-%m-%d %H:%M:%S"

25 | )

26 |

27 |

28 | # pylint: disable=too-many-arguments,too-many-locals

29 |

30 |

31 | @click.command(context_settings={"max_content_width": 120})

32 | @click.option(

33 | "-m",

34 | "--model",

35 | "model_path",

36 | required=True,

37 | type=click.Path(exists=True, file_okay=True, dir_okay=False, path_type=pathlib.Path),

38 | help="Path to the saved .keras model.",

39 | )

40 | @click.option(

41 | "--output-path",

42 | required=True,

43 | type=str,

44 | help="Output name for ONNX model.",

45 | )

46 | @click.option(

47 | "--simplify/--no-simplify",

48 | default=False,

49 | show_default=True,

50 | help="Simplify ONNX model using onnxsim.",

51 | )

52 | @click.option(

53 | "--config-file",

54 | required=True,

55 | type=click.Path(exists=True, file_okay=True, path_type=pathlib.Path),

56 | help="Path pointing to the model license plate OCR config.",

57 | )

58 | @click.option(

59 | "--opset",

60 | default=16,

61 | type=click.IntRange(max=max(tf2onnx_constants.OPSET_TO_IR_VERSION)),

62 | show_default=True,

63 | help="Opset version for ONNX.",

64 | )

65 | def export_onnx(

66 | model_path: pathlib.Path,

67 | output_path: str,

68 | simplify: bool,

69 | config_file: pathlib.Path,

70 | opset: int,

71 | ) -> None:

72 | """

73 | Export Keras models to ONNX format.

74 | """

75 | config = load_config_from_yaml(config_file)

76 | model = load_keras_model(

77 | model_path,

78 | vocab_size=config.vocabulary_size,

79 | max_plate_slots=config.max_plate_slots,

80 | )

81 | spec = (tf.TensorSpec((None, config.img_height, config.img_width, 1), tf.uint8, name="input"),)

82 | # Convert from Keras to ONNX using tf2onnx library

83 | with NamedTemporaryFile(suffix=".onnx") as tmp:

84 | tmp_onnx = tmp.name

85 | model_proto, _ = tf2onnx.convert.from_keras(

86 | model,

87 | input_signature=spec,

88 | opset=opset,

89 | output_path=tmp_onnx,

90 | )

91 | if simplify:

92 | logging.info("Simplifying ONNX model ...")

93 | model_simp, check = onnxsim.simplify(onnx.load(tmp_onnx))

94 | assert check, "Simplified ONNX model could not be validated!"

95 | onnx.save(model_simp, output_path)

96 | else:

97 | shutil.copy(tmp_onnx, output_path)

98 | output_names = [n.name for n in model_proto.graph.output]

99 | x = np.random.randint(0, 256, size=(1, config.img_height, config.img_width, 1), dtype=np.uint8)

100 | # Run dummy inference and log time taken

101 | m = rt.InferenceSession(output_path)

102 | with log_time_taken("ONNX inference took:"):

103 | onnx_pred = m.run(output_names, {"input": x})

104 | # Check if ONNX and keras have the same results

105 | if not np.allclose(model.predict(x, verbose=0), onnx_pred[0], rtol=1e-5, atol=1e-5):

106 | logging.warning("ONNX model output was not close to Keras model for the given tolerance!")

107 | logging.info("Model converted to ONNX! Saved at %s", output_path)

108 |

109 |

110 | if __name__ == "__main__":

111 | export_onnx()

112 |

--------------------------------------------------------------------------------

/fast_plate_ocr/cli/train.py:

--------------------------------------------------------------------------------

1 | """

2 | Script for training the License Plate OCR models.

3 | """

4 |

5 | import pathlib

6 | import shutil

7 | from datetime import datetime

8 | from typing import Literal

9 |

10 | import albumentations as A

11 | import click

12 | from keras.src.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

13 | from keras.src.optimizers import Adam

14 | from torch.utils.data import DataLoader

15 |

16 | from fast_plate_ocr.cli.utils import print_params, print_train_details

17 | from fast_plate_ocr.train.data.augmentation import TRAIN_AUGMENTATION

18 | from fast_plate_ocr.train.data.dataset import LicensePlateDataset

19 | from fast_plate_ocr.train.model.config import load_config_from_yaml

20 | from fast_plate_ocr.train.model.custom import (

21 | cat_acc_metric,

22 | cce_loss,

23 | plate_acc_metric,

24 | top_3_k_metric,

25 | )

26 | from fast_plate_ocr.train.model.models import cnn_ocr_model

27 |

28 | # ruff: noqa: PLR0913

29 | # pylint: disable=too-many-arguments,too-many-locals

30 |

31 |

32 | @click.command(context_settings={"max_content_width": 120})

33 | @click.option(

34 | "--dense/--no-dense",

35 | default=True,

36 | show_default=True,

37 | help="Whether to use Fully Connected layers in model head or not.",

38 | )

39 | @click.option(

40 | "--config-file",

41 | required=True,

42 | type=click.Path(exists=True, file_okay=True, path_type=pathlib.Path),

43 | help="Path pointing to the model license plate OCR config.",

44 | )

45 | @click.option(

46 | "--annotations",

47 | required=True,

48 | type=click.Path(exists=True, file_okay=True, path_type=pathlib.Path),

49 | help="Path pointing to the train annotations CSV file.",

50 | )

51 | @click.option(

52 | "--val-annotations",

53 | required=True,

54 | type=click.Path(exists=True, file_okay=True, path_type=pathlib.Path),

55 | help="Path pointing to the train validation CSV file.",

56 | )

57 | @click.option(

58 | "--augmentation-path",

59 | type=click.Path(exists=True, file_okay=True, path_type=pathlib.Path),

60 | help="YAML file pointing to the augmentation pipeline saved with Albumentations.save(...)",

61 | )

62 | @click.option(

63 | "--lr",

64 | default=1e-3,

65 | show_default=True,

66 | type=float,

67 | help="Initial learning rate to use.",

68 | )

69 | @click.option(

70 | "--label-smoothing",

71 | default=0.05,

72 | show_default=True,

73 | type=float,

74 | help="Amount of label smoothing to apply.",

75 | )

76 | @click.option(

77 | "--batch-size",

78 | default=128,

79 | show_default=True,

80 | type=int,

81 | help="Batch size for training.",

82 | )

83 | @click.option(

84 | "--num-workers",

85 | default=0,

86 | show_default=True,

87 | type=int,

88 | help="How many subprocesses to load data, used in the torch DataLoader.",

89 | )

90 | @click.option(

91 | "--output-dir",

92 | default="./trained_models",

93 | type=click.Path(dir_okay=True, path_type=pathlib.Path),

94 | help="Output directory where model will be saved.",

95 | )

96 | @click.option(

97 | "--epochs",

98 | default=500,

99 | show_default=True,

100 | type=int,

101 | help="Number of training epochs.",

102 | )

103 | @click.option(

104 | "--tensorboard",

105 | "-t",

106 | is_flag=True,

107 | help="Whether to use TensorBoard visualization tool.",

108 | )

109 | @click.option(

110 | "--tensorboard-dir",

111 | "-l",

112 | default="tensorboard_logs",

113 | show_default=True,

114 | type=click.Path(path_type=pathlib.Path),

115 | help="The path of the directory where to save the TensorBoard log files.",

116 | )

117 | @click.option(

118 | "--early-stopping-patience",

119 | default=100,

120 | show_default=True,

121 | type=int,

122 | help="Stop training when 'val_plate_acc' doesn't improve for X epochs.",

123 | )

124 | @click.option(