├── .actor

├── Dockerfile

├── actor.json

└── input_schema.json

├── .dockerignore

├── .editorconfig

├── .eslintrc

├── .github

└── workflows

│ └── checks.yml

├── .gitignore

├── CHANGELOG.md

├── LICENSE.md

├── README.md

├── data

├── dataset_rag-web-browser_2024-09-02_2gb_maxResult_1.json

├── dataset_rag-web-browser_2024-09-02_2gb_maxResults_5.json

├── dataset_rag-web-browser_2024-09-02_4gb_maxResult_1.json

├── dataset_rag-web-browser_2024-09-02_4gb_maxResult_5.json

└── performance_measures.md

├── docs

├── apify-gpt-custom-action.png

├── aws-lambda-call-rag-web-browser.py

├── stand_by_rag_web_browser_example.py

└── standby-openapi-3.0.0.json

├── eslint.config.mjs

├── package-lock.json

├── package.json

├── src

├── const.ts

├── crawlers.ts

├── errors.ts

├── google-search

│ └── google-extractors-urls.ts

├── input.ts

├── main.ts

├── mcp

│ └── server.ts

├── performance-measures.ts

├── request-handler.ts

├── responses.ts

├── search.ts

├── server.ts

├── types.ts

├── utils.ts

└── website-content-crawler

│ ├── html-processing.ts

│ ├── markdown.ts

│ └── text-extractor.ts

├── tests

├── cheerio-crawler.content.test.ts

├── helpers

│ ├── html

│ │ └── basic.html

│ └── server.ts

├── playwright-crawler.content.test.ts

├── standby.test.ts

└── utils.test.ts

├── tsconfig.eslint.json

├── tsconfig.json

├── types

└── turndown-plugin-gfm.d.ts

└── vitest.config.ts

/.actor/Dockerfile:

--------------------------------------------------------------------------------

1 | # Specify the base Docker image. You can read more about

2 | # the available images at https://crawlee.dev/docs/guides/docker-images

3 | # You can also use any other image from Docker Hub.

4 | FROM apify/actor-node-playwright-chrome:22-1.46.0 AS builder

5 |

6 | # Copy just package.json and package-lock.json

7 | # to speed up the build using Docker layer cache.

8 | COPY --chown=myuser package*.json ./

9 |

10 | # Install all dependencies. Don't audit to speed up the installation.

11 | RUN npm install --include=dev --audit=false

12 |

13 | # Next, copy the source files using the user set

14 | # in the base image.

15 | COPY --chown=myuser . ./

16 |

17 | # Install all dependencies and build the project.

18 | # Don't audit to speed up the installation.

19 | RUN npm run build

20 |

21 | # Create final image

22 | FROM apify/actor-node-playwright-firefox:22-1.46.0

23 |

24 | # Copy just package.json and package-lock.json

25 | # to speed up the build using Docker layer cache.

26 | COPY --chown=myuser package*.json ./

27 |

28 | # Install NPM packages, skip optional and development dependencies to

29 | # keep the image small. Avoid logging too much and print the dependency

30 | # tree for debugging

31 | RUN npm --quiet set progress=false \

32 | && npm install --omit=dev --omit=optional \

33 | && echo "Installed NPM packages:" \

34 | && (npm list --omit=dev --all || true) \

35 | && echo "Node.js version:" \

36 | && node --version \

37 | && echo "NPM version:" \

38 | && npm --version \

39 | && rm -r ~/.npm

40 |

41 | # Remove the existing firefox installation

42 | RUN rm -rf ${PLAYWRIGHT_BROWSERS_PATH}/*

43 |

44 | # Install all required playwright dependencies for firefox

45 | RUN npx playwright install firefox

46 | # symlink the firefox binary to the root folder in order to bypass the versioning and resulting browser launch crashes.

47 | RUN ln -s ${PLAYWRIGHT_BROWSERS_PATH}/firefox-*/firefox/firefox ${PLAYWRIGHT_BROWSERS_PATH}/

48 |

49 | # Overrides the dynamic library used by Firefox to determine trusted root certificates with p11-kit-trust.so, which loads the system certificates.

50 | RUN rm $PLAYWRIGHT_BROWSERS_PATH/firefox-*/firefox/libnssckbi.so

51 | RUN ln -s /usr/lib/x86_64-linux-gnu/pkcs11/p11-kit-trust.so $(ls -d $PLAYWRIGHT_BROWSERS_PATH/firefox-*)/firefox/libnssckbi.so

52 |

53 | # Copy built JS files from builder image

54 | COPY --from=builder --chown=myuser /home/myuser/dist ./dist

55 |

56 | # Next, copy the remaining files and directories with the source code.

57 | # Since we do this after NPM install, quick build will be really fast

58 | # for most source file changes.

59 | COPY --chown=myuser . ./

60 |

61 | # Disable experimental feature warning from Node.js

62 | ENV NODE_NO_WARNINGS=1

63 |

64 | # Run the image.

65 | CMD npm run start:prod --silent

66 |

--------------------------------------------------------------------------------

/.actor/actor.json:

--------------------------------------------------------------------------------

1 | {

2 | "actorSpecification": 1,

3 | "name": "rag-web-browser",

4 | "title": "RAG Web browser",

5 | "description": "Web browser for OpenAI Assistants API and RAG pipelines, similar to a web browser in ChatGPT. It queries Google Search, scrapes the top N pages from the results, and returns their cleaned content as Markdown for further processing by an LLM.",

6 | "version": "1.0",

7 | "input": "./input_schema.json",

8 | "dockerfile": "./Dockerfile",

9 | "storages": {

10 | "dataset": {

11 | "actorSpecification": 1,

12 | "title": "RAG Web Browser",

13 | "description": "Too see all scraped properties, export the whole dataset or select All fields instead of Overview.",

14 | "views": {

15 | "overview": {

16 | "title": "Overview",

17 | "description": "An view showing just basic properties for simplicity.",

18 | "transformation": {

19 | "flatten": ["metadata", "searchResult"],

20 | "fields": [

21 | "metadata.url",

22 | "metadata.title",

23 | "searchResult.resultType",

24 | "markdown"

25 | ]

26 | },

27 | "display": {

28 | "component": "table",

29 | "properties": {

30 | "metadata.url": {

31 | "label": "Page URL",

32 | "format": "text"

33 | },

34 | "metadata.title": {

35 | "label": "Page title",

36 | "format": "text"

37 | },

38 | "searchResult.resultType": {

39 | "label": "Result type",

40 | "format": "text"

41 | },

42 | "text": {

43 | "label": "Extracted Markdown",

44 | "format": "text"

45 | }

46 | }

47 | }

48 | },

49 | "searchResults": {

50 | "title": "Search results",

51 | "description": "A view showing just the Google Search results, without the page content.",

52 | "transformation": {

53 | "flatten": ["searchResult"],

54 | "fields": [

55 | "searchResult.title",

56 | "searchResult.description",

57 | "searchResult.resultType",

58 | "searchResult.url"

59 | ]

60 | },

61 | "display": {

62 | "component": "table",

63 | "properties": {

64 | "searchResult.description": {

65 | "label": "Description",

66 | "format": "text"

67 | },

68 | "searchResult.title": {

69 | "label": "Title",

70 | "format": "text"

71 | },

72 | "searchResult.resultType": {

73 | "label": "Result type",

74 | "format": "text"

75 | },

76 | "searchResult.url": {

77 | "label": "URL",

78 | "format": "text"

79 | }

80 | }

81 | }

82 | }

83 | }

84 | }

85 | }

86 | }

87 |

--------------------------------------------------------------------------------

/.actor/input_schema.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "RAG Web Browser",

3 | "description": "Here you can test RAG Web Browser and its settings. Just enter the search terms or URL and click *Start ▶* to get results. In production applications, call the Actor via Standby HTTP server for fast response times.",

4 | "type": "object",

5 | "schemaVersion": 1,

6 | "properties": {

7 | "query": {

8 | "title": "Search term or URL",

9 | "type": "string",

10 | "description": "Enter Google Search keywords or a URL of a specific web page. The keywords might include the [advanced search operators](https://blog.apify.com/how-to-scrape-google-like-a-pro/). Examples:\n\n- san francisco weather\n- https://www.cnn.com\n- function calling site:openai.com",

11 | "prefill": "web browser for RAG pipelines -site:reddit.com",

12 | "editor": "textfield",

13 | "pattern": "[^\\s]+"

14 | },

15 | "maxResults": {

16 | "title": "Maximum results",

17 | "type": "integer",

18 | "description": "The maximum number of top organic Google Search results whose web pages will be extracted. If `query` is a URL, then this field is ignored and the Actor only fetches the specific web page.",

19 | "default": 3,

20 | "minimum": 1,

21 | "maximum": 100

22 | },

23 | "outputFormats": {

24 | "title": "Output formats",

25 | "type": "array",

26 | "description": "Select one or more formats to which the target web pages will be extracted and saved in the resulting dataset.",

27 | "editor": "select",

28 | "default": ["markdown"],

29 | "items": {

30 | "type": "string",

31 | "enum": ["text", "markdown", "html"],

32 | "enumTitles": ["Plain text", "Markdown", "HTML"]

33 | }

34 | },

35 | "requestTimeoutSecs": {

36 | "title": "Request timeout",

37 | "type": "integer",

38 | "description": "The maximum time in seconds available for the request, including querying Google Search and scraping the target web pages. For example, OpenAI allows only [45 seconds](https://platform.openai.com/docs/actions/production#timeouts) for custom actions. If a target page loading and extraction exceeds this timeout, the corresponding page will be skipped in results to ensure at least some results are returned within the timeout. If no page is extracted within the timeout, the whole request fails.",

39 | "minimum": 1,

40 | "maximum": 300,

41 | "default": 40,

42 | "unit": "seconds",

43 | "editor": "hidden"

44 | },

45 | "serpProxyGroup": {

46 | "title": "SERP proxy group",

47 | "type": "string",

48 | "description": "Enables overriding the default Apify Proxy group used for fetching Google Search results.",

49 | "editor": "select",

50 | "default": "GOOGLE_SERP",

51 | "enum": ["GOOGLE_SERP", "SHADER"],

52 | "sectionCaption": "Google Search scraping settings"

53 | },

54 | "serpMaxRetries": {

55 | "title": "SERP max retries",

56 | "type": "integer",

57 | "description": "The maximum number of times the Actor will retry fetching the Google Search results on error. If the last attempt fails, the entire request fails.",

58 | "minimum": 0,

59 | "maximum": 5,

60 | "default": 2

61 | },

62 | "proxyConfiguration": {

63 | "title": "Proxy configuration",

64 | "type": "object",

65 | "description": "Apify Proxy configuration used for scraping the target web pages.",

66 | "default": {

67 | "useApifyProxy": true

68 | },

69 | "prefill": {

70 | "useApifyProxy": true

71 | },

72 | "editor": "proxy",

73 | "sectionCaption": "Target pages scraping settings"

74 | },

75 | "scrapingTool": {

76 | "title": "Select a scraping tool",

77 | "type": "string",

78 | "description": "Select a scraping tool for extracting the target web pages. The Browser tool is more powerful and can handle JavaScript heavy websites, while the Plain HTML tool can't handle JavaScript but is about two times faster.",

79 | "editor": "select",

80 | "default": "raw-http",

81 | "enum": ["browser-playwright", "raw-http"],

82 | "enumTitles": ["Browser (uses Playwright)", "Raw HTTP"]

83 | },

84 | "removeElementsCssSelector": {

85 | "title": "Remove HTML elements (CSS selector)",

86 | "type": "string",

87 | "description": "A CSS selector matching HTML elements that will be removed from the DOM, before converting it to text, Markdown, or saving as HTML. This is useful to skip irrelevant page content. The value must be a valid CSS selector as accepted by the `document.querySelectorAll()` function. \n\nBy default, the Actor removes common navigation elements, headers, footers, modals, scripts, and inline image. You can disable the removal by setting this value to some non-existent CSS selector like `dummy_keep_everything`.",

88 | "editor": "textarea",

89 | "default": "nav, footer, script, style, noscript, svg, img[src^='data:'],\n[role=\"alert\"],\n[role=\"banner\"],\n[role=\"dialog\"],\n[role=\"alertdialog\"],\n[role=\"region\"][aria-label*=\"skip\" i],\n[aria-modal=\"true\"]",

90 | "prefill": "nav, footer, script, style, noscript, svg, img[src^='data:'],\n[role=\"alert\"],\n[role=\"banner\"],\n[role=\"dialog\"],\n[role=\"alertdialog\"],\n[role=\"region\"][aria-label*=\"skip\" i],\n[aria-modal=\"true\"]"

91 | },

92 | "htmlTransformer": {

93 | "title": "HTML transformer",

94 | "type": "string",

95 | "description": "Specify how to transform the HTML to extract meaningful content without any extra fluff, like navigation or modals. The HTML transformation happens after removing and clicking the DOM elements.\n\n- **None** (default) - Only removes the HTML elements specified via 'Remove HTML elements' option.\n\n- **Readable text** - Extracts the main contents of the webpage, without navigation and other fluff.",

96 | "default": "none",

97 | "prefill": "none",

98 | "editor": "hidden"

99 | },

100 | "desiredConcurrency": {

101 | "title": "Desired browsing concurrency",

102 | "type": "integer",

103 | "description": "The desired number of web browsers running in parallel. The system automatically scales the number based on the CPU and memory usage. If the initial value is `0`, the Actor picks the number automatically based on the available memory.",

104 | "minimum": 0,

105 | "maximum": 50,

106 | "default": 5,

107 | "editor": "hidden"

108 | },

109 | "maxRequestRetries": {

110 | "title": "Target page max retries",

111 | "type": "integer",

112 | "description": "The maximum number of times the Actor will retry loading the target web page on error. If the last attempt fails, the page will be skipped in the results.",

113 | "minimum": 0,

114 | "maximum": 3,

115 | "default": 1

116 | },

117 | "dynamicContentWaitSecs": {

118 | "title": "Target page dynamic content timeout",

119 | "type": "integer",

120 | "description": "The maximum time in seconds to wait for dynamic page content to load. The Actor considers the web page as fully loaded once this time elapses or when the network becomes idle.",

121 | "default": 10,

122 | "unit": "seconds"

123 | },

124 | "removeCookieWarnings": {

125 | "title": "Remove cookie warnings",

126 | "type": "boolean",

127 | "description": "If enabled, the Actor attempts to close or remove cookie consent dialogs to improve the quality of extracted text. Note that this setting increases the latency.",

128 | "default": true

129 | },

130 | "debugMode": {

131 | "title": "Enable debug mode",

132 | "type": "boolean",

133 | "description": "If enabled, the Actor will store debugging information into the resulting dataset under the `debug` field.",

134 | "default": false

135 | }

136 | },

137 | "required": ["query"]

138 | }

139 |

--------------------------------------------------------------------------------

/.dockerignore:

--------------------------------------------------------------------------------

1 | # configurations

2 | .idea

3 |

4 | # crawlee and apify storage folders

5 | apify_storage

6 | crawlee_storage

7 | storage

8 |

9 | # installed files

10 | node_modules

11 |

12 | # git folder

13 | .git

14 |

15 | # data

16 | data

17 | src/storage

18 | dist

19 |

--------------------------------------------------------------------------------

/.editorconfig:

--------------------------------------------------------------------------------

1 | root = true

2 |

3 | [*]

4 | indent_style = space

5 | indent_size = 4

6 | charset = utf-8

7 | trim_trailing_whitespace = true

8 | insert_final_newline = true

9 | end_of_line = lf

10 | max_line_length = 120

11 |

--------------------------------------------------------------------------------

/.eslintrc:

--------------------------------------------------------------------------------

1 | {

2 | "root": true,

3 | "env": {

4 | "browser": true,

5 | "es2020": true,

6 | "node": true

7 | },

8 | "extends": [

9 | "@apify/eslint-config-ts"

10 | ],

11 | "parserOptions": {

12 | "project": "./tsconfig.json",

13 | "ecmaVersion": 2020

14 | },

15 | "ignorePatterns": [

16 | "node_modules",

17 | "dist",

18 | "**/*.d.ts"

19 | ],

20 | "plugins": ["import"],

21 | "rules": {

22 | "import/order": [

23 | "error",

24 | {

25 | "groups": [

26 | ["builtin", "external"],

27 | "internal",

28 | ["parent", "sibling", "index"]

29 | ],

30 | "newlines-between": "always",

31 | "alphabetize": {

32 | "order": "asc",

33 | "caseInsensitive": true

34 | }

35 | }

36 | ],

37 | "max-len": ["error", { "code": 120, "ignoreUrls": true, "ignoreStrings": true, "ignoreTemplateLiterals": true }]

38 | }

39 | }

40 |

--------------------------------------------------------------------------------

/.github/workflows/checks.yml:

--------------------------------------------------------------------------------

1 | name: Code Checks

2 |

3 | on:

4 | push:

5 | branches: [ master ]

6 | pull_request:

7 | branches: [ master ]

8 |

9 | jobs:

10 | build-and-test:

11 | runs-on: ubuntu-latest

12 |

13 | steps:

14 | - uses: actions/checkout@v4

15 |

16 | - name: Setup Node.js

17 | uses: actions/setup-node@v4

18 | with:

19 | node-version: 'latest'

20 | cache: 'npm'

21 |

22 | - name: Install dependencies

23 | run: npm ci

24 |

25 | - name: Build

26 | run: npm run build

27 |

28 | - name: Lint

29 | run: npm run lint

30 |

31 | - name: Install Playwright

32 | run: npx playwright install

33 |

34 | - name: Test

35 | run: npm run test

36 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # This file tells Git which files shouldn't be added to source control

2 |

3 | .DS_Store

4 | .idea

5 | dist

6 | node_modules

7 | apify_storage

8 | storage

9 |

10 | # Added by Apify CLI

11 | .venv

12 | .aider*

13 |

14 | # Actor run input

15 | input.json

16 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | This changelog summarizes all changes of the RAG Web Browser

2 |

3 | ### 1.0.15 (2025-03-27)

4 |

5 | 🐛 Bug Fixes

6 | - Cancel requests only in standby mode

7 |

8 | ### 1.0.13 (2025-03-27)

9 |

10 | 🐛 Bug Fixes

11 | - Cancel crawling requests from timed-out search queries

12 |

13 | ### 1.0.12 (2025-03-24)

14 |

15 | 🐛 Bug Fixes

16 | - Updated selector for organic search results and places

17 |

18 | ### 1.0.11 (2025-03-21)

19 |

20 | 🐛 Bug Fixes

21 | - Selector for organic search results

22 |

23 | ### 1.0.10 (2025-03-19)

24 |

25 | 🚀 Features

26 | - Handle all query parameters in the standby mode (including proxy)

27 |

28 | ### 1.0.9 (2025-03-14)

29 |

30 | 🚀 Features

31 | - Change default value for `scrapingTool` from 'browser-playwright' to 'raw-http' to improve latency.

32 |

33 | ### 1.0.8 (2025-03-07)

34 |

35 | 🚀 Features

36 | - Add a new `scrapingTool` input to allow users to choose between Browser scraper and raw HTTP scraper

37 |

38 | ### 1.0.7 (2025-02-20)

39 |

40 | 🚀 Features

41 | - Update Readme.md to include information about MCP

42 |

43 | ### 1.0.6 (2025-02-04)

44 |

45 | 🚀 Features

46 | - Handle double encoding of URLs

47 |

48 | ### 1.0.5 (2025-01-17)

49 |

50 | 🐛 Bug Fixes

51 | - Change default value of input query

52 | - Retry search if no results are found

53 |

54 | ### 1.0.4 (2025-01-04)

55 |

56 | 🚀 Features

57 | - Include Model Context Protocol in Standby Mode

58 |

59 | ### 1.0.3 (2024-11-13)

60 |

61 | 🚀 Features

62 | - Improve README.md and simplify configuration

63 | - Add an AWS Lambda function

64 | - Hide variables initialConcurrency, minConcurrency, and maxConcurrency in the Actor input and remove them from README.md

65 | - Remove requestTimeoutContentCrawlSecs and use only requestTimeoutSecs

66 | - Ensure there is enough time left to wait for dynamic content before the Actor timeout (normal mode)

67 | - Rename googleSearchResults to searchResults and searchProxyGroup to serpProxyGroup

68 | - Implement input validation

69 |

70 | ### 0.1.4 (2024-11-08)

71 |

72 | 🚀 Features

73 | - Add functionality to extract content from a specific URL

74 | - Update README.md to include new functionality and provide examples

75 |

76 | ### 0.0.32 (2024-10-17)

77 |

78 | 🚀 Features

79 | - Handle errors when request is added to Playwright queue.

80 | This will prevent the Cheerio crawler from repeating the same request multiple times.

81 | - Silence error: Could not parse CSS stylesheet as there is no way to fix it at our end

82 | - Set logLevel to INFO (debug level can be set using the `debugMode=true` input)

83 |

84 | ### 2024-10-11

85 |

86 | 🚀 Features

87 | - Increase the maximum number of results (`maxResults`) from 50 to 100

88 | - Explain better how to search a specific website using "llm site:apify.com"

89 |

90 | ### 2024-10-07

91 |

92 | 🚀 Features

93 | - Add a short description how to create a custom action

94 |

95 | ### 2024-09-24

96 |

97 | 🚀 Features

98 | - Updated README.md to include tips on improving latency

99 | - Set initialConcurrency to 5

100 | - Set minConcurrency to 3

101 |

102 | ### 2024-09-20

103 |

104 | 🐛 Bug Fixes

105 | - Fix response format when crawler fails

106 |

107 | ### 2024-09-24

108 |

109 | 🚀 Features

110 | - Add ability to create new crawlers using query parameters

111 | - Update Dockerfile to node version 22

112 |

113 | 🐛 Bug Fixes

114 | - Fix playwright key creation

115 |

116 | ### 2024-09-11

117 |

118 | 🚀 Features

119 | - Initial version of the RAG Web Browser

120 |

--------------------------------------------------------------------------------

/LICENSE.md:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "{}"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright 2024 Apify Technologies s.r.o.

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # 🌐 RAG Web Browser

2 |

3 | [](https://apify.com/apify/rag-web-browser)

4 |

5 | This Actor provides web browsing functionality for AI agents and LLM applications,

6 | similar to the [web browsing](https://openai.com/index/introducing-chatgpt-search/) feature in ChatGPT.

7 | It accepts a search phrase or a URL, queries Google Search, then crawls web pages from the top search results, cleans the HTML, converts it to text or Markdown,

8 | and returns it back for processing by the LLM application.

9 | The extracted text can then be injected into prompts and retrieval augmented generation (RAG) pipelines, to provide your LLM application with up-to-date context from the web.

10 |

11 | ## Main features

12 |

13 | - 🚀 **Quick response times** for great user experience

14 | - ⚙️ Supports **dynamic JavaScript-heavy websites** using a headless browser

15 | - 🔄 **Flexible scraping** with Browser mode for complex websites or Plain HTML mode for faster scraping

16 | - 🕷 Automatically **bypasses anti-scraping protections** using proxies and browser fingerprints

17 | - 📝 Output formats include **Markdown**, plain text, and HTML

18 | - 🔌 Supports **OpenAPI and MCP** for easy integration

19 | - 🪟 It's **open source**, so you can review and modify it

20 |

21 | ## Example

22 |

23 | For a search query like `fast web browser in RAG pipelines`, the Actor will return an array with a content of top results from Google Search, which looks like this:

24 |

25 | ```json

26 | [

27 | {

28 | "crawl": {

29 | "httpStatusCode": 200,

30 | "httpStatusMessage": "OK",

31 | "loadedAt": "2024-11-25T21:23:58.336Z",

32 | "uniqueKey": "eM0RDxDQ3q",

33 | "requestStatus": "handled"

34 | },

35 | "searchResult": {

36 | "title": "apify/rag-web-browser",

37 | "description": "Sep 2, 2024 — The RAG Web Browser is designed for Large Language Model (LLM) applications or LLM agents to provide up-to-date ....",

38 | "url": "https://github.com/apify/rag-web-browser"

39 | },

40 | "metadata": {

41 | "title": "GitHub - apify/rag-web-browser: RAG Web Browser is an Apify Actor to feed your LLM applications ...",

42 | "description": "RAG Web Browser is an Apify Actor to feed your LLM applications ...",

43 | "languageCode": "en",

44 | "url": "https://github.com/apify/rag-web-browser"

45 | },

46 | "markdown": "# apify/rag-web-browser: RAG Web Browser is an Apify Actor ..."

47 | }

48 | ]

49 | ```

50 |

51 | If you enter a specific URL such as `https://openai.com/index/introducing-chatgpt-search/`, the Actor will extract

52 | the web page content directly like this:

53 |

54 | ```json

55 | [{

56 | "crawl": {

57 | "httpStatusCode": 200,

58 | "httpStatusMessage": "OK",

59 | "loadedAt": "2024-11-21T14:04:28.090Z"

60 | },

61 | "metadata": {

62 | "url": "https://openai.com/index/introducing-chatgpt-search/",

63 | "title": "Introducing ChatGPT search | OpenAI",

64 | "description": "Get fast, timely answers with links to relevant web sources",

65 | "languageCode": "en-US"

66 | },

67 | "markdown": "# Introducing ChatGPT search | OpenAI\n\nGet fast, timely answers with links to relevant web sources.\n\nChatGPT can now search the web in a much better way than before. ..."

68 | }]

69 | ```

70 |

71 | ## ⚙️ Usage

72 |

73 | The RAG Web Browser can be used in two ways: **as a standard Actor** by passing it an input object with the settings,

74 | or in the **Standby mode** by sending it an HTTP request.

75 |

76 | See the [Performance Optimization](#-performance-optimization) section below for detailed benchmarks and configuration recommendations to achieve optimal response times.

77 |

78 | ### Normal Actor run

79 |

80 | You can run the Actor "normally" via the Apify API, schedule, integrations, or manually in Console.

81 | On start, you pass the Actor an input JSON object with settings including the search phrase or URL,

82 | and it stores the results to the default dataset.

83 | This mode is useful for testing and evaluation, but might be too slow for production applications and RAG pipelines,

84 | because it takes some time to start the Actor's Docker container and a web browser.

85 | Also, one Actor run can only handle one query, which isn't efficient.

86 |

87 | ### Standby web server

88 |

89 | The Actor also supports the [**Standby mode**](https://docs.apify.com/platform/actors/running/standby),

90 | where it runs an HTTP web server that receives requests with the search phrases and responds with the extracted web content.

91 | This mode is preferred for production applications, because if the Actor is already running, it will

92 | return the results much faster. Additionally, in the Standby mode the Actor can handle multiple requests

93 | in parallel, and thus utilizes the computing resources more efficiently.

94 |

95 | To use RAG Web Browser in the Standby mode, simply send an HTTP GET request to the following URL:

96 |

97 | ```

98 | https://rag-web-browser.apify.actor/search?token=&query=hello+world

99 | ```

100 |

101 | where `` is your [Apify API token](https://console.apify.com/settings/integrations).

102 | Note that you can also pass the API token using the `Authorization` HTTP header with Basic authentication for increased security.

103 |

104 | The response is a JSON array with objects containing the web content from the found web pages, as shown in the example [above](#example).

105 |

106 | #### Query parameters

107 |

108 | The `/search` GET HTTP endpoint accepts all the input parameters [described on the Actor page](https://apify.com/apify/rag-web-browser/input-schema). Object parameters like `proxyConfiguration` should be passed as url-encoded JSON strings.

109 |

110 |

111 | ## 🔌 Integration with LLMs

112 |

113 | RAG Web Browser has been designed for easy integration with LLM applications, GPTs, OpenAI Assistants, and RAG pipelines using function calling.

114 |

115 | ### OpenAPI schema

116 |

117 | Here you can find the [OpenAPI 3.1.0 schema](https://apify.com/apify/rag-web-browser/api/openapi)

118 | or [OpenAPI 3.0.0 schema](https://raw.githubusercontent.com/apify/rag-web-browser/refs/heads/master/docs/standby-openapi-3.0.0.json)

119 | for the Standby web server. Note that the OpenAPI definition contains

120 | all available query parameters, but only `query` is required.

121 | You can remove all the others parameters from the definition if their default value is right for your application,

122 | in order to reduce the number of LLM tokens necessary and to reduce the risk of hallucinations in function calling.

123 |

124 | ### OpenAI Assistants

125 |

126 | While OpenAI's ChatGPT and GPTs support web browsing natively, [Assistants](https://platform.openai.com/docs/assistants/overview) currently don't.

127 | With RAG Web Browser, you can easily add the web search and browsing capability to your custom AI assistant and chatbots.

128 | For detailed instructions,

129 | see the [OpenAI Assistants integration](https://docs.apify.com/platform/integrations/openai-assistants#real-time-search-data-for-openai-assistant) in Apify documentation.

130 |

131 | ### OpenAI GPTs

132 |

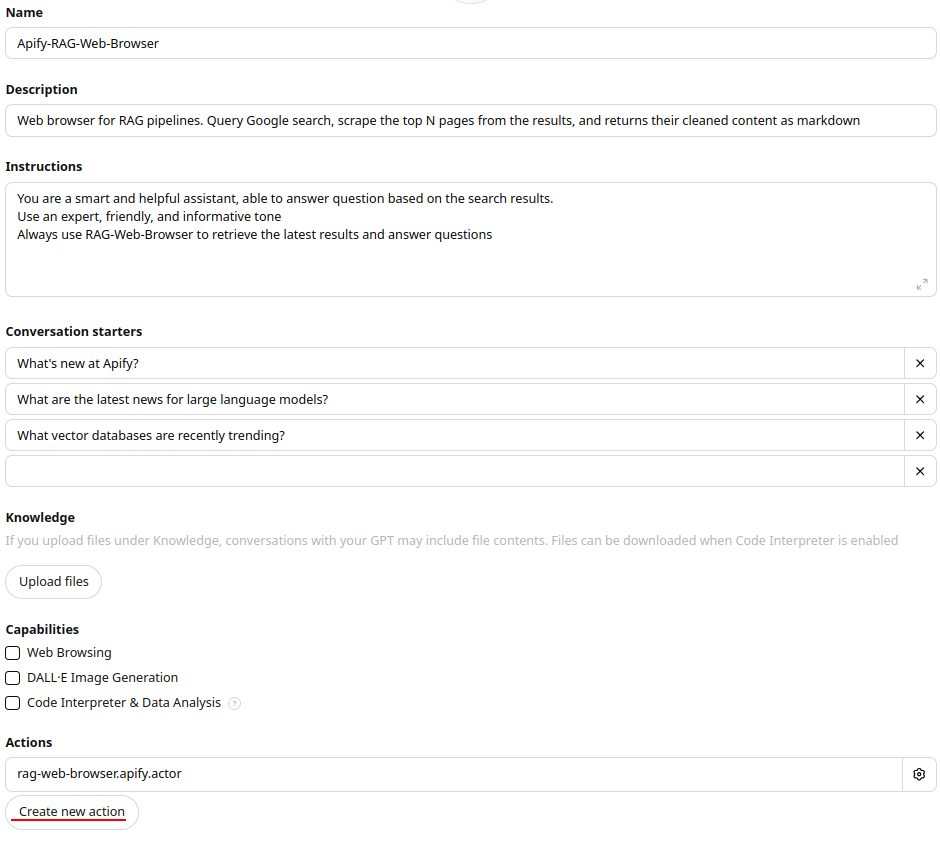

133 | You can easily add the RAG Web Browser to your GPTs by creating a custom action. Here's a quick guide:

134 |

135 | 1. Go to [**My GPTs**](https://chatgpt.com/gpts/mine) on ChatGPT website and click **+ Create a GPT**.

136 | 2. Complete all required details in the form.

137 | 3. Under the **Actions** section, click **Create new action**.

138 | 4. In the Action settings, set **Authentication** to **API key** and choose Bearer as **Auth Type**.

139 | 5. In the **schema** field, paste the [OpenAPI 3.1.0 schema](https://raw.githubusercontent.com/apify/rag-web-browser/refs/heads/master/docs/standby-openapi-3.1.0.json)

140 | of the Standby web server HTTP API.

141 |

142 |

143 |

144 | Learn more about [adding custom actions to your GPTs with Apify Actors](https://blog.apify.com/add-custom-actions-to-your-gpts/) on Apify Blog.

145 |

146 | ### Anthropic: Model Context Protocol (MCP) Server

147 |

148 | The RAG Web Browser Actor can also be used as an [MCP server](https://github.com/modelcontextprotocol) and integrated with AI applications and agents, such as Claude Desktop.

149 | For example, in Claude Desktop, you can configure the MCP server in its settings to perform web searches and extract content.

150 | Alternatively, you can develop a custom MCP client to interact with the RAG Web Browser Actor.

151 |

152 | In the Standby mode, the Actor runs an HTTP server that supports the MCP protocol via SSE (Server-Sent Events).

153 |

154 | 1. Initiate SSE connection:

155 | ```shell

156 | curl https://rag-web-browser.apify.actor/sse?token=

157 | ```

158 | On connection, you'll receive a `sessionId`:

159 | ```text

160 | event: endpoint

161 | data: /message?sessionId=5b2

162 | ```

163 |

164 | 1. Send a message to the server by making a POST request with the `sessionId`, `APIFY-API-TOKEN` and your query:

165 | ```shell

166 | curl -X POST "https://rag-web-browser.apify.actor/message?session_id=5b2&token=" -H "Content-Type: application/json" -d '{

167 | "jsonrpc": "2.0",

168 | "id": 1,

169 | "method": "tools/call",

170 | "params": {

171 | "arguments": { "query": "recent news about LLMs", "maxResults": 1 },

172 | "name": "rag-web-browser"

173 | }

174 | }'

175 | ```

176 | For the POST request, the server will respond with:

177 | ```text

178 | Accepted

179 | ```

180 |

181 | 1. Receive a response at the initiated SSE connection:

182 | The server invoked `Actor` and its tool using the provided query and sent the response back to the client via SSE.

183 |

184 | ```text

185 | event: message

186 | data: {"result":{"content":[{"type":"text","text":"[{\"searchResult\":{\"title\":\"Language models recent news\",\"description\":\"Amazon Launches New Generation of LLM Foundation Model...\"}}

187 | ```

188 |

189 | You can try the MCP server using the [MCP Tester Client](https://apify.com/jiri.spilka/tester-mcp-client) available on Apify. In the MCP client, simply enter the URL `https://rag-web-browser.apify.actor/sse` in the Actor input field and click **Run** and interact with server in a UI.

190 | To learn more about MCP servers, check out the blog post [What is Anthropic's Model Context Protocol](https://blog.apify.com/what-is-model-context-protocol/).

191 |

192 | ## ⏳ Performance optimization

193 |

194 | To get the most value from RAG Web Browsers in your LLM applications,

195 | always use the Actor via the [Standby web server](#standby-web-server) as described above,

196 | and see the tips in the following sections.

197 |

198 | ### Scraping tool

199 |

200 | The **most critical performance decision** is selecting the appropriate scraping method for your use case:

201 |

202 | - **For static websites**: Use `scrapingTool=raw-http` to achieve up to 2x faster performance. This lightweight method directly fetches HTML without JavaScript processing.

203 |

204 | - **For dynamic websites**: Use the default `scrapingTool=browser-playwright` when targeting sites with JavaScript-rendered content or interactive elements

205 |

206 | This single parameter choice can significantly impact both response times and content quality, so select based on your target websites' characteristics.

207 |

208 | ### Request timeout

209 |

210 | Many user-facing RAG applications impose a time limit on external functions to provide a good user experience.

211 | For example, OpenAI Assistants and GPTs have a limit of [45 seconds](https://platform.openai.com/docs/actions/production#timeouts) for custom actions.

212 |

213 | To ensure the web search and content extraction is completed within the required timeout,

214 | you can set the `requestTimeoutSecs` query parameter.

215 | If this timeout is exceeded, **the Actor makes the best effort to return results it has scraped up to that point**

216 | in order to provide your LLM application with at least some context.

217 |

218 | Here are specific situations that might occur when the timeout is reached:

219 |

220 | - The Google Search query failed => the HTTP request fails with a 5xx error.

221 | - The requested `query` is a single URL that failed to load => the HTTP request fails with a 5xx error.

222 | - The requested `query` is a search term, but one of target web pages failed to load => the response contains at least

223 | the `searchResult` for the specific page contains a URL, title, and description.

224 | - One of the target pages hasn't loaded dynamic content (within the `dynamicContentWaitSecs` deadline)

225 | => the Actor extracts content from the currently loaded HTML

226 |

227 |

228 | ### Reducing response time

229 |

230 | For low-latency applications, it's recommended to run the RAG Web Browser in Standby mode

231 | with the default settings, i.e. with 8 GB of memory and maximum of 24 requests per run.

232 | Note that on the first request, the Actor takes a little time to respond (cold start).

233 |

234 | Additionally, you can adjust the following query parameters to reduce the response time:

235 |

236 | - `scrapingTool`: Use `raw-http` for static websites or `browser-playwright` for dynamic websites.

237 | - `maxResults`: The lower the number of search results to scrape, the faster the response time. Just note that the LLM application might not have sufficient context for the prompt.

238 | - `dynamicContentWaitSecs`: The lower the value, the faster the response time. However, the important web content might not be loaded yet, which will reduce the accuracy of your LLM application.

239 | - `removeCookieWarnings`: If the websites you're scraping don't have cookie warnings or if their presence can be tolerated, set this to `false` to slightly improve latency.

240 | - `debugMode`: If set to `true`, the Actor will store latency data to results so that you can see where it takes time.

241 |

242 |

243 | ### Cost vs. throughput

244 |

245 | When running the RAG Web Browser in Standby web server, the Actor can process a number of requests in parallel.

246 | This number is determined by the following [Standby mode](https://docs.apify.com/platform/actors/running/standby) settings:

247 |

248 | - **Max requests per run** and **Desired requests per run** - Determine how many requests can be sent by the system to one Actor run.

249 | - **Memory** - Determines how much memory and CPU resources the Actor run has available, and this how many web pages it can open and process in parallel.

250 |

251 | Additionally, the Actor manages its internal pool of web browsers to handle the requests.

252 | If the Actor memory or CPU is at capacity, the pool automatically scales down, and requests

253 | above the capacity are delayed.

254 |

255 | By default, these Standby mode settings are optimized for quick response time:

256 | 8 GB of memory and maximum of 24 requests per run gives approximately ~340 MB per web page.

257 | If you prefer to optimize the Actor for the cost, you can **Create task** for the Actor in Apify Console

258 | and override these settings. Just note that requests might take longer and so you should

259 | increase `requestTimeoutSecs` accordingly.

260 |

261 |

262 | ### Benchmark

263 |

264 | Below is a typical latency breakdown for RAG Web Browser with **maxResults** set to either `1` or `3`, and various memory settings.

265 | These settings allow for processing all search results in parallel.

266 | The numbers below are based on the following search terms: "apify", "Donald Trump", "boston".

267 | Results were averaged for the three queries.

268 |

269 | | Memory (GB) | Max results | Latency (sec) |

270 | |-------------|-------------|---------------|

271 | | 4 | 1 | 22 |

272 | | 4 | 3 | 31 |

273 | | 8 | 1 | 16 |

274 | | 8 | 3 | 17 |

275 |

276 | Please note the these results are only indicative and may vary based on the search term, target websites, and network latency.

277 |

278 | ## 💰 Pricing

279 |

280 | The RAG Web Browser is free of charge, and you only pay for the Apify platform consumption when it runs.

281 | The main driver of the price is the Actor compute units (CUs), which are proportional to the amount of Actor run memory

282 | and run time (1 CU = 1 GB memory x 1 hour).

283 |

284 | ## ⓘ Limitations and feedback

285 |

286 | The Actor uses [Google Search](https://www.google.com/) in the United States with English language,

287 | and so queries like "_best nearby restaurants_" will return search results from the US.

288 |

289 | If you need other regions or languages, or have some other feedback,

290 | please [submit an issue](https://console.apify.com/actors/3ox4R101TgZz67sLr/issues) in Apify Console to let us know.

291 |

292 |

293 | ## 👷🏼 Development

294 |

295 | The RAG Web Browser Actor has open source available on [GitHub](https://github.com/apify/rag-web-browser),

296 | so that you can modify and develop it yourself. Here are the steps how to run it locally on your computer.

297 |

298 | Download the source code:

299 |

300 | ```bash

301 | git clone https://github.com/apify/rag-web-browser

302 | cd rag-web-browser

303 | ```

304 |

305 | Install [Playwright](https://playwright.dev) with dependencies:

306 |

307 | ```bash

308 | npx playwright install --with-deps

309 | ```

310 |

311 | And then you can run it locally using [Apify CLI](https://docs.apify.com/cli) as follows:

312 |

313 | ```bash

314 | APIFY_META_ORIGIN=STANDBY apify run -p

315 | ```

316 |

317 | Server will start on `http://localhost:3000` and you can send requests to it, for example:

318 |

319 | ```bash

320 | curl "http://localhost:3000/search?query=example.com"

321 | ```

322 |

--------------------------------------------------------------------------------

/data/dataset_rag-web-browser_2024-09-02_4gb_maxResult_1.json:

--------------------------------------------------------------------------------

1 | [{

2 | "crawl": {

3 | "httpStatusCode": 200,

4 | "loadedAt": "2024-09-02T11:57:16.049Z",

5 | "uniqueKey": "6cca1227-3742-4544-b1c1-16cb13d2dba8",

6 | "requestStatus": "handled",

7 | "debug": {

8 | "timeMeasures": [

9 | {

10 | "event": "request-received",

11 | "timeMs": 0,

12 | "timeDeltaPrevMs": 0

13 | },

14 | {

15 | "event": "before-cheerio-queue-add",

16 | "timeMs": 143,

17 | "timeDeltaPrevMs": 143

18 | },

19 | {

20 | "event": "cheerio-request-handler-start",

21 | "timeMs": 2993,

22 | "timeDeltaPrevMs": 2850

23 | },

24 | {

25 | "event": "before-playwright-queue-add",

26 | "timeMs": 3011,

27 | "timeDeltaPrevMs": 18

28 | },

29 | {

30 | "event": "playwright-request-start",

31 | "timeMs": 15212,

32 | "timeDeltaPrevMs": 12201

33 | },

34 | {

35 | "event": "playwright-wait-dynamic-content",

36 | "timeMs": 22158,

37 | "timeDeltaPrevMs": 6946

38 | },

39 | {

40 | "event": "playwright-remove-cookie",

41 | "timeMs": 22331,

42 | "timeDeltaPrevMs": 173

43 | },

44 | {

45 | "event": "playwright-parse-with-cheerio",

46 | "timeMs": 23122,

47 | "timeDeltaPrevMs": 791

48 | },

49 | {

50 | "event": "playwright-process-html",

51 | "timeMs": 25226,

52 | "timeDeltaPrevMs": 2104

53 | },

54 | {

55 | "event": "playwright-before-response-send",

56 | "timeMs": 25433,

57 | "timeDeltaPrevMs": 207

58 | }

59 | ]

60 | }

61 | },

62 | "metadata": {

63 | "author": null,

64 | "title": "Apify: Full-stack web scraping and data extraction platform",

65 | "description": "Cloud platform for web scraping, browser automation, and data for AI. Use 2,000+ ready-made tools, code templates, or order a custom solution.",

66 | "keywords": "web scraper,web crawler,scraping,data extraction,API",

67 | "languageCode": "en",

68 | "url": "https://apify.com/"

69 | },

70 | "text": "Full-stack web scraping and data extraction platformStar apify/crawlee on GitHubProblem loading pageBack ButtonSearch IconFilter Icon\npowering the world's top data-driven teams\nSimplify scraping with\nCrawlee\nGive your crawlers an unfair advantage with Crawlee, our popular library for building reliable scrapers in Node.js.\n\nimport\n{\nPuppeteerCrawler,\nDataset\n}\nfrom 'crawlee';\nconst crawler = new PuppeteerCrawler(\n{\nasync requestHandler(\n{\nrequest, page,\nenqueueLinks\n}\n) \n{\nurl: request.url,\ntitle: await page.title(),\nawait enqueueLinks();\nawait crawler.run(['https://crawlee.dev']);\nUse your favorite libraries\nApify works great with both Python and JavaScript, with Playwright, Puppeteer, Selenium, Scrapy, or any other library.\nStart with our code templates\nfrom scrapy.spiders import CrawlSpider, Rule\nclass Scraper(CrawlSpider):\nname = \"scraper\"\nstart_urls = [\"https://the-coolest-store.com/\"]\ndef parse_item(self, response):\nitem = Item()\nitem[\"price\"] = response.css(\".price_color::text\").get()\nreturn item\nTurn your code into an Apify Actor\nActors are serverless microapps that are easy to develop, run, share, and integrate. The infra, proxies, and storages are ready to go.\nLearn more about Actors\nimport\n{ Actor\n}\nfrom 'apify'\nawait Actor.init();\nDeploy to the cloud\nNo config required. Use a single CLI command or build directly from GitHub.\nDeploy to Apify\n> apify push\nInfo: Deploying Actor 'computer-scraper' to Apify.\nRun: Updated version 0.0 for scraper Actor.\nRun: Building Actor scraper\nACTOR: Pushing Docker image to repository.\nACTOR: Build finished.\nActor build detail -> https://console.apify.com/actors#/builds/0.0.2\nSuccess: Actor was deployed to Apify cloud and built there.\nRun your Actors\nStart from Apify Console, CLI, via API, or schedule your Actor to start at any time. It’s your call.\nPOST/v2/acts/4cT0r1D/runs\nRun object\n{ \"id\": \"seHnBnyCTfiEnXft\", \"startedAt\": \"2022-12-01T13:42:00.364Z\", \"finishedAt\": null, \"status\": \"RUNNING\", \"options\": { \"build\": \"version-3\", \"timeoutSecs\": 3600, \"memoryMbytes\": 4096 }, \"defaultKeyValueStoreId\": \"EiGjhZkqseHnBnyC\", \"defaultDatasetId\": \"vVh7jTthEiGjhZkq\", \"defaultRequestQueueId\": \"TfiEnXftvVh7jTth\" }\nNever get blocked\nUse our large pool of datacenter and residential proxies. Rely on smart IP address rotation with human-like browser fingerprints.\nLearn more about Apify Proxy\nawait Actor.createProxyConfiguration(\n{\ncountryCode: 'US',\ngroups: ['RESIDENTIAL'],\nStore and share crawling results\nUse distributed queues of URLs to crawl. Store structured data or binary files. Export datasets in CSV, JSON, Excel or other formats.\nLearn more about Apify Storage\nGET/v2/datasets/d4T453t1D/items\nDataset items\n[ { \"title\": \"myPhone 99 Super Max\", \"description\": \"Such phone, max 99, wow!\", \"price\": 999 }, { \"title\": \"myPad Hyper Thin\", \"description\": \"So thin it's 2D.\", \"price\": 1499 } ]\nMonitor performance over time\nInspect all Actor runs, their logs, and runtime costs. Listen to events and get custom automated alerts.\nIntegrations. Everywhere.\nConnect to hundreds of apps right away using ready-made integrations, or set up your own with webhooks and our API.\nSee all integrations\nCrawls websites using raw HTTP requests, parses the HTML with the Cheerio library, and extracts data from the pages using a Node.js code. Supports both recursive crawling and lists of URLs. This actor is a high-performance alternative to apify/web-scraper for websites that do not require JavaScript.\nCrawls arbitrary websites using the Chrome browser and extracts data from pages using JavaScript code. The Actor supports both recursive crawling and lists of URLs and automatically manages concurrency for maximum performance. This is Apify's basic tool for web crawling and scraping.\nExtract data from hundreds of Google Maps locations and businesses. Get Google Maps data including reviews, images, contact info, opening hours, location, popular times, prices & more. Export scraped data, run the scraper via API, schedule and monitor runs, or integrate with other tools.\nYouTube crawler and video scraper. Alternative YouTube API with no limits or quotas. Extract and download channel name, likes, number of views, and number of subscribers.\nScrape Booking with this hotels scraper and get data about accommodation on Booking.com. You can crawl by keywords or URLs for hotel prices, ratings, addresses, number of reviews, stars. You can also download all that room and hotel data from Booking.com with a few clicks: CSV, JSON, HTML, and Excel\nCrawls websites with the headless Chrome and Puppeteer library using a provided server-side Node.js code. This crawler is an alternative to apify/web-scraper that gives you finer control over the process. Supports both recursive crawling and list of URLs. Supports login to website.\nUse this Amazon scraper to collect data based on URL and country from the Amazon website. Extract product information without using the Amazon API, including reviews, prices, descriptions, and Amazon Standard Identification Numbers (ASINs). Download data in various structured formats.\nScrape tweets from any Twitter user profile. Top Twitter API alternative to scrape Twitter hashtags, threads, replies, followers, images, videos, statistics, and Twitter history. Export scraped data, run the scraper via API, schedule and monitor runs or integrate with other tools.\nBrowse 2,000+ Actors",

71 | "markdown": "# Full-stack web scraping and data extraction platformStar apify/crawlee on GitHubProblem loading pageBack ButtonSearch IconFilter Icon\n\npowering the world's top data-driven teams\n\n#### \n\nSimplify scraping with\n\nCrawlee\n\nGive your crawlers an unfair advantage with Crawlee, our popular library for building reliable scrapers in Node.js.\n\n \n\nimport\n\n{\n\n \n\nPuppeteerCrawler,\n\n \n\nDataset\n\n}\n\n \n\nfrom 'crawlee';\n\nconst crawler = new PuppeteerCrawler(\n\n{\n\n \n\nasync requestHandler(\n\n{\n\n \n\nrequest, page,\n\n \n\nenqueueLinks\n\n}\n\n) \n\n{\n\nurl: request.url,\n\ntitle: await page.title(),\n\nawait enqueueLinks();\n\nawait crawler.run(\\['https://crawlee.dev'\\]);\n\n\n\n#### Use your favorite libraries\n\nApify works great with both Python and JavaScript, with Playwright, Puppeteer, Selenium, Scrapy, or any other library.\n\n[Start with our code templates](https://apify.com/templates)\n\nfrom scrapy.spiders import CrawlSpider, Rule\n\nclass Scraper(CrawlSpider):\n\nname = \"scraper\"\n\nstart\\_urls = \\[\"https://the-coolest-store.com/\"\\]\n\ndef parse\\_item(self, response):\n\nitem = Item()\n\nitem\\[\"price\"\\] = response.css(\".price\\_color::text\").get()\n\nreturn item\n\n#### Turn your code into an Apify Actor\n\nActors are serverless microapps that are easy to develop, run, share, and integrate. The infra, proxies, and storages are ready to go.\n\n[Learn more about Actors](https://apify.com/actors)\n\nimport\n\n{ Actor\n\n}\n\n from 'apify'\n\nawait Actor.init();\n\n\n\n#### Deploy to the cloud\n\nNo config required. Use a single CLI command or build directly from GitHub.\n\n[Deploy to Apify](https://console.apify.com/actors/new)\n\n\\> apify push\n\nInfo: Deploying Actor 'computer-scraper' to Apify.\n\nRun: Updated version 0.0 for scraper Actor.\n\nRun: Building Actor scraper\n\nACTOR: Pushing Docker image to repository.\n\nACTOR: Build finished.\n\nActor build detail -> https://console.apify.com/actors#/builds/0.0.2\n\nSuccess: Actor was deployed to Apify cloud and built there.\n\n\n\n#### Run your Actors\n\nStart from Apify Console, CLI, via API, or schedule your Actor to start at any time. It’s your call.\n\n```\nPOST/v2/acts/4cT0r1D/runs\n```\n\nRun object\n\n```\n{\n \"id\": \"seHnBnyCTfiEnXft\",\n \"startedAt\": \"2022-12-01T13:42:00.364Z\",\n \"finishedAt\": null,\n \"status\": \"RUNNING\",\n \"options\": {\n \"build\": \"version-3\",\n \"timeoutSecs\": 3600,\n \"memoryMbytes\": 4096\n },\n \"defaultKeyValueStoreId\": \"EiGjhZkqseHnBnyC\",\n \"defaultDatasetId\": \"vVh7jTthEiGjhZkq\",\n \"defaultRequestQueueId\": \"TfiEnXftvVh7jTth\"\n}\n```\n\n\n\n#### Never get blocked\n\nUse our large pool of datacenter and residential proxies. Rely on smart IP address rotation with human-like browser fingerprints.\n\n[Learn more about Apify Proxy](https://apify.com/proxy)\n\nawait Actor.createProxyConfiguration(\n\n{\n\ncountryCode: 'US',\n\ngroups: \\['RESIDENTIAL'\\],\n\n\n\n#### Store and share crawling results\n\nUse distributed queues of URLs to crawl. Store structured data or binary files. Export datasets in CSV, JSON, Excel or other formats.\n\n[Learn more about Apify Storage](https://apify.com/storage)\n\n```\nGET/v2/datasets/d4T453t1D/items\n```\n\nDataset items\n\n```\n[\n {\n \"title\": \"myPhone 99 Super Max\",\n \"description\": \"Such phone, max 99, wow!\",\n \"price\": 999\n },\n {\n \"title\": \"myPad Hyper Thin\",\n \"description\": \"So thin it's 2D.\",\n \"price\": 1499\n }\n]\n```\n\n\n\n#### Monitor performance over time\n\nInspect all Actor runs, their logs, and runtime costs. Listen to events and get custom automated alerts.\n\n\n\n#### Integrations. Everywhere.\n\nConnect to hundreds of apps right away using ready-made integrations, or set up your own with webhooks and our API.\n\n[See all integrations](https://apify.com/integrations)\n\n[\n\nCrawls websites using raw HTTP requests, parses the HTML with the Cheerio library, and extracts data from the pages using a Node.js code. Supports both recursive crawling and lists of URLs. This actor is a high-performance alternative to apify/web-scraper for websites that do not require JavaScript.\n\n](https://apify.com/apify/cheerio-scraper)[\n\nCrawls arbitrary websites using the Chrome browser and extracts data from pages using JavaScript code. The Actor supports both recursive crawling and lists of URLs and automatically manages concurrency for maximum performance. This is Apify's basic tool for web crawling and scraping.\n\n](https://apify.com/apify/web-scraper)[\n\nExtract data from hundreds of Google Maps locations and businesses. Get Google Maps data including reviews, images, contact info, opening hours, location, popular times, prices & more. Export scraped data, run the scraper via API, schedule and monitor runs, or integrate with other tools.\n\n](https://apify.com/compass/crawler-google-places)[\n\nYouTube crawler and video scraper. Alternative YouTube API with no limits or quotas. Extract and download channel name, likes, number of views, and number of subscribers.\n\n](https://apify.com/streamers/youtube-scraper)[\n\nScrape Booking with this hotels scraper and get data about accommodation on Booking.com. You can crawl by keywords or URLs for hotel prices, ratings, addresses, number of reviews, stars. You can also download all that room and hotel data from Booking.com with a few clicks: CSV, JSON, HTML, and Excel\n\n](https://apify.com/voyager/booking-scraper)[\n\nCrawls websites with the headless Chrome and Puppeteer library using a provided server-side Node.js code. This crawler is an alternative to apify/web-scraper that gives you finer control over the process. Supports both recursive crawling and list of URLs. Supports login to website.\n\n](https://apify.com/apify/puppeteer-scraper)[\n\nUse this Amazon scraper to collect data based on URL and country from the Amazon website. Extract product information without using the Amazon API, including reviews, prices, descriptions, and Amazon Standard Identification Numbers (ASINs). Download data in various structured formats.\n\n](https://apify.com/junglee/Amazon-crawler)[\n\nScrape tweets from any Twitter user profile. Top Twitter API alternative to scrape Twitter hashtags, threads, replies, followers, images, videos, statistics, and Twitter history. Export scraped data, run the scraper via API, schedule and monitor runs or integrate with other tools.\n\n](https://apify.com/quacker/twitter-scraper)\n\n[Browse 2,000+ Actors](https://apify.com/store)",

72 | "html": null

73 | },

74 | {

75 | "crawl": {

76 | "httpStatusCode": 200,

77 | "loadedAt": "2024-09-02T11:57:46.636Z",

78 | "uniqueKey": "8b63e9cc-700b-4c36-ae32-3622eb3dba76",

79 | "requestStatus": "handled",

80 | "debug": {

81 | "timeMeasures": [

82 | {

83 | "event": "request-received",

84 | "timeMs": 0,

85 | "timeDeltaPrevMs": 0

86 | },

87 | {

88 | "event": "before-cheerio-queue-add",

89 | "timeMs": 101,

90 | "timeDeltaPrevMs": 101

91 | },

92 | {

93 | "event": "cheerio-request-handler-start",

94 | "timeMs": 2726,

95 | "timeDeltaPrevMs": 2625

96 | },

97 | {

98 | "event": "before-playwright-queue-add",

99 | "timeMs": 2734,

100 | "timeDeltaPrevMs": 8

101 | },

102 | {

103 | "event": "playwright-request-start",

104 | "timeMs": 11707,

105 | "timeDeltaPrevMs": 8973

106 | },

107 | {

108 | "event": "playwright-wait-dynamic-content",

109 | "timeMs": 12790,

110 | "timeDeltaPrevMs": 1083

111 | },

112 | {

113 | "event": "playwright-remove-cookie",

114 | "timeMs": 13525,

115 | "timeDeltaPrevMs": 735

116 | },

117 | {

118 | "event": "playwright-parse-with-cheerio",

119 | "timeMs": 13914,

120 | "timeDeltaPrevMs": 389

121 | },

122 | {

123 | "event": "playwright-process-html",

124 | "timeMs": 14788,

125 | "timeDeltaPrevMs": 874

126 | },

127 | {

128 | "event": "playwright-before-response-send",

129 | "timeMs": 14899,

130 | "timeDeltaPrevMs": 111

131 | }

132 | ]

133 | }

134 | },

135 | "metadata": {

136 | "author": null,

137 | "title": "Home | Donald J. Trump",

138 | "description": "Certified Website of Donald J. Trump For President 2024. America's comeback starts right now. Join our movement to Make America Great Again!",

139 | "keywords": null,

140 | "languageCode": "en",

141 | "url": "https://www.donaldjtrump.com/"

142 | },

143 | "text": "Home | Donald J. Trump\n\"THEY’RE NOT AFTER ME, \nTHEY’RE AFTER YOU \n…I’M JUST STANDING \nIN THE WAY!”\nDONALD J. TRUMP, 45th President of the United States \nContribute VOLUNTEER \nAgenda47 Platform\nAmerica needs determined Republican Leadership at every level of Government to address the core threats to our very survival: Our disastrously Open Border, our weakened Economy, crippling restrictions on American Energy Production, our depleted Military, attacks on the American System of Justice, and much more. \nTo make clear our commitment, we offer to the American people the 2024 GOP Platform to Make America Great Again! It is a forward-looking Agenda that begins with the following twenty promises that we will accomplish very quickly when we win the White House and Republican Majorities in the House and Senate. \nPlatform \nI AM YOUR VOICE. AMERICA FIRST!\nPresident Trump Will Stop China From Owning America\nI will ensure America's future remains firmly in America's hands!\nPresident Donald J. Trump Calls for Probe into Intelligence Community’s Role in Online Censorship\nThe ‘Twitter Files’ prove that we urgently need my plan to dismantle the illegal censorship regime — a regime like nobody’s ever seen in the history of our country or most other countries for that matter,” President Trump said.\nPresident Donald J. Trump — Free Speech Policy Initiative\nPresident Donald J. Trump announced a new policy initiative aimed to dismantle the censorship cartel and restore free speech.\nPresident Donald J. Trump Declares War on Cartels\nJoe Biden prepares to make his first-ever trip to the southern border that he deliberately erased, President Trump announced that when he is president again, it will be the official policy of the United States to take down the drug cartels just as we took down ISIS.\nAgenda47: Ending the Nightmare of the Homeless, Drug Addicts, and Dangerously Deranged\nFor a small fraction of what we spend upon Ukraine, we could take care of every homeless veteran in America. Our veterans are being treated horribly.\nAgenda47: Liberating America from Biden’s Regulatory Onslaught\nNo longer will unelected members of the Washington Swamp be allowed to act as the fourth branch of our Republic.\nAgenda47: Firing the Radical Marxist Prosecutors Destroying America\nIf we cannot restore the fair and impartial rule of law, we will not be a free country.\nAgenda47: President Trump Announces Plan to Stop the America Last Warmongers and Globalists\nPresident Donald J. Trump announced his plan to defeat the America Last warmongers and globalists in the Deep State, the Pentagon, the State Department, and the national security industrial complex.\nAgenda47: President Trump Announces Plan to End Crime and Restore Law and Order\nPresident Donald J. Trump unveiled his new plan to stop out-of-control crime and keep all Americans safe. In his first term, President Trump reduced violent crime and stood strongly with America’s law enforcement. On Joe Biden’s watch, violent crime has skyrocketed and communities have become less safe as he defunded, defamed, and dismantled police forces. www.DonaldJTrump.com Text TRUMP to 88022\nAgenda47: President Trump on Making America Energy Independent Again\nBiden's War on Energy Is The Key Driver of the Worst Inflation in 58 Years! When I'm back in Office, We Will Eliminate Every Democrat Regulation That Hampers Domestic Enery Production!\nPresident Trump Will Build a New Missile Defense Shield\nWe must be able to defend our homeland, our allies, and our military assets around the world from the threat of hypersonic missiles, no matter where they are launched from. Just as President Trump rebuilt our military, President Trump will build a state-of-the-art next-generation missile defense shield to defend America from missile attack.\nPresident Trump Calls for Immediate De-escalation and Peace\nJoe Biden's weakness and incompetence has brought us to the brink of nuclear war and leading us to World War 3. It's time for all parties involved to pursue a peaceful end to the war in Ukraine before it spirals out of control and into nuclear war.\nPresident Trump’s Plan to Protect Children from Left-Wing Gender Insanity\nPresident Trump today announced his plan to stop the chemical, physical, and emotional mutilation of our youth.\nPresident Trump’s Plan to Save American Education and Give Power Back to Parents\nOur public schools have been taken over by the Radical Left Maniacs!\nWe Must Protect Medicare and Social Security\nUnder no circumstances should Republicans vote to cut a single penny from Medicare or Social Security\nPresident Trump Will Stop China From Owning America\nI will ensure America's future remains firmly in America's hands!\nPresident Donald J. Trump Calls for Probe into Intelligence Community’s Role in Online Censorship\nThe ‘Twitter Files’ prove that we urgently need my plan to dismantle the illegal censorship regime — a regime like nobody’s ever seen in the history of our country or most other countries for that matter,” President Trump said.\nPresident Donald J. Trump — Free Speech Policy Initiative\nPresident Donald J. Trump announced a new policy initiative aimed to dismantle the censorship cartel and restore free speech.\nPresident Donald J. Trump Declares War on Cartels\nJoe Biden prepares to make his first-ever trip to the southern border that he deliberately erased, President Trump announced that when he is president again, it will be the official policy of the United States to take down the drug cartels just as we took down ISIS.\nAgenda47: Ending the Nightmare of the Homeless, Drug Addicts, and Dangerously Deranged\nFor a small fraction of what we spend upon Ukraine, we could take care of every homeless veteran in America. Our veterans are being treated horribly.\nAgenda47: Liberating America from Biden’s Regulatory Onslaught\nNo longer will unelected members of the Washington Swamp be allowed to act as the fourth branch of our Republic.",