├── src
│   ├── __init__.py
│   ├── bitcoin
│   │   ├── __init__.py
│   │   ├── descriptors.py
│   │   ├── musig.py
│   │   ├── address.py
│   │   ├── coverage.py
│   │   ├── segwit_addr.py
│   │   ├── authproxy.py
│   │   ├── util.py
│   │   ├── mininode.py
│   │   ├── key.py
│   │   ├── test_node.py
│   │   └── test_framework.py
│   ├── signer
│   │   ├── __init__.py
│   │   ├── nsec.txt
│   │   ├── coordinator_pk.txt
│   │   ├── wallet.py
│   │   └── signer.py
│   ├── utils
│   │   ├── __init__.py
│   │   ├── payload.py
│   │   └── nostr_utils.py
│   └── coordinator
│       ├── __init__.py
│       ├── nsec.txt
│       ├── signer_pks.txt
│       ├── db.template.json
│       ├── mempool_space_client.py
│       ├── db.py
│       ├── coordinator.py
│       └── wallet.py
├── requirements.txt
├── assets
│   ├── images
│   │   ├── flow.png
│   │   ├── munstr-logo.png
│   │   ├── on_chain_tx.png
│   │   ├── frankenstein.png
│   │   └── xcf
│   │       └── munstr-logo.xcf
│   ├── font
│   │   └── 1313MockingbirdLane.ttf
│   └── presentation
│       └── MIT2023-Munstr.pdf
├── start_coordinator.py
├── start_signer.py
├── .gitignore
└── README.md

/src/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/bitcoin/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/signer/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/coordinator/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | bip32

2 | nostr

3 | colorama

4 | python-bitcoinlib

5 |

--------------------------------------------------------------------------------

/src/signer/nsec.txt:

--------------------------------------------------------------------------------

1 | nsec13kwzykv6tvranz0hj4ckc7mt8jh7x7s8us5j4m0xv2zd02esrz3qy6xq40

--------------------------------------------------------------------------------

/src/coordinator/nsec.txt:

--------------------------------------------------------------------------------

1 | nsec1fwfc64nlq4sefw3myz7sft7npvdh9666x330rtlhzh5uxl88l9gs59th9h

--------------------------------------------------------------------------------

/src/signer/coordinator_pk.txt:

--------------------------------------------------------------------------------

1 | 5b08cc2b5d5771243ad6fe108972411338ea04ed7fc4a499a904e1cf6895f471

--------------------------------------------------------------------------------

/src/coordinator/signer_pks.txt:

--------------------------------------------------------------------------------

1 | 939222991dc2c54918551ead7082bc0ef9fc52e3b705f5d9d48727b57e70b845

--------------------------------------------------------------------------------

/assets/images/flow.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/images/flow.png

--------------------------------------------------------------------------------

/src/coordinator/db.template.json:

--------------------------------------------------------------------------------

1 | {"xpubs": [], "nonces": [], "wallets": [], "signatures": [], "spends": []}

--------------------------------------------------------------------------------

/assets/images/munstr-logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/images/munstr-logo.png

--------------------------------------------------------------------------------

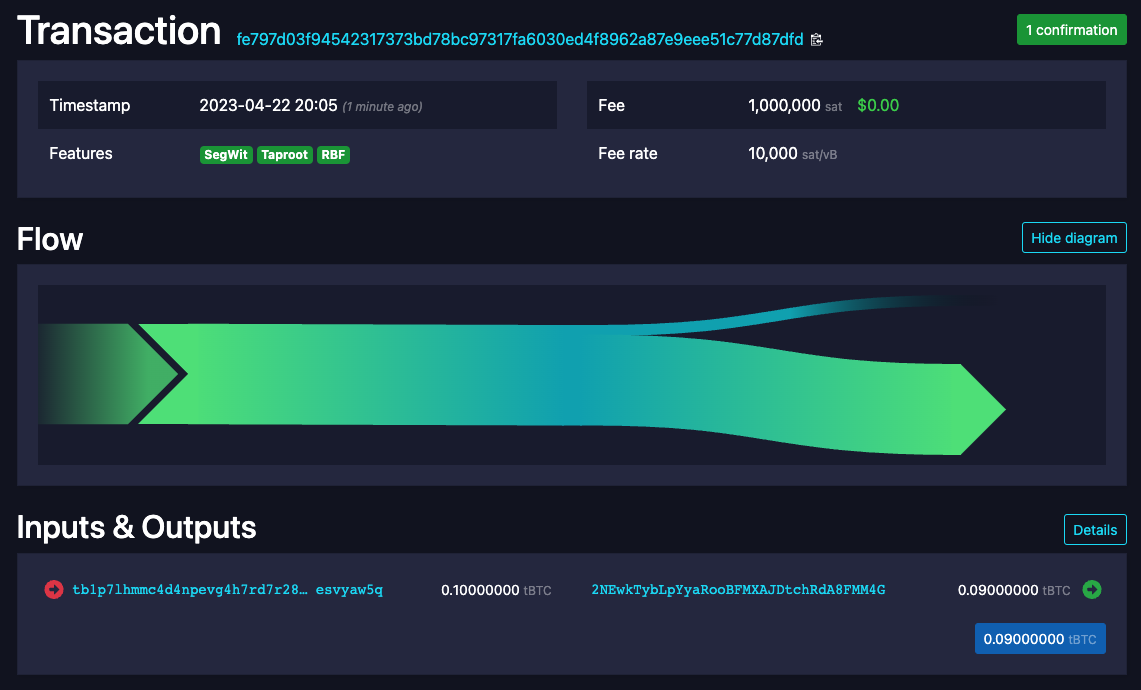

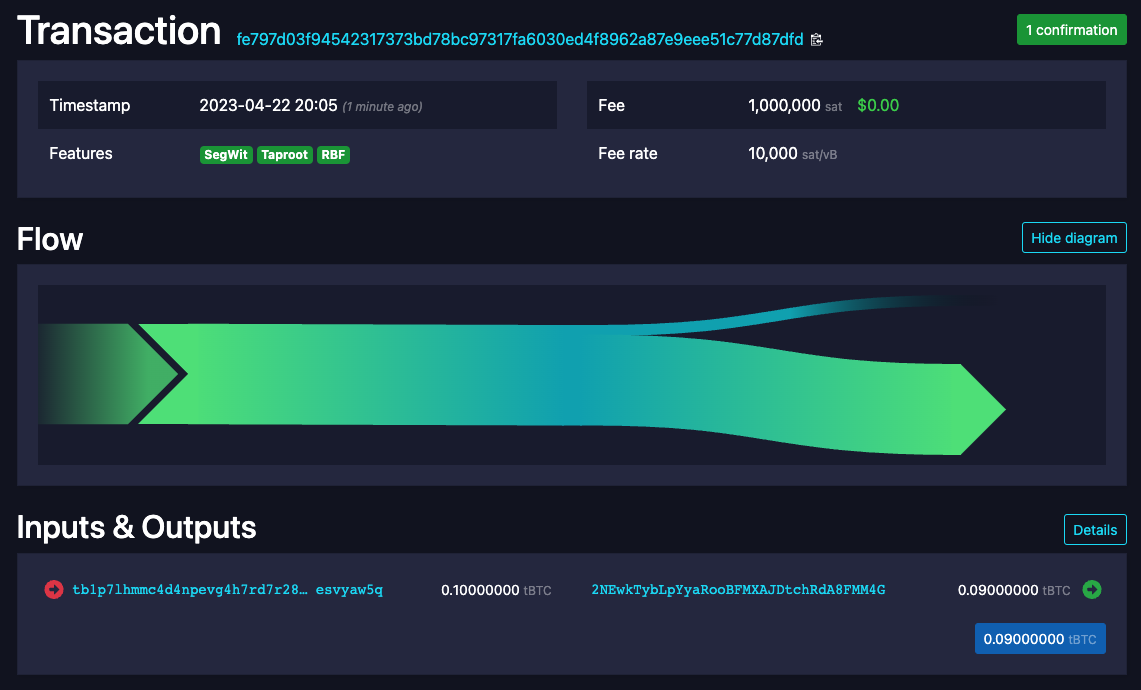

/assets/images/on_chain_tx.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/images/on_chain_tx.png

--------------------------------------------------------------------------------

/assets/images/frankenstein.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/images/frankenstein.png

--------------------------------------------------------------------------------

/assets/images/xcf/munstr-logo.xcf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/images/xcf/munstr-logo.xcf

--------------------------------------------------------------------------------

/assets/font/1313MockingbirdLane.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/font/1313MockingbirdLane.ttf

--------------------------------------------------------------------------------

/assets/presentation/MIT2023-Munstr.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/arminsabouri/munstr/HEAD/assets/presentation/MIT2023-Munstr.pdf

--------------------------------------------------------------------------------

/start_coordinator.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
from src.coordinator.coordinator import run

def main():
    run()

if __name__ == "__main__":
    main()

--------------------------------------------------------------------------------

/start_signer.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
from src.signer.signer import run_signer

import sys
import argparse


def main(wallet_id=None, key_pair_seed=None, nonce_seed=None):
    run_signer(wallet_id=wallet_id, key_pair_seed=key_pair_seed, nonce_seed=nonce_seed)

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--wallet_id", help="Wallet ID", default=None)
    parser.add_argument("--key_seed", help="Key seed")
    parser.add_argument("--nonce_seed", help="Nonce seed")
    args = parser.parse_args()

    # Call the main function with the command line arguments
    main(args.wallet_id, args.key_seed, args.nonce_seed)

--------------------------------------------------------------------------------

/src/utils/payload.py:

--------------------------------------------------------------------------------

import json
import logging

from enum import Enum

# ref_id is also valid but not required
class PayloadKeys(Enum):
    REQUEST_ID = 'req_id'   # the request ID
    COMMAND = 'command'     # command to pass into the application
    PAYLOAD = 'payload'     # payload for the command
    TIMESTAMP = 'ts'        # timestamp

def is_valid_json(json_str: str):
    """Return True if json_str parses as JSON, False otherwise."""
    try:
        json.loads(json_str)
    except ValueError as e:
        logging.error("Invalid JSON: %s", e)
        return False
    return True

def is_valid_payload(payload: dict):
    """Return True if every key listed in PayloadKeys is present in payload."""
    required_keys = [payload_key.value for payload_key in PayloadKeys]
    for key in required_keys:
        if key not in payload:
            # logging.error("Key is missing from JSON payload: %s", key)
            return False

    return True

--------------------------------------------------------------------------------

/src/coordinator/mempool_space_client.py:

--------------------------------------------------------------------------------

#
# Use mempool.space's API for interfacing with the network. In the future we
# would like users to be able to connect Munstr to their own node
#
import requests
import logging

API_ENDPOINT = "https://mempool.space/testnet/api"

def broadcast_transaction(tx_hex):
    broadcast_transaction_path = "/tx"

    # The endpoint takes the raw transaction hex as the request body,
    # not a form-encoded {"tx": ...} dictionary.
    response = requests.post(API_ENDPOINT + broadcast_transaction_path, data=tx_hex)

    if response.status_code == 200:
        logging.info('[mempool.space client] Transaction broadcast success!')
    else:
        logging.error('[mempool.space client] Transaction broadcast failed with error code %d', response.status_code)
        logging.error(response.content)

def get_transaction(txid):
    get_transaction_path = f"/tx/{txid}"

    try:
        response = requests.get(API_ENDPOINT + get_transaction_path)
        response.raise_for_status()
        transaction = response.json()

        return transaction
    except requests.exceptions.RequestException as e:
        logging.error("[mempool.space client] Error retrieving transaction %s: %s", txid, e)
        return None

--------------------------------------------------------------------------------

/src/bitcoin/descriptors.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
# Copyright (c) 2019 Pieter Wuille
# Distributed under the MIT software license, see the accompanying
# file COPYING or http://www.opensource.org/licenses/mit-license.php.
"""Utility functions related to output descriptors"""

INPUT_CHARSET = "0123456789()[],'/*abcdefgh@:$%{}IJKLMNOPQRSTUVWXYZ&+-.;<=>?!^_|~ijklmnopqrstuvwxyzABCDEFGH`#\"\\ "
CHECKSUM_CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
GENERATOR = [0xf5dee51989, 0xa9fdca3312, 0x1bab10e32d, 0x3706b1677a, 0x644d626ffd]

def descsum_polymod(symbols):
    """Internal function that computes the descriptor checksum."""
    chk = 1
    for value in symbols:
        top = chk >> 35
        chk = (chk & 0x7ffffffff) << 5 ^ value
        for i in range(5):
            chk ^= GENERATOR[i] if ((top >> i) & 1) else 0
    return chk

def descsum_expand(s):
    """Internal function that does the character to symbol expansion"""
    groups = []
    symbols = []
    for c in s:
        if c not in INPUT_CHARSET:
            return None
        v = INPUT_CHARSET.find(c)
        symbols.append(v & 31)
        groups.append(v >> 5)
        if len(groups) == 3:
            symbols.append(groups[0] * 9 + groups[1] * 3 + groups[2])
            groups = []
    if len(groups) == 1:
        symbols.append(groups[0])
    elif len(groups) == 2:
        symbols.append(groups[0] * 3 + groups[1])
    return symbols

def descsum_create(s):
    """Add a checksum to a descriptor that does not have one"""
    symbols = descsum_expand(s) + [0, 0, 0, 0, 0, 0, 0, 0]
    checksum = descsum_polymod(symbols) ^ 1
    return s + '#' + ''.join(CHECKSUM_CHARSET[(checksum >> (5 * (7 - i))) & 31] for i in range(8))

def descsum_check(s, require=True):
    """Verify that the checksum is correct in a descriptor"""
    if '#' not in s:
        return not require
    if s[-9] != '#':
        return False
    if not all(x in CHECKSUM_CHARSET for x in s[-8:]):
        return False
    symbols = descsum_expand(s[:-9]) + [CHECKSUM_CHARSET.find(x) for x in s[-8:]]
    return descsum_polymod(symbols) == 1

--------------------------------------------------------------------------------
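
A short usage sketch for the descriptor checksum above. The helper names (`polymod`, `expand`, `create`, `check`) are compact local copies made so the snippet runs on its own, and the `wpkh(...)` descriptor is only an illustrative string, not one taken from this repository. It shows the round trip: appending the 8-character checksum, verifying it, and seeing a single-character change get rejected.

```python
INPUT_CHARSET = "0123456789()[],'/*abcdefgh@:$%{}IJKLMNOPQRSTUVWXYZ&+-.;<=>?!^_|~ijklmnopqrstuvwxyzABCDEFGH`#\"\\ "
CHECKSUM_CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
GENERATOR = [0xf5dee51989, 0xa9fdca3312, 0x1bab10e32d, 0x3706b1677a, 0x644d626ffd]

def polymod(symbols):
    # Same 40-bit polynomial accumulator as descsum_polymod.
    chk = 1
    for value in symbols:
        top = chk >> 35
        chk = (chk & 0x7ffffffff) << 5 ^ value
        for i in range(5):
            chk ^= GENERATOR[i] if ((top >> i) & 1) else 0
    return chk

def expand(s):
    # Each character yields a 5-bit symbol; the high bits of every three
    # characters are packed into one extra symbol.
    groups, symbols = [], []
    for c in s:
        v = INPUT_CHARSET.index(c)  # raises ValueError on an invalid character
        symbols.append(v & 31)
        groups.append(v >> 5)
        if len(groups) == 3:
            symbols.append(groups[0] * 9 + groups[1] * 3 + groups[2])
            groups = []
    if len(groups) == 1:
        symbols.append(groups[0])
    elif len(groups) == 2:
        symbols.append(groups[0] * 3 + groups[1])
    return symbols

def create(s):
    checksum = polymod(expand(s) + [0] * 8) ^ 1
    return s + "#" + "".join(CHECKSUM_CHARSET[(checksum >> (5 * (7 - i))) & 31] for i in range(8))

def check(s):
    body, _, tail = s.rpartition("#")
    if len(tail) != 8 or not all(c in CHECKSUM_CHARSET for c in tail):
        return False
    return polymod(expand(body) + [CHECKSUM_CHARSET.index(c) for c in tail]) == 1

# Illustrative descriptor (assumption: any string over INPUT_CHARSET works here).
desc = "wpkh(02f9308a019258c31049344f85f89d5229b531c845836f99b08601f113bce036f9)"
with_checksum = create(desc)
tampered = with_checksum.replace("02f9", "03f9", 1)

print(with_checksum)
print(check(with_checksum), check(tampered))
```

Unlike `descsum_expand`, this sketch raises on characters outside the input charset instead of returning `None`; that is a simplification for the example, not the behaviour of the file above.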

/.gitignore:

--------------------------------------------------------------------------------

1 | ## Python

2 |

3 | # Byte-compiled / optimized / DLL files

4 | __pycache__/

5 | *.py[cod]

6 | *$py.class

7 |

8 | # C extensions

9 | *.so

10 |

11 | # Distribution / packaging

12 | .Python

13 | build/

14 | develop-eggs/

15 | dist/

16 | downloads/

17 | eggs/

18 | .eggs/

19 | lib/

20 | lib64/

21 | parts/

22 | sdist/

23 | var/

24 | wheels/

25 | pip-wheel-metadata/

26 | share/python-wheels/

27 | *.egg-info/

28 | .installed.cfg

29 | *.egg

30 | MANIFEST

31 |

32 | # PyInstaller

33 | # Usually these files are written by a python script from a template

34 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

35 | *.manifest

36 | *.spec

37 |

38 | # Installer logs

39 | pip-log.txt

40 | pip-delete-this-directory.txt

41 |

42 | # Unit test / coverage reports

43 | htmlcov/

44 | .tox/

45 | .nox/

46 | .coverage

47 | .coverage.*

48 | .cache

49 | nosetests.xml

50 | coverage.xml

51 | *.cover

52 | .hypothesis/

53 | .pytest_cache/

54 |

55 | # Translations

56 | *.mo

57 | *.pot

58 |

59 | # Django stuff:

60 | *.log

61 | local_settings.py

62 | db.sqlite3

63 | db.sqlite3-journal

64 |

65 | # Flask stuff:

66 | instance/

67 | .webassets-cache

68 |

69 | # Scrapy stuff:

70 | .scrapy

71 |

72 | # Sphinx documentation

73 | docs/_build/

74 |

75 | # PyBuilder

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | .python-version

87 |

88 | # pipenv

89 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

90 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

91 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

92 | # install all needed dependencies.

93 | #Pipfile.lock

94 |

95 | # celery beat schedule file

96 | celerybeat-schedule

97 |

98 | # SageMath parsed files

99 | *.sage.py

100 |

101 | # Environments

102 | .env

103 | .venv

104 | env/

105 | venv/

106 | ENV/

107 | env.bak/

108 | venv.bak/

109 |

110 | # Spyder project settings

111 | .spyderproject

112 | .spyproject

113 |

114 | # Rope project settings

115 | .ropeproject

116 |

117 | # mkdocs documentation

118 | /site

119 |

120 | # mypy

121 | .mypy_cache/

122 | .dmypy.json

123 | dmypy.json

124 |

125 | # Pyre type checker

126 | .pyre/

127 |

128 | ## VIM

129 |

130 | # Swap

131 | [._]*.s[a-v][a-z]

132 | [._]*.sw[a-p]

133 | [._]s[a-rt-v][a-z]

134 | [._]ss[a-gi-z]

135 | [._]sw[a-p]

136 |

137 | # Session

138 | Session.vim

139 | Sessionx.vim

140 |

141 | # Temporary

142 | .netrwhist

143 | *~

144 | # Auto-generated tag files

145 | tags

146 | # Persistent undo

147 | [._]*.un~

148 |

149 | # Custom stuff

150 | db.json

151 |

152 | # Lil' Stevie Jobs

153 | .DS_Store

154 |

--------------------------------------------------------------------------------

/src/bitcoin/musig.py:

--------------------------------------------------------------------------------

# Copyright (c) 2019 The Bitcoin Core developers
# Distributed under the MIT software license, see the accompanying
# file COPYING or http://www.opensource.org/licenses/mit-license.php.
"""Preliminary MuSig implementation.

WARNING: This code is slow, uses bad randomness, does not properly protect
keys, and is trivially vulnerable to side channel attacks. Do not use for
anything but tests.

See https://eprint.iacr.org/2018/068.pdf for the MuSig signature scheme implemented here.
"""

import hashlib

from .key import (
    SECP256K1,
    SECP256K1_ORDER,
    TaggedHash,
)

def generate_musig_key(pubkey_list):
    """Aggregate individually generated public keys.

    Returns a MuSig public key as defined in the MuSig paper."""
    pubkey_list_sorted = sorted([int.from_bytes(key.get_bytes(), 'big') for key in pubkey_list])

    # Concatenate all the public keys together
    L = b''
    for px in pubkey_list_sorted:
        L += px.to_bytes(32, 'big')

    # hash of all the public keys concatenated
    Lh = hashlib.sha256(L).digest()

    # the MuSig coefficients. This prevents a key cancellation attack.
    musig_c = {}

    aggregate_key = 0
    for key in pubkey_list:
        musig_c[key] = hashlib.sha256(Lh + key.get_bytes()).digest()
        aggregate_key += key.mul(musig_c[key])
    return musig_c, aggregate_key

def aggregate_schnorr_nonces(nonce_point_list):
    """Construct aggregated musig nonce from individually generated nonces."""
    R_agg = sum(nonce_point_list)
    R_agg_affine = SECP256K1.affine(R_agg.p)
    negated = False
    if R_agg_affine[1] % 2 != 0:
        negated = True
        R_agg_negated = SECP256K1.mul([(R_agg.p, SECP256K1_ORDER - 1)])
        R_agg.p = R_agg_negated
    return R_agg, negated

def sign_musig(priv_key, k_key, R_musig, P_musig, msg):
    """Construct a MuSig partial signature and return the s value."""
    assert priv_key.valid
    assert priv_key.compressed
    assert P_musig.compressed
    assert len(msg) == 32
    assert k_key is not None and k_key.secret != 0
    assert R_musig.get_y() % 2 == 0
    e = musig_digest(R_musig, P_musig, msg)
    return (k_key.secret + e * priv_key.secret) % SECP256K1_ORDER

def musig_digest(R_musig, P_musig, msg):
    """Get the digest to sign for musig"""
    return int.from_bytes(TaggedHash("BIP0340/challenge", R_musig.get_bytes() + P_musig.get_bytes() + msg), 'big') % SECP256K1_ORDER

def aggregate_musig_signatures(s_list, R_musig):
    """Construct valid Schnorr signature from a list of partial MuSig signatures."""
    assert s_list is not None and all(isinstance(s, int) for s in s_list)
    s_agg = sum(s_list) % SECP256K1_ORDER
    return R_musig.get_x().to_bytes(32, 'big') + s_agg.to_bytes(32, 'big')

--------------------------------------------------------------------------------
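
The functions above rely on Schnorr signatures being linear: summing the partial `s` values gives a signature that verifies against the aggregate nonce and the aggregate key. The sketch below checks that identity, `s_agg*G == R_agg + e*P_agg`, with a small self-contained secp256k1 implementation. It is a simplified illustration under stated assumptions, not the code path used by this repository: the toy secrets and nonces, the helper names (`add`, `mul`, `h`, `ser`), and the use of plain SHA-256 for the challenge (instead of the `BIP0340/challenge` tagged hash) are all made up for the example, and the even-y negation handled by `aggregate_schnorr_nonces` is left out.

```python
import hashlib

# secp256k1 parameters
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def add(a, b):
    """Affine point addition; None stands for the point at infinity."""
    if a is None:
        return b
    if b is None:
        return a
    if a[0] == b[0] and (a[1] + b[1]) % P == 0:
        return None
    if a == b:
        lam = 3 * a[0] * a[0] * pow(2 * a[1] % P, -1, P) % P
    else:
        lam = (b[1] - a[1]) * pow((b[0] - a[0]) % P, -1, P) % P
    x = (lam * lam - a[0] - b[0]) % P
    return (x, (lam * (a[0] - x) - a[1]) % P)

def mul(k, pt):
    """Double-and-add scalar multiplication (slow, not constant time)."""
    result = None
    while k:
        if k & 1:
            result = add(result, pt)
        pt = add(pt, pt)
        k >>= 1
    return result

def ser(pt):
    return pt[0].to_bytes(32, "big")  # x-only serialization

def h(*parts):
    return int.from_bytes(hashlib.sha256(b"".join(parts)).digest(), "big") % N

# Toy values for illustration only; never use fixed secrets or nonces.
secrets = [11, 22, 33]
nonces = [101, 202, 303]
msg = bytes(32)

pubkeys = [mul(x, G) for x in secrets]
L = hashlib.sha256(b"".join(sorted(ser(p) for p in pubkeys))).digest()
coeffs = [h(L, ser(p)) for p in pubkeys]  # per-key MuSig coefficients

P_agg = None
for c, pk in zip(coeffs, pubkeys):
    P_agg = add(P_agg, mul(c, pk))

R_agg = None
for k in nonces:
    R_agg = add(R_agg, mul(k, G))

e = h(ser(R_agg), ser(P_agg), msg)  # shared challenge
partials = [(k + e * c * x) % N for k, c, x in zip(nonces, coeffs, secrets)]
s_agg = sum(partials) % N

lhs = mul(s_agg, G)
rhs = add(R_agg, mul(e, P_agg))
print(lhs == rhs)
```

Each signer only ever uses its own secret and nonce; the coefficient `c` plays the role of the tweak that `Wallet.get_private_key_tweaked` applies before calling `sign_musig`.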

/src/utils/nostr_utils.py:

--------------------------------------------------------------------------------

import json
import logging
import ssl
import sys
import time
import uuid

from nostr.event import Event, EventKind
from nostr.filter import Filter, Filters
from nostr.key import PrivateKey
from nostr.message_type import ClientMessageType
from nostr.relay_manager import RelayManager

from src.utils.payload import PayloadKeys

NOSTR_RELAYS = ["wss://nostr-pub.wellorder.net", "wss://relay.damus.io"]

def add_relays():
    relay_manager = RelayManager()
    for relay in NOSTR_RELAYS:
        relay_manager.add_relay(relay)

    logging.info("[nostr] Added the following relay(s): %s", NOSTR_RELAYS)
    return relay_manager

def construct_and_publish_event(payload: dict, private_key: PrivateKey, relay_manager: RelayManager):
    public_key = private_key.public_key
    event = Event(content=json.dumps(payload), public_key=public_key.hex())

    private_key.sign_event(event)
    relay_manager.publish_event(event)

    logging.info('[nostr] Published event for the %s command', payload[PayloadKeys.COMMAND.value])


# Used to generate both requests and responses
def generate_nostr_message(command: str, req_id=None, ref_id=None, payload=None):
    # Defaults are resolved per call: a default of str(uuid.uuid4()) in the
    # signature would be evaluated once and shared by every message.
    if req_id is None:
        req_id = str(uuid.uuid4())
    if payload is None:
        payload = {}

    message = {
        PayloadKeys.COMMAND.value: command,
        PayloadKeys.REQUEST_ID.value: req_id,
        PayloadKeys.PAYLOAD.value: payload,
        PayloadKeys.TIMESTAMP.value: int(time.time())
    }

    if ref_id is not None:
        message['ref_id'] = ref_id

    return message

def init_relay_manager(relay_manager: RelayManager, author_pks: list[str]):
    # set up relay subscription
    subscription_id = "str"
    filters = Filters([Filter(authors=author_pks, kinds=[EventKind.TEXT_NOTE])])
    relay_manager.add_subscription(subscription_id, filters)

    # NOTE: This disables ssl certificate verification
    relay_manager.open_connections({"cert_reqs": ssl.CERT_NONE})

    # wait a moment for a connection to open to each relay
    time.sleep(1.5)

    request = [ClientMessageType.REQUEST, subscription_id]
    request.extend(filters.to_json_array())
    message = json.dumps(request)

    relay_manager.relays[NOSTR_RELAYS[0]].publish(message)

    # give the message a moment to send
    time.sleep(1)

    logging.info("[nostr] Relay manager started!")

# read an nsec from a file, return both private and public keys
def read_nsec(nsec_file_name):
    with open(nsec_file_name, 'r') as f:
        try:
            nsec = f.read().strip()
            private_key = PrivateKey().from_nsec(nsec)
            public_key = private_key.public_key.hex()
            logging.info("[nostr] My public key: %s", public_key)
            return private_key, public_key
        except Exception:
            logging.error("[nostr] Unexpected error reading nsec from %s", nsec_file_name)
            sys.exit(1)

# read public keys from a file
def read_public_keys(file_name):
    with open(file_name, 'r') as f:
        try:
            lines = f.readlines()
            return [line.strip() for line in lines]
        except Exception:
            logging.error("[nostr] Unexpected error reading public keys from %s", file_name)
            sys.exit(1)

--------------------------------------------------------------------------------
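
To make the message envelope concrete, here is a minimal standalone sketch of what `generate_nostr_message` produces and how the key check from `payload.py` applies to it. The names `build_message` and `is_valid_message` and the `"example_command"` value are hypothetical and exist only for this example; the four required keys are the ones listed in `PayloadKeys`. No relay connection or `nostr` import is needed, since the envelope is plain JSON carried in the event content.

```python
import json
import time
import uuid

# Keys every message must carry (mirrors PayloadKeys in payload.py).
REQUIRED_KEYS = ("req_id", "command", "payload", "ts")

def build_message(command, req_id=None, ref_id=None, payload=None):
    """Build the JSON-serializable envelope; a fresh req_id is drawn per call."""
    message = {
        "command": command,
        "req_id": req_id if req_id is not None else str(uuid.uuid4()),
        "payload": payload if payload is not None else {},
        "ts": int(time.time()),
    }
    if ref_id is not None:
        # Optional: lets a response point back at the request it answers.
        message["ref_id"] = ref_id
    return message

def is_valid_message(raw):
    """Accept only JSON objects that contain every required key."""
    try:
        decoded = json.loads(raw)
    except ValueError:
        return False
    return isinstance(decoded, dict) and all(key in decoded for key in REQUIRED_KEYS)

request = build_message("example_command", payload={"wallet_id": "1"})
response = build_message("example_command", ref_id=request["req_id"])

wire = json.dumps(request)  # this string would become the event content
print(is_valid_message(wire), is_valid_message('{"command": "example_command"}'))
```

Because `req_id` is generated inside the function body, two consecutive calls get different identifiers, which is what allows a `ref_id` to identify a single request unambiguously.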

/src/bitcoin/address.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
# Copyright (c) 2016-2019 The Bitcoin Core developers
# Distributed under the MIT software license, see the accompanying
# file COPYING or http://www.opensource.org/licenses/mit-license.php.
"""Encode and decode BASE58, P2PKH and P2SH addresses."""

import enum

from .script import hash256, hash160, sha256, CScript, OP_0
from .util import hex_str_to_bytes

from . import segwit_addr

ADDRESS_BCRT1_UNSPENDABLE = 'bcrt1qqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqq3xueyj'


class AddressType(enum.Enum):
    bech32 = 'bech32'
    p2sh_segwit = 'p2sh-segwit'
    legacy = 'legacy'  # P2PKH


chars = '123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz'


def byte_to_base58(b, version):
    result = ''
    str = b.hex()
    str = chr(version).encode('latin-1').hex() + str
    checksum = hash256(hex_str_to_bytes(str)).hex()
    str += checksum[:8]
    value = int('0x'+str,0)
    while value > 0:
        result = chars[value % 58] + result
        value //= 58
    while (str[:2] == '00'):
        result = chars[0] + result
        str = str[2:]
    return result

# TODO: def base58_decode

def keyhash_to_p2pkh(hash, main = False):
    assert len(hash) == 20
    version = 0 if main else 111
    return byte_to_base58(hash, version)

def scripthash_to_p2sh(hash, main = False):
    assert len(hash) == 20
    version = 5 if main else 196
    return byte_to_base58(hash, version)

def key_to_p2pkh(key, main = False):
    key = check_key(key)
    return keyhash_to_p2pkh(hash160(key), main)

def script_to_p2sh(script, main = False):
    script = check_script(script)
    return scripthash_to_p2sh(hash160(script), main)

def key_to_p2sh_p2wpkh(key, main = False):
    key = check_key(key)
    p2shscript = CScript([OP_0, hash160(key)])
    return script_to_p2sh(p2shscript, main)

## TODO
# def witness_v1_program_to_scriptpubkey(witness_program):
#     return CScript([OP_1, ])

def program_to_witness(version, program, main=False):
    if (type(program) is str):
        program = hex_str_to_bytes(program)
    assert 0 <= version <= 16
    assert 2 <= len(program) <= 40
    assert version > 0 or len(program) in [20, 32]
    return segwit_addr.encode_segwit_address("bc" if main else "tb", version, program)

def script_to_p2wsh(script, main = False):
    script = check_script(script)
    return program_to_witness(0, sha256(script), main)

def key_to_p2wpkh(key, main = False):
    key = check_key(key)
    return program_to_witness(0, hash160(key), main)

def script_to_p2sh_p2wsh(script, main = False):
    script = check_script(script)
    p2shscript = CScript([OP_0, sha256(script)])
    return script_to_p2sh(p2shscript, main)

def check_key(key):
    if (type(key) is str):
        key = hex_str_to_bytes(key)  # Assuming this is hex string
    if (type(key) is bytes and (len(key) == 33 or len(key) == 65)):
        return key
    assert False

def check_script(script):
    if (type(script) is str):
        script = hex_str_to_bytes(script)  # Assuming this is hex string
    if (type(script) is bytes or type(script) is CScript):
        return script
    assert False

--------------------------------------------------------------------------------
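
The Base58Check steps performed by `byte_to_base58` can be sketched with only the standard library, as below. The function name `base58check` is an assumption for this example; it works on bytes rather than hex strings but follows the same sequence: prepend the version byte, append the first four bytes of the double SHA-256, convert to base 58, and emit one `1` per leading zero byte.

```python
import hashlib

B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"

def base58check(payload: bytes, version: int) -> str:
    """Encode version + payload + 4-byte checksum in base 58."""
    data = bytes([version]) + payload
    checksum = hashlib.sha256(hashlib.sha256(data).digest()).digest()[:4]
    data += checksum

    # Convert the whole byte string to base 58, most significant digit first.
    value = int.from_bytes(data, "big")
    encoded = ""
    while value > 0:
        value, remainder = divmod(value, 58)
        encoded = B58_ALPHABET[remainder] + encoded

    # Leading zero bytes vanish in the integer conversion, so each one is
    # represented explicitly by the first alphabet character.
    leading_zeros = len(data) - len(data.lstrip(b"\x00"))
    return B58_ALPHABET[0] * leading_zeros + encoded

zero_hash = bytes(20)  # 20-byte hash of all zeros, for illustration only

mainnet_p2pkh = base58check(zero_hash, 0)    # version 0, as in keyhash_to_p2pkh(main=True)
testnet_p2pkh = base58check(zero_hash, 111)  # version 111, the default in address.py
print(mainnet_p2pkh)
print(testnet_p2pkh)
```

The version byte is what gives address types their recognizable first character: 0 yields a leading `1`, while 111 yields `m` or `n`.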

/src/bitcoin/coverage.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
# Copyright (c) 2015-2018 The Bitcoin Core developers
# Distributed under the MIT software license, see the accompanying
# file COPYING or http://www.opensource.org/licenses/mit-license.php.
"""Utilities for doing coverage analysis on the RPC interface.

Provides a way to track which RPC commands are exercised during
testing.
"""

import os


REFERENCE_FILENAME = 'rpc_interface.txt'


class AuthServiceProxyWrapper():
    """
    An object that wraps AuthServiceProxy to record specific RPC calls.

    """
    def __init__(self, auth_service_proxy_instance, coverage_logfile=None):
        """
        Kwargs:
            auth_service_proxy_instance (AuthServiceProxy): the instance
                being wrapped.
            coverage_logfile (str): if specified, write each service_name
                out to a file when called.

        """
        self.auth_service_proxy_instance = auth_service_proxy_instance
        self.coverage_logfile = coverage_logfile

    def __getattr__(self, name):
        return_val = getattr(self.auth_service_proxy_instance, name)
        if not isinstance(return_val, type(self.auth_service_proxy_instance)):
            # If proxy getattr returned an unwrapped value, do the same here.
            return return_val
        return AuthServiceProxyWrapper(return_val, self.coverage_logfile)

    def __call__(self, *args, **kwargs):
        """
        Delegates to AuthServiceProxy, then writes the particular RPC method
        called to a file.

        """
        return_val = self.auth_service_proxy_instance.__call__(*args, **kwargs)
        self._log_call()
        return return_val

    def _log_call(self):
        rpc_method = self.auth_service_proxy_instance._service_name

        if self.coverage_logfile:
            with open(self.coverage_logfile, 'a+', encoding='utf8') as f:
                f.write("%s\n" % rpc_method)

    def __truediv__(self, relative_uri):
        return AuthServiceProxyWrapper(self.auth_service_proxy_instance / relative_uri,
                                       self.coverage_logfile)

    def get_request(self, *args, **kwargs):
        self._log_call()
        return self.auth_service_proxy_instance.get_request(*args, **kwargs)

def get_filename(dirname, n_node):
    """
    Get a filename unique to the test process ID and node.

    This file will contain a list of RPC commands covered.
    """
    pid = str(os.getpid())
    return os.path.join(
        dirname, "coverage.pid%s.node%s.txt" % (pid, str(n_node)))


def write_all_rpc_commands(dirname, node):
    """
    Write out a list of all RPC functions available in `bitcoin-cli` for
    coverage comparison. This will only happen once per coverage
    directory.

    Args:
        dirname (str): temporary test dir
        node (AuthServiceProxy): client

    Returns:
        bool. if the RPC interface file was written.

    """
    filename = os.path.join(dirname, REFERENCE_FILENAME)

    if os.path.isfile(filename):
        return False

    help_output = node.help().split('\n')
    commands = set()

    for line in help_output:
        line = line.strip()

        # Ignore blanks and headers
        if line and not line.startswith('='):
            commands.add("%s\n" % line.split()[0])

    with open(filename, 'w', encoding='utf8') as f:
        f.writelines(list(commands))

    return True

--------------------------------------------------------------------------------
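
The wrapping pattern used by `AuthServiceProxyWrapper` can be tried without a running node. In the sketch below, `FakeProxy` is a hypothetical stand-in for `AuthServiceProxy` (it only mimics the "attribute access returns a callable proxy with a `_service_name`" behaviour), and `RecordingWrapper` appends method names to an in-memory list instead of a log file. Both names are assumptions for this example.

```python
class FakeProxy:
    """Hypothetical stand-in: any attribute becomes a callable RPC method."""

    def __init__(self, service_name=None):
        self._service_name = service_name

    def __getattr__(self, name):
        # Only reached for attributes that are not set on the instance.
        if name.startswith("_"):
            raise AttributeError(name)
        return FakeProxy(name)

    def __call__(self, *args):
        return "%s called with %r" % (self._service_name, args)


class RecordingWrapper:
    """Forward attribute access and calls, recording each RPC name."""

    def __init__(self, proxy, calls):
        self.proxy = proxy
        self.calls = calls

    def __getattr__(self, name):
        value = getattr(self.proxy, name)
        if not isinstance(value, type(self.proxy)):
            # Plain values pass through unwrapped.
            return value
        # Re-wrap so the eventual call is recorded as well.
        return RecordingWrapper(value, self.calls)

    def __call__(self, *args, **kwargs):
        result = self.proxy(*args, **kwargs)
        self.calls.append(self.proxy._service_name)
        return result


calls = []
node = RecordingWrapper(FakeProxy(), calls)
result = node.getblockcount()
node.help("getblockcount")
print(calls)
```

Re-wrapping in `__getattr__` is the key step: the method name is only known on the inner proxy object, so the wrapper has to sit around that object when it is finally called.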

/src/signer/wallet.py:

--------------------------------------------------------------------------------

1 | from bip32 import BIP32

2 |

3 | from src.bitcoin.key import ECKey, ECPubKey, generate_key_pair

4 | from src.bitcoin.messages import sha256

5 | from src.bitcoin.musig import sign_musig

6 |

7 |

8 | class Wallet:

9 | def __init__(self, wallet_id, key_pair_seed, nonce_seed):

10 | self.wallet_id = wallet_id

11 | self.nonce_seed = int(nonce_seed)

12 |

13 | self.private_key = None

14 | self.public_key = None

15 | self.cmap = None

16 | self.pubkey_agg = None

17 | self.r_agg = None

18 | self.should_negate_nonce = None

19 |

20 | self.current_spend_request_id = None

21 | self.sig_hash = None

22 |

23 | # TODO should use ascii or utf-8?

24 | prv, pk = generate_key_pair(sha256(bytes(key_pair_seed, 'ascii')))

25 |

26 | self.private_key = prv

27 | self.public_key = pk

28 |

29 | def get_root_xpub(self):

30 | return self.get_root_hd_node().get_xpub()

31 |

32 | def get_root_hd_node(self):

33 | key_bytes = self.private_key.get_bytes()

34 | return BIP32.from_seed(key_bytes)

35 |

36 | def get_pubkey_at_index(self, index: int):

37 | root_xpub = self.get_root_xpub()

38 | bip32_node = BIP32.from_xpub(root_xpub)

39 | pk = bip32_node.get_pubkey_from_path(f"m/{index}")

40 |

41 | key = ECPubKey().set(pk)

42 | return key.get_bytes()

43 |

44 | def get_new_nonce(self):

45 | k = ECKey().set(self.nonce_seed)

46 | return k.get_pubkey()

47 |

48 | def get_wallet_id(self):

49 | return self.wallet_id

50 |

51 | def set_cmap(self, cmap):

52 | modified_cmap = {}

53 | # Both key and value should be hex encoded strings

54 | # Key is public key

55 | # value is the challenge

56 | for key, value in cmap.items():

57 | modified_cmap[key] = bytes.fromhex(value)

58 |

59 | self.cmap = modified_cmap

60 |

61 | def get_pubkey(self):

62 | return self.public_key.get_bytes().hex()

63 |

64 | def set_pubkey_agg(self, pubkey_agg):

65 | self.pubkey_agg = ECPubKey().set(bytes.fromhex(pubkey_agg))

66 |

67 | def set_r_agg(self, r_agg):

68 | self.r_agg = ECPubKey().set(bytes.fromhex(r_agg))

69 |

70 | def set_should_negate_nonce(self, value):

71 | self.should_negate_nonce = value

72 |

73 | def set_sig_hash(self, sig_hash):

74 | self.sig_hash = bytes.fromhex(sig_hash)

75 |

76 | def set_current_spend_request_id(self, current_spend_request_id):

77 | self.current_spend_request_id = current_spend_request_id

78 |

79 | def get_private_key_tweaked(self):

80 | if self.cmap != None:

81 | # TODO this is all bip32 stuff

82 | # TODO index is hardcoded at 1

83 | # index = 1

84 | # pk = self.get_pubkey_at_index(index).hex()

85 | # TODO hardcoded pk, get from class variable

86 | # prv = self.get_root_hd_node().get_privkey_from_path(f"m/{index}")

87 | # print("prv", prv)

88 | # private_key = ECKey().set(prv)

89 |

90 | pk = self.public_key.get_bytes().hex()

91 | private_key = self.private_key

92 | tweaked_key = private_key * self.cmap[pk]

93 |

94 | if self.pubkey_agg.get_y() % 2 != 0:

95 | tweaked_key.negate()

96 | self.pubkey_agg.negate()

97 |

98 | return tweaked_key

99 | return None

100 |

101 | def sign_with_current_context(self, nonce: str):

102 | if self.sig_hash is None or self.cmap is None or self.r_agg is None or self.pubkey_agg is None:

103 | # TODO should throw

104 | return None

105 |

106 | k1 = ECKey().set(self.nonce_seed)

107 | # negate here

108 | if self.should_negate_nonce:

109 | k1.negate()

110 | tweaked_private_key = self.get_private_key_tweaked()

111 | return sign_musig(tweaked_private_key, k1, self.r_agg, self.pubkey_agg, self.sig_hash)

112 |

--------------------------------------------------------------------------------

/src/bitcoin/segwit_addr.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # Copyright (c) 2017 Pieter Wuille

3 | # Distributed under the MIT software license, see the accompanying

4 | # file COPYING or http://www.opensource.org/licenses/mit-license.php.

5 | """Reference implementation for Bech32/Bech32m and segwit addresses."""

6 | from enum import Enum

7 |

8 | CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"

9 | BECH32_CONST = 1

10 | BECH32M_CONST = 0x2bc830a3

11 |

12 | class Encoding(Enum):

13 | """Enumeration type to list the various supported encodings."""

14 | BECH32 = 1

15 | BECH32M = 2

16 |

17 |

18 | def bech32_polymod(values):

19 | """Internal function that computes the Bech32 checksum."""

20 | generator = [0x3b6a57b2, 0x26508e6d, 0x1ea119fa, 0x3d4233dd, 0x2a1462b3]

21 | chk = 1

22 | for value in values:

23 | top = chk >> 25

24 | chk = (chk & 0x1ffffff) << 5 ^ value

25 | for i in range(5):

26 | chk ^= generator[i] if ((top >> i) & 1) else 0

27 | return chk

28 |

29 |

30 | def bech32_hrp_expand(hrp):

31 | """Expand the HRP into values for checksum computation."""

32 | return [ord(x) >> 5 for x in hrp] + [0] + [ord(x) & 31 for x in hrp]

33 |

34 |

35 | def bech32_verify_checksum(hrp, data):

36 | """Verify a checksum given HRP and converted data characters."""

37 | check = bech32_polymod(bech32_hrp_expand(hrp) + data)

38 | if check == BECH32_CONST:

39 | return Encoding.BECH32

40 | elif check == BECH32M_CONST:

41 | return Encoding.BECH32M

42 | else:

43 | return None

44 |

45 | def bech32_create_checksum(encoding, hrp, data):

46 | """Compute the checksum values given HRP and data."""

47 | values = bech32_hrp_expand(hrp) + data

48 | const = BECH32M_CONST if encoding == Encoding.BECH32M else BECH32_CONST

49 | polymod = bech32_polymod(values + [0, 0, 0, 0, 0, 0]) ^ const

50 | return [(polymod >> 5 * (5 - i)) & 31 for i in range(6)]

51 |

52 |

53 | def bech32_encode(encoding, hrp, data):

54 | """Compute a Bech32 or Bech32m string given HRP and data values."""

55 | combined = data + bech32_create_checksum(encoding, hrp, data)

56 | return hrp + '1' + ''.join([CHARSET[d] for d in combined])

57 |

58 |

59 | def bech32_decode(bech):

60 | """Validate a Bech32/Bech32m string, and determine HRP and data."""

61 | if ((any(ord(x) < 33 or ord(x) > 126 for x in bech)) or

62 | (bech.lower() != bech and bech.upper() != bech)):

63 | return (None, None, None)

64 | bech = bech.lower()

65 | pos = bech.rfind('1')

66 | if pos < 1 or pos + 7 > len(bech) or len(bech) > 90:

67 | return (None, None, None)

68 | if not all(x in CHARSET for x in bech[pos+1:]):

69 | return (None, None, None)

70 | hrp = bech[:pos]

71 | data = [CHARSET.find(x) for x in bech[pos+1:]]

72 | encoding = bech32_verify_checksum(hrp, data)

73 | if encoding is None:

74 | return (None, None, None)

75 | return (encoding, hrp, data[:-6])

76 |

77 |

78 | def convertbits(data, frombits, tobits, pad=True):

79 | """General power-of-2 base conversion."""

80 | acc = 0

81 | bits = 0

82 | ret = []

83 | maxv = (1 << tobits) - 1

84 | max_acc = (1 << (frombits + tobits - 1)) - 1

85 | for value in data:

86 | if value < 0 or (value >> frombits):

87 | return None

88 | acc = ((acc << frombits) | value) & max_acc

89 | bits += frombits

90 | while bits >= tobits:

91 | bits -= tobits

92 | ret.append((acc >> bits) & maxv)

93 | if pad:

94 | if bits:

95 | ret.append((acc << (tobits - bits)) & maxv)

96 | elif bits >= frombits or ((acc << (tobits - bits)) & maxv):

97 | return None

98 | return ret

99 |

100 |

101 | def decode_segwit_address(hrp, addr):

102 | """Decode a segwit address."""

103 | encoding, hrpgot, data = bech32_decode(addr)

104 | if hrpgot != hrp:

105 | return (None, None)

106 | decoded = convertbits(data[1:], 5, 8, False)

107 | if decoded is None or len(decoded) < 2 or len(decoded) > 40:

108 | return (None, None)

109 | if data[0] > 16:

110 | return (None, None)

111 | if data[0] == 0 and len(decoded) != 20 and len(decoded) != 32:

112 | return (None, None)

113 | if (data[0] == 0 and encoding != Encoding.BECH32) or (data[0] != 0 and encoding != Encoding.BECH32M):

114 | return (None, None)

115 | return (data[0], decoded)

116 |

117 |

118 | def encode_segwit_address(hrp, witver, witprog):

119 | """Encode a segwit address."""

120 | encoding = Encoding.BECH32 if witver == 0 else Encoding.BECH32M

121 | ret = bech32_encode(encoding, hrp, [witver] + convertbits(witprog, 8, 5))

122 | if decode_segwit_address(hrp, ret) == (None, None):

123 | return None

124 | return ret

125 |

--------------------------------------------------------------------------------

/src/coordinator/db.py:

--------------------------------------------------------------------------------

1 | import json

2 | import os

3 | import logging

4 | import uuid

5 | class UUIDEncoder(json.JSONEncoder):

6 | def default(self, obj):

7 | if isinstance(obj, uuid.UUID):

8 | # If obj is a UUID, serialize it to a string

9 | return str(obj)

10 | # For all other objects, use the default serialization method

11 | return super().default(obj)

12 |

13 | class DB:

14 | def __init__(self, json_file):

15 | if not os.path.isfile(json_file):

16 | raise FileNotFoundError(f"JSON file '{json_file}' not found")

17 | self.json_file = json_file

18 |

19 | def get_data(self):

20 | with open(self.json_file, "r") as f:

21 | return json.load(f)

22 |

23 | def set_data(self, new_data):

24 | with open(self.json_file, "w") as f:

25 | json.dump(new_data, f)

26 |

27 | def get_value(self, key):

28 | with open(self.json_file, "r") as f:

29 | data = json.load(f)

30 | return data.get(key)

31 |

32 | def set_value(self, key, value):

33 | with open(self.json_file, "r") as f:

34 | data = json.load(f)

35 | data[key] = value

36 | with open(self.json_file, "w") as f:

37 | json.dump(data, f)

38 |

39 | ## TODO these utility functions should reside in their own file

40 |

41 | def add_wallet(self, wallet_id, quorum):

42 | with open(self.json_file, "r") as f:

43 | data = json.load(f)

44 |

45 | filtered = [wallet for wallet in data['wallets'] if wallet['wallet_id'] == wallet_id]

46 | if len(filtered) > 0:

47 | return False

48 | data['wallets'].append({'wallet_id': wallet_id, 'quorum': quorum})

49 | with open(self.json_file, "w") as f:

50 | json.dump(data, f)

51 |

52 | return True

53 |

54 | def get_wallet(self, wallet_id):

55 | with open(self.json_file, "r") as f:

56 | data = json.load(f)

57 | filtered = [wallet for wallet in data['wallets'] if wallet['wallet_id'] == wallet_id]

58 | if len(filtered) > 0:

59 | return filtered[0]

60 | return None

61 |

62 | def get_xpubs(self, wallet_id):

63 | with open(self.json_file, "r") as f:

64 | data = json.load(f)

65 | filtered = [xpub for xpub in data['xpubs'] if xpub['wallet_id'] == wallet_id]

66 | if len(filtered) > 0:

67 | return filtered

68 | return None

69 |

70 | def add_xpub(self, wallet_id, xpub):

71 | with open(self.json_file, "r") as f:

72 | data = json.load(f)

73 | # TODO check wallet id exists

74 | filtered_xpubs = [entry for entry in data['xpubs'] if entry['wallet_id'] == wallet_id and entry['xpub'] == xpub]

75 | if len(filtered_xpubs) > 0:

76 | return False

77 |

78 | data['xpubs'].append({'wallet_id': wallet_id, 'xpub': xpub})

79 |

80 | with open(self.json_file, "w") as f:

81 | json.dump(data, f)

82 |

83 | return True

84 |

85 | def add_spend_request(self, txid, output_index, prev_script_pubkey, prev_value_sats, spend_request_id, new_address,

86 | value, wallet_id):

87 | with open(self.json_file, "r") as f:

88 | data = json.load(f)

89 | filtered = [spend for spend in data['spends'] if spend['spend_request_id'] == spend_request_id]

90 | if len(filtered) > 0:

91 | return False

92 | data['spends'].append({'spend_request_id': spend_request_id, 'txid': txid, 'output_index': output_index, 'prev_script_pubkey': prev_script_pubkey,

93 | 'prev_value_sats': prev_value_sats, 'new_address': new_address, 'value': value, 'wallet_id': wallet_id})

94 | with open(self.json_file, "w") as f:

95 | json.dump(data, f)

96 |

97 | return True

98 |

99 | def get_spend_request(self, spend_request_id):

100 | with open(self.json_file, "r") as f:

101 | data = json.load(f)

102 |

103 | filtered = [spend for spend in data['spends'] if spend['spend_request_id'] == spend_request_id]

104 |

105 | if (len(filtered) == 0):

106 | logging.error('[db] Unable to locate spend request %s', spend_request_id)

107 | raise LookupError(f"[db] Spend request '{spend_request_id}' not found")

108 | # There should only be one

109 | return filtered[0]

110 |

111 | def add_nonce(self, nonce, spend_request_id):

112 | with open(self.json_file, "r") as f:

113 | data = json.load(f)

114 | # TODO should protect the same nonce being provided again

115 | data['nonces'].append({'spend_request_id': spend_request_id, 'nonce': nonce})

116 | with open(self.json_file, "w") as f:

117 | json.dump(data, f)

118 |

119 | return True

120 |

121 | def get_all_nonces(self, spend_request_id):

122 | with open(self.json_file, "r") as f:

123 | data = json.load(f)

124 |

125 | # TODO check that the wallet ID exists

126 | nonces = [nonce for nonce in data['nonces'] if nonce['spend_request_id'] == spend_request_id]

127 |

128 | return nonces

129 |

130 | def add_partial_signature(self, signature, spend_request_id):

131 | with open(self.json_file, "r") as f:

132 | data = json.load(f)

133 |

134 | # TODO should guard against providing the same signature again

135 | data['signatures'].append({'spend_request_id': spend_request_id, 'signature': signature})

136 |

137 | with open(self.json_file, "w") as f:

138 | json.dump(data, f)

139 |

140 | return True

141 |

142 | def get_all_signatures(self, spend_request_id):

143 | with open(self.json_file, "r") as f:

144 | data = json.load(f)

145 |

146 | # TODO check that the wallet ID exists

147 | signatures = [signature for signature in data['signatures'] if signature['spend_request_id'] == spend_request_id]

148 | return signatures

--------------------------------------------------------------------------------

/src/coordinator/coordinator.py:

--------------------------------------------------------------------------------

1 | import json

2 | import logging

3 | import time

4 |

5 | from colorama import Fore

6 |

7 | from src.utils.nostr_utils import add_relays, construct_and_publish_event, generate_nostr_message, init_relay_manager, read_nsec, read_public_keys

8 | from src.utils.payload import is_valid_json, is_valid_payload, PayloadKeys

9 | from src.coordinator.wallet import add_xpub, create_wallet, is_valid_command, get_address, save_nonce, start_spend, save_signature

10 | from src.coordinator.db import DB

11 |

12 | header = """

13 | ▄████▄ ▒█████ ▒█████ ██▀███ ▓█████▄ ██▓ ███▄ █ ▄▄▄ ▄▄▄█████▓ ▒█████ ██▀███

14 | ▒██▀ ▀█ ▒██▒ ██▒▒██▒ ██▒▓██ ▒ ██▒▒██▀ ██▌▓██▒ ██ ▀█ █ ▒████▄ ▓ ██▒ ▓▒▒██▒ ██▒▓██ ▒ ██▒

15 | ▒▓█ ▄ ▒██░ ██▒▒██░ ██▒▓██ ░▄█ ▒░██ █▌▒██▒▓██ ▀█ ██▒▒██ ▀█▄ ▒ ▓██░ ▒░▒██░ ██▒▓██ ░▄█ ▒

16 | ▒▓▓▄ ▄██▒▒██ ██░▒██ ██░▒██▀▀█▄ ░▓█▄ ▌░██░▓██▒ ▐▌██▒░██▄▄▄▄██░ ▓██▓ ░ ▒██ ██░▒██▀▀█▄

17 | ▒ ▓███▀ ░░ ████▓▒░░ ████▓▒░░██▓ ▒██▒░▒████▓ ░██░▒██░ ▓██░ ▓█ ▓██▒ ▒██▒ ░ ░ ████▓▒░░██▓ ▒██▒

18 | ░ ░▒ ▒ ░░ ▒░▒░▒░ ░ ▒░▒░▒░ ░ ▒▓ ░▒▓░ ▒▒▓ ▒ ░▓ ░ ▒░ ▒ ▒ ▒▒ ▓▒█░ ▒ ░░ ░ ▒░▒░▒░ ░ ▒▓ ░▒▓░

19 | ░ ▒ ░ ▒ ▒░ ░ ▒ ▒░ ░▒ ░ ▒░ ░ ▒ ▒ ▒ ░░ ░░ ░ ▒░ ▒ ▒▒ ░ ░ ░ ▒ ▒░ ░▒ ░ ▒░

20 | ░ ░ ░ ░ ▒ ░ ░ ░ ▒ ░░ ░ ░ ░ ░ ▒ ░ ░ ░ ░ ░ ▒ ░ ░ ░ ░ ▒ ░░ ░

21 | ░ ░ ░ ░ ░ ░ ░ ░ ░ ░ ░ ░ ░ ░ ░

22 | ░ ░

23 | """

24 |

25 | # Map application commands to the corresponding methods

26 | COMMAND_MAP = {

27 | 'address': get_address,

28 | 'nonce': save_nonce,

29 | 'spend': start_spend,

30 | 'sign': save_signature,

31 | 'wallet': create_wallet,

32 | 'xpub': add_xpub

33 | }

34 |

35 | def setup_logging():

36 | logging.basicConfig()

37 | logging.getLogger().setLevel(logging.INFO)

38 | logging.info(Fore.GREEN + header)

39 |

40 | def run():

41 | setup_logging()

42 |

43 | # start up the db

44 | db = DB('src/coordinator/db.json')

45 |

46 | relay_manager = add_relays()

47 | nostr_private_key, nostr_public_key = read_nsec('src/coordinator/nsec.txt')

48 |

49 | # get the public keys for the signers so we can subscribe to messages from them

50 | signer_pks = read_public_keys('src/coordinator/signer_pks.txt')

51 |

52 | init_relay_manager(relay_manager, signer_pks)

53 |

54 | # initialize a timestamp filter

55 | # this will be used to keep track of messages that we have already seen

56 | timestamp_filter = int(time.time())

57 |

58 | while 1:

59 | if (not relay_manager.message_pool.has_events()):

60 | logging.info('No messages! Sleeping ZzZzZzzz...')

61 | time.sleep(1)

62 | continue

63 |

64 | new_event = relay_manager.message_pool.get_event()

65 | event_content = new_event.event.content

66 | # print(f"Message content: {event_content}")

67 | # print(f"From Public key {new_event.event.public_key}")

68 |

69 | #

70 | # Event validation

71 | #

72 | if (not is_valid_json(event_content)):

73 | logging.info('Error with new event! Invalid JSON')

74 | continue

75 |

76 | json_payload = json.loads(event_content)

77 | if (not is_valid_payload(json_payload)):

78 | logging.info('Error with new event! Payload does not have the required keys')

79 | continue

80 |

81 | command = json_payload['command']

82 | if command not in COMMAND_MAP:

83 | logging.info('%s is not a valid command!', command)

84 | continue

85 |

86 | # skip if this event is old

87 | event_timestamp = json_payload[PayloadKeys.TIMESTAMP.value]

88 | if (event_timestamp < timestamp_filter):

89 | continue

90 |

91 | #

92 | # Handle the command that's in the event

93 | #

94 | try:

95 | logging.info('[coordinator] Handling command of type: %s', command)

96 | result = COMMAND_MAP[command](json_payload['payload'], db)

97 |

98 | # package the result into a response

99 | ref_id = json_payload[PayloadKeys.REQUEST_ID.value]

100 | response_payload = {}

101 |

102 | if command == "wallet":

103 | response_payload = {

104 | 'wallet_id': result

105 | }

106 | elif command == "address":

107 | response_payload = {

108 | 'address': result[0],

109 | 'cmap': result[1],

110 | 'pubkey_agg': result[2]

111 | }

112 | elif command == "spend":

113 | response_payload = {

114 | 'spend_request_id': result

115 | }

116 | elif command == "nonce":

117 | if result != None:

118 | response_payload = {

119 | 'r_agg': result[0],

120 | 'sig_hash': result[1],

121 | 'negated': result[2],

122 | 'spend_request_id': json_payload['payload']['spend_request_id']

123 | }

124 | elif command == "sign":

125 | if (result != None):

126 | response_payload = {

127 | 'raw_tx': result,

128 | 'spend_request_id': json_payload['payload']['spend_request_id']

129 | }

130 | if result != None:

131 | nostr_response = generate_nostr_message(command=command, ref_id=ref_id, payload=response_payload)

132 | construct_and_publish_event(nostr_response, nostr_private_key, relay_manager)

133 |

134 | except Exception as e:

135 | # Log the failure with its traceback and keep polling for new events

136 | logging.exception('[coordinator] Failed to handle command: %s', command)

137 |

138 | # TODO better error handling

139 |

140 | # update the timestamp filter to keep track of messages we have already seen

141 | timestamp_filter = int(time.time())

142 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

Secure your Bitcoin with the Munstrous power of decentralized multi-signature technology

🕸🕯 An open source, privacy-focused MuSig wallet 🕯🕸

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 | [](https://bitcoin.org) [](https://www.python.org/)

19 |

20 | [](https://github.com/0xBEEFCAF3/munstr/releases/) [](#license) [](https://github.com/0xBEEFCAF3/munstr)

21 |

22 |

23 |

24 |

25 |

26 | ## What is Munstr?

27 | **Munstr** (MuSig + Nostr) is a terminal-based wallet that combines Schnorr-signature-based **MuSig** (multisignature) keys with decentralized **Nostr** networks as a communication layer. Nostr provides a secure, encrypted way to transport and digitally sign bitcoin transactions, and chain analysis cannot identify the nature and setup of the resulting transaction data. To anyone observing the blockchain, Munstr transactions look like single-key **Pay-to-Taproot** (P2TR) spends.
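
As a rough illustration of what a P2TR output looks like on chain, the sketch below turns a 32-byte x-only key into a witness v1 script and a Bech32m testnet address. It is condensed from the reference code in `src/bitcoin/segwit_addr.py`; the helper names `to_5bit`, `p2tr_address`, and `p2tr_script_pubkey` and the placeholder key are assumptions made for this example and are not part of Munstr.

```python
CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
BECH32M_CONST = 0x2bc830a3


def polymod(values):
    """Compute the Bech32 checksum polynomial over a list of 5-bit values."""
    generator = [0x3b6a57b2, 0x26508e6d, 0x1ea119fa, 0x3d4233dd, 0x2a1462b3]
    chk = 1
    for value in values:
        top = chk >> 25
        chk = ((chk & 0x1ffffff) << 5) ^ value
        for i in range(5):
            chk ^= generator[i] if ((top >> i) & 1) else 0
    return chk


def hrp_expand(hrp):
    """Expand the human-readable part into values for the checksum."""
    return [ord(c) >> 5 for c in hrp] + [0] + [ord(c) & 31 for c in hrp]


def to_5bit(data):
    """Regroup 8-bit bytes into 5-bit values, zero-padding the last group."""
    acc, bits, out = 0, 0, []
    for byte in data:
        acc = (acc << 8) | byte
        bits += 8
        while bits >= 5:
            bits -= 5
            out.append((acc >> bits) & 31)
    if bits:
        out.append((acc << (5 - bits)) & 31)
    return out


def p2tr_address(xonly_pubkey: bytes, hrp: str = "tb") -> str:
    """Encode a 32-byte output key as a witness v1 (Bech32m) address."""
    if len(xonly_pubkey) != 32:
        raise ValueError("a P2TR witness program is a 32-byte x-only key")
    data = [1] + to_5bit(xonly_pubkey)  # witness version 1, then the program
    check = polymod(hrp_expand(hrp) + data + [0] * 6) ^ BECH32M_CONST
    checksum = [(check >> 5 * (5 - i)) & 31 for i in range(6)]
    return hrp + "1" + "".join(CHARSET[d] for d in data + checksum)


def p2tr_script_pubkey(xonly_pubkey: bytes) -> bytes:
    """OP_1 (0x51) followed by a 32-byte push (0x20) of the output key."""
    return bytes([0x51, 0x20]) + xonly_pubkey


placeholder_key = bytes(range(32))  # placeholder bytes, NOT a real aggregate key
address = p2tr_address(placeholder_key)
script = p2tr_script_pubkey(placeholder_key)
print(address)
print(script.hex())
```

Nothing in the script or the address records how many signers stand behind the key, which is why an aggregated key is indistinguishable from a single-signer one at this level.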

28 |

29 | Under the hood, Munstr is an interactive, n-of-n multisignature Bitcoin wallet: a group of signers coordinates a signing session for Taproot outputs that belong to an aggregated public key.
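
The aggregation idea can be sketched with a toy example. The code below runs an n-of-n Schnorr key and signature aggregation in the style of MuSig over a tiny multiplicative group instead of secp256k1, so it is an illustration under stated assumptions and not the code Munstr runs. The variable names `cmap`, `pubkey_agg`, and `r_agg` mirror those in `src/signer/wallet.py`; the group parameters, the hash helper `h`, and the fixed secrets and nonces are hypothetical. It leaves out nonce commitments, x-only keys, and the even-Y negation that the real signer performs.

```python
import hashlib

# Toy Schnorr group: P = 2*Q + 1 with Q prime, and G = 4 generates the
# subgroup of order Q. Far too small to be secure; for illustration only.
P, Q, G = 2039, 1019, 4


def h(*parts) -> int:
    """Hash a sequence of ints/strings/bytes to a scalar modulo Q."""
    digest = hashlib.sha256()
    for part in parts:
        data = part if isinstance(part, bytes) else str(part).encode()
        digest.update(len(data).to_bytes(4, "big") + data)
    return int.from_bytes(digest.digest(), "big") % Q


def aggregate_keys(pubkeys):
    """Return (coefficient map, aggregate key) for a list of public keys."""
    ordered = sorted(pubkeys)
    cmap = {pk: h("coef", *ordered, pk) for pk in pubkeys}
    agg = 1
    for pk in pubkeys:
        agg = agg * pow(pk, cmap[pk], P) % P
    return cmap, agg


def partial_sign(secret, coef, nonce, r_agg, pubkey_agg, msg):
    """One signer's share: nonce + challenge * coefficient * secret (mod Q)."""
    challenge = h("chal", r_agg, pubkey_agg, msg)
    return (nonce + challenge * coef * secret) % Q


def verify(pubkey_agg, msg, r_agg, s):
    """Single-key Schnorr check: G^s == R * X^c (mod P)."""
    challenge = h("chal", r_agg, pubkey_agg, msg)
    return pow(G, s, P) == r_agg * pow(pubkey_agg, challenge, P) % P


# Fixed values keep the example reproducible. Real signers must use
# fresh random nonces for every signature and never reuse them.
signer_secrets = [101, 202, 303]
signer_nonces = [11, 22, 33]
pubkeys = [pow(G, x, P) for x in signer_secrets]

cmap, pubkey_agg = aggregate_keys(pubkeys)

r_agg = 1
for k in signer_nonces:
    r_agg = r_agg * pow(G, k, P) % P

msg = b"sighash placeholder"
partials = [
    partial_sign(x, cmap[pk], k, r_agg, pubkey_agg, msg)
    for x, pk, k in zip(signer_secrets, pubkeys, signer_nonces)
]
s = sum(partials) % Q

print(verify(pubkey_agg, msg, r_agg, s))
```

The verifier only ever sees `pubkey_agg`, `r_agg`, and `s`, so the check is the same as for a signature made with one key, and every signer has to contribute a partial signature for the sum to verify.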

30 |

31 |

32 |

33 |

34 |

35 |

36 |

37 |


166 | ## License

167 |

168 | Licensed under the MIT License, Copyright © 2023-present TeamMunstr

169 |

--------------------------------------------------------------------------------

/src/bitcoin/authproxy.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2011 Jeff Garzik

2 | #

3 | # Previous copyright, from python-jsonrpc/jsonrpc/proxy.py:

4 | #

5 | # Copyright (c) 2007 Jan-Klaas Kollhof

6 | #

7 | # This file is part of jsonrpc.

8 | #

9 | # jsonrpc is free software; you can redistribute it and/or modify

10 | # it under the terms of the GNU Lesser General Public License as published by

11 | # the Free Software Foundation; either version 2.1 of the License, or

12 | # (at your option) any later version.

13 | #

14 | # This software is distributed in the hope that it will be useful,

15 | # but WITHOUT ANY WARRANTY; without even the implied warranty of

16 | # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

17 | # GNU Lesser General Public License for more details.

18 | #

19 | # You should have received a copy of the GNU Lesser General Public License

20 | # along with this software; if not, write to the Free Software

21 | # Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

22 | """HTTP proxy for opening RPC connection to bitcoind.

23 |

24 | AuthServiceProxy has the following improvements over python-jsonrpc's

25 | ServiceProxy class:

26 |

27 | - HTTP connections persist for the life of the AuthServiceProxy object

28 | (if server supports HTTP/1.1)

29 | - sends protocol 'version', per JSON-RPC 1.1

30 | - sends proper, incrementing 'id'

31 | - sends Basic HTTP authentication headers

32 | - parses all JSON numbers that look like floats as Decimal

33 | - uses standard Python json lib

34 | """

35 |

36 | import base64

37 | import decimal

38 | from http import HTTPStatus

39 | import http.client

40 | import json

41 | import logging

42 | import os

43 | import socket

44 | import time

45 | import urllib.parse

46 |

47 | HTTP_TIMEOUT = 30

48 | USER_AGENT = "AuthServiceProxy/0.1"

49 |

50 | log = logging.getLogger("BitcoinRPC")

51 |

52 | class JSONRPCException(Exception):

53 | def __init__(self, rpc_error, http_status=None):

54 | try:

55 | errmsg = '%(message)s (%(code)i)' % rpc_error

56 | except (KeyError, TypeError):

57 | errmsg = ''

58 | super().__init__(errmsg)

59 | self.error = rpc_error

60 | self.http_status = http_status

61 |

62 |

63 | def EncodeDecimal(o):

64 | if isinstance(o, decimal.Decimal):

65 | return str(o)

66 | raise TypeError(repr(o) + " is not JSON serializable")

67 |

68 | class AuthServiceProxy():

69 | __id_count = 0

70 |

71 | # ensure_ascii: escape unicode as \uXXXX, passed to json.dumps

72 | def __init__(self, service_url, service_name=None, timeout=HTTP_TIMEOUT, connection=None, ensure_ascii=True):

73 | self.__service_url = service_url

74 | self._service_name = service_name

75 | self.ensure_ascii = ensure_ascii # can be toggled on the fly by tests

76 | self.__url = urllib.parse.urlparse(service_url)

77 | user = None if self.__url.username is None else self.__url.username.encode('utf8')

78 | passwd = None if self.__url.password is None else self.__url.password.encode('utf8')

79 | authpair = user + b':' + passwd

80 | self.__auth_header = b'Basic ' + base64.b64encode(authpair)

81 | self.timeout = timeout

82 | self._set_conn(connection)

83 |

84 | def __getattr__(self, name):

85 | if name.startswith('__') and name.endswith('__'):

86 | # Python internal stuff

87 | raise AttributeError

88 | if self._service_name is not None:

89 | name = "%s.%s" % (self._service_name, name)

90 | return AuthServiceProxy(self.__service_url, name, connection=self.__conn)

91 |

92 | def _request(self, method, path, postdata):

93 | '''

94 | Do a HTTP request, with retry if we get disconnected (e.g. due to a timeout).

95 | This is a workaround for https://bugs.python.org/issue3566 which is fixed in Python 3.5.

96 | '''

97 | headers = {'Host': self.__url.hostname,

98 | 'User-Agent': USER_AGENT,

99 | 'Authorization': self.__auth_header,

100 | 'Content-type': 'application/json'}

101 | if os.name == 'nt':

102 | # Windows somehow does not like to re-use connections

103 | # TODO: Find out why the connection would disconnect occasionally and make it reusable on Windows

104 | self._set_conn()

105 | try:

106 | self.__conn.request(method, path, postdata, headers)

107 | return self._get_response()

108 | except http.client.BadStatusLine as e:

109 | if e.line == "''": # if connection was closed, try again

110 | self.__conn.close()

111 | self.__conn.request(method, path, postdata, headers)

112 | return self._get_response()

113 | else:

114 | raise

115 | except (BrokenPipeError, ConnectionResetError):

116 | # Python 3.5+ raises BrokenPipeError instead of BadStatusLine when the connection was reset

117 | # ConnectionResetError happens on FreeBSD with Python 3.4

118 | self.__conn.close()

119 | self.__conn.request(method, path, postdata, headers)

120 | return self._get_response()

121 |

122 | def get_request(self, *args, **argsn):

123 | AuthServiceProxy.__id_count += 1

124 |

125 | log.debug("-{}-> {} {}".format(

126 | AuthServiceProxy.__id_count,

127 | self._service_name,

128 | json.dumps(args or argsn, default=EncodeDecimal, ensure_ascii=self.ensure_ascii),

129 | ))

130 | if args and argsn:

131 | raise ValueError('Cannot handle both named and positional arguments')

132 | return {'version': '1.1',

133 | 'method': self._service_name,

134 | 'params': args or argsn,

135 | 'id': AuthServiceProxy.__id_count}

136 |

137 | def __call__(self, *args, **argsn):

138 | postdata = json.dumps(self.get_request(*args, **argsn), default=EncodeDecimal, ensure_ascii=self.ensure_ascii)

139 | response, status = self._request('POST', self.__url.path, postdata.encode('utf-8'))

140 | if response['error'] is not None:

141 | raise JSONRPCException(response['error'], status)

142 | elif 'result' not in response:

143 | raise JSONRPCException({

144 | 'code': -343, 'message': 'missing JSON-RPC result'}, status)

145 | elif status != HTTPStatus.OK:

146 | raise JSONRPCException({

147 | 'code': -342, 'message': 'non-200 HTTP status code but no JSON-RPC error'}, status)

148 | else:

149 | return response['result']

150 |

151 | def batch(self, rpc_call_list):

152 | postdata = json.dumps(list(rpc_call_list), default=EncodeDecimal, ensure_ascii=self.ensure_ascii)

153 | log.debug("--> " + postdata)

154 | response, status = self._request('POST', self.__url.path, postdata.encode('utf-8'))

155 | if status != HTTPStatus.OK:

156 | raise JSONRPCException({

157 | 'code': -342, 'message': 'non-200 HTTP status code but no JSON-RPC error'}, status)

158 | return response

159 |

160 | def _get_response(self):

161 | req_start_time = time.time()

162 | try:

163 | http_response = self.__conn.getresponse()

164 | except socket.timeout:

165 | raise JSONRPCException({

166 | 'code': -344,

167 | 'message': '%r RPC took longer than %f seconds. Consider '

168 | 'using larger timeout for calls that take '

169 | 'longer to return.' % (self._service_name,

170 | self.__conn.timeout)})

171 | if http_response is None:

172 | raise JSONRPCException({

173 | 'code': -342, 'message': 'missing HTTP response from server'})

174 |

175 | content_type = http_response.getheader('Content-Type')

176 | if content_type != 'application/json':

177 | raise JSONRPCException(

178 | {'code': -342, 'message': 'non-JSON HTTP response with \'%i %s\' from server' % (http_response.status, http_response.reason)},

179 | http_response.status)

180 |

181 | responsedata = http_response.read().decode('utf8')

182 | response = json.loads(responsedata, parse_float=decimal.Decimal)

183 | elapsed = time.time() - req_start_time

184 | if "error" in response and response["error"] is None:

185 | log.debug("<-%s- [%.6f] %s" % (response["id"], elapsed, json.dumps(response["result"], default=EncodeDecimal, ensure_ascii=self.ensure_ascii)))

186 | else:

187 | log.debug("<-- [%.6f] %s" % (elapsed, responsedata))

188 | return response, http_response.status

189 |

190 | def __truediv__(self, relative_uri):

191 | return AuthServiceProxy("{}/{}".format(self.__service_url, relative_uri), self._service_name, connection=self.__conn)

192 |

193 | def _set_conn(self, connection=None):

194 | port = 80 if self.__url.port is None else self.__url.port

195 | if connection:

196 | self.__conn = connection

197 | self.timeout = connection.timeout

198 | elif self.__url.scheme == 'https':

199 | self.__conn = http.client.HTTPSConnection(self.__url.hostname, port, timeout=self.timeout)

200 | else:

201 | self.__conn = http.client.HTTPConnection(self.__url.hostname, port, timeout=self.timeout)

202 |

--------------------------------------------------------------------------------

/src/coordinator/wallet.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import uuid

3 | from bip32 import BIP32

4 |

5 | from src.bitcoin.musig import generate_musig_key, aggregate_schnorr_nonces, aggregate_musig_signatures

6 | from src.bitcoin.address import program_to_witness

7 | from src.bitcoin.key import ECPubKey

8 | from src.bitcoin.messages import CTransaction, CTxIn, COutPoint, CTxOut, CScriptWitness, CTxInWitness

9 | from src.bitcoin.script import TaprootSignatureHash, SIGHASH_ALL_TAPROOT

10 | from src.coordinator.mempool_space_client import broadcast_transaction, get_transaction

11 |

12 | # Using Peter Todd's python-bitcoinlib (https://github.com/petertodd/python-bitcoinlib)

13 | from bitcoin.wallet import CBitcoinAddress

14 | import bitcoin

15 |

16 | # can abstract this network selection bit out to a properties/config file

17 | bitcoin.SelectParams('testnet')

18 |

19 | COMMANDS = ['address', 'nonce', 'sign', 'spend', 'wallet', 'xpub']

20 |

21 | # in memory cache of transactions that are in the process of being spent

22 | # keys are aggregate nonces and values are spending transactions

23 | spending_txs = {}

24 |

25 | def is_valid_command(command: str):

26 | return command in COMMANDS

27 |

28 | def create_spending_transaction(txid, outputIndex, destination_addr, amount_sat, version=1, nSequence=0):

29 | """Construct a CTransaction object that spends output `outputIndex` of txid."""

30 | # Construct transaction

31 | spending_tx = CTransaction()

32 | # Populate the transaction version

33 | spending_tx.nVersion = version

34 | # Populate the locktime

35 | spending_tx.nLockTime = 0

36 |

37 | # Populate the transaction inputs

38 | outpoint = COutPoint(int(txid, 16), outputIndex)

39 | spending_tx_in = CTxIn(outpoint=outpoint, nSequence=nSequence)

40 | spending_tx.vin = [spending_tx_in]

41 |

42 | script_pubkey = CBitcoinAddress(destination_addr).to_scriptPubKey()

43 | dest_output = CTxOut(nValue=amount_sat, scriptPubKey=script_pubkey)

44 | spending_tx.vout = [dest_output]

45 |

46 | return (spending_tx, script_pubkey)

47 |

48 |

49 | def create_wallet(payload: dict, db):

50 | if (not 'quorum' in payload):

51 | raise Exception("[wallet] Cannot create a wallet without the 'quorum' property")

52 |

53 | quorum = payload['quorum']

54 | if (quorum < 1):

55 | raise Exception("[wallet] Quorum must be at least 1")

56 |

57 | new_wallet_id = str(uuid.uuid4())

58 |

59 | if (db.add_wallet(new_wallet_id, quorum)):

60 | logging.info("[wallet] Saved new wallet ID %s to the database", new_wallet_id)

61 | return new_wallet_id

62 |

63 | # Get a wallet ID from the provided payload. Throw an error if it is missing.

64 | def get_wallet_id(payload: dict):

65 | if (not 'wallet_id' in payload):

66 | raise Exception("[wallet] 'wallet_id' property is missing")

67 |

68 | wallet_id = payload['wallet_id']

69 | return wallet_id

70 |

71 | # Get the spend request ID from the provided payload. Throw an error if it is missing

72 | def get_spend_request_id(payload: dict):

73 | if (not 'spend_request_id' in payload):

74 | raise Exception("[wallet] 'spend_request_id' property is missing")

75 |

76 | spend_request_id = payload['spend_request_id']

77 | return spend_request_id

78 |

79 | def add_xpub(payload: dict, db):

80 | if (not 'xpub' in payload):

81 | raise Exception("[wallet] Cannot add an xpub without the 'xpub' property")

82 |

83 | xpub = payload['xpub']

84 | wallet_id = get_wallet_id(payload)

85 |

86 | if (db.add_xpub(wallet_id, xpub)):

87 | logging.info('[wallet] Added xpub to wallet %s', wallet_id)

88 |

89 | def get_address(payload: dict, db):

90 | index = payload['index']

91 | wallet_id = get_wallet_id(payload)

92 | ec_public_keys = []

93 |

94 | # wallet = db.get_wallet(wallet_id)

95 | wallet_xpubs = db.get_xpubs(wallet_id)

96 |

97 | if (wallet_xpubs == []):

98 | raise Exception('[wallet] No xpubs to create an address from!')

99 |

100 | for xpub in wallet_xpubs:

101 | # The method to generate and aggregate MuSig key expects ECPubKey objects

102 | ec_public_key = ECPubKey()

103 |

104 | # TODO xpubs aren't working quite right. Using regular public keys for now.

105 | # bip32_node = BIP32.from_xpub(xpub['xpub'])

106 | # public_key = bip32_node.get_pubkey_from_path(f"m/{index}")

107 | # ec_public_key.set(public_key)

108 |

109 | ec_public_key.set(bytes.fromhex(xpub['xpub']))

110 | ec_public_keys.append(ec_public_key)

111 |

112 | c_map, pubkey_agg = generate_musig_key(ec_public_keys)

113 | logging.info('[wallet] Aggregate public key: %s', pubkey_agg.get_bytes().hex())

114 |

115 | # Create a segwit v1 address (P2TR) from the aggregate key

116 | p2tr_address = program_to_witness(0x01, pubkey_agg.get_bytes())

117 | logging.info('[wallet] Returning P2TR address %s', p2tr_address)

118 |

119 | # convert the challenges/coefficients to hex so they can be returned to the signer

120 | c_map_hex = {}

121 | for key, value in c_map.items():

122 | # k is the hex encoded pubkey, the value is the challenge/coefficient

123 | k = key.get_bytes().hex()

124 | c_map_hex[k] = value.hex()

125 |

126 | return [p2tr_address, c_map_hex, pubkey_agg.get_bytes().hex()]

127 |

128 | # Initiates a spending transaction

129 | def start_spend(payload: dict, db):

130 | # create an ID for this request

131 | spend_request_id = str(uuid.uuid4())

132 | logging.info('[wallet] Starting spend request with id %s', spend_request_id)

133 |

134 |

135 | if (not 'txid' in payload):

136 | raise Exception("[wallet] Cannot spend without the 'txid' property, which corresponds to the transaction ID of the output that is being spent")

137 |

138 | if (not 'output_index' in payload):

139 | raise Exception("[wallet] Cannot spend without the 'output_index' property, which corresponds to the index of the output that is being spent")

140 |

141 | if (not 'new_address' in payload):

142 | raise Exception("[wallet] Cannot spend without the 'new_address' property, which corresponds to the destination address of the transaction")

143 |

144 | if (not 'value' in payload):

145 | raise Exception("[wallet] Cannot spend without the 'value' property, which corresponds to the value (in satoshis) of the output that is being spent")

146 |

147 | txid = payload['txid']

148 | output_index = payload['output_index']

149 | destination_address = payload['new_address']

150 | wallet_id = get_wallet_id(payload)

151 |

152 | # 10% of the spent value is left to miners as the fee. Fee support can be improved in the future

153 | output_amount = int(payload['value'] * 0.9)

154 |

155 | # Use mempool.space to look up the scriptpubkey for the output being spent

156 | # Could probably find a library to do this so we don't have to make any external calls

157 | tx = get_transaction(txid)

158 | input_script_pub_key = tx['vout'][output_index]['scriptpubkey']

159 | input_value_sats = tx['vout'][output_index]['value']

160 |

161 | # Persist to the db so other signers can easily retrieve this information

162 | if (db.add_spend_request(txid,

163 | output_index,

164 | input_script_pub_key,

165 | input_value_sats,

166 | spend_request_id,

167 | destination_address,

168 | output_amount,

169 | wallet_id)):

170 | logging.info('[wallet] Saved spend request %s to the database', spend_request_id)

171 |

172 | return spend_request_id

173 |
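# Illustrative sketch (not part of the original module): start_spend repeats the same
# "property missing" check four times and computes the post-fee amount with a float multiply.
# The helpers below show one possible consolidation. require_fields and output_after_fee are
# hypothetical names, and the integer arithmetic is an assumption about the intended behavior;
# it can differ from int(value * 0.9) where float rounding is involved.

```python
def require_fields(payload: dict, fields: list) -> None:
    """Raise a single error naming every required property missing from the payload."""
    missing = [field for field in fields if field not in payload]
    if missing:
        raise ValueError(
            "[wallet] Cannot spend without the following properties: " + ", ".join(missing)
        )


def output_after_fee(value_sats: int, fee_percent: int = 10) -> int:
    """Return the amount left after reserving fee_percent of the value, using integers only."""
    return value_sats * (100 - fee_percent) // 100


if __name__ == "__main__":
    payload = {"txid": "ab" * 32, "output_index": 0, "new_address": "bc1p...", "value": 1000}
    require_fields(payload, ["txid", "output_index", "new_address", "value"])
    print(output_after_fee(payload["value"]))
```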

174 | def save_nonce(payload: dict, db):

175 | if (not 'nonce' in payload):

176 | raise Exception("[wallet] Cannot save a nonce without the 'nonce' property")

177 |

178 | nonce = payload['nonce']

179 | spend_request_id = get_spend_request_id(payload)

180 | spend_request = db.get_spend_request(spend_request_id)

181 | if (spend_request is None):

182 | logging.error('[wallet] Cannot find spend request %s in the database', spend_request_id)

183 | raise Exception('[wallet] Cannot save a nonce for an unknown spend request')

184 |

185 | wallet_id = spend_request['wallet_id']

186 | logging.info('[wallet] Saving nonce for request id %s', spend_request_id)

187 |

188 | wallet = db.get_wallet(wallet_id)

189 |

190 | # Save the nonce to the db

191 | db.add_nonce(nonce, spend_request_id)

192 |

193 | logging.info('[wallet] Successfully saved nonce for request id %s', spend_request_id)

194 |

195 | # When the last signer provides a nonce, we can return the aggregate nonce (R_AGG)

196 | nonces = db.get_all_nonces(spend_request_id)

197 |

198 | if (len(nonces) != wallet['quorum']):

199 | return None

200 |

201 | # Generate nonce points

202 | nonce_points = [ECPubKey().set(bytes.fromhex(nonce['nonce'])) for nonce in nonces]

203 | # R_agg is the aggregate nonce; negated indicates whether it had to be negated to produce

204 | # an even y coordinate (signers then negate their own nonces before signing)

205 | R_agg, negated = aggregate_schnorr_nonces(nonce_points)

206 |

207 | (spending_tx, _script_pub_key) = create_spending_transaction(spend_request['txid'],

208 | spend_request['output_index'],

209 | spend_request['new_address'],

210 | spend_request['value'])

211 |

212 | # Create a sighash for ALL (0x00)

213 | sighash_musig = TaprootSignatureHash(spending_tx, [{'n': spend_request['output_index'], 'nValue': spend_request['prev_value_sats'], 'scriptPubKey': bytes.fromhex(spend_request['prev_script_pubkey'])}], SIGHASH_ALL_TAPROOT)

214 | logging.debug('[wallet] Sighash: %s', sighash_musig.hex())

215 |

216 | # Update cache

217 | spending_txs[R_agg] = spending_tx

218 |

219 | # Encode everything as hex before returning

220 | return (R_agg.get_bytes().hex(), sighash_musig.hex(), negated)

221 |
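# Illustrative sketch (not part of the original module): save_nonce stores each nonce and
# returns None until the number of stored nonces reaches the wallet quorum. The in-memory
# class below models only that gating step. NonceCollector is a hypothetical stand-in for the
# db object, and it does not perform the aggregation done by aggregate_schnorr_nonces.
# Unlike the original `!=` comparison, it uses `<`, so extra nonces do not reset the gate.

```python
class NonceCollector:
    """Collects hex-encoded nonces per spend request and releases them at quorum."""

    def __init__(self, quorum: int):
        self.quorum = quorum
        self._nonces = {}  # spend_request_id -> list of hex-encoded nonces

    def add(self, spend_request_id: str, nonce_hex: str):
        # Reject malformed input early; bytes.fromhex raises ValueError on bad hex.
        bytes.fromhex(nonce_hex)
        nonces = self._nonces.setdefault(spend_request_id, [])
        nonces.append(nonce_hex)
        if len(nonces) < self.quorum:
            return None  # still waiting for other signers
        return list(nonces)  # quorum reached: caller can aggregate these


if __name__ == "__main__":
    collector = NonceCollector(quorum=2)
    print(collector.add("request-1", "02ab"))
    print(collector.add("request-1", "03cd"))
```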

222 | def save_signature(payload, db):

223 | if (not 'signature' in payload):

224 | raise Exception("[wallet] Cannot save a signature without the 'signature' property")

225 |

226 | signature = payload['signature']

227 | spend_request_id = get_spend_request_id(payload)

228 |

229 | logging.info("[wallet] Received partial signature for spend request %s", spend_request_id)

230 |

231 | db.add_partial_signature(signature, spend_request_id)

232 |

233 | spend_request = db.get_spend_request(spend_request_id)

234 | wallet_id = spend_request['wallet_id']

235 | wallet = db.get_wallet(wallet_id)

236 |

237 | sigs = db.get_all_signatures(spend_request_id)

238 | sigs = [sig['signature'] for sig in sigs]

239 |

240 | nonces = db.get_all_nonces(spend_request_id)

241 | quorum = wallet['quorum']

242 | if len(nonces) != quorum or len(sigs) != quorum:

243 | logging.error('[wallet] Number of nonces and signatures does not match expected quorum of %d', quorum)

244 | return None

245 |

246 | nonce_points = [ECPubKey().set(bytes.fromhex(nonce['nonce'])) for nonce in nonces]

247 |

248 | # Aggregate the nonces

249 | R_agg, negated = aggregate_schnorr_nonces(nonce_points)

250 |

251 | # Retrieve the current transaction from the cache

252 | spending_tx = spending_txs[R_agg]

253 |

254 | # The aggregate signature

255 | tx_sig_agg = aggregate_musig_signatures(sigs, R_agg)

256 |

257 | # Add the aggregate signature to the witness stack

258 | witness_stack = CScriptWitness()

259 | witness_stack.stack.append(tx_sig_agg)

260 |

261 | # Add the witness to the transaction

262 | spending_tx.wit.vtxinwit.append(CTxInWitness(witness_stack))

263 |

264 | tx_serialized_hex = spending_tx.serialize().hex()