├── .claude

├── commands

│ ├── INDEX.md

│ ├── README.md

│ ├── architecture-design.md

│ ├── context-compact.md

│ ├── crawl-docs.md

│ ├── daily-check.md

│ ├── firecrawl-api-research.md

│ ├── generate-tests.md

│ ├── knowledge-extract.md

│ ├── linear-continue-debugging.md

│ ├── linear-continue-testing.md

│ ├── linear-continue-work.md

│ ├── linear-debug-systematic.md

│ ├── linear-feature.md

│ ├── linear-plan-implementation.md

│ ├── linear-retroactive-git.md

│ ├── mcp-context-build.md

│ ├── mcp-doc-extract.md

│ ├── mcp-repo-analyze.md

│ ├── pr-complete.md

│ ├── pre-commit-fix.md

│ ├── standards-check.md

│ └── validate-docs.md

├── debugging

│ ├── cfos-41-lance-issues

│ │ ├── COMPLETION_SUMMARY.md

│ │ ├── COMPREHENSIVE_SOLUTIONS_ANALYSIS.md

│ │ ├── FINAL_IMPLEMENTATION_PLAN.md

│ │ ├── LANCE_BLOB_FILTER_BUG_ANALYSIS.md

│ │ ├── LANCE_ISSUE_SOLUTION.md

│ │ ├── LONG_TERM_IMPLEMENTATION_PLAN.md

│ │ ├── README.md

│ │ └── SOLUTION_SUMMARY.md

│ └── lance-map-type-issue.md

├── development-workflow.md

├── documentation

│ ├── docling

│ │ ├── docling-project.github.io_docling_.md

│ │ ├── docling-project.github.io_docling_concepts_.md

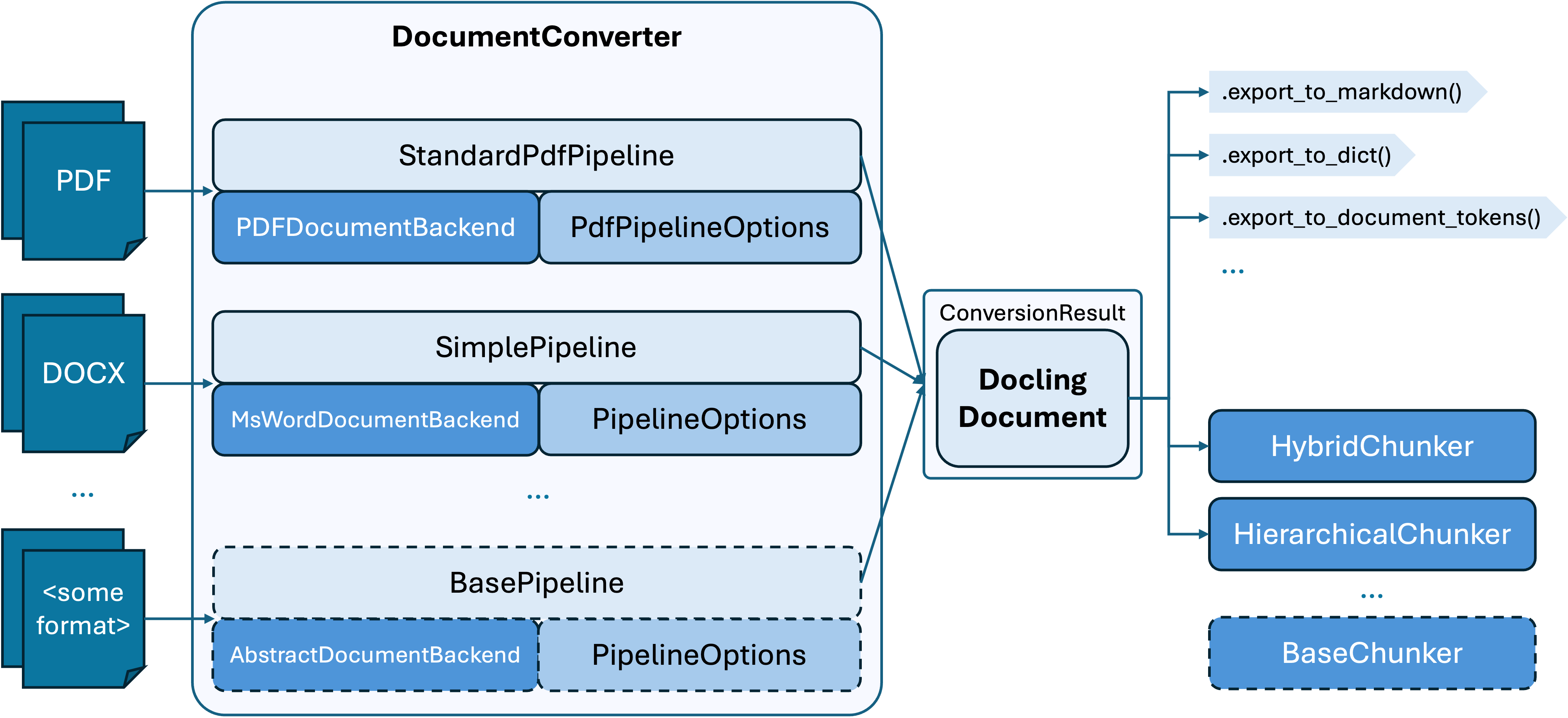

│ │ ├── docling-project.github.io_docling_concepts_architecture_.md

│ │ ├── docling-project.github.io_docling_concepts_chunking_.md

│ │ ├── docling-project.github.io_docling_concepts_docling_document_.md

│ │ ├── docling-project.github.io_docling_concepts_plugins_.md

│ │ ├── docling-project.github.io_docling_concepts_serialization_.md

│ │ ├── docling-project.github.io_docling_examples_.md

│ │ ├── docling-project.github.io_docling_examples_advanced_chunking_and_serialization_.md

│ │ ├── docling-project.github.io_docling_examples_backend_csv_.md

│ │ ├── docling-project.github.io_docling_examples_backend_xml_rag_.md

│ │ ├── docling-project.github.io_docling_examples_batch_convert_.md

│ │ ├── docling-project.github.io_docling_examples_compare_vlm_models_.md

│ │ ├── docling-project.github.io_docling_examples_custom_convert_.md

│ │ ├── docling-project.github.io_docling_examples_develop_formula_understanding_.md

│ │ ├── docling-project.github.io_docling_examples_develop_picture_enrichment_.md

│ │ ├── docling-project.github.io_docling_examples_export_figures_.md

│ │ ├── docling-project.github.io_docling_examples_export_multimodal_.md

│ │ ├── docling-project.github.io_docling_examples_export_tables_.md

│ │ ├── docling-project.github.io_docling_examples_full_page_ocr_.md

│ │ ├── docling-project.github.io_docling_examples_hybrid_chunking_.md

│ │ ├── docling-project.github.io_docling_examples_inspect_picture_content_.md

│ │ ├── docling-project.github.io_docling_examples_minimal_.md

│ │ ├── docling-project.github.io_docling_examples_minimal_vlm_pipeline_.md

│ │ ├── docling-project.github.io_docling_examples_pictures_description_.md

│ │ ├── docling-project.github.io_docling_examples_pictures_description_api_.md

│ │ ├── docling-project.github.io_docling_examples_rag_azuresearch_.md

│ │ ├── docling-project.github.io_docling_examples_rag_haystack_.md

│ │ ├── docling-project.github.io_docling_examples_rag_langchain_.md

│ │ ├── docling-project.github.io_docling_examples_rag_llamaindex_.md

│ │ ├── docling-project.github.io_docling_examples_rag_milvus_.md

│ │ ├── docling-project.github.io_docling_examples_rag_weaviate_.md

│ │ ├── docling-project.github.io_docling_examples_rapidocr_with_custom_models_.md

│ │ ├── docling-project.github.io_docling_examples_retrieval_qdrant_.md

│ │ ├── docling-project.github.io_docling_examples_run_md_.md

│ │ ├── docling-project.github.io_docling_examples_run_with_accelerator_.md

│ │ ├── docling-project.github.io_docling_examples_run_with_formats_.md

│ │ ├── docling-project.github.io_docling_examples_serialization_.md

│ │ ├── docling-project.github.io_docling_examples_tesseract_lang_detection_.md

│ │ ├── docling-project.github.io_docling_examples_translate_.md

│ │ ├── docling-project.github.io_docling_examples_visual_grounding_.md

│ │ ├── docling-project.github.io_docling_examples_vlm_pipeline_api_model_.md

│ │ ├── docling-project.github.io_docling_faq_.md

│ │ ├── docling-project.github.io_docling_installation_.md

│ │ ├── docling-project.github.io_docling_integrations_.md

│ │ ├── docling-project.github.io_docling_integrations_apify_.md

│ │ ├── docling-project.github.io_docling_integrations_bee_.md

│ │ ├── docling-project.github.io_docling_integrations_cloudera_.md

│ │ ├── docling-project.github.io_docling_integrations_crewai_.md

│ │ ├── docling-project.github.io_docling_integrations_data_prep_kit_.md

│ │ ├── docling-project.github.io_docling_integrations_docetl_.md

│ │ ├── docling-project.github.io_docling_integrations_haystack_.md

│ │ ├── docling-project.github.io_docling_integrations_instructlab_.md

│ │ ├── docling-project.github.io_docling_integrations_kotaemon_.md

│ │ ├── docling-project.github.io_docling_integrations_langchain_.md

│ │ ├── docling-project.github.io_docling_integrations_llamaindex_.md

│ │ ├── docling-project.github.io_docling_integrations_nvidia_.md

│ │ ├── docling-project.github.io_docling_integrations_opencontracts_.md

│ │ ├── docling-project.github.io_docling_integrations_openwebui_.md

│ │ ├── docling-project.github.io_docling_integrations_prodigy_.md

│ │ ├── docling-project.github.io_docling_integrations_rhel_ai_.md

│ │ ├── docling-project.github.io_docling_integrations_spacy_.md

│ │ ├── docling-project.github.io_docling_integrations_txtai_.md

│ │ ├── docling-project.github.io_docling_integrations_vectara_.md

│ │ ├── docling-project.github.io_docling_reference_cli_.md

│ │ ├── docling-project.github.io_docling_reference_docling_document_.md

│ │ ├── docling-project.github.io_docling_reference_document_converter_.md

│ │ ├── docling-project.github.io_docling_reference_pipeline_options_.md

│ │ ├── docling-project.github.io_docling_usage_.md

│ │ ├── docling-project.github.io_docling_usage_enrichments_.md

│ │ ├── docling-project.github.io_docling_usage_supported_formats_.md

│ │ ├── docling-project.github.io_docling_usage_vision_models_.md

│ │ └── docling-project.github.io_docling_v2_.md

│ ├── lance

│ │ ├── lancedb.github.io_lance_.md

│ │ ├── lancedb.github.io_lance_api_api.html.md

│ │ ├── lancedb.github.io_lance_api_py_modules.html.md

│ │ ├── lancedb.github.io_lance_api_python.html.md

│ │ ├── lancedb.github.io_lance_arrays.html.md

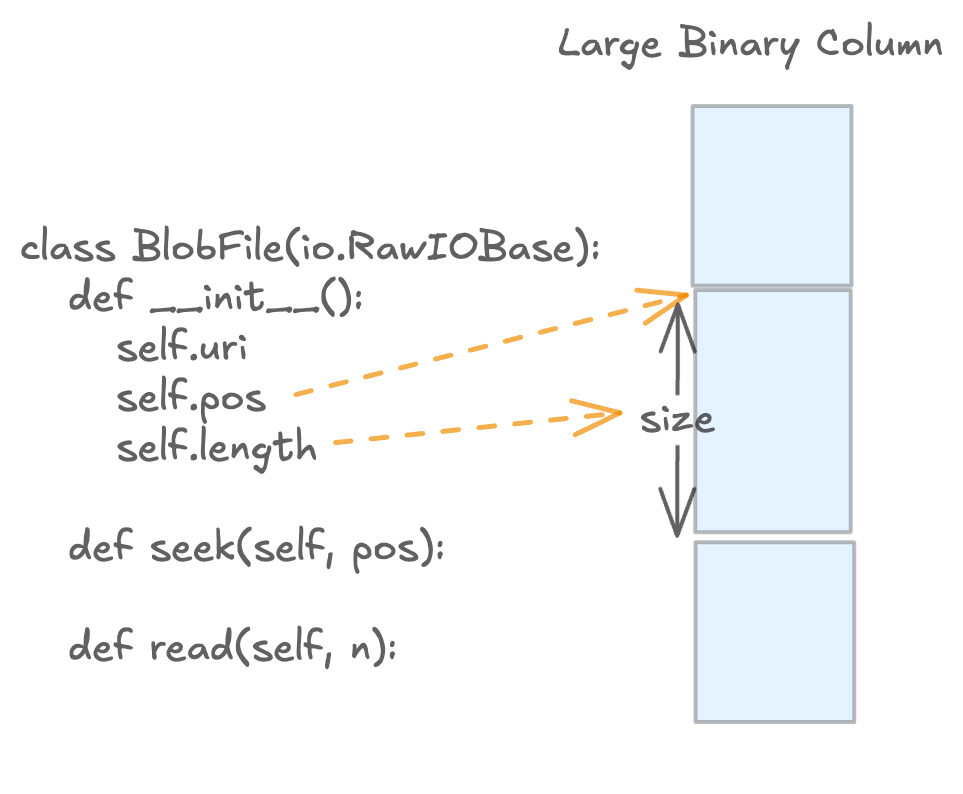

│ │ ├── lancedb.github.io_lance_blob.html.md

│ │ ├── lancedb.github.io_lance_contributing.html.md

│ │ ├── lancedb.github.io_lance_distributed_write.html.md

│ │ ├── lancedb.github.io_lance_examples_examples.html.md

│ │ ├── lancedb.github.io_lance_format.html.md

│ │ ├── lancedb.github.io_lance_format.rst.md

│ │ ├── lancedb.github.io_lance_genindex.html.md

│ │ ├── lancedb.github.io_lance_integrations_huggingface.html.md

│ │ ├── lancedb.github.io_lance_integrations_pytorch.html.md

│ │ ├── lancedb.github.io_lance_integrations_ray.html.md

│ │ ├── lancedb.github.io_lance_integrations_spark.html.md

│ │ ├── lancedb.github.io_lance_integrations_tensorflow.html.md

│ │ ├── lancedb.github.io_lance_introduction_read_and_write.html.md

│ │ ├── lancedb.github.io_lance_introduction_schema_evolution.html.md

│ │ ├── lancedb.github.io_lance_notebooks_quickstart.html.md

│ │ ├── lancedb.github.io_lance_object_store.html.md

│ │ ├── lancedb.github.io_lance_performance.html.md

│ │ ├── lancedb.github.io_lance_py-modindex.html.md

│ │ ├── lancedb.github.io_lance_search.html.md

│ │ ├── lancedb.github.io_lance_tags.html.md

│ │ └── lancedb.github.io_lance_tokenizer.html.md

│ ├── litellm

│ │ ├── docs.litellm.ai_docs_.md

│ │ ├── docs.litellm.ai_docs_anthropic_unified.md

│ │ ├── docs.litellm.ai_docs_apply_guardrail.md

│ │ ├── docs.litellm.ai_docs_assistants.md

│ │ ├── docs.litellm.ai_docs_audio_transcription.md

│ │ ├── docs.litellm.ai_docs_batches.md

│ │ ├── docs.litellm.ai_docs_budget_manager.md

│ │ ├── docs.litellm.ai_docs_completion.md

│ │ ├── docs.litellm.ai_docs_contributing.md

│ │ ├── docs.litellm.ai_docs_data_retention.md

│ │ ├── docs.litellm.ai_docs_data_security.md

│ │ ├── docs.litellm.ai_docs_enterprise.md

│ │ ├── docs.litellm.ai_docs_exception_mapping.md

│ │ ├── docs.litellm.ai_docs_files_endpoints.md

│ │ ├── docs.litellm.ai_docs_fine_tuning.md

│ │ ├── docs.litellm.ai_docs_hosted.md

│ │ ├── docs.litellm.ai_docs_image_edits.md

│ │ ├── docs.litellm.ai_docs_image_generation.md

│ │ ├── docs.litellm.ai_docs_image_variations.md

│ │ ├── docs.litellm.ai_docs_langchain_.md

│ │ ├── docs.litellm.ai_docs_mcp.md

│ │ ├── docs.litellm.ai_docs_migration.md

│ │ ├── docs.litellm.ai_docs_migration_policy.md

│ │ ├── docs.litellm.ai_docs_moderation.md

│ │ ├── docs.litellm.ai_docs_oidc.md

│ │ ├── docs.litellm.ai_docs_project.md

│ │ ├── docs.litellm.ai_docs_providers.md

│ │ ├── docs.litellm.ai_docs_proxy_server.md

│ │ ├── docs.litellm.ai_docs_realtime.md

│ │ ├── docs.litellm.ai_docs_reasoning_content.md

│ │ ├── docs.litellm.ai_docs_rerank.md

│ │ ├── docs.litellm.ai_docs_response_api.md

│ │ ├── docs.litellm.ai_docs_routing-load-balancing.md

│ │ ├── docs.litellm.ai_docs_routing.md

│ │ ├── docs.litellm.ai_docs_rules.md

│ │ ├── docs.litellm.ai_docs_scheduler.md

│ │ ├── docs.litellm.ai_docs_sdk_custom_pricing.md

│ │ ├── docs.litellm.ai_docs_secret.md

│ │ ├── docs.litellm.ai_docs_set_keys.md

│ │ ├── docs.litellm.ai_docs_simple_proxy.md

│ │ ├── docs.litellm.ai_docs_supported_endpoints.md

│ │ ├── docs.litellm.ai_docs_text_completion.md

│ │ ├── docs.litellm.ai_docs_text_to_speech.md

│ │ ├── docs.litellm.ai_docs_troubleshoot.md

│ │ └── docs.litellm.ai_docs_wildcard_routing.md

│ ├── mirascope

│ │ ├── mirascope.com_docs_mirascope.md

│ │ ├── mirascope.com_docs_mirascope_api.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_anthropic_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_azure_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_call_factory.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_merge_decorators.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_message_param.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_metadata.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_prompt.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_structured_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_toolkit.md

│ │ ├── mirascope.com_docs_mirascope_api_core_base_types.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_bedrock_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_cohere_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_costs_calculate_cost.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_google_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_groq_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_litellm_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_mistral_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_openai_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_call.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_call_params.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_dynamic_config.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_core_xai_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_call.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_call_response.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_call_response_chunk.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_context.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_override.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_stream.md

│ │ ├── mirascope.com_docs_mirascope_api_llm_tool.md

│ │ ├── mirascope.com_docs_mirascope_api_mcp_client.md

│ │ ├── mirascope.com_docs_mirascope_api_retries_fallback.md

│ │ ├── mirascope.com_docs_mirascope_api_retries_tenacity.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_system_docker_operation.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_system_file_system.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_web_duckduckgo.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_web_httpx.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_web_parse_url_content.md

│ │ ├── mirascope.com_docs_mirascope_api_tools_web_requests.md

│ │ ├── mirascope.com_docs_mirascope_getting-started_contributing.md

│ │ ├── mirascope.com_docs_mirascope_getting-started_help.md

│ │ ├── mirascope.com_docs_mirascope_getting-started_migration.md

│ │ ├── mirascope.com_docs_mirascope_getting-started_why.md

│ │ ├── mirascope.com_docs_mirascope_guides.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_blog-writing-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_documentation-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_local-chat-with-codebase.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_localized-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_qwant-search-agent-with-sources.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_sql-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_agents_web-search-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_evals_evaluating-documentation-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_evals_evaluating-sql-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_evals_evaluating-web-search-agent.md

│ │ ├── mirascope.com_docs_mirascope_guides_getting-started_dynamic-configuration-and-chaining.md

│ │ ├── mirascope.com_docs_mirascope_guides_getting-started_quickstart.md

│ │ ├── mirascope.com_docs_mirascope_guides_getting-started_structured-outputs.md

│ │ ├── mirascope.com_docs_mirascope_guides_getting-started_tools-and-agents.md

│ │ ├── mirascope.com_docs_mirascope_guides_langgraph-vs-mirascope_quickstart.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_code-generation-and-execution.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_document-segmentation.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_extract-from-pdf.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_extraction-using-vision.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_generating-captions.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_generating-synthetic-data.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_knowledge-graph.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_llm-validation-with-retries.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_named-entity-recognition.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_o1-style-thinking.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_pii-scrubbing.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_query-plan.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_removing-semantic-duplicates.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_search-with-sources.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_speech-transcription.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_support-ticket-routing.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_text-classification.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_text-summarization.md

│ │ ├── mirascope.com_docs_mirascope_guides_more-advanced_text-translation.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_chain-of-verification.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_decomposed-prompting.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_demonstration-ensembling.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_diverse.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_least-to-most.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_mixture-of-reasoning.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_prompt-paraphrasing.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_reverse-chain-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_self-consistency.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_self-refine.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_sim-to-m.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_skeleton-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_step-back.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_chaining-based_system-to-attention.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_chain-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_common-phrases.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_contrastive-chain-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_emotion-prompting.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_plan-and-solve.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_rephrase-and-respond.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_rereading.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_role-prompting.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_self-ask.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_tabular-chain-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_guides_prompt-engineering_text-based_thread-of-thought.md

│ │ ├── mirascope.com_docs_mirascope_learn.md

│ │ ├── mirascope.com_docs_mirascope_learn_agents.md

│ │ ├── mirascope.com_docs_mirascope_learn_async.md

│ │ ├── mirascope.com_docs_mirascope_learn_calls.md

│ │ ├── mirascope.com_docs_mirascope_learn_chaining.md

│ │ ├── mirascope.com_docs_mirascope_learn_evals.md

│ │ ├── mirascope.com_docs_mirascope_learn_extensions_custom_provider.md

│ │ ├── mirascope.com_docs_mirascope_learn_extensions_middleware.md

│ │ ├── mirascope.com_docs_mirascope_learn_json_mode.md

│ │ ├── mirascope.com_docs_mirascope_learn_local_models.md

│ │ ├── mirascope.com_docs_mirascope_learn_mcp_client.md

│ │ ├── mirascope.com_docs_mirascope_learn_output_parsers.md

│ │ ├── mirascope.com_docs_mirascope_learn_prompts.md

│ │ ├── mirascope.com_docs_mirascope_learn_provider-specific_anthropic.md

│ │ ├── mirascope.com_docs_mirascope_learn_provider-specific_openai.md

│ │ ├── mirascope.com_docs_mirascope_learn_response_models.md

│ │ ├── mirascope.com_docs_mirascope_learn_retries.md

│ │ ├── mirascope.com_docs_mirascope_learn_streams.md

│ │ ├── mirascope.com_docs_mirascope_learn_tools.md

│ │ ├── mirascope.com_docs_mirascope_llms-full.md

│ │ └── mirascope.com_docs_mirascope_llms-full.txt.md

│ └── modelcontextprotocol

│ │ ├── 01_overview

│ │ └── index.md

│ │ ├── 02_architecture

│ │ └── architecture.md

│ │ ├── 03_base_protocol

│ │ ├── 01_overview.md

│ │ ├── 02_lifecycle.md

│ │ ├── 03_transports.md

│ │ └── 04_authorization.md

│ │ ├── 04_server_features

│ │ ├── 01_overview.md

│ │ ├── 02_prompts.md

│ │ ├── 03_resources.md

│ │ └── 04_tools.md

│ │ ├── 05_client_features

│ │ ├── 01_roots.md

│ │ └── 02_sampling.md

│ │ └── README.md

├── implementations

│ ├── collection_metadata_fix.md

│ ├── collection_metadata_lance_filtering_analysis.md

│ ├── elasticsearch_migration_guide.md

│ ├── http_primary_transport.md

│ ├── mcp_architecture_analysis.md

│ ├── mcp_documentation_summary.md

│ ├── mcp_integration_patterns.md

│ ├── mcp_monitoring_analysis.md

│ ├── mcp_protocol_research.md

│ ├── mcp_security_analysis.md

│ ├── mcp_tools_analysis.md

│ ├── mcp_transport_analysis.md

│ ├── mongodb_atlas_vector_search_research.md

│ ├── phase2_mcp_server.md

│ ├── phase3.2_batch_operations.md

│ ├── phase3.3_collection_management.md

│ ├── phase3.3_collection_management_complete.md

│ ├── phase3.4_subscription_system.md

│ ├── phase3.5_http_transport.md

│ ├── phase3.6_analytics_performance.md

│ ├── phase3_mcp_server.md

│ ├── phase4.1_monitoring.md

│ └── weaviate_export_research.md

├── pre-commit-quick-fixes.md

├── standards

│ ├── claude-code-meta-standards.md

│ ├── code-standards.md

│ ├── meta-prompts-library.md

│ ├── mirascope-lilypad-best-practice-standards.md

│ ├── quick-reference.md

│ └── testing-standards.md

└── temp

│ ├── claude-code-advanced-temp.md

│ ├── claude-prompting-temp.md

│ ├── firecrawl-tools-temp.md

│ ├── lance-quickstart-temp.md

│ ├── memvid-analysis-temp.md

│ └── semantic-splitter-temp.mc

├── .cursor

└── rules

│ ├── MDC-file to-help-generate-MDC-files.mdc

│ ├── contextframe-concepts.mdc

│ └── python_package_best_practices.mdc

├── .github

└── workflows

│ └── claude.yml

├── .gitignore

├── .mypy.ini

├── .pre-commit-config.yaml

├── .vscode

├── extensions.json

├── launch.json

└── settings.json

├── CHANGELOG.md

├── CLAUDE.md

├── CODE_OF_CONDUCT.md

├── LICENSE

├── MANIFEST.in

├── README.md

├── contextframe

├── __init__.py

├── __main__.py

├── builders

│ ├── __init__.py

│ ├── embed.py

│ ├── encode.py

│ ├── enhance.py

│ └── serve.py

├── cli.py

├── connectors

│ ├── __init__.py

│ ├── base.py

│ ├── discord.py

│ ├── github.py

│ ├── google_drive.py

│ ├── linear.py

│ ├── notion.py

│ ├── obsidian.py

│ └── slack.py

├── embed

│ ├── __init__.py

│ ├── base.py

│ ├── batch.py

│ ├── integration.py

│ └── litellm_provider.py

├── enhance

│ ├── __init__.py

│ ├── base.py

│ ├── prompts.py

│ └── tools.py

├── examples

│ └── __init__.py

├── exceptions.py

├── extract

│ ├── __init__.py

│ ├── base.py

│ ├── batch.py

│ ├── chunking.py

│ └── extractors.py

├── frame.py

├── helpers

│ ├── __init__.py

│ └── metadata_utils.py

├── io

│ ├── __init__.py

│ ├── exporter.py

│ ├── formats.py

│ └── importer.py

├── mcp

│ ├── README.md

│ ├── TRANSPORT_GUIDE.md

│ ├── __init__.py

│ ├── __main__.py

│ ├── analytics

│ │ ├── __init__.py

│ │ ├── analyzer.py

│ │ ├── optimizer.py

│ │ ├── stats.py

│ │ └── tools.py

│ ├── batch

│ │ ├── __init__.py

│ │ ├── handler.py

│ │ ├── tools.py

│ │ └── transaction.py

│ ├── collections

│ │ ├── __init__.py

│ │ ├── templates.py

│ │ └── tools.py

│ ├── core

│ │ ├── __init__.py

│ │ ├── streaming.py

│ │ └── transport.py

│ ├── enhancement_tools.py

│ ├── errors.py

│ ├── example_client.py

│ ├── handlers.py

│ ├── http_client_example.py

│ ├── monitoring

│ │ ├── __init__.py

│ │ ├── collector.py

│ │ ├── cost.py

│ │ ├── integration.py

│ │ ├── performance.py

│ │ ├── tools.py

│ │ └── usage.py

│ ├── resources.py

│ ├── schemas.py

│ ├── security

│ │ ├── __init__.py

│ │ ├── audit.py

│ │ ├── auth.py

│ │ ├── authorization.py

│ │ ├── integration.py

│ │ ├── jwt.py

│ │ ├── oauth.py

│ │ └── rate_limiting.py

│ ├── server.py

│ ├── subscriptions

│ │ ├── __init__.py

│ │ ├── manager.py

│ │ └── tools.py

│ ├── tools.py

│ ├── transport.py

│ └── transports

│ │ ├── __init__.py

│ │ ├── http

│ │ ├── __init__.py

│ │ ├── adapter.py

│ │ ├── auth.py

│ │ ├── config.py

│ │ ├── security.py

│ │ ├── server.py

│ │ └── sse.py

│ │ └── stdio.py

├── schema

│ ├── __init__.py

│ ├── contextframe_schema.json

│ ├── contextframe_schema.py

│ └── validation.py

├── scripts

│ ├── README.md

│ ├── __init__.py

│ ├── add_impl.py

│ ├── contextframe-add

│ ├── contextframe-get

│ ├── contextframe-list

│ ├── contextframe-search

│ ├── create_dataset.py

│ ├── get_impl.py

│ ├── list_impl.py

│ └── search_impl.py

├── templates

│ ├── __init__.py

│ ├── base.py

│ ├── business.py

│ ├── examples

│ │ └── __init__.py

│ ├── registry.py

│ ├── research.py

│ └── software.py

└── tests

│ ├── test_all_connectors.py

│ ├── test_connectors.py

│ └── test_mcp

│ ├── __init__.py

│ ├── test_analytics.py

│ ├── test_batch_handler.py

│ ├── test_batch_tools.py

│ ├── test_batch_tools_integration.py

│ ├── test_collection_tools.py

│ ├── test_http_first_approach.py

│ ├── test_http_primary.py

│ ├── test_monitoring.py

│ ├── test_protocol.py

│ ├── test_security.py

│ ├── test_subscription_tools.py

│ ├── test_transport_migration.py

│ └── test_transports

│ ├── __init__.py

│ └── test_http.py

├── docs

├── CNAME

├── api

│ ├── connectors.md

│ ├── frame-dataset.md

│ ├── frame-record.md

│ ├── overview.md

│ ├── schema.md

│ └── utilities.md

├── blog

│ └── index.md

├── cli

│ ├── commands.md

│ ├── configuration.md

│ ├── overview.md

│ └── versioning.md

├── community

│ ├── code-of-conduct.md

│ ├── contributing.md

│ ├── index.md

│ └── support.md

├── concepts

│ ├── dataset_layout.md

│ ├── frame_storage.md

│ └── schema_cheatsheet.md

├── cookbook

│ ├── api-docs.md

│ ├── changelog-tracking.md

│ ├── course-materials.md

│ ├── customer-support-analytics.md

│ ├── document-pipeline.md

│ ├── email-archive.md

│ ├── extraction_patterns.md

│ ├── financial-analysis.md

│ ├── github-knowledge-base.md

│ ├── index.md

│ ├── legal-repository.md

│ ├── meeting-notes.md

│ ├── multi-language.md

│ ├── multi-source-search.md

│ ├── news-clustering.md

│ ├── patent-search.md

│ ├── podcast-index.md

│ ├── rag-system.md

│ ├── research-papers.md

│ ├── scientific-catalog.md

│ ├── slack-knowledge.md

│ └── video-transcripts.md

├── core-concepts

│ ├── architecture.md

│ ├── collections-relationships.md

│ ├── data-model.md

│ ├── record-types.md

│ ├── schema-system.md

│ └── storage-layer.md

├── faq.md

├── getting-started

│ ├── basic-examples.md

│ ├── first-steps.md

│ └── installation.md

├── index.md

├── integration

│ ├── blobs.md

│ ├── connectors

│ │ ├── index.md

│ │ └── introduction.md

│ ├── custom.md

│ ├── discord.md

│ ├── docling_integration_analysis.md

│ ├── embedding_providers.md

│ ├── external-connectors.md

│ ├── external_extraction_tools.md

│ ├── github.md

│ ├── google-drive.md

│ ├── installation.md

│ ├── linear.md

│ ├── notion.md

│ ├── object_storage.md

│ ├── obsidian.md

│ ├── overview.md

│ ├── python_api.md

│ ├── slack.md

│ └── validation.md

├── mcp

│ ├── api

│ │ └── tools.md

│ ├── concepts

│ │ ├── overview.md

│ │ ├── tools.md

│ │ └── transport.md

│ ├── configuration

│ │ ├── index.md

│ │ ├── monitoring.md

│ │ └── security.md

│ ├── cookbook

│ │ └── index.md

│ ├── getting-started

│ │ ├── installation.md

│ │ └── quickstart.md

│ ├── guides

│ │ ├── agent-integration.md

│ │ └── cli-tools.md

│ ├── index.md

│ └── mcp_implementation_plan.md

├── migration

│ ├── data-import-export.md

│ ├── from-document-stores.md

│ ├── from-vector-databases.md

│ ├── overview.md

│ └── pgvector_export_guide.md

├── modules

│ ├── embeddings.md

│ ├── enrichment.md

│ ├── frame-dataset.md

│ ├── frame-record.md

│ ├── import-export.md

│ └── search-query.md

├── quickstart.md

├── recipes

│ ├── bulk_ingest_embedding.md

│ ├── collections_relationships.md

│ ├── fastapi_vector_search.md

│ ├── frameset_export_import.md

│ ├── s3_roundtrip.md

│ └── store_images_blobs.md

├── reference

│ ├── error-codes.md

│ ├── index.md

│ └── schema.md

├── troubleshooting.md

└── tutorials

│ └── basics_tutorial.md

├── examples

├── all_connectors_usage.py

├── connector_usage.py

├── embedding_providers_demo.py

├── enrichment_demo.py

├── external_tools

│ ├── chunkr_pipeline.py

│ ├── docling_pdf_pipeline.py

│ ├── ollama_local_embedding.py

│ ├── reducto_pipeline.py

│ └── unstructured_io_pipeline.py

└── semantic_chunking_demo.py

├── mkdocs.yml

├── pyproject.toml

├── schema_discrepancy_report.md

└── tests

├── test_embed.py

├── test_enhance.py

├── test_extract.py

├── test_extract_integration.py

├── test_frameset.py

├── test_io.py

├── test_lazy_loading.py

├── test_litellm_provider.py

└── test_templates.py

/.claude/commands/INDEX.md:

--------------------------------------------------------------------------------

1 | CLAUDE COMMANDS - QUICK INDEX

2 |

3 | ## Core Workflow Commands

4 |

5 | - `/project:linear-plan-implementation` - Create Linear implementation plans with project organization

6 | - `/project:linear-feature` - Complete feature workflow (Linear → Branch → Commits → PR)

7 | - `/project:daily-check` - Daily development status check

8 | - `/project:linear-debug-systematic` - Scientific debugging with root cause analysis

9 | - `/project:pr-complete` - Create professional pull requests

10 |

11 | ## Quality & Testing Commands

12 |

13 | - `/project:standards-check` - Check and auto-fix code standards

14 | - `/project:generate-tests` - Generate comprehensive test suites (80/20 rule)

15 |

16 | ## Architecture & Documentation Commands

17 |

18 | - `/project:architecture-design` - Design system architecture with ADRs

19 | - `/project:knowledge-extract` - Extract and document code knowledge

20 | - `/project:crawl-docs` - Extract web documentation to local files

21 | - `/project:validate-docs` - Validate documentation against codebase implementation

22 |

23 | ## MCP-Powered Commands

24 |

25 | - `/project:mcp-doc-extract` - Extract docs using Firecrawl MCP

26 | - `/project:mcp-repo-analyze` - Analyze GitHub repos using DeepWiki MCP

27 | - `/project:firecrawl-api-research` - Research APIs using Firecrawl deep research

28 | - `/project:mcp-context-build` - Build context using both MCPs

29 |

30 | ---

31 |

32 | **Usage**: `/project:[command-name] [arguments]`

33 |

34 | **Example**: `/project:linear-plan-implementation User authentication system`

35 |

36 | **Details**: See individual command files or `README.md` for full documentation.

37 |

--------------------------------------------------------------------------------

/.claude/commands/architecture-design.md:

--------------------------------------------------------------------------------

1 | Design comprehensive architecture for: $ARGUMENTS

2 |

3 |

4 | Think deeply. Consider everything. This design guides months of development.

5 |

6 |

7 |

8 | The assistant should create a production-grade architecture covering scalability, maintainability, security, and performance. The assistant should design for 10x scale from day one and give it their all.

9 |

10 |

11 |

12 | Building foundation for: $ARGUMENTS

13 | Need complete technical blueprint with all decisions documented

14 |

15 |

16 |

17 | - High-level architecture with ASCII diagrams

18 | - Component design with clear boundaries

19 | - Data architecture (entities, flow, consistency)

20 | - Security architecture (auth, encryption, audit)

21 | - Performance strategy (caching, async, monitoring)

22 | - Architecture Decision Records (ADRs) for key choices

23 | - Risk assessment with mitigations

24 | - Implementation roadmap with Linear phases

25 |

26 |

27 |

28 | 1. Analyze requirements (functional, non-functional, constraints, growth)

29 | 2. Design system architecture with clear service boundaries

30 | 3. Create component specifications (responsibilities, interfaces, scaling)

31 | 4. Document all technical decisions with ADRs

32 | 5. Build security layer (authentication, authorization, compliance)

33 | 6. Design performance optimization strategy

34 | 7. Create Linear epic with phased implementation plan

35 | 8. Save to `.claude/architecture/$ARGUMENTS/` with all artifacts

36 |

37 |

38 | The assistant should generate a comprehensive architecture that future developers will thank them for. The assistant should include every detail needed for successful implementation and go beyond the basics to create an exceptional technical foundation.

39 |

40 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

--------------------------------------------------------------------------------

/.claude/commands/crawl-docs.md:

--------------------------------------------------------------------------------

1 | Extract comprehensive documentation from: $ARGUMENTS

2 |

3 |

4 | Capture every useful detail, example, pattern. Make this our definitive knowledge source.

5 |

6 |

7 |

8 | The assistant should use WebFetch for intelligent content extraction, follow links, find hidden gems, and organize systematically.

9 |

10 |

11 |

12 | Need complete documentation extraction from: $ARGUMENTS

13 | Will become our source of truth for development

14 |

15 |

16 |

17 | - Deep content analysis with link following

18 | - Logical organization (concepts, API, examples, troubleshooting)

19 | - Code snippet extraction with context

20 | - Configuration examples and patterns

21 | - Version-specific information

22 | - Quality verification

23 |

24 |

25 |

26 | 1. WebFetch main page → identify structure and navigation

27 | 2. Extract linked pages, subpages, related docs

28 | 3. Organize by categories: Getting Started, Core Concepts, API Reference, Examples, Best Practices, Configuration, Troubleshooting, Advanced

29 | 4. Extract all code snippets with descriptions

30 | 5. Capture error messages, solutions, patterns, anti-patterns

31 | 6. Save to `.claude/documentation/[source-name]/` with metadata

32 | 7. Generate quick reference guide and cheatsheet

33 | 8. Flag outdated or conflicting information

34 |

35 |

36 | The assistant should create a comprehensive, well-organized documentation package that accelerates development and is immediately useful.

37 |

38 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

39 |

--------------------------------------------------------------------------------

/.claude/commands/daily-check.md:

--------------------------------------------------------------------------------

1 | Perform comprehensive daily development check: $ARGUMENTS

2 |

3 |

4 | What needs attention? What's blocked? What moves the project forward today?

5 |

6 |

7 |

8 | The assistant should analyze Linear issues, git state, and code quality, then create a focused action plan that optimizes for flow and impact.

9 |

10 |

11 |

12 | Daily standup replacement - comprehensive development status and prioritization

13 | Need clear picture of work state and optimal task order

14 |

15 |

16 |

17 | - Linear status (in progress, todo, blocked, in review)

18 | - Git repository state (branch, changes, pending PRs)

19 | - Code quality indicators (tests, linting, coverage)

20 | - Priority matrix (urgent/important, quick wins)

21 | - Health indicators (tech debt, blockers, days since deploy)

22 |

23 |

24 |

25 | 1. mcp_linear.list_my_issues() → categorize by status

26 | 2. git status && git diff --stat && git log --oneline -10

27 | 3. gh pr list --author @me

28 | 4. pytest -x && ruff check --select E,F

29 | 5. Generate priority matrix: 🔴 Urgent (blocked PRs, critical bugs) 🟡 Important (features, reviews) 🟢 Quick wins

30 | 6. Create focused action items with specific Linear issue numbers

31 | 7. Identify blockers, risks, coordination needs

32 | 8. Generate end-of-day checklist

33 |

34 |

35 | The assistant should cut through noise, highlight what truly needs attention, make today productive and move the project forward meaningfully.

36 |

37 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

38 |

--------------------------------------------------------------------------------

/.claude/commands/firecrawl-api-research.md:

--------------------------------------------------------------------------------

1 | Deep API research using Firecrawl MCP for: $ARGUMENTS

2 |

3 |

4 | Extract everything needed for robust integration. Authentication, endpoints, rate limits, examples, edge cases.

5 |

6 |

7 |

8 | The assistant should use Firecrawl's AI-powered extraction and build complete API understanding for production integration.

9 |

10 |

11 |

12 | Researching API: $ARGUMENTS

13 | Need comprehensive documentation for integration

14 |

15 |

16 |

17 | - Complete API specification extraction

18 | - Authentication methods and setup

19 | - All endpoints with parameters/responses

20 | - Rate limits and error codes

21 | - Real-world usage examples

22 | - SDK/library documentation

23 | - Common issues and solutions

24 |

25 |

26 |

27 | 1. mcp_firecrawl.firecrawl_scrape(url="$ARGUMENTS", formats=["markdown", "links"])

28 | 2. mcp_firecrawl.firecrawl_deep_research(query="$ARGUMENTS API authentication endpoints rate limits error codes examples", maxDepth=3, maxUrls=75)

29 | 3. mcp_firecrawl.firecrawl_extract(urls=["$ARGUMENTS/reference", "$ARGUMENTS/auth", "$ARGUMENTS/endpoints"], schema={endpoints, authentication, rateLimits, errorCodes})

30 | 4. mcp_firecrawl.firecrawl_search(query="$ARGUMENTS API integration example Python JavaScript", limit=20)

31 | 5. mcp_firecrawl.firecrawl_batch_scrape(urls=[SDKs, libraries, quickstart])

32 | 6. Research common issues: timeout, rate limit, 401, 403 errors

33 | 7. Extract all code examples with full implementation

34 | 8. Generate API client template with retry logic, rate limiting, error handling

35 | 9. Create integration guide with checklist

36 | 10. Monitor API changes via changelog

37 |

38 |

39 | The assistant should build complete API understanding enabling smooth, robust integration and generate production-ready client implementation.

40 |

41 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

42 |

--------------------------------------------------------------------------------
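Step 8 of the command above asks for a client template with retry logic. A minimal sketch of that idea, using only the standard library, could look like the following. The helper name `call_with_retry`, its parameters, and the choice of retryable exceptions are illustrative assumptions, not part of Firecrawl or of this repository.

```python
import random
import time


def call_with_retry(func, *, attempts=3, base_delay=0.5,
                    retry_on=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Call func() and retry on transient errors with exponential backoff.

    Hypothetical helper: the delay doubles after each failed attempt and a
    small random jitter is added so parallel clients do not retry in lockstep.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error to the caller
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 10))
```

Passing `sleep` as a parameter keeps the helper easy to test, because a test can substitute a stub instead of actually waiting.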

/.claude/commands/generate-tests.md:

--------------------------------------------------------------------------------

1 | Generate comprehensive test suite for: $ARGUMENTS

2 |

3 |

4 | Strategic coverage. Focus effort where it matters. Prevent real bugs.

5 |

6 |

7 |

8 | The assistant should apply 80/20 rule and test critical paths thoroughly, common cases well, edge cases smartly.

9 |

10 |

11 |

12 | Creating test suite for: $ARGUMENTS

13 | Need meaningful protection against regressions

14 |

15 |

16 |

17 | - Map all functions, dependencies, critical paths

18 | - Priority 1: Core business logic (50% effort)

19 | - Priority 2: Happy paths (30% effort)

20 | - Priority 3: Edge cases (20% effort)

21 | - Performance benchmarks for critical operations

22 | - Test utilities (factories, mocks, fixtures)

23 | - 80% overall coverage, 90%+ for business logic

24 |

25 |

26 |

27 | 1. Analyze code structure → identify test targets

28 | 2. Generate test file structure with proper fixtures

29 | 3. Write comprehensive happy path tests first

30 | 4. Add error handling tests with specific assertions

31 | 5. Create parametrized edge case tests

32 | 6. Add performance benchmarks: assert benchmark.stats['median'] < 0.1

33 | 7. Generate test data factories and mock helpers

34 | 8. Run coverage: pytest --cov=$ARGUMENTS --cov-report=term-missing

35 | 9. Document test intentions (what, why, failure meaning)

36 |

37 |

38 | Every test should prevent a real bug. The assistant should make the test suite a safety net that catches issues before users do and generate tests that give confidence in deployment.

39 |

40 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

41 |

--------------------------------------------------------------------------------
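Steps 5 and 6 of the command above (parametrized edge cases and a median-runtime budget) can be sketched without any test framework. The function `normalize_title` and the helper `median_runtime` are hypothetical stand-ins; in a real suite the same checks would more likely use `pytest.mark.parametrize` and a benchmark fixture.

```python
import statistics
import time


def median_runtime(func, *args, repeats=5):
    """Return the median wall-clock time in seconds of func(*args)."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def normalize_title(title):
    """Hypothetical function under test: collapse whitespace and lowercase."""
    return " ".join(title.split()).lower()


# Each pair is (input, expected output); one table drives all edge cases.
EDGE_CASES = [
    ("", ""),
    ("  Hello   World ", "hello world"),
    ("ALREADY\tTABBED", "already tabbed"),
]


def check_edge_cases():
    for raw, expected in EDGE_CASES:
        assert normalize_title(raw) == expected, (raw, expected)


check_edge_cases()
# Same 0.1 second median budget as the benchmark assertion in step 6.
assert median_runtime(normalize_title, "x " * 1000) < 0.1
```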

/.claude/commands/knowledge-extract.md:

--------------------------------------------------------------------------------

1 | Extract deep knowledge about: $ARGUMENTS

2 |

3 |

4 | Investigative journalism mode. Uncover the complete story - WHY it exists, HOW it evolved, WHAT it teaches.

5 |

6 |

7 |

8 | The assistant should dig into history, design decisions, trade-offs, document the undocumented, and capture tribal knowledge.

9 |

10 |

11 |

12 | Knowledge extraction for: $ARGUMENTS

13 | Create organizational memory that makes future work easier

14 |

15 |

16 |

17 | - Code archaeology (git history, evolution, major changes)

18 | - Design rationale (why this approach, alternatives considered)

19 | - Dependency mapping (uses, used by, breaking impacts)

20 | - Usage patterns (common, advanced, mistakes to avoid)

21 | - Tribal knowledge (gotchas, tricks, workarounds)

22 | - Performance characteristics

23 | - Future roadmap ideas

24 |

25 |

26 |

27 | 1. git log --follow -- $ARGUMENTS && git blame $ARGUMENTS

28 | 2. rg -l "$ARGUMENTS" → find all usages

29 | 3. rg -B2 -A2 "$ARGUMENTS" → understand contexts

30 | 4. Ask as if interviewing creator: Why created? Alternatives? Do differently? Most important? Breaks often? Bottlenecks?

31 | 5. Document undocumented: deployment gotchas, config secrets, debugging tricks, tuning, known issues

32 | 6. Create `.claude/knowledge/$ARGUMENTS/` structure:

33 | - Executive Summary

34 | - Core Concepts

35 | - Architecture & Design

36 | - Usage Guide (basic, advanced, troubleshooting)

37 | - Implementation Details

38 | - Performance Characteristics

39 | - Evolution History

40 | - Lessons Learned

41 | 7. Generate one-page quick reference

42 | 8. Create FAQ from issues and comments

43 |

44 |

45 | The assistant should create a time capsule for future developers so they understand the full story - not just WHAT but WHY and HOW to work with it effectively.

46 |

47 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

48 |

--------------------------------------------------------------------------------

/.claude/commands/linear-debug-systematic.md:

--------------------------------------------------------------------------------

1 | Debug systematically: $ARGUMENTS

2 |

3 |

4 | Think harder. Dig deeper. Find root cause, not symptoms.

5 |

6 |

7 |

8 | The assistant should use scientific method, generate hypotheses, design tests, prove/disprove, and extract maximum learning.

9 |

10 |

11 |

12 | Debugging issue: $ARGUMENTS

13 | Need root cause analysis and comprehensive fix

14 |

15 |

16 |

17 | - Minimal reproduction case

18 | - Complete error capture (messages, logs, stack traces, environment)

19 | - Multiple hypotheses (most likely, most dangerous, most subtle)

20 | - Scientific testing approach

21 | - Root cause fix (not symptom patch)

22 | - Regression prevention

23 | - Knowledge capture

24 |

25 |

26 |

27 | 1. Create Linear tracking:

28 | - For simple bugs: mcp_linear.create_issue(title="[Bug] $ARGUMENTS", description="Systematic debugging with RCA")

29 | - For complex bugs: Create project with issues for investigation, fix, tests, and prevention

30 | 2. Reproduce → document exact steps, intermittency patterns

31 | 3. Generate ranked hypotheses → think harder about causes

32 | 4. Design specific tests for each hypothesis

33 | 5. Add targeted logging: logger.debug(f"State: {state}, Inputs: {inputs}, Conditions: {conditions}")

34 | 6. Fix root cause → handle all discovered edge cases

35 | 7. Write failing test → add integration test → document pattern

36 | 8. Create `.claude/debugging/[issue-type].md` with full learnings

37 | 9. If using project: Update all related issues with findings

38 |

39 |

40 | This bug teaches us about our system. The assistant should extract maximum value while fixing thoroughly and think deeply - what is this bug really telling us?

41 |

42 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

43 |

--------------------------------------------------------------------------------
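The targeted logging in step 5 of the command above can be sketched with the standard `logging` module. The function `apply_discount` and the logger name are hypothetical; the point is only to show state, inputs, and conditions recorded at the decision point under investigation.

```python
import logging

logger = logging.getLogger("debug_sketch")


def apply_discount(price, percent):
    """Hypothetical function under investigation."""
    in_range = 0 <= percent <= 100
    # Log the values the hypothesis depends on, right before the branch.
    logger.debug(
        "State: price=%r, Inputs: percent=%r, Conditions: in_range=%r",
        price, percent, in_range,
    )
    if not in_range:
        raise ValueError(f"percent out of range: {percent}")
    return round(price * (1 - percent / 100), 2)


if __name__ == "__main__":
    logging.basicConfig(level=logging.DEBUG)
    print(apply_discount(200, 25))
```

This sketch uses `%r` placeholders rather than an f-string so that the message is only formatted when debug logging is enabled; either form records the same information.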

/.claude/commands/linear-feature.md:

--------------------------------------------------------------------------------

1 | Execute complete feature workflow for: $ARGUMENTS

2 |

3 |

4 | Step-by-step through entire lifecycle. Meticulous. Thorough. Zero technical debt.

5 |

6 |

7 |

8 | The assistant should use Linear integration for structured development with project-based organization where each issue = one branch/PR, maintaining clean history and production-ready code.

9 |

10 |

11 |

12 | Implementing feature: $ARGUMENTS

13 | Need complete workflow from Linear issue to merged PR

14 |

15 |

16 |

17 | - Linear project for feature organization

18 | - Separate issues for each major component

19 | - One branch/PR per issue

20 | - Full error handling and tests

21 | - Quality checks at each step

22 | - Comprehensive PRs with Linear links

23 |

24 |

25 |

26 | 1. Check/Create Linear project:

27 | - Check existing: mcp_linear.list_projects(teamId, includeArchived=false)

28 | - Find or create: project = mcp_linear.create_project(name="[Feature] $ARGUMENTS", teamId)

29 | 2. Create focused issues within project:

30 | - Design Issue: Architecture and data models

31 | - Implementation Issue: Core business logic

32 | - API Issue: Endpoints and contracts

33 | - Frontend Issue: UI components (if applicable)

34 | - Testing Issue: Test suite implementation

35 | - Documentation Issue: User and API docs

36 | 3. For each issue:

37 | - Get branch: mcp_linear.get_issue_git_branch_name(issueId)

38 | - git checkout -b {branch}

39 | - Mark "In Progress" in Linear

40 | - Implement with full error handling

41 | - Write comprehensive tests

42 | - Commit with issue reference

43 | - Push and create PR

44 | - Link PR to Linear issue

45 | - Update to "Done" when merged

46 | 4. Quality gates per PR: tests pass, linting clean, docs updated

47 | 5. Track progress via project view in Linear

48 |

49 |

50 | The assistant should execute the workflow completely, with each step building on the previous one, toward the goal of a production-ready feature with zero technical debt.

51 |

52 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

53 |

--------------------------------------------------------------------------------

/.claude/commands/mcp-context-build.md:

--------------------------------------------------------------------------------

1 | Build comprehensive development context: $ARGUMENTS

2 |

3 |

4 | Combine DeepWiki repository analysis + Firecrawl web research. Maximum context for informed decisions.

5 |

6 |

7 |

8 | The assistant should perform multi-source intelligence gathering including internal patterns, external best practices, and implementation examples.

9 |

10 |

11 |

12 | Building context for: $ARGUMENTS

13 | Could be new tech, feature, problem, or architecture decision

14 |

15 |

16 |

17 | - Internal codebase analysis (our patterns, similar solutions)

18 | - External documentation research (official docs, best practices)

19 | - Open source implementation examples

20 | - Technical specifications and constraints

21 | - Problem domain understanding

22 | - Decision matrix with trade-offs

23 | - Implementation guidance

24 |

25 |

26 |

27 | Internal Analysis:

28 | 1. mcp_deepwiki.ask_question(repo="[our-repo]", question="How is $ARGUMENTS implemented/used? Include patterns.")

29 | 2. mcp_deepwiki.ask_question(repo="[our-repo]", question="What similar solutions exist?")

30 |

31 | External Research:

32 | 3. mcp_firecrawl.firecrawl_deep_research(query="$ARGUMENTS best practices implementation guide", maxDepth=3, maxUrls=100)

33 | 4. mcp_firecrawl.firecrawl_search(query="$ARGUMENTS implementation example github production", limit=20)

34 | 5. For found repos: mcp_deepwiki.ask_question(repo="[found-repo]", question="How is $ARGUMENTS implemented? Patterns? Best practices?")

35 | 6. mcp_firecrawl.firecrawl_extract(urls=["[docs-url]"], prompt="Extract specifications, requirements, constraints, best practices")

36 |

37 | Synthesis:

38 | 7. Research problem domain and alternatives

39 | 8. Build decision matrix from findings

40 | 9. Create implementation guide based on context

41 | 10. Extract lessons learned from others

42 | 11. Generate `.claude/context/$ARGUMENTS/` package

43 | 12. Create LLM-friendly reference: mcp_firecrawl.firecrawl_generate_llmstxt()

44 | 13. Output to `.claude/context/$ARGUMENTS/` directory as a markdown file

45 |

46 |

47 | The assistant should combine repository wisdom with web intelligence and build context that accelerates development and improves decisions.

48 |

49 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

50 |

--------------------------------------------------------------------------------

/.claude/commands/mcp-doc-extract.md:

--------------------------------------------------------------------------------

1 | Extract comprehensive documentation using Firecrawl: $ARGUMENTS

2 |

3 |

4 | Intelligent crawling. Structure extraction. Practical artifacts for development.

5 |

6 |

7 |

8 | The assistant should demonstrate Firecrawl MCP mastery, perform deep crawl, extract patterns, and generate actionable docs.

9 |

10 |

11 |

12 | Documentation source: $ARGUMENTS

13 | Transform into practical development resource

14 |

15 |

16 |

17 | - Site structure analysis and navigation mapping

18 | - Deep crawl with deduplication

19 | - Structured information extraction

20 | - Code examples with context

21 | - Configuration and error handling

22 | - LLM-friendly output generation

23 |

24 |

25 |

26 | 1. mcp_firecrawl.firecrawl_scrape(url="$ARGUMENTS", formats=["markdown", "links"]) → analyze structure

27 | 2. mcp_firecrawl.firecrawl_crawl(url="$ARGUMENTS", maxDepth=3, limit=100, deduplicateSimilarURLs=true)

28 | 3. mcp_firecrawl.firecrawl_extract(urls=[key sections], schema={endpoints, auth, examples, config, errors})

29 | 4. mcp_firecrawl.firecrawl_batch_scrape(urls=[essential pages], options={onlyMainContent: true})

30 | 5. mcp_firecrawl.firecrawl_search(query="$ARGUMENTS authentication OAuth JWT", limit=10)

31 | 6. mcp_firecrawl.firecrawl_generate_llmstxt(url="$ARGUMENTS", maxUrls=50, showFullText=true)

32 | 7. Organize in `.claude/docs/[source]/`: index, quick-start, api/, examples/, config, troubleshooting

33 | 8. Extract all code examples with use cases

34 | 9. Create integration guide tailored to our stack

35 | 10. Set up monitoring for updates

36 | 11. Output to `.claude/docs/$ARGUMENTS/` directory as a markdown file

37 |

38 |

39 | The assistant should create a comprehensive, practical documentation package that accelerates development and is immediately useful.

40 |

41 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

42 |

43 |

--------------------------------------------------------------------------------

/.claude/commands/mcp-repo-analyze.md:

--------------------------------------------------------------------------------

1 | Analyze repository using DeepWiki: $ARGUMENTS

2 |

3 |

4 | Extract patterns, solutions, wisdom. Learn from their code to improve ours.

5 |

6 |

7 |

8 | The assistant should perform DeepWiki AI-powered analysis, understand architecture, patterns, decisions, and extract reusable wisdom.

9 |

10 |

11 |

12 | Repository to analyze: $ARGUMENTS

13 | Extract actionable insights for our development

14 |

15 |

16 |

17 | - Documentation structure and core concepts

18 | - Architectural patterns and design decisions

19 | - Code conventions and best practices

20 | - Problem solutions and approaches

21 | - Testing and quality strategies

22 | - Reusable components and utilities

23 | - Security measures and performance tactics

24 |

25 |

26 |

27 | 1. mcp_deepwiki.read_wiki_structure(repo="$ARGUMENTS") → map available docs

28 | 2. Read core docs: architecture, API, contributing

29 | 3. Ask targeted questions:

30 | - "Main architectural patterns? Service boundaries, data flow, key decisions?"

31 | - "Coding patterns? Error handling, testing strategies, utilities?"

32 | - "Performance optimization? Caching, database, scaling?"

33 | - "Interesting problem solutions? Auth, validation, API design?"

34 | - "Testing strategy? Structure, mocking, coverage, CI/CD?"

35 | - "Reusable components? Purpose and implementation?"

36 | - "Development workflow? Branches, reviews, deployment?"

37 | - "Security practices? Auth, data protection, validation?"

38 | 4. Extract specific pattern implementations with file paths

39 | 5. Compare approaches with common practices

40 | 6. Create `.claude/learning/[repo]/` with insights

41 | 7. Generate adoption plan: immediate wins, architecture ideas, code to extract

42 | 8. Output to `.claude/learning/$ARGUMENTS/` directory as a markdown file

43 |

44 |

45 | The assistant should extract maximum learning value and focus on patterns we can apply immediately to improve our codebase.

46 |

47 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

48 |

--------------------------------------------------------------------------------

/.claude/commands/pr-complete.md:

--------------------------------------------------------------------------------

1 | Create exemplary pull request for current changes: $ARGUMENTS

2 |

3 |

4 | Professional. Complete. Everything reviewers need to understand, verify, merge confidently.

5 |

6 |

7 |

8 | The assistant should create PRs that reviewers appreciate with clear context, comprehensive testing, and zero friction to merge.

9 |

10 |

11 |

12 | Creating PR for current branch changes

13 | Make review process smooth and efficient

14 |

15 |

16 |

17 | - Pre-flight quality checks

18 | - Complete change analysis

19 | - Linear issue context

20 | - Professional PR description

21 | - Testing documentation

22 | - Performance impact

23 | - Breaking change assessment

24 | - Post-PR monitoring

25 |

26 |

27 |

28 | 1. Pre-flight: git status && pytest && ruff check && ruff format && pre-commit run --all-files

29 | 2. Analyze changes: git diff --stat && git diff main...HEAD && git log main..HEAD --oneline

30 | 3. Extract Linear context from commits → get issue titles and scope

31 | 4. Create PR with comprehensive body:

32 | ```

33 | gh pr create --title "[Type] Description #FUN-XXX" --body "$(cat <<'EOF'

34 | ## Summary

35 | [What and why]

36 |

37 | ## Changes

38 |

39 | - Feature: [additions]

40 | - Fix: [corrections]

41 | - Refactor: [improvements]

42 | - Docs: [documentation]

43 | - Tests: [test coverage]

44 |

45 | ## Linear Issues

46 |

47 | Resolves: #FUN-XXX, #FUN-YYY

48 |

49 | ## Testing

50 |

51 | - How to test: [steps]

52 | - Coverage: X% → Y%

53 | - Critical paths: [steps]

54 |

55 | ## Performance

56 |

57 | - Benchmarks: [results]

58 | - Impact: [assessment]

59 |

60 | ## Breaking Changes

61 |

62 | - [ ] None / [migration path]

63 |

64 | ## Checklist

65 |

66 | - [x] Tests pass

67 | - [x] Standards met

68 | - [x] Docs updated

69 | - [x] Ready for review

70 | EOF

71 | )"

72 | ```

73 |

74 | 5. Update Linear: link PR, set "In Review", add PR link to issues

75 | 6. Set up success: request reviews, add labels, enable auto-merge

76 | 7. Monitor CI/CD → respond to feedback → keep updated with main

77 |

78 |

79 | The assistant should create a PR that makes review a pleasure - complete, clear, ready to merge. This is the work's presentation layer - the assistant should make it shine.

80 |

81 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

82 |

--------------------------------------------------------------------------------

/.claude/commands/standards-check.md:

--------------------------------------------------------------------------------

1 | Comprehensive standards compliance check: $ARGUMENTS

2 |

3 |

4 | The assistant should not just check, but actively fix and elevate code to excellence.

5 |

6 |

7 |

8 | The assistant should enforce meticulous standards, auto-fix everything possible, and make code exemplary.

9 |

10 |

11 |

12 | Checking standards compliance for: $ARGUMENTS

13 | Need to meet all project standards automatically

14 |

15 |

16 |

17 | - Code standards (line length, imports, types, docstrings)

18 | - Testing standards (coverage, structure, naming)

19 | - Mirascope/Lilypad patterns

20 | - Pre-commit compliance

21 | - Documentation completeness

22 | - Security and performance

23 |

24 |

25 |

26 | 1. Code Standards (`.claude/standards/code-standards.md`):

27 | - Fix line length ≤ 130

28 | - Organize imports: stdlib → third-party → local

29 | - Add missing type hints

30 | - Ensure Google-style docstrings

31 | - Remove debug statements

32 | - Fix naming conventions

33 |

34 | 2. Testing Standards (`.claude/standards/testing-standards.md`):

35 | - Check coverage: 80% overall, 90%+ business logic

36 | - Generate missing tests

37 | - Fix test structure and naming

38 |

39 | 3. Framework Patterns:

40 | - Proper @llm.call, @prompt_template usage

41 | - Async patterns for LLM calls

42 | - Response model definitions

43 | - Error handling with retries

44 |

45 | 4. Auto-fix with tools:

46 | ```bash

47 | ruff check --fix && ruff format

48 | pre-commit run --all-files

49 | ```

50 |

51 | 5. Documentation: verify all public functions documented

52 | 6. Security: no hardcoded secrets, input validation, SQL safety

53 | 7. Generate report:

54 | ```markdown

55 | ## Compliance Report

56 | - Score: X/10

57 | - Auto-fixed: X issues

58 | - Manual needed: X items

59 | - [Detailed findings]

60 | - [Applied fixes]

61 | - [Remaining actions]

62 | ```

63 |

64 |

65 | The assistant should not just report issues, but fix everything possible automatically, make this code exemplary, and elevate quality to the highest standards.

66 |

67 | Take a deep breath in, count 1... 2... 3... and breathe out. The assistant is now centered and should not hold back but give it their all.

68 |

--------------------------------------------------------------------------------

/.claude/debugging/cfos-41-lance-issues/COMPLETION_SUMMARY.md:

--------------------------------------------------------------------------------

1 | # CFOS-41 Completion Summary

2 |

3 | ## Issue Resolution

4 | The issue has been successfully resolved. What initially appeared to be a Map type incompatibility issue with custom_metadata turned out to be a more fundamental limitation in Lance: it cannot scan/filter blob-encoded columns.

5 |

6 | ## Changes Made

7 |

8 | ### 1. Schema Updates

9 | - Changed `custom_metadata` from `pa.map_` to `pa.list_(pa.struct([...]))` for Lance compatibility

10 | - Added `custom_metadata` field to JSON validation schema

11 |

12 | ### 2. Code Changes in frame.py

13 | - Added `_get_non_blob_columns()` helper method to identify non-blob columns

14 | - Modified `scanner()` method to automatically exclude blob columns when filters are used

15 | - Updated `get_by_uuid()` to use the filtered scanner

16 | - Fixed `from_arrow()` to handle missing fields gracefully (for when raw_data is excluded)

17 | - Fixed `delete_record()` to handle Lance's delete method returning None

18 | - Added missing return statement in `find_related_to()`

19 |

20 | ### 3. Documentation

21 | - Created comprehensive documentation in `.claude/debugging/cfos-41-lance-issues/`

22 | - Updated the original lance-map-type-issue.md to reference the resolved status

23 | - Organized all findings and analysis in a structured directory

24 |

25 | ## Current Status

26 | - All tests pass ✅

27 | - Code has been formatted with ruff ✅

28 | - Type checking shows only expected errors (missing stubs for pyarrow/lance) ✅

29 | - Documentation is complete and organized ✅

30 |

31 | ## Key Learnings

32 | 1. Lance doesn't support Map type - must use list instead

33 | 2. Lance cannot scan blob-encoded columns - this is documented behavior, not a bug

34 | 3. The solution is to exclude blob columns from scanner projections when filtering

35 | 4. The Lance Blob API (take_blobs) should be used for retrieving blob data separately

36 |

37 | ## Trade-offs

38 | - When records are retrieved via filtering, the `raw_data` field will be None

39 | - This is acceptable since raw_data is typically not needed during search/filter operations

40 | - If blob data is needed, it can be retrieved separately using the take_blobs API (future enhancement)

41 |

42 | ## Next Steps

43 | The branch is ready for PR creation and merging. All changes are minimal, backward-compatible, and solve the issue effectively.

--------------------------------------------------------------------------------

/.claude/debugging/cfos-41-lance-issues/LANCE_ISSUE_SOLUTION.md:

--------------------------------------------------------------------------------

1 | # Lance Filtering Issue - Root Cause and Solution

2 |

3 | ## Summary

4 |

5 | We've identified and solved the Lance filtering panic issue that was blocking CFOS-41 and CFOS-20.

6 |

7 | ## Root Cause

8 |

9 | The issue was **NOT** caused by the `custom_metadata` field's `list<struct>` type as initially suspected. Instead, it was caused by a combination of:

10 |

11 | 1. The `raw_data` field being `pa.large_binary()` with `lance-encoding:blob` metadata

12 | 2. Having null values in this blob-encoded field

13 | 3. Using any filter operation on the dataset

14 |

15 | This appears to be a bug in Lance where filtering operations cause a panic when blob-encoded fields contain null values.

16 |

17 | ## Solution

18 |

19 | We need to make two changes to fix the issue:

20 |

21 | ### 1. Remove blob encoding from raw_data field

22 |

23 | Change from:

24 | ```python

25 | pa.field("raw_data", pa.large_binary(), metadata={"lance-encoding:blob": "true"})

26 | ```

27 |

28 | To:

29 | ```python

30 | pa.field("raw_data", pa.large_binary())

31 | ```

32 |

33 | ### 2. Change custom_metadata to JSON string (optional but recommended)

34 |

35 | While the `list<struct>` approach works, using a JSON string is simpler and more flexible:

36 |

37 | Change from:

38 | ```python

39 | pa.field("custom_metadata", pa.list_(pa.struct([

40 |     pa.field("key", pa.string()),

41 |     pa.field("value", pa.string())

42 | ])))

43 | ```

44 |

45 | To:

46 | ```python

47 | pa.field("custom_metadata", pa.string()) # Store as JSON string

48 | ```

49 |

50 | ## Data Conversion

51 |

52 | For the custom_metadata field, use these helper functions:

53 |

54 | ```python

55 | import json

56 |

57 | # When writing to Lance

58 | def dict_to_lance(metadata_dict):

59 |     return json.dumps(metadata_dict) if metadata_dict else None

60 |

61 | # When reading from Lance

62 | def lance_to_dict(metadata_json):

63 |     return json.loads(metadata_json) if metadata_json else {}

64 | ```
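A quick round trip with these two helpers shows one edge case worth knowing: an empty dict is written as `None`, so after reading it back an empty dict and a missing value are indistinguishable. The sample metadata below is made up for illustration.

```python
import json


def dict_to_lance(metadata_dict):
    return json.dumps(metadata_dict) if metadata_dict else None


def lance_to_dict(metadata_json):
    return json.loads(metadata_json) if metadata_json else {}


original = {"source": "upload", "tags": ["a", "b"]}
stored = dict_to_lance(original)

assert isinstance(stored, str)            # stored as a JSON string
assert lance_to_dict(stored) == original  # nested values survive the round trip

assert dict_to_lance({}) is None          # empty dict is written as null
assert lance_to_dict(None) == {}          # null reads back as an empty dict
```

Unlike the key/value struct layout, the JSON string can carry nested values such as lists, which is part of why it is described as more flexible.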

65 |

66 | ## Testing Results

67 |

68 | With these changes:

69 | - ✅ All write operations work

70 | - ✅ All read operations work

71 | - ✅ All filter operations work (uuid, IS NOT NULL, compound filters, etc.)

72 | - ✅ No Lance panics occur

73 |

74 | ## Next Steps

75 |

76 | 1. Update `contextframe_schema.py` to implement these changes

77 | 2. Update `frame.py` to handle JSON conversion for custom_metadata

78 | 3. Run all tests to ensure compatibility

79 | 4. Consider filing a bug report with Lance about the blob+null+filter issue

--------------------------------------------------------------------------------

/.claude/debugging/cfos-41-lance-issues/README.md:

--------------------------------------------------------------------------------

1 | # CFOS-41: Lance Map Type and Blob Filter Issues

2 |

3 | This directory contains the investigation and solution documentation for CFOS-41, which initially appeared to be a Lance Map type incompatibility issue but turned out to be a more fundamental limitation with Lance's blob column scanning.

4 |

5 | ## Files in This Directory

6 |

7 | 1. **SOLUTION_SUMMARY.md** - Final summary of the implemented solution

8 | 2. **LANCE_ISSUE_SOLUTION.md** - Initial solution thoughts

9 | 3. **COMPREHENSIVE_SOLUTIONS_ANALYSIS.md** - Detailed analysis of different approaches

10 | 4. **LANCE_BLOB_FILTER_BUG_ANALYSIS.md** - Deep dive into the Lance blob filtering issue

11 | 5. **LONG_TERM_IMPLEMENTATION_PLAN.md** - Long-term strategy for handling Lance limitations

12 | 6. **FINAL_IMPLEMENTATION_PLAN.md** - The final implementation approach

13 |

14 | ## Key Findings

15 |

16 | 1. **Lance doesn't support Map type** - We must use `list<struct>` instead

17 | 2. **Lance can't scan blob-encoded columns** - This is the root cause of the panics

18 | 3. **Solution**: Automatically exclude blob columns from scanner projections when filters are used

19 |

20 | ## Implementation

21 |

22 | The solution was implemented in `contextframe/frame.py`:

23 | - Added `_get_non_blob_columns()` helper

24 | - Modified `scanner()` to exclude blob columns when filtering

25 | - Updated `from_arrow()` to handle missing fields gracefully
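To make the last point concrete, here is a minimal sketch of building a record from a row in which some columns (such as `raw_data`) were excluded from the projection. It is not the real `from_arrow()` implementation: `record_from_row`, `FIELD_DEFAULTS`, and the field names in the example are hypothetical, and the row is modelled as a plain mapping.

```python
from typing import Any, Dict, Iterable, Mapping

# Hypothetical per-field defaults used when a column was not projected.
FIELD_DEFAULTS: Dict[str, Any] = {"raw_data": None, "custom_metadata": []}


def record_from_row(row: Mapping[str, Any], expected_fields: Iterable[str]) -> Dict[str, Any]:
    """Build a record dict, filling absent columns instead of raising KeyError."""
    return {name: row.get(name, FIELD_DEFAULTS.get(name)) for name in expected_fields}


# A filtered scan that excluded the blob column returns no "raw_data" key.
row = {"uuid": "abc", "title": "Doc"}
record = record_from_row(row, ["uuid", "title", "raw_data"])
assert record["raw_data"] is None
```

This is the behavior the trade-off in COMPLETION_SUMMARY.md refers to: records retrieved through a filter come back with `raw_data` set to `None`.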

26 |

27 | ## Test Files

28 |

29 | All tests were implemented in the standard location:

30 | - `contextframe/tests/test_frameset.py` - Contains tests for frameset functionality

31 |

32 | ## Related Linear Issue

33 |

34 | - **CFOS-41**: Fix Lance Map type incompatibility for custom_metadata field

35 | - Status: Resolved with the blob column exclusion approach

--------------------------------------------------------------------------------

/.claude/debugging/cfos-41-lance-issues/SOLUTION_SUMMARY.md:

--------------------------------------------------------------------------------

1 | # Solution Summary for CFOS-41

2 |

3 | ## Problem

4 | Lance panicked when filtering datasets that contained blob-encoded fields with null values. This was initially thought to be a Map type incompatibility issue with `custom_metadata`.

5 |

6 | ## Root Cause Discovery

7 | Through extensive testing and research:

8 |

9 | 1. **Lance doesn't support Map type** - Confirmed this is still the case

10 | 2. **The real issue**: Lance doesn't support scanning/filtering blob-encoded columns at all

11 | 3. **Lance documentation confirms**: You must exclude blob columns from scanner projections and use `take_blobs` API separately

12 |

13 | ## Solution Implemented

14 |

15 | ### 1. Schema Changes

16 | - Changed `custom_metadata` from `pa.map_` to `pa.list_(pa.struct([...]))` for Lance compatibility

17 | - Added `custom_metadata` to JSON validation schema

18 |

19 | ### 2. Frame.py Changes