34 |

35 |

34 |

35 | 2 |

4 | Haofei Xu

5 | ·

6 | Anpei Chen

7 | ·

8 | Yuedong Chen

9 | ·

10 | Christos Sakaridis

11 | ·

12 | Yulun Zhang

13 | Marc Pollefeys

14 | ·

15 | Andreas Geiger

16 | ·

17 | Fisher Yu

18 |

29 | MuRF supports multiple different baseline settings. 30 |

31 |

32 |

33 |  34 |

35 |

34 |

35 |

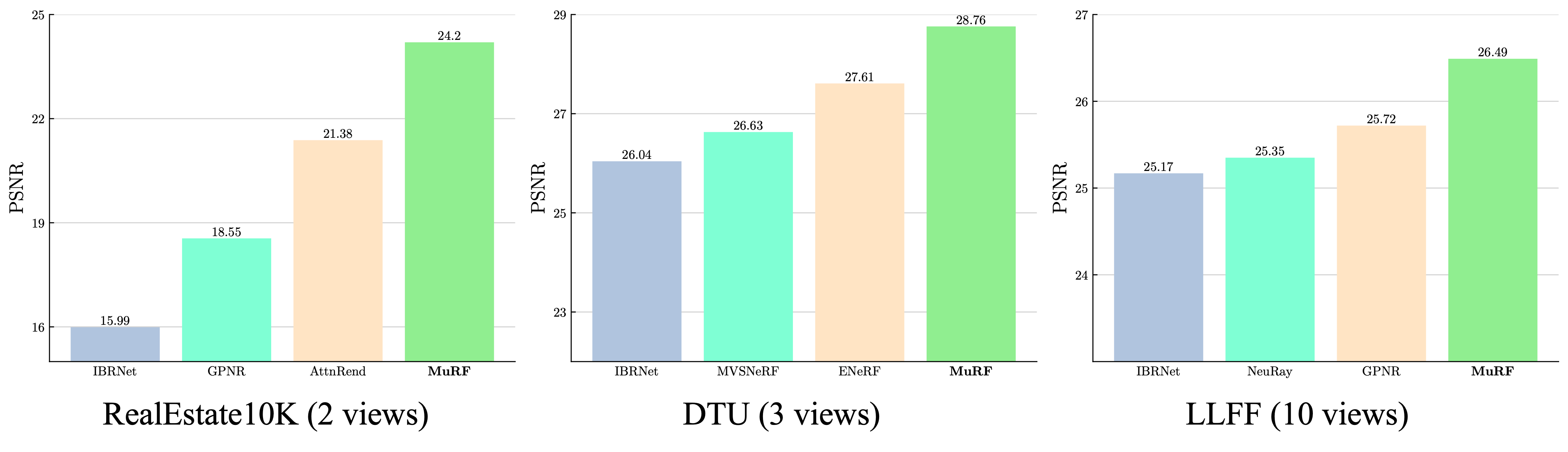

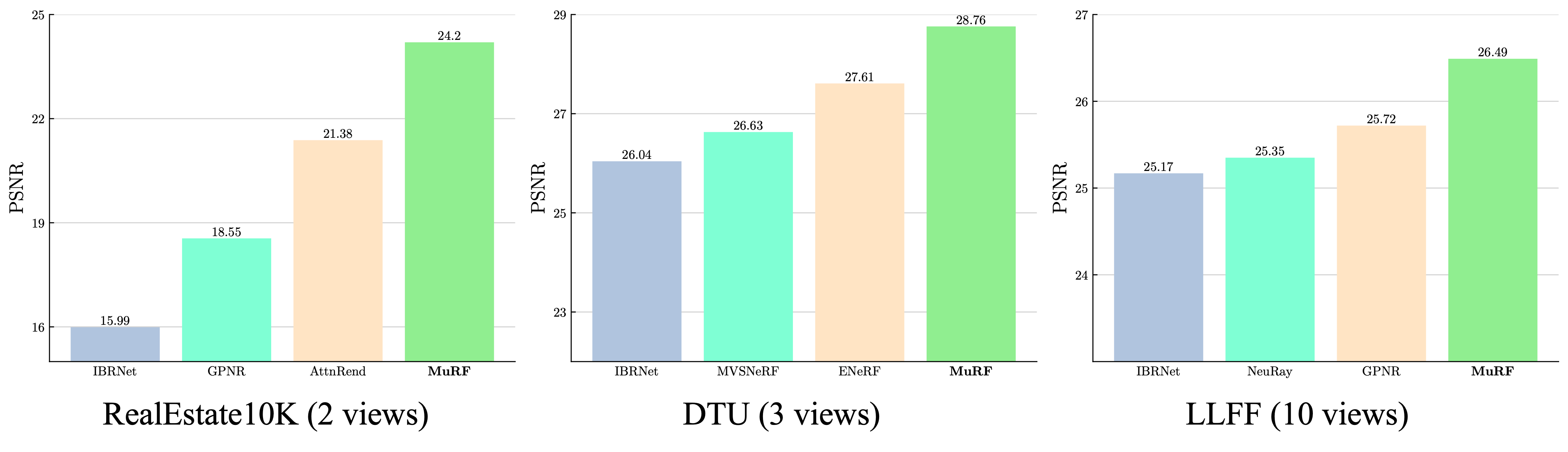

38 | MuRF achieves state-of-the-art performance under various evaluation settings. 39 |