I guess you really like me.

9 |

10 |

10 | {% for post in site.categories[cnamex] reversed %}

11 | {% include archive_post.html %}

12 | {% endfor %}

13 |

--------------------------------------------------------------------------------

/blog/clipjump/index.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: default

3 | cname: clipjump

4 | title: Posts on Clipjump

5 | nod: 1

6 | ---

7 |

8 | {% capture cnamex %}{{ page.cname }}{% endcapture %}

9 |

10 | {% for post in site.categories[cnamex] reversed %}

11 | {% include archive_post.html %}

12 | {% endfor %}

13 |

--------------------------------------------------------------------------------

/projects/clipjump/index.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: page

3 | title: Clipjump

4 | tagline: v11

5 | desc: Clipjump, The Ultimate Clipboard Manager for Windows

6 | ghlink: https://github.com/aviaryan/Clipjump

7 | ---

8 |

9 | ` tag to create a *stretched* container. Then use the `.symbol` class to create the icon and add the message text later. Here is the

56 | code for the following 4 boxes (in screenshot).

57 |

58 | {% highlight html %}

59 |

A kind of a notice box !

60 |

Error message

61 |

A positive/success/completion message

62 |

A warning message

63 | {% endhighlight %}

64 |

65 |

66 |

67 |

68 |

To keep the message box from stretching to the full width of the page, use a span tag instead of a div tag.

69 | See the working example on Raw-Github and the gist's source.

70 | Don't hesitate to ask if you face problems.

71 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-06-24-s3-for-storage.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Using S3 for cloud storage

4 | category: gsoc

5 | tags: gsoc gsoc16 python

6 | ---

7 |

8 | In this post, I will talk about how we can use the [Amazon S3](https://docs.aws.amazon.com/AmazonS3/latest/dev/Welcome.html) (Simple Storage Service) for cloud storage.

9 | As you may know, S3 is a no-fuss, super easy cloud storage service based on the IaaS model.

10 | There is no limit on the total amount of data or the number of files you can keep on S3 (a single object can be up to 5 TB), and you only pay for what you use: storage, requests and data transfer.

11 | This makes S3 very popular among enterprises of all sizes and individuals.

12 |

13 | Now let's see how to use S3 in Python. Luckily we have a very nice library called [Boto](http://boto.cloudhackers.com/en/latest/) for it.

14 | Boto is a Python SDK for Amazon Web Services.

15 | Using it is simple and straightforward. Here is a basic example of uploading a file to S3 using Boto -

16 |

17 | {% highlight python %}

18 | import boto

19 | from boto.s3.key import Key

20 | # connect to the bucket

21 | conn = boto.connect_s3(AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY)

22 | bucket = conn.get_bucket(BUCKET_NAME)

23 | # set the key

24 | key = 'key/for/file'

25 | file = '/full/path/to/file'

26 | # create a key to keep track of our file in the storage

27 | k = Key(bucket)

28 | k.key = key

29 | k.set_contents_from_filename(file)

30 | {% endhighlight %}

31 |

32 | The above example uploads `file` to the S3 bucket `BUCKET_NAME` under the key `key`.

33 |

34 | Buckets are containers that store data.

35 | The `key` is the unique identifier of an item in the bucket. Every item in the bucket is identified by the key assigned to it.

36 | If the item is publicly readable, it can be downloaded from the URL `BUCKET_NAME.s3.amazonaws.com/{key}`.

37 | Uploading to an existing key overwrites the item stored there, so choose key names carefully to avoid overwriting existing files.
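The key-to-URL mapping can be written as a small function. `object_url` is a hypothetical helper, not part of Boto. It assumes the virtual-hosted URL form quoted above and a publicly readable object. It shows that the URL depends only on the bucket and the key. This is why reusing a key means replacing whatever was stored there.

```python
from urllib.parse import quote


def object_url(bucket_name: str, key: str) -> str:
    # virtual-hosted-style URL: https://BUCKET_NAME.s3.amazonaws.com/{key}
    # quote() escapes characters such as spaces but keeps the '/' separators
    return "https://%s.s3.amazonaws.com/%s" % (bucket_name, quote(key, safe="/"))


print(object_url("my-bucket", "users/42/avatar"))
# https://my-bucket.s3.amazonaws.com/users/42/avatar
```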

38 |

39 | In the [Open Event project](https://github.com/fossasia/open-event-orga-server/), I came up with a scheme that avoids such conflicts. It uses the IDs of items to distinguish them and goes as follows -

40 |

41 | * When uploading user avatar, key should be 'users/{userId}/avatar'

42 | * When uploading event logo, key should be 'events/{eventId}/logo'

43 | * When uploading audio of session, key should be 'events/{eventId}/sessions/{sessionId}/audio'
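The scheme translates directly into a few key builders. The sketch below uses hypothetical helper names, not code from the Open Event code base. Each key embeds database IDs, so two different users or sessions can never share a key. Re-uploading for the same item deliberately replaces the old file.

```python
def avatar_key(user_id: int) -> str:
    # one avatar per user: users/{userId}/avatar
    return "users/%d/avatar" % user_id


def event_logo_key(event_id: int) -> str:
    # one logo per event: events/{eventId}/logo
    return "events/%d/logo" % event_id


def session_audio_key(event_id: int, session_id: int) -> str:
    # session audio is nested under its event: events/{eventId}/sessions/{sessionId}/audio
    return "events/%d/sessions/%d/audio" % (event_id, session_id)


print(session_audio_key(3, 12))  # events/3/sessions/12/audio
```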

44 |

45 | Note that to store a user's avatar, I am setting the key as `/avatar` and not `/avatar.extension`. This is because if a user uploads pictures in different formats, we would end up

46 | storing several copies of the avatar for the same user. The limitation of this approach is that a file downloaded from the URL has no extension.

47 | So to solve this issue, we can use the Content-Disposition header.

48 |

49 | {% highlight python %}

50 | k.set_contents_from_filename(

51 | file,

52 | headers={

53 | 'Content-Disposition': 'attachment; filename=filename.extension'

54 | }

55 | )

56 | {% endhighlight %}

57 |

58 | So now when someone tries to download the file from that link, they will get the file with an extension instead of a no-extension "Choose what you want to do" file.
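The header value itself can be built from the name of the file the user actually uploaded. `content_disposition` below is a hypothetical helper, and it assumes `download_name` contains no double quotes. Its return value is what would go into the `'Content-Disposition'` entry of the `headers` dict in the `set_contents_from_filename` call above. The filename is quoted, as RFC 6266 allows, so names with spaces survive.

```python
import os


def content_disposition(download_name: str, uploaded_filename: str) -> str:
    # reuse the extension of the file the user uploaded (".png", ".jpg", ...)
    _, ext = os.path.splitext(uploaded_filename)
    # quoting the filename keeps names with spaces intact
    return 'attachment; filename="%s%s"' % (download_name, ext)


print(content_disposition("avatar", "holiday photo.JPG"))
# attachment; filename="avatar.JPG"
```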

59 |

60 | This covers the basics of using S3 in your Python project. You may explore [Boto's S3 documentation](http://boto.cloudhackers.com/en/latest/s3_tut.html) to find other interesting

61 | functions, such as deleting a folder, copying one folder to another, and so on.

62 |

63 | Also don't forget to have a look at the [awesome documentation](https://github.com/fossasia/open-event-orga-server/blob/master/docs/AMAZON_S3.md)

64 | we wrote for the Open Event project.

65 | It provides a more pictorial and detailed guide on how to set up S3 for your project.

66 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-08-20-docker-compose-starting.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Getting started with Docker Compose

4 | category: gsoc

5 | tags: gsoc gsoc16 docker

6 | ---

7 |

8 | In this post, I will talk about running multiple containers at once using [Docker Compose](https://github.com/docker/compose).

9 |

10 |

11 | #### The problem?

12 |

13 | Suppose you have a complex app with database containers, Redis and whatnot. How are you going to start the app?

14 | One way is to write a shell script that starts the containers one by one.

15 |

16 | {% highlight bash %}

17 | docker run -d --name mydb postgres:latest

18 | docker run -d --name myredis redis:3-alpine

19 | docker run -d myapp

20 | {% endhighlight %}

21 |

22 | Now suppose these containers need a lot of configuration (links, volumes, ports, environment variables) to function. You would have to write all those parameters

23 | into the shell script.

24 |

25 | {% highlight bash %}

26 | docker network create myapp_default

27 | docker run -d --name db -p 5432:5432 --net myapp_default postgres:latest

28 | docker run -d --name redis -p 6379:6379 --net myapp_default \

29 | -v redis:/var/lib/redis/data redis:3-alpine

30 | docker run -d -p 5000:5000 --net myapp_default -e SOMEVAR=value --link db:db \

31 | --link redis:redis -v storage:/myapp/static myapp

32 | {% endhighlight %}

33 |

34 | Won't this get unmanageable? Wouldn't it be great to have a cleaner way of running multiple containers? This is where docker-compose comes to the rescue.

35 |

36 |

37 | #### Docker compose

38 |

39 | [Docker compose](https://docs.docker.com/compose/) is a python package which does the job of handling multiple containers for an application very elegantly.

40 | The main file of docker-compose is `docker-compose.yml`, a YAML file that lists the services and settings required to run your app.

41 | Once you define that file, you can just run `docker-compose up` to start your app with all its components and settings. Pretty cool, right?

42 |

43 | So let's see the docker-compose.yml for the fictional app we have considered above.

44 |

{% highlight yaml %}
version: '2'

services:
  db:
    image: postgres:latest
    ports:
      - '5432:5432'

  redis:
    image: 'redis:3-alpine'
    command: redis-server
    volumes:
      - 'redis:/var/lib/redis/data'
    ports:
      - '6379:6379'

  web:
    build: .
    environment:
      SOMEVAR: value
    links:
      - db:db
      - redis:redis
    volumes:
      - 'storage:/myapp/static'
    ports:
      - '5000:5000'

volumes:
  redis:
  storage:
{% endhighlight %}

78 |

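79 | Once this file is in the project's root directory, you can use `docker-compose up` to start the application.

80 | Compose starts the services in dependency order, so `db` and `redis`, which `web` links to, are started before `web`. By default, it does not wait for a service to be ready before starting the services that depend on it.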
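Dependency ordering is a topological sort over the services. The sketch below uses Python's `graphlib` (3.9+) on the `links` from the file above. It illustrates the idea only and is not Compose's own code. `db` and `redis` have no dependencies, so either may come first. `web` always comes last.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# the `links` of each service in the docker-compose.yml above
services = {
    "db": [],
    "redis": [],
    "web": ["db:db", "redis:redis"],
}

# a link "SERVICE:ALIAS" means this service depends on SERVICE
graph = {name: {link.split(":")[0] for link in links}
         for name, links in services.items()}

# static_order() yields every service after all of its dependencies
order = list(TopologicalSorter(graph).static_order())
print(order)  # 'web' is always the last entry
```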

81 |

82 | The Compose file supports many options that generally correspond to the parameters that `docker run` accepts.

83 | You can see the full list in the official [Compose file reference](https://docs.docker.com/compose/compose-file/).

84 |

85 |

86 | #### Conclusion

87 |

88 | There is no doubt that docker-compose is a boon when you have to run complex applications. I personally use Compose in every dockerized application that I write.

89 | In GSoC 16, I dockerized [Open Event](https://github.com/fossasia/open-event-orga-server).

90 | Here is the [docker-compose.yml](https://github.com/fossasia/open-event-orga-server/blob/development/docker-compose.yml) file if you are interested.

91 |

92 | PS - If you liked this post, you might find my [other posts on Docker](http://aviaryan.in/blog/tags.html#docker) interesting. Do take a look and let me know your views.

93 |

94 |

--------------------------------------------------------------------------------

/ahk/functions/ahkini.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: page

3 | title: AhkIni()

4 | tagline: Fast Ini lib with standard comment-linked support

5 | ghlink: https://github.com/aviaryan/autohotkey-scripts/blob/master/Functions/AhkIni.ahk

6 | ---

7 |

8 |

Ahk Board Topic

9 |

10 | Class to perform Ini reading and writing operations

11 | Created as a complete replacement of the default Ini commands

12 |

13 |

- Much much faster than the default Ini operations.

14 |

- Support for reading/writing linked comments to keys and sections.

15 |

- Same syntax as the default Ini commands.

16 |

17 |

Example of an Ini created through the lib

18 |

19 | {% highlight ini %}

20 | [section1]

21 | ;section1 comment

22 | key1 = hithere_key1

23 | ;keycomment

24 | ;line2

25 | ;line3

26 | key2 = hithere_key2

27 | ;keycomment2

28 | key3 = hithere_key3

29 |

30 | [section2]

31 | ;section2 comment

32 | key1 = hithere2

33 | ;keycomment2

34 | {% endhighlight %}
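The example file shows how comments are linked. Comment lines directly under a section header belong to that section. Comment lines under a key belong to that key. The Python sketch below parses that layout to make the structure explicit. It is a reading of the example file, not a port of AhkIni (which is written in AutoHotkey), and `parse_linked_ini` is a hypothetical name.

```python
def parse_linked_ini(text):
    """Attach each ';' comment line to the section or key just above it."""
    sections = {}
    section = key = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            section, key = line[1:-1], None
            sections[section] = {"comment": [], "keys": {}}
        elif section is None:
            continue  # ignore anything before the first section header
        elif line.startswith(";"):
            # no key seen yet in this section -> it is a section comment
            owner = sections[section] if key is None else sections[section]["keys"][key]
            owner["comment"].append(line[1:])
        else:
            name, _, value = line.partition("=")
            key = name.strip()
            sections[section]["keys"][key] = {"value": value.strip(), "comment": []}
    return sections


sample = """
[section1]
;section1 comment
key1 = hithere_key1
;keycomment
;line2
;line3
key2 = hithere_key2
;keycomment2
key3 = hithere_key3

[section2]
;section2 comment
key1 = hithere2
;keycomment2
"""
parsed = parse_linked_ini(sample)
print(parsed["section1"]["keys"]["key1"]["comment"])  # ['keycomment', 'line2', 'line3']
```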

35 |

36 |

The code

37 | {% highlight autohotkey %}

38 | SetWorkingDir,% A_scriptdir

39 |

40 | Ini := new AhkIni("black2.ini") ;will create new ini if nothing exists

41 |

42 | ini.write("section1", "key1", "hithere_key1", "keycomment`nline2`nline3")

43 | ini.write("section1", "key2", "hithere_key2", "keycomment2", "section1 comment")

44 | ini.write("section1", "key3", "hithere_key3") ;no comment for this key

45 | ini.write("section2", "key1", "hithere2", "keycomment2", "section2 comment")

46 |

47 | msgbox,% ini.read("section1", "key1", key, sec) "`n`n" key "`n`n" sec

48 | msgbox,% "Section1 keys are `n`n" ini.read("section1") ;read the keys in section 1

49 | msgbox,% "Sections in the ini are`n`n" ini.read()

50 | ;ini.delete("section1", "key1") ;delete the above read key

51 |

52 | ini.Save()

53 |

54 | #include, AhkIni.ahk

55 | {% endhighlight %}

56 |

57 |

58 |

59 |

60 |

61 | Methods

62 |

63 |

64 | - New(Inifile)

65 |

Sets a pointer to an Ini settings file.

66 | The Ini file is created if it doesn't exist.

67 |

68 | - .Read(Section="", Key="", Byref Key_com="", ByRef Sec_com="")

69 |

Reads value of the Key contained in a Section to the outputvar.

70 | If the Byref parameters are specified, the linked Key comments (Key_com) and Section comments (Sec_com) are

71 | also read.

72 | If Section="" (i.e. the first param is unspecified), the list of sections in the ini is returned, in a format similar to the AHK_L

73 | IniRead command.

74 | If Key="", the list of Keys in the specified Section is returned.

75 |

76 | - .Write(Section, Key, Value, Key_com="", Sec_com="")

77 |

Write values to a key, creating the key and section if necessary.

78 | The params Key_com and Sec_com are for key and section comments.

79 | If these params are omitted, the existing linked comment is left unchanged, but if

80 | they are passed as a space (" " or A_Space), the linked comment for the section/key is

81 | deleted.

82 |

83 | - .Delete(Section="", Key="")

84 |

Delete keys or sections from the ini.

85 | If Key is omitted, the whole section is deleted.

86 | If Section is omitted, the whole Ini is deleted.

87 |

88 | - .Save()

89 |

Saves the in-memory changes to the Ini file.

90 | Remember to call .Save after making the changes.

91 |

92 |

--------------------------------------------------------------------------------

/ahk/tools/htmltag.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: page

3 | title: HTMLTag

4 | tagline: v ----

5 | ghlink: https://github.com/aviaryan/autohotkey-scripts/blob/master/Tools/HtmlTag.ahk

6 | ---

7 |

8 |

Ahk Topic

9 |

DOWNLOAD

10 |

HTML Tagger is a tool to add HTML, bb-code and custom tags or wrappings to selected text on the screen.

11 | It is intuitive, fast and powerful.

12 |

13 |

14 |

15 |

FEATURES

16 |

17 | - No need for shortcuts: you can quickly cycle between tags/wrappings using a single Ctrl+H.

18 | This feature is the same as the one introduced in Clipjump.

19 | - Support for unlimited tags/wrappings.

20 |

- Very fast switching through tags/wrappings which can be added/deleted at runtime

21 |

- You can determine where should the cursor be placed after insertion of tags so that it gets easy to append custom

22 | information.

23 |

- Open source and free.

24 |

25 |

26 |

27 |

USAGE

28 |

29 | - Select Text you want to apply tags to.

30 |

- Hold Ctrl, then tap H to cycle between different Items OR tags available

31 |

- Release Ctrl to apply the current selected tag.

32 |

- While holding Ctrl, tap X to cancel Tag operation

33 |

- While holding Ctrl, tap D to delete the tag/wrapping item currently shown in the tooltip.

34 |

35 | Use Win+H to show a GUI which will enable you to add more tag items to the program.

36 | The item added here is saved in an HTMLTag.ini file for future use.

37 |

38 |

HtmlTag Adder GUI Options

39 |

40 | - LABEL

41 |

The name by which an item will be identified (Shown in Tooltip)

42 | - MASK

43 |

The MASK that you want the program to impose on the selected text.

44 | eg -> <h1>|</h1>

45 | Here | is the delimiter and stands for the selected text (see the TEXT POINT param).

46 | - TEXT POINT

47 |

The delimiter that you want to be replaced by the selected text.

48 | This may seem a useless option, as it will usually be 1.

49 | Use TEXT POINT = 0 to make selected Text appear before tag items.

50 |

51 | - CARET INSERTION POINT

52 |

If 0, the caret (cursor) is placed at the end of the tag after a tag is applied.

53 | If 1 or more, the caret is placed that many points away from the right of the inserted selected text.

54 | Use Caret=1 to place the caret right after the selected text tagged by the program.

55 | If this is unclear, just try it.

56 |

57 | - DELIMITER

58 |

The Delimiter used to separate MASK items.

59 | Default = |
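MASK, TEXT POINT and DELIMITER can be sketched in Python to show how a mask becomes the inserted text. This is one reading of the option descriptions above, not HTMLTag's AutoHotkey code, and `apply_mask` is a hypothetical helper. It does not model caret placement. The selected text replaces the TEXT POINT-th delimiter, other delimiters are dropped, and TEXT POINT = 0 puts the text before the tag items.

```python
def apply_mask(mask, selected, text_point=1, delimiter="|"):
    """Return the text HTMLTag would insert for `selected` (assumed semantics)."""
    parts = mask.split(delimiter)
    if text_point == 0:
        # TEXT POINT = 0: selected text first, then the tag items
        return selected + "".join(parts)
    # put the selection where the text_point-th delimiter was
    before = "".join(parts[:text_point])
    after = "".join(parts[text_point:])
    return before + selected + after


print(apply_mask("<h1>|</h1>", "Title"))  # <h1>Title</h1>
print(apply_mask("/*|*/", "old code"))    # /*old code*/
```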

60 |

61 |

62 |

63 |

64 |

Include Files

65 |

AhkIni.ahk

66 |

Clipjump Communicator.ahk (OPTIONAL)

67 |

68 |

69 |

Some Examples

70 |

71 | - Commenting text in Autohotkey scripts

72 |

Using Mask = /*|*/ and all other options default, the selected text can be converted into an

73 | Autohotkey Block comment.

74 | - Inserting code in BB-Code Forums (like Autohotkey)

75 |

Using Mask = [c]|[/c] and all other options default

76 | - Commenting text (inline) in Autohotkey

77 |

Using Mask = ;| and all other options default

78 |

79 |

80 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-06-19-paginated-apis-flask.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Paginated APIs in Flask

4 | category: gsoc

5 | tags: gsoc gsoc16 flask python

6 | ---

7 |

8 | In week 2 of GSoC, I had the task of implementing paginated APIs in the [Open Event](https://github.com/fossasia/open-event) project.

9 | I was aware that [DRF](https://www.django-rest-framework.org/) provides such a feature for Django, so I searched the Internet for a similar library for Flask.

10 | I didn't find any, so I decided to make my own.

11 |

12 | A paginated API returns its data one page at a time. This approach is used because API data can sometimes be very large, and pagination breaks it into small chunks.

13 | The paginated API built in the Open Event project looks like this -

14 |

15 | {% highlight json %}

16 | {

17 | "start": 41,

18 | "limit": 20,

19 | "count": 128,

20 | "next": "/api/v2/events/page?start=61&limit=20",

21 | "previous": "/api/v2/events/page?start=21&limit=20",

22 | "results": [

23 | {

24 | "data": "data"

25 | },

26 | {

27 | "data": "data"

28 | }

29 | ]

30 | }

31 | {% endhighlight %}

32 |

33 | Let me explain what the keys in this JSON mean -

34 |

35 | 1. `start` - It is the position from which we want the data to be returned.

36 | 2. `limit` - It is the max number of items to return from that position.

37 | 3. `next` - It is the url for the next page of the query assuming current value of `limit`

38 | 4. `previous` - It is the url for the previous page of the query assuming current value of `limit`

39 | 5. `count` - It is the total count of results available in the dataset. Here, as the count is 128, the last page (keeping limit as 20) starts at start=121. When

40 | you get the page with start=121 and limit=20, only 8 items (121 to 128) are returned.

41 | 6. `results` - This is the list of results whose position lies within the bounds specified by the request.
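The `count` arithmetic from item 5 can be checked directly. Paging forward from `start=1` in steps of `limit` gives the page starts, and the last page holds whatever remains up to `count`.

```python
count, limit = 128, 20
# page start positions when paging forward from start=1 in steps of `limit`
starts = list(range(1, count + 1, limit))
print(starts)  # [1, 21, 41, 61, 81, 101, 121]
# the last page runs from its start up to `count`, inclusive
last_page_size = count - starts[-1] + 1
print(last_page_size)  # 8
```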

42 |

43 | Now let's see how to implement it. I have simplified the code to make it easier to understand.

44 |

{% highlight python %}
from flask import Flask, abort, request, jsonify
from models import Event

app = Flask(__name__)

@app.route('/api/v2/events/page')
def view():
    return jsonify(get_paginated_list(
        Event,
        '/api/v2/events/page',
        # query-string values arrive as strings, so convert them to int
        start=request.args.get('start', 1, type=int),
        limit=request.args.get('limit', 20, type=int)
    ))

def get_paginated_list(klass, url, start, limit):
    # check if page exists
    results = klass.query.all()
    count = len(results)
    if count < start:
        abort(404)
    # make response
    obj = {}
    obj['start'] = start
    obj['limit'] = limit
    obj['count'] = count
    # make URLs
    # make previous url
    if start == 1:
        obj['previous'] = ''
    else:
        start_copy = max(1, start - limit)
        # the previous page must end right before `start`
        limit_copy = min(limit, start - 1)
        obj['previous'] = url + '?start=%d&limit=%d' % (start_copy, limit_copy)
    # make next url
    if start + limit > count:
        obj['next'] = ''
    else:
        start_copy = start + limit
        obj['next'] = url + '?start=%d&limit=%d' % (start_copy, limit)
    # finally extract result according to bounds
    # (model objects must be converted to dicts before jsonify can serialize them)
    obj['results'] = results[(start - 1):(start - 1 + limit)]
    return obj
{% endhighlight %}

89 |

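90 | Just to be clear, here I am assuming you are using SQLAlchemy for the database. The `klass` parameter in the above code is the SQLAlchemy db.Model class that you want

91 | to query for the results. The `url` is the base URL of the request, here '/api/v2/events/page', and it is used in building the *previous* and *next* URLs.

92 | Other things should be clear from the code. Note that `klass.query.all()` loads every row just to slice one page out of it; for large tables, let the database do the work with `klass.query.count()` and `klass.query.offset(start - 1).limit(limit).all()`.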
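To test the bounds arithmetic without Flask or a database, the same logic can run over a plain list. `paginate` below is a hypothetical helper. It returns `None` where the Flask view would `abort(404)`. The previous page's limit is `min(limit, start - 1)`, so that page ends just before the current `start`.

```python
def paginate(items, url, start, limit):
    """Build the page dict for a plain list; `start` is 1-based as in the API."""
    count = len(items)
    if start < 1 or limit < 1 or start > count:
        return None  # the Flask view would abort(404) here
    page = {"start": start, "limit": limit, "count": count}
    if start == 1:
        page["previous"] = ""
    else:
        prev_start = max(1, start - limit)
        # the previous page must stop just before the current `start`
        prev_limit = min(limit, start - 1)
        page["previous"] = "%s?start=%d&limit=%d" % (url, prev_start, prev_limit)
    if start + limit > count:
        page["next"] = ""
    else:
        page["next"] = "%s?start=%d&limit=%d" % (url, start + limit, limit)
    # convert the 1-based `start` into a 0-based slice
    page["results"] = items[start - 1:start - 1 + limit]
    return page


page = paginate(list(range(1, 129)), "/api/v2/events/page", 41, 20)
print(page["previous"])  # /api/v2/events/page?start=21&limit=20
print(page["next"])      # /api/v2/events/page?start=61&limit=20
print(page["results"][0], page["results"][-1])  # 41 60
```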

93 |

94 | So this was how to implement your very own Paginated API framework in Flask (should say Python). I hope you found this post interesting.

95 |

96 | Until next time.

97 |

98 | Ciao

99 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-07-15-celery-flask-using.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Setting up Celery with Flask

4 | category: gsoc

5 | tags: gsoc gsoc16 celery flask

6 | ---

7 |

8 | In this article, I will explain how to use Celery with a Flask application.

9 | Celery requires a message broker to run. One of the most popular brokers is Redis.

10 | So to start using Celery with Flask, first we have to set up the Redis broker.
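Why a broker at all? In Celery, `.delay()` does not run the task itself. It puts a message on the broker, and separate worker processes pull messages off and execute them. The stdlib sketch below imitates that flow inside one process. `queue.Queue` stands in for Redis and a thread stands in for a Celery worker. It only illustrates the idea and is not how Celery is implemented.

```python
import queue
import threading

# stands in for the broker (Redis in this post): a FIFO of pending calls
broker = queue.Queue()
results = []


def delay(func, *args):
    # conceptually what .delay() does: enqueue "call func(*args)" and return
    broker.put((func, args))


def worker():
    # a worker loops forever, taking messages off the broker and running them
    while True:
        func, args = broker.get()
        try:
            results.append(func(*args))
        finally:
            broker.task_done()


def add(x, y):
    return x + y


threading.Thread(target=worker, daemon=True).start()
delay(add, 2, 3)
delay(add, 10, 20)
broker.join()  # wait until every queued message has been processed
print(results)  # [5, 30]
```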

11 |

12 | Redis can be downloaded from their site [http://redis.io](http://redis.io).

13 | I wrote a script that simplifies downloading, building and running the redis server.

14 |

15 | {% highlight bash %}

16 | #!/bin/bash

17 | # This script downloads and runs redis-server.

18 | # If redis has been already downloaded, it just runs it

19 | if [ ! -d redis-3.2.1/src ]; then

20 | wget http://download.redis.io/releases/redis-3.2.1.tar.gz

21 | tar xzf redis-3.2.1.tar.gz

22 | rm redis-3.2.1.tar.gz

23 | cd redis-3.2.1

24 | make

25 | else

26 | cd redis-3.2.1

27 | fi

28 | src/redis-server

29 | {% endhighlight %}

30 |

31 | When the above script is run for the first time, the redis folder doesn't exist, so it downloads redis, builds it and then runs it.

32 | In subsequent runs, it will skip the downloading and building part and just run the server.

33 |

34 | Now that the redis server is running, we will have to install its Python counterpart.

35 |

36 | {% highlight bash %}

37 | pip install redis

38 | {% endhighlight %}

39 |

40 | With the redis broker set up, it's time to set up the Celery extension.

41 | First install celery by using `pip install celery`.

42 | Then we need to set up celery in the Flask app definition.

43 |

{% highlight python %}
# in app.py
from os import environ

from celery import Celery
from flask import Flask

app = Flask(__name__)

def make_celery(app):
    # set redis url vars
    app.config['CELERY_BROKER_URL'] = environ.get('REDIS_URL', 'redis://localhost:6379/0')
    app.config['CELERY_RESULT_BACKEND'] = app.config['CELERY_BROKER_URL']
    # create context tasks in celery
    celery = Celery(app.import_name, broker=app.config['CELERY_BROKER_URL'])
    celery.conf.update(app.config)
    TaskBase = celery.Task
    class ContextTask(TaskBase):
        abstract = True
        def __call__(self, *args, **kwargs):
            # run every task inside the Flask application context
            with app.app_context():
                return TaskBase.__call__(self, *args, **kwargs)
    celery.Task = ContextTask
    return celery

# pass the app object itself; current_app only works inside an app context
celery = make_celery(app)
{% endhighlight %}
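The `ContextTask` subclass guarantees that every task body runs inside `app.app_context()`. This lets tasks use things like the database session that depend on the app context. The stdlib-only sketch below reproduces that wrapping pattern so it can be run without Flask or Celery. `FakeApp`, `Task` and `SendMail` are hypothetical stand-ins, not Flask or Celery classes.

```python
from contextlib import contextmanager

events = []


class FakeApp:
    """Hypothetical stand-in for a Flask app; only models app_context()."""

    @contextmanager
    def app_context(self):
        events.append("push context")
        try:
            yield
        finally:
            events.append("pop context")


class Task:
    """Hypothetical stand-in for celery.Task: calling an instance runs run()."""

    def __call__(self, *args, **kwargs):
        return self.run(*args, **kwargs)


def make_context_task(app, base):
    class ContextTask(base):
        def __call__(self, *args, **kwargs):
            # same idea as make_celery(): each call happens inside app_context()
            with app.app_context():
                return super().__call__(*args, **kwargs)
    return ContextTask


ContextTask = make_context_task(FakeApp(), Task)


class SendMail(ContextTask):
    def run(self, to):
        events.append("sending to %s" % to)
        return "sent"


result = SendMail()("a@example.com")
print(result)  # sent
print(events)  # ['push context', 'sending to a@example.com', 'pop context']
```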

64 |

65 | Now that Celery is setup on our project, let's define a sample task.

66 |

{% highlight python %}
@app.route('/task')
def view():
    # .delay() queues the task on the broker and returns immediately
    background_task.delay()
    return 'OK'

@celery.task
def background_task(*args, **kwargs):
    # long-running code goes here; it is executed by a Celery worker
    pass
{% endhighlight %}

78 |

79 | Now to run the celery workers, execute

80 |

81 | {% highlight bash %}

82 | celery -A app.celery worker

83 | {% endhighlight %}

84 |

85 | That should be all. Now to run our little project, we can execute the following script.

86 |

87 | {% highlight bash %}

88 | bash run_redis.sh & # to run redis

89 | celery -A app.celery worker & # to run celery workers

90 | python app.py

91 | {% endhighlight %}

92 |

93 | If you are wondering how to run the same on Heroku, just use the [heroku-redis](https://elements.heroku.com/addons/heroku-redis) add-on.

94 | It provisions a Redis server for your app and exposes its address in the `REDIS_URL` config var, which `make_celery` reads. Then, to run the workers and the app, set the Procfile as -

95 |

96 | {% highlight yaml %}

97 | web: sh heroku.sh

98 | {% endhighlight %}

99 |

100 | Then set the heroku.sh as -

101 |

102 | {% highlight bash %}

103 | #!/bin/bash

104 | celery -A app.celery worker &

105 | gunicorn app:app

106 | {% endhighlight %}

107 |

108 |

109 | That's a basic guide on how to run a Flask app with Celery and Redis.

110 | If you want more information on this topic, please see my post

111 | [Ideas on Using Celery in Flask for background tasks](http://aviaryan.in/blog/gsoc/celery-flask-good-ideas.html).

112 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-07-02-jwt-intro.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Introduction to JWT

4 | category: gsoc

5 | tags: gsoc gsoc16

6 | ---

7 |

8 | In this post, I will try to explain what JWT is, what its advantages are and why you should be using it.

9 |

10 | [JWT](https://jwt.io) stands for JSON Web Tokens. Let me explain what each word means.

11 |

12 | 1. Tokens - Token is in tech terms a piece of data (claim) which gives access to certain piece of information and allows certain actions.

13 | 2. Web - Web here means that it was designed to be used on the web i.e. web projects.

14 | 3. JSON - JSON means that the token carries JSON data. In JWT, the JSON is serialized and then [Base64url encoded](https://en.wikipedia.org/wiki/Base64) (the URL-safe variant of Base64, without padding).

15 |

16 | A JWT looks like a random sequence of characters split into three parts by two dots. The `yyyyy` part below is the Base64url encoded form of the JSON data mentioned earlier.

17 |

18 | {% highlight bash %}

19 | xxxxx.yyyyy.zzzzz

20 | {% endhighlight %}

21 | The 3 parts in order are -

22 |

23 | * Header - The header is the Base64url encoded JSON that names the algorithm used to sign the token (for example HS256) and the token type.

24 | * Payload - The payload is the Base64url encoded JSON data that needs to be shared through the token.

25 | The JSON can include some registered keys like `iss` (issuer), `exp` (expiration time), `sub` (subject) etc. `exp` is particularly interesting, as it lets you specify the

26 | expiry time of the token.

27 |

28 | At this point you might be wondering how JWT is secure if all we are doing is Base64 encoding the payload. After all, Base64 is easy to decode.

29 | This is where the 3rd part (zzzzz) comes in.

30 |

31 | * Signature - The signature is computed from the first two parts of the token (header and payload) and a `secret`. The secret must stay confidential to the server

32 | that issues and verifies the tokens. The signature does not hide the payload, which anyone can decode; it lets the server detect any change to the header or payload. This is how the signature is created (assuming HMAC-SHA256 as the algorithm):

33 |

34 | {% highlight python %}

35 | HMACSHA256(

36 | xxxxx + "." + yyyyy,

37 | secret)

38 | {% endhighlight %}
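For a concrete version of the formula, here is a minimal HS256 signer using only the Python standard library. It is a sketch for understanding the format. In a real project, one of the JWT libraries mentioned later in this post is the better choice. `sign_hs256` and the Base64url helpers are hypothetical names. The JSON is serialized compactly, and Base64url output has its `=` padding stripped, as JWT requires.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(data: bytes) -> str:
    # JWT uses the URL-safe Base64 alphabet with '=' padding removed
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # restore the padding that b64url_encode stripped, then decode
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(payload: dict, secret: str) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    # xxxxx and yyyyy: compact JSON, then Base64url
    header_part = b64url_encode(json.dumps(header, separators=(",", ":")).encode())
    payload_part = b64url_encode(json.dumps(payload, separators=(",", ":")).encode())
    signing_input = ("%s.%s" % (header_part, payload_part)).encode("ascii")
    # zzzzz: HMAC-SHA256 over "xxxxx.yyyyy" keyed with the secret
    signature = hmac.new(secret.encode(), signing_input, hashlib.sha256).digest()
    return "%s.%s.%s" % (header_part, payload_part, b64url_encode(signature))


token = sign_hs256({"username": "john.doe"}, "my-secret")
print(token.count("."))  # 2
```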

39 |

40 | #### How to use JWT for authentication

41 |

42 | Once you realize it, the idea of JWT is quite simple. To use JWT for authentication, have the client POST their username and password to a certain URL.

43 | If the combination is correct, return a JWT with the `username` in the payload. So the payload looks like -

44 |

45 | {% highlight json %}

46 | {

47 | "username": "john.doe"

48 | }

49 | {% endhighlight %}

50 |

51 | Once the client has this JWT, it sends it in a request header (typically `Authorization`) when accessing protected routes. The server reads the JWT from the header and verifies it by recomputing the signature from the header, payload and secret, and comparing the result with the signature in the token (the zzzzz part).

52 | If they match, the JWT is valid and the request can be given access to the route.

53 | BTW, you won't have to do all this by hand to use JWT for authentication; there are already plenty of [libraries](https://jwt.io/#libraries-io) that do it for

54 | you.
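For the server side, here is a minimal stdlib sketch of HS256 verification. It recomputes the signature, compares it in constant time, and rejects expired tokens. `verify_hs256` is a hypothetical name, and the sketch only accepts `HS256`. A maintained JWT library would also handle other algorithms and claims.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def verify_hs256(token: str, secret: str):
    """Return the payload if `token` is a valid, unexpired HS256 JWT, else None."""
    try:
        header_part, payload_part, signature_part = token.split(".")
        header = json.loads(b64url_decode(header_part))
        if header.get("alg") != "HS256":
            return None  # only accept the algorithm we expect
        signing_input = ("%s.%s" % (header_part, payload_part)).encode("ascii")
        expected = hmac.new(secret.encode(), signing_input, hashlib.sha256).digest()
        # compare_digest avoids leaking how many leading bytes matched
        if not hmac.compare_digest(expected, b64url_decode(signature_part)):
            return None
        payload = json.loads(b64url_decode(payload_part))
    except ValueError:
        # wrong number of parts, bad Base64 or bad JSON (all ValueError subclasses)
        return None
    # reject the token once its optional `exp` claim (Unix seconds) has passed
    if "exp" in payload and time.time() >= payload["exp"]:
        return None
    return payload


# usage: build a token by hand, then verify it
h = b64url_encode(b'{"alg":"HS256","typ":"JWT"}')
p = b64url_encode(b'{"username":"john.doe"}')
sig = b64url_encode(hmac.new(b"my-secret", ("%s.%s" % (h, p)).encode(), hashlib.sha256).digest())
token = "%s.%s.%s" % (h, p, sig)
print(verify_hs256(token, "my-secret"))     # {'username': 'john.doe'}
print(verify_hs256(token, "wrong-secret"))  # None
```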

55 |

56 |

57 | #### Why use JWT over auth tokens ?

58 |

59 | As you might have noticed in the previous section, JWT has a payload field that can contain any type of information.

60 | If you include `username` in it, you can identify the user just by validating the JWT, with no need to read from the database, unlike typical tokens which require a database lookup to find the claimed user.

61 | If you also include permission information in the JWT (like `"isAdmin": true`), even more database reads can be avoided.

62 | The trade-off is that these claims can't change until the token expires, so a revoked user or a changed permission only takes effect once a new token is issued (unless the server keeps its own revocation list); keeping `exp` short limits this. For most APIs the saved database reads are well worth it, and this is why you should be using JWT.

63 |

64 |

65 | We at [Open Event](https://github.com/fossasia/open-event) use JWT as our primary means of authentication. Apart from that, we support basic authentication too.

66 | Read [this post](http://aviaryan.in/blog/gsoc/auth-flask-done-right.html) for some points about that.

67 |

68 | That's it for now. Thanks for reading.

69 |

--------------------------------------------------------------------------------

/assets/css/mojombo.css:

--------------------------------------------------------------------------------

1 | /** https://github.com/mojombo/tpw/blob/master/css/syntax.css **/

2 | .highlight pre { background: rgb(241, 241, 241); padding: 10px 15px;}

3 | .highlight .c { color: #999988; font-style: italic } /* Comment */

4 | .highlight .err { color: #a61717; background-color: #e3d2d2 } /* Error */

5 | .highlight .k { font-weight: bold } /* Keyword */

6 | .highlight .o { font-weight: bold } /* Operator */

7 | .highlight .cm { color: #999988; font-style: italic } /* Comment.Multiline */

8 | .highlight .cp { color: #999999; font-weight: bold } /* Comment.Preproc */

9 | .highlight .c1 { color: #999988; font-style: italic } /* Comment.Single */

10 | .highlight .cs { color: #999999; font-weight: bold; font-style: italic } /* Comment.Special */

11 | .highlight .gd { color: #000000; background-color: #ffdddd } /* Generic.Deleted */

12 | .highlight .gd .x { color: #000000; background-color: #ffaaaa } /* Generic.Deleted.Specific */

13 | .highlight .ge { font-style: italic } /* Generic.Emph */

14 | .highlight .gr { color: #aa0000 } /* Generic.Error */

15 | .highlight .gh { color: #999999 } /* Generic.Heading */

16 | .highlight .gi { color: #000000; background-color: #ddffdd } /* Generic.Inserted */

17 | .highlight .gi .x { color: #000000; background-color: #aaffaa } /* Generic.Inserted.Specific */

18 | .highlight .go { color: #888888 } /* Generic.Output */

19 | .highlight .gp { color: #555555 } /* Generic.Prompt */

20 | .highlight .gs { font-weight: bold } /* Generic.Strong */

21 | .highlight .gu { color: #aaaaaa } /* Generic.Subheading */

22 | .highlight .gt { color: #aa0000 } /* Generic.Traceback */

23 | .highlight .kc { font-weight: bold } /* Keyword.Constant */

24 | .highlight .kd { font-weight: bold } /* Keyword.Declaration */

25 | .highlight .kp { font-weight: bold } /* Keyword.Pseudo */

26 | .highlight .kr { font-weight: bold } /* Keyword.Reserved */

27 | .highlight .kt { color: #445588; font-weight: bold } /* Keyword.Type */

28 | .highlight .m { color: #009999 } /* Literal.Number */

29 | .highlight .s { color: #d14 } /* Literal.String */

30 | .highlight .na { color: #008080 } /* Name.Attribute */

31 | .highlight .nb { color: #0086B3 } /* Name.Builtin */

32 | .highlight .nc { color: #445588; font-weight: bold } /* Name.Class */

33 | .highlight .no { color: #008080 } /* Name.Constant */

34 | .highlight .ni { color: #800080 } /* Name.Entity */

35 | .highlight .ne { color: #990000; font-weight: bold } /* Name.Exception */

36 | .highlight .nf { color: #990000; font-weight: bold } /* Name.Function */

37 | .highlight .nn { color: #555555 } /* Name.Namespace */

38 | .highlight .nt { color: #000080 } /* Name.Tag */

39 | .highlight .nv { color: #008080 } /* Name.Variable */

40 | .highlight .ow { font-weight: bold } /* Operator.Word */

41 | .highlight .w { color: #bbbbbb } /* Text.Whitespace */

42 | .highlight .mf { color: #009999 } /* Literal.Number.Float */

43 | .highlight .mh { color: #009999 } /* Literal.Number.Hex */

44 | .highlight .mi { color: #009999 } /* Literal.Number.Integer */

45 | .highlight .mo { color: #009999 } /* Literal.Number.Oct */

46 | .highlight .sb { color: #d14 } /* Literal.String.Backtick */

47 | .highlight .sc { color: #d14 } /* Literal.String.Char */

48 | .highlight .sd { color: #d14 } /* Literal.String.Doc */

49 | .highlight .s2 { color: #d14 } /* Literal.String.Double */

50 | .highlight .se { color: #d14 } /* Literal.String.Escape */

51 | .highlight .sh { color: #d14 } /* Literal.String.Heredoc */

52 | .highlight .si { color: #d14 } /* Literal.String.Interpol */

53 | .highlight .sx { color: #d14 } /* Literal.String.Other */

54 | .highlight .sr { color: #009926 } /* Literal.String.Regex */

55 | .highlight .s1 { color: #d14 } /* Literal.String.Single */

56 | .highlight .ss { color: #990073 } /* Literal.String.Symbol */

57 | .highlight .bp { color: #999999 } /* Name.Builtin.Pseudo */

58 | .highlight .vc { color: #008080 } /* Name.Variable.Class */

59 | .highlight .vg { color: #008080 } /* Name.Variable.Global */

60 | .highlight .vi { color: #008080 } /* Name.Variable.Instance */

61 | .highlight .il { color: #009999 } /* Literal.Number.Integer.Long */

--------------------------------------------------------------------------------

/_posts/gsoc/2016-08-04-dynamic-marshal-restplus.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Dynamically marshalling output in Flask Restplus

4 | category: gsoc

5 | tags: gsoc gsoc16 flask-restplus flask

6 | ---

7 |

8 | Do you use [Flask-Restplus](https://github.com/noirbizarre/flask-restplus)? Have you ever needed to modify API output dynamically based on some condition?

9 | If yes, then this post is for you.

10 |

11 | In this post, I will show how to use decorators to restrict GET API output. So let's start.

12 |

13 | This is the basic code to create an API. Here we have created a `get_speaker` API to get a single item from the Speaker model.

14 |

15 | {% highlight python %}

16 | from flask_restplus import Resource, Model, fields, Namespace

17 | from models import Speaker as SpeakerModel  # aliased: the Resource below is also named Speaker

18 |

19 | api = Namespace('speakers', description='Speakers', path='/')

20 |

21 | SPEAKER = Model('Name', {

22 |     'id': fields.Integer(),

23 |     'name': fields.String(),

24 |     'phone': fields.String()

25 | })

26 |

27 | class DAO:

28 |     def get(speaker_id):

29 |         return SpeakerModel.query.get(speaker_id)

30 |

31 | @api.route('/speakers/<int:speaker_id>')

32 | class Speaker(Resource):

33 |     @api.doc('get_speaker')

34 |     @api.marshal_with(SPEAKER)

35 |     def get(self, speaker_id):

36 |         """Fetch a speaker given its id"""

37 |         return DAO.get(speaker_id)

38 | {% endhighlight %}

39 |

40 | Now suppose we need to change the returned API data based on some condition, e.g. return the `phone` field of the SPEAKER model only if the user is authenticated.

41 | One way to do this is to add conditional statements in the `get` method that marshal the output according to the situation. But if lots of methods require this,

42 | then repeating those conditions everywhere is not a good approach.

43 |

44 | So let's create a decorator which can swap the `marshal_with` decorator at runtime. It will accept, as parameters, the models to marshal with in the authenticated and non-authenticated cases.

45 |

46 | {% highlight python %}

47 | from functools import wraps

48 | from flask_login import current_user

49 | from flask_restplus import marshal_with

50 |

51 | def selective_marshal_with(fields, fields_private):

52 |     """Selective response marshalling. Doesn't update apidoc."""

53 |     def decorator(func):

54 |         @wraps(func)

55 |         def wrapper(*args, **kwargs):

56 |             # Pick the model on every request, based on the current user

57 |             if current_user.is_authenticated:

58 |                 model = fields

59 |             else:

60 |                 model = fields_private

61 |             func2 = marshal_with(model)(func)

62 |             return func2(*args, **kwargs)

63 |         return wrapper

64 |     return decorator

65 | {% endhighlight %}

66 |

67 | The above code adds a wrapper over the API function which checks if the user is authenticated. If so, the `fields` model is used for marshalling; otherwise

68 | `fields_private` is used.

69 |
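To see the pattern without Flask, here is a minimal, framework-free sketch of the same idea. It rests on some assumptions: a plain list of field names stands in for a Flask-Restplus model, and a simple `marshal` helper keeps only the listed keys. A hypothetical `is_authenticated` callable takes the place of flask_login's `current_user`. None of these are the real Flask-Restplus or Flask-Login APIs.

```python
from functools import wraps

def marshal(data, fields):
    """Keep only the keys listed in `fields` (a stand-in for Flask-Restplus marshalling)."""
    return {key: data.get(key) for key in fields}

def selective_marshal_with(fields, fields_private, is_authenticated):
    """Pick the field set on every call, then marshal the wrapped function's result."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            model = fields if is_authenticated() else fields_private
            return marshal(func(*args, **kwargs), model)
        return wrapper
    return decorator

SPEAKER = ['id', 'name', 'phone']
SPEAKER_PRIVATE = ['id', 'name']

# Hypothetical login flag standing in for flask_login's current_user.
auth_state = {'logged_in': False}

@selective_marshal_with(SPEAKER, SPEAKER_PRIVATE, lambda: auth_state['logged_in'])
def get_speaker(speaker_id):
    return {'id': speaker_id, 'name': 'Jay Sean', 'phone': '555-0100'}

print(get_speaker(1))  # {'id': 1, 'name': 'Jay Sean'}
auth_state['logged_in'] = True
print(get_speaker(1))  # {'id': 1, 'name': 'Jay Sean', 'phone': '555-0100'}
```

The choice of model happens inside `wrapper`, i.e. per call, which is what lets one decorated function serve both audiences.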

70 | So let's create the private model for `SPEAKER`. We will call it `SPEAKER_PRIVATE`.

71 |

72 | {% highlight python %}

73 | from flask_restplus import Model, fields

74 |

75 | SPEAKER_PRIVATE = Model('NamePrivate', {

76 |     'id': fields.Integer(),

77 |     'name': fields.String()

78 | })

79 | {% endhighlight %}

80 |

81 | The final step is attaching the `selective_marshal_with` decorator to the get() method.

82 |

83 | {% highlight python %}

84 | @api.route('/speakers/<int:speaker_id>')

85 | class Speaker(Resource):

86 |     @api.doc('get_speaker', model=SPEAKER)

87 |     @selective_marshal_with(SPEAKER, SPEAKER_PRIVATE)

88 |     def get(self, speaker_id):

89 |         """Fetch a speaker given its id"""

90 |         return DAO.get(speaker_id)

91 | {% endhighlight %}

92 |

93 | You will notice that I removed `@api.marshal_with(SPEAKER)`. This was to disable automatic marshalling of output by flask-restplus. To compensate for this, I have added

94 | `model=SPEAKER` in `api.doc`. It will not auto-marshal the output but will still show the swagger documentation.

95 |

96 | That's it. The `get` method will now switch the marshalling model according to the authentication level of the user.

97 | As you may notice, the `selective_marshal_with` function is generic and can be used with other models and APIs too.

98 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-08-16-docker-using-alpine.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Small Docker images using Alpine Linux

4 | category: gsoc

5 | tags: gsoc gsoc16 docker

6 | ---

7 |

8 | Everyone likes optimization, small file sizes and such. Wouldn't it be great if you could reduce your Docker image sizes by a factor of 2 or more?

9 | Say hello to [Alpine Linux](https://hub.docker.com/_/alpine/).

10 | It is a minimal Linux distribution weighing only about 5 MB. It still has the basic Linux tools and a nice package manager, APK, which is quite stable and has a considerable number of

11 | packages.

12 |

13 | {% highlight bash %}

14 | apk add python gcc

15 | {% endhighlight %}

16 |

17 | In this post, my main goal is to show how to squeeze the most out of Alpine Linux to create the smallest possible Docker image. So let's start.

18 |

19 |

20 | ### Step 1: Use AlpineLinux based images

21 |

22 | OK, I know that's obvious, but for the sake of completeness I will state it anyway: prefer Alpine-based images wherever possible.

23 | [Python](https://hub.docker.com/_/python/) and Redis have official Alpine-based images, whereas NodeJS has good unofficial Alpine-based images.

24 | Same goes for Postgres, Ruby and other popular environments.

25 |

26 |

27 | ### Step 2: Install only needed dependencies

28 |

29 | Prefer installing select dependencies over installing a package that contains lots of them. For example, prefer installing `gcc` and development libraries over buildpacks.

30 | You can find a listing of Alpine packages on their [website](https://pkgs.alpinelinux.org/packages).

31 |

32 | **Pro Tip** - A great list of Debian v/s Alpine development packages is on the [alpine-buildpack-deps](https://hub.docker.com/r/praekeltfoundation/alpine-buildpack-deps/) Docker Hub page (scroll down to Packages). It is a very complete list, so you will almost always find the dependency you are looking for.

33 |

34 |

35 | ### Step 3: Delete build dependencies after use

36 |

37 | Build dependencies are required by components/libraries to build native extensions for the platform. Once the build is done, they are not needed.

38 | So you should delete the build-dependencies after their job is complete. Have a look at the following snippet.

39 |

40 | {% highlight bash %}

41 | RUN apk add --virtual build-dependencies gcc python-dev linux-headers musl-dev postgresql-dev \

42 | && pip install -r requirements.txt \

43 | && apk del build-dependencies

44 | {% endhighlight %}

45 |

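46 | I am using `--virtual` to group the packages installed in that step under a single label (`build-dependencies`), and once `pip install` is done, I delete them all at once with `apk del build-dependencies`. Doing all of this in a single `RUN` matters: if the packages were deleted in a separate `RUN`, they would still take up space in the earlier image layer.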

47 |

48 |

49 | ### Step 4: Remove cache

50 |

51 | The package cache can take up a lot of unneeded space, so always run `apk add` with the `--no-cache` flag.

52 |

53 | {% highlight bash %}

54 | RUN apk add --no-cache package1 package2

55 | {% endhighlight %}

56 |

57 | If you are using npm for managing project dependencies and bower for managing frontend dependencies, it is recommended to clear their caches too.

58 |

59 | {% highlight bash %}

60 | RUN npm cache clean --force && bower cache clean

61 | {% endhighlight %}

62 |

63 |

64 | ### Step 5: Learn from the experts

65 |

66 | Official images on Docker Hub are open source, meaning that their Dockerfiles are freely available. Since the official images are made as efficient as possible,

67 | it's easy to find great tricks in them on how to achieve optimum performance and compact size. So when viewing an image on Docker Hub, don't forget to peek into its

68 | Dockerfile; it helps more than you can imagine.

69 |

70 |

71 | ## Conclusion

72 |

73 | That's all I have for now. I will keep you updated on new tips if I find any. In my personal experience, I found AlpineLinux to be worth using.

74 | I tried deploying [Open Event Server](https://github.com/fossasia/open-event-orga-server) on Alpine but faced some issues, so I ended up creating a Dockerfile

75 | using [debian:jessie](https://hub.docker.com/_/debian/).

76 | But for small projects, I would recommend Alpine.

77 | On large and complex projects, however, you may face issues with Alpine at times. That may be due to a lack of packages, lack of library support or something else.

78 | But it's not impossible to overcome those issues so if you try hard enough, you can get your app running on Alpine.

79 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-07-25-open-event-import-export-algo.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Import/Export feature of Open Event - Challenges

4 | category: gsoc

5 | tags: gsoc gsoc16

6 | ---

7 |

8 | We have developed a nice import/export feature as a part of our GSoC project [Open Event](https://github.com/fossasia/open-event).

9 | It allows users to export an event and later import it back.

10 |

11 | An event contains data like tracks, sessions, microlocations etc.

12 | When I was developing the basic part of this feature, the challenge was how to export the data and then import the same data back.

13 | I needed a format that stores the data completely and is recognized by the current system.

14 | This is when I decided to use the APIs.

15 |

16 | API documentation of Open Event project is at [http://open-event.herokuapp.com/api/v2](http://open-event.herokuapp.com/api/v2). We have a considerably rich API covering most

17 | aspects of the system.

18 | For the export, I adopted this very simple technique.

19 |

20 | 1. Call the corresponding GET APIs (tracks, sessions etc) for a database model internally.

21 | 2. Save the data in separate json files.

22 | 3. Zip them all and done.
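The three export steps above can be sketched in Python as follows. This is a minimal illustration, not the Open Event code. `fetch` is a hypothetical callable standing in for calling the GET APIs internally, and the model names are assumed. The archive is built in memory with the standard `zipfile` module.

```python
import io
import json
import zipfile

def export_event(fetch, models=('tracks', 'speakers', 'sessions')):
    """Call the GET side for each model, save one JSON file per model, zip them all."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, 'w', zipfile.ZIP_DEFLATED) as archive:
        for model in models:
            # e.g. tracks.json holds exactly what the tracks GET API returned
            archive.writestr(model + '.json', json.dumps(fetch(model), indent=2))
    return buffer.getvalue()

# Hypothetical stand-in for the internal GET API calls.
fake_api = {
    'tracks': [{'id': 1, 'name': 'Warmups'}],
    'speakers': [{'id': 1, 'name': 'Jay Sean'}],
    'sessions': [{'id': 1, 'track': {'id': 1, 'name': 'Warmups'},
                  'speakers': [{'id': 1, 'name': 'Jay Sean'}]}],
}
data = export_event(fake_api.get)
with zipfile.ZipFile(io.BytesIO(data)) as archive:
    print(sorted(archive.namelist()))  # ['sessions.json', 'speakers.json', 'tracks.json']
```

Writing the raw GET output unchanged keeps the export trivial; all the shape conversion is deferred to the import side.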

23 |

24 | This was very simple and convenient. Then came the real challenge: importing the event from the exported data.

25 | As the exported data was nothing but JSON, we could have re-created the event by sending the data back as POST requests.

26 | But this was not that easy because the data formats are not exactly the same for GET and POST requests.

27 |

28 | Example -

29 |

30 | Sessions GET --

31 |

32 | {% highlight json %}

33 | {

34 | "speakers": [

35 | {

36 | "id": 1,

37 | "name": "Jay Sean"

38 | }

39 | ],

40 | "track": {

41 | "id": 1,

42 | "name": "Warmups"

43 | }

44 | }

45 | {% endhighlight %}

46 |

47 | Sessions POST --

48 |

49 | {% highlight json %}

50 | {

51 | "speaker_ids": [1],

52 | "track_id": 1

53 | }

54 | {% endhighlight %}

55 |

56 | So the exported data can only be imported once it has been converted to POST form. Luckily, the only difference between the GET and POST APIs was in the related attributes, where the

57 | dictionary in GET was replaced with just the ID in POST/PUT.

58 | So when importing, I had to convert these dicts to their POST counterparts. For this, all I had to do was list all dict-type keys

59 | and extract the `id` key from them.

60 | I defined the following global variable listing all dict keys (each entry gives the GET key, the POST key and the related model), and then wrote a function to extract the IDs and convert the keys.

61 |

62 | {% highlight python %}

63 | RELATED_FIELDS = {

64 | 'sessions': [

65 | ('track', 'track_id', 'tracks'),

66 | ('speakers', 'speaker_ids', 'speakers'),

67 | ]

68 | }

69 | {% endhighlight %}
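Here is a minimal sketch of such a conversion function. It assumes each `RELATED_FIELDS` entry is `(GET key, POST key, related model)` as in the listing above. The function name `get_to_post` and the extra `title` field in the sample are hypothetical, and the actual Open Event function may differ.

```python
import copy

RELATED_FIELDS = {
    'sessions': [
        ('track', 'track_id', 'tracks'),
        ('speakers', 'speaker_ids', 'speakers'),
    ]
}

def get_to_post(model, item):
    """Convert one exported (GET-shaped) item into the POST/PUT shape."""
    data = copy.deepcopy(item)  # leave the exported data untouched
    for get_key, post_key, _related_model in RELATED_FIELDS.get(model, []):
        if get_key not in data:
            continue
        value = data.pop(get_key)
        if value is None:
            data[post_key] = None
        elif isinstance(value, list):  # to-many relation, e.g. speakers
            data[post_key] = [related['id'] for related in value]
        else:  # to-one relation, e.g. track
            data[post_key] = value['id']
    return data

session_get = {
    'title': 'Opening',
    'speakers': [{'id': 1, 'name': 'Jay Sean'}],
    'track': {'id': 1, 'name': 'Warmups'},
}
print(get_to_post('sessions', session_get))
# {'title': 'Opening', 'track_id': 1, 'speaker_ids': [1]}
```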

70 |

71 |

72 | #### Second challenge

73 |

74 | Then I realized there was an even tougher problem: how to re-create the relations.

75 | In the above JSON, you may have noticed that a session can be related to speaker(s) and a track. These relations are managed using the IDs of the items.

76 | When an event is imported, the IDs are bound to change, so the old IDs become outdated, i.e. a track which was at ID 62 when exported can be at ID 92 when imported.

77 | This will cause the relationships to break.

78 | So to counter this problem, I did the following -

79 |

80 | 1. Import items in a specific order, independent first

81 | 2. Store a map of old IDs v/s new IDs.

82 | 3. When dependent items are to be created, get new ID from the map and relate with it.

83 |

84 | Let me explain the above -

85 |

86 | The first step was to import/re-create the independent items. Here the independent items are tracks and speakers, and the dependent item is the session.

87 | While creating the independent items, store their new IDs after creation, building a map of old IDs v/s new IDs.

88 | This map will hold a clue to *what became what* after they were recreated from the JSON.

89 | Now the key final step: when dependent items are to be created, find the independent *related* keys in their JSON using the *RELATED_FIELDS* listing defined above.

90 | Once they are found, extract their IDs and find the new ID corresponding to their old ID.

91 | Link the new ID with the dependent item and that would be all.
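The three steps can be sketched as below. This is an illustrative, self-contained version that rests on some assumptions:

- `create(model, payload)` is a hypothetical callable that stores an item and returns its new ID. In Open Event this would be the internal POST call.
- Tracks and speakers are treated as independent, and sessions as dependent.
- The `RELATED_FIELDS` listing from above is repeated so the snippet runs on its own.

```python
import itertools

RELATED_FIELDS = {
    'sessions': [
        ('track', 'track_id', 'tracks'),
        ('speakers', 'speaker_ids', 'speakers'),
    ]
}
INDEPENDENT = ('tracks', 'speakers')  # assumed: no relations, import first
DEPENDENT = ('sessions',)             # assumed: relate to the items above

def without_id(item):
    """Drop the old ID; the database assigns a new one on create."""
    return {key: value for key, value in item.items() if key != 'id'}

def import_event(exported, create):
    id_map = {}  # e.g. {'tracks': {old_id: new_id}}
    # Steps 1 and 2: create independent items and remember old -> new IDs.
    for model in INDEPENDENT:
        id_map[model] = {}
        for item in exported.get(model, []):
            id_map[model][item['id']] = create(model, without_id(item))
    # Step 3: create dependent items, relating them through the new IDs.
    for model in DEPENDENT:
        for item in exported.get(model, []):
            payload = without_id(item)
            for get_key, post_key, related in RELATED_FIELDS.get(model, []):
                value = payload.pop(get_key, None)
                if isinstance(value, list):
                    payload[post_key] = [id_map[related][v['id']] for v in value]
                elif value is not None:
                    payload[post_key] = id_map[related][value['id']]
            create(model, payload)
    return id_map

# Hypothetical in-memory "database" that hands out new IDs from 90 upwards.
created = []
new_ids = itertools.count(90)

def fake_create(model, payload):
    created.append((model, payload))
    return next(new_ids)

exported = {
    'tracks': [{'id': 62, 'name': 'Warmups'}],
    'speakers': [{'id': 1, 'name': 'Jay Sean'}],
    'sessions': [{'id': 5, 'title': 'Opening',
                  'track': {'id': 62, 'name': 'Warmups'},
                  'speakers': [{'id': 1, 'name': 'Jay Sean'}]}],
}
id_map = import_event(exported, fake_create)
print(id_map)        # {'tracks': {62: 90}, 'speakers': {1: 91}}
print(created[-1])   # ('sessions', {'title': 'Opening', 'track_id': 90, 'speaker_ids': [91]})
```

The import order is what makes the map usable. Every related ID a session needs has already been created and recorded by the time the session is built.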

92 |

93 | This post covers the main challenges I faced when developing the import/export feature and how I overcame them.

94 | I hope it will provide some help when you are dealing with similar problems.

95 |

96 |

--------------------------------------------------------------------------------

/_posts/2018-05-11-introducing-chattt.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: "Introducing Chattt - an open source CLI chat client"

4 | tags: open-source project github

5 | ---

6 |

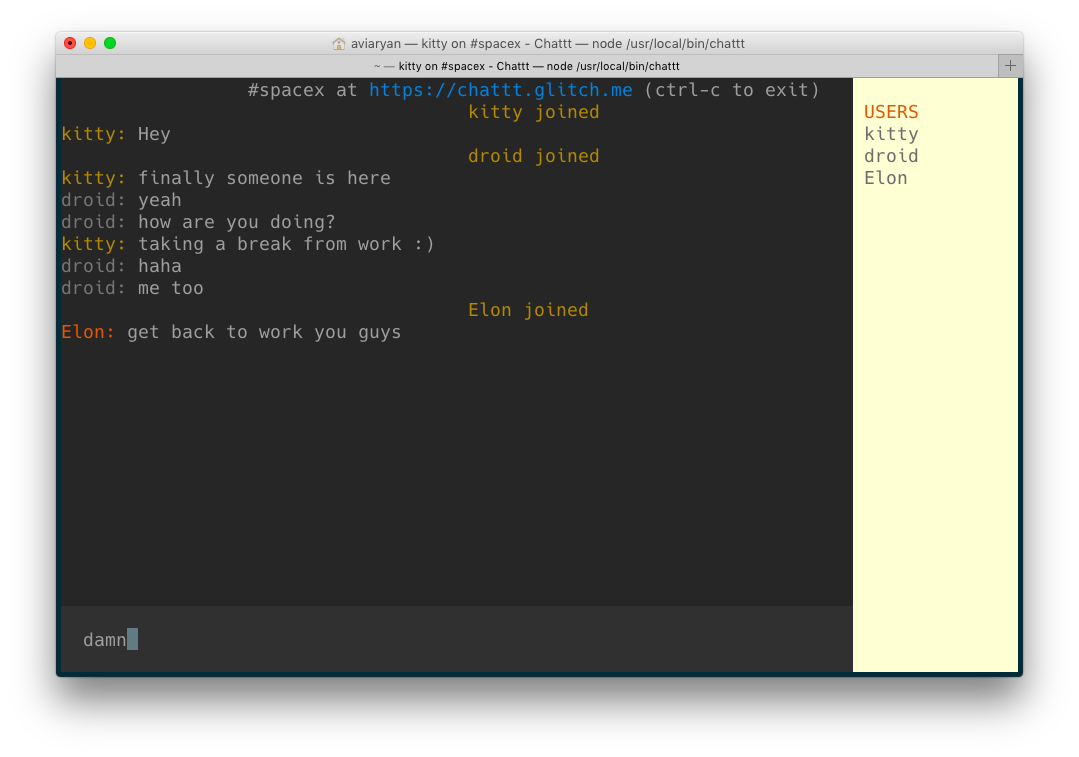

7 |

8 |

9 |

10 |

11 | For the past 2 months I have been slowly working on a hobby project called "Chattt", and I recently released [v0.2](https://github.com/aviaryan/chattt/releases/tag/v0.2.0). It's ready for public use now, so I thought I should share it with everyone.

12 |

13 | ## So what is Chattt?

14 |

15 | Chattt is an open-source, terminal-based chat system. Its selling point is that it is very simple to use and doesn't require much technical knowledge. I created it because I always wanted a simpler chat system that worked right from the terminal. I know you might say "IRC does the same thing", but I have always found IRC too complex, though that could be because I haven't given it much of a shot.

16 |

17 | Whatever the reason, I went ahead with this project and even published it on `npm`. Here is how you can install and use it.

18 |

19 | ```sh

20 | $ npm install -g chattt

21 | $ chattt

22 | ```

23 | Once installed, it's ready to use. The video below shows a demo.

24 |

25 |

26 |

27 |

28 |

29 |

30 | ## The Development

31 |

32 | Chattt was developed entirely in **JavaScript** (JS), which also happens to be my new favorite language. I had to create two GitHub repositories for it since it has both a [backend](https://github.com/aviaryan/chattt-backend) and a [frontend](https://github.com/aviaryan/chattt) part.

33 |

34 | The backend part of Chattt is an ExpressJS server using [socket.io](http://socket.io/) to interact with chat clients, i.e. the Chattt CLI instances. For those who don't know, `socket.io` is a convenient library for using web sockets in your projects. Chattt uses it to implement its core, i.e. the chatting feature.

35 |

36 | I also needed a place to host the backend. Since it was just a hobby project and I wasn't too sure about its success, I didn't want to spend money on a compute server. But then I learned about [Glitch](https://glitch.com/) which allows hosting of NodeJS projects for free. I gave it a try and after a few attempts, I was able to host [chattt's backend on it](https://glitch.com/edit/#!/chattt).

37 |

38 | Coming to the frontend part, I had to make a CLI for Chattt. This was a real challenge for me because a CLI chat application needs a very dynamic command-line interface, i.e. the terminal window should update in place. I didn't know how to do such a thing. But then I stumbled upon [blessed](https://github.com/chjj/blessed), a terminal interface library which allowed me to do just that. Excited, I started learning how to use it. This was quite challenging since blessed's documentation was massive and I didn't understand the terminology used there (I hadn't done CLI programming before). But then I came upon [gitter-cli](https://github.com/RodrigoEspinosa/gitter-cli), an open-source CLI chat client for [Gitter](https://gitter.im/) which also used the `blessed` library. Going through its codebase helped me pick up `blessed`'s concepts, and I was finally able to develop Chattt's user interface (UI).

39 |

40 | Connecting the UI to the backend to display chats was fairly easy once the UI was done, so I won't be talking about that. In the end, I think I did an impressive job on the UI; this is what it looks like now.

41 |

42 |

43 |


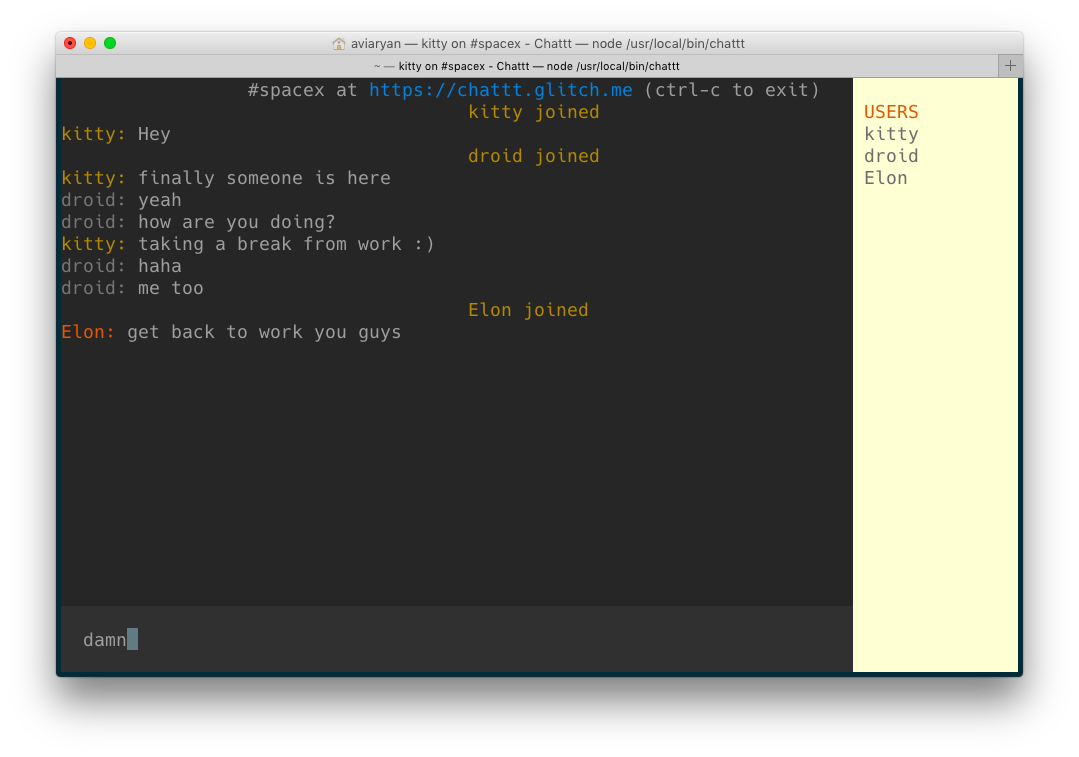


44 |

45 |

46 |

47 | ## What's Next?

48 |

49 | Glad you asked. Chattt is still in an early phase (only `v0.2`) and I plan to add some more features to it. The most notable ones are -

50 |

51 | - Feature to set custom backend server in the client [v0.3]

52 | - Feature to view active user list of a channel before actually joining it [v0.3]

53 |

54 | I also plan to generalize the Chattt frontend to support other chat/communication providers like Telegram and Discord. Many users have suggested this, and I will give it a try after publishing `v0.3`. If you don't want to miss out on these updates, I would suggest [watching](https://github.com/aviaryan/chattt/watchers) the GitHub repo.

55 |

56 |

57 | ## Conclusion

58 |

59 | Chattt is one of the [many](https://github.com/aviaryan?tab=repositories) "hobby" open source projects of mine. I learned a lot of new things developing it. I would really appreciate it if you could share your suggestions and ideas regarding it with me on my Discord (**aviaryan#7504**) or GitHub. Seriously, they mean a lot. And thanks for reading this far. Bye! 😊

60 |

61 | 👋🏻👋🏻👋🏻

62 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-07-28-downloading-files-from-urls.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Downloading Files from URLs in Python

4 | category: gsoc

5 | tags: gsoc gsoc16 python

6 | ---

7 |

8 | This post is about how to efficiently/correctly download files from URLs using Python.

9 | I will be using the godsend library [requests](http://docs.python-requests.org/) for it. I will write about methods to correctly download binaries from URLs and set their filenames.

10 |

11 | Let's start with baby steps on how to download a file using requests --

12 |

13 | {% highlight python %}

14 | import requests

15 |

16 | url = 'http://google.com/favicon.ico'

17 | r = requests.get(url, allow_redirects=True)

18 | open('google.ico', 'wb').write(r.content)

19 | {% endhighlight %}

20 |

21 | The above code will download the media at [http://google.com/favicon.ico](http://google.com/favicon.ico) and save it as google.ico.

22 |

23 | Now let's take another example where the URL is [https://www.youtube.com/watch?v=9bZkp7q19f0](https://www.youtube.com/watch?v=9bZkp7q19f0).

24 | What do you think will happen if the above code is used to download it?

25 | If you said that an HTML page will be downloaded, you are spot on. This was one of the problems I faced in the Import module of Open Event, where I had to download media from

26 | certain links. When the URL linked to a webpage rather than a binary, I had to skip the download and just keep the link as is.

27 | To solve this, I inspected the headers of the URL. Headers usually contain a `Content-Type` parameter which tells us the type of data the URL links to.

28 | A naive way to do it would be -

29 |

30 | {% highlight python %}

31 | r = requests.get(url, allow_redirects=True)

32 | print(r.headers.get('content-type'))

33 | {% endhighlight %}

34 |

35 | It works, but it is not the optimal way to do so, as it downloads the whole file just to check the header.

36 | So if the file is large, this will do nothing but waste bandwidth.

37 | I looked into the requests documentation and found a better way: fetch just the headers of a URL (using a HEAD request) before actually downloading it.

38 | This allows us to skip downloading files which weren't meant to be downloaded.

39 |

40 | {% highlight python %}

41 | import requests

42 |

43 | def is_downloadable(url):

44 |     """

45 |     Does the url contain a downloadable resource

46 |     """

47 |     h = requests.head(url, allow_redirects=True)

48 |     header = h.headers

49 |     content_type = header.get('content-type', '')

50 |     if 'text' in content_type.lower():

51 |         return False

52 |     if 'html' in content_type.lower():

53 |         return False

54 |     return True

55 |

56 | print(is_downloadable('https://www.youtube.com/watch?v=9bZkp7q19f0'))

57 | # >> False

58 | print(is_downloadable('http://google.com/favicon.ico'))

59 | # >> True

60 | {% endhighlight %}

61 |

62 | To restrict download by file size, we can get the filesize from the `Content-Length` header and then do suitable comparisons.

63 |

64 | {% highlight python %}

65 |     content_length = header.get('content-length', None)

66 |     if content_length and int(content_length) > 2e8:  # 200 mb approx; header values are strings

67 |         return False

68 | {% endhighlight %}

69 |

70 | So using the above function, we can skip downloading urls which don't link to media.
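Putting the content-type and size checks together, here is a variant that works on the headers alone, so it can be tried without any network access. It assumes lowercase keys when a plain `dict` is passed. The `headers` attribute of a `requests` response is case-insensitive, so it works directly. The function name `is_downloadable_headers` and the default 200 MB limit are illustrative choices.

```python
def is_downloadable_headers(headers, max_bytes=2e8):
    """Decide from response headers alone whether the body is worth downloading."""
    content_type = (headers.get('content-type') or '').lower()
    if 'text' in content_type or 'html' in content_type:
        return False  # a web page, not a binary
    content_length = headers.get('content-length')
    # Header values are strings, so convert before comparing sizes.
    if content_length is not None and int(content_length) > max_bytes:
        return False
    return True

print(is_downloadable_headers({'content-type': 'text/html; charset=utf-8'}))  # False
print(is_downloadable_headers({'content-type': 'image/x-icon', 'content-length': '5430'}))  # True
print(is_downloadable_headers({'content-type': 'video/mp4', 'content-length': '500000000'}))  # False
```

In practice you would pass it `requests.head(url, allow_redirects=True).headers`.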

71 |

72 |

73 | #### Getting filename from URL

74 |

75 | We can parse the url to get the filename.

76 | Example - [http://aviaryan.in/images/profile.png](http://aviaryan.in/images/profile.png).

77 |

78 | To extract the filename from the above URL, we can write a routine which fetches the last string after the slash (/).

79 |

80 | {% highlight python %}

81 | url = 'http://aviaryan.in/images/profile.png'

82 | if '/' in url:

83 |     print(url.rsplit('/', 1)[1])

84 | {% endhighlight %}

85 |

86 | This will give the correct filename in some cases. However, there are times when the filename information is not present in the URL,

87 | for example, something like `http://url.com/download`. In that case, the `Content-Disposition` header, if the server sends one, will usually contain the filename information.

88 | Here is how to fetch it.

89 |

90 | {% highlight python %}

91 | import requests

92 | import re

93 |

94 | def get_filename_from_cd(cd):

95 |     """

96 |     Get filename from content-disposition

97 |     """

98 |     if not cd:

99 |         return None

100 |     fname = re.findall('filename=(.+)', cd)

101 |     if len(fname) == 0:

102 |         return None

103 |     return fname[0]

104 |

105 |

106 | url = 'http://google.com/favicon.ico'

107 | r = requests.get(url, allow_redirects=True)

108 | filename = get_filename_from_cd(r.headers.get('content-disposition')) or url.rsplit('/', 1)[1]  # fall back to the URL

109 | open(filename, 'wb').write(r.content)

110 | {% endhighlight %}

111 |

112 | The URL-parsing code, in conjunction with the above method to get the filename from the `Content-Disposition` header, will work for most cases.

113 | Use them and test the results.
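Combining both approaches, a small helper can prefer the `Content-Disposition` filename and fall back to the last URL path segment. This sketch (Python 3) differs from the code above in two ways:

- It swaps the plain `rsplit` for `urllib.parse.urlparse`, so a query string such as `?v=1` does not end up in the name.
- It strips quotes around the header's filename.

The helper names and the `'download'` default are hypothetical. The sketch also does not handle the RFC 6266 encoded `filename*=` form.

```python
import os
import re
from urllib.parse import unquote, urlparse

def filename_from_url(url):
    """Last path segment of the URL, ignoring any query string or fragment."""
    return unquote(os.path.basename(urlparse(url).path)) or None

def filename_from_cd(cd):
    """Filename from a Content-Disposition header value, surrounding quotes removed."""
    if not cd:
        return None
    match = re.search(r'filename="?([^";]+)"?', cd)
    return match.group(1) if match else None

def pick_filename(url, headers, default='download'):
    """Prefer the server-supplied name, then the URL, then a fixed default."""
    return (filename_from_cd(headers.get('content-disposition'))
            or filename_from_url(url)
            or default)

print(pick_filename('http://url.com/download',
                    {'content-disposition': 'attachment; filename="data.csv"'}))  # data.csv
print(pick_filename('http://aviaryan.in/images/profile.png', {}))  # profile.png
```

With `requests`, you would call it as `pick_filename(url, r.headers)`.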

114 |

115 | These are my 2 cents on downloading files using requests in Python. Let me know of other tricks I might have overlooked.

116 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-08-11-dockerfile-basic.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: Writing your first Dockerfile

4 | category: gsoc

5 | tags: gsoc gsoc16 docker

6 | ---

7 |

8 | In this tutorial, I will show you how to write your first `Dockerfile`.

9 | I got to learn Docker because I had to implement a [Docker](http://docker.com) deployment for our GSoC project [Open Event Server](https://github.com/aviaryan/open-event-orga-server).

10 |

11 | First up, what is Docker?

12 | Simply put, Docker is an open platform for building, shipping and running applications anywhere. Using Docker, your app will be able to run on any

13 | platform that supports Docker. And the best part is that it will run the same way on different platforms, i.e. you largely avoid cross-platform issues.

14 | So you build your app for the platform you are most comfortable with and then deploy it anywhere.

15 | This is the fundamental advantage of Docker and why it was created.

16 |

17 | So let's start our dive into Docker.

18 |

19 | Docker builds your app from a Dockerfile ([example](https://github.com/fossasia/open-event-orga-server/blob/master/Dockerfile)), a file which specifies how Docker is supposed to build your application.

20 | It contains the steps Docker is supposed to follow to package your app. Once that is done, you can send this packaged app to anyone and they can run it on their system with

21 | no problems.

22 |

23 | Let's start with the project structure. Keep the `Dockerfile` at the root of your project, which is where `docker build .` looks for it by default. A basic project will look as follows -

24 |

25 | {% highlight bash %}

26 | - app.py

27 | - Dockerfile

28 | - requirements.txt

29 | - some_app_folder/

30 | - some_file

31 | - some_file

32 | {% endhighlight %}

33 |

34 | A Dockerfile starts with a base image, which decides what your app is built upon. Basically, images are packaged, ready-to-run environments (an OS, an app, or both).

35 | So, for example, if you want to run your application in an Ubuntu 14.04 environment, you use [ubuntu:14.04](https://hub.docker.com/_/ubuntu/) as the base image.

36 |

37 | {% highlight bash %}

38 | FROM ubuntu:14.04

39 | LABEL maintainer="Your Name"

40 | {% endhighlight %}

41 |

42 | These are usually the first two lines of a Dockerfile and they specify the base image and Dockerfile maintainer respectively.

43 | You can look into [Docker Hub](https://hub.docker.com/) for more base images.

44 |

45 | Now that we have started our Dockerfile, it's time to do something. Think: if you were setting up your app on a fresh Ubuntu system, what would be the first thing you

46 | do? You update the package lists.

47 |

48 | {% highlight bash %}

49 | RUN apt-get update

50 | {% endhighlight %}

51 |

52 | You may possibly want to update the packages too.

53 |

54 | {% highlight bash %}

55 | RUN apt-get update

56 | RUN apt-get upgrade -y

57 | {% endhighlight %}

58 |

59 | Let's explain what's happening. `RUN` is a Docker instruction which runs a command in the shell. Here we are running `apt-get update` followed by `apt-get upgrade -y`

60 | in the shell. There is no need for `sudo`, as Docker runs these commands as the root user by default.

61 |

62 | The next thing you will want to do is put your application inside the container (your Ubuntu environment). The `COPY` instruction is meant for just that.

63 |

64 | {% highlight bash %}

65 | RUN mkdir -p /myapp

66 | WORKDIR /myapp

67 | COPY . .

68 | {% endhighlight %}

69 |

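70 | Until now we were at the root (`/`) of the Ubuntu filesystem, i.e. alongside /var, /home, /root etc. You surely don't want to copy your files there.

71 | So we create a 'myapp' directory and set it as the WORKDIR (the project's directory). `WORKDIR` would actually create the directory on its own, so the `mkdir` line is optional. From now on, all `RUN`, `COPY` and `CMD` instructions run inside it, and `COPY . .` copies your project (the build context) into it.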

72 |

73 | Now that the app has been copied, you may want to install its requirements.

74 |

75 | {% highlight bash %}

76 | RUN apt-get install -y python python-setuptools python-pip

77 | RUN pip install -r requirements.txt

78 | {% endhighlight %}

79 |

80 | You might be wondering why I am installing Python here. Isn't it present by default?! Well, the 'ubuntu' base image is not the Ubuntu you are used to. It contains only the bare essentials, not stuff like Python, gcc, Ruby etc. So you will have to install them on your own.

81 |

82 | Similarly, if you are installing a Python package that needs gcc to compile, it will fail until you install a compiler (e.g. the `build-essential` package). When you are stuck on an issue like that, try googling the error message and most

83 | likely you will find an answer. :grinning:

84 |

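85 | The last thing remaining is to tell Docker how to run your app. `CMD` sets the default command that runs when a container starts (not during the build). With this, your Dockerfile is complete.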

86 |

87 | {% highlight bash %}

88 | CMD python app.py

89 | {% endhighlight %}

90 |

91 |

92 | #### Building the app

93 |

94 | To build the image, run the following command from the project root.

95 |

96 | {% highlight bash %}

97 | docker build -t myapp .

98 | {% endhighlight %}

99 |

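100 | Here `-t myapp` tags (names) the image `myapp`, and `.` passes the current directory as the build context. Then, to run the app, execute `docker run myapp`.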
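If you would rather drive these two steps from Python (say, from a test script), here is a minimal sketch using the standard library's `subprocess` module. The helper names are hypothetical. Running it for real assumes the `docker` CLI is installed and on your PATH. With `dry_run=True`, it only returns the commands it would run.

```python
import subprocess


def docker_build_cmd(tag, context="."):
    """Command list equivalent to `docker build -t <tag> <context>`."""
    return ["docker", "build", "-t", tag, context]


def docker_run_cmd(tag, extra_args=()):
    """Command list equivalent to `docker run [extra_args...] <tag>`."""
    return ["docker", "run", *extra_args, tag]


def build_and_run(tag="myapp", context=".", dry_run=True):
    """Build the image, then run it; with dry_run=True only return the commands."""
    commands = [docker_build_cmd(tag, context), docker_run_cmd(tag)]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError if docker exits non-zero,
            # so a failed build stops before we try to run the image.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    for cmd in build_and_run(dry_run=True):
        print(" ".join(cmd))
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems if the tag or path ever contains spaces.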

101 |

102 |

103 | #### Where to go next

104 |

105 | Refer to the [official Dockerfile reference](https://docs.docker.com/engine/reference/builder/) to learn more Dockerfile commands.

106 | Also you may find my post on [using Travis to test Docker applications](http://aviaryan.in/blog/gsoc/docker-test.html) interesting if you want to automate testing of your Docker application.

107 |

108 | I will write more blog posts on Docker as I learn more. I hope you found this one useful.

109 |

110 |

--------------------------------------------------------------------------------

/_posts/gsoc/2016-06-06-auth-flask-done-right.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: REST API Authentication in Flask

4 | category: gsoc

5 | tags: gsoc gsoc16 flask python

6 | ---

7 |

8 | Recently I had the challenge of restricting unauthorized users from accessing some views in Flask.

9 | Sure, the naive way would be to ask for the username and password in the JSON body itself and check them against the records in the database. The request would look something like this:

10 |

11 | {% highlight json %}

12 | {

13 | "username": "open_event_user",

14 | "password": "password"

15 | }

16 | {% endhighlight %}

17 |

18 | But I wanted to do something better. So I looked around the Internet and found that Flask can accept Basic authorization credentials out of the box, through `request.authorization` (sadly, this isn't prominently documented).

19 | For those who don't know, Basic authorization is a way to send a plain `username:password` combo in the `Authorization` header of a request, encoded with base64. Base64 only obscures the credentials. It is not encryption, so Basic auth should only be used over HTTPS.

20 | So for the above username and password, the corresponding header will be:

21 |

22 | {% highlight json %}

23 | {

24 | "Authorization": "Basic b3Blbl9ldmVudF91c2VyOnBhc3N3b3Jk"

25 | }

26 | {% endhighlight %}

27 | where the encoded string is the base64 form of the string `open_event_user:password`.
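You can reproduce the header above, and decode it again, with Python's standard `base64` module. This is a stdlib-only sketch. `make_basic_auth_header` and `parse_basic_auth` are hypothetical helpers. In Flask, Werkzeug does the parsing for you and hands you the result as `request.authorization`.

```python
import base64


def make_basic_auth_header(username, password):
    """Build the value of an HTTP Basic `Authorization` header."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def parse_basic_auth(header):
    """Return (username, password) from a Basic header value, or None if invalid."""
    if not header:
        return None
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return None
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except ValueError:
        # binascii.Error and UnicodeDecodeError are both ValueError subclasses
        return None
    username, sep, password = decoded.partition(":")
    if not sep:
        return None  # no colon: not a username:password pair
    return username, password


header = make_basic_auth_header("open_event_user", "password")
print(header)                    # Basic b3Blbl9ldmVudF91c2VyOnBhc3N3b3Jk
print(parse_basic_auth(header))  # ('open_event_user', 'password')
```

Splitting on the first colon matters. RFC 7617 forbids colons in the user-id but allows them in the password.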

28 |

29 | Now back to the topic: the next job is to protect the views by checking the Basic auth credentials in the header and calling `abort(401)` if they are missing or wrong.

30 | For this, we can easily create a helper function that aborts the view if there is something wrong with the credentials.

31 |

32 | {% highlight python %}

33 | from flask import request, Flask, abort

34 | from models import UserModel

35 |

36 | app = Flask(__name__)

37 |

38 | def validate_auth():

39 |     auth = request.authorization  # parsed Basic auth header, or None

40 |     if not auth:  # no header set

41 |         abort(401)

42 |     user = UserModel.query.filter_by(username=auth.username).first()

43 |     if user is None or user.password != auth.password:

44 |         abort(401)

45 |

46 | @app.route('/view')

47 | def my_view():

48 |     validate_auth()

49 |     # stuff on success

50 |     return 'Authenticated!'  # more stuff

51 | {% endhighlight %}
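The check `user.password != auth.password` assumes that passwords are stored in plain text in the database. If you store salted hashes instead (strongly advisable), the comparison could look like the following stdlib-only sketch. The `hash_password`/`check_password` helpers and the salt and iteration choices are assumptions for illustration. Werkzeug also ships ready-made helpers, `generate_password_hash` and `check_password_hash` in `werkzeug.security`.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative PBKDF2 work factor; tune for your hardware


def hash_password(password, salt=None):
    """Return (salt, digest) to store instead of the plain password."""
    salt = salt if salt is not None else os.urandom(16)  # fresh random salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def check_password(password, salt, expected_digest):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    # hmac.compare_digest avoids leaking how many leading bytes matched
    return hmac.compare_digest(digest, expected_digest)


salt, stored = hash_password("password")
print(check_password("password", salt, stored))  # True
print(check_password("wrong", salt, stored))     # False
```

Inside `validate_auth`, the check would then become something like `not check_password(auth.password, user.salt, user.password_hash)`, where `salt` and `password_hash` are hypothetical columns.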

52 |

53 | This works, but wouldn't it be nice if we could apply `validate_auth` as a decorator instead of calling it at the top of every view?

54 | It would also let us set it once on a class-based view whose methods all require auth. Right? So here we go (the snippet reuses the imports from above, plus `from functools import wraps`):

55 |

56 | {% highlight python %}

57 | def requires_auth(f):

58 |     @wraps(f)

59 |     def decorated(*args, **kwargs):

60 |         auth = request.authorization

61 |         if not auth:  # no header set

62 |             abort(401)

63 |         user = UserModel.query.filter_by(username=auth.username).first()

64 |         if user is None or user.password != auth.password:

65 |             abort(401)

66 |         return f(*args, **kwargs)

67 |     return decorated

68 |

69 | @app.route('/view')

70 | @requires_auth

71 | def my_view():

72 |     # stuff on success

73 |     return 'Authenticated!'  # more stuff

74 | {% endhighlight %}

75 | I renamed the function from `validate_auth` to `requires_auth` because the name suits the context better.
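The `@wraps(f)` line matters for a specific reason. By default, Flask uses a view function's `__name__` as its endpoint name. Without `functools.wraps`, every view decorated with `requires_auth` would be named `decorated`, and registering a second one would make Flask raise an `AssertionError` about overwriting an existing endpoint. The framework-free comparison below shows what `wraps` changes. The view names are hypothetical.

```python
from functools import wraps


def without_wraps(f):
    def decorated(*args, **kwargs):
        return f(*args, **kwargs)
    return decorated


def with_wraps(f):
    @wraps(f)  # copies __name__, __doc__, __module__ (among others) from f
    def decorated(*args, **kwargs):
        return f(*args, **kwargs)
    return decorated


@without_wraps
def list_events():
    return "events"


@with_wraps
def list_sessions():
    return "sessions"


print(list_events.__name__)    # decorated
print(list_sessions.__name__)  # list_sessions
```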

76 |

77 | At this point, the above code may look perfect, but it doesn't work when you access the API through the Swagger web UI.

78 | This is because the Swagger UI we were using offered no way to set the base64-encoded Authorization header.

79 | For those wondering "what the hell is Swagger": it is a tool for API-based projects that creates a nice web UI to live-test the API and

80 | also exports a schema of the API that can be used to understand the API definitions.

81 |

82 | Now, how do we get `requires_auth` to work when a request is sent through the Swagger UI? It was a little tricky and took me a couple of hours, but I finally got it.

83 | The trick is to check for an active session when no Authorization header is set (as is the case with Swagger UI).

84 | If an active session is found, the user is authenticated: Swagger UI runs in the browser, so its requests carry the session cookie of a user who has already logged in to the app.

85 | Here I would suggest using the Flask-Login extension, which makes session and login management child's play.

86 | Always use it if your Flask project deals with logins, user accounts and the like.

87 |

88 | Now back to the task at hand: here is how we can make `requires_auth` check for existing sessions.

89 |

90 | {% highlight python %}

91 | from functools import wraps

92 | from flask import request, abort, g

93 | import flask_login as login  # the old `flask.ext.login` import path was removed in Flask 1.0

94 | def requires_auth(f):

95 |     @wraps(f)

96 |     def decorated(*args, **kwargs):

97 |         auth = request.authorization

98 |         if not auth:  # no header set

99 |             if login.current_user.is_authenticated:  # check active session

100 |                 g.user = login.current_user

101 |                 return f(*args, **kwargs)

102 |             else:

103 |                 abort(401)

104 |         user = UserModel.query.filter_by(username=auth.username).first()

105 |         if user is None or user.password != auth.password:

106 |             abort(401)

107 |         g.user = user

108 |         return f(*args, **kwargs)

109 |     return decorated

110 | {% endhighlight %}
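The decorator's order of checks is: header first, then session, otherwise 401. That policy can be written as a small framework-free function, which makes it easy to unit-test. `resolve_user`, `lookup` and the in-memory `USERS` dict are hypothetical stand-ins for `request.authorization`, `login.current_user` and the `UserModel` query.

```python
from typing import Callable, Optional, Tuple

User = str  # hypothetical stand-in for a UserModel instance


def resolve_user(
    credentials: Optional[Tuple[str, str]],
    session_user: Optional[User],
    lookup: Callable[[str, str], Optional[User]],
) -> Optional[User]:
    """Return the authenticated user, or None (where the view would abort(401))."""
    if credentials is None:
        # No Authorization header (e.g. Swagger UI): fall back to the login session.
        return session_user
    # A header was sent, so it decides, even if a session also exists.
    username, password = credentials
    return lookup(username, password)


USERS = {"open_event_user": "password"}  # hypothetical user store


def lookup(username: str, password: str) -> Optional[User]:
    return username if USERS.get(username) == password else None


print(resolve_user(("open_event_user", "password"), None, lookup))           # open_event_user
print(resolve_user(None, "logged_in_user", lookup))                          # logged_in_user
print(resolve_user(("open_event_user", "wrong"), "logged_in_user", lookup))  # None
print(resolve_user(None, None, lookup))                                      # None
```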

111 |

112 | Pretty easy, right? Also notice that I am saving the authenticated user in Flask's `g` object, which lives for the duration of a single request.

113 | Now the authenticated user can be accessed from views as `g.user`. Cool, isn't it?

114 | If you later need a more secure form of authorization, such as token-based, you only have to update the `requires_auth` decorator, and every protected view gets the same results.
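As one possible direction (not something this post implements), a signed token could be verified with only the standard library's `hmac` module. The `Token <value>` header scheme, the helper names and `SECRET_KEY` are assumptions, and the token below never expires. For real use, a vetted library such as `itsdangerous` (which Flask itself uses to sign session cookies) is the safer choice.

```python
import hashlib
import hmac

SECRET_KEY = b"change-me"  # hypothetical; load from configuration in real code


def _sign(username):
    """Hex HMAC-SHA256 of the username under SECRET_KEY."""
    return hmac.new(SECRET_KEY, username.encode("utf-8"), hashlib.sha256).hexdigest()


def make_token(username):
    """Token of the form '<username>.<signature>' (no expiry, for illustration)."""
    return f"{username}.{_sign(username)}"


def verify_token(header):
    """Return the username for a valid 'Token <value>' header, else None."""
    if not header:
        return None
    scheme, _, token = header.partition(" ")
    if scheme != "Token" or not token:
        return None
    # rpartition: the hex signature has no dots, so usernames may contain them
    username, sep, signature = token.rpartition(".")
    if not sep:
        return None
    # compare bytes in constant time (str compare_digest rejects non-ASCII input)
    if hmac.compare_digest(signature.encode("utf-8"), _sign(username).encode("ascii")):
        return username
    return None
```

Inside `requires_auth`, you could then try `verify_token(request.headers.get('Authorization'))` before falling back to the Basic auth and session checks.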

115 |

116 | I hope this article provided valuable insight into managing REST API authorizations in Flask. I will keep posting more awesome things I learn in my GSoC journey.

117 |

118 | That's it. Sayonara.

119 |