$date

| $newline$title $date |

| $newline$title $date |

| $newline$title $date |

| $newline$title $date |

| $newline$title $date |

|

5 | How to Build PROTEIN VISUALIZATION WEB-APP using PYTHON and STREAMLIT | Bioinformatics | Sep 22, 2022 |

|

4 | LANGCHAIN AI AUTONOMOUS AGENT WEB APP - 👶 BABY AGI 🤖 with EMAIL AUTOMATION using DATABUTTON May 11, 2023 |

|

6 | Build a PERSONAL CHATBOT with LangChainAI MEMORY with ChatGPT-3.5-Turbo API in PYTHON Apr 13, 2023 |

|

8 | How to Build a CHAT BOT with ChatGPT API (GPT-3.5-TURBO) having CONVERSATIONAL MEMORY in Python Mar 15, 2023 |

|

10 | USING 🦜 LANGCHAIN AIs SequentialChain 🔗 : BUILD - @OpenAI @streamlit Web APP | Python Feb 20, 2023 |

|

12 | CHAT-GPT-LIKE live STREAM completions AI-ASSISTANT BOT in PYTHON? @OpenAI +Streamlit Feb 11, 2023 |

|

14 | I built my own SEMANTIC TEXT SEARCH WEB APP using OPENAI EMBEDDINGS + STREAMLIT | ada-002 engine Feb 6, 2023 |

|

16 | Is ChatGPT PRO (Paid Version) worth the $42 monthly? @OpenAI’s API as cheaper alternatives & more … Jan 21, 2023 |

|

18 | ChatGPT helped me to build this AI TWEET Generator Web APP using OpenAI + Streamlit ( Python ) Jan 19, 2023 |

|

20 | Using chatGPT to build a Machine Learning Web App in Python! Jan 3, 2023 |

|

22 | I asked ChatGPT to buid this Data Science Web App from scratch in PYTHON Dec 29, 2022 |

|

24 | OpenAI GPT-3 CHAT BOT 🤖 within Streamlit Python Web app 🚀| Python @OpenAI @streamlitofficial Dec 24, 2022 |

|

26 | How to build OpenAI Web Apps in Python | Streamlit | GPT3 text-da-vinci model for Summarizer App Dec 21, 2022 |

|

6 | 🤖CHAT with ANY ONLINE RESOURCES using EMBEDCHAIN - a LangChain wrapper, in few lines of code ! Jul 18, 2023 |

|

8 | Convert EXCEL SHEETS DATA to ANIMATED PLOTS EASILY in PYTHON using IPYVIZZU Jan 28, 2023 |

|

10 | Using chatGPT to build a Machine Learning Web App in Python! Jan 3, 2023 |

|

12 | I asked ChatGPT to buid this Data Science Web App from scratch in PYTHON Dec 29, 2022 |

|

14 | Python Web App using INTERACTIVE AGGRID Table connected to GOOGLE SHEET |JavaScript Injection | Dec 6, 2022 |

|

16 | How to automate Google Sheets using Python May 16, 2022 |

|

18 | STREAMLIT PYTHON WEB APP connected to GOOGLE SHEET as DATABASE | Automate Google Spreadsheets Jun 23, 2021 |

|

24 | Built an AI Agent from Screenshot & Deployed as Full Stack App! Dec 15, 2024 |

|

26 | Customers asked how Databutton is different than Bolt.new, so we did a livestream Nov 15, 2024 |

|

28 | AI AGENTS simplified in 60 Seconds #aiagents #ai #aicode #aiagent #aiappdevelopment #aicode Nov 5, 2024 |

|

30 | Simplest Way to Build AI Agents Explained ( And use them in Your Full Stack APPS !) Nov 1, 2024 |

|

32 | (Not Just Hype!) Build a Multi-Agent AI App with Just Prompts? Watch Live—A Real Backend & Slick UI Oct 26, 2024 |

51 |

51 |  52 |

52 |  53 | [](https://www.youtube.com/c/Avra_b)

54 | [](https://medium.com/@avra42)

55 |

--------------------------------------------------------------------------------

/Streamlit-Python/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/avrabyt/YouTube-Tutorials/148ba08fea304bd04916268d6c4e2cd1052d67b7/Streamlit-Python/.DS_Store

--------------------------------------------------------------------------------

/Streamlit-Python/README.md:

--------------------------------------------------------------------------------

1 | # [Streamlit-Python Play List](https://youtube.com/playlist?list=PLqQrRCH56DH8JSoGC3hsciV-dQhgFGS1K) ⬇️

2 |

3 | [](https://github.com/avrabyt/YouTube-Tutorials/actions/workflows/Streamlit-workflow.yml)

4 |

5 |

53 | [](https://www.youtube.com/c/Avra_b)

54 | [](https://medium.com/@avra42)

55 |

--------------------------------------------------------------------------------

/Streamlit-Python/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/avrabyt/YouTube-Tutorials/148ba08fea304bd04916268d6c4e2cd1052d67b7/Streamlit-Python/.DS_Store

--------------------------------------------------------------------------------

/Streamlit-Python/README.md:

--------------------------------------------------------------------------------

1 | # [Streamlit-Python Play List](https://youtube.com/playlist?list=PLqQrRCH56DH8JSoGC3hsciV-dQhgFGS1K) ⬇️

2 |

3 | [](https://github.com/avrabyt/YouTube-Tutorials/actions/workflows/Streamlit-workflow.yml)

4 |

5 |  |

6 | Building Web Apps with GPT-4 Vision & Text to Speech (TTS) API - Transform Videos into AI Voiceovers Nov 30, 2023 |

|

8 | Generate Apps from Sketches or Screenshots with OpenAI GPT-4 Vision API (6 mins quick demo) Nov 24, 2023 |

|

10 | How to Build App with OpenAI's New GPT-4 TURBO VISION API (gpt vision) Nov 15, 2023 |

|

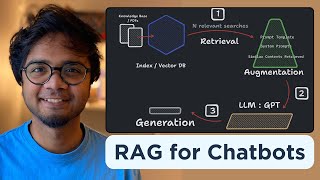

12 | Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough Nov 3, 2023 |

|

14 | 🤖Prompt to Powerful Data Visualization 📊 - Build Your One-Prompt Charts App using GPT and Databutton Oct 12, 2023 |

|

16 | How to Stream LangChainAI Abstractions and Responses using Streamlit Callback Handler and Chat UI Oct 3, 2023 |

|

18 | 🦜🛠️ Getting started with LangSmith - Integrating with LANGCHAIN powered Web Applications & Chatbots Jul 20, 2023 |

|

20 | 🤖CHAT with ANY ONLINE RESOURCES using EMBEDCHAIN - a LangChain wrapper, in few lines of code ! Jul 18, 2023 |

|

22 | MULTILINGUAL CHATBOT 🤖 USING @CohereAI embedding models, @databutton and @LangChain Jun 27, 2023 |

|

24 | LANGCHAIN AI- ConstitutionalChainAI + Databutton AI ASSISTANT Web App ( in 13 minutes from scratch) May 13, 2023 |

|

26 | LANGCHAIN AI AUTONOMOUS AGENT WEB APP - 👶 BABY AGI 🤖 with EMAIL AUTOMATION using DATABUTTON May 11, 2023 |

|

28 | Build a PERSONAL CHATBOT with LangChainAI MEMORY with ChatGPT-3.5-Turbo API in PYTHON Apr 13, 2023 |

|

30 | How to Build a CHAT BOT with ChatGPT API (GPT-3.5-TURBO) having CONVERSATIONAL MEMORY in Python Mar 15, 2023 |

|

32 | USING 🦜 LANGCHAIN AIs SequentialChain 🔗 : BUILD - @OpenAI @streamlit Web APP | Python Feb 20, 2023 |