├── tests
│   └── __init__.py
├── pautobot
│   ├── __init__.py
│   ├── routers
│   │   ├── __init__.py
│   │   ├── bot.py
│   │   ├── contexts.py
│   │   └── documents.py
│   ├── engine
│   │   ├── __init__.py
│   │   ├── bot_enums.py
│   │   ├── llm_factory.py
│   │   ├── qa_factory.py
│   │   ├── chatbot_factory.py
│   │   ├── context_manager.py
│   │   ├── bot_context.py
│   │   ├── ingest.py
│   │   └── engine.py
│   ├── models.py
│   ├── app_info.py
│   ├── config.py
│   ├── database.py
│   ├── globals.py
│   ├── db_models.py
│   ├── app.py
│   └── utils.py
├── MANIFEST.in
├── frontend
│   ├── .prettierignore
│   ├── styles
│   │   └── globals.css
│   ├── public
│   │   ├── favicon.ico
│   │   ├── pautobot.png
│   │   └── loading.svg
│   ├── jsconfig.json
│   ├── postcss.config.js
│   ├── README.md
│   ├── tailwind.config.js
│   ├── components
│   │   ├── RightSidebar.js
│   │   ├── icons
│   │   │   ├── UploadIcon.js
│   │   │   └── LoadingIcon.js
│   │   ├── ModelSelector.js
│   │   ├── Sidebar.js
│   │   ├── ContextManager.js
│   │   ├── SidebarBottomMenu.js
│   │   ├── SidebarMenu.js
│   │   ├── SidebarTopMenu.js
│   │   ├── NewMessage.js
│   │   ├── Main.js
│   │   └── QADBManager.js
│   ├── next.config.js
│   ├── lib
│   │   └── requests
│   │       ├── history.js
│   │       ├── bot.js
│   │       └── documents.js
│   ├── pages
│   │   ├── _app.js
│   │   └── index.js
│   └── package.json
├── docs
│   ├── pautobot.png
│   ├── screenshot.png
│   └── python3.11.3_lite.zip
├── pyproject.toml
├── requirements.txt
├── .pre-commit-config.yaml
├── .gitignore
├── setup.py
└── README.md

/tests/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pautobot/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pautobot/routers/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | recursive-include pautobot/frontend-dist *

--------------------------------------------------------------------------------

/frontend/.prettierignore:

--------------------------------------------------------------------------------

1 | .next

2 | dist

3 | node_modules

--------------------------------------------------------------------------------

/pautobot/engine/__init__.py:

--------------------------------------------------------------------------------

1 | from pautobot.engine.engine import *

2 |

--------------------------------------------------------------------------------

/docs/pautobot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awesomedev08/pautobot/HEAD/docs/pautobot.png

--------------------------------------------------------------------------------

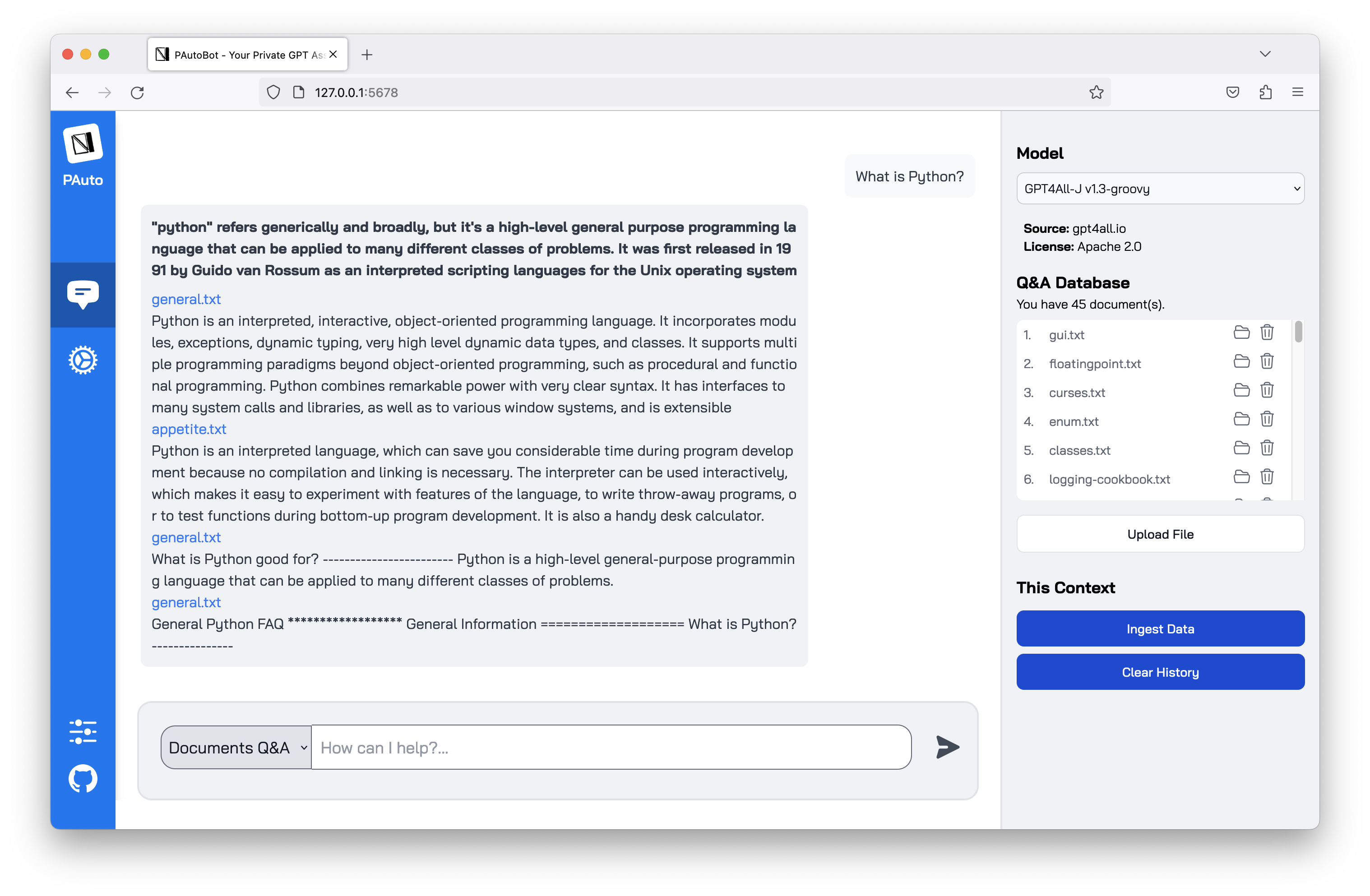

/docs/screenshot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awesomedev08/pautobot/HEAD/docs/screenshot.png

--------------------------------------------------------------------------------

/frontend/styles/globals.css:

--------------------------------------------------------------------------------

1 | @tailwind base;

2 | @tailwind components;

3 | @tailwind utilities;

4 |

--------------------------------------------------------------------------------

/docs/python3.11.3_lite.zip:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awesomedev08/pautobot/HEAD/docs/python3.11.3_lite.zip

--------------------------------------------------------------------------------

/frontend/public/favicon.ico:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awesomedev08/pautobot/HEAD/frontend/public/favicon.ico

--------------------------------------------------------------------------------

/frontend/public/pautobot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awesomedev08/pautobot/HEAD/frontend/public/pautobot.png

--------------------------------------------------------------------------------

/frontend/jsconfig.json:

--------------------------------------------------------------------------------

1 | {

2 | "compilerOptions": {

3 | "paths": {

4 | "@/*": ["./*"]

5 | }

6 | }

7 | }

8 |

--------------------------------------------------------------------------------

/pautobot/models.py:

--------------------------------------------------------------------------------

1 | from pydantic import BaseModel

2 |

3 |

4 | class Query(BaseModel):

5 | mode: str

6 | query: str

7 |

--------------------------------------------------------------------------------

/frontend/postcss.config.js:

--------------------------------------------------------------------------------

1 | module.exports = {

2 | plugins: {

3 | tailwindcss: {},

4 | autoprefixer: {},

5 | },

6 | };

7 |

--------------------------------------------------------------------------------

/pautobot/app_info.py:

--------------------------------------------------------------------------------

1 | __appname__ = "PautoBot"

2 | __description__ = (

3 | "Private AutoGPT Robot - Your private task assistant with GPT!"

4 | )

5 | __version__ = "0.0.27"

6 |

--------------------------------------------------------------------------------
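The `__version__` string above is the single source of truth for the package version: `setup.py` in this repository reads it with a regular expression instead of importing the package. A minimal sketch of that lookup follows, assuming the file content is already available as a string. `APP_INFO_TEXT` and `parse_version` are illustrative names, not part of the repository.

```python
import re

# Illustrative stand-in for the content of pautobot/app_info.py.
APP_INFO_TEXT = '''__appname__ = "PautoBot"
__version__ = "0.0.27"
'''


def parse_version(text: str) -> str:
    """Extract the value assigned to __version__ from module source text."""
    # re.M lets ^ match at the start of every line, not only the first one.
    match = re.search(r"""^__version__ = ['"]([^'"]*)['"]""", text, re.M)
    if not match:
        raise RuntimeError("text doesn't contain __version__")
    return match.group(1)


version = parse_version(APP_INFO_TEXT)
```

Parsing the text rather than importing the module means the version can be read at build time even when the package's runtime dependencies are not installed.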

/frontend/README.md:

--------------------------------------------------------------------------------

1 |

2 |

🔥 PⒶutoBot 🔥

4 | Your private task assistant with GPT

5 |

6 |

--------------------------------------------------------------------------------

/pautobot/config.py:

--------------------------------------------------------------------------------

1 | import os

2 | import pathlib

3 |

4 | DATA_ROOT = os.path.abspath(

5 | os.path.join(os.path.expanduser("~"), "pautobot-data")

6 | )

7 | pathlib.Path(DATA_ROOT).mkdir(parents=True, exist_ok=True)

8 |

9 | DATABASE_PATH = os.path.abspath(os.path.join(DATA_ROOT, "pautobot.db"))

10 |

--------------------------------------------------------------------------------

/pautobot/engine/bot_enums.py:

--------------------------------------------------------------------------------

1 | from enum import Enum

2 |

3 |

4 | class BotStatus(str, Enum):

5 | """Bot status."""

6 |

7 | READY = "READY"

8 | THINKING = "THINKING"

9 | ERROR = "ERROR"

10 |

11 |

12 | class BotMode(str, Enum):

13 | """Bot mode."""

14 |

15 | QA = "QA"

16 | CHAT = "CHAT"

17 |

--------------------------------------------------------------------------------
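Both enums above inherit from `str` as well as `Enum`. The sketch below repeats the `BotStatus` definition so that it runs on its own and shows what the mixin provides: members compare equal to plain strings and serialize to JSON without a custom encoder. The variable names are illustrative only.

```python
import json
from enum import Enum


class BotStatus(str, Enum):
    """Bot status (copied from pautobot/engine/bot_enums.py)."""

    READY = "READY"
    THINKING = "THINKING"
    ERROR = "ERROR"


# Members compare equal to their plain string values.
is_thinking = BotStatus.THINKING == "THINKING"

# json.dumps treats a member as a str, so it is written as its value.
payload = json.dumps({"status": BotStatus.READY})

# A plain string can be converted back to a member by value.
parsed = BotStatus("ERROR")
```

This is likely why a check such as `current_answer["status"] == BotStatus.THINKING` works whether the stored status is an enum member or a raw string.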

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [tool.black]

2 | line-length = 79

3 | #include = '\.pyi?$'

4 | exclude = '''

5 | /(

6 | \.git

7 | | \.hg

8 | | \.tox

9 | | \.venv

10 | | _build

11 | | buck-out

12 | | build

13 | | dist

14 | )/

15 | '''

16 |

17 | [build-system]

18 | requires = ["setuptools", "wheel"]

19 | build-backend = "setuptools.build_meta"

20 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | langchain==0.0.194

2 | gpt4all==0.3.0

3 | chromadb==0.3.23

4 | urllib3==2.0.2

5 | pdfminer.six==20221105

6 | unstructured==0.6.6

7 | extract-msg==0.41.1

8 | tabulate==0.9.0

9 | pandoc==2.3

10 | pypandoc==1.11

11 | tqdm==4.65.0

12 | python-multipart==0.0.6

13 | fastapi==0.96.0

14 | SQLAlchemy==2.0.15

15 | alembic==1.11.1

16 | sentence_transformers==2.2.2

17 | requests

18 |

--------------------------------------------------------------------------------

/frontend/tailwind.config.js:

--------------------------------------------------------------------------------

1 | /** @type {import('tailwindcss').Config} */

2 | module.exports = {

3 | content: [

4 | "./app/**/*.{js,ts,jsx,tsx,mdx}",

5 | "./pages/**/*.{js,ts,jsx,tsx,mdx}",

6 | "./components/**/*.{js,ts,jsx,tsx,mdx}",

7 | ],

8 | theme: {

9 | extend: {

10 | rotate: {

11 | logo: "-10deg",

12 | },

13 | },

14 | },

15 | plugins: [],

16 | };

17 |

--------------------------------------------------------------------------------

/frontend/components/RightSidebar.js:

--------------------------------------------------------------------------------

1 | "use client";

2 | import React from "react";

3 |

4 | import ModelSelector from "./ModelSelector";

5 | import QADBManager from "./QADBManager";

6 | import ContextManager from "./ContextManager";

7 |

8 | export default function SidebarTools() {

9 | return (

10 | <>

11 |

11 |

18 | );

19 | }

20 |

--------------------------------------------------------------------------------

/frontend/pages/_app.js:

--------------------------------------------------------------------------------

1 | import "@/styles/globals.css";

2 | import "react-toastify/dist/ReactToastify.css";

3 |

4 | import { Bai_Jamjuree } from "next/font/google";

5 | import Head from "next/head";

6 |

7 | const bai_jam = Bai_Jamjuree({

8 | subsets: ["latin", "vietnamese"],

9 | weight: ["200", "300", "400", "500", "600", "700"],

10 | });

11 |

12 | export default function RootLayout({ Component, pageProps }) {

13 | return (

14 | <>

15 |

16 | PAutoBot - Your Private GPT Assistant

17 |

18 |

19 |

21 |

22 | );

23 | }

24 |

--------------------------------------------------------------------------------

/frontend/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "pauto-frontend",

3 | "version": "0.0.27",

4 | "private": true,

5 | "scripts": {

6 | "dev": "next dev",

7 | "build": "next build",

8 | "export": "next export",

9 | "start": "next start",

10 | "lint": "next lint",

11 | "pr": "prettier --write ."

12 | },

13 | "dependencies": {

14 | "next": "13.4.3",

15 | "react": "18.2.0",

16 | "react-dom": "18.2.0",

17 | "react-toastify": "^9.1.3"

18 | },

19 | "devDependencies": {

20 | "autoprefixer": "^10.4.14",

21 | "postcss": "^8.4.23",

22 | "tailwindcss": "^3.3.2",

23 | "prettier": "^2.8.8",

24 | "eslint": "8.41.0",

25 | "eslint-config-next": "13.4.3"

26 | }

27 | }

28 |

--------------------------------------------------------------------------------

/frontend/pages/index.js:

--------------------------------------------------------------------------------

1 | import { ToastContainer } from "react-toastify";

2 |

3 | import Sidebar from "@/components/Sidebar";

4 | import Main from "@/components/Main";

5 | import SidebarTools from "@/components/RightSidebar";

6 |

7 | export default function Home() {

8 | return (

9 |

10 |

11 |

13 |

14 |

17 |

18 |

20 |

Model

5 |

6 |

10 | GPT4All-J v1.3-groovy

11 |

12 |

13 |

14 |

24 |

25 | License: Apache 2.0

26 |

27 |

8 |

9 |

window.open("https://pautobot.com/", "_blank")}

14 | >

15 |

20 |

21 | PAuto

22 |

23 |

24 |

25 |

26 |

28 |

29 |

31 |

32 |

33 |

This Context

10 |

11 | {

16 | toast.info("Ingesting data...");

17 | ingestData(0).catch((error) => {

18 | toast.error(error);

19 | });

20 | }}

21 | >

22 | Ingest Data

23 |

24 | {

27 | clearChatHistory(0).then(() => {

28 | toast.success("Chat history cleared!");

29 | window.location.reload();

30 | });

31 | }}

32 | >

33 | Clear History

34 |

35 |

36 |

37 | );

38 | }

39 |

--------------------------------------------------------------------------------

/frontend/components/icons/LoadingIcon.js:

--------------------------------------------------------------------------------

1 | export default function () {

2 | return (

3 |

11 |

20 | );

21 | }

22 |

--------------------------------------------------------------------------------

/pautobot/db_models.py:

--------------------------------------------------------------------------------

1 | import datetime

2 |

3 | from sqlalchemy import Column, DateTime, ForeignKey, Integer, String

4 | from sqlalchemy.orm import relationship

5 |

6 | from pautobot.database import Base, engine

7 |

8 |

9 | class BotContext(Base):

10 | __tablename__ = "contexts"

11 |

12 | id = Column(Integer, primary_key=True, index=True)

13 | name = Column(String, index=True)

14 | created_at = Column(DateTime, default=datetime.datetime.utcnow)

15 | documents = relationship("Document", back_populates="bot_context")

16 | chat_chunks = relationship("ChatChunk", back_populates="bot_context")

17 |

18 |

19 | class Document(Base):

20 | __tablename__ = "documents"

21 |

22 | id = Column(Integer, primary_key=True, index=True)

23 | name = Column(String)

24 | storage_name = Column(String)

25 | created_at = Column(DateTime, default=datetime.datetime.utcnow)

26 | bot_context_id = Column(Integer, ForeignKey("contexts.id"))

27 | bot_context = relationship("BotContext", back_populates="documents")

28 |

29 |

30 | class ChatChunk(Base):

31 | __tablename__ = "chat_chunks"

32 |

33 | id = Column(Integer, primary_key=True, index=True)

34 | created_at = Column(DateTime, default=datetime.datetime.utcnow)

35 | text = Column(String)

36 | bot_context_id = Column(Integer, ForeignKey("contexts.id"))

37 | bot_context = relationship("BotContext", back_populates="chat_chunks")

38 |

39 |

40 | Base.metadata.create_all(engine)

41 |

--------------------------------------------------------------------------------

/frontend/lib/requests/bot.js:

--------------------------------------------------------------------------------

1 | export const getBotInfo = () => {

2 | return fetch("/api/bot_info", {

3 | method: "GET",

4 | headers: {

5 | "Content-Type": "application/json",

6 | },

7 | }).then(async (response) => {

8 | let data = await response.json();

9 | if (!response.ok) {

10 | const error = (data && data.message) || response.status;

11 | return Promise.reject(error);

12 | }

13 | return Promise.resolve(data);

14 | });

15 | };

16 |

17 | export const ask = (contextId, mode, message) => {

18 | return fetch(`/api/${contextId}/ask`, {

19 | method: "POST",

20 | headers: {

21 | "Content-Type": "application/json",

22 | },

23 | body: JSON.stringify({ mode: mode, query: message }),

24 | }).then(async (response) => {

25 | let data = await response.json();

26 | if (!response.ok) {

27 | const error = (data && data.message) || response.status;

28 | return Promise.reject(error);

29 | }

30 | return Promise.resolve(data);

31 | });

32 | };

33 |

34 | export const queryBotResponse = (contextId) => {

35 | return fetch(`/api/${contextId}/get_answer`, {

36 | method: "GET",

37 | headers: {

38 | "Content-Type": "application/json",

39 | },

40 | }).then(async (response) => {

41 | let data = await response.json();

42 | if (!response.ok) {

43 | const error = (data && data.message) || response.status;

44 | return Promise.reject(error);

45 | }

46 | return Promise.resolve(data);

47 | });

48 | };

49 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | ---

2 | files: ^(.*\.(py|json|md|sh|yaml|cfg|txt))$

3 | exclude: ^(\.[^/]*cache/.*|.*/_user.py|source_documents/)$

4 | repos:

5 | - repo: https://github.com/pre-commit/pre-commit-hooks

6 | rev: v4.4.0

7 | hooks:

8 | - id: check-yaml

9 | args: [--unsafe]

10 | - id: end-of-file-fixer

11 | - id: trailing-whitespace

12 | exclude: \.md$

13 | - id: check-json

14 | - id: mixed-line-ending

15 | - id: check-merge-conflict

16 | - id: check-docstring-first

17 | - id: fix-byte-order-marker

18 | - id: check-case-conflict

19 | - repo: https://github.com/adrienverge/yamllint.git

20 | rev: v1.29.0

21 | hooks:

22 | - id: yamllint

23 | args:

24 | - --no-warnings

25 | - -d

26 | - '{extends: relaxed, rules: {line-length: {max: 90}}}'

27 | - repo: https://github.com/myint/autoflake

28 | rev: v1.4

29 | hooks:

30 | - id: autoflake

31 | exclude: .*/__init__.py

32 | args:

33 | - --in-place

34 | - --remove-all-unused-imports

35 | - --expand-star-imports

36 | - --remove-duplicate-keys

37 | - --remove-unused-variables

38 | - repo: https://github.com/pre-commit/mirrors-isort

39 | rev: v5.4.2

40 | hooks:

41 | - id: isort

42 | args: ["--profile", "black"]

43 | - repo: https://github.com/pre-commit/pre-commit-hooks

44 | rev: v3.3.0

45 | hooks:

46 | - id: trailing-whitespace

47 | - id: end-of-file-fixer

48 |

--------------------------------------------------------------------------------

/pautobot/routers/bot.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import traceback

3 |

4 | from fastapi import APIRouter, BackgroundTasks, status

5 | from fastapi.responses import JSONResponse

6 |

7 | from pautobot import globals

8 | from pautobot.engine.bot_enums import BotStatus

9 | from pautobot.models import Query

10 |

11 | router = APIRouter(

12 | prefix="/api",

13 | tags=["Ask Bot"],

14 | )

15 |

16 |

17 | @router.get("/bot_info")

18 | async def get_bot_info():

19 | return globals.engine.get_bot_info()

20 |

21 |

22 | @router.post("/{context_id}/ask")

23 | async def ask(

24 | context_id: int, query: Query, background_tasks: BackgroundTasks

25 | ):

26 | try:

27 | globals.engine.check_query(

28 | query.mode, query.query, context_id=context_id

29 | )

30 | except ValueError as e:

31 | logging.error(traceback.format_exc())

32 | return JSONResponse(

33 | status_code=status.HTTP_400_BAD_REQUEST,

34 | content={"message": str(e)},

35 | )

36 | if globals.engine.context.current_answer["status"] == BotStatus.THINKING:

37 | return JSONResponse(

38 | status_code=status.HTTP_400_BAD_REQUEST,

39 | content={"message": "Bot is already thinking"},

40 | )

41 | globals.engine.context.current_answer = {

42 | "answer": "",

43 | "docs": [],

44 | }

45 | background_tasks.add_task(globals.engine.query, query.mode, query.query)

46 | return {"message": "Query received"}

47 |

48 |

49 | @router.get("/{context_id}/get_answer")

50 | async def get_answer(context_id: int):

51 | return globals.engine.get_answer(context_id=context_id)

52 |

--------------------------------------------------------------------------------
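The router above accepts a question with `POST /api/{context_id}/ask`, runs the query as a background task, and exposes the result through `GET /api/{context_id}/get_answer`, so a client has to poll for the answer. The following is a minimal sketch of that polling loop. It assumes the `get_answer` payload carries a `"status"` field with the `BotStatus` values, which this listing does not show. `wait_for_answer` and `fetch_answer` are hypothetical names, and the HTTP call is replaced by an in-memory stand-in.

```python
import time
from typing import Callable, Dict


def wait_for_answer(
    fetch_answer: Callable[[], Dict],
    interval: float = 0.5,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Call fetch_answer repeatedly until the bot is no longer THINKING."""
    for _ in range(max_polls):
        answer = fetch_answer()
        # Assumption: the payload exposes the bot status under "status".
        if answer.get("status") != "THINKING":
            return answer
        sleep(interval)
    raise TimeoutError("bot did not finish within the polling budget")


# Stand-in for repeated GET /api/{context_id}/get_answer requests.
fake_responses = iter(
    [
        {"status": "THINKING", "answer": "", "docs": []},
        {"status": "READY", "answer": "Hello!", "docs": []},
    ]
)
result = wait_for_answer(lambda: next(fake_responses), sleep=lambda _: None)
```

Passing the fetch and sleep functions in as parameters keeps the loop independent of any HTTP library and makes it easy to exercise without a running server.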

/pautobot/engine/chatbot_factory.py:

--------------------------------------------------------------------------------

1 | from langchain import LLMChain, PromptTemplate

2 | from langchain.memory import ConversationBufferWindowMemory

3 |

4 |

5 | class ChatbotFactory:

6 | """Factory for instantiating chatbots."""

7 |

8 | @staticmethod

9 | def create_chatbot(

10 | llm,

11 | ):

12 | template = """Assistant is a large language model trained by humans.

13 |

14 | Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

15 |

16 | Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

17 |

18 | Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

19 |

20 | {history}

21 | Human: {human_input}

22 | Assistant:"""

23 |

24 | prompt = PromptTemplate(

25 | input_variables=["history", "human_input"], template=template

26 | )

27 | chatbot_instance = LLMChain(

28 | llm=llm,

29 | prompt=prompt,

30 | verbose=True,

31 | memory=ConversationBufferWindowMemory(k=2),

32 | )

33 | return chatbot_instance

34 |

--------------------------------------------------------------------------------

/frontend/lib/requests/documents.js:

--------------------------------------------------------------------------------

1 | export const ingestData = (contextId) => {

2 | return fetch(`/api/${contextId}/documents/ingest`, {

3 | method: "POST",

4 | headers: {

5 | "Content-Type": "application/json",

6 | },

7 | }).then(async (response) => {

8 | let data = await response.json();

9 | if (!response.ok) {

10 | const error = (data && data.message) || response.status;

11 | return Promise.reject(error);

12 | }

13 | return Promise.resolve(data);

14 | });

15 | };

16 |

17 | export const uploadDocument = (contextId, file) => {

18 | const formData = new FormData();

19 | formData.append("file", file);

20 | return fetch(`/api/${contextId}/documents`, {

21 | method: "POST",

22 | body: formData,

23 | }).then(async (response) => {

24 | let data = await response.json();

25 | if (!response.ok) {

26 | const error = (data && data.message) || response.status;

27 | console.log(error);

28 | return Promise.reject(error);

29 | }

30 | return Promise.resolve(data);

31 | });

32 | };

33 |

34 | export const openDocument = (contextId, documentId) => {

35 | return fetch(

36 | `/api/${contextId}/documents/${documentId}/open_in_file_explorer`,

37 | {

38 | method: "POST",

39 | }

40 | );

41 | };

42 |

43 | export const getDocuments = (contextId) => {

44 | return fetch(`/api/${contextId}/documents`, {

45 | method: "GET",

46 | headers: {

47 | "Content-Type": "application/json",

48 | },

49 | }).then(async (response) => {

50 | let data = await response.json();

51 | if (!response.ok) {

52 | const error = (data && data.message) || response.status;

53 | return Promise.reject(error);

54 | }

55 | return Promise.resolve(data);

56 | });

57 | };

58 |

59 | export const deleteDocument = (contextId, documentId) => {

60 | return fetch(`/api/${contextId}/documents/${documentId}`, {

61 | method: "DELETE",

62 | }).then(async (response) => {

63 | let data = await response.json();

64 | if (!response.ok) {

65 | const error = (data && data.message) || response.status;

66 | return Promise.reject(error);

67 | }

68 | return Promise.resolve(data);

69 | });

70 | };

71 |

--------------------------------------------------------------------------------

/frontend/components/SidebarBottomMenu.js:

--------------------------------------------------------------------------------

1 | import { toast } from "react-toastify";

2 |

3 | export default function () {

4 | return (

5 |

6 |

{

9 | toast.info("Coming soon!");

10 | }}

11 | >

12 |

18 |

20 |

21 |

22 |

34 |

35 |

6 |

7 |

8 |

9 |

15 |

21 | Query

22 |

24 |

25 | {

28 | openDocumentsFolder();

29 | }}

30 | >

31 |

38 |

40 | Manage Knowledge DB

41 |

43 |

44 |

3 |

4 |

7 |

10 |

13 |

16 |

--------------------------------------------------------------------------------

/pautobot/engine/context_manager.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import os

3 | import shutil

4 |

5 | from pautobot import db_models

6 | from pautobot.config import DATA_ROOT

7 | from pautobot.database import session

8 | from pautobot.engine.bot_context import BotContext

9 |

10 |

11 | class ContextManager:

12 | """

13 | Context manager. Handles logic related to PautoBot contexts.

14 | """

15 |

16 | def __init__(self):

17 | self._current_context = None

18 | self._contexts = {}

19 |

20 | def load_contexts(self) -> None:

21 | """

22 | Load all contexts from the database.

23 | """

24 | self._contexts = {0: BotContext(id=0, name="Default")}

25 | self._current_context = self._contexts[0]

26 | for context in session.query(db_models.BotContext).all():

27 | self._contexts[context.id] = BotContext(id=context.id)

28 |

29 | def rename_context(self, context_id: int, new_name: str) -> None:

30 | """

31 | Rename a context.

32 | """

33 | if context_id not in self._contexts:

34 | raise ValueError(f"Context {context_id} not found!")

35 | session.query(db_models.BotContext).filter_by(id=context_id).update(

36 | {"name": new_name}

37 | )

38 | session.commit()

39 |

40 | def delete_context(self, context_id: int) -> None:

41 | """

42 | Completely delete a context.

43 | """

44 | if context_id not in self._contexts:

45 | raise ValueError(f"Context {context_id} not found!")

46 | if context_id in self._contexts:

47 | del self._contexts[context_id]

48 | try:

49 | session.query(db_models.BotContext).filter_by(

50 | id=context_id

51 | ).delete()

52 | session.commit()

53 | shutil.rmtree(os.path.join(DATA_ROOT, "contexts", str(context_id)))

54 | except Exception as e:

55 | logging.error(f"Error while deleting context {context_id}: {e}")

56 |

57 | def get_context(self, context_id: int) -> BotContext:

58 | """

59 | Get a context by its ID.

60 | """

61 | if context_id not in self._contexts:

62 | raise ValueError(f"Context {context_id} not found!")

63 | return self._contexts[context_id]

64 |

65 | def get_contexts(self) -> dict:

66 | """

67 | Get all contexts.

68 | """

69 | return self._contexts

70 |

71 | def set_current_context(self, context_id: int) -> None:

72 | """

73 | Set the current context.

74 | """

75 | if context_id not in self._contexts:

76 | raise ValueError(f"Context {context_id} not found!")

77 | self._current_context = self._contexts[context_id]

78 |

79 | def get_current_context(self) -> BotContext:

80 | """

81 | Get the current context.

82 | """

83 | return self._current_context

84 |

--------------------------------------------------------------------------------

/pautobot/routers/documents.py:

--------------------------------------------------------------------------------

1 | import os

2 | import tempfile

3 | import zipfile

4 |

5 | from fastapi import APIRouter, File, UploadFile

6 | from fastapi.responses import JSONResponse

7 |

8 | from pautobot import globals

9 | from pautobot.utils import SUPPORTED_DOCUMENT_TYPES

10 |

11 | router = APIRouter(

12 | prefix="/api",

13 | tags=["Documents"],

14 | )

15 |

16 |

17 | @router.get("/{context_id}/documents")

18 | async def get_documents(context_id: int):

19 | """

20 | Get all documents in the bot's context

21 | """

22 | return globals.context_manager.get_context(context_id).get_documents()

23 |

24 |

25 | @router.post("/{context_id}/documents")

26 | async def upload_document(context_id: int, file: UploadFile = File(...)):

27 | """

28 | Upload a document to the bot's context

29 | """

30 | if not file:

31 | return {"message": "No file sent"}

32 |

33 | file_extension = os.path.splitext(file.filename)[1]

34 | if file_extension == ".zip":

35 | tmp_dir = tempfile.mkdtemp()

36 | tmp_zip_file = os.path.join(tmp_dir, file.filename)

37 | with open(tmp_zip_file, "wb") as tmp_zip:

38 | tmp_zip.write(file.file.read())

39 | with zipfile.ZipFile(tmp_zip_file, "r") as zip_ref:

40 | zip_ref.extractall(tmp_dir)

41 | for filename in os.listdir(tmp_dir):

42 | if os.path.splitext(filename)[1] in SUPPORTED_DOCUMENT_TYPES:

43 | with open(os.path.join(tmp_dir, filename), "rb") as doc_file:

44 | globals.context_manager.get_context(

45 | context_id

46 | ).add_document(doc_file, filename)

47 | elif file_extension in SUPPORTED_DOCUMENT_TYPES:

48 | globals.context_manager.get_context(context_id).add_document(

49 | file.file, file.filename

50 | )

51 | else:

52 | return JSONResponse(

53 | status_code=400,

54 | content={"message": f"File type {file_extension} not supported"},

55 | )

56 | globals.engine.ingest_documents_in_background(context_id=context_id)

57 | return {"message": "File uploaded"}

58 |

59 |

60 | @router.delete("/{context_id}/documents/{document_id}")

61 | async def delete_document(context_id: int, document_id: int):

62 | """

63 | Delete a document from the bot's context

64 | """

65 | try:

66 | globals.context_manager.get_context(context_id).delete_document(

67 | document_id

68 | )

69 | except ValueError as e:

70 | return JSONResponse(status_code=400, content={"message": str(e)})

71 | globals.engine.ingest_documents_in_background(context_id=context_id)

72 | return {"message": "Document deleted"}

73 |

74 |

75 | @router.post("/{context_id}/documents/ingest")

76 | async def ingest_documents(context_id: int):

77 | """

78 | Ingest all documents in the bot's context

79 | """

80 | globals.engine.ingest_documents_in_background(context_id=context_id)

81 | return {"message": "Ingestion started"}

82 |

83 |

84 | @router.post("/{context_id}/documents/{document_id}/open_in_file_explorer")

85 | async def open_in_file_explorer(context_id: int, document_id: int):

86 | """

87 | Open the bot's context in the file explorer

88 | """

89 | globals.context_manager.get_context(context_id).open_document(document_id)

90 | return {"message": "Documents folder opened"}

91 |

--------------------------------------------------------------------------------
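The `.zip` branch of `upload_document` extracts the archive into a directory created with `tempfile.mkdtemp()` and keeps only top-level files whose extension is supported. The sketch below shows one way to do the same filtering with a temporary directory that is cleaned up automatically. It is not the repository's implementation: `read_supported_files` is a hypothetical helper, and `SUPPORTED` is an illustrative subset of the extensions listed in the README, since the real set lives in `pautobot.utils.SUPPORTED_DOCUMENT_TYPES`.

```python
import os
import tempfile
import zipfile
from typing import Iterable, List, Tuple

# Illustrative subset; the real set is pautobot.utils.SUPPORTED_DOCUMENT_TYPES.
SUPPORTED = {".txt", ".md", ".pdf", ".csv"}


def read_supported_files(
    zip_path: str, supported: Iterable[str] = SUPPORTED
) -> List[Tuple[str, bytes]]:
    """Return (filename, content) pairs for supported top-level files."""
    allowed = set(supported)
    results = []
    # TemporaryDirectory deletes the extracted files when the block exits.
    with tempfile.TemporaryDirectory() as tmp_dir:
        with zipfile.ZipFile(zip_path, "r") as zip_ref:
            zip_ref.extractall(tmp_dir)
        for filename in sorted(os.listdir(tmp_dir)):
            path = os.path.join(tmp_dir, filename)
            # Like the router, only look at the top level of the archive.
            if not os.path.isfile(path):
                continue
            if os.path.splitext(filename)[1] in allowed:
                with open(path, "rb") as extracted:
                    results.append((filename, extracted.read()))
    return results
```

Reading the content before the temporary directory is removed is a choice made for this sketch; the router instead hands the open file object to `add_document`.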

/setup.py:

--------------------------------------------------------------------------------

1 | import re

2 |

3 | from setuptools import find_packages, setup

4 |

5 |

6 | def get_version():

7 | """Get package version from app_info.py file"""

8 | filename = "pautobot/app_info.py"

9 | with open(filename, encoding="utf-8") as f:

10 | match = re.search(

11 | r"""^__version__ = ['"]([^'"]*)['"]""", f.read(), re.M

12 | )

13 | if not match:

14 | raise RuntimeError(f"{filename} doesn't contain __version__")

15 | version = match.groups()[0]

16 | return version

17 |

18 |

19 | def get_install_requires():

20 | """Get python requirements based on context"""

21 | install_requires = [

22 | "langchain>=0.0.194",

23 | "gpt4all>=0.3.0",

24 | "chromadb>=0.3.23",

25 | "urllib3>=2.0.2",

26 | "pdfminer.six>=20221105",

27 | "unstructured>=0.6.6",

28 | "extract-msg>=0.41.1",

29 | "tabulate>=0.9.0",

30 | "pandoc>=2.3",

31 | "pypandoc>=1.11",

32 | "tqdm>=4.65.0",

33 | "python-multipart>=0.0.6",

34 | "fastapi==0.96.0",

35 | "SQLAlchemy==2.0.15",

36 | "alembic==1.11.1",

37 | "sentence_transformers==2.2.2",

38 | "requests",

39 | ]

40 |

41 | return install_requires

42 |

43 |

44 | def get_long_description():

45 | """Read long description from README"""

46 | with open("README.md", encoding="utf-8") as f:

47 | long_description = f.read()

48 | long_description = long_description.replace(

49 | "",

50 | "",

51 | )

52 | long_description = long_description.replace(

53 | '

2 |

🔥 PⒶutoBot 🔥

4 | Private AutoGPT Robot - Your private task assistant with GPT!

5 |

6 |

7 | - 🔥 **Chat** with your offline **LLMs on CPU only**. **100% private**: no data leaves your execution environment at any point.

8 | - 🔥 **Ask questions** about your documents without an internet connection. The engine is based on [PrivateGPT](https://github.com/imartinez/privateGPT).

9 | - 🔥 **Automate tasks** easily with **PAutoBot plugins**. Easy for everyone.

10 | - 🔥 **Easy coding structure** with **Next.js** and **Python**. Easy to understand and modify.

11 | - 🔥 **Built with** [LangChain](https://github.com/hwchase17/langchain), [GPT4All](https://github.com/nomic-ai/gpt4all), [Chroma](https://www.trychroma.com/), [SentenceTransformers](https://www.sbert.net/), [PrivateGPT](https://github.com/imartinez/privateGPT).

12 |

13 |

14 |

15 | **The supported extensions are:**

16 |

17 | - `.csv`: CSV

18 | - `.docx`: Word Document

19 | - `.doc`: Word Document

20 | - `.enex`: Evernote

21 | - `.eml`: Email

22 | - `.epub`: EPUB

23 | - `.html`: HTML File

24 | - `.md`: Markdown

25 | - `.msg`: Outlook Message

26 | - `.odt`: Open Document Text

27 | - `.pdf`: Portable Document Format (PDF)

28 | - `.pptx`: PowerPoint Document

29 | - `.ppt`: PowerPoint Document

30 | - `.txt`: Text file (UTF-8)

31 |

32 | ## I. Installation and Usage

33 |

34 | ### 1. Installation

35 |

36 | - Python 3.8 or higher.

37 | - Install **PAutoBot**:

38 |

39 | ```shell

40 | pip install pautobot

41 | ```

42 |

43 | ### 2. Usage

44 |

45 | - Run the app:

46 |

47 | ```shell

48 | python -m pautobot.app

49 | ```

50 |

51 | or just:

52 |

53 | ```shell

54 | pautobot

55 | ```

56 |

57 | - Go to

6 |

20 |

{

23 | toast.info("Coming soon!");

24 | }}

25 | >

26 |

32 |

39 |

40 |

frontend-dist not found in package pautobot. Please run: bash build_frontend.sh"

62 | )

63 | return

64 |

65 |

66 | def download_file(url, file_path):

67 | """

68 | Download `url` to `file_path`, streaming the response through a temporary file.

69 | """

70 | tmp_file = tempfile.NamedTemporaryFile(delete=False)

71 | pathlib.Path(file_path).parent.mkdir(parents=True, exist_ok=True)

72 | response = requests.get(url, stream=True)

73 |

74 | # Check if the request was successful

75 | if response.status_code == 200:

76 | total_size = int(response.headers.get("content-length", 0))

77 | block_size = 8192 # Chunk size in bytes

78 | progress_bar = tqdm(total=total_size, unit="B", unit_scale=True)

79 |

80 | with open(tmp_file.name, "wb") as file:

81 | # Iterate over the response content in chunks

82 | for chunk in response.iter_content(chunk_size=block_size):

83 | file.write(chunk)

84 | progress_bar.update(len(chunk))

85 |

86 | progress_bar.close()

87 | shutil.move(tmp_file.name, file_path)

88 | logging.info("File downloaded successfully.")

89 | else:

90 | logging.error(f"Failed to download file (HTTP {response.status_code}).")

91 |

92 |

93 | DEFAULT_MODEL_URLS = {

94 | "ggml-gpt4all-j": "https://gpt4all.io/models/ggml-gpt4all-j.bin",

95 | "ggml-gpt4all-j-v1.1-breezy": "https://gpt4all.io/models/ggml-gpt4all-j-v1.1-breezy.bin",

96 | "ggml-gpt4all-j-v1.2-jazzy": "https://gpt4all.io/models/ggml-gpt4all-j-v1.2-jazzy.bin",

97 | "ggml-gpt4all-j-v1.3-groovy": "https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin",

98 | "ggml-gpt4all-l13b-snoozy": "https://gpt4all.io/models/ggml-gpt4all-l13b-snoozy.bin",

99 | "ggml-mpt-7b-base": "https://gpt4all.io/models/ggml-mpt-7b-base.bin",

100 | "ggml-mpt-7b-instruct": "https://gpt4all.io/models/ggml-mpt-7b-instruct.bin",

101 | "ggml-nous-gpt4-vicuna-13b": "https://gpt4all.io/models/ggml-nous-gpt4-vicuna-13b.bin",

102 | "ggml-replit-code-v1-3b": "https://huggingface.co/nomic-ai/ggml-replit-code-v1-3b/resolve/main/ggml-replit-code-v1-3b.bin",

103 | "ggml-stable-vicuna-13B.q4_2": "https://gpt4all.io/models/ggml-stable-vicuna-13B.q4_2.bin",

104 | "ggml-v3-13b-hermes-q5_1": "https://huggingface.co/eachadea/ggml-nous-hermes-13b/resolve/main/ggml-v3-13b-hermes-q5_1.bin",

105 | "ggml-vicuna-13b-1.1-q4_2": "https://gpt4all.io/models/ggml-vicuna-13b-1.1-q4_2.bin",

106 | "ggml-vicuna-7b-1.1-q4_2": "https://gpt4all.io/models/ggml-vicuna-7b-1.1-q4_2.bin",

107 | "ggml-wizard-13b-uncensored": "https://gpt4all.io/models/ggml-wizard-13b-uncensored.bin",

108 | "ggml-wizardLM-7B.q4_2": "https://gpt4all.io/models/ggml-wizardLM-7B.q4_2.bin",

109 | }

110 |

111 |

112 | def download_model(model_type, model_path):

113 | """

114 | Download the model if it does not exist at `model_path` yet.

115 | TODO (vietanhdev):

116 | - Support more model types

117 | - Multiple download links

118 | - Check hash of the downloaded file

119 | """

120 | MODEL_URL = DEFAULT_MODEL_URLS["ggml-gpt4all-j-v1.3-groovy"]

121 | if not os.path.exists(model_path):

122 | logging.info("Downloading model...")

123 | try:

124 | download_file(MODEL_URL, model_path)

125 | except Exception as e:

126 | logging.error(f"Error while downloading model: {e}")

127 | traceback.print_exc()

128 | exit(1)

129 | logging.info("Model downloaded!")

130 |
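
The TODO in `download_model` mentions checking the hash of the downloaded file. The following is only a sketch of how such a check might look, not part of the project: `sha256_of_file` and `verify_download` are hypothetical helper names, and the expected digest would have to come from a trusted source that this repository does not provide.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(file_path, block_size=8192):
    """Return the hex SHA-256 digest of a file, read in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(file_path, "rb") as f:
        # iter() with a sentinel stops once read() returns an empty bytes object.
        for chunk in iter(lambda: f.read(block_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(file_path, expected_sha256):
    """Hypothetical helper: True if the file matches the expected digest."""
    return sha256_of_file(file_path) == expected_sha256.lower()


if __name__ == "__main__":
    # Demonstrate on a throwaway file rather than a real model download.
    with tempfile.TemporaryDirectory() as tmp_dir:
        sample = Path(tmp_dir) / "sample.bin"
        sample.write_bytes(b"hello")
        expected = hashlib.sha256(b"hello").hexdigest()
        print(verify_download(sample, expected))
```

Reading in chunks keeps memory use flat for multi-gigabyte model files, which mirrors the chunked write loop in `download_file`.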

--------------------------------------------------------------------------------

/frontend/components/Main.js:

--------------------------------------------------------------------------------

1 | "use client";

2 | import React, { useState, useRef, useEffect } from "react";

3 | import { toast } from "react-toastify";

4 | import NewMessage from "./NewMessage";

5 |

6 | import { getChatHistory } from "@/lib/requests/history";

7 | import { ask, queryBotResponse } from "@/lib/requests/bot";

8 | import { openDocument } from "@/lib/requests/documents";

9 |

10 | export default function Main() {

11 | const [messages, setMessages] = useState([]);

12 | const [thinking, setThinking] = useState(false);

13 | const messagesRef = useRef(null);

14 |

15 | const scrollMessages = () => {

16 | setTimeout(() => {

17 | messagesRef.current.scrollTop = messagesRef.current.scrollHeight;

18 | }, 300);

19 | };

20 |

21 | useEffect(() => {

22 | getChatHistory(0).then(async (response) => {

23 | let data = await response.json();

24 | if (!response.ok) {

25 | const error = (data && data.message) || response.status;

26 | return Promise.reject(error);

27 | }

28 | setMessages(data);

29 | scrollMessages();

30 | });

31 | }, []);

32 |

33 | const onSubmitMessage = (mode, message) => {

34 | if (thinking) {

35 | toast.warning("I am still thinking about the previous question! Please wait...");

36 | return;

37 | }

38 | setThinking(true);

39 | let newMessages = [

40 | ...messages,

41 | { query: message },

42 | { answer: "Thinking..." },

43 | ];

44 | setMessages(newMessages);

45 | scrollMessages();

46 |

47 | ask(0, mode, message)

48 | .then(async (data) => {

49 | // Query data from /api/get_answer

50 | const interval = setInterval(async () => {

51 | queryBotResponse(0)

52 | .then(async (data) => {

53 | if (data.status === "THINKING" && data.answer) {

54 | newMessages.pop();

55 | newMessages = [

56 | ...newMessages,

57 | { answer: data.answer, docs: null },

58 | ];

59 | setMessages(newMessages);

60 | scrollMessages();

61 | } else if (data.status === "READY") {

62 | clearInterval(interval);

63 | newMessages.pop();

64 | newMessages = [

65 | ...newMessages,

66 | { answer: data.answer, docs: data.docs },

67 | ];

68 | setMessages(newMessages);

69 | setThinking(false);

70 | scrollMessages();

71 | }

72 | })

73 | .catch((error) => {

74 | toast.error(error);

75 | setThinking(false); clearInterval(interval); // stop polling after an error

76 | });

77 | }, 2000);

78 | })

79 | .catch((error) => {

80 | toast.error(error);

81 | setThinking(false);

82 | });

83 | };

84 |

85 | return (

86 | <>

87 |

88 |

92 |

93 | {messages.map((message, index) => {

94 | if (message.query) {

95 | return (

96 |

97 |

98 |

{message.query}

99 |

100 |

101 | );

102 | } else {

103 | return (

104 |

105 |

106 |

107 | {message.answer}

108 | {message.answer === "Thinking..." && (

109 |

112 | {message.docs && (

113 |

114 |

115 | {message.docs.map((doc, index) => {

116 | return (

117 |

118 |

{

121 | openDocument(0, doc.source_id);

122 | }}

123 | >

124 | {doc.source}

125 |

126 |

{doc.content}

127 |

128 | );

129 | })}

130 |

131 |

132 | )}

133 |

134 |

135 | );

136 | }

137 | })}

138 | {messages.length === 0 && (

139 |

140 |

Hello World!

141 |

142 | We are on a mission to build an all-in-one task assistant

143 | with PrivateGPT!

144 |

145 |

146 | )}

147 |

148 |

149 |

153 |

Q&A Database

123 |

124 | {documents.length > 0 ? (

125 | You have {documents.length} document(s).

126 | ) : (

127 |

128 | You have no documents. Please upload a file and ingest data for Q&A.

129 |

130 | )}

131 |

132 | {

135 | e.preventDefault();

136 | e.stopPropagation();

137 | }}

138 | onDrop={(e) => {

139 | e.preventDefault();

140 | e.stopPropagation();

141 | uploadFiles(e.dataTransfer.files);

142 | }}

143 | >

144 |

145 |

146 | {documents.map((document, key) => (

147 |

148 | {key + 1}.

149 |

150 | {document.name}

151 |

152 |

153 | {

155 | openDocument(0, document.id);

156 | }}

157 | >

158 |

166 |

172 |

173 | {

175 | let confirmation = confirm(

176 | "Are you sure you want to delete this document?"

177 | );

178 | if (confirmation) {

179 | deleteDocument(0, document.id)

180 | .then((response) => {

181 | toast.success("Document deleted!");

182 | refetchDocuments(0);

183 | })

184 | .catch((error) => {

185 | toast.error(error);

186 | });

187 | }

188 | }}

189 | >

190 |

198 |

204 |

205 |

206 |

207 | ))}

208 |

209 |

210 |

213 |

214 | Note: The bot is

215 | currently ingesting data. Please wait until it finishes.

216 |

217 |

219 | )}

220 |

221 | {

237 | if (uploading) return;

238 | fileInput.current.click();

239 | }}

240 | >

241 | Upload Files

242 | {uploading ?

244 |

245 |

246 | );

247 | }

248 |

--------------------------------------------------------------------------------

/pautobot/engine/engine.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import os

3 | import threading

4 | import traceback

5 |

6 | from pautobot import db_models

7 | from pautobot.config import DATA_ROOT

8 | from pautobot.database import session

9 | from pautobot.engine.bot_enums import BotMode, BotStatus

10 | from pautobot.engine.chatbot_factory import ChatbotFactory

11 | from pautobot.engine.context_manager import ContextManager

12 | from pautobot.engine.ingest import ingest_documents

13 | from pautobot.engine.llm_factory import LLMFactory

14 | from pautobot.engine.qa_factory import QAFactory

15 |

16 |

17 | class PautoBotEngine:

18 | """PautoBot engine for answering questions."""

19 |

20 | def __init__(

21 | self, mode, context_manager: ContextManager, model_type="GPT4All"

22 | ) -> None:

23 | self.mode = mode

24 | self.model_type = model_type

25 | self.model_path = os.path.join(

26 | DATA_ROOT,

27 | "models",

28 | "ggml-gpt4all-j-v1.3-groovy.bin",

29 | )

30 | self.context_manager = context_manager

31 | if not self.context_manager.get_contexts():

32 | raise ValueError(

33 | "No contexts found! Please create at least one context first."

34 | )

35 | self.context = self.context_manager.get_current_context()

36 | self.status = BotStatus.READY

37 |

38 | # Prepare the LLM

39 | self.model_n_ctx = 1000

40 | self.llm = LLMFactory.create_llm(

41 | model_type=self.model_type,

42 | model_path=self.model_path,

43 | model_n_ctx=self.model_n_ctx,

44 | streaming=False,

45 | verbose=False,

46 | )

47 | self.chatbot_instance = ChatbotFactory.create_chatbot(self.llm)

48 |

49 | # Prepare the retriever

50 | self.qa_instance = None

51 | self.qa_instance_error = None

52 | self.is_ingesting_data = False

53 | if mode == BotMode.CHAT.value:

54 | return

55 | self.ingest_documents_in_background()

56 |

57 | def get_bot_info(self) -> dict:

58 | """Get the bot's info."""

59 | return {

60 | "mode": self.mode,

61 | "model_type": self.model_type,

62 | "qa_instance_error": self.qa_instance_error,

63 | "status": self.status.value,

64 | "is_ingesting_data": self.is_ingesting_data,

65 | "context": self.context.dict(),

66 | }

67 |

68 | def ingest_documents(self, context_id=None) -> None:

69 | """Ingest the bot's documents."""

70 | if self.is_ingesting_data:

71 | logging.warning("Already ingesting data. Skipping...")

72 | return

73 | self.is_ingesting_data = True

74 | if context_id is not None:

75 | self.switch_context(context_id)

76 | try:

77 | ingest_documents(

78 | self.context.documents_directory,

79 | self.context.search_db_directory,

80 | self.context.embeddings_model_name,

81 | )

82 | # Reload QA

83 | self.qa_instance = QAFactory.create_qa(

84 | context=self.context,

85 | llm=self.llm,

86 | )

87 | except Exception as e:

88 | logging.error(f"Error while ingesting documents: {e}")

89 | logging.error(traceback.format_exc())

90 | self.qa_instance_error = "Error while ingesting documents!"

91 | finally:

92 | self.is_ingesting_data = False

93 |

94 | def ingest_documents_in_background(self, context_id=None) -> None:

95 | """Ingest the bot's documents in the background using a thread."""

96 | if self.is_ingesting_data:

97 | logging.warning("Already ingesting data. Skipping...")

98 | return

99 | thread = threading.Thread(

100 | target=self.ingest_documents,

101 | args=(context_id,),

102 | )

103 | thread.start()

104 |

105 | def switch_context(self, context_id: int) -> None:

106 | """Switch the bot context if needed."""

107 | if self.context.id != context_id:

108 | self.context = self.context_manager.get_context(context_id)

109 | self.qa_instance = QAFactory.create_qa(

110 | context=self.context,

111 | llm=self.llm,

112 | )

113 |

114 | def check_query(self, mode, query, context_id=None) -> None:

115 | """

116 | Check if the query is valid.

117 | Raises an exception on invalid query.

118 | """

119 | if context_id is not None:

120 | self.switch_context(context_id)

121 | if not query:

122 | raise ValueError("Query cannot be empty!")

123 | if mode == BotMode.QA.value and self.mode == BotMode.CHAT.value:

124 | raise ValueError(

125 | "PautobotEngine was initialized in chat mode! "

126 | "Please restart in QA mode."

127 | )

128 | elif mode == BotMode.QA.value and self.is_ingesting_data:

129 | raise ValueError(

130 | "Pautobot is currently ingesting data! Please wait a few minutes and try again."

131 | )

132 | elif mode == BotMode.QA.value and self.qa_instance_error is not None:

133 | raise ValueError(

134 | "Pautobot QA instance is not ready! Please wait a few minutes and try again."

135 | )

136 |

137 | def query(self, mode, query, context_id=None) -> None:

138 | """Query the bot."""

139 | self.status = BotStatus.THINKING

140 | if context_id is not None:

141 | self.switch_context(context_id)

142 | self.check_query(mode, query)

143 | if mode is None:

144 | mode = self.mode

145 | if mode == BotMode.QA.value and self.qa_instance is None:

146 | logging.info(self.qa_instance_error)

147 | mode = BotMode.CHAT.value

148 | self.context.current_answer = {

149 | "status": self.status,

150 | "answer": "",

151 | "docs": [],

152 | }

153 | self.context.write_chat_history(

154 | {

155 | "query": query,

156 | "mode": mode,

157 | }

158 | )

159 | if mode == BotMode.QA.value:

160 | try:

161 | logging.info("Received query: %s", query)

162 | logging.info("Searching...")

163 | res = self.qa_instance(query)

164 | answer, docs = (

165 | res["result"],

166 | res["source_documents"],

167 | )

168 | doc_json = []

169 | for document in docs:

170 | document_file = document.metadata["source"]

171 | document_id = os.path.basename(document_file).split(".")[0]

172 | document_id = int(document_id)

173 | db_document = (

174 | session.query(db_models.Document)

175 | .filter(db_models.Document.id == document_id)

176 | .first()

177 | )

178 | if not db_document:

179 | continue

180 | doc_json.append(

181 | {

182 | "source": db_document.name,

183 | "source_id": db_document.id,

184 | "content": document.page_content,

185 | }

186 | )

187 | self.status = BotStatus.READY

188 | self.context.current_answer = {

189 | "status": self.status,

190 | "answer": answer,

191 | "docs": doc_json,

192 | }

193 | self.context.write_chat_history(self.context.current_answer)

194 | except Exception as e:

195 | logging.error("Error during thinking: %s", e)

196 | traceback.print_exc()

197 | answer = "Error during thinking! Please try again."

198 | if "Index not found" in str(e):

199 | answer = "Index not found! Please ingest documents first."

200 | self.status = BotStatus.READY

201 | self.context.current_answer = {

202 | "status": self.status,

203 | "answer": answer,

204 | "docs": None,

205 | }

206 | self.context.write_chat_history(self.context.current_answer)

207 | else:

208 | try:

209 | logging.info("Received query: %s", query)

210 | logging.info("Thinking...")

211 | answer = self.chatbot_instance.predict(human_input=query)

212 | logging.info("Answer: %s", answer)

213 | self.status = BotStatus.READY

214 | self.context.current_answer = {

215 | "status": self.status,

216 | "answer": answer,

217 | "docs": None,

218 | }

219 | self.context.write_chat_history(self.context.current_answer)

220 | except Exception as e:

221 | logging.error("Error during thinking: %s", e)

222 | traceback.print_exc()

223 | self.status = BotStatus.READY

224 | self.context.current_answer = {

225 | "status": self.status,

226 | "answer": "Error during thinking! Please try again.",

227 | "docs": None,

228 | }

229 | self.context.write_chat_history(self.context.current_answer)

230 |

231 | def get_answer(self, context_id=None) -> dict:

232 | """Get the bot's answer."""

233 | if context_id is not None:

234 | self.switch_context(context_id)

235 | return self.context.current_answer

236 |
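
`ingest_documents` guards against concurrent runs with the plain boolean `is_ingesting_data`, so two threads could both pass the check before either sets the flag. A minimal sketch of one way to make the check-and-claim step atomic is shown here, assuming a standalone helper; `IngestGuard` and its methods are hypothetical names and are not part of `PautoBotEngine`.

```python
import threading


class IngestGuard:
    """Hypothetical helper that lets only one ingestion job run at a time."""

    def __init__(self):
        self._lock = threading.Lock()

    @property
    def is_ingesting(self):
        """True while a job holds the lock."""
        return self._lock.locked()

    def run(self, ingest_fn, *args):
        """Run ingest_fn(*args) unless another run is already in progress.

        Returns True if the job ran, False if it was skipped.
        """
        # A non-blocking acquire checks and claims the lock in one atomic step.
        if not self._lock.acquire(blocking=False):
            return False
        try:
            ingest_fn(*args)
            return True
        finally:
            # Always release, even if the job raised.
            self._lock.release()

    def run_in_background(self, ingest_fn, *args):
        """Start run() on a new thread and return the thread."""
        thread = threading.Thread(target=self.run, args=(ingest_fn, *args))
        thread.start()
        return thread
```

With this shape, a second call made while a job is running returns `False` immediately instead of starting an overlapping ingestion.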

--------------------------------------------------------------------------------

20 |  ',

54 | '

',

54 | ' ',

55 | )

56 | return long_description

57 |

58 |

59 | setup(

60 | name="pautobot",

61 | version=get_version(),

62 | packages=find_packages(),

63 | description="Private AutoGPT Robot - Your private task assistant with GPT!",

64 | long_description=get_long_description(),

65 | long_description_content_type="text/markdown",

66 | author="Viet-Anh Nguyen",

67 | author_email="vietanh.dev@gmail.com",

68 | url="https://github.com/vietanhdev/pautobot",

69 | install_requires=get_install_requires(),

70 | license="Apache License 2.0",

71 | keywords="Personal Assistant, Automation, GPT, LLM, PrivateGPT",

72 | classifiers=[

73 | "Natural Language :: English",

74 | "Operating System :: OS Independent",

75 | "Programming Language :: Python",

76 | "Programming Language :: Python :: 3.8",

77 | "Programming Language :: Python :: 3.9",

78 | "Programming Language :: Python :: 3.10",

79 | "Programming Language :: Python :: 3.11",

80 | "Programming Language :: Python :: 3 :: Only",

81 | ],

82 | package_data={

83 | "pautobot": [

84 | "frontend-dist/**/*",

85 | "frontend-dist/*",

86 | ]

87 | },

88 | include_package_data=True,

89 | entry_points={

90 | "console_scripts": [

91 | "pautobot=pautobot.app:main",

92 | "pautobot.ingest=pautobot.ingest:main",

93 | ],

94 | },

95 | )

96 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |  3 |

3 |

110 | )}

111 |