├── MANIFEST.in
├── manifest.json
├── src
    ├── sagemaker_defect_detection
    │   ├── dataset
    │   │   ├── __init__.py
    │   │   └── neu.py
    │   ├── models
    │   │   ├── __init__.py
    │   │   └── ddn.py
    │   ├── __init__.py
    │   ├── utils
    │   │   ├── __init__.py
    │   │   ├── coco_utils.py
    │   │   ├── visualize.py
    │   │   └── coco_eval.py
    │   ├── transforms.py
    │   └── classifier.py
    ├── prepare_data
    │   ├── test_neu.py
    │   └── neu.py
    ├── utils.py
    ├── prepare_RecordIO.py
    └── xml2json.py
├── .gitattributes
├── cloudformation
    ├── solution-assistant
    │   ├── requirements.in
    │   ├── requirements.txt
    │   ├── solution-assistant.yaml
    │   └── src
    │   │   └── lambda_fn.py
    ├── defect-detection-sagemaker-notebook-instance.yaml
    ├── defect-detection-permissions.yaml
    └── defect-detection.yaml
├── .viperlightrc
├── NOTICE
├── docs
    ├── arch.png
    ├── data.png
    ├── sample1.png
    ├── sample2.png
    ├── sample3.png
    ├── data_flow.png
    ├── numerical.png
    ├── sagemaker.png
    ├── train_arch.png
    └── launch.svg
├── requirements.in
├── .github
    ├── ISSUE_TEMPLATE
    │   ├── questions-or-general-feedbacks.md
    │   ├── bug_report.md
    │   └── feature_request.md
    └── workflows
    │   ├── codeql.yaml
    │   └── ci.yml
├── .viperlightignore
├── THIRD_PARTY
├── .gitignore
├── setup.cfg
├── CODE_OF_CONDUCT.md
├── scripts
    ├── train_classifier.sh
    ├── set_kernelspec.py
    ├── find_best_ckpt.py
    ├── build.sh
    └── train_detector.sh
├── pyproject.toml
├── .pre-commit-config.yaml
├── setup.py
├── CONTRIBUTING.md
├── requirements.txt
├── LICENSE
├── notebooks
    ├── 3_classification_from_scratch.ipynb
    ├── 0_demo.ipynb
    ├── 1_retrain_from_checkpoint.ipynb
    └── 2_detection_from_scratch.ipynb
└── README.md

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include requirements.txt

2 |

--------------------------------------------------------------------------------

/manifest.json:

--------------------------------------------------------------------------------

1 | {

2 | "version": "1.1.1"

3 | }

4 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/dataset/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/models/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.py text diff=python

2 | *.ipynb text

3 |

--------------------------------------------------------------------------------

/cloudformation/solution-assistant/requirements.in:

--------------------------------------------------------------------------------

1 | crhelper

2 |

--------------------------------------------------------------------------------

/.viperlightrc:

--------------------------------------------------------------------------------

1 | {

2 | "failOn": "medium",

3 | "all": true

4 | }

5 |

--------------------------------------------------------------------------------

/NOTICE:

--------------------------------------------------------------------------------

1 | Copyright Amazon.com, Inc. or its affiliates. All Rights Reserved.

2 |

--------------------------------------------------------------------------------

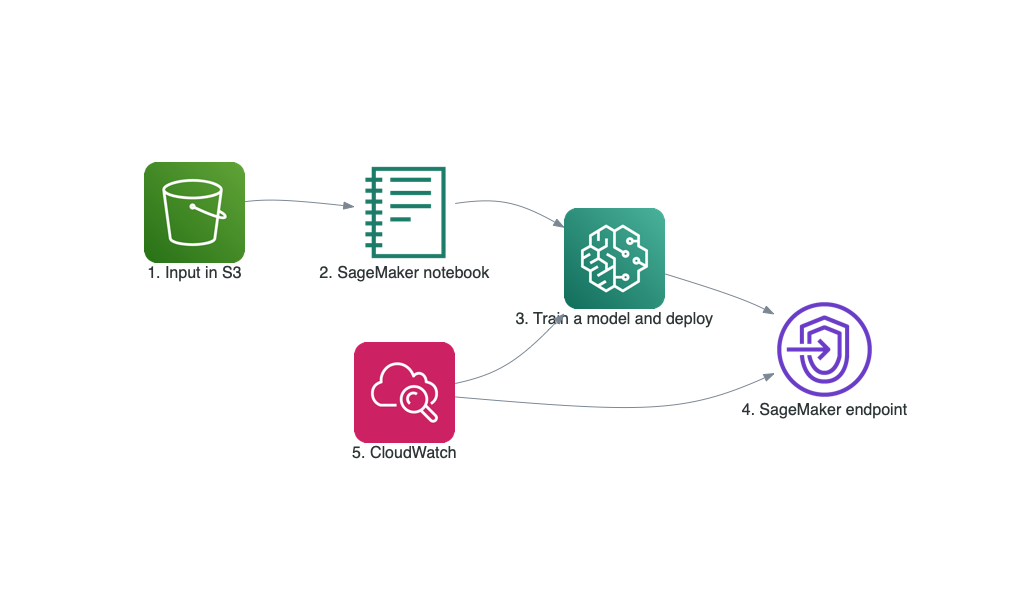

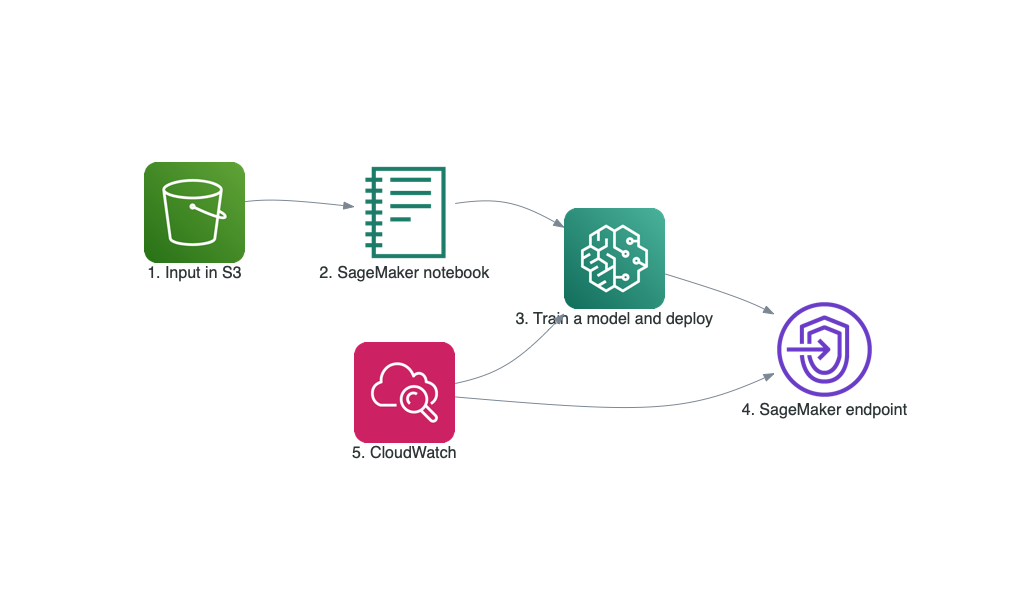

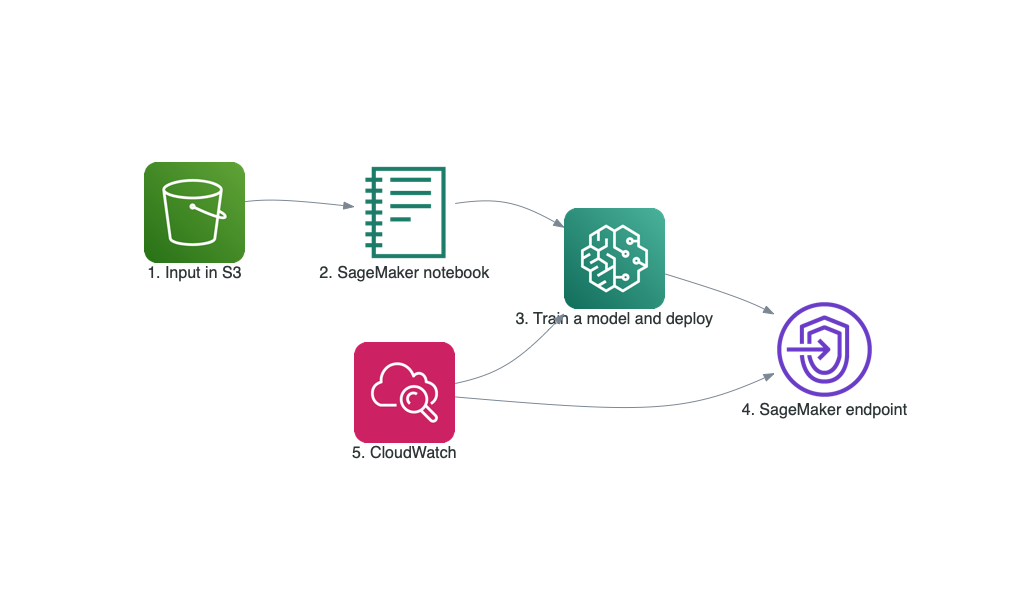

/docs/arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/arch.png

--------------------------------------------------------------------------------

/docs/data.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/data.png

--------------------------------------------------------------------------------

/docs/sample1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/sample1.png

--------------------------------------------------------------------------------

/docs/sample2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/sample2.png

--------------------------------------------------------------------------------

/docs/sample3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/sample3.png

--------------------------------------------------------------------------------

/docs/data_flow.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/data_flow.png

--------------------------------------------------------------------------------

/docs/numerical.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/numerical.png

--------------------------------------------------------------------------------

/docs/sagemaker.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/sagemaker.png

--------------------------------------------------------------------------------

/docs/train_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/awslabs/sagemaker-defect-detection/HEAD/docs/train_arch.png

--------------------------------------------------------------------------------

/requirements.in:

--------------------------------------------------------------------------------

1 | patool

2 | pyunpack

3 | torch

4 | torchvision

5 | albumentations

6 | pytorch_lightning

7 | pycocotools

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/questions-or-general-feedbacks.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Questions or general feedback

3 | about: Questions and feedback

4 | title: "[General]"

5 | labels: question

6 | assignees: ehsanmok

7 |

8 | ---

9 |

--------------------------------------------------------------------------------

/cloudformation/solution-assistant/requirements.txt:

--------------------------------------------------------------------------------

1 | #

2 | # This file is autogenerated by pip-compile

3 | # To update, run:

4 | #

5 | # pip-compile requirements.in

6 | #

7 | crhelper==2.0.6 # via -r requirements.in

8 |

--------------------------------------------------------------------------------

/.viperlightignore:

--------------------------------------------------------------------------------

1 | ^dist/

2 | docs/launch.svg:4

3 | CODE_OF_CONDUCT.md:4

4 | CONTRIBUTING.md:50

5 | build/solution-assistant.zip

6 | cloudformation/defect-detection-endpoint.yaml:42

7 | cloudformation/defect-detection-permissions.yaml:114

8 | docs/NEU_surface_defect_database.pdf

9 |

--------------------------------------------------------------------------------

/THIRD_PARTY:

--------------------------------------------------------------------------------

1 | Modifications Copyright Amazon.com, Inc. or its affiliates. All Rights Reserved.

2 | SPDX-License-Identifier: Apache-2.0

3 |

4 | Original Copyright 2016 Soumith Chintala. All Rights Reserved.

5 | SPDX-License-Identifier: BSD-3

6 | https://github.com/pytorch/vision/blob/master/LICENSE

7 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.egg-info

2 | __pycache__

3 | .vscode

4 | .mypy*

5 | .ipynb*

6 | .pytest*

7 | *output

8 | *logs*

9 | *checkpoints*

10 | *.ckpt*

11 | *.tar.gz

12 | dist

13 | build

14 | site-packages

15 | stack_outputs.json

16 | docs/*.py

17 | raw_neu_det

18 | neu_det

19 | raw_neu_cls

20 | neu_cls

21 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [tool:pytest]

2 | norecursedirs =

3 | .git

4 | dist

5 | build

6 | python_files =

7 | test_*.py

8 |

9 | [metadata]

10 | # license_files = LICENSE

11 |

12 | [check-manifest]

13 | ignore =

14 | *.yaml

15 | .github

16 | .github/*

17 | build

18 | deploy

19 | notebook

20 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | ## Code of Conduct

2 | This project has adopted the [Amazon Open Source Code of Conduct](https://aws.github.io/code-of-conduct).

3 | For more information see the [Code of Conduct FAQ](https://aws.github.io/code-of-conduct-faq) or contact

4 | opensource-codeofconduct@amazon.com with any additional questions or comments.

5 |

--------------------------------------------------------------------------------

/scripts/train_classifier.sh:

--------------------------------------------------------------------------------

1 | CLASSIFICATION_DATA_PATH=$1

2 | BACKBONE=$2

3 | BASE_DIR="$(dirname "$(dirname "$(readlink -f "$0")")")"

4 | CLASSIFIER_SCRIPT="${BASE_DIR}/src/sagemaker_defect_detection/classifier.py"

5 | MFN_LOGS="${BASE_DIR}/logs/"

6 |

7 | python "${CLASSIFIER_SCRIPT}" \

8 | --data-path="${CLASSIFICATION_DATA_PATH}" \

9 | --save-path="${MFN_LOGS}" \

10 | --backbone="${BACKBONE}" \

11 | --gpus=1 \

12 | --learning-rate=1e-3 \

13 | --epochs=50

14 |

--------------------------------------------------------------------------------
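
For callers that would rather launch training from Python, the invocation above can be sketched as an argument list. This is a minimal sketch and not part of the repository: `build_classifier_command` is a hypothetical helper, the flags and fixed values are copied from the script, and the arguments in the demo call are placeholders.

```python
import sys
from pathlib import Path
from typing import List


def build_classifier_command(base_dir: str, data_path: str, backbone: str) -> List[str]:
    """Hypothetical helper: mirror scripts/train_classifier.sh as an argv list."""
    base = Path(base_dir)
    script = base / "src" / "sagemaker_defect_detection" / "classifier.py"
    return [
        sys.executable,
        str(script),
        f"--data-path={data_path}",
        f"--save-path={base / 'logs'}",
        f"--backbone={backbone}",
        "--gpus=1",
        "--learning-rate=1e-3",
        "--epochs=50",
    ]


if __name__ == "__main__":
    # Placeholder arguments, for illustration only.
    print(" ".join(build_classifier_command(".", "neu_cls", "resnet34")))
```

Passing the list to `subprocess.run` avoids shell word splitting, so paths containing spaces need no extra quoting.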

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: "[Bug]"

5 | labels: bug

6 | assignees: ehsanmok

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior and error details

15 |

16 | **Expected behavior**

17 | A clear and concise description of what you expected to happen.

18 |

19 | **Additional context**

20 | Add any other context about the problem here.

21 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = [

3 | "setuptools",

4 | "wheel",

5 | ]

6 |

7 | [tool.black]

8 | line-length = 119

9 | exclude = '''

10 | (

11 | /(

12 | \.eggs

13 | | \.git

14 | | \.mypy_cache

15 | | build

16 | | dist

17 | )

18 | )

19 | '''

20 |

21 | [tool.portray]

22 | modules = ["src/sagemaker_defect_detection", "src/prepare_data/neu.py"]

23 | docs_dir = "docs"

24 |

25 | [tool.portray.mkdocs.theme]

26 | favicon = "docs/sagemaker.png"

27 | logo = "docs/sagemaker.png"

28 | name = "material"

29 | palette = {primary = "dark blue"}

30 |

--------------------------------------------------------------------------------

/docs/launch.svg:

--------------------------------------------------------------------------------

1 |

7 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: "[Feature]"

5 | labels: enhancement

6 | assignees: ehsanmok

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/__init__.py:

--------------------------------------------------------------------------------

1 | try:

2 | import pytorch_lightning

3 | except ModuleNotFoundError:

4 | print("installing the dependencies for sagemaker_defect_detection package ...")

5 | import subprocess

6 |

7 | subprocess.run(

8 | "python -m pip install -q albumentations==0.4.6 pytorch_lightning==0.8.5 pycocotools==2.0.1", shell=True

9 | )

10 |

11 | from sagemaker_defect_detection.models.ddn import Classification, Detection, RoI, RPN

12 | from sagemaker_defect_detection.dataset.neu import NEUCLS, NEUDET

13 | from sagemaker_defect_detection.transforms import get_transform, get_augmentation, get_preprocess

14 |

15 | __all__ = [

16 | "Classification",

17 | "Detection",

18 | "RoI",

19 | "RPN",

20 | "NEUCLS",

21 | "NEUDET",

22 | "get_transform",

23 | "get_augmentation",

24 | "get_preprocess",

25 | ]

26 |

--------------------------------------------------------------------------------
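
The import guard above installs dependencies as a side effect of importing the package. A gentler variant, shown only as a sketch under the assumption that reporting is preferable to installing, lists the modules that are missing without importing or installing anything. `missing_modules` is a hypothetical helper, not part of the package.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be located."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names of the packages pinned in the guard above.
    print(missing_modules(["albumentations", "pytorch_lightning", "pycocotools"]))
```

The check is limited to top-level names; `find_spec` on a dotted name imports the parent package first, which would defeat the purpose here.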

/scripts/set_kernelspec.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import json

3 |

4 |

5 | def set_kernel_spec(notebook_filepath, display_name, kernel_name):

6 | with open(notebook_filepath, "r") as openfile:

7 | notebook = json.load(openfile)

8 | kernel_spec = {"display_name": display_name, "language": "python", "name": kernel_name}

9 | if "metadata" not in notebook:

10 | notebook["metadata"] = {}

11 | notebook["metadata"]["kernelspec"] = kernel_spec

12 | with open(notebook_filepath, "w") as openfile:

13 | json.dump(notebook, openfile)

14 |

15 |

16 | if __name__ == "__main__":

17 | parser = argparse.ArgumentParser()

18 | parser.add_argument("--notebook")

19 | parser.add_argument("--display-name")

20 | parser.add_argument("--kernel")

21 | args = parser.parse_args()

22 | set_kernel_spec(args.notebook, args.display_name, args.kernel)

23 |

--------------------------------------------------------------------------------
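
To see what the script does to a notebook, the sketch below re-declares the function and applies it to a throwaway file. The display name and kernel name are illustrative values, not ones taken from the repository, and `demo` is a hypothetical helper.

```python
import json
import tempfile
from pathlib import Path


def set_kernel_spec(notebook_filepath, display_name, kernel_name):
    # Same logic as scripts/set_kernelspec.py, repeated so the example runs alone.
    with open(notebook_filepath, "r") as openfile:
        notebook = json.load(openfile)
    notebook.setdefault("metadata", {})["kernelspec"] = {
        "display_name": display_name,
        "language": "python",
        "name": kernel_name,
    }
    with open(notebook_filepath, "w") as openfile:
        json.dump(notebook, openfile)


def demo() -> dict:
    """Write a minimal notebook without metadata, patch it, and read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "example.ipynb"
        path.write_text(json.dumps({"cells": [], "nbformat": 4, "nbformat_minor": 4}))
        set_kernel_spec(str(path), "Python 3 (example)", "python3")
        return json.loads(path.read_text())


if __name__ == "__main__":
    print(demo()["metadata"]["kernelspec"])
```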

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | default_language_version:

2 | python: python3.6

3 |

4 | repos:

5 | - repo: https://github.com/pre-commit/pre-commit-hooks

6 | rev: v2.3.0

7 | hooks:

8 | - id: end-of-file-fixer

9 | - id: trailing-whitespace

10 | - repo: https://github.com/psf/black

11 | rev: 20.8b1

12 | hooks:

13 | - id: black

14 | - repo: https://github.com/kynan/nbstripout

15 | rev: 0.3.7

16 | hooks:

17 | - id: nbstripout

18 | name: nbstripout

19 | description: "nbstripout: strip output from Jupyter and IPython notebooks"

20 | entry: nbstripout notebooks

21 | language: python

22 | types: [jupyter]

23 | - repo: https://github.com/tomcatling/black-nb

24 | rev: 0.3.0

25 | hooks:

26 | - id: black-nb

27 | name: black-nb

28 | entry: black-nb

29 | language: python

30 | args: ["--include", '\.ipynb$']

31 |

--------------------------------------------------------------------------------

/.github/workflows/codeql.yaml:

--------------------------------------------------------------------------------

1 | name: "CodeQL"

2 |

3 | on:

4 | push:

5 | pull_request:

6 | schedule:

7 | - cron: '0 21 * * 1'

8 |

9 | jobs:

10 | CodeQL-Build:

11 |

12 | runs-on: ubuntu-latest

13 | strategy:

14 | fail-fast: false

15 | matrix:

16 | language: ['python']

17 |

18 | steps:

19 | - name: Checkout repository

20 | uses: actions/checkout@v2

21 | with:

22 | # We must fetch at least the immediate parents so that if this is

23 | # a pull request then we can checkout the head.

24 | fetch-depth: 2

25 |

26 | # If this run was triggered by a pull request event, then checkout

27 | # the head of the pull request instead of the merge commit.

28 | - run: git checkout HEAD^2

29 | if: ${{ github.event_name == 'pull_request' }}

30 |

31 | - name: Initialize CodeQL

32 | uses: github/codeql-action/init@v1

33 | with:

34 | languages: ${{ matrix.language }}

35 |

36 | - name: Perform CodeQL Analysis

37 | uses: github/codeql-action/analyze@v1

38 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | from pathlib import Path

2 |

3 | from setuptools import setup, find_packages

4 |

5 | ROOT = Path(__file__).parent.resolve()

6 |

7 | long_description = (ROOT / "README.md").read_text(encoding="utf-8")

8 |

9 | dev_dependencies = ["pre-commit", "mypy==0.781", "black==20.8b1", "nbstripout==0.3.7", "black-nb==0.3.0"]

10 | test_dependencies = ["pytest>=6.0"]

11 | doc_dependencies = ["portray>=1.4.0"]

12 |

13 | setup(

14 | name="sagemaker_defect_detection",

15 | version="0.1",

16 | description="Detect Defects in Products from their Images using Amazon SageMaker",

17 | long_description=long_description,

18 | author="Ehsan M. Kermani",

19 | python_requires=">=3.6",

20 | package_dir={"": "src"},

21 | packages=find_packages("src", exclude=["tests", "tests/*"]),

22 | install_requires=open(str(ROOT / "requirements.txt"), "r").read(),

23 | extras_require={"dev": dev_dependencies, "test": test_dependencies, "doc": doc_dependencies},

24 | license="Apache License 2.0",

25 | classifiers=[

26 | "Development Status :: 3 - Alpha",

27 | "Programming Language :: Python :: 3.6",

28 | "Programming Language :: Python :: 3.7",

29 | "Programming Language :: Python :: 3.8",

30 | "Programming Language :: Python :: 3 :: Only",

31 | ],

32 | )

33 |

--------------------------------------------------------------------------------

/scripts/find_best_ckpt.py:

--------------------------------------------------------------------------------

1 | import re

2 | from pathlib import Path

3 |

4 |

5 | def get_score(s: str) -> float:

6 | """Gets the criterion score from .ckpt formatted with ModelCheckpoint

7 |

8 | Parameters

9 | ----------

10 | s : str

11 | Checkpoint file name; the last decimal number before ".ckpt" is assumed to be the score

12 |

13 | Returns

14 | -------

15 | float

16 | The criterion float

17 | """

18 | return float(re.findall(r"(\d+\.\d+)\.ckpt", s)[0])

19 |

20 |

21 | def main(path: str, op: str) -> str:

22 | """Finds the best ckpt path

23 |

24 | Parameters

25 | ----------

26 | path : str

27 | ckpt path

28 | op : str

29 | "max" (for mAP for example) or "min" (for loss)

30 |

31 | Returns

32 | -------

33 | str

34 | A ckpt path

35 | """

36 | ckpts = list(map(str, Path(path).glob("*.ckpt")))

37 | if not len(ckpts):

38 | return

39 |

40 | ckpt_score_dict = {ckpt: get_score(ckpt) for ckpt in ckpts}

41 | op = max if op == "max" else min

42 | out = op(ckpt_score_dict, key=ckpt_score_dict.get)

43 | print(out, flush=True) # flush so the calling bash script sees the path

44 | return out

45 |

46 |

47 | if __name__ == "__main__":

48 | import sys

49 |

50 | if len(sys.argv) < 3:

51 | print("provide checkpoint path and op either max or min")

52 | sys.exit(1)

53 |

54 | main(sys.argv[1], sys.argv[2])

55 |

--------------------------------------------------------------------------------
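
How the score is recovered from a file name is easiest to see on concrete names. The sketch below assumes checkpoints named like `epoch=3-loss=0.412.ckpt`; that naming pattern and the `best_checkpoint` helper are illustrative assumptions, not something the repository specifies.

```python
import re
from typing import Iterable, Optional

# Escaped dots, so "." matches only a literal decimal point or extension dot.
SCORE_PATTERN = re.compile(r"(\d+\.\d+)\.ckpt$")


def get_score(name: str) -> float:
    """Parse the decimal number that immediately precedes the .ckpt extension."""
    match = SCORE_PATTERN.search(name)
    if match is None:
        raise ValueError(f"no score found in {name!r}")
    return float(match.group(1))


def best_checkpoint(names: Iterable[str], op: str) -> Optional[str]:
    """Pick the name with the highest ("max") or lowest ("min") score."""
    names = list(names)
    if not names:
        return None
    chooser = max if op == "max" else min
    return chooser(names, key=get_score)
```

Use `"min"` when the score is a loss and `"max"` when it is a metric such as mAP, matching the `op` argument of the script.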

/src/prepare_data/test_neu.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | import pytest

4 |

5 | import neu

6 |

7 |

8 | def tmp_fill(tmpdir_, classes, ext):

9 | for cls_ in classes.values():

10 | tmpdir_.join(cls_ + "_0" + ext)

11 | # add one more image

12 | tmpdir_.join(classes["crazing"] + "_1" + ext)

13 | return

14 |

15 |

16 | @pytest.fixture()

17 | def tmp_neu():

18 | def _create(tmpdir, filename):

19 | tmpneu = tmpdir.mkdir(filename)

20 | if filename == "NEU-CLS":

21 | tmp_fill(tmpneu, neu.CLASSES, ".png")

22 | elif filename == "NEU-DET":

23 | imgs = tmpneu.mkdir("IMAGES")

24 | tmp_fill(imgs, neu.CLASSES, ".png")

25 | anns = tmpneu.mkdir("ANNOTATIONS")

26 | tmp_fill(anns, neu.CLASSES, ".xml")

27 | else:

28 | raise ValueError("Not supported")

29 | return tmpneu

30 |

31 | return _create

32 |

33 |

34 | @pytest.mark.parametrize("filename", ["NEU-CLS", "NEU-DET"])

35 | def test_main(tmpdir, tmp_neu, filename) -> None:

36 | data_path = tmp_neu(tmpdir, filename)

37 | output_path = tmpdir.mkdir("output_path")

38 | neu.main(data_path, output_path, archived=False)

39 | assert len(os.listdir(output_path)) == len(neu.CLASSES), "failed to match number of classes in output"

40 | for p in output_path.visit():

41 | assert p.check(), "correct path was not created"

42 | return

43 |

--------------------------------------------------------------------------------

/cloudformation/solution-assistant/solution-assistant.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: "2010-09-09"

2 | Description: "(SA0015) - sagemaker-defect-detection solution assistant stack"

3 |

4 | Parameters:

5 | SolutionPrefix:

6 | Type: String

7 | SolutionName:

8 | Type: String

9 | StackName:

10 | Type: String

11 | S3Bucket:

12 | Type: String

13 | SolutionS3Bucket:

14 | Type: String

15 | RoleArn:

16 | Type: String

17 |

18 | Mappings:

19 | Function:

20 | SolutionAssistant:

21 | S3Key: "build/solution-assistant.zip"

22 |

23 | Resources:

24 | SolutionAssistant:

25 | Type: "Custom::SolutionAssistant"

26 | Properties:

27 | SolutionPrefix: !Ref SolutionPrefix

28 | ServiceToken: !GetAtt SolutionAssistantLambda.Arn

29 | S3Bucket: !Ref S3Bucket

30 | StackName: !Ref StackName

31 |

32 | SolutionAssistantLambda:

33 | Type: AWS::Lambda::Function

34 | Properties:

35 | Handler: "lambda_fn.handler"

36 | FunctionName: !Sub "${SolutionPrefix}-solution-assistant"

37 | Role: !Ref RoleArn

38 | Runtime: "python3.8"

39 | Code:

40 | S3Bucket: !Ref SolutionS3Bucket

41 | S3Key: !Sub

42 | - "${SolutionName}/${LambdaS3Key}"

43 | - LambdaS3Key: !FindInMap [Function, SolutionAssistant, S3Key]

44 | Timeout: 60

45 | Metadata:

46 | cfn_nag:

47 | rules_to_suppress:

48 | - id: W58

49 | reason: >-

50 | The required permissions are provided in the permissions stack.

51 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/utils/__init__.py:

--------------------------------------------------------------------------------

1 | from typing import Optional, Union

2 | from pathlib import Path

3 | import tarfile

4 | import logging

5 | from logging.config import fileConfig

6 |

7 | import torch

8 | import torch.nn as nn

9 |

10 |

11 | logger = logging.getLogger(__name__)

12 | logger.setLevel(logging.INFO)

13 |

14 |

15 | def get_logger(config_path: str) -> logging.Logger:

16 | fileConfig(config_path, disable_existing_loggers=False)

17 | logger = logging.getLogger()

18 | return logger

19 |

20 |

21 | def str2bool(flag: Union[str, bool]) -> bool:

22 | if not isinstance(flag, bool):

23 | if flag.lower() == "false":

24 | flag = False

25 | elif flag.lower() == "true":

26 | flag = True

27 | else:

28 | raise ValueError("Wrong boolean argument!")

29 | return flag

30 |

31 |

32 | def freeze(m: nn.Module) -> None:

33 | assert isinstance(m, nn.Module), "freeze can only be applied to modules"

34 | for param in m.parameters():

35 | param.requires_grad = False

36 |

37 | return

38 |

39 |

40 | def load_checkpoint(model: nn.Module, path: str, prefix: Optional[str]) -> nn.Module:

41 | path = Path(path)

42 | logger.info(f"path: {path}")

43 | if path.is_dir():

44 | path_str = str(list(path.rglob("*.ckpt"))[0])

45 | else:

46 | path_str = str(path)

47 |

48 | device = "cuda" if torch.cuda.is_available() else "cpu"

49 | state_dict = torch.load(path_str, map_location=torch.device(device))["state_dict"]

50 | if prefix is not None:

51 | if prefix[-1] != ".":

52 | prefix += "."

53 |

54 | state_dict = {k[len(prefix) :]: v for k, v in state_dict.items() if k.startswith(prefix)}

55 |

56 | model.load_state_dict(state_dict, strict=True)

57 | return model

58 |

--------------------------------------------------------------------------------
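
The prefix handling in `load_checkpoint` can be tried without PyTorch. The sketch below applies the same filtering to a plain dictionary; the key names are invented for illustration, and `strip_prefix` is a hypothetical helper.

```python
from typing import Any, Dict, Optional


def strip_prefix(state_dict: Dict[str, Any], prefix: Optional[str]) -> Dict[str, Any]:
    """Keep only keys under `prefix` and drop the prefix from their names."""
    if prefix is None:
        return dict(state_dict)
    if not prefix.endswith("."):
        prefix += "."
    return {k[len(prefix):]: v for k, v in state_dict.items() if k.startswith(prefix)}


if __name__ == "__main__":
    # Invented keys standing in for a checkpoint's "state_dict" entry.
    checkpoint = {"backbone.conv.weight": 1, "backbone.conv.bias": 2, "head.fc.weight": 3}
    print(strip_prefix(checkpoint, "backbone"))
```

Appending the trailing dot before matching keeps a prefix such as `backbone` from also capturing keys of a sibling module named, say, `backbone2`.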

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI

2 |

3 | on:

4 | pull_request:

5 | push:

6 | branches: [ mainline ]

7 |

8 | jobs:

9 | build:

10 |

11 | strategy:

12 | max-parallel: 4

13 | fail-fast: false

14 | matrix:

15 | python-version: [3.7, 3.8]

16 | platform: [ubuntu-latest]

17 |

18 | runs-on: ${{ matrix.platform }}

19 |

20 | steps:

21 | - uses: actions/checkout@v2

22 | - name: Set up Python ${{ matrix.python-version }}

23 | uses: actions/setup-python@v2

24 | with:

25 | python-version: ${{ matrix.python-version }}

26 | - name: CloudFormation lint

27 | run: |

28 | python -m pip install --upgrade pip

29 | python -m pip install cfn-lint

30 | for y in `find cloudformation/* -name "*.yaml" -o -name "*.template" -o -name "*.json"`; do

31 | echo "============= $y ================"

32 | cfn-lint --fail-on-warnings $y || ec1=$?

33 | done

34 | if [ "$ec1" -ne "0" ]; then echo 'ERROR-1'; else echo 'SUCCESS-1'; ec1=0; fi

35 | echo "Exit Code 1 `echo $ec1`"

36 | if [ "$ec1" -ne "0" ]; then echo 'ERROR'; ec=1; else echo 'SUCCESS'; ec=0; fi;

37 | echo "Exit Code Final `echo $ec`"

38 | exit $ec

39 | - name: Build the package

40 | run: |

41 | python -m pip install -e '.[dev,test,doc]'

42 | - name: Code style check

43 | run: |

44 | black --check src

45 | - name: Notebook style check

46 | run: |

47 | black-nb notebooks/*.ipynb --check

48 | - name: Type check

49 | run: |

50 | mypy --ignore-missing-imports --allow-redefinition --pretty --show-error-context src/

51 | - name: Run tests

52 | run: |

53 | pytest --pyargs src/ -s

54 | - name: Update docs

55 | run: |

56 | portray on_github_pages -m "Update gh-pages" -f

57 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/utils/coco_utils.py:

--------------------------------------------------------------------------------

1 | """

2 | BSD 3-Clause License

3 |

4 | Copyright (c) Soumith Chintala 2016,

5 | All rights reserved.

6 |

7 | Redistribution and use in source and binary forms, with or without

8 | modification, are permitted provided that the following conditions are met:

9 |

10 | * Redistributions of source code must retain the above copyright notice, this

11 | list of conditions and the following disclaimer.

12 |

13 | * Redistributions in binary form must reproduce the above copyright notice,

14 | this list of conditions and the following disclaimer in the documentation

15 | and/or other materials provided with the distribution.

16 |

17 | * Neither the name of the copyright holder nor the names of its

18 | contributors may be used to endorse or promote products derived from

19 | this software without specific prior written permission.

20 |

21 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

22 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

23 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

24 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

25 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

26 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

27 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

28 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

29 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

30 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

31 |

32 |

33 | This module is a modified version of https://github.com/pytorch/vision/tree/03b1d38ba3c67703e648fb067570eeb1a1e61265/references/detection

34 | """

35 |

36 | from pycocotools.coco import COCO

37 |

38 |

39 | def convert_to_coco_api(ds):

40 | coco_ds = COCO()

41 | # annotation IDs need to start at 1, not 0, see torchvision issue #1530

42 | ann_id = 1

43 | dataset = {"images": [], "categories": [], "annotations": []}

44 | categories = set()

45 | for img_idx in range(len(ds)):

46 | # find better way to get target

47 | # targets = ds.get_annotations(img_idx)

48 | img, targets, _ = ds[img_idx]

49 | image_id = targets["image_id"].item()

50 | img_dict = {}

51 | img_dict["id"] = image_id

52 | img_dict["height"] = img.shape[-2]

53 | img_dict["width"] = img.shape[-1]

54 | dataset["images"].append(img_dict)

55 | bboxes = targets["boxes"]

56 | bboxes[:, 2:] -= bboxes[:, :2]  # (xmin, ymin, xmax, ymax) -> COCO (x, y, width, height)

57 | bboxes = bboxes.tolist()

58 | labels = targets["labels"].tolist()

59 | areas = targets["area"].tolist()

60 | iscrowd = targets["iscrowd"].tolist()

61 | num_objs = len(bboxes)

62 | for i in range(num_objs):

63 | ann = {}

64 | ann["image_id"] = image_id

65 | ann["bbox"] = bboxes[i]

66 | ann["category_id"] = labels[i]

67 | categories.add(labels[i])

68 | ann["area"] = areas[i]

69 | ann["iscrowd"] = iscrowd[i]

70 | ann["id"] = ann_id

71 | dataset["annotations"].append(ann)

72 | ann_id += 1

73 | dataset["categories"] = [{"id": i} for i in sorted(categories)]

74 | coco_ds.dataset = dataset

75 | coco_ds.createIndex()

76 | return coco_ds

77 |

--------------------------------------------------------------------------------
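
The line `bboxes[:, 2:] -= bboxes[:, :2]` turns corner-format boxes into the `[x, y, width, height]` layout that COCO annotations use. Below is a minimal sketch of the same arithmetic on plain lists, assuming the input boxes are `[xmin, ymin, xmax, ymax]`; `xyxy_to_xywh` is a hypothetical name.

```python
from typing import List, Sequence


def xyxy_to_xywh(boxes: Sequence[Sequence[float]]) -> List[List[float]]:
    """Convert [xmin, ymin, xmax, ymax] boxes to [x, y, width, height]."""
    return [[x0, y0, x1 - x0, y1 - y0] for x0, y0, x1, y1 in boxes]


if __name__ == "__main__":
    print(xyxy_to_xywh([[10, 20, 50, 80]]))
```

Unlike the in-place subtraction in `convert_to_coco_api`, this version returns a new list and leaves its input untouched.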

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing Guidelines

2 |

3 | Thank you for your interest in contributing to our project. Whether it's a bug report, new feature, correction, or additional

4 | documentation, we greatly value feedback and contributions from our community.

5 |

6 | Please read through this document before submitting any issues or pull requests to ensure we have all the necessary

7 | information to effectively respond to your bug report or contribution.

8 |

9 |

10 | ## Reporting Bugs/Feature Requests

11 |

12 | We welcome you to use the GitHub issue tracker to report bugs or suggest features.

13 |

14 | When filing an issue, please check existing open, or recently closed, issues to make sure somebody else hasn't already

15 | reported the issue. Please try to include as much information as you can. Details like these are incredibly useful:

16 |

17 | * A reproducible test case or series of steps

18 | * The version of our code being used

19 | * Any modifications you've made relevant to the bug

20 | * Anything unusual about your environment or deployment

21 |

22 |

23 | ## Contributing via Pull Requests

24 | Contributions via pull requests are much appreciated. Before sending us a pull request, please ensure that:

25 |

26 | 1. You are working against the latest source on the *mainline* branch.

27 | 2. You check existing open, and recently merged, pull requests to make sure someone else hasn't addressed the problem already.

28 | 3. You open an issue to discuss any significant work - we would hate for your time to be wasted.

29 |

30 | To send us a pull request, please:

31 |

32 | 1. Fork the repository.

33 | 2. Modify the source; please focus on the specific change you are contributing. If you also reformat all the code, it will be hard for us to focus on your change.

34 | 3. Ensure local tests pass.

35 | 4. Commit to your fork using clear commit messages.

36 | 5. Send us a pull request, answering any default questions in the pull request interface.

37 | 6. Pay attention to any automated CI failures reported in the pull request, and stay involved in the conversation.

38 |

39 | GitHub provides additional documentation on [forking a repository](https://help.github.com/articles/fork-a-repo/) and

40 | [creating a pull request](https://help.github.com/articles/creating-a-pull-request/).

41 |

42 |

43 | ## Finding contributions to work on

44 | Looking at the existing issues is a great way to find something to contribute on. As our projects, by default, use the default GitHub issue labels (enhancement/bug/duplicate/help wanted/invalid/question/wontfix), looking at any 'help wanted' issues is a great place to start.

45 |

46 |

47 | ## Code of Conduct

48 | This project has adopted the [Amazon Open Source Code of Conduct](https://aws.github.io/code-of-conduct).

49 | For more information see the [Code of Conduct FAQ](https://aws.github.io/code-of-conduct-faq) or contact

50 | opensource-codeofconduct@amazon.com with any additional questions or comments.

51 |

52 |

53 | ## Security issue notifications

54 | If you discover a potential security issue in this project, we ask that you notify AWS/Amazon Security via our [vulnerability reporting page](http://aws.amazon.com/security/vulnerability-reporting/). Please do **not** create a public GitHub issue.

55 |

56 |

57 | ## Licensing

58 |

59 | See the [LICENSE](LICENSE) file for our project's licensing. We will ask you to confirm the licensing of your contribution.

60 |

61 | We may ask you to sign a [Contributor License Agreement (CLA)](http://en.wikipedia.org/wiki/Contributor_License_Agreement) for larger changes.

62 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | #

2 | # This file is autogenerated by pip-compile with python 3.8

3 | # To update, run:

4 | #

5 | # pip-compile requirements.in

6 | #

7 | absl-py

8 | # via tensorboard

9 | albumentations

10 | # via -r requirements.in

11 | cachetools

12 | # via google-auth

13 | certifi

14 | # via requests

15 | charset-normalizer

16 | # via requests

17 | cycler

18 | # via matplotlib

19 | cython

20 | # via pycocotools

21 | easyprocess

22 | # via pyunpack

23 | entrypoint2

24 | # via pyunpack

25 | fonttools

26 | # via matplotlib

27 | future

28 | # via

29 | # pytorch-lightning

30 | # torch

31 | google-auth

32 | # via

33 | # google-auth-oauthlib

34 | # tensorboard

35 | google-auth-oauthlib

36 | # via tensorboard

37 | grpcio

38 | # via tensorboard

39 | idna

40 | # via requests

41 | imageio

42 | # via

43 | # imgaug

44 | # scikit-image

45 | imgaug

46 | # via albumentations

47 | importlib-metadata

48 | # via markdown

49 | kiwisolver

50 | # via matplotlib

51 | markdown

52 | # via tensorboard

53 | matplotlib

54 | # via

55 | # imgaug

56 | # pycocotools

57 | networkx

58 | # via scikit-image

59 | numpy

60 | # via

61 | # albumentations

62 | # imageio

63 | # imgaug

64 | # matplotlib

65 | # opencv-python

66 | # opencv-python-headless

67 | # pytorch-lightning

68 | # pywavelets

69 | # scikit-image

70 | # scipy

71 | # tensorboard

72 | # tifffile

73 | # torch

74 | # torchvision

75 | oauthlib

76 | # via requests-oauthlib

77 | opencv-python

78 | # via imgaug

79 | opencv-python-headless

80 | # via albumentations

81 | packaging

82 | # via

83 | # matplotlib

84 | # scikit-image

85 | patool

86 | # via -r requirements.in

87 | pillow

88 | # via

89 | # imageio

90 | # imgaug

91 | # matplotlib

92 | # scikit-image

93 | # torchvision

94 | protobuf

95 | # via tensorboard

96 | pyasn1

97 | # via

98 | # pyasn1-modules

99 | # rsa

100 | pyasn1-modules

101 | # via google-auth

102 | pycocotools

103 | # via -r requirements.in

104 | pyparsing

105 | # via

106 | # matplotlib

107 | # packaging

108 | python-dateutil

109 | # via matplotlib

110 | pytorch-lightning

111 | # via -r requirements.in

112 | pyunpack

113 | # via -r requirements.in

114 | pywavelets

115 | # via scikit-image

116 | pyyaml

117 | # via

118 | # albumentations

119 | # pytorch-lightning

120 | requests

121 | # via

122 | # requests-oauthlib

123 | # tensorboard

124 | requests-oauthlib

125 | # via google-auth-oauthlib

126 | rsa

127 | # via google-auth

128 | scikit-image

129 | # via imgaug

130 | scipy

131 | # via

132 | # albumentations

133 | # imgaug

134 | # scikit-image

135 | shapely

136 | # via imgaug

137 | six

138 | # via

139 | # google-auth

140 | # grpcio

141 | # imgaug

142 | # python-dateutil

143 | tensorboard

144 | # via pytorch-lightning

145 | tensorboard-data-server

146 | # via tensorboard

147 | tensorboard-plugin-wit

148 | # via tensorboard

149 | tifffile

150 | # via scikit-image

151 | torch

152 | # via

153 | # -r requirements.in

154 | # pytorch-lightning

155 | # torchvision

156 | torchvision

157 | # via -r requirements.in

158 | tqdm

159 | # via pytorch-lightning

160 | urllib3

161 | # via requests

162 | werkzeug

163 | # via tensorboard

164 | wheel

165 | # via tensorboard

166 | zipp

167 | # via importlib-metadata

168 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/transforms.py:

--------------------------------------------------------------------------------

1 | from typing import Callable

2 | import torchvision.transforms as transforms

3 |

4 | import albumentations as albu

5 | import albumentations.pytorch.transforms as albu_transforms

6 |

7 |

8 | PROBABILITY = 0.5

9 | ROTATION_ANGLE = 90

10 | NUM_CHANNELS = 3

11 | # required for resnet

12 | IMAGE_RESIZE_HEIGHT = 256

13 | IMAGE_RESIZE_WIDTH = 256

14 | IMAGE_HEIGHT = 224

15 | IMAGE_WIDTH = 224

16 | # standard imagenet1k mean and standard deviation of RGB channels

17 | MEAN_RED = 0.485

18 | MEAN_GREEN = 0.456

19 | MEAN_BLUE = 0.406

20 | STD_RED = 0.229

21 | STD_GREEN = 0.224

22 | STD_BLUE = 0.225

23 |

24 |

25 | def get_transform(split: str) -> Callable:

26 | """

27 | Image data transformations such as normalization for train split for classification task

28 |

29 | Parameters

30 | ----------

31 | split : str

32 | train or else

33 |

34 | Returns

35 | -------

36 | Callable

37 | Image transformation function

38 | """

39 | normalize = transforms.Normalize(mean=[MEAN_RED, MEAN_GREEN, MEAN_BLUE], std=[STD_RED, STD_GREEN, STD_BLUE])

40 | if split == "train":

41 | return transforms.Compose(

42 | [

43 | transforms.RandomResizedCrop(IMAGE_HEIGHT),

44 | transforms.RandomRotation(ROTATION_ANGLE),

45 | transforms.RandomHorizontalFlip(),

46 | transforms.ToTensor(),

47 | normalize,

48 | ]

49 | )

50 |

51 | else:

52 | return transforms.Compose(

53 | [

54 | transforms.Resize(IMAGE_RESIZE_HEIGHT),

55 | transforms.CenterCrop(IMAGE_HEIGHT),

56 | transforms.ToTensor(),

57 | normalize,

58 | ]

59 | )

60 |

61 |

62 | def get_augmentation(split: str) -> Callable:

63 | """

64 | Obtains proper image augmentation in train split for detection task.

65 | We have split the transformations for the detection task into augmentation and preprocessing

66 | for clarity

67 |

68 | Parameters

69 | ----------

70 | split : str

71 | train or else

72 |

73 | Returns

74 | -------

75 | Callable

76 | Image augmentation function

77 | """

78 | if split == "train":

79 | return albu.Compose(

80 | [

81 | albu.Resize(IMAGE_RESIZE_HEIGHT, IMAGE_RESIZE_WIDTH, always_apply=True),

82 | albu.RandomCrop(IMAGE_HEIGHT, IMAGE_WIDTH, always_apply=True),

83 | albu.RandomRotate90(p=PROBABILITY),

84 | albu.HorizontalFlip(p=PROBABILITY),

85 | albu.RandomBrightness(p=PROBABILITY),

86 | ],

87 | bbox_params=albu.BboxParams(

88 | format="pascal_voc",

89 | label_fields=["labels"],

90 | min_visibility=0.2,

91 | ),

92 | )

93 | else:

94 | return albu.Compose(

95 | [albu.Resize(IMAGE_HEIGHT, IMAGE_WIDTH)],

96 | bbox_params=albu.BboxParams(format="pascal_voc", label_fields=["labels"]),

97 | )

98 |

99 |

100 | def get_preprocess() -> Callable:

101 | """

102 | Image normalization using albumentation for detection task that aligns well with image augmentation

103 |

104 | Returns

105 | -------

106 | Callable

107 | Image normalization function

108 | """

109 | return albu.Compose(

110 | [

111 | albu.Normalize(mean=[MEAN_RED, MEAN_GREEN, MEAN_BLUE], std=[STD_RED, STD_GREEN, STD_BLUE]),

112 | albu_transforms.ToTensorV2(),

113 | ]

114 | )

115 |
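The normalization used by `get_transform` and `get_preprocess` is per-channel `(x - mean) / std` with the ImageNet statistics defined above. The following is a minimal, library-free sketch of that arithmetic and its inverse on a single RGB pixel. The helper names `normalize_pixel` and `unnormalize_pixel` are hypothetical and are not part of this package.

```python
# ImageNet channel statistics, copied from the constants in transforms.py.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Hypothetical helper: map an RGB pixel in [0, 1] to (x - mean) / std."""
    return [(x - m) / s for x, m, s in zip(rgb, MEAN, STD)]


def unnormalize_pixel(rgb):
    """Hypothetical helper: invert normalize_pixel and clip to [0, 1]."""
    return [min(max(x * s + m, 0.0), 1.0) for x, m, s in zip(rgb, MEAN, STD)]


pixel = [0.5, 0.25, 1.0]
restored = unnormalize_pixel(normalize_pixel(pixel))
```

A pixel equal to the channel means maps to zero in every channel, and the round trip recovers the original values up to floating-point error.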

--------------------------------------------------------------------------------

/cloudformation/defect-detection-sagemaker-notebook-instance.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: "2010-09-09"

2 | Description: "(SA0015) - sagemaker-defect-detection notebook stack"

3 | Parameters:

4 | SolutionPrefix:

5 | Type: String

6 | SolutionName:

7 | Type: String

8 | S3Bucket:

9 | Type: String

10 | SageMakerIAMRoleArn:

11 | Type: String

12 | SageMakerNotebookInstanceType:

13 | Type: String

14 | StackVersion:

15 | Type: String

16 |

17 | Mappings:

18 | S3:

19 | release:

20 | BucketPrefix: "sagemaker-solutions-prod"

21 | development:

22 | BucketPrefix: "sagemaker-solutions-devo"

23 |

24 | Resources:

25 | NotebookInstance:

26 | Type: AWS::SageMaker::NotebookInstance

27 | Properties:

28 | DirectInternetAccess: Enabled

29 | InstanceType: !Ref SageMakerNotebookInstanceType

30 | LifecycleConfigName: !GetAtt LifeCycleConfig.NotebookInstanceLifecycleConfigName

31 | NotebookInstanceName: !Sub "${SolutionPrefix}"

32 | RoleArn: !Sub "${SageMakerIAMRoleArn}"

33 | VolumeSizeInGB: 100

34 | Metadata:

35 | cfn_nag:

36 | rules_to_suppress:

37 | - id: W1201

38 | reason: Solution does not have KMS encryption enabled by default

39 | LifeCycleConfig:

40 | Type: AWS::SageMaker::NotebookInstanceLifecycleConfig

41 | Properties:

42 | NotebookInstanceLifecycleConfigName: !Sub "${SolutionPrefix}-nb-lifecycle-config"

43 | OnStart:

44 | - Content:

45 | Fn::Base64: |

46 | set -e

47 | sudo -u ec2-user -i <> stack_outputs.json

69 | echo ' "AccountID": "${AWS::AccountId}",' >> stack_outputs.json

70 | echo ' "AWSRegion": "${AWS::Region}",' >> stack_outputs.json

71 | echo ' "IamRole": "${SageMakerIAMRoleArn}",' >> stack_outputs.json

72 | echo ' "SolutionPrefix": "${SolutionPrefix}",' >> stack_outputs.json

73 | echo ' "SolutionName": "${SolutionName}",' >> stack_outputs.json

74 | echo ' "SolutionS3Bucket": "${SolutionsRefBucketBase}",' >> stack_outputs.json

75 | echo ' "S3Bucket": "${S3Bucket}"' >> stack_outputs.json

76 | echo '}' >> stack_outputs.json

77 | cat stack_outputs.json

78 | sudo chown -R ec2-user:ec2-user *

79 | EOF

80 | - SolutionsRefBucketBase:

81 | !FindInMap [S3, !Ref StackVersion, BucketPrefix]

82 |

83 | Outputs:

84 | SourceCode:

85 | Description: "Open Jupyter IDE. This authenticates you against Jupyter."

86 | Value: !Sub "https://${NotebookInstance.NotebookInstanceName}.notebook.${AWS::Region}.sagemaker.aws/"

87 |

88 | SageMakerNotebookInstanceSignOn:

89 | Description: "Link to the SageMaker notebook instance"

90 | Value: !Sub "https://${NotebookInstance.NotebookInstanceName}.notebook.${AWS::Region}.sagemaker.aws/notebooks/notebooks"

91 |

--------------------------------------------------------------------------------

/cloudformation/solution-assistant/src/lambda_fn.py:

--------------------------------------------------------------------------------

1 | import boto3

2 | import sys

3 |

4 | sys.path.append("./site-packages")

5 | from crhelper import CfnResource

6 |

7 | helper = CfnResource()

8 |

9 |

10 | @helper.create

11 | def on_create(_, __):

12 | pass

13 |

14 |

15 | @helper.update

16 | def on_update(_, __):

17 | pass

18 |

19 |

20 | def delete_sagemaker_endpoint(endpoint_name):

21 | sagemaker_client = boto3.client("sagemaker")

22 | try:

23 | sagemaker_client.delete_endpoint(EndpointName=endpoint_name)

24 | print("Successfully deleted endpoint " "called '{}'.".format(endpoint_name))

25 | except sagemaker_client.exceptions.ClientError as e:

26 | if "Could not find endpoint" in str(e):

27 | print("Could not find endpoint called '{}'. " "Skipping delete.".format(endpoint_name))

28 | else:

29 | raise e

30 |

31 |

32 | def delete_sagemaker_endpoint_config(endpoint_config_name):

33 | sagemaker_client = boto3.client("sagemaker")

34 | try:

35 | sagemaker_client.delete_endpoint_config(EndpointConfigName=endpoint_config_name)

36 | print("Successfully deleted endpoint configuration " "called '{}'.".format(endpoint_config_name))

37 | except sagemaker_client.exceptions.ClientError as e:

38 | if "Could not find endpoint configuration" in str(e):

39 | print(

40 | "Could not find endpoint configuration called '{}'. " "Skipping delete.".format(endpoint_config_name)

41 | )

42 | else:

43 | raise e

44 |

45 |

46 | def delete_sagemaker_model(model_name):

47 | sagemaker_client = boto3.client("sagemaker")

48 | try:

49 | sagemaker_client.delete_model(ModelName=model_name)

50 | print("Successfully deleted model called '{}'.".format(model_name))

51 | except sagemaker_client.exceptions.ClientError as e:

52 | if "Could not find model" in str(e):

53 | print("Could not find model called '{}'. " "Skipping delete.".format(model_name))

54 | else:

55 | raise e

56 |

57 |

58 | def delete_s3_objects(bucket_name):

59 | s3_resource = boto3.resource("s3")

60 | try:

61 | s3_resource.Bucket(bucket_name).objects.all().delete()

62 | print("Successfully deleted objects in bucket " "called '{}'.".format(bucket_name))

63 | except s3_resource.meta.client.exceptions.NoSuchBucket:

64 | print("Could not find bucket called '{}'. " "Skipping delete.".format(bucket_name))

65 |

66 |

67 | def delete_s3_bucket(bucket_name):

68 | s3_resource = boto3.resource("s3")

69 | try:

70 | s3_resource.Bucket(bucket_name).delete()

71 | print("Successfully deleted bucket " "called '{}'.".format(bucket_name))

72 | except s3_resource.meta.client.exceptions.NoSuchBucket:

73 | print("Could not find bucket called '{}'. " "Skipping delete.".format(bucket_name))

74 |

75 |

76 | @helper.delete

77 | def on_delete(event, __):

78 | # remove sagemaker endpoints

79 | solution_prefix = event["ResourceProperties"]["SolutionPrefix"]

80 | endpoint_names = [

81 | "{}-demo-endpoint".format(solution_prefix), # make sure it is the same as your endpoint name

82 | "{}-demo-model".format(solution_prefix),

83 | "{}-finetuned-endpoint".format(solution_prefix),

84 | "{}-detector-from-scratch-endpoint".format(solution_prefix),

85 | "{}-classification-endpoint".format(solution_prefix),

86 | ]

87 | for endpoint_name in endpoint_names:

88 | delete_sagemaker_model(endpoint_name)

89 | delete_sagemaker_endpoint_config(endpoint_name)

90 | delete_sagemaker_endpoint(endpoint_name)

91 |

92 | # remove files in s3

93 | output_bucket = event["ResourceProperties"]["S3Bucket"]

94 | delete_s3_objects(output_bucket)

95 |

96 | # delete buckets

97 | delete_s3_bucket(output_bucket)

98 |

99 |

100 | def handler(event, context):

101 | helper(event, context)

102 |
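The delete helpers above share one pattern: attempt the delete, treat a "could not find" error as success, and re-raise anything else, so the cleanup can run repeatedly. The sketch below illustrates that pattern without AWS access. `FakeClientError`, `FakeSageMakerClient` and `delete_if_exists` are hypothetical stand-ins written for this example; they are not boto3 or crhelper APIs.

```python
class FakeClientError(Exception):
    """Hypothetical stand-in for the client error raised by the real SDK."""


class FakeSageMakerClient:
    """Hypothetical in-memory client that only tracks endpoint names."""

    def __init__(self, endpoints):
        self.endpoints = set(endpoints)

    def delete_endpoint(self, EndpointName):
        if EndpointName not in self.endpoints:
            raise FakeClientError("Could not find endpoint '{}'.".format(EndpointName))
        self.endpoints.remove(EndpointName)


def delete_if_exists(client, endpoint_name):
    """Return True if the endpoint was deleted, False if it was already gone."""
    try:
        client.delete_endpoint(EndpointName=endpoint_name)
        return True
    except FakeClientError as e:
        if "Could not find endpoint" in str(e):
            return False
        raise  # unexpected errors still propagate


client = FakeSageMakerClient(["demo-endpoint"])
results = [
    delete_if_exists(client, "demo-endpoint"),
    delete_if_exists(client, "demo-endpoint"),
]
```

Calling the helper twice on the same name succeeds both times; only the first call actually removes anything.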

--------------------------------------------------------------------------------

/src/prepare_data/neu.py:

--------------------------------------------------------------------------------

1 | """

2 | Dependencies: unzip unrar

3 | python -m pip install patool pyunpack

4 | """

5 |

6 | from pathlib import Path

7 | import shutil

8 | import re

9 | import os

10 |

11 | try:

12 | from pyunpack import Archive

13 | except ModuleNotFoundError:

14 | print("installing the dependencies `patool` and `pyunpack` for unzipping the data")

15 | import subprocess

16 |

17 | subprocess.run("python -m pip install patool==1.12 pyunpack==0.2.1 -q", shell=True)

18 | from pyunpack import Archive

19 |

20 | CLASSES = {

21 | "crazing": "Cr",

22 | "inclusion": "In",

23 | "pitted_surface": "PS",

24 | "patches": "Pa",

25 | "rolled-in_scale": "RS",

26 | "scratches": "Sc",

27 | }

28 |

29 |

30 | def unpack(path: str) -> None:

31 | path = Path(path)

32 | Archive(str(path)).extractall(str(path.parent))

33 | return

34 |

35 |

36 | def cp_class_images(data_path: Path, class_name: str, class_path_dest: Path) -> None:

37 | lst = list(data_path.rglob(f"{class_name}_*"))

38 | for img_file in lst:

39 | shutil.copy2(str(img_file), str(class_path_dest / img_file.name))

40 |

41 | assert len(lst) == len(list(class_path_dest.glob("*")))

42 | return

43 |

44 |

45 | def cp_image_annotation(data_path: Path, class_name: str, image_path_dest: Path, annotation_path_dest: Path) -> None:

46 | img_lst = sorted(list((data_path / "IMAGES").rglob(f"{class_name}_*")))

47 | ann_lst = sorted(list((data_path / "ANNOTATIONS").rglob(f"{class_name}_*")))

48 | assert len(img_lst) == len(

49 | ann_lst

50 | ), f"images count {len(img_lst)} does not match with annotations count {len(ann_lst)} for class {class_name}"

51 | for (img_file, ann_file) in zip(img_lst, ann_lst):

52 | shutil.copy2(str(img_file), str(image_path_dest / img_file.name))

53 | shutil.copy2(str(ann_file), str(annotation_path_dest / ann_file.name))

54 |

55 | assert len(list(image_path_dest.glob("*"))) == len(list(annotation_path_dest.glob("*")))

56 | return

57 |

58 |

59 | def main(data_path: str, output_path: str, archived: bool = True) -> None:

60 | """

61 | Data preparation

62 |

63 | Parameters

64 | ----------

65 | data_path : str

66 | Raw data path

67 | output_path : str

68 | Output data path

69 | archived: bool

70 | Whether the file is archived or not (for testing)

71 |

72 | Raises

73 | ------

74 | ValueError

75 | If the packed data file is different from NEU-CLS or NEU-DET

76 | """

77 | data_path = Path(data_path)

78 | if archived:

79 | unpack(data_path)

80 |

81 | data_path = data_path.parent / re.search(r"^[^.]*", str(data_path.name)).group(0)

82 | try:

83 | os.remove(str(data_path / "Thumbs.db"))

84 | except FileNotFoundError:

85 | print(f"Thumbs.db is not found. Continuing ...")

86 | pass

87 | except Exception as e:

88 | print(f"{e}: Unknown error!")

89 | raise e

90 |

91 | output_path = Path(output_path)

92 | if data_path.name == "NEU-CLS":

93 | for cls_ in CLASSES.values():

94 | cls_path = output_path / cls_

95 | cls_path.mkdir(exist_ok=True)

96 | cp_class_images(data_path, cls_, cls_path)

97 | elif data_path.name == "NEU-DET":

98 | for cls_ in CLASSES:

99 | cls_path = output_path / CLASSES[cls_]

100 | image_path = cls_path / "images"

101 | image_path.mkdir(parents=True, exist_ok=True)

102 | annotation_path = cls_path / "annotations"

103 | annotation_path.mkdir(exist_ok=True)

104 | cp_image_annotation(data_path, cls_, image_path, annotation_path)

105 | else:

106 | raise ValueError(f"Unknown data. Choose between `NEU-CLS` and `NEU-DET`. Given {data_path.name}")

107 |

108 | return

109 |

110 |

111 | if __name__ == "__main__":

112 | import sys

113 |

114 | if len(sys.argv) < 3:

115 | print("Provide `data_path` and `output_path`")

116 | sys.exit(1)

117 |

118 | main(sys.argv[1], sys.argv[2])

119 | print("Done")

120 |
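In `main`, the directory that the archive unpacks into is derived from the archive file name by keeping everything before the first dot. A small sketch of that step in isolation follows; the function name `extracted_dir` and the example paths are hypothetical.

```python
import re
from pathlib import Path


def extracted_dir(archive_path: str) -> Path:
    """Hypothetical helper: directory next to the archive, named up to the first dot."""
    path = Path(archive_path)
    # Same regular expression as in main(): match everything before the first ".".
    stem = re.search(r"^[^.]*", path.name).group(0)
    return path.parent / stem


result = extracted_dir("raw/NEU-DET.zip")
```

Because the match stops at the first dot, multi-part extensions such as `.tar.gz` are stripped as well, which `Path.stem` alone would not do.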

--------------------------------------------------------------------------------

/scripts/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -euxo pipefail

3 |

4 | NOW=$(date +"%x %r %Z")

5 | echo "Time: $NOW"

6 |

7 | if [ $# -lt 3 ]; then

8 | echo "Please provide the solution name as well as the base S3 bucket name and the region to run build script."

9 | echo "For example: bash ./scripts/build.sh trademarked-solution-name sagemaker-solutions-build us-west-2"

10 | exit 1

11 | fi

12 |

13 | REGION=$3

14 | SOURCE_REGION="us-west-2"

15 | echo "Region: $REGION, source region: $SOURCE_REGION"

16 | BASE_DIR="$(dirname "$(dirname "$(readlink -f "$0")")")"

17 | echo "Base dir: $BASE_DIR"

18 |

19 | rm -rf build

20 |

21 | # build python package

22 | echo "Python build and package"

23 | python -m pip install --upgrade pip

24 | python -m pip install --upgrade wheel setuptools

25 | python setup.py build sdist bdist_wheel

26 |

27 | find . | grep -E "(__pycache__|\.pyc|\.pyo$|\.egg*|lightning_logs)" | xargs rm -rf

28 |

29 | echo "Add requirements for SageMaker"

30 | cp requirements.txt build/lib/

31 | cd build/lib || exit

32 | mv sagemaker_defect_detection/{classifier.py,detector.py} .

33 | touch source_dir.tar.gz

34 | tar --exclude=source_dir.tar.gz -czvf source_dir.tar.gz .

35 | echo "Only keep source_dir.tar.gz for SageMaker"

36 | find . ! -name "source_dir.tar.gz" -type f -exec rm -r {} +

37 | rm -rf sagemaker_defect_detection

38 |

39 | cd - || exit

40 |

41 | mv dist build

42 |

43 | # add notebooks to build

44 | echo "Prepare notebooks and add to build"

45 | cp -r notebooks build

46 | rm -rf build/notebooks/*neu* # remove local datasets for build

47 | for nb in build/notebooks/*.ipynb; do

48 | python "$BASE_DIR"/scripts/set_kernelspec.py --notebook "$nb" --display-name "Python 3 (PyTorch JumpStart)" --kernel "HUB_1P_IMAGE"

49 | done

50 |

51 | echo "Copy src to build"

52 | cp -r src build

53 |

54 | # add solution assistant

55 | echo "Solution assistant lambda function"

56 | cd cloudformation/solution-assistant/ || exit

57 | python -m pip install -r requirements.txt -t ./src/site-packages

58 |

59 | cd - || exit

60 |

61 | echo "Clean up pyc files, needed to avoid security issues. See: https://blog.jse.li/posts/pyc/"

62 | find cloudformation | grep -E "(__pycache__|\.pyc|\.pyo$)" | xargs rm -rf

63 | cp -r cloudformation/solution-assistant build/

64 | cd build/solution-assistant/src || exit

65 | zip -q -r9 "$BASE_DIR"/build/solution-assistant.zip -- *

66 |

67 | cd - || exit

68 | rm -rf build/solution-assistant

69 |

70 | if [ -z "${4:-}" ] || [ "${4:-}" == 'mainline' ]; then

71 | s3_prefix="s3://$2-$3/$1"

72 | else

73 | s3_prefix="s3://$2-$3/$1-$4"

74 | fi

75 |

76 | # cleanup and copy the build artifacts

77 | echo "Removing the existing objects under $s3_prefix"

78 | aws s3 rm --recursive "$s3_prefix" --region "$REGION"

79 | echo "Copying new objects to $s3_prefix"

80 | aws s3 sync . "$s3_prefix" --delete --region "$REGION" \

81 | --exclude ".git/*" \

82 | --exclude ".vscode/*" \

83 | --exclude ".mypy_cache/*" \

84 | --exclude "logs/*" \

85 | --exclude "stack_outputs.json" \

86 | --exclude "src/sagemaker_defect_detection/lightning_logs/*" \

87 | --exclude "notebooks/*neu*/*"

88 |

89 | echo "Copying solution artifacts"

90 | aws s3 cp "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/demo/model.tar.gz" "$s3_prefix"/demo/model.tar.gz --source-region "$SOURCE_REGION"

91 |

92 | mkdir -p build/pretrained/

93 | aws s3 cp "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/pretrained/model.tar.gz" build/pretrained --source-region "$SOURCE_REGION" &&

94 | cd build/pretrained/ && tar -xf model.tar.gz && cd .. &&

95 | aws s3 sync pretrained "$s3_prefix"/pretrained/ --delete --region "$REGION"

96 |

97 | aws s3 cp "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/data/NEU-CLS.zip" "$s3_prefix"/data/ --source-region "$SOURCE_REGION"

98 | aws s3 cp "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/data/NEU-DET.zip" "$s3_prefix"/data/ --source-region "$SOURCE_REGION"

99 |

100 | echo "Add docs to build"

101 | aws s3 sync "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/docs" "$s3_prefix"/docs --delete --region "$REGION"

102 | aws s3 sync "s3://sagemaker-solutions-artifacts/sagemaker-defect-detection/docs" "$s3_prefix"/build/docs --delete --region "$REGION"

103 |

--------------------------------------------------------------------------------

/src/sagemaker_defect_detection/utils/visualize.py:

--------------------------------------------------------------------------------

1 | from typing import Iterable, List, Union, Tuple

2 |

3 | import numpy as np

4 |

5 | import torch

6 |

7 | from matplotlib import pyplot as plt

8 |

9 | import cv2

10 |

11 | import torch

12 |

13 |

14 | TEXT_COLOR = (255, 255, 255) # White

15 | CLASSES = {

16 | "crazing": "Cr",

17 | "inclusion": "In",

18 | "pitted_surface": "PS",

19 | "patches": "Pa",

20 | "rolled-in_scale": "RS",

21 | "scratches": "Sc",

22 | }

23 | CATEGORY_ID_TO_NAME = {i: name for i, name in enumerate(CLASSES.keys(), start=1)}

24 |

25 |

26 | def unnormalize_to_hwc(

27 | image: torch.Tensor, mean: List[float] = [0.485, 0.456, 0.406], std: List[float] = [0.229, 0.224, 0.225]

28 | ) -> np.ndarray:

29 | """

30 | Unnormalizes a normalized image tensor: CHW in [0, 1] -> HWC in [0, 255]

31 |

32 | Parameters

33 | ----------

34 | image : torch.Tensor

35 | Normalized image

36 | mean : List[float], optional

37 | RGB averages used in normalization, by default [0.485, 0.456, 0.406] from imagenet1k

38 | std : List[float], optional

39 | RGB standard deviations used in normalization, by default [0.229, 0.224, 0.225] from imagenet1k

40 |

41 | Returns

42 | -------

43 | np.ndarray

44 | Unnormalized image as numpy array

45 | """

46 | image = image.numpy().transpose(1, 2, 0) # HWC

47 | image = (image * std + mean).clip(0, 1)

48 | image = (image * 255).astype(np.uint8)

49 | return image

50 |

51 |

52 | def visualize_bbox(img: np.ndarray, bbox: np.ndarray, class_name: str, color, thickness: int = 2) -> np.ndarray:

53 | """

54 | Uses cv2 to draw colored bounding boxes and class names in an image

55 |

56 | Parameters

57 | ----------

58 | img : np.ndarray

59 | Image to draw on (modified in place by cv2)

60 | bbox : np.ndarray

61 | Bounding box as (x_min, y_min, x_max, y_max)

62 | class_name : str

63 | Class name

64 | color : tuple

65 | BGR tuple

66 | thickness : int, optional

67 | Bounding box thickness, by default 2

68 | """

69 | x_min, y_min, x_max, y_max = tuple(map(int, bbox))

70 |

71 | cv2.rectangle(img, (x_min, y_min), (x_max, y_max), color=color, thickness=thickness)

72 |

73 | ((text_width, text_height), _) = cv2.getTextSize(class_name, cv2.FONT_HERSHEY_SIMPLEX, 0.35, 1)

74 | cv2.rectangle(img, (x_min, y_min - int(1.3 * text_height)), (x_min + text_width, y_min), color, -1)

75 | cv2.putText(

76 | img,

77 | text=class_name,

78 | org=(x_min, y_min - int(0.3 * text_height)),

79 | fontFace=cv2.FONT_HERSHEY_SIMPLEX,

80 | fontScale=0.35,

81 | color=TEXT_COLOR,

82 | lineType=cv2.LINE_AA,

83 | )

84 | return img

85 |

86 |

87 | def visualize(

88 | image: np.ndarray,

89 | bboxes: Iterable[Union[torch.Tensor, np.ndarray]] = [],

90 | category_ids: Iterable[Union[torch.Tensor, np.ndarray]] = [],

91 | colors: Iterable[Tuple[int, int, int]] = [],

92 | titles: Iterable[str] = [],

93 | category_id_to_name=CATEGORY_ID_TO_NAME,

94 | dpi=150,

95 | ) -> None:

96 | """

97 | Applies the bounding boxes and category ids to an image

98 |

99 | Parameters

100 | ----------

101 | image : np.ndarray

102 | Image as numpy array

103 | bboxes : Iterable[Union[torch.Tensor, np.ndarray]], optional

104 | Bounding boxes, by default []

105 | category_ids : Iterable[Union[torch.Tensor, np.ndarray]], optional

106 | Category ids, by default []

107 | colors : Iterable[Tuple[int, int, int]], optional

108 | Colors for each bounding box, by default []

109 | titles : Iterable[str], optional

110 | Titles for each image, by default []

111 | category_id_to_name : Dict[int, str], optional

112 | Dictionary of category ids to names, by default CATEGORY_ID_TO_NAME

113 | dpi : int, optional

114 | DPI for clarity, by default 150

115 | """

116 | bboxes, category_ids, colors, titles = list(map(list, [bboxes, category_ids, colors, titles])) # type: ignore

117 | n = len(bboxes)

118 | assert (

119 | n == len(category_ids) == len(colors) == len(titles) - 1

120 | ), f"number of bboxes, category ids, colors and titles (minus one) do not match"

121 |

122 | plt.figure(dpi=dpi)

123 | ncols = n + 1

124 | plt.subplot(1, ncols, 1)

125 | img = image.copy()

126 | plt.axis("off")

127 | plt.title(titles[0])

128 | plt.imshow(image)

129 | if not len(bboxes):

130 | return

131 |

132 | titles = titles[1:]

133 | for i in range(2, ncols + 1):

134 | img = image.copy()

135 | plt.subplot(1, ncols, i)

136 | plt.axis("off")

137 | j = i - 2

138 | plt.title(titles[j])

139 | for bbox, category_id in zip(bboxes[j], category_ids[j]): # type: ignore

140 | if isinstance(bbox, torch.Tensor):

141 | bbox = bbox.numpy()

142 |

143 | if isinstance(category_id, torch.Tensor):

144 | category_id = category_id.numpy()

145 |

146 | if isinstance(category_id, np.ndarray):

147 | category_id = category_id.item()

148 |

149 | class_name = category_id_to_name[category_id]

150 | img = visualize_bbox(img, bbox, class_name, color=colors[j])

151 |

152 | plt.imshow(img)

153 | return

154 |
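`CATEGORY_ID_TO_NAME` numbers the classes from 1 in the insertion order of `CLASSES`, and `visualize` looks each predicted id up in it. The sketch below reproduces the mapping and adds a reverse lookup. `NAME_TO_CATEGORY_ID` is a hypothetical addition for illustration and is not defined in this module.

```python
CLASSES = {
    "crazing": "Cr",
    "inclusion": "In",
    "pitted_surface": "PS",
    "patches": "Pa",
    "rolled-in_scale": "RS",
    "scratches": "Sc",
}

# Same construction as in visualize.py: ids start at 1 and follow dict order.
CATEGORY_ID_TO_NAME = {i: name for i, name in enumerate(CLASSES.keys(), start=1)}

# Hypothetical reverse mapping, handy when building labels from class names.
NAME_TO_CATEGORY_ID = {name: i for i, name in CATEGORY_ID_TO_NAME.items()}
```

Since the ids depend on dictionary order, reordering the entries of `CLASSES` would silently change which id refers to which defect class.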

--------------------------------------------------------------------------------

/scripts/train_detector.sh:

--------------------------------------------------------------------------------

1 | NOW=$(date +"%x %r %Z")

2 | echo "Time: ${NOW}"

3 | DETECTION_DATA_PATH=$1

4 | BACKBONE=$2

5 | BASE_DIR="$(dirname $(dirname $(readlink -f $0)))"

6 | DETECTOR_SCRIPT="${BASE_DIR}/src/sagemaker_defect_detection/detector.py"

7 | LOG_DIR="${BASE_DIR}/logs"

8 | MFN_LOGS="${LOG_DIR}/classification_logs"

9 | RPN_LOGS="${LOG_DIR}/rpn_logs"

10 | ROI_LOGS="${LOG_DIR}/roi_logs"

11 | FINETUNED_RPN_LOGS="${LOG_DIR}/finetune_rpn_logs"

12 | FINETUNED_ROI_LOGS="${LOG_DIR}/finetune_roi_logs"

13 | FINETUNED_FINAL_LOGS="${LOG_DIR}/finetune_final_logs"

14 | EXTRA_FINETUNED_RPN_LOGS="${LOG_DIR}/extra_finetune_rpn_logs"

15 | EXTRA_FINETUNED_ROI_LOGS="${LOG_DIR}/extra_finetune_roi_logs"

16 | EXTRA_FINETUNING_STEPS=3

17 |

18 | function find_best_ckpt() {

19 | python ${BASE_DIR}/scripts/find_best_ckpt.py $1 $2

20 | }

21 |

22 | function train_step() {

23 | echo "training step $1"

24 | case $1 in

25 | "1")

26 | echo "skipping step 1 and use 'train_classifier.sh'"

27 | ;;

28 | "2") # train rpn

29 | python ${DETECTOR_SCRIPT} \

30 | --data-path=${DETECTION_DATA_PATH} \

31 | --backbone=${BACKBONE} \

32 | --train-rpn \

33 | --pretrained-mfn-ckpt=$(find_best_ckpt "${MFN_LOGS}" "max") \

34 | --save-path=${RPN_LOGS} \

35 | --gpus=-1 --distributed-backend=ddp \

36 | --epochs=100

37 | ;;

38 | "3") # train roi

39 | python ${DETECTOR_SCRIPT} \

40 | --data-path=${DETECTION_DATA_PATH} \

41 | --backbone=${BACKBONE} \

42 | --train-roi \

43 | --pretrained-rpn-ckpt=$(find_best_ckpt "${RPN_LOGS}" "min") \

44 | --save-path=${ROI_LOGS} \

45 | --gpus=-1 --distributed-backend=ddp \

46 | --epochs=100

47 | ;;

48 | "4") # finetune rpn

49 | python ${DETECTOR_SCRIPT} \

50 | --data-path=${DETECTION_DATA_PATH} \

51 | --backbone=${BACKBONE} \

52 | --finetune-rpn \

53 | --pretrained-rpn-ckpt=$(find_best_ckpt "${RPN_LOGS}" "min") \

54 | --pretrained-roi-ckpt=$(find_best_ckpt "${ROI_LOGS}" "min") \

55 | --save-path=${FINETUNED_RPN_LOGS} \

56 | --gpus=-1 --distributed-backend=ddp \

57 | --learning-rate=1e-4 \

58 | --epochs=100

59 | # --resume-from-checkpoint=$(find_best_ckpt "${FINETUNED_RPN_LOGS}" "max")

60 | ;;

61 | "5") # finetune roi

62 | python ${DETECTOR_SCRIPT} \

63 | --data-path=${DETECTION_DATA_PATH} \

64 | --backbone=${BACKBONE} \

65 | --finetune-roi \

66 | --finetuned-rpn-ckpt=$(find_best_ckpt "${FINETUNED_RPN_LOGS}" "max") \

67 | --pretrained-roi-ckpt=$(find_best_ckpt "${ROI_LOGS}" "min") \

68 | --save-path=${FINETUNED_ROI_LOGS} \

69 | --gpus=-1 --distributed-backend=ddp \

70 | --learning-rate=1e-4 \

71 | --epochs=100

72 | # --resume-from-checkpoint=$(find_best_ckpt "${FINETUNED_ROI_LOGS}" "max")

73 | ;;

74 | "extra_rpn") # initially EXTRA_FINETUNED_*_LOGS is a copy of FINETUNED_*_LOGS

75 | python ${DETECTOR_SCRIPT} \

76 | --data-path=${DETECTION_DATA_PATH} \

77 | --backbone=${BACKBONE} \

78 | --finetune-rpn \

79 | --finetuned-rpn-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_RPN_LOGS}" "max") \

80 | --finetuned-roi-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_ROI_LOGS}" "max") \

81 | --save-path=${EXTRA_FINETUNED_RPN_LOGS} \

82 | --gpus=-1 --distributed-backend=ddp \

83 | --learning-rate=1e-4 \

84 | --epochs=100

85 | ;;

86 | "extra_roi") # initially EXTRA_FINETUNED_*_LOGS is a copy of FINETUNED_*_LOGS

87 | python ${DETECTOR_SCRIPT} \

88 | --data-path=${DETECTION_DATA_PATH} \

89 | --backbone=${BACKBONE} \

90 | --finetune-roi \

91 | --finetuned-rpn-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_RPN_LOGS}" "max") \

92 | --finetuned-roi-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_ROI_LOGS}" "max") \

93 | --save-path=${EXTRA_FINETUNED_ROI_LOGS} \

94 | --gpus=-1 --distributed-backend=ddp \

95 | --learning-rate=1e-4 \

96 | --epochs=100

97 | ;;

98 | "joint") # final

99 | python ${DETECTOR_SCRIPT} \

100 | --data-path=${DETECTION_DATA_PATH} \

101 | --backbone=${BACKBONE} \

102 | --finetuned-rpn-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_RPN_LOGS}" "max") \

103 | --finetuned-roi-ckpt=$(find_best_ckpt "${EXTRA_FINETUNED_ROI_LOGS}" "max") \

104 | --save-path="${FINETUNED_FINAL_LOGS}" \

105 | --gpus=-1 --distributed-backend=ddp \

106 | --learning-rate=1e-3 \

107 | --epochs=300

108 | # --resume-from-checkpoint=$(find_best_ckpt "${FINETUNED_FINAL_LOGS}" "max")

109 | ;;

110 |

111 | *) ;;

112 | esac

113 | }

114 |

115 | function train_wait_to_finish() {

116 | train_step $1 &

117 | BPID=$!

118 | wait $BPID

119 | }

120 |

121 | function run() {

122 | if [ "$1" != "" ]; then

123 | train_step $1

124 | else

125 | nvidia-smi | grep python | awk '{ print $3 }' | xargs -n1 kill -9 >/dev/null 2>&1

126 | read -p "Training all steps from scratch? (Y/N): " confirm && [[ $confirm == [yY] || $confirm == [yY][eE][sS] ]]

127 | if [[ $confirm == [yY] || $confirm == [yY][eE][sS] ]]; then # accept y/Y/yes, matching the prompt check above

128 | for i in {1..5}; do

129 | train_wait_to_finish $i

130 | done

131 | echo "finished all the training steps"

132 | mkdir -p "${EXTRA_FINETUNED_RPN_LOGS}" && cp -r "${FINETUNED_RPN_LOGS}/"* "${EXTRA_FINETUNED_RPN_LOGS}"

133 | mkdir -p "${EXTRA_FINETUNED_ROI_LOGS}" && cp -r "${FINETUNED_ROI_LOGS}/"* "${EXTRA_FINETUNED_ROI_LOGS}"

134 | fi

135 | echo "repeating extra finetuning steps ${EXTRA_FINETUNING_STEPS} more times"

136 | for i in $(seq 1 "${EXTRA_FINETUNING_STEPS}"); do # brace expansion does not expand variables in bash

137 | train_wait_to_finish "extra_rpn"

138 | train_wait_to_finish "extra_roi"

139 | done

140 | echo "final joint training"

141 | train_step "joint"

142 | fi

143 | exit 0

144 | }

145 |

146 | run $1

147 |

--------------------------------------------------------------------------------

/cloudformation/defect-detection-permissions.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: "2010-09-09"

2 | Description: "(SA0015) - sagemaker-defect-detection permission stack"

3 | Parameters:

4 | SolutionPrefix:

5 | Type: String

6 | SolutionName:

7 | Type: String

8 | S3Bucket:

9 | Type: String

10 | StackVersion:

11 | Type: String

12 |

13 | Mappings:

14 | S3:

15 | release:

16 | BucketPrefix: "sagemaker-solutions-prod"

17 | development:

18 | BucketPrefix: "sagemaker-solutions-devo"

19 |

20 | Resources:

21 | SageMakerIAMRole:

22 | Type: AWS::IAM::Role

23 | Properties:

24 | RoleName: !Sub "${SolutionPrefix}-${AWS::Region}-nb-role"

25 | AssumeRolePolicyDocument:

26 | Version: "2012-10-17"

27 | Statement:

28 | - Effect: Allow

29 | Principal:

30 | AWS:

31 | - !Sub "arn:aws:iam::${AWS::AccountId}:root"

32 | Service:

33 | - sagemaker.amazonaws.com

34 | - lambda.amazonaws.com # for solution assistant resource cleanup

35 | Action:

36 | - "sts:AssumeRole"

37 | Metadata:

38 | cfn_nag:

39 | rules_to_suppress:

40 | - id: W28

41 | reason: Needs to be explicitly named to tighten launch permissions policy

42 |

43 | SageMakerIAMPolicy:

44 | Type: AWS::IAM::Policy

45 | Properties:

46 | PolicyName: !Sub "${SolutionPrefix}-nb-instance-policy"

47 | Roles:

48 | - !Ref SageMakerIAMRole

49 | PolicyDocument:

50 | Version: "2012-10-17"

51 | Statement:

52 | - Effect: Allow

53 | Action:

54 | - sagemaker:CreateTrainingJob

55 | - sagemaker:DescribeTrainingJob

56 | - sagemaker:CreateProcessingJob

57 | - sagemaker:DescribeProcessingJob

58 | - sagemaker:CreateModel

59 | - sagemaker:DescribeEndpointConfig

60 | - sagemaker:DescribeEndpoint

61 | - sagemaker:CreateEndpointConfig

62 | - sagemaker:CreateEndpoint

63 | - sagemaker:DeleteEndpointConfig

64 | - sagemaker:DeleteEndpoint

65 | - sagemaker:DeleteModel

66 | - sagemaker:InvokeEndpoint

67 | Resource:

68 | - !Sub "arn:aws:sagemaker:${AWS::Region}:${AWS::AccountId}:*"